Abstract

Many animals exhibit agile mobility in obstructed environments due to their ability to tune their bodies to negotiate and manipulate obstacles and apertures. Most mobile robots are rigid structures and avoid obstacles where possible. In this work, we introduce a new framework named Haptic And Visual Environment Navigation (HAVEN) Architecture that combines vision and proprioception to make a deformable mobile robot more agile in obstructed environments. The algorithms enable the robot to be autonomously (a) predictive, by analysing visual feedback from the environment and preparing its body accordingly, (b) reactive, by responding to proprioceptive feedback, and (c) active, by manipulating obstacles and gap sizes using its deformable body. The robot was tested approaching apertures of different sizes in obstructed environments, ranging from wider than its natural shape to narrower than its narrowest possible width. The experiments involved multiple obstacles with different physical properties. The results show higher navigation success rates and an average 32% reduction in navigation time when the robot actively manipulates obstacles using its shape-changing body.

Keywords: Embodied intelligence, Deformable robot, Bio-inspired robotics, Multimodal perception

Subject terms: Mechanical engineering, Electrical and electronic engineering

Introduction

With the emergence of new robot-based applications, robots have to plan and execute tasks in the presence of environmental uncertainties, which makes sensing an essential component. Robots must be equipped with different sensing modalities to operate in unstructured environments. Combining modalities can have a synergistic effect, particularly in cases where a single sensor modality would fail1,2. It has been shown that fusing different sensing modalities (including tactile sensing) can increase the success rate of tasks that require interaction with the environment3,4.

As tactile sensing performance improves, sensor fusion becomes more important in multiple applications5. However, in the literature on haptics, exploration has been treated only to a limited extent compared to grasping6. The majority of mobile robot path planning methods focus on obstacle avoidance, rather than object traversal or manipulation.

Deformable or transformable robots offer the ability to change shape depending on their task or requirement. Some examples include continuum7,8, origami9,10, and modular robots11,12. The development of deformable robots is important and useful because they can adapt their bodies to suit their environments, navigate more efficiently and safely, and present a wide range of potential applications. Some of these applications include medical and healthcare13,14, household15,16, search and rescue17,18, and environmental and space exploration19–21. Deformability or compliance in robots is also advantageous since unexpected collisions can be somewhat tolerated22, similar to how animals minimise collision force to reduce risk of injury. Other benefits of shape change include variable stability and centre of mass (which could be extended for advantages with payload capability), changeable points of contact with the ground (which can enable the robot to drive on more desirable surfaces or avoid potential hazards), varying turning circle/radius (especially useful in cluttered or obstructed environments), and inherent damage resilience and mechanical robustness (increasing ability to absorb impacts or adapt in case of component failure)23.

We previously developed DeforMoBot24, a deformable mobile robot that uses proprioceptive whisker feedback to adjust its shape in real time in order to traverse obstacles in its path. The robot’s design is inspired by animals such as cats, which can squeeze through spaces narrower than their normal body size.

In this work, we propose a new framework named Haptic And Visual Environment Navigation (HAVEN) Architecture. This architecture enables the augmented robot to autonomously choose between reactive traversal and active mobile manipulation of obstacles based on a visual prediction algorithm. The IEEE Robotics and Automation Society’s Technical Committee for Mobile Manipulation defines mobile manipulation as robotic tasks that require a synergistic combination of navigation and interaction with the environment25. Specifically, using vision and proprioception algorithms, the robot is now

Predictive, by visually analysing the environment and preparing its body for the given circumstances;

Reactive, by responding to proprioceptive feedback; and

Active, by manipulating obstacles and gap sizes.

While most robots do not engage in tasks through kinematic tuning such as stiffness and shape change, our robot, taking inspiration from its biological counterparts, can act both in state space and through kinematic tuning. The motion planning problem of Navigation Among Movable Obstacles explores the potential for obstacle manipulation26, but it has not been thoroughly explored in unstructured environments.

Many animals (such as cats, rats, rabbits, and seals) use a combination of their eyes and whiskers for perception of their environment. Likewise, we have enabled the robot to benefit from multimodal perception, as shown in Fig. 1. Building on proprioceptive sensing developed previously24, the robot can now use vision to analyse its environment, find the properties and location of the biggest gap in the scene, and prepare itself accordingly (through speed and shape change). Real-time proprioceptive feedback then allows the robot to update its shape during navigation and also helps it handle edge cases. The robot can also manipulate obstacles and gaps through active shape change.

Fig. 1.

(A) Taking a bio-inspired approach from animals such as cats, we present a robot which uses visual and proprioceptive sensing to be predictive, reactive, and active in its locomotion and interactions. From left to right: the robot analyses its environment and observes the small gap between the obstacles, approaches at its narrowest body shape, expands its shape to actively widen the gap, and is able to resume its natural shape once it has progressed. (B) Overhead view of the augmented deformable mobile robot DeforMoBot detailing design measurements and augmentations including depth camera, longer whiskers, microswitch, and speed and direction control.

The remainder of the paper is organised as follows. “Related work” discusses relevant related work. “Method” presents the methodology including algorithms and the proposed HAVEN Architecture. “Experiments and results” explains the experimentation and also analyses the results obtained. Finally, “Conclusions” presents the conclusions of this work.

Related work

In general, a major aim of robot navigation is to avoid collisions between the robot and its environment27,28. However, this is limiting, especially in obstructed environments, where obstacles could instead be moved or manipulated to facilitate the robot’s navigation. The same limitation is evident in outdoor locomotion, where many works to date have limited navigation to traversing uneven terrain29–31. To enable more robust navigation in the face of these challenges, integrating further sensing such as proprioception can be beneficial.

The effective combination of visual and tactile modalities remains a challenging problem32. The fusion of vision and haptics in robotics has predominantly been utilised in object grasping, and has also been used in virtual reality applications. Using vision alone, robots cannot infer physical properties of obstacles (beyond geometry, colour, and semantics). Obtaining increased knowledge about its surroundings can help a robot to achieve its goal in unstructured environments. Some work has demonstrated that fusion of visual and tactile sensor data can enable robots to build a more detailed model of objects with which they have interacted. For example, a robot arm with RGB-D camera for tabletop scanning and mapping was able to determine rigidity by poking with force sensing, measure local material type using single-pixel spectroscopy, and predict force distributions by pushing33. The combination of tactile sensing and vision for haptic mapping by a robot arm has also been explored where visually similar scenes are assumed to have similar tactile characteristics34.

In the context of navigation, the fusion of visual and tactile information could allow a robot to navigate in poor visibility conditions with high occlusion or navigate around objects which are difficult to perceive. At present, there are few works that have fused visual and tactile information for navigation. Some work has been done on this combination for simultaneous localization and mapping (SLAM), examples of which include bio-inspired visuo-tactile SLAM for environment exploration and interaction35, and whisker-based tactile SLAM to generate occupancy maps36. In other work, a mobile robot used a camera to measure the compression of a passive foam rod which deformed when in contact with objects in its environment37. However, these works did not investigate this visual-tactile fusion for mobile manipulation.

The field of mobile manipulation largely consists of robots using manipulator arms to achieve tasks38,39. Robot arms can be useful and helpful, but they require additional hardware, computation, and power. Moreover, a manipulator adds an occasionally-used payload and an undesirable kinematic augmentation on the robot. A deformable robot can instead use its own shape-changing body to its advantage and directly interact with and manipulate obstacles.

Affordances can be described as key attributes of an environment which could be perceived by a robot to effectively interact with objects40. One particular robot used 3D range sensing and learned to perceive traversability affordances, although the authors reported this sensing as slow, requiring considerable learning data and limiting the robot’s perception and reactivity41. Morphology should also be considered for increased agility and robustness42 and for understanding affordance detection in organisms and robots. We recently developed the PaTS-Wheel, a passively-transformable single-part wheel that can render hooks when presented with obstacles or uneven terrain43. In other recent work, a simulation involving voxel-based soft robots predicted whether they could fit through an aperture using minimal tactile feedback44.

Shape-changing robots offer potential beyond the capabilities of rigid robots. Some soft robots have been manufactured for navigating narrow gaps. One such example is a multiple-gait soft robot capable of squeezing into confined spaces45. In other work, an inch-long soft robot mimicked the crawling locomotion of a caterpillar with a travelling-wave deformation to squeeze through a narrow slit46. However, soft robots have limited force capability47. Bio-inspired origami robots combine smart material actuators with folding processes to obtain compliance and soft-body features9. Diverse transformations aim to achieve increased mobility and versatility48 as well as reconfigurable modularity49. Shape-changing legs, as opposed to shape-changing bodies, have also been explored. A sprawl-tuned autonomous robot (STAR) uses variable leg sprawl angle to adapt its stiffness and height50. Passively telescoping legs on another robot can compress in a programmed direction and allow it to passively traverse narrow channels51. Other examples of folding robots include a compliant legged articulated robotic insect (CLARI)18, a cockroach-inspired soft legged robot52, a hybrid mobile robot with controllable stiffness53, and a foldable drone54. However, these works do not explore the potential for mobile manipulation of obstacles.

The ability to change shape allows robots to expand their functionality and application due to enhanced agility and adaptability. Enabling shape sensing, automating shape-changing, and integrating functional materials into systems remain overarching challenges in their development55. Our transformable structure uses rigid-body kinematics which can actively change shape. Other advantages of the design include the potential to carry payload and additional sensor modalities. We believe the robot offers advancement through the proposed combination of predictive (vision), reactive (proprioception), and active (manipulation) obstacle traversal strategies.

Method

To highlight the motivation for using haptic and visual sensing modalities, we tested the robot’s reaction to various edge cases where a single sensor modality fails. The visual predictions and navigation attempts for obstacles such as transparent glass, string curtains, and a solid wall are shown in Fig. 2A. The robot does not recognise the transparent glass as an obstacle, whereas it identifies both the string curtains and the solid wall as occupied. In these tests, the recorded whisker angle measurements show no change, highlighting that these are complicated situations which need more than one modality. While vision allows the robot to prepare its body shape, speed, and strategy for navigation, proprioceptive sensing is needed to confirm that the robot can physically traverse obstacles in different circumstances without damaging itself or its environment, and without losing efficiency by attempting impossible tasks. Manipulation can be used if the robot gets stuck or when the biggest gap in the environment is smaller than the robot’s narrowest possible width.

Fig. 2.

(A) Edge cases illustrating the motivation for employing multimodal sensor fusion. The top row shows the relative colourmap depth and RGB images from the robot’s onboard camera, while the bottom row shows the robot’s progression during the navigation attempt. (B) Validation of the kinematic analysis. Cubic fits to the experimental measurements of the 2-bar link angle as a function of w (the width of the robot) are plotted against the derived kinematic equations. (C) Relationships between the change in whisker angle and change in servo angle are modelled by linear regression (left), and between the change in servo angle and change in robot width are modelled by quadratic polynomial regression (right).

We utilise an enhanced version of DeforMoBot, a bio-inspired deformable mobile robot24. A number of augmentations have been made to the robot to expand its capabilities, as detailed in Fig. 1B: an Intel RealSense Depth Camera provides depth and RGB sensing, while a microswitch at the front tip of the robot improves proprioceptive sensing. Speed and direction control are also added for different approach strategies. The whiskers are elongated for multiple reasons: the wheels and robot body are shielded during obstacle interaction, obstacles are better protected during navigation (instead of being in contact primarily with the rimless wheels), and the robot can use the whiskers to push directly against objects when it expands to manipulate obstacles and gap sizes. The shape of the entire robot is controlled solely by its 2-bar link angle, which can be calculated in terms of the desired robot width w as shown in the Supplementary Methods.

Figure 2B compares the 2-bar link angle predicted by the kinematic equations for a desired robot width w with experimental measurements of the angle at different w values, with and without sprawl. As expected, the influence of sprawl increases as the robot width decreases. The overlap of the plotted data demonstrates a good fit between the experimental data and the analytical predictions.

The relationships between the robot angles and width are shown in Fig. 2C. The left-hand plot shows the tuned linear relationship between the change in the whisker angle and the change in the servo angle, which solely controls the robot’s shape. This tuning must complement the robot’s body shape to achieve meaningful behaviour: whisker perception that is too sensitive would make the robot overcompensate by compressing its body more than necessary to traverse obstacles, while whisker perception that is too coarse would leave the robot at risk of struggling to move through gaps. The direct quadratic relationship between the change in the servo angle and the change in the robot’s width w is plotted on the right-hand side of Fig. 2C.
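As an illustration of how the two fitted relationships in Fig. 2C can be chained, the sketch below maps a change in whisker angle to a servo-angle change (linear fit) and then to a change in robot width (quadratic fit). The class and coefficient names (`ShapeModel`, `k_ws`, `p2`, `p1`, `p0`) are hypothetical, and the paper’s fitted values are not reproduced; any numbers passed in are placeholders.

```python
from dataclasses import dataclass


@dataclass
class ShapeModel:
    """Hypothetical coefficients for the two regressions in Fig. 2C (placeholders)."""
    k_ws: float  # linear gain: servo-angle change per degree of whisker-angle change
    p2: float    # quadratic coefficient of the servo-to-width fit
    p1: float    # linear coefficient of the servo-to-width fit
    p0: float    # constant offset of the servo-to-width fit

    def servo_change(self, d_whisker_deg: float) -> float:
        """Linear regression: whisker-angle change -> servo-angle change."""
        return self.k_ws * d_whisker_deg

    def width_change(self, d_servo_deg: float) -> float:
        """Quadratic regression: servo-angle change -> robot width change."""
        return self.p2 * d_servo_deg ** 2 + self.p1 * d_servo_deg + self.p0

    def width_change_from_whisker(self, d_whisker_deg: float) -> float:
        """Chain both fits: whisker deflection -> servo command -> width change."""
        return self.width_change(self.servo_change(d_whisker_deg))
```

With placeholder coefficients `ShapeModel(k_ws=1.5, p2=0.01, p1=-0.5, p0=0.0)`, a 10° whisker change maps to a 15° servo change. The gain `k_ws` is where the sensitivity trade-off described above would be tuned: too large a gain makes the robot overcompensate, too small a gain makes it under-react.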

Haptic And Visual Environment Navigation (HAVEN) Architecture

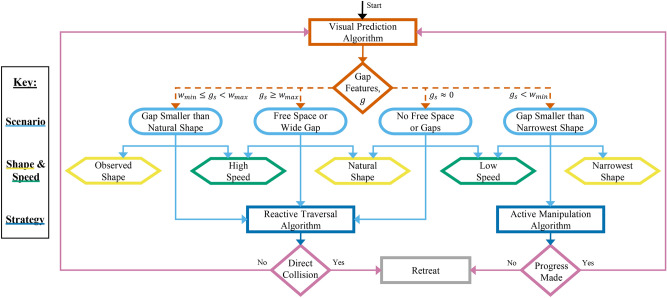

We present the HAVEN Architecture in Fig. 3 which the robot employs to autonomously make decisions and take actions. The Visual Prediction Algorithm enables the robot to be predictive by analysing the environment and preparing for the estimated conditions before it encounters them. The Reactive Traversal Algorithm allows the robot to be reactive to real-time proprioceptive feedback in the course of its journey. The Active Manipulation Algorithm enables the robot to actively control its environment by manipulating obstacles and gap sizes.

Fig. 3.

Haptic and visual environment navigation (HAVEN) architecture. After determining a state (light blue ellipses) based on the output of the Visual Prediction Algorithm (in orange), the robot autonomously chooses its initial shape (yellow hexagons), driving speed (green hexagons) and navigation strategy (dark blue rectangles). Diamonds represent decision points, ellipses show states, hexagons depict processes, and rectangles portray algorithms.

The Visual Prediction Algorithm uses the depth camera and operates as follows. A ROS node subscribes to depth data messages in real time and converts them to an OpenCV image encoding, focusing on a region of interest. The algorithm then smooths out “depth holes” in the images using mean value replacement and determines whether gaps exist between obstacles by grouping together similar depth readings, d, within a threshold. If any apertures exist in the depth image, parameters such as the start and end pixel indices of each aperture are computed so that the biggest gap can be identified.
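A minimal NumPy sketch of the gap-detection step follows. It assumes (i) holes are pixels with depth 0 and are replaced by the mean of the valid pixels in the region of interest, and (ii) a column belongs to a gap when its mean depth exceeds the nearest obstacle depth by more than the threshold `tau`. The function names are hypothetical, the paper’s exact grouping rule may differ, and the ROS subscription and OpenCV conversion are omitted.

```python
import numpy as np


def fill_depth_holes(depth: np.ndarray) -> np.ndarray:
    """Mean value replacement: zero-valued 'holes' take the mean of the valid pixels."""
    filled = depth.astype(float).copy()
    valid = filled > 0
    if valid.any():
        filled[~valid] = filled[valid].mean()
    return filled


def biggest_gap(depth_roi: np.ndarray, tau: float):
    """Return (start_col, end_col) of the widest run of 'far' columns, or None.

    A column is treated as part of a gap when its mean depth is more than tau
    beyond the closest column (assumed to be an obstacle).
    """
    col_depth = fill_depth_holes(depth_roi).mean(axis=0)  # one depth per column
    is_gap = col_depth > col_depth.min() + tau
    best, start = None, None
    # A trailing False sentinel closes a run that reaches the last column.
    for i, g in enumerate(np.append(is_gap, False)):
        if g and start is None:
            start = i
        elif not g and start is not None:
            if best is None or (i - start) > (best[1] - best[0] + 1):
                best = (start, i - 1)
            start = None
    return best
```

The returned pixel indices are what the next step converts to a metric width. A per-column mean keeps the sketch short; a per-column median or a neighbourhood-based hole filler would be more robust to noisy depth data.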

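The biggest gap distance is converted from pixels to meters by first extracting the intrinsic calibration matrix, K, containing the focal lengths (f_x, f_y) and optical centre (c_x, c_y). The average 3D points at the start and end of the aperture are then calculated by converting (x, y) pixel coordinates with depth d to 3D point coordinates (X, Y, Z) in the camera frame:

$$\begin{bmatrix} X \\ Y \\ Z \end{bmatrix} = d\,K^{-1}\begin{bmatrix} x \\ y \\ 1 \end{bmatrix}, \qquad K = \begin{bmatrix} f_x & 0 & c_x \\ 0 & f_y & c_y \\ 0 & 0 & 1 \end{bmatrix}, \tag{1}$$

that is, $X = (x - c_x)\,d/f_x$, $Y = (y - c_y)\,d/f_y$, and $Z = d$.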

This accounts for the depths of the obstacles on either side and ensures that the algorithm can be applied to scenes regardless of the obstacle positions or the robot’s approach angle.
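Eq. (1) can be written directly in Python. This sketch assumes an undistorted pinhole model (lens distortion is ignored) and uses hypothetical function names. It also computes the Euclidean distance between the two back-projected edge points, which gives the gap width used in the following step. With an Intel RealSense camera, `pyrealsense2` also provides `rs2_deproject_pixel_to_point`, which can take the camera’s distortion model into account.

```python
import math


def deproject(x: float, y: float, d: float,
              fx: float, fy: float, cx: float, cy: float):
    """Pinhole back-projection of pixel (x, y) with depth d into the camera frame (Eq. 1)."""
    return ((x - cx) * d / fx, (y - cy) * d / fy, d)


def gap_width(start_px, end_px, fx, fy, cx, cy) -> float:
    """Euclidean distance between the 3D points at the start and end of a gap.

    start_px and end_px are (x, y, d) tuples, e.g. averaged over the gap edges.
    Each edge keeps its own depth, so oblique views still give a metric width.
    """
    p = deproject(*start_px, fx, fy, cx, cy)
    q = deproject(*end_px, fx, fy, cx, cy)
    return math.dist(p, q)
```

Because each edge point keeps its own depth, an aperture viewed obliquely (obstacles at different depths) still yields its full 3D width rather than only its lateral extent, which is the property the paragraph above relies on.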

The Euclidean distance between the two 3D points is calculated and communicated to the robot so that it can decide how best to proceed. The initial shape of the robot is set as

| 2 |

where a, b, and c are coefficients which can be adjusted depending on the application. In our case, the coefficients are obtained by the method of least squares so that they satisfy the desired relationship. Fig. 4 shows sample depth and aligned depth-to-colour images overlaid with gap bounding boxes, gap widths, and obstacle depths.
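The text states only that a, b, and c are obtained by least squares. Assuming, for illustration, that Eq. (2) is a quadratic in the measured gap width D (consistent with it having three coefficients), the fit and its evaluation could look as follows. The clamp to the robot’s achievable range (`s_min`, `s_max`) is an added assumption, and all names are hypothetical.

```python
import numpy as np


def fit_initial_shape(gap_widths, shape_targets):
    """Least-squares fit of s = a*D**2 + b*D + c (assumed form of Eq. 2).

    gap_widths: measured gap widths D; shape_targets: desired initial shape
    (e.g. a servo angle) for each D. np.polyfit returns the highest power first.
    """
    a, b, c = np.polyfit(np.asarray(gap_widths, dtype=float),
                         np.asarray(shape_targets, dtype=float), deg=2)
    return float(a), float(b), float(c)


def initial_shape(D, a, b, c, s_min, s_max):
    """Evaluate the fitted quadratic and clamp it to the achievable range (assumption)."""
    s = a * D ** 2 + b * D + c
    return min(max(s, s_min), s_max)
```

Fitting on a handful of calibration pairs (gap width, desired shape) and then calling `initial_shape` on each new visual measurement reproduces the role of Eq. (2) under the stated assumption.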

Fig. 4.

Examples of the Visual Prediction Algorithm employed by the robot from different approach angles on various obstacles with different apertures: (A) a gap between weighted boxes approached directly from 90°, and (B) a gap between weighted boxes approached from an angle of 45°. In each group, the coloured depth images are shown on the left and the aligned depth-to-colour images with overlaid feature information are shown on the right.

There are four possible outcomes of the Visual Prediction Algorithm, outlined as follows.

In free space, or where the gap is bigger than the robot’s natural shape, the robot progresses in its natural shape and follows an Obstacle Traversal Algorithm inspired by the shape/mobility algorithm introduced previously24. It updates its shape using proprioceptive feedback and retreats if it detects a head-on collision with an unseen obstacle, which can occur in edge cases such as windows or transparent glass.

If no free space or gaps are identified, the robot proceeds cautiously at low speed, again employing the Obstacle Traversal Algorithm and retreating if a head-on collision occurs. This covers obstacles that may be solid (e.g. walls) or deformable (e.g. string curtains), as well as camera failure.

If the largest aperture measures between the robot’s smallest and largest widths, the robot changes its size to match the observed distance and again executes the Obstacle Traversal Algorithm, retreating in the case of a head-on collision. It updates its shape using proprioceptive feedback and resumes its natural shape once it has traversed the obstacles.

Finally, if the biggest opening is narrower than the robot’s smallest size, it drives slowly at its narrowest width and follows the Obstacle Manipulation Algorithm presented in the supplementary material. After the robot comes into contact with the obstacles (determined by proprioceptive whisker feedback), it changes the servo angle by a degree at a rate of to gradually widen its body shape to push against the obstacles and expand the gap. If the robot can widen the gap enough to fit through, it can traverse the obstacles before resuming its natural shape. If it cannot manipulate the gap and progress within a certain time frame, it retreats and can look for other possibilities if desired.
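The four outcomes above amount to a small decision rule that maps the visually measured biggest gap to an initial width, speed, and strategy. The sketch below expresses that rule. The speed labels, the use of the natural width when no gap is seen, and all names are assumptions for illustration; the head-on-collision retreat and the proprioceptive updates that follow are not modelled.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Strategy(Enum):
    TRAVERSAL = "Obstacle Traversal Algorithm"
    MANIPULATION = "Obstacle Manipulation Algorithm"


@dataclass
class Plan:
    width: float        # initial body width adopted before contact
    speed: str          # hypothetical labels: "normal" or "slow"
    strategy: Strategy


def plan_from_vision(gap: Optional[float], w_min: float, w_max: float) -> Plan:
    """Map the biggest visible gap to an initial plan (sketch of the four outcomes).

    gap is None when no free space or gap is detected (including camera failure).
    w_min and w_max are the robot's narrowest and natural widths.
    """
    if gap is None:
        # No gap seen: proceed slowly, relying on whiskers (natural width assumed).
        return Plan(w_max, "slow", Strategy.TRAVERSAL)
    if gap >= w_max:
        # Free space or a gap wider than the natural shape.
        return Plan(w_max, "normal", Strategy.TRAVERSAL)
    if gap >= w_min:
        # Gap between narrowest and natural widths: pre-shape to match it.
        return Plan(gap, "normal", Strategy.TRAVERSAL)
    # Gap narrower than the narrowest shape: approach slowly at minimum width, then push.
    return Plan(w_min, "slow", Strategy.MANIPULATION)
```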

Experiments and results

Several experiments were performed to analyse the robot’s performance following the HAVEN Architecture. These experiments compared the traversal and manipulation strategies in different scenarios and also tested the robot in different obstructed, challenging environments.

Having no prior knowledge of the scene, obstacles, or aperture size, the robot used the Visual Prediction Algorithm. Examples of depth and aligned depth-to-colour images obtained for different obstacles are shown in Fig. 4. Colourmaps are applied to the depth images to clearly show the relative depth measurements, and details of feature information are overlaid on the RGB images including the obstacle depths measured, the largest gaps seen in the images, and the “bounding boxes” of these gaps. For reference, depth and aligned depth-to-colour images with overlaid features for all obstacle types at each approach angle are shown in Supplementary Fig. 1.

Comparison of Traversal and Manipulation Algorithms

The Traversal and Manipulation Algorithms were tested and compared in different scenarios. We used balls, boxes weighted with 2.5 kg, cushions, and stone blocks as obstacles since these cover a wide range of physical properties. The robot was tested attempting to traverse gaps between the obstacles of , , , and (ranging from bigger than its natural size of to much smaller than its narrowest width of ) from angles of 90° (directly facing them) and 45°.

We also conducted additional tests in which the robot drove blindly at full speed while fixed in its Natural shape or in its Narrowest shape. In its Natural shape, the robot achieves comparatively lower success rates and higher navigation times. In its Narrowest shape, it performs similarly to the Traversal strategy, but without the aforementioned benefits of shape change. Repeated higher-speed collisions are also likely to cause wear on the robot and any payload, and there is a higher likelihood of damage to the robot or its environment. The navigation time statistics are shown in Supplementary Fig. 2, with a direct comparison of the median navigation times in Supplementary Table 1. Images of the robot’s progression for each of these cases (at all gap sizes for every obstacle at each approach angle) are included in Supplementary Figs. 3–6.

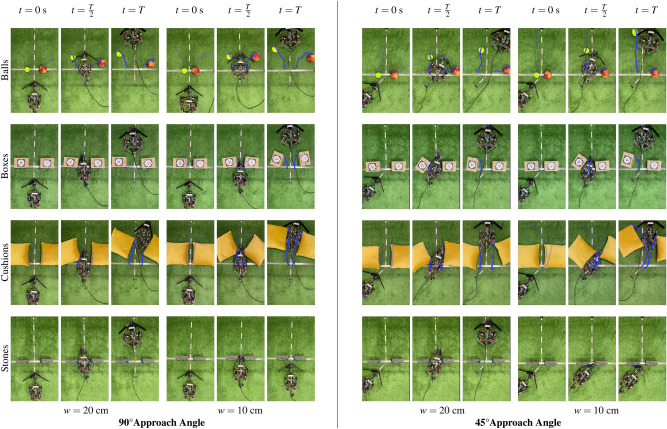

In order to directly compare the reactive Obstacle Traversal Algorithm and the active Obstacle Manipulation Algorithm, we constrained the robot to employ each algorithm in every scenario where apertures are smaller than the robot’s natural width. The robot still autonomously controlled its initial conditions based on the visual prediction. As it progressed, it continued to adjust its motion and shape based on real-time proprioceptive feedback from the whisker and microswitch measurements, and was allowed up to 15 seconds for navigation.

The robot was given 10 attempts at traversing each obstacle, at each width, from each approach angle, using each algorithm, resulting in a total of 480 trials. Fig. 5 shows the progression of the robot’s path and shape as it employs the Obstacle Manipulation Algorithm at gap sizes smaller than its narrowest possible width. The obstacles’ movements are annotated in blue to clearly show the effects of the robot’s interaction with them, and the average total displacement of these obstacle points is shown in Supplementary Table 2. For comparison with the Obstacle Manipulation Algorithm, images of the robot’s progression as it exclusively used the Obstacle Traversal Algorithm (at all gap sizes for every obstacle at each approach angle) are included in Supplementary Figs. 7 and 8.
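As a consistency check on the trial count, assuming the comparison covers the four obstacle types (balls, boxes, cushions, stone blocks) and the three gap widths narrower than the robot’s natural width (the scenarios in which both algorithms were run), the total works out as follows.

$$N_{\text{trials}} = \underbrace{10}_{\text{attempts}} \times \underbrace{4}_{\text{obstacles}} \times \underbrace{3}_{\text{gap widths}} \times \underbrace{2}_{\text{approach angles}} \times \underbrace{2}_{\text{algorithms}} = 480$$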

Fig. 5.

The robot attempts to traverse various obstacles employing the Obstacle Manipulation Algorithm in which it uses its shape-changing ability to push against the obstacles. The obstacles’ movements are annotated in blue to clearly show the effects of the robot’s interaction with them. Timestamps are shown in the column headers, obstacles are listed in the row headers, and gap widths are detailed below the figures.

Examples of the robot’s whisker angle changes and servo angle changes for a particular case are presented in Fig. 6A, with other obstacles, apertures, and approach angles shown for reference in Supplementary Fig. 9. The servo angle—controlling the robot’s overall shape—is reactive in real-time to feedback from the whisker apart from a rewidening delay so that the robot does not become stuck when progressing through obstacles. It begins at an angle determined by the results of the Visual Prediction Algorithm and holds this position until it comes into contact with the obstacles when it can then update in real-time.
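The reactive servo behaviour described above (hold the visually predicted angle until first contact, then follow whisker feedback, but delay re-widening) can be sketched as a small controller. This is a hypothetical illustration, not the authors’ code: it assumes that a smaller servo angle means a narrower body, that the whisker input is a non-negative deflection magnitude, and that the gain and delay are free parameters.

```python
class ReactiveServo:
    """Sketch of a whisker-driven servo update with a re-widening delay (hypothetical)."""

    def __init__(self, reference_deg, gain, rewiden_delay_s, min_deg, max_deg):
        self.reference = reference_deg   # initial angle from the Visual Prediction Algorithm
        self.angle = reference_deg       # current servo command
        self.gain = gain                 # servo degrees per degree of whisker deflection
        self.delay = rewiden_delay_s     # minimum time after narrowing before re-widening
        self.min_deg, self.max_deg = min_deg, max_deg
        self.in_contact = False
        self.last_narrow_t = float("-inf")

    def update(self, deflection_deg: float, t: float) -> float:
        """Return the servo command at time t (s) for a whisker deflection (deg, >= 0)."""
        if not self.in_contact:
            if deflection_deg == 0.0:
                return self.angle        # no contact yet: hold the predicted shape
            self.in_contact = True
        # Linear whisker-to-servo mapping; assumed: smaller angle = narrower body.
        target = self.reference - self.gain * deflection_deg
        target = min(max(target, self.min_deg), self.max_deg)
        if target < self.angle:          # narrowing is applied immediately
            self.angle = target
            self.last_narrow_t = t
        elif t - self.last_narrow_t >= self.delay:
            self.angle = target          # re-widening only after the delay has elapsed
        return self.angle
```

The asymmetry (immediate narrowing, delayed widening) is one simple way to realise the rewidening delay mentioned in the text, so that the body does not re-expand while still between the obstacles.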

Fig. 6.

(A) Direct comparisons of the mean measurements of the whisker angle (in blue) and servo angle (in orange) of the robot employing the Traversal and Manipulation Algorithms (detailed in the legend) for a particular obstacle (in this case, weighted boxes) at apertures of (top plot) and (bottom plot) approached directly at 90°. The robot’s initial body shape (controlled by the servo angle) is set using visual prediction and updates based on proprioceptive feedback. (B) Box plots comparing summary statistics of experiments involving the robot employing the Traversal (in blue) and Manipulation Algorithms (in orange) for the various scenarios. Each box extends from the lower to upper quartile values of the data, with a line at the median. Whiskers extend from boxes to show the range of the data. Circles represent failed navigation attempts. Plots show results of paired two-tailed Mann–Whitney U tests.

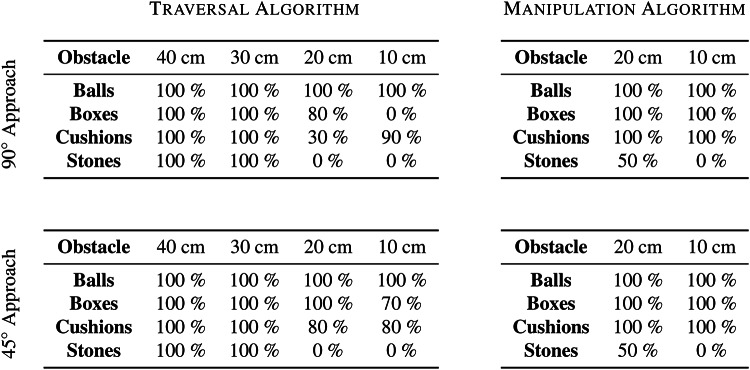

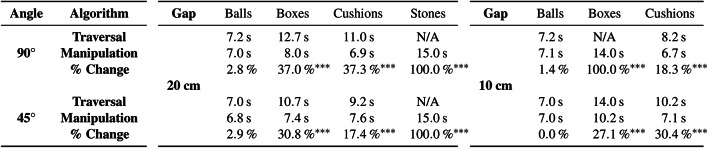

The success rates from the experiments are summarised in Table 1 and the navigation time statistics are shown in Fig. 6B. The robot achieved 100% navigation success for all obstacles at the wider apertures of and . It achieved 100% success at negotiating balls at all gap sizes, since they rolled away upon contact and caused negligible whisker deformation. As expected, narrower gaps between the other obstacles proved more challenging.

Table 1.

Navigation success rates.

The Manipulation Algorithm outperformed the Traversal Algorithm for apertures narrower than the robot’s smallest possible width. It achieved 100% success—and improved times taken—at overcoming the boxes and the cushions at every aperture, and was sometimes able to manipulate and traverse the heavier stone blocks. Given longer or multiple obstacle manipulation attempts, the robot could potentially achieve even higher navigation success rates.

Table 2 further examines the results by comparing the median navigation times of the two algorithms using paired two-tailed Mann–Whitney U tests. The Obstacle Manipulation Algorithm achieved lower navigation times in all cases involving the narrower gaps from both approach angles. In the following, each pair of improvements is given for approach angles of 90° and 45°, respectively: for gaps of , it achieved improvements for boxes of 37.0% and 30.8%, for cushions of 37.3% and 17.4%, and for stones of 100% in both cases; for gaps of , it achieved improvements for cushions of 18.3% and 30.4%, and for boxes of 100% and 27.1%. The corresponding Mann–Whitney U test results are reported in Table 2.
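To make the comparison in Table 2 concrete, the sketch below computes the percentage reduction in median navigation time and the Mann–Whitney U statistic (with average ranks for ties) in plain Python. It is an illustrative reimplementation, not the authors’ analysis code; how failed trials enter the time samples is not specified here, and p-values are left to a statistics library such as SciPy’s `scipy.stats.mannwhitneyu`, which returns the statistic together with a p-value (two-sided by default).

```python
from statistics import median


def median_improvement_pct(t_traversal, t_manipulation) -> float:
    """Percentage reduction in median navigation time of Manipulation relative to Traversal."""
    m_trav, m_manip = median(t_traversal), median(t_manipulation)
    return 100.0 * (m_trav - m_manip) / m_trav


def mann_whitney_u(x, y) -> float:
    """U statistic for sample x against sample y, using average ranks for ties."""
    xs, ys = list(x), list(y)
    values = xs + ys
    order = sorted(range(len(values)), key=values.__getitem__)
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over a run of tied values.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg_rank = (i + j) / 2 + 1      # mean of the 1-based ranks i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        i = j + 1
    n_x = len(xs)
    return sum(ranks[:n_x]) - n_x * (n_x + 1) / 2
```

Applied per obstacle, gap width, and approach angle, `median_improvement_pct` yields percentages of the kind reported above, and `mann_whitney_u` gives the statistic that underlies the reported test.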

Table 2.

Comparison of median navigation times.

Results of paired two-tailed Mann–Whitney U tests.

Outdoor environments

Finally, the robot and its algorithms were observed in different outdoor environments including terrains such as tarmac, gravel, and grass, and with obstacles including leaves, stones, rocks, and bricks. Examples of such tests are shown in Fig. 7 in which the robot attempts to traverse gaps between obstacles comprising twigs and leaves (Fig. 7A) and bricks (Fig. 7B). Following the HAVEN Architecture, the robot observed the gap was smaller than its minimum width and chose its navigation strategy accordingly. By pushing the obstacles apart using its shape-changing body, it was able to create a gap wide enough for it to progress through before resuming its natural shape.

Fig. 7.

Examples of the robot using the HAVEN Architecture to progress through gaps narrower than its minimum size between obstacles in outdoor environments such as twigs and leaves (A), and bricks (B). From left to right: the robot analyses the coloured depth images (top) and aligned depth-to-colour images with overlaid information (bottom), approaches at its narrowest width and, since it cannot fit through, expands its body shape to widen the gap; it is then able to compress once more and progress, before finally resuming its natural shape.

The capabilities of the robot and the algorithms presented improve the robot’s perception and proficiency, allowing it to navigate more effectively and efficiently. These abilities can be particularly useful in obstructed, cluttered, unstructured, or otherwise challenging environments. The robot’s ability to manipulate obstacles could also be used to widen gaps so that other, bigger robots can traverse them. Furthermore, two or more copies of the robot could potentially work together to manipulate larger or heavier obstacles. These ideas, further perception and sensing modalities, and incremental design improvements will be interesting to explore in future work.

Conclusions

This paper presents a new Haptic And Visual Environment Navigation (HAVEN) Architecture for predictive, reactive, and active obstacle traversal and manipulation strategies by a deformable mobile robot using vision and proprioceptive sensing. We tested the robot attempting to traverse obstructed environments with different types of obstacles placed apart at decreasing gaps from to . These obstacles varied in their geometric and mechanical properties, and gaps between them were on a scale ranging from greater than the robot’s natural widest shape of to much smaller than the robot’s narrowest width of . The proposed approach of using visual feature extraction to choose between reactive obstacle traversal and active obstacle manipulation results in achieving high success rates (detailed in Table 1) and a significant reduction in time (shown in Table 2) to negotiate gaps. This improves the robot’s locomotion and navigation abilities, particularly in obstructed environments.

Supplementary Information

Acknowledgements

This work was partly supported by the EU Horizon 2020 research and innovation programme under grant agreement 101016970 (Natural Intelligence for Robotic Monitoring of Habitats) and by a UK Engineering and Physical Sciences Research Council (EPSRC) DTP scholarship jointly with Analog Devices, Inc. The authors thank Digby Chappell for meaningful feedback on this work and Yukun Ge for help with the drawing in Fig. 1A.

Author contributions

All authors conceived the experiments. B.W.M. conducted the experiments, and all authors analysed the results. B.W.M. wrote the paper, and all authors revised the paper.

Data availability

The data supporting the findings of this study are in the main text and supplementary materials. Raw data and code are available from the corresponding author upon reasonable request.

Declarations

Competing interests

The authors declare no competing interests.

Footnotes

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Change history

7/10/2025

This article has been updated to amend the license information.

Supplementary Information

The online version contains supplementary material available at 10.1038/s41598-024-75607-7.

References

1. Luo, R. C., Yih, C.-C. & Su, K. L. Multisensor fusion and integration: approaches, applications, and future research directions. IEEE Sens. J. 2, 107–119 (2002).

2. Alatise, M. B. & Hancke, G. P. A review on challenges of autonomous mobile robot and sensor fusion methods. IEEE Access 8, 39830–39846 (2020).

3. Prats, M., Sanz, P. J. & Del Pobil, A. P. Vision-tactile-force integration and robot physical interaction. In 2009 IEEE International Conference on Robotics and Automation, 3975–3980 (IEEE, 2009).

4. Wang, J., Lin, S. & Liu, A. Bioinspired perception and navigation of service robots in indoor environments: A review. Biomimetics 8, 350 (2023).

5. Luo, S., Bimbo, J., Dahiya, R. & Liu, H. Robotic tactile perception of object properties: A review. Mechatronics 48, 54–67 (2017).

6. Seminara, L. et al. Active haptic perception in robots: a review. Front. Neurorobot. 13, 53 (2019).

7. Russo, M. et al. Continuum robots: An overview. Adv. Intell. Syst. 5, 2200367 (2023).

8. Coad, M. M. et al. Vine robots. IEEE Robot. Autom. Mag. 27, 120–132 (2019).

9. Rus, D. & Tolley, M. T. Design, fabrication and control of origami robots. Nat. Rev. Mater. 3, 101–112 (2018).

10. Ze, Q. et al. Soft robotic origami crawler. Sci. Adv. 8, eabm7834 (2022).

11. Chen, I.-M. & Yim, M. Modular robots. Springer Handbook of Robotics 531–542 (2016).

12. Seo, J., Paik, J. & Yim, M. Modular reconfigurable robotics. Annu. Rev. Control Robot. Auton. Syst. 2, 63–88 (2019).

13. Yim, S. & Sitti, M. Design and rolling locomotion of a magnetically actuated soft capsule endoscope. IEEE Trans. Rob. 28, 183–194 (2011).

14. Fusco, S. et al. Shape-switching microrobots for medical applications: The influence of shape in drug delivery and locomotion. ACS Appl. Mater. Interfaces 7, 6803–6811 (2015).

15. Samarakoon, S. B. P., Muthugala, M. V. J., Le, A. V. & Elara, M. R. hTetro-Infi: A reconfigurable floor cleaning robot with infinite morphologies. IEEE Access 8, 69816–69828 (2020).

16. Ramalingam, B. et al. Optimal selective floor cleaning using deep learning algorithms and reconfigurable robot hTetro. Sci. Rep. 12, 15938 (2022).

17. Wharton, P. et al. Tetraflex: A multigait soft robot for object transportation in confined environments. IEEE Robot. Autom. Lett. (2023).

18. Kabutz, H. & Jayaram, K. Design of CLARI: A miniature modular origami passive shape-morphing robot. Adv. Intell. Syst. 5, 2300181 (2023).

19. Sabelhaus, A. P. et al. System design and locomotion of SUPERball, an untethered tensegrity robot. In 2015 IEEE International Conference on Robotics and Automation (ICRA), 2867–2873 (IEEE, 2015).

20. Hermes, M., Ishida, M., Luhar, M. & Tolley, M. T. Bioinspired shape-changing soft robots for underwater locomotion: Actuation and optimization for crawling and swimming. Bioinspired Sens. Actuation Control Underw. Soft Robot. Syst. 7–39 (2021).

21. Baines, R. et al. Multi-environment robotic transitions through adaptive morphogenesis. Nature 610, 283–289 (2022).

22. Albu-Schaffer, A. et al. Soft robotics. IEEE Robot. Autom. Mag. 15, 20–30 (2008).

23. Cully, A., Clune, J., Tarapore, D. & Mouret, J.-B. Robots that can adapt like animals. Nature 521, 503–507 (2015).

24. Mulvey, B. W., Lalitharatne, T. D. & Nanayakkara, T. DeforMoBot: A bio-inspired deformable mobile robot for navigation among obstacles. IEEE Robot. Autom. Lett. 8, 3828–3835 (2023).

25. IEEE Robotics and Automation Society. Mobile Manipulation. https://www.ieee-ras.org/mobile-manipulation.

26. Stilman, M. & Kuffner, J. J. Navigation among movable obstacles: Real-time reasoning in complex environments. Int. J. Humanoid Rob. 2, 479–503 (2005).

27. Hoy, M., Matveev, A. S. & Savkin, A. V. Algorithms for collision-free navigation of mobile robots in complex cluttered environments: A survey. Robotica 33, 463–497 (2015).

28. Pandey, A., Pandey, S. & Parhi, D. Mobile robot navigation and obstacle avoidance techniques: A review. Int. Rob. Auto J. 2, 00022 (2017).

29. Bruzzone, L. & Quaglia, G. Locomotion systems for ground mobile robots in unstructured environments. Mech. Sci. 3, 49–62 (2012).

30. Guastella, D. C. & Muscato, G. Learning-based methods of perception and navigation for ground vehicles in unstructured environments: A review. Sensors 21, 73 (2020).

31. Wijayathunga, L., Rassau, A. & Chai, D. Challenges and solutions for autonomous ground robot scene understanding and navigation in unstructured outdoor environments: A review. Appl. Sci. 13, 9877 (2023).

32. Liu, H., Yu, Y., Sun, F. & Gu, J. Visual-tactile fusion for object recognition. IEEE Trans. Autom. Sci. Eng. 14, 996–1008 (2016).

33. Haughton, I., Sucar, E., Mouton, A., Johns, E. & Davison, A. J. Real-time mapping of physical scene properties with an autonomous robot experimenter. In Conference on Robot Learning (2022).

34. Bhattacharjee, T., Shenoi, A. A., Park, D., Rehg, J. M. & Kemp, C. C. Combining tactile sensing and vision for rapid haptic mapping. In 2015 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 1200–1207 (IEEE, 2015).

35. Struckmeier, O., Tiwari, K., Salman, M., Pearson, M. J. & Kyrki, V. ViTa-SLAM: A bio-inspired visuo-tactile SLAM for navigation while interacting with aliased environments. In 2019 IEEE International Conference on Cyborg and Bionic Systems (CBS), 97–103 (IEEE, 2019).

36. Pearson, M. J. et al. Simultaneous localisation and mapping on a multi-degree of freedom biomimetic whiskered robot. In 2013 IEEE International Conference on Robotics and Automation, 586–592 (IEEE, 2013).

37. Alt, N. & Steinbach, E. Navigation and manipulation planning using a visuo-haptic sensor on a mobile platform. IEEE Trans. Instrum. Meas. 63, 2570–2582 (2014).

38. Thakar, S. et al. A survey of wheeled mobile manipulation: A decision-making perspective. J. Mech. Robot. 15, 020801 (2023).

39. Sandakalum, T. & Ang, M. H. Jr. Motion planning for mobile manipulators-a systematic review. Machines 10, 97 (2022).

40. Gibson, J. J. The Ecological Approach to Visual Perception: Classic Edition (Psychology Press, 2014).

41. Uğur, E. & Şahin, E. Traversability: A case study for learning and perceiving affordances in robots. Adapt. Behav. 18, 258–284 (2010).

42. Ozkan-Aydin, Y., Chong, B., Aydin, E. & Goldman, D. I. A systematic approach to creating terrain-capable hybrid soft/hard myriapod robots. In 2020 3rd IEEE International Conference on Soft Robotics (RoboSoft), 156–163 (IEEE, 2020).

43. Godden, T., Mulvey, B. W., Redgrave, E. & Nanayakkara, T. PaTS-Wheel: A passively-transformable single-part wheel for mobile robot navigation on unstructured terrain. IEEE Robot. Autom. Lett. 9, 5512–5519 (2024).

44. Pigozzi, F., Woodman, S., Medvet, E., Kramer-Bottiglio, R. & Bongard, J. Morphology choice affects the evolution of affordance detection in robots. In Proceedings of the Genetic and Evolutionary Computation Conference, 211–219 (2023).

45. Shepherd, R. F. et al. Multigait soft robot. Proc. Natl. Acad. Sci. 108, 20400–20403 (2011).

46. Rogóż, M., Zeng, H., Xuan, C., Wiersma, D. S. & Wasylczyk, P. Light-driven soft robot mimics caterpillar locomotion in natural scale. Adv. Opt. Mater. 4, 1689–1694 (2016).

47. Mazzolai, B. et al. Roadmap on soft robotics: multifunctionality, adaptability and growth without borders. Multifunct. Mater. 5, 032001 (2022).

48. Firouzeh, A. & Paik, J. Robogami: A fully integrated low-profile robotic origami. J. Mech. Robot. 7, 021009 (2015).

49. Belke, C. H. & Paik, J. Mori: a modular origami robot. IEEE/ASME Trans. Mechatron. 22, 2153–2164 (2017).

50. Zarrouk, D., Pullin, A., Kohut, N. & Fearing, R. S. STAR, a sprawl tuned autonomous robot. In 2013 IEEE International Conference on Robotics and Automation, 20–25 (IEEE, 2013).

51. Lathrop, E., Tolley, M. T. & Gravish, N. Directionally compliant legs enabling crevasse traversal in small ground-based robots. Adv. Intell. Syst. 5, 2200258 (2023).

52. Jayaram, K. & Full, R. J. Cockroaches traverse crevices, crawl rapidly in confined spaces, and inspire a soft, legged robot. Proc. Natl. Acad. Sci. 113, E950–E957 (2016).

53. Labazanova, L. et al. Self-reconfigurable soft-rigid mobile agent with variable stiffness and adaptive morphology. IEEE Robot. Autom. Lett. 8, 1643–1650 (2023).

54. Falanga, D., Kleber, K., Mintchev, S., Floreano, D. & Scaramuzza, D. The foldable drone: A morphing quadrotor that can squeeze and fly. IEEE Robot. Autom. Lett. 4, 209–216 (2018).

55. Shah, D. et al. Shape changing robots: bioinspiration, simulation, and physical realization. Adv. Mater. 33, 2002882 (2021).
