Abstract

Manual decisions regarding the timing of surveillance endoscopy for premalignant Barrett’s oesophagus (BO) are error-prone. This leads to inefficient resource usage and safety risks. To automate decision-making, we fine-tuned Bidirectional Encoder Representations from Transformers (BERT) models to categorize BO length (EndoBERT) and worst histopathological grade (PathBERT) on 4,831 endoscopy and 4,581 pathology reports from Guy’s and St Thomas’ Hospital (GSTT). The accuracies for EndoBERT test sets from GSTT, King’s College Hospital (KCH), and Sandwell and West Birmingham Hospitals (SWB) were 0.95, 0.86, and 0.99, respectively. Average accuracies for PathBERT were 0.93, 0.91, and 0.92, respectively. A retrospective analysis of 1,640 GSTT reports revealed a 27% discrepancy between endoscopists’ decisions and model recommendations. This study underscores the development and deployment of NLP-based software in BO surveillance, demonstrating high performance at multiple sites. The analysis emphasizes the potential efficiency of automation in enhancing precision and guideline adherence in clinical decision-making.

Subject terms: Barrett oesophagus, Machine learning

Introduction

Barrett’s oesophagus (BO) is a precancerous condition and the most common precursor for the development of oesophageal adenocarcinoma1,2. It is characterised by the replacement of the normal oesophageal squamous epithelium with a columnar lined epithelium with an associated risk of malignant transformation ranging from 0.3% to 0.5% per year3.

Regular endoscopic surveillance is crucial to mitigate the risk of cancer progression, the timing of which is determined by specific endoscopic and pathological findings. Both are reported separately. Established guidelines offer recommendations for the appropriate intervals of surveillance endoscopy, considering the combination of these findings4. Adherence to guidelines is well established to improve dysplasia detection and patient outcomes5.

Endoscopic and pathological data are electronically stored, often in semi-structured text formats. Despite this, clinicians individually track patients and review their largely free-text endoscopy and pathology reports to extract elements that are relevant to the surveillance guidelines. This manual process, though once a practical solution, has become increasingly challenging in the face of rising caseloads. Moreover, this process can introduce human error5, leading to inconsistencies and deviations from the recommended surveillance intervals. The result is either excessive surveillance, with unnecessary resource usage, or inadequate surveillance, with a risk of cancer progression6.

The need for a more automated and standardised solution is apparent. The development of an efficient and reliable system could alleviate the administrative burden on clinicians, while ensuring adherence to evidence-based guidelines and optimising operational efficiency to enhance patient outcomes.

Recent advances in Natural Language Processing (NLP) have shown potential in automating the extraction of diagnostic and quality metrics from endoscopy and pathology reports7–10. However, several challenges persist in the effective implementation of NLP in clinical settings for BO. For example, many studies focus on binary dysplasia classification9, categorising conditions as either dysplastic or non-dysplastic. This approach, while straightforward, fails to account for crucial factors like the length of BO in endoscopy reports or the presence or absence of intestinal metaplasia, which are essential for determining follow-up intervals.

Further, while numerous studies have successfully developed NLP models with high efficiency and generalisability11, a critical gap often remains in their deployment within clinical pathways. This disconnect is exacerbated by the difficulty in obtaining large, well-designed, and diverse clinical datasets crucial for real-world performance. The lack of such datasets has been a recurrent issue12, highlighting the disparity between the theoretical promise of AI-driven algorithms and their actual applicability in clinical environments.

The aim of the current study is to demonstrate the development, implementation, evaluation and deployment of an NLP-based solution tailored to help clinicians with decision making for gastrointestinal premalignant surveillance. We use BO as an example of how an NLP model can be trained, developed under an ISO 13485:2016 accredited Quality Management System and seamlessly deployed into live clinical pathways within an NHS Trust13. To gauge the effectiveness and real-world viability of our approach, we have chosen to conduct the study in three NHS trusts, which experience a high throughput of BO surveillance cases.

Results

Cross-validation evaluation

Cross-validation of PathBERT and EndoBERT (Tables 1 and 2) demonstrated high performance and stability. PathBERT showed high precision, recall, and F1-score across all categories, with low standard deviations suggesting consistent performance. EndoBERT also performed well, particularly in the “Long” and “Short” categories. These results underscore the effectiveness of both models in categorising endoscopy and pathology reports with high accuracy.

Table 1.

PathBERT cross-validation evaluation results (5 folds)

| Category | Averaged Precision (± std) | Averaged Recall (± std) | Averaged F1 Score (± std) |
|---|---|---|---|
| No IM | 0.95 ± 0.01 | 0.95 ± 0.02 | 0.95 ± 0.01 |
| IM | 0.98 ± 0.01 | 0.98 ± 0.01 | 0.98 ± 0.01 |
| Dysplasia/Cancer | 0.98 ± 0.01 | 0.99 ± 0.01 | 0.98 ± 0.01 |
| Insufficient | 0.92 ± 0.04 | 0.90 ± 0.04 | 0.90 ± 0.01 |

Overall averaged accuracy: 0.96 ± 0.01.

Table 2.

EndoBERT cross-validation evaluation results (5 folds)

| Category | Averaged Precision (± std) | Averaged Recall (± std) | Averaged F1 Score (± std) |
|---|---|---|---|
| Insufficient | 0.89 ± 0.05 | 0.80 ± 0.01 | 0.84 ± 0.04 |
| Long | 0.97 ± 0.01 | 0.97 ± 0.01 | 0.97 ± 0.01 |
| Short | 0.94 ± 0.01 | 0.95 ± 0.01 | 0.95 ± 0.01 |
| No Barrett’s | 0.94 ± 0.01 | 0.94 ± 0.02 | 0.95 ± 0.01 |

Overall averaged accuracy: 0.95 ± 0.01.

GSTT, KCH and SWB holdout test sets evaluation

Holdout results for EndoBERT and PathBERT are given in Tables 3 and 4. For EndoBERT, the best performing category for all three Trusts was ‘Long’, achieving an F1-score of 1, 0.92 and 1 for GSTT, KCH and SWB respectively. The ‘Short’ category also performed well (F1-score 0.98, 0.90 and 0.99 respectively), as did ‘No Barrett’s’ (F1-score 0.95, 0.81 and 0.88 respectively). Performance decreased for the ‘Insufficient’ category for all three Trusts. This category had a small sample size at every Trust: GSTT n = 17 (false positive = 2, false negative = 0), KCH n = 25 (false positive = 4, false negative = 4) and SWB n = 7 (false positive = 0, false negative = 1).

Table 3.

EndoBERT external evaluation results

| Dataset | Category | Precision | Recall | F1 |
|---|---|---|---|---|
| GSTT | No Barrett’s | 0.96 | 0.94 | 0.95 |
| GSTT | Long | 1.00 | 1.00 | 1.00 |
| GSTT | Short | 1.00 | 0.96 | 0.98 |
| GSTT | Insufficient | 0.73 | 0.85 | 0.79 |
| KCH | No Barrett’s | 0.79 | 0.83 | 0.81 |
| KCH | Long | 0.98 | 0.86 | 0.92 |
| KCH | Short | 0.93 | 0.88 | 0.90 |
| KCH | Insufficient | 0.66 | 0.88 | 0.75 |
| SWB | No Barrett’s | 0.78 | 1 | 0.88 |
| SWB | Long | 1 | 1 | 1 |
| SWB | Short | 1 | 0.98 | 0.99 |
| SWB | Insufficient | 1 | 0.67 | 0.80 |

Table 4.

PathBERT external evaluation results

| Dataset | Category | Precision | Recall | F1 |
|---|---|---|---|---|
| GSTT | No IM | 0.93 | 0.90 | 0.92 |
| GSTT | IM | 1 | 0.94 | 0.97 |
| GSTT | Insufficient | 0.75 | 0.92 | 0.83 |
| GSTT | Dysplasia/Cancer | 1 | 1 | 1 |
| KCH | No IM | 0.93 | 0.8 | 0.86 |
| KCH | IM | 0.92 | 0.99 | 0.95 |
| KCH | Insufficient | 0.81 | 0.81 | 0.81 |
| KCH | Dysplasia/Cancer | 1 | 1 | 1 |
| SWB | No IM | 0.82 | 0.72 | 0.77 |
| SWB | IM | 0.94 | 0.95 | 0.95 |
| SWB | Insufficient | 0.71 | 1 | 0.83 |
| SWB | Dysplasia/Cancer | 1 | 1 | 1 |

EndoBERT achieved an accuracy of 0.95 on the GSTT test set (109/115 cases accurately classified), 0.86 on the KCH test set (121/140 cases accurately classified), and 0.99 on the SWB test set (149/151 cases accurately classified).

PathBERT detected all cancers or dysplasias with an F1-score of 1 for all trusts. For intestinal metaplasia, the F1-score was 0.97, 0.95 and 0.95 for GSTT, KCH and SWB respectively. Performance decreased for the detection of ‘No IM’ in the SWB test set (F1-score 0.92, 0.86 and 0.77 for GSTT, KCH and SWB respectively). This was mostly attributable to 7 false negatives in a small sample size (‘No IM’ category n = 18). The performance of the ‘Insufficient’ category was consistent across all three trusts (F1-score 0.83, 0.81 and 0.83 for GSTT, KCH and SWB respectively).

The average accuracy across all categories in the GSTT, KCH and SWB test sets for PathBERT was 0.93 (100 out of 107 cases correctly classified), 0.91 (123 out of 135 cases correctly classified) and 0.92 (139 out of 151 cases correctly classified) respectively.
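The reported accuracies follow directly from the correct/total counts quoted above. A minimal sketch of that arithmetic is given below; the dictionary layout and function name are illustrative and not part of EndominerAI.

```python
# Correct / total counts as reported in the text for each holdout test set.
counts = {
    "EndoBERT": {"GSTT": (109, 115), "KCH": (121, 140), "SWB": (149, 151)},
    "PathBERT": {"GSTT": (100, 107), "KCH": (123, 135), "SWB": (139, 151)},
}


def accuracy(correct: int, total: int) -> float:
    """Fraction of reports whose predicted class matched the clinician label."""
    return correct / total


# Round to two decimals, matching the precision used in the text.
accuracies = {
    model: {site: round(accuracy(c, n), 2) for site, (c, n) in sites.items()}
    for model, sites in counts.items()
}

for model, sites in accuracies.items():
    print(model, sites)
```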

Comparison between EndominerAI predictions and historical follow up intervals

Based on its rigorous validation and evaluation, EndominerAI’s recommendations in our retrospective analysis of 1640 GSTT reports (Table 5) were considered reliable ground truth for GSTT datasets. The analysis revealed that in 444 instances (27.0%), endoscopists’ actions did not align with these recommendations. Discrepancies occurred as both late and early decisions in every cohort. Across all cohorts, the primary error observed in endoscopy scheduling14 without EndominerAI’s input was scheduling endoscopies too early. Early scheduling for patients with long segment BO with IM accounted for 26.58% of the 444 discrepancies (118 cases). A further 13.29% of the discrepancies (59 cases) were endoscopies requested late for patients with long segment BO with IM, a high-risk group for malignancy progression.

Table 5.

Seven-year retrospective analysis: comparison between endoscopist and EndominerAI predictions

| Metric | Total count n = 1640 | |
|---|---|---|
| Number of differences, n (%) | 444 (27.0%) | |
| When endoscopist was incorrect: Cohort | Late n (%) | Early n (%) |
| Long BO with IM | 59 (13.29%) | 118 (26.58%) |
| Long BO with no IM | 4 (0.90%) | 43 (9.68%) |
| Short BO with IM | 25 (5.63%) | 76 (17.12%) |
| Short BO with no IM | 2 (0.45%) | 117 (26.35%) |
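The cohort percentages in Table 5 are consistent with using the 444 differences, rather than the 1640 reports, as the denominator. The sketch below recomputes them from the tabulated counts; the variable names are illustrative.

```python
# Counts copied from Table 5: (late, early) per cohort.
discrepancies = {
    "Long BO with IM": (59, 118),
    "Long BO with no IM": (4, 43),
    "Short BO with IM": (25, 76),
    "Short BO with no IM": (2, 117),
}

# The late and early counts together make up the total number of differences.
total_diff = sum(late + early for late, early in discrepancies.values())

# Share of all differences contributed by each cohort, as (late %, early %).
shares = {
    cohort: (round(100 * late / total_diff, 2), round(100 * early / total_diff, 2))
    for cohort, (late, early) in discrepancies.items()
}

early_total = sum(early for _, early in discrepancies.values())
late_total = sum(late for late, _ in discrepancies.values())
print(total_diff, late_total, early_total)
for cohort, (late_pct, early_pct) in shares.items():
    print(f"{cohort}: late {late_pct}%, early {early_pct}%")
```

Summing the columns this way also shows how strongly early scheduling dominates the differences.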

Implementation in clinical practice

The implementation of EndominerAI software streamlines the booking of endoscopic procedures by enabling faster and more accurate decision-making. Traditionally, scheduling each endoscopy takes about 5 minutes per patient, whereas EndominerAI can determine follow-up timings for hundreds of patients in under a minute.

Discussion

This study demonstrates the development, deployment and validation of an automatable decision-aid process to allow accurate decision-making in BO. A combination of natural language processing of endoscopy and pathology reports with evidence-based rules can accurately determine optimal endoscopy follow-up intervals.

Firstly, this demonstrates the application of machine learning in healthcare NLP, offering a framework for its implementation in a hospital setting. The infrastructure required for training natural language models in healthcare has become more accessible, with the ability to train in-house on standard GPUs.

Secondly, our approach required no complex entity or relationship labelling, yet achieved high accuracy and recall. This strategy, suitable for various pre-malignant conditions involving procedural and pathological text, significantly reduces the need for extensive text annotation, a common bottleneck in model development. Typically, entity- and relation-level annotation demands the training of domain experts and the establishment of inter-annotator agreement. By extracting a limited number of categories for a ruleset, we simplified the process, which proved effective for our domain. Future research could assess the annotation granularity needed for a given task.

Thirdly, a key advantage of employing a hybrid algorithmic structure lies in its adaptability to evolving medical guidelines. The rule-based component of the architecture can be swiftly adapted to align with updated clinical recommendations, without necessitating modifications to the NLP component.

For the endoscopic data at GSTT, EndoBERT achieved an F1-score of at least 0.95 in all categories apart from “Insufficient” (Table 3). This latter result is not unexpected given the complexity of the category, which involves identifying the absence of descriptors characteristic of other categories. The imbalanced class distribution might also have influenced the performance of the model. PathBERT also performed exceptionally well on the GSTT pathology data (Table 4). It is particularly reassuring that the model consistently identified dysplasia or cancer correctly in every test case.

We observe that both PathBERT and EndoBERT generalise exceptionally well on data from hospitals not included in the training set. However, performance across different classes is directly influenced by variations in class distributions between Trusts (Supplementary Tables 3 and 4). For instance, the endoscopy test set for KCH contains a higher proportion of Insufficient cases compared to the other two Trusts. This class, as previously discussed, is more challenging to predict, resulting in a slight decrease in performance. It may be that an increased annotation granularity, such as entity-relation annotation would help, although this would come with additional annotation overhead that our simple approach avoids.

A further important emphasis is the need for a robust framework for the practical deployment and workflow implementation of NLP-based models. In addition to training models, healthcare settings also need to consider how such a tool is used and by whom, according to local practices. We implemented and deployed the models according to a robust, industry-accepted quality management system, which allowed the decision aid to be classified as a Class 1 medical device. The deployment across the trust required the development of an easily accessible user interface that clinicians could intuitively understand.

This study also emphasises the importance of decision aids in improving resource utilisation. Human interpretation of guidelines is fallible even among gastroenterologists. Although we were unable to identify patient-specific factors that may explain erroneous decisions about follow-up timings, the comparison between the automated prediction of surveillance timing and the timings that were actually booked indicates a 27% error rate (Table 5). The majority of the human error was related to the early booking of the follow-up endoscopy. This indicates that decision aids such as the tool presented here may provide a significant cost benefit by preventing overuse of endoscopic resources. Late follow-up would also be prevented, with consequent benefit for the patient. We would reiterate that the tool is a decision aid. As mentioned, there may be other factors affecting follow-up timing decisions which we were not able to quantify and which were not a result of human error per se. Clinicians may occasionally deviate from the guidelines for several reasons, including patient preferences, administrative errors, or resource constraints. In some cases, clinicians might choose to follow up with a patient earlier than recommended due to concerns about the patient’s condition. That being said, given the wide margins used to define an error, any error represents a significant departure from the guidelines. Further, we would not expect the number of errors described to be explained simply by the deviation reasons given above, which only arise in exceptional cases. The aim of the described tool, however, is to improve adherence to guidelines where it is appropriate to do so, and therefore to remove human error from the decision-making process where it exists.

One key limitation is the model’s vulnerability to data drift, which can erode its predictive accuracy over time. Continuous monitoring and periodic retraining are essential to mitigate this limitation. Other potential limitations include the model’s lack of validation on diverse patient populations or healthcare systems. The models also cannot be used in cases where knowledge of an individual patient’s entire endoscopic history is needed in order to make decisions about follow-up timing, such as patients who have undergone successful therapeutic eradication of Barrett’s oesophagus. Furthermore, while this study suggests potential resource savings by reducing unnecessary endoscopies, a full economic impact analysis was not conducted and is planned for future work. Finally, the study is not longitudinal, as assessing long-term outcomes robustly would require many years of follow-up, which falls outside the scope of the current paper.

Overall, the current study demonstrates a simple method to train and implement a model using procedural (endoscopic) and related pathological data to provide accurate timings for further surveillance examinations. This tool serves as a decision-making aid and is used under clinical supervision. Our approach is applicable to other premalignant conditions where endoscopic and pathology text are needed to make decisions.

Methods

Patient and public involvement

In the initial scoping phase of the project, we prioritised Patient and Public Involvement (PPI) to ensure the acceptability and usability of our application (EndominerAI) by patients. The research questions and outcome measures were developed with an understanding of patients’ priorities and experiences gathered through in-house forums, social media engagement via Twitter, Facebook and Q&A sessions, and feedback collected from posters placed around the endoscopy department. We invited patients to share their views on the project, providing valuable insights that were considered during the conduct of the study.

Establishment of surveillance intervals

Surveillance intervals were based on the British Society of Gastroenterology guidelines for the surveillance of BO4. The guidelines rely on elements from both endoscopy and pathology reports. Endoscopic elements include the maximum extent of BO defined as the length of BO from the top of the gastric folds to the most proximal maximum extent of BO (M stage). Pathological elements include whether intestinal or gastric metaplasia, dysplasia or cancer was detected. Each element impacts on the follow up timing for subsequent endoscopic surveillance. Detection of dysplasia or cancer commits the patient to alternative higher intensity surveillance or therapeutic pathways that are outside of the remit of this study.

Data access

Data acquisition was authorised through the GSTT Electronic Records Research Interface (GERRI) institutional board review (IRAS ID = 257283). Endoscopy reports were selected from Guy’s and St Thomas’ NHS Foundation Trust (GSTT) electronic patient records (EPR) using a case-insensitive keyword search for the term “Barrett” from endoscopy reports between 2007 and 2021. This keyword was chosen to best represent this patient cohort by an expert gastroenterologist (SZ). Pathology records pertaining to the endoscopy procedures were retrieved through querying the EPR as a separate dataset. These were retrieved by date-matching the endoscopic procedure to the pathology specimen collection date.
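The cohort selection described above amounts to a case-insensitive keyword filter followed by a date join between endoscopy and pathology records. The sketch below illustrates the idea on made-up records; the field names, the sample data and the use of exact same-day matching per patient are assumptions for illustration and do not describe the actual EPR query.

```python
import re
from datetime import date

BARRETT = re.compile(r"barrett", re.IGNORECASE)  # case-insensitive keyword

# Hypothetical records; field names are illustrative only.
endoscopies = [
    {"patient_id": "A", "date": date(2019, 3, 4), "text": "BARRETT oesophagus C2M4."},
    {"patient_id": "B", "date": date(2019, 3, 5), "text": "Normal oesophagus."},
]
pathology = [
    {"patient_id": "A", "collected": date(2019, 3, 4), "text": "Intestinal metaplasia."},
    {"patient_id": "A", "collected": date(2020, 1, 9), "text": "Unrelated specimen."},
]


def match_reports(endoscopies, pathology):
    """Pair each keyword-matched endoscopy with same-patient, same-day pathology."""
    by_key = {}
    for p in pathology:
        by_key.setdefault((p["patient_id"], p["collected"]), []).append(p)
    pairs = []
    for e in endoscopies:
        if BARRETT.search(e["text"]):
            # Assumption: the specimen collection date equals the procedure date.
            pairs.append((e, by_key.get((e["patient_id"], e["date"]), [])))
    return pairs


pairs = match_reports(endoscopies, pathology)
```

An endoscopy paired with an empty pathology list would correspond to the first exclusion criterion, absence of relevant pathology results.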

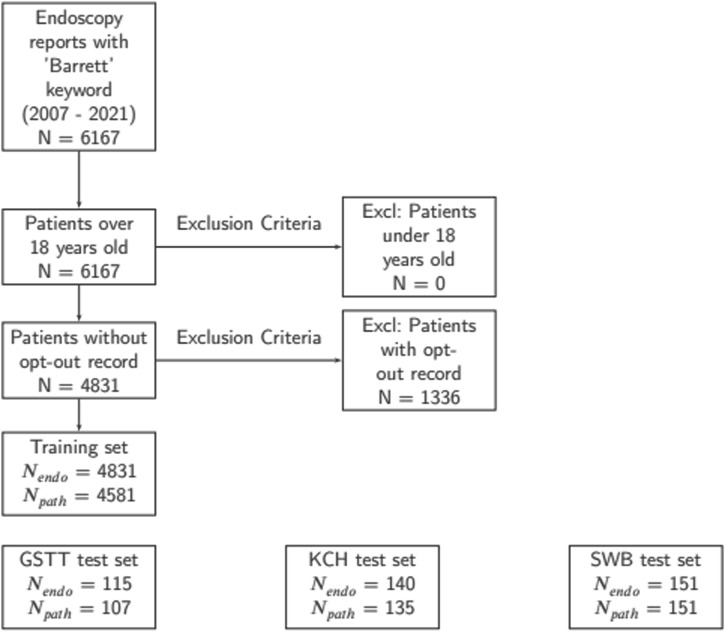

Patients were excluded for the following reasons: (1) absence of pathology results relevant to the upper GI tract; (2) age under 18; (3) the patient had opted out of data analysis using the NHS opt-out system (Fig. 1).

Fig. 1. Cohort selection process for both model training and test sets.

This figure presents a CONSORT diagram detailing the process used for selecting patient cohorts for the training set and illustrating the distribution of test samples across different trusts: GSTT, KCH and SWB.

The demographic profile of our training set, predominantly male with an average age of 63.7 years, mirrors trends observed in broader studies on BO15,16. These similarities in demographic distribution suggest that our training set, despite its gender bias, is representative of the broader patient population typically affected by BO in the UK. More details can be found in Supplementary Table 1.

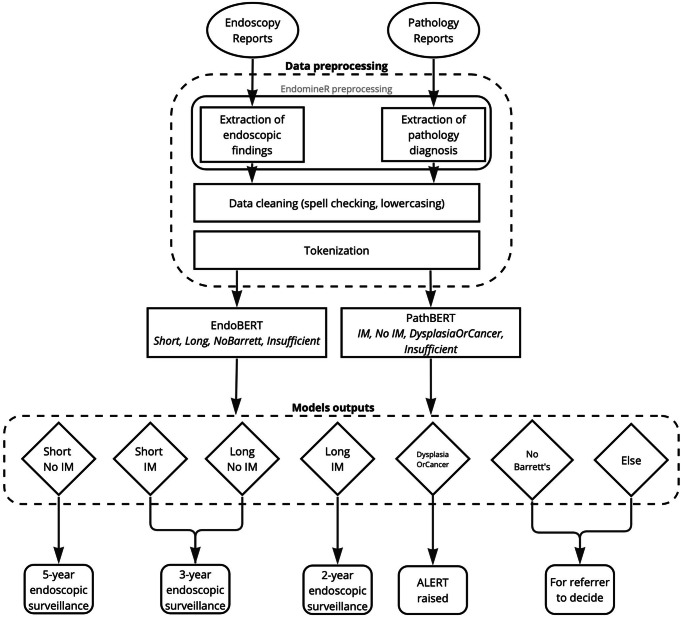

Data preprocessing

The endoscopy free-text analysis software EndoMineR17 was used to extract relevant sections of the endoscopy and pathology reports, consisting of the endoscopic findings and the pathology microscopic description and diagnosis, as represented in Fig. 2. EPRs in free-text form introduce complexities that include, but are not limited to, spelling errors, inconsistent capitalisation, and the presence of irrelevant information. Previous research confirms that spelling errors are a prevalent issue in free-text clinical documents, necessitating meticulous data cleaning18. These errors have been shown to impact the performance of transformer-based models significantly19. To address these challenges, our data cleaning pipeline followed preprocessing best practice20, including tokenization and lowercasing. We also performed spell checking by cross-referencing with a dictionary of common GI terms to correct any errors and maintain consistency across the training set. For instance, variations like “ogj”, “goj”, and “gastrooesophageal” were all standardised to “GOJ”.
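The cleaning steps described here (lowercasing, tokenization and dictionary-based standardisation) can be sketched in a few lines. This is a minimal illustration, not the EndominerAI pipeline: only the three GOJ variants come from the text, and the tokenizer, dictionary name and function name are assumptions.

```python
import re

# Illustrative variant dictionary; only these GOJ entries come from the text.
GI_TERMS = {"ogj": "GOJ", "goj": "GOJ", "gastrooesophageal": "GOJ"}


def clean_report(text: str) -> str:
    """Lowercase, split into word tokens, then standardise known variants."""
    tokens = re.findall(r"\w+", text.lower())  # simple tokenizer, drops punctuation
    return " ".join(GI_TERMS.get(tok, tok) for tok in tokens)


print(clean_report("Columnar mucosa 2cm above the OGJ"))
```

A production dictionary would be considerably larger and would likely handle multi-word variants, which this token-level lookup does not.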

Fig. 2. EndominerAI end-to-end data pipeline.

The diagram presents the comprehensive data pipeline of EndominerAI, showcasing the sequence from data preprocessing to follow-up prediction. After data preprocessing, the data is submitted to EndoBERT (for endoscopic data) or PathBERT (for histopathological data). The outputs of these models (diamonds) are then passed into a rule-based algorithm to determine follow-up timing.

The primary goal of annotating data for our machine learning tool was to accurately categorise key elements in endoscopy and pathology reports for BO patients. Annotation involves assigning predefined labels to the reports, which is crucial for training the models to recognise and extract relevant information for clinical decision-making. The definitions in Table 6 were established. The training set (4831 endoscopy reports and 4581 pathology reports) was blindly annotated by an expert endoscopist (SZ).

Table 6.

Class definition for Endoscopy and Pathology

| Report type | Category | Definition |
|---|---|---|
| Endoscopy | Long segment Barrett’s oesophagus | Maximum extent of Barrett’s oesophagus is equal to or exceeds 3 cm above the gastroesophageal junction4. |
| Endoscopy | Short segment Barrett’s oesophagus | Maximum extent of Barrett’s oesophagus is less than 3 cm above the gastroesophageal junction. |
| Endoscopy | No Barrett’s oesophagus | Absence of Barrett’s oesophagus, as explicitly stated in the report. |
| Endoscopy | Insufficient | Report either lacks a clear statement regarding the presence or absence of Barrett’s oesophagus or does not provide information on the length of Barrett’s oesophagus. |
| Pathology | No intestinal metaplasia | Presence of gastric metaplasia but does not indicate the presence of intestinal metaplasia, or explicitly states the absence of intestinal metaplasia. Dysplasia and cancer are not detected in these cases. |
| Pathology | Intestinal metaplasia | Intestinal metaplasia is present but without any signs of dysplasia or cancer. |
| Pathology | Dysplasia or cancer present | Dysplasia or cancer is detected in the pathology report. |
| Pathology | Insufficient | Either no biopsies relating to an endoscopic diagnosis of Barrett’s oesophagus are available or the pathology report does not clearly indicate the nature of the sampled Barrett’s tissue. |

Fine-tuning BERT for multiclass classification

Model training was performed on an NVIDIA DGX-1 server running Ubuntu 20.04, configured with eight 32 GB V100 GPUs and 512 GB RAM. Each model was trained on a single GPU, with multiple GPUs used to parallelize hyperparameter tuning. All model development, training, and tuning were performed using an in-house machine learning operations (MLOps) framework for healthcare applications.

Unlike elementary NLP techniques such as Bag-of-Words (BoW), or even more traditional symbolic and statistical approaches that excel in tasks with well-defined linguistic rules, transformer-based models offer dense representations that capture long-range dependencies and nuanced context within the text, which is critical for accurate disease classification. The choice of BERT-based models is therefore justified in our context by the need to capture long-range dependencies and subtle contextual information within endoscopy and pathology reports. For instance, accurately categorising BO lesions requires linking information about the length of the affected oesophageal segment, often located in a separate part of the report, with the initial description of the findings.

We fine-tuned two BERT-based models21, EndoBERT and PathBERT, to classify text segments extracted from endoscopy and pathology reports, respectively. These models are built on the pre-trained “bert-base-uncased” model obtained from the HuggingFace library22. We modified the pre-trained BERT architecture by adding a linear classification layer with sigmoid activation. This layer maps the BERT model’s hidden state output to the number of classes relevant to our tasks (Table 6).
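To make the role of the added layer concrete, the toy sketch below applies a linear map followed by a sigmoid to a stand-in hidden-state vector and selects the highest-scoring class. It is written in plain Python for readability and is not the training code: the random weights, the toy hidden size of 8 (bert-base-uncased uses 768), the use of a single pooled vector and the arg-max decision rule are all assumptions.

```python
import math
import random

CLASSES = ["No Barrett's", "Short", "Long", "Insufficient"]  # EndoBERT labels
HIDDEN = 8  # toy size; bert-base-uncased uses 768

random.seed(0)
# Randomly initialised weights stand in for parameters learned in fine-tuning.
W = [[random.uniform(-0.1, 0.1) for _ in range(HIDDEN)] for _ in CLASSES]
b = [0.0] * len(CLASSES)


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def classify(hidden_state):
    """Linear layer + sigmoid over one hidden-state vector, then pick the top class."""
    scores = [
        sigmoid(sum(w * h for w, h in zip(row, hidden_state)) + bias)
        for row, bias in zip(W, b)
    ]
    return CLASSES[scores.index(max(scores))], scores


pooled = [random.uniform(-1, 1) for _ in range(HIDDEN)]  # stand-in for BERT output
label, scores = classify(pooled)
```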

Model training

A cross-validation strategy was employed for the training and evaluation of the EndoBERT and PathBERT models. This approach involved splitting the training dataset into k = 5 folds, with distinct sets used for training and internal validation in each iteration.

Stratification was maintained on class labels to ensure representative distribution across folds. The final model was then trained on the overall training set. Endoscopy and pathology class distribution can be found in Supplementary Tables 3 and 4.
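Stratified five-fold splitting can be illustrated as follows. This is a minimal standard-library sketch on synthetic labels, assuming a simple round-robin allocation within each class; the study's own splitting code is not described and may differ.

```python
import random
from collections import defaultdict


def stratified_folds(labels, k=5, seed=0):
    """Return k lists of indices, each with a similar class mix."""
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    rng = random.Random(seed)
    folds = [[] for _ in range(k)]
    for indices in by_class.values():
        rng.shuffle(indices)
        # Deal each class round-robin so every fold gets its share.
        for pos, idx in enumerate(indices):
            folds[pos % k].append(idx)
    return folds


# Synthetic, deliberately imbalanced labels for illustration.
labels = ["Long"] * 20 + ["Short"] * 15 + ["No Barrett's"] * 10 + ["Insufficient"] * 5
folds = stratified_folds(labels)

for i, val_idx in enumerate(folds):
    held_out = set(val_idx)
    train_idx = [j for j in range(len(labels)) if j not in held_out]
    # A real run would fine-tune on train_idx and validate on val_idx here.
    print(f"fold {i}: train={len(train_idx)} validation={len(val_idx)}")
```

With this allocation even the rarest class appears in every validation fold, which matters for small categories such as “Insufficient”.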

Metrics that would reflect models’ performance across all categories, with a specific focus on those of critical medical significance, were used for model evaluation. Accuracy, precision (positive predictive value), recall (sensitivity) and F1 score were calculated for each class. This approach allowed careful performance monitoring of classes where false negatives could have significant clinical implications, such as “Dysplasia/Cancer” or “Long” (long segment BO).
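For reference, the per-class metrics named above can be computed from true and predicted labels as in the following sketch. The function name and the small example labels are illustrative only and do not reproduce any result in this paper.

```python
def per_class_metrics(y_true, y_pred):
    """Precision, recall and F1 for each class, plus overall accuracy."""
    report = {}
    for cls in sorted(set(y_true) | set(y_pred)):
        tp = sum(t == cls and p == cls for t, p in zip(y_true, y_pred))
        fp = sum(t != cls and p == cls for t, p in zip(y_true, y_pred))
        fn = sum(t == cls and p != cls for t, p in zip(y_true, y_pred))
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        report[cls] = {"precision": precision, "recall": recall, "f1": f1}
    accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
    return report, accuracy


# Made-up labels: one "Short" report is misclassified as "Insufficient".
y_true = ["Long", "Long", "Short", "Short", "Insufficient", "Short"]
y_pred = ["Long", "Long", "Short", "Insufficient", "Insufficient", "Short"]
report, accuracy = per_class_metrics(y_true, y_pred)
```

In this toy example the single error lowers recall for “Short” and precision for “Insufficient”, which is why both metrics are tracked per class.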

In this study, we performed a random search for hyperparameter tuning before the cross-validation procedure. We explored a pre-defined space of hyperparameters across more than 50 trials, taking about 30 hours in total. The performance metric used for optimisation was average accuracy. The primary hyperparameters, their optimal values, and the search space are detailed in Supplementary Table 2.
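Random search samples configurations independently from the search space and keeps the best-scoring one. The sketch below shows the loop structure only: the search space values are hypothetical (the actual space is in Supplementary Table 2), and the objective is a placeholder formula standing in for a full fine-tuning run scored by average accuracy.

```python
import random

# Hypothetical search space; the actual one is in Supplementary Table 2.
SEARCH_SPACE = {
    "learning_rate": [1e-5, 2e-5, 3e-5, 5e-5],
    "batch_size": [8, 16, 32],
    "epochs": [2, 3, 4],
}


def validation_accuracy(config):
    """Placeholder objective; a real run would fine-tune and score the model."""
    lr_penalty = abs(config["learning_rate"] - 3e-5) * 1e3
    epoch_penalty = 0.001 * abs(config["epochs"] - 3)
    return 0.9 - lr_penalty - epoch_penalty


def random_search(space, objective, n_trials=50, seed=0):
    """Sample n_trials random configurations and return the best one found."""
    rng = random.Random(seed)
    best_config, best_score = None, float("-inf")
    for _ in range(n_trials):
        config = {name: rng.choice(values) for name, values in space.items()}
        score = objective(config)
        if score > best_score:
            best_config, best_score = config, score
    return best_config, best_score


best_config, best_score = random_search(SEARCH_SPACE, validation_accuracy)
```

Because trials are independent, they can be distributed across GPUs, which matches the parallel tuning setup described earlier.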

Use of algorithm to combine elements

A rule-based algorithm was designed to leverage the classifications from EndoBERT and PathBERT models and provide accurate follow-up timings. This rule-based algorithm strictly adheres to the endoscopic guidelines4. The rule-based algorithm combines outputs from EndoBERT and PathBERT to provide a recommendation for patient follow-up timings (Fig. 2). For example, if EndoBERT outputs “Short” for the segment length and PathBERT outputs “No IM” for the histopathological grade, the rule-based algorithm will predict a follow-up timing of 5 years.
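The combination step can be pictured as a lookup keyed on the two model outputs. The sketch below encodes only the one rule stated in the text (“Short” with “No IM” gives 5 years); the remaining intervals are deliberately left unset rather than guessed, and the handling of “Insufficient”, “No Barrett’s” and “Dysplasia/Cancer” outputs, along with all names and messages, is an assumption for illustration.

```python
from typing import Optional

# Only the ("Short", "No IM") -> 5 years rule is stated in the text; the other
# intervals must be taken from the guideline and are left as None here.
FOLLOW_UP_YEARS = {
    ("Short", "No IM"): 5,
    ("Short", "IM"): None,
    ("Long", "No IM"): None,
    ("Long", "IM"): None,
}


def recommend(endo_class: str, path_class: str) -> str:
    """Combine the EndoBERT and PathBERT classes into a follow-up recommendation."""
    if path_class == "Dysplasia/Cancer":
        # Such patients follow pathways outside the remit of this study.
        return "Outside surveillance remit: refer to dysplasia/cancer pathway"
    if endo_class in ("Insufficient", "No Barrett's") or path_class == "Insufficient":
        # Assumption: no interval is issued without both usable classes.
        return "No automated interval: clinician review"
    years: Optional[int] = FOLLOW_UP_YEARS.get((endo_class, path_class))
    if years is None:
        return "Interval not encoded in this sketch: consult guideline"
    return f"Repeat endoscopy in {years} years"


print(recommend("Short", "No IM"))
```

Keeping the intervals in a table separate from the models reflects the adaptability argument made in the Discussion: a guideline update changes the table, not the classifiers.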

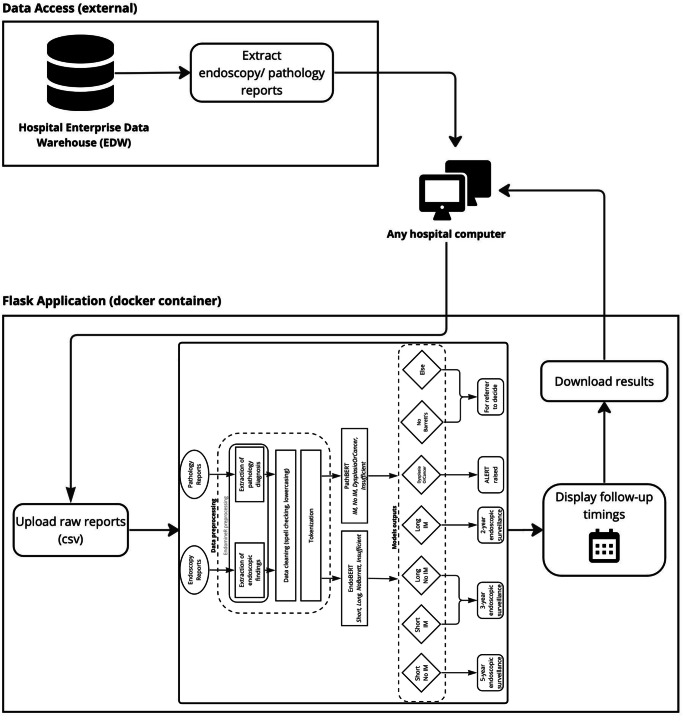

Deployment in Hospital Network as Part of Quality Management System

EndominerAI was developed as a Class I software as medical device (SaMD) under an ISO 13485:2016 accredited Quality Management System. Developing an application within an accredited quality management system ensures compliance with all applicable requirements outlined in ISO 14971:2019, BS EN 62304:2006, BS EN 62366:2015, DCB0129, and UKMDR2002. This involves assessing potential risks associated with software as a medical device throughout the entire project lifecycle.

During the project’s scoping phase, a Clinical Risk Management plan is established. This plan includes the creation of a hazard log, identification of hazards, description of patient safety consequences, explanation of hazard causes and contributory conditions, identification of existing mitigating controls, estimation of clinical risk, and identification of participating personnel.

Throughout the development phase, a Verification and Validation plan is implemented to ensure that new software releases do not introduce any vulnerabilities or risks. The primary method for verifying the correctness of the code involves generating and performing unit tests. These tests are designed to cover over 90% of the code and are written for each item in the design specification. Unit tests are reviewed at key stages of the development process, and each pull request is only merged if all unit tests pass.

A user interface was developed using the scalable and flexible Flask web framework23 to facilitate integration within the hospital environment. The application is hosted on in-house hardware with a fixed IP, encapsulated within a Docker container, ensuring secure access from any computer within the hospital (Fig. 3). As part of the QMS requirements, User Acceptability Testing and User Training sessions were conducted to verify the acceptability and usability of the software by end users. One example of feedback we received was the request to add a ‘download reduced dataset’ button, allowing clinicians to download a condensed version of the results instead of the full dataset.

Fig. 3. EndominerAI architecture in hospital environment.

This figure displays the operational framework of EndominerAI in a hospital setting. It shows how data is extracted from hospital databases, processed and returned to the user.

The current workflow involves the curation of endoscopic data related to the term “Barrett’s”. Pathology reports related to the endoscopy reports are also curated. Both datasets are easily accessed from the Enterprise Data Warehouse. No pre-processing is needed before uploading them to the web interface. The interface then provides a downloadable spreadsheet with the predicted follow-up times alongside the most recent endoscopy and pathology results. The entities that have been extracted from the relevant reports as well as how the entities were combined to reach a final decision are also presented to the clinician to provide output explainability. This can then be validated by a clinician. Thus, the process is completed under clinical supervision.

Evaluation

A prospective evaluation was then conducted by accessing the records of all patients who had undergone endoscopic surveillance for BO at GSTT between May and July 2023 and for whom pathology results from the endoscopy were available (115 patient records). The time period was chosen as representative of the period over which records would normally be assessed in order to plan follow-up surveillance endoscopies. Pathology reports derived from templates were excluded, as these can be easily analysed separately using simple regular expressions. This resulted in a test set of 107 pathology reports. For each of the endoscopy and pathology test sets, the respective model’s predictions for the class label were included, along with the algorithm’s prediction for the timing of each patient’s return for endoscopic surveillance. These predictions, along with the output pathology data, were then assessed by expert clinicians (SZ and PV) to determine their accuracy.

For the external evaluation, reports were collected from KCH and SWB, ranging from the years 2015 to 2022. For SWB, data access was authorised by setting up a data sharing agreement between the institutions, in compliance with the GDPR. KCH data access was authorised through the Kings Electronic Records Research Interface (KERRI) institutional board review (IRAS ID = 232823). The dataset sizes were chosen to be roughly equivalent to the GSTT test set size. The same exclusion criteria applied to the GSTT dataset were also employed for the KCH and SWB datasets. These datasets were also assessed by expert clinicians (SZ and PV) to ensure the accuracy of the model predictions.

Subsequently, a retrospective evaluation was performed using seven years of historical data from GSTT. To determine whether the automated approach could enhance resource utilisation and reduce late endoscopies, the timing of actual endoscopies following BO surveillance endoscopy was compared with the algorithm’s predictions. This allowed a direct comparison between the algorithm’s decision for return to endoscopy and what had been done historically. Reports were excluded if no BO was found, or if either the endoscopy or the pathology category was insufficient according to the definitions above. An endoscopy was considered early if it took place more than 6 months before the date suggested by EndominerAI, and late if it took place more than 6 months after it.
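The early/late comparison can be illustrated with a short sketch. It reads the 6-month rule as a tolerance window either side of the suggested date; the function name `classify_timing`, the "on time" label, and the approximation of 6 months as 183 days are assumptions of this example rather than details taken from the study.

```python
from datetime import date

# Assumption: 6 months is approximated as 183 days for this sketch.
TOLERANCE_DAYS = 183


def classify_timing(suggested: date, actual: date,
                    tolerance_days: int = TOLERANCE_DAYS) -> str:
    """Label an actual endoscopy date relative to the suggested date."""
    delta_days = (actual - suggested).days  # negative when the endoscopy came first
    if delta_days < -tolerance_days:
        return "early"
    if delta_days > tolerance_days:
        return "late"
    return "on time"


# Hypothetical dates, for illustration only.
print(classify_timing(date(2020, 1, 1), date(2019, 1, 1)))
print(classify_timing(date(2020, 1, 1), date(2020, 3, 1)))
print(classify_timing(date(2020, 1, 1), date(2021, 1, 1)))
```

Applying such a function to every pair of suggested and actual dates gives the counts of early, late, and on-time endoscopies that a retrospective comparison of this kind would report.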

Acknowledgements

This work has been funded by the South East London Cancer Alliance (SELCA). We would like to express our gratitude to the Clinical Scientific Computing team at Guy’s and St. Thomas’ NHS Foundation Trust for providing us with access to their hardware resources and technical expertise, which significantly facilitated the computational aspects of this work. We would particularly like to thank Dijana Vilic and Haris Shuaib for their contributions.

Author contributions

A.Z. developed the concept, methodology, and validation, and was the principal contributor to the visualization and writing of both the original draft and review/editing. S.Z. took a lead and supervising role in conceptualization, methodology, validation, investigation, funding acquisition, project administration, and supervision, and was the principal contributor to both the original draft and review/editing. L.J. supported methodology, contributed to software development, and assisted in writing and reviewing the manuscript. X.Z. supported data curation and provided support in writing and reviewing. P.P. and J.D. both offered supporting supervision roles. P.V. assisted in data curation and validation. Z.Y. supported data curation. A.R. provided support in supervision and contributed equally to writing and reviewing. N.T. and S.A. supported the evaluation of the software on SWB data. All authors have read and approved the manuscript.

Data availability

The datasets generated and analysed during the current study are not publicly available due to the sensitive nature of the patient data derived from Guy’s and St Thomas’ NHS Trust Electronic Patient Records.

Code availability

The Python code used in this research is available upon request from the corresponding author.

Competing interests

The authors declare no competing interests.

Footnotes

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

The online version contains supplementary material available at 10.1038/s41746-024-01302-6.

References

- 1. Robertson, C. S., Mayberry, J. F., Nicholson, D. A., James, P. D. & Atkinson, M. Value of endoscopic surveillance in the detection of neoplastic change in Barrett’s oesophagus. Br. J. Surg. 75, 760–763 (2005).

- 2. Hameeteman, W., Tytgat, G. N. J., Houthoff, H. J. & van den Tweel, J. G. Barrett’s Esophagus; Development of Dysplasia and Adenocarcinoma. Gastroenterology 96, 1249–1256 (1989).

- 3. Bhat, S. et al. Risk of Malignant Progression in Barrett’s Esophagus Patients: Results from a Large Population-Based Study. JNCI J. Natl Cancer Inst. 103, 1049–1057 (2011).

- 4. Fitzgerald, R. C. et al. British Society of Gastroenterology guidelines on the diagnosis and management of Barrett’s oesophagus. Gut 63, 7–42 (2014).

- 5. Abrams, J. A. et al. Adherence to Biopsy Guidelines for Barrett’s Esophagus Surveillance in the Community Setting in the United States. Clin. Gastroenterol. Hepatol. 7, 736–742 (2009).

- 6. Zeki, S. & Dunn, J. PTH-69 Short segment non dysplastic Barrett’s is often surveyed too early by non-experts. Gut 70, A134 (2021).

- 7. Soroush, A. et al. Natural language processing can automate extraction of Barrett’s Esophagus endoscopy quality metrics. medRxiv. 10.1101/2023.07.11.23292529 (2023).

- 8. Kefeli J., Saroush A., Diamond C., Zylberberg H., May B., Abrams A., Weng C., Tatonetti N. Large Language Models for Granularized Barrett’s Esophagus Diagnosis Classification. Preprint at https://arxiv.org/abs/2308.08660 (2023).

- 9. Nguyen Wenker, T. et al. Using Natural Language Processing to Automatically Identify Dysplasia in Pathology Reports for Patients With Barrett’s Esophagus. Clin. Gastroenterol. Hepatol. 21, 1198–1204 (2023).

- 10. Li, J. et al. A deep learning and natural language processing-based system for automatic identification and surveillance of high-risk patients undergoing upper endoscopy: A multicenter study. EClinicalMedicine 53, 101704 (2022).

- 11. Lederman, A., Lederman, R. & Verspoor, K. Tasks as needs: reframing the paradigm of clinical natural language processing research for real-world decision support. J. Am. Med. Inform. Assoc. 29, 1810–1817 (2022).

- 12. Panch, T., Mattie, H. & Celi, L. A. The “inconvenient truth” about AI in healthcare. NPJ Digit. Med. 2, 77 (2019).

- 13. Messmann, H., Ebigbo, A., Hassan, C., Repici, A. & Mori, Y. How to Integrate Artificial Intelligence in Gastrointestinal Practice. Gastroenterology 162, 1583–1586 (2022).

- 14. Chen, M., Lan, G., Du, F. & Lobanov, V. Joint Learning with Pre-trained Transformer on Named Entity Recognition and Relation Extraction Tasks for Clinical Analytics. in Proceedings of the 3rd Clinical Natural Language Processing Workshop 234–242 (Association for Computational Linguistics, Stroudsburg, PA, USA, 2020). 10.18653/v1/2020.clinicalnlp-1.26.

- 15. Caygill, C. P., Watson, A., Reed, P. I. & Hill, M. J. Characteristics and regional variations of patients with Barrett’s oesophagus in the UK. Eur. J. Gastroenterol. Hepatol. 15, 1217–1222 (2003).

- 16. Cook, M. B., Wild, C. P. & Forman, D. A Systematic Review and Meta-Analysis of the Sex Ratio for Barrett’s Esophagus, Erosive Reflux Disease, and Nonerosive Reflux Disease. Am. J. Epidemiol. 162, 1050–1061 (2005).

- 17. Zeki, S. EndoMineR for the extraction of endoscopic and associated pathology data from medical reports. J. Open Source Softw. 3, 701 (2018).

- 18. Lai, K. H., Topaz, M., Goss, F. R. & Zhou, L. Automated misspelling detection and correction in clinical free-text records. J. Biomed. Inf. 55, 188–195 (2015).

- 19. Kumar, A., Makhija, P. & Gupta, A. Noisy Text Data: Achilles’ Heel of BERT. in Proceedings of the Sixth Workshop on Noisy User-generated Text (W-NUT 2020) 16–21 (Association for Computational Linguistics, Stroudsburg, PA, USA, 2020). 10.18653/v1/2020.wnut-1.3.

- 20. Chai, C. P. Comparison of text preprocessing methods. Nat. Lang. Eng. 29, 509–553 (2023).

- 21. Devlin, J., Chang, M.-W., Lee, K. & Toutanova, K. Pre-training of Deep Bidirectional Transformers for Language Understanding. in Proceedings of the 2019 Conference of the North 4171–4186 (Association for Computational Linguistics, Stroudsburg, PA, USA, 2019). 10.18653/v1/N19-1423.

- 22. Wolf, T. et al. Transformers: State-of-the-Art Natural Language Processing. in Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing: System Demonstrations 38–45 (Association for Computational Linguistics, Stroudsburg, PA, USA, 2020). 10.18653/v1/2020.emnlp-demos.6.
