Abstract

In everyday life, people often stand up and sit down. Unlike young, able-bodied individuals, older adults and those with disabilities usually stand up or sit down slowly, often pausing during the transition. It is crucial to design interfaces that accommodate these movements. Additionally, in public settings, protecting personal information is essential. Addressing these considerations, this paper presents a distance-based representation scheme for the motions of standing up and sitting down. This proposed scheme identifies both standing and sitting positions, as well as the transition process between these two states. Our scheme is based solely on the variations in distance between a sensor and the surfaces of the human body during these movements. Specifically, the proposed solution relies on distance as input, allowing for the use of a proximity sensor without the need for cameras or additional wearable sensor attachments. A single microcontroller is adequate for this purpose. Our contribution highlights that using a proximity sensor broadens the applicability of the approach while ensuring that personal information remains secure. Additionally, the scheme alleviates users’ mental burden, particularly regarding privacy concerns. Extensive experiments were performed on 58 subjects, including 19 people over the age of 70, to verify the effectiveness of the proposed solution, and the results are described in detail.

Keywords: standing-and-sitting motions, one-dimensional representation, interface, distance measurements, proximity sensor

1. Introduction

Standing up and sitting down are seemingly simple actions that most people repeat numerous times each day. However, elderly individuals and those with muscle diseases or disorders may find these movements challenging. The ability to stand up from a seated position and sit back down is crucial for maintaining independence in daily living. Various assistive technologies [1,2,3,4] have been developed to support these motions. Among the related topics, recognition methods for standing up and sitting down have been extensively researched. These methods are used to monitor the health of elderly individuals in nursing homes [5,6,7,8], diagnose lower-limb diseases and assess locomotion in hospitals [9,10,11,12], and develop interfaces for assistive systems [13,14]. Measurement methods serve as the core technology for these approaches. Based on how their sensors are installed, measurement tools fall into two main types: wearable and nonwearable devices.

Wearable devices for measuring human activities are increasingly being reported. These devices incorporate sensors such as inertial measurement units (IMUs), barometers, pressure sensors, and electromyography (EMG) sensors. An IMU-based wearable device [10,15,16,17,18,19,20] measures the acceleration and angular velocity of the specific body part to which it is attached, so multiple sensors must be integrated into the device to assess the posture of the entire body. A wearable barometer [21,22,23] provides the height of the body part to which it is affixed, whether the wearer is standing or sitting. Since this device is influenced by environmental conditions, the sensor values must be calibrated frequently. Wearable devices equipped with pressure sensors [1,24,25,26] detect variations in forces on the contacted surfaces. However, these pressure sensors are sensitive to temperature and are affected by signal acquisition noise. EMG-based wearable devices [8,9,27,28,29] use muscle potential and frequency analysis to identify changes in fatigue resulting from muscle movements. However, inadequate skin contact hampers stable signal acquisition. These wearable devices facilitate the measurement and swift recognition of standing-up and sitting-down motions, thus eliminating the need to monitor computer screens. Most of the devices mentioned are portable, hands-free tools; however, they have a notably short battery life. Additionally, their size and the effort needed to wear them render them impractical for daily use. A further drawback of several wearable devices is the requirement for wires connecting the sensors to their electronic terminals. While these wires are essential for transmitting signals from the human body, they further impede the practicality of these devices for everyday tasks.

Several studies have detailed the measurement methods for typical nonwearable devices. First, some of these devices use an interface scheme that relies on visual input from a camera [12,30,31,32,33,34]; this visual input can capture three-dimensional posture data of the entire body from a single point or frame. By improving the identification of related motion features, these visual data enable the interface scheme to detect emerging trends. In recognizing standing and sitting motions, camera-based interface schemes efficiently and quickly convey motion intents, thereby expediting the decision-making process. However, the quality of their data is influenced by lighting conditions and environmental factors. Additionally, the expenses associated with processing large amounts of information must be taken into account. Moreover, privacy protection is a critical consideration when camera systems are used in sensitive areas such as toilets or bathrooms. Second, a force plate [12,34,35,36,37], which consists of multiple force sensors, measures the ground reaction force exerted on the human body during standing-up or sitting-down movements. The input from the force plate can be used to analyze the movement of the center of mass. In addition, by detecting the ground reaction force, the force plate enables the measurement of postural stability, explosive force, and leg strength and power. However, the maintenance and calibration of a force plate are expensive.

Based on our survey, we summarize the interface requirements for standing and sitting motions here. First, physical strength diminishes with age, resulting in slower movements and reflexes among the elderly. Second, older individuals often find complex controls with small characters and intricate buttons challenging to learn and use. Third, because these interfaces are expected to be used in diverse locations, protecting users’ personal information is essential. In public spaces, it is important to implement noncontact maneuvering to the greatest extent possible in the post-COVID-19 era.

In light of the aforementioned considerations, this study addresses the interface issue related to representing the motions of standing up and sitting down. Our proposed solution involves using distance measurements from a fixed sensor to the body of an individual in either a standing or sitting position. The underlying concept is as follows. When observed from the side, a standing body appears as a straight line, while the sitting body forms a bent line at the waist and knee. Based on this idea, we propose a distance-based representation scheme for the standing position, the sitting position, and the transition between these two positions. The core technology behind this solution involves the conversion of one-dimensional (1D) information (distance) and the estimation of lower-limb joints, leading to the creation of a linkage model consisting of three rotational joints. A notable advantage of this solution is its reliance on 1D distances, allowing it to function with a proximity sensor that only requires a single microcontroller, eliminating the need for additional memory. This representation scheme broadens the application potential of the solution beyond spatial limitations. Given its applications, such as with public transport straps, these interfaces should be compact and portable, and it is also preferable to maintain low computational costs for recognition processes. For example, each strap in a public transport vehicle should ascend and descend as its user (passenger) stands up and sits down, respectively.

This paper is structured as follows. Section 2 outlines our research problem and its definitions. Section 3 provides an explanation of the proposed solution. Section 4 details the results from extensive experiments performed to evaluate the effectiveness and performance of the proposed approach, along with a discussion of these findings and potential future directions. Finally, Section 5 presents our conclusions.

2. Problem Statement

2.1. Problem Definition

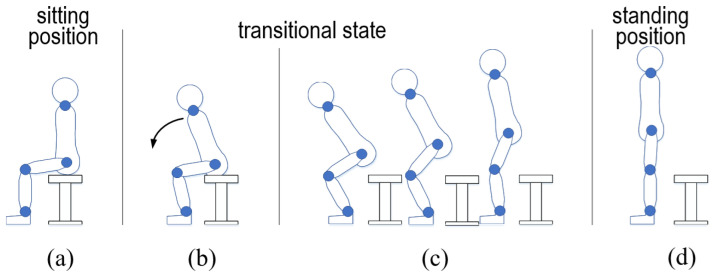

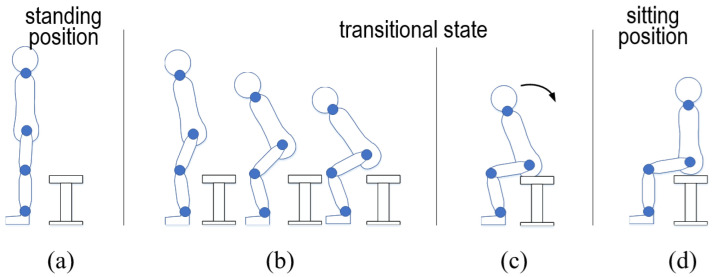

We aim to create an interface model for the standing-up and sitting-down motions without requiring additional sensor equipment or compromising privacy. Typically, these motions are categorized into two primary patterns: standing and sitting positions. The distinction between these positions can be determined by measuring the difference in distance from the sensor to the surfaces of the human body in each position. However, additional effort is essential to achieve a more sophisticated representation of the standing-up and sitting-down motions. First, as illustrated in Figure 1 and Figure 2, the transitional states between the sitting and standing positions must be clearly defined. Elderly individuals tend to raise or lower themselves slowly and often pause during this transition. Second, aligning with the aforementioned requirement, the transition process should be monitored in sync with human motions for the effective use of assistive systems. Third, experiments involving participants across a broad age range should be performed to validate the effectiveness of the proposed approach.

Figure 1.

Illustration of the standing-up motion process from sitting position to standing position ((a) preparation, (b) standing phase I, (c) standing phase II, (d) standing phase III).

Figure 2.

Illustration of the sitting-down motion process from standing position to sitting position ((a) preparation, (b) sitting phase I, (c) sitting phase II, (d) sitting phase III).

To meet these requirements, we study the representation of standing-up and sitting-down motions. By connecting the physical characteristics of the elderly to these motion processes, it becomes essential to describe the transitional states for a clear estimation of these motions. Consequently, we pose the following problem: How can the standing-up and sitting-down motions, along with their individual transition processes, be described and modeled using distances?

This problem is treated as a representation problem between human motions and a sensor system. Our approach uses a distance measurement-based model, which facilitates the representation of standing and sitting positions as well as the transition process between them. The human body performing these motions is replaced by a linkage model to streamline its representation. Based on this linkage model, we propose a distance-based representation scheme, which is detailed in the following subsection.

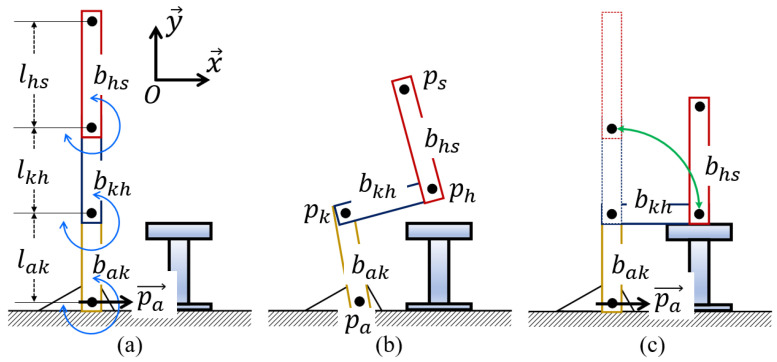

2.2. Definitions of Measurement Model

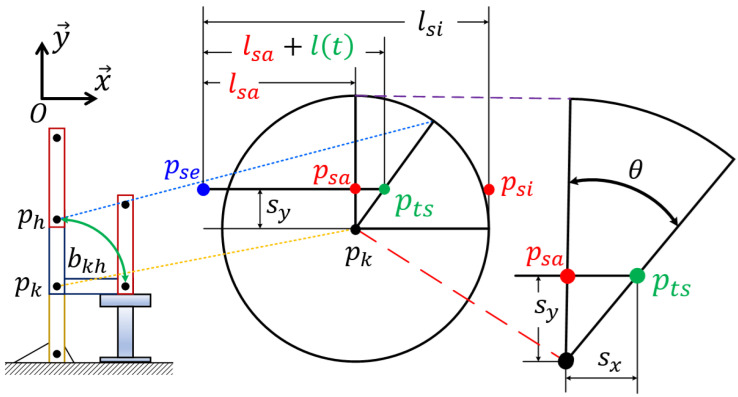

To start, we introduce the model definition for the standing-up and sitting-down motions. A two-dimensional (2D) observation plane is designated as the sagittal plane. As shown in Figure 3, the horizontal axis parallel to the ground is defined as , while the axis that is counterclockwise and perpendicular to is denoted by . The intersection point of and is labeled as O. Following this, we present three types of lengths measured from the ground along . First, represents the height of the stool. Second, for an individual seated on a stool of , represents the distance from the ground to the knee , with the assumption that is greater than . Third, for a person in a standing posture, the height from the ground to a section of the thigh is denoted by , which is greater than . Lastly, refers to a point that is positioned at a height of from the ground along .

Figure 3.

Definitions and notations of linkage representation where the linkage is composed of three rigid bars and three joints ((a) standing position, (b) transition process, and (c) sitting position).

The linkage model, consisting of three rigid bars, is created to simplify the motions of standing up and sitting down. In the linkage model shown in Figure 3b, , , , and denote the ankle, knee, hip, and shoulder joints, respectively. From bottom to top, the three bars are as follows: the bar connecting the foot to the knee (), the bar connecting the knee to the pelvis (), and the bar connecting the pelvis to the shoulder (). Next, several geometric definitions are presented. , , and denote the straight lines connecting to , to , and to , respectively. The lengths between individual joints, from bottom to top, are defined as , , and . In addition, denotes a vector that is parallel to and passes through . Using these straight lines, the angles formed between and , between and , and between and are defined as , , and , respectively.

Moreover, , , and are assumed to be rotatable at , , and to illustrate the motions of standing up and sitting down. In this linkage, these motions are assumed to occur solely in the anteroposterior (front to back) and vertical (top to bottom) directions. In the standing position, , , and are aligned along the same line (Figure 3a). In the sitting position (Figure 3c), , , and are oriented at right angles to each other. There are also transitional states observed between the standing and sitting positions (Figure 3b).

3. Distance-Based Representation Scheme

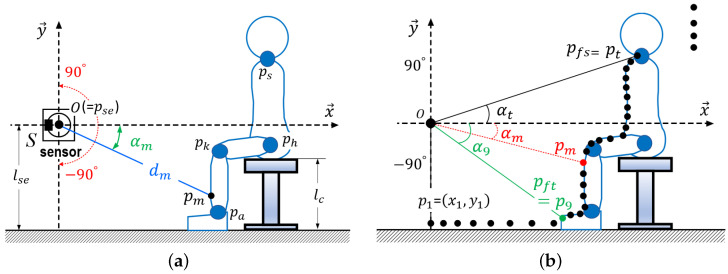

3.1. Measurements Using Proximity Sensor

We outline the measurement method for the standing-up and sitting-down motions. As shown in Figure 4a, the sensor (S) is located at with the center of S defined as O. Scanning is performed by S across a range of to at predetermined intervals. The rotation time of S is designated as one sampling time t. The input number for t is represented as . The distance from to the body surface is denoted as , and the scanning angle of S corresponding to is defined as . Using trigonometric functions with and , a point () is determined. After one rotation of S, as depicted in Figure 4b, a set of is collected. It is assumed that the feet do not move along the direction while a person is standing up or sitting down. Additionally, in both standing and sitting positions, , , and remain stationary, with no surging or swaying occurring.
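The conversion from a distance and scanning-angle pair to a point can be sketched as follows. This is a minimal illustration rather than the authors' implementation: the function name `scan_to_points`, the sensor position `(sensor_x, sensor_y)`, and the convention that angles are given in degrees and measured counterclockwise from the horizontal axis are all assumptions made for this example.

```python
import math

def scan_to_points(distances, angles_deg, sensor_x=0.0, sensor_y=0.0):
    """Convert one sweep of (distance, angle) pairs into 2D points.

    Assumptions (not taken from the paper): angles are in degrees,
    measured counterclockwise from the horizontal axis, and the sensor
    sits at (sensor_x, sensor_y) in the sagittal plane.
    """
    if len(distances) != len(angles_deg):
        raise ValueError("distances and angles must have the same length")
    points = []
    for d, a in zip(distances, angles_deg):
        rad = math.radians(a)
        # Standard polar-to-Cartesian conversion, offset by the sensor position.
        points.append((sensor_x + d * math.cos(rad),
                       sensor_y + d * math.sin(rad)))
    return points

# Two beams of 1000 mm each: one horizontal, one vertical.
pts = scan_to_points([1000.0, 1000.0], [0.0, 90.0])
```

One sweep of the sensor yields one such list of points, which is all the later modules need as input.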

Figure 4.

Illustration of measurement method using proximity sensor (S) positioned at . (a) Location of a sensor and measurement parameters; (b) Computations by pairs of distances and angles.

3.2. Overall Computation Flow

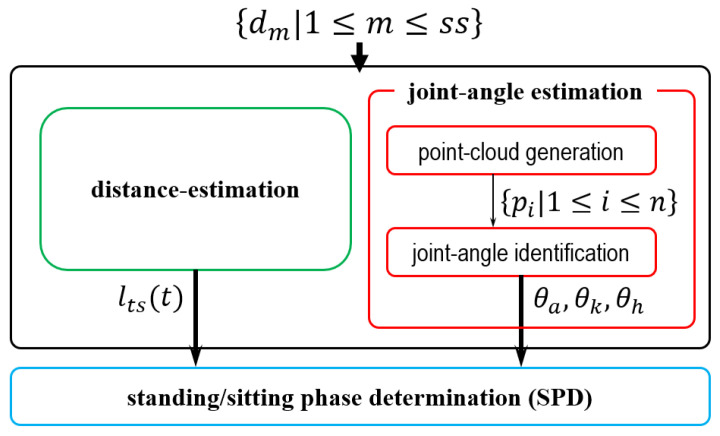

Figure 5 shows the computation flow of the proposed distance-based representation scheme. The input consists of , derived from S, while the output denotes phase determination (either standing or sitting). Using , the distance estimation and joint angle estimation modules calculate the distance changes from the standing position to the sitting position, as well as the joint angles , , and , respectively. These modules operate in parallel. At time t, the motion phase can be identified using the outputs from the standing/sitting phase determination (SPD) module. Detailed descriptions of these modules and their computation flows are provided in the subsequent subsections. In summary, at t, the representation scheme calculates the motion process based on , producing the determination result as the current phase. Notably, the scheme performs scheduling at t recursively without retaining any prior data.

Figure 5.

Computation flow of the distance-based representation scheme, with detailed explanations of individual modules provided in the subsequent subsections.

3.3. Distance Estimation Module

The distance estimation module is designed to identify standing and sitting positions by calculating the distance change during the actions of standing up and sitting down. This module computes and outputs based on t. The computation process of is illustrated in Figure 6.

Figure 6.

Illustration of computation based on the rotation of centered at and formula.

First, three types of distances are defined. For the sitting position, represents the distance from to a point on the body surface. In the standing position, the distance from to a point on the body surface is expressed as . During the standing-up and sitting-down motions, a predetermined beam of S identifies a point on the body surface, where denotes the distance from to . In the standing position, is equal to . In the sitting position, and are equivalent. Relative to , can be decomposed into and . This means that, during the motions, can be expressed as and . Next, to derive , the rotational movement of is examined. Following the conditions and assumptions regarding as detailed in Section 2.2, the standing-up and sitting-down motions on the plane are modeled by the rotation of around .

The circular movements during these actions create a fan-like shape with an angle of , configured by and . In other words, the changes in this fan shape can be seen as the reciprocating motions between and . Since these reciprocating motions can be represented by the distance from to , the standing and sitting positions can be tracked by measuring the variation in this distance.

With reference to , two types of distances with respect to and are illustrated in Figure 6. First, the distance between and (or ) in the direction is defined as . Second, represents the distance between and in the direction. When , the distance from to is denoted as , indicating the standing position. According to the definition of , if is nonzero, it is determined by equation . Therefore, from the aforementioned geometric considerations, the distance from to is expressed by combining and , formulated as . Concerning , the variations in as a function of t are designated as during the standing-up and sitting-down motions. As a result, the rotational motion can be perceived as a 1D reciprocating motion of transitioning from to . From the perspective of circular movement, the angular velocity relative to t is taken into account. Using the relation , can be calculated.

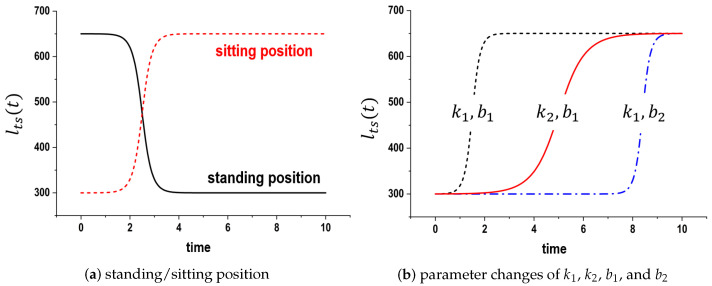

Next, the standing-up and sitting-down motions are analyzed to estimate at t. Our observations reveal that these motions gradually accelerate at the beginning and then gradually decelerate toward the end. Therefore, the value of the fan-shaped motion can be represented as an exponential function. The approximation of this exponential motion is related to the use of the well-known Gudermannian function [38]. Using this function, the rotation is transformed into linear motion as follows:

| (1) |

where and are positive constants, representing the motion speed and starting time parameters, respectively. Based on this equation, Figure 7a shows the trajectories represented by . Individual motions are plotted according to the sign of . The slopes and starting times are modified by adjusting and as shown in Figure 7b.
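To give a feel for the kind of smooth, S-shaped profile that a Gudermannian-based model produces, the following sketch evaluates one possible normalization. It is not Equation (1): the function `distance_trajectory`, the scaling to the interval between two distances, and the parameter names `speed` and `shift` are hypothetical choices for illustration, loosely mirroring the roles of the two positive constants described above.

```python
import math

def gudermannian(x):
    """Gudermannian function gd(x) = 2 * atan(tanh(x / 2)), range (-pi/2, pi/2)."""
    return 2.0 * math.atan(math.tanh(0.5 * x))

def distance_trajectory(t, d_start, d_end, speed, shift):
    """Illustrative S-shaped transition from d_start to d_end.

    Assumed form (not the paper's Equation (1)): the Gudermannian output
    is rescaled from (-pi/2, pi/2) to (0, 1) and used to blend the two
    distances. `speed` steepens the curve; `shift` moves it along time.
    """
    s = gudermannian(speed * (t - shift)) / math.pi + 0.5
    return d_start + (d_end - d_start) * s

# Sample a hypothetical transition between 400 mm and 900 mm.
samples = [distance_trajectory(t, 400.0, 900.0, 2.0, 5.0) for t in range(0, 11)]
```

Swapping `d_start` and `d_end` reverses the direction of the transition, and increasing `speed` shortens the time spent between the two plateaus.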

Figure 7.

Computational results for trajectories in the distance estimation module when and .

3.4. Joint Angle Estimation Module

To compute , , and , as detailed in Section 3.2, the joint angle estimation module performs two functions: point cloud generation and joint angle identification. The computation process of this module is as follows.

As illustrated in Figure 4b, the point cloud generation function produces a set of points that represent the body surface. At t, is calculated using and . Once , , ⋯, , ⋯, are calculated, the points are analyzed to identify the valid range that represents the body surface. Starting from the point , which corresponds to the direction of S, the coordinate of each point is checked to see if it exceeds the value of . If the ordinate is greater, then that coordinate is considered to be the front of the toes. Specifically, this point is designated as . After establishing , is positioned at a predetermined distance along . Subsequently, the distance between and in the direction of of S is calculated from to . This calculation routine verifies whether exceeds the assigned length , which serves as our empirical value. If is greater than , then points following the ()th are disregarded as part of the body surface. In this context, the mth point is defined as . This function outputs a set of points that represents the valid range from to . For convenience, this set of these points is represented by , and is empirically considered as .
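The gap check that bounds the valid range can be sketched as follows. This is a simplified illustration under stated assumptions: it uses the Euclidean distance between consecutive points, it omits the toe-detection step, and the names `trim_body_surface` and `max_gap` are hypothetical. The threshold value is a placeholder, not the empirical value used in the paper.

```python
import math

def trim_body_surface(points, max_gap):
    """Keep points up to the first gap between neighbors larger than max_gap.

    `points` is an ordered list of (x, y) tuples from one sweep.
    Assumption: a large jump between consecutive points means the beam
    has left the body surface, so everything after it is discarded.
    """
    if not points:
        return []
    valid = [points[0]]
    for prev, cur in zip(points, points[1:]):
        if math.dist(prev, cur) > max_gap:
            break  # first large gap: the rest is not body surface
        valid.append(cur)
    return valid

sweep = [(0, 0), (0, 10), (0, 20), (0, 500), (0, 510)]
body = trim_body_surface(sweep, max_gap=50)
```

In this example the jump from `(0, 20)` to `(0, 500)` exceeds the threshold, so only the first three points are kept.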

Next, the joint angle identification function aims to determine and . The underlying principle of this computation is to locate points with larger (=) values, which are established by neighboring points. Since human-body movements occur at joints, points with higher can be considered joints. Thus, the first step is to calculate formed by contiguous points , , and . The calculation of uses the inner product formula. For , ⋯, , ⋯, , points that correspond to substantial tilts are selected as joint candidates. Subsequently, and are identified by referencing , which corresponds to the largest .
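The idea of scoring each surface point by how sharply the contour turns there can be sketched with the inner product formula. This is a minimal sketch, not the authors' code: the helper names `bend_angle_deg` and `joint_candidates`, the choice to measure the turn between the incoming and outgoing segments, and the `top_k` parameter are assumptions for illustration.

```python
import math

def bend_angle_deg(p_prev, p, p_next):
    """Turning angle in degrees at p; 0 means the three points are collinear."""
    v1 = (p[0] - p_prev[0], p[1] - p_prev[1])
    v2 = (p_next[0] - p[0], p_next[1] - p[1])
    n1 = math.hypot(v1[0], v1[1])
    n2 = math.hypot(v2[0], v2[1])
    if n1 == 0 or n2 == 0:
        return 0.0  # degenerate segment: treat as no bend
    cos_a = (v1[0] * v2[0] + v1[1] * v2[1]) / (n1 * n2)
    cos_a = max(-1.0, min(1.0, cos_a))  # guard against rounding error
    return math.degrees(math.acos(cos_a))

def joint_candidates(points, top_k=2):
    """Return indices of the top_k interior points with the largest bend."""
    scored = [(bend_angle_deg(points[i - 1], points[i], points[i + 1]), i)
              for i in range(1, len(points) - 1)]
    scored.sort(reverse=True)
    return sorted(i for _, i in scored[:top_k])

# A contour with two right-angle bends, loosely resembling a seated profile.
contour = [(0, 0), (0, 1), (0, 2), (1, 2), (2, 2), (2, 3), (2, 4)]
candidates = joint_candidates(contour)
```

With real sensor data, neighboring points are noisy, so some smoothing or a minimum spacing between candidates would likely be needed before the scores are reliable.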

Then, , , and are calculated through distance computations involving , , , and . The values of and are sequentially assessed to determine the appropriateness of these estimations. If , , and fall outside of the effective range of the lengths, alternative point candidates are calculated and substituted with new joint points. This calculation process is reiterated to identify , , , and , allowing for the computation of , , and using the inner product formula. This applies to a standing posture or a similar position. If all differences in are negligible, it will be challenging to ascertain and . To mitigate this uncertainty, the length ratios of , , and are empirically established as 0.35, 0.35, and 0.3, respectively, relative to . These values are derived from research findings pertaining to Japanese body size [39].

3.5. SPD Module

The SPD module is illustrated using Figure 1 and Figure 2. The motions of standing up and sitting down are broadly categorized into two states: dynamic and static. The dynamic state includes the actions involved in transitioning to a standing or sitting position. First, the dynamic state of the standing-up motion is split into two phases: the forward flexion phase and the upward movement with the body extension phase. For ease of reference, these phases are labeled standing phase I (-I) and standing phase II (-II), respectively (Figure 1). Next, the dynamic state of the sitting-down motion is further divided into two phases, specifically, the downward movement associated with the sitting phase and the back extension phase, referred to as sitting phase I (-I) and sitting phase II (-II), respectively (see Figure 2). In contrast, the static state, encompassing both the standing and sitting positions, exhibits no variations in , , and . Since these positions can also be viewed as distinct phases in the context of continuous movements, the standing position is designated as standing phase III (-III), while the sitting position is denoted as sitting phase III (-III).

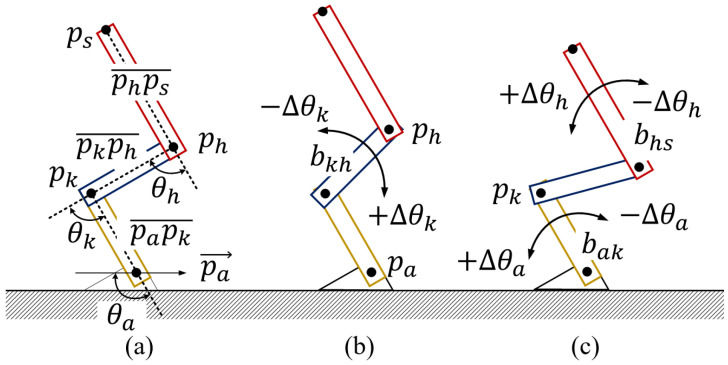

Here, we explain the relationships between the postures and the angles , , and . The changes in these angles between time points and t are denoted as , , and , respectively. As shown in Figure 8, when is positive, bends forward while the knee is pushed outward; conversely, when is negative, extends. Furthermore, if , both and at bend, causing to move backward. In contrast, when , is lifted forward owing to an extension movement. Additionally, when is positive, and at bend forward, whereas when is negative, and at extend.

Figure 8.

Pose explanation based on angle variations , , and of individual joint angles , , and ((a) definitions of , , and , (b) variations, (c) and variations).

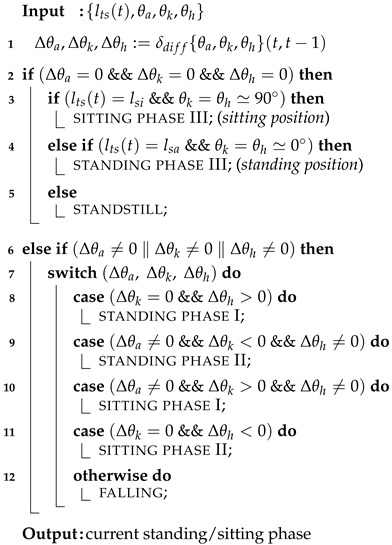

Considering these conditions and assumptions, we propose a phase determination algorithm (Algorithm 1) for the SPD module. The inputs for Algorithm 1 include from the distance estimation module and , , and from the joint angle estimation module. The phase determination computation uses these inputs, producing one of six possible phases at t.

| Algorithm 1: Standing/sitting phase determination. |

|

The details of the computation are as follows. Figure 1a,d and Figure 2a,d illustrate the static state, where , , and are all 0 (indicating that , , and are stationary). As noted in Section 1, an individual may pause while either standing up or sitting down. To avoid incorrect phase determination, is used to verify if the current phase is -III or -III. When and , this indicates a standing position as shown in Figure 1d and Figure 2a. Conversely, when and , it indicates a sitting position as depicted in Figure 1a and Figure 2d.
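The static-state check described above can be sketched as follows. This is only an illustration of the logic, not Algorithm 1 itself: the function name `static_phase`, the reference distances `d_stand` and `d_sit`, and the tolerances `dist_tol` and `angle_tol` are hypothetical, introduced because real measurements are never exactly zero or exactly equal to a reference value.

```python
def static_phase(d, d_stand, d_sit, dtheta, dist_tol=20.0, angle_tol=1.0):
    """Classify a static posture, or return None if it cannot be confirmed.

    d        : current estimated distance
    d_stand  : reference distance for the standing position (assumed known)
    d_sit    : reference distance for the sitting position (assumed known)
    dtheta   : iterable of the three joint-angle changes since the last sample
    Tolerances are illustrative placeholders, not values from the paper.
    """
    if any(abs(a) > angle_tol for a in dtheta):
        return None  # at least one joint is moving: dynamic state
    if abs(d - d_stand) <= dist_tol:
        return "standing"
    if abs(d - d_sit) <= dist_tol:
        return "sitting"
    # Joints are still, but the distance matches neither reference:
    # the person has paused partway through a transition.
    return None

result = static_phase(900.0, 900.0, 400.0, (0.0, 0.0, 0.0))
```

The last branch reflects why the distance is consulted in addition to the angle changes: a pause in the middle of a transition also produces zero angle changes, and the distance is what separates it from a completed standing or sitting position.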

Next, each phase is identified based on the variations in , , and . As depicted in Figure 1b, in -I, the body bends forward while the knee remains fixed. This phase is recognized when and . -II, shown in Figure 1c, commences when the buttocks leave the stool’s surface and concludes just before the individual reaches a standing position. During this phase, the center of gravity of the body rises, and is extended. -II is identified when , , and . As seen in Figure 2b, -I begins at the standing position and ends when the buttocks reconnect with the stool surface. During this phase, the body posture shifts downward through the bending of joint angles. Consequently, -I is identified when , . As illustrated in Figure 2c, -II encompasses the motion process until a seated position is achieved after the buttocks make contact with the seating surface. In this phase, the bent body rises. If and , then -II is determined. Finally, for any posture not included in the six previously mentioned processes, it is assumed that the individual is in a state of falling.

4. Evaluation Results and Discussion

4.1. Evaluation Direction and Experimental Settings

Five types of experiments on the standing-up and sitting-down motions were performed with two groups of voluntary participants, namely, laboratory colleagues and anonymous individuals (including elderly people), to verify the effectiveness of the proposed representation scheme. Specifically, we examined the characteristics of the scheme using a proximity sensor and validated its performance by comparison with other measurement sensors. Before performing the experiments, we explained the objectives of this study, along with the experimental processes and settings, to the participants. Subsequently, we obtained written informed consent from the participants for their participation and for any accompanying images. The experiments were approved by the ethics committee of the University of Miyazaki (approval No. 2021-002), and all experiments were performed in accordance with the protocol established by that committee.

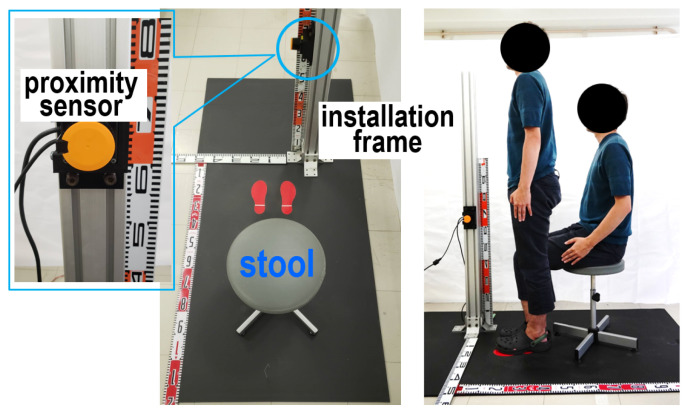

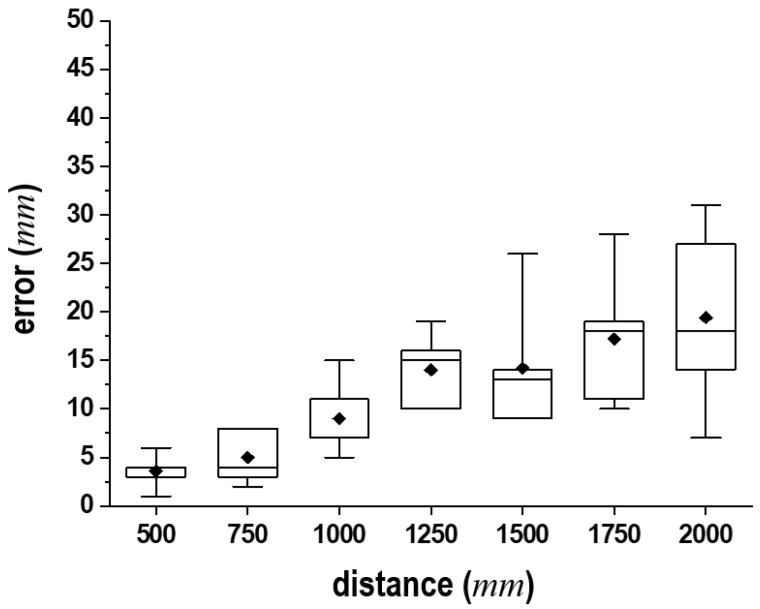

As illustrated in Figure 9, the experimental setup comprised a height-adjustable stool capable of adjusting , a UST-10LX proximity sensor (Hokuyo Automatic Co., Ltd., Tokyo, Japan), and its supporting frame. Figure 10 shows the statistical analysis results from 100 trials, measuring distances at 250 mm intervals in a range of 500 mm to 2000 mm. Despite a slight decrease in the measurement accuracy, the proximity sensor demonstrated satisfactory performance for practical experimental applications. Subsequently, was adjusted to ensure that the subjects’ heels naturally made contact with the ground when seated. The subjects were instructed to wear lightweight clothing or to adjust their shirts and pants to minimize the distance from S to their bodies. The surfaces were measured. During these experiments, participants assumed the following postures: they were instructed to either cross their arms in front of their chest or let their arms rest by their sides. At times, the positioning of the hands interfered with the distances to the body surface, leading to inaccuracies in the distance measurements. These complications made it challenging to accurately detect the joint locations, resulting in some deviated data. Additionally, we asked participants to perform these motions slowly according to our command signals for standing up and sitting down to avoid causing them any strain. Each individual’s body was oriented toward the installation frame of S to facilitate distance measurements along the sagittal plane.

Figure 9.

Experimental setting using proximity sensor (left) and experimental scene (right).

Figure 10.

Experimental results for distance measurements at 250 mm intervals using the UST-10LX proximity sensor.

An FDR-AX700 digital 4K video camera recorder (SONY Corporation, Tokyo, Japan) and a Vantage V8 motion capture device (Vicon) (Inter Reha Co., Ltd., Tokyo, Japan) were used to evaluate the results of the proposed scheme. The standing-up and sitting-down motions were recorded on the plane using the video camera, and the recordings were examined through video analysis for comparison. Motion capture was also performed to assess the results of the proposed scheme further. Furthermore, an EAS linear actuator (Orientalmotor Co., Ltd., Tokyo, Japan), simulating the standing-up or sitting-down motion, was used as an index for evaluating the phase determination.

4.2. Experimental Results in Laboratory Environment

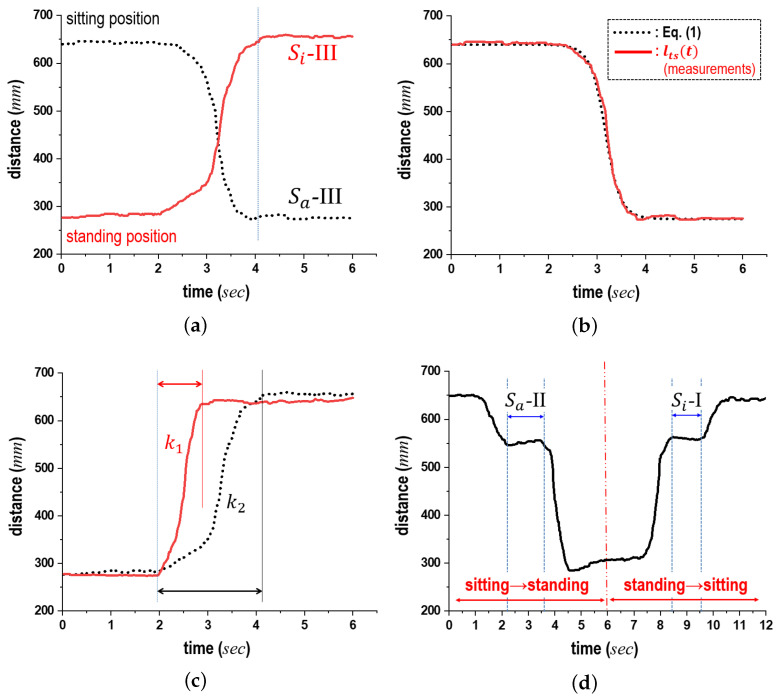

The initial experiments were performed to validate the functionality of the distance estimation module, with the results presented in Figure 11. In this figure, the horizontal axis represents time (in seconds), and the vertical axis represents distance (in millimeters). The trajectories depicting the average values, measured over 10 trials while the subjects were performing standing-up and sitting-down motions, are shown in Figure 11a. The black dotted line represents the trajectory for the standing-up motion, while the red solid line indicates the trajectory for the sitting-down motion. These results confirmed that obtained during these motions was similar to the trajectories given by Equation (1) (Figure 7a). Therefore, this module could adequately determine the standing and sitting positions and compute according to the standing-up and sitting-down motions.

Figure 11.

Experimental results obtained using the distance estimation module. (a) Trajectories of when standing up and sitting down; (b) Comparative results between Equation (1) and (measurements); (c) Comparisons according to sitting speeds and ; (d) Trajectories of when pausing in -II and -I.

In Figure 11b, the black dotted line represents Equation (1), while the red bold line corresponds to the measured . As illustrated in Figure 7, Equation (1) and show a relatively close resemblance. Figure 11c shows that the speed differences during the sitting-down motion were evident between 2 and 4 s after the start. The black dotted line indicates the results of slow sitting-down motions, whereas the red solid line represents the results of normal sitting-down motions. As expected, a shorter motion time led to a steeper incline in relation to the change in distance. Whether standing up or sitting down quickly or slowly, variations in completion time are attributed to differences in motion speeds. It was concluded that the distance estimation module, by adjusting in Equation (1), is capable of monitoring both standing-up and sitting-down motions.

Figure 11d shows the trajectories of based on the following motion patterns. During the standing-up process, each subject was instructed to stop moving. After approximately 1 s, they were prompted to resume standing up. Subsequently, participants were asked to sit down from the standing position. In the middle of these movements, subjects were again instructed to stop. After approximately 1 s, they continued the downward motion. During these pauses, the module mistakenly classified the stop as a single phase, assigning the pausing commands to -II and -I. As detailed in Section 3.3, Equation (1) only identifies the static phase based on . While elderly individuals may pause during transitions, these transitional states should be accurately represented.

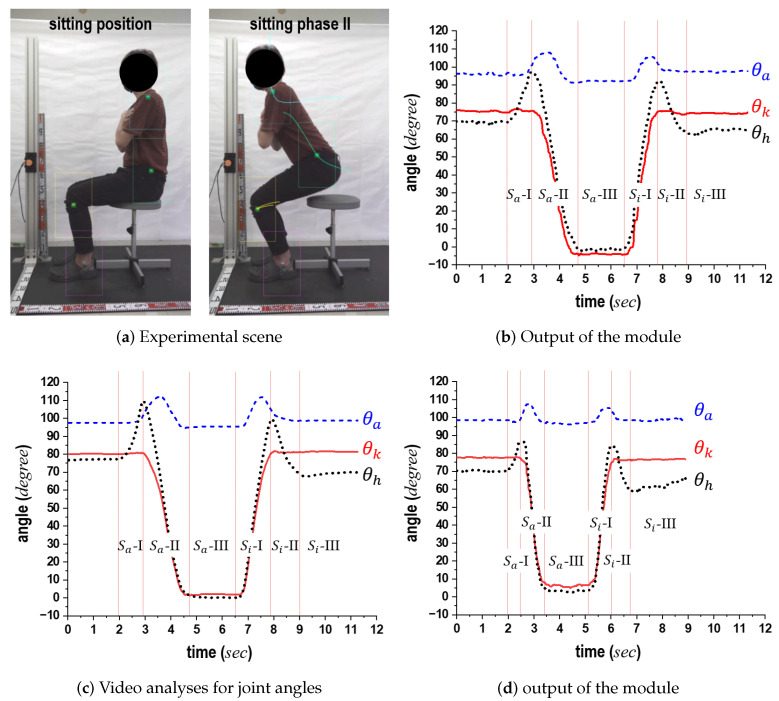

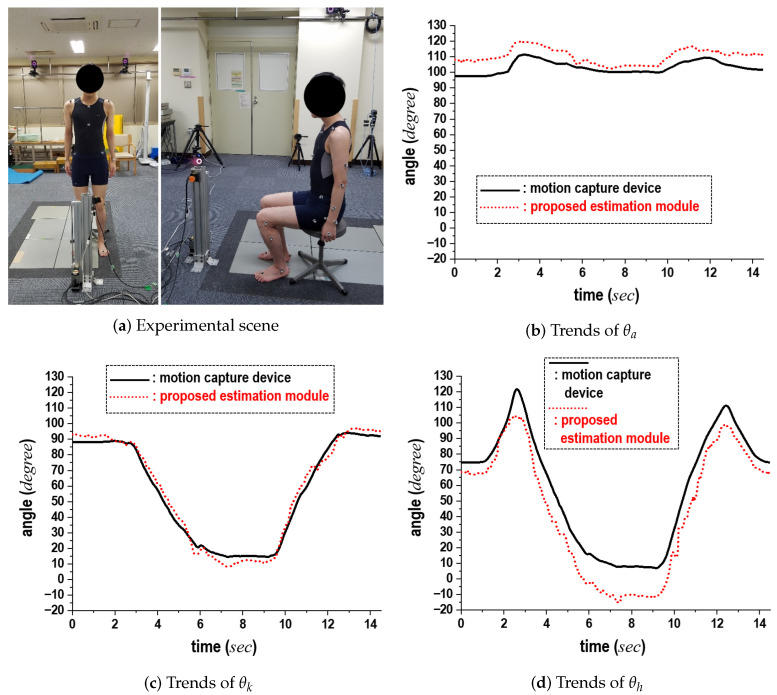

Second, to assess the effectiveness of the joint angle estimation module, we compared the module’s outputs with the image analysis results derived from video clips. Figure 12a shows the experimental setup. Markers were affixed to the ankle, knee, and hip of each participant. Their standing-up and sitting-down motions were simultaneously recorded by a video camera, allowing for the computation of , , and through the module. The trajectories of the three markers were extracted from the video clip.

Figure 12.

Computation and video analysis results for , , and are provided to evaluate the joint angle estimation module, with cases (b,c) representing trajectories obtained by the joint-angle estimation module and those obtained from the video clips, respectively, and with cases (b,d) representing the results for normal motions and swifter motions, respectively.

Figure 12b shows the , , and trajectories calculated by the joint angle estimation module. Figure 12c presents the , , and trajectories extracted from the video clips recorded during the standing-up and sitting-down motions depicted in Figure 12b. In these figures, the horizontal axis represents time (in seconds), while the vertical axis indicates the angle (in degrees). The red vertical lines denote the boundaries of individual phases. The transition between the preparation phase and -I (or -I) corresponds to the moment when command signals were issued. Other boundary lines were marked based on the sitting position on the stool. Overall, the trajectories of , , and exhibited similar trends. Despite the small differences in the , , and values obtained from the module and those from the video clips, our comparisons demonstrated that the proposed module could calculate the individual angles based on only as explained in Section 3.2.

In a different experimental setting, unlike the scenarios depicted in Figure 12b,c, we instructed the subject to perform standing-up and sitting-down motions at a faster pace. Figure 12d shows the , , and trajectories for these motions. The results indicate that the overall characteristics of these trajectories displayed similar patterns. Owing to the increased speed of the actions in these experiments, the maximum observed was lower compared to those in Figure 12b. Notably, distinct speeds were reflected in during the standing-up and sitting-down motions. As a result, although the motions were performed 1.3 times faster when comparing the motion duration in Figure 12b with those in Figure 12d, the joint angle estimation module effectively monitored and calculated the variations in , , and .

The third experiment was performed to assess the feasibility of the joint angle estimation module by comparing its measurements to those from the motion capture device. Figure 13a shows the experimental setup with a young subject, while the results in Figure 13b–d show the , , and trajectories, respectively. In these graphs, the black solid line represents the motion capture results, and the red dotted line indicates the computation results from the proposed module (obtained using the proximity sensor). The horizontal and vertical axes represent time (in seconds) and angle (in degrees), respectively. The two sets of results showed a close approximation regarding both the time-change zones and angle magnitudes. Fluctuations in were noticeable, even though the trends of the and trajectories were comparable. Additionally, when compared to the results from the motion capture device, displayed the largest error, with an average deviation of up to . This increased error in occurred since, when flexed, the waist position was relatively distant from S, located in the front direction, making its measurement more challenging compared to and . Despite this slight difference, we confirmed that the joint angle estimation module is capable of producing patterns similar to those measured by the motion capture.
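A comparison of this kind can be quantified with a simple deviation measure. The sketch below computes the average and the largest absolute difference between two angle trajectories. It assumes that both trajectories are sampled at the same time instants, and the function name `angle_deviation` is hypothetical. The paper does not state how its deviation was computed, so this is only one plausible choice.

```python
def angle_deviation(estimated, reference):
    """Return (mean, max) absolute deviation in degrees between two trajectories.

    estimated : joint angles from the estimation module (degrees)
    reference : joint angles from the reference device (degrees)
    Both sequences are assumed to share the same sampling instants.
    """
    if len(estimated) != len(reference) or len(estimated) == 0:
        raise ValueError("trajectories must be non-empty and of equal length")
    diffs = [abs(e - r) for e, r in zip(estimated, reference)]
    return sum(diffs) / len(diffs), max(diffs)
```

If the two devices sample at different rates, the trajectories would first have to be resampled onto a common time base before calling this function.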

Figure 13.

Comparison results between the joint-angle estimation module and the motion capture device.

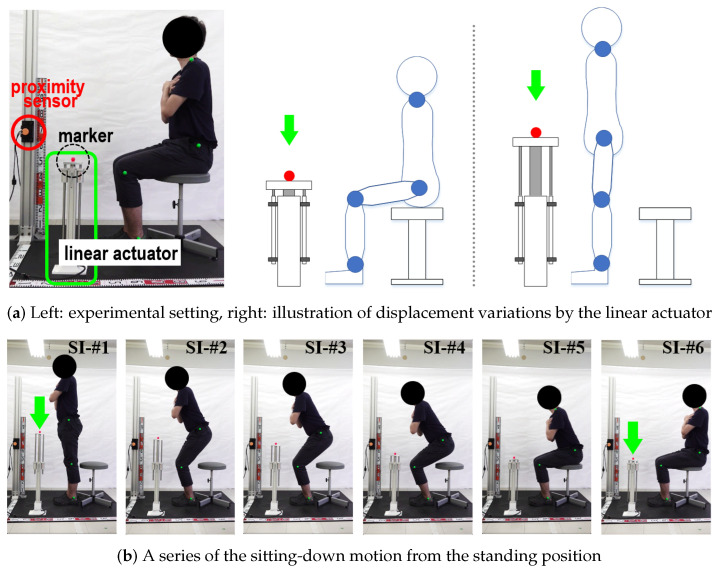

Fourth, as detailed in Section 3.5, the SPD module (Algorithm 1) produced outputs for each motion phase at t. The effectiveness of this module was evaluated through experiments performed with a linear actuator. Figure 14a shows the experimental setup and provides a conceptual depiction of the experiments involving the linear actuator. This actuator was governed by the motions of each subject. During the standing-up (sitting-down) motions, the transitional displacements controlled by the linear actuator were specified as changes in the projected onto exclusively at -II (and -I), meaning the displacements of the subject’s in the direction. In summary, the goal of this experiment was to assess the accuracy of the , , and values generated by the joint angle estimation module and to evaluate the reliability of the SPD module’s output. Under identical experimental conditions, as shown in Figure 12, we recorded the transitional movements using a camera, and the video clips were analyzed to simultaneously verify the subjects’ motions alongside those of the actuator. Figure 14b shows photographs of a subject executing the sitting-down motion from a standing position.

Figure 14.

Experimental setup using linear actuator to evaluate the effectiveness of the SPD module.

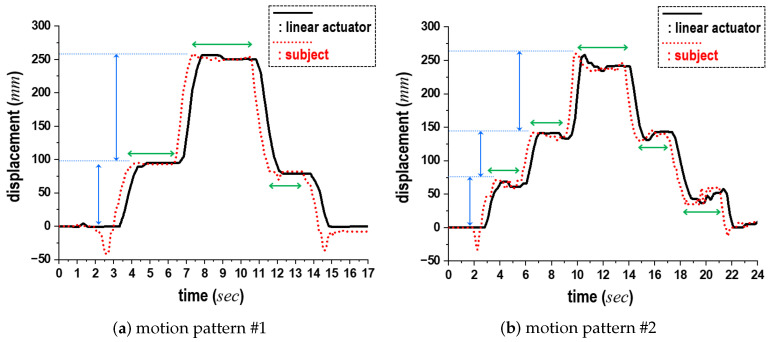

As comparative results, Figure 15 shows the variations in the projected onto , alongside the corresponding displacements of the linear actuator in the same direction when -II (and -I) are applied. In this figure, the black solid lines represent the displacements of the linear actuator, while the red dotted lines denote the of the subject. The horizontal and vertical axes depict time (in seconds) and displacement (in millimeters), respectively. Additionally, the blue and green bidirectional arrows indicate the range of displacement observed during continuous movements and the pause intervals in these motions, respectively. (See video-S1: Experimental-scene-1.mp4 submitted as Supplementary Materials).

Figure 15.

Comparison of displacements of linear actuator, shown in Figure 14, according to two patterns of subject movements.

As illustrated in Figure 15a, each subject paused once during both the standing-up and sitting-down motions (motion pattern ). Despite these pauses, the linear actuator rose and subsequently returned to its initial position. In contrast, during the second trial shown in Figure 15b, we instructed each subject to pause twice during the standing-up and sitting-down motions (motion pattern ). This required the subjects to maintain unfamiliar pause postures as directed, leading to body swaying. Consequently, minor fluctuations were noted during the preparation phase, as the subjects readied themselves for their next movements or during the pauses. Upon analysis of the data, the average displacement of these fluctuations was recorded at 3 cm before the initiation of the motions and 5 cm during the paused postures. We initially attributed the delay in the actuator’s motions, which was approximately 0.3 s, to the acceleration and deceleration processes. From a hardware perspective, we observed that the standing-up and sitting-down motions coincided with similar displacements of the actuator, which confirmed the accuracy of the SPD module. Finally, Figure 16 presents the experimental results from the feasibility test of one target application, wherein the proposed scheme monitors the standing-up and sitting-down actions of a passenger in a bus. According to these motions, the marker of the linear actuator ascends and descends, respectively.
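A delay such as the 0.3 s reported above could be estimated from two recorded displacement series by searching for the time shift that best aligns them. The following is a minimal sketch under stated assumptions: both series are uniformly sampled at the same rate, the actuator only lags (never leads) the subject, and the function name `estimate_delay` and its parameters are hypothetical. It is not the procedure used in the paper.

```python
def estimate_delay(subject, actuator, sample_period, max_shift):
    """Estimate how many seconds the actuator lags behind the subject.

    subject       : subject displacement samples (mm)
    actuator      : actuator displacement samples (mm), same sampling rate
    sample_period : time between samples in seconds
    max_shift     : largest lag to test, in samples (must be < len(actuator))
    """
    if max_shift >= len(actuator):
        raise ValueError("max_shift must be smaller than the series length")
    best_shift, best_cost = 0, float("inf")
    for shift in range(max_shift + 1):
        # Compare subject[i] with actuator[i + shift] over the overlap.
        pairs = list(zip(subject, actuator[shift:]))
        cost = sum((s - a) ** 2 for s, a in pairs) / len(pairs)
        if cost < best_cost:
            best_shift, best_cost = shift, cost
    return best_shift * sample_period
```

The mean squared difference is used here instead of a correlation coefficient because the two displacements are expected to have similar magnitudes. The resolution of the estimate is limited to one sample period.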

Figure 16.

Experimental scene for our application example in a bus.

4.3. Experimental Results for Elderly and Voluntary Subjects

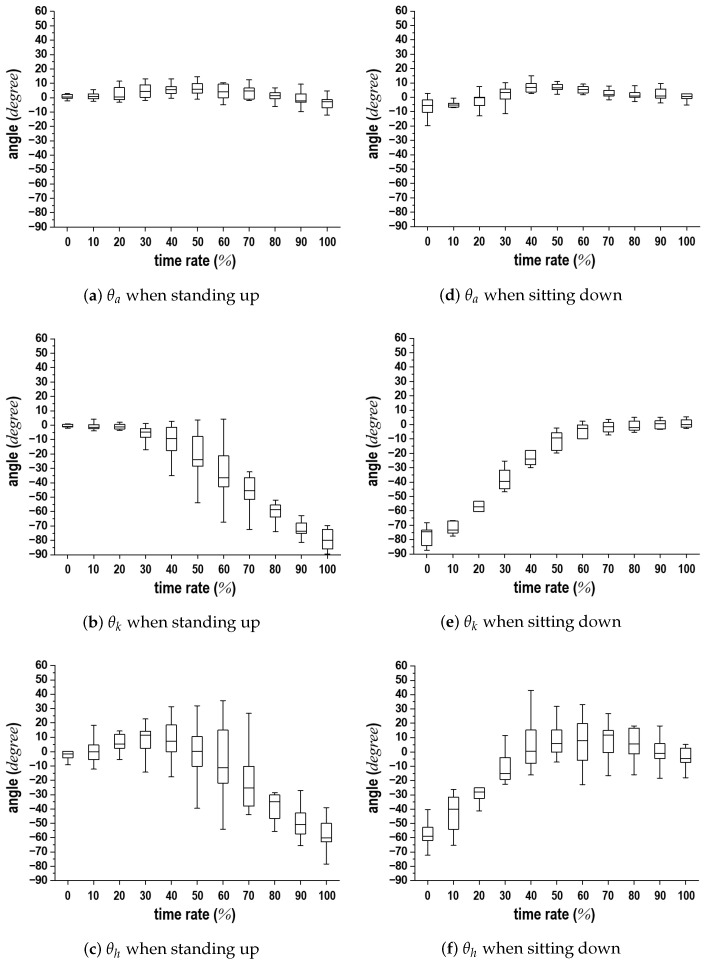

We performed experiments to assess the usability of the proposed representation scheme for anonymous subjects with individual differences. The participants included 58 male and female volunteers: 4 in their 20s, 5 in their 30s, 8 in their 40s, 11 in their 50s, 11 in their 60s, 14 in their 70s, and 5 in their 80s. Approximately 33% of the participants were elderly (aged 70 and above). As illustrated in Figure 14, comparative experiments were performed at a nursing home and during a regional welfare event, with the results from the scheme being thoroughly analyzed. (See video-S2: Experimental-scene-2.mp4 and video-S3: Experimental-scene-3.mp4 submitted as Supplementary Materials).
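The stated proportion of elderly participants follows directly from the age distribution, as the short check below shows. The counts are taken from the paragraph above; the dictionary keys are only labels.

```python
# Number of participants per age decade, as reported in the text.
age_groups = {"20s": 4, "30s": 5, "40s": 8, "50s": 11, "60s": 11, "70s": 14, "80s": 5}

total = sum(age_groups.values())                    # all participants
elderly = age_groups["70s"] + age_groups["80s"]     # aged 70 and above
elderly_share = 100 * elderly / total               # percentage of elderly participants

print(total, elderly, round(elderly_share))  # prints: 58 19 33
```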

The statistical data from the experiments are shown in Figure 17. In this figure, the boxes represent the confidence intervals of 25–75%, the horizontal bars in the boxes indicate the mean values, and the error bars denote the standard deviations. Figure 17a,d, Figure 17b,e, and Figure 17c,f depict the statistical variations in , , and over time taken for the standing-up and sitting-down motions. Each subject exhibited unique motion patterns and speeds during these movements, making it challenging to analyze the data of all participants without an appropriate standard. Consequently, we developed a comparison standard based on individual variations. The joint angle in the sitting position was designated as the initial value of ; thus, the comparison standard was defined as the change in angle over the duration needed to complete the standing-up or sitting-down motion.
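One reading of this comparison standard is sketched below: each trial is rescaled so that time runs from 0% to 100% of the motion duration, and each angle is expressed as a change from the angle in the sitting position. The function name `normalize_trial` and its arguments are hypothetical, and the exact normalization used for Figure 17 may differ.

```python
def normalize_trial(times, angles, sitting_angle):
    """Express one trial as (time rate in %, angle change in degrees) pairs.

    times         : sample times in seconds, increasing, for one motion
    angles        : joint angles in degrees at those times
    sitting_angle : joint angle in the sitting position, used as the reference
    """
    duration = times[-1] - times[0]
    if duration <= 0:
        raise ValueError("the trial must span a positive duration")
    return [
        (100.0 * (t - times[0]) / duration, a - sitting_angle)
        for t, a in zip(times, angles)
    ]
```

For a standing-up trial, `sitting_angle` would be the first sample; for a sitting-down trial, it would be the last. After this step, trials of different speeds share the same horizontal axis, so per-subject curves can be summarized at each time rate.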

Figure 17.

Statistical trends of experimental results for , , and over time rate (%) during standing-up and sitting-down motions of 58 subjects.

The trends observed in Figure 17 were consistent with those in Figure 12 and Figure 13, despite the variations in motion speeds attributed to individual differences. The median values indicate that the displacement ranges for each joint during these motions were between and relative to the initial , between and relative to the initial , and between and relative to the initial . As demonstrated in the submitted video clip, individual differences in the standing-up and sitting-down motions were influenced by personal habits, muscle strength, and body types. It is believed that this contributed to the increase in the error bar at and . These ranges can serve as an index for the variability in the joint angles. As a result, different motion trends can be represented despite individual differences leading to variations in motion speeds. Furthermore, the proposed distance-based representation scheme successfully estimated , , and , confirming that the six phases could be identified even among subjects with differing motion speeds.

4.4. Discussion

The findings from the experiments mentioned above are summarized as follows. First, the measurement and the estimation of , , and using the geometric model showed trends in their angle transitions that closely aligned with the joint angles obtained through motion capture. This confirms the efficacy of the angle estimation method. Second, to assess the effectiveness of the SPD module, we validated the phase determination and the displacements of derived from , , and during standing-up and sitting-down motions through comparative experiments involving a linear actuator and multiple subjects. Third, we performed experiments on 58 voluntary subjects and found that the proposed scheme could be applied to cases involving individual differences during the standing-up and sitting-down motions.

These analyses suggest that this study is valuable for interface development. However, dispersions were observed in the transition processes for , , and due to several factors. The proximity sensor measurements relied on the distances from the sensor to the body surface, while the video camera and motion capture device tracked markers attached to the body. Additionally, the type of clothing worn also contributed to these variations. The upper garments worn by the subjects were specifically designed for the experiments; however, the lower garments were not designated for this purpose. Some hems were wide and shifted during movement. For the postures in these experiments, subjects were asked to either cross their arms in front of their chests or place their arms at their sides. Given the intended applications, it is undesirable to impose such restrictions on the positions and locations of the hands. One of our ongoing projects focuses on the accuracy of the measurement setups in relation to these factors. Additionally, we are developing an algorithm for situations where only a portion of the body is visible owing to obstacles that restrict the sensor’s line of sight.

This study will have a considerable impact on the field of assistive systems for lower limbs because the proposed scheme prioritizes user privacy and can seamlessly integrate into daily routines. However, there is still potential for improvement. A measurement method capable of working with thick clothing, skirts, or textured fabrics will be necessary to implement this representation scheme in everyday life. Furthermore, the proposed measurement method uses a proximity sensor to scan the body surface from the shoulder to the toes on the sagittal plane. As such, during the experiments, subjects were instructed to stand in easily scannable positions. Taking these factors into account, future work can further refine the measurement method, including sensor installation, to develop an interface system based on this scheme.

5. Conclusions

An interface scheme that uses relative distance measurements to represent the motions of standing up and sitting down is introduced. This scheme encompasses the measurement of relative distance from a proximity sensor to the body surface, data reduction to a 2D coordinate plane, and the determination of motion phases based on variations in joint angles. Additionally, the transition process of the joint locations is captured by modeling the human body as a geometric linkage structure. Using a proximity sensor, the recognition of standing-up and sitting-down motions requires less data compared to other measurement methods, such as cameras. The sensors do not need to be affixed to the body for measurement purposes. Furthermore, individual privacy is protected since the scheme does not require extensive data that could identify individuals. Comparative experiments were performed with subjects of varying ages to showcase the effectiveness and performance of the proposed approach. While minor fluctuations in the experimental results were noted owing to individual variations among participants, the proposed scheme proved to be satisfactorily effective. In conclusion, the scheme emphasizes the following key aspects: noncontact handling in the post-COVID-19 era and the recognition of the discontinuous transition process between standing and sitting positions, and vice versa. The proposed scheme is applicable to the interfaces of welfare and measurement devices in medical and welfare facilities, among others. Our future research will focus on improving the measurement accuracy of the developed method and exploring its applications in public spaces.

Acknowledgments

Special thanks to Naohisa Togami for data analysis and technical assistance. His extraordinary contributions have greatly improved the quality of this paper.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| 1D | one-dimensional |
| 2D | two-dimensional |
| SPD | standing/sitting phase determination |

Supplementary Materials

The following supporting information can be downloaded at: https://www.mdpi.com/article/10.3390/s24216967/s1. The first video clip shows the experimental results obtained with the linear actuator on one laboratory colleague to examine the effectiveness of the SPD module as shown in Figure 14 and Figure 15. The second video clip shows the experimental scene in the nursing home. The results are shown in Figure 17. The third video clip presents the experimental scene with the voluntary subjects at the regional event. The related results are shown in Figure 17.

Author Contributions

Conceptualization, G.L.; methodology, G.L. and Y.H.; software, G.L., Y.H. and T.W.; validation, G.L., Y.H., T.W. and C.L.; formal analysis, G.L. and Y.H.; investigation, G.L., Y.H. and T.W.; resources, G.L.; data curation, G.L. and Y.H.; writing—original draft preparation, G.L.; writing—review and editing, Y.H., T.W. and C.L.; visualization, G.L.; supervision, C.L.; project administration, G.L.; funding acquisition, G.L. All authors have read and agreed to the published version of the manuscript.

Institutional Review Board Statement

The permission of the ethics committee of the University of Miyazaki was obtained for the experiments (Approval No. 2021-002).

Informed Consent Statement

We obtained written informed consent from the voluntary subjects for their participation and for any accompanying images.

Data Availability Statement

Data are contained within the article.

Conflicts of Interest

The authors declare no conflict of interest.

Funding Statement

This research received no external funding.

References

- 1. Lee K., Ha S., Lee K., Hong S., Shin H., Lee G. Development of a Sit-to-Stand Assistive Device with Pressure Sensor for Elderly and Disabled: A Feasibility Test. Phys. Eng. Sci. Med. 2021;44:677–682. doi: 10.1007/s13246-021-01015-0.

- 2. Lee G., Togami N., Hayakawa Y., Tamura H. Two functional wheel mechanism capable of step ascending for personal mobility aids. Electronics. 2023;12:1399. doi: 10.3390/electronics12061399.

- 3. Lee S.-J., Lee J.H. Safe Patient Handling Behaviors and Lift Use among Hospital Nurses: A Cross-Sectional Study. Int. J. Nurs. Stud. 2017;74:53–60. doi: 10.1016/j.ijnurstu.2017.06.002.

- 4. Asker A., Assal S.F.M., Ding M., Takamatsu J., Ogasawara T., Mohamed A.M. Modeling of Natural Sit-To-Stand Movement Based on Minimum Jerk Criterion for Natural-like Assistance and Rehabilitation. Adv. Robot. 2017;31:901–917. doi: 10.1080/01691864.2017.1372214.

- 5. Thakur D., Biswas S. Online Change Point Detection in Application with Transition-Aware Activity Recognition. IEEE Trans. Hum. Mach. Syst. 2022;52:1176–1185. doi: 10.1109/THMS.2022.3185533.

- 6. Yahaya S.W., Lotfi A., Mahmud M., Adama D.A. A Centralised Cloud-Based Monitoring System for Older Adults in a Community; Proceedings of the 2021 IEEE International Conference on Systems, Man, and Cybernetics; Melbourne, Australia. 17–20 October 2021; pp. 2439–2443.

- 7. Schrader L., Toro A.V., Konietzny S., Rüping S., Schäpers B., Steinböck M., Krewer C., Müller F., Güttler J., Bock T. Advanced Sensing and Human Activity Recognition in Early Intervention and Rehabilitation of Elderly People. J. Popul. Ageing. 2020;13:139–165. doi: 10.1007/s12062-020-09260-z.

- 8. Yang N., An Q., Kogami H., Yamakawa H., Tamura Y., Takahashi K., Kinomoto M., Yamasaki H., Itkonen M., Shibata-Alnajjar F., et al. Temporal Features of Muscle Synergies in Sit-To-Stand Motion Reflect the Motor Impairment of Post-Stroke Patients. IEEE Trans. Neural Syst. Rehabil. Eng. 2019;27:2118–2127. doi: 10.1109/TNSRE.2019.2939193.

- 9. Subramani P., K S., B K.R., R S., D P.B. Prediction of Muscular Paralysis Disease Based on Hybrid Feature Extraction with Machine Learning Technique for COVID-19 and Post-COVID-19 Patients. Pers. Ubiquitous Comput. 2021;27:831–844. doi: 10.1007/s00779-021-01531-6.

- 10. Mitamura S., Nagamune K. A Development of Motion Measurement System of Lower Limb during Isotonic Contraction of Knee Extension Using MMT; Proceedings of the 2019 IEEE International Conference on Systems, Man and Cybernetics (SMC); Bari, Italy. 6–9 October 2019; pp. 1182–1186.

- 11. Bisio I., Garibotto C., Lavagetto F., Sciarrone A. When EHealth Meets IoT: A Smart Wireless System for Post-Stroke Home Rehabilitation. IEEE Wirel. Commun. 2019;26:24–29. doi: 10.1109/MWC.001.1900125.

- 12. Shukla B.K., Jain H., Vijay V., Yadav S.K., Mathur A., Hewson D.J. A Comparison of Four Approaches to Evaluate the Sit-To-Stand Movement. IEEE Trans. Neural Syst. Rehabil. Eng. 2020;28:1317–1324. doi: 10.1109/TNSRE.2020.2987357.

- 13. Sharma B., Pillai B.M., Suthakorn J. Physical Human-Robot Interaction (PHRI) through Admittance Control of Dynamic Movement Primitives in Sit-To-Stand Assistance Robot; Proceedings of the 2022 15th International Conference on Human System Interaction (HSI); Melbourne, Australia. 28–31 July 2022; pp. 1–6.

- 14. Takeda M., Sato K., Hirata Y., Katayama T., Mizuta Y., Koujina A. Standing, Walking, and Sitting Support Robot Based on User State Estimation Using a Small Number of Sensors. IEEE Access. 2021;9:152677–152687. doi: 10.1109/ACCESS.2021.3127275.

- 15. Greene B.R., Doheny E.P., McManus K., Caulfield B. Estimating Balance, Cognitive Function, and Falls Risk Using Wearable Sensors and the Sit-To-Stand Test. Wearable Technol. 2022;3:e9. doi: 10.1017/wtc.2022.6.

- 16. Billiaert K., Al-Yassary M., Antonarakis G.S., Kiliaridis S. Measuring the Difference in Natural Head Position between the Standing and Sitting Positions Using an Inertial Measurement Unit. J. Oral Rehabil. 2021;48:1144–1149. doi: 10.1111/joor.13233.

- 17. Milosevic B., Leardini A., Farella E. Kinect and Wearable Inertial Sensors for Motor Rehabilitation Programs at Home: State of the Art and an Experimental Comparison. Biomed. Eng. OnLine. 2020;19:25. doi: 10.1186/s12938-020-00762-7.

- 18. Takano W. Annotation Generation from IMU-Based Human Whole-Body Motions in Daily Life Behavior. IEEE Trans. Hum. Mach. Syst. 2020;50:13–21. doi: 10.1109/THMS.2019.2960630.

- 19. Bochicchio G., Ferrari L., Bottari A., Lucertini F., Scarton A., Pogliaghi S. Temporal, Kinematic and Kinetic Variables Derived from a Wearable 3D Inertial Sensor to Estimate Muscle Power during the 5 Sit to Stand Test in Older Individuals: A Validation Study. Sensors. 2023;23:4802. doi: 10.3390/s23104802.

- 20. Lindemann U., Krespach J., Daub U., Schneider M., Sczuka K.S., Klenk J. Effect of a Passive Exosuit on Sit-to-Stand Performance in Geriatric Patients Measured by Body-Worn Sensors—A Pilot Study. Sensors. 2023;23:1032. doi: 10.3390/s23021032.

- 21. Cola G., Avvenuti M., Piazza P., Vecchio A. Fall Detection Using a Head-Worn Barometer. In: Perego P., Andreoni G., Rizzo G., editors. Proceedings of the Wireless Mobile Communication and Healthcare, Lecture Notes of the Institute for Computer Sciences; Milan, Italy. 14–16 November 2016; Cham, Switzerland: Springer; 2017. pp. 217–224.

- 22. Masse F., Gonzenbach R., Paraschiv-Ionescu A., Luft A.R., Aminian K. Wearable Barometric Pressure Sensor to Improve Postural Transition Recognition of Mobility-Impaired Stroke Patients. IEEE Trans. Neural Syst. Rehabil. Eng. 2016;24:1210–1217. doi: 10.1109/TNSRE.2016.2532844.

- 23. Massé F., Bourke A.K., Chardonnens J., Paraschiv-Ionescu A., Aminian K. Suitability of Commercial Barometric Pressure Sensors to Distinguish Sitting and Standing Activities for Wearable Monitoring. Med. Eng. Phys. 2014;36:739–744. doi: 10.1016/j.medengphy.2014.01.001.

- 24. Zhang M., Liu D., Wang Q., Zhao B., Bai O., Sun J. Gait Pattern Recognition Based on Plantar Pressure Signals and Acceleration Signals. IEEE Trans. Instrum. Meas. 2022;71:4008415. doi: 10.1109/TIM.2022.3204088.

- 25. Tsai M.-C., Chu E.T.-H., Lee C.-R. An Automated Sitting Posture Recognition System Utilizing Pressure Sensors. Sensors. 2023;23:5894. doi: 10.3390/s23135894.

- 26. Zhang W., Liu Y., Chen C., Fan C. A Study on the Comfort Level of Standing Chairs Based on Pressure Sensors and sEMG. Appl. Sci. 2024;14:6009. doi: 10.3390/app14146009.

- 27. Paez-Granados D.F., Kadone H., Hassan M., Chen Y., Suzuki K. Personal Mobility with Synchronous Trunk–Knee Passive Exoskeleton: Optimizing Human–Robot Energy Transfer. IEEE/ASME Trans. Mechatronics. 2022;27:3613–3623. doi: 10.1109/TMECH.2021.3135453.

- 28. Hyodo K., Kanamori A., Kadone H., Kajiwara M., Okuno K., Kikuchi N., Yamazaki M. Evaluation of Sit-to-Stand Movement Focusing on Kinematics, Kinetics, and Muscle Activity after Modern Total Knee Arthroplasty. Appl. Sci. 2024;14:360. doi: 10.3390/app14010360.

- 29. Zhang W., Bai Z., Yan P., Liu H., Shao L. Recognition of Human Lower Limb Motion and Muscle Fatigue Status Using a Wearable FES-sEMG System. Sensors. 2024;24:2377. doi: 10.3390/s24072377.

- 30. Sun J., Wang Z., Yu H., Zhang S., Dong J., Gao P. Two-Stage Deep Regression Enhanced Depth Estimation from a Single RGB Image. IEEE Trans. Emerg. Top. Comput. 2020;10:719–727. doi: 10.1109/TETC.2020.3034559.

- 31. Kwon B., Huh J., Lee K., Lee S. Optimal Camera Point Selection toward the Most Preferable View of 3-D Human Pose. IEEE Trans. Syst. Man Cybern. Syst. 2022;52:533–553. doi: 10.1109/TSMC.2020.3004338.

- 32. Huynh-The T., Hua C.-H., Kim D.-S. Encoding Pose Features to Images with Data Augmentation for 3-D Action Recognition. IEEE Trans. Ind. Inform. 2020;16:3100–3111. doi: 10.1109/TII.2019.2910876.

- 33. Thompson L.A., Melendez R.A.R., Chen J. Investigating Biomechanical Postural Control Strategies in Healthy Aging Adults and Survivors of Stroke. Biomechanics. 2024;4:153–164. doi: 10.3390/biomechanics4010010.

- 34. Laschowski B., Razavian R.S., McPhee J. Simulation of Stand-To-Sit Biomechanics for Robotic Exoskeletons and Prostheses with Energy Regeneration. IEEE Trans. Med. Robot. Bionics. 2021;3:455–462. doi: 10.1109/TMRB.2021.3058323.

- 35. Wang H., Xu S., Fu J., Xu X., Wang Z., Na R.S. Sit-To-Stand (STS) Movement Analysis of the Center of Gravity for Human–Robot Interaction. Front. Neurorobotics. 2022;16:863722. doi: 10.3389/fnbot.2022.863722.

- 36. Job M., Battista S., Stanzani R., Signori A., Testa M. Quantitative Comparison of Human and Software Reliability in the Categorization of Sit-To-Stand Motion Pattern. IEEE Trans. Neural Syst. Rehabil. Eng. 2021;29:770–776. doi: 10.1109/TNSRE.2021.3073456.

- 37. Bochicchio G., Ferrari L., Bottari A., Lucertini F., Cavedon V., Milanese C., Pogliaghi S. Loaded 5 Sit-to-Stand Test to Determine the Force–Velocity Relationship in Older Adults: A Validation Study. Appl. Sci. 2023;13:7837. doi: 10.3390/app13137837.

- 38. Zwillinger D. CRC Standard Mathematical Tables and Formulae. 31st ed. Chapman & Hall/CRC; Boca Raton, FL, USA: 2002.

- 39. Kouchi M., Mochimaru M. Anthropometric data bases. J. Hum. Interface Soc. 2000;2:252–258. (In Japanese)
