Abstract.

Significance

Accurately distinguishing pathologic (e.g., tumor) from healthy brain tissue is a critical need in neurosurgery. However, conventional surgical adjuncts have significant limitations toward achieving this goal (e.g., image guidance based on pre-operative imaging can become inaccurate by up to 3 cm as surgery proceeds). Hyperspectral imaging (HSI) has emerged as a potentially powerful surgical adjunct to enable surgeons to accurately distinguish pathologic from normal tissues.

Aim

We review HSI techniques in neurosurgery; categorize, explain, and summarize their technical and clinical details; and present some promising directions for future work.

Approach

We performed a literature search on HSI methods in neurosurgery focusing on their hardware and implementation details; classification, estimation, and band selection methods; publicly available labeled and unlabeled data; image processing and augmented reality visualization systems; and clinical study conclusions.

Results

We present a detailed review of HSI results in neurosurgery with a discussion of over 25 imaging systems, 45 clinical studies, and 60 computational methods. We first provide a short overview of HSI and the main branches of neurosurgery. Then, we describe in detail the imaging systems, computational methods, and clinical results for HSI using reflectance or fluorescence. Clinical implementations of HSI yield promising results in estimating perfusion and mapping brain function, classifying tumors and healthy tissues (e.g., in fluorescence-guided tumor surgery, detecting infiltrating margins not visible with conventional systems), and detecting epileptogenic regions. Finally, we discuss the advantages and disadvantages of HSI approaches and interesting research directions as a means to encourage future development.

Conclusions

We describe a number of HSI applications across every major branch of neurosurgery. We believe these results demonstrate the potential of HSI as a powerful neurosurgical adjunct as more work continues to enable rapid acquisition with smaller footprints, greater spectral and spatial resolutions, and improved detection.

Keywords: hyperspectral imaging, fluorescence-guided surgery, neurosurgery, brain tumors

1. Introduction

Optical imaging approaches have transformed surgery via improved intraoperative detection of both normal and diseased tissues.1–5 Technologies that jointly leverage optics, computational methods, and visualization tools have facilitated this transformation, with several successful commercial technologies in areas such as surgical robotics6–8 and image-2,3,5 and fluorescence-guided9,10 surgery. Image-guided surgery allows for the clinical deployment of non-invasive, non-ionizing optical imaging systems that have a relatively compact footprint and allow for rapid acquisition; these systems in turn support intraoperative computer vision,11 tactile sensing,12 manipulation, and tracking algorithms.13

As an example, images acquired via a surgical endoscope and processed through computer vision pipelines14 have been used for post-surgical analysis of the surgical workflow,15,16 including recognizing surgical goals, predicting the current task being performed, segmenting and recognizing relevant landmarks during surgery, evaluating the difficulty of the surgical plan, and assessing surgeon skill.11 In addition, visual instrument detection and tracking methods for minimally invasive surgeries have been developed and validated on surgical videos.13 Deep learning methods have made possible autonomous, high-precision, and dexterous surgical instrument manipulation for surgery and remote telesurgery6,17–19 at precisions previously thought impractical.8 Recently developed image-guided surface sensing systems, such as the GelSight sensor,20 can jointly provide micron-scale topography (2.5-dimensional depth data) and tactile feedback more sensitive than human skin.21 The demonstrated effectiveness of these approaches suggests promising prospects for intraoperative applications.

A promising approach in image-guided surgery is hyperspectral imaging (HSI),22–25 which captures wide-field, spectrally resolved images of the surgical field. HSI systems have been deployed successfully for applications in remote sensing, astronomy, agriculture, and surveillance.26–28 Hyperspectral data can be interpreted as an “optical fingerprint” of the material being analyzed (e.g., its diffuse reflectance properties) and can be used for material recognition and classification.29–32 Therefore, HSI can enhance visualization of tissue structure and composition in image-guided surgery, helping to guide diagnosis and treatment.

In this paper, we review the applications of HSI in neurosurgery, focusing on specific HSI techniques and their medical implementations and benefits in clinical practice. Specifically, we provide the reader with an up-to-date review of how HSI has been implemented clinically and, thus, focus only on HSI systems and techniques used in clinical studies. We begin with preliminaries (Sec. 2), which include an overview of the major subspecialties in neurosurgery (Sec. 2.1), followed by a short review of current HSI techniques (Sec. 2.2). We then discuss the benefits and challenges of HSI in neurosurgery (Secs. 2.3 and 2.4). Next, we proceed with an in-depth review of HSI technologies and their clinical applications for imaging under white light in reflectance mode (Sec. 3) and for imaging fluorescence in fluorescence-guided surgery (Sec. 4). We have divided Secs. 3 and 4 into technological subsections, namely imaging hardware and software (Secs. 3.1 and 4.1), datasets (Sec. 3.2), and visualization tools (Sec. 3.3), followed by the clinical implementations of and results from these HSI technologies (Secs. 3.4 and 4.2). Because each section is separated into technological and clinical subsections, readers can refer either to the more detailed technological aspects of HSI (e.g., imaging systems, computational methods, datasets, and visualization techniques) or to the clinical results and implementations of these technologies in the various subspecialties of neurosurgery. We also provide in-depth tables that summarize the technological and clinical subsections for ease of reference. Finally, we discuss future perspectives on HSI as a novel tool with the potential to become a standard adjunct in image-guided neurosurgery (Sec. 5).

2. Preliminaries

2.1. Neurosurgery

Neurosurgery is the branch of medicine that treats disorders of the central nervous system (CNS) or peripheral nervous system (PNS) by physical manipulation, modification, or modulation of anatomical structures (e.g., the subthalamic nucleus in deep brain stimulation) and pathological structures (e.g., aneurysms in aneurysm clipping and brain tumors in tumor resection).33–35 In terms of research and clinical techniques, neurosurgery is among the most rapidly developing specialties of medicine,36 propelled by the interdisciplinary integration of tools from imaging, molecular biology, cancer neuroscience, electrophysiology, brain mapping, neuroengineering, computational biology, bioinformatics, and robotics. Clinically, neurosurgery is composed of the following subspecialties:

“Neurosurgical oncology” is the surgical branch of neuro-oncology focused on the diagnosis, treatment, and long-term management of tumors of the CNS and PNS. Surgical resection is the primary treatment for a large set of tumors. The success of tumor resection is one of the most important initial predictors of overall survival and quality of life.37,38 Therefore, the goal of tumor surgery is to maximize the extent of tumor resection (EOR) while preserving functional brain tissue to ensure good post-operative functional outcomes (i.e., achieving an oncofunctional balance39–44). However, with conventional surgical techniques, EOR rates as assessed by standard-of-care post-operative magnetic resonance imaging (MRI) can be as low as 30%.45

Conventional resections are performed under white light illumination with or without magnification (e.g., using microscopes or surgical loupes). In these procedures, the surgeon relies on visual cues under white light and on tactile feedback to determine which tissue to resect and which to preserve.41 However, because brain tumors often appear visually similar to normal brain tissue, residual tumor often remains unresected, leading to low rates of maximal EOR. This is especially problematic in infiltrative areas of the most aggressive malignant tumors, such as glioblastomas (GBMs).41 Surgical adjuncts such as intraoperative MRI (iMRI), intraoperative ultrasound (US), and neuronavigation can improve visualization and intraoperative surgical decision-making. Despite their benefits, these tools have limitations, including disruption of the surgical workflow, inaccurate spatial information due to brain shift, low contrast between normal tissue and pathology, and high costs.46 Therefore, there is an acute need for real-time, high-resolution technologies that accurately delineate tumors from normal brain tissue in neurosurgical oncology.47–52

“Vascular neurosurgery” is the branch of neurosurgery focused on the diagnosis and surgical treatment of blood vessel pathologies of the nervous system.33 This encompasses a variety of conditions including aneurysms, arteriovenous malformations (AVMs), stroke, and hemorrhage. The primary aims of surgical treatment include restoring normal blood flow to the brain, preventing blood clot formation and stroke, repairing vascular pathologies (e.g., aneurysms and fistulas), and resecting vascular lesions (e.g., AVMs and cavernomas). Given that vascular structures in the nervous system are on the order of millimeters, submillimeter precision and real-time intraoperative feedback are critical to safely treat pathologies while preserving normal vasculature. Although intraoperative three-dimensional (3D) digital subtraction angiography visualizes the neurovasculature in 3D and differentiates its venous and arterial components,53 it does not provide direct intraoperative visualization of vasculature and pathology at the tissue level. Intraoperative Doppler US can detect blood flow,54 but it is limited in resolution (i.e., millimeters) and field of view (i.e., single-point detection) and is sensitive to patient motion. Intraoperative indocyanine green (ICG) fluorescence angiography provides real-time feedback with surface visualization of vasculature using ICG, a fluorescent dye that accumulates in the blood vessels.55 However, this visualization is transient (i.e., the ICG signal washes out shortly after administration), is useful only for surface imaging, and is not specific to pathologies, because ICG accumulates in all normal and abnormal vasculature.56 Therefore, there is an acute need for real-time, non-transient, and highly specific intraoperative imaging technologies that can distinguish between normal and pathological neurovasculature for visual feedback in vascular neurosurgery.

“Functional neurosurgery” is the branch of neurosurgery that treats various chronic neurologic disorders of the brain through functional modification. These disorders include epilepsy, movement disorders, pain, spasticity, and psychiatric illnesses.33 One example of functional neurosurgery is the treatment of intractable epilepsy by surgically resecting the epileptogenic area, which is the area of the brain where seizures are believed to originate. The goal of this surgery is to eliminate seizures or decrease their frequency and severity.57 In epilepsy surgery, it is important to map the affected area of the brain, typically with intraoperative electrocorticography (ECoG).58 During this procedure, a grid of electrodes is placed on the cortex to measure electrical activity and identify regions with abnormal signals that might indicate seizure origin. However, intraoperative ECoG interrupts the surgical workflow by requiring electrode placement, signal measurement, signal interpretation, electrode removal, and co-registration of electrode locations with signal origins on the brain. In addition, recordings can take a few minutes to complete and interpret. The spatial resolution of ECoG depends on the spacing between electrodes in the array and can be as coarse as a centimeter with conventional grids. There is also a risk of infection associated with such electrode arrays when they are used for long-term monitoring. As such, imaging techniques that visualize the epileptogenic regions could provide real-time feedback and, ideally, more accurate identification of the seizure-causing regions. Overall, there is a need for imaging technologies that provide functional neurosurgeons with real-time and highly specific identification of normal and abnormal functions in the nervous system.

“Spine surgery” is the branch of neurosurgery that treats disorders affecting the spinal cord.33 Spine surgery can address issues such as spinal deformity, nerve compression, pain, and neurological deficits due to disorders of the spinal cord and nerves. Surgical navigation has become critical in spine surgery to perform accurate manipulation of bony structures while preventing damage to the spinal cord and its surrounding neural elements. Such navigation is typically done with fiducial markers placed on the skin and spine, but these can become obscured, deformed, or displaced during surgery,59 compromising accurate real-time guidance. Therefore, to enhance the accuracy and safety of spine surgery, there is a pressing need for non-invasive, real-time tracking systems and algorithms. Such technologies could provide better guidance during surgical procedures, enabling more effective treatment of spinal disorders and improved patient outcomes.

“Other subspecialties” of neurosurgery include trauma and peripheral nerve surgery. However, there has been no clinical work with HSI in these subspecialties, so we will not discuss them here.

2.2. Hyperspectral Imaging

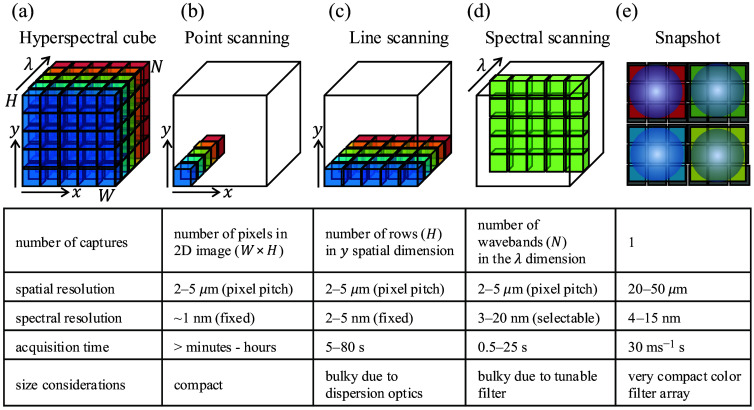

HSI is the acquisition of high-resolution spectra over a wide field of view. HSI captures a 3D hyperspectral cube of size $H \times W \times \Lambda$, where $H$ and $W$ are the height and width of images in the cube, respectively, and $\Lambda$ is the number of wavelength channels [Fig. 1(a)]. The value of $\Lambda$ roughly distinguishes HSI from multispectral imaging, a spectrally resolved imaging paradigm that uses fewer, broader spectral bins. Here, we define a multispectral system to have fewer than 10 wavelength channels ($\Lambda < 10$) and a hyperspectral system to have more than 10 ($\Lambda > 10$). Each channel in the cube is equivalent to a two-dimensional (2D) image that would be captured by placing an appropriate bandpass spectral filter in front of the camera. Capturing spectral data in addition to spatial information can be used to determine the composition of the contents of the imaged scene.31,32,60 An in-depth review of the construction and properties of such systems can be found in the literature,31,32 and we discuss only the essentials here. HSI technologies relevant to neurosurgery and their general specifications are illustrated in Fig. 1. Acquisition of a 3D hyperspectral image cube with a 2D camera sensor, however, is not straightforward. Thus, several techniques for capturing hyperspectral image cubes have been developed, each with its own advantages and pitfalls.61,62
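To make the cube notation concrete, the following minimal Python sketch assumes the cube is held as a NumPy array indexed as `cube[y, x, k]`, with hypothetical dimensions $H$ (height), $W$ (width), and $\Lambda$ (number of wavelength channels) and with synthetic random values standing in for camera data. A pixel spectrum is then a 1D slice along the wavelength axis, and a single channel is a 2D slice, equivalent to an image taken through a bandpass filter. The helper names are illustrative only.

```python
import numpy as np

# Hypothetical cube dimensions: H rows (y), W columns (x), LAMBDA wavelength channels.
H, W, LAMBDA = 4, 5, 32
wavelengths_nm = np.linspace(400.0, 1000.0, LAMBDA)  # assumed channel center wavelengths

# Synthetic cube indexed as cube[y, x, k]; real data would come from an HSI camera.
rng = np.random.default_rng(0)
cube = rng.random((H, W, LAMBDA))


def pixel_spectrum(cube, y, x):
    """Spectrum (length LAMBDA) recorded at spatial coordinate (y, x)."""
    return cube[y, x, :]


def band_image(cube, k):
    """H x W image of channel k, as if captured through a bandpass filter."""
    return cube[:, :, k]


def imaging_category(num_channels):
    """Label a system by channel count using the 10-channel convention in the text."""
    if num_channels < 10:
        return "multispectral"
    if num_channels > 10:
        return "hyperspectral"
    return "boundary case (exactly 10 channels)"


print(pixel_spectrum(cube, 1, 2).shape, band_image(cube, 0).shape)
print(imaging_category(25), imaging_category(5))
```

The text leaves a system with exactly 10 channels undefined, so the sketch reports it as a boundary case rather than forcing it into either class.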

Fig. 1.

Hyperspectral imaging technologies used in neurosurgery. (a) A hyperspectral image cube is an array of size $H \times W \times \Lambda$, where $W$ and $H$ are the width and height, respectively, of images in the cube along the $x$ and $y$ spatial dimensions, and $\Lambda$ is the number of wavelength channels along the $\lambda$ dimension. Each channel in the cube is equivalent to an image that would be captured by placing an appropriate bandpass spectral filter in front of the camera. (b) Point scanning methods acquire a complete spectrum at a single pixel coordinate (i.e., “point”), scanning along the $x$ and $y$ spatial dimensions to reconstruct the full 3D hyperspectral cube. (c) Line scanning methods acquire 2D data of size $W \times \Lambda$ along one spatial dimension, scanning along the $y$ spatial dimension (i.e., “line”) to reconstruct the full 3D hyperspectral cube. (d) Spectral scanning methods acquire 2D images of size $H \times W$ at one wavelength channel, scanning along the wavelength dimension (i.e., “spectral”) to reconstruct the full 3D hyperspectral cube. (e) Snapshot methods acquire the full 3D hyperspectral image cube of size $H \times W \times \Lambda$ with each single acquisition (i.e., “snapshot”).

“Point scanning methods” (also referred to as whiskbroom scanners) use a single detector or a small array of detectors to scan the scene sequentially, capturing spectral data pixel by pixel. Although this method provides high spectral resolution, the point-scanning approach requires one acquisition per pixel ($H \times W$ acquisitions for an $H \times W$ image), which is time-consuming for megapixel-sized images and limits its use to imaging static scenes and/or small fields of view [Fig. 1(b)].

“Line scanning methods” (also referred to as pushbroom scanners) disperse the spectrum along one axis of the sensor while measuring one spatial dimension in parallel along the other axis. Typically, these methods use a linear array of detectors aligned perpendicular to the scanning direction (say, along the $x$ dimension), capturing spectral data row by row. This approach reduces the number of acquisitions to one per row ($H$ for an image with $H$ rows), which significantly reduces acquisition time compared with point scanners. However, acquiring thousands of line scans is still time-consuming. These are the most widely available systems63–66 and are used extensively in HSI applications [Fig. 1(c)].

“Spectral scanning methods” image one spectral channel (i.e., one waveband) of the hyperspectral cube at a time, using a tunable bandpass spectral filter to sequentially capture a 2D image at each spectral channel. Spectral scanners offer the flexibility to acquire cubes over a programmable set of wavelengths with selectable spectral resolution. However, high-spectral-resolution cubes come at a high time cost, especially in the dynamic, fast-paced surgical setting. Typical tunable filters are liquid crystal tunable filters (LCTFs)67 and acousto-optic tunable filters68 [Fig. 1(d)].
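The number of exposures each scanning strategy needs can be compared with a back-of-the-envelope Python sketch: point scanning needs one exposure per pixel, line scanning one per row, spectral scanning one per channel, and, for comparison, a snapshot system a single exposure. The sketch assumes a hypothetical $1000 \times 1000 \times 100$ cube and a fixed per-exposure time, and it ignores readout, stage motion, and filter settling unless they are supplied through the hypothetical `overhead_s` parameter, so the resulting times are idealized lower bounds rather than specifications of any system in Table 1.

```python
def acquisitions_needed(mode, height, width, channels):
    """Number of sensor exposures needed to assemble one height x width x channels cube.

    point:    one full spectrum per pixel            -> height * width exposures
    line:     one row of spectra per exposure        -> height exposures (scan along y)
    spectral: one full 2D image per spectral channel -> channels exposures
    snapshot: the whole cube in a single exposure    -> 1 exposure
    """
    counts = {
        "point": height * width,
        "line": height,
        "spectral": channels,
        "snapshot": 1,
    }
    if mode not in counts:
        raise ValueError(f"unknown scanning mode: {mode!r}")
    return counts[mode]


def cube_time_s(mode, height, width, channels, exposure_s, overhead_s=0.0):
    """Idealized time per cube: exposures x (exposure + hypothetical per-step overhead)."""
    return acquisitions_needed(mode, height, width, channels) * (exposure_s + overhead_s)


for mode in ("point", "line", "spectral", "snapshot"):
    n = acquisitions_needed(mode, 1000, 1000, 100)
    t = cube_time_s(mode, 1000, 1000, 100, exposure_s=0.01)
    print(f"{mode:>8}: {n:>9,d} exposures, about {t:g} s per cube")
```

With a 10 ms exposure, this gives roughly $10^4$ s for point scanning, 10 s for line scanning, 1 s for spectral scanning, and 0.01 s for a snapshot, before accounting for the resolution that snapshot designs give up.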

“Snapshot methods”69–71 capture a hyperspectral cube with complete spatial and spectral information in a single exposure. Snapshot acquisition is achieved by space division multiplexing of the sensor over the spatial and spectral dimensions, similar to a plenoptic camera.72 In this approach, the sensor area is divided into a number of parts equal to the number of spectral channels. Each part records a wide-field image corresponding to one spectral channel, and these images are stacked together to form the hyperspectral cube. This technology is facilitated by new optical designs incorporating lenslet arrays70,71,73,74 and varying filtering and dispersion strategies. Rapid acquisition enables the use of snapshot systems in applications requiring real-time hyperspectral feedback, such as intraoperative image guidance, where long scan times or bulky scanning hardware can interfere with the surgical workflow. However, space division multiplexing imposes a trade-off between spatial and spectral resolution for a given sensor: as the number of parts increases, fewer pixels are available for each part [Fig. 1(e)].
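The spatial cost of this multiplexing can be quantified with a short Python sketch for a mosaic-type snapshot sensor. It assumes that each spectral channel occupies one position of a `tile_w` × `tile_h` filter tile repeated across the whole sensor, which is a simplification (lenslet- and dispersion-based designs partition the sensor differently), and it uses a hypothetical 2048 × 1088 pixel sensor.

```python
def per_channel_resolution(sensor_w, sensor_h, tile_w, tile_h):
    """Pixels per channel when a tile_w x tile_h filter mosaic tiles the whole sensor.

    Each tile position carries one spectral channel; partial tiles at the sensor
    edges are discarded (floor division).
    Returns (width_px, height_px, channels).
    """
    return sensor_w // tile_w, sensor_h // tile_h, tile_w * tile_h


# Hypothetical 2048 x 1088 sensor with square mosaics of increasing size.
for tile in (1, 3, 4, 5):
    w, h, channels = per_channel_resolution(2048, 1088, tile, tile)
    print(f"{channels:>2} channels -> {w} x {h} pixels per channel")
```

The 5 × 5 case yields 409 × 217 pixels per channel, matching the per-channel image size listed in Table 1 for the 25-band Ximea snapshot camera; this agreement is consistent with, but does not establish, the assumed sensor size.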

“Snapscan systems” combine the benefits of snapshot and line scanning hyperspectral systems. Such systems are built with mosaic filter arrays as in snapshot systems but employ internal scanning of the mosaic and computational reconstructions to yield fast, high-resolution hyperspectral cubes.75

“Compressed sensing methods” exploit the regularity in natural signals to obtain an approximation of the hyperspectral cube.76 An example of such regularity is the sparsity of individual spectral channels in the spatial frequency domain, which is the subject of a classic signal processing technique called compressed sensing. Such systems can provide video-rate hyperspectral acquisition with high spatial resolution for scenes that satisfy these assumptions.77 In addition, such methods can implement programmable spectral filters78 beyond simple bandpass filters, which allows matched filtering of spectral signals for classification and segmentation applications.
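The sparse-recovery principle can be illustrated with a small, self-contained Python sketch; it is not a model of any specific compressive hyperspectral camera. It assumes that one spatial line of one spectral channel (128 samples) is sparse in the discrete cosine transform (DCT) domain, a stand-in for the spatial-frequency sparsity mentioned above, that the optics apply a random Gaussian measurement matrix with only 64 rows, and that the signal is recovered with the iterative shrinkage-thresholding algorithm (ISTA) for $\ell_1$-regularized least squares. The measurement matrix, sparsity level, and weight `lam` are illustrative choices.

```python
import numpy as np


def dct_matrix(n):
    """Orthonormal DCT-II matrix D: coefficients c = D @ s, signal s = D.T @ c."""
    k = np.arange(n)[:, None]
    i = np.arange(n)[None, :]
    d = np.sqrt(2.0 / n) * np.cos(np.pi * (i + 0.5) * k / n)
    d[0, :] = np.sqrt(1.0 / n)  # the DC row uses a different normalization
    return d


def soft_threshold(v, t):
    """Proximal operator of t * ||v||_1: shrinks each entry toward zero by t."""
    return np.sign(v) * np.maximum(np.abs(v) - t, 0.0)


def ista(a, y, lam, iters=5000):
    """ISTA for min_c 0.5 * ||y - a @ c||^2 + lam * ||c||_1."""
    step = 1.0 / np.linalg.norm(a, 2) ** 2  # 1 / Lipschitz constant of the gradient
    c = np.zeros(a.shape[1])
    for _ in range(iters):
        c = soft_threshold(c + step * (a.T @ (y - a @ c)), step * lam)
    return c


rng = np.random.default_rng(1)
n, m, k = 128, 64, 4  # samples along one line, measurements (m < n), nonzero coefficients

D = dct_matrix(n)
c_true = np.zeros(n)
support = rng.choice(n, size=k, replace=False)
c_true[support] = rng.choice([-1.0, 1.0], size=k) * rng.uniform(1.0, 2.0, size=k)
s_true = D.T @ c_true  # one line of one spectral channel, sparse in the DCT domain

phi = rng.normal(0.0, 1.0 / np.sqrt(m), size=(m, n))  # hypothetical random measurement optics
y = phi @ s_true  # m < n compressed measurements (noise-free here)

c_hat = ista(phi @ D.T, y, lam=0.01)
s_hat = D.T @ c_hat
rel_err = np.linalg.norm(s_hat - s_true) / np.linalg.norm(s_true)
print(f"{m} measurements for {n} unknowns, relative error {rel_err:.3f}")
```

Real compressive systems differ in their measurement operators and often use richer priors and faster solvers; ISTA is used here only because it is short and easy to check.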

2.3. Benefits of HSI in Neurosurgery

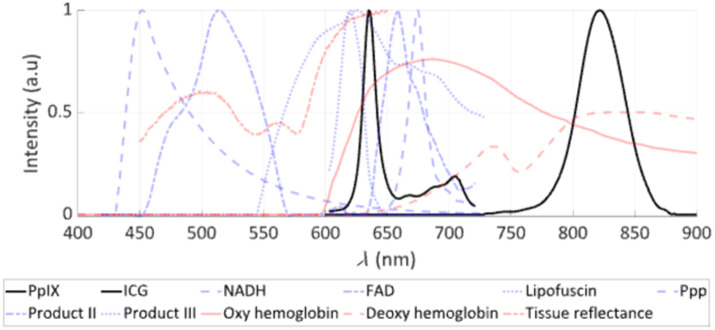

As mentioned before, the spectrum in one pixel of the hyperspectral cube contains the optical signature or “optical fingerprint” of the imaged scene point at that spatial coordinate (Fig. 2). This fingerprint can include fluorophores [e.g., protoporphyrin IX (PpIX)] and/or chromophores (e.g., oxy- and deoxyhemoglobin) that differentially accumulate in tissues. This fingerprint is representative of the tissue composition of the imaged scene point—typically, bulk brain tissue, arterial blood vessels, venous blood vessels, various types of tumors, and background. HSI is particularly useful when classifying these kinds of tissue because reflectance and fluorescence spectra obtained with the hyperspectral cubes have high discriminative power that has been widely characterized.79–83
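One simple way to exploit such fingerprints for pixel-wise classification is the spectral angle mapper (SAM), which assigns each pixel to the reference spectrum forming the smallest angle with it and is therefore insensitive to overall brightness. The Python sketch below is a generic SAM baseline, not a method attributed to the studies reviewed here, and its three reference spectra are synthetic curves with hypothetical class names rather than measured tissue spectra.

```python
import numpy as np


def spectral_angle(a, b):
    """Angle (radians) between two spectra; scaling either spectrum leaves it unchanged."""
    cos = np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b))
    return float(np.arccos(np.clip(cos, -1.0, 1.0)))


def sam_classify(cube, references):
    """Assign each pixel of an (H, W, Lambda) cube to the reference with the smallest angle.

    references: dict mapping class name -> reference spectrum of length Lambda.
    Returns (labels, names), where labels is an (H, W) array of indices into names.
    """
    names = list(references)
    refs = np.stack([references[name] for name in names])     # (C, Lambda)
    refs = refs / np.linalg.norm(refs, axis=1, keepdims=True)
    pix = cube / np.linalg.norm(cube, axis=2, keepdims=True)   # unit-norm pixel spectra
    angles = np.arccos(np.clip(pix @ refs.T, -1.0, 1.0))      # (H, W, C)
    return np.argmin(angles, axis=2), names


# Synthetic example: hypothetical reference spectra, not real tissue measurements.
wl = np.linspace(400.0, 1000.0, 60)
refs = {
    "class_a": 0.2 + 0.6 * (wl - 400.0) / 600.0,                   # rising curve
    "class_b": 0.8 - 0.6 * (wl - 400.0) / 600.0,                   # falling curve
    "class_c": 0.3 + 0.5 * np.exp(-(((wl - 700.0) / 80.0) ** 2)),  # peaked curve
}
cube = np.empty((2, 2, wl.size))
cube[0, 0] = 2.0 * refs["class_a"]  # brighter copy of class_a
cube[0, 1] = 0.5 * refs["class_b"]  # dimmer copy of class_b
cube[1, 0] = refs["class_c"]
cube[1, 1] = 1.3 * refs["class_a"]

labels, names = sam_classify(cube, refs)
print([[names[i] for i in row] for row in labels])
```

Because SAM normalizes every spectrum, the brighter and dimmer copies are still assigned to their original classes; in practice, illumination geometry and specular reflections make this scale invariance useful, while learned classifiers are typically needed for finer distinctions such as tumor grade.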

Fig. 2.

Spectra of fluorophores, chromophores, and reflectance in the visible to near-infrared (NIR) used in HSI for neurosurgery. HSI in neurosurgery has used exogenous agents, such as 5-aminolevulinic acid (which leads to the production of protoporphyrin IX, PpIX) and ICG, as key fluorescence biomarkers in fluorescence-guided surgery (FGS); their fluorescence spectra are shown in black. Other endogenous fluorophores (e.g., FAD, NADH) are shown in blue, and PpIX photoproducts as well as tissue reflectance and chromophores (e.g., oxy- and deoxyhemoglobin) are shown in red. The $y$-axis shows the intensity of fluorescence emission, reflectance, or absorption in arbitrary units, and the $x$-axis shows the wavelength $\lambda$ in nanometers.

As an example of this high discriminative power in the context of vascular neurosurgery, consider a pixel corresponding to a blood vessel. The main chromophores contributing to the reflectance spectrum of this pixel are oxyhemoglobin and deoxyhemoglobin. The absorption spectra of deoxyhemoglobin and oxyhemoglobin, which are equal at 545 nm, change rapidly in opposite directions between 545 and 560 nm. Therefore, spectrally resolved imaging in the visible range enables accurate estimates of the relative concentrations of deoxyhemoglobin and oxyhemoglobin and, hence, optical measurements of oxygen saturation.
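The least-squares idea behind such an estimate can be sketched in Python as a two-component linear unmixing under a simplified Beer–Lambert model, in which absorbance $A(\lambda) = -\log_{10} R(\lambda)$ is modeled as $c_{\mathrm{HbO_2}}\,\varepsilon_{\mathrm{HbO_2}}(\lambda) + c_{\mathrm{Hb}}\,\varepsilon_{\mathrm{Hb}}(\lambda)$ and oxygen saturation is $c_{\mathrm{HbO_2}} / (c_{\mathrm{HbO_2}} + c_{\mathrm{Hb}})$. This is a generic textbook approach, not a method attributed to the reviewed studies: it ignores scattering and wavelength-dependent path length, and the two spectra below are invented placeholder curves (their peak positions loosely follow the familiar hemoglobin Q-bands, but amplitudes and widths are made up). Real use would require tabulated extinction coefficients and a tissue optics model.

```python
import numpy as np

wl = np.linspace(500.0, 600.0, 51)  # nm; hypothetical sampling of the visible band

# PLACEHOLDER absorption spectra in arbitrary units. These are made-up smooth curves,
# NOT hemoglobin data; a real analysis would load tabulated extinction coefficients.
eps_oxy = (1.0
           + 0.8 * np.exp(-(((wl - 542.0) / 8.0) ** 2))
           + 0.8 * np.exp(-(((wl - 577.0) / 8.0) ** 2)))
eps_deoxy = 1.0 + 1.0 * np.exp(-(((wl - 555.0) / 14.0) ** 2))


def reflectance_to_absorbance(reflectance):
    """Simplified Beer-Lambert conversion; ignores scattering and path-length variation."""
    return -np.log10(reflectance)


def estimate_so2(absorbance, eps_oxy, eps_deoxy):
    """Fit absorbance ~ c_oxy * eps_oxy + c_deoxy * eps_deoxy by linear least squares.

    Path length is folded into the fitted concentrations and cancels in the ratio
    SO2 = c_oxy / (c_oxy + c_deoxy).
    """
    basis = np.column_stack([eps_oxy, eps_deoxy])
    (c_oxy, c_deoxy), *_ = np.linalg.lstsq(basis, absorbance, rcond=None)
    return c_oxy / (c_oxy + c_deoxy), c_oxy, c_deoxy


# Synthetic pixel with 70% oxygen saturation, built from the same placeholder spectra.
true_oxy, true_deoxy = 0.7, 0.3
reflectance = 10.0 ** -(true_oxy * eps_oxy + true_deoxy * eps_deoxy)
so2, c_oxy, c_deoxy = estimate_so2(reflectance_to_absorbance(reflectance), eps_oxy, eps_deoxy)
print(f"estimated SO2 = {so2:.3f}")
```

Because the synthetic pixel is generated from the same model it is fitted with, recovery here is exact; with real tissue, model mismatch and noise make the choice of wavelengths, such as the rapidly diverging region noted above, matter.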

In addition to pixel-wise classification of tissue constituents, hyperspectral data enable other kinds of optical characterization across the surgical field of view. The rich data encoded in each hyperspectral cube offer the potential to extract optical features that would otherwise be impossible to detect with the naked eye or with a conventional color image.67,84 For example, spectrally resolved wide-field data have been used to correct for the distorting effects of tissue optical properties on emitted fluorescence signals,85 which opens the possibility of using HSI across the surgical field of view to provide quantitative, objective measures of fluorescence and, therefore, of absolute fluorophore molar concentrations.67

Putting all these capabilities together with modern acquisition techniques from optics and computational imaging, advances in computational methods and hardware, and segmentation and classification with artificial intelligence,86 HSI has the potential to be a powerful tool for real-time intraoperative guidance.

2.4. Challenges in Current Neurosurgical HSI Approaches

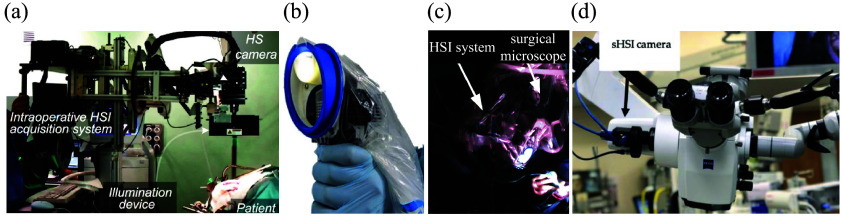

Translating an optical system for clinical use into the neurosurgical operating room presents unique challenges not encountered in traditional benchtop imaging settings for pre-clinical studies87 (Fig. 3). The fundamental principle for translation of a novel HSI system into the operating room is that any system and imaging process must not significantly interfere with or interrupt the neurosurgical workflow; it should enable ease of integration, safety, and efficiency for dynamic intraoperative use. A major practical consideration is the size of the imaging system. The spatial footprint of the optical setup must be as small as possible to seamlessly integrate and “fit” into the already instrument-dense neurosurgical operating room (consisting of, for example, the surgical microscope, US imager, ultrasonic aspirator, neuronavigation, drill, and suction control).

Fig. 3.

HSI systems in neurosurgery. (a) HELICoiD system uses an exoscope with two line-scan hyperspectral cameras mounted in a confocal configuration. The HELICoiD system fits within a bounding and requires removing the surgical microscope for acquisition, thus interrupting the surgical workflow. (b) Small footprint handheld HSI snapshot system does not require removing the surgical microscope but does not provide the same field of view as seen from the surgeon’s oculars. (c) and (d) HSI systems [spectral scanning in panel (c) and snapscan in panel (d)] mounted on one of the side ports of the surgical microscope enable the acquisition of 3D hyperspectral image cubes co-registered with the surgeon’s field of view with a small physical footprint to seamlessly integrate into the already space-constrained neurosurgical operating room. (a) Adapted from Leon et al.,88 under CC-BY 4.0. (b) Adapted from MacCormac et al.,89 under CC-BY 4.0. (c) Reproduced from Valdés et al.,67 under CC-NC-SA 3.0. (d) Adapted from Kifle et al.,90 under CC-BY 4.0.

Next, the hyperspectral image captured by the system should be of the highest possible quality while being acquired as close to real time as possible, consistent with other intraoperative imaging modalities such as US imaging, neuronavigation feedback, microscope visualization, and 3D exoscope imaging. For the hyperspectral data to be useful for surgical guidance, they must fulfill certain basic constraints in addition to real-time acquisition. First, structures in the brain visualized intraoperatively are on the order of millimeters. Therefore, submillimeter resolution over a surgical field of view on the order of centimeters is critical. Second, the spectral bandwidth of the fluorescence peaks of commonly used fluorophores may be as narrow as nanometers, requiring spectral resolutions of a few nanometers. Lastly, because light is split into spectral channels under the already light-starved conditions of fluorescence imaging, the hyperspectral system sensor should have high quantum efficiency, high bit depth, and low dark noise to enable short exposure times.

These conditions are difficult to satisfy simultaneously because the speed of hyperspectral acquisition is constrained by the space–spectrum–sensitivity trade-off. The most common, line-scan hyperspectral imagers provide high spectral and spatial resolution along one spatial dimension [Fig. 3(a)]. However, providing equivalent resolution along the second spatial dimension at surgically relevant scales is time-consuming (typically tens to hundreds of seconds). To be more sensitive to low-intensity fluorescence signals, existing spectral scanning methods [Fig. 3(c)] typically increase exposure times, which decreases hyperspectral cube acquisition rates. Snapshot and snapscan HSI systems70,71,75,91 [Figs. 3(b) and 3(d)] can potentially provide fast frame rates for hyperspectral acquisition.67,87,92–94 However, they sacrifice spatial resolution to do so and also require longer exposures when higher sensitivity is needed. Managing the balance among these imaging parameters to construct clinically practical and effective systems is one of the most important open problems in neurosurgical HSI.
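To see why splitting light into more channels pushes exposure times up, consider a generic detector noise model with shot noise, dark current, and read noise. This is a standard simplification rather than a characterization of any camera in Table 1. The Python sketch assumes a hypothetical total photoelectron rate per pixel that is divided evenly among the spectral channels with no filter losses, and it finds by bisection the shortest exposure that reaches a target per-channel signal-to-noise ratio (SNR). All numbers are illustrative.

```python
import math


def snr(signal_rate, exposure_s, dark_rate=0.0, read_noise_e=0.0):
    """Per-pixel, per-channel SNR with shot, dark-current, and read noise.

    signal_rate: detected photoelectrons per second in this channel
    dark_rate: dark-current electrons per second; read_noise_e: RMS read noise in electrons
    """
    signal = signal_rate * exposure_s
    noise = math.sqrt(signal + dark_rate * exposure_s + read_noise_e ** 2)
    return signal / noise if noise > 0 else 0.0


def exposure_for_snr(target, signal_rate, dark_rate=0.0, read_noise_e=0.0, t_max=1e3):
    """Shortest exposure (s) reaching the target SNR; SNR increases monotonically with exposure."""
    if snr(signal_rate, t_max, dark_rate, read_noise_e) < target:
        return math.inf  # unreachable within t_max
    lo, hi = 0.0, t_max
    for _ in range(100):  # bisection; 100 halvings shrink the interval below float resolution
        mid = 0.5 * (lo + hi)
        if snr(signal_rate, mid, dark_rate, read_noise_e) >= target:
            hi = mid
        else:
            lo = mid
    return hi


total_rate = 2e4  # hypothetical photoelectrons/s per pixel across all channels
for channels in (1, 25, 100):
    t = exposure_for_snr(20.0, total_rate / channels, dark_rate=5.0, read_noise_e=2.0)
    print(f"{channels:>3} channels -> {t * 1e3:.1f} ms per channel for SNR 20")
```

Under these assumptions, the required per-channel exposure grows roughly in proportion to the number of channels when shot noise dominates, and slightly faster once dark current and read noise matter; for a spectral scanner, the per-cube time then grows again with the number of channels scanned.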

3. Neurosurgical HSI in Reflectance Mode

Traditionally, neurosurgery has been performed under white-light illumination provided by xenon or halogen lamps.95 The spectral distributions of such illumination extend across the visible-near-infrared (VIS-NIR) range of the optical spectrum, where the optical properties and reflectance spectra of various types of brain tissue, intracranial structures (e.g., arteries, veins, and nerves), pathologies (e.g., tumors, aneurysms, hemorrhages, and abscesses), and their molecular constituents (e.g., oxyhemoglobin and deoxyhemoglobin) have been well characterized.79–83 Therefore, HSI systems can be used across neurosurgical subspecialties to serve a common purpose: determining the composition of what the surgeon sees in the surgical field of view.

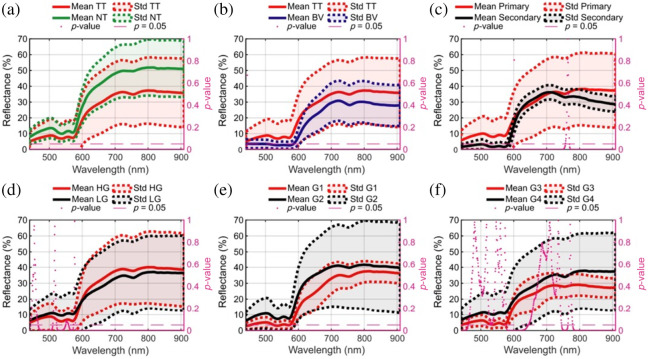

For example, in neurosurgical oncology, the aim is to determine the presence or absence of tumor in the field of view, to classify tumor type, and to identify background tissue (Fig. 4). In vascular neurosurgery, the aim is to image blood perfusion and oxygen saturation. In functional neurosurgery, the aim is to identify epileptiform regions by measuring neurovascular coupling. In spine surgery, the aim is to track skin features in the surgical field for intraoperative navigation without the use of fiducial markers. Here, we present in detail the optical designs of HSI systems that have been implemented in the neurosurgical operating room. These systems and their parameters are discussed in Sec. 3.1 and summarized in Table 1.

Fig. 4.

Reflectance spectra of normal brain and brain tumors. (a) and (b) Reflectance spectra of normal tissue (NT), tumor tissue (TT), and blood vessels (BVs) differ significantly in the visible-NIR regime. (c)–(f) Significant differences are observed among the reflectance spectra of different grades of primary tumors (low grade, high grade, grade 1, grade 2, grade 3, and grade 4) as well as metastases (i.e., secondary tumors). These differences in reflectance spectra enable the classification of the field of view into brain parenchyma, blood vessels, and tumor tissue, along with subclassification into arteries, veins, and various tumor types and grades. The $y$-axis shows the reflectance of tissue in arbitrary units, and the $x$-axis shows the wavelength $\lambda$ in nanometers. Adapted from Leon et al.,88 under CC-BY 4.0.

Table 1.

Technical specifications of current hyperspectral imaging systems in neurosurgery (as applied in individual work).

| HSI system | Clinical application | HSI technique | Sensor technology | Wavelength range | Spectral bands | Spectral resolution | Field of view | Pixel resolution | Spatial resolution | Frame rate (per line/channel) | Total time |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Neurosurgical oncology: reflectance | | | | | | | | | | | |
| Headwall Hyperspec® VNIR A-series, Headwall Hyperspec® NIR 100/U | Tumor segmentation from normal tissue, blood, and background112–115,146,147 | Line detection, scanned manually on a translation stage | Silicon CCD (VNIR); InGaAs (NIR) | 400 to 1000 nm (VNIR); 900 to 1700 nm (NIR) | 826 (VNIR); 172 (NIR) | 2 to 3 nm (VNIR); 5 nm (NIR) | 230 mm (max) × 129 mm (VNIR); 230 mm (max) × 153 mm (NIR) | 1787 (max) × 1004 (VNIR); 479 (max) × 320 (NIR) | | 90 fps (VNIR); 100 fps (NIR) | 80 s (VNIR); 40 s (NIR) |
| Specim ImSpector VNIR V10-E spectrograph | Brain tissue classification97 | Pushbroom | CCD | 400 to 1000 nm | 1040 | 2.8 nm | N/S | N/S | N/S | N/S | N/S |
| Headwall Hyperspec® VNIR A-series (only) | Tumor segmentation from normal tissue, blood, and background88,96,99,116,119,122,127,131,132,140,148,174 | Line detection, scanned manually on a translation stage | Silicon CCD | 400 to 1000 nm | 826 | 2 to 3 nm | 230 mm (max) × 129 mm | 1787 (max) × 1004 | | 90 fps | 80 s |
| IMEC snapshot multispectral SM5x5 | Brain tissue classification234 | Snapshot | CMOS | 676 to 954 nm | 25 | 12 nm (inf.) | N/S | 410 × 216 | N/S | N/A | N/S |
| Ximea MQ022HG-IM-SM5X5-NIR | Brain tissue classification98,150 | Snapshot | CMOS | 665 to 975 nm | 25 | 14 nm (inf.) | N/S | 409 × 217 | N/S | 170 fps | 70 ms |
| TIVITA tissue camera | Brain tissue classification87 | Pushbroom | CMOS | 500 to 1000 nm | 100 | 5 nm | 60 mm × 70 mm | 640 × 480 | 110 to (inf.) | 100 fps | |
| IMEC snapscan VNIR 150 | Brain tumor identification93,141 | Snapscan | CMOS | 470 to 900 nm | 150 | 10 to 15 nm | N/S | 3600 × 2048 | N/S | N/A | 2 to 20 s |
| BaySpec OCI™-D-2000 Ultra-compact hyperspectral | Brain tumor identification90 | Snapshot | N/S | 475 to 875 nm | 35 to 40 | 12 to 15 nm | N/S | 500 × 270 | N/S | 50 fps | |
| Cubert Ultris X50 | Evaluation of snapshot hyperspectral imaging in neurosurgery89 | Snapshot | CMOS | 350 to 1000 nm | 155 | 4 nm | N/S | 570 × 570 | N/S | 1.5 fps | 0.67 s |
| Neurosurgical oncology: fluorescence | | | | | | | | | | | |
| Custom multispectral system | Residual brain tumor detection209 | Spectral scanning | CCD | 495 to 720 nm | 5 | 20 nm | 3 cm diameter | 755 × 484 | N/S | 15 s | |
| CRi VariSpec LCTF + PhotonMax | Brain tumor identification107 | Spectral scanning | CCD | 400 to 720 nm | 33 | 20 nm at 550 nm | 25.4 mm | 512 × 512 | | 6 s | 120 s |
| CRi VariSpec LCTF + pco.pixelfly | PpIX concentration estimation67,84 | Spectral scanning | CCD | 400 to 720 nm | 55 (WL); 75 (FL) | 5 nm (WL); 3 nm (FL) | 10 to 50 mm × 7.5 to 40 mm | 696 × 520 | N/S | N/S | 4 to 16 s |
| CRi VariSpec LCTF + pco.edge | PpIX concentration estimation211,219 | Spectral scanning | CMOS | 400 to 720 nm | N/S | N/S | N/S | 2560 × 2160 | N/S | 50 ms | 10 to 30 s |
| CRi VariSpec LCTF + hNü EMCCD | PpIX concentration estimation217 | Spectral scanning | EMCCD | 400 to 720 nm | 52 (WL); 52 (FL) | 3 nm (WL); 3 nm (FL) | | 512 × 512 | N/S | 10 to 100 ms | 1.04 to 10.4 s |
| CRi VariSpec LCTF + ORCA-Flash4.0 | PpIX concentration estimation221 | Spectral scanning | EMCCD | 400 to 720 nm | 33 (WL); 33 (FL) | 10 nm | N/S | 1024 × 1024 | N/S | >100 ms | 26.4 s |
| CRi VariSpec LCTF + Sony IMX252 | PpIX concentration estimation94,198,199,201,202,222,223,226 | Spectral scanning | sCMOS | 420 to 730 nm | 63 (WL) 104 (FL) |

5 nm (WL) 3 nm (FL) |

N/S | (variable across work) | N/S | >100 ms | N/S |

| Senop HSC-2 |

PpIX concentration visual versus machine threshold comparison206 |

Spectral scanning |

CMOS |

510 to 635 nm |

4 |

20 nm |

N/S |

1024 × 1024 |

N/S |

65.9 ms |

0.46 s |

| Vascular neurosurgery | |||||||||||

| Eba Japan HSC1700 | Oxygenation mapping101,156 | Pushbroom | CCD | 400 to 800 nm | 81 | 5 nm | N/S | 640 × 480 | N/S | 30 fps | 5 to 16 s |

| IMEC snapshot multispectral |

Distinguishing blood and blood vessels157 |

Snapshot |

CCD |

480 to 630 nm |

16 |

15 nm |

|

256 × 512 |

|

20 fps |

<50 ms |

| Functional neurosurgery | |||||||||||

| CRi VariSpec LCTF + pco.pixelfly | Imaging epileptiform regions104 | Spectral scanning | CCD | 480 to 660 nm | 4 | N/S | N/S | 1392 × 1024 | N/S | N/S | N/S |

| IMEC snapshot multispectral | Imaging neurovascular coupling92,160 | Snapshot | CMOS | 480 to 630 nm | 16 | 15 nm | 256 × 512 | 10 to 20 fps | 25 to 95 ms | ||

| Ximea MQ022HG-IM-SM5X5-NIR |

Intraoperative brain mapping152,163 |

Snapshot |

CMOS |

665 to 960 nm |

25 |

13 nm (inf.) |

N/S |

409 × 217 |

N/S |

170 fps |

14 fps |

| Spine surgery | |||||||||||

| Quest Medical Imaging BV Hyperea | Markerless positioning during spine surgery59 | Snapshot | Silicon CCD | 450 to 950 nm | 41 | 15×15 cm | 500 × 250 | (inf.) | 16 fps | N/S | |

| IMEC snapscan VNIR, Photonfocus (MV0-D2048x1088-C01-HS02-160-G2) | Tissue classification in spine surgery167 | Snapscan Snapshot |

CCD | 470 to 900 nm 665 to 975 nm |

150+25 | 10 to 15 nm 15 nm |

N/SN/S | 3650 × 2048 409 × 217 |

N/S N/S |

N/S 50 fps |

2 to 40 s 1 s |

N/S, not specified; N/A, not applicable; WL, white light; FL, fluorescence
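Table 1 reports both the number of spectral bands and the spectral resolution, and the two are not interchangeable. The following worked example uses only the Headwall VNIR A-series entries (400 to 1000 nm, 826 bands, 2- to 3-nm resolution) and assumes evenly spaced bands. Under that assumption, the spectral sampling interval is

$$
\Delta\lambda_{\mathrm{sampling}} \approx \frac{1000\,\mathrm{nm} - 400\,\mathrm{nm}}{826 - 1} \approx 0.73\,\mathrm{nm},
$$

which is about 2.7 to 4.1 times finer than the quoted spectral resolution. Adjacent bands therefore carry strongly overlapping information. This is consistent with the spectral downsampling and band selection steps that appear in many of the pipelines in Table 2.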

To process, interpret, and visualize the hyperspectral data captured with these HSI systems, accompanying computational methods have been developed. For example, in neurosurgical oncology, a number of classification and segmentation algorithms label every pixel in the surgical field as normal tissue, tumor (primary or secondary),96 necrosis,97 blood vessel (artery or vein),98 dura mater,98 hypervascularized tissue,99 skull,100 or background. Similarly, spectral fitting methods process HSI data captured during vascular and functional neurosurgery to yield perfusion and oxygenation maps.92,101–105 Along with details on optical hardware, we also present a brief review of these computational methods in Sec. 3.1 and summarize their pipelines, validation methods, and best results in Table 2. For a more detailed review of such computational methods, please refer to Massalimova et al.106
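Spectral fitting for oxygenation maps is commonly posed as a linear least-squares problem. The sketch below assumes a simplified modified Beer–Lambert model. Under this model, the attenuation $-\ln R(\lambda)$ at each pixel is a weighted sum of oxyhemoglobin and deoxyhemoglobin extinction spectra plus a wavelength-independent offset. The model ignores wavelength-dependent pathlength and scattering. The function name `fit_oxygenation` is hypothetical, and the extinction spectra in the example are made-up smooth curves rather than tabulated coefficients. It is an illustration of the general idea, not the method of any specific study in Table 2.

```python
import numpy as np


def fit_oxygenation(reflectance, eps_hbo2, eps_hb):
    """Estimate a per-pixel oxygen saturation (StO2) map by linear spectral fitting.

    reflectance: calibrated cube of shape (H, W, B) with values in (0, 1].
    eps_hbo2, eps_hb: length-B extinction spectra for HbO2 and Hb.
    Assumes a simplified modified Beer-Lambert model (no pathlength correction).
    """
    h, w, b = reflectance.shape
    # Attenuation per pixel; clip to avoid log(0) on dark pixels
    attenuation = -np.log(np.clip(reflectance, 1e-6, None)).reshape(-1, b).T  # (B, N)
    # Columns: HbO2 spectrum, Hb spectrum, and a constant offset term
    design = np.column_stack([eps_hbo2, eps_hb, np.ones(b)])  # (B, 3)
    coeffs, *_ = np.linalg.lstsq(design, attenuation, rcond=None)  # (3, N)
    hbo2, hb = coeffs[0], coeffs[1]
    total = hbo2 + hb
    # StO2 = HbO2 / (HbO2 + Hb); NaN where total hemoglobin is not positive
    sto2 = np.divide(hbo2, total, out=np.full_like(total, np.nan), where=total > 0)
    return sto2.reshape(h, w)


# Synthetic check with hypothetical extinction spectra (placeholders only)
wavelengths = np.linspace(500.0, 600.0, 21)
eps_hbo2 = 1.0 + 0.5 * np.sin(wavelengths / 15.0)
eps_hb = 1.0 + 0.5 * np.cos(wavelengths / 20.0)
attenuation = 0.7 * eps_hbo2 + 0.3 * eps_hb + 0.1  # 70% saturation plus offset
cube = np.tile(np.exp(-attenuation), (2, 3, 1))  # 2 x 3 pixels, identical spectra
sto2_map = fit_oxygenation(cube, eps_hbo2, eps_hb)
```

In practice, constrained solvers (e.g., non-negative least squares) and tabulated extinction coefficients would replace the unconstrained fit and placeholder spectra used here.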

Table 2.

Computational methods developed for hyperspectral imaging in neurosurgery.

| Objective | Pre-processing | Input format | Target | Algorithm | Validation standard | Validation method | Best validation metrics/results | Speed | Hardware/algorithm |
|---|---|---|---|---|---|---|---|---|---|
| Neurosurgical oncology: reflectance | | | | | | | | | |
| Tumor identification, Fabelo et al.113 | A. Spatial non-uniformity correction; B. Dark frame subtraction and flat-fielding; C. Denoising; D. Spectral normalization | Pixel-wise | Classes: tumor tissue, normal tissue, and background | SVM | Tumor histopathology results from regions of interest | Tenfold cross-validation on mixed-patient pixel spectra | 87% overall accuracy; 78% tumor sensitivity | N/A | Hyperspec® Data Processing Unit |
| | | | | MLP | | | 97% overall accuracy; 93% tumor sensitivity | | |
| | | | | RF | | | 99% overall accuracy; 99% tumor sensitivity | | |
| Tumor identification and type prediction, Fabelo et al.96 | A. Dark frame subtraction and flat-fielding; B. Spatial denoising; C. Spectral smoothing and cropping; D. Spectral normalization | Pixel-wise | Classes: tumor tissue and normal tissue; Subclasses: primary tumor and metastasis | RF | Visual assessment and tumor histopathology results from region of interest | Tenfold cross-validation on mixed-patient pixel spectra | 99.7% overall accuracy; 99.7% tumor sensitivity; 99.6% subclass accuracy; 100% subclass sensitivity | N/A | Kalray many-core processor |
| Tumor identification speedup, Madroñal et al.97 | A. Cropping of regions of interest; B. Automatic specularity and background removal | Pixel-wise | Classes: tumor tissue, normal tissue, and necrosis | SVM | Ex vivo | Tenfold cross-validation on mixed-sample pixel spectra | N/S | 2.3 Hz | Kalray massively parallel processor array MPPA-256-N |
| Dimensionality reduction with semantic tumor segmentation, Ravi et al.114 | A. Dark frame subtraction and flat-fielding; B. Spatial denoising; C. Spectral smoothing and cropping; D. Spectral normalization; E. Novel deep learning–based embedding (FR-t-SNE) | Cube | Classes: tumor tissue and normal tissue; Subclasses: primary tumor and their types and metastasis and their origin (nine total) | Discrete cosine transform–based semantic texton forest | Visual assessment and tumor histopathology results from region of interest | Sixfold cross-validation | 72% overall accuracy; 53% tumor sensitivity (due to inter-patient variability); 92% tumor specificity | 40 s/cube | Intel Xeon E7-8890 v4 (24 cores) |
| Tumor and blood vessel identification, Fabelo et al.122 | A. Dark frame subtraction and flat-fielding; B. Spatial denoising; C. Spectral smoothing and cropping; D. Spectral normalization; E. FR-t-SNE embedding | Cube | Classes: tumor tissue, normal tissue, blood vessel, and background | Mixed supervised–unsupervised pipeline | Visual assessment and tumor histopathology results from region of interest | Tenfold cross-validation | 99% to 100% overall accuracy; 98% to 100% tumor sensitivity | 1 min/cube | Kalray MPPA-256-N |
| Tumor and blood vessel identification, tumor type prediction, and speedup, Fabelo et al.115 | A. Dark frame subtraction and flat-fielding; B. Spatial denoising; C. Spectral smoothing and cropping; D. Spectral normalization | Cube | Classes: tumor tissue, normal tissue, and blood vessel; Subclasses: primary tumors, metastasis, and their origin (eight total) | Mixed supervised–unsupervised pipeline | Visual assessment and tumor histopathology results from region of interest | Tenfold cross-validation | 98% overall accuracy; unspecified sensitivity | 1 min/cube | Kalray MPPA EMB01 board |
| Brain tissue classification, Fabelo et al.131 | A. Dark frame subtraction and flat-fielding; B. Spatial denoising; C. Spectral smoothing and cropping; D. Spectral normalization | Cube | Classes: tumor tissue, normal tissue, hypervascularized tissue, and background | Combined 1D DNN and 2D CNN | Visual assessment and tumor histopathology results from region of interest | Leave-one-patient-out cross-validation | 80% overall accuracy; 42% tumor accuracy | 1 min/cube | NVIDIA Quadro K2200 GPU |
| | | Cube | | 2D deep convolutional neural network | | | 77% overall accuracy; 42% tumor accuracy | 1 min/cube | |
| | | Pixel-wise | | 1D deep neural network | | | 77% overall accuracy; 40% tumor accuracy | 10 s/cube | |
| Hyperspectral band selection, Martinez et al.99 | A. Dark frame subtraction and flat-fielding; B. Spatial denoising; C. Spectral cropping and normalization; D. Spectral resampling | Pixel-wise | Classes: tumor tissue, normal tissue, hypervascularized tissue, and background | SVM | Visual assessment and tumor histopathology results from region of interest | Leave-one-patient-out cross-validation | With the top 2.5% most significant spectral bands: 77% overall accuracy; 57% tumor sensitivity | N/S | Algorithm |
| Brain tissue classification, Fabelo et al.174 | A. Dark frame subtraction and flat-fielding; B. Spatial denoising; C. Spectral smoothing and cropping; D. Spectral normalization | Cube | Classes: tumor tissue, normal tissue, hypervascularized tissue, and background | 2D deep convolutional neural network | Visual assessment and tumor histopathology results from region of interest | Leave-one-patient-out cross-validation | 85% overall accuracy; 41% tumor sensitivity | 1 min/cube | NVIDIA Titan-XP GPU |
| | | Pixel-wise | | 1D deep neural network | | | 84% overall accuracy; 42% tumor sensitivity | 10 s/cube | NVIDIA Quadro K2200 GPU |
| Tumor and blood vessel identification, Manni et al.132 | A. Dark frame subtraction and flat-fielding; B. Spectral cropping; C. Spectral band selection | Pixel-wise | Classes: tumor tissue, normal tissue, blood vessel, and background | SVM | Visual assessment and tumor histopathology results from region of interest | Leave-one-patient-out cross-validation | 76% overall accuracy; 43% tumor sensitivity | N/S | N/S |
| | | Cube | | 2D convolutional neural network | | | 72% overall accuracy; 14% tumor sensitivity | | NVIDIA Titan-XP GPU |
| | | Cube | | 2D–3D hybrid convolutional neural network | | | 80% overall accuracy; 68% tumor sensitivity | | |
| | | Pixel-wise | | 1D deep neural network | | | 78% overall accuracy; 19% tumor sensitivity | | |
| Tumor identification, Martínez-González et al.119 | A. Dark frame subtraction and flat-fielding; B. Spectral smoothing; C. Spectral band selection | Pixel-wise | Classes: tumor tissue and normal tissue | Linear scalar SVM | Visual assessment and tumor histopathology results from region of interest | Unspecified data split | 89% overall sensitivity | <1 s | Intel Core i5 |
| Gray–white matter classification, Lai et al.234 | Dark frame subtraction and flat-fielding | Pixel-wise | Classes: gray matter and white matter | SVM | Visual assessment and tumor histopathology results from region of interest | Leave-one-patient-out cross-validation | 96% overall sensitivity; 89% overall specificity | N/S | N/S |
| Brain tissue classification, Cruz-Guerrero et al.116 | Dark frame subtraction and flat-fielding | Pixel-wise | Classes: tumor tissue, normal tissue, hypervascularized tissue, and background | Blind linear unmixing with end-member estimation (EBEAE)144,235 | Visual assessment and tumor histopathology results from region of interest | Leave-one-patient-out cross-validation | 67% to 76% overall accuracy; 30% to 50% tumor sensitivity | 29 to 32 s/cube | Algorithm |
| Tumor and blood vessel identification and tumor type prediction, Ruiz et al.98 | A. Dark frame subtraction and flat-fielding; B. Spectral correction and normalization | Pixel-wise | Classes: tumor tissue, normal tissue, venous blood vessel, arterial blood vessel, and dura mater | SVM | Visual assessment and tumor histopathology results from region of interest | Leave-one-patient-out cross-validation | 75% to 97% overall median accuracy | N/S | N/S |
| | | | | RF | | | 55% to 97% overall median accuracy | | |
| Hyperspectral cube fusion, Leon et al.147 | A. Dark frame subtraction and flat-fielding; B. Spatial denoising; C. Spatial upsampling for NIR image | Cube | Fused hyperspectral image | Spatial registration using SURF and MSER detectors via a projective transform | N/A | Structural similarity index (SSIM) among gray reconstructions from transformed cubes | 0.78 SSIM; 21% accuracy improvement | N/S | N/S |
| Brain tissue classification, Hao et al.135 | A. Dark frame subtraction and flat-fielding; B. Spatial denoising; C. Spectral smoothing and band selection; D. Spectral normalization | Cube | Classes: tumor tissue, normal tissue, hypervascularized tissue, and background | CNN | Visual assessment and tumor histopathology results from region of interest | Leave-one-patient-out cross-validation | 97% overall accuracy; 91% tumor sensitivity | N/S | NVIDIA GeForce RTX 2080 Ti GPU |
| Hyperspectral band selection, Baig et al.118 | A. Dark frame subtraction and flat-fielding; B. Spatial denoising; C. Spectral smoothing and downsampling; D. Spectral normalization | Pixel-wise | Classes: tumor tissue and normal tissue | Empirical mode decomposition | Visual assessment and tumor histopathology results from region of interest | Leave-one-patient-out cross-validation | 88% overall accuracy for the top 2.5% most significant bands | N/S | Algorithm |
| Brain tissue classification, Urbanos et al.150 | A. Dark frame subtraction and flat-fielding; B. Spectral correction and normalization | Pixel-wise | Classes: tumor tissue, normal tissue, venous blood vessel, arterial blood vessel, and dura mater | SVM | Visual assessment and tumor histopathology results from region of interest | Leave-one-patient-out cross-validation | 60% overall accuracy; 20% tumor sensitivity | N/S | N/S |
| | | Pixel-wise | | RF | | | 53% overall accuracy; 11% tumor sensitivity | | |
| | | Cube | | CNN | | | 49% overall accuracy; 32% tumor sensitivity | | |
| Hyperspectral image denoising, Sun et al.236 | N/S | Cube | Denoised image | TV-regularized denoising | N/A | N/A | N/A | N/S | N/S |
| Brain tissue classification, Ayaz et al.136 | A. Dark frame subtraction and flat-fielding; B. Spectral dimensionality reduction and sensitivity correction | Cube | Classes: tumor tissue, normal tissue, hypervascularized tissue, and background | 3D CNN | Visual assessment and tumor histopathology results from region of interest | 80:10:10 data split | >99% overall accuracy; 99% tumor sensitivity | N/S | NVIDIA GeForce RTX 5000 GPU |
| Brain tissue classification, Wang et al.134 | A. Dark frame subtraction and flat-fielding; B. Spectral dimensionality reduction and sensitivity correction | Cube | Classes: tumor tissue, normal tissue, hypervascularized tissue, and background | CNN | Visual assessment and tumor histopathology results from region of interest | 500:1 data split | >99% overall accuracy; 99% tumor accuracy | N/S | N/S |
| Brain tissue classification, Cebrián et al.137 | N/S | Cube | Classes: tumor tissue, normal tissue, blood, and meninges | Deep recurrent neural network | Visual assessment and tumor histopathology results from region of interest | Fivefold cross-validation | >99% overall AUC; >99% tumor AUC | N/S | N/S |
| Brain tissue classification, La Salvia et al.140 | A. Dark frame subtraction and flat-fielding; B. Spectral band selection | Cube | Classes: tumor tissue, normal tissue, hypervascularized tissue, and background | CNN (UNet++ and DeepLabV3+ architectures) | Visual assessment and tumor histopathology results from region of interest | Leave-one-patient-out cross-validation | 76% tumor accuracy; 76% tumor sensitivity | 0.29 s | NVIDIA GeForce RTX 2080 GPU |
| Testing deep learning and classical machine learning algorithms for low-grade gliomas, Giannantonio et al.141 | Spectral band selection | Pixel-wise | Classes: tumor tissue and normal tissue | SVM | Visual assessment | 75:25 data split | 91% overall accuracy; 92% overall sensitivity | N/S | NVIDIA GeForce RTX 3090 GPU |
| | | Pixel-wise | | RF | | | 86% overall accuracy; 88% overall sensitivity | | |
| | | Pixel-wise | | MLP | | | 92% overall accuracy; 91% overall sensitivity | | |
| | | Cube | | CNN | | | 81% overall accuracy; 80% overall sensitivity | | |
| Hyperspectral band selection, Zhang et al.145 | A. Dark frame subtraction and flat-fielding; B. Spectral normalization | Pixel-wise | Classes: tumor tissue, normal tissue, blood vessel, and background | Data gravitation and weak correlation | Visual assessment and tumor histopathology results from region of interest | Fivefold cross-validation | 90% to 98% overall accuracy | 1 s | Algorithm |
| Tumor and blood vessel identification, Leon et al.88 | A. Dark frame subtraction and flat-fielding; B. Spatial denoising; C. Spectral cropping, smoothing, and downsampling; D. Spectral normalization | Cube | Classes: tumor tissue, normal tissue, blood vessel, and background | Mixed supervised–unsupervised pipeline | Visual assessment and tumor histopathology results from region of interest | 60:20:20 data split; fivefold cross-validation | 87% overall accuracy; 58% tumor accuracy | N/S | N/S |
| Pediatric tumor identification, Kifle et al.90 | None | Pixel-wise | Classes: tumor tissue and normal tissue | RF | Visual assessment | 70:30 data split | 83% to 85% overall accuracy | N/S | N/S |
| Tumor and blood vessel identification, Sancho et al.152 | A. Dark frame subtraction and flat-fielding; B. Spectral normalization and correction | Cube | Classes: tumor tissue, normal tissue, blood vessel, and dura mater | Mixed supervised–unsupervised pipeline | Visual assessment and tumor histopathology results from region of interest | 80:20 data split | 95% overall AUC; 95% tumor AUC | 14 fps | NVIDIA GeForce RTX 3090 GPU |
| Brain tissue classification, Martín-Pérez et al.100 | A. Dark frame subtraction and flat-fielding; B. Spatial denoising; C. Spectral cropping and correction; D. Spectral normalization | Pixel-wise | Classes: tumor tissue (with subclasses), normal tissue, arterial and venous blood vessels, dura mater, and skull | RF | Visual assessment and tumor histopathology results from region of interest | 80:15:5 data split | 57% tumor AUC (with snapshot HSI); 65% tumor AUC (with line scan HSI) | N/S | N/S |
| HSI-MR registration, Villa et al.173 | None | Cube | MRI-HSI fusion | Depth-based 3D registration | Actuator position | N/A | registration error | 5 s | N/S |
| Brain tissue classification, Zhang et al.142 | A. Dark frame subtraction and flat-fielding; B. Spectral normalization | Cube | Classes: tumor tissue, normal tissue, blood vessel, and background | CNN | Visual assessment and tumor histopathology results from region of interest | Unspecified data split | 97% overall accuracy | 90 to 100 s | N/S |
| Neurosurgical oncology: fluorescence | | | | | | | | | |
| PpIX concentration estimation, Valdés et al.67 and Valdés et al.84 | Spectral interpolation | Pixel-wise | PpIX concentrations | Fitting to known fluorophore mixture spectra and empirical correction algorithm | Liquid tissue-mimicking phantoms | Phantom correction accuracy | 24% PpIX concentration accuracy; 20 ng/ml detection threshold | 4 to 8 s | N/A |
| PpIX concentration estimation, Valdés et al.211 | Spectral interpolation | Pixel-wise | PpIX concentrations | Empirical correction algorithm | Liquid tissue-mimicking phantoms | Phantom correction accuracy | 6% PpIX concentration accuracy; 20 ng/ml detection threshold | 1 to 2 s | N/A |
| PpIX concentration estimation, Jermyn et al.217 | Spectral interpolation | Pixel-wise | PpIX concentrations | Empirical correction algorithm | Liquid tissue-mimicking phantoms | Phantom correction accuracy | Best corrected fluorescence fit detection threshold | N/S | N/A |
| PpIX concentration estimation, Xie et al.221 | Dark frame subtraction and flat-fielding | Cube | PpIX concentrations | Spatially regularized reconstruction | Liquid tissue-mimicking phantoms | Phantom correction accuracy | Best corrected fluorescence fit detection threshold | N/S | N/A |
| PpIX concentration estimation, Bravo et al.219 | Dark frame subtraction and flat-fielding | Pixel-wise | PpIX concentrations | Fitting to known fluorophore mixture spectra and empirical correction algorithm | Liquid tissue-mimicking phantoms | Phantom correction accuracy | Ground truth to estimate linear fit detection threshold | N/S | N/A |
| Fluorescence component spectra identification, Black et al.199 | Dark frame subtraction and flat-fielding | Pixel-wise | Significance of autofluorescence | Fitting to autofluorescence and PpIX spectra | Fluorescence spectra from biopsies | Spectral unmixing fit quality | In weakly fluorescing areas, 82% lower error for five-component spectral fitting than for fitting the PpIX 635-nm peak only | N/A | N/A |
| Tumor property classification, Black et al.222 | A. Dark frame subtraction and flat-fielding; B. Spectrally constrained dual-band normalization | Pixel-wise | Tumor type, grade, glioma margins, and IDH mutation prediction | RF and multilayer perceptron | Fluorescence spectra from biopsies | Fivefold cross-validation | 87% tumor type accuracy; 96% tumor grade accuracy; 86% margin accuracy; 93% IDH mutation accuracy | N/A | N/S |
| Joint correction and unmixing of fluorescence spectra, Black et al.226 | N/S | Cube | Corrected fluorescence spectra | 1D convolutional neural network in a mixed supervised–unsupervised framework | Liquid tissue-mimicking phantoms/pig brain homogenates | Pearson correlation coefficients between known and predicted concentrations | for phantoms; for pig brain homogenates | N/A | N/S |
| Fluorescence component spectra identification and significance, Black et al.223 | A. Dark frame subtraction and flat-fielding; B. Spectrally constrained dual-band normalization | Pixel-wise | Fluorescence spectrum library | Sparse non-negative Poisson regression | Fluorescence spectra from biopsies, simulated data | Data distribution analysis; spectral component abundances | Data distribution is 82% closer to Poisson than Gaussian in terms of KL divergence; each library component is present in >7% of the dataset | N/A | N/A |
| Vascular neurosurgery | | | | | | | | | |
| Cerebral oxygenation mapping, Mori et al.101 | A. Spectral smoothing and cropping; B. Spectral normalization | Pixel-wise | Oxygen saturation | Fitting to known hemoglobin and oxyhemoglobin spectra | N/A | N/A | N/A | 10 s/cube | N/A |
| Distinguishing blood and blood vessels, Laurence et al.157 | A. Dark frame subtraction and flat-fielding; B. Denoising; C. Spatial registration to account for breathing | Pixel-wise | Oxygen saturation temporal dynamics | Fitting to known hemoglobin and oxyhemoglobin spectra and Fourier transform | Electrocorticography recordings | Visual overlay comparison | N/A | 25 s/cube | N/A |
| Diagnosing cerebral hyperperfusion, Iwaki et al.156 | ROI selection and outlier rejection | Pixel-wise | Oxygen saturation | Fitting to known hemoglobin and oxyhemoglobin spectra | Visual assessment and co-registered SPECT images | Comparison against SPECT | 85% hyperperfusion sensitivity | N/S | N/A |
| Co-designing hemodynamic and brain mapping, Caredda et al.165 | N/A | Pixel-wise | Oxygen saturation and cytochrome-c-oxidase concentration | Fitting to known hemoglobin, cytochrome-c-oxidase, and oxyhemoglobin spectra and Monte Carlo light transport simulation | Ground truth from light transport simulation | Comparison against ground truth | Concentration estimation errors: 0.5% oxyhemoglobin; 4.4% hemoglobin; 15% oxCCO | N/A | N/A |
| Functional neurosurgery | | | | | | | | | |
| Imaging seizures within surgery, Noordmans et al.104 | A. Dark frame subtraction and flat-fielding; B. Spectral normalization | Pixel-wise | Oxygen saturation temporal dynamics | Fitting to known hemoglobin and oxyhemoglobin spectra | Electrocorticography recordings | Visual overlay comparison | N/A | N/S | N/A |
| Imaging neurovascular coupling, Pichette et al.92 | A. Dark frame subtraction and flat-fielding; B. Spectral filter response linear correction; C. Spatial registration to account for breathing; D. Spatial cropping to region of interest | Pixel-wise | Oxygen saturation temporal dynamics | Fitting to known hemoglobin and oxyhemoglobin spectra | N/A | N/A | N/A | N/S | N/A |
| Metabolic brain mapping, Caredda et al.163 | A. Spatial registration to account for breathing; B. Spectral smoothing | Pixel-wise | Oxygen saturation and cytochrome-c-oxidase concentration | Fitting to known hemoglobin and oxyhemoglobin spectra | Electrical brain stimulation data | Visual overlay comparison and normalized cross-correlation coefficient | Correlation coefficients over time range of interest: 0.76 oxyhemoglobin; 0.86 hemoglobin; 0.84 oxCCO | N/S | Intel Core i5-7200U |
| Imaging hemodynamic response to interictal epileptiform discharges, Laurence et al.160 | A. Dark frame subtraction and flat-fielding; B. Spatial registration to account for breathing; C. Spatial cropping to region of interest; D. Outlier rejection | Pixel-wise | Oxygen saturation | Fitting to known hemoglobin and oxyhemoglobin spectra | Electrocorticography recordings | Visual overlay comparison | N/A | N/S | N/A |
| Spine surgery | | | | | | | | | |
| Positioning feedback and navigation, Manni et al.59 | A. Dark frame subtraction and flat-fielding; B. Spatial denoising; C. Spectral band selection | SURF/DELF/MSER features237–239 | Feature displacement | k-nearest neighbors | Fiducial markers | Comparison between detected and actual marker locations | marker localization error | N/S | N/A |

N/S, not specified; N/A, not applicable; WL, white light; FL, fluorescence; SURF, speeded up robust features; DELF, deep local features; MSER, maximally stable extremal regions; SSIM, structural similarity index measure; KL, Kullback–Leibler; SPECT, single-photon emission computed tomography; oxCCO, oxidized cytochrome-c-oxidase.
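Nearly every reflectance pipeline in Table 2 begins with dark-frame subtraction and flat-fielding. A common form of this calibration converts raw counts to relative reflectance as $R = (I_{\mathrm{raw}} - I_{\mathrm{dark}})/(I_{\mathrm{white}} - I_{\mathrm{dark}})$. Here, the white reference is a reflectance standard imaged under the same illumination, and the dark frame is captured without light reaching the sensor. The sketch below assumes all three frames share the same shape and exposure. The per-pixel min-max scaling is only one possible choice of "spectral normalization" and is not necessarily the one used in any cited study. The function names are hypothetical.

```python
import numpy as np


def calibrate_cube(raw, white, dark, eps=1e-6):
    """Convert raw sensor counts to relative reflectance.

    Implements dark-frame subtraction and flat-fielding:
    R = (raw - dark) / (white - dark), computed per pixel and per band.
    """
    raw = np.asarray(raw, dtype=np.float64)
    white = np.asarray(white, dtype=np.float64)
    dark = np.asarray(dark, dtype=np.float64)
    # Guard against zero or negative reference signal (e.g., dead pixels)
    reference = np.maximum(white - dark, eps)
    # Negative values arise from noise below the dark level; clip them to zero
    return np.clip((raw - dark) / reference, 0.0, None)


def normalize_spectra(cube, eps=1e-12):
    """Min-max scale each pixel spectrum to [0, 1] (one possible spectral normalization)."""
    lo = cube.min(axis=-1, keepdims=True)
    hi = cube.max(axis=-1, keepdims=True)
    return (cube - lo) / np.maximum(hi - lo, eps)


# Synthetic check: build raw counts from a known reflectance and recover it
rng = np.random.default_rng(0)
dark = np.full((4, 5, 8), 100.0)
white = dark + 1000.0
true_reflectance = rng.uniform(0.1, 0.9, size=(4, 5, 8))
raw = dark + true_reflectance * (white - dark)
reflectance = calibrate_cube(raw, white, dark)
normalized = normalize_spectra(reflectance)
```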

3.1. Imaging Hardware and Software

3.1.1. Neurosurgical oncology

HSI for use in neurosurgical oncology was introduced by Gebhart et al.107 in 2007. They coupled a VariSpec VIS-20 LCTF from Cambridge Research Instruments, Inc.108 with a PhotonMax electron multiplying charge-coupled device (EMCCD) camera109 mounted on a surgical microscope to measure intraoperative autofluorescence and diffuse reflectance spectra, with acquisition times of 5 min. Rather than relying on reflectance alone, the authors combined reflectance and autofluorescence measurements into a reflectance/autofluorescence ratio for optimal identification of tumor tissue. Similarly, Valdés et al.67 used a VariSpec LCTF coupled with a pco.pixelfly charge-coupled device (CCD) camera110 on a surgical microscope (Zeiss OPMI Pentero) [Fig. 3(c)] to measure reflectance and fluorescence spectra. These spectra were combined in a fluorescence correction algorithm to enable more accurate measurement of tissue fluorophores during brain tumor resection. In both approaches, reflectance measurements were coupled with fluorescence to identify tumor tissue, as discussed in more detail in Sec. 4. It was not until 2016, with the kickoff of the European Hyperspectral Imaging Cancer Detection (HELICoiD) project111 and the development of the HELICoiD demonstrator by Salvador et al.112 and Fabelo et al.,113 that reflectance HSI alone was used for tumor tissue identification.
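At the pixel level, combining reflectance with autofluorescence, as in the ratio-based approach above, reduces to a band-wise ratio followed by a decision rule. The sketch below is a hypothetical illustration. The band ranges, the threshold, and the direction of the comparison are placeholders and are not the parameters used by Gebhart et al. The two cubes are assumed to be co-registered.

```python
import numpy as np


def reflectance_autofluorescence_ratio(reflectance, autofluorescence, refl_bands, af_bands, eps=1e-9):
    """Per-pixel ratio of band-averaged reflectance to band-averaged autofluorescence.

    reflectance, autofluorescence: co-registered cubes of shape (H, W, B).
    refl_bands, af_bands: index arrays or slices selecting the bands to average.
    """
    r = reflectance[..., refl_bands].mean(axis=-1)
    f = autofluorescence[..., af_bands].mean(axis=-1)
    return r / np.maximum(f, eps)  # eps guards against division by zero


# Toy example: 2 x 2 pixels, 6 bands; the right-hand column fluoresces more strongly
refl = np.full((2, 2, 6), 0.5)
af = np.full((2, 2, 6), 0.25)
af[:, 1, :] = 0.5
ratio = reflectance_autofluorescence_ratio(refl, af, slice(0, 3), slice(3, 6))
flagged = ratio > 1.5  # hypothetical threshold and comparison direction
```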

The HELICoiD demonstrator consists of a pair of line-scan hyperspectral cameras mounted on a custom optical breadboard in the operating room [Fig. 3(a)]. These off-the-shelf cameras from Headwall Photonics64 are:

- the CCD-based Hyperspec® VNIR A-series, operating in the VIS-NIR wavelength range (400 to 1000 nm, 826 spectral bands, 2- to 3-nm spectral resolution); and
- the InGaAs-based Hyperspec® NIR 100/U, operating in the NIR to short-wave infrared (SWIR) wavelength range (900 to 1700 nm, 172 spectral bands, 5-nm spectral resolution).

The cameras are set up in a confocal stereo configuration with matched fields of view, at an imaging distance of 40 cm and a surgical field clearance of 29 cm. The entire imaging assembly is mounted on a translation stage to implement pushbroom scanning [Fig. 1(c)]. Illumination is provided by a 150-W quartz–tungsten–halogen (QTH) bulb with a spectral range of 400 to 2200 nm. Its light passes through an optical fiber to a cold light emitter, which keeps the heat of the bulb away from the tissue and avoids thermal damage. Follow-up work in the HELICoiD project used other hyperspectral line cameras in linear scanning configurations to capture hyperspectral datasets. These include the Specim ImSpector® VNIR V10-E spectrograph66 (400 to 1000 nm, 2.8-nm resolution), used by Madroñal et al.,97 and the Headwall Hyperspec® NIR X-Series63 (900 to 1700 nm, 166 spectral bands), used by Ravi et al.114
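In pushbroom scanning, each camera frame records one spatial line across all wavelengths, and the translation stage supplies the second spatial axis. The sketch below assumes that each frame arrives as an array of shape (pixels along the slit, bands) and that frames are ordered by stage position. Some cameras output the transposed layout, which would need a transpose first. The function name is hypothetical.

```python
import numpy as np


def assemble_pushbroom_cube(line_frames):
    """Stack pushbroom line frames into a hyperspectral cube.

    line_frames: sequence of arrays, each of shape (n_slit_pixels, n_bands),
    ordered by translation-stage position.
    Returns an array of shape (n_lines, n_slit_pixels, n_bands).
    """
    frames = [np.asarray(frame, dtype=np.float64) for frame in line_frames]
    if not frames:
        raise ValueError("no frames to assemble")
    shapes = {frame.shape for frame in frames}
    if len(shapes) != 1 or frames[0].ndim != 2:
        raise ValueError(f"expected 2D frames of one shape, got {shapes}")
    # The scan direction becomes the first spatial axis of the cube
    return np.stack(frames, axis=0)


# Simulate scanning a synthetic 6 x 4 scene with 5 bands, one line per stage step
rng = np.random.default_rng(42)
scene = rng.random((6, 4, 5))
frames = [scene[line] for line in range(scene.shape[0])]
cube = assemble_pushbroom_cube(frames)
```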

In the initial HELICoiD pilot study, several pixel-wise classification algorithms were applied to data collected with the HELICoiD demonstrator to test the potential of reflectance spectra for guiding tumor resection. These include support vector machines (SVMs), multilayer perceptrons (MLPs), and random forests (RFs), implemented on parallel processing platforms such as:

- the Headwall Hyperspec® Data Processing Unit112,113 (31 images from 22 procedures on primary glioblastomas and 135k labeled spectra from the HELICoiD demonstrator); and
- the Kalray MPPA-256-N HPC device96 (13 images from 13 procedures on glioblastomas and metastases and 25k labeled spectra from the HELICoiD demonstrator).

The training data consisted of mixed-patient pixel-wise spectra from intraoperative hyperspectral cubes with pathologist-labeled ground truth. The classifiers were tested on data from each HELICoiD camera separately. The VIS-NIR data were most effective with the RF classifier, providing cross-validated accuracy, sensitivity, and specificity greater than 99% for mixed-patient pixel-wise three-class classification.96,113 Subsequently, this classification scheme was trained on a larger dataset (36 cubes from 22 patients, labeled spectra from the HELICoiD demonstrator) and integrated into a mixed supervised–unsupervised framework for fast intraoperative visualization.115 This framework achieved a total per-frame acquisition and processing time of 1 min and an overall accuracy greater than 98% for five-class classification (including blood vessels). Further work has extended these results with techniques such as blind linear unmixing116,117 and empirical mode decomposition,118 has shown SVMs to be effective for identifying malignant tumor phenotypes,119 and has demonstrated real-time estimation of the molecular composition of brain tissues.120
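The mixed supervised–unsupervised framework mentioned above pairs a pixel-wise classifier with an unsupervised segmentation of the same cube. One simple way to fuse the two is majority voting, sketched below: every pixel in an unsupervised cluster receives the class predicted most often within that cluster. This is a simplified illustration rather than the exact HELICoiD implementation. The classifier and clustering outputs are assumed to be integer label maps of the same shape, and the function and class names are hypothetical.

```python
import numpy as np


def cluster_majority_vote(class_map, cluster_map, n_classes):
    """Assign each unsupervised cluster the most frequent supervised class within it.

    class_map: integer map of per-pixel class predictions in [0, n_classes).
    cluster_map: integer map of cluster IDs (e.g., from k-means), same shape.
    Ties go to the lowest class index (np.argmax behavior).
    """
    class_map = np.asarray(class_map)
    cluster_map = np.asarray(cluster_map)
    if class_map.shape != cluster_map.shape:
        raise ValueError("class_map and cluster_map must have the same shape")
    fused = np.empty_like(class_map)
    for cluster_id in np.unique(cluster_map):
        mask = cluster_map == cluster_id
        votes = np.bincount(class_map[mask], minlength=n_classes)
        fused[mask] = np.argmax(votes)
    return fused


# Toy 2 x 3 example: class 0 = normal, 1 = tumor, 2 = blood vessel (hypothetical labels)
classes = np.array([[0, 0, 1], [2, 1, 1]])
clusters = np.array([[0, 0, 0], [1, 1, 1]])
fused = cluster_majority_vote(classes, clusters, n_classes=3)
```

Voting within clusters smooths isolated misclassified pixels but inherits any errors in the cluster boundaries.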

Further, manifold embedding has been employed for dimensionality reduction.114 The goals are to reduce the time and computational complexity of working with high-dimensional hyperspectral data (hundreds of wavelength channels across millions of pixels) and to improve the semantic consistency of segmentation. This method uses a deep learning–based modified version of the t-distributed stochastic neighbor embedding (t-SNE) algorithm,121 called fixed-reference t-SNE (FR-t-SNE). This non-linear embedding attempts to preserve local spatial regularity (nearby pixels belong to the same class with high probability) while still capturing high-level global features (pixel classes). Its generalization was evaluated by testing the model on data from patients not used for training, yielding around 72% overall accuracy and 53% tumor sensitivity for four-class classification (33 images from 18 patients, captured with the HELICoiD demonstrator). Fabelo et al.122 combined the above pixel-wise and dimensionality-reduced classifiers into a joint spatio-spectral classifier (five cubes from five patients and 45k labeled spectra from the HELICoiD demonstrator). This classifier reached an overall accuracy greater than 99%, with a speed-up of up to 8.5× achieved through hardware acceleration.
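The >99% mixed-patient results and the roughly 72% obtained on unseen patients reflect different data splits. In a mixed-patient split, pixels from the same patient can fall on both sides of the split. In a leave-one-patient-out split, all pixels of the held-out patient are excluded from training. The sketch below builds both kinds of folds over synthetic patient IDs and counts how many folds share a patient between training and test. The helper names are hypothetical.

```python
import numpy as np


def leave_one_patient_out(patient_ids):
    """Yield (train_idx, test_idx) pairs that hold out all pixels of one patient at a time."""
    patient_ids = np.asarray(patient_ids)
    for patient in np.unique(patient_ids):
        test_idx = np.flatnonzero(patient_ids == patient)
        train_idx = np.flatnonzero(patient_ids != patient)
        yield train_idx, test_idx


def mixed_patient_kfold(n_samples, k, seed=0):
    """Yield k folds over shuffled pixels, ignoring which patient each pixel came from."""
    rng = np.random.default_rng(seed)
    order = rng.permutation(n_samples)
    for fold in np.array_split(order, k):
        test_idx = np.sort(fold)
        train_idx = np.setdiff1d(np.arange(n_samples), test_idx)
        yield train_idx, test_idx


def count_shared_patient_folds(folds, patient_ids):
    """Count folds in which some patient contributes pixels to both train and test."""
    patient_ids = np.asarray(patient_ids)
    return sum(
        bool(np.intersect1d(patient_ids[train_idx], patient_ids[test_idx]).size)
        for train_idx, test_idx in folds
    )


# Four hypothetical patients with 25 labeled pixels each
patient_ids = np.repeat([0, 1, 2, 3], 25)
lopo_shared = count_shared_patient_folds(leave_one_patient_out(patient_ids), patient_ids)
mixed_shared = count_shared_patient_folds(mixed_patient_kfold(patient_ids.size, k=10), patient_ids)
```

Here, every mixed-patient fold shares a patient with its training set, while no leave-one-patient-out fold does. This is one reason why patient-wise validation is the stricter test of generalization.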

Various hardware acceleration platforms have been explored to speed up classification by individually optimizing the components of these classifiers. The linear-kernel SVM113 has been sped up 3 to 5× on massively parallel processor arrays97 and system-on-chip architectures123,124 and 90× on graphics processing units (GPUs);125 principal component analysis (PCA) for dimensionality reduction during data preprocessing115 has been sped up 36× using multiple central processing unit (CPU) cores;126 k-nearest neighbor (KNN) classification115,122,127 has been sped up 30 to 66× on GPUs; and k-means clustering115,122 has been sped up 150× on GPUs.128 Jointly implementing the entire pipeline, including PCA, on a multi-GPU platform has yielded a total speed-up of 180× over serial implementations, reducing processing times from several hundred seconds to tens of seconds.129 The effect of optimizing the data-type representation of the hyperspectral images and their storage in memory has also been explored for lower-throughput processing.130
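To illustrate why nearest-neighbor classification parallelizes well, the following serial, brute-force sketch (plain Python; names and toy spectra are hypothetical, and it is not the cited GPU implementation) labels a query spectrum by majority vote among its k closest training spectra:

```python
import math
from collections import Counter


def knn_classify(train_spectra, train_labels, query, k=3):
    """Assign `query` the majority label among its k nearest training spectra,
    using Euclidean distance across wavelength bands (brute force)."""
    # Every distance below is computed independently of the others, as is
    # every query pixel; this independent work is what a GPU can spread out.
    nearest = sorted(
        (math.dist(query, spectrum), label)
        for spectrum, label in zip(train_spectra, train_labels)
    )[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


# Toy two-band spectra; real cubes have hundreds of bands.
train = [[0, 0], [0, 1], [1, 0], [10, 10], [10, 11], [11, 10]]
labels = ["NT", "NT", "NT", "TT", "TT", "TT"]
print(knn_classify(train, labels, [0.5, 0.5]))  # NT
```

Each query pixel needs a distance to every training spectrum, so the serial cost grows with both the number of pixels and the training set size, which makes these independent distance computations a natural target for GPU parallelization.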

Recently, deep learning has been applied to tumor identification in both fully connected per-pixel and convolutional spatio-spectral configurations.131,132 These networks learn both the embedding of the hyperspectral data and the classification features for the embedded data, while allowing fast computation on GPUs. In combination with unsupervised clustering techniques and minimal user guidance, the reported accuracies reach 77% to 78% for one-dimensional (1D) spectral deep neural networks (DNNs),131,132 72% to 77% for 2D convolutional neural networks (CNNs),131,132 80% for a combination of 1D DNN and 2D CNN,131 and 80% for 3D spatio-spectral CNNs132 (with datasets consisting of eight cubes from six patients and 82k labeled spectra;131 and 12 cubes from 12 patients and 116k spectra,132 both from the HELICoiD demonstrator). Other deep learning architectures133–143 have produced comparable results, showing potential for fast hyperspectral classification of brain structures. Figure 5 shows examples of the HELICoiD demonstrator used during brain tumor surgery for tissue classification with unmixing methods and deep neural networks.
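A two-layer pixel-wise DNN of the kind used in Fig. 5 maps one spectrum to class probabilities. The plain-Python sketch below shows only the forward pass; the weights are hand-picked placeholders rather than trained values, and the layer sizes are illustrative assumptions, not those of the cited networks.

```python
import math


def dense(x, weights, biases):
    """Fully connected layer: one output per row of `weights`."""
    return [sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(weights, biases)]


def relu(v):
    return [max(0.0, a) for a in v]


def softmax(v):
    m = max(v)  # subtract the max for numerical stability
    exps = [math.exp(a - m) for a in v]
    total = sum(exps)
    return [e / total for e in exps]


def pixelwise_dnn(spectrum, w1, b1, w2, b2):
    """Spectrum -> ReLU hidden layer -> class probabilities (NT, TT, BV, BG)."""
    return softmax(dense(relu(dense(spectrum, w1, b1)), w2, b2))


# Placeholder weights (NOT trained): 3 bands -> 2 hidden units -> 4 classes.
w1, b1 = [[1, 0, 0], [0, 1, 0]], [0, 0]
w2, b2 = [[1, 0], [0, 1], [0, 0], [-1, -1]], [0, 0, 0, 0]
probs = pixelwise_dnn([2.0, 1.0, 5.0], w1, b1, w2, b2)
classes = ["NT", "TT", "BV", "BG"]
print(classes[probs.index(max(probs))])  # NT
```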

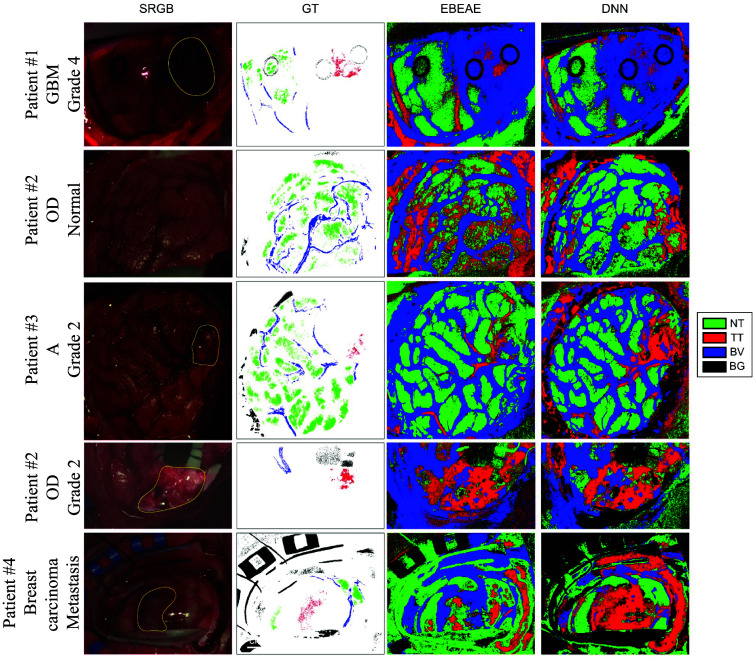

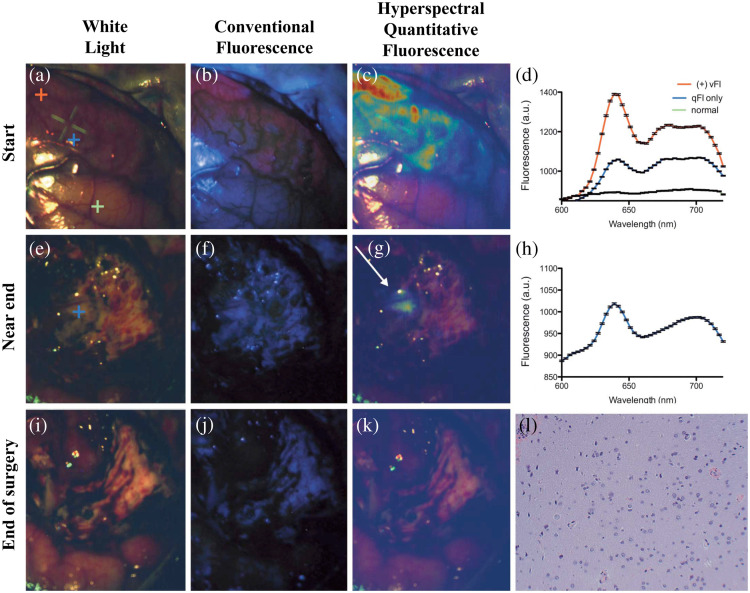

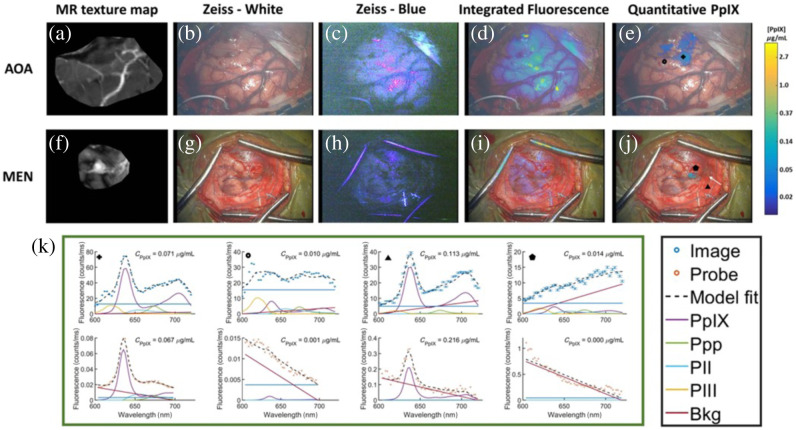

Fig. 5.

Classifying brain tissue types based on reflectance spectra. Left to right: intraoperative hyperspectral reflectance imaging of four patients with grade 2 and grade 4 gliomas using the HELICoiD system (patient 1 in row 1, patient 2 in rows 2 and 4, patient 3 in row 3, and patient 4 in row 5); white-light synthetic RGB image reconstructed from the hyperspectral cube, with tumor regions marked in yellow and biopsy sites marked with black circles; ground truth–labeled pixels; and pixel classifications using a linear unmixing method [extended blind end-member and abundance extraction (EBEAE)]117,144 and a two-layer pixel-wise DNN.131 The four classes are normal tissue (NT), tumor tissue (TT), blood vessel (BV), and background (BG). With fivefold cross-validation on mixed-patient pixel-wise data, EBEAE yields around 60% overall accuracy, 30% tumor sensitivity, and 85% tumor specificity, whereas the DNN yields 85% overall accuracy, 65% tumor sensitivity, and 95% tumor specificity. GBM, glioblastoma; OD, oligodendroglioma; A, astrocytoma. Adapted from Leon et al.,88 under CC-BY 4.0.
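The unmixing methods in Fig. 5 rest on the linear mixing model, in which a pixel spectrum is approximated as a weighted sum of end-member spectra. EBEAE is blind, estimating end-members and abundances jointly under constraints; the sketch below (hypothetical names, toy end-members) covers only the simpler step of estimating unconstrained least-squares abundances when the end-member spectra are already known.

```python
def solve(a, b):
    """Solve the square system a @ x = b (Gaussian elimination, partial pivoting)."""
    n = len(a)
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in reversed(range(n)):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def unmix(pixel, endmembers):
    """Least-squares abundances a_j such that pixel ~ sum_j a_j * endmembers[j]
    (normal equations; no nonnegativity or sum-to-one constraints)."""
    gram = [[sum(p * q for p, q in zip(u, v)) for v in endmembers] for u in endmembers]
    rhs = [sum(p * q for p, q in zip(u, pixel)) for u in endmembers]
    return solve(gram, rhs)


# Toy 4-band end-members and a pixel mixed 30% / 70% from them.
e1, e2 = [1.0, 0.0, 1.0, 0.0], [0.0, 1.0, 1.0, 1.0]
pixel = [0.3 * a + 0.7 * b for a, b in zip(e1, e2)]
print([round(a, 6) for a in unmix(pixel, [e1, e2])])  # [0.3, 0.7]
```

A pixel could then be assigned, for example, to the class whose end-member has the largest abundance; that assignment rule is an illustrative assumption.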

Feature engineering before classification has also been explored to provide better pre-processed input data for classification algorithms, for example, by selecting the most relevant spectral bands using iterative combinatorial optimization algorithms,99 correlation-based ranking,145 and deep learning.141 In addition, registered pairs of VIS-NIR and NIR images from the HELICoiD demonstrator have been analyzed for spectral similarities between classes so that non-distinctive samples can be ignored.146
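Correlation-based band ranking can be sketched by scoring each band by the absolute Pearson correlation between its reflectance and a binary tissue label. The label encoding (1 for tumor), the function names, and the toy spectra are assumptions, and the criterion used in the cited work may differ.

```python
import math


def pearson(x, y):
    """Pearson correlation; returns 0.0 if either input is constant."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return 0.0  # a constant band carries no information about the label
    return sxy / math.sqrt(sxx * syy)


def rank_bands(spectra, labels):
    """Band indices sorted from most to least |correlation| with a 0/1 label."""
    n_bands = len(spectra[0])
    scores = [abs(pearson([s[b] for s in spectra], labels)) for b in range(n_bands)]
    return sorted(range(n_bands), key=lambda b: (-scores[b], b))


# Hypothetical 3-band spectra; label 1 = tumor, 0 = other tissue.
spectra = [[0.5, 0.1, 0.3], [0.2, 0.2, 0.3], [0.4, 0.9, 0.3], [0.3, 0.8, 0.3]]
labels = [0, 0, 1, 1]
print(rank_bands(spectra, labels))  # [1, 0, 2]
```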

In the original HELICoiD setup,112,113 the two data streams from the visible and near-infrared (VNIR) and NIR cameras must be fused into a single hyperspectral cube146 so that the computational methods described above can exploit the combined spectral range. Therefore, Leon et al.147 proposed a new version of the demonstrator in which the confocal stereo configuration is changed to make the camera axes parallel. This changes the transformation between the two camera viewpoints from a projection to a translation, reducing the spatial and radiometric distortion of the captured spectra. Combined with spatial and spectral upsampling, hyperspectral cubes are generated at the original spatial resolution over two wavelength ranges (641 spectral bands between 435 and 901 nm and 144 spectral bands between 956 and 1638 nm), yielding a 21% increase in accuracy compared with using only the VNIR camera on a synthetic material database.
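With parallel camera axes, registering the two cameras reduces to a translation. The following sketch assumes that both cubes have already been resampled to a common pixel grid and that the offset (dy, dx) is known and integer-valued; real systems would also need sub-pixel registration and radiometric calibration. The function name and the cube[row][col][band] layout are hypothetical.

```python
def fuse_translated(vnir, nir, dy, dx):
    """Concatenate per-pixel spectra of two cubes related by a pure translation.

    Assumes VNIR pixel (r, c) images the same tissue as NIR pixel
    (r + dy, c + dx) and that both cubes share one pixel grid. VNIR pixels
    with no NIR counterpart are marked None.
    """
    rows, cols = len(nir), len(nir[0])
    fused = []
    for r, line in enumerate(vnir):
        out = []
        for c, spectrum in enumerate(line):
            rn, cn = r + dy, c + dx
            inside = 0 <= rn < rows and 0 <= cn < cols
            out.append(list(spectrum) + list(nir[rn][cn]) if inside else None)
        fused.append(out)
    return fused


# Toy 2 x 2 cubes with one band each; the NIR view is shifted by one column.
vnir = [[[10 * r + c] for c in range(2)] for r in range(2)]
nir = [[[100 + 10 * r + c] for c in range(2)] for r in range(2)]
fused = fuse_translated(vnir, nir, dy=0, dx=1)
print(fused[0])  # [[0, 101], None]
```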

Because the HELICoiD system is mounted on a platform separate from the surgical microscope, it interrupts the surgical workflow: the system must be physically moved into position before data acquisition [Fig. 3(a)]. To avoid such movement, Mühle et al.87 designed a workflow with a TIVITA® VIS-NIR tissue hyperspectral camera (500 to 1000 nm, 100 spectral bands, 5-nm spectral resolution, output pixels, , )65 mounted onto the oculars of a surgical microscope. However, because the cameras used in the above projects capture only one-dimensional spatial slices, they must be physically scanned in one dimension across the surgical field of view to capture the entire hyperspectral cube. Thus, this system can capture nanometer-resolution megapixel intraoperative surgical datasets (comparable with previous systems88,122,147,148) at the cost of a long acquisition time per capture. Data captured with this system yielded 99% accuracy and greater than 98% sensitivity for tumor detection (one patient, 29k labeled spectra). However, given the time required to acquire a single hyperspectral cube, the system has had limited utility for routine clinical use: it significantly interrupts the surgical workflow, which precludes performing the resection under continuous feedback from the HSI system.

Therefore, snapshot HSI systems such as the Ximea Corporation MQ022HG-IM-SM5X5-NIR (665 to 975 nm, 25 spectral bands, , )149 based on the IMEC SM5x5 NIR sensor, the Cubert Ultris X50 (350 to 1000 nm, 155 spectral bands, , ),91 the Senop HSC-2 (freely selectable bandwidths and resolutions),73 and the BaySpec OCI-2000 Series snapshot hyperspectral imagers (475 to 875 nm, 35 to 40 spectral bands, )74 have been explored as potential alternatives89,90,98,150–154 [Fig. 1(d)]. These can be mounted either by themselves89,98,150–152 or coupled to a surgical microscope90,93,153,154 to minimize disturbance to the surgical workflow [Fig. 3(d)]. In addition, systems that combine the advantages of snapshot and line-scanning hyperspectral acquisition, called snapscan systems (such as the IMEC Snapscan VNIR,75,93 470 to 900 nm, 150 spectral bands, , 2- to 20-s acquisition), have been coupled with surgical microscopes for intraoperative imaging.141 These systems have been used to develop machine learning–based classifiers (e.g., SVM, decision tree, and RF classifiers90,93,98,151) and convolutional neural networks,153 with similar results; for instance, a system with the Senop HSC-2 camera reported accuracies of around 98%.153
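Snapshot mosaic sensors such as the IMEC SM5x5 place a repeating 5 × 5 pattern of spectral filters over the pixel array, so a single exposure yields 25 band images, each at one-fifth of the sensor resolution along each axis. The sketch below splits a raw frame into those band images; the row-major filter order and the function name are assumptions (vendors supply the actual layout), and practical pipelines additionally correct spectral crosstalk and interpolate the missing pixels.

```python
def split_mosaic(raw, pattern=5):
    """Split a raw mosaic frame (2D list) into pattern * pattern band images.

    Band b = i * pattern + j collects the pixels at row offset i and column
    offset j within every pattern x pattern tile (assumed filter order).
    """
    height, width = len(raw), len(raw[0])
    bands = []
    for i in range(pattern):
        for j in range(pattern):
            bands.append([[raw[r][c] for c in range(j, width, pattern)]
                          for r in range(i, height, pattern)])
    return bands


raw = [[10 * r + c for c in range(10)] for r in range(10)]  # toy 10 x 10 frame
bands = split_mosaic(raw)
print(len(bands), bands[7])  # 25 [[12, 17], [62, 67]]
```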

3.1.2. Vascular neurosurgery

A major goal in vascular neurosurgery is to restore healthy blood flow to structures in the brain and to prevent ischemia (i.e., oxygen starvation of tissue), clots, and bleeding. Healthy blood flow provides an adequate supply of oxyhemoglobin to tissue. Therefore, oxygen saturation (i.e., the ratio of oxyhemoglobin to total hemoglobin) in bulk tissue is used as a measure of tissue health and of adequate oxygen delivery.

Hyperspectral oxygen saturation estimation was first used for intraoperative imaging of the cerebral cortex during superficial temporal artery (STA)–middle cerebral artery (MCA) bypass by Mori et al.101 Hyperspectral cubes were acquired with a standalone HSC1700 line scanning camera originally developed for the TAIKI Hyperspectral EO Mission (400 to 800 nm, 81 spectral bands, , 5- to 16-s acquisition).155 A mixed spectrum consisting of oxyhemoglobin, deoxyhemoglobin, and bulk tissue scattering was fit,102 and oxygen saturation was estimated from the fitted proportions. This study found that STA-MCA anastomosis increased oxygen saturation distal to the anastomosis, in brain regions of the MCA territory, in two patients with moyamoya disease and two with occlusion of the internal carotid artery. Iwaki et al.156 also found that HSI could detect cerebral hyperperfusion following this anastomosis in five patients with moyamoya disease. These results showcased the potential of hyperspectral data for hemodynamic imaging (i.e., imaging of blood flow and tissue perfusion) in vascular neurosurgery.
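The saturation estimate from a chromophore fit can be sketched as a linear least-squares problem. Assuming, as a simplification not taken from the cited model, that log-attenuation is linear in the oxyhemoglobin and deoxyhemoglobin concentrations plus a linear-in-wavelength scattering baseline, oxygen saturation follows as StO2 = c_oxy / (c_oxy + c_deoxy). Real extinction coefficients would come from published hemoglobin tabulations; the values in the usage example are placeholders chosen only to check that the fit recovers a known saturation.

```python
import math


def solve(a, b):
    """Solve the square system a @ x = b (Gaussian elimination, partial pivoting)."""
    n = len(a)
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in reversed(range(n)):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def fit_saturation(wavelengths, reflectance, eps_oxy, eps_deoxy):
    """Fit -ln R = c_oxy*eps_oxy + c_deoxy*eps_deoxy + s0 + s1*(wl - mean)/100
    by least squares and return StO2 = c_oxy / (c_oxy + c_deoxy)."""
    w0 = sum(wavelengths) / len(wavelengths)  # centering improves conditioning
    basis = [eps_oxy, eps_deoxy, [1.0] * len(wavelengths),
             [(w - w0) / 100 for w in wavelengths]]
    absorbance = [-math.log(r) for r in reflectance]
    gram = [[sum(p * q for p, q in zip(u, v)) for v in basis] for u in basis]
    rhs = [sum(p * q for p, q in zip(u, absorbance)) for u in basis]
    c_oxy, c_deoxy, _, _ = solve(gram, rhs)
    return c_oxy / (c_oxy + c_deoxy)


# Synthetic check with PLACEHOLDER extinction shapes (not real hemoglobin data).
wl = [500 + 10 * i for i in range(10)]
eps_o = [1, 3, 2, 5, 4, 1, 2, 6, 3, 2]
eps_d = [2, 1, 4, 2, 6, 3, 1, 2, 5, 4]
refl = [math.exp(-(0.6 * o + 0.4 * d + 0.1 + 0.001 * w))
        for o, d, w in zip(eps_o, eps_d, wl)]
print(round(fit_saturation(wl, refl, eps_o, eps_d), 3))  # 0.6
```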

Fu et al.103 developed an LCTF-based HSI system coupled with a Zeiss surgical microscope and tested it for predicting cerebral ischemia in rats. Unlike the prior work, which fit spectra to estimate oxygen saturation, the authors used an empirical measure of oxygen saturation and tissue perfusion. They showed that the ratio of tissue reflectance around 545 nm to reflectance around 560 nm could identify early brain ischemia in a rat stroke model. The method exploits the absorption spectra of deoxyhemoglobin and oxyhemoglobin, which are equal at 545 nm but change rapidly in opposite directions between 545 and 560 nm, giving the ratio high predictive power for detecting low oxygen saturation.
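The empirical 545/560 nm index reduces to a per-pixel band ratio. Because deoxyhemoglobin absorbs more strongly than oxyhemoglobin near 560 nm, a drop in saturation at fixed total hemoglobin lowers R(560) and raises the ratio. The sketch below (hypothetical names, toy reflectances) picks the nearest available bands; any decision threshold for ischemia would have to be calibrated and is not shown.

```python
def nearest_band(wavelengths, target_nm):
    """Index of the band whose center wavelength is closest to target_nm."""
    return min(range(len(wavelengths)), key=lambda i: abs(wavelengths[i] - target_nm))


def ratio_map(cube, wavelengths, num_nm=545.0, den_nm=560.0):
    """Per-pixel reflectance ratio R(num_nm) / R(den_nm); cube[row][col][band]."""
    i, j = nearest_band(wavelengths, num_nm), nearest_band(wavelengths, den_nm)
    return [[pixel[i] / pixel[j] for pixel in row] for row in cube]


wl = [540, 545, 550, 555, 560, 565]
# One row, two hypothetical pixels: the second absorbs more strongly near 560 nm.
cube = [[[0.40, 0.40, 0.38, 0.36, 0.40, 0.42],
         [0.40, 0.40, 0.34, 0.25, 0.20, 0.30]]]
print(ratio_map(cube, wl))  # [[1.0, 2.0]]
```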

Further, a snapshot hyperspectral system from IMEC with filters mosaiced on a CCD sensor (480 to 630 nm, 16 spectral bands, , ) was used by Laurence et al.157 to distinguish between blood vessels and bleeding in the cortex in three patients. Diffuse reflectance spectra measured by the camera were fit to a model consisting of a combination of oxyhemoglobin, deoxyhemoglobin, and tissue absorption,102 and the estimated oxyhemoglobin proportion was Fourier-transformed to obtain its temporal frequency distribution. The authors inferred that healthy regions (cortex and blood vessels), where oxygen saturation is driven by the respiratory rate, had a first harmonic temporal frequency of around 0.23 Hz, with a significant second harmonic at 0.46 Hz. Bleeding, by contrast, varied more strongly with the heart rate, at a frequency of around 1.3 Hz, which allowed vessels and bleeding to be accurately distinguished. Noordmans et al.158 used intraoperative HSI and found that these slow, sinusoidal hemodynamic oscillations had a stable and reproducible frequency in four epilepsy patients (with non-lesional epilepsy, focal cortical dysplasia, and dysembryoplastic neuroepithelial tumor), suggesting that the method may generalize.
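The frequency analysis above can be sketched with a discrete Fourier transform of a per-pixel oxygenation time series. The plain-Python version below is O(n²) and intended only for illustration (an FFT library would be used in practice); the frame rate, recording length, and synthetic signals are hypothetical and merely built to contain the 0.23, 0.46, and 1.3 Hz components reported in the text.

```python
import cmath
import math


def dominant_frequency(signal, fs, f_min=0.05):
    """Frequency (Hz) of the largest DFT magnitude at or above f_min, after
    removing the mean. Uses a direct O(n^2) DFT for clarity."""
    n = len(signal)
    mean = sum(signal) / n
    x = [v - mean for v in signal]
    best_f, best_mag = 0.0, -1.0
    for k in range(1, n // 2 + 1):
        f = k * fs / n
        if f < f_min:
            continue
        mag = abs(sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n)))
        if mag > best_mag:
            best_f, best_mag = f, mag
    return best_f


fs, n = 5.0, 500  # hypothetical 5-Hz frame rate, 100-s recording
t = [i / fs for i in range(n)]
cortex = [0.7 + 0.02 * math.sin(2 * math.pi * 0.23 * s)
          + 0.008 * math.sin(2 * math.pi * 0.46 * s) for s in t]
bleed = [0.6 + 0.02 * math.sin(2 * math.pi * 1.3 * s)
         + 0.005 * math.sin(2 * math.pi * 0.23 * s) for s in t]
print(round(dominant_frequency(cortex, fs), 2), round(dominant_frequency(bleed, fs), 2))  # 0.23 1.3
```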

3.1.3. Functional neurosurgery

Epilepsy surgery requires the mapping of metabolically active brain regions, including epileptogenic regions, that demand more oxygen and blood. This link between neuronal activity and changes in blood flow and oxygenation is commonly referred to as neurovascular coupling.159 As seizures result from intense, uncontrolled neuronal activity, regions of the brain exhibiting seizure activity are highly metabolically active and as such display differences in their neurovascular coupling compared with regions not exhibiting seizure activity.

The first intraoperative use of HSI for evaluating neurovascular coupling dynamics in epilepsy was reported in 2013 by Noordmans et al.,104 who imaged one patient with intractable sensorimotor seizures of the left hand using an LCTF-based system (Varispec VIS108 filter with a pco.pixelfly camera,110 ) coupled to a Zeiss Pentero surgical microscope (Fig. 6). In this work, the entire cerebral cortex was imaged over 7 min, and the area of increased oxyhemoglobin at the start of seizure activity matched the epileptogenic zone. Subsequently, Laurence et al.105 further validated this finding in 12 epilepsy patients, including cases of non-lesional epilepsy, focal cortical dysplasia type, and heterotopia, and found that regions of seizure activity could be isolated with an intraoperative HSI system.
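Matching an area of increased oxyhemoglobin to the epileptogenic zone can be illustrated with a simple per-pixel baseline comparison. The sketch below flags pixels whose oxyhemoglobin estimate at seizure onset exceeds the pre-onset mean by more than k standard deviations; the threshold rule, k = 2, the function name, and the toy maps are assumptions rather than the processing used by the cited authors.

```python
import statistics


def activation_mask(baseline_frames, event_frame, k=2.0):
    """True where the event-frame value exceeds the per-pixel baseline mean by
    more than k baseline standard deviations.

    baseline_frames: list of 2D maps (time x rows x cols) recorded before onset.
    event_frame: 2D map of the same quantity at seizure onset.
    """
    mask = []
    for r, row in enumerate(event_frame):
        out = []
        for c, value in enumerate(row):
            series = [frame[r][c] for frame in baseline_frames]
            mean, sd = statistics.mean(series), statistics.pstdev(series)
            out.append(value - mean > k * sd)
        mask.append(out)
    return mask


baseline = [[[1.0, 1.0]], [[1.1, 1.1]], [[0.9, 0.9]]]  # three toy 1 x 2 frames
onset = [[1.5, 1.05]]
print(activation_mask(baseline, onset))  # [[True, False]]
```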

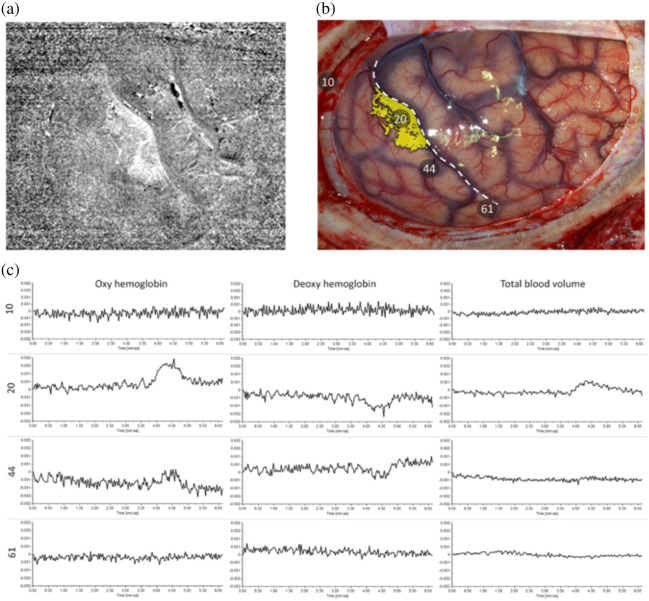

Fig. 6.

HSI to map seizures intraoperatively. (a) Local increase in oxygenation during seizure: oxygenation changes estimated from oxyhemoglobin concentration during a seizure. (b) Area matched to a photo of the cortex: overlay of oxygenation changes on an RGB image of the brain cortex, which correlates with electrical recordings of seizure activity measured via electrocorticography. Position 20 corresponds to the sensory cortex of the hand, where positive seizure activity was recorded and HSI measured higher oxygenation. (c) Relative concentration as a function of time. Reproduced from Noordmans et al.,104 with permission from John Wiley & Sons, Inc.

Further, a snapshot hyperspectral system from IMEC with filters mosaiced on a CCD sensor (480 to 630 nm, 16 spectral bands, , 10 to ) coupled with a Zeiss Pentero microscope was used by Pichette et al.92 for video-rate intraoperative hemodynamic imaging of one patient undergoing epilepsy surgery. Laurence et al.160 tested this system to measure interictal discharges in eight patients with non-lesional epilepsy or subcortical heterotopias undergoing epilepsy surgery and found that unsupervised clustering of oxygenation correlated well with direct electrical measurements of the imaged cortex.
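Unsupervised clustering of oxygenation can be sketched with k-means (Lloyd's algorithm) applied to per-pixel oxygenation time courses. The features and clustering algorithm of the cited study may differ, so the feature choice, the function name, and the toy data below are assumptions.

```python
import math
import random


def kmeans(series, k, iterations=50, seed=0):
    """Cluster equal-length time courses (one per pixel) with Lloyd's algorithm."""
    rng = random.Random(seed)
    centroids = [list(s) for s in rng.sample(series, k)]
    labels = [0] * len(series)
    for _ in range(iterations):
        # Assignment step: each time course joins its closest centroid.
        labels = [min(range(k), key=lambda j: math.dist(s, centroids[j])) for s in series]
        # Update step: each centroid moves to the mean of its members.
        for j in range(k):
            members = [s for s, lab in zip(series, labels) if lab == j]
            if members:  # keep the old centroid if a cluster empties
                centroids[j] = [sum(v) / len(members) for v in zip(*members)]
    return labels, centroids


# Toy oxygenation time courses: three flat pixels and three with a transient rise.
series = [[0, 0, 0, 0], [0.1, 0, 0, 0], [0, 0.1, 0, 0],
          [0, 1, 1, 0], [0, 1.1, 1, 0], [0, 1, 1.1, 0]]
labels, _ = kmeans(series, k=2)
print(labels[:3], labels[3:])
```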