Abstract

Dynamic LR and QR factorization are fundamental problems that arise widely in the control field. However, existing solutions lack convergence speed and anti-noise ability under noisy conditions. To this end, this paper combines the advantages of the Dynamic-Coefficient Type (DCT) and Integration-Enhance Type (IET) of Zeroing Neural Dynamic (ZND) and proposes an Adaptive and Robust-Enhanced Neural Dynamic (AREND). On this basis, a Strategy of Integration-Coupling (SIC) is proposed to address multiple error function problems, improving model stability and broadening the application scenarios. This strategy is shown experimentally to be effective and has potential for extension. The convergence and robustness of the AREND are then analyzed theoretically. Furthermore, the proposed AREND is verified by numerical experiments on low- to high-dimensional factorization in comparison with existing solutions. Finally, real-time 3-D Angle of Arrival (AoA) localization under multiple high-noise conditions validates the accuracy of the proposed model. Code is available at https://github.com/Alana2a3/AREND-Code-Implementation.

Keywords: LR factorization, QR factorization, Zeroing neural dynamic, Anti-noise ability

Subject terms: Computational science, Computer science

Introduction

Matrix factorization is a fundamental tool that simplifies problems by splitting a matrix into a product of several matrices of particular types1–4. Among these methods, LR and QR factorization each factor a matrix M into two matrices. The LR factorization expresses M as the product of a unit lower triangular matrix and an upper triangular matrix, and the QR factorization expresses it as the product of an orthogonal matrix Q and an upper triangular matrix. The LR factorization can be utilized in parallel computation5–7 and is applied in many engineering tasks such as data movement analysis and circuit simulation8–10. Owing to the special properties of the orthogonal matrix produced by the QR factorization, computations based on it are efficient and stable, and it is often applied to least squares and optimization problems11,12. In practice, QR factorization is applied in areas such as the smart grid, ill-conditioned matrix problems, and harmonic compensation of inductors, among others13–16.

In practical applications, matrix factorization has to account for environmental noise. To improve the stability of matrix factorization, algorithms minimize the accumulation and amplification of noise during computation to provide reliable results17–22. With the development of neural dynamics in various fields23–30, researchers have turned their attention to matrix factorization. Most of this work addresses static matrices. For the general case of dynamic matrices, Refs. 31,32 assume only the quasi-stationary case, and Refs. 33,34 do not consider noise. Ref. 35 focuses on improving the convergence speed of the solution. Noise, however, disturbs the factorization considerably, and the existing practical solutions for factorization under noise are not satisfactory.

In recent years, a novel kind of neural dynamic called Zeroing Neural Dynamic (ZND) has been proposed36, which is commonly employed for solving dynamic problems. ZNDs utilize first-order derivatives and are susceptible to noise. To improve their accuracy, ZNDs have been extended to handle a variety of noise types, resulting in the Integration-Enhance Type (IET) of ZNDs, which has been designed from control theory37, applied to robotic arms38, and used for constrained dynamic optimization39, among other applications. However, IET ZNDs fail to rapidly regulate the two guiding volumes of the evolutionary direction, namely the residual error and the integration information. The Dynamic-Coefficient Type (DCT) of ZNDs40,41 essentially applies an adaptive coefficient to adjust the residual error guidance. Nevertheless, DCT ZNDs still lack guidance from the integration information and have only been considered for problems under slight noise. In addition, ZNDs have also been applied to dynamic matrix factorization42–44, but with limited convergence speed and robustness. In Ref. 35, two nonlinear-activated ZNDs were designed for LR and QR factorization to address the convergence speed, but there is still room to improve the anti-noise ability. At the same time, dynamic target localization is also drawing attention. In particular, Angle-of-Arrival (AoA) localization covers a wide range of algorithms that can be applied to positioning systems45, wireless communication46, and satellite localization47. On the downside, AoA localization is susceptible to noise, and the IET of ZND performs poorly in noisy situations45.

A single error function built for a problem is universal, whereas multiple error functions arising from the characterization of a problem are correlated. Since the two guiding volumes are the basis for constructing the error function48, the volumes are also correlated with each other. To prevent instability in the solution, this correlation has to be taken into account when choosing a model that, like the IET, relies on the two guiding volumes.

In general, related work on dynamic problems lacks an improved ZND with strong robustness. In this paper, we propose an Adaptive and Robust-Enhanced Neural Dynamic (AREND) that combines the advantages of the IET and DCT for solving dynamic matrix LR and QR factorization, utilizing activation functions to drive the adaptive control of the guiding volumes. In addition, a Strategy of Integration-Coupling (SIC) is given for multiple error function problems.

The rest of the paper is structured as follows: Section “Preliminaries and existing solutions” gives preliminaries and existing solutions for the LR and QR factorization. Section “Methodology” explains the motivation of the SIC, unifies the AREND evolution formula, and then constructs the AREND for LR and QR factorization. Section “Theorem analysis” gives a theoretical analysis of the convergence and robustness of the AREND. Section “Numerical experiments” conducts comprehensive assessments and comparative experiments that demonstrate the effectiveness and superiority of the AREND. Section “Conclusions” summarizes the whole paper. The main contributions of this paper are as follows:

- Unlike the existing ZNDs, the AREND introduces adaptive regulation of the evolutionary guiding volumes, which contributes to its fast convergence and strong robustness in solving the LR and QR factorization problems.

- For multiple error function problems, the SIC is proposed for the first time, which alleviates the convergence instability of the IET of ZND solution. The AREND is then unified into a double-coupling evolution formula, broadening the application scenarios of the model.

- The convergence speed and robustness of the AREND are verified by LR and QR factorization of matrices of different dimensions under noise-free and noise-perturbed cases, and the accuracy of the AREND is validated by real-time 3-D AoA localization.

Preliminaries and existing solutions

LR and QR factorization

The mathematical expression for the definition of LR factorization is as follows:

| 1 |

where time , the dynamic full-rank matrix , unit lower triangular matrix , and upper triangular matrix are assumed to be smooth. Then the error function of LR factorization is defined as

| 2 |
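As a concrete illustration of the LR definition and its error function, the following minimal sketch factors one sample of a dynamic matrix by Doolittle elimination without pivoting and evaluates the residual norm. The function names `lr_factor` and `sample_matrix`, the 2×2 test matrix, and the residual convention M − LR are assumptions made for this example and are not taken from the paper. It is a static reference computation, not the neural dynamic itself.

```python
import numpy as np

def lr_factor(M):
    """Doolittle elimination without pivoting: returns unit lower L and upper R with M = L @ R."""
    M = np.asarray(M, dtype=float)
    n = M.shape[0]
    L = np.eye(n)
    R = M.copy()
    for k in range(n - 1):
        if R[k, k] == 0.0:
            raise ValueError("zero pivot: LR factorization without pivoting does not exist")
        for i in range(k + 1, n):
            L[i, k] = R[i, k] / R[k, k]          # elimination multiplier
            R[i, k + 1:] -= L[i, k] * R[k, k + 1:]
            R[i, k] = 0.0                        # entry eliminated exactly
    return L, R

def sample_matrix(t):
    """Hypothetical smooth dynamic matrix with nonzero leading minors for all t."""
    return np.array([[4 + np.sin(t), np.cos(t)],
                     [np.sin(t), 4 + np.cos(t)]])

M_t = sample_matrix(0.5)
L, R = lr_factor(M_t)
residual = np.linalg.norm(M_t - L @ R)  # Frobenius norm of the assumed error M - LR
```

A dynamic solver tracks L(t) and R(t) continuously instead of refactoring at every time instant, which is what the ZND models in the following sections are designed to do.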

The mathematical expression for the definition of QR factorization is given as

| 3 |

where the dynamic column full-rank matrix , orthogonal matrix , and upper triangular matrix are supposed to be smooth as well.

Remark

The QR factorization can exploit the orthogonality of the factor matrix to construct two additional error functions, ensuring uniqueness and stability of the factorization. This has advantages and disadvantages. On the positive side, the target residual error tends to decrease rapidly over time, indicating an effective self-correction mechanism. However, multiple error function constraints complicate the process: introducing multiple feedback volumes can inadvertently increase the residual error once it has become negligible, contrary to the goal of minimizing it. This motivates the requirement that the model adaptively control the decline of the residual errors.

Based on the definition and orthogonality of the QR factorization, the error functions are given: one definition-error function , two property-error functions , , where I is the identity matrix, and represents the transpose operation.
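The error functions of the QR factorization can be checked numerically on a static sample. The sketch below is illustrative only: it uses `numpy.linalg.qr` as a reference factorization, and it assumes that the definition error takes the form B − QR and that Q^T Q − I is representative of the orthogonality-based property errors. The helper name `qr_residuals` and the sample matrix are hypothetical.

```python
import numpy as np

def qr_residuals(B, Q, R):
    """Return the Frobenius norms of the assumed definition and orthogonality errors."""
    identity = np.eye(Q.shape[1])
    definition_error = B - Q @ R            # assumed form of the definition error
    orthogonality_error = Q.T @ Q - identity  # assumed form of one property error
    return np.linalg.norm(definition_error), np.linalg.norm(orthogonality_error)

t = 0.3
# Hypothetical 3x2 sample with full column rank.
B = np.array([[3 + np.sin(t), np.cos(t)],
              [np.cos(t), 3 + np.sin(t)],
              [1.0, np.sin(t)]])
Q, R = np.linalg.qr(B)  # reduced QR: Q is 3x2, R is 2x2 upper triangular

definition_norm, orthogonality_norm = qr_residuals(B, Q, R)

# Perturbing R breaks the definition error while leaving the property error untouched.
perturbed_norm, _ = qr_residuals(B, Q, R + 0.1 * np.eye(2))
```

The perturbed case shows why the error functions are mutually constrained: a candidate can satisfy the orthogonality property exactly while still violating the definition of the factorization.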

To improve efficiency and simplicity, we extract the elements of the factor matrices and arrange them into column vectors that are compatible with the matrix multiplication and vectorization calculations. To this end, the following lemmas are introduced; more information is available in Refs. 35,44:

Lemma 1

The primary elements of the unit lower triangular matrix are extracted as column vector the major elements of the upper triangular matrix are extracted as column vector Then has and the substitution matrices , which satisfy the equations and
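Lemma 1 can be illustrated with constant 0/1 substitution matrices. The sketch below assumes column-major vectorization and the relations vec(L) = P l + vec(I) and vec(R) = S r, where l and r collect the free elements. The names `P`, `S`, and `selection_matrix` are hypothetical, and the element ordering used in the paper may differ.

```python
import numpy as np

def selection_matrix(n, keep):
    """Build the 0/1 matrix that scatters the kept entries (i, j) into a column-major vec."""
    entries = [(i, j) for j in range(n) for i in range(n) if keep(i, j)]
    S = np.zeros((n * n, len(entries)))
    for col, (i, j) in enumerate(entries):
        S[j * n + i, col] = 1.0  # column-major index of entry (i, j)
    return S

def vec(Z):
    """Column-major vectorization."""
    return Z.flatten(order="F")

n = 3
P = selection_matrix(n, lambda i, j: i > j)    # strictly lower entries of L
S = selection_matrix(n, lambda i, j: i <= j)   # upper triangular entries of R

L = np.array([[1.0, 0.0, 0.0], [2.0, 1.0, 0.0], [3.0, 4.0, 1.0]])
R = np.array([[5.0, 6.0, 7.0], [0.0, 8.0, 9.0], [0.0, 0.0, 10.0]])

l = P.T @ vec(L)   # free elements of L
r = S.T @ vec(R)   # free elements of R

# Rebuild both factors from their free elements.
L_rebuilt = (P @ l + vec(np.eye(n))).reshape(n, n, order="F")
R_rebuilt = (S @ r).reshape(n, n, order="F")
```

Working with l and r instead of the full matrices keeps the triangular structure by construction, so the solver only evolves n(n−1)/2 + n(n+1)/2 = n² unknowns.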

Lemma 2

The matrix and the transpose of the matrix after vectorization can be respectively denoted as

| 4 |

| 5 |

where and are the operation matrix and transpose matrix, respectively. is obtained by vectorizing the upper triangular non-zero elements of into columns, is obtained from Q column vectorization.

Existing solutions

The experimental principle is to let every comparison model achieve its best convergence result, i.e., the optimal activation function and variable-parameter function are chosen for each comparison model, so that the superiority of the AREND proposed in this paper is shown against the strongest baselines. Because studies on LR and QR factorization lack solutions for comparison, the existing ZNDs are provided here. The evolution formula of the Original ZND (OZND) is

| 6 |

where is an adjustable positive coefficient. The evolution formula of the Nonlinear-activated ZND (NZND)35 is

| 7 |

In this paper, a representative activation function is given, where denotes the activation function that acts on each element s of an example matrix S. The activation function employed by the NZND in the experiments is

| 8 |

where , is expressed as

A representative of the IET of ZNDs, the Noise-Tolerance ZND (NTZND)37, is

| 9 |

the residual error control coefficient , and the integration feedback coefficient .
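The effect of the integration term can be illustrated on a scalar error. The sketch below assumes the linear forms commonly written for these models, ė = −γe for the OZND and ė = −γe − λ∫e dτ for the NTZND, each with an additive constant noise. The names `gamma` and `lam`, the noise level, and the forward Euler discretization are assumptions of this example.

```python
def simulate(gamma, lam, noise, e0=1.0, dt=1e-3, t_end=5.0):
    """Forward Euler simulation of e' = -gamma*e - lam*integral(e) + noise; returns e(t_end)."""
    e = e0
    integral = 0.0
    for _ in range(int(round(t_end / dt))):
        e_dot = -gamma * e - lam * integral + noise
        integral += dt * e   # running integral of the error
        e += dt * e_dot
    return e

noise = 2.0
ozn_final = simulate(gamma=10.0, lam=0.0, noise=noise)    # no integration feedback
ntz_final = simulate(gamma=10.0, lam=25.0, noise=noise)   # with integration feedback
```

Without the integration term the error settles at noise/gamma, whereas the integration feedback absorbs the constant disturbance and drives the error back toward zero.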

For the DCT of ZNDs, a variable-parameter function automatically adjusts the sampling interval according to the error: it decreases the step to speed up convergence when the error is large and increases the step to conserve computational resources when the error is small. A canonical example of the DCT of ZNDs, proposed in 2021 and called VPZND40, is

| 10 |

and is the optimal variable-parameter function as

is the optimal activation function of VPZND as follows:

where .

Methodology

AREND

In view of the high stability requirements of dynamic matrix factorization, the AREND is proposed in this section, which makes the solution system adaptively control a stable decrease of the residual error. Adaptive activation functions are utilized to couple the two guiding volumes and ensure adaptive adjustment. Unlike previous work, a novel evolution formula is designed as

| 11 |

where is the derivative form of , and the functions and represent adaptive activation functions that act on each element s of a matrix S, all of which can be constructed from the following adaptive function types.

- Adaptive activation function type I:

| 12 |

- Adaptive activation function type II:

| 13 |

where is a positive parameter that defines the upper and lower bounds, controls the behavior of the functions, and limits the feedback of guiding information in the solution system to enhance robustness. The parameters and are hyperparameters used to control the adjustment speed of the guiding volumes.

SIC

Because the QR factorization involves several mutually constrained error functions, an IET of ZNDs that relies solely on a single integration is always affected by the other integrations, which leads to instability. The SIC, which couples multiple integrations, is therefore proposed to solve this kind of problem. A unified double-coupling AREND evolution formula is given as

| 14 |

where , and N depends on the number of error functions. To simplify the equation, (14) is transformed into

| 15 |

where . To demonstrate the effectiveness of the SIC, a unified evolution formula of the NTZND is also given as

| 16 |

AREND for LR factorization

In this subsection, the AREND for LR factorization (AREND-LR) is designed step by step, following Ref. 35. First, substituting the error function of LR factorization (2) into (14) gives

| 17 |

Second, applying the Kronecker product to (17), the vectorized equation is formulated as

| 18 |

where is the derivative form of , and and are obtained by substituting the matrices L(t), into Lemma 1, I is the identity matrix, and represents the Kronecker product.
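The vectorization step rests on the standard identity vec(AXB) = (Bᵀ ⊗ A) vec(X) for column-major vectorization. The short check below verifies it numerically on random matrices; the matrix names and sizes are arbitrary choices for this example.

```python
import numpy as np

def vec(Z):
    """Column-major vectorization."""
    return Z.flatten(order="F")

rng = np.random.default_rng(0)
A = rng.standard_normal((3, 3))
X = rng.standard_normal((3, 3))
B = rng.standard_normal((3, 3))

lhs = vec(A @ X @ B)
rhs = np.kron(B.T, A) @ vec(X)

# Special cases used when a product of an unknown derivative and a known factor is vectorized:
# vec(X @ B) = (B.T kron I) vec(X) and vec(A @ X) = (I kron A) vec(X).
I = np.eye(3)
right_case = np.kron(B.T, I) @ vec(X)
left_case = np.kron(I, A) @ vec(X)
```

The two special cases are what turn a matrix differential equation in the unknown factor derivatives into a linear system in their vectorized forms.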

Finally, let , and , the AREND-LR is given as

| 19 |

where represents the pseudo-inverse of .
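To show how a vectorized model of this shape is integrated in practice, the following sketch solves a small dynamic LR problem with forward Euler steps and a pseudo-inverse. It is a simplified stand-in under stated assumptions: it uses the linear OZND-type design Ė = −γE rather than the AREND formula (11), takes E = M − LR as the error, and uses a hypothetical 2×2 matrix `M(t)`. The helper names are illustrative and not taken from the released code.

```python
import numpy as np

def selection_matrix(n, keep):
    """0/1 matrix scattering the kept entries (i, j) into a column-major vec."""
    entries = [(i, j) for j in range(n) for i in range(n) if keep(i, j)]
    S = np.zeros((n * n, len(entries)))
    for col, (i, j) in enumerate(entries):
        S[j * n + i, col] = 1.0
    return S

def M(t):
    """Hypothetical smooth dynamic matrix with nonzero leading minors."""
    return np.array([[4 + np.sin(t), np.cos(t)],
                     [np.sin(t), 4 + np.cos(t)]])

def M_dot(t):
    """Analytic time derivative of M(t)."""
    return np.array([[np.cos(t), -np.sin(t)],
                     [np.cos(t), -np.sin(t)]])

def solve_lr(gamma=10.0, dt=1e-3, t_end=2.0):
    n = 2
    I = np.eye(n)
    P = selection_matrix(n, lambda i, j: i > j)    # free entries of L
    S = selection_matrix(n, lambda i, j: i <= j)   # free entries of R
    l = np.zeros(P.shape[1])                       # L(0) = I
    r = S.T @ I.flatten(order="F")                 # R(0) = I
    residuals = []
    for k in range(int(round(t_end / dt))):
        t = k * dt
        L = I + (P @ l).reshape(n, n, order="F")
        R = (S @ r).reshape(n, n, order="F")
        E = M(t) - L @ R
        residuals.append(np.linalg.norm(E))
        # From E' = -gamma * E:  L' R + L R' = M' + gamma * E, vectorized as W y' = rhs.
        W = np.hstack([np.kron(R.T, I) @ P, np.kron(I, L) @ S])
        rhs = (M_dot(t) + gamma * E).flatten(order="F")
        y_dot = np.linalg.pinv(W) @ rhs
        l = l + dt * y_dot[:P.shape[1]]
        r = r + dt * y_dot[P.shape[1]:]
    return np.array(residuals)

residuals = solve_lr()
```

The residual starts at ‖M(0) − I‖ and decays roughly as exp(−γt) until it reaches the discretization floor of the Euler scheme. Replacing the right-hand side with a different evolution formula changes only the `rhs` line.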

Because noise is unavoidable in real systems, the model under noise is designed as follows to monitor the performance of the AREND in a noisy environment:

| 20 |

where is the noise in vector form.

AREND for QR factorization

This subsection designs the AREND for QR factorization (AREND-QR). First, substituting the QR error functions into the evolution formula (14) gives , where . Second, applying the Kronecker product and Lemma 2 to the above formula, the vectorized equation is as follows:

| 21 |

where , , , are the derivatives of B(t), Q(t), , , . I represents the identity matrix. and are obtained by substituting into Lemma 2 (4), while and are obtained by substituting into Lemma 2 (5).

Finally, the above equations are transformed into a matrix product form, and the AREND-QR is

| 22 |

where

and represents the pseudo-inverse of . The model under noise is as follows:

| 23 |

where is the noise in vector form.

Theorem analysis

Convergence and robustness are essential properties of a model. In this section, we provide theoretical analysis and proofs for the convergence and robustness of the AREND. Note that although only one error function is discussed, the analysis also applies to the multiple error function case.

Convergence analysis

The following theorem is formulated to investigate and verify the global convergence of the AREND.

Theorem 1

Starting from a random initial state, the residual error of the AREND without external noise disturbance converges to the theoretical solution of the target error function.

Proof

First, according to the evolution formula of the AREND, the ijth element of the model is transformed into:

| 24 |

Based on Lyapunov theory, an intermediate variable is constructed as , and its derivative is obtained as . Subsequently, a Lyapunov function is defined to prove the stability:

where is positive definite. Then it is easy to obtain . In terms of the definitions (12) and (13), the following inequality holds: . When , is derived. Consequently, . In view of , we obtain , so is negative definite. Only when are and satisfied. It follows that Z(t) converges to zero.

Furthermore, , which by LaSalle’s invariance principle49 means . Consistent with the previous discussion, a new Lyapunov function is designed as . Similar to , the above proof steps show that and its derivative . The above proves that the AREND converges stably to the theoretical solution of the objective error function.

The proof is completed.

Robustness analysis

Noise immunity is an important indicator of model robustness. This subsection analyzes the performance of the AREND under common types of noise perturbation.

Theorem 2

Under constant noise , the AREND converges to the theoretical solution of the target error function.

Proof

Using the Laplace transform50, the ijth subsystem of the AREND polluted by constant noise is represented as

| 25 |

Without loss of generality and also for simplicity, the nonlinear activation functions and are exploited and investigated in this subsection. The following equation can be written as

According to the final value theorem51, we obtain . Thus, the residual error of the AREND converges to zero, and constant noise does not affect the steady-state performance.

The proof is completed.
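The final-value argument can be written out for a simplified scalar case. The derivation below is a sketch that assumes linear activations, so that one subsystem reduces to a linear integration-enhanced error equation with constant noise n. The symbols ε, γ, and λ (both coefficients positive) are notation chosen for this example.

```latex
\begin{aligned}
\dot{\varepsilon}(t) &= -\gamma\,\varepsilon(t) - \lambda \int_0^t \varepsilon(\tau)\,\mathrm{d}\tau + n, \\
s\,\varepsilon(s) - \varepsilon(0) &= -\gamma\,\varepsilon(s) - \frac{\lambda}{s}\,\varepsilon(s) + \frac{n}{s}, \\
\varepsilon(s) &= \frac{s\,\varepsilon(0) + n}{s^{2} + \gamma s + \lambda}, \\
\lim_{t\to\infty}\varepsilon(t) &= \lim_{s\to 0} s\,\varepsilon(s)
  = \lim_{s\to 0}\frac{s\,\bigl(s\,\varepsilon(0) + n\bigr)}{s^{2} + \gamma s + \lambda} = 0 .
\end{aligned}
```

Since γ > 0 and λ > 0, both poles of ε(s) lie in the open left half-plane, so the final value theorem applies and the constant noise leaves no steady-state error in this linear case.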

Theorem 3

For periodic noise with upper and lower bounds and period T, the AREND converges to the theoretical solution of the objective problem. The upper bound for each element of the residual error can be expressed as .

Proof

Using the Laplace transform, the ijth subsystem of the AREND polluted by periodic noise is written as

| 26 |

where is the Laplace transform of . Then, according to the final value theorem, we can get

In general, when the coefficients and are large enough, the final value of the residual error tends to zero in the presence of periodic noise. In other words, these two coefficients determine the robustness of the AREND under periodic noise.

Thus, the proof is completed.
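The dependence of the residual on the two coefficients can be explored with a linear surrogate. The sketch below assumes linear activations, a sinusoidal noise amp·sin(ωt), and a scalar error obeying ė = −γe − λ∫e dτ + noise. For this surrogate the noise-to-error transfer function is s/(s² + γs + λ), so the steady-state amplitude is amp·ω/√((λ − ω²)² + γ²ω²). The names `gamma`, `lam`, `amp`, and `omega` are assumptions of this example, and the nonlinear AREND may behave differently.

```python
import math

def simulated_amplitude(gamma, lam, amp=1.0, omega=2.0, dt=5e-4, t_end=20.0):
    """Forward Euler simulation; returns the peak |e| over the last noise period."""
    e, integral, peak = 0.0, 0.0, 0.0
    last_period_start = t_end - 2 * math.pi / omega
    for k in range(int(round(t_end / dt))):
        t = k * dt
        e_dot = -gamma * e - lam * integral + amp * math.sin(omega * t)
        integral += dt * e
        e += dt * e_dot
        if t >= last_period_start:
            peak = max(peak, abs(e))
    return peak

def predicted_amplitude(gamma, lam, amp=1.0, omega=2.0):
    """Steady-state amplitude from the transfer function s / (s^2 + gamma*s + lam)."""
    return amp * omega / math.sqrt((lam - omega ** 2) ** 2 + (gamma * omega) ** 2)

small_gain = simulated_amplitude(gamma=10.0, lam=25.0)
large_gain = simulated_amplitude(gamma=50.0, lam=625.0)
```

In this surrogate, increasing both coefficients shrinks the steady-state residual without eliminating it, which matches the qualitative statement that the residual bound tightens as the coefficients grow.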

Numerical experiments

The theoretical analysis in the previous section proves the convergence and robustness of the AREND. In this section, numerical experiments on low- to high-dimensional matrices are designed to further demonstrate the performance of the proposed model, together with a model validation in an AoA localization application. The parameters , , , , , , and hyperparameters are utilized throughout the experiments unless otherwise noted. These hyperparameters can only be tuned empirically. Only when addressing the QR factorization are and adjusted to 12. In these experiments, the phase in which the AREND adjusts the guiding volumes appears as fluctuations of the residual error curve, as shown in Figs. 1 and 5a,b.

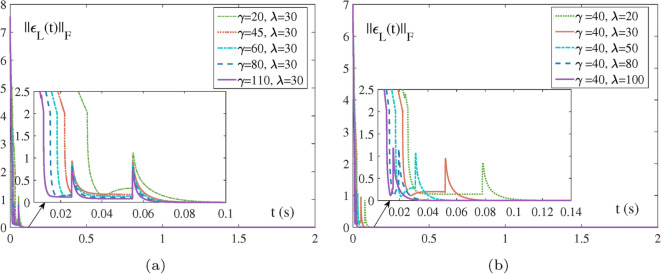

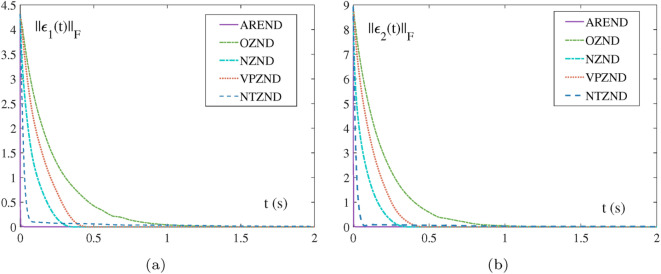

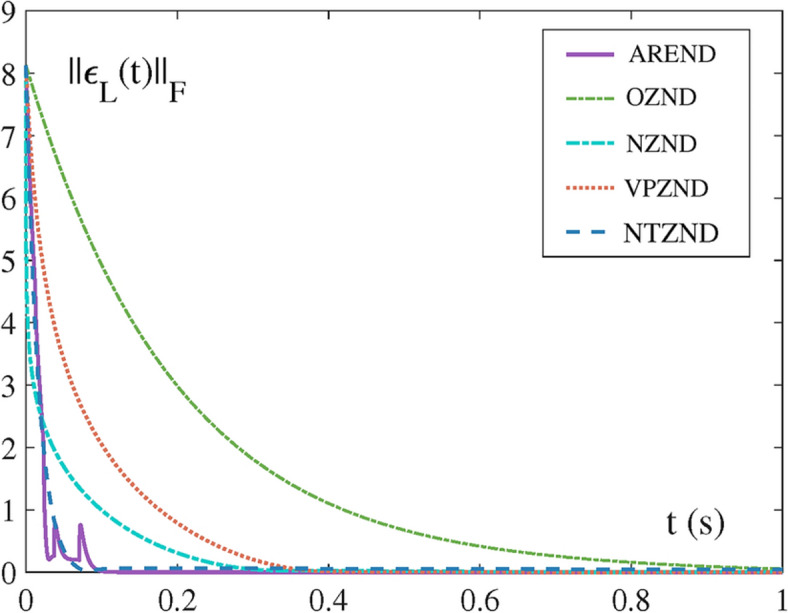

Fig. 1.

Comparison of the residual errors of the models solving the LR factorization.

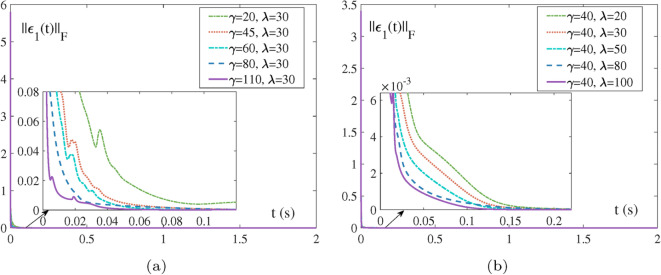

Fig. 5.

Comparison of the residual errors of the AREND-LR (19) solving the high-dimensional matrix with different values of the two parameters. (a) . (b) .

Instances for evaluation

LR factorization

To verify the convergence of the AREND-LR, the following dynamic matrix is designed:

Figure 1 compares the AREND, OZND, NZND, VPZND, and NTZND for the LR factorization in the absence of noise. The OZND converges last. The NZND and VPZND converge at around 0.3 s and 0.4 s, respectively. The NTZND cannot adaptively adjust the two guiding volumes, and its convergence is incomplete. In contrast, the AREND converges to zero quickly, at about 0.1 s, because its adaptive activation functions adjust the level of the two guiding volumes in a very short time. This demonstrates that the AREND performs excellently on single-error-function problems such as the LR factorization.

QR factorization

To verify the convergence of the AREND-QR and the effectiveness of the SIC, a dynamic matrix is designed as

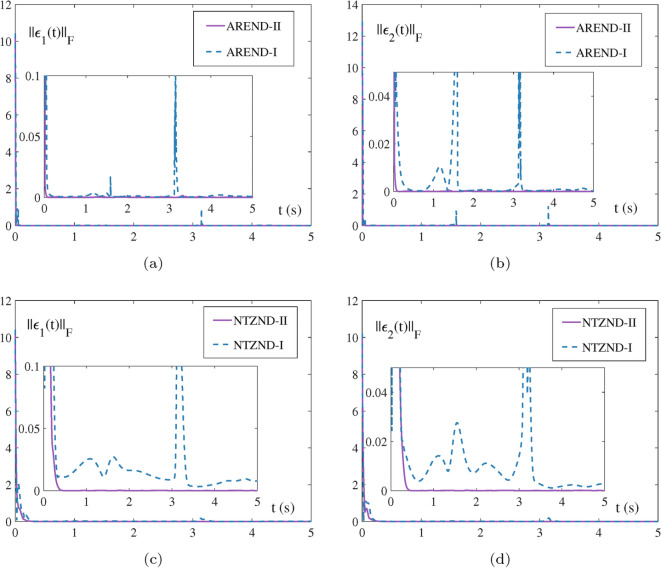

Integration-coupling vs. single-integration: In Fig. 2, two IET ZNDs, the AREND and NTZND, are compared with the SIC and with single integration. The single-integration strategy converges precariously: the curves show a number of oscillations, indicating that IET ZNDs guided by a single integration fail to solve multiple error function problems such as the QR factorization. In contrast, the IET ZNDs all become stable and convergent with the help of the SIC. NTZND-I, the original model, uses only the integration feedback from one of the error functions and cannot eliminate the internal errors of the system. NTZND-II, with the help of the SIC, lets all the error functions play a comparable role in solving the system; they are coupled through cumulative and integral terms, which mutually eliminate the errors of the individual error functions. Furthermore, the AREND activated by the adaptive functions controls the decline of the residual error better, indicating its superiority. Note that the IET ZNDs all utilize the SIC in the following experiments.

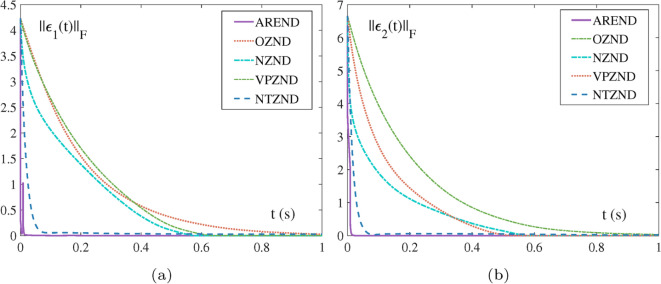

Convergence under the noise-free case: Referring to Fig. 3, for and , the convergence times of the OZND, NZND, and VPZND are around 1 s, 0.4 s, and 0.5 s, respectively, whereas the NTZND only converges to a small nonzero value. The two residual errors solved by the AREND converge to zero within 0.1 s. The real-time adaptive tuning by the activation functions thus plays a crucial role in the strong competitiveness of the AREND-QR.

Fig. 2.

Comparison of the residual errors of integration-coupling and single-integration models for solving QR factorization, where II and I represent integration-coupling and single-integration models, respectively. (a) and (c) . (b) and (d) .

Fig. 3.

The comparison of residual errors by different models for solving QR factorization. (a) . (b) .

High-dimensional situations

LR factorization

To investigate model convergence and robustness in noisy environments for the LR factorization of a high-dimensional matrix, a dynamic matrix is designed as

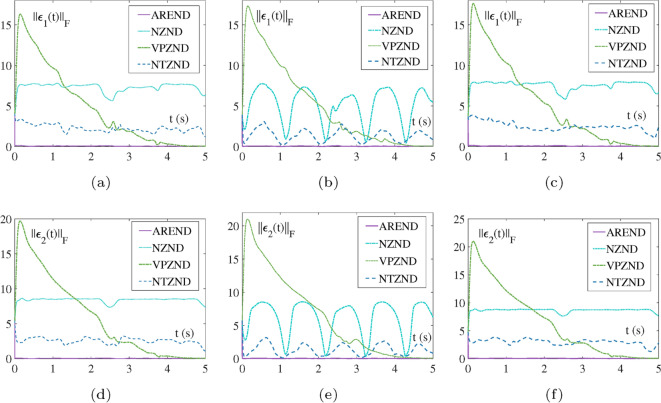

Convergence and robustness discussion: Fig. 4 compares multiple models solving the high-dimensional LR factorization. Figure 4a shows that the NZND and VPZND converge in 0.4 s, while the AREND has the best convergence performance, converging in 0.1 s. Figure 4b,d indicate that the results of all models under the constant noise and random noise are generally consistent, and Fig. 4c shows that under the periodic noise , the compared ZNDs fluctuate more. The VPZND is resistant to noise but converges slowly. The AREND is the fastest to converge under all three types of noise and still converges in 0.1 s. The activation-coupling gives it effective access to the integration information. The convergence of the AREND-LR is thus almost unaffected by the noises.

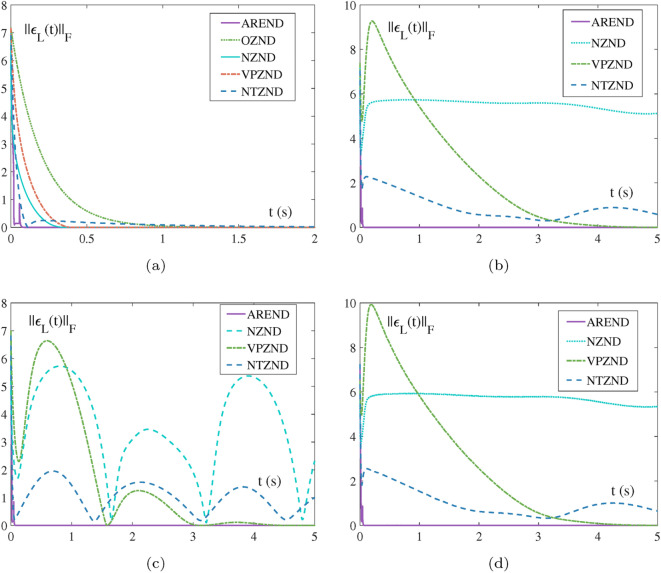

Different values of model parameters: Fig. 5 compares different values of the two AREND parameters. In Fig. 5a, when is fixed, the larger is, the faster the adjustment of the residual error and the shorter the convergence time. In Fig. 5b, when is fixed, the larger the value of , the more rapid the adjustment of the two guiding volumes and the shorter the convergence time. In summary, when the two parameters are increased while kept in a certain proportion, the adjustment speed of the two guiding volumes increases and the convergence is faster.

Fig. 4.

Multi-model comparisons of the residual error for solving high-dimensional matrix LR factorization with different noises. (a) Without noise. (b) With constant noise . (c) With periodic noise . (d) With random noise .

QR factorization

To further explore the performance of the AREND-QR for a high-dimensional matrix, a dynamic matrix is designed as

Under no noise: Fig. 6 compares the two residual errors of the models solving the high-dimensional matrix QR factorization. The residual errors of the AREND almost all converge in about 0.01 s. In addition to the role of the hyperparameters, the double-coupling of the AREND ensures that the residual error of the solution system decreases rapidly by integrating multiple pieces of information, making the AREND suitable even for high-dimensional matrices.

Under different noises: Fig. 7 compares the two residual errors of the models under noises. It reveals that the AREND, NTZND, and VPZND are resistant to all three types of noise. However, the VPZND converges more slowly, requiring longer running time and more computational cost. The integration term of the NTZND gives it some immunity to noise, yet its performance is not satisfactory. The AREND, in contrast, converges in a very short time of about 0.1 s. This shows that the AREND is superior in dealing with QR factorization in noisy environments.

Different values of the parameters: Fig. 8 compares different values of the control parameters of the AREND. As in the LR factorization case (Fig. 5), the convergence speed of the AREND is positively correlated with the values of , . Unlike the LR factorization case, the SIC improves the adjustment speed controlled by the parameters , and improves the model accuracy.

Fig. 6.

The comparison results by multiple models for solving high-dimensional matrix QR factorization. (a) . (b) .

Fig. 7.

Comparison of the residual errors of multiple models for solving high-dimensional matrix QR factorization with multiple large noises. (a) and (d) With constant noise . (b) and (e) With periodic noise . (c) and (f) With random bounded noise .

Fig. 8.

The residual error results by AREND-QR (22) solving the high dimensional matrix with different values of two parameters. (a) . (b) .

Quantitative analysis

The quantitative experiment results are shown in Tables 1, 2, 3 and 4, which record in detail the Average Steady-State Residual Error (ASSRE) and Maximal Steady-State Residual Error (MSSRE) when different models solve the high-dimensional LR and QR factorization. The MSSRE is defined as , and the ASSRE is defined as , where t(s) represents the length of time in seconds. These tables all indicate that the DCT ZNDs, such as DPZND41 and DVPEZND52, are mostly not suitable for solving LR and QR factorization.
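The two metrics can be computed from a sampled residual curve as follows. This is a sketch under assumptions: the steady-state window is taken as all samples with t ≥ `t_start`, the residual is a norm sampled on a uniform grid, and the function name `steady_state_metrics` and the synthetic curve are hypothetical. The window used in the paper's tables is not specified here.

```python
import numpy as np

def steady_state_metrics(times, residuals, t_start):
    """Return (ASSRE, MSSRE): mean and maximum of the residual over samples with t >= t_start."""
    times = np.asarray(times, dtype=float)
    residuals = np.asarray(residuals, dtype=float)
    window = residuals[times >= t_start]
    if window.size == 0:
        raise ValueError("steady-state window is empty")
    return float(window.mean()), float(window.max())

# Synthetic residual curve: exponential decay onto a small noise floor.
times = np.linspace(0.0, 2.0, 201)
residuals = np.exp(-10.0 * times) + 1e-3
assre, mssre = steady_state_metrics(times, residuals, t_start=1.0)
```

The MSSRE bounds the worst steady-state deviation, while the ASSRE summarizes typical accuracy, so by construction MSSRE ≥ ASSRE for any window.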

Table 1.

Comparison of the ASSREs of different models when solving the LR problem.

| Model | ASSRE with NF | ASSRE with CN | ASSRE with PN | ASSRE with RN |
|---|---|---|---|---|
| OZND | | | | |
| NZND | | | | |
| VPZND | | | | |
| NTZND | | | | |
| DPZND | Divergent | Divergent | Divergent | Divergent |
| DVPEZND | Divergent | Divergent | Divergent | Divergent |
| AREND | | | | |

NF, CN, PN, and RN denote the noise-free, constant noise, periodic noise, and random noise cases, respectively. Bold font denotes the best result in the corresponding item.

Table 2.

Comparison of the MSSREs of different models when solving the LR problem.

| Model | MSSRE with NF | MSSRE with CN | MSSRE with PN | MSSRE with RN |
|---|---|---|---|---|
| OZND | | | | |
| NZND | | | | |
| VPZND | | | | |
| NTZND | | | | |
| DPZND | Divergent | Divergent | Divergent | Divergent |
| DVPEZND | Divergent | Divergent | Divergent | Divergent |
| AREND | | | | |

NF, CN, PN, and RN denote the noise-free, constant noise, periodic noise, and random noise cases, respectively. Bold font denotes the best result in the corresponding item.

Table 3.

Comparison of the ASSREs of different models when solving the QR problem.

| Model | ASSRE with NF | ASSRE with CN | ASSRE with PN | ASSRE with RN | |
|---|---|---|---|---|---|
| OZND | | | | | |
| NZND | | | | | |
| VPZND | | | | | |
| NTZND | | | | | |
| DPZND | Divergent | Divergent | Divergent | Divergent | |
| DVPEZND | Divergent | Divergent | Divergent | Divergent | |
| AREND | | | | | |
| OZND | | | | | |
| NZND | | | | | |
| VPZND | | | | | |
| NTZND | | | | | |
| DPZND | Divergent | Divergent | Divergent | Divergent | |
| DVPEZND | Divergent | Divergent | Divergent | Divergent | |
| AREND | | | | | |

NF, CN, PN, and RN denote the noise-free, constant noise, periodic noise, and random noise cases, respectively. Bold font denotes the best result in the corresponding item.

Table 4.

Comparison of the MSSREs of different models when solving the QR problem.

| Model | MSSRE with NF | MSSRE with CN | MSSRE with PN | MSSRE with RN | |
|---|---|---|---|---|---|
| OZND | | | | | |
| NZND | | | | | |
| VPZND | | | | | |
| NTZND | | | | | |
| DPZND | Divergent | Divergent | Divergent | Divergent | |
| DVPEZND | Divergent | Divergent | Divergent | Divergent | |
| AREND | | | | | |
| OZND | | | | | |
| NZND | | | | | |
| VPZND | | | | | |
| NTZND | | | | | |
| DPZND | Divergent | Divergent | Divergent | Divergent | |
| DVPEZND | Divergent | Divergent | Divergent | Divergent | |
| AREND | | | | | |

NF, CN, PN, and RN denote the noise-free, constant noise, periodic noise, and random noise cases, respectively. Bold font denotes the best result in the corresponding item.

Performance under noise-free conditions: The AREND performs well under noise-free conditions on both the LR and QR problems. Table 1 shows that the AREND has an ASSRE of for the LR problem, which is much lower than those of other models, such as for OZND and for NTZND. The QR problem in Table 3 yields similar results, with the AREND’s ASSREs of and , respectively, significantly better than those of the other models. The MSSRE results further support this conclusion. In Tables 2 and 4, the MSSRE of the AREND is for the LR problem and and for the QR problem, which are much smaller than those of the other models and indicate very high accuracy.

Performance under constant noise conditions: Under constant noise, the AREND still performs the best, although the errors of most models increase. The LR problem in Table 1 shows that the AREND has an ASSRE of , which is significantly better than NZND’s and VPZND’s . For the QR problem (see Table 3), the AREND’s ASSREs of and are much smaller than OZND’s and . In terms of the MSSRE, the AREND attains in the LR problem (Table 2) and and in the QR problem (Table 4). Compared with the other models, the AREND is less affected by constant noise and shows good robustness.

Performance under periodic noise conditions: Periodic noise has a large impact on most models, but the AREND still maintains good performance. In Table 1, the AREND’s ASSRE for the LR problem is , while the errors for OZND and NTZND are as high as and , respectively. The ASSREs for the QR problem in Table 3 show a similar trend, with the AREND having errors of and , significantly better than the other models. In terms of the MSSRE, the AREND attains for the LR problem (Table 2) and and for the QR problem (Table 4), which, compared with the other models, again demonstrates its robustness under periodic noise.

Performance under random noise conditions: Random noise is usually the most challenging type of noise, and many models perform poorly or even diverge under this condition. For example, DPZND and DVPEZND diverge under noisy conditions (Tables 1 and 3). However, AREND still maintains a low error. In the LR problem in Table 1, AREND has an ASSRE of , compared to for OZND and for NZND. In the QR problem in Table 3, AREND’s ASSRE of and again outperforms the other models. The MSSRE results also support this observation, with AREND having an MSSRE of in the LR problem (Table 2) and values of and in the QR problem (Table 4). These results show that the performance of the AREND remains stable under random noise, demonstrating excellent noise immunity.

Summary: The quantitative results show more rigorously that the AREND significantly outperforms the other models under all noise conditions, whether solving the LR problem or the QR problem. In particular, the AREND demonstrates excellent robustness under complex random and periodic noise, while other models, such as DPZND and DVPEZND, tend to diverge under noisy conditions. This indicates that the AREND is a reliable model for dealing with real noise environments and has a wide range of potential applications.

Application in AoA

In this subsection, we design a 3-D AoA localization algorithm to validate the AREND. This localization method relies on the azimuth and elevation of the base stations to locate a mobile target.

The principle of the AoA localization algorithm is illustrated in Fig. 9a. The position of the base station is defined as , , where N depends on the number of base stations. The position of the target object is given as . After geometric derivation, the following equation is obtained:

where denotes , and stands for element-wise division. The equation reduces to . The error function is then defined as . Using the evolution formula (9) and injecting the vector-type noise , the NTZND for the 3-dimensional AoA localization is obtained as

| 27 |

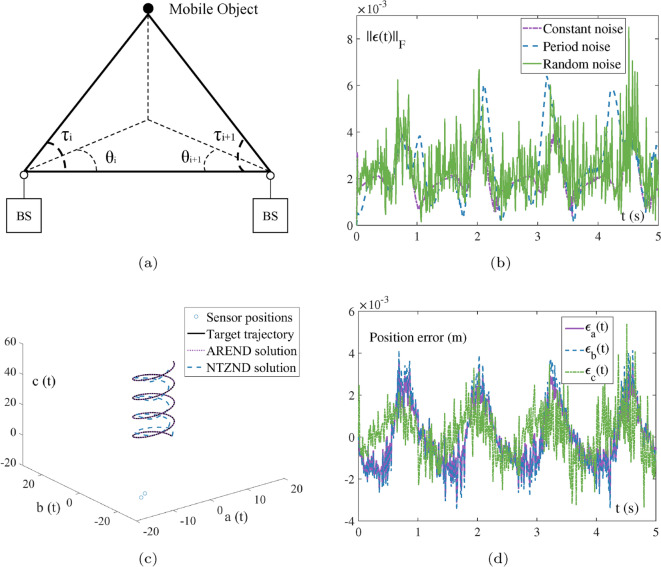

Fig. 9. Validation results of the NTZND (27) and AREND (28) applied to AoA positioning, where the parameters are set to , . (a) Schematic diagram of the 3-D AoA localization algorithm. (b) Residual errors of the AREND solving AoA localization under multiple noises, where the constant noise is , the period noise is , and the random bounded noise is . (c) Comparison of the target trajectory with the positions solved by the AREND and the NTZND under random noise. (d) Real-time position errors in the three directions for the AREND under random noise.

Likewise, the AREND for the 3-dimensional AoA localization by the evolution formula (11) is designed as

| 28 |

Two base stations are set up to simulate the localization process at the following locations: , , and the true trajectory of the target object is set as . The initial position of the target is assumed to be . As shown in Fig. 9b, under the three larger noises, the accuracy of the AREND is on the order of . In practical localization, random noise is the main concern, so the target trajectories solved by the models under random noise are shown in Fig. 9c. The NTZND solution deviates substantially from the target, while the solution trajectory of the AREND almost overlaps with the target trajectory. The position errors in the three directions are shown in Fig. 9d. These results support the effectiveness and accuracy of the AREND in target localization.
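
The geometry behind AoA localization can be sketched with a plain least-squares baseline. The example below uses a common textbook linearization of the azimuth and elevation constraints, which may differ in form from the equation derived above, and it solves each time instant independently rather than through the NTZND (27) or AREND (28) dynamics. The station coordinates, the target trajectory, and the function names `aoa_angles` and `aoa_locate` are hypothetical placeholders, since the values used in the experiment are not reproduced here.

```python
import numpy as np

def aoa_angles(stations, target):
    """Noise-free azimuth and elevation of the target seen from each station."""
    d = target - stations                              # shape (N, 3)
    azimuth = np.arctan2(d[:, 1], d[:, 0])
    elevation = np.arctan2(d[:, 2], np.hypot(d[:, 0], d[:, 1]))
    return azimuth, elevation

def aoa_locate(stations, azimuth, elevation):
    """Least-squares target position from the angles measured at N stations."""
    sa, ca = np.sin(azimuth), np.cos(azimuth)
    sb, cb = np.sin(elevation), np.cos(elevation)
    # Azimuth constraint:   sin(a)*(x - xi) - cos(a)*(y - yi) = 0
    rows_az = np.stack([sa, -ca, np.zeros_like(sa)], axis=1)
    # Elevation constraint: sin(b)*(cos(a)*(x - xi) + sin(a)*(y - yi)) - cos(b)*(z - zi) = 0
    rows_el = np.stack([sb * ca, sb * sa, -cb], axis=1)
    A = np.vstack([rows_az, rows_el])                  # shape (2N, 3)
    b = np.concatenate([
        np.sum(rows_az * stations, axis=1),
        np.sum(rows_el * stations, axis=1),
    ])
    position, *_ = np.linalg.lstsq(A, b, rcond=None)
    return position

# Hypothetical setup: two base stations and a slowly moving target.
stations = np.array([[0.0, 0.0, 0.0], [10.0, 0.0, 0.0]])
times = np.linspace(0.0, 10.0, 50)
trajectory = np.stack(
    [4.0 + np.cos(times), 6.0 + np.sin(times), 3.0 + 0.1 * times], axis=1
)

estimates = np.array([
    aoa_locate(stations, *aoa_angles(stations, p)) for p in trajectory
])
max_error = float(np.max(np.abs(estimates - trajectory)))
print("max position error:", max_error)
```

With two stations the system has four equations in three unknowns, so it is solvable as long as the target does not lie on the line through the two stations. A dynamic solver such as the NTZND or the AREND instead tracks the solution continuously and has to cope with noise injected into the dynamics, which this static baseline does not model.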

Conclusions

In this paper, an Adaptive and Robust-Enhanced Neural Dynamic (AREND), built on two types of Zeroing Neural Dynamic (ZND), is proposed to improve the performance of solving dynamic matrix LR and QR factorization. A Strategy of Integration-Coupling (SIC) is also proposed for problems with multiple error functions. The simulation experiments first demonstrate that the SIC moderates the instability of the Integration-Enhance Type of ZNDs. The comparisons then show that the AREND equipped with the SIC significantly improves performance under various environmental disturbances, making the proposed model a more desirable alternative. Compared with the two types of ZNDs it builds on, the AREND shows clear advantages and provides a valuable tool for solving dynamic problems. We therefore believe that the AREND and the SIC can be extended to more complex dynamic problems in future work.

Author contributions

F.H. Zhuang, H.T. He and A.P. Ye were responsible for experimentation and writing of the manuscript, while L.L. Zou was responsible for methodological guidance, financial support, and manuscript revisions.

Funding

This work is supported by the National Natural Science Foundation of China under Grant 62272109 and the Undergraduate Innovation Team Project of Guangdong Ocean University under Grant CXTD2024011.

Data availability

Code related to this article can be found at https://github.com/Alana2a3/AREND-Code-Implementation, an open-source online code repository hosted at GitHub.

Declarations

Competing interests

The authors declare no competing interests.

Ethical standard

All data used in this paper are publicly available and no consent was required for their use.

Footnotes

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

