Abstract

Background

The significance of emotional prosody in social communication is well established, yet research on emotion perception among cochlear implant (CI) users remains limited. This study aimed to explore vocal emotion perception in children using a CI alone and with bimodal hearing devices, and to compare their performance with that of their normal hearing (NH) peers.

Methods

The study involved children aged 4-10 years using a unilateral CI and a contralateral hearing aid (HA), matched with NH peers by gender and listening age. Children in the CI group were recruited using snowball sampling, and those in the NH group using purposive sampling. Vocal emotion perception was assessed with semantically neutral sentences spoken in “happy,” “sad,” and “angry” emotions, using a 3-alternative forced-choice test.

Results

The NH group demonstrated significantly better emotion perception (P = .002) than the CI group. Both groups accurately identified the “happy” emotion. However, the NH group scored higher for “angry” than for “sad,” whereas the CI group scored higher for “sad” than for “angry.” Bimodal hearing devices improved recognition of the “sad” and “angry” emotions and reduced the percentage of confusions. The unbiased hit (Hu) value provided more insight than the hit score.

Conclusion

Bimodal hearing devices enhance the perception of “sad” and “angry” vocal emotions compared with a CI alone, likely because the HA provides temporal fine structure cues that better represent fundamental frequency variations. Children with a unilateral CI benefit significantly in emotion perception from using a HA in the contralateral ear, which may support better socio-emotional development.

Keywords: Bimodal hearing devices, children, vocal emotion perception, cochlear implant

Main Points

Perception of the “sad” vocal emotion improved significantly with bimodal hearing devices in children.

Using bimodal hearing devices led to a reduction in the percentage of confusions between different emotions.

Improvement with HA may be due to the better representation of fundamental frequency variation, even with limited residual hearing.

Unbiased hit score offers more valuable insight than the hit score.

Introduction

Recognition of emotions is crucial for effective human interaction.1 In socio-emotional communication, emotional cues often carry more significance than the words themselves.2,3 Spoken sentences convey not only linguistic meaning but also information about the speaker’s age, gender, dialect, and emotional state, thereby enriching communication.4 Accurate emotion recognition relies on both visual and auditory cues, and the weighting of these cues differs between adults and children: infants and young children typically show a preference for auditory processing.5,6 However, children with congenital hearing impairment lack this early auditory dominance7,8 and have difficulty perceiving the acoustic cues that convey different emotions, which leads to inadequate development of emotion perception and production skills.9

The preferred rehabilitation options for children with congenital hearing impairment are hearing aids (HAs) and cochlear implants (CIs). The audibility and perception of sound in these children are influenced by device characteristics. A CI typically preserves the intensity and duration cues of natural speech but may degrade fine structure cues such as pitch and harmonics.10 A HA can provide access to low-frequency acoustic cues such as the fundamental frequency and lower harmonics.11,12

In listeners using both devices, known as bimodal stimulation (a CI in one ear and a HA in the other), research suggests significant improvement in speech perception in noisy environments.13-16 However, findings conflict: some studies indicate no benefit for speech recognition in noise,17 and others suggest interference from the HA, leading to poorer performance in the bimodal condition than with the CI alone.13,14,18

Emotion perception among CI users has received less attention than speech recognition. However, accumulating evidence indicates that children using CIs perform poorly in emotion identification tasks.19-21 Despite this, few studies have investigated how bimodal fitting influences the perception of vocal emotions, and it remains uncertain whether adding a HA improves vocal emotion perception, has no effect, or interferes with it. The current study was designed to address this gap.

Further, preschool-aged children using CI have shown deficiencies in emotion identification, even when provided with facial cues.22 Interestingly, another study reports that by school age, children with CI have attained normal facial emotion recognition.23 This suggests that accurate facial emotion recognition may depend on auditory cues received during early childhood development. Consequently, there is a need to explore how a bimodal representation of acoustic stimuli might aid in the perception of emotions, particularly in preschool-aged children.

Thus, we hypothesized that children with normal hearing (NH) would perform better in vocal emotion perception due to better access to acoustic stimuli. Additionally, we hypothesized that adding a HA to the contralateral ear would improve vocal emotion perception in children with unilateral CI. Therefore, we aimed to (1) compare vocal emotion perception between children with NH and those with bimodal hearing devices, and (2) understand the perception of “happy,” “sad,” and “angry” emotions in children using unilateral CI with and without a contralateral HA.

Methods

Study Population

A standard group comparison and within-subjects research design was used. A total of 40 children participated: 20 children with NH and 20 children with congenital hearing impairment. The CI group (mean age: 6.06 ± 1.4 years; age range: 4-10 years) included children using a CI in one ear and a HA in the other ear. Children were included in the CI group if they met the following criteria: (1) profound bilateral hearing impairment with no malformation of the cochlea or auditory nerve, (2) use of a stable CI map for at least 1 year, (3) no retrocochlear pathology, such as auditory neuropathy spectrum disorder, and (4) daily use of the contralateral HA for more than 4 hours and of the CI for more than 8 hours.

Before implantation, all these children underwent 2-3 months of aural rehabilitation with bilateral HAs. Because their speech-language skills did not improve significantly with HAs, they underwent cochlear implantation. Various CI models, including Nucleus and MED-EL devices, were used among the children. Children in the CI group received 2-3 sessions of aural rehabilitation per week.

The NH group consisted of 20 children aged 3.5-4.5 years (mean age: 3.85 ± 0.34 years). The inclusion criteria for the NH group were (1) air-conduction thresholds below 25 dB HL from 250 to 8000 Hz and (2) an “A” or “As” type tympanogram with acoustic reflexes present. Purposive sampling was used to select the children in the NH group, whereas snowball sampling was used for the CI group. Written consent was obtained from the caregivers of all children before testing began.

The listening age of the children was assessed using the Integrated Scales of Development provided in Cochlear’s Listen, Learn and Talk handbook (2005). This scale summarizes developmental stages in listening, speech, language, cognition, and social communication; for the purpose of this study, only the listening milestones were considered. A listening age was assigned only when the child performed more than 75% of the listening tasks listed for that age interval. To ensure equivalence in listening age and gender, each child in the CI group was matched with a child of the same listening age and gender in the NH group, irrespective of chronological age. In the NH children, listening age corresponded to chronological age. The listening ages of the children ranged from the 31-36-month interval to the 43-48-month interval.
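As a rough illustration of the >75% rule above, the hypothetical Python function below assigns a listening age from a milestone checklist. It assumes the checklist is stored as a dictionary from age-interval label to pass/fail results, ordered from youngest to oldest, and that the child is credited with the oldest interval meeting the criterion; the study does not describe its scoring procedure at this level of detail.

```python
def assign_listening_age(checklist, threshold=0.75):
    """Return the oldest age interval in which the child passed more than
    `threshold` (as a proportion) of the listed listening tasks.

    `checklist` is a hypothetical structure: a dict mapping an interval label
    to a list of booleans (True = task passed), ordered youngest to oldest.
    Returns None if no interval meets the criterion.
    """
    assigned = None
    for interval, results in checklist.items():
        if results and sum(results) / len(results) > threshold:
            assigned = interval  # later (older) qualifying intervals overwrite earlier ones
    return assigned


# Hypothetical child: 9/10, 8/10 and 6/10 tasks passed in three intervals.
child = {
    "31-36 months": [True] * 9 + [False],
    "37-42 months": [True] * 8 + [False] * 2,
    "43-48 months": [True] * 6 + [False] * 4,
}
print(assign_listening_age(child))  # 37-42 months
```

Note that the comparison is strict: a child passing exactly 75% of the tasks in an interval is not credited with it.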

Ethical Approval

Ethical approval for the study was granted by the AIISH Ethical Committee (No. DOR.9.1/Ph.D/SP/872/2020-21, February 08, 2023).

Procedure

The study used sentences from the Auditory Perception Test of emotions in Kannada,24 which comprises semantically neutral sentences. The original test included recordings of sentences expressing “happy,” “sad,” “neutral,” and “questioning” emotions; to incorporate the “angry” emotion for the current study, a new set of stimuli was recorded. The sentences were audio recorded by an adult female native speaker with good voice quality and clear speech, because female speakers are generally more emotionally expressive than male speakers.25 The speaker was instructed to modulate her voice to convey happy, sad, and angry emotions while uttering the sentences. Recording was done in Adobe Audition software using a calibrated unidirectional microphone in a sound-treated room, and the sentences were digitized with a 16-bit analog-to-digital converter at a sampling frequency of 44,100 Hz. The sentences were then normalized to minimize differences in stimulus energy across the different emotions. The recorded sentences were given to 3 experienced audiologists and/or speech-language pathologists (ASLPs), who rated how appropriately each sentence conveyed its tagged emotion on a 3-point scale (1: very appropriate, 2: fairly appropriate, 3: not appropriate). Only sentences rated “very appropriate” by at least 2 of the 3 raters were included, as test items (10 items) and practice items (5 items).
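The item-selection rule and the energy normalization described above could be sketched as follows. The rating codes follow the 3-point scale in the text; the normalization method is not specified in the study, so equalizing root-mean-square (RMS) amplitude is only an assumption, and all function names are hypothetical.

```python
import math


def keep_item(ratings, min_votes=2):
    """Keep a sentence if at least `min_votes` raters scored it 1 ("very appropriate").

    Scale from the text: 1 = very appropriate, 2 = fairly appropriate, 3 = not appropriate.
    """
    return sum(1 for r in ratings if r == 1) >= min_votes


def rms(samples):
    """Root-mean-square amplitude of a waveform given as a list of floats."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def normalize_rms(samples, target_rms):
    """Scale a waveform so its RMS equals `target_rms` (an assumed normalization method)."""
    current = rms(samples)
    if current == 0:
        raise ValueError("cannot normalize a silent signal")
    gain = target_rms / current
    return [s * gain for s in samples]


print(keep_item([1, 1, 3]))  # True
print(keep_item([1, 2, 2]))  # False
```

RMS equalization matches overall energy but leaves the within-sentence intensity contour untouched, which is consistent with the intensity differences that remain in the acoustic analysis.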

Before formal testing, a practice session with 5 sentences familiarized the children with the speaker’s voice, speaking style, and the testing protocol. Both groups received three 20-minute practice sessions, and feedback was provided during the practice sessions only. The child was seated 1 m from a loudspeaker placed at 0° azimuth. The sentences were played from a laptop through a calibrated audiometer at 50 dB HL. Three emojis representing happy, sad, and angry emotions were displayed. The task used a three-alternative forced-choice (3AFC) paradigm in which the child pointed to the emoji matching the perceived emotion. A score of “1” was given for correct identification and “0” for incorrect identification. For incorrect identifications, the author recorded the emotion chosen in a confusion matrix.
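The 3AFC scoring and the confusion-matrix bookkeeping can be illustrated with a short hypothetical Python sketch, assuming each trial is logged as a (target, response) pair. Row percentages correspond to the layout of the confusion matrices reported in the Results.

```python
from collections import Counter

EMOTIONS = ("happy", "sad", "angry")


def score_trial(target, response):
    """3AFC scoring used in the text: 1 for a correct choice, 0 otherwise."""
    return 1 if target == response else 0


def confusion_percentages(trials):
    """Build a row-normalized confusion matrix from (target, response) pairs.

    Returns {target: {response: percentage of that target's trials}}.
    """
    counts = Counter(trials)
    table = {}
    for target in EMOTIONS:
        row_total = sum(counts[(target, r)] for r in EMOTIONS)
        table[target] = {
            r: 100.0 * counts[(target, r)] / row_total if row_total else 0.0
            for r in EMOTIONS
        }
    return table


# Hypothetical session with 4 "happy", 2 "sad" and 2 "angry" trials.
trials = [("happy", "happy")] * 3 + [("happy", "angry")] + [("sad", "sad")] * 2 + [("angry", "happy")] * 2
print(sum(score_trial(t, r) for t, r in trials))  # 5
print(confusion_percentages(trials)["happy"])  # {'happy': 75.0, 'sad': 0.0, 'angry': 25.0}
```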

In the CI group, testing was done with the CI at its recommended settings and, in the contralateral ear, a trial behind-the-ear (BTE) HA with a fitting range of 60-110 dB HL coupled to the child’s custom earmold. Children in the CI group were tested in both the CI alone and CI with HA conditions, whereas children in the NH group were tested binaurally.

Statistical Analysis

Within-group and between-group comparisons were conducted using the Statistical Package for the Social Sciences (SPSS), version 20. A multifactor analysis of variance, among other tests, was used to analyze differences in F0 and other acoustic parameters of the sentences expressing different emotions. The Mann–Whitney U-test was used to compare emotion perception between the CI and NH groups, and the Wilcoxon signed-rank test to compare the CI alone and CI with HA conditions.
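For readers unfamiliar with the between-group test, the sketch below computes the Mann–Whitney U statistic from its pairwise definition together with a normal-approximation Z. It is a simplified illustration, not the SPSS procedure used in the study: it omits the tie correction to the variance and the exact small-sample p-values that statistical packages provide, and the scores in the example are invented.

```python
import math


def mann_whitney_u(x, y):
    """U for sample x: pairs with x > y count 1, ties count 0.5.

    The statistic for sample y is len(x) * len(y) - U.
    """
    u = 0.0
    for xi in x:
        for yj in y:
            if xi > yj:
                u += 1.0
            elif xi == yj:
                u += 0.5
    return u


def mann_whitney_z(x, y):
    """Normal-approximation Z for U, without the tie correction to the variance."""
    n1, n2 = len(x), len(y)
    u = mann_whitney_u(x, y)
    mean_u = n1 * n2 / 2.0
    sd_u = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12.0)
    return (u - mean_u) / sd_u


# Invented scores (number correct out of 10) for two small groups.
nh = [9, 10, 8, 9]
ci = [5, 6, 9, 4]
print(mann_whitney_u(nh, ci))  # 14.0
```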

Results

The children in the CI group received their implants at ages ranging from 3.6 to 5.10 years (mean: 4.25 years; SD: 1.13 years). Implant age (duration of implant use) ranged from 1 to 2.75 years (mean: 1.65 ± 0.7 years). The average aided thresholds in the CI alone condition were 29.09 ± 2.18 dB HL for low frequencies (250, 500, and 750 Hz), 25.35 ± 2.26 dB HL for mid frequencies (1000, 1500, and 2000 Hz), and 23.70 ± 3.30 dB HL for high frequencies (3000, 4000, 6000, and 8000 Hz). Aided thresholds in the CI and HA condition were similar for mid and high frequencies. For low frequencies, the mean threshold in the CI and HA condition was 27.58 ± 1.09 dB HL, which did not differ significantly from that in the CI alone condition (P = .082).

Acoustic Analysis of Sentences with Different Emotions

The acoustic analysis of the sentences was performed using the Praat software package.26 Ten recordings per emotion were analyzed to calculate the mean F0, F0 range, and mean intensity. As shown in Table 1, the mean F0 and F0 range were higher for the “angry” and “happy” emotions and lower for the “sad” emotion. Additionally, intensity was higher for the “angry” emotion than for the “sad” emotion.
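The summary measures in Table 1 can be illustrated with a minimal sketch that works on a frame-by-frame F0 track and intensity contour. It assumes the contours have already been extracted (for example, exported from Praat) and that unvoiced frames have been coded as 0 Hz. The study does not state which averaging method was used for mean intensity, so both energy (power) averaging and plain averaging of dB values are shown; the function names are hypothetical.

```python
import math
import statistics


def f0_summary(f0_track_hz):
    """Mean F0 and F0 range over voiced frames; unvoiced frames are assumed coded as 0 Hz."""
    voiced = [f for f in f0_track_hz if f > 0]
    if not voiced:
        raise ValueError("no voiced frames in the F0 track")
    return {
        "mean_f0": statistics.mean(voiced),
        "f0_min": min(voiced),
        "f0_max": max(voiced),
        "f0_range": max(voiced) - min(voiced),
    }


def mean_intensity_db(frames_db, energy_average=True):
    """Mean of an intensity contour in dB.

    energy_average=True converts to power, averages, and converts back to dB;
    energy_average=False takes the plain arithmetic mean of the dB values.
    """
    if energy_average:
        mean_power = statistics.mean(10 ** (db / 10) for db in frames_db)
        return 10 * math.log10(mean_power)
    return statistics.mean(frames_db)


print(f0_summary([0, 200, 250, 0, 300]))
```

Energy averaging weights loud frames more heavily, so for a contour such as 60 and 80 dB it gives about 77 dB rather than 70 dB; the choice matters when comparing emotions with different intensity contours.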

Table 1.

Mean and Standard Deviation of Fundamental Frequency and Intensity

| Emotion | Mean F0 ± SD (Hz) | F0 Range (Hz) | Mean Intensity ± SD (dB) | Intensity Range (dB) |
|---|---|---|---|---|
| Happy | 248.93 ± 35.52 | 155.04-332.98 | 73.68 ± 1.85 | 31.55-59.23 |
| Sad | 220.83 ± 12.33 | 177.8-234.8 | 72.46 ± 2.36 | 33.16-43.94 |
| Angry | 288.82 ± 36.16 | 231.61-435.1 | 75.39 ± 1.67 | 33.12-53.32 |

Because the data for mean F0 (P = .20), F0 range (P = .19), mean intensity (P = .13), and intensity range (P = .47) were normally distributed, a multifactor analysis of variance was used, followed where indicated by post hoc pairwise comparisons (happy–sad, happy–angry, sad–angry). The mean F0 and mean intensity differed significantly across the 3 emotions (F(2,11) = 12.866, P = .03, partial η² = 0.38). Pairwise comparisons revealed that the mean F0 of the “angry” emotion differed significantly from that of the “happy” and “sad” emotions. In addition, the F0 range was larger for the “angry” emotion than for the others. Mean intensity, however, differed significantly only between the “sad” and “angry” emotions.
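To make the effect-size measure concrete, the sketch below computes a one-way analysis of variance and partial η² from sums of squares. This is a simplification: the study used a multifactor design, whereas a one-way layout is assumed here, in which partial η² reduces to SS_between / (SS_between + SS_within). The data in the example are invented.

```python
def one_way_anova(groups):
    """One-way ANOVA on a list of groups (lists of numbers).

    Returns (F, df_between, df_within, partial_eta_squared). In a one-way
    design partial eta squared equals SS_between / (SS_between + SS_within).
    """
    all_values = [v for g in groups for v in g]
    grand_mean = sum(all_values) / len(all_values)
    group_means = [sum(g) / len(g) for g in groups]
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means))
    ss_within = sum((v - m) ** 2 for g, m in zip(groups, group_means) for v in g)
    df_between = len(groups) - 1
    df_within = len(all_values) - len(groups)
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    partial_eta_sq = ss_between / (ss_between + ss_within)
    return f_stat, df_between, df_within, partial_eta_sq


# Invented mean-F0 values (Hz) for three sentences per emotion.
happy = [240.0, 250.0, 255.0]
sad = [215.0, 220.0, 225.0]
angry = [280.0, 290.0, 295.0]
print(one_way_anova([happy, sad, angry]))
```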

Vocal Emotion Recognition

The Shapiro–Wilk test of normality was used to assess the data distribution. Because the emotion perception scores were not normally distributed (P < .05), the Mann–Whitney U-test was used for between-group comparisons.

Comparison Between Cochlear Implant Group and Normal Hearing Group

To address the primary objective of the study, the emotion perception scores of the CI group, under both CI and HA conditions, were compared with those of the NH group. As predicted, vocal emotion perception was significantly better in the NH group than in the CI group for “happy” (U = 180.00, |Z| = 4.42, P = .01), “sad” (U = 167.00, |Z| = 3.78, P = .002), and “angry” (U = 182.00, |Z| = 4.50, P = .02) emotions.

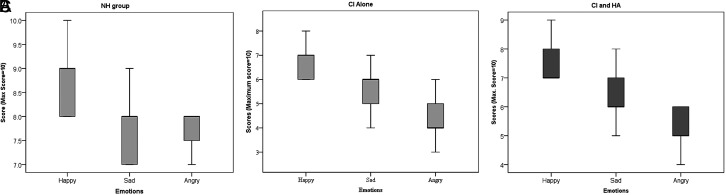

Notably, both groups recognized the “happy” emotion with the highest accuracy of the 3 emotions. Children in the CI group scored better for “sad” than for “angry,” whereas the NH group recognized “angry” better than “sad.” The medians and interquartile ranges for the CI group in the CI alone and CI with HA conditions, and for the NH group, are shown in Figure 1.

Figure 1.

Box plot depicting emotion scores for (A) NH group, (B) CI group in CI alone condition, and (C) CI group in CI and HA condition. It shows median, first quartile, and third quartile.

Comparison Between Cochlear Implant Alone and Cochlear Implant and Hearing Aid

A within-group comparison was conducted using the Wilcoxon signed-rank test because the data were not normally distributed. Emotion perception scores differed significantly between the CI alone and CI with HA conditions for “sad” (Z = –3.30, P < .01) and “angry” (Z = –2.23, P = .02), but not for “happy” (Z = –1.34, P = .18).
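The within-group comparison rests on the Wilcoxon signed-rank statistic. The sketch below computes it for paired scores (for example, a child’s score with CI alone and with CI and HA), dropping zero differences and giving tied absolute differences their average rank. The normal approximation here omits the tie correction, so Z values would differ slightly from those reported by SPSS when ties are present; the example scores are invented.

```python
import math


def wilcoxon_signed_rank(before, after):
    """Wilcoxon signed-rank statistics for paired samples.

    Zero differences are dropped; tied absolute differences receive their
    average rank. Returns (W_plus, W_minus, z), where z is the normal
    approximation for the smaller of the two sums, without tie correction.
    """
    diffs = [a - b for b, a in zip(before, after) if a != b]
    n = len(diffs)
    if n == 0:
        raise ValueError("all paired differences are zero")
    order = sorted(range(n), key=lambda k: abs(diffs[k]))
    ranks = [0.0] * n
    i = 0
    while i < n:
        j = i
        # Extend j over a run of equal absolute differences.
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        avg_rank = (i + j) / 2 + 1  # positions i..j hold ranks i+1..j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        i = j + 1
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    mean_w = n * (n + 1) / 4
    sd_w = math.sqrt(n * (n + 1) * (2 * n + 1) / 24)
    z = (min(w_plus, w_minus) - mean_w) / sd_w
    return w_plus, w_minus, z


# Invented "sad" scores (out of 10) for five children, CI alone vs CI and HA.
ci_alone = [3, 4, 2, 5, 3]
ci_and_ha = [5, 6, 4, 5, 6]
print(wilcoxon_signed_rank(ci_alone, ci_and_ha))
```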

To provide greater insight into the patterns of error in the judgment of emotions, confusion matrices were constructed for both groups (Table 2).

Table 2.

Average Perceptual Confusion Matrices. Values Represent the Percentage of Responses; Bold Numbers Indicate a Match Between the Intended Emotion and the Given Response.

| Target | CI alone: Happy (%) | CI alone: Sad (%) | CI alone: Angry (%) | CI and HA: Happy (%) | CI and HA: Sad (%) | CI and HA: Angry (%) | NH binaural: Happy (%) | NH binaural: Sad (%) | NH binaural: Angry (%) |
|---|---|---|---|---|---|---|---|---|---|
| Happy | **61.04** | 6.09 | 32.06 | **67.90** | 6.06 | 26.04 | **90.15** | 2.45 | 7.40 |
| Sad | 44.76 | **34.04** | 21.02 | 38.23 | **55.71** | 10.78 | 6.94 | **75.43** | 19.63 |
| Angry | 55.05 | 18.42 | **36.63** | 44.23 | 12.85 | **42.92** | 15.81 | 2.69 | **81.50** |

CI, cochlear implant; HA, hearing aid.

In the CI group, confusions occurred primarily between “happy” and “angry,” whereas the fewest confusions were observed for the “sad”–“happy” and “sad”–“angry” pairs. The percentage of confusions was lower in the CI and HA condition than in the CI alone condition. The NH group showed the most confusions between “sad” and “angry” and the fewest between “happy” and “sad.”

Unbiased hit (Hu) values27 were calculated for all 3 emotions, in the CI alone and CI with HA conditions for the CI group and for the NH group, so that recognition accuracy could be assessed independently of response guessing or response bias.

$$Hu_{\text{happy}} = \frac{a}{a+b+c} \times \frac{a}{a+d+g} = \frac{a^{2}}{(a+b+c)(a+d+g)}$$

where “a” is the number of correct identifications of “happy,” “b” is the number of times “happy” was identified as “sad,” “c” is the number of times “happy” was identified as “angry,” “d” is the number of times “sad” was identified as “happy,” and “g” is the number of times “angry” was identified as “happy.” Hu values for “sad” and “angry” are calculated analogously.
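The Hu calculation can be expressed as a small function over a confusion matrix of counts, with rows as the intended emotion and columns as the response, so that for “happy” the row is (a, b, c) and the column is (a, d, g) in the notation above. The function name and matrix layout are illustrative assumptions.

```python
def unbiased_hit_rates(matrix, labels=("happy", "sad", "angry")):
    """Unbiased hit rate (Hu) per category from a confusion matrix of counts.

    matrix[i][j] = number of times stimulus i was identified as j
    (rows = intended emotion, columns = response). For category i,
    Hu = (n_ii / row total) * (n_ii / column total); it is 0 when either total is 0.
    """
    hu = {}
    for i, label in enumerate(labels):
        hit = matrix[i][i]
        row_total = sum(matrix[i])
        col_total = sum(row[i] for row in matrix)
        if row_total == 0 or col_total == 0:
            hu[label] = 0.0
        else:
            hu[label] = (hit / row_total) * (hit / col_total)
    return hu


# A hypothetical listener who answers "happy" on every trial (10 trials per emotion).
always_happy = [
    [10, 0, 0],  # happy stimuli: a, b, c
    [10, 0, 0],  # sad stimuli: d in the "happy" column
    [10, 0, 0],  # angry stimuli: g in the "happy" column
]
print(unbiased_hit_rates(always_happy))
```

Here the hit rate for “happy” is 100%, yet Hu for “happy” is only (10/10) × (10/30) ≈ 0.33, and Hu is 0 for “sad” and “angry.” This is how Hu penalizes an indiscriminate response bias that the raw hit score rewards.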

The Wilcoxon signed-rank test revealed that Hu values differed significantly between the CI alone and CI and HA conditions for “happy” (Z = −2.97, P = .03), “sad” (Z = −3.49, P < .01), and “angry” (Z = −3.15, P < .01). The increase in Hu values from the CI alone to the CI and HA condition across all emotions suggests that adding a HA in the contralateral ear improves the accuracy of identifying the target emotion (Table 3).

Table 3.

Average Hit and Unbiased Hit Rates, Expressed as Percentage of Responses

| Condition | Happy H (%) | Happy Hu (%) | Sad H (%) | Sad Hu (%) | Angry H (%) | Angry Hu (%) |
|---|---|---|---|---|---|---|
| CI alone | 61.04 | 23.38 | 34.04 | 11.98 | 36.63 | 11.31 |
| CI and HA | 67.90 | 30.65 | 55.71 | 27.79 | 42.92 | 23.39 |
| NH group | 90.15 | 72.14 | 75.43 | 69.68 | 81.50 | 60.92 |

H, average hit; Hu, unbiased hit rate.

Discussion

The current study evaluated vocal emotion perception in children using unilateral CI with and without a contralateral HA, compared to the performance of children in the NH group.

Comparison of Normal Hearing Group Versus Cochlear Implant Group

The NH group identified the different emotions more accurately than the CI group, in line with several previous studies.9,19,20 Although the children in both groups had similar listening ages, the superior performance of the NH children can be attributed to their greater access to acoustic features such as fundamental frequency (F0) variations, intensity, and duration. These features are often degraded in children using hearing devices, which can reduce the clarity of vocal emotion cues. The most important acoustic cue for emotion perception is F0 variation, followed by intensity, with duration being the least reliable.28

Apart from pitch cues, intensity differences among emotions help listeners recognize the target emotion. However, normalizing overall intensity across stimuli reduces this cue, which poses a challenge for the CI group.9 As a result, children with CIs must rely more on pitch distinctions for emotion perception, and because of the poor spectral resolution of their devices (CI and HA), the CI group performs worse than the NH group.

Further, previous studies indicate that 6-month-old infants can discriminate changes in auditory temporal characteristics but not in visual stimuli, highlighting early auditory dominance by this age.7,8 Although the NH and CI groups had the same listening age, the NH group likely benefited from auditory stimulation from birth, leading to superior vocal emotion perception. In contrast, children in the CI group faced congenital hearing impairment and delayed intervention with HAs or CIs, which widens the differences between the 2 groups.

Comparison of Cochlear Implant Alone Versus Cochlear Implant and Hearing Aid

Adding a HA to the ear contralateral to the CI enhanced performance. This benefit could be explained by the HA’s transmission of lower-frequency acoustic information, which is particularly valuable because a CI alone often has limited access to low-frequency sounds owing to constraints on electrode array insertion.30

Additionally, a CI is limited by its speech coding strategies, which primarily represent the envelope of the acoustic signal. In contrast, a HA provides temporal fine structure cues,31 which are crucial for vocal emotion perception. The combination of CI and HA thus allows envelope and fine structure cues to be integrated, thereby improving vocal emotion perception.

Nevertheless, the improved scores in the bimodal condition did not reach the performance of the NH group, possibly because the CI children in our study lacked sufficient acoustic bandwidth and sensitivity to detect additional voice cues.32 Dorman et al33 found that bimodal benefits for speech perception in quiet were greater for individuals with mild (0-40 dB HL) or moderate (41-60 dB HL) low-frequency hearing loss than for those with more severe loss (>60 dB HL), indicating the influence of residual hearing. Children in our CI group had mean unaided pure-tone thresholds greater than 80 dB HL at 500, 1000, and 2000 Hz, indicating insufficient residual hearing to approach the performance of children with NH.13

Comparison of Vocal Emotion Perception: Happy, Sad, and Angry

Both groups identified “happy” at a high rate, which could be attributed to its greater within-stimulus intensity variation compared with “sad” and “angry.” The CI group, on the other hand, had difficulty perceiving the “angry” emotion. This could be linked to rapid F0 variations within a sentence during the expression of “angry,” which might not be well represented by either the CI (CI alone condition) or the HA (CI and HA condition). These findings contradict previous research suggesting that large F0 variations and high intensity help children with CIs recognize “angry.”1,4 Given the broad inter-subject variability in the study population, the current results should be validated with a larger sample.

Additionally, the confusion patterns observed among CI children were largely congruent with those reported in prior research.34 Children in the CI group had fewer confusions between “sad” and “angry” than between other emotion pairs, likely owing to the intensity differences between these emotions.4,35 This argument is supported by the acoustic analysis, which showed a statistically significant difference in mean intensity between “sad” and “angry.” Moreover, CI children showed more confusion between “happy” and “angry,” two emotions that differ acoustically mainly in mean F0; the reduced spectral resolution in the CI group may hinder their ability to distinguish these F0 differences.35

The findings of the current study also indicate improved scores for identifying “sad” in the CI and HA condition. This enhancement is likely due to the lower intensity of “sad” relative to the other emotions, which makes it acoustically more salient and easier to distinguish.36 The “sad” emotion had the lowest mean intensity, mean F0, and F0 variation range, emphasizing its distinctiveness from the “happy” and “angry” targets.34

Thus, children in the CI group relied on intensity cues rather than spectral variations to perceive emotions, consistent with previous findings.34,36 Additionally, the HA in the contralateral ear may have further enhanced their ability to differentiate intensity changes within sentences among different emotions. The HA not only improved vocal emotion perception but also decreased the number of confusions and increased the accuracy in identifying target emotions, as evidenced by the Hu value.

Conversely, children in the NH group showed minimal confusion between “happy” and “sad,” but greater confusion between “angry” and “sad,” indicating a reliance on pitch cues and/or spectral variations rather than intensity cues.35 Overall, these findings highlight a distinct pattern in vocal emotion perception between the CI and NH groups, emphasizing their differing reliance on spectral and temporal cues. Future research could investigate the relative importance of spectral and temporal cues with increasing age among CI children, using a longitudinal approach.

In contrast, Meister and colleagues37 found that CI users performed poorly in identifying the stressed syllable, suggesting that, unlike NH listeners, CI users may be unable to use the intensity cues that are naturally present in speech.9 In another study, Hopyan et al19 reported that CI users relied on tempo cues rather than the pitch cues associated with musical emotion to distinguish “happy” from “sad.” The conflicting results across studies may be due to heterogeneity within the CI population. The diversity among the CI children in our study arises from factors such as age at implantation, implant age, CI manufacturer, and aural rehabilitation. These factors may affect the perception of vocal emotions, and future research could examine the influence of each factor individually.

Confounding Factors in Vocal Emotion Perception

The age at which children receive a CI can strongly influence their vocal emotion perception. One study reported that the optimal age of implantation for full development of emotional comprehension is 18 months,38 slightly later than the 12 months recommended for language development. The authors noted that this development predominantly involves the right hemisphere, which dominates the processing of non-verbal emotional cues during the first 2 years; after this period, the balance between hemispheres shifts and oral language becomes more significant.38

Furthermore, a sensitive period of about 3.5 years for the functional development of central auditory pathways is well established.39 Because the children in our study were implanted after 3.5 years of age, the results cannot be extrapolated to children implanted earlier, i.e., within this sensitive period. This prolonged auditory deprivation before implantation may also explain the observed differences in vocal emotion perception between the CI and NH groups.

Strengths and Limitations

We utilized Hu values as an additional measure to assess emotion perception, ensuring accuracy independent of potential influences such as response guessing or bias. This method proved invaluable in quantifying the impact of using HA in conjunction with unilateral CI. Another strength of our study is the inclusion of a NH group matched for listening age, facilitating a direct comparison of the potential binaural benefits achievable with CI and HA.

As in other studies involving CI users, the external validity of the results must be considered in light of several methodological limitations. These include the small sample size and the reliance on snowball sampling, which may introduce bias and limit the generalizability of the findings. Further, the CI children varied considerably in age at implantation (3.6-5.10 years) and duration of implant use (1-2.75 years), which could further constrain the applicability of the results. Future research could improve reliability by increasing the sample size in subgroups based on age at implantation and by considering differences in device manufacturers and their speech coding strategies, all of which could affect the outcomes.

Conclusion

In conclusion, the NH group demonstrated superior vocal emotion perception compared with the CI group. Children in the CI group are disadvantaged by their congenital hearing impairment, and current hearing devices do not fully replicate the function of a normal ear. Technological advances may in future offer strategies to represent the fundamental frequency variation of speech signals more accurately. In the meantime, using a HA in the contralateral ear could provide access to low-frequency information and its fine structure, potentially improving emotion perception and thereby supporting socio-emotional development.

Funding Statement

The authors declare that this study received no financial support.

Footnotes

Ethics Committee Approval: This study was approved by the AIISH Ethical Committee (Approval no.: DOR.9.1/Ph.D/SP/872/2020-21, Date: 08.02.2023).

Informed Consent: Written informed consent was obtained from the caregivers of all children who participated in the study.

Peer-Review: Externally peer-reviewed.

Author Contributions: Concept – P.S., P.M.; Design – P.S., P.M.; Supervision – P.M.; Resources – P.S., P.M.; Materials – P.S., P.M.; Data Collection and/or Processing – P.S.; Analysis and/or Interpretation – P.S., P.M.; Literature Search – P.S.; Writing – P.S., P.M.; Critical Review – P.M.

Declaration of Interests: The authors have no conflicts of interest to declare.

References

- 1. Bryant G, Barrett HC. Vocal emotion recognition across disparate cultures. J Cogn Cult. 2008;8(1-2):135 148. ( 10.1163/156770908X289242) [DOI] [Google Scholar]

- 2. Zajonc RB. Feeling and thinking: preferences need no inferences. Am Psychol. 1980;35(2):151 175. ( 10.1037/0003-066X.35.2.151) [DOI] [Google Scholar]

- 3. Wallbott HG, Scherer KR. Cues and channels in emotion recognition. J Pers Soc Psychol. 1986;51(4):690 699. ( 10.1037/0022-3514.51.4.690) [DOI] [Google Scholar]

- 4. Ren L, Zhang Y, Zhang J, et al. Voice emotion recognition by mandarin-speaking children with cochlear implants. Ear Hear. 2022;43(1):165 180. ( 10.1097/AUD.0000000000001085) [DOI] [PubMed] [Google Scholar]

- 5. Nava E, Pavani F. Changes in sensory dominance during childhood: converging evidence from the Colavita effect and the sound-induced flash illusion. Child Dev. 2013;84(2):604 616. ( 10.1111/j.1467-8624.2012.01856.x) [DOI] [PubMed] [Google Scholar]

- 6. Neves L, Martins M, Correia AI, Castro SL, Lima CF. Associations between vocal emotion recognition and socio-emotional adjustment in children. R Soc Open Sci. 2021;8(11):211412. ( 10.1098/rsos.211412) [DOI] [PMC free article] [PubMed] [Google Scholar]

7. Lewkowicz DJ. Sensory dominance in infants: I. Six-month-old infants’ response to auditory–visual compounds. Dev Psychol. 1988;24(2):172-182. doi:10.1037/0012-1649.24.2.172

8. Zupan B. The role of audition in audiovisual perception of speech and emotion in children with hearing impairment. In: Belin P, Campanella S, Ethofer T, eds. Integrating Face and Voice in Person Perception. 2013:299-324.

9. Jiam NT, Caldwell M, Deroche ML, Chatterjee M, Limb CJ. Voice emotion perception and production in cochlear implant users. Hear Res. 2017;352:30-39. doi:10.1016/j.heares.2017.01.006

10. Fu QJ, Chinchilla S, Galvin JJ. The role of frequency and temporal cues in voice gender discrimination by normal-hearing listeners and cochlear implant users. J Assoc Res Otolaryngol. 2004;5(3):253-260. doi:10.1007/s10162-004-4046-1

11. Carroll J, Tiaden S, Zeng FG. Fundamental frequency is critical to speech perception in noise in combined acoustic and electric hearing. J Acoust Soc Am. 2011;130(4):2054-2062. doi:10.1121/1.3631563

12. Neuman AC, Svirsky MA. Effect of hearing aid bandwidth on speech recognition performance of listeners using a cochlear implant and contralateral hearing aid (bimodal hearing). Ear Hear. 2013;34(5):553-561. doi:10.1097/AUD.0b013e31828e86e8

13. Ching TYC, Incerti P, Hill M. Binaural benefits for adults who use hearing aids and cochlear implants in opposite ears. Ear Hear. 2004;25(1):9-21. doi:10.1097/01.AUD.0000111261.84611.C8

14. Dunn CC, Tyler RS, Witt SA. Benefit of wearing a hearing aid on the unimplanted ear in adult users of a cochlear implant. J Speech Lang Hear Res. 2005;48(3):668-680. doi:10.1044/1092-4388(2005/046)

15. Mok M, Grayden D, Dowell RC, Lawrence D. Speech perception for adults who use hearing aids in conjunction with cochlear implants in opposite ears. J Speech Lang Hear Res. 2006;49(2):338-351. doi:10.1044/1092-4388(2006/027)

16. Gifford RH, Shallop JK, Peterson AM. Speech recognition materials and ceiling effects: considerations for cochlear implant programs. Audiol Neurootol. 2008;13(3):193-205. doi:10.1159/000113510

17. Morera C, Manrique M, Ramos A, et al. Advantages of binaural hearing provided through bimodal stimulation via a cochlear implant and a conventional hearing aid: a 6-month comparative study. Acta Otolaryngol. 2005;125(6):596-606. doi:10.1080/00016480510027493

18. Litovsky RY, Johnstone PM, Godar SP. Benefits of bilateral cochlear implants and/or hearing aids in children. Int J Audiol. 2006;45(suppl 1):S78-S91. doi:10.1080/14992020600782956

19. Hopyan T, Gordon KA, Papsin BC. Identifying emotions in music through electrical hearing in deaf children using cochlear implants. Cochlear Implants Int. 2011;12(1):21-26. doi:10.1179/146701010X12677899497399

20. Chatterjee M, Zion DJ, Deroche ML, et al. Voice emotion recognition by cochlear-implanted children and their normally-hearing peers. Hear Res. 2015;322:151-162. doi:10.1016/j.heares.2014.10.003

21. Nakata T, Trehub SE, Kanda Y. Effect of cochlear implants on children’s perception and production of speech prosody. J Acoust Soc Am. 2012;131(2):1307-1314. doi:10.1121/1.3672697

22. Wiefferink CH, Rieffe C, Ketelaar L, De Raeve L, Frijns JHM. Emotion understanding in deaf children with a cochlear implant. J Deaf Stud Deaf Educ. 2013;18(2):175-186. doi:10.1093/deafed/ens042

23. Hopyan-Misakyan TM, Gordon KA, Dennis M, Papsin BC. Recognition of affective speech prosody and facial affect in deaf children with unilateral right cochlear implants. Child Neuropsychol. 2009;15(2):136-146. doi:10.1080/09297040802403682

24. Agarwal D, Yathiraj A. Auditory Perception Test of Emotions in Kannada Sentences. Unpublished material. Department of Audiology, All India Institute of Speech & Hearing, Mysore; 2007.

25. Chaplin TM, Aldao A. Gender differences in emotion expression in children: a meta-analytic review. Psychol Bull. 2013;139(4):735-765. doi:10.1037/a0030737

26. Boersma P. PRAAT, a system for doing phonetics by computer. Glot Int. 2001;5(9-10):341-347.

27. Wagner HL. On measuring performance in category judgment studies of nonverbal behavior. J Nonverbal Behav. 1993;17(1):3-28. doi:10.1007/BF00987006

28. Peng SC, Chatterjee M, Lu N. Acoustic cue integration in speech intonation recognition with cochlear implants. Trends Amplif. 2012;16(2):67-82. doi:10.1177/1084713812451159

29. Green T, Faulkner A, Rosen S. Enhancing temporal cues to voice pitch in continuous interleaved sampling cochlear implants. J Acoust Soc Am. 2004;116(4 Pt 1):2298-2310. doi:10.1121/1.1785611

30. Dorman MF, Loizou PC, Rainey D. Simulating the effect of cochlear-implant electrode insertion depth on speech understanding. J Acoust Soc Am. 1997;102(5 Pt 1):2993-2996. doi:10.1121/1.420354

31. Stronks HC, Briaire JJ, Frijns JHM. The temporal fine structure of background noise determines the benefit of bimodal hearing for recognizing speech. J Assoc Res Otolaryngol. 2020;21(6):527-544. doi:10.1007/s10162-020-00772-1

32. Nyirjesy S, Rodman C, Tamati TN, Moberly AC. Are there real-world benefits to bimodal listening? Otol Neurotol. 2020;41(9):e1111-e1117. doi:10.1097/MAO.0000000000002767

33. Dorman MF, Cook S, Spahr A, et al. Factors constraining the benefit to speech understanding of combining information from low-frequency hearing and a cochlear implant. Hear Res. 2015;322:107-111. doi:10.1016/j.heares.2014.09.010

34. Luo X, Fu QJ, Galvin JJ. Vocal emotion recognition by normal-hearing listeners and cochlear implant users. Trends Amplif. 2007;11(4):301-315. doi:10.1177/1084713807305301 [published correction appears in Trends Amplif. 2007;11(3):e1].

35. Mildner V, Koska T. Recognition and production of emotions in children with cochlear implants. Clin Linguist Phon. 2014;28(7-8):543-554. doi:10.3109/02699206.2014.927000

36. Most T, Gaon-Sivan G, Shpak T, Luntz M. Contribution of a contralateral hearing aid to perception of consonant voicing, intonation, and emotional state in adult cochlear implantees. J Deaf Stud Deaf Educ. 2012;17(2):244-258. doi:10.1093/deafed/enr046

37. Meister H, Landwehr M, Pyschny V, Walger M, von Wedel H. The perception of prosody and speaker gender in normal-hearing listeners and cochlear implant recipients. Int J Audiol. 2009;48(1):38-48. doi:10.1080/14992020802293539

38. Mancini P, Giallini I, Prosperini L, et al. Level of emotion comprehension in children with mid to long term cochlear implant use: how basic and more complex emotion recognition relates to language and age at implantation. Int J Pediatr Otorhinolaryngol. 2016;87:219-232. doi:10.1016/j.ijporl.2016.06.033

39. Sharma A, Dorman MF. Central auditory development in children with cochlear implants: clinical implications. Adv Otorhinolaryngol. 2006;64:66-88. doi:10.1159/000094646

Content of this journal is licensed under a

Content of this journal is licensed under a