Abstract

Purpose:

To develop an artificial intelligence (AI) approach for differentiating among normal corneas, subclinical keratoconus (KC), and KC using tomographic maps from Pentacam (Oculus) and corneal biomechanics from Corvis ST (Oculus).

Methods:

A total of 1,668 tomographic images (769 patients) and 611 biomechanical images (307 patients) from the Chula Refractive Surgery Center, King Chulalongkorn Memorial Hospital, were included. The dataset was divided into Pentacam and combined Pentacam-Corvis groups. Different convolutional neural network approaches were used to improve KC and subclinical KC detection.

Results:

AI model 1, which used refractive maps from Pentacam, achieved an area under the receiver operating characteristic curve (AUC) of 0.938 and an accuracy of 0.947 (sensitivity, 90.8%; specificity, 96.9%). AI model 2, which added the dynamic corneal response and the Vinciguerra screening report from Corvis ST to AI model 1, achieved an AUC of 0.985 and an accuracy of 0.956 (sensitivity, 93.0%; specificity, 94.3%). AI model 3, which added the corneal biomechanical index to AI model 2, reached an AUC of 0.991 and an accuracy of 0.956 (sensitivity, 93.0%; specificity, 94.3%).

Conclusions:

Our study showed that AI models using anterior corneal curvature, alone or combined with corneal biomechanics, could help classify normal and keratoconic corneas, which could improve diagnostic accuracy and support treatment decision-making.

Keywords: Artificial intelligence, Forme fruste keratoconus, Keratoconus, Keratoconus suspect, Machine learning, Subclinical keratoconus

INTRODUCTION

Keratoconus (KC) is a progressive corneal ectasia that causes visual impairment. Its etiology is not well understood, but several risk factors have been proposed, including eye rubbing, repeated trauma from contact lenses, and atopic disease.1 A meta-analysis of studies up to June 2018 reported a KC prevalence of 1.38 per 1,000 population,2 and a 10-year retrospective study reported 19.6 per 100,000 among Asians.3 Asian populations show a higher incidence of KC than Caucasians.3,4 Early detection allows treatment at an earlier stage, so better visual acuity can be preserved.

Previously, KC was diagnosed using slit-lamp examination and clinical signs. Subsequently, computer software was integrated with imaging devices, and topographic indices were included in the diagnostic criteria.5 More recently, Scheimpflug imaging with the Pentacam (Oculus Inc., Wetzlar, Germany) was introduced to provide three-dimensional images of the anterior segment of the eye, allowing evaluation of both the anterior and posterior corneal surfaces and the pachymetric map.6 Following this, in vivo corneal biomechanical imaging systems that capture the deformation response to an air pulse using Scheimpflug imaging, such as the Corvis ST (Oculus Inc., Wetzlar, Germany), were developed to provide dynamic corneal response (DCR) parameters representing the mechanical stability of the cornea.7 The corneal biomechanical index (CBI) combines the corneal thickness profile and deformation parameters using logistic regression analysis and can differentiate between KC and normal corneas. However, to detect early KC, such as in the topographically normal fellow eye of a patient with very asymmetric ectasia in the other eye,8 CBI should be combined with other clinical data. Later, the tomographic and biomechanical index (TBI), which combines data from Pentacam and Corvis ST, was developed using a random forest with leave-one-out cross-validation. Its detection of subclinical and clinical ectasia was significantly better than that of either the Belin-Ambrósio enhanced ectasia total deviation display (BAD-D) or CBI.8,9
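A logistic-regression index such as CBI turns several measurements into one score between 0 and 1. The minimal sketch below shows only that mechanism: a weighted sum of inputs passed through the logistic function. The coefficients, intercept, and feature values are hypothetical placeholders, not the published CBI coefficients or inputs.

```python
import math

def logistic_index(features, coefficients, intercept):
    """Map a weighted sum of measurements to a score between 0 and 1.

    NOTE: coefficients and intercept are hypothetical; the published CBI
    model is not reproduced here.
    """
    if len(features) != len(coefficients):
        raise ValueError("features and coefficients must have the same length")
    z = intercept + sum(c * x for c, x in zip(coefficients, features))
    # Numerically stable logistic function (avoids overflow for large |z|).
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

# Hypothetical example: two standardized inputs (e.g., one thickness-derived and
# one deformation-derived parameter) with made-up weights.
score = logistic_index([1.2, -0.5], coefficients=[1.5, -2.0], intercept=-1.0)
```

In a fitted model the coefficients come from training data, and a cut-off on the score separates the classes.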

Over 8.5 million people have undergone refractive surgery, and over 13 million individuals have undergone laser-assisted in situ keratomileusis (LASIK) in the United States.10 Small-incision lenticule extraction (SMILE) is another refractive surgery option that alters the biomechanical integrity of the cornea less than LASIK does.11,12 However, cases of post-SMILE ectasia have been reported in patients with normal preoperative topography, indicating that abnormal corneal topography may not be the only risk factor for postoperative ectasia.13 Thus, combining multiple parameters can enhance the early detection of KC.8

Artificial intelligence (AI) plays an important role in several medical fields.14 In ophthalmology, AI is being combined with fundus photography, optical coherence tomography (OCT), and visual fields to screen for and classify diseases, including retinal diseases such as retinopathy of prematurity, age-related macular degeneration, and diabetic retinopathy.15 In the cornea field, AI is most commonly applied to KC detection, screening of refractive surgery candidates, pterygium detection, and detection of graft detachment after Descemet membrane endothelial keratoplasty.14 For KC detection, previous studies have used various supervised AI models: linear discriminant analysis, which reduces the number of features while maximizing separability between groups;16,17,18,19,20 neural network models, which process input data through interconnected nodes to produce output data;16,17,21,22,23,24,25,26,27,28 decision tree models, which classify data based on conditions, with branches representing decisions and leaves representing outcomes;29,30,31 the multilayer perceptron, a fully connected neural network consisting of at least three layers of nodes;21 support vector machines, which classify data by finding a separating hyperplane;21,32,33 and random forest models, which combine multiple decision trees.8,34

In recent years, convolutional neural networks (CNNs), which classify images and other two-dimensional data, have been successful in many fields.35,36,37 A CNN typically comprises two main types of layers. The first is the convolutional layer, which performs feature extraction and learns the characteristics of images directly from the data,38,39 unlike other algorithms that rely on parameters chosen by humans.19,21,22,23,24,25,30,32,33 The second is the fully connected layer, which combines all extracted features to recognize patterns in the input data.38,39 To date, eight studies have used CNNs to detect KC, and only three of these evaluated subclinical KC. Different imaging technologies, such as Pentacam,39,40 Orbscan,41 and OCT,38,42 were used for data collection. Notably, the inclusion of corneal biomechanics in a CNN framework has not been reported previously.
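The two kinds of CNN layers described above can be illustrated in plain Python: a convolution that slides a small kernel over an image to produce a feature map, and a fully connected layer that combines the flattened features into class probabilities. This is a toy sketch, not the network used in this study; the kernel and dense weights are hand-set hypothetical values, whereas a real CNN learns them during training.

```python
import math

def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` with stride 1 and no padding.

    As in deep-learning frameworks, this is cross-correlation
    (the kernel is not flipped).
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b] for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

def relu(feature_map):
    """Element-wise ReLU non-linearity."""
    return [[max(0, v) for v in row] for row in feature_map]

def fully_connected_softmax(features, weights, biases):
    """One dense layer followed by softmax, giving class probabilities."""
    logits = [sum(w * x for w, x in zip(row, features)) + b for row, b in zip(weights, biases)]
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Toy 4x4 "map" containing a vertical edge, and a hand-set edge-detecting kernel.
image = [[0, 0, 1, 1] for _ in range(4)]
kernel = [[-1, 1], [-1, 1]]
feature_map = relu(conv2d_valid(image, kernel))      # [[0, 2, 0], [0, 2, 0], [0, 2, 0]]
features = [v for row in feature_map for v in row]  # flatten to 9 features
weights = [[0.1] * 9, [0.0] * 9, [-0.1] * 9]        # 3 hypothetical classes
probs = fully_connected_softmax(features, weights, [0.0, 0.0, 0.0])
```

Pretrained architectures such as EfficientNet stack many such convolutional blocks before the final classifier.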

This study aimed to develop an AI model that classifies eyes as normal, subclinical KC, or KC using tomographic maps and corneal biomechanics, with a CNN as the base architecture. Such AI models could benefit clinicians by providing more accurate diagnoses and could support decision-making by noncorneal ophthalmologists.

METHODS

All procedures were performed in accordance with the Declaration of Helsinki, the Belmont Report, the CIOMS guidelines, and the International Conference on Harmonization Good Clinical Practice guidelines. The research protocol was approved by the relevant institutional review boards, and informed consent for data collection was obtained.

We reviewed the Chula Refractive Surgery Center database for all patients who visited King Chulalongkorn Memorial Hospital, Bangkok, Thailand, between 2014 and 2020. Patients aged >12 years who had a Pentacam tomographic map, with or without Corvis ST biomechanical evaluation, and who had discontinued rigid and soft contact lenses for at least 3 weeks and 7 days, respectively, before examination were included. Patients with other corneal diseases, such as pellucid marginal degeneration; eyes with a history of trauma or corneal surgery, such as corneal crosslinking, intracorneal ring segment implantation, keratoplasty, or laser vision correction; and poor-quality images were excluded. Demographic data, tomographic maps from Pentacam, and corneal biomechanics from Corvis ST were collected. For patients with more than one visit, images were collected from visits at least 6 months apart.

A total of 1,668 corneal tomographic and 611 corneal biomechanical images were obtained from 769 and 307 patients, respectively. Three corneal specialists independently classified each eye examination as normal, subclinical KC, or KC, primarily based on clinical judgment, with additional consideration of the following criteria. A normal cornea was defined as bilaterally normal topography, no slit-lamp findings suggestive of corneal ectasia, and no family history of KC. Subclinical KC was defined as (1) a normal-appearing cornea on slit-lamp biomicroscopy, keratometry, retinoscopy, and ophthalmoscopy, plus at least one of criteria (2) or (3): (2) central corneal power >47.20 diopters (D) and/or inferior–superior asymmetry >1.40 D (inferior minus superior power at a 3-mm diameter) and/or skewed radial axis >21° on corneal topography;43 (3) a BAD-D value ≥1.6 and <2.6 standard deviations.44 KC was defined according to the criteria established by the Global Consensus on Keratoconus and Ectatic Diseases in 2015: abnormal posterior elevation, abnormal corneal thickness distribution, and corneal thinning.45 Disagreements among the corneal specialists were resolved by group discussion to reach a final decision.
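The rule-based part of the subclinical KC definition can be written as a small predicate. This is only a sketch of criteria (1) to (3); in the study, classification rested primarily on the specialists' clinical judgment. The function and argument names are hypothetical, and reading criterion (3) as 1.6 ≤ BAD-D < 2.6 is our assumption.

```python
def meets_subclinical_kc_criteria(normal_clinical_exam, central_power_d,
                                  inferior_superior_d, srax_deg, bad_d):
    """Sketch of the subclinical KC criteria (hypothetical helper).

    Criterion (1) is required, plus at least one of (2) or (3).
    ASSUMPTION: criterion (3) is read as 1.6 <= BAD-D < 2.6 (standard deviations).
    Not a substitute for the specialists' clinical judgment.
    """
    # (2) topographic signs: any one threshold suffices ("and/or").
    topographic = (central_power_d > 47.20
                   or inferior_superior_d > 1.40
                   or srax_deg > 21.0)
    # (3) borderline BAD-D value.
    tomographic = 1.6 <= bad_d < 2.6
    # (1) must hold, then (2) or (3).
    return bool(normal_clinical_exam) and (topographic or tomographic)

# A clinically normal-appearing eye with inferior-superior asymmetry of 1.6 D:
flagged = meets_subclinical_kc_criteria(True, 45.0, 1.6, 10.0, 1.0)
```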

The dataset was divided into two groups: Pentacam and combined Pentacam-Corvis. The Pentacam group had 1,668 images, including 945, 98, and 625 images of normal corneas, subclinical KC, and KC, respectively. The combined Pentacam-Corvis group had 611 images, including 320, 48, and 243 images of normal corneas, subclinical KC, and KC, respectively. We used the fastai library for data handling and augmentation. In each group, images were randomly split into training, validation, and test sets. To prevent data leakage, all images from the same patient were assigned to the same set.
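Patient-level splitting means shuffling patients, not images, and then giving each set all of a patient's images. A minimal standard-library sketch follows; the function name and the 80/10/10 patient fractions are assumptions chosen to roughly mirror the reported set sizes, not the study's fastai code.

```python
import random
from collections import defaultdict

def patient_level_split(records, fractions=(0.8, 0.1, 0.1), seed=0):
    """Split images into train/valid/test so that no patient spans two sets.

    records: iterable of (image_id, patient_id) pairs.
    fractions: share of *patients* per set (an assumption for illustration).
    """
    images_by_patient = defaultdict(list)
    for image_id, patient_id in records:
        images_by_patient[patient_id].append(image_id)

    patients = sorted(images_by_patient)     # deterministic order before shuffling
    random.Random(seed).shuffle(patients)    # reproducible random assignment

    n = len(patients)
    n_train = round(fractions[0] * n)
    n_valid = round(fractions[1] * n)
    patient_sets = {
        "train": patients[:n_train],
        "valid": patients[n_train:n_train + n_valid],
        "test": patients[n_train + n_valid:],  # remainder goes to test
    }
    # Expand each patient back into all of that patient's images.
    return {
        name: [img for pid in pids for img in images_by_patient[pid]]
        for name, pids in patient_sets.items()
    }

# Hypothetical data: 20 patients with two images each (e.g., two visits).
records = [(f"img{i}", f"p{i // 2}") for i in range(40)]
splits = patient_level_split(records, seed=1)
```

Because whole patients are assigned, image-level proportions only approximate the patient fractions; with a rare class such as subclinical KC, one may also want to stratify by class.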

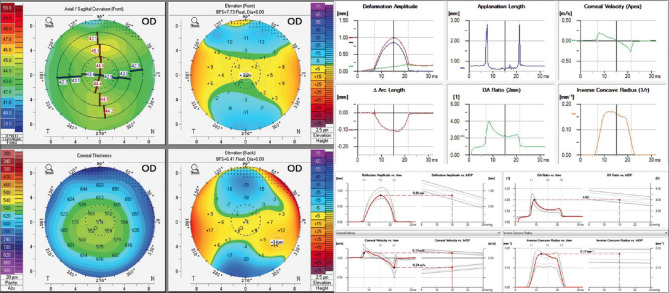

The training set was used to create three AI models. AI model 1 used only the four maps refractive display from Pentacam [Figure 1]. We used EfficientNet-B7,46 pretrained on ImageNet,47 as the base architecture, which allowed us to leverage its image recognition capabilities. Initial training updated only the classifier head for one epoch to adapt the pretrained network to our classification task. Subsequent fine-tuning involved all network layers for five epochs, using a 1-cycle learning rate schedule with cosine annealing48 and a peak learning rate of 2e–4. The model was optimized for cross-entropy loss with label smoothing,49 with AdamW50 as the optimizer. To alleviate class imbalance, we oversampled subclinical KC images by adding five duplicates of each subclinical image to the training set.41,42 During training, we applied image augmentation with rotation, zooming, warping, and lighting transforms to enhance the model's exposure to underrepresented classes.51 During fine-tuning, in which all weights were allowed to change,51 we saved the weights as a model checkpoint at the end of each epoch. The checkpoint with the lowest validation loss was selected for final evaluation on the test set.
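Two of the training ingredients named above can be sketched in plain Python: a 1-cycle learning-rate schedule with cosine annealing that peaks at 2e-4, and cross-entropy with label smoothing. This is not the study's training code. The warm-up fraction (25%), start and end divisors (25 and 1e5), and smoothing factor (eps = 0.1) are assumptions modeled on common fastai defaults, not values reported here.

```python
import math

def one_cycle_lr(step, total_steps, peak_lr=2e-4, pct_start=0.25,
                 div=25.0, div_final=1e5):
    """1-cycle schedule: cosine warm-up to peak_lr, then cosine annealing.

    ASSUMPTION: pct_start, div, and div_final are fastai-style defaults,
    not values reported in the study.
    """
    def cos_interp(start, end, frac):
        # frac=0 -> start, frac=1 -> end, following half a cosine wave.
        return end + (start - end) * (1 + math.cos(math.pi * frac)) / 2

    t = step / total_steps
    if t < pct_start:
        return cos_interp(peak_lr / div, peak_lr, t / pct_start)
    return cos_interp(peak_lr, peak_lr / div_final, (t - pct_start) / (1 - pct_start))

def label_smoothing_cross_entropy(logits, target, eps=0.1):
    """Cross-entropy against a smoothed target distribution.

    The true class gets 1 - eps + eps/K; every other class gets eps/K.
    ASSUMPTION: eps=0.1 is illustrative; the study does not report it.
    """
    k = len(logits)
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    log_probs = [z - log_sum_exp for z in logits]  # stable log-softmax
    smoothed = [eps / k + (1 - eps if i == target else 0.0) for i in range(k)]
    return -sum(q * lp for q, lp in zip(smoothed, log_probs))

# Learning rate over a hypothetical 100-step run, and the loss for one image.
schedule = [one_cycle_lr(s, 100) for s in range(101)]
loss = label_smoothing_cross_entropy([2.0, 0.0, -1.0], target=0)
```

Label smoothing penalizes over-confident predictions, which can help when class labels such as subclinical KC are inherently uncertain.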

Figure 1.

Example of data preparation for four maps refractive display from Pentacam (left) and dynamic corneal response (top right) and Vinciguerra screening report (bottom right) from Corvis ST

AI models 2 and 3 each combined two models: one classifying the four maps refractive display from Pentacam and another classifying the DCR and Vinciguerra screening report from Corvis ST [Figure 1]. Both models weighted and averaged the output probabilities of the two analyses to refine class predictions, leveraging the complementary strengths of the Pentacam and Corvis ST. Model 3 differed from Model 2 by additionally incorporating CBI, providing an additional layer of information. The validation set was used for preliminary performance assessment, enabling iterative refinements to optimize KC and subclinical KC detection, and the test set was used to evaluate final model performance.
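Weighting and averaging output probabilities can be sketched as a weighted mean of the per-class probability vectors from each model, followed by picking the most probable class. The weights and probability values below are hypothetical, since the study does not report them. The same function would accept a third vector, for instance one derived from CBI, but how CBI was mapped into this scheme is not specified here.

```python
def fuse_probabilities(prob_vectors, weights):
    """Weighted average of per-class probability vectors from several models.

    prob_vectors: equal-length probability lists (e.g., Pentacam CNN, Corvis ST CNN).
    weights: one non-negative weight per model, normalized to sum to 1.
    NOTE: the weights are hypothetical; the study does not report its values.
    """
    total_w = sum(weights)
    if total_w <= 0:
        raise ValueError("weights must sum to a positive number")
    n_classes = len(prob_vectors[0])
    fused = [0.0] * n_classes
    for probs, w in zip(prob_vectors, weights):
        for c in range(n_classes):
            fused[c] += (w / total_w) * probs[c]
    return fused

CLASSES = ["normal", "subclinical KC", "KC"]
pentacam_probs = [0.55, 0.35, 0.10]  # hypothetical output of the Pentacam CNN
corvis_probs = [0.20, 0.30, 0.50]    # hypothetical output of the Corvis ST CNN
fused = fuse_probabilities([pentacam_probs, corvis_probs], weights=[0.6, 0.4])
prediction = CLASSES[fused.index(max(fused))]
```

Averaging probabilities rather than hard votes lets a confident model outweigh an uncertain one.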

Statistical analysis

We calculated two-sided 95% confidence intervals using DeLong's method for the area under the receiver operating characteristic curve (AUC) and the Wilson score interval for accuracy, sensitivity, specificity, and positive predictive value (PPV) in each class. Overall AI model performance was assessed using both macro and weighted averages: macro averaging weights all classes equally, whereas weighted averaging weights each class by its prevalence, addressing class imbalance. Results were compared with those of BAD-D, CBI, and TBI.
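The Wilson score interval has a closed form, so a minimal standard-library sketch is short. Which denominator enters `n` for each metric (positives for sensitivity, negatives for specificity, all images for accuracy) must be chosen explicitly; the example call is illustrative only. DeLong's method for the AUC is more involved and is not shown.

```python
import math

def wilson_interval(successes, n, z=1.959963984540054):
    """Two-sided Wilson score interval for a binomial proportion.

    z defaults to the standard normal quantile for a 95% interval.
    """
    if n <= 0:
        raise ValueError("n must be positive")
    p = successes / n
    z2 = z * z
    denom = 1 + z2 / n
    center = (p + z2 / (2 * n)) / denom
    half_width = (z / denom) * math.sqrt(p * (1 - p) / n + z2 / (4 * n * n))
    return max(0.0, center - half_width), min(1.0, center + half_width)

# Example: a sensitivity of 76 correct out of 84 positive cases.
low, high = wilson_interval(76, 84)
```

Unlike the simple normal-approximation interval, the Wilson interval stays within [0, 1] and behaves sensibly for small classes or proportions near 0 or 1.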

RESULTS

A total of 1,668 corneal tomographic images from 769 patients (132 of whom had more than one visit) in the Pentacam group and 611 corneal biomechanical images from 307 patients (46 of whom had more than one visit) in the combined Pentacam-Corvis group were included from the Chula Refractive Surgery Center database of all patients who visited King Chulalongkorn Memorial Hospital, Bangkok, Thailand, between 2014 and 2020.

The 769 patients (391 men and 378 women) had a mean age of 27.87 ± 6.83 years (range, 12–58 years) at their first visit. Topographic characteristics of the Pentacam and combined Pentacam-Corvis groups are shown in Table 1. Mean keratometry, maximum keratometry, astigmatism, and inferior–superior values were highest in the KC group, and all parameters of the subclinical KC group lay between those of the normal and KC groups. Minimum corneal thickness was greatest in the normal group.

Table 1.

Description of the study groups in mean±standard deviation (range)

| Group | Classification | Kmean (D) | Kmax (D) | Pachymin (µm) | Astig (D) | I-S value (D) |
|---|---|---|---|---|---|---|
| Pentacam | Normal (n=945) | 43.84±1.36 (40.00–48.40) | 45.15±1.58 (40.60–52.20) | 545.66±28.81 (460–669) | 1.50±0.82 (0.10–7.30) | 0.34±0.56 (−1.46–2.35) |
| | Subclinical KC (n=98) | 44.68±1.56 (41.00–48.10) | 46.57±1.64 (43.40–51.60) | 524.18±29.95 (453–601) | 2.11±1.17 (0.10–5.00) | 0.83±0.77 (−1.57–3.01) |
| | KC (n=625) | 47.93±4.50 (40.00–66.20) | 55.23±7.79 (43.30–81.50) | 469.69±39.98 (322–570) | 4.67±2.94 (0.2–15.1) | 3.95±3.31 (−3.39–18.53) |
| Combined Pentacam-Corvis | Normal (n=320) | 43.68±1.28 (40.00–48.40) | 45.04±1.52 (40.90–52.20) | 545.27±27.53 (478–629) | 1.56±0.90 (0.20–7.30) | 0.33±0.59 (−1.32–2.35) |
| | Subclinical KC (n=48) | 44.96±1.79 (42.20–48.10) | 46.96±1.88 (43.40–51.60) | 524.18±29.95 (453–601) | 2.15±1.36 (0.10–5.00) | 0.96±0.79 (−0.38–3.01) |
| | KC (n=243) | 47.36±3.88 (40.00–66.20) | 53.88±6.73 (43.30–78.80) | 474.71±37.29 (356–570) | 4.25±2.66 (0.2–13.8) | 3.71±2.84 (−2.38–13.35) |

Classification was based on consensus of experts. Kmean: Mean keratometry, Kmax: Maximum keratometry, Pachymin: Minimum pachymetry, Astig: Astigmatism, I-S value: Inferior-superior value, n: Number of images, KC: Keratoconus

In the Pentacam group, 13 normal eyes had mean keratometry >47.2 D and 27 normal eyes had an inferior–superior value >1.4 D. In the subclinical KC group, nine eyes had mean keratometry >47.2 D and 20 had an inferior–superior value >1.4 D. In the KC group, 345 eyes had mean keratometry ≤47.2 D and 140 had an inferior–superior value ≤1.4 D.

In the combined Pentacam-Corvis group, four normal eyes had mean keratometry >47.2 D and 11 normal eyes had an inferior–superior value >1.4 D. In the subclinical KC group, nine eyes had mean keratometry >47.2 D and 12 had an inferior–superior value >1.4 D. In the KC group, 143 eyes had mean keratometry ≤47.2 D and 45 had an inferior–superior value ≤1.4 D. Corneal biomechanical characteristics of the combined Pentacam-Corvis group are shown in Table 2.

Table 2.

Description of the combined Pentacam-Corvis subgroups in mean±standard deviation (range)

| Group | A1 length (mm) | A2 length (mm) | PD (mm) | A1 velocity (m/s) | A2 velocity (m/s) | CRHC (mm) | DA maximum (mm) |
|---|---|---|---|---|---|---|---|
| Normal (n=320) | 2.24±0.34 (1.44–3.08) | 2.04±0.37 (0.72–3.20) | 4.88±0.28 (3.86–5.49) | 0.14±0.02 (0.09–0.19) | 0.24±0.03 (0.14–0.34) | 6.92±0.85 (5.04–10.35) | 1.00±0.09 (0.72–1.25) |
| Subclinical KC (n=48) | 2.24±0.40 (1.38–3.05) | 1.94±0.45 (1.06–3.17) | 4.87±0.31 (4.28–5.57) | 0.14±0.02 (0.10–0.19) | 0.25±0.03 (0.21–0.34) | 6.33±0.84 (4.95–8.42) | 1.03±0.11 (0.87–1.31) |
| KC (n=243) | 2.00±0.34 (1.38–3.10) | 1.71±0.35 (0.69–2.95) | 4.92±0.26 (3.51–5.61) | 0.16±0.02 (0.10–0.25) | 0.26±0.04 (0.09–0.42) | 5.56±0.90 (2.93–10.22) | 1.10±0.13 (0.64–1.70) |

| Group | IOP (mmHg) | bIOP (mmHg) | CCT (µm) | DA ratio maximum | INR | ARTh | SP-A1 (mmHg/mm) |
|---|---|---|---|---|---|---|---|
| Normal (n=320) | 15.88±2.50 (11.00–24.50) | 15.45±2.06 (11.10–22.80) | 552.58±28.88 (484–644) | 4.32±0.40 (3.30–5.60) | 8.85±1.03 (6.10–11.30) | 528.40±91.70 (325.70–813.50) | 109.85±17.51 (57.10–182.40) |
| Subclinical KC (n=48) | 15.13±2.37 (10.00–19.50) | 14.83±1.98 (10.70–18.60) | 547.90±32.38 (463–610) | 4.60±0.47 (3.80–5.70) | 9.67±1.20 (7.60–12.4) | 477.95±90.28 (314.30–753.80) | 102.25±18.71 (59.40–134.60) |
| KC (n=243) | 13.29±2.78 (5.00–34.00) | 14.47±2.60 (7.40–35.90) | 492.99±34.32 (392–582) | 5.34±0.92 (3.40–11.50) | 11.95±2.32 (4.60–23.70) | 277.39±103.39 (62.70–621.00) | 73.53±18.85 (15.30–123.70) |

Classification was based on consensus of experts. A1 length: First applanation length, A2 length: Second applanation length, PD: Peak distance, A1 velocity: First applanation velocity, A2 velocity: Second applanation velocity, CRHC: Curvature radius at highest concavity, DA maximum: Maximum deformation amplitude, IOP: Intraocular pressure, bIOP: Biomechanically corrected intraocular pressure, CCT: Central corneal thickness, DA ratio maximum: Maximum deformation amplitude ratio, INR: Integrated radius, ARTh: Ambrósio's relational thickness to the horizontal profile, SP-A1: Stiffness parameter at A1, n: Number of images, KC: Keratoconus

In total, 1,668 corneal tomographic and 611 corneal biomechanical images were divided into three sets. The training:validation:test ratios were 1334:167:167 in the Pentacam group and 488:61:62 in the combined Pentacam-Corvis group (approximately 80:10:10 in both). The number of images in each class and set is listed in Table 3.

Table 3.

Number of images in each group in artificial intelligence models 1–3

| Number of images | Normal | Subclinical KC | KC | Total |
|---|---|---|---|---|
| AI model 1 | | | | |
| Training set | 760 | 75 | 499 | 1334 |
| Validation set | 101 | 8 | 58 | 167 |
| Test set | 84 | 15 | 68 | 167 |
| Total | 945 | 98 | 625 | 1668 |
| AI models 2–3 | | | | |
| Training set | 251 | 42 | 195 | 488 |
| Validation set | 36 | 2 | 23 | 61 |
| Test set | 33 | 4 | 25 | 62 |
| Total | 320 | 48 | 243 | 611 |

KC: Keratoconus, AI: Artificial intelligence

Confusion matrices for AI models 1–3 are presented in Table 4. For AI model 1, four KC, four subclinical KC, and eight normal eyes were misclassified. For AI models 2 and 3, one KC eye and three subclinical KC eyes were misclassified. AI model 1 tended to classify normal eyes as subclinical KC, whereas AI models 2 and 3 tended to classify subclinical KC eyes as KC.

Table 4.

Confusion matrices (actual versus predicted class) of artificial intelligence models 1–3 on the test set

| Predicted class | Actual: Normal | Actual: Subclinical KC | Actual: KC |
|---|---|---|---|
| AI model 1 | | | |
| Normal | 76 | 2 | 1 |
| Subclinical KC | 8 | 11 | 3 |
| KC | 0 | 2 | 64 |
| Total (n=167) | 84 | 15 | 68 |
| AI model 2 | | | |
| Normal | 33 | 0 | 1 |
| Subclinical KC | 0 | 1 | 0 |
| KC | 0 | 3 | 24 |
| Total (n=62) | 33 | 4 | 25 |
| AI model 3 | | | |
| Normal | 33 | 0 | 1 |
| Subclinical KC | 0 | 1 | 0 |
| KC | 0 | 3 | 24 |
| Total (n=62) | 33 | 4 | 25 |

KC: Keratoconus, n: Number of images, AI: Artificial intelligence

We developed three AI models to classify normal, subclinical KC, and KC eyes. AI model 3 achieved the highest overall AUC (0.991), with an accuracy of 0.956 (equal to that of AI model 2), 93.0% sensitivity, and 94.3% specificity. The performance of each AI model for each class is shown in Table 5, and comparisons between the AI models and BAD-D, CBI, and TBI are presented in Table 6.

Table 5.

Performance of artificial intelligence models 1–3 on the test set

| Group | Accuracy (95% CI) | AUC (95% CI) | Sensitivity, % (95% CI) | Specificity, % (95% CI) | PPV (95% CI) |
|---|---|---|---|---|---|
| AI model 1 | | | | | |
| Normal (n=84) | 0.934 (0.882–0.965) | 0.934 (0.897–0.972) | 90.5 (84.7–94.3) | 96.4 (92.0–98.5) | 0.962 (0.917–0.984) |
| Subclinical KC (n=15) | 0.910 (0.854–0.947) | 0.831 (0.713–0.948) | 73.3 (65.8–79.7) | 92.8 (87.4–96.0) | 0.500 (0.422–0.578) |
| KC (n=68) | 0.964 (0.920–0.985) | 0.961 (0.929–0.992) | 94.1 (89.1–97.0) | 98.0 (94.1–99.4) | 0.970 (0.927–0.989) |
| Weighted average overall (n=167) | 0.947 | 0.938 | 90.8 | 96.9 | 0.924 |
| Macro average overall (n=167) | 0.936 | 0.968 | 86.0 | 95.7 | 0.811 |
| AI model 2 | | | | | |
| Normal (n=33) | 0.984 (0.902–0.999) | 1.000 (1.000–1.000) | 100.0 (92.7–100.0) | 96.6 (87.5–99.4) | 0.971 (0.882–0.995) |
| Subclinical KC (n=4) | 0.952 (0.856–0.987) | 0.823 (0.815–0.831) | 25.0 (15.3–37.9) | 100.0 (92.7–100.0) | 1.000 (0.927–1.000) |
| KC (n=25) | 0.935 (0.835–0.979) | 0.994 (0.993–0.994) | 96.0 (86.8–99.1) | 91.9 (81.4–97.0) | 0.889 (0.777–0.951) |
| Weighted average overall (n=62) | 0.956 | 0.985 | 93.0 | 94.3 | 0.929 |
| Macro average overall (n=62) | 0.957 | 0.939 | 73.7 | 96.1 | 0.953 |
| AI model 3 | | | | | |
| Normal (n=33) | 0.984 (0.902–0.999) | 1.000 (1.000–1.000) | 100.0 (92.7–100.0) | 96.6 (87.5–99.4) | 0.971 (0.882–0.995) |
| Subclinical KC (n=4) | 0.952 (0.856–0.987) | 0.901 (0.897–0.905) | 25.0 (15.3–37.9) | 100.0 (92.7–100.0) | 1.000 (0.927–1.000) |
| KC (n=25) | 0.935 (0.835–0.979) | 0.995 (0.994–0.995) | 96.0 (86.8–99.1) | 91.9 (81.4–97.0) | 0.889 (0.777–0.951) |
| Weighted average overall (n=62) | 0.956 | 0.991 | 93.0 | 94.3 | 0.929 |
| Macro average overall (n=62) | 0.957 | 0.965 | 73.7 | 96.1 | 0.953 |

KC: Keratoconus, n: Number of images, AUC: Area under the receiver operating characteristic curve, PPV: Positive predictive value, AI: Artificial intelligence, CI: Confidence interval
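The per-class accuracy, sensitivity, specificity, and PPV in Table 5 follow from the confusion matrices in Table 4 by treating each class one-vs-rest. The sketch below derives them and then averages across classes. Applied to the AI model 1 matrix, the per-class values and macro averages agree with Table 5 to the reported precision (for example, normal-class accuracy 0.934 and macro-average sensitivity 86.0%). Weighting each class by its number of actual cases is one common convention for the weighted average and is an assumption here, since the exact weighting is not stated.

```python
def per_class_metrics(matrix, labels):
    """One-vs-rest metrics from a confusion matrix.

    matrix[i][j] = number of images predicted as class i whose actual class
    is j (rows = predicted, columns = actual, as in Table 4).
    """
    k = len(labels)
    total = sum(sum(row) for row in matrix)
    out = {}
    for c in range(k):
        tp = matrix[c][c]
        fp = sum(matrix[c]) - tp                        # predicted c, actually other
        fn = sum(matrix[r][c] for r in range(k)) - tp   # actually c, predicted other
        tn = total - tp - fp - fn
        out[labels[c]] = {
            "support": tp + fn,
            "accuracy": (tp + tn) / total,
            "sensitivity": tp / (tp + fn),
            "specificity": tn / (tn + fp),
            "ppv": tp / (tp + fp) if tp + fp else float("nan"),
        }
    return out

def macro_average(metrics, key):
    """Every class counts equally."""
    return sum(m[key] for m in metrics.values()) / len(metrics)

def weighted_average(metrics, key):
    """ASSUMPTION: each class is weighted by its number of actual cases."""
    total = sum(m["support"] for m in metrics.values())
    return sum(m[key] * m["support"] for m in metrics.values()) / total

# AI model 1 confusion matrix from Table 4.
model1 = per_class_metrics([[76, 2, 1], [8, 11, 3], [0, 2, 64]],
                           ["normal", "subclinical", "kc"])
macro_sensitivity = macro_average(model1, "sensitivity")
```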

Table 6.

Performance of BAD-D, Corvis biomechanical index, and tomographic and biomechanical index on the test sets

| Score | Accuracy | AUC | Sensitivity (%) | Specificity (%) | PPV |
|---|---|---|---|---|---|
| AI model 1 test set (n=167) | | | | | |
| BAD-D, weighted average | 0.912 | 0.904 | 85.6 | 95.3 | 0.893 |
| BAD-D, macro average | 0.892 | 0.865 | 79.9 | 93.1 | 0.744 |
| AI models 2–3 test set (n=62) | | | | | |
| BAD-D, weighted average | 0.951 | 0.988 | 91.4 | 98.0 | 0.938 |
| BAD-D, macro average | 0.935 | 0.956 | 86.3 | 96.2 | 0.778 |
| CBI, weighted average | 0.798 | 0.988 | 80.1 | 78.5 | 0.727 |
| CBI, macro average | 0.839 | 0.956 | 55.6 | 86.2 | 0.533 |
| TBI, weighted average | 0.951 | 0.988 | 91.4 | 98.0 | 0.938 |
| TBI, macro average | 0.935 | 0.956 | 86.3 | 96.2 | 0.778 |

BAD-D: Belin-Ambrósio enhanced ectasia total deviation display, CBI: Corvis biomechanical index, TBI: Tomography and biomechanical index, n: Number of images, AUC: Area under the receiver operating characteristic curve, PPV: Positive predictive value, AI: Artificial intelligence

DISCUSSION

The cornea is viscoelastic, which allows it to absorb and dissipate energy.52 In KC, biochemical and cellular changes result in loss of corneal stroma.53,54 Breakdown of the collagen network weakens the cornea, causing it to protrude.55 Because refractive surgery alters corneal biomechanics,11,12 screening patients for subclinical KC before refractive surgery is essential to decrease the risk of iatrogenic ectasia. Moreover, early detection enables early intervention to slow disease progression.56 Using multiple parameters from topography and corneal biomechanics can improve detection of early forms of KC.8

In this study, we developed three AI models. AI model 1, which used Pentacam images, achieved the highest sensitivity for subclinical KC among the three models (73.3%); therefore, it would be the most suitable of the three for screening. AI model 2 used information from both Pentacam and Corvis ST images. To our knowledge, this is the first study to combine two CNN models, one for Pentacam and one for Corvis ST. Adding Corvis ST images improved most performance metrics. AI model 3, which added the CBI score to AI model 2, performed slightly better than AI model 2 (weighted-average AUC, 0.991 vs. 0.985). AI model 3 had the highest AUC and, together with AI model 2, the highest accuracy, suggesting that it is best suited for diagnostic applications. Interestingly, although AI models 2 and 3 produced identical confusion matrices, their AUCs differed. This is because the AUC depends on the predicted probabilities across all decision thresholds, not only on the final class assignments, and adding CBI changed these probabilities without changing the predicted classes. However, the training sets for AI models 2 and 3 were much smaller than that for AI model 1 because of the limited number of Corvis ST images; thus, the differences in outcomes were minor. To improve overall performance, additional Corvis ST images from all classes should be collected.
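How identical confusion matrices can coexist with different AUCs can be illustrated with a toy one-vs-rest example. The scores are hypothetical, and a 0.5 threshold stands in for the argmax decision of a multiclass model. AUC is computed here as the Mann-Whitney statistic: the fraction of positive-negative pairs in which the positive case receives the higher score, with ties counted as one half.

```python
def auc_mann_whitney(pos_scores, neg_scores):
    """AUC as the probability that a positive outscores a negative (ties = 0.5)."""
    wins = 0.0
    for p in pos_scores:
        for n in neg_scores:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos_scores) * len(neg_scores))

def predict(scores, threshold=0.5):
    """Hard class decisions; this is all a confusion matrix sees."""
    return [s >= threshold for s in scores]

# Hypothetical scores for the positive class from two models on the same 4 eyes.
neg = [0.20, 0.45]           # two negative eyes, identical in both models
pos_model_a = [0.90, 0.40]   # second positive eye is missed (score < 0.5)
pos_model_b = [0.90, 0.48]   # still missed, but now ranked above every negative

same_decisions = predict(pos_model_a) == predict(pos_model_b)  # True
auc_a = auc_mann_whitney(pos_model_a, neg)   # 3 of 4 pairs ranked correctly -> 0.75
auc_b = auc_mann_whitney(pos_model_b, neg)   # 4 of 4 pairs ranked correctly -> 1.0
```

Both models make the same hard decisions, yet model B ranks cases better, which only the AUC reveals.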

Eight previous studies used CNNs for KC screening. Dos Santos et al. used 20,160 ultra-high-resolution OCT images from 142 eyes and achieved an accuracy of 99.56%,42 while Lavric and Valentin used Pentacam topographic maps from 3,000 eyes to classify normal and KC eyes and achieved an accuracy of 99.33%.39 Kamiya et al. applied deep learning to the arithmetic mean output data of six color-coded maps from anterior segment OCT of 543 eyes; accuracy for classifying normal corneas was 99.1% (sensitivity, 100%; specificity, 98.4%), and total accuracy for grading KC severity was 87.4%.38 Xie et al. built an AI model, the Pentacam InceptionResNetV2 Screening System (PIRSS), from 6,465 Pentacam four-map images (axial curvature, front elevation, back elevation, and pachymetry maps). PIRSS achieved an accuracy of 95.0% (88.8%–97.8%) in discriminating among five groups: normal, suspected irregular, early-stage KC, KC, and myopic postoperative cornea; when the suspected irregular cornea group was excluded, accuracy reached 98.8%.40 Zéboulon et al. used raw numeric data from 3,000 Orbscan four-map images and achieved an accuracy of 99.3%.41 Kuo et al. trained a model on 326 topographic maps from a computer-assisted videokeratoscope to classify normal corneas and KC, achieving a total accuracy of 95.8%.57 Abdelmotaal et al. used 3,218 Pentacam refractive maps to build an AI model, with accuracies of 99% and 98% for classifying normal corneas and KC, respectively.58 Subramanian and Ramesh used indices from the SyntEye KTC model to generate 1,500 topography images (500 per class: normal, mild KC, and KC), with accuracies of 95.4% and 97.4% for classifying normal corneas and KC, respectively.59 Considering only the diagnoses of normal cornea and KC, our outcomes are comparable. For identifying normal corneas, AI model 1 had an accuracy of 93.4%, and AI models 2 and 3 both had an accuracy of 98.4%. For KC diagnosis, AI model 1 had an accuracy of 96.4%, and AI models 2 and 3 both had an accuracy of 93.5%.

The diagnosis of subclinical KC remains challenging because there is no clear definition. Of the eight previous CNN studies, three included subclinical KC. Xie et al. trained a CNN with 799 Pentacam images of suspected irregular corneas, defined as suspicious for corneal morphologic abnormalities, and achieved 76.5% (68.0%–83.3%) sensitivity and 98.2% (97.3%–98.8%) specificity (AUC, 0.98) in that group.40 Abdelmotaal et al., using 1,072 refractive maps of subclinical KC, achieved an accuracy of 98%.58 Subramanian and Ramesh, using 500 generated topographic images of subclinical KC, reached an accuracy of 95.1% (sensitivity, 94.9%; specificity, 95.1%).59 AI model 1, trained with only 75 subclinical KC images, achieved results comparable to those of PIRSS, with a sensitivity of 73.3% and a specificity of 92.8% for the subclinical KC group; however, challenges persist. Because of their low sensitivity for subclinical KC, our AI models cannot yet be used for effective screening, highlighting the need for further investigation. We speculate that this shortcoming arises from the indistinct features of subclinical KC, which prevent the models from accurately differentiating these cases from normal corneas.

In terms of overall accuracy, AI model 1 performed similarly to PIRSS (accuracy, 93.7% when only three categories were considered: normal, suspected irregular cornea, and early KC plus KC).40

We also compared the performance of BAD-D, CBI, and TBI on the same test sets used for our models [Table 6]. AI model 1 performed slightly better than BAD-D. The outcomes of AI models 2 and 3 were comparable to those of BAD-D and TBI, whereas CBI achieved lower accuracy. AI model 3 was not compared with CBI because CBI was one of its inputs.

Our study aimed to establish the potential of AI models using the available data. Although we endeavored to create a robust model, the scarcity of subclinical KC data was a challenge we could not fully overcome within the scope of this study; further studies should collect more subclinical KC data to improve model performance. In addition, we did not evaluate KC severity grading. Finally, the absence of external validation emphasizes the need for further research to confirm the models' applicability in real-world settings.

In conclusion, our AI model integrating anterior corneal curvature with corneal biomechanics showed high sensitivity and specificity for detecting KC. It could help ophthalmologists detect KC more accurately and help refractive surgeons provide more efficient care to refractive surgery candidates. However, identifying subclinical KC remains a challenge, highlighting the need for ongoing research.

Financial support and sponsorship

This work was financially supported by Quality Improvement Fund, King Chulalongkorn Memorial Hospital, The Thai Red Cross Society under Grant number HA-64-3300-21-024.

Conflicts of interest

There are no conflicts of interest.

Acknowledgments

The authors are grateful to BridgeAsia Limited for AI and analytic software support. The authors also thank Editage (www.editage.com) for English language editing and Research Affairs, Faculty of Medicine, Chulalongkorn University, for statistical consultation.

REFERENCES

- 1.Krachmer JH, Feder RS, Belin MW. Keratoconus and related noninflammatory corneal thinning disorders. Surv Ophthalmol. 1984;28:293–322. doi: 10.1016/0039-6257(84)90094-8. [DOI] [PubMed] [Google Scholar]

- 2.Hashemi H, Heydarian S, Hooshmand E, Saatchi M, Yekta A, Aghamirsalim M, et al. The prevalence and risk factors for Keratoconus: A systematic review and meta-analysis. Cornea. 2020;39:263–70. doi: 10.1097/ICO.0000000000002150. [DOI] [PubMed] [Google Scholar]

- 3.Pearson AR, Soneji B, Sarvananthan N, Sandford-Smith JH. Does ethnic origin influence the incidence or severity of Keratoconus? Eye (Lond) 2000;14:625–8. doi: 10.1038/eye.2000.154. [DOI] [PubMed] [Google Scholar]

- 4.Georgiou T, Funnell CL, Cassels-Brown A, O'Conor R. Influence of ethnic origin on the incidence of Keratoconus and associated atopic disease in Asians and white patients. Eye (Lond) 2004;18:379–83. doi: 10.1038/sj.eye.6700652. [DOI] [PubMed] [Google Scholar]

- 5.Rabinowitz YS. Videokeratographic indices to aid in screening for Keratoconus. J Refract Surg. 1995;11:371–9. doi: 10.3928/1081-597X-19950901-14. [DOI] [PubMed] [Google Scholar]

- 6.Swartz T, Marten L, Wang M. Measuring the cornea: The latest developments in corneal topography. Curr Opin Ophthalmol. 2007;18:325–33. doi: 10.1097/ICU.0b013e3281ca7121. [DOI] [PubMed] [Google Scholar]

- 7.Moshirfar M, Motlagh MN, Murri MS, Momeni-Moghaddam H, Ronquillo YC, Hoopes PC. Advances in biomechanical parameters for screening of refractive surgery candidates: A review of the literature, part III. Med Hypothesis Discov Innov Ophthalmol. 2019;8:219–40. [PMC free article] [PubMed] [Google Scholar]

- 8. Ambrósio R Jr, Lopes BT, Faria-Correia F, Salomão MQ, Bühren J, Roberts CJ, et al. Integration of Scheimpflug-based corneal tomography and biomechanical assessments for enhancing ectasia detection. J Refract Surg. 2017;33:434–43. doi: 10.3928/1081597X-20170426-02.

- 9. Kataria P, Padmanabhan P, Gopalakrishnan A, Padmanaban V, Mahadik S, Ambrósio R Jr. Accuracy of Scheimpflug-derived corneal biomechanical and tomographic indices for detecting subclinical and mild Keratectasia in a South Asian population. J Cataract Refract Surg. 2019;45:328–36. doi: 10.1016/j.jcrs.2018.10.030.

- 10. Corcoran KJ. Macroeconomic landscape of refractive surgery in the United States. Curr Opin Ophthalmol. 2015;26:249–54. doi: 10.1097/ICU.0000000000000159.

- 11. Sinha Roy A, Dupps WJ Jr, Roberts CJ. Comparison of biomechanical effects of small-incision lenticule extraction and laser in situ Keratomileusis: Finite-element analysis. J Cataract Refract Surg. 2014;40:971–80. doi: 10.1016/j.jcrs.2013.08.065.

- 12. Wu D, Wang Y, Zhang L, Wei S, Tang X. Corneal biomechanical effects: Small-incision lenticule extraction versus femtosecond laser-assisted laser in situ keratomileusis. J Cataract Refract Surg. 2014;40:954–62. doi: 10.1016/j.jcrs.2013.07.056.

- 13. Moshirfar M, Albarracin JC, Desautels JD, Birdsong OC, Linn SH, Hoopes PC Sr. Ectasia following small-incision lenticule extraction (SMILE): A review of the literature. Clin Ophthalmol. 2017;11:1683–8. doi: 10.2147/OPTH.S147011.

- 14. Ting DS, Foo VH, Yang LW, Sia JT, Ang M, Lin H, et al. Artificial intelligence for anterior segment diseases: Emerging applications in ophthalmology. Br J Ophthalmol. 2021;105:158–68. doi: 10.1136/bjophthalmol-2019-315651.

- 15. Keel S, van Wijngaarden P. The eye in AI: Artificial intelligence in ophthalmology. Clin Exp Ophthalmol. 2019;47:5–6. doi: 10.1111/ceo.13435.

- 16. Vieira de Carvalho LA, Barbosa MS. Neural networks and statistical analysis for classification of corneal videokeratography maps based on Zernike coefficients: A quantitative comparison. Arq Bras Oftalmol. 2008;71:337–41. doi: 10.1590/s0004-27492008000300006.

- 17. Silverman RH, Urs R, Roychoudhury A, Archer TJ, Gobbe M, Reinstein DZ. Epithelial remodeling as basis for machine-based identification of Keratoconus. Invest Ophthalmol Vis Sci. 2014;55:1580–7. doi: 10.1167/iovs.13-12578.

- 18. Saad A. Validation of a new scoring system for the detection of early forme of Keratoconus. Int J Keratoconus Ectatic Corneal Dis. 2012;1:100–8.

- 19. Maeda N, Klyce SD, Smolek MK, Thompson HW. Automated keratoconus screening with corneal topography analysis. Invest Ophthalmol Vis Sci. 1994;35:2749–57.

- 20. Leão E, Ing Ren T, Lyra JM, Machado A, Koprowski R, Lopes B, et al. Corneal deformation amplitude analysis for Keratoconus detection through compensation for intraocular pressure and integration with horizontal thickness profile. Comput Biol Med. 2019;109:263–71. doi: 10.1016/j.compbiomed.2019.04.019.

- 21. Souza MB, Medeiros FW, Souza DB, Garcia R, Alves MR. Evaluation of machine learning classifiers in Keratoconus detection from Orbscan II examinations. Clinics (Sao Paulo). 2010;65:1223–8. doi: 10.1590/S1807-59322010001200002.

- 22. Smolek MK, Klyce SD. Current Keratoconus detection methods compared with a neural network approach. Invest Ophthalmol Vis Sci. 1997;38:2290–9.

- 23. Maeda N, Klyce SD, Smolek MK. Neural network classification of corneal topography. Preliminary demonstration. Invest Ophthalmol Vis Sci. 1995;36:1327–35.

- 24. Kovács I, Miháltz K, Kránitz K, Juhász É, Takács Á, Dienes L, et al. Accuracy of machine learning classifiers using bilateral data from a Scheimpflug camera for identifying eyes with preclinical signs of Keratoconus. J Cataract Refract Surg. 2016;42:275–83. doi: 10.1016/j.jcrs.2015.09.020.

- 25. Accardo PA, Pensiero S. Neural network-based system for early Keratoconus detection from corneal topography. J Biomed Inform. 2002;35:151–9. doi: 10.1016/s1532-0464(02)00513-0.

- 26. Klyce SD, Karon MD, Smolek MK. Screening patients with the corneal navigator. J Refract Surg. 2005;21:S617–22. doi: 10.3928/1081-597X-20050902-12.

- 27. Karimi A, Meimani N, Razaghi R, Rahmati SM, Jadidi K, Rostami M. Biomechanics of the healthy and Keratoconic corneas: A combination of the clinical data, finite element analysis, and artificial neural network. Curr Pharm Des. 2018;24:4474–83. doi: 10.2174/1381612825666181224123939.

- 28. Issarti I, Consejo A, Jiménez-García M, Hershko S, Koppen C, Rozema JJ. Computer aided diagnosis for suspect Keratoconus detection. Comput Biol Med. 2019;109:33–42. doi: 10.1016/j.compbiomed.2019.04.024.

- 29. Twa MD, Parthasarathy S, Roberts C, Mahmoud AM, Raasch TW, Bullimore MA. Automated decision tree classification of corneal shape. Optom Vis Sci. 2005;82:1038–46. doi: 10.1097/01.opx.0000192350.01045.6f.

- 30. Smadja D, Touboul D, Cohen A, Doveh E, Santhiago MR, Mello GR, et al. Detection of subclinical Keratoconus using an automated decision tree classification. Am J Ophthalmol. 2013;156:237–46.e1. doi: 10.1016/j.ajo.2013.03.034.

- 31. Chastang PJ, Borderie VM, Carvajal-Gonzalez S, Rostène W, Laroche L. Automated keratoconus detection using the EyeSys videokeratoscope. J Cataract Refract Surg. 2000;26:675–83. doi: 10.1016/s0886-3350(00)00303-5.

- 32. Arbelaez MC, Versaci F, Vestri G, Barboni P, Savini G. Use of a support vector machine for Keratoconus and subclinical Keratoconus detection by topographic and tomographic data. Ophthalmology. 2012;119:2231–8. doi: 10.1016/j.ophtha.2012.06.005.

- 33. Ruiz Hidalgo I, Rodriguez P, Rozema JJ, NíDhubhghaill S, Zakaria N, Tassignon MJ, et al. Evaluation of a machine-learning classifier for Keratoconus detection based on Scheimpflug tomography. Cornea. 2016;35:827–32. doi: 10.1097/ICO.0000000000000834.

- 34. Lopes BT, Ramos IC, Salomão MQ, Guerra FP, Schallhorn SC, Schallhorn JM, et al. Enhanced tomographic assessment to detect corneal ectasia based on artificial intelligence. Am J Ophthalmol. 2018;195:223–32. doi: 10.1016/j.ajo.2018.08.005.

- 35. Xu B, Kocyigit D, Grimm R, Griffin BP, Cheng F. Applications of artificial intelligence in multimodality cardiovascular imaging: A state-of-the-art review. Prog Cardiovasc Dis. 2020;63:367–76. doi: 10.1016/j.pcad.2020.03.003.

- 36. Ueda D, Shimazaki A, Miki Y. Technical and clinical overview of deep learning in radiology. Jpn J Radiol. 2019;37:15–33. doi: 10.1007/s11604-018-0795-3.

- 37. Chan S, Reddy V, Myers B, Thibodeaux Q, Brownstone N, Liao W. Machine learning in dermatology: Current applications, opportunities, and limitations. Dermatol Ther (Heidelb). 2020;10:365–86. doi: 10.1007/s13555-020-00372-0.

- 38. Kamiya K, Ayatsuka Y, Kato Y, Fujimura F, Takahashi M, Shoji N, et al. Keratoconus detection using deep learning of colour-coded maps with anterior segment optical coherence tomography: A diagnostic accuracy study. BMJ Open. 2019;9:e031313. doi: 10.1136/bmjopen-2019-031313.

- 39. Lavric A, Valentin P. KeratoDetect: Keratoconus detection algorithm using convolutional neural networks. Comput Intell Neurosci. 2019;2019:8162567. doi: 10.1155/2019/8162567.

- 40. Xie Y, Zhao L, Yang X, Wu X, Yang Y, Huang X, et al. Screening candidates for refractive surgery with corneal tomographic-based deep learning. JAMA Ophthalmol. 2020;138:519–26. doi: 10.1001/jamaophthalmol.2020.0507.

- 41. Zéboulon P, Debellemanière G, Bouvet M, Gatinel D. Corneal topography raw data classification using a convolutional neural network. Am J Ophthalmol. 2020;219:33–9. doi: 10.1016/j.ajo.2020.06.005.

- 42. Dos Santos VA, Schmetterer L, Stegmann H, Pfister M, Messner A, Schmidinger G, et al. CorneaNet: Fast segmentation of cornea OCT scans of healthy and Keratoconic eyes using deep learning. Biomed Opt Express. 2019;10:622–41. doi: 10.1364/BOE.10.000622.

- 43. Rabinowitz YS, McDonnell PJ. Computer-assisted corneal topography in Keratoconus. Refract Corneal Surg. 1989;5:400–8.

- 44. Fan R, Chan TC, Prakash G, Jhanji V. Applications of corneal topography and tomography: A review. Clin Exp Ophthalmol. 2018;46:133–46. doi: 10.1111/ceo.13136.

- 45. Gomes JA, Tan D, Rapuano CJ, Belin MW, Ambrósio R Jr, Guell JL, et al. Global consensus on Keratoconus and ectatic diseases. Cornea. 2015;34:359–69. doi: 10.1097/ICO.0000000000000408.

- 46. Tan M, Le Q. EfficientNet: Rethinking model scaling for convolutional neural networks. In: Kamalika C, Ruslan S, editors. Proceedings of the 36th International Conference on Machine Learning. Proceedings of Machine Learning Research: PMLR; 2019. p. 6105–14.

- 47. Deng J, Dong W, Socher R, Li LJ, Kai L, Li FF. ImageNet: A large-scale hierarchical image database. 2009 IEEE Conference on Computer Vision and Pattern Recognition. 2009:20–5.

- 48. Smith LN, Topin N. Super-convergence: Very fast training of neural networks using large learning rates. In: Artificial Intelligence and Machine Learning for Multi-Domain Operations Applications. Bellingham, Washington, USA: International Society for Optics and Photonics; 2019.

- 49. Szegedy C, Vanhoucke V, Ioffe S, Shlens J, Wojna Z. Rethinking the Inception architecture for computer vision. 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR). 2016:27–30.

- 50. Ginsburg B, Castonguay P, Hrinchuk O, Kuchaiev O, Lavrukhin V, Leary R, et al. Stochastic gradient methods with layer-wise adaptive moments for training of deep networks. arXiv preprint arXiv:1905.11286. 2019.

- 51. Howard J, Gugger S. Fastai: A layered API for deep learning. Information. 2020;11:108.

- 52. Edmund C. Corneal elasticity and ocular rigidity in normal and Keratoconic eyes. Acta Ophthalmol (Copenh). 1988;66:134–40. doi: 10.1111/j.1755-3768.1988.tb04000.x.

- 53. Fukuchi T, Yue BY, Sugar J, Lam S. Lysosomal enzyme activities in conjunctival tissues of patients with Keratoconus. Arch Ophthalmol. 1994;112:1368–74. doi: 10.1001/archopht.1994.01090220118033.

- 54. Sawaguchi S, Yue BY, Sugar J, Gilboy JE. Lysosomal enzyme abnormalities in Keratoconus. Arch Ophthalmol. 1989;107:1507–10. doi: 10.1001/archopht.1989.01070020581044.

- 55. Akhtar S, Bron AJ, Salvi SM, Hawksworth NR, Tuft SJ, Meek KM. Ultrastructural analysis of collagen fibrils and proteoglycans in Keratoconus. Acta Ophthalmol. 2008;86:764–72. doi: 10.1111/j.1755-3768.2007.01142.x.

- 56. Wollensak G, Spoerl E, Seiler T. Riboflavin/ultraviolet-a-induced collagen crosslinking for the treatment of Keratoconus. Am J Ophthalmol. 2003;135:620–7. doi: 10.1016/s0002-9394(02)02220-1.

- 57. Kuo BI, Chang WY, Liao TS, Liu FY, Liu HY, Chu HS, et al. Keratoconus screening based on deep learning approach of corneal topography. Transl Vis Sci Technol. 2020;9:53. doi: 10.1167/tvst.9.2.53.

- 58. Abdelmotaal H, Mostafa MM, Mostafa AN, Mohamed AA, Abdelazeem K. Classification of color-coded Scheimpflug camera corneal tomography images using deep learning. Transl Vis Sci Technol. 2020;9:30. doi: 10.1167/tvst.9.13.30.

- 59. Subramanian P, Ramesh GP. Keratoconus classification with convolutional neural networks using segmentation and index quantification of eye topography images by particle swarm optimisation. Biomed Res Int. 2022;2022:8119685. doi: 10.1155/2022/8119685. [Retracted]