Abstract

Artificial intelligence (AI) integration in healthcare has emerged as a transformative force, promising to enhance medical diagnosis, treatment, and overall healthcare delivery. This study investigates university students’ perceptions of the use of AI in healthcare. A cross-sectional survey was conducted at two major universities using a paper-based questionnaire from September 2023 to November 2023. Participants’ views on the use of AI in healthcare were investigated using 25 items distributed across five domains. The Mann-Whitney U test was applied to compare groups. Two hundred seventy-nine (279) students completed the questionnaire. More than half of the participants (52%, n = 145) believed that AI could reduce treatment errors. However, 61.6% (n = 172) feared that AI could prevent doctors from learning to make their own correct judgments about patients, and most participants (69%) agreed that doctors should maintain final control over diagnosis and therapy. Participants with experience of AI, such as using AI chatbots, reported significantly higher scores in both the “Benefits and Positivity Toward AI in Healthcare” (p = 0.024) and “Concerns and Fears” (p = 0.026) domains. The cautious optimism, concerns, and fears identified here highlight the delicate balance required for successful AI integration. The findings emphasize the importance of addressing specific concerns, promoting positive experiences with AI, and establishing transparent communication channels. Insights from such research can guide the development of ethical frameworks, policies, and targeted interventions, fostering a harmonious integration of AI into the healthcare landscape in developing countries.

Supplementary Information

The online version contains supplementary material available at 10.1038/s41598-024-80203-w.

Keywords: Artificial intelligence in healthcare, Acceptability, Chatbot, Public perceptions, Students, Yemen

Subject terms: Health policy, Health services, Public health

Introduction

Artificial intelligence (AI) is anticipated to become the gold standard for health care decision making because of its power to analyze and understand massive amounts of data and make more precise, intelligent judgments than humans1–3. Expert systems, machine learning, deep learning, and artificial neural networks (ANNs) are among the forms that AI may take. Improvements in accessible storage, faster networks, and more powerful computers have made it possible to incorporate AI into many healthcare services. AI is now mature enough to be used for image analysis, disease pattern prediction, and triage across various medical specialties, including radiology, gynecology, neurology, cardiology, pathology, and robotic surgery4. Integrating AI into healthcare offers a unique opportunity to improve healthcare services.

AI has become a growing trend in the healthcare sector owing to the expanding availability of medical data and the development of new algorithms5,6. AI can increase medical diagnostic accuracy through semantic analysis and image recognition. Stanford University research from 2017 found that AI outperformed human doctors in detecting skin cancer, achieving more than 90% diagnostic accuracy7. With increasingly sophisticated algorithms and sensitive tools, AI technology can offer outstanding therapeutic options and carry out specialized procedures in medical decision-making and therapy. For instance, Shademan’s research team built the first robotic system to handle soft tissue autonomously8. Researchers from the Las Vegas Department of Health created an AI system that can aid in preventing foodborne diseases by applying machine learning to Twitter data9. Richer medical and health data also offer prospects for more precise disease prevention and health monitoring in preventive healthcare; DeepMind researchers, for example, used this approach to create a model that predicts acute kidney injury up to 48 h before it occurs10.

AI is being rapidly integrated into healthcare and will significantly impact services. For AI to reach its full potential in health care, it must be adopted by physicians, health professionals, patients, and members of the public. Understanding the public acceptability of the technology is therefore crucial. This includes attitudes and beliefs toward collecting and using the health-related data that AI systems need to work11,12. Furthermore, while experts agree on what is and is not AI in health care11, public opinion may be more varied12. Effective engagement with and adoption of AI technology in health care require understanding what non-experts believe.

Given the speed of technological developments worldwide, understanding perspectives on AI in Yemen is essential, and public perceptions of the use of AI in healthcare hold particular significance. Yemen faces unique challenges in healthcare accessibility and resources, exacerbated by ongoing civil conflict and humanitarian crises13,14. Investigating perceptions in this context offers a crucial lens on how a population coping with limited access to medical resources perceives the potential of AI to address healthcare challenges. This study contributes to local considerations and enriches the broader global understanding of AI integration in healthcare, shedding light on the dynamics of perception and acceptance in regions grappling with adversity12. The findings from Yemen may offer insights into tailoring AI implementation strategies to the specific needs and concerns of populations facing resource constraints and complex healthcare landscapes.

Hence, this paper aims to explore university students’ perceptions of AI in healthcare, evaluate the factors influencing their attitudes, and discuss the implications for the successful implementation of AI technologies. By analyzing public opinion, understanding concerns, and addressing ethical considerations, we can help ensure that AI in healthcare is embraced as a valuable tool to improve patient outcomes, enhance healthcare delivery, and foster a positive and inclusive healthcare ecosystem.

Methods

Study setting and design

Using a paper-based questionnaire, we conducted a cross-sectional study among students of all disciplines at Hodeidah and Sana’a universities to explore their perceptions of the use of artificial intelligence in healthcare. The inclusion criteria were the ability to read and understand the information in the questionnaire and willingness to participate. There were no explicit exclusion criteria.

Participants and sampling

University students often help shape public opinion and contribute to societal discussions, and their views on the use of AI in healthcare can have a ripple effect on the broader population. Their insights are therefore particularly valuable, and our sample included students from any discipline at two major universities located in different multicultural cities in the country. Hodeidah and Sana’a universities were selected for their reputation, diverse student populations, and strategic geographical locations. By including students from different cities and universities, we aimed to capture regional variations in perceptions of AI use in healthcare, since cities may differ in their exposure to AI technologies, cultural influences, and healthcare infrastructure, all of which can shape perceptions. The Raosoft sample size calculator (http://www.raosoft.com/samplesize.html) was used to estimate the sample size with a margin of error of 5%, a confidence level of 95%, and a response distribution of 50%. The estimated sample size was 377. Hence, using convenience sampling, we distributed 400 questionnaires to account for non-responders: 200 at Hodeidah University and 200 at Sana’a University.
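The calculator’s output can be approximated with the standard normal-approximation formula for estimating a proportion, n₀ = z²·p(1 − p)/e², optionally followed by a finite population correction. The Python sketch below illustrates that formula under this assumption; it is not Raosoft’s own code. The paper does not report the population size used, so the `population=20000` call is purely illustrative: it happens to reproduce 377, while the uncorrected formula gives 385, which suggests a finite population correction was applied.

```python
import math
from statistics import NormalDist


def sample_size(margin=0.05, confidence=0.95, p=0.5, population=None):
    """Sample size for estimating a proportion (normal approximation).

    margin: margin of error (0.05 = 5%)
    confidence: two-sided confidence level (0.95 = 95%)
    p: expected response distribution (0.5 gives the most conservative n)
    population: optional finite population size N (an assumption; the study
        does not report one)
    """
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)  # about 1.96 for 95%
    n0 = z ** 2 * p * (1 - p) / margin ** 2  # infinite-population size
    if population is not None:
        # finite population correction
        n0 = n0 * population / (n0 + population - 1)
    return math.ceil(n0)


print(sample_size())                  # 385 (no population correction)
print(sample_size(population=20000))  # 377 (hypothetical N = 20,000)
```

Distributing 400 questionnaires against a target of 377 leaves a margin of roughly 6% for non-response.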

Development of the questionnaire

The questionnaire was adopted from a previously validated quantitative study15 and was presented in both Arabic and English after forward-backward translation. It included three parts; the first part collected the students’ socio-demographic information (age, gender, etc.). The 25 items in the questionnaire were grouped into five domains: “Benefits and Positivity, Concerns and Fears, Trust and Confidence in AI and Doctors, Patient Involvement and Data Sharing, and Professional Autonomy of Doctors.” This categorization is based on the existing literature on public perceptions of AI in healthcare12,16, which identifies these as critical areas of concern and interest. The perception and self-assessment questions were answered on a five-point Likert scale. The questionnaire was tested for face and content validity: three independent faculty members evaluated the items for relevance, clarity, conciseness, and ease of understanding, and the items were also assessed by four students. In addition, a pilot study was conducted among fifteen students to assess the readability and internal consistency of the 25 items representing participants’ perceptions of AI use in healthcare (overall Cronbach’s alpha = 0.816). The experts’ comments and the pilot study results were considered in the final draft of the questionnaire.
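The internal-consistency figure reported above is Cronbach’s alpha, α = k/(k − 1) · (1 − Σ item variances / variance of total scores), for k items. The sketch below computes it from a participants × items score matrix; the five-respondent, four-item matrix is hypothetical and is not the study’s pilot data, which the paper does not publish.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a participants x items matrix of numeric scores.

    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total scores))
    Sample variances (n - 1 denominator) are used for both terms; the ratio
    is unchanged with population variances as long as the choice is consistent.
    """
    k = len(responses[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")
    columns = list(zip(*responses))  # one tuple of scores per item
    item_variance_sum = sum(variance(col) for col in columns)
    total_variance = variance([sum(row) for row in responses])
    return k / (k - 1) * (1 - item_variance_sum / total_variance)


# Hypothetical answers (5 respondents x 4 items, coded 1-5); not the study's data.
pilot = [
    [4, 4, 5, 4],
    [3, 3, 3, 2],
    [5, 4, 5, 5],
    [2, 2, 3, 2],
    [4, 3, 4, 4],
]
print(round(cronbach_alpha(pilot), 3))  # 0.961
```

Values around 0.8, such as the 0.816 reported here, are conventionally read as good internal consistency.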

Consent and ethical approval

Written informed consent was obtained from all participants prior to their involvement in the study. Before initiating any data collection procedures, the purpose of the study, the procedures involved, and the confidentiality measures were explained to each participant in their preferred language. Participants were informed that their participation was voluntary and that they had the right to withdraw from the study at any time without consequences. Additionally, participants were assured that their responses would remain confidential and would only be used for research purposes. The study design and procedure were approved by the Institutional Review Board at Aden University, Aden, Yemen, in compliance with the International Conference on Harmonisation (ICH) Research Code (REC-164–2023), and all methods were performed in accordance with the relevant guidelines and regulations.

Data analysis

The data were coded and analyzed using the Statistical Package for the Social Sciences (SPSS) version 26 (IBM Corp., Armonk, NY). The Kolmogorov-Smirnov test was used to test normality. Frequencies, percentages, medians, and IQRs were used to describe the respondents’ socio-demographic characteristics, and frequencies and percentages were used to describe their perceptions of AI use in healthcare. The Mann-Whitney U test was applied to compare perception scores between groups. The significance level (alpha) was set at 0.05.
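Because the perception scores are ordinal, the group comparisons use the Mann-Whitney U test. The sketch below is a plain-Python version using the normal approximation with a tie correction and no continuity correction. It is an illustration, not SPSS’s implementation; we have not verified that it matches SPSS’s asymptotic significance in every case. The example scores are hypothetical.

```python
import math
from statistics import NormalDist


def average_ranks(values):
    """1-based ranks; tied values share the mean of the positions they occupy."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        # extend the block while the next sorted value equals the current one
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        mean_rank = (start + end) / 2 + 1
        for k in range(start, end + 1):
            ranks[order[k]] = mean_rank
        start = end + 1
    return ranks


def mann_whitney_u(x, y):
    """Two-sided Mann-Whitney U test: normal approximation with tie correction,
    no continuity correction. Returns (U, p)."""
    n1, n2 = len(x), len(y)
    pooled = list(x) + list(y)
    ranks = average_ranks(pooled)
    u1 = sum(ranks[:n1]) - n1 * (n1 + 1) / 2  # U for the first sample
    u = min(u1, n1 * n2 - u1)
    n = n1 + n2
    # tie correction: sum of (t^3 - t) over groups of tied values
    tie_sizes = {}
    for v in pooled:
        tie_sizes[v] = tie_sizes.get(v, 0) + 1
    ties = sum(t ** 3 - t for t in tie_sizes.values())
    var_u = n1 * n2 / 12 * ((n + 1) - ties / (n * (n - 1)))
    if var_u == 0:  # every observation identical: no evidence of a difference
        return u, 1.0
    z = (u - n1 * n2 / 2) / math.sqrt(var_u)
    p = 2 * NormalDist().cdf(-abs(z))
    return u, p


# Hypothetical domain scores for chatbot users vs. non-users.
users = [3.8, 4.0, 3.6, 3.4, 4.2]
non_users = [3.4, 3.2, 3.6, 3.0, 3.5, 3.8]
u, p = mann_whitney_u(users, non_users)
print(f"U = {u}, p = {p:.3f}")
```

For samples as small as this toy example, an exact test would be preferable. The approximation is better suited to groups of the study’s size (for example, 85 chatbot users vs. 194 non-users).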

Principal component analysis (PCA) was conducted to investigate the underlying structure of the dataset and identify key components. The suitability of the data for PCA was first assessed using the Kaiser-Meyer-Olkin (KMO) measure of sampling adequacy and Bartlett’s test of sphericity. The KMO measure was 0.789, exceeding the recommended minimum of 0.6 and indicating that the sample was adequate for PCA. Bartlett’s test of sphericity was significant (χ² = 1296, d.f. = 300, p < 0.001), confirming that the correlations between items were sufficiently large for PCA.
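For reference, the two suitability checks are conventionally defined as follows. Here $r_{ij}$ is the correlation between items $i$ and $j$, $a_{ij}$ is their partial correlation controlling for all other items, $R$ is the item correlation matrix, $p$ is the number of items, and $n$ is the sample size. These are the textbook forms, offered as a sketch; the paper does not show how SPSS computed them. With $p = 25$ items, the degrees of freedom come to 300, matching the value reported under Table 2.

$$
\mathrm{KMO} = \frac{\sum_{i \neq j} r_{ij}^{2}}{\sum_{i \neq j} r_{ij}^{2} + \sum_{i \neq j} a_{ij}^{2}}
$$

$$
\chi^{2} = -\left(n - 1 - \frac{2p + 5}{6}\right)\ln\lvert R\rvert, \qquad \mathrm{d.f.} = \frac{p(p-1)}{2} = \frac{25 \times 24}{2} = 300
$$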

To align with the theoretical framework and content areas of the study, we specified in advance that five components should be extracted. Although the scree plot (S1 Fig. 1) suggested a point of inflection after the second component, indicating that the first two components account for most of the explained variance, we retained five components based on the theoretical framework and the need to capture the full breadth of the content areas identified in our study12,15.
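The scree plot shows each component’s eigenvalue against its rank. Assume, as is usual for Likert items, that the PCA was run on the correlation matrix of the $p = 25$ standardized items. Then the eigenvalues $\lambda_1 \ge \lambda_2 \ge \dots \ge \lambda_{25}$ sum to 25, so the share of total variance captured by component $k$, and by the five retained components together, is:

$$
\frac{\lambda_k}{\sum_{j=1}^{25}\lambda_j} = \frac{\lambda_k}{25}, \qquad \frac{\lambda_1 + \lambda_2 + \lambda_3 + \lambda_4 + \lambda_5}{25}
$$

The paper presents the scree plot (S1 Fig. 1) rather than the eigenvalues, so no numerical values are filled in here.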

The component loadings were examined to interpret the components (S1 Table 1). Items with loadings below 0.400 were retained because of their theoretical importance and practical relevance to the constructs12,15. The rotated component matrix (Varimax rotation) showed that several items loaded strongly on their respective components. For instance, the item “I think that the use of artificial intelligence brings benefits for the patient” had a loading of 0.417 on Benefits and Positivity, indicating a moderate association with this component. In contrast, the items “Doctors will play a less important role in the therapy of patients in the future” and “Through the use of artificial intelligence, there will be fewer treatment errors in the future” had loadings of 0.352 and 0.174, respectively. These weaker associations were accepted, and the items were retained because of their theoretical relevance.
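The retention rule described above (flag items below the 0.400 cutoff but keep them) can be made explicit with a short sketch. Only the three loadings quoted in the text are included; the full loading matrix is in S1 Table 1 and is not reproduced here. The helper name is hypothetical.

```python
# Loadings quoted in the text (S1 Table 1 holds the full matrix).
LOADINGS = {
    "I think that the use of artificial intelligence brings benefits for the patient": 0.417,
    "Doctors will play a less important role in the therapy of patients in the future": 0.352,
    "Through the use of artificial intelligence, there will be fewer treatment errors in the future": 0.174,
}
CUTOFF = 0.400


def below_cutoff(loadings, cutoff=CUTOFF):
    """Return items whose absolute loading is below the cutoff.

    The items are only flagged for review; in this study they were retained
    on theoretical grounds rather than dropped.
    """
    return [item for item, value in loadings.items() if abs(value) < cutoff]


for item in below_cutoff(LOADINGS):
    print(f"retained despite loading < {CUTOFF}: {item}")
```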

Table 1.

Participants’ Socio-demographics & characteristics.

| Variables | n (%) | Overall perception, median (IQR) | p-value |
|---|---|---|---|
| Age | | | 0.75 |
| 18–24 | 231 (82.8) | 3.4 (3.1–3.7) | |
| ≥ 25 | 48 (17.2) | 3.5 (3.2–3.8) | |
| Sex | | | 0.98 |
| Male | 107 (38.4) | 3.6 (3.2–3.8) | |
| Female | 172 (61.6) | 3.5 (3.1–3.7) | |
| Permanent residency | | | 0.43 |
| Rural | 43 (15.4) | 3.5 (3.2–3.8) | |
| Urban | 236 (84.6) | 3.5 (3.2–3.8) | |
| Are you a student in a medical or any other health-related specialty? | | | 0.52 |
| Yes | 123 (44.1) | 3.5 (3.2–3.8) | |
| No | 156 (55.9) | 3.5 (3.2–3.8) | |
| Have you ever used any artificial intelligence (AI) chatbot? | | | 0.023* |
| Yes | 85 (30.5) | 3.6 (3.2–3.8) | |
| No | 194 (69.5) | 3.4 (3.2–3.7) | |

* Significant p-value

The overall Cronbach’s Alpha for the scale was 0.816, indicating good internal consistency. These results suggest that the five-component model is supported, and the retention of items with lower loadings ensures a comprehensive assessment of the constructs, maintaining the theoretical and practical integrity of the questionnaire.

Results

General results

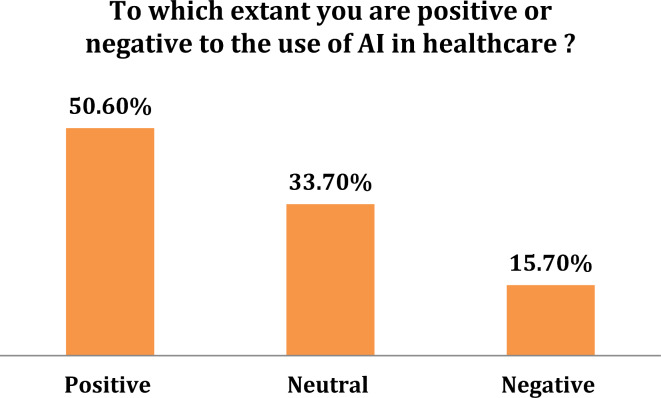

The study questionnaire received responses from 328 participants, a response rate of 82%. We excluded 36 surveys with two or more unanswered items and a further 13 surveys with unanswered socio-demographic questions, leaving 279 surveys for the final analysis. Most participants were aged 18–24 years (82.8%, n = 231), and a smaller proportion were aged 25 years or older (17.2%, n = 48). There were 107 (38.4%) male and 172 (61.6%) female participants. Most participants resided permanently in urban areas (84.6%, n = 236), and a smaller percentage lived in rural regions (15.4%, n = 43). Regarding educational background, 44.1% (n = 123) of participants were students in medical or other health-related specialties. When asked about their experience with AI chatbots, 30.5% (n = 85) reported having used them, whereas the majority (69.5%, n = 194) had not yet engaged with such technology (Table 1). When asked about the overall extent of their positivity toward the use of AI in healthcare, about half of the participants were positive (Fig. 1). As shown in Table 1, participants who had previously used AI chatbots reported higher overall perception scores regarding AI use in healthcare (p = 0.023).

Fig. 1.

Participants’ extent of positivity toward the use of AI in healthcare.
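The sample flow and the Table 1 percentages described in the General results can be checked with a few lines of arithmetic. All counts below are taken from the text and Table 1, and the percentages are rounded to one decimal as in the table.

```python
# Counts reported in the General results.
DISTRIBUTED = 400
RETURNED = 328
EXCLUDED_MISSING_ITEMS = 36         # two or more unanswered perception items
EXCLUDED_MISSING_DEMOGRAPHICS = 13  # unanswered socio-demographic questions

response_rate = 100 * RETURNED / DISTRIBUTED
analysed = RETURNED - EXCLUDED_MISSING_ITEMS - EXCLUDED_MISSING_DEMOGRAPHICS


def pct(count, total=analysed):
    """Percentage of the analysed sample, rounded to one decimal as in Table 1."""
    return round(100 * count / total, 1)


print(response_rate, analysed)                 # 82.0 279
print(pct(231), pct(107), pct(85), pct(123))   # 82.8 38.4 30.5 44.1
```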

Benefits and positivity toward AI in healthcare

Table 2 shows moderate perceptions in the Benefits and Positivity Toward AI in Healthcare domain, with a median (IQR) of 3.5 (3.2–3.8). The overall score of this domain differed significantly by AI chatbot use: participants who had previously used AI chatbots reported higher scores, with a median (IQR) of 3.7 (3.3–4) (p = 0.024; Table 3).
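The domain medians in Tables 2 and 3 are fractional (for example, 3.5 or 3.7), which is consistent with each participant receiving a domain score equal to the mean of their item responses. The paper does not state its scoring rule or Likert coding, so the sketch below assumes both (Strongly agree = 5 … Strongly disagree = 1; domain score = item mean). The three participants’ answers are hypothetical.

```python
from statistics import median

# Assumed coding (not stated in the paper): Strongly agree = 5 ... Strongly disagree = 1.
LIKERT = {"SA": 5, "A": 4, "N": 3, "DA": 2, "SDA": 1}


def domain_score(answers):
    """Mean coded answer across one domain's items (assumed scoring rule)."""
    coded = [LIKERT[a] for a in answers]
    return sum(coded) / len(coded)


# Hypothetical answers of three participants to the five Benefits and Positivity items.
participants = [
    ["A", "A", "SA", "A", "N"],
    ["N", "A", "A", "DA", "A"],
    ["SA", "SA", "A", "A", "A"],
]
scores = [domain_score(p) for p in participants]
print(scores, median(scores))  # [4.0, 3.4, 4.4] 4.0
```

Per-participant scores like these are what a Mann-Whitney U test would compare between groups, such as chatbot users and non-users.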

Table 2.

Participants’ perception of different domains of AI in healthcare.

| Domain / item | SA, n (%) | A, n (%) | N, n (%) | DA, n (%) | SDA, n (%) | Median (IQR) |
|---|---|---|---|---|---|---|
| Benefits and Positivity Toward AI in Healthcare | | | | | | 3.5 (3.2–3.8) |
| I think that the use of artificial intelligence brings benefits for the patient | 20 (7.2) | 106 (38) | 85 (30.5) | 48 (17.2) | 20 (7.2) | 3 (3–4) |
| Through the use of artificial intelligence, there will be fewer treatment errors in the future | 31 (11.1) | 114 (40.9) | 68 (24.4) | 48 (17.2) | 18 (6.5) | 4 (3–4) |
| By using Artificial Intelligence, doctors will again have more time for the patient | 56 (20.1) | 116 (41.6) | 39 (14) | 44 (15.8) | 24 (8.6) | 4 (3–4) |
| The use of artificial intelligence is an effective instrument against the overload of doctors and the shortage of doctors | 53 (19) | 125 (44.8) | 48 (17.2) | 36 (12.9) | 17 (6.1) | 4 (3–4) |
| The use of artificial intelligence will reduce the workload of doctors | 53 (19) | 119 (42.7) | 51 (18.3) | 41 (14.7) | 15 (5.4) | 4 (3–4) |
| Concerns and Fears | | | | | | 3.4 (3.1–3.8) |
| Doctors will play a less important role in the therapy of patients in the future | 39 (14) | 99 (35.5) | 60 (21.5) | 62 (22.2) | 19 (6.8) | 4 (2–4) |
| Artificial intelligence should not be used in medicine as a matter of principle | 53 (19) | 105 (37.6) | 49 (17.6) | 57 (20.4) | 15 (5.4) | 4 (2–4) |
| Doctors are becoming too dependent on computer systems | 64 (22.9) | 126 (45.2) | 45 (16.1) | 30 (10.8) | 14 (5) | 4 (3–4) |
| The influence of Artificial Intelligence on medical treatment scares me | 55 (19.7) | 123 (44.1) | 56 (20.1) | 34 (12.2) | 11 (3.9) | 4 (3–4) |
| The use of Artificial Intelligence prevents doctors from learning to make their own correct judgement of the patient | 55 (19.7) | 117 (41.9) | 52 (18.6) | 46 (16.5) | 9 (3.2) | 4 (3–4) |
| If AI predicts a low chance of survival for the patient, doctors will not fight for that patient’s life as much as before | 44 (15.8) | 85 (30.5) | 58 (20.8) | 62 (22.2) | 30 (10.8) | 3 (2–4) |
| I am more afraid of a technical malfunction of Artificial Intelligence than of a wrong decision by a doctor | 72 (25.8) | 105 (37.6) | 44 (15.8) | 43 (15.4) | 15 (5.4) | 4 (3–5) |
| I am worried that Artificial Intelligence-based systems could be manipulated from the outside (terrorists, hackers, …) | 77 (27.6) | 120 (43) | 34 (12.2) | 34 (12.2) | 14 (5) | 4 (3–5) |
| The use of artificial intelligence impairs the doctor-patient relationship | 59 (21.1) | 101 (36.2) | 58 (20.8) | 48 (17.2) | 13 (4.7) | 4 (3–4) |
| Trust and Confidence in AI and Doctors | | | | | | 3.4 (3.2–3.8) |
| The testing of artificial intelligence before it is used on patients should be carried out by an independent body | 110 (39.4) | 111 (39.8) | 23 (8.2) | 27 (9.7) | 8 (2.9) | 4 (4–5) |
| If a patient has been harmed, a doctor should be held responsible for not following the recommendations of AI | 49 (17.6) | 126 (45.2) | 46 (16.5) | 45 (16.1) | 13 (4.7) | 2 (2–4) |
| I would trust the assessment of an artificial intelligence more than the assessment of a doctor | 26 (9.3) | 45 (16.1) | 62 (22.2) | 110 (39.4) | 36 (12.9) | 2 (2–4) |
| Doctors know too little about AI to use it on patients | 36 (12.9) | 127 (45.5) | 63 (22.6) | 32 (11.5) | 21 (7.5) | 4 (3–4) |
| I would like my doctor to override the recommendations of Artificial Intelligence if he comes to a different conclusion based on his experience or knowledge | 75 (26.9) | 116 (41.6) | 50 (17.9) | 28 (10) | 10 (3.6) | 4 (3–4) |
| Patient Involvement and Data Sharing | | | | | | 3.3 (2.7–4) |
| I would like my personal, medical treatment to be supported by Artificial Intelligence | 23 (8.2) | 90 (32.3) | 63 (22.6) | 79 (28.3) | 24 (8.6) | 3 (2–4) |
| I would make my anonymous patient data available for non-commercial research (universities, hospitals, etc.) if this could improve future patient care | 40 (14.3) | 106 (38) | 60 (21.5) | 50 (17.9) | 23 (8.2) | 4 (2–4) |
| Artificial intelligence-based decision support systems for doctors should only be used for patient care if their benefit has been scientifically proven | 42 (15.1) | 128 (45.9) | 50 (17.9) | 47 (16.8) | 12 (4.3) | 4 (3–4) |
| I am not worried about the security of my data | 37 (13.3) | 86 (30.8) | 65 (23.3) | 74 (26.5) | 17 (6.1) | 3 (2–4) |
| Professional Autonomy of Doctors | | | | | | 3.5 (3–4) |
| The use of Artificial Intelligence is changing the demands of the medical profession | 44 (15.8) | 111 (39.8) | 49 (17.6) | 52 (18.6) | 23 (8.2) | 4 (2–4) |
| A doctor should always have the final control over diagnosis and therapy | 86 (30.8) | 106 (38) | 37 (13.3) | 34 (12.2) | 16 (5.7) | 4 (3–5) |

SA = Strongly agree, A = Agree, N = Neutral, DA = Disagree, SDA = Strongly disagree

Overall Cronbach’s alpha = 0.816. Kaiser-Meyer-Olkin (KMO) measure of sampling adequacy = 0.789. Bartlett’s test of sphericity: χ² = 1296 (d.f. = 300, p < 0.001).

Table 3.

Analysis of five domains of perception of AI in healthcare.

| Variables | Benefits and Positivity Toward AI in Healthcare, median (IQR) | p-value | Concerns and Fears, median (IQR) | p-value | Trust and Confidence in AI and Doctors, median (IQR) | p-value | Patient Involvement and Data Sharing, median (IQR) | p-value | Professional Autonomy of Doctors, median (IQR) | p-value |
|---|---|---|---|---|---|---|---|---|---|---|
| Age | | 0.70 | | 0.07 | | 0.5 | | 0.5 | | 0.84 |
| 18–24 | 3.5 (3.2–3.8) | | 3.4 (3.1–3.8) | | 3.4 (3.2–3.8) | | 3.3 (2.6–4) | | 3.5 (3–4) | |
| ≥ 25 | 3.5 (3–4) | | 3.6 (3.2–4) | | 3.6 (3–4) | | 3.3 (2.6–3.6) | | 3.5 (3–4.2) | |
| Sex | | 0.39 | | 0.43 | | 0.023* | | 0.006* | | 0.52 |
| Male | 3.5 (3.2–4) | | 3.4 (3.2–3.8) | | 3.6 (3.3–4) | | 3.3 (3–4) | | 3.5 (3–4.5) | |
| Female | 3.5 (3.2–3.8) | | 3.4 (3.1–3.8) | | 3.4 (3.3–3.8) | | 3.3 (2.7–3.7) | | 3.5 (3–4) | |
| Permanent residency | | 0.67 | | 0.48 | | 0.25 | | 0.4 | | 0.81 |
| Rural | 3.5 (3.2–4) | | 3.4 (3.2–3.9) | | 3.6 (3.2–3.9) | | 3.3 (2.7–4) | | 3.5 (3–4.3) | |
| Urban | 3.5 (3.2–3.8) | | 3.4 (3.1–3.7) | | 3.4 (3.2–3.8) | | 3.3 (2.7–4) | | 3.5 (3–4) | |
| Are you a student in a medical or any other health-related specialty? | | 0.18 | | 0.24 | | 0.66 | | 0.56 | | 0.05 |
| Yes | 3.5 (3.2–4) | | 3.4 (3.2–3.8) | | 3.4 (3–3.8) | | 3.3 (2.7–4) | | 3.5 (3–4.5) | |
| No | 3.5 (3.2–3.8) | | 3.4 (3–3.8) | | 3.4 (3.2–3.9) | | 3.3 (3–3.7) | | 4 (3–4) | |
| Have you ever used any artificial intelligence (AI) chatbot? | | 0.024* | | 0.026* | | 0.097 | | 0.62 | | 0.12 |
| Yes | 3.7 (3.3–4) | | 3.7 (3.2–3.9) | | 3.6 (3.2–4) | | 3.3 (2.7–4) | | 4 (3–4.5) | |
| No | 3.5 (3.2–3.8) | | 3.4 (3.1–3.8) | | 3.4 (3.2–3.8) | | 3.3 (2.7–4) | | 3.5 (3–4) | |

* Significant p-value

As shown in Table 2, 45.2% (n = 126) of participants agreed or strongly agreed that using artificial intelligence benefits patients, and over half (52%, n = 145) believed that AI could reduce treatment errors in the future. Over 60% (n = 172) agreed that AI could give doctors more time to dedicate to patient care. Additionally, more than three-fifths (63.8%, n = 178) regarded AI as an effective tool to address the overload and shortage of doctors.
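The agreement percentages and item medians quoted in the Benefits and Positivity results can be recomputed from the frequency counts in Table 2. The sketch below treats “agreement” as Strongly agree plus Agree. It assumes the coding 5 = SA … 1 = SDA, which is consistent with the item medians shown for these two rows; the paper does not state the coding explicitly.

```python
from statistics import median

# Frequency counts from Table 2; assumed coding 5 = SA, 4 = A, 3 = N, 2 = DA, 1 = SDA.
FEWER_ERRORS = {5: 31, 4: 114, 3: 68, 2: 48, 1: 18}
BRINGS_BENEFITS = {5: 20, 4: 106, 3: 85, 2: 48, 1: 20}


def agreement_pct(counts):
    """Percentage answering Strongly agree or Agree, rounded to one decimal."""
    return round(100 * (counts[5] + counts[4]) / sum(counts.values()), 1)


def item_median(counts):
    """Median of the individual responses reconstructed from the counts."""
    return median(score for score, c in counts.items() for _ in range(c))


print(agreement_pct(FEWER_ERRORS), item_median(FEWER_ERRORS))        # 52.0 4
print(agreement_pct(BRINGS_BENEFITS), item_median(BRINGS_BENEFITS))  # 45.2 3
```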

Concerns and fears

The Concerns and Fears domain showed a median (IQR) of 3.4 (3.1–3.8). As shown in Table 2, about half of the participants (49.5%, n = 138) were concerned that doctors could play a less important role in patient therapy in the future, and over two-thirds (68.1%, n = 190) believed that doctors are becoming excessively dependent on computer systems. Approximately 64% (n = 178) of participants feared AI’s influence on medical treatment. Almost two-thirds (63.4%, n = 177) were more afraid of a technical malfunction of AI than of a wrong decision by a doctor, and around 70% (n = 197) were worried that external actors, such as terrorists and hackers, could manipulate AI-based systems. Moreover, 46.2% (n = 129) feared that doctors might be less motivated to fight for a patient’s life if AI predicts a low chance of survival. More than half of the participants (57.3%, n = 160) believed that using AI could undermine the doctor-patient relationship. In addition, more than three-fifths of respondents (61.6%, n = 172) felt that AI prevents doctors from learning to make their own correct judgments about patients.

Table 3 shows that the overall Concerns and Fears score differed significantly by AI chatbot use, with participants who had previously used AI chatbots reporting higher scores (p = 0.026). In S1 Table 1, participants studying health or medical specialties (p = 0.018) and those who had previously used AI chatbots (p = 0.032) agreed more strongly than their peers that doctors rely excessively on computer systems. Furthermore, AI chatbot users reported higher scores for fearing that external actors such as terrorists and hackers could manipulate AI-based systems, with a median (IQR) of 4 (4–5) (p = 0.046).

Trust and confidence in AI and doctors

More than 60% of participants (62.7%, n = 175) believed that if a patient is harmed, the doctor should be held responsible for not following the AI’s recommendations. Trust in AI assessments versus doctors’ evaluations diverged: about 25% (n = 71) of participants would trust an AI assessment more than a doctor’s, whereas over half (52.3%, n = 146) disagreed and 22.2% remained neutral. Most participants (79.2%, n = 221) supported testing AI by an independent body before it is used on patients. Moreover, most participants (58.4%, n = 163) were concerned that doctors know too little about AI to use it in patient care.

In S1 Table 2, participants studying health or medical specialties reported higher scores for trusting AI assessments over doctors’ evaluations than participants in non-health-related specialties, with medians (IQR) of 3 (2–4) and 2 (2–3), respectively (p = 0.036). AI chatbot users reported higher agreement, with a median (IQR) of 5 (4–5), that AI should be tested by an independent body before it is used on patients (p = 0.005).

Patient involvement and data sharing

This domain had the lowest perception score of the five domains in this study, with a median (IQR) of 3.3 (2.7–4) (Table 2). Table 2 also shows that 40.5% (n = 113) of participants would like their medical treatment to be supported by AI. Furthermore, many participants (61%, n = 170) agreed that AI-based decision support systems should only be used for patient care if their benefit has been scientifically proven. Interestingly, 44.1% (n = 123) of participants were not worried about the security of their data.

The professional autonomy of doctors

As shown in Table 2, 55.6% (n = 155) of respondents agreed that the use of AI is changing the demands of the medical profession. However, most participants (69%, n = 192) agreed that doctors should always have final control over diagnosis and therapy.

Discussion

The study aimed to explore university students’ perceptions of the use of artificial intelligence (AI) in healthcare. The findings across the five domains (Benefits and Positivity, Concerns and Fears, Trust and Confidence in AI and Doctors, Patient Involvement and Data Sharing, and Professional Autonomy of Doctors) provide valuable insights into the complex landscape of perceptions toward AI in healthcare. It is essential to acknowledge the context in which these perceptions were obtained. Yemen faces significant healthcare challenges, including limited access to medical resources and facilities13,14. As a result, participants may be inclined to view AI as a potential solution to improve healthcare delivery and outcomes.

Our findings reveal a cautious but hopeful outlook among participants regarding the advantages of incorporating AI into healthcare. Despite understandable concerns, such as the potential for AI to lessen the role of doctors and worries about technical issues, participants recognized AI’s potential to alleviate healthcare challenges in Yemen, a context marked by scarce resources and ongoing conflict. Notably, firsthand experience with AI applications, especially AI chatbots, was associated with differences in perception, highlighting the potential role of exposure and education. Our results also shed light on the intricate relationship between trust in AI and trust in doctors: a notable portion of participants attributed responsibility to doctors who fail to heed AI recommendations, which warrants further exploration.

Benefits and positivity toward AI in healthcare

Our study indicated that participants had moderately positive perceptions of the benefits of AI in healthcare. This suggests cautious optimism: while participants see the potential benefits of AI in healthcare, they may still have doubts or misgivings12. This finding underscores the need for more research and education on AI in healthcare to address any misconceptions or apprehensions the public may have. Nevertheless, many participants agreed that AI might help patients by lowering treatment errors, reducing doctors’ workload, and giving doctors more time to dedicate to patient care, similar to recent findings from Nigerian and German studies15,16. This acknowledgement of AI’s potential beneficial influence on healthcare delivery shows that participants perceive value in implementing AI technology. This optimism might stem from a desire to close current gaps in the healthcare system and improve patient safety, or from increased faith in technology to solve complex healthcare challenges11,12,17. Participants’ perspectives also reflect a practical awareness of Yemen’s healthcare situation, with AI seen as a viable option to address inadequate healthcare resources and a physician shortage. This aligns with the global aim of improving healthcare outcomes through technological advances4.

Another notable finding is that AI chatbot use was significantly associated with higher overall perception scores. This suggests that experience with AI applications, such as AI chatbots, might lead to more positive impressions of AI in healthcare. However, the cross-sectional design cannot establish causality, and chatbot users also scored higher in the Concerns and Fears domain. This finding highlights the value of allowing people to interact with AI systems in hospital settings to build familiarity, knowledge, and acceptance.

Concerns and fears

About half of the participants expressed concern that doctors may play a lesser role in patient therapy in the future, reflecting concerns about the changing dynamics of healthcare delivery. This worry reflects a realization of AI’s transformational potential in healthcare and a desire to retain the human touch in medical treatment as technology advances. It may also stem from the idea that AI systems could completely replace human doctors. A striking example is a mixed-methods study of posts on Sina Weibo, a Chinese social media platform: among the 200 (21%) posts that mentioned AI replacing human doctors, 95 (47.5%) stated that it would do so completely18. While AI can help with diagnostic and treatment decisions, physicians provide a depth of knowledge, empathy, and ethical judgment that AI cannot replace.

The results of the current study showed that close to two-thirds of participants were concerned about using AI in medical treatment. This apprehension may derive from restricted access to healthcare facilities and resources in Yemen, a healthcare system weakened by the ongoing civil conflict and humanitarian catastrophe, and fears about the possible loss of the human touch and personalized treatment. Furthermore, a limited understanding of AI technology and its limits may add to misconceptions and concerns, particularly when AI is entrusted with vital medical decisions. Existing societal and cultural views in Yemen may also contribute to this fear: some people may view the use of AI as interfering with the natural order or as performing a function reserved for human professionals. These worries may also stem from potential ethical issues and from concern about not being able to fully control or understand the judgments of AI systems.

The finding that approximately half of the participants feared doctors would be less motivated to fight for a patient’s life if AI predicted a low chance of survival raises significant concerns about the ethical implications of using AI in healthcare; this proportion was very similar to that reported in a previous study among German patients15. First, this fear highlights a potential loss of trust between patients and healthcare professionals. Patients rely on their doctors to provide the best care and to prioritize their well-being. If AI is seen as influencing doctors’ decisions and weakening their motivation to save a patient’s life, patient trust may erode and doctor-patient relationships may suffer. Second, this result raises questions about how AI predictions should be integrated into medical decision-making. While AI has the potential to enhance patient care and improve outcomes by providing accurate predictions, it should not replace the human judgment and empathy that physicians bring to their practice. Doctors have a moral and professional obligation to act in the best interest of their patients, irrespective of AI predictions.

Furthermore, approximately 63% of respondents were more afraid of a technical malfunction of AI than of a wrong decision by a doctor. This fear could be attributed to the perceived unpredictability and potential dangers of technology. Participants may have concerns about the reliability and accuracy of AI systems, worrying that a malfunction or an algorithmic error could have severe consequences for patient outcomes. A common finding in many studies is that patients want physicians to supervise AI and, in a direct comparison, prefer a physician12,15,16,19. A particularly striking example of the high respect for, and trust in, physicians’ competence compared with AI tools is provided by the study of York and colleagues on radiographic fracture identification. On a 10-point confidence scale, the 216 respondents gave their human radiologists a near-maximum score of 9.2 points, whereas the AI tool received only 7.0 points20. These findings suggest a need for transparency and clear communication about the limitations and capabilities of AI in healthcare. It is crucial to ensure that patients and healthcare professionals are well informed about how AI systems function and about the safeguards in place to mitigate potential risks.

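In addition, participants with experience of AI applications, such as engaging with AI chatbots, reported significantly higher scores in the “Concerns and Fears” domain (p = 0.026). Together with their higher scores in the “Benefits and Positivity Toward AI in Healthcare” domain, this suggests that exposure to AI may sharpen awareness of both its potential and its risks rather than simply increasing acceptance.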

Trust and confidence in AI and doctors

The results indicate that a substantial proportion of Yemeni participants would hold doctors responsible for any harm patients experience because the doctors did not follow AI recommendations, which raises critical concerns within the specific socio-cultural and healthcare context of the region. In Yemen, where healthcare resources and infrastructure may face challenges, reliance on doctors is already pronounced. Participants may therefore be inclined to view AI as a potential way to improve healthcare delivery and outcomes despite these challenges. This finding may also reflect high expectations of AI systems’ ability to provide accurate and reliable recommendations in healthcare decision-making. However, medical decision-making involves factors such as patient history, personal preferences, clinical expertise, and contextual nuances4,17. Doctors are trained to exercise clinical judgment and to integrate AI technologies with their own knowledge and skills. While AI technologies can provide valuable insights, they should not be viewed as infallible or all-encompassing17. Doctors must balance AI recommendations against patient-specific considerations and their own clinical judgment.

The finding that 25% of participants preferred AI’s evaluation over that of physicians indicates that a notable minority already places considerable confidence and trust in AI technology. This may be explained by the potential advantages AI offers, such as rapid analysis of enormous volumes of data and the capacity to make suggestions based on patterns and trends. This group of participants may see AI as a trustworthy and objective source of information. However, more than half of the participants disagreed with this statement, expressing doubt about relying on AI alone to make medical judgments. This mistrust may stem from worries about the drawbacks and hazards of AI technology, such as its inability to simulate human empathy and the possibility of biases or errors in its algorithms. These participants may value the particular knowledge, wisdom, and intuition that human physicians bring to decision-making. These findings are consistent with a mixed-methods systematic review of studies from different settings, which reported that participants overall trusted AI less than humans, although this trust gap narrowed when participants could choose freely between AI and humans12.

The differences in trust between AI and doctors’ assessments reflect the ongoing debate in medical care about how to strike the right balance between human judgment and AI suggestions. They emphasize the importance of carefully weighing the benefits and drawbacks of AI and of human doctors in order to make well-informed, patient-centered decisions1,3,6,17. Achieving optimal patient outcomes and preserving patient confidence in the healthcare system requires a careful balance between AI technology and human skills.

Patient involvement and data sharing

Our study found that the Patient Involvement and Data Sharing domain received the lowest perception score, suggesting concerns about patient involvement in decision-making and about data sharing. About 40.5% of participants desired AI-supported medical treatment, indicating some trust in AI’s potential benefits. Sixty-one percent of participants emphasized the need for evidence-based practice and a cautious approach to AI integration, and 44.1% expressed no concerns about data security, suggesting trust in healthcare organizations within this group. Possible reasons for the low perception score include concerns about losing control over treatment decisions, fear of data misuse, and skepticism about the role of patient input. To improve patient involvement and data sharing, transparent communication channels should be established, including educating patients about AI’s benefits and limitations, ensuring informed consent, and implementing robust security measures. Patients should also be involved throughout their healthcare journey through shared decision-making processes that promote patient empowerment. Collaborative efforts between healthcare providers, AI developers, and policymakers can help establish guidelines and ethical frameworks for patient involvement and data sharing15,16,18.

The professional autonomy of doctors

The finding that more than half of respondents recognized the changing demands of the medical profession due to AI can be explained by the increasing integration of AI technologies in healthcare. As AI applications become more prevalent, healthcare professionals and the general public are likely to become more aware of their transformative effects on medical practice1–6,17,19. This awareness is particularly significant given the current global trend towards integrating AI into various aspects of healthcare12, which prompts individuals to acknowledge and adapt to the evolving landscape of the medical profession12,15,16,19.

Most respondents (69%) believed that doctors should have the final say in diagnosis and therapy even when AI systems are used, which raises questions about the role of AI in healthcare. This result is not surprising, as it fits with most past research on patient and general public opinion: human physicians are typically trusted and followed more than AI systems12,15,16,18,19. This may be due to the perception that doctors have a level of expertise and experience that AI systems cannot replicate. AI systems are often seen as tools that support doctors in their decision-making rather than as replacements for them. There are also concerns about the reliability and accuracy of AI systems, which can produce incorrect or biased results. The complexity of diagnosis and therapy, which involves various symptoms, patient history, and clinical data, may also influence this perception1,3,4. However, AI systems can rapidly analyze vast amounts of medical data, identify patterns, and generate insights, helping doctors make more informed decisions and provide timely, personalized care. AI tools can also improve healthcare access and efficiency1–8,17. The optimal approach balances human expertise and AI capabilities, incorporating AI insights into doctors’ decision-making while doctors retain responsibility for the final diagnosis and therapy.

Study recommendations

Efforts to advance AI within Yemen’s healthcare landscape should encompass two critical dimensions: education and broader integration. Targeted educational programs, workshops, and informational campaigns can deepen stakeholders’ understanding and appreciation of AI’s potential in healthcare. At the same time, tailored research should seek solutions to Yemen’s unique healthcare challenges and drive innovation. Encouraging interaction with AI systems, such as chatbots in hospital settings, can foster familiarity and trust among stakeholders and highlight AI’s supportive role alongside human expertise. Ethical guidelines should state clearly that AI augments, rather than replaces, medical judgment, and should address potential conflicts of interest. Transparency initiatives that clarify AI’s limitations and safeguards can bolster trust and support informed decision-making. Sustained dialogue among stakeholders, together with transparent communication channels for patients, promotes accountability and informed consent. Collaboration among healthcare providers, developers, and policymakers is pivotal for establishing ethical frameworks that emphasize AI’s complementary nature. Because perceptions evolve, ongoing monitoring with diverse samples is needed to keep interventions responsive. International collaboration facilitates knowledge exchange, which is crucial for informed policy formulation and responsible AI use. Finally, planning for long-term interventions acknowledges the dynamic nature of technology and public sentiment, helping to ensure that AI’s trajectory aligns with ethical imperatives and healthcare priorities in Yemen.

Study limitations and strengths

While our study provides valuable insights into public perceptions of AI in healthcare among university students in Yemen, certain limitations must be acknowledged. The sample consists primarily of university students, which limits the generalizability of the findings to the broader population. More importantly, the sample size did not meet the initially calculated requirement for optimal statistical power and generalizability. This could affect the robustness of the findings and their applicability to broader populations. Nevertheless, efforts were made to ensure a diverse sample and to apply robust statistical techniques to mitigate these effects. Future research should include larger and more diverse samples to validate and extend these findings. Additionally, the cross-sectional design captures a snapshot in time, and perceptions may evolve over the long term. The study’s strengths lie in its comprehensive exploration of diverse domains and the nuanced understanding it offers. Despite these limitations, the findings serve as a foundation for targeted interventions and highlight the need for ongoing research to capture the dynamic nature of attitudes toward AI in Yemen’s healthcare landscape and in other settings with similar contexts and resources.

Study implications

Our study of public perceptions of AI use in healthcare among Yemeni university students provides insights with important implications for the future of healthcare in the country. The cautious optimism, concerns, and fears identified emphasize the need for nuanced strategies for integrating AI technologies. To ensure successful implementation, initiatives should address specific concerns, promote positive experiences with AI, and establish transparent communication channels. The study also underscores the importance of ongoing research, including longitudinal and repeated cross-sectional studies, to track changing perceptions and explore variations across demographic groups. Insights from such research can guide the development of ethical frameworks, policies, and targeted interventions, fostering a harmonious integration of AI into the healthcare landscape in Yemen.

Conclusion

Our research illuminates the complex perceptions held by university students regarding the use of artificial intelligence (AI) in healthcare in Yemen. The findings suggest tempered optimism among participants about the potential benefits and positive impact of AI in healthcare. However, this confidence wanes when participants are asked to choose directly between AI-driven systems and human doctors. Despite prevalent concerns, including apprehension about the potential displacement of doctors and anxiety about technical glitches, participants recognized AI’s capacity to address healthcare challenges in Yemen’s resource-constrained and conflict-affected context. Firsthand experience with AI applications, notably AI chatbots, was significantly associated with perceptions, emphasizing the pivotal role of exposure and education. Furthermore, the research reveals a nuanced interplay between trust in AI and trust in doctors, with a notable proportion of participants holding doctors responsible for any harm resulting from not following AI recommendations. Particularly concerning is that the Patient Involvement and Data Sharing domain received the lowest perception score, indicating apprehension about control over treatment decisions and data security. Insights derived from such studies can inform the development of ethical frameworks, policies, and targeted interventions, facilitating the integration of AI into the healthcare landscape, especially in developing nations.


Author contributions

Hatem NAH. Conceptualization; Formal analysis; Methodology; Project administration; Resources; Writing – original draft and Writing – review & editing. Mohamed Ibrahim MI. Supervision and Writing – review & editing. Yousuf SA. Methodology; Resources and Writing – review & editing.

Funding

The authors received no funding for this study.

Data availability

The data that support the findings of this study are available from the first author upon reasonable request.

Declarations

Competing interests

The authors declare no competing interests.

Footnotes

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1. McKinney, S. M. et al. International evaluation of an AI system for breast cancer screening. Nature 577, 89–94 (2020).

- 2. Niazi, M. K. K., Parwani, A. V. & Gurcan, M. N. Digital pathology and artificial intelligence. Lancet Oncol. 20, e253–e261 (2019).

- 3. Pai, K.-C. et al. Artificial intelligence–aided diagnosis model for acute respiratory distress syndrome combining clinical data and chest radiographs. Digit. Health 8, 205520762211203 (2022).

- 4. Yu, K.-H., Beam, A. L. & Kohane, I. S. Artificial intelligence in healthcare. Nat. Biomed. Eng. 2, 719–731 (2018).

- 5. The Lancet. Artificial intelligence in health care: within touching distance. Lancet 390 (10114), 2739 (2018).

- 6. Beam, A. L. & Kohane, I. S. Translating artificial intelligence into clinical care. JAMA 316 (22), 2368–2369 (2016).

- 7. Esteva, A. et al. Dermatologist-level classification of skin cancer with deep neural networks. Nature 542 (7639), 115–118 (2017).

- 8. Shademan, A. et al. Supervised autonomous robotic soft tissue surgery. Sci. Transl. Med. 8 (337), 337ra64 (2016).

- 9. Sadilek, A. et al. Deploying nEmesis: preventing foodborne illness by data mining social media. AI Mag. 38 (1), 37 (2017).

- 10. Tomašev, N. et al. A clinically applicable approach to continuous prediction of future acute kidney injury. Nature 572 (7767), 116–119 (2019).

- 11. Matheny, M., Israni, S. M., Ahmed, M. & Whicher, D. (eds) Artificial Intelligence in Health Care: The Hope, the Hype, the Promise, the Peril (National Academy of Medicine, 2019).

- 12. Young, A. T., Amara, D., Bhattacharya, A. & Wei, M. L. Patient and general public attitudes towards clinical artificial intelligence: a mixed methods systematic review. Lancet Digit. Health 3 (9), e599–e611. 10.1016/S2589-7500(21)00132-1 (2021).

- 13. Alshakka, M. et al. Detection of short-term side effects of ChAdOx1 nCoV-19 vaccine: a cross-sectional study in a war-torn country. Pragmat. Obs. Res. 13, 85–91. 10.2147/POR.S381836 (2022).

- 14. Alshakka, M. et al. Knowledge, attitude, and practice toward antibiotic use among the general public in a resource-poor setting: a case of Aden-Yemen. J. Infect. Dev. Ctries. 17 (3), 345–352. 10.3855/jidc.17319 (2023).

- 15. Fritsch, S. J. et al. Attitudes and perception of artificial intelligence in healthcare: a cross-sectional survey among patients. Digit. Health 8, 20552076221116772. 10.1177/20552076221116772 (2022).

- 16. Adigwe, O. P., Onavbavba, G. & Sanyaolu, S. E. Exploring the matrix: knowledge, perceptions and prospects of artificial intelligence and machine learning in Nigerian healthcare. Front. Artif. Intell. 6, 1293297. 10.3389/frai.2023.1293297 (2024).

- 17. Yin, J., Ngiam, K. Y. & Teo, H. H. Role of artificial intelligence applications in real-life clinical practice: systematic review. J. Med. Internet Res. 23, e25759 (2021).

- 18. Gao, S., He, L., Chen, Y., Li, D. & Lai, K. Public perception of artificial intelligence in medical care: content analysis of social media. J. Med. Internet Res. 22, e16649 (2020).

- 19. Rojahn, J., Palu, A., Skiena, S. & Jones, J. J. American public opinion on artificial intelligence in healthcare. PLoS ONE 18 (11), e0294028. 10.1371/journal (2023).

- 20. York, T., Jenney, H. & Jones, G. Clinician and computer: a study on patient perceptions of artificial intelligence in skeletal radiography. BMJ Health Care Inf. 27, e100233 (2020).
