Abstract

Primary school pupils have difficulty making accurate judgments of learning (JOL) in a digital environment. In two studies, we examined the contribution of metacognitive self-questioning to JOL accuracy in the course of reading literacy (Study 1) and mathematical literacy (Study 2) digital learning programs. Both studies comprised a six-session intervention. In each session fifth-grade students studied a short text (Study 1, N = 65) or story containing mathematical components (Study 2, N = 72), then judged their comprehension by predicting their performance before completing a test. For the experimental groups, metacognitive questioning was incorporated into the study materials. In both studies the experimental groups improved over the six sessions in both performance and calibration (i.e., reducing the gap between judgment and performance). The findings highlight the contribution of metacognitive support to improved performance and judgment accuracy and strengthen the case for incorporating metacognitive practices when teaching literacy in a digital environment.

Keywords: Metacognition, Accurate judgments of learning, Meta strategy knowledge, Reading literacy, Mathematical literacy

1. Introduction

1.1. Fostering literacy in a digital environment

Literacy is broadly defined as the ability to identify, understand, interpret, create, communicate and compute, using printed and written materials associated with varying contexts [1]. Literacy incorporates reading literacy, or an ability to read and communicate using the written word, and mathematical literacy—a capacity to reason mathematically and to formulate, employ, and interpret mathematics to solve problems in a variety of real-world contexts [2]. Both forms of literacy are important if individuals are to achieve their goals, develop their potential, and participate fully in their community and wider society [1,3]. Hence, fostering literacy is a cardinal objective of education systems worldwide.

Technological advancements have transformed digital learning environments, making them substantially different from those centered around printed books and prompting a shift in reading and learning habits [4,5]. Learning in a digital environment offers numerous advantages. With the necessary equipment, it is accessible and convenient (available anytime and anywhere) and cost-effective. Additionally, it is adaptable, providing access to diverse media (such as digital tasks, virtual online learning systems, and MOOCs) and extensive content repositories (including digital books and journals, encyclopedias, and information databases across various disciplines) [6,7]. However, research has identified learning challenges specific to digital environments compared to traditional on-paper formats, including reduced achievement in areas like reading comprehension [4,8–12].

In an attempt to explain learning inferiority in a digital environment, scholars have tested several hypotheses. Some are associated with technological shortcomings of on-screen learning, whether physical, such as visual difficulties traced to screen display characteristics [13–15], or cognitive, such as the loss of mnemonic aids when learners navigate digital documents by scrolling [10,14]. However, even in studies that avoid these technological drawbacks (e.g., reducing visual impediments by using e-books and tablets, or eliminating the need for scrolling by using short texts), findings tend to show inferior performance relative to on-paper learning [1,9,16].

To determine how learning in digital environments may be improved, researchers have been examining the metacognitive processes by which individuals regulate and monitor their own learning. According to the model put forward by Nelson and Narens [17], individuals repeatedly self-judge the quality and quantity of their acquired knowledge as they learn. The metacognitive process by which people regulate their learning is known as Judgment of Learning (JOL) [18,19]. Under the discrepancy reduction model [17,20,21], people stop learning when they judge that they have attained an adequate level of knowledge. Learners devote additional time to learning as long as they consider their level of knowledge to be below a certain (self-defined) target level. It follows that when JOL is biased toward overconfidence, learning may cease prematurely, while underconfidence may result in an overallocation of learning time [22]. Inaccurate JOL may therefore result in ineffective learning and poor performance.

To examine JOL in metacognitive studies, learners are asked to provide a subjective assessment of their level of knowledge by predicting their performance—i.e., their ability to correctly answer questions about some recently studied material (e.g., paired associate items, category exemplars, or texts) [23,24]. When the material studied is texts, as in the current study, the JOL refers to a judgment of comprehension. Predictions of performance then examine how learners evaluate their comprehension of the text.

Accuracy in JOL is calculated by comparing the judgment with performance and is measured in two ways: absolute accuracy (calibration) and relative accuracy (resolution). Absolute accuracy (calibration) is the disparity between JOL and performance [25]. The direction of this disparity points to overconfidence (JOL above actual learning) or underconfidence (JOL below actual learning). The smaller the disparity, the closer calibration is to the ideal. Relative accuracy (resolution) denotes the extent to which individuals’ judgments correspond to their success in one case (i.e., one test or item) relative to another [25,26]. In comprehension tasks, optimal relative accuracy indicates the ability to differentiate between texts or items that are well-understood and those that are not. Depending on whether or not the study structure allows for returning to earlier items, resolution can help the learner choose which items or texts to spend extra time on, or recognize which ones might require extra study time going forward.

Ackerman and Goldsmith [23], comparing on-paper learners with digital learners, found that the disparity between JOL and performance in digital contexts was significantly wider than that seen in on-paper learning, and that digital learners’ misjudgments in self-assessed knowledge manifested largely in overconfidence. Such findings have recurred in other studies among different age groups, with a variety of topics, and under various learning conditions [27–30]. Younger learners, a relatively understudied group, have been found to have particular difficulty in accurately judging their own knowledge and correctly assessing how well they understand what they have learned [31,32].

Such findings suggest that learning in a digital environment is typified by faulty metacognitive monitoring based on inaccurate judgments of learning, and that this is why it has been found to be inferior to on-paper learning. However, studies have also found that learning strategies designed to induce deep processing (such as summarizing paragraphs, writing keywords, etc.) can help learners improve their JOL accuracy and performance, and eliminate the digital learning inferiority [16,25]. Many researchers call for continued testing of innovative pedagogies based on different learning strategies that may trigger deep processing and improve learning in a digital environment [1,9].

1.2. Metacognitive scaffolding, judgments of learning, and performance

A growing body of work in educational science, including theoretical and empirical studies, has begun to investigate learning strategies based on metacognitive processes in both traditional and digital environments. Learners, even young learners, are able to use metacognitive strategies and regulate their learning by applying monitoring and JOL [33]. Indeed, inculcating metacognitive strategies in learners has been found to improve performance in areas including problem-solving and inquiry-based learning [34,35], reading comprehension [36,37], processing information and online learning [38,39], and foreign-language studies [40,41].

One method of metacognitive teaching involves presenting questions that bring metacognitive processes aimed at monitoring and regulating learning to the learner's awareness. Interventions aimed at inculcating metacognitive self-questioning have been found to significantly improve learners' achievements in text comprehension [42–44]. However, thus far, such studies have mainly been conducted in on-paper learning environments.

The present study had two objectives (O). The first was to examine whether metacognitive self-questioning improves judgment of learning (JOL) accuracy in a digital environment (O1). We specifically focus on young learners (primary school pupils), who, as noted earlier, are a relatively understudied group in metacognitive research. The second was to take a broad approach by investigating the effect of a metacognitive questioning intervention on young learners' JOL accuracy as they engage with texts related to both reading and mathematical literacy (O2). We hypothesize (H) that metacognitive support in the form of self-questioning will improve learners’ JOL accuracy (H1) as well as their reading and mathematical literacy in digital environments (H2).

2. Research setting, participants, and method

In two studies, we tested the effect of metacognitive support on the accuracy of judgments of learning and students' actual performance during digital learning in two fields of knowledge: reading literacy (Study 1) and mathematical literacy (Study 2). In both studies, primary-school students took part in a series of six sessions at intervals of approximately one week. At each session, the children were given texts to read and study on computer monitors. Participants’ JOLs were then elicited before the children were tested on their comprehension.

The children were divided into two groups: an intervention group, which received metacognitive support, and a control group, which received no such support. The intervention integrated metacognitive self-questioning into the environment in which the texts were read. We then analyzed the findings with respect to performance and JOL accuracy (calibration and resolution).

A sample size of n = 32 per condition was determined based on the need to detect medium-sized effects (f = .25) related to group differences in students' accuracy of judgments of learning, as well as reading literacy (Study 1) and mathematical literacy (Study 2) across two research conditions. This calculation was made with α = .05 and a statistical power of 1 − β = .80, using G*Power 3 (Faul et al., 2007). To allow for potential technical issues or participant dropout, 35 participants per condition were recruited, totaling 140 fifth graders aged 10–11 years. The schools involved had mid-level socio-economic populations, as defined by Israel's Central Bureau of Statistics (2016), and average scores on national standardized tests.

3. Study 1

We examined the impact of metacognitive self-questioning on the accuracy of judgments of learning and on students’ actual performance during digital learning in reading literacy.

3.1. Method

The next paragraph includes details on the method we used in Study 1.

3.1.1. Participants

Sixty-five fifth graders (ages 10–11, 52.3 % girls) attending two different schools took part in this study. The two schools were of similar socioeconomic status as defined by the Israel Central Bureau of Statistics [45]. Students who reported being recent immigrants were omitted from the sample because their reading comprehension difficulties might be traced to the challenges of reading in a foreign language. The study was approved by the Research Ethics Board at Bar-Ilan University, and by the Chief Scientist in the Israeli Ministry of Education (permit number 9223). In addition, students’ parents provided consent for their children to participate in the study.

The students were divided randomly into two groups. Group 1, “Meta_read” (n = 32, 62.5 % girls), engaged in lessons on reading literacy, and received metacognitive support in the form of questions integrated into the text. Group 2, “Control_read” (n = 33, 42.4 % girls), engaged in the same lessons on reading literacy without receiving support.

3.1.2. The intervention program

A courseware product called “Oriani—ze ani!” (“Literacy—That's Me!”) was developed for the purposes of this study. The program, written in the C Sharp programming language, includes six different texts tailored to the fifth-grade curriculum, each followed by a judgment of learning and then a multiple-choice test. The materials were based on the Meitzav (“School Growth and Efficiency Measures”) standardized tests developed by the National Authority for Measurement and Evaluation in Education (RAMA) in Israel, adjusted for the present study. The texts were in various genres (fiction, science, etc.), and their average length was 650 words.

In the intervention, the six texts were presented in six sessions at roughly one-week intervals. In each session, participants first read the text, then provided their judgment of learning. Finally, they were tested on their comprehension of the text they had read. The JOLs and test results from the first session (before the intervention proper) were used as a baseline (the pre-test), and the final set were used as the main measure of the intervention's effects (the post-test).

In the Meta_read group, in all sessions except the baseline (pre-test), four metacognitive self-questions following the IMPROVE model [46] were integrated into the texts: a comprehension question (“What is the text that I read about?”), a context question (“What topic that I am familiar with is related to this text?”), a strategy question (“What strategies will I use to help me understand the text that I read?”), and a reflection question (“I've finished reading. Did I understand the text? How can I improve my reading next time?”). In addition, the Meta_read group received a general introduction to the topic of metacognition before the intervention sessions began (see below).

3.1.3. Research tools

3.1.3.1. Judgment of learning - JOL

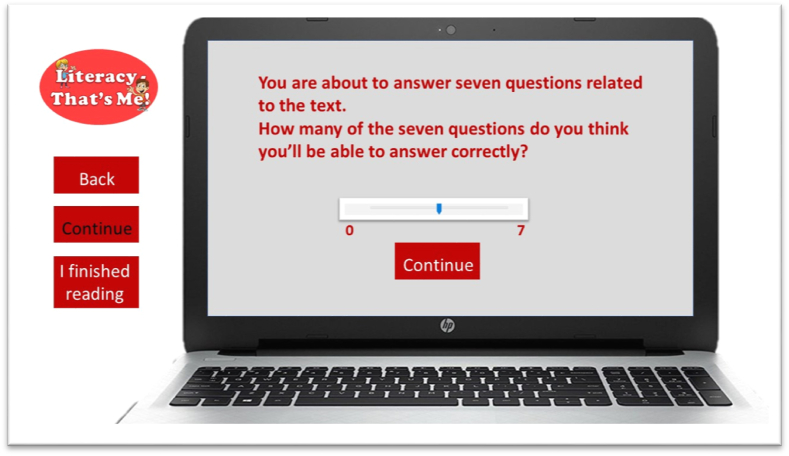

After reading each text, participants were asked to judge how well they had understood it. There are several possible ways of posing this type of question [23,46,47]. In the current study, participants were asked a performance-prediction question: “You are about to answer seven questions related to the text. How many of the seven questions do you think you'll be able to answer correctly?” The answer was given by sliding a cursor along a scale from 0 to 7.

After judging their comprehension, participants were given the choice to reread the text. This choice enabled us to examine whether the accuracy of participants' JOL influenced their choice to reread texts that were not well-understood, and whether re-reading improved their performance [48]. Afterwards, the courseware presented participants with a multiple-choice test that examined their comprehension of the text. The accuracy of students’ self-monitoring was calculated by comparing their JOLs with their test scores in two respects: resolution and calibration. In this study, six judgments were compared with outcomes on six tests.
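As a rough illustration of the calibration side of this comparison, the sketch below computes the mean absolute gap between predictions and scores on the 0–7 scale, plus a signed gap whose sign indicates overconfidence or underconfidence. The function names and the six data points are hypothetical and are not taken from the study's courseware or data set; the signed measure is added here only to show the direction of the disparity.

```python
def calibration(jols, scores):
    """Mean absolute gap between predicted and actual scores (0 means perfect)."""
    if len(jols) != len(scores) or not jols:
        raise ValueError("jols and scores must be non-empty and equally long")
    return sum(abs(j - s) for j, s in zip(jols, scores)) / len(jols)


def bias(jols, scores):
    """Mean signed gap: positive values indicate overconfidence,
    negative values indicate underconfidence."""
    if len(jols) != len(scores) or not jols:
        raise ValueError("jols and scores must be non-empty and equally long")
    return sum(j - s for j, s in zip(jols, scores)) / len(jols)


# Hypothetical learner: six predictions and six test scores, each out of 7.
example_jols = [6, 5, 6, 4, 5, 5]
example_scores = [3, 4, 5, 4, 6, 6]

print("calibration (absolute gap):", calibration(example_jols, example_scores))
print("bias (signed gap):", bias(example_jols, example_scores))
```

A smaller absolute gap means better calibration. The signed gap is reported separately because over- and under-predictions can cancel out when averaged.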

3.1.3.2. Test Performance

Performance was evaluated through seven-item multiple-choice tests tailored to each text. As noted above, the texts and their corresponding tests were based on Hebrew-language Meitzav exams developed and validated by RAMA to test reading literacy among fifth-graders [49]. An individual score for each test was calculated for each participant. Scores were on a scale of 0–7, with higher scores indicating higher performance.

A reliability check for internal consistency (Cronbach's alpha) performed for the full set of questions on all the tests (42 items) yielded α = .840. On the basis of this coefficient, a general index score was calculated for each participant by averaging their scores on all 42 items.
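For readers unfamiliar with the coefficient, the following is a minimal sketch of the standard Cronbach's alpha formula, alpha = k/(k − 1) · (1 − Σ item variances / variance of total scores). It is not the SPSS procedure used in the study. The function name and the tiny response matrix are hypothetical, and items are assumed to be scored 1 (correct) or 0 (incorrect).

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of participant rows.

    Each row holds one participant's scores on the same k items.
    """
    k = len(responses[0])
    if k < 2 or any(len(row) != k for row in responses):
        raise ValueError("every row needs the same number (>= 2) of items")
    # Variance of each item across participants.
    item_variances = [variance(column) for column in zip(*responses)]
    # Variance of participants' total scores.
    total_variance = variance([sum(row) for row in responses])
    if total_variance == 0:
        raise ValueError("alpha is undefined when total scores do not vary")
    return k / (k - 1) * (1 - sum(item_variances) / total_variance)


# Hypothetical data: four participants, three items (1 = correct, 0 = incorrect).
toy_responses = [
    [1, 1, 1],
    [0, 0, 0],
    [1, 1, 0],
    [0, 1, 0],
]
print("alpha:", round(cronbach_alpha(toy_responses), 3))
```

Sample variances are used for both the items and the totals. Using population variances throughout would give the same ratio and therefore the same alpha.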

Figs. 1–4 present a sample text in both conditions, the performance prediction question, and one test question.

Fig. 1.

Sample Reading Literacy Text—Control Group (Study 1)

Note. The example shows a version of the text used for the control group in Study 1. Students had the option of continuing to read, scrolling back and reading the text again, or moving on to the next stage. The sample is shorter than the study texts and was used for training.

Fig. 2.

Sample Reading Literacy Text—Experimental Group (Study 1)

Note. In the experimental (Meta_read) condition, the courseware introduces metacognitive questions.

Fig. 3.

Prediction of performance (study 1).

Fig. 4.

Sample Question Used in a Literacy Test (Study 1)

Note. After reading the text and then predicting their performance, students are asked to answer seven multiple-choice items testing their understanding of the text. Each item has one correct answer.

3.1.4. Procedure

Participants were split into small groups based on their condition (Control_read or Meta_read) and were taught how to use the courseware during a training session in their school's computer room. During the training session, participants in the Meta_read group also received a detailed explanation about metacognition, including what it is and how it can be used before, during, and after learning to improve achievement. The explanation was designed to be easy to understand but not oversimplified (for example, the difficult word “metacognition” was introduced and defined as “thinking about thinking”). At the end of the training session, participants practiced the use of metacognitive questions with a few sample texts.

The six intervention sessions took place in small groups divided by condition, in the school's computer room, at intervals of about a week. In each session, participants worked independently using the courseware. Participants worked at their own pace, with each session lasting up to about an hour. For participants in the Meta_read group, each text was accompanied by the metacognitive questions outlined above (except for the pre-test; see Section 3.1.2). Participants in that group were asked to answer these questions in writing, using a notebook that they had been given. Participants in the Control_read group were also given a notebook and invited to write down anything they considered helpful, but they were not given specific questions to answer.

As noted above, the texts were about 650 words long, and required scrolling through several screens. In both groups, as participants read the text, they had the option of continuing to read (using a button marked “Continue” to scroll forward) or scrolling back and reading the text again (using a button marked “Back”; see Fig. 1, Fig. 2). When participants were satisfied that they had read and understood the text, they pressed a button labeled “I finished reading.” At this stage, the text disappeared from the screen and was replaced by a performance-prediction question: “You are about to answer seven questions related to the text. How many of the seven questions do you think you'll be able to answer correctly?” The participant answered by sliding a cursor along a ruler labeled from 0 to 7 (see Fig. 3). Then the following question appeared: “Would you like to read the text again?” If the participant pressed “Yes,” the text reappeared, followed again by the performance-prediction screen; otherwise, the participant advanced to the testing stage. The test comprised seven items in multiple-choice format, where each question (with its possible answers) appeared on the screen one at a time. Participants were asked to select the correct answer, then clicked a button marked “Continue” to move on to the next item. Finally, after completing the last item, participants were given the option of reviewing and changing their answers if they desired (they were not able to scroll back to the text at this stage). All data, including students' initial and subsequent judgments, test answers, etc., were stored by the system. The analyses reported below employ participants' final JOLs and final test answers.

The procedure for each session is summarized in Table 1.

Table 1.

Procedure for each session in study 1 (both conditions).

| Stage of performance | Action taken | Instructions |
|---|---|---|
| Stage A | Activating the courseware | Participants activate the courseware by inputting a personal code. They are invited to write down in a notebook anything that may help them understand and remember what they have read. |
| Stage B | Reading a text | Participants read a brief text in one of various genres. In the Meta_read condition, metacognitive questions are integrated into the text. Participants in the Meta_read condition are asked to answer these questions by writing in their notebooks. |
| Stage C | Prediction of performance | Participants are told that they will be asked seven questions related to the text. They are asked to predict how many questions they will be able to answer correctly by sliding a cursor along a ruler anchored at 0 and 7. |
| Stage D | Option to re-read | Participants are asked if they would like to read the text again. Those who choose to re-read the text are then asked to provide a second prediction of performance. |
| Stage E | Testing | Participants answer seven questions by choosing one of four possible responses. |
| Stage F | Option to change responses | Participants are invited to review and change any of their responses. |

Finally, to ensure that participants' JOLs indeed reflected their perceived level of comprehension, a seventh session was run after the intervention with a text that was significantly harder than the others, and not suited to a fifth-grade reading level. This was meant to rule out the possibility that participants had predicted their performance randomly or uniformly across the various texts. Low performance predictions for the seventh test would indicate that participants’ JOLs indeed reflected the level of difficulty of the text.

3.2. Results

3.2.1. Test Performance

The quantitative data were analyzed to assess each research question, using IBM SPSS Statistics version 25 (IBM Corp, 2017). We first examined the results for the students’ performance in the six reading literacy tests. Table 2 presents the mean scores on each of the six tests for each research group.

Table 2.

Student performance in reading literacy by research group (study 1).

| Test | Meta_read M | Meta_read SD | Control_read M | Control_read SD |
|---|---|---|---|---|
| Test1 | 3.43 | 2.05 | 3.44 | 2.07 |
| Test2 | 3.59 | 1.36 | 2.94 | 1.69 |
| Test3 | 4.10 | 1.68 | 2.82 | 1.68 |
| Test4 | 4.16 | 1.36 | 3.66 | 1.59 |
| Test5 | 4.45 | 2.24 | 3.67 | 1.68 |
| Test6 | 4.84 | 1.86 | 3.10 | 2.44 |

To rule out the possibility of pre-existing differences between the research groups, we performed an independent-samples t-test on the test results for the pre-test (the baseline test, taken by both groups without metacognitive support). No difference was found between the pre-test scores of the Control_read and Meta_read groups, t(51.5) = 1.52, p = .133.

The averages presented in Table 2 and the simple-effects analysis reveal an upward trend, though not significant, in participants' performance from test to test within the Meta_read group. We then checked for differences between participants’ performance in the pre-test and post-test, both within each group and between the groups. For this purpose, we conducted a 2 (time: pre and post) × 2 (research group: Meta_read and Control_read) repeated-measures ANOVA. No main effect was found for time, F(1,59) = 1.430, p = .237, ηp² = .024. However, a significant interaction between time and group was found, F(1,59) = 4.931, p < .05, ηp² = .077. Fig. 5 presents this interaction effect graphically.

Fig. 5.

Study 1: Pre-test and post-test performance by research group.

The averages presented in Fig. 5 and the simple-effects analysis reveal a significant difference between the tests only in the Meta_read condition, with higher performance in the post-test, F(1,30) = 7.381, p < .05, ηp² = .197. In the Control_read group, there were no significant differences between the tests.
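The effect-size values that follow each F statistic in this section are consistent with partial eta squared, which can be recovered from F and its degrees of freedom as F·df_effect / (F·df_effect + df_error). The sketch below is a reader's check using the values reported above, not part of the authors' SPSS analysis, and the function name is hypothetical.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# (label, F, df_effect, df_error, reported effect size) from the performance analyses.
reported_effects = [
    ("main effect of time", 1.430, 1, 59, 0.024),
    ("time x group interaction", 4.931, 1, 59, 0.077),
    ("Meta_read simple effect", 7.381, 1, 30, 0.197),
]

for label, f_value, df_effect, df_error, reported in reported_effects:
    recovered = partial_eta_squared(f_value, df_effect, df_error)
    print(f"{label}: recovered {recovered:.3f}, reported {reported:.3f}")
```

All three recovered values round to the reported figures, which supports reading the unlabeled effect sizes as partial eta squared.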

Finally, to check for an effect of the intervention on all six tests together, an independent-samples t-test was performed, with the average of the six test scores for each participant as the dependent variable and the research group as the independent variable. The results show a significant difference between the research groups in average scores. The performance of the Meta_read group (M = 4.169, SD = 1.12) significantly surpassed that of the Control_read group (M = 3.26, SD = 1.34), t(63) = 2.967, p < .005.

3.2.2. Judgment of learning - JOL

We next examined the results for students' JOLs. First, however, we checked to ensure that the JOL data accurately reflected participants' perceptions. As noted above, at the end of the intervention participants were presented with a seventh, more difficult text not suited to their age. As expected, paired-sample t-tests reveal a significant difference between the six age-appropriate texts and the seventh text in students' average test performance, t(59) = 7.836, p < .001, such that students' performance on the intervention tests (M = 3.744, SD = 1.33) exceeded their performance on the seventh test (M = 2.55, SD = 1.443). In addition, a significant difference was found between the six age-appropriate texts and the seventh text in students' performance predictions, t(59) = 7.393, p < .001, with a higher average JOL for the six intervention texts (M = 5.497, SD = 1.264) compared with the seventh (M = 3.91, SD = 1.582). Thus, we can assume that the students’ JOLs reflect their actual perceptions.

We can now consider the JOLs, and in particular their relation to the students’ performance. Fig. 6 presents the JOL and performance data for the six tests by research group.

Fig. 6.

Study 1: JOL and performance by research group.

3.2.3. Calibration

One aspect of JOL accuracy is calibration, defined as the absolute difference between participants’ JOLs (provided before testing) and actual test performance. Table 3 presents the calibration results for the six tests, divided by research group.

Table 3.

Calibration in reading literacy by research group (study 1).

| Test | Meta_read M | Meta_read SD | Control_read M | Control_read SD |
|---|---|---|---|---|
| Test1 | 1.46 | .97 | 2.53 | 1.98 |
| Test2 | 2.10 | 1.56 | 3.36 | 1.83 |
| Test3 | 1.96 | 1.23 | 3.27 | 1.92 |
| Test4 | 1.93 | 1.51 | 2.81 | 1.82 |
| Test5 | 1.89 | 1.44 | 2.30 | 1.62 |
| Test6 | 1.75 | 1.57 | 2.54 | 2.30 |

To examine the relationship between calibration and reading literacy performance, we measured the correlation between the two. A significant negative relationship was found between the size of the performance–JOL gap and actual performance (r = −.72, p < .001), such that the smaller the disparity (i.e., the better the calibration) for a given student in a given test, the higher the student's score on that test.

As described above, our initial analyses ruled out the possibility of pre-existing differences between the research groups in performance. We likewise conducted an independent-samples t-test to confirm that the research groups did not differ in calibration in the pre-test. Indeed, the Control_read and Meta_read groups showed no difference in calibration in the pre-test, t(50.4) = 1.23, p = .226.

The results presented in Table 3 and the simple-effects analysis reveal a downward trend, though not significant, in participants' performance–JOL gaps from test to test within the Meta_read group. That is, the participants’ JOLs within the Meta_read group became more and more accurate.

To check for effects of the experimental condition on differences in calibration between the pre-test and post-test, a 2 (time: pre and post) × 2 (research group: Meta_read and Control_read) repeated-measures ANOVA was performed. The results showed a main effect for time, F(1,59) = 6.555, p < .05, ηp² = .100, and a significant interaction between time and group, F(1,59) = 4.974, p < .05, ηp² = .078. The averages and the simple-effects analyses reveal a significant difference between the tests in the Meta_read group, F(1,30) = 11.316, p < .005, ηp² = .274, such that calibration was better (i.e., the difference between students’ predictions and their performance was smaller) on the post-test (M = 1.290, SD = 1.346) compared with the pre-test (M = 2.258, SD = 1.210). In the Control_read group, no significant differences between the tests were found.

Finally, to check for an effect of the intervention on all six tests [...] than their low-achieving peers who did not receive such support. See Fig. 7.

Fig. 7.

Study 1: Performance–JOL gap among High and Low Achievers.

3.2.4. Resolution

Resolution denotes the extent to which participants successfully differentiate between higher and lower levels of performance. In the current study, resolution was calculated at the text level, and used to assess how well participants differentiated between texts they understood well and those they understood less well. A common way of measuring resolution is Goodman and Kruskal's gamma [50,51]. The gamma correlation ranges from −1 to 1, with values near 1 indicating ideal resolution. In the current study, gamma was calculated based on participants' final JOLs and their performance.
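To make the measure concrete, the sketch below computes Goodman and Kruskal's gamma for one participant from the standard definition, (concordant − discordant) / (concordant + discordant), counted over all pairs of texts and ignoring tied pairs. The function name and the sample values are hypothetical and do not come from the study's data.

```python
from itertools import combinations


def goodman_kruskal_gamma(jols, scores):
    """Gamma between one participant's JOLs and test scores across texts.

    Returns None when every pair of texts is tied on JOL or on score,
    because gamma is undefined in that case.
    """
    if len(jols) != len(scores):
        raise ValueError("jols and scores must be equally long")
    concordant = 0
    discordant = 0
    for (jol_a, score_a), (jol_b, score_b) in combinations(zip(jols, scores), 2):
        direction = (jol_a - jol_b) * (score_a - score_b)
        if direction > 0:
            concordant += 1  # higher JOL went with the higher score
        elif direction < 0:
            discordant += 1  # higher JOL went with the lower score
        # pairs tied on either variable are ignored
    if concordant + discordant == 0:
        return None
    return (concordant - discordant) / (concordant + discordant)


# Hypothetical participant: six JOLs and six test scores, each out of 7.
sample_jols = [6, 5, 6, 4, 5, 5]
sample_scores = [3, 4, 5, 4, 6, 6]
print("gamma:", goodman_kruskal_gamma(sample_jols, sample_scores))
```

Because tied pairs are dropped, a participant who gives the same prediction for every text has no defined gamma. This is one reason per-participant sample sizes in resolution analyses can be smaller than in calibration analyses.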

First, we again checked to confirm that participants' judgments were made rationally as opposed to randomly. To do this, we performed a one-sample t-test in which the average gamma was compared with 0. In this test, the average gamma was found to be significantly different from 0 (p < .001), indicating that participants’ judgments were reasonable. In this study, resolution was found to be weak in both research groups (Meta_read: M = .32, SD = .45; Control_read: M = .269, SD = .47), with no significant difference between them.

To check for a difference in resolution between low-achieving and high-achieving participants, a t-test was performed. It yielded a significant difference in gamma between the achievement groups (M = −.066, SD = .591, vs. M = .301, SD = .545, for the low and high achievers, respectively). These findings imply that the low achievers were less able than higher achievers to distinguish between the texts they understood well and those they understood less well—an unsurprising finding, and one that accords with the finding reported above, that low achievers suffered from greater overconfidence than high achievers (see Fig. 7). However, whereas we found that metacognitive support was able to improve the calibration of low achievers, no such finding emerged for resolution.

3.3. Discussion of findings from study 1

The results of Study 1 point to meaningful improvement in reading literacy among students who received support in the form of metacognitive questioning when learning in a digital environment. These findings reinforce and expand on previous findings examining metacognitive support [51,52]. In particular, we observed an upward trend (though not significant) in students’ performance from test to test; and a significant improvement from the pre-test to the post-test within the group that received metacognitive support.

More important, the study introduced JOL as a variable related to performance in a reading literacy program. Participants were asked to judge their learning by predicting their performance in a comprehension test. As in other studies [22], it was found here that calibration—i.e., an ability to accurately assess one's own learning—is a metacognitive process associated with more effective learning and better performance. We found that the better the calibration—i.e., the smaller the disparity between prediction and performance—the better the participant's performance. This was the case in both the experimental group and the control group, and among high achievers and low achievers alike.

Calibration is especially important in the context of learning in a digital environment, where inaccurate JOLs are typical [23]. Such inaccurate JOLs can reflect overconfidence, leading learners to make poor decisions (e.g., to stop studying before they really understand the material). Accordingly, this study introduced a metacognitive support program aimed at helping learners improve their monitoring accuracy. The findings suggest that a support program based on asking metacognitive questions does have a positive effect on calibration. In the six-session intervention program, it was found that metacognitive support induced improvements in calibration from one session to the next. The participants’ JOLs became increasingly accurate, such that the gap between their evaluations and their actual performance narrowed from test to test.

Importantly, the effect of metacognitive support on JOL accuracy differed among participants at different levels of achievement. Low-achieving participants who received support showed significantly stronger calibration than did low achievers who did not receive support. It follows that metacognitive support in digital learning is an immensely valuable strategy for low-achieving students in particular. This conclusion reinforces the findings of other studies that demonstrate the efficacy of metacognitive support in improving performance among underachieving students [[53], [54], [55]]. The findings also point to a potential ceiling effect for metacognitive support. Among the high achievers, high calibration was observed in both the group that received support and the group that did not, suggesting that high performers are already able to accurately assess their learning.

In contrast to the improvement in calibration, the current study found low resolution values in both research groups. Across the board, participants had trouble differentiating between texts that they had learned and understood well and those they had not learned well and required further study. This finding recurs in many studies on judgment of comprehension among young students [19,47]. The lengthy lapse of time between each session (a week or longer) may have degraded the participants’ resolution by making it hard for them to determine which texts they had learned well and which they had not.

Study 1 examined the effect of metacognitive support on reading literacy. To test the effect of metacognitive support from a broader perspective, in Study 2 we focused on learning in the context of mathematical literacy, using short texts that conveyed mathematical information. Mathematical literacy has characteristics that differ from those of reading literacy. Mathematical texts, for example, are generally shorter than reading literacy texts in terms of word count, but they demand comprehension of mathematical symbols and language. Accordingly, the purpose of Study 2 was to pinpoint the contribution of metacognitive support to accuracy of JOLs in the context of mathematical literacy in a digital environment.

4. Study 2

We examined the impact of metacognitive self-questioning on the accuracy of judgments of learning (JOL) during digital learning programs focused on mathematical literacy.

4.1. Method

The next paragraph includes details on the method we used in Study 2.

4.1.1. Participants

Seventy-two fifth-graders (age 10–11, 45.8 % girls) took part in this study. The children attended two schools that had not been involved in Study 1. As in Study 1, the schools were of similar socioeconomic status, and students who defined themselves as recent immigrants were omitted from the sample. The students’ parents provided consent for their children to participate in the study.

The students were divided randomly into two groups. Group 1, “Meta_math” (n = 33, 47.1 % girls), engaged in lessons on mathematical literacy and received metacognitive support in the form of questions integrated into the mathematical text. Group 2, “Control_math” (n = 39, 44.7 % girls), engaged in the same lessons on mathematical literacy without receiving support.

4.1.2. The intervention program

The intervention program in Study 2 comprised six texts (sometimes accompanied by images) that examined mathematical literacy, tailored to the fifth-grade curriculum [56]. The texts were around 100 words long on average. Each text involved a situation from daily life that posed a mathematical problem; the participants were asked to consider the problem and complete a seven-item multiple-choice test by applying mathematical procedures. The texts included mathematical language and symbols based on PISA assessment tools (from the OECD's Programme for International Student Assessment) and adjusted for the age of the participants.

Fig. 8, Fig. 9, Fig. 10 present a sample text in both conditions and one test question.

Fig. 8.

Sample Mathematical Literacy Text—Control Group (Study 2)

Note. The example shows a version of the text used for the control group in Study 2. Students had the option of continuing to read, scrolling back and reading the text again, or moving on to the next stage. The sample is shorter than the study texts and was used for training.

Fig. 9.

Sample Mathematical Literacy Text—Experimental Group (Study 2)

Note. In the experimental (Meta_math) condition, the courseware introduces metacognitive questions.

Fig. 10.

Sample Question Used in a Mathematical Literacy Test (Study 2)

Note. After reading the text and then predicting their performance, students are asked to answer seven multiple-choice items testing their understanding of the text. Each item has one correct answer.

As in Study 1, in the Meta_math condition, four metacognitive self-questions following the IMPROVE model [46] were integrated into the texts. Again, the first set of JOLs and test results were used as a baseline (pre-test), with no metacognitive support for either group, and the final set were used as a post-test.

4.1.3. Research tools

4.1.3.1. Judgment of learning

Predictions of performance were collected as in Study 1; see Section 3.1.3.

4.1.3.2. Test Performance

Mathematical literacy performance was evaluated through seven-item multiple-choice tests developed for the purposes of the study. As described above, the texts and tests were based on Hebrew-language Meitzav exams, and were thoroughly vetted for clarity and coherence by a group of experts in teaching mathematics [56]. An individual score for each test was calculated for each participant. Scores were on a 0–7 scale, with higher scores meaning higher performance.

A reliability check for internal consistency (Cronbach's alpha) across the full set of questions on all the tests (42 items) yielded α = .804. On the basis of this coefficient, a general index score on the comprehension test was calculated for each participant by averaging their scores on all 42 items.
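As an illustration of the internal-consistency check described above, the following minimal sketch computes Cronbach's alpha from a participants-by-items score matrix. The function name `cronbach_alpha` and the toy data are hypothetical and are not taken from the study; the study's own coefficient was computed over 42 items.

```python
from statistics import variance

def cronbach_alpha(item_scores):
    """Cronbach's alpha for a list of rows (one row per participant,
    one column per test item, e.g. 1 = correct, 0 = incorrect)."""
    k = len(item_scores[0])  # number of items
    # Sample variance of each item across participants
    item_vars = [variance([row[j] for row in item_scores]) for j in range(k)]
    # Sample variance of each participant's total score
    total_var = variance([sum(row) for row in item_scores])
    return (k / (k - 1)) * (1 - sum(item_vars) / total_var)

# Hypothetical toy data: 4 participants x 3 items (not study data)
toy_scores = [
    [1, 1, 1],
    [1, 1, 0],
    [0, 1, 0],
    [0, 0, 0],
]
alpha = cronbach_alpha(toy_scores)
print(f"alpha = {alpha:.3f}")
```

Alpha rises as items covary more strongly relative to their individual variances, which is why a value near .80 is usually read as acceptable consistency for a pooled item set.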

4.1.4. Procedure

The stages of this study were identical to those in Study 1 (see Section 3.1.4). As in that study, all students were given notebooks in which they were invited to write down any information they felt might be helpful, including mathematical data. Thus, the tests assessed students’ comprehension and not their memory for details.

4.2. Results

4.2.1. Test Performance

As in Study 1, we first examined participants’ test performance. The average scores in the mathematical literacy tests for each research group are shown in Table 4.

Table 4.

Student performance in mathematical literacy by research group (study 2).

| | Meta_math M | Meta_math SD | Control_math M | Control_math SD |
|---|---|---|---|---|
| Test1 | 3.09 | 1.75 | 2.97 | 1.59 |
| Test2 | 4.00 | 1.88 | 3.32 | 1.54 |
| Test3 | 3.94 | 1.51 | 3.11 | 1.65 |
| Test4 | 4.03 | 1.51 | 3.42 | 1.55 |
| Test5 | 4.56 | 1.77 | 3.33 | 1.53 |
| Test6 | 5.27 | 1.70 | 3.47 | 1.90 |

To rule out the possibility of pre-existing differences between the research groups, an independent-samples t-test was performed on the results for the pre-test (taken for both groups without metacognitive support). Indeed, no difference in pre-test scores was found between the Control_math and Meta_math groups, t(68) = .29, p = .769.

The averages presented in Table 4 and the simple-effects analysis reveal an upward trend, though not significant, in participants’ performance from test to test within the Meta_math group. To check for differences between the pre-test and post-test within each research group and between the groups, we carried out a 2 (points in time: pre and post) × 2 (research groups: Meta_math and Control_math) repeated-measures ANOVA. In this analysis, a main effect was found for time, F(1,67) = 28.228, p < .001, ηp² = .296. A significant interaction effect of time and group was also found, F(1,67) = 11.102, p < .005, ηp² = .142. Fig. 11 presents this interaction effect graphically.

Fig. 11.

Study 2: Pre-test and post-test performance by research group.

The averages presented in Fig. 11 and the simple-effects analysis reveal a significant difference between the tests only in the Meta_math group, with higher performance in the post-test, F(1,32) = 40.245, p < .001, ηp² = .557. In the Control_math group, no significant differences between the tests were found.
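The effect-size values printed after each F statistic in this section are numerically consistent with partial eta squared, which can be recovered from F and its degrees of freedom. The sketch below shows that arithmetic; the helper name `partial_eta_squared` is an assumption introduced for illustration, and the inputs are the F values reported above.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F statistic:
    (F * df_effect) / (F * df_effect + df_error)."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)

# F values and degrees of freedom as reported in the text
reported = [
    ("time main effect", 28.228, 1, 67),
    ("time x group interaction", 11.102, 1, 67),
    ("Meta_math pre vs. post", 40.245, 1, 32),
]
effect_sizes = {
    label: partial_eta_squared(f, df1, df2) for label, f, df1, df2 in reported
}
for label, value in effect_sizes.items():
    print(f"{label}: {value:.3f}")
```

Rounded to three decimals, these reproduce the reported values of .296, .142, and .557.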

Finally, to check for an effect of the intervention on all six tests, an independent-samples t-test was performed, with the average of the six test scores for each participant as the dependent variable and the research group as the independent variable. The results show a significant difference between the research groups in average scores, t(70) = 3.413, p < .005, with the performance of the Meta_math group (M = 4.136, SD = 1.053) significantly higher than that of the Control_math group (M = 3.284, SD = 1.063).
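For readers who want to check the comparison above from the summary statistics alone, here is a minimal sketch of an independent-samples t statistic computed from means, standard deviations, and group sizes. It assumes a pooled-variance (Student's) test and the group sizes given in Section 4.1.1 (n = 33 and n = 39); the function name `pooled_t` is hypothetical.

```python
from math import sqrt

def pooled_t(m1, sd1, n1, m2, sd2, n2):
    """Student's t for two independent groups from summary statistics,
    assuming equal variances (pooled estimate)."""
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    standard_error = sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (m1 - m2) / standard_error, df

# Average test scores: Meta_math vs. Control_math (values from the text)
t_value, df = pooled_t(4.136, 1.053, 33, 3.284, 1.063, 39)
print(f"t({df}) = {t_value:.2f}")
```

Because the inputs are rounded to three decimals, the result only approximates the reported t(70) = 3.413; a small discrepancy is expected.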

4.2.2. Judgment of learning

Fig. 12 presents participants’ JOLs and performance for each of the six tests by research group.

Fig. 12.

Study 2: JOL and performance by research group.

4.2.3. Calibration

As in Study 1, we first examined calibration, defined as the absolute difference between participants’ JOL and actual performance. Table 5 presents the calibration results for the six tests, divided by research group.

Table 5.

Calibration in mathematical literacy by research group (study 2).

| | Meta_math M | Meta_math SD | Control_math M | Control_math SD |
|---|---|---|---|---|
| Test1 | 2.75 | 1.90 | 3.02 | 1.62 |
| Test2 | 1.84 | 1.52 | 2.78 | 1.70 |
| Test3 | 2.27 | 1.44 | 3.16 | 1.64 |
| Test4 | 2.28 | 1.78 | 2.36 | 1.58 |
| Test5 | 1.82 | 1.31 | 2.86 | 1.70 |
| Test6 | 1.51 | 1.75 | 2.97 | 2.07 |

We next measured the correlation between calibration and mathematical literacy performance. A significant negative relationship was found between the size of the performance–JOL gap and actual performance (r = −.718, p < .001), such that the smaller the disparity (i.e., the better the calibration) for a given student in a given test, the better the student's performance in that test.
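The calibration measure and its correlation with performance can be sketched as follows. This is an illustration only: it assumes JOLs are expressed as a predicted number of correct items on the same 0–7 scale as the test score, and the data and function names (`calibration_gap`, `pearson_r`) are hypothetical rather than taken from the study.

```python
from math import sqrt
from statistics import mean

def calibration_gap(jol, score):
    """Absolute difference between predicted and actual score
    (smaller gap = better calibration)."""
    return abs(jol - score)

def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length lists."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)

# Hypothetical predictions and scores for five students on one test
jols = [7, 6, 6, 5, 4]
scores = [2, 3, 5, 5, 4]

gaps = [calibration_gap(j, s) for j, s in zip(jols, scores)]
r = pearson_r(gaps, scores)
print(gaps, round(r, 3))
```

A negative r, as in the reported result, means that students with smaller prediction gaps tended to score higher.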

Again, to ensure there was no variance in calibration between the research groups in the pre-test, an independent-samples t-test was performed. Indeed, the Control_math and Meta_math groups showed no difference in calibration in the pre-test, t(68) = .639, p = .525.

The results presented in Table 5 and the simple-effects analysis reveal a downward trend, though not significant, in participants' performance–JOL gaps from test to test within the Meta_math group. That is, the participants’ JOLs within the Meta_math group became more and more accurate.

To check for a difference in calibration between the pre-test and the post-test within each research group, a 2 (points in time: pre and post) × 2 (research groups: Meta_math and Control_math) repeated-measures ANOVA was performed. This analysis found a significant difference in calibration over time, F(1,67) = 4.908, p < .05, ηp² = .068, and a significant interaction between time and group, F(1,67) = 4.104, p < .05, ηp² = .058. The averages and the simple-effects analyses reveal a significant difference between the tests in the Meta_math group, F(1,32) = 8.067, p < .01, ηp² = .201, such that calibration was better on the post-test (M = 1.515, SD = 1.752) than on the pre-test (M = 2.757, SD = 1.904). In the Control_math group, no significant differences between the tests were found.

Finally, to check for an effect of the intervention on all six tests, an independent-samples t-test was performed, with the average of the six absolute differences for each participant as the dependent variable and the research group (Meta_math or Control_math) as the independent variable. The results show a significant difference between the research groups in average calibration, t(70) = 3.359, p = .001, such that the disparities were significantly lower (i.e., calibration was better) in the Meta_math group (M = 2.098, SD = .859) compared to the Control_math group (M = 2.851, SD = 1.029).

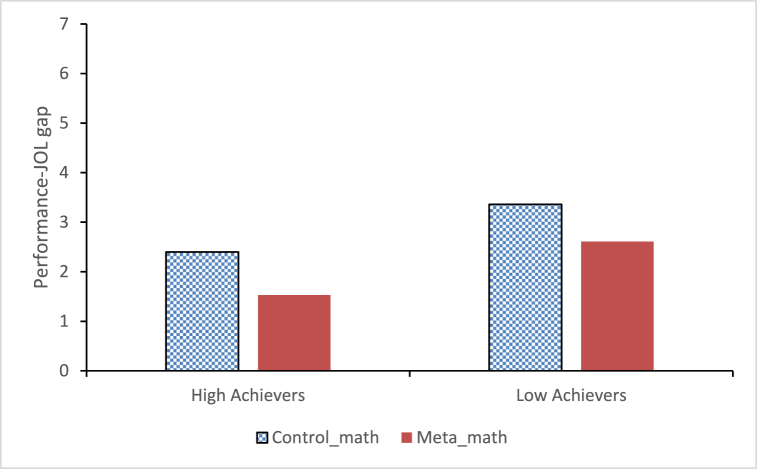

To examine the effect of metacognitive support on calibration among participants with different achievement levels, the participants were divided into two groups based on the median of their average scores on the pre-test. The high achievers (i.e., participants who scored higher than the median; n = 36) scored, on average, 4.470 (SD = .925) in the pre-test, while the low achievers (those scoring at or below the median; n = 36) scored, on average, 2.903 (SD = .702). To test for differences in the effect of support between the high and low achievers, independent-samples t-tests were performed within each achievement group. Interestingly, these results show significant differences in calibration between those who received metacognitive support and those who did not in both achievement groups. Among the high achievers, participants who received support predicted their performance more accurately than those not receiving support (M = 1.53, SD = .73; M = 2.40, SD = .78, for the Meta_math and Control_math groups, respectively), t(34) = 3.42, p = .002. The same was true for the low achievers (M = 2.61, SD = .54; M = 3.36, SD = 1.05, for the Meta_math and Control_math groups, respectively), t(34) = 2.63, p = .013. These findings are shown in Fig. 13.

Fig. 13.

Study 2: Performance–JOL gap among High and Low Achievers.
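The median split used to form the achievement groups can be sketched as below. The rule follows the description in the text (strictly above the median = high achiever; at or below = low achiever); the participant identifiers, scores, and the function name `median_split` are hypothetical.

```python
from statistics import median

def median_split(pretest_scores):
    """Split participants into high and low achievers around the median.
    Scores strictly above the median count as high; the rest as low."""
    cutoff = median(pretest_scores.values())
    high = [pid for pid, s in pretest_scores.items() if s > cutoff]
    low = [pid for pid, s in pretest_scores.items() if s <= cutoff]
    return cutoff, high, low

# Hypothetical pre-test scores keyed by participant ID
toy_pretest = {"p1": 2, "p2": 3, "p3": 3, "p4": 5, "p5": 6}
cutoff, high, low = median_split(toy_pretest)
print(cutoff, high, low)
```

Note that assigning ties at the median to the low group can make the two groups unequal in size when many participants share the median score.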

4.2.4. Resolution

As in Study 1, a one-sample t-test compared the mean gamma against 0. The average gamma was found to be significantly different from 0 (p < .007), indicating that participants’ JOLs were rational. In this study, weak correlations were found in both research groups (Meta_math: M = .235, SD = .601; Control_math: M = .167, SD = .523), with no significant difference between the groups.
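Resolution is computed per participant as a within-person correlation between JOLs and scores across the six texts. The sketch below assumes the gamma referred to here is the Goodman–Kruskal gamma commonly used for this purpose; the function name and the sample data are hypothetical.

```python
def goodman_kruskal_gamma(jols, scores):
    """Goodman-Kruskal gamma: (C - D) / (C + D), where C and D count
    concordant and discordant pairs; tied pairs are ignored."""
    concordant = discordant = 0
    n = len(jols)
    for i in range(n):
        for j in range(i + 1, n):
            product = (jols[i] - jols[j]) * (scores[i] - scores[j])
            if product > 0:
                concordant += 1
            elif product < 0:
                discordant += 1
    if concordant + discordant == 0:
        return None  # undefined when every pair is tied
    return (concordant - discordant) / (concordant + discordant)

# Hypothetical JOLs and scores for one participant across six texts
toy_jols = [5, 6, 4, 7, 5, 6]
toy_scores = [3, 5, 4, 6, 2, 4]
gamma = goodman_kruskal_gamma(toy_jols, toy_scores)
print(round(gamma, 3))
```

A gamma near 1 means the participant gave higher JOLs to the texts on which they later scored higher; values near 0, like the group means reported above, indicate little discrimination between better- and worse-understood texts.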

4.3. Discussion of findings from study 2

The results of Study 2 show significant improvement in mathematical literacy among students who received support in the form of metacognitive questioning when learning in a digital environment. As in Study 1, the six-session intervention program showed that metacognitive support induced improvement in mathematical literacy from session to session. Again, there was an upward trend (though not significant) in participants’ performance from one test to the next within the group that received metacognitive support, and a significant improvement in that group from the pre-test to the post-test.

Our findings in Study 2 reinforce the findings of Study 1 and previous research [22] that calibration is a metacognitive process associated with effective learning and strong performance. Again, we found that better calibration—i.e., a smaller disparity between prediction and performance—is linked with better performance.

Importantly, the findings of this study show again that a support program can be effective in enhancing calibration. Over the course of the six-session intervention, metacognitive support led to an improvement in calibration from one session to the next, as participants’ JOLs became increasingly reflective of their performance.

In contrast to the improvement in calibration, Study 2 yielded low values of resolution among both research groups. The participants found it hard to differentiate between mathematical situations that they understood and managed well, and those learned poorly that required further study. This finding appears in many studies on comprehension judgments among young students [19,47]. As in Study 1, the lapse of time between sessions (a week or more) may have degraded resolution by making it hard for the participants to determine which mathematical concepts they had grasped well and which they had not.

5. General discussion

The present research examined the effects of metacognitive self-questioning on the accuracy of judgments of learning (JOL) and literacy outcomes in a digital learning environment, focusing on both reading and mathematical literacy among young learners.

Across both studies, the results consistently demonstrated that metacognitive support through self-questioning led to significant improvements in literacy outcomes. In Study 1, students receiving metacognitive support showed meaningful gains in reading literacy, with a notable improvement from pre-test to post-test. Similarly, in Study 2, students exhibited significant progress in mathematical literacy, again with a marked enhancement from pre-test to post-test. These findings align with previous research, underscoring the effectiveness of metacognitive interventions in fostering academic performance in digital environments.

A key focus of both studies was the role of metacognitive support in improving JOL accuracy. The findings from both studies confirmed that better calibration—reflected in a smaller disparity between predicted and actual performance—was associated with improved academic outcomes. Importantly, the metacognitive questioning intervention effectively enhanced calibration, as evidenced by increasingly accurate JOLs over the course of the intervention sessions. This improvement was particularly pronounced among low-achieving students, suggesting that metacognitive support is a valuable strategy for enhancing self-regulation and learning accuracy in this group.

Despite the positive effects on calibration, both studies reported low resolution values, indicating that participants struggled to differentiate between well-understood material and content that required further study. This difficulty, observed across both reading and mathematical literacy contexts, may be attributed to the time lapse between sessions, which could have impacted the students' ability to accurately assess their comprehension. This finding is consistent with prior research and highlights a potential limitation in the implementation of metacognitive interventions, particularly in digital learning environments [[53], [54], [55]].

To sum up, the combined results of Study 1 and Study 2 provide compelling evidence for the effectiveness of metacognitive support in enhancing both reading and mathematical literacy among young learners [36,37,47]. The studies also emphasize the importance of calibration as a metacognitive process that contributes to better learning outcomes. While the interventions successfully improved JOL accuracy, the challenge of low resolution suggests the need for further refinement of metacognitive support strategies, particularly in contexts where extended time lapses between learning sessions may occur. Overall, these findings contribute to the growing body of literature advocating for the integration of metacognitive strategies into digital education, especially for supporting underachieving students.

6. Limitations and directions for future research

This study had several limitations, which also provide scope for future investigation. First, Study 1 materials included texts in different genres. Our research framework did not allow us to determine whether the effect of metacognitive support on JOL accuracy and performance differed based on the genre of the text. In view of studies examining adult readers that found an association between text genre and JOL accuracy [1,57], the role of genre in the context of JOL accuracy among young readers should be tested in future research.

Second, the intervention took place over six sessions carried out at intervals of about a week, and sometimes more. This interval was chosen in order to test the efficacy of the intervention program over time. This may have made it hard for participants to differentiate between texts that were learned well and those that were not, thus creating difficulty in resolution compared with studies that calculated resolution on the basis of reading different texts in one sitting [58]. It is worth investigating in future research whether resolution will improve with a more compact timeframe for the intervention, and how such a change might affect other measures of learning (including calibration and performance).

Finally, the text-comprehension tests included multiple-choice questions but not open-ended ones. In reference to studies that noted the drawbacks of tests that lack open-ended questions [59], courseware in future research should examine the use of metacognitive questioning with open-ended test items.

These caveats notwithstanding, the present findings offer important insights for educational systems. Reading and learning in digital environments have become common for learners of all ages. We show that a well-designed intervention program can help to improve metacognitive learning processes, comprehension, and performance in reading and mathematical literacy.

CRediT authorship contribution statement

Tova Michalsky: Writing – original draft. Hila Bakrish: Data curation.

Data availability statement

The data is not available for this study due to ethical issues.

Declaration of competing interest

The authors declare the following financial interests/personal relationships which may be considered as potential competing interests: The Author is a section editor in Education for this journal. If there are other authors, they declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

References

- 1.Delgado P., Vargas C., Ackerman R., Salmerón L. Don't throw away your printed books: a meta-analysis on the effects of reading media on reading comprehension. Educ. Res. Rev. 2018;25:23–38. doi: 10.1016/J.EDUREV.2018.09.003.

- 2.OECD. PISA 2021 mathematics framework (first draft). 2018. https://www.upc.smm.lt/naujienos/smm/penkiolikmeciu-matematinis-rastingumas/GB-2018-4-PISA-2021-Mathematics-Framework-First-Draft.pdf

- 3.UIL (UNESCO Institute for Lifelong Learning) Addressing Global Citizenship Education in Adult Learning Ens Education. 2019. APCEIU, & understanding, (UNESCO asia-pacific centre of education for international.

- 4.Murphy P., Long J., Holleran T., Esterly E. Persuasion online or on paper: a new take on an old issue. Learn. Instr. 2003;13(5):511–532. doi: 10.1016/S0959-4752(02)00041-5.

- 5.Winer D. Israel country report on ICT in education. 2018. http://meyda.education.gov.il/files/Owl/Hebrew/education-plans.pdf

- 6.Ross B., Pechenkina E., Aeschliman C., Chase A.M. Print versus digital texts: understanding the experimental research and challenging the dichotomies. Res. Learn. Technol. 2017;25.

- 7.Dennis A.R., Abaci S., Morrone A.S., Plaskoff J., McNamara K.O. Effects of e-textbook instructor annotations on learner performance. J. Comput. High Educ. 2016;28(2):221–235. doi: 10.1007/s12528-016-9109-x.

- 8.Daniel D.B., Woody W.D. E-textbooks at what cost? Performance and use of electronic v. print texts. Comput. Educ. 2013;62:18–23. doi: 10.1016/J.COMPEDU.2012.10.016.

- 9.Kong Y., Seo Y.S., Zhai L. Comparison of reading performance on screen and on paper: a meta-analysis. Comput. Educ. 2018;123:138–149. doi: 10.1016/J.COMPEDU.2018.05.005.

- 10.Mangen A., Walgermo B.R., Brønnick K. Reading linear texts on paper versus computer screen: effects on reading comprehension. Int. J. Educ. Res. 2013;58:61–68. doi: 10.1016/j.ijer.2012.12.002.

- 11.Singer L.M., Alexander P.A. Reading across mediums: effects of reading digital and print texts on comprehension and calibration. J. Exp. Educ. 2017;85(1):155–172. doi: 10.1080/00220973.2016.1143794.

- 12.Sperling R.A., Howard B.C., Miller L.A., Murphy C. Measures of children's knowledge and regulation of cognition. Contemp. Educ. Psychol. 2002;27(1):51–79. doi: 10.1006/ceps.2001.1091.

- 13.Benedetto S., Drai-Zerbib V., Pedrotti M., Tissier G., Baccino T. E-readers and visual fatigue. PLoS One. 2013;8(12). doi: 10.1371/journal.pone.0083676.

- 14.Chou I.-C. Reading for the purpose of responding to literature: EFL students' perceptions of e-books. Comput. Assist. Lang. Learn. 2016;29(1):1–20. doi: 10.1080/09588221.2014.881388.

- 15.Jeong H. A comparison of the influence of electronic books and paper books on reading comprehension, eye fatigue, and perception. Electron. Libr. 2012;30(3):390–408. doi: 10.1108/02640471211241663.

- 16.Sidi Y., Shpigelman M., Zalmanov H., Ackerman R. Understanding metacognitive inferiority on screen by exposing cues for depth of processing. Learn. Instr. 2017. doi: 10.1016/j.learninstruc.2017.01.002.

- 17.Nelson T., Narens L. Metamemory: a theoretical framework and new findings. Psychol. Learn. Motiv. 1990;26:125–173. http://www.imbs.uci.edu/~lnarens/1990/Nelson&Narens_Book_Chapter_1990.pdf

- 18.Dunlosky J., Rawson K.A. Overconfidence produces underachievement: inaccurate self evaluations undermine students' learning and retention. Learn. Instr. 2012;22(4):271–280. doi: 10.1016/j.learninstruc.2011.08.003.

- 19.Pieger E., Mengelkamp C., Bannert M. Metacognitive judgments and disfluency - does disfluency lead to more accurate judgments, better control, and better performance? Learn. Instr. 2016;44:31–40. doi: 10.1016/j.learninstruc.2016.01.012.

- 20.Butler D.L., Winne P.H. Feedback and self-regulated learning: a theoretical synthesis. Rev. Educ. Res. 1995;65(3):245–281. doi: 10.3102/00346543065003245.

- 21.Dunlosky J., Thiede K.W. What makes people study more? An evaluation of factors that affect self-paced study. Acta Psychol. 1998;98(1):37–56. doi: 10.1016/S0001-6918(97)00051-6.

- 22.Bjork R.A., Dunlosky J., Kornell N. Self-regulated learning: beliefs, techniques, and illusions. Annu. Rev. Psychol. 2013;64(1):417–444. doi: 10.1146/annurev-psych-113011-143823.

- 23.Ackerman R., Goldsmith M. Metacognitive regulation of text learning: on screen versus on paper. J. Exp. Psychol. Appl. 2011;17(1):18–32. doi: 10.1037/a0022086.

- 24.Ariel R., Karpicke J.D., Witherby A.E., Tauber S.K. Do judgments of learning directly enhance learning of educational materials? Educ. Psychol. Rev. 2020:1–20. doi: 10.1007/s10648-020-09556-8.

- 25.Rawson K.A., Dunlosky J., Thiede K.W. The rereading effect: metacomprehension accuracy improves across reading trials. Mem. Cognit. 2000;28(6):1004–1010. doi: 10.3758/BF03209348.

- 26.Thiede K.W., Anderson M.C.M., Therriault D. Accuracy of metacognitive monitoring affects learning of texts. J. Educ. Psychol. 2003;95(1):66–73. doi: 10.1037/0022-0663.95.1.66.

- 27.Dahan Golan D., Barzillai M., Katzir T. The effect of presentation mode on children's reading preferences, performance, and self-evaluations. Comput. Educ. 2018;126:346–358. doi: 10.1016/J.COMPEDU.2018.08.001.

- 28.Efklides A. Metacognition and affect: what can metacognitive experiences tell us about the learning process? Educ. Res. Rev. 2006;1(1):3–14. doi: 10.1016/j.edurev.2005.11.001.

- 29.Lauterman T., Ackerman R. Overcoming screen inferiority in learning and calibration. Comput. Hum. Behav. 2014;35:455–463. doi: 10.1016/J.CHB.2014.02.046.

- 30.Porat E., Blau I., Barak A. Measuring digital literacies: junior high-school students' perceived competencies versus actual performance. Comput. Educ. 2018;126:23–36.

- 31.Sidi Y., Ophir Y., Ackerman R. Generalizing screen inferiority - does the medium, screen versus paper, affect performance even with brief tasks? Metacognition and Learning. 2016;11(1):15–33. doi: 10.1007/s11409-015-9150-6.

- 32.Van Loon M.H., de Bruin A.B.H., van Gog T., van Merriënboer J.J.G. Activation of inaccurate prior knowledge affects primary-school students' metacognitive judgments and calibration. Learn. Instr. 2013;24(1):15–25. doi: 10.1016/j.learninstruc.2012.08.005.

- 33.Baars M., van Gog T., de Bruin A., Paas F. Effects of problem solving after worked example study on primary school children's monitoring accuracy. Appl. Cognit. Psychol. 2014;28(3):382–391. doi: 10.1002/acp.3008.

- 34.Kim Y.H. Early childhood educators' meta-cognitive knowledge of problem-solving strategies and quality of childcare curriculum implementation. Educ. Psychol. 2016;36(4):658–674. doi: 10.1080/01443410.2014.934661.

- 35.Mihalca L., Mengelkamp C., Schnotz W. Accuracy of metacognitive judgments as a moderator of learner control effectiveness in problem-solving tasks. Metacognition and Learning. 2017:1–23. doi: 10.1007/s11409-017-9173-2.

- 36.Carretti B., Caldarola N., Tencati C., Cornoldi C. Improving reading comprehension in reading and listening settings: the effect of two training programmes focusing on metacognition and working memory. Br. J. Educ. Psychol. 2014;84(2):194–210. doi: 10.1111/bjep.12022.

- 37.Muijselaar M.M.L., Swart N.M., Steenbeek-Planting E.G., Droop M., Verhoeven L., de Jong P.F. Developmental relations between reading comprehension and reading strategies. Sci. Stud. Read. 2017;21(3):194–209. doi: 10.1080/10888438.2017.1278763.

- 38.Pellas N. The influence of computer self-efficacy, metacognitive self-regulation and self-esteem on student engagement in online learning programs: evidence from the virtual world of Second Life. Comput. Hum. Behav. 2014;35:157–170. doi: 10.1016/j.chb.2014.02.048.

- 39.Winne P.H., Hadwin A.F. Springer; New York, NY: 2013. nStudy: Tracing and Supporting Self-Regulated Learning in the Internet; pp. 293–308.

- 40.Guo L. Modeling the relationship of metacognitive knowledge, L1 reading ability, L2 language proficiency and L2 reading. Read. Foreign Lang. 2018;30(2):209–231. http://nflrc.hawaii.edu/rfl

- 41.Zhang D., Zhang L.J. Springer; Cham: 2019. Metacognition and Self-Regulated Learning (SRL) in Second/Foreign Language Teaching; pp. 883–897. [DOI] [Google Scholar]

- 42.McKeown M.G., Beck I.L., Blake R.G.K. Rethinking reading comprehension instruction: a comparison of instruction for strategies and content approaches. Read. Res. Q. 2009;44(3):218–253. doi: 10.1598/RRQ.44.3.1. [DOI] [Google Scholar]

- 43.McMaster K.L., den Broek P., van A., Espin C., White M.J., Rapp D.N., Kendeou P., Bohn-Gettler C.M., Carlson S. Making the right connections: differential effects of reading intervention for subgroups of comprehenders. Learn. Indiv Differ. 2012;22(1):100–111. doi: 10.1016/j.lindif.2011.11.017. [DOI] [Google Scholar]

- 44.Michalsky T., Mevarech Z.R., Haibi L. Elementary school children reading science texts: effects of metacognitive instruction. J. Educ. Res. 2009;102(5):363–374. [Google Scholar]

- 45.Central Bureau of Statistics. 2019. Characterization and Classification of Geographical Units by the Socio-Economic Level of the Population 2015. www.cbs.gov.il.

- 46.Mevarech Z., Kramarski B. IMPROVE: a multidimensional method for teaching mathematics in heterogeneous classrooms. Am. Educ. Res. J. 1997;34(2):365–394. doi: 10.3102/00028312034002365.

- 47.Pilegard C., Mayer R.E. Adding judgments of understanding to the metacognitive toolbox. Learn. Individ. Differ. 2015;41:62–72. doi: 10.1016/j.lindif.2015.07.002.

- 48.Thiede K.W., Anderson M.C. Summarizing can improve metacomprehension accuracy. Contemp. Educ. Psychol. 2003;28(2):129–160. doi: 10.1016/S0361-476X(02)00011-5.

- 49.RAMA - the National Authority for Measurement & Evaluation in Education. Medida Besherut Ha’lemida [Assessment for Learning]. 2009.

- 50.Nelson T.O. A comparison of current measures of the accuracy of feeling-of-knowing predictions. Psychol. Bull. 1984;95(1):109–133. doi: 10.1037/0033-2909.95.1.109.

- 51.Michalsky T., Schechter C. Preservice teachers' self-regulated learning: integrating learning from problems and learning from successes. Teach. Teach. Educ. 2013;30:60–73.

- 52.Valencia-Vallejo N., López-Vargas O., Sanabria-Rodríguez L. Effect of a metacognitive scaffolding on self-efficacy, metacognition, and achievement in E-learning environments. Knowledge Management & E-Learning. 2019;11(1):1–19.

- 53.Raes A., Schellens T., De Wever B., Vanderhoven E. Scaffolding information problem solving in web-based collaborative inquiry learning. Comput. Educ. 2012;59(1):82–94. doi: 10.1016/j.compedu.2011.11.010.

- 54.Zohar A., Ben David A. Explicit teaching of meta-strategic knowledge in authentic classroom situations. Metacognition and Learning. 2008;3(1):59–82. doi: 10.1007/s11409-007-9019-4.

- 55.Zohar A., Peled B. The effects of explicit teaching of metastrategic knowledge on low- and high-achieving students. Learn. Instr. 2008;18(4):337–353. doi: 10.1016/j.learninstruc.2007.07.001.

- 56.RAMA - the National Authority for Measurement & Evaluation in Education. 2016. Meitzav 2006 [School Growth and Efficiency Measures 2006].

- 57.Golke S., Hagen R., Wittwer J. Lost in narrative? The effect of informative narratives on text comprehension and metacomprehension accuracy. Learn. Instr. 2019;60:1–19. doi: 10.1016/j.learninstruc.2018.11.003.

- 58.Dunlosky J., Lipko A.R. Metacomprehension. Curr. Dir. Psychol. Sci. 2007;16(4):228–232. doi: 10.1111/j.1467-8721.2007.00509.x.

- 59.Ozuru Y., Briner S., Kurby C.A., McNamara D.S. Comparing comprehension measured by multiple-choice and open-ended questions. Can. J. Exp. Psychol. 2013;67(3):215–227. doi: 10.1037/a0032918.

Data Availability Statement

The data for this study are not available because of ethical issues.