Abstract

The geometric Brownian motion (GBM) is widely used for modeling stochastic processes, particularly in finance. However, its solutions are constrained by the assumption that the underlying distribution of returns follows a log-normal distribution. This assumption limits the predictive power of GBM, especially in capturing the complexities of real-world data, where deviations from log-normality are common. In this work, we introduce entropy corrections to the GBM framework to relax the log-normality constraint and better account for the inherent structures in real data. We demonstrate that as the deterministic components within the data increase, entropy decreases, leading to refinements in GBM’s predictive accuracy. Our approach shows significant improvements over conventional GBM in handling distributions that deviate from log-normal behavior, as demonstrated through both a simple dice roll experiment and real-world financial data. Beyond just financial modeling, this research also opens up new avenues for generating synthetic data that better captures real-world complexity, enhancing applications in fields like machine learning and statistical modeling.

Subject terms: Information theory and computation, Information technology

Introduction

The geometric Brownian motion (GBM) has long served as a foundational model for capturing the stochastic nature of systems characterized by continuous random fluctuations. In particular, this model has been argued to be well suited for forecasting diffusion processes1, population dynamics2 or, most notably, stock prices3. However, central to GBM is the assumption that the logarithm of its solutions follows a normal distribution, a premise that, while convenient for analytical purposes, may not fully capture the complexities inherent in realistic systems4. For instance, real data distributions often exhibit non-zero skewness, excess kurtosis or fluctuating volatility, notably deviating from the idealized bell curve5. These deviations have significant implications for the accuracy of traditional GBM, challenging its ability to portray extreme events or to interpret the underlying dynamics of the data under consideration.

Recognizing these limitations motivates the exploration of stochastic modeling beyond the normality constraints of the conventional GBM. In other words, there is a need to consider stochastic processes whose solutions do not necessarily follow a log-normal distribution. While alternative models, such as stochastic volatility6 and jump-diffusion processes7, have been proposed, they mostly call for the modification or replacement of GBM. Hence, the advantages of this framework, in terms of its simplicity, efficiency and continuity, are partly or entirely lost. As an alternative, the present study proposes a non-invasive approach that employs entropy constraints to improve the predictive capabilities of GBM without sacrificing its beneficial features. The idea originates from Shannon's information theory, where entropy plays a pivotal role and quantifies the minimum average number of bits per symbol needed to encode a message8. Accordingly, a well-ordered message is expected to have a lower entropy than a more random one. As a result, entropy provides a means to judge how well GBM predicts future events, by measuring the level of uncertainty of the forecast data with respect to the original time series. This premise is further supported by the ability of entropy measures to capture extreme events9.

To illustrate the above reasoning, let us consider a thought experiment based on a dice roll. We begin with the well-known fact that every face of a fair die is equally probable, resulting in a uniform distribution. According to Shannon, the information content of such a process can be quantified by the entropy8:

$$H = -\sum_{i=1}^{N} p_i \log_2 p_i \tag{1}$$

where N is the number of intervals (classes) in the probability distribution (PD) and $p_i$ denotes the corresponding probability. For a fair dice roll, the Shannon entropy takes its maximum value of 2.585 bits, as $p_i = 1/6$ for all six outcomes and hence $H = \log_2 6 \approx 2.585$. However, for a biased die, where one particular number is rolled with probability 1/2 and each of the remaining five outcomes with probability 1/10, the scenario is more deterministic and $H = \tfrac{1}{2}\log_2 2 + 5 \cdot \tfrac{1}{10}\log_2 10 \approx 2.161$. Importantly, further increasing the probability of rolling that particular number continues to decrease the entropy. Hence, an increase in the deterministic content of a probability distribution corresponds to a reduction in entropy.
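The dice example can be checked numerically with a few lines of Python. The sketch below simply evaluates Eq. (1) in bits; the third, "more biased" die is a hypothetical extra case added only to show the continued decrease of entropy.

```python
import math

def shannon_entropy(probs):
    """Shannon entropy in bits, H = -sum p_i log2 p_i (Eq. 1); zero-probability classes add nothing."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

fair = [1 / 6] * 6                  # fair die: uniform distribution
biased = [1 / 2] + [1 / 10] * 5     # one face with probability 1/2, the others 1/10 each
more_biased = [0.9] + [0.02] * 5    # hypothetical, even more deterministic die

for name, p in [("fair", fair), ("biased", biased), ("more biased", more_biased)]:
    print(f"{name:12s} H = {shannon_entropy(p):.3f} bits")
```

This prints approximately 2.585, 2.161 and 0.701 bits, respectively, so the entropy falls as probability mass concentrates on one outcome.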

Extending this principle to evolving time series suggests that the normality assumption (i.e. the assumption that solutions to a stochastic process follow a log-normal distribution) may be only a rough approximation, particularly over short- to mid-term time frames. Consequently, GBM is expected to be less effective for forecasting in such cases. The link between an increased level of determinism and a decreased entropy of the system supports this viewpoint. In line with this rationale, it is worth exploring the entropy change that results from appending a trajectory forecast by GBM to the original time series. In particular, an analysis of such entropy shifts should allow for the identification of trajectories that amplify the dominant probabilities within the original time series, thereby reducing its entropy. Here, we introduce the corresponding methodology and provide its detailed validation.

Methodology

To begin with, it is instructive to briefly recall the GBM approach. This stochastic process extends conventional Brownian motion by incorporating drift and volatility terms, and is defined by the following stochastic differential equation:

$$dS(t) = \mu S(t)\,dt + \sigma S(t)\,dW(t) \tag{2}$$

where S(t) is the series value at time t, $\mu$ and $\sigma$ are the drift and volatility coefficients, respectively, and dW(t) denotes the increment of the Wiener process W(t) (Brownian motion). Applying Itô's calculus to Eq. (2) yields the closed-form solution for S(t), which is log-normally distributed10–12:

$$S(t) = S(0)\exp\left[\left(\mu - \frac{\sigma^2}{2}\right)t + \sigma W(t)\right] \tag{3}$$
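For completeness, the step from Eq. (2) to Eq. (3) is the standard application of Itô's lemma to $\ln S(t)$, using the Itô rule $(dW)^2 = dt$, so that $(dS)^2 = \sigma^2 S^2\,dt$:

$$
\begin{aligned}
d\ln S(t) &= \frac{dS(t)}{S(t)} - \frac{1}{2}\frac{\left(dS(t)\right)^2}{S(t)^2}
           = \mu\,dt + \sigma\,dW(t) - \frac{\sigma^2}{2}\,dt,\\
\ln S(t) &= \ln S(0) + \left(\mu - \frac{\sigma^2}{2}\right)t + \sigma W(t), \qquad W(0) = 0 .
\end{aligned}
$$

Since $W(t) \sim \mathcal{N}(0, t)$, $\ln S(t)$ is normally distributed with mean $\ln S(0) + (\mu - \sigma^2/2)\,t$ and variance $\sigma^2 t$; this is the log-normality property of GBM discussed above.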

GBM is a memoryless (Markov) process, meaning that its future evolution depends only on its present state and not on past states. Owing to the stochasticity imparted by the Wiener process, GBM can generate numerous forecast trajectories from the $\mu$ and $\sigma$ of the corresponding probability distribution. These solutions can then be used to compute the expectation value of the future movement.
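A minimal sketch of how such GBM forecasts might be generated, assuming that $\mu$ and $\sigma$ are estimated from the log-returns of the historical series (a common choice; the text does not fix the estimator). The function names `estimate_gbm_params` and `simulate_gbm` are hypothetical, and unit time steps are assumed.

```python
import numpy as np

def estimate_gbm_params(series, dt=1.0):
    """Estimate drift mu and volatility sigma from log-returns (one possible estimator)."""
    log_returns = np.diff(np.log(series))
    sigma = log_returns.std(ddof=1) / np.sqrt(dt)
    mu = log_returns.mean() / dt + 0.5 * sigma**2   # undo the -sigma^2/2 term of Eq. (3)
    return mu, sigma

def simulate_gbm(s0, mu, sigma, n_steps, n_paths, dt=1.0, rng=None):
    """Sample paths from the exact solution, Eq. (3), on a uniform time grid."""
    rng = np.random.default_rng() if rng is None else rng
    dW = rng.normal(0.0, np.sqrt(dt), size=(n_paths, n_steps))   # Wiener increments
    W = np.cumsum(dW, axis=1)                                      # W(t) at t = dt, 2dt, ...
    t = dt * np.arange(1, n_steps + 1)
    return s0 * np.exp((mu - 0.5 * sigma**2) * t + sigma * W)

rng = np.random.default_rng(0)
history = simulate_gbm(100.0, 0.001, 0.02, n_steps=200, n_paths=1, rng=rng)[0]   # toy history
mu_hat, sigma_hat = estimate_gbm_params(history)
paths = simulate_gbm(history[-1], mu_hat, sigma_hat, n_steps=20, n_paths=1000, rng=rng)
expected_path = paths.mean(axis=0)   # Monte Carlo estimate of E[S(t)] at each future step
```

Sampling the closed-form solution rather than discretizing Eq. (2) avoids time-stepping error; for GBM the Monte Carlo mean should approach $S(0)e^{\mu t}$.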

Here, the above approach is modified according to the presented concept of entropy constraints. This modification requires that the entropy calculated for a given distribution is compared with that of a reference state: the higher the entropy with respect to the reference state, the higher the randomness. Conversely, the entropy decreases as the level of determinism within the probability distribution increases. In this context, the main aim of the proposed modification is to search for series predictions that lead to such a decrease in entropy. This ensures that, conditioned on a given probability distribution, its information content is exploited to select forecasts of the time series that decrease the entropy of the evolved distribution.

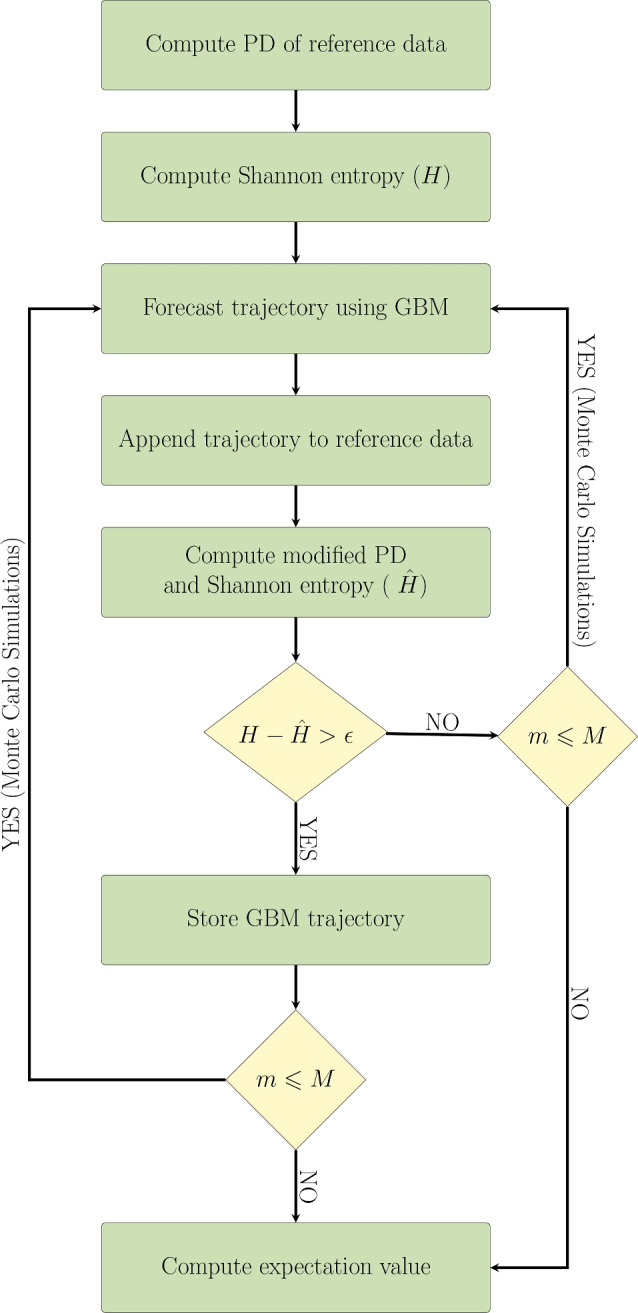

The detailed flowchart of the proposed entropy corrected geometric Brownian motion (EC-GBM) approach is shown in Fig. 1. In summary, GBM is first employed to generate trajectories based on the mean and standard deviation of the reference PD. Next, these trajectories are appended to this distribution one at a time, and the entropy of each new distribution is computed. This entropy is then compared with the entropy of the reference distribution. If the entropy decrease exceeds a predetermined threshold, the corresponding trajectory is accepted as a forecast trajectory. The threshold can be configured as a percentage of the maximum entropy decrease observed throughout the simulation. This process is iterated in a Monte Carlo fashion, yielding a set of forecast trajectories aligned with the characteristics of the underlying distribution.
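The acceptance step can be sketched as follows. This is an illustration under stated assumptions, not the authors' code: entropies are computed from histograms with a fixed number of bins over one shared set of bin edges, each trajectory's values are appended to the reference sample, and the threshold is a fraction (`threshold_frac`, a hypothetical name) of the largest observed decrease. The way the GBM volatility is derived from the reference mean and standard deviation is likewise an assumption.

```python
import numpy as np

def hist_entropy(values, edges):
    """Shannon entropy (bits) of the histogram of `values` on fixed bin edges."""
    counts = np.histogram(values, bins=edges)[0]
    p = counts[counts > 0] / counts.sum()
    return float(-np.sum(p * np.log2(p)))

def ec_gbm_select(reference, trajectories, threshold_frac=0.75, n_bins=20):
    """Accept trajectories whose appending lowers the reference entropy by at least
    threshold_frac x (largest decrease observed). Names and defaults are hypothetical."""
    # One shared partition for every entropy evaluation (an assumption about binning).
    edges = np.histogram_bin_edges(np.concatenate([reference, trajectories.ravel()]), bins=n_bins)
    h_ref = hist_entropy(reference, edges)
    decrease = np.array([h_ref - hist_entropy(np.concatenate([reference, tr]), edges)
                         for tr in trajectories])
    if decrease.max() <= 0:                       # no trajectory lowers the entropy
        return trajectories[:0], decrease
    return trajectories[decrease >= threshold_frac * decrease.max()], decrease

# Usage: normally distributed (not log-normal) reference data and M GBM trajectories
# built from its mean and standard deviation (illustrative parameter choices).
rng = np.random.default_rng(1)
reference = rng.normal(100.0, 5.0, size=500)
M, steps = 2000, 10
sigma = reference.std(ddof=1) / reference.mean()   # relative volatility per step (assumption)
t = np.arange(1, steps + 1)
W = np.cumsum(rng.normal(size=(M, steps)), axis=1)
trajs = reference.mean() * np.exp(-0.5 * sigma**2 * t + sigma * W)   # zero-drift GBM, Eq. (3)
accepted, dH = ec_gbm_select(reference, trajs)
```

Using one set of bin edges for all evaluations keeps the entropies comparable; recomputing edges per trajectory would change the partition and mix binning effects into the entropy difference.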

Fig. 1.

Flowchart representing the steps involved in the EC-GBM approach for time series forecasting. The Monte Carlo sub-procedure is marked and is meant to run M times in total, with each iteration denoted by m. The EC-GBM solutions are refined with respect to the entropy threshold value.

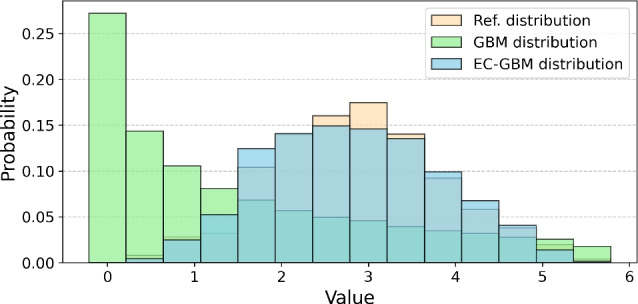

To briefly illustrate the advantages of EC-GBM over conventional GBM, consider a scenario where the data follow a normal distribution instead of a log-normal one. In such circumstances, GBM would yield trajectories that diverge significantly from the reference distribution, since it assumes that the data adhere to a log-normal distribution and guides its trajectory forecasts accordingly. However, as depicted in Fig. 2, even when the underlying distribution deviates from log-normality, the EC-GBM method filters the trajectories in alignment with the reference distribution. This illustrates the robustness of EC-GBM relative to GBM when the log-normality assumption does not hold.

Fig. 2.

The reconstructed probability distributions resulting from the GBM (green) and EC-GBM (blue) approaches when the underlying reference distribution is normal (orange).

Biased dice roll

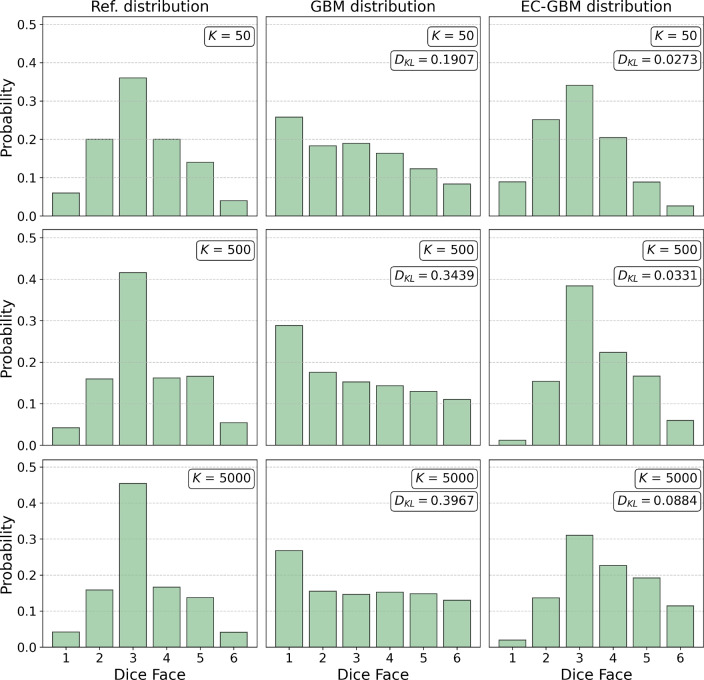

To validate the potential and accuracy of EC-GBM, we next analyze this approach quantitatively. This allows for a systematic statistical discussion of EC-GBM, as well as an initial identification of the regimes where the proposed model works best. To do so, let us again consider a biased die, but this time with unequal probabilities for all of its faces. This bias becomes particularly apparent when examining the reference distributions depicted in Fig. 3 for an increasing number of rolls, K. By treating the biased dice rolls as a time series, our objective is to discern the inherent distribution and generate future rolls that align with it. In this context, it is crucial to note that the resulting distribution does not follow a normal distribution, even for a large number of rolls: the bias introduces skewness towards the favored outcomes, deviating from the typical bell curve of the normal distribution.
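The following sketch shows how a biased dice roll might be treated as a time series and how its reference PD and skewness evolve with K. The face probabilities in `FACE_PROBS` and the K values are hypothetical, chosen only for illustration; the values behind Fig. 3 are not given in the text.

```python
import numpy as np

# Hypothetical face probabilities (all unequal); not the values used for Fig. 3.
FACE_PROBS = np.array([0.05, 0.10, 0.15, 0.20, 0.22, 0.28])

def roll_biased_die(n_rolls, rng):
    """Successive rolls of a biased die, read as a time series of face values 1..6."""
    return rng.choice(np.arange(1, 7), size=n_rolls, p=FACE_PROBS)

def empirical_pd(rolls):
    """Reference PD: relative frequency of each face among the given rolls."""
    counts = np.bincount(rolls, minlength=7)[1:]
    return counts / counts.sum()

def skewness(x):
    """Sample skewness m3 / m2**1.5; zero for a symmetric (e.g. normal) distribution."""
    d = x - x.mean()
    return np.mean(d**3) / np.mean(d**2) ** 1.5

rng = np.random.default_rng(7)
series = roll_biased_die(10_000, rng)
for K in (100, 1_000, 10_000):   # illustrative numbers of rolls
    print(K, np.round(empirical_pd(series[:K]), 3), round(float(skewness(series[:K].astype(float))), 3))
```

With these probabilities the population skewness is about -0.52, so the reference PD stays visibly asymmetric however large K becomes.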

Fig. 3.

The probability distributions of a biased die for varying numbers of rolls, as generated through the GBM and EC-GBM approaches. This process involves considering the reference probability distribution of up to K rolls. Its mean and standard deviation are used to forecast the next 10, 50 and 100 dice rolls for the three considered values of K, respectively, using the GBM approach. Subsequently, the trajectories that decrease the entropy upon being appended to the reference distribution constitute the EC-GBM distribution.

The future trajectories are obtained by employing both the GBM and EC-GBM techniques, and the resulting distributions are compared in Fig. 3. The inadequacy of the traditional GBM approach when confronted with a skewed reference distribution is evident there. EC-GBM again emerges as the more robust method, exploiting the information content of the time series. This allows it to predict outcomes that align more accurately with the underlying probability distribution, even for fewer rolls. Overall, these results demonstrate the limitations of GBM caused by its inherent log-normality assumption.

As a measure of the difference between the reference distribution and the distributions generated by the GBM and EC-GBM methods, the Kullback–Leibler divergence ($D_{\mathrm{KL}}$) is calculated and shown in Fig. 3. This measure is commonly used in information theory and statistics to quantify the difference between two probability distributions13:

$$D_{\mathrm{KL}}(P \,\|\, Q) = \sum_{i} P(i) \log \frac{P(i)}{Q(i)} \tag{4}$$

where P and Q stand for the reference and approximated distributions, respectively. In other words, $D_{\mathrm{KL}}$ measures the information lost when Q is used to approximate P.
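Eq. (4) translates directly into a few lines of NumPy. In this sketch the natural logarithm is used (result in nats), and flooring Q at a small `eps` is an assumption made to keep the value finite when a generated histogram has empty bins where P does not; the example distributions are hypothetical.

```python
import numpy as np

def kl_divergence(p, q, eps=1e-12):
    """Eq. (4): D_KL(P || Q) = sum_i P(i) log(P(i) / Q(i)), in nats.

    Bins with P(i) = 0 contribute nothing. Where Q(i) = 0 < P(i) the true value is
    infinite; flooring Q at `eps` (an assumption) keeps the result finite but large.
    """
    p = np.asarray(p, dtype=float)
    q = np.asarray(q, dtype=float)
    p, q = p / p.sum(), q / q.sum()          # normalize counts to probabilities
    mask = p > 0
    return float(np.sum(p[mask] * np.log(p[mask] / np.maximum(q[mask], eps))))

reference = np.array([0.05, 0.10, 0.15, 0.20, 0.22, 0.28])   # hypothetical biased-die PD
uniform = np.full(6, 1 / 6)                                    # "fair die" approximation
close = np.array([0.06, 0.10, 0.14, 0.20, 0.22, 0.28])        # a closer approximation
print(kl_divergence(reference, uniform), kl_divergence(reference, close))
```

Note the argument order: $D_{\mathrm{KL}}$ is not symmetric, and here P is the reference distribution, matching Eq. (4).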

As depicted in Fig. 3, $D_{\mathrm{KL}}$ between the reference distribution and the distribution generated by the EC-GBM approach is substantially lower than that obtained with the traditional GBM approach. This is because the bias increases the level of determinism within the probability distribution; consequently, as discussed above, appending a trajectory that enhances the probability peaks of the reference distribution decreases its entropy. Therefore, the GBM trajectories that contribute to a decrease in entropy essentially represent paths that reinforce the bias within the distribution.

Hyperparameter selection and performance optimization

A critical aspect of many advanced models, including machine learning techniques, is the selection of hyperparameters, which can significantly influence model performance. In machine learning algorithms, parameters such as the learning rate or the regularization factor are tuned through cross-validation to achieve optimal results. Similarly, in the EC-GBM model, the entropy threshold hyperparameter, which controls the degree of entropy correction, plays a pivotal role in determining the accuracy of the modeled distributions.

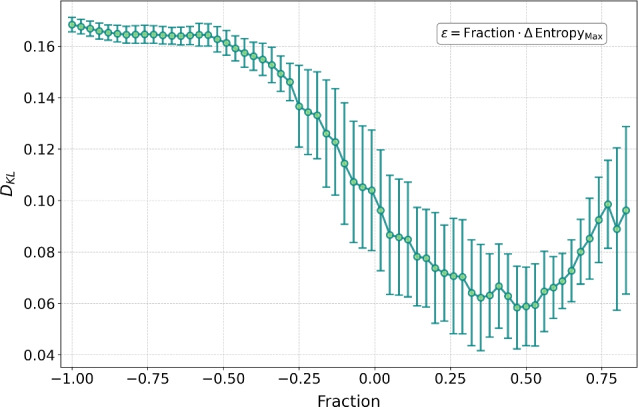

To select the entropy threshold, we conducted a systematic analysis by computing the KL divergence ($D_{\mathrm{KL}}$) as a function of the threshold, parameterized as a fraction of the maximum entropy decrease. The resulting U-shaped curve in Fig. 4 shows that $D_{\mathrm{KL}}$ reaches a minimum at an intermediate threshold value, which indicates an optimal choice for this hyperparameter. This optimal threshold minimizes the divergence between the EC-GBM-generated distributions and the target distributions, so that the model best reflects the system it seeks to represent. Thus, the threshold can be selected by a principled procedure akin to hyperparameter tuning in machine learning: by evaluating $D_{\mathrm{KL}}$ or alternative metrics, users can choose the threshold that maximizes model performance, which also makes the results reproducible.
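A threshold sweep of this kind might look as follows. It is a self-contained sketch under the same assumptions as a histogram-based entropy criterion (fixed shared bins, threshold as a fraction of the largest entropy decrease); the toy reference data, volatility and grid of fractions are illustrative, and no claim is made that this toy reproduces the U-shape of Fig. 4.

```python
import numpy as np

def entropy_bits(counts):
    """Shannon entropy (bits) of a histogram given as bin counts."""
    p = counts[counts > 0] / counts.sum()
    return float(-np.sum(p * np.log2(p)))

def kl_counts(p_counts, q_counts, eps=1e-12):
    """D_KL(P || Q) between two histograms on the same bins; Q floored at eps (assumption)."""
    p = p_counts / p_counts.sum()
    q = q_counts / max(q_counts.sum(), 1)
    m = p > 0
    return float(np.sum(p[m] * np.log(p[m] / np.maximum(q[m], eps))))

def sweep_threshold(reference, trajectories, fractions, n_bins=20):
    """Return D_KL(reference || accepted trajectories) for each threshold fraction."""
    edges = np.histogram_bin_edges(np.concatenate([reference, trajectories.ravel()]), bins=n_bins)
    ref_counts = np.histogram(reference, bins=edges)[0]
    h_ref = entropy_bits(ref_counts)
    # With fixed edges, the histogram of reference + trajectory is the sum of both histograms.
    decrease = np.array([h_ref - entropy_bits(ref_counts + np.histogram(tr, bins=edges)[0])
                         for tr in trajectories])
    scores = []
    for f in fractions:
        keep = decrease >= f * decrease.max()
        gen_counts = np.histogram(trajectories[keep].ravel(), bins=edges)[0]
        scores.append(kl_counts(ref_counts, gen_counts))
    return np.array(scores)

rng = np.random.default_rng(3)
reference = rng.normal(100.0, 5.0, size=400)   # non-log-normal toy reference data
t = np.arange(1, 11)                            # 10-step GBM trajectories
sigma = 0.05                                    # illustrative volatility
W = np.cumsum(rng.normal(size=(1000, 10)), axis=1)
trajs = reference.mean() * np.exp(-0.5 * sigma**2 * t + sigma * W)
fractions = np.linspace(0.0, 0.95, 20)
scores = sweep_threshold(reference, trajs, fractions)
best_fraction = fractions[np.argmin(scores)]    # threshold with the lowest D_KL
```

In practice the sweep would be repeated over several Monte Carlo instances, which is what produces error bars such as those in Fig. 4.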

Fig. 4.

KL divergence ($D_{\mathrm{KL}}$) as a function of the entropy threshold hyperparameter, expressed as a fraction of the maximum entropy decrease. The U-shaped curve indicates that $D_{\mathrm{KL}}$ is minimized at an optimal threshold, where the EC-GBM distributions align best with the target distribution. Error bars indicate variability across different instances of the EC-GBM process.

Results

The above discussion is supplemented by an analysis of the proposed methodology on real-world empirical examples, to further substantiate the effectiveness of EC-GBM. Since our approach focuses on optimizing the use of information embedded in historical time series, it is well suited for refining forecasting models and strategies that aim to predict future data behavior. Predictive analytics in financial markets therefore constitutes a convenient test case. In particular, we present two complementary examples of time series forecasting: (i) two simulated time series, one characterized by an upward trend and the other by a downward trend, and (ii) the time series of the gold-to-US dollar exchange rate over the period from March 9 to March 17, 2023. This choice of data is motivated by gold's status as a significant asset in financial markets, often serving as a hedge against currency fluctuations and economic uncertainty. This time frame was chosen to capture short-term market behaviors and trends, which are particularly relevant in volatile trading environments. Our approach emphasizes the adaptability of the EC-GBM model in utilizing historical data to enhance predictive analytics, thus demonstrating its effectiveness in real-world financial scenarios.

The simulated time series for the artificial upward and downward trends are represented, respectively, by the following equations:

$$y(t) = y(0) + m\,t + \varepsilon(t) \tag{5}$$

$$y(t) = y(0) - m\,t + \varepsilon(t) \tag{6}$$

where  ,

,  are time series values at time t and 0 units, m is the slope and

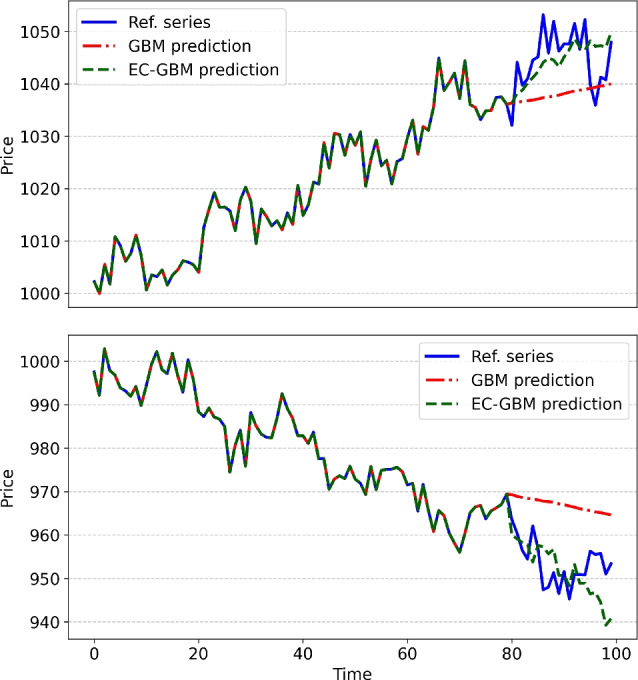

are time series values at time t and 0 units, m is the slope and  denotes a random error obtained from a normal distribution with mean zero and variance one. The computation of such time series is carried out for 100 steps with the objective of leveraging the initial 80 steps as an input to our methodology, subsequently employing both the GBM and EC-GBM approaches to forecast the next 20 steps. The deviations observed in the last 20 steps arise from the fact that these values are predictions generated by the models, which are compared to the true data, highlighting the effectiveness of the forecasting methodologies. This approach allows us to evaluate the predictive capabilities of our proposed methodology in comparison to the traditional GBM method. To derive the ultimate forecast trajectory, we calculate the expectation value for both the GBM and EC-GBM approaches at each time step. In the EC-GBM approach, expectations are computed based on trajectories that exhibit a reduction in entropy of at least 75

denotes a random error obtained from a normal distribution with mean zero and variance one. The computation of such time series is carried out for 100 steps with the objective of leveraging the initial 80 steps as an input to our methodology, subsequently employing both the GBM and EC-GBM approaches to forecast the next 20 steps. The deviations observed in the last 20 steps arise from the fact that these values are predictions generated by the models, which are compared to the true data, highlighting the effectiveness of the forecasting methodologies. This approach allows us to evaluate the predictive capabilities of our proposed methodology in comparison to the traditional GBM method. To derive the ultimate forecast trajectory, we calculate the expectation value for both the GBM and EC-GBM approaches at each time step. In the EC-GBM approach, expectations are computed based on trajectories that exhibit a reduction in entropy of at least 75 compared to the maximum entropy reduction observed across all GBM trajectories. Figure 5 visually demonstrates that while both GBM and EC-GBM accurately predict the overall trend, the forecast generated by the EC-GBM offers more valuable insights. Specifically, the EC-GBM solutions not only align with the temporal movement of the time series but also provide nuanced information regarding the magnitude of observed shifts, a feature that harmonizes more closely with the actual trajectory of time series.

compared to the maximum entropy reduction observed across all GBM trajectories. Figure 5 visually demonstrates that while both GBM and EC-GBM accurately predict the overall trend, the forecast generated by the EC-GBM offers more valuable insights. Specifically, the EC-GBM solutions not only align with the temporal movement of the time series but also provide nuanced information regarding the magnitude of observed shifts, a feature that harmonizes more closely with the actual trajectory of time series.

Fig. 5.

The forecast trajectories of simulated data, as obtained within the GBM (dashed red line) and EC-GBM (dashed green line) approach. The data for the upward (top panel) and downward (bottom panel) trend is considered. For reference purposes the historical data of simulated time series is depicted (blue solid line).
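The simulated-trend experiment can be sketched end to end as follows. This is a hedged illustration: it assumes the additive form $y(t) = y(0) \pm m\,t + \varepsilon(t)$ suggested by the variable definitions, estimates the GBM parameters from log-returns, and uses a histogram-based entropy filter with a 75% threshold; the helper names, bin count and number of paths are hypothetical choices, and no claim is made that this toy reproduces Fig. 5.

```python
import numpy as np

def trend_series(y0, m, n_steps, upward, rng):
    """Assumed reading of Eqs. (5)-(6): y(t) = y(0) +/- m t + eps(t), eps ~ N(0, 1)."""
    t = np.arange(n_steps)
    return y0 + (m if upward else -m) * t + rng.normal(0.0, 1.0, size=n_steps)

def hist_entropy(values, edges):
    """Shannon entropy (bits) of the histogram of `values` on fixed bin edges."""
    counts = np.histogram(values, bins=edges)[0]
    p = counts[counts > 0] / counts.sum()
    return float(-np.sum(p * np.log2(p)))

def forecasts(history, horizon, rng, n_paths=5000, frac=0.75, n_bins=20):
    """Expected GBM path and entropy-filtered (EC-GBM-style) expected path."""
    log_r = np.diff(np.log(history))
    drift, vol = log_r.mean(), log_r.std(ddof=1)   # drift estimates mu - sigma^2/2 per step
    W = np.cumsum(rng.normal(size=(n_paths, horizon)), axis=1)
    paths = history[-1] * np.exp(drift * np.arange(1, horizon + 1) + vol * W)
    edges = np.histogram_bin_edges(np.concatenate([history, paths.ravel()]), bins=n_bins)
    h_ref = hist_entropy(history, edges)
    dH = np.array([h_ref - hist_entropy(np.concatenate([history, p]), edges) for p in paths])
    if dH.max() > 0:
        keep = dH >= frac * dH.max()               # at least 75% of the largest decrease
    else:
        keep = np.ones(n_paths, dtype=bool)        # fallback (assumption): keep every path
    return paths.mean(axis=0), paths[keep].mean(axis=0)

rng = np.random.default_rng(42)
y = trend_series(100.0, 0.5, 100, upward=True, rng=rng)   # illustrative y(0) and slope
train, test = y[:80], y[80:]                               # 80 input steps, 20 forecast steps
gbm_fc, ec_fc = forecasts(train, horizon=20, rng=rng)
rmse = lambda f: float(np.sqrt(np.mean((f - test) ** 2)))
print(f"GBM RMSE: {rmse(gbm_fc):.3f}  EC-GBM-style RMSE: {rmse(ec_fc):.3f}")
```

Setting `upward=False` gives the downward-trend case; y(0) and m should keep the series positive, since GBM operates on logarithms.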

It is crucial to note that the simulated time series under consideration incorporate both an underlying trend and seasonality, providing ample information for informed forecasting of future trajectories. In contrast, real-world time series often involve a multitude of additional factors influencing their dynamics, which calls for a more comprehensive approach to account for these complexities. Nevertheless, incorporating information criteria into forecasting methodologies holds promise for enhancing the precision of predictions in the presence of diverse influencing factors. In this context, we also apply our forecasting methodology to real-world financial market data, as shown in Fig. 6. To simulate real-world conditions, we show the evolution of the forecast trajectories from both methodologies at various time steps, where each forecast uses the most recent 4 days of data as the underlying distribution to forecast the subsequent 32 h, with each time step representing a 4-h interval. Both the GBM and EC-GBM approaches predict the directional trend of the market movement, similarly to the case of the simulated time series. On closer inspection of the EC-GBM results, however, this approach captures not only the qualitative trend but also the quantitative features of the market dynamics, offering a more accurate depiction of the observed movements.
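The rolling set-up described above (4 days of 4-h bars as history, 32 h ahead) amounts to a 24-bar window and an 8-step horizon. The sketch below only slices such windows; `rolling_windows` is a hypothetical helper, and the placeholder series stands in for the resampled gold/US dollar data, whose preprocessing is not detailed in the text.

```python
import numpy as np

BAR_HOURS = 4                       # each time step is a 4-h bar
WINDOW = (4 * 24) // BAR_HOURS      # 4 days of history -> 24 bars
HORIZON = 32 // BAR_HOURS           # 32 h ahead -> 8 bars

def rolling_windows(series, forecast_starts):
    """Yield (start, history, target) for each forecast start index.

    history: the WINDOW bars preceding `start` (the underlying distribution);
    target:  the next HORIZON bars, against which a forecast would be compared.
    Starts without a full window or a full horizon are skipped.
    """
    series = np.asarray(series)
    for s in forecast_starts:
        if s < WINDOW or s + HORIZON > len(series):
            continue
        yield s, series[s - WINDOW:s], series[s:s + HORIZON]

# Usage with a placeholder series of 100 bars (not real market data):
windows = list(rolling_windows(np.arange(100.0), forecast_starts=[10, 24, 50, 95]))
```

Each `history` window would then be passed to the GBM and EC-GBM forecasting routines, and the resulting 8-step forecasts compared with `target`.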

Fig. 6.

The forecast trajectories of the real world financial data for the pair made up of gold against the US dollar (dashed lines), as obtained within the GBM (top panel) and EC-GBM (bottom panel) approach. The simulated data is presented for the selected time steps. For reference purposes the historical data of real world time series is depicted (blue solid line).

Conclusion

In summary, the present study deepens the current understanding of how to effectively discern and harness the information contained within historical time series. It aligns with the concept that the information content of probability distributions can be well characterized by entropy, which serves as a fundamental tool for quantifying uncertainty and disorder across various systems and distributions14,15. In particular, the above findings show that entropy corrections can improve the forecasting potential of the elementary GBM process by uncovering patterns and structures within the data sets of interest. The crux of the introduced methodology lies in manipulating the entropy measure through the strategic addition of trajectories, simulated by the traditional GBM method, to the existing time series distribution. In doing so, our ability to predict future data behavior can be notably refined. Note that entropy is not treated here as an ergodic observable: we focus on refining the statistical description of the system in order to capture real-world complexities, rather than on ensuring the statistical equivalence between time and ensemble averages (for more details on ergodic observables see16). In other words, entropy serves here as a practical correctional tool that accounts for the increased deterministic content of the data.

Our research underscores the potential applications of the EC-GBM approach across various domains where the traditional GBM method is employed. Particularly noteworthy are its implications for risk management, such as estimating value at risk, where the inadequacies of GBM become apparent for non-log-normal distributions. Additionally, in option pricing, where GBM-based Monte Carlo simulations are prevalent, our method offers promising avenues for improvement. By addressing the limitations of GBM in these critical financial applications, our research opens the door to more robust risk assessment and pricing strategies, thereby facilitating more informed decision-making in financial markets. Furthermore, since the EC-GBM approach relaxes the log-normality assumption on the underlying distribution, even short-term time series distributions can be used to construct risk strategies tailored to the high volatility prevalent in financial markets. Nonetheless, the prospects of the presented model are not limited to financial applications. Given appropriate conditions, the reasoning outlined here can be adapted to diverse fields where stochastic processes play a pivotal role. For instance, in modeling quantum stochastic processes, especially within continuous-time quantum dynamics, decoherence phenomena can be described using GBM to characterize the random fluctuations responsible for decoherence. Moreover, refining probability distributions based on entropy values, as demonstrated here, shows promise for addressing analogous challenges in refining sampling schemes, including rejection sampling, importance sampling, the Metropolis–Hastings algorithm, and others.

Beyond its immediate applications in financial modeling, the EC-GBM framework may also prove valuable in machine learning, particularly for the generation of synthetic data. By generating data that accounts for entropy corrections, EC-GBM can produce artificial datasets that align more closely with the underlying distribution of real-world data. This can be especially beneficial in scenarios involving limited or imbalanced datasets, where augmented data improves the training and performance of machine learning models. Hence, EC-GBM may serve not only as a robust methodology in itself but also as a valuable tool for enhancing the capabilities of machine learning systems.

Acknowledgements

S.K. would like to acknowledge the National Science Foundation under award number 1955907.

Author contributions

R.G., E.A.D.S. and D.S. designed the study. R.G. wrote the final numerical code. E.A.D.S. and D.S. participated in writing parts of the numerical code. R.G. performed the numerical calculations. All authors critically reviewed and analyzed the obtained results. R.G. wrote the draft of the manuscript. R.G., E.A.D.S. and D.S. wrote the final manuscript. S.K. and D.S. supervised the investigations. All authors reviewed the final manuscript.

Data availability

The datasets generated during the current study are available from the corresponding author on reasonable request, while the employed financial datasets are publicly available online at www.histdata.com.

Declarations

Competing interests

The authors declare no competing interests.

Footnotes

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1. Grebenkov, D. S., Sposini, V., Metzler, R., Oshanin, G. & Seno, F. Exact distributions of the maximum and range of random diffusivity processes. New J. Phys. 23, 023014 (2021).

- 2. Stojkoski, V., Utkovski, Z., Basnarkov, L. & Kocarev, L. Cooperation dynamics in networked geometric Brownian motion. Phys. Rev. E 99, 062312 (2019).

- 3. Black, F. & Scholes, M. The pricing of options and corporate liabilities. J. Polit. Econ. 81, 637 (1973).

- 4. Marathe, R. R. & Ryan, S. M. On the validity of the geometric Brownian motion assumption. Eng. Econom. 50, 159 (2005).

- 5. Stojkoski, V., Sandev, T., Basnarkov, L., Kocarev, L. & Metzler, R. Generalised geometric Brownian motion: Theory and applications to option pricing. Entropy 22, 1432 (2020).

- 6. Heston, S. L. A closed-form solution for options with stochastic volatility with applications to bond and currency options. Rev. Financ. Stud. 6, 327 (1993).

- 7. Merton, R. C. Option pricing when underlying stock returns are discontinuous. J. Financ. Econ. 3, 125 (1976).

- 8. Shannon, C. E. A mathematical theory of communication. Bell Syst. Tech. J. 27, 623 (1948).

- 9. Drzazga-Szczęśniak, E. A., Szczepanik, P., Kaczmarek, A. Z. & Szczęśniak, D. Entropy of financial time series due to the shock of war. Entropy 25, 823 (2023).

- 10. Itô, K. Stochastic integral. Proc. Imp. Acad. 20, 519 (1944).

- 11. Itô, K. Multiple Wiener integral. J. Math. Soc. Jpn. 3, 157 (1951).

- 12. Itô, K. On stochastic differential equations. Mem. Am. Math. Soc. 4, 1 (1951).

- 13. Kullback, S. & Leibler, R. A. On information and sufficiency. Ann. Math. Stat. 22, 79 (1951).

- 14. Jaynes, E. T. Information theory and statistical mechanics. Phys. Rev. 106, 620 (1957).

- 15. Gupta, R., Xia, R., Levine, R. D. & Kais, S. Maximal entropy approach for quantum state tomography. PRX Quantum 2, 010318 (2021).

- 16. Baldovin, M., Marino, R. & Vulpiani, A. Ergodic observables in non-ergodic systems: The example of the harmonic chain. Phys. A 630, 129273 (2023).
