Abstract

In this paper, we consider two fundamental cut approximation problems on large graphs. We prove new lower bounds for both problems that are optimal up to logarithmic factors.

The first problem is to approximate cuts in balanced directed graphs. In this problem, the goal is to build a data structure that -approximates cut values in graphs with vertices. For arbitrary directed graphs, such a data structure requires bits even for constant . To circumvent this, recent works study -balanced graphs, meaning that for every directed cut, the total weight of edges in one direction is at most times that in the other direction. We consider two models: the for-each model, where the goal is to approximate each cut with constant probability, and the for-all model, where all cuts must be preserved simultaneously. We improve the previous lower bound to in the for-each model, and we improve the previous lower bound to in the for-all model. 1 This resolves the main open questions of (Cen et al., ICALP, 2021).

The second problem is to approximate the global minimum cut in a local query model, where we can only access the graph via degree, edge, and adjacency queries. We improve the previous query complexity lower bound to for this problem, where m is the number of edges, is the size of the minimum cut, and we seek a -approximation. In addition, we show that existing upper bounds with slight modifications match our lower bound up to logarithmic factors.

1. Introduction

The notion of cut sparsifiers has been extremely influential. It was introduced by Benczúr and Karger [BK96] and it is the following: Given a graph with vertices, edges, edge weights , and a desired error parameter , a () cut sparsifier of is a subgraph on the same vertex set with (possibly) different edge weights, such that approximates the value of every cut in within a factor of . Benczúr and Karger [BK96] showed that every undirected graph has a cut sparsifier with only edges. This was later extended to the stronger notion of spectral sparsifiers [ST11] and the number of edges was improved to [BSS12]; see also related work with different bounds for both cut and spectral sparsifiers [FHHP19, KP12, ST04, SS11, LS17, CKST19].

In the database community, a key result is the work of [AGM12], which shows how to construct a sparsifer using linear measurements to -approximate all cut values. Sketching massive graphs arises in various applications where there are entities and relationships between them, such as webpages and hyperlinks, people and friendships, and IP addresses and data flows. As large graph databases are often distributed or stored on external memory, sketching algorithms are useful for reducing communication and memory usage in distributed and streaming models. We refer the readers to [McG14] for a survey of graph stream algorithms in the database community.

For very small values of , the dependence in known cut sparsifiers may be prohibitive. Motivated by this, the work of [ACK+16] relaxed the cut sparsification problem to outputting a data structure , such that for any fixed cut , the value is within a factor of the cut value of in with probability at least 2/3. Notice the order of quantifiers — the data structure only needs to preserve the value of any fixed cut (chosen independently of its randomness) with high constant probability. This is referred to as the for-each model, and the data structure is called a for-each cut sketch. Surprisingly, [ACK+16] showed that every undirected graph has a for-each cut sketch of size bits, reducing the dependence on to linear. They also showed an bits lower bound in the for-each model. The improved dependence on is indeed coming from relaxing the original sparsification problem to the for-each model: [ACK+16] proved an bit lower bound on any data structure that preserves all cuts simultaneously, which is referred to as the for-all model. This lower bound in the for-all model was strengthened to bits in [CKST19].

While the above results provide a fairly complete picture for undirected graphs, a natural question is whether similar improvements are possible for directed graphs. This is the main question posed by [CCPS21]. For directed graphs, even in the for-each model, there is an lower bound without any assumptions on the graph. Motivated by this, [EMPS16, IT18, CCPS21] introduced the notion of -balanced directed graphs, meaning that for every directed cut , the total weight of edges from to is at most times that from to . The notion of -balanced graphs turned out to be very useful for directed graphs, as [IT18, CCPS21] showed an upper bound in the for-each model, and an upper bound in the for-all model, thus giving non-trivial bounds for both problems for small values of . The work of [CCPS21] also proved lower bounds: they showed an lower bound in the for-each model, and an lower bound in the for-all model. While their lower bounds are tight for constant , there is a quadratic gap for both models in terms of the dependence on . The main open question of [CCPS21] is to determine the optimal dependence on , which we resolve in this work.

Recent work further explored spectral sketches, faster computation of sketches, and sparsification of Eulerian graphs (-balanced graphs with ) [ACK+16, JS18, CKK+18, CGP+23, SW19]. In this paper, we focus on the space complexity of cut sketches for general values of .

As observed in [ACK+16], one of the main ways to use for-each cut sketches is to solve the distributed minimum cut problem. This is the problem of computing a -approximate global minimum cut of a graph whose edges are distributed across multiple servers. One can ask each server to compute a (1 ± 0.2) for-all cut sketch and a for-each cut sketch. This allows one to find all -approximate minimum cuts, and because there are at most cuts with value within a factor of of the minimum cut, one can query all these cuts using the more accurate for-each cut sketches, resulting in an optimal linear in dependence in the communication.

Motivated by this connection to distributed minimum cut estimation, we also consider the problem of directly approximating the minimum cut in a local query model, which was introduced in [RSW18] and studied for minimum cut in [ER18, BGMP21]. The model is defined as follows.

Let be an unweighted and undirected graph, where the vertex set is known but the edge set is unknown. In the local query model, we have access to an oracle that can answer the following three types of local queries:

Degree query: Given , the oracle returns the degree of .

Edge query: Given and index , the oracle returns the i-th neighbor of , or if the edge does not exist.

Adjacency query: Given , the oracle returns whether .

In the Min-Cut problem, our goal is to estimate the global minimum cut up to a -factor using these local queries. The complexity of the problem is measured by the number of queries, and we want to use as few queries as possible. For this problem we focus on undirected graphs.

Previous work [ER18] showed an query complexity lower bound, where is the size of the minimum cut. The main open question is what the dependence on should be. There is also an upper bound in [BGMP21], and a natural question is to close this gap.

1.1. Our Results

We resolve the main open questions mentioned above.

Cut Sketch for Balanced (Directed) Graphs.

We study the space complexity of cut sketches for -node -balanced (directed) graphs. Previous work [IT18, CCPS21] gave an upper bound in the for-all model and an upper bound in the for-each model, along with an lower bound and an lower bound, respectively.

We close these gaps and resolve the dependence on , improving the lower bounds to match the upper bounds for all parameters , , and (up to logarithmic factors). Formally, we have:

Theorem 1.1 (For-Each Cut Sketch for Balanced Graphs).

Let and . Assume . Any for-each cut sketching algorithm for -balanced -node graphs must output bits.

Theorem 1.2 (For-All Cut Sketch for Balanced Graphs).

Let and . Assume . Any for-all cut sketching algorithm for -balanced -node graphs must output bits.

Query Complexity of Min-Cut in the Local Query Model.

We study the problem of -approximating the (undirected) global minimum cut in a local query model, where we can only access the graph via degree, edge, and adjacency queries.

We close the gap on the dependence in the query complexity of this problem by proving a tight lower bound, where is the number of edges and is the size of the minimum cut. This improves the previous lower bound in [ER18]. Formally, we have:

Theorem 1.3 (Approximating Min-Cut using Local Queries).

Any algorithm that estimates the size of the global minimum cut of a graph up to a factor requires queries in expectation in the local query model, where m is the number of edges in and is the size of the minimum cut.

We also show that with a slight modification, the query complexity upper bound in [BGMP21] can be improved to , which implies that our lower bound is tight (up to logarithmic factors).

1.2. Our Techniques

A common technique we use for the different problems is communication complexity games that involve the approximation parameter . For example, suppose Alice has a bit string of length , and she can encode into a graph such that, if she sends Bob a (for-each or for-all) cut sketch to Bob, then Bob can recover a specific bit of s with high constant probability. By communication complexity lower bounds, we know Alice must send bits to Bob, which gives a lower bound on the size of the cut sketch.

For-Each Cut Sketch Lower Bound.

Let . At a high level, we partition the nodes into sub-graphs, where each sub-graph is a -by- bipartite graph with two parts and . We then divide and into disjoint clusters and . For every cluster pair and , there are a total of edges. Intuitively, we wish to encode a bit string into forward edges (left to right) each with weight , and add backward edges (right to left) each with weight so that the graph -balanced. If we could approximately decode this string from a for-each cut sketch, then we would get an lower bound.

However, if we use a simple encoding method [ACK+16, CCPS21] where each bit is encoded into one edge (e.g., with weight 1 or 2) and query the edges leaving , then the backward edges with weight will cause the cut value to be . The cut sketch will have additive error , which will obscure . To address this, we instead encode bits of information across edges simultaneously. When we want to decode a specific bit , we query the (directed) cut values between two carefully designed subsets and . The key idea of our construction is that, although each edge in is used to encode many bits of , the encoding of different bits of is never too correlated: while encoding other bits does affect the total weight from to , this effect is similar to adding noise which only varies the total weight from to by a small amount.

For-All Cut Sketch Lower Bound.

Let . At a high level, we partition the nodes into sub-graphs, where each sub-graph is a -by- bipartite graph with two parts and . Let . We partition into disjoint clusters . We use edges from to to encode a bit string by setting the weight of each forward edge to 1 or 2, and adding a backward edge of weight to balance the graph.

We can show that the following problem requires bits of communication: Consider and a random subset where . Let denote ’s neighbors such that has weight 2, which is uniformly random if is uniformly random. The problem is to decide whether or for a sufficiently small constant . Intuitively, the graph encodes a -fold version of this communication problem, which implies an lower bound.

We need to show that Bob can distinguish between the two cases of given a for-all cut sketch. However, there are some challenges. The difference between the two cases is while the natural cut to query has value . The cut sketch will have additive error , which is too much. To overcome this, note that we have not used the property that the for-all cut sketch preserves all cuts. We make use of the following crucial observation in [ACK+16]: In expectation, roughly half of the nodes satisfy because is small. If Bob enumerates all subsets of size , he will eventually get lucky and find a set that contains almost all such nodes. Since there are roughly such nodes, the bias per node will contribute in total, which is enough to be detected even under an additive error.

Query Complexity of Min-Cut in the Local Query Model.

We prove our lower bound using communication complexity, but unlike previous work [ER18], we consider the following 2SUM problem [WZ14]: Given length- binary strings and , we want to approximate the value of up to a additive error, with the promise that at least a constant fraction of the satisfy while the remaining pairs satisfy or . Here is the number of indices where and are both 1, and is the set-disjointness problem, i.e., if and otherwise. The parameters , and will be chosen later.

We construct our graph based on the vectors and in a way inspired by [ER18]. We then give a careful analysis of the size of the minimum cut of , and show that under certain conditions, the size of the minimum cut is exactly . Consequently, a ()-approximation of the minimum cut yields an approximation of up to a additive error, which implies the desired lower bound.

2. Preliminaries

Let be a weighted (directed) graph with vertices and edges, where each edge has weight . We write if is unweighted and leave out . For two sets of nodes , let denote the set of edges from to . Let denote the total weight of edges from to . For a node and a set of nodes , we write for .

We write for . We use to denote the all-ones vector. For a vector , we write and for the and norm of respectively. For two vectors , let be the tensor product of and . Given a matrix , we use to denote the i-th row of .

Directed Cut Sketches.

We start with the definitions of -balanced graphs, for-all and for-each cut sketches [BK96, ST11, ACK+16, CCPS21].

We say a directed graph is balanced if all cuts have similar values in both directions.

Definition 2.1 (-Balanced Graphs).

A strongly connected directed graph is -balanced if, for all , it holds that .

We say is a for-all cut sketch if the value of all cuts can be approximately recovered from it. Note that is not necessarily a graph and can be an arbitrary data structure.

Definition 2.2 (For-All Cut Sketch).

Let . We say is a () for-all cut sketching algorithm if there exists a recovering algorithm such that, given a directed graph as input, can output a sketch such that, with probability at least 2/3, for all :

Another notion of cut approximation is that of a “for-each” cut sketch, which requires that the value of each individual cut is preserved with high constant probability, rather than approximating the values of all cuts simultaneously.

Definition 2.3 (For-Each Cut Sketch).

Let . We say is a for-each cut sketching algorithm if there exists a recovering algorithm such that, given a directed graph as input, can output a sketch such that, for each , with probability at least 2/3,

In Definitions 2.2 and 2.3, the sketching algorithm and the recovering algorithm can be randomized, and the probability is over the randomness in and .

3. For-Each Cut Sketch

In this section, we prove an lower bound on the output size of () for-each cut sketching algorithms (Definition 2.3).

Theorem 1.1 (For-Each Cut Sketch for Balanced Graphs).

Let and . Assume . Any for-each cut sketching algorithm for -balanced -node graphs must output bits.

Our result uses the following communication complexity lower bound for a variant of the Index problem, where Alice and Bob’s inputs are random.

Lemma 3.1 ([KNR01]).

Suppose Alice has a uniformly random string and Bob has a uniformly random index . If Alice sends a single (possibly randomized) message to Bob, and Bob can recover with probability at least 2/3 (over the randomness in the input and their protocol), then Alice must send bits to Bob.

Our lower-bound construction relies on the following technical lemma.

Lemma 3.2.

For any integer , there exists a matrix such that:

for all .

for all .

For all , the -th row of can be written as where and .

Proof.

Our construction is based on the Hadamard matrix . Recall that the first row of is the all-ones vector and that for all . For every , , we add as a row of , so has rows.

Condition (3) holds because for all . For Conditions (1) and (2), note that for any vectors , , , and , we have . Using this fact, Condition (1) holds because , and Condition (2) holds because and thus . □

We first prove a lower bound for the special case . Our proof for this special case introduces important building blocks for proving the general case .

Lemma 3.3.

Suppose . Any for-each cut sketching algorithm for -balanced -node graphs must output bits.

At a high level, we reduce the Index problem (Lemma 3.1) to the for-each cut sketching problem. Given Alice’s string , we construct a graph to encode , such that Bob can recover any single bit in by querying cut values of . Our lower bound (Lemma 3.3) then follows from the communication complexity lower bound of the Index problem (Lemma 3.1), because Alice can run a for-each cut sketching algorithm and send the cut sketch to Bob, and Bob can successfully recover the cut values with high constant probability.

Proof of Lemma 3.3.

We reduce from the Index problem. Let denote Alice’s random string.

Construction of .

We construct a directed complete bipartite graph to encode . Let and denote the left and right nodes of , where . We partition into disjoint blocks of equal size, and similarly partition into . We divide into disjoint strings of the same length. We will encode using the edges from to . Note that the encoding of each is independent since for .

We fix and and focus on the encoding of . Note that . We refer to the edges from to as forward edges and the edges from to as backward edges. Let denote the weights of the forward edges, which we will choose soon. Every backward edge has weight .

Let . Assume w.l.o.g. that for some integer . Consider the vector where is the matrix in Lemma 3.2 with . Because is uniformly random, each coordinate of is a sum of i.i.d. random variables of value ±1. By the Chernoff bound and the union bound, we know that with probability at least 99/100, for some constant . If this happens, we set , so that each entry of is between and . Otherwise, we set to indicate that the encoding failed.

We first verify that is -balanced. This is because every edge has a reverse edge with similar weight: For every and , the edge has weight , while the edge has weight .

We will show that given a cut sketch for some constant , Bob can recover a specific bit of using 4 cut queries. By Lemma 3.1, this implies an lower bound for cut sketching algorithms for and .

Recovering a bit in from a for-each cut sketch of .

Suppose Bob wants to recover a specific bit of , which belongs to the substring and has an index in . We assume that is successfully encoded by the subgraph between and .

For simplicity, we index the nodes in as and similarly for . We index the forward edges in alphabetical order, first by and then by . Under this notation, gives the total weight of forward edges from to , where are the indicator vectors of and .

The crucial observation is that, given a cut sketch of , Bob can approximate using 4 cut queries. By Lemma 3.2, for some . Let be the set of nodes with . Let be the set of nodes with . Let and .

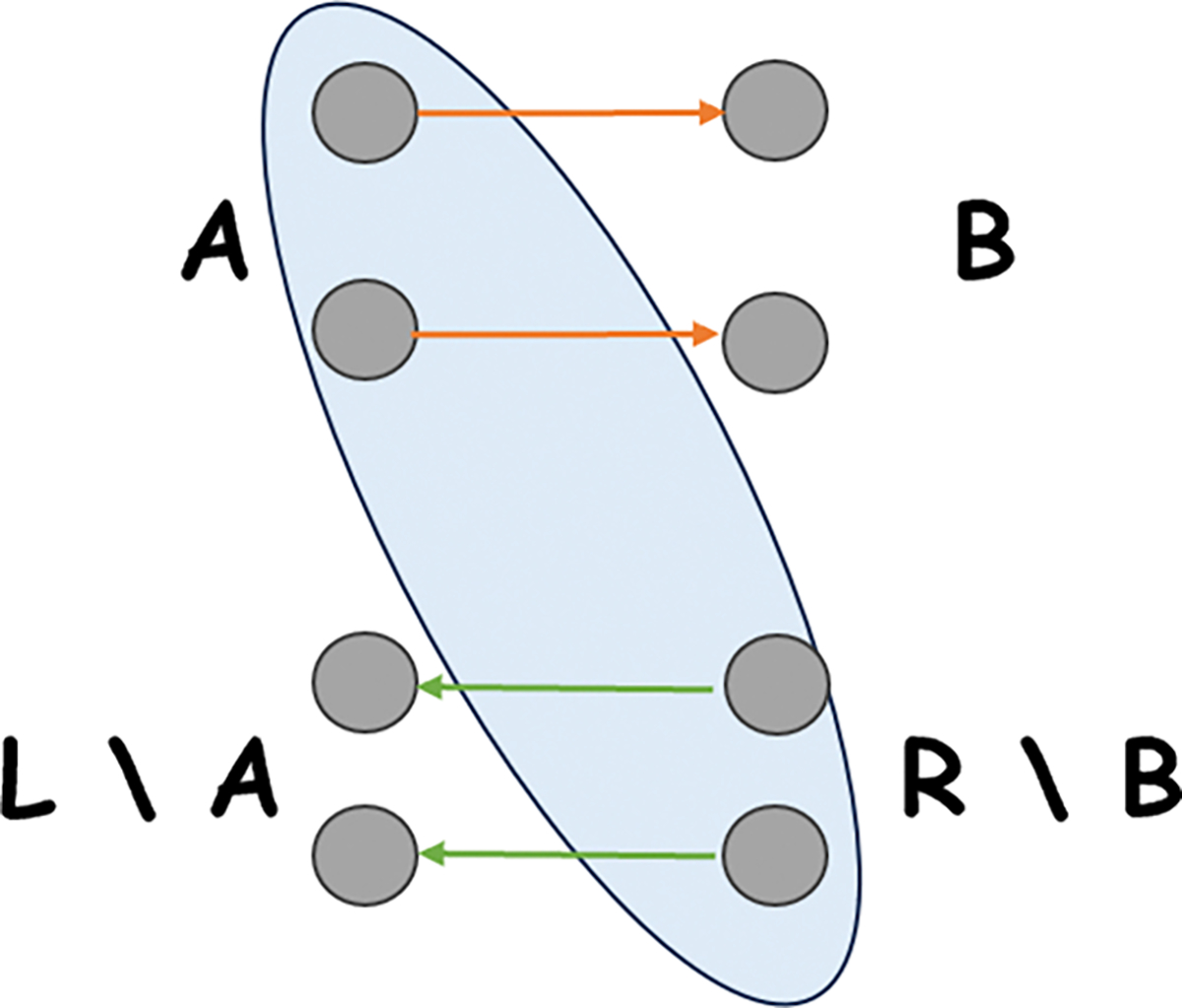

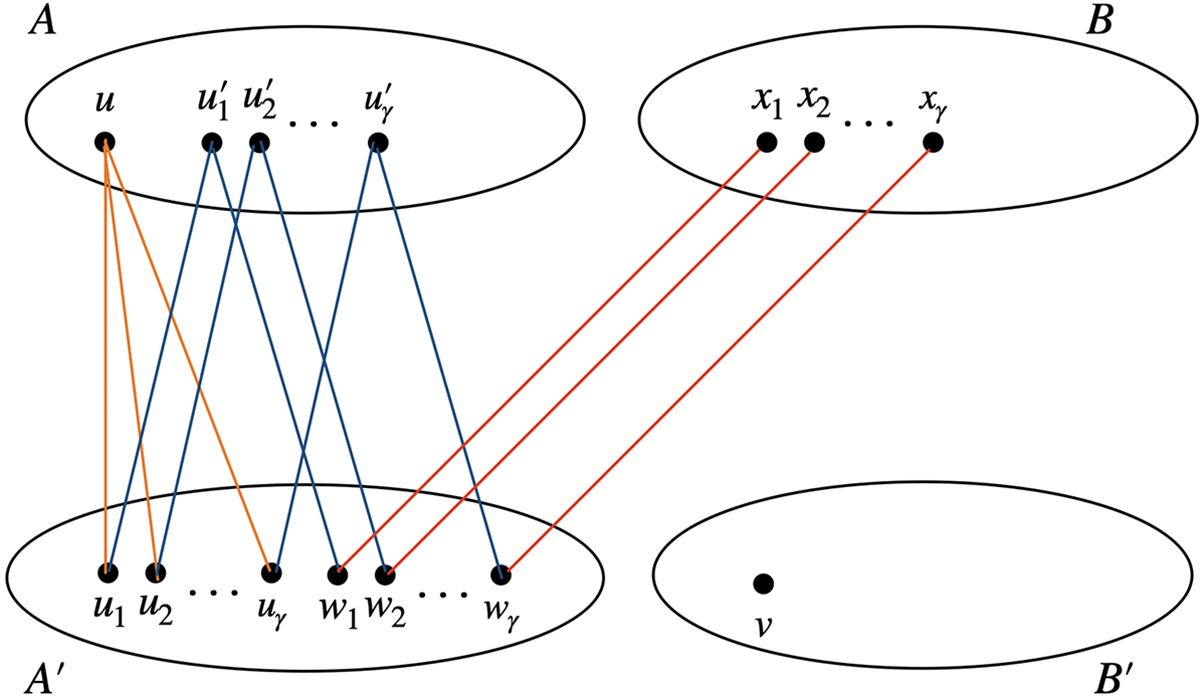

To approximate the value of (and similarly , , ), Bob can query for . Consider the edges from to : the forward edges are from to , each with weight ; and the backward edges are from to , each with weight . See Figure 1 as an example.

Figure 1:

For , the (directed) edges from to consist of the following: the forward edges from to , each with weight , and the backward edges from to , each with weight .

By Lemma 3.2, , so . The total weight of the forward edges is , and the total weight of the backward edges is , so the cut value is . Given a for-each cut sketch, Bob can obtain a multiplicative approximation of , which has additive error. After subtracting the total weight of backward edges, which is fixed, Bob has an estimate of with additive error. Consequently, Bob can approximate with additive error using 4 cut queries.

Now consider . By Lemma 3.2, and the rows of are orthogonal,

We can see that, for a sufficiently small universal constant , Bob can distinguish whether or based on an additive approximation of .

Bob’s success probability is at least 0.95, because the encoding of fails with probability at most 0.01, and each of the 4 cut queries fails with probability at most 0.01.2 □

We next consider the case with general values of , and , and prove Theorem 1.1.

Proof of Theorem 1.1.

Let . We assume w.l.o.g. that is an integer, is a multiple of , and is a power of 2. Suppose Alice has a random string . We will show that can be encoded into a graph such that

has nodes and is -balanced, and

Given a for-each cut sketch of and an index , where is a sufficiently small universal constant, Bob can recover with probability at least 2/3.

Consequently, by Lemma 3.1, any for-each cut sketching algorithm must output bits for and .

We first describe the construction of . We partition the nodes into disjoint sets , each containing nodes. Let be Alice’s random string with length . We partition into strings , with bits in each substring. We then follow the same procedure as in Lemma 3.3 to encode into a complete bipartite graph between and . Notice that we have and , which is the same setting as in Lemma 3.3.

We can verify that is -balanced. This is because every edge has a reverse edge whose weight is at most times the weight of . For every and , the edge has weight , while the edge has weight .

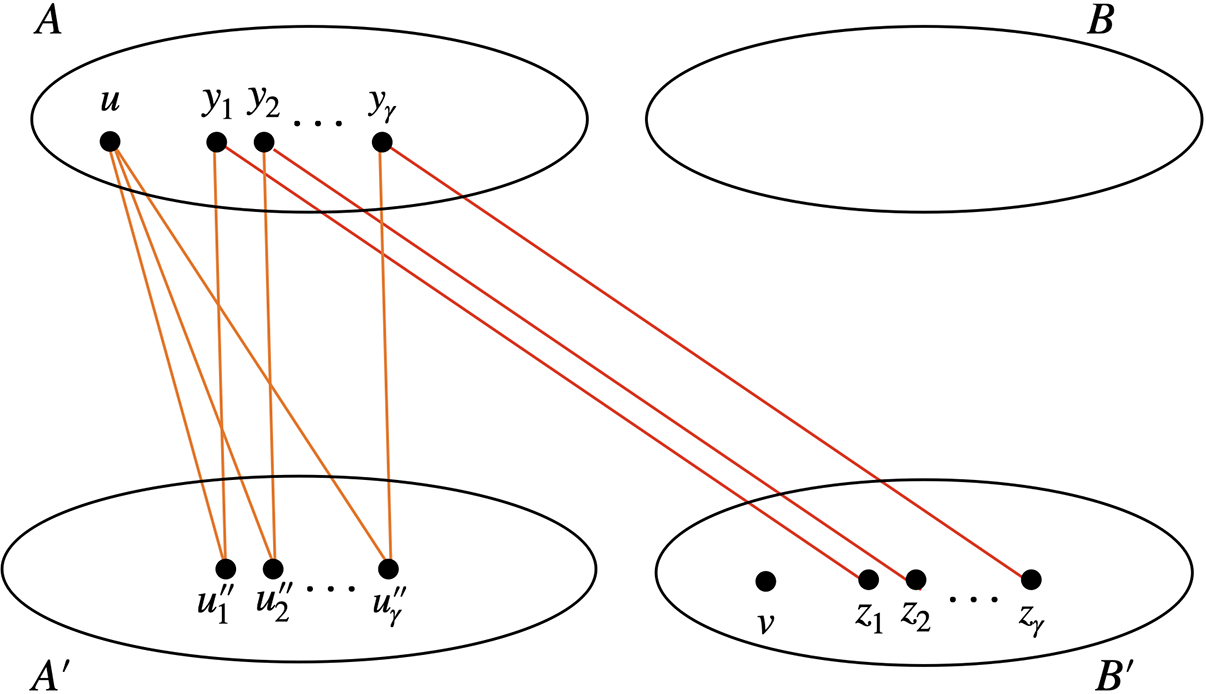

We next show that Bob can recover the -th bit of . Suppose Bob’s index belongs to the substring which is encoded by the subgraph between and . Similar to the proof of Lemma 3.3, Bob only needs to approximate for 4 pairs of with additive error, where , , and . To achieve this, Bob can query the cut value for . The edges from to are:

forward edges from to , each with weight .

backward edges from to , each with weight .

backward edges from to when , each with weight .

The cut value is . Consequently, given a for-each cut sketch, after subtracting the fixed weight of the backward edges, Bob can approximate with additive error. Similar to the proof of Lemma 3.3, for sufficiently small constant , repeating this process for 4 different pairs of will allow Bob to recover . □

4. For-All Cut Sketch

In this section, we prove an lower bound on the output size of for-all cut sketching algorithms (Definition 2.2).

Theorem 1.2 (For-All Cut Sketch for Balanced Graphs).

Let and . Assume . Any for-all cut sketching algorithm for -balanced -node graphs must output bits.

Our proof is inspired by [ACK+16] and uses the following communication complexity lower bound for an -fold version of the Gap-Hamming problem.

Lemma 4.1 ([ACK+16]).

Consider the following distributional communication problem: Alice has strings of Hamming weight . Bob has an index and a string of Hamming weight , drawn as follows:

is chosen uniformly at random;

every for is chosen uniformly at random;

and are chosen uniformly at random, conditioned on their Hamming distance being, with equal probability, either or for some universal constant .

Consider a (possibly randomized) one-way protocol, in which Alice sends Bob a message, and Bob then determines with success probability at least 2/3 whether is or . Then Alice must send bits to Bob.

Before proving Theorem 1.2, we first consider the special case .

Lemma 4.2.

Suppose . Any for-all cut sketching algorithm for -balanced n-node graphs must output bits.

We reduce the distributional Gap-Hamming problem (Lemma 4.1) to the for-all cut sketching problem. Suppose Alice has strings where , and Bob has an index and a string . We construct a graph to encode , such that given a for-all cut sketch of , Bob can determine whether or with high constant probability. Our lower bound then follows from Lemma 4.1.

Construction of .

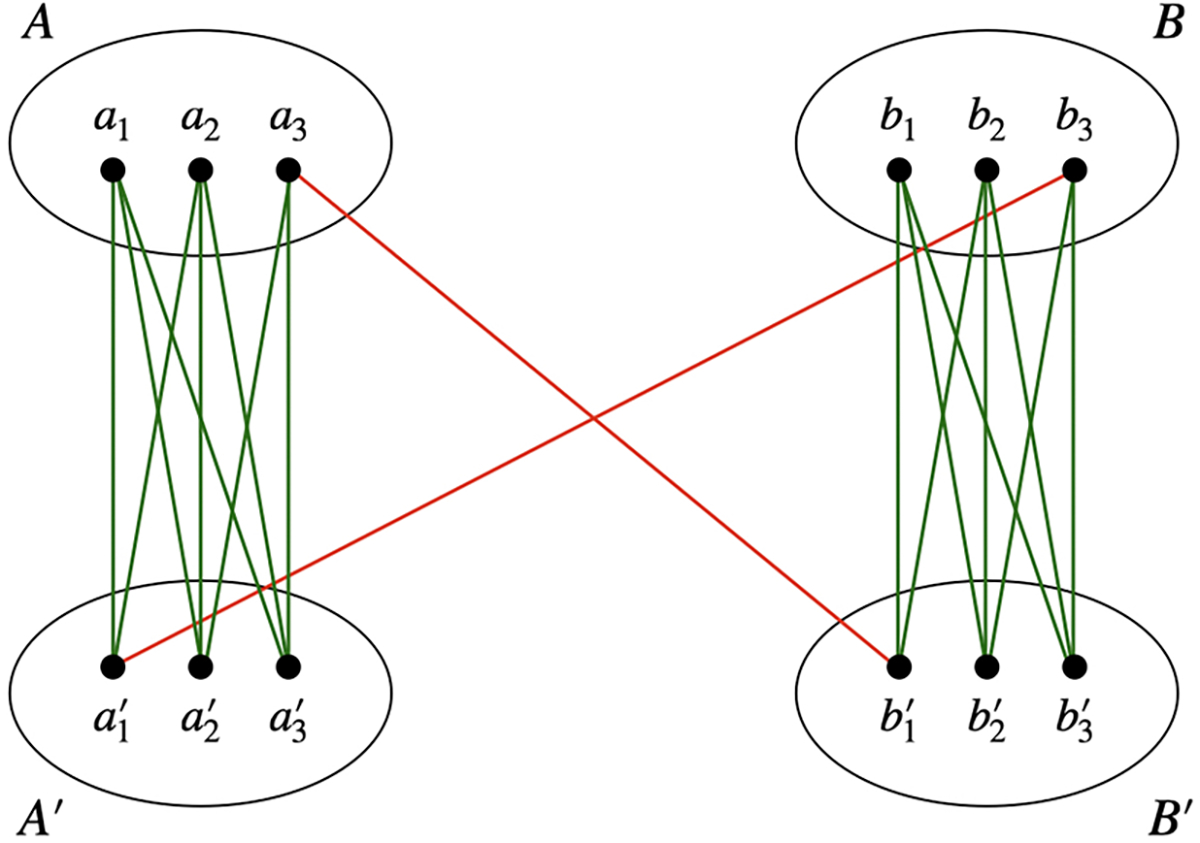

We construct a directed complete bipartite graph . Let and denote the left and right nodes of , where . We partition into disjoint sets with .

Consider the distributional Gap-Hamming problem in Lemma 4.1 with . We re-index Alice’s strings as , where and . Let be the nodes in . We encode using the edges from to : For node and the v-th node in , the forward edge has weight , and the backward edge has weight . Note that the encoding of each is independent since for .

Determining from a for-all cut sketch of .

Suppose Bob’s input (after re-indexing) is , , and . Bob wants to decide whether or .

Let denote the set of nodes where the forward edge has weight 2, which corresponds to the positions of 1 in . Let be the set of nodes such that .

Hence, to determine whether or , Bob only needs to decide whether or .

Let . The cut consists of forward edges from to and backward edges from to (. Ideally, if Bob knows , he can subtract the weight of backward edges to obtain and recover . However, Bob can only get a (-approximation of , which may have additive error because . With this much error, Bob cannot distinguish between the two cases.

To overcome this issue, we follow the idea of [ACK+16]. Intuitively, when is small, roughly half of satisfy . By enumerating all subsets of size , Bob can find a set such that most nodes satisfy . Since , the bias per node adds up to roughly , which can be detected even with error.

To prove Lemma 4.2, we need the following two technical lemmas, which are essentially proved in [ACK+16].

Lemma 4.3 (Claim 3.5 in [ACK+16]).

Let and . Consider the following sets:

With probability at least 0.98, we have and .

Lemma 4.4 (Lemma 3.4 in [ACK+16]).

Let be a sufficiently small universal constant. Suppose one can approximate with additive error for every with . Let be the subset with the highest (approximate) cut value. Then, with probability at least 0.96, we have .

We are now ready to prove Lemma 4.2.

Proof of Lemma 4.2.

We reduce from the distributional Gap-Hamming problem (Lemma 4.1) with . We re-index Alice’s strings as , where and .

We construct a directed bipartite graph with two parts and , where . Let . We partition into disjoint sets with . We encode using the edges from to : For node and the v-th node in , the forward edge has weight , and the backward edge has weight .

Note that is -balanced. We will show that given a for-all cut sketch of for some constant , Bob can decide whether or with probability at least 2/3. Consequently, by Lemma 4.1, any for-all cut sketching algorithm must output bits for and .

Bob enumerates every with and uses the cut sketch to approximate , where corresponds to the positions of 1 in Bob’s string and . Let . The cut has forward edges from to with weights 1 or 2, and backward edges from to with weight . The total weight of these edges is . Therefore, given a () for-all cut sketch, Bob can subtract the fixed weight of the backward edges and approximate with additive error . When is sufficiently small, this additive error is at most . By Lemma 4.4, Bob can find with such that . Finally, if , Bob decides and ; and if , Bob decides .

Suppose Bob’s index is . Notice that Bob uses to determine which to look at, but does not use any information about . Therefore, when and ,

Conversely, when and , because ,

The last inequality holds because and by Lemma 4.3 when .

We analyze Bob’s success probability. Lemma 4.3 fails with probability at most 0.02, Lemma 4.4 fails with probability at most 0.04, and the for-all cut sketch fails with probability at most 0.013 If they all succeed, Bob’s probability of answering correctly is at least . Bob’s overall fail probability is at most 0.02 + 0.04 + 0.01 + 0.25 < 1/3. □

We next consider the case with general values of , and , and prove Theorem 1.2.

Proof of Theorem 1.2.

Let . We assume w.l.o.g. that is an integer and is a multiple of . We reduce from the distributional Gap-Hamming problem in Lemma 4.1 with . We will show that Alice’s strings can be encoded into a graph such that

has nodes and is ()-balanced, and

After receiving a string , an index , and a () for-all cut sketch of for some universal constant , Bob can distinguish whether or with probability at least 2/3.

Consequently, by Lemma 4.1, any for-all cut sketching algorithm must output bits for and .

We first describe the construction of . We partition the nodes into disjoint sets , each containing nodes. Let be Alice’s random strings where . We partition the strings into disjoint sets , each with strings. We then follow the same procedure as in Lemma 4.2 to encode into a complete bipartite graph between and . Notice that has strings and , which is the same setting as in Lemma 4.2.

We can verify that is -balanced. This is because every edge has a reverse edge whose weight is at most times the weight of . For every and , the edge has weight 1 or 2, while the edge has weight .

We next show how Bob can distinguish between the two cases. Suppose Bob’s index specifies a string encoded by the subgraph between and . Similar to the proof of Lemma 4.2, we only need to show that given a for-all cut sketch, Bob can approximate with additive error for every with and for some with . To see this, consider . The edges from to are

forward edges from to , each with weight 1 or 2.

backward edges from to , each with weight .

backward edges from to when , each with weight .

The total weight of these edges is . Consequently, given a cut sketch, Bob can subtract the fixed weight of the backward edges and approximate with additive error. Similar to the proof of Lemma 4.2, for sufficiently small constant , this will allow Bob to distinguish between the two cases or with probability at least 2/3. □

5. Local Query Complexity of Min-Cut

In this section, we present an lower bound on the query complexity of approximating the global minimum cut of an undirected graph to a () factor in the local query model. Formally, we have the following theorem.

Theorem 1.3 (Approximating Min-Cut using Local Queries).

Any algorithm that estimates the size of the global minimum cut of a graph up to a factor requires queries in expectation in the local query model, where is the number of edges in and is the size of the minimum cut.

To achieve this, we define a variant of the 2-SUM communication problem in Section 5.1, show a graph construction in Section 5.2, and show that approximating 2-SUM can be reduced to the minimum cut problem using our graph construction in Section 5.3. In Section 5.4, we will show that our lower bound is tight up to logarithmic factors.

5.1. 2-SUM Preliminaries

Building off of the work of [WZ14], we define the following variant of the problem.

Definition 5.1.

For binary strings and , let denote the number of indices where and are both 1. Let denote whether and are disjoint. That is, if , and if .

Definition 5.2.

Suppose Alice has binary strings where each string has length and likewise Bob hast strings each of length is guaranteed to be either 0 or for each pair of strings . Furthermore, at least 1/1000 of the pairs are guaranteed to satisfy . In the problem, Alice and Bob want to approximate up to additive error with high constant probability.

Lemma 5.3.

To solve with high constant probability, the expected number of bits Alice and Bob need to communicate is .

Proof.

[WZ14] proved an expected communication complexity of for without the promise that at least a 1/1000 fraction of the string pairs intersect. Adding this promise does not change the communication complexity, because if ( and do not satisfy the promise, we can add a number of new and to satisfy the promise and later subtract their contribution to approximate with additive error . □

Theorem 5.4.

To solve with high constant probability, the expected number of bits Alice and Bob need to communicate is .

Proof.

Consider an instance of with Alice’s strings and Bob’s strings each with length . For each of Alice’s strings with length , we produce (with length ) by concatenating copies of , and likewise we produce for each of Bob’s strings . The setup where Alice has strings and Bob has strings is an instance of . From Lemma 5.3, the communication complexity of is . Thus, the communication complexity of is . □

5.2. Graph Construction

Inspired by the graph construction from [ER18], given two strings , we construct a graph such that is partitioned into , , and , where . Note that since , we can index the bits in by , where , . We construct the edges according to the following rule:

Figure 2 illustrates an example of the graph when and .

Figure 2:

Example of where and . The red edges represent the intersection at . The green edges represent all the non-intersections in and .

We will show that under certain assumptions about and , the number of intersections in is twice the size of the minimum cut in .

Lemma 5.5.

Given , if , then .

Proof.

To prove this, we use some properties about -connectivity of a graph. A graph is -connected if at least edges must be removed from to disconnect it. In other words, if a graph is -connected, then . Equivalently, a graph is -connected if for every , there are at least edge-disjoint paths between and . Therefore, given , if we can show that is -connected and there exists one cut of size exactly , then we can show . By the construction of the graph, it is easy to see that has size , since each intersection of , produces two crossing edges in between. Therefore, all we need to show here is that if , then is -connected.

Similar to [ER18], we prove this by looking at each pair of . Our goal is to show that for every , there exist at least edge-disjoint paths from to .

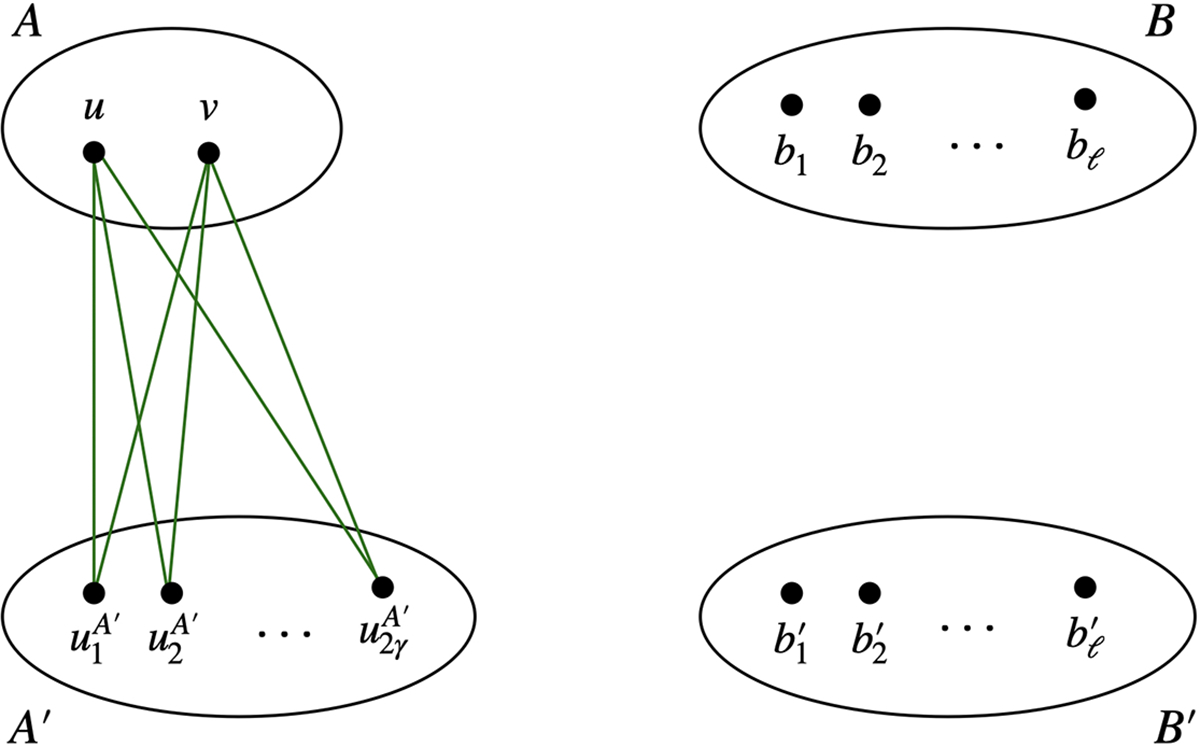

Case 1.

(or symmetrically ). For each pair , we have that there are at least distinct common neighbors in . This is because one intersection at and implies that the edge is not contained in , and would remove at most one common neighbor in . Since , we have that there are at least distinct common neighbors in , which we denote by . Therefore, each path is edge-disjoint, and we have at least edge-disjoint paths from to , as shown in Figure 3.

Figure 3:

. We omit all the , , and edges.

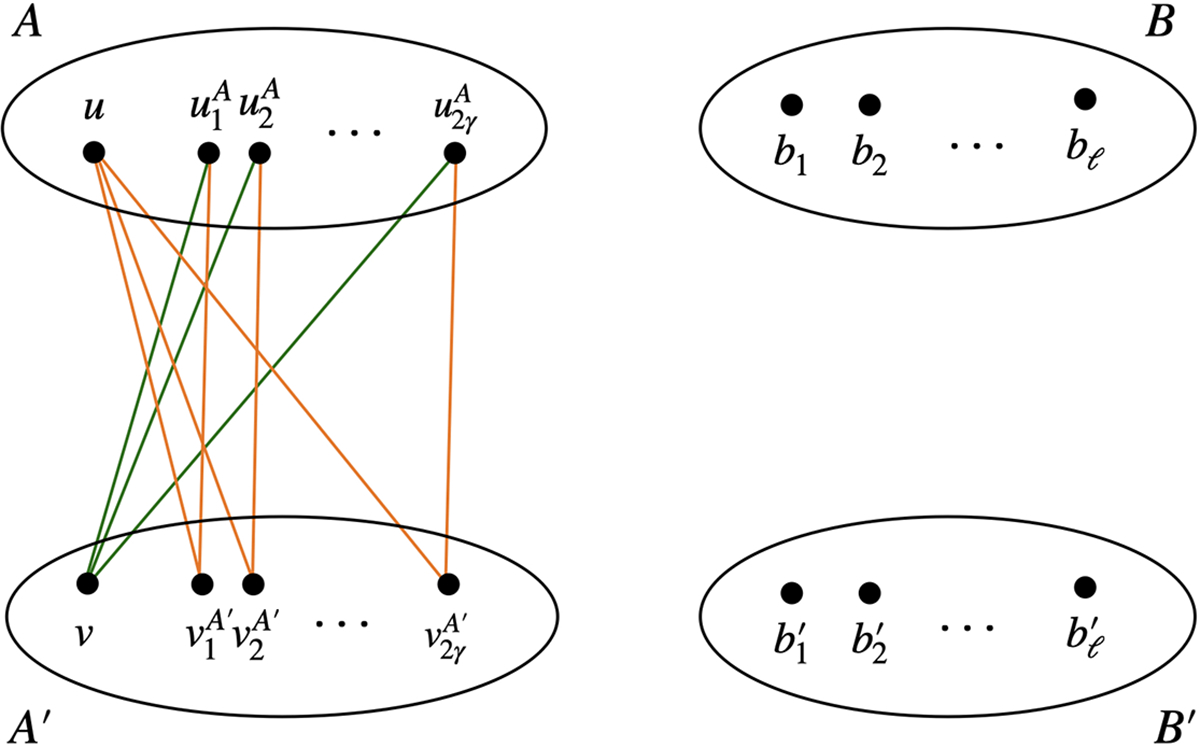

Case 2.

, (or symmetrically , ). Since , we have that has at least distinct neighbors in , which we denote by . From Case 1, we also have that each has at least distinct common neighbors in . Therefore, we can choose such that each path is edge-disjoint, so we have at least edge-disjoint paths from to , as shown in Figure 4. Note that it may be the case where . In this case, we can simply take the edge to be one of the edge-disjoint paths.

Figure 4:

, . We omit all the , , and edges. The green edges exist since has at least neighbors in . The orange edges exist since and have at least common neighbors in .

Case 3.

, (or symmetrically , ). In this case, we show two sets of edge-disjoint paths, where each set has at least edge-disjoint paths from to , and the two sets of paths do not overlap. Overall, we have at least edge-disjoint paths.

The first set of paths uses the edges between and . Let be the edges between and . Each of these edges represents one intersection in and . Therefore, there are exactly of them. From Case 2, we have that for every , there are edge-disjoint paths from to . Hence, for every , we can choose a path from to and these paths are edge-disjoint. Figure 5 illustrates the paths . By symmetry, we can extend the paths from to . This gives us edge-disjoint paths from to .

Figure 5:

, . The first set of paths goes from . We omit the paths from to , as they are symmetric to the paths from to . Once we extend the paths from to , we have edge-disjoint paths from to . Note that the and may not be distinct.

We now consider the second set of paths . Let

be the distinct edges between and . Once again, it suffices to prove that there are edge-disjoint paths from to , since the paths between to would be symmetric. From Case 1, we have that for every , there are at least common neighbors between and . Therefore, we can always find distinct such that the paths are edge-disjoint, as shown in Figure 6. Once we extend the paths from to , we have -edge disjoint paths in the second set.

Figure 6:

, . The second set of paths goes from . We omit the paths from to , as they are symmetric to the paths from to . Once we extend the paths from to , we have edge-disjoint paths from to . Note that the and may not be distinct.

Now we have two sets of paths and , where both sets have at least edge-disjoint paths. It remains to show that the paths in and can be edge-disjoint. Observe that the only possible edge overlaps between the paths from to the and paths from to the are and , since they are both neighbors of . However, note that what we have shown is that for every or , there are at least edge-disjoint paths from to or . Therefore, one can choose edge-disjoint paths from to and such that and do not overlap. And similarly one can choose edge-disjoint paths from to the and the . Overall, we have edge-disjoint paths from to .

Case 4.

, (or symmetrically , ). This case is similar to Case 3, where we have two edge-disjoint sets and . Consider the set of paths , where we use the edges

We can construct the paths from to using the same way as for in Case 3 (Figure 5). For the paths from to , however, we construct them using the same way as in in Case 3 (Figure 6). By connecting these paths, we obtain at least edge-disjoint paths in . Similarly, we can also construct at least edge-disjoint paths in , where we use the edges

We follow the same way of choosing the paths in and that are edge-disjoint. □

5.3. Reducing 2-SUM to MINCUT

In this section, we use the graph constructions in Section 5.2 to reduce the problem to MINCUT and derive a lower bound on the number of queries in the local query model.

Lemma 5.6.

Given , and , suppose that we have any algorithm that can estimate the size of the minimum cut of a graph up to a multiplicative factor with expected queries in the local query model. Then there exists an algorithm that can approximate up to an additive error using at most bits of communication in expectation given .

Proof.

We will show that the following algorithm satisfies the above conditions:

Given Alice’s strings each of length , let be the concatenation of Alice’s strings having total length . Similarly let be the concatenation of Bob’s strings.

Construct a graph as in Section 5.2 using the above concatenated strings as , .

Run and output as the solution to .

For the 2-SUM problem, let be the number of string pairs with intersections. Since there are pairs is at most . From our definition of 2-SUM, each intersecting string pair has intersections. are formed by concatenations, so . Since , Lemma 5.5 is applicable to so that

Since approximates MINCUT up to a () factor, . Thus, ‘s output to the 2-SUM problem is within . Recall that . We can see that approximates up to additive error .

To compare the complexities of and , recall is measured by degree, neighbor, and pair queries, whereas is measured by bits of communication. Given the construction of , as shown in [ER18], degree, neighbor, and pair queries can each be simulated using at most 2 bits of communication:

Degree queries: each vertex in has degree so Alice and Bob do not need to communicate to simulate degree queries.

Neighbor queries: assuming an ordering where ‘s j’th neighbor is either or , Alice and Bob can exchange and with 2 bits of communication to simulate a neighbor query.

Pair queries: Alice and Bob can exchange and with 2 bits of communication to determine whether edges and exist.

As each of ’s queries can be simulated using up to 2 bits of communication in , can use bits of communication to simulate queries in . So we have established a reduction from approximating up to additive error to approximating MINCUT up to a () multiplicative factor. □

We are now ready to prove Theorem 1.3.

Proof of Theorem 1.3.

Given an instance of , consider the same way of constructing the graph in Lemma 5.6. From the construction of , the number of edges is since each of pair corresponds to 2 edges. Using the promise from 2-SUM, we get that , where , which means that the size of the minimum cut of is . When , we have that the size of the minimum cut of is , and from Lemma 5.6 we obtain that any algorithm that satisfies the guarantee on the distribution of must have queries in expectation. When , the size of the minimum cut of is and similarly we get that any algorithm that satisfies the guarantee on the distribution of must use queries in expectation. Combining the two, we finally obtain an lower bound on the expected number of queries in the local query model. □

5.4. Almost Matching Upper Bound

In this section, we will show that our lower bound is tight up to logarithmic factors. In the work of [BGMP21], the authors presented an algorithm that uses queries, where is the size of the minimum cut. We will show that, despite their analysis giving a dependence of , a slight modification of their algorithm yields a dependence of . Formally, we have the following theorem.

Theorem 5.7 (essentially [BGMP21]).

There is an algorithm that solves the minimum cut query problem up to a -multiplicative factor with high constant probability in the local query model. Moreover, the expected number of queries used by this algorithm is .

To prove Theorem 5.7, we first give a high-level description of the algorithm in [BGMP21]. The algorithm is based on the following sub-routine.

Lemma 5.8 ([BGMP21]).

There exists an algorithm Verify-Guess which makes queries in expectation such that (here is the degree of each node)

If , then rejects with probability at least .

If , then accepts and outputs a -approximation of with probability at least .

Given the above sub-routine, the algorithm initializes a guess for the value of the minimum cut and proceeds as follows:

if rejects , set and repeat the process.

if accepts , set where . Let and return the value of as the output.

To analyze the query complexity of the algorithm, notice that when Verify-Guess first accepts , we have that . which means that and hence one call to will get the desired output. However, at a time in , the Verify-Guess procedure needs to make queries in expectation.

To avoid this, the crucial observation is that, during the above binary search process, the error parameter of does not have to be set to . Using a small constant is sufficient. This way, when Verify-Guess first accepts , we have , where is a constant. Consequently, the output of will satisfy the error guarantee. Using the analysis in [BGMP21], we can show that the query complexity of the new algorithm is .

Acknowledgement

Yu Cheng is supported in part by NSF Award CCF-2307106. Honghao Lin and David Woodruff would like to thank support from the National Institute of Health (NIH) grant 5R01 HG 10798-2, and a Simons Investigator Award. Part of this work was done while D. Woodruff was visiting the Simons Institute for the Theory of Computing.

Footnotes

In this paper, we use and to hide logarithmic factors in its parameters.

The success probability of a cut query given a for-each cut sketch (Definition 2.3) can be boosted from 2/3 to 99/100, e.g., by running the sketching and recovering algorithms times and taking the median. This increases the length of Alice’s message by a constant factor, which does not affect our asymptotic lower bound.

The probability that a for-all cut sketch (Definition 2.2) preserves all cuts simultaneously can be boosted from 2/3 to 99/100, e.g., by running the sketching and recovering algorithms times and taking the median. This increases the length of Alice’s message by a constant factor, which does not affect our asymptotic lower bound.

References

- [ACK+16].Andoni Alexandr, Chen Jiecao, Krauthgamer Robert, Qin Bo, Woodruff David P., and Zhang Qin. On sketching quadratic forms. In Proceedings of the 2016 ACM Conference on Innovations in Theoretical Computer Science (ITCS), pages 311–319, 2016. 2, 4, 5, 9, 10 [Google Scholar]

- [AGM12].Kook Jin Ahn Sudipto Guha, and McGregor Andrew. Graph sketches: sparsification, spanners, and subgraphs. In Proceedings of the 31st ACM SIGMOD-SIGACT-SIGART Symposium on Principles of Database Systems (PODS), pages 5–14, 2012. 2 [Google Scholar]

- [BGMP21].Bishnu Arijit, Ghosh Arijit, Mishra Gopinath, and Paraashar Manaswi. Query complexity of global minimum cut. In Approximation, Randomization, and Combinatorial Optimization. Algorithms and Techniques (APPROX/RANDOM), volume 207 of Leibniz International Proceedings in Informatics (LIPIcs), pages 6:1–6:15, 2021. 2, 3, 18, 19 [Google Scholar]

- [BK96].Benczúr András A. and Karger David R.. Approximating s-t minimum cuts in Õ(n2) time. In Proceedings of the 28th Annual ACM Symposium on the Theory of Computing (STOC), pages 47–55, 1996. 1, 5 [Google Scholar]

- [BSS12].Batson Joshua D., Spielman Daniel A., and Srivastava Nikhil. Twice-Ramanujan sparsifiers. SIAM J. Comput, 41(6):1704–1721, 2012. 1 [Google Scholar]

- [CCPS21].Cen Ruoxu, Cheng Yu, Panigrahi Debmalya, and Sun Kevin. Sparsification of directed graphs via cut balance. In 48th International Colloquium on Automata, Languages, and Programming (ICALP), volume 198 of LIPIcs, pages 45:1–45:21, 2021. 2, 3, 4, 5 [Google Scholar]

- [CGP+23].Chu Timothy, Gao Yu, Peng Richard, Sachdeva Sushant, Sawlani Saurabh, and Wang Junxing. Graph sparsification, spectral sketches, and faster resistance computation via short cycle decompositions. SIAM J. Comput, 52(6):S18–85, 2023. 2 [Google Scholar]

- [CKK+18].Cohen Michael B., Kelner Jonathan A, Kyng Rasmus, Peebles John, Peng Richard, Rao Anup B, and Sidford Aaron. Solving directed Laplacian systems in nearly-linear time through sparse LU factorizations. In Proceedings of the 59th IEEE Annual Symposium on Foundations of Computer Science (FOCS), pages 898–909, 2018. 2 [Google Scholar]

- [CKST19].Carlson Charles, Kolla Alexandra, Srivastava Nikhil, and Trevisan Luca. Optimal lower bounds for sketching graph cuts. In Proceedings of the 30th Annual ACM-SIAM Symposium on Discrete Algorithms (SODA), pages 2565–2569, 2019. 1, 2 [Google Scholar]

- [EMPS16].Ene Alina, Miller Gary L., Pachocki Jakub, and Sidford Aaron. Routing under balance. In Proceedings of the 48th Annual ACM SIGACT Symposium on Theory of Computing (STOC), pages 598–611, 2016. 2 [Google Scholar]

- [ER18].Eden Talya and Rosenbaum Will. Lower bounds for approximating graph parameters via communication complexity. In Approximation, Randomization, and Combinatorial Optimization. Algorithms and Techniques (APPROX/RANDOM), volume 116 of Leibniz International Proceedings in Informatics (LIPIcs), pages 11:1–11:18, 2018. 2, 3, 5, 13, 14, 17 [Google Scholar]

- [FHHP19].Wai Shing Fung Ramesh Hariharan, Harvey Nicholas J. A., and Panigrahi Debmalya. A general framework for graph sparsification. SIAM J. Comput, 48(4):1196–1223, 2019. 1 [Google Scholar]

- [IT18].Ikeda Motoki and Tanigawa Shin-ichi. Cut sparsifiers for balanced digraphs. In Approximation and Online Algorithms - 16th International Workshop (WAOA), volume 11312 of Lecture Notes in Computer Science, pages 277–294, 2018. 2, 3 [Google Scholar]

- [JS18].Jambulapati Arun and Sidford Aaron. Efficient Õ(n/ϵ) spectral sketches for the Laplacian and its pseudoinverse. In Proceedings of the 29th Annual ACM-SIAM Symposium on Discrete Algorithms (SODA), pages 2487–2503, 2018. 2 [Google Scholar]

- [KNR01].Kremer Ilan, Nisan Noam, and Ron Dana. Errata for: “on randomized one-round communication complexity”. Comput. Complex, 10(4):314–315, 2001. 6 [Google Scholar]

- [KP12].Kapralov Michael and Panigrahy Rina. Spectral sparsification via random spanners. In Innovations in Theoretical Computer Science (ITCS), pages 393–398, 2012. 1 [Google Scholar]

- [LS17].Lee Yin Tat and Sun He. An SDP-based algorithm for linear-sized spectral sparsification. In Proceedings of the 49th Annual ACM SIGACT Symposium on Theory of Computing (STOC), pages 678–687, 2017. 1 [Google Scholar]

- [McG14].Andrew McGregor. Graph stream algorithms: a survey. ACM SIGMOD Record, 43(1):9–20, 2014. 2 [Google Scholar]

- [RSW18].Rubinstein Aviad, Schramm Tselil, and Matthew Weinberg S. Computing exact minimum cuts without knowing the graph. In 9th Innovations in Theoretical Computer Science Conference (ITCS), volume 94 of LIPIcs, pages 39:1–39:16, 2018. 2 [Google Scholar]

- [SS11].Spielman Daniel A. and Srivastava Nikhil. Graph sparsification by effective resistances. SIAM J. Comput, 40(6):1913–1926, 2011. 1 [Google Scholar]

- [ST04].Spielman Daniel A. and Teng Shang-Hua. Nearly-linear time algorithms for graph partitioning, graph sparsification, and solving linear systems. In Proceedings of the 36th Annual ACM Symposium on Theory of Computing (STOC), pages 81–90, 2004. 1 [Google Scholar]

- [ST11].Spielman Daniel A. and Teng Shang-Hua. Spectral sparsification of graphs. SIAM J. Comput, 40(4):981–1025, 2011. 1, 5 [Google Scholar]

- [SW19].Saranurak Thatchaphol and Wang Di. Expander decomposition and pruning: Faster, stronger, and simpler. In Proceedings of the 30th Annual ACM-SIAM Symposium on Discrete Algorithms (SODA), pages 2616–2635, 2019. 2 [Google Scholar]

- [WZ14].Woodruff David P. and Zhang Qin. An optimal lower bound for distinct elements in the message passing model. In Proceedings of the 25th Annual ACM-SIAM Symposium on Discrete Algorithms (SODA), page 718–733, 2014. 5, 13 [Google Scholar]