Abstract

FMRI data are noisy, complicated to acquire, and typically go through many steps of processing before they are used in a study or clinical practice. Being able to visualize and understand the data from the start through the completion of processing, while being confident that each intermediate step was successful, is challenging. AFNI’s afni_proc.py is a tool to create and run a processing pipeline for FMRI data. With its flexible features, afni_proc.py allows users to both control and evaluate their processing at a detailed level. It has been designed to keep users informed about all processing steps: it does not just process the data, but also first outputs a fully commented processing script that the users can read, query, interpret, and refer back to. Having this full provenance is important for being able to understand each step of processing; it also promotes transparency and reproducibility by keeping the record of individual-level processing and modeling specifics in a single, shareable place. Additionally, afni_proc.py creates pipelines that contain several automatic self-checks for potential problems during runtime. The output directory contains a dictionary of relevant quantities that can be programmatically queried for potential issues and a systematic, interactive quality control (QC) HTML. All of these features help users evaluate and understand their data and processing in detail. We describe these and other aspects of afni_proc.py here using a set of task-based and resting-state FMRI example commands.

Keywords: FMRI, processing, reproducibility, quality control, data visualization, software

1. Introduction

FMRI processing is complicated. It relies on many disparate types of computational procedures, including alignment, “data cleaning” (such as despiking and censoring), time series analysis, and statistical modeling. Researchers perform many different types of studies, each with a particular acquisition and modeling design: task-based, resting-state (Biswal et al., 1995), and naturalistic (Hasson et al., 2010) datasets have distinct considerations. For example, task-based paradigms might require consideration of response times or modulation, as well as careful choice of hemodynamic response modeling assumptions (Bellgowan et al., 2003; Chen et al., 2023; Lindquist et al., 2009; Prince et al., 2022). Furthermore, EPI data may be acquired with either a “traditional” single echo or with multiple echoes (Posse et al., 1999), with the latter becoming increasingly popular and requiring a choice of echo combination methods. Data can be acquired across human age ranges (infant, pediatric, adult, aging populations) and across different species (macaque, rat, mouse, fetal pig, etc.), with each scenario requiring special considerations and particular assumptions. Finally, analyses can take place in either volumetric or surface-based topologies, and include one or more runs to process simultaneously.

Here, we describe afni_proc.py, a program available within the open source, publicly available AFNI toolbox (Cox, 1996), to create full processing pipelines across this wide FMRI landscape. Briefly, afni_proc.py allows a researcher to set up a full (or partial) subject-level FMRI processing script, specifying a desired set of steps and options to manage reading in raw data through linear regression and quality control (QC). From its creation in 2006, the program was designed to balance several important aspects of processing by having these features:

Being readable and understandable, both by the researcher using it and by those with whom it is shared.

Being flexible to accommodate the precise steps that a researcher wants for the given analysis.

Being easy to use, relative to the amount of provided control.

Facilitating reproducibility, just by sharing the command and code version number used.

Retaining the provenance (record) of all processing that has been performed in a commented script, so no steps are hidden or require guessing.

Also retaining the intermediate datasets from each processing step, to facilitate quality control and to expedite investigations when the final results appear “unreasonable.”

Growing and adapting to new user needs.

These design choices have had an added benefit: as new acquisition methods have been developed (e.g., multiecho EPI), afni_proc.py has been able to incorporate new processing steps within a consistent framework.

This text is organized as follows. In Section 2, we first describe the general usage and organization of afni_proc.py, a program for specifying single-subject (ss) pipelines. While not all details and options can be discussed here, we highlight several important aspects. These include the convenience and rigor of afni_proc.py processing, such as automatically concatenating alignment transforms and integrating consistency checks into regression modeling, as well as how it provides detailed analysis provenance and inherent reproducibility. We then discuss how it facilitates quality control (QC) with its automatically generated HTML report and summary dictionary. Next, we outline general pipeline management before describing the specific datasets and processing examples presented here. In Section 3, we present practical examples of afni_proc.py commands for different analysis cases to guide the examination of major processing steps, briefly describing features and possible adjustments that might apply in various scenarios. Finally, in Section 4, we summarize afni_proc.py’s design, development philosophy, and various validations. We outline some important considerations for setting up pipelines, present some notes on choosing a hemodynamic response function (HRF), and discuss future directions and integrations for the program.

2. Methods

2.1. Setting up the pipeline: modular processing blocks with applicable options

AFNI’s afni_proc.py is a program to generate a full (or partial, if desired) FMRI processing pipeline for a single subject (covering what some researchers refer to as “first- and second-level” processing). First, a researcher specifies input datasets (such as an anatomical volume, one or more EPI time series, and tissue segmentations) and any accompanying files (stimulus timing files, physiological regressors, precalculated warp datasets, etc.); then, the researcher specifies the necessary processing choices (Fig. 1A). In order to simplify pipeline specification and conceptualization, afni_proc.py has a hierarchical and modular organization for defining an analysis. There is a top-level list of the major “processing blocks” to be performed (EPI-to-anatomical alignment, blurring, etc.), and then a set of any desirable option flags and values can be provided for each block (e.g., a particular cost function and blur radius). This layered framework allows the code to be readable, since the sequence of block names summarizes the processing and associated options have related prefixes. It also allows for flexibility to fit appropriately with a study design, since users can set up the blocks and tailor any number of options for each block (with the possibility for adding more at a researcher’s request, a frequent occurrence). Table 1 provides a list of the currently available processing blocks within afni_proc.py, with a brief description of each.

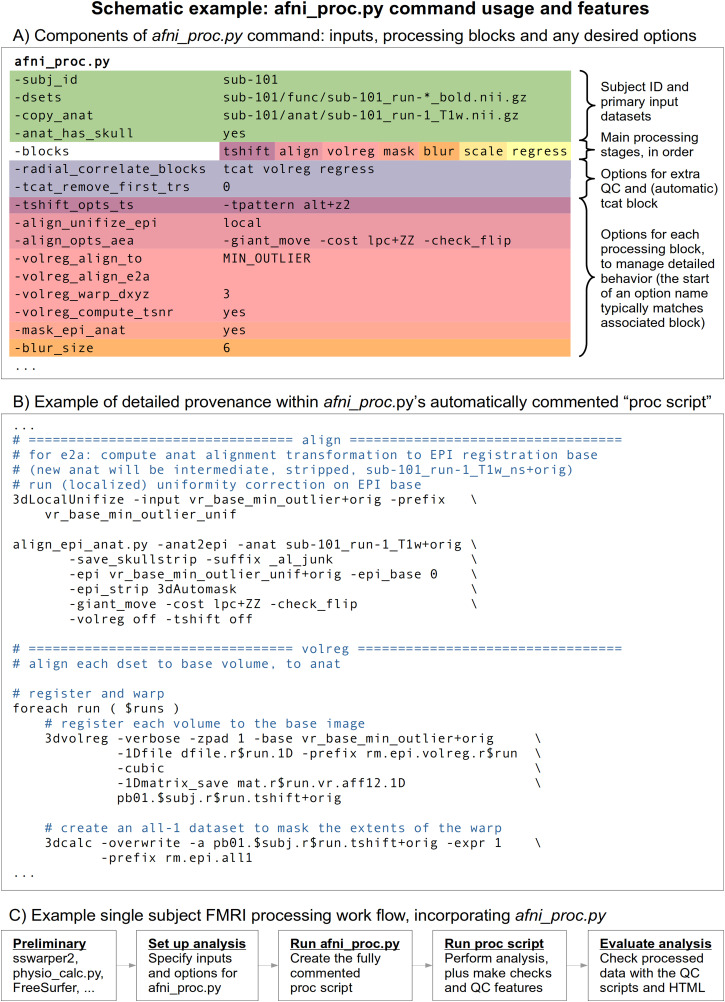

Fig. 1.

Schematic features of afni_proc.py. (A) Primary data inputs and descriptors are highlighted in green. The processing is managed hierarchically: first the user selects and orders the desired blocks (or major stages), and then for each can specify zero, one, or more options. The array of hot colors highlights which options are associated with which block, by matching them: the “tshift” block label with the “-tshift_opts_ts” option, etc. Note that the start of the option name typically matches the block, as well. (B) The afni_proc.py command creates a fully commented processing pipeline (“proc script”), so that the user has detailed understanding and provenance of all the steps of the analysis. (C) An example workflow that uses afni_proc.py for a single-subject analysis, utilizing some preliminary programs beforehand and incorporating automatically generated data checks and quality control features at the end. This can simply be looped over all subjects in a data collection.

Table 1.

Current processing blocks in afni_proc.py, with brief descriptions.

| Block | Description |
|---|---|
| *Automatic blocks:* setup and initialization; not user specified, always used | |
| setup | check args, set run list, make output directory, copy stim files |
| tcat | copy input datasets and remove unwanted initial TRs |
| *Default blocks:* standard steps; user may skip, modify, or rearrange | |
| tshift | slice timing alignment on volumes |
| volreg | volume registration (for reduction of subject motion effects) |
| blur | blur each volume (default is 4 mm FWHM) |
| mask | create a “brain” mask from the EPI data |
| scale | scale each run mean to 100, for each voxel (max of 200)ᵃ |
| regress | regression analysis (stimulus model, filter, censor, etc.) |
| *Optional blocks:* default is to not apply these blocks, but the user can add them | |
| align | align EPI and anatomy (linear affine) |
| combine | combine echoes into one |
| despike | truncate spikes in each voxel’s time series |
| empty | placeholder for some other user command |
| ricor | RETROICORᵇ: removal of cardiac/respiratory regressors |
| surf | project volumetric data into the surface domain (via SUMAᶜ) |
| tlrc | warp anatomical volume to a standard space or template |
| *Implicit blocks:* not user-specified, but performed when appropriate | |
| blip | perform B0 distortion correction |
| outcount | temporal outlier detection |
| QC_review | generate QC review scripts and HTML report (APQC HTML) |
| anat_unif | anatomical uniformity correction |

The automatic and implicit blocks are not specified by the user in the “-blocks” option list. Automatic blocks are always performed, and the user can still manage their behavior with options such as “-tcat_remove_first_trs”. Implicit blocks are added automatically at an appropriate point in the processing stream when the user provides a relevant option, such as “-blip_forward_dset” for the blip block. Users can see exactly where and how these blocks are performed in the created processing script.

Within each processing block, there can be zero, one, or more control parameters to specify. Several blocks and useful options are described in the code examples below. We note that the presence of the “empty” block allows researchers further flexibility to directly insert their own steps. Historically, however, many steps have been directly added to the program itself.
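The “scale” entry in Table 1 can be illustrated with a minimal NumPy sketch. It assumes one run held as a (voxels × time points) array. The function name `scale_run` and the choice to zero out voxels with a nonpositive mean are assumptions for this illustration, not a reproduction of AFNI’s code; the exact command that afni_proc.py uses appears in each generated proc script.

```python
import numpy as np

def scale_run(data, cap=200.0):
    """Scale each voxel's time series so that its run mean is 100, capped at `cap`.

    data: array of shape (n_voxels, n_timepoints) for one run (assumed layout).
    Voxels with a nonpositive mean are set to 0 (an assumption of this sketch,
    to avoid dividing by zero in empty voxels outside the head).
    """
    data = np.asarray(data, dtype=float)
    means = data.mean(axis=1, keepdims=True)
    scaled = np.zeros_like(data)
    good = means[:, 0] > 0
    scaled[good] = 100.0 * data[good] / means[good]
    return np.minimum(scaled, cap)

run = np.array([[900.0, 1000.0, 1100.0],   # typical voxel
                [0.0, 0.0, 0.0],           # empty voxel
                [10.0, 10.0, 70.0]])       # voxel with one large spike
print(scale_run(run))
```

After scaling, regression coefficients can be read approximately as percent signal change relative to each voxel's mean, and the cap limits the influence of voxels with very small means.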

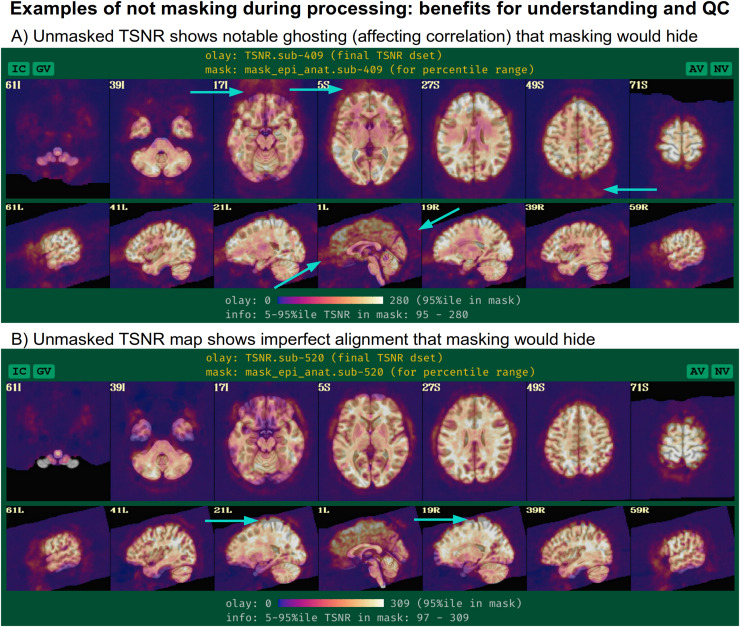

An important note about the “mask” block is that it typically only computes relevant masks from the EPI and anatomical volumes, which can later be used at the group level or for summary estimates. The masks are typically not applied to the data during standard processing. They are used only to define regions within which to estimate summary statistics such as temporal signal-to-noise ratio (TSNR), so that modeling results from all voxels (even outside the brain) can be assessed. This allows one to check more thoroughly for artifacts (e.g., due to a bad coil), severe ghosting, misalignment, or other features of the data, rather than hiding them. Two examples are shown in Figure 2, which displays TSNR after processing (in MNI space) for data from the FMRI Open QC project (Taylor, Glen, et al., 2023), as processed for the Reynolds et al. (2023) contribution. In panel A, strong ghosting patterns are visible along the anterior–posterior axis, which helps explain some unexpected correlation patterns within the brain. In panel B, the full TSNR pattern reveals misalignment during initial processing that might otherwise have been missed; this led to the discovery that anatomical skullstripping had been imprecise, an error that had been overlooked due to low tissue contrast. Because the data outside the brain were visible, the problem was detected and the data were reprocessed to fix it; otherwise, the misalignment would likely have gone unnoticed in masked data and simply skewed the final results.

Fig. 2.

While an EPI brain mask is estimated during afni_proc.py processing, it is not applied to the data, so that results throughout the whole FOV can be viewed. This facilitates understanding the data and improves QC evaluation. Two examples are shown from data processed within the FMRI Open QC project, displaying TSNR in the final MNI space after regression modeling. In (A), strong ghosting is visible outside the brain (cyan arrows), which helps explain some of the unexpected correlation patterns observed within the brain. In (B), the TSNR pattern shows that part of the final EPI data is not well aligned in standard space; this was due to initially imprecise skullstripping, which could then be fixed. In both cases, masking would have hidden what was actually happening and contributed to potentially biased results.
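The benefit of unmasked TSNR maps can be sketched in a few lines. The sketch computes a simple TSNR (temporal mean divided by temporal standard deviation) for every voxel with no mask applied, and uses a mask only afterwards for summary values. The toy “brain” and “ghost” voxels and the helper name `tsnr` are hypothetical. The exact datasets that afni_proc.py uses for the numerator and denominator are spelled out in the proc script and may differ from this simple definition.

```python
import numpy as np

def tsnr(data, eps=1e-12):
    """Voxelwise TSNR: temporal mean / temporal standard deviation.

    data: array of shape (n_voxels, n_timepoints). Voxels with (near) zero
    standard deviation get TSNR 0 in this sketch.
    """
    data = np.asarray(data, dtype=float)
    mean = data.mean(axis=1)
    std = data.std(axis=1, ddof=1)
    out = np.zeros_like(mean)
    ok = std > eps
    out[ok] = mean[ok] / std[ok]
    return out

rng = np.random.default_rng(0)
brain = 100 + rng.normal(0, 1, size=(5, 200))    # stable, brain-like voxels
ghost = 20 + rng.normal(0, 5, size=(5, 200))     # noisy voxels outside the brain
vals = tsnr(np.vstack([brain, ghost]))           # computed over the full FOV

mask = np.array([True] * 5 + [False] * 5)        # a hypothetical brain mask
print("mean TSNR inside mask: ", vals[mask].mean())
print("mean TSNR outside mask:", vals[~mask].mean())
```

The masked mean alone would hide the ghost voxels entirely. Keeping `vals` for every voxel, and viewing it as a map, is what allows out-of-brain structure to be noticed.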

The above is one example of how, in general, afni_proc.py has been developed to allow the researcher to see and explore more in their data: more details about the processing code, more intermediate files to explore for verification, and more quality control images and quantitative warnings to help evaluate the processing. This facilitates understanding and improves confidence in both the data and the processing.

2.1.1. Processing convenience and rigor

Many underlying steps are managed by afni_proc.py itself when it builds the processing script, in ways that are mathematically advantageous. For example, a given single-subject pipeline typically contains several volume registration or alignment steps, such as various combinations of reverse-phase-encoded EPI alignment for B0 distortion correction (implicit “blip” block); motion correction, possibly with multiple transformations (“volreg” block); EPI-to-anatomical alignment (“align” block); and subject anatomical-to-template alignment (“tlrc” block). Creating new datasets at each of these steps would introduce unnecessary smoothing into the final data, because each regridding involves interpolation. Instead, it is preferable first to concatenate all the estimated transforms and then to apply them in a single step (Jo et al., 2013); afni_proc.py performs this concatenation automatically, simplifying the procedure for the user. Similarly, any bandpassing, censoring, and regression are performed as a single step, rather than as a mathematically inconsistent two-step process (Hallquist et al., 2013). This allows the degrees of freedom used in processing to be fully accounted for, avoiding their inadvertent overuse.
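A minimal NumPy sketch of why concatenation helps, under simplifying assumptions. Three hypothetical affine transforms (4 × 4 homogeneous matrices with made-up values) are composed into one matrix. Separately, a 1D spike is regridded either twice by half a sample or once by the combined shift, with linear interpolation standing in for the interpolation done at each regridding. Nonlinear warps, which afni_proc.py also concatenates, are not covered here.

```python
import numpy as np

def translation(dx, dy, dz):
    """4x4 homogeneous translation matrix (units: mm)."""
    m = np.eye(4)
    m[:3, 3] = [dx, dy, dz]
    return m

def rotation_z(deg):
    """4x4 homogeneous rotation about the z axis."""
    a = np.deg2rad(deg)
    m = np.eye(4)
    m[:2, :2] = [[np.cos(a), -np.sin(a)], [np.sin(a), np.cos(a)]]
    return m

# Hypothetical affine transforms for three steps (illustrative values only).
volreg = rotation_z(2.0)                   # motion correction
align = translation(1.5, -0.5, 2.0)        # EPI -> anatomical
tlrc = np.diag([1.1, 1.1, 1.1, 1.0])       # anatomical -> template (affine part only)

# Concatenate once: each later step multiplies on the left.
combined = tlrc @ align @ volreg
point = np.array([10.0, 20.0, 30.0, 1.0])
assert np.allclose(combined @ point, tlrc @ (align @ (volreg @ point)))

def shift_linear(signal, shift):
    """Resample a 1D signal shifted by `shift` samples, using linear interpolation."""
    x = np.arange(signal.size, dtype=float)
    return np.interp(x - shift, x, signal)

spike = np.zeros(21)
spike[10] = 1.0
two_step = shift_linear(shift_linear(spike, 0.5), 0.5)   # regrid after each step
one_step = shift_linear(spike, 1.0)                       # apply the combined shift once
print("two regrids:", two_step[9:14])   # the spike is spread over 3 samples
print("one regrid: ", one_step[9:14])   # the spike stays a single sample
```

The composed matrix gives the same coordinates as applying the steps in sequence, but the data are interpolated only once, so the extra blurring seen in `two_step` is avoided.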

Having these programmatic conveniences occur automatically “under the hood” has several benefits. It simplifies the processing specification, reduces the chance of errors or subtle bugs occurring, and increases the understandability of the processing itself. It also makes it easier to alter or update a processing stream, because one merely needs to add an option or change a parameter, rather than to reorganize potentially complicated logic within a script. Finally, it also means that more consistency checks can be performed automatically (e.g., keeping track of utilized degrees of freedom in the regression modeling appropriately).
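The degree-of-freedom bookkeeping described in this subsection can be sketched as a single least-squares fit. Bandpass regressors, baseline terms, and a task regressor all sit in one design matrix, and censored time points are dropped from the data and the design together. The passband, the 20 s on/off design, the censored TRs, the noise level, and the warning threshold are all hypothetical. The construction of the bandpass columns is one common approach and not necessarily identical to afni_proc.py’s.

```python
import numpy as np

def fourier_regressors(n_tp, tr, f_lo, f_hi):
    """Cosine/sine pairs at DFT frequencies outside [f_lo, f_hi] Hz.

    Including these columns in the design matrix removes the out-of-band
    frequencies within the same fit (0 Hz and Nyquist are skipped here,
    since the baseline is modeled separately).
    """
    t = np.arange(n_tp) * tr
    cols = []
    for k in range(1, (n_tp + 1) // 2):
        f = k / (n_tp * tr)
        if f < f_lo or f > f_hi:
            cols += [np.cos(2 * np.pi * f * t), np.sin(2 * np.pi * f * t)]
    return np.column_stack(cols) if cols else np.empty((n_tp, 0))

def fit_censored(y, X, keep):
    """Single least-squares fit; censored TRs are dropped from y and X together."""
    Xk, yk = X[keep], y[keep]
    beta, *_ = np.linalg.lstsq(Xk, yk, rcond=None)
    dof = Xk.shape[0] - np.linalg.matrix_rank(Xk)
    return beta, dof

n_tp, tr = 150, 2.0
t = np.arange(n_tp)
task = ((t % 20) < 10).astype(float)          # hypothetical 20 s on / 20 s off design
F = fourier_regressors(n_tp, tr, 0.01, 0.1)   # illustrative passband
X = np.column_stack([np.ones(n_tp), t / n_tp, task, F])

rng = np.random.default_rng(42)
y = 100 + 2.0 * task + rng.normal(0, 0.5, n_tp)

keep = np.ones(n_tp, dtype=bool)
keep[60:70] = False                           # e.g., TRs censored for motion

beta, dof = fit_censored(y, X, keep)
print(f"regressors: {X.shape[1]}, TRs kept: {keep.sum()}, residual DF: {dof}")
if dof < 0.2 * keep.sum():                    # illustrative warning threshold
    print("warning: few residual degrees of freedom remain")
```

Because every column and every censored TR is visible in one model, the remaining degrees of freedom follow directly from the kept time points minus the rank of the design. A separate prefiltering step would consume degrees of freedom without this accounting.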

2.1.2. Provenance and reproducibility

Specifying a full FMRI pipeline with a set of options in an afni_proc.py command is convenient. However, it is also necessary to ensure that the researcher knows exactly what occurs during the processing—having the provenance of the results—and afni_proc.py also provides this. When executed, the afni_proc.py command first produces a full processing script (Fig. 1B), which is organized by processing block, automatically commented, and even contains a copy of the generative afni_proc.py command. This “proc script” is then itself executed to process an individual’s data and saved for later reference. This two-stage approach—having the command generate a readable and commented script file, rather than simply carrying out the processing with no record—ensures that the researcher has all the details of the analysis at their fingertips. They are able to investigate the script to see the exact options used in each command at any time.

For example, a researcher might choose to smooth the EPI data during preprocessing by using the “blur” block, but not specify the blur radius explicitly and just use the program’s default (which is not necessarily set as a recommendation for all types of data). Even so, it is still possible to directly see the actual value used within the generated processing script, so there is no ambiguity or guesswork. The proc script is cleanly and clearly organized with the same hierarchy as the afni_proc.py command itself and contains detailed comments. Thus, the proc script fulfills two roles: it is both an exact, searchable specification of analysis, and it is a learning tool. If there are any questions about any aspect of the procedure, one can verify each step directly. Furthermore, when using the “-execute” option, a log file of all terminal text is also saved, for later reference (users are also encouraged to create their own log files, if not using this option).

Additionally, AFNI itself has inherent provenance tracking within its programs on a file-by-file basis. When a dataset is output, the command used to create it is added to its header. Therefore, each dataset contains an accumulating history of the commands used to produce it, including the AFNI code version and date+time of creation. This history can be viewed along with the full header information via AFNI’s 3dinfo program, or queried specifically by adding the “-history” option. This information is retained even in NIFTI files output by AFNI (via the AFNI extension), as well as in NIML datasets such as surface files.

The relatively compact afni_proc.py command (typically 20–50 lines, as vertically spaced and aligned in Fig. 1A) can readily be published in a paper’s Appendix or in an online repository (GitHub, OSF, etc.). The processing can be reproduced by using the same command with the original code version or in a container. To simplify comparisons of different afni_proc.py commands, several options within the program itself exist:

“-compare_opts ..” compares a user’s command against a predefined afni_proc.py example from the help examples;

“-compare_example_pair ..” compares two sets of predefined commands;

“-compare_opts_vs_opts ..” compares two full commands;

and “-show_example ..” displays the full afni_proc.py command from the help file, which includes many examples from publications (we include the relevant command at the start of each example description, below).

For example, users could add “-compare_opts ‘example 6b’” to their afni_proc.py command to display a comparison with that help file-enumerated example, or “-compare_opts ‘AP publish 3b’” to compare against Ex. 2’s processing command from this paper. To display the basic surface-based processing command used in AFNI Bootcamp courses, one could use “afni_proc.py -show_example ‘AP class 3’.”

We note that the program has also been developed with minimal external dependencies, to maximize stability, compatibility, and reproducibility over time. See Appendix B for more discussion of development.

2.1.3. Quality control and understanding data through processing

A primary goal of the afni_proc.py program (and of the entire AFNI platform) since its inception has been to help users “stay close to their data,” meaning that they understand the dataset from its raw state through all stages of processing. As part of this, afni_proc.py creates a “*.results” directory for each single-subject analysis, which contains copies of the original data, many of the intermediate datasets, and the final outputs, so that all stages of the processing can be verified after running the script.

The afni_proc.py pipelines also generate several automatic QC features to review many aspects of the single-subject (ss) processing; the recent FMRI Open QC Project illustrated the numerous benefits of integrating both qualitative and quantitative QC items with full preprocessing, as demonstrated in both AFNI and several other neuroimaging software packages (see Taylor, Glen, et al., 2023 and op cit.). During afni_proc.py’s FMRI processing, relevant “basic” quantities, such as subject motion summaries, degree of freedom (DF) counts, and TSNR, are calculated and reported to the researcher at the end; these are saved in a text file and can be displayed via the @ss_review_basic script in each results directory. Several potential analysis pitfalls are also checked automatically, such as failing to remove pre-steady-state volumes or having collinear regressors of interest, with results stored in “warning” files (see Taylor et al., 2024 for a more complete list).
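One of these checks, for collinear regressors of interest, can be sketched with pairwise correlations and a condition number. This is not afni_proc.py’s own warning code. The regressors, names, and the 0.9 correlation limit are hypothetical, chosen to show one near-duplicate pair being flagged.

```python
import numpy as np

def collinearity_report(X, names, corr_limit=0.9):
    """Return highly correlated regressor pairs and the design's condition number.

    X holds regressors of interest as columns (no constant column, since a
    centered constant has zero norm). The 0.9 limit is an illustrative choice.
    """
    X = np.asarray(X, dtype=float)
    Xc = X - X.mean(axis=0)                       # center each column
    norms = np.linalg.norm(Xc, axis=0)
    R = (Xc.T @ Xc) / np.outer(norms, norms)      # Pearson correlation matrix
    flagged = []
    for i in range(len(names)):
        for j in range(i + 1, len(names)):
            if abs(R[i, j]) >= corr_limit:
                flagged.append((names[i], names[j], round(float(R[i, j]), 3)))
    return flagged, float(np.linalg.cond(X))

n = 120
t = np.arange(n)
task_a = ((t % 20) < 10).astype(float)            # hypothetical condition A
task_c = ((t % 40) < 20).astype(float)            # uncorrelated with A by design
rng = np.random.default_rng(7)
task_b = task_a + rng.normal(0, 0.05, n)          # nearly a copy of A: a modeling error

flagged, cond = collinearity_report(np.column_stack([task_a, task_b, task_c]),
                                    ["task_a", "task_b", "task_c"])
for a, b, r in flagged:
    print(f"warning: {a} and {b} are highly correlated (r = {r})")
print(f"condition number: {cond:.1f}")
```

A large condition number means that small changes in the data can produce large, unstable changes in the separate estimates for the flagged regressors, even when their combined fit looks reasonable.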

Since many processing steps either require or are greatly helped by visual verification, there is also a script created called @ss_review_driver, which will open viewing panels and the AFNI GUI to guide the user through visually verifying steps such as alignment, motion censoring, and model fitting. Each step of this review is commented with a pop-up GUI guide, as well. A script called @epi_review.${subj} (where ${subj} is replaced by the actual subject ID in the filename) is created, which opens up the AFNI GUI graph viewer and image panels, with time-scrolling on, to allow the user to assess the quality of the original EPI time series.

Finally, afni_proc.py generates a QC document that can be opened in a web browser to review important features from the pipeline in a single, navigable report: the APQC HTML (Taylor et al., 2024). It incorporates systematic images, automated warning checks, and several additional features. Together with a review of the “basic” quantities, it may be considered an efficient, minimal QC source for each single-subject analysis. The HTML can also now be run using a local web server, so that users can save QC ratings and comments as they scroll through, and open the datasets themselves interactively. These features have all been shown to be useful in understanding FMRI data and quality issues (Reynolds et al., 2023).

It is worth emphasizing the importance of afni_proc.py’s quality control features and of having them integrated with processing. FMRI data can have a wide array of issues, many of which can be subtle. While the primary focus of processing is typically to make the data more appropriate for later analysis (removing artifacts, aligning datasets, etc.), processing also allows underlying properties of the data to be probed in different ways. The QC procedures in AFNI generally, and in afni_proc.py specifically, have been developed to take advantage of these intermediate stages to understand the data themselves more completely (Reynolds et al., 2023; Taylor, Glen, et al., 2023). This is also one reason why intermediate datasets are created and retained as part of afni_proc.py outputs: to allow for more detailed QC as needed. As such, quality control should not be viewed merely as filtering out “bad” data, but as determining whether one can have confidence in the data across their full range of properties.

2.1.4. Pipeline management

The outputs of other programs can be used directly as inputs to afni_proc.py scripts. One typical case is using the anatomical T1w-based results of FreeSurfer’s recon-all (Fischl et al., 2002). Common usages include incorporating the volumetric segmentations for defining tissue-based regressors in the “regress” block, or the SUMA-standardized surface meshes (Argall et al., 2006) for projecting EPI data in surface-based analysis via the “surf” block. Nonlinear warps between the subject anatomical volume and a reference template can also be estimated beforehand, with the results passed along to afni_proc.py to concatenate with other transforms. Those warps can be calculated, for example, using AFNI’s 3dQwarp (Cox & Glen, 2013), sswarper2 (a new, preferred method that incorporates 3dQwarp to perform both anatomical skullstripping and nonlinear warping simultaneously; see, e.g., Taylor et al., 2018), or @animal_warper (with functionality similar to sswarper2, but specifically designed for processing nonhuman datasets; see Jung et al., 2021). Physiological regressors can be included after RETROICOR (Glover et al., 2000) time series estimation with programs such as RetroTS.py or the newer physio_calc.py (Lauren et al., 2023). A benefit of this modularity and separation is that, if the FMRI processing with afni_proc.py needs to be rerun for any reason, these precursor steps do not need to be redone; this can save considerable time and resources when using computationally intensive programs such as recon-all and sswarper2.

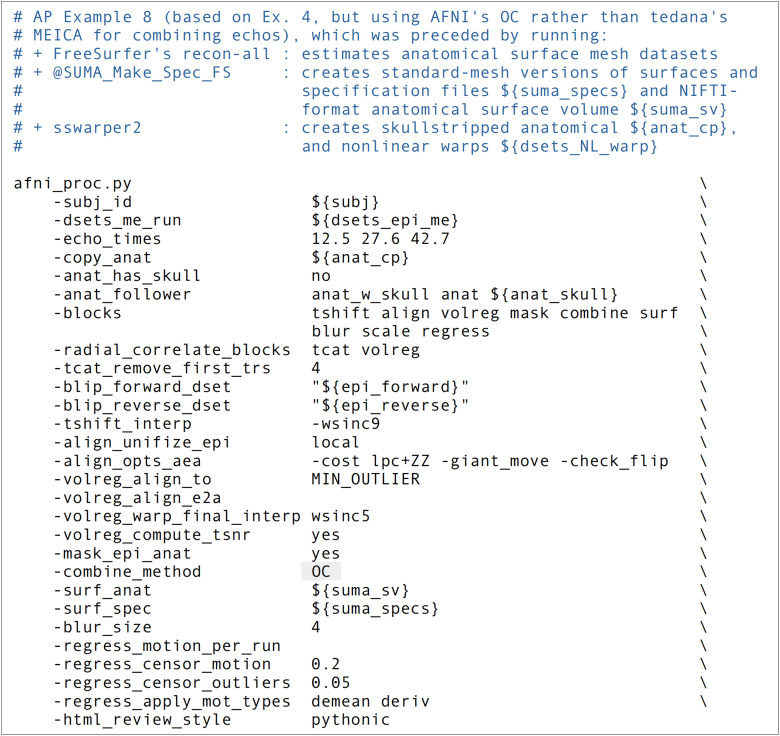

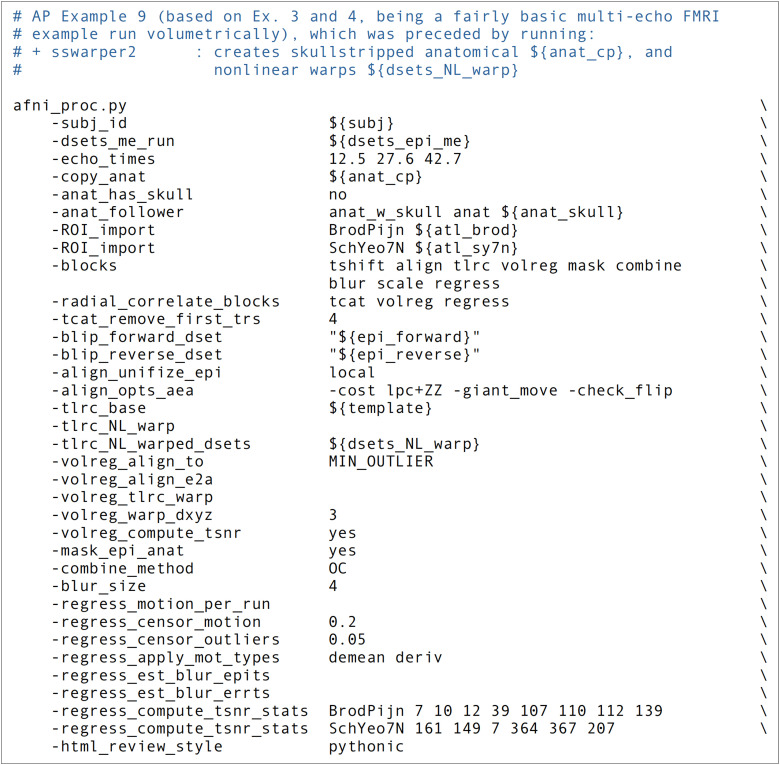

Some external programs for specific processing have been integrated within afni_proc.py. This has been the case for some workflows with the increasingly popular multiecho (ME) FMRI data, in which several (typically 3–5) T2*-weighted volumes are acquired per time point. This information can be combined in various ways to boost the signal-to-noise ratio (SNR) of the analyzed BOLD signal. Such data can be processed in afni_proc.py, using the “combine” block to specify processing choices. The program contains its own implementation of the optimal combination (OC) formulation of Posse et al. (1999). Additionally, options can be provided so that it calls one of the multiecho independent component analysis (MEICA) routines from either the original Kundu et al. (2011) codebase or the newer tedana (TE-dependent analysis) codebase of DuPre et al. (2021). The QC page of the latter is even integrated into afni_proc.py’s own APQC HTML. Note that tedana must be installed separately.
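The optimal combination idea can be sketched for a single voxel. T₂* is estimated from a log-linear fit of mean signal against echo time. Each echo is then weighted by w_n ∝ TE_n·exp(−TE_n/T₂*), the weighting commonly attributed to Posse et al. (1999), with weights normalized to sum to 1. The echo times match the example ME dataset in Section 2.1.5; S₀, the true T₂*, and the noise level are made-up values. afni_proc.py’s own implementation (masking, limits on T₂*, etc.) may differ from this sketch.

```python
import numpy as np

def estimate_t2star(mean_signals, tes):
    """Fit log S(TE) = log S0 - TE / T2* by least squares; return T2* (units of tes)."""
    slope, _intercept = np.polyfit(tes, np.log(mean_signals), 1)
    return -1.0 / slope

def oc_weights(tes, t2star):
    """Weights proportional to TE_n * exp(-TE_n / T2*), normalized to sum to 1."""
    w = tes * np.exp(-tes / t2star)
    return w / w.sum()

tes = np.array([12.5, 27.6, 42.7])            # ms, as in the example ME dataset
s0, t2s_true = 1000.0, 30.0                    # hypothetical voxel values
mean_signals = s0 * np.exp(-tes / t2s_true)    # noise-free mean signal per echo

t2s = estimate_t2star(mean_signals, tes)
w = oc_weights(tes, t2s)

# Combine one voxel's echo time series (rows = echoes) into a single series.
rng = np.random.default_rng(1)
echoes = mean_signals[:, None] + rng.normal(0, 5.0, size=(3, 200))
combined = w @ echoes
print("T2* estimate:", round(t2s, 3), "weights:", np.round(w, 3))
```

For T₂* near 30 ms, the middle echo (27.6 ms) receives the largest weight, because TE·exp(−TE/T₂*) peaks at TE = T₂*.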

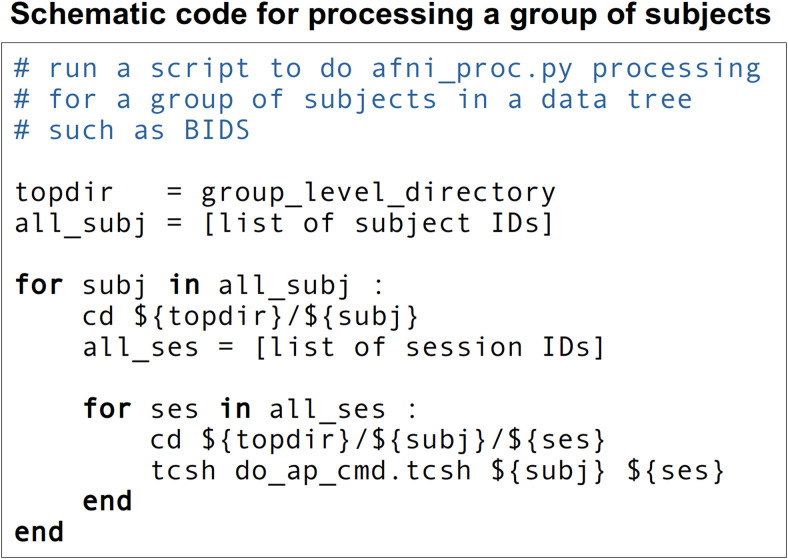

In the end, an example afni_proc.py workflow for single-subject analysis can look like that in Figure 1C. To incorporate this into a full group analysis, one can simply loop over a list of subjects, changing the input file names but keeping the remaining afni_proc.py blocks and options the same. This keeps the scripting simple even if, for instance, subjects have different randomly generated stimulus timing files for a task-based FMRI analysis. Input volumes can be in either NIFTI (Cox et al., 2004) or BRIK/HEAD format, and afni_proc.py also works directly with BIDS-formatted collections (Gorgolewski et al., 2016). Figure 3 shows a pseudocode example of running group-level processing in this way: for each subject ID and session ID, run the afni_proc.py processing contained in a script. This framework runs efficiently on a BIDS data structure, as well as on any reasonably organized one. To use JSON sidecar information, AFNI’s abids_*.py programs can also be incorporated in the central script. In some cases, processing may need to be rerun on a subgroup of subjects (for example, to fix imprecise EPI-anatomical alignment), and afni_proc.py can easily be rerun for either a subgroup or the entire group within a data collection.

Fig. 3.

Pseudocode for running single-subject processing at a group level, looping over a list of subject IDs and one or more sessions for each. At the heart of the second loop is the subject processing itself: here, running a hypothetical shell script (“do_ap_cmd.tcsh”) that contains an afni_proc.py command and needs only the subject and session ID values as arguments. This runs easily on a BIDS-formatted data collection (though some BIDS trees do not contain a session-level ID or directory structure, in which case the second loop would be omitted).
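The Fig. 3 pseudocode can be written as a small Python driver. This is a sketch under stated assumptions: “do_ap_cmd.tcsh” is the hypothetical per-subject script from the caption, every subject is assumed to share the same session list, and the helpers `find_ids`, `build_commands`, and `run_all` are illustrative names, not AFNI tools. By default it only prints the commands (a dry run).

```python
import subprocess
from pathlib import Path

def find_ids(root, pattern):
    """List directory names matching a glob pattern (e.g., "sub-*"), sorted."""
    return sorted(p.name for p in Path(root).glob(pattern) if p.is_dir())

def build_commands(subjects, sessions=None, script="do_ap_cmd.tcsh"):
    """One command per subject (and per session, when the tree has sessions)."""
    cmds = []
    for subj in subjects:
        for ses in (sessions or [None]):
            args = ["tcsh", script, subj]
            if ses is not None:
                args.append(ses)
            cmds.append(args)
    return cmds

def run_all(cmds, dry_run=True):
    """Print commands (dry run) or execute them one after another."""
    for args in cmds:
        if dry_run:
            print(" ".join(args))
        else:
            subprocess.run(args, check=True)

# Hypothetical usage on a BIDS-like tree:
#   subjects = find_ids("/path/to/bids_root", "sub-*")
run_all(build_commands(["sub-01", "sub-02"], ["ses-1"]))
```

With `check=True`, the loop stops at the first failing subject. For large collections, one might instead log failures and continue, or submit each command to a cluster scheduler. Rerunning a subgroup only requires passing a shorter subject list.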

The results directory created by afni_proc.py contains relevant outputs in a standardized structure and naming convention, including copies of the input data, several stages of intermediate files, a QC directory, and the final datasets. There is also a reference dictionary of key datasets and quantities (“out.ss_review_uvars.json”), which is parsed by the QC generation programs and also serves as a useful reference of important outputs for the user (“uvars” stands for “user variables”; see Taylor et al., 2024, for details). Some additional quantities relevant for group analysis can also be calculated by afni_proc.py output scripts and used later. These include estimates of the spatial smoothness of the noise, via autocorrelation function (ACF) parameters, for group-level clustering (Cox et al., 2017); see Example 2, below. All of these QC quantities can be compared across subjects, or evaluated against data-dropping criteria, with AFNI’s gen_ss_review_table.py (Reynolds et al., 2023).
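Because the QC quantities are stored in machine-readable dictionaries, simple screening can also be scripted. The sketch below assumes that one subject’s quantities have been loaded into a JSON dictionary. The key names (`censor_fraction`, `tsnr_median`), the thresholds, and the example values are all hypothetical and made up for illustration. They should be replaced with the keys that actually appear in one’s own outputs. For real group-level tables, AFNI’s gen_ss_review_table.py is the intended tool.

```python
import json

# Hypothetical key names and limits, for illustration only; check the keys
# that actually appear in your own QC outputs before adapting this.
CRITERIA = {
    "censor_fraction": ("max", 0.10),
    "tsnr_median": ("min", 40.0),
}

def load_qc(path):
    """Read one subject's JSON dictionary of QC quantities."""
    with open(path) as f:
        return json.load(f)

def flag_subject(qc, criteria=CRITERIA):
    """Return (key, value, reason) for each criterion that fails or is missing."""
    problems = []
    for key, (kind, limit) in criteria.items():
        if key not in qc:
            problems.append((key, None, "missing"))
            continue
        val = float(qc[key])
        if kind == "max" and val > limit:
            problems.append((key, val, f"> {limit}"))
        elif kind == "min" and val < limit:
            problems.append((key, val, f"< {limit}"))
    return problems

# Made-up values for a hypothetical subject:
example = json.loads('{"subj": "sub-example", "censor_fraction": 0.14, "tsnr_median": 55.2}')
print(flag_subject(example))
```

Reporting missing keys explicitly, rather than silently passing them, helps catch pipelines that changed or failed partway through.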

The derived outputs can be used for further analysis within AFNI or any other software. The key quantities and datasets from processing are known from the keys defined for the “uvar” and “basic review” dictionaries. This output structure is inherently mappable to ones from analogous software tools in other packages or to BIDS-Derivatives. We have recently added functionality to create an additional output directory that follows the BIDS-Derivatives (v1.9.0) file structure and naming convention for FMRI processing, for the subset of afni_proc.py outputs that currently have definitions there. The user implements this by specifying “-bids_deriv yes.” An example of this is provided in the supplementary Ex. 9 (see Appendix C).

2.1.5. Description of example datasets used here

In the Results section, we present four examples of afni_proc.py commands for various FMRI processing. The full processing scripts for the workflows described here (including physio_calc.py, sswarper2, FreeSurfer’s recon-all, etc.) are publicly available,* including all of the supplementary examples in Appendix C. We first briefly describe the MRI datasets, which are publicly available† and representative of standard acquisitions. Each was collected at 3T field strength.

Examples 1, 3, and 4 use the same resting-state FMRI dataset (“sub-005”), which was acquired with ME-FMRI. Exs. 1 and 3 use only a single echo, while Ex. 4 uses all the echoes during processing. The acquisition and original application of this dataset are detailed in Gilmore et al. (2019) and in Gotts et al. (2020). Briefly, there is one ME-FMRI resting-state run with TR = 2,200 ms; TE = 12.5, 27.6, 42.7 ms; voxels = 3.2 × 3.2 × 3.5 mm³; matrix = 64 × 64 × 33; N = 220 volumes; slice acceleration factor = 2 (ASSET). A short, single-echo (SE) reverse-encoded EPI set (N = 10, TE = 27.6 ms) was acquired for B0-distortion correction. There is also a standard T1w anatomical volume (1 mm isotropic voxels), and both cardiac and respiratory data were collected. This subject’s unprocessed dataset is organized in a BIDS-ish manner: the NIFTI file naming and directory structure match BIDS v1.3.0, but there are minor differences, such as the absence of associated JSON sidecar files.

Example 2 presents processing for one subject of task-based FMRI (“sub-10506”), using the publicly available Paired Associates Memory Task-Encoding (PAMENC) data collection (Poldrack et al., 2016; https://openneuro.org/datasets/ds000030/versions/1.0.0). The stimulus paradigm during scanning was a two-part memory task involving pairs of words and their pictures. For the “task” trials, participants were shown pairs of words for 1 s, and then corresponding images were also shown for a further 3 s. One image was drawn in a single color and the other in black-and-white, and participants were instructed to press Button 1 or 2 when the color image was on the left or right, respectively. For the “control” trials, pairs of scrambled stimuli were shown, again one in a single color and one in black-and-white, and participants similarly indicated the side of the color image with a button press. At the same time, they were instructed to try to remember the word pairs, as they would later be asked how sure they were that two particular words had been presented together. Briefly, there is one single-echo EPI run with TR = 2,000 ms; TE = 30 ms; voxels = 3.0 × 3.0 × 4.0 mm³; matrix = 64 × 64 × 34; N = 242 volumes. There were two FMRI stimulus classes, 40 trials of “task” and 24 trials of “control.” A standard T1w anatomical volume (1 mm isotropic voxels) was also acquired. This subject’s unprocessed dataset is organized in a complete BIDS v1.3.0 structure.

2.1.6. Overview of processing examples

Ex. 1 presents a special use case of afni_proc.py: performing only the spatial transformation steps of processing. This subset of steps might be used to test options in setting up alignment, or to investigate specifically spatial properties of the data. The remaining Examples 2–4 all demonstrate full single-subject processing, through regression. Each is aimed at standard voxelwise analysis, since each includes blurring (spatial smoothing) during processing. Further examples or variations are provided in Appendix C.

Most voxelwise studies include blurring, with the goals of increasing local TSNR and of likely improving group overlap in the face of imperfect alignment and substantial structural variability (particularly in the human cortex). Blurring can be applied in different ways, and we highlight some of these below. To use these examples as starting points for ROI-based analysis, one could simply remove the “blur” processing block and associated “-blur_*” options. Blurring should generally not be included when analyses will average within ROIs, because it spreads signals across region boundaries, artificially boosting local correlations and likely weakening distant ones. Moreover, the later averaging of time series within ROIs already boosts local TSNR.

In these examples, some separate programs were run prior to afni_proc.py. This is sometimes done to derive useful information from supplementary datasets, such as tissue maps from the anatomical volume, or physiologically based regressors when cardiac and respiratory traces have been recorded during the scan. The results of these steps are then included as additional inputs to afni_proc.py. We note these briefly here for each example, as well as in each table that shows an example command:

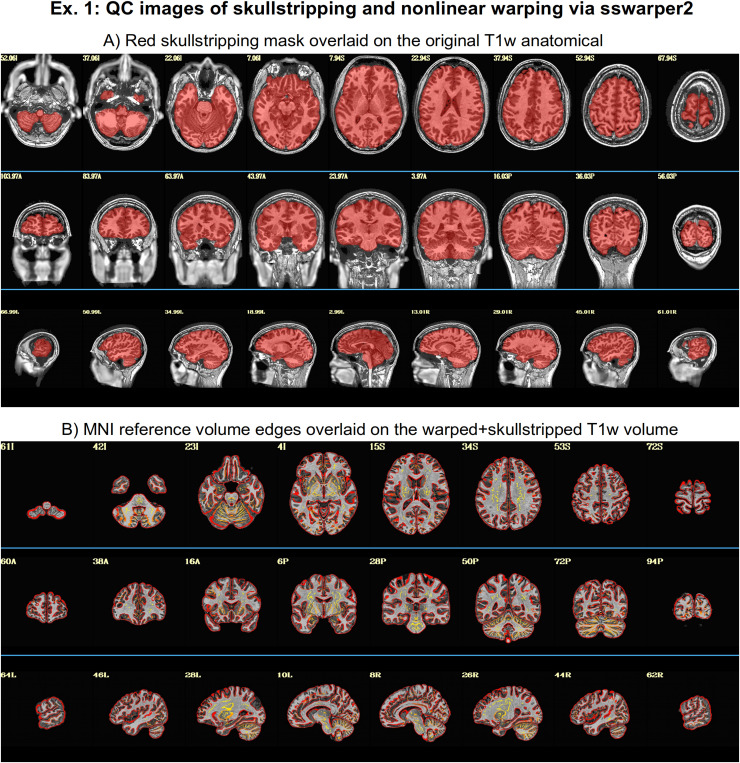

Example 1: AFNI’s sswarper2 was run on the anatomical T1w volume to simultaneously skullstrip (SS) it and estimate its nonlinear warp to standard space. Examples of its brainmask estimation and alignment to standard space are shown in Figure 4.

Example 2: AFNI’s sswarper2 was run on the T1w volume for skullstripping and nonlinear warp estimation; AFNI’s timing_tool.py was used to create stimulus timing files from the provided events TSV files.

Example 3: FreeSurfer’s recon-all was run on the anatomical T1w volume to estimate CSF and ventricle maps (for creating local tissue-based regressors with ANATICOR; Jo et al., 2010), as well as ROI parcellations; AFNI’s physio_calc.py (Lauren et al., 2023) was run on the cardiac and respiratory traces, for creating physiologically based regressors with RETROICOR (C. Chang & Glover, 2009); AFNI’s sswarper2 was run on the T1w volume for skullstripping and nonlinear warp estimation.

Example 4: FreeSurfer’s recon-all was run on the T1w volume to estimate the anatomical surface, as well as ventricle maps; AFNI’s @SUMA_Make_Spec_FS was run on the FreeSurfer meshes to convert them to GIFTI format and to create standard meshes (Argall et al., 2006).

Fig. 4.

QC images generated by AFNI’s sswarper2, as it both skullstrips an anatomical volume (panel A) and calculates its nonlinear warp to template space (panel B). Both brainmasking and alignment with the template appear to be generally strong throughout the brain. The outputs of this program (or analogous ones, such as AFNI’s @animal_warper) can be used directly in afni_proc.py. Here and in axial/coronal images below, image left is subject left.

When processing included alignment to a volumetric standard-space template (i.e., when a script contained the tlrc processing block and a “${template}” dataset‡), the MNI-2009c-Asym space (Fonov et al., 2011) was used. Specifically, we used AFNI’s MNI152_2009_template_SSW.nii.gz version of the template, which contains multiple subvolumes of information utilized by sswarper2 (and its predecessor, @SSwarper). Note that processing does not require a template space: final volumetric results could remain in native subject space, and surface-based results typically end up on the mesh estimated from the subject’s own anatomical (though if one of SUMA’s standard meshes is used, they could be equivalently displayed on any surface mesh).

3. Results

We present four afni_proc.py example commands and their results, describing the processing choices made in these examples, as well as other choices that could be used. It is impossible to provide a comprehensive set of examples,§ and the ones presented here have been chosen to highlight various features. The order of options within the command does not matter (NB: the order of blocks specified within the “-blocks ..” option does matter), but is typically chosen to group related options and for clarity of purpose. Several of the images shown below come directly from the systematic views provided within the APQC HTML created by the given processing. These were run with AFNI version 24.2.02.**

3.1. Ex. 1: Partial processing, warping-only case: Spatial transformations

In addition to building complete processing pipelines, afni_proc.py can be used to perform subsets of processing. This can be simpler than writing a separate script to carry out the task, because afni_proc.py includes several convenience features. In this example, we focus on the subset of alignment-related features in standard FMRI processing. Note that the “regress” block is merely included so that the APQC HTML is created; no regression options are used here, and those will be discussed in subsequent examples along with other nonalignment considerations.

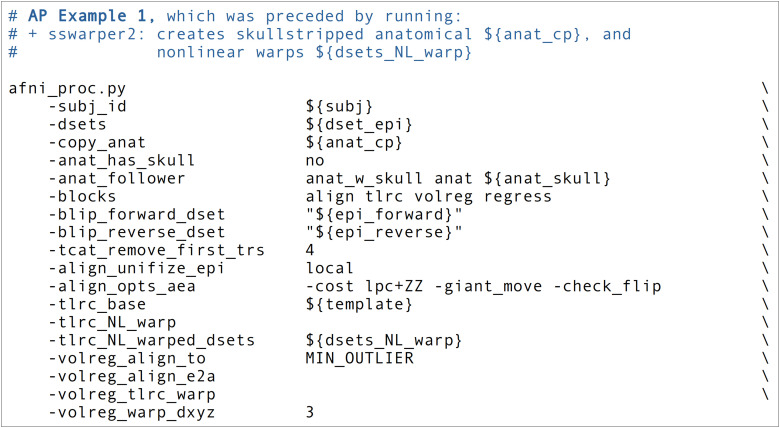

In the Ex. 1 command (Fig. 5, or run: afni_proc.py -show_example “AP publish 3a”), we use afni_proc.py to perform four alignment processes:

Fig. 5.

The afni_proc.py command for Ex. 1 (warping-only, single-echo FMRI). The options and any arguments are vertically spaced for readability. Here and throughout, items starting with “$” are variable names, which are typically file names or control options. ${sub} = the subject ID; ${anat_cp} = the input anatomical dataset (here, one that has been skullstripped by sswarper2); ${anat_skull} = a version of the input anatomical dataset that still has its skull, for reference during processing; ${dset_epi} = the input EPI dataset (a single echo, here the second one from the ME-FMRI acquisition); ${epi_forward} = an EPI volume with phase encoding in the same direction as the main input FMRI datasets, to be used in alignment-based B0-inhomogeneity correction; ${epi_reverse} = an EPI volume with phase encoding in the direction opposite to that of ${epi_forward}, for B0-inhomogeneity correction; ${template} = name of the reference volume for the final space (here, the MNI template). Running this command produces a commented script of >450 lines, encoding the detailed provenance of all processing.

forward-to-reverse phase-encoded EPI alignment (“blip” block, which is implicitly included when the “-blip_*_dset ..” options are present), which is estimated with a restricted nonlinear alignment using AFNI’s “3dQwarp -plusminus ..”;

EPI-to-anatomical alignment (“align” block), which is estimated with a linear affine transform;

anatomical-to-template alignment (“tlrc” block), which has been estimated nonlinearly here using AFNI’s sswarper2 so that anatomical skullstripping is also included;

EPI-to-EPI volumetric motion estimation and correction (“volreg” block), which is estimated with rigid-body alignment across the input FMRI dataset.

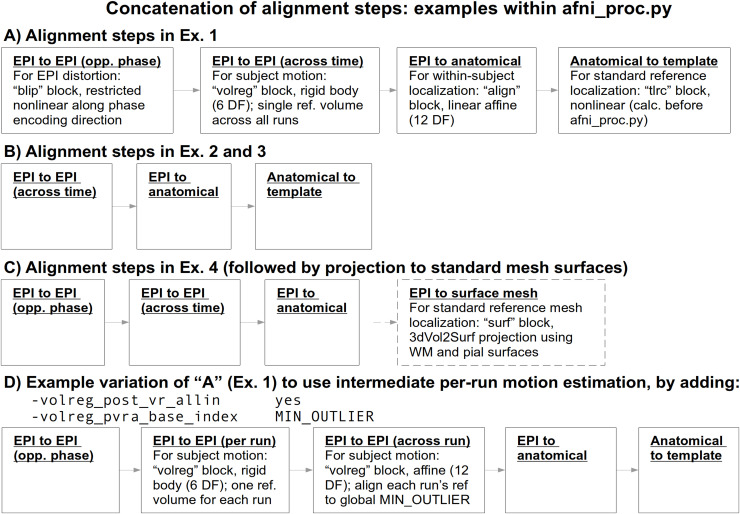

From the short command provided in Figure 5, the created proc script will first estimate each of the alignments individually. It will then conveniently concatenate the transformations into one comprehensive warp and apply that as a single transformation to the raw, input EPI dataset (Fig. 6A). This concatenation minimizes the blurring necessarily incurred by regridding and interpolation, while simultaneously motion correcting and warping the FMRI time series to the template space. The afni_proc.py command is an extremely compact way to perform this procedure (the produced “proc” script is over 450 lines long, including comments), and the programmatic details of concatenation can all be checked for educational or verification purposes within the output proc script.
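The benefit of concatenation can be illustrated with plain 4 x 4 affine matrices. The sketch below is a minimal, hypothetical NumPy example: the three matrices are made-up stand-ins for the volreg, align, and tlrc transforms (the real anatomical-to-template warp is nonlinear, and the generated proc script shows exactly which AFNI commands compose and apply the warps). It only demonstrates that applying the transforms one after another and applying their product once give the same mapping, so the data need to be resampled only once.

```python
import numpy as np

def affine(rot_z_deg=0.0, shift=(0.0, 0.0, 0.0), scale=1.0):
    """Return a 4x4 affine matrix: uniform scaling plus rotation about z, then a shift."""
    th = np.deg2rad(rot_z_deg)
    c, s = np.cos(th), np.sin(th)
    mat = np.eye(4)
    mat[:3, :3] = scale * np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])
    mat[:3, 3] = shift
    return mat

# Hypothetical transforms, in the order in which they act on an EPI coordinate.
motion = affine(rot_z_deg=1.5, shift=(0.4, -0.2, 0.1))                 # rigid body (volreg)
epi2anat = affine(rot_z_deg=-3.0, shift=(2.0, 5.0, -1.0), scale=1.02)  # affine (align)
anat2tlrc = affine(rot_z_deg=0.5, shift=(-1.0, 0.0, 3.0), scale=0.95)  # stand-in for tlrc

point = np.array([10.0, -20.0, 30.0, 1.0])  # homogeneous coordinate (x, y, z, 1)

# Applying each transform separately (with real images: three resamplings).
stepwise = anat2tlrc @ (epi2anat @ (motion @ point))

# Concatenating first, then applying one combined transform (a single resampling).
combined = anat2tlrc @ epi2anat @ motion
single = combined @ point

print(np.allclose(stepwise, single))  # True
```

With real images, every separate resampling interpolates the data again; composing the transforms first is what avoids that repeated interpolation.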

Fig. 6.

Schematics of the various alignment steps within each example’s afni_proc.py command. Details are shown for the first time a particular step is presented. Alignment is calculated separately for each step, but then concatenated within the afni_proc.py script before applying to the EPI data. This tends to minimize extra blurring that would be incurred by multiple regridding and interpolation processes, if the stages were applied separately. In C (Ex. 4), after the concatenated warp is applied, the EPI data are projected onto a standardized surface mesh with 3dVol2Surf. Case D displays a variation of how to handle motion estimation when multiple runs are input, particularly if one might expect more differences between runs.

We note some additional points on the input EPI and anatomical data (which are provided with “-dsets ..” and “-copy_anat ..,” respectively). First, these datasets can be in either NIFTI or BRIK/HEAD format, since AFNI reads and writes both. By default, the input anatomical volume would be skullstripped with a simple method; when skullstripping has already been performed, the user can turn this off by including “-anat_has_skull no,” as has been done here. Multiple single-echo EPI datasets can be input after the single “-dsets ..” option, such as when there are multiple runs per session to be analyzed simultaneously. The final output from processing will be derived from one concatenated dataset, and one would typically then include an option for “per run regressors” within the regress block, to appropriately handle the breaks in the time series during regression (see Exs. 2–4, below). Finally, it is possible to remove initial time points from the input EPI datasets as they are copied at the start of processing, using the “-tcat_remove_first_trs ..” option; this is often done if presteady-state volumes remain in the data, and it is done here to remove the first four time points. The afni_proc.py script also contains an automatic check for potential presteady-state volumes, whose results are included in the “warns” section of the APQC HTML.
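The bookkeeping implied by “-tcat_remove_first_trs ..” and by concatenating several runs can be sketched as follows. This is a hypothetical NumPy illustration (the second run and its length are invented, and the function name is ours): it trims the first volumes of each run, concatenates the runs in time, and records where each run starts, which is the information later needed to handle the breaks between runs.

```python
import numpy as np

def tcat_runs(runs, remove_first=4):
    """Drop the first `remove_first` volumes of each run (time is the last axis),
    concatenate the trimmed runs in time, and return run start indices and lengths."""
    trimmed = [run[..., remove_first:] for run in runs]
    lengths = [t.shape[-1] for t in trimmed]
    starts = [int(s) for s in np.cumsum([0] + lengths[:-1])]
    return np.concatenate(trimmed, axis=-1), starts, lengths

rng = np.random.default_rng(0)
run1 = rng.normal(size=(4, 4, 3, 220))  # 220 volumes, as in the Ex. 1 run
run2 = rng.normal(size=(4, 4, 3, 200))  # hypothetical second run
data, starts, lengths = tcat_runs([run1, run2], remove_first=4)
print(data.shape, starts, lengths)  # (4, 4, 3, 412) [0, 216] [216, 196]
```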

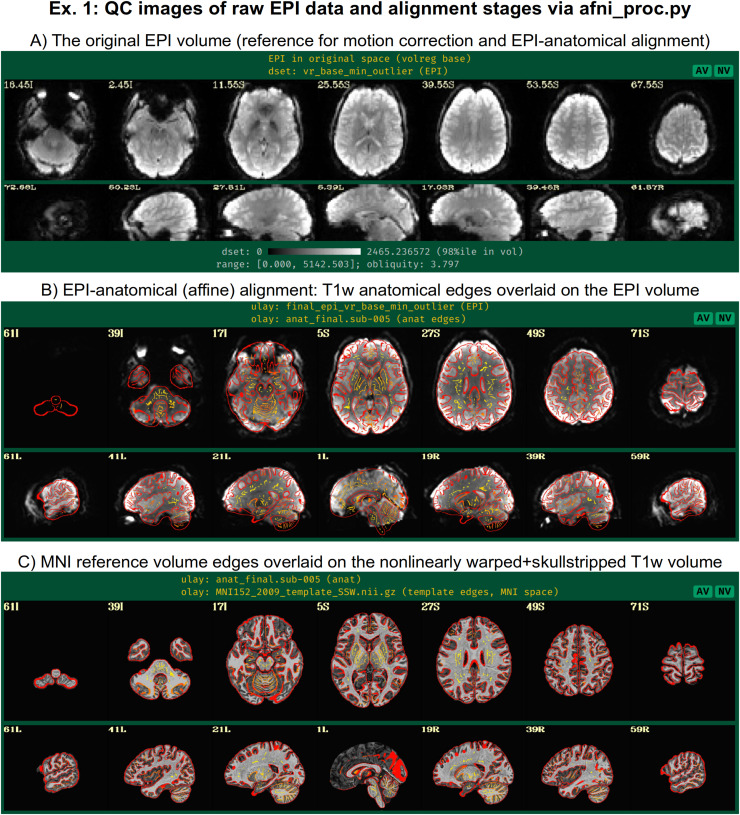

In addition to the main EPI time series data, reverse-phase-encoded EPI datasets, also known as “blip up/blip down” datasets, are input in this example via “-blip_forward_dset ..” and “-blip_reverse_dset …” The “blip” processing block†† is, therefore, implicitly included in the processing block list, as noted in Table 1; here, it occurs just prior to the “align” block in the generated processing script. This pair of blip datasets will be mutually aligned to produce a warp that “meets in the middle,” which reduces the geometric effects of B0 inhomogeneity (Andersson et al., 2003; H. Chang & Fitzpatrick, 1992; Holland et al., 2010). This has been shown to improve the matching of structures between subject EPI and anatomical data (e.g., Hong et al., 2015; Hutton et al., 2002; Irfanoglu et al., 2019; Roopchansingh et al., 2020). In Figure 7, one can see the reduced geometric distortion of the EPI volume, particularly by comparing sagittal slice views in “A” (e.g., slice 17.03R) with the postalignment EPIs shown beneath the anatomical edges in “B” (e.g., slice 19R): the stretching of the former along the AP axis is greatly reduced in the latter. Note that some bright CSF can still be observed outside the anatomical edges.

Fig. 7.

A selection of QC images generated by afni_proc.py for Ex. 1, which focuses on alignment-related steps of preprocessing. Panel A shows one EPI volume in original view (specifically, the one used as a reference for motion correction and EPI-anatomical alignment) to check coverage, tissue contrast, etc. Panel B shows the underlaid EPI and overlaid edges of the anatomical volume after affine alignment. Here, after blip up/down correction, the EPI shows greatly reduced B0 inhomogeneity distortion along the AP axis (cf. the sagittal views; some of the bright regions are CSF), and the general matching of the sulcal and gyral features and other tissue boundaries is strong. Panel C shows the anatomical (underlaid) and reference template (overlaid, edges) volumes after nonlinear alignment. Some local structural differences are to be expected (particularly where there are differing numbers of sulci and gyri), but again the general matching of structural features is quite high.

In the anatomical-to-template block (“tlrc”), nonlinear alignment is switched on with “-tlrc_NL_warp,” here specifying an MNI template as a reference base (“-tlrc_base ..”). By default, this transform would be calculated using AFNI’s auto_warp.py, which calls 3dQwarp (Cox & Glen, 2013). However, in this example we have already run AFNI’s sswarper2 program to both skullstrip the anatomical and generate the nonlinear warp to template space prior to running afni_proc.py. This is a useful approach for running and evaluating a computationally expensive procedure before follow-on processing. If we end up running the afni_proc.py processing more than once or in a parallel processing stream, the warp estimation for this particular T1w dataset need not be recalculated. Figure 7C shows the QC images for the anatomical-to-template alignment in the APQC HTML, which in this case match those of sswarper2 (Fig. 4B). The precalculated warps have been passed to afni_proc.py with “-tlrc_NL_warped_dsets …” For nonhuman primate (NHP) and other animal processing, the @animal_warper program for skull removal and nonlinear warp estimation (Jung et al., 2021) can be integrated in exactly the same way.

In the volume registration block (“volreg”), each EPI time point is aligned to a reference using rigid-body alignment with 3dvolreg. The resulting per-volume transformations will be concatenated with the other transforms later in the processing. In the full processing examples below, we describe how the motion parameter time series can also be used both for motion censoring and in the regression modeling. The “-volreg_align_to ..” option allows the user to specify which EPI time point should be used as the reference volume. There are many considerations for this, but in general one would like to ensure that the reference volume itself is not corrupted by motion. To accomplish this in a general fashion, we recommend using the “MIN_OUTLIER” keyword with this option, so that the selected time point is the one with the fewest outliers in the input EPI time series; that choice seems to be the most generally reliable, with minimal risk of being corrupted in a given subject. The same volume selected as the motion correction reference is also used for EPI-to-anatomical alignment. If the user would like to use a reference volume from a dataset other than the input EPI (e.g., a separate, presteady-state volume with higher tissue contrast), then the “-volreg_base_dset ..” option could be used instead. Finally, the output spatial resolution for the processed data can be specified here, with the “-volreg_warp_dxyz ..” option. If this option is not used, the data will be output at an isotropic spatial resolution slightly higher than the input EPI’s minimal voxel dimension.‡‡
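The idea behind “MIN_OUTLIER” can be sketched as choosing the volume with the smallest outlier fraction. The outlier test below is a simplified, hypothetical stand-in for AFNI’s 3dToutcount (a robust, median-based deviation test with an assumed cutoff of 3.5 robust standard deviations), not its exact algorithm; the function names are ours.

```python
import numpy as np

def outlier_fraction(ts, mask, k=3.5):
    """Simplified outlier count: within `mask`, flag time points whose deviation from
    the voxel median exceeds k robust SDs (1.4826 * MAD), and return the fraction of
    masked voxels flagged at each time point."""
    vox = ts[mask]                                   # shape (n_voxels, n_time)
    med = np.median(vox, axis=1, keepdims=True)
    mad = np.median(np.abs(vox - med), axis=1, keepdims=True)
    robust_sd = 1.4826 * mad + 1e-12                 # avoid division by zero
    flagged = np.abs(vox - med) > k * robust_sd
    return flagged.mean(axis=0)

def min_outlier_index(frac):
    """Index of the volume with the fewest outliers (the first one, if tied)."""
    return int(np.argmin(frac))

rng = np.random.default_rng(1)
ts = rng.normal(100.0, 1.0, size=(6, 6, 4, 50))
ts[..., 0] += 20.0                                   # a corrupted first volume
mask = np.ones(ts.shape[:3], dtype=bool)
frac = outlier_fraction(ts, mask)
ref = min_outlier_index(frac)                        # never the corrupted volume 0
```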

In the EPI-to-anatomical alignment block (“align”), linear affine registration with 12 degrees of freedom is performed between the anatomical dataset and the same EPI reference volume used in motion correction. These volumes typically have differing (often opposite) tissue contrast, so an appropriate cost function must be selected to drive the alignment optimization. AFNI’s local Pearson correlation (lpc) cost function, specified here with “-align_opts_aea ..,” has been shown to generally provide excellent alignment in these cases (Saad et al., 2009); the “+ZZ” provides extra stability, since EPIs can be variously noisy, inhomogeneous, and distorted. In some protocols, applied contrast agents such as MION can alter the EPI tissue contrast so that it matches that of the T1w volume. In such cases of matching contrast, the related “lpa” or “lpa+ZZ” cost function would be recommended. In some scenarios where the EPI volume has minimal tissue contrast, the “nmi” cost function may be a useful alternative. For other specialized scenarios, other cost functions can be tried.

In addition to distortions, EPI volumes can have other nonideal properties that affect alignment. The recently added AFNI program 3dLocalUnifize helps deal with EPI volumes that have notable brightness inhomogeneity patterns, which effectively change or greatly reduce tissue contrast. This program creates a brightness-homogenized version of a dataset while still preserving structural patterns; this intermediate dataset is used only for the alignment. It is invoked within afni_proc.py by “-align_unifize_epi local,” as used here. We find that this option provides general stability and typically improves coregistration for human datasets, even those without notable inhomogeneity; for nonhuman datasets, it is not currently recommended, because these tend to have more significant nonbrain features in the FOV, with which this functionality interacts. The “-giant_move” suboption widens the search space for alignment parameters, which is useful when the EPI and anatomical do not have a strong initial overlap (even though in this case, the datasets overlay well). The “-check_flip” suboption checks for instances when the EPI and anatomical might be left–right flipped relative to each other; while this sounds like an odd concern, this functionality has found such orientation errors in datasets contained in major public repositories such as FCON-1000, ABIDE, and OpenfMRI/OpenNeuro (Glen et al., 2020; Reynolds et al., 2023).

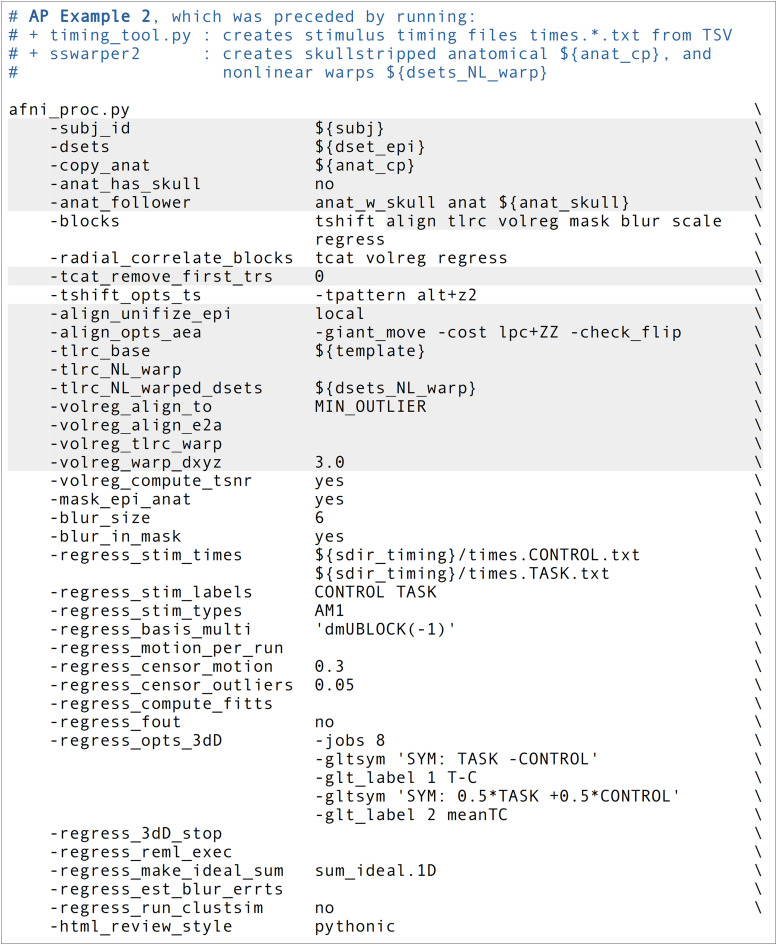

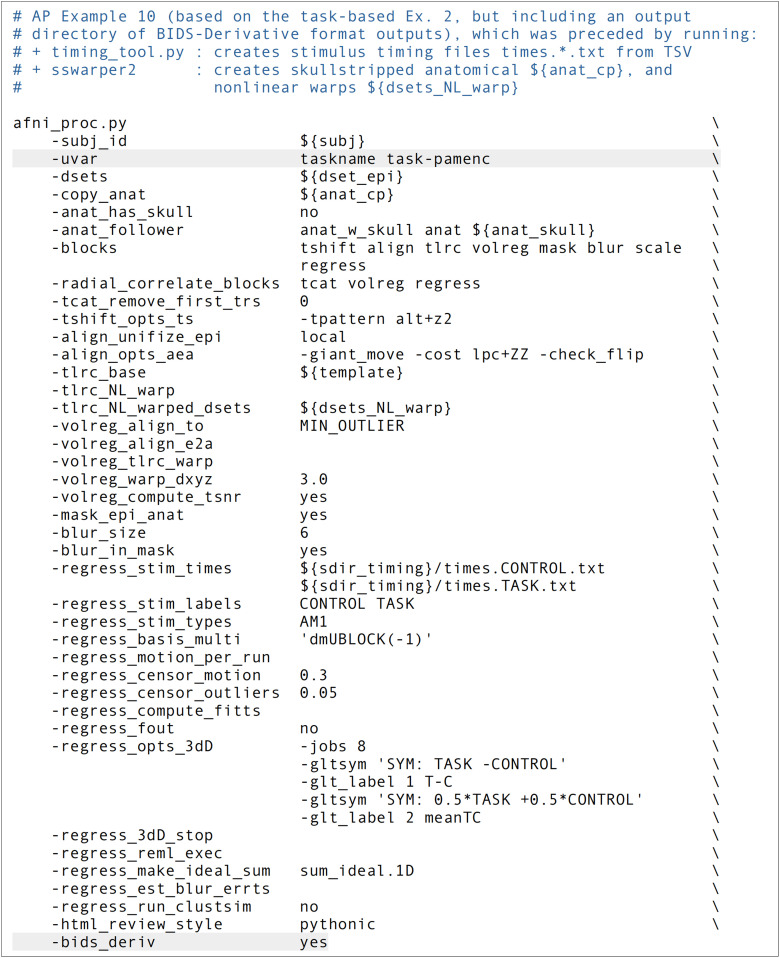

3.2. Ex. 2: Full task-based FMRI processing, with duration modulation

In this example, we demonstrate the full subject-level processing of a task-based FMRI dataset (that is, through the regression modeling block) with afni_proc.py (Fig. 8, or run: afni_proc.py -show_example “AP publish 3b”). The processing here would apply to a standard voxelwise analysis, given the presence of the “blur” block. The main facets of the “volreg,” “align,” and “tlrc” blocks were described above in Ex. 1 and apply equivalently here. As in Ex. 1, sswarper2 was run prior to afni_proc.py, and both its skullstripping and warping results are imported. One alignment-related difference from Ex. 1 is that this dataset does not have a pair of opposite phase encoding datasets for “blip” block geometric correction (though the block could be used, if such data were available for this subject).

Fig. 8.

The afni_proc.py command for Ex. 2 (task-based, single-echo FMRI, full processing). Options with a gray background were described earlier in Ex. 1, and variables not defined here are described in the caption of Figure 5. ${blur_size} is the FWHM of the applied blur, in mm; ${sdir_timing} is the directory containing stimulus timing files. Running this command produces a commented script of >640 lines, encoding the detailed provenance of all processing.

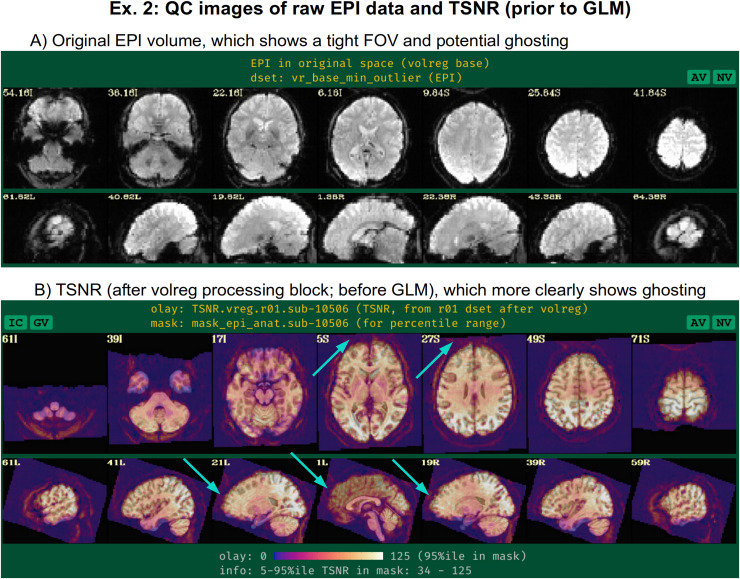

Since this example focuses more on time series modeling, the “-volreg_compute_tsnr yes” option has been added to include an image of the TSNR immediately after motion correction has been applied to the EPI time series, prior to regression modeling; the TSNR after the GLM is already included by default. An example of the utility of this can be seen in Figure 9B, where ghosting in the dataset is apparent. This means that one must be particularly cautious in interpreting statistical results in the frontal regions where the ghost signal overlaps the brain. As noted above, simply masking the data would hide this circumstance and could lead to erroneous conclusions from viewing only the GLM outputs. Panel A of the same figure shows the raw EPI data, which indeed reveal an extremely tight FOV for the acquisition; typically, leaving more empty space around the brain helps prevent such issues.
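As a reminder of what the TSNR map contains, here is a minimal NumPy sketch: per voxel, the temporal mean divided by the temporal standard deviation. Removing only a linear trend before computing the standard deviation is an assumption made for brevity (the proc script records the exact detrending used), and the function name is ours. No brain mask is applied, so artifacts such as ghosting outside or overlapping the brain remain visible in the resulting map.

```python
import numpy as np

def tsnr(ts):
    """Voxelwise TSNR for a 4D array (time on the last axis): temporal mean divided
    by the standard deviation of the linearly detrended time series."""
    n = ts.shape[-1]
    mean = ts.mean(axis=-1)
    resid = ts - mean[..., None]
    t = np.arange(n) - (n - 1) / 2.0            # centered time index
    slope = (resid * t).sum(axis=-1) / (t ** 2).sum()
    resid = resid - slope[..., None] * t        # remove the linear trend
    sd = resid.std(axis=-1, ddof=1)
    with np.errstate(divide="ignore", invalid="ignore"):
        return np.where(sd > 0, mean / sd, 0.0)

rng = np.random.default_rng(2)
n_t = 200
drift = 0.02 * np.arange(n_t)                   # slow linear drift
ts = 100.0 + drift + rng.normal(0.0, 2.0, size=(5, 5, 5, n_t))
ts[0] = 0.0                                     # an empty slab outside the head
snr = tsnr(ts)                                  # about 51 in the noisy voxels, 0 in the slab
```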

Fig. 9.

QC images generated by afni_proc.py for Ex. 2, showing: (A) the raw EPI volume in native space; and (B) the unmasked TSNR after the volreg processing block, prior to regression modeling. The unmasked TSNR image shows evidence for ghosting artifact overlapping into the brain (cyan arrows); as described in Figure 2, this shows the benefits of not masking data during processing to understand it better and more reliably evaluate it. The fact that the EPI has been acquired with such a tight FOV (see panel A) likely contributes to the presence of ghosting. The TSNR map also shows the presence of EPI distortion (the anterior TSNR pattern extends beyond the anatomical boundaries, even though structural alignment is good).

This afni_proc.py command includes some additional QC-specific options, which add or modify features in the output APQC HTML. For example, “-radial_correlate_blocks ..” specifies a list of blocks for which images of local radial correlation will be created (via AFNI’s @radial_correlate). That is, a dataset is calculated in which each voxel’s time series is correlated with the Gaussian-weighted average of surrounding voxels’ time series over a large radius (20 mm half width at half max, by default); such a dataset has been shown to be useful in revealing scanner- or motion-related artifacts (Taylor et al., 2024). The “-html_review_style” option lets the user choose whether to use an older, simple program for line plots within the HTML (“basic” style), or a more modern version that contains extra information but requires Python’s Matplotlib library (“pythonic” style). At present, the program checks for the Python dependencies needed to run the latter style, so that the more informative plots can be provided by default.
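The radial correlation idea can be sketched in a few lines of NumPy. This is a simplified illustration, not @radial_correlate itself: the Gaussian weights are applied separably along each axis, renormalized at the edges of the FOV, and (as an assumption) include the center voxel; the 20 mm half width at half max is converted to a Gaussian standard deviation via sigma = HWHM / sqrt(2 ln 2). The function names and the toy data are hypothetical.

```python
import numpy as np

def axis_weights(n, sigma_vox):
    """Row-normalized Gaussian weight matrix for smoothing along one axis of length n."""
    idx = np.arange(n)
    w = np.exp(-0.5 * ((idx[:, None] - idx[None, :]) / sigma_vox) ** 2)
    return w / w.sum(axis=1, keepdims=True)

def radial_correlate(ts, voxel_mm, hwhm_mm=20.0):
    """Correlate each voxel's time series (4D array, time last) with the
    Gaussian-weighted average of the time series around it."""
    sigma_mm = hwhm_mm / np.sqrt(2.0 * np.log(2.0))
    wx, wy, wz = (axis_weights(n, sigma_mm / d) for n, d in zip(ts.shape[:3], voxel_mm))
    local = np.einsum("ai,bj,ck,ijkt->abct", wx, wy, wz, ts)  # separable 3D smoothing
    a = ts - ts.mean(axis=-1, keepdims=True)
    b = local - local.mean(axis=-1, keepdims=True)
    num = (a * b).sum(axis=-1)
    den = np.sqrt((a ** 2).sum(axis=-1) * (b ** 2).sum(axis=-1))
    with np.errstate(divide="ignore", invalid="ignore"):
        return np.where(den > 0, num / den, 0.0)

rng = np.random.default_rng(3)
shape, n_t = (8, 8, 6), 100
noise = rng.normal(size=shape + (n_t,))
shared = rng.normal(size=n_t)  # e.g., a widespread scanner- or motion-related signal
r_noise = radial_correlate(noise, voxel_mm=(3.0, 3.0, 3.0))
r_shared = radial_correlate(noise + 5.0 * shared, voxel_mm=(3.0, 3.0, 3.0))
```

In this toy case, independent noise gives low radial correlation, while a signal shared across the FOV pushes it close to 1, which is the kind of pattern the QC images are meant to make visible.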

When slice timing information is available in the EPI data, it can be applied to shift the input time series datasets appropriately by including the “tshift” block, as in this example. The user can supply various options to the 3dTshift command that will be used in the proc script via “-tshift_opts_ts …” In this case, the timing pattern is specified. With other “-tshift_* ..” options, users can control the interpolation method or the part of the TR to which shifted times should be aligned.
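For intuition about what slice timing correction does, the sketch below computes per-slice acquisition offsets for an interleaved “alt+z” pattern (even-numbered slices first, then odd) and resamples one voxel’s time series onto a common reference time. It is a hypothetical, simplified NumPy illustration with names of our choosing: it uses linear interpolation and a reference time of 0 s, whereas 3dTshift offers several interpolation methods and options for choosing the reference time.

```python
import numpy as np

def alt_z_offsets(n_slices, tr):
    """Acquisition time offset (s) of each slice for an 'alt+z' pattern:
    slices 0, 2, 4, ... are acquired first, then 1, 3, 5, ..."""
    order = list(range(0, n_slices, 2)) + list(range(1, n_slices, 2))
    offsets = np.empty(n_slices)
    for position, slice_index in enumerate(order):
        offsets[slice_index] = position * tr / n_slices
    return offsets

def shift_slice(ts, offset, tr, ref_time=0.0):
    """Resample a 1D time series sampled at n*tr + offset onto n*tr + ref_time,
    using linear interpolation (values beyond the ends are held constant)."""
    n = len(ts)
    acquired = np.arange(n) * tr + offset
    target = np.arange(n) * tr + ref_time
    return np.interp(target, acquired, ts)

tr = 2.0
offsets = alt_z_offsets(4, tr)                 # [0.0, 1.0, 0.5, 1.5]
acq_times = np.arange(10) * tr + offsets[2]    # slice 2 is acquired 0.5 s into each TR
ramp = acq_times.copy()                        # a signal equal to time itself
shifted = shift_slice(ramp, offsets[2], tr)    # n * tr, except at the first point
```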

The “mask” block leads to the estimation of a whole brain mask, or as close to one as the data allow. Importantly, the mask is not applied directly to the FMRI data (which would zero out much of the field of view, FOV), but instead is reserved for use in various calculations. While most studies focus their investigations within the brain only, it is quite helpful to see EPI data across the whole FOV in order to be aware of possible distortions, noise values, and other properties within the data (see, e.g., Taylor, Reynolds, et al., 2023). The mask can be used to calculate mean quantities, such as average TSNR for reporting, and can be combined with similar masks across all subjects to create a group-level mask. The “-mask_epi_anat yes” option added here tightens the EPI mask by intersecting it with the anatomical mask, which is typically done to improve specificity.
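A minimal NumPy sketch of the mask uses mentioned here, with made-up 2D arrays standing in for volumes: intersecting an EPI mask with an anatomical mask (the idea behind “-mask_epi_anat yes”), averaging a quantity such as TSNR within the mask, and forming a group mask from several subjects. The function names and the group-mask overlap fraction are hypothetical, not afni_proc.py settings.

```python
import numpy as np

def intersect_masks(epi_mask, anat_mask):
    """Keep only voxels present in both masks."""
    return np.logical_and(epi_mask, anat_mask)

def mean_in_mask(values, mask):
    """Average of a map (e.g., TSNR) over the voxels inside a mask."""
    return float(values[mask].mean())

def group_mask(subject_masks, min_frac=1.0):
    """Voxels inside at least `min_frac` of the subject masks (1.0 = intersection)."""
    frac = np.stack(subject_masks).astype(float).mean(axis=0)
    return frac >= min_frac

epi = np.zeros((4, 4), dtype=bool)
epi[0:3, 0:3] = True
anat = np.zeros((4, 4), dtype=bool)
anat[1:4, 1:4] = True
both = intersect_masks(epi, anat)              # the 2x2 block [1:3, 1:3]
tsnr_map = np.arange(16, dtype=float).reshape(4, 4)
avg = mean_in_mask(tsnr_map, both)             # mean of 5, 6, 9, 10 = 7.5
grp = group_mask([epi, anat, both], min_frac=2 / 3)
```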

The “blur” block is also included in the processing, which is standard for FMRI data that will be analyzed voxelwise; it boosts local SNR, though at the cost of spatial specificity. While the selected amount of blur can vary by application (including no blurring, in the case of ROI-based analysis), a general guideline for single-echo FMRI inputs might be to use a “-blur_size ..” that is about 1.5–2 times the minimum voxel dimension. The present EPI voxels are 3 x 3 x 4 mm³, and, therefore, the selected blur has 6 mm FWHM. For multiecho FMRI data, one might prefer minimal blurring, because the TSNR after combining echoes tends to be much higher. There are many different styles of blurring that can be applied (see Ex. 4 below for surface-based smoothing, and supplementary Ex. 6 in Appendix C for blurring data to an average amount).
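The rule of thumb above, and the relation between a Gaussian kernel’s FWHM and its standard deviation, can be written down directly. This is a small illustrative sketch with hypothetical function names; afni_proc.py itself takes the FWHM directly via “-blur_size ..”.

```python
import math

def blur_range_mm(voxel_dims_mm, low=1.5, high=2.0):
    """FWHM range (mm) from the rule of thumb: 1.5-2 times the minimum voxel dimension."""
    vmin = min(voxel_dims_mm)
    return low * vmin, high * vmin

def fwhm_to_sigma(fwhm_mm):
    """Gaussian standard deviation for a given FWHM: sigma = FWHM / (2 * sqrt(2 ln 2))."""
    return fwhm_mm / (2.0 * math.sqrt(2.0 * math.log(2.0)))

low, high = blur_range_mm((3.0, 3.0, 4.0))  # Ex. 2 voxels -> (4.5, 6.0)
sigma = fwhm_to_sigma(high)                 # about 2.55 mm for the chosen 6 mm FWHM
```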

Including the “scale” block gives the time series an important property: the coefficients (or effect estimates) of the regressors of interest then have meaningful units of BOLD percent signal change, based on per-voxel baseline scaling. This method of voxelwise scaling has been shown to be useful in interpreting results and for promoting more detailed comparisons across studies (Chen et al., 2017). Note that other software may provide other formulations for scaling, which will have different interpretations and properties. We typically recommend including the scale block, particularly in task-based FMRI studies. This is in line with both the aforementioned paper and Chen et al. (2022), who further argued that doing so provides results with more valuable information for both quality control (QC) and analysis.
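Per-voxel mean scaling can be sketched as below. We assume the form found in afni_proc.py proc scripts we have seen, min(200, 100·a/b) for voxel values a and voxel mean b, with nonpositive values set to zero; the generated proc script remains the authoritative record of the exact expression (including any masking), and the function name here is ours. The toy example shows why coefficients then read as percent signal change.

```python
import numpy as np

def scale_to_percent(ts, cap=200.0):
    """Scale each voxel's time series (time on the last axis) to a mean of 100.
    Values above `cap` are clipped, and samples that are nonpositive (or whose
    voxel mean is nonpositive) are set to 0."""
    mean = ts.mean(axis=-1, keepdims=True)
    with np.errstate(divide="ignore", invalid="ignore"):
        scaled = np.where(mean > 0, 100.0 * ts / mean, 0.0)
    scaled = np.minimum(scaled, cap)
    return np.where(ts > 0, scaled, 0.0)

n_t = 200
stim = (np.arange(n_t) % 40 < 10).astype(float)  # hypothetical block design
raw = 500.0 + 5.0 * stim                         # a 5-unit response on a 500 baseline
scaled = scale_to_percent(raw[None, :])          # shape (1, n_t): one voxel

# Fitting [1, stim] to the scaled data gives a stimulus coefficient near 1,
# i.e., roughly a 1% signal change relative to the voxel mean.
X = np.column_stack([np.ones(n_t), stim])
beta = np.linalg.lstsq(X, scaled[0], rcond=None)[0]
```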

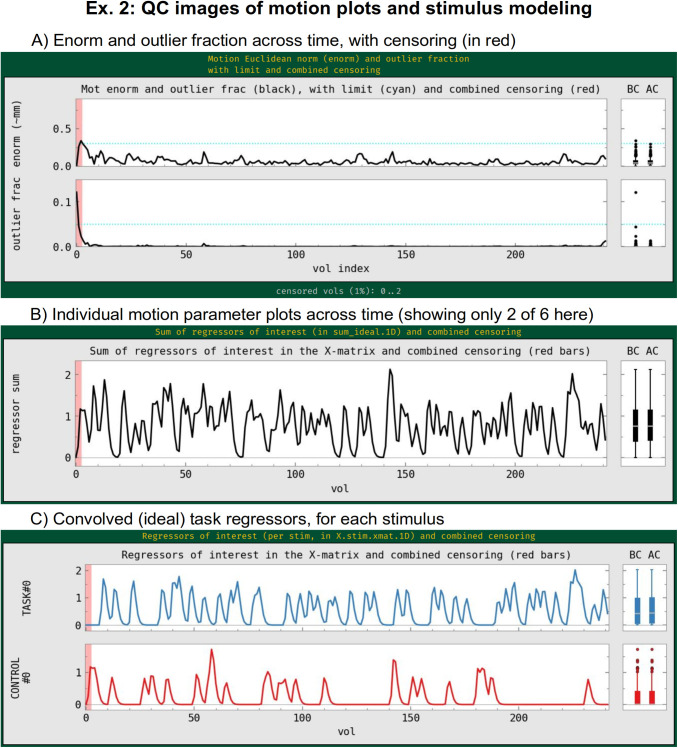

The “regress” block contains a number of important processing options related to the subject-level general linear model (GLM). This block typically contains the largest number of detailed specifications for the processing, as it produces the main outputs at the single-subject level. In the current block, two criteria are set for censoring time points during regression, that is, removing specific time points from influencing the model (referred to as “scrubbing” in some software). First, an outlier-based criterion is used via “-regress_censor_outliers 0.05,” so that any volume in which more than 5% of the brain mask voxels are flagged as outliers in the input time series will be censored. Second, a motion censoring criterion is based on the Euclidean norm (Enorm) of the first difference of the EPI motion parameter time series, here censoring where Enorm > 0.3, which has approximate units of mm (“-regress_censor_motion 0.3”). Since this motion criterion is based on the difference of parameters, both time points contributing to a suprathreshold difference are censored. The Enorm is the square root of the sum of squares (or L2-norm) of the motion parameter differences, similar to how standard distance metrics are formulated; this makes it more sensitive to a large change in any single component than an L1-norm, such as the framewise displacement (FD) parameter. Figure 10A shows the Enorm and outlier plots from the APQC HTML, as well as part of the individual motion parameter plots; censor thresholds (cyan lines) and suprathreshold locations (red fields) are both displayed on those plots and on subsequent line plots within the HTML.
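The two censoring criteria can be sketched in NumPy as follows. This is an illustration under stated assumptions, not afni_proc.py’s code: the motion parameters are taken as a (time x 6) array, the outlier fractions are given as inputs, the function names are ours, and the rule of censoring both time points around a suprathreshold motion difference follows the description above. The last lines illustrate the L2-versus-L1 point: two changes with the same L1 total can have quite different Enorm values.

```python
import numpy as np

def enorm_of_diff(motion):
    """Euclidean norm of the first difference of the motion parameters.
    motion: (n_time, 6) array (3 rotations in degrees, 3 shifts in mm).
    The first time point has no difference and is assigned 0."""
    d = np.diff(motion, axis=0)
    return np.concatenate(([0.0], np.sqrt((d ** 2).sum(axis=1))))

def censor_vector(motion, outlier_frac, motion_limit=0.3, outlier_limit=0.05):
    """Return 1 for kept and 0 for censored time points. A suprathreshold Enorm at
    time t censors both t and t-1; the outlier criterion censors only t."""
    enorm = enorm_of_diff(motion)
    keep = np.ones(len(enorm), dtype=int)
    bad = np.flatnonzero(enorm > motion_limit)  # never 0 for a positive limit
    keep[bad] = 0
    keep[bad - 1] = 0
    keep[np.asarray(outlier_frac) > outlier_limit] = 0
    return keep

n_t = 20
motion = np.zeros((n_t, 6))
motion[5:, 3] += 0.5              # a sudden 0.5 mm shift between time points 4 and 5
outlier_frac = np.full(n_t, 0.01)
outlier_frac[12] = 0.08           # one volume with 8% outliers
keep = censor_vector(motion, outlier_frac)
censored = np.flatnonzero(keep == 0)  # time points 4, 5, and 12

# Same L1 total (0.6), different L2 norms: Enorm weights a single large
# component more heavily than a change spread evenly over all six parameters.
concentrated = np.linalg.norm([0.6, 0, 0, 0, 0, 0])  # 0.6
spread = np.linalg.norm([0.1] * 6)                    # about 0.245
```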

Fig. 10.

QC images generated by afni_proc.py for Ex. 2 focused on the motion and regression model setup when processing task-based FMRI. Panel A shows the Enorm and outlier fraction plots across time, which are used for time point censoring. The dashed lines show the thresholds for each quantity, and the red bands highlight the location of any volumes to be censored (here, only three volumes are censored). The “BC” and “AC” boxplots show distributions of each plotted parameter before and after censoring, respectively. The lower two panels show the “ideal” stimulus response based on the timing and chosen hemodynamic response function (HRF): B shows the sum of responses, and C shows each individual stimulus class. The red band of censoring is also displayed here, to reveal any cases of stimulus-correlated motion (which is also checked automatically in the “warns” section of the APQC HTML).

In the present task paradigm, the modeling of the memory and button response includes duration modulation (DM), to allow for stimulus events of varying duration, whose values are also encoded in the stimulus timing files. There are two stimulus classes here, with the timing files and labels provided, respectively, by “-regress_stim_times ..” and “-regress_stim_labels …” Each event is modeled as a boxcar of its own duration convolved with the BOLD impulse response, so that events of different durations produce idealized hemodynamic responses of varying shape and magnitude. Amplitude modulation (AM; also referred to as “parametric modulation” in some software) might be an alternative choice. The duration modulation function has two specifications§§: its shape, which here is “BLOCK”; and its length scale, which for the present task is 1 s (the negative sign is a syntax convention; see the online description in the footnote). By convolution, a stimulus of 1 s duration then has unit magnitude in the units of the scaled regressor, and longer stimuli have a larger magnitude, up to the limits of the basis function. The “idealized” response curve for each stimulus from the APQC HTML is shown in Figure 10C, along with the location of any censoring (described in the previous paragraph). Note that each stimulus class can have its own basis function (which is what the “multi” in the “-regress_basis_multi ..” option refers to). There are many choices for how to model events, such as whether to use a fixed HRF or to fit the shape from the data, and these can greatly affect results; this is described further in Section 4.
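To make the duration-modulated basis concrete, the sketch below convolves boxcars of different durations with the gamma variate t^4 exp(-t)/(4^4 exp(-4)), which, to our understanding, is the impulse response underlying 3dDeconvolve’s BLOCK-type functions, and rescales so that a 1 s event peaks at 1. It is a numerical illustration of the idea behind a BLOCK shape with a 1 s length scale, with hypothetical function names; it is not 3dDeconvolve’s implementation.

```python
import numpy as np

DT = 0.01  # seconds; time step of the numerical convolution

def gamma_variate(t):
    """h(t) = t^4 exp(-t) / (4^4 exp(-4)) for t >= 0 (peak value 1 at t = 4 s)."""
    t = np.clip(t, 0.0, None)
    return t ** 4 * np.exp(-t) / (4.0 ** 4 * np.exp(-4.0))

def block_response(duration, t_max=60.0):
    """Response to a boxcar of `duration` seconds starting at t = 0."""
    t = np.arange(0.0, t_max, DT)
    boxcar = (t < duration).astype(float)
    return t, np.convolve(boxcar, gamma_variate(t))[: len(t)] * DT

def unit_scaled_response(duration, ref_duration=1.0):
    """Rescale so that a `ref_duration`-second event peaks at 1 (the idea behind a
    negative length scale such as -1 in the duration-modulated BLOCK basis)."""
    ref_peak = block_response(ref_duration)[1].max()
    t, resp = block_response(duration)
    return t, resp / ref_peak

peaks = {d: unit_scaled_response(d)[1].max() for d in (1.0, 3.0, 10.0, 30.0)}
# The 1 s event peaks at 1; longer events peak higher, leveling off (at roughly 5
# here) once the boxcar is much longer than the impulse response.
```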

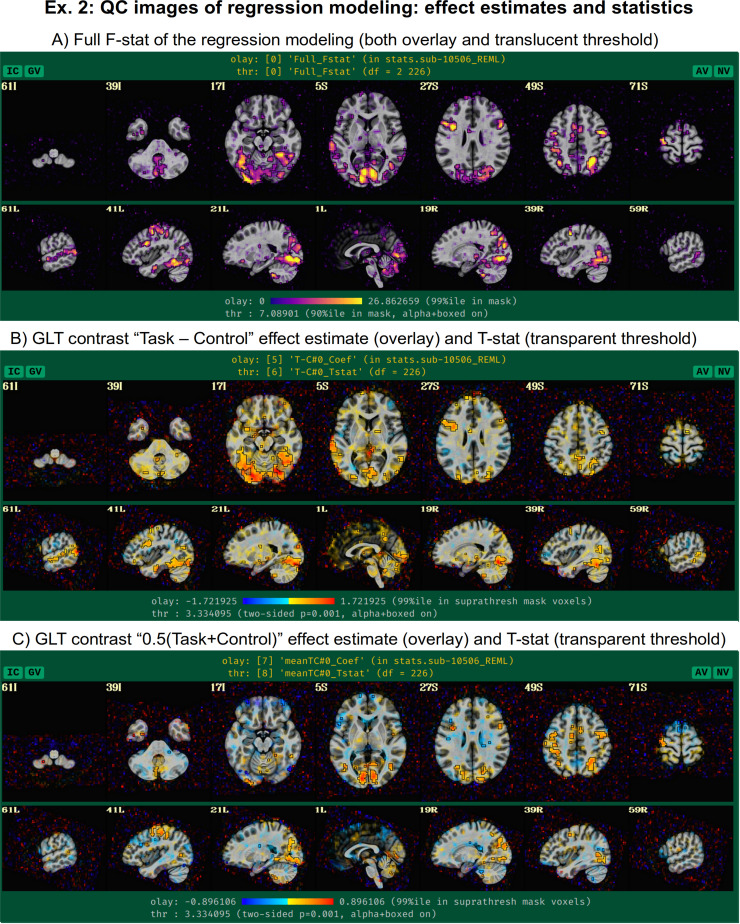

Beyond just modeling the events, one can also evaluate hypotheses about their effects as general linear tests, using “-regress_opts_3dD” to specify the desired tests for 3dDeconvolve to run. In this analysis, the two general linear tests evaluated are a basic “Task - Control” contrast and the mean “0.5 (Task + Control)” response, given the labels “T-C” and “meanTC,” respectively. We note that the topic of HRF modeling itself is quite large, and it depends heavily on the study and task details; more basis function considerations and options are presented in Section 4. Figure 11 shows the regression modeling results as displayed in the APQC HTML. Statistical maps are used as threshold datasets and, where possible, effect estimates are used as the overlay (color) dataset. To retain useful information while thresholding and to better perform QC, transparent thresholding is applied to highlight regions of high significance while still showing results throughout the full field of view, even outside the brain (Allen et al., 2012; Taylor, Reynolds, et al., 2023).
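The arithmetic behind these general linear tests can be sketched with ordinary least squares in NumPy. Assumptions: a made-up design with a baseline column plus “Task” and “Control” regressors, hypothetical function names, and OLS rather than the REML-based generalized least squares that 3dREMLfit uses. In 3dDeconvolve the tests are given symbolically; here each is a plain weight vector over the betas.

```python
import numpy as np

def fit_ols(y, X):
    """Ordinary least squares fit; returns betas, residual variance, and (X'X)^-1."""
    beta, _, rank, _ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ beta
    sigma2 = resid @ resid / (len(y) - rank)
    return beta, sigma2, np.linalg.pinv(X.T @ X)

def glt(weights, beta, sigma2, xtx_inv):
    """Estimate and t-statistic of the linear combination weights . beta."""
    c = np.asarray(weights, dtype=float)
    estimate = c @ beta
    return estimate, estimate / np.sqrt(sigma2 * (c @ xtx_inv @ c))

rng = np.random.default_rng(4)
n_t = 242
idx = np.arange(n_t)
task = (idx % 20 < 5).astype(float)            # hypothetical regressors
control = ((idx + 10) % 20 < 5).astype(float)
X = np.column_stack([np.ones(n_t), task, control])
y = 100.0 + 2.0 * task + 1.0 * control + rng.normal(0.0, 0.5, n_t)

beta, sigma2, xtx_inv = fit_ols(y, X)
t_minus_c = glt([0.0, 1.0, -1.0], beta, sigma2, xtx_inv)  # "T-C": true value 1.0
mean_tc = glt([0.0, 0.5, 0.5], beta, sigma2, xtx_inv)     # "meanTC": true value 1.5
```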

Fig. 11.

QC images generated by afni_proc.py for Ex. 2, focused on evaluating the task-based regression modeling results. In each panel, the statistic value is used for thresholding in a translucent fashion: suprathreshold locations are opaque and outlined, and subthreshold locations are increasingly translucent. The overlay color is the accompanying effect estimate coefficient where available (panels B and C). Panel A exhibits the full F-stat, which shows the relative quality of model fit. Panels B and C show the two contrasts specified in the afni_proc.py command. In all cases, modeling results outside the brain are shown, for more complete evaluation and understanding of the processing results (Taylor, Reynolds, et al., 2023).
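One way to express translucent thresholding is as an opacity per voxel: fully opaque at or above the threshold, fading toward transparent below it (suprathreshold voxels would additionally be outlined). The quadratic fade in this NumPy sketch is an assumption chosen for illustration, and the helper name is hypothetical; it is not necessarily the exact mapping used to render the APQC images.

```python
import numpy as np

def threshold_opacity(stat, thresh, power=2.0):
    """Opacity in [0, 1]: 1 where |stat| >= thresh, (|stat| / thresh) ** power below."""
    ratio = np.abs(np.asarray(stat, dtype=float)) / thresh
    return np.clip(ratio, 0.0, 1.0) ** power

alpha = threshold_opacity([0.0, 1.5, -3.0, 5.0], thresh=3.0)  # [0.0, 0.25, 1.0, 1.0]
```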

Finally, there are three other points to note about the regress block options. First, even though there is only one time series in this dataset, we include the “-regress_motion_per_run” flag to highlight that, when multiple runs are concatenated, per-run motion regressors can better account for motion-correlated variance. This option has no particular effect when there is only one input time series, but we typically leave it in as a practical default, so as not to forget it in other scenarios. Second, 3dREMLfit is used to account for serial correlation in the time series residuals, using generalized least squares with an estimated temporal autocorrelation structure (“-regress_reml_exec”). This generalizes the default estimation of 3dDeconvolve, allowing the beta coefficients of the model to be estimated simultaneously with the temporal correlation and variance. Third, “-regress_est_blur_errts” flags the processing script to estimate the smoothness of the residual time series (the “errts*” dataset) with 3dFWHMx, which can be useful for QC considerations as well as for some group analyses that use clustering.
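Per-run motion regressors can be pictured as a block-diagonal layout: each run gets its own copy of the motion columns, which is zero outside that run. The NumPy sketch below is hypothetical; in particular, demeaning each run’s parameters is an assumption made here, and the proc script shows how afni_proc.py actually constructs these regressors.

```python
import numpy as np

def motion_per_run(motion, run_lengths):
    """Split each motion column into per-run regressors: within a run, the columns hold
    that run's (demeaned) parameters, and they are zero elsewhere.
    motion: (n_time, n_par) array for the concatenated runs."""
    n_time, n_par = motion.shape
    if sum(run_lengths) != n_time:
        raise ValueError("run lengths must sum to the number of time points")
    out = np.zeros((n_time, n_par * len(run_lengths)))
    start = 0
    for r, length in enumerate(run_lengths):
        seg = motion[start:start + length]
        out[start:start + length, r * n_par:(r + 1) * n_par] = seg - seg.mean(axis=0)
        start += length
    return out

rng = np.random.default_rng(5)
motion = rng.normal(size=(10, 6))              # two hypothetical runs: 4 + 6 time points
regs = motion_per_run(motion, [4, 6])          # shape (10, 12)
```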

3.3. Ex. 3: Full resting-state FMRI: Volumetric, voxelwise analysis

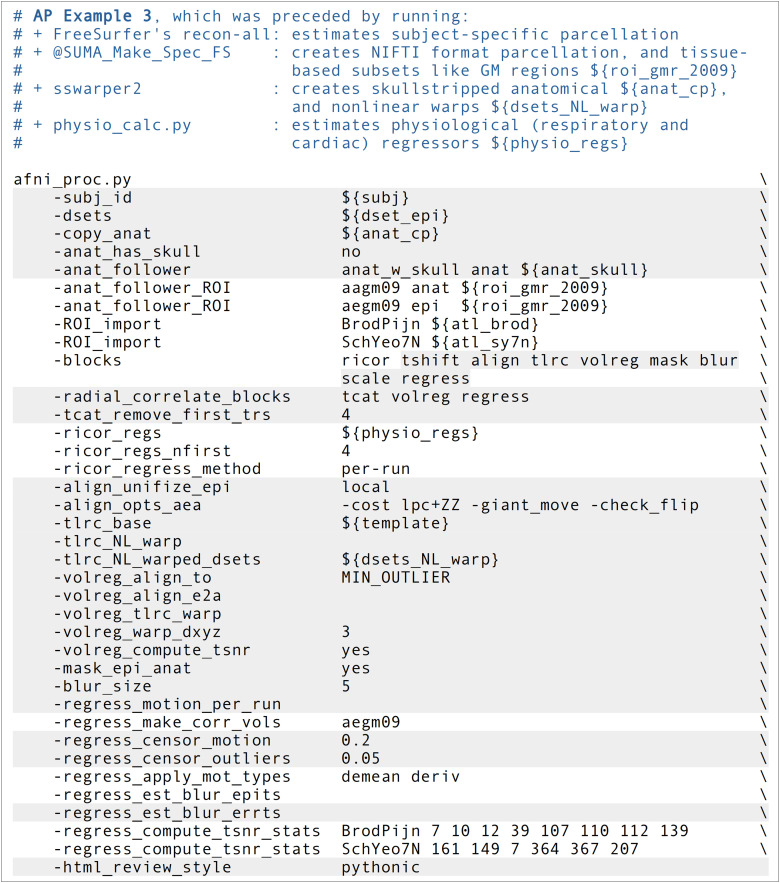

We now show an example of full resting-state processing of single-echo FMRI data for voxelwise analysis (Fig. 12; or run: afni_proc.py -show_example “AP publish 3c”). Again, both the skullstripping and nonlinear warping from sswarper2 have been integrated. Unlike previous examples, this command includes local regressors that were estimated from separately measured physiological time series, as described below. It also imports results from FreeSurfer’s recon-all on the subject’s anatomical T1w dataset. The reverse phase encoding datasets from Ex. 1 could also be applied here with the same “-blip_*” options. We leave out blip correction in this case, so that users can compare the results of applying B0 distortion correction with those of not doing so; note that how much difference this step makes for a given dataset depends strongly on the scanner and acquisition details.

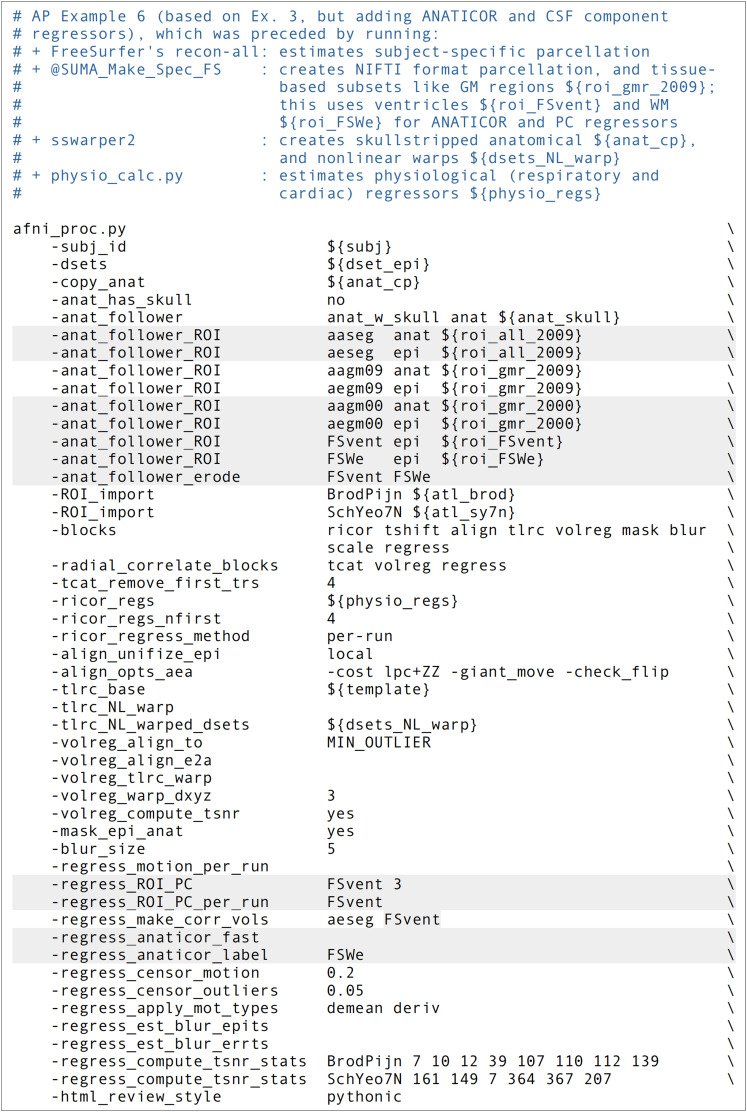

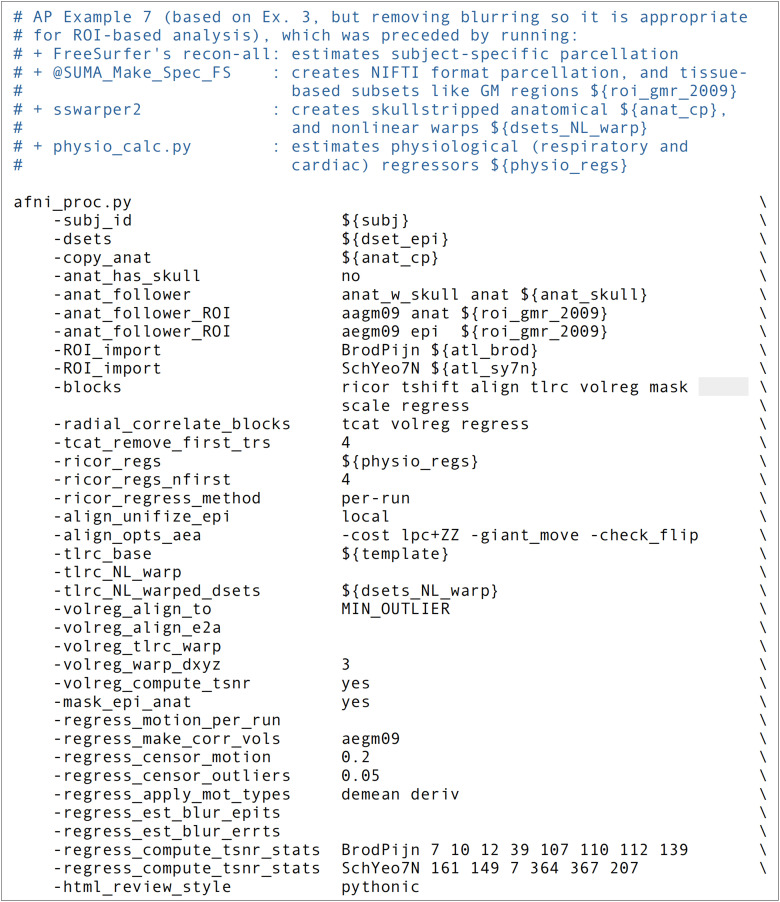

Fig. 12.

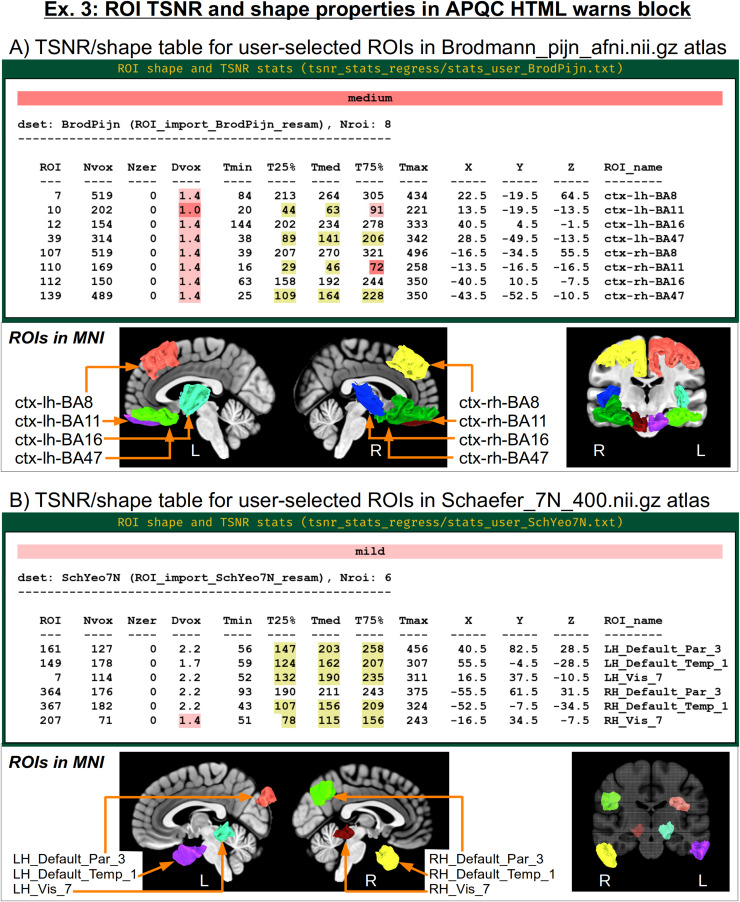

The afni_proc.py command for Ex. 3 (resting-state, single-echo FMRI, full processing). Options with gray background have already been described in earlier examples here, and variables are described in the captions of Figures 5 and 8. ${sdir_timing} is the directory containing stimulus timing files. Running this command produces a commented script of >740 lines, encoding the detailed provenance of all processing. Two additional atlases are imported here, for extracting ROIs for checking TSNR and shape properties: “BrodPijn” is the Brodmann atlas (1909) digitized by Pijnenburg et al. (2021); and “SchYeo7N” is the refined version of the 7-network, 400-parcel Schaefer-Yeo atlas (Glen et al., 2021; Schaefer et al., 2018).

There are several situations where it can be useful to add precalculated masks or ROI atlas maps into the processing stream; each such dataset ends up in the final space (here, MNI) on either the EPI or the anatomical grid, as specified. Here, the “-anat_follower_ROI ..” option is used to bring anatomical parcellation datasets from FreeSurfer’s recon-all into the processing stream. For each imported dataset, the user assigns a brief label for referring to the dataset within the code and also designates the final grid. Here, we bring in the gray matter (GM) map from FreeSurfer’s “2009” parcellation (Destrieux et al., 2010) twice: one copy will have “epi” grid spacing (label = “aegm09”) and one will have “anat” grid spacing (label = “aagm09”). These could be imported as part of processing for an ROI-based analysis, for example (in which case we would remove the blur block from the afni_proc.py command), but in the present case they are used only for QC-related purposes.

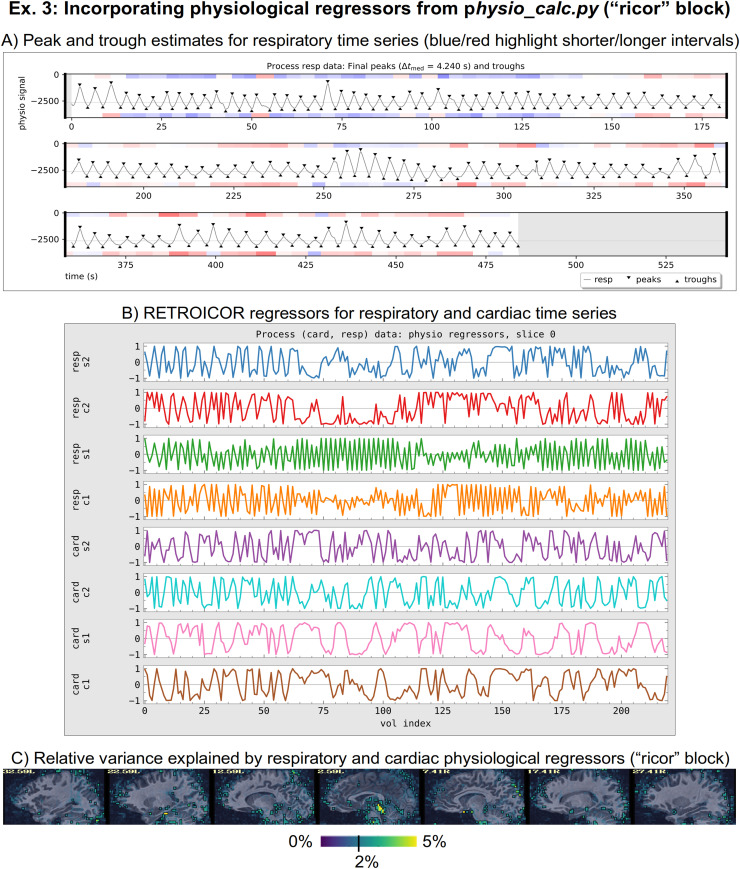

The “ricor” block is used to include regressors that have been estimated from physiological time series measured during the FMRI acquisition. These typically include slicewise RETROICOR regressors (C. Chang & Glover, 2009; Glover et al., 2000), as well as volumetric respiration volume per time (RVT; Birn et al., 2006) regressors. In the present case, both cardiac and respiratory traces were acquired, so that eight slicewise regressors were calculated along with five volumetric ones (shifted copies of RVT). These sets of regressors were calculated with AFNI’s physio_calc.py prior to running afni_proc.py. Figure 13A displays the QC image of peak and trough estimation for the respiratory time series during that processing. Panel B of the same figure shows the eight RETROICOR-based cardiac and respiratory regressors that are applied to the FMRI data (the five RVT regressors, not shown, are also included). Because the RETROICOR regressors are slicewise, they must be applied to the data before any spatial transformation mixes signals across slices, so “ricor” is typically one of the earliest processing blocks. Additionally, users can specify whether the regressors should be applied “per run” or “across runs” (the former is selected here), a choice that matters when multiple EPI datasets are present. The relative variance explained by the combined physiological regressors is shown in Figure 13C, where the largest amounts are in the subcortical/inferior regions, as expected.
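The slicewise nature of RETROICOR regressors can be illustrated with a minimal Python sketch in the spirit of Glover et al. (2000): a physiological phase in [0, 2π) is computed at each slice's acquisition time, and low-order Fourier terms of that phase become regressors. With two orders of sine and cosine per signal, cardiac plus respiratory gives the eight regressors mentioned in the text. As a loudly labeled simplification, the same peak-interpolated phase is used for both signals here, whereas Glover et al. derive respiratory phase from the amplitude histogram; the peak times and function names are hypothetical and do not reproduce physio_calc.py.

```python
# Hypothetical sketch of RETROICOR-style slicewise regressors.
# Simplification (assumption): respiratory phase uses the same peak-based
# interpolation as cardiac phase, unlike the amplitude-histogram method.
import bisect
import math

def peak_phase(t, peaks):
    """Phase at time t, rising linearly from 0 at one peak to 2*pi at the next."""
    i = bisect.bisect_right(peaks, t) - 1
    if i < 0 or i + 1 >= len(peaks):
        raise ValueError("time t must lie between two detected peaks")
    t1, t2 = peaks[i], peaks[i + 1]
    return 2.0 * math.pi * (t - t1) / (t2 - t1)

def retroicor_terms(phase, order=2):
    """cos/sin terms up to the given order: 2*order values per signal."""
    terms = []
    for m in range(1, order + 1):
        terms += [math.cos(m * phase), math.sin(m * phase)]
    return terms

def slice_regressors(n_vols, tr, slice_offset, card_peaks, resp_peaks):
    """One row per volume for a single slice: 4 cardiac + 4 respiratory terms."""
    rows = []
    for v in range(n_vols):
        t = v * tr + slice_offset  # acquisition time of this slice in volume v
        rows.append(retroicor_terms(peak_phase(t, card_peaks))
                    + retroicor_terms(peak_phase(t, resp_peaks)))
    return rows

# Made-up peak times (s): cardiac every 0.8 s, respiratory every 4 s
card_peaks = [0.8 * k - 0.4 for k in range(30)]
resp_peaks = [4.0 * k - 2.0 for k in range(8)]
rows = slice_regressors(5, 2.0, 0.5, card_peaks, resp_peaks)
print(len(rows), len(rows[0]))  # 5 volumes x 8 regressors
```

Calling slice_regressors with a different slice_offset for each slice yields a different regressor set per slice, which is why these regressors must be applied while the original slice geometry is intact.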

Fig. 13.

Aspects of processing related to having respiratory and cardiac time series data included in FMRI processing in Ex. 3. The AFNI program physio_calc.py was run on these physiological time series, for which peak and/or trough detection is a first key step, shown in panel A for the respiratory data. The QC image shows the estimated peak and trough locations with triangles (which can be edited in the program’s interactive mode, if necessary); the blue and red bands reflect the relative intervals between pairs of each, which can help highlight potential algorithm problems. Panel B shows the final RETROICOR regressors estimated by physio_calc.py. These are included in a slicewise manner within early afni_proc.py processing, along with five RVT regressors from the same program (not shown). Finally, panel C shows a map of the fractional variance explained using the 13 physiological regressors, with highest values around the subcortical and inferior regions.

It is worth noting that the way the physiological regressors are implemented relies on the ability of AFNI’s 3dREMLfit to work with voxelwise regressors. Most FMRI regression modeling uses a single set of regressors across the whole volume, but inherently the RETROICOR regressors are calculated per slice. This and other features such as the voxelwise regressors of ANATICOR (Jo et al., 2010), which are described in Appendix C, rely on this extended functionality.
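Why slicewise regressors require voxelwise modeling support can be seen by assembling the design matrix each voxel actually uses: all voxels share the global columns, but the physiological columns come from the voxel's own slice. The following minimal Python sketch only builds those per-slice matrices; the function name and toy values are hypothetical, and it does not reproduce how 3dREMLfit handles such regressors internally.

```python
# Hypothetical sketch: per-slice design matrices built from shared (global)
# columns plus slice-specific columns, as with slicewise RETROICOR regressors.

def slice_design_matrices(global_cols, slice_cols):
    """Return one design matrix per slice as rows (time points) x columns.
    global_cols: columns shared by all voxels.
    slice_cols[z]: columns specific to slice z."""
    mats = []
    for cols_z in slice_cols:
        cols = global_cols + cols_z
        n_t = len(cols[0])
        if any(len(c) != n_t for c in cols):
            raise ValueError("all columns must have the same length")
        mats.append([[c[t] for c in cols] for t in range(n_t)])
    return mats

# One shared baseline column and one made-up slice-specific column per slice
g = [[1.0, 1.0, 1.0]]
s = [[[0.1, 0.2, 0.3]], [[0.4, 0.5, 0.6]]]
mats = slice_design_matrices(g, s)
print(len(mats), len(mats[0]), len(mats[0][0]))  # 2 slices, 3 time points, 2 columns
```

A standard GLM program that assumes one design matrix for the whole volume cannot represent this; the model must allow the regressors to vary by voxel (or at least by slice).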

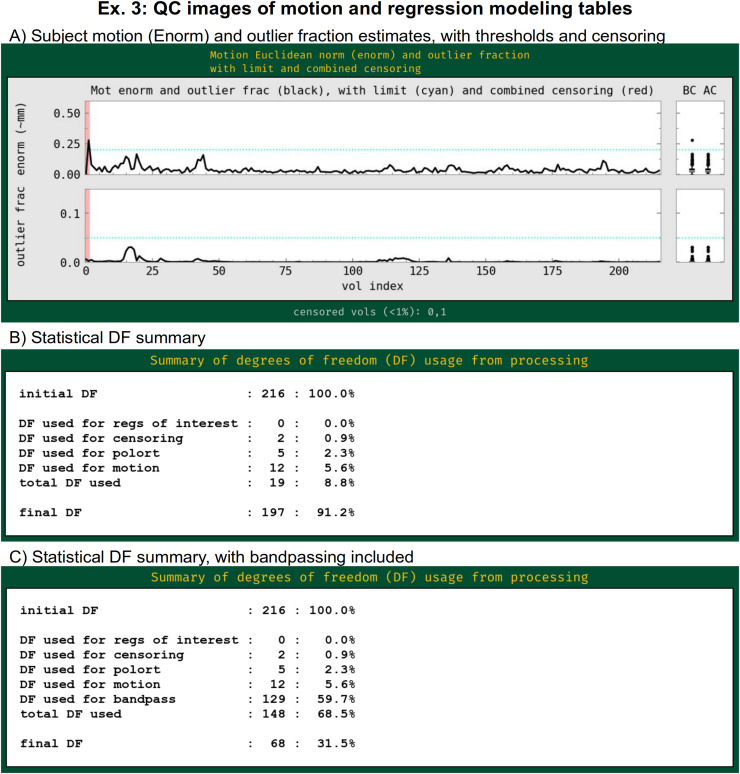

This example includes both outlier- and motion-based censoring, similar to Ex. 2. In this case, a slightly stricter motion criterion (Enorm > 0.2) is used, since resting-state FMRI tends to be more susceptible to motion-based artifacts than task-based data (as long as motion in the latter is not strongly stimulus-correlated). Figure 14A displays the Enorm and outlier fraction estimates for this subject, for whom only two time points reached the censoring thresholds. As is common for resting-state FMRI, we also include both the estimated motion profiles and their derivatives in the regression model (“-regress_apply_mot_types demean deriv”), spending slightly more degrees of freedom (DFs) to try to reduce motion effects. The DF bookkeeping for this regression model is displayed in Figure 14B, organized by category. It is worth noting that these EPI data display very little motion, and therefore censoring uses up very few DFs (<1%). However, in many cases censoring can use up a sizable fraction of DFs, and one must take care in overall model design not to use up too many. The APQC HTML includes automatic checks for this.
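The combination of motion- and outlier-based censoring can be sketched in Python as follows. Enorm is computed here as the Euclidean norm of the first differences of the six motion parameters (rotations in degrees and shifts in mm are combined as-is, which is why Enorm is described as approximately mm). The 0.2 limit comes from the text; the outlier-fraction limit of 0.05 and the option of also censoring the time point preceding a large displacement are assumptions for illustration, not the settings of Ex. 3.

```python
# Hypothetical sketch of Enorm- and outlier-based censoring.
# enorm_limit=0.2 follows the text; outlier_limit and censor_prev are assumptions.
import math

def enorm(motion):
    """motion: list of 6-parameter rows, one per time point. Enorm[0] is 0."""
    out = [0.0]
    for prev, cur in zip(motion, motion[1:]):
        out.append(math.sqrt(sum((c - p) ** 2 for p, c in zip(prev, cur))))
    return out

def censor_mask(motion, outfrac, enorm_limit=0.2, outlier_limit=0.05,
                censor_prev=True):
    """Return 1 (keep) or 0 (censor) for each time point."""
    keep = [1] * len(motion)
    for t, e in enumerate(enorm(motion)):
        if e > enorm_limit:
            keep[t] = 0
            if censor_prev and t > 0:
                keep[t - 1] = 0  # the displacement spans t-1 -> t
        if outfrac[t] > outlier_limit:
            keep[t] = 0
    return keep

# Made-up example: a 0.3 mm jump at t=2 and a high outlier fraction at t=3
m = [[0.0] * 6, [0.0] * 6, [0.3, 0, 0, 0, 0, 0], [0.3, 0, 0, 0, 0, 0]]
keep = censor_mask(m, [0.0, 0.0, 0.0, 0.1])
print(keep, "fraction censored:", 1 - sum(keep) / len(keep))
```

Each censored time point removes one DF from the model, so the fraction printed here feeds directly into the DF bookkeeping shown in Figure 14B.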

Fig. 14.

QC images generated by afni_proc.py related to motion effects and regression modeling in Ex. 3 processing. Panel A shows the primary quantities that are used to assess subject motion and its effects: Enorm (Euclidean norm), which is approximately the amount of subject motion between time points, in mm; and outlier fraction. Users typically set thresholds for these quantities (horizontal blue lines) to determine which time points should be censored (highlighted in red). Panel B shows the degree of freedom bookkeeping for the regression model, organized by category of regressor. During modeling, data analysts must balance the removal of motion and other non-neuronal effects with the reduction of the statistical DF count. This example did not include bandpassing in processing, but Panel C shows the DF count if it had been included (see supplementary Ex. 5 in Appendix C). Note that bandpassing itself reduces the DF count by 60% of the original amount. Bandpassing can be problematic, particularly in cases of more subject motion.

It is worth highlighting that this processing example does not include bandpassing, even though restricting the analyzed time series to “low frequency fluctuations” (LFFs) in this way has historically been a widely implemented choice in resting-state FMRI. The LFF band is typically 0.001–0.1 Hz or 0.01–0.1 Hz. It is certainly possible for afni_proc.py to include this within the regression model, using a single option to specify the interval of the frequency band to keep (e.g., “-regress_bandpass 0.01 0.1”, in units of Hz). In fact, one can keep multiple bands, simply by specifying multiple pairs of boundaries. But there are several caveats about the typical bandpassing to the LFF range. Many of them concern counting degrees of freedom (DFs) during processing, which is unfortunately often overlooked in the field, as is the care that software must take in how bandpassing is performed within the processing; see Appendix A and Caballero-Gaudes and Reynolds (2017).
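Bandpassing within the regression model amounts to adding sine/cosine regressors for every frequency that lies outside the kept band(s), and each such regressor costs one DF. The following minimal Python sketch counts them for N time points and a given TR. It assumes the discrete Fourier frequencies k/(N·TR), k = 1..N/2; that the zero-frequency term is already covered by the baseline regressors; and that the Nyquist frequency (N even) needs only one regressor. AFNI's own construction may differ in such edge details.

```python
# Hypothetical sketch: count regressors added by bandpassing-by-regression.
# bands: list of (low, high) frequency pairs in Hz to KEEP; all others are removed.

def bandpass_regressor_count(n, tr, bands):
    removed = 0
    for k in range(1, n // 2 + 1):
        f = k / (n * tr)                      # discrete Fourier frequency (Hz)
        if any(lo <= f <= hi for lo, hi in bands):
            continue                          # frequency is kept
        is_nyquist = (n % 2 == 0 and k == n // 2)
        removed += 1 if is_nyquist else 2     # sine + cosine, except at Nyquist
    return removed

n, tr = 100, 2.0
count = bandpass_regressor_count(n, tr, [(0.01, 0.1)])
print(count, count / n)
```

For this toy case of 100 time points at TR = 2 s with the 0.01–0.1 Hz band, 61 regressors are added (61% of the DFs), close to the 64% predicted by Equation 1a; keeping a second band, e.g. [(0.01, 0.1), (0.15, 0.2)], reduces the count.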

The fractional loss of degrees of freedom for FMRI bandpassing can be approximated by either of the following simple formulas:

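$$\text{fraction of DFs lost} \approx 1 - 2\,\mathrm{TR}\,\left(f_{\mathrm{top}} - f_{\mathrm{bot}}\right) \qquad \text{(1a)}$$

$$\text{fraction of DFs lost} \approx 1 - 2\,\mathrm{TR}\,f_{\mathrm{top}} \qquad \text{(1b)}$$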

where TR is the repetition time of the acquired EPI data (in seconds), and f_top and f_bot are, respectively, the chosen upper and lower bounds of the band (in Hz); when f_bot is much smaller than f_top, as is common for standard LFF bands, the simpler form in Equation 1b applies. In most resting-state papers that bandpass to LFFs, f_top = 0.1 Hz, so that for TR = 2 s one loses 60% of the degrees of freedom of the input data solely to the bandpass regressors. For FMRI with faster temporal sampling, the loss grows: for TR = 1 s, standard bandpassing alone removes 80% of the DFs. These are huge fractions of the time series DFs to remove, even before considering the further loss of DFs to motion, baseline, and censoring regressors. We note that afni_proc.py applies bandpassing in a mathematically consistent way within the regression model. It also tabulates these DF counts and reports them, with various warning levels, so that users are aware of them. Without such accounting, users might not notice a severe loss of DFs, or even that more than 100% of them have been used up, a risk that increases with subject motion and the resulting censoring.
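Equations 1a and 1b, together with a simple DF budget, can be checked with a minimal Python sketch. The DF budget function and the counts in the example (300 time points, 10 censored, 30 other regressors) are hypothetical illustrations, not values from Ex. 3, and real bookkeeping (e.g., in the APQC HTML) is more detailed.

```python
# Sketch of Equations 1a/1b plus a simple, hypothetical DF budget.

def bandpass_df_fraction(tr, f_top, f_bot=0.0):
    """Approximate fraction of DFs lost to bandpassing: 1 - 2*TR*(f_top - f_bot).
    With f_bot = 0 this is the simpler Equation 1b."""
    return max(0.0, 1.0 - 2.0 * tr * (f_top - f_bot))

def remaining_df(n_time, n_censored, n_other_regs, tr=None, f_top=None, f_bot=0.0):
    """DFs left after censoring, other regressors, and (optionally) bandpassing."""
    n_bandpass = 0
    if f_top is not None:
        if tr is None:
            raise ValueError("TR is required when bandpassing")
        n_bandpass = round(n_time * bandpass_df_fraction(tr, f_top, f_bot))
    return n_time - n_censored - n_other_regs - n_bandpass

for tr in (2.0, 1.0):
    frac = bandpass_df_fraction(tr, 0.1)
    df = remaining_df(300, 10, 30, tr=tr, f_top=0.1)
    print(f"TR={tr} s: {frac:.0%} of DFs lost to bandpassing; {df} DFs remain")
```

In this hypothetical budget, halving TR from 2 s to 1 s leaves only a quarter as many residual DFs (80 versus 20), and a few more censored time points would drive the count toward zero or below, which is the scenario the automatic warnings are meant to catch.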

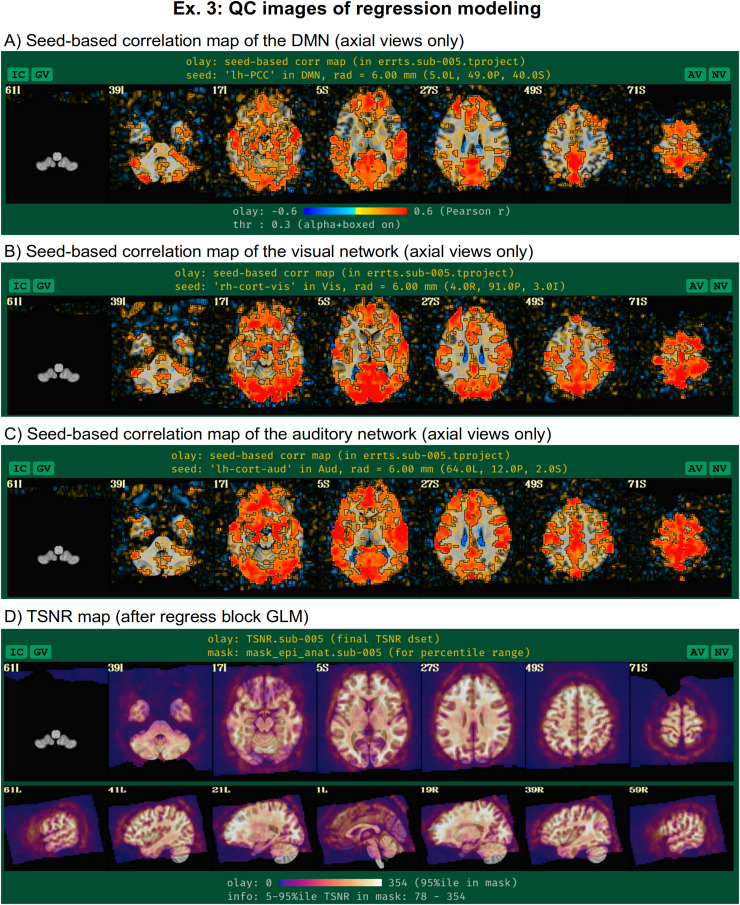

For resting-state and naturalistic FMRI, the main output of interest is the residual or “error” time series (errts) from the regression model. This is a notable difference from task-based data, where the effect estimates and statistics from the regressors of interest are the main output. In task-based analyses, the residuals should mostly contain the “noise” and other nonmodeled parts of the data, and they are typically just used to help judge the quality of data fit. In resting-state processing, evaluating the modeling job is more difficult because the conceptual separation of “signal” and “noise” does not materialize in the outputs. To help evaluate the final time series, the afni_proc.py QC HTML displays, when the template space is known, seed-based correlation maps of several major resting-state networks (Taylor et al., 2024): the default mode network (DMN) and the visual and auditory networks (Fig. 15A–C). These can reveal the presence of artifacts, signal dropout, problems with motion or regressors, and more. As above, transparent thresholding is applied. Additionally, effects of B0 inhomogeneity distortion are visible in the anterior regions (axial slices at Z = 5 S and 27 S); these may be reduced by including distortion correction via fieldmaps or reverse phase encoding-derived warps (see Ex. 1, above).
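A seed-based correlation map can be sketched as averaging the residual (errts) time series within a seed region and correlating that average with every voxel's time series. This minimal Python sketch uses Pearson correlation on toy data; the seed definition, any masking, and the function names are hypothetical and do not reproduce the APQC's specific seed locations or settings.

```python
# Hypothetical sketch of a seed-based correlation map on residual time series.
import math

def pearson(x, y):
    """Pearson correlation; returns 0.0 if either series is constant."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return 0.0
    return sxy / math.sqrt(sxx * syy)

def seed_correlation_map(voxel_series, seed_indices):
    """Correlate the mean seed time series with every voxel's time series."""
    n_t = len(voxel_series[0])
    seed = [sum(voxel_series[i][t] for i in seed_indices) / len(seed_indices)
            for t in range(n_t)]
    return [pearson(seed, ts) for ts in voxel_series]

# Toy data: 4 "voxels" with 4 time points each; voxels 0 and 1 form the seed
vox = [[1, 2, 3, 4], [2, 4, 6, 8], [4, 3, 2, 1], [1, 1, 1, 1]]
print(seed_correlation_map(vox, [0, 1]))
```

In a real map, a network pattern that does not match expectations, or strong correlations outside the brain, can point to the artifacts and regressor problems mentioned in the text.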

Fig. 15.

QC images of statistical output for resting-state time series, for which the residuals are the time series of interest (Ex. 3). Panels A–C show axial slices of the three seed-based correlation maps displayed in the APQC HTML when the final space is a known template: for the default mode network (DMN), the visual network, and the auditory network. These allow checks for artifacts and other potential problems from processing. Panel D displays the TSNR for these data, which can help distinguish regions of strong signal coverage from those with dropout or artifact.

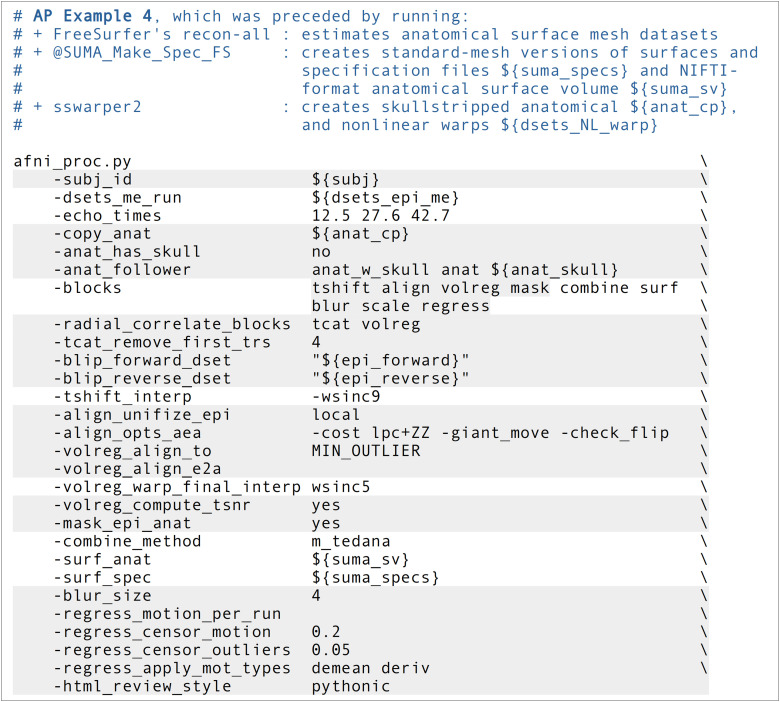

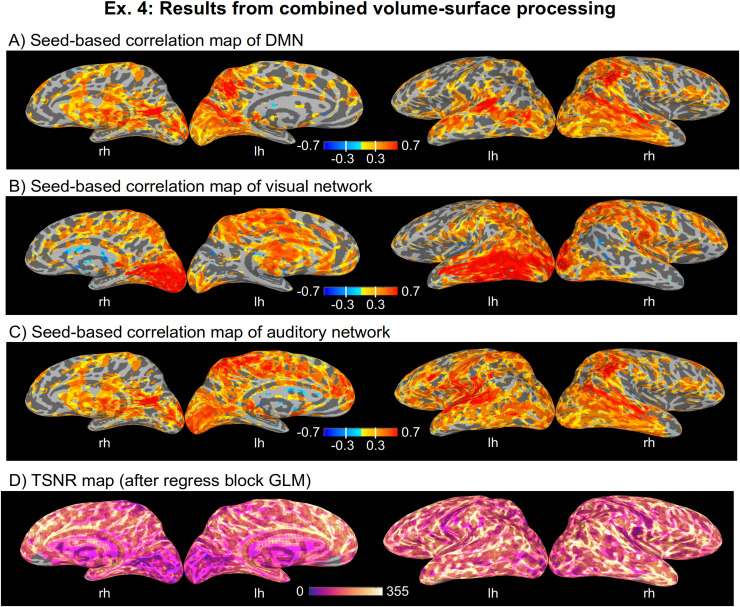

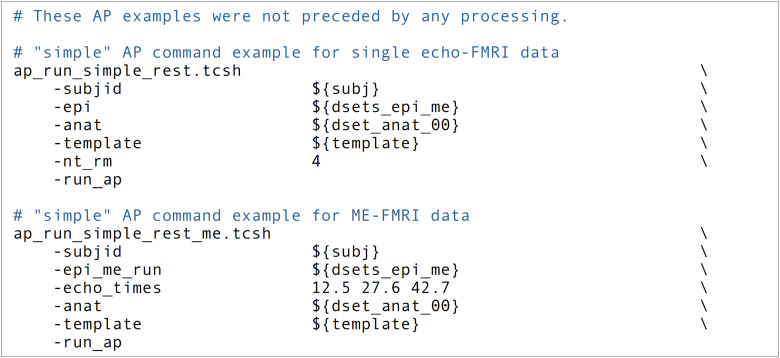

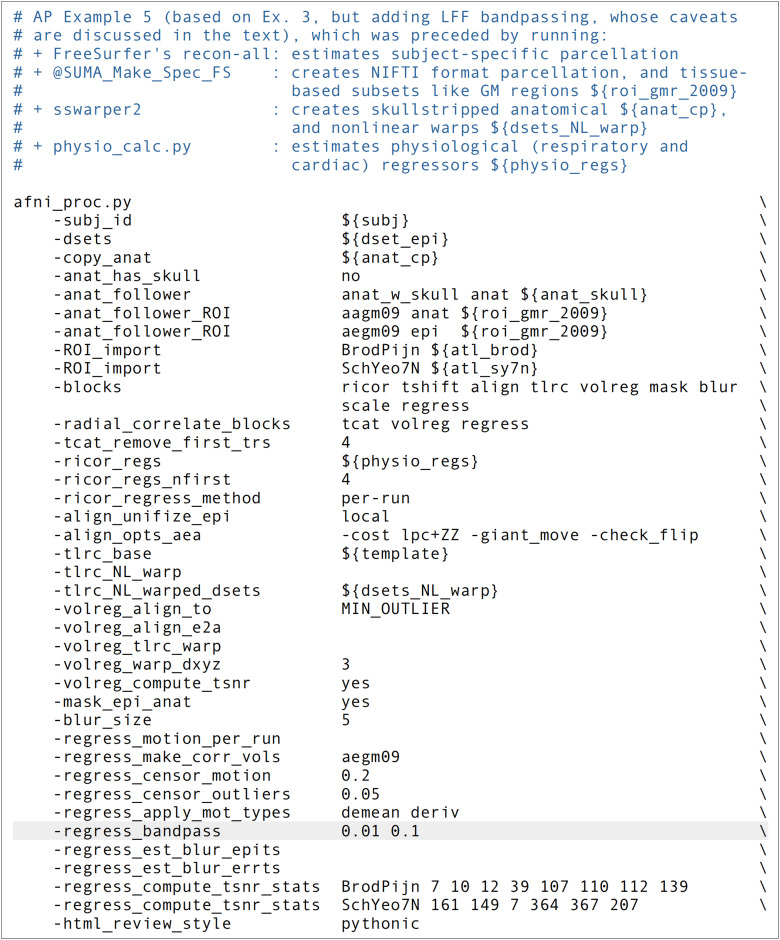

Additionally, viewing the temporal SNR (TSNR) of the time series can be useful for judging which brain regions have reasonable coverage for analysis (see Fig. 15D). Even within the acquired field of view, distortions and dropout can greatly reduce the measured signal, which can limit the researcher’s ability to investigate particular regions. For example, medial frontal and subcortical regions can be particularly affected by dropout, to a degree that differs across subjects depending on the brain-to-slice angle, reducing the ability to accurately study specific locations within the brain. We note that Figure 7 of Reynolds et al. (2023) contains an illustrative set of TSNR maps of varying quality, which are discussed in detail in the text.
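Voxelwise TSNR is commonly defined as the temporal mean of a time series divided by its temporal standard deviation. This minimal Python sketch uses that definition; the optional separate noise series is an assumption-labeled variant, since pipelines differ in the denominator (for example, a detrended or residual time series may be used in place of the raw one), and the values below are made up.

```python
# Hypothetical sketch of voxelwise TSNR = mean(signal) / stdev(noise).
# By default the noise series is the signal itself; passing a residual series
# as `noise` is an assumed variant, not a statement of afni_proc.py's method.
import statistics

def tsnr(signal, noise=None):
    noise = signal if noise is None else noise
    sd = statistics.stdev(noise)
    return statistics.fmean(signal) / sd if sd > 0 else 0.0

# Made-up voxel time series: mean 100, small fluctuations
print(round(tsnr([99, 101, 99, 101]), 1))
```

Low TSNR values clustered in, say, medial frontal or subcortical areas would flag the dropout-affected regions described in the text.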