Abstract

Background

The aim of this study was to examine the determinants of publication and whether publication bias occurred in gastroenterological research.

Methods

A random sample of abstracts submitted to DDW, the major GI meeting (1992–1995), was evaluated. The publication status was determined by database searches, complemented by a mailed survey to abstract authors. Determinants of publication were examined by Cox proportional hazards models and multiple logistic regression.

Results

The sample included abstracts on 326 controlled clinical trials (CCT), 336 other clinical research reports (OCR), and 174 basic science studies (BSS). 392 abstracts (47%) were published as full papers. Acceptance for presentation at the meeting was a strong predictor of subsequent publication for all research types (overall, 54% vs. 34%, OR 2.3, 95% CI 1.7 to 3.1). In the multivariate analysis, multi-center status was found to predict publication (OR 2.8, 95% CI 1.6–4.9). There was no significant association between direction of study results and subsequent publication. Studies were less likely to be published in high impact journals if the results were not statistically significant (OR 0.5, 95% CI 0.3–0.6). The author survey identified lack of time or interest as the main reason for failure to publish.

Conclusions

Abstracts which were selected for presentation at the DDW are more likely to be followed by full publications. The statistical significance of the study results was not found to be a predictor of publication but influenced the chances of high impact publication.

Background

Publication bias is the tendency to submit or accept manuscripts for publication based on the direction or strength of the study findings [1]. There are concerns that studies with statistically significant results are more likely to be published compared to studies with non significant (negative) results.

Publication bias is particularly problematic when pooled analyses are performed as it leads to an overestimation of the effect size [2,3]. Therefore, the issue is considered to be a problem primarily in those areas of research where meta-analyses are commonly performed [4-6]. Several studies on the determinants of publication have included basic science research abstracts [7-11], but so far only one study has looked into the occurrence of publication bias in this type of research [12].

There are several methods to detect publication bias in the medical literature. Most take advantage of the fact that small studies are more susceptible to publication bias and may, consequently, show larger treatment effects [13,14]. One of the earliest reports of publication bias in the biomedical literature came from the area of hepato-gastroenterology. In 1965, T.C. Chalmers et al. examined possible reasons for the high variability in reported case fatality rates in post transfusion hepatitis and found an inverse correlation between sample size and fatality rate [15]. More recently, Shaheen et al. were able to demonstrate publication bias in the reporting of cancer risk in Barrett's esophagus by using a funnel plot, a graphical method to detect publication bias [16]. Preferential publication of studies with positive results was also shown following the presentation of abstracts in endoscopy research [17].

Publication bias can arise during several phases of the publication process [18]. Abstracts submitted to scientific meetings are at an intermediate stage in the dissemination of research findings. Digestive Disease Week (DDW) is an important annual event in gastroenterology. The meeting is jointly organized by the American Gastroenterological Association (AGA), the American Association for the Study of Liver Diseases (AASLD), the American Society for Gastrointestinal Endoscopy (ASGE), and the Society for Surgery of the Alimentary Tract (SSAT). Every year, several thousand abstracts are submitted to this meeting from investigators worldwide. Approximately half of these abstracts will eventually be followed by full articles in peer reviewed journals [19]. We wished to study the determinants of publication in gastroenterological research using DDW abstracts as a representative sample. We were particularly interested to know whether there is any indication of publication bias, where it arises and whether it varies by type of research or national origin. We also wished to survey the abstract authors to determine their perception of the factors that determined whether an abstract went on to full publication.

Methods

Study design and sampling

This retrospective cohort study was based on abstracts submitted to DDW between 1992 and 1995 (n = 17,205). Each was screened for abstract acceptance status (presentation at a meeting), number of centers participating, country of origin and research type. The mode of presentation (poster vs. oral) was not known. Multi-center status was assumed if abstract authors from > 3 different centers were named. The country of origin was based on the affiliation of the first author. Research type was defined as basic science studies (BSS) if the unit of analysis was not an intact human. Controlled clinical trials (CCT) were prospective studies on the effects of diagnostic tests or therapeutic interventions, using parallel or cross over controls. "Other clinical research" (OCR) comprised human physiology experiments, epidemiological studies and uncontrolled therapeutic studies. The screening was performed by student raters following a short introduction to research methodology. Following the screening, 50 BSS, 100 OCR and 100 CCT abstracts were randomly selected from each year using computer assigned random numbers, for a total sample size of 1,000.
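
The stratified draw described above can be illustrated with a minimal sketch. It assumes the screened abstracts are held as a list of records with hypothetical `id`, `year` and `type` fields; the quotas per year and research type come from the text, while the seed, the field names and the made-up screening list are illustrative only and do not reproduce the procedure actually used.

```python
import random

# Sampling quota per research type and year, as stated in the text.
QUOTA = {"BSS": 50, "OCR": 100, "CCT": 100}
YEARS = (1992, 1993, 1994, 1995)


def draw_stratified_sample(abstracts, seed=0):
    """Draw QUOTA[type] abstracts per (year, type) stratum without replacement.

    `abstracts` is a list of dicts with the hypothetical keys
    'id', 'year' and 'type'.
    """
    rng = random.Random(seed)  # fixed seed so the draw can be repeated
    sample = []
    for year in YEARS:
        for rtype, quota in QUOTA.items():
            stratum = [a for a in abstracts
                       if a["year"] == year and a["type"] == rtype]
            sample.extend(rng.sample(stratum, quota))
    return sample


# Made-up screening list: 300 abstracts per research type and year.
screened = [
    {"id": f"{year}-{rtype}-{i}", "year": year, "type": rtype}
    for year in YEARS
    for rtype in QUOTA
    for i in range(300)
]
sample = draw_stratified_sample(screened)
print(len(sample))  # 4 years x (50 + 100 + 100) abstracts
```

Sampling within each year and research type, rather than from the pooled list, keeps the smaller CCT stratum from being swamped by the much larger OCR and BSS strata.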

Abstract evaluation

Each abstract in the sample was reviewed in detail as to study design, sample size and statistical significance of the study results. Completeness of reporting and details of the study design were rated and combined into a summary score of formal abstract quality. The evaluation of abstracts was performed by raters with training in epidemiology and gastroenterology, masked in terms of authors, origin, acceptance for presentation at the meeting, and publication status. For all analytical studies (i.e. studies using designs appropriate for hypothesis testing), results were considered positive if statistical significance, defined by a p-value < 0.05 or by a 95% confidence interval excluding unity, was achieved for the main outcome of interest, or for the majority of outcomes if these were multiple [5,20]. Results were considered negative if statistical significance was not achieved for the main outcome, or for the majority of outcomes. Results were considered equivocal if there were no statements concerning the statistical significance of the main outcome, or of the majority of outcomes. Descriptive studies (case reports, case series, incidence/prevalence studies, qualitative research and descriptions of procedures) were excluded from the main analyses.
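
The classification rule above may be easier to follow as a short sketch. The function names and the dictionary layout are hypothetical, and treating a tie between outcomes as equivocal is an assumption, since the text does not say how ties were handled.

```python
def outcome_is_significant(p_value=None, ci=None):
    """Return True or False for one outcome, or None if nothing is reported.

    p_value: reported p-value, or None.
    ci: (lower, upper) 95% confidence interval of a ratio measure, or None.
    """
    if p_value is not None:
        return p_value < 0.05
    if ci is not None:
        lower, upper = ci
        return not (lower <= 1.0 <= upper)  # True when the interval excludes unity
    return None


def classify_abstract(main=None, others=()):
    """Classify an abstract as 'positive', 'negative' or 'equivocal'.

    main: dict with optional 'p_value' / 'ci' keys for the main outcome,
          or None when no main outcome can be identified.
    others: dicts of the same shape, used only when `main` is None.
    """
    outcomes = [main] if main is not None else list(others)
    verdicts = [outcome_is_significant(**o) for o in outcomes]
    n = len(verdicts)
    positives = sum(v is True for v in verdicts)
    negatives = sum(v is False for v in verdicts)
    if positives * 2 > n:
        return "positive"
    if negatives * 2 > n:
        return "negative"
    # Significance not stated for the main outcome or for most outcomes
    # (ties are also placed here, which is an assumption).
    return "equivocal"


print(classify_abstract({"p_value": 0.01}))
print(classify_abstract({"ci": (0.8, 1.3)}))
print(classify_abstract({}))
```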

Abstract quality assessment tool

We developed an instrument to evaluate formal abstract quality based on previously validated quality scoring instruments for full papers [21,22]. We also incorporated into the instrument published guidelines for the preparation of structured abstracts and short reports [23-25]. Depending on the study design, there were up to 21 items, including, for example, the definition of a research objective, use of randomization, control for confounding, use and reporting of adequate statistical methods and appropriateness of sample size. A summary score ranging from 0 to 1 could be calculated, adjusting for variations in the number of applicable items. The development and validation of this instrument are described in more detail in a separate article (Timmer, et al. Manuscript submitted for publication).
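
One plausible way to adjust a summary score for the number of applicable items is to average over applicable items only. The sketch below assumes each item is scored between 0 and 1 and that inapplicable items are marked `None`; the item names are taken from the examples in the text, but the scoring scale and the averaging rule are assumptions, as the instrument itself is described elsewhere.

```python
def summary_score(item_scores):
    """Average the applicable items; None marks an item that does not apply."""
    applicable = [s for s in item_scores.values() if s is not None]
    if not applicable:
        raise ValueError("no applicable items")
    return sum(applicable) / len(applicable)


# Hypothetical ratings for an uncontrolled study, where randomization
# does not apply and is therefore left out of the denominator.
ratings = {
    "research objective defined": 1.0,
    "randomization": None,
    "control for confounding": 0.5,
    "adequate statistical methods": 1.0,
    "appropriate sample size": 0.0,
}
print(summary_score(ratings))  # 2.5 / 4 = 0.625
```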

Data base searches

Publication status was assessed by searching MEDLINE, EMBASE, the Cochrane Library (CCT only) and Bios (BSS only) on the names of first and last authors. Only full manuscripts in peer reviewed journals as defined by Last [26] were considered to represent publications. The 1995 scientific impact factor (SIF) of a journal was used as an approximation of the prestige of a journal. This is a measure of the frequency with which the "average article" in a journal has been cited in a particular year or period [27].

Statistical methods

Prior to the examination of publication rates, all continuous data were dichotomized. Sample size medians were calculated separately for different subgroups (CCT: cross over, parallel; OCR: physiology, epidemiology, therapy & diagnostics; BSS: animal studies); more details are presented in a separate publication [28]. Subgroup specific medians were used as cut-off values for high sample size. A high quality score was defined as a summary score > 0.63, which corresponds to the upper tertile in this sample. Similarly, high journal impact was defined by an SIF > 3. Abstracts were categorized into four country groups based on geopolitical regions: 1) USA/Canada, 2) North and West Europe (NW Europe), 3) South and East Europe (SE Europe), 4) other. France and Germany were classified as NW European, while SE Europe also included Mediterranean countries such as Israel and Turkey. Due to the low number of submissions from Australia, these were combined with NW-European abstracts. Analyses were re-run excluding Australian abstracts to examine whether this altered the results.
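
The subgroup specific dichotomization of sample size can be sketched as follows. The records and field names are hypothetical, and the sample sizes are made up for illustration.

```python
from collections import defaultdict
from statistics import median


def flag_large_samples(studies):
    """Return per-subgroup median cut-offs and a 'large sample' flag per study.

    `studies` is a list of dicts with the hypothetical keys 'subgroup' and 'n'.
    """
    sizes = defaultdict(list)
    for study in studies:
        sizes[study["subgroup"]].append(study["n"])
    cutoffs = {group: median(ns) for group, ns in sizes.items()}
    # A study counts as large only if it exceeds its own subgroup's median.
    flags = [study["n"] > cutoffs[study["subgroup"]] for study in studies]
    return cutoffs, flags


# Made-up sample sizes for two CCT subgroups.
studies = [
    {"subgroup": "CCT parallel", "n": 20},
    {"subgroup": "CCT parallel", "n": 50},
    {"subgroup": "CCT parallel", "n": 120},
    {"subgroup": "CCT cross over", "n": 8},
    {"subgroup": "CCT cross over", "n": 15},
    {"subgroup": "CCT cross over", "n": 30},
]
cutoffs, flags = flag_large_samples(studies)
print(cutoffs)
print(flags)
```

Using a separate cut-off per subgroup avoids labelling every cross over trial as small simply because such designs need fewer subjects than parallel group trials.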

The major analyses were based on the information derived from the abstract evaluation and the data base searches. Annual publication rates were calculated using actuarial survival methods [29]. Adjusted hazard ratios (HR) for the determinants of publication were calculated using Cox regression, or by multiple logistic regression if the assumption of proportional hazards was not met [30]. The independent variables included in the initial model were: research type, region of origin, sample size category, quality score category, statistical significance, country group and multi-center status. The model was reduced using manual back and forth procedures, with variable selection based on the stability of the effect sizes, and on the statistical significance of the estimates (p < 0.1).
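
For readers unfamiliar with actuarial (life table) methods, the following minimal sketch shows how annual and cumulative publication rates can be derived. The counts are made up, and the convention that abstracts leaving follow-up during an interval count as at risk for half of it is the usual actuarial assumption, not a detail taken from this study.

```python
def actuarial_life_table(n_start, intervals):
    """Life table estimate of annual and cumulative publication rates.

    n_start: number of abstracts under follow-up at the start.
    intervals: list of (published, withdrawn) counts, one pair per year.
    Returns a list of (annual_rate, cumulative_rate) tuples.
    """
    at_risk = n_start
    unpublished = 1.0  # running probability of remaining unpublished
    rows = []
    for published, withdrawn in intervals:
        # Withdrawals are assumed to be at risk for half the interval.
        effective = at_risk - withdrawn / 2.0
        rate = published / effective if effective > 0 else 0.0
        unpublished *= 1.0 - rate
        rows.append((rate, 1.0 - unpublished))
        at_risk -= published + withdrawn
    return rows


# Made-up example: 100 abstracts followed for three years.
table = actuarial_life_table(100, [(10, 0), (20, 0), (7, 20)])
for year, (annual, cumulative) in enumerate(table, start=1):
    print(f"year {year}: annual {annual:.3f}, cumulative {cumulative:.3f}")
```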

The association between abstract acceptance and subsequent publication was examined separately as the presentation of an abstract was not considered an independent variable but rather part of the pathway to full publication (an 'interim outcome'). Logistic regression was used to predict early publication (< 2 years after the meeting) and high impact publication within the subgroup of published studies.

Sample size considerations

The sample size was chosen to provide sufficient power (>80%) to detect a 20% difference in publication rates between studies with statistically non significant ("negative") results vs. studies with positive outcome. The calculation allowed for separate analyses for CCT and OCR, assuming a negative:positive outcome ratio of 1:2, and a 60% publication rate for positive outcome studies (α = 0.05). Since at the beginning of this study, there were no data on publication bias following BSS abstract presentations and because of uncertainty about the applicability of the descriptors used, a higher relevant difference was chosen for this subgroup (30%) and a smaller sample size was possible. Statistical significance was assumed on a 95% confidence level (two tailed).
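
A calculation of this kind can be sketched with the standard normal approximation for comparing two proportions with unequal group sizes. The sketch assumes that the 20% difference is absolute (60% vs. 40%) and that this formula is an acceptable stand-in; the formula actually used is not stated, so the numbers are illustrative only.

```python
from math import ceil, sqrt
from statistics import NormalDist


def n_for_two_proportions(p_pos, p_neg, ratio_pos_to_neg=2.0,
                          alpha=0.05, power=0.80):
    """Minimum group sizes (negative, positive) for a two-sided test."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    k = ratio_pos_to_neg
    # Pooled publication rate under the null hypothesis of no difference.
    p_bar = (p_neg + k * p_pos) / (1 + k)
    term_null = z_alpha * sqrt((1 + 1 / k) * p_bar * (1 - p_bar))
    term_alt = z_beta * sqrt(p_neg * (1 - p_neg) + p_pos * (1 - p_pos) / k)
    n_neg = ceil((term_null + term_alt) ** 2 / (p_pos - p_neg) ** 2)
    return n_neg, ceil(k * n_neg)


# CCT and OCR: 60% vs. 40%, negative:positive ratio 1:2.
print(n_for_two_proportions(0.60, 0.40))
# BSS: larger relevant difference of 30% (60% vs. 30%, assumed absolute).
print(n_for_two_proportions(0.60, 0.30))
```

These figures count only abstracts with a clearly positive or negative result, so the number of abstracts to be sampled would need to be larger to allow for descriptive studies and equivocally reported results.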

Author survey

A four-page questionnaire was sent to authors of all abstracts, followed, if necessary, by up to two written reminders. The final reminder included a short version of the original questionnaire. The number of publications identified by the author survey was compared to those identified by the data base searches to calculate data base retrieval rates. Responder bias was assessed by comparing the characteristics of abstracts for which questionnaires were received with those for which no questionnaires were received. We corrected for responder bias and incomplete retrieval by multiplying the data base derived rate for the full sample by the reciprocal of the retrieval rate for the abstracts covered in the survey. Agreement between abstract and survey information was assessed using Cohen's kappa (κ) [31].
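
Cohen's kappa compares the observed agreement between two sources with the agreement expected by chance. A minimal sketch for categorical ratings follows; the four paired ratings are made up purely to show the arithmetic.

```python
from collections import Counter


def cohens_kappa(ratings_a, ratings_b):
    """Cohen's kappa for two equally long lists of categorical ratings."""
    if len(ratings_a) != len(ratings_b) or not ratings_a:
        raise ValueError("ratings must be non-empty and of equal length")
    n = len(ratings_a)
    observed = sum(a == b for a, b in zip(ratings_a, ratings_b)) / n
    freq_a, freq_b = Counter(ratings_a), Counter(ratings_b)
    # Chance agreement: product of the marginal proportions, summed over categories.
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / n ** 2
    if expected == 1.0:
        raise ValueError("kappa is undefined when chance agreement is 1")
    return (observed - expected) / (1.0 - expected)


# Made-up example: acceptance status from the abstract book vs. the survey.
from_abstract = ["accepted", "accepted", "rejected", "rejected"]
from_survey = ["accepted", "rejected", "rejected", "rejected"]
print(cohens_kappa(from_abstract, from_survey))  # (0.75 - 0.5) / (1 - 0.5) = 0.5
```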

Models based on the mail survey included, in addition to those described above, the following: mode of abstract presentation, governmental funding, funding by industry, number of previous publications of the principal investigator and age and sex of the investigator.

Results

Abstract characteristics and publication rates

Between 1992 and 1995, 17,205 abstracts were submitted to DDW (928 CCT, 8028 OCR, 8150 BSS, 99 not classifiable (preliminary classification by student raters)). After revision of the research type by the expert raters, the stratified random sample drawn from these abstracts included 326 CCT, 455 OCR and 219 BSS. Of these, 164 descriptive studies were excluded from the analysis, reducing the sample size to 836 (326 CCT, 336 OCR, 174 BSS). Abstract characteristics are shown in Table 1. The highest percentage of abstracts with equivocal results was noted in BSS (overall 40%). OCR and BSS abstracts rarely reported negative results (12% and 4%, respectively), compared with CCT (30%). Based on the median sample size, the following cut off points were used to define large sample size (total number of abstracts in parentheses): CCT: > 50 subjects for CCT with parallel controls (n = 249), > 15 for cross over trials (n = 77). OCR: > 30 for therapy/diagnostics (n = 95), > 75 for epidemiological studies (n = 94), > 15 for physiology (n = 147). BSS: animal studies > 9 (n = 90), studies in biological material: not applicable or not reported (n = 84).

Table 1.

Baseline characteristics of abstracts

| | CCT N (%) | OCR N (%) | BSS N (%) | total N |
|---|---|---|---|---|
| total sampled | 326 | 455 | 219 | 1,000 |
| descriptive studies (excluded) | - | 119 | 45 | 164 |
| total in analysis | 326 | 336 | 174 | 836 |
| acceptance of abstract | | | | |
| accepted | 227 (70) | 214 (64) | 101 (58) | 542 |
| rejected | 99 (30) | 122 (36) | 73 (42) | 294 |
| country region of origin | | | | |
| US/Canada | 88 (27) | 128 (38) | 95 (55) | 311 |
| NW-Europe/Australia | 138 (42) | 107 (32) | 34 (20) | 279 |
| SE-Europe/Mediterranean | 52 (16) | 44 (13) | 9 (5) | 105 |
| other | 48 (15) | 57 (17) | 36 (21) | 141 |
| number of study centers | | | | |
| 1–3 | 280 (86) | 324 (96) | 173 (99) | 777 |
| > 3 | 46 (14) | 12 (4) | 1 (1) | 59 |
| statistical significance | | | | |
| positive | 140 (43) | 149 (44) | 65 (37) | 354 |
| negative | 99 (30) | 41 (12) | 7 (4) | 147 |
| equivocal | 87 (27) | 146 (44) | 102 (59) | 335 |

The data base searches were performed three to six years after abstract submission. For 392 abstracts, full publications were identified (publication rate 47%, 95% CI 43% to 50%). In Table 2, publication rates are shown for different subgroups. The proportion of published studies was highest for CCT (52%) and lowest for OCR (42%), with BSS in between (47%; p = 0.03 for differences by research type). Accepted abstracts were more often followed by full publication than abstracts rejected for presentation at the meeting (54% vs. 34%, p < 0.001). For CCT, multi-center status, the statistical significance of the study results, and a high quality score were also associated with higher publication rates. No differences were found for country of origin or sample size.

Table 2.

Number of publications and crude publication rates by research type

| | CCT N (%) | OCR N (%) | BSS N (%) | total N (%) |
|---|---|---|---|---|
| total in analysis | 326 | 336 | 174 | 836 |
| total published | 170 (52) | 140 (42) | 82 (47) | 392 (47) |
| abstract acceptance | | | | |
| accepted | 128 (56)* | 107 (50)* | 59 (58)* | 292 (54)* |
| rejected | 42 (42) | 33 (27) | 23 (32) | 98 (34) |
| country group | | | | |
| USA/Canada | 43 (49) | 57 (45) | 48 (51) | 147 (48) |
| NW Europe/Australia | 78 (57) | 43 (40) | 14 (41) | 136 (48) |
| SE Europe/Mediterranean | 22 (42) | 19 (43) | 6 (67) | 45 (44) |
| other | 27 (56) | 21 (37) | 14 (39) | 62 (43) |
| multi-center status | | | | |
| 1–3 centers | 134 (48)* | n/a | n/a | n/a |
| > 3 centers | 36 (78) | | | |
| sample size | | | | |
| < median | 72 (50) | 69 (40) | 8 (44) | 142 (43) |
| > median | 98 (54) | 68 (42) | 12 (41) | 181 (48) |
| statistical significance | | | | |
| positive | 84 (60)* | 64 (43) | 32 (49) | 177 (50) |
| negative | 47 (48) | 17 (42) | 5 (71) | 69 (47) |
| equivocal | 39 (45) | 59 (40) | 45 (44) | 144 (43) |
| quality score | | | | |
| < 0.63 | 103 (47)* | 88 (42) | 54 (49) | 240 (45) |
| > 0.63 | 67 (63) | 52 (41) | 28 (44) | 150 (50) |

* p < 0.05 for differences in publication rates between categories. A quality score > 0.63 equals good abstract quality

Because of the violation of the proportional hazards assumption, Cox regression analysis could not be used to determine time to publication by research type for the combined sample. The results of the logistic regression can be seen in Table 4. Only multi-center status was a significant predictor of full publication (OR 2.8, 95% CI 1.6 to 4.9). Type of research, statistical significance of the results, origin of the abstract and sample size were not associated with publication rates. For CCT, multi-center status (HR 2.0, 95% CI 1.0 – 1.9) and a high quality score (HR 1.4, 95% CI 1.0–1.9) were associated with publication. No significant predictors of publication for OCR and BSS were identified. Using the country of the last author as country of origin, or excluding Australian abstracts from the analysis did not change the results of any of the models.

Table 4.

Predictors of publication

| | data base based OR (95% CI) | survey based OR (95% CI) |
|---|---|---|
| number of applicable abstracts | 836 | 494 |
| abstracts with missing values | 0 | 71 |
| total in analysis | 836 | 423 |
| statistical significance | | |
| positive | 1 | 1 |
| negative | 0.8 (0.6–1.2) | 0.7 (0.4–1.2) |
| equivocal | 0.7 (0.6–1.0) | 0.4 (0.2–0.7) |
| previous publications of PI | | |
| less than 10 | not available | 1 |
| more than 10 | not available | 1.7 (1.1–2.8) |
| multi-center status | | |
| 1–3 centers | 1 | 1 |
| > 3 centers | 2.8 (1.6–4.9) | 2.0 (0.9–4.5) |

Data base search based: model χ² 17.1, df 3, p < .001; Hosmer and Lemeshow goodness-of-fit test: χ² 0.24, df 3, p = .97. Survey based: model χ² 13.2, df 1, p < .001; Hosmer and Lemeshow test: skipped (df < 1).

Time to publication and impact factor of the publications

Annual publication rates were highest in the second year after submission. Of the identified publications, 67% were published within two years (median time to publication: 18 months). If a project was not published within four years, the chances for subsequent publication were only 3% per year.

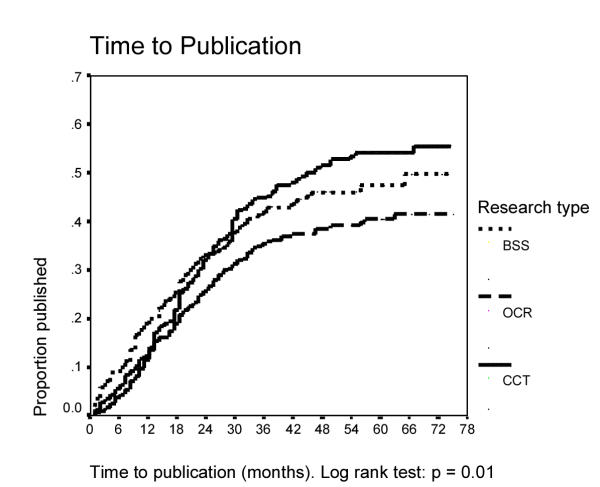

The time to publication by research type is presented in Fig. 1. The proportion of published studies was highest for CCT at the end of the follow up period, but they took longer to get published. In contrast, 71% of the BSS publications appeared within 2 years. This difference by research type was statistically significant (p = 0.01). No other predictors of rapid publication as defined by publication within 2 years were identified. Specifically, the statistical significance of the study results had no influence on the time to publication in this analysis, neither in the combined group, nor in any of the subgroups. Among the 392 publications identified by the data base searches, there were only 15 in languages other than English (4%). In a multivariate logistic regression model containing all published studies (n = 392), negative outcomes were less likely to appear in high impact journals (OR 0.5, 95% CI 0.3–0.6). For equivocal studies, the OR was 1.2 (95% CI 0.7–1.9, reference: positive studies, OR 1.0). Multi-center status (OR 2.4, 95% CI 1.2–4.9) and a high quality score (OR 1.7, 95% CI 1.1–2.6) were also retained in this model, as was the origin (North America: OR 1.0, NW-Europe: 0.9, 95% CI 0.6–1.5, SE Europe: 0.3, 95% CI 0.1–0.6, other: 0.7, 95% CI 0.4–1.3). The analysis was controlled for research type which, however, was not itself independently associated with journal scientific impact. Acceptance for presentation at a meeting was also associated with publication in a higher impact journal. The difference was small but statistically significant (OR for high impact if accepted: 1.5 (95% CI 1.0–2.4); rejected, OR = 1).

Figure 1.

Time to publication

Results of the mail survey

Questionnaires were received for 593 of 1,000 abstracts (46 returned undeliverable, response rate 62.2%). Of these, 58 were in short form. Response rates were higher for authors of subsequently published abstracts (69.9% vs. 55.6%, based on data base searches, p < 0.001), indicating significant responder bias. The survey identified 387 publications including 306 identified by the data base searches (data base retrieval rate 79%). The retrieval rate was lower for manuscripts published in languages other than English (33%; English language manuscripts: 80%, p = 0.03); however, this group was very small (n = 6). No other factors were associated with retrieval rates. The combined effect of incomplete retrieval by the data base searches on the one hand and responder bias in the survey on the other hand resulted in a significantly higher publication rate for the survey based analysis as compared to the data base search (64% vs. 47%). The publication rate corrected for these biases was estimated to be 58% (95% CI 55% to 61%).
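
The response rate, the retrieval rate and the correction described in the Methods can be retraced from the counts given in this paragraph. The sketch below is only an approximation: it divides the crude data base rate for the 836 analysed abstracts by the retrieval rate, whereas the exact inputs used for the corrected estimate are not stated, so the result lands near, though not exactly on, the reported corrected rate.

```python
def corrected_publication_rate(database_rate, retrieval_rate):
    """Scale the data base derived rate by the reciprocal of the retrieval rate."""
    return database_rate / retrieval_rate


# Counts taken from the text.
response_rate = 593 / (1000 - 46)   # questionnaires received / deliverable
retrieval_rate = 306 / 387          # survey publications also found by the searches
database_rate = 392 / 836           # publications found by the data base searches
corrected_rate = corrected_publication_rate(database_rate, retrieval_rate)

print(f"response rate  {response_rate:.1%}")
print(f"retrieval rate {retrieval_rate:.1%}")
print(f"corrected rate {corrected_rate:.1%}")
```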

Where survey information could be validated against information from the abstracts or from Medline, agreement was poor (abstract acceptance κ = 0.5, randomization (CCT only) κ = 0.2, statistical significance κ = 0.3). The poor agreement for statistical significance was due to the large proportion of abstracts with equivocally reported results. The majority of these (64%) had become statistically significant in the survey (8% negative, 27% equivocal). The date of publication was missing or incorrect in 58% of the reported publications.

In spite of the evident problems in the reliability and validity of the survey data, exploratory analysis of the survey data showed similar trends for the prediction of publication compared with the data base derived rates (Table 3). Based on the crude rates, the mode of presentation, the statistical significance of the results, and the number of previous publications were found to be significantly associated with full publication. There was a trend for higher publication rates with increasing age of the investigator; however, this was not statistically significant. Also, this variable was strongly correlated with the number of previous publications. Gender differences could not be adequately assessed due to the low number of female investigators (n = 33). In the multivariate analysis only the number of previous publications was significantly associated with publication (Table 4).

Table 3.

Survey information and survey based publication rates

| | CCT N (%) | OCR N (%) | BSS N (%) | total N | publication rate |
|---|---|---|---|---|---|
| total received | 192 | 270 | 131 | 593 | |
| descriptive studies (excluded) | - | 67 | 32 | 99 | |
| total in analysis | 192 | 203 | 99 | 494¹ | 66% |
| presentation at the meeting | | | | | |
| oral presentation | 44 (24) | 27 (14) | 22 (22) | 93 | 80%* |
| poster | 120 (65) | 128 (66) | 65 (66) | 313 | 68% |
| no presentation | 20 (11) | 28 (20) | 11 (11) | 69 | 44% |
| statistical significance of results | | | | | |
| positive | 126 (66) | 136 (67) | 79 (80) | 341 | 72%* |
| negative | 51 (27) | 15 (7) | 4 (4) | 70 | 61% |
| equivocal | 15 (8) | 52 (26) | 16 (16) | 83 | 49% |
| age of principal investigator | | | | | |
| < 35 years | 12 (7) | 30 (17) | 17 (20) | 49 | 61% |
| 35–50 years | 100 (61) | 106 (60) | 43 (51) | 249 | 66% |
| > 50 years | 53 (32) | 42 (24) | 25 (29) | 120 | 72% |
| sex of principal investigator | | | | | |
| male | 145 (93) | 156 (91) | 77 (92) | 378 | 68% |
| female | 11 (7) | 15 (9) | 7 (8) | 33 | 61% |
| number of previous publications | | | | | |
| up to 10 | 34 (19) | 56 (31) | 33 (33) | 154 | 59%* |
| > 10 | 149 (81) | 126 (69) | 67 (67) | 128 | 70% |
| funding² | | | | | |
| by industry | 106 (55) | 38 (19) | 10 (10) | 154 | 66% |
| by government | 25 (13) | 44 (22) | 59 (60) | 128 | 73% |

* p < 0.05 for differences in publication rates between categories. ¹ Totals 494 due to missing values. ² Categories are not mutually exclusive.

Reasons for non publication

In the majority of the 206 unpublished studies for which this information was available, the failure to publish rested with the investigator: 156 projects were never submitted as a manuscript (76% of the unpublished projects). The most frequent reason for non publication was lack of time (Table 5). Fifty manuscripts were not published because of editorial rejection. In addition, 121 manuscripts were reported to have been rejected at least once before eventual publication (maximum number of submissions: 5). Reasons for rejection were reported in 22 of the unpublished, and 102 of the eventually published studies, and most often included methodological problems or lack of interest in the topic. Negative results were cited as a reason for rejection in nine cases; however, all nine studies were eventually published in other journals.

Table 5.

Reasons for non completion or non submission

| | most important factor N (%) | other factors N (%) |
|---|---|---|
| total reporting¹ | 112 | 112 |
| lack of time | 48 (42.9) | 76 (67.9) |
| co-investigator left | 12 (10.7) | 32 (28.6) |
| lack of interest | 5 (4.5) | 25 (22.3) |
| sample size/recruitment problems | 7 (6.3) | 26 (23.2) |
| limitations in methodology | 4 (3.6) | 21 (18.7) |
| unimportant results | 3 (2.7) | 16 (14.3) |
| rejection anticipated | 3 (2.7) | 10 (8.9) |
| publication not the aim | 3 (2.7) | 10 (8.9) |
| negative results | 3 (2.7) | 11 (9.8) |
| external problems | 6 (5.4) | 6 (8.5) |
| side effects/ethical problems | 1 (0.9) | 5 (4.5) |
| equipment/software problems | 2 (1.8) | 3 (2.7) |
| single decisive factor not reported | 15 (13.4) | - |

¹ Reasons for rejection were not available from short form questionnaires sent to primary non responders. Multiple responses were allowed for "other factors".

Discussion

This study examined determinants of publication of gastrointestinal research, based on a large sample of abstracts submitted to the major scientific meeting in this field. The evaluation of the randomly selected abstracts showed a wide variety in terms of research topic, origin, quality and research type. Until 1999 all DDW abstracts were published as a supplement to Gastroenterology. Abstracts are often not included in reviews nor are they always easily available to the interested reader. The reliability of information from abstracts has been questioned [32-34]. It is important to identify the factors which influence full publication as selective underreporting of research may result in seriously distorted information [35].

Based on data base searches, approximately half of the abstracts were subsequently published as full manuscripts. The proportion ranged from 42% for other clinical research to 52% for controlled clinical trials. This is in accordance with data reported for other meetings. Publication rates following abstract presentation have been published for a large variety of biomedical specialties. In a recent Cochrane review on publication rates following abstract presentation, 46 studies were identified, following up a total of 15,985 abstracts [36]. Publication rates varied from 10% to 78%, with a median rate of 47%. This meta-analysis constitutes an update and extension to a previous study by Scherer, combining 2391 meeting abstracts from 10 studies, resulting in a pooled publication rate of 51% [37]. Since the publication of the Cochrane review, more follow up studies have been published, including two in subspecialties of gastroenterology [17,38]; two more studies following up gastroenterological abstracts are available in abstract form [19,39]. The similarity in the reporting of publication rates across different meetings is striking. Only the report by Eloubeidi found a significantly lower rate (25%) [17]. We suggest that this may be due to the restriction to endoscopy research which may not be representative of more general meetings.

The most important factor found to be associated with full publication in our study was the acceptance of the abstract for presentation at the meeting. We found an odds ratio of 2.3 (95% CI 1.7 to 3.1) for publication of accepted abstracts, as compared to rejected abstracts. This translates into a relative risk of 1.6 (95% CI 1.4 to 1.9), a number which is consistent with data reported by other investigators [8,39-44]. Scherer calculated a pooled relative risk of 1.8 (95% CI 1.7 to 2.0) for publication, based on 7 reports [36]. Generally, the determinants of abstract acceptance resemble those of subsequent publication, although additional factors may play a role [42,45]. Acceptance of an abstract for presentation may influence the decision of authors to pursue full publication. We did not consider abstract acceptance an independent factor in the publication process, but rather a variable on the causal chain. Therefore, we did not include this factor in our multivariate analysis. Our findings on the determinants of abstract acceptance are reported in more detail in a separate publication [28].
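
The relation between the odds ratio and the relative risk quoted above can be retraced from the crude counts in Table 2 (292 of 542 accepted and 98 of 294 rejected abstracts published). The sketch below uses Wald confidence intervals on the log scale, which is an assumption about the method but reproduces the reported figures to one decimal place.

```python
from math import exp, log, sqrt

Z95 = 1.959964  # standard normal quantile for a two-sided 95% interval


def odds_ratio_and_relative_risk(a, n1, c, n2):
    """Crude OR and RR with Wald 95% CIs for a events in n1 vs. c events in n2."""
    b, d = n1 - a, n2 - c  # non-events in each group

    def interval(estimate, se_log):
        return exp(log(estimate) - Z95 * se_log), exp(log(estimate) + Z95 * se_log)

    odds_ratio = (a * d) / (b * c)
    se_log_or = sqrt(1 / a + 1 / b + 1 / c + 1 / d)
    relative_risk = (a / n1) / (c / n2)
    se_log_rr = sqrt(1 / a - 1 / n1 + 1 / c - 1 / n2)
    return ((odds_ratio, *interval(odds_ratio, se_log_or)),
            (relative_risk, *interval(relative_risk, se_log_rr)))


# Published / total for accepted and rejected abstracts (Table 2, total column).
or_ci, rr_ci = odds_ratio_and_relative_risk(292, 542, 98, 294)
print("OR %.1f (%.1f to %.1f)" % or_ci)  # OR 2.3 (1.7 to 3.1)
print("RR %.1f (%.1f to %.1f)" % rr_ci)  # RR 1.6 (1.4 to 1.9)
```

Because publication is a common outcome here (roughly half of all abstracts), the odds ratio is noticeably further from 1 than the relative risk, which is why both are worth reporting.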

Most previous studies focused on clinical research, or were restricted to randomized clinical trials only. Few data are available on publication processes for basic science. Generally, due to the higher heterogeneity in terms of study design and study objectives, results in this group are more difficult to interpret. However, most trends were similar in the subanalyses. Basic science research was found to take less time to publication as compared to clinical research. This may explain the higher publication rate for BSS as compared to clinical research which was described by Kiroff in a study involving a shorter follow up period, and was also confirmed in the meta-analysis by Scherer [12,36]. In our study, the rate and the scientific impact of publication as measured by the scientific journal impact factor was similar between basic science research and controlled clinical trials.

A novel aspect was the international context which allowed for the examination of differences in publication rates based on the origin of the research. In a parallel study, North American origin was shown to be the most important predictor of abstract acceptance for presentation at the US-based DDW meetings [28]. In contrast, no difference in the proportion of published studies, nor in the impact of the publications, was found between research from North America and research from NW Europe and Australia. There was some indication that abstracts from countries other than North America, NW Europe and Australia were of somewhat lower quality, resulting in a lower proportion of high impact publications and lower publication rates. However, the number of submitted abstracts from these other countries was low, and estimates were imprecise.

Ideally, the decision to submit or accept a manuscript for publication is based on the scientific quality of the research [1]. This variable is particularly difficult to examine based on the limited information available from meeting abstracts. In a study on the fate of emergency medicine abstracts, abstract quality was assessed by a modification of Chalmers' rating system for the methodological quality of randomized clinical trials, as well as by a scientific originality rating [43]. Originality was found to be associated with subsequent publication, but, as in a previous study using a similar modification, the methodology score was not predictive [6,46]. However, only few design features were included in this score, and the instrument may not have been very sensitive to differences in formal quality. In contrast, we used a newly developed 21 item score, encompassing various aspects of completeness of reporting and design features to assess abstract quality. Due to the wide range of research types and topics within the sample, originality of the research could not be uniformly assessed. The positive association found for formal quality and prestige of the publication, however, may be taken as indicative of a good correlation between form and content, at least for the subgroup of controlled clinical trials, as was suspected by other authors [47].

A positive outcome of the study results was not significantly associated with subsequent publication. This is somewhat surprising in view of the consistent findings in the literature, which show a roughly twofold higher chance of publication if the results are statistically significant [36]. One reason may be the high proportion of equivocal results. The inability to correctly classify these abstracts is likely to have diluted the strength of the association studied. Nevertheless, publication bias was still evident from the higher impact of publications with positive results, which is in accordance with the observation of a higher susceptibility to publication bias in higher impact journals [4,6]. In addition, for basic science studies in particular, the low proportion of positive outcome studies indicated biased abstract submission, a phenomenon also demonstrated by Callaham [43].
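The dilution argument can be illustrated with a small numerical sketch. The counts below are hypothetical and are not data from this study, and the function names `odds_ratio` and `misclassify` are introduced here for illustration only. The sketch assumes that a fixed fraction of abstracts in each outcome group is assigned to the wrong group, independent of publication status (non-differential misclassification), and shows that the observed odds ratio then moves towards 1.

```python
def odds_ratio(pos_pub, pos_unpub, neg_pub, neg_unpub):
    """Odds ratio for publication, positive versus negative results."""
    return (pos_pub * neg_unpub) / (pos_unpub * neg_pub)


def misclassify(pos_pub, pos_unpub, neg_pub, neg_unpub, error_rate):
    """Expected 2x2 table when a fraction `error_rate` of each outcome
    group is wrongly assigned to the other group, regardless of whether
    the abstract was later published (non-differential error)."""
    keep = 1.0 - error_rate
    return (
        pos_pub * keep + neg_pub * error_rate,
        pos_unpub * keep + neg_unpub * error_rate,
        neg_pub * keep + pos_pub * error_rate,
        neg_unpub * keep + pos_unpub * error_rate,
    )


# Hypothetical counts, chosen only to give a true odds ratio near 2.
TRUE_TABLE = (60, 40, 40, 60)

true_or = odds_ratio(*TRUE_TABLE)
observed_or = odds_ratio(*misclassify(*TRUE_TABLE, error_rate=0.2))

print(f"true OR: {true_or:.2f}, observed OR: {observed_or:.2f}")
```

Under these assumptions the observed odds ratio lies between 1 and the true value, so a real association of the size reported in the literature could appear weaker, or fail to reach significance, when many results cannot be classified reliably.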

Limitations of this study include the low response rate in the author survey, which compromised the validity of analyses based on author information. As significant responder bias and limitations to the reliability of the information given by the surveyed authors were evident, the results from the survey-based models have to be interpreted with caution. On the other hand, the complementary use of two methods of data collection, database searches and the author survey, was important to gauge the reliability of the information used.

Another limitation stems from the heterogeneity of the research projects and the international context. While this added valuable information on publication processes in a wider perspective, a few variables were more difficult to assess. Lack of comparability between study types may, for example, explain the failure to show an association between sample size and full publication. Similarly, national funding policies are likely to differ considerably, so that potential effects of source of funding on publication rates were possibly missed.

Conclusions

Based on the results of our study, there is subtle evidence for publication bias in gastroenterological research, expressed by a low proportion of negative outcome abstract submissions, especially in basic science, and by a lower proportion of high impact publications if the results are statistically negative. In the majority of cases, authors are responsible for the failure to publish. The acceptance of an abstract for presentation at a scientific meeting seems to be an important factor in the decision to submit a manuscript for publication. The submission of studies with statistically negative results needs to be encouraged, especially in basic science.

Abbreviations

BSS: basic science studies

N/W: North and West

CCT: controlled clinical trial

OCR: other clinical research

DDW: Digestive Diseases Week

S/E: South and East

HR: hazard ratio

SIF: scientific impact factor

Competing interests

None declared.

Authors' contributions

AT designed the study, developed the evaluation instruments, collected the data, performed the statistical analysis and drafted the manuscript. RJH participated in the development of the evaluation instrument and in the abstract evaluation. JC contributed to the design of the study and served as an advisor for the database assessment. DH also contributed to the design of the study. LRS conceived the study and participated in its design and coordination. All authors read and approved the final manuscript.

Acknowledgments

The authors wish to thank K. Dickersin, Providence, for expert advice and thoughtful comments on the manuscript. Thanks also to M. Dorgan, Edmonton, for assistance with the database searches. A. Timmer was a fellow of the German Academic Exchange Service (DAAD). The study was supported by a grant from the Calgary Regional Health Authority R&D and by Searle Canada.

Contributor Information

Antje Timmer, Email: antje.timmer@klinik.uni-regensburg.de.

Robert J Hilsden, Email: rhilsden@acs.ucalgary.ca.

John Cole, Email: jhcole@ucalgary.ca.

David Hailey, Email: dhailey@ualberta.ca.

Lloyd R Sutherland, Email: lsutherl@acs.ucalgary.ca.

References

1. Dickersin K. The existence of publication bias and risk factors for its occurrence. JAMA. 1990;263:1385–1389. doi: 10.1001/jama.263.10.1385.

2. Simes RJ. Confronting publication bias: a cohort design for meta-analysis. Stat Med. 1987;6:11–29. doi: 10.1002/sim.4780060104.

3. Thornton A, Lee P. Publication bias in meta-analysis. Its causes and consequences. J Clin Epidemiol. 2000;53:207–216. doi: 10.1016/S0895-4356(99)00161-4.

4. Begg CB, Berlin JA. Publication bias: a problem in interpreting medical data. J R Stat Soc A. 1988;151:419–463.

5. Begg CB, Berlin JA. Review: publication bias and dissemination of clinical research. J Natl Cancer Inst. 1989;81:107–115. doi: 10.1093/jnci/81.2.107.

6. Easterbrook PJ, Berlin JA, Gopalan R, Matthews DR. Publication bias in clinical research. Lancet. 1991;337:867–872. doi: 10.1016/0140-6736(91)90201-Y.

7. Gavazza JB, Foulkes GD, Meals RA. Publication pattern of papers presented at the American Society for Surgery of the Hand annual meeting. J Hand Surg [Am]. 1996;21:742–745. doi: 10.1016/S0363-5023(96)80185-7.

8. Goldman L, Loscalzo A. Fate of cardiology research originally published in abstract form. N Engl J Med. 1980;303:255–259. doi: 10.1056/NEJM198007313030504.

9. Hamlet WP, Fletcher A, Meals RA. Publication patterns of papers presented at the Annual Meeting of The American Academy of Orthopaedic Surgeons. J Bone Joint Surg Am. 1997;79:1138–1143. doi: 10.2106/00004623-199708000-00004.

10. Juzych MS, Shin DH, Coffey J, Juzych L, Shin D. Whatever happened to abstracts from different sections of the association for research in vision and ophthalmology? Inv Ophthalmol Vis Sci. 1993;34:1879–1882.

11. Landry VL. The publication outcome for the papers presented at the 1990 ABA conference. J Burn Care Rehabil. 1996;17:23A–26A. doi: 10.1097/00004630-199601000-00002.

12. Kiroff GK. Publication bias in presentations to the Annual Scientific Congress. Aust N Z J Surg. 2001;71:167–171. doi: 10.1046/j.1440-1622.2001.02058.x.

13. Sterne JA, Gavaghan D, Egger M. Publication and related bias in meta-analysis: power of statistical tests and prevalence in the literature. J Clin Epidemiol. 2000;53:1119–1129. doi: 10.1016/S0895-4356(00)00242-0.

14. Sterne JA, Egger M, Smith GD. Investigating and dealing with publication and other biases in meta-analysis. Br Med J. 2001;323:101–105. doi: 10.1136/bmj.323.7304.101.

15. Chalmers TC, Koff RS, Grady GF. A note on fatality in serum hepatitis. Gastroenterology. 1965;49:22–26.

16. Shaheen NJ, Crosby MA, Bozymski EM, Sandler RS. Is there publication bias in the reporting of cancer risk in Barrett's esophagus? Gastroenterology. 2000;119:333–338. doi: 10.1053/gast.2000.9302.

17. Eloubeidi MA, Wade SB, Provenzale D. Factors associated with acceptance and full publication of GI endoscopic research originally published in abstract form. Gastrointest Endosc. 2001;53:275–282. doi: 10.1067/mge.2001.113383.

18. Chalmers TC, Frank CS, Reitman D. Minimizing the three stages of publication bias. JAMA. 1990;263:1392–1395. doi: 10.1001/jama.263.10.1392.

19. Duchini A, Genta RM. From abstract to peer-reviewed article: the fate of abstracts submitted to the DDW [Abstract]. Gastroenterology. 1997;112:A12.

20. Dickersin K. Confusion about "negative" studies. N Engl J Med. 1990;322:1084. doi: 10.1056/NEJM199004123221514.

21. Moher D, Jadad AR, Nichol G, Penman M, Tugwell P, Walsh S. Assessing the quality of randomized controlled trials: an annotated bibliography of scales and checklists. Control Clin Trials. 1995;16:62–73. doi: 10.1016/0197-2456(94)00031-W.

22. Cho MK, Bero LA. Instruments for assessing the quality of drug studies published in the medical literature. JAMA. 1994;272:101–104. doi: 10.1001/jama.272.2.101.

23. Ad Hoc Working Group for Critical Appraisal of the Medical Literature. A proposal for more informative abstracts of clinical studies. Ann Intern Med. 1987;106:598–604.

24. Squires BP. Structured abstracts of original research and review articles. Can Med Ass J. 1990;143:619–622.

25. Deeks JJ, Altman DG. Inadequate reporting of controlled trials as short reports. Lancet. 1998;352:1908–1909. doi: 10.1016/S0140-6736(05)60399-6.

26. Last JM. A dictionary of epidemiology. 3rd ed. New York, Oxford, Toronto: Oxford University Press; 1995.

27. Garfield E. The impact factor. ISI-essays. 1994. http://:essay7.html

28. Timmer A, Hilsden RJ, Sutherland LR. Determinants of abstract acceptance for the Digestive Diseases Week. BMC Med Res Methodol. 2001;1:13. doi: 10.1186/1471-2288-1-13.

29. Altman DG. Practical statistics for medical research. London: Chapman & Hall; 1991.

30. Christensen E. Multivariate survival analysis using Cox's regression model. Hepatology. 1987;7:1346–1358. doi: 10.1002/hep.1840070628.

31. Landis JR, Koch GG. The measurement of observer agreement for categorical data. Biometrics. 1977;33:159–174.

32. Relman AS. News reports of medical meetings: how reliable are abstracts? N Engl J Med. 1980;303:277–278. doi: 10.1056/NEJM198007313030509.

33. Soffer A. Beware the 200-word abstract! Arch Int Med. 1976;136:1232–1233. doi: 10.1001/archinte.136.11.1232.

34. Weintraub WH. Are published manuscripts representative of the surgical meeting abstracts? An objective appraisal. J Ped Surg. 1987;22:11–13. doi: 10.1016/s0022-3468(87)80005-2.

35. Chalmers I. Underreporting research is scientific misconduct. JAMA. 1990;263:1405–1408. doi: 10.1001/jama.263.10.1405.

36. Scherer RW, Langenberg P. Full publication of results initially presented in abstracts (Cochrane Methodology Review). The Cochrane Library, Issue 4. Oxford: Update Software; 2001.

37. Scherer RW, Dickersin K, Langenberg P. Full publication of results initially presented in abstracts. A meta-analysis. JAMA. 1994;272:158–162. doi: 10.1001/jama.272.2.158.

38. Timmer A, Blum T, Lankisch PG. Publication rates following pancreas meetings. Pancreas. 2001;23:212–215. doi: 10.1097/00006676-200108000-00012.

39. Sanders DS, Carter MJ, Hurston JP, Hoggard N, Lobo AJ. Full publication of abstracts presented at the British Society of Gastroenterology [Abstract]. Gut. 2000;46:A18. doi: 10.1136/gut.49.1.154a.

40. Goldman L, Loscalzo A. Publication rates of research originally presented in abstract form in three sub-specialties of internal medicine. Clin Res. 1982;30:13–17.

41. Jackson KR, Daluiski A, Kay RM. Publication of abstracts submitted to the annual meeting of the Pediatric Orthopaedic Society of North America. J Pediatr Orthop. 2000;20:2–6. doi: 10.1097/00004694-200001000-00002.

42. De Bellefeuille C, Morrison CA, Tannock IF. The fate of abstracts submitted to a cancer meeting: factors which influence presentation and subsequent publication. Ann Oncol. 1992;3:187–191. doi: 10.1093/oxfordjournals.annonc.a058147.

43. Callaham ML, Wears RL, Weber EJ, Barton C, Young G. Positive-outcome bias and other limitations in the outcome of research abstracts submitted to a scientific meeting. JAMA. 1998;280:254–257. doi: 10.1001/jama.280.3.254.

44. McCormick MC, Holmes JH. Publication of research presented at the pediatric meetings. Change in selection. Am J Dis Child. 1985;139:122–126. doi: 10.1001/archpedi.1985.02140040020017.

45. Weber EJ, Callaham ML, Wears RL, Barton C, Young G. Unpublished research from a medical specialty meeting. Why investigators fail to publish. JAMA. 1998;280:257–259. doi: 10.1001/jama.280.3.257.

46. Chalmers I, Adams M, Dickersin K, et al. A cohort study of summary reports of controlled trials. JAMA. 1990;263:1401–1405. doi: 10.1001/jama.263.10.1401.

47. Panush RS, Delafuente JC, Connelly CS, et al. Profile of a meeting: how abstracts are written and reviewed. J Rheumatol. 1989;16:145–147.