ABSTRACT

There are limited options to estimate the treatment effects of variables which are continuous and measured at multiple time points, particularly if the true dose–response curve should be estimated as closely as possible. However, these situations may be of relevance: in pharmacology, one may be interested in how outcomes of people living with—and treated for—HIV, such as viral failure, would vary for time‐varying interventions such as different drug concentration trajectories. A challenge for doing causal inference with continuous interventions is that the positivity assumption is typically violated. To address positivity violations, we develop projection functions, which reweigh and redefine the estimand of interest based on functions of the conditional support for the respective interventions. With these functions, we obtain the desired dose–response curve in areas of enough support, and otherwise a meaningful estimand that does not require the positivity assumption. We develop ‐computation type plug‐in estimators for this case. Those are contrasted with g‐computation estimators which are applied to continuous interventions without specifically addressing positivity violations, which we propose to be presented with diagnostics. The ideas are illustrated with longitudinal data from HIV positive children treated with an efavirenz‐based regimen as part of the CHAPAS‐3 trial, which enrolled children years in Zambia/Uganda. Simulations show in which situations a standard g‐computation approach is appropriate, and in which it leads to bias and how the proposed weighted estimation approach then recovers the alternative estimand of interest.

1. Introduction

Causal inference for multiple time‐point interventions has received considerable attention in the literature over the past few years: if the intervention of interest is binary, popular estimation approaches include inverse probability of treatment weighting approaches (IPTW) [1], g‐computation estimators [2, 3] and longitudinal targeted maximum likelihood estimators (LTMLE) [4], among others. For IPTW, the treatment and censoring mechanisms need to be estimated at each time point, parametric g‐computation requires fitting of both the outcome and confounder mechanisms, sequential g‐computation is based on the iterated outcome regressions only, and LTMLE needs models for the outcome, censoring, and treatment mechanisms, iteratively at each point in time. All of those methods are relatively well‐understood and have been successfully applied in different fields (e.g., in [5, 6, 7, 8, 9, 10]).

However, suggestions on how to estimate treatment effects of variables that are continuous and measured at multiple time points are limited. This may, however, be of interest to construct causal dose–response curves (CDRC). For example, in pharmacoepidemiology, one may be interested in how counterfactual outcomes vary for different dosing strategies for a particular drug, see Section 2.

Early work on continuous interventions includes the seminal paper of Robins, Hernán, and Brumback [1] on inverse probability weighting of marginal structural models (MSMs). For a single time point, this requires the estimation of stabilized weights based on the conditional density of the treatment, given the confounders. This density may be estimated with parametric regression models, such as linear regression models. The MSM, describing the dose–response relationship (i.e., the CDRC), can then be obtained using weighted regression. The suggested approach can also be used for the longitudinal case, where stabilized weights can be constructed easily, and one may work with a working model where, for example, the effect of cumulative dose over all time points on the response is estimated.

There are several other suggestions for the point treatment case (i.e., a single time point): for example, the use of the generalized propensity score (GPS) is often advocated in the literature [11]. Similar to the MSM approach described above, both the conditional density of the treatment, given the confounders, and the dose–response relationship have to be specified and estimated. Instead of using stabilized weights, the GPS is included in the dose–response model as a covariate. To reduce the risk of bias due to model mis‐specification, it has been suggested to incorporate machine learning (ML) in the estimation process [12]. However, when combining GPS and ML approaches, there is no guarantee for valid inference, that is, related confidence intervals may not achieve nominal coverage [4]. Doubly robust (DR) approaches allow the integration of ML while retaining valid inference. As such, the DR‐estimator proposed by Kennedy et al. [13] is a viable alternative to both MSM and GPS approaches. It does not rely on the correct specification of parametric models and can incorporate general machine learning approaches. As with other, similar, approaches [14] both the treatment and outcome mechanisms need to be modeled. Then a pseudo‐outcome based on those two estimated nuisance functions is constructed, and put into relationship with the continuous intervention using kernel smoothing. Further angles in the point treatment case are given in the literature [15, 16, 17, 18].

There are fewer suggestions as to how to estimate CDRCs for multiple time point interventions. As indicated above, one could work with inverse probability weighting of marginal structural models and specify a parametric dose–response curve. Alternatively, one may favor the definition of the causal parameter as a projection of the true CDRC onto a specified working model [19]. Both approaches have the disadvantage that, with mis‐specification of the dose–response relationship or an inappropriate working model, the postulated curve may be far away from the true CDRC. Moreover, the threat of practical positivity violations is even more severe in the longitudinal setup, and working with inverse densities—which can be volatile—remains a serious concern [20]. It has thus been suggested in the literature to avoid these issues by changing the scientific question of interest, if meaningful, and to work with alternative definitions of causal effects.

For example, Young, Hernan, and Robins [21] consider so‐called modified treatment policies, where treatment effects are allowed to be stochastic and depend on the natural value of treatment, defined as the treatment value that would have been observed at time , had the intervention been discontinued right before . A similar approach relates to using representative interventions, which are stochastic interventions that maintain a continuous treatment within a pre‐specified range [22]. Diaz et al. [23] present four estimators for longitudinal modified treatment policies (LMTPs), based on IPTW, g‐computation and doubly robust considerations. These estimators are implemented in an R‐package (lmtp). An advantage of those approaches is that the positivity assumption can sometimes be relaxed, depending on how interventions are being designed. Moreover, the proposed framework is very general, applicable to longitudinal and survival settings, and one is not forced to make arbitrary parametric assumptions if the doubly robust estimators are employed. It also avoids estimation of conditional densities as it recasts the problem at hand as a classification procedure for the density ratio of the post‐intervention and natural treatment densities. A disadvantage of the approach is that it does not aim at estimating the CDRC, which may, however, relate to the research question of interest, see Section 2.

In this paper, we are interested in counterfactual outcomes after intervening on a continuous exposure (such as drug concentration) at multiple time points. For example, we may be interested in the probability of viral suppression after 1 year of follow‐up, had HIV‐positive children had a fixed concentration level of efavirenz during this year. In this example, it would be desirable to estimate the true CDRC as closely as possible, to understand and visualize the underlying biological mechanism and decide what preferred target concentrations should be. This comes with several challenges: most importantly, violations of the positivity assumption are to be expected with continuous multiple time‐point interventions. If this is the case, estimands that are related to modified treatment policies or stochastic interventions, as discussed above, can be tailored to tackle the positivity violation problem, but redefine the question of interest. In pharmacoepidemiology and other fields this may be not ideal, as interpretations of the true CDRC are of considerable clinical interest, for example to determine appropriate drug target concentrations.

We propose g‐computation based approaches to estimate and visualize causal dose–response curves. This is an obvious suggestion in our setting because developing a standard doubly robust estimator, for example, a targeted maximum likelihood estimator, is not possible as the CDRC is not a pathwise‐differentiable parameter; and developing non‐standard doubly robust estimators is not straightforward in a multiple time‐point setting. Our suggested approach has two angles:

As a first step, we simply consider computing counterfactual outcomes for multiple values of the continuous intervention (at each time point) using standard parametric and sequential g‐computation. We evaluate with simulation studies where this “naive” estimation strategy can be successful and useful, and where not; and which diagnostics may be helpful in judging its reliability. To our knowledge, this standard approach has not been evaluated in the literature yet.

We then define regions of low support through low values of the conditional treatment density, evaluated at the intervention trajectories of interest. Our proposal is to redefine the estimand of interest (i.e., the CDRC) only in those regions, based on suitable weight functions. Such an approach entails a compromise between identifiability and interpretability: it is a tradeoff between estimating the CDRC as closely as possible [as in (i)], at the risk of bias due to positivity violations and minimizing the risk of bias due to positivity violations, at the cost of redefining the estimand in regions of low support. We develop a g‐computation based plug‐in estimator for this weighted approach.

We introduce the motivating question in Section 2, followed by the theoretical framework in Section 3. After presenting our extensive Monte‐Carlo simulations (Section 4), we analyze the illustrative data example in Section 4.3. We conclude in Section 5.

2. Motivating Example

Our data comes from CHAPAS‐3, an open‐label, parallel‐group, randomized trial (CHAPAS‐3) [24]. Children with HIV (aged 1 month to 13 years), from four different treatment centers (one in Zambia, three in Uganda) were randomized to receive one out of 3 different antiretroviral therapy (ART) regimens, given as fixed‐dose‐combination tablets. Each regimen consisted of 3 drugs. Every child received lamivudine (first drug), and either nevirapine or efavirenz (second drug), which was chosen at the discretion of the treating physician (and based on age). The third drug was randomly assigned (1:1:1) and either stavudine, zidovudine, or abacavir. The primary endpoint in the trial were adverse events, both clinical (grade 2/3/4) and laboratory (confirmed grade 3, or any grade 4).

Several substudies of the trial explored pharmacokinetic aspects of the nonnucleoside reverse transcriptase inhibitors (NNRTI) efavirenz and nevirapine (the second, non‐randomized drug), in particular the relationship between the respective drug concentrations and elevated viral load, that is, viral failure [25, 26]. Our analyses are motivated by these substudies and are based on the subsets of children, who received efavirenz (i.e., 125 out of 478 patients). We use the same data as Bienczak et al. [25].

In the trial, efavirenz dose was recommended to be based on weight using 200 mg for those weighing 10–13.9 kg, 300 mg for 14–19.9 kg, 400 mg for 20–34.9 kg, and 600 mg (the adult dose) above 34.9 kg. While children may receive the same dose, EFV concentrations vary individually and depend on the child's metabolism (i.e., specifically the single nucleotide polymorphisms in the CYP2B6 gene encoding the key metabolizing enzyme). Too low concentrations reduce antiviral activity of the drug and thus lead to viral failure (a negative outcome, which typically leads to a change in drug regimen). This is the reason why one is interested in the minimum concentration that is still effective against viral replication; or, more generally in the range of concentrations that should be targeted.

Our analysis evaluates the relationship between EFV concentrations (not doses) and viral failure, over a follow‐up period of 84 weeks. We are specifically interested in the counterfactual probability of viral load (VL) copies/ml at 84 weeks if children had concentrations (12/24 h after dose) of mg/L at each follow‐up visit, where ranges from to mg/L. This question translates into the longitudinal causal dose–response curve (CDRC), which –in our example–, is actually a concentration‐response curve. That is, we want to know how the probability of failure varies for different concentration trajectories.

However, drawing a particular CDRC is challenging for the following reasons: (i) the form of the curve should be flexible and as close as possible to the truth; (ii) there exist time‐dependent confounders (e.g., adherence, weight) that are themselves affected by prior concentration levels, making regression an invalid method for causal effect estimation [27]; (iii) with long follow‐up and moderate sample size, and given the continuous nature of the concentration variables, positivity violations are an issue of concern that have to be addressed.

More details on the data analysis are given in Section 4.3.

3. Framework

3.1. Notation

We consider a longitudinal data setup where at each time point , , we measure the outcome , a continuous intervention and covariates , for individuals. We denote as “baseline variables” and as follow‐up variables, with . The intervention and covariate histories of a unit (up to and including time ) are and , , , respectively. The observed data structure is

That is, we consider units, followed‐up over time points where at each time point we work with the order .

We are interested in the counterfactual outcome that would have been observed at time if unit had received, possibly contrary to the fact, the intervention history , . For a given intervention , the counterfactual covariates are denoted as . We use to denote the history of all data up to before .

3.2. Estimands

3.2.1. Estimands for One Time Point

In order to illustrate the ideas, we first consider a simple example with only one time point where . Suppose we observe an independent and identically distributed sample , where has support . Our estimand of interest is the causal dose–response curve:

Estimand 1:

| (1) |

where . Assume has density with respect to some dominating measure . The dose–response curve (1) can be identified [13] as

| (2) |

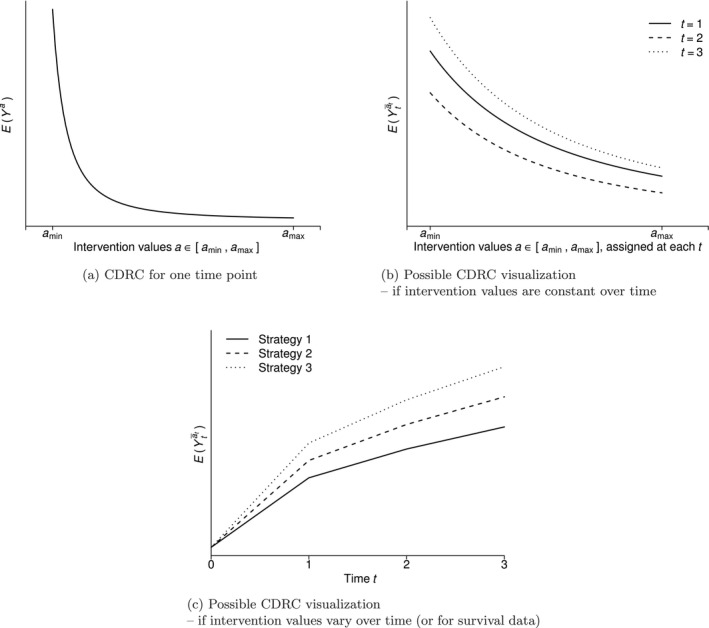

where the first equality follows by the law of iterated expectation, the assumption that is independent of conditional on (conditional exchangeability) and positivity (see below). The second equality follows because in the event of (consistency). An example of such a curve for a particular interval is given in Figure 1a.

FIGURE 1.

Considerations for causal dose–response curves (CDRCs).

This quantity is undefined if there is an intervention level such that the conditional density function is zero for some with . The strong positivity assumption reflects this consideration by requiring that almost everywhere [28]. This assumption may be weakened by making additional parametric model assumptions or restricting the range of . In this paper, we however address violations of the positivity assumption through a redefinition of the estimand of interest, where we replace the marginal distribution function of in (2) by a user‐given distribution function. That is, we instead target

| (3) |

for some weight function . This is essentially a weighted average of the conditional dose–response curve .

To illustrate the construction of a meaningful weight function for (3), consider two extreme cases: , and , where is the marginal density of . Under the first case, we have , which is equal to the dose–response curve (Estimand 1) whenever the positivity assumption holds. Under , we have , which is not a causal quantity (unless exchangeability is assumed) but it does not require the positivity assumption. We therefore propose to use a function such that in areas of that have good support, and in areas of that have low support. By “good support”, we mean that the positivity assumption is met in the sense of , that is, bounded away from zero. Thus, one possible function to use is

| (4) |

Alternatively, one may use to define good support. Using (4) in (3) targets the desired dose–response curve under enough support and avoids reliance on positivity otherwise. Formally,

Estimand 2 is then given by:

| (5) |

For fixed , this is taking a weighted average of the counterfactuals , where the weights are equal to one for units in the population for whom positivity holds, and the weights are for those for whom it does not. Whenever , this simply downweights observations that rely more on extrapolation so that they are not as influential in the estimators, and imply an interpretation as outlined below otherwise

Interpretation. To understand the implication and interpretation of using this estimand, consider our motivating data example: first, note that both and are undefined outside the support region of . For instance, in our study the CDRC is undefined for negative concentration values and biologically implausible concentration values of mg/L. Suppose we are interested in for mg/L: for all patients with covariate regions that have we stick to the CDRC m(a), such that we obtain ; but for those where this does not hold, maybe because they are ultraslow metabolizers who will not clear the drug fast enough to ever achieve mg/L under full adherence (i.e., ), we target . This means that we do not require positivity or rely on parametric extrapolations in poor support regions (where the intervention seems “unrealistic”); we rather use the present associations to allow individual concentration trajectories that lead to for those patient groups. Thus, the proposed estimand (5) offers a tradeoff between identifiability and interpretability: that is, a tradeoff between estimating the CDRC as closely as possible, at the risk of bias due to positivity violations because of the continuous intervention; and minimizing the risk of bias due to positivity violations, at the cost of redefining the estimand. More details on possible interpretations are given in Sections 3.2.2 and 3.5.

Choice of c . As in the case for binary interventions, when truncating the propensity score for inverse probability weighting or targeted maximum likelihood estimation, one may use rules of thumbs and simulation evidence to decide for . This is because we want the conditional treatment density to be bounded away from zero, to avoid negative effects of (near‐)positivity violations. Possible ad‐hoc choices are and [29], though we argue below that multiple c's may be selected and presented based on diagnostics, see Section 3.4.

Estimands under different weight functions. In principal, one could construct other weight functions too. An obvious choice would be to simply use the marginal treatment density as a weight. Such a weight choice has been motivated in the context of estimands that are defined through parameters in a marginal structural working model for (continuous summaries of) longitudinal binary interventions [30]. In this case, greater weight is given to interventions with greater marginal support; and the more support there is for each possible intervention choice, the estimand will be closer to the CDRC. While intuitively it may make sense to rely more on interventions that are more often observed in the data, this approach does not directly address positivity violations; in the example above, there may be enough patients with concentrations close to mg/L overall, but not among ultraslow metabolizers, which is the issue to be addressed.

3.2.2. Estimands for Multiple Time Points

To illustrate our proposed concepts for multiple time points, consider data for two time points first: (). We are interested in . In principle, we may be interested in any within the support region . Practically, it may be possible that we only care about interventions for which (as in the motivating example), or : in this case, we may restrict the estimand to the respective region . Similar to a single time point there may be the situation where , but . This corresponds to a situation where there is little support for the intervention value given that we already intervened with and given the covariate history; for regions, where this is the case, we address the respective positivity violations by redefining the estimand.

Causal Dose–Response‐Curve. If there are multiple time points, the CDRC is

Estimand 1:

| (6) |

where and . The estimand (6) can, in principle, be identified through various ways. A possible option under the assumptions of sequential conditional exchangeability, consistency and positivity [31] –as defined below– is the sequential g‐formula (also known as the iterated conditional expectation representation [3]):

| (7) |

In the above expression, is part of , and thus . Because is continuous, a strong positivity requirement corresponds to

| (8) |

This means, we require within the support region a positive conditional treatment density for each , given its past. This strong assumption may be relaxed either under additional parametric modeling assumptions or by a restriction to some or by a redefinition of the estimand. We continue with the latter strategy. Consistency in the multiple time‐point case is the requirement that if and if . With sequential conditional exchangeability we require the counterfactual outcome under the assigned treatment trajectory to be independent of the actually assigned treatment at time , given the past: for .

Note: Sometimes, we may want to visualize the CDRC graphically, for example, by plotting for each (and stratified by , or a subset thereof, if meaningful) if the set of strategies is restricted to those that always assign the same value at each time point, see Figure 1b for an illustration. Alternatively, we may opt to plot the CDRC as a function of , for some selected strategies , see Figure 1c. This may be useful if intervention values change over time, or for survival settings.

To link the above longitudinal g‐computation formula (7) to our proposals, it may be written in terms of the following recursion. Let . For recursively define

| (9) |

where = .

Then, the counterfactual mean outcome is identified as , which follows from the reexpression of (9) in terms of (7) by recursively evaluating the integral; see Appendix A.1 for details. The above recursive integral is well defined only if the positivity assumption (8) is met. As for the single time‐point case, we propose to address violations of the positivity assumption by targeting a modified identifying expression, which we express through the following recursive integral:

| (10) |

As before, if the weight function is equal to one, the above expression can be used to define the actual CDRC (6) through recursive evaluation of (10). If, however, the weight function is equal to

| (11) |

then the expression becomes

| (12) |

by application of Bayes' rule. Intuitively, the above quantity does not remove confounding of the relation between and , because it conditions on rather than fixing (i.e., intervening on) . However, the above expression does not require the positivity assumption to be well defined, as motivated further below. Generally speaking, applying the above weights iteratively over all time points (and all units) leads to

see Appendix A.2 for details.

For the longitudinal case, we follow a strategy similar to that considered for the single time‐point case, and consider a compromise between satisfying the positivity assumption and adjusting for confounding. This compromise can be achieved by using weight functions that are in areas of good support, and are equal to (11) in areas of poor support:

| (13) |

Using the weights (13) in (10) has similar implications as for a single time point; that is, we obtain a weighted average of counterfactuals depending on the units' support for a given strategy . Formally, Estimand 2 equates to:

| (14) |

where , indicates that at least one , , and the notation indicates that the weights have been applied up to and including time . Practically, this means that

For units where there is enough conditional support for a strategy in terms of for all time points up to and including time , the weights are 1. For those, we still target the CDRC, that is, .

For units that do not have enough conditional support for a strategy at all time points, the weights in (13), second row, are used. With this, intuitively we target as outlined above.

If there exist units for which there is support for a strategy at some points, but not at others, the weights will be 1 at some time points, but not for all. The implication is that we target the CDRC as much as this possible and deviate from it only at the time points where it is necessary.

Note that for the second estimand no positivity assumption as defined in (8) is required for identification because whenever a (near‐)positivity violation is present in terms of the weights (13) redefine the estimand in a way such that the assumption is not needed.

If the denominator in (13) is very small, that is, , one could replace it with a very small ad‐hoc value. Alternatively, one may want to compromise in the sense of evaluating the conditional density until previous time points:

| (15) |

Interpretation of the weighted estimand. The weighted estimand can be interpreted as follows: those units which have a covariate trajectory that makes the intervention value of interest at not unlikely to occur (under the desired intervention before ), receive the intervention at ; all other units get different interventions that produce, on average, outcomes as we would expect among those who actually follow the intervention trajectory of interest (if the weight denominator is well‐defined). Informally speaking, we calculate the CDRC in regions of enough support and stick to the actual research question, but make use of the marginal associations otherwise, if possible.

As a practical illustration for this interpretation, consider our motivating study: if, given the covariate and intervention history, it seems possible for a child to have a concentration level of mg/L, then we do indeed calculate the counterfactual outcome under . For some patients however, it may be unlikely (or even biologically impossible!) to actually observe this concentration level: for example, children who are adherent to their drug regimen and got an appropriate drug dose prescribed, but are slow metabolizers will likely never be able to have very low concentration values. In this case, we do not consider the intervention to be feasible and rather let those patients have individual concentration levels which generate outcomes that are typical for children with mg/L. In short, we calculate the probability of failure at time if the concentration level is set at for all patients where this seems “feasible”, and otherwise to individual concentration trajectories that produce “typical” outcomes with mg/L.

The more complex cases of the weighted estimand reflect the fact that certain strategies become unlikely only at certain time points: for example, patients who are adherent to their treatment and receive always the same dose will likely not have heavy varying concentrations in their body; thus, a strategy that looks at the effect of a concentration jump during follow‐up, for example, (3, 3, 3, 0) will only be unlikely at the fourth visit, but not before—and hence a deviation from the CDRC will only be needed at the fourth time point.

The interpretation of this weighted dose–response curve seems somewhat unusual; note however that this approach has the advantages that (i) compared to a naive approach it does not require the positivity assumption, and (ii) compared to LMTP's it both sticks to the actual research question as close as “possible” and does not require a positivity assumption for the chosen policies of interest. We explain below, in Section 3.5, why we believe, however, that the weighted estimand (Estimand 2) should typically be presented together with the CDRC, that is, Estimand 1.

3.3. Estimation

To develop an estimation strategy, it is useful to see that we can reexpress our target quantity (10) in terms of the iterated weighted conditional expectation representation

| (16) |

where ; see Appendix A.3 for details. This means that Estimand 2 can be written as a series of iterated weighted outcomes. An alternative representation, based on a weighted parametric ‐formula is given in Appendix A.4.

For Estimand 2, one can build a substitution estimator based on either (16) or (A.3). That is, we use either a parametric or sequential g‐formula type of approach where the (iterated) outcome is multiplied with the respective weight at each time point, regressed on its past, and recursively evaluated over time for a specific intervention . More specifically, a substitution estimator of (16) can be constructed as follows: (i) estimate the innermost expectation, that is, multiply the estimated weights (13) with the outcome at time ; then, (ii) intervene with the first intervention trajectory of interest ; (iii) predict the weighted outcome under this intervention; (iv) estimate the second innermost expectation by regressing the product of the prediction from (iii) and the respective weight on its past; (v) then, intervene with and obtain the predicted weighted outcome under the intervention. These steps can be repeated until , and for each intervention trajectory of interest.

As the weighted (iterated) outcomes may possibly have a skewed, complex distribution (depending on the weights), we advocate for the use of (16) as a basis for estimation. This is because estimating the expectation only is typically easier than estimating the whole conditional distribution, as needed for (A.3). In many applications, a data‐adaptive estimation approach may be a good choice for modeling the expectation of the weighted outcomes.

For the estimates of the weighted curve to be consistent, both the expected weighted (iterated) outcomes and the weights need to be estimated consistently.

Note that for , we suggest to calculate a weighted mean, instead of estimating the weighted expected outcome data‐adaptively. This is because the former is typically more stable when follows some standard distribution, but does not. It is a valid strategy as standardizing with respect to the pre‐intervention variables and then calculating the weighted mean of the outcome is identical to standardizing the weighted outcome with respect to , and then calculating the mean. Another option for is to facilitate step 6 before step 5 and then create a stacked dataset of the counterfactual outcomes for all intervention values , and then fit a weighted regression of on , based on the stacked weight vectors. The fitted model can then be used to predict the expected outcome under all . This strategy is however not explored further in this paper.

The algorithm in Table 1 presents the detailed steps for a substitution estimator of (16). The algorithm is implemented in the ‐package mentioned below, and further illustrated in Appendix A.6.

TABLE 1.

Algorithm for estimating Estimand 2 at time .

| Step 0a | Define a set of interventions , with and is the element of , . |

| Step 0b | Set . |

| For | |

| Step 1a | Estimate the conditional density , see also Footnote 1. |

| Step 1b | Estimate the conditional density , see also Footnote 1. |

| Step 2 | Set , where is the element of intervention . |

| Step 3a | Plug in into the estimated densities from step 1, to calculate and . |

| Step 3b | Calculate the weights from (15) based on the estimates from 3a. |

| If , estimate as required by the definition of (15) for . | |

| Step 4 | Estimate , see also Footnote 2. |

| Step 5 | Predict based on the fitted model from step 4 and the given intervention . |

| For | |

| Step 1a | Estimate the conditional density , see also Footnote 1. |

| Step 1b | Estimate the conditional density , see also Footnote 1. |

| Step 2 | Set . |

| Step 3a | Calculate and . |

| Step 3b | Calculate the weights . If , then is undefined. |

| Step 4 | Estimate . |

| Step 5 | Calculate ; that is, obtain the estimate of Estimand 2 at through calculating the weighted mean of the iterated outcome under the respective intervention at t=0. |

| Then | |

| Step 6 | Repeat steps 2–5 for the other interventions , . This yields an estimate of estimand 2 at . |

| Step 7 | Repeat steps 1–6 on bootstrap samples to obtain confidence intervals. |

Note: 1The conditional treatment densities can be estimated with (i) parametric models, if appropriate, like the linear model, (ii) nonparametric flexible estimators, like highly‐adaptive LASSO density estimation [32], (iii) a “binning strategy” where a logistic regression model models the probability of approximately observing the intervention of interest at time , given one has followed the strategy so far and given the covariates, (iv) other options, like transformation models or generalized additive models of location, shape and scale [33, 34]. Items (i)–(iii) are implemented in our package mentioned below. 2The iterated weighted outcome regressions are recommended to be estimated data‐adaptively, because the weighted outcomes are often non‐symmetric. We recommend super learning for it [4], and this is what is implemented in the package mentioned below.

3.3.1. Multiple Interventions and Censoring

So far, we have described the case of 1 intervention variable per time point. Of course, it is possible to employ the proposed methodology for multiple intervention variables . In this case, one simply has to estimate the conditional densities for all those intervention variables, and taking their product, at each time point. This follows from replacing (11) with , and then factorizing the joint intervention distribution based on the assumed time ordering of . Intervention variables may include censoring variables: we may, for instance, construct estimands under the intervention of no censoring. A possible option to apply the proposed methodology is then to use weights to target for units that do not have enough conditional support for the intervention strategy of interest. Appendix A.5 lists the required modifications of the estimation procedure in this case. Alternatively, one may use weights that lead to under positivity violations. For this, one would require estimating the conditional censoring mechanisms in steps 1a and 1b too. There are however many subtleties and dangers related to the interpretation and estimation under censoring, and possibly competing events; for example, conditioning on the censoring indicators may lead to collider bias, intervening on censoring mechanisms to direct effect estimands [35], identification assumptions need to be refined and generic time‐orderings where treatment variables are separated by different blocks of confounders may lead to more iterated expectations that have to be fitted. Those details go beyond the scope of this paper.

3.4. Violations of the Positivity Assumption, Diagnostics and the Choice of

Suppose we are interested in a subset of interventions that are part of the support region . A violation of the strong positivity assumption exists if for any particular intervention of interest it happens that . To diagnose the extent of practical positivity violations, one needs estimates of the conditional treatment densities, at each time point, and evaluated for each treatment strategy that is part of —and summarize them in a meaningful way. We propose two options for facilitating this:

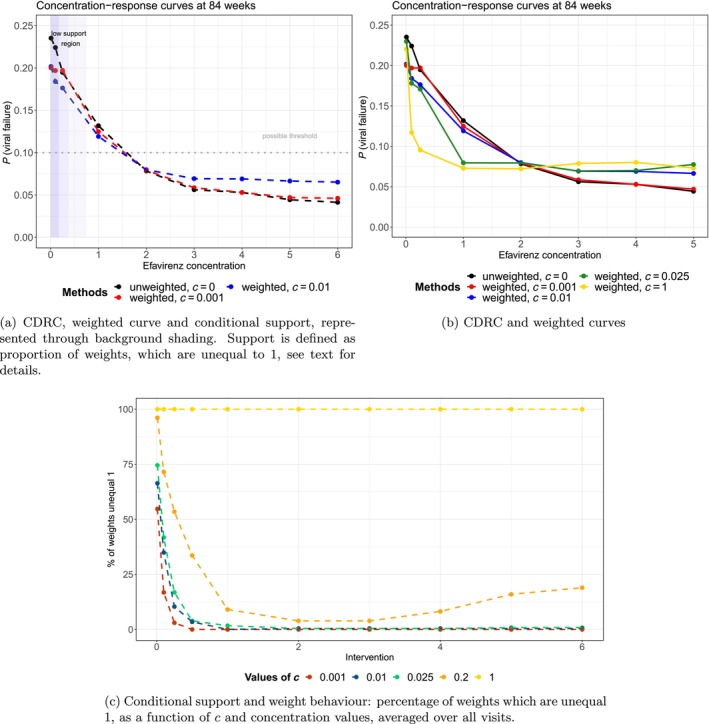

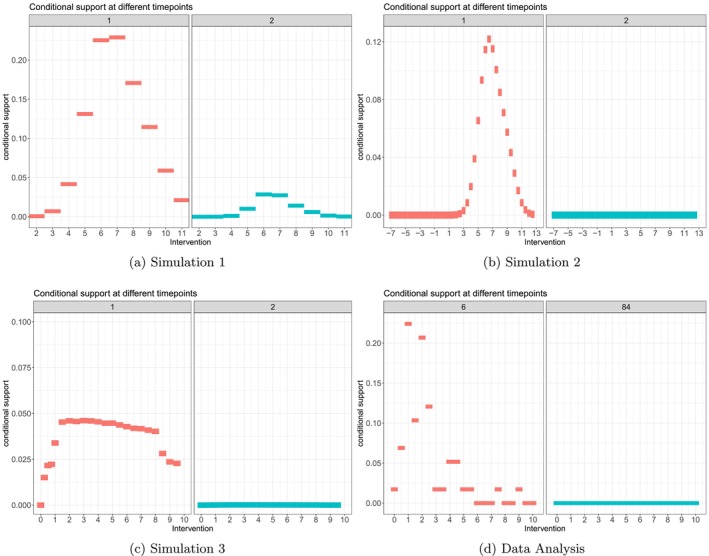

Calculate the proportion of weights (13) that are different from 1 (and thus indicate positivity violations) for each time point, for a range of 's and the interventions of interest. Figure 4c gives an example on how to visualize those proportions: it shows that for intervention trajectories close to a high proportion of children have weights and thus for these interventions practical positivity violations exist (which we may want to address with the weighted estimand).

Estimate the conditional treatment densities, under the intervention trajectories of interest, with the following binning strategy: suppose is the element of and the intervention values at are already ordered such that ; then calculate

| (17) |

that is, we want to estimate the probability to approximately observe the intervention value under strategy , given that one has followed the same strategy of interest so far (until ), and irrespective of the covariate history. Alternatively, instead of defining the bin widths through the intervention values of interest, we may calculate them data‐adaptively [36]. Estimating the mean of those probabilities over all observed in a particular data set serves as diagnostic tool to measure the support for each rule , at each time point. One could estimate (17) with standard regression techniques among the subset of those units who followed the respective strategy until , and present a summary of those probabilities as a rough measure of support for each intervention trajectory of interest. This approach gives a sense of actual units following the strategies of interest in the data, given the covariates. Examples are given in Figure B2.

FIGURE 4.

Results of the data analysis.

Choice of c : The first diagnostic, possibly visualized as in Figure 4c, can be used to get a sense which set of are informative to present weighted curves (as argued in Section 3.5). More specifically, those c's which are close enough to zero to detect positivity violations, but do not alter the intervention values of interest for a too large proportion of units (and for too many intervention values), are good candidates. Alternatively, as indicated above, similar to the case when truncating the propensity score for inverse probability of treatment weighting or TMLE, ad‐hoc choices could be used to decide when a treatment density is considered to be bounded away from zero (e.g., ).

3.5. The Case for Presenting Both Estimands

Consider again our running example where we are interested in the probability of failure at 84 weeks under concentration levels of . As indicated above, adherent patients who are ultraslow metabolizers may not be able to have low concentration levels, for example, . In such a case, there is a strong case to redefine the estimand and not enforce to calculate . Now, suppose we have : obviously, there is no need to redefine the estimand. However, due to the finite sample the actual estimate may be . Obviously, we would in this region more likely rely on extrapolation than changing the estimand.

Now, practically, whenever an estimate of the conditional treatment density is close to zero, we do not necessarily know whether this is a finite sample (or estimation) issue, or due to “infeasibility”, that is, an illogical intervention given the history of a patient. Presenting Estimand 1 shows the case where nothing is infeasible and we rely on extrapolations; presenting Estimand 2, possibly shown for multiple c's, shows the change in the curve as we consider more intervention trajectories to be infeasible. Both estimands together give us a sense if main conclusions could change when positivity violations are addressed differently.

4. Monte‐Carlo Simulations

In this section, we evaluate the proposed methods in three different simulation settings. Simulation 1 considers a very simple setting, as a basic reference for all approaches presented. In Simulation 2, a survival setting is considered, to explore the stability of standard, unweighted g‐computation in more sophisticated setups. The third simulation is complex, and inspired by the data‐generating process of the data analysis. It serves as the most realistic setup for all method evaluations.

4.1. Data Generating Processes and Estimands

Simulation 1: We simulated both a binary and normally distributed confounder, a continuous (normally distributed) intervention and a normally distributed outcome—for 3 time points and a sample size of . The exact model specifications are given in Appendix C.1. The intervention strategies of interest comprised intervention values in the interval which were constant over time; that is, . The primary estimand is the CDRC (6) for and all . The secondary estimand is the weighted curve defined through (14), with the weights (13), for the same intervention strategies.

Simulation 2: We simulated a continuous (normally distributed) intervention, two covariates (one of which is a confounder), an event outcome and a censoring indicator—for 5 time points and varying sample sizes of and . The exact model specifications are given in Appendix C.2. The intervention strategies of interest comprised intervention values in the interval which were constant over time; that is, . The estimand is the CDRC (6) for , all and under no censoring ().

Simulation 3: We simulated data inspired by the data generating process of the data example outlined in Sections 2 and 4.3 as well as Figure 3. The continuous intervention refers to drug concentration (of evavirenz), modeled through a truncated normal distribution. The binary outcome of interest is viral failure. Time‐varying confounders, affected by prior interventions, are weight and adherence. Other variables include co‐morbidities and drug dose (time‐varying) we well as sex, genotype, age and NRTI regimen. We considered 5 clinic visits and a sample size of . The exact model specifications are given in Appendix C.3. The intervention strategies of interest comprised concentration values in the interval which were constant over time; that is, . The primary estimand is the CDRC (6) for and all . The secondary estimand is the weighted curve (14) with the weights (13) for the same intervention strategies.

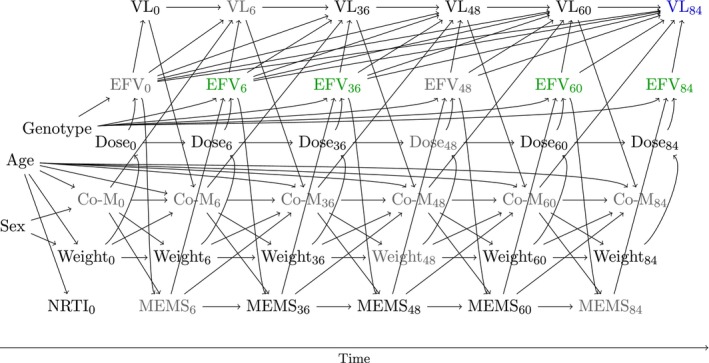

FIGURE 3.

Directed acyclic graph for the data analysis. The intervention variable is shown in green (efavirenz concentration), the outcome in blue (viral load at the end of follow‐up). Unmeasured variables are colored in gray. Both MEMS and weight are time‐dependent confounders which are affected by prior treatment nodes.

4.2. Estimation and Evaluation

All simulations were evaluated based on the results of simulation runs. We evaluated the bias of estimating and with standard parametric g‐computation with respect to the true CDRC. In Simulations 1 and 3 model specification for ‐computation was based on variable screening with LASSO, Simulation 2 explored the idealized setting of using correct model specifications. We further evaluated the bias of the estimated weighted curve with the true weighted curve, for ; that is, we looked whether could be recovered for every intervention strategy of interest. Estimation was based on the algorithm of Table 1. The density estimates, which are required for estimating the weights, were based on both appropriate parametric regression models (i.e., linear regression) and binning the continuous intervention into intervals; a computationally more sophisticated data‐adaptive approach for density estimation is considered in Section 4.3. We then compared the estimated CDRC and weighted curve () with other weighted curves where and . To estimate the iterated conditional expectations of the weighted outcome, we used super learning, that is, a data adaptive approach [4]. Our learner sets included different generalized linear (additive) regression models (with penalized splines, and optionally interactions), multivariate adaptive regression splines, LASSO estimates and regression trees—after prior variable screening with LASSO and Cramer's V [37]. Lastly, we visualize the conditional support of all considered intervention strategies, in all simulations, as suggested in (17).

4.3. Results

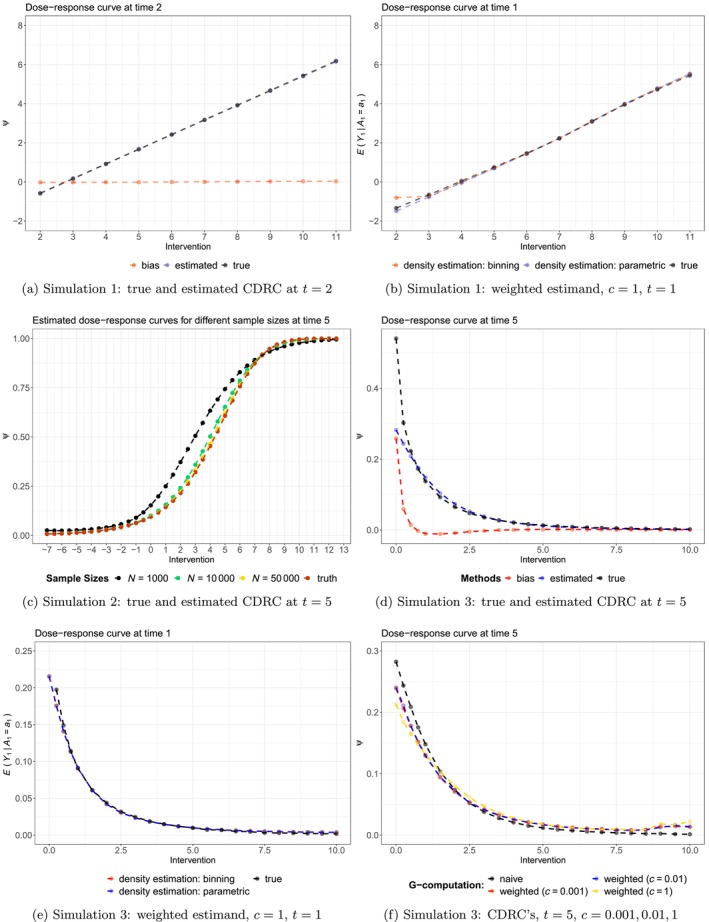

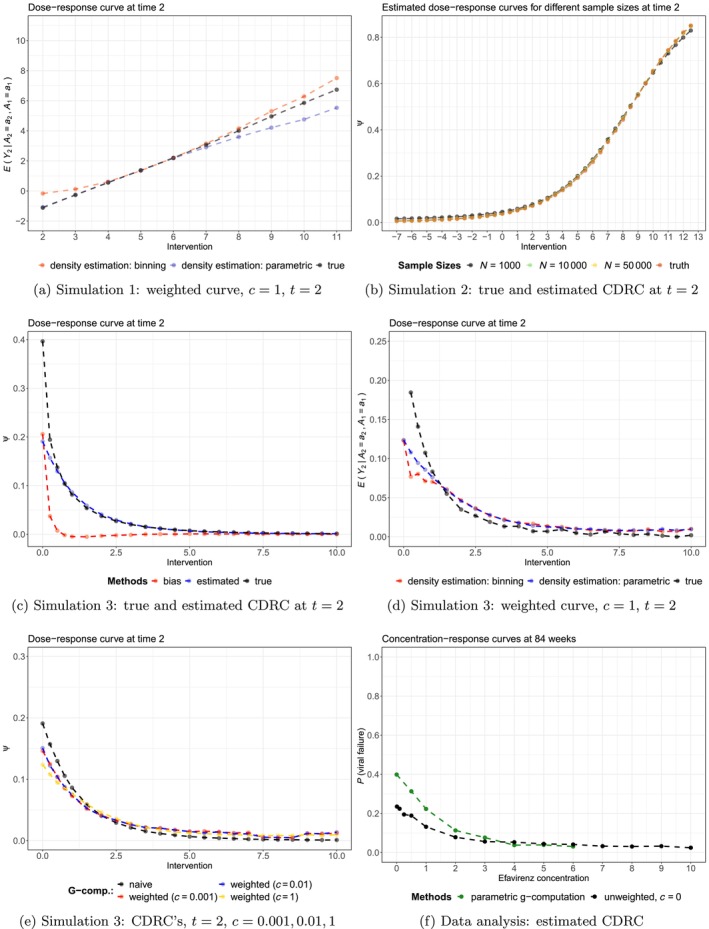

The results of the simulations are summarized in Figures 2, B1, and B2.

FIGURE 2.

Results of the Monte‐Carlo simulations.

In Simulation 1, applying standard g‐computation to the continuous intervention led to approximately unbiased estimates for all intervention values, through all time points (Figure 2a); and independent of the level of support (Figure B2a). The weighted curve, with , recovered the association perfectly for the first time point, independent of the density estimation strategy (Figure 2b). For later time points, some bias can be observed in regions of lower support (i.e., for intervention values between and ), see Figure B1a.

Simulation 2 shows a setting in which g‐computation estimates for the CDRC are not always unbiased, despite correct model specifications! Figure 2c visualizes this finding for the fifth time point: it can be seen that a sample size of 10.000 is needed for approximately unbiased estimation of the CDRC over the range of all intervention strategies. Note, however, that for some time points lower sample sizes are sufficient to guarantee unbiased estimation (Figure B1b).

The most sophisticated simulation setting 3 reveals some more features of the proposed methods. First, we can see that in areas of lowest support, toward concentration values close to zero (Figure B2c), there is relevant bias of a standard g‐computation analysis (Figure 2d); whereas in areas of reasonable to good support the CDRC estimates are approximately unbiased. The weighted curve with can recover the associations (Figure 2e) but there is some bias for later time points as the most critical area close to zero is approached, independent of the method used for estimating the weights (Figure 5d). Both Figures 2f and B1e highlight the behavior of Estimand 2 for and : it can be clearly seen that the curves represent a compromise between the CDRC and the association represented by the weighted curve (with ). Evaluating the results for intervention values of zero, shows that weighting the curve in areas of low support yields to a compromise that moves the CDRC away from the estimated high probabilities of viral failure to more moderate values informed by the observed association. Knowledge of this behavior may be informative for the data analysis below.

5. Data Analysis

We now illustrate the ideas based on the data from Bienczak et al. [25], introduced in Section 2. We consider the trial visits at weeks of children on an efavirenz‐based treatment regime. The intervention of interest is efavirenz mid‐dose interval concentration (), defined as plasma concentration (in ) 12 h after dose; the outcome is viral failure (, defined as copies/mL). Measured baseline variables, which we included in the analysis, are . Genotype refers to the metabolism status (slow, intermediate, extensive) related to the single nucleotide polymorphisms in the CYP2B6 gene, which is relevant for metabolizing evafirenz and directly affects its concentration in the body. Measured follow‐up variables are Lt = {weight, adherence (measured through memory capsMEMS), dose}.

The assumed data generating process is visualized in the DAG in Figure 3, and explained in more detail in Appendix D. Briefly, both weight and adherence are time‐dependent confounders, potentially affected by prior concentration trajectories, which are needed for identification of the CDRC.

Our target estimands are the CDRC (6) and the weighted curve (14) at weeks. The intervention strategies of interest are .

The analysis illustrates the ideas based on a complete case analysis of all measured variables represented in the DAG (), but excluding dose (not needed for identification) and MEMS (due to the high proportion of missingness) [38].

We estimated the CDRC both with sequential and parametric g‐computation using the intervention strategies . The estimation of the weighted curve followed the algorithm of Table 1. The conditional treatment densities, which are needed for the construction of the weights, were estimated both parametrically based on the implied distributions from linear models (for concentrations under 5 ) and with highly‐adaptive LASSO conditional density estimation (for concentrations ) [32, 39]. This is because the density in the lower concentration regions were approximately normally distributed, but more complex for higher values.

The conditional expectation of the weighted outcome (Step 4 of the algorithm) was estimated data‐adaptively with super learning using the following learning algorithms: multivariate adaptive regression splines, generalized linear models (also with interactions), ordinal cumulative probability models, generalized additive models as well as the mean and median. Prior variable screening, which was essential given the small sample size, was based on both the LASSO and Cramer's .

We estimated the weighted curve for . We also calculate the support of the continuous intervention strategies as described in Section 3.4.

5.1. Results

The main results of the analyses are given in Figure 4.

Figure 4a,b show that the estimated CDRC (black solid line) suggests higher probabilities of failure with lower concentration values. The curve is steep in the region from 0 to 2 mg, which is even more pronounced for parametric g‐computation when compared to sequential g‐computation (Figure B1f).

Both Figures 4a and B2d illustrate the low level of (conditional support) support for extremely small concentration values close to mg/L.

Note that in Figure 4a the area shaded in dark blue relates to low conditional support, defined as a proportion % of weights being unequal from 1; that is, more than 50% of observations having an estimated conditional treatment density under the respective intervention values. Intermediate, light and no blue background shadings refer to percentages of 15%–50%, 5%–15% and %, respectively. More details on how the conditional support defined through weight summaries varies as a function of and the intervention values is illustrated in Figure 4c. It can be seen that with a choice of , for most patients the actual intervention of interest seems feasible for concentrations values above mg/L; however, concentrations mg/L seem unrealistic for many patients and thus weighting is then used more often. As the proportion of weights, which are unequal 1, do not change much between and , we report only curves for and in Figure 4a.

The weighted curves in Figure 4b are less steep in the crucial areas close to zero and –as in the simulation studies– we observe a tendency of the curves with to provide a visual compromise between the CDRC and the estimand recovering the relevant association ().

The weighted curves show the probability of failure under different concentration levels, at least if the respective concentration level is likely possible (realistic, probable) to be observed given the patient's covariate history; for patients where this seems unlikely, patients rather have individual concentrations that lead to outcomes comparable to the patient population with the respective concentration trajectory. For example, if patients are adherent and slow metabolizers, it may be unlikely that they achieve low concentration levels and we do not enforce the intervention of interest. We let these patients rather behave individually in line with outcomes typically seen for low concentration values.

The practical implication is that if we do not want to extrapolate in regions of low support and/or do not consider specific concentration values to be feasible for some patients, the concentration‐response curve is flatter. The current lower recommended target concentration limit is mg/L, at which we still estimate high failure probabilities under standard g‐computation approaches. If, for example, we do not want to accept failure probabilities %—but are worried about positivity violations—, then we see that main conclusions with respect to a lower recommended target concentration limit would not change when using Estimand 2 in addition to Estimand 1 (Figure 4a).

We recommend to report results similar to Figure 4a, that is presenting a figure that shows both Estimand 1 and Estimand 2 (possibly based on several 's, informed by a graph as in Figure 4c).

6. Discussion

We have introduced and evaluated various methods for causal effect estimation with continuous multiple time‐point interventions. As an obvious first step, we investigated standard (parametric or sequential) g‐formula based approaches for identification and estimation of the actual causal dose–response curve. We emphasized that this has the advantage of sticking to the estimand of interest, but with a relatively strong positivity assumption which will likely be violated in most data analyses. Our simulations were designed to explore how well such a standard approach may extrapolate in sparse data regions and how the approach performs in both simple and complex settings. We found that in simple scenarios the CDRC estimates were approximately unbiased, but that in more complex settings sometimes large sample sizes were needed for good performance and that in regions of very poor support a relatively large bias could be observed. These findings suggest that the toolkit for causal effect estimation with longitudinal continuous interventions should therefore ideally be broader.

We therefore proposed the use of a weight function which returns the CDRC under enough conditional support, and makes use of the crude associations between the outcome at time and the treatment history otherwise. This choice ensures that the estimand is always well‐defined and one does not require positivity. Our simulations suggest that the provided estimation algorithm can indeed successfully recover the weighted estimand and illustrate the compromise that is practically achieved. As the proposed weights may have skewed distributions, we highlighted the importance of using a data‐adaptive estimation approach, though appropriate interval estimation, beyond using bootstrapping, remains to be investigated.

We hope that our manuscript has shown that for continuous multiple time point interventions one has to ultimately make a tradeoff between estimating the CDRC as closely as possible, at the risk of bias due to positivity violations and minimizing the risk of bias due to positivity violations, at the cost of redefining the estimand. If the scientific question of interest allows a redefinition of the estimand in terms of longitudinal modified treatment policies [23, 40, 41] then this may be a great choice. Otherwise, it may be helpful to use g‐computation algorithms for continuous interventions, visualize the estimates in appropriate curves, calculate the suggested diagnostics by estimating the conditional support and then estimate the proposed weighted curves as a magnifying glass for the curves behavior in areas of low support (where the intervention of interest may not be feasible or realistic).

Our suggestions can be extended in several directions: it may be possible to design different weight functions that keep the spirit of modifying estimands under continuous interventions only in areas of low support; the extent of the compromise, controlled by the tuning parameter , may be chosen data‐adaptively and our basic considerations for time‐to‐event data may be extended to cover more estimands, particular in the presence of competing risks.

6.1. Software

All approaches considered in this paper have been implemented in the ‐packages CICI and CICIplus available at https://cran.r‐project.org/web/packages/CICI/index.html and https://github.com/MichaelSchomaker/CICIplus.

Conflicts of Interest

The authors declare no conflicts of interest.

Supporting information

Data S1.

Data S2.

Data S3.

Data S4.

Data S5.

Acknowledgments

We are grateful for the support of the CHAPAS‐3 trial team, their advice regarding the illustrative data analysis and making their data available to us. We would like to particularly thank David Burger, Sarah Walker, Di Gibb, Andrzej Bienczak, and Elizabeth Kaudha. We would also like to acknowledge Daniel Saggau, who has contributed to the data‐generating processes of our simulation setups and Igor Stojkov for contributing substantive knowledge to pharmacological angles relevant to the data example. Computations were performed using facilities provided by the University of Cape Town's ICTS High Performance Computing team. Michael Schomaker is supported by the German Research Foundations (DFG) Heisenberg Programm (grants 465412241 and 465412441).

Appendix A. Additional Theory Details

A.1. Iterated Nested Expectation Representation of Estimand 1

Using (9) corresponds to a recursive definition of Estimand 1. For , the integral corresponds to

Moving to yields

Reevaluating the integral from then leads to

This expression is identical to under conditional sequential exchangeability, positivity and consistency, which yields the sequential g‐formula listed in (7). The result is known from Bang and Robins [3]. Briefly, first recall that positivity can be defined as in (8), consistency is the requirement that

| (A.1) |

and sequential conditional exchangeability is defined as

| (A.2) |

Suppose . Then,

where the first and third equality follow from the law of iterated expectation, the second and fourth by conditional exchangeability (i.e., and ), the fifth by definition and the sixth by consistency. Similarly, we can derive (7) generically for any . For the conditional expectations to be well‐defined, one needs the positivity assumption.

A.2. On Why the Weights (11) Lead to

Consider the recursive evaluation of the integral (12) from to , which shows how the weights recover the association:

A.3. Iterated Nested Expectation Representation of Estimand 2

Using the definition of (10), and observing that = , we have for :

where . For , we then get

Reevaluating the integral from then leads to

A.4. Parametric ‐Formula Representation

The weighted estimand, shown in the iterated weighted outcome representation in (16) can, alternatively, also be rewritten as

| (A.3) |

where the second term in (A.3) is

Note that in the above notation includes past outcomes, multiplied with the respective weights.

The equality follows from the knowledge that iterated nested expectation representations of the g‐formula can be reexpressed as a traditional (parametric) g‐formula factorization because both representations essentially standardize with respect to the post intervention distribution of the time‐dependent confounders [3, 6, 42]. In our case, the outcome is .

A.5. Time‐to‐Event Considerations

Suppose we have a time‐ordering of , . If we use weights to target for units that do not have enough conditional support for the intervention strategy of interest, that is, , we have to modify the estimation algorithm of Table 1 as follows:

| Step 1a/b | Estimate the densities among those uncensored, and without prior event (). |

| Step 2 | Also, set . |

| Step 4 | Fit the model for the weighted outcome among those uncensored and without prior event, that is, estimate . |

| Step 5 | Additionally, set , if . |

A.6. Example Code for the Implementation of the Algorithm of Table 1

# load example efavirenz data from package CICI, select 2 time points for illustration # variable order: sex metabolic log_age NRTI weight.0 efv.0 VL.0 adherence.1 weight.1 efv.1 VL.1 library(CICI); library(CICIplus); data(EFV); EFV.2 <‐ EFV[,1:11]

# manual implementation of estimand 2

# Step 0a: interventions are (efv.0, efv.1) = [(0,0),(0.5,0.5),(1,1)]

# Step 0b: define ∖tilde{Y}_1 = Y_1 = VL.1

Y.tilde.1 <‐ EFV.2$VL.1

### iteration: t=1

# Step 1a: estimate numerator density g(efv.1 | past)

# (assuming normality for illustration)

fitted.n.1 <‐ lm(efv.1 ˜ .,data=subset(EFV.2, select=‐VL.1))

# Step 1b: estimate denominator density g(efv.1 | past up to efv.0)

fitted.d.1 <‐ lm(efv.1 ˜ sex+metabolic+log_age+NRTI+weight.0+efv.0,data=EFV.2)

# Step 2: set a_1=a_0=0

EFV.2.A <‐ EFV.2; EFV.2.A[,c("efv.0","efv.1")] <‐ 0

# Step 3a: evaluate densities at a=0

eval.n.1 <‐ dnorm(0,mean=predict(fitted.n.1, newdata=EFV.2.A),sd=summary(fitted.n.1)$sigma)

eval.d.1 <‐ dnorm(0,mean=predict(fitted.d.1, newdata=EFV.2.A),sd=summary(fitted.d.1)$sigma)

# Step 3b: estimate weights with c=0.01

wf <‐ function(num,den,c)as.numeric(num > c) + as.numeric(num <= c)*(num/den)

w.1 <‐ wf(eval.n.1,eval.d.1,c=0.01)

# Step 4: estimate iterated weighted outcome regression

# (binomial as all wY are 0/1 in this specific case, otherwise gaussian)

mY.1 <‐ glm(I(Y.tilde.1*w.1) ˜ ., data=subset(EFV.2, select=‐VL.1), family="binomial")

# Step 5: predict iterated weighted outcome under intervention

Y.tilde.0 <‐ predict(mY.1, newdata=EFV.2.A, type="response")

### iteration: t=0 # Step 1a: estimate numerator density g(efv.0 | past) fitted.n.0 <‐ lm(efv.0 ˜ sex+metabolic+log_age+NRTI+weight.0,data=EFV.2) # Step 1b: estimate denominator density g(efv.0) fitted.d.0 <‐ lm(efv.0 ˜ 1,data=EFV.2) # Step 2: set a_0=0 (done above already) # Step 3a: evaluate densities at a=0 eval.n.0 <‐ dnorm(0,mean=predict(fitted.n.0, newdata=EFV.2.A),sd=summary(fitted.n.0)$sigma) eval.d.0 <‐ dnorm(0,mean=predict(fitted.d.0, newdata=EFV.2.A),sd=summary(fitted.d.0)$sigma) # Step 3b: estimate weights with c=0.01 w.0 <‐ wf(eval.n.0,eval.d.0,c=0.01) # Step 4: estimate iterated (unweighted) outcome regression mY.0 <‐ glm(Y.tilde.0 ˜ sex+metabolic+log_age+NRTI+weight.0+efv.0, data=EFV.2) # Step 5: final estimate under intervention estimate <‐ weighted.mean(predict(mY.0, newdata=EFV.2.A),w=w.0)

# Step 6: Repeat steps 1‐5 for a=(0.5,0.5) and a=(1,1) # Step 7: Bootstrapping

# Note: the online material contains code on how to arrive at exactly the same result with the package # Note: typically super learning is used to estimate the iterated outcome regressions, and the # densities are preferably estimated non‐parametrically. The package offers these options.

Appendix B. Additional Results

B.1. Simulation and Analysis Results

FIGURE B1.

Additional simulation and analysis results.

B.2. Intervention Support

FIGURE B2.

Conditional support for intervention strategies of interest, for both the simulation settings and the data analysis.

Appendix C. Data‐Generating Processes

C.1. DGP for Simulation 1

For :

For :

C.2. DGP for Simulation 2

For :

For :

C.3. DGP for Simulation 3

Both baseline data () and follow‐up data () were created using structural equations using the ‐package simcausal. The below listed distributions, listed in temporal order, describe the data‐generating process. Our baseline data consists of sex, genotype, , and the respective Nucleoside Reverse Transcriptase Inhibitor (NRTI). Time‐varying variables are co‐morbidities (CM), dose, efavirenz mid‐dose concentration (EFV), elevated viral load (= viral failure, VL) and adherence (measured through memory caps, MEMS), respectively. In addition to Bernoulli (), Multinominal () and Normal () distributions, we also use truncated normal distributions; they are denoted by , where and are the truncation levels. Values which are smaller than are replaced by a random draw from a distribution and values greater than are drawn from a distribution, where refers to a continuous uniform distribution. For the specified multinomial distributions, probabilities are normalized, if required, such that they add up to 1. The data‐generating process reflects the following considerations: more complexity than the first two simulation settings in terms of distribution shape and variety, as well as non‐linearities; similarity to the assumed DGP in the data analysis; generation of both areas of poor and good conditional intervention support, such that the proposed weighting scheme can be evaluated in all its breadth.

For :

For :

Appendix D. More on the DAG

D.1.

The measured efavirenz concentration depends on the following factors: the dose itself (which is recommended to be assigned based on the weight‐bands), adherence (without regular drug intake, the concentration becomes lower), and the metabolism characterized in the gene CYP2B6, through the 516G and 983T polymorphisms [43]. Given the short half‐life of the drug relative to the measurement interval, no arrow from EFV to EFV

to EFV is required. Viral failure is essentially caused if there is not enough drug concentration in the body; and there might be interactions with co‐morbidities and co‐medications. Note also that co‐morbidities, which are reflected in the DAG, are less frequent in the given data analysis as trial inclusion criteria did not allow children with active infections, treated for tuberculosis and laboratory abnormalities to be enrolled into the study. Both weight and MEMS (= adherence) are assumed to be time‐dependent confounders affected by prior treatments (= concentrations). Weight affects the concentration indirectly through the dosing, whereas adherence affects it directly. Adherence itself is affected by prior concentrations, as too high concentration values can cause nightmares and other central nervous system side effects, or strong discomfort, that might affect adherence patterns. Weight is affected from prior concentration trajectories through the pathway of viral load and co‐morbidities. Finally, both weight and adherence affect viral outcomes not only through EFV concentrations, but potentially also through co‐morbidities such as malnutrition, pneumonia and others.

is required. Viral failure is essentially caused if there is not enough drug concentration in the body; and there might be interactions with co‐morbidities and co‐medications. Note also that co‐morbidities, which are reflected in the DAG, are less frequent in the given data analysis as trial inclusion criteria did not allow children with active infections, treated for tuberculosis and laboratory abnormalities to be enrolled into the study. Both weight and MEMS (= adherence) are assumed to be time‐dependent confounders affected by prior treatments (= concentrations). Weight affects the concentration indirectly through the dosing, whereas adherence affects it directly. Adherence itself is affected by prior concentrations, as too high concentration values can cause nightmares and other central nervous system side effects, or strong discomfort, that might affect adherence patterns. Weight is affected from prior concentration trajectories through the pathway of viral load and co‐morbidities. Finally, both weight and adherence affect viral outcomes not only through EFV concentrations, but potentially also through co‐morbidities such as malnutrition, pneumonia and others.

Funding: Michael Schomaker is supported by the German Research Foundations (DFG) Heisenberg Programm (grants 465412241 and 465412441).

Data Availability Statement

The authors have nothing to report.

References

- 1. Robins J. M., Hernan M. A., and Brumback B., “Marginal Structural Models and Causal Inference in Epidemiology,” Epidemiology 11, no. 5 (2000): 550–560. [DOI] [PubMed] [Google Scholar]

- 2. Robins J., “A New Approach to Causal Inference in Mortality Studies With a Sustained Exposure Period—Application to Control of the Healthy Worker Survivor Effect,” Mathematical Modelling 7, no. 9–12 (1986): 1393–1512. [Google Scholar]

- 3. Bang H. and Robins J. M., “Doubly Robust Estimation in Missing Data and Causal Inference Models,” Biometrics 64, no. 2 (2005): 962–972. [DOI] [PubMed] [Google Scholar]

- 4. Van der Laan M. and Rose S., Targeted Learning (New York: Springer, 2011). [Google Scholar]

- 5. Schnitzer M. E., van der Laan M. J., Moodie E. E., and Platt R. W., “Effect of Breastfeeding on Gastrointestinal Infection in Infants: A Targeted Maximum Likelihood Approach for Clustered Longitudinal Data,” Annals of Applied Statistics 8, no. 2 (2014): 703–725. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6. Schomaker M., Luque Fernandez M. A., Leroy V., and Davies M. A., “Using Longitudinal Targeted Maximum Likelihood Estimation in Complex Settings With Dynamic Interventions,” Statistics in Medicine 38 (2019): 4888–4911. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7. Baumann P., Schomaker M., and Rossi E., “Estimating the Effect of Central Bank Independence on Inflation Using Longitudinal Targeted Maximum Likelihood Estimation,” Journal of Causal Inference 9, no. 1 (2021): 109–146. [Google Scholar]

- 8. Bell‐Gorrod H., Fox M. P., Boulle A., et al., “The Impact of Delayed Switch to Second‐Line Antiretroviral Therapy on Mortality, Depending on Failure Time Definition and CD4 Count at Failure,” American Journal of Epidemiology 189 (2020): 811–819. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9. Cain L. E., Saag M. S., Petersen M., et al., “Using Observational Data to Emulate a Randomized Trial of Dynamic Treatment‐Switching Strategies: An Application to Antiretroviral Therapy,” International Journal of Epidemiology 45, no. 6 (2016): 2038–2049. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10. Kreif N., Tran L., Grieve R., De Stavola B., Tasker R. C., and Petersen M., “Estimating the Comparative Effectiveness of Feeding Interventions in the Pediatric Intensive Care Unit: A Demonstration of Longitudinal Targeted Maximum Likelihood Estimation,” American Journal of Epidemiology 186, no. 12 (2017): 1370–1379. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11. Hirano K. and Imbens G. W., The Propensity Score With Continuous Treatments, chapter 7 (Hoboken, NJ: Wiley, 2004), 73–84. [Google Scholar]

- 12. Kreif N., Grieve R., Díaz I., and Harrison D., “Evaluation of the Effect of a Continuous Treatment: A Machine Learning Approach With an Application to Treatment for Traumatic Brain Injury,” Health Economics 24, no. 9 (2015): 1213–1228. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13. Kennedy E. H., Ma Z., McHugh M., and Small D. S., “Nonparametric Methods for Doubly Robust Estimation of Continuous Treatment Effects,” Journal of the Royal Statistical Society, Series B: Statistical Methodology 79 (2017): 1229–1245. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14. Westling T., Gilbert P., and Carone M., “Causal isotonic regression,” Journal of the Royal Statistical Society, Series B 82 (2020): 719–747. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15. Zhang Z., Zhou J., Cao W., and Zhang J., “Causal Inference With a Quantitative Exposure,” Statistical Methods in Medical Research 25, no. 1 (2016): 315–335. [DOI] [PubMed] [Google Scholar]

- 16. Galvao A. F. and Wang L., “Uniformly Semiparametric Efficient Estimation of Treatment Effects With a Continuous Treatment,” Journal of the American Statistical Association 110, no. 512 (2015): 1528–1542. [Google Scholar]

- 17. VanderWeele T. J., Chen Y., and Ahsan H., “Inference for Causal Interactions for Continuous Exposures Under Dichotomization,” Biometrics 67, no. 4 (2011): 1414–1421. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18. Díaz I. and van der Laan M. J., “Targeted Data Adaptive Estimation of the Causal Dose–Response Curve,” Journal of Causal Inference 1, no. 2 (2013): 171–192. [Google Scholar]

- 19. Neugebauer R. and van der Laan M., “Nonparametric Causal Effects Based on Marginal Structural Models,” Journal of Statistical Planning and Inference 137, no. 2 (2007): 419–434. [Google Scholar]

- 20. Goetgeluk S., Vansteelandt S., and Goetghebeur E., “Estimation of Controlled Direct Effects,” Journal of the Royal Statistical Society, Series B: Statistical Methodology 70 (2008): 1049–1066. [Google Scholar]

- 21. Young J. G., Hernan M. A., and Robins J. M., “Identification, Estimation and Approximation of Risk Under Interventions That Depend on the Natural Value of Treatment Using Observational Data,” Epidemiological Methods 3, no. 1 (2014): 1–19. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 22. Young J. G., Logan R. W., Robins J. M., and Hernán M. A., “Inverse Probability Weighted Estimation of Risk Under Representative Interventions in Observational Studies,” Journal of the American Statistical Association 114, no. 526 (2019): 938–947. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23. Díaz I., Williams N., Hoffman K. L., and Schenck E. J., “Non‐Parametric Causal Effects Based on Longitudinal Modified Treatment Policies,” Journal of the American Statistical Association 118, no. 542 (2021): 1–16. [Google Scholar]

- 24. Mulenga V., Musiime V., Kekitiinwa A., et al., “Abacavir, Zidovudine, or Stavudine as Paediatric Tablets for African HIV‐Infected Children (Chapas‐3): An Open‐Label, Parallel‐Group, Randomised Controlled Trial,” Lancet Infectious Diseases 16, no. 2 (2016): 169–179. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 25. Bienczak A., Denti P., Cook A., et al., “Plasma Efavirenz Exposure, Sex, and Age Predict Virological Response in HIV‐Infected African Children,” Journal of Acquired Immune Deficiency Syndromes 73, no. 2 (2016): 161–168. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 26. Bienczak A., Denti P., Cook A., et al., “Determinants of Virological Outcome and Adverse Events in African Children Treated With Paediatric Nevirapine Fixed‐Dose‐Combination Tablets,” AIDS 31, no. 7 (2017): 905–915. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 27. Hernan M. and Robins J., Causal Inference (Boca Raton, USA: Chapman & Hall/CRC, 2020), https://www.hsph.harvard.edu/miguel‐hernan/causal‐inference‐book/. [Google Scholar]

- 28. Petersen M. L., Porter K. E., Gruber S., Wang Y., and van der Laan M. J., “Diagnosing and Responding to Violations in the Positivity Assumption,” Statistical Methods in Medical Research 21, no. 1 (2012): 31–54. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 29. Gruber S., Phillips R. V., Lee H., and van der Laan M. J., “Data‐Adaptive Selection of the Propensity Score Truncation Level for Inverse‐Probability—Weighted and Targeted Maximum Likelihood Estimators of Marginal Point Treatment Effects,” American Journal of Epidemiology 191, no. 9 (2022): 1640–1651. [DOI] [PubMed] [Google Scholar]

- 30. Petersen M., Schwab J., Gruber S., Blaser N., Schomaker M., and van der Laan M., “Targeted Maximum Likelihood Estimation for Dynamic and Static Longitudinal Marginal Structural Working Models,” Journal of Causal Inference 2, no. 2 (2014): 147–185. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 31. van der Laan M. J. and Gruber S., “Targeted Minimum Loss Based Estimation of Causal Effects of Multiple Time Point Interventions,” International Journal of Biostatistics 8, no. 1 (2012): 9. [DOI] [PubMed] [Google Scholar]

- 32. Hejazi N. S., van der Laan M. J., and Benkeser D., “‘Haldensify’: Highly Adaptive Lasso Conditional Density Estimation in R,” Journal of Open Source Software 7, no. 77 (2022): 4522, 10.21105/joss.04522. [DOI] [Google Scholar]

- 33. Hothorn T., Kneib T., and Bühlmann P., “Conditional Transformation Models,” Journal of the Royal Statistical Society, Series B 76, no. 1 (2014): 3–27. [Google Scholar]

- 34. Stasinopoulos M. D., Rigby R. A., Heller G. Z., Voudouris V., and Bastiani F. D., Flexible Regression and Smoothing: Using GAMLSS in R (New York: Chapman and Hall/CRC, 2017). [Google Scholar]

- 35. Young J. G., Stensrud M. J., Tchetgen E. J. T., and Hernan M. A., “A Causal Framework for Classical Statistical Estimands in Failure‐Time Settings With Competing Events,” Statistics in Medicine 39, no. 8 (2020): 1199–1236. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 36. Díaz I. and van der Laan M., “Super Learner Based Conditional Density Estimation With Application to Marginal Structural Models,” International Journal of Biostatistics 7, no. 1 (2011): 38, 10.2202/1557-4679.1356. [DOI] [PubMed] [Google Scholar]

- 37. Heumann C., Schomaker M., and Shalabh, Introduction to Statistics and Data Analysis—With Exercises, Solutions and Applications in R (Heidelberg, Germany: Springer, 2023). [Google Scholar]

- 38. Holovchak A., McIlleron H., Denti P., and Schomaker M., “Recoverability of Causal Effects in a Longitudinal Study Under Presence of Missing Data,” arXiv Eprints, 2024, https://arxiv.org/abs/2402.14562. [DOI] [PubMed]

- 39. Nima S., Hejazi D. B., and van der Laan M. J., “haldensify: Highly Adaptive Lasso Conditional Density Estimation,” R package version 0.2.3, 2022, https://github.com/nhejazi/haldensify.

- 40. Díaz I., Hoffman K. L., and Hejazi N. S., “Causal Survival Analysis Under Competing Risks Using Longitudinal Modified Treatment Policies,” Lifetime Data Analysis 30, no. 1 (2024): 213–236. [DOI] [PubMed] [Google Scholar]

- 41. Hoffman K. L., Salazar‐Barreto D., Williams N. T., Rudolph K. E., and Díaz I., “Studying Continuous, Time‐Varying, and/or Complex Exposures Using Longitudinal Modified Treatment Policies,” Epidemiology 35, no. 5 (2024): 667–675. [DOI] [PubMed] [Google Scholar]

- 42. Petersen M. L., “Commentary: Applying a Causal Road Map in Settings With Time‐Dependent Confounding,” Epidemiology 25, no. 6 (2014): 898–901. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 43. Bienczak A., Cook A., Wiesner L., et al., “The Impact of Genetic Polymorphisms on the Pharmacokinetics of Efavirenz in African Children,” British Journal of Clinical Pharmacology 82, no. 1 (2016): 185–198. [DOI] [PMC free article] [PubMed] [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.

Supplementary Materials

Data S1.

Data S2.

Data S3.

Data S4.

Data S5.

Data Availability Statement