Abstract

Background

As implementation science (IS) in low- and middle-income country (LMIC) settings continues to grow and generate interest, there is continual demand for capacity building in the field. Training programs have proliferated, but evaluations of these efforts are sparse and come primarily from high-income countries. Little is known about the impact of IS training on students’ careers after graduation. This evaluation of the first cohort of students who graduated from the 18-month implementation science concentration in HIV/AIDS within the Master of Science program at the University of the Witwatersrand in South Africa addresses this gap.

Methods

We conducted two rounds of virtual interviews with the students, who came from eight African countries: the first after the training program ended (n = 10 participants) and the second five years after graduation (n = 9 participants). The first round captured students’ perceptions of IS before they entered the program and their opinions after graduation. The follow-up round evaluated their perceptions five years after graduation. Interviews were recorded, transcribed, and coded in ATLAS.ti (first round) and MAXQDA (second round) using the framework method and thematic analysis.

Results

Prior to the training, all students, even those with no knowledge of the field, perceived that the IS training program would help them develop skills to address critical public health priorities. The training program generally met these expectations. Most students reported satisfaction despite two concerns: what they felt was the program’s limited timeframe, and insufficient mentorship for completing their dissertation projects at their home institutions across the African continent. Five years post-graduation, most of the students did not have jobs in IS. They nonetheless continued to apply their training in their roles and had subsequently pursued further education, some in IS-related programs.

Conclusions

Trainees clearly saw IS training in Africa as valuable, but IS job opportunities remain scarce. Training programs need to be more closely tied to local government priorities. Training for in-country policymakers and decision-makers is also needed to increase demand for qualified IS researchers and practitioners.

Supplementary Information

The online version contains supplementary material available at 10.1186/s43058-024-00672-y.

Keywords: Capacity building, Implementation science, South Africa

Contributions to the literature.

In recent years, implementation science training programs have expanded across high-income and low- and middle-income countries (LMICs) to meet the need for implementation science expertise among researchers and implementers.

To our knowledge, this is the first study to assess the long-term impacts of an implementation science capacity building program (the Wits IS training program) in an LMIC.

This longitudinal qualitative study contributes to the implementation science literature by describing graduates’ perspectives on how the IS program influenced their career trajectories and by identifying improvement areas for LMIC-based IS training programs to encourage greater professional opportunities for trained implementation scientists.

Background

Over the last two decades, implementation science (IS), a relatively new field centered on identifying strategies to improve the implementation of evidence-based interventions (EBIs), has grown in significance and impact [1]. IS has been lauded as a method for speeding up the process of getting lifesaving interventions to patients and populations [2]. As the field has gained recognition, training programs in its theories and methodologies have expanded across both high-income and low- and middle-income countries (LMICs) [1, 3]. Public health schools in the United States and Europe now offer IS tracks and courses within their master’s and PhD programs [3–6]. Likewise, several capacity building programs in LMICs that focus on implementation science, or include it as a major component, have been established [7].

Many of these programs in LMICs aim to develop IS capacity among individuals who will become program managers, policymakers, or independent researchers. These individuals are expected to apply their IS knowledge in research and practice to improve EBI implementation and, ultimately, population health [8–10]. Acknowledging these needs, organizations have funded a proliferation of IS training programs in LMICs. Funders include the National Institutes of Health (NIH) Fogarty International Center and the World Health Organization (WHO) Special Programme for Research and Training in Tropical Diseases (TDR). Recent publications have described a range of program modalities, from intensive multi-year fellowships to shorter massive open online courses. Evaluations of student satisfaction and short-term impacts in these programs have revealed generally positive reviews and improvements in IS competencies [11–14].

One such program was the 18-month Master of Science (MSc) in Epidemiology with an IS concentration at the University of the Witwatersrand (Wits) School of Public Health in Johannesburg, South Africa. This program was created in partnership with the University of North Carolina (UNC) at Chapel Hill [10]. The Wits IS training program was co-developed and co-taught by UNC and Wits faculty. Funded by both the Fogarty International Center and WHO TDR, the program has existed since 2015 and has attracted many international students. It consists of twelve courses, five of which are required IS core courses. Most of the courses are lectures delivered in two-week blocks, with assessments spread over the semester. In the second year, students are required to complete their theses by conducting implementation research in their home countries. Ramaswamy et al. (2020) describe the program’s pedagogy at inception in full [10].

The first cohort of students enrolled in the Wits IS program in 2015 and graduated in 2017. As members of the first cohort, these students had close relationships with Wits and UNC faculty. This gave us the opportunity to interview them several years after graduation to assess the long-term effects of the IS training. To our knowledge, no evaluations globally have explored the impact of IS training on students’ careers after graduation. This qualitative study describes the perceptions of the first cohort of Wits University students about their experience at three time points: pre-training, post-training, and five years after graduation. We expect the results of this study to help improve the design and delivery of future LMIC-based IS training programs. We included relevant items from the Consolidated Criteria for Reporting Qualitative Research in reporting this study (see Additional File 1).

Methods

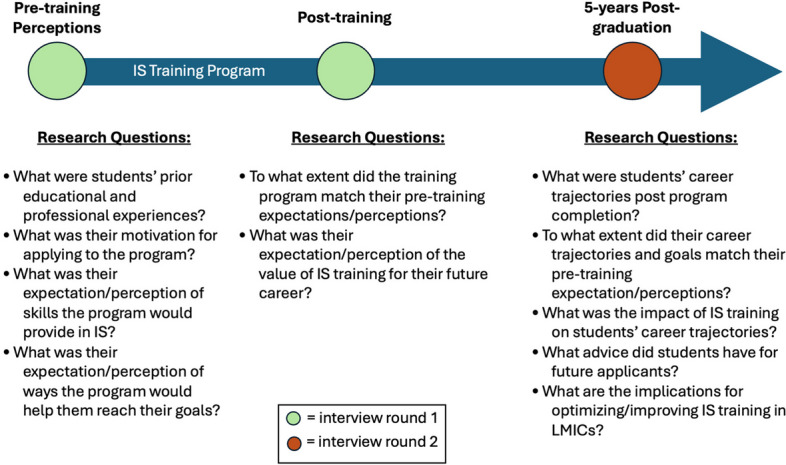

We conducted two sets of interviews. The first took place in 2018, one year after participants graduated from the program. The second took place with the same participants in 2022, five years after graduation. The first set explored students’ motivations and expectations when they applied to the program, and how these changed as a result of what they learned and their learning experience in the program. The second set gathered perspectives on how these expectations evolved after graduation and on the corresponding implications for improving IS training in academic programs in LMICs. Figure 1 summarizes the study timeline and the research questions at each time point.

Fig. 1.

Training timeline and research questions

First-round interviews: post-training

To be included in the study, interviewees must have been enrolled in the first cohort of MSc IS students and have successfully graduated from the program. In May 2018, twelve MSc students who met the inclusion criteria were invited via email to participate in the first-round interviews. An information sheet attached to the email gave participants details about the project. Ten students were successfully interviewed. Of the two not interviewed, one declined and the other repeatedly missed the scheduled interview.

For the first round of interviews, one of the co-authors (NS) created the interview guide based on input from the program leadership team (LI, JK, RR, and AP). Questions focused on participants’ pre-training perceptions and expectations, their in-program experiences, and the perceived value of the training program for their careers after training (see Additional File 2 for the round 1 interview guide). NS, a female doctoral student with previous training in qualitative interviewing, conducted the interviews in private. They were conducted in English via Zoom, were audio-recorded, and lasted around 60 min each. Verbal consent for study participation was obtained via Zoom, and written consent was obtained for recording the interview. The Wits and UNC Human Research Ethics Committees approved this study prior to data collection.

Second-round interviews: 5 years post-training

After data analysis was completed in July 2019, all participants were asked to keep their contact information up to date. In 2022, a new member of the research team (OO) conducted follow-up interviews with the same graduates who participated in the round one interviews to learn about their longer-term post-graduation experiences. The follow-up interviews aimed to explore three things:

- program graduates’ perceptions of the impact of the IS training on their career paths;
- how the program met or did not meet their pre-training expectations;
- their opinions on how to improve IS training across LMICs.

SB and OO developed the round 2 interview guide (see Additional File 3 for the interview guide). OO contacted the graduates via email, provided an updated information sheet, and invited them to participate in the follow-up interviews. Nine of the ten graduates contacted responded to the email and consented to the interview and recording. OO, PhD, a female researcher with previous training in qualitative interviewing, scheduled virtual interviews with the consenting graduates and conducted them in private on Microsoft Teams. Each interview was recorded and lasted 30 to 45 min. The UNC and Wits Human Research Ethics Committees also approved these follow-up interviews, in a process facilitated by LI.

Data analysis

The framework method, a seven-stage process for analyzing qualitative data, guided analysis of the first round of interviews [15, 16]. Framework analysis belongs to a class of thematic analysis methods that Braun and Clarke (2023) call “codebook methods.” It is a highly systematic method. The researcher first codes a limited set of transcripts and aggregates the codes into categories, creating an “analytical framework.” These codes and categories form the framework used to code the remaining transcripts. A defining element of the framework method is the use of matrices to reduce the data and summarize it by category [15]. Framework analysis is appropriate when the objective is not to develop a new theory but to categorize themes from field data to answer a set of research questions.

After transcription was completed, NS anonymized the transcripts. NS coded three transcripts using ATLAS.ti and shared them with the principal investigators to develop the categories of the analytical framework [17]. All transcripts were then coded, and each code was either assigned to an existing category or used to identify a new category. Table 1 shows the categories organized by research question. Summaries of the coded interviews were charted onto a matrix under the appropriate framework categories. For thematic analysis, we highlighted potential themes in the matrix for each category to make connections between and within interviews. We then compared highlighted sections within categories across and within interviews to find similarities in student experiences and perceptions.

Table 1.

Research questions and analytical framework categories – interview round 1

| Research Questions | Framework Categories |
|---|---|
| Pre-training | |
| What were students’ prior educational and professional experiences and how did these lead to their motivation for applying to the program? | Prior professional experience |
| What was their expectation/perception of the skills the program would provide in IS and the ways in which the program would help them to reach their goals? | Learning objectives; Pre-training perceptions |
| Post-training | |
| To what extent did the training program match their pre-training expectations/perceptions? | In-training experience; Course content; Course structure; Challenges; Field project; Post-training perceptions |
| What was their expectation/perception of the value of IS training for their future career? | Influence of IS; Professional goals; Academic goals; Employment |

The second round of interviews also used a codebook method. After the second-round interviews were transcribed and anonymized, OO worked with SB to develop a codebook using the post-graduation research questions as a guide. OO coded all interviews in MAXQDA (2022 version) [18] and then organized the main codes into a matrix of categories. Table 2 shows the matrix, organized by research question. As before, thematic analysis was performed within each category. Where a second-round theme was more applicable to the research questions in Table 1, it was combined with the first-round results.

Table 2.

Research questions and categories – interview round 2

| Research Questions | Categories |
|---|---|
| What were students’ career trajectories post program completion? | Additional academic training; Employment history; Current employment |
| To what extent did their career trajectories and goals match their pre-training expectation/perceptions? | Professional goals; How goals have evolved; Thought it would be easy to get a job |
| What was the impact of IS training on students’ career trajectories? | Benefits of Wits training; Professional recognition; Unused aspects of training |
| What advice did students have for future applicants? | Advice for potential applicants |
| What are the implications for optimizing/improving IS training in LMICs? | Advice for training in Africa; Opinion about IS gap in Africa |

Results

Participant demographics

Tables 3 and 4 present demographic and background data on the interviewees. Eight nationalities were represented. Six interviewees had prior degrees in the medical field, two had prior public health degrees, and two had degrees in laboratory science. Four students received funding during IS training, and five found IS-aligned positions after graduating from the program.

Table 3.

Interview 1 participant demographics

| Characteristic | Number (n = 10) |
|---|---|
| Gender | |
| Man | 6 |
| Woman | 4 |
| Nationality | |
| Burundian | 1 |
| Ghanaian | 1 |
| Kenyan | 1 |
| Malawian | 1 |
| Nigerian | 3 |
| South African | 1 |
| Tanzanian | 1 |
| Zambian | 1 |
| Highest Degree | |
| Medical Degree (e.g. MBBS) | 4 |
| Post-graduate (e.g. MPH, MSc) | 1 |
| Bachelors (e.g. BSc) | 5 |
| Received Funding for MSc IS | |
| Yes | 4 |
| No | 6 |

Abbreviations: MBBS Bachelor of Medicine and Bachelor of Surgery, MPH Master of Public Health, BSc Bachelor of Science

Table 4.

Interview participant degree focus and employment status at both interview rounds

| Characteristic | Number |
|---|---|
| Prior Degree Focus (n = 10) | |
| Medical Field | 6 |
| Public Health Field | 2 |
| Laboratory Science | 2 |
| Employment Status at Time of Interview 1 (n = 10) | |
| Employed – Non-IS-aligned positions | 3 |
| Employed – IS-aligned positions | 5 |
| Unemployed | 1 |
| Student (PhD) | 1 |
| Employment Status at Time of Interview 2 (n = 9) | |
| Employed – Non-IS-aligned positions | 1 |
| Employed – IS-aligned positions | 3 |
| Unemployed | 1 |
| Student (PhD with some IS focus)ᵃ | 4 |

ᵃSome students were also employed, but for clarity we counted them only as students.

Themes from interviews

Results are presented in the order of the timeline in Fig. 1.

Pre-training

Questions about students’ pre-training experience focused on their prior professional experience, their motivation for applying to the program, and their pre-training perceptions and goals. Students’ prior experience was in medicine, public health, or laboratory science. Most student responses showed a preference for preventive medicine over tertiary medicine. Students with prior degrees in medicine and laboratory science had experiences that pushed them toward prevention and public health work and away from clinical care, lab work, and one-on-one work with patients. Students with prior experience in public health wanted to focus on the aspect of prevention they felt was most needed. For these students, prior experience motivated them to find a program that would bolster their skills and their ability to work with health systems to improve population outcomes in the field. One student described their reason for choosing the program as follows:

“So I had done my MPH in, well, I specialized in drug policy and health system management…but then when I saw an opportunity for implementation science and then you read what implementation science was about, I think oh, this is something that has been missing for me in terms of what I want to do with my life. You know, I just did not just want to report on what is happening, but then somehow bridge that gap into practice…I was like, OK, I can do another master’s because this one is not your typical Epi [Epidemiology] MPH master’s but it’s more. For me, I saw it as being practical.”

Students perceived that implementation science would give them the skills to translate knowledge into practice, which in turn would help them improve health outcomes. They drew this conclusion from information provided on the program website. One interviewee said she “loved… the definition of the program in translating research into practice so I really wanted to see what the program was all about.” Overall, students wanted careers in which their work had tangible public health outcomes: “I want to be doing and conducting projects, implementing projects in the field.” Some students were unhappy with their current positions, while others wanted to focus more narrowly on IS because they believed in its importance as a field. Thus, students expected the IS training program to improve their skills and abilities and so their work capabilities, leading to careers in which they could affect health outcomes. As one student said, “I approached the program because it was going into the past of what I was already doing and it actually added up towards what I want to do in the future.”

Post-training

Post-training questions included the extent to which the training program matched students’ pre-training expectations and goals and their perceptions of the value of IS training for their future careers.

Pre vs. Post-training expectations

All students interviewed successfully completed the course requirements. However, they described a few challenges during the program, which made it difficult for some to have as fulfilling a learning experience as they had originally envisioned. The IS program was part of an 18-month MSc degree program in Epidemiology and was offered in a short-module format. The primary implementation science content was provided in two courses based on similar content offered in the MPH program at UNC (described in Ramaswamy, 2019 [19]).

The first challenge was the program’s short timeframe of 18 months. Within it, students had to complete 12 courses and also an implementation research project involving primary data collection in their home countries. The project involved several steps:

- writing a research proposal;
- applying for and receiving ethics board approval;
- implementing the field project and collecting primary data;
- analyzing the data;
- writing the final report.

Several students felt that 18 months was insufficient to conduct rigorous research. As one student put it, “primary data in such a short span of time, it’s impossible.” Ethics approval was complicated because students often needed approval from both Wits and their home countries. A further delay arose because ethics board members did not have formal IS training, so aspects of the proposed research had to be clarified before approval.

A second major challenge was that the supervisors assigned to students during field projects were sometimes unable to provide the feedback and mentorship that students expected. In most cases, this was because the supervisors lacked IS knowledge. As a result, students felt they learned less than they would have with a supervisor formally trained in IS. One student described it this way: “I would have actually loved to see someone who knows the stuff guiding me, telling me what to do not just me proposing what to do. I mean getting the guidance or advice from someone, but I didn’t get any advice during that process.” The same was true of the external evaluators who reviewed the final IS project reports. Some students felt this lack of knowledge led to harsher assessments of their research projects than were warranted. In their view, it also led to implementation science students receiving lower grades than MSc students in other Epidemiology tracks.

Despite these challenges, all but two students felt that the material presented during the classes was sufficient and that they were able to apply the theoretical knowledge gained in class in completing their field project. Reflecting back on their experiences at the end of the program, all interviewees felt that the program was overall beneficial:

“It was practical, it was applicable and interesting;” “(the training is) actually very important, training is essential. I am now a trained implementation scientist, one of the… first in Africa so I think that is something to be proud of.”

Perceptions of IS training value for future careers

Overall, interviewees perceived that the program gave them the skills and knowledge needed to reach their professional goals, all of which included some aspect of IS. Their professional goals fell into two main categories: research and improving health. Five students said the program, including the field project, had given them a better understanding of research and a greater ability to conduct it. These students also discussed plans to enroll in a PhD program, which they felt would help them reach their long-term goal of becoming independent researchers. In contrast, four students described their main objective as effecting change in the health system. Both groups felt that these goals were achievable because of the training they received at Wits: “the training and the practicum was very useful because they gave me the skills, the knowledge as well as the confidence that I can really do this,” “the program actually helped me to be able to focus and to build a niche for myself.”

Being employed in an implementation science-focused position was noted as a professional goal for all the students. At the time of the interview, five of the students were employed in a position where they could do implementation science work, such as working in a project management role, conducting research that involved implementation science, or teaching implementation science courses. Four were looking for implementation science jobs, but lack of opportunities made it difficult to find a position. One student was enrolled in a PhD program at the time of the first-round interview.

5 years post-training

Nine interviews were conducted in the 5-year post-training round. As shown in Fig. 1, these interviews explored five main themes:

- career trajectory post-graduation;
- the extent to which students’ career trajectories and goals matched their pre-training expectations and perceptions;
- the impact of IS training on career trajectory;
- advice for future program candidates;
- overall implications for improving/optimizing training in LMICs.

Career trajectory post-graduation

Eight of the graduates were employed at the time of the interview, and one was not. The unemployed graduate had expected that finding a job would be easier than it proved to be: “I thought it would be easier getting a job in the field of implementation science … Yeah things have just been something else.”

Eight of the graduates had gone on to apply for additional graduate programs:

- one MSc program;
- six PhD programs (two completed and four ongoing at the time of the interview);
- one professional fellowship program in public health (this participant also planned to apply for an IS PhD program).

Three of the programs were IS-related. The others were in Pharmacoeconomics, Applied Health Services Research, Public Health Economics and Decision Science, and Clinical Medicine (see Table 4). Three factors influenced interviewees’ decisions to obtain further qualifications: a desire to pursue an academic career, a wish to fill the IS capacity gap in their home countries, and an interest in personal academic development. The one graduate who had not pursued an additional qualification was taking a break from school and work to focus on raising her children. However, she aspired to start a PhD program related to implementation science within the next year.

Impact of training on career trajectory & trajectory alignment with expectations

In the second round of interviews, most of the students felt that where they were in their careers was more or less aligned with their expectations before entering the Wits program and immediately after graduation. In the first interview round, they had described their primary motivation for joining the program as improving health outcomes, equipped with additional skills in research and translation. This remained their goal after graduation. Five years after graduation, many of the graduates felt that they held positions aligned with these goals. For instance, one of the participants stated:

“My goal was to actually become a lecturer at university and again to provide assistance, like to the Ministry of Health and other players in the sector of Public Health to be able to actually give them advice on how better to implement projects and programs. How better to run programs at country level in the African context. So just it was sort of becoming a consultant to independent organizations and WHO, which I think I’ve achieved.”

Another participant who had started a PhD program said:

“No, my goals haven’t changed really. Only that I was successful in being given an academic position back in my country and I’m teaching…in the School of Medicine and that dates from back in 2017 as well. By the time I graduated, I was already given a position. And yeah, for me the aim or just the rationale behind my training and education in the UK is that since I’m in the academic field, I need to train further to just be promoted to professor and also to you know, just to grab some further skills into research…I’m still in the same path as at the time I graduated from Wits.”

The participants all spoke of different ways that the IS training had influenced their current professional trajectories. Whether or not their current employment involved implementation science directly, they had found ways to integrate the IS competencies in whichever sector they worked. One of the participants, whose job involved health program development, said:

“The beauty of Implementation Science is, you see it in everyday work, especially when you’re implementing health programs. So, suppose I started a project from scratch, something that had actually not been done in my country before that is TB in the private sector, getting the project to start and sustaining it and even take it to scale was actually something that required a lot of implementation science skills, which I already possess…So, all that implementation science is something that we see and use all the time.”

Going further, interviewees highlighted aspects of the training that were particularly useful in different contexts. One participant described the integration of quality improvement skills in their current role:

“The IS program at Wits is a very robust program. And it takes one through the core concepts of implementation science, as well as quality improvement. And this is something that I’ve found extremely useful in my career…I’m actually able to guide healthcare workers and small groups within healthcare facilities to implement some of those quality improvement interventions…Help facilities contextualize their situation, help them select the best interventions that is fit for their facilities at that particular point in time, depending on the area, and then help them see it through, that they are implementing those interventions with fidelity…And some of these things you don’t just stumble upon them. I mean, you really need to have gone through an implementation science program to be able to do this efficiently.”

Only one participant expressed an expectation of IS training as a step to better employment opportunities and was disappointed when she did not get a job as easily as she had hoped:

“Considering we’ve got all the training Wits collaborated with WHO TDR and all that. So, I felt it was going to be much easier, not necessarily a soft landing, but then at least getting the job would be easy actually in the field of implementation science. But unfortunately, that’s not the case.”

Several other interviewees echoed the view that finding employment in implementation science may not be straightforward. They felt that graduates should be prepared to integrate IS competencies into their professions rather than wait for jobs specifically in the field. At the same time, they acknowledged that IS training opened up a wide range of opportunities.

One participant said, “I think it opens up a wide range of skills and it’s up to you to choose which ones to embark on later on as I choose to be a Health Economist you know nesting some components from IS. You can dive into implementation research and academics, or from consulting to policy.”

Another participant noted that, because implementation science is an emerging field, it is important to “stay abreast of updates in the field.” He stated:

“[I] also keep tabs of IS work that is been done by other people across the world. IS is an emerging field, a lot of things are changing, a lot of things are being added all the time. It’s good to keep yourself abreast with what’s happening in other places in other countries that are really serious about implementation science, so that you have a very broad knowledge.”

Finally, another interviewee highlighted that having work experience prior to joining the program would be useful to better conceptualize career growth. Referring to personal experience, the participant stated:

“I think that for me, personally, the training helped me shape my career goal much better. If I hadn’t had any work experience or worked in any particular disease program my perspective of the program might be a little bit different and I think that it’s very important if implementation science is something you’re considering, then go into the field, start working on something, get your hands a little bit dirty, before you go back to class and learn the theories and concepts of implementation science.”

Recommendations for IS Training in Africa

The participants made several suggestions for improving IS training on the African continent. They noted the importance of more localized research, which would make the theories learned in the classroom easier for students to conceptualize and apply:

“Implementation Science is new in Africa or in some Sub-Saharan Africa countries, so most of these implementation theories are theories that are developed in advanced countries. So sometimes the context in which they are developed and transferring them to make them relevant to non-western research participants is very difficult, it is a very big challenge because sometimes you have to adapt them to be able to suit your context or suit your studies or suit your population. Most of the practical examples that we learned during the program were guided by papers that were or studies that were developed in the U.S. I would suggest that more of such practical examples should be within the African continent.”

Participants also suggested that program faculty could provide connections to areas of need on the continent where the training could be applied. They felt that this would make it easier for graduates to identify employment opportunities after the training:

“It would be important to link people from the training to the work environment in a more intentional way probably by collaborating with organizations and that kind of thing, so that at the end, people could have maybe a list of projects from different organizations that students can choose from…Otherwise, people will just go to other organizations doing public health and they will focus on the Public Health and Epidemiology and Health Economics and other Biostatistics and things like that, and then Implementation Science will keep struggling.”

To ensure that the IS skills gained through the program are deployed within health sectors in Africa, one participant suggested, “… get people from government, but also people in the non-governmental sector to join the IS program as massively as possible. We need these skills to permeate different institutions at different levels. So, for those policymakers that decides on who gets into the program, this is something that is really, really important to look into.” Another interviewee suggested that the Wits program support other institutions in developing IS programs and provide post-graduation support to its alumni:

“So beyond Wits, I think Wits should be able to actually motivate other schools to provide the training across Africa. It should really support its graduates, its alumni, to continue to do some work related to implementation science. Because I remember that time after graduation, we did request for any programs to follow up students who graduated from the program… because the aim was to build some people who can help the implementation, effective implementation of programs in Africa and there was nothing linked to the follow up really. That’s why everyone just had to find their own way you know around. Some went into PhD, different PhDs. Others went into programs.”

Discussion

As far as we know, this is the first paper to evaluate the long-term impact of an IS training program in an LMIC setting. Some recent papers have evaluated the more immediate impact of IS training programs and found positive perceptions of the programs and increases in IS core competencies among recent graduates. Three of these were surveys, and most were conducted in high-income countries (HICs) [14, 20–22]. To build this literature, future IS training programs should systematically collect data on their alumni, including their current position, whether it is an IS position, and which IS components (if any) they are able to use in their work. Our study contributes to the literature not only through its focus on LMICs but also through its detailed exploration of how, from the graduates’ perspective, one of the earliest implementation science training programs in Africa affected their career trajectories. This analysis can inform the tailoring of IS curricula to strengthen capacity building and increase the supply of qualified candidates. Several training programs now exist in Africa, and our study also highlights the level of interest in the field beyond implementation researchers. Students with little knowledge of IS applied to the program because the need for more systematic methods to support implementation is well understood in LMIC settings, and they were enthusiastic about gaining the knowledge and skills to implement programs more effectively.

The need for implementation science is therefore intuitively understood, and it is important to ensure that capacity-building curricula are tailored to topics relevant to LMIC settings. In the US, much implementation research focuses on implementing EBIs in clinical practice in medical and behavioral health. Our research indicates that in Africa, IS priorities center more on implementing programs and policies at the national and regional levels [4, 23]. These differing priorities should be considered in the development of IS training programs and in efforts to build IS teaching capacity throughout Africa. For example, these programs could center on policy-focused implementation strategies, community-engaged research, and fit to local context, using case studies as a teaching tool [24, 25]. In addition, these programs should emphasize methods for adapting and developing implementation science measures and theories, given that most were developed in HICs and may not be relevant to all LMIC contexts [25, 26]. This emphasis will encourage the development of local IS literature, which is still limited in many LMICs. It may also be beneficial to offer IS courses within MPH programs in LMICs, given that these programs are practice-oriented.

In addition to tailored capacity building, this study indicates the need to advocate for, and communicate, the value of implementation science to employers in order to increase demand for employees with IS skill sets. The students had a positive experience during their IS training and felt that the training confirmed their initial interest in the field. Many were also able to integrate components of IS into their work. However, it is a matter of concern that few graduates from the first cohort were able to pursue careers in implementation science, despite persistent implementation challenges in their countries (one graduate, for example, became a faculty member in implementation science, but outside Africa). Expanding the training to include potential employers, through practice-oriented IS short courses, is one solution. Learning needs assessments in LMICs have also strongly indicated the need for field-based mentors [27, 28]. As the study participants indicated, local mentorship was an ongoing challenge for students in this cohort. IS training at the university level needs to be reinforced by training for mentors and decision-makers. This may increase demand for qualified IS researchers and practitioners and create job opportunities for graduates of IS programs. After training, policymakers and senior managers could join the pool of supervisors for home-country research projects. This will require relationships between training programs, policymakers, and government. IS programs in LMIC settings need to be closely connected to local government priorities so that stakeholders can see the value of IS. Otherwise, the training will either be diluted by being incorporated into other forms of research or become focused on obtaining grant funding that reflects HIC priorities.

A limitation of this research is that interviews were conducted only with the first cohort of the training program, which was a small sample. There have now been six cohorts, and some of the concerns raised, especially the lack of mentors, are likely to have eased over time. For example, students now analyze secondary rather than primary data for their projects. In addition, almost all faculty in the department have been trained in implementation science and so serve as internal supervisors on the projects. However, the 5-year interviews were conducted in 2022, and the 2017 graduates still saw no market for implementation scientists in Africa, even though they are likely among the most qualified and experienced IS professionals in their home countries. In the meantime, formal and informal training programs have proliferated. Yet there is little evidence that these trainings are producing significant improvements in implementation outcomes, or that LMIC governments have increased funding for implementation research enough to employ trainees. It is therefore unlikely that the challenges experienced by members of this cohort differ from those of more recent program graduates.

Conclusions

Global IS training programs will play an important role in helping to bridge the research-to-practice gap and to develop an IS workforce globally. From our short- and long-term evaluation of the UNC-Wits IS training program, we found that graduates have been able to use the IS skills gained through their training in their careers to guide the implementation of EBIs and public health programs. However, there are still significant opportunities to improve academic and non-academic training programs. These include equipping trainees to address local implementation challenges and equipping decision-makers and employers to understand the value of the field. These findings can help shape future global IS training programs so that their graduates are well prepared to lead the expansion of IS research and practice within their countries.

Additional global implementation science training programs will not be sufficient to ensure the development of a local implementation science-trained workforce without the mechanisms to ensure that the students who graduate from them are employed in implementation science-related positions. We end this paper with a call to action to policymakers, training institutions, and employers in LMICs to prioritize the creation of implementation science-centered positions to help ensure the sustainability of implementation science training programs and the opportunities that they create.

Supplementary Information

Additional file 2. Round 1 Interview Guide

Additional file 3. Round 2 Interview Guide

Acknowledgements

We would like to thank the first cohort of IS training program participants who were interviewed for this project.

Abbreviations

- EBIs: Evidence-based Interventions
- HIC: High-income Country
- IS: Implementation Science
- LMIC: Low- and Middle-income Country
- MPH: Master of Public Health
- MSc: Master of Science
- TDR: Special Programme for Research and Training in Tropical Diseases
- Wits: University of the Witwatersrand
- WHO: World Health Organization
- UNC: University of North Carolina

Authors’ contributions

AP and RR were co-PIs and TC was the local PI on the project. RR also wrote sections of the manuscript. OO and NS conducted the interviews and drafted the results sections. SMB and KR drafted sections of the manuscript and SMB helped develop the round 2 codebook. KS and KR were program managers, while LI and JK were part of the IS program leadership team. All authors reviewed and provided feedback on the paper.

Funding

This study was funded by NIH Fogarty International Center Grant D43TW009774, the University of North Carolina/University of the Witwatersrand AIDS Implementation Research and Cohort Analyses Training Grant. Sophia M. Bartels was supported by NIH’s Population Research Infrastructure Program awarded to the Carolina Population Center (P2C HD050924) and by the National Institute on Drug Abuse of the National Institutes of Health under Award Number F31DA057893 during the writing of this manuscript.

Data availability

Data can be made available upon reasonable request; we would work with the interviewees to facilitate this request, if needed.

Declarations

Ethics approval and consent to participate

The Institutional Review Boards of the University of North Carolina at Chapel Hill and the University of the Witwatersrand approved this study, and all interviewees provided informed consent before participating in the interviews.

Consent for publication

Not applicable.

Competing interests

We have no competing interests to list.

Footnotes

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

1. Chambers DA, Emmons KM. Navigating the field of implementation science towards maturity: challenges and opportunities. Implement Sci. 2024;19(1):26.

2. Bauer MS, Damschroder L, Hagedorn H, Smith J, Kilbourne AM. An introduction to implementation science for the non-specialist. BMC Psychol. 2015;3(1):32.

3. Davis R, D’Lima D. Building capacity in dissemination and implementation science: a systematic review of the academic literature on teaching and training initiatives. Implement Sci. 2020;15(1):97.

4. Chambers DA, Pintello D, Juliano-Bult D. Capacity-building and training opportunities for implementation science in mental health. Psychiatry Res. 2020;283:112511.

5. Shete PB, Gonzales R, Ackerman S, Cattamanchi A, Handley MA. The University of California San Francisco (UCSF) training program in implementation science: program experiences and outcomes. Front Public Health. 2020;8:94.

6. EIC. Training and Education. European Implementation Collaborative; 2019. Available from: https://implementation.eu/training-and-education/.

7. NIH. NIH RePORTER. Available from: https://reporter.nih.gov/search/D_jm-aqbkEqVro9DjkBbQg/projects.

8. Akiba CF, Go V, Mwapasa V, Hosseinipour M, Gaynes BN, Amberbir A, et al. The sub-Saharan Africa Regional Partnership (SHARP) for mental health capacity building: a program protocol for building implementation science and mental health research and policymaking capacity in Malawi and Tanzania. Int J Mental Health Syst. 2019;13(1):70.

9. Osanjo GO, Oyugi JO, Kibwage IO, Mwanda WO, Ngugi EN, Otieno FC, et al. Building capacity in implementation science research training at the University of Nairobi. Implement Sci. 2016;11(1):30.

10. Ramaswamy R, Chirwa T, Salisbury K, Ncayiyana J, Ibisomi L, Rispel L, et al. Developing a field of study in implementation science for the Africa Region: the Wits–UNC AIDS implementation science Fogarty D43. Pedagogy Health Promotion. 2020;6(1):46–55.

11. Bartels SM, Hai Hoang VT, Le GM, Trang NT, Van Dyk QF, Sripaipan T, et al. Innovations for building implementation science capacity among researchers and policymakers: the depth and diffusion model. Glob Implement Res Appl. 2024;7:1–4.

12. Asampong E, Kamau EM, Teg-Nefaah Tabong P, Glozah F, Nwameme A, Opoku-Mensah K, et al. Capacity building through comprehensive implementation research training and mentorship: an approach for translating knowledge into practice. Globalization Health. 2023;19(1):35.

13. Dako-Gyeke P, Asampong E, Afari E, Launois P, Ackumey M, Opoku-Mensah K, et al. Capacity building for implementation research: a methodology for advancing health research and practice. Health Res Policy Syst. 2020;18(1):53.

14. Hooley C, Baumann AA, Mutabazi V, Brown A, Reeds D, Cade WT, et al. The TDR MOOC training in implementation research: evaluation of feasibility and lessons learned in Rwanda. Pilot Feasibility Stud. 2020;6(1):66.

15. Gale NK, Heath G, Cameron E, Rashid S, Redwood S. Using the framework method for the analysis of qualitative data in multi-disciplinary health research. BMC Med Res Methodol. 2013;13(1):117.

16. Pope C, Ziebland S, Mays N. Qualitative research in health care. Analysing qualitative data. BMJ. 2000;320(7227):114–6.

17. ATLAS.ti Mac (version 23.2.1) [Qualitative data analysis software]. ATLAS.ti Scientific Software Development GmbH; 2023. Available from: https://atlasti.com.

18. VERBI Software. MAXQDA 2022 [computer software]. Berlin: VERBI Software; 2021. Available from: https://maxqda.com.

19. Ramaswamy R, Mosnier J, Reed K, Powell BJ, Schenck AP. Building capacity for public health 3.0: introducing implementation science into an MPH curriculum. Implement Sci. 2019;14(1):18.

20. Vroom EB, Albizu-Jacob A, Massey OT. Evaluating an implementation science training program: impact on professional research and practice. Glob Implement Res Appl. 2021;1(3):147–59.

21. Carlfjord S, Roback K, Nilsen P. Five years’ experience of an annual course on implementation science: an evaluation among course participants. Implement Sci. 2017;12(1):101.

22. Van Pelt AE, Bonafide CP, Rendle KA, Wolk C, Shea JA, Bettencourt A, et al. Evaluation of a brief virtual implementation science training program: the Penn Implementation Science Institute. Implement Sci Commun. 2023;4(1):131.

23. Dearing J, Kee K. Historical roots of dissemination and implementation science. 2012. p. 55–71.

24. Beecroft B, Sturke R, Neta G, Ramaswamy R. The case for case studies: why we need high-quality examples of global implementation research. Implement Sci Commun. 2022;3(1):15.

25. Bartels SM, Haider S, Williams CR, Mazumder Y, Ibisomi L, Alonge O, et al. Diversifying implementation science: a global perspective. Glob Health Sci Pract. 2022;10(4):e2100757.

26. Aldridge LR, Kemp CG, Bass JK, Danforth K, Kane JC, Hamdani SU, et al. Psychometric performance of the Mental Health Implementation Science Tools (mhIST) across six low- and middle-income countries. Implement Sci Commun. 2022;3(1):54.

27. Turner MW, Bogdewic S, Agha E, Blanchard C, Sturke R, Pettifor A, et al. Learning needs assessment for multi-stakeholder implementation science training in LMIC settings: findings and recommendations. Implement Sci Commun. 2021;2(1):134.

28. Tabak RG, Padek MM, Kerner JF, Stange KC, Proctor EK, Dobbins MJ, et al. Dissemination and implementation science training needs: insights from practitioners and researchers. Am J Prev Med. 2017;52(3 Suppl 3):S322–9.
