Abstract

This paper proposes DigitalUpSkilling, a novel IoT- and AI-based framework for improving and personalising the training of workers in physical-labour-intensive jobs. DigitalUpSkilling uses wearable IoT sensors to observe how individuals perform work activities. These sensor observations are continuously processed to synthesise an avatar-like kinematic model of each worker being trained, referred to as the worker's digital twin. The framework incorporates novel work activity recognition using generative adversarial network (GAN) and machine learning (ML) models, which recognise the types and sequences of work activities by analysing an individual's kinematic model. Finally, a skill proficiency ML model is proposed to evaluate each trainee's proficiency in individual work activities and in the overall task. The paper illustrates DigitalUpSkilling end to end, from wearable IoT-sensor-driven kinematic models to GAN-ML models for work activity recognition and skill proficiency assessment. It does so through a comprehensive study of how specific meat processing activities in a real-world work environment can be recognised and assessed. In this study, DigitalUpSkilling achieved 99% accuracy in recognising specific work activities performed by meat workers. The study also evaluates worker proficiency by comparing kinematic data from trainees performing work activities. The proposed DigitalUpSkilling framework lays the foundation for next-generation digital personalised training.

Keywords: wearable sensors, internet of things, machine learning, work activity recognition, worker training

1. Introduction

A work skill is the ability of a worker to perform a specific task that usually requires different modes of action and frequently repeated body movements [1,2]. Training is vital for workers to develop the skills required to complete their assigned tasks proficiently [3]. Work training helps individuals acquire and refine their skills, leading to improved proficiency and productivity [4]. A crucial step in training a worker is to identify the individual's current proficiency in the specific work activities required for his/her assigned task [5]. In traditional training, a human trainer is primarily responsible for recognising and assessing the work activities of the trainees [6]. However, the trainer's ability to observe and analyse information from the trainees is limited, and the trainer's evaluations of each trainee's activities and skills are often subjective. These challenges stem from well-understood human limitations in observing and analysing large amounts of information, which lead to poor improvement in skill proficiency [7]. Moreover, traditional training often uses a one-size-fits-all approach [8]. Individual trainees, however, differ not only in their baseline proficiency but also in their capacity to acquire new information, their preferred learning styles, and their cognitive approaches to skill acquisition [9]. When each trainee's proficiency in specific activities is not considered, trainees may undergo unnecessary training or may not be trained enough in the activities they need to improve [10]. In current training practice, trainers typically rely on easily trackable parameters, such as distance covered, materials produced, or time taken to complete tasks [3].
Several studies have used production output, completion time, and quality of work as metrics for measuring work skill proficiency [11]. However, these measurements alone are not always sufficient for improving work proficiency. The movement patterns of workers, particularly movements of the hands and other body parts, can contribute significantly to proficiency [12]. To address these issues, this paper proposes DigitalUpSkilling, a novel wearable IoT-ML-based framework for digital and personalised worker training. DigitalUpSkilling supplements the human trainer's partial observations and subjective skill evaluations of the trainees with two additions: comprehensive observations by IoT sensors [13] and smart ML-based models for recognising activities and evaluating skill proficiency. Together, these provide a data-driven and personalised worker training solution.

For any sensor-based training, the first and most crucial step is human activity recognition (HAR). Related work on HAR can be classified as either sensor-based or vision-based. Existing vision-based HAR solutions typically analyse camera images [14] or thermal images [15], or they propose human pose recognition algorithms [16]. Such research typically focuses on detecting generic activities, and the accuracy of vision-based HAR algorithms is usually limited in real-world work environments [17]. Sensor-based HAR is gaining momentum due to recent advances in wearable sensor technology and accuracy [18,19,20,21]. Wearable sensors can now collect data in real-life environments with high accuracy and without being affected by visual obstruction. However, no related study has used workers' kinematic data from body movements during work activities to improve worker skills and work performance, although some related work has combined sensors and ML to improve performance in sports [22,23,24]. Another advantage of using sensors is the easy creation of digital twins, which have proved useful in digitalising manufacturing activities [25]; however, their use in real-world activity recognition is currently very limited. Finally, some related works [26] on AI-based personalised worker training showed improved training and worker performance, but they did not consider improving workers' skills in physical work activities.

To illustrate the proposed DigitalUpSkilling framework and its benefits, the paper presents a study on training meat processing workers in a real-life production environment. The meat processing industry is one of the biggest export industries in Australia [27], and workers' expenses account for a significant portion (57%) of its production cost [28]. Inefficient training can lead to higher labour costs and lower productivity. By enhancing training methods, the proposed framework can reduce these inefficiencies, enabling workers to perform their tasks faster and more accurately, ultimately reducing labour costs and improving overall productivity. The study first evaluates the accuracy of work activity recognition in meat processing tasks and then compares the performance attributes of the recognised activities. However, challenges such as limited datasets and a small number of participants complicate this study. Additionally, like other real-world job activities, these tasks are not time-bound, which increases the likelihood of class imbalance among activities. To overcome these limitations, the study proposes a hybrid GAN-ML activity classification model that uses generative techniques to augment the data and enhance classification performance.

In summary, the novel contributions of this paper are the following:

Digital twins of workers, which continuously synthesise an avatar-like kinematic model of the activities of each worker being trained, using wearable sensors that observe how individual workers perform physical work activities;

A hybrid GAN-ML work activity recognition model for recognising the types of work activities each worker performs;

Skill proficiency recognition analysis for evaluating how well each trainee performs specific work activities and the overall task he/she is responsible for;

An industry study that illustrates how highly accurate work activity recognition GAN-ML models can detect complex meat processing activities using body movement data from full-body wearable IoT sensors.

The remainder of the paper is organised as follows: Section 2 presents related work on IoT-GAN-ML-based HAR and skill assessment. Section 3 describes the proposed DigitalUpSkilling framework. Section 4 presents a study on activity recognition involving complex work activities performed by meat processing workers. Section 5 discusses the performance of the activity recognition GAN-ML model in the study, while Section 6 discusses work skill proficiency measurement from sensor data and digital twins. Section 7 concludes the paper and provides directions for future research.

2. Related Work

As discussed in Section 1, the DigitalUpSkilling framework involves steps for automatically recognising and measuring the proficiency of individual workers conducting specific work. The steps include collecting body movement data, synthesising kinematic digital twins of the workers being trained, and training and using GAN-ML models for work activity classification and for skill assessments. This section provides an overview of the relevant literature related to these steps.

IoT sensors for digitally capturing human movement from work activities: Because of its versatility and performance, wearable sensor-based technology is now used in various fields, including smart homes, healthcare, and sports [29,30,31,32]. Wearable IoT sensors have gained popularity in work activity recognition because they can easily be integrated into garments and accessories or attached directly to the body, enabling unobtrusive monitoring during activities [33]. IoT sensors are also well suited to recognising the activities of users engaged in vigorous activity and movement [34,35]. The accelerometer and gyroscope in an IoT sensor can collect body movement data from individuals. These sensors can capture kinematic data from work activities, which can then be used to create a kinematic model. The abundance of data collected by sensors enables training programmes to be tailored to individuals' specific needs and capabilities, thereby optimising their proficiency during training activities [23,36]. Recently, smartphones and wristbands have become popular tools for activity recognition in real-world environments due to their portability and ability to collect continuous sensor data [37,38]. These devices, equipped with accelerometers, gyroscopes, and heart rate sensors, can effectively monitor physical activities such as walking and running, and even stress. When combined with ML models, they provide valuable data for health and fitness applications [39]. However, few studies have focused on body movement metrics such as joint angles, smoothness of movements, and abduction, which are crucial to work proficiency.

Moreover, several studies have used IoT sensors on participants' hands to analyse their movement [35,40], and other studies [32,34,35,41] have mounted sensors on other body parts, such as the sacrum and shoulder. Studies show that a single sensor placed on the human body allows only the movement of that particular body part to be detected [42]. In contrast, full-body sensors capture full-body movements. Hence, they can provide more precise feedback on body movement and proficiency through a full-body kinematic model [42,43,44], which is important for work activities.

Research has also reported good accuracy in recognising human activity from sensor data [45,46,47]. For posture recognition of construction workers, Subedi et al. [48] used a depth sensor camera to collect movement data and an ML algorithm for work activity classification.

IoT-sensor-based digital twins for capturing human movement: The integration of the IoT and digital twins for human training has created new possibilities for data-driven and personalised learning. Digital twins, which are virtual representations of physical entities, are produced by gathering real-time data from IoT devices, including wearable sensors, and enable continuous monitoring of and feedback on performance [49]. Although digital twins are not new in manufacturing in the Industry 4.0 era [50,51], they are underutilised in human training, particularly for physically intensive activities. Digital twins can track detailed human body movements in real time [43], provide insights into skill proficiency [52], and contribute to next-generation training [43]. IoT devices, such as inertial measurement units (IMUs) and smart wearables, stream real-time data to digital twins, which represent and analyse human movements in real time [42], thus facilitating adaptive training environments.

GAN-ML-based work activity classification: Activities can be recognised and measured from IoT sensor data using machine learning algorithms [21]. ML-based HAR can detect physical activity, identify areas for improvement, and suggest actions for improvement [53]. The last decade has seen ML-based activity recognition applied in different domains, including smart homes, healthcare, rehabilitation, security, and sports, covering both simple and complex human physical activities [18,19,20,21].

In a literature review [54] of daily activities, such as running, jogging, eating, and biking, various ML-based HAR models achieved accuracies of 80% to 98%. In another literature review, Yadav et al. [55] summarise seven studies that used wearable IoT sensors with different machine learning algorithms, such as random forest (RF) and support vector machine (SVM), reporting accuracies of 72% to 98%. Forkan et al. [56] used IoT- and ML-based models to recognise work activities in the meat industry, achieving good activity recognition accuracy. Subedi et al. [48] used an ML algorithm to classify the activities of construction workers, but in a non-work environment. Other studies also exist on ML-based activity recognition for workers [57,58]. These studies indicate that ML models such as RF and SVM are widely used for human activity recognition and provide good accuracy.

To address the issue of small datasets for human activity recognition, some studies have used generative adversarial networks (GANs), conditional GANs (CGANs), and similar models for data augmentation [59,60]. In addition to GANs, oversampling techniques such as the synthetic minority oversampling technique (SMOTE) [61,62] and the adaptive synthetic sampling approach (ADASYN) [63] have been applied to mitigate the class imbalance typical of real-world datasets. Among these approaches, the combination of GAN and SMOTE often outperformed other models [64]. Furthermore, to enhance data quality and remove overlapping instances, techniques such as Tomek links and edited nearest neighbours (ENN) are frequently used [65,66].
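The following NumPy sketch makes the oversampling idea concrete by showing the core SMOTE step. Each synthetic sample is placed at a random point on the line segment between a minority-class sample and one of its k nearest minority-class neighbours. The function name, the default k = 5, and the fixed seed are illustrative assumptions rather than settings from the cited studies.

```python
import numpy as np


def smote_samples(X_min, n_new, k=5, seed=0):
    """Generate n_new synthetic minority-class rows by SMOTE-style interpolation.

    X_min must contain at least two rows belonging to the same (minority) class.
    """
    rng = np.random.default_rng(seed)
    X_min = np.asarray(X_min, dtype=float)
    n = len(X_min)
    k = min(k, n - 1)  # cannot use more neighbours than other minority samples
    # Pairwise squared Euclidean distances within the minority class.
    diff = X_min[:, None, :] - X_min[None, :, :]
    dist = (diff ** 2).sum(axis=-1)
    np.fill_diagonal(dist, np.inf)  # a sample is not its own neighbour
    neighbours = np.argsort(dist, axis=1)[:, :k]

    synthetic = np.empty((n_new, X_min.shape[1]))
    for j in range(n_new):
        i = rng.integers(n)                  # pick a random minority sample
        nn = neighbours[i, rng.integers(k)]  # and one of its k nearest neighbours
        gap = rng.random()                   # random position on the segment between them
        synthetic[j] = X_min[i] + gap * (X_min[nn] - X_min[i])
    return synthetic
```

Every synthetic row is a convex combination of two real minority rows, so it stays inside the region spanned by the minority class. Library implementations, such as `SMOTE` and the combined `SMOTEENN` in imbalanced-learn, add details this sketch omits, for example choosing how many samples to generate per class.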

ML-based skill proficiency measurement and assessment: Several studies have investigated human proficiency using IoT and ML models, mainly in sports. Su and Chen [22] used IoT and AI models to predict the scores of basketball players and support the team's decision-making process. The data came from an existing dataset of NBA players, and proficiency was predicted based on the players' salaries. Another study [67] used an ML model to predict and evaluate the proficiency of female handball players from their physiological characteristics, such as BMI and height. Pappalardo et al. [68] evaluated the proficiency of soccer players using ML models. A systematic review by Lam et al. [69] found that ML models assessed technical skill proficiency in surgery with good accuracy. However, these studies focused on external factors such as salaries and physiological characteristics rather than measuring proficiency based on the participants' actions or body movements while performing tasks.

Machine learning (ML) models are increasingly being used to analyse complex biomechanical data, such as flexion, abduction, and acceleration of body parts, which are traditionally overlooked during training because of the limitations of human observation [70]. ML can process large datasets generated from sensors to extract meaningful patterns and insights about movement efficiency, enabling real-time feedback and performance improvement [71]. By leveraging ML, these methods can provide precise, data-driven insights that help optimise worker training and enhance proficiency [72].

AI and IoT solutions for personalised training frameworks for workers: Fraile et al. [73] proposed a methodological framework for personalised training programmes for industry workers using artificial intelligence (AI) and natural language processing (NLP). The study analysed workers' internal conversations in the workplace and determined their skills from these conversations. Another study [74] proposed an AI-based framework for personalised employee training to improve overall learning models and abilities. In that study, a questionnaire and feedback were collected from employees, and an NLP model was then used to extract and assess skills from them. Butean et al. [75] proposed an AI- and VR-based solution for improving training methods for industry workers, in the form of a learning platform for training humans, including industrial workers.

Research gaps: Research that employs wearable IoT sensors for work activity recognition is at an early stage. Existing GAN-ML models for work activity recognition and skill proficiency measurement have been trained with work data collected in laboratories, not in real-life production settings. Similarly, no current studies use GAN-ML models trained on human movement data from work-related activities specifically to assess work skill proficiency. Finally, to the best of our knowledge, no existing frameworks or studies combine IoT, GAN, and ML models for work activity recognition and work skill proficiency measurement in worker training.

3. DigitalUpSkilling Framework for Digital Personalised Training

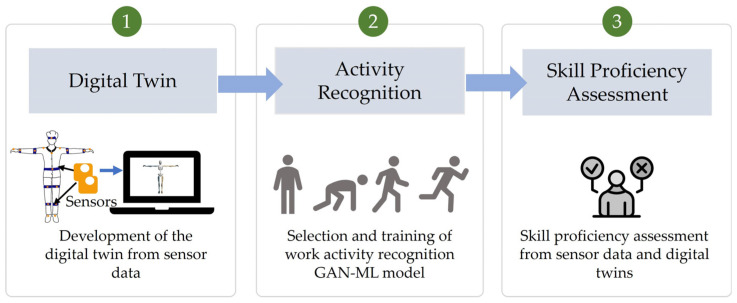

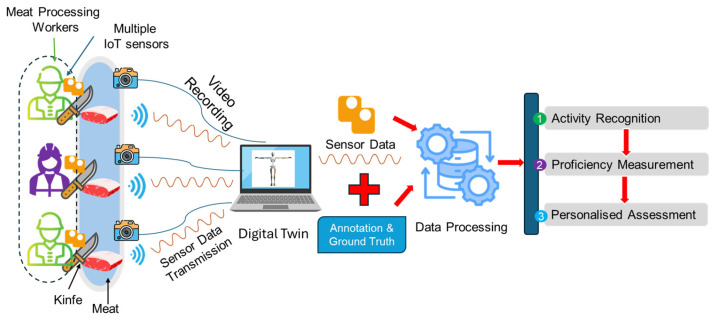

The proposed DigitalUpSkilling framework includes three major parts, as depicted in Figure 1:

(1) development of digital twins (i.e., kinematic models) of each trainee from the observations produced by the wearable sensors he/she is wearing;

(2) selection and training of models for work activity recognition, using a hybrid GAN-ML activity classification model; and

(3) development of the skill proficiency assessment ML model.

Sections 3.1, 3.2, and 3.3 present these parts in further detail.

Figure 1.

DigitalUpSkilling framework.

3.1. Data Collection Using Wearable IoT Sensors and Generation of Workers’ Digital Twins

As work activities have many variations without set patterns, it is difficult to distinguish them. Therefore, to capture work activities, the DigitalUpSkilling framework employs full-body wearable IoT sensors that collect kinematic data from inertial measurement units (IMUs) attached to different parts of each worker’s body. The sensor data observations include body joint rotations, movements, and related accelerations that determine a participant’s orientation, position, and movement in real time.

The DigitalUpSkilling framework uses all of the kinematic data collected by these sensors on each worker to generate real-time digital twins of their movements. The workers' digital twins are used to capture and observe the movements needed to perform specific work activities, as well as the sequence of such activities in completing tasks. Ground truth is provided by a camera that records each training and work session; the resulting video is then used to label the data for ML training.

3.2. Work Activity Recognition

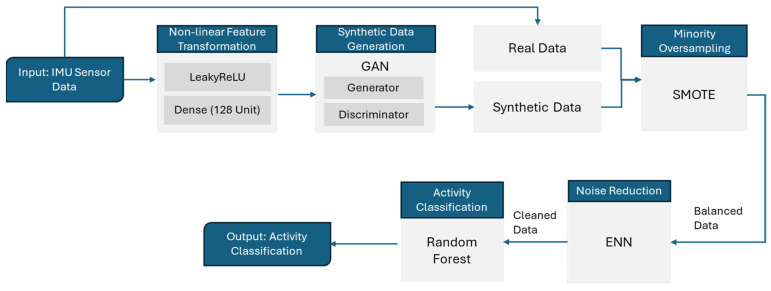

The DigitalUpSkilling framework proposes a hybrid GAN-ML activity classification model that can be trained to recognise specific work activities from human body movement (Figure 2). The model is developed in the following steps:

1. The accuracies of the ML models are evaluated on the original sensor data.
2. Classification accuracies are evaluated for sensors on individual body parts as well as for combinations of sensors.
3. The GAN in the proposed model uses a generator to produce synthetic data and a discriminator to distinguish synthetic data from the original sensor data. This process increases the size of the collected dataset.
4. To correct any class imbalance, SMOTE is applied to oversample the minority classes and balance the dataset.
5. ENN filters out noisy samples, reducing misclassification between neighbouring classes with similar values.
6. The RF model classifies the activity.

In the proposed model, supervised ML models are trained to recognise the specific work activities typically performed by workers engaged in the same tasks. The classification model takes IMU sensor data as input, analyses the kinematic data on the trainees' body parts while they perform work activities, and classifies their activities.
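The ENN cleaning step can be sketched as follows. This NumPy version follows Wilson's original majority-vote rule: a sample is kept only if its label matches the majority label of its k nearest neighbours. The function name and k = 3 are assumptions for illustration. Library implementations, such as imbalanced-learn's `EditedNearestNeighbours`, also offer a stricter mode in which all neighbours must agree.

```python
from collections import Counter

import numpy as np


def enn_keep_mask(X, y, k=3):
    """Wilson's edited nearest neighbours: return a boolean mask that keeps a
    sample only if its label matches the majority label of its k nearest neighbours."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y)
    diff = X[:, None, :] - X[None, :, :]
    dist = (diff ** 2).sum(axis=-1)   # pairwise squared Euclidean distances
    np.fill_diagonal(dist, np.inf)    # exclude each sample from its own neighbours
    neighbours = np.argsort(dist, axis=1)[:, :k]

    keep = np.empty(len(y), dtype=bool)
    for i, nbrs in enumerate(neighbours):
        majority, _ = Counter(y[nbrs].tolist()).most_common(1)[0]
        keep[i] = majority == y[i]
    return keep


# Two well-separated clusters; the point at (0.05, 0.05) is labelled 1 but sits among class-0 points.
X = [[0, 0], [0, 0.1], [0.1, 0], [0.05, 0.05], [5, 5], [5, 5.1], [5.1, 5]]
y = [0, 0, 0, 1, 1, 1, 1]
mask = enn_keep_mask(X, y)
X_clean, y_clean = np.asarray(X)[mask], np.asarray(y)[mask]
```

On its own, this step only removes samples. Because it follows SMOTE in the pipeline described above, it also discards synthetic samples that landed among another class's neighbours.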

Figure 2.

Hybrid GAN-ML activity classification.

3.3. Skill Proficiency ML

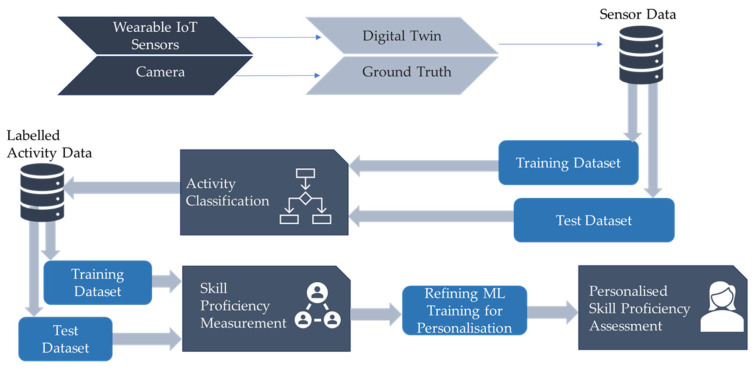

The DigitalUpSkilling framework uses ML-based work performance classification to assess work skill proficiency in physically intensive jobs. The skill proficiency ML models are trained as follows:

(1) kinematic data are collected from the digital twins of all workers who perform the work activity;

(2) the proposed GAN-ML-based work activity recognition model divides this comprehensive dataset into activity-specific datasets, each containing kinematic data from all workers performing that type of activity; and

(3) for each type of activity detected by the work activity recognition model, a proficiency classifier is trained with data from the workers who performed best in that activity type (e.g., those who produced more product, fewer defects, better-quality products, or a combination of these).

This approach to proficiency assessment using the DigitalUpSkilling framework is depicted in Figure 3.
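Steps (1) to (3) can be sketched as a data-preparation function. It groups samples by the recognised activity and marks samples from the best-performing workers as proficient. The binary label, the `top_k` cut-off, and the per-activity score dictionary are hypothetical choices; the text does not specify how the best performers are selected.

```python
from collections import defaultdict


def build_proficiency_datasets(samples, scores, top_k=1):
    """Split kinematic samples by activity and label the best performers.

    samples: iterable of (worker_id, activity, features), where `activity` is the
             label produced by the activity recognition model.
    scores:  dict mapping (worker_id, activity) -> performance score
             (hypothetical, e.g. output volume or a quality rating).
    Returns {activity: [(features, is_proficient), ...]} with is_proficient in {0, 1}.
    """
    by_activity = defaultdict(list)
    for worker, activity, features in samples:
        by_activity[activity].append((worker, features))

    datasets = {}
    for activity, rows in by_activity.items():
        workers = {worker for worker, _ in rows}
        # Rank workers by their score for this specific activity type.
        ranked = sorted(workers, key=lambda w: scores[(w, activity)], reverse=True)
        best = set(ranked[:top_k])
        datasets[activity] = [(features, int(worker in best)) for worker, features in rows]
    return datasets
```

Ranking per activity, rather than once per worker, reflects step (3): a worker may be the best performer in one activity but not in another.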

Figure 3.

Skill proficiency assessment.

For certain work tasks, the skill proficiency model may need to be tailored to each activity type; in other cases, a single model may suffice for assessing skill proficiency across activities. The training and test datasets are defined in the same way as for the activity recognition ML models. This approach can be refined further to account for the activity's difficulty level or for the gender, age, and past work experience of the workers being trained. Such refinement would enable a more personalised and accurate skill proficiency assessment, in which the ML models are customised to better evaluate individual skill levels.

Furthermore, the ground truth is updated whenever a new dataset is added to the framework. In this way, the framework aims to improve the overall training approach for industrial workers and to make training and assessment digital and personalised.

4. Study of Work Activity Recognition for Meat Processing Activities

We conducted a real-world study in the meat processing industry based on the proposed DigitalUpSkilling framework. This section describes the design of the study.

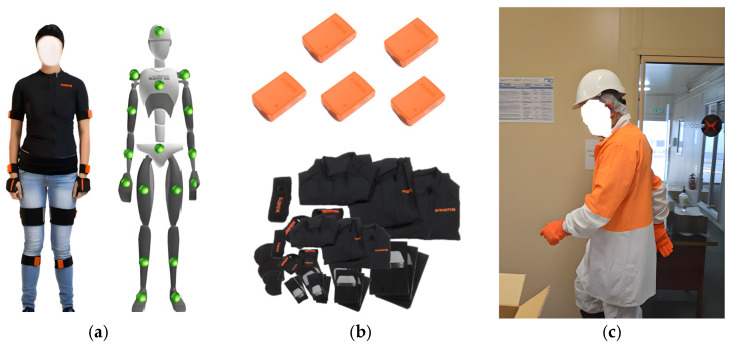

Sensor selection for data collection: The study used the Movella Awinda Suit [76], which has 17 IMU sensors and provides data at 60 Hz within a range of 100 m. The 17 IMU sensors were placed on the participants' wrists, forearms, upper arms, shoulders, feet, lower legs, and upper legs, as well as on the hips, chest, and neck (Figure 4). This placement provided a full-body kinematic model capable of capturing the movements of any type of work activity.

Figure 4.

(a) Placement of sensors; (b) sensors and straps; (c) alignment of sensors with the participant’s movements.

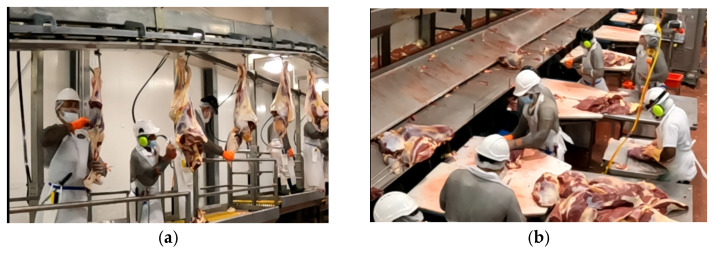

Participants and datasets: The study involved two male participants, each with more than fifteen years of work experience and experience as a trainer. The number of participants was limited because obtaining such data from workers in real work environments is challenging (Figure 5).

Figure 5.

Work environment for the data collection: (a) boning area; (b) slicing area.

Moreover, no public datasets contained data on work activities in real work environments that were suitable for our study [77]. The entire data collection procedure was carried out inside the meat processing factory while participants performed their regular meat processing tasks.

For each activity set (boning and slicing), the participants performed two repetitions with two different knives, to obtain a balanced dataset covering different sets of body movements. Hence, the study had eight sets of activity data from the participants. We asked participants to use knives with varying levels of sharpness during data collection. This captured broad variations in body movements and actions and ensured that the study included diverse data on real work activities. The activities were performed on two different assembly lines in the meat processing plant. Each activity set with a given knife was performed for 5 to 15 min.

Activity selection: To incorporate real-life data, we chose meat processing workers involved in work activities such as boning and slicing meat in a meat processing plant. Both are crucial meat processing activities involving specific types of complex physical activities and techniques.

Boning: Boning is the removal of bones from large meat cuts. This physical activity requires steady hand control and attention to detail. Meat processors utilise various tools, such as knives and cleavers, to carefully separate meat from bones. They also trim excess fat and connective tissue. Boning requires repetitive motions to extract meat from the bones efficiently.

Slicing: Slicing involves cutting meat into thin, uniform slices. This task demands repetitive movement of the hand and body of meat workers. Different knives are used to create slices with a consistent thickness. Like boning, slicing also requires steady hand control and attention to detail.

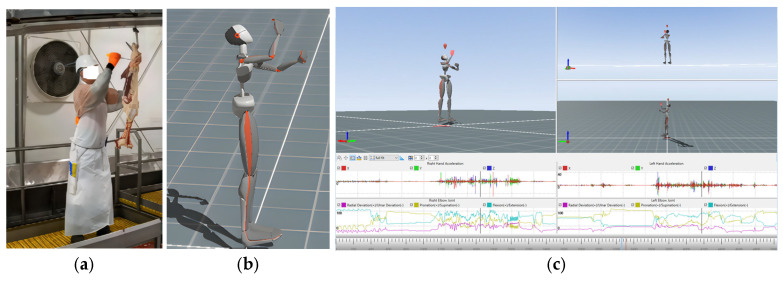

Data Collection: Seventeen IMU sensors were mounted on the workers using straps and a body vest. The workers then put on their uniforms, performed the calibration, and entered the meat processing area to carry out their work activities. We collected sensor data for two activities: boning and slicing. Sensor data were transmitted via Bluetooth to a computer system, where digital twins visualised worker movements in real time. Movements were also recorded with a video camera. The overall setup, data collection process, and data processing are presented in Figure 6.

Figure 6.

Dataflow of the study.

Digital twins from IMU data: Initially, we took the participants' body measurements and placed the sensors as depicted in Figure 4. To initialise the digital twin representation, we calibrated the sensors before the workers started their regular work activities. We collected data from the wearable full-body IoT sensors, which were recorded using the real-time digital twins created with MVN Analyse software (version: 2024.2.0) [76] (Figure 6). We also checked for magnetic interference, as it could affect the quality of the digital twins and their live representations. The digital twins not only represented body movements via a 3D avatar but also allowed us to monitor the rotation angle, acceleration, and flexion of any joint. These are important factors in body movements and workers' skills, as depicted in Figure 7c. The session was also recorded with a camera, which served as the ground truth for the digital twins. Thanks to the calibration process, all sensors were properly aligned, and the digital twins accurately represented the participants' body movements.
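MVN Analyse reports joint angles directly, so the following only illustrates the geometry behind a flexion angle. The plain-Python sketch assumes three hypothetical 3D landmark positions (e.g., shoulder, elbow, and wrist) and defines flexion as 180° minus the angle between the two segments meeting at the joint. The software's own joint-angle conventions may differ.

```python
import math


def flexion_angle_deg(proximal, joint, distal):
    """Flexion at `joint`, defined here as 180 degrees minus the angle between the
    segments joint->proximal and joint->distal (0 = fully extended).
    Both segments must have non-zero length."""
    u = [p - j for p, j in zip(proximal, joint)]
    v = [d - j for d, j in zip(distal, joint)]
    dot = sum(a * b for a, b in zip(u, v))
    cos_theta = dot / (math.hypot(*u) * math.hypot(*v))
    cos_theta = max(-1.0, min(1.0, cos_theta))  # guard against rounding outside [-1, 1]
    return 180.0 - math.degrees(math.acos(cos_theta))


# Hypothetical shoulder and elbow positions in metres.
shoulder, elbow = (0.0, 0.0, 0.0), (0.0, -0.3, 0.0)
straight = flexion_angle_deg(shoulder, elbow, (0.0, -0.55, 0.0))  # wrist in line with upper arm
bent = flexion_angle_deg(shoulder, elbow, (0.25, -0.3, 0.0))      # forearm at a right angle
```

Tracking such an angle over time gives the kind of per-joint trace shown in Figure 7c.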

Figure 7.

(a) Worker performing boning; (b) worker’s real-time digital twin; (c) digital twins showing body movements along with real-time graphs of the joint’s movements.

Data annotation from digital twins: We recorded each session with a video camera to capture the ground truth. We annotated each microstep by synchronising the video with the real-time kinematic digital twins generated from the wearable full-body sensor data in MVN Analyse software. The signals from each sensor, the video, and the associated timestamps were synchronised to annotate the data accurately.
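Synchronising video annotations with the 60 Hz sensor stream amounts to mapping each annotated time interval onto a range of sample indices. The sketch below makes two assumptions that the text does not describe: annotations are stored as (start, end, label) tuples in seconds on the video timeline, and a single constant offset aligns the video and sensor clocks. The function and parameter names are hypothetical.

```python
import math

SAMPLE_RATE_HZ = 60  # sampling rate reported for the sensor suit


def labels_from_intervals(n_samples, intervals, offset_s=0.0, unlabelled=-1):
    """Convert annotated video intervals into one label per sensor sample.

    intervals: list of (start_s, end_s, label) on the video timeline, with `label`
               using the activity codes of Tables 2 and 3.
    offset_s:  video time minus sensor time for the same instant (hypothetical,
               e.g. measured from a shared synchronisation event).
    Sample i is taken at sensor time i / SAMPLE_RATE_HZ and receives the label of
    the interval with start <= t < end; samples outside every interval keep `unlabelled`.
    """
    labels = [unlabelled] * n_samples
    for start_s, end_s, label in intervals:
        first = max(0, math.ceil((start_s - offset_s) * SAMPLE_RATE_HZ))
        last = min(n_samples, math.ceil((end_s - offset_s) * SAMPLE_RATE_HZ))
        for i in range(first, last):
            labels[i] = label
    return labels


# Three seconds of data: idle (0) for 1 s, then cutting (4) for 1.5 s.
labels = labels_from_intervals(180, [(0.0, 1.0, 0), (1.0, 2.5, 4)])
```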

Collected data included activities such as idleness, walking, steeling, reaching, cutting, slicing, pulling, placing/manipulating, and dropping (Table 3). Each activity consisted of several single actions performed by different body parts, such as closing, reaching, opening, moving, unlocking, holding, cutting, spreading, releasing, dropping, picking, and throwing. The dataset consists of 529,718 data samples from the IMU sensors, collected at a 60 Hz sampling rate.

The study identified many low-level actions that make up complex work activities, as shown in Table 1.

Table 1.

Actions and activities.

| Actions and Activities | Names |
|---|---|
| Work activities | Idleness, walking, cutting, reaching, slicing, dropping |
| Single actions: body locomotion | Sit, stand, walk, bend |
| Single actions: left hand | Spread, reach, open, close, move, unlock, hold, cut, release, drop, pick, throw |
| Single actions: right hand | Spread, reach, open, close, move, unlock, hold, cut, release, drop, pick, throw |
| Single actions: left leg | Still, move, spread, straighten, bend, lift |
| Single actions: right leg | Still, move, spread, straighten, bend, lift |

4.1. Data Labelling

Activity labelling was conducted for each timestamp, as given in Tables 2 and 3.

Table 2.

Activity Labelling—Boning.

| Activity | Label | Observation |
|---|---|---|
| Idle | 0 | The participant keeps a hand stationary and waits for the next piece to arrive in the carcass. |
| Walking | 1 | The participant walks to get the next piece of meat in the carcass or to move around. |
| Steeling | 2 | The participant sharpens the knife with sharpening tools; to do this, they use both hands. |
| Reaching | 3 | The participant reaches for a new piece of meat from the carcass. |
| Cutting | 4 | Using the knife, the participant turns a large piece of meat into smaller pieces. |
| Dropping | 5 | The participant grabs a small piece of separated meat and throws it onto the conveyor belt. |

Table 3.

Activity Labelling—Slicing.

| Activity | Label | Actions Description |
|---|---|---|
| Idle | 0 | The participant keeps a hand stationary and waits for the next piece to arrive in the carcass. |
| Walking | 1 | The participant walks to get the next piece of meat in the carcass or to move around. |
| Steeling | 2 | The participant sharpens the knife with sharpening tools; to do this, they use both hands. |
| Reaching | 3 | The participant reaches for a new piece of meat on the belt or table. |
| Cutting | 4 | The participant turns a large piece of meat into a smaller piece with the help of a knife. |
| Slicing | 5 | The participant cuts fats from a meat piece. |
| Pulling | 6 | The participant rips away fat/meat from the meat piece. |
| Placing/Manipulating | 7 | The participant manipulates the meat placement or pinches the meat. |
| Dropping | 8 | The participant grabs a small piece of separated meat and throws it away. |
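Tables 2 and 3 reuse the same integer codes for different activities: label 5 is Dropping in boning but Slicing in slicing. If the two datasets were pooled into one classifier (the text does not state whether they were), the labels would first need a shared encoding. Because every boning activity also appears in the slicing table, the slicing codes can serve as that shared encoding. This choice, and the names below, are assumptions for illustration.

```python
BONING_LABELS = {0: "Idle", 1: "Walking", 2: "Steeling", 3: "Reaching",
                 4: "Cutting", 5: "Dropping"}
SLICING_LABELS = {0: "Idle", 1: "Walking", 2: "Steeling", 3: "Reaching",
                  4: "Cutting", 5: "Slicing", 6: "Pulling",
                  7: "Placing/Manipulating", 8: "Dropping"}

# Map activity names to slicing codes, then re-express each boning code by name.
SLICING_CODE = {name: code for code, name in SLICING_LABELS.items()}
BONING_TO_SHARED = {code: SLICING_CODE[name] for code, name in BONING_LABELS.items()}


def to_shared(boning_codes):
    """Re-encode a sequence of boning labels with the shared (slicing) codes."""
    return [BONING_TO_SHARED[c] for c in boning_codes]
```

Matching by activity name rather than by integer code prevents boning Dropping samples (code 5) from being merged with slicing Slicing samples (also code 5).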

Data Preprocessing: After annotation, we preprocessed the raw sensor data to clean them and prepare them for activity classification. First, we filled in missing values using imputation and removed irrelevant or redundant features, ensuring that the data were clean and consistent. We then applied data augmentation using a generative adversarial network (GAN) built from dense layers of 128 units with Leaky ReLU activation functions. The GAN generated synthetic data to increase the size of the dataset, which initially contained 529,718 data samples from two participants. To address class imbalance, we then applied SMOTE (synthetic minority oversampling technique) to further balance the data by oversampling the minority classes. This was followed by edited nearest neighbours (ENN) to remove noisy or misclassified samples. The dataset was split into training and test sets at a 75/25 ratio using stratified sampling to preserve the class distribution. For each participant, the sensor data formed a matrix with 969 features per data sample, collected from the 17 sensors.
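The text specifies only dense layers of 128 units with Leaky ReLU activations. The NumPy sketch below shows one generator/discriminator pair consistent with that description, together with the standard GAN objective. The following are all assumptions: the latent size, the use of a single hidden layer per network, the initialisation, the Leaky ReLU slope, and the assumption that features are standardised. The gradient updates, which a deep learning framework would normally handle, are omitted. The input width of 969 matches the per-sample feature count; 969 = 17 × 57, which is consistent with 57 features per sensor.

```python
import numpy as np

rng = np.random.default_rng(0)

N_FEATURES = 969  # features per data sample (from the text)
LATENT_DIM = 64   # assumption: size of the random noise vector
HIDDEN = 128      # dense layer width stated in the text


def leaky_relu(x, alpha=0.2):
    return np.where(x > 0, x, alpha * x)


def dense(n_in, n_out):
    """Glorot-uniform weights and a zero bias for one fully connected layer."""
    limit = np.sqrt(6.0 / (n_in + n_out))
    return rng.uniform(-limit, limit, size=(n_in, n_out)), np.zeros(n_out)


G = [dense(LATENT_DIM, HIDDEN), dense(HIDDEN, N_FEATURES)]  # generator weights
D = [dense(N_FEATURES, HIDDEN), dense(HIDDEN, 1)]           # discriminator weights


def generator(z):
    (w1, b1), (w2, b2) = G
    return leaky_relu(z @ w1 + b1) @ w2 + b2  # linear output for standardised features


def discriminator_logits(x):
    (w1, b1), (w2, b2) = D
    return leaky_relu(x @ w1 + b1) @ w2 + b2  # real-vs-synthetic logit


def gan_losses(real_batch):
    """Standard GAN losses as binary cross-entropy on logits.

    Discriminator: -log D(x) - log(1 - D(G(z)))
    Generator (non-saturating form): -log D(G(z))
    """
    z = rng.standard_normal((len(real_batch), LATENT_DIM))
    fake = generator(z)
    real_logit = discriminator_logits(real_batch)
    fake_logit = discriminator_logits(fake)
    # Numerically stable identities: -log(sigmoid(l)) = logaddexp(0, -l)
    # and -log(1 - sigmoid(l)) = logaddexp(0, l).
    d_loss = np.mean(np.logaddexp(0, -real_logit) + np.logaddexp(0, fake_logit))
    g_loss = np.mean(np.logaddexp(0, -fake_logit))
    return fake, d_loss, g_loss
```

During training, the two losses would be minimised alternately with respect to `D` and `G`. After training, rows from `generator` would be appended to the real data as augmented samples.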

ML model selection for work activity recognition: For this study, we chose existing ML models that have proven effective at human activity classification: random forest (RF), support vector machine (SVM), decision tree (DT), and k-nearest neighbours (KNN). We then compared the accuracy of these models to find the most accurate one for this study. After computing the features, we split the data into training and test sets and trained the ML models on the labelled training data, which comprised the various work activities.

Feature selection: To classify the data with the ML models, we extracted the 3D coordinates, velocity, acceleration, and rotational data for each of the 17 sensors. We then selected the features of the body parts that were active during the activities and, among these, the features that promised a high contribution to recognising the activity. For each timestamp, we computed the mean ($\bar{X}$) and standard deviation ($\sigma$), where $\bar{X}$ signifies the magnitude and $\sigma$ represents the variability. These values depict orientation-related data. The sensor readings for each axis ($X$, $Y$, and $Z$) were combined separately, and the sum of squares was calculated.

Then, the combined value over $X$, $Y$, and $Z$ was calculated as follows:

$$ M = \sqrt{\sum_{i=1}^{n} \left( X_i^2 + Y_i^2 + Z_i^2 \right)} \tag{1} $$

Here:

$n$ represents the total number of sensors;

$X_i$, $Y_i$, and $Z_i$ represent the readings of the $i$th sensor along $X$, $Y$, and $Z$, respectively.

This expression represents a vector magnitude (Euclidean norm) that combines the contributions of all three components ($X$, $Y$, and $Z$) across the 17 sensors.
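A minimal NumPy sketch of Eq. (1) is given below. It assumes the readings are stored in an array of shape (timestamps, sensors, 3) with the $X$, $Y$, and $Z$ components on the last axis; this layout and the function name `combined_magnitude` are hypothetical, not taken from the study's code.

```python
import numpy as np


def combined_magnitude(readings: np.ndarray) -> np.ndarray:
    """Eq. (1): Euclidean norm over all n sensors and the X, Y, Z axes.

    readings: array of shape (timestamps, n_sensors, 3); this layout is an
    assumption made for the sketch. Returns one combined value per timestamp.
    """
    # Square every component, sum X_i^2 + Y_i^2 + Z_i^2 over all sensors, take the root.
    return np.sqrt(np.sum(readings ** 2, axis=(1, 2)))


# Two timestamps from 17 sensors; only sensor 0 moves at the first timestamp.
readings = np.zeros((2, 17, 3))
readings[0, 0] = [3.0, 4.0, 0.0]
print(combined_magnitude(readings))  # [5. 0.]
```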

4.2. Data Preprocessing

Let the dataset be represented as $\mathcal{D} = \{(x_i, y_i)\}_{i=1}^{N}$, where $x_i$ is the feature vector (sensor data) of the $i$th sample and $y_i$ is the corresponding activity label.

Handling Missing Values

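For any feature vector, $x_i$, with missing data, we applied an imputation function, $f_{\text{imp}}$, which replaces each NaN value with the mean of the corresponding feature:

$$ f_{\text{imp}}(x_{ij}) = \begin{cases} x_{ij}, & \text{if } x_{ij} \text{ is observed}, \\ \dfrac{1}{|O_j|} \sum_{k \in O_j} x_{kj}, & \text{if } x_{ij} = \text{NaN}, \end{cases} \tag{2} $$

where $x_{ij}$ is the $j$th feature of $x_i$ and $O_j$ is the set of samples in which feature $j$ is observed.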
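A minimal NumPy version of the mean imputation in Eq. (2) follows. The helper name is hypothetical; scikit-learn's `SimpleImputer(strategy="mean")` performs the same column-mean imputation.

```python
import numpy as np


def impute_feature_means(X: np.ndarray) -> np.ndarray:
    """Eq. (2): replace each NaN with the mean of the observed values of its feature (column).

    A column with no observed values stays NaN (np.nanmean warns in that case).
    """
    col_means = np.nanmean(X, axis=0)     # mean over observed entries only (the set O_j)
    rows, cols = np.where(np.isnan(X))    # positions of the missing values
    X_filled = X.copy()                   # leave the caller's array unchanged
    X_filled[rows, cols] = col_means[cols]
    return X_filled


X = np.array([[1.0, np.nan],
              [3.0, 4.0],
              [np.nan, 8.0]])
# Column means of the observed values are 2.0 and 6.0, so the NaNs become 6.0 and 2.0.
print(impute_feature_means(X))
```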

Train–Test Split

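Split the dataset, $\mathcal{D}$, into the training set, $\mathcal{D}_{\text{train}}$, and the test set, $\mathcal{D}_{\text{test}}$, at a 75:25 ratio, using stratified sampling to preserve the class distribution.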
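For illustration, a stratified 75/25 split can be written with NumPy alone, as sketched below (hypothetical helper; scikit-learn's `train_test_split(X, y, test_size=0.25, stratify=y)` provides an equivalent stratified split). Splitting each class separately keeps minority activities represented in both sets.

```python
import numpy as np


def stratified_split(X, y, test_frac=0.25, seed=0):
    """Split (X, y) so that each class keeps about the same share in the train and test sets."""
    rng = np.random.default_rng(seed)
    test_mask = np.zeros(len(y), dtype=bool)
    for label in np.unique(y):
        idx = np.flatnonzero(y == label)   # indices of this class
        rng.shuffle(idx)
        n_test = int(round(test_frac * len(idx)))
        test_mask[idx[:n_test]] = True     # the first test_frac of the shuffled class goes to test
    return X[~test_mask], X[test_mask], y[~test_mask], y[test_mask]


y = np.array([0] * 8 + [1] * 4)
X = np.arange(12).reshape(12, 1)
X_train, X_test, y_train, y_test = stratified_split(X, y)
print(np.bincount(y_train), np.bincount(y_test))  # [6 3] [2 1]
```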

4.3. Generating Synthetic Data Using GAN

Generator: The generator, $G$, takes a random noise vector, $z$, and outputs synthetic data, $\tilde{x} = G(z)$, in the same feature space as the real data. The generator uses Leaky ReLU activations in its hidden layers.

Leaky ReLU activation

$$ \mathrm{LeakyReLU}(a) = \begin{cases} a, & a \ge 0, \\ \alpha a, & a < 0, \end{cases} \tag{3} $$

where α is a small slope (e.g., 0.01) for negative inputs.

Generator output:

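$$ h_0 = z, \qquad h_l = \mathrm{LeakyReLU}\left( W_l h_{l-1} + b_l \right), \ l = 1, \dots, L-1, \qquad \tilde{x} = G(z) = W_L h_{L-1} + b_L \tag{4} $$

where $W_l$ and $b_l$ are the weights and biases of the $l$th dense layer.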
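The NumPy sketch below evaluates Leaky ReLU and a generator forward pass for an untrained generator with one 128-unit hidden layer and a linear output layer of 969 features. The noise dimension (100), the single hidden layer, the linear output, the weight initialisation, and the row-vector convention (`h @ W` rather than `W h`) are assumptions for illustration; in practice the weights would be learned with a deep-learning framework.

```python
import numpy as np


def leaky_relu(a: np.ndarray, alpha: float = 0.01) -> np.ndarray:
    """Eq. (3): pass non-negative inputs through, scale negative inputs by alpha."""
    return np.where(a >= 0, a, alpha * a)


def generator_forward(z, weights, biases, alpha=0.01):
    """Leaky ReLU dense hidden layers followed by a linear output layer."""
    h = z
    for W, b in zip(weights[:-1], biases[:-1]):
        h = leaky_relu(h @ W + b, alpha)   # hidden layer (row-vector convention)
    return h @ weights[-1] + biases[-1]    # output in the 969-feature space


rng = np.random.default_rng(0)
noise_dim, hidden, n_features = 100, 128, 969   # noise_dim is a hypothetical choice
weights = [rng.normal(0.0, 0.05, (noise_dim, hidden)),
           rng.normal(0.0, 0.05, (hidden, n_features))]
biases = [np.zeros(hidden), np.zeros(n_features)]

z = rng.normal(size=(4, noise_dim))             # a batch of four noise vectors
x_synthetic = generator_forward(z, weights, biases)
print(x_synthetic.shape)  # (4, 969)
```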

Discriminator: The discriminator, $D$, takes an input, $x$, and outputs a probability, $D(x) \in [0, 1]$, that the input is real. It is trained to distinguish between real data, $x$, and synthetic data, $G(z)$.

Discriminator loss for real data

$$ \mathcal{L}_D^{\text{real}} = -\mathbb{E}_{x \sim p_{\text{data}}}\left[ \log D(x) \right] \tag{5} $$

Discriminator loss for fake data

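$$ \mathcal{L}_D^{\text{fake}} = -\mathbb{E}_{z \sim p_z}\left[ \log\left( 1 - D(G(z)) \right) \right] \tag{6} $$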

Training GAN

The generator is trained to minimise the following:

$$ \mathcal{L}_G = -\mathbb{E}_{z \sim p_z}\left[ \log D(G(z)) \right] \tag{7} $$

The discriminator is trained to minimise the following:

$$ \mathcal{L}_D = \mathcal{L}_D^{\text{real}} + \mathcal{L}_D^{\text{fake}} \tag{8} $$
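The GAN losses are expectations; with a finite batch they are estimated by averages over the discriminator's outputs. The NumPy sketch below computes these batch estimates from given probabilities `d_real` (that is, $D(x)$) and `d_fake` (that is, $D(G(z))$). The generator loss uses the common non-saturating form, $-\log D(G(z))$. The `EPS` guard and the function names are illustrative choices; a deep-learning framework would normally compute the same quantities as binary cross-entropy and backpropagate them.

```python
import numpy as np

EPS = 1e-12  # keeps log() finite when a probability is exactly 0 or 1


def discriminator_loss(d_real: np.ndarray, d_fake: np.ndarray) -> float:
    """Real-data term plus synthetic-data term, each estimated as a batch mean."""
    loss_real = -np.mean(np.log(d_real + EPS))        # real samples should score near 1
    loss_fake = -np.mean(np.log(1.0 - d_fake + EPS))  # synthetic samples should score near 0
    return float(loss_real + loss_fake)


def generator_loss(d_fake: np.ndarray) -> float:
    """Non-saturating generator loss: small when D(G(z)) is close to 1."""
    return float(-np.mean(np.log(d_fake + EPS)))


d_real = np.array([0.9, 0.8])   # D(x) on a batch of real samples
d_fake = np.array([0.1, 0.2])   # D(G(z)) on a batch of synthetic samples
print(discriminator_loss(d_real, d_fake), generator_loss(d_fake))
```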

Synthetic data generation

After training, synthetic samples, $\tilde{x}_k$, are produced by the generator from fresh noise vectors, as follows: $\tilde{x}_k = G(z_k)$, with $z_k \sim p_z$.

4.4. Handling Class Imbalance with SMOTE

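Let $X_{\min}$ be the subset of feature vectors belonging to a minority class. We use SMOTE to generate synthetic samples by interpolating between a minority sample and one of its nearest minority-class neighbours, as follows:

$$ x_{\text{new}} = x_i + \lambda \left( x_{\text{nn}} - x_i \right), \qquad \lambda \sim \mathcal{U}(0, 1) \tag{9} $$

where $x_i \in X_{\min}$ and $x_{\text{nn}}$ is chosen at random from the $k$ nearest neighbours of $x_i$ within $X_{\min}$. This creates new synthetic data points, $x_{\text{new}}$, that balance the class distribution.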
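A compact NumPy sketch of the SMOTE interpolation for one minority class is shown below. It assumes Euclidean distance and k = 5 neighbours (the default in imbalanced-learn's `SMOTE`; the study's value of k is not reported). The function name and parameters are hypothetical.

```python
import numpy as np


def smote(X_min: np.ndarray, n_new: int, k: int = 5, seed: int = 0) -> np.ndarray:
    """x_new = x_i + lambda * (x_nn - x_i) for randomly chosen minority samples x_i.

    Requires at least two minority samples. Pairwise distances are computed in full,
    which is fine for a sketch but memory-heavy for large classes.
    """
    rng = np.random.default_rng(seed)
    dists = np.linalg.norm(X_min[:, None, :] - X_min[None, :, :], axis=-1)
    np.fill_diagonal(dists, np.inf)                  # a sample is not its own neighbour
    k = min(k, len(X_min) - 1)
    neighbours = np.argsort(dists, axis=1)[:, :k]    # k nearest minority neighbours of each sample
    base = rng.integers(0, len(X_min), size=n_new)   # index of x_i for each new sample
    nn = neighbours[base, rng.integers(0, k, size=n_new)]  # one random x_nn per new sample
    lam = rng.random((n_new, 1))                     # lambda drawn uniformly from [0, 1)
    return X_min[base] + lam * (X_min[nn] - X_min[base])


X_min = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 1.0]])
X_new = smote(X_min, n_new=10, k=2)
print(X_new.shape)  # (10, 2)
```

Because every new point lies on a segment between two real minority samples, SMOTE fills in the region the class already occupies rather than duplicating samples.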

4.5. Data Cleaning with ENN

After SMOTE, we applied edited nearest neighbours (ENN) to remove noisy or misclassified data points.

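For each sample, $x_i$, we find its $k$ nearest neighbours, $N_k(x_i)$, and the majority label among them:

$$ \hat{y}_i = \operatorname{mode}\left\{ y_j : x_j \in N_k(x_i) \right\} \tag{10} $$

If the label of $x_i$ disagrees with the majority label in its neighbourhood ($y_i \neq \hat{y}_i$), $x_i$ is removed from the dataset.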
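A brute-force NumPy sketch of the ENN rule follows, assuming Euclidean distance and k = 3 (the study does not report k). The majority rule here corresponds to imbalanced-learn's `EditedNearestNeighbours(kind_sel="mode")`; that class's default, `kind_sel="all"`, is stricter and removes a sample if any neighbour disagrees. The helper name is hypothetical.

```python
import numpy as np


def edited_nearest_neighbours(X: np.ndarray, y: np.ndarray, k: int = 3):
    """Drop samples whose label differs from the majority label of their k nearest neighbours."""
    dists = np.linalg.norm(X[:, None, :] - X[None, :, :], axis=-1)
    np.fill_diagonal(dists, np.inf)                  # exclude each sample from its own neighbourhood
    neighbours = np.argsort(dists, axis=1)[:, :k]
    keep = np.empty(len(y), dtype=bool)
    for i, idx in enumerate(neighbours):
        labels, counts = np.unique(y[idx], return_counts=True)
        keep[i] = labels[np.argmax(counts)] == y[i]  # ties resolved toward the smallest label
    return X[keep], y[keep]


# A class-1 point (0.15) sitting inside a class-0 cluster is treated as noise.
X = np.array([[0.0], [0.1], [0.2], [0.15], [5.0], [5.1], [5.2]])
y = np.array([0, 0, 0, 1, 1, 1, 1])
X_clean, y_clean = edited_nearest_neighbours(X, y)
print(y_clean)  # [0 0 0 1 1 1]
```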

4.6. Classification with RF Model

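We then trained the RF model, $F$, on the resampled and cleaned dataset, $\mathcal{D}'$. The RF consists of $B$ decision trees, $T_1, \dots, T_B$, each trained on a bootstrapped subset of the training data. The prediction for each sample, $x$, is given by the following:

$$ \hat{y} = F(x) = \operatorname{mode}\left\{ T_1(x), T_2(x), \dots, T_B(x) \right\} \tag{11} $$

Here, each tree outputs a class label, $T_b(x)$, and $\hat{y}$ is the final prediction, obtained by majority vote.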
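The RF prediction rule is a hard majority vote. The sketch below, with hypothetical helper names, shows the two ingredients named in the text: bootstrap index sets for training each tree and the per-sample vote over the tree outputs (the trees themselves are omitted). Note that scikit-learn's `RandomForestClassifier` predicts by averaging the trees' class-probability estimates (soft voting), which usually, but not always, agrees with a hard vote.

```python
import numpy as np


def bootstrap_indices(n_samples: int, n_trees: int, seed: int = 0) -> np.ndarray:
    """Row b holds the sample indices (drawn with replacement) used to train tree T_b."""
    rng = np.random.default_rng(seed)
    return rng.integers(0, n_samples, size=(n_trees, n_samples))


def majority_vote(tree_predictions: np.ndarray) -> np.ndarray:
    """Per-sample mode of the B tree outputs.

    tree_predictions: int array of shape (B, n_samples) with labels 0..C-1.
    Ties are resolved toward the smallest class index.
    """
    n_classes = int(tree_predictions.max()) + 1
    votes = np.apply_along_axis(np.bincount, 0, tree_predictions, minlength=n_classes)
    return votes.argmax(axis=0)   # votes has shape (n_classes, n_samples)


# Three trees voting on three samples.
preds = np.array([[0, 1, 2],
                  [0, 1, 1],
                  [1, 1, 2]])
print(majority_vote(preds))  # [0 1 2]
```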

Evaluation metrics: We used accuracy, precision, recall, and the F1-score to evaluate the performance of the ML models. These metrics are defined as follows:

Accuracy: the ratio of correctly classified samples to the total number of samples.

$$ \text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \tag{12} $$

Precision: the ratio of correctly predicted positives to all predicted positives, representing the accuracy of the positive predictions.

$$ \text{Precision} = \frac{TP}{TP + FP} \tag{13} $$

Recall: the ratio of correctly predicted positives to all actual positives.

$$ \text{Recall} = \frac{TP}{TP + FN} \tag{14} $$

F1-score: the harmonic mean of precision and recall.

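$$ F_1 = 2 \times \frac{\text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}} \tag{15} $$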

In these formulas:

True positives (TP): samples correctly predicted as positive;

False positives (FP): samples incorrectly predicted as positive;

False negatives (FN): samples incorrectly predicted as negative;

True negatives (TN): samples correctly predicted as negative.
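The four formulas can be computed directly from the counts, as in the sketch below (function names are illustrative). For multi-class activity recognition, the metrics are computed per class and then averaged; the second helper shows support-weighted recall, which is algebraically equal to overall accuracy because the class weights cancel the per-class denominators. Whether Table 5 uses weighted averaging is not stated, but this identity would be consistent with its identical Accuracy and Recall columns.

```python
import numpy as np


def binary_metrics(tp: int, fp: int, fn: int, tn: int) -> tuple:
    """Accuracy, precision, recall, and F1 from the confusion counts of one class (one-vs-rest)."""
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return accuracy, precision, recall, f1


def weighted_recall(y_true, y_pred) -> float:
    """Per-class recall averaged with weights equal to each class's number of true samples."""
    y_true, y_pred = np.asarray(y_true), np.asarray(y_pred)
    labels, support = np.unique(y_true, return_counts=True)
    recalls = np.array([np.mean(y_pred[y_true == c] == c) for c in labels])
    return float(np.sum(recalls * support) / support.sum())


print(binary_metrics(tp=8, fp=2, fn=2, tn=88))  # approximately (0.96, 0.8, 0.8, 0.8)
y_true = [0, 0, 1, 1, 1]
y_pred = [0, 1, 1, 1, 0]
print(weighted_recall(y_true, y_pred))  # about 0.6, equal to the accuracy (3 of 5 correct)
```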

We also used the confusion matrix (CM) to evaluate the performance of the selected ML classification models. The CM is a square matrix in which the rows represent the true activity labels and the columns represent the predicted activity labels. Each diagonal element gives the percentage of samples of a true activity that were predicted as that same activity.
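A row-normalised confusion matrix of this kind can be computed in a few lines of NumPy. The sketch below assumes integer labels from 0 to C-1, such as the activity codes 0 to 8 used in this study; the helper name is hypothetical.

```python
import numpy as np


def confusion_matrix_percent(y_true, y_pred, n_classes: int) -> np.ndarray:
    """Rows = true activity, columns = predicted activity, each row scaled to 100%."""
    cm = np.zeros((n_classes, n_classes))
    np.add.at(cm, (np.asarray(y_true), np.asarray(y_pred)), 1)   # count (true, predicted) pairs
    row_totals = cm.sum(axis=1, keepdims=True)
    # Divide each row by its total; rows with no samples stay at zero.
    return 100 * np.divide(cm, row_totals, out=np.zeros_like(cm), where=row_totals > 0)


cm = confusion_matrix_percent([0, 0, 1, 1], [0, 1, 1, 1], n_classes=3)
print(np.diag(cm))  # diagonal: 50% for class 0, 100% for class 1, 0% for the unused class 2
```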

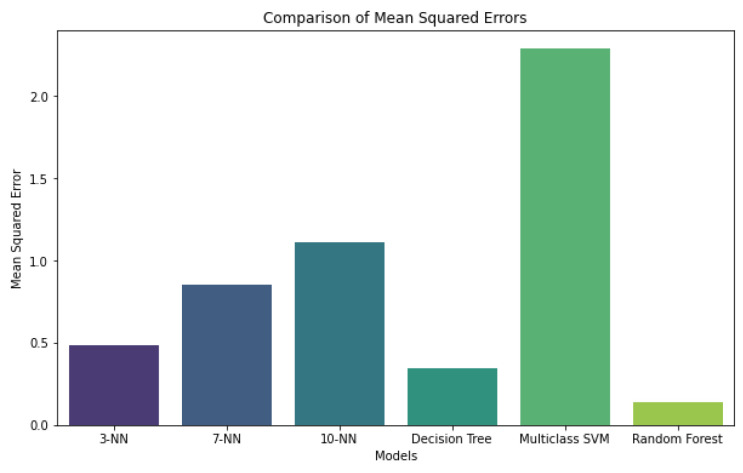

5. Performance of the Work Activity Recognition Model

We evaluated several feature combinations and algorithms for activity recognition, using classification accuracy as the primary criterion for direct comparison. We first compared the classifiers using all 969 features from the 17 sensors, covering full-body movement. The RF model achieved the best classification accuracy on the full-body sensor data: KNN and SVM showed higher error rates (Figure 8), whereas the RF model had the lowest mean squared error (MSE). We therefore used the RF model for the rest of the study to obtain the most accurate classification results.

Figure 8.

Comparison of the error rates of the different ML models.

Next, we compared the accuracy of the RF model using single features and fused features for different sensor combinations. We started with the four sensors on the right hand, the body part most actively involved in these physical tasks, and then used the eight sensors placed on both hands and the 17 sensors placed over the entire body. Table 4 shows the classification accuracy of RF using single features (velocity, magnitude, and pitch and roll) and their combination. The accuracy was slightly higher when using data from both hands or the full body than when using the right hand alone. The most accurate results came from the pitch and roll data and from the fused velocity, magnitude, and pitch and roll data.

Table 4.

Accuracy comparison with sensor data from different body parts.

| Sensors | Activity | Velocity | Magnitude | Pitch and Roll | Velocity + Magnitude + Pitch and Roll |
|---|---|---|---|---|---|
| Right Hand (4 Sensors) | Boning | 0.7371 | 0.8372 | 0.9936 | 0.9921 |
| | Slicing | 0.4972 | 0.5648 | 0.9779 | 0.9737 |
| Both Hands (8 Sensors) | Boning | 0.8062 | 0.9158 | 0.9984 | 0.9992 |
| | Slicing | 0.5275 | 0.7359 | 0.9923 | 0.9897 |
| Full Body (17 Sensors) | Boning | 0.8372 | 0.8713 | 0.9984 | 0.9984 |
| | Slicing | 0.5563 | 0.5988 | 0.9962 | 0.9962 |

Table 5 presents the classification performance of RF using single and fused features. Performance differed between the two activity sets: it was lower for slicing than for boning. When the features were fused, accuracy increased, and the accuracy for boning and slicing became similar.

Table 5.

Accuracy comparison with data fusion.

| Data | Activity | Accuracy | Precision | Recall | F1-Score |
|---|---|---|---|---|---|
| 3D acceleration | Boning | 0.8362 ± 0.0011 | 0.8516 ± 0.0048 | 0.8362 ± 0.0011 | 0.8032 ± 0.0101 |
| | Slicing | 0.596 ± 0.0397 | 0.69 ± 0.0346 | 0.596 ± 0.0397 | 0.5188 ± 0.0604 |
| Magnitude | Boning | 0.8682 ± 0.0031 | 0.8651 ± 0.0056 | 0.8682 ± 0.0031 | 0.8531 ± 0.0087 |
| | Slicing | 0.6213 ± 0.0225 | 0.6854 ± 0.0082 | 0.6213 ± 0.0225 | 0.566 ± 0.0356 |
| Pitch and roll | Boning | 0.9979 ± 0.0005 | 0.998 ± 0.0004 | 0.9979 ± 0.0005 | 0.9979 ± 0.0005 |
| | Slicing | 0.9961 ± 0.0001 | 0.9962 ± 0.0001 | 0.9961 ± 0.0001 | 0.9961 ± 0.0001 |
| 3D acceleration, magnitude, pitch and roll | Boning | 0.9982 ± 0.0002 | 0.9982 ± 0.0002 | 0.9982 ± 0.0002 | 0.9982 ± 0.0002 |
| | Slicing | 0.9963 ± 0.0001 | 0.9964 ± 0.0000 | 0.9963 ± 0.0001 | 0.9963 ± 0.0001 |
| 3D acceleration, magnitude, pitch and roll, centre of mass | Boning | 0.9978 ± 0.0006 | 0.9978 ± 0.0006 | 0.9978 ± 0.0006 | 0.9978 ± 0.0006 |
| | Slicing | 0.9962 ± 0.0009 | 0.9962 ± 0.0009 | 0.9962 ± 0.0009 | 0.9962 ± 0.0009 |

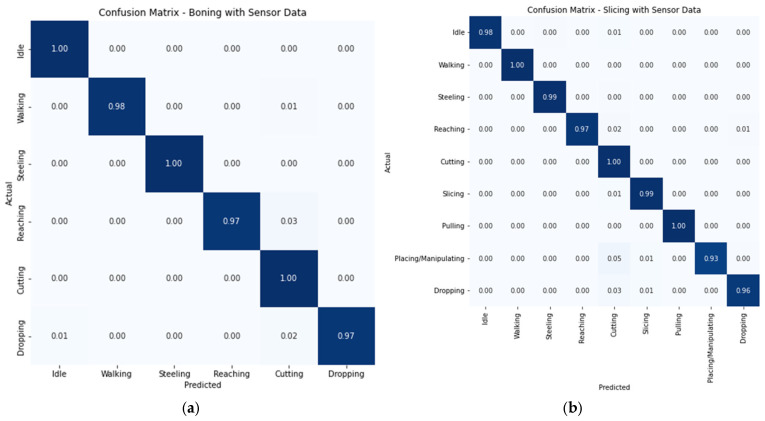

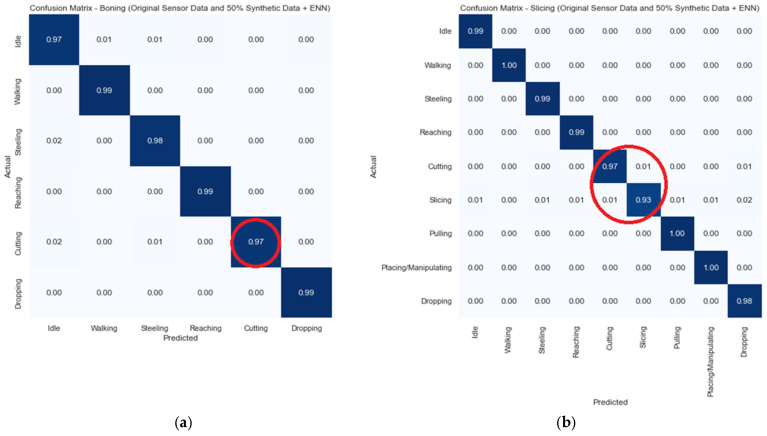

Based on the results in Tables 4 and 5, we computed the confusion matrices of the random forest (RF) model for boning and slicing using pitch and roll data from the four sensors on the right hand. The matrices show that RF recognised with high accuracy both simple activities, such as idling, standing, and walking, and complex work activities, such as steeling, cutting, and slicing. This shows that the ML model can recognise each participant's work activities using a minimal set of features (Figure 9).

Figure 9.

Confusion matrices: (a) boning; (b) slicing with pitch and roll from right-hand sensors.

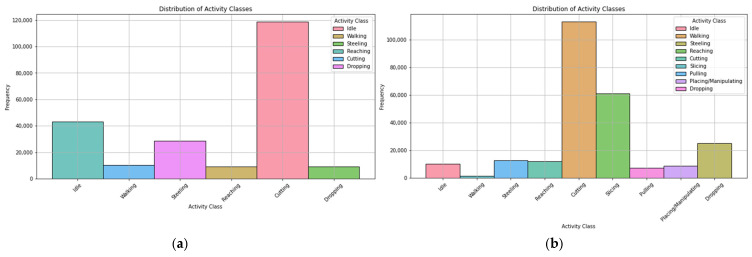

Figure 10 shows the distribution of the activity classes after classification. In boning, cutting had far more data samples than any other activity; similarly, in slicing, cutting and slicing had many more samples than the remaining activities. Both distributions indicate class imbalance.

Figure 10.

Distribution of the activity classification: (a) boning; (b) slicing.

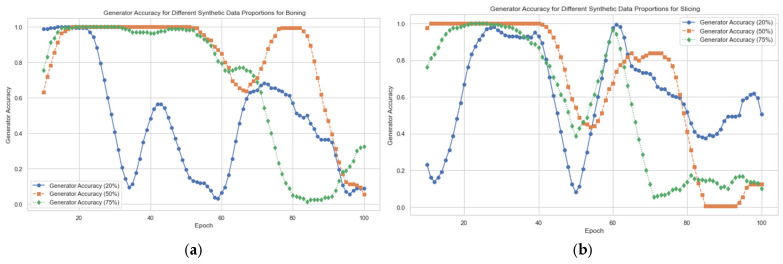

A total of 529,718 data samples were collected during boning and slicing. Because the collected datasets are imbalanced (Figure 10), we used a generative adversarial network (GAN) to generate synthetic data, both to make the data more robust and to mitigate the imbalance, adding synthetic data in proportions of 25%, 50%, and 75%. The results show that 50% synthetic data yielded high accuracy when the GAN was trained for 60–80 epochs (Figure 11).
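The text does not state how the synthetic-data percentages are defined. One plausible reading, assumed in the hypothetical helper below, is that a 50% setting adds synthetic samples equal to half the number of real samples (for the full dataset, 0.5 × 529,718 = 264,859 synthetic samples). The sketch also assumes that each synthetic sample already carries an activity label, for example from a class-conditional GAN or one GAN per activity; a plain GAN does not produce labels by itself.

```python
import numpy as np


def augment_with_synthetic(X_real, y_real, X_synth, y_synth, fraction, seed=0):
    """Append synthetic rows amounting to `fraction` of the real dataset size (assumed definition)."""
    n_add = int(round(fraction * len(X_real)))
    if n_add > len(X_synth):
        raise ValueError("not enough synthetic samples for the requested fraction")
    rng = np.random.default_rng(seed)
    pick = rng.choice(len(X_synth), size=n_add, replace=False)   # sample without repetition
    return np.vstack([X_real, X_synth[pick]]), np.concatenate([y_real, y_synth[pick]])


rng = np.random.default_rng(1)
X_real, y_real = rng.normal(size=(8, 3)), np.zeros(8, dtype=int)
X_synth, y_synth = rng.normal(size=(10, 3)), np.ones(10, dtype=int)
X_aug, y_aug = augment_with_synthetic(X_real, y_real, X_synth, y_synth, fraction=0.5)
print(X_aug.shape)  # (12, 3)
```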

Figure 11.

Accuracy of the GAN for different percentages of synthetic data: (a) boning; (b) slicing.

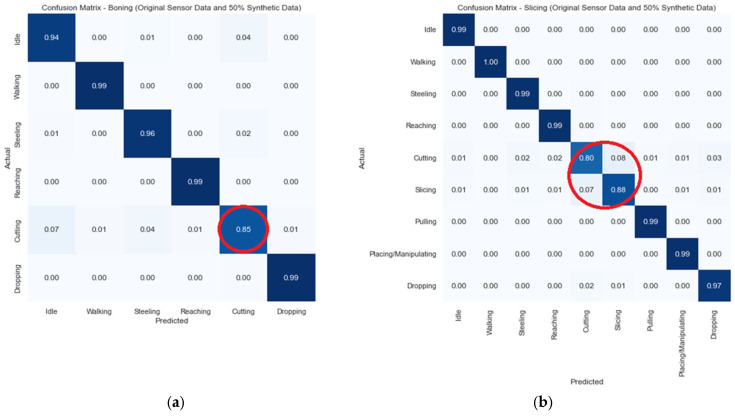

Synthetic data were created with a standard GAN, in which the generator produces synthetic samples and the discriminator learns to distinguish between synthetic and real data. In addition to the GAN-generated data, SMOTE was applied to address the class imbalance. The classification results are shown in Figure 12.

Figure 12.

Accuracy of the GAN with different percentages of synthetic data (circled area showing drop in the accuracy): (a) boning; (b) slicing.

Figure 12 shows that after combining the GAN-generated synthetic data with SMOTE, the accuracy of the major classes decreased in both boning and slicing. The drop was most pronounced for slicing, where 8% of the cutting class data were classified as slicing and 7% of the slicing class data were classified as cutting. To reduce this misclassification, we applied ENN, a data-cleaning method that removes samples whose labels disagree with those of their nearest neighbours. After this step, the classification accuracy exceeded 90% (Figure 13).

Figure 13.

Classification accuracy with the GAN, SMOTE, and ENN (circled area showing improvement in the accuracy): (a) boning; (b) slicing.

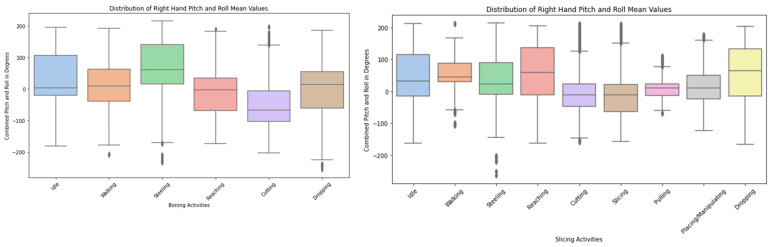

After activity recognition, we carried out a preliminary work proficiency analysis following the proposed DigitalUpSkilling framework. This preliminary analysis of body movement data illustrates how the IoT-GAN-ML model can support the proposed framework. For example, Figure 14 shows that steeling, reaching, and dropping involve the most varied hand movements, with a wider range of pitch and roll values, whereas cutting, slicing, and pulling involve more stable hand orientations, with pitch and roll values concentrated around the median.
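Distribution plots such as Figure 14 are commonly summarised by each activity's median and interquartile range (IQR). The hypothetical NumPy helper below computes these two statistics per activity from a right-hand pitch or roll series; a wider IQR corresponds to the more varied hand orientations described for steeling, reaching, and dropping. Whether Figure 14 uses exactly these statistics is an assumption, and the example values are made up.

```python
import numpy as np


def orientation_spread_by_activity(angles_deg, labels) -> dict:
    """Median and interquartile range (IQR) of an orientation signal for each activity."""
    angles_deg = np.asarray(angles_deg, dtype=float)
    labels = np.asarray(labels)
    summary = {}
    for activity in np.unique(labels):
        q1, median, q3 = np.percentile(angles_deg[labels == activity], [25, 50, 75])
        summary[str(activity)] = {"median": float(median), "iqr": float(q3 - q1)}
    return summary


angles = [0, 0, 0, 0, 0, 0, 10, 20, 30, 40]          # pitch in degrees (illustrative)
labels = ["cutting"] * 5 + ["steeling"] * 5
print(orientation_spread_by_activity(angles, labels))
```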

Figure 14.

Distribution of right-hand pitch and roll mean (in degrees).

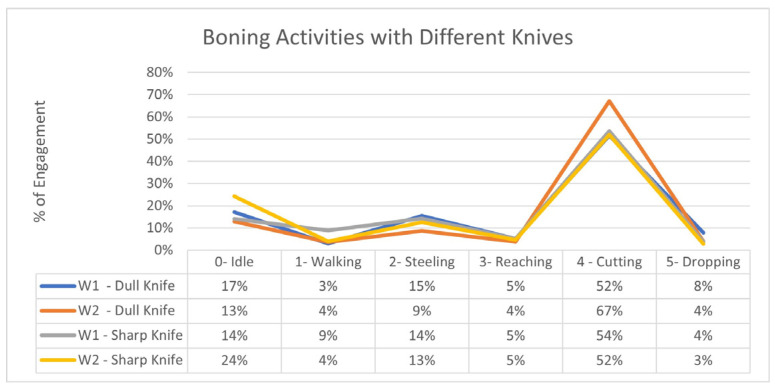

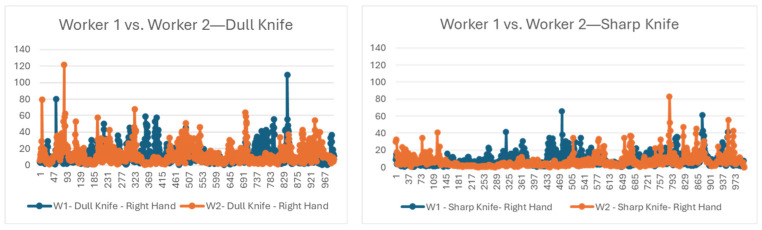

The initial results suggest that workers’ engagement, as well as the effectiveness of the tools used for work activities, can be estimated with the proposed GAN-ML-based HAR. Figure 14 suggests a correlation between knife sharpness and workers’ idle time: sharp knives allowed participants to spend less time actively working while maintaining their productivity. For instance, worker 2 was idle more often with a sharp knife yet remained more productive than when using a dull knife.

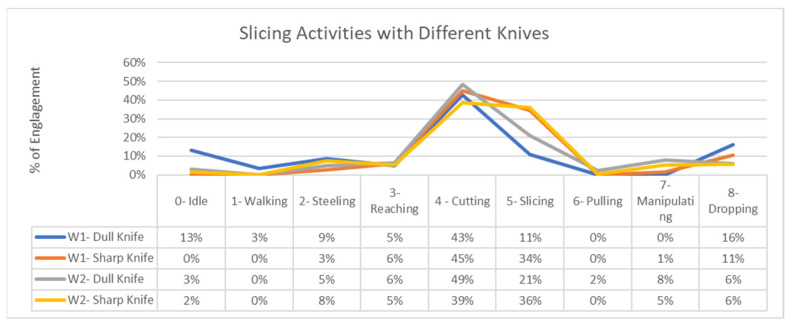

The model can also indicate the effectiveness of the tools. Figure 15 shows that as the knives became dull, workers exerted more effort and spent more time cutting than slicing, since slicing depends mostly on knife sharpness and requires less effort than cutting.

Figure 15.

Comparison of the engagement in boning (W1: Worker 1; W2: Worker 2).

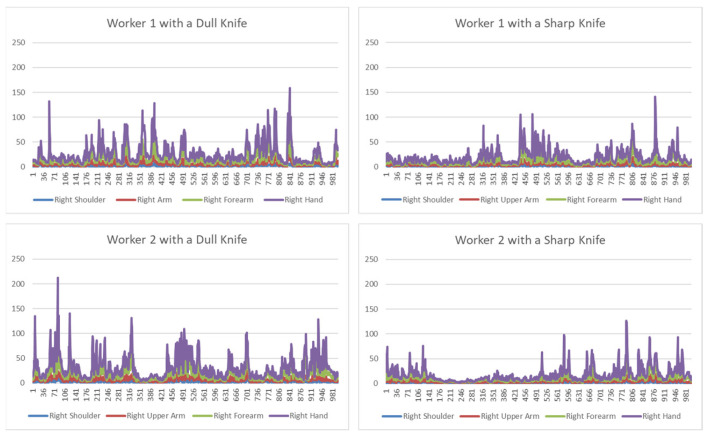

The evaluation of participants’ proficiency suggests a correlation between knife sharpness and performance, as the participants’ body movements changed accordingly. To examine this, we conducted a preliminary analysis to demonstrate that the proposed framework can be used to recognise work activities and analyse individual proficiency. For this analysis, we initially used 12 of the 969 features (the right-hand acceleration features) for both participants, with dull and sharp knives. Some of the results are presented in Figure 16.

Figure 16.

Comparison of the engagement in slicing.

In Figure 17, the top two graphs show the magnitude of right-hand acceleration for worker 1 with the two knives, and the bottom two graphs show the same for worker 2. The data come from the sensors on the right shoulder, right upper arm, right forearm, and right hand, which capture the dominant hand acceleration during cutting in boning. For both workers, hand acceleration increased with a dull knife, which suggests that their performance decreased and their effort increased because the knife lacked sharpness.

Figure 17.

Comparison of the accelerations of the right hand.

6. Discussion of Skill Proficiency

Section 5 presented the performance of the proposed work activity classification model, together with comparisons of the workers’ body movements to understand work proficiency. Using the IoT-GAN-ML-based classification model, we also identified each worker’s idle time, the time spent sharpening the knife, and the total active working time, and, most importantly, we compared proficiency across these dimensions. In the same way, the proposed framework can be used to assess the effectiveness of the tools used during work, detect unusual body movements, and recommend areas for improvement through training for real-life work activities. These findings indicate that the proposed framework can assess proficiency in work activities, which in turn can support personalised and digital worker training. Therefore, if sensor data are continuously fed into the proposed model, the DigitalUpSkilling framework can perform activity recognition and proficiency measurement using more of the features provided by the sensors, which could suit dynamic work or sports environments involving real-life physical activities.

Beyond work proficiency, identifying unusual body movements during work, such as abnormal acceleration, may help prevent work-related injuries caused by repetitive limb movements [20]. Studies show that people engaged in repetitive tasks in physical-activity-based industries face ergonomic risk factors, including excessive force, stressful postures, repetitive movements, lifting, and climbing [19]. In an extended experiment, we compared the accelerations of the participants’ body parts while they performed the same activity. For instance, Figure 18 compares the hand accelerations of both workers. As noted earlier, both workers showed a marked increase in acceleration in different parts of their hands with a dull knife; however, worker 1 consistently generated higher acceleration, and thus faster hand movements, than worker 2, regardless of the knife’s sharpness.

Figure 18.

Comparison of the accelerations of the right hand.

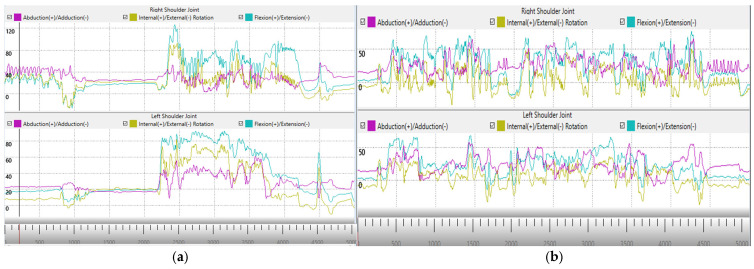

We also compared the joint angles, including abduction, of both participants using similar knives during boning, as represented by their digital twins. The live representations from sensor data in Figure 19 show that worker 1’s right and left shoulder movements, including abduction, rotation, and especially flexion/extension, exhibited greater variability and amplitude, particularly during peak activity periods. Worker 2’s movements of both shoulder joints were more controlled and less intense, with smoother, more consistent patterns and lower variability across the same joint movement parameters. Shoulder flexion is the forward motion of the arm, usually lifting the arm in front of the body. Increased variability in shoulder flexion is a sign of irregular or excessive body motion, especially during repetitive tasks such as those in a meat processing facility.
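The comparison above is visual. One simple way to quantify it, assumed here rather than taken from the study, is to summarise each joint-angle trace by its standard deviation (variability) and peak-to-peak range (amplitude). The helper name is hypothetical, and the traces in the example are made-up numbers used only to exercise the function.

```python
import numpy as np


def joint_angle_summary(angles_deg) -> dict:
    """Variability (standard deviation) and amplitude (max - min) of a joint-angle series in degrees."""
    angles = np.asarray(angles_deg, dtype=float)
    return {"variability": float(np.std(angles)),
            "amplitude": float(angles.max() - angles.min())}


# Hypothetical shoulder flexion traces (degrees) for two workers over the same task.
worker_a = [10, 35, 5, 40, 8, 38]
worker_b = [18, 24, 20, 25, 19, 23]
print(joint_angle_summary(worker_a), joint_angle_summary(worker_b))
```

In a fuller analysis, the same summary could be computed over sliding windows to isolate the peak activity periods mentioned above.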

Figure 19.

Comparisons of abduction, rotation, and flexion of the right shoulder during boning activities: (a) worker 1; (b) worker 2.

Both results, the acceleration from sensor data (Figure 18) and the abduction graphs from the digital twins (Figure 19), show that worker 2 had smoother hand movements than worker 1. Such attributes are usually difficult for a human trainer to observe. These findings can help both trainers and trainees focus on specific areas for improvement, both to further improve work proficiency and to practise healthy body movements during work. Hence, the proposed framework can provide better input for trainees and trainers and improve on the current approach to training.

Here, the comparisons were based on 8–12 features. With the digital twins and the hybrid GAN-ML model, the DigitalUpSkilling framework can draw on many more of the 969 available features for a more comprehensive analysis. These data could be used to train an ML model that identifies unusual body movements and categorises those that are potentially harmful. Such findings could also inform personalised rehabilitation exercises, supporting optimal recovery and lowering the risk of injury [78,79]. Thus, the DigitalUpSkilling framework has the potential to be used in rehabilitation and sports to prevent injuries during training and exercise [80].

7. Conclusions and Future Directions

In this study, we proposed DigitalUpSkilling, a framework for digital and personalised worker training. The framework advances current IMU-based activity recognition by integrating digital twins with a combination of GAN and other advanced machine learning techniques, focusing in particular on representing real-time body movement through digital twins and on data augmentation for small datasets. Unlike traditional approaches, we used a hybrid GAN-ML model that augments the data through GAN-based synthetic data generation, combined with SMOTE and ENN to handle class imbalance and remove noise, ultimately improving classification accuracy. Additionally, the model leverages sensor fusion techniques and non-linear feature transformation with Leaky ReLU and dense (128-unit) layers, resulting in a more refined body movement assessment. The integration of these methods shows promise for improving robustness in work environments with limited data availability. To illustrate the framework and its benefits, the paper presented a case study on meat processing. The study’s results show that the proposed hybrid GAN-ML-based work activity recognition can recognise work activities from IoT sensor data with an accuracy of over 90%.

The study also included preliminary work skill proficiency assessments, covering hand acceleration, rotation, and movement smoothness, using 12 of the 969 possible features obtained from wearable sensors. While a trainer’s observation can consider only a few work skill proficiency parameters, the proposed models can potentially take many more of the 969 features into account, enabling a more comprehensive and specific skill proficiency assessment. This study can be extended to analyse proficiency in terms of body posture and movement smoothness during work activities from abduction data, which would contribute not only to work proficiency but also to preventing injurious movements during work activities.

This work has several limitations that point to future directions. First, both participants in the study were professional trainers in the meat industry, so their skill proficiencies and body movements did not differ substantially. In future work, we intend to include participants with more diverse skill proficiencies. We also plan to apply semi-supervised and deep learning models to activity recognition and skill proficiency measurement. Finally, we aim to extend the DigitalUpSkilling framework with more body movement metrics, such as jerk metrics and RULA (Rapid Upper Limb Assessment) scores computed from digital twins, which would benefit areas of physical training beyond specialised work tasks, such as sports, post-operative rehabilitation, and the prevention of occupational injuries.

Acknowledgments

The authors express their gratitude to Felip Marti Carrillo and Prem Prakash Jayaraman of Swinburne University of Technology for their contributions to the data collection process. They also extend sincere thanks to Mats Isaksson and John McCormick of Swinburne University of Technology for their support with the IoT sensors and software for data collection.

Author Contributions

Conceptualisation, N.A. and D.G.; methodology, N.A.; validation, N.A. and D.G.; formal analysis, N.A.; data curation, N.A.; writing—original draft preparation, N.A.; writing—review and editing, N.A., D.G. and A.M.; supervision, D.G. and A.M. All authors have read and agreed to the published version of the manuscript.

Institutional Review Board Statement

Ethics approval was provided by Swinburne University of Technology’s Human Research Ethics Committee (Ref: 20237262-17305).

Informed Consent Statement

Participants gave their informed consent before they participated in the study. Ethics approval was received from Swinburne University of Technology’s Human Research Ethics Committee (Ref: 20237262-17305). All data collection and experiments were performed according to the university’s ethical guidelines.

Data Availability Statement

Data and results are included in the article. Further inquiries can be directed to the corresponding author(s).

Conflicts of Interest

The authors declare no conflicts of interest.

Funding Statement

The authors express their sincere appreciation to the Australian Meat Processor Corporation Ltd. (AMPC) for supporting data collection through the project ‘An IoT Solution for Measuring Knife Sharpness and Force in Red Meat Processing Plants’ (Project No. 2021-1735).

Footnotes

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

References

- 1.Bosse H.M., Mohr J., Buss B., Krautter M., Weyrich P., Herzog W., Jünger J., Nikendei C. The Benefit of Repetitive Skills Training and Frequency of Expert Feedback in the Early Acquisition of Procedural Skills. BMC Med. Educ. 2015;15:22. doi: 10.1186/s12909-015-0286-5. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Guthrie J.P. High-Involvement Work Practices, Turnover, and Productivity: Evidence from New Zealand. Acad. Manag. J. 2001;44:180–190. doi: 10.2307/3069345. [DOI] [Google Scholar]

- 3.Salas E., Tannenbaum S.I., Kraiger K., Smith-Jentsch K.A. The Science of Training and Development in Organizations. Psychol. Sci. Public Interest. 2012;13:74–101. doi: 10.1177/1529100612436661. [DOI] [PubMed] [Google Scholar]

- 4.Schonewille M. Does Training Generally Work? Explaining labour productivity effects from schooling and training. Int. J. Manpow. 2001;22:158–173. doi: 10.1108/01437720110386467. [DOI] [Google Scholar]

- 5.Anders Ericsson K. Deliberate Practice and Acquisition of Expert Performance: A General Overview. Acad. Emerg. Med. 2008;15:988–994. doi: 10.1111/j.1553-2712.2008.00227.x. [DOI] [PubMed] [Google Scholar]

- 6.Analoui F. Training and Development. J. Manag. Dev. 1994;13:61–72. doi: 10.1108/02621719410072107. [DOI] [Google Scholar]

- 7.Urbancová H., Vrabcová P., Hudáková M., Petrů G.J. Effective Training Evaluation: The Role of Factors Influencing the Evaluation of Effectiveness of Employee Training and Development. Sustainability. 2021;13:2721. doi: 10.3390/su13052721. [DOI] [Google Scholar]

- 8.Pashler H., McDaniel M., Rohrer D., Bjork R. Learning Styles. Psychol. Sci. Public Interest. 2008;9:105–119. doi: 10.1111/j.1539-6053.2009.01038.x. [DOI] [PubMed] [Google Scholar]

- 9.Jenkins J.M. Strategies for Personalizing Instruction, Part Two. Int. J. Educ. Reform. 1999;8:83–88. doi: 10.1177/105678799900800110. [DOI] [Google Scholar]

- 10.Morgan P.J., Barnett L.M., Cliff D.P., Okely A.D., Scott H.A., Cohen K.E., Lubans D.R. Fundamental Movement Skill Interventions in Youth: A Systematic Review and Meta-Analysis. Pediatrics. 2013;132:e1361–e1383. doi: 10.1542/peds.2013-1167. [DOI] [PubMed] [Google Scholar]

- 11.Arthur W., Bennett W., Edens P.S., Bell S.T. Effectiveness of Training in Organizations: A Meta-Analysis of Design and Evaluation Features. J. Appl. Psychol. 2003;88:234–245. doi: 10.1037/0021-9010.88.2.234. [DOI] [PubMed] [Google Scholar]

- 12.Zhang L., Diraneyya M.M., Ryu J., Haas C.T., Abdel-Rahman E.M. Jerk as an Indicator of Physical Exertion and Fatigue. Autom. Constr. 2019;104:120–128. doi: 10.1016/j.autcon.2019.04.016. [DOI] [Google Scholar]

- 13.Georgakopoulos D., Jayaraman P.P. Internet of Things: From Internet Scale Sensing to Smart Services. Computing. 2016;98:1041–1058. doi: 10.1007/s00607-016-0510-0. [DOI] [Google Scholar]

- 14.Hidaka I., Inoue S., Zin T.T. A Study on Worker Tracking Using Camera to Improve Work Efficiency in Factories; Proceedings of the 2022 IEEE 4th Global Conference on Life Sciences and Technologies (LifeTech); Osaka, Japan. 7–9 March 2022; Piscataway, NJ, USA: IEEE; 2022. pp. 568–569. [Google Scholar]

- 15.Guan A.C.K., Mamat M.J. Bin Allocation of Thermal Imaging Camera: Promoting Efficient Movement in Entrepreneur Workplace. ARTEKS J. Tek. Arsit. 2022;7:67–76. doi: 10.30822/arteks.v7i1.1215. [DOI] [Google Scholar]

- 16.Chintada A., V U. Improvement of Productivity by Implementing Occupational Ergonomics. J. Ind. Prod. Eng. 2022;39:59–72. doi: 10.1080/21681015.2021.1958936. [DOI] [Google Scholar]

- 17.Jegham I., Ben Khalifa A., Alouani I., Mahjoub M.A. Vision-Based Human Action Recognition: An Overview and Real World Challenges. Forensic Sci. Int. Digit. Investig. 2020;32:200901. doi: 10.1016/j.fsidi.2019.200901. [DOI] [Google Scholar]

- 18.Nawal Y., Oussalah M., Fergani B., Fleury A. New Incremental SVM Algorithms for Human Activity recognition in Smart Homes. J. Ambient Intell. Humaniz. Comput. 2022;14:13433–13450. doi: 10.1007/s12652-022-03798-w. [DOI] [Google Scholar]

- 19.Bock M., Hölzemann A., Moeller M., Van Laerhoven K. Improving Deep Learning for HAR with Shallow LSTMs; Proceedings of the 2021 International Symposium on Wearable Computers; Online. 21–26 September 2021; New York, NY, USA: ACM; 2021. pp. 7–12. [Google Scholar]

- 20.Kim Y.-W., Joa K.-L., Jeong H.-Y., Lee S. Wearable IMU-Based Human Activity Recognition Algorithm for Clinical Balance Assessment Using 1D-CNN and GRU Ensemble Model. Sensors. 2021;21:7628. doi: 10.3390/s21227628. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 21.Fu D. Research on Intelligent Recognition Technology of Gymnastics Posture Based on KNN Fusion DTW Algorithm Based on Sensor Technology. Int. J. Wirel. Mob. Comput. 2023;25:58–67. doi: 10.1504/IJWMC.2023.132423. [DOI] [Google Scholar]

- 22.Su F., Chen M. Basketball Players’ Score Prediction Using Artificial Intelligence Technology via the Internet of Things. J. Supercomput. 2022;78:19138–19166. doi: 10.1007/s11227-022-04573-6. [DOI] [Google Scholar]

- 23.Meżyk E., Unold O. Machine Learning Approach to Model Sport Training. Comput. Hum. Behav. 2011;27:1499–1506. doi: 10.1016/j.chb.2010.10.014. [DOI] [Google Scholar]

- 24.Fernandes C., Matos L.M., Folgado D., Nunes M.L., Pereira J.R., Pilastri A., Cortez P. A Deep Learning Approach to Prevent Problematic Movements of Industrial Workers Based on Inertial Sensors; Proceedings of the 2022 International Joint Conference on Neural Networks (IJCNN); Padua, Italy. 18–23 July 2022; Piscataway, NJ, USA: IEEE; 2022. pp. 1–8. [Google Scholar]

- 25.Georgakopoulos D., Jayaraman P.P. Digital Twins and Dependency/Constraint-Aware AI for Digital Manufacturing. Commun. ACM. 2023;66:87–88. doi: 10.1145/3589662. [DOI] [Google Scholar]

- 26.Luo X., Qin M.S., Fang Z., Qu Z. Artificial Intelligence Coaches for Sales Agents: Caveats and Solutions. J. Mark. 2021;85:14–32. doi: 10.1177/0022242920956676. [DOI] [Google Scholar]

- 27.Beef and Veal—DAFF. [(accessed on 14 March 2024)]; Available online: https://www.agriculture.gov.au/abares/research-topics/agricultural-outlook/beef-and-veal.

- 28.Ampc Cost to Operate and Processing Cost Competitiveness. [(accessed on 14 March 2024)]. Available online: https://www.ampc.com.au/getmedia/cddf3a65-fac3-49a9-a0db-986bd25dfd1b/AMPC_CostToOperateAndProcessingCostCompetetitivness_FinalReport.pdf?ext=.pdf.

- 29.Taborri J., Keogh J., Kos A., Santuz A., Umek A., Urbanczyk C., van der Kruk E., Rossi S. Sport Biomechanics Applications Using Inertial, Force, and EMG Sensors: A Literature Overview. Appl. Bionics Biomech. 2020;2020:2041549. doi: 10.1155/2020/2041549. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 30.Ahmed K., Saini M. FCML-Gait: Fog Computing and Machine Learning Inspired Human identity and Gender Recognition Using Gait Sequences. Signal Image Video Process. 2023;17:925–936. doi: 10.1007/s11760-022-02217-z. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 31.Rana M., Mittal V. Wearable Sensors for Real-Time Kinematics Analysis in Sports: A Review. IEEE Sens. J. 2021;21:1187–1207. doi: 10.1109/JSEN.2020.3019016.

- 32.Hamidi Rad M., Gremeaux V., Massé F., Dadashi F., Aminian K. SmartSwim, a Novel IMU-Based Coaching Assistance. Sensors. 2022;22:3356. doi: 10.3390/s22093356.

- 33.Qin Z., Zhang Y., Meng S., Qin Z., Choo K.-K.R. Imaging and Fusing Time Series for Wearable Sensor-Based Human Activity Recognition. Inf. Fusion. 2020;53:80–87. doi: 10.1016/j.inffus.2019.06.014.

- 34.Yuan B., Kamruzzaman M.M., Shan S. Application of Motion Sensor Based on Neural Network in Basketball Technology and Physical Fitness Evaluation System. Wirel. Commun. Mob. Comput. 2021;2021:5562954. doi: 10.1155/2021/5562954.

- 35.Wiesener C., Seel T., Axelgaard J., Horton R., Niedeggen A., Schauer T. An Inertial Sensor-Based Trigger Algorithm for Functional Electrical Stimulation-Assisted Swimming in Paraplegics. IFAC-PapersOnLine. 2019;51:278–283. doi: 10.1016/j.ifacol.2019.01.039.

- 36.Chen K., Zhang D., Yao L., Guo B., Yu Z., Liu Y. Deep Learning for Sensor-Based Human Activity Recognition: Overview, Challenges, and Opportunities. ACM Comput. Surv. 2022;54:77. doi: 10.1145/3447744.

- 37.Cescon M., Choudhary D., Pinsker J.E., Dadlani V., Church M.M., Kudva Y.C., Doyle III F.J., Dassau E. Activity Detection and Classification from Wristband Accelerometer Data Collected on People with Type 1 Diabetes in Free-Living Conditions. Comput. Biol. Med. 2021;135:104633. doi: 10.1016/j.compbiomed.2021.104633.

- 38.Straczkiewicz M., James P., Onnela J.-P. A Systematic Review of Smartphone-Based Human Activity Recognition Methods for Health Research. NPJ Digit. Med. 2021;4:148. doi: 10.1038/s41746-021-00514-4.

- 39.Wong J.C.Y., Wang J., Fu E.Y., Leong H.V., Ngai G. Activity Recognition and Stress Detection via Wristband; Proceedings of the 17th International Conference on Advances in Mobile Computing & Multimedia; Munich, Germany. 2–4 December 2019; New York, NY, USA: ACM; 2019. pp. 102–106.

- 40.Song X., van de Ven S.S., Chen S., Kang P., Gao Q., Jia J., Shull P.B. Proposal of a Wearable Multimodal Sensing-Based Serious Games Approach for Hand Movement Training After Stroke. Front. Physiol. 2022;13:811950. doi: 10.3389/fphys.2022.811950.

- 41.Albert J.A., Zhou L., Glöckner P., Trautmann J., Ihde L., Eilers J., Kamal M., Arnrich B. Will You Be My Quarantine; Proceedings of the 14th EAI International Conference on Pervasive Computing Technologies for Healthcare; Atlanta, GA, USA. 18–20 May 2020; New York, NY, USA: ACM; 2020. pp. 380–383.

- 42.Caserman P., Krug C., Göbel S. Recognizing Full-Body Exercise Execution Errors Using the Teslasuit. Sensors. 2021;21:8389. doi: 10.3390/s21248389.

- 43.Demircan E. A Pilot Study on Locomotion Training via Biomechanical Models and a Wearable Haptic Feedback System. Robomech J. 2020;7:19. doi: 10.1186/s40648-020-00167-0.

- 44.Chartomatsidis M., Goumopoulos C. A Balance Training Game Tool for Seniors Using Microsoft Kinect and 3D Worlds. In: Ziefle M., Maciaszek L., editors. Proceedings of the 5th International Conference on Information and Communication Technologies for Ageing Well and e-Health; Heraklion, Greece. 2–4 May 2019; Setúbal, Portugal: SCITEPRESS - Science and Technology Publications; 2019. pp. 135–145.

- 45.Bianchi V., Bassoli M., Lombardo G., Fornacciari P., Mordonini M., De Munari I. IoT Wearable Sensor and Deep Learning: An Integrated Approach for Personalized Human Activity Recognition in a Smart Home Environment. IEEE Internet Things J. 2019;6:8553–8562. doi: 10.1109/JIOT.2019.2920283.

- 46.Ding W., Abdel-Basset M., Mohamed R. HAR-DeepConvLG: Hybrid Deep Learning-Based Model for Human Activity Recognition in IoT Applications. Inf. Sci. 2023;646:119394. doi: 10.1016/j.ins.2023.119394.

- 47.Mekruksavanich S., Jitpattanakul A. LSTM Networks Using Smartphone Data for Sensor-Based Human Activity Recognition in Smart Homes. Sensors. 2021;21:1636. doi: 10.3390/s21051636.

- 48.Subedi S., Pradhananga N. Sensor-Based Computational Approach to Preventing Back Injuries in Construction Workers. Autom. Constr. 2021;131:103920. doi: 10.1016/j.autcon.2021.103920.

- 49.Tao F., Zhang H., Liu A., Nee A.Y.C. Digital Twin in Industry: State-of-the-Art. IEEE Trans. Ind. Inform. 2019;15:2405–2415. doi: 10.1109/TII.2018.2873186.

- 50.Uhlemann T.H.-J., Lehmann C., Steinhilper R. The Digital Twin: Realizing the Cyber-Physical Production System for Industry 4.0. Procedia CIRP. 2017;61:335–340. doi: 10.1016/j.procir.2016.11.152.

- 51.Kritzinger W., Karner M., Traar G., Henjes J., Sihn W. Digital Twin in Manufacturing: A Categorical Literature Review and Classification. IFAC-PapersOnLine. 2018;51:1016–1022. doi: 10.1016/j.ifacol.2018.08.474.

- 52.Ryselis K., Petkus T., Blažauskas T., Maskeliūnas R., Damaševičius R. Multiple Kinect Based System to Monitor and Analyze Key Performance Indicators of Physical Training. Hum.-Cent. Comput. Inf. Sci. 2020;10:51. doi: 10.1186/s13673-020-00256-4.

- 53.Upadhyay A.K., Khandelwal K. Artificial Intelligence-Based Training Learning from Application. Dev. Learn. Organ. Int. J. 2019;33:20–23. doi: 10.1108/DLO-05-2018-0058.

- 54.Alo U.R., Nweke H.F., Teh Y.W., Murtaza G. Smartphone Motion Sensor-Based Complex Human Activity Identification Using Deep Stacked Autoencoder Algorithm for Enhanced Smart Healthcare System. Sensors. 2020;20:6300. doi: 10.3390/s20216300.

- 55.Yadav S.K., Tiwari K., Pandey H.M., Akbar S.A. A Review of Multimodal Human Activity Recognition with Special Emphasis on Classification, Applications, Challenges and Future Directions. Knowl. Based Syst. 2021;223:106970. doi: 10.1016/j.knosys.2021.106970.

- 56.Forkan A.R.M., Montori F., Georgakopoulos D., Jayaraman P.P., Yavari A., Morshed A. An Industrial IoT Solution for Evaluating Workers’ Performance Via Activity Recognition; Proceedings of the 2019 IEEE 39th International Conference on Distributed Computing Systems (ICDCS); Dallas, TX, USA. 7–9 July 2019; Piscataway, NJ, USA: IEEE; 2019. pp. 1393–1403.

- 57.Choi J., Gu B., Chin S., Lee J.-S. Machine Learning Predictive Model Based on National Data for Fatal Accidents of Construction Workers. Autom. Constr. 2020;110:102974. doi: 10.1016/j.autcon.2019.102974.

- 58.Sopidis G., Ahmad A., Haslgruebler M., Ferscha A., Baresch M. Micro Activities Recognition and Macro Worksteps Classification for Industrial IoT Processes; Proceedings of the 11th International Conference on the Internet of Things; St. Gallen, Switzerland. 8–12 November 2021; New York, NY, USA: ACM; 2021. pp. 185–188.

- 59.Chen W.-H., Cho P.-C. A GAN-Based Data Augmentation Approach for Sensor-Based Human Activity Recognition. Int. J. Comput. Commun. Eng. 2021;10:75–84. doi: 10.17706/IJCCE.2021.10.4.75-84.

- 60.Jimale A.O., Mohd Noor M.H. Fully Connected Generative Adversarial Network for Human Activity Recognition. IEEE Access. 2022;10:100257–100266. doi: 10.1109/ACCESS.2022.3206952.

- 61.Shafqat W., Byun Y.-C. A Hybrid GAN-Based Approach to Solve Imbalanced Data Problem in Recommendation Systems. IEEE Access. 2022;10:11036–11047. doi: 10.1109/ACCESS.2022.3141776.

- 62.Veigas K.C., Regulagadda D.S., Kokatnoor S.A. Optimized Stacking Ensemble (OSE) for Credit Card Fraud Detection Using Synthetic Minority Oversampling Model. Indian J. Sci. Technol. 2021;14:2607–2615. doi: 10.17485/IJST/v14i32.807.

- 63.He H., Yang B., Garcia E.A., Li S.T. ADASYN: Adaptive Synthetic Sampling Approach for Imbalanced Learning; Proceedings of the 2008 IEEE International Joint Conference on Neural Networks (IEEE World Congress on Computational Intelligence); Hong Kong, China. 1–8 June 2008; Piscataway, NJ, USA: IEEE; 2008. pp. 1322–1328.

- 64.Sauber-Cole R., Khoshgoftaar T.M. The Use of Generative Adversarial Networks to Alleviate Class Imbalance in Tabular Data: A Survey. J. Big Data. 2022;9:98. doi: 10.1186/s40537-022-00648-6.

- 65.Elhassan A.T., Aljourf M., Al-Mohanna F., Shoukri M. Classification of Imbalance Data Using Tomek Link (T-Link) Combined with Random Under-Sampling (RUS) as a Data Reduction Method. Glob. J. Technol. Optim. 2016;1:111. doi: 10.4172/2229-8711.S1111.

- 66.Xu Z., Shen D., Nie T., Kou Y. A Hybrid Sampling Algorithm Combining M-SMOTE and ENN Based on Random Forest for Medical Imbalanced Data. J. Biomed. Inform. 2020;107:103465. doi: 10.1016/j.jbi.2020.103465.

- 67.Oytun M., Tinazci C., Sekeroglu B., Acikada C., Yavuz H.U. Performance Prediction and Evaluation in Female Handball Players Using Machine Learning Models. IEEE Access. 2020;8:116321–116335. doi: 10.1109/ACCESS.2020.3004182.

- 68.Pappalardo L., Cintia P., Ferragina P., Massucco E., Pedreschi D., Giannotti F. PlayeRank: Data-driven Performance Evaluation and Player Ranking in Soccer via a Machine Learning Approach. ACM Trans. Intell. Syst. Technol. 2019;10:59. doi: 10.1145/3343172.

- 69.Lam K., Chen J., Wang Z., Iqbal F.M., Darzi A., Lo B., Purkayastha S., Kinross J.M. Machine Learning for Technical Skill Assessment in Surgery: A Systematic Review. NPJ Digit. Med. 2022;5:24. doi: 10.1038/s41746-022-00566-0.

- 70.Matijevich E.S., Scott L.R., Volgyesi P., Derry K.H., Zelik K.E. Combining Wearable Sensor Signals, Machine Learning and Biomechanics to Estimate Tibial Bone Force and Damage during Running. Hum. Mov. Sci. 2020;74:102690. doi: 10.1016/j.humov.2020.102690.

- 71.Patalas-Maliszewska J., Pajak I., Krutz P., Pajak G., Rehm M., Schlegel H., Dix M. Inertial Sensor-Based Sport Activity Advisory System Using Machine Learning Algorithms. Sensors. 2023;23:1137. doi: 10.3390/s23031137.

- 72.Senanayake D., Halgamuge S., Ackland D.C. Real-Time Conversion of Inertial Measurement Unit Data to Ankle Joint Angles Using Deep Neural Networks. J. Biomech. 2021;125:110552. doi: 10.1016/j.jbiomech.2021.110552.

- 73.Fraile F., Psarommatis F., Alarcón F., Joan J. A Methodological Framework for Designing Personalised Training Programs to Support Personnel Upskilling in Industry 5.0. Computers. 2023;12:224. doi: 10.3390/computers12110224.

- 74.Patki S., Sankhe V., Jawwad M., Mulla N. Personalised Employee Training; Proceedings of the 2021 International Conference on Communication information and Computing Technology (ICCICT); Mumbai, India. 25–27 June 2021; Piscataway, NJ, USA: IEEE; 2021. pp. 1–6.

- 75.Butean A., Olescu M.L., Tocu N.A., Florea A. Improving Training Methods for Industry Workers Though AI Assisted Multi-Stage Virtual Reality Simulations. Elearning Softw. Educ. 2019;1:61–67.

- 76.MVN Analyze | Movella.com. (accessed on 28 January 2024). Available online: https://www.movella.com/products/motion-capture/mvn-analyze.

- 77.Morshed M.G., Sultana T., Alam A., Lee Y.-K. Human Action Recognition: A Taxonomy-Based Survey, Updates, and Opportunities. Sensors. 2023;23:2182. doi: 10.3390/s23042182.

- 78.Hulshof C.T.J., Pega F., Neupane S., van der Molen H.F., Colosio C., Daams J.G., Descatha A., Kc P., Kuijer P.P.F.M., Mandic-Rajcevic S., et al. The Prevalence of Occupational Exposure to Ergonomic Risk Factors: A Systematic Review and Meta-Analysis from the WHO/ILO Joint Estimates of the Work-Related Burden of Disease and Injury. Environ. Int. 2021;146:106157. doi: 10.1016/j.envint.2020.106157.

- 79.He Z., Liu T., Yi J. A Wearable Sensing and Training System: Towards Gait Rehabilitation for Elderly Patients With Knee Osteoarthritis. IEEE Sens. J. 2019;19:5936–5945. doi: 10.1109/JSEN.2019.2908417.

- 80.Zhao Y., You Y. Design and Data Analysis of Wearable Sports Posture Measurement System Based on Internet of Things. Alex. Eng. J. 2021;60:691–701. doi: 10.1016/j.aej.2020.10.001.


Data Availability Statement

All data and results are included in the article. Further inquiries can be directed to the corresponding author(s).