Abstract

Background

Plain language summaries (PLSs) are easy-to-understand summaries of research articles that should follow principles of plain language and health literacy. PLS author instructions from health journals help guide authors on word count/PLS length, structure and the use of jargon. However, it is unclear whether published PLSs currently adhere to author instructions.

Objectives

This study aims to determine (1) the degree of compliance of published PLSs against the PLS author instructions in health journals and (2) the extent to which PLSs meet health literacy principles.

Study design

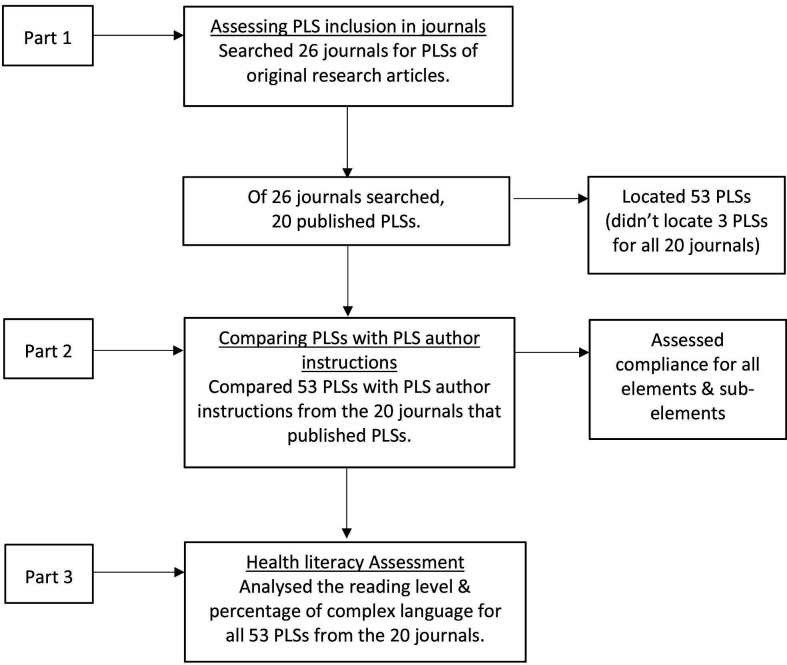

We conducted a three-part systematic environmental scan.

Methods

We examined 26 health journals identified from a previous review. In part 1, we assessed the inclusion frequency of PLSs in the 26 journals; in part 2, we assessed the level of compliance of PLSs with PLS author instructions; and in part 3, we conducted a health literacy assessment of the PLSs.

Results

Part 1: we found PLSs for 20/26 (76.9%) included journals. Part 2: no journal achieved 100% compliance with PLS author instructions. The highest level was 88% and the lowest was 0%. Part 3: no PLS was written at a readability level suitable for a general audience. The mean reading level was grade 15.8 (range 10.2–21.2) and the mean percentage of complex words was 31% (range 8.5%–49.8%).

Discussion

PLSs are an important means through which consumers can access research findings. We found a lack of compliance between PLS author instructions and PLSs published in health journals that may impede access and use by consumers. This study highlights the need for better ways to support authors to adhere to PLS instructions and for improved monitoring by journals.

Keywords: PUBLIC HEALTH, Health Literacy, Health Education, Patient-Centered Care

Strengths and limitations of this study

We provide a health literacy assessment (reading level and use of complex words) of plain language summaries (PLSs) from journals covering a range of medical specialties.

Our dataset only included journals that published PLSs in English, so it is unclear if including PLSs published in languages other than English might have altered the results.

Author instructions for writing PLSs were inconsistent in terms of the elements included and the amount of detail provided, so direct comparisons were not straightforward.

Introduction

For the past two decades, the availability of health information in all forms has increased, most notably that of online information.1 This increased access has led to some concerns about the quality and trustworthiness of online health information and whether it is suitable for a general audience.1 Plain language summaries (PLSs) are an important means of providing consumers with reliable results of health research as they are written with consumers in mind. By definition, PLSs are easy-to-understand summaries of research articles that follow principles of plain language and health literacy, such as avoiding or explaining jargon.2 3 PLSs can play a vital role in helping to prevent the spread of health misinformation, the impact of which was highlighted by the recent pandemic.4 This is important as online health information is often used by patients and practitioners to guide medical decisions or shared with others, for example, through peer networks such as online patient groups.5 By providing health information to the public in a way they can understand and use, PLSs can help contribute to improved health literacy.3

It is standard practice for health journals to provide a series of instructions to guide authors as they prepare and submit their manuscripts, referred to as author instructions or guidelines for authors. Journals that publish PLSs include important details for the PLS in these author instructions, covering areas such as word limit, content, structure and the use of jargon, acronyms and abbreviations. Instructions related to PLS word limit and structure may vary between journals based on formatting requirements. Those instructions relating to content help to ensure the information in the PLS is based on the article and useful to the reader. In principle, instructions related to the use of jargon, acronyms, abbreviations and reading level can assist with the readability of the PLS and suitability for the intended audience. Although agencies differ in their specific recommendations, most agree that written material for a general audience should be written at a reading level no higher than grade 8.36,8 Unfortunately, readability analysis of PLSs in health journals shows the reading level of PLSs is consistently higher than grade 8.9,11

In academic publication, author instructions for PLSs help guide authors to know what a journal wants included in a PLS and how it should be structured. There is, however, little value in a journal including author instructions for writing PLSs if they are not reflected in PLSs published by the journal. Compliance of PLSs with their corresponding author instructions in health journals has not been investigated. However, similar research was conducted by Malički et al on general author instructions, that is, those for the entire manuscript. Malički et al conducted a systematic review and meta-analysis of 153 studies that analysed author instructions.12 Twelve of these studies analysed adherence of published articles to the author instructions from the journal, with most (83.3%) achieving suboptimal adherence (<80% of manuscripts adhered to the author instructions either partially or completely).12 Although the studies in Malički et al’s review did not measure adherence to PLS author instructions explicitly, their results suggest that instructions for PLSs may not be followed in many instances.

This study is a follow-up to the scoping review on the instructions available to health researchers for writing PLSs.13 This scoping review highlighted that only 5.1% of journals included a PLS.13 Of those journals that published PLSs, most (70%) had author instructions that included advice about the use of jargon.13 However, only one journal recommended that PLS authors use a readability tool.13 This may account for the high reading level of many PLSs in health journals.

The purpose of this study is to determine (1) the degree of compliance of published PLSs against the PLS author instructions in health journals and (2) the extent to which PLSs meet health literacy principles such as readability.

Methods

Definition of terms

For this review, we define a consumer as a member of the lay public not possessing any expert or technical health expertise. We use the terms consumer, patient and public synonymously. Journals vary in the terms used to refer to the instructions or guidelines for writing PLSs, so we will use the term ‘author instructions’ in all instances for consistency. We will use the acronym PLS to refer to the singular form and PLSs to refer to the plural form of the term PLS.

Eligibility criteria and selection of health journals

Health journals to be included in this environmental scan were identified from our previous scoping review.13 This scoping review included a comprehensive search of 534 health journals covering 11 journal categories linked to the top 10 non-communicable diseases.13 In our original review, we located author instructions from 27 journals that met our inclusion criteria, which were that the journal (1) published text-based PLSs (as defined by the INVOLVE PLS resource published by the National Institute for Health Research2) (2) included author instructions indicating the audience for the PLS was consumers and (3) published PLSs in English.13 We only had two exclusion criteria for this study, which were to (1) exclude one journal from our previous scoping review13 (Cochrane Database of Systematic Reviews published by Wiley) because it exclusively publishes systematic reviews, and the focus of this study was journals that published original research articles and (2) exclude journals that publish PLSs in languages other than English.

The included 26 journals were Postgraduate Medicine; JACC-Cardiovascular Imaging; Journal Of Cardiac Failure; European Urology Oncology; Cancer; Osteoarthritis And Cartilage; Therapeutic Advances In Musculoskeletal Disease; Rheumatology And Therapy; Journal Of Applied Sport Psychology; European Urology; European Urology Open Science; European Urology Focus; Journal Of Asthma And Allergy; Expert Review Of Respiratory Medicine; Neurology And Therapy; CNS Drugs; Pain And Therapy; Journal Of Hepatology; Gastroenterology; Therapeutic Advances In Gastroenterology; Expert Review Of Gastroenterology & Hepatology; Ophthalmology And Therapy; British Journal Of Dermatology; American Journal Of Clinical Dermatology; Dermatology And Therapy and Clinical Cosmetic And Investigational Dermatology.

Most of these journals (73%) did not require a PLS for all published articles, that is, the PLS was optional.13

Data collection and analysis

This study was divided into three sections, which we outline in figure 1. Any disagreements or inconsistencies between reviewers (KG and JS) in any part of the study were first resolved by discussion, then assessed by a third person (DM) when needed.

Figure 1. Data collection and analysis process. PLSs, plain language summaries.

Part 1: assessing the inclusion of a PLS in original research articles

The purpose of part 1 was to determine the extent to which the included journals published PLSs with original research articles. Our strategy was to search a maximum of 20 original research articles per journal with the aim of locating three articles that had an accompanying PLS. Two reviewers (KG and JS) searched journals in September and October 2022, beginning with the most recently published volumes and working in reverse chronological order of publication date. Reviewers recorded the number of articles searched to locate three with a corresponding PLS. For example, if reviewers located three PLSs after searching 14 articles, the search was stopped at this point. Reviewers accessed journal articles through the library subscriptions held by The University of Sydney, Australia. All journals were accessible via these subscriptions.
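The stopping rule described above can be sketched in a few lines of Python. This is only an illustration of the rule as stated (stop at three PLSs or at 20 articles, whichever comes first); the function name `search_journal` and its boolean input are hypothetical and were not part of the study, in which reviewers searched manually.

```python
from typing import Iterable, Tuple


def search_journal(
    articles_have_pls: Iterable[bool],
    target_pls: int = 3,
    max_articles: int = 20,
) -> Tuple[int, int]:
    """Return (PLSs found, articles searched) for one journal.

    `articles_have_pls` lists, in reverse chronological order, whether each
    original research article has an accompanying PLS (hypothetical input).
    """
    found = 0
    searched = 0
    for has_pls in articles_have_pls:
        # Stop once the target is reached or the search cap is hit.
        if found >= target_pls or searched >= max_articles:
            break
        searched += 1
        if has_pls:
            found += 1
    return found, searched


# Example from the text: the third PLS is located at the 14th article.
print(search_journal([False] * 11 + [True] * 3))
```

A journal with no PLSs in its 20 most recent articles would return zero PLSs found and 20 articles searched under this rule.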

Part 2: comparing identified PLSs with PLS author instructions

For part 2, we compared each PLS identified in part 1 with the PLS author instructions from the corresponding journal. We assessed the level of compliance across those elements from Gainey et al13 for which a compliance review was feasible. These were word count/PLS length, content, structure, wording and the use of jargon, acronyms and abbreviations. We defined feasibility based on the elements from Gainey et al13 that we could easily measure using existing tools or through a priori consensus. Therefore, we excluded the elements of purpose and resources, as we considered text relating to the purpose of the PLS to be informational rather than instructional and we could not determine whether the PLS author had used any of the included resources when writing the PLS.

We broke each of the elements into subelements to simplify the assessment process. To assess each subelement, two independent raters (KG and JS) referred to the journal’s author instructions and determined the extent to which each PLS was consistent with them, using a three-point rating scale of ‘full’, ‘partial’ or ‘non’ compliance. We calculated overall compliance as a percentage across all PLSs for each journal. Compliance of ≥80% was considered ‘high’, 51%–79% was ‘medium’ compliance, 31%–50% was ‘low’ compliance and ≤30% was ‘very low’ compliance.
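As a rough illustration of this calculation, the Python sketch below assumes each subelement is scored 2 for full, 1 for partial and 0 for non-compliance. That scoring is an assumption: it reproduces the percentages reported in table 2, but the actual scoring is described in online supplemental appendix 1. The function names are hypothetical, and the handling of values that fall between the stated bands (for example, 50.5%) is also an assumption.

```python
from typing import Sequence

MAX_POINTS_PER_SUBELEMENT = 2  # assumed: full = 2, partial = 1, non = 0


def compliance_percentage(pls_scores: Sequence[int], n_subelements: int) -> float:
    """Overall compliance (%) for one journal across all of its located PLSs."""
    possible = MAX_POINTS_PER_SUBELEMENT * n_subelements * len(pls_scores)
    return 100 * sum(pls_scores) / possible


def compliance_rating(percentage: float) -> str:
    """Map a compliance percentage to the four rating bands used in the study."""
    if percentage >= 80:
        return "high"
    if percentage > 50:  # assumed cut-off for values between 50% and 51%
        return "medium"
    if percentage > 30:  # assumed cut-off for values between 30% and 31%
        return "low"
    return "very low"


# Example: a journal with 7 subelements and three PLSs scoring 13, 12 and 12.
pct = compliance_percentage([13, 12, 12], n_subelements=7)
print(round(pct), compliance_rating(pct))
```

Under these assumptions, a journal with 7 subelements and PLS scores of 13, 12 and 12 earns 37 of a possible 42 points.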

Assessing some of these elements is subjective, so a priori criteria were established and iteratively refined by reviewers during data collection. In online supplemental appendix 1, we show the method or criteria used to assess compliance, compliance scoring and an example of the elements and subelements from the author instructions.

Part 3: health literacy assessment

The purpose of part 3 was to assess the suitability of the PLSs for a consumer audience, using health literacy principles such as readability and the use of complex language. To do this, reviewers (KG and JS) used an online real-time editor (ie, the SHeLL Editor8) for both analyses. The SHeLL Editor8 measures readability using the Simple Measure of Gobbledygook (SMOG) Index. In our assessments, we copied the text from each PLS into the editor and recorded the grade reading level and percentage of complex language (with higher scores indicating greater text complexity).
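For readers unfamiliar with the SMOG Index, the sketch below implements the commonly cited SMOG formula (McLaughlin, 1969), which estimates a grade level from the number of words with three or more syllables and the number of sentences. It is not the SHeLL Editor's code, whose exact implementation may differ. The function name is hypothetical, and the counts are passed in directly because syllable counting itself depends on the tool used.

```python
import math


def smog_grade(polysyllable_count: int, sentence_count: int) -> float:
    """Estimate the SMOG grade reading level.

    polysyllable_count: number of words with three or more syllables
    sentence_count: number of sentences in the sample
    """
    if sentence_count <= 0:
        raise ValueError("sentence_count must be positive")
    # Scale the polysyllable count to a 30-sentence sample, as in the formula.
    scaled = polysyllable_count * (30 / sentence_count)
    return 1.0430 * math.sqrt(scaled) + 3.1291


# Example: 30 polysyllabic words across 30 sentences.
print(round(smog_grade(30, 30), 1))
```

Because the grade rises with the square root of the polysyllable density, removing a handful of unavoidable technical terms from a short text can change the score noticeably.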

Anderson et al14 noted that readability scores are impacted by jargon or complex words that are unavoidable, as their use is important to the understanding of the subject in the PLS. Using this rationale, we excluded jargon or complex words from our readability analysis. Using these words was appropriate, conveying important detail or context to the reader and, most times, there was no plain language equivalent.

When preparing the text from each PLS for the readability and complex language analysis, we excluded words from the following categories:

Term for an illness or disease if this term is the subject of the PLS, for example, we would remove the term ‘psoriasis’ from the PLS of a paper titled ‘Treatment Patterns for Targeted Therapies, Nontargeted Therapies, and Drug Holidays in Patients with Psoriasis’.

Medication/pharmaceutical product, for example, Vericiguat. This did not apply to descriptive terms such as antibiotic or anti-inflammatory.

Medical device or other commercial product, for example, HeartLogic.

Company name, for example, Neurolief.

Study or trial name, for example, SPARTAN study.

Geographical location, for example, Spain.

Population descriptor, for example, indigenous.
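One way to picture this preparation step is the Python sketch below, which removes a list of excluded terms from PLS text before it is analysed. In the study, words were excluded manually according to the categories above; the function `strip_excluded_terms` and the example sentence are hypothetical and shown only for illustration.

```python
import re
from typing import Iterable


def strip_excluded_terms(text: str, excluded_terms: Iterable[str]) -> str:
    """Remove whole-word, case-insensitive matches of excluded terms from text."""
    terms = sorted(set(excluded_terms), key=len, reverse=True)
    if not terms:
        return text
    # Longer terms come first so multi-word names are removed before their parts.
    pattern = r"\b(?:" + "|".join(re.escape(t) for t in terms) + r")\b"
    cleaned = re.sub(pattern, "", text, flags=re.IGNORECASE)
    cleaned = re.sub(r"\s+", " ", cleaned)  # collapse doubled spaces
    cleaned = re.sub(r"\s+([.,;:!?])", r"\1", cleaned)  # tidy space before punctuation
    return cleaned.strip()


# Hypothetical sentence using example terms from the categories above.
sample = "Vericiguat helped patients with psoriasis in Spain."
print(strip_excluded_terms(sample, ["psoriasis", "Vericiguat", "Spain"]))
```

The cleaned text is no longer grammatical, which is acceptable here because it is only used as input to the readability and complex language scores.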

After we obtained scores for readability and complex words for all PLSs, we applied a rating scale of ‘excellent’, ‘good’ and ‘poor’. Written material aimed at the public should have a reading level of grade 8 or lower, so a rating of ‘excellent’ was given to PLSs with a reading level of ≤grade 8.36,8 A ‘good’ rating was given if the reading level was >8 and ≤10, and a ‘poor’ rating was given if the reading level was >10. For complex words, a rating of ‘excellent’ was given if the complex language score was ≤5%, ‘good’ if it was >5% and ≤10%, and ‘poor’ if it was >10%. Results for reading level and percentage of complex words are shown in online supplemental appendix 2. We included results both before (raw) and after (edited) we excluded words.
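The rating scale can be expressed as two small functions. This Python sketch simply encodes the thresholds given above; the function names are hypothetical, and boundary values are treated as inclusive at the upper end of each band (for example, exactly grade 10 is rated ‘good’), which is an interpretation of the text.

```python
def rate_reading_level(grade: float) -> str:
    """Rate a grade reading level as excellent (<=8), good (>8 to 10) or poor (>10)."""
    if grade <= 8:
        return "excellent"
    if grade <= 10:
        return "good"
    return "poor"


def rate_complex_words(percentage: float) -> str:
    """Rate the share of complex words as excellent (<=5%), good (>5% to 10%) or poor (>10%)."""
    if percentage <= 5:
        return "excellent"
    if percentage <= 10:
        return "good"
    return "poor"


# Example: a PLS at grade 15.8 with 31% complex words.
print(rate_reading_level(15.8), rate_complex_words(31.0))
```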

Patient and public involvement

We consulted a consumer representative (SC), who was engaged as part of the research team to provide input on the study. SC provided ongoing feedback on the study methods and results, offering insight from the perspective of an end-user of PLSs. SC also reviewed the full manuscript and cowrote a PLS for the review.

Results

Part 1: assessing the inclusion of a PLS in original research articles

We found PLSs for 20 of the 26 (76.9%) included journals, locating 53 PLSs in total. For 15/26 (57.7%) journals, we located three PLSs; for 3/26 (11.5%) journals, we located two PLSs; for 2/26 (7.7%) journals, we located one PLS; and for 6/26 (23.1%) journals, we located no PLSs. Of the seven journals for which PLSs were stated as being mandatory, we found PLSs in six.

For just over half of the journals (14/26, 53.8%), we had to search the maximum of 20 articles. In contrast, for seven journals, we only had to search three articles to locate three with a PLS. See table 1 for results from part 1 of the study, that is, the number of PLSs located for each journal.

Table 1. Number of plain language summaries located in each journal.

| Journal name | Total number of PLSs found | Total number of articles searched to locate ≤3 PLSs |
| --- | --- | --- |
| American Journal Of Clinical Dermatology | 3 | 10 |
| British Journal Of Dermatology | 3 | 3 |
| Cancer | 3 | 5 |
| CNS Drugs | 3 | 7 |
| Dermatology And Therapy | 3 | 19 |
| European Urology | 3 | 3 |
| European Urology Focus | 3 | 3 |
| European Urology Oncology | 3 | 3 |
| European Urology Open Science | 3 | 3 |
| Journal Of Applied Sport Psychology | 3 | 3 |
| Journal Of Asthma And Allergy | 3 | 20 |
| Journal Of Cardiac Failure | 3 | 6 |
| Journal Of Hepatology | 3 | 3 |
| Rheumatology And Therapy | 3 | 20 |
| Therapeutic Advances In Gastroenterology | 3 | 10 |
| Expert Review Of Respiratory Medicine | 2 | 20 |
| Neurology And Therapy | 2 | 20 |
| Pain And Therapy | 2 | 20 |
| Clinical Cosmetic And Investigational Dermatology | 1 | 20 |
| Ophthalmology And Therapy | 1 | 20 |
| Expert Review Of Gastroenterology & Hepatology | 0 | Did not locate any PLSs |
| Gastroenterology | 0 | Did not locate any PLSs |
| JACC-Cardiovascular Imaging | 0 | Did not locate any PLSs |
| Osteoarthritis And Cartilage | 0 | Did not locate any PLSs |
| Postgraduate Medicine | 0 | Did not locate any PLSs |
| Therapeutic Advances In Musculoskeletal Disease | 0 | Did not locate any PLSs |
| Total | 53 | 238 |

PLSs, plain language summaries.

Part 2: comparing identified PLSs with PLS author instructions

The results for part 2 of the study are presented in tables 2 and 3. Out of the 20 journals assessed, no journal achieved 100% compliance with all author instructions. The highest level of compliance was 88% (ie, high compliance) and the lowest was 0% (ie, very low compliance). Two journals (10%) achieved a compliance rating of high (ie, ≥80% compliance) and 13 (65%) achieved a rating of medium (ie, 51%–79% compliance). The compliance for five (25%) journals was rated as low or very low (ie, ≤50% compliance). Four of the journals with low or very low compliance were from the same journal publisher and contained five or fewer subelements in their PLS instructions. Two of these achieved zero compliance.

Table 2. Compliance for all journals.

| Journal | Number of subelements | Number of PLSs located | Compliance score: PLS 1 | Compliance score: PLS 2 | Compliance score: PLS 3 | Compliance score for all PLSs | Compliance score for all PLSs (%) | Compliance rating* |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| American Journal Of Clinical Dermatology | 7 | 3 | 13 | 12 | 12 | 37 | 88 | High |
| Rheumatology And Therapy | 7 | 3 | 10 | 13 | 13 | 36 | 86 | High |
| British Journal Of Dermatology | 9 | 3 | 15 | 13 | 14 | 42 | 78 | Medium |
| Neurology And Therapy | 7 | 2 | 10 | 11 | 0 | 21 | 75 | Medium |
| Expert Review Of Respiratory Medicine | 13 | 2 | 19 | 19 | 0 | 38 | 73 | Medium |
| Journal Of Asthma And Allergy | 12 | 3 | 17 | 16 | 19 | 52 | 72 | Medium |
| Pain And Therapy | 7 | 2 | 8 | 12 | 0 | 20 | 71 | Medium |
| Journal Of Applied Sport Psychology | 13 | 3 | 18 | 18 | 18 | 54 | 69 | Medium |
| Clinical Cosmetic And Investigational Dermatology | 13 | 1 | 18 | 0 | 0 | 18 | 69 | Medium |
| European Urology Oncology | 4 | 3 | 6 | 4 | 6 | 16 | 67 | Medium |
| CNS Drugs | 7 | 3 | 9 | 10 | 8 | 27 | 64 | Medium |
| Therapeutic Advances In Gastroenterology | 15 | 3 | 18 | 19 | 16 | 53 | 59 | Medium |
| Dermatology And Therapy | 8 | 3 | 10 | 10 | 7 | 27 | 56 | Medium |
| Journal Of Cardiac Failure | 3 | 3 | 4 | 3 | 3 | 10 | 56 | Medium |
| Cancer | 10 | 3 | 9 | 10 | 12 | 31 | 52 | Medium |
| Ophthalmology And Therapy | 9 | 1 | 8 | 0 | 0 | 8 | 44 | Low |
| Journal Of Hepatology | 5 | 3 | 2 | 7 | 3 | 12 | 40 | Low |
| European Urology | 4 | 3 | 2 | 2 | 0 | 4 | 17 | Very low |
| European Urology Focus | 4 | 3 | 0 | 0 | 0 | 0 | 0 | Very low |
| European Urology Open Science | 4 | 3 | 0 | 0 | 0 | 0 | 0 | Very low |

*High=≥80%, medium=51%–79%, low=31%–50%, very low=≤30%.

PLSs, plain language summaries.

Table 3. Compliance for all elements and subelements of PLSs.

| Element | Subelement | Frequency in author instructions (N=53) | Compliance score all PLSs | Percentage compliance | Compliance rating* |
| --- | --- | --- | --- | --- | --- |
| Word count/PLS length | Maximum number of words | 38 | 70 | 92.1% | High |
| Word count/PLS length | PLS length | 15 | 10 | 50% | Low |
| Content | Based on manuscript | 24 | 46 | 95.8% | High |
| Content | Background | 17 | 26 | 76.5% | Medium |
| Content | Methods | 15 | 25 | 83.3% | High |
| Content | Main findings/take-home message | 29 | 35 | 60.0% | Medium |
| Content | Impact/‘so what’ of research | 18 | 23 | 63.9% | Medium |
| Content | Other | 3 | 1 | 16.7% | Very low |
| Structure | Bullet points | 10 | 8 | 40% | Low |
| Structure | Paragraph style or similar | 48 | 68 | 70.1% | Medium |
| Structure | Other | 3 | 3 | 50% | Low |
| Wording/language | Plain English/easy to understand | 43 | 31 | 36.0% | Low |
| Wording/language | Active voice | 29 | 25 | 43.1% | Low |
| Wording/language | First person | 3 | 0 | 0% | Very low |
| Wording/language | Person-centred language | 9 | 14 | 77.8% | Medium |
| Wording/language | Reading level/Readability | 2 | 4 | 50% | Low |
| Wording/language | Other | 18 | 18 | 50% | Low |
| Jargon, acronyms and abbreviations | Jargon: explain or avoid | 32 | 31 | 48.4% | Low |
| Jargon, acronyms and abbreviations | Abbreviations: explain or avoid | 29 | 56 | 96.6% | High |

*High=≥80%, medium=51%–79%, low=31%–50%, very low=≤30%.

PLSs, plain language summaries.

The degree of compliance varied between author instruction elements and subelements. Since author instructions vary between journals, not all subelements are included in the instructions for all journals. As a result, although we analysed 53 PLSs, the number of PLSs assessed for some subelements is less than 53.

No element or subelement achieved 100% compliance; however, four subelements achieved a high compliance rating. These were the word count/PLS length subelement of ‘maximum number of words’, the content subelements ‘based on manuscript’ and ‘methods’, and the jargon, acronyms and abbreviations subelement ‘abbreviations: explain or avoid’. Of the 18 subelements, more than half (11/18, 61.1%) were given a low or very low compliance rating. This means most PLSs were not written in accordance with these aspects of the author instructions. For the structure subelement of ‘paragraph style or similar’, approximately one-third fully complied (27.3%), one-third partially complied (38.6%) and one-third did not comply (34.1%). Only 11 (35.5%) PLSs fully complied with the instruction to explain or avoid jargon, with 11 (35.5%) failing to comply. Jargon scores ranged from 0% to 18%, with a mean and median of 5%.

Part 3: health literacy assessment

Based on the health literacy assessment, no PLS was likely to be suitable for a general audience, that is, no PLS met the standard reading level of grade 8 recommended for written material aimed at a general patient audience.3 8 Using the edited scores for readability (with words excluded), the lowest reading level was grade 10.2, and the highest was 21.2. The mean readability score was a grade reading level of 15.8 and the median was 15.9. All PLSs were rated as ‘poor’, meaning they had a reading level of >grade 10. Using the edited scores for the complex language analysis (with words excluded), the range was from 8.5% to 49.8%. The mean was 31.0% and the median was 31.7%. All but one of the PLSs were rated as ‘poor’, meaning 52/53 (98.1%) had >10% complex words.

In online supplemental appendix 2, we show results of the health literacy assessment. We included both raw and edited scores for both analyses. The raw scores are those prior to excluding any words and the edited scores are after excluding words.

Discussion

We conducted a systematic environmental scan to determine (1) the degree of compliance of published PLSs against the PLS author instructions in health journals and (2) the extent to which PLSs meet health literacy principles such as readability. We found 53 PLSs across 20 journals. When assessing PLS compliance with journal instructions, only two journals were rated as highly compliant, while about two-thirds (65%) of journals obtained a medium level of compliance and five were given either a low or very low compliance rating. Compliance ratings for subelements of PLSs varied greatly but were highest for elements such as word count/PLS length and lowest for elements such as wording. No PLS met the reading level of grade 8 recommended for written material aimed at a general patient audience,3 8 and across PLSs, an average of 31% of words were considered complex. There was a high level of homogeneity between the PLS author instructions of journals from the same publisher, which impacted the results. This is particularly the case for the journals that received low and very low compliance ratings.

The poor compliance for some journals and subelements identified in this study could be caused by many factors. As PLSs are not mandatory for most biomedical journals, authors unfamiliar with writing PLSs may not consider including a PLS with their manuscript submission. Also, some researchers may not prioritise PLSs or may find them too time-consuming to produce because of a lack of experience communicating their research to a general audience.15 In this instance, authors may not consult PLS author instructions or may only refer to some subelements such as word count and structure, as these are quite easy to follow. This may be exacerbated by journal editors if they do not reinforce the author instructions when manuscripts are accepted for publication, regardless of whether the PLS is optional or mandatory. This could be because of a lack of time, resources and commitment to ensuring the PLS is useful for the intended audience, or an assumption that the author has followed the author instructions. There are generally no specific criteria for peer reviewers in relation to reviewing PLSs. A lack of detail and consistency in PLS author instructions may also be a factor in low compliance. Nambiar et al assessed 20 subcategories in author instructions from 80 journals (40 biomedical and 40 physical science).16 They found no journal had a perfect score for completeness and clarity. Many journals had incomplete information for word limits and intended audience.16 Applied to PLSs specifically, unclear and incomplete author instructions, particularly regarding the intended audience, can lead to a PLS written at an inappropriate reading level, unsuitable for a lay audience. This problem is worsened when limited detail is provided for the use of jargon, acronyms and abbreviations, and complex language.

PLSs that contain jargon or complex wording could be difficult for a general audience to understand fully. If the take-away message of a PLS is misunderstood, it can increase the potential for incorrect health information to be used to inform medical decisions. This incorrect message may then be shared on social networks, for example, patient support groups or through peer networks, worsening the spread of misinformation. Our findings pertaining to the high grade reading level of PLSs mirror the findings of other studies.9,11 The PLSs in this study ranged from grade 10 to grade 21, much higher than grade 8, which is recommended for a general audience.36,8 The main purpose of PLSs is to convey health research to a general audience. If a PLS cannot be easily understood by a general audience, not only is effort wasted in producing it, but there is the potential for the message of the study to be misunderstood by the reader. If this misinformation is then shared or used to inform decisions about medical care, there can be negative, unintended consequences.

We have already discussed potential reasons for non-compliance with PLS author instructions, such as a lack of experience writing for a general audience. These reasons likely apply to our findings for health literacy principles, that is, the mean and median reading levels were approximately grade 16, almost all PLSs contained high percentages of complex language and only half of the PLSs complied with the instruction to avoid jargon. Other factors should also be considered, and they relate to the type of people who read PLSs. Research about the health literacy level of people who access PLSs is limited. What is known suggests that people who read PLSs have a higher health literacy level than the general public and display high health information-seeking behaviour.17 Martínez Silvagnoli et al17 found that participants preferred PLSs written at a grade 9–11 reading level, finding PLSs at this reading level contained enough detail to convey the message of the PLS without oversimplifying the language used. The Patient-Centered Outcomes Research Institute acknowledges that jargon may be appropriate to include in some PLSs even though it will probably increase the reading level.18 What matters most is ensuring any jargon used is relevant and defined when first used.19

When the PLS length was expressed in the author instructions as overall length, for example, one to two short sentences, only half of the PLSs complied. The primary reason for non-compliance was the PLS containing long sentences when the author instructions stated ‘short sentences’. This finding is of note when considering the effectiveness of PLS author instructions from both an author and reader perspective. Although it might be easier to write a PLS according to a word count, sentence length contributes to the readability of written material. PLS author instructions may be improved if the PLS length is expressed as both a maximum word count and a recommendation to use short sentences.

Strengths and limitations

This is the first study to assess the level of compliance between PLSs in health journals and the PLS author instructions on which they are to be based. Although there was subjectivity in rating some subelements, we used a systematic approach, including two independent raters to conduct the journal search and data analysis using a priori criteria to reduce bias. Also, we used gold-standard readability formulas and validated tools for assessing the reading level and jargon (the SHeLL editor8 and the De-Jargoniser tool20).

It is unclear what role language may play in compliance between author instructions and PLSs. For example, some journals use the terminology ‘author instructions’ whereas others use ‘author guidelines’, ‘submission guidelines’ or ‘guide for authors’. Unfortunately, our dataset was not large enough to segment results according to these terms. The scope of this study was limited to assessing compliance only, so we cannot comment on the appropriateness or usefulness of any elements within the PLS author instructions themselves.

Future directions

Support from journals to make PLS instructions easier to follow might improve compliance. The Golden Rules for scholarly journal editors were published in 2014 by the European Association of Science Editors and they suggest that author instructions should be ‘simple and easily understood’.21 They also suggest a table at the beginning of a journal’s author instructions that outlines the important information needed for the manuscript submission.21 This proposed table would cover areas such as word limits, title page information, structure, formatting, author forms required, submission notes such as tables, figures and supplementary files, and journal policies.21 Resources such as this table could provide authors with a more standardised guide during their article preparation and submission.21 One limitation of this table is that it does not include PLSs.21 If this table or similar resources are to be adopted by journal publishers, it is vital that PLSs are included, whether they are optional, mandatory, or not even included by the journal. In the latter instance, ‘NA’ (not applicable) could be entered for the PLS rather than detailed PLS instructions. To support this idea, monitoring systems for compliance could be initiated by health journals prior to manuscript approval.

This study found that most PLSs were not written at a grade 8 reading level, consistent with previous studies on this topic.8,10 Based on our understanding of the readers of PLSs as having higher health literacy than the general population,17 perhaps it is time to reconsider the reading level we recommend for PLSs to accommodate the appropriate use of complex words and jargon. A reading level of grade 8 may be too restrictive and compromise the message of the PLS.

Conclusion

The PLSs from most of the journals we included in our study were rated as having a medium level of compliance with the author instructions. There was wide variation in the degree of compliance with elements and subelements, which could be due to how easily authors can comply with the PLS author instructions. PLSs that contain jargon or complex wording could be difficult for consumers to understand fully. If the take-away message of a PLS is misunderstood, it can increase the potential for incorrect health information to be used to inform medical decisions. This incorrect message may then be shared on social networks, for example, in patient support groups or through peer networks, worsening the spread of misinformation. However, it might be time to reconsider current PLS instructions and challenge existing ideas about what reading level and use of jargon are appropriate. PLSs should strike a balance between providing enough detail about the study and not patronising the reader. Several international groups across various industries are collaborating to develop evidence-based guidelines for PLSs.22 This is an opportunity for experts in this field to challenge current assumptions about PLSs and produce guidelines that are contemporary and practical. Journals could assist by ensuring PLS instructions are easy to follow and by monitoring compliance. More data on the users of PLSs would provide a fuller understanding of how to address this issue.

Acknowledgements

DM is supported by an Australian Research Council (ARC) Discovery Early Career Researcher Award (DECRA). The authors thank Sarah Lukeman for her contribution to the study methods section as a consumer representative.

Footnotes

Funding: The authors have not declared a specific grant for this research from any funding agency in the public, commercial or not-for-profit sectors.

Prepublication history and additional supplemental material for this paper are available online. To view these files, please visit the journal online (https://doi.org/10.1136/bmjopen-2024-086464).

Provenance and peer review: Not commissioned; externally peer reviewed.

Patient consent for publication: Not applicable.

Data availability free text: All data were collated from publicly available sources. The datasets generated and/or analysed during the current study are available from the corresponding author on reasonable request.

Patient and public involvement: Patients and/or the public were involved in the design, or conduct, or reporting, or dissemination plans of this research. Refer to the Methods section for further details.

Ethics approval: None required.

Presented at: Preliminary results of this study were presented at the Cochrane Colloquium 2023 by KG and the abstract was published as a supplement in the Cochrane Database of Systematic Reviews. Gainey K, Smith J, McCaffery K, et al. Plain language summaries of published health research articles: how well are we doing? Abstract was also accepted for the 27th Cochrane Colloquium, London, UK. Cochrane Database of Systematic Reviews 2023; (1 Supp 1). https://doi.org/10.1002/14651858.CD202301.

Contributor Information

Karen Gainey, Email: karen.gainey@sydney.edu.au.

Jenna Smith, Email: jenna.smith@sydney.edu.au.

Kirsten McCaffery, Email: kirsten.mccaffery@sydney.edu.au.

Sharon Clifford, Email: sharon.clifford@bigpond.com.

Danielle Muscat, Email: danielle.muscat@sydney.edu.au.

Data availability statement

Data are available upon reasonable request.

References

- 1. Jacobs W, Amuta AO, Jeon KC. Health information seeking in the digital age: An analysis of health information seeking behavior among US adults. Cog Soc Sci. 2017;3:1302785. doi: 10.1080/23311886.2017.1302785.

- 2. NIHR. Plain English summaries. Available: https://www.nihr.ac.uk/plain-english-summaries

- 3. Cheng C, Dunn M. Health literacy and the Internet: a study on the readability of Australian online health information. Aust N Z J Public Health. 2015;39:309–14. doi: 10.1111/1753-6405.12341.

- 4. James LC, Beeby R. Plain Language Summaries of Publications – What Has COVID-19 Taught Us. J Clin Stud. 2022;13.

- 5. Ramsay I, Peters M, Corsini N, et al. Consumer health information needs and preferences: a rapid evidence review. Sydney: ACSQHC; 2017.

- 6. South Australia Health. Engaging with consumers, carers and the community: guide and resources. 2021. Available: https://www.sahealth.sa.gov.au/wps/wcm/connect/public+content/sa+health+internet/resources/engaging+with+consumers+carers+and+the+community+guide+and+resources

- 7. Daraz L, Morrow AS, Ponce OJ, et al. Readability of Online Health Information: A Meta-Narrative Systematic Review. Am J Med Qual. 2018;33:487–92. doi: 10.1177/1062860617751639.

- 8. Ayre J, Bonner C, Muscat DM, et al. Multiple Automated Health Literacy Assessments of Written Health Information: Development of the SHeLL (Sydney Health Literacy Lab) Health Literacy Editor v1. JMIR Form Res. 2023;7:e40645. doi: 10.2196/40645.

- 9. Carvalho FA, Elkins MR, Franco MR, et al. Are plain-language summaries included in published reports of evidence about physiotherapy interventions? Analysis of 4421 randomised trials, systematic reviews and guidelines on the Physiotherapy Evidence Database (PEDro). Physiotherapy. 2019;105:354–61. doi: 10.1016/j.physio.2018.11.003.

- 10. Yi L, Yang X. Are lay abstracts published in Autism readable enough for the general public? A short report. Autism. 2023;27:2555–9. doi: 10.1177/13623613231163083.

- 11. Wen J, He S, Yi L. Easily readable? Examining the readability of lay summaries published in Autism Research. Autism Res. 2023;16:935–40. doi: 10.1002/aur.2917.

- 12. Malički M, Jerončić A, Aalbersberg IjJ, et al. Systematic review and meta-analyses of studies analysing instructions to authors from 1987 to 2017. Nat Commun. 2021;12:5840. doi: 10.1038/s41467-021-26027-y.

- 13. Gainey KM, Smith J, McCaffery KJ, et al. What Author Instructions Do Health Journals Provide for Writing Plain Language Summaries? A Scoping Review. Patient. 2023;16:31–42. doi: 10.1007/s40271-022-00606-7.

- 14. Anderson HL, Moore JE, Millar BC. Comparison of the readability of lay summaries and scientific abstracts published in CF. Res News J Cyst Fibros. 2021;13:48. doi: 10.1016/j.jcf.2021.09.009.

- 15. Brownell SE, Price JV, Steinman L. Science Communication to the General Public: Why We Need to Teach Undergraduate and Graduate Students this Skill as Part of Their Formal Scientific Training. J Undergrad Neurosci Educ. 2013;12:E6–10.

- 16. Nambiar R, Tilak P, Cerejo C. Quality of author guidelines of journals in the biomedical and physical sciences. Learn Publ. 2014;27:201–6. doi: 10.1087/20140306.

- 17. Martínez Silvagnoli L, Shepherd C, Pritchett J, et al. Optimizing Readability and Format of Plain Language Summaries for Medical Research Articles: Cross-sectional Survey Study. J Med Internet Res. 2022;24:e22122. doi: 10.2196/22122.

- 18. Maurer M, Siegel JE, Firminger KB, et al. Lessons Learned from Developing Plain Language Summaries of Research Studies. HLRP: Health Literacy Research and Practice. 2021;5:e155–161. doi: 10.3928/24748307-20210524-01.

- 19. Stoll M, Kerwer M, Lieb K, et al. Plain language summaries: A systematic review of theory, guidelines and empirical research. PLoS One. 2022;17:e0268789. doi: 10.1371/journal.pone.0268789.

- 20. Rakedzon T, Segev E, Chapnik N, et al. Automatic jargon identifier for scientists engaging with the public and science communication educators. PLoS One. 2017;12:e0181742. doi: 10.1371/journal.pone.0181742.

- 21. Ufnalska S, Terry A. Proposed universal framework for more user-friendly author instructions. Eur Sci. 2020;46. doi: 10.3897/ese.2020.e53477.

- 22. Rosenberg A. Working Toward Standards for Plain Language Summaries. Sci Editor. 2022;45:46–50. doi: 10.36591/SE-D-4502-46.