Abstract

Highly effective antiobesity and diabetes medications such as glucagon-like peptide 1 (GLP-1) agonists and glucose-dependent insulinotropic polypeptide/GLP-1 (dual) receptor agonists (RAs) have ushered in a new era of treatment for these highly prevalent, morbid conditions, whose burden has grown worldwide. However, the rapidly escalating use of GLP-1/dual RA medications is poised to overwhelm an already overburdened health care provider workforce and health care delivery system, stifling their potentially dramatic benefits. Relying on existing systems and resources to address the oncoming rise in GLP-1/dual RA use will be insufficient. Generative artificial intelligence (GenAI) has the potential to offset the clinical and administrative demands associated with managing patients on these medications. Early adoption of GenAI to facilitate the management of GLP-1/dual RAs could improve health outcomes while reducing the associated workload. Research and development efforts are urgently needed to develop GenAI obesity medication management tools and to ensure their accessibility and use by encouraging their integration into health care delivery systems.

Introduction

Highly effective antiobesity and diabetes medications such as glucagon-like peptide 1 (GLP-1) agonists have ushered in a new era of treatment for these highly prevalent, morbid conditions, whose burden has grown worldwide over the past few decades. It is estimated that by 2030 nearly 30 million people in the United States will be taking GLP-1 or glucose-dependent insulinotropic polypeptide/GLP-1 (dual) receptor agonist (RA) medications (henceforth, GLP-1/dual RAs). Currently, their use is throttled by limited availability and insurance coverage challenges. As these issues resolve, widespread use will create an even larger bottleneck: the substantial clinical management burden driven by the frequent communication, titration, and administrative interactions required to manage obesity and related conditions successfully with these important new medications. Indeed, health care providers (HCPs) and their practices have already begun to feel the strain of high demand for weight loss medications. Relying on existing systems and resources to address the oncoming rise in GLP-1/dual RA use will be insufficient. Generative artificial intelligence (GenAI) has the potential to offset the clinical and administrative demands associated with managing patients on these medications. Research and development efforts are urgently needed to develop GenAI GLP-1/dual RA medication management tools and to ensure their accessibility and use by encouraging their integration into health care delivery systems.

The High Burden of Obesity Medications

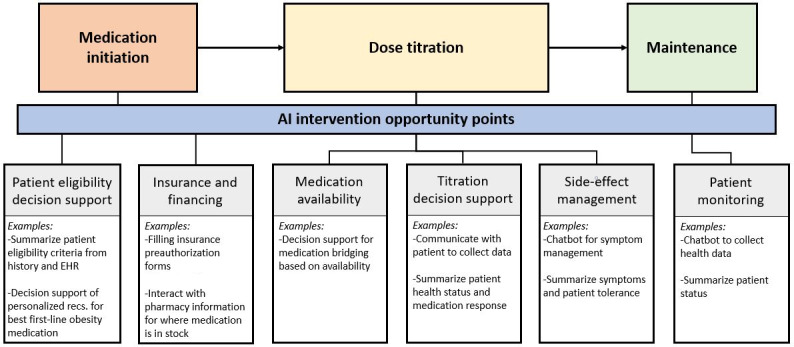

When an HCP prescribes a GLP-1/dual RA, they embark on a months-long journey of clinical and administrative work that exceeds the burden of most common chronic disease medications. The clinical team must regularly balance weight loss goals, hemoglobin A1c targets, and side effects, and continuously evaluate whether to titrate the dose up (or down) until a maintenance dose is reached. Moreover, HCPs will likely have to navigate insurance preauthorizations and field patient calls and messages about side effects, while searching for alternative pharmacies or bridging medications during medication shortages.

On a small scale, this may be manageable, but as GLP-1/dual RA use expands toward the 42% of US adults with obesity [1], it becomes unsustainable. With clinical practices already overburdened by administrative workload and HCPs at high risk for burnout [2], it is unreasonable to assume that the existing workforce could absorb the additional labor of managing patients on GLP-1/dual RAs, or that health care systems could feasibly hire enough personnel to meet this demand. To address the potential wave of future patients on GLP-1/dual RAs, tools are needed that reduce communication and administrative burden, allowing HCPs to focus on more complex patient care.

GenAI Role in Medication Management

GenAI may represent an opportunity to automate many of the low-complexity, high-burden tasks of GLP-1/dual RA management. Compared with earlier forms of artificial intelligence (AI), GenAI can create content, which makes it possible to automate many care management tasks that were previously impossible or too burdensome to automate. Specifically, through recurrent neural networks [3], generative adversarial networks [4], and large language models [5] with natural language processing [6] capabilities, GenAI is inherently flexible in combining heterogeneous data sources to generate summaries, perform calculations, and create original content, including potentially impactful metrics to improve clinical decision-making [7-10]. Furthermore, advances in natural language understanding research have enabled AI-driven chatbots, conversational agents that mimic human interaction with a user through written, oral, and visual communication [11,12]. AI chatbots can learn from previous interactions, offering a more personalized, engaging, and on-demand user experience to support health behaviors [13,14]. Adding GenAI functionality to AI-driven chatbots further improves their ability to respond dynamically. In these ways, GenAI's potential functionality extends well beyond a single medication titration algorithm.

Indeed, although still an emerging technology, GenAI has shown promise as a tool for patient medication and care management in diabetes insulin management, hypertension, and weight management. AI chatbots have been used to facilitate the collection of patient data, reduce HCP message burden [14], and deliver health coaching to adults with overweight and obesity [12], with results similar to those expected from in-person lifestyle interventions [15]. AI chatbots have also shown potential for integrating wearable device data and messaging platforms to create personalized intervention messages [16]. GenAI-generated responses to patient questions have even been shown to be perceived as higher in quality and more empathetic than physician responses, and to offer greater clinical decision support accuracy [17,18]. GenAI is also powering new “ambient clinical documentation” tools that transform patient-clinician conversations into medical documentation [19].

Through the synthesis of patient data, clinical guidelines, and information databases, GenAI can provide effective and accurate clinical decision support and patient intervention, and even pharmacist-validated medication management [20]. For example, a voice-based conversational GenAI application provided autonomous, real-time remote patient intervention for basal insulin management among patients with type 2 diabetes. The application combined daily patient reports of insulin dose and blood glucose values with HCP-selected titration algorithms and emergency protocols (parameters) for hypoglycemia and hyperglycemia, and it significantly improved insulin management compared with standard care [21]. Similarly, GenAI has shown promise for reducing the high burden of remote patient monitoring for hypertension. A GenAI-powered messaging platform for patient interactions, combined with GenAI-created smart summaries integrated into the electronic health record (EHR), assisted in managing the large volume of incoming data and has the potential to enhance both patient- and HCP-facing tasks in digital hypertension care [22]. These examples highlight how GenAI tools may support more efficient GLP-1/dual RA dose titration and greater patient engagement without substantially increasing HCP workload.
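The rule-based core of an intervention like the one described above (HCP-selected titration parameters plus emergency thresholds applied to a daily patient report) can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the algorithm used in the cited trial [21]: the class and function names, the single glucose reading per report, the action labels, and every threshold value are hypothetical placeholders, not clinical recommendations. One plausible design lets a GenAI layer handle the conversation that collects the report, while a deterministic check like this one decides when to hand off to the care team.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class TitrationProtocol:
    """HCP-selected parameters (hypothetical; values are set by the care team)."""
    hypo_threshold: float   # glucose (mg/dL) below which the hypoglycemia protocol applies
    hyper_threshold: float  # glucose (mg/dL) above which the hyperglycemia protocol applies
    target_high: float      # upper bound (mg/dL) of the HCP-chosen target range
    step_units: int         # dose increment suggested when a reading exceeds the target
    max_dose_units: int     # dose ceiling set by the HCP


@dataclass(frozen=True)
class DailyReport:
    """One patient-reported check-in: current dose and a glucose reading."""
    dose_units: int
    glucose: float


def review_report(report: DailyReport, p: TitrationProtocol) -> Tuple[str, int]:
    """Return (action, suggested_dose) for a single daily report.

    Emergency conditions are checked first and never change the dose
    automatically; they are flagged for the care team instead.
    """
    if report.glucose < p.hypo_threshold:
        return "escalate_hypoglycemia", report.dose_units
    if report.glucose > p.hyper_threshold:
        return "escalate_hyperglycemia", report.dose_units
    if report.glucose > p.target_high:
        proposed = min(report.dose_units + p.step_units, p.max_dose_units)
        if proposed == report.dose_units:
            # Already at the HCP-set ceiling: a human decides what happens next.
            return "escalate_max_dose", report.dose_units
        return "increase", proposed
    # Within the target range (and above the hypoglycemia threshold): no change.
    return "maintain", report.dose_units


if __name__ == "__main__":
    # Illustrative placeholder numbers only.
    protocol = TitrationProtocol(hypo_threshold=70, hyper_threshold=300,
                                 target_high=130, step_units=2, max_dose_units=50)
    for r in [DailyReport(20, 160), DailyReport(20, 60), DailyReport(50, 160)]:
        print(r, review_report(r, protocol))
```

Keeping the emergency branches ahead of the titration branch means a dangerous reading is always routed to a person, regardless of what the titration rule would otherwise suggest.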

As in these examples, GLP-1/dual RA management requires patient engagement and the collation of information from patients, medical records, and clinical guidelines. Furthermore, GLP-1/dual RA management involves subjective, nuanced interpretation of symptom tolerability and therefore relies more on clinical judgment than on hard-and-fast rules or cutoffs. GenAI could address multiple aspects of GLP-1/dual RA management, including streamlining patient-HCP communication, recommending optimal dose titration to HCPs, and providing prescribing guidance based on nonclinical factors such as insurance coverage and medication availability (Figure 1). Because it can flexibly incorporate multiple data sources, GenAI can interpret patients' natural language responses about side effects and weight loss goals, and it can incorporate information stored in the EHR and other databases, such as weight changes, medical history, current medication dose, blood glucose levels, and insurance status [7]. This enables GenAI to provide a broad range of GLP-1/dual RA management services, from personalized guidance for patients on managing side effects to clinical advice for optimizing dose titration.
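One way to picture the multisource input described above is a function that merges structured EHR fields with a patient's free-text message into a single prompt for a language model. The sketch below is hypothetical: the `PatientContext` fields, the prompt wording, and the choice to ask the model for a draft for clinician review (rather than a final decision) are illustrative assumptions. The call to an actual model is deliberately left out because no specific GenAI service is named here.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class PatientContext:
    """Hypothetical structured fields pulled from the EHR and other databases."""
    weights_kg: List[float]        # chronological weight measurements
    current_dose: str              # medication and current dose as charted
    a1c_percent: Optional[float]   # most recent hemoglobin A1c, if available
    insurance_status: str
    history: List[str] = field(default_factory=list)


def build_prompt(ctx: PatientContext, patient_message: str) -> str:
    """Combine EHR data and the patient's own words into one model prompt."""
    if len(ctx.weights_kg) >= 2:
        change = ctx.weights_kg[-1] - ctx.weights_kg[0]
        weight_line = f"Weight change since first recorded value: {change:+.1f} kg"
    else:
        weight_line = "Weight change: insufficient data"
    a1c_line = (
        f"Most recent hemoglobin A1c: {ctx.a1c_percent:.1f}%"
        if ctx.a1c_percent is not None
        else "Most recent hemoglobin A1c: not available"
    )
    sections = [
        "Draft a note for clinician review. Do not make final dosing decisions.",
        f"Current medication and dose: {ctx.current_dose}",
        weight_line,
        a1c_line,
        f"Insurance status: {ctx.insurance_status}",
        "Relevant history: " + ("; ".join(ctx.history) if ctx.history else "none recorded"),
        "Patient message (verbatim):",
        patient_message.strip(),
        "Task: summarize reported side effects and goals, then suggest whether the care "
        "team should consider maintaining, increasing, or reducing the dose, with reasons.",
    ]
    return "\n".join(sections)


if __name__ == "__main__":
    ctx = PatientContext(
        weights_kg=[100.0, 98.0, 96.5],
        current_dose="GLP-1/dual RA, current charted dose",  # placeholder text
        a1c_percent=None,
        insurance_status="prior authorization approved",
        history=["type 2 diabetes"],
    )
    print(build_prompt(ctx, "Mild nausea for two days after my last dose."))
```

Computing derived values such as the weight change in code, before the prompt is built, keeps arithmetic out of the model's hands and leaves the model to the parts that need language understanding: reading the patient's description of side effects and goals.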

Figure 1. GLP-1/dual RA medication management workflow and example opportunities for GenAI intervention. AI: artificial intelligence; EHR: electronic health record; GenAI: generative artificial intelligence; GLP-1: glucagon-like peptide 1; RA: receptor agonist.

Health Care System Integration of GenAI Interventions

Scaling the effective management of GLP-1/dual RAs, however, cannot be achieved through stand-alone GenAI tools, bots, and algorithms; they must be deeply integrated into the health care delivery system. This requires careful EHR and clinical workflow integration, a “last-mile” problem that most health care startups and innovators defer until the end of their product development journey. While this may be consistent with their business plans, it has repeatedly led to low uptake of these potentially valuable digital tools. GenAI-assisted GLP-1/dual RA management will need early, deep clinical and EHR integration to break this pattern.

Achieving clinical integration, however, will require addressing several privacy, cost, implementation, and ethical challenges [23]. First, as with other forms of health care digitization, the use of AI may introduce additional data privacy concerns regarding data storage, sharing, and use in model training [24]. Consequently, patient data and the AI models themselves will need to be housed and maintained on internal, firewall-protected servers rather than on externally hosted AI platforms [17].

The cost of integrating GenAI into clinical practice is also substantial. In addition to the costs of setting up and maintaining additional secure servers to house data, each GenAI interaction incurs a cost. Depending on the task, a sequence of several back-end prompts is likely required to achieve the desired outcome; each prompt consumes many “tokens” (ie, the basic units of text or code GenAI uses to process and generate language), and each token carries a monetary cost [25]. Moreover, each use of GenAI incurs an additional inference cost from energy consumption; at high volumes of use, cumulative inference energy costs can exceed the energy cost of training the model [26].
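The token arithmetic above can be made concrete with a back-of-the-envelope estimator. The per-1,000-token prices, token counts, and interaction volumes in the usage example are hypothetical placeholders (real prices vary by vendor and model and change often), and the sketch covers only per-token usage fees, not server hosting or the energy cost of inference.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class PromptStep:
    """Token usage of one back-end prompt in a multistep GenAI task."""
    input_tokens: int
    output_tokens: int


def interaction_cost(steps: Iterable[PromptStep],
                     usd_per_1k_input: float,
                     usd_per_1k_output: float) -> float:
    """Usage fee for one patient-facing interaction made of several chained prompts."""
    return sum(
        s.input_tokens / 1000 * usd_per_1k_input
        + s.output_tokens / 1000 * usd_per_1k_output
        for s in steps
    )


def annual_cost(per_interaction: float,
                interactions_per_patient_month: float,
                patients: int) -> float:
    """Scale a per-interaction fee to a year of use across a patient panel."""
    return per_interaction * interactions_per_patient_month * 12 * patients


if __name__ == "__main__":
    # Hypothetical three-prompt task: summarize chart, interpret message, draft reply.
    steps = [PromptStep(1500, 300), PromptStep(2000, 500), PromptStep(800, 200)]
    per_call = interaction_cost(steps, usd_per_1k_input=0.01, usd_per_1k_output=0.03)
    print(f"per interaction: ${per_call:.3f}")
    print(f"per year: ${annual_cost(per_call, 4, 10_000):,.0f}")
```

With these placeholder figures, one interaction costs about $0.073, and four interactions per patient per month across 10,000 patients come to roughly $35,000 per year in usage fees alone. Because the fee is summed over every prompt in the chain, adding back-end steps to a task raises the per-interaction cost in direct proportion to the tokens those steps consume.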

To promote the successful implementation of GenAI products in clinical practice, usability, workflow integration, and user trust must be considered. Although it has been presumed that the user-friendly, adaptable, and rapidly iterative nature of GenAI will improve efficiency, productivity, and quality in ways previous technologies did not [27], GenAI interventions must be deployed with awareness of clinical workflows and existing technology integrations, and designed with user needs in mind [28]. Furthermore, AI technologies in clinical care are not universally trusted by HCPs or patients [29,30], suggesting that substantial training and trust-building efforts will be required to improve acceptability and achieve broad adoption.

In addition, numerous ethical concerns remain about relying on GenAI in clinical care, including the potential to exacerbate health disparities. Some ethical issues stem from the technology itself, including algorithmic and language bias built into the data used to train GenAI models, and the ways in which model reliability, including the potential for AI hallucinations, may affect clinical safety [31,32]. To address these concerns, health care institutions will likely need a governance committee to oversee GenAI implementation, set policies on data protection and data management practices, and thoroughly test GenAI models before approving them for clinical use [33].

Digital health equity is another ethical consideration that will need to be addressed early and often in the development and deployment of GenAI-enabled clinical care, such as GLP-1/dual RA management tools. Because of structural inequities in access to insurance coverage, digital tools, and digital literacy, as well as in health care system resources for GenAI adoption, the potential benefits of GenAI GLP-1/dual RA management tools may be inequitably distributed.

Bias within GenAI training datasets has the potential to reinforce existing inequities [17]. Deliberate efforts will likely be needed to evaluate and tailor model training data for the populations of interest, for example by comparing and validating different samples of training data for representativeness [34]. Developing and using frameworks that evaluate the impact of GenAI on health disparities and guide model modifications, as has been explored in other areas such as clinical predictive modeling [35], may help guide the equitable use of GenAI in clinical care [36].
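A very simple version of the representativeness comparison mentioned above checks whether each subgroup's share of a training sample departs from its share of the target population by more than a chosen tolerance. This is a sketch of one basic technique, not the NIST approach [34] or a complete bias audit: the group labels, reference shares, and 5-percentage-point tolerance are hypothetical, and a real evaluation would also examine model performance within each subgroup.

```python
from collections import Counter
from typing import Dict, Sequence


def representation_gaps(
    sample_groups: Sequence[str],
    reference_shares: Dict[str, float],
    tolerance: float = 0.05,
) -> Dict[str, float]:
    """Flag subgroups whose share of the sample differs from the reference share.

    Positive values mean over-representation, negative values under-representation.
    Groups that appear only in the sample (not in reference_shares) are not checked.
    """
    counts = Counter(sample_groups)
    n = len(sample_groups)
    gaps: Dict[str, float] = {}
    for group, ref_share in reference_shares.items():
        sample_share = counts[group] / n if n else 0.0
        diff = sample_share - ref_share
        if abs(diff) > tolerance:
            gaps[group] = round(diff, 3)
    return gaps


if __name__ == "__main__":
    sample = ["A"] * 70 + ["B"] * 28 + ["C"] * 2   # hypothetical training-sample labels
    reference = {"A": 0.50, "B": 0.30, "C": 0.20}  # hypothetical population shares
    print(representation_gaps(sample, reference))
```

In this example, groups A (over-represented by about 20 percentage points) and C (under-represented by about 18) are flagged, while B's 2-point gap falls within the tolerance.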

Correspondingly, the development of GenAI obesity medication management tools and other GenAI-driven clinical tools should draw on equitable digital design philosophies such as liberatory design [37]. In practice, this may mean integrating GenAI into widely available technologies, such as SMS text messaging or existing EHR platforms, to make these tools more accessible. Furthermore, to serve as health equity promotion interventions in their own right, GenAI tools could be designed to detect and address known structural inequities, proactively mitigating potential conscious or unconscious biases of HCPs.

Conclusions

The rapidly escalating use of GLP-1/dual RA medications is poised to overwhelm an already overburdened HCP workforce and health care delivery system, stifling their potentially dramatic benefits. Early adoption of GenAI to facilitate the management of GLP-1/dual RAs could improve health outcomes while reducing the associated workload. Investment in GenAI’s potential to support GLP-1/dual RA management is greatly needed. This effort should be guided by inclusive design principles and deep integration into clinical workflows to achieve scalable impact on clinical outcomes.

Acknowledgments

Generative artificial intelligence (AI) was not used in the writing of this study.

Abbreviations

- AI: artificial intelligence
- EHR: electronic health record
- GenAI: generative artificial intelligence
- GLP-1: glucagon-like peptide 1
- HCP: health care provider
- RA: receptor agonist

Footnotes

Authors’ Contributions: ERS contributed to conceptualization, methodology, writing the first draft, and revisions. AES contributed to conceptualization, methodology, writing the first draft, and revisions. HL contributed to conceptualization and revisions. DMM contributed to conceptualization, methodology, writing the paper draft, and revisions.

Contributor Information

Elizabeth R Stevens, Email: elizabeth.stevens@nyulangone.org.

Arielle Elmaleh-Sachs, Email: Arielle.Elmaleh-Sachs@nyulangone.org.

Holly Lofton, Email: holly.lofton@nyulangone.org.

Devin M Mann, Email: devin.mann@nyulangone.org.

References

1. Stierman B, Afful J, Carroll MD, et al. National Health and Nutrition Examination Survey 2017–March 2020 prepandemic data files: development of files and prevalence estimates for selected health outcomes. National Health Statistics Reports No. 158. 2021.

2. Murthy VH. Confronting health worker burnout and well-being. N Engl J Med. 2022 Aug 18;387(7):577–579. doi: 10.1056/NEJMp2207252.

3. Salehinejad H, Sankar S, Barfett J, Colak E, Valaee S. Recent advances in recurrent neural networks. arXiv. Preprint posted online on 2017 Dec 29. doi: 10.48550/arXiv.1801.01078.

4. Alqahtani H, Kavakli-Thorne M, Kumar G. Applications of generative adversarial networks (GANs): an updated review. Arch Computat Methods Eng. 2021 Mar;28(2):525–552. doi: 10.1007/s11831-019-09388-y.

5. Zhao WX, Zhou K, Li J, et al. A survey of large language models. arXiv. Preprint posted online on 2023 Mar 31. doi: 10.48550/arXiv.2303.18223.

6. Fanni SC, Febi M, Aghakhanyan G, Neri E. In: Natural Language Processing. Klontzas ME, Fanni SC, Neri E, editors. Springer International Publishing; 2023. Introduction to artificial intelligence; pp. 87–99.

7. Bays HE, Fitch A, Cuda S, et al. Artificial intelligence and obesity management: an Obesity Medicine Association (OMA) Clinical Practice Statement (CPS) 2023. Obes Pillars. 2023 Jun;6:100065. doi: 10.1016/j.obpill.2023.100065.

8. Yu KH, Beam AL, Kohane IS. Artificial intelligence in healthcare. Nat Biomed Eng. 2018 Oct;2(10):719–731. doi: 10.1038/s41551-018-0305-z.

9. Jayakumar P, Bozic KJ. Advanced decision-making using patient-reported outcome measures in total joint replacement. J Orthop Res. 2020 Jul;38(7):1414–1422. doi: 10.1002/jor.24614.

10. Morin O, Vallières M, Braunstein S, et al. An artificial intelligence framework integrating longitudinal electronic health records with real-world data enables continuous pan-cancer prognostication. Nat Cancer. 2021 Jul;2(7):709–722. doi: 10.1038/s43018-021-00236-2.

11. Laranjo L, Dunn AG, Tong HL, et al. Conversational agents in healthcare: a systematic review. J Am Med Inform Assoc. 2018 Sep 1;25(9):1248–1258. doi: 10.1093/jamia/ocy072.

12. Oh YJ, Zhang J, Fang ML, Fukuoka Y. A systematic review of artificial intelligence chatbots for promoting physical activity, healthy diet, and weight loss. Int J Behav Nutr Phys Act. 2021 Dec 11;18(1):1–25. doi: 10.1186/s12966-021-01224-6.

13. Stephens TN, Joerin A, Rauws M, Werk LN. Feasibility of pediatric obesity and prediabetes treatment support through Tess, the AI behavioral coaching chatbot. Transl Behav Med. 2019 May 16;9(3):440–447. doi: 10.1093/tbm/ibz043.

14. Lee NS, Luong T, Rosin R, et al. Developing a chatbot–clinician model for hypertension management. NEJM Catalyst. 2022 Oct 19;3(11):0228. doi: 10.1056/CAT.22.0228.

15. Stein N, Brooks K. A fully automated conversational artificial intelligence for weight loss: longitudinal observational study among overweight and obese adults. JMIR Diabetes. 2017 Nov 1;2(2):e28. doi: 10.2196/diabetes.8590.

16. Chew HSJ. The use of artificial intelligence-based conversational agents (chatbots) for weight loss: scoping review and practical recommendations. JMIR Med Inform. 2022 Apr 13;10(4):e32578. doi: 10.2196/32578.

17. Yu P, Xu H, Hu X, Deng C. Leveraging generative AI and large language models: a comprehensive roadmap for healthcare integration. Healthcare (Basel). 2023 Oct 20;11(20):2776. doi: 10.3390/healthcare11202776.

18. Small WR, Wiesenfeld B, Brandfield-Harvey B, et al. Large language model–based responses to patients’ in-basket messages. JAMA Netw Open. 2024 Jul 1;7(7):e2422399. doi: 10.1001/jamanetworkopen.2024.22399.

19. Tierney AA, Gayre G, Hoberman B, et al. Ambient artificial intelligence scribes to alleviate the burden of clinical documentation. NEJM Catalyst. 2024 Feb 21;5(3). doi: 10.1056/CAT.23.0404.

20. Roosan D, Padua P, Khan R, Khan H, Verzosa C, Wu Y. Effectiveness of ChatGPT in clinical pharmacy and the role of artificial intelligence in medication therapy management. J Am Pharm Assoc (2003). 2024;64(2):422–428. doi: 10.1016/j.japh.2023.11.023.

21. Nayak A, Vakili S, Nayak K, et al. Use of voice-based conversational artificial intelligence for basal insulin prescription management among patients with type 2 diabetes: a randomized clinical trial. JAMA Netw Open. 2023 Dec 1;6(12):e2340232. doi: 10.1001/jamanetworkopen.2023.40232.

22. Rodriguez DV, Andreadis K, Chen J, Gonzalez J, Mann D. Development of a GenAI-powered hypertension management assistant: early development phases and architectural design. Presented at: 2024 IEEE 12th International Conference on Healthcare Informatics (ICHI); Jun 3-6, 2024; Orlando, FL, USA.

23. Golda A, Mekonen K, Pandey A, et al. Privacy and security concerns in generative AI: a comprehensive survey. IEEE Access. 2024;12:48126–48144. doi: 10.1109/ACCESS.2024.3381611.

24. Paul M, Maglaras L, Ferrag MA, Almomani I. Digitization of healthcare sector: a study on privacy and security concerns. ICT Expr. 2023 Aug;9(4):571–588. doi: 10.1016/j.icte.2023.02.007.

25. Tokens, characters and usage fees: decoding the AI price war. PYMNTS. Dec 15, 2023. URL: https://www.pymnts.com/news/artificial-intelligence/2023/tokens-characters-and-usage-fees-decoding-the-ai-price-war/ Accessed September 27, 2024.

26. Desislavov R, Martínez-Plumed F, Hernández-Orallo J. Trends in AI inference energy consumption: beyond the performance-vs-parameter laws of deep learning. Sustain Comput Inform Syst. 2023 Apr;38:100857. doi: 10.1016/j.suscom.2023.100857.

27. Wachter RM, Brynjolfsson E. Will generative artificial intelligence deliver on its promise in health care? JAMA. 2024 Jan 2;331(1):65. doi: 10.1001/jama.2023.25054.

28. Margetis G, Ntoa S, Antona M, Stephanidis C. In: Handbook of Human Factors and Ergonomics. John Wiley & Sons, Inc; 2021. Human-centered design of artificial intelligence; pp. 1085–1106.

29. Rojas JC, Teran M, Umscheid CA. Clinician trust in artificial intelligence: what is known and how trust can be facilitated. Crit Care Clin. 2023 Oct;39(4):769–782. doi: 10.1016/j.ccc.2023.02.004.

30. Hatherley JJ. Limits of trust in medical AI. J Med Ethics. 2020 Jul;46(7):478–481. doi: 10.1136/medethics-2019-105935.

31. Chen Y, Esmaeilzadeh P. Generative AI in medical practice: in-depth exploration of privacy and security challenges. J Med Internet Res. 2024 Mar 8;26:e53008. doi: 10.2196/53008.

32. Zhang P, Kamel Boulos MN. Generative AI in medicine and healthcare: promises, opportunities and challenges. Fut Internet. 2023;15(9):286. doi: 10.3390/fi15090286.

33. Reddy S. Generative AI in healthcare: an implementation science informed translational path on application, integration and governance. Implement Sci. 2024 Mar 15;19(1):27. doi: 10.1186/s13012-024-01357-9.

34. Schwartz R, Vassilev A, Greene K, Perine L, Burt A, Hall P. Towards a Standard for Identifying and Managing Bias in Artificial Intelligence. National Institute of Standards and Technology; 2022. URL: https://tsapps.nist.gov/publication/get_pdf.cfm?pub_id=934464 Accessed September 27, 2024.

35. Stevens ER, Caverly T, Butler JM, et al. Considerations for using predictive models that include race as an input variable: the case study of lung cancer screening. J Biomed Inform. 2023 Nov;147:104525. doi: 10.1016/j.jbi.2023.104525.

36. Kim JY, Hasan A, Kellogg KC, et al. Development and preliminary testing of Health Equity Across the AI Lifecycle (HEAAL): a framework for healthcare delivery organizations to mitigate the risk of AI solutions worsening health inequities. PLOS Digit Health. 2024 May;3(5):e0000390. doi: 10.1371/journal.pdig.0000390.

37. Sarafian K. In: EdMedia + Innovate Learning 2023. Bastiaens T, editor. Association for the Advancement of Computing in Education (AACE); 2023. Liberatory design: taking action to learn and liberate; pp. 211–215.