Key Points

Question

How accurate and fair are predictive models of postpartum depression, defined as screening above clinical cutoffs on psychometric screening tools, trained on commonly available electronic health records?

Findings

In this diagnostic study of 19 430 patients assessing predictive models of postpartum depression, models performed modestly (mean areas under the receiver operating curves ranged from 0.602 to 0.635) and did not display the same historical biases against racial and ethnic minority groups (significantly higher positive screen rates and lower false-negative rates).

Meaning

Findings suggest that it is critical for researchers and physicians to consider their model design (eg, desired target and predictor variables) and evaluate model bias to minimize health disparities.

Abstract

Importance

Machine learning for augmented screening of perinatal mood and anxiety disorders (PMADs) requires thorough consideration of clinical biases embedded in electronic health records (EHRs) and rigorous evaluations of model performance.

Objective

To mitigate bias in predictive models of PMADs trained on commonly available EHRs.

Design, Setting, and Participants

This diagnostic study collected data as part of a quality improvement initiative from 2020 to 2023 at Cedars-Sinai Medical Center in Los Angeles, California. The study inclusion criteria were birthing patients aged 14 to 59 years with live birth records and admission to the postpartum unit or the maternal-fetal care unit after delivery.

Exposure

Patient-reported race and ethnicity (7 levels) obtained through EHRs.

Main Outcomes and Measures

Logistic regression, random forest, and extreme gradient boosting models were trained to predict 2 binary outcomes: moderate to high-risk (positive) screen assessed using the 9-item Patient Health Questionnaire (PHQ-9), and the Edinburgh Postnatal Depression Scale (EPDS). Each model was fitted with or without reweighing data during preprocessing and evaluated through repeated K-fold cross validation. In every iteration, each model was evaluated on its area under the receiver operating curve (AUROC) and on 2 fairness metrics: demographic parity (DP), and difference in false negatives between races and ethnicities (relative to non-Hispanic White patients).

Results

Among 19 430 patients in this study, 1402 (7%) identified as African American or Black, 2371 (12%) as Asian American and Pacific Islander, 1842 (10%) as Hispanic White, 10 942 (56%) as non-Hispanic White, 606 (3%) as multiple races, 2146 (11%) as other (not further specified), and 121 (<1%) did not provide this information. The mean (SD) age was 34.1 (4.9) years, and all patients identified as female. Racial and ethnic minority patients were significantly more likely than non-Hispanic White patients to screen positive on both the PHQ-9 (odds ratio, 1.47 [95% CI, 1.23-1.77]) and the EPDS (odds ratio, 1.38 [95% CI, 1.20-1.57]). Mean AUROCs ranged from 0.610 to 0.635 without reweighing (baseline), and from 0.602 to 0.622 with reweighing. Baseline models predicted significantly greater prevalence of postpartum depression for patients who were not non-Hispanic White relative to those who were (mean DP, 0.238 [95% CI, 0.231-0.244]; P < .001) and displayed significantly lower false-negative rates (mean difference, −0.184 [95% CI, −0.195 to −0.174]; P < .001). Reweighing significantly reduced differences in DP (mean DP with reweighing, 0.022 [95% CI, 0.017-0.026]; P < .001) and false-negative rates (mean difference with reweighing, 0.018 [95% CI, 0.008-0.028]; P < .001) between racial and ethnic groups.

Conclusions and Relevance

In this diagnostic study of predictive models of postpartum depression, clinical prediction models trained to predict psychometric screening results from commonly available EHRs achieved modest performance and were less likely to widen existing health disparities in PMAD diagnosis and potentially treatment. These findings suggest that it is critical for researchers and physicians to consider their model design (eg, desired target and predictor variables) and evaluate model bias to minimize health disparities.

This diagnostic study assesses whether predictive models of perinatal mood and anxiety disorders that consider model design and are trained on commonly available electronic health record data can mitigate clinical biases embedded in those records and minimize health disparities.

Introduction

Perinatal mood and anxiety disorders (PMADs) encompass a range of mental health disorders, including major depressive disorder and various anxiety disorders, that occur during pregnancy or up to 1 year post partum and affect approximately 20% of birthing parents.1 Postpartum depression (PPD) is the most common PMAD, affecting approximately 13% of birthing parents in high-income countries2 and 20% of birthing parents in low-income and middle-income countries.3 Despite recent national recommendations and state laws,4,5,6 nearly 50% of PMADs are never identified. Fewer than 1 in 4 women in Los Angeles, California, receive PMAD screening or education during prenatal, postpartum, or well-child medical visits.7 Even when they receive screening, many women do not respond openly to these questions.8,9,10 The consequences of untreated PMAD are substantial, including inhibited child development.11,12,13,14,15,16,17,18,19 When severe, PPD can lead to suicide, one of the leading causes of perinatal death.20 As with all health conditions, early detection and prevention are key. However, comprehensive clinical interviews are a time-intensive process that can be conducted only by a licensed mental health professional.21,22 The diagnostic efficacy of more brief, inpatient screening procedures can be affected by varying psychometric properties,23 method of administration,24,25 stigma,26 and human error.9 Thus, better diagnostic and prognostic models are needed for early identification and referral to treatment.9,22,27,28

Women from racial and ethnic minority groups are at an increased risk of PMAD.29,30,31 PMAD prevalence rates in low-income and ethnic minority women, for example, are more than double the expected rate (approximately 13%),2 with prevalence rates as high as 38% for depression.32 However, women from racial and ethnic minority groups are less likely to be screened for systemic reasons (eg, access) and less likely to follow-up with care for reasons related to perceived stigma, cultural beliefs, culturally insensitive interventions, and lower standard of care.33,34,35,36,37,38 For example, non-Hispanic White women are more likely than Black and Latina women to initiate postpartum mental health care, receive follow-up care, and refill prescriptions.33 Prior studies have also shown that low-income urban Black women self-report lower severity of depressive symptoms on psychometric questionnaires23,39,40,41,42 despite equivalent diagnoses, yielding higher rates of false negatives when compared with other groups; recommendations following these results suggest lowering the standard cutoffs for positive screening.

Due to the many barriers and the complex etiology of psychiatric disorders,22 identification of patients at risk of PMAD poses a major challenge for public health officials and health care professionals. To address this challenge, recent research offers machine learning as a clinical tool to support more routine screening of PMAD by using information obtained from electronic health records (EHRs), which readily include many highly predictive factors,43,44 such as prior mental health diagnoses,45 gestational age,46 infant birth weight,47 method of delivery,48 and neonatal intensive care unit admission.49 While machine learning has the potential to democratize medicine,50 such models are inherently prone to reproducing existing medical biases present in EHRs.51 Therefore, it is paramount to assess and account for any existing biases in the machine learning process.

Given the persistent disparities in obtaining diagnosis and treatment, this study emphasizes that the choices of target and predictor variables are imperative to ensure that the model does not widen existing disparities by reproducing biases in the dataset, an issue first raised by Obermeyer et al.52 While prior work on predictive models of PMAD has achieved impressive performance (areas under the receiver operating curves [AUROCs] of 0.60 to 0.93), the target variable is often represented as logged International Classification of Diseases (ICD) codes (ie, official diagnoses) and pharmacy records,43,44,53,54,55,56,57 or prior psychometric screening results are required as a key predictor in the model.44,58,59,60,61,62,63 However, given that women from racial and ethnic minority groups are less likely to receive screening and treatment, such models are inherently susceptible to reproducing existing biases. For example, such models predict greater risk of PMAD for White women than for Black women, even after debiasing,55 reflecting disparities in obtaining official diagnoses and treatment. Similar to Obermeyer et al,52 such algorithmic predictions are not necessarily indicative of lower prevalence of PMAD among Black women, but likely indicative of lower access or greater discrimination42 and perceived stigma.42 (Indeed, non-Hispanic White patients in our study were more likely than Asian, Black, or Hispanic patients to have been prescribed psychotherapeutic drugs prior to delivery [eTable 1 in Supplement 1]). While there are disparities in psychometric responding, adjusting for lower self-reported severity (eg, lowering cutoff values) is more tractable than adjusting for lower rates of logged ICD codes and pharmacy records. Moreover, models that use prior psychometric screening as a primary predictor may be unusable for communities that are unlikely to receive routine symptom screening.

The current study evaluated the performance of machine learning models trained on commonly available EHR data (ie, did not require prior screening) to predict psychometric screening outcomes; the models were also evaluated for potential bias. We do not recommend replacing traditional psychometric screening with machine learning. Instead, we suggest that machine learning can augment traditional procedures to encourage more equitable and routine screening for PMAD. For example, predictive modeling does not require patients to answer potentially sensitive questions regarding their mental health status (eg, suicidal ideation) in the presence of family or during stressful events (eg, being admitted for an emergency cesarean delivery), as is commonly done in the current workflow,8 which may contribute to higher false-negative screen rates in racial and ethnic minority women.

To our knowledge, only 2 studies have used psychometric screening for PPD as the target variable without requiring prior psychometric screening as a key predictor. When using comparable information for training, Matsuo et al64 achieved modest performance on predicting the Patient Health Questionnaire 9 (PHQ-9) screening results trained on EHRs obtained from multiple birthing centers in Japan (AUROCs = 0.569 to 0.626) but did not evaluate algorithmic bias.64 Huang et al65 also achieved modest performance on predicting the Edinburgh Postnatal Depression Scale (EPDS) screening results from EHRs obtained from an urban academic hospital in Chicago, Illinois (best AUROC = 0.670).65 Huang et al65 concluded that algorithmic performance was biased against Black and Latina women despite these groups comprising the majority group in the dataset; algorithmic bias was defined as lower AUROC values for Black and Latina women relative to non-Hispanic White women. Last, all prior work that evaluated model bias was limited to 2 or 3 racial and ethnic groups. The present study extends the current literature by evaluating model performance between multiple racial and ethnic groups and provides a more rigorous evaluation of model performances through repeated K-fold cross validation in lieu of reporting results from a single training-validation-testing split, which may yield overly optimistic results of model performance.

Methods

Data Collection

The report for this diagnostic study adheres to the Transparent Reporting of a Multivariable Prediction Model for Individual Prognosis or Diagnosis for Artificial Intelligence (TRIPOD + AI) guideline for reporting clinical prediction models. The data were collected between 2020 and 2023 as part of the Postpartum Depression Screening, Education and Referral Quality Improvement Initiative at Cedars-Sinai Medical Center (CSMC), located in Los Angeles, California. Since 2017, CSMC has routinely screened all patients with a live birth for PPD immediately following delivery. The aim of the quality improvement project is to support early identification and intervention at CSMC. Screening data from birthing individuals were included in this study if they were admitted to the postpartum unit or the maternal-fetal care unit after delivery. Our dataset does not include screening data from the prenatal or predelivery time point or from patients who experienced stillbirth. The hospital’s institutional review board reviewed this project and approved the use of deidentified patient data and thus waived the need to obtain patient informed consent.8,9 In February 2022, screening for PMAD transitioned from the PHQ-9 to the EPDS, which was originally developed to screen for perinatal depression, but further assesses anxiety symptoms.66

Race and Ethnicity

Race and ethnicity were provided by the patient during intake or later through the patient portal. Patients selected from applicable categories, including African American or Black, American Indian or Alaskan Native, Asian or Native Hawaiian or Other Pacific Islander, White, and other (not further specified). None of the patients in our sample identified as American Indian or Alaskan Native. If a patient selected multiple categories, we categorized them as multiple races. If a patient declined to answer, we categorized them as unknown race but did not exclude their data from analysis due to potential systematic reasons for withholding this information. Patients selected from ethnicity categories of Hispanic or not Hispanic.

Depression Screening

PHQ-9

The PHQ-9 was developed for the dual purpose of establishing tentative diagnoses of depressive episodes and depressive symptom severity.67 Each question in the scale had 4 response choices: “not at all,” “several days,” “more than half the days,” and “nearly every day.” Total scores ranged from 0 to 27, which assessed the presence and severity of a depressive episode,68 with higher scores indicating greater symptoms of depression.

EPDS

The EPDS was developed to screen for PMADs.69,70 Patients indicated on a 4-point Likert scale the frequency with which they experienced symptoms (eg, “I have blamed myself unnecessarily when things go wrong”) during the past 7 days. Total scores ranged from 0 to 30, with higher scores indicating greater symptoms of PPD.

Data Preparation

The target variable was patients’ screening results from either the PHQ-9 or the EPDS, which were dichotomized into low risk (negative) or moderate to high risk (positive) based on the clinical cutoffs used at CSMC, which are more sensitive than research-based cutoffs, to ensure that patients with PMAD are identified and referred for treatment.9 Both measures screen for suicidal ideation. Screening positive was determined by endorsement of suicidal ideation, a PHQ-9 score of 5 or higher, or an EPDS score of 8 or higher.
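The dichotomization rule can be written as a short function. This is a minimal sketch rather than the study's code: the function name, argument names, and scale labels are hypothetical, and only the two cutoffs and the suicidal ideation rule come from the text.

```python
def screens_positive(scale: str, total_score: int, suicidal_ideation: bool) -> bool:
    """Return True for a moderate- to high-risk (positive) screen."""
    # Clinical cutoffs stated in the text: PHQ-9 >= 5, EPDS >= 8.
    cutoffs = {"PHQ-9": 5, "EPDS": 8}
    if scale not in cutoffs:
        raise ValueError(f"unknown scale: {scale}")
    # Endorsing suicidal ideation counts as positive regardless of the total score.
    return suicidal_ideation or total_score >= cutoffs[scale]
```

Under this rule, a patient with an EPDS total of 7 screens negative unless suicidal ideation is endorsed.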

Dataset

The full dataset consisted of 19 790 EHRs. Patients were screened for depression no more than 9 days following delivery. Only the first complete record from each patient was included in the analysis. Data were analyzed separately for patients who received the PHQ-9 and the EPDS. The final dataset consisted of 19 430 unique patients.

Machine Learning

Deidentified data and code for model fitting and visualization are available on GitHub.71 Model fitting and statistical analyses were conducted with the Python packages sklearn and scipy, respectively. Statistical tests assume a significance level of α = .05 and 2-tailed tests of hypotheses.

Models

Three supervised classification algorithms were fitted to the data: logistic regression, random forest, and extreme gradient boosting. The Box outlines the predictors used to train each model. To determine 1 set of hyperparameters for each model, the dataset was first split into approximately 75% for training, 15% for validation, and 10% for testing using stratified random sampling (stratified by race and outcome). Random undersampling of the training set was used to address issues related to class imbalance. Hyperparameters were fine-tuned using the tree-structured Parzen estimator sampling algorithm from the optuna library, with an objective to maximize the AUROC in the validation set. The test performance (AUROC) was calculated for each model. To provide a more robust evaluation of each set of fine-tuned hyperparameters, each of the 3 models was subsequently fitted with its set of fine-tuned hyperparameters and was evaluated using repeated 10-fold cross validation (repeated 10 times). In every fold, random undersampling was implemented on the training split to address issues related to class imbalance. In each of the 100 total iterations, fairness and performance (AUROC) metrics were computed for each race and ethnicity in the testing split.
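The repeated cross-validation loop with undersampling applied only to the training split might look like the following. This is a sketch under stated assumptions, not the published pipeline: the helper names `undersample` and `repeated_cv_auroc` are hypothetical, folds are stratified by outcome only, logistic regression with default settings stands in for the tuned models, and the data are synthetic.

```python
import numpy as np
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import roc_auc_score
from sklearn.model_selection import RepeatedStratifiedKFold


def undersample(X, y, rng):
    """Randomly drop majority-class rows so both classes are equally frequent."""
    pos = np.flatnonzero(y == 1)
    neg = np.flatnonzero(y == 0)
    n = min(len(pos), len(neg))
    keep = np.concatenate([rng.choice(pos, n, replace=False),
                           rng.choice(neg, n, replace=False)])
    return X[keep], y[keep]


def repeated_cv_auroc(X, y, n_splits=10, n_repeats=10, seed=0):
    """Return one test AUROC per fold (n_splits * n_repeats values)."""
    rng = np.random.default_rng(seed)
    cv = RepeatedStratifiedKFold(n_splits=n_splits, n_repeats=n_repeats,
                                 random_state=seed)
    aurocs = []
    for train_idx, test_idx in cv.split(X, y):
        # Undersample the training split only; the test split keeps
        # its natural class balance.
        X_train, y_train = undersample(X[train_idx], y[train_idx], rng)
        model = LogisticRegression(max_iter=1000).fit(X_train, y_train)
        scores = model.predict_proba(X[test_idx])[:, 1]
        aurocs.append(roc_auc_score(y[test_idx], scores))
    return np.array(aurocs)


# Synthetic data for illustration only (not study data).
demo_rng = np.random.default_rng(1)
X_demo = demo_rng.normal(size=(600, 4))
y_demo = (X_demo[:, 0] + demo_rng.normal(size=600) > 1.0).astype(int)
demo_aurocs = repeated_cv_auroc(X_demo, y_demo, n_splits=5, n_repeats=2)
```

Keeping the undersampling inside the loop means the AUROC for each fold is computed on data with the observed prevalence, which is the setting in which a deployed model would be used.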

Box. Predictor Variables for Machine Learning Models.

Predictors

- Age: Birthing patient age at time of delivery
- Race: African American or Black, Asian American and Pacific Islander, Hispanic White, non-Hispanic White (reference group in logistic regression), multiple race, other, and unknown
- Ethnicity: Hispanic, non-Hispanic (reference group in logistic regression), unknown
- Marital status: Married (reference group in logistic regression), single, significant other, domestic partner, legally separated, divorced, widowed, unknown
- Financial class: Government (0) or private (1) health insurance
- Low birth weight: Infant birth weight <2500 g
- Preterm birth: Gestational age <36 wk
- Delivery method: Vaginal (0) or cesarean (1)
- Neonatal intensive care unit admission
- Maternal fetal care unit admission
- Gestational preeclampsia
- Gestational diabetes
- Gestational hypertension
- History of anxiety: Previous diagnosis of anxiety, postpartum anxiety, panic, or posttraumatic stress disorder (at least 1 y prior to delivery)
- History of depression: Previous diagnosis of depression, postpartum depression (at least 1 y prior to delivery)
- History of bipolar disorder: Previous diagnosis of bipolar disorder (at least 1 y prior to delivery)
- History of postpartum mental health disturbances: Previous diagnosis of postpartum mental health disturbance related to another pregnancy (at least 1 y prior to delivery)
- Mental health diagnoses: Previous diagnosis of any psychiatric disorder (at least 1 y prior to delivery)
- History of psychotherapeutic medication: Psychotherapeutic prescription prior to delivery
- History of cardiovascular medication

Metrics of Algorithmic Fairness

Multiple metrics to measure algorithmic fairness exist in the literature (eg, demographic parity [DP]72 and equalized odds73). Although Huang et al65 operationalized bias as the differences in AUROCs—a metric that jointly evaluates true- and false-positive rates—health disparities in PMAD diagnoses are specifically in underdiagnosis and higher false-negative rates in certain populations. Therefore, the present study evaluated models on DP and false-negative rates between patients in racial and ethnic minority groups and non-Hispanic White patients. Demographic parity is a condition that posits that the predictions of a model ought to be independent of group membership.74 However, it may not be an appropriate measure of fairness when there are known disparities in disease prevalence among groups,74 as in PMADs.29,30,31 Therefore, DP was used for evaluating whether models correctly recovered known variations of PMADs (eg, higher for Black patients than for non-Hispanic White patients). In a classification case, DP was calculated as DP = P(ŷ = 1|g = a) − P(ŷ = 1|g = b), where ŷ indicates the model predictions, and g indicates group membership.
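Both fairness metrics reduce to differences of simple rates between a group and the reference group. The following is a minimal sketch with hypothetical function names and toy labels. It assumes the false-negative difference is taken in the same direction as DP (group a minus reference group b), so that a negative value means fewer missed positives in group a.

```python
def positive_rate(y_pred):
    """Share of cases predicted positive: P(y_hat = 1)."""
    return sum(y_pred) / len(y_pred)


def false_negative_rate(y_true, y_pred):
    """FN / (FN + TP), computed among truly positive cases."""
    preds_for_positives = [p for t, p in zip(y_true, y_pred) if t == 1]
    if not preds_for_positives:
        raise ValueError("no positive cases in this group")
    missed = sum(1 for p in preds_for_positives if p == 0)
    return missed / len(preds_for_positives)


def fairness_gaps(y_true, y_pred, group, a, b):
    """Return (DP, FNR difference) for group a relative to reference group b."""
    def subset(g):
        idx = [i for i, v in enumerate(group) if v == g]
        return [y_true[i] for i in idx], [y_pred[i] for i in idx]

    true_a, pred_a = subset(a)
    true_b, pred_b = subset(b)
    dp = positive_rate(pred_a) - positive_rate(pred_b)
    fnr_diff = (false_negative_rate(true_a, pred_a)
                - false_negative_rate(true_b, pred_b))
    return dp, fnr_diff


# Toy labels for illustration only.
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]
group = ["a", "a", "a", "a", "b", "b", "b", "b"]
dp, fnr_diff = fairness_gaps(y_true, y_pred, group, a="a", b="b")
```

In the toy example, group a is predicted positive more often than group b (DP = 0.5) and has a lower false-negative rate (difference = −0.5), the same direction as the baseline pattern reported in the Results.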

Additional Bias Mitigation Techniques

Multiple methods have been proposed over the years to mitigate bias in various stages of the machine learning pipeline. These methods may modify properties of the dataset (preprocessing75,76,77,78), model (in processing79), or predictions (postprocessing73) to optimize an objective function (eg, accuracy).

In addition to training models to predict a target variable that is less likely to reflect existing disparities, we evaluated a preprocessing technique called reweighing.75 This method assigns weights to data points in the training dataset to change their contribution to the objective function of the model. The current study calculated sample weights in accordance with the formulation in the study by Kamiran and Calders.75
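In the Kamiran and Calders formulation, each training example receives the ratio of the expected to the observed frequency of its (group, outcome) combination, where the expected frequency assumes that group and outcome are independent. A minimal sketch follows. The function name and toy data are hypothetical, and how the study's code passes the weights to each model is not shown here.

```python
from collections import Counter


def reweighing_weights(group, y):
    """Weight per example: P(group) * P(y) / P(group, y)."""
    n = len(y)
    n_group = Counter(group)
    n_label = Counter(y)
    n_joint = Counter(zip(group, y))
    return [n_group[g] * n_label[c] / (n * n_joint[(g, c)])
            for g, c in zip(group, y)]


# Toy data: group "w" rarely screens positive, group "m" often does.
group = ["w", "w", "w", "w", "m", "m", "m", "m"]
y = [1, 0, 0, 0, 1, 1, 1, 0]
weights = reweighing_weights(group, y)
```

In the toy data, the rare combinations (a positive screen in group "w", a negative screen in group "m") receive a weight of 2, and the common combinations receive 2/3. In scikit-learn, such weights are typically supplied through the `sample_weight` argument of an estimator's `fit` method.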

Statistical Analysis

One-Sample t Tests

To test the overall direction of potential bias across all baseline models (ie, models without reweighing), 1-sample t tests were performed for the DP and differences in false-negative rates from the test sets. The null hypotheses assert that the true differences are equal to zero.

Independent Samples t Tests

To test the effect of reweighing on the aforementioned metrics, metrics obtained from models without reweighing were compared with those with reweighing using independent samples t tests. The null hypotheses assert that the differences between models with or without reweighing are zero.

All analyses were conducted with Python, version 3.11.9, software (Python Software Foundation). A 2-sided value of P < .05 was considered statistically significant.
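The two tests map onto two scipy calls. The sketch below uses synthetic per-fold DP values with arbitrary means, not study results. It also assumes the default equal-variance form of the independent samples test, which the text does not specify.

```python
import numpy as np
from scipy import stats

rng = np.random.default_rng(0)

# Synthetic DP values from 100 cross-validation folds (illustration only).
dp_baseline = rng.normal(loc=0.20, scale=0.03, size=100)
dp_reweighed = rng.normal(loc=0.05, scale=0.03, size=100)

# One-sample t test: is the mean baseline DP different from zero?
one_sample = stats.ttest_1samp(dp_baseline, popmean=0.0)

# Independent samples t test: do models with and without reweighing differ?
two_sample = stats.ttest_ind(dp_baseline, dp_reweighed)

print(f"one-sample: t = {one_sample.statistic:.2f}, P = {one_sample.pvalue:.3g}")
print(f"two-sample: t = {two_sample.statistic:.2f}, P = {two_sample.pvalue:.3g}")
```

Both calls return 2-sided P values by default, matching the 2-tailed tests described above.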

Results

The final dataset used for model training and evaluation consisted of 11 377 PHQ-9 records and 8658 EPDS records, with a total of 19 430 unique patients between 14 and 59 years of age (mean [SD] age, 34.1 [4.9] years) at the time of their first delivery at CSMC. Among them, 1402 (7%) identified as African American or Black, 2371 (12%) as Asian American and Pacific Islander, 1842 (10%) as Hispanic White, 10 942 (56%) as non-Hispanic White, 606 (3%) as multiple races, and 2146 (11%) as other (not further specified); 121 (<1%) did not provide this information. We differentiated between only non-Hispanic and Hispanic White patients, rather than other groups, because while the sample included other race categories with Hispanic ethnicity, the number of individuals in each group was too small for meaningful analyses. All patients identified as female. During the transition of CSMC from using the PHQ-9 to using the EPDS, 605 patients with complete data received both measures and were included in each set of analyses.

Prevalence

In our sample, racial and ethnic minority patients were more likely than non-Hispanic White patients to screen positive on both the PHQ-9 (odds ratio, 1.47 [95% CI, 1.23-1.77]) and the EPDS (odds ratio, 1.38 [95% CI, 1.20-1.57]). eTable 2 in Supplement 1 gives prevalence rates for each racial and ethnic group.

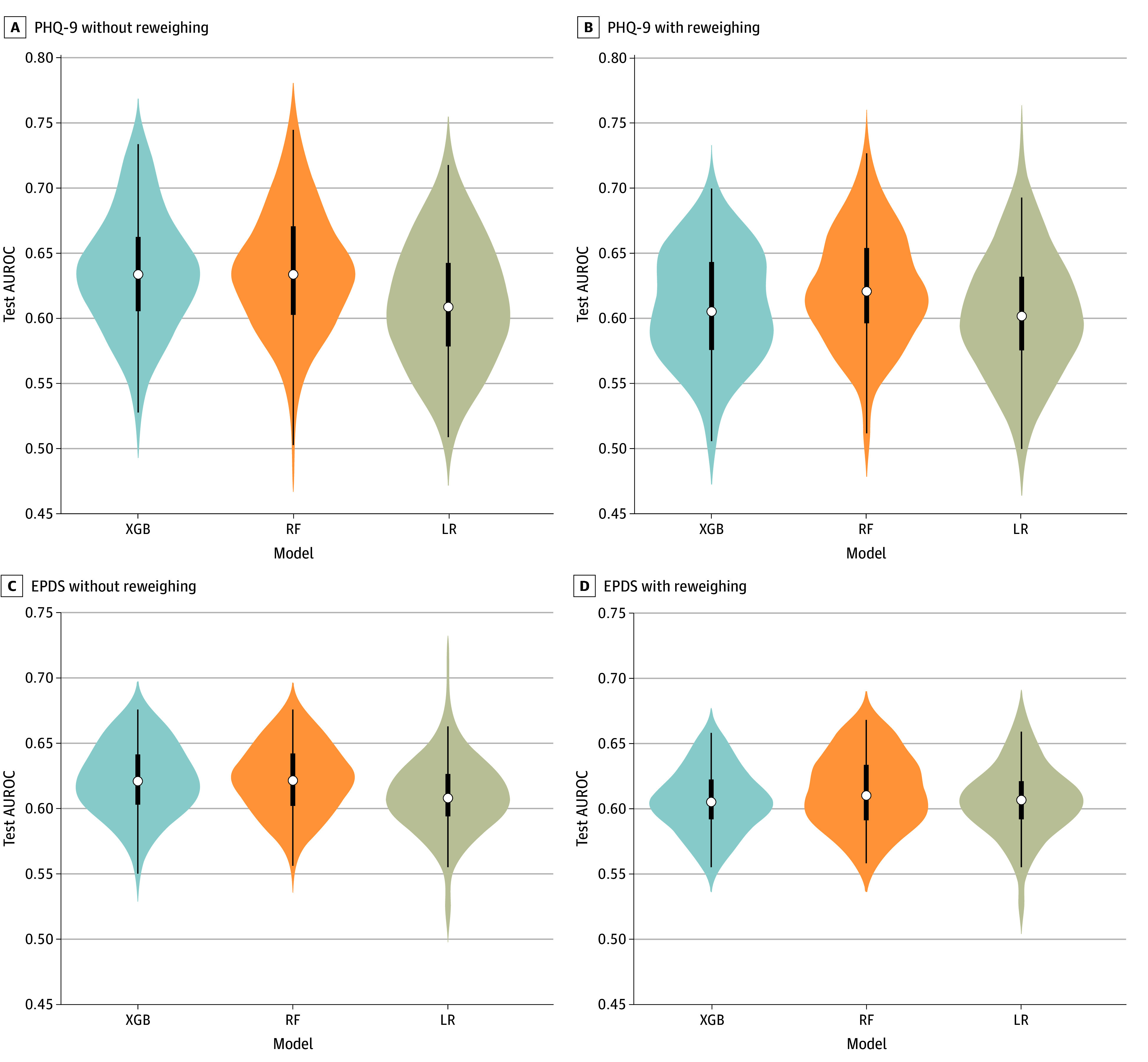

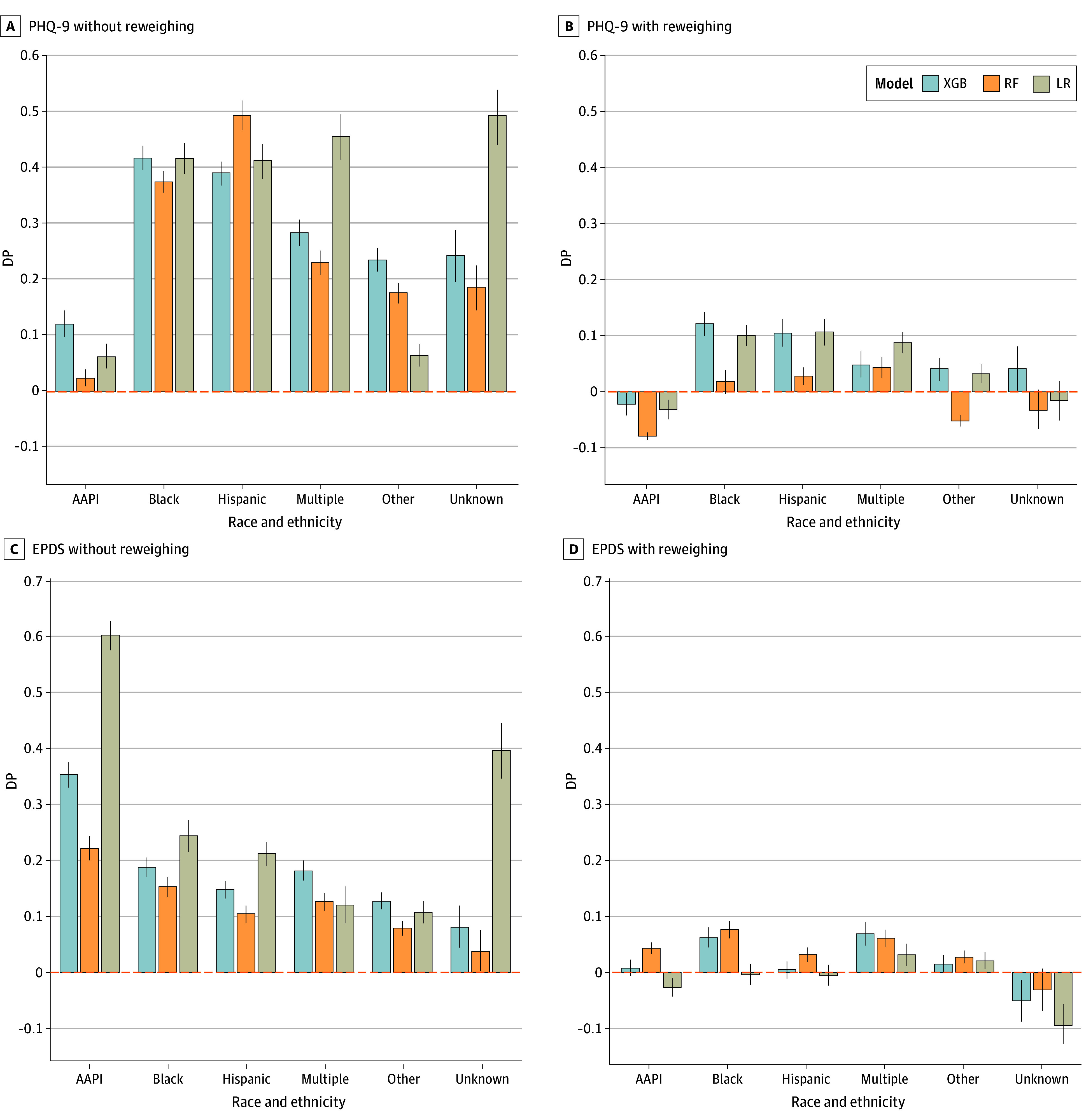

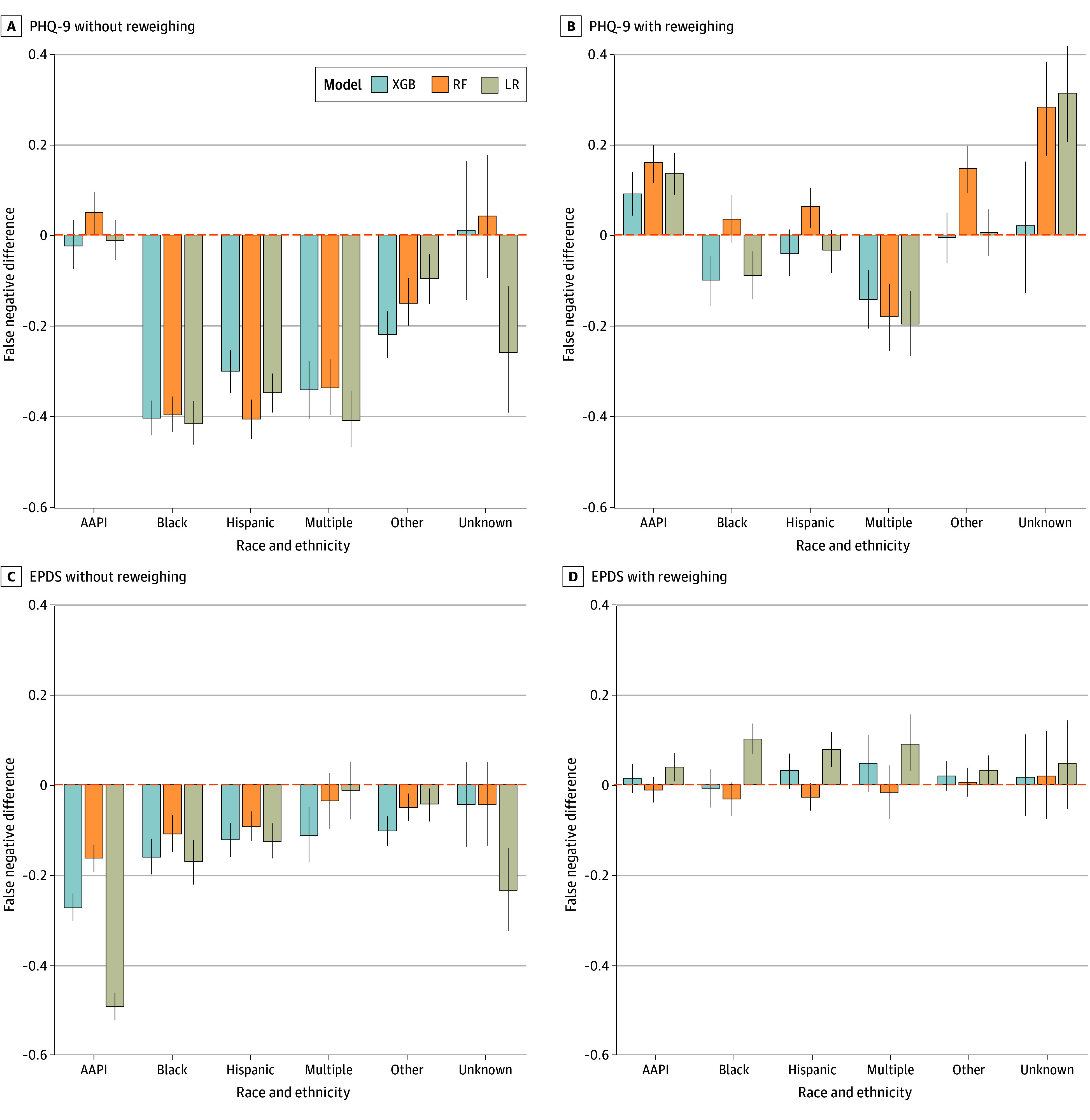

Machine Learning Performances

The present models were comparable with or outperformed (eTable 3 and eFigures 1-5 in Supplement 1) other studies using similar target and predictor features64,65 (mean AUROCs between 0.602 and 0.635). The Table displays the mean test AUROC of each model without (0.610 to 0.635) or with (0.602 to 0.622) reweighing the samples in the training set. Figure 1 displays the AUROCs of the 3 models obtained from 100 unique training-testing splits. Figure 2 and Figure 3 display the DP and differences in false negatives between each racial and ethnic group and non-Hispanic White patients.

Table. Mean Test Area Under the Receiver Operating Curve (AUROC).

| Scale | Reweighing | Logistic regression, mean AUROC (95% CI) | Random forest, mean AUROC (95% CI) | Extreme gradient boosting, mean AUROC (95% CI) |
|---|---|---|---|---|
| PHQ-9 | No | 0.610 (0.601-0.620) | 0.635 (0.626-0.644) | 0.635 (0.626-0.643) |
| PHQ-9 | Yes | 0.602 (0.594-0.611) | 0.622 (0.613-0.630) | 0.609 (0.600-0.617) |
| EPDS | No | 0.611 (0.605-0.616) | 0.624 (0.619-0.629) | 0.623 (0.618-0.628) |
| EPDS | Yes | 0.607 (0.602-0.613) | 0.614 (0.609-0.620) | 0.607 (0.602-0.612) |

Abbreviations: EPDS, Edinburgh Postnatal Depression Scale; PHQ-9, Patient Health Questionnaire 9.

Figure 1. Distribution of the Model Test Areas Under the Receiver Operating Curves (AUROCs) From Repeated 10-Fold Cross Validation.

The white marker in the middle of each distribution represents the median; thick lines, the 25th and 75th percentiles; and thin lines, minimum and maximum data values. EPDS indicates Edinburgh Postnatal Depression Scale; LR, logistic regression; PHQ-9, Patient Health Questionnaire 9; RF, random forest; and XGB, extreme gradient boosting.

Figure 2. Demographic Parity (DP) for Each Race and Ethnicity Group Relative to Non-Hispanic White Patients.

The figure collapses across ethnic groups but distinguishes between Hispanic and Non-Hispanic White patients. Error bars represent 95% CIs. AAPI indicates Asian American and Pacific Islander; EPDS, Edinburgh Postnatal Depression Scale; LR, logistic regression; PHQ-9, Patient Health Questionnaire 9; RF, random forest; and XGB, extreme gradient boosting.

Figure 3. False Negatives for Each Race and Ethnicity Group Relative to Non-Hispanic White Patients.

The figure collapses across ethnic groups but distinguishes between Hispanic and Non-Hispanic White patients. Error bars represent 95% CIs. AAPI indicates Asian American and Pacific Islander; EPDS, Edinburgh Postnatal Depression Scale; LR, logistic regression; PHQ-9, Patient Health Questionnaire 9; RF, random forest; and XGB, extreme gradient boosting.

Statistical Analyses for Bias Metrics

One-Sample t Tests

Without reweighing, the models predicted greater positive rates for racial and ethnic minority patients than for non-Hispanic White patients (mean DP, 0.238 [95% CI, 0.231-0.244]; P < .001). They also displayed lower false-negative rates for racial and ethnic minority patients relative to non-Hispanic White patients (mean difference, −0.184 [95% CI, −0.195 to −0.174]; P < .001).

Independent Samples t Tests

Reweighing seemingly minimized differences in DP. Overall, DP was lower in models with reweighing (mean DP, 0.022 [95% CI, 0.017-0.026]) than in models without (mean DP, 0.238 [95% CI, 0.231-0.244]) (P < .001). Overall differences in false-negative rates were also smaller with reweighing (mean difference, 0.018 [95% CI, 0.008-0.028]) than without (mean difference, −0.184 [95% CI, −0.195 to −0.174]) (P < .001).

Given that non-Hispanic White patients were among the least likely to screen positive in our dataset, their positive data points were upweighted in the reweighing process. While reweighing data based on observed frequencies appears to minimize differences in false-negative rates between patients from various racial and ethnic groups and non-Hispanic White patients, doing so may perpetuate biases against patients who are not non-Hispanic White (Figure 3).

Discussion

The present diagnostic study evaluated the ability of machine learning to predict PPD screening results, given commonly available EHR data elements, and emphasized the need to use target variables that are less likely to reflect existing disparities, an issue first raised by Obermeyer et al.52 Additionally, given that health disparities in PMADs specifically lie in underdiagnosis and missed diagnoses for racial and ethnic minorities relative to non-Hispanic White patients, the present study operationalized bias as differences in false-negative rates. Together with routine screening, the current results demonstrated how model designs that integrate knowledge of health disparities may limit the potential of an algorithm to exaggerate those disparities. This approach also operationalized bias to be more context-specific, thereby providing a more nuanced understanding of potential algorithmic bias in predictive models of PPD. Unlike prior research, the present models do not perpetuate the same biases against Black and Hispanic patients relative to non-Hispanic White patients. False-negative rates were lower for racial and ethnic minorities, and models were more likely to predict a positive PPD screen for them relative to non-Hispanic White patients (DP). The difference in conclusions between the present study and those from Huang et al65 is likely due to different definitions of bias (ie, AUROC in Huang et al65 vs false negatives in the present study). Therefore, it may be possible that the best performing model in the study by Huang et al65 also had lower false-negative rates for Black and Hispanic patients relative to non-Hispanic White patients, but had higher false-positive rates, yielding lower AUROCs (eFigures 6-8 in Supplement 1).

Compared with studies that predict ICD codes and pharmacy records using EHRs and prior screening, the current results and those from similar studies64,65 displayed lower performance. The model predictions in the present study did not reproduce historic biases in which racial and ethnic minorities were less likely to receive a positive screen.55 Additionally, our results likely corroborate prior findings that demonstrate the predictive power of prior psychometric screening results.43,44,58,59,60,61,62,63

We acknowledge that machine learning alone will not resolve issues related to physical and social barriers.80,81,82,83 Instead, we propose machine learning as a component of achieving more equitable health care. Achieving completely equitable mental health care will require fundamental restructuring of clinical workflows, establishing more comprehensive mental health services, and improving mental health and implicit bias training for clinical workers. Furthermore, while machine learning tools may support early detection efforts, there may be limited resources after a positive screen, a critically important problem outside the scope of this study.

Limitations

Ideally, the accuracy of the models would be equal across all patient demographics. However, deterministically reweighing based on expected and observed frequencies in the data can potentially rebias against certain racial and ethnic groups, as observed in our results.

Conclusions

In this diagnostic study of predictive models of postpartum depression, clinical prediction models trained to predict psychometric screening results from commonly available EHR data elements achieved modest performance and were less likely to widen existing health disparities in PMAD diagnosis and potentially treatment. Future work should explore methods to optimize the weights during training that achieve specific performance and fairness goals.

eTable 1. Percentage of patients with records of psychotherapeutic drugs, prescribed prior to delivery, across racial groups

eTable 2. Counts of patients who received the PHQ-9 and EPDS measures

eTable 3. Mean test AUROC without reweighing during preprocessing with associated 95% confidence intervals (bootstrapped)

eFigure 1. Model test AUROC’s across 100 bootstrapped test sets and collapsed across sensitive attributes

eFigure 2. Feature importance based on mean accuracy decrease for predicting PHQ-9 outcome

eFigure 3. Feature importance based on mean accuracy for predicting EPDS outcome

eFigure 4. Demographic parity for each race relative to Non-Hispanic White patients

eFigure 5. False negative for each race relative to Non-Hispanic White patients

eAppendix 1. Comparing with Huang et al

eFigure 6. Test AUROCs for each race relative to Non-Hispanic White patients

eFigure 7. False positive rates for each race relative to Non-Hispanic White patients

eFigure 8. True positive (TP) rates for each race relative to Non-Hispanic White patients

Data Sharing Statement

References

- 1. Meltzer-Brody S, Rubinow D. An Overview of Perinatal Mood and Anxiety Disorders: Epidemiology and Etiology. In: Cox E, ed. Women’s Mood Disorders. Springer; 2021:5-16. doi: 10.1007/978-3-030-71497-0_2

- 2. Gavin NI, Gaynes BN, Lohr KN, Meltzer-Brody S, Gartlehner G, Swinson T. Perinatal depression: a systematic review of prevalence and incidence. Obstet Gynecol. 2005;106(5 Pt 1):1071-1083. doi: 10.1097/01.AOG.0000183597.31630.db

- 3. Fisher J, Cabral de Mello M, Patel V, et al. Prevalence and determinants of common perinatal mental disorders in women in low- and lower-middle-income countries: a systematic review. Bull World Health Organ. 2012;90(2):139G-149G. doi: 10.2471/BLT.11.091850

- 4. The American College of Obstetricians and Gynecologists Committee Opinion No. 630: Screening for perinatal depression. Obstet Gynecol. 2015;125(5):1268-1271. doi: 10.1097/01.AOG.0000465192.34779.dc

- 5. Siu AL, Bibbins-Domingo K, Grossman DC, et al.; US Preventive Services Task Force (USPSTF). Screening for depression in adults: US Preventive Services Task Force recommendation statement. JAMA. 2016;315(4):380-387. doi: 10.1001/jama.2015.18392

- 6. Earls MF; Committee on Psychosocial Aspects of Child and Family Health American Academy of Pediatrics. Incorporating recognition and management of perinatal and postpartum depression into pediatric practice. Pediatrics. 2010;126(5):1032-1039. doi: 10.1542/peds.2010-2348

- 7. Los Angeles County Department of Public Health. Health indicators for mothers and babies in Los Angeles County, 2016. Accessed August 20, 2024. http://publichealth.lacounty.gov/mch/lamb/results/2016%20results/2016lambsurveillancerpt_07052018.pdf

- 8. Accortt EE, Wong MS. It is time for routine screening for perinatal mood and anxiety disorders in obstetrics and gynecology settings. Obstet Gynecol Surv. 2017;72(9):553-568. doi: 10.1097/OGX.0000000000000477

- 9. Accortt EE, Haque L, Bamgbose O, Buttle R, Kilpatrick S. Implementing an inpatient postpartum depression screening, education, and referral program: a quality improvement initiative. Am J Obstet Gynecol MFM. 2022;4(3):100581. doi: 10.1016/j.ajogmf.2022.100581

- 10. Los Angeles County Department of Public Health. Health indicators for mothers and babies in Los Angeles County, 2014. Accessed August 20, 2024. http://publichealth.lacounty.gov/mch/LAMB/Results/2014Results/2014LAMBSurveillanceRptFINAL_041718.pdf

- 11. Miller ES, Saade GR, Simhan HN, et al. Trajectories of antenatal depression and adverse pregnancy outcomes. Am J Obstet Gynecol. 2022;226(1):108.e1-108.e9. doi: 10.1016/j.ajog.2021.07.007

- 12. Grace SL, Evindar A, Stewart DE. The effect of postpartum depression on child cognitive development and behavior: a review and critical analysis of the literature. Arch Womens Ment Health. 2003;6(4):263-274. doi: 10.1007/s00737-003-0024-6

- 13. Paulson JF, Dauber S, Leiferman JA. Individual and combined effects of postpartum depression in mothers and fathers on parenting behavior. Pediatrics. 2006;118(2):659-668. doi: 10.1542/peds.2005-2948

- 14. Zelkowitz P, Milet TH. Postpartum psychiatric disorders: their relationship to psychological adjustment and marital satisfaction in the spouses. J Abnorm Psychol. 1996;105(2):281-285. doi: 10.1037/0021-843X.105.2.281

- 15. Davis EP, Snidman N, Wadhwa PD, Glynn LM, Schetter CD, Sandman CA. Prenatal maternal anxiety and depression predict negative behavioral reactivity in infancy. Infancy. 2004;6(3):319-331. doi: 10.1207/s15327078in0603_1

- 16. Feldman R, Granat A, Pariente C, Kanety H, Kuint J, Gilboa-Schechtman E. Maternal depression and anxiety across the postpartum year and infant social engagement, fear regulation, and stress reactivity. J Am Acad Child Adolesc Psychiatry. 2009;48(9):919-927. doi: 10.1097/CHI.0b013e3181b21651

- 17. Misri S, Kendrick K. Perinatal depression, fetal bonding, and mother-child attachment: a review of the literature. Curr Pediatr Rev. 2008;4(2):66-70. doi: 10.2174/157339608784462043

- 18. Tronick E, Reck C. Infants of depressed mothers. Harv Rev Psychiatry. 2009;17(2):147-156. doi: 10.1080/10673220902899714

- 19. Fihrer I, McMahon CA, Taylor AJ. The impact of postnatal and concurrent maternal depression on child behaviour during the early school years. J Affect Disord. 2009;119(1-3):116-123. doi: 10.1016/j.jad.2009.03.001

- 20. Grigoriadis S, Wilton AS, Kurdyak PA, et al. Perinatal suicide in Ontario, Canada: a 15-year population-based study. CMAJ. 2017;189(34):E1085-E1092. doi: 10.1503/cmaj.170088

- 21. First MB, Spitzer RL, Gibbon M, Williams JBW. Structured Clinical Interview for the DSM-IV-TR Axis I Disorders, Patient Edition (SCID-I/P). Biometrics Research, New York State Psychiatric Institute; 1994.

- 22. Meltzer-Brody S, Howard LM, Bergink V, et al. Postpartum psychiatric disorders. Nat Rev Dis Primers. 2018;4:18022. doi: 10.1038/nrdp.2018.22

- 23. Yang J, Martinez M, Schwartz TA, Beeber L. What is being measured? a comparison of two depressive symptom severity instruments with a depression diagnosis in low-income high-risk mothers. J Womens Health (Larchmt). 2017;26(6):683-691. doi: 10.1089/jwh.2016.5974

- 24. Miller ES, Wisner KL, Gollan J, Hamade S, Gossett DR, Grobman WA. Screening and treatment after implementation of a universal perinatal depression screening program. Obstet Gynecol. 2019;134(2):303-309. doi: 10.1097/AOG.0000000000003369

- 25. Puryear LJ, Nong YH, Correa NP, Cox K, Greeley CS. Outcomes of implementing routine screening and referrals for perinatal mood disorders in an integrated multi-site pediatric and obstetric setting. Matern Child Health J. 2019;23(10):1292-1298. doi: 10.1007/s10995-019-02780-x

- 26. Jones N, Corrigan PW. Understanding stigma. In: Corrigan PW, ed. The Stigma of Disease and Disability: Understanding Causes and Overcoming Injustices. American Psychological Association; 2013:9-34.

- 27. National Collaborating Centre for Mental Health and National Institute for Health and Clinical Excellence. Antenatal and postnatal mental health: clinical management and service guidance. Accessed August 31, 2024. https://www.nice.org.uk/guidance/cg192/resources/antenatal-and-postnatal-mental-health-clinical-management-and-service-guidance-pdf-35109869806789

- 28. Milgrom J, Gemmill AW, eds. Identifying Perinatal Depression and Anxiety: Evidence-Based Practice in Screening, Psychosocial Assessment and Management. John Wiley & Sons, Ltd; 2015. doi: 10.1002/9781118509722

- 29. Mehra R, Boyd LM, Magriples U, Kershaw TS, Ickovics JR, Keene DE. Black pregnant women “get the most judgment”: a qualitative study of the experiences of black women at the intersection of race, gender, and pregnancy. Womens Health Issues. 2020;30(6):484-492. doi: 10.1016/j.whi.2020.08.001

- 30. Somerville K, Neal-Barnett A, Stadulis R, Manns-James L, Stevens-Robinson D. Hair cortisol concentration and perceived chronic stress in low-income urban pregnant and postpartum Black women. J Racial Ethn Health Disparities. 2021;8(2):519-531. doi: 10.1007/s40615-020-00809-4

- 31. Giurgescu C, Engeland CG, Templin TN, Zenk SN, Koenig MD, Garfield L. Racial discrimination predicts greater systemic inflammation in pregnant African American women. Appl Nurs Res. 2016;32:98-103. doi: 10.1016/j.apnr.2016.06.008

- 32. Gress-Smith JL, Luecken LJ, Lemery-Chalfant K, Howe R. Postpartum depression prevalence and impact on infant health, weight, and sleep in low-income and ethnic minority women and infants. Matern Child Health J. 2012;16(4):887-893. doi: 10.1007/s10995-011-0812-y

- 33. Kozhimannil KB, Trinacty CM, Busch AB, et al. Racial and ethnic disparities in postpartum depression care among low-income women. Psychiatr Serv. 2011;62(6):619-625. doi: 10.1176/appi.ps.62.6.619

- 34. Saha S, Beach MC. Impact of physician race on patient decision-making and ratings of physicians: a randomized experiment using video vignettes. J Gen Intern Med. 2020;35(4):1084-1091. doi: 10.1007/s11606-020-05646-z

- 35. Takeshita J, Wang S, Loren AW, et al. Association of racial/ethnic and gender concordance between patients and physicians with patient experience ratings. JAMA Netw Open. 2020;3(11):e2024583. doi: 10.1001/jamanetworkopen.2020.24583

- 36. Fernandez Turienzo C, Newburn M, Agyepong A, et al.; NIHR ARC South London Maternity and Perinatal Mental Health Research and Advisory Teams. Addressing inequities in maternal health among women living in communities of social disadvantage and ethnic diversity. BMC Public Health. 2021;21(1):176. doi: 10.1186/s12889-021-10182-4

- 37. Dennis CL, Chung-Lee L. Postpartum depression help-seeking barriers and maternal treatment preferences: a qualitative systematic review. Birth. 2006;33(4):323-331. doi: 10.1111/j.1523-536X.2006.00130.x

- 38. Abrams LS, Dornig K, Curran L. Barriers to service use for postpartum depression symptoms among low-income ethnic minority mothers in the United States. Qual Health Res. 2009;19(4):535-551. doi: 10.1177/1049732309332794

- 39. Chaudron LH, Szilagyi PG, Tang W, et al. Accuracy of depression screening tools for identifying postpartum depression among urban mothers. Pediatrics. 2010;125(3):e609-e617. doi: 10.1542/peds.2008-3261

- 40. Tandon SD, Cluxton-Keller F, Leis J, Le HN, Perry DF. A comparison of three screening tools to identify perinatal depression among low-income African American women. J Affect Disord. 2012;136(1-2):155-162. doi: 10.1016/j.jad.2011.07.014

- 41. Hsieh WJ, Sbrilli MD, Huang WD, et al. Patients’ perceptions of perinatal depression screening: a qualitative study. Health Aff (Millwood). 2021;40(10):1612-1617. doi: 10.1377/hlthaff.2021.00804

- 42. Putnam-Hornstein E, Needell B, King B, Johnson-Motoyama M. Racial and ethnic disparities: a population-based examination of risk factors for involvement with child protective services. Child Abuse Negl. 2013;37(1):33-46. doi: 10.1016/j.chiabu.2012.08.005

- 43. Wang S, Pathak J, Zhang Y. Using electronic health records and machine learning to predict postpartum depression. In: Ohno-Machado L, Séroussi B, eds. MEDINFO 2019: Health and Wellbeing e-Networks for All. IOS Press; 2019:888-892.

- 44. Amit G, Girshovitz I, Marcus K, et al. Estimation of postpartum depression risk from electronic health records using machine learning. BMC Pregnancy Childbirth. 2021;21(1):630; Epub ahead of print. doi: 10.1186/s12884-021-04087-8

- 45. Beck CT. Predictors of postpartum depression: an update. Nurs Res. 2001;50(5):275-285. doi: 10.1097/00006199-200109000-00004

- 46. Ihongbe TO, Masho SW. Do successive preterm births increase the risk of postpartum depressive symptoms? J Pregnancy. 2017;2017:4148136. doi: 10.1155/2017/4148136

- 47. Helle N, Barkmann C, Bartz-Seel J, et al. Very low birth-weight as a risk factor for postpartum depression four to six weeks postbirth in mothers and fathers: cross-sectional results from a controlled multicentre cohort study. J Affect Disord. 2015;180:154-161. doi: 10.1016/j.jad.2015.04.001

- 48. Smithson S, Mirocha J, Horgan R, Graebe R, Massaro R, Accortt E. Unplanned cesarean delivery is associated with risk for postpartum depressive symptoms in the immediate postpartum period. J Matern Fetal Neonatal Med. 2022;35(20):3860-3866. doi: 10.1080/14767058.2020.1841163

- 49. Wyatt T, Shreffler KM, Ciciolla L. Neonatal intensive care unit admission and maternal postpartum depression. J Reprod Infant Psychol. 2019;37(3):267-276. doi: 10.1080/02646838.2018.1548756

- 50. Clusmann J, Kolbinger FR, Sophie Muti H, et al. The future landscape of large language models in medicine. Commun Med (Lond). 2023;3(1):141.

- 51. Agniel D, Kohane IS, Weber GM. Biases in electronic health record data due to processes within the healthcare system: retrospective observational study. BMJ. 2018;361:k1479. doi: 10.1136/bmj.k1479

- 52. Obermeyer Z, Powers B, Vogeli C, Mullainathan S. Dissecting racial bias in an algorithm used to manage the health of populations. Science. 2019;366(6464):447-453. doi: 10.1126/science.aax2342

- 53. Zhang Y, Wang S, Hermann A, Joly R, Pathak J. Development and validation of a machine learning algorithm for predicting the risk of postpartum depression among pregnant women. J Affect Disord. 2021;279:1-8. doi: 10.1016/j.jad.2020.09.113

- 54. Hochman E, Feldman B, Weizman A, et al. Development and validation of a machine learning-based postpartum depression prediction model: a nationwide cohort study. Depress Anxiety. 2021;38(4):400-411. doi: 10.1002/da.23123

- 55. Park Y, Hu J, Singh M, et al. Comparison of methods to reduce bias from clinical prediction models of postpartum depression. JAMA Netw Open. 2021;4(4):e213909. doi: 10.1001/jamanetworkopen.2021.3909

- 56. Slezak J, Sacks D, Chiu V, et al. Identification of postpartum depression in electronic health records: validation in a large integrated health care system. JMIR Med Inform. 2023;11:e43005. doi: 10.2196/43005

- 57. Wakefield C, Frasch MG. Predicting patients requiring treatment for depression in the postpartum period using common electronic medical record data available antepartum. AJPM Focus. 2023;2(3):100100. doi: 10.1016/j.focus.2023.100100

- 58. English S, Steele A, Williams A, et al. Modelling of psychosocial and lifestyle predictors of peripartum depressive symptoms associated with distinct risk trajectories: a prospective cohort study. Sci Rep. 2018;8(1):12799.

- 59. Zhang W, Liu H, Silenzio VMB, Qiu P, Gong W. Machine learning models for the prediction of postpartum depression: application and comparison based on a cohort study. JMIR Med Inform. 2020;8(4):e15516. doi: 10.2196/15516

- 60. Andersson S, Bathula DR, Iliadis SI, Walter M, Skalkidou A. Predicting women with depressive symptoms postpartum with machine learning methods. Sci Rep. 2021;11(1):7877. doi: 10.1038/s41598-021-86368-y

- 61. Gopalakrishnan A, Venkataraman R, Gururajan R, et al. Predicting women with postpartum depression symptoms using machine learning techniques. Mathematics. 2022;10(23):4570. doi: 10.3390/math10234570

- 62. Yang ST, Yang SQ, Duan KM, et al. The development and application of a prediction model for postpartum depression: optimizing risk assessment and prevention in the clinic. J Affect Disord. 2022;296:434-442. doi: 10.1016/j.jad.2021.09.099

- 63. Venkatesh KK, Kaimal AJ, Castro VM, Perlis RH. Improving discrimination in antepartum depression screening using the Edinburgh Postnatal Depression Scale. J Affect Disord. 2017;214:1-7. doi: 10.1016/j.jad.2017.01.042

- 64. Matsuo S, Ushida T, Emoto R, et al. Machine learning prediction models for postpartum depression: a multicenter study in Japan. J Obstet Gynaecol Res. 2022;48(7):1775-1785. doi: 10.1111/jog.15266

- 65. Huang Y, Alvernaz S, Kim SJ, et al. Predicting prenatal depression and assessing model bias using machine learning models. medRxiv. Preprint posted online July 18, 2023. doi: 10.1101/2023.07.17.23292587

- 66. Brouwers EPM, van Baar AL, Pop VJM. Does the Edinburgh Postnatal Depression Scale measure anxiety? J Psychosom Res. 2001;51(5):659-663. doi: 10.1016/S0022-3999(01)00245-8

- 67. Kroenke K, Spitzer RL. The PHQ-9: a new depression diagnostic and severity measure. Psychiatr Ann. 2002;32:509-515. doi: 10.3928/0048-5713-20020901-06

- 68. Kroenke K, Spitzer RL, Williams JBW. The PHQ-9: validity of a brief depression severity measure. J Gen Intern Med. 2001;16(9):606-613. doi: 10.1046/j.1525-1497.2001.016009606.x

- 69. Cox JL, Holden JM, Sagovsky R. Detection of postnatal depression: development of the 10-item Edinburgh Postnatal Depression Scale. Br J Psychiatry. 1987;150:782-786. doi: 10.1192/bjp.150.6.782

- 70. Smith-Nielsen J, Egmose I, Wendelboe KI, Steinmejer P, Lange T, Vaever MS. Can the Edinburgh Postnatal Depression Scale-3A be used to screen for anxiety? BMC Psychol. 2021;9(1):118. doi: 10.1186/s40359-021-00623-5

- 71. Wong E. Evaluating bias-mitigated predictive models of perinatal mood and anxiety disorders. GitHub. Accessed August 20, 2024. https://github.com/emilyfranceswong/Evaluating-Bias-Mitigated-Predictive-Models-of-Perinatal-Mood-and-Anxiety-Disorders/tree/main

- 72. Dwork C, Hardt M, Pitassi T, et al. Fairness through awareness. Paper presented at: ITCS ’12: Proceedings of the 3rd Innovations in Theoretical Computer Science Conference; January 8-10, 2012;214-226. Cambridge, MA. Accessed August 15, 2024. https://dl.acm.org/doi/abs/10.1145/2090236.2090255

- 73. Hardt M, Price E, Srebro N. Equality of opportunity in supervised learning. Paper presented at: 30th Conference on Neural Information Processing Systems; December 5-10, 2016; Barcelona, Spain.

- 74. Hou B, Mondragón A, Tarzanagh DA, et al. PFERM: a fair empirical risk minimization approach with prior knowledge. AMIA Jt Summits Transl Sci Proc. 2024;2024:211-220.

- 75. Kamiran F, Calders T. Data preprocessing techniques for classification without discrimination. Knowl Inf Syst. 2012;33:1-33. doi: 10.1007/s10115-011-0463-8

- 76. Jiang H, Nachum O. Identifying and correcting label bias in machine learning. Paper presented at: 23rd International Conference on Artificial Intelligence and Statistics; August 26-28, 2020; Palermo, Italy. In: Proceedings of the Twenty Third International Conference on Artificial Intelligence and Statistics, PMLR 108:702-712, 2020.

- 77. Calmon FP, Wei D, Vinzamuri B, et al. Optimized pre-processing for discrimination prevention. Paper presented at: 31st Conference on Neural Information Processing Systems; December 4-9, 2017; Long Beach, CA. In: 31st Conference on Neural Information Processing Systems; 2017.

- 78. Xing X, Liu H, Chen C, et al. Fairness-aware unsupervised feature selection. Paper presented at: 30th ACM International Conference on Information & Knowledge Management; November 1-5, 2021; New York, NY. In: Proceedings of the 30th ACM International Conference on Information and Knowledge Management; 2021:3548-3552.

- 79. Zhang BH, Lemoine B, Mitchell M. Mitigating unwanted biases with adversarial learning. Paper presented at: 2018 AAAI/ACM Conference on AI, Ethics, and Society; October 21-23, 2018. In: Proceedings of the 2018 AAAI/ACM Conference on AI, Ethics, and Society; 2018:335-340.

- 80. Frankham C, Richardson T, Maguire N. Psychological factors associated with financial hardship and mental health: a systematic review. Clin Psychol Rev. 2020;77:101832. doi: 10.1016/j.cpr.2020.101832

- 81. Myers CA. Food insecurity and psychological distress: a review of the recent literature. Curr Nutr Rep. 2020;9(2):107-118. doi: 10.1007/s13668-020-00309-1

- 82. Liu Y, Njai RS, Greenlund KJ, Chapman DP, Croft JB. Relationships between housing and food insecurity, frequent mental distress, and insufficient sleep among adults in 12 US States, 2009. Prev Chronic Dis. 2014;11:E37. doi: 10.5888/pcd11.130334

- 83. Downey L, Van Willigen M. Environmental stressors: the mental health impacts of living near industrial activity. J Health Soc Behav. 2005;46(3):289-305. doi: 10.1177/002214650504600306
