Abstract

Background

Feedback plays an integral role in clinical training and can profoundly impact students’ motivation and academic progression. The shift to online teaching, accelerated by the COVID-19 pandemic, highlighted the necessity of transitioning traditional feedback mechanisms to digital platforms. Despite this, there is still a lack of clarity regarding effective strategies and tools for delivering digital feedback in clinical education. This scoping review aimed to assess the current utilization of digital feedback methods in clinical education, with a focus on identifying potential directions for future research and innovation.

Methods

A database search was conducted using a published protocol based on the Joanna Briggs Institute framework, covering literature published between January 2010 and December 2023. Six databases were searched: PubMed/MEDLINE, EBSCOhost, Scopus, Google Scholar, Union Catalogue of Theses and Dissertations, and WorldCat Dissertations and Theses. Reviewers independently screened the papers against eligibility criteria and discussed the papers to attain consensus. Extracted data were analyzed qualitatively and descriptively.

Results

Of the 2412 records identified, 33 reports met the inclusion criteria. Digital tools explored for feedback included web-based and social sites, smart device applications, virtual learning environments, virtual reality, and artificial intelligence. Convenience, immediate and personalized feedback, and enhanced formative assessment outcomes were major facilitators of digital feedback utility. Technical constraints, limited content development, training, and data security issues hindered the adoption of these tools. Most reports were empirical studies published in the global North and conducted with undergraduate medical students.

Conclusion

This review highlighted a geographical imbalance in research on feedback exchange via digital tools for clinical training and stressed the need for increased studies in the global South. Furthermore, there is a call for broader exploration across other health professions and postgraduate education. Additionally, student perceptions of digital tools as intrusive necessitate a balanced integration with traditional feedback dialogues. The incorporation of virtual reality and artificial intelligence presents promising opportunities for personalized, real-time feedback, but requires vigilant governance to ensure data integrity and privacy.

Scoping review protocol

Supplementary Information

The online version contains supplementary material available at 10.1186/s13643-024-02705-y.

Keywords: Digital tools, Applications, Digital feedback, Feedback, Clinical training

Background

Feedback is a powerful tool that educators can utilize to improve student motivation, engagement, and performance [1]. Feedback in education is generally understood as a continuum, an ongoing teaching and learning process, used to support and promote student learning [1, 2]. In clinical training, feedback has been defined as “specific information about the comparison between a trainee’s observed performance and a standard, given with the intent to improve the trainee’s performance” [3]. Effective feedback provides information on observations rather than judgement, highlights the differences between benchmark and actual outcomes, and offers students an opportunity to grow through self-reflection [4]. Feedback can also guide teaching and assessment strategies and help develop self-regulated learners [5].

The concept of feedback provision has been reconceptualized with the adoption of competency-based learning in medical education [6]. In this model, students are at the core of the feedback process, and their engagement in feedback exchange is encouraged [6]. It is acknowledged that feedback is a formative, continuous process and that instructors need to provide appropriate feedback regularly so students can remediate observed behaviors timeously [7, 8]. However, even though instructors believe that they provide effective, timely feedback, students appear to disagree [9]. This could be attributed to students’ lack of awareness that feedback was being provided, difficulties in processing the feedback, or an actual lack of feedback [9]. Student acceptance of feedback may depend on various factors, including the mode and tone of feedback delivery, cultural influences, the medium of instruction (for example, feedback not delivered in the student’s first language), student expectations, or the use of technology [4, 10]. It is recommended that feedback delivery be reviewed to enhance how students perceive and use the feedback provided to them [11].

Modes of feedback delivery include written, verbal, gestural, video-based, or automated via virtual learning systems [4, 12–14]. While it is recognized that no single feedback model will suit every clinical context [15], the digitization of education has demonstrated promise, offering the potential for various feedback modalities. Digital tools in education gained widespread prominence with the enforced shift to online learning during COVID-19, along with associated challenges in education continuity and feedback provision [16, 17]. In clinical training, digital tools were in use prior to the pandemic, but several novel alternatives were attempted during this period to support clinical training and feedback. Some of the digital tools used to promote medical education and feedback delivery included smartphone applications; videoconferencing, social networking, and instant messaging platforms such as Zoom, Facebook, and WhatsApp; and virtual and augmented reality [18–20].

Considering the digital evolution of medical education, the aim of this scoping review was to identify and describe the digital tools and applications currently being used in feedback exchange in clinical training. Furthermore, it aimed to spotlight prevailing trends and gaps regarding the adoption and use of these digital resources in giving and receiving feedback for learning in clinical training.

Methods

Protocol

This scoping review was informed by a published protocol [21], based on the Joanna Briggs Institute (JBI) framework, and adhered to the reporting guideline of the Preferred Reporting Items for Systematic Reviews and Meta-Analyses extension for Scoping Reviews (PRISMA-ScR) [22, 23] (see Additional file 1).

Eligibility criteria

Peer-reviewed studies, including original research, review papers and guides, and grey literature, including conference proceedings, on digital tools and applications used to give and receive feedback in clinical training were included. Full-text articles that were available in English and included undergraduate and/or postgraduate medical education or clinical training with a specific focus on feedback delivered via digital technologies or electronic modality were sought. Opinion papers and studies utilizing digital tools and applications for reasons other than feedback provision in clinical training environments were excluded. The timeframe for the database search was from 01 January 2010 to 31 December 2023, as most digital tools in clinical education gained prominence over the past decade [24].

Information sources and search strategy

Database searches were conducted, with assistance from an experienced subject librarian, to find articles that fulfilled the eligibility criteria of this scoping review. The following databases were searched:

PubMed/MEDLINE

EBSCOhost (Academic search complete, CINAHL with full text)

Google Scholar

Scopus

Union Catalogue for Theses and Dissertations via SABINET Online

WorldCat Dissertations and Theses

In October 2022, an initial search was conducted to develop the study protocol [21]. Subsequently, database searches were conducted in February 2023 and January 2024. Details of the database search are provided in Additional file 2.

Screening and selection

The results were downloaded to an EndNote library and duplicate entries were removed. Two researchers independently screened the titles and abstracts against the eligibility criteria. The full texts of all suitable articles were then retrieved and reviewed. A manual search of the reference lists of these articles and a hand search were also conducted. If consensus on the eligibility of an article was not reached, a third reviewer made the final decision.
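The screening rule described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name `screening_decision` and its boolean inputs are hypothetical, and treating agreement between the two reviewers as "consensus" is a simplification of the discussion process the reviewers actually followed.

```python
from typing import Optional


def screening_decision(
    reviewer_a: bool,
    reviewer_b: bool,
    reviewer_c: Optional[bool] = None,
) -> bool:
    """Return True if an article is judged eligible (hypothetical helper).

    reviewer_a, reviewer_b: independent eligibility judgements.
    reviewer_c: judgement of a third reviewer, consulted only when the
    first two disagree.
    """
    # Assumption: matching judgements stand in for consensus.
    if reviewer_a == reviewer_b:
        return reviewer_a
    # No consensus: the third reviewer makes the final decision.
    if reviewer_c is None:
        raise ValueError("Reviewers disagree; a third reviewer's decision is required")
    return reviewer_c
```

For example, `screening_decision(True, False, reviewer_c=True)` returns the third reviewer's judgement, whereas `screening_decision(True, True)` never consults a third reviewer.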

Data charting and synthesis of results

A data charting form was created for this scoping review. Initially, one reviewer tested the form, which was then deliberated upon with the other reviewers. Following further testing and critical discussion involving all reviewers, the form was revised and, once consensus was reached, used to compile the information. The following descriptive data were collected: year of publication, country of publication, article type, sampling method, study design, data collection tools, participant profile, type of digital tools and applications, the main features and functions of the digital tools and applications, their current use for feedback exchange in clinical training, and the facilitators and barriers of using these tools and applications in clinical training. The data charting form is found in Additional file 3. Content thematic analysis [21] was adopted to extract the relevant data. The findings were coded and reported in a narrative for each identified category. In accordance with the JBI framework, scoping reviews provide an overview of current evidence; formal assessment of methodological quality is not mandatory and was therefore not conducted [25].

Results

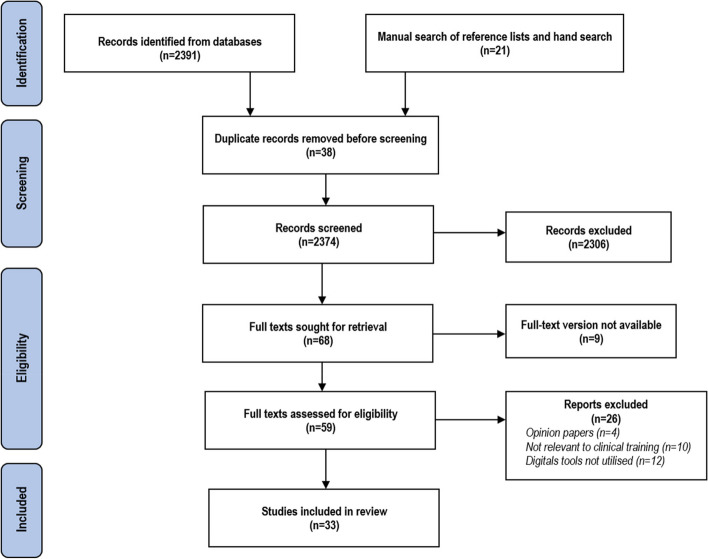

The database search, including the manual search of reference lists and hand search, identified 2412 reports. After the removal of duplicates and completion of title and abstract screening, 68 papers were eligible for review. The eligibility assessment process led to the inclusion of 33 papers in this scoping review (Fig. 1, Table 1).

Fig. 1.

PRISMA flow diagram for scoping review of digital tools and applications used for feedback exchange in clinical training
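The selection counts reported above imply two derived figures, which the short Python sketch below makes explicit. The three input numbers come from the text; the function and key names are hypothetical. Because the text does not state how many records were duplicates versus title and abstract exclusions, only their combined total is computed.

```python
def flow_summary(identified: int, eligible: int, included: int) -> dict:
    """Derive exclusion counts from the reported selection figures."""
    if not (identified >= eligible >= included >= 0):
        raise ValueError("Counts must be non-increasing along the flow")
    return {
        # Duplicates plus title/abstract exclusions (split not reported).
        "removed_before_full_text": identified - eligible,
        # Papers assessed for eligibility but not included.
        "excluded_at_full_text": eligible - included,
    }


# Figures reported in the Results: 2412 identified, 68 eligible, 33 included.
flow = flow_summary(identified=2412, eligible=68, included=33)
print(flow)
```

Under these figures, 2344 records were removed before full-text review and 35 of the 68 eligible papers were excluded during the eligibility assessment.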

Table 1.

Summary of included publications

| Author; Year of publication; Country | Article type | Sampling method; Study design; Data collection tools | Participant profile; Sample size | Aim | Key findings | Digital tools and applications available for feedback exchange | Main functions or features | Current use for feedback exchange in clinical training | Sub-themes for facilitators (F) and barriers (B) |
|---|---|---|---|---|---|---|---|---|---|
| De Kleijn et al. [26]; 2013; Netherlands | Original research | Purposive; Mixed method; Survey | Biomedical Sciences; Undergraduate; n = 147 | To provide insight on student reasons for using or not using OFA | OFA on VLE were useful in providing feed up, feedback, and feed forward information to help students prepare for summative assessments and improved course completion rates | VLE | Immediate feedback | FA | F: convenience; B: technical constraints, digital literacy |
| Ferenchick et al. [27]; 2013; USA | Innovation in research | Purposive; Quantitative method; Performance data | Medicine; Undergraduate; n = 883 | To develop an easily accessible and user-friendly web-based assessment and feedback tool with customizable content that can record trainee progress for both learner and program evaluations | User-friendly application and is highly feasible in competency-based medical education | Just in Time Medicine; e-logbook | Cloud-based, customizable; Real-time data of training program and feedback | WBA | F: convenience, improved administration; B: device privacy |
| Forgie et al. [28]; 2013; Canada | Review | Keywords search; Review; Database search | Research papers; Undergraduate; n = 37 | To describe how X (Twitter) may be used as a learning tool in medical education | X is easily accessible, useful for immediate personalized feedback, and provided students with control over their learning process | X (Twitter) | Immediate feedback | FA | F: convenience; B: digital literacy, data authenticity |
| Gray et al. [29]; 2014; UK | Innovation in research | Purposive; Action research; Literature review and survey | Medicine; Undergraduate; n = 12 | To develop a novel smartphone application to provide feedback about the trainees’ performance and on the trainers’ clinical training and supervision skills | Healthcare Supervision Logbook serves as a logbook of procedures, collects daily feedback from trainers and trainees, and is useful in identifying trends in clinical practice and training | Healthcare Supervision Logbook; e-logbook | Cloud-based mobile application; Real-time data of training program and feedback | WBA | F: convenience, improved administration; B: content development |
| Stone [30]; 2014; UK | Original research | Convenience; Mixed method; Survey | Medicine; Undergraduate; n = 75 | To determine the factors that could influence a medical student’s engagement with written feedback delivered through an online marking tool | Most students preferred the online feedback as compared to handwritten feedback, only a few were dissatisfied and further feedback dialogues between lecturer and students were recommended | Online feedback tool (Grademark by Turnitin) | Timely feedback | FA | F: convenience; B: technical constraints, training |
| Hennessy et al. [31]; 2016; UK | Original research | Convenience; Qualitative method; Survey and focus group | Medicine; Undergraduate; n = 150 | To determine if X (Twitter) can support student learning on a neuroanatomy module and its impact on student engagement and learning experiences | X was a useful platform for immediate feedback, created supportive student network and positively impacted on students' learning experience | X (Twitter) | Immediate feedback | FA | F: convenience, enhanced FA outcomes; B: training |
| Liu et al. [32]; 2016; Australia | Original research | Purposive; Randomized crossover study; Questionnaires | Medicine; Undergraduate; n = 268 | To evaluate the effectiveness of EQClinic in improving the nonverbal clinical communication skills of medical students | EQClinic facilitated student reflection and improved nonverbal clinical communication skills | EQClinic | Immediate feedback | Nonverbal communication skills | F: convenience, enhanced FA outcomes; B: technical constraints, training |
| Lefroy et al. [33]; 2017; UK | Innovation in research | Purposive; Action research; Performance data and focus group | Medicine; Undergraduate; n = 666 | To determine whether an app-based software system that supports creation and storage of assessment feedback summaries makes WBA easier for clinical tutors and enhances the educational impact on medical students | The app was designed to provide feedback on the trainee’s WBA and deemed useful for detailed feedback but device usage during feedback discussions was distracting and further training for tutors on app usage was recommended | Keele Workplace Assessor; Mobile and web-based application; e-logbook | Automatic speech recognition; Real-time data of training program and feedback | WBA | F: convenience; B: technical constraints, digital literacy |
| Abdulla [34]; 2018; Ireland | Original research | Convenience; Quantitative method; Survey and performance data | Medicine; Undergraduate; n = 65 | To validate the utilization of online student response systems in medical education | Online interactive exercises with immediate feedback were positively received by students and improved class performance | Socrative | Immediate feedback; Real-time class performance data | FA | F: convenience, improved administration; B: technical constraints |
| Donkin et al. [35]; 2019; Australia | Original research | Purposive; Mixed method; Survey | Medical Laboratory Science; Undergraduate; n = 28 | To determine if students who used video feedback and online resources displayed greater engagement with the subject and achieved higher grades | Virtual training may be effective when the hands-on approach is too complex for early learners, expensive, or inaccessible due to laboratory or time constraints. Video-assisted feedback helped with self-reflection and expert feedback improved student performance | Interactive lessons on SmartSparrow Adaptive e-Learning Platform; Video-assisted feedback via Blackboard | Immediate feedback; Interactive virtual lessons; Immediate access to skills video for reflection | FA | F: convenience, enhanced FA outcomes; B: training |
| Dixon et al. [36]; 2020; UK | Original research | Purposive; Action research; Performance data | Trainers; n = 12 | To measure aspects of the validity of the assessments and qualitative clinical feedback provided by VR dental simulators and comparison of the feedback from VR dental simulator and clinical tutors | The Virteasy dental simulator provided reliable and clinically relevant qualitative feedback. VR simulators need to be supported by well-defined and clear pedagogy to maximize their utility and ensure validated approaches to assessment | Virteasy dental simulator by HRV | Real-time, qualitative feedback | FA | F: enhanced FA outcomes, customizable content; B: currently limited clinical scenarios |
| Duggan et al. [37]; 2020; Canada | Innovation in research | Purposive; Mixed method; Performance data and survey | Medicine; Undergraduate; n = 704 | To evaluate the use of mobile technology in recording of EPA in an undergraduate medical curriculum | The eClinic Card allowed for recording of the level of EPA and monitoring of students. Although it was easy to use, instructors required further coaching and feedback training | eClinic card; Mobile-based application; e-logbook | Real-time data of training program and feedback | WBA, EPA | F: convenience, improved administration; B: faculty training |
| McGann et al. [38]; 2020; USA | Original research | Purposive; Non-random study; Survey | Medicine; Undergraduate; Trainees n = 86; Trainers n = 21 | To examine the feasibility and utility of a completely online surgical skills elective for undergraduate medical students | Students preferred a hybrid elective. Peer feedback was not highly rated, and multiple, shorter videos were recommended for a more complete evaluation | Instrument Identification Module [purposegames.com]; Video-assisted feedback [box.com]; Zoom feedback session | Timely feedback | FA | F: enhanced FA outcomes, safe learning environment; B: training |
| Rucker et al. [39]; 2020; USA | Innovation in research | Convenience; Action research with participatory enquiry; Survey | Medicine; Undergraduate; n = 118 | To facilitate a clinical interviewing skills lab and telemedicine-type encounter with standardized patients using Zoom breakout and waiting room features | Useful alternative to live group activities and for FA. It is not practical to assess every student if there are large student classes, and more faculty are required to host further sessions | Breakout rooms in Zoom for online OSCEs | Real-time, personalized feedback | FA | F: convenience; B: content development, training |
| Mukherjee et al. [40]; 2021; India | Original research | Purposive; Interventional study; Performance data and questionnaire | Medicine; Undergraduate; n = 197 | To objectively quantify the benefit of virtual education using WhatsApp-based discussion groups | Instant messaging platforms were popular, especially during the pandemic. WhatsApp-based case discussions were a good supplementation for surgical training and evaluation | WhatsApp; Instant messaging application | Encrypted data; Customizable; Timely feedback | FA | F: convenience; B: content development, digital literacy |
| Snekalatha et al. [41]; 2021; India | Curriculum development and assessment | Purposive; Quantitative method; Survey | Medicine; Undergraduate; n = 100 | To determine medical students’ perception of the reliability of the unproctored online assessments and the usefulness of the feedback they receive on their performance, and practical issues of these online tests | OFA via Google Forms provided faster feedback than traditional testing methods. Although technical challenges were faced with network connectivity, the immediate feedback from any location improved student engagement and helped them prepare for summative assessments | Google Forms | Cloud-based; Immediate feedback | FA | F: convenience, improved administration, enhanced FA outcomes; B: technical constraints, content development |
| Tautz et al. [42]; 2021; Switzerland | Original research | Convenience; Convergent mixed method; Survey and focus group | Psychology; Undergraduate; n = 95 | To evaluate the perceived impact of digital tools on active learning, repetition, and feedback in a large university class | The classroom response system was well received by students and was perceived to improve active learning and student engagement | Classroom response system; Question tool | Immediate feedback | FA | F: convenience, enhanced FA outcomes; B: training |
| Ain et al. [43]; 2022; Pakistan | Original research | Convenience; Quantitative method; Survey | Medical, Dental, Pharmacy; Undergraduate; n = 351 | To determine undergraduate medical and health sciences students’ perceptions of OFA | Immediate feedback was appreciated by students and improved student engagement, preparation for summative assessments, and course completion rates although time constraints were reported. Useful for educational continuity during the enforced lockdown during the pandemic and in low-resourced environments | VLE | Immediate feedback | FA | F: convenience; B: technical constraints |
| Barteit et al. [44]; 2022; Zambia | Original research | Purposive; Convergent mixed-methods; Interviews and performance data | Medicine; Undergraduate; Trainees n = 10; Trainers n = 2 | To evaluate the feasibility and acceptability of an e-logbook for continuous evaluation and monitoring of medical skills training | The e-logbook was accepted for monitoring and evaluating medical skills during the clinical rotations. Challenges included e-logbook design and need to improve user flow. It also created a perception of increased work burden as additional time was required to complete the e-logbook. Continuous training and contextual redesign were recommended | e-logbook Internal Medicine LMMU; Tablet-based e-logbook | Offline use possible; Immediate feedback; Real-time data of training program | WBA | F: convenience, improved administration; B: content development, training |
| Fazlollahi et al. [45]; 2022; Canada | Original research | Purposive; Randomized clinical trial; Performance data | Medicine; Undergraduate; n = 70 | To determine if AI tutoring feedback resulted in improvement in surgical performance over 5 practice sessions and on learning and skill retention | In comparison to remote expert instructions, feedback from the virtual online assistant simulation showed improved performance outcome and skill transfer, suggesting advantages for its use in simulation training. Repeated individualized feedback was possible | Virtual online assistant application in a tumor resection simulator, NeuroVR | Real-time feedback | FA | F: customizable content, improved administration; B: currently single clinical scenario |
| Goodman et al. [46]; 2022; USA | Original research | Convenience; Innovation in research; Survey | Medicine; Undergraduate n = 20; Postgraduate n = 23; Trainers n = 13 | To create a free, mobile, case-based gastroenterology/hepatology educational resource and report on trainee feedback on these cases | A novel open-access educational resource that supported on-the-go learning for trainees at various stages of studies, and promoted independent, self-paced learning, and clinical reasoning development. It was perceived as a valuable tool especially for remote learning | GISIM open-access website resource with virtual case-based learning; Mobile platform | Customizable; Real-time iterative feedback | FA | F: convenience; B: content development |
| Hung et al. [47]; 2022; Taiwan | Original research | Convenience; Quantitative method; Survey | Medicine; Undergraduate; n = 176 | To determine the effectiveness of SRS in the online dermatologic video curriculum on medical students | The integration of SRS in the online video curriculum improved students’ completion rates and learning outcomes. SRS is easy to use and enhanced student–faculty interaction | Zuvio | Immediate feedback | FA | F: convenience, enhanced FA outcomes; B: technical constraints |
| Sader et al. [48]; 2022; Switzerland | Original research | Purposive; Prospective mixed method; Survey and focus group | Medicine; Undergraduate; n = 158 | To evaluate quality of the feedback given by near peers during online OSCEs and explore the experience of near-peer feedback from both learner’s and near peer’s perspectives | Junior students found the online OSCEs less stressful and more personalized, but feedback on hands-on skills was a challenge. Senior students found feedback provision enhanced their skills. Further training on feedback techniques and content development of OSCEs was required | Zoom | Immediate, personalized feedback | FA | F: convenience; B: content development |
| Shankar [49]; 2022; Malaysia | Review | Narrative review | n/a | To review how AI can be used in health professions education | Intelligent tutoring systems can support student learning by providing individualized feedback and creating personalized learning pathways and help identify knowledge and skill gaps and support learning. Data integrity and privacy are important issues to consider. Unconscious bias in the data used to educate AI systems should be avoided | AI tutoring systems | Customizable; Personalized automated feedback | FA | F: convenience, customizable, safe learning environment; B: data integrity and privacy |
| Singaram [50]; 2022; South Africa | Original research | Convenience; Action research (participatory); Interviews | Medicine; Postgraduate; Trainees n = 5; Trainers n = 6 | To design and develop a mobile application to enable bi-directional, specific, non-judgemental feedback to improve self-regulated learning and enhance clinical competence | The Feedback App was convenient and easy to use. There was potential to enhance clinical training thereby improving patient outcomes | Mobile Feedback App | Immediate feedback | FA | F: convenience, enhanced FA outcomes; B: technical constraints |
| Al Kahf et al. [51]; 2023; France | Original research | Purposive; Randomized controlled study; Performance data and survey | Medicine; Undergraduate; n = 355 | To compare the scores of trainees with access to Chatprogress against the control group | Students’ performance improved with access to and use of chatbots, and was a potential tool for teaching clinical reasoning in medicine | Chatprogress website with chatbot games | Immediate feedback | FA | F: convenience, safe learning environment; B: currently limited clinical scenarios |
| Daud et al. [52]; 2023; Qatar | Original research | Purposive; Interventional study; Questionnaire | Dentistry; Undergraduate; n = 23 | To evaluate the impact of VRHS in restorative dentistry on the learning experiences and perceptions of dental students | Novice dental students perceived VRHS as a useful tool for enhancing their manual dexterity. VR should support rather than replace conventional mannequin-simulated training in pre-clinical restorative dentistry. Advancements to the VRHS hardware and software are required to bridge the gap and provide a smooth transition to clinics. Further research with longitudinal data is recommended | VRHS (SIMtoCARE Dente) | Immediate, haptic sensory feedback | FA | F: safe learning environment, reduced overheads, enhanced FA outcomes; B: currently unrealistic scenarios, cost factors |
| Drachuk et al. [53]; 2023; Ukraine | Conference proceedings | Narrative review | n/a | To characterize digital online tools used to provide effective feedback | The introduction of various online tools into the educational process motivates students and educators and provides an opportunity for students to receive high-quality, constructive feedback | Google Forms, Typeform, Survey Monkey, Kahoot, Slido, Mentimeter, Miro | Immediate feedback | FA | F: convenience, improved administration; B: technical constraints |
| How et al. [54]; 2023; Canada | Original research | Purposive; Randomized controlled trial; Questionnaires | Medicine; Undergraduate n = 52; Feedback providers (residents) n = 11 | To implement remote smartphone-based feedback methods and report on the perceived feasibility and utility thereof in comparison to standard feedback methods | The study demonstrated feasibility and utility of an accessible and affordable model of remote video-delivered feedback in technical skills acquisition. Further investigation on more advanced technical skills which limit third-party observation (non-video endoscopy), and non-surgical skills were suggested | Remote live feedback or remote recorded feedback via Zoom and Google Drive | Immediate feedback | FA | F: enhanced FA outcomes, customizable content; B: cost factors, training, digital fatigue |
| Marty et al. [55]; 2023; Switzerland | Guide | n/a | n/a | This AMEE Guide was developed to support institutions and programs that want to utilize mobile technology to implement EPA and WBA | Mobile technology should ensure easy, efficient, and valid information to assess for students’ readiness for autonomy in patient care, and for progression in medical training. Collection of assessment data on a large scale creates the fear of a surveillance society. Unintended data usage or breach is possible, and institutions must create ethical rules to protect the patient and data user | e-portfolios and mobile-based applications | Immediate feedback; Real-time data on training programs | WBA, EPA | F: convenience, enhanced FA outcomes; B: cost factors, data ethics and legal concerns |
| Narayanan et al. [56]; 2023; India | Review | Narrative review | n/a | To categorize the utility of available AI tools for students, faculty, and administrators | There is currently limited adoption of AI in medical education. Institutions should encourage AI use while providing appropriate support to educators and students to ensure optimal ethical use of technology | AI systems | Immediate feedback | FA | F: enhanced FA outcomes; B: data integrity, faculty digital literacy |
| Peñafiel et al. [57]; 2023; Ecuador | Conference proceedings | Purposive; Randomized controlled study; Performance data and questionnaire | Medicine; Undergraduate; Trainees n = 100; Trainers n = 10 | To compare the effectiveness of AI-based tutoring against expert tutoring | Students who received AI-based tutoring demonstrated better performance in the multi-test examination and appeared to have better retention of complex medical skills | Virtual Learning Experience platform with AI | Immediate feedback | FA | F: enhanced FA outcomes; B: content development |
| Yilmaz et al. [58]; 2023; Canada | Original research | Purposive; Randomized controlled trial; Performance data and questionnaire | Medicine; Undergraduate; n = 120 | To explore optimal feedback methodologies to enhance trainee skill acquisition in simulated surgical bimanual skills learning during brain tumor resections, by comparing numerical, visual, and visuospatial feedback | Simulations with autonomous visual and visuospatial assistance may be effective in teaching bimanual operative skills using visual and visuospatial feedback modalities | NeuroVR neurosurgical simulator with haptic feedback | Immediate automated feedback | FA | F: enhanced FA outcomes, safe learning environment, customizable content; B: content development |

OFA: online formative assessments; VLE: virtual learning environment; FA: formative assessment; WBA: workplace-based assessments; VR: virtual reality; AI: artificial intelligence; EPA: entrustable professional activities; SRS: student response system; OSCEs: objective structured clinical examinations; VRHS: virtual reality with haptic simulation; F: facilitators; B: barriers

Characteristics of included publications

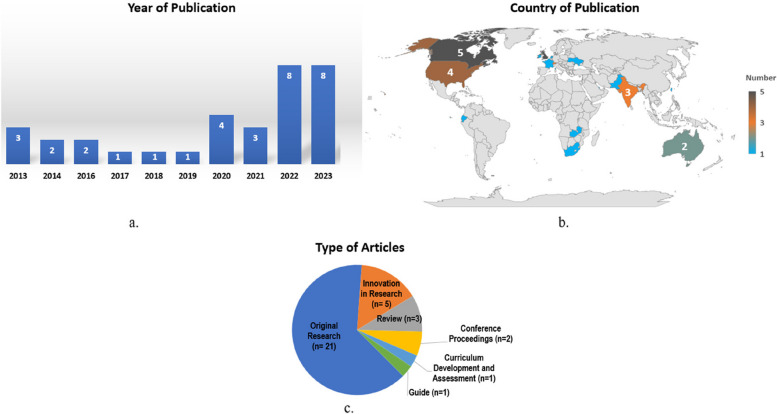

Year of publication

The timeframe selected for this scoping review was 01 January 2010 to 31 December 2023. Eight papers were published between 2010 and 2017 [26–33], and 25 papers were published from 2018 onward (the halfway mark of the selected time range) [34–58] (Fig. 2a).

Fig. 2. Characteristics of included publications. a Year of publication. b Country of publication. c Type of articles

Country of publication

Of the 33 papers selected for this scoping review, 24 originated in the global North, namely, the UK (n = 5) [29–31, 33, 36], Canada (n = 5) [28, 37, 45, 54, 58], the USA (n = 4) [27, 38, 39, 46], Switzerland (n = 3) [42, 48, 55], Australia (n = 2) [32, 35], and one paper each from France [51], Ireland [34], the Netherlands [26], Ukraine [53], and Taiwan [47]. Nine papers emanated from the global South: three from India [40, 41, 56] and one each from Ecuador [57], Malaysia [49], Qatar [52], Pakistan [43], South Africa [50], and Zambia [44] (Fig. 2b). In the context of this study, global North and global South refer not to geographical but rather to political and economic contexts, and draw attention to the inequitable power dynamics in global research and publication [59, 60].

Type of articles

The types of studies were original research (n = 21) [26, 30–32, 34–36, 38, 40, 42–48, 50–52, 54, 58], innovation in research (n = 5) [27, 29, 33, 37, 39], review (n = 3) [28, 49, 56], curriculum development and assessment [41], conference proceedings (n = 2) [53, 57], and a guide [55] (Fig. 2c). For the purpose of this review, the “innovation in research” category was based on the actual publication classification, which described studies that developed their digital tools or applications in iterative steps.

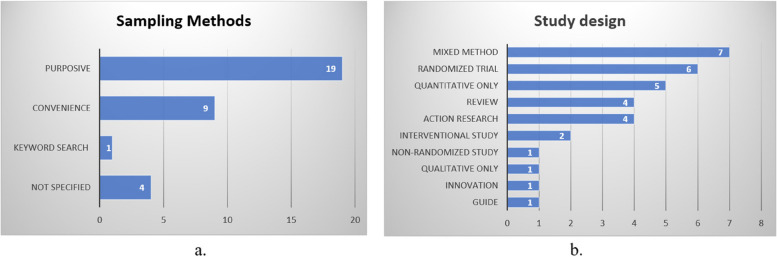

Sampling methods

Sampling methods of the eligible papers comprised purposive sampling (n = 19) [26, 27, 29, 32, 33, 35–38, 40, 41, 44, 45, 48, 51, 52, 54, 57, 58], convenience sampling (n = 9) [30, 31, 34, 39, 42, 43, 46, 47, 50], literature search using keywords [28], and unspecified methods [49, 53, 55, 56] (Fig. 3a).

Fig. 3. Characteristics of included publications. a Sampling methods. b Study design

Study design

Study designs included mixed methods (n = 7) [26, 30, 35, 37, 42, 44, 48], randomized trials (n = 6) [32, 45, 51, 54, 57, 58], quantitative only (n = 5) [27, 34, 41, 43, 47], action research (n = 5) [29, 33, 36, 39, 50], review (n = 4) [28, 49, 53, 56], interventional study (n = 2) [40, 52], and one paper each for non-randomized study [38], qualitative only [31], innovation [46], and a guide [55] (Fig. 3b).

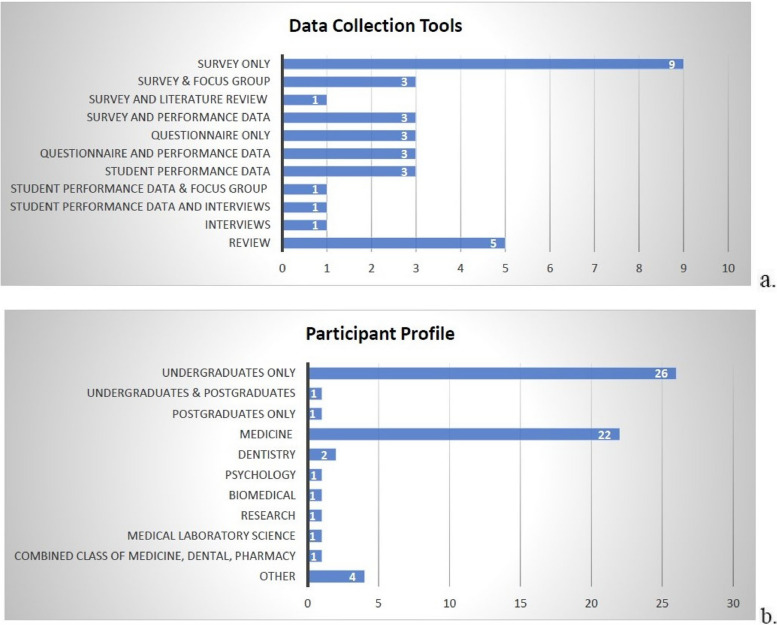

Data collection tools

Nine studies used surveys as a sole method of data collection [26, 30, 35, 38, 39, 41, 43, 46, 47], while further studies employed surveys in combination with focus groups [31, 42, 48], performance data [34, 37, 51], and literature review [29]. In total, six studies used questionnaires [32, 40, 52, 54, 57, 58], and of these, three studies employed questionnaires in conjunction with performance data [40, 57, 58]. In addition, three studies used performance data exclusively as data collection tools [27, 36, 45], while further studies used performance data in combination with either focus groups [33] or interviews [44]. One study collected data solely from interviews [50], and five studies conducted a literature search [28, 49, 53, 55, 56] (Fig. 4a).

Fig. 4. Characteristics of included publications. a Data collection tools. b Participant profile

Participant profile

Of the empirical studies (n = 28), 26 investigated undergraduate students [26–35, 37–45, 47, 48, 51, 52, 54, 57, 58], one paper explored postgraduate students [50], while a further paper investigated both undergraduate and postgraduate students [46]. Studies conducted on medical students comprised 22 papers [27, 29–34, 37–41, 44–48, 50, 51, 54, 57, 58], two studies were conducted in dentistry [36, 52], and one paper each for biomedical sciences [26], research [28], psychology [42], medical laboratory science [35], and a combined class of medical, dental, and pharmacy students [43] (Fig. 4b).

Types of digital tools and applications utilized for feedback exchange in clinical training

Four categories of digital tools were identified in feedback exchange for clinical training: web-based platforms, applications, virtual learning environments, and virtual reality and artificial intelligence.

Web-based platforms

Four papers investigated feedback provision using web-based platforms. Two explored the social networking platform X (formerly Twitter) [28, 31], and one explored Turnitin [30]. One study developed a dedicated open-access website, called GISIM, to deliver Gastroenterology/Hepatology resources [46].

Applications

Fourteen papers reported on the use of either mobile or web-based applications in feedback provision. These included the instant messaging application WhatsApp [40]; novel custom-designed e-logbook applications (n = 5), namely Just in Time Medicine [27], Healthcare Supervision Logbook [29], eClinic Card [37], Keele Workplace Assessor [33], and e-logbook LMMU [44]; e-portfolios [55]; and a mobile formative assessment application [50]. Two papers investigated open-access applications: Google Drive [54], and box.com and purposegame.com [38]. Interactive web-based student response systems (SRS) were investigated by five papers, via learning management systems (LMS) [42] or through Zuvio [47], Socrative [34], Google Forms [41, 53], and Miro, Mentimeter, Kahoot!, and Slido [53].

Virtual learning environments

Three papers described feedback through the higher education institute LMS, namely Blackboard, Moodle, Sakai, and the SmartSparrow Adaptive e-Learning Platform [26, 35, 43]. Virtual environments such as the teleconferencing platform Zoom [38, 39, 48] and the teleconsultation site EQClinic [32] were also explored in feedback provision.

Virtual reality and artificial intelligence

Eight papers investigated virtual reality (VR) and artificial intelligence (AI) in feedback provision. In dentistry training, papers described the use of the Virteasy VR system [36] and the SIMtoCARE Dente VR system, the latter of which was programmed to provide haptic feedback [52]. In neurosurgical skills training, the NeuroVR system was investigated both as a source of haptic feedback [58] and in combination with a Virtual Operative Assistant (VOA) that provided AI tutoring feedback [45]. AI tutoring systems were explored in two further papers [49, 57], and chatbots and gamification were also investigated in feedback provision [51, 56].

Main features of digital tools and applications in feedback exchange

Eight main features of the digital tools and applications were identified, including the use of cloud-based technology (n = 9) [27, 29, 35, 39, 41, 43, 48, 53, 54]. Data security (n = 5), in the form of encryption [40], secure cloud [29], or internal servers [33, 55, 56], and user authentication to gain access to the feedback (n = 7) [29, 33, 34, 39, 47, 48, 51] were notable features. Other reported features included the user interface (n = 10) [27, 29, 32, 33, 40, 44, 46, 47, 50, 53], utility in resource-constrained areas and offline use of digital resources [40, 44], and access through the institute virtual learning environment (VLE) [35, 43]. Automatic speech recognition was described [33], as well as customizable content (n = 7) [27, 35, 40, 41, 43, 49, 53]. Digital tools and applications that permitted multiple simultaneous users were explored by 13 papers [28, 31, 34, 35, 39, 40, 42, 43, 46–48, 51, 53].

Main functions of digital tools and applications in feedback exchange

The focus of this scoping review was on the feedback process; therefore, the main functions of the digital tools and applications were reported in relation to temporal aspects and types of feedback provision.

Immediate automated feedback

Immediate feedback occurs in asynchronous learning conditions; pre-engineered responses are automatically supplied when students ask a question or answer a quiz, for example, responses by a chatbot or explanations in an online quiz. Nine papers reported immediate feedback to trainees, including studies using the VLE via LMS [35, 43] and EQClinic [32], Google Forms and SRS [34, 41, 42, 47, 53], and chatbots [51].

Real-time feedback

Real-time feedback is provided in synchronous learning environments in which follow-up questions can be asked. It was further categorized as feedback provided to the trainee and to the faculty. Thirteen papers described real-time feedback to trainees using web-based platforms such as GISIM [46] and X [28, 31], VLE via Zoom [39, 48, 54], the instant messaging platform WhatsApp [40], VR via Virteasy VR [36], NeuroVR with VOA [45], VR systems with haptic feedback [52, 58], and AI tutoring systems [49, 57]. Four papers described real-time feedback to faculty in the form of real-time data on the program and student progress [27, 29, 33, 37].

Timely feedback

Timely feedback was reported as feedback provided within 2 h of the trainee's request. Eight papers reported timely feedback using WhatsApp [40], Turnitin [30], video-assisted feedback via box.com [38] and Zoom [54], and e-logbooks [27, 29, 33, 37].

Individualized feedback

Personalized feedback for trainees was described by 14 papers, via WhatsApp chat feature [40] and X using tweets and hashtags [28, 31], VLE via LMS [35], Zoom [39, 48, 54], and EQClinic [32]. Some VR and AI tools provided adaptive feedback through machine learning processes (n = 6) [36, 45, 52, 56–58].

Other feedback functions

Studies reported on digital feedback provision from a variety of sources. Four papers explored peer feedback [38, 48, 50, 54], and two papers utilized multi-feedback sources that included patients, peers, trainees, and trainers [29, 32]. In addition, iterative feedback [46], feedforward and feed up feedback provision [26], feedback on nonverbal communication [32], and bi-directional feedback [50] were described.

Utilization of the digital tools and applications in feedback exchange

Feedback utility was categorized into workplace-based assessments (WBA), entrustable professional activities (EPA), and formative assessments (FA). WBA refers to documented observations by a trainer regarding a trainee’s clinical competencies and ability to provide patient-centric care [61], while EPA evaluations are completed while the trainee is being supervised to gain feedback on their competency, allowing them to eventually perform the tasks without supervision [37]. E-logbooks were used for WBA (n = 6) [27, 29, 33, 37, 44, 55] and EPA [37].

FA is “assessment for learning” that continues throughout the learning process [5]. It is useful as it informs students on their level of understanding and enables the instructor to monitor student progress and intervene timeously if necessary [5, 62]. Twenty papers explored digital tools for FA via web-based and VLE platforms [28, 31, 34, 35, 38, 39, 41–43, 46–48, 53], WhatsApp [40], mobile application [50], chatbots [51, 56], and AI tutoring systems [45, 49, 57].

Facilitators of the use of digital tools and applications in feedback exchange

Facilitators of the use of digital tools and applications in feedback provision were categorized as convenience, enhanced formative assessment outcomes, customizable content, safe learning environment, and improved administrative processes.

Convenience

Feedback could be easily accessed on all digital devices (n = 18) [27, 29, 32–35, 37, 40–44, 46, 47, 50, 51, 53, 55], and digital tools were feasible for WBA and programmatic assessment [37, 44, 46, 55]. Digital tools allowed for ease of access from any location and time (n = 7) [30, 31, 41, 48, 49, 53, 54] and offline use was also possible [44]. Platforms such as Zoom, WhatsApp, and X allowed for FA and continuity of clinical training [39, 40], especially in the lockdown period during COVID-19 [26, 48] and were useful in resource-constrained regions [28, 44].

Enhanced formative assessment outcomes

Various feedback formats were reported to improve trainee skills and performance (n = 25). Immediate, real-time, and timely feedback (n = 10) [27–29, 36, 38, 42, 47, 49, 52, 56] from multiple feedback sources [29, 32, 55] allowed for longitudinal monitoring of trainees [37, 44, 55]. Digital tools provided opportunities for student self-reflection (n = 12) [28, 29, 32, 35–37, 42, 45, 49, 50, 54, 57]. Eye-hand coordination and fine motor skills were enhanced with the use of VR systems [52]. Unlimited viewing access to personalized feedback [54], opportunities for repeated practice in a low-stakes environment [36, 38, 57, 58], and the ability to train at their own pace and time [54] helped trainees to prepare for future residencies [38] and summative assessments [41, 47]. This also improved trainee engagement in the module [35] and course completion rates [47].

Customizable content

Digital tools allowed for the creation of personalized, objective, and iterative FA [36, 46, 52]. They offered flexibility by providing options for several clinical scenarios of varying duration and knowledge levels; therefore, trainees with different levels of expertise could practice and receive tailored feedback [45, 51]. It was also possible to adapt feedback modalities to suit the user’s personal preferences [58]. Furthermore, anonymous student login to the FA could be enabled, and this encouraged student participation in the module [42].

Safe learning environment

Five papers highlighted the usefulness of VR and AI in promoting trainee and patient safety. AI learning scenarios minimized the risk of user injuries from sharps [52], and VR and AI training tools allowed trainees to learn in a safe environment without risk to a real patient (n = 5) [49, 51, 52, 56, 58].

Improved administrative processes

Seventeen papers reported that digital tools enhanced the monitoring and administration of the academic program. The collection of daily data and the instant feedback from FA aided with monitoring class performance and identifying trends and challenges that could prompt early intervention by trainers (n = 5) [26, 29, 34, 47, 53]. Evaluations and feedback plans were linked to the trainee’s cloud-based account [27], and digital tools supported a programmatic assessment approach (n = 5) [27, 37, 44, 46, 55]. Digital tools allowed for efficient administration with large numbers of trainees per class [41, 42]. E-logbooks promoted data safety by eliminating the challenges that occurred when paper-based logbooks were lost [44]. Digital tools were cost-effective for use in data collation [41]. The potential for unlimited independent practice sessions with VR and AI saved instructor time (n = 5) [45, 49, 51, 52, 56] and reduced the overheads for resource consumables and faculty [36, 52].

Barriers to the use of digital tools and applications in the feedback process

The use of digital tools and applications in the feedback process was hindered by technical constraints, cost factors, content development, training, data security and privacy, and digital literacy.

Technical constraints

A few students experienced technical constraints in the use of some of the applications [26, 30, 31]. Challenges with the design of the digital devices included the layout [50], small typeface, and an occasionally inaccurate speech recognition facility [33]. In addition, the device needed to have a good camera, microphone, and adequate lighting [32, 54]. Network connectivity was required to use the digital tools [33, 53], and the lack of a stable internet connection hampered active participation in FA [41, 43]. One application was restricted to a single country and available in two languages only [47].

Cost factors

High initial investment and running costs were reported for the installation, maintenance, and update of software [52, 54, 55]. Additional faculty were required to assess all students during virtual activities such as online OSCEs, and supplementary financial resources were required to compensate these staff [39].

Content development

The authenticity and accuracy of learning resources accessed via social platforms such as X were questioned [28]. Some of the online feedback tools were designed to provide one-way flow of information which is not ideal, and therefore bi-directional feedback mechanisms were recommended [30, 33, 44]. Digital feedback is not a replacement for high-quality face-to-face feedback [29]. The nature of online activities meant that hands-on clinical training was not possible via Zoom and WhatsApp [40, 48]. It was impractical and time-consuming to assess every student in the online OSCE, especially with large student numbers [39]. Unproctored online FA may not be an accurate reflection of student performance [41].

In one study, the e-logbook was not adapted for the local context, which resulted in trainees and trainers experiencing challenges with terminology and use [44]. Faculty also contended that it was time-intensive to complete the e-logbooks in addition to providing feedback to the many trainees they had to supervise [44].

The use of multiple choice FA does not reflect real-life conversations with patients [51], and students preferred open questions and requested the clinical reasoning for both correct and incorrect options [51]. The prevailing VR and AI training tools were limited to single scenarios or simple skill assessments and could not assess complex competencies or a complete range of competencies [36, 45, 46, 51]. The VR dental simulator had unrealistic teeth, jaw range of movement, and handpieces, and the use of associated instruments was impractical [52]. AI tutorials required a higher level of interaction and dedication from students compared to face-to-face methods [57], and cognitive overload may affect the trainee’s understanding of content [58].

Need for training

The lack of training on the use of X was thought to have affected student engagement in the course [31]. Students could have been provided with guidelines on suitable questions for the feedback tool [42]. Some students were nervous during the recording of FA which may have impacted on their performance [35], and they requested further training on how to use optimal camera angles and lighting to create FA videos and guidelines on how to conduct peer review [38, 54]. Student training was required on the use of the e-logbooks [44]. Faculty training was required on the efficient use of the applications on the digital devices [37, 44]. Furthermore, faculty required guidance on coaching and feedback strategies [37, 39] and content development for online FA [39].

Data security, data integrity, and device privacy

Data security and privacy and the ethical use of data were highlighted by five papers [28, 29, 49, 55, 56]. Data integrity is essential as an unconscious bias in data will impact on feedback provided by AI tutoring systems [49]. Device privacy was a concern as some trainees and trainers were uncomfortable with using the trainees’ personal mobile device for feedback provision [27, 33].

Digital literacy

Student acceptance of and engagement with digital feedback can depend on various factors such as their learning preferences, time management skills, and the presence of digital fatigue [26, 54]. Some students had difficulty expressing themselves through a digital format [40] and preferred face-to-face feedback as they could ask follow-up questions [38]. Students with poor discipline were easily distracted by other social applications on their devices and by the large volume of notifications during group activities [28, 40]. Some trainees were distracted by the trainer’s use of the device to input feedback during a live feedback session [33]. A further concern was faculty fear that AI would take their jobs, which affected their acceptance of AI in the institutional space [56].

Discussion

This scoping review explored the utility of digital tools and applications for feedback exchange in clinical training.

The volume of publications in the latter part of the selected timeframe (2018–2023), 25 of the 33 included papers, highlighted the marked growth in the use of digital tools for feedback in clinical training. This trend aligned with the increased integration of technology in education, partially attributed to improved accessibility to mobile smart digital devices, wireless networks, and faster broadband [63]. The challenges posed by the COVID-19 pandemic also prompted the recent acceleration in the adoption of digital tools by medical education. However, it must be noted that the absence of earlier studies in this review may limit a comprehensive understanding of the historical evolution of digital tools in this context.

The significant volume of studies from the global North, particularly the UK, Canada, and Europe, raised concerns about the applicability of these findings to diverse global contexts. Furthermore, the limited number of studies from the global South highlighted a significant gap in understanding of how digital tools are utilized for feedback provision in resource-constrained settings. In addition, research on digital tools may be lacking in resource-constrained regions due to the burgeoning digital divide between the global North and global South, with the inequities in the latter regions being further exacerbated after COVID-19 [64]. Another consideration in the lack of representation of related research in the global South could stem from reported “epistemic injustice” [65]. Under-resourced researchers from the global South face challenges in gaining recognition, funding, support, and publishing their research in high-impact journals that are predominantly based in the global North [60, 65].

The predominant focus on undergraduate students, mostly in medical education, indicated a potential bias in the current literature. The scant representation of other health professions and postgraduate students raised questions about the inclusivity and diversity of research in this area. In addition, while mixed-methods designs prevailed, the limited number of purely qualitative studies may mean that nuanced aspects of learner and instructor experiences with digital feedback tools are overlooked.

The diverse array of digital tools and applications in the eligible studies reflected the dynamic landscape of technology integration in clinical training. The emergence of mobile clinical applications demonstrates promise for educational continuity, particularly in resource-limited settings. The capacity for customizable content and multiple feedback modalities and sources is a major driver for the shift to digitization of clinical feedback. There is a greater depth of feedback, from patients, trainers, trainees, and peers, which can promote student self-reflection and enable easier longitudinal monitoring and programmatic assessment compared to traditional paper-based data collection processes.

The advantages of using VR for feedback exchange in clinical training included the potential for unlimited, iterative FA in a low-risk environment, and immediate, objective, personalized feedback [36]. It provided a safe learning environment for students without causing harm to real patients [66]. However, the training of clinical reasoning in, for example, multiple choice format did not translate to how real-life conversations with patients are conducted [51]. Current VR training scenarios are confined to simple skills and limited knowledge transfer. Realistic complex clinical scenarios and debriefing feedback sessions with students are vital to optimize student learning through VR and AI clinical training programs. Interestingly, it is acknowledged that although VR training can enhance clinical knowledge, develop skills, and reduce errors, it could also create an unrealistic level of self-confidence, which may affect student performance with a live patient [67].

AI can be trained to discern the various levels of surgical skill expertise. It can also provide objective feedback on observed skills and grade student assignments and virtual OSCEs, thereby reducing the potential bias associated with traditional assessments and saving faculty time and resources [68]. AI can develop individualized learning plans and provide real-time iterative feedback by guiding students through their responses and recommending additional study references [68]. These data can provide valuable insight into student performance, identify the areas that require improvement, and support program monitoring and evaluation [68]. However, AI training tools are vulnerable to bias; therefore, the datasets used to train machine learning processes that create FA and customized student feedback should be transparent, trustworthy, valid, and reliable [69, 70].

To encourage student acceptance of feedback, it must be delivered in a recognizable and accessible format. Although digital tools are prevalent in the educational sphere, students may not always be willing or prepared to adopt digitization in an academic setting [71]. Digital fatigue [54] and cognitive overload [58] may affect the acceptance of digitized feedback. Furthermore, although students may be familiar with social platforms on a personal level, they may not understand the professional implications of data privacy and ethics on social sites [67]. Issues that have been raised include lack of awareness of the division between social and professional identities, posting of non-anonymized patient interactions, and the use of informal language on open-access sites [67].

The trainer’s attitudes and beliefs on technology also influence their adoption and use of technology [72]. It is reported that an intergenerational gap between students and faculty may result in the latter being less comfortable or familiar with technology and hence less likely to utilize digital tools for clinical training [67].

Some studies on digital tools reported that the structured feedback format was intended to promote feedback conversations between the trainee and trainer after observed activities; however, some trainees perceived the digital tool to be an “obtrusive third party” during feedback conversations as their trainers focused on completing the feedback on the device rather than engaging in a constructive feedback dialogue [33, 37]. Several studies recommended further training of instructors for optimum balance in the use of digital tools in the feedback conversations [29, 33, 37]. Major challenges that affect the roll-out of digitization in clinical education, especially in low-resourced areas, were identified as the lack of training for both students and educators on digital technology, and inadequate infrastructure and devices to enable effective utilization of technology [73]. Therefore, to ensure the successful adoption of digital resources in clinical training, continuing student and faculty training is essential, as well as easy access to digital tools and applications that have been adapted for local context [74].

A recent study described the adaptation of a digital WBA tool using a relationship-centric approach in peri-operative surgical training [75]. Positive findings included improved trainer-trainee communication, teaching, feedback, and learning outcomes [75]. This study reiterated the importance of engagement, iterative feedback, and collaboration in the feedback process by both feedback providers and users. These factors would also be essential in the successful adoption of digital tools and applications for feedback in clinical training.

Cloud-based technology is renowned for rapid storage and retrieval of large volumes of data [76]. In the studies that utilized cloud-based technology, trainers and management were able to receive daily and sessional updates from the data uploaded via the digital platforms which allowed them to monitor and identify potential challenges and implement timeous changes [29, 37]. This was found to have improved student engagement and program completion rates. However, cloud-based technology is vulnerable to security threats and attacks; hence, additional costs may be incurred to secure the data [76]. Furthermore, reports indicate that governance processes to oversee the utilization of digital applications in healthcare and the workplace are lacking [29, 55]. Although the rapid pace of digitization may improve communication, feedback provision, and patient outcomes in the delivery of healthcare, researchers suggest that academic and healthcare institutes develop appropriate governance frameworks to avoid unintended effects and to ensure the data security and privacy of the users of digital technology and their patients [77].

The positive impact of digital tools on education continuity and in resource-constrained regions is evident. Immediate feedback, real-time longitudinal monitoring of academic progress, and personalized feedback emerged as significant facilitators of digital tool use in clinical training, aligning with literature that emphasizes the positive influence of timely feedback on student engagement and learning outcomes. However, technical and cost constraints, digital literacy, concerns regarding data security and ethics, and the need for continuous training are some of the multifaceted challenges associated with the adoption of digital tools for feedback in clinical training.

Limitations

Only articles in the English language were sought, and potentially relevant studies published in other languages would not have been included in this scoping review. Further, while the selected databases are expansive, other potentially relevant databases may have been omitted due to limited access. Rapid digitization in education is occurring, as evidenced by the surge in recent publications regarding this topic. The database search covered publications up to December 2023, and it is likely that updated research in this area has since been published. A scoping review aims to map and describe current evidence and is not required to critique the methodological quality of the studies; hence, quality assessments of the included papers were not conducted [25]. This may introduce potential bias, with the reporting of positive outcomes only. The findings of the scoping review were overwhelmingly focused on feedback provision to undergraduate medical students and may not be generalizable to postgraduate and diverse student populations.

Conclusion

The findings from this scoping review map the evidence of what is currently known about the use of digital tools and applications in giving and receiving feedback in clinical training. The geographical imbalance in the literature prompts a call for increased research in the global South, acknowledging contextual differences and resource constraints. Furthermore, the current literature focuses on undergraduate medical students. A broader exploration of health professions beyond medicine and a more extensive examination of postgraduate education are needed for a comprehensive understanding of the role of digital tools in diverse educational settings.

Student perceptions that digital tools are an “obtrusive third party” in feedback sessions highlighted the need to balance the use of technology with constructive, timely feedback dialogues between trainer and trainee. There has been a notable transformation in recent years with the inclusion of VR and AI in clinical training. If the complexity of clinical scenarios is improved, these tools bode well for personalized, objective, real-time feedback. This can improve student motivation and engagement, thereby potentially enhancing performance and course completion rates; however, ensuring dataset integrity is vital for AI systems. Evidence also suggests that governance processes should be implemented to oversee data security, privacy, and integrity at the institutional level. Further research and implementation of appropriate protocols on these aspects are warranted.

In conclusion, this scoping review offers valuable insights into the effectiveness of digital tools and applications for feedback exchange in clinical training. Further, it highlights critical considerations and gaps in the literature, proposes avenues for future research, and stresses the significance of contextualizing findings within diverse educational contexts.

Supplementary Information

Additional file 1. Preferred Reporting Items for Systematic reviews and Meta-Analyses extension for Scoping Reviews (PRISMA-ScR) Checklist.

Acknowledgements

Not applicable.

Authors’ contributions

VSS led the development, conceptualization, and writing of the manuscript. RP contributed to the data analysis and interpretation, and preparation and revision of the manuscript. EMK contributed to the data search and analysis and review of the manuscript. All authors read and approved the manuscript.

Funding

This research did not receive any specific grant from funding agencies in the public, commercial, or not-for-profit sectors.

Data availability

All data generated or analyzed during this study are included in this published article and its supplementary information files.

Declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

We confirm that this work is original and has not been published elsewhere, nor is it currently under consideration for publication elsewhere. All authors involved agreed to submit this manuscript to Systematic Reviews.

Competing interests

The authors declare that they have no competing interests.

References

- 1. Hattie J, Timperley H. The power of feedback. Rev Educ Res. 2007;77(1):81–112. 10.3102/003465430298487.

- 2. Shepard LA. The role of assessment in a learning culture. J Educ. 2008;189(1/2):95–106.

- 3. Van De Ridder JMM, Stokking KM, McGaghie WC, Ten Cate OTJ. What is feedback in clinical education? Med Educ. 2008;42(2):189–97. 10.1111/j.1365-2923.2007.02973.x.

- 4. Amonoo HL, Longley RM, Robinson DM. Giving feedback. Psychiatr Clin. 2021;44(2):237–47. 10.1016/j.psc.2020.12.006.

- 5. Hooda M, Rana C, Dahiya O, Rizwan A, Hossain MS. Artificial Intelligence for assessment and feedback to enhance student success in higher education. Math Probl Eng. 2022:1–19. 10.1155/2022/5215722.

- 6. Ramani S, Könings KD, Ginsburg S, van der Vleuten CPM. Feedback redefined: Principles and practice. JGIM: J Gen Intern Med. 2019;34(5):744–9. 10.1007/s11606-019-04874-2.

- 7. Govender L, Archer E. Feedback as a spectrum: The evolving conceptualisation of feedback for learning. Afr J Health Prof Educ. 2021;13(1):12–3. 10.7196/AJHPE.2021.v13i1.1414.

- 8. Ramani S, Krackov SK. Twelve tips for giving feedback effectively in the clinical environment. Med Teach. 2012;34(10):787–91. 10.3109/0142159X.2012.684916.

- 9. Branch Jr WT, Paranjape A. Feedback and reflection: teaching methods for clinical settings. Acad Med. 2002;77(12 Part 1):1185–8. 10.1097/00001888-200212000-00005.

- 10. Shrivasta SR, Shrivasta PS, Ramasamy J. Effective feedback: An indispensable tool for improvement in quality of medical education. 2014.

- 11. Hattie J, Gan M. Instruction based on feedback. Handbook of Research on Learning and Instruction: Routledge; 2011. p. 263–85.

- 12. Dinham S. Feedback on feedback. Teacher: Nat Educ Mag. 2008(May 2008):20–3.

- 13. Halim J, Jelley J, Zhang N, Ornstein M, Patel B. The effect of verbal feedback, video feedback, and self-assessment on laparoscopic intracorporeal suturing skills in novices: a randomized trial. Surg Endosc Other Interv Tech. 2021;35(7):3787–95. 10.1007/s00464-020-07871-3.

- 14. Johnson CE, Weerasuria MP, Keating JL. Effect of face-to-face verbal feedback compared with no or alternative feedback on the objective workplace task performance of health professionals: a systematic review and meta-analysis. BMJ Open. 2020;10(3):e030672. 10.1136/bmjopen-2019-030672.

- 15. Burgess A, van Diggele C, Roberts C, Mellis C. Feedback in the clinical setting. BMC Med Educ. 2020;20:1–5. 10.1186/s12909-020-02280-5.

- 16. Domene-Martos S, Rodríguez-Gallego M, Caldevilla-Domínguez D, Barrientos-Báez A. The use of digital portfolio in higher education before and during the COVID-19 pandemic. Int J Environ Res Public Health. 2021;18(20):10904. 10.3390/ijerph182010904.

- 17. Jiang L, Yu S. Understanding changes in EFL teachers’ feedback practice during COVID-19: Implications for teacher feedback literacy at a time of crisis. Asia Pac Educ Res. 2021;30(6):509–18.

- 18. Belfi LM, Dean KE, Bartolotta RJ, Shih G, Min RJ. Medical student education in the time of COVID-19: A virtual solution to the introductory radiology elective. Clin Imaging. 2021;75:67–74. 10.1016/j.clinimag.2021.01.013.

- 19. Chandrasinghe PC, Siriwardana RC, Kumarage SK, Munasinghe BNL, Weerasuriya A, Tillakaratne S, et al. A novel structure for online surgical undergraduate teaching during the COVID-19 pandemic. BMC Med Educ. 2020;20:1–7. 10.1186/s12909-020-02236-9.

- 20. Igbonagwam HO, Dauda MA, Ibrahim SO, Umana IP, Richard SK, Enebeli UU, et al. A review of digital tools for clinical learning. J Med Women’s Assoc Nigeria. 2022;7(2):29–35. 10.4103/jmwa.jmwa_13_22.

- 21. Singaram VS, Bagwandeen CI, Abraham RM, Baboolal S, Sofika DNA. Use of digital technology to give and receive feedback in clinical training: A scoping review protocol. Syst Rev. 2022;11(1):268. 10.1186/s13643-022-02151-8.

- 22. Peters MDJ, Marnie C, Tricco AC, Pollock D, Munn Z, Alexander L, et al. Updated methodological guidance for the conduct of scoping reviews. Int J Evid Based Healthc. 2021;19(1):3–10. 10.1097/XEB.0000000000000277.

- 23. Tricco AC, Lillie E, Zarin W, O’Brien KK, Colquhoun H, Levac D, et al. PRISMA Extension for scoping reviews (PRISMA-ScR): Checklist and explanation. Ann Intern Med. 2018;169(7):467–73. 10.7326/M18-0850.

- 24. Usmanova G, Lalchandani K, Srivastava A, Joshi CS, Bhatt DC, Bairagi AK, et al. The role of digital clinical decision support tool in improving quality of intrapartum and postpartum care: experiences from two states of India. BMC Pregnancy Childbirth. 2021;21(1):1–12. 10.1186/s12884-021-03710-y.

- 25. Peters MDJ, Godfrey CM, Khalil H, McInerney P, Parker D, Soares CB. Guidance for conducting systematic scoping reviews. JBI Evidence Implementation. 2015;13(3):141–6. 10.1097/XEB.0000000000000050.

- 26. De Kleijn RAM, Bouwmeester RAM, Ritzen MMJ, Ramaekers SPJ, Van Rijen HVM. Students’ motives for using online formative assessments when preparing for summative assessments. Med Teach. 2013;35(12):e1644–50. 10.3109/0142159X.2013.826794.

- 27. Ferenchick GS, Solomon D. Using cloud-based mobile technology for assessment of competencies among medical students. PeerJ. 2013;1:e164. 10.7717/peerj.164.

- 28. Forgie SE, Duff JP, Ross S. Twelve tips for using Twitter as a learning tool in medical education. Med Teach. 2013;35(1):8–14. 10.3109/0142159X.2012.746448.

- 29. Gray TG, Hood G, Farrell T. The development and production of a novel Smartphone App to collect day-to-day feedback from doctors-in-training and their trainers. BMJ Innovations. 2015;1(1):25–32.

- 30. Stone A. Online assessment: What influences students to engage with feedback? Clin Teach. 2014;11(4):284–9.

- 31. Hennessy CM, Kirkpatrick E, Smith CF, Border S. Social media and anatomy education: Using Twitter to enhance the student learning experience in anatomy. Anat Sci Educ. 2016;9(6):505–15. 10.1002/ase.1610.

- 32. Liu C, Lim RL, McCabe KL, Taylor S, Calvo RA. A web-based telehealth training platform incorporating automated nonverbal behavior feedback for teaching communication skills to medical students: A randomized crossover study. J Med Internet Res. 2016;18(9):e246. 10.2196/jmir.6299.

- 33. Lefroy J, Roberts N, Molyneux A, Bartlett M, Gay S, McKinley R. Utility of an app-based system to improve feedback following workplace-based assessment. Int J Med Educ. 2017;8:207. 10.5116/ijme.5910.dc69.

- 34. Abdulla MH. The use of an online student response system to support learning of Physiology during lectures to medical students. Educ Inf Technol. 2018;23(6):2931–46. 10.1007/s10639-018-9752-0.

- 35. Donkin R, Askew E, Stevenson H. Video feedback and e-Learning enhances laboratory skills and engagement in medical laboratory science students. BMC Med Educ. 2019;19:1–12. 10.1186/s12909-019-1745-1.

- 36. Dixon J, Towers A, Martin N, Field J. Re-defining the virtual reality dental simulator: Demonstrating concurrent validity of clinically relevant assessment and feedback. Eur J Dent Educ. 2021;25(1):108–16. 10.1111/eje.12581.

- 37. Duggan N, Curran VR, Fairbridge NA, Deacon D, Coombs H, Stringer K, et al. Using mobile technology in assessment of entrustable professional activities in undergraduate medical education. Perspect Med Educ. 2021;10(6):373–7. 10.1007/s40037-020-00618-9.

- 38. McGann KC, Melnyk R, Saba P, Joseph J, Glocker RJ, Ghazi A. Implementation of an e-learning academic elective for hands-on basic surgical skills to supplement medical school surgical education. J Surg Educ. 2021;78(4):1164–74. 10.1016/j.jsurg.2020.11.014.

- 39. Rucker J, Steele S, Zumwalt J, Bray N. Utilizing Zoom breakout rooms to expose preclerkship medical students to TeleMedicine encounters. Med Sci Educ. 2020;30:1359–60. 10.1007/s40670-020-01113-w.

- 40. Mukherjee R, Roy P, Parik M. What’s up with WhatsApp in supplementing surgical education: An objective assessment. Ann R Coll Surg Engl. 2022;104(2):148–52. 10.1308/rcsann.2021.0145.

- 41. Snekalatha S, Marzuk SM, Meshram SA, Maheswari KU, Sugapriya G, Sivasharan K. Medical students’ perception of the reliability, usefulness and feasibility of unproctored online formative assessment tests. Adv Physiol Educ. 2021;45(1):84–8. 10.1152/advan.00178.2020.

- 42. Tautz D, Sprenger DA, Schwaninger A. Evaluation of four digital tools and their perceived impact on active learning, repetition and feedback in a large university class. Comput Educ. 2021;175:104338. 10.1016/j.compedu.2021.104338.

- 43. Ain NU, Jan S, Yasmeen R, Mumtaz H. Perception of undergraduate medical and Health Sciences students regarding online formative assessments during COVID-19. Pakistan Armed Forces Med J. 2022;72(3):882–6. 10.51253/pafmj.v72i3.7556.

- 44. Barteit S, Schmidt J, Kakusa M, Syakantu G, Shanzi A, Ahmed Y, et al. Electronic logbooks (e-logbooks) for the continuous assessment of medical licentiates and their medical skill development in the low-resource context of Zambia: A mixed-methods study. Front Med. 2022;9:943971. 10.3389/fmed.2022.943971.

- 45. Fazlollahi AM, Bakhaidar M, Alsayegh A, Yilmaz R, Winkler-Schwartz A, Mirchi N, et al. Effect of artificial intelligence tutoring vs expert instruction on learning simulated surgical skills among medical students: A randomized clinical trial. JAMA Network Open. 2022;5(2):e2149008. 10.1001/jamanetworkopen.2021.49008.

- 46. Goodman MC, Chesner JH, Pourmand K, Farouk SS, Shah BJ, Rao BB. Developing a novel case-based Gastroenterology/Hepatology online resource for enhanced education during and after the COVID-19 pandemic. Dig Dis Sci. 2023;68(6):2370–8. 10.1007/s10620-023-07910-8.

- 47. Hung C-T, Fang S-A, Liu F-C, Hsu C-H, Yu T-Y, Wang W-M. Applying the student response system in the online dermatologic video curriculum on medical students’ interaction and learning outcomes during the COVID-19 pandemic. Indian J Dermatol. 2022;67(4):477. 10.4103/ijd.ijd_147_22.

- 48. Sader J, Cerutti B, Meynard L, Geoffroy F, Meister V, Paignon A, et al. The pedagogical value of near-peer feedback in online OSCEs. BMC Med Educ. 2022;22(1):572. 10.1186/s12909-022-03629-8.