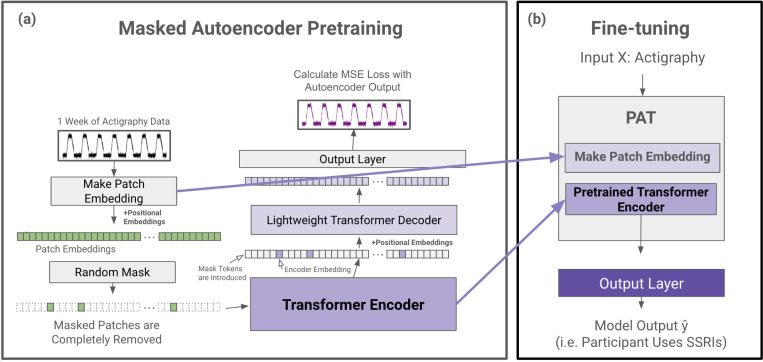

Figure 3. PAT Pretraining and Fine-tuning.

(a) During pretraining, actigraphy data is transformed into patch embeddings with positional embeddings added, and the patches are then randomly masked without replacement (e.g., 90% of patches are removed). The remaining patches are fed into a transformer encoder. The encoder output tokens are then realigned with the masked tokens, and positional embeddings are added again before the full sequence is fed into a lightweight decoder. The decoder output passes through a simple output layer that reconstructs the input actigraphy data, and the mean squared error between the input and the reconstruction is used as the loss. (b) After pretraining, the patch embedding layer and the pretrained transformer encoder are extracted to form PAT. Any output layer can be added to PAT for a variety of actigraphy understanding tasks (e.g., classification).
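The data flow in panel (a) can be illustrated with a minimal, dependency-free sketch. It is not the PAT implementation: the encoder and decoder are identity placeholders, positional embeddings are omitted, and the patch size, the zero-valued mask token, and all function names are hypothetical choices made for illustration. Only the 90% mask ratio and the mean squared error loss come from the caption.

```python
import random


def patchify(signal, patch_size):
    """Split a 1-D sequence into non-overlapping patches."""
    if len(signal) % patch_size != 0:
        raise ValueError("signal length must be divisible by patch_size")
    return [signal[i:i + patch_size] for i in range(0, len(signal), patch_size)]


def random_mask(num_patches, mask_ratio, rng):
    """Pick patch indices to mask by sampling without replacement."""
    num_masked = int(round(num_patches * mask_ratio))
    masked = sorted(rng.sample(range(num_patches), num_masked))
    masked_set = set(masked)
    kept = [i for i in range(num_patches) if i not in masked_set]
    return kept, masked


def realign(encoded_visible, kept, num_patches, mask_token):
    """Put encoded visible tokens back at their original positions and
    fill every masked position with a shared mask token."""
    full = [mask_token] * num_patches
    for token, idx in zip(encoded_visible, kept):
        full[idx] = token
    return full


def mse(target_patches, predicted_patches):
    """Mean squared error over all values in all patches."""
    target = [x for patch in target_patches for x in patch]
    predicted = [x for patch in predicted_patches for x in patch]
    return sum((t - p) ** 2 for t, p in zip(target, predicted)) / len(target)


# Toy "actigraphy" signal: 40 samples, hypothetical patch size of 4.
rng = random.Random(0)
signal = [float(i % 7) for i in range(40)]
patches = patchify(signal, patch_size=4)

# Mask 90% of patches, as in the caption's example.
kept, masked = random_mask(len(patches), mask_ratio=0.9, rng=rng)
visible = [patches[i] for i in kept]

# Placeholder encoder: identity. A real model would use a transformer here.
encoded_visible = visible

# Realign encoder outputs with mask tokens (assumed here to be all zeros).
mask_token = [0.0] * 4
decoder_input = realign(encoded_visible, kept, len(patches), mask_token)

# Placeholder decoder and output layer: identity.
reconstruction = decoder_input

loss = mse(patches, reconstruction)
```

With identity placeholders, the visible patch is reproduced exactly and the masked patches contribute all of the error; in a trained model the decoder would instead predict the masked patches from the encoded visible ones. For panel (b), only the patch embedding and encoder stages would be retained, with a task-specific output layer attached in place of the decoder.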