Abstract

Objective

To co-design artificial intelligence (AI)-based clinical informatics workflows to routinely analyse patient-reported experience measures (PREMs) in hospitals.

Methods

The context was public hospitals (n=114) and health services (n=16) in a large state in Australia serving a population of ~5 million. We conducted a participatory action research study with multidisciplinary healthcare professionals, managers, data analysts, consumer representatives and industry professionals (n=16) across three phases: (1) defining the problem, (2) current workflow and co-designing a future workflow and (3) developing proof-of-concept AI-based workflows. Co-designed workflows were deductively mapped to a validated feasibility framework to inform future clinical piloting. Qualitative data underwent inductive thematic analysis.

Results

Between 2020 and 2022 (n=16 health services), 175 282 PREMs inpatient surveys received 23 982 open-ended responses (mean response rate, 13.7%). Existing PREMs workflows were problematic due to overwhelming data volume, analytical limitations, poor integration with health service workflows and inequitable resource distribution. Three potential semiautomated, AI-based (unsupervised machine learning) workflows were developed to address the identified problems: (1) no code (simple reports, no analytics), (2) low code (Power BI dashboard, descriptive analytics) and (3) high code (Power BI dashboard, descriptive analytics, clinical unit-level interactive reporting).

Discussion

The manual analysis of free-text PREMs data is laborious and difficult at scale. Automating analysis with AI could sharpen the focus on consumer input and accelerate quality improvement cycles in hospitals. Future research should investigate how AI-based workflows impact healthcare quality and safety.

Conclusion

AI-based clinical informatics workflows to routinely analyse free-text PREMs data were co-designed with multidisciplinary end-users and are ready for clinical piloting.

Keywords: Medical Informatics, Patient-Centered Care, Health Services Research, Machine Learning

WHAT IS ALREADY KNOWN ON THIS TOPIC

It is difficult to routinely capture and integrate the patient voice into quality improvement cycles at scale. Free-text data collected from patient-reported experience measures (PREMs) provide rich context into the patient experience. Digital solutions are emerging to analyse this unstructured data with speed and precision; yet, understanding how PREMs analysis can be digitised in healthcare settings remains unexplored.

WHAT THIS STUDY ADDS

In partnership with a statewide healthcare system in Australia as a case setting, this study identified problems with existing workflows to routinely analyse free-text PREMs data. Three AI-based clinical workflows that use unsupervised machine learning algorithms were co-produced with multidisciplinary end-users to address the existing workflow problems and are ready to be evaluated in practice.

HOW THIS STUDY MIGHT AFFECT RESEARCH, PRACTICE OR POLICY

AI can potentially improve how the narrative patient experience is routinely analysed at scale. AI-based semi-automated analytics could improve the speed and accuracy of analysing free-text PREMs data to advance towards a learning health system.

Introduction

Patient experience is a key indicator of healthcare quality and safety. Recognition of the importance of measuring patient experience of healthcare has grown steadily over time and is now widely reflected in key healthcare performance frameworks, such as the Quadruple Aim.1 Improving patient experience has been associated with positive patient safety outcomes across diverse settings (eg, emergency care provision, paediatrics, elective surgery) and conditions (eg, chronic heart failure, mental health, stroke).2 In a hospital setting, patient satisfaction is commonly surveyed via patient-reported experience measures (PREMs). There are many validated PREMs that invite patients to rate elements of care using a simple numerical scale, but these ratings often overlook the richness of the patient narrative.3 Open-ended questions are now commonly included in PREMs surveys, such as ‘what was good about your care?’ and ‘what could be improved?’. Free-text (unstructured) data from patient experience surveys allow clinicians and administrators to learn from the narrative experiences of patients (rather than just quantitative ranking data) to conduct meaningful continuous quality improvement activity cycles in healthcare settings.4

Analysing free-text data at scale is time and resource intensive and increasingly unsustainable as acute care populations continue to grow. The complexities of large-scale manual analysis of free-text patient-generated health data are driving a new era of digital and AI-based analytical solutions. Natural language processing (NLP) and other machine learning (ML) techniques have emerged as contemporary tools to analyse free-text data from patient experience feedback.3 To date, most applications of NLP and ML for patient experience in health services have focused on analysing publicly sourced data from social media sites (eg, Twitter, Facebook) and healthcare forums (eg, National Health Service Choices, Yelp).3 There is limited research that has investigated how to integrate automation via NLP or ML techniques into routine practice to create a semi-automated (human and AI) workflow that analyses free-text PREMs data. Our study addressed this research and practice gap across three research questions (RQs):

RQ1: What are the current problems of analysing free-text PREMs data in the state public healthcare system?

RQ2: What is the current workflow, and how can we co-design an optimal future workflow to routinely analyse free-text PREMs in the state public healthcare system?

RQ3: What are the requirements of a proof-of-concept AI-based clinical informatics workflow to routinely analyse free-text PREMs data in the state public healthcare system, and what is the theoretical feasibility of translating this workflow into practice?

Our overall aim was to co-produce an AI-based (semi-automated) clinical informatics workflow to routinely analyse PREMs in hospitals and health services in a statewide public healthcare system in Australia that delivers inpatient care to more than 1.3 million patients across 114 hospitals each year.

Methods

Study design

The study design was qualitative cross-sectional and adhered to the Consolidated criteria for Reporting Qualitative Research checklist (online supplemental material).5 We adapted a generative co-design framework for healthcare innovation6 to answer our RQs in three phases: (1) framing the issue (ie, defining the problem) and understanding the current state, (2) generatively co-designing a desired future state and (3) exploring translation.6 Researchers and stakeholders collaborated across these three phases and applied specific qualitative methods to answer each RQ:

RQ1: Defining the problem (multidisciplinary stakeholder focus group)

RQ2: Current workflow and co-designing a new workflow (statewide key informant interviews)

RQ3: Proof-of-concept and assessing the theoretical feasibility of translating a new AI-based workflow into practice (co-design workshops)

The study was theoretically underpinned by participatory action research principles7 8 of iterative, collaborative and open-ended enquiry to co-create knowledge. We pursued collaboration between researchers and multidisciplinary stakeholders with direct experience of the problem to encourage problem-ownership, open dialogue and create a shared research environment to promote healthcare improvement for analysing PREMs data.8

Setting

The setting for this study was a statewide public hospital (n=114) and health service (n=16) network in Australia. This health system provides acute care for >1.3 million inpatients annually. PREMs inpatient surveys have been implemented in all 114 hospitals since May 2021. The average statewide survey response rate is ~14%.

Participants and recruitment

Due to the focus on patient safety and quality improvement, purposive sampling was used to identify and invite multidisciplinary stakeholders within the state healthcare system to participate. Directors/managers of the statewide patient safety and quality branch were purposively targeted for recruitment to answer RQ1 due to expert knowledge and experience of PREMs at a systems level. Multidisciplinary (healthcare professionals, data analysts, operational staff) stakeholders were purposively targeted in RQ2 to generatively co-design workflow solutions for PREMs data analysis. Multidisciplinary (quality improvement managers, health consumer engagement leads, data analysts, researchers) stakeholders and a health consumer were purposively invited across diverse health services (by rural, regional and metropolitan geography given variations in services across the state) to generatively co-design workflow solutions for PREMs data analysis (RQ2). Inclusion criteria were staff members currently employed by the public hospital and health service network with an active role in patient safety and quality improvement activities. There were no exclusion criteria.

A short overview (10 min) explaining the aims, design, benefits and risks of the study was offered to potential participants. Participants could re-negotiate or withdraw consent at any time throughout the project. All qualitative data collection was recorded with participant consent and transcribed automatically using Microsoft Teams. Researchers had no prior relationship with participants, participants’ knowledge of the researchers was limited to the present study, only approved researchers and participants were present during research activities, and no research activities were repeated.

Patient and public involvement

A health consumer representative was engaged to co-design solutions to PREMs data analysis to answer RQ2. Health consumer engagement leads (staff) from the statewide public hospital and health service were also invited to participate. Health consumers were not involved in designing or developing the study. Results will be disseminated via stakeholder email networks and social media.

Study procedure

RQ1: defining the problem

A statewide patient safety and quality branch was the primary stakeholder, and directors/managers (n=4) were invited to participate in a virtual focus group (~1 hour) to develop a problem statement relating to statewide PREMs free-text data analysis. Three open-ended questions were asked to develop a draft problem statement:

What is the current state of statewide PREMs free-text data analysis?

What is the ideal future state?

How can we fill the gap between the current and future state?

The draft problem statement was iteratively refined via email by all stakeholders until consensus was reached. Deidentified, aggregate data on PREMs survey administration were collected a priori from the state patient safety and quality improvement team and stratified by health service, including number of surveys distributed, survey responses (n (%)) and responses to the ‘satisfaction with overall care’ question (n (%)), to summarise the current state of PREMs responses and inform the problem statement.

RQ2: current workflow and co-designing a future workflow

Healthcare staff in health services with roles and responsibilities related to PREMs inpatient data were identified by the improvement service engaged in phase 1. These healthcare staff were approached and invited to participate in virtual key informant interviews (~45 min) to explore (a) the current state and (b) the ideal future state of local PREMs free-text data analysis in health services. Researchers (OC, Research Fellow (PhD); WC, Research Assistant (BSc); TS, Programme Manager (DipGov)) conducted key informant interviews with diverse quality and safety representatives (n=12) responsible for PREMs analysis across five health services. Semistructured interviews were conducted that explored: the current workflow for PREMs free-text data analysis, the ideal future state of workflows for PREMs free-text data analysis and the gap between the current and ideal states.

RQ3: proof-of-concept and theoretical feasibility assessment of an artificial intelligence (AI)-based workflow

The research team (comprising a clinical informatician, health services researchers, research assistant, data analyst and project manager) conducted design workshops (2×2 hours) based on the ‘ideal future state’ solution requirements communicated by healthcare staff in phase 2. Qualitative data generated from phases 1–2 were analysed using an inductive-deductive approach to develop solution requirements. Individual solution requirements were first inductively defined from line-by-line codes and overall themes from the qualitative focus group (phase 1) and key informant interviews (phase 2). Then, solution requirements were deductively mapped into three categories:

Must have: in scope, highly feasible.

Should have: in scope, somewhat feasible.

Could have: out of scope, limited feasibility.

Two workshops (virtual and in-person) were then held to iteratively co-design technical solutions and workflows to enable AI-based analysis of PREMs free-text data at a large operational scale. The co-designed solutions were deductively mapped to the evidence-based Feasibility Assessment Framework (FAF)9 to assess the theoretical feasibility of integrating solutions into routine quality improvement cycles. Theoretical feasibility was assessed using the Technical, Economic, Legal, Operational and Schedule criteria (see table 1).

Table 1. Technical, Economic, Legal, Operational and Schedule (TELOS) criteria according to the Feasibility Assessment Framework9.

| TELOS area | Objective |

| Technical | Assess alternatives for buildability, functionality/performance, reliability/availability, capacity and maintainability. |

| Economic | Assess whether benefits will exceed costs. |

| Legal | Determine project’s ability to surmount regulatory and ethical requirements. |

| Operational | Determine project’s environmental fitness (eg, culture, structure, systems, policies and stakeholder acceptance). |

| Schedule | Assess whether the optimal/alternative solutions can be completed within desired or mandatory time. |

Stakeholders (healthcare staff) who participated in RQ1 (defining the problem) and RQ2 (co-design) were invited to provide expert feedback on the co-designed solutions and their mapping to the FAF.

Data analysis

Qualitative data derived from phases 1 to 3 were analysed using an inductive, thematic approach according to the framework method,10 which is designed to generate practice-oriented findings to support multidisciplinary healthcare research, across four phases: (1) transcription and familiarisation with interviews, (2) independent line-by-line coding of the first three interviews (NVivo v.14) to develop an initial working analytical framework (WC, OC), (3) applying the analytical framework to the remaining transcripts (WC, OC) and (4) charting data into a final framework matrix to derive final themes and subthemes (WC, OC). Quantitative data on inpatient responses to statewide PREMs surveys derived from RQ1 were analysed using descriptive statistics (counts, percentages).

Results

Participant characteristics

A total of 16 participants were recruited from across the state healthcare system to participate in RQ1 (defining the problem) and RQ2 (current state vs ideal future state). No potential participants refused to participate and no participants withdrew.

RQ1: Defining the problem

Quantitative data of PREMs inpatient surveys in the statewide target setting revealed that across 16 health services between 2020 and 2022, there were a total of 175 282 PREMs inpatient surveys distributed that received 23 982 responses (mean response rate, 13.7%) (online supplemental material). In the five health services targeted in phase 2 (HS Red, Orange, Purple, Navy, Maroon), there were a total of 92 925 PREMs inpatient surveys distributed that received 13 304 responses, delivering a mean response rate of 14% versus the state average of 12%.
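As a quick check on the arithmetic, the pooled response rate can be recomputed from the survey counts reported above. This is a minimal sketch: the function and variable names are illustrative, and it assumes the rate is pooled (total responses divided by total surveys distributed), which can differ from a mean of per-service rates.

```python
# Pooled response rate = responses received / surveys distributed.
# Counts come from the paragraph above; all names here are illustrative.

def response_rate(responses: int, distributed: int) -> float:
    """Return the response rate as a percentage."""
    if distributed <= 0:
        raise ValueError("distributed must be positive")
    return 100.0 * responses / distributed

# All 16 health services, 2020 to 2022.
statewide = response_rate(23_982, 175_282)

# The five health services targeted in phase 2.
phase2_services = response_rate(13_304, 92_925)

print(f"Statewide pooled rate: {statewide:.1f}%")
print(f"Phase 2 services pooled rate: {phase2_services:.1f}%")
```

Under the pooled assumption, the statewide figure rounds to the reported 13.7% and the phase 2 figure to roughly 14%.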

Three issues were identified by participants (n=4) as core to the problem of routinely analysing PREMs free-text data in their health service (see table 2). Participants identified that due to the amount of data received, limitations with data analysis, poor integration with health service workflows and inequitable distribution of resources across health services, using PREMs data to implement quality improvement activities was difficult.

Table 2. Problems of analysing patient-reported experience measures (PREMs) free-text data as identified by statewide healthcare staff (n=4).

| Problem | Description |

| Data analysis | |

| Health service workflows | |

| Capability and resourcing | |

RQ2: current workflow and co-designing a future workflow

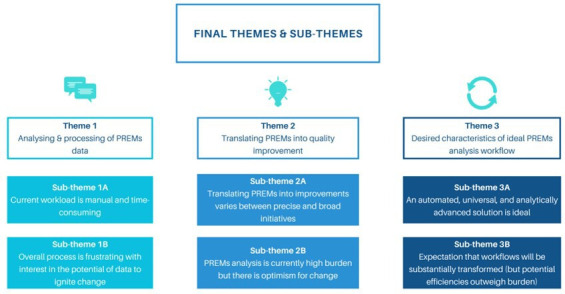

A total of 12 healthcare staff and one health consumer were interviewed across five statewide health services, representing >30% of the state public healthcare system in major (n=2), regional (n=2) and remote (n=1) geographical settings. Qualitative analysis revealed three themes and six subthemes that describe the current and future (ideal) state of analysing free-text PREMs data (figure 1). Themes 1 and 2 describe the current state and theme 3 characterises the future (ideal) state (see online supplemental material for supporting participant quotes).

Figure 1. Final themes and subthemes related to current and future (ideal) state of patient-reported experience measures (PREMs) free-text analysis workflows from key informant interviews.

Theme 1: analysis and processing of patient-reported experience measures (PREMs) data

All participants emphasised the manual and time-intensive nature of the existing workflow to analyse PREMs free-text data (quote 1A, quote 1B). PREMs free-text data analysis was not completed consistently; key barriers were competing priorities and time limitations (quote 1C). Formal qualitative analysis of PREMs responses was reported as rare. Two health services described a deductive analytical approach, whereby individual PREMs responses were applied to predetermined themes of interest to the health service.

Theme 2: translating patient-reported experience measures (PREMs) into quality improvement

Translation of analysed PREMs free-text data into quality improvement activities varied across all health services and ranged from ‘complaint management’ to proactively generating targeted solutions to health service problems with multidisciplinary staff (quote 2A, quote 2B). Participants generally reported difficulty in translating PREMs responses into quality improvement activities; yet there was curiosity in the story the data was telling and how PREMs analysis is used to target improvement initiatives in clinical priority areas (quote 2C).

Theme 3: desired characteristics of ideal patient-reported experience measures (PREMs) analysis workflows

Automation was a universally desired characteristic of future state PREMs free-text data analysis workflows (quote 3A). Despite positive overall attitudes towards automation of workflows, one health service cautioned that automation may struggle to replace authentic, human-centred value that is derived from manual analysis (quote 3B, quote 3C). The disparity in resourcing capabilities for PREMs free-text data analysis between rural and metropolitan sites was noted as motivation for an automated solution as it could reduce inequalities in staffing for PREMs analysis (quote 3D). Augmenting existing point-in-time statistics with longitudinal descriptive analytics to monitor and evaluate PREMs feedback over time and place was viewed as desirable (quote 3E). Upskilling staff in a new technical solution was recognised as a significant investment; however, there was acknowledgement that disruption is typical in healthcare environments and would ultimately produce a return on investment (quote 3F, quote 3G).

RQ3: proof-of-concept and theoretical feasibility assessment of an artificial intelligence (AI)-based workflow

Semi-automated (artificial intelligence (AI)-based) content analysis tool

Results from RQ2 demonstrated that healthcare staff desired an automated solution to analyse free-text PREMs data that maintained human-centred authenticity and was realistic to implement in practice. Given that emerging research applies NLP to analyse patient experience data and demonstrates its feasibility across a range of settings (social media, healthcare),3 11 we identified Leximancer (v4.5), an AI-based semi-automated content analysis tool, as a potential validated solution that was aligned with healthcare staff needs.12 Prior research demonstrated that Leximancer is 74% effective at mapping complex concepts from matched qualitative data and >3 times faster than traditional manual thematic analysis.13 Leximancer applies an unsupervised ML algorithm to reveal patterns of terms in a body of text.14 It then generates networks between terms to develop ‘concepts’, which are collections of words that are linked together within the text, and groups them into ‘themes’, which are concepts that are highly connected.14 Leximancer displays the relationship between concepts and themes visually. The primary output of Leximancer is an inter-topic concept map (see online supplemental material for a published example).15
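To illustrate the general idea of finding patterns of terms without labelled training data, the following toy sketch counts how often pairs of terms appear in the same free-text response. It is not Leximancer's algorithm, which is proprietary and considerably more sophisticated; the mock responses, stopword list and function names are all invented for illustration.

```python
from collections import Counter
from itertools import combinations

# Mock free-text responses (invented for illustration; not study data).
responses = [
    "nurses were kind and staff explained my medication",
    "long wait in emergency and staff seemed rushed",
    "food was cold but nurses were kind",
    "wait for discharge was long and parking was expensive",
]

# A tiny, hand-picked stopword list (an assumption for this toy example).
STOPWORDS = {"and", "but", "in", "my", "was", "were", "for", "the", "seemed"}

def tokenize(text: str) -> set[str]:
    """Lowercase, split on whitespace and drop stopwords."""
    return {word for word in text.lower().split() if word not in STOPWORDS}

def cooccurrence(docs: list[str]) -> Counter:
    """Count how many responses contain each unordered pair of terms."""
    pairs: Counter = Counter()
    for doc in docs:
        for a, b in combinations(sorted(tokenize(doc)), 2):
            pairs[(a, b)] += 1
    return pairs

pairs = cooccurrence(responses)

# Term pairs that recur across responses hint at candidate 'concepts'.
frequent = [(pair, n) for pair, n in pairs.most_common() if n >= 2]
for pair, n in frequent:
    print(pair, n)
```

In this mock data, only the pairs ("kind", "nurses") and ("long", "wait") recur. A real tool would go further by weighting terms, linking them into networks and clustering highly connected concepts into themes.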

Solution requirements

The results of phases 1 and 2 were consolidated with two design workshops held with the Leximancer team (n=3) in phase 3 to generate solution requirements. Solution requirements were presented to Leximancer staff to inform workflow development. ‘Must have’ requirements of an ideal (future state) PREMs free-text data analytical workflow were advanced descriptive analytics (eg, multilevel stratification by health system demographics), dashboard visualisation, ability to view raw free-text data, automation of reports and findings and privacy management controls. ‘Should have’ requirements added the ability to selectively analyse free-text data by using custom dates and additional stratification by quantitative patient demographic details. ‘Could have’ requirements added capability to longitudinally monitor themes and concepts to identify trends in patient experience. Solution requirements were generated for three potential solutions of increasing complexity and capability for end-users to consider:

No code (must have requirements only).

Low code (must have + should have requirements).

High code (must have + should have + could have requirements).
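The cumulative relationship between requirement categories and solution tiers can be sketched as a small lookup. The requirement wording paraphrases the paragraph above; the dictionary layout and the `requirements_for` helper are illustrative assumptions, not part of the study's tooling.

```python
# Requirement categories, paraphrased from the text above.
REQUIREMENTS = {
    "must": [
        "advanced descriptive analytics",
        "dashboard visualisation",
        "view raw free-text data",
        "automated reports and findings",
        "privacy management controls",
    ],
    "should": [
        "custom date selection",
        "stratification by patient demographics",
    ],
    "could": [
        "longitudinal monitoring of themes and concepts",
    ],
}

# Each tier includes every category of the tier before it.
TIERS = {
    "no code": ["must"],
    "low code": ["must", "should"],
    "high code": ["must", "should", "could"],
}

def requirements_for(tier: str) -> list[str]:
    """Return the flat list of requirements covered by a solution tier."""
    return [req for category in TIERS[tier] for req in REQUIREMENTS[category]]

for tier in TIERS:
    print(f"{tier}: {len(requirements_for(tier))} requirements")
```

Representing the tiers as cumulative lists of categories keeps each requirement defined once, so moving a requirement between categories changes every affected tier consistently.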

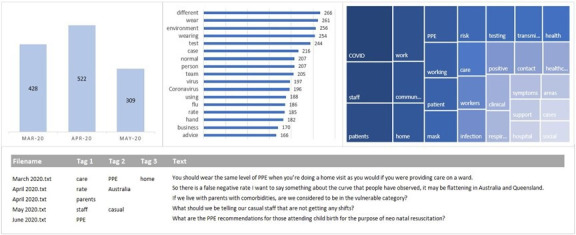

Feasibility Assessment Framework

Table 3 presents the completed theoretical feasibility assessment of three potential solutions—no code, low code, high code—to integrate an AI-based content analysis workflow into routine PREMs reporting and analysis workflows in the statewide health system. Figure 2 shows the sample dashboard (using mock data) for displaying AI-based analysis of PREMs free-text data in a low code solution.

Table 3. Theoretical feasibility assessment of three artificial intelligence (AI)-based semi-automated workflows to routinely analyse free-text patient-reported experience measures (PREMs) data.

| TELOS area | Overall assessment | No code | Low code | High code |

| Technical feasibility | | | | |

| Economic feasibility | | | | |

| Legal feasibility | | | | |

| Operational feasibility | | | | |

| Schedule feasibility | | | | |

TELOS, Technical, Economic, Legal, Operational and Schedule.

Figure 2. Sample dashboard (using mock data) for displaying AI-based analysis of patient-reported experience measures (PREMs) free-text data in a low code solution.

Discussion

Principal findings

This qualitative study explored the use of AI with multidisciplinary end-users to analyse routinely collected free-text PREMs data in hospitals and health services in a large state in Australia. The current methods used by health services and healthcare professionals to analyse PREMs free-text data are manual, time-consuming and labour-intensive with limited supporting resources to drive continuous quality improvement. Driven by an unsupervised ML algorithm, the three co-designed workflows (no code, low code and high code) appeared theoretically feasible on technical, operational and legal criteria to integrate into routine practice. Healthcare staff were optimistic and ready for a digital transformation of their PREMs free-text data analysis workflows to improve frontline capability for quality improvement of inpatient care at new levels of speed and precision.

Comparison to literature

Open-ended questions are an essential component of the ‘patient voice’ and often are used to describe negative experiences that become highly useful for healthcare management teams to drive quality improvement.16 The patient narrative is also an essential part of clinical reasoning.17 18 Fragmentation or underappreciation of the patient story in the context of electronic medical records has been shown to impede efficient healthcare relationships and care delivery.19 Automated solutions for analysing unstructured health data have been explored with increasing intensity over the past decade to capture the richness of the patient narrative. Early research succeeded in predicting the agreement between a patient’s structured (closed-ended) experience feedback with their unstructured (open-ended), free-text comments to a high degree of accuracy.20 Sentiment of patient experience (eg, positive, negative, neutral) was also investigated to quickly classify and track improvements in patient experience over time and place.21 This has advanced to developing comprehensive digital systems to analyse free-text PREMs data in real time and visualise their results to healthcare professionals.22 A PREMs observatory in Italy demonstrates capability to monitor PREMs data using online reports across multiple healthcare regions and conduct real-time qualitative analysis that is returned to healthcare staff on the ward as actionable feedback to ‘close the loop’.22 Similarly in the Netherlands, a new open-ended PREMs questionnaire, NLP analysis pipeline and visualisation package, called AI-PREM, was co-designed with patients and clinicians to provide real-time analysis of free-text PREMs data for healthcare professionals, although its application in real-world quality improvement cycles remains unclear.11 Recent research has also co-designed web-based dashboards using free-text PREMs data that are highly usable.23 Understanding how their application in routine healthcare workflows affects the quality and safety of care over time remains a translational gap.

Implications for patients and health services

‘Improved patient experience’ is one quadrant of the Quadruple Aim of Healthcare,1 a unifying framework that is emerging as a standard for meaningfully evaluating the value of digital healthcare.24 Digitising analysis of free-text PREMs data could improve patient experience of healthcare by enabling a learning health system (LHS). An LHS collects data, generates new knowledge and translates benefits in a continuous cycle that improves the quality and safety of healthcare.25 Healthcare stakeholders in the present study presented a clear vision for the ‘ideal future state’ of PREMs analysis that aligned strongly with an LHS (one that is automated, monitors trends over time and is integrated into existing workflows) to guide targeted quality improvement at a ward and facility level. As with any digital healthcare transformation, there are likely cultural, behavioural and operational impacts of implementing AI-based analysis of free-text PREMs data, such as digital deceleration, transient reduction in operational efficiency post-transformation and digital hypervigilance, whereby actors can unnecessarily overcompensate in their response to potential issues.26 Healthcare professionals in this study expressed their willingness to receive specialist training in semi-automated PREMs analysis to reduce potential disruption26 and anticipated that any immediate operational difficulties with a new system would be outweighed by long-term efficiencies.

Limitations

Patients are the ultimate stakeholder in this research, and while our study did include consumer representatives, future research will require meaningful coproduction and governance of AI PREMs analysis workflows with patients and consumers.

Conclusions

Currently, health services rely on manual analysis and interpretation of free-text data collected from PREMs questionnaires that is difficult to translate into healthcare quality improvement activities. This limits the capacity for routinely incorporating the rich patient narrative into routine quality improvement. This study co-produced AI-based clinical workflows to routinely analyse free-text PREMs data to address this problem. Future research into the implementation of AI-based PREMs analysis must be prioritised as PREMs data are increasingly part of global health system infrastructure. Healthcare improvement will likely accelerate by integrating the consumer voice into routine clinical governance activities.

Acknowledgements

We would like to thank the health consumer and health consumer representatives who contributed during the co-design phase of this study.

Footnotes

Funding: This study was funded by Queensland Health (No grant/award number).

Provenance and peer review: Not commissioned; externally peer reviewed.

Patient consent for publication: Not applicable.

Ethics approval: This study involved human participants, and this study was granted ethics exemption by the Metro South Hospital and Health Service human research ethics committee (EX/2022/QRBW/86221) and ratified by the University of Queensland Research Integrity Office (2022/HE001400). Participants gave informed consent to participate in the study before taking part.

Contributor Information

Oliver J Canfell, Email: oliver.canfell@kcl.ac.uk.

Wilkin Chan, Email: wilkin.chan@uq.edu.au.

Jason D Pole, Email: j.pole@uq.edu.au.

Teyl Engstrom, Email: t.engstrom@uq.edu.au.

Tim Saul, Email: tim.saul@health.qld.gov.au.

Jacqueline Daly, Email: jacqueline.daly@health.qld.gov.au.

Clair Sullivan, Email: clair.sullivan@health.qld.gov.au.

Data availability statement

Data may be obtained from a third party and are not publicly available.

References

- 1.Bodenheimer T, Sinsky C. From triple to quadruple aim: care of the patient requires care of the provider. Ann Fam Med. 2014;12:573–6. doi: 10.1370/afm.1713. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Doyle C, Lennox L, Bell D. A systematic review of evidence on the links between patient experience and clinical safety and effectiveness. BMJ Open. 2013;3:e001570. doi: 10.1136/bmjopen-2012-001570. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Khanbhai M, Anyadi P, Symons J, et al. Applying natural language processing and machine learning techniques to patient experience feedback: a systematic review. BMJ Health Care Inform . 2021;28:e100262. doi: 10.1136/bmjhci-2020-100262. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Grob R, Schlesinger M, Barre LR, et al. What Words Convey: The Potential for Patient Narratives to Inform Quality Improvement. Milbank Q. 2019;97:176–227. doi: 10.1111/1468-0009.12374. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Tong A, Sainsbury P, Craig J. Consolidated criteria for reporting qualitative research (COREQ): a 32-item checklist for interviews and focus groups. Int J Qual Health Care. 2007;19:349–57. doi: 10.1093/intqhc/mzm042. [DOI] [PubMed] [Google Scholar]

- 6.Bird M, McGillion M, Chambers EM, et al. A generative co-design framework for healthcare innovation: development and application of an end-user engagement framework. Res Involv Engagem. 2021;7:12. doi: 10.1186/s40900-021-00252-7. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7. Baum F, MacDougall C, Smith D. Participatory action research. J Epidemiol Community Health. 2006;60:854–7. doi: 10.1136/jech.2004.028662.

- 8. Cornish F, Breton N, Moreno-Tabarez U, et al. Participatory action research. Nat Rev Methods Primers. 2023;3:34. doi: 10.1038/s43586-023-00214-1.

- 9. Ssegawa JK, Muzinda M. Feasibility Assessment Framework (FAF): A Systematic and Objective Approach for Assessing the Viability of a Project. Procedia Comput Sci. 2021;181:377–85. doi: 10.1016/j.procs.2021.01.180.

- 10. Gale NK, Heath G, Cameron E, et al. Using the framework method for the analysis of qualitative data in multi-disciplinary health research. BMC Med Res Methodol. 2013;13:117. doi: 10.1186/1471-2288-13-117.

- 11. van Buchem MM, Neve OM, Kant IMJ, et al. Analyzing patient experiences using natural language processing: development and validation of the artificial intelligence patient reported experience measure (AI-PREM). BMC Med Inform Decis Mak. 2022;22:183. doi: 10.1186/s12911-022-01923-5.

- 12. Leximancer Pty Ltd; 2021. Leximancer.

- 13. Engstrom T, Strong J, Sullivan C, et al. A Comparison of Leximancer Semi-automated Content Analysis to Manual Content Analysis: A Healthcare Exemplar Using Emotive Transcripts of COVID-19 Hospital Staff Interactive Webcasts. Int J Qual Methods. 2022;21. doi: 10.1177/16094069221118993.

- 14. Haynes E, Garside R, Green J, et al. Semiautomated text analytics for qualitative data synthesis. Res Synth Methods. 2019;10:452–64. doi: 10.1002/jrsm.1361.

- 15. Canfell OJ, Meshkat Y, Kodiyattu Z, et al. Understanding the Digital Disruption of Health Care: An Ethnographic Study of Real-Time Multidisciplinary Clinical Behavior in a New Digital Hospital. Appl Clin Inform. 2022;13:1079–91. doi: 10.1055/s-0042-1758482.

- 16. Riiskjær E, Ammentorp J, Kofoed PE. The value of open-ended questions in surveys on patient experience: number of comments and perceived usefulness from a hospital perspective. Int J Qual Health Care. 2012;24:509–16. doi: 10.1093/intqhc/mzs039.

- 17. López A, Detz A, Ratanawongsa N, et al. What patients say about their doctors online: a qualitative content analysis. J Gen Intern Med. 2012;27:685–92. doi: 10.1007/s11606-011-1958-4.

- 18. Schembri S. Experiencing health care service quality: through patients’ eyes. Aust Health Rev. 2015;39:109–16. doi: 10.1071/AH14079.

- 19. Varpio L, Rashotte J, Day K, et al. The EHR and building the patient’s story: A qualitative investigation of how EHR use obstructs a vital clinical activity. Int J Med Inform. 2015;84:1019–28. doi: 10.1016/j.ijmedinf.2015.09.004.

- 20. Greaves F, Ramirez-Cano D, Millett C, et al. Machine learning and sentiment analysis of unstructured free-text information about patient experience online. The Lancet. 2012;380:S10. doi: 10.1016/S0140-6736(13)60366-9.

- 21. Alemi F, Torii M, Clementz L, et al. Feasibility of real-time satisfaction surveys through automated analysis of patients’ unstructured comments and sentiments. Qual Manag Health Care. 2012;21:9–19. doi: 10.1097/QMH.0b013e3182417fc4.

- 22. De Rosis S, Cerasuolo D, Nuti S. Using patient-reported measures to drive change in healthcare: the experience of the digital, continuous and systematic PREMs observatory in Italy. BMC Health Serv Res. 2020;20:315. doi: 10.1186/s12913-020-05099-4.

- 23. Khanbhai M, Symons J, Flott K, et al. Enriching the Value of Patient Experience Feedback: Web-Based Dashboard Development Using Co-design and Heuristic Evaluation. JMIR Hum Factors. 2022;9:e27887. doi: 10.2196/27887.

- 24. Woods L, Eden R, Canfell OJ, et al. Show me the money: how do we justify spending health care dollars on digital health? Med J Aust. 2023;218:53–7. doi: 10.5694/mja2.51799.

- 25. Enticott J, Johnson A, Teede H. Learning health systems using data to drive healthcare improvement and impact: a systematic review. BMC Health Serv Res. 2021;21:200. doi: 10.1186/s12913-021-06215-8.

- 26. Sullivan C, Staib A. Digital disruption “syndromes” in a hospital: important considerations for the quality and safety of patient care during rapid digital transformation. Aust Health Rev. 2018;42:294–8. doi: 10.1071/AH16294.