Abstract

Artificial intelligence (AI) is transforming respiratory healthcare through a wide range of deep learning and generative tools, and is increasingly integrated into both patients’ lives and routine respiratory care. The implications of AI in respiratory care are vast and multifaceted, presenting both promises and uncertainties from the perspectives of clinicians, patients and society. Clinicians contemplate whether AI will streamline or complicate their daily tasks, while patients weigh the potential benefits of personalised self-management support against risks such as data privacy concerns and misinformation. The impact of AI on the clinician–patient relationship remains a pivotal consideration, with the potential to either enhance collaborative care or create depersonalised interactions. Societally, there is an imperative to leverage AI in respiratory care to bridge healthcare disparities, while safeguarding against the widening of inequalities. Strategic efforts to promote transparency and prioritise inclusivity and ease of understanding in algorithm co-design will be crucial in shaping future AI to maximise benefits and minimise risks for all stakeholders.

Shareable abstract

AI has the potential to revolutionise healthcare, enhancing care outcomes. Balancing benefits and risks is crucial. Used responsibly, AI-informed shared decision-making can empower patients, support clinicians and mitigate healthcare disparities. https://bit.ly/47SZlrL

Introduction

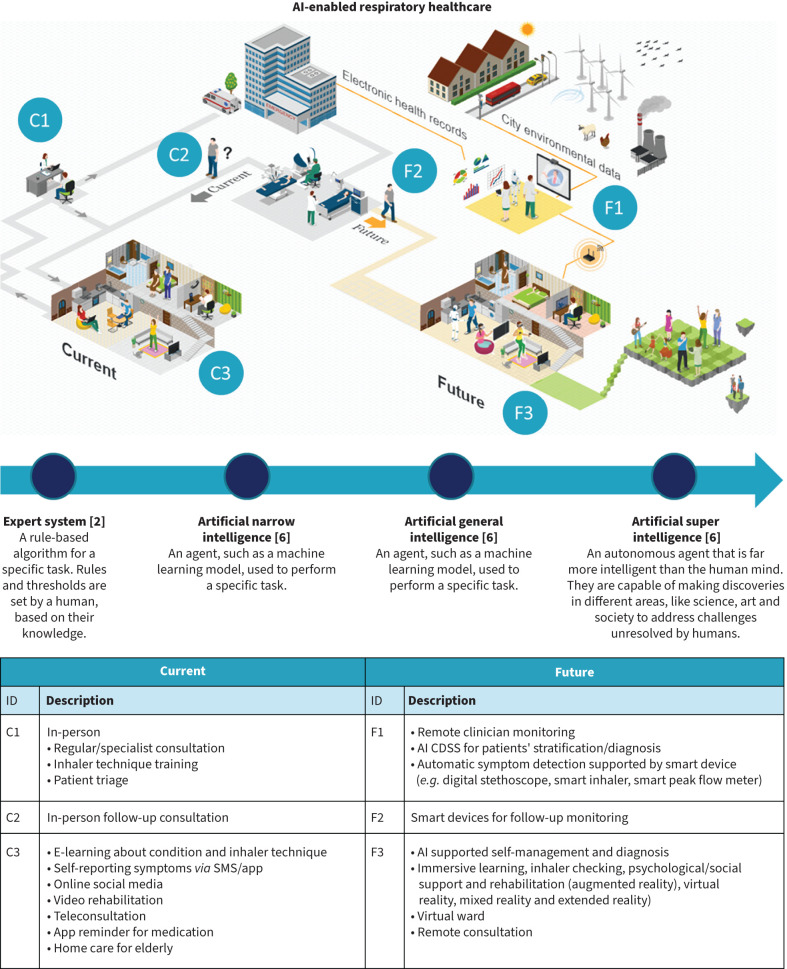

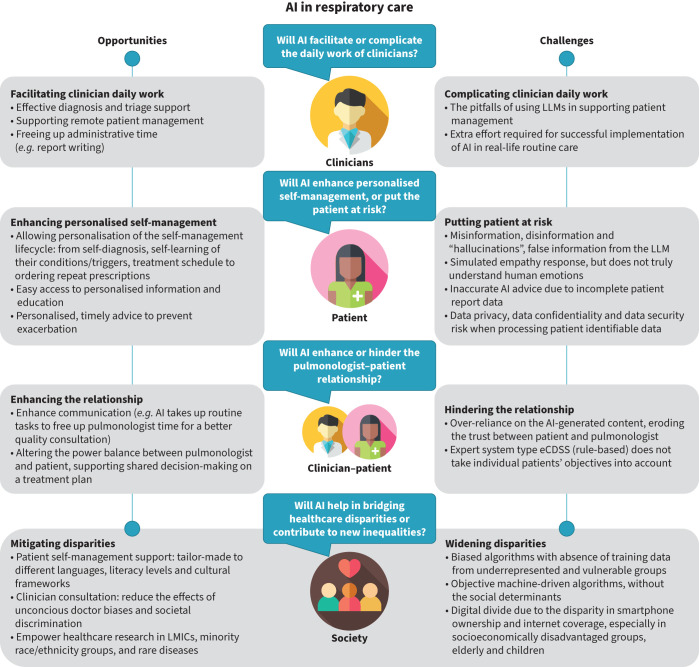

Artificial intelligence (AI) is transforming respiratory healthcare. It can enable early diagnosis of respiratory disease, reduce patients’ waiting time in primary care or hospital triage processes, facilitate medication adherence and support self-management by recognising deterioration and giving patients tailor-made advice about adjusting medication to prevent attacks [1]. Figure 1 illustrates a vision of the current and future roles of AI in supporting respiratory healthcare. While AI can improve health outcomes and support efficient routine care, it also raises concerns regarding data privacy, algorithmic bias and potential misuse in supporting patient care [1, 3]. This review considers the opportunities and challenges of using a spectrum of AI in respiratory care, from rule-based expert algorithms to the widely discussed deep learning and generative AI models, such as large language models (LLMs), and breakthroughs beyond generative AI. ChatGPT (OpenAI), Copilot (Microsoft) and Gemini (Google) are examples of generative AI machine learning models, trained on very large datasets, that can recognise, summarise, translate, predict and generate language (text) [4, 5]. We will discuss state-of-the-art evidence on the promise and uncertainties such models offer from the perspectives of both the clinician and the patient, highlight how the patient–clinician relationship is shifting from a traditional care model to a patient-centric, AI-enabled care model, and consider the overarching societal themes of disparities and equity. Figure 2 summarises the risks and the benefits of using AI. Box 1 summarises the pitfalls from these four perspectives explicitly for LLMs.

FIGURE 1.

The risks and benefits of artificial intelligence (AI) in respiratory healthcare from the clinician, patient, clinician–patient and societal perspective. CDSS: clinical decision support system. Figure created using ICOGRAMS.

FIGURE 2.

The risks and benefits of artificial intelligence (AI) from the clinician, patient, clinician–patient and societal perspectives. eCDSS: electronic clinical decision support system; LLMs: large language models; LMICs: low- and middle-income countries.

BOX 1 Summary of the pitfalls of using large language models (LLMs) in respiratory healthcare from the clinician, patient, clinician–patient relationship and societal perspectives

• Hallucinations: LLMs can generate plausible-sounding responses that are not justified based on the training data, giving the illusion of a correct answer.

• Overconfidence: LLMs can present this incorrect information (the hallucinations) with a high degree of apparent certainty, potentially misleading users.

• Inconsistency: Due to their stochastic nature, LLMs may provide different answers to the same question across multiple inferences. Periodic updates to LLMs can also result in varying outputs between versions, potentially leading to inconsistent advice over time.

• Bias: LLMs may exhibit biases, offering incorrect answers to medical questions based on a patient's sex, race or ethnic origin.

• Data privacy risks: LLMs may store or use identifiable medical information in ways that could compromise patient privacy or violate HIPAA/GDPR regulations due to the rapidly changing nature of AI models.

• Outdated information: LLMs may not always reflect the most current medical guidelines or research, leading to potentially outdated advice.

• Lack of explanatory power: LLMs can generate answers but cannot explain their reasoning. This inability to justify decisions is a key barrier to accepting generative AI models for clinical use in healthcare.

HIPAA: US Health Insurance Portability and Accountability Act; GDPR: EU/UK General Data Protection Regulation.
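The inconsistency pitfall in box 1 can be illustrated with a minimal sketch of temperature-based sampling, one common source of stochastic output in language models. The candidate replies and their scores are hypothetical values chosen for illustration, and `sample_answer` is an assumed helper, not part of any real model's interface.

```python
import math
import random

def sample_answer(scores, temperature, rng):
    """Pick one candidate reply from softmax(scores / temperature)."""
    if temperature <= 0:
        # Greedy decoding: always return the highest-scoring reply.
        return max(scores, key=scores.get)
    top = max(scores.values())
    # Subtract the maximum score before exponentiating for numerical stability.
    weights = {a: math.exp((s - top) / temperature) for a, s in scores.items()}
    answers = list(weights)
    return rng.choices(answers, weights=[weights[a] for a in answers], k=1)[0]

# Hypothetical scores for three candidate replies to the same question.
scores = {"advice A": 2.0, "advice B": 1.5, "advice C": 0.2}
rng = random.Random(0)

greedy = {sample_answer(scores, 0.0, rng) for _ in range(20)}
sampled = {sample_answer(scores, 1.0, rng) for _ in range(200)}
print("temperature 0:", greedy)
print("temperature 1:", sampled)
```

With greedy decoding the same reply is returned every time, whereas sampling at a non-zero temperature returns different replies to an identical question across repeated runs.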

Clinicians' perspective of AI-enabled respiratory care

AI can help clinicians in their day-to-day work by facilitating the diagnostic process, aiding in patient management and freeing up administrative time.

Diagnosis and triage support

In terms of diagnostic support, AI has evidence-based utility for interpreting pulmonary function tests (PFTs) and analysing chest radiography. Using interpretation rules recommended by the American Thoracic Society and the European Respiratory Society, AI can identify PFT patterns (obstructive, restrictive, mixed or normal) and, with some additional clinical information, can assist in the diagnosis of respiratory diseases [6]. A study has shown that collaboration between AI and clinicians leads to better diagnostic interpretation of PFTs than either AI alone or the clinician alone, with AI-suggested diagnoses prompting clinicians to consider cases more thoroughly [7]. For the analysis of chest radiographs, the information provided by AI-powered automated image analysis helped improve the diagnostic performance of clinicians. A randomised trial showed that using AI assistance significantly improved the diagnostic performance of non-radiologist clinicians in detecting abnormal lung findings [8]. LLMs have demonstrated utility in triaging patients according to the severity of their respiratory symptoms. After being provided with information about the patients’ main symptoms, vital parameters and medical history, ChatGPT-4 performed almost as well as an emergency clinician at triaging emergency department patients [9].
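As a minimal sketch of how rule-based pattern recognition of this kind could work, the example below labels a PFT as obstructive, restrictive, mixed or normal by comparing two measurements with their lower limits of normal (LLN). It is a deliberate simplification: the field names and the example values are assumptions made for illustration, and the full ATS/ERS interpretation strategy and the cited AI system involve considerably more than these two comparisons.

```python
from dataclasses import dataclass

@dataclass
class PFT:
    fev1_fvc: float      # measured FEV1/FVC ratio
    fev1_fvc_lln: float  # lower limit of normal for FEV1/FVC in this patient
    tlc: float           # measured total lung capacity (litres)
    tlc_lln: float       # lower limit of normal for total lung capacity (litres)

def classify_pattern(pft: PFT) -> str:
    """Simplified pattern labelling based on two comparisons with the LLN."""
    obstructed = pft.fev1_fvc < pft.fev1_fvc_lln
    restricted = pft.tlc < pft.tlc_lln
    if obstructed and restricted:
        return "mixed"
    if obstructed:
        return "obstructive"
    if restricted:
        return "restrictive"
    return "normal"

# Hypothetical measurements, for illustration only.
example = PFT(fev1_fvc=0.58, fev1_fvc_lln=0.70, tlc=6.1, tlc_lln=5.0)
print(classify_pattern(example))
```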

Patient management

In terms of patient management, AI is particularly interesting for helping clinicians monitor patients and make decisions about their care. AI enables clinicians to objectively monitor changes in their patients’ images (computed tomography (CT) or magnetic resonance) using automated methods for quantifying affected areas in, for example, interstitial lung diseases and cystic fibrosis [10–12]. For patients monitored at home using remote monitoring systems, AI-based systems can alert the clinical team to any worsening of the condition by interpreting data from connected devices (e.g. in asthma) [13, 14].
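A remote monitoring alert of the kind described above might, in its simplest form, look like the following sketch. It assumes daily peak flow readings from a connected device and flags a patient when the most recent readings all fall below a fraction of their personal best. The 80% threshold, the two-day window and the function name are illustrative assumptions, not values taken from the cited systems, which may use far richer models.

```python
def should_alert(readings, personal_best, threshold=0.8, consecutive=2):
    """Return True if the last `consecutive` readings are all below
    threshold * personal_best (assumed, illustrative alert rule)."""
    if len(readings) < consecutive:
        return False  # not enough data to judge a sustained drop
    cutoff = threshold * personal_best
    return all(r < cutoff for r in readings[-consecutive:])

# Hypothetical peak flow readings in L/min, most recent last.
stable = [400, 390, 395, 405]
worsening = [400, 390, 300, 290]
print(should_alert(stable, personal_best=400))
print(should_alert(worsening, personal_best=400))
```

Requiring several consecutive low readings, rather than one, is a simple way to trade a slightly later alert for fewer false alarms from a single poor measurement.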

Conversing with LLMs may also inform decisions about patient management. For example, a team of radiologists asked ChatGPT “What CT features of congenital pulmonary airway malformations should I look for in a child?” and obtained an answer they considered appropriate [15]. Another team evaluated the recommendations given by ChatGPT-3.5 on a real case of unresolved pneumonia and considered that 87% of the recommendations obtained from ChatGPT were very appropriate [16]. However, there are a number of important considerations for clinicians using LLMs in clinical practice (see box 1 for a summary of LLM pitfalls). If LLMs are not given appropriate access to learn about a patient's history, the answers will be less personalised for the individual; however, ensuring confidentiality is paramount. The safer approach is to use LLMs to obtain answers to questions about patient management without entering a specific patient's history.

Administrative support

Finally, AI could save clinicians time on administrative tasks such as report writing. It is possible to use multimodal generative AI to produce consultation reports from audio recordings of the conversation between clinician and patient during consultations [15]. This could enable clinicians to concentrate on other tasks. Conversely, AI could also complicate the daily work of doctors. One challenge is implementing AI solutions in the clinical workflow. If, for example, the AI requires different data collection from the data already collected in daily practice, the time wasted on duplicate processes will not be sustainable in the long term.

AI has many applications that could make the day-to-day work of clinicians easier. However, the use of AI in clinical practice is currently limited except for automated analysis of chest radiographs. Clinical validation studies and reflection on the integration of AI into clinicians’ workflows are still needed.

Patients’ perspective of AI-enabled personalised self-management

Generative AI can readily be accessed by patients, who can interact with it in natural language without requiring any computer coding skills. A recent study demonstrated that ChatGPT-3 and InstructGPT can achieve a pass mark equivalent to that of a third-year medical student in a US medical licensing examination, suggesting that LLMs have the potential to provide timely clinical advice to patients. The caveat is that exam scenarios may not reflect actual patient inquiries, so the accuracy of clinical advice in real-life situations may differ [17].

Personalising self-management

By accessing generative AI using smartphones, patients can easily obtain health information about their condition [18], which has the potential to be particularly useful for helping newly diagnosed patients to understand their treatment as they are learning how to manage their condition. A large action model (LAM) is a machine learning model that acts as an agent, performing tasks by itself to achieve a goal through a series of actions [19]. For instance, on a simple interface, patients can ask the AI to perform actions beyond just providing the information that existing LLMs can support. No LAM is yet used for healthcare purposes, but in the future, they have the potential to link with existing healthcare apps on patients' smart devices to provide seamless personalised AI-enabled self-management support. This could align (for example) with the Global Initiative for Asthma personalised management lifecycle: from self-diagnosis, self-learning of their condition/triggers, ordering repeat prescriptions, advice on when and what quantity of medicines should be taken to avoid attacks, and arranging a follow-up consultation with their clinicians [19–21]. LAMs also have the potential to organise a treatment schedule for patients, offering visualisation of the treatment process. They can seek the patient's consent at each stage to automatically schedule clinic visits and reorder prescriptions or medications. Building trust is crucial for patients to adopt such AI systems [6].

Respiratory information and education

Several studies show that generative AI in LLMs (such as ChatGPT) has the potential to answer general, “factual” questions about a disease, such as cancer, retinal disease and genetics [18]. Performance may vary between general enquiries regarding a relatively common condition like pneumonia and specific queries about interstitial lung disease, potentially due to less extensive training data available for the latter. In the field of respiratory health, several studies are underway in collaboration with the European Respiratory Society Clinical Research Collaboration (ERS CRC) CONNECT to assess the medical accuracy of LLM responses to patient inquiries about respiratory diseases [22]. Early findings show that this subset of AI is able to gather an understandable summary answer for patients from a huge amount of available information online. However, the accuracy of the answers depends on the quality of the source information (that is, the “training data” provided to the AI), and a major limitation is the possibility of “hallucinations” – generated responses that are not justified based on the training data [23]. The system accuracy of LLMs is improving with time [24], but patients should be cautioned about the limitations and the risks of such AI advice. Further investigation is necessary to identify the risk factors and mitigate the risk by incorporating a second LLM source to confirm (or not) the original response, to safeguard patient safety during real-life deployments [25]. Apart from the system accuracy, the manner in which patients interact with LLMs significantly influences their response precision. The accuracy of the responses relies heavily on how users formulate their questions, raising the issue of how we can “prompt” patients so they interact with the LLMs in a manner that elicits meaningful responses.
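The idea of using a second LLM to confirm (or not) the original response can be sketched as follows. This is an illustrative pattern only: `primary` and `verifier` are hypothetical callables standing in for two independent models, the stub functions are placeholders that make the example runnable, and no real LLM interface is assumed.

```python
from typing import Callable, Dict

def cross_checked_answer(
    question: str,
    primary: Callable[[str], str],
    verifier: Callable[[str], str],
) -> Dict[str, object]:
    """Ask one model, then ask a second model whether the answer is accurate."""
    answer = primary(question)
    check_prompt = (
        f"Question: {question}\n"
        f"Proposed answer: {answer}\n"
        "Is the proposed answer medically accurate? Reply YES or NO."
    )
    verdict = verifier(check_prompt)
    confirmed = verdict.strip().upper().startswith("YES")
    # Unconfirmed answers are flagged for human review rather than shown as-is.
    return {"answer": answer, "confirmed": confirmed, "needs_review": not confirmed}

# Stand-in functions used only to make the sketch runnable; they are not real models.
def stub_primary(question: str) -> str:
    return "Placeholder answer for: " + question

def stub_verifier_yes(prompt: str) -> str:
    return "YES"

def stub_verifier_no(prompt: str) -> str:
    return "no, the answer omits key safety advice"

result = cross_checked_answer("What is a preventer inhaler?", stub_primary, stub_verifier_no)
print(result["needs_review"])
```

Agreement between two models does not guarantee accuracy, since both may share the same flawed source information, so a design like this reduces rather than removes the need for human oversight.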

Personalised timely advice

AI can provide timely management advice to a patient if an imminent exacerbation is detected [1]. A limitation is that AI does not (yet) possess qualia and cannot truly understand human emotion, although it can simulate empathy to provide “personalised” self-management advice [26, 27]. Qualia are the subjective properties that determine the conscious aspect of experience, referring to the ways things are experienced as opposed to how they objectively are. They are the phenomenal properties that define “what it is like” to have a conscious experience [28]. Patients can acknowledge and praise the AI, which reinforces the presentation of medical information in a manner that resonates with their understanding and appreciation. However, unlike a human clinician, the AI is still limited in recognising the patient's physical, mental and emotional needs when providing trustworthy self-management and treatment advice [29].

For an AI system that uses regular patient-reported data for generating advice or remote diagnosis, missing data is a significant challenge. Patients may stop submitting readings for multiple reasons, for example, feeling too ill, forgetfulness or a lack of motivation. For instance, some patients might believe they understand their condition better than the machine, while others may perceive logging as unhelpful and discontinue data submission after a few days or weeks [30]. Some patients may manipulate data to complete a missing daily reading, risking introducing bias to their self-reported data. AI that can automatically and silently collect patient data reduces these inherent sources of bias [31].
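Before any advice is generated from patient-reported data, a system could first quantify how much of the expected data is missing. The sketch below assumes one expected reading per day; the function names and the example log are hypothetical and serve only to illustrate the check.

```python
from datetime import date, timedelta

def missing_days(submitted, start, end):
    """Return the sorted list of days in [start, end] with no submitted reading."""
    expected = {start + timedelta(days=i) for i in range((end - start).days + 1)}
    return sorted(expected - set(submitted))

def completeness(submitted, start, end):
    """Fraction of expected daily readings that were actually submitted."""
    total = (end - start).days + 1
    return (total - len(missing_days(submitted, start, end))) / total

# Hypothetical log: one week of monitoring with three days not submitted.
start, end = date(2024, 1, 1), date(2024, 1, 7)
log = [date(2024, 1, 1), date(2024, 1, 2), date(2024, 1, 4), date(2024, 1, 7)]
gaps = missing_days(log, start, end)
print(gaps, round(completeness(log, start, end), 2))
```

Reporting the gaps explicitly, rather than silently filling them, keeps the downstream advice honest about how much data it is based on.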

Data privacy

AI can provide a real-time quality check for measurements that require good technique to obtain an accurate value (for example, taking a peak flow measurement) and check inhaler technique as the patient uses their inhaler [32]. However, when AI is used to collect patients’ data in their daily lives (especially when it involves location or facial recognition applications), data privacy, data confidentiality and data security become a concern [33]. While data privacy statements may provide detailed explanations, lengthy terms and conditions or legal statements are overwhelming and many (most?) patients will simply check the consent boxes during registration to expedite the process and proceed to using an AI service [34]. The trend of valuing service convenience over data privacy was first observed with search engines. Many patients search for information online before seeing a clinician. Research has shown that analysing these searches can detect diseases like lung carcinoma, sometimes before formal diagnosis [35]. This highlights both the predictive potential and the privacy risks of search data analysis. Regulations, such as the US Health Insurance Portability and Accountability Act (HIPAA) and the EU/UK General Data Protection Regulation (GDPR), exist to protect data privacy when AI collects and shares patients' personal data with third parties [36, 37]. The norms outlined in these regulations ensure a lawful, fair and transparent approach to obtaining patient consent for data use, collecting minimal patient data and ensuring that patient data are deidentified when processed by third parties. However, as AI evolves rapidly and iteratively, maintaining ongoing compliance will become increasingly challenging [38]. Approaches like federated learning or blockchain, and techniques such as homomorphic encryption and differential privacy, which allow patients to use the AI on their own device whilst retaining control of their own data, offer a potential “trade-off” to supporting compliance in the future [39].
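Of the techniques named above, differential privacy is the simplest to illustrate. The sketch below shows the Laplace mechanism applied to a count query, in which noise scaled to sensitivity/ε is added before a statistic is released. The patient count and the ε values are hypothetical, and a real deployment would require careful privacy budgeting that is beyond this example.

```python
import random

def laplace_noise(scale, rng):
    """Draw Laplace(0, scale) noise as the difference of two exponential draws."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)

def private_count(true_count, epsilon, rng, sensitivity=1.0):
    """Release a count with Laplace noise of scale sensitivity / epsilon."""
    return true_count + laplace_noise(sensitivity / epsilon, rng)

rng = random.Random(42)
true_count = 120  # hypothetical number of patients reporting a symptom

# A smaller epsilon means stronger privacy and therefore noisier released values.
strong_privacy = [private_count(true_count, 0.1, rng) for _ in range(20000)]
weak_privacy = [private_count(true_count, 1.0, rng) for _ in range(20000)]

def mean_abs_error(values):
    return sum(abs(v - true_count) for v in values) / len(values)

print(round(mean_abs_error(strong_privacy), 1), round(mean_abs_error(weak_privacy), 1))
```

The comparison makes the trade-off concrete: stronger privacy guarantees come at the cost of less accurate released statistics.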

Misinformation and disinformation

Generative AI applications such as Deepfakes (https://deepfakesweb.com) and Deepswap (www.deepswap.ai) can generate synthetic videos, images and voices. There is a risk that this type of generative AI could create a video purporting to be from an official healthcare organisation or famous clinician to promote a fake treatment. The risk is that patients who do not already know the answer to a question would find it difficult to judge if such a video were genuine or if a given answer is correct. In a cross-sectional study, it was observed that patients tend to favour online AI responses for their healthcare inquiries over consulting with a clinician [26]. Patients may be overly accepting and spread the mis/disinformation widely, which sparks concerns about who may be able to verify the information quickly to prevent the negative consequences that this may bring for patients' health [40].

AI can offer personalised self-management support for a respiratory patient, but risks such as lack of emotional understanding, data privacy concerns and potential misinformation must be carefully managed for safe and ethical deployment in the patient's daily life. The accuracy and usefulness of AI responses are influenced by the way the questions are asked, highlighting the importance of further mitigation strategies to maximise the benefits and minimise risks in patient self-management.

The clinician–patient relationship in the era of AI

AI could have an impact on two fundamental aspects of the clinician–patient relationship: communication and the power balance between clinicians and patients.

The impact of AI on communication

AI has the potential to transform communication between clinicians and their patients. By automating routine tasks with AI, clinicians could free up time for more meaningful and empathetic face-to-face discussions with their patients [41, 42]. Alongside human interactions during face-to-face consultations, novel patient–AI–clinician interactions could be incorporated into telemonitoring systems, with the clinician giving general instructions to the AI, which then follows a predefined pattern for daily decision-making with the patient. In practice, this would not represent a radical change for patients with chronic respiratory conditions, such as asthma, who are used to following action plan algorithms when their symptoms worsen. This familiarity may explain the high acceptance of AI-based decision-making systems for the day-to-day management of children with asthma and their parents, as shown by two surveys conducted in France [43, 44]. In addition, clinician–patient communication may increasingly rely on AI-generated content. Some clinicians might be tempted to save time by asking an LLM to draft a response to a patient's message, which they would then edit before sending [45]. However, this practice raises concerns about the authenticity of clinician–patient exchanges. If patients become aware that a substantial portion of the messages they receive are generated by AI, it could erode trust in the clinician–patient relationship.

The impact of AI on power balance

AI may alter the power balance between clinicians and patients. When an AI system, using vast amounts of data and complex algorithms, can recommend a treatment based on a patient's specific characteristics, the clinician's role in the patient's care may be diminished [46, 47]. Conversely, patients can be empowered by the information they access through LLMs, just as search engines like Google have enabled patients to engage in more informed discussions with their clinicians [48].

To navigate this shift, a collaborative approach between the clinician and the patient is required. Clinicians must be transparent about their use of AI and explain their clinical reasoning to the patient to maintain trust and foster partnership [49]. In turn, patients can participate more meaningfully in decisions about their care and choose between the options presented by their clinician.

Paradoxically, the development of AI decision-making systems increases the need for human shared decision-making. A study aimed at developing an electronic clinical decision support system (eCDSS) to determine the most appropriate treatment for children with asthma illustrates this point. Researchers asked 137 children, their parents and their clinicians to assign points to different objectives when taking, supervising or prescribing an asthma controller treatment [50]. The study revealed little correlation within each child–parent–clinician triad. Children prioritised the prevention of symptoms during exercise, parents emphasised the preservation of lung function, and clinicians focused on preventing asthma attacks. The study concluded that to incorporate patients’ values into an eCDSS for asthma controller treatments, it was first necessary to engage in a shared decision-making process between the children, parents and clinicians.

This example highlights the importance of human collaboration and communication in the era of AI-assisted healthcare. While AI can provide valuable insights and recommendations, the best outcomes are ultimately achieved through open dialogue and shared decision-making. In contrast, rule-based eCDSSs may provide standardised recommendations misaligned with individual patient goals. Although AI can learn and incorporate patient objectives, implementation is more complex when a caregiver's perspective is added to that of the patient and professional.

Societal perspective in bridging healthcare disparities

AI has been hailed as part of the “fourth industrial revolution”, and its ability to autonomously integrate vast inputs into cogent output is a key driver for growth in other technologies. The pace and scale of AI's development dwarfs that of previous industrial revolutions [51], which themselves had longstanding consequences with which societies still grapple today. They serve as a reminder of the responsibility to anticipate the harms of revolutionary technologies and channel them into a force for good.

Healthcare disparities often mirror societal inequities, affecting vulnerable and marginalised groups. Disparities are often multifactorial, combining the effects of social determinants, underrepresentation in research and institutionalised discrimination [52–57]. This section sets out how AI may both mitigate and widen existing disparities.

AI may be used to mitigate disparities

Self-management plays a vital role in chronic respiratory diseases [58], incorporating concepts such as treatment concordance, trigger avoidance, self-monitoring and lifestyle change. With its ability to integrate data from existing knowledge with sensor data from the “Internet of Things”, AI has potential to facilitate personalised self-management in single platform solutions tailored to language, literacy and cultural frameworks. AI is already being used to communicate asthma education by generating multilingual avatars in languages including Urdu and Bengali [59].

Potential benefits and pitfalls of eCDSSs have already been discussed. In rendering personalised treatment decisions more objective, an eCDSS potentially reduces the effects of unconscious clinician biases and societal discrimination in the decision-making process. This, however, relies on the output of the eCDSS itself being unbiased (discussed in the following section).

The COVID-19 pandemic highlighted the issue of missing data on protected characteristics, and AI is demonstrating superior methods of accounting for missing race/ethnicity data [53, 60, 61]. It may even circumvent the lack of power in research into rare diseases as demonstrated by the use of AI to produce a cluster analysis in lymphangioleiomyomatosis [62].

In low- and middle-income countries (LMICs), poor infrastructure and individual poverty may reduce access to high quality healthcare. AI-enabled technologies have the potential to automate diagnostics, reducing the burden on healthcare workers in limited resource settings. An eCDSS may increase the accessibility of specialist clinical care to those living remotely, for example, when used by healthcare workers [63, 64].

AI risks widening disparities

Whilst AI could prove transformative, crucially it relies on good quality, large datasets to provide training for its algorithms [63, 65]. Systematic absence of underrepresented and vulnerable groups from such datasets can lead to biased outputs [53, 66]. For LMICs, challenges may arise due to a paucity of routinely collected health data, risking inappropriate application of algorithms trained on data from high income contexts with more complete routine health datasets. Outside of the healthcare system, this has been exemplified in the reinforcement of institutionalised prejudices through biased predictive policing systems [67]. Risks in respiratory health could include algorithms which exclude people from advanced treatments such as lung transplant based on biases in training data.

To prevent such biases from being “baked in” to AI systems, there must be deliberate effort to detect these biases in training data and even to “bake in” equity through (for example) development and application co-design with diverse stakeholders [68]. It should not be assumed that because AI is machine-driven it is objective or that it will not “see” social determinants which are removed from training data [69]. Both explainability and ongoing scrutiny will be essential to maintain a vision of digital equity for the long-term.
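One simple first step towards detecting such biases is to compare how each group is represented in a training dataset with its share of the population the algorithm is meant to serve. The sketch below illustrates this check; the group labels, records and reference shares are entirely hypothetical, and a representation audit of this kind is only one of many checks that would be needed in practice.

```python
from collections import Counter

def representation_gaps(records, reference_shares, key="group"):
    """Return dataset share minus reference population share for each group.
    Negative values indicate under-representation in the dataset."""
    counts = Counter(r[key] for r in records)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no records to audit")
    return {
        group: counts.get(group, 0) / total - share
        for group, share in reference_shares.items()
    }

# Hypothetical training records and reference population shares.
records = [{"group": "A"}] * 8 + [{"group": "B"}] * 2
reference = {"A": 0.5, "B": 0.4, "C": 0.1}

gaps = representation_gaps(records, reference)
under_represented = sorted(g for g, gap in gaps.items() if gap < 0)
print(under_represented)
```

Equal representation alone does not make an algorithm fair, but a large gap is an early warning that outputs for the affected group deserve closer scrutiny.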

At an individual level, smartphone ownership, access to affordable internet connectivity, digital literacy and the manual dexterity to use digital tools are required [64]. Groups which may be excluded from this digital health revolution include the elderly, those in rural neighbourhoods, those facing socioeconomic disadvantage and children [70]. Children face the risk of being left behind due to their smaller population size and the increased precautions necessary for conducting clinical research. Consequently, this situation may result in AI solutions receiving lower priority because of their limited economic returns.

Closely related to the issue of access is that of trust, which is at risk when public institutions are insufficiently transparent [71, 72]. Engagement may be hampered in communities where there is already a lack of trust, as was seen in some patterns of COVID-19 vaccine hesitancy [73, 74]. Misinformation can centre on issues of privacy, and may have a disproportionate impact on disadvantaged groups, further reducing their representation in the data [75].

There is a unique opportunity to prepare for a “fourth industrial revolution” which is already transforming healthcare and society. This includes a responsibility to facilitate AI's potential to mitigate existing disparities, as well as counter the risks of AI leading to worsening disparities.

Conclusions

The use of AI, especially generative AI (such as LLMs), in respiratory care is growing rapidly. Off-the-shelf AI applications are already assisting clinicians and patients in routine care, self-education, self-diagnosis and self-management. These applications are promising in supporting effective clinical decisions, faster diagnoses, and timely advice to prevent exacerbations. They also have the potential to foster a more empathetic clinician–patient relationship. However, there are potential risks from misuse of AI, misinformation, data privacy, and widening healthcare disparities. Continuous evaluation and improvement of systems are essential to ensure the AI is reliable, transparent, inclusive, and understandable by patients and clinicians. Collaboration between technology developers, healthcare providers, and patients is crucial in shaping AI tools that truly enhance respiratory care. Robust policies and guidelines are necessary to ensure equitable access to these tools and to address ethical concerns related to AI in healthcare. As we navigate this rapidly evolving landscape, it is clear that the potential of AI in respiratory care is vast but must be harnessed responsibly and ethically.

Acknowledgements

We are grateful to Hilary Pinnock (Usher Institute, The University of Edinburgh, Edinburgh, UK) for providing insights on the implications from the respiratory patient care perspective.

Footnotes

D. Drummond is the Secretary of the mHealth and eHealth group of the European Respiratory Society; K. Hansen is the Former Chair of the European Lung Foundation; V. Poberezhets is the Former Chair of the mHealth and eHealth group of the European Respiratory Society; C.Y. Hui is the Chair of the mHealth and eHealth group of the European Respiratory Society.

Author contributions: D. Drummond, I. Adejumo and C.Y. Hui wrote the initial draft and final version of the manuscript. K. Hansen, V. Poberezhets and G. Slabaugh commented on the implications from the patient, clinical, societal and technology perspective. All authors approved the final version.

Conflict of interest: D. Drummond is the secretary of the mHealth/eHealth group 1.04 at the European Respiratory Society and is the principal investigator of the Asthma JoeCare study (NCT04942639) sponsored by the company Ludocare. I. Adejumo is a committee member of the Specialist Asthma Advisory Group at the British Thoracic Society, and declares investigator-led PhD studentship funding and speaker's fees from GlaxoSmithKline outside the submitted work. K. Hansen reports support for attending meetings from the European Respiratory Society, the International Respiratory Coalition and the World Health Organization, and leadership roles with the European Respiratory Society (Advocacy Council), the International Respiratory Coalition (Steering Committee) and the World Health Organization (Civil Society Commission Steering Committee). V. Poberezhets is the former Chair of the mHealth/eHealth group 1.04 at the European Respiratory Society, and declares personal fees from AstraZeneca and TEVA outside the submitted work. G. Slabaugh is a member of the Scientific Advisory Board at BioAIHealth and has stock or stock options in BioAIHealth; declares investigator-led PhD studentship funding, project grants and consultant fee from Exscientia, MSD, MRC, Innovate UK, AstraZeneca, Remark AI, and Derg; and has planned or issued patents with Queen Mary University of London and Huawei outside the submitted work. C.Y. Hui is the Chair of the mHealth/eHealth group 1.04 at the European Respiratory Society, and reports consultancy fees from the European Respiratory Society CRC CONNECT, and is a visiting researcher at the University of Edinburgh and a senior consultant in net zero and sustainability (healthcare) at Turner and Townsend. Her research with the University of Edinburgh is independent from, and not financially supported by Turner and Townsend. Her views in this publication are her own, and not those of Turner and Townsend. Neither she nor Turner and Townsend stand to gain financially from this work.

References

- 1.Pinnock H, Hui CY, van Boven JF. Implementation of digital home monitoring and management of respiratory disease. Curr Opin Pulm Med 2023; 29: 302–312. doi: 10.1097/MCP.0000000000000965

- 2.Grosan C, Abraham A. Rule-based expert systems. In: Grosan C, Abraham A, eds. Intelligent Systems: A Modern Approach. Berlin, Springer, 2011; pp. 149–185.

- 3.European Medicines Agency. Reflection paper on the use of artificial intelligence in the lifecycle of medicines. Date last accessed: 21 March 2024. Date last updated: 19 July 2023. www.ema.europa.eu/en/news/reflection-paper-use-artificial-intelligence-lifecycle-medicines

- 4.NVIDIA. Large Language Models Explained. 2024. Date last accessed: 5 August 2024. www.nvidia.com/en-us/glossary/large-language-models/

- 5.Introduction to Large Language Models. Date last accessed: 5 August 2024. Date last updated: 6 September 2024. https://developers.google.com/machine-learning/resources/intro-llms

- 6.Topalovic M, Das N, Ninane V, et al. Artificial intelligence outperforms pulmonologists in the interpretation of pulmonary function tests. Eur Respir J 2019; 53: 1801660. doi: 10.1183/13993003.01660-2018

- 7.Das N, Happaerts S, Janssens W, et al. Collaboration between explainable artificial intelligence and pulmonologists improves the accuracy of pulmonary function test interpretation. Eur Respir J 2023; 61: 2201720. doi: 10.1183/13993003.01720-2022

- 8.Lee HW, Jin KN, Kim YJ, et al. Artificial intelligence solution for chest radiographs in respiratory outpatient clinics: multicenter prospective randomized clinical trial. Ann Am Thorac Soc 2023; 20: 660–667. doi: 10.1513/AnnalsATS.202206-481OC

- 9.Paslı S, Şahin AS, İmamoğlu M, et al. Assessing the precision of artificial intelligence in emergency department triage decisions: insights from a study with ChatGPT. Am J Emerg Med 2024; 78: 170–175. doi: 10.1016/j.ajem.2024.01.037

- 10.Dournes G, Hall CS, Woods JC, et al. Artificial intelligence in computed tomography for quantifying lung changes in the era of CFTR modulators. Eur Respir J 2022; 59: 2100844. doi: 10.1183/13993003.00844-2021

- 11.Handa T, Tanizawa K, Sakamoto R, et al. Novel artificial intelligence-based technology for chest computed tomography analysis of idiopathic pulmonary fibrosis. Ann Am Thorac Soc 2022; 19: 399–406. doi: 10.1513/AnnalsATS.202101-044OC

- 12.Chassagnon G, Vakalopoulou M, Hua-Huy T, et al. Deep learning-based approach for automated assessment of interstitial lung disease in systemic sclerosis on CT images. Radiol Artif Intell 2020; 2: e190006. doi: 10.1148/ryai.2020190006

- 13.Finkelstein J, Jeong IC. Machine learning approaches to personalize early prediction of asthma exacerbations. Ann N Y Acad Sci 2017; 1387: 153–165. doi: 10.1111/nyas.13218

- 14.Zhang O, Minku LL, Gonem S. Detecting asthma exacerbations using daily home monitoring and machine learning. J Asthma 2021; 58: 1518–1527. doi: 10.1080/02770903.2020.1802746

- 15.Tierney AA, Gayre G, Lee K, et al. Ambient artificial intelligence scribes to alleviate the burden of clinical documentation. NEJM Catal Innov Care Deliv 2024; 5: CAT-23. doi: 10.1056/CAT.23.0404

- 16.Chirino A, Wiemken T, Ramirez J, et al. High consistency between recommendations by a pulmonary specialist and ChatGPT for the management of a patient with non-resolving pneumonia. Nort Healthc Med J 2023; 1. doi: 10.59541/001c.75456

- 17.Gilson A, Safranek CW, Taylor RA, et al. How does ChatGPT perform on the United States Medical Licensing Examination? The implications of large language models for medical education and knowledge assessment. JMIR Med Educ 2023; 9: e45312. doi: 10.2196/45312

- 18.Li J, Dada A, Egger J, et al. ChatGPT in healthcare: a taxonomy and systematic review. Comput Methods Programs Biomed 2024; 245: 108013. doi: 10.1016/j.cmpb.2024.108013

- 19.Rabbit lab. Large action models. Date last accessed: 5 August 2024. www.rabbit.tech/research

- 20.Pinnock H, McClatchey K, Hui CY. Supported self-management in asthma. In: Pinnock H, Poberezhets V, Drummond D, eds. Digital Respiratory Healthcare (ERS Monograph). Sheffield, European Respiratory Society, 2023; pp. 199–215. doi: 10.1183/2312508X.erm10223

- 21.Global Initiative for Asthma. GINA global strategy for asthma management and prevention 2023. Date last accessed: 21 March 2024. https://ginasthma.org/

- 22.Drummond D, Hui CY, Adejumo I, et al. ERS “CONNECT” Clinical Research Collaboration – moving multiple digital innovations towards connected respiratory care: addressing the over-arching challenges of whole systems implementation. Eur Respir J 2023; 62: 2301680. doi: 10.1183/13993003.01680-2023

- 23.Eysenbach G. The role of ChatGPT, generative language models, and artificial intelligence in medical education: a conversation with ChatGPT and a call for papers. JMIR Med Educ 2023; 9: e46885. doi: 10.2196/46885

- 24.Johnson D, Goodman R, Scoville E, et al. Assessing the accuracy and reliability of AI-generated medical responses: an evaluation of the Chat-GPT model. Res Sq 2023; preprint [10.21203/rs.3.rs-2566942/v1].

- 25.Potapenko I, Boberg-Ans LC, Subhi Y, et al. Artificial intelligence-based chatbot patient information on common retinal diseases using ChatGPT. Acta Ophthalmol 2023; 101: 829–831. doi: 10.1111/aos.15661

- 26.Ayers JW, Poliak A, Smith DM, et al. Comparing physician and artificial intelligence chatbot responses to patient questions posted to a public social media forum. JAMA Intern Med 2023; 183: 589–596. doi: 10.1001/jamainternmed.2023.1838

- 27.Gkinko L, Elbanna A. Hope, tolerance and empathy: employees’ emotions when using an AI-enabled chatbot in a digitalised workplace. Inform Technol People 2022; 35: 1714–1743. doi: 10.1108/ITP-04-2021-0328

- 28.Auvray M, Myin E, Spence C. The sensory-discriminative and affective-motivational aspects of pain. Neurosci Biobehav Rev 2010; 34: 214–223. doi: 10.1016/j.neubiorev.2008.07.008

- 29.Aru J, Labash A, Vicente R, et al. Mind the gap: challenges of deep learning approaches to theory of mind. Artif Intell Rev 2023; 56: 9141–9156. doi: 10.1007/s10462-023-10401-x

- 30.Tsang KCH, Pinnock H, Wilson AM, et al. Predicting asthma attacks using connected mobile devices and machine learning; the AAMOS-00 observational study protocol. BMJ Open 2022; 12: e064166. doi: 10.1136/bmjopen-2022-064166

- 31.Exarchos KP, Beltsiou M, Kostikas K, et al. Artificial intelligence techniques in asthma: a systematic review and critical appraisal of the existing literature. Eur Respir J 2020; 56: 2000521. doi: 10.1183/13993003.00521-2020

- 32.Wijsenbeek MS, Moor CC, Johannson KA, et al. Home monitoring in interstitial lung diseases. Lancet Respir Med 2023; 11: 97–110. doi: 10.1016/S2213-2600(22)00228-4

- 33.Gerke S, Shachar C, Cohen IG. Regulatory, safety, and privacy concerns of home monitoring technologies during COVID-19. Nat Med 2020; 26: 1176–1182. doi: 10.1038/s41591-020-0994-1

- 34.Belcheva V, Ermakova T, Fabian B. Understanding website privacy policies—a longitudinal analysis using natural language processing. Information 2023; 14: 622. doi: 10.3390/info14110622

- 35.Van Riel N, Auwerx K, Schoenmakers B, et al. The effect of Dr Google on doctor–patient encounters in primary care: a quantitative, observational, cross-sectional study. BJGP Open 2017; 1: bjgpopen17X100833. doi: 10.3399/bjgpopen17X100833

- 36.US Department of Health and Human Services. Health information privacy. Date last accessed: 5 August 2024. www.hhs.gov/hipaa/index.html

- 37.European Commission. Data protection in the EU. Date last accessed: 5 August 2024. https://commission.europa.eu/law/law-topic/data-protection/data-protection-eu_en

- 38.Rezaeikhonakdar D. AI Chatbots and challenges of HIPAA compliance for AI developers and vendors. J Law Med Ethics 2023; 51: 988–995. doi: 10.1017/jme.2024.15

- 39.Khalid N, Qayyum A, Bilal M, et al. Privacy-preserving artificial intelligence in healthcare: techniques and applications. Comput Biol Med 2023; 158: 106848. doi: 10.1016/j.compbiomed.2023.106848

- 40.Monteith S, Glenn T, Bauer M, et al. Artificial intelligence and increasing misinformation. Br J Psychiatry 2023; 224: 33–35. doi: 10.1192/bjp.2023.136

- 41.Ridd M, Shaw A, Salisbury C, et al. The patient–doctor relationship: a synthesis of the qualitative literature on patients’ perspectives. Br J Gen Pract 2009; 59: e116–e133. doi: 10.3399/bjgp09X420248

- 42.Aminololama-Shakeri S, López JE. The doctor-patient relationship with artificial intelligence. AJR Am J Roentgenol 2019; 212: 308–310. doi: 10.2214/AJR.18.20509

- 43.Abdoul C, Cros P, Drummond D, et al. Parents’ views on artificial intelligence for the daily management of childhood asthma: a survey. J Allergy Clin Immunol Pract 2021; 9: 1728–1730. doi: 10.1016/j.jaip.2020.11.048

- 44.Gonsard A, AbouTaam R, Drummond D, et al. Children's views on artificial intelligence and digital twins for the daily management of their asthma: a mixed-method study. Eur J Pediatr 2023; 182: 877–888. doi: 10.1007/s00431-022-04754-8

- 45.Chen S, Guevara M, Savova GK, et al. The effect of using a large language model to respond to patient messages. Lancet Digit Health 2024; 6: e379–e381. doi: 10.1016/S2589-7500(24)00060-8

- 46.Tanaka M, Matsumura S, Bito S. Roles and competencies of doctors in artificial intelligence implementation: qualitative analysis through physician interviews. JMIR Form Res 2023; 7: e46020. doi: 10.2196/46020

- 47.Drummond D. Between competence and warmth: the remaining place of the physician in the era of artificial intelligence. NPJ Digit Med 2021; 4: 85. doi: 10.1038/s41746-021-00457-w

- 48.Tan SS, Goonawardene N. Internet health information seeking and the patient-physician relationship: a systematic review. J Med Internet Res 2017; 19: e9. doi: 10.2196/jmir.5729

- 49.Hamilton JG, Genoff Garzon M, Gucalp A, et al. ‘A tool, not a crutch’: patient perspectives about IBM Watson for oncology trained by Memorial Sloan Kettering. J Oncol Pract 2019; 15: e277–e288. doi: 10.1200/JOP.18.00417

- 50.Masrour O, Personnic J, Wanin S, et al. Objectives for algorithmic decision-making systems in childhood asthma: perspectives of children, parents, and physicians. Digit Health 2024; 10: 20552076241227285. doi: 10.1177/20552076241227285

- 51.Schwab K. The fourth industrial revolution: what it means, how to respond. Date last updated: 12 December 2015. https://www.foreignaffairs.com/world/fourth-industrial-revolution

- 52.Foley D, Best E, Reid N, et al. Respiratory health inequality starts early: the impact of social determinants on the aetiology and severity of bronchiolitis in infancy. J Paediatr Child Health 2019; 55: 528–532. doi: 10.1111/jpc.14234

- 53.Magesh S, John D, Li WT, et al. Disparities in COVID-19 outcomes by race, ethnicity, and socioeconomic status: a systematic-review and meta-analysis. JAMA Netw Open 2021; 4: e2134147. doi: 10.1001/jamanetworkopen.2021.34147

- 54.Shepherd V, Wood F, Griffith R, et al. Protection by exclusion? The (lack of) inclusion of adults who lack capacity to consent to research in clinical trials in the UK. Trials 2019; 20: 474. doi: 10.1186/s13063-019-3603-1

- 55.Akenroye A, Keet C. Underrepresentation of blacks, smokers, and obese patients in studies of monoclonal antibodies for asthma. J Allergy Clin Immunol Pract 2020; 8: 739–741.e6. doi: 10.1016/j.jaip.2019.08.023

- 56.Sheikh A. Why are ethnic minorities under-represented in US research studies? PLoS Med 2006; 3: e49. doi: 10.1371/journal.pmed.0030049

- 57.Okelo SO. Structural inequities in medicine that contribute to racial inequities in asthma care. Semin Respir Crit Care Med 2022; 43: 752–762. doi: 10.1055/s-0042-1756491

- 58.Pinnock H, Parke HL, Taylor SJ, et al. Systematic meta-review of supported self-management for asthma: a healthcare perspective. BMC Med 2017; 15: 64. doi: 10.1186/s12916-017-0823-7

- 59.Mid-Yorkshire Hospitals NHS Trust. NHS - Mid Yorkshire Hospitals NHS Trust Asthma Self-Management Videos. Date last accessed: 5 August 2024. www.respiratoryfutures.org.uk/resources/general-resources-multi-lingual-respiratory-resources/nhs-mid-yorkshire-hospitals-nhs-trust-asthma-self-management-videos/

- 60.Kim JS, Gao X, Rzhetsky A. RIDDLE: race and ethnicity imputation from disease history with deep learning. PLoS Comput Biol 2018; 14: e1006106. doi: 10.1371/journal.pcbi.1006106

- 61.Raghav K, Anand S, Loree JM, et al. Underreporting of race/ethnicity in COVID-19 research. Int J Infect Dis 2021; 108: 419–421. doi: 10.1016/j.ijid.2021.05.075

- 62.Chernbumroong S, Johnson J, Johnson SR, et al. Machine learning can predict disease manifestations and outcomes in lymphangioleiomyomatosis. Eur Respir J 2021; 57: 2003036. doi: 10.1183/13993003.03036-2020

- 63.Williams D, Hornung H, Peery A, et al. Deep learning and its application for healthcare delivery in low and middle income countries. Front Artif Intell 2021; 4: 553987. doi: 10.3389/frai.2021.553987

- 64.Brall C, Schröder-Bäck P, Maeckelberghe E. Ethical aspects of digital health from a justice point of view. Eur J Public Health 2019; 29: Suppl. 3, 18–22. doi: 10.1093/eurpub/ckz167

- 65.Liu JX, Goryakin Y, Scheffler R, et al. Global health workforce labor market projections for 2030. Hum Resour Health 2017; 15: 1. doi: 10.1186/s12960-016-0176-x

- 66.Benson RT, Koroshetz WJ. Health disparities: research that matters. Stroke 2022; 53: 663–669. doi: 10.1161/STROKEAHA.121.035087

- 67.Reese H. What happens when police use AI to predict and prevent crime? Date last accessed: 21 March 2024. Date last updated: 23 February 2022. https://daily.jstor.org/what-happens-when-police-use-ai-to-predict-and-prevent-crime/

- 68.Gebran A, Thakur SS, Daye D, et al. Development of a machine learning–based prescriptive tool to address racial disparities in access to care after penetrating trauma. JAMA Surg 2023; 158: 1088–1095. doi: 10.1001/jamasurg.2023.2293

- 69.Gichoya JW, Banerjee I, Kuo PC, et al. AI recognition of patient race in medical imaging: a modelling study. Lancet Digit Health 2022; 4: e406–e414. doi: 10.1016/S2589-7500(22)00063-2

- 70.The King's Fund. Supporting digital inclusion in health care. Date last accessed: 5 August 2024. www.kingsfund.org.uk/insight-and-analysis/projects/digital-equity

- 71.Powles J, Hodson H. Google DeepMind and healthcare in an age of algorithms. Health Technol (Berl) 2017; 7: 351–367. doi: 10.1007/s12553-017-0179-1

- 72.Armstrong S. NHS England faces legal action over redacted NHS data contract with Palantir. BMJ 2024; 384: q455. doi: 10.1136/bmj.q455

- 73.Hansen BT, Labberton AS, Kraft KB, et al. Coverage of primary and booster vaccination against COVID-19 by socioeconomic level: a nationwide cross-sectional registry study. Hum Vaccin Immunother 2023; 19: 2188857. doi: 10.1080/21645515.2023.2188857

- 74.Willis DE, Andersen JA, McElfish PA, et al. COVID-19 vaccine hesitancy: race/ethnicity, trust, and fear. Clin Transl Sci 2021; 14: 2200–2207. doi: 10.1111/cts.13077

- 75.Yao Y, Wu YS, Wang MP, et al. Socio-economic disparities in exposure to and endorsement of COVID-19 vaccine misinformation and the associations with vaccine hesitancy and vaccination. Public Health 2023; 223: 217–222. doi: 10.1016/j.puhe.2023.08.005