SUMMARY

Clinical medicine has embraced the use of evidence for patient treatment decisions; however, strategies for evaluating the evidence behind laboratory medicine practices have lagged. It was not until the end of the 20th century that the Institute of Medicine (IOM), now the National Academy of Medicine, and the Centers for Disease Control and Prevention, Division of Laboratory Systems (CDC DLS), focused on laboratory tests and on how testing processes can be designed to benefit patient care. In collaboration with CDC DLS, the American Society for Microbiology (ASM) used an evidence review method developed by CDC DLS to build a program for creating laboratory testing guidelines and practices. The CDC DLS initiative is called Laboratory Medicine Best Practices (LMBP), and its evidence review method is the A-6 cycle. ASM's adaptation is called Evidence-based Laboratory Medicine Practice Guidelines (EBLMPG). This review details how the ASM Systematic Review (SR) processes were developed and executed collaboratively with CDC DLS. It also describes ASM's transition from LMBP to its current EBLMPG process, while maintaining a commitment to working with agencies in the U.S. Department of Health and Human Services and other partners to ensure that EBLMPG evidence is readily understood and consistently used.

KEYWORDS: systematic review, meta-analysis, outcomes, evidence-based medicine

INTRODUCTION

This review describes the history of the Laboratory Medicine Best Practices (LMBP) initiative of the Centers for Disease Control and Prevention, Division of Laboratory Systems (CDC DLS), and its integration with the American Society for Microbiology (ASM), specifically through the “ASM 7,” a group of clinical microbiologists with diverse backgrounds and interests but a shared belief in the value of clinical microbiology to patient care. The ASM 7 met in February 2011 at ASM headquarters in Washington, D.C., and embraced the CDC’s vision of promoting evidence-based laboratory medicine practices in clinical microbiology. The alliance resulted in two Systematic Review (SR) manuscripts, one on rapid detection of bloodstream infections and one on urine pre-analytics (1, 2). These manuscripts represent the first microbiology-focused questions that ASM examined using the CDC DLS LMBP A-6 cycle SR methods (3). After a few adaptations to the A-6 cycle, ASM published a third manuscript, focused on Clostridioides difficile diagnostic methods (4). Over time, many microbiologists, stakeholders, biostatisticians, medical librarians, and subject matter experts joined the original “ASM 7” team and shaped the iterations that followed, which culminated in ASM’s Evidence-based Laboratory Medicine Practice Guidelines (EBLMPG) for clinical microbiology. ASM is currently updating two earlier CDC DLS SRs, one on blood culture contamination (5) and one on rapid identification of bloodstream infections (1). This review traces ASM’s adoption and adaptation of the CDC’s LMBP A-6 cycle method.

WHAT IS AN ASM EVIDENCE-BASED LABORATORY MEDICINE PRACTICE GUIDELINE?

Evidence-based practice (EBP), like evidence-based medicine (EBM), has historical roots going back centuries, but its modern popularity dates only to the mid-1990s (6). Its strength lies in “the conscientious and judicious use of current best evidence derived from clinical care research in managing individual patients” (7). EBP is intended to optimize decision-making by making available EBP guidelines derived from rigorous analysis of evidence from well-designed and properly conducted clinical research. This evidence analysis approach is called an SR process: it informs individual medical and scientific decisions with valid summary findings obtained through a systematic search of the available evidence, followed by critique and synthesis.

Important medical decisions, diagnoses, and therapeutic choices (6–8) are made by combining EBP with the clinician’s expertise, proficiency, and judgment and with the patient’s needs. The Agency for Healthcare Research and Quality (AHRQ), a leader in EBP and EBM, states that the evidence-based decision-making process involves the following steps (9):

Converting information needs into focused questions.

Efficiently identifying the best evidence to answer the question and synthesizing that evidence.

Critically appraising the evidence for validity and clinical usefulness.

Applying the results in clinical practice.

Evaluating the performance of the evidence in clinical application.

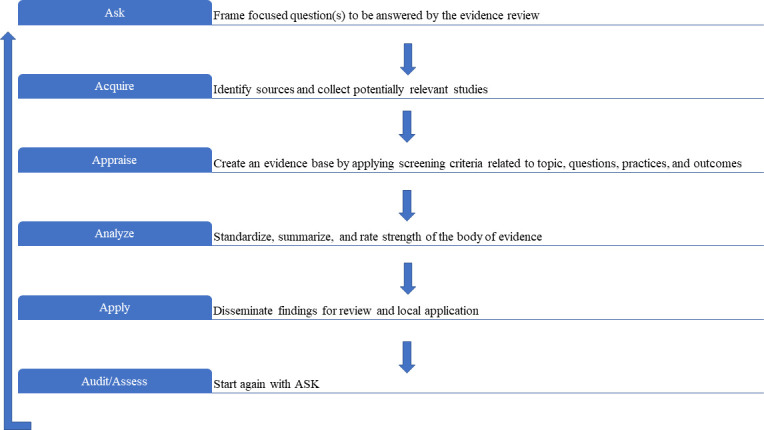

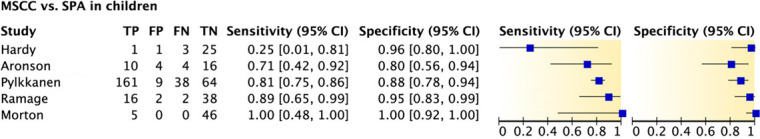

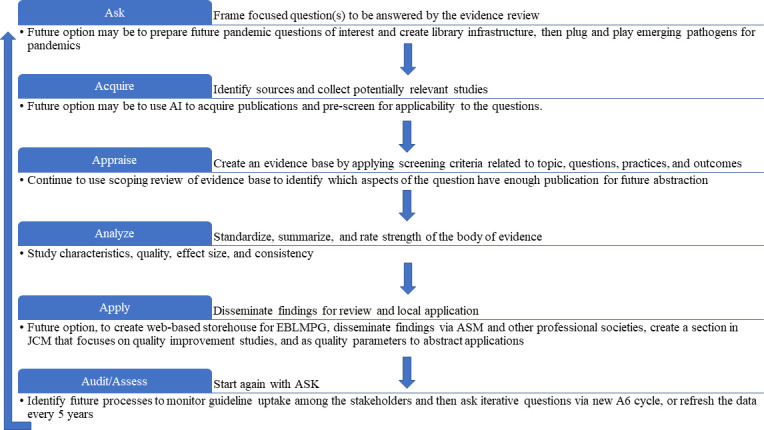

Extending EBM to laboratory practice was a logical step. Importantly, medical decisions include those based on laboratory test results, which make up approximately 70% of electronic health record data (10). In alignment with AHRQ, the A-6 method (Fig. 1) had been validated by CDC DLS (3) and adopted by ASM by 2011. The CDC DLS LMBP A-6 cycle is a method for deriving evidence-based laboratory medicine practice guidelines (EBLMPG), which apply EBP principles and processes to provide best-practice guidelines for clinical laboratories, linked to healthcare quality aims and patient outcomes.

Fig 1.

The original LMBP A-6 cycle.

In contrast to SRs in clinical medicine, which focus primarily on patient outcomes, clinical research in laboratory medicine generally focuses on the accuracy, precision, value, and outcomes of diagnostic tests. Such laboratory evidence can lead to previously accepted diagnostic tests being replaced with more robust ones that support patient outcomes more efficiently and economically.

What makes "evidence-based" practice different from consensus-based expert opinion?

When problem-solving is performed by a consensus of expert opinion, the focus is on persons with extensive knowledge and expertise in a given field. Consensus opinion incorporates the input of several experts in a group whose views are heard and understood. Consensus is neither absolute agreement among the experts nor simply the majority’s preference. Instead, the consensus process involves collaborative, cooperative, egalitarian, inclusive, and participatory decision-making; the resulting outcome reflects the best interest of the whole group, is achievable at the time, and is supported by all. As expected, any group of experts has varied experience levels and different perspectives, which influence their input to the discussion and their practice recommendations. Proposals are generated collaboratively, unresolved concerns are addressed, and recommendations are modified to maximize general agreement. Through such cooperative decision-making, participants agree to move forward on the issue or question at hand, even if they disagree on select topics. The final solution is thus a consensus opinion, often described as an “acceptable solution” on which participants mutually agree, representing the collective opinion of the group members.

Many consensus-driven guidelines based on expert opinion have been published by scientific organizations on topics within, and overlapping, their areas of expertise. In clinical microbiology, most guidelines come from the American Society for Microbiology (ASM), the Clinical and Laboratory Standards Institute (CLSI), the Infectious Diseases Society of America (IDSA), and the College of American Pathologists (CAP). Each organization has its own, albeit often similar, approach to publishing consensus guidelines; these approaches are summarized in Table 1. In 2010, ASM set out to replace its Cumitech series with Practical Guidance for Clinical Microbiology (PGCM) documents, now published in Clinical Microbiology Reviews (CMR), ASM Press. CMR editors prioritize previous Cumitech subjects and new subjects, assembling a group of experts with complementary expertise for each topic under review. Each group uses the evidence-based literature, its members' expertise, and team consensus to develop documents that provide general guidance for clinical microbiologists, emphasizing current diagnostic methods and their appropriate implementation. These PGCM documents link microbiologic practice to current clinical and scientific issues.

TABLE 1.

Laboratory guideline attributes by organization[a]

| Attribute | CLSI | CAP[b] | IDSA | CDC-LMBP[c] | ASM-Cumitech and CPG | ASM-EBLMPG |
|---|---|---|---|---|---|---|
| Consensus expert opinion after a routine literature review | Yes | Sometimes | Sometimes | No | Yes | No |
| Consensus based on evidence from a systematic literature review | No | Yes | Yes | Yes | No | Yes |
| Consensus based on evidence from a systematic literature review with meta-analysis | No | No | No | Yes | No | Yes |
| Multidisciplinary panels convened from major stakeholders | Yes | Yes | Yes | Yes | No | Yes |
| Training provided for the multidisciplinary panelists | No | Unknown | Unknown | Yes | No | Yes |
| Librarian included | No | Unknown | Unknown | Yes | No | Yes |
| Methodologist included | No | Yes | No | Yes | No | Yes |
| Biostatistician included | No | No | Optional | Yes | No | Yes |
| Patient advocates included | No | Sometimes | Unknown | Yes | No | No |
| Documented requirements for guideline creation | Yes | Yes | Yes | Yes | Yes | Yes |
| Uses GRADE criteria for guidelines | No | No | No | Yes | No | Yes |
| IOM-compliant or posted to AHRQ or the former NGC (defunded) | No | No | Yes | Yes | No | Yes |
| Includes information about worldwide regulatory agencies and compliance | Yes | No | No | No | No | No |
| May include industry or government representation with COI disclosure | Yes | Optional | Optional | Yes | Yes | Optional |
| Includes unpublished grey literature or data | No | Yes | No | Yes | Maybe | No |
| Includes possible harm assessment | No | Yes | No | Yes | No | Yes |
| Includes bias assessment | No | No | No | Yes | No | No |
| Includes cost assessment | No | Yes | Optional | Sometimes | No | Sometimes |

[a] CLSI, Clinical and Laboratory Standards Institute; CAP, College of American Pathologists; IDSA, Infectious Diseases Society of America; CDC-LMBP, CDC Laboratory Medicine Best Practices; ASM-CPG, ASM Practical Guidance for Clinical Microbiology (PGCM) or Cumitech; ASM-EBLMPG, ASM Evidence-based Laboratory Medicine Practice Guidelines; COI, conflict of interest; IOM, Institute of Medicine; NGC, National Guideline Clearinghouse.

[b] CAP guidelines also consider the benefits/harms, value, and cost of each guideline and include open-comment-period feedback from stakeholders and experts.

[c] Published to AHRQ.

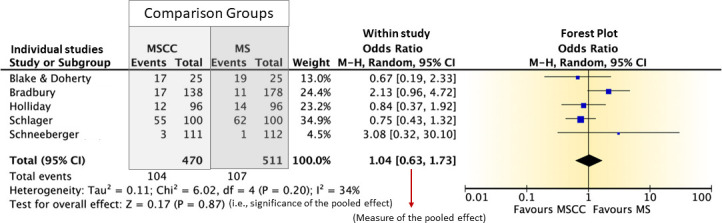

In contrast to the consensus process, EBP focuses on the critical evaluation and synthesis of evidence from empirical research gathered by an SR process. The analysis of SR data is then combined with the expertise of clinicians, scientists, and patient advocates to make final decisions about the care of individual patients (4). The goal of EBP is to improve patient outcomes and achieve healthcare quality aims. EBP exposes limitations and research gaps in the existing evidence base while emphasizing the most efficient and effective patient care processes and practices. To align with EBP, LMBP combines the best available, systematically evaluated evidence with clinical acumen, scientific expertise, and knowledge of patient-centric healthcare and laboratory operations. Together, these elements are used to weigh each evidence-based laboratory guideline for use in patient care, with decisions based on an SR with meta-analysis. An SR need not include a meta-analysis (especially when studies are both limited and highly heterogeneous); in that case, a narrative synthesis without statistical assessment may be more appropriate. However, combining an SR with a meta-analysis (Fig. 3) is optimal (11, 12).

What is a systematic review with meta-analysis?

A Systematic Review (SR) is a literature review designed to answer a defined research question by collecting, summarizing, and critically analyzing all empirical evidence (e.g., multiple research studies or manuscripts) that fits pre-specified eligibility criteria. The hallmarks of an SR leading to an EBP guideline are 3-fold: first, the literature search is extensive, systematic, and transparent; second, the risk of bias (RoB) is assessed for all included studies; and third, the strength of the synthesized evidence is graded in a standardized fashion. When the data allow, the SR is followed by a meta-analysis, which uses statistical methods to combine the results of the studies selected in the SR into a summary estimate (3).
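The text does not specify which statistical model ASM or CDC DLS uses for pooling. Under that caveat, the Python below is a minimal sketch of one common way a meta-analysis combines study results: inverse-variance weighting, with the DerSimonian-Laird estimator of between-study variance for a random-effects model. The effect estimates (for example, log odds ratios) and their variances are hypothetical inputs; pooled diagnostic accuracy is often modeled with bivariate methods instead, which this univariate sketch does not cover.

```python
import math
from typing import List, Tuple


def fixed_effect(effects: List[float], variances: List[float]) -> Tuple[float, float]:
    """Inverse-variance (fixed-effect) pooled estimate and its standard error."""
    weights = [1.0 / v for v in variances]
    pooled = sum(w * y for w, y in zip(weights, effects)) / sum(weights)
    return pooled, math.sqrt(1.0 / sum(weights))


def random_effects(effects: List[float], variances: List[float]) -> dict:
    """DerSimonian-Laird random-effects pooling, with Cochran's Q and I^2."""
    k = len(effects)
    if k < 2:
        raise ValueError("need at least two studies")
    weights = [1.0 / v for v in variances]
    fe_estimate, _ = fixed_effect(effects, variances)
    # Cochran's Q: weighted squared deviations from the fixed-effect estimate
    q = sum(w * (y - fe_estimate) ** 2 for w, y in zip(weights, effects))
    c = sum(weights) - sum(w * w for w in weights) / sum(weights)
    # Between-study variance (tau^2), truncated at zero
    tau2 = max(0.0, (q - (k - 1)) / c)
    # I^2: share of total variability attributed to between-study heterogeneity
    i2 = max(0.0, (q - (k - 1)) / q) if q > 0 else 0.0
    # Random-effects weights add tau^2 to each within-study variance
    re_weights = [1.0 / (v + tau2) for v in variances]
    pooled = sum(w * y for w, y in zip(re_weights, effects)) / sum(re_weights)
    se = math.sqrt(1.0 / sum(re_weights))
    return {"pooled": pooled, "se": se,
            "ci95": (pooled - 1.96 * se, pooled + 1.96 * se),
            "tau2": tau2, "i2": i2, "q": q}


if __name__ == "__main__":
    # Hypothetical log odds ratios and variances from three studies (not real data)
    print(random_effects([0.2, 0.5, 0.9], [0.04, 0.09, 0.16]))
```

When studies agree closely, tau^2 falls to zero and the random-effects result matches the fixed-effect one; when they disagree, tau^2 grows, the weights even out, and the confidence interval widens to reflect the heterogeneity.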

For individual treatment questions, SRs of extensive collections of randomized clinical trials (RCTs) are the ideal evidence base, although they do not necessarily include recommendations. EBP is not restricted to RCTs; it can draw on other studies, published or unpublished (e.g., grey literature), that meet pre-established quality criteria and contain information relevant to the project question(s) (13). Importantly, questions in laboratory practice extend beyond individual treatment to include laboratory process improvement, where the focus is on diagnostic accuracy or on implementing procedures or interventions within organizations that could improve the health of individual patients. For such questions, study designs other than RCTs are often more appropriate (14).

Limitations of systematic reviews

By their very nature, Systematic Reviews generally seek to answer a narrow and specific question; they typically do not cover a wide breadth of related topics. Moreover, a meta-analysis cannot remove bias present in the individual studies it synthesizes: methodological flaws in the design of individual studies carry through to the pooled result. One set of authors cautions users of SRs to consider the quality of the product carefully and to adhere to the dictum “caveat emptor” (buyer beware) (15).

These limitations and other issues led the U.S. Congress to request that the Institute of Medicine (IOM) develop a set of standards for creating rigorous, trustworthy clinical practice guidelines (https://nap.nationalacademies.org/resource/9728/To-Err-is-Human-1999--report-brief.pdf). The standards encompass issues such as transparency, conflicts of interest, guideline development group composition, external review, strength of recommendations, and guideline updates. The resulting IOM report, “Clinical Practice Guidelines We Can Trust,” was published in 2011 (16). Since then, various tools have been developed to help both SR authors and readers evaluate the RoB in SRs (13, 17, 18).

HISTORY OF EVIDENCE-BASED GUIDELINES IN LABORATORY MEDICINE AND CLINICAL MICROBIOLOGY

The Cochrane Consumer Network

The Cochrane Consumer Network (https://consumers.cochrane.org/) is the original clinical medicine group performing SRs to generate clinical evidence. It is an international network headquartered in the United Kingdom and is home to over 7,500 SRs.

The CDC DLS LMBP initiative, with its A-6 cycle method for SRs, was the first evidence-based method dedicated to quality issues in laboratory medicine. CDC DLS commissioned a report, Laboratory Medicine: A National Status Report, which was published in May 2008 (19). The report’s content was developed with input from a committee of technical experts and numerous, diverse stakeholders in laboratory medicine, and the report aimed to lay the groundwork for transforming laboratory medicine over the following decade. The LMBP initiative was conceived, in part, to address the evaluation gap identified between laboratory medicine practices and their impact on national healthcare quality aims and patient outcomes. The plan was to develop methods for systematically reviewing and evaluating laboratory quality improvement studies to identify effective laboratory medicine practices linked to national healthcare quality aims and improved patient outcomes. The result was the robust CDC DLS LMBP A-6 SR method, based on validated evidence-based SR methods used in clinical medicine. Because most errors occur in the pre-analytical and post-analytical testing phases, laboratory practices in these phases are the focus of LMBP efforts (19). The guideline standards outlined in the IOM report (16) were adopted as inclusion criteria by the now-defunded National Guideline Clearinghouse (NGC) in 2013. All published LMBP SRs and the first two ASM guidelines were accepted and posted by the NGC before it was discontinued.

The American Association for Clinical Chemistry (AACC) was the first clinical laboratory society to publish laboratory practice guidelines. Its National Academy of Clinical Biochemistry (NACB) initially published guidelines for point-of-care testing (20), developed in the manner of consensus guidelines. Since then, CAP has begun publishing evidence-based guidelines for laboratory medicine, which qualified for inclusion in the NGC (21). ASM also began producing evidence-based guidelines that address clinical microbiology laboratory issues. The following sections describe ASM’s approach to EBLMPG, which is based on the CDC DLS LMBP A-6 cycle method.

The CDC DLS LMBP A-6 cycle method

The CDC DLS LMBP A-6 cycle method is summarized in Table 2, where it is compared with the SR steps common to all evidence-based guideline approaches. Steps A-1 through A-6 of the cycle guide the LMBP SRs. LMBP data collection and analysis are distinct but interrelated processes and are followed by a meta-analysis (3). The SR is conducted by a team whose members have specific functions and work in a highly coordinated manner to complete the SR and meta-analysis and develop an EBP guideline.

TABLE 2.

Steps in performing a systematic review and their relationship to the Laboratory Medicine Best Practices A-6 method

| Step | Description | A-6 step |
|---|---|---|
| Step 1 | Formulate a question for review. Once the question is agreed upon, it should not be changed. | ASK (A-1) |
| Step 2 | Identify all relevant studies. The American Society for Microbiology (ASM) works with a medical librarian specially trained in performing literature searches for Systematic Reviews; a Centers for Disease Control and Prevention (CDC) librarian was among those who worked with ASM. The librarian and the rest of the team refine the keywords and search strings used to retrieve the available literature. | ACQUIRE (A-2) |
| Step 3 | Assess the quality of the studies. There are several ways to do this. Studies in clinical medicine were originally appraised using the “Cochrane” method, part of the original British approach to Systematic Reviews, which uses the QUADAS method to assess bias and quality. | APPRAISE (A-3) |
| Step 4 | Summarize the total body of evidence. Data analysis uses statistical methods, including meta-analysis, to understand similarities and differences between studies. | ANALYZE (A-4) |
| Step 5 | Interpret the findings. At this point, the Systematic Review (SR) question should be answered based on the findings and conclusions drawn from the total body of evidence. | ANALYZE (A-4) |
| Post-SR | Apply the findings to clinical practice, then assess the impact of the practice. | APPLY (A-5); ASSESS (A-6) |

The six steps of the A-6 cycle are described in the section “How Are ASM Systematic Reviews Performed Using the CDC DLS LMBP A-6 Cycle Method?” To promote transparency, the A-6 process is open to all relevant stakeholders, including the public (3). The defined “A” steps (Ask, Acquire, Appraise, Analyze, Apply, and Assess) are intended to ensure reproducibility and minimize bias while facilitating guideline development and updates: if an A-6 cycle SR were repeated by a different team using the same method and criteria, it should reach the same findings. One distinguishing feature of the LMBP A-6 cycle method is the inclusion of unpublished data, which The Cochrane Collaboration recommends to mitigate publication bias (1, 2, 22). Such grey data can come from laboratories’ Quality Improvement (QI) projects or from cost-benefit business data and can be included in the review to benefit patient care.

In 2010, Dr. Robert Kolter, the ASM President, convened 10 clinical microbiology leaders at ASM to review current products and services, brainstorm new ideas, and plan for the future. The intent was to ensure that the clinical microbiology community viewed ASM as responsive to its concerns. One important new initiative was the ASM Professional Practice Committee, which established the EBLMPG Subcommittee so that clinical microbiologists could address laboratory knowledge gaps through an SR process and ultimately improve health outcomes by developing and disseminating evidence-based guidance to laboratorians, patients, clinicians, and other decision-makers. The anticipated guidance would describe the laboratory interventions most effective for patients under specific circumstances. In addition, it was clear that the Centers for Medicare and Medicaid Services (CMS) was critically evaluating which practices to reimburse and was determining that payment was not appropriate for services lacking supporting evidence (i.e., services that were not “medically necessary”). Because other payers generally follow CMS payment rules and loss of reimbursement would be detrimental to the clinical laboratory, evidence to support best practices was deemed imperative.

Collaboration between CDC DLS and ASM

CDC DLS had developed and validated the methodology that became the LMBP “A-6 Cycle” method (3) and was interested in piloting it with external clinical laboratory partners. In 2010, through the collaboration of CDC DLS and ASM, Dr. Peter Gilligan, Dr. Alice S. Weissfeld, and Peggy McNult worked to expand the use of the LMBP A-6 cycle in clinical microbiology and invited seven members to participate. ASM had several reasons for deciding to adopt and adapt the CDC DLS LMBP A-6 cycle method for Systematic Reviews. One reason was that the method focuses on identifying evidence-based practices that support the six healthcare quality aims of the Institute of Medicine (IOM), now the National Academy of Medicine: healthcare that is patient-centered, safe, timely, effective, efficient, and equitable (23).

CDC DLS trained the ASM EBLMPG committee members on the LMBP A-6 cycle method in 2011. Each of the seven committee members selected a topic, developed a question, and performed a prequalification literature search under the guidance of the CDC DLS team. Topics were presented at a weekend meeting, and the ASM members selected the top three to investigate. All guidelines are freely accessible upon publication, aligning with CDC and NGC recommendations so that all clinical laboratories have access and can utilize the best practice recommendations therein.

ASM shadowed the CDC DLS team as it researched the first topic: "What practices are effective at increasing timeliness for providing targeted therapy for those patients who are admitted for or are found to have bloodstream infections (e.g., positive blood cultures) to improve clinical outcomes (reductions in length of stay, antibiotic costs, morbidity, and mortality)?" (1). In 2013, ASM and CDC DLS signed a Memorandum of Understanding to solidify their relationship. They then developed a second guideline, also on a topic chosen by ASM committee members: "Does optimizing the collection, preservation, and transport of urine for microbiological culture improve the diagnosis and management of patients with urinary tract infection?" (2). The third guideline topic was C. difficile testing; among the questions it addressed were “How effective are NAAT testing practices for diagnosing patients suspected of Clostridioides difficile infection?”, “What is the diagnostic accuracy of Nucleic Acid Amplification Tests (NAAT) versus either toxigenic culture or cell cytotoxicity neutralization assay?”, and “What is the diagnostic accuracy of a glutamate dehydrogenase (GDH)-positive EIA followed by NAAT versus either toxigenic culture or cell cytotoxicity neutralization assay (CCNA)?” (4). Pre-surveys for the urine pre-analytics and C. difficile testing guidelines were launched in early 2016 and were the last pre-surveys performed.
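Diagnostic accuracy questions like these compare an index test (e.g., NAAT, or a GDH EIA followed by NAAT) against a reference standard (toxigenic culture or CCNA). As a minimal, hypothetical sketch of the underlying arithmetic, the Python below tabulates paired per-specimen results into a 2x2 table and computes sensitivity and specificity with Wilson score 95% intervals. The counts are invented for illustration, and the two-step rule (reported positive only if both steps are positive) is a simplifying assumption, not the guideline's algorithm.

```python
import math
from dataclasses import dataclass
from typing import Iterable, Tuple


@dataclass
class TwoByTwo:
    tp: int = 0  # index test positive, reference standard positive
    fp: int = 0  # index test positive, reference standard negative
    fn: int = 0  # index test negative, reference standard positive
    tn: int = 0  # index test negative, reference standard negative


def two_step_positive(gdh_positive: bool, naat_positive: bool) -> bool:
    """Hypothetical two-step rule: report positive only if both steps are positive."""
    return gdh_positive and naat_positive


def tabulate(pairs: Iterable[Tuple[bool, bool]]) -> TwoByTwo:
    """Count (index_positive, reference_positive) pairs into a 2x2 table."""
    table = TwoByTwo()
    for index_pos, ref_pos in pairs:
        if index_pos and ref_pos:
            table.tp += 1
        elif index_pos:
            table.fp += 1
        elif ref_pos:
            table.fn += 1
        else:
            table.tn += 1
    return table


def wilson_interval(successes: int, n: int, z: float = 1.96) -> Tuple[float, float]:
    """Wilson score confidence interval for a binomial proportion."""
    if n <= 0:
        raise ValueError("n must be positive")
    p = successes / n
    denom = 1 + z * z / n
    center = (p + z * z / (2 * n)) / denom
    half = z * math.sqrt(p * (1 - p) / n + z * z / (4 * n * n)) / denom
    return center - half, center + half


def accuracy(t: TwoByTwo) -> dict:
    """Sensitivity and specificity of the index test against the reference standard."""
    return {
        "sensitivity": (t.tp / (t.tp + t.fn), wilson_interval(t.tp, t.tp + t.fn)),
        "specificity": (t.tn / (t.tn + t.fp), wilson_interval(t.tn, t.tn + t.fp)),
    }


if __name__ == "__main__":
    # Invented counts for illustration only
    print(accuracy(TwoByTwo(tp=90, fp=45, fn=10, tn=855)))
```

The Wilson interval is used here rather than the simple normal approximation because it behaves better when sensitivity or specificity is close to 100%, which is common for diagnostic tests; other interval methods are equally defensible.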

Finally, ASM entered into a 5-year cooperative agreement with CDC DLS designed to address issues with the final steps of the LMBP A-6 process (A-5, APPLY, and A-6, ASSESS). The agreement intended to establish metrics to measure EBLMPG’s dissemination and promotion, awareness, familiarity, adoption, and implementation (24, 25). The agreement was designed to help organizations develop lasting and sustainable institutional knowledge with qualitative or quantitative methods using free or relatively inexpensive approaches. The survey instruments for all the activities mentioned above were reviewed and approved by the Office of Management and Budget (OMB Control Number 0920–1096; Expiration Date 01/31/2019). The surveys were intended to be completed by stakeholders pre- and post-publication of guidelines to assess guideline impact on laboratory practice.

ASM members on the LMBP committee

Three groups are integral to ASM’s use of the LMBP A-6 cycle method: (i) the “Review Team” (aka the Core Team), composed of up to 10 individuals; (ii) the Expert Panel of five individuals; and (iii) the LMBP Workgroup (15–20 individuals, depending on the focused questions selected for the project) (3). All original and revised roles are listed in Table 3. The Review Team is led by the Review Coordinator, who works with the Medical Librarian and assigns articles to the Expert Panel, which consists of subject matter experts on the topic under investigation; the panel members perform the data abstractions. The Review Coordinator ultimately develops the manuscript detailing the SR process and its conclusions. The Review Team also includes the Technical Lead, a clinical microbiologist with particular expertise in the topic under review. Other members include the CDC Liaison, a member of the LMBP team, and the ASM Liaison, the Chair of the Subcommittee on Evidence-based Medicine for ASM. Finally, the Biostatistician (more specifically, a Meta-biostatistician) serves on the Review Team and participates in all discussions leading up to the data abstraction, audits, and abstractor consensus. The Meta-biostatistician also coordinates the statistical review of the findings, including the meta-analysis on which recommendations are based and graded. The LMBP Workgroup, a group of multidisciplinary stakeholders convened by CDC DLS, provides an independent external review of the SR activities to identify conflicts of interest, problems with the SR protocol and execution, and any bias (financial, unconscious, or intellectual) that may have been inadvertently introduced.

TABLE 3.

Team members in the LMBP A-6 process

| Title | Role in CDC/ASM teams | Role in current ASM EBLMPG |
|---|---|---|
| Review coordinator | Process oversight | Yes |
| Technical/Scientific lead | Scientific oversight | Yes |
| ASM Liaison | ASM/CDC liaison | Yes |
| CDC Liaison | ASM/CDC liaison | No |
| LMBP workgroup (CDC) | Independent advisory and review group convened by CDC | Yes[a] |
| Expert Panel | Reviewers for inclusion/exclusion criteria, performing extractions and adding scientific subject-matter expertise | Yes |
| External Extraction Team | Not applicable | Yes[b] |
| Technical assessment Team | Appraisers of final recommendations | No |
| Technical review team | Reviewers for final document pre-publication | No |
| Medical librarian | Support for data procurement and management | Yes |
| Meta-Biostatistician | Support for data analysis including meta-analysis | Yes |
| Stakeholders | Reviewers for final document post-publication | No |

[a] The role differs with ASM: CDC no longer assigns the roles, and the workgroup performs only bias assessment, not the full extraction. The full extraction and scoping reviews for ASM are now performed by the Rutgers External Extraction Team.

[b] A new team added by ASM for initial extraction and scoping reviews.

ADAPTING LMBP TO ASM’S CURRENT EBLMPG PROCESS

Since 2011, ASM has made several notable changes as it further adapted the EBLMPG process. In 2016, QUADAS-2 was added to the EBLMPG process, initially to assess diagnostic accuracy studies for the C. difficile guideline (4). QUADAS stands for Quality Assessment of Diagnostic Accuracy Studies; the tool was originally developed in 2003 specifically for systematic reviews of diagnostic accuracy studies (26). QUADAS and QUADAS-2 were developed to facilitate the comparison of studies with heterogeneous designs (27). These instruments include questions to assess bias, sources of variation, and reporting quality. QUADAS-2 is recommended by the AHRQ, the Cochrane Collaboration, and the United Kingdom National Institute for Health and Clinical Excellence.
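QUADAS-2 organizes its appraisal into four domains (patient selection, index test, reference standard, and flow and timing). Each domain is rated for risk of bias, and the first three are also rated for concerns regarding applicability. QUADAS-2 guidance suggests that a study rated "low" in every domain can be judged low overall, whereas a "high" or "unclear" rating in one or more domains may place it at risk. The Python below is a minimal sketch of that summary rule only; the data structures and labels are hypothetical, do not reproduce any specific software, and in practice the overall judgement remains a reviewer decision.

```python
from enum import Enum
from typing import Dict, Iterable


class Rating(Enum):
    LOW = "low"
    HIGH = "high"
    UNCLEAR = "unclear"


# QUADAS-2 domains: all four are rated for risk of bias;
# the first three are also rated for concerns regarding applicability.
BIAS_DOMAINS = ("patient_selection", "index_test", "reference_standard", "flow_and_timing")
APPLICABILITY_DOMAINS = ("patient_selection", "index_test", "reference_standard")


def _all_low(ratings: Dict[str, Rating], domains: Iterable[str]) -> bool:
    """True only if every required domain is present and rated LOW."""
    missing = [d for d in domains if d not in ratings]
    if missing:
        raise ValueError(f"missing ratings for: {missing}")
    return all(ratings[d] is Rating.LOW for d in domains)


def summarize(bias: Dict[str, Rating], applicability: Dict[str, Rating]) -> Dict[str, str]:
    """Per-study overall judgement using the 'low in all domains' rule."""
    return {
        "risk_of_bias": "low" if _all_low(bias, BIAS_DOMAINS) else "at risk",
        "applicability": ("low concern" if _all_low(applicability, APPLICABILITY_DOMAINS)
                          else "concerns"),
    }
```

Requiring every domain to be rated before summarizing mirrors the structured nature of the tool: a study cannot be called low risk simply because a domain was skipped.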

By 2019, the Memorandum of Understanding with CDC DLS and the cooperative agreement had ended, and Dr. Colleen Kraft succeeded Dr. Alice S. Weissfeld as leader of the ASM EBLMPG. In 2021, ASM discontinued its collaboration with CDC DLS and named Rutgers University, Newark, NJ, as a new collaborator under the direction of Dr. J. Scott Parrott, and Dr. Esther Babady became the new EBLMPG leader.

Table 3 provides an overview of ASM’s adaptation of roles from the CDC DLS LMBP SR process from 2010 to 2023. To speed and streamline its EBLMPG process, ASM excluded grey data in 2019, because few such abstracts met the quality criteria and their use required IRB review. Additionally, the Rayyan web-based software (28) was used to drive title and abstract review and to document consensus among the experts on inclusion and exclusion; before Rayyan, the Core Review Team used spreadsheets to document the review process. Finally, in 2019, ASM adopted an artificial intelligence (AI)-enhanced title and abstract screening resource (29) and the SR Data Repository Plus (SRDR+) web-based software, which is now the method of choice for documenting how the full literature set is narrowed to the list of publications that meet the criteria of the research question before data abstraction.
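The text does not say how agreement between screeners is quantified. As a hedged illustration only, the Python below assumes two reviewers independently mark each title/abstract as include or exclude, lists the records on which they disagree (those that would go to consensus discussion), and computes Cohen's kappa, a common chance-corrected agreement statistic. It is a standalone sketch with hypothetical record IDs; it does not use or mimic the interfaces of Rayyan or SRDR+.

```python
from collections import Counter
from typing import Dict, List, Tuple


def screening_agreement(a: Dict[str, str], b: Dict[str, str]) -> Tuple[float, List[str]]:
    """Return Cohen's kappa and the record IDs on which two screeners disagree.

    a and b map record IDs to decisions such as "include" or "exclude".
    """
    if a.keys() != b.keys():
        raise ValueError("both reviewers must screen the same records")
    ids = sorted(a)
    n = len(ids)
    if n == 0:
        raise ValueError("no records to compare")
    # Disagreements are the records that need a consensus decision
    conflicts = [i for i in ids if a[i] != b[i]]
    observed = (n - len(conflicts)) / n
    # Agreement expected by chance, from each reviewer's marginal proportions
    count_a, count_b = Counter(a.values()), Counter(b.values())
    expected = sum(count_a[c] * count_b[c] for c in count_a) / (n * n)
    if expected == 1.0:
        # Both reviewers used one identical category for every record
        return 1.0, conflicts
    kappa = (observed - expected) / (1.0 - expected)
    return kappa, conflicts


if __name__ == "__main__":
    reviewer_1 = {"r1": "include", "r2": "include", "r3": "exclude", "r4": "exclude"}
    reviewer_2 = {"r1": "include", "r2": "exclude", "r3": "exclude", "r4": "exclude"}
    print(screening_agreement(reviewer_1, reviewer_2))
```

In this toy example the reviewers agree on 3 of 4 records (75%), but because 50% agreement would be expected by chance from their marginal rates, kappa is 0.5, and record r2 is flagged for consensus.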

In 2021, the process diverged further from the classic LMBP process. Since then, the Core Review Team has not included a CDC Liaison. In addition, the Expert Panel no longer performs the discrete data abstractions alone. Recognizing the difference between content expertise and statistical expertise, the Expert Panel now co-extracts data from the included articles, together with specially trained students and faculty at Rutgers University, into the AHRQ Systematic Review Data Repository (30). The Expert Panel also performs the bias assessment of all publications and harmonizes the results. Finally, the LMBP Workgroup is no longer part of the EBLMPG process.

One of the most important requirements for any SR is that all participants use the same extraction template (31). The SRDR electronic platform is maintained by Brown University (Providence, RI) on behalf of AHRQ. Using this platform, Rutgers faculty and the Core Review Team complete scoping reviews before the full systematic reviews to determine which research questions are most likely to have sufficient evidence to be answered. An example is the scoping review by Rubenstein et al. describing rapid diagnostic practices for positive blood cultures (32).
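To illustrate why a shared template matters, the Python below sketches a hypothetical extraction record that two co-extractors (for example, an Expert Panel member and a Rutgers extractor) complete for the same study, plus a comparison that lists the fields needing harmonization. The field names are assumptions for illustration and are not the SRDR+ data model.

```python
from dataclasses import dataclass, fields
from typing import Dict, Optional, Tuple


@dataclass(frozen=True)
class ExtractionRecord:
    """Hypothetical shared extraction template (illustrative fields only)."""
    study_id: str
    study_design: str
    population: str
    index_test: str
    reference_standard: str
    tp: Optional[int] = None  # 2x2 counts, if the study reports them
    fp: Optional[int] = None
    fn: Optional[int] = None
    tn: Optional[int] = None


def discrepancies(first: ExtractionRecord,
                  second: ExtractionRecord) -> Dict[str, Tuple[object, object]]:
    """Map each field on which two extractors disagree to their two values."""
    if first.study_id != second.study_id:
        raise ValueError("records describe different studies")
    return {
        f.name: (getattr(first, f.name), getattr(second, f.name))
        for f in fields(ExtractionRecord)
        if getattr(first, f.name) != getattr(second, f.name)
    }


if __name__ == "__main__":
    a = ExtractionRecord("S1", "cross-sectional", "adults", "NAAT", "toxigenic culture", 90, 5, 10, 200)
    b = ExtractionRecord("S1", "cross-sectional", "adults", "NAAT", "toxigenic culture", 88, 5, 10, 200)
    print(discrepancies(a, b))  # fields to resolve during harmonization
```

Because every extractor fills in the same fields, disagreements reduce to a field-by-field comparison; with free-form spreadsheets, the same check would first require reconciling differing column layouts.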

HOW ARE ASM SYSTEMATIC REVIEWS PERFORMED USING THE CDC DLS LMBP A-6 CYCLE METHOD?

ASM’s experience with ask step – A-1

The initial LMBP topics were selected by the clinical microbiologists dubbed “ASM 7.” The development of focused questions was guided by the PICO framework (Population, Indicator/intervention/test, Comparator/control, and Outcome). The focused question reflected the problem, including (i) population involved, (ii) practices/intervention used, (iii) intermediate outcomes (e.g., laboratory parameters, impact on workload, and turn-around-times), and (iv) health/healthcare outcomes (e.g., morbidity and mortality). The latter may also include economic impact information. Analytic frameworks were established for these focused questions to depict how implementing selected interventions/practices can lead to targeted quality aims and outcomes.

ASM’s experience in developing analytic frameworks

Systematic Reviews using the CDC DLS A-6 cycle method begin with the preparation of an analytic framework, a collaborative effort among the SR team, the expert panel, the meta-biostatistician, and others as required for the particular project (librarians, evaluators, etc.). The analytic framework visually outlines the question(s) being asked and identifies the bodies of evidence to be included. Analytic frameworks also define and clarify the scope of the topic by promoting a “structured” methodological approach, as well as transparency, consistency, and compatibility of results in external review. Analytic frameworks are a common method for organizing and linking separate evidence analysis questions and are a type of logic model (33, 34); an analytic framework may even serve as a major outcome of an SR (e.g., by organizing a complex process into a coherent “map” or model) (35). At the very least, an analytic framework provides a crucial planning template for the evidence analysis project that accomplishes the following tasks:

Formulate answerable questions

Questions that link “near neighbor” items in the model are often more feasible than questions that try to link early processes to “downstream” outcomes. For example, a question focusing on pre-analytical processes (e.g., appropriate test or test algorithm selection) may connect easily with a question about diagnostic test accuracy. In contrast, a question that seeks to link pre-analytical processes to downstream patient health outcomes may be unanswerable by the current evidence except through inference and consideration of other bodies of evidence (e.g., the availability of proven patient management strategies triggered by test results).

Prioritize among questions

When several different review questions are asked about a laboratory medicine process, an analytic framework can help the SR team prioritize questions and focus the search and analysis efforts.

Prepare for analysis

The question being asked determines not only the data to be extracted from the individual studies but also the structure of the data extraction tool and the type of synthesis (e.g., meta-analysis or narrative synthesis) that can be performed. Capturing the correct data is crucial for successful quantitative analysis later in the process.

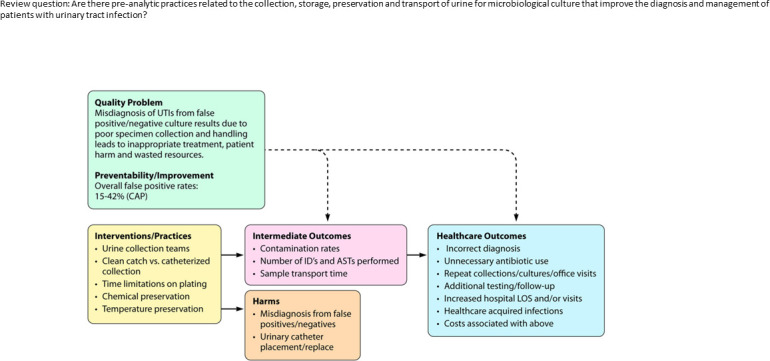

LaRocco and colleagues provide a simplified example (Fig. 2) of an analytic framework (2). Several aspects of the LaRocco analytic model are worth noting: (i) The single model gave rise to eight different practice (evidence analysis) questions. (ii) The model links the eight questions into a coherent framework to build the evidence base, piece by piece, within the larger process of interest. (iii) The model suggests many more review questions that could have been asked and may need to be asked in future research. (iv) The model could easily be elaborated, and likely will need to be, for future projects that seek to integrate the findings of the LaRocco work into the complexities of the patient care process (36).

Fig 2.

Analytic framework. Adapted from LaRocco et al. (2). Review question: Are there pre-analytic practices related to the collection, storage, preservation, and transport of urine for microbiological culture that improve the diagnosis and management of patients with urinary tract infection?

In this framework, the Review Question was defined first: “Are there preanalytical practices related to the collection, storage, preservation, and transport of urine for microbiological culture that improve the diagnosis and management of patients with urinary tract infections?” The quality issue was articulated based on the 15% to 42% false-positive urine culture rates reported by the College of American Pathologists (CAP) using quality metrics (2). Interventions and practices to answer the review question and address the problem were then listed; in this case, they included (i) the use of urine collection teams, (ii) how clean-catch collection compared with catheterized collection, (iii) time limits from collection to plating, and (iv) whether chemical preservation or refrigeration was necessary. Intermediate outcomes indicating a successful intervention would be a lower contamination rate, a decrease in the number of identifications and antimicrobial susceptibility tests performed, and a reduction in transport time. Harms to the patient also needed to be considered, e.g., no treatment because of a false-negative result or the wrong therapy because of a false-positive result, as well as the potential for unnecessary placement of an indwelling urinary catheter or incorrect categorization of a CAUTI (catheter-associated urinary tract infection) for quality reporting purposes. Finally, general healthcare outcomes were considered. These relate to specific clinical and financial outcomes that should be avoided, including (i) incorrect diagnosis, (ii) unnecessary antibiotic use, (iii) repeat urine cultures if the physician questions the result, and (iv) healthcare-acquired infections and additional healthcare costs.
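One way to make the “near neighbor” idea concrete is to treat an analytic framework as a directed graph and count the links between two boxes. The Python sketch below is an illustration under stated assumptions: the node names are simplified from the urine framework described above, the edges are hypothetical choices, and counting links is offered only as a feasibility heuristic, not as a step of the LMBP method.

```python
from collections import deque

# Hypothetical, simplified edges: practice -> intermediate outcome -> health outcome.
FRAMEWORK = {
    "urine collection teams": ["contamination rate"],
    "refrigeration or chemical preservation": ["contamination rate"],
    "time from collection to plating": ["contamination rate"],
    "contamination rate": ["identifications and AST performed", "incorrect diagnosis"],
    "incorrect diagnosis": ["unnecessary antibiotic use"],
}


def hops(graph, start, goal):
    """Breadth-first search: number of links from start to goal, or None if unlinked."""
    queue, seen = deque([(start, 0)]), {start}
    while queue:
        node, dist = queue.popleft()
        if node == goal:
            return dist
        for nxt in graph.get(node, []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append((nxt, dist + 1))
    return None


for outcome in ["contamination rate", "unnecessary antibiotic use"]:
    print(outcome, "->", hops(FRAMEWORK, "urine collection teams", outcome), "link(s)")
```

A question spanning a single link (a practice and the contamination rate) is a near-neighbor question; one spanning several links reaches a downstream health outcome and may need inference from other bodies of evidence.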

ASM’s experience with meta-biostatistician assistance in creating the analytic framework

Comprehensive SR with meta-analysis projects may include questions that encompass the entire laboratory medicine process: pre-analytical practices, intra-analytical practices (including test use based on diagnostic accuracy), and post-analytical practices (including the effect of diagnostic tests on patient management), as well as longer-term individual health and organizational outcomes (19). ASM learned through its collaborative efforts with CDC DLS that it is vital for the SR Team and Expert Panel to have a clear picture of how these components interrelate in preparation for the analysis (A-4) step, including quantitative meta-analysis. Meta-analyses can be carried out only when the review question(s) have been clearly and narrowly specified, a requirement that can be difficult to meet in a larger and more comprehensive evidence analysis project. Thus, the meta-biostatistician plays a critical role in advising the SR Team and Expert Panel as they create the analytic framework.

ASM’s experience planning for study designs during the preparation of the analytic framework

As clinical laboratories and the entire healthcare system continuously evolve, increasing focus is placed on improving the value of services, including patient and organizational outcomes. As laboratory and diagnostic interventions become more data-driven, outcome-oriented, and evidence-based, laboratory professionals will need to become well versed in experimental design, biostatistics, epidemiology, quality improvement science, implementation science, and evaluation science. For instance, understanding the conditions necessary for a high-quality experimental design, assessing study bias, and reviewing confounding variables are critical aspects of well-constructed quality improvement studies. Knowledge of these concepts will allow laboratory scientists to generate data that are more suitable for inclusion in clinical outcome studies derived from quality improvement projects, comparative analytics studies, or impact studies of new testing methods.

“In vitro diagnostic” (IVD) clinical research studies that use “de-identified” human tissues or fluids, which cannot be linked long-term to a living individual, are commonly performed in collaboration with clinical laboratories that conduct research. This type of clinical research differs from “patient-oriented research,” which is commonly interventional, is also described as “research conducted with human subjects,” and may occur in collaboration with clinical laboratories or outside the laboratory. Whatever the experimental design, formulating a sound and reasonable hypothesis is always the starting point, followed by an appropriate and ethical study design, choice of relevant endpoints, assessment of study bias and confounding variables, statistical analysis, feasibility, and hypothesis testing. For details about these aspects of research, the reader can refer to several references (19, 36–43).

Given an evolving healthcare system, emerging technology, and the expanding competencies required of laboratory professionals, the LMBP Initiative was created to develop a standardized process by which laboratories can identify and evaluate quality improvement practices that effectively improve healthcare quality and patient outcomes. These efforts focus mainly on the pre-analytical and post-analytical phases of laboratory testing, where most errors occur (19). LMBP adapted existing qualitative and quantitative synthesis approaches used in Systematic Reviews (41–43), with the same goal of producing laboratory guidelines and study conclusions that are stronger than any single study’s analysis alone.

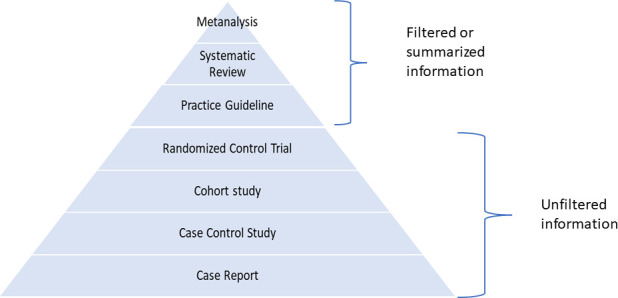

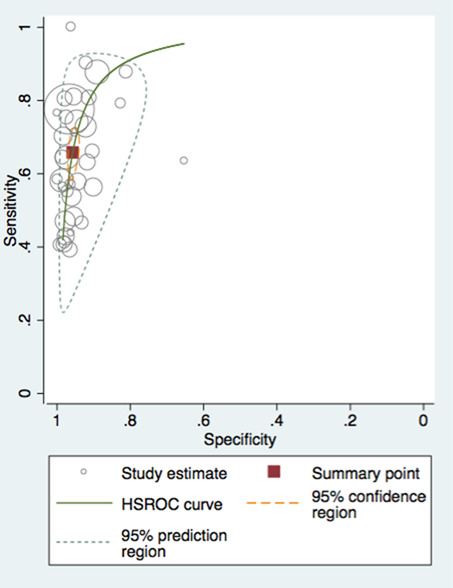

The ideal approach to synthesis is generally quantitative. Meta-analysis allows numerical synthesis of the effect sizes of the combined studies and requires knowledge of advanced statistical techniques and of the heterogeneity of the study populations. Although meta-analysis is the study type with the highest rigor for answering questions (Fig. 3), confidence in meta-analytic results can still vary, because the studies that form the basis of a meta-analysis provide different levels of evidence. As noted above, a meta-analysis of high-rigor RCTs would provide the highest level of confidence for individual treatment questions, whereas a meta-analysis of cross-sectional diagnostic accuracy studies would provide the highest level of confidence regarding test accuracy (44, 45). For laboratory process improvement questions, where results are sensitive to highly localized contexts, other types of study designs may be optimal (14).

Fig 3.

Ranking in terms of scientific rigor, with meta-analysis representing the highest form of scientific rigor.

ASM’s experience with acquire step – A-2, Keywords and strings

ASM’s use of step A-2 involved the development of an SR search strategy aimed at finding all relevant literature on the topic. Through the ASM-CDC DLS collaboration, ASM learned that search strategy development is most successful when a Medical Librarian, the Expert Panel, and a Meta-biostatistician are involved (their roles are described in later subsections). Search strategies should be designed with high sensitivity for maximum retrieval of relevant literature. A highly sensitive strategy incorporates an appropriate combination of subject headings and text words. Subject headings are standardized terms used in a bibliographic database’s indexing scheme or controlled vocabulary. MeSH (Medical Subject Headings) terms are assigned to MEDLINE/PubMed records to describe their content and enhance access, as Emtree terms are to Embase records (46). Other bibliographic databases use different schemes to facilitate information retrieval. The searcher can identify and map subject headings using the database’s online thesaurus. ASM also found that relying entirely on subject headings for SR literature searching is not recommended, because the quality and depth of indexing vary across bibliographic records. Subject headings provide a solid foundation on which to build a search strategy; to account for indexing shortfalls, text words are added to boost its retrieval potential. Only a few of the earliest LMBP Initiatives (1, 8) sought grey data to avoid publication bias (22). In those studies, library databases that included grey data were used, along with direct calls for data, with or without preconceived data-gathering templates. Since 2019, ASM has excluded grey data; therefore, publication bias is a potential limitation of EBLMPGs published in or after 2019.

During ASM’s adoption of the A-6 cycle method, key concepts were derived from the review questions, and the analytic frameworks were used to identify terms that were formed into search strings. Review questions structured in a standard format, such as PICO components, can quickly be broken down into searchable concepts. Not all concepts are included in the strategy, as some are difficult to express as search terms. For instance, concepts that refer to specific populations, settings, or outcomes may not lend themselves to searching because they are inadequately described in titles and abstracts and poorly defined in controlled vocabulary (47). ASM learned that attempts to capture such concepts could inflate or limit the results of a search in undesirable ways. Other concepts, such as study type, are common to many SRs, and validated search strategies for them are available. These validated strategies, called filters or hedges, are incorporated into a strategy to increase sensitivity. Filters designed for specific databases should be used accordingly. For example, the Cochrane Occupational Safety and Health Review Group has developed three randomized controlled trial (RCT) filters: one for PubMed, one for Embase.com, and one for PsycINFO (ProQuest). Each filter accounts for the controlled vocabulary, field identifiers, and syntax specific to its database and platform.
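The concept-block approach described above can be sketched in Python: synonyms within one concept are combined with OR, and the concept blocks are combined with AND. The terms and the PubMed-style field tags ([Mesh], [tiab]) below are illustrative assumptions only; they are not a validated strategy from any ASM review, and a real strategy must be adapted to each database’s syntax.

```python
def or_block(terms):
    """Join the synonyms for one concept with OR and wrap them in parentheses."""
    return "(" + " OR ".join(terms) + ")"


def build_query(concepts):
    """Join non-empty concept blocks with AND; empty concepts are left out."""
    return " AND ".join(or_block(terms) for terms in concepts.values() if terms)


# Hypothetical concept blocks loosely based on the urine pre-analytics question.
concepts = {
    "population": ['"Urinary Tract Infections"[Mesh]', '"urinary tract infection*"[tiab]'],
    "intervention": ['"Specimen Handling"[Mesh]', "refrigerat*[tiab]", "preservative*[tiab]"],
    "outcome": [],  # deliberately omitted: outcomes are poorly described in titles/abstracts
}

print(build_query(concepts))
```

Leaving the outcome block empty mirrors the point above that some concepts are better left out of the strategy than forced into search terms.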

The final search strategy was developed iteratively across the targeted literature databases. For example, a broad initial sweep of the literature was launched to find a sample set of relevant citations, from which additional terms and subject headings were harvested for inclusion in the search strategy. Once a draft of the strategy had been written, the searcher tested the usefulness of additional search elements such as alternate spellings, truncation characters, adjacency operators, and field restrictions. The search strategy, typically developed first for the highest-priority database, was then modified for subsequent databases to account for variations in database capabilities, structure, and search syntax. The strategy evolved through several iterations as it was reviewed, tested, revised, and finally approved by the team. The final search strategy strikes a delicate balance between being reasonably specific (i.e., minimizing the inclusion of irrelevant citations in the search results) and highly sensitive (i.e., maximizing the inclusion of relevant citations).
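One way to make this trade-off concrete while iterating is to test each draft strategy against a seed set of citations already known to be relevant. In the Python sketch below, the record identifiers are hypothetical, and being “reasonably specific” is approximated by precision (the share of retrieved records that are relevant); this is an assumed evaluation aid, not a documented ASM procedure.

```python
def evaluate_search(retrieved, relevant):
    """Return (sensitivity, precision) of a draft search against a known relevant set."""
    retrieved, relevant = set(retrieved), set(relevant)
    hits = retrieved & relevant
    sensitivity = len(hits) / len(relevant) if relevant else 0.0
    precision = len(hits) / len(retrieved) if retrieved else 0.0
    return sensitivity, precision


seed_set = {"pmid1", "pmid2", "pmid3", "pmid4"}           # hypothetical known-relevant records
draft_1 = {"pmid1", "pmid2", "x1", "x2"}                  # narrow draft
draft_2 = seed_set | {"x1", "x2", "x3", "x4"}             # broader draft
for name, draft in [("draft 1", draft_1), ("draft 2", draft_2)]:
    sens, prec = evaluate_search(draft, seed_set)
    print(f"{name}: sensitivity={sens:.2f}, precision={prec:.2f}")
```

Here the broader draft finds every seed citation at the same precision, which is the direction an SR search is expected to favor.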

ASM learned that mistakes in the strategy can result in a biased or incomplete evidence base, degrading the quality of the SR (48). Some of the most common errors in search strategies include missed MeSH terms, irrelevant terms and subject headings, missed spelling variants, and inappropriate use of logical operators (49). ASM also learned that searchers may submit their strategies to colleagues for peer review. A formal peer review process for electronic search strategies has yet to be established; however, a tool called the PRESS Instrument, developed by the Canadian Agency for Drugs and Technologies in Health (CADTH) and the Cochrane Information Retrieval Methods Work Group, has demonstrated value in facilitating strategy evaluation (50). This tool identifies the elements of a search strategy most likely to affect the accuracy and completeness of the evidence base (49).

ASM’s experience with the role of the medical librarian

IOM standards for Systematic Reviews underscore the need to employ a librarian or information management professional with expertise in searching (51). Medical librarians are highly educated professionals with specialized expertise in managing information from clinical and biomedical literature sources, and their skills contribute measurably to an SR’s overall integrity and credibility. They are trained and experienced in collecting, indexing, storing, and retrieving bibliographic information. They understand the mechanics of searching and the logic of search strings, and they can design complex strategies to achieve search objectives. Furthermore, they are aware of hundreds of databases and information resources that can be used for evidence gathering. They are also trained in numerous database systems and software applications for storing and managing large amounts of information, and they maintain professional connections with thousands of knowledgeable librarians whom they can consult. A medical librarian with specific training in SR searches is recommended, because SR search methods differ from those used in routine searches. Some librarians have not only additional training in the SR process but also prior experience working on SR projects.

Developing rigorous, appropriate, and complete search strategies is a skill honed by experience and practiced daily by librarians, as is detailed record keeping. The quality of searching and reporting is significantly improved when a trained librarian is engaged in the SR process (52). Librarians should be introduced to the SR project in the initial planning phases. Meeting with the team early in the process helps the librarian understand the rationale for the project, the questions being investigated, and the protocol being used. The information gathered at this point can be synthesized into a preliminary search strategy with which a pilot search can be launched. The results of this search can help establish the need for an SR and identify any previous reviews published on the topic, and these findings can help the team refine or refocus the study questions and protocol.

Experienced SR searchers understand the importance of maintaining reporting standards. The SR methods must be transparent, replicable, and updatable. The search process, strategy, and results must be meticulously documented and fully reported. A failure to accurately record and report on these elements degrades the value of the SR. During the search phase, the librarian keeps track of all reportable elements. At the end of the search phase, the team receives a complete library of retrieved references with all pertinent bibliographic information and the final search report.

To best meet the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) guidelines, the librarian’s final report should include the following: the complete search strategy as executed in each database searched; information on each database searched, including time coverage and platform; the number of citations retrieved per database; and the date each search was executed (53). These items are then reported in the methods section of, or an appendix to, the final manuscript. Without this information, the SR is neither replicable nor updatable. Copies of search reports must be maintained as a component of the SR process.
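The reporting elements listed above map naturally onto one record per executed search. The Python sketch below assumes a hypothetical `SearchRecord` class with placeholder values; it is not a PRISMA-defined schema, only a way of checking that no element is missing before the report is archived.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class SearchRecord:
    """One executed database search, holding the elements PRISMA asks to be reported."""
    database: str
    platform: str
    coverage: str        # time coverage of the database, e.g. "1946-present"
    strategy: str        # the complete strategy exactly as it was executed
    n_retrieved: int     # citations retrieved from this database
    date_searched: date


def missing_fields(record):
    """List empty text fields; a record with gaps cannot support replication."""
    return [name for name in ("database", "platform", "coverage", "strategy")
            if not getattr(record, name).strip()]


def total_retrieved(records):
    """Sum citations across databases, before any duplicate removal."""
    return sum(r.n_retrieved for r in records)


# Hypothetical search log; values are placeholders, not from any ASM review.
log = [
    SearchRecord("MEDLINE", "Ovid", "1946-present", "(full strategy text)", 1200, date(2023, 1, 15)),
    SearchRecord("Embase", "", "1974-present", "(full strategy text)", 900, date(2023, 1, 15)),
]
print(total_retrieved(log), [missing_fields(r) for r in log])
```

The second record is flagged because its platform was not recorded, the kind of gap that would make a later refresh impossible.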

If a significant amount of time elapses between the initial search and submission of the manuscript for publication, a search refresh is required. The review team again engages the librarian, who runs the update, documents the update process, and reports on the necessary elements. With the original search report in hand, any librarian can perform an update or refresh of the literature lists; if the complete original search strategies and database information are unavailable, an update is impossible.

Databases for literature searches

Databases were selected based on subject content, date coverage, and scope. Appropriate database choices account for the subject in question, the population under study, and the likelihood of finding relevant publications (51). Searchers considered which journals each database indexes and how often it is updated. ASM learned that secondary factors such as cost, accessibility, and usability may come into play when making database decisions. Though time and money can influence these decisions, selection is “ideally based on the potential contribution of each database to the project or on the potential for bias if a database is excluded, as supported by research evidence” (54). In addition to vetted databases, hundreds of information resources are publicly available on association, agency, and government websites. These provide access to published articles, unpublished papers, technical reports, conference proceedings, grey literature, and government documents. Once electronic resources are exhausted, supplemental hand-searching of subject bibliographies, reference lists, and selected journals can identify studies that may have been overlooked.

Based on guidance from the CDC Librarian, who has special training in SR processes, ASM learned that a minimum of three bibliographic databases should be used to gather the evidence (55). According to Cochrane guidance, the three most important databases for clinical trials are MEDLINE/PubMed, Embase, and the Cochrane Central Register of Controlled Trials (46). MEDLINE and Embase together provide good coverage of the biomedical literature. ASM has also used Scopus and CINAHL. For interdisciplinary questions, additional searching in subject-specific databases is warranted. Subject-specific databases were searched when the core databases were deemed unlikely to yield a complete evidence base (51); examples include PsycINFO, commonly searched for mental health or behavioral science literature, and CINAHL, used for nursing and allied health. Regional databases were also introduced to capture literature targeting specific populations or settings. Two good examples of regional databases are LILACS, which provides comprehensive coverage of Caribbean and Latin American literature, and African Index Medicus, for literature published in and about Africa.

ASM’s experience with the role of the expert panel

The primary role of the Expert Panel in the Acquire Step (A-2) is to provide feedback and input to the SR team for a Laboratory Medicine Best Practices SR. The panel may also contribute to the final manuscript. The panel members should be knowledgeable about the topic to be reviewed and understand evidence review methods and data management. Potential panelists are evaluated and selected based on their publication record and their level of involvement and leadership in relevant organizations and initiatives (19). The Expert Panel composition should be selected before the start of the SR.

ASM’s experience with the role of the meta-biostatistician

It is vital to capture (i.e., “Acquire”) the correct information in the right way to conduct the proper analysis. Readers new to the SR/meta-analysis process may not be aware that, even for the same diagnostic test, the information captured (and, hence, the data structure) can be very different depending on whether the question concerns the accuracy of the test relative to a reference test (i.e., a diagnostic test accuracy question) or the test’s use and timing in the patient care process as they relate to patient health outcomes (i.e., a practice intervention or even a prognosis question) (56). For example, if the question is specifically about diagnostic test accuracy, the numbers of true positives, false positives, true negatives, and false negatives must be extracted from the individual studies. In contrast, for practice intervention/improvement or prognosis questions, data extraction would include group means, measures of dispersion, odds ratios, hazard ratios, etc. From the perspective of quantitative analysis, these two types of data have not only very different data structures but also very different meta-analytic methods (57).
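The contrast between the two data structures can be shown in a few lines of Python. The class names and counts below are hypothetical: one record holds the 2×2 counts a diagnostic accuracy study yields against its reference test, and the other holds event counts per arm from a practice-intervention study, summarized here as an odds ratio.

```python
from dataclasses import dataclass


@dataclass
class TwoByTwo:
    """Diagnostic test accuracy counts versus a reference test, from one study."""
    tp: int
    fp: int
    fn: int
    tn: int

    def sensitivity(self):
        return self.tp / (self.tp + self.fn)

    def specificity(self):
        return self.tn / (self.tn + self.fp)


@dataclass
class ArmComparison:
    """Events and totals per arm, from one practice-intervention study."""
    events_intervention: int
    total_intervention: int
    events_control: int
    total_control: int

    def odds_ratio(self):
        a = self.events_intervention
        b = self.total_intervention - a
        c = self.events_control
        d = self.total_control - c
        return (a * d) / (b * c)


accuracy = TwoByTwo(tp=90, fp=15, fn=10, tn=385)     # hypothetical counts
practice = ArmComparison(20, 200, 40, 200)           # hypothetical contamination counts
print(f"{accuracy.sensitivity():.2f} {accuracy.specificity():.2f} {practice.odds_ratio():.2f}")
```

Because the first record yields a pair of correlated proportions and the second a single comparative effect, the two cannot be pooled with the same meta-analytic model.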

Before data extraction, the Expert Panel and Review Team (with the input of the meta-biostatistician) should identify whether the questions of interest relate to a practice intervention (i.e., comparison of implemented quality improvement practices, protocols, procedures, etc.) or (from an in vitro diagnostic perspective) relate to analytic validity, clinical validity, or clinical utility (58, 59). Appropriate study designs for inclusion, the structure of the data collection tool, the methods of analysis, and, ultimately, the conclusions that can be drawn all vary depending on which of the above questions are being asked. These must be specified ahead of time for the project to be successful.

The meta-biostatistician is not merely an expert in computation but should be an expert in the methodological procedures and principles of creating SRs and meta-analyses at many steps along the process. The statistical methods involved in most meta-analyses other than diagnostic accuracy meta-analyses are less complicated, and several resources (59, 60) and Review Manager software (RevMan version 5.3; http://community.cochrane.org/tools/review-production-tools/revman-5) allow non-statisticians to quickly and easily compute pooled effect sizes and measures of heterogeneity and to create forest and funnel plots. Nevertheless, these types of meta-analyses carry hazards that are easily avoided with periodic consultation with a meta-biostatistician. In contrast, the challenges of diagnostic accuracy meta-analyses are far more complex and require more intensive involvement of the meta-biostatistician. For either diagnostic accuracy or practice intervention/improvement questions, the participation of a meta-biostatistician versed in the methods of the more extensive evidence analysis process is essential, particularly regarding issues of confounding, model veracity, and publication bias.
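For readers unfamiliar with what such software computes, the Python sketch below implements the standard inverse-variance fixed-effect pooling, Cochran’s Q, I², and the DerSimonian-Laird random-effects estimate for log-scale effects such as log odds ratios. It is a teaching sketch with hypothetical inputs, not RevMan’s code, and it does not cover bivariate models for diagnostic accuracy, which is one reason those analyses need closer statistical involvement.

```python
import math


def pool_inverse_variance(effects, ses):
    """Pool log-scale effects (e.g., log odds ratios) given their standard errors.

    Returns fixed-effect and DerSimonian-Laird random-effects estimates together
    with Cochran's Q, I^2, and the between-study variance tau^2.
    """
    w = [1 / se ** 2 for se in ses]
    sum_w = sum(w)
    fixed = sum(wi * yi for wi, yi in zip(w, effects)) / sum_w
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, effects))
    df = len(effects) - 1
    i2 = max(0.0, (q - df) / q) if q > 0 else 0.0
    c = sum_w - sum(wi ** 2 for wi in w) / sum_w
    tau2 = max(0.0, (q - df) / c) if c > 0 else 0.0
    w_re = [1 / (se ** 2 + tau2) for se in ses]
    random_effect = sum(wi * yi for wi, yi in zip(w_re, effects)) / sum(w_re)
    return {
        "fixed": fixed,
        "random": random_effect,
        "se_random": math.sqrt(1 / sum(w_re)),
        "Q": q,
        "I2": i2,
        "tau2": tau2,
    }


# Hypothetical log odds ratios and standard errors from three studies.
result = pool_inverse_variance([-0.8, -0.5, -0.2], [0.30, 0.25, 0.40])
print(f"pooled OR (random effects) = {math.exp(result['random']):.2f}, I2 = {result['I2']:.0%}")
```

Reporting both models side by side, with I² and tau², is one way to surface the heterogeneity questions that the text recommends discussing with a meta-biostatistician.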

Though a meta-biostatistician should be included in the evidence analysis team, there is no definitive guide for when and how the meta-biostatistician should be included in the evidence analysis process. The issue of when to involve the meta-biostatistician in the more extensive evidence analysis process is important (61). Indeed, the LMBP is an integrated process where activities that occur in any one part of the process are carried out with thoughtful reference to other steps in the LMBP A-6 cycle (19). For instance, formulating practice-relevant evidence analysis questions has direct implications for not only the types of data to be extracted from research studies but also the best (or possible) analyses that can be carried out on these data as well as the limitations of what can be inferred from the evidence. Not having the correct data in the proper format can hinder or even preclude the possibility of analyses of interest for stakeholders.

The meta-biostatistician’s role depends as much on the other team members’ experience as on the meta-biostatistician’s own. In general, however, the meta-biostatistician’s involvement should not be limited to the ANALYZE step. An experienced meta-biostatistician should be able to offer valuable input at several points in the process (see Table 1). There are at least three key analysis challenges in which meta-biostatistician input is crucial; these are discussed in the context of the A-6 step in which they are encountered (Table 4). These challenges include the following:

TABLE 4.

Steps in the LMBP A-6 process for input from the meta-statistician

| LMBP A-6 step | Input of meta-statistician |
|---|---|
| ASK (A-1) | Consultation on question formulation; methodological criteria for specifying inclusion and exclusion criteria; creation of the logic model or analytic framework |
| APPRAISE (A-3) | Input on the structure of data extraction fields tailored to the particular project (to facilitate meta-analyses); availability for technical data extraction questions; consultation on effect size |
| ANALYZE (A-4) | Help with statistical measure conversions to facilitate meta-analysis; recommendations for dealing with missing data; methods for conducting indirect comparisons and more complex mixed treatment methods; sensitivity analyses |

Creating the analytic framework (ASK)

Identifying the right information and right structure before analysis (ASK)

Addressing the challenges of diagnostic test accuracy meta-analyses (ANALYZE)
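As an example of the “statistical measure conversions” listed in Table 4 for the ANALYZE step, a study may report only an odds ratio with its 95% confidence interval, whereas inverse-variance pooling needs the log odds ratio and its standard error. The Python sketch below assumes the interval was computed symmetrically on the log scale (a Wald-type interval); intervals produced by other methods would need a different conversion.

```python
import math
from statistics import NormalDist


def log_or_and_se_from_ci(odds_ratio, lower, upper, level=0.95):
    """Convert a reported odds ratio and its confidence interval to log(OR) and SE.

    Assumes the interval was computed symmetrically on the log scale (Wald-type).
    """
    z = NormalDist().inv_cdf(0.5 + level / 2)  # about 1.96 for a 95% interval
    log_or = math.log(odds_ratio)
    se = (math.log(upper) - math.log(lower)) / (2 * z)
    return log_or, se


# Hypothetical study reporting OR 0.45 (95% CI 0.25 to 0.81).
log_or, se = log_or_and_se_from_ci(0.45, 0.25, 0.81)
print(f"log OR = {log_or:.3f}, SE = {se:.3f}")
```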

Collecting and storing SR data

The review team developed a data management plan for data collection, management, and storage. The final publication data set from an SR literature search typically contains thousands of citations. The plan specified a reference management software program designed to handle the large volume of citations characteristic of SRs. Commonly used programs include EndNote, RefWorks, Reference Manager, and others (62). ASM learned that it was important for all team members to work in the same software program and for a central repository of publications to be kept. Modifications to the reference library and records should be visible to and shared with the whole team. Operating in multiple programs and funneling results from one system to another could result in lost data, complicating reference tracking and counts.

The commercial bibliographic databases used in evidence retrieval commonly include a feature that allows hundreds of bibliographic citations to be exported at a time. These databases often allow direct export of citations into a reference software program and provide options to download information in importable file formats. For proprietary reasons, database vendors limit the number of citations that can be exported at once; if the results exceed these limits, citations must be downloaded in batches. Keeping careful track of the numbers during exporting and importing is essential. Smaller information sources may not offer citation export; relevant citations identified in these sources are manually entered into the citation library as new records. ASM previously used RefWorks but currently uses EndNote.
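The bookkeeping described above can be sketched in Python: split a result set into export batches, then check that the records actually imported add up to the database hit count. The export limit of 1,000 records and the counts are hypothetical; actual vendor limits vary by database and platform.

```python
def export_batches(total, limit):
    """Split records numbered 1..total into inclusive (first, last) ranges of at most `limit`."""
    return [(start + 1, min(start + limit, total)) for start in range(0, total, limit)]


def reconcile(expected, imported_counts):
    """Compare the database hit count with the records actually imported, batch by batch."""
    imported = sum(imported_counts)
    return imported == expected, expected - imported


# Hypothetical: 2,350 hits and an assumed vendor limit of 1,000 records per export.
print(export_batches(2350, 1000))
print(reconcile(2350, [1000, 1000, 349]))  # one record lost between export and import
```

A nonzero difference points to the batch that needs to be re-exported before screening begins.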

ASM’s experience with appraise Step – A-3

Step A-3 involves screening the evidence obtained during step A-2 for inclusion or exclusion, generally by title and abstract review by at least two team members, who must agree on inclusion. Inclusion and exclusion criteria are well defined, and careful records of each title’s disposition are maintained. For studies deemed acceptable for inclusion, this step is followed by abstracting information from the included evidence into standardized data extraction templates (formerly called abstraction forms by CDC DLS), with determination of risk of bias (RoB) ratings and effect size measures for individual studies.
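Dual screening produces two sets of include/exclude calls that must be compared. The Python sketch below lists the records needing a tie-breaker and reports percent agreement and Cohen’s kappa; kappa is one common chance-corrected agreement statistic, used here as an assumption rather than as a documented ASM requirement, and the record IDs are hypothetical. The function assumes both reviewers screened at least one common record.

```python
def screening_summary(reviewer_a, reviewer_b):
    """Compare two reviewers' include (True) / exclude (False) calls keyed by record ID.

    Returns the records needing a tie-breaker, percent agreement, and Cohen's kappa.
    """
    ids = sorted(reviewer_a.keys() & reviewer_b.keys())
    n = len(ids)
    conflicts = [i for i in ids if reviewer_a[i] != reviewer_b[i]]
    observed = (n - len(conflicts)) / n
    p_a = sum(reviewer_a[i] for i in ids) / n  # share included by reviewer A
    p_b = sum(reviewer_b[i] for i in ids) / n  # share included by reviewer B
    expected = p_a * p_b + (1 - p_a) * (1 - p_b)  # agreement expected by chance
    kappa = 1.0 if expected == 1 else (observed - expected) / (1 - expected)
    return conflicts, observed, kappa


a = {"rec1": True, "rec2": True, "rec3": False, "rec4": False, "rec5": True, "rec6": False}
b = {"rec1": True, "rec2": False, "rec3": False, "rec4": False, "rec5": True, "rec6": True}
conflicts, agreement, kappa = screening_summary(a, b)
print(conflicts, f"{agreement:.0%}", f"{kappa:.2f}")
```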

Translational laboratory medicine studies (diagnostic accuracy)

In clinical laboratory settings, a translational step adapts and deploys findings from patient-oriented research or clinical trials into daily clinical practice. These transfer-of-practice studies are typically called method comparison or diagnostic accuracy studies. As an example of how diagnostic clinical trial data may enhance our understanding of infections, multiplex molecular panel testing identified an increased frequency of co-infections, which prompted investigations into the clinical impact of co-infections on disease severity. For more detail on reporting the results of such diagnostic tests, refer to the current document, “Statistical Guidance on Reporting Results from Studies Evaluating Diagnostic Tests; Guidance for Industry and FDA Reviewers” (40).

Incorporating large-scale clinical trials

In the pharmaceutical industry, most large-scale clinical trials are randomized controlled trials (RCTs), but such trials are uncommon in the clinical laboratory (37). Clinical trials are hypothesis-driven and need to be adequately powered, i.e., large enough that a negative result reflects a true absence of a biological effect or diagnostic difference rather than an insufficient number of samples or patients. For non-randomized studies, clinical outcomes may not be defined; however, if such a study answers any of the LMBP questions posed for a particular project, its size and general design may still be amenable to the LMBP A-6 process.
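As a rough illustration of what "adequately powered" means, the usual normal-approximation formula for comparing two proportions gives the number of subjects needed per group. This is a generic textbook sketch, not a method taken from the source; the example proportions are hypothetical, and real trial designs typically involve a biostatistician and may add continuity corrections or dropout allowances.

```python
import math
from statistics import NormalDist


def n_per_group(p1: float, p2: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Subjects per group to detect p1 vs p2 with a two-sided test (normal approximation)."""
    if p1 == p2:
        raise ValueError("p1 and p2 must differ")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    p_bar = (p1 + p2) / 2
    numerator = (
        z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))
    ) ** 2
    return math.ceil(numerator / (p1 - p2) ** 2)


# e.g., detecting a drop in a hypothetical outcome rate from 20% to 10%
print(n_per_group(0.20, 0.10))
```

Smaller differences or higher target power both raise the required sample size, which is why underpowered studies so often report "negative" results.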

Incorporating other meta-analyses

A “meta-analysis” is the process by which the results of multiple individual studies are statistically combined (41, 42), and it is a component of the SR process. It systematically combines pertinent quantitative data from several selected studies to reach a single conclusion with greater statistical power. This conclusion is statistically more robust than the analysis of any single study because of the larger number of subjects, the greater diversity among subjects, or the accumulation of effects and results.
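The statistical combination described above can be sketched with inverse-variance weighting of log odds ratios, one standard fixed-effect approach. It assumes the studies estimate a common effect; random-effects models are often preferred when studies differ, and diagnostic accuracy data need specialized methods. The study counts are hypothetical.

```python
import math


def log_odds_ratio(a: float, b: float, c: float, d: float) -> tuple[float, float]:
    """Log odds ratio and its variance from a 2x2 table.

    a, b: events and non-events in the intervention group
    c, d: events and non-events in the comparison group
    """
    if 0 in (a, b, c, d):
        a, b, c, d = (x + 0.5 for x in (a, b, c, d))  # continuity correction for empty cells
    return math.log((a * d) / (b * c)), 1 / a + 1 / b + 1 / c + 1 / d


def fixed_effect_pool(estimates: list[tuple[float, float]]) -> tuple[float, float]:
    """Inverse-variance weighted mean of (estimate, variance) pairs and its standard error."""
    weights = [1 / var for _, var in estimates]
    pooled = sum(w * est for w, (est, _) in zip(weights, estimates)) / sum(weights)
    return pooled, math.sqrt(1 / sum(weights))


studies = [log_odds_ratio(10, 20, 5, 25), log_odds_ratio(12, 30, 8, 34)]
pooled, se = fixed_effect_pool(studies)
print(f"pooled OR {math.exp(pooled):.2f}, 95% CI "
      f"{math.exp(pooled - 1.96 * se):.2f} to {math.exp(pooled + 1.96 * se):.2f}")
```

The pooled standard error is always smaller than that of any contributing study, which is the gain in statistical power the text describes.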

Bias in meta-analysis

Because the SR process is complex and time-consuming, meta-analyses must guard against certain pitfalls. Human literature review and grading are inherently subjective, and if these processes are not carefully designed and executed, appropriate studies may be discarded by mistake. An infrastructure that provides multiple human raters for each publication, third-party tie-breakers, and strict guidelines for data abstraction helps mitigate these biases. Bias can also arise if the studies pooled for an SR mix different experimental designs (e.g., observational studies combined with RCTs). To mitigate this bias, the pooled studies should share a design (i.e., all RCTs or all observational studies).

Publication bias (one source of so-called small-study effects) is another type of bias, in which studies with positive results have a better chance of being published, are published earlier, and appear in journals with higher impact factors. Because studies with negative results are less likely to be published at all, they are underrepresented in the literature, and conclusions based exclusively on published studies can be misleading. Publication bias can also arise because some healthcare settings are less likely than others to publish their results (e.g., community hospitals vs. academic medical centers). Publication bias is common in the healthcare literature and may lead readers to understand a problem differently than they would with information from a broader group of healthcare organizations. Ideally, publication bias is assessed and documented (63), preferably with the help of a meta-biostatistician.
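One common way to assess funnel-plot asymmetry is Egger's regression test: each study's standardized effect (effect divided by its standard error) is regressed on its precision (one over the standard error), and an intercept far from zero suggests small-study effects such as publication bias. The sketch below computes the intercept and its standard error by ordinary least squares; a t test on n - 2 degrees of freedom would follow. It is an illustration with hypothetical inputs, not the source's method, and for diagnostic accuracy reviews other asymmetry tests (for example, Deeks' test) are generally recommended instead.

```python
import math


def egger_intercept(effects: list[float], std_errors: list[float]) -> tuple[float, float, float]:
    """Return (intercept, slope, SE of intercept) for Egger's regression.

    effects: per-study estimates on an additive scale (e.g., log odds ratios)
    std_errors: matching standard errors (all > 0)
    """
    if len(effects) != len(std_errors) or len(effects) < 3:
        raise ValueError("need at least three studies with matching standard errors")
    x = [1 / se for se in std_errors]                    # precision
    y = [e / se for e, se in zip(effects, std_errors)]   # standardized effect
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("standard errors must vary across studies")
    slope = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y)) / sxx
    intercept = mean_y - slope * mean_x
    residual_var = sum((yi - intercept - slope * xi) ** 2 for xi, yi in zip(x, y)) / (n - 2)
    se_intercept = math.sqrt(residual_var * (1 / n + mean_x ** 2 / sxx))
    return intercept, slope, se_intercept
```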

Confounding variables in study design

When reviewing primary literature, subject matter experts must identify RoB and use their expertise to construct plausible scenarios describing how or why the interventions work in a healthcare or laboratory setting, in order to assess the generalizability of the final results. To do so, reviewers must understand the relationships among variables in the context of the quality question and identify confounding variables so that they can be controlled for. A confounding variable (also called a confounding factor or confounder) is an extraneous variable in a statistical model that correlates (positively or negatively) with both the dependent and independent variables, distorting the perceived relationship between them. Confounding can occur in a primary clinical study of infection when an infectious exposure (independent variable) and an outcome such as mortality (dependent variable) are both strongly associated with a third variable that lies outside the causal pathway of interest (e.g., end-stage cardiac disease). In contrast, a moderator variable is one whose value changes the strength or direction of the effect of the predictor on the outcome; it specifies the conditions under which the relationship changes. For example, a moderating variable could provide insight into how an intervention works differently in different settings (e.g., Emergency Department versus Critical Care versus general inpatient units). Finally, mediator variables are intermediate variables in a causal chain between two other variables. Within SRs and meta-analyses, in addition to applying subject matter expertise, reviewers can detect bias from confounding through subgroup analyses comparing studies that do and do not adjust for known confounders (64) and through subgroup analyses by diagnostic testing method or device.

It is essential to control for confounding effects and to assess the impact of the variables of interest. For any research study, it is crucial to determine whether the study as designed will answer the question raised in the hypothesis. In clinical laboratory-based research, confounders may be easier to control because the investigator can match samples on many baseline characteristics (e.g., disease states) and control the environment during the study. Considering subject age, gender, race, and co-morbidities can help the investigator match subjects in the control and intervention groups; such matching should be planned during the experimental design phase of a study.

In clinical research, controlling for confounders is often more challenging. For example, the laboratory may have little control over the type of patients who present to an emergency department with sepsis, but it can record information describing the subjects being tested and compare characteristics between two cohorts after the study period ends. When confounders are not controlled for in the design, their effects are often addressed through statistical adjustment, i.e., regression analysis. However, these techniques may still not fully account for the impact of confounders on study outcomes (65–67).
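Regression is one form of statistical adjustment; a simpler stratified relative, the Mantel-Haenszel odds ratio, illustrates the same principle and is named here as a swapped-in example rather than a method from the source. In the hypothetical counts below, mortality appears higher among exposed patients overall only because exposed patients fall more often into the stratum with end-stage cardiac disease; within each stratum the odds ratio is 1, and the stratified estimate recovers that.

```python
def odds_ratio(a: float, b: float, c: float, d: float) -> float:
    """a, b: exposed deaths/survivors; c, d: unexposed deaths/survivors."""
    return (a * d) / (b * c)


def mantel_haenszel_or(strata: list[tuple[int, int, int, int]]) -> float:
    """Odds ratio pooled across strata of a confounder (Mantel-Haenszel estimator)."""
    numerator = sum(a * d / (a + b + c + d) for a, b, c, d in strata)
    denominator = sum(b * c / (a + b + c + d) for a, b, c, d in strata)
    return numerator / denominator


# Hypothetical strata: (exposed deaths, exposed survivors, unexposed deaths, unexposed survivors)
strata = [
    (40, 40, 10, 10),  # patients with end-stage cardiac disease
    (2, 18, 8, 72),    # patients without it
]
# Crude analysis ignores the confounder by collapsing the strata into one table
crude = odds_ratio(*(sum(cells) for cells in zip(*strata)))
print(f"crude OR {crude:.2f}, adjusted OR {mantel_haenszel_or(strata):.2f}")
```

Here the crude odds ratio is about 3.3, while the stratified estimate is 1.0, showing how an uncontrolled confounder can manufacture an apparent association.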

Specific challenges for bias in diagnostic studies

Many factors affect the reporting of diagnostic accuracy studies. For example, the gold or reference standard may be defined differently in different studies, depending on the methodology used and the purpose of the study. In many evaluations of a diagnostic laboratory test or product, there is either no gold standard or its use is impractical, which can introduce variability in study rankings and potential for bias. In these instances, the ideal would be to calibrate the new test against the known performance of an accepted reference standard; however, this is not always done or possible. Without such calibration, the indiscriminate use of terms such as sensitivity and specificity can be misleading, and positive and negative agreement are the preferred terms. Agreement studies evaluate a diagnostic test by assessing how well its results agree with those of another well-understood but imperfect test or tests.
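When the comparator is an imperfect test, the 2x2 table is summarized as positive and negative percent agreement rather than sensitivity and specificity. The sketch below computes both, plus overall agreement, with Wilson score 95% intervals as one reasonable interval choice (an assumption; other interval methods exist). The cell counts are hypothetical.

```python
import math


def wilson_interval(successes: int, n: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score confidence interval for a binomial proportion."""
    p = successes / n
    denom = 1 + z**2 / n
    center = (p + z**2 / (2 * n)) / denom
    half = z * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2)) / denom
    return center - half, center + half


def percent_agreement(both_pos: int, new_pos_comp_neg: int,
                      new_neg_comp_pos: int, both_neg: int) -> dict:
    """Agreement of a new test with a non-reference comparator."""
    comp_pos = both_pos + new_neg_comp_pos   # comparator-positive specimens
    comp_neg = both_neg + new_pos_comp_neg   # comparator-negative specimens
    total = comp_pos + comp_neg
    return {
        "PPA": (both_pos / comp_pos, wilson_interval(both_pos, comp_pos)),
        "NPA": (both_neg / comp_neg, wilson_interval(both_neg, comp_neg)),
        "OPA": ((both_pos + both_neg) / total, wilson_interval(both_pos + both_neg, total)),
    }


for name, (value, (low, high)) in percent_agreement(90, 5, 10, 95).items():
    print(f"{name} {value:.1%} (95% CI {low:.1%} to {high:.1%})")
```

The arithmetic is identical to that for sensitivity and specificity; only the interpretation changes, because the comparator is not assumed to reflect the truth.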

In diagnostic test evaluation, there are also situations in which the truth is known only for a subset of subjects or specimens, which can lead to verification bias. In such cases, adjustment for verification bias is imperative because establishing the truth of the patient’s condition depends on the test or tests applied to a small and possibly unrepresentative group of patients. One example of a practice that introduces bias is “discrepant testing” (discrepant resolution), in which only the results that disagree between the two methods receive further testing. Although commonly reported, this practice biases results toward confirmation of the new test and is discouraged (68).
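The direction of that bias can be seen with simple arithmetic. Because only discordant specimens are retested, errors shared by both methods are never examined, so resolution can move counts only toward agreement with the new test. The counts and function names below are hypothetical.

```python
def sens_spec(tp: int, fp: int, fn: int, tn: int) -> tuple[float, float]:
    return tp / (tp + fn), tn / (tn + fp)


def after_discrepant_resolution(a, b, c, d, b_resolved_pos, c_resolved_neg):
    """Accuracy of a new test after retesting only discordant results.

    a: new+/comparator+   b: new+/comparator-
    c: new-/comparator+   d: new-/comparator-
    b_resolved_pos: b specimens the resolver calls positive (reassigned to true positive)
    c_resolved_neg: c specimens the resolver calls negative (reassigned to true negative)
    Concordant cells a and d are accepted as-is, even if both methods are wrong.
    """
    return sens_spec(tp=a + b_resolved_pos, fp=b - b_resolved_pos,
                     fn=c - c_resolved_neg, tn=d + c_resolved_neg)


before = sens_spec(tp=80, fp=10, fn=20, tn=90)
after = after_discrepant_resolution(80, 10, 20, 90, b_resolved_pos=6, c_resolved_neg=8)
print(before, after)
```

Whatever the resolver finds, apparent sensitivity and specificity can only stay the same or rise.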

As another example, the laboratory investigator often needs to establish the limit of detection (LOD), for which a nonparametric statistical approach can be used; such approaches are commonly used to model and analyze ordinal or nominal data with small sample sizes (69). LOD can be determined in different ways: some investigators define it as the lowest concentration detected in 19 of 20 replicates, whereas others use probit analysis. Because of these inherent differences, reviewers must consider RoB when comparing the diagnostic accuracy reported across studies. To control for diagnostic study bias, the STARD (Standards for Reporting of Diagnostic Accuracy Studies) criteria, the FDA document “Statistical Guidance on Reporting Results from Studies Evaluating Diagnostic Tests; Guidance for Industry and FDA Reviewers” (40), or the GRADE (Grading of Recommendations, Assessment, Development, and Evaluation) tools can be helpful.
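The hit-rate convention (at least 19 of 20 replicates detected) can be sketched as follows. The replicate counts and concentrations are hypothetical, and the sketch also requires every higher concentration to meet the rate, which is one reasonable reading rather than a stated rule; probit analysis would instead fit a dose-response curve and is not shown.

```python
def lod_by_hit_rate(results: dict[float, tuple[int, int]], required: float = 19 / 20):
    """Lowest concentration at which it, and every higher concentration, meets the hit rate.

    results maps concentration -> (replicates detected, replicates tested).
    Returns None if even the highest concentration fails.
    """
    lod = None
    for conc in sorted(results, reverse=True):
        detected, tested = results[conc]
        if tested == 0 or detected / tested < required:
            break
        lod = conc
    return lod


# Hypothetical dilution series in copies/mL
series = {1000: (20, 20), 500: (20, 20), 250: (19, 20), 100: (15, 20), 50: (8, 20)}
print(lod_by_hit_rate(series))
```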

Abstraction training and performance, and the roles of the expert panel and meta-biostatistician

Individuals who volunteered for the Expert Panel underwent structured training sessions using the database designed for entering abstractions. Each member of the Expert Panel independently abstracted a selected publication, after which a conference call was convened to compare the individual quality ratings. This training helped ensure consistency among team members performing abstractions.

Using the LMBP method, two separate abstractors reviewed each paper. If they disagreed, a third person was asked to adjudicate the differences. By the time this phase was finished, at least two of three abstractors had agreed on the essence of the evidence summary and had placed the results in an Evidence Summary Table (EST). Agreement between at least two abstractors was usually honored but, if necessary, was discussed further with other Expert Panel/Review Team members, including the Meta-biostatistician, to audit the process and confirm consensus. ASM recruited 10 to 12 or more subject matter experts (SMEs), as necessary, to serve as the abstractors/Expert Panel and Core Team. The final recommendations were presented to the LMBP Workgroup convened by CDC DLS, which independently assessed the data acquisition, the analysis process, and the resulting practice recommendations (15).
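The two-abstractor rule with third-party adjudication can be expressed as a small decision function. The rating labels and the function name are hypothetical; a case with no two-of-three agreement is returned as unresolved so that it can be escalated for further discussion with the wider panel.

```python
from typing import Optional


def consensus(first: str, second: str, third: Optional[str] = None) -> Optional[str]:
    """Rating agreed by at least two of up to three abstractors, or None if unresolved."""
    if first == second:
        return first
    if third is None:
        raise ValueError("abstractors disagree; a third adjudicator is needed")
    if third in (first, second):
        return third
    return None  # no two-of-three agreement; escalate to the Expert Panel
```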

ASM’s experience with the analyze step – A-4

The Analyze step involves aggregating the body of evidence to derive summary findings, including practice recommendations based on a practice’s effect as observed in the evidence base and the quality of evidence, as described in Christenson et al. 2011 (3).

Role of the expert panel

Under the LMBP process, the SR team works with the Expert Panel and the meta-biostatistician to assess the strength of evidence for the practice(s) being evaluated and to translate the evidence into draft evidence-based recommendations, which are then submitted to the LMBP Workgroup for independent external review (16). After the data are abstracted, analyzed by the meta-biostatistician, and reviewed and discussed by the Core Team members, further discussion addresses whether the initial questions can be answered with recommendations and what potential harms or limitations exist. The Technical Assessment Team, the Technical Review Team, and, if available, outside Stakeholders then weigh in. Finally, the Review Coordinator and/or Technical Lead and the Meta-biostatistician present these recommendations to the LMBP Workgroup. Translating summary findings into draft evidence-based practice recommendations includes direct input on recommendation categories and on the degree of confidence that the practice will do more good than harm, in light of the evidence on both effectiveness and aspects of implementation.

Role of the meta-biostatistician

Meta-analytic methods for questions of intervention, etiology, or prognosis are well established. Readers familiar with the standard statistical procedures used in primary research (the original collection and analysis of new data, as opposed to secondary research, which examines data collected and reported in previous studies) should find the computation and interpretation of most meta-analytic statistics for questions other than diagnostic accuracy reasonably straightforward, because they are extensions of the statistical procedures used in primary research. More recent methods that allow indirect comparisons (70, 71) and manage heterogeneity (72) have been developed but are not yet widely used and are not discussed here. Special statistical considerations arise with diagnostic test accuracy (DTA) questions, and four are particularly important:

Paired outcome measures

In non-DTA questions (e.g., therapy or prognosis questions), there is typically only one measure of overall effect (e.g., the pooled mean or odds ratio). In DTA questions, two related values are typically reported: sensitivity and specificity, positive and negative predictive values, or positive and negative likelihood ratios. It is recommended that meta-analyses be carried out on all three of these pairs of measures (73). Although single-value summary statistics are available (e.g., the diagnostic odds ratio [DOR]), they may not be clinically useful (74).
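All three pairs come from the same 2x2 table of index test results against the reference standard, as the sketch below shows; the diagnostic odds ratio collapses them into a single number. The counts are hypothetical, the function assumes no empty cells, and predictive values computed this way reflect the prevalence in the study population.

```python
def dta_measures(tp: int, fp: int, fn: int, tn: int) -> dict[str, float]:
    """Paired accuracy measures and the diagnostic odds ratio from one 2x2 table."""
    if min(tp, fp, fn, tn) <= 0:
        raise ValueError("sketch assumes every cell is positive")
    sens, spec = tp / (tp + fn), tn / (tn + fp)
    lr_pos, lr_neg = sens / (1 - spec), (1 - sens) / spec
    return {
        "sensitivity": sens,
        "specificity": spec,
        "PPV": tp / (tp + fp),
        "NPV": tn / (tn + fn),
        "LR+": lr_pos,
        "LR-": lr_neg,
        "DOR": lr_pos / lr_neg,  # equals (tp * tn) / (fp * fn)
    }


print(dta_measures(tp=45, fp=15, fn=5, tn=135))
```

Very different sensitivity and specificity combinations can share the same DOR, which is one reason a single summary number may not be clinically useful.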

Outcome measures are related

Because sensitivity and specificity are related across index test threshold levels (one typically increases as the other decreases), their pooled estimates should not be computed separately (65). This dependence means that special statistical modeling procedures are warranted (57).

Threshold values

Because a diagnostic test aims to differentiate people with the disease or condition from those without it, the outcome is binary. The index test’s threshold (or cutoff) level is therefore used to “sort” subjects into those with and those without the condition or disease, and changing the threshold changes the results. Primary DTA studies often evaluate different index test threshold levels, which poses a challenge when combining summary statistics across studies: sensitivity and specificity values from two primary studies that used very different threshold levels are not easily comparable.
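The trade-off can be made concrete by sweeping a cutoff over index test values. The sketch below assumes that higher values indicate disease (values at or above the cutoff are called positive) and uses hypothetical values; as the cutoff rises, sensitivity can only fall and specificity can only rise, which is why the two measures move together rather than independently.

```python
def sweep(diseased: list[float], non_diseased: list[float], thresholds: list[float]):
    """(threshold, sensitivity, specificity) for each cutoff; values >= cutoff are positive."""
    rows = []
    for t in sorted(thresholds):
        sens = sum(v >= t for v in diseased) / len(diseased)
        spec = sum(v < t for v in non_diseased) / len(non_diseased)
        rows.append((t, sens, spec))
    return rows


for t, sens, spec in sweep([3, 5, 7, 9], [1, 2, 4, 6], [2, 4, 6, 8]):
    print(f"cutoff {t}: sensitivity {sens:.2f}, specificity {spec:.2f}")
```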

Reference test error

When a “gold standard” (or “reference standard”) test exists (i.e., when we can be relatively confident whether or not the subject had the disease or condition), determining whether the index test accurately sorts subjects into disease-positive and disease-negative categories is straightforward (74). However, when the reference test is known to be imperfect, or the new (index) test is believed to be better than the reference test, estimates of diagnostic accuracy statistics will not be trustworthy (74).
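How an imperfect reference distorts the estimates can be worked through with expected proportions. The sketch assumes that index and reference test errors are conditionally independent given true disease status (an assumption; correlated errors can bias estimates in the opposite direction), and all input values are hypothetical.

```python
def apparent_accuracy(prev, se_index, sp_index, se_ref, sp_ref):
    """Expected sensitivity/specificity of an index test judged against an imperfect reference.

    Assumes index and reference errors are conditionally independent given true status.
    """
    both_pos = prev * se_index * se_ref + (1 - prev) * (1 - sp_index) * (1 - sp_ref)
    ref_pos = prev * se_ref + (1 - prev) * (1 - sp_ref)
    both_neg = prev * (1 - se_index) * (1 - se_ref) + (1 - prev) * sp_index * sp_ref
    ref_neg = prev * (1 - se_ref) + (1 - prev) * sp_ref
    return both_pos / ref_pos, both_neg / ref_neg


# Index test truly 95% sensitive / 98% specific; reference 90% sensitive / 99% specific
se_app, sp_app = apparent_accuracy(0.10, 0.95, 0.98, 0.90, 0.99)
print(f"apparent sensitivity {se_app:.3f}, apparent specificity {sp_app:.3f}")
```

With a perfect reference the function returns the true values; with the imperfect reference above, both apparent values fall below the index test's true accuracy.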