Abstract

In recent years, advances in image processing and machine learning have fueled a paradigm shift in detecting genomic regions under natural selection. Early machine learning techniques employed population-genetic summary statistics as features, which focus on specific genomic patterns expected under adaptive and neutral processes. Though such engineered features are important when training data are limited, the ease with which simulated data can now be generated has led to the recent development of approaches that take in image representations of haplotype alignments and automatically extract important features using convolutional neural networks. Digital image processing methods termed α-molecules are a class of techniques for multiscale representation of objects that can extract a diverse set of features from images. One such α-molecule method, termed wavelet decomposition, lends greater control over high-frequency components of images. Another α-molecule method, termed curvelet decomposition, is an extension of the wavelet concept that considers events occurring along curves within images. We show that application of these α-molecule techniques to extract features from image representations of haplotype alignments yields high true positive rate and accuracy to detect hard and soft selective sweep signatures from genomic data with both linear and nonlinear machine learning classifiers. Moreover, we find that such models are easy to visualize and interpret, with performance rivaling that of contemporary deep learning approaches for detecting sweeps.

Keywords: feature extraction, machine learning, selective sweep, signal processing

Introduction

The rapid increase in computational power over the last decade has fueled the development of sophisticated models for making predictions about diverse evolutionary phenomena (Angermueller et al. 2016; Schrider and Kern 2018; Azodi et al. 2020; Korfmann et al. 2023; Rymbekova et al. 2024). In particular, artificial intelligence has been a major driver, with approaches employing linear and nonlinear modeling frameworks (Hastie et al. 2009). These novel methodologies have been applied for detecting selection, estimating evolutionary parameters, and inferring rates of genetic processes (Williamson et al. 2007; Chun and Fay 2009; Ronen et al. 2013; Schrider and Kern 2016; Sheehan and Song 2016; Flagel et al. 2019; Adrion et al. 2020; Wang et al. 2021; Burger et al. 2022; Hejase et al. 2022; Kyriazis et al. 2022; Gower et al. 2023; Hamid et al. 2023; Smith et al. 2023; Zhang et al. 2023; Ray et al. 2024).

Multiple strategies have been pursued to address evolutionary questions using machine learning. One such attempt has been to use summary statistics calculated in contiguous windows of the genome as input feature vectors to detect natural selection (Ronen et al. 2013; Pybus et al. 2015; Sheehan and Song 2016; Sugden et al. 2018; Mughal and DeGiorgio 2019; Mughal et al. 2020; Arnab et al. 2023; Korfmann et al. 2024), as natural selection is expected to leave a local footprint of altered diversity within the genome (Hudson and Kaplan 1988; Hermisson and Pennings 2017; Setter et al. 2020). To train such models, summary statistics are calculated from simulated genomic data, with frameworks ranging from linear models, such as regularized logistic regression (Mughal and DeGiorgio 2019; Mughal et al. 2020), to nonlinear models, such as ensemble methods that combine the effectiveness of different classifiers and deep neural networks (Lin et al. 2011; Pybus et al. 2015; Schrider and Kern 2016; Sheehan and Song 2016; Kern and Schrider 2018; Hejase et al. 2022; Arnab et al. 2023; Mo and Siepel 2023). However, when opting to use summary statistics to train these models, we are making an assumption that the chosen set of summary statistics is sufficient to discriminate among different evolutionary events, thereby providing satisfactory classification. Summary statistics can thus lead to subpar model performance if suitable measures are not chosen, with these statistics often selected based on theoretical knowledge and experience.

A complementary strategy is to use raw genomic data in the form of haplotype alignments as input to machine learning models, and for the model to perform automatic feature extraction. Attempts at this have mainly adopted convolutional neural networks (CNNs) (LeCun et al. 1998), which have been successfully applied to a number of problems in population genomics, including detection of diverse evolutionary phenomena, estimation of genetic parameters such as recombination rate, and inference of demographic history (Chan et al. 2018; Flagel et al. 2019; Torada et al. 2019; Gower et al. 2021; Isildak et al. 2021; Qin et al. 2022; Cecil and Sugden 2023; Lauterbur et al. 2023; Whitehouse and Schrider 2023; Riley et al. 2024). By construction, CNNs are not sensitive to small differences in details among different neighborhoods in a sample because of the smoothing that is accomplished through the one or more pooling layers in the CNN architecture (Goodfellow et al. 2016). Because such subtle details are often noise, it is typically beneficial to remove them as they might impact model predictions. However, other times those small differences in details could prove to be important enough to provide an improvement in predictive performance, as we will show in this article. In many of the population-genomic problems that machine learning methods have been applied to, the data are expected to have appreciable levels of autocorrelation (i.e. correlation of neighboring genomic locations due to linkage disequilibrium), which have been handled by using input features deriving from contiguous windows of the genome (e.g. Lin et al. 2011; Schrider and Kern 2016; Kern and Schrider 2018) and by explicitly modeling such autocorrelations (e.g. Flagel et al. 2019; Mughal and DeGiorgio 2019; Mughal et al. 2020; Isildak et al. 2021; Arnab et al. 2023).

A recent attempt to improve input data modeling was taken by Mughal et al. (2020), which used signal processing tools to extract features from input summary statistics calculated in contiguous windows of the genome, with those features automatically modeling the spatial autocorrelation of the data. Mughal et al. (2020) demonstrated that signal processing techniques can help improve true positive rate and accuracy for detecting positive natural selection, even within a linear model. Moreover, the trained models could be easily visualized, resulting in an interpretable framework for understanding what features are important for making predictions. Therefore, we believe such approaches, which have a long-standing foundation within engineering (e.g. Starck et al. 2002; Liu and Chen 2019), can further be used to make more accurate inferences from raw signals and haplotype data.

To apply such methods on raw haplotype data, we consider basis expansions (see supplementary methods, Supplementary Material online) in terms of wavelet (Daubechies 1992) and curvelet (Candes et al. 2006) bases. Wavelets and curvelets are part of a generalized framework termed α-molecules (Grohs et al. 2014), which have been extensively employed in image (two-dimensional signal) analysis (Starck et al. 2002). Here, the parameter α symbolizes the amount of anisotropy permitted in the scaling of basis functions. Isotropic scaling means that both coordinate axes are scaled by the same amount. In contrast, anisotropic scaling means that the x and y axes can have different scaling factors. As an example, isotropic scaling of a circle would lead to other circles of different sizes, whereas anisotropic scaling of a circle would lead to ellipses of different lengths and widths. For wavelets, the basis functions are scaled isotropically, whereas for curvelets, the basis functions can be rotated and are scaled anisotropically (parabolic scaling; see supplementary methods, Supplementary Material online). The resulting wavelet and curvelet coefficients used to decompose an image can be used as input features to machine learning models, and we expect wavelets and curvelets to embed key components in the data to aid machine learning models in achieving better performance.
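The distinction between isotropic and anisotropic scaling can be written compactly. The notation below follows a common convention for α-molecules and is an assumption here rather than a reproduction of the supplementary methods: a scale parameter $s > 0$ and an anisotropy parameter $\alpha \in [0, 1]$ define the scaling matrix

```latex
A_{\alpha,s} =
\begin{pmatrix}
  s & 0 \\
  0 & s^{\alpha}
\end{pmatrix},
\qquad s > 0,\quad \alpha \in [0, 1].
```

Under this convention, $\alpha = 1$ scales both axes by $s$ (isotropic scaling, as for wavelets), whereas $\alpha = 1/2$ scales the second axis by $\sqrt{s}$ (parabolic scaling, as for curvelets). Applied to the unit circle, $A_{\alpha,s}$ yields an ellipse with semi-axes $s$ and $s^{\alpha}$, which is a circle only when $\alpha = 1$.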

As a proof of concept, we highlight the utility of α-molecules for extracting features from image representations of haplotype alignments when applied to the problem of uncovering genomic regions affected by past positive natural selection. Positive selection leads to the increase in frequency of beneficial traits within a population. Because genomic loci underlie traits, beneficial genetic variants at these loci that code for such traits will also rise in frequency. The rapid rise of these beneficial mutations causes alleles at nearby neutral loci to also increase in frequency through a phenomenon known as genetic hitchhiking (Smith and Haigh 1974). This hitchhiking causes a loss of diversity at neighboring neutral loci in addition to the lost diversity at the site of selection due to positive selection. The resulting ablation of haplotype diversity is known as a selective sweep (Przeworski 2002; Hermisson and Pennings 2005, 2017; Pennings and Hermisson 2006), and this is a key pattern that researchers exploit when developing statistics for detecting positive selection from genomic data.

Evidence of natural selection garnered by identifying selective sweeps aids in our understanding of the natural history of populations. For example, detection of sweeps can lend insight into the pervasiveness of positive selection in shaping genomic variation, whereas the prediction of the particular type of adaptive process (i.e. as hard sweep, soft sweep, or adaptive introgression) (Hermisson and Pennings 2005; Pennings and Hermisson 2006; Setter et al. 2020) can serve as a blueprint for pinpointing particular mechanisms that have driven adaptation (Hernandez et al. 2011; Granka et al. 2012; Huerta-Sánchez et al. 2014; Schrider and Kern 2016). However, sweep detectors can be confused by false signals due to other common evolutionary phenomena. For example, other forms of natural selection (e.g. background selection) (Charlesworth et al. 1993; Braverman et al. 1995; Charlesworth et al. 1995; Hudson and Kaplan 1995; Nordborg et al. 1996; McVean and Charlesworth 2000; Boyko et al. 2008; Akashi et al. 2012; Charlesworth 2012) as well as demographic history, such as population bottlenecks (Jensen et al. 2005; Stajich and Hahn 2005), can leave similar imprints on genetic data (though see Schrider 2020). Therefore, the problem of detecting sweep patterns from genomic variation has been extensively studied for decades, and represents a difficult yet suitable setting for evaluating novel modeling frameworks.

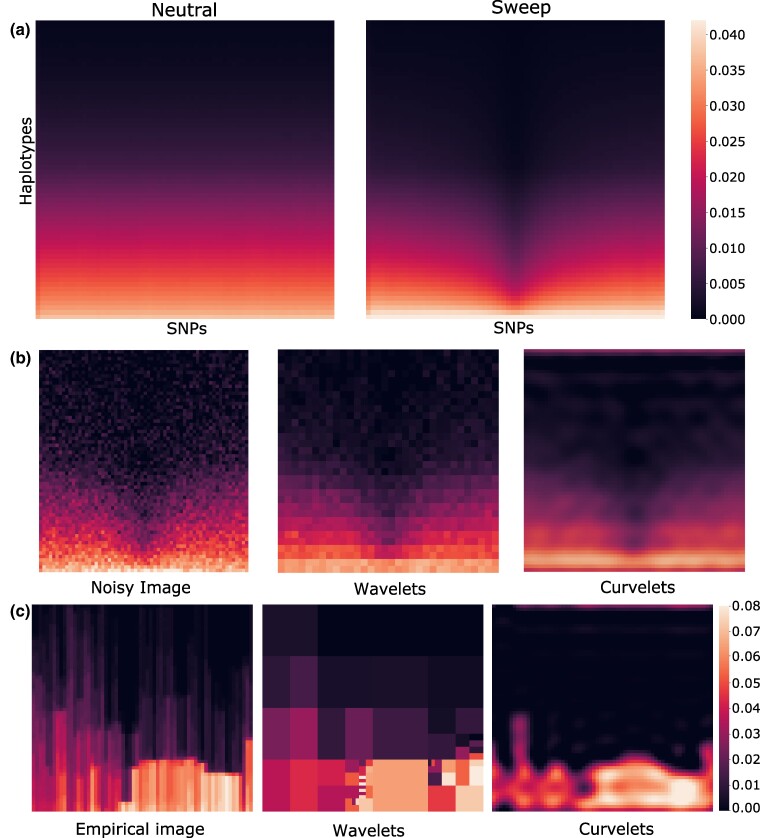

As an illustration of applying α-molecules to raw haplotype data, we depict the effect of decomposing and reconstructing image representations of haplotype alignments from wavelet and curvelet basis expansions (Fig. 1). Specifically, Fig. 1a shows heatmaps of haplotype images, averaged across many simulated replicates, for two evolutionary scenarios: neutrality (left) and a selective sweep (right). The sweep image displays a prominent vertical dark region in the image center, which symbolizes the loss of diversity due to positive selection. This pattern can be further illustrated by an example of how such an image was created (supplementary fig. S1, Supplementary Material online), where we see that after processing a haplotype alignment, regions with greater numbers of major alleles will have a concentration of values close to zero toward the top of the image and values close to one toward the bottom of the image. The sharp contrast in the center of the sweep image (Fig. 1a) signifies the presence of major alleles (zeros) at high frequency in the center of the image, which represents a reduction in diversity. However, in more practical settings, this dark region will not be as easy to detect, as there will be some noise involved. Figure 1b shows a noisy image of the sweep setting, together with reconstructions from wavelet and curvelet decomposition that recover the purity of the original image. Noise in this scenario may have arisen due to both technical and biological factors, such as the particular set and number of sampled individuals, the accuracy of genotype calling and haplotype phasing in these individuals, and whether there have been mutation or recombination events recently in the history of some of those samples. The reconstructions are the result of wavelet and curvelet decomposition for which only the top one percent of coefficients with largest magnitude values are retained and the remaining coefficients have their values set to zero. This hard percentile-based thresholding results in a sparse set of coefficients such that only a few have nonzero values. We depict a more realistic setting of haplotype variation expected from empirical data in Fig. 1c, which shows original and sparse wavelet and curvelet reconstructions of an image of diversity surrounding a gene on human autosome 7. Both wavelet and curvelet processes can capture the details of the original sweep image fairly well, with the curvelet reconstructed image smoother due to the greater freedom that is afforded to it by the curvelet decomposition of the functions (see supplementary methods, Supplementary Material online).
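The hard percentile-based thresholding described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the α-DAWG implementation: it substitutes a single-level orthonormal Haar transform for the Daubechies least asymmetric wavelets used in this article, it omits curvelets entirely, and all function names (`haar_2d`, `ihaar_2d`, `hard_threshold`) are hypothetical.

```python
import math

def haar_1d(v):
    """One level of the orthonormal Haar transform (len(v) must be even)."""
    r = math.sqrt(2.0)
    half = len(v) // 2
    avg = [(v[2 * i] + v[2 * i + 1]) / r for i in range(half)]
    det = [(v[2 * i] - v[2 * i + 1]) / r for i in range(half)]
    return avg + det  # smooth coefficients first, then detail coefficients

def ihaar_1d(c):
    """Inverse of haar_1d."""
    r = math.sqrt(2.0)
    half = len(c) // 2
    out = []
    for i in range(half):
        a, d = c[i], c[half + i]
        out += [(a + d) / r, (a - d) / r]
    return out

def transpose(m):
    return [list(col) for col in zip(*m)]

def haar_2d(img):
    """Transform every row, then every column."""
    rows = [haar_1d(row) for row in img]
    return transpose([haar_1d(col) for col in transpose(rows)])

def ihaar_2d(coef):
    """Undo the column transform, then the row transform."""
    rows = transpose([ihaar_1d(col) for col in transpose(coef)])
    return [ihaar_1d(row) for row in rows]

def hard_threshold(coef, keep_fraction):
    """Keep the largest-magnitude fraction of coefficients; zero the rest.

    Ties at the cutoff are all kept, so slightly more than the requested
    fraction can survive.
    """
    mags = sorted((abs(x) for row in coef for x in row), reverse=True)
    k = max(1, math.ceil(keep_fraction * len(mags)))
    cutoff = mags[k - 1]
    return [[x if abs(x) >= cutoff else 0.0 for x in row] for row in coef]

def sparse_reconstruction(img, keep_fraction=0.01):
    """Decompose, threshold, and reconstruct an image given as a list of rows."""
    return ihaar_2d(hard_threshold(haar_2d(img), keep_fraction))
```

Because the transform is orthonormal, zeroing the small coefficients discards the least energy possible for a given number of retained terms, which is why a one percent cutoff can still preserve the dominant vertical band in a sweep image.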

Fig. 1.

Image representations of haplotype alignments and their sparse reconstructions. Darker regions correspond to higher prevalence of major alleles. a) Heatmaps representing the -dimensional original haplotype alignments used as input to α-DAWG, averaged across 10,000 simulated replicates for either neutral (left) or sweep (right) settings. The mean sweep heatmap (right) shows the characteristic signature of positive selection, with a loss of genomic diversity (vertical darkened region) at the center of the haplotype alignments where a beneficial allele was introduced within sweep simulations. In contrast, this feature is absent in the mean neutral heatmap (left). b) Reconstructed images of a noisy signal with wavelet and curvelet decomposition. The noisy image was generated by adding Gaussian noise of mean zero and standard deviation one to the mean sweep image depicted in panel (a). For sparse reconstruction from wavelet and curvelet coefficients, we employed a hard percentile-based cutoff, where only the one percent of coefficients with largest magnitude values were retained for the image and the remaining coefficients were set to zero. While both sparse reconstruction approaches capture the overall signal in the noisy image, the curvelet reconstructed image is smoother than the wavelet reconstructed image. c) Sparse reconstruction of haplotype alignments for a genomic region encompassing the CCZ1 gene on human chromosome 7 from wavelet and curvelet coefficients in which hard percentile-based thresholding was employed as in panel (b).

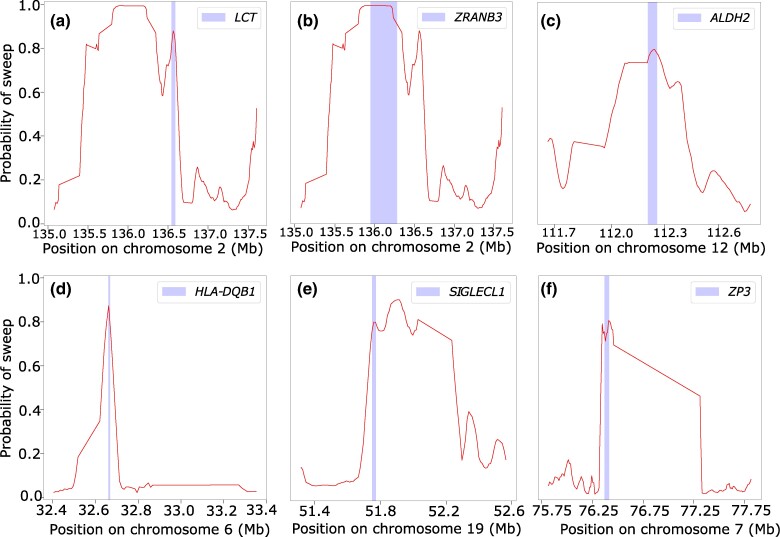

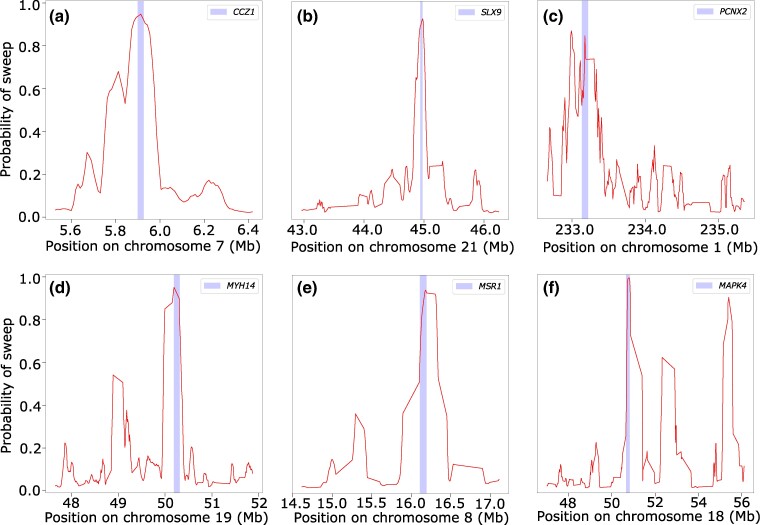

In the following sections, we introduce a set of linear and nonlinear methods that we term α-DAWG (α-molecules for Detecting Adaptive Windows in Genomes), where such methods first decompose an image representation of a haplotype alignment in terms of α-molecules, specifically with wavelets and curvelets, and then use extracted α-molecule basis coefficients as features to train and test models to discriminate sweeps from neutrality. We validate the true positive rate, accuracy, and robustness of our proposed α-DAWG framework, and show that models trained to detect sweeps from neutrality perform favorably on a number of demographic and selection scenarios, as well as the confounding factors of background selection, recombination rate variation, and missing genomic segments. We also demonstrate that α-DAWG attains superior true positive rate and accuracy relative to a CNN-based sweep classifier, and expand upon potential reasons for this in the section “Discussion”. As a product of employing wavelet and curvelet analysis on images, we are also able to visualize the parameters underlying the α-DAWG sweep classifiers, which provides a framework for understanding the learned characteristics of input images important for detecting sweeps. As a proof of effectiveness on realistic data, we apply α-DAWG to phased whole-genome data of central European (CEU) humans (The 1000 Genomes Project Consortium 2015) and recover a number of established candidate sweep regions (e.g. LCT, ZRANB3, ALDH2, and SIGLECL1), as well as predict some novel genes as sweep candidates (e.g. CCZ1, SLX9, PCNX2, and MSR1). Finally, we make available open-source software for implementing α-DAWG at https://github.com/RuhAm/AlphaDAWG.

Results

Sweep Detection with Linear α-DAWG Implementations

We decompose two-dimensional genetic signals (haplotype alignments) using wavelets or curvelets before using them to train models to differentiate between sweeps and neutrality. For the wavelet transform, we employed Daubechies least asymmetric wavelets as the basis functions (Daubechies 1988). Each sampled observation of the data is composed of a dimensional matrix (see section “Haplotype alignment processing”). Each sample is wavelet decomposed and the resulting coefficients are flattened into a single vector of length 4,096. Likewise, the curvelet transform gives a vector of coefficients with a length of 10,521 for each sample. We train individual linear models that use as input either wavelet or curvelet coefficients (α-DAWG[W] and α-DAWG[C]), and we also train a model that jointly uses as input wavelet and curvelet coefficients (α-DAWG[W-C]) with a flattened vector of length 14,617 per sample.
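The assembly of the joint feature vector can be sketched as follows. The vector lengths 4,096 and 10,521 come from the text; the 64 × 64 layout of the wavelet coefficient matrix is an assumption made only so that flattening gives 4,096 values, and the zero-filled placeholders stand in for real coefficients.

```python
N_WAVELET = 4096    # wavelet coefficients per sample (from the text)
N_CURVELET = 10521  # curvelet coefficients per sample (from the text)

def flatten(matrix):
    """Row-major flattening of a list-of-rows matrix into one list."""
    return [x for row in matrix for x in row]

# Placeholder coefficients; the 64 x 64 shape is an assumption.
wavelet_coefs = [[0.0] * 64 for _ in range(64)]
curvelet_vec = [0.0] * N_CURVELET

wavelet_vec = flatten(wavelet_coefs)   # input to a wavelet-only model
joint_vec = wavelet_vec + curvelet_vec  # input to a joint wavelet-curvelet model
```

The joint vector has 4,096 + 10,521 = 14,617 entries, matching the feature count stated for α-DAWG[W-C].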

We first test α-DAWG on two datasets of differing difficulty that are inspired by a simplified population genetic model. Specifically, we initially explore application of α-DAWG on a constant population size demographic history with diploid individuals (Takahata 1993), as well as a mutation rate of per site per generation (Scally and Durbin 2012) and a recombination rate drawn from an exponential distribution with a mean of per site per generation (Payseur and Nachman 2000) and truncated at three times the mean (Schrider and Kern 2016) for 1.1 megabase (Mb) simulated sequences. Under these parameters, we generated simulated sequences using the coalescent simulator discoal (Kern and Schrider 2016) for 200 sampled haplotypes that we assigned as neutral observations. Additionally, to generate selective sweep observations from standing variation, we introduced a beneficial mutation at the center of simulated sequences with per-generation selection coefficient (Mughal et al. 2020) drawn uniformly at random on a scale, frequency of beneficial mutation when it becomes selected drawn uniformly at random on a scale, and generations in the past in which the beneficial mutation becomes fixed t. For the first dataset (denoted by Constant_1), we set such that sampling occurs immediately after the selective sweep completes. For the second dataset (denoted by Constant_2), we draw uniformly at random, such that the distinction between sweeps and neutrality is less clear. We outline these parameters for generating the four datasets in section “Protocol for simulating population genetic variation”.
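To make the recombination rate prior concrete, the sketch below draws from an exponential distribution truncated at three times its mean. Two things are assumptions: truncation is implemented by rejection sampling (the simulator may handle it differently), and the function name and the mean passed in the usage note are hypothetical rather than values taken from this article.

```python
import random

def draw_recombination_rate(mean_rate, rng, max_multiple=3.0):
    """Draw from Exponential(mean=mean_rate), truncated at max_multiple * mean_rate.

    Rejection sampling: redraw until the value falls at or below the upper bound.
    """
    upper = max_multiple * mean_rate
    while True:
        # random.expovariate takes the rate parameter, i.e. 1 / mean
        r = rng.expovariate(1.0 / mean_rate)
        if r <= upper:
            return r
```

For example, `draw_recombination_rate(1e-8, random.Random(42))` returns one per-site, per-generation rate no larger than three times the hypothetical mean of 1e-8. About 95% of untruncated draws fall below three times the mean, so rejection rarely needs more than one or two attempts.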

To evaluate the performance of α-DAWG on the two datasets, we generated training sets of 10,000 observations per class and test sets of 1,000 observations per class under each of the Constant_1 and Constant_2 datasets. We applied glmnet (Friedman et al. 2010) for training and testing under a logistic regression model with an elastic net regularization penalty. We used 5-fold cross validation to identify the optimum regularization hyperparameters (Hastie et al. 2009). Moreover, for α-DAWG[W] and α-DAWG[W-C], we treated the wavelet decomposition level as an additional hyperparameter, which we also determined using 5-fold cross validation across the set . Additional details describing the model and its fitting to training data can be found in the section “Methods” and supplementary methods, Supplementary Material online. Optimum values for the three hyperparameters estimated for the Constant_1 and Constant_2 datasets are displayed in Table 1.
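For orientation, the elastic net logistic regression objective can be written in glmnet's parameterization. The correspondence assumed here, with γ in Table 1 acting as the mixing parameter between the two penalties and λ as the overall penalty strength, is an assumption; the exact form used by α-DAWG is given in the supplementary methods and may differ.

```latex
(\hat{\beta}_0, \hat{\beta}) = \arg\min_{\beta_0,\,\beta}\;
  -\frac{1}{n} \sum_{i=1}^{n}
  \Big[ y_i \big(\beta_0 + x_i^{\top}\beta\big)
        - \log\!\big(1 + e^{\beta_0 + x_i^{\top}\beta}\big) \Big]
  + \lambda \Big[ \tfrac{1-\gamma}{2}\,\lVert \beta \rVert_2^2
                  + \gamma\,\lVert \beta \rVert_1 \Big]
```

Here $y_i \in \{0, 1\}$ labels observation $i$ as neutral or sweep and $x_i$ is its vector of wavelet or curvelet coefficients. Under this parameterization, $\gamma = 1$ gives the lasso and $\gamma = 0$ gives ridge regression, so values of γ near 0.9 would correspond to a predominantly sparsity-inducing penalty.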

Table 1.

Optimum hyperparameters chosen through 5-fold cross validation for the elastic net logistic regression classifier across the four datasets (Constant_1, Constant_2, CEU_1, and CEU_2) and three feature sets (wavelet, curvelet, and joint wavelet-curvelet [W-C])

| Hyperparameter | Constant_1 Wavelet | Constant_1 Curvelet | Constant_1 W-C | Constant_2 Wavelet | Constant_2 Curvelet | Constant_2 W-C | CEU_1 Wavelet | CEU_1 Curvelet | CEU_1 W-C | CEU_2 Wavelet | CEU_2 Curvelet | CEU_2 W-C |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Wavelet level | 1 | N/A | 1 | 1 | N/A | 1 | 1 | N/A | 1 | 1 | N/A | 1 |
| γ | 0.9 | 0.8 | 0.9 | 0.7 | 0.9 | 0.9 | 0.1 | 0.4 | 0.1 | 0.9 | 0.9 | 0.9 |
| λ | 0.00189 | 0.002 | 0.001 | 0.00168 | 0.001 | 0.021 | 0.0013 | 0.038 | 0.0012 | 0.0019 | 0.011 | 0.0017 |

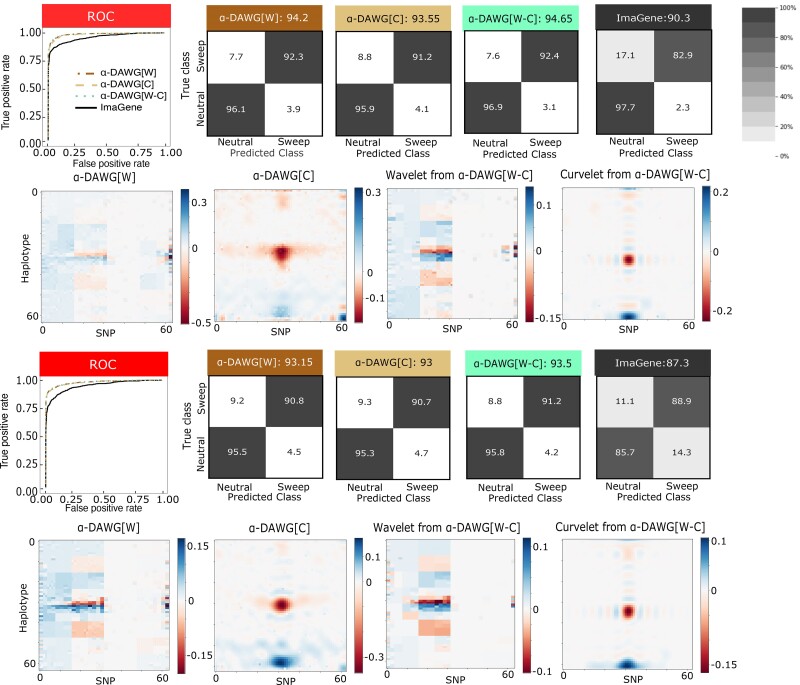

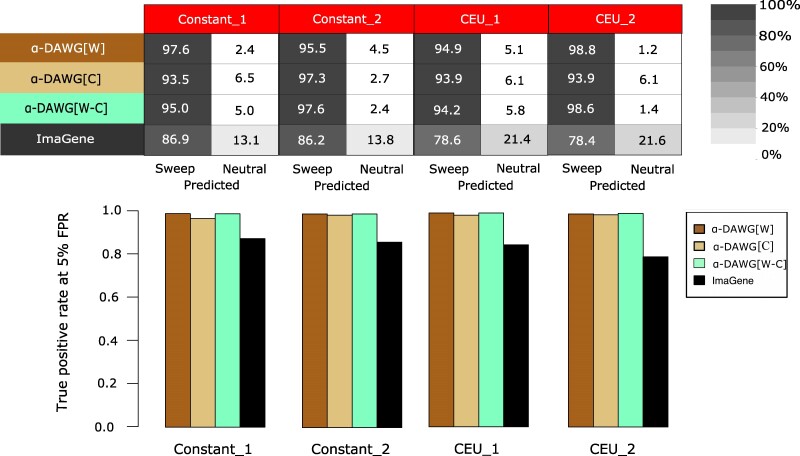

To assess classification ability, we evaluated model accuracy, relative classification rates through confusion matrices, and true positive rate with receiver-operating characteristic (ROC) curves. Using these evaluation metrics, all three linear α-DAWG models have similar classification abilities when applied on either the Constant_1 or Constant_2 dataset (Fig. 2). However, for both Constant_1 and Constant_2 datasets, linear α-DAWG[W-C] performs slightly better than linear α-DAWG[W] and α-DAWG[C]. As expected, method true positive rate and accuracy are higher for the Constant_1 dataset compared with Constant_2, as there is greater class overlap in the latter dataset. For both datasets, all three linear α-DAWG models outperform ImaGene, and the linear α-DAWG models classify neutral regions with higher accuracy than sweep regions (Fig. 2). However, though our three linear α-DAWG models exhibit relatively balanced classification rates between neutral and sweep settings, ImaGene is more unbalanced on the Constant_1 dataset. Specifically, for the Constant_1 dataset, ImaGene classifies only 2.3% of neutral regions incorrectly, which is slightly better than the linear α-DAWG models, but also misclassifies 17.1% of sweeps as neutral, which ultimately leads to its lower overall accuracy but is preferable to a high misclassification of neutral regions as sweeps. Interestingly, for the Constant_2 dataset, ImaGene displays more balanced classification rates, with 11.1% and 14.3% rates of misclassification for sweep and neutral regions, respectively. This finding could be the result of the Constant_2 dataset having more overlap between the two classes. For both of these datasets, the linear α-DAWG models exhibit superior classification accuracy relative to ImaGene, as well as higher true positive rate at low false positive rates based on the ROC curves (Fig. 2).
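The relationship between per-class misclassification rates and overall accuracy can be sketched as below. The helper names are hypothetical, and the worked numbers in the usage note are derived from the rates quoted above for ImaGene on Constant_1 together with the stated 1,000 test observations per class, not read from the figure.

```python
def confusion_matrix(true_labels, predicted_labels, classes=("neutral", "sweep")):
    """Counts indexed as counts[true_class][predicted_class]."""
    counts = {t: {p: 0 for p in classes} for t in classes}
    for t, p in zip(true_labels, predicted_labels):
        counts[t][p] += 1
    return counts

def row_percentages(counts):
    """Convert each true-class row to percentages (each row sums to 100)."""
    out = {}
    for t, row in counts.items():
        total = sum(row.values())
        out[t] = {p: 100.0 * c / total for p, c in row.items()}
    return out

def accuracy(counts):
    """Fraction of all observations on the diagonal of the confusion matrix."""
    correct = sum(counts[c][c] for c in counts)
    total = sum(sum(row.values()) for row in counts.values())
    return correct / total

# 2.3% of 1,000 neutral and 17.1% of 1,000 sweep replicates misclassified
imagene_constant_1 = {
    "neutral": {"neutral": 977, "sweep": 23},
    "sweep": {"neutral": 171, "sweep": 829},
}
```

With balanced classes, `accuracy(imagene_constant_1)` is the mean of the two per-class correct rates, (977 + 829) / 2,000, so a 17.1% miss rate on sweeps pulls overall accuracy down even when neutral regions are classified almost perfectly.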

Fig. 2.

Performances of the three linear α-DAWG models and ImaGene applied to the Constant_1 (top two rows) and Constant_2 (bottom two rows) datasets that were simulated under a constant-size demographic history and 200 sampled haplotypes. The training and testing sets respectively consisted of 10,000 and 1,000 observations for each class (neutral and sweep). Sweeps were simulated by drawing per-generation selection coefficient and the frequency of beneficial mutation when it becomes selected , both uniformly at random on a scale. Moreover, the generations in the past in which the sweep fixed t was set as for the Constant_1 dataset and drawn uniformly at random as for the Constant_2 dataset. Model hyperparameters were optimized using 5-fold cross validation (Table 1) and ImaGene was trained for the number of epochs that obtained the smallest validation loss. The first and third rows from the top display the ROC curves for each classifier (first panel) as well as the confusion matrices and accuracies (in labels after colons) for the four classifiers (second to fifth panel). The second and fourth rows from the top display the two-dimensional representations of regression coefficient functions reconstructed from wavelets or curvelets for α-DAWG[W] (first panel), α-DAWG[C] (second panel), and α-DAWG[W-C] (third and fourth panels). Cells within confusion matrices with darker shades of gray indicate that classes in associated columns are predicted at higher percentages. The white color at the center of the color bar associated with a β function represents little to no emphasis placed by linear α-DAWG models, whereas the dark blue and dark red colors signify a positive and negative emphasis, respectively.

In addition to their accuracies and true positive rates, an important aspect of predictive models in population genetics is their interpretability in terms of the ability to understand what features of the input image representations of haplotype alignments are important for discriminating between sweeps and neutrality. To explore how well each classifier captures characteristic differences between sweep and neutral observations, we collected the fitted regression coefficients and applied inverse wavelet and curvelet transforms to reconstruct the function describing the importance of different regions of the haplotype alignment that we use as input to linear α-DAWG (see section “Linear α-DAWG models with elastic net penalization” of the supplementary methods, Supplementary Material online). For both datasets, the resulting β functions display elevated importance in places where we would expect differences between sweep and neutral variation to arise—i.e. increased importance for features at the center of simulated sequences near the site of selection, tapering off toward zero with distance from the center (Fig. 2). This pattern of importance is reflected in the mean two-dimensional images for the 10,000 training observations per class, with sweeps showing (on average) valleys of diversity toward the center of simulated sequences, whereas diversity of neutral simulations is (on average) flat across the simulated sequences (Fig. 1a). We also notice that the β function for the linear α-DAWG[W] models generally has a step-wise structure (ignoring local noise), whereas the β functions from linear α-DAWG[C] models are smoother. Similar characteristics are found in the corresponding β functions under linear α-DAWG[W-C] models (one function for wavelet coefficients and one for curvelet coefficients).

Robustness to Background Selection

Though we have shown that α-DAWG performs well at distinguishing the pattern of lost genomic diversity due to positive selection from neutral variation, it is important to consider other common forces that may lead to local reductions in diversity within the genome. In particular, the pervasive force of negative selection (McVicker et al. 2009; Comeron 2014) that constrains variation at functional genomic elements can lead to not only reductions in diversity at selected loci, but also at nearby linked neutral loci through a phenomenon termed background selection (Charlesworth et al. 1993; Hudson and Kaplan 1995; Charlesworth 2012), much like genetic hitchhiking that leads to a pattern of a selective sweep resulting from positive selection. Moreover, background selection can lead to distortions in the distribution of allele frequencies that can mislead sweep detectors (Charlesworth et al. 1993, 1995, 1997; Keinan and Reich 2010; Seger et al. 2010; Nicolaisen and Desai 2013; Huber et al. 2016). We expect that α-DAWG should be robust to background selection, as background selection is not expected to substantially alter the distribution of haplotype frequencies as it does not lead to the increase in frequencies of haplotypes (Charlesworth et al. 1993; Charlesworth 2012; Enard et al. 2014; Fagny et al. 2014; Schrider 2020). Nevertheless, it is important to explore whether novel sweep detectors are robust to false signals of selective sweeps due to background selection.

To assess whether α-DAWG is robust to background selection, we simulated 1,000 new test replicates of background selection under a constant-size demographic history using the forward-time simulator SLiM (Haller and Messer 2019), as the original simulator discoal that we used to train α-DAWG does not simulate negative selection. Specifically, we evolved a population of diploid individuals for generations, which includes a burn-in period of generations and a subsequent generations of evolution after the burn-in, under the same genetic and demographic parameters used to generate the Constant_2 dataset. At the end of each simulation, we sampled 200 haplotypes from the population for sequences of length 1.1 Mb. In addition to these parameters, we also introduced a functional element located at the center of the 1.1 Mb sequence for which deleterious mutations may arise continuously throughout the duration of the simulation and with a structure that mimics a protein-coding gene of length 55 kb where we might expect selective constraint. Using the protocol of Cheng et al. (2017), selection coefficients for recessive () deleterious mutations that arise within this coding gene were distributed as gamma with shape parameter 0.2 and mean 0.1 or 0.5 for moderately and highly deleterious alleles, respectively. Moreover, this gene consisted of 50 exons each of length 100 bases, 49 introns interleaved with the exons each of length 1,000 bases, and 5’ and 3’ untranslated regions (UTRs) flanking the first and last exons of the gene of lengths 200 and 800 bases, respectively. The lengths of these components of the coding gene structure were selected to roughly match the mean values from human genomes (Mignone et al. 2002; Sakharkar et al. 2004). 
Sampled haplotype alignments were processed according to the steps described in the section “Haplotype alignment processing”, and these 1,000 background selection test observations were then used as input to α-DAWG trained on the Constant_2 dataset.
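The gene structure and the distribution of selection coefficients described above can be checked with a short calculation. This is an illustrative sketch rather than the SLiM configuration used in this article: the variable and function names are hypothetical, and the draws are magnitudes only, since the sign convention for deleterious mutations depends on the simulator.

```python
import random

# Gene structure from the text
N_EXONS, EXON_LEN = 50, 100
N_INTRONS, INTRON_LEN = 49, 1000
UTR5_LEN, UTR3_LEN = 200, 800

gene_length = (N_EXONS * EXON_LEN + N_INTRONS * INTRON_LEN
               + UTR5_LEN + UTR3_LEN)

def gamma_scale(mean, shape):
    """Scale parameter of a gamma distribution with the given mean and shape."""
    return mean / shape

def draw_selection_magnitudes(mean, shape, n, rng):
    """Draw n gamma-distributed magnitudes of deleterious selection coefficients."""
    scale = gamma_scale(mean, shape)
    # random.gammavariate(alpha, beta): alpha is the shape, beta is the scale
    return [rng.gammavariate(shape, scale) for _ in range(n)]
```

The components sum to 5,000 + 49,000 + 1,000 = 55,000 bases, matching the stated 55 kb gene. With shape 0.2, a mean of 0.1 corresponds to a scale of 0.5 and a mean of 0.5 to a scale of 2.5; such a small shape parameter concentrates most draws near zero with a long right tail.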

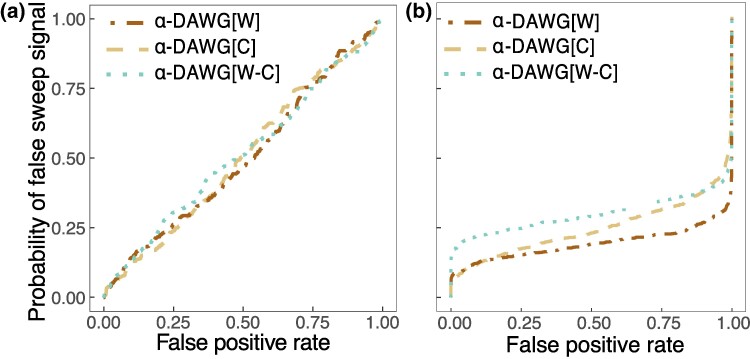

We find that the probability linear α-DAWG falsely detects moderate background selection as a sweep is roughly equal to the false positive rate based on neutral replicates (Fig. 3a), indicating that from the lens of linear α-DAWG, the distribution of sweep probabilities under background selection is approximately the same as under neutrality. When it comes to detecting moderately strong background selection, linear α-DAWG performs even better as the probability of falsely detecting background selection as a sweep is even lower than the false positive rate based on neutral replicates, emphasizing robustness under moderately strong background selection (Fig. 3b). Thus, as expected, because linear α-DAWG operates on features extracted from haplotype alignments, it is robust to patterns of lost diversity locally in the genome due to background selection in settings of moderately strong and weaker background selection under human-inspired demographic and genetic parameters.

Fig. 3.

Probability of falsely detecting moderate background selection a) and moderately strong background selection b) as a sweep as a function of false positive rate under neutrality for the three linear α-DAWG models trained using the Constant_2 dataset as in Fig. 2. The probability of a false sweep signal is the fraction of background selection test replicates with a sweep probability higher than the sweep probability under neutral test replicates that generated a given false positive rate. Details regarding the simulation of background selection can be found in the section “Robustness to background selection”.
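The probability of a false sweep signal defined in this caption can be computed from two lists of sweep probabilities, as in the sketch below. The function names are hypothetical, and the handling of ties and of rounding when converting a false positive rate to a count is an assumption.

```python
def threshold_at_fpr(neutral_probs, fpr):
    """Sweep-probability cutoff that a fraction `fpr` of neutral replicates exceeds."""
    ordered = sorted(neutral_probs, reverse=True)
    n_allowed = round(fpr * len(ordered))  # neutral replicates allowed above the cutoff
    if n_allowed >= len(ordered):
        return float("-inf")
    return ordered[n_allowed]

def false_signal_probability(bgs_probs, neutral_probs, fpr):
    """Fraction of background selection replicates scoring above the neutral cutoff."""
    cutoff = threshold_at_fpr(neutral_probs, fpr)
    return sum(p > cutoff for p in bgs_probs) / len(bgs_probs)
```

If background selection replicates are scored like neutral ones, this quantity tracks the false positive rate along the diagonal; values below the diagonal indicate that background selection is mistaken for a sweep less often than neutrality is.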

Effect of Population Size Fluctuations

Our evaluation of the performance of α-DAWG classifiers in comparison to ImaGene focused on equilibrium demographic settings in which the population size is held constant. However, this is a highly unrealistic scenario, as true populations tend to fluctuate in their sizes over time for a number of reasons. We therefore sought to explore whether demographic models with population size changes would substantially hamper the accuracies and true positive rates of α-DAWG classifiers. Extreme population bottlenecks have been demonstrated to cause false signals of selective sweeps due to their increased variance in coalescent times, as well as to make sweep detection more difficult through their global loss of haplotype diversity across the genome. We therefore simulated a setting of a severe population bottleneck, using a demographic history inferred (Terhorst et al. 2017) from whole-genome sequencing of individuals from the CEU population in the 1000 Genomes Project dataset (The 1000 Genomes Project Consortium 2015).

Neutral simulations were run under this demographic history, and sweep simulations were performed with a beneficial mutation added on top of the demographic history, using the same selection parameters as in the constant-size demographic history that we previously explored—i.e. per-generation selection coefficient (Mughal et al. 2020) and frequency of beneficial mutation when it becomes selected , each drawn uniformly at random on a scale. Moreover, similarly to our previous experiments, we considered two datasets of varying difficulty, each with 10,000 simulations per class for the training set and 1,000 simulations per class for the testing set. The first dataset (denoted by CEU_1) with time of sweep completion set to generations in the past and the second, more difficult, dataset (denoted by CEU_2) with drawn uniformly at random. All classification models were trained and tested in an identical manner to the earlier Constant_1 and Constant_2 datasets, with optimum estimated values for the three hyperparameters displayed in Table 1.

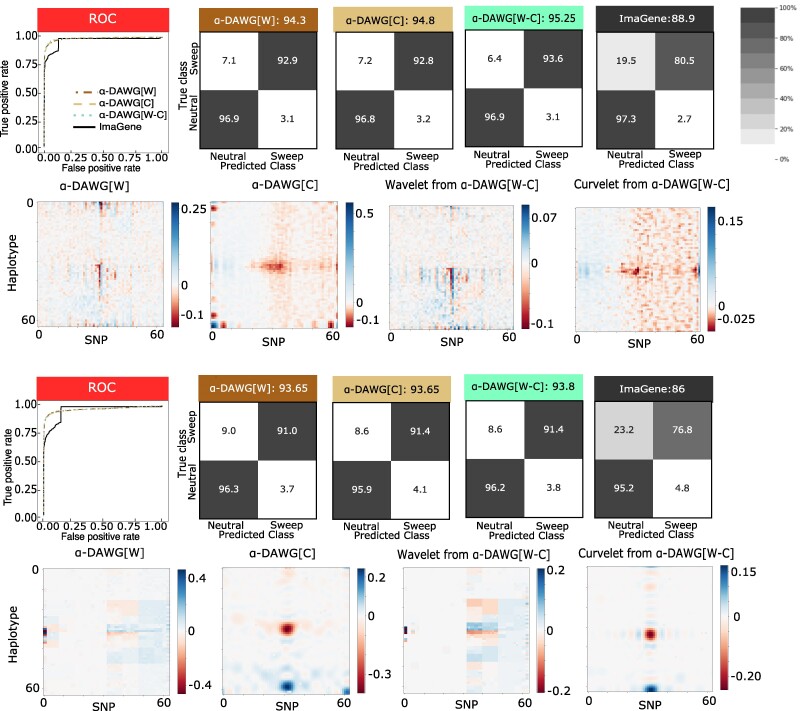

Comparing Fig. 4 to Fig. 2, we can see that demographic histories with extreme bottlenecks have actually led to an improvement (though marginal) in the true positive rates and accuracies of the three linear α-DAWG models compared with the constant-size demographic histories, with linear α-DAWG[W-C] displaying slightly elevated true positive rate and accuracy compared with linear α-DAWG[W] and α-DAWG[C]. In contrast, ImaGene has slightly decreased accuracy and true positive rate compared with the constant-size histories. Similarly, the three linear α-DAWG models have relatively balanced classification rates between neutral and sweep settings (with a slight skew toward neutrality), whereas ImaGene has highly unbalanced classification rates, with a strong, yet conservative, skew toward neutrality for both the CEU_1 and CEU_2 datasets.

Fig. 4.

Performances of the three linear α-DAWG models and ImaGene applied to the CEU_1 (top two rows) and CEU_2 (bottom two rows) datasets that were simulated under a fluctuating population size demographic history estimated from CEU humans (Terhorst et al. 2017) and 200 sampled haplotypes. The training and testing sets, respectively, consisted of 10,000 and 1,000 observations for each class (neutral and sweep). Sweeps were simulated by drawing per-generation selection coefficient and the frequency of beneficial mutation when it becomes selected , both uniformly at random on a scale. Moreover, the generations in the past in which the sweep fixed t was set as for the CEU_1 dataset and drawn uniformly at random as for the CEU_2 dataset. Model hyperparameters were optimized using 5-fold cross validation (Table 1) and ImaGene was trained for the number of epochs that obtained the smallest validation loss. The first and third rows from the top display the ROC curves for each classifier (first panel) as well as the confusion matrices and accuracies (in labels after colons) for the four classifiers (second to fifth panels). The second and fourth rows from the top display the two-dimensional representations of regression coefficient functions reconstructed from wavelets or curvelets for α-DAWG[W] (first panel), α-DAWG[C] (second panel), and α-DAWG[W-C] (third and fourth panels). Cells within confusion matrices with darker shades of gray indicate that classes in associated columns are predicted at higher percentages. The white color at the center of the color bar associated with a β function represents little to no emphasis placed by linear α-DAWG models, whereas the dark blue and dark red colors signify a positive and negative emphasis, respectively.

To ascertain whether more training data may aid in boosting the performance of ImaGene, we simulated additional training data using the same protocols used to generate the CEU_2 dataset, resulting in a training set comprising 30,000 observations per class. We trained ImaGene on this larger set and evaluated it on the same test dataset consisting of 1,000 observations per class. Our experiments reveal that using more training data results in a 5.5% increase in overall accuracy and a 9.4% increase in sweep detection accuracy for ImaGene (supplementary fig. S2, Supplementary Material online). Furthermore, the true positive rates at small false positive rates also improved with this additional training data, showing a quicker ascent to the upper left-hand corner of the ROC curve (supplementary fig. S2, Supplementary Material online). Despite the improvement in performance by ImaGene with additional training data, linear α-DAWG models trained on the smaller training set still outperformed it by at most 1.95% in overall classification accuracy (compare third row of Fig. 4 to supplementary fig. S2, Supplementary Material online), while the nonlinear models, also trained on the smaller set, outperformed ImaGene by at most 3.1% (compare fourth row of supplementary fig. S8 to supplementary fig. S2, Supplementary Material online).

Though this increase in classification performance by ImaGene is promising, it comes at a significant cost in additional computation and time. Time requirements could potentially have been reduced by employing a population genetic simulator that is faster than the coalescent simulator that we used, such as the forward-time simulator SLiM (Haller and Messer 2023), which can employ advances such as parameter scaling and tree-sequence recording for speedup. However, even with such advances, replicate generation can remain slow for sweeps deriving from weak selection coefficients, which may require many simulation restarts, and parameter scaling has recently been shown to potentially compromise the integrity of the simulation (Dabi and Schrider 2024).

From these results, linear α-DAWG appears to be robust in this classically problematic setting for detecting sweeps. We also reconstructed the linear α-DAWG β functions for these bottleneck scenarios, showing increased importance for features near the center of image representations of haplotype alignments for which diversity from sweeps is expected to differ from neutrality in our simulations (Fig. 4), similar to the results from the constant-size history settings (Fig. 2). We also observe that the β functions are noisier when trained on the CEU_1 dataset than on the CEU_2 dataset. This increased noise is due to the optimal hyperparameter γ (see Table 1) being estimated closer to zero for all three linear α-DAWG models trained on CEU_1, whereas γ is estimated closer to one on CEU_2. Because smaller γ values result in greater ℓ₂-norm penalization compared with ℓ₁-norm, the lack of sparsity in the estimated wavelet coefficients for reconstructing the β functions from CEU_1 likely led to more noise. Moreover, the significant peaks near the pixels toward the bottom rows and middle columns of the β functions (e.g. α-DAWG[C] in both Figs. 2 and 4) likely reflect emphasis in the model contributed by the most recent, strongest, and hardest sweeps.

Robustness to Recombination Rate Heterogeneity

Recombination rate varies across genomes, and therefore has an impact in shaping haplotypic diversity observed among populations within and among species (Smukowski and Noor 2011; Cutter and Payseur 2013; Singhal et al. 2015; Peñalba and Wolf 2020; Winbush and Singh 2020). In particular, low recombination rates may decrease local haplotypic diversity, which may resemble the pattern of a selective sweep, whereas high recombination rates may elevate local haplotypic diversity, which may eliminate sweep signatures. The genomes of a variety of organisms exhibit a complex recombination landscape in which we observe isolated genomic regions with extremely high (known as hotspots) and low (known as coldspots) recombination rates (Petes 2001; Hey 2004; Myers et al. 2005; Galetto et al. 2006; Grey et al. 2009; Baudat et al. 2010; Singhal et al. 2015; Booker et al. 2020; Lauterbur et al. 2023). Therefore, it is important to evaluate the degree to which α-DAWG is robust against scenarios of recombination rate heterogeneity, including at hotspots and coldspots.

To test the robustness of α-DAWG under recombination rate heterogeneity, we simulated 1,000 neutral test replicates under both constant (i.e. Constant_1 and Constant_2) and fluctuating population size (i.e. CEU_1 and CEU_2) models using the coalescent simulator discoal (Kern and Schrider 2016), fixing genetic parameters identical to their respective original datasets (Constant_1, Constant_2, CEU_1, and CEU_2) while only changing the recombination rate. Specifically, for a given replicate the recombination rate was drawn from an exponential distribution with mean of or per site per generation and truncated at three times the mean, resulting in a respective decrease in the mean recombination rate across the entire simulated 1.1 Mb region by one or two orders of magnitude relative to the distribution used to train the α-DAWG classifiers. To simulate recombination hotspots and coldspots under constant and fluctuating population size models, we simulated 1,000 neutral test replicates using the coalescent simulator msHOT (Hellenthal and Stephens 2007), fixing genetic parameters identical to their respective original datasets (Constant_1, Constant_2, CEU_1, and CEU_2) with the exception of the recombination rate. In particular, for each test replicate, the recombination rate (r) was drawn from an exponential distribution with mean of per site per generation and truncated at three times the mean (as in the settings used to train α-DAWG), except that the central 100 kb region of the sequence evolved with a recombination rate of or for coldspots and or for hotspots, resulting in a localized decrease or increase in the recombination rate at the center of the simulated sequences, respectively.
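
One way to read the sampling scheme above is as rejection sampling from an exponential distribution. The following is a minimal sketch under that assumption: draws above the truncation point are simply redrawn, which may differ from how the simulators apply the bound. The function name and the mean used in the usage line are placeholders, not values from the study.

```python
import random


def draw_truncated_exponential(mean, rng, upper_multiple=3.0):
    """Draw from an exponential distribution with the given mean,
    redrawing any value above upper_multiple * mean (assumed truncation rule)."""
    if mean <= 0:
        raise ValueError("mean must be positive")
    upper = upper_multiple * mean
    while True:
        # random.expovariate takes the rate, i.e. 1 / mean.
        value = rng.expovariate(1.0 / mean)
        if value <= upper:
            return value


rng = random.Random(42)
placeholder_mean = 1e-8  # hypothetical per-site, per-generation rate
rates = [draw_truncated_exponential(placeholder_mean, rng) for _ in range(1000)]
```

Under this reading, every replicate receives a rate between zero and three times the nominal mean, so lowering the nominal mean by one or two orders of magnitude shifts the whole distribution of test rates downward by the same factor.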

Our results reveal that under a shift in the mean recombination rate by one or two orders of magnitude lower than what was used for training, linear α-DAWG models exhibit an increased neutral misclassification rate up to 14% for constant-size demographic histories when compared with results in which the recombination rate distribution in test data matched what the models were trained on (supplementary fig. S3, Supplementary Material online). For the more realistic CEU demographic history, the neutral misclassification rate observed for linear α-DAWG models is somewhat lower, maxing out at about an 11% increase in neutral misclassification (supplementary fig. S3, Supplementary Material online). Of these models, linear α-DAWG[W] often had the smallest misclassification error, though the ranking of the linear α-DAWG models based on neutral misclassification errors was not consistent across tested settings. Therefore, in the face of significant reductions in mean recombination rates relative to what was employed during training, linear α-DAWG models show modest inflation of neutral misclassification rates when compared with results under the usual training settings for realistic demographic settings.

Furthermore, when faced with recombination hotspots and coldspots, linear α-DAWG models show a slight rise (as high as 10%) in the misclassification rate of neutrally evolving regions, whereas some models show a reduction (as much as 4%) (supplementary fig. S4, Supplementary Material online). In general, increasing the recombination rate from extreme coldspot to extreme hotspot tends to reduce the neutral misclassification rate under the realistic CEU demographic history (supplementary fig. S4, Supplementary Material online). When it comes to coldspots, linear α-DAWG models show decreases in neutral misclassification rates of up to 2% as well as elevations as high as 10% (supplementary fig. S4, Supplementary Material online). In the case of hotspots, linear α-DAWG models exhibit decreases in neutral misclassification rates of up to 4% and inflations as high as 8% (supplementary fig. S4, Supplementary Material online). In summary, we observe that even under recombination hotspots or coldspots, linear α-DAWG models show a general resilience, as evidenced by their minimal change in misclassification rate from original settings, with fewer errors made for hotspots compared with coldspots (supplementary fig. S4, Supplementary Material online).

In addition to testing the resilience of linear α-DAWG models on recombination rate heterogeneity, we went on to evaluate how a reduced sweep footprint affects sweep detection accuracy when applied to the CEU_2 dataset. In particular, the sweep footprint size F can be computed as , where s is the selection coefficient per generation, r is the recombination rate per site per generation, and is the effective population size (Gillespie 2004; Garud et al. 2015; Hermisson and Pennings 2017). Thus, the sweep footprint size is inversely proportional to the recombination rate. To this end, we simulated 1,000 sweep test replicates with recombination rates drawn from an exponential distribution with mean of (twice that used for training) per site per generation and truncated at three times the mean leading to a sweep footprint size that is on average half the width of the original replicates, with the mean footprint size across the original replicates approximately 329 kb. We find that the reduced footprint size indeed presents a challenge for the linear α-DAWG models, as we observe a drop in sweep detection accuracy from original results (supplementary fig. S5, Supplementary Material online). This drop in sweep detection accuracy due to reduced sweep footprint size falls in the range of 3.7% to 4.5% when compared with the sweep detection accuracy obtained from test replicates using the original recombination rate (supplementary fig. S5, Supplementary Material online). Overall, a 2-fold reduction in sweep footprint size has minimal to moderate effects on the ability of linear α-DAWG models to detect sweeps, adding to the potential robustness of our models.
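
The halving of the footprint follows directly from the inverse proportionality stated above. As a brief worked step, write the footprint as $F = c/r$, where $c$ is shorthand introduced here (not notation from the original) for the factor that depends on the selection coefficient and effective population size but not on $r$:

```latex
F(r) = \frac{c}{r}
\quad\Longrightarrow\quad
F(2r) = \frac{c}{2r} = \tfrac{1}{2}\,F(r),
\qquad
\tfrac{1}{2}\times 329\ \text{kb} \approx 164.5\ \text{kb}.
```

Because doubling the mean of the recombination rate distribution (and its truncation point) doubles every draw in distribution, a mean footprint of about 329 kb in the original replicates corresponds to roughly 164.5 kb in the reduced-footprint replicates.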

Performance Under Mutation Rate Variation

Mutation rate varies within the genome and across species (Kumar and Subramanian 2002; Bromham 2011; Bromham et al. 2015; Harpak et al. 2016; Castellano et al. 2020) due to factors including transcription-translation conflicts and DNA replication errors (Bromham 2009; Dillon et al. 2018), and this mutation rate heterogeneity could affect the performance of predictive models that use genomic variation as input. As with recombination rate, genomic regions with low mutation rates can be mistaken as evidence of a selective sweep, as they will harbor low haplotypic diversity, whereas genomic regions with high mutation rates can mask footprints of past selective sweeps, as they will exhibit elevated haplotypic diversity (Harris and Pritchard 2017). Thus, it is paramount that α-DAWG perform well under such conditions of mutation rate variation.

To evaluate whether α-DAWG is resilient to, and performs well under, mutation rate heterogeneity, we simulated an additional 1,000 sweep and 1,000 neutral replicates using discoal (Kern and Schrider 2016), where we deviated from simulation protocol for generating training data for α-DAWG in which we fixed the mutation rate as per site per generation. Specifically, for each new test replicate, we sampled the mutation rate uniformly at random within the interval and evaluated how α-DAWG models fare under this setting of mutation rate variation. We outlined the performance in terms of accuracy and classification rates using confusion matrices and true positive rate using ROC curves (supplementary fig. S6, Supplementary Material online).

We found that linear α-DAWG models show excellent overall accuracy (from 89.55 to 96.9%) under mutation rate variation (supplementary fig. S6, Supplementary Material online). In terms of detecting neutrally evolving regions, linear α-DAWG[W] exhibits accuracy in the range of 90.5 to 96%, whereas linear α-DAWG[C] and α-DAWG[W-C] display a better neutral detection rate in the range of 95.2 to 98.7% and 93.5 to 98.2%, respectively (supplementary fig. S6, Supplementary Material online). Moreover, all linear α-DAWG models retain high true positive rates across scenarios tested, evidenced by quick rises to the upper left hand corner of the ROC curve, with linear α-DAWG[C] and α-DAWG[W-C] models demonstrating higher true positive rates than linear α-DAWG[W]—an exception being the applications of these models on the Constant_1 dataset for which linear α-DAWG[W] edges out the other two (supplementary fig. S6, Supplementary Material online). These results suggest that all linear α-DAWG models retain high true positive rates and are accurate when confronted with mutation rate variation.

Comparison with a Summary Statistic Based Deep Learning Classifier

Though we have benchmarked the linear α-DAWG models with the nonlinear classifier ImaGene that also uses images of haplotype alignments as input, it is important to consider classifiers that instead use statistics summarizing variation as input. We specifically investigate the performance of the nonlinear diploS/HIC classifier (Kern and Schrider 2018), which was originally developed for distinguishing among five classes, namely, soft sweeps, hard sweeps, linked soft sweeps, linked hard sweeps, and neutrality from unphased multilocus genotypes (MLGs) using a feature vector of 12 summary statistics calculated across 11 windows, where the central window is being classified. We have adjusted diploS/HIC from its native state as a multiclass classifier, to instead make decisions as a binary classifier to distinguish sweeps from neutrality for comparison purposes with α-DAWG. We trained and tested diploS/HIC on the Constant_1, Constant_2, CEU_1, and CEU_2 datasets.

We find that diploS/HIC displays excellent overall accuracy (supplementary fig. S7b, Supplementary Material online) and high true positive rates (supplementary fig. S7a, Supplementary Material online) across different false positive rate thresholds in both the constant and fluctuating population size settings. On all four test datasets, diploS/HIC outperforms the best performing linear α-DAWG[W-C] by between and (compare Figs. 2 and 4 with supplementary fig. S7b, Supplementary Material online) in terms of overall accuracy. The edge of diploS/HIC over the linear α-DAWG models in terms of performance is further evident in the ROC curves, where on all datasets we observe a rapid ascent to the upper left-hand corner of the curve (supplementary fig. S7a, Supplementary Material online). Though diploS/HIC outperforms linear α-DAWG across all datasets, a possible opportunity to close this performance gap would be to employ a nonlinear α-DAWG, which we explore further in the section “Performance boost with nonlinear models”.

Comparison with a Likelihood Ratio Based Classifier

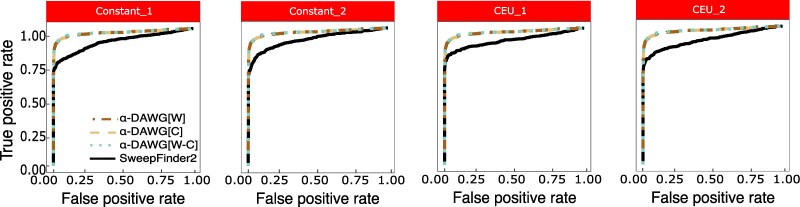

Though we have elected to evaluate the performance of our α-DAWG methods in comparison to ImaGene, as it also uses images as input to a machine learning classifier, it is informative to explore classification ability relative to more traditional methods of sweep detection, such as the maximum likelihood approach SweepFinder (Nielsen et al. 2005; DeGiorgio et al. 2016). Comparing linear α-DAWG to SweepFinder2 (DeGiorgio et al. 2016) on all four test datasets, we see that linear α-DAWG models consistently demonstrate superior true positive rate across the range of false positive rates compared with SweepFinder2 (Fig. 5). Though SweepFinder is a powerful sweep classifier, this result is expected, because the test sweep datasets have varying degrees of sweep softness, strength, and age. The sweep model employed by SweepFinder2 is one of a recent, hard, and effectively immediate (i.e. strong) sweep, and thus the method has limited true positive rate in detecting soft sweeps. On the other hand, the sweep training data given to linear α-DAWG models were generated across a range of sweep softness, strength, and age, and so α-DAWG is more suited to detecting a broad set of sweep modes relative to traditional model-based approaches.

Fig. 5.

ROC curves of the three linear α-DAWG models and SweepFinder2 applied to the Constant_1, Constant_2, CEU_1, and CEU_2 test datasets, with the linear α-DAWG models trained and applied in Figs. 2 and 4.

Ability to Detect Hard Sweeps from de Novo Mutations

Our experiments have explored α-DAWG classification ability when trained and tested on settings for which the initial frequency of the beneficial mutation (f) was allowed to vary across a broad range of values from 0.001 to 0.1, with adaptation from beneficial mutations at these frequencies occurring through selection on standing variation. The lower frequency range would likely lead to harder sweeps, as only one or a few haplotypes would rise to high frequency, whereas the upper range would yield softer sweeps. However, a more classic example of a hard sweep would occur through selection on a de novo mutation for which the beneficial allele is present on a single haplotype (Przeworski 2002; Hermisson and Pennings 2005). We therefore elected to examine the accuracy of α-DAWG for detecting hard sweeps from de novo mutations.

To evaluate this scenario, we simulated an additional 1,000 test sweep replicates with discoal (Kern and Schrider 2016) for each of the Constant_1, Constant_2, CEU_1, and CEU_2 datasets, with protocol identical to those for simulating sweeps under these datasets with the exception that , where is the diploid effective population size rather than . We then deployed our three linear α-DAWG models and ImaGene that were trained on settings for which to evaluate relative classification accuracy and true positive rate of hard sweeps from de novo mutations. We find that linear α-DAWG and ImaGene showcase relative classification ability consistent with prior experiments, with all approaches having high accuracy and true positive rate and with linear α-DAWG edging out ImaGene for sweep detection (Fig. 6). Though the setting of hard sweeps from de novo mutations was not explicitly included within the domain of the linear α-DAWG training distribution, it is not surprising that linear α-DAWG models still retain high accuracy and true positive rate for such scenarios, as the footprints of hard sweeps are more prominent than those of soft sweeps (Hermisson and Pennings 2017).

Fig. 6.

Performances of the three linear α-DAWG models and ImaGene applied to the Constant_1, Constant_2, CEU_1, and CEU_2 test datasets of hard sweeps from de novo mutations (see Performance on hard sweeps from de novo mutations) using the linear α-DAWG models and ImaGene trained as in Figs. 2 and 4. The top panel depicts the percentage of 1,000 hard sweep from de novo mutation test replicates classified as a sweep or neutrality, whereas the bottom panel shows true positive rate at a 5% false positive rate (FPR) to detect such sweeps. Cells within confusion matrices at the top with darker shades of gray indicate that classes in associated columns are predicted at higher percentages.

Performance Boost with Nonlinear Models

So far we have only discussed linear classifiers. However, if the decision boundary separating sweeps from neutrality is nonlinear, then a nonlinear model may be expected to yield better performance than a linear model. We therefore considered extending our logistic regression classifier to a multilayer perceptron neural network. The number of hidden layers or the number of nodes within a hidden layer of the network is related to the model's capacity, or its flexibility in the set of functions that it can model well (Goodfellow et al. 2016). Because a neural network with enough hidden layers or enough nodes within the hidden layers can approximate arbitrarily complicated functions, it is possible to overfit the model to the training data (Cybenko 1989; Hornik et al. 1989). Common solutions to this overfitting issue include limiting the network capacity (number of hidden layers and nodes) or constraining the model through regularization (Goodfellow et al. 2016).

With this in mind, we considered a neural network with one hidden layer containing eight hidden nodes so that we can still model nonlinear functions while also limiting the capacity of the network. This limited capacity also heavily reduces the number of parameters that need to be estimated in the model, thereby reducing the computational cost of fitting the model. As with our previous linear models, we also included an elastic net regularization penalty to constrain the model, and employed 5-fold cross validation to identify the optimum regularization hyperparameters. This neural network was implemented using keras with a tensorflow backend, and we fit this model to all four datasets that we considered earlier: Constant_1, Constant_2, CEU_1, and CEU_2. Additional details describing the model and its fitting to training data can be found in the section “Methods” and supplementary methods, Supplementary Material online. Optimum values for the three hyperparameters estimated on the four datasets are displayed in Table 2.
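
The architecture described above is small enough to sketch in a few lines. The block below is an illustration in plain Python rather than keras, so that it has no dependencies, and it makes several assumptions not stated in this section: a ReLU activation in the hidden layer, a sigmoid output, and an elastic net penalty of the form λ[γ‖w‖₁ + (1 − γ)‖w‖₂²] applied to weights only. All function names are hypothetical.

```python
import math

N_HIDDEN = 8  # one hidden layer with eight nodes, as described in the text


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def predict_sweep_probability(x, W1, b1, w2, b2):
    """Forward pass: features -> hidden nodes (ReLU, assumed) -> sigmoid output."""
    hidden = [max(0.0, sum(w * xi for w, xi in zip(row, x)) + b)
              for row, b in zip(W1, b1)]
    return sigmoid(sum(w * h for w, h in zip(w2, hidden)) + b2)


def elastic_net_penalty(weights, lam, gamma):
    """Assumed form: lam * (gamma * L1 norm + (1 - gamma) * squared L2 norm)."""
    l1 = sum(abs(w) for w in weights)
    l2_sq = sum(w * w for w in weights)
    return lam * (gamma * l1 + (1.0 - gamma) * l2_sq)


def n_parameters(n_features, n_hidden=N_HIDDEN):
    """Hidden-layer weights and biases plus output-node weights and bias."""
    return n_hidden * (n_features + 1) + (n_hidden + 1)
```

With this parameterization, the parameter count grows by only eight per additional input feature, which is the sense in which a single narrow hidden layer keeps capacity and fitting cost low.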

Table 2.

Optimum hyperparameters chosen through 5-fold cross validation for the elastic net eight node and one hidden layer perceptron classifier across the four datasets (Constant_1, Constant_2, CEU_1, and CEU_2) and three feature sets (wavelet, curvelet, and joint wavelet-curvelet [W-C])

| Hyperparameters | Constant_1 Wavelet | Constant_1 Curvelet | Constant_1 W+C | Constant_2 Wavelet | Constant_2 Curvelet | Constant_2 W+C | CEU_1 Wavelet | CEU_1 Curvelet | CEU_1 W+C | CEU_2 Wavelet | CEU_2 Curvelet | CEU_2 W+C |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Wavelet level | 1 | N/A | 1 | 1 | N/A | 1 | 1 | N/A | 1 | 1 | N/A | 1 |
| γ | 0.3 | 1 | 0.9 | 1 | 0.1 | 0.9 | 1 | 0.9 | 0.1 | 1 | 0.9 | 0.1 |
| λ | | | | | | | | | | | | |

Using nonlinear versions of the α-DAWG models instead of linear, we see once again that the three nonlinear α-DAWG classifiers perform similarly to each other on each dataset (supplementary fig. S8, Supplementary Material online). In contrast to the linear classifiers (Figs. 2 and 4), nonlinear α-DAWG[W-C] outperforms the other nonlinear models on only the Constant_2 and CEU_2 datasets, while lagging slightly behind α-DAWG[C] and α-DAWG[W] on the Constant_1 and CEU_1 datasets, respectively (supplementary fig. S8, Supplementary Material online). These scenarios in which the nonlinear α-DAWG[W-C] performs the best are settings in which there is greater overlap between the neutral and sweep classes. Comparing Figs. 2 and 4 with supplementary fig. S8, Supplementary Material online, we see that nonlinear α-DAWG[C] and α-DAWG[W-C] models showcase increased overall classification accuracy on the Constant_1 and CEU_2 datasets. Moreover, nonlinear α-DAWG[W] and α-DAWG[W-C] models exhibit increased overall classification accuracy on the CEU_1 dataset, with a neutral detection rate as high as 98.5%, which provides the nonlinear models with an edge over their linear counterparts with the same image decomposition method. We also observe a deviation from nonlinear models having superior performance over linear ones on the Constant_2 dataset, for which all nonlinear α-DAWG models have overall decreased accuracy. That is, no single model among the six α-DAWG models (three linear and three nonlinear) consistently performs better than the others. However, when examining the performance boost of our nonlinear models, we need to consider robustness scenarios in which the test inputs may have been generated from genomic regions with missing data, which may give rise to false detection of sweep signals at neutrally evolving regions. We discuss more about how nonlinear α-DAWG models fare when faced with technical hurdles like missing data in the section “Robustness to missing genomic segments”.

In addition to predictive ability, as with the linear model, we collected the regression coefficients from the eight hidden nodes and inverse transformed them to reconstruct the β functions at each of the hidden nodes. We then averaged these maps according to the weights with which they contribute to the output node (see section “Nonlinear α-DAWG models with elastic net penalization” of the supplementary methods, Supplementary Material online). Supplementary fig. S9, Supplementary Material online shows that the β functions for each of the nonlinear α-DAWG models display expected patterns, with increased importance for features at the center of the haplotype images, tapering off toward zero with distance from the center, and with curvelet coefficient functions typically smoother than wavelet coefficient functions. Though the β functions observed differ from each other, they each emphasize the center of input images (supplementary fig. S9, Supplementary Material online). Importantly, the β functions are not an indicator of model performance, as they simply depict areas of an image on which nonlinear α-DAWG models place emphasis. We also note that we observe markedly different β functions across nonlinear α-DAWG models in both smoothness and magnitude, which depend on the signal decomposition method applied as well as the optimal regularization hyperparameters associated with the model.

To further evaluate the performance of the nonlinear α-DAWG models, we compared them with diploS/HIC. We find that the gap in overall accuracy between nonlinear α-DAWG models and diploS/HIC narrows, with diploS/HIC outperforming the best nonlinear α-DAWG models by between and (compare fourth row of supplementary fig. S8, Supplementary Material online with supplementary fig. S7b, Supplementary Material online). The edge diploS/HIC has over nonlinear α-DAWG models is likely owing to the fact that diploS/HIC uses summary statistics, which have been chosen because they are adept at detecting sweep patterns and also at discriminating among evolutionary processes in general (Panigrahi et al. 2023). On the other hand, this is an ideal setting without some of the potential technical hurdles that might be encountered in empirical data. Thus, in the section “Robustness to missing genomic segments”, we explore how diploS/HIC and the linear and nonlinear α-DAWG models fare when challenged with artificial drops in haplotypic diversity due to missing data.

Robustness to Missing Genomic Segments

So far we have explored experiments that mimicked the biological process that would allow simulated haplotype variation to approximate real empirical haplotype variation as closely as possible. However, we assumed that this variation was known with certainty, and have not yet considered flawed data due to technical artifacts. One particular technical issue is that some regions of the genome are difficult to assay variation at, leading to chunks of missing genomic segments in downstream datasets due to the inability to access the portion of the genome or because that region was filtered as the data were found to be unreliable. The presence of such missing segments can reduce the number of SNPs and, thus, the number of distinct observed haplotypes, causing spurious drops in haplotype diversity locally in the genome that may masquerade as selective sweeps. Indeed, previous studies have found that such forms of missing data can mislead methods to erroneously detect sweeps at neutrally evolving regions (Mallick et al. 2009; Mughal and DeGiorgio 2019). It is therefore desirable that sweep classifiers are robust against this kind of confounding factor.

To evaluate the robustness of α-DAWG to missing data, we removed portions of SNPs in the test set using the identical protocol of Mughal and DeGiorgio (2019). Briefly, we removed 30% of the total number of SNPs in each simulated replicate by deleting 10 nonoverlapping chunks of contiguous SNPs, each of size equaling 3% of the total number of simulated SNPs. The starting position for each missing chunk was chosen uniformly at random from the set of SNPs, and this position was redrawn if the chunk overlapped with previously deleted chunks. Image representations of haplotype alignments were then created from these modified genomic segments by applying the same data processing steps as in our nonmissing experiments (see section “Haplotype alignment processing”). All models were trained assuming no missing genomic segments, with missing segments only in the test dataset.
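
The masking protocol above can be sketched as follows. This is an illustration, not the code of Mughal and DeGiorgio (2019): the function name is hypothetical, the chunk size is rounded to the nearest integer, and a start position whose chunk would run past the last SNP is also redrawn, which the text does not specify.

```python
import random


def remove_snp_chunks(n_snps, rng, n_chunks=10, chunk_frac=0.03):
    """Return the sorted indices of SNPs kept after deleting n_chunks
    nonoverlapping runs of contiguous SNPs, each chunk_frac of the total."""
    chunk = int(round(chunk_frac * n_snps))
    if chunk < 1:
        raise ValueError("too few SNPs for the requested chunk fraction")
    removed = set()
    placed = 0
    while placed < n_chunks:
        start = rng.randrange(n_snps)  # uniform over SNP indices
        block = range(start, start + chunk)
        # Redraw if the chunk runs off the end (assumption) or overlaps
        # a previously deleted chunk (as in the described protocol).
        if start + chunk > n_snps or any(i in removed for i in block):
            continue
        removed.update(block)
        placed += 1
    return [i for i in range(n_snps) if i not in removed]


rng = random.Random(0)
kept = remove_snp_chunks(1000, rng)  # 1,000 SNPs is an arbitrary example size
```

The returned indices would then be used to subset the columns of the haplotype alignment before the usual image-processing steps are applied.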

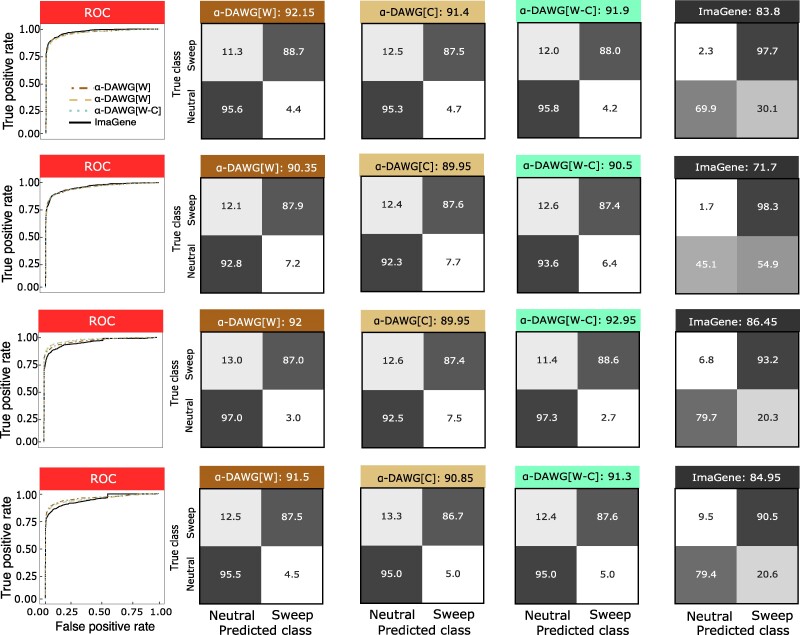

Figure 7 shows the true positive rates, accuracies, and classification rates of the three linear α-DAWG models and ImaGene applied to the four datasets in which the test data have missing segments. Comparing the results to those of Figs. 2 and 4, in all cases the three linear α-DAWG models have unbalanced classification rates, with a skew toward predicting neutrality. Though missing data ultimately reduces accuracy of the three linear α-DAWG models, the misclassifications are conservative, as it is preferable to misclassify sweeps as neutral (i.e. fail to detect the sweep event) than to falsely classify neutral regions as sweeps (i.e. detect a nonexistent process). Moreover, as evident from comparing Fig. 7 to Figs. 2 and 4, the three linear α-DAWG models have sacrificed only a small margin of overall performance. These experiments therefore suggest that the three linear α-DAWG models are robust to missing data, in that they do not falsely detect sweeps, which is what we might expect from missing genomic segments due to the loss of haplotype diversity. In contrast, missing data have a more critical impact on the performance of ImaGene, with it now exhibiting a strong skew toward classifying sweeps. Unfortunately, such skew is detrimental as a high percentage of neutral simulations are now falsely predicted as sweeps, which diverges from conservative classification rates of the three linear α-DAWG models under missing genomic regions. The diminished performance of ImaGene under missing data suggests that the alignment processing method employed by α-DAWG may help it guard against false sweep footprints due to the reduced haplotypic variation caused by missing genomic regions.

Fig. 7.

Performances of the three linear α-DAWG models and ImaGene applied to the Constant_1, Constant_2, CEU_1, and CEU_2 (from top to bottom) test datasets with missing genomic segments (see Robustness to missing genomic segments) using the linear α-DAWG models and ImaGene trained and applied in Figs. 2 and 4. Each row displays the ROC curves (first panel) as well as the confusion matrices and accuracies (in labels after colons) for the four classifiers (second to fifth panels). Cells within confusion matrices with darker shades of gray indicate that classes in associated columns are predicted at higher percentages.

We also ran identical missing data analyses for our nonlinear α-DAWG models, with supplementary fig. S10, Supplementary Material online highlighting the considerable robustness of α-DAWG to the technical artifacts generated by missing genomic segments. We observe that among our nonlinear α-DAWG models, α-DAWG[W-C] shows higher overall classification accuracy than the other two nonlinear α-DAWG models (supplementary fig. S10, Supplementary Material online). This elevated accuracy comes at an increased computational cost due to the greater number of coefficients that must be optimized in the nonlinear α-DAWG[W-C] model (supplementary fig. S11, Supplementary Material online). Specifically, the nonlinear α-DAWG[W-C] model has the highest computational demand, with a mean CPU usage of 18.75% and a mean memory overhead of about 52.14 GB per epoch, whereas the nonlinear α-DAWG[W] and α-DAWG[C] models respectively have mean CPU usages of 9.45% and 14.95% and mean memory overheads of about 49.91 GB and 49.64 GB per epoch (supplementary fig. S11, Supplementary Material online).

We further compared the performance of diploS/HIC against α-DAWG on all four datasets when the test data contain missing segments. We find that all linear α-DAWG models have better overall accuracy than diploS/HIC (compare Fig. 7 and supplementary fig. S7d, Supplementary Material online). Though diploS/HIC shows high sweep detection accuracy, it struggles to correctly detect neutrally evolving regions, as it misclassifies at minimum 30.6% of neutral replicates with missing segments as sweeps (supplementary fig. S7d, Supplementary Material online). This high misclassification rate of neutral regions as sweeps is likely due to the fact that diploS/HIC uses physical-based windows to compute summary statistics (Mughal and DeGiorgio 2019). On the other hand, misclassification of neutral regions as sweeps does not exceed 7.7% across all linear α-DAWG models on all datasets in the presence of missing segments (Fig. 7). It is also possible that diploS/HIC suffered on missing genomic segments because it is a nonlinear model that may have learned too many of the fine details within the idealized training data. Thus, we also evaluated how nonlinear α-DAWG models behave when encountering missing genomic segments in test input data. We find that nonlinear α-DAWG models misclassify at most 9.4% of neutral replicates with missing segments as sweeps across all datasets, as opposed to a misclassification rate of at least 30.6% for such neutral observations by diploS/HIC (compare supplementary fig. S10, Supplementary Material online with supplementary fig. S7d, Supplementary Material online). These results underscore the apparent disadvantage of using physical-based windows when faced with missing genomic tracts. Overall, both linear and nonlinear α-DAWG models show better resilience than diploS/HIC when confronted with scenarios involving missing genomic segments.

These missing data experiments assumed a fixed percentage of missing SNPs (30%) distributed evenly across 10 genomic chunks of roughly 3% missing SNPs each, which is likely to be less realistic than missing data distributions observed empirically. To consider a more realistic scenario, we selected missing genomic segments inspired by an empirical distribution in which the missing segments are arranged in blocks with mean CRG (Centre for Genomic Regulation) mappability and alignability score (Talkowski et al. 2011) lower than 0.9 (Mughal et al. 2020). To generate test replicates with missing segments, we randomly selected one of the 22 human autosomes, where the probability of selecting a particular autosome is proportional to its length. Once an autosome was selected, we chose a 1.1 Mb region uniformly at random, and identified blocks within this region with low mean CRG scores. If this region did not harbor blocks with low mean CRG score, we chose another starting position for a 1.1 Mb region until a region was found with blocks of low mean CRG score. We then scaled the genomic positions of this 1.1 Mb region to start at zero and stop at one to adhere to the discoal position format for simulated replicates, and subsequently removed SNPs from the test replicate that intersected positions of blocks with low mean CRG scores. As a 1.1 Mb region is likely to contain only a few (typically one) long blocks of missing segments, this protocol for generating missing data in a contiguous stretch is different from, and more realistic than, our prior protocol for removing SNPs within 10 short blocks. The mean percentage of missing SNPs under this empirically inspired protocol is about 10.87%, which is lower than the 30% of SNPs removed in our original experiment.
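The empirically inspired masking steps above can be outlined as follows. This is a hedged sketch, not the authors' implementation: the helper names (`pick_autosome`, `rescale_blocks`, `drop_masked_snps`), the toy chromosome lengths, and the block coordinates are all hypothetical, and the identification of low-CRG blocks from mappability tracks is assumed to have been done elsewhere. Clipping blocks to the region boundaries is also an assumption.

```python
import random

def pick_autosome(lengths, rng):
    """Index of one autosome, drawn with probability proportional to its length."""
    return rng.choices(range(len(lengths)), weights=lengths, k=1)[0]

def rescale_blocks(blocks, region_start, region_len=1_100_000):
    """Map (start, end) base-pair blocks onto the [0, 1] position scale
    used for simulated replicates, clipping blocks to the region."""
    scaled = []
    for b_start, b_end in blocks:
        lo = max(0.0, (b_start - region_start) / region_len)
        hi = min(1.0, (b_end - region_start) / region_len)
        if lo < hi:
            scaled.append((lo, hi))
    return scaled

def drop_masked_snps(positions, scaled_blocks):
    """Indices of SNPs whose position falls outside every masked block."""
    return [i for i, p in enumerate(positions)
            if not any(lo <= p <= hi for lo, hi in scaled_blocks)]

rng = random.Random(0)
chrom = pick_autosome([248, 242, 198], rng)           # toy lengths, not real values
blocks = rescale_blocks([(1_220_000, 1_550_000)],     # hypothetical low-CRG block (bp)
                        region_start=1_000_000)
kept = drop_masked_snps([0.05, 0.30, 0.45, 0.90], blocks)
```

Here the single block maps to roughly the interval from 0.2 to 0.5 on the unit scale, so the two SNPs at 0.30 and 0.45 are removed and the SNPs at indices 0 and 3 are retained.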

Comparing the results of our empirically inspired experiments (supplementary fig. S12, Supplementary Material online) to those without missing data (Figs. 2 and 4), in all cases the three linear α-DAWG models with missing data performed on par with nonmissing scenarios in terms of overall accuracy (from 91.30 to 96.55%). In general, when compared with settings without missing data, all methods on all datasets display a relative increase in sweep detection accuracy, with the exception of linear α-DAWG[C] applied to the Constant_2 dataset for which sweep detection accuracy is slightly decreased by 0.4%. Moreover, our empirically inspired experiments show promising results using nonlinear α-DAWG models, in which the nonlinear α-DAWG[W-C] model shows an edge over the other two nonlinear α-DAWG models with overall accuracies ranging from 92.55 to 96.40% (supplementary fig. S13, Supplementary Material online). Furthermore, when compared with our previous missing data experiments, we find the empirically inspired missing data distribution has better overall accuracy as well as fewer sweep misclassifications on all datasets for both linear (compare Fig. 7 and supplementary fig. S12, Supplementary Material online) and nonlinear (compare supplementary figs. S10 and S13, Supplementary Material online) models. This improved accuracy is owed to the fact that in the empirically inspired experiments, the mean percentage of missing SNPs is lower than that of the original missing data experiments. Overall, α-DAWG models are robust to different degrees and distributions of missing loci, and are unlikely to falsely attribute lost haplotypic diversity due to missing segments as a sweep.

Robustness of α-DAWG Models Against Class Imbalance

Class imbalance during training can potentially cause the trained machine learning models to be biased toward more accurately predicting the major class at the expense of the minor class (Libbrecht and Noble 2015), making the exploration of classifier robustness to such settings important. Moreover, a minority of the genome is expected to be evolving under positive selection (Sabeti et al. 2006), and so we expect that in many empirical applications, the sweep class would be a minor class within the test (empirical) set. We therefore set out to explore whether α-DAWG models are able to surmount class imbalance in the training and test sets using precision-recall curves. Precision is defined as the proportion of true positives among all the predictions that are positives, whereas recall is the proportion of true positives among actual positives in a dataset. Precision-recall curves provide a more transparent view of classifier performance than ROC curves under class imbalance. To evaluate the effect of training imbalance, we considered training sets of 10,000 observations, each with different combinations of observations from each class that ranged from balanced (5,000 observations per class) to severely imbalanced (1,000 observations in one class and 9,000 in the remaining class). Furthermore, to assess the impact of testing imbalance, we considered training sets of 10,000 observations per class and test sets composed of varying combinations of observations from each class that ranged from balanced (500 observations per class) to severely imbalanced (100 observations in one class and 900 in the remaining class), totaling 1,000 observations per test set.
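To make the definitions of precision and recall concrete, the sketch below computes both for the sweep class on two toy test sets of 1,000 observations. The function name `precision_recall` and the assumed error rates (a classifier that detects 90% of sweeps and mislabels 10% of neutral replicates) are hypothetical and chosen only for illustration; they are not results from this study.

```python
def precision_recall(y_true, y_pred):
    """Precision and recall for the positive (sweep = 1) class."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 1.0   # convention when nothing is predicted positive
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall

# Balanced test set: 500 sweeps, 500 neutral (hypothetical 90% TPR, 10% FPR).
balanced_true = [1] * 500 + [0] * 500
balanced_pred = [1] * 450 + [0] * 50 + [1] * 50 + [0] * 450

# Imbalanced test set: 100 sweeps, 900 neutral, same per-class error rates.
imbalanced_true = [1] * 100 + [0] * 900
imbalanced_pred = [1] * 90 + [0] * 10 + [1] * 90 + [0] * 810

balanced = precision_recall(balanced_true, balanced_pred)
imbalanced = precision_recall(imbalanced_true, imbalanced_pred)
```

Under these assumed rates, recall is 0.9 in both settings, but precision falls from 0.9 to 0.5 when sweeps make up only 10% of the test set. The true and false positive rates that define an ROC curve are unchanged between the two settings, which illustrates why precision-recall curves are the more informative summary under class imbalance.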

Our results show that despite infusing severe class imbalance during training, all linear α-DAWG models exhibit high precision for the majority of the recall range (supplementary fig. S14, Supplementary Material online). This excellent performance is further accentuated by the area under the precision-recall curve (AUPRC), which shows that all linear α-DAWG models have an AUPRC ranging from 96.23% to 99.15% (supplementary fig. S14, Supplementary Material online). Specifically, when linear α-DAWG models are trained with a balanced dataset (5,000 observations per class), the AUPRC is highest (ranging from 97.17% to 99.15%), whereas the lowest AUPRC (96.23%) is obtained when only 1,000 of the training observations were neutral (supplementary fig. S14, Supplementary Material online). These results suggest that class imbalance during training has only a minor effect on the performance of linear α-DAWG. When it comes to resilience of linear α-DAWG models under imbalanced testing sets, all linear α-DAWG models have high AUPRC, ranging from 94.03% to 99.98% (supplementary fig. S15, Supplementary Material online). Moreover, in most cases, as the proportion of sweep observations increased, the AUPRC also increased (supplementary fig. S15, Supplementary Material online). Overall, the observed robustness to training imbalance is echoed by the results of testing imbalance.

Effect of Selection Strength on α-DAWG Models

Selection strength of a beneficial mutation influences the prominence of the valley of lost diversity left by a selective sweep, with stronger sweeps contributing to a wider sweep footprint on average (Willoughby et al. 2017; Roze 2021; Sultanov and Hochwagen 2022). Moreover, this adaptive parameter also impacts the sojourn time of the sweeping haplotypes, which may lead to the erosion of sweep footprints by recombination (Hamblin and Di Rienzo 2002; Pennings and Hermisson 2017; Garud 2023). Therefore, it is important to assess the ability of α-DAWG models to detect sweeps when the range of selection coefficients (s) is varied, to better understand the effectiveness of α-DAWG models under different selection regimes. To this end, we simulated sweep test replicates with selection strength that differs from the range used in the training set, which was drawn uniformly at random on a logarithmic scale. We instead simulated five test sets each with 1,000 sweep replicates, where the selection coefficient was drawn uniformly at random within the restricted ranges of , , , , or , and with all other genetic, demographic, and adaptive parameters identical to those of the CEU_2 dataset (see the section “Effect of population size fluctuations” for details).
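As an illustration of what drawing a selection coefficient "uniformly at random on a logarithmic scale" means, the following sketch samples the base-10 exponent uniformly and then exponentiates. The function name `draw_log_uniform` and the bounds used in the example are hypothetical placeholders, not the ranges used in this study.

```python
import math
import random

def draw_log_uniform(low, high, rng):
    """Draw a value whose base-10 logarithm is uniform on
    [log10(low), log10(high)]."""
    return 10 ** rng.uniform(math.log10(low), math.log10(high))

rng = random.Random(42)
# Hypothetical bounds, chosen only to demonstrate the sampling scheme.
s_values = [draw_log_uniform(0.001, 0.1, rng) for _ in range(1000)]
```

Under this scheme, each order of magnitude within the range is equally likely to be sampled, so weak selection coefficients are represented as often as strong ones rather than being swamped by the upper end of the range.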