ABSTRACT

Dyslexia is a language‐based neurobiological and developmental learning disability marked by inaccurate and disfluent word recognition, poor decoding, and difficulty spelling. Individuals can be diagnosed with and experience symptoms of dyslexia throughout their lifespan. Screening tools such as the Dyslexia Adult Checklist allow individuals to self‐evaluate common risk factors of dyslexia prior to or in lieu of obtaining costly and time‐consuming psychoeducational assessments. Although widely available online, the Dyslexia Adult Checklist has yet to be validated. The purpose of this study was to validate this Checklist in a sample of adults with and without dyslexia using both univariate and multivariate statistical approaches. We hypothesised that the Dyslexia Adult Checklist would accurately distinguish between individuals with a self‐reported diagnosis of dyslexia (n = 200) and a control group (n = 200), as measured by total scores on the screening tool. Results from our sample found the Dyslexia Adult Checklist to be reliable (Cronbach's α = 0.86) and valid (sensitivity = 76%–91.5%, specificity = 80%–88%). Because a cut‐off score of 40 yields a higher sensitivity rate and negative predictive value than the originally proposed cut‐off score of 45, we recommend that researchers and clinicians use a cut‐off score of 40 to indicate possible mild to severe symptoms of dyslexia when using the Dyslexia Adult Checklist.

Keywords: Adult Dyslexia Checklist, checklist, dyslexia, screening tool, validation

1. Introduction

Dyslexia is a language‐based neurobiological and developmental learning disability marked by slow and laboured reading, reading comprehension difficulties, spelling difficulties, problems with word retrieval, poor working memory, difficulties with language acquisition, a deficit in letter‐sound correspondence, and the flipping of letters (American Psychiatric Association 2013; Lyon, Shaywitz, and Shaywitz 2003; Wagner et al. 2020). As a specific learning disability in reading, individuals with dyslexia often experience difficulties with daily tasks including word retrieval, recalling addresses, telephone numbers, the days of the week, months of the year, foreign names, and places, as well as early speech production and later articulation difficulties (Share 2021). These difficulties are not thought to arise from a single deficit, but from multiple contributing factors of biological origin, neurological and cognitive differences, and environmental influences (Erbeli and Wagner 2023; International Dyslexia Association 2021; Shaywitz and Shaywitz 2005; Siegel and Smythe 2005). Dyslexia is often diagnosed in childhood but persists throughout the lifespan (Lyon, Shaywitz, and Shaywitz 2003; McNulty 2003; Swanson and Hsieh 2009).

While 15% to 20% of the general population display some symptoms of dyslexia, not everyone with a diagnosis of dyslexia experiences the same constellation or severity of symptoms (American Psychiatric Association 2013; Snowling et al. 2012). This inconsistency in symptomology reflects the lack of a definitive causal theory for dyslexia. This gap in our understanding of the causes of dyslexia magnifies the inconsistencies when operationally defining and evaluating dyslexia (Pennington 2006; Siegel and Smythe 2005; Snowling et al. 2012; Wagner et al. 2020). Our theoretical understanding of dyslexia influences our ability to consistently and accurately detect, assess, and diagnose individuals with dyslexia. While an overview of causal theories of dyslexia is beyond the scope of this paper, it is critical that researchers and practitioners alike keep these theories in mind when discussing assessment and screening tools, because these tools are derived from theories such as the phonological deficit theory, double deficit theory and magnocellular theory of dyslexia (Bosse, Tainturier, and Valdois 2007; Everatt and Denston 2020; Saksida et al. 2016; Stein 2001, 2018). Further complicating this issue is the high rate of comorbidity with other developmental deficits and neurodevelopmental disorders, such as Attention Deficit/Hyperactivity Disorder (ADHD; a neurodevelopmental disorder categorised by inattention and/or a hyperactive/impulsive presentation; Dahl et al. 2020; Gooch et al. 2013), dyscalculia (a learning disability affecting a person's ability to understand number‐based information and mathematics; Gliksman and Henik 2018), and dysgraphia (a learning disability affecting a person's written expression and/or fine motor skills; Nicolson and Fawcett 2011).

The identification of dyslexia allows for timely interventions and specialised support, which can significantly improve outcomes for individuals with dyslexia, as a dyslexia diagnosis is often required to access accommodations and support in educational settings from elementary/primary school to university. Additionally, time of diagnosis can lead to secondary effects on perceived academic and general competence in individuals with dyslexia who acquire a diagnosis later in life, with individuals receiving an earlier diagnosis having an overall better understanding of their diagnosis (Battistutta, Commissaire, and Steffgen 2018; Brunswick and Bargary 2022). A diagnostic assessment for dyslexia is typically costly and includes the use of standardised test batteries, such as measures of academic ability, behavioural observations, self‐report measures, as well as parent and teacher reports (Andresen and Monsrud 2022; Everatt and Denston 2020). However, diagnostic assessments for dyslexia place heavy demands on school systems owing to a lack of resources (e.g., access to school psychologists) and long wait times, and they are costly if conducted privately. In addition, the requirements for diagnosis are often governed by regulatory bodies based on the country, state, or province where the individual resides (Everatt and Denston 2020; Yates and Taub 2003).

Screening tools are generally more cost‐effective compared to formal assessments and can be used as a precursor to assessment. They are designed to be time efficient, allowing for a quick screening of a large number of individuals. They can be administered in various settings, such as schools or community centres, by educators or healthcare professionals, without the need for specialised training or equipment. This makes them particularly useful for quickly and cost‐efficiently screening populations or helping identify individuals who may require further assessment and evaluation by professionals to confirm a dyslexia diagnosis (Trevethan 2017). Additionally, screening tools can and have previously been used to provide students with access to accommodations when assessments are pending (Everatt and Denston 2020). Implementing a screening tool for dyslexia allows for systematic data collection and research on the prevalence, characteristics, and outcomes associated with dyslexia. Screening tools can also provide valuable insights into the effectiveness of various interventions (Leloup et al. 2021; Nukari et al. 2020), help in monitoring progress over time (Bjornsdottir et al. 2014; Huang et al. 2020), and support multigenerational investigations of dyslexia and further self‐understanding (Snowling et al. 2012). While the use of screening tools in the early identification of dyslexia is declining in favour of more functional and procedural changes with the implementation of a Response to Intervention process in school systems (Coyne et al. 2018; Sharp et al. 2016; Snowling 2013), validated self‐administered tools to identify adults with dyslexia at the individual and research levels continue to be lacking.

One of today's most widely used screening tools for adults is the Dyslexia Adult Screening Test (DAST; Nicolson and Fawcett 1997, 2011). The DAST is intended to assess adults in higher education. This battery of tests consists of 11 subtasks of which nine are domain‐specific to dyslexia. Some examples of these subtasks include a verbal fluency test and a rapid naming task, which assesses the ability to rapidly retrieve the name of visually presented stimuli. The DAST can be administered in 30 min by a qualified professional. Nicolson and Fawcett (1997) found the DAST to be accurate, with a sensitivity rate of 94% and a false positive rate of 0%. However, this study was conducted with a sample size of 165 participants, with only 15 individuals identified as having dyslexia based on the Adult Dyslexia Index (Nicolson and Fawcett 1997). A follow‐up study was conducted with a total sample size of 238 Canadian post‐secondary students of which 117 participants had a previous diagnosis of dyslexia (Harrison and Nichols 2005). Harrison and Nichols (2005) found the DAST to be less accurate, correctly categorising only 74% of students with dyslexia as having dyslexia, and incorrectly classifying 16% of control participants as benefiting from further assessment. Given that screening tools are intended to reduce wait times and alleviate demands on the system, a high false positive rate could in fact have negative consequences for the system. Additionally, a false negative rate of 26% can have detrimental effects on the careers and quality of life of individuals who could otherwise benefit from further assessment. See also the Dyslexia Screening Test‐Junior/Secondary, also created by Nicolson and Fawcett (2004), a screening tool that provides a profile of strengths and weaknesses to guide in‐school support for students aged 6 years to 11 years and 5 months, and 11 years and 6 months to 16 years and 5 months, respectively.

Similarly to the DAST, the Bangor Dyslexia Test (BDT) was designed to be administered by a qualified professional (Miles 1983). The BDT can evaluate dyslexia‐like characteristics in individuals above the age of seven. The BDT consists of a battery of 10 subtests, mainly comprised of working memory tasks rather than reading and spelling. The BDT was designed based on observational evidence from clinical investigations of memory in individuals with dyslexia. The BDT was validated in a sample of 233 university students, of which 193 students self‐identified as having dyslexia. The results indicated that the BDT has a sensitivity of 96.4% and a specificity of 82.5% (Reynolds and Caravolas 2016). While highly sensitive and specific, the theoretical underpinning of this screening tool, with its focus on working memory deficits, is no longer consistent with the growing literature on the phonological and other deficits of dyslexia (Erbeli and Wagner 2023; Everatt and Denston 2020; Franzen, Stark, and Johnson 2021; Shaywitz and Shaywitz 2005; Siegel and Smythe 2005).

The Yale Children's Inventory (YCI) is an 11‐scale parent‐based rating scale, derived from a definition of learning disabilities suggesting (1) disturbance in attentional processes, (2) disruptive behavioural activities, (3) cognitive skills deficits, or some combination of the three, based on the Diagnostic and Statistical Manual of Mental Disorders, Third Edition (DSM‐III; Shaywitz et al. 1986). Based on their sample of 260 parent ratings of typically developing, gifted and learning‐disabled children, Shaywitz et al. (1986) found the YCI to have an internal consistency that ranged from 0.72 to 0.93 and test/retest correlations of 0.61 to 0.89. However, the YCI's emphasis on attentional deficits is similarly outdated and no longer in line with current theoretical beliefs about dyslexia (Erbeli and Wagner 2023; Everatt and Denston 2020; Franzen, Stark, and Johnson 2021; Shaywitz and Shaywitz 2005; Siegel and Smythe 2005).

The Adult Reading Questionnaire (ARQ) is a 15‐item self‐report questionnaire that assesses literacy, language, and organisation and was designed to assess dyslexia in adulthood (Snowling et al. 2012). This questionnaire was first validated on a sample of parents of children participating in a larger longitudinal research initiative. The authors of this questionnaire used some questions derived from the Dyslexia Adult Checklist such as “do you find it difficult to read aloud?,” “do you ever confuse the names of things?,” and “do you confuse left and right?” (Snowling et al. 2012). The result of their factor structure was a four‐factor solution with the 11 items of interest making up the Adult Reading Questionnaire loading onto two factors, termed word reading and word finding. The convergent and divergent validity of individual ARQ items were investigated based on nonverbal ability and vocabulary subtests on the Wechsler Abbreviated Scale of Intelligence as well as reading and spelling skills. Self‐reported dyslexia was used for group classification, and sensitivity and specificity of the ARQ as a screening tool for predicting self‐reported dyslexia were not investigated. Despite numerous existing screening tools outlined above, there is no true “gold standard” screening tool for adults with dyslexia and many of the available tools are required to be administered by qualified professionals. Smythe (2010) explains that the ideal screening tool for identifying risk factors for further investigation and later diagnosis, or research purposes would be free of charge, easily accessible, self‐administered and psychometrically valid and reliable. One screening tool that has the potential to meet these criteria for dyslexia is the Dyslexia Adult Checklist; however, it has not been evaluated to date.

Created by Smythe and Everatt (2001), the Dyslexia Adult Checklist consists of 15 items with various item weightings. It screens for deficits in phonology, word retrieval, and orthography, among others. This checklist examines literacy both directly and indirectly. Questions on the checklist ask responders about perceived deficits, except for item 10 which asks about finding creative solutions to problems (see Majeed, Hartanto, and Tan 2021 for meta‐analysis on dyslexia creativity). For example, the question “How easy do you find it to sound out words such as el‐e‐phant?” examines phonological manipulation difficulties; “Do you confuse visually similar words such as cat and cot?” suggests orthographic confusion; and “Do you confuse the names of objects, for example table for chair?” relates to semantic difficulties. Short‐term memory problems are investigated by asking, “Do you get confused when given several instructions at once?” and “Do you make mistakes when taking down telephone messages?”. Other questions in the checklist such as “When writing, do you find it difficult to organise thoughts on paper?” are more complex, whereby the underlying deficit in this question straddles deficits seen in individuals with both dyslexia and dysgraphia (Smythe and Siegel 2005). The breadth of questions in this checklist reflects an adherence to a multiple‐deficit approach to dyslexia (Erbeli and Wagner 2023; Siegel and Smythe 2005). It broadens the definition of dyslexia by considering multiple literary and non‐literary sources of deficits, leading to a questionnaire structure that may indicate a one or two‐factor solution. This suggests that the correlation between items on the questionnaire may indicate either one overarching construct or two underlying constructs. 
The checklist is available on the British Dyslexia Association website, which states that it contains questions that help predict dyslexia (https://www.bdadyslexia.org.uk/dyslexia/how-is-dyslexia-diagnosed/dyslexia-checklists). However, publicly available information on the structure, development, validity, and reliability of this screening tool is limited.

The purpose of this study is to investigate the psychometric properties of the Dyslexia Adult Checklist in an adult sample, by using both univariate reliability and validity measures, as well as multivariate statistical approaches to examine construct validity. This paper will investigate the underlying factor structure of the Dyslexia Adult Checklist. In addition, we will answer the question of whether the Dyslexia Adult Checklist can accurately categorise adults who self‐identify as having dyslexia. We hypothesise that individuals with dyslexia will score higher on the checklist compared to typical readers and that the checklist will demonstrate both sensitivity and specificity. Additionally, we theorise a single‐factor solution, whereby the checklist measures the single construct of dyslexia. Given the theoretical underpinnings of the phonological and other non‐literary deficit theories of dyslexia, and the high number of items in the checklist that inquire about phonological, orthographic, and semantic deficits, analyses were carried out for both one and two‐factor solutions with the second‐factor representing visual–spatial and short‐term memory deficits.

2. Method

All aspects of this research were conducted in compliance with the regulations outlined by The Tri‐Council Policy Statement: Ethical Conduct for Research Involving Humans, as well as The Official Policies of the first author's affiliated University, including the Policy for the Ethical Review of Research Involving Human Participants. Informed consent was obtained from the participants after an explanation of the study was given to them. The research protocol was approved by the University's Human Rights Ethics Research Committee (certificates #30009781, #30003975, #30009701, and #30010817).

2.1. Participants

A sample of 400 adults was recruited for both in‐person and online participation. Targeted advertisements seeking English‐speaking adults with dyslexia between the ages of 18 and 49 were directed at students in accessibility centres on university campuses across Canada and the United States, as well as at advocacy groups. Control participants were mainly recruited from the Department of Psychology Participant Pool at Concordia University in Montreal. The dyslexia group comprised 200 individuals who self‐identified as having dyslexia. The remaining 200 individuals did not identify as having dyslexia and therefore made up the control group. The data were divided into two samples, one for exploratory factor analysis (EFA; n = 200) and one for confirmatory factor analysis (CFA; n = 200), each with 100 control participants and 100 participants with dyslexia (see Table 1 for demographic information).

TABLE 1.

Demographic information of groups.

| | Total sample (n = 400) | | EFA (n = 200) | | CFA (n = 200) | |
|---|---|---|---|---|---|---|
| | Control (CON) | Dyslexia (DYS) | Control (CON) | Dyslexia (DYS) | Control (CON) | Dyslexia (DYS) |
| n | 200 | 200 | 100 | 100 | 100 | 100 |
| Gender identity | | | | | | |
| Male | 46 | 69 | 15 | 32 | 31 | 37 |
| Female | 152 | 125 | 84 | 68 | 68 | 57 |
| Other | 1 | 5 | 1 | 0 | 0 | 5 |
| Prefer not to answer | 1 | 1 | 0 | 0 | 1 | 1 |
| Mean age | 23.36 | 25.29 | 23.89 | 23.74 | 22.82 | 26.84 |
| SD age | 4.88 | 7.35 | 5.37 | 5.30 | 4.31 | 8.70 |
| Minimum age | 17 | 18 | 17 | 18 | 18 | 18 |
| Maximum age | 43 | 58 | 43 | 46 | 40 | 58 |

Note: The age range of participants was between 17 and 58, above that of our initial recruitment parameters of 18 and 49. Additional measures were taken to ensure that participants above the initial cut‐off did not skew results.

An a priori power analysis, conducted using G*Power 3.1 (Erdfelder, Faul, and Buchner 1996), tested the difference between two independent means. Using a one‐tailed independent samples t‐test, a minimal effect size of interest (d = 0.80) based on Stark, Franzen, and Johnson (2022), an alpha of 0.05, and a minimal desired power of 0.95, a minimum sample size of 35 participants per group will detect a true effect size greater than 0.794 (Faul et al. 2007).
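As a rough cross-check of this sample-size calculation, the sketch below applies the textbook normal approximation for a one-tailed comparison of two independent means, n per group ≈ 2(z₁₋α + z₁₋β)² / d². It is not the G*Power routine, which works with the noncentral t distribution and can therefore return a slightly larger value; the function name is illustrative.

```python
import math
from statistics import NormalDist


def n_per_group_normal_approx(d: float, alpha: float, power: float) -> int:
    """Approximate n per group for a one-tailed two-sample test of means."""
    z_alpha = NormalDist().inv_cdf(1 - alpha)  # one-tailed critical value
    z_beta = NormalDist().inv_cdf(power)       # quantile for the desired power
    return math.ceil(2 * (z_alpha + z_beta) ** 2 / d ** 2)


# Inputs taken from the power analysis described above.
n = n_per_group_normal_approx(d=0.80, alpha=0.05, power=0.95)
print(n)
```

With these inputs the approximation gives 34 participants per group, one fewer than the 35 reported from the exact calculation.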

2.2. Measures

2.2.1. Dyslexia Adult Checklist

The Dyslexia Adult Checklist (Smythe and Everatt 2001) comprises 15 questions used to assess aspects of literacy, language and organisation. Items require the respondent to rate symptoms of dyslexia on a 4‐point Likert‐type scale (rarely, occasionally, often, most of the time; or easy, challenging, difficult, very difficult), and certain questions are assigned a higher weight in the total score. The higher the total score, the higher the risk of having dyslexia. According to Smythe and Everatt (2001), a total score of 45 or more indicates that the respondent is showing signs consistent with mild dyslexia, and a score above 60 indicates signs consistent with moderate or severe dyslexia. On the checklist, the minimum and maximum possible scores are 22 and 88, respectively.
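The weighted scoring described above can be sketched as follows. The actual item weights are not given in this paper, so the weights below are hypothetical placeholders chosen only so that 15 items coded 1 to 4 reproduce the stated score range of 22 to 88; the function names and the wording of the score bands are likewise illustrative.

```python
from typing import Sequence

# HYPOTHETICAL item weights: the text states only that some items are weighted
# more heavily and that totals range from 22 to 88. These placeholders sum to 22.
ITEM_WEIGHTS = [1, 1, 1, 1, 1, 1, 1, 1, 2, 2, 2, 2, 2, 2, 2]


def total_score(responses: Sequence[int]) -> int:
    """Weighted total for 15 responses, each coded 1 (lowest) to 4 (highest)."""
    if len(responses) != len(ITEM_WEIGHTS):
        raise ValueError("expected one response per checklist item")
    if any(r not in (1, 2, 3, 4) for r in responses):
        raise ValueError("responses must be coded 1 to 4")
    return sum(w * r for w, r in zip(ITEM_WEIGHTS, responses))


def interpret(score: int, mild_cutoff: int = 45, severe_cutoff: int = 60) -> str:
    """Band a total score using the originally proposed thresholds."""
    if score > severe_cutoff:
        return "signs consistent with moderate or severe dyslexia"
    if score >= mild_cutoff:
        return "signs consistent with mild dyslexia"
    return "below the screening cut-off"


print(total_score([1] * 15), total_score([4] * 15))
print(interpret(47))
```

Passing a different `mild_cutoff` lets the same function be reused when comparing alternative thresholds.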

2.2.2. Apparatus

Data were collected using the online data collection platform within Qualtrics. All data were anonymous and coded, with no link between the participants' identities and their data. Data collection through in‐person recruitment was also anonymous and coded.

2.2.3. Procedure

Data were collected as part of three in‐person and online studies investigating perceptual aspects of dyslexia. Participants' age and gender identities were collected and used in exploratory analysis. Participants completed the questionnaire using a pen and paper or online, respectively.

2.3. Data Analysis

2.3.1. Univariate Analysis

Psychometric properties of the screening tool and other statistical analyses were examined using XLSTAT (Addinsoft 2021), a data analysis add‐on for Microsoft Excel (Microsoft Corporation 2021, Version 2102), as well as Jeffreys's Amazing Statistics Program (JASP: JASP Team 2020, Version 0.14) statistical software. After screening for any missing values and assessing normality, we obtained descriptive statistics, including means and standard deviations, for total scores on the Dyslexia Adult Checklist. All data were analysed using Bayes factors and effect size measures. This approach allowed us to identify the strength of evidence for both the null and research hypotheses, alongside traditional significance testing.

2.3.2. Multivariate Analysis

All multivariate analyses were conducted using MPlus 8.6 with a Maximum Likelihood with robust standard errors (MLR) estimation and unit variance identification to set the scale of the latent factor(s). First, a 1 to 4‐factor Exploratory Factor Analysis (EFA) was conducted on the data collected from the first 200 participants who completed the Dyslexia Adult Checklist to determine the best‐fitting factor structure. Following the investigation of the factor structure for the different EFAs, we selected a final model to replicate using a Confirmatory Factor Analysis (CFA) and an Exploratory Structural Equation Model (ESEM) in another sample of 200 participants who completed the Dyslexia Adult Checklist as a method to confirm the factor structure found with the results of the EFA. There was no missing data in either of the samples. For the ESEM model, a priori cross‐loadings were “targeted” to approach zero via the rotational procedure thus providing a confirmatory approach to ESEM specifications (Morin, Myers, and Lee 2020). Target loadings were freely estimated for their respective factors.

To avoid reliance on the chi‐squared test of exact fit, which is overly sensitive to minor misspecifications, we used sample‐size independent goodness‐of‐fit indices. We used typical interpretation guidelines, which suggest that comparative fit index (CFI) and Tucker‐Lewis index (TLI) values should be greater than 0.90 and 0.95, supporting adequate and excellent model fit, respectively. Comparable guidelines for the Root Mean Square Error of Approximation (RMSEA) suggest relying on values smaller than 0.08 and 0.06 to support adequate and excellent model fit, respectively (Hu and Bentler 1999; Marsh, Hau, and Grayson 2005). In addition to these fit indices, we also considered the statistical adequacy and theoretical conformity of the different solutions by examining factor loadings, cross‐loadings, uniquenesses, and latent correlations. The same guidelines for the evaluation of model fit were used to evaluate model fit for the CFA and the ESEM.
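The interpretation guidelines above amount to a pair of threshold rules, which the following minimal sketch encodes. The function names and the "poor" label for values that miss the adequate threshold are assumptions made for illustration, and the example values are not results from this study.

```python
def rate_incremental_index(value: float) -> str:
    """Rate a CFI or TLI value: > 0.95 excellent, > 0.90 adequate."""
    if value > 0.95:
        return "excellent"
    if value > 0.90:
        return "adequate"
    return "poor"  # label assumed; the guidelines name only adequate/excellent


def rate_rmsea(value: float) -> str:
    """Rate an RMSEA value: < 0.06 excellent, < 0.08 adequate."""
    if value < 0.06:
        return "excellent"
    if value < 0.08:
        return "adequate"
    return "poor"  # label assumed, as above


# Illustrative values only; these are not fit indices from the study.
example = {"CFI": 0.93, "TLI": 0.91, "RMSEA": 0.05}
ratings = {
    "CFI": rate_incremental_index(example["CFI"]),
    "TLI": rate_incremental_index(example["TLI"]),
    "RMSEA": rate_rmsea(example["RMSEA"]),
}
print(ratings)
```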

Data and additional tables and figures are available in Data S1.

3. Results

3.1. Univariate Statistics

3.1.1. Data Integrity

Our sample used for the statistical analysis comprised 400 participants: 200 individuals with dyslexia (Mean age = 25.29, SD = 7.35) and 200 controls (Mean age = 23.36, SD = 4.88), and upon inspection, no missing values were found. Individuals in the dyslexia group self‐identified as having dyslexia. Given the wide range and severity of symptoms seen in dyslexia, no univariate outliers were removed from the analysis. See Table 1 for demographic information.

In the control group, total scores on the screening tool were non‐normally distributed, with skewness of 1.36 (SE = 0.17) and kurtosis of 1.89 (SE = 0.34). In the dyslexia group, normality was met, with skewness of 0.32 (SE = 0.17) and kurtosis of −0.45 (SE = 0.34). However, a Shapiro–Wilk test of normality, performed as an assumption check for the independent samples t‐test, suggested a deviation from normality in both groups (W dyslexia = 0.91, p = 0.008; W control = 0.89, p < 0.001). Additionally, Levene's test indicated that the assumption of equal variances was violated (F(1) = 24.47, p < 0.001). Given these violations, we opted to employ Welch's independent samples t‐test and Glass's delta effect size for comparing group differences (Lakens 2013). Bayesian and Frequentist statistics were conducted for all analyses. Bayes factors were computed to interpret the relative strength of the null hypothesis as compared to the alternative hypothesis (Dienes 2011; Wetzels et al. 2011), using the JASP default Cauchy prior width of 0.7, as other values only have minimal impact on the results (Gronau, Ly, and Wagenmakers 2020).

3.1.2. Group Comparison

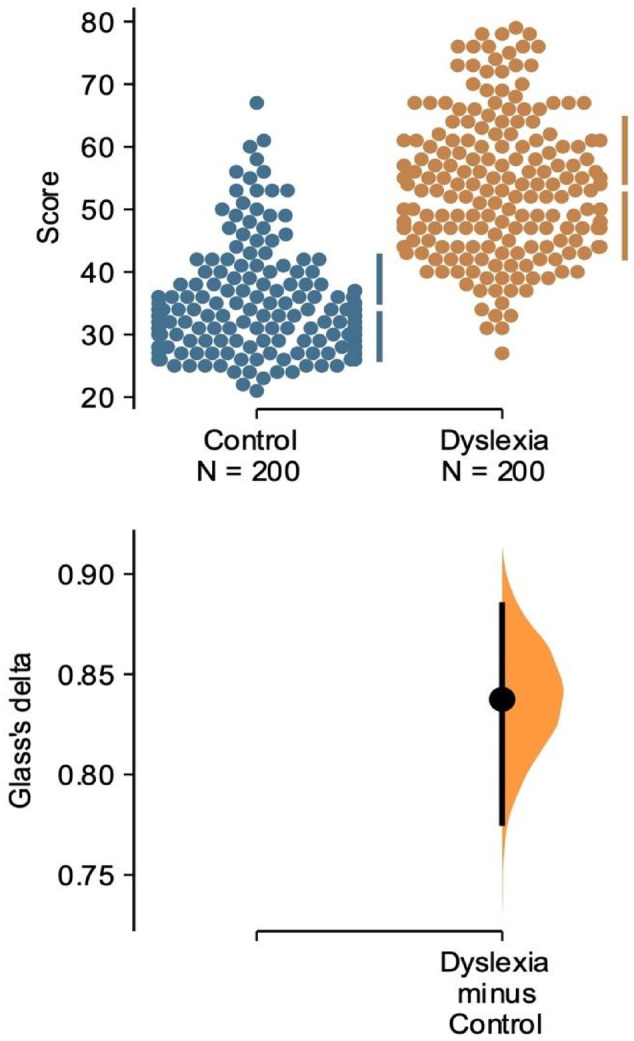

To compare mean total scores on the Dyslexia Adult Checklist between groups, we conducted a Welch's independent samples t‐test and a Bayesian independent samples t‐test. Both analyses were performed using a directional hypothesis such that total scores in the dyslexia group were hypothesised to be higher than total scores in the control group. Individuals with dyslexia obtained higher scores on the Dyslexia Adult Checklist (M = 53.39, SD = 11.11) compared to control participants (M = 34.27, SD = 8.23; see Figure 1). This difference was statistically significant, t(366.82) = −19.58, p < 0.001, Glass's delta = −1.72, 95% CI [−∞, −1.50], BF₁₀ = 5.85 × 10⁵⁶. The Bayes factor indicates decisive evidence for the alternative hypothesis that individuals with dyslexia obtained higher scores on the screening tool than those without dyslexia.

FIGURE 1.

Mean total score on the Dyslexia Adult Checklist between groups. The Glass's delta between Control and Dyslexia is shown in the above Gardner‐Altman estimation plot. Both groups are plotted on the left axes; the mean difference is plotted on a floating axis on the right as a bootstrap sampling distribution. The mean difference is depicted as a dot; the 95% confidence interval is indicated by the ends of the vertical error bar.
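For readers who want to trace the group comparison, the sketch below recomputes Welch's t statistic, the Welch–Satterthwaite degrees of freedom, and Glass's delta from the rounded group means and standard deviations reported in the text. It is not the JASP analysis itself. Which group's standard deviation serves as the standardiser is not stated in the text; using the dyslexia group's SD is an assumption made here because it reproduces the reported effect size.

```python
import math


def welch_t(mean_a, sd_a, n_a, mean_b, sd_b, n_b):
    """Return (t, df) for Welch's unequal-variances t-test from summary stats."""
    var_a, var_b = sd_a ** 2 / n_a, sd_b ** 2 / n_b
    t = (mean_a - mean_b) / math.sqrt(var_a + var_b)
    # Welch-Satterthwaite approximation to the degrees of freedom.
    df = (var_a + var_b) ** 2 / (var_a ** 2 / (n_a - 1) + var_b ** 2 / (n_b - 1))
    return t, df


def glass_delta(mean_a, mean_b, sd_reference):
    """Mean difference standardised by a single reference group's SD."""
    return (mean_a - mean_b) / sd_reference


# Control minus dyslexia, using the rounded values reported in the text.
t, df = welch_t(34.27, 8.23, 200, 53.39, 11.11, 200)
# ASSUMPTION: the dyslexia group's SD is used as the standardiser.
delta = glass_delta(34.27, 53.39, sd_reference=11.11)
print(round(t, 2), round(df, 2), round(delta, 2))
```

With these rounded inputs, t comes out near −19.56 with roughly 366.9 degrees of freedom, close to the reported t(366.82) = −19.58, and the standardised difference is about −1.72. The small discrepancies are consistent with rounding in the summary statistics.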

3.1.3. Reliability

The purpose of this paper is to examine the validity of the Dyslexia Adult Checklist. One requirement for statistical validity is reliability. Since data were collected only once from each participant, a single‐test reliability analysis, as well as a Bayesian single‐test reliability analysis were conducted to ensure that the screening tool consistently reflects the construct it is measuring. For the purposes of this study, reliability was defined by the coefficient Cronbach's alpha (α) and an average interitem correlation. Cronbach's α can be used to quantify the internal consistency of the items within a screening tool (Streiner 2003). It is used under the assumption that multiple items within the scale measure the same underlying construct. Cronbach's α is affected by inconsistent responses at the item level and test length. According to both Frequentist and Bayesian scale reliability statistics, our scores collected from the Dyslexia Adult Checklist were found to be consistent and reliable (15 items; Cronbach's α = 0.86, 95% CI [0.84, 0.87]).

The average inter‐item correlation represents how well the items in the screening tool measure the construct that is being evaluated. Using our sample of individuals with and without dyslexia, we found that the Dyslexia Adult Checklist had an average inter‐item correlation of 0.35 (95% CI [0.32, 0.38]) when both Frequentist and Bayesian scale reliability statistics were conducted.
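To make the internal-consistency statistic concrete, the sketch below computes Cronbach's α from a respondents-by-items table using the standard formula α = k/(k − 1) × (1 − Σ item variances / variance of total scores). The toy data are invented for illustration and are not drawn from the study; the function name is likewise illustrative.

```python
from statistics import variance


def cronbach_alpha(scores):
    """Cronbach's alpha for a respondents-by-items table of numeric scores."""
    k = len(scores[0])  # number of items
    item_variances = [variance(column) for column in zip(*scores)]
    total_variance = variance([sum(row) for row in scores])
    return k / (k - 1) * (1 - sum(item_variances) / total_variance)


# Toy data (three respondents, two items); not data from the study.
toy = [[1, 1], [2, 3], [3, 2]]
alpha = cronbach_alpha(toy)
print(round(alpha, 3))
```

Because the formula depends on k, adding items tends to raise α even when the average inter-item correlation is unchanged, which is why both statistics are reported.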

3.1.4. Predictive Validity

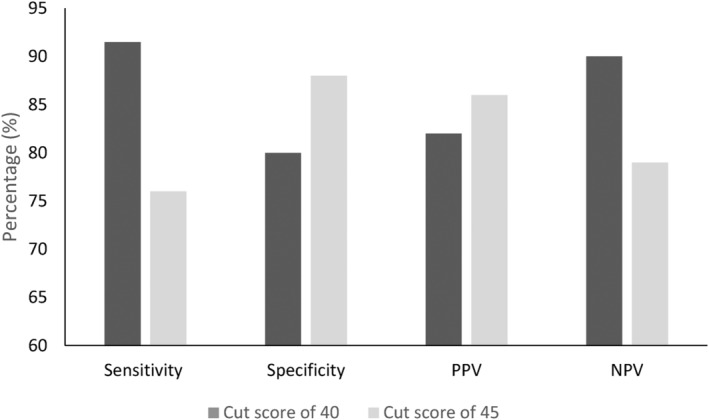

The predictive validity of the Dyslexia Adult Checklist was investigated using a fourfold table and measured by calculating the sensitivity, specificity, and predictive values of scores (see Table 2 and Figure 2). Using the originally proposed cut‐off‐score of 45 (Smythe and Everatt 2001), we found our sample to have a sensitivity rate of 76%, and therefore a false negative rate of 24%. Additionally, scores from our sample reflected a specificity rate of 88% and a false positive rate was found to be 12%. Predictive values were likewise calculated yielding a positive predictive value of 86% and a negative predictive value of 79% (Figure 2).

TABLE 2.

Fourfold tables with cut‐off‐score of 45 and 40.

| Cut‐off score | Checklist total | Self‐identified dyslexia (n = 200) | Self‐identified control (n = 200) |
|---|---|---|---|
| Cut‐off score of 45 | Above 45 | 152 | 24 |
| | Below 45 | 48 | 176 |
| Cut‐off score of 40 | Above 40 | 183 | 40 |
| | Below 40 | 17 | 160 |

Note: N = 400.

FIGURE 2.

Sensitivity, specificity, and predictive values by cut‐off score. Comparison of sensitivity, specificity, and predictive values for the Dyslexia Adult Checklist across the cut‐off scores used. N = 400 for each cut‐off score. NPV = negative predictive value; PPV = positive predictive value.

A secondary analysis was conducted using a more conservative cut‐off‐score of 40 (see Table 2). This analysis revealed that our sample exhibited an increased sensitivity rate of 91.5% and a specificity rate of 80%. Additionally, we found a positive predictive value of 82% and a negative predictive value of 90% (Figure 2). Hence, the adoption of this more conservative cut‐off score resulted in improved sensitivity.
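The sensitivity, specificity, and predictive values for both cut‐off scores follow directly from the counts in Table 2. A minimal sketch of that arithmetic is given below; the function and variable names are illustrative.

```python
def screening_metrics(tp, fn, fp, tn):
    """Sensitivity, specificity, PPV and NPV from a fourfold table."""
    return {
        "sensitivity": tp / (tp + fn),  # dyslexia group scoring above cut-off
        "specificity": tn / (tn + fp),  # control group scoring below cut-off
        "ppv": tp / (tp + fp),          # above cut-off who report dyslexia
        "npv": tn / (tn + fn),          # below cut-off who do not
    }


# Counts taken from Table 2.
cutoff_45 = screening_metrics(tp=152, fn=48, fp=24, tn=176)
cutoff_40 = screening_metrics(tp=183, fn=17, fp=40, tn=160)

for label, metrics in (("45", cutoff_45), ("40", cutoff_40)):
    print(label, {name: round(100 * value, 1) for name, value in metrics.items()})
```

Lowering the cut‐off from 45 to 40 moves 31 respondents in the dyslexia group and 16 in the control group above the threshold, which is why sensitivity and negative predictive value rise while specificity and positive predictive value fall.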

3.2. Multivariate Statistics

3.2.1. Data Integrity

3.2.1.1. Multivariate Outliers

To assess for multivariate outliers, the Mahalanobis Distance statistic was investigated. The chi‐square critical value table was examined to find the critical value at the 0.01 alpha level with 10 degrees of freedom (for the 10 variables), which yields a Mahalanobis Distance of D² = 23.209 (Raykov and Marcoulides 2008). With this method, 84 multivariate outliers were identified. Additionally, two scatterplots plotting Cook's Distance (x‐axis) against the Mahalanobis Distance (y‐axis) were created to visually inspect for severe outliers, one for the sample used in the EFA and one for the sample used in the CFA. In the scatterplot for the first sample (i.e., EFA sample), three severe outliers were identified (i.e., Participant ID numbers 1, 16, and 193; see Figure A in Data S1). In the scatterplot for the second sample (i.e., CFA sample), three severe outliers were identified (i.e., Participant ID numbers 5, 57, and 137; see Figure B in Data S1). All analyses were conducted with and without outliers to determine whether their presence influenced the results. As the results did not change once the outliers were removed, we retained the model including the data of all participants.

3.2.1.2. Factorability of the Matrix

Two Pearson correlation analyses were conducted to investigate potential issues of high covariance across both samples. For the first sample, the largest correlation observed was between question 1 (“Do you confuse visually similar words such as cat and cot?”) and question 6 (“Do you re‐read paragraphs to understand them?”; r = 0.59). Additionally, out of the 106 bivariate correlations, 65 were above 0.3 (see Table A in Data S1). The Kaiser–Meyer–Olkin measure of sampling adequacy was conducted and was found to be good (KMO = 0.90).

For the second sample, we likewise found the largest correlation between question 1 and question 6 (r = 0.59). Out of the 106 bivariate correlations, 78 were above 0.3 (see Table B in Data S1). The Kaiser‐Meyer‐Olkin measure of sampling adequacy was conducted and was found to be great (KMO = 0.92).

3.2.2. Exploratory Factor Analyses

An EFA was conducted using Maximum Likelihood Robust (MLR) and a Geomin (0.5) oblique rotation, with a maximum of 1000 iterations using the MPlus 8.6 software. A parallel analysis was conducted and plotted as a first method to establish the final number of factors (see Figure C in Data S1). Visual observation of this matrix led us to determine that both the one‐factor and two‐factor solutions were viable options, as additional factors showed no improvement over randomness. The number of solutions paired with the number of factors fell in line with our predictions. The model fit indices for the one to four‐factor solutions are reported in Table C in Data S1.

In the one‐factor solution, model fit indices were adequate in terms of CFI and TLI, and excellent in terms of RMSEA. All factor loadings were positive and statistically significant (M |λ| = 0.582), with a few items [i.e., items 5 (0.232), 10 (0.298), and 14 (0.338)] displaying weaker loadings (see Table 3 for a full list of factor loadings for the 1‐ and 2‐factor EFAs). Moving to the two‐factor solution, model fit was adequate for CFI and TLI (albeit slightly better than for the one‐factor solution), and excellent in terms of RMSEA. The Geomin factor correlations indicate a statistically significant correlation (r = 0.545) between Factor 1 and Factor 2. Based on our a priori theoretical operationalization of the two‐factor structure, the target factor loadings for factor 1 (M |λ| = 0.455) and factor 2 (M |λ| = 0.541) are all statistically significant, but generally lower than for the 1‐factor solution. Moreover, we observed multiple cross‐loadings (e.g., items 9, 13, and 15) that indicate rather strong overlap between the factors. Theoretically speaking, these cross‐loading items are no more likely to define both factors than other items in the questionnaire, while all items may be suited to represent a 1‐factor structure based on our 1‐factor EFA results. It therefore remains unclear whether the one‐ or two‐factor solution is best, necessitating a full investigation of the factor structure in our second sample. As such, we conducted 1‐ and 2‐factor CFAs using our second sample and compared the 2‐factor CFA model to a 2‐factor ESEM model with target rotation based on the a priori factor structure to investigate whether the same cross‐loadings emerge (i.e., displaying stability in the items defining both factors).

TABLE 3.

EFA one‐ and two‐factor solutions Geomin rotated loadings.

| Items | One‐factor solution: Factor loadings (1) | One‐factor solution: Uniquenesses | Two‐factor solution: Factor loadings (1) | Two‐factor solution: Uniquenesses | Two‐factor solution: Factor loadings (2) | Two‐factor solution: Uniquenesses |
|---|---|---|---|---|---|---|
| Item 1 | 0.754* (0.04) | 0.43 | 0.269 (0.185) | 0.437 | 0.569* (1.65) | 0.437 |
| Item 2 | 0.749* (0.04) | 0.44 | 0.294* (0.086) | 0.438 | 0.548* (0.081) | 0.438 |
| Item 3 | 0.564* (0.051) | 0.68 | 0.538* (0.192) | 0.615 | 0.133 (−188) | 0.615 |
| Item 4 | 0.612* (0.054) | 0.63 | 0.893* (0.251) | 0.292 | −0.102 (0.15) | 0.292 |
| Item 5 | 0.232* (0.073) | 0.95 | 0.208 (0.134) | 0.938 | 0.065 (0.137) | 0.938 |
| Item 6 | 0.643* (0.049) | 0.59 | 0.134 (0.102) | 0.562 | 0.579* (0.098) | 0.562 |
| Item 7 | 0.676* (0.041) | 0.54 | 0.044 (0.105) | 0.472 | 0.702* (0.099) | 0.472 |
| Item 8 | 0.777* (0.034) | 0.4 | 0.242 (0.125) | 0.389 | 0.623* (0.121) | 0.389 |
| Item 9 | 0.649* (0.044) | 0.58 | 0.338* (0.098) | 0.573 | 0.405* (0.102) | 0.573 |
| Item 10 | 0.298* (0.066) | 0.91 | 0.051 (0.117) | 0.908 | 0.273* (0.115) | 0.908 |
| Item 11 | 0.475* (0.056) | 0.78 | 0.408* (0.19) | 0.746 | 0.147 (0.177) | 0.746 |
| Item 12 | 0.675* (0.045) | 0.55 | 0.079 (0.096) | 0.494 | 0.665* (0.085) | 0.494 |
| Item 13 | 0.615* (0.052) | 0.62 | 0.327* (0.129) | 0.625 | 0.37* (0.135) | 0.625 |
| Item 14 | 0.338* (0.082) | 0.89 | 0.39* (0.112) | 0.838 | 0.021 (0.113) | 0.838 |
| Item 15 | 0.661* (0.047) | 0.56 | 0.411* (0.138) | 0.555 | 0.347* (0.14) | 0.555 |

* p < 0.05.

3.2.3. CFA & ESEM

The results of the CFA indicated an adequate model fit in terms of CFI and TLI and excellent model fit in terms of RMSEA. All factor loadings for this solution are statistically significant (M |λ| = 0.541) and only one weak loading (i.e., item 10: |λ| = 0.190) was present. In fact, item 10 also loaded weakly in the 1‐factor EFA for the first sample, indicating that this item may not be as relevant (at least statistically speaking) in terms of defining dyslexia. Interestingly, the 2‐factor solution displayed almost identical fit to the 1‐factor solution, which is likely due to the standardised correlation approaching one (i.e., a 2‐factor CFA model with a standardised correlation equal to one between both factors is mathematically equivalent to a 1‐factor model). The strong factor correlation for the 2‐factor solution is likely due to the presence of substantive cross‐loadings (as observed in the first sample), making it clear that ESEM should be favoured over CFA, should a 2‐factor solution be retained (Morin, Myers, and Lee 2020). All factor loadings for the 1‐ and 2‐factor CFA models are available in Table 4.
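The equivalence noted above can be illustrated numerically. In a standardised CFA without cross‐loadings, the model‐implied correlation between an item on factor 1 and an item on factor 2 is the product of their loadings and the factor correlation. The sketch below assumes this standard result, borrows a few two‐factor loadings from Table 4 purely as example inputs, and uses a hypothetical factor correlation of 0.9 for contrast; the function names are illustrative.

```python
def implied_cross_correlations(loadings_f1, loadings_f2, factor_corr):
    """Implied correlations between items on different factors (two-factor CFA)."""
    return [[a * b * factor_corr for b in loadings_f2] for a in loadings_f1]


def implied_one_factor_correlations(loadings_a, loadings_b):
    """Implied correlations when all items load on a single factor."""
    return [[a * b for b in loadings_b] for a in loadings_a]


# Example inputs taken from the two-factor columns of Table 4.
factor1 = [0.664, 0.543, 0.500]  # AC3, AC4, AC5
factor2 = [0.727, 0.688, 0.641]  # AC1, AC2, AC6

at_one = implied_cross_correlations(factor1, factor2, factor_corr=1.0)
single = implied_one_factor_correlations(factor1, factor2)
models_equivalent = at_one == single

# Hypothetical factor correlation below one, for contrast only.
below_one = implied_cross_correlations(factor1, factor2, factor_corr=0.9)
largest_gap = max(
    abs(x - y)
    for row_a, row_b in zip(below_one, single)
    for x, y in zip(row_a, row_b)
)

print(models_equivalent, round(largest_gap, 3))
```

When the factor correlation equals one, the two models imply the same correlations, so their fit cannot differ; the further the correlation falls below one, the more the implied cross‐factor correlations diverge.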

TABLE 4.

Factor loadings for one and two‐factor CFA solutions.

| Items (one‐factor solution) | Standardised | Uniquenesses | Items (two‐factor solution) | Standardised | Uniquenesses |
|---|---|---|---|---|---|
| Factor 1 | | | Factor 1 | | |
| AC1 | 0.725* | 0.474 | AC3 | 0.664* | 0.559 |
| AC2 | 0.678* | 0.54 | AC4 | 0.543* | 0.705 |
| AC3 | 0.655* | 0.571 | AC5 | 0.5* | 0.75 |
| AC4 | 0.536* | 0.712 | AC9 | 0.684* | 0.533 |
| AC5 | 0.493* | 0.757 | AC11 | 0.588* | 0.654 |
| AC6 | 0.632* | 0.601 | AC14 | 0.536* | 0.713 |
| AC7 | 0.709* | 0.497 | AC15 | 0.717* | 0.486 |
| AC8 | 0.671* | 0.549 | Factor 2 | | |
| AC9 | 0.683* | 0.533 | AC1 | 0.727* | 0.472 |
| AC10 | 0.16* | 0.974 | AC2 | 0.688* | 0.526 |
| AC11 | 0.577* | 0.667 | AC6 | 0.641* | 0.589 |
| AC12 | 0.715* | 0.488 | AC7 | 0.716* | 0.488 |
| AC13 | 0.555* | 0.692 | AC8 | 0.672* | 0.549 |
| AC14 | 0.519* | 0.731 | AC10 | 0.165* | 0.973 |
| AC15 | 0.705* | 0.503 | AC12 | 0.72* | 0.482 |
| | | | AC13 | 0.554* | 0.693 |

* p < 0.05.

The results of the two‐factor ESEM model indicate excellent fit in terms of CFI, TLI, and RMSEA as well as a substantial decrease in the standardised correlation between factors 1 and 2 (r = 0.626). However, once again several cross‐loadings were observed, and these differed from those in the 2‐factor EFA conducted on the first sample (i.e., items 1, 8, 13, and 15). Paired with a standardised correlation between the two factors greater than 0.6, this indicates substantive overlap between the two factors in the ESEM model. Moreover, six of the 15 target loadings were not statistically significant in the ESEM model, revealing that the a priori 2‐factor structure specified is not supported. Standardised factor loadings and uniquenesses for this solution are available in Table F in Data S1.

3.2.4. Model Selection

To recap, in the first sample both the EFA and CFA displayed adequate to excellent model fit and both had adequate target loadings with a moderate correlation observed between the two factors in the 2‐factor EFA. In the second sample, the 1‐factor solution was again adequate in terms of fit and standardised factor loadings, with item 10 systematically displaying a weaker association with the latent constructs across both samples and all models. The two‐factor CFA model was ruled out due to the substantive degree of overlap between the two factors. The 2‐factor ESEM displayed the greatest amount of fit and an acceptable standardised factor correlation, but the factor structure was not supported based on the strength of the target and cross‐loadings. Indeed, composite reliability coefficients for the two‐factor solutions, based on the a priori factor structure specified, are low for factor 1 in both samples (sample 1: ω = 0.695; sample 2: ω = 0.690) and low for factor 2 in the second sample (sample 1: ω = 0.812; sample 2: ω = 0.505). Conversely, composite reliability coefficients for the 1‐factor solution are excellent in both samples (ω: McDonald 1970; sample 1 ω = 0.889, sample 2 ω = 0.897). Based on the above results it appears that a 1‐factor CFA better captures the underlying factor structure of the scale by doing so in a more stable manner compared to a two, or more‐factor solution. These results lend further support in favour of viewing the Dyslexia Adult Checklist as unidimensional.
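As an illustration of how a composite reliability coefficient of this kind can be obtained, the sketch below applies the commonly used omega formula for a single factor with standardised loadings: the squared sum of loadings divided by that quantity plus the summed uniquenesses. This formula is an assumption, since the exact computation used by the software is not described here. The inputs are the one‐factor CFA values from Table 4 (second sample).

```python
def composite_reliability(loadings, uniquenesses):
    """McDonald's omega for one factor from standardised loadings and uniquenesses."""
    if len(loadings) != len(uniquenesses):
        raise ValueError("one uniqueness is needed per loading")
    common_variance = sum(loadings) ** 2
    return common_variance / (common_variance + sum(uniquenesses))


# One-factor CFA solution, items AC1 to AC15, as listed in Table 4.
one_factor_loadings = [
    0.725, 0.678, 0.655, 0.536, 0.493, 0.632, 0.709, 0.671,
    0.683, 0.160, 0.577, 0.715, 0.555, 0.519, 0.705,
]
one_factor_uniquenesses = [
    0.474, 0.540, 0.571, 0.712, 0.757, 0.601, 0.497, 0.549,
    0.533, 0.974, 0.667, 0.488, 0.692, 0.731, 0.503,
]

omega = composite_reliability(one_factor_loadings, one_factor_uniquenesses)
print(round(omega, 3))  # 0.897
```

Under these assumptions the result agrees with the coefficient reported for the 1‐factor solution in the second sample (ω = 0.897).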

3.2.5. Factor Scores Comparison With Total Scores

We compared the individual factor scores per participant obtained in the one‐factor CFA to the total score per participant obtained on the checklist. This was done to determine if the factor scores predicted total scores on the Dyslexia Adult Checklist. We ran a Pearson correlation and found a large and statistically significant positive association between these two scores (r = 0.988, p < 0.001, 95% CI [0.984, 0.991]), whereby the larger the factor score, the larger the score on the checklist. Looking at specific scores as examples, a participant who received a total score of 25 on the Dyslexia Adult Checklist had a factor score of −1.5. Further, a participant who received a score of 40 on the Dyslexia Adult Checklist received a factor score of −0.1. Finally, a participant who received a score of 78 on the Dyslexia Adult Checklist received a factor score of 2.4. For the full list of factor scores and manual scores, please see Table G in the Data S1. The consistency between the factor scores and the manual scores lends further support in favour of viewing the Dyslexia Adult Checklist as unidimensional. In addition, the factor scores obtained from the CFA can subsequently be used in the same fashion as the scores obtained by the checklist to determine if an individual has dyslexia where, with replication, a cut‐off score or range of scores can be established for diagnostic purposes. This statistical approach helps to avoid the need for arbitrarily defined cut‐off scores.

4. Discussion

The purpose of this study was to investigate whether the Dyslexia Adult Checklist, a widely accessible screening tool, is a valid and reliable measure for identifying adults with dyslexia. As hypothesised, individuals with a self‐reported diagnosis of dyslexia obtained significantly higher total scores on the Dyslexia Adult Checklist than individuals without dyslexia. The screening tool was additionally found to be both reliable at the item level (Cronbach's α = 0.86) and accurate (sensitivity = 76%–91.5%, specificity = 80%–88%) in its screening for individuals with dyslexia. Lastly, a one‐factor structure was found, reflecting a single construct of dyslexia.

The Dyslexia Adult Checklist scores are based on the weighted total sum of item responses with the official scoring guideline specifying scores below 45 as indicating “probably non‐dyslexic,” scores between 45 and 60 representing “showing signs consistent with mild dyslexia” and a score above 60 being indicative of “signs consistent with moderate or severe dyslexia.” The simplicity of the scoring system with the provided interpretation is appealing since any respondent can score and understand the results of the screening tool. In our first analysis, we investigated group‐level differences using an independent samples t‐test. The result of this analysis revealed group‐level differences between our sample of individuals with and without dyslexia using complementary null hypothesis significance testing and Bayesian statistics. Given that the checklist was designed with the intention of identifying individuals with dyslexia based on a high overall score, our findings are consistent with our prior hypothesis and the overall objective of the screening tool.

When identifying and classifying individuals of a certain group based on an individual's reported characteristics, such as dyslexia, it is paramount that the tool is both reliable and valid. Reliability is a requirement for statistical validity; a tool cannot be valid if it is not first reliable. In this study, the reliability of our scores was assessed by calculating Cronbach's alpha (α) and the average inter‐item correlation. We found that approximately 14% of the variance in our scores (i.e., 1 − α) was due to the combined effect of test length and inconsistent responding at the item level, as measured by Cronbach's alpha. Additionally, the items that make up the screening tool are correlated, indicating that they measure the same underlying construct; however, they do not repeatedly measure the same characteristics of dyslexia, as indicated by the low‐to‐mid range inter‐item correlation. We can therefore conclude that the Dyslexia Adult Checklist is a reliable measure of dyslexia at the item level. That said, to our knowledge, this is the first study to report any reliability coefficients for scores on the Dyslexia Adult Checklist, and so replication is needed to further strengthen and comment on the reliability of this screening tool. In comparison to other existing screening tools, we found the Dyslexia Adult Checklist to have strong reliability (α = 0.86) compared to the BDT (α = 0.72; Reynolds and Caravolas 2016) and the Adult Reading Questionnaire (α ≥ 0.60; Protopapa and Smith‐Spark 2022; Snowling et al. 2012). Future studies should investigate the reliability of this tool over time using test–retest methods, given that dyslexia is a developmental disorder that persists throughout the lifespan (McNulty 2003).

With reliability assumed, we examined the validity of the scores using sensitivity, specificity, and predictive values. Scores were calculated using the cut‐off score of 45 proposed by Smythe and Everatt (2001), and a more lenient cut‐off score of 40. Sensitivity refers to the ability of the screening tool to correctly classify individuals from the dyslexia group as showing signs of dyslexia, based on a cut‐off score of 45 (Parikh et al. 2008; Smythe and Everatt 2001; Trevethan 2017). Specificity refers to the ability of the screening tool to correctly classify individuals from the control group as not showing signs of dyslexia, by obtaining a score below 45 (Parikh et al. 2008; Smythe and Everatt 2001; Trevethan 2017). Our findings indicate a sensitivity rate of 76% and a specificity rate of 88% with a cut‐off score of 45. By comparison, when using a cut‐off score of 40 to indicate signs of dyslexia, we found a sensitivity rate of 91.5% and a specificity rate of 80%. In addition, we calculated positive and negative predictive values as they consider the entire sample, both individuals with and without self‐reported dyslexia. At the individual level, should the respondent score above 45, indicating mild to severe symptoms of dyslexia, the probability of that individual having dyslexia is 86% (82% when using a cut‐off score of 40). However, should the respondent score below 45, the probability of that individual not having dyslexia is 79% (90% with a cut‐off score of 40). As expected, depending on the cut‐off score used, we see a trade‐off between sensitivity, specificity, and our predictive values (Figure 2). With a cut‐off score of 45, we have a higher specificity rate and a lower sensitivity, and the opposite pattern emerged with a cut‐off score of 40. With a cut‐off score of 40, the high negative predictive value implies minimal false negatives, which is desirable in a screening tool such as this. However, this comes at a cost: a lower positive predictive value, which is indicative of more false positives. Given these results, we suggest using the lower cut‐off score of 40 when using the Dyslexia Adult Checklist, although researchers and practitioners may choose the cut‐off score they desire based on how they wish to use the screening tool.
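The trade‐off described above can be reproduced from a two‐by‐two classification table. The sketch below is illustrative only: the cell counts are not reported in this section and are reconstructed here by applying the reported sensitivity and specificity rates to groups of 200 participants each, which is an assumption, and the class and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ScreeningMetrics:
    sensitivity: float
    specificity: float
    ppv: float  # positive predictive value
    npv: float  # negative predictive value


def metrics_from_counts(tp: int, fn: int, tn: int, fp: int) -> ScreeningMetrics:
    """Compute screening accuracy metrics from the four cells of a 2x2 table."""
    return ScreeningMetrics(
        sensitivity=tp / (tp + fn),
        specificity=tn / (tn + fp),
        ppv=tp / (tp + fp),
        npv=tn / (tn + fn),
    )


# Reconstructed counts (assumption): reported rates applied to 200 per group.
cutoff_45 = metrics_from_counts(tp=152, fn=48, tn=176, fp=24)
cutoff_40 = metrics_from_counts(tp=183, fn=17, tn=160, fp=40)

for label, m in (("cut-off 45", cutoff_45), ("cut-off 40", cutoff_40)):
    print(
        f"{label}: sensitivity={m.sensitivity:.3f}, specificity={m.specificity:.2f}, "
        f"PPV={m.ppv:.2f}, NPV={m.npv:.2f}"
    )
```

With these reconstructed counts, the predictive values round to the figures given in the text (86% and 79% at a cut‐off of 45; 82% and 90% at a cut‐off of 40). Lowering the cut‐off moves cases from the false‐negative cell to the true‐positive cell, at the price of more false positives.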

In comparison to other existing screening tools, Nicolson and Fawcett's (1997) original research study reported a higher sensitivity (94%) and lower false positive rate (0%) compared to our findings from the Dyslexia Adult Checklist using both a cut‐off‐score of 45 (76% and 12%, respectively) and 40 (91.5% and 20%, respectively). This is however in contrast to replication findings by Harrison and Nichols (2005), who report a sensitivity rate of only 74%, a specificity rate of 84%, and a false positive rate of 16%. Distinctively, Singleton, Horne, and Simmons (2009) developed a computerised screening tool using adapted phonological processing, lexical access, and working memory tasks. Their test battery can be completed in 15 min and for the most part, is self‐administered. Using a sample of 70 individuals with dyslexia and 69 controls, the results of their discriminant function analysis based on the composite scale score was a sensitivity measure of 83.6% and a specificity of 88.1%. However, the aforementioned screening tools are either required to be professionally administered or do not rely on self‐reporting. Therefore, we are unable to speak to the convergent validity.

We consider our results to demonstrate improved psychometric validity of the Dyslexia Adult Checklist when using a cut‐off score of 40 versus 45 for indicating signs of dyslexia. Given the nature of screening tools, high sensitivity and negative predictive value are paramount in ensuring the usefulness of the tool. This is in stark contrast to assessment tools, which require a more nuanced balance between sensitivity, specificity, and predictive values (Grimes and Schulz 2002; see Singleton, Horne, and Simmons 2009). Within the field of educational screening, it is recommended that screening tools have false positive and false negative rates that are less than 25% (Singleton, Horne, and Simmons 2009; also see Singleton 1997). Additionally, Glascoe and Byrne (1993) argue that specificity rates should be at least 90% and sensitivity at least 80% for a test to be regarded as valid. Based on these criteria, the Dyslexia Adult Checklist would be considered valid using a cut‐off score of 40. With a cut‐off score of 45, however, the checklist does not meet these criteria, as its sensitivity rate was found to be only 76%. Given the higher sensitivity rate and negative predictive value when using a more inclusive cut‐off score of 40, we recommend that researchers and clinicians use this cut‐off score to indicate possible mild to severe symptoms of dyslexia when using the Dyslexia Adult Checklist. To our knowledge, this is the first study to assess the validity of the Dyslexia Adult Checklist. For this reason, we are unable to directly compare our findings to previous research using this screening tool.

The results of the EFA allowed us to confirm the possibility of a one‐ or two‐factor solution for the Dyslexia Adult Checklist. Upon completion of the CFA, it became evident that a one‐factor solution yielded the best fit for the data. This conclusion was drawn based on the consistent factor loadings observed from the EFA to the CFA results, as well as the substantial cross‐loading between factors in the two‐factor solutions. Dyslexia is an umbrella term encompassing various difficulties experienced by individuals with dyslexia. Consequently, a checklist featuring a single‐factor solution is deemed appropriate, where the individual items assess a broad spectrum of deficits. These findings allow us to interpret that the Dyslexia Adult Checklist effectively captures one underlying construct of dyslexia and, thus, measures the construct it intends to evaluate. This finding stands in contrast to the findings by Snowling et al. (2012) in their development of the Adult Reading Questionnaire. As stated in the introduction, the Adult Reading Questionnaire is loosely derived from questions from the Dyslexia Adult Checklist by Smythe and Everatt (2001). Snowling et al. found their questions on dyslexia to load onto two distinct factors, word reading and word finding. Despite their findings, we hypothesised and found a one‐factor solution to best fit our data and the Dyslexia Adult Checklist, as it adheres to and asks questions based on a multi‐deficit framework, not only focusing on literacy outcomes such as word reading and finding.

The present study's design allowed for a sensible way to assess the reliability and validity of the Dyslexia Adult Checklist. Nevertheless, a potential limitation of this study is the generalizability of the results. The results of this study may not hold over other populations or settings, as the sample used in our study was mainly recruited from university campuses (Shadish, Cook, and Campbell 2002). Participants in the control and dyslexia groups were recruited both online and in person, as part of multiple research studies, some of which required participants to be educated at the university level. We hypothesised, however, that individuals taking part in higher‐level education would rate their symptoms lower in severity compared to individuals who did not pursue higher education. This would make these individuals more difficult to identify and differentiate from individuals without dyslexia, as their responses may fall closer to the cut‐off score. However, this is not what we found. We further hypothesise that, should this study be replicated with a more representative sample, the results would be consistent with ours. On the other hand, participants making up our control group were university students and therefore may not represent the average reader. Further affecting the generalizability of our findings, the sample used in our study was over‐represented by female participants, which is to be expected when sampling from a post‐secondary institution (Statistics Canada 2022). It should be noted that the individuals with dyslexia who make up our experimental group self‐identified as having dyslexia and were not required to provide proof of diagnosis to participate in our research study. Additionally, a detailed examination of the difficulties of our dyslexia sample was not undertaken, such as an investigation into comorbidities, perceived severity of dyslexia and family diagnosis, which could impact the generalizability of our findings. Considering that this study appears to be the first to assess the psychometric properties of the Dyslexia Adult Checklist, future studies should aim to replicate this study on a more diverse and representative sample to allow for greater generalizability.

The Dyslexia Adult Checklist is a self‐report screening tool that can be completed and scored without the presence of a qualified professional. Although we may speculate that self‐report screening tools are not as accurate as professionally administered measures, there are many advantages to using self‐report screening tools. For instance, individuals may easily identify key traits that they possess and, in turn, pay close attention to how they respond to questions (Robins, Fraley, and Krueger 2010). As discussed above, screening tools are not diagnostic and should only be used to signal individuals for further assessment, should they wish to pursue additional testing. The self‐report nature of the Dyslexia Adult Checklist can therefore act as a precursor for further professionally administered screening tools and later diagnostic testing. Additionally, the Dyslexia Adult Checklist can act as a quick and easy screening tool for researchers investigating adults with dyslexia or conducting reading and literacy‐related research in the general population.

As cited throughout the literature, it appears that the Dyslexia Adult Checklist originally appeared in the BDA Handbook in 2001, and again in the BDA Employment and Dyslexia Handbook in 2009, both written by Smythe. We were unable to access copies of these documents from the British Dyslexia Association. Smythe and Siegel (2005) state that the questionnaire was developed based on research, that it is indeed predictive and has been validated in English. However, based on our investigation, there is no further mention of this research study or its results. Validating such tools before they are widely distributed is not only best practice but necessary, and public access to the results is paramount for transparent research and replication.

Based on the findings from our sample of individuals with and without dyslexia, we recommend a lower cut‐off score of 40 on the Dyslexia Adult Checklist when self‐administering this screening tool. The currently recommended cut‐off score of 45 produces a higher false negative rate, whereby a higher number of individuals who could possibly have dyslexia may be misclassified as not having severe enough symptoms of dyslexia. This may be problematic, as these individuals may not get access to further assessment or may believe that they do not need it.

Currently, the use of this tool is twofold; for self‐exploration and understanding (British Dyslexia Association n.d.), as well as for research purposes in group categorisation (Franzen, Stark, and Johnson 2021; Stark, Franzen, and Johnson 2022; Vatansever et al. 2019). With the current research, we aim to prompt other researchers in the field to report the psychometric properties of screening and assessment tools used in their own research. We eagerly await the replication of our findings and the reporting of psychometric properties from across research samples. Due to the average inter‐item correlation being 0.35, future researchers may wish to investigate other types of reliability and validity such as test–retest reliability through longitudinal research and convergent and divergent validity, as well as conduct an item analysis with the aim of condensing the questionnaire by removing highly correlated items. In addition, research should investigate contributing factors affecting scores on the Dyslexia Adult Checklist, such as the perceived severity of dyslexia and perceived support from others. The Dyslexia Adult Checklist makes note of an additional cut‐off‐score of 60, distinguishing between mild and severe signs which may warrant further investigation. Lastly, researchers may wish to develop new self‐administered questionnaires investigating multiple underlying factors of dyslexia more explicitly, such as the Adult Reading Questionnaire, as a predictive screening tool for adults with dyslexia (Snowling et al. 2012). Such a questionnaire can be used to identify profiles of deficits in individuals with dyslexia with the aim of developing more targeted interventions.

As diagnostic tests are generally time‐consuming and expensive, screening tools offer an efficient means of self‐evaluating common symptoms of dyslexia prior to seeking a clinical diagnosis, for better self‐understanding, and for use in research. The Dyslexia Adult Checklist is a widely accessible screening tool that we found to be both valid and reliable, though we recommend using a lower cut‐off score of 40 rather than the 45 proposed by the original authors of the Dyslexia Adult Checklist (Smythe and Everatt 2001).

Author Contributions

All authors contributed to the study's conception and design. Material preparation and data collection were performed by Zoey Stark, Vanessa Soldano and Léon Franzen. Data analysis was performed by Zoey Stark and Karine Elalouf. All authors contributed to the writing of the manuscript and approved its final version.

Ethics Statement

The present study has received approval by the Concordia University Human Ethics Research Committee (UHREC Certificate: 30003975; 30009781; 3000970; 30010817).

Conflicts of Interest

The authors declare no conflicts of interest.

Supporting information

Data S1:

Acknowledgements

We would like to thank our participants and research volunteers for their support with this study. We would also like to extend our thanks to the Concordia University Access Center for Students with Disabilities for their help in recruitment.

Funding: This work was supported by Social Sciences and Humanities Research Council (grant 767‐2022‐1603) and Fonds de recherche du Québec—Société et culture (FRQSC; grant 282985) given to Zoey Stark.

Data Availability Statement

The data that support the findings of this study are openly available from the first author's OSF repository (DOI: 10.17605/OSF.IO/JX42M).

References

- Addinsoft . 2021. “XLSTAT Statistical and Data Analysis Solution.” [Computer Software].

- American Psychiatric Association . 2013. Diagnostic and Statistical Manual of Mental Disorders. 5th ed. Washington, DC: American Psychiatric Association. [Google Scholar]

- Andresen, A. , and Monsrud M. B.. 2022. “Assessment of Dyslexia–Why, When, and With What?” Scandinavian Journal of Educational Research 66, no. 6: 1063–1075. 10.1080/00313831.2021.1958373. [DOI] [Google Scholar]

- Battistutta, L. , Commissaire E., and Steffgen G.. 2018. “Impact of the Time of Diagnosis on the Perceived Competence of Adolescents With Dyslexia.” Learning Disability Quarterly 41, no. 3: 170–178. 10.1177/07319487187621. [DOI] [Google Scholar]

- Bjornsdottir, G. , Halldorsson J. G., Steinberg S., et al. 2014. “The Adult Reading History Questionnaire (ARHQ) in Icelandic: Psychometric Properties and Factor Structure.” Journal of Learning Disabilities 47, no. 6: 532–542. 10.1177/0022219413478662. [DOI] [PubMed] [Google Scholar]

- Bosse, M. L. , Tainturier M. J., and Valdois S.. 2007. “Developmental Dyslexia: The Visual Attention Span Deficit Hypothesis.” Cognition 104, no. 2: 198–230. 10.1016/j.cognition.2006.05.009. [DOI] [PubMed] [Google Scholar]

- British Dyslexia Association . n.d. “How Is Dyslexia Diagnosed?” https://www.bdadyslexia.org.uk/dyslexia/how‐is‐dyslexia‐diagnosed/dyslexia‐checklists.

- Brunswick, N. , and Bargary S.. 2022. “Self‐Concept, Creativity and Developmental Dyslexia in University Students: Effects of Age of Assessment.” Dyslexia 28, no. 3: 293–308. 10.1002/dys.1722. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Coyne, M. D. , Oldham A., Dougherty S. M., et al. 2018. “Evaluating the Effects of Supplemental Reading Intervention Within an MTSS or RTI Reading Reform Initiative Using a Regression Discontinuity Design.” Exceptional Children 84, no. 4: 350–367. 10.1177/0014402918772791. [DOI] [Google Scholar]

- Dahl, V. , Ramakrishnan A., Spears A. P., et al. 2020. “Psychoeducation Interventions for Parents and Teachers of Children and Adolescents With ADHD: A Systematic Review of the Literature.” Journal of Developmental and Physical Disabilities 32: 257–292. 10.1007/s10882-019-09691-3. [DOI] [Google Scholar]

- Dienes, Z. 2011. “Bayesian Versus Orthodox Statistics: Which Side Are You On?” Perspectives on Psychological Science 6, no. 3: 274–290. 10.1177/1745691611406920. [DOI] [PubMed] [Google Scholar]

- Erbeli, F. , and Wagner R. K.. 2023. “Advancements in Identification and Risk Prediction of Reading Disabilities.” Scientific Studies of Reading 27, no. 1: 1–4. 10.1080/10888438.2022.2146508. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Erdfelder, E. , Faul F., and Buchner A.. 1996. “GPOWER: A General Power Analysis Program.” Behavior Research Methods, Instruments, & Computers 28, no. 1: 1–11. 10.3758/bf03203630. [DOI] [Google Scholar]

- Everatt, J. , and Denston A.. 2020. Dyslexia: Theories, Assessment and Support. London: Routledge. [Google Scholar]

- Faul, F. , Erdfelder E., Lang A.‐G., and Buchner A.. 2007. “G*Power 3:A Flexible Statistical Power Analysis Program for the Social, Behavioral, and Biomedical Sciences.” Behavior Research Methods 39, no. 2: 175–191. 10.3758/bf03193146. [DOI] [PubMed] [Google Scholar]

- Franzen, L., Stark Z., and Johnson A. P. 2021. “Individuals With Dyslexia Use a Different Visual Sampling Strategy to Read Text.” Scientific Reports 11, no. 1: 6449. 10.1038/s41598-021-84945-9.

- Glascoe, F. P., and Byrne K. E. 1993. “The Accuracy of Three Developmental Screening Tests.” Journal of Early Intervention 17: 368–379.

- Gliksman, Y., and Henik A. 2018. “Conceptual Size in Developmental Dyscalculia and Dyslexia.” Neuropsychology 32, no. 2: 190–198. 10.1037/neu0000432.

- Gooch, D., Hulme C., Nash H. M., and Snowling M. J. 2013. “Comorbidities in Preschool Children at Family Risk of Dyslexia.” Journal of Child Psychology and Psychiatry 55, no. 3: 237–246. 10.1111/jcpp.12139.

- Grimes, D. A., and Schulz K. F. 2002. “Uses and Abuses of Screening Tests.” Lancet 359, no. 9309: 881–884. 10.1016/S0140-6736(02)07948-5.

- Gronau, Q. F., Ly A., and Wagenmakers E.‐J. 2020. “Informed Bayesian T‐Tests.” American Statistician 74: 137–143.

- Harrison, A. G., and Nichols E. 2005. “A Validation of the Dyslexia Adult Screening Test (DAST) in a Post‐Secondary Population.” Journal of Research in Reading 28, no. 4: 423–434. 10.1111/j.1467-9817.2005.00280.x.

- Hu, L., and Bentler P. M. 1999. “Cutoff Criteria for Fit Indexes in Covariance Structure Analysis: Conventional Criteria Versus New Alternatives.” Structural Equation Modeling: A Multidisciplinary Journal 6, no. 1: 1–55. 10.1080/10705519909540118.

- Huang, A., Wu K., Li A., Zhang X., Lin Y., and Huang Y. 2020. “The Reliability and Validity of an Assessment Tool for Developmental Dyslexia in Chinese Children.” International Journal of Environmental Research and Public Health 17, no. 10: 3660. 10.3390/ijerph17103660.

- International Dyslexia Association. 2021. https://dyslexiaida.org/.

- JASP Team. 2020. “JASP (Version 0.14).” [Computer Software].

- Lakens, D. 2013. “Calculating and Reporting Effect Sizes to Facilitate Cumulative Science: A Practical Primer for T‐Tests and ANOVAs.” Frontiers in Psychology 4: 863. 10.3389/fpsyg.2013.00863.

- Leloup, G., Anders R., Charlet V., Eula‐Fantozzi B., Fossoud C., and Cavalli E. 2021. “Improving Reading Skills in Children With Dyslexia: Efficacy Studies on a Newly Proposed Remedial Intervention—Repeated Reading With Vocal Music Masking (RVM).” Annals of Dyslexia 71, no. 1: 60–83. 10.1007/s11881-021-00222-4.

- Lyon, G., Shaywitz S., and Shaywitz B. 2003. “A Definition of Dyslexia.” Annals of Dyslexia 53, no. 1: 1–14. 10.1007/s11881-003-0001-9.

- Majeed, N. M., Hartanto A., and Tan J. J. X. 2021. “Developmental Dyslexia and Creativity: A Meta‐Analysis.” Dyslexia 27, no. 2: 187–203. 10.1002/dys.1677.

- Marsh, H. W., Hau K. T., and Grayson D. 2005. “Goodness of Fit in Structural Equation.” In Contemporary Psychometrics, edited by A. Maydeu‐Olivares and J. J. McArdle, 275–340. New Jersey: Lawrence Erlbaum Associates Publishers.

- McDonald, R. P. 1970. “Theoretical Foundations of Principal Factor Analysis, Canonical Factor Analysis, and Alpha Factor Analysis.” British Journal of Mathematical and Statistical Psychology 23: 1–21.

- McNulty, M. A. 2003. “Dyslexia and the Life Course.” Journal of Learning Disabilities 36, no. 4: 363–381. 10.1177/00222194030360040701.

- Microsoft Corporation. 2021. “Microsoft Excel (Version 2102).” [Computer Software].

- Miles, T. 1983. Dyslexia: The Pattern of Difficulties. 2nd ed. London: Whurr Publishers.

- Morin, A. J. S., Myers N. D., and Lee S. 2020. “Modern Factor Analytic Techniques: Bifactor Models, Exploratory Structural Equation Modeling (ESEM) and Bifactor‐ESEM.” In Handbook of Sport Psychology, edited by Tenenbaum G. and Eklund R. C., 4th ed., 1044–1073. London, UK: Wiley.

- Nicolson, R., and Fawcett A. 2004. Dyslexia Screening Test – Junior. London, UK: Pearson.

- Nicolson, R. I., and Fawcett A. J. 1997. “Development of Objective Procedures for Screening and Assessment of Dyslexic Students in Higher Education.” Journal of Research in Reading 20, no. 1: 77–83. 10.1111/1467-9817.00022.

- Nicolson, R. I., and Fawcett A. J. 2011. “Dyslexia, Dysgraphia, Procedural Learning and the Cerebellum.” Cortex 47, no. 1: 117–127. 10.1016/j.cortex.2009.08.016.

- Nukari, J. M., Poutiainen E. T., Arkkila E. P., Haapanen M.‐L., Lipsanen J. O., and Laasonen M. R. 2020. “Both Individual and Group‐Based Neuropsychological Interventions of Dyslexia Improve Processing Speed in Young Adults: A Randomized Controlled Study.” Journal of Learning Disabilities 53, no. 3: 213–227. 10.1177/0022219419895261.

- Parikh, R., Mathai A., Parikh S., Sekhar G. C., and Thomas R. 2008. “Understanding and Using Sensitivity, Specificity and Predictive Values.” Indian Journal of Ophthalmology 56, no. 1: 45–50. 10.4103/0301-4738.37595.

- Pennington, B. F. 2006. “From Single to Multiple Deficit Models of Developmental Disorders.” Cognition 101, no. 2: 385–413. 10.1016/j.cognition.2006.04.008.

- Protopapa, C., and Smith‐Spark J. H. 2022. “Self‐Reported Symptoms of Developmental Dyslexia Predict Impairments in Everyday Cognition in Adults.” Research in Developmental Disabilities 128: 104288. 10.1016/j.ridd.2022.104288.

- Raykov, T., and Marcoulides G. A. 2008. An Introduction to Applied Multivariate Analysis. Routledge.

- Reynolds, A. E., and Caravolas M. 2016. “Evaluation of the Bangor Dyslexia Test (BDT) for Use With Adults.” Dyslexia 22: 27–46. 10.1002/dys.1520.

- Robins, R., Fraley R., and Krueger R. 2010. Handbook of Research Methods in Personality Psychology. New York: Guilford.

- Saksida, A., Iannuzzi S., Bogliotti C., et al. 2016. “Phonological Skills, Visual Attention Span, and Visual Stress in Developmental Dyslexia.” Developmental Psychology 52, no. 10: 1503–1516. 10.1037/dev0000184.

- Shadish, W. R., Cook T. D., and Campbell D. T. 2002. Experimental and Quasi‐Experimental Designs for Generalized Causal Inference. Boston, MA: Houghton Mifflin.

- Share, D. L. 2021. “Common Misconceptions About the Phonological Deficit Theory of Dyslexia.” Brain Sciences 11, no. 11: 1510. 10.3390/brainsci11111510.

- Sharp, K., Sanders K., Noltemeyer A., Hoffman J., and Boone W. J. 2016. “The Relationship Between RTI Implementation and Reading Achievement: A School‐Level Analysis.” Preventing School Failure: Alternative Education for Children and Youth 60, no. 2: 152–160. 10.1080/1045988X.2015.1063038.

- Shaywitz, S. E., Schnell C., Shaywitz B. A., and Towle V. R. 1986. “Yale Children's Inventory (YCI): An Instrument to Assess Children With Attentional Deficits and Learning Disabilities I. Scale Development and Psychometric Properties.” Journal of Abnormal Child Psychology 14: 347–364.

- Shaywitz, S. E., and Shaywitz B. A. 2005. “Dyslexia (Specific Reading Disability).” Biological Psychiatry 57: 1301–1309. 10.1016/j.biopsych.2005.01.043.

- Siegel, L. S., and Smythe I. S. 2005. “Reflections on Research on Reading Disability With Special Attention to Gender Issues.” Journal of Learning Disabilities 38, no. 5: 473–477.

- Singleton, C., Horne J., and Simmons F. 2009. “Computerised Screening for Dyslexia in Adults.” Journal of Research in Reading 32, no. 1: 137–152. 10.1111/j.1467-9817.2008.01386.x.

- Singleton, C. H. 1997. “Screening Early Literacy.” In The Psychological Assessment of Reading, edited by Beech J. R. and Singleton C. H., 67–101. London: Routledge.

- Smythe, I. 2010. “Dyslexia in the Digital Age: Making It Work.” Continuum.

- Smythe, I., and Everatt J. 2001. “Adult Checklist.” https://cdn.bdadyslexia.org.uk/documents/Dyslexia/Adult‐Checklist‐1.pdf?mtime=20190410221643&focal=none.

- Smythe, I., and Siegel L. 2005. Provision and Use of Information Technology With Dyslexic Students in University in Europe, 28–39. An EU Funded Project.

- Snowling, M., Dawes P., Nash H., and Hulme C. 2012. “Validity of a Protocol for Adult Self‐Report of Dyslexia and Related Difficulties.” Dyslexia 18, no. 1: 1–15. 10.1002/dys.1432.

- Snowling, M. J. 2013. “Early Identification and Interventions for Dyslexia: A Contemporary View.” Journal of Research in Special Educational Needs 13, no. 1: 7–14. 10.1111/j.1471-3802.2012.01262.x.

- Stark, Z., Franzen L., and Johnson A. P. 2022. “Insights From a Dyslexia Simulation Font: Can We Simulate Reading Struggles of Individuals With Dyslexia?” Dyslexia 28, no. 2: 228–243. 10.1002/dys.1704.

- Statistics Canada. 2022. Table 37‐10‐0135‐01. “Postsecondary Graduates, By Field of Study, International Standard Classification of Education, Age Group and Gender.” 10.25318/3710013501-eng.

- Stein, J. F. 2001. “The Magnocellular Theory of Developmental Dyslexia.” Dyslexia 7, no. 1: 12–36. 10.1002/dys.186842.

- Stein, J. F. 2018. “The Current Status of the Magnocellular Theory of Developmental Dyslexia.” Neuropsychologia 130, no. 1: 66–77. 10.1016/j.neuropsychologia.2018.03.022.

- Streiner, D. 2003. “Starting at the Beginning: An Introduction to Coefficient Alpha and Internal Consistency.” Journal of Personality Assessment 80, no. 1: 99–103. 10.1207/s15327752jpa8001_18.

- Swanson, H. L., and Hsieh C. J. 2009. “Reading Disabilities in Adults: A Selective Meta‐Analysis of the Literature.” Review of Educational Research 79, no. 4: 1362–1390. 10.3102/0034654309350931.

- Trevethan, R. 2017. “Sensitivity, Specificity, and Predictive Values: Foundations, Pliabilities, and Pitfalls in Research and Practice.” Frontiers in Public Health 5: 307. 10.3389/fpubh.2017.00307.

- Vatansever, D., Bozhilova N. S., Asherson P., and Smallwood J. 2019. “The Devil Is in the Detail: Exploring the Intrinsic Neural Mechanisms That Link Attention‐Deficit/Hyperactivity Disorder Symptomatology to Ongoing Cognition.” Psychological Medicine 49, no. 7: 1185–1194. 10.1017/S0033291718003598.

- Wagner, R. K., Zirps F., Edwards A., et al. 2020. “The Prevalence of Dyslexia: A New Approach to Its Estimation.” Journal of Learning Disabilities 53, no. 5: 354–365. 10.1177/0022219420920377.

- Wetzels, R., Matzke D., Lee M. D., Rouder J. N., Iverson G. J., and Wagenmakers E.‐J. 2011. “Statistical Evidence in Experimental Psychology: An Empirical Comparison Using 855 T Tests.” Perspectives on Psychological Science 6, no. 3: 291–298. 10.1177/1745691611406923.

- Yates, B., and Taub J. 2003. “Assessing the Costs, Benefits, Cost‐Effectiveness, and Cost‐Benefit of Psychological Assessment: We Should, We Can, and Here's How.” Psychological Assessment 15, no. 4: 478–495. 10.1037/1040-3590.15.4.478.

Supplementary Materials

Data S1:

Data Availability Statement

The data that support the findings of this study are openly available from the first author's OSF repository (DOI: 10.17605/OSF.IO/JX42M).