Abstract

The deferred correction (DeC) is an iterative procedure, characterized by increasing the accuracy at each iteration, which can be used to design numerical methods for systems of ODEs. The main advantage of such a framework is the automatic way of getting arbitrarily high order methods, which can be put in the Runge-Kutta (RK) form. The drawback is the larger computational cost with respect to the most used RK methods. To reduce such cost, in an explicit setting, we propose an efficient modification: we introduce interpolation processes between the DeC iterations, decreasing the computational cost associated with the low order ones. We provide the Butcher tableaux of the new modified methods and we study their stability, showing that in some cases the computational advantage does not affect the stability. The flexibility of the novel modification allows nontrivial applications to PDEs and the construction of adaptive methods. The good performance of the introduced methods is broadly tested on several benchmarks in both ODE and PDE contexts.

Electronic Supplementary Material

The online version of this article (10.1007/s42967-023-00294-6) contains supplementary material, which is available to authorized users.

Keywords: Efficient deferred correction (DeC), Arbitrary high order, Stability, Adaptive methods, Hyperbolic PDEs

Introduction

A huge number of phenomena in many different fields can be modeled through ODEs and PDEs, whose analytical solutions are usually not available; hence, many numerical methods have been developed to approximate such solutions. The higher the accuracy needed in the approximation, the more expensive the associated numerical simulations are in terms of computational time and resources. On the one hand, the speed of simulations has drastically improved with modern computers; on the other hand, the ever stricter tolerances required by modern applications have led to massive simulations that are only accessible to supercomputers and are still characterized by very long computational times. That is why any effort to reduce the computational cost of numerical simulations is of paramount importance. A classical way of reducing it is the adoption of high order methods, which reach lower errors on coarser discretizations.

A wide series of arbitrarily high order methods is based on the DeC approach. Its original formulation was first introduced in 1949 in [17] in a simple prediction-correction time integrator framework. A more elegant version based on spectral integration in time was introduced in 2000 [16], characterized by an iterative procedure that increases the order of accuracy by one at each iteration. In 2003 [28], Minion generalized the DeC framework to obtain an implicit-explicit arbitrarily high order method, with various applications to ODEs and PDEs [19, 23, 29, 30, 35]. Later on, the DeC approach was generalized by Abgrall [2] to solve hyperbolic PDEs with high order continuous Galerkin (CG) spatial discretizations, overcoming the burden related to the mass matrix, which led to numerous applications in the hyperbolic field [4, 6, 7, 14, 27]. The DeC has also been modified to preserve physical structures (positivity, entropy, moving equilibria, conservation) [3, 5, 14, 31]. Finally, in [18] it has been pointed out that DeC and ADER methods are very similar iterative time integrators and that, when restricted to ODEs, they can be written as RK schemes, see also [21, 36].

The clear advantage of the DeC framework is the possibility to easily increase the order of accuracy; its drawback is the high computational cost, due to the iterations and to the high degree of the polynomial reconstruction of the numerical solution considered in each of them. To alleviate such a cost, the ladder strategy was proposed for implicit DeC algorithms [23, 28, 35], where the degree of the reconstruction in time increases at each iteration. Between the iterations, an interpolation procedure links the different reconstructions. Although this idea has been used in some works, it has never been studied and analyzed in depth, in particular for the purely explicit DeC.

Inspired by this idea, in this work we provide a detailed description of two novel families of efficient explicit DeC methods, based on simple modifications of existing DeC schemes. By explicitly constructing their Butcher tableaux and studying their stability, we show that in some cases the new efficient versions and the classical ones have the same stability functions. Moreover, we exploit the modification to build adaptive methods that, given a certain tolerance, automatically choose the order of accuracy needed to reach such an error in the most efficient way. We also apply the efficient modification in the context of mass matrix-free CG-DeC methods [2] for hyperbolic PDEs.

The structure of this work is the following. We start by introducing the DeC procedure in an abstract framework in Sect. 2 and as a tool for the numerical solution of systems of ODEs in Sect. 3. In Sect. 4, we introduce the new families of efficient DeC methods. Then, we give their Butcher tableaux in Sect. 5 and in Sect. 6 we study their linear stability in detail. In Sect. 7, we describe the application to the numerical solution of hyperbolic problems with CG spatial discretizations avoiding mass matrices. We propose an adaptive and efficient version of the methods in Sect. 8. In Sect. 9, we present numerical results for ODEs and hyperbolic PDEs, comparing the new methods with the classical DeC methods. Section 10 is dedicated to the conclusions.

Abstract DeC Formulation

We will first introduce the abstract DeC formulation proposed by Abgrall in [2]. Let us assume that we have two operators between two normed vector spaces $X$ and $Y$, namely $\mathcal{L}^1_\Delta, \mathcal{L}^2_\Delta\colon X \to Y$, associated with two discretizations of the same problem and dependent on the same discretization parameter $\Delta$. In particular, assume that $\mathcal{L}^2_\Delta$ corresponds to a high order implicit discretization, while $\mathcal{L}^1_\Delta$ corresponds to a low order explicit one. We would like to solve $\mathcal{L}^2_\Delta$, i.e., to find $\underline{u}^*_\Delta \in X$ such that $\mathcal{L}^2_\Delta(\underline{u}^*_\Delta) = \underline{0}$, to get a high order approximation of the solution to the original problem, but this is not easy because of its implicit character. Instead, the low order explicit operator $\mathcal{L}^1_\Delta$ is very easy to solve and, more generally, we assume that it is easy to solve $\mathcal{L}^1_\Delta(\underline{u}) = \underline{r}$ with $\underline{r} \in Y$ given, but the associated accuracy is not sufficient for our intended goals. In the next theorem, we provide a simple recipe to get an arbitrarily high order approximation of the solution of $\mathcal{L}^2_\Delta(\underline{u}) = \underline{0}$ by combining the operators $\mathcal{L}^1_\Delta$ and $\mathcal{L}^2_\Delta$ in an easy iterative procedure.

Theorem 1

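(DeC accuracy) Let the following hypotheses hold:

i) existence of a unique solution to $\mathcal{L}^2_\Delta$: there exists a unique $\underline{u}^*_\Delta \in X$, solution of $\mathcal{L}^2_\Delta$, such that $\mathcal{L}^2_\Delta(\underline{u}^*_\Delta) = \underline{0}$;

ii) coercivity-like property of $\mathcal{L}^1_\Delta$: there exists $\alpha_1 > 0$ independent of $\Delta$ such that
$$\|\mathcal{L}^1_\Delta(\underline{v}) - \mathcal{L}^1_\Delta(\underline{w})\|_Y \geq \alpha_1 \|\underline{v} - \underline{w}\|_X, \qquad \forall\, \underline{v}, \underline{w} \in X; \tag{1}$$

iii) Lipschitz-continuity-like property of $\mathcal{L}^1_\Delta - \mathcal{L}^2_\Delta$: there exists $\alpha_2 \geq 0$ independent of $\Delta$ such that
$$\big\|\big(\mathcal{L}^1_\Delta(\underline{v}) - \mathcal{L}^2_\Delta(\underline{v})\big) - \big(\mathcal{L}^1_\Delta(\underline{w}) - \mathcal{L}^2_\Delta(\underline{w})\big)\big\|_Y \leq \alpha_2 \Delta \|\underline{v} - \underline{w}\|_X, \qquad \forall\, \underline{v}, \underline{w} \in X. \tag{2}$$

Then, if we iteratively define $\underline{u}^{(p)}$ as the solution of
$$\mathcal{L}^1_\Delta(\underline{u}^{(p)}) = \mathcal{L}^1_\Delta(\underline{u}^{(p-1)}) - \mathcal{L}^2_\Delta(\underline{u}^{(p-1)}), \qquad p = 1, \dots, P, \tag{3}$$

we have that
$$\|\underline{u}^{(P)} - \underline{u}^*_\Delta\|_X \leq \left(\Delta \frac{\alpha_2}{\alpha_1}\right)^P \|\underline{u}^{(0)} - \underline{u}^*_\Delta\|_X. \tag{4}$$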

Proof

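The proof relies on a direct use of the hypotheses. In particular, we have
$$\begin{aligned}
\alpha_1 \|\underline{u}^{(P)} - \underline{u}^*_\Delta\|_X &\leq \|\mathcal{L}^1_\Delta(\underline{u}^{(P)}) - \mathcal{L}^1_\Delta(\underline{u}^*_\Delta)\|_Y && \text{(5a)}\\
&= \|\mathcal{L}^1_\Delta(\underline{u}^{(P-1)}) - \mathcal{L}^2_\Delta(\underline{u}^{(P-1)}) - \mathcal{L}^1_\Delta(\underline{u}^*_\Delta)\|_Y && \text{(5b)}\\
&= \|\mathcal{L}^1_\Delta(\underline{u}^{(P-1)}) - \mathcal{L}^2_\Delta(\underline{u}^{(P-1)}) - \mathcal{L}^1_\Delta(\underline{u}^*_\Delta) + \mathcal{L}^2_\Delta(\underline{u}^*_\Delta)\|_Y && \text{(5c)}\\
&\leq \alpha_2 \Delta \|\underline{u}^{(P-1)} - \underline{u}^*_\Delta\|_X, && \text{(5d)}
\end{aligned}$$

where in (5a) we have used (1), in (5b) the definition of the DeC iteration (3), in (5c) the fact that $\mathcal{L}^2_\Delta(\underline{u}^*_\Delta) = \underline{0}$ and, finally, in (5d) we have used (2). By repeating these calculations recursively we get the desired result.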

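Let us remark that, due to our assumption on the operator $\mathcal{L}^1_\Delta$, the updating formula (3) represents a simple explicit recipe to approximate arbitrarily well the solution $\underline{u}^*_\Delta$ of $\mathcal{L}^2_\Delta(\underline{u}) = \underline{0}$. The convergence for $p \to \infty$ is ensured independently of the starting vector $\underline{u}^{(0)}$ provided that $\Delta \frac{\alpha_2}{\alpha_1} < 1$. The coefficients $\alpha_1$ and $\alpha_2$ can be computed once the operators $\mathcal{L}^1_\Delta$ and $\mathcal{L}^2_\Delta$ are defined. In the next sections, we will provide such definitions for different DeC ODE solvers, and the convergence constraint $\Delta \frac{\alpha_2}{\alpha_1} < 1$ will reduce to a classical timestep restriction for explicit methods.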
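To make the recipe (3) and the estimate (4) concrete, here is a minimal Python sketch on a hypothetical linear toy problem that does not come from the paper: $\mathcal{L}^2_\Delta(\underline{u}) = \underline{u} - \Delta K \underline{u} - \underline{f}$, which needs a linear solve, and $\mathcal{L}^1_\Delta(\underline{u}) = \underline{u} - \underline{f}$, which is trivially invertible. In the maximum norm, $\alpha_1 = 1$ and $\alpha_2 = \|K\|_\infty$, so the error should shrink at least by the factor $\Delta \|K\|_\infty$ per iteration. The helper `dec_iterate` and the data `K`, `f`, `Delta` are illustrative assumptions.

```python
import numpy as np


def dec_iterate(solve_L1, L1, L2, u0, P):
    """Abstract DeC update (3): at each iteration solve
    L1(u_new) = L1(u_old) - L2(u_old) using only the easy operator L1."""
    u = np.asarray(u0, dtype=float)
    history = [u]
    for _ in range(P):
        u = solve_L1(L1(u) - L2(u))
        history.append(u)
    return history


# Hypothetical toy operators on R^3 (illustration only, not from the paper).
K = np.array([[1.0, 2.0, 0.0],
              [0.5, -1.0, 1.0],
              [0.0, 1.0, 3.0]])
f = np.array([1.0, -2.0, 0.5])
Delta = 0.1


def L1(u):
    return u - f                      # low order and trivially invertible: alpha_1 = 1


def L2(u):
    return u - Delta * K @ u - f      # "implicit": its zero requires a linear solve


def solve_L1(r):
    return r + f                      # exact solution of L1(u) = r


u_star = np.linalg.solve(np.eye(3) - Delta * K, f)   # L2(u_star) = 0
history = dec_iterate(solve_L1, L1, L2, np.zeros(3), P=6)
errors = [np.max(np.abs(u - u_star)) for u in history]
rate = Delta * np.max(np.sum(np.abs(K), axis=1))     # Delta * alpha_2 / alpha_1 = 0.4
```

Each iteration of this toy example only costs a matrix-vector product, whereas computing $\underline{u}^*_\Delta$ directly requires a linear solve; this is the trade-off the DeC exploits.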

If the solution $\underline{u}^*_\Delta$ of $\mathcal{L}^2_\Delta(\underline{u}) = \underline{0}$ is an $R$th order accurate approximation of the exact solution $\underline{u}^{ex}$ of the original problem to which the operators are associated, it does not make sense to approximate $\underline{u}^*_\Delta$ with an accuracy higher than $R$, as we are actually interested in $\underline{u}^{ex}$. In particular, thanks to the accuracy estimate (4), if $\underline{u}^{(0)}$ is an $O(\Delta)$-approximation of $\underline{u}^*_\Delta$, the optimal choice is $P = R$, i.e., the optimal number of iterations coincides with the accuracy of the operator $\mathcal{L}^2_\Delta$. Any further iteration is a waste of computational resources.

In the following, we will characterize the operators and for some DeC ODEs solvers, explicitly writing the associated updating formulas. To provide a clearer understanding of the methods, we also report their more classical formulation, in Appendix A, in terms of residual and error functions [16]. However, we will stick to Abgrall’s formulation [2] for its compactness, the possibility to directly work on the solution and its flexibility, which allows for applications to more general contexts, such as structure preserving methods [3, 5, 14, 31], mass-matrix free finite element methods [2, 4, 6], ADER-DG methods [18, 25]. All these generalizations and the efficient modifications that we present in this paper are straightforward in Abgrall’s formulation, while they are more involved in the classical DeC framework.

The DeC for Systems of ODEs

We want to solve the Cauchy problem

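$$\begin{cases} \dfrac{d}{dt} u(t) = G(t, u(t)), & t \in \mathbb{R}^+_0,\\[4pt] u(0) = u_0, \end{cases} \tag{6}$$

with $u\colon \mathbb{R}^+_0 \to \mathbb{R}^Q$, $u_0 \in \mathbb{R}^Q$ and $G\colon \mathbb{R}^+_0 \times \mathbb{R}^Q \to \mathbb{R}^Q$ a continuous map, Lipschitz continuous with respect to $u$ uniformly with respect to $t$ with a Lipschitz constant $L$, which ensures the existence of a unique solution. We will present two explicit DeC methods for the numerical solution of such a problem, which are based on approximations of its integral form.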

bDeC, which was originally introduced in [24] within a more general family of schemes, but fully exploited for its simplicity only starting from [2], in the context of Galerkin solvers for hyperbolic PDEs without the mass matrix. In this method, the integral form is approximated on “big” intervals, hence the name bDeC.

sDeC, which has a longer history [16] and more developments [19, 22, 28, 35]. In this method, the integral form is approximated on “small” intervals, hence the name sDeC.

Then, we will consider a general family of DeC methods, DeC$_\vartheta$, depending on a parameter $\vartheta \in [0, 1]$, which contains both the previously described formulations as particular cases, as described in [24].

We assume a one-step method setting: in each time interval $[t_n, t_{n+1}]$, we assume that $u_n \approx u(t_n)$ is known and we look for $u_{n+1} \approx u(t_{n+1})$. In particular, as in the context of a general consistency analysis, we assume $u_n = u(t_n)$. In this context, the parameter $\Delta$ of the DeC is the step size $\Delta t := t_{n+1} - t_n$. A more traditional but equivalent formulation of bDeC and sDeC in terms of error and residual functions [8, 9, 12, 13] is reported in Appendix A.

bDeC

In the generic time step $[t_n, t_{n+1}]$, we introduce $M+1$ subtimenodes $t_n = t^0 < t^1 < \dots < t^M = t_{n+1}$. Several choices of subtimenodes are possible, but for the following discussion we will consider equispaced ones. In the numerical tests, we will also present results obtained with Gauss-Lobatto (GL) subtimenodes [16, 18, 31], which achieve a higher accuracy for a fixed number of subtimenodes. We will refer to $u(t^m)$ as the exact solution in the subtimenode $t^m$ and to $u^m$ as the approximation of the solution in the same subtimenode. Just for the first subtimenode, we set $u^0 := u_n$.
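As a small illustration, the two families of subtimenodes can be generated on the reference interval $[0, 1]$ and then mapped to $[t_n, t_{n+1}]$ through $t^m = t_n + \Delta t\, \beta_m$. The sketch below assumes the standard characterization of Gauss-Lobatto nodes as the endpoints plus the roots of the derivative of the Legendre polynomial of degree $M$; the helper name `subtimenodes` is hypothetical, and only NumPy's `numpy.polynomial.legendre` module is used.

```python
import numpy as np
from numpy.polynomial import legendre


def subtimenodes(M, kind="equispaced"):
    """Return M + 1 subtimenodes normalized to [0, 1] (hypothetical helper).

    kind="equispaced": uniform nodes.
    kind="gl": Gauss-Lobatto nodes, i.e. the endpoints -1, 1 plus the roots
    of P_M' (derivative of the Legendre polynomial of degree M), mapped to [0, 1].
    """
    if kind == "equispaced":
        return np.linspace(0.0, 1.0, M + 1)
    if kind == "gl":
        interior = np.real(legendre.Legendre.basis(M).deriv().roots())
        x = np.concatenate(([-1.0], np.sort(interior), [1.0]))
        return 0.5 * (x + 1.0)
    raise ValueError(f"unknown kind: {kind}")


# Subtimenodes of a step [t_n, t_n + dt]: t^m = t_n + dt * beta_m
t_n, dt = 0.0, 0.1
print(t_n + dt * subtimenodes(3, "gl"))
```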

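The bDeC method is based on the integral version of the ODE (6) in each interval $[t^0, t^m]$, which reads
$$u(t^m) - u(t^0) - \int_{t^0}^{t^m} G(t, u(t))\, dt = 0, \qquad m = 1, \dots, M. \tag{7}$$

Starting from this formulation, we define the high order operator $\mathcal{L}^2_\Delta$ and the low order operator $\mathcal{L}^1_\Delta$. We define $\mathcal{L}^2_\Delta$ by approximating the function $G(t, u(t))$ in (7) with a high order interpolation via the Lagrange polynomials $\psi^r$ of degree $M$ associated with the subtimenodes, and by integrating such polynomials exactly:
$$\mathcal{L}^{2,m}_\Delta(\underline{u}) := u^m - u^0 - \Delta t \sum_{r=0}^{M} \theta^m_r\, G(t^r, u^r), \qquad \theta^m_r := \frac{1}{\Delta t} \int_{t^0}^{t^m} \psi^r(t)\, dt, \qquad m = 1, \dots, M, \tag{8}$$

where the normalized coefficients $\theta^m_r$ do not depend on $\Delta t$. This leads to the definition of the spaces $X = Y = \mathbb{R}^{M \times Q}$ of Sect. 2. Let us remark that $\mathcal{L}^2_\Delta$ is defined on the $M$ components $\underline{u} = (u^1, \dots, u^M)$ corresponding to the subtimenodes where the solution is unknown, while $u^0 = u_n$ is an intrinsic datum of the operator. The generic $m$th component $\mathcal{L}^{2,m}_\Delta(\underline{u}) = 0$ of the global problem $\mathcal{L}^2_\Delta(\underline{u}) = \underline{0}$ corresponds to a high order discretization of (7). In particular, for equispaced subtimenodes, if $u^{*,m}_\Delta$ is the $m$th component of the solution of $\mathcal{L}^2_\Delta(\underline{u}) = \underline{0}$, then it is an $(M+1)$th order accurate approximation of $u(t^m)$. The proof is based on a fixed-point argument and can be found in the supplementary material. It is worth noting that $\mathcal{L}^2_\Delta(\underline{u}) = \underline{0}$ coincides with an implicit RK method with $M$ stages; e.g., when choosing GL subtimenodes, one obtains the LobattoIIIA methods.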
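Since the coefficients $\theta^m_r$ of (8) only depend on the normalized subtimenodes, they can be precomputed once. The sketch below assumes subtimenodes rescaled to $[0, 1]$ and uses a hypothetical helper `theta_matrix`: it builds each Lagrange basis polynomial from its roots and integrates it exactly with NumPy's power-series routines. Row $m = 0$ is zero by construction and, since the Lagrange basis sums to one, row $m$ sums to the normalized subtimenode $(t^m - t^0)/\Delta t$.

```python
import numpy as np
from numpy.polynomial import polynomial as P


def theta_matrix(nodes):
    """theta[m, r] = (1/dt) * integral from t^0 to t^m of psi_r, with psi_r the
    Lagrange basis on the subtimenodes normalized to [0, 1]; row m = 0 is zero."""
    nodes = np.asarray(nodes, dtype=float)
    M = len(nodes) - 1
    theta = np.zeros((M + 1, M + 1))
    for r in range(M + 1):
        # psi_r vanishes at all the other nodes and equals 1 at nodes[r]
        psi = P.polyfromroots(np.delete(nodes, r))
        psi = psi / P.polyval(nodes[r], psi)
        antider = P.polyint(psi)                 # exact integration of the polynomial
        theta[:, r] = P.polyval(nodes, antider) - P.polyval(nodes[0], antider)
    return theta


theta = theta_matrix(np.linspace(0.0, 1.0, 3))   # M = 2, equispaced
# The last row reproduces Simpson's rule on the whole step.
print(theta)
```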

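The definition of the low order explicit operator $\mathcal{L}^1_\Delta$ is based on a first order explicit Euler discretization of (7), leading to
$$\mathcal{L}^{1,m}_\Delta(\underline{u}) := u^m - u^0 - \Delta t\, \beta^m\, G(t^0, u^0), \qquad \beta^m := \frac{t^m - t^0}{\Delta t}, \qquad m = 1, \dots, M, \tag{9}$$

where the normalized coefficients $\beta^m$ are determined only by the distribution of the subtimenodes. The generic $m$th component of $\mathcal{L}^1_\Delta(\underline{u}) = \underline{0}$ corresponds to the explicit Euler discretization of (7); hence, it is first order accurate, and any system $\mathcal{L}^1_\Delta(\underline{u}) = \underline{r}$ can be readily solved for a given $\underline{r}$.

The operators $\mathcal{L}^1_\Delta$ and $\mathcal{L}^2_\Delta$ fulfill the hypotheses required to apply the DeC procedure; the proofs can be found in the supplementary material. In particular, we highlight that, in the maximum norm over the components, $\mathcal{L}^1_\Delta(\underline{v}) - \mathcal{L}^1_\Delta(\underline{w}) = \underline{v} - \underline{w}$ gives $\alpha_1 = 1$, while $\alpha_2$ is proportional to the Lipschitz constant $L$ of $G$, e.g., $\alpha_2 = L \max_m \sum_{r} |\theta^m_r|$ with the coefficients of (8).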

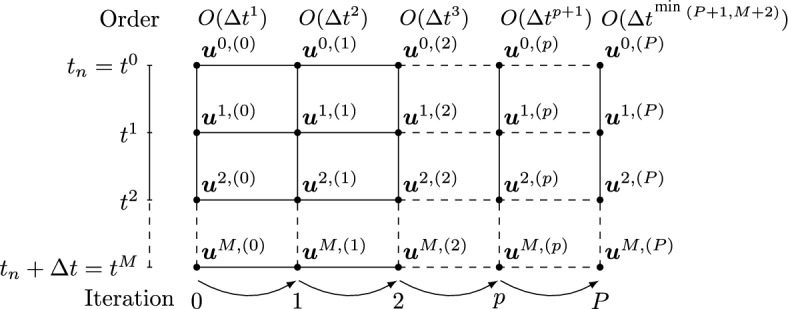

Let us now characterize the updating formula (3) in this setting. The vector $\underline{u}^{(p)}$ is, in this case, made of $M$ components $u^{m,(p)}$, $m = 1, \dots, M$, associated with the subtimenodes in which the solution is unknown, while we set $u^{0,(p)} := u_n$ for all $p$. Then, (3) gives
$$u^{m,(p)} = u_n + \Delta t \sum_{r=0}^{M} \theta^m_r\, G(t^r, u^{r,(p-1)}), \qquad m = 1, \dots, M, \tag{10}$$

with the coefficients $\theta^m_r$ of (8). The starting vector for our iterative procedure is chosen as $u^{m,(0)} := u_n$ for all $m$. At the end of the iteration process, we set $u_{n+1} := u^{M,(P)}$. A graphical sketch of the updating process is shown in Fig. 1. As said in Sect. 2, the optimal number of iterations depends on the accuracy of the operator $\mathcal{L}^2_\Delta$, i.e., $P = M+1$ for equispaced subtimenodes and $P = 2M$ for GL ones. Further iterations would not increase the order of accuracy of the method. Conversely, to build a $P$th order method, the optimal choice consists of $P$ iterations with $M = P-1$ for equispaced and $M = \lceil P/2 \rceil$ for GL subtimenodes.
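A minimal sketch of one bDeC step based on update (10), assuming a scalar ODE, equispaced subtimenodes and the hypothetical helper names `theta_matrix`, `bdec_step`, `integrate` and `observed_order`. Since every component of $\underline{u}^{(p)}$ depends only on the previous iteration, each update is a single matrix-vector product (and could be parallelized over $m$). The usage at the end estimates the order on $u' = -u$, which should be close to $P$ when $M = P-1$.

```python
import numpy as np
from numpy.polynomial import polynomial as P


def theta_matrix(nodes):
    """theta[m, r]: exact integral of the r-th Lagrange basis polynomial on [nodes[0], nodes[m]]."""
    M = len(nodes) - 1
    theta = np.zeros((M + 1, M + 1))
    for r in range(M + 1):
        psi = P.polyfromroots(np.delete(nodes, r))
        antider = P.polyint(psi / P.polyval(nodes[r], psi))
        theta[:, r] = P.polyval(nodes, antider) - P.polyval(nodes[0], antider)
    return theta


def bdec_step(G, t_n, u_n, dt, M, P_iter):
    """One bDeC step for a scalar ODE: P_iter iterations of update (10)
    on M + 1 equispaced subtimenodes, starting from u^{m,(0)} = u_n."""
    beta = np.linspace(0.0, 1.0, M + 1)
    theta = theta_matrix(beta)
    t = t_n + beta * dt
    U = np.full(M + 1, u_n, dtype=float)
    for _ in range(P_iter):
        G_prev = np.array([G(tm, um) for tm, um in zip(t, U)])
        U = u_n + dt * theta @ G_prev      # row 0 of theta is zero, so U[0] stays u_n
    return U[-1]                           # u_{n+1} = u^{M,(P)}


def integrate(step, G, u0, T, N, **kwargs):
    """Apply a one-step method N times with constant step T / N."""
    dt = T / N
    u = u0
    for n in range(N):
        u = step(G, n * dt, u, dt, **kwargs)
    return u


def decay(t, u):
    return -u


def observed_order(P_order, N=20):
    """Observed order of bDeC with M = P - 1 on u' = -u, u(0) = 1, T = 1."""
    errs = [abs(integrate(bdec_step, decay, 1.0, 1.0, n, M=P_order - 1, P_iter=P_order)
                - np.exp(-1.0)) for n in (N, 2 * N)]
    return np.log2(errs[0] / errs[1])


for P_order in (2, 3, 4):
    print(P_order, observed_order(P_order))
```

With $M = 1$ and two iterations, this sketch reduces to Heun's method.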

Fig. 1.

Sketch of the DeC iterative process for equispaced subtimenodes

sDeC

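The sDeC operators differ from the bDeC ones in the “smaller” intervals considered to obtain the integral version of the ODE. In fact, adopting the previous definition of the subtimenodes, the sDeC method is based on the integral version of (6) over the intervals $[t^{m-1}, t^m]$ for $m = 1, \dots, M$. This leads to the following definition of the operators
$$\mathcal{L}^{1,m}_\Delta(\underline{u}) := u^m - u^{m-1} - \Delta t\, \gamma^m\, G(t^{m-1}, u^{m-1}), \qquad m = 1, \dots, M, \tag{11}$$

$$\mathcal{L}^{2,m}_\Delta(\underline{u}) := u^m - u^{m-1} - \Delta t \sum_{r=0}^{M} \delta^m_r\, G(t^r, u^r), \qquad m = 1, \dots, M, \tag{12}$$

with $\gamma^m := \frac{t^m - t^{m-1}}{\Delta t}$ and $\delta^m_r := \frac{1}{\Delta t} \int_{t^{m-1}}^{t^m} \psi^r(t)\, dt$ normalized coefficients, $\psi^r$ being the Lagrange basis polynomials associated with the subtimenodes. As before, $\mathcal{L}^1_\Delta$ is a first order explicit discretization, while $\mathcal{L}^2_\Delta$ is a high order implicit one; further, we have $\delta^m_r = \theta^m_r - \theta^{m-1}_r$, with the coefficients $\theta^m_r$ of (8) and $\theta^0_r := 0$, so that $\mathcal{L}^2_\Delta(\underline{u}) = \underline{0}$ has the same solution as its bDeC counterpart.

Differently from the previous formulation, in this case we cannot solve $\mathcal{L}^1_\Delta(\underline{u}) = \underline{0}$ in all its components at the same time; we have to do it component by component, from $m = 1$ to $m = M$. The same holds for the general problem $\mathcal{L}^1_\Delta(\underline{u}) = \underline{r}$ for a fixed $\underline{r}$. However, its solution can still be computed explicitly.

Let us characterize the updating formula (3) in this context. The explicit character of the operator $\mathcal{L}^1_\Delta$ leads to an explicit recipe for the computation of $\underline{u}^{(p)}$, whose components, in this case, must be computed in increasing order
$$u^{m,(p)} = u^{m-1,(p)} + \Delta t\, \gamma^m \left( G(t^{m-1}, u^{m-1,(p)}) - G(t^{m-1}, u^{m-1,(p-1)}) \right) + \Delta t \sum_{r=0}^{M} \delta^m_r\, G(t^r, u^{r,(p-1)}). \tag{13}$$

With recursive substitutions, (13) can be equivalently written as
$$u^{m,(p)} = u_n + \Delta t \sum_{j=1}^{m} \gamma^j \left( G(t^{j-1}, u^{j-1,(p)}) - G(t^{j-1}, u^{j-1,(p-1)}) \right) + \Delta t \sum_{j=1}^{m} \sum_{r=0}^{M} \delta^j_r\, G(t^r, u^{r,(p-1)}). \tag{14}$$

Now, let us focus on the last term of (14). Exchanging the sums over $r$ and $j$, thanks to the fact that $\sum_{j=1}^{m} \delta^j_r = \theta^m_r - \theta^0_r = \theta^m_r$, we have
$$u^{m,(p)} = u_n + \Delta t \sum_{j=1}^{m} \gamma^j \left( G(t^{j-1}, u^{j-1,(p)}) - G(t^{j-1}, u^{j-1,(p-1)}) \right) + \Delta t \sum_{r=0}^{M} \theta^m_r\, G(t^r, u^{r,(p-1)}), \tag{15}$$

which allows us to explicitly compute all the components in sequence from $m = 1$ to $m = M$, as opposed to bDeC, where a parallel strategy can be adopted. Concerning the accuracy of the method and the optimal number of iterations, one can refer to what was already said for the bDeC formulation.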

Let us observe that the sDeC method is equivalent to the DeC method presented in [16] in terms of residuals and error functions. We show the equivalence in Appendix A.

A General Family of DeC Methods, DeC$_\vartheta$

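Following [21], we can construct a family of schemes dependent on a single parameter $\vartheta \in [0, 1]$ by a convex combination of the updating formulas of bDeC (10) and sDeC (15):
$$u^{m,(p)} = u_n + \vartheta\, \Delta t \sum_{j=1}^{m} \gamma^j \left( G(t^{j-1}, u^{j-1,(p)}) - G(t^{j-1}, u^{j-1,(p-1)}) \right) + \Delta t \sum_{r=0}^{M} \theta^m_r\, G(t^r, u^{r,(p-1)}), \tag{16}$$

with $\gamma^j = (t^j - t^{j-1})/\Delta t$ and $\theta^m_r$ as in (8). Through (16), it is possible to explicitly compute, iteration by iteration, the different components $u^{m,(p)}$ starting from $m = 1$ until $M$. Of course, for $\vartheta = 0$ we retrieve the bDeC formulation, while for $\vartheta = 1$ we get the sDeC one.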
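The following sketch implements update (16) for a scalar ODE with equispaced subtimenodes; all helper names (`theta_matrix`, `dec_theta_step`, `integrate`, `observed_order`) are hypothetical. The inner loop runs over $m$ in increasing order because the correction term needs $G(t^{j-1}, u^{j-1,(p)})$ for $j \leq m$; setting `vartheta=0` recovers bDeC (10) and `vartheta=1` recovers sDeC (15).

```python
import numpy as np
from numpy.polynomial import polynomial as P


def theta_matrix(nodes):
    """theta[m, r]: exact integral of the r-th Lagrange basis polynomial on [nodes[0], nodes[m]]."""
    M = len(nodes) - 1
    theta = np.zeros((M + 1, M + 1))
    for r in range(M + 1):
        psi = P.polyfromroots(np.delete(nodes, r))
        antider = P.polyint(psi / P.polyval(nodes[r], psi))
        theta[:, r] = P.polyval(nodes, antider) - P.polyval(nodes[0], antider)
    return theta


def dec_theta_step(G, t_n, u_n, dt, M, P_iter, vartheta):
    """One DeC_theta step, update (16), for a scalar ODE on M + 1 equispaced
    subtimenodes; vartheta = 0 is bDeC (10), vartheta = 1 is sDeC (15)."""
    nodes = np.linspace(0.0, 1.0, M + 1)
    theta = theta_matrix(nodes)
    gamma = np.diff(nodes)                 # gamma[j - 1] = (t^j - t^{j-1}) / dt
    t = t_n + nodes * dt
    U_prev = np.full(M + 1, u_n, dtype=float)
    for _ in range(P_iter):
        G_prev = np.array([G(tm, um) for tm, um in zip(t, U_prev)])
        U_new, G_new = U_prev.copy(), G_prev.copy()   # index 0 (u^0 = u_n) is never updated
        for m in range(1, M + 1):          # increasing m: G_new[:m] is already up to date
            corr = vartheta * dt * np.dot(gamma[:m], G_new[:m] - G_prev[:m])
            U_new[m] = u_n + dt * np.dot(theta[m], G_prev) + corr
            G_new[m] = G(t[m], U_new[m])
        U_prev = U_new
    return U_prev[-1]


def integrate(step, G, u0, T, N, **kwargs):
    """Apply a one-step method N times with constant step T / N."""
    dt = T / N
    u = u0
    for n in range(N):
        u = step(G, n * dt, u, dt, **kwargs)
    return u


def decay(t, u):
    return -u


def observed_order(vartheta, P_order, N=20):
    """Observed order on u' = -u, u(0) = 1, T = 1, with M = P - 1 and P iterations."""
    errs = [abs(integrate(dec_theta_step, decay, 1.0, 1.0, n, M=P_order - 1,
                          P_iter=P_order, vartheta=vartheta) - np.exp(-1.0))
            for n in (N, 2 * N)]
    return np.log2(errs[0] / errs[1])


for vartheta in (0.0, 0.5, 1.0):
    print(vartheta, observed_order(vartheta, 3))
```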

Matrix Formulation

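We will now introduce a compact matrix formulation of the presented methods. For convenience, we introduce vectors containing, as components, the quantities related to all the subtimenodes including the initial one, even though $u^0$ is never changed along the iterations and is not an input of the operators previously described. To avoid confusion, we denote the vectors not containing such a component with small letters and the vectors containing it with capital letters, i.e.,
$$\underline{u}^{(p)} := \left(u^{1,(p)}, \dots, u^{M,(p)}\right)^T, \qquad \underline{U}^{(p)} := \left(u^{0,(p)}, u^{1,(p)}, \dots, u^{M,(p)}\right)^T. \tag{17}$$

We will also denote the component-wise application of $G$ to the vectors $\underline{u}^{(p)}$ and $\underline{U}^{(p)}$ by
$$G(\underline{u}^{(p)}) := \left(G(t^1, u^{1,(p)}), \dots, G(t^M, u^{M,(p)})\right)^T, \qquad G(\underline{U}^{(p)}) := \left(G(t^0, u^{0,(p)}), \dots, G(t^M, u^{M,(p)})\right)^T. \tag{18}$$

With the previous definitions, it is possible to recast the general updating formula (16) in the following compact form:
$$\underline{U}^{(p)} = \underline{U}^{(0)} + \Delta t\, \Theta\, G(\underline{U}^{(p-1)}) + \vartheta\, \Delta t\, \Gamma \left( G(\underline{U}^{(p)}) - G(\underline{U}^{(p-1)}) \right), \tag{19}$$

where the vector $\underline{U}^{(0)}$ and the matrices $\Theta, \Gamma \in \mathbb{R}^{(M+1) \times (M+1)}$ are defined, with zero-based indices $m, j, r = 0, \dots, M$, as
$$\underline{U}^{(0)} := (u_n, \dots, u_n)^T, \qquad \Theta_{m,r} := \theta^m_r, \qquad \Gamma_{m,j} := \begin{cases} \dfrac{t^{j+1} - t^j}{\Delta t}, & j < m,\\[4pt] 0, & j \geq m, \end{cases} \tag{20}$$

with $\theta^0_r := 0$ and the matrix $\Gamma$ being strictly lower triangular, as the scheme is fully explicit. Let us observe that the first component of $\underline{U}^{(p)}$ is never updated, since the first rows of $\Theta$ and $\Gamma$ vanish. This is coherent with what we have said so far. The matrices $\Theta$ and $\Gamma$ that we have defined refer to a scalar ODE ($Q = 1$). To adapt them to a vectorial problem, they must be block-expanded.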
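A small sketch of how the two matrices of the compact form could be assembled and block-expanded for a system of $Q$ ODEs. It assumes $\Theta_{m,r} = \theta^m_r$ and a strictly lower triangular $\Gamma_{m,j} = (t^{j+1} - t^j)/\Delta t$ for $j < m$ (zero-based indices), and implements the block expansion as a Kronecker product with the $Q \times Q$ identity acting on the stacked states $(u^0, u^1, \dots, u^M)$. The helper names and the test system $u' = (-u_2, u_1)$ are hypothetical.

```python
import numpy as np
from numpy.polynomial import polynomial as P


def dec_matrices(nodes):
    """Theta[m, r] = theta^m_r and Gamma[m, j] = (t^{j+1} - t^j)/dt for j < m,
    with zero-based indices m, j, r = 0, ..., M and nodes normalized to [0, 1]."""
    nodes = np.asarray(nodes, dtype=float)
    M = len(nodes) - 1
    Theta = np.zeros((M + 1, M + 1))
    for r in range(M + 1):
        psi = P.polyfromroots(np.delete(nodes, r))
        antider = P.polyint(psi / P.polyval(nodes[r], psi))
        Theta[:, r] = P.polyval(nodes, antider) - P.polyval(nodes[0], antider)
    gaps = np.append(np.diff(nodes), 0.0)               # the last entry is never used
    Gamma = np.tril(np.tile(gaps, (M + 1, 1)), k=-1)    # strictly lower triangular
    return Theta, Gamma


def block_expand(mat, Q):
    """Block expansion for a system of Q ODEs acting on stacked states (u^0, ..., u^M)."""
    return np.kron(mat, np.eye(Q))


# One bDeC iteration (vartheta = 0) of the matrix form for a hypothetical
# Q = 2 system u' = (-u_2, u_1), starting from U^(0) = (u_n, ..., u_n).
Theta, Gamma = dec_matrices(np.linspace(0.0, 1.0, 4))   # M = 3
Q, dt, u_n = 2, 0.1, np.array([1.0, 0.0])
U_prev = np.tile(u_n, 4)                                 # stacked (u^0, u^1, u^2, u^3)
G_prev = np.concatenate([[-U_prev[2 * m + 1], U_prev[2 * m]] for m in range(4)])
U_new = U_prev + dt * block_expand(Theta, Q) @ G_prev
```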

Two Novel Families of DeC Methods

In this section, we will show how to construct two novel families of efficient DeC methods by introducing a modification in the DeC methods, first focusing on equispaced subtimenodes and then extending the idea to GL ones. The modification is based on the following observation: at any iteration $p$, we get a solution that is $p$th order accurate using $M+1$ subtimenodes, even though only $p$ of them would be formally sufficient to provide such an accuracy. In other words, the number of subtimenodes is fixed a priori for all iterations to get the desired order of accuracy. These subtimenodes are used throughout the whole iterative process, although the formal order of accuracy for which such nodes are required is reached only in the final iteration. This is indeed a waste of computational resources.

The proposed modification consists in starting with only two subtimenodes and increasing their number, iteration by iteration, matching the order of accuracy achieved in the specific iteration. In particular, we introduce intermediate interpolation processes between the iterations to retrieve the needed quantities in the new subtimenodes. The idea was introduced in [28] for implicit methods, but without a systematic theory and related analytical study. We will present here two possible interpolation strategies, which lead to the definition of two general families of efficient DeC methods.

We will use the star symbol to refer to quantities obtained through the interpolation process. The number of subtimenodes changes iteration by iteration; therefore, it is useful to define the vector $\underline{t}^{(p)}$ of the subtimenodes in which we obtain the approximations of the solution at the $p$th iteration, whose first and last components are always $t_n$ and $t_{n+1}$.

DeCu

The DeCu methods are obtained from the DeC methods by introducing an intermediate interpolation process on the solution between the iterations. For convenience, we will formulate the methods in terms of the vectors containing the component associated to the initial subtimenode.

We start with $\underline{U}^{(0)} = (u_n, u_n)^T$ associated with two subtimenodes, $t_n$ and $t_{n+1}$, and we perform the first iteration

| 21 |

$\underline{U}^{(1)}$ is first order accurate and yields an $O(\Delta t^2)$-accurate reconstruction on $[t_n, t_{n+1}]$. Here, $\mathcal{L}^1_\Delta$ and $\mathcal{L}^2_\Delta$ are the operators associated with two subtimenodes. Now, we perform the first interpolation, via a suitable interpolation matrix, passing from two to three equispaced subtimenodes

| 22 |

where the last equality is due to the fact that, by consistency, the elements of each row of the interpolation matrices sum to 1. The subscript 3 has been added to $\underline{U}^{(0)}_3$ to distinguish it from the initial two-component $\underline{U}^{(0)}$. Now, we have $\underline{U}^{*(1)} \in \mathbb{R}^3$, still first order accurate. Then, we perform the second iteration

| 23 |

which gives a second order accurate approximation, i.e., an $O(\Delta t^3)$-accurate approximation. Thus, we continue with another interpolation

| 24 |

from which we can get $\underline{U}^{(3)}$, which is $O(\Delta t^4)$-accurate, and so on. Proceeding iteratively, at the $p$th iteration we have

| 25 |

| 26 |

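where $\underline{U}^{(p)}$ is $O(\Delta t^{p+1})$-accurate, and so is the interpolated $\underline{U}^{*(p)}$. Clearly, the DeC operators $\mathcal{L}^1_\Delta$ and $\mathcal{L}^2_\Delta$ used in the $p$th iteration are chosen according to the dimension of the involved variables.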
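The interpolation steps only require a Lagrange interpolation matrix between two sets of normalized subtimenodes. A minimal sketch, with the hypothetical helper `interpolation_matrix`, is given below: row $i$ evaluates the Lagrange basis of the source nodes at the $i$th target node, so every row sums to 1, which is the consistency property used in (22), and the map is exact for polynomials of degree up to the number of source nodes minus one.

```python
import numpy as np


def interpolation_matrix(src, dst):
    """H[i, r] = psi_r(dst[i]), psi_r being the Lagrange basis on the nodes src.
    Multiplying node values on src by H gives the interpolant evaluated at dst."""
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    H = np.ones((len(dst), len(src)))
    for r in range(len(src)):
        for k in range(len(src)):
            if k != r:
                H[:, r] *= (dst - src[k]) / (src[r] - src[k])
    return H


# From two to three equispaced subtimenodes (normalized to [0, 1])
H = interpolation_matrix([0.0, 1.0], [0.0, 0.5, 1.0])
print(H)
```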

Let us notice that $\underline{U}^{(p)}$, obtained at the $p$th iteration, is $O(\Delta t^{p+1})$-accurate and associated with $p+1$ subtimenodes, which would actually be enough to guarantee $O(\Delta t^{p+2})$-accuracy. For this reason, if the final number of subtimenodes is fixed to be $M+1$, the optimal choice is to perform $M$ iterations to reach such a setting and a final $(M+1)$th iteration without interpolation to saturate the $O(\Delta t^{M+2})$-accuracy associated with the $M+1$ subtimenodes. In this way, the interpolation is performed at each iteration except the first and the last one. Thus, the last iteration reads

| 27 |

where the matrices involved are the same ones used in the $M$th iteration. A useful sketch of the algorithm is represented in Fig. 2.

Fig. 2.

DeCu and DeCdu, sketches: dots for computed values, crosses for interpolated ones

On the other hand, one could also avoid fixing the final number of subtimenodes a priori and stop when certain conditions are met; see Sect. 8 for an example with adaptive methods.
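A minimal sketch of one bDeCu step ($\vartheta = 0$) for a scalar ODE with equispaced subtimenodes: the first iteration runs on two nodes, the solution is interpolated to one more node after each of the first $M-1$ iterations, and a final iteration without interpolation is performed on the $M+1$ nodes, for $P = M+1$ iterations in total. The helpers (`theta_matrix`, `interpolation_matrix`, `bdecu_step`, `integrate`, `observed_order`) are hypothetical names chosen for this illustration, and the usage checks that the observed order on $u' = -u$ is close to $M+1$.

```python
import numpy as np
from numpy.polynomial import polynomial as P


def theta_matrix(nodes):
    """theta[m, r]: exact integral of the r-th Lagrange basis polynomial on [nodes[0], nodes[m]]."""
    M = len(nodes) - 1
    theta = np.zeros((M + 1, M + 1))
    for r in range(M + 1):
        psi = P.polyfromroots(np.delete(nodes, r))
        antider = P.polyint(psi / P.polyval(nodes[r], psi))
        theta[:, r] = P.polyval(nodes, antider) - P.polyval(nodes[0], antider)
    return theta


def interpolation_matrix(src, dst):
    """H[i, r] = psi_r(dst[i]), Lagrange basis on src evaluated at dst."""
    H = np.ones((len(dst), len(src)))
    for r in range(len(src)):
        for k in range(len(src)):
            if k != r:
                H[:, r] *= (dst - src[k]) / (src[r] - src[k])
    return H


def bdecu_step(G, t_n, u_n, dt, M):
    """One bDeCu step for a scalar ODE (P = M + 1 iterations)."""
    nodes = np.linspace(0.0, 1.0, 2)
    U = np.full(2, u_n, dtype=float)                   # U^(0) on two subtimenodes
    for p in range(1, M + 2):
        G_prev = np.array([G(t_n + s * dt, v) for s, v in zip(nodes, U)])
        U = u_n + dt * theta_matrix(nodes) @ G_prev    # bDeC update on the current nodes
        if p < M:                                      # U^(p) -> U^{*(p)} on one more node
            new_nodes = np.linspace(0.0, 1.0, len(nodes) + 1)
            U = interpolation_matrix(nodes, new_nodes) @ U
            nodes = new_nodes
    return U[-1]


def integrate(step, G, u0, T, N, **kwargs):
    """Apply a one-step method N times with constant step T / N."""
    dt = T / N
    u = u0
    for n in range(N):
        u = step(G, n * dt, u, dt, **kwargs)
    return u


def decay(t, u):
    return -u


def observed_order(M, N=20):
    """Observed order of bDeCu on u' = -u, u(0) = 1, T = 1 (expected: M + 1)."""
    errs = [abs(integrate(bdecu_step, decay, 1.0, 1.0, n, M=M) - np.exp(-1.0))
            for n in (N, 2 * N)]
    return np.log2(errs[0] / errs[1])


for M in (1, 2, 3):
    print(M, observed_order(M))
```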

DeCdu

Like the DeCu methods, the DeCdu methods are based on the introduction of an interpolation process between the iterations. In this case, the interpolated quantity is the function $G$. The name is due to the fact that we formally interpolate $\frac{du}{dt} = G(t, u)$.

We start with two subtimenodes, associated with $t_n$ and $t_{n+1}$, and with $\underline{U}^{(0)}$, and we perform the first iteration of the DeC method, as in (21), getting $\underline{U}^{(1)}$, which is $O(\Delta t^2)$-accurate. Then, we can compute $G(\underline{U}^{(1)})$, whose components allow us to get an $O(\Delta t^2)$-accurate global reconstruction of $G$ in the interval $[t_n, t_{n+1}]$ through Lagrange interpolation. We thus perform an interpolation to retrieve the approximated values of $G$ in three equispaced subtimenodes in the interval $[t_n, t_{n+1}]$, getting $G^{*(1)}$. Then, we compute

| 28 |

which is in $\mathbb{R}^3$ and $O(\Delta t^3)$-accurate. We can iteratively continue, alternating interpolations of $G$ and iterations, obtaining the general updating formula

| 29 |

with $\underline{U}^{(p)} \in \mathbb{R}^{p+1}$ and $O(\Delta t^{p+1})$-accurate. Considerations analogous to those for the DeCu method hold on the advantage of performing a final iteration with no interpolation when the final number of subtimenodes is fixed. Also in this case, the reader is referred to Fig. 2 for a better understanding of the method.
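The bDeCdu variant differs from the bDeCu sketch only in what is interpolated: the values of $G$ (i.e., of $du/dt$) are carried to one more node before iterations $2, \dots, M$, and the bDeC update is then applied on the enlarged set of nodes. The minimal sketch below assumes a scalar ODE, equispaced subtimenodes and the same hypothetical helper names as a self-contained illustration.

```python
import numpy as np
from numpy.polynomial import polynomial as P


def theta_matrix(nodes):
    """theta[m, r]: exact integral of the r-th Lagrange basis polynomial on [nodes[0], nodes[m]]."""
    M = len(nodes) - 1
    theta = np.zeros((M + 1, M + 1))
    for r in range(M + 1):
        psi = P.polyfromroots(np.delete(nodes, r))
        antider = P.polyint(psi / P.polyval(nodes[r], psi))
        theta[:, r] = P.polyval(nodes, antider) - P.polyval(nodes[0], antider)
    return theta


def interpolation_matrix(src, dst):
    """H[i, r] = psi_r(dst[i]), Lagrange basis on src evaluated at dst."""
    H = np.ones((len(dst), len(src)))
    for r in range(len(src)):
        for k in range(len(src)):
            if k != r:
                H[:, r] *= (dst - src[k]) / (src[r] - src[k])
    return H


def bdecdu_step(G, t_n, u_n, dt, M):
    """One bDeCdu step for a scalar ODE (P = M + 1 iterations): G is interpolated, not u."""
    nodes = np.linspace(0.0, 1.0, 2)
    U = np.full(2, u_n, dtype=float)
    for p in range(1, M + 2):
        G_vals = np.array([G(t_n + s * dt, v) for s, v in zip(nodes, U)])
        if 2 <= p <= M:                                # G(U^(p-1)) -> G^{*(p-1)} on one more node
            new_nodes = np.linspace(0.0, 1.0, len(nodes) + 1)
            G_vals = interpolation_matrix(nodes, new_nodes) @ G_vals
            nodes = new_nodes
        U = u_n + dt * theta_matrix(nodes) @ G_vals
    return U[-1]


def integrate(step, G, u0, T, N, **kwargs):
    """Apply a one-step method N times with constant step T / N."""
    dt = T / N
    u = u0
    for n in range(N):
        u = step(G, n * dt, u, dt, **kwargs)
    return u


def decay(t, u):
    return -u


def observed_order(M, N=20):
    """Observed order of bDeCdu on u' = -u, u(0) = 1, T = 1 (expected: M + 1)."""
    errs = [abs(integrate(bdecdu_step, decay, 1.0, 1.0, n, M=M) - np.exp(-1.0))
            for n in (N, 2 * N)]
    return np.log2(errs[0] / errs[1])


for M in (1, 2, 3):
    print(M, observed_order(M))
```

On linear problems such as $u' = -u$, this sketch returns the same values as the bDeCu one, because interpolating $u$ and then applying a linear $G$ is the same as interpolating $G$.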

DeCu and DeCdu with Gauss-Lobatto Subtimenodes

As already explained, GL subtimenodes can guarantee an accuracy equal to $2M$. In such a case, if the final number of subtimenodes is fixed, we start with two subtimenodes and we alternate iterations of the DeC method and interpolations as in the equispaced case, adding one subtimenode at each iteration until reaching the desired $M+1$ subtimenodes; then, we continue with normal iterations of the DeC until we get the maximal order of accuracy associated with such a choice. The updating formulas are identical to the ones already presented. The interpolation is not performed at the first iteration and from the $(M+1)$th iteration on. On the other hand, if the order $P$ is fixed, the most efficient choice is given by a final number of subtimenodes equal to $M+1$, with $M = \lceil P/2 \rceil$, and $P$ iterations.

Contrary to what one might think, it is not possible to postpone an interpolation process after the saturation of the maximal accuracy associated with some intermediate number of GL subtimenodes adopted in the early iterations. The interpolation processes must take place in the first iterations. This is due to the mismatch between the $O(\Delta t^{2M+1})$-accuracy of the operator $\mathcal{L}^2_\Delta$ associated with $M+1$ GL subtimenodes and the $O(\Delta t^{M+1})$-accuracy of the interpolation process with the same number of subtimenodes.

The DeC as RK

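An explicit RK method with $S$ stages applied in the interval $[t_n, t_{n+1}]$ reads
$$u^{(s)} = u_n + \Delta t \sum_{j=1}^{s-1} a_{s,j}\, G\big(t_n + c_j \Delta t, u^{(j)}\big), \quad s = 1, \dots, S, \qquad u_{n+1} = u_n + \Delta t \sum_{s=1}^{S} b_s\, G\big(t_n + c_s \Delta t, u^{(s)}\big). \tag{30}$$

The coefficients $a_{s,j}$, $b_s$, and $c_s$ uniquely characterize the RK method and can be stored, respectively, in the strictly lower triangular matrix $A$ and in the vectors $\mathbf{b}$ and $\mathbf{c}$, often summarized in a Butcher tableau
$$\begin{array}{c|c} \mathbf{c} & A \\ \hline & \mathbf{b}^T \end{array}$$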
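For reference, a minimal sketch of one step of (30) given a Butcher tableau; the function name `explicit_rk_step` is hypothetical, and the classical fourth order RK method is used only as a usage example. Because $A$ is strictly lower triangular, stage $s$ only needs the stages $j < s$.

```python
import numpy as np


def explicit_rk_step(A, b, c, G, t_n, u_n, dt):
    """One step of the explicit RK method (30) with Butcher tableau (A, b, c)."""
    A, b, c = (np.asarray(x, dtype=float) for x in (A, b, c))
    S = len(b)
    k = []                                                        # k[j] = G(t_n + c_j dt, u^(j))
    for s in range(S):
        u_s = u_n + dt * sum(A[s, j] * k[j] for j in range(s))   # only j < s: explicit
        k.append(G(t_n + c[s] * dt, u_s))
    return u_n + dt * sum(b[s] * k[s] for s in range(S))


# Usage example: classical RK4 on u' = -u, one step of size 0.1 from u = 1
A_rk4 = [[0, 0, 0, 0], [0.5, 0, 0, 0], [0, 0.5, 0, 0], [0, 0, 1, 0]]
b_rk4 = [1 / 6, 1 / 3, 1 / 3, 1 / 6]
c_rk4 = [0, 0.5, 0.5, 1]
u1 = explicit_rk_step(A_rk4, b_rk4, c_rk4, lambda t, u: -u, 0.0, 1.0, 0.1)
print(u1)
```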

It is well known, as presented in [18, 21, 24], that DeC methods can be written in RK form. This also holds for the new methods, DeCu and DeCdu. In this section, we will explicitly construct their Butcher tableaux. We will adopt a zero-based numeration and the following convention for slicing. If $A$ is a matrix, we denote by $A_{i:j,k:\ell}$ its slice from the $i$th row to the $j$th row (included) and from the $k$th column to the $\ell$th column (included). We omit the last (first) index in case we want to include all the entries until the end (from the beginning), e.g., $A_{i:,:\ell}$. The same notation is assumed for vectors. We also define the vectors of the coefficients used in the different iterations of the new methods and, for the original DeC method, the corresponding fixed vector. In order to make the Butcher tableaux as compact as possible, the computation of the solution in the different subtimenodes at the first iteration will always be made through the explicit Euler method. This small modification has no impact on the formal accuracy, since the first iteration is meant to provide a first order approximation of the solution.

We will focus on equispaced subtimenodes. The extension to the GL case is straightforward: it suffices to repeat the block without interpolation, related to the final iteration of the standard method, as many times as needed in the optimal case.

DeC

We recall the general updating formula of the DeC methods in matrix form

| 31 |

If we align the iterations one after the other and consider the approximation in each subtimenode of each iteration as an RK stage, we can pass to the RK formulation. Indeed, we do not repeat the redundant states, i.e., all the $u^{0,(p)}$, and we keep only $u^0 = u_n$ as representative of all of them. This leads to the RK formulation (30) with the Butcher tableau in Table 1, where we added on top and on the right side the references to the different iteration steps. The number of stages of this formulation amounts to $MP$ for any type of subtimenodes. If $\vartheta = 0$, the DeC method reduces to the bDeC method and the Butcher tableau simplifies to Table 2. In such a case, we observe that we do not need the whole vector $\underline{U}^{(P)}$: we can just compute the component associated with the final subtimenode, which directly provides $u_{n+1}$, leading to a total number of RK stages equal to $M(P-1)+1$.
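To illustrate how the bDeC stages can be collected into a Butcher tableau, the sketch below assembles $(A, \mathbf{b}, \mathbf{c})$ for equispaced subtimenodes, with the first iteration performed by explicit Euler as stated above. The stage ordering (stage 0 for $u_n$, then the $M$ unknown subtimenodes of each iteration $p = 1, \dots, P-1$) is our assumption and need not coincide with the layout of Table 2; the helper names are hypothetical. By construction the tableau has $M(P-1)+1$ stages, and the usage checks the third order conditions for $M = 2$, $P = 3$.

```python
import numpy as np
from numpy.polynomial import polynomial as P


def theta_matrix(nodes):
    """theta[m, r]: exact integral of the r-th Lagrange basis polynomial on [nodes[0], nodes[m]]."""
    M = len(nodes) - 1
    theta = np.zeros((M + 1, M + 1))
    for r in range(M + 1):
        psi = P.polyfromroots(np.delete(nodes, r))
        antider = P.polyint(psi / P.polyval(nodes[r], psi))
        theta[:, r] = P.polyval(nodes, antider) - P.polyval(nodes[0], antider)
    return theta


def bdec_butcher(M, P_iter):
    """Butcher tableau (A, b, c) of bDeC with M + 1 equispaced subtimenodes and
    P_iter >= 2 iterations, the first one performed with explicit Euler."""
    nodes = np.linspace(0.0, 1.0, M + 1)
    theta = theta_matrix(nodes)
    S = M * (P_iter - 1) + 1
    A, b, c = np.zeros((S, S)), np.zeros(S), np.zeros(S)

    def idx(p, m):                            # stage index of u^{m,(p)}, m = 1..M
        return 1 + (p - 1) * M + (m - 1)

    for m in range(1, M + 1):                 # first iteration: explicit Euler from u_n
        A[idx(1, m), 0] = nodes[m]
        c[idx(1, m)] = nodes[m]
    for p in range(2, P_iter):                # intermediate iterations, update (10)
        for m in range(1, M + 1):
            A[idx(p, m), 0] = theta[m, 0]
            for r in range(1, M + 1):
                A[idx(p, m), idx(p - 1, r)] = theta[m, r]
            c[idx(p, m)] = nodes[m]
    b[0] = theta[M, 0]                        # last iteration: only u^{M,(P)} = u_{n+1}
    for r in range(1, M + 1):
        b[idx(P_iter - 1, r)] = theta[M, r]
    return A, b, c


A, b, c = bdec_butcher(M=2, P_iter=3)
print(A.shape, b @ c, b @ c**2, b @ A @ c)
```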

Table 1.

RK structures for the original DeC with equispaced subtimenodes: $\mathbf{c}$ at the left, $\mathbf{b}$ at the bottom, $A$ in the middle

| A | ||||||||

|---|---|---|---|---|---|---|---|---|

| 0 | 0 | |||||||

Table 2.

RK structures for the original bDeC with equispaced subtimenodes: $\mathbf{c}$ at the left, $\mathbf{b}$ at the bottom, $A$ in the middle

| A | ||||||||

|---|---|---|---|---|---|---|---|---|

| 0 | 0 | |||||||

bDeCu

Let us recall the general updating formulas of the DeCu methods

| 32 |

| 33 |

to which we need to add an initial iteration made with the explicit Euler method and either a final iteration or, in the context of GL subtimenodes, some final iterations ($M$ in the optimal case) of the standard DeC method performed without interpolation. In this case, the stages of the RK method are given by all the components of the vectors $\underline{U}^{(p)}$ and $\underline{U}^{*(p)}$ (excluding the redundant states). Simple computations show that, for $\vartheta \neq 0$, the number of stages of the DeCu method coincides with the number of stages of the DeC method, so there is no computational advantage from this point of view. For this reason, we focus on the bDeCu method ($\vartheta = 0$), for which we have a substantial computational advantage. In such a case, the updating formulas (32) and (33) reduce to

| 34 |

| 35 |

The right-hand sides of the previous equations involve the computation of $G$ in interpolated states only and, in particular, the update of $\underline{U}^{*(p)}$ only depends on $\underline{U}^{*(p-1)}$. This means that the scheme can be rewritten in terms of the interpolated vectors only (plus the states of the final iterations, which are not interpolated), drastically reducing the number of stages. The RK coefficients are reported in Table 3, in which we have

| 36 |

The total number of RK stages is given by $MP - \frac{M(M-1)}{2}$, i.e., $\frac{(M-1)(M-2)}{2}$ fewer than the original bDeC method. The formula holds for both equispaced and GL subtimenodes.

Table 3.

RK structures for the bDeCu method: $\mathbf{c}$ at the left, $\mathbf{b}$ at the bottom, $A$ in the middle

| A | dim | |||||||||

|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 0 | 1 | ||||||||

| 2 | ||||||||||

| 3 | ||||||||||

| 4 | ||||||||||

| M | ||||||||||

| M | ||||||||||

Remark 1

(On the relation between stages and computational cost) The number of stages is not completely explanatory of the computational costs of the new algorithms. In the context of the novel methods, the cost associated to the computation of the different stages is not homogeneous, especially in applications to PDEs, as some of them are “properly” computed through the updating formula (16) of the original scheme, while the others are got through an interpolation process which is much cheaper. As an example, (32) can be computed as . In particular, as already specified, the novel DeCu methods for are characterized by the same number of stages as the original DeC, nevertheless, roughly half of them is computed through interpolation. For this reason, they have been numerically investigated for .

DeCdu

Again, we start by recalling the updating formulas of the method

| 37 |

supplemented with an initial Euler step and a final iteration or, for GL subtimenodes, at most M final iterations of DeC without interpolation. The usual identification of subtimenodes and RK stages leads to the Butcher tableau in Table 4, in which we have

| 38 |

| 39 |

The number of stages in this case amounts to $MP - \frac{M(M-1)}{2}$, with a computational advantage of $\frac{M(M-1)}{2}$ stages with respect to the original method.

Table 4.

RK structures for the DeCdu method with equispaced subtimenodes: $\mathbf{c}$ at the left, $\mathbf{b}$ at the bottom, $A$ in the middle

| A | dim | ||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 0 | 1 | |||||||||

| 1 | |||||||||||

| 2 | |||||||||||

| 3 | |||||||||||

| M | |||||||||||

Also in this case, it is worth paying particular attention to the method given by $\vartheta = 0$, i.e., bDeCdu. Again, the possibility to compute $u_{n+1}$ without any need for the other components of the final vector further reduces the number of stages to $M(P-1) + 1 - \frac{M(M-1)}{2}$.

We conclude this section with two tables, Tables 5 and 6, containing the number of stages of the original methods and of the novel ones, for equispaced and GL subtimenodes respectively, up to order 13, together with the associated theoretical speed up factors, computed as the ratios between the stages of the original methods and the stages of the modified methods.

Table 5.

Number of stages for the original (DeC, bDeC) and novel (DeCu, DeCdu, bDeCu, bDeCdu) methods with equispaced subtimenodes and speed up factor

| P | M | DeC/DeCu stages | DeCdu stages | DeCdu speed up | bDeC stages | bDeCu stages | bDeCdu stages | bDeCu speed up | bDeCdu speed up |
|---|---|---|---|---|---|---|---|---|---|
| 2 | 1 | 2 | 2 | 1.000 | 2 | 2 | 2 | 1.000 | 1.000 |
| 3 | 2 | 6 | 5 | 1.200 | 5 | 5 | 4 | 1.000 | 1.250 |
| 4 | 3 | 12 | 9 | 1.333 | 10 | 9 | 7 | 1.111 | 1.429 |
| 5 | 4 | 20 | 14 | 1.429 | 17 | 14 | 11 | 1.214 | 1.545 |
| 6 | 5 | 30 | 20 | 1.500 | 26 | 20 | 16 | 1.300 | 1.625 |
| 7 | 6 | 42 | 27 | 1.556 | 37 | 27 | 22 | 1.370 | 1.682 |
| 8 | 7 | 56 | 35 | 1.600 | 50 | 35 | 29 | 1.429 | 1.724 |
| 9 | 8 | 72 | 44 | 1.636 | 65 | 44 | 37 | 1.477 | 1.757 |
| 10 | 9 | 90 | 54 | 1.667 | 82 | 54 | 46 | 1.519 | 1.783 |
| 11 | 10 | 110 | 65 | 1.692 | 101 | 65 | 56 | 1.554 | 1.804 |
| 12 | 11 | 132 | 77 | 1.714 | 122 | 77 | 67 | 1.584 | 1.821 |
| 13 | 12 | 156 | 90 | 1.733 | 145 | 90 | 79 | 1.611 | 1.835 |

Table 6.

Number of stages for the original (DeC, bDeC) and novel (DeCu, DeCdu, bDeCu, bDeCdu) methods with GL subtimenodes and speed up factor

| P | M | DeC/DeCu stages | DeCdu stages | DeCdu speed up | bDeC stages | bDeCu stages | bDeCdu stages | bDeCu speed up | bDeCdu speed up |
|---|---|---|---|---|---|---|---|---|---|
| 2 | 1 | 2 | 2 | 1.000 | 2 | 2 | 2 | 1.000 | 1.000 |
| 3 | 2 | 6 | 5 | 1.200 | 5 | 5 | 4 | 1.000 | 1.250 |
| 4 | 2 | 8 | 7 | 1.143 | 7 | 7 | 6 | 1.000 | 1.167 |
| 5 | 3 | 15 | 12 | 1.250 | 13 | 12 | 10 | 1.083 | 1.300 |
| 6 | 3 | 18 | 15 | 1.200 | 16 | 15 | 13 | 1.067 | 1.231 |
| 7 | 4 | 28 | 22 | 1.273 | 25 | 22 | 19 | 1.136 | 1.316 |
| 8 | 4 | 32 | 26 | 1.231 | 29 | 26 | 23 | 1.115 | 1.261 |
| 9 | 5 | 45 | 35 | 1.286 | 41 | 35 | 31 | 1.171 | 1.323 |
| 10 | 5 | 50 | 40 | 1.250 | 46 | 40 | 36 | 1.150 | 1.278 |
| 11 | 6 | 66 | 51 | 1.294 | 61 | 51 | 46 | 1.196 | 1.326 |
| 12 | 6 | 72 | 57 | 1.263 | 67 | 57 | 52 | 1.175 | 1.288 |
| 13 | 7 | 91 | 70 | 1.300 | 85 | 70 | 64 | 1.214 | 1.328 |
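The speed up factors in Tables 5 and 6 are plain ratios of stage counts. As a quick consistency check, the Python sketch below recomputes some of them from the tabulated values. It also verifies an observation of ours that is not stated in the text, namely that, for both distributions of subtimenodes, the DeC and bDeC columns match $MP$ and $1 + M(P-1)$ stages, respectively.

```python
# Stage counts copied from Tables 5 and 6: P -> (M, DeC, DeCdu, bDeC, bDeCu, bDeCdu)
EQUISPACED = {
    3: (2, 6, 5, 5, 5, 4),
    5: (4, 20, 14, 17, 14, 11),
    9: (8, 72, 44, 65, 44, 37),
    13: (12, 156, 90, 145, 90, 79),
}
GL = {
    4: (2, 8, 7, 7, 7, 6),
    7: (4, 28, 22, 25, 22, 19),
    13: (7, 91, 70, 85, 70, 64),
}


def speed_ups(row):
    """Speed up = stages of the original method / stages of the modified method."""
    _, dec, decdu, bdec, bdecu, bdecdu = row
    return round(dec / decdu, 3), round(bdec / bdecu, 3), round(bdec / bdecdu, 3)


def check_closed_forms(table):
    """Our observation: DeC uses M*P stages and bDeC uses 1 + M*(P - 1) stages."""
    return all(dec == M * P and bdec == 1 + M * (P - 1)
               for P, (M, dec, _, bdec, _, _) in table.items())


for name, table in (("equispaced", EQUISPACED), ("GL", GL)):
    for P, row in table.items():
        print(name, P, speed_ups(row))  # (DeCdu, bDeCu, bDeCdu) speed ups
    print(name, "closed forms hold:", check_closed_forms(table))
```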

Stability Analysis

In this section, we study the stability of the novel DeC schemes and prove two original results. First, the stability functions of bDeCu and bDeCdu coincide with that of bDeC and depend only on the order, not on the distribution of the subtimenodes. Second, for a fixed distribution of subtimenodes and a fixed order, the DeCdu methods coincide with the DeCu methods on linear problems. For all the schemes, we show the stability regions obtained with symbolic and numerical tools.

Let us start by reviewing some known results for RK methods [10, 37]. The linear stability of an RK scheme is tested on Dahlquist's problem $y'(t) = \lambda y(t)$, with $\lambda \in \mathbb{C}$. Since RK schemes are linear, a general RK step applied to this problem can be written as $y_{n+1} = R(z)\,y_n$, with $z = \lambda \Delta t$ and $R$ the stability function of the method, defined as

$$R(z) = 1 + z\,\boldsymbol{b}^{\mathrm{T}}(I - zA)^{-1}\boldsymbol{1}, \tag{40}$$

where $\boldsymbol{1}$ is the vector with all entries equal to 1. The set of complex numbers $z$ such that $|R(z)| \le 1$ is called the stability region. We remark that the stability function of an explicit RK method is a polynomial. In fact, the inverse of $I - zA$ can be written through the Taylor expansion

$$(I - zA)^{-1} = \sum_{r=0}^{\infty} z^r A^r, \tag{41}$$

and, since $A$ is strictly lower triangular, it is nilpotent, i.e., there exists an integer $r$ such that $A^r = 0$; the minimum $\bar{r}$ of such integers is called the degree of nilpotence. By definition of $\bar{r}$, it is clear that $A^r = 0$ for all $r \ge \bar{r}$. Moreover, $\bar{r} \le S$, where $S$ is the number of stages of the explicit RK method and the dimension of the matrix $A$. Hence, $R(z)$ is a polynomial in $z$ of degree at most $S$. We recall [37] that, if an RK method is of order $P$, then

$$R(z) = \sum_{k=0}^{P} \frac{z^k}{k!} + \mathcal{O}\left(z^{P+1}\right). \tag{42}$$
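To make (40) to (42) concrete, the sketch below computes the coefficients of $R(z)$ for an explicit Butcher tableau through the finite Neumann series: the coefficient of $z^{k+1}$ is $\boldsymbol{b}^{\mathrm{T}}A^k\boldsymbol{1}$. The classical four-stage RK4 tableau is used purely as an illustration (it is not one of the DeC methods of this paper); its coefficients reproduce $1/k!$ up to $k = 4$, as (42) predicts, and the series stops after $S = 4$ terms because $A$ is nilpotent.

```python
from fractions import Fraction as F


def stability_coefficients(A, b):
    """Coefficients c_k of R(z) = sum_k c_k z^k for an explicit RK method.

    Uses R(z) = 1 + z b^T (I - zA)^{-1} 1 with (I - zA)^{-1} = sum_r z^r A^r,
    which is a finite sum because a strictly lower triangular A is nilpotent.
    """
    S = len(b)
    coeffs = [F(1)]
    v = [F(1)] * S                                   # v = A^k 1, starting with k = 0
    for _ in range(S):                               # A^S = 0: at most S further terms
        coeffs.append(sum(bi * vi for bi, vi in zip(b, v)))            # b^T A^k 1
        v = [sum(A[i][j] * v[j] for j in range(S)) for i in range(S)]  # A^(k+1) 1
    return coeffs


# Classical RK4 tableau (illustration only)
A_rk4 = [[F(0), F(0), F(0), F(0)],
         [F(1, 2), F(0), F(0), F(0)],
         [F(0), F(1, 2), F(0), F(0)],
         [F(0), F(0), F(1), F(0)]]
b_rk4 = [F(1, 6), F(1, 3), F(1, 3), F(1, 6)]

print(stability_coefficients(A_rk4, b_rk4))  # coefficients 1, 1, 1/2, 1/6, 1/24
```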

Thus, we know the first $P+1$ terms of the stability functions of all the DeC methods of order $P$ presented above. Further, the following result holds.

Theorem 2

The stability function of any bDeC, bDeCu, and bDeCdu method of order P is

$$R(z) = \sum_{k=0}^{P} \frac{z^k}{k!} \tag{43}$$

and does not depend on the distribution of the subtimenodes.

Proof

The proof of this theorem relies only on the block structure of the matrix A for such schemes. In all these cases, the matrix A can be written as

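$$A = \begin{pmatrix} \mathbf{0} & \mathbf{0} & \cdots & \mathbf{0} & \mathbf{0} \\ A_{2,1} & \mathbf{0} & \cdots & \mathbf{0} & \mathbf{0} \\ A_{3,1} & A_{3,2} & \ddots & \vdots & \vdots \\ \vdots & \vdots & \ddots & \mathbf{0} & \mathbf{0} \\ A_{P,1} & A_{P,2} & \cdots & A_{P,P-1} & \mathbf{0} \end{pmatrix}, \tag{44}$$

where the $A_{i,j}$ are block matrices and the $\mathbf{0}$ are zero block matrices of different sizes. The number of blocks in each row and column of $A$ is $P$, the order of the scheme. By induction, we can prove that $A^r$ has zero blocks on and above the main block diagonal and on the first $r-1$ block diagonals below it, i.e., $(A^r)_{i,j} = \mathbf{0}$ if $i < j + r$, where the indices refer to the blocks. Indeed, for $r = 1$ it is true that $A_{i,j} = \mathbf{0}$ if $i < j + 1$. Now, let us consider the entry $(A^{r+1})_{i,j}$ with $i < j + r + 1$, i.e., $i \le j + r$. Such entry is defined as $(A^{r+1})_{i,j} = \sum_{k=1}^{P} A_{i,k}(A^r)_{k,j}$, and we will prove that all the terms of the sum are $\mathbf{0}$. If $k \ge i$, then $A_{i,k} = \mathbf{0}$ because of the structure of $A$; while, if $k < i$, we have $k < i \le j + r$, so $(A^r)_{k,j} = \mathbf{0}$ by induction.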

In particular, this means that $A^P = 0$, because any block row index $i$ is smaller than $j + P$ for any block column index $j$, as $P$ is the number of blocks in each row and column. Hence,

$$(I - zA)^{-1} = \sum_{r=0}^{P-1} z^r A^r. \tag{45}$$

Plugging this result into (40), we can state that the stability function $R(z)$ is a polynomial of degree at most $P$, the order of the scheme. Since all its terms of degree lower than or equal to $P$ must agree with the expansion of the exponential function (42), the stability function must be (43). Finally, let us notice that no assumption has been made on the distribution of the subtimenodes; hence, the result holds for any distribution.
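Theorem 2 can also be checked numerically. The sketch below assumes, for illustration, that the bDeC update (10) applied to Dahlquist's problem with $y_0 = 1$ takes the Picard-type form $y^{(p)}_m = 1 + z\sum_{r=0}^{M}\theta^m_r\, y^{(p-1)}_r$, where $\theta^m_r = \int_0^{\beta_m}\ell_r(s)\,\mathrm{d}s$ integrates the Lagrange basis on normalized subtimenodes $0 = \beta_0 < \dots < \beta_M = 1$ and all subtimenodes start from $y_0$; this form should be compared with (10). With equispaced nodes ($M = P-1$) and with GL nodes ($M = 2$ for $P = 3, 4$ and $M = 3$ for $P = 5, 6$, as in Table 6), the end-point value after $P$ iterations coincides with (43) up to round-off. The basis is evaluated in product form and integrated with Gauss-Legendre quadrature, a choice of ours to avoid the ill-conditioned monomial basis.

```python
import math
import numpy as np


def lagrange_eval(nodes, r, s):
    """r-th Lagrange basis polynomial on `nodes`, evaluated at points s (product form)."""
    s = np.asarray(s, dtype=float)
    out = np.ones_like(s)
    for k, node in enumerate(nodes):
        if k != r:
            out *= (s - node) / (nodes[r] - node)
    return out


def theta_matrix(nodes):
    """theta[m, r] = integral over [0, nodes[m]] of the r-th Lagrange basis polynomial."""
    n = len(nodes)
    x, w = np.polynomial.legendre.leggauss(n)       # exact up to degree 2n - 1
    theta = np.zeros((n, n))
    for m, b in enumerate(nodes):
        s = 0.5 * b * (x + 1.0)                      # Gauss points mapped to [0, b]
        for r in range(n):
            theta[m, r] = 0.5 * b * np.dot(w, lagrange_eval(nodes, r, s))
    return theta


def bdec_dahlquist(z, nodes, P):
    """Assumed bDeC form: y_m^(p) = 1 + z * sum_r theta[m, r] * y_r^(p-1), starting from y = 1."""
    theta = theta_matrix(nodes)
    y = np.ones(len(nodes), dtype=complex)           # iteration 0: all subtimenodes equal y0 = 1
    for _ in range(P):
        y = 1.0 + z * (theta @ y)                    # row 0 stays 1 because theta[0, :] = 0
    return y[-1]


def truncated_exp(z, P):
    """Right-hand side of (43)."""
    return sum(z**k / math.factorial(k) for k in range(P + 1))


a = 0.5 * (1.0 - 1.0 / math.sqrt(5.0))               # interior GL points for M = 3 on [0, 1]
cases = [(P, np.linspace(0.0, 1.0, P)) for P in range(2, 8)]         # equispaced, M = P - 1
cases += [(3, [0.0, 0.5, 1.0]), (4, [0.0, 0.5, 1.0]),                # GL, M = 2
          (5, [0.0, a, 1.0 - a, 1.0]), (6, [0.0, a, 1.0 - a, 1.0])]  # GL, M = 3

for P, nodes in cases:
    for z in (-1.5, 0.3 + 0.8j, -2.0 + 1.0j):
        assert np.isclose(bdec_dahlquist(z, nodes, P), truncated_exp(z, P), rtol=1e-11, atol=1e-12)
print("bDeC end-point value equals the degree-P Taylor polynomial in all cases")
```

The GL cases with $P = 2M$ are the most telling: intermediate subtimenode values are not exact there, yet the end-point value still matches (43), in line with the fact that the proof only uses the block structure of $A$ and the order of the method.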

In the following, we will show that, given a certain order P and a distribution of subtimenodes, the DeCu and DeCdu methods are equivalent on linear problems and, as a consequence, they share the same stability functions.

Theorem 3

(Equivalence on linear problems) Given an order P, a distribution of subtimenodes and , the schemes DeCu and DeCdu applied to linear systems are equivalent.

Proof

Without loss of generality, we can focus on Dahlquist's equation $y' = \lambda y$. Since the schemes are linear, the same arguments apply component-wise to linear systems of equations. Let us start by explicitly writing down the general updating formula (29) of the DeCdu method for Dahlquist's equation

| 46 |

For the DeCu method, the updating formula (26) becomes

| 47 |

Now, using the definition of , we obtain

| 48 |

which coincides with (46). This means that, at each iteration, the two modified schemes coincide.

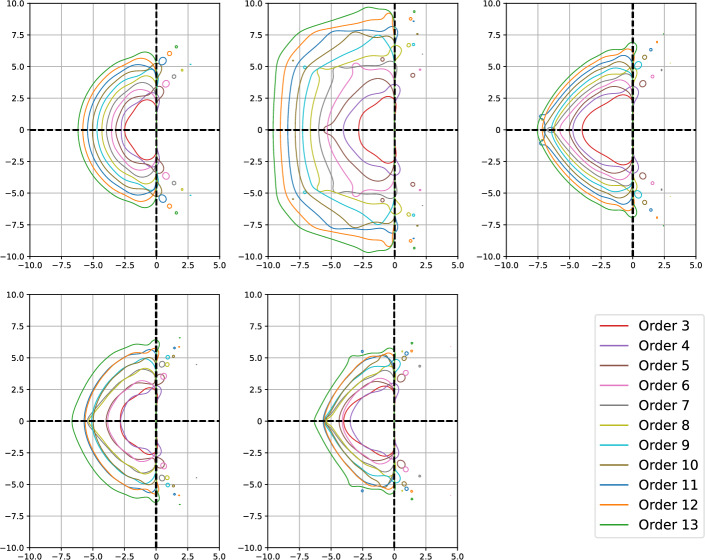

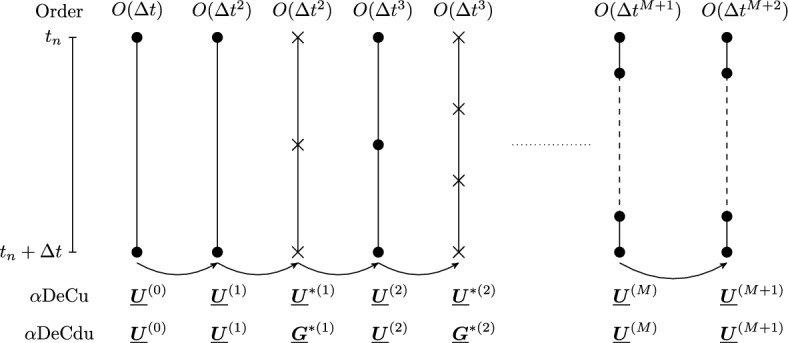

In Fig. 3, we depict the stability regions of all the presented methods from order 3 to 13. We remark that there is no difference in terms of stability between bDeC, bDeCu, and bDeCdu, nor any dependence on the distribution of the subtimenodes, and that sDeCu and sDeCdu have the same stability regions for fixed subtimenodes, in agreement with Theorems 2 and 3.
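By Theorem 2, the stability region of bDeC, bDeCu, and bDeCdu of order $P$ is $\{z \in \mathbb{C} : |\sum_{k=0}^{P} z^k/k!| \le 1\}$. The sketch below estimates where this region, starting from the origin, ends on the negative real axis, using a plain scan; the step size and scanning range are arbitrary choices of ours. It does not cover the sDeC family, whose stability functions differ.

```python
import math


def bdec_stability(z, P):
    """Stability function (43) shared by bDeC, bDeCu, and bDeCdu of order P."""
    return sum(z**k / math.factorial(k) for k in range(P + 1))


def negative_real_limit(P, step=1e-4, x_min=-10.0):
    """Most negative scanned x such that |R(s)| <= 1 for all scanned s in [x, 0]."""
    x, k = 0.0, 0
    while True:
        k += 1
        s = -k * step
        if s < x_min or abs(bdec_stability(s, P)) > 1.0 + 1e-12:
            return x
        x = s


for P in range(3, 9):
    print(P, round(negative_real_limit(P), 3))
```

For $P = 3$ and $P = 4$ the scan recovers the familiar real-axis limits of third and fourth order explicit RK methods (about $-2.51$ and $-2.79$).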

Fig. 3.

Stability regions for various schemes with order from 3 to 13: bDeC, bDeCu, and bDeCdu (equivalent) for any distribution of subtimenodes (top left), sDeC for equispaced subtimenodes (top center), sDeCu and sDeCdu (equivalent) for equispaced subtimenodes (top right), sDeC for GL subtimenodes (bottom left), sDeCu and sDeCdu (equivalent) for GL subtimenodes (bottom center), legend (bottom right)

Application to Hyperbolic PDEs

In this section, we apply the novel explicit efficient DeC techniques to hyperbolic PDEs. We focus on the CG framework, which is particularly challenging compared with FV and DG formulations due to the presence of a global sparse mass matrix. In particular, we consider two strategies that avoid the related issues. We describe the operators $\mathcal{L}^1_\Delta$ and $\mathcal{L}^2_\Delta$ for the two strategies in the bDeC formulation and show how to apply the bDeCu efficient modification. The proofs of the properties of the operators are provided in the supplementary material.

Continuous Galerkin FEM

The general form of a hyperbolic system of balance laws reads

| 49 |

where , with some initial condition on the space domain , and boundary conditions on . We consider a tessellation of with characteristic length h, made of closed convex polytopes K, and we introduce the space of continuous piecewise polynomial functions . We choose a basis of , e.g., the Lagrange polynomials or the Bernstein polynomials, such that each basis function can be associated with a degree of freedom (DoF) and such that with . Further, we assume a normalization of the basis functions yielding Then, we project the weak formulation in space of the PDE (49) over , i.e., we look for such that for any

| 50 |

where the stabilization term is added to avoid the instabilities associated to central schemes. Thanks to the assumption on the support of the basis functions, it is possible to recast (50) as

| 51 |

where is the vector of all and the space residuals are defined as

| 52 |

We would like to solve this system of ODEs in time without solving any linear system at each iteration and without inverting the large mass matrix.

The first possibility consists in adopting particular basis functions which, combined with the induced quadrature formulas, allow one to achieve a high order lumping of the mass matrix. This leads to a system of ODEs like the one described in the previous section, to which the novel methods can be applied in a straightforward way. Examples of such basis functions are the Lagrange polynomials associated with the GL points in one-dimensional (1D) domains and the Cubature elements in two-dimensional (2D) domains, introduced in [15] and studied in [20, 26, 27, 32]. The second strategy, introduced by Abgrall in [2] and based on the concept of residual [1, 4, 6, 33], exploits the abstract DeC formulation presented in Sect. 2 by introducing a first order lumping of the mass matrix in the operator $\mathcal{L}^1_\Delta$, which results in a fully explicit scheme, as we explain in detail in the following.

DeC for CG

In this section, we define the operators $\mathcal{L}^1_\Delta$ and $\mathcal{L}^2_\Delta$ of the DeC formulation for CG FEM discretizations proposed by Abgrall in [2]. In this context, the parameter $\Delta$ of the DeC is the mesh parameter h of the space discretization. We assume CFL conditions of the type .

The definition of the high order implicit operator $\mathcal{L}^2_\Delta$ is not very different from the one seen in the context of the bDeC method for ODEs. We denote by the exact solution of the ODE (51) in the subtimenode and by its approximation, containing, respectively, all components and . As usual, for the first subtimenode we set . Starting from the exact integration of (51) over and replacing by its Mth order interpolation in time associated with the subtimenodes, we get the definition of the operator $\mathcal{L}^2_\Delta$ as

| 53 |

where, for any and , we have

| 54 |

The solution to is th order accurate. Unfortunately, this problem is a large nonlinear system that is difficult to solve directly. Following the DeC philosophy, we introduce the operator $\mathcal{L}^1_\Delta$, which makes use of low order approximations of (51) to achieve an explicit formulation. In particular, we use the forward Euler time discretization and a first order mass lumping, obtaining

| 55 |

whose components, for any and , are defined as

| 56 |

with .

Remark 2

(Choice of the basis functions) For any m and i, we can explicitly compute from if and only if . This means that the construction of the operator $\mathcal{L}^1_\Delta$ is not well-posed for an arbitrary polynomial basis. For example, with Lagrange polynomials of degree 2 on triangular meshes, we have for some i. However, the construction is always well-posed with Bernstein bases, which verify for all i.
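The example in Remark 2 can be made concrete on a single triangle $K$. We read the missing condition as a condition on the lumped masses $\int_K \varphi_i$ (our interpretation). Using the classical formula $\int_K \lambda_1^a\lambda_2^b\lambda_3^c = 2|K|\,a!\,b!\,c!/(a+b+c+2)!$ for barycentric coordinates, the sketch below computes these integrals, in units of $|K|$, and shows that the degree 2 Lagrange vertex functions $\lambda_i(2\lambda_i - 1)$ integrate to zero, whereas all quadratic Bernstein polynomials have positive integrals.

```python
from fractions import Fraction
from math import factorial


def monomial_integral(a, b, c):
    """Integral of l1^a * l2^b * l3^c over a triangle, in units of the triangle area |K|."""
    return Fraction(2 * factorial(a) * factorial(b) * factorial(c), factorial(a + b + c + 2))


def integrate(poly):
    """poly: dict mapping exponent triples (a, b, c) to coefficients."""
    return sum(coef * monomial_integral(*exps) for exps, coef in poly.items())


# Degree 2 Lagrange basis in barycentric coordinates
lagrange_vertex = {(2, 0, 0): 2, (1, 0, 0): -1}    # l1 * (2*l1 - 1)
lagrange_edge = {(1, 1, 0): 4}                     # 4 * l1 * l2
# Quadratic Bernstein basis
bernstein_vertex = {(2, 0, 0): 1}                  # l1^2
bernstein_edge = {(1, 1, 0): 2}                    # 2 * l1 * l2

for name, poly in [("Lagrange vertex", lagrange_vertex), ("Lagrange edge", lagrange_edge),
                   ("Bernstein vertex", bernstein_vertex), ("Bernstein edge", bernstein_edge)]:
    print(name, integrate(poly))
```

Since every vertex function integrates to zero on each triangle, its lumped mass vanishes on the whole mesh, so division by it in $\mathcal{L}^1_\Delta$ is impossible.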

Let us characterize the iterative formula (3) in this context. We have

| 57 |

where consists of M subtimenode components , each of them containing I DoF components . Just like in the ODE case, procedure (57) results in an explicit iterative algorithm because the operator $\mathcal{L}^1_\Delta$ is explicit. After a direct computation, the update of the component associated with the general DoF i in the mth subtimenode at the pth iteration reads

| 58 |

We remark that also in this case we assume whenever p or m are equal to 0. As for the optimal number of iterations, considerations analogous to those made in the ODE case hold. Finally, it is worth observing that the resulting DeC schemes cannot be written in RK form, due to the difference between the mass matrices in $\mathcal{L}^1_\Delta$ and $\mathcal{L}^2_\Delta$. In fact, this DeC formulation is not obtained via a straightforward application of the method of lines.
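The explicit structure of this iteration can be sketched as follows, under assumptions of ours that should be checked against (51) to (58): the semi-discrete system reads $\mathcal{M}\,\frac{\mathrm{d}\boldsymbol{c}}{\mathrm{d}t} + \boldsymbol{\Phi}(\boldsymbol{c}) = \boldsymbol{0}$ with mass matrix $\mathcal{M}$, the low order operator (denoted here $\mathcal{L}^1_\Delta$) uses forward Euler and the diagonal lumped mass $\int_\Omega \varphi_i$, the high order operator ($\mathcal{L}^2_\Delta$) uses the full $\mathcal{M}$ with the Lagrange-basis weights $\theta^m_r$, and the iterative formula (3) is the standard DeC update $\mathcal{L}^1_\Delta(\underline{\boldsymbol{c}}^{(p)}) = \mathcal{L}^1_\Delta(\underline{\boldsymbol{c}}^{(p-1)}) - \mathcal{L}^2_\Delta(\underline{\boldsymbol{c}}^{(p-1)})$. Since the Euler terms of $\mathcal{L}^1_\Delta$ only involve the fixed initial value, each iteration reduces to a division by the lumped mass. The test problem (P1 elements for periodic linear advection) and all function names are hypothetical; the final check compares the iterates with a direct solve of $\mathcal{L}^2_\Delta = 0$, which is precisely the linear solve the iteration avoids.

```python
import numpy as np


def lagrange_eval(nodes, r, s):
    """r-th Lagrange basis polynomial on `nodes`, evaluated at points s (product form)."""
    s = np.asarray(s, dtype=float)
    out = np.ones_like(s)
    for k, node in enumerate(nodes):
        if k != r:
            out *= (s - node) / (nodes[r] - node)
    return out


def theta_matrix(nodes):
    """theta[m, r] = integral over [0, nodes[m]] of the r-th Lagrange basis polynomial."""
    n = len(nodes)
    x, w = np.polynomial.legendre.leggauss(n)
    return np.array([[0.5 * b * np.dot(w, lagrange_eval(nodes, r, 0.5 * b * (x + 1.0)))
                      for r in range(n)] for b in nodes])


def dec_cg_step(c0, mass, lumped, phi, dt, nodes, n_iter):
    """Hypothetical DeC step for mass @ dc/dt + phi(c) = 0 with a lumped-mass L^1 operator.

    Each iteration applies c^{m,(p)} = c^{m,(p-1)} - L2_m(c^{(p-1)}) / lumped, where
    L2_m(c) = mass @ (c^m - c^0) + dt * sum_r theta[m, r] * phi(c^r).
    """
    theta = theta_matrix(nodes)
    c = np.tile(c0, (len(nodes), 1))                    # row m: DoF values at subtimenode m
    for _ in range(n_iter):
        phis = np.array([phi(cr) for cr in c])
        l2 = (c - c0) @ mass.T + dt * theta @ phis       # rows are L2_m; row 0 vanishes
        c = c - l2 / lumped                              # only the diagonal lumped mass is inverted
    return c


# Hypothetical test: P1 elements for u_t + a u_x = 0 with periodic boundary conditions on [0, 1]
I, a = 40, 1.0
h = 1.0 / I
x = np.arange(I) * h
eye = np.eye(I)
up, down = np.roll(eye, 1, axis=1), np.roll(eye, -1, axis=1)  # (up @ c)_i = c_{i+1}, (down @ c)_i = c_{i-1}
mass = h * (2.0 / 3.0 * eye + (up + down) / 6.0)              # consistent P1 mass matrix
lumped = mass.sum(axis=1)                                     # integrals of the hat functions (= h)
K = 0.5 * a * (up - down)                                     # Galerkin residual a (c_{i+1} - c_{i-1}) / 2
dt, nodes = 0.1 * h, np.array([0.0, 0.5, 1.0])
c0 = np.sin(2.0 * np.pi * x)

c_dec = dec_cg_step(c0, mass, lumped, lambda c: K @ c, dt, nodes, n_iter=60)

# Reference: solve L2(c) = 0 directly for the unknowns at subtimenodes 1..M
theta = theta_matrix(nodes)
n_sub = len(nodes) - 1
big = np.kron(np.eye(n_sub), mass) + dt * np.kron(theta[1:, 1:], K)
rhs = np.concatenate([mass @ c0 - dt * theta[m, 0] * (K @ c0) for m in range(1, n_sub + 1)])
c_ref = np.linalg.solve(big, rhs).reshape(n_sub, I)
print("max deviation from the implicit solution:", np.max(np.abs(c_dec[1:] - c_ref)))
```

In an actual DeC step only a few iterations are performed, matching the order; the many iterations here only serve to show that the fixed point of the explicit update is the high order implicit solution.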

bDeCu for CG

As for ODEs, it is possible to modify the original DeC for hyperbolic problems to obtain a new, more efficient method by introducing interpolation processes between the iterations. The underlying idea is the same: we increase the number of subtimenodes as the accuracy of the approximation increases. At the general iteration p, the interpolation process allows one to get from and then we perform the iteration via (58), getting

| 59 |

Application to Adaptivity

In this section, we show how to exploit the interpolation processes in the new schemes, DeCu and DeCdu, to design adaptive methods. In an original DeC method with a fixed number of subtimenodes, each iteration increases the order of accuracy with respect to the solution of the operator $\mathcal{L}^2_\Delta$. For this reason, performing more iterations than the order of accuracy of the discretization adopted in the construction of $\mathcal{L}^2_\Delta$ is formally useless, as already pointed out in Sect. 2. Instead, in a DeCu or DeCdu method, we could in principle keep adding subtimenodes through interpolation, always improving the accuracy of the approximation with respect to the exact solution of (6), until a convergence condition on the final component of (always associated to ) is met, e.g.,

| 60 |

with a desired tolerance. This leads to a p-adaptive version of the presented algorithms.
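A p-adaptive loop of this kind can be sketched on Dahlquist's problem with $y_0 = 1$ and $z = \lambda\Delta t$. The sketch rests on assumptions of ours rather than on the paper's definitions (26) and (29): iteration $p$ uses $p+1$ equispaced subtimenodes, the previous iterate is moved onto them by polynomial interpolation, the update has the Picard-type bDeC form $y^{(p)}_m = 1 + z\sum_r \theta^m_r\, y^{*}_r$, and the stopping test is a relative variation of the final component below a tolerance, in the spirit of (60). The function `adaptive_step` and its parameters are hypothetical.

```python
import numpy as np


def lagrange_eval(nodes, r, s):
    """r-th Lagrange basis polynomial on `nodes`, evaluated at points s (product form)."""
    s = np.asarray(s, dtype=float)
    out = np.ones_like(s)
    for k, node in enumerate(nodes):
        if k != r:
            out *= (s - node) / (nodes[r] - node)
    return out


def theta_matrix(nodes):
    """theta[m, r] = integral over [0, nodes[m]] of the r-th Lagrange basis polynomial."""
    n = len(nodes)
    x, w = np.polynomial.legendre.leggauss(n)
    return np.array([[0.5 * b * np.dot(w, lagrange_eval(nodes, r, 0.5 * b * (x + 1.0)))
                      for r in range(n)] for b in nodes])


def adaptive_step(z, tol=1e-12, p_max=30):
    """Hypothetical p-adaptive, bDeCu-like step for y' = lambda*y, y0 = 1, z = lambda*dt.

    Assumption (illustration only): iteration p uses p + 1 equispaced subtimenodes.
    """
    nodes = np.array([0.0, 1.0])
    y = np.ones(2, dtype=complex)                             # iteration 0: constant predictor
    for p in range(1, p_max + 1):
        new_nodes = np.linspace(0.0, 1.0, p + 1)
        # interpolate the previous iterate onto the new subtimenodes
        y_star = sum(y[r] * lagrange_eval(nodes, r, new_nodes) for r in range(len(nodes)))
        y_new = 1.0 + z * (theta_matrix(new_nodes) @ y_star)  # Picard-type (bDeC-like) update
        # relative variation of the final component, in the spirit of (60)
        if abs(y_new[-1] - y[-1]) <= tol * abs(y_new[-1]):
            return y_new[-1], p
        nodes, y = new_nodes, y_new
    return y[-1], p_max


for z in (-0.5, 0.3 + 0.4j):
    value, p = adaptive_step(z)
    print(z, p, abs(value - np.exp(z)))
```

With this node schedule, each iteration on Dahlquist's problem adds exactly one Taylor term, so the loop stops once $|z|^p/p!$ falls below the relative tolerance; smaller time steps therefore need fewer iterations, consistent with the behavior reported for Fig. 6.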

Numerical Results

In this section, we will numerically investigate the new methods, showing the computational advantage with respect to the original ones. Since the DeC, DeCu, and DeCdu methods of order 2 coincide, we will focus on methods from order 3 on.

ODE Tests

We assess here the properties of the new methods on different ODE tests, checking their computational costs, their errors, and their adaptive versions. We focus on the methods obtained for (bDeC) and (sDeC).

Linear System

The first test is a very simple system of equations

| 61 |

with the exact solution and . We assume a final time .

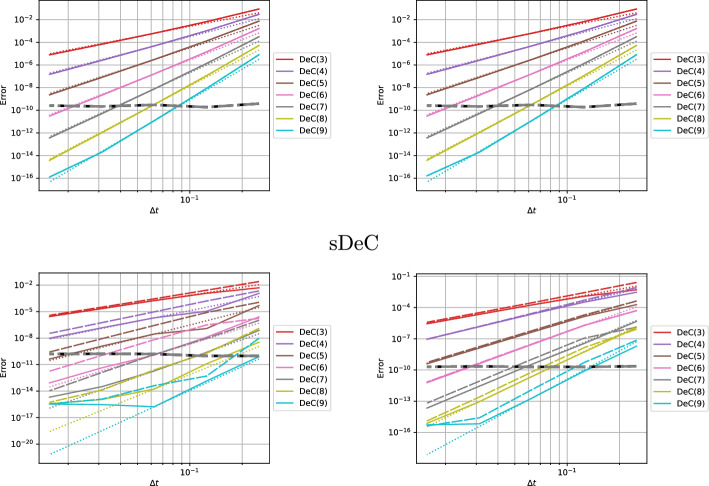

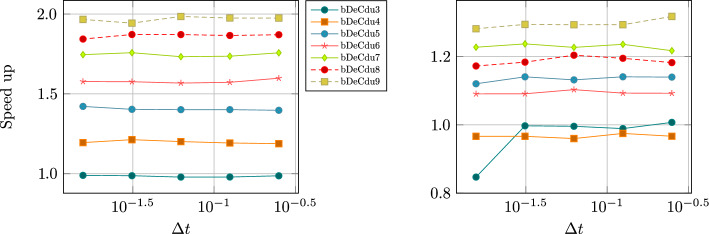

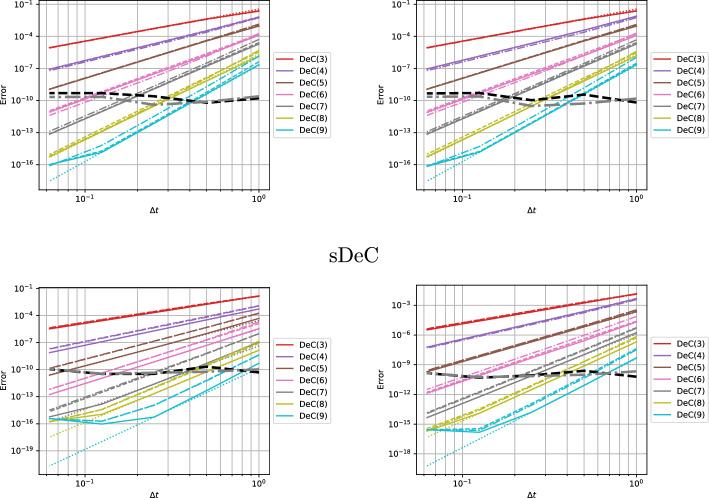

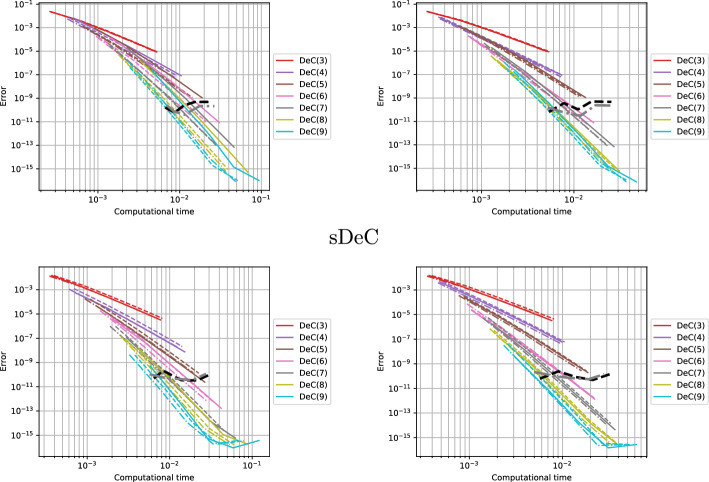

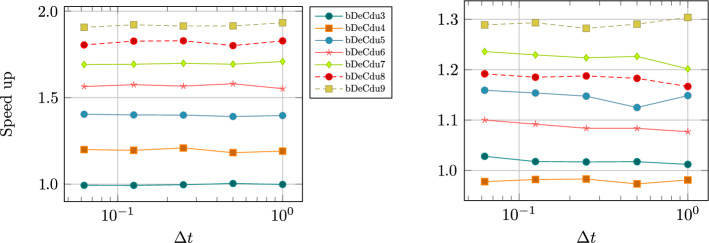

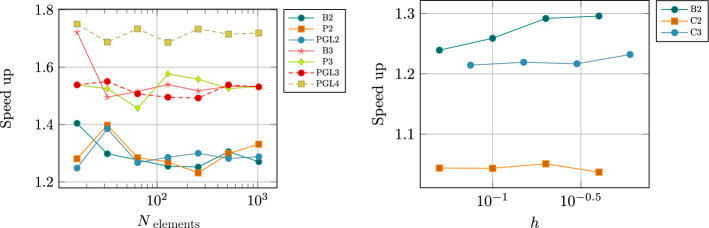

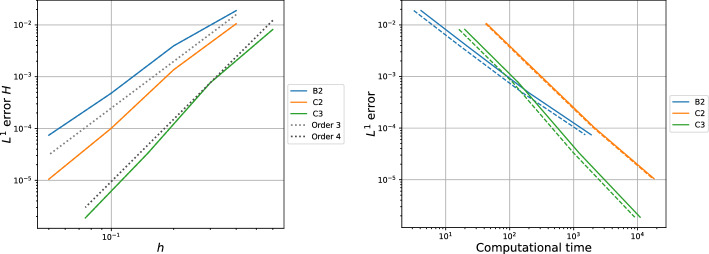

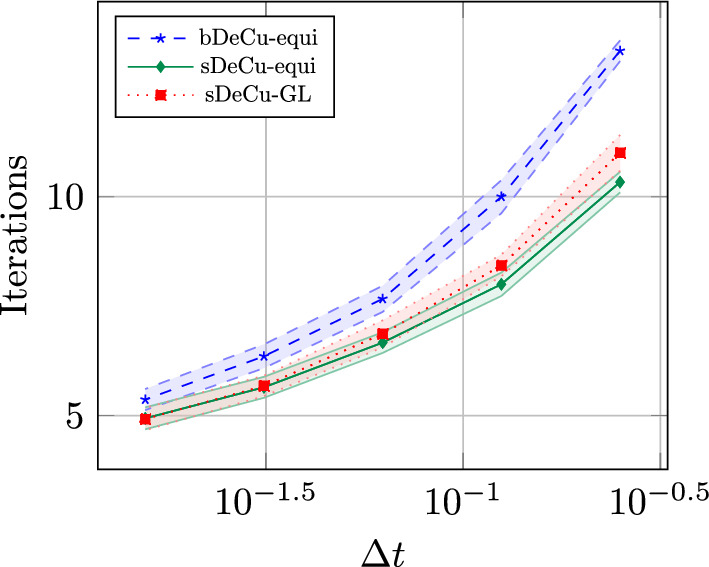

In Fig. 4, we plot the error decay of all methods with respect to $\Delta t$ for all orders from 3 to 9: the expected order of convergence is achieved in all cases. The bDeC, bDeCu, and bDeCdu methods have the same error since, as shown in Theorem 2, they share the same stability function and hence coincide on linear problems. The sDeC methods show a more irregular behavior and, on average, the errors of sDeCu and sDeCdu, which coincide due to Theorem 3, are slightly larger than that of sDeC for a fixed $\Delta t$. In Fig. 5, we plot the error against the computational time of the methods. For bDeC methods there is a huge advantage in using the novel methods: the Pareto front is composed only of the novel methods. In particular, the reduction in computational cost is larger for equispaced subtimenodes than for GL ones, as predicted by the theory. For sDeC methods the situation is not as clear as in the bDeC case. We systematically observe a difference between sDeCu and sDeCdu, the latter being more efficient than the former. With GL subtimenodes, sDeCdu is slightly better than the original sDeC method from order 5 on under mesh refinement. We also tested the adaptive versions of the methods, characterized by the convergence criterion (60) with a tolerance . As we observe in Fig. 4, the error of these methods (in black and gray) is constant and independent of $\Delta t$. The required computational time, see Fig. 5, is comparable to that of very high order schemes. In Fig. 6, we report the average number of iterations ± half standard deviation for different adaptive methods with respect to the time discretization. As expected, the smaller the time step, the smaller the number of iterations needed to reach the prescribed accuracy. In Fig. 7, we display, for different , the speed up factor of the bDeCdu method with respect to the bDeC method, computed as the ratio between the computational time required by the bDeC method and that required by the bDeCdu method.
For equispaced subtimenodes, as the order increases, the interpolation process reduces the computational time by an increasing factor, which is almost 2 for order 9. For GL subtimenodes the reduction is smaller but still remarkable, close to in the asymptotic limit.

Fig. 4.

Linear system: error decay for DeC with the continuous line, DeCu with the dashed line, DeCdu with the dash-dotted line, reference order with the dotted line, adaptive DeCu with the dashed black line, and adaptive DeCdu with the dash-dotted gray line. Equispaced subtimenodes on the left and GL on the right

Fig. 5.

Linear system: error with respect to computational time for DeC with the continuous line, DeCu with the dashed line, DeCdu with the dash-dotted line, adaptive DeCu with the dashed black line, and adaptive DeCdu with the dash-dotted gray line. Equispaced subtimenodes on the left and GL on the right

Fig. 6.

Linear test: average number of iterations (± half standard deviation) of some adaptive DeC for different time steps

Fig. 7.

Linear system test: speed up factor for the bDeCdu method. Equispaced subtimenodes on the left and GL on the right

Vibrating System

Let us consider a vibrating system defined by the following ODE:

| 62 |

with , . Its exact solution [11] reads with particular solution of the whole equation characterized by

| 63 |

and general solution of the homogeneous equation

| 64 |

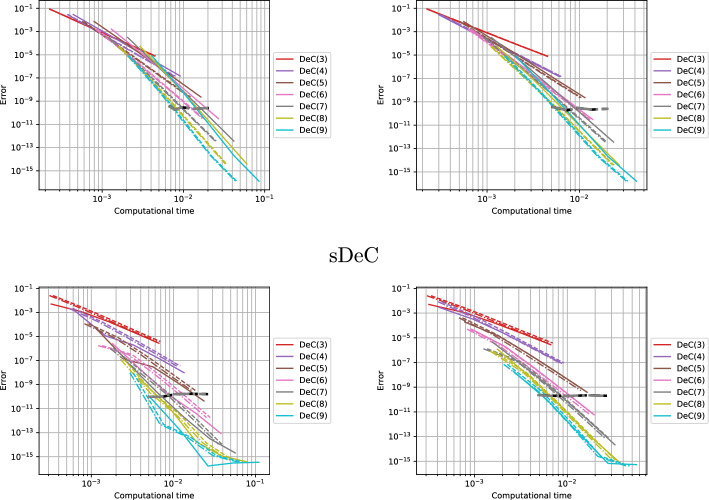

where , and are the real roots of the characteristic polynomial associated with (62), which are equal to when . and are two constants computed by imposing the initial conditions and . The mathematical steps needed to get the solution are reported in the supplementary material. The second order scalar ODE (62) can be rewritten in a standard way as a first order vector ODE. In the test, we have set , , , , , , , and with a final time . In Fig. 8, we show the error decay for all methods. Differently from the linear case, here bDeC, bDeCu, and bDeCdu are not equivalent. Nevertheless, in terms of errors, they behave in a similar way, and no large deviations are observed between equispaced and GL subtimenodes either. On average, the novel schemes are slightly less accurate for a fixed $\Delta t$, even if this is not true for all orders of accuracy. For the sDeC family, there is a larger difference between the errors of sDeC and those of sDeCu or sDeCdu, although the expected order of accuracy is always recovered. These effects are also visible in Fig. 9. For bDeC with equispaced subtimenodes, the advantages of the novel methods are evident: the error is almost the same and the computational time is almost halved for high order schemes. For bDeC methods with GL subtimenodes, the computational advantage of the novel methods is smaller than in the equispaced case, as expected from the theory (see Tables 5 and 6), but still clearly visible. As for the sDeC methods with equispaced subtimenodes, the performance of sDeCdu is similar to that of sDeC up to order 5, while, from order 6 on, the novel method is definitely more convenient. The sDeCu method is always less efficient than the sDeCdu one; in particular, it appears preferable to the standard method only for very high orders. With GL subtimenodes, the general trend is that sDeCdu and sDeCu perform, respectively, slightly better and slightly worse than the original sDeC.
The results of the adaptive methods for this test are qualitatively similar to those of the previous test: the methods produce a constant error for any $\Delta t$. Also in this case, the threshold for the relative error has been chosen equal to . Finally, in Fig. 10, we display the speed up factor of the new bDeCdu methods with respect to the original bDeC: as expected from the theory, it increases with the order of accuracy.

Fig. 8.

Vibrating system: error decay for DeC with the continuous line, DeCu with the dashed line, DeCdu with the dash-dotted line, reference order with the dotted line, adaptive DeCu with the dashed black line, and adaptive DeCdu with the dash-dotted gray line. Equispaced subtimenodes on the left and GL on the right

Fig. 9.

Vibrating system: error with respect to the computational time for DeC with the continuous line, DeCu with the dashed line, DeCdu with the dash-dotted line, adaptive DeCu with the dashed black line, and adaptive DeCdu with the dash-dotted gray line. Equispaced subtimenodes on the left and GL on the right

Fig. 10.

Vibrating system test: speed up factor for the bDeCdu method. Equispaced subtimenodes on the left and GL on the right

Hyperbolic PDE Tests

For hyperbolic PDEs, we focus on the bDeC and bDeCu methods with equispaced subtimenodes. The order of the DeC is chosen to match that of the spatial discretization. We use two stabilizations discussed in [26, 27]: continuous interior penalty (CIP) and orthogonal subscale stabilization (OSS). The CIP stabilization is defined as

| 65 |

where , is the set of the -dimensional faces shared by two elements of , is the jump across the face f, is the partial derivative in the direction normal to the face f, is a local reference value for the spectral radius of the normal Jacobian of the flux, is the diameter of f, and is a parameter that must be tuned.

The OSS stabilization is given by

| 66 |

where , is the projection of onto , is a local reference value for the spectral radius of the normal Jacobian of the flux, is the diameter of K, and is a parameter that must be tuned.

1D Linear Advection Equation (LAE)

We consider the LAE with periodic boundary conditions on the domain , the initial condition and the final time . The exact solution is given by . For the spatial discretization, we considered three families of polynomial basis functions of degree n: Bn, the Bernstein polynomials [2, 4]; Pn, the Lagrange polynomials associated with equispaced nodes; PGLn, the Lagrange polynomials associated with the GL nodes [26]. For Bn and Pn, we used the bDeC version for hyperbolic PDEs (58) introduced by Abgrall; for PGLn, we adopted the bDeC formulation for ODEs (10), since, in this case, the quadrature formula associated with the GL nodes leads to a high order mass lumping. For all of them, we used the CIP stabilization (65) with the coefficients reported in Table 7, taken from [26], where they were chosen to minimize the dispersion error, even if, differently from there, we assumed here a constant CFL . Since the coefficients for P3 and PGL4 were not provided in [26], we used for the former the same coefficient as for B3 and for the latter the same coefficient as for PGL3.

Table 7.

Coefficients used for LAE in one dimension

| B2 | P2 | PGL2 | B3 | P3 | PGL3 | PGL4 |
|---|---|---|---|---|---|---|
| 0.016 | 0.002 42 | 0.003 46 | 0.007 02 | 0.007 02 | 0.000 113 | 0.000 113 |

The coefficients adopted for P3 and PGL4 are not provided in [26]

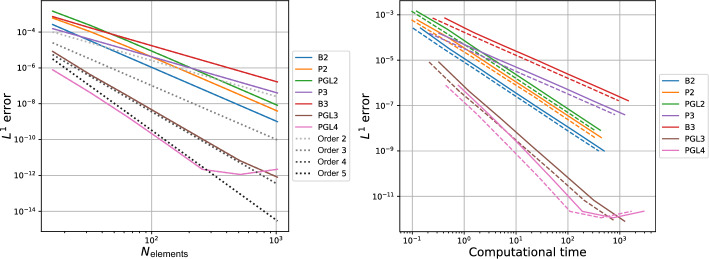

The results of the convergence analysis and of the computational cost analysis are displayed in Fig. 11. For a fixed number of elements, the errors of the bDeC and bDeCu methods are essentially identical, which leads to a remarkable computational advantage of the novel method with respect to the original bDeC, visible in the right plot, where the error is plotted against the computational time. The formal order of accuracy is recovered in all cases except B3 and P3, for which only second order is obtained with both bDeC and bDeCu.

Fig. 11.

1D LAE: bDeC with continuous line, bDeCu with the dashed line, and reference order with the dotted line. Convergence analysis on the left and error with respect to computational time on the right

Remark 3

(Issues with the DeC for PDEs) The loss of accuracy for bDeC4 and B3 elements has been reported in other works, e.g., [4, 26, 27]. Even in the original paper [2], the author underlined the necessity of performing more iterations than theoretically expected for orders greater than 3 in order to recover the formal order of accuracy. In the authors' opinion, the problem deserves particular attention; for this reason, the results related to B3 and P3 have not been omitted. The pathology seems to affect only unsteady tests and may be due to a high order weak instability. The phenomenon is currently under investigation; more details can be found in the supplementary material. However, we remark that this issue does not occur for elements that allow a proper mass lumping, such as PGL (or Cubature in 2D).

The speed up factor of the novel bDeCu with respect to the original method is reported in Fig. 12. The obtained speed up factors are higher than those observed for ODEs because, in the implementation of the DeC for PDEs, the major cost is not the flux evaluation of previously computed stages but the evolution of the new stages. This makes the observed speed up differ slightly from the expected one, providing even larger computational advantages.

Fig. 12.

Speed up in the hyperbolic tests of bDeCu with respect to bDeC. 1D LAE on the left and 2D shallow water (SW) on the right

2D Shallow Water (SW) Equations

We consider the SW equations on , defined, in the form (49), by

| 67 |

where H is the water height, is the vertically averaged speed of the flow, g is the gravitational constant, is the identity matrix, and is the number of physical dimensions. The test is a compactly supported unsteady vortex from the collection presented in [34] given by

| 68 |

where , , and

| 69 |

with and the function defined by

| 70 |

We set , , , with a final time and Dirichlet boundary conditions. For the spatial discretization, we considered two families of basis functions: Bn, the Bernstein polynomials, and Cn, the Cubature elements introduced in [15]. Since Cn elements allow a high order mass lumping, for them we used the bDeC (10) for ODEs and the OSS stabilization (66); for Bn, instead, we considered the PDE formulation (58) and the CIP stabilization (65). The tests with B2 have been run with and ; for C2 elements we have set and , the optimal coefficients minimizing the dispersion error of the original bDeC according to the linear analysis performed in [27]; for C3 we adopted and .

The results of the convergence analysis and of the computational cost analysis are displayed in Fig. 13. The errors produced by the novel and the original bDeC methods are so close that the lines coincide. The resulting computational advantage can be seen in the right plot. The formal order of accuracy is recovered in all cases, and the speed up factor in Fig. 12 confirms the convenience of using the novel bDeCu formulation instead of the original bDeC. Let us observe that, according to Table 5, bDeC3 and bDeCu3 have the same number of stages; nevertheless, as observed in Remark 1, the number of stages does not strictly correspond to the computational time. If we do not count the “cheap” stages computed via interpolation, we get the theoretical speed up factor , which is what we obtained in the numerical test for B2. We conclude this section with one last observation: the computational advantage registered with B2 is much higher than with C2 and C3 because the simulations were run with different codes: the results for B2 were obtained with a Fortran implementation, while for C2 and C3 we used Parasol, a Python implementation developed by Michel et al. [27] and kindly provided to us. A more careful implementation could further increase the speed up factors associated with such elements.

Fig. 13.

2D SW: bDeC with the continuous line, bDeCu with the dashed line, and reference order with the dotted line. Convergence analysis on the left and error with respect to computational time on the right

Conclusions and Further Developments

In this work, we have investigated analytical and numerical aspects of two novel families of efficient explicit DeC methods. The novel methods are constructed by introducing interpolation processes between the iterations, which increase the degree of the discretization to match the accuracy of the approximation reached at each iteration. In particular, we proved that for some of the novel methods the stability region coincides with that of the original methods. The novel methods have been tested on classical benchmarks in the ODE context, revealing, in most cases, a remarkable computational advantage with respect to the original ones. Furthermore, the interpolation strategies have been used to design adaptive schemes. Finally, we showed the good performance of the novel methods in the numerical solution of hyperbolic PDEs with continuous space discretizations. Overall, we believe that the approach proposed in this work can alleviate the computational costs not only of DeC methods but also of other schemes with a similar structure. For this reason, investigations of other numerical frameworks are planned; in particular, we are working on applications to hyperbolic PDEs with FV and ADER schemes, in which the order of the space reconstruction is also gradually increased iteration by iteration. We hope that this technique will spread broadly in the community to save computational time and resources in the numerical solution of differential problems, as only a little effort is required to embed the novel modification in an existing DeC code.

Electronic supplementary material


Supplementary material 1 The interested reader is referred to the supplementary material for all the proofs omitted in this document for the sake of compactness. (PDF 562 kb)

Acknowledgements

L. Micalizzi has been funded by the SNF grant 200020_204917 “Structure preserving and fast methods for hyperbolic systems of conservation laws” and by the Forschungskredit grant FK-21-098. D. Torlo has been funded by a SISSA Mathematical Fellowship. The authors warmly acknowledge Sixtine Michel for providing the code Parasol.

Appendix A: Residual Formulations

Here, we report the residual formulations of the original bDeC and sDeC methods presented in Sect. 3. In particular, we will present the spectral DeC formulation in terms of residuals introduced in [16] and prove that it is equivalent to the sDeC method. Then, we will see how to get, with a little modification of the presented spectral DeC formulation, the residual formulation of the bDeC method.

Appendix A.1: Link Between Spectral DeC and sDeC

We want to solve system (6) in the interval getting from . Also in this case, we consider an iterative procedure on the approximated values of the solution in the subtimenodes , collected in the vector , with fixed. Given , we consider the interpolation polynomial . The spectral DeC relies on the definition at each iteration p of two support variables, namely the error function with respect to the exact solution and the residual function respectively given by

| A1 |

| A2 |

By integrating the original ODE (6), making use of the definitions of the error function and of the residual function and differentiating again, we get that the error function satisfies the ODE

| A3 |

We can numerically solve such an ODE in each subinterval through the explicit Euler method starting from on, thus getting

| A4 |

with the integrals in the residual function approximated through a spectral integration, i.e., . We have used for and the usual convention adopted throughout the manuscript, with m standing for the subtimenode to which such quantities are associated. Indeed, we have and . The computed errors are then used to get new approximated values of the solution , which allows one to repeat the described process with new error and residual functions, and , analogously defined. The procedure gains one order of accuracy at each iteration until the accuracy of the discretization is saturated and, at the end of the iteration process with P iterations, one can set . By explicit computation, (A4) turns out to be equivalent to the sDeC updating formula (13). In fact, recalling the definition of and , we get

| A5 |

from which, recalling the definition of , follows

| A6 |
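For readers who prefer code, the sketch below implements one explicit sweep of the residual formulation (A4) written directly for the solution, i.e., the classical explicit spectral deferred correction sweep
$y^{(p)}_m = y^{(p)}_{m-1} + \Delta t(\beta_m - \beta_{m-1})\,[f(y^{(p)}_{m-1}) - f(y^{(p-1)}_{m-1})] + \Delta t\sum_r(\theta^m_r - \theta^{m-1}_r)\,f(y^{(p-1)}_r)$,
applied to Dahlquist's problem with $z = \lambda\Delta t$; we take, as an assumption, that this matches (13) up to notation. Two checks are included: starting from a constant predictor, the first sweep reduces to explicit Euler on each subinterval, and the sweeps converge to the collocation solution $y_m = 1 + z\sum_r\theta^m_r y_r$, which is also the fixed point of the bDeC iteration.

```python
import numpy as np


def lagrange_eval(nodes, r, s):
    """r-th Lagrange basis polynomial on `nodes`, evaluated at points s (product form)."""
    s = np.asarray(s, dtype=float)
    out = np.ones_like(s)
    for k, node in enumerate(nodes):
        if k != r:
            out *= (s - node) / (nodes[r] - node)
    return out


def theta_matrix(nodes):
    """theta[m, r] = integral over [0, nodes[m]] of the r-th Lagrange basis polynomial."""
    n = len(nodes)
    x, w = np.polynomial.legendre.leggauss(n)
    return np.array([[0.5 * b * np.dot(w, lagrange_eval(nodes, r, 0.5 * b * (x + 1.0)))
                      for r in range(n)] for b in nodes])


def sdc_sweep(y_old, z, nodes, theta):
    """One explicit sweep for y' = lambda*y with z = lambda*dt; y_old holds iterate p-1."""
    y_new = np.empty_like(y_old)
    y_new[0] = y_old[0]
    for m in range(1, len(nodes)):
        dtau = nodes[m] - nodes[m - 1]
        quad = (theta[m] - theta[m - 1]) @ y_old      # z * quad: spectral integral of f over subinterval m
        y_new[m] = y_new[m - 1] + z * dtau * (y_new[m - 1] - y_old[m - 1]) + z * quad
    return y_new


nodes = np.linspace(0.0, 1.0, 4)                      # M = 3 equispaced subintervals
theta = theta_matrix(nodes)
z = -0.4 + 0.2j

# Check 1: from a constant predictor, the first sweep is explicit Euler on each subinterval
y1 = sdc_sweep(np.ones(4, dtype=complex), z, nodes, theta)
assert np.allclose(y1, (1.0 + z / 3.0) ** np.arange(4))

# Check 2: the sweeps converge to the collocation solution
y = y1
for _ in range(100):
    y = sdc_sweep(y, z, nodes, theta)
colloc = np.linalg.solve(np.eye(3) - z * theta[1:, 1:], 1.0 + z * theta[1:, 0])
assert np.allclose(y[1:], colloc, rtol=1e-10, atol=1e-12)
print("sweep checks passed")
```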

Appendix A.2: bDeC

The residual formulation of the bDeC method is obtained in a similar way. Keeping the same definitions of , , and , we have that (A3) still holds. We solve it through the explicit Euler method in each subinterval obtaining

| A7 |

with the same definition for through spectral integration. This is the residual formulation of the bDeC method. Recalling that , we get

| A8 |

from which, recalling the definition of and of , finally follows

| A9 |

which is nothing but (10).

Funding

Open access funding provided by University of Zurich. This work received financial support by the Swiss National Foundation (Switzerland) and Scuola Internazionale Superiore di Studi Avanzati (Italy).

Data Availability

On behalf of all authors, the corresponding author is available to collect documentation of compliance with ethical standards and send upon request.

Compliance with Ethical Standards

Conflict of Interest

On behalf of all authors, the corresponding author states that there is no conflict of interest.

Footnotes

Lorenzo Micalizzi and Davide Torlo contributed equally to this work.

References

1. Abgrall, R.: Residual distribution schemes: current status and future trends. Comput. Fluids 35(7), 641–669 (2006)

2. Abgrall, R.: High order schemes for hyperbolic problems using globally continuous approximation and avoiding mass matrices. J. Sci. Comput. 73(2/3), 461–494 (2017)

3. Abgrall, R., Ivanova, K.: Staggered residual distribution scheme for compressible flow. arXiv:2111.10647 (2022)

4. Abgrall, R., Bacigaluppi, P., Tokareva, S.: High-order residual distribution scheme for the time-dependent Euler equations of fluid dynamics. Comput. Math. Appl. 78(2), 274–297 (2019)

5. Abgrall, R., Le Mélédo, E., Öffner, P., Torlo, D.: Relaxation deferred correction methods and their applications to residual distribution schemes. SMAI J. Comput. Math. 8, 125–160 (2022)

6. Abgrall, R., Torlo, D.: High order asymptotic preserving deferred correction implicit-explicit schemes for kinetic models. SIAM J. Sci. Comput. 42(3), 816–845 (2020)

7. Bacigaluppi, P., Abgrall, R., Tokareva, S.: “A posteriori” limited high order and robust schemes for transient simulations of fluid flows in gas dynamics. J. Comput. Phys. 476, 111898 (2023)

8. Boscarino, S., Qiu, J.-M.: Error estimates of the integral deferred correction method for stiff problems. ESAIM Math. Model. Numer. Anal. 50(4), 1137–1166 (2016)

9. Boscarino, S., Qiu, J.-M., Russo, G.: Implicit-explicit integral deferred correction methods for stiff problems. SIAM J. Sci. Comput. 40(2), 787–816 (2018)

10. Butcher, J.C.: Numerical Methods for Ordinary Differential Equations. Wiley, Auckland (2016)

11. Cheli, F., Diana, G.: Advanced Dynamics of Mechanical Systems. Springer, Cham (2015)

12. Christlieb, A., Ong, B., Qiu, J.-M.: Comments on high-order integrators embedded within integral deferred correction methods. Commun. Appl. Math. Comput. Sci. 4(1), 27–56 (2009)

13. Christlieb, A., Ong, B., Qiu, J.-M.: Integral deferred correction methods constructed with high order Runge-Kutta integrators. Math. Comput. 79(270), 761–783 (2010)

14. Ciallella, M., Micalizzi, L., Öffner, P., Torlo, D.: An arbitrary high order and positivity preserving method for the shallow water equations. Comput. Fluids 247, 105630 (2022)

15. Cohen, G., Joly, P., Roberts, J.E., Tordjman, N.: Higher order triangular finite elements with mass lumping for the wave equation. SIAM J. Numer. Anal. 38(6), 2047–2078 (2001)

16. Dutt, A., Greengard, L., Rokhlin, V.: Spectral deferred correction methods for ordinary differential equations. BIT 40(2), 241–266 (2000)

17. Fox, L., Goodwin, E.: Some new methods for the numerical integration of ordinary differential equations. In: Mathematical Proceedings of the Cambridge Philosophical Society, vol. 45, pp. 373–388. Cambridge University Press, Cambridge (1949)

18. Han Veiga, M., Öffner, P., Torlo, D.: DeC and ADER: similarities, differences and a unified framework. J. Sci. Comput. 87(1), 1–35 (2021)

19. Huang, J., Jia, J., Minion, M.: Accelerating the convergence of spectral deferred correction methods. J. Comput. Phys. 214(2), 633–656 (2006)

20. Jund, S., Salmon, S.: Arbitrary high-order finite element schemes and high-order mass lumping. Int. J. Appl. Math. Comput. Sci. 17(3), 375–393 (2007)

21. Ketcheson, D., Bin Waheed, U.: A comparison of high-order explicit Runge-Kutta, extrapolation, and deferred correction methods in serial and parallel. Commun. Appl. Math. Comput. Sci. 9(2), 175–200 (2014)

22. Layton, A.T., Minion, M.L.: Conservative multi-implicit spectral deferred correction methods for reacting gas dynamics. J. Comput. Phys. 194(2), 697–715 (2004)

23. Layton, A.T., Minion, M.L.: Implications of the choice of quadrature nodes for Picard integral deferred corrections methods for ordinary differential equations. BIT Numer. Math. 45(2), 341–373 (2005)

24. Liu, Y., Shu, C.-W., Zhang, M.: Strong stability preserving property of the deferred correction time discretization. J. Comput. Math. 26(5), 633–656 (2008)

25. Micalizzi, L., Torlo, D., Boscheri, W.: Efficient iterative arbitrary high order methods: an adaptive bridge between low and high order. arXiv:2212.07783 (2022)

26. Michel, S., Torlo, D., Ricchiuto, M., Abgrall, R.: Spectral analysis of continuous FEM for hyperbolic PDEs: influence of approximation, stabilization, and time-stepping. J. Sci. Comput. 89(2), 1–41 (2021)

27. Michel, S., Torlo, D., Ricchiuto, M., Abgrall, R.: Spectral analysis of high order continuous FEM for hyperbolic PDEs on triangular meshes: influence of approximation, stabilization, and time-stepping. J. Sci. Comput. 94(3), 49 (2023)

28. Minion, M.L.: Semi-implicit spectral deferred correction methods for ordinary differential equations. Commun. Math. Sci. 1(3), 471–500 (2003)

29. Minion, M.L.: Semi-implicit projection methods for incompressible flow based on spectral deferred corrections. Appl. Numer. Math. 48(3/4), 369–387 (2004)

30. Minion, M.L.: A hybrid parareal spectral deferred corrections method. Commun. Appl. Math. Comput. Sci. 5(2), 265–301 (2011)

31. Öffner, P., Torlo, D.: Arbitrary high-order, conservative and positivity preserving Patankar-type deferred correction schemes. Appl. Numer. Math. 153, 15–34 (2020)

32. Pasquetti, R., Rapetti, F.: Cubature points based triangular spectral elements: an accuracy study. J. Math. Stud. 51(1), 15–25 (2018)

33. Ricchiuto, M., Abgrall, R.: Explicit Runge-Kutta residual distribution schemes for time dependent problems: second order case. J. Comput. Phys. 229(16), 5653–5691 (2010)

34. Ricchiuto, M., Torlo, D.: Analytical travelling vortex solutions of hyperbolic equations for validating very high order schemes. arXiv:2109.10183 (2021)

35. Speck, R., Ruprecht, D., Emmett, M., Minion, M., Bolten, M., Krause, R.: A multi-level spectral deferred correction method. BIT Numer. Math. 55(3), 843–867 (2015)

36. Torlo, D.: Hyperbolic problems: high order methods and model order reduction. PhD thesis, University of Zurich (2020)

37. Wanner, G., Hairer, E.: Solving Ordinary Differential Equations II: Stiff and Differential-Algebraic Problems, vol. 375. Springer, Berlin (1996)

Supplementary Materials

Supplementary material 1 (PDF 562 kB): the interested reader is referred to the supplementary material for all the proofs omitted from this document for the sake of compactness.
