Abstract

Background: Cancer ranks second among the causes of mortality worldwide, following cardiovascular diseases. Brain cancer, in particular, has the lowest survival rate of any form of cancer. Brain tumors vary in their morphology, texture, and location, which determine their classification. The accurate diagnosis of tumors enables physicians to select the optimal treatment strategies and potentially prolong patients’ lives. Researchers who have implemented deep learning models for the diagnosis of diseases in recent years have largely focused on deep neural network optimization to enhance their performance. This involves implementing models with the best performance and incorporating various network architectures by configuring their hyperparameters. Methods: This paper presents a novel hybrid approach for improved brain tumor classification by combining CNNs and EfficientNetV2B3 for feature extraction, followed by K-nearest neighbors (KNN) for classification, which has been described as one of the simplest machine learning algorithms based on supervised learning techniques. The KNN algorithm assumes similarities between new cases and available cases and assigns new cases to the category that most closely resembles the available categories. Results: To evaluate the recommended method’s efficacy, two widely known benchmark MRI datasets were utilized in the experiments. The initial dataset consisted of 3064 MRI images depicting meningiomas, gliomas, and pituitary tumors. Images from two classes, consisting of healthy brains and brain tumors, were included in the second dataset, which was obtained from Kaggle. Conclusions: In order to enhance the performance even further, this study concatenated the CNN and EfficientNetV2B3’s flattened outputs before feeding them into the KNN classifier. The proposed framework was run on these two different datasets and demonstrated outstanding performance, with accuracy of 99.51% and 99.8%, respectively.

Keywords: brain tumor, CNN, EfficientNetV2B3, KNN

1. Introduction

Cancer is the second leading cause of death worldwide. It is a serious pathological condition. Brain tumors are thought to be among the deadliest malignancies, with poor survival rates [1]. The tumor shape, texture, location, and other factors distinguish the types of tumors that occur in the brain, which include gliomas, pituitary tumors, and meningiomas [2]. Their rates of occurrence during clinical monitoring are approximately 45 percent, 15 percent, and 15 percent, respectively. Doctors can reach a diagnosis and predict the survival of patients based on the type of tumor [3]. The tumor type also guides the choice of care, which ranges from surgery, radiotherapy, and chemotherapy to a ’wait and see’ strategy that avoids invasive procedures. Moreover, a crucial part of treatment planning is tumor grading [4].

Brain tumors can be imaged in 2D and 3D using magnetic resonance imaging (MRI), a noninvasive, quick, and easy medical imaging technique. It is one of the most widely accepted techniques for the identification and detection of cancer, as it produces high-resolution images of brain tissue [5].

However, identifying the type of cancer from such images can be challenging, error-prone, and time-consuming, requiring highly specialized physician experience [6]. Tumors can appear in many different shapes, and the images may not contain enough discernible landmarks to aid in medical assessment. The traditional diagnostic method of histopathology involves determining the tumor type via the microscopic examination of a tumor biopsy on a glass slide. A comprehensive analysis allows the patient to begin the best treatment immediately, extending their life [7]. This highlights the pressing need for artificial intelligence (AI) to support a modern computer-assisted diagnosis (CAD) system. Such a system will alleviate the workload during the analysis of tumor types and act as a supporting tool for medical doctors and radiologists. This paper describes the implementation of solutions for the classification of tumors using classical and hybrid techniques that combine convolutional neural networks (CNNs) with classical machine learning. We assessed the proposed technique using an MRI brain tumor dataset including three types of brain tumors (meningiomas, gliomas, and pituitary tumors), as well as a dataset of images classified as ’tumor’ or ’non-tumor’ [8]. The main objective of this research is to explore how artificial intelligence can be used to determine the type of tumor in order to identify the appropriate treatment method, as, in most medical cases, brain surgery can be life-threatening and lead to serious health issues for the patient.

In summary, manual diagnosis is often inaccurate. Furthermore, the treatment of brain tumors frequently presents problems because it can impair a patient’s ability to respond effectively during surgery and lower their chances of survival [9]. However, accurate diagnosis can benefit patients, allowing them to begin the appropriate course of treatment immediately. Consequently, there is a critical need to develop innovative machine diagnosis systems via artificial intelligence (AI). These systems aim to alleviate the burden of patient diagnosis and tumor classification, providing a helpful tool for radiologists and physicians [10].

The most popular systems use convolutional neural networks (CNNs), which have several layers based on linear operations between matrices known as convolutions. These CNNs comprise convolutional, nonlinear, pooling, and fully connected layers. The convolutional and fully connected layers have learnable parameters, whereas the nonlinear and pooling layers do not [11].

EfficientNetV2 is a newer family of CNNs designed to improve the training speed and parameter efficiency compared to previous architectures. It has provided strong results on the ImageNet benchmark. In brief, EfficientNetV2B3 may offer a suitable model for medical image classification; in this work, it takes 32 × 32 input images, has 14.5M parameters, and has a reported accuracy of 95.8% [12].

EfficientNetV2 surpasses EfficientNet in speed and parameter efficiency. It was created by employing a set of scaling parameters (depth, resolution, and width). EfficientNetV2 trains much faster than previous state-of-the-art models while being much smaller (up to 6.8 times) [13]. The input image size and regularization parameters are user-defined, and EfficientNetV2 employs three types of regularization: dropout, RandAugment, and Mixup [14].

The CNN model, which comprises five blocks of layers with two dropout layers and max pooling after each block, is combined with EfficientNetV2B3 in the proposed framework. It has a primary block of 32 filters and individual blocks of 64, 128, and 256 filters [15].

EfficientNetV2B3 combines dropout, pooling, and batch normalization to form a 24-layer architecture consisting of one fully connected layer, one softmax layer, and 22 convolutional layers. Eight blocks constitute the convolutional layers, and the input images are resized to 32 × 32 pixels [16]. The proposed model performs better when the output of each model is followed by two layers with 64 and 16 units, respectively [17,18].

Many algorithms are employed in brain tumor classification, such as KNN and deep learning methods. In this research, a hybrid framework is proposed, combining CNN and EfficientNetV2B3 for feature extraction and employing KNN for the classification of images.

The structure of this paper is as follows. Section 2 presents related research on the classification of brain tumors. Section 3 presents the problem definition. Section 4 illustrates the proposed approach, including the dataset, image pre-processing, data augmentation, feature extraction, classification models, implementation, and evaluation metrics. Section 5 presents the results of our experiments. Section 6 presents the ablation study, and a discussion is presented in Section 7. Finally, the conclusions regarding the proposed hybrid method are presented in Section 8.

2. Related Work

Image processing techniques play a significant role in medical applications by assisting in the detection of anomalies and diagnosis of diseases. This includes medical images obtained through imaging techniques such as CT scans, MRI, and X-rays. MRI, in particular, has a significant advantage over other diagnosis methods because it does not expose patients to radiation [19].

Across several domains, including computer vision, robotics, and computer-aided diagnosis, deep learning has become a powerful tool. This paves the way for its integration with biomedical image processing, which could result in significant improvements in medical care [20].

Deep learning models can learn several levels of representation and abstraction at scale because they are fed with raw data. They possess many advantages over existing machine learning techniques, which are limited in their ability to process raw image data, are slower, and require a large amount of effort to engineer features [21].

CNNs are among these types. They are most commonly utilized for image, video, and speech recognition. The biological functions of the visual system in animals have served as inspiration for CNNs. The CNN’s ability to identify patterns in images has allowed it to be successfully used in image processing [22].

Convolutional layers extract features such as colors and edges by applying learnable kernels. The kernels’ spatial dimensions are small, but they extend through the full depth of the input. Each filter is slid across the spatial dimensions of the input to create a feature map. The pooling layer lowers the dimensions of the features, which decreases the number of parameters and the model’s computational complexity. Many algorithms have been employed in brain tumor classification, like KNN [23].

Cheng et al. [24] proposed a model consisting of the gray-level co-occurrence matrix (GLCM) and bag-of-words (BoW) features, which was applied to a dataset with three different types of brain tumors and obtained accuracy of 91.28%.

Ismael et al. [25] presented a model based on a neural network algorithm and used statistical features; it obtained 91.9% accuracy. Its specificity for pituitary tumors was 95.66%, while it was 96% and 96.29% for meningiomas and gliomas, respectively.

Afshar et al. [26] proposed a model for the categorization of brain tumors with rough boundaries, using an additional pipeline as input to improve CapsNet’s focus, with accuracy of 90.89%. In [10], Deepak et al. proposed a comprehensive framework based on the pre-trained GoogleNet for feature extraction on an MRI dataset. Gull et al. [27] proposed a model that utilized AlexNet and VGG-19 for brain tumor classification and achieved accuracy of 98.50% and 97.25%, respectively, for VGG-19 and AlexNet. Mondal et al. [28] compared the results of various CNN models (such as DenseNet201 and ResNet50) and achieved accuracy of 98.33%.

3. Problem Definition

Determining a tumor’s grade and type is essential, particularly at the beginning of the treatment plan. The accurate detection of abnormal tissue is essential for diagnosis. This has been confirmed by the availability of practical methods that employ classification, segmentation, or a combination of both to quantitatively and qualitatively characterize the brain. The processing of MR images can be performed manually, semi-automatically, or fully automatically, depending on the degree of human interaction. Accurate segmentation and classification are essential for medical image processing, but they must usually be performed manually by specialists, which takes time. Conversely, an accurate diagnosis allows patients to start an appropriate treatment sooner and have longer lifespans. Therefore, in the area of artificial intelligence (AI), there is a pressing need for the development and design of innovative frameworks to decrease the amount of work that radiologists and physicians must perform to diagnose and characterize tumors.

This work uses an automation system that was developed for the purpose of classifying and segmenting brain tumors. This new technology could significantly improve the diagnostic skills of neurosurgeons and other medical professionals, especially with regard to analyzing tumors in ectopic brain regions, which is crucial for early and precise diagnosis. One of the main principles of this research is to promote accessible and efficient communication. To decrease the knowledge gap between medical professionals, the system simplifies the presentation of magnetic resonance imaging (MRI) results. This improved comprehension can enable well-informed decision-making across the board in clinical workflows. Furthermore, the proposed methodology promotes a fully automated approach, minimizing the need for extensive pre-processing steps. This not only optimizes the workflow’s efficiency but also mitigates the potential for human errors during pre-processing, ultimately leading to more consistent and reliable results.

4. Proposed Approach

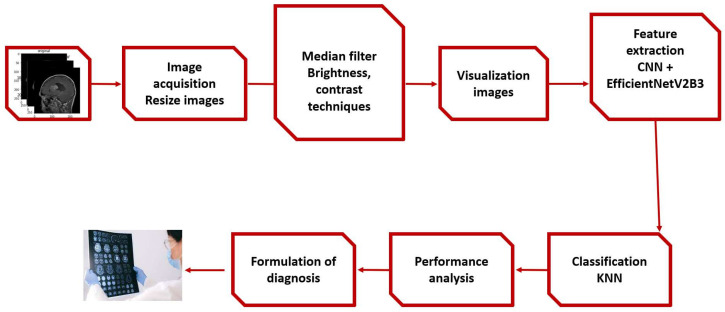

The proposed framework and the experimental stages are described in Figure 1 and discussed in this section.

Figure 1. The proposed framework.

4.1. Proposed Framework

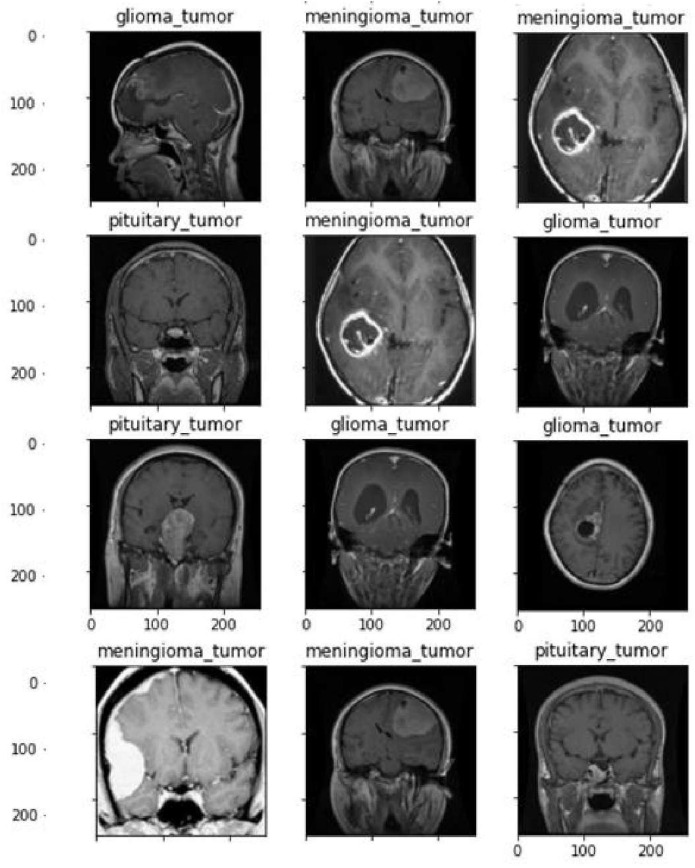

Within the suggested framework, the input images are as shown in Figure 2 and Figure 3, obtained from MRI datasets [29].

Figure 2. Samples of brain tumor images with class labels in dataset 1.

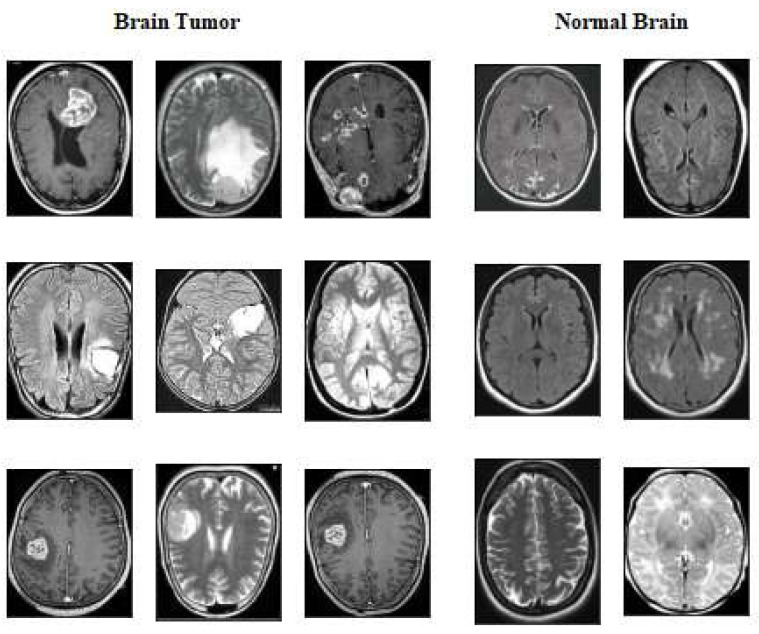

Figure 3. Samples of brain tumor images with class labels in dataset 2.

First, we apply a median filter to remove noise and then apply augmentation techniques such as brightness and contrast enhancement. We then proceed to the feature extraction stage, which combines EfficientNetV2B3 and the CNN, and classify the extracted features with the KNN classifier in place of the deep CNN models’ standard softmax classifier. Our studies use KNN because we were inspired by earlier research that showed KNN to perform well in CNN feature classification.

4.2. Dataset

We worked with two distinct, publicly accessible brain MRI datasets to perform our experimental studies.

The first dataset consisted of MRI images of three types of brain tumors, namely gliomas, meningiomas, and pituitary tumors. Cheng et al. [29] initially processed the dataset, which was obtained from Tianjin Medical University General Hospital and Nan Fang Hospital in Guangzhou, China. Figure 2 displays a selection of sample images from the dataset, along with their corresponding class labels. The ground truth for the tumor dataset includes the following tumor types: pituitary tumors, gliomas, and meningiomas, with a total of 930, 1426, and 708 images, respectively. The dataset of brain CE-MRI images can be accessed at (https://figshare.com/articles/dataset/brain_tumor_dataset/1512427, accessed on 19 September 2024).
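As a quick check of the class counts stated above, the short sketch below sums them and computes each class's share of the dataset. Only the three counts come from the text; the variable names are illustrative.

```python
# Class counts for dataset 1 as stated in the text.
class_counts = {"pituitary": 930, "glioma": 1426, "meningioma": 708}

# Total number of images; this should match the 3064 images cited for the dataset.
total = sum(class_counts.values())

# Fraction of the dataset contributed by each class, to make the imbalance visible.
shares = {name: count / total for name, count in class_counts.items()}

largest_class = max(shares, key=shares.get)
smallest_class = min(shares, key=shares.get)
```

The glioma class is roughly twice the size of the meningioma class, which is worth keeping in mind when choosing the number of neighbors for a vote-based classifier.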

The second dataset, named Brain Tumour Detection 2020 (BR35H) [30], is an MRI dataset obtained from the Kaggle website at (https://www.kaggle.com/datasets/ahmedhamada0/brain-tumor-detection, accessed on 19 September 2024). We refer to this dataset as dataset2, consisting of two classes, with 1500 images of the tumor class and 1500 images of normal or non-tumor cases.

4.3. Image Pre-Processing

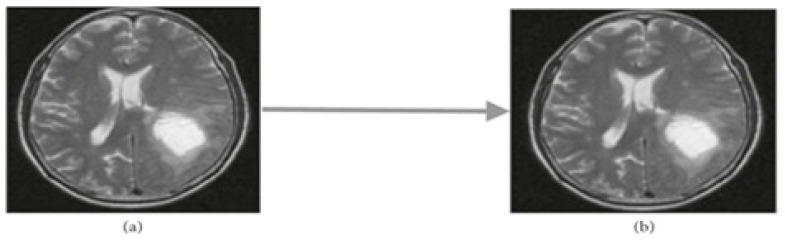

MRI images contain a significant amount of noise, which can be caused by the surroundings, instrumentation, or operator errors. This noise can cause significant inaccuracies in MRI scans. Thus, the first stage is to remove noise from the MRI image. There are two types of noise reduction methods: linear and nonlinear. Linear filters update each pixel value with a weighted average of its neighborhood, which reduces the image quality by blurring. Conversely, nonlinear methods preserve the edges, although fine structures may be degraded [31]. The essential challenge in the pre-processing step is to improve the quality of the MRI brain tumor image and enhance the contrast. The pre-processing step can assist in increasing the signal-to-noise ratio, eliminating noise, and clarifying the edges of the MRI brain tumor image. In this case, the noise was removed from the images using a median filter, as illustrated in Figure 4.

Figure 4. Applying median filter. (a) Input image. (b) Median filtering.
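To illustrate how a median filter suppresses impulse noise while leaving flat regions untouched, the following is a minimal pure-Python sketch. The 3 × 3 window size and the edge-replication border handling are assumptions made for the example; the paper does not state the settings that were used.

```python
from statistics import median

def median_filter(image, size=3):
    """Apply a size x size median filter to a 2D grayscale image (list of rows).

    Border pixels are handled by replicating the nearest edge pixel
    (an assumption for this sketch).
    """
    height, width = len(image), len(image[0])
    radius = size // 2
    filtered = [[0] * width for _ in range(height)]
    for r in range(height):
        for c in range(width):
            window = []
            for dr in range(-radius, radius + 1):
                for dc in range(-radius, radius + 1):
                    # Clamp coordinates so the window stays inside the image.
                    rr = min(max(r + dr, 0), height - 1)
                    cc = min(max(c + dc, 0), width - 1)
                    window.append(image[rr][cc])
            filtered[r][c] = median(window)
    return filtered

# A flat patch with one bright outlier pixel in the center.
noisy = [
    [10, 10, 10],
    [10, 255, 10],
    [10, 10, 10],
]
denoised = median_filter(noisy)  # the outlier is replaced by the neighborhood median
```

Because the median ignores a single extreme value in the window, the outlier disappears without averaging it into its neighbors, which is the behavior that distinguishes this filter from a linear mean filter.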

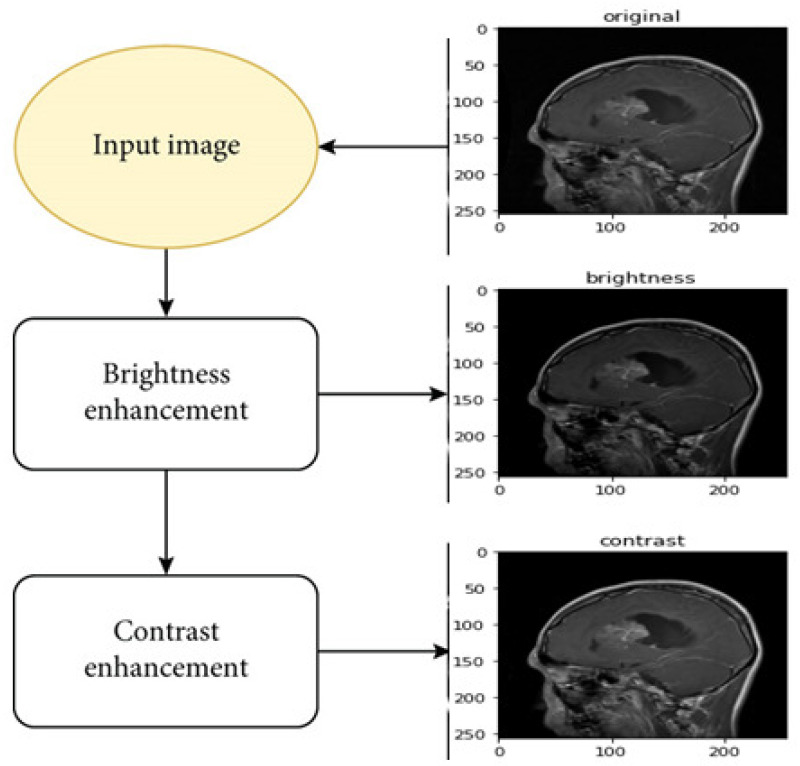

4.4. Data Augmentation

Data augmentation is a technique that artificially creates modified versions of a dataset to enlarge the training set and make better use of the available data. It involves making minor modifications to the existing data to generate new data points [32].

Data augmentation has demonstrated strong performance in various clinical imaging problems and in brain scan analysis. It is commonly used to artificially increase the scale of the training image data, for example by rotating and flipping the original images. More training image data help the CNN to improve its overall performance and produce more capable models [33]. In this study, the contrast and brightness augmentation methods illustrated in Figure 5 were applied to the images during training.

Figure 5. Applying augmentation techniques including brightness and contrast enhancement.
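The brightness and contrast augmentations can be sketched as simple per-pixel operations. The version below is an illustrative assumption, not the authors' implementation: brightness adds a constant offset, contrast scales each pixel's distance from the image mean, and both clip to the 8-bit range. The function names and the example offset and factor are hypothetical.

```python
def adjust_brightness(image, delta):
    """Add a constant offset to every pixel, clipping to the 8-bit range [0, 255]."""
    return [[min(max(p + delta, 0), 255) for p in row] for row in image]

def adjust_contrast(image, factor):
    """Scale each pixel's distance from the image mean by `factor`, then clip."""
    pixels = [p for row in image for p in row]
    mean = sum(pixels) / len(pixels)
    return [
        [min(max(round(mean + factor * (p - mean)), 0), 255) for p in row]
        for row in image
    ]

image = [[60, 100], [140, 180]]

brighter = adjust_brightness(image, 40)        # every pixel shifted up by 40
higher_contrast = adjust_contrast(image, 1.5)  # pixels pushed away from the mean (120)
saturated = adjust_brightness(image, 100)      # the brightest pixel is clipped at 255
```

Each transformed copy keeps the original label, so the training set grows without new annotation effort.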

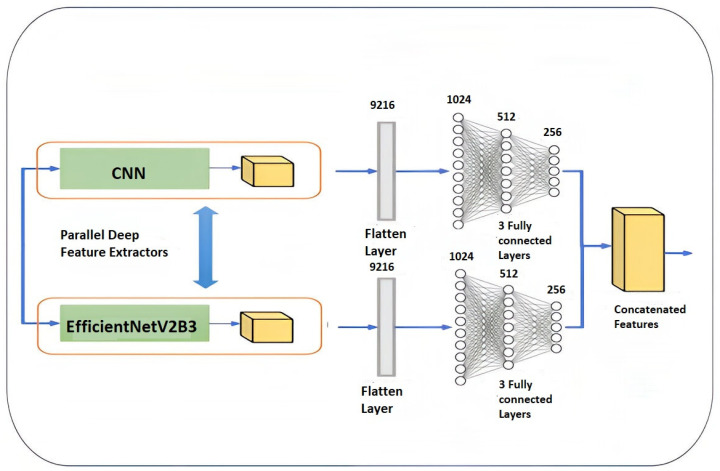

4.5. Feature Extraction

In these experiments, automated feature extraction was achieved by integrating the CNN and EfficientNetV2B3, as seen in Figure 6.

Figure 6. Feature extraction layer followed by KNN classifier.

4.6. Classification Methods

KNN is a supervised, non-parametric learning algorithm that relies on proximity to make predictions, and it can be used for both classification and regression tasks. It operates on the principle that similar points are located close to each other. The KNN algorithm has been utilized in the classification of brain tumor datasets and is suitable for multi-class problems, where it has exhibited high accuracy as a classifier. We use the hybrid of EfficientNetV2B3 and KNN to enhance the accuracy. KNN takes the place of the softmax layer and classifies the features produced after the final pooling layer [34].

The K-nearest neighbors pseudo-code is shown in Algorithm 1.

Algorithm 1. KNN algorithm.

1. Compute dist(z, x_i) for i = 1, 2, …, m, where dist is the Euclidean distance between the query point z and the training point x_i.
2. Sort the m Euclidean distances in ascending order.
3. Let k be a positive integer. Take the first k distances from the sorted list.
4. Find the k points corresponding to these k distances.
5. Let k_i be the number of points belonging to class i among the k points, i.e., k_i ≥ 0.
6. If k_x > k_y for all y ≠ x, then put z in class x.
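The steps of Algorithm 1 can be sketched in a few lines of Python. This is a minimal illustration using only the standard library; the toy 2D points stand in for the high-dimensional deep features, and the function name `knn_predict` is hypothetical.

```python
from collections import Counter
from math import dist

def knn_predict(train_points, train_labels, query, k):
    """Classify `query` by majority vote among its k nearest training points."""
    # Steps 1-2: compute the Euclidean distance to every training point and sort.
    ranked = sorted(
        zip(train_points, train_labels),
        key=lambda pair: dist(pair[0], query),
    )
    # Steps 3-4: keep the labels of the k closest points.
    nearest_labels = [label for _, label in ranked[:k]]
    # Steps 5-6: count the votes per class and return the most frequent class.
    return Counter(nearest_labels).most_common(1)[0][0]

# Toy 2D feature vectors standing in for the extracted deep features.
points = [(0.0, 0.0), (0.1, 0.2), (0.2, 0.1), (1.0, 1.0), (0.9, 1.1), (1.1, 0.9)]
labels = ["glioma", "glioma", "glioma", "meningioma", "meningioma", "meningioma"]

prediction = knn_predict(points, labels, query=(0.15, 0.15), k=3)
```

In the case of a tied vote, `Counter.most_common` returns the class that was encountered first, which here is the class of the nearer neighbor; other tie-breaking rules are equally valid.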

4.7. Implementation

The image classification performance relies on treating feature extraction and classification as separate phases within the framework. The classification stage is crucial in brain tumor classification models. The framework’s code is written using Python libraries and executed on Google Colaboratory (Colab), which is also used for the training of the hybrid technique. It is tested on two different datasets, both with augmentation techniques such as brightness and contrast enhancement and without augmenting the input images. Colab is a cloud service based on Jupyter Notebooks, and it provides a virtual GPU powered by an NVIDIA Tesla K80 with 16 GB RAM. The Keras library is adopted, along with TensorFlow, to build the deep learning architecture.

A CNN is a feedforward neural network that is frequently used to extract discriminative information from data. The convolutional layers are Conv1D (1, 256) and Conv1D (1, 128), with the ReLU activation function. After the data are processed by the Conv1D (1, 256), Conv1D (1, 128), and max-pooling layers, the dimensions of the output are 1 × 128. EfficientNetV2 is a newer family of convolutional networks with faster training speeds and better parameter efficiency than previous models; it is used to extract the view feature X1. The CNN is applied to extract the shape feature X2. X1 and X2 are each processed by a flatten layer.
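The flattening of X1 and X2 and their concatenation into a single feature vector for the classifier can be illustrated with nested lists. This is only a shape-level sketch: the toy values and sizes are placeholders, and the helper names `flatten` and `fuse_features` are hypothetical.

```python
def flatten(feature_map):
    """Recursively flatten a nested list (e.g., height x width x channels) into one list."""
    flat = []
    for item in feature_map:
        if isinstance(item, list):
            flat.extend(flatten(item))
        else:
            flat.append(item)
    return flat

def fuse_features(x1_map, x2_map):
    """Flatten both feature maps and concatenate them into a single feature vector."""
    return flatten(x1_map) + flatten(x2_map)

# Toy feature maps standing in for the EfficientNetV2B3 (X1) and CNN (X2) outputs.
x1 = [[[0.1, 0.2], [0.3, 0.4]]]  # shape 1 x 2 x 2, i.e., 4 values
x2 = [[0.5, 0.6, 0.7]]           # shape 1 x 3, i.e., 3 values

fused = fuse_features(x1, x2)    # one vector of length 4 + 3 = 7
```

The fused vector is what a distance-based classifier such as KNN would receive for each image, so the two extractors contribute side by side rather than being averaged.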

First, the median filter was used to minimize noise in the input images, and the augmentation methods mentioned previously were applied. Then, the features were extracted using the hybrid method (CNN and EfficientNetV2B3) as a feature extraction layer. The KNN algorithm was executed in the classification stage. The KNN parameters include the number of neighbors k, which was set to 49, and the distance metric. A small k value can increase the system’s vulnerability to noise and overfitting, whereas a larger k bases each decision on more neighbors. Additionally, when the classes are imbalanced, a high k value allows the majority class to dominate the vote. The experiment was performed five times, each with a validation process, and the results of these five experiments are reported as the mean value and deviation.
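The effect of k under class imbalance can be seen in a toy example. The sketch below uses synthetic points, not data from the study, and shows the same query being assigned to different classes depending on k.

```python
from collections import Counter
from math import dist

def knn_vote(points, labels, query, k):
    """Return the majority label among the k training points closest to `query`."""
    ranked = sorted(zip(points, labels), key=lambda pair: dist(pair[0], query))
    return Counter(label for _, label in ranked[:k]).most_common(1)[0][0]

# Imbalanced toy data: 3 minority points near the origin, 10 majority points far away.
minority = [(0.0, 0.0), (0.1, 0.0), (0.0, 0.1)]
majority = [(5.0 + 0.1 * i, 5.0) for i in range(10)]
points = minority + majority
labels = ["minority"] * len(minority) + ["majority"] * len(majority)

query = (0.05, 0.05)  # clearly inside the minority cluster

small_k = knn_vote(points, labels, query, k=3)   # only the nearby minority points vote
large_k = knn_vote(points, labels, query, k=13)  # all points vote, so the larger class wins
```

With k = 3 the query is labeled by its true neighbors, while with k = 13 every training point votes and the larger class wins regardless of distance. This is why k is usually tuned on validation data rather than fixed in advance.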

4.8. Evaluation Metrics

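Precision measures how often the model’s positive predictions of the disease are correct, and it is calculated using the following equation:

$$\text{Precision} = \frac{TP}{TP + FP}$$

The following formula can be used to calculate the recall:

$$\text{Recall} = \frac{TP}{TP + FN}$$

F1 score: The F1 score is the harmonic mean of the precision and the recall (true positive rate), calculated using the following formula:

$$F1 = 2 \times \frac{\text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}}$$

Here, TP, FP, and FN denote the numbers of true positives, false positives, and false negatives, respectively.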
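As a worked illustration of these metrics, the sketch below computes precision, recall, and the F1 score from confusion counts using their standard definitions. It also computes the specificity, TN / (TN + FP), which is reported in the results tables; its formula is not given in this section and is included here as an assumption. The counts are hypothetical and chosen only to make the arithmetic easy to follow.

```python
def classification_metrics(tp, fp, fn, tn):
    """Compute precision, recall, F1 score, and specificity from confusion counts."""
    precision = tp / (tp + fp)    # share of positive predictions that are correct
    recall = tp / (tp + fn)       # share of actual positives that are found
    f1 = 2 * precision * recall / (precision + recall)  # harmonic mean of the two
    specificity = tn / (tn + fp)  # share of actual negatives that are rejected
    return {
        "precision": precision,
        "recall": recall,
        "f1": f1,
        "specificity": specificity,
    }

# Hypothetical counts for one class, not results from the study.
metrics = classification_metrics(tp=90, fp=10, fn=30, tn=870)
```

For these counts, the precision is 90/100 and the recall is 90/120, so the F1 score falls between the two but closer to the lower value, as expected of a harmonic mean.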

5. Experimental Results

Multiple metrics have been established for the assessment of a typical classifier’s performance. The most widely used measure of quality is the accuracy of classification. Accuracy measures the percentage of correctly classified samples relative to the total number of samples.

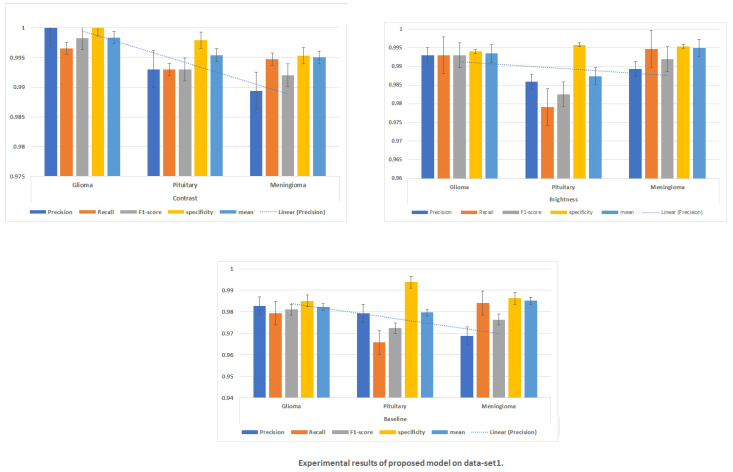

The accuracy of classification achieved on each dataset is shown in Table 1. It is found that the best method, utilizing contrast images on dataset1, achieved accuracy of 99.51%, as shown in Figure 7.

Table 1. Experimental results of proposed model.

| Dataset | Augmentation | Precision | Recall | F1 Score | Specificity | Mean |
|---|---|---|---|---|---|---|
| Dataset1 | Baseline | 99.76% | 97.64% | 97.66% | 98.83% | 98.23% |
| | Brightness | 99.02% | 98.89% | 98.92% | 99.50% | 99.19% |
| | Contrast | 99.51% | 99.47% | 99.44% | 99.77% | 99.19% |
| Dataset2 | Baseline | 99.46% | 99.47% | 99.46% | 99.46% | 99.47% |
| | Brightness | 99.83% | 99.83% | 99.83% | 99.83% | 99.83% |
| | Contrast | 99.83% | 99.83% | 99.83% | 99.83% | 99.83% |

Figure 7. Experimental results of proposed model on dataset1.

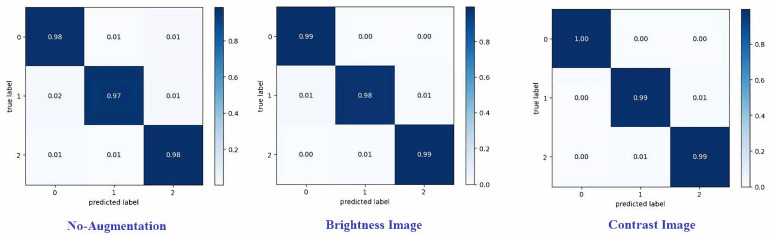

A confusion matrix summarizes the predictions of a model. Each row corresponds to an actual class, and each entry counts the items of that class assigned to a given predicted class. A confusion matrix is normalized by dividing each element by the total number of samples in its class, enhancing the visual representation of misclassification in each class [35]. Normalized confusion matrices for the best technique are shown, where G, P, and M, or 0, 1, and 2, refer to gliomas, pituitary tumors, and meningiomas, respectively. Various metrics can be derived from the confusion matrix to demonstrate the classifier’s performance for each tumor category. Recall (or sensitivity) and precision are essential metrics [36,37,38].
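A minimal sketch of confusion matrix normalization follows. It assumes that rows hold the true classes and that each row is divided by its own total, which is one common convention; the counts are hypothetical and are not taken from the study's figures.

```python
def normalize_rows(confusion):
    """Divide each row (true class) by its total so that every row sums to 1."""
    normalized = []
    for row in confusion:
        total = sum(row)
        normalized.append([count / total if total else 0.0 for count in row])
    return normalized

# Hypothetical counts; rows are true classes (G, P, M), columns are predicted classes.
confusion = [
    [48, 1, 1],
    [0, 29, 1],
    [2, 0, 18],
]

normalized = normalize_rows(confusion)

# With row normalization, the diagonal holds the per-class recall.
per_class_recall = [normalized[i][i] for i in range(len(normalized))]
```

Row normalization makes classes of different sizes directly comparable, since each diagonal entry can be read as the fraction of that class recognized correctly.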

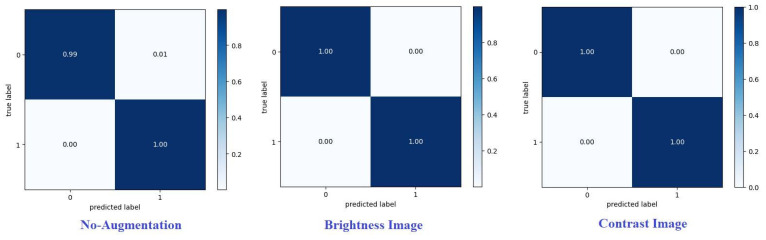

Figure 8 and Figure 9 present the confusion matrices for the proposed framework when using various augmentation techniques on each dataset.

Figure 8. Confusion matrix for proposed model on dataset1 with different augmentation techniques.

Figure 9. Confusion matrix for proposed model on dataset2 with different augmentation techniques.

6. Ablation Study

To confirm the significance of the proposed approach, ablation experiments were performed with and without augmentation. We evaluated the hybrid classification approach for each class, and the results are presented in Table 2 and Table 3. The classification experiments were conducted with several configurations, with the hybrid approach combined with each augmentation technique and compared to the baseline model.

Table 2. Results of experiments on dataset 1.

| Augmentation | Tumor | Precision | Recall | F1 Score | Specificity | Mean |
|---|---|---|---|---|---|---|
| Contrast | G | 1 | 0.9965 | 0.9982 | 1 | 0.9983 |
| | P | 0.993 | 0.9930 | 0.9930 | 0.9979 | 0.9954 |
| | M | 0.9894 | 0.9947 | 0.9920 | 0.9953 | 0.9950 |
| Brightness | G | 0.9930 | 0.9930 | 0.9930 | 0.9940 | 0.9935 |
| | P | 0.9860 | 0.9792 | 0.9826 | 0.9958 | 0.9874 |
| | M | 0.9894 | 0.9947 | 0.9920 | 0.9954 | 0.9950 |
| Baseline | G | 0.9828 | 0.9794 | 0.9811 | 0.9851 | 0.9822 |
| | P | 0.9792 | 0.9658 | 0.9724 | 0.9938 | 0.9797 |
| | M | 0.9688 | 0.9841 | 0.9764 | 0.9863 | 0.9852 |

Table 3. Results of experiments on dataset 2.

| Augmentation | Tumor | Precision | Recall | F1 Score | Specificity | Mean |
|---|---|---|---|---|---|---|
| Contrast | Tumor | 1 | 0.9983 | 1 | 1 | 0.9983 |
| | No tumor | 0.9967 | 1 | 0.9983 | 0.9967 | 0.9983 |
| Brightness | Tumor | 0.9993 | 0.9973 | 0.9983 | 0.9993 | 0.9983 |
| | No tumor | 0.9973 | 0.9993 | 0.9983 | 0.9993 | 0.9983 |
| Baseline | Tumor | 0.9973 | 0.9921 | 0.9947 | 0.9973 | 0.9947 |
| | No tumor | 0.9921 | 0.9973 | 0.9947 | 0.9921 | 0.9947 |

7. Discussion

This article introduces a precise and completely automated system, requiring minimal pre-processing, for the categorization of brain tumors. The system utilizes advanced transfer learning techniques to extract the features of brain MRI scans, and KNN is utilized to classify them. Augmentation techniques are applied to improve the classifier’s performance. This framework achieved higher accuracy than the comparable approaches from previous research, and it could be further examined using a larger number of images.

Typically, the primary metric used to evaluate such systems in the majority of previous studies is the accuracy, alongside the sensitivity, precision, and recall. We compare the obtained results with those of past work conducted on an identical benchmark dataset [29] in Table 4 and on the Kaggle BR35H dataset in Table 5.

Table 4. Comparison of the obtained accuracy with that achieved in past work on the same dataset (dataset1).

| Author | Year | Method | Performance |
|---|---|---|---|
| Cheng et al. [24] | 2015 | BoW-SVM | 91.28% |
| Paul et al. [39] | 2016 | Fully connected CNN | 84.19% |
| Ismael et al. [25] | 2018 | DWT-Gabor-NN | 91.9% |
| Pashaei et al. [26] | 2018 | CNN-ELM | 93.68% |
| Afshar et al. [40] | 2019 | CapsNet | 90.89% |
| Deepak et al. [41] | 2019 | Deep CNN-SVM | 97.1% |
| Ismael et al. [10] | 2020 | ResNet50 | 97% for image level |
| Gull et al. [27] | 2021 | VGG-19, AlexNet | 97.25% |
| Mondal et al. [28] | 2022 | DenseNet201, InceptionV3, MobileNetV2, ResNet50, and VGG19 | 97.91% |
| Eman et al. [42] | 2023 | ResNet50+KNN | 99.1% |
| Proposed model | 2024 | Combination of CNN with EfficientNetV2B3 and KNN classifier | 99.51% |

Table 5. Comparison of the obtained accuracy with that achieved in past work on the same dataset (dataset2 (BR35H)).

| Author | Year | Method | Performance |
|---|---|---|---|
| Hamada et al. [30] | 2020 | CNN models | 97.5% |
| Asmaa et al. [43] | 2021 | CNN with augmented images | 98.8% |
| Amran et al. [44] | 2022 | AlexNet, MobileNetV2 | 99.51% |
| Falak et al. [45] | 2023 | Keras Sequential Model (KSM) | 97.99% |
| Proposed model | 2024 | Combination of CNN with EfficientNetV2B3 and KNN classifier | 99.83% |

The authors in [10,24,25,26,27,28,39,40,41] achieved accuracy of 91.28%, 84.19%, 91.90%, 93.68%, 90.89%, 97.1%, 97%, 97.25%, and 97.91% respectively. In this study, the suggested model has also achieved high accuracy when tested on the same dataset1, exceeding that of the best-performing model in the previous studies by 1.5%.

The authors in [30,43,44,45] achieved accuracy of 97.5%, 98.8%, 99.50%, and 97.99%, respectively. High accuracy has also been attained with the proposed model when tested on the same BR35H Kaggle dataset2, exceeding that of the best-performing model in the previous studies by 0.3%.

8. Conclusions

This paper introduces a system for the automatic categorization of brain tumors, requiring only minimal pre-processing. The model was trained on a brain tumor MRI dataset consisting of 3064 images and assessed with various performance metrics, such as the accuracy, precision, and recall. We combined the flatten layer of the CNN with the flatten layer of EfficientNetV2B3 as the feature extraction layers and then used a KNN classifier with various augmentation techniques. This led to 99.51% accuracy, an improvement of 1.5% over the best-performing models in the previous studies. We intend to expand the proposed framework to more extensive datasets and more brain tumor types. Moreover, the proposed framework will be made available in the cloud as a web application so that medical professionals can analyze MRI images with speed and precision. The proposed model could also be employed with a range of other medical imaging techniques, such as X-rays, ultrasound, endoscopic methods, thermoscopy, and histological imaging. Future studies could address a number of the constraints of this work. An adequate evaluation requires a large amount of additional patient data, mainly for the meningioma class, which had the lowest number of images of the three classes studied. Moreover, studies should focus on tuning additional hyperparameters, such as the number of convolutional layers, the number of filters in each convolutional layer, the kernel size, and the number of fully connected layers. In addition, the proposed model may be enhanced by widening the range of hyperparameters explored. Including CE-MRI images of normal brains in the dataset may allow further differentiation in tumor classification. In the future, a larger dataset could be used for training purposes. Finally, the problems of dimensionality that arise from the weights and parameters should be addressed.

Author Contributions

E.M.G.Y.: conceptualization, data collection, formal analysis, writing—review and editing; M.N.M. and I.A.I.: conceptualization, methodology, writing—original draft, validation, supervision; A.M.A.: funding acquisition, validation, visualization; M.N.M.: writing—original draft, analysis; E.M.G.Y.: investigation, data collection. All authors have read and agreed to the published version of the manuscript.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data collected and analyzed during this study are available from the corresponding author upon reasonable request.

Conflicts of Interest

The authors declare no conflicts of interest.

Funding Statement

This research did not receive any specific grant from funding agencies in the public, commercial, or not-for-profit sectors.


