Abstract

Collections librarians from academic libraries are often asked, on short notice, to evaluate whether their collections are able to support changes in their institutions' curricula, such as new programs or courses or revisions to existing programs or courses. With insufficient time to perform an exhaustive critique of the collection and a need to prepare a report for faculty external to the library, a selection of reliable but brief qualitative and quantitative tests is needed. In this study, materials-centered and use-centered methods were chosen to evaluate the toxicology collection of the University of Saskatchewan (U of S) Library. Strengths and weaknesses of the techniques are reviewed, along with examples of their use in evaluating the toxicology collection. The monograph portion of the collection was evaluated using list checking, citation analysis, and classified profile methods. Cost-effectiveness and impact factor data were compiled to rank journals from the collection. Use-centered methods such as circulation and interlibrary loan data identified highly used items that should be added to the collection. Finally, although the data were insufficient to evaluate the toxicology electronic journals at the U of S, a brief discussion of three initiatives that aim to assist librarians as they evaluate the use of networked electronic resources in their collections is presented.

INTRODUCTION

From time to time academic librarians are asked to evaluate collections, either entirely or in specific areas, to determine whether the collections can support changes in the curriculum. These adjustments to the curriculum may be in the form of new courses introduced at the undergraduate or graduate level or revisions to existing courses. An affirmation that the library owns the titles on a professor's reading list is often all that is expected. Librarians know that students require much more support from collections and library staff than just access to titles on a reading list. Thus, requests for collection evaluation from academic staff may be seen as opportunities to educate faculty about the collections at their disposal and the depth of knowledge that librarians must possess to be effective stewards of these valuable resources.

Librarians facing a collection-evaluation exercise may select from a variety of techniques described in the voluminous collection development literature. It is important to select both quantitative and qualitative techniques to avoid skewing the results in favor of one or more methods. Several factors need to be considered when selecting evaluation techniques, including the reasons for the study and the documented reliability and validity of selected techniques. Other factors—such as cost, available staff time, and timeline—are also important. Baker and Lancaster [1] identify two basic approaches to collection evaluation:

- the materials-centered approach that focuses on the materials in a collection and considers collection size and diversity

- the use-centered approach that focuses on the use a collection receives and a library's patron base

The faculty of the Toxicology Centre [2] at the University of Saskatchewan (U of S) requested an evaluation of the ability of the library's collections to support a graduate-level program in toxicology. After considering the environmental factors of no incremental funding, no additional staff support (one librarian would collect the data, analyze them, and write the report), and a timeline of one month, the following evaluation techniques were selected:

- materials-centered approaches
  – list checking: books
  – citation analysis: books
  – citation analysis: journals
    ○ cost-effectiveness
    ○ impact factor
  – classified profile: books
  – Internet resources

- use-centered approaches
  – interlibrary loan: books, journals
  – circulation: books, journals

Most of the above techniques were developed to evaluate materials found in traditional print collections; revised or new methods are required to evaluate library collections in which electronic resources are becoming increasingly important. For instance, CD-ROMs that are added to the collection can be evaluated as if they were print materials, but electronic journals that are not owned by the library, but only leased, must be evaluated with usage data obtained from the information provider. Several initiatives are underway to investigate ways in which libraries can collect and analyze statistics to evaluate collections containing electronic resources; these initiatives will be mentioned later. Regardless of the problems associated with assessing the usefulness of electronic resources in a collection, if data are available, electronic resources should be evaluated.

The conspectus methodology was rejected as one of the evaluation techniques, because, according to Lancaster, it “does not evaluate the strength of its [library's] collections in various subject areas, it merely identifies its stated collection development policies in those areas” [3]. The conclusion is that conspectus data are more useful for internal library collection management decisions and for cooperative collection activities and services such as interlibrary loans [4] than for reporting to university staff external to the library.

MATERIALS-CENTERED APPROACHES

List checking: books

This method checks the collection against authoritative sources, such as bibliographies by recognized subject experts, catalogs of libraries with strong collections in the area, or standard lists in a discipline. A customized list may be prepared by compiling references from a number of authoritative sources. List checking using subject bibliographies is generally preferred for specialized subject collections or for collections of large specialized libraries [5]. An adequate collection will contain many titles on the list, although knowledge of local users' needs is necessary to determine the level of adequacy of the collection. A sample may contain as few as 300 titles, but 1,000 or more titles would be needed to determine what is missing from the collection [6]. Examining the titles of held items is useful for identifying strengths and weaknesses of the collection and for compiling lists of items for purchase. Some disadvantages of list checking, as determined by Hall, are that lists quickly become outdated, that they encourage conformity among collections that may not reflect local needs, and that they give no indication of items that the library should not hold (e.g., outdated materials or multiple copies) [7].

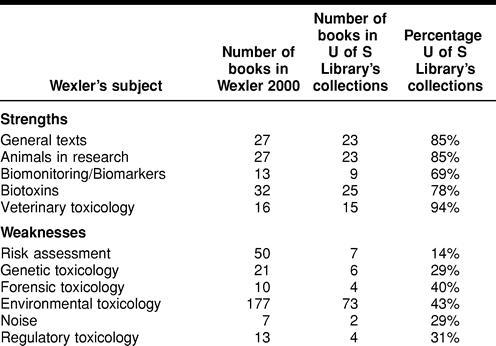

In evaluating the specialized toxicology collection at the U of S Library, the subject bibliography used was Information Resources in Toxicology by Wexler [8]. The U of S Library owns approximately 50% of the titles on Wexler's list. Table 1 shows areas of strength in the toxicology collection including general texts on toxicology, animals in research, biotoxins, and veterinary toxicology. Areas that need attention are risk assessment, genetic toxicology, forensic toxicology, and environmental toxicology. Areas with few resources, such as noise and regulatory toxicology, need not be addressed, because they are not reflected in the present curriculum. A list of titles recommended for purchase can also be generated from the data and included in the evaluation report.

Table 1 Areas of strength and weakness determined by percentage of books by subject owned by the University of Saskatchewan (U of S) Library compared to books listed in Wexler 2000
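The percentage-held calculation behind a table such as Table 1 can be sketched in a few lines of Python. This is a minimal illustration only: the function name, the title-matching rule (case-insensitive exact match), and the sample titles are assumptions and are not taken from the study, which does not describe how titles were matched against the catalog.

```python
from collections import defaultdict


def percent_held_by_subject(checklist, held_titles):
    """Return {subject: percent of checklist titles held by the library}.

    checklist   -- iterable of (subject, title) pairs from the bibliography
    held_titles -- iterable of titles found in the library catalog
    """
    # Normalize case and whitespace so trivial differences do not hide a match.
    held = {title.strip().lower() for title in held_titles}
    totals = defaultdict(int)
    owned = defaultdict(int)
    for subject, title in checklist:
        totals[subject] += 1
        if title.strip().lower() in held:
            owned[subject] += 1
    return {s: round(100 * owned[s] / totals[s], 1) for s in totals}


# Hypothetical sample data, not taken from the study.
checklist = [
    ("Veterinary toxicology", "Title A"),
    ("Veterinary toxicology", "Title B"),
    ("Risk assessment", "Title C"),
    ("Risk assessment", "Title D"),
]
catalog = ["title a", "Title B ", "Title D"]

for subject, pct in percent_held_by_subject(checklist, catalog).items():
    print(f"{subject}: {pct}% held")
```

In practice, matching on a standard identifier such as an ISBN would likely be more reliable than matching on title strings.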

Citation analysis

Citation analysis of collections is based on the assumption that library users select bibliographic references from scholarly sources in their subject areas that have been used by their peers in the preparation of their publications [9]. This method can be used for collection management purposes to predict future scholarly use of collections [10] and is suitable for analyzing research collections used by faculty and graduate students. Care is taken to select the source of references, so that it will provide a pool representing what should be present in a given collection. In evaluating science, technology, and medicine collections, currency is important; therefore, references should be selected from recent issues of important journals or books rather than from older sources [11].

Citation analysis has several disadvantages, including a primary focus on the needs of those who do research, identification of items that may not be useful to undergraduate students in a field, and underrepresentation of items that are not cited in scholarly publications. Regardless of its limitations, citation analysis is useful, because it identifies references within the core discipline as well as those in allied and other disciplines. Because of the currency of references, this technique is also useful for identifying the literature used in emerging and interdisciplinary areas. Two approaches to citation analysis are used in this report: a form of list checking, in which the collection is evaluated against a list of references cited by researchers, and ranking of journals using citation data, which have been collected and analyzed in the Journal Citation Reports on CD Science Edition.

Citation analysis: books

In the first approach, the toxicology collection at the U of S was evaluated against a list of the bibliographic references that have been cited by researchers, instead of a list of “best” books. A list of references to monographs representing the materials that should be available in a research-level toxicology collection was created. All non-serial sources cited from 1997 to 1999 in the top-ranked journal in toxicology, the Annual Review of Pharmacology and Toxicology, were collected; random sampling was not required. During the three-year period sampled, 193 references were identified and analyzed by language, format, date, and subject area. Forty-five percent of the items from the Annual Review reference list were in the U of S toxicology collection. The cited items appeared in two formats: 185 were books, and twelve were government documents. Fifty-eight percent of the books were published after 1990, and 97% were in English. No pattern of prolific authors was revealed; 159 authors were responsible for one title each, while an additional sixteen authors produced two or more books accounting for thirty-four titles.

Using Wexler's subject headings [12] as a template, the most-cited subjects were: target sites, accounting for 10% of all the books sampled; pharmacokinetics and metabolic toxicology (12%); biochemical, cellular, and molecular toxicology (10%); and miscellaneous titles (21%). These subjects, representing areas of current research intensiveness in toxicology, were highlighted for developing the U of S toxicology collection. If Wexler's subjects represented the core areas in toxicology, and the titles assigned to the miscellaneous subject did not fit into Wexler's categories, it was reasonable to assume that these materials were peripheral to toxicology.

Analyzing references to monographs in the serials literature yields results that appear to be more useful for making selection decisions than for demonstrating the merits of an existing collection. The analysis indicates areas in which researchers are working (e.g., pharmacokinetics and metabolic toxicology) and highlights peripheral areas of interest. This information is useful for selecting materials for future purchase and adjusting acquisition patterns. While citation analysis may work well for serials, it is less useful for monographs, because: (1) they are not cited heavily in the literature even though they may be used extensively in libraries, (2) verifying and correcting references from the monographic literature is time consuming, and (3) references from books emphasize retrospective materials. Subramanyam [13] and Hall [14] both recommend that circulation data, rather than citation data, be used to identify highly used books.

Citation analysis: journals

In another approach to collection evaluation using citation analysis, journals in a subject area are ranked according to some criteria to determine their relative value in a discipline. The ranking allows use statistics to be compared among similar titles and allows librarians to select the “best,” or highest-ranked, journals. Lancaster [15] lists seven methods of ranking journals; the two used here are cost-effectiveness and impact factor.

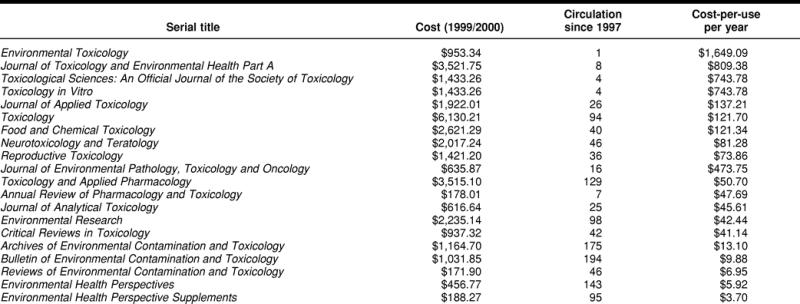

Rank ordering of journals by cost per use is a technique that highlights a serial's cost effectiveness. Cost-per-use data are available in many libraries because of past serials cancellation projects; the data include subscription costs and circulation data compiled for a specified time period. For the toxicology collection, eight titles out of twenty cost more than $100 per use (Table 2). These eight titles were examined and, based upon faculty input, three were selected for cancellation with document delivery being recognized as a reasonable alternative to ownership. Five titles have been retained for their support of specialized areas of the curriculum and research but are subject to annual review to gauge their continued usefulness. In an evaluation report, a list of serials, ranked by annual cost and including cost-per-use data, is useful for educating faculty about some of the issues surrounding serials management in an academic library.

Table 2 Ranking of toxicology serials currently held at the U of S Library by cost-per-use per year
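A cost-per-use ranking of this kind is simple arithmetic: annual subscription cost divided by recorded uses over the same period. The sketch below is illustrative only. The function name, the handling of titles with zero recorded uses, and the sample figures are assumptions; only the $100 threshold comes from the text above.

```python
def rank_by_cost_per_use(serials, threshold=100.0):
    """Rank serials from most to least expensive per use.

    serials   -- iterable of (title, annual_cost, annual_uses)
    threshold -- cost per use above which a title is flagged for review
    """
    ranked = []
    for title, cost, uses in serials:
        # Assumption: a title with no recorded use has an unbounded cost per use.
        cost_per_use = cost / uses if uses > 0 else float("inf")
        ranked.append((title, cost_per_use))
    ranked.sort(key=lambda row: row[1], reverse=True)
    flagged = [title for title, cpu in ranked if cpu > threshold]
    return ranked, flagged


# Hypothetical subscription costs and use counts, not taken from Table 2.
sample = [
    ("Journal A", 2400.0, 12),
    ("Journal B", 900.0, 30),
    ("Journal C", 1500.0, 0),
]
ranked, flagged = rank_by_cost_per_use(sample)
for title, cpu in ranked:
    print(f"{title}: {cpu:.2f} per use")
print("Flagged for review:", flagged)
```

Flagging is only a starting point; as described above, the final retention decisions for the toxicology titles rested on faculty input.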

The second method of citation analysis of journals used in evaluating the U of S toxicology collection is ranking by impact factor. The impact factor, along with other data, can be found in ISI's Journal Citation Reports (JCR). The ranking is seen as a measure of the prestige of a journal in a discipline, as defined by citation by peers.

Two advantages of ranking serials using citation counts are the objective data that are readily available from the JCR and rankings that are not influenced by individual bias. There are several disadvantages to ranking journals by impact factor, some of which, according to Nisonger [16], involve the age of the journals. Older, established journals are cited more often because they are older, established, and, thus, well known. They have larger audiences, and their articles have more opportunities to be cited. New journals are at a disadvantage, because their citation counts may not be available. Two disadvantages of this method that are not related to the age of serials are citation counts that are exaggerated by self-citation and data that are not local.

Regardless of the disadvantages of the method, the data compiled in the JCR continue to be consulted by librarians and faculty to determine the quality of a journal in a specific discipline. Nisonger [17] has determined that, because a journal's ranking may fluctuate from year to year, data from three consecutive years of the JCR should be used for ranking. ISI calculates a journal's impact factor by dividing the citations received in the current year to items the journal published in the previous two years by the number of items it published in those two years; consequently, when the data from three years are averaged, the middle years are counted twice. Nisonger proposed a correction method called the “adjusted impact factor” to compensate for this double counting.
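The double counting is easier to see with a small worked example. The sketch below computes the standard two-year impact factor and then counts how often each publication year enters the calculation when impact factors for three consecutive JCR years are averaged. The function names and sample figures are hypothetical, and Nisonger's actual correction formula is not reproduced here; the code only illustrates the problem that the adjusted impact factor is meant to address.

```python
from collections import Counter


def impact_factor(citations, items, year):
    """Two-year impact factor for `year`.

    citations -- {(citing_year, publication_year): citation count}
    items     -- {publication_year: number of citable items published}
    """
    cited = citations[(year, year - 1)] + citations[(year, year - 2)]
    published = items[year - 1] + items[year - 2]
    return cited / published


def publication_year_weights(jcr_years):
    """Count how many impact-factor windows each publication year falls into."""
    weights = Counter()
    for year in jcr_years:
        weights[year - 1] += 1
        weights[year - 2] += 1
    return dict(weights)


# Hypothetical figures for a single journal, not taken from the JCR.
citations = {(1999, 1998): 30, (1999, 1997): 50}
items = {1998: 40, 1997: 40}
print(impact_factor(citations, items, 1999))

# Averaging the 1997, 1998, and 1999 impact factors weights the
# publication years 1996 and 1997 twice and 1995 and 1998 once.
print(publication_year_weights([1997, 1998, 1999]))
```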

The U of S Library owns thirteen of the top twenty toxicology journals ranked by adjusted impact factor from the 1999 science edition of the JCR [18] and has incomplete holdings of an additional six titles. Of the complete list of sixty-eight toxicology journals in the 1999 JCR, the library owns twenty-three, has partial holdings of eighteen more titles, and has never owned twenty-seven of the titles on the list. A list of top-ranked journals in a discipline is useful to indicate to faculty which of the “best” titles the library owns and to compile a purchase list of those titles that would improve the collection.

Classified profile: monograph

The classified profile is a materials-centered technique of evaluation that focuses on the collection's ability to support teaching in its institution. In a classified profile, Library of Congress (LC) classification numbers are first selected to represent the subject matter of courses from the curriculum and then searched in the catalog to determine the number of books in this area in the collection. Enrollment figures for each course are included in the analysis to indicate demand for materials.

Golden [19] developed this methodology to assess the support provided by the current book collection for the courses offered by University of Nebraska at Omaha. Burr [20] modified the method by comparing his classified profile data to that of Association of College and Research Libraries standards for collections. He further refined the analysis by considering publication date, country of origin, publisher, and language of publications for all books in the LC classification ranges.

To evaluate the U of S toxicology collection, LC classification numbers were assigned to eight graduate student courses in toxicology. Support in terms of numbers of books per course was adequate, although enrollments were low, because the courses were taught at the graduate level. For example, the “General Toxicology I” course (VT P 836.5) was supported by 236 books in the following LC classification ranges: RA1193, RA1211, RA1219.3–RA1221, RA1230–RA1270, and SF757.5. The “Carcinogenesis and Mutagens” course, with seventy-two books, was identified as an area requiring additional resources. In an evaluation report, a classified profile uses local data to show the library's support (number of books) for specific courses currently taught. The classified profile focuses on the local needs of students and teachers.
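Counting the books that fall within a course's LC classification ranges can be sketched as follows. The ranges are those listed above for “General Toxicology I”; everything else is an assumption made for illustration, including the simplified call-number parsing (class letters plus class number only), the treatment of a single number such as RA1193 as an exact match, and the sample call numbers. The study does not describe how its catalog search was performed.

```python
import re

# Simplified pattern: one to three class letters followed by a class number.
LC_PATTERN = re.compile(r"^\s*([A-Z]{1,3})\s*(\d+(?:\.\d+)?)")

# (class letters, low, high) for "General Toxicology I", from the text above.
GENERAL_TOX_RANGES = [
    ("RA", 1193, 1193),
    ("RA", 1211, 1211),
    ("RA", 1219.3, 1221),
    ("RA", 1230, 1270),
    ("SF", 757.5, 757.5),
]


def parse_lc(call_number):
    """Return (class letters, class number) or None if the string does not parse."""
    match = LC_PATTERN.match(call_number.upper())
    if match is None:
        return None
    return match.group(1), float(match.group(2))


def in_ranges(call_number, ranges):
    """True if the call number's class falls inside any of the given ranges."""
    parsed = parse_lc(call_number)
    if parsed is None:
        return False
    letters, number = parsed
    return any(letters == cls and low <= number <= high for cls, low, high in ranges)


def count_books(call_numbers, ranges):
    """Number of call numbers that fall inside the ranges."""
    return sum(in_ranges(cn, ranges) for cn in call_numbers)


# Hypothetical call numbers, not taken from the U of S catalog.
sample = ["RA1211 .C37 2001", "RA1219.3 .H36", "SF757.5 .V48", "QH541 .E3"]
print(count_books(sample, GENERAL_TOX_RANGES))
```

Repeating the count for each course, alongside its enrollment figure, would give the rows of a classified profile.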

Internet resources

When evaluating Internet resources, access to those resources is essential, but ownership is not. Because the number of Internet resources that can be added to a list of best resources is virtually unlimited and constantly changing, the number of potential resources can be reduced by consulting authoritative sources in the discipline. Resources that are repeatedly cited should be included in the preliminary list. Finally, each item on the list should be evaluated using many of the same criteria used when adding items to a collection. Pionteck and Garlock [21] have devised a series of questions to ask when evaluating Internet resources. A list of critically evaluated best Internet resources included in an evaluation report is useful for faculty and staff.

USE-CENTERED APPROACHES

Use-centered studies examine how collections are used, and they are versatile enough to evaluate all types and sizes of collections. Their value in research libraries is still being debated, because use studies reveal little about how useful patrons find the materials or about what patrons want but cannot find, and nothing at all about people who do not use the library. Three types of data that reflect collection use are: (1) records of interlibrary loan requests, (2) counts of circulation of materials, and (3) usage of electronic resources. Interlibrary loan (ILL) data indicate unmet user needs, and circulation data reflect patron needs that are met by a collection. The best evaluation reports include analyses of data collected by a variety of use-centered and materials-centered methods.

Interlibrary loan

ILL requests can be broken down by material type—such as title, subject, age, format, and language of request—and requestor characteristics—such as status of requestor, degree program, and college or departmental affiliation. Using ILL data to select materials for science, technology, and medicine collections is facilitated by the fact that the majority of ILL requests in these disciplines are for current in-print titles. Pritchard [22] has shown that items requested by ILL, and later added to the collection, had circulation statistics comparable to those selected directly by library staff. ILL data can also be used to identify groups who borrow heavily and to indicate whether the area of the collection that they use needs strengthening. In an evaluation report to faculty, an analysis of ILL requests can indicate types of materials that are of interest to users and identify specific titles requested frequently enough that they should be added to the collection.

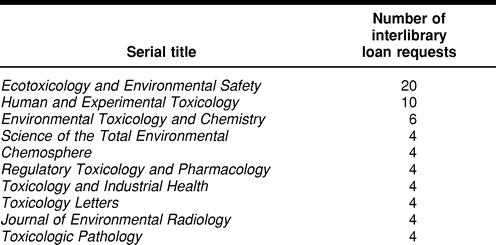

For the U of S toxicology collection, from September 11, 1999, to March 17, 2000, toxicology faculty, staff, and students submitted 2.5% of all U of S ILL requests. Articles from ten journals were requested three or more times (Table 3), but the journals were not subsequently purchased. They are, however, monitored annually to determine the number of ILL requests generated and their usefulness in supporting the curriculum and research. Journal articles were the format most requested (152 copies were supplied; 8 books were borrowed). The eight books requested by patrons were in print and were acquired for the toxicology collection. In terms of patron status, graduate students submitted 69% of requests for materials, while faculty submitted 31% of the requests; all requests were eventually filled.

Table 3 Titles of toxicology serials requested by interlibrary loan more than three times at the U of S Library from September 1999 to March 2000
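The two ILL tallies reported above, frequently requested titles and the share of requests by patron status, can be sketched as simple counts. The function names, the record layout, and the sample requests are hypothetical; the threshold of three requests follows the text, and real ILL software may export its data quite differently.

```python
from collections import Counter


def frequently_requested(requests, min_requests=3):
    """Return (title, count) pairs for titles requested at least min_requests times.

    requests -- iterable of (journal_title, patron_status) pairs
    """
    counts = Counter(title for title, _status in requests)
    return [(title, n) for title, n in counts.most_common() if n >= min_requests]


def share_by_status(requests):
    """Return {patron_status: percent of all requests}."""
    counts = Counter(status for _title, status in requests)
    total = sum(counts.values())
    return {status: round(100 * n / total, 1) for status, n in counts.items()}


# Hypothetical request log, not taken from the study.
log = [
    ("Journal X", "graduate student"),
    ("Journal X", "graduate student"),
    ("Journal X", "faculty"),
    ("Journal X", "graduate student"),
    ("Journal Y", "faculty"),
]
print(frequently_requested(log))
print(share_by_status(log))
```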

ILL borrowing accounts for only a small percentage of library use; therefore, other techniques for measuring collection use, such as circulation studies, need to be included in the evaluation.

Circulation

Circulation data are useful in evaluations, because they reveal which materials users select to satisfy their needs. When evaluating circulation data, the author assumes that, when patrons check out items, they are displaying an interest in them, although they may not use them. Patrons also use materials inhouse. When considered together, circulation counts and inhouse data provide a fuller understanding of collection use [23].

Circulation statistics can be used to support collection decisions such as weeding, storing, preserving, buying multiple copies, converting records, and reclassifying collections. Many library studies have shown that borrowed books, whether individually or by subject groupings, are more or less the same as those that are used inhouse. Therefore, predicting which materials will be highly used is possible by examining either circulation or inhouse data. As mentioned earlier, circulation studies, rather than citation studies, best reveal the use patterns of books and identify highly used books. Age and language are two other factors that are useful in determining which items will be used and which will not be used.

In evaluating the U of S toxicology collection, circulation data for two years, ending in March 2001, were examined. Internal use and total checkouts data for 476 toxicology monographs revealed that 129 books circulated four or more times. Sixty percent of these books were published prior to 1991. This finding did not agree with the assumption that library users preferred recently published scientific materials. Further examination of publication dates indicated that as total number of checkouts (or use) increased, the percentage of books published after 1990 increased. For example, 78% of books that circulated four times were published before 1991. At the other end of the spectrum, 100% of the books that circulated fourteen to twenty-four times were published in 1991 or later. The collection of highly used books was examined; duplicate and replacement copies were purchased as required.
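The relationship between publication date and number of checkouts can be examined by grouping books by how often they circulated and computing the share of older titles in each group. The sketch below is an assumption-laden illustration: the function name, the record layout, and the sample records are hypothetical, while the 1991 cutoff and the minimum of four checkouts follow the text.

```python
from collections import defaultdict


def pct_older_by_checkouts(books, cutoff_year=1991, min_checkouts=4):
    """Return {checkout count: percent of books published before cutoff_year}.

    books -- iterable of (publication_year, total_checkouts) pairs
    """
    groups = defaultdict(lambda: [0, 0])  # checkouts -> [older books, all books]
    for pub_year, checkouts in books:
        if checkouts < min_checkouts:
            continue  # only highly used books are analyzed
        group = groups[checkouts]
        group[1] += 1
        if pub_year < cutoff_year:
            group[0] += 1
    return {
        checkouts: round(100 * older / total, 1)
        for checkouts, (older, total) in sorted(groups.items())
    }


# Hypothetical circulation records, not taken from the study.
records = [
    (1985, 4), (1989, 4), (1995, 4), (1988, 4),
    (1996, 14), (1999, 14),
    (1980, 2),
]
print(pct_older_by_checkouts(records))
```

With real data, adjacent checkout counts would probably be binned (for example, fourteen to twenty-four checkouts, as in the text) so that each group holds enough books to be meaningful.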

In a report to faculty, circulation data can be used to create a list of highly used materials and to identify materials that require multiple copies, replacement, or both. ILL analysis also highlights patron usage by material type; this information can be kept in mind for future purchases.

Electronic resources

As mentioned in the abstract, data were insufficient to evaluate the electronic journals component of the U of S toxicology collection. At present, a number of national and international initiatives are underway to examine and develop library electronic statistics and performance measures.

The Association of Research Libraries (ARL) E-Metrics Project “supports an investigation of measurement of library performance in the networked information environment” [24]. Twenty-four ARL institutions are funding the study and participating in it. The three-phase project began in April 2000. The ARL E-Metrics Project plans to provide libraries with a manual of statistical tests that can be used to evaluate the use of electronic resources.

Another initiative, with a different approach from the consortium-based ARL E-Metrics Project, is the White Paper on Electronic Journal Usage Statistics published in October 2000 and sponsored by the Council on Library and Information Resources (CLIR). The CLIR “commissioned Judy Luther to review how and what statistics are currently collected and to identify issues that must be resolved before librarians and publishers feel comfortable with the data and confident in using them” [25].

An international initiative is the International Coalition of Library Consortia (ICOLC) report Guidelines for Statistical Measures of Usage of Web-Based, Indexed, Abstracted, and Full Text Resources, released in November 1998. The report defines a basic set of information requirements for electronic products. “Information providers are encouraged to go beyond these minimal requirements” [26]. Use elements that must be included for electronic products are: number of queries, number of menu selections, number of sessions (logins), number of turn aways, number of items viewed or downloaded (e.g., tables of contents, abstracts, articles, chapters, poems, etc.), and other items such as images.

CONCLUSIONS

Within the constraints of the examination of the U of S toxicology collection, the materials-centered evaluation techniques were generally the simplest to use and their results the easiest to interpret. For monographs, list checking and the classified profile method each reflected the local needs of library users and revealed the strengths and weaknesses of the toxicology collection. Analyzing the monograph collection using citation analysis was not productive; it did highlight areas of research interest that could be used in selecting books, but, because journals are paramount in current research, the technique did not identify important and recent books in toxicology.

Considering journals, cost effectiveness was the most useful journal-ranking tool, because it used local data and highlighted for faculty the exorbitant cost of some scientific journals. Impact factor data were easy to use, and “adjusting” the data improved their reliability.

Of the use-centered methods, circulation counts were the most reliable data collected over a range of variables. ILL data, on the other hand, were quite superficial due to the statistical capability of the ILL software. Regardless, use-centered data are important to collect and examine, because they focus on users instead of the collection.

As collections shift from print to electronic formats, the author looks to the development of statistics and measures that enable evaluation of networked electronic resources and services. These techniques will supplement traditional collection-evaluation methods and allow librarians to fully evaluate their collections.

Footnotes

* Based on a presentation at the 101st Annual Meeting of the Medical Library Association, Orlando, Florida; May 29, 2001.

REFERENCES

1. Baker SL, Lancaster FW. The measurement and evaluation of library services. 2d ed. Arlington, VA: Information Resources Press, 1991.

2. Toxicology Centre, University of Saskatchewan. The Toxicology Group. [Web document]. Saskatoon, CA: The University, 2001. [rev 15 Oct 2001; cited 4 Feb 2002]. <http://www.usask.ca/toxicology/toxgroup.shtml>.

3. Lancaster FW. If you want to evaluate your library …. 2d ed. Champaign, IL: University of Illinois Graduate School of Library and Information Science, 1993.

4. Baker SL, Lancaster FW. The measurement and evaluation of library services. 2d ed. Arlington, VA: Information Resources Press, 1991:77.

5. Baker SL, Lancaster FW. The measurement and evaluation of library services. 2d ed. Arlington, VA: Information Resources Press, 1991:44.

6. Baker SL, Lancaster FW. The measurement and evaluation of library services. 2d ed. Arlington, VA: Information Resources Press, 1991:44.

7. Hall BH. Collection assessment manual for college and university libraries. Phoenix, AZ: Oryx Press, 1985.

8. Wexler P, ed. Information resources in toxicology. 3d ed. San Diego, CA: Academic Press, 2000.

9. Nisonger TE. Management of serials in libraries. Englewood, CO: Libraries Unlimited, 1998.

10. Hall BH. Collection assessment manual for college and university libraries. Phoenix, AZ: Oryx Press, 1985:55.

11. Baker SL, Lancaster FW. The measurement and evaluation of library services. 2d ed. Arlington, VA: Information Resources Press, 1991:47.

12. Wexler P, ed. Information resources in toxicology. 3d ed. San Diego, CA: Academic Press, 2000:27.

13. Subramanyam K. Citation studies in science and technology. In: Stueart RD, Miller GB, Jr., eds. Collection development in libraries: a treatise. v.10, part B. Greenwich, CT: JAI Press, 1980:345–72. (Foundations in library and information science.).

14. Hall BH. Collection assessment manual for college and university libraries. Phoenix, AZ: Oryx Press, 1985:55.

15. Lancaster FW. If you want to evaluate your library …. 2d ed. Champaign, IL: University of Illinois Graduate School of Library and Information Science, 1993:87.

16. Nisonger TE. Management of serials in libraries. Englewood, CO: Libraries Unlimited, 1998:126.

17. Nisonger TE. A methodological issue concerning the use of Social Sciences Citation Index Journal Citation Reports impact factor data for journal ranking. Libr Acquis Pract Th. 1994 Winter; 18(4):447–58.

18. Journal Citation Reports on CD-ROM (science edition). Philadelphia, PA: Institute for Scientific Information, 1999.

19. Golden B. A method of quantitatively evaluating a university library collection. Libr Res Tech Serv. 1974 Summer; 18(3):268–74.

20. Burr RL. Evaluating library collections: a case study. J Acad Libr. 1979 Nov; 5(5):256–60.

21. Pionteck S, Garlock K. Creating a World Wide Web resource collection. Internet Res [Internet], 1996;6(4):20–6. [cited 5 May 2001]. <http://www.emerald-library.com/brev/17206dc1.htm>. (Subscription required).

22. Pritchard SJ. Purchase and use of monographs originally requested on interlibrary loan in a medical school library. Libr Acquis Pract Th 1980;4(2):135–9.

23. Hall BH. Collection assessment manual for college and university libraries. Phoenix, AZ: Oryx Press, 1985:64.

24. Association of Research Libraries. Measures for electronic resources (e-metrics). [Web document]. Washington, DC: The Association. [cited 4 Feb 2002]. <http://www.arl.org/stats/newmeas/emetrics/>.

25. Luther J. White paper on electronic journal usage statistics. [Web document]. Council on Library and Information Resources, October 2000. [cited 3 May 2001]. <http://www.clir.org/pubs/reports/pub94/contents.html>.

26. International Coalition of Library Consortia. Guidelines for statistical measures of usage of Web-based indexed, abstracted, and full text resources. The Coalition, 1998. [cited 3 May 2001]. <http://www.library.yale.edu/consortia/webstats.html>.