Abstract

Because multi-view learning leverages complementary information from multiple feature sets to improve model performance, a tensor-based data fusion layer for neural networks, called Multi-View Data Tensor Fusion (MV-DTF), is used. It fuses M feature spaces $\{\mathcal{X}_m\}_{m=1}^{M}$, referred to as views, in a new latent tensor space, $\mathcal{S}$, of order P and dimension $J_1 \times \cdots \times J_P$, defined in the space of affine mappings composed of a multilinear map $T: \mathcal{X}_1 \times \cdots \times \mathcal{X}_M \to \mathcal{S}$ (represented as the Einstein product between a $(P+M)$-order tensor $\mathcal{W}$ and a rank-one tensor, $\mathcal{X} = \mathbf{x}^{(1)} \otimes \cdots \otimes \mathbf{x}^{(M)}$, where $\mathbf{x}^{(m)} \in \mathcal{X}_m$ is the m-th view) and a translation. Unfortunately, as the number of views increases, the number of parameters that determine the MV-DTF layer grows exponentially, and consequently, so does its computational complexity. To address this issue, we enforce low-rank constraints on certain subtensors of tensor $\mathcal{W}$ using canonical polyadic decomposition, from which M other tensors $\{\mathcal{W}^{(m)}\}_{m=1}^{M}$, called here Hadamard factor tensors, are obtained. We found that the Einstein product $\mathcal{W} \ast_M \mathcal{X}$ can be approximated using a sum of R Hadamard products of M Einstein products encoded as $\mathcal{W}^{(m)} \ast_1 \mathbf{x}^{(m)}$, where R is related to the decomposition rank of subtensors of $\mathcal{W}$. For this relationship, the lower the rank values, the more computationally efficient the approximation. To the best of our knowledge, this relationship has not previously been reported in the literature. As a case study, we present a multitask model of vehicle traffic surveillance for occlusion detection and vehicle-size classification tasks, with a low-rank MV-DTF layer, achieving up to and in the normalized weighted Matthews correlation coefficient metric in individual tasks, representing a significant and improvement compared to the single-task single-view models.

Keywords: Einstein product, Hadamard product, Hadamard factor tensors, multi-view learning, multitask learning, vehicle traffic surveillance

1. Introduction

Vehicle traffic surveillance (VTS) systems are key components of intelligent transportation systems (ITSs), as they enable the automated video content analysis of traffic scenes to extract valuable traffic data. These data include crucial aspects of vehicle behavior, such as trajectories and speed, as well as traffic parameters, e.g., lane occupancy, traffic volume, and density. They serve as the cornerstone for a variety of high-level ITS applications, including collision detection [1,2], route planning, and traffic control [3,4]. Several mathematical models currently exist for various tasks related to vehicle traffic, each with different conditions and traffic network topologies. For a comprehensive overview of vehicle traffic models, see, e.g., [5].

However, due to the complex nature of vehicle traffic, VTS systems are usually broken down into a set of smaller tasks, including vehicle detection, occlusion handling, and classification [6,7,8,9,10,11,12,13,14]. Each task is represented by a feature model, either developed by human experts (hand-crafted) or learned automatically, which should capture the underlying task-specific explanatory factors. These features focus on specific aspects of vehicles, such as texture, color, and shape, and provide complementary information to one another. Therefore, finding a highly descriptive feature model is crucial for enhancing the learning process on every VTS task.

Such feature diversity has made data fusion (DF) attractive for leveraging shared and complementary information. DF allows for the integration of data from different sources to enhance our understanding and analysis of the underlying process [15]. In this context, there are two common DF levels [16]: low-level, where data are combined before analysis, and decision-level, where processed data from each source are integrated at a higher level, such as in ensemble learning [17]. Moreover, the diverse nature of data sources poses challenges that DF algorithms should address, such as heterogeneity across sources, high-dimensional data, missing values, and substantial redundancy [18,19].

As part of DF, multi-view learning (MVL) is a machine learning (ML) paradigm that exploits the shared and complementary information contained in multiple data sources, called views, obtained from different feature sets [20]. Here, data represented by M views are referred to as M-view data. For instance, an image represented by texture, edges, and color features can be regarded as three-view data. MVL methods can be grouped into three categories: co-training, multiple kernel learning, and subspace learning (SL) [21,22]. Among these, SL-based methods focus on learning a low-dimensional latent subspace that captures the shared information across views [23].

On the other hand, multitask learning (MTL) is another ML paradigm where multiple related tasks are learned simultaneously to leverage their shared knowledge, with the ultimate aim of improving generalization and performance in individual tasks [24,25,26,27,28].

Recently, artificial neural networks (ANNs) have shown superior performance in vision-based VTS systems. ANNs are computational models built from a composition of functions, called layers, which together capture the underlying relationships between the so-called input and output spaces to solve a given task, such as regression or classification [29]. Such layers, including fully connected (FC) and convolutional (Conv), are parameterized by weights and biases structured as tensors, matrices, or vectors, which are learned during training. Notably, the first layers usually act as feature extractors, whereas higher layers capture the relationships between extracted features and the output space.

Furthermore, higher-order tensors [30], or multidimensional arrays, have gained significant attention over the last decade due to their ability to naturally represent multi-modal data, e.g., images and videos, and their interactions. They have been successfully applied in various domains, including signal processing [31], machine learning [32,33,34,35,36,37], computer vision [38], and wireless communications [39,40]. For instance, tensor methods such as decomposition models have been employed for the low-rank approximation of tensor data, enabling more efficient and effective analysis of such data.

In this work, we propose a computationally efficient tensor-based multi-view data fusion layer for neural networks, here expressed as the Einstein product. Our approach leverages multiple feature spaces to address the limitations inherent to single-view models, such as reduced data representation capacity and model overfitting. It offers improved flexibility and scalability, as it enables the integration of additional views without significantly increasing the computational burden. Finally, we present a case study with a multitask, multi-view VTS model, demonstrating significant performance improvements in vehicle-size classification and occlusion-detection tasks.

1.1. Related Work

Occlusion detection is a challenging problem in vision-based tasks, in which vehicles or some parts of them are hidden by other elements in the traffic scene, making their detection a difficult task. Early works have explored approaches based on empirical models, which infer the presence of occlusion by assuming specific geometric patterns, such as concavity in the shape of occluded vehicles [41,42,43,44,45,46,47]. Recently, deep learning (DL) has also been employed for occlusion detection [48,49,50,51,52], where such models are even capable of reconstructing the occluded parts [53,54].

Several algorithms based on ML and DL have been proposed for intra- and inter-class vehicle classification [6,8,9,55,56,57,58,59,60]. In [8], Hsieh et al. employ an optimal classifier to categorize vehicles as cars, buses, or trucks by leveraging the linearity and size features of vehicles, achieving accuracy of up to 97.0%. Moussa [9] introduces two levels of vehicle classification: the multiclass level, which categorizes vehicles as small, midsize, and large, and the intra-class level, in which midsize vehicles are classified as pickups, SUVs, and vans. In [6], we proposed a one-class support vector machine (OC-SVM) classifier with a radial basis kernel to classify vehicles as small, midsize, and large. By representing vehicles in a 3D feature space (area, width, and aspect ratio), a recall, precision, and f-measure of up to were achieved for the midsize class. Other techniques include the gray-level co-occurrence matrix (GLCM) [61], 3D appearance models [62,63,64], eigenvehicles [65], and non-negative factorization [66,67,68]. Recently, CNN-based classifiers have been employed, outperforming previous works [55,58,59,60,69].

Other works based on MVL and MTL have also been developed for VTS systems. For instance, Wang et al. [70] proposed an MVL approach to foreground detection, where three-view heterogeneous data (brightness, chromaticity, and texture variations) are employed to improve detection performance. Their conditional probability densities are estimated via kernel density estimation, followed by pixel labeling through a Markov random field. In [71], a multi-view object retrieval approach to surveillance videos integrates semantic structure information from CNNs trained on ImageNet and deep color features, using locality-sensitive hashing (LSH) to encode the features into short binary codes for efficient retrieval. Chu et al. [72] present vehicle detection with multitask CNNs and a region-of-interest (RoI) voting scheme. This framework simultaneously exploits supervision from subcategory, region overlap, bounding-box regression, and category information to enhance detection performance. In [73], a multitask CNN for traffic scene understanding is proposed. The CNN consists of a shared encoder and specific decoders for road segmentation and object detection, generating complementary representations efficiently. Additionally, the detection stage predicts object orientation, aiding in 3D bounding box estimation. Finally, Liu et al. [74] introduce the Multi-Task Attention Network (MTAN), a shared network with global feature pooling and task-specific soft-attention modules that learn task-specific features from global features while allowing feature sharing across tasks.

Although our work focuses on multi-view and multitask VTS systems, some works from other domains are also reviewed. In [36], a tensor-based, multi-view feature selection method called DUAL-TMFS is proposed for effective disease diagnosis. This approach integrates clinical, imaging, immunologic, serologic, and cognitive data into a joint space using tensor products, and it employs SVM with recursive feature elimination to select relevant features, improving classification performance in neurological disorder datasets. Zadeh et al. [75] introduce a model called a tensor fusion network for multimodal sentiment analysis. It leverages the outer product between modalities to model both the intra-modality and inter-modality dynamics. In turn, Liu et al. [76] propose an efficient multimodal fusion scheme using low-rank tensors. Experimental validations across multimodal sentiment analysis, speaker trait analysis, and emotion recognition tasks demonstrate competitive performance and robustness across a variety of low-rank settings.

Table 1 offers a comprehensive overview of existing research related to our approach and to VTS systems. It highlights the use of ML and DL approaches, fed either by hand-crafted features or raw data with automatic feature learning, to capture the underlying task patterns. While DL features generally achieve superior performance, they require large, high-quality training sets and high computational complexity models to find suitable representations. Conversely, hand-crafted features can perform competitively for specific tasks, but determining the optimal feature representation is challenging, as no single hand-crafted feature can fully describe the underlying task’s relationships.

Furthermore, the emerging trend towards the adoption of ANN models on VTS systems is evident. However, despite their high performance, these models demand substantial memory and computational resources for learning and inference, as their layers are usually overparameterized. To address these challenges, various techniques such as sparsification, quantization, and low-rank approximation have been proposed to compress the parameters of pre-trained layers [77,78,79,80,81,82,83]. Among these techniques, low-rank approximation is very often employed. In [79,80], Denil et al. compress FC layers using matrix decomposition models. Conv layers are compressed via tensor decompositions, including canonical polyadic decomposition (CPD) [81,82] and Tucker decomposition [83]. However, compressing pre-trained layers usually results in an accuracy loss, and a fine-tuning procedure is often employed to recover the accuracy drop [82,84,85,86]. Therefore, some authors have suggested the incorporation of low-rank constraints into the optimization problem [87,88,89]. Other works have found that compressing raw images before training also contributes to computational complexity reduction, as suggested in [32,90]. Additionally, in [91], tensor contraction layers (TCLs) and tensor regression layers (TRLs) are introduced in CNNs for dimensionality reduction and multilinear regression tasks, respectively. This approach imposes low-rank constraints via Tucker decomposition on the weights of TCLs and TRLs to speed up their computations.

Table 1.

Related work summary.

| Reference | Input | Method | Contribution |
|---|---|---|---|
| [6,8,9,10,14] | Single-view | ML | Hand-crafted geometric features represent vehicles for detection and classification using ML-based algorithms |
| [11,12] | Single-view | DL | CNN models are proposed to perform automatic feature learning for vehicle detection and classification |
| [65] | Single-view | Eigenvalue decomposition | Eigenvehicles are introduced as an unsupervised feature representation method for vehicle recognition |
| [66,67,68] | Single-view | Non-negative factorization | A part-based model is employed for vehicle recognition via non-negative matrix/tensor factorization |
| [72,73,74] | Single-view | DL-based MTL | MTL models based on DL are employed to simultaneously perform multiple tasks, including road segmentation, vehicle detection, and classification |
| [92] | Multi-view | DL | This work employs a YOLO-based model that fuses camera and LiDAR data at multiple levels |
| [61,93,94] | Single-view | ML | Single-view features, such as HOG, Haar wavelets, or GLCM, represent vehicles for classification in ML models |
| [95] | Multi-view | Tucker decomposition | A tensor decomposition is employed for feature selection of HOG, LBP, and FDF features |
| [70,71,96] | Multi-view | MVL | MVL approaches are proposed to enhance vehicle detection, classification, and background modeling by learning richer data representations from color features |
| [30,97,98,99,100] | − | − | These works provide theoretical foundations on tensors and their operations, such as the Einstein and Hadamard products, with applications across multiple domains |
| [32,77,78,79,80,81,82,83,90] | − | DL | Matrix and tensor decompositions are employed for speeding up CNNs by compressing FC and Conv layers and reducing the dimensionality of their input space |
| [91] | − | DL | Multilinear layers are introduced for dimensionality reduction and regression purposes in CNNs, leveraging tensor decompositions for efficient computation |

1.2. Contributions

The main contributions of this work are the following:

We found a novel connection or mathematical relationship between the Einstein and Hadamard products for tensors (for details, see Section 5.2). From this connection, other algorithms for efficient approximations of the Einstein product can be developed.

Since multi-view models provide a more comprehensive input space than single-view models, we employ a tensor-based data fusion layer, here called multi-view data tensor fusion (MV-DTF). Unlike other works, our approach maps the multiple feature spaces (views) into a latent tensor space, $\mathcal{S}$, using a multilinear map, here expressed as the Einstein product (see Section 5), followed by a translation.

A major drawback of the MV-DTF layer is its high computational complexity, which grows exponentially with the number of views. To address this issue, a low-rank approximation for the MV-DTF layer, here called the low-rank multi-view data tensor fusion (LRMV-DTF) layer, is also proposed. This approach leverages the novel relationship between the Einstein and Hadamard products (see Section 5.2), where the lower the rank values, the more computationally efficient the operation.

As a case study, we introduce a high-performance multitask ANN model for VTS systems. The model can address various VTS tasks simultaneously, although here it is limited to occlusion detection and vehicle-size classification. It incorporates the proposed LRMV-DTF layer as a multi-view feature extractor to provide a more comprehensive input space than the individual feature spaces.

1.3. How to Read This Article

For a comprehensive understanding of this paper, the following reading order is suggested. Section 1 presents the motivation behind our research on VTS systems, as well as a review of related work. Section 2 introduces tensor algebra and multilinear maps, which are essential for understanding the subsequent mathematical definitions; readers already familiar with these foundations can proceed directly to Section 3, which gives the problem statement and its mathematical formulation and states the main objectives. These objectives are important for understanding the major results of the paper. Section 4 provides an overview of VTS systems and their associated tasks as an important case study; readers already familiar with these concepts can proceed to Section 5 for the technical and mathematical details of the MV-DTF layer. Section 5 is particularly important because it presents the novel connection between the Einstein and Hadamard products. Section 6 presents the results and their analysis, complemented by figures and tables to facilitate data interpretation. Finally, Section 7 provides the conclusions of this work, summarizing the key points and suggesting directions for future research.

2. Mathematical Background

2.1. Notation

In this study, we adopt the conventional notation established in [30], along with other commonly used symbols. Table 2 provides an overview of the symbols used in this paper. An Nth-order tensor is denoted by $\mathcal{X} \in \mathbb{R}^{I_1 \times \cdots \times I_N}$, where the dimension $I_n$ is usually referred to as the n-mode of $\mathcal{X}$. The ith entry of a vector, $\mathbf{x} \in \mathbb{R}^{I}$, is denoted as $x_i$; the $(i,j)$th entry of a matrix $\mathbf{X} \in \mathbb{R}^{I \times J}$ by $x_{ij}$; while the $(i_1, \dots, i_N)$th entry of an Nth-order tensor $\mathcal{X}$ is denoted as $x_{i_1 \cdots i_N}$, where $i_n$ is called the n-mode index. The n-mode fiber of an Nth-order tensor $\mathcal{X}$ is an $I_n$-dimensional vector that results from fixing every index but $i_n$; i.e., $\mathbf{x}_{i_1 \cdots i_{n-1} : i_{n+1} \cdots i_N}$, where the colon denotes all possible values of the n-mode index $i_n$, i.e., $i_n = 1, \dots, I_n$. The ith n-mode slice of an Nth-order tensor $\mathcal{X}$ is an $(N-1)$th-order tensor defined by fixing only the $i_n$ index, i.e., $i_n = i$. Finally, for any two functions, $f$ and $g$, $g \circ f$ denotes their function composition. For an introduction to tensor algebra, we refer the interested reader to the comprehensive work by Kolda and Bader [30].

Table 2.

Basic notation used in this work.

| Symbol | Description |
|---|---|
| $\mathbb{R}$, $\mathbb{N}$, $\mathbb{B}$ | The sets of real, natural, and binary numbers |
| ≃ | It denotes isomorphism between two structures |
| $[N]$ | The subset of natural numbers $\{1, \dots, N\}$ |
| $\mathcal{X}$, $\mathbf{X}$, $\mathbf{x}$, $x$ | Tensor, matrix, column vector, and scalar |
| $\dim(V)$ | The dimension of a vector space, V |
| ⊙ | Hadamard product |
| ⊗ | Tensor product |
| $\times_n$ | The n-mode tensor-matrix product |
| $\ast_N$ | The Einstein product along the last N modes |
| $X^{(n)}$ | The nth element of a sequence, $\{X^{(n)}\}_{n=1}^{N}$, indexed by n, where X can be a scalar, vector, or tensor |
| $\mathcal{X}_m$ | The feature space for the m-th view |
| $\mathcal{Y}_t$ | The output space for the t-th task |
| $\mathcal{T}_t$ | The t-th classification task |
| $\phi$, $\tilde{\phi}$ | The MV-DTF layer and its low-rank approximation |
| $g_t$ | The t-th task-specific function |
| $\mathcal{H}_t$ | Hypothesis space of the classifiers for the t-th task |
| $\mathcal{S}$ | The latent tensor space |
| P | The order of the latent tensor space |
| $J_1 \times \cdots \times J_P$ | The dimension of the latent tensor space |
| $R_{j_1 \cdots j_P}$ | For a tensor $\mathcal{W}$, it denotes the tensor rank of the $(j_1, \dots, j_P)$-th subtensor |

2.2. Multilinear Algebra

This section provides an overview of basic concepts of multilinear algebra, such as tensors and their operations over a set of vector spaces.

Definition 1

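(Multilinear map [101]). Let $V_1, \dots, V_M$ and W be vector spaces over a field, $\mathbb{F}$, and let $T: V_1 \times \cdots \times V_M \to W$ be a function that maps an ordered M-tuple of vectors, $(\mathbf{v}_1, \dots, \mathbf{v}_M)$, into an element, $\mathbf{w} \in W$, where $\mathbf{v}_m \in V_m$. If, for all $\alpha, \beta \in \mathbb{F}$, all $\mathbf{v}_m, \mathbf{v}'_m \in V_m$, and every $m$, Equation (1) holds, then T is said to be a multilinear map (or an M-linear map); i.e., it is linear in each argument.

$$T(\mathbf{v}_1, \dots, \alpha \mathbf{v}_m + \beta \mathbf{v}'_m, \dots, \mathbf{v}_M) = \alpha\, T(\mathbf{v}_1, \dots, \mathbf{v}_m, \dots, \mathbf{v}_M) + \beta\, T(\mathbf{v}_1, \dots, \mathbf{v}'_m, \dots, \mathbf{v}_M) \tag{1}$$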

Definition 2

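(Tensor product). Let $V_1, \dots, V_M$ and W be real vector spaces, where $\dim(V_m) = I_m$, and $m = 1, \dots, M$. Then, the tensor product of the set of M vector spaces $\{V_m\}_{m=1}^{M}$, denoted as $V_1 \otimes \cdots \otimes V_M$, is another vector space of dimension $\prod_{m=1}^{M} I_m$, called tensor space, together with a multilinear map, $\otimes: V_1 \times \cdots \times V_M \to V_1 \otimes \cdots \otimes V_M$, that satisfies the following universal mapping property [101,102]: for any multilinear map $T: V_1 \times \cdots \times V_M \to W$, there exists a unique linear map, $\tilde{T}: V_1 \otimes \cdots \otimes V_M \to W$, such that $T = \tilde{T} \circ \otimes$.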

Definition 3

(Tensor). Let $V_1, \dots, V_M$ be vector spaces over some field, $\mathbb{F}$, where $\dim(V_m) = I_m$. An M-order tensor, denoted as $\mathcal{X}$, is an element in the tensor product $V_1 \otimes \cdots \otimes V_M$.

Definition 4

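(m-mode matricization [30]). The m-mode matricization is a mapping that rearranges the m-mode fibers of a tensor, $\mathcal{X} \in \mathbb{R}^{I_1 \times \cdots \times I_M}$, into the columns of a matrix, $\mathbf{X}_{(m)} \in \mathbb{R}^{I_m \times \bar{I}_m}$, where $\bar{I}_m = \prod_{k \neq m} I_k$.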

Definition 5

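(Rank-one tensor). Let $\mathcal{X} \in \mathbb{R}^{I_1 \times \cdots \times I_M}$ be an Mth-order tensor, and let $\{\mathbf{x}^{(m)}\}_{m=1}^{M}$ be a set of M vectors, where $\mathbf{x}^{(m)} \in \mathbb{R}^{I_m}$ for all $m$. Then, if $\mathcal{X}$ can be written using the tensor product $\mathcal{X} = \mathbf{x}^{(1)} \otimes \cdots \otimes \mathbf{x}^{(M)}$, it is said to be a rank-one tensor, and its $(i_1, \dots, i_M)$-th entry will be determined by $x_{i_1 \cdots i_M} = \prod_{m=1}^{M} x^{(m)}_{i_m}$.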

Definition 6

(Tensor decomposition rank). The decomposition rank, R, of a tensor, $\mathcal{X}$, is the smallest number of rank-one tensors that reconstructs $\mathcal{X}$ exactly as their sum. Then, $\mathcal{X}$ is called a rank-R tensor.

Definition 7

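(Tensor multilinear rank). For any Mth-order tensor, $\mathcal{X} \in \mathbb{R}^{I_1 \times \cdots \times I_M}$, its multilinear rank, denoted as $\operatorname{rank}_{\mathrm{ML}}(\mathcal{X})$, is the M-tuple $(R_1, \dots, R_M)$, whose mth entry, $R_m$, corresponds to the dimension of the column space of $\mathbf{X}_{(m)}$, i.e., $R_m = \operatorname{rank}(\mathbf{X}_{(m)})$, formally called m-mode rank.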

Definition 8

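(Tensor m-mode product). Given a tensor, $\mathcal{X} \in \mathbb{R}^{I_1 \times \cdots \times I_M}$, and a matrix, $\mathbf{U} \in \mathbb{R}^{J \times I_m}$, their m-mode product, denoted as $\mathcal{X} \times_m \mathbf{U}$, produces another tensor, $\mathcal{Y} \in \mathbb{R}^{I_1 \times \cdots \times I_{m-1} \times J \times I_{m+1} \times \cdots \times I_M}$, whose $(i_1, \dots, i_{m-1}, j, i_{m+1}, \dots, i_M)$th entry is given by Equation (2). Therefore, $\mathbf{Y}_{(m)} = \mathbf{U} \mathbf{X}_{(m)}$.

$$y_{i_1 \cdots i_{m-1}\, j\, i_{m+1} \cdots i_M} = \sum_{i_m=1}^{I_m} x_{i_1 \cdots i_M}\, u_{j i_m} \tag{2}$$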
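As a minimal numerical sketch of Definition 8 (not taken from the original work), the m-mode product can be computed with NumPy as follows. The helper names `mode_product` and `unfold`, and the zero-based `mode` argument, are assumptions introduced here for illustration only.

```python
import numpy as np

def mode_product(tensor, matrix, mode):
    """m-mode product of `tensor` (I_1 x ... x I_M) with `matrix` (J x I_mode).

    `mode` is zero-based here, unlike the one-based m used in the text.
    """
    # Contract the columns of `matrix` with axis `mode` of `tensor`;
    # tensordot places the new axis (size J) first, so move it back to `mode`.
    out = np.tensordot(matrix, tensor, axes=(1, mode))
    return np.moveaxis(out, 0, mode)

def unfold(tensor, mode):
    """Arrange the mode fibers of `tensor` as the columns of a matrix.

    The column ordering is one consistent choice, not necessarily that of [30].
    """
    return np.moveaxis(tensor, mode, 0).reshape(tensor.shape[mode], -1)

rng = np.random.default_rng(0)
X = rng.standard_normal((3, 4, 5))   # third-order tensor
U = rng.standard_normal((2, 4))      # acts on the second mode (size 4)
Y = mode_product(X, U, mode=1)       # resulting shape: (3, 2, 5)
```

Under this column ordering, `unfold(Y, 1)` coincides with `U @ unfold(X, 1)`, which mirrors the matricized form of the product stated in the definition.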

2.3. Einstein and Hadamard Products

In this section, the fundamental concepts for the mathematical modeling of the MV-DTF layer are presented, including the Hadamard and Einstein products.

Definition 9

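(Inner product). For any two tensors, $\mathcal{A}$, $\mathcal{B} \in \mathbb{R}^{I_1 \times \cdots \times I_N}$, their inner product is defined as the sum of the products of their corresponding entries, as Equation (3) shows:

$$\langle \mathcal{A}, \mathcal{B} \rangle = \sum_{i_1=1}^{I_1} \cdots \sum_{i_N=1}^{I_N} a_{i_1 \cdots i_N}\, b_{i_1 \cdots i_N} \tag{3}$$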

Definition 10

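(Hadamard product). The Hadamard product of two Nth-order tensors $\mathcal{A}$, $\mathcal{B} \in \mathbb{R}^{I_1 \times \cdots \times I_N}$, denoted as $\mathcal{A} \odot \mathcal{B}$, results in an Nth-order tensor, $\mathcal{C} \in \mathbb{R}^{I_1 \times \cdots \times I_N}$, such that its $(i_1, \dots, i_N)$th entry is equal to the element-wise product $a_{i_1 \cdots i_N}\, b_{i_1 \cdots i_N}$.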

Definition 11

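(Einstein product [100,103]). Given two tensors, $\mathcal{A} \in \mathbb{R}^{J_1 \times \cdots \times J_P \times I_1 \times \cdots \times I_N}$ and $\mathcal{B} \in \mathbb{R}^{I_1 \times \cdots \times I_N \times K_1 \times \cdots \times K_Q}$, of order $P+N$ and $N+Q$, their Einstein product or tensor contraction, denoted as $\mathcal{A} \ast_N \mathcal{B}$, produces a $(P+Q)$th-order tensor, $\mathcal{C} \in \mathbb{R}^{J_1 \times \cdots \times J_P \times K_1 \times \cdots \times K_Q}$, whose $(j_1, \dots, j_P, k_1, \dots, k_Q)$th entry is given by the inner product between subtensors $\mathcal{A}_{j_1 \cdots j_P : \cdots :}$ and $\mathcal{B}_{: \cdots : k_1 \cdots k_Q}$, as Equation (4) shows:

$$c_{j_1 \cdots j_P k_1 \cdots k_Q} = \sum_{i_1=1}^{I_1} \cdots \sum_{i_N=1}^{I_N} a_{j_1 \cdots j_P i_1 \cdots i_N}\, b_{i_1 \cdots i_N k_1 \cdots k_Q} \tag{4}$$

For a fixed $\mathcal{A}$, the product can be understood as a linear map, $\mathcal{B} \mapsto \mathcal{A} \ast_N \mathcal{B}$; i.e., for any two scalars $\alpha, \beta \in \mathbb{R}$, and tensors $\mathcal{B}$, $\mathcal{C}$ of the same dimensions, the following properties hold:

Distributive: $\mathcal{A} \ast_N (\mathcal{B} + \mathcal{C}) = \mathcal{A} \ast_N \mathcal{B} + \mathcal{A} \ast_N \mathcal{C}$.

Homogeneity: $(\alpha \mathcal{A}) \ast_N (\beta \mathcal{B}) = \alpha \beta\, (\mathcal{A} \ast_N \mathcal{B})$.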
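The following NumPy sketch illustrates the Hadamard and Einstein products numerically. The function name `einstein_product` and the tensor sizes are assumptions made for this example; `np.tensordot` with an integer `axes=n` contracts the last n modes of its first argument with the first n modes of its second.

```python
import numpy as np

def einstein_product(a, b, n):
    """Contract the last n modes of `a` with the first n modes of `b`."""
    return np.tensordot(a, b, axes=n)

rng = np.random.default_rng(0)
A = rng.standard_normal((2, 3, 4, 5))   # order 2 + 2
B = rng.standard_normal((4, 5))         # order 2, no free modes left after contraction
C = rng.standard_normal((4, 5))

Z = einstein_product(A, B, n=2)          # shape (2, 3); Z[i, j] = <A[i, j], B>
H = B * C                                # Hadamard product, shape (4, 5)

# Linearity in the second argument (distributivity and homogeneity).
lhs = einstein_product(A, 2.0 * B + 3.0 * C, n=2)
rhs = 2.0 * Z + 3.0 * einstein_product(A, C, n=2)
```

Here each entry of `Z` is the inner product between a subtensor of `A` and `B`, and `lhs` agrees with `rhs` up to floating-point error.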

2.4. Subspace Learning

Recent advances in sensing and storage technologies have resulted in the generation of massive amounts of complex data, commonly referred to as big data [104,105]. These data are often represented in a high-dimensional space, making their visualization and analysis a challenging task. To address these challenges, subspace learning methods have emerged as a powerful approach to learning a low-dimensional representation of high-dimensional data [106,107], such as the spatial and temporal information encoded in videos. In this section, a brief review of linear and multilinear methods for subspace learning is presented, highlighting their advantages and disadvantages.

2.4.1. Linear Subspace Learning (LSL)

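Given a dataset, $\mathcal{D} = \{\mathbf{x}_n\}_{n=1}^{N}$, of N samples, arranged in matrix form as $\mathbf{X} \in \mathbb{R}^{I \times N}$, whose n-th column vector corresponds to the n-th sample $\mathbf{x}_n \in \mathbb{R}^{I}$, LSL seeks to find a linear subspace of $\mathbb{R}^{I}$ that best explains the data. The resulting subspace can be spanned by a set of linearly independent basis vectors, $\{\mathbf{u}_j\}_{j=1}^{J}$, where $J \leq I$. By leveraging this subspace, high-dimensional data can be projected onto a lower-dimensional space $\mathbb{R}^{J}$, as Equation (5) shows:

$$\mathbf{Y} = \mathbf{U}^{\top} \mathbf{X} \tag{5}$$

where $\mathbf{U} \in \mathbb{R}^{I \times J}$ is called the factor matrix, whose columns correspond to the basis vectors, and $\mathbf{Y} \in \mathbb{R}^{J \times N}$ is the projection of the input matrix onto the learned subspace.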
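As an illustrative sketch of the projection described above, the factor matrix can be estimated with principal component analysis computed through the singular value decomposition. The data, the sizes, and the choice of PCA (including the centering step) are assumptions made for this example and are not prescribed by the text.

```python
import numpy as np

rng = np.random.default_rng(0)
I, J, N = 6, 2, 100
X = rng.standard_normal((I, N))          # columns are samples

# PCA assumption: center each feature before estimating the subspace.
Xc = X - X.mean(axis=1, keepdims=True)

# Left singular vectors give an orthonormal basis ordered by explained variance.
U_full, s, _ = np.linalg.svd(Xc, full_matrices=False)
U = U_full[:, :J]                        # factor matrix, I x J

Y = U.T @ Xc                             # projected data, J x N

# Squared reconstruction error equals the energy in the discarded singular values.
err = np.linalg.norm(Xc - U @ Y) ** 2
```

The basis in `U` is orthonormal, so projecting and back-projecting loses exactly the variance carried by the discarded directions.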

A wide variety of techniques have been proposed to address the LSL problem, ranging from unsupervised approaches, such as principal component analysis [108], factor analysis (FA) [109], independent component analysis [110], canonical correlation analysis [111], and singular value decomposition [112], to supervised approaches like linear discriminant analysis [113]. Such techniques aim to estimate the factor matrix $\mathbf{U}$ by solving optimization problems such as maximizing the variance or minimizing the reconstruction error of the projected data.

Although LSL methods have shown great effectiveness in modeling vector-based observations, they face difficulties when addressing multidimensional data. To apply LSL methods to tensor data, the data must first be vectorized. Unfortunately, this transformation very often leads to a computationally intractable problem due to the large number of parameters to be estimated, and the model may suffer from overfitting. Furthermore, vectorization destroys the inherent multidimensional structure and the correlations across modes of tensor data [30,106].

2.4.2. Multilinear Subspace Learning (MSL)

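Multilinear subspace learning is a mathematical framework for exploring, analyzing, and modeling complex relationships over tensor data, preserving their inherent multidimensional structure. According to Lu [106], the MSL problem can be formulated as follows: Given a dataset arranged in tensor form as $\mathcal{X} \in \mathbb{R}^{I_1 \times \cdots \times I_M \times N}$, where subtensor $\mathcal{X}_{: \cdots : n}$ corresponds with the n-th data point $\mathcal{X}_n \in \mathbb{R}^{I_1 \times \cdots \times I_M}$, MSL seeks to find a set of M subspaces that best explains the data, where the mth subspace resides in $\mathbb{R}^{I_m}$ and is spanned by a set of linearly independent basis vectors, $\{\mathbf{u}^{(m)}_j\}_{j=1}^{J_m}$. The MSL problem can be formally defined using Equation (6):

$$\{\mathbf{U}^{(m)}\}_{m=1}^{M} = \arg\max_{\mathbf{U}^{(1)}, \dots, \mathbf{U}^{(M)}} \Psi\big(\mathbf{U}^{(1)}, \dots, \mathbf{U}^{(M)}\big) \tag{6}$$

where $\mathbf{U}^{(m)} \in \mathbb{R}^{I_m \times J_m}$ is a matrix whose columns correspond to the basis vectors of the m-th subspace, and $\Psi(\cdot)$ denotes a function to be maximized.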

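A classical MSL technique is the Tucker decomposition [30], which aims to approximate a given Mth-order tensor, $\mathcal{X} \in \mathbb{R}^{I_1 \times \cdots \times I_M}$, by a core tensor, $\mathcal{G} \in \mathbb{R}^{R_1 \times \cdots \times R_M}$, multiplied along the m-mode by a matrix, $\mathbf{U}^{(m)} \in \mathbb{R}^{I_m \times R_m}$, for all $m$, as Equation (7) shows:

$$\mathcal{X} \approx \mathcal{G} \times_1 \mathbf{U}^{(1)} \times_2 \mathbf{U}^{(2)} \cdots \times_M \mathbf{U}^{(M)} \tag{7}$$

where $\mathbf{U}^{(m)}$ is the m-th factor matrix associated with the m-mode fiber space of $\mathcal{X}$, $\mathcal{G}$ captures the level of interaction between the factor matrices, and $R_m \leq I_m$.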
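To make the Tucker model concrete, the sketch below builds a third-order tensor from a core tensor and three factor matrices and checks the resulting mode ranks. The sizes and the random data are assumptions chosen only for illustration; no fitting procedure is shown.

```python
import numpy as np

rng = np.random.default_rng(0)
dims, ranks = (4, 5, 6), (2, 3, 2)

G = rng.standard_normal(ranks)                                   # core tensor
U = [rng.standard_normal((i, r)) for i, r in zip(dims, ranks)]   # factor matrices

# X = G x_1 U1 x_2 U2 x_3 U3, written as a single contraction.
X = np.einsum('abc,ia,jb,kc->ijk', G, U[0], U[1], U[2])

# Each mode-m unfolding of X has rank at most R_m.
mode_ranks = [
    int(np.linalg.matrix_rank(np.moveaxis(X, m, 0).reshape(dims[m], -1)))
    for m in range(3)
]

n_dense = X.size                                    # entries of the full tensor
n_tucker = G.size + sum(u.size for u in U)          # entries of core plus factors
```

In this toy setting the Tucker form stores `n_tucker` values instead of `n_dense`, which is the kind of saving that motivates low-rank representations.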

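Similarly, canonical polyadic decomposition [30] aims to approximate a given Mth-order tensor $\mathcal{X} \in \mathbb{R}^{I_1 \times \cdots \times I_M}$ as a sum of R rank-one tensors, as Equation (8) shows:

$$\mathcal{X} \approx \sum_{r=1}^{R} \lambda_r\, \mathbf{a}^{(1)}_r \otimes \mathbf{a}^{(2)}_r \otimes \cdots \otimes \mathbf{a}^{(M)}_r \tag{8}$$

where $\lambda_r$ is the r-th weighting term, and $\mathbf{a}^{(m)}_r \in \mathbb{R}^{I_m}$ is the m-mode factor vector for the r-th rank-one tensor $\mathbf{a}^{(1)}_r \otimes \cdots \otimes \mathbf{a}^{(M)}_r$, while Equation (8) can be made exact iff R is at least the decomposition rank of $\mathcal{X}$.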
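A short sketch of the canonical polyadic model follows: a tensor is assembled from R weighted rank-one terms, once with a single contraction and once term by term. The sizes, the random factors, and the variable names are assumptions for this example; estimating the factors from data (e.g., by alternating least squares) is outside its scope.

```python
import numpy as np

rng = np.random.default_rng(0)
dims, R = (4, 5, 6), 3

lam = rng.standard_normal(R)                        # weighting terms
A = [rng.standard_normal((i, R)) for i in dims]     # column r holds the r-th factor vector

# Sum of R weighted rank-one tensors in one contraction.
X = np.einsum('r,ir,jr,kr->ijk', lam, A[0], A[1], A[2])

# The same tensor built term by term from outer products.
X_loop = sum(
    lam[r] * np.multiply.outer(np.multiply.outer(A[0][:, r], A[1][:, r]), A[2][:, r])
    for r in range(R)
)

# Every unfolding of a tensor with R rank-one terms has matrix rank at most R.
unfold_rank = int(np.linalg.matrix_rank(X.reshape(dims[0], -1)))
```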

While MSL effectively mitigates several drawbacks related to LSL methods, it has some disadvantages. First, the intricate mathematical operations required for MSL methods very often involve high computational complexity, impacting both time and storage requirements. Moreover, MSL requires a substantial amount of data to effectively capture the intricate relationships of multilinear subspaces. Therefore, addressing these challenges is crucial to ensuring proper learning.

3. Problem Statement and Mathematical Definition

In this section, the problem to be addressed is formulated in natural language, outlining specific tasks related to VTS systems. Subsequently, the inherent challenges are mathematically formulated.

3.1. Problem Statement

Given a traffic surveillance video of $\tau$ seconds, recorded with a static camera, where multiple moving vehicles are observed, we aim to comprehensively model vehicle traffic using a multitask, multi-view learning approach. This model simultaneously addresses various tasks, such as vehicle detection, classification, and occlusion detection, each of them represented by specific views that partially describe the underlying problem. By projecting multi-view data into a unified, low-dimensional latent tensor space, which builds a new input space for the tasks, our approach should improve the model performance and provide a more comprehensive representation of different study cases, e.g., the traffic scene, compared to single-task, single-view learning models.

3.2. Mathematical Definition

3.2.1. Multitask, Multi-View Dataset: The Input and Output Spaces

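Consider a collection of T supervised classification tasks related to VTS systems, such as vehicle detection, classification, and occlusion detection, where the t-th task has a corresponding dataset, $\mathcal{D}_t$, composed of $N_t$ M-view labeled instances, e.g., moving vehicles, as Equation (9) shows:

$$\mathcal{D}_t = \Big\{ \big(\mathbf{x}^{(1)}_{t,k}, \dots, \mathbf{x}^{(M)}_{t,k}, \mathbf{y}_{t,k}\big) \Big\}_{k=1}^{N_t} \tag{9}$$

where $\mathbf{x}^{(m)}_{t,k}$ is the feature vector of the k-th instance over the m-th view and t-th task, belonging to the feature space $\mathcal{X}_m$, i.e., $\mathbf{x}^{(m)}_{t,k} \in \mathcal{X}_m \simeq \mathbb{R}^{I_m}$, the M-tuple $\big(\mathbf{x}^{(1)}_{t,k}, \dots, \mathbf{x}^{(M)}_{t,k}\big)$ is an element of the input data space $\mathcal{X}_1 \times \cdots \times \mathcal{X}_M$, and $\mathbf{y}_{t,k}$ is its corresponding true label in an output space, $\mathcal{Y}_t$.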

3.2.2. Task Functions

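For the t-th task, we aim to learn a multi-view classification function, $f_t: \mathcal{X}_1 \times \cdots \times \mathcal{X}_M \to \mathcal{Y}_t$, that predicts, with high probability, the true label of the k-th instance, as Equation (10) shows, where $f_t$ belongs to some hypothesis space, $\mathcal{H}_t$.

$$\hat{\mathbf{y}}_{t,k} = f_t\big(\mathbf{x}^{(1)}_{t,k}, \dots, \mathbf{x}^{(M)}_{t,k}\big) \tag{10}$$

Consequently, the dimension of the output space $\mathcal{Y}_t$ represents the number of classes in the t-th learning task.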

3.2.3. The Parametric Model

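Considering the high dimension of the input data space, it seems reasonable to project multi-view data onto a low-dimensional latent space, $\mathcal{S}$, by learning some mapping $\phi: \mathcal{X}_1 \times \cdots \times \mathcal{X}_M \to \mathcal{S}$, as Equation (11) shows:

$$\mathcal{Z}_{t,k} = \phi\big(\mathbf{x}^{(1)}_{t,k}, \dots, \mathbf{x}^{(M)}_{t,k}\big) \tag{11}$$

where $\mathcal{Z}_{t,k} \in \mathcal{S}$ is the projection of the k-th instance, and the dimension of $\mathcal{S}$ can be either unidimensional (e.g., J), or multidimensional (e.g., $J_1 \times \cdots \times J_P$). If a more efficient mapping is needed, a low-rank approximation function, $\tilde{\phi}$, is required instead of $\phi$.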

Let $g_t: \mathcal{S} \to \mathcal{Y}_t$ be the t-th task-specific mapping that predicts the label from the k-th instance embedded in the latent space $\mathcal{S}$, as shown in Equation (12), where $g_t$ can be represented by, e.g., ANN, SVM, or random forest (RF) algorithms. In consequence, the function composition $g_t \circ \phi$ can determine the t-th task function $f_t$.

$$\hat{\mathbf{y}}_{t,k} = g_t\big(\mathcal{Z}_{t,k}\big) \tag{12}$$

3.2.4. The Optimization Problem

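For a given multitask, multi-view dataset, $\mathcal{D} = \{\mathcal{D}_t\}_{t=1}^{T}$, our problem reduces to simultaneously learning the set of functions $\{f_t\}_{t=1}^{T}$ that minimizes the multi-objective empirical risk of Equation (13) [114]:

$$\min_{f_1, \dots, f_T} \; \sum_{t=1}^{T} \lambda_t\, \frac{1}{N_t} \sum_{k=1}^{N_t} \mathcal{L}_t\Big(\mathbf{y}_{t,k},\, f_t\big(\mathbf{x}^{(1)}_{t,k}, \dots, \mathbf{x}^{(M)}_{t,k}\big)\Big) \tag{13}$$

where $f_t$ belongs to some hypothesis space $\mathcal{H}_t$, $\mathcal{L}_t$ is the loss function related to the t-th task that measures the discrepancy between the true label and the predicted one, and $\lambda_t$ is a weighting parameter, determined either statically or dynamically, which controls the relative importance of the t-th task.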
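The weighted multitask risk can be sketched numerically as follows. The cross-entropy loss, the static task weights, and the helper names `cross_entropy` and `multitask_risk` are assumptions made for this illustration; the text above leaves the per-task loss and the weighting scheme open.

```python
import numpy as np

def cross_entropy(probs, labels):
    """Mean negative log-likelihood for one task (assumed loss).

    probs: (N_t, C_t) predicted class probabilities; labels: (N_t,) integer classes.
    """
    picked = probs[np.arange(len(labels)), labels]
    return float(-np.mean(np.log(picked)))

def multitask_risk(task_probs, task_labels, weights):
    """Weighted sum of the per-task empirical risks."""
    return sum(
        w * cross_entropy(p, y)
        for w, p, y in zip(weights, task_probs, task_labels)
    )

# Two toy tasks: binary occlusion detection and three-class size classification.
probs_occ = np.array([[0.5, 0.5], [0.5, 0.5]])
labels_occ = np.array([0, 1])
probs_size = np.array([[0.25, 0.5, 0.25]])
labels_size = np.array([1])

risk = multitask_risk(
    [probs_occ, probs_size], [labels_occ, labels_size], weights=[0.5, 0.5]
)
```

With these toy predictions every true class receives probability 0.5, so each task contributes the same loss and the weights simply average them.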

3.2.5. Objectives

The main objectives are as follows:

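For a multi-view input space of M views, to learn a mapping $\phi: \mathcal{X}_1 \times \cdots \times \mathcal{X}_M \to \mathcal{S}$, where $\mathcal{S}$ is a low-dimensional latent tensor space with dimension $J_1 \times \cdots \times J_P$ or J (see Section 5.1, particularly Equation (20)).

To reduce the computational complexity of $\phi$, a low-rank approximation, $\tilde{\phi}$, needs to be learned.

For a set of T tasks, e.g., VTS tasks, the set of task-specific functions $\{g_t\}_{t=1}^{T}$ must be learned, where $g_t: \mathcal{S} \to \mathcal{Y}_t$, and $\mathcal{Y}_t$ is the output space of the t-th task.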

To evaluate the performance of our approach, a multitask, multi-view model for the case study of VTS systems (see Section 6.2) is employed.
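The first two objectives can be illustrated with a small numerical sketch, written under simplifying assumptions that are ours rather than the paper's: three views, a first-order latent space of dimension J, and the same rank R for every subtensor of the weight tensor. When each subtensor is exactly a sum of R rank-one tensors, the full affine map (Einstein product plus translation) coincides with a sum of R Hadamard products of per-view contractions. All names below (`W_fac`, `z_full`, `z_low`) are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
dims, J, R = (4, 5, 6), 3, 2          # M = 3 views, latent dimension J, rank R

# Hypothetical Hadamard factor tensors, one per view, each of shape (J, R, I_m).
W_fac = [rng.standard_normal((J, R, i)) for i in dims]
b = rng.standard_normal(J)                     # translation term
x = [rng.standard_normal(i) for i in dims]     # one feature vector per view

# Full weight tensor: each subtensor W[j] is a sum of R rank-one tensors.
W = np.einsum('jra,jrb,jrc->jabc', *W_fac)

# Full layer: Einstein product with the rank-one tensor of the views, plus translation.
X = np.einsum('a,b,c->abc', *x)
z_full = np.tensordot(W, X, axes=3) + b

# Low-rank layer: per-view contractions (J x R each), Hadamard product over views,
# then a sum over the R terms.
per_view = [Wm @ xm for Wm, xm in zip(W_fac, x)]
z_low = (per_view[0] * per_view[1] * per_view[2]).sum(axis=1) + b

n_full = W.size                        # J * I_1 * I_2 * I_3 parameters
n_low = sum(Wm.size for Wm in W_fac)   # J * R * (I_1 + I_2 + I_3) parameters
```

In this toy configuration the two outputs agree to floating-point precision, while the parameter count drops from a product of the view dimensions to a sum of them scaled by R, which is why smaller ranks give cheaper layers.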

4. Vehicle Traffic Surveillance System: Multitask, Multi-View Input Space Formation

In this section, we provide a general description of several tasks associated with a typical vision-based VTS system, including background and foreground segmentation, occlusion handling, and vehicle-size classification. Together, these tasks enable the estimation of traffic parameters, such as traffic density, vehicle count, and lane occupancy, inferred from the video. Specifically, these parameters are essential for high-level ITS applications.

4.1. Background and Foreground Segmentation

Let be a fourth-order tensor representing a traffic surveillance video, recorded at a frame rate with a duration of seconds. Here, , W, and H represent the image spatial dimensions, corresponding to width and height, respectively, and B is the dimensionality of the image spectral coordinate system, i.e., the color space in which each pixel lives, or the number of spectral bands in hyper-spectral imaging (HSI). For example, corresponds to grayscale, while corresponds to RGB color space. Finally, denotes the number of frames in the video.

From the aforementioned tensor , it is important to note the following:

The nth frontal slice represents the nth frame of the video at time .

The third-mode fiber denotes the th pixel value at frame n, where is the pixel location belonging to the image spatial domain .

Each pixel value is quantized using D bits per spectral band. For simplicity, here, we assume the 8-bit grayscale color space , i.e., , but it can be extended to other color spaces. Consequently, reduces to .

Every th pixel value can be modeled as a discrete random variable, , with a probability mass function (pmf), denoted as , where .

For any observation time, , the pmf of any pixel can be estimated, denoted as .

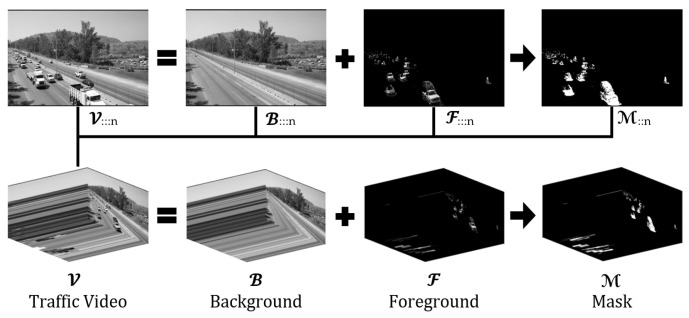

Then, tensor can be decomposed as Equation (14) shows and Figure 1 illustrates:

| (14) |

where is called the background tensor, is the foreground tensor, and is the binary mask of the foreground tensor, whose -th entry if the th pixel value of at frame n is part of the foreground tensor ; otherwise , the complement of , and can be obtained from the Hadamard product .

Figure 1.

Illustration of the traffic surveillance video decomposition model.

4.2. Blob Formation

After decomposing into the background and foreground tensors, various moving objects, including vehicles, pedestrians, and cyclists, can be extracted by analyzing or its mask, . One such technique is called connected components analysis (CCA) [115,116]. CCA recursively searches at every nth frontal slice for connected pixel regions (see Definition 12), referred to in the literature as binary large objects (blobs), which can contain pixels associated with moving objects.

Definition 12

(Blob). A blob, denoted as S, is a set of pixel locations connected by a specified connectivity criterion (e.g., four-connectivity or eight-connectivity [117]). Specifically, a pixel located at belongs to blob S if there exists another pixel location, , such that the connectivity criterion is met, as Equation (15) shows:

| (15) |

where is an inter-pixel distance that establishes the connectivity criterion given some threshold value, , and .

For every blob detected at frame n, a blob mask, , can be formed whose entries are given by Equation (16). Note that the pixel values of blob can be obtained from the product .

| (16) |
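The blob extraction described above can be sketched as follows. This is an illustrative breadth-first implementation of connected components analysis on a single binary mask, not the routine used in the experiments; the function name `connected_components` and the toy mask are assumptions. Four-connectivity corresponds to a city-block distance of at most one between neighboring pixels, and eight-connectivity to a chessboard distance of at most one.

```python
from collections import deque


def connected_components(mask, connectivity=4):
    """Return the blobs of a binary mask as a list of sets of (row, col)."""
    if connectivity == 4:
        offsets = [(-1, 0), (1, 0), (0, -1), (0, 1)]
    elif connectivity == 8:
        offsets = [(di, dj) for di in (-1, 0, 1) for dj in (-1, 0, 1)
                   if (di, dj) != (0, 0)]
    else:
        raise ValueError("connectivity must be 4 or 8")

    height, width = len(mask), len(mask[0])
    seen = [[False] * width for _ in range(height)]
    blobs = []
    for i in range(height):
        for j in range(width):
            if not mask[i][j] or seen[i][j]:
                continue
            # Grow a new blob from this unvisited foreground pixel.
            blob = set()
            queue = deque([(i, j)])
            seen[i][j] = True
            while queue:
                a, b = queue.popleft()
                blob.add((a, b))
                for da, db in offsets:
                    na, nb = a + da, b + db
                    inside = 0 <= na < height and 0 <= nb < width
                    if inside and mask[na][nb] and not seen[na][nb]:
                        seen[na][nb] = True
                        queue.append((na, nb))
            blobs.append(blob)
    return blobs


# Toy foreground mask: an L-shaped region plus two diagonal pixels.
mask = [
    [1, 1, 0, 0],
    [0, 1, 0, 0],
    [0, 0, 1, 0],
    [0, 0, 0, 1],
]
blobs4 = connected_components(mask, connectivity=4)
blobs8 = connected_components(mask, connectivity=8)
print(len(blobs4), len(blobs8))
```

The diagonal pixels form separate blobs under four-connectivity but merge with the L-shaped region under eight-connectivity, which is why the choice of criterion affects how many candidate vehicles a frame yields.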

4.3. Vehicle Feature Extraction and Selection

Feature extraction can be considered a mapping, , that transforms a given blob, S, into a low-dimensional point, , called the feature vector, as shown in Equation (17):

| (17) |

where , called the feature space, captures specific aspects of blobs , e.g., color, shape, or texture.

The image moments (IMs) are a classical hand-crafted feature extractor that provides information about the spatial distribution, shape, and intensity of a blob image. Typical features extracted via the IM include centroid, area, orientation, and eccentricity. Formally, the -th raw IM for blob S is given by the bilinear map of Equation (18):

| (18) |

where , are vectors whose i-th and j-th entries are and , respectively.
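To illustrate the bilinear form of a raw image moment, the sketch below assumes that the two vectors hold the row and column coordinates raised to the moment orders, with zero-based pixel indexing. The names `raw_moment`, `p`, and `q`, and the toy blob, are illustrative assumptions.

```python
import numpy as np


def raw_moment(blob_image, p, q):
    """Raw image moment of order (p, q), written as the bilinear map x^T S y."""
    rows, cols = blob_image.shape
    x = np.arange(rows, dtype=float) ** p  # i-th entry: i**p (assumed form)
    y = np.arange(cols, dtype=float) ** q  # j-th entry: j**q (assumed form)
    return x @ blob_image @ y


# Toy blob: a 2 x 2 square of ones inside a 4 x 4 image.
blob = np.array([
    [0, 0, 0, 0],
    [0, 1, 1, 0],
    [0, 1, 1, 0],
    [0, 0, 0, 0],
], dtype=float)

area = raw_moment(blob, 0, 0)
centroid = (raw_moment(blob, 1, 0) / area, raw_moment(blob, 0, 1) / area)
print(area, centroid)
```

The zeroth-order moment gives the blob area, and the ratio of first-order to zeroth-order moments gives the centroid, two of the features mentioned above.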

4.4. Vehicle Occlusion Task

Assuming there are vehicles on the road at the n-th frame, each associated with a specific blob , let denote the set of these blobs, and let be the set of blobs detected via CCA in the n-th frame, where . The v-th vehicle, with blob , is occluded by the u-th detected blob if any of the conditions in Equation (19) are met.

| (19) |

Given the set of detected blobs in the n-th frame, the vehicle occlusion detection aims to predict, with high probability, a set of blobs , each containing more than one vehicle. To achieve this, an occlusion feature space, , is constructed using a feature extraction mapping to capture the vehicle occlusion patterns. In this space, every detected blob, , is represented by an -dimensional feature vector . Assuming occlusions are only composed of partially observed vehicles, a classification function, , can be built to predict whether a detected blob, , has more than one vehicle.

4.5. Vehicle Classification Task

Given a set of vehicle-size labels (e.g., ) represented in a vector space, , called the output space, the vehicle classification task aims to predict, with high probability, the true label for an unseen vehicle blob instance, , at frame n. First, each blob, , is mapped into some feature space, , using a feature extraction mapping, , constructed to explain the vehicle-size patterns. From this space, a feature vector, , associated with , is derived. Then, a classification function, , can be built to predict the label of a vehicle blob instance, .

5. A Multi-View Data Tensor Fusion Layer and the Connection Between the Einstein and Hadamard Products

In this section, the concept of a multi-view data tensor fusion (MV-DTF) layer and its connection with the Einstein and Hadamard products are introduced. In essence, the MV-DTF layer is a form of FC layer for multi-view data; i.e., it is an affine function, but instead of a linear map, it employs a multilinear map to encode the interactions across views. A low-rank approximation of the MV-DTF layer is also proposed to reduce its computational complexity.

5.1. Multi-View Tensor Data Fusion Layer: The Mapping g as an Einstein Product

Inspired by previous works [36,75,76], we restrict the function space of the MV-DTF layer to the affine functions characterized by a multilinear map, , followed by a translation and, possibly, a non-linear map, , as Equation (20) shows:

| (20) |

where is the MV-DTF layer, the P-order tensor is the projection of the k-th instance onto the latent tensor space , called the fused tensor, with dimension , is the translational term, formally called bias, and the mapping .

Definition 13 specifies how a multilinear map can be represented using coordinate systems, and from this representation, a tensor can be induced for every multilinear map.

Definition 13

(Coordinate representation of a multilinear map [101]). Let and W be real vector spaces, where for all , and . Let be the standard basis for W. And let be a multilinear map. Given an ordered M-tuple , where , the map is completely determined by a linear combination of basis vectors and scalars , as Equation (21) shows.

| (21) |

The collection of scalars can then be arranged into an th-order tensor, denoted as , which determines , and whose -th entry corresponds to .

Next, Definition 14 establishes a connection between the Einstein product and multilinear maps via the universal property of multilinear maps (see Definition 2).

Definition 14.

Let be a set of vectors, where for all . And let be the multilinear map induced via the tensor , and is the multilinear map associated with the tensor product . For , the Einstein product can be understood as a linear map, . Then, and Φ are related by the universal property of multilinear maps, as Equation (22) shows.

| (22) |

For the multilinear map in Equation (20), Definition 13 ensures the existence of a tensor, , that determines , and Definition 14 provides the associated linear map of . From the above definitions, Equation (20) can be rewritten in tensor form as Equation (23) shows, where is a rank-one tensor resulting from the tensor product of the M view vectors associated with the k-th instance.

| (23) |

Note that Equation (23) represents a differentiable expression with respect to tensors and . Consequently, their values can be learned using optimization algorithms such as stochastic gradient descent (SGD), where the number of parameters to learn, denoted as L, corresponds to the number of entries of tensors and , as Equation (24) shows. Note that L scales exponentially with the number of views, M, and the order P of . Specifically, for , L is reduced to . This exponential growth can lead to computational challenges while increasing the risk of overfitting due to the induced curse of dimensionality [118,119,120]; i.e., the number of samples needed to train a model grows exponentially with its dimension.

| (24) |
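As a minimal numerical sketch of the tensor form above, the snippet below evaluates the affine part of an MV-DTF layer for a first-order latent space with NumPy. It assumes three views with the case-study dimensions (4, 3, 2) and a latent dimension J = 2; the random values, the variable names, and the omission of the non-linear map are illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)
view_dims = (4, 3, 2)  # dimensions of the three views
J = 2                  # latent space dimension (first-order latent space)

x1, x2, x3 = (rng.standard_normal(d) for d in view_dims)
W = rng.standard_normal((J, *view_dims))  # weight tensor of order 1 + 3
b = rng.standard_normal(J)                # bias

# Rank-one tensor: tensor (outer) product of the three view vectors.
X = np.einsum("a,b,c->abc", x1, x2, x3)

# Einstein product: contract the last three modes of W with all modes of X.
z = np.einsum("jabc,abc->j", W, X) + b

# Same result without materializing the rank-one tensor.
z_direct = np.einsum("jabc,a,b,c->j", W, x1, x2, x3) + b

num_weights = W.size  # J * 4 * 3 * 2
print(z, num_weights)
```

The weight tensor alone already has J times the product of the view dimensions entries, which is the source of the exponential growth in the number of views discussed above.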

5.2. Hadamard Products of Einstein Products and Low-Rank Approximation Mapping

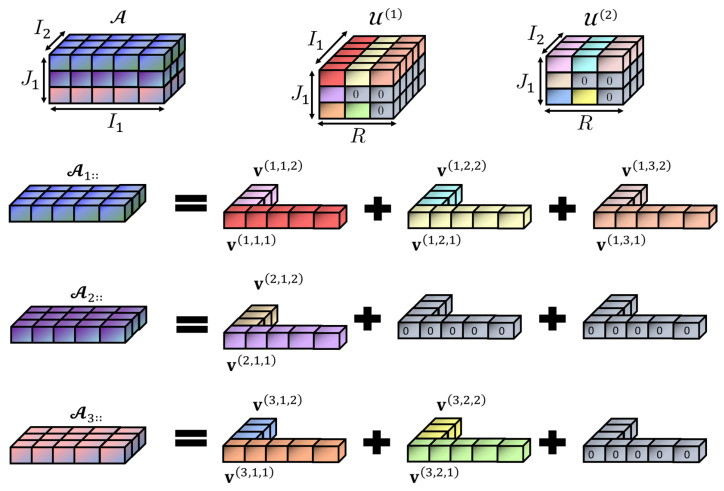

Low-rank approximation is a well-known technique that not only reduces model parameter storage requirements but also helps alleviate the computational burden of neural network models [81,82,85,86,87,88,89,121]. Based on these facts, in this work, we explore a CPD-based low-rank structure, illustrated in Figure 2, to overcome the curse of dimensionality induced by the MV-DTF layer. This structure reduces the number of parameters required for the MV-DTF layer, and it is computationally more efficient (see Proposition 1). Before presenting this structure, Definition 15 introduces the concept of Hadamard factor tensors.

Figure 2.

Illustration of subtensors of and the Hadamard factor tensors , and for the multilinear map , where , , , and .

Definition 15

(Hadamard factor tensors). Let be a -order tensor, whose -th subtensor results from fixing every index but the last M modes; i.e., for all and can be approximated as a rank- tensor using the CPD, as Equation (25) shows:

| (25) |

where the number of subtensors in corresponds to the dimension of the latent space ; i.e., , each -th subtensor has a specific rank, , which can be different across subtensors, and for , known as the m-mode factor vector, the superscripts identify the -th subtensor to which it corresponds, identifies its associated r-th rank-one tensor in the CPD, and its mode. Then, the set of factor vectors along the m-mode can be rearranged into a -order tensor, , here called the m-mode Hadamard factor tensor, whose -mode fibers are given by Equation (26):

| (26) |

where is the zero vector, and is the maximum rank across subtensors, employed to avoid inconsistencies due to different rank values between subtensors.

Figure 2 illustrates the concept of Hadamard factor tensors for the multilinear map with associated tensor . Here, the data have two views () with dimensions , and , respectively; the order and dimension of the latent tensor space are and , and hence, there are three subtensors, , , associated with the tensor . For subtensor , its rank is ; hence, for subtensor , , i.e., , while for subtensor , , and . From these vectors, two Hadamard factor tensors, and , can be constructed, corresponding to the first and second views, respectively. The second-mode dimension of these tensors corresponds to the greatest subtensor rank, i.e., , to avoid heterogeneous rank values across subtensors. Hence, the second and third subtensors incorporate two and one additional zero vectors, respectively, as Figure 2 shows.
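The zero-padding step can be sketched as follows for a first-order latent space. The subtensor ranks (3, 1, 2) are an assumption chosen so that the second and third subtensors receive two and one zero vectors, respectively; the view dimension, the random factor vectors, and the function name `build_hadamard_factor_tensor` are likewise illustrative.

```python
import numpy as np


def build_hadamard_factor_tensor(factor_vectors, max_rank, view_dim):
    """Stack the m-mode factor vectors of every subtensor into a
    (J, max_rank, view_dim) array, padding missing ranks with zero vectors."""
    num_subtensors = len(factor_vectors)
    tensor = np.zeros((num_subtensors, max_rank, view_dim))
    for j, vectors in enumerate(factor_vectors):
        for r, vector in enumerate(vectors):
            tensor[j, r] = vector
    return tensor


rng = np.random.default_rng(0)
ranks = [3, 1, 2]  # assumed ranks of the three subtensors
view_dim = 3       # assumed dimension of one view

# One list of factor vectors per subtensor, with as many vectors as its rank.
factors = [[rng.standard_normal(view_dim) for _ in range(rank)]
           for rank in ranks]
A = build_hadamard_factor_tensor(factors, max(ranks), view_dim)

# Count the zero vectors that were added to each subtensor.
zero_rows = [int((~A[j].any(axis=1)).sum()) for j in range(len(ranks))]
print(A.shape, zero_rows)
```

Padding to the maximum rank gives every subtensor the same second-mode size, so all of them can be stored in a single dense array; the zero vectors contribute nothing to the reconstructed subtensors.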

Proposition 1 presents the primary result of this work, i.e., the mathematical relationship between the Einstein and Hadamard products. To the best of our knowledge, this relationship has not previously been reported in the literature.

Proposition 1.

Let be a rank-one tensor, where for all . And let be a -order tensor induced via the multilinear map , which can be decomposed into a set of M factor tensors for a given rank, , where for all . Then, can be approximated by a sum of R Hadamard products of Einstein products, as Equation (27) shows:

| (27) |

where is the m-mode Hadamard factor tensor. A vector of all ones is denoted as . And .

Proof.

In Appendix A. □

By leveraging Proposition 1 for tensor in Equation (23), the MV-DTF layer can be approximated through a more efficient low-rank mapping , called the low-rank multi-view data tensor fusion (LRMV-DTF) layer, defined in Equation (28), where the m-mode factor tensor , associated with the m-th view, contributes to building every k-th fused tensor .

| (28) |
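The relationship behind the low-rank layer can be checked numerically. The sketch below assumes a first-order latent space, three views with the case-study dimensions (4, 3, 2), J = 2, a common rank R = 2, and Hadamard factor tensors stored with shape (J, R, view dimension); the bias and the non-linear map are omitted, and all values are random.

```python
import numpy as np

rng = np.random.default_rng(1)
view_dims = (4, 3, 2)
J, R = 2, 2

# One Hadamard factor tensor per view, with shape (J, R, I_m).
A = [rng.standard_normal((J, R, d)) for d in view_dims]
x = [rng.standard_normal(d) for d in view_dims]

# Full weight tensor: each j-th subtensor is a sum of R rank-one tensors (CPD).
W = np.einsum("jra,jrb,jrc->jabc", A[0], A[1], A[2])

# Full evaluation: Einstein product of W with the rank-one tensor of the views.
z_full = np.einsum("jabc,a,b,c->j", W, x[0], x[1], x[2])

# Low-rank evaluation: project each view with its factor tensor, take the
# Hadamard product across views, and sum over the rank index.
projections = [np.einsum("jri,i->jr", A_m, x_m) for A_m, x_m in zip(A, x)]
z_low_rank = np.prod(projections, axis=0).sum(axis=1)

print(np.allclose(z_full, z_low_rank))
print(W.size, sum(A_m.size for A_m in A))
```

Because the weight tensor here is built exactly from the factor tensors, both evaluations agree to floating-point precision; for an arbitrary weight tensor, the low-rank form is an approximation whose quality depends on R.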

From this approximation, the number of parameters required for the LRMV-DTF layer, denoted as , is provided in Equation (29). Note that the product of the -dimensions related to the views in L (Equation (24)) has been replaced with a summation, which yields fewer parameters to learn compared to those in the MV-DTF layer, reducing the risk of overfitting.

| (29) |

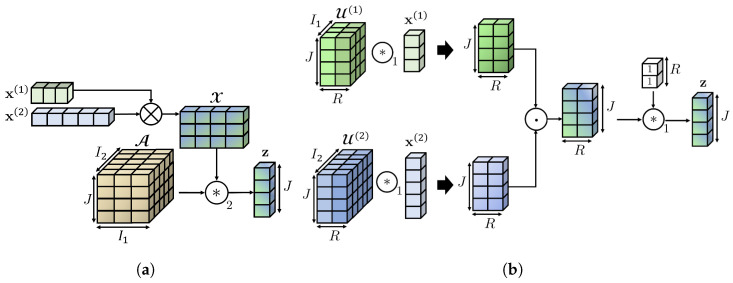

An illustration of our layers is shown in Figure 3a (MV-DTF), and Figure 3b (LRMV-DTF). Here, the number of views , and their dimensions , and respectively. The order of the latent space is , and its dimension . Consequently, the multilinear map is , with associated tensor , and bias . However, vector is fixed to zero for simplicity. For low-rank approximation, the rank of the -th subtensor is . Hence, according to Definition 15, tensor can be decomposed into two factor tensors: , and , associated with the first and second views, respectively.

Figure 3.

Illustration of the MV-DTF and LRMV-DTF layers. (a) MV-DTF layer , where . (b) LRMV-DTF layer .

This relationship between the Einstein and Hadamard product enables a rank-R CPD for every subtensor of tensor and, consequently, a low-rank approximation.

5.3. Dimension J or , Order P of the Latent Space , and the Rank R: The Hyperparameters of the MV-DTF and LRMV-DTF Layers

The proposed layers introduce three hyperparameters to tune: the order P and the dimension of , and the rank value R:

Latent space dimension: It determines the expressiveness of the latent space to capture complex patterns across views. High-dimensional spaces enhance expressiveness but also increase the risk of overfitting, while low-dimensional spaces reduce expressiveness but mitigate the risk of overfitting.

Latent space order: It is determined by the architecture of the ANN. For instance, in multi-layer perceptron (MLP) architectures, the input space is unidimensional, e.g., ; hence, . In contrast, the input space of CNNs is multidimensional; thus, .

Rank: It determines the computational complexity of the low-rank MV-DTF layer. Low rank values on subtensors of reduce the number of parameters to learn, but the layer may then fail to capture complex interactions across views, limiting model performance. Conversely, high rank values increase the capacity to learn complex patterns in data, but they may lead to overfitting.

5.4. MV-DTF and LRMV-DTF on Neural Network Architectures: The Mapping Set

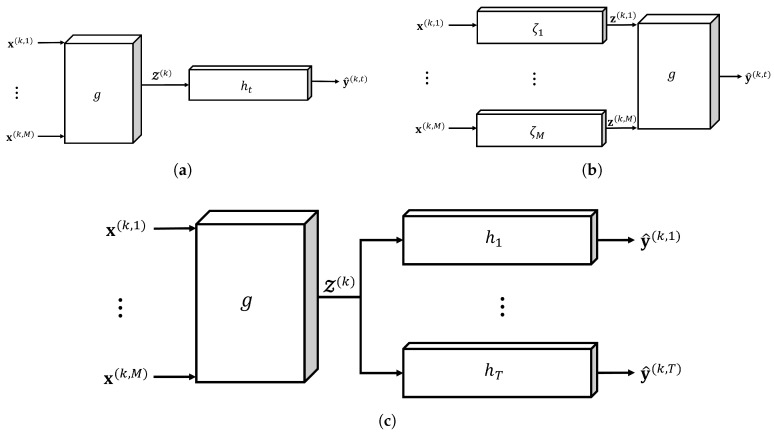

Depending on the desired level of fusion [16], our data fusion layer can be incorporated into an ANN architecture in two primary configurations:

Feature extraction: The MV-DTF layer g can be integrated into an ANN to map the multi-view input space into some latent space, , for multi-view feature extraction; see Figure 4a,c. Here, both the order P and dimension of must correspond with the order and dimension of the input layer in the architecture of the ANN.

Multilinear regression: The MV-DTF layer performs multilinear regression to capture the multilinear relationships between the multi-view latent space and the output space for single-task learning (see Figure 4b). Here, is the m-th single-view latent space obtained from the mapping , where is the m-th single-view input space. Consequently, the dimension and order of the latent space must correspond with those of the output space.

Figure 4.

Primary configurations for incorporating the MV-DTF layer in neural network architectures. (a) MV-DTF layer for multi-view feature extraction on single-task learning, where , and . (b) MV-DTF layer for multilinear regression on single-task learning, where , and . (c) MV-DTF for multi-view feature extraction on multitask learning, where , and .

6. Results and Discussion

6.1. Dataset Description

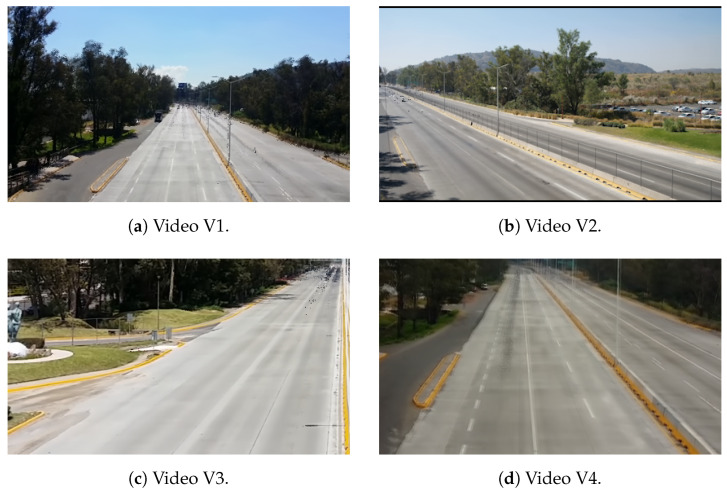

To test the effectiveness of the proposed MV-DTF layer, we conduct experiments on four real-world traffic surveillance videos, encompassing more than 50,000 frames of footage with a resolution of 420 × 240 pixels and recorded at a frame rate of 25 FPS (accessible via [122]). Sample images from each test video can be observed in Figure 5, while technical details are provided in Table 3.

Figure 5.

Test videos employed for vehicle-size classification and occlusion detection tasks. They were recorded on different dates and from different viewpoints.

Table 3.

Technical details of the test videos.

| Video | Duration (s) | Tracked Vehicles | Temporal Samples of Tracked Vehicles |
|---|---|---|---|
| V1 | 146 | 137 | 6132 |
| V2 | 326 | 333 | 19,194 |
| V3 | 216 | 239 | 14,457 |
| V4 | 677 | 720 | 91,870 |

A collection of over 92,000 images of vehicles was then extracted from the test videos using the background and foreground segmentation method (see Section 4.1). Each image was manually labeled for two tasks (): (1) occlusion detection, where vehicles are categorized as occluded or non-occluded (labeled as 1 and 0, respectively), and (2) vehicle-size classification, where non-occluded vehicles are categorized as small (S), midsize (M), large (L), or very large (XL), with labels 1 to 4. Next, one-hot encoding was used to represent the class labels of each task. Consequently, the output spaces for the classification and occlusion detection tasks become , and , respectively, i.e., and .

In addition, three subsets of image moment-based features were extracted and normalized for each vehicle image: (1) a 4D feature space, (i.e., ), consisting of the vehicle blob solidity, orientation, eccentricity, and compactness features; (2) a 3D feature space, (i.e., ), encompassing the vehicle’s width, area, and aspect ratio; and (3) a 2D feature space, , representing the vehicle centroid coordinates. Together, the three feature spaces form a three-view input space of dimension ; i.e., the number of views is , and , , and are the dimensions of each feature space.

As a result, two datasets, denoted as and , were created from the test videos, where corresponds to the occlusion detection task and to the vehicle-size classification task. Both datasets encompass vehicle instances represented in a three-view feature space, available in [123]. Table 4 and Table 5 provide a summary of our datasets, detailing the distribution of images across the occlusion and vehicle-size classification tasks.

Table 4.

Description of the vehicle instance dataset for the occlusion detection task.

| Video | Occluded | Unoccluded |
|---|---|---|
| V1 | 4671 | 1461 |
| V2 | 12,684 | 6510 |
| V3 | 10,384 | 4073 |
| V4 | 41,002 | 11,084 |

Table 5.

Description of the vehicle instance dataset for the vehicle-size classification task.

| Video | Small (Class 1) | Midsize (Class 2) | Large (Class 3) | Very Large (Class 4) |
|---|---|---|---|---|
| V1 | 45 | 4018 | 390 | 218 |
| V2 | 169 | 11,687 | 676 | 152 |
| V3 | 206 | 8426 | 1179 | 573 |
| V4 | 777 | 35,843 | 3101 | 1282 |

6.2. The Multitask, Multi-View Model Architecture and Training

6.2.1. The Multitask, Multi-View Model Architecture

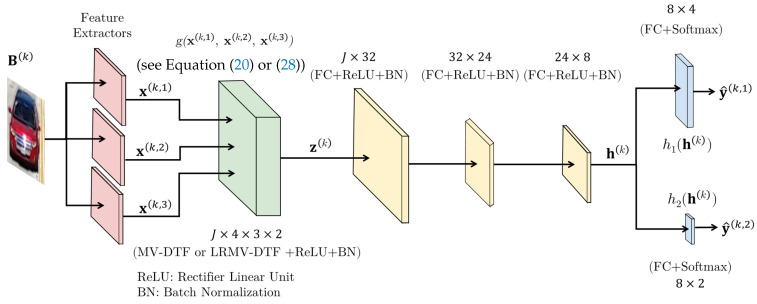

To learn the two tasks, a multitask, multi-view ANN model based on the MLP architecture was employed. The proposed model is structured in four main stages (see Figure 6): (1) hand-crafted feature extractors (shown in red), (2) an MV-DTF/LRMV-DTF layer (in green), (3) the neck (in yellow), and (4) the task-specific heads (in blue). Stages 1 and 2 form the backbone of the model, serving as a feature extractor to capture both low-level and high-level features from the raw data. Stage 3 refines the features extracted from the backbone. Finally, stage 4 performs prediction or inference. In addition, dropout is applied at the end of each stage to reduce the risk of overfitting and enhance the model’s generalization.

Figure 6.

The proposed multitask, multi-view ANN architecture.

The MV-DTF/LRMV-DTF layer provides the mapping , where , , and . The order P of the latent space is fixed to one, i.e., without loss of generality, which simplifies its dimension to J, a hyperparameter to tune. Therefore, the parameters of the MV-DTF/LRMV-DTF layer are either the tensor , or the associated Hadamard factor tensors , and , where the rank R is a hyperparameter to tune, along with the bias tensor . Consequently, Equation (28) is reduced to Equation (30) (Equation (30) holds when is the tensor decomposition rank for all ):

| (30) |

where the fused tensor and bias are transformed to the vectors and , respectively, and .

To solve this problem (see Section 3), the multi-objective optimization defined in Equation (13) is employed with and , where and are the task-specific occlusion detection and vehicle classification functions, can be either the MV-DTF or LRMV-DTF layer, and are the binary cross-entropy and multiclass cross-entropy loss (see Definitions 16 and 17, respectively) for the above tasks, and the task importance weighting hyperparameters and were selected from a finite set of values through cross-validation, a technique often employed by other authors [69].

Definition 16

(Binary cross-entropy (BCE)). Let be the true label of an instance, and let be the predicted probability for the positive class. The BCE between and is given by the following:

| (31) |

Definition 17

(Multiclass cross entropy (MCE)). Let be the true label of an instance, related to some multi-classification problem with C classes, encoded in one-hot format. And let be the predicted probability vector, where is the probability that the instance belongs to the c-th class. The MCE between and is given by the following:

| (32) |
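For reference, the two losses can be sketched with their standard textbook forms, which are assumed here to match Definitions 16 and 17. The clipping constant `eps` is an added numerical safeguard, not part of the definitions, and the function names and example values are illustrative.

```python
import math


def binary_cross_entropy(y_true, p_pred, eps=1e-12):
    """Standard BCE for a label in {0, 1} and a predicted positive-class
    probability; eps avoids log(0)."""
    p = min(max(p_pred, eps), 1.0 - eps)
    return -(y_true * math.log(p) + (1 - y_true) * math.log(1.0 - p))


def multiclass_cross_entropy(y_onehot, p_vector, eps=1e-12):
    """Standard MCE for a one-hot label and a predicted probability vector."""
    return -sum(y * math.log(max(p, eps)) for y, p in zip(y_onehot, p_vector))


# An occluded instance (label 1) predicted with probability 0.5.
bce = binary_cross_entropy(1, 0.5)

# A class-3 instance (one-hot over four size classes) predicted with 0.5.
mce = multiclass_cross_entropy([0, 0, 1, 0], [0.1, 0.2, 0.5, 0.2])
print(bce, mce)
```

Both example values equal log 2, since in each case the true class receives probability 0.5.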

6.2.2. Training and Validation

From the total number of tracked vehicles in Table 3, of them were selected from the four test videos via stratified random sampling for training and validation purposes. Including all temporal instances of a particular vehicle can cause data leakage; i.e., the model may learn specific patterns from highly correlated temporal samples, resulting in reduced generalization to unseen vehicles. To prevent this, only uncorrelated temporal instances were considered for each selected vehicle.

Vehicles from the subset, along with their uncorrelated temporal instances, were partitioned into two sets: (1) the training set , containing the of vehicles and their temporal instances; and (2) the validation set , with of the vehicles and their instances, where superscript t indexes the task-specific dataset (i.e., for vehicle-size classification and for occlusion detection). The remaining of vehicles and their instances comprise the testing set, denoted as .

Adaptive moment estimation [124] was employed to optimize the parameters of our model. Training was performed for a maximum of 200 epochs, with an early stopping scheme to avoid overfitting by halting training when performance on no longer improved. The training strategy for our multitask, multi-view model is shown in Algorithm 1, where is a mutually exclusive batch of the t-th task, i.e., for , with , and K is the number of batches.

| Algorithm 1 Training scheme. |

|
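The early stopping rule mentioned above can be sketched as follows. This is an illustrative patience-based criterion, not a reproduction of Algorithm 1: the `patience` and `min_delta` parameters, the function name, and the toy loss history are all assumptions.

```python
def early_stopping_epoch(val_losses, patience=10, min_delta=0.0):
    """Return (stop_epoch, best_epoch), both zero-based.

    Training stops at the first epoch where the validation loss has not
    improved by more than min_delta for `patience` consecutive epochs.
    If that never happens, the last epoch is returned as stop_epoch.
    """
    best_loss = float("inf")
    best_epoch = -1
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            best_loss, best_epoch = loss, epoch
        elif epoch - best_epoch >= patience:
            return epoch, best_epoch
    return len(val_losses) - 1, best_epoch


# Toy validation-loss history: improvement stalls after the third epoch.
history = [1.0, 0.8, 0.7, 0.72, 0.71, 0.73, 0.74]
stop_epoch, best_epoch = early_stopping_epoch(history, patience=3)
print(stop_epoch, best_epoch)
```

In practice, the model parameters saved at `best_epoch` would be restored before evaluating on the testing set.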

All experiments were implemented in Python 3.10 with the PyTorch framework and run on a computer equipped with an Intel Core i7 processor running at 2.2 GHz. An NVIDIA GTX 1050 Ti GPU was employed to accelerate processing.

6.3. Performance Evaluation Metrics

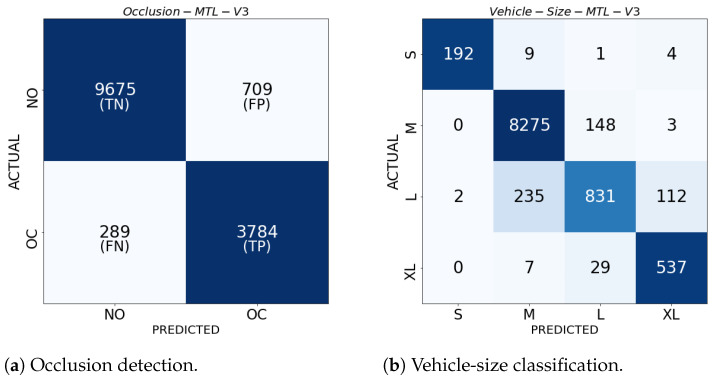

In this work, we evaluate the performance of the proposed multitask, multi-view model using five main metrics: accuracy (ACC), F1-measure (F1), geometric mean (GM), normalized Matthews correlation coefficient (MCCn), and normalized Bookmaker informedness (BMn), as detailed in Table 6 (see details of these metrics in [125]). For binary classification, where vehicle instances are categorized into two classes (positive and negative), the performance metrics were directly derived from the entries of a confusion matrix (CM), characterized by true positives (TPs), false negatives (FNs), false positives (FPs), and true negatives (TNs). In multiclass classification with classes, the notions of TP, FN, FP, and TN are less straightforward than in binary classification, as the confusion matrix becomes a matrix whose (i,j)-th entry represents the number of samples that truly belong to the i-th class and were classified as the j-th class. To derive the performance metrics, a one-vs.-rest approach is typically employed to reduce the multiclass CM into C binary CMs, where the c-th matrix is formed by treating the c-th class as positive and the rest as the negative class [125,126]. Figure 7 illustrates the CM notion for binary classification (Figure 7a) and multiclass classification with classes (Figure 7b), which were obtained from the mean values of runs.

Table 6.

Mathematical definition of classification performance metrics used in this work (metrics marked with * are biased by class imbalance [127]).

| Metric | Equation | Weighted Metric |
|---|---|---|
| ACC * | | |
| F1 * | | |
| MCC * | | |
| GM | | |
| BM | | |
| SNS | | |
| SPC | | |
| PRC * | | |
| Global GM | - | |
| Global BM | - | |
| multiclass MCC * | - | |

Figure 7.

Confusion matrices for vehicle-size classification and occlusion detection on video V3 ( and ), whose entries correspond to the mean values of runs.

In Table 6, C denotes the number of classes of interest, M is the number of classified instances, is a multiclass CM, and metrics with subscript c are computed from the c-th binary CM, obtained by reducing the multiclass CM with the c-th class treated as positive. Metrics with subscript w denote weighted metrics, which account for the individual contribution of each class by weighting the metric value of the c-th class by the number of samples, , of class c. This approach provides a “fair” evaluation by considering the impact of imbalanced class distributions on the overall performance.
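The one-vs.-rest reduction and the class-weighted averaging can be sketched as follows. The confusion matrix is a toy example rather than measured data, the function names are illustrative, and the normalized MCC is assumed to follow the common rescaling (MCC + 1) / 2.

```python
import math


def one_vs_rest(cm, c):
    """Reduce a multiclass CM (rows: true class, columns: predicted class)
    to the binary counts (TP, FN, FP, TN) with class c as positive."""
    total = sum(sum(row) for row in cm)
    tp = cm[c][c]
    fn = sum(cm[c]) - tp
    fp = sum(row[c] for row in cm) - tp
    tn = total - tp - fn - fp
    return tp, fn, fp, tn


def sensitivity(tp, fn, fp, tn):
    return tp / (tp + fn) if (tp + fn) else 0.0


def normalized_mcc(tp, fn, fp, tn):
    """MCC rescaled from [-1, 1] to [0, 1] (assumed normalization)."""
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    mcc = (tp * tn - fp * fn) / denom if denom else 0.0
    return (mcc + 1.0) / 2.0


def weighted_metric(cm, metric):
    """Average a per-class metric, weighting class c by its sample count."""
    support = [sum(row) for row in cm]
    total = sum(support)
    return sum(n_c * metric(*one_vs_rest(cm, c))
               for c, n_c in enumerate(support)) / total


# Toy three-class confusion matrix with 10, 20, and 10 samples per class.
cm = [
    [8, 2, 0],
    [1, 17, 2],
    [0, 3, 7],
]
counts_class0 = one_vs_rest(cm, 0)
weighted_sns = weighted_metric(cm, sensitivity)
weighted_mccn = weighted_metric(cm, normalized_mcc)
print(counts_class0, weighted_sns, weighted_mccn)
```

Any per-class metric that takes the four binary counts can be passed to `weighted_metric`, so the same helper covers the weighted variants of the other metrics.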

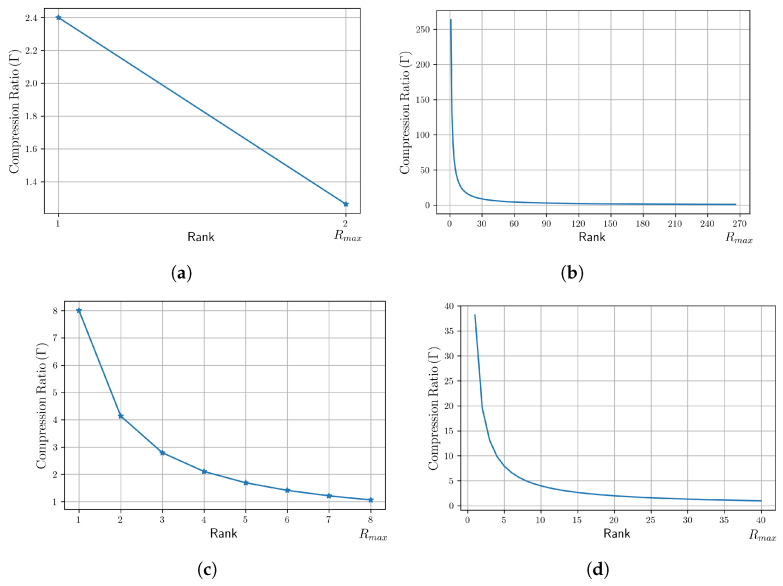

Furthermore, to quantify how much compression is achieved via the LRMV-DTF layer, a compression ratio, , between the number of parameters in the MV-DTF layer and those in the LRMV-DTF layer, i.e., L and , is defined in Equation (33). Note that is independent of the latent space dimension; it depends only on the rank R and the view dimensions (compare the rows of Table 7 that share the same R). Consequently, the compression ratio is the same for every latent tensor space dimension.

| (33) |
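As a worked example of the compression ratio, the sketch below uses counting conventions inferred from the entries of Table 7 for a first-order latent space: the MV-DTF count is J times the product of the view dimensions, and the LRMV-DTF count is J times R times the sum of the view dimensions, plus J bias terms. These conventions and the function names are assumptions, not a restatement of Equations (24), (29), and (33).

```python
from math import prod


def mv_dtf_params(J, view_dims):
    """Entries of the weight tensor: J * product of the view dimensions."""
    return J * prod(view_dims)


def lrmv_dtf_params(J, R, view_dims):
    """Entries of the factor tensors, J * R * sum of dims, plus J biases."""
    return J * R * sum(view_dims) + J


def compression_ratio(J, R, view_dims):
    return mv_dtf_params(J, view_dims) / lrmv_dtf_params(J, R, view_dims)


case_study = (4, 3, 2)  # dimensions of the three views in the case study
for J, R in [(2, 1), (2, 2), (8, 3), (32, 4)]:
    print((J, R),
          mv_dtf_params(J, case_study),
          lrmv_dtf_params(J, R, case_study),
          round(compression_ratio(J, R, case_study), 2))
```

Under these conventions, J cancels in the ratio, which is why rows with the same R share the same compression ratio regardless of J.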

6.4. Hyperparameter Tuning: The Latent Space Dimension J and the Rank R Values

To determine suitable hyperparameters for the low-rank MV-DTF layer, cross-validation via a grid search was employed [128]. Let be two finite sets containing candidate values for the rank R and latent space dimensionality J, respectively. A grid search trains the multitask, multi-view model, built with the pair , on the training set , and it subsequently evaluates its performance on the validation set using some metric, . The most suitable pair of values is that which achieves the highest performance metric over the validation set .
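The grid search can be sketched as follows. The callable `train_and_validate` stands in for training the model with a given pair and returning its validation metric; the candidate sets and the toy scoring function are placeholders, not the values or results of this study.

```python
from itertools import product


def grid_search(rank_values, dim_values, train_and_validate):
    """Return the (R, J) pair with the highest validation score."""
    best_pair, best_score = None, float("-inf")
    for R, J in product(rank_values, dim_values):
        score = train_and_validate(R, J)
        if score > best_score:
            best_pair, best_score = (R, J), score
    return best_pair, best_score


def toy_score(R, J):
    """Placeholder for training plus validation; NOT measured data."""
    return -((R - 2) ** 2) - ((J - 16) ** 2) / 100.0


best_pair, best_score = grid_search([1, 2, 3], [4, 16, 64], toy_score)
print(best_pair, best_score)
```

Since every pair requires a full training run, the cost of the search grows with the product of the sizes of the two candidate sets.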

For our case study with a tensor, , we fixed to study the impact of the J value on the classification metrics across tasks, whereas was selected based on the rank values that reduce the number of parameters in the LRMV-DTF layer according to the compression ratio (see Table 7), and and for performance analysis only. Since our datasets exhibit class imbalance, the MCC was used as the evaluation metric, given its robustness to imbalanced classes, as explained by Luque et al. in [127]. Through this empirical process, we found that and achieve the best trade-off between model performance and the compression ratio in the set . The sets and , and the most suitable pair of values, , must be determined for each multitask, multi-view dataset.

Table 7.

Compression ratio for different pairs of values and two multi-view spaces.

| (J, R) | Case study: MV-DTF parameters (L) | Case study: LRMV-DTF parameters | Case study: compression ratio | Second space: MV-DTF parameters (L) | Second space: LRMV-DTF parameters | Second space: compression ratio |
|---|---|---|---|---|---|---|
| (2, 1) | 48 | 20 | 2.4 | 48,000 | 182 | 263.7 |
| (2, 2) | 48 | 38 | 1.26 | 48,000 | 362 | 132.6 |
| (2, 3) | 48 | 56 | 0.86 | 48,000 | 542 | 88.56 |
| (2, 4) | 48 | 74 | 0.65 | 48,000 | 722 | 66.48 |
| (8, 1) | 192 | 80 | 2.4 | 192,000 | 728 | 263.7 |
| (8, 2) | 192 | 152 | 1.26 | 192,000 | 1448 | 132.6 |
| (8, 3) | 192 | 224 | 0.86 | 192,000 | 2168 | 88.56 |
| (8, 4) | 192 | 296 | 0.65 | 192,000 | 2888 | 66.48 |
| (32, 1) | 768 | 320 | 2.4 | 768,000 | 2912 | 263.7 |
| (32, 2) | 768 | 608 | 1.26 | 768,000 | 5792 | 132.6 |
| (32, 3) | 768 | 896 | 0.86 | 768,000 | 8672 | 88.56 |
| (32, 4) | 768 | 1184 | 0.65 | 768,000 | 11,552 | 66.48 |

6.5. Performance Evaluation

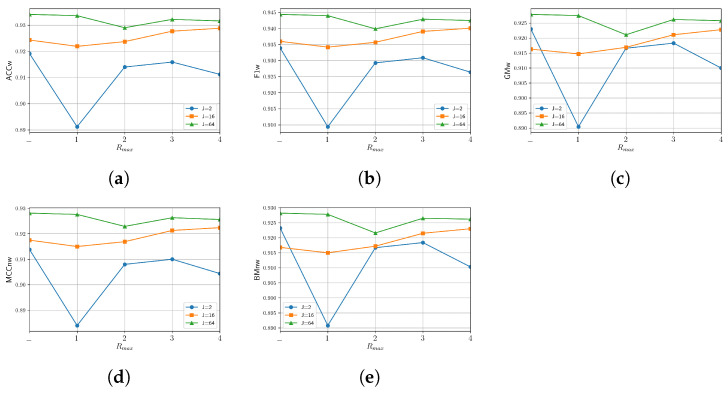

In this section, the performance of our multitask, multi-view case study in occlusion detection and vehicle classification tasks is evaluated. Our experiments focused on evaluating the impact of the rank, R, and dimension, J, of the latent tensor space on computational complexity and model performance. To ensure the consistency of our results, each experiment was repeated 30 times. We first provide the results for the space saving achieved using different R and J values in MV-DTF and its low-rank approximation, LRMV-DTF, followed by an analysis of their effects on the learning phases and model performance.

Table 7 provides a comparison of the compression achieved across different pairs of values and two multi-view input space dimensions. It is noteworthy that compression is only achieved for , and the larger the , the higher the compression. Specifically, for the multi-view space , compression is achieved only for (see Figure 8a), while for the multi-view space , a compression can be achieved for higher rank values (see Figure 8b). In consequence, provides an upper rank bound, denoted as , beyond which compression is no longer achieved. For tensors with greater dimensions or a greater order, the upper rank bound would be greater (see Figure 8).
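The upper rank bound can be sketched numerically under the same counting convention inferred from Table 7, in which compression is achieved when the product of the view dimensions exceeds R times their sum plus one. The function name is illustrative, and the three-view space (20, 30, 40) is a hypothetical example chosen because its product and sum agree with the second group of columns in Table 7.

```python
from math import prod


def max_compressing_rank(view_dims):
    """Largest integer R with prod(dims) > R * sum(dims) + 1, or 0 if none.

    Assumes the parameter-count convention inferred from Table 7.
    """
    p, s = prod(view_dims), sum(view_dims)
    # R * s + 1 < p  is equivalent to  R <= (p - 2) // s  for integers.
    return max((p - 2) // s, 0)


bound_case_study = max_compressing_rank((4, 3, 2))
bound_hypothetical = max_compressing_rank((20, 30, 40))
print(bound_case_study, bound_hypothetical)
```

For the case-study space, the bound is 2, in line with the ratios above and below one in Table 7; larger view dimensions raise the bound because the product grows much faster than the sum.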

Figure 8.

Compression ratio for the set of rank R values that enable compression () and multi-view input space dimensions. (a) our case study. (b) . (c) . (d) .

Figure 8 illustrates the relationship between the compression ratio and the rank R for various multi-view spaces with different orders and dimensionalities. For each space, the compression ratio decreases as the rank R increases. Figure 8a,b show the compression for multi-view spaces with the same order but different dimensionality, while Figure 8c,d show the compression for higher-order multi-view spaces.

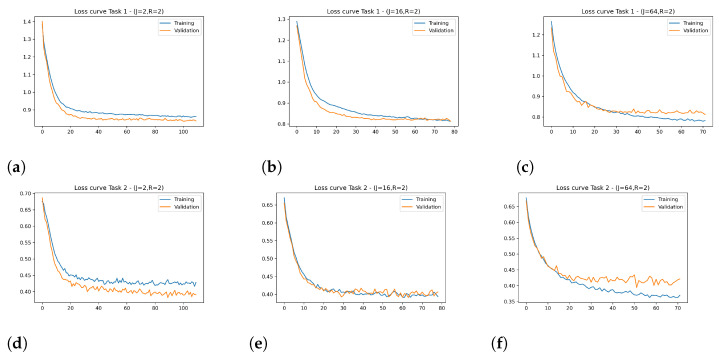

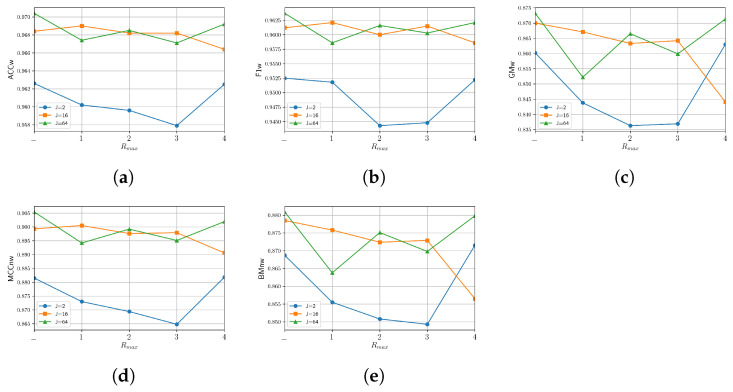

Figure 9 shows the training and validation loss curves over epochs for the model using either the MV-DTF or LRMV-DTF layer. From this figure, distinct behaviors in the loss curves can be observed on the training and validation phases:

For low-dimensional latent tensor space (see Figure 9a,d), although stable, a slower convergence and higher loss values for both training and validation are observed. This indicates that the model may be underfitting.

For high-dimensional latent tensor space (Figure 9c,f), a lower training loss is achieved. However, it exhibits fluctuations in the validation loss, especially for Task 2 (Figure 9f). This suggests that the model begins to overfit as J increases, leading to probable instability in validation performance. The marginal gains in training loss do not justify the increased risk of overfitting.

For (Figure 9b,e), the most balanced performance across both tasks is achieved, showing faster convergence and smoother validation loss curves compared to and . It achieves lower training loss while maintaining minimal validation loss variability, indicating good generalization.

Figure 9.

Loss curves obtained during the training and validation stages of the multitask, multi-view model across different latent space dimensions (J values), with fixed rank R = 2, for occlusion detection (first row) and vehicle-size classification (second row). (a) Loss curves for task 1 (). (b) Loss curves for task 1 (). (c) Loss curves for task 1 (). (d) Loss curves for task 2 (). (e) Loss curves for task 2 (). (f) Loss curves for task 2 ().

In the subsequent subsections, the performance evaluation for each task on the test videos is presented, highlighting the impact of the selected hyperparameters on the model’s generalization.

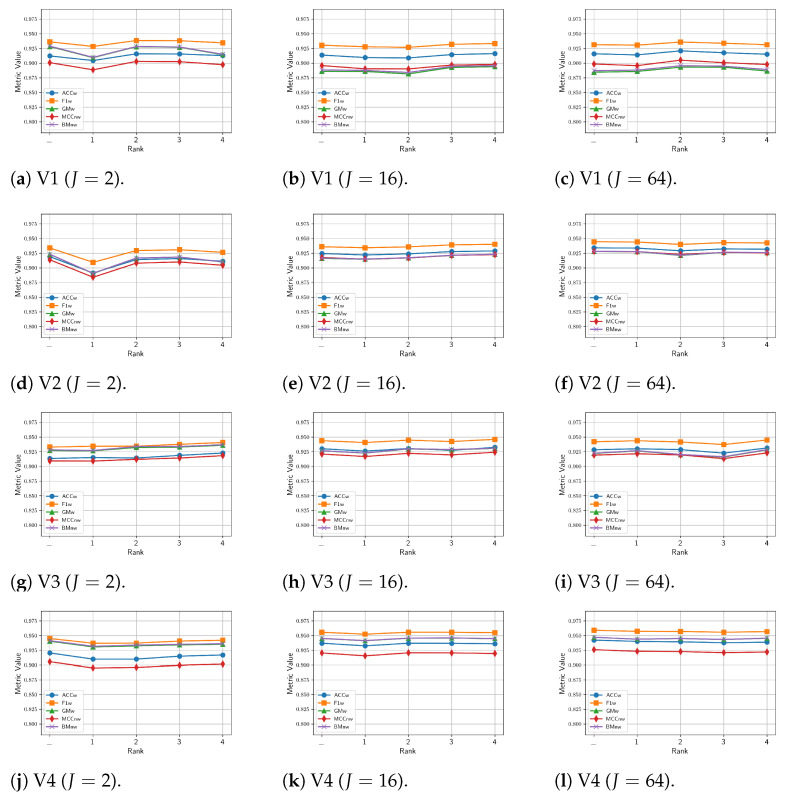

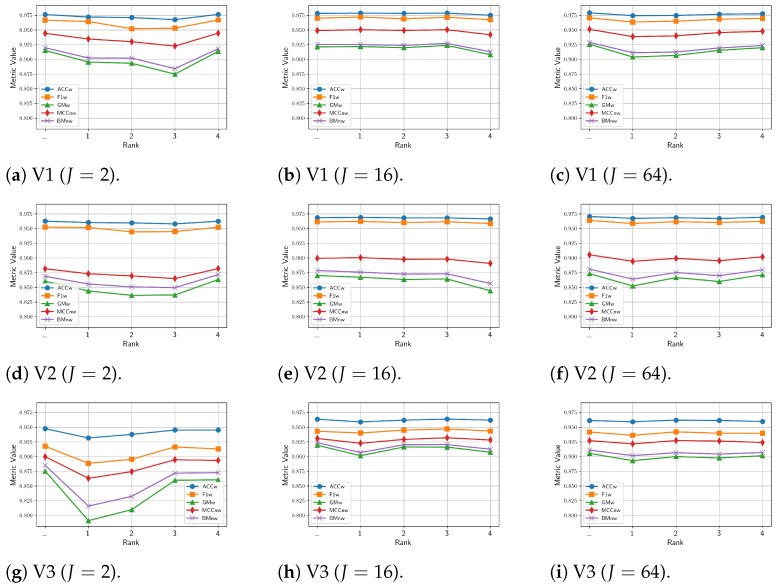

6.5.1. Vehicle Occlusion Detection Results

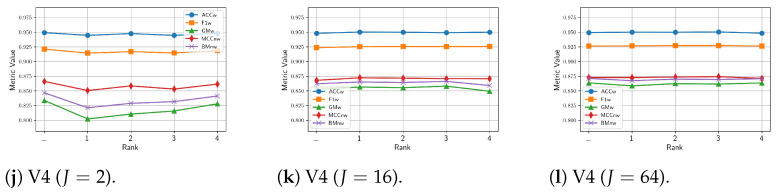

This section presents the comparison results of the proposed multitask, multi-view model, with either the MV-DTF or LRMV-DTF layer and different pairs of values, on the occlusion detection task. Figure 10 shows the mean values of performance metrics obtained from our model for 30 different training runs, evaluated across test videos. Each row corresponds to a specific test video, while each column corresponds to a particular value of the latent tensor-space dimension J. As illustrated in Figure 10, the performance drop across rank R values is very small, especially in high-dimensional spaces (e.g., and , in the second and third columns of Figure 10). However, in low-dimensional spaces (see the first column of Figure 10 for ), the choice of rank has a slightly greater impact on performance, so the rank R must be tuned more carefully as the dimension J decreases.

Figure 10.

Mean values for 30 runs of performance metrics achieved on the occlusion detection task in test videos with the multi-view multitask model. The value denotes the results when the MV-DTF layer is employed, whereas the other values correspond to the LRMV-DTF layer.

Additionally, Figure 10 is complemented by Table A1, which presents the mean and standard deviation of performance metrics across multiple runs, with the best and worst values highlighted in blue and red, respectively. From this table, the pair shows the lowest standard deviations across most metrics, providing a good balance between computational complexity (see Table 7) and competitive performance with the MV-DTF layer. Although high-dimensional spaces (e.g., ) yield high performance, they also tend to exhibit large standard deviations, potentially increasing the risk of overfitting despite their higher mean values.

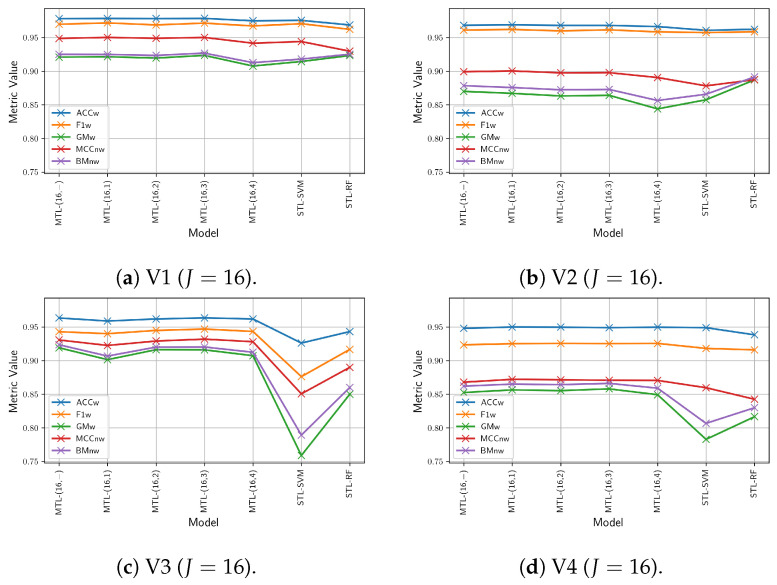

Finally, Figure 11 presents a performance comparison between our multi-view multitask model, using the LRMV-DTF with , and single-task learning (STL), single-view learning (SVL) models based on SVM and RF, evaluated across the test videos. Figure 11 highlights that the proposed model exhibits higher and more consistent performance than the STL-SVL models on all metrics and videos, particularly in V2, V3, and V4, where the SVM and RF models show a noticeable performance drop. Overall, the proposed model improves the performance in the MCCnw metric by up to , which represents a significant improvement over the SVM and RF models.

Figure 11.

Comparison results between MTL and STL models on the occlusion detection task.

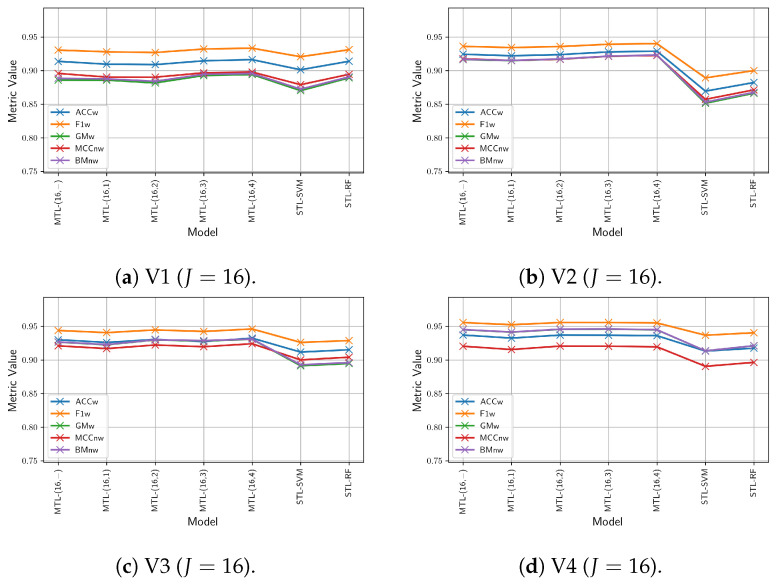

6.5.2. Vehicle-Size Classification Results

This section presents the comparison results of the proposed multitask, multi-view model, incorporating either the MV-DTF or LRMV-DTF layer with different pairs of values, on the vehicle-size classification task. Figure 12 shows the mean values of the performance metrics for 30 different training runs on the test videos, where each row corresponds to a specific test video and each column to a latent tensor space dimension. From this figure, we observe that the lower the J value, the worse the performance. Similarly, high R values generally contribute to improved performance. For , there is a noticeable drop in performance, especially on the GMw, BMnw, and MCCnw metrics, suggesting that low-dimensional spaces fail to capture the complexity of the task. However, as J increases to 16 and 64, the metrics stabilize, and the performance drop across ranks becomes negligible, particularly for the ACCw and F1w metrics.

Figure 12.

Mean values for 30 runs of performance metrics achieved on the vehicle-size classification task in test videos with the multi-view multitask model. The value denotes the results when the MV-DTF layer is employed, whereas the other values correspond to the LRMV-DTF layer.

Additionally, Table A2 shows the mean and standard deviation of performance metrics across runs, with the best and worst values highlighted in blue and red, respectively. From this table, we found that high-dimensional spaces tend to yield not only higher mean performance but also lower standard deviation, indicating more stable and consistent outcomes across different test videos. Consequently, a computationally efficient LRMV-DTF layer can be achieved in high-dimensional spaces by selecting low rank values without a significant performance drop. In contrast, low-dimensional latent spaces (e.g., ) are more sensitive to the choice of the rank R, particularly for GMw, BMnw, and MCCnw. Therefore, selecting an appropriate rank becomes crucial for low J values to avoid significant drops in performance.

Finally, in Figure 13, a comparison between our multi-view, multitask model and STL-SVL models (SVM and RF) across test videos is presented. This figure highlights the superiority of our multitask model, particularly in videos V3 and V4, where the SVM and RF models again exhibit a significant performance drop. Overall, the proposed model improves the performance in the MCCnw metric by up to , which represents a significant improvement over the SVM and RF models.

Figure 13.

Comparison results between MTL and STL models on the vehicle-size classification task.

6.5.3. Comparison with a Multitask Single-View Model

We also provide a comparison between the proposed multitask, multi-view model and its corresponding single-view model in Table A3. The latter is essentially the proposed model without the MV-DTF layer, so its input space can only be either , , or . In this work, we fix the input space to . Finally, for a fair comparison, this model incorporates a layer that maps the feature space onto a latent space of dimension J.

The results provided in Table A3 show the overall mean value of the weighted metrics across all videos. Incorporating the MV-DTF layer into this single-view model achieves an improvement of up to and on the BMnw and MCCnw metrics, respectively. These results are also consistent across all latent space dimensions.

Unlike the F1 metric, the experimental results show that the performance of single-view models does not exhibit a negative impact when the fusion layer is incorporated. Furthermore, even though the number of model parameters increases, incorporating the MV-DTF layer offers several advantages. In particular, approximating the layer through Hadamard products allows selecting ranks that achieve higher compression rates than the classical CPD.

Finally, Figure A1 and Figure A2 show the results for the occlusion detection and vehicle-size classification tasks, respectively. In contrast to the performance shown in Figure 10 and Figure 12, Figure A1 and Figure A2 show each metric independently for more detail.

6.6. Discussion

The promising results of the MV-DTF layer and its low-rank approximation LRMV-DTF comprise the following:

The performance and consistency of the multitask, multi-view model are significantly influenced by the dimensionality of the latent tensor space (see Figure 10 and Figure 12). For a specific dimension, , the model exhibits two distinct behaviors, given another J value: for , the model tends to underfit, whereas for , it is prone to overfitting to the training data.

A negligible performance drop was observed in our case study as the compression ratio approaches 1, i.e., , when the LRMV-DTF layer is employed. This result provides empirical evidence of the underlying low-rank structure in the subtensors of tensor in the MV-DTF layer.

The maximum allowable rank value (upper rank bound) that still achieves parameter compression increases as the dimensions of the multi-view space grow and/or as the tensor order increases.
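The last observation can be illustrated with a small calculation. The sketch below assumes, as a simplification, that the full layer stores a number of weights proportional to the product of the view dimensions, while the low-rank layer stores a number proportional to R times their sum; compression then requires R · ΣI_m < ∏I_m. The function name `upper_rank_bound` and the example dimensions are hypothetical and are not taken from the experiments.

```python
from math import prod


def upper_rank_bound(dims):
    """Largest integer R with R * sum(dims) < prod(dims), under the assumed counts."""
    return (prod(dims) - 1) // sum(dims)


# Same order (two views), growing dimensionality.
for dims in [(4, 4), (8, 8), (16, 16)]:
    print(dims, "->", upper_rank_bound(dims))

# Same dimensionality, growing order (number of views).
for dims in [(8, 8), (8, 8, 8), (8, 8, 8, 8)]:
    print(dims, "->", upper_rank_bound(dims))
```

Under these assumptions, the bound grows both when the view dimensions increase and when more views are added, so larger multi-view spaces leave more room for choosing R.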

The major limitations of the MV-DTF layer are as follows:

Selecting suitable hyperparameters, i.e., the dimensionality J of the latent tensor space , and the rank R for the LRMV-DTF layer, is a challenging task.

A high-dimensional latent space increases the risk of overfitting, while very low-dimensional spaces may not fully capture the underlying relationships across views, resulting in underfitting.

Reducing the rank of subtensors tends to decrease performance and increase the risk of underfitting classification models for low-dimensional latent spaces. Although higher rank values may improve model performance, they also increase the risk of overfitting.

The choice of rank R involves a trade-off: higher values increase computational complexity but can capture more complex patterns, while lower values reduce the computational burden but may limit the expressiveness of the model, resulting in decreased performance.

7. Conclusions

In this work, we found a novel connection between the Einstein and Hadamard products for tensors. It is a mathematical relationship involving the Einstein product of the tensor associated with a multilinear map , and a rank-one tensor , where , for all , and . By enforcing low-rank constraints on the subtensors of , which result from fixing every index but the last M, each j-th subtensor is approximated as a rank- tensor through the CPD. By exploiting this structure, a set of M third-order tensors , here called the Hadamard factor tensors, is obtained. We found that the Einstein product can then be approximated by a sum of R Hadamard products of M Einstein products , where R corresponds to the maximum decomposition rank across subtensors, and for all .
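A minimal NumPy sketch of this relationship is given below, assuming a first-order latent space of dimension J, three views, and factor tensors stored with the index layout (J, I_m, R). These layout choices, the variable names, and the sizes are illustrative assumptions rather than the notation of the paper. The full tensor is built so that every j-th subtensor has an exact rank-R CPD, and the Einstein product with the rank-one tensor is then compared against the sum of R Hadamard products of the per-view Einstein products.

```python
import numpy as np

rng = np.random.default_rng(0)
J, R = 4, 3                 # latent dimension and rank (assumed values)
dims = (5, 6, 7)            # view dimensions I_1, I_2, I_3 (assumed values)

# Hypothetical Hadamard factor tensors, one per view, with layout (J, I_m, R).
factors = [rng.standard_normal((J, I, R)) for I in dims]
views = [rng.standard_normal(I) for I in dims]

# Full tensor: the j-th subtensor is a sum of R outer products (rank-R CPD).
W = np.einsum("jar,jbr,jcr->jabc", *factors)

# Rank-one tensor formed by the outer product of the views.
X = np.einsum("a,b,c->abc", *views)

# Einstein product contracting the last M = 3 modes of W with X.
y_full = np.einsum("jabc,abc->j", W, X)

# Low-rank form: one small Einstein product per view (each a J x R matrix),
# combined by an element-wise (Hadamard) product and summed over the rank index.
projections = [np.einsum("jir,i->jr", A, x) for A, x in zip(factors, views)]
y_low = np.prod(projections, axis=0).sum(axis=1)

print("outputs agree:", np.allclose(y_full, y_low))
print("full weights:", W.size, "| factor weights:", sum(A.size for A in factors))
```

Because each subtensor here is constructed to be exactly rank R, the two outputs agree up to floating-point error; for a general tensor, the low-rank form is only an approximation whose quality depends on R.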

Since multi-view learning leverages complementary information from multiple feature sets to enhance model performance, a tensor-based data fusion layer for neural networks, called Multi-View Data Tensor Fusion, is employed here. This layer projects M feature spaces , referred to as views, into a unified latent tensor space through a mapping , where , and for all . Here, we constrain g to the space of affine mappings composed of a multilinear map, , followed by a translation. The multilinear map is represented by the Einstein product , where is the induced tensor of , and . Unfortunately, as the number of views increases, the number of parameters that determine g grows exponentially, and consequently, so does its computational complexity.

To mitigate the curse of dimensionality in the MV-DTF layer, we exploit the mathematical relationship between the Einstein product and Hadamard product, which is the low-rank approximation of the Einstein product, useful when the compression ratio .

The use of the LRMV-DTF layer based on the Hadamard product does not necessarily improve model performance compared to the MV-DTF layer based on the Einstein product. In fact, the dimension of the latent space J and the rank of subtensors R must be tuned via cross-validation (see Section 6.4). When the decomposition rank of subtensors is less than the upper rank bound (), an efficient low-rank approximation of the MV-DTF layer based on the Einstein product is obtained.

Our experiments show that introducing the MV-DTF and LRMV-DTF layers in a case-study multitask VTS model for vehicle-size classification and occlusion detection improves its performance compared to single-task and single-view models. For our case study, i.e., a particular case using the LRMV-DTF layer with and , our model achieved an MCCnw of up to 95.10% for vehicle-size classification and 92.81% for occlusion detection, representing significant improvements of 7% and 6%, respectively, over single-task single-view models while reducing the number of parameters by a factor of 1.3.

Finally, for every case study, the dimension of the latent tensor space, J, and the decomposition rank, R, must be tuned. Additionally, the choice between an MV-DTF layer and an LRMV-DTF layer must take into account the trade-off between model performance and computational complexity.

Open Issues

A computational complexity analysis must be conducted to evaluate the LRMV-DTF layer efficiency.

For VTS systems, to integrate other high-dimensional feature spaces in order to improve the expressiveness of the latent tensor space and its computational efficiency.

To explore other tensor decomposition models, such as the tensor-train model, for more efficient algorithms in high-dimensional data.

To extend our work to more complex network architectures.

To address other VTS tasks within the MTL framework for a more comprehensive vehicle traffic model.

Acknowledgments

The authors acknowledge the Consejo Nacional de Ciencia y Tecnología (CONACYT) for Ph.D. student grant no. CB-253955. The first author also expresses gratitude to the Center for Research and Advanced Studies of the National Polytechnic Institute (CINVESTAV IPN) and its academic staff, especially to Torres, D. Special thanks are extended to the reviewers for their valuable feedback and constructive comments, which greatly contributed to improving the quality of this paper.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| ITS | Intelligent transportation systems |
| VTS | Vehicle traffic surveillance |
| MVL | Multi-view learning |
| MTL | Multitask learning |
| MV-DTF | Multi-View Data Tensor Fusion |
| LRMV-DTF | Low-Rank Multi-View Data Tensor Fusion |

Appendix A. Mathematical Proofs