Abstract

Sign language (SL) is a means of communication that is used to bridge the gap between the deaf, hearing-impaired, and others. For Arabic speakers who are hard of hearing or deaf, Arabic Sign Language (ArSL) is a form of nonverbal communication. The development of effective Arabic sign language recognition (ArSLR) tools helps facilitate this communication, especially for people who are not familiar with ArSL. Although researchers have investigated various machine learning (ML) and deep learning (DL) methods and techniques that affect the performance of ArSLR systems, a systematic review of these methods is lacking. The objectives of this study are to present a comprehensive overview of research on ArSL recognition and present insights from previous research papers. In this study, a systematic literature review of ArSLR based on ML/DL methods and techniques published between 2014 and 2023 is conducted. Three online databases are used: Web of Science (WoS), IEEE Xplore, and Scopus. Each study has undergone the proper screening processes, which include inclusion and exclusion criteria. Throughout this systematic review, PRISMA guidelines have been followed and applied. The results of this screening are divided into two parts: an analysis of all the datasets utilized in the reviewed papers, underscoring their characteristics and importance, and a discussion of the potential and limitations of the ML/DL techniques. Among the 56 articles included in this study, most focus on fingerspelling and isolated word recognition rather than continuous sentence recognition, and the vast majority use vision-based approaches. The challenges remaining in the field and future research directions in this area of study are also discussed.

Keywords: Arabic sign language (ArSL), Arabic sign language recognition (ArSLR), dataset, machine learning, deep learning, hand gesture recognition

1. Introduction

Sign language (SL) is a powerful means of communication among humans. It is used by people who are deaf or hard of hearing, as well as those who struggle with oral speech due to disability or special conditions. According to the latest estimations, as of March 2021, there are over 1.5 billion people worldwide experiencing some degree of hearing loss, which could increase to 2.5 billion by 2050 [1]. Around 78 million people with hearing difficulties were estimated in the Eastern Mediterranean region, which could grow to 194 million by 2050 [1].

There are two main classes of sign language symbols: single-handed and double-handed [2]. These signs are further divided into dynamic and static. One dominant hand is used to represent one-handed signs. Any static or dynamic gesture can be used to represent it. Both dominant and non-dominant hands are used when signing to indicate two-handed signs [2]. SL uses non-manual signs (NMS), such as body language, lip patterns, and facial expressions, in addition to manual signs (MS) that use static hand/arm gestures, hand/arm motions, and fingerspelling [3].

Sign language recognition (SLR) is the process of recognizing and deciphering sign language movements or gestures [4]. It usually involves complex algorithms and computational operations. A sign language recognition system (SLRS) is an application within the field of human-computer interaction (HCI) that translates the sign language of people with hearing impairments into the text or voice of an oral language [5]. SLRSs can be categorized into three main groups based on the main technique used for gathering data, namely sensor-based (hardware-based), vision-based (image-based), and hybrid [6,7]. Sensor-based techniques use data gloves worn by the signer to collect data about the signer’s actions from external sensors. Most current research, however, has focused on vision-based approaches that use images, videos, and depth data to extract the semantic meaning of hand signs, owing to practical concerns with sensor-based techniques [4,6]. Hybrid methods have occasionally been employed to gather information for sign language recognition. Compared with other approaches, hybrid methods perform similarly or even better in terms of proportional automatic speech or handwriting recognition. In hybrid techniques, multi-modal information about hand shapes and movement is obtained by combining vision-based cameras with other types of sensors, such as glove sensors.

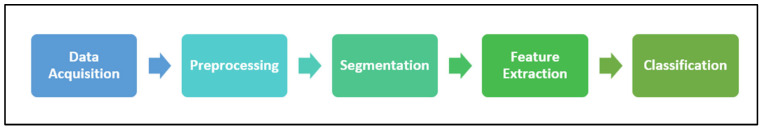

As shown in Figure 1, the stages involved in sign language recognition can be broadly divided into data acquisition, pre-processing, segmentation, feature extraction, and classification [8,9]. In data acquisition, single image frames are the input for static sign language recognition, whereas video, or a continuous sequence of image frames, is the input for dynamic signs. The pre-processing stage modifies the image or video inputs to enhance the system’s overall performance. Segmentation, the partitioning of an image or video into several separate parts, is the third step. Good feature extraction depends on accurate segmentation [10]. Feature extraction transforms the important parts of the input data into sets of compact feature vectors. For sign language recognition, the features extracted from the input hand gestures should contain pertinent information in a condensed form that helps distinguish the sign to be classified from other signs. The final step is classification. Machine learning (ML) techniques for classification can be divided into two categories: supervised and unsupervised [8]. In supervised machine learning, a system is trained on labeled examples to identify specific patterns in the input data, and the function it infers is then used to make predictions on new data. In unsupervised machine learning, inferences are drawn from datasets that contain input data without labeled responses. Because the classifier receives no labeled responses, there is no reward or penalty indicating which classes the data should belong to. Deep learning (DL) approaches have surpassed earlier state-of-the-art machine learning techniques in a number of domains in recent years, particularly in computer vision and natural language processing [4]. One of the primary objectives of deep models is to eliminate the need to construct or extract features manually. Deep learning enables multi-layered computational models to learn and represent data at various levels of abstraction, mimicking the workings of the human brain and implicitly capturing the intricate structures of massive amounts of data.

Figure 1.

Stages of sign language recognition systems.

It is worth noting that there is no international SL. In fact, SL is geography-specific, as it differs from one region or country to another in terms of syntax and grammar [9,11,12,13]. Different sign languages include, for example, American Sign Language (ASL), Australian Sign Language (Auslan), British Sign Language (BSL), Japanese Sign Language (JSL), Urdu Sign Language, Arabic Sign Language (ArSL), and others [9,11,12,13].

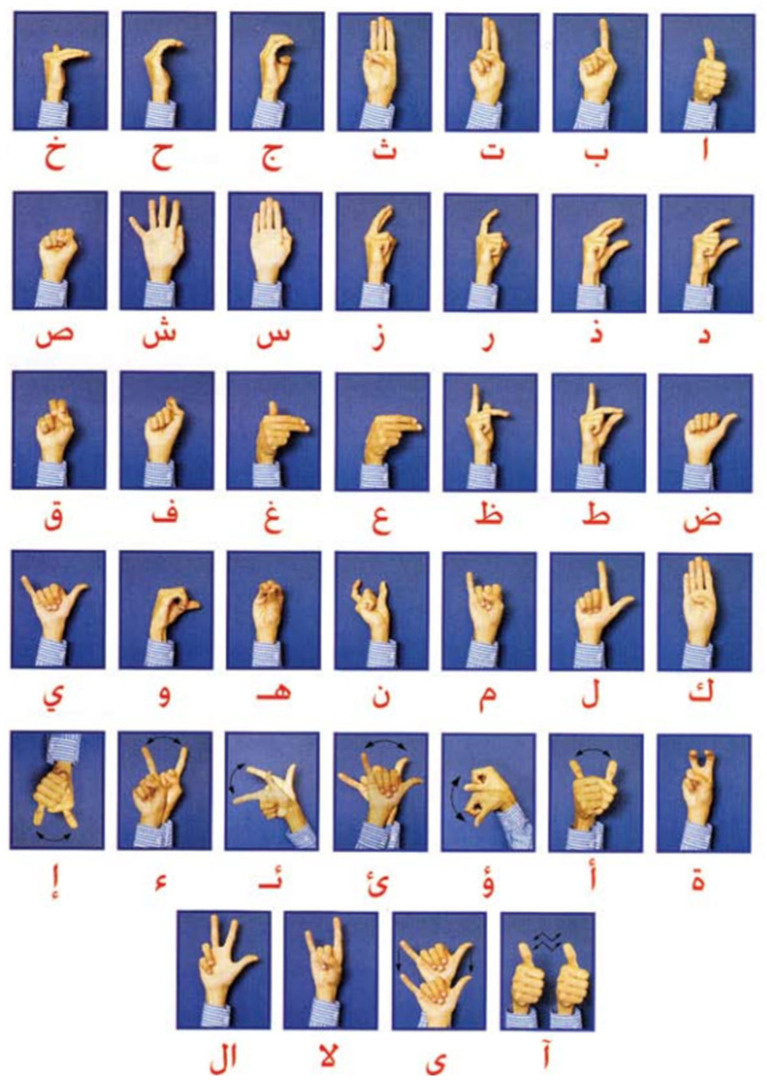

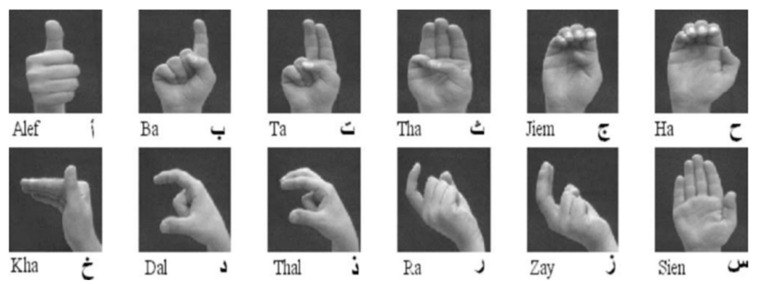

Unified ArSL is the sign language used throughout the Middle East and North Africa [2]. Although there are 28 letters in the Arabic alphabet, Arabic sign language uses 39 signs [14,15], as depicted in Figure 2. The eleven extra signs are fundamental signs made up of two letters combined. For example, in Arabic, the two letters “ال” are frequently used, much like “the” in English. Thus, the 39 signs for the Arabic alphabet are used in the majority of reviewed works on Arabic sign language recognition (ArSLR) [9,15].

Figure 2.

Representation of the Arabic sign language for Arabic alphabets [14].

SLR research efforts are categorized according to a taxonomy that starts with systems that recognize small units, such as alphabet letters, progresses to larger units, such as isolated words, and ultimately reaches the largest and most challenging units, such as complete sentences [4,9,15]. As the size of the unit to be recognized increases, the ArSLR task becomes harder. ArSLR research can be classified into three main categories: fingerspelling (alphabet) sign language recognition, isolated word sign language recognition, and continuous sentence sign language recognition [9,15].

In this study, a comprehensive review of the current landscape of ArSLR using machine learning and deep learning techniques is conducted. Specifically, the goal is to explore the application of ML and DL in the past decade, the period between 2014 and 2023, to gain deeper insights into ArSLR. To the author’s knowledge, none of the previously reviewed surveys have systematically reviewed the ArSLR studies published in the past decade. Therefore, the main purpose of the current study is to thoroughly understand the progress made in this field, discover valuable information about ArSLR, and shed light on the current state of knowledge. Through an extensive systematic literature review, I have collected and synthesized the most recent results regarding recognizing ArSL using both ML and DL. The analysis involves a collection of techniques, exploring their effectiveness and potential for improving the accuracy of ArSLR. Moreover, current trends and future directions in the area of ArSLR are explored, highlighting important areas of interest and innovation. By understanding the current landscape, the aim is to provide valuable insights into the direction of research and development in this evolving field.

The rest of this paper is organized as follows: in Section 2, the methodology that will help to achieve the goals of this systematic literature review is detailed. In Section 3, the results of the research questions and sub-questions are analyzed, synthesized, and interpreted. In Section 3.4, the most important findings of this study are discussed in an orderly and logical manner, the future perspectives are highlighted, and the limitations of this study are presented. Finally, Section 4 draws the conclusions.

2. Research Methodology

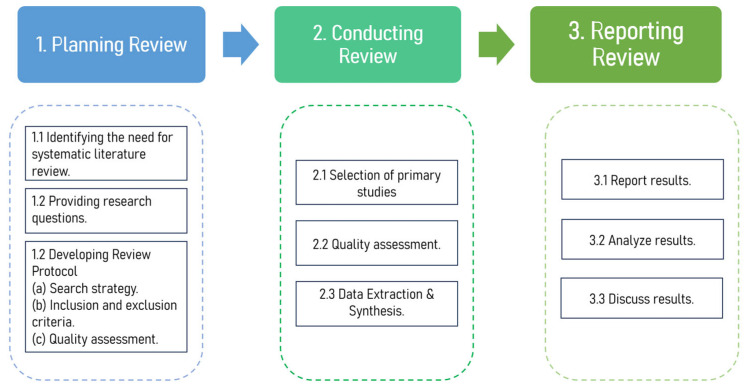

The systematic literature review gathers and synthesizes the papers published in various scientific databases in an orderly, accurate, and analytical manner about an area of interest. The goal of the systematic approach is to direct the review process on a specific research topic to assess the advancement of research and identify potential new directions. This study adopts the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) guidelines [16]. The research methodology encompasses three key stages: planning, conducting, and reporting the review. Figure 3 provides a visual representation of the methodology applied in this study.

Figure 3.

Overview of research methodology.

2.1. Planning the Systematic Literature Review

The aim of this stage is to determine the need for a systematic literature review and to establish a review protocol. To determine the need for such a systematic review, an exhaustive search for systematic literature reviews in different scientific databases is performed.

2.1.1. Identification of the Need for a Systematic Literature Review

A search string was developed to find similar systematic literature reviews on ArSLR and to assess whether the planned, systematic review of this study will help close or reduce any gaps in knowledge. Two equivalent search strings were constructed, one for the Web of Science database and one for the Scopus database:

Web of Science = TS = ((Arabic sign language) AND recognition AND ((literature review) OR review OR survey OR (systematic literature review)))

Scopus = TITLE-ABS-KEY ((Arabic AND sign AND language) AND recognition AND ((literature AND review) OR review OR survey OR (systematic AND literature AND review))).

Any review paper published before 2014 was discarded. After filtering the remaining review papers based on their relevance to the topic of interest, a total of five literature reviews were eligible for inclusion. Three of the review papers [9,15,17] were found in both Web of Science and Scopus. Two papers were found in Scopus [18,19].

In 2014, Mohandes et al. [17] presented an overview of image-based ArSLR studies. Systems and models in the three levels of image-based ArSLR, including alphabet recognition, isolated word recognition, and continuous sign language recognition, are reviewed along with their recognition rate. Mohandes et al. have extended their survey [17] to include not only systems and methods for image-based ArSLR but also sensor-based approaches [15]. This survey also shed light on the main challenges in the field and potential research directions. The datasets used in some of the reviewed papers are briefly discussed [15,17].

A review of the ArSLR background, architecture, approaches, methods, and techniques in the literature published between 2001 and 2017 was conducted by Al-Shamayleh et al. [9]. Vision-based (image-based) and sensor-based approaches were covered. The three levels of ArSLR for both approaches, including alphabet recognition, isolated word recognition, and continuous sign language recognition, were examined with their corresponding recognition rates. The study also identified future research gaps and provided a road map for research in this field. Limited details of the utilized datasets in the reviewed papers were mentioned, such as training and testing data sizes.

Mohamed-Saeed and Abdulbqi [18] presented an overview of sign language and its history and main approaches, including hardware-based and software-based. The classification techniques and algorithms used in sign language research were briefly discussed, along with their accuracy. Tamimi and Hashlmon [19] focused on Arabic sign language datasets and presented an overview of different datasets and how to improve them; however, other areas, such as classification techniques, were not addressed.

In addition to Web of Science and Scopus, Google Scholar was used as a support database to search for systematic literature review papers on ArSLR. Similar keywords were used and yielded four more reviews [2,12,20,21] that are summarized below:

Alselwi and Taşci [12] gave an overview of the vision-based ArSL studies and the challenges the researchers face in this field. They also outlined the future directions of ArSL research. Another study was conducted by Wadhawan and Kumar [2] to systematically review previous studies on the recognition of different sign languages, including Arabic. They reviewed the papers published between 2007 and 2017. The papers in each sign language were classified according to six dimensions: acquisition method, signing mode, single/double-handed, static/dynamic signing, techniques used, and recognition rate. Mohammed and Kadhem [20] offered an overview of the ArSL studies published in the period between 2010 and 2019, focusing on input devices utilized in each study, feature extraction techniques, and classification algorithms. They also reviewed some foreign sign language studies. Al Moustafa et al. [21] provided a thorough review of the ArSLR studies in three categories: alphabets, isolated words, and continuous sentence recognition. Additionally, they outlined the public datasets that can be used in the field of ArSLR. Despite mentioning “systematic review” in the paper’s title, they did not follow a systematic approach in their review.

These surveys make valuable contributions by gathering papers on ArSLR and carrying out a comprehensive literature evaluation. Of the surveys mentioned above, only one adopts a systematic review process [2]; nevertheless, this survey does not analyze or focus on the datasets included in the evaluated studies, and it does not cover the studies published after 2017. As a result, it was necessary to provide the community and interested researchers with an analysis of the current status of ArSLR. The purpose of this research, therefore, is to close or at least lessen that gap. All the review papers are listed and summarized in Table 1 in chronological order from the oldest to the newest.

Table 1.

Summary of existing surveys of Arabic sign language recognition (ArSLR).

| Ref | Year | Journal/Conference | Timeline | SL | Datasets | Classifiers | Feature Extraction | Performance Metrics | Systematic | Focus of the Study | Source |
|---|---|---|---|---|---|---|---|---|---|---|---|
| [15] | 2014 | IEEE Transactions on Human-Machine Systems | Not specified | Arabic | Partial | Yes | Yes | Recognition accuracy: yes | No | Reviews systems and methods for image-based and sensor-based ArSLR, main challenges, and presents research directions for ArSLR. | WoS, Scopus, Google Scholar |
| [17] | 2014 | IEEE 11th International Multi-Conference on Systems, Signals & Devices (SSD14) | Not specified | Arabic | Partial | Yes | Yes | Recognition accuracy: yes, Error rate: partial | No | Reviews methods for image-based ArSLR, challenges, and shows research directions for ArSLR. | WoS, Scopus, Google Scholar |
| [9] | 2020 | Malaysian Journal of Computer Science | 2001–2017 | Arabic | Partial | Yes | Yes | Recognition accuracy: yes | No | Provides review, taxonomy, open challenges, research roadmap, and future directions. | WoS, Scopus, Google Scholar |
| [12] | 2021 | Bayburt University Journal of Science | Not specified | Arabic | No | Yes | Partial | Recognition accuracy: yes | No | Reviews vision-based ArSLR studies and shows challenges and future directions. | Google Scholar |
| [2] | 2021 | Archives of Computational Methods in Engineering | 2007–2017 | Arabic and others | Partial | Yes | No | Recognition accuracy: yes | Yes | Reviews previous studies on recognition of different sign languages, including ArSLR, with a focus on six aspects: acquisition method, signing mode, single/double handed, static/dynamic signing, techniques used, and recognition rate. | Google Scholar |
| [20] | 2021 | Journal of Physics: Conference Series | 2010–2019 | Arabic and others | No | Yes | Yes | Recognition accuracy: yes | No | Gives an overview of the ArSLR studies in terms of input devices, feature extraction and classifier algorithms. | Google Scholar |
| [18] | 2023 | Conference of Mathematics, Applied Sciences, Information and Communication Technology | Not specified | Arabic, Iraqi sign language and others | No | Yes | No | Recognition accuracy: yes | No | Presents techniques used in sign language research and shows the main classification of the approaches. | Scopus |
| [19] | 2023 | International Symposium on Networks, Computers and Communications (ISNCC) | Not specified | Arabic | Partial: Only public datasets | No | No | No | No | Reviews the current ArSL datasets and how to improve them. | Scopus |
| [21] | 2024 | Indian Journal of Computer Science and Engineering (IJCSE) | Not specified | Arabic | Partial: Only public datasets | Yes | Yes | Accuracy | No | Investigates the ArSLR studies focusing on classifier algorithms, feature extraction, and input modalities. It also sheds light on the public datasets in the field of ArSLR. | Google Scholar |
| Our | 2024 | Sensors Journal | 2014–2023 | Arabic | Yes | Yes | Yes | Yes | Systematic | A variety of ArSLR algorithms are systematically reviewed in order to determine their efficacy and potential for raising ArSLR performance. Furthermore, the present and future directions in the field of ArSLR are examined, emphasizing significant areas of innovation and interest. | |

Yes means this aspect is fully covered, Partial means this aspect is partially covered, and No means this aspect is not covered.

2.1.2. Providing Research Questions

This section discusses the fundamental questions to be investigated in the study. The research questions are divided into three main groups:

Questions about the used datasets

Questions about the used algorithms and methods

Questions about the research limitations and future directions.

Table 2, Table 3 and Table 4 display the questions along with their respective purposes.

Table 2.

Research questions related to the used datasets.

| No. | Research Question | Purpose |
|---|---|---|
| RQ.1 | What are the characteristics of the datasets used in the selected papers? | To discover the quality and characteristics of the datasets utilized for ArSLR. |
| RQ1.1 | How many datasets are used in each of the selected papers? | |
| RQ1.2 | What is the availability status of the datasets in the reviewed papers? | |
| RQ1.3 | How many signers were employed to represent the signs in each dataset? | |
| RQ1.4 | How many samples are there in each dataset? | |
| RQ1.5 | How many signs are represented by each dataset? | |
| RQ1.6 | What is the number of datasets that include manual signs, non-manual signs, or both manual and non-manual signs? | |
| RQ1.7 | What are the data acquisition devices that were used to capture the data for ArSLR? | |
| RQ1.8 | What are the acquisition modalities used to capture the signs? | |
| RQ1.9 | Do the datasets contain images, videos, or others? | |
| RQ1.10 | What is the percentage of the datasets that represent alphabets, numbers, words, sentences, or a combination of these? | |
| RQ1.11 | What is the percentage of the datasets that have isolated, continuous, fingerspelling, or miscellaneous signing modes? | |
| RQ1.12 | What is the percentage of the datasets based on their data collection location/country? | |

Table 3.

Research questions related to the used algorithms and methods.

| No. | Research Question | Purpose |
|---|---|---|
| RQ.2 | What were the existing methodologies and techniques used in ArSLR? | To identify and compare the existing ML/DL methodologies and algorithms used in different phases of ArSLR. |
| RQ2.1 | Which preprocessing methods were utilized? | |
| RQ2.2 | Which segmentation methods were applied? | |
| RQ2.3 | Which feature extraction methods were used? | |
| RQ2.4 | What ML/DL algorithms were used for ArSLR? | |
| RQ2.5 | Which evaluation metrics were used to measure the performance of ArSLR algorithms? | |
| RQ2.6 | What are the performance results in terms of recognition accuracy? | |

Table 4.

Research questions related to the research limitations and future directions.

| No. | Research Question | Purpose |
|---|---|---|
| RQ.3 | What are the challenges, limitations, and future directions mentioned in the reviewed papers? | To identify and analyze limitations, challenges, and future directions in the field of ArSLR research. |
| RQ3.1 | Has the number of research papers regarding ArSLR been increasing in the past decade? | |
| RQ3.4 | What are the limitations and/or challenges faced by researchers in the field of ArSLR? | |
| RQ3.5 | What are the future directions for ArSLR research? | |

2.1.3. Developing Review Protocol

Once the research questions and sub-questions were specified, the scope of the research was defined using the PICO method proposed by Petticrew and Roberts [22]:

P: Population. Deaf individuals who use Arabic sign language to communicate.

I: Intervention. Recognition of Arabic sign language.

C: Comparison. The machine learning and deep learning algorithms adopted for Arabic sign language recognition and the datasets developed for Arabic sign language.

O: Outcomes. Performance reported in the selected studies.

Search Strategy

To establish a systematic literature review, it is crucial to specify the search terms and to identify the scientific databases where the search will be conducted. In 2019, a study was carried out to evaluate the search quality of PubMed, Google Scholar, and 26 other academic search databases [23]; the results revealed that Google Scholar is not suitable as a primary search resource. Therefore, the most widely used scientific databases in the field of research were selected for this systematic review, namely Web of Science, Scopus, and IEEE Xplore Digital Library. Each database was last consulted on 2 January 2024.

A search string is an essential component of a systematic literature review for study selection, as it restricts the scope and coverage of the search. A set of search strings was created using Boolean operators to combine suitable synonyms and alternate terms: AND restricts the results, and OR expands the search [24]. Moreover, double quotes were used to search for exact phrases. At this initial stage, the search was limited to the title, abstract, and keywords of the papers. Each database uses different reserved words to indicate these three elements, such as TS in Web of Science, TITLE-ABS-KEY in Scopus, and All Metadata in IEEE Xplore. The specific search strings used in each scientific database are listed below:

Web of Science:

TS = (Arabic sign language) AND recognition

Scopus:

TITLE-ABS-KEY (“Arabic sign language” AND recognition)

IEEE Xplore:

((“All Metadata”: “Arabic sign language”) AND (“All Metadata”: recognition))
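The three search strings above share one structure: an exact phrase combined with a second term through AND, wrapped in each database's field syntax. A minimal Python sketch of that structure is given below. The function name `build_queries` is hypothetical, straight double quotes stand in for the typographic quotes shown above, and the output is meant for pasting into each database's advanced search form rather than for any programmatic API.

```python
def build_queries(phrase: str, term: str) -> dict[str, str]:
    """Return one query string per database for `phrase AND term`.

    The field tags (TS, TITLE-ABS-KEY, "All Metadata") are the ones
    quoted in the text; everything else here is illustrative.
    """
    return {
        # The Web of Science string in the text leaves the phrase unquoted.
        "Web of Science": f"TS = ({phrase}) AND {term}",
        # Scopus: the quoted phrase is matched exactly.
        "Scopus": f'TITLE-ABS-KEY ("{phrase}" AND {term})',
        # IEEE Xplore: each operand carries its own field tag.
        "IEEE Xplore": f'(("All Metadata": "{phrase}") AND ("All Metadata": {term}))',
    }


queries = build_queries("Arabic sign language", "recognition")
for database, query in queries.items():
    print(f"{database}: {query}")
```

Keeping the phrase and the term as parameters makes it easy to check that the same concepts are searched in every database, which is the property the review protocol relies on.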

Inclusion and Exclusion Criteria

The selection process of the studies has a significant impact on the systematic review’s findings. As a result, all studies that were found using the search strings were assessed to see if they should be included in this review. The review excluded all studies that did not fulfill all inclusion criteria. A study was excluded if it satisfied at least one of the exclusion criteria. The inclusion criteria used in this systematic review are:

IN1: Papers must be peer-reviewed articles and published in journals.

IN2: Papers that are written in English.

IN3: Papers are published between January 2014 and December 2023.

IN4: Papers must be primary studies.

IN5: Papers that use Arabic sign language as a language for recognition.

IN6: Papers that use machine learning and/or deep learning methods as a solution to the problem of ArSLR.

IN7: Papers that include details about the achieved results.

IN8: Papers that mention the datasets used in their experiment.

Several criteria were used to discard papers from the candidate set. The exclusion criteria (EC) are as follows:

EC1: Papers that are conference proceedings, data papers, books, notes, theses, letters, or patents.

EC2: Papers that are not written in English.

EC3: Papers that are published before January 2014 or after December 2023.

EC4: Papers that are secondary studies (i.e., review, survey).

EC5: Papers that do not use Arabic sign language as a language for recognition.

EC6: Papers that do not use machine learning or deep learning methods as a solution to the problem of ArSLR.

EC7: Papers that do not include details about the achieved results.

EC8: Papers that do not mention the datasets used in their experiment.

EC9: Papers with multiple versions are not included; only one version will be included.

EC10: Duplicate papers found in more than one database (e.g., in both Scopus and Web of Science) are not included; only one will be included.

EC11: The full text is not accessible.
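The criteria that depend only on bibliographic metadata (EC1 to EC4) and the duplicate rule (EC10) lend themselves to automatic filtering. The following Python sketch illustrates one way this could be done. The `Record` fields, the document-type labels, and the choice to detect duplicates by normalized title are all assumptions made for illustration; they are not the export format of any particular database, nor the exact procedure used in this review.

```python
from dataclasses import dataclass

# Assumed document-type labels; real database exports use their own vocabularies.
EC1_TYPES = {"conference paper", "data paper", "book", "note", "thesis", "letter", "patent"}
EC4_TYPES = {"review", "survey"}


@dataclass(frozen=True)
class Record:
    title: str
    doc_type: str
    language: str
    year: int
    database: str


def metadata_exclusions(rec: Record) -> list[str]:
    """Return the metadata-based exclusion criteria that a record meets."""
    reasons = []
    if rec.doc_type in EC1_TYPES:
        reasons.append("EC1")
    if rec.language.lower() != "english":
        reasons.append("EC2")
    if not 2014 <= rec.year <= 2023:
        reasons.append("EC3")
    if rec.doc_type in EC4_TYPES:
        reasons.append("EC4")
    return reasons


def deduplicate(records: list[Record]) -> list[Record]:
    """Keep the first record per title (EC10), ignoring case and extra spaces."""
    seen = set()
    unique = []
    for rec in records:
        key = " ".join(rec.title.lower().split())
        if key not in seen:
            seen.add(key)
            unique.append(rec)
    return unique


def screen(records: list[Record]) -> list[Record]:
    """Drop duplicates, then drop any record that meets at least one criterion."""
    return [rec for rec in deduplicate(records) if not metadata_exclusions(rec)]
```

Returning the list of matched criteria, rather than a single yes/no flag, keeps a record of why each paper was excluded. The remaining criteria (EC5 to EC9 and EC11) require reading the title, abstract, or full text and are not covered by this sketch.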

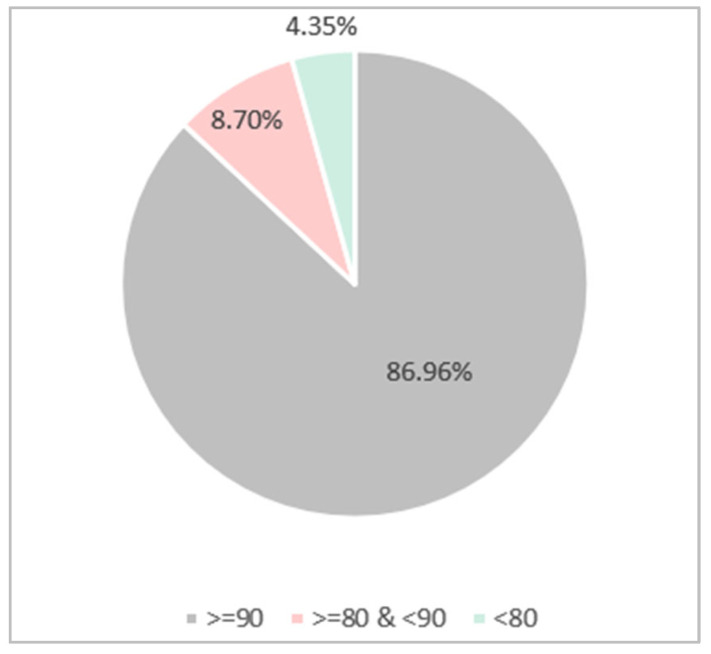

Quality Assessment

It is deemed essential to assess the quality of the primary studies in addition to inclusion/exclusion criteria to offer more thorough criteria for inclusion and exclusion, to direct the interpretation of results, and to direct suggestions for further research [16]. In-depth assessments of quality are typically conducted using quality instruments, which are checklists of variables that must be considered for each primary study. Numerical evaluations of quality can be achieved if a checklist’s quality items are represented by numerical scales. Typically, checklists are created by considering variables that might affect study findings. A quality checklist aims to contribute to the selection of studies through a set of questions that must be answered to guide the research. In the current study, a scoring system based on the answers to six questions was applied to each study. These questions are:

QA1: Is the experiment on ArSLR clearly explained?

QA2: Are the machine learning/deep learning algorithms used in the paper clearly described?

QA3: Are the dataset and the numbers of training and testing samples clearly identified?

QA4: Are the performance metrics defined?

QA5: Are the performance results clearly shown and discussed?

QA6: Are the study’s drawbacks or limitations mentioned clearly?

Each study received one of three scores for each question: 0, 0.5, or 1, denoting no, partially, and yes, respectively. A study was retained only if its total score across the six questions was 4 or more out of 6.
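The scoring rule can be expressed as a short Python sketch. The answer labels and function names are illustrative assumptions; only the score values (0, 0.5, 1), the six questions, and the threshold of 4 come from the text.

```python
# Score values and threshold as stated in the text; the labels are assumed.
SCORES = {"no": 0.0, "partially": 0.5, "yes": 1.0}
QUESTIONS = ("QA1", "QA2", "QA3", "QA4", "QA5", "QA6")
THRESHOLD = 4.0


def quality_score(answers: dict[str, str]) -> float:
    """Sum the per-question scores for one study."""
    missing = [q for q in QUESTIONS if q not in answers]
    if missing:
        raise ValueError(f"missing answers for: {', '.join(missing)}")
    return sum(SCORES[answers[q]] for q in QUESTIONS)


def passes_quality(answers: dict[str, str]) -> bool:
    """A study is retained when its total score reaches the threshold."""
    return quality_score(answers) >= THRESHOLD


# Hypothetical study: four questions answered yes, two answered partially.
example = {"QA1": "yes", "QA2": "yes", "QA3": "yes", "QA4": "yes",
           "QA5": "partially", "QA6": "partially"}
print(quality_score(example), passes_quality(example))
```

Because 0, 0.5, and 1 are exactly representable as floating-point numbers, comparing the sum against the threshold directly is safe here.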

2.2. Conducting the Systematic Literature Review

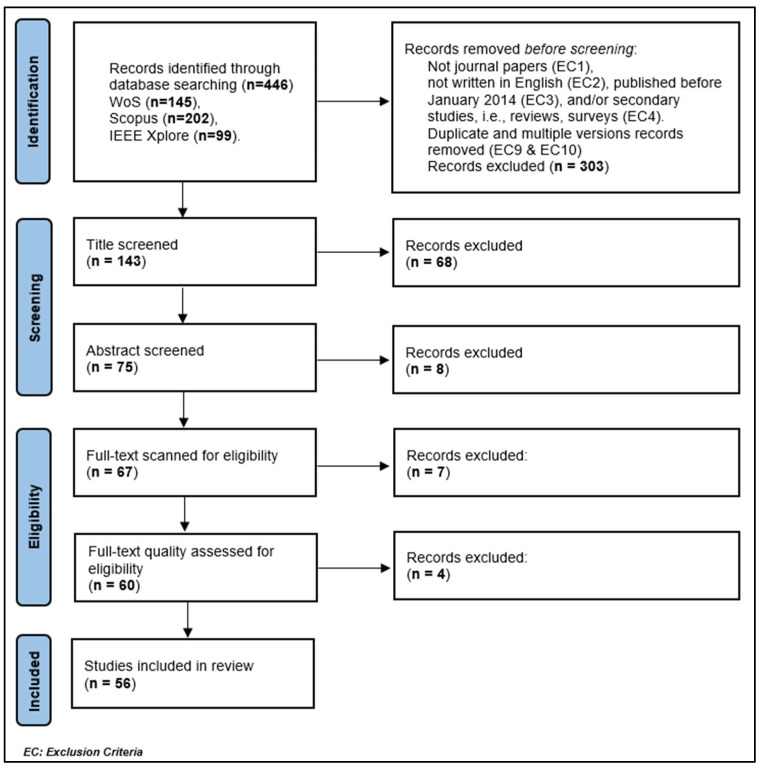

The search results and evaluation of the papers chosen for inclusion are discussed here. Figure 4 summarizes the number of eligible papers and shows how many were eliminated in each step.

Figure 4.

Steps of paper selection.

2.2.1. Selection of Primary Studies

The search for peer-reviewed papers was conducted in three large databases: Web of Science, Scopus, and IEEE Xplore. These databases are considered suitable because the search results contain duplicate papers, i.e., the same work appears in more than one database, which indicates their very high coverage. The databases were searched using the predetermined search strings specified in the review protocol above. The search found 145 papers in Web of Science, 202 in Scopus, and 99 in IEEE Xplore.

The exclusion criteria were then applied automatically to the 446 papers from the initial search to select eligible papers: 303 papers were discarded because they were conference proceedings, data papers, books, notes, theses, letters, or patents (EC1), not written in English (EC2), published before January 2014 (EC3), and/or secondary studies, i.e., reviews or surveys (EC4). At the end of this step, 62, 74, and 7 papers remained in Web of Science, Scopus, and IEEE Xplore, respectively.

Title-Level Screening Stage

Exclusion criteria EC9 and EC10 were applied to remove duplicate papers and multiple versions. The titles were also screened to discard the papers that meet EC1, EC4, and EC5. In this stage, 68 research papers were excluded, reducing the number of eligible papers to 75. Examples of research papers that were excluded in this stage are presented in Table 5.

Table 5.

The reasoning behind the exclusion of some papers in the title-level screening stage.

| Ref. | Paper Title | Reason for Exclusion |
|---|---|---|
| [25] | Indian sign language recognition system using network deconvolution and spatial transformer network | EC5: Indian sign language recognition, not ArSLR |
| [26] | SignsWorld Atlas; a benchmark Arabic Sign Language database | EC1: Data paper |
| [15] | Image-Based and Sensor-Based Approaches to Arabic Sign Language Recognition | EC4: Review paper |

Abstract-Level Screening Stage

In this stage, the abstracts for the 75 papers were screened to shorten paper reading time. The paper is excluded if the abstract meets at least one of the exclusion criteria: EC5: Papers that do not use Arabic sign language as a language for recognition and/or EC6: Papers that do not use machine learning or deep learning methods as a solution to the problem of ArSLR.

As a result of this screening, eight research papers were excluded, and 67 were included in the full-text article scanning. Examples of research papers that were excluded in this stage are included in Table 6.

Table 6.

The reasoning behind the exclusion of some papers in the abstract-level screening stage.

| Ref. | Paper Title | Reason for Exclusion |

|---|---|---|

| [27] | A machine translation system from Arabic sign language to Arabic | EC6—Paper does not use machine learning or deep learning methods as a solution to the problem of ArSLR |

| [28] | Isolated Video-Based Arabic Sign Language Recognition Using Convolutional and Recursive Neural Networks | EC5—Paper does not use ArSL as a language for recognition. Moroccan sign language recognition is used. Although Moroccan is an Arabic dialect, Moroccan sign language is different from the unified Arabic sign language. |

Full-Text Scanning Stage

In this stage, the full texts of the 67 papers are scanned. A paper is excluded if it satisfies one of the following exclusion criteria:

EC5: Papers that do not use Arabic sign language as a language for recognition,

EC6: Papers that do not use machine learning or deep learning methods as a solution to the problem of ArSLR,

EC7: Papers that do not include details about the achieved results,

EC8: Papers that do not mention the datasets used in their experiment,

EC9: Papers with multiple versions are not included; only one version will be included, or

EC11: The full text is not accessible.

A total of seven research papers were excluded in this stage, leaving 60 studies for the next stage. Examples of the excluded research papers and the reasoning for their exclusion are illustrated in Table 7.

Table 7.

The reasoning behind the exclusion of some papers in the full-text scanning stage.

| Ref. | Paper Title | Reason for Exclusion |

|---|---|---|

| [29] | Supervised learning classifiers for Arabic gestures recognition using Kinect V2 | EC5—Paper does not use ArSL as a language for recognition. Egyptian sign language recognition is used. Although Egyptian is an Arabic dialect, Egyptian sign language is different from the unified Arabic sign language (ArSL). |

| [30] | Arabic dynamic gesture recognition using classifier fusion | |

| [31] | Intelligent real-time Arabic sign language classification using attention-based inception and BiLSTM | EC5—Paper does not use ArSL as a language for recognition. Saudi sign language recognition is used. Although Saudi is an Arabic dialect, Saudi sign language is different from the unified Arabic sign language (ArSL). |

| [32] | Arabic sign language intelligent translator | EC9—Papers with multiple versions are not included; only one version will be included. The same methodology and results discussed in this paper are presented in another paper published in 2019 and written by the same authors [33]. |

| [34] | Mobile camera-based sign language to speech by using zernike moments and neural network | EC11—The full text is not accessible. |

2.2.2. Quality Assessment Results

This section presents the quality assessment results used to select relevant papers based on the quality criteria defined in Section 2.1.3. Failure to provide information to meet these criteria leads to a lower paper evaluation score. Papers with a score of 3.5 or lower out of 6 are removed from the pool of candidates. As a result, only high-quality papers are taken into consideration. Table 8 shows some example research papers from this stage and elaborates on how and why they were included or excluded.

Table 8.

The reasoning behind the exclusion or inclusion of some papers in the full-text article screening stage.

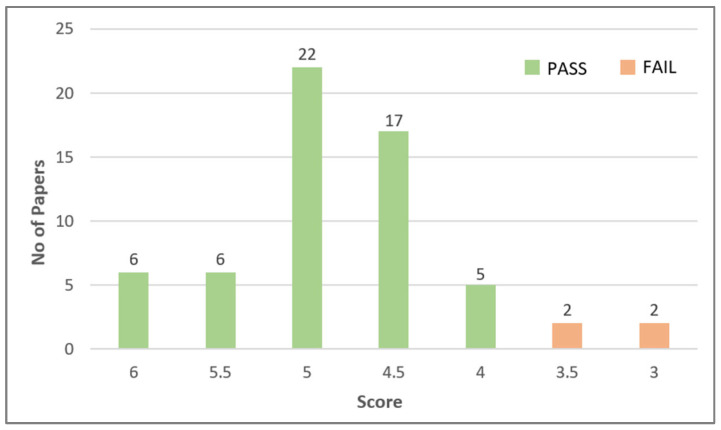
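The cutoff described above can be expressed as a simple filter. The sketch below is illustrative only: the function name `passes_quality`, the paper identifiers, and the example scores are hypothetical. Only the rule itself (a score of 3.5 or lower out of 6 removes a paper) comes from the text.

```python
# Cutoff taken from the text: papers scoring 3.5 or lower (out of 6) are removed.
MAX_SCORE = 6.0
CUTOFF = 3.5


def passes_quality(score: float) -> bool:
    """Return True when a quality score is strictly above the cutoff."""
    if not 0.0 <= score <= MAX_SCORE:
        raise ValueError(f"score must lie in [0, {MAX_SCORE}]")
    return score > CUTOFF


# Hypothetical scores, used only to illustrate the rule.
example_scores = {"paper_a": 5.5, "paper_b": 3.5, "paper_c": 4.0}
kept = [name for name, score in example_scores.items() if passes_quality(score)]
print(kept)
```

Note that a paper scoring exactly 3.5 is removed, since the rule is stated as "3.5 or lower".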

Out of 60 papers, 56 of them met the quality assessment criteria. The number of papers that failed and passed these criteria is illustrated in Figure 5.

Figure 5.

Number of papers that passed and failed the quality assessment.
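The paper counts reported for the screening stages and the quality assessment can be cross-checked with a short tally. This is a minimal sketch: the stage labels and the helper name `remaining_after_each_stage` are assumptions, and the figure of four papers removed by the quality assessment is derived from the reported 60 and 56 rather than stated directly.

```python
# Number of papers excluded at each stage, as reported in the text.
INITIAL_PAPERS = 446
exclusions = [
    ("automatic screening", 303),
    ("title-level screening", 68),
    ("abstract-level screening", 8),
    ("full-text scanning", 7),
    ("quality assessment", 4),  # derived: 60 candidates, 56 passed
]


def remaining_after_each_stage(start, stages):
    """Return (stage, remaining) pairs after subtracting each stage's exclusions."""
    remaining = start
    result = []
    for name, excluded in stages:
        remaining -= excluded
        result.append((name, remaining))
    return result


flow = remaining_after_each_stage(INITIAL_PAPERS, exclusions)
for name, remaining in flow:
    print(f"{name}: {remaining} papers remain")
```

The running totals reproduce the values given in the text: 143, 75, 67, 60, and finally 56 included papers.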

One reason why some papers failed the quality assessment is that they did not explain their ArSLR methodology clearly and thoroughly. Other reasons are an insufficient description of the ML or DL algorithms used and/or a lack of information about the datasets, such as the sizes of the training and testing sets. Missing performance metrics and poor analysis and discussion of the results also contributed to removing some papers from the pool of candidates. Many of the studies included a detailed explanation of their methodology; however, some neglected to address the limitations and drawbacks of the techniques, which also affected the assessment.

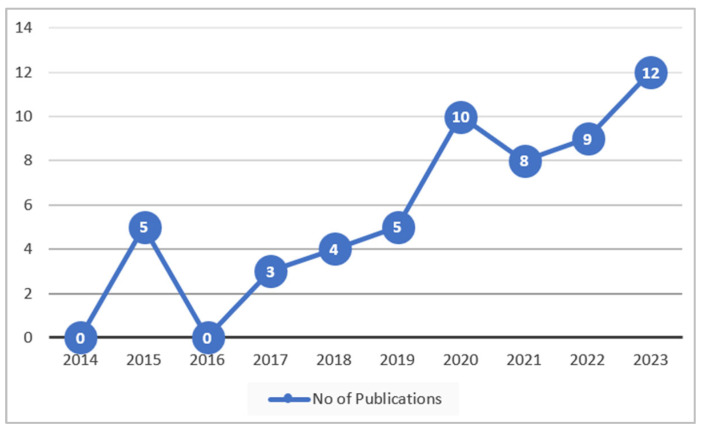

As seen in Figure 6, the number of published ArSLR studies has increased in recent years, from 2017 to 2023, which underlines the significance of this investigation.

Figure 6.

Number of eligible papers published per year.

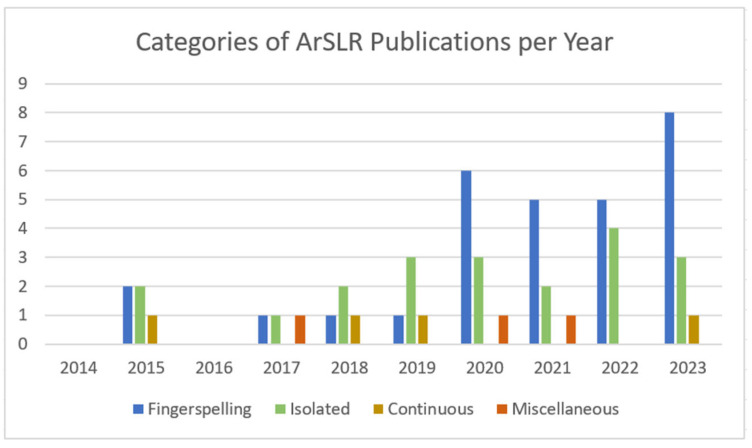

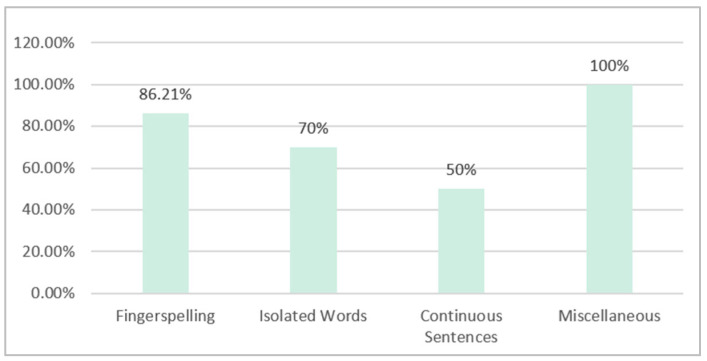

Figure 7 reveals the number of publications for each category of ArSLR for the years between 2014 and 2023. It can be seen that, over the years, fingerspelling recognition has attracted more researchers, followed by isolated recognition and then continuous recognition. It is worth noting that a few of the selected papers have worked on more than one category of ArSLR, and they belong to the category of miscellaneous recognition [37,38,39].

Figure 7.

Number of publications for each category of ArSLR for the years between 2014 and 2023.

2.2.3. Data Extraction and Synthesis

After reading the full texts, the author extracted the answers to the defined research questions from each of the 56 papers included in this systematic review. The extracted data were synthesized to provide a comprehensive summary of the results. To verify the consistency of the inclusion/exclusion judgments, the author used a test-retest strategy and reassessed a random sample of the primary studies identified after the first screening.

2.3. Reporting the Systematic Literature Review

Addressing the research questions and sub-questions listed in Table 2, Table 3 and Table 4 is the main aim of this stage. The following section presents the literature review, answering questions RQ1 through RQ3 and making a summary and synthesis of the data gathered from the results of the selected studies.

3. Research Results

In this section, the answer to each research question is provided by summarizing and discussing the results of the selected papers.

3.1. Datasets of Arabic Sign Language

To answer the first research question, RQ1 (What are the characteristics of the datasets used in the selected papers?), twelve research sub-questions have been answered. These sub-questions address different aspects and characteristics of the datasets utilized in the selected papers.

Large volumes of data are necessary for ML/DL models to carry out certain tasks accurately. One of the obstacles to the advancement of Arabic sign language recognition research and development is the limited availability of datasets [27]. Despite the huge number of sign language videos on the internet, recognition systems cannot benefit from these videos because they are unannotated.

Sign language datasets fall into three primary categories: fingerspelling, isolated signs, and continuous signs. Fingerspelling datasets focus on the shape, orientation, and direction of the finger. Sign language for numbers and alphabets belongs to this category, where the signs are primarily static and performed with just fingers. Datasets for isolated words can be either static (images) or dynamic (videos). Continuous sign language datasets consist of videos of signers performing more than one sign word continuously. Combinations of the other collections make up the fourth category of datasets, known as miscellaneous datasets.
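The four-way categorization above can be sketched as a small mapping from the signing modes a dataset covers to its category. This is a simplified reading of the paragraph, not the procedure used in the review; the function name `dataset_category` and its boolean parameters are hypothetical.

```python
def dataset_category(has_fingerspelling: bool, has_isolated: bool, has_continuous: bool) -> str:
    """Map the signing modes covered by a dataset to one of the four categories."""
    modes = [
        name
        for name, present in (
            ("fingerspelling", has_fingerspelling),
            ("isolated", has_isolated),
            ("continuous", has_continuous),
        )
        if present
    ]
    if not modes:
        raise ValueError("a dataset must cover at least one signing mode")
    # A dataset that combines several modes falls into the miscellaneous category.
    return modes[0] if len(modes) == 1 else "miscellaneous"


print(dataset_category(True, False, False))
print(dataset_category(True, True, False))
```

Under this reading, a dataset that covers only fingerspelling is a fingerspelling dataset, while one that covers both fingerspelling and isolated signs is miscellaneous.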

Table 9, Table 10, Table 11 and Table 12 summarize the main characteristics of the datasets in the reviewed papers according to the category they represent. ArSL datasets for Arabic alphabets and/or numbers are summarized in Table 9. Table 10 and Table 11 describe ArSL datasets for words and sentences, whereas Table 12 summarizes ArSL miscellaneous datasets. Rows shaded in gray indicate methods that utilize wearable sensors.

Table 9.

Fingerspelling ArSL datasets. “PR” means Private dataset, “AUR” means Available Upon Request dataset, and “PA” means Publicly Available dataset.

| Year | Dataset | Created/Used by: Ref. | Created/Used by: Year | Created/Used by: With Other Datasets? | Availability | # Signers (Subjects) | Samples | # Signs (Classes) | MS/NMS | Data Acquisition Device | Acquisition Modalities | Images/Videos or Others? | Collection: Alphabets | Collection: Numbers | Collection: Words | Collection: Sentences | Signing Mode: Isolated | Signing Mode: Continuous | Signing Mode: Fingerspelling | Static/Dynamic | Data Collection Location/Country | Comments |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 2001 | Al-jarrah dataset [40] | [41] | 2023 | No | AUR | 60 | 1800 | 30 | MS | Camera | RGB | 128 × 128 pixels grey scale images | ✓ | ✗ | ✗ | ✗ | ✗ | ✗ | ✓ | S | Jordan | |

| 2015 | ArASLRDB [42] | [42] | 2015 | No | PR | - | 357 | 38 | MS | Camera | RGB | 640 × 480 pixels saved in jpg file format | ✓ | ✓ | ✗ | ✗ | ✗ | ✗ | ✓ | S | Egypt | 29 letters, 9 numbers |

| 2015 | The Suez Canal University ArSL dataset [43] | [43] | 2015 | No | AUR | - | 210 | 30 | MS | Camera | RGB | 200 × 200 Gray-scale images | ✓ | ✗ | ✗ | ✗ | ✗ | ✗ | ✓ | S | Egypt | 7 images for each letter gesture |

| [44] | 2020 | No | ||||||||||||||||||||

| 2016 | ArSL [45] | [46] | 2023 | Yes | AUR | - | 350 | 14 | MS | Camera | RGB | 64 × 64 grey scale images | ✓ | ✗ | ✗ | ✗ | ✗ | ✗ | ✓ | S | Egypt | |

| [47] | 2023 | Yes | ||||||||||||||||||||

| 2017 | [48] | [48] | 2017 | No | PR | 20 | 1398 | 28 | MS | Microsoft Kinect V2 and Leap Motion Controller (LMC) sensors (manufactured by Leap Motion, Inc., a company based in San Francisco, CA, USA. Leap Motion, Inc. has since merged with Ultraleap, a UK-based company) | Depth, skeleton models | All upper joints (hand’s skeleton data). | ✓ | ✗ | ✗ | ✗ | ✗ | ✗ | ✓ | S | Saudi Arabia | |

| 2018 | [49] | [49] | 2018 | No | PR | 30 | 900 | 30 | MS | Smart phone cameras | RGB | RGB | ✓ | ✗ | ✗ | ✗ | ✗ | ✗ | ✓ | S | Saudi Arabia | |

| 2019 | ArSL2018 [50,51] | [52] | 2019 | No | PA | 40 | 54,049 | 32 | MS | smart camera (iPhone 6S manufactured by Apple Inc., headquartered in Cupertino, CA, USA) | RGB | 64 × 64 pixels gray scale images | ✓ | ✗ | ✗ | ✗ | ✗ | ✗ | ✓ | S | Saudi Arabia | |

| [53] | 2020 | No | ||||||||||||||||||||

| [54] | 2020 | No | ||||||||||||||||||||

| [38] | 2020 | YES | ||||||||||||||||||||

| [55] | 2020 | No | ||||||||||||||||||||

| [56] | 2021 | YES | ||||||||||||||||||||

| [57] | 2021 | No | ||||||||||||||||||||

| [58] | 2022 | No | ||||||||||||||||||||

| [59] | 2022 | No | ||||||||||||||||||||

| [60] | 2022 | No | ||||||||||||||||||||

| [61] | 2022 | No | ||||||||||||||||||||

| [47] | 2023 | Yes | ||||||||||||||||||||

| [62] | 2023 | Yes | ||||||||||||||||||||

| [63] | 2023 | Yes | ||||||||||||||||||||

| [46] | 2023 | Yes | ||||||||||||||||||||

| [64] | 2023 | No | ||||||||||||||||||||

| [65] | 2023 | No | ||||||||||||||||||||

| [66] | 2023 | No | ||||||||||||||||||||

| 2019 | Ibn Zohr University dataset [67] | [63] | 2023 | Yes | AUR | - | 5839 | 28 | MS | professional Canon® camera (manufactured by Canon Inc., headquartered in Ōta City, Tokyo, Japan). | RGB | 256 × 256 pixels in RGB colored images | ✓ | ✗ | ✗ | ✗ | ✗ | ✗ | ✓ | S | Morocco | |

| 2020 | [68] | [68] | 2020 | No | PR | 10 | 300 | 28 | MS | RGB digital camera | RGB | 128 × 128 RGB images | ✓ | ✗ | ✗ | ✗ | ✗ | ✗ | ✓ | S | Egypt | |

| 2020 | [69] | [69] | 2020 | No | PR | - | 3875 | 31 | MS | Camera | RGB | 128 × 128 RGB images | ✓ | ✗ | ✗ | ✗ | ✗ | ✗ | ✓ | S | Saudi Arabia | 125 images for each letter |

| 2021 | [70] | [70] | 2021 | No | PR | 7 | 4900 | 28 | MS | Six 3-D IMU sensors (manufactured by SparkFun Electronics, headquartered in Boulder, CO, USA). | Raw data | Gyroscopes and accelerometers data | ✓ | ✗ | ✗ | ✗ | ✗ | ✗ | ✓ | S | Palestine | |

| 2021 | [71] | [71] | 2021 | Yes | PR | 10 | 22,000 | 44 | MS | Camera | RGB | RGB image | ✓ | ✓ | ✗ | ✗ | ✗ | ✗ | ✓ | S | Iraq | 32 letters, 11 numbers (0:10), and 1 sign for none |

| 2021 | [72] | [72] | 2021 | Yes | PR | 10 | 2800 | 28 | MS | Webcam and smart mobile cameras | RGB | RGB images | ✓ | ✗ | ✗ | ✗ | ✗ | ✗ | ✓ | S | Egypt | Light background |

| 2021 | [72] | [72] | 2021 | Yes | PR | 10 | 2800 | 28 | MS | Webcam and smart mobile cameras | RGB | RGB images | ✓ | ✗ | ✗ | ✗ | ✗ | ✗ | ✓ | S | Egypt | Dark background |

| 2021 | [72] | [72] | 2021 | Yes | PR | 10 | 1400 | 28 | MS | Webcam and smart mobile cameras | RGB | RGB images | ✓ | ✗ | ✗ | ✗ | ✗ | ✗ | ✓ | S | Egypt | Images made with a right hand and wearing gloves, white background |

| 2021 | [72] | [72] | 2021 | Yes | PR | 10 | 1400 | 28 | MS | Webcam and smart mobile cameras | RGB | RGB images | ✓ | ✗ | ✗ | ✗ | ✗ | ✗ | ✓ | S | Egypt | Images made with a bare hand “two hands” and wearing glove with different background |

| 2021 | [73] | [73] | 2021 | No | PR | - | 15,360 | 30 | MS | Mobile cameras | RGB | 720 × 960 × 3 RGB images | ✓ | ✗ | ✗ | ✗ | ✗ | ✗ | ✓ | S | Saudi Arabia | |

| 2022 | [74] | [74] | 2022 | No | PR | 20+ | 5400 | 30 | MS | Smart phone camera | RGB | RGB | ✓ | ✗ | ✗ | ✗ | ✗ | ✗ | ✓ | S | Saudi Arabia | |

| 2023 | [63] | [63] | 2023 | Yes | PR | 30 | 840 | 28 | MS | Camera | RGB | 64 × 64 pixels gray scale images | ✓ | ✗ | ✗ | ✗ | ✗ | ✗ | ✓ | S | Iraq | 50% left-handed, 50% right-handed |

Table 10.

ArSL Datasets for isolated words. “PR” means Private dataset, “AUR” means Available Upon Request dataset, and “PA” means Publicly Available dataset.

| Year | Dataset | Created/Used by: Ref. | Created/Used by: Year | Created/Used by: With Other Datasets? | Availability | # Signers (Subjects) | Samples | # Signs (Classes) | MS/NMS | Data Acquisition Device | Acquisition Modalities | Images/Videos or Others? | Collection: Alphabets | Collection: Numbers | Collection: Words | Collection: Sentences | Signing Mode: Isolated | Signing Mode: Continuous | Signing Mode: Fingerspelling | Static/Dynamic | Data Collection Location/Country | Comments |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 2007 | Shanableh dataset [75] | [76] | 2019 | Yes | AUR | 3 | 3450 | 23 | MS | Video camera | RGB | RGB video recorded at 25 fps with 320 × 240 resolution. | ✗ | ✗ | ✓ | ✗ | ✓ | ✗ | ✗ | D | United Arab Emirates (UAE) | Words chosen from the greeting section |

| [77] | 2020 | No | ||||||||||||||||||||

| [78] | 2022 | Yes | ||||||||||||||||||||

| 2015 | EMCC database (EMCCDB) [79] | [79] | 2015 | No | PR | 3 | 1288 | 40 | MS | Camera | RGB | 30 frames per second for video frames and the size of the frames is 640 × 480 pixels. | ✗ | ✗ | ✓ | ✗ | ✓ | ✗ | ✗ | D | Egypt | |

| 2015 | Dataset for hands recognition [80] | [80] | 2015 | YES | PR | 2 | 80 | 20 | MS | LMC | Skeleton joint points | Sequences of frames | ✗ | ✗ | ✓ | ✗ | ✓ | ✗ | ✗ | D | Egypt | |

| 2015 | Dataset for facial expressions recognition [80] | [80] | 2015 | YES | PR | 2 | 50 | 5 | NMS | Digital camera | RGB | 70 × 70 RGB image | ✗ | ✗ | ✓ | ✗ | ✓ | ✗ | ✗ | S | Egypt | 5 basic facial expressions: happy, sad, normal, surprised, and looking-up. |

| 2015 | Dataset for body movement recognition [80] | [80] | 2015 | YES | PR | 2 | NM | 21 | NMS | Digital camera | RGB | 100 × 100 RGB image | ✗ | ✗ | ✓ | ✗ | ✓ | ✗ | ✗ | S | Egypt | 16 different hand positions, and 5 shoulder movements. |

| 2017 | [81] | [81] | 2017 | No | PR | 10 | 143 | 5 | MS | Microsoft Kinect V2 and LMC sensors | Depth, skeleton models | All features of upper joints including the hand’s skeleton | ✗ | ✗ | ✓ | ✗ | ✓ | ✗ | ✗ | S | Saudi Arabia | Words [Cruel, Giant, Plate, Tower, Objection] |

| 2018 | [82] | [82] | 2018 | YES | PR | 21 | 7500 | 150 | MS & NMS | Microsoft Kinect V2 | RGB video, depth video, 3D skeleton sequence, sequence of hand states, sequence of face features. | Joints of the upper body part, which are 16 joints are used. | ✗ | ✗ | ✓ | ✗ | ✓ | ✗ | ✗ | D | Egypt | |

| 2018 | [5] | [5] | 2018 | No | PR | - | 450 | 30 | MS | Camera | RGB | Colored videos at a rate of 30 fps | ✗ | ✗ | ✓ | ✗ | ✓ | ✗ | ✗ | D | Egypt | 30 daily commonly used words at school |

| 2019 | [83] | [83] | 2019 | No | PR | 2 | 2000 | 100 | MS | Front and side LMCs. | RGB | Sequence of frames. | ✗ | ✗ | ✓ | ✗ | ✓ | ✗ | ✗ | D | Saudi Arabia | |

| 2019 | [76] | [76] | 2019 | YES | PR | 3 | 7500 | 50 | MS | Microsoft Kinect V2 | RGB, depth, and skeleton joints | The color images are saved into MP4 video, depth frames and skeleton joints are saved into binary files. | ✗ | ✗ | ✓ | ✗ | ✓ | ✗ | ✗ | D | Saudi Arabia | |

| 2019 | ArSL Isolated Gesture Dataset [33] | [33] | 2019 | No | AUR | 3 | 1500 | 100 | MS | Camera | RGB | Each video has a frame speed of 30 frames per second | ✓ | ✓ | ✓ | ✗ | ✓ | ✗ | ✗ | D | Egypt | 30 Alphabets, 10 numbers, 10 Prepositions, pronouns and question words, 10 Arabic life expressions, and 40 Common nouns and verbs. |

| 2020 | [84] | [84] | 2020 | NO | PR | 5 | 440 | 44 | MS | LMC | Skeleton joint points | Sequences of frames | ✗ | ✗ | ✓ | ✗ | ✓ | ✗ | ✗ | D | Syria | 29 signs are one-hand gestures, and 15 signs are two-hand gestures |

| 2020 | [85] | [85] | 2020 | No | PR | 10 | 222 | 6 | MS | Kinect sensor | Depth | Depth video frames | ✗ | ✗ | ✓ | ✗ | ✓ | ✗ | ✗ | D | Saudi Arabia | Arabic Words [Common-, Protein, Stick, Disaster, Celebrity, Bacteria] |

| 2020 | [38] | [38] | 2020 | Yes | PR | - | 288 | 10 | MS | Mobile camera | RGB | RGB images | ✗ | ✗ | ✓ | ✗ | ✓ | ✗ | ✗ | S | Egypt | |

| 2021 | KArSL-33 [86,87] | [78] | 2022 | Yes | PA | 3 | 4950 | 33 | MS & NMS | Kinect V2 | Depth, Skeleton joint points, RGB | RGB video format | ✗ | ✗ | ✓ | ✗ | ✓ | ✗ | ✗ | D | Saudi Arabia | |

| 2021 | mArSL [88,89] | [88] | 2021 | No | AUR | 4 | 6748 | 50 | MS & NMS | Kinect V2 | RGB, depth, joint points, face, and faceHD. | 224 × 224 RGB video frames | ✗ | ✗ | ✓ | ✗ | ✓ | ✗ | ✗ | D | Saudi Arabia | |

| [35] | 2023 | No | ||||||||||||||||||||

| 2021 | KArSL-100 [86,87] | [78] | 2022 | Yes | PA | 3 | 15,000 | 100 | MS & NMS | Kinect V2 | RGB, depth, skeleton joint points | RGB video format | ✓ | ✓ | ✓ | ✗ | ✓ | ✗ | ✗ | S & D | Saudi Arabia | 30 digit signs, 39 letter signs, and 31 word signs. |

| 2021 | KArSL-190 [86,87] | [78,90] | 2022 | Yes | PA | 3 | 28,500 | 190 | MS & NMS | Kinect V2 | RGB, depth, skeleton joint points | RGB video format | ✓ | ✓ | ✓ | ✗ | ✓ | ✗ | ✗ | S & D | Saudi Arabia | 30 digit signs, 39 letter signs, and 121 word signs. |

| 2021 | KArSL-502 [86,87] | [90] | 2022 | Yes | PA | 3 | 75,300 | 502 | MS & NMS | Kinect V2 | RGB, depth, skeleton joint points | RGB video format | ✓ | ✓ | ✓ | ✗ | ✓ | ✗ | ✗ | S & D | Saudi Arabia | 30 digit signs, 39 letter signs, and 433 word signs. |

| 2022 | [91] | [91] | 2022 | No | PR | - | 1100 | 11 | MS | - | RGB | RGB video format | ✗ | ✗ | ✓ | ✗ | ✓ | ✗ | ✗ | D | Saudi Arabia | 11 Words: [Friend, Neighbor, Guest, Gift, Enemy, To Smell, To Help, Thank you, Come in, Shame, House] |

| 2022 | [92] | [92] | 2022 | No | PR | 55 | 7350 | 21 | MS | Kinect camera V2 | RGB and Depth video | RGB video format | ✗ | ✗ | ✓ | ✗ | ✓ | ✗ | ✗ | D | Iraq | Words: [Nothing, Cheek, Friend, Plate, Marriage, Moon, Break, Broom, You, Mirror, Table, Truth, Watch, Arch, Successful, Short, Smoking, I, Push, stingy, and Long] |

| 2023 | [93] | [93] | 2023 | No | PR | 1 | 500 | 5 | MS | - | - | - | ✗ | ✗ | ✓ | ✗ | ✓ | ✗ | ✗ | S | Saudi Arabia | |

| 2023 | [94] | [94] | 2023 | No | PR | 72 | 8467 | 20 | MS | Mobile camera | RGB | Videos | ✗ | ✗ | ✓ | ✗ | ✓ | ✗ | ✗ | D | Egypt | |

Table 11.

ArSL Datasets for continuous sentences. “PR” means Private dataset, “AUR” means Available Upon Request dataset, and “PA” means Publicly Available dataset.

| Year | Dataset | Created/Used by: Ref. | Created/Used by: Year | Created/Used by: With Other Datasets? | Availability | # Signers (Subjects) | Samples | # Signs (Classes) | MS/NMS | Data Acquisition Device | Acquisition Modalities | Images/Videos or Others? | Collection: Alphabets | Collection: Numbers | Collection: Words | Collection: Sentences | Signing Mode: Isolated | Signing Mode: Continuous | Signing Mode: Fingerspelling | Static/Dynamic | Data Collection Location/Country | Comments |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 2015 | Tubaiz, Shanableh, & Assaleh dataset [95] | [95] | 2015 | No | AUR | 1 | 400 sentences 800 words | 40 | MS | Two DG5-VHand data gloves and (a video camera in training phase) | Raw sensor data (feature vectors) for hand movement and hand orientation | Sensor readings | ✗ | ✗ | ✗ | ✓ | ✗ | ✓ | ✗ | D | UAE | An 80-word lexicon was used to form 40 sentences. |

| [96] | 2019 | Yes | ||||||||||||||||||||

| 2018 | [97] | [97] | 2018 | No | PR | 7 | 1260 samples | 42 | MS | Microsoft Kinect V2 | RGB, depth, skeleton joints | For each sign, a sequence of skeleton data consisted of 20 joint positions per frame is recorded (formed from x, y, and depth coordinates) | ✗ | ✗ | ✓ | ✓ | ✗ | ✓ | ✗ | D | Egypt | Sentences containing medical terms performed by 7 signers (5 training, 2 testing). (20 samples from different 5 signers for each sign as a training set and 10 samples from different 2 signers as a testing set) |

| 2019 | Self-acquired sensor-based dataset 2 [96] | [96] | 2019 | YES | AUR | 2 | 400 sentences 800 words. | 40 | MS | Two Polhemus G4 motion trackers | Raw data | Motion tracker readings | ✗ | ✗ | ✗ | ✓ | ✗ | ✓ | ✗ | D | Egypt | |

| 2019 | Self-acquired vision-based dataset 3 [96] | [96] | 2019 | Yes | AUR | 1 | 400 sentences 800 words. | 40 | MS | Camera | RGB | Videos with frame rate set to 25 Hz with a spatial resolution of 720 × 528 | ✗ | ✗ | ✗ | ✓ | ✗ | ✓ | ✗ | D | Egypt | - |

| [98] | 2023 | No | ||||||||||||||||||||

Table 12.

ArSL Miscellaneous Datasets. “PR” means Private dataset, “AUR” means Available Upon Request dataset, and “PA” means Publicly Available dataset.

| Year | Dataset | Created or Used by: Ref. | Created or Used by: Year | Created or Used by: With Other Datasets? | Availability | # Signers (Subjects) | Samples | # Signs (Classes) | MS/NMS | Data Acquisition Device | Acquisition Modalities | Images/Videos or Others? | Collection: Alphabets | Collection: Numbers | Collection: Words | Collection: Sentences | Signing Mode: Isolated | Signing Mode: Continuous | Signing Mode: Fingerspelling | Static/Dynamic | Data Collection Location/Country | Comments |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 2017 | [37] | [37] | 2017 | No | PR | 3 | 26,060 samples | 79 | MS | LMC | Hands skeleton joint points | Sequences of frames | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | S & D | Egypt | Alphabets: 28, Numbers: 11 (0–10), common dentist words: 8, common verbs and nouns-single hand: 20, common verbs and nouns-two hands: 10 |

| 2021 | [39] | [39] | 2021 | No | PR | 40 | 16,000 videos | 80 | MS & NMS | Kinect camera V2 | RGB, depth, skeleton data | 1280 × 920 × 3 RGB frames | ✓ | ✓ | ✓ | ✗ | ✓ | ✗ | ✓ | S & D | Saudi Arabia | |

3.1.1. RQ1.1: How Many Datasets Are Used in Each of the Selected Papers?

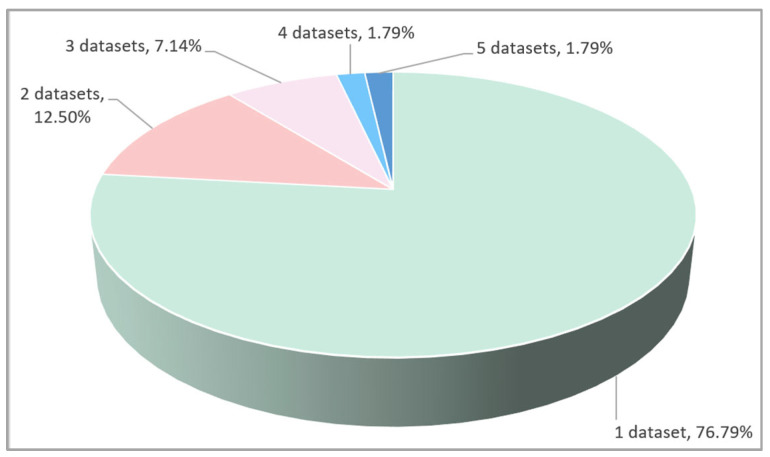

According to the results in Figure 8, a large majority of the reviewed papers (43 papers, 76.79%) use a single dataset in their experiments. Seven papers (12.5%) rely on two datasets, four papers (7.14%) use three datasets, one paper (1.79%) uses four datasets, and one paper (1.79%) uses five datasets.

Figure 8.

Percentage of the reviewed studies based on the number of utilized datasets.
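The percentages reported for Figure 8 follow directly from the paper counts. The short sketch below recomputes them; the variable names are illustrative and only the counts come from the text.

```python
# Number of reviewed papers, keyed by how many datasets each paper uses (from the text).
papers_by_dataset_count = {1: 43, 2: 7, 3: 4, 4: 1, 5: 1}

total_papers = sum(papers_by_dataset_count.values())
shares = {
    n_datasets: round(100 * n_papers / total_papers, 2)
    for n_datasets, n_papers in papers_by_dataset_count.items()
}

print(total_papers)
for n_datasets, share in shares.items():
    print(f"{n_datasets} dataset(s): {share}%")
```

The counts sum to the 56 included papers, and the rounded shares match the reported 76.79%, 12.5%, 7.14%, 1.79%, and 1.79%.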

One of the following could be the rationale for using multiple datasets:

Reason 1: Incorporate different collections of sign language, for example, a dataset for letters and a dataset for words.

Reason 2: Collect sign language datasets pertaining to many domains, such as health-related words and greeting words.

Reason 3: Collect datasets representing different modalities, for example, one for JPG images and the other for depth and skeleton joints, and/or different data acquisition devices.

Reason 4: Enhance the study by training and testing the model using more than one sign language dataset and/or comparing the results.

Reason 5: Conduct user-independent sign language recognition, where the proposed model is tested using signs performed by different signers from those in the training set.
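User-independent evaluation, the last rationale in the list above, means that no signer contributes samples to both the training and the testing sets. The sketch below illustrates the idea on toy data; the function name `signer_independent_split`, the signer identifiers, and the labels are hypothetical and do not come from any reviewed study.

```python
def signer_independent_split(samples, test_signers):
    """Split (signer_id, label) samples so that no signer appears in both sets."""
    held_out = set(test_signers)
    train = [sample for sample in samples if sample[0] not in held_out]
    test = [sample for sample in samples if sample[0] in held_out]
    return train, test


# Hypothetical toy data: three signers, two sign classes each.
toy_samples = [(signer, label) for signer in ("s1", "s2", "s3") for label in ("alif", "ba")]
train_set, test_set = signer_independent_split(toy_samples, test_signers={"s3"})

print(len(train_set), len(test_set))
```

Holding out whole signers, rather than random samples, is what distinguishes this setup from an ordinary random split.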

Table 13 summarizes the reasons behind the use of more than one dataset in the reviewed studies.

Table 13.

Reasons for utilizing more than one dataset in the reviewed studies.

| No of Datasets | Ref. | Reason for Utilizing More Than One Dataset | Comments |

|---|---|---|---|

| 2 | [76] | Reason 2 & 3 | Different modalities and sign words from different domains. |

| 2 | [38] | Reason 1 | Alphabet and words. |

| 2 | [56] | Reason 4 | Arabic and American alphabet sign language datasets. |

| 2 | [71] | Reason 4 | Arabic sign language for 32 letters, 11 numbers and none, and American sign language for 27 letters, delete, space, and nothing. |

| 2 | [46] | Reason 5 | Both datasets contain sign language representations for 14 letters. The second dataset is entirely used for testing. |

| 2 | [47] | Reason 5 | Both datasets contain sign language representations for 14 letters. The second dataset is entirely used for testing. |

| 2 | [62] | Reason 1 | Alphabet and numbers. |

| 3 | [82] | Reason 1, 2, 3 and 4 | Arabic sign language for words, Indian sign language for common and technical words, numbers and fingerspelling (alphabets), and Italian sign language for words. All datasets have different modalities. |

| 3 | [96] | Reason 3 | A total of 40 Arabic sign language sentences created from 80 words lexicon signs are captured in each dataset with different acquisition devices, namely DG5-VHand data gloves, two Polhemus G4 motion trackers, and camera. |

| 3 | [90] | Reason 1, 2, 3 and 4 | KArSL-502 and KArSL-190 constitute letters, numbers, and words captured using Kinect V2 and stored in different modalities, RGB, depth, and skeleton joint points. The third dataset is LSA64 (Argentinian Sign Language), which contains only words captured by the camera and saved as RGB. |

| 3 | [63] | Reason 5 | All the ArSL datasets are for letters, however, two of them are only used for testing. |

| 4 | [72] | Reason 4 & 5 | All the ArSL datasets are for letters, however, only one of them is used for testing. |

| 5 | [78] | Reason 1, 2, 3, 4 | KArSL-33, KArSL-100, and KArSL-190 constitute letters, numbers, and words captured using Kinect V2 and stored in different modalities, RGB, depth, and skeleton joint points. The fourth dataset, Kazakh-Russian sign language (K-RSL), contains sign language for Kazakh-Russian words captured by LOGITECH C920 HD PRO WEBCAM and saved as RGB, skeleton joint points. The fifth dataset is for greeting words captured by camera and saved as RGB. |

3.1.2. RQ1.2: What Is the Availability Status of the Datasets in the Reviewed Papers?

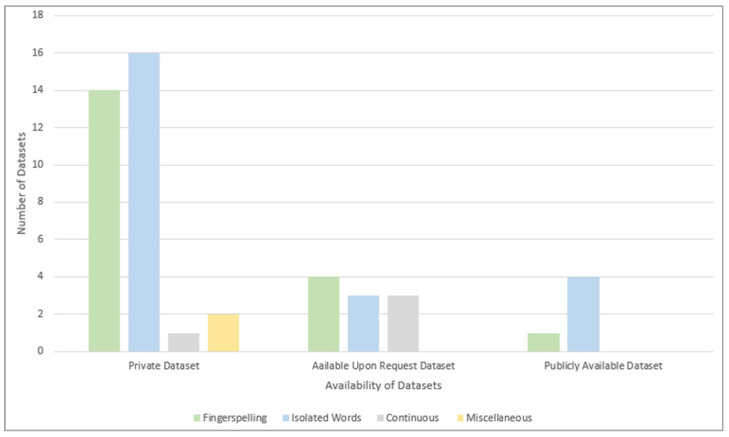

The datasets in the reviewed papers can be divided into three availability groups: publicly available, available upon request, and self-acquired private datasets. Figure 9 shows that self-acquired private datasets constitute the bulk of the reviewed datasets, followed by datasets that can be obtained upon request and then those that are publicly available. Because few fingerspelling, isolated, or miscellaneous datasets are publicly available or available upon request, the researchers who worked in these categories had to build their own private ArSL datasets. In contrast, three of the four continuous datasets are available upon request, while the remaining one was built privately.

Figure 9.

Availability status of the reviewed datasets in each dataset category.
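The per-category availability breakdown can be tallied from the dataset tables. The sketch below does this for the four continuous datasets listed in Table 11 only; the function name `availability_share` and the tuple layout are assumptions made for illustration.

```python
from collections import Counter

# (category, availability) pairs for the four continuous datasets in Table 11.
# "AUR" = available upon request, "PR" = private.
continuous_rows = [
    ("continuous", "AUR"),
    ("continuous", "PR"),
    ("continuous", "AUR"),
    ("continuous", "AUR"),
]


def availability_share(rows, category):
    """Return the fraction of datasets per availability status within one category."""
    counts = Counter(status for cat, status in rows if cat == category)
    total = sum(counts.values())
    if total == 0:
        raise ValueError(f"no datasets found for category {category!r}")
    return {status: count / total for status, count in counts.items()}


share = availability_share(continuous_rows, "continuous")
print(share)
```

The same tally could be extended to the other categories by adding their rows from Tables 9, 10, and 12.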

Publicly available and available-upon-request datasets are discussed below according to the dataset category. Examples from each dataset are also presented where available.

Category 1: Fingerspelling Datasets

Arabic Alphabets Sign Language (ArSL2018)

This publicly available dataset [50,51] consists of 54,049 images of ArSL alphabets for 32 Arabic signs, performed by 40 signers of various age groups. Each alphabet (class) has a different number of images. The RGB format was used to capture the images, which had varying backgrounds, lighting, angles, timings, and sizes. Image preprocessing was carried out to prepare for classification and recognition. The gathered images were adjusted to a fixed dimension of 64 × 64 and converted into grayscale images, meaning that individual pixels may have values ranging from 0 to 255. Figure 10 shows a sample of the ArSL2018 dataset.

Figure 10.

The ArSL2018 dataset, illustration of the ArSL for Arabic alphabets [48,49].
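The preprocessing described for ArSL2018 (resizing to 64 × 64 and converting to grayscale with pixel values from 0 to 255) can be sketched in plain Python. This is not the dataset authors' pipeline: the luma weights (0.299, 0.587, 0.114) and the nearest-neighbour resampling are assumptions chosen for illustration, and the helper names and the input image are hypothetical.

```python
def to_grayscale(rgb_image):
    """Convert rows of (R, G, B) tuples to rows of gray values in the range 0-255."""
    return [
        [min(255, round(0.299 * r + 0.587 * g + 0.114 * b)) for r, g, b in row]
        for row in rgb_image
    ]


def resize_nearest(image, size=64):
    """Resize a 2-D list of pixels to size x size using nearest-neighbour sampling."""
    height, width = len(image), len(image[0])
    return [
        [image[y * height // size][x * width // size] for x in range(size)]
        for y in range(size)
    ]


# Hypothetical 128 x 96 input image filled with white pixels.
rgb = [[(255, 255, 255)] * 96 for _ in range(128)]
gray64 = resize_nearest(to_grayscale(rgb), size=64)

print(len(gray64), len(gray64[0]))
```

In practice an image library would normally handle both steps; the explicit loops here only make the two operations visible.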

Al-Jarrah Dataset

This dataset [40] is available upon request and contains gray-scale images. For every gesture, 60 signers executed a total of 60 samples, of which 40 were used for training and the remaining 20 were used for testing. The samples were captured in various orientations and at varying distances from the camera. Samples of the dataset are exhibited in Figure 11.

Figure 11.

Samples of ArSL alphabets [39].
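The 40/20 division of the 60 samples per gesture can be illustrated with a per-gesture split. The text does not state how samples were assigned to each set, so the seeded random shuffle below is an assumption; the function name `split_per_gesture` and the sample identifiers are hypothetical, and the count of 30 gestures is taken from Table 9.

```python
import random


def split_per_gesture(samples_by_gesture, n_train=40, seed=0):
    """Split each gesture's samples into n_train training samples and the rest for testing."""
    rng = random.Random(seed)
    train, test = {}, {}
    for gesture, samples in samples_by_gesture.items():
        shuffled = list(samples)
        rng.shuffle(shuffled)
        train[gesture] = shuffled[:n_train]
        test[gesture] = shuffled[n_train:]
    return train, test


# Hypothetical sample identifiers: 30 gestures with 60 samples each.
data = {g: [f"g{g:02d}_s{i:02d}" for i in range(60)] for g in range(30)}
train_split, test_split = split_per_gesture(data)

print(sum(len(v) for v in train_split.values()), sum(len(v) for v in test_split.values()))
```

Splitting within each gesture keeps the 40/20 ratio identical across all classes.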

The Suez Canal University ArSL Dataset

This dataset [43] consists of 210 grayscale ArSL images representing 30 letters, with seven images for each letter. Different rotations, lighting, quality settings, and picture bias were all taken into consideration when capturing the images. All images are centered and cropped to 200 × 200. The images were gathered from a range of signers with varying hand sizes. This dataset is available upon request from the researchers.

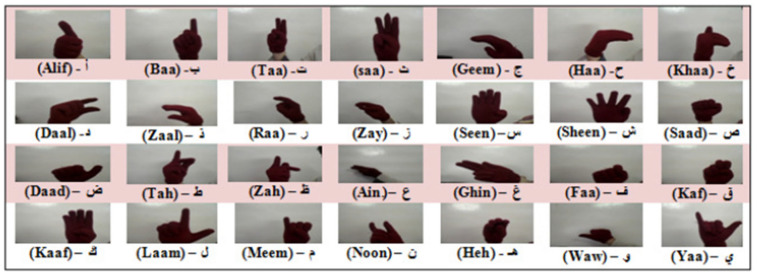

ArSL Dataset

The 700 color images in this dataset [45], which is available upon request, show the motions of 28 Arabic letters, with 25 images per letter. Various settings and lighting conditions were used to take the images included in the dataset. Different signers wearing dark-colored gloves and with varying hand sizes executed the actions, as shown in Figure 12.

Figure 12.

Samples of the ArSL Alphabet in the ArSL dataset [43].

Ibn Zohr University Dataset

This dataset [67], which is available upon request, is made up of 5771 images for 28 Arabic letters. The images are in color, with a size of 256 × 256 pixels.

Category 2: Isolated Datasets

Shanableh Dataset

This dataset [75], which is available upon request, includes ArSL videos of 23 Arabic gesture words/sentences signed by three distinct signers. Each signer repeated each gesture 50 times, so each gesture has 150 repetitions and the dataset contains 3450 videos in total (23 × 3 × 50). The signers were recorded with an analog camcorder, with no restrictions on background or clothing. After being converted to digital video, the recordings from each session were edited into short sequences, each representing a single gesture.

KArSL-33

There are 33 dynamic sign words in the publicly available KArSL-33 dataset [86,87]. Each sign was executed by three experienced signers. By performing each sign 50 times by each signer, a total of 4950 samples (33 × 3 × 50) were generated. There are three modalities for each sign: RGB, depth, and skeleton joint points.

Category 3: Continuous Datasets

Tubaiz, Shanableh, & Assaleh Dataset

This dataset [95], which is available upon request, employs an 80-word lexicon to create 40 sentences with no restrictions on sentence structure or length. The sentences are recorded using two DG5-VHand data gloves. Each glove is equipped with five integrated bend sensors and a three-axis accelerometer, enabling the detection of both hand orientation and movement. The gloves are battery-powered and operate wirelessly, sending their sensor readings to a PC over Bluetooth. The recorded sentences were subsequently segmented and labeled. A 24-year-old, right-handed female volunteer repeated each sentence ten times. A total of 33 sentences need both hands for gesturing, whereas the remaining seven sentences can be signed with just the right hand.

Hassan, Assaleh, and Shanableh Sensor-Based Dataset

An 80-word lexicon was utilized to create 40 sentences in this dataset [96], which is available upon request, with no restrictions on sentence structure or length. Two Polhemus G4 motion trackers were employed to gather this dataset. These trackers provide six measurements: the position coordinates (x, y, z) and the Euler angles azimuth, elevation, and roll (a, e, r). Two signers volunteered to perform the signs.

Hassan, Assaleh, and Shanableh Vision-Based Dataset

This dataset [95], available upon request, uses an 80-word lexicon to form 40 sentences with no restrictions on sentence grammar or length. It was recorded solely with a camera; no wearable sensors were employed. A single signer volunteered to perform the signs.

Category 4: Miscellaneous Datasets

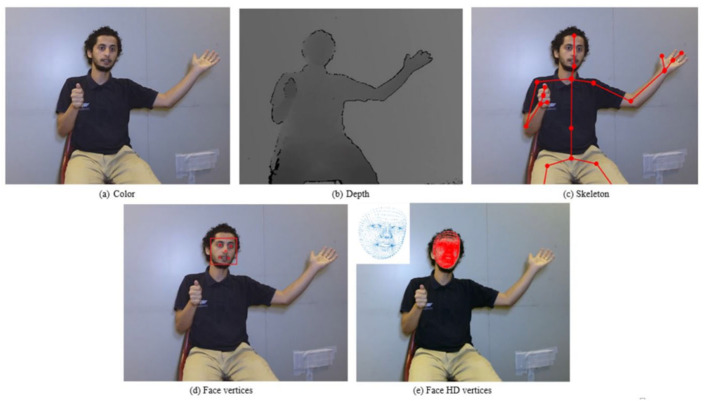

mArSL

Five distinct modalities—color, depth, joint points, face, and faceHD—are provided in the multi-modality ArSL dataset [88,89]. It is composed of 6748 video samples captured using Kinect V2 sensors, demonstrating fifty signs executed by four signers. Both manual and non-manual signs are emphasized. An example of the five modalities offered for every sign in mArSL is presented in Figure 13.

Figure 13.

The mArSL dataset, an example of the five modalities provided for each sign sample [88].

KArSL-100

There are 100 static and dynamic sign representations in the KArSL-100 dataset [86,87]. A wide range of sign gestures were included in the dataset: 30 numerals, 39 letters, and 31 sign words. For every sign, there were three experienced signers. Each signer repeated each sign 50 times, resulting in an aggregate of 15,000 samples of the whole dataset (100 × 3 × 50). For every sign, there are three modalities available: skeleton joint points, depth, and RGB.

KArSL-190

There are 190 static and dynamic sign representations in the KArSL-190 dataset [86,87]. The dataset covers a broad spectrum of sign gestures: 30 numerals, 39 letters, and 121 sign words. Each sign was executed by three skilled signers. Each signer repeated each sign 50 times, resulting in 28,500 samples (190 × 3 × 50). For each sign, there are three modalities available: skeleton joint points, depth, and RGB.

KArSL-502

The KArSL-502 dataset [86,87] covers eleven chapters of the ArSL dictionary, totaling 502 static and dynamic signs: 433 sign words, 30 numerals, and 39 letters. Each sign was executed by three skilled signers. Each signer repeated each sign 50 times, resulting in 75,300 samples (502 × 3 × 50). Each sign has three modalities: RGB, depth, and skeleton joint points.
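The sample counts quoted for the KArSL subsets all follow the same rule: number of signs × number of signers × repetitions per signer. The short sketch below reproduces the four totals from the compositions given above; the dictionary layout and the function name `sample_count` are illustrative choices, not part of the KArSL distribution.

```python
# Figures quoted in the dataset descriptions above.
SIGNERS = 3
REPETITIONS = 50

# Composition of each subset as (numerals, letters, sign words).
KARSL_SUBSETS = {
    "KArSL-33": (0, 0, 33),
    "KArSL-100": (30, 39, 31),
    "KArSL-190": (30, 39, 121),
    "KArSL-502": (30, 39, 433),
}


def sample_count(num_signs: int, signers: int = SIGNERS,
                 repetitions: int = REPETITIONS) -> int:
    """Total samples when every signer repeats every sign equally often."""
    return num_signs * signers * repetitions


totals = {name: sample_count(sum(parts)) for name, parts in KARSL_SUBSETS.items()}

for name, total in totals.items():
    print(f"{name}: {sum(KARSL_SUBSETS[name])} signs, {total} samples")
```

The same rule gives the per-sign figure of 150 samples (3 × 50) in every subset, so the subsets differ only in vocabulary size.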

It is worth mentioning that public non-ArSL datasets were also utilized in a number of reviewed studies along with ArSL datasets [56,71,78,82,90]. The purpose of these studies was to apply the proposed models to these publicly available datasets and compare the results with published work on the same datasets.

3.1.3. RQ1.3 How Many Signers Were Employed to Represent the Signs in Each Dataset?

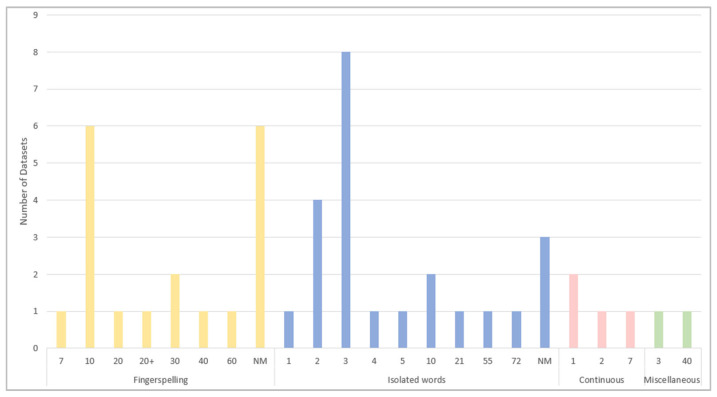

The number of signers is one of the factors that affect the diversity of a dataset. In the reviewed studies, the number of signers varies from one dataset to another. The minimum is one signer, found in three datasets, whereas the maximum is 72 signers, found in a single isolated word dataset. Figure 14 shows that the majority of the ArSL datasets, around 60%, recruited between one and ten signers. More diversity is provided by ten ArSL datasets that were executed by 20 to 72 signers. The number of signers is not mentioned or specified (NM) in the remaining nine ArSL datasets, all of which are fingerspelling or isolated datasets.

Figure 14.

Number of datasets according to the number of signers.

3.1.4. RQ1.4 How Many Samples Are There in Each Dataset?

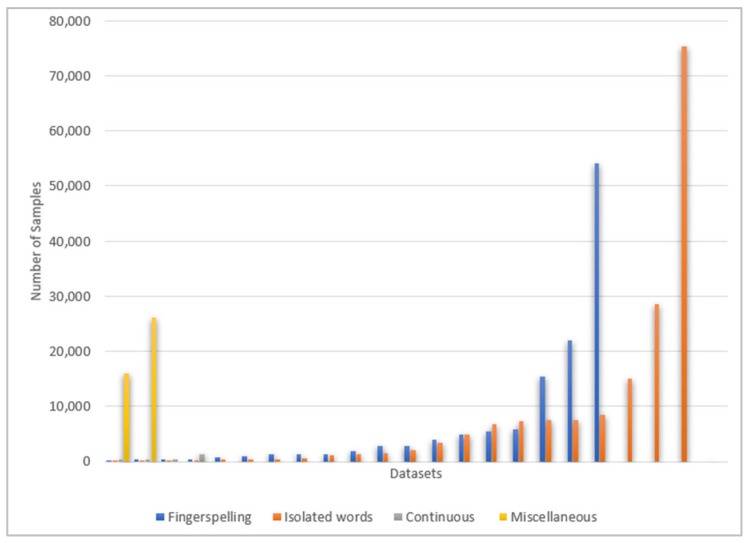

Training machine learning and deep learning algorithms requires datasets with a high volume of data or samples. Table 9, Table 10, Table 11 and Table 12 show the number of samples in each of the reviewed datasets. As Figure 15 reveals, a high number of samples are found in the datasets that belong to the category of isolated words. The biggest dataset in terms of the number of samples among all the reviewed datasets is KArSL-502 [86,87], with 75,300 samples. The second-biggest dataset is ArSL2018 [50,51], the fingerspelling dataset, which contains 54,049 samples. This is followed by the datasets that represent isolated words and fingerspelling. The dataset with the lowest sample size, 50, is the dataset for five facial expressions [80], which belongs to the category of isolated words.

Figure 15.

Number of samples in the datasets of reviewed papers for each dataset category.

3.1.5. RQ1.5 How Many Signs Are Represented by Each Dataset?

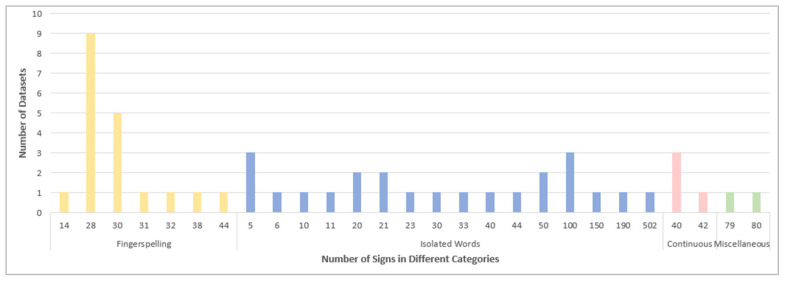

The number of signs differs based on the dataset category, as illustrated in Figure 16. In fingerspelling datasets, the number of signs ranges from 14 letters representing the openings of the Qur’anic surahs [46,47] to 38 representing letters and numbers [42], and 44 representing letters, numbers, and none [71]. The signs for the 28 basic Arabic letters are represented by eight datasets. The remaining datasets in this category consist of signs for 30, 31, and 38 basic and extra Arabic letters. Interestingly, both the highest number of signs, 502 [86,87], and the lowest number of signs, five [80,81,93], are found in isolated word datasets.

Figure 16.

Number of datasets for each number of signs in different categories.

3.1.6. RQ1.6 What Is the Number of Datasets That Include Manual Signs, Non-Manual Signs, or Both Manual and Non-Manual Signs?

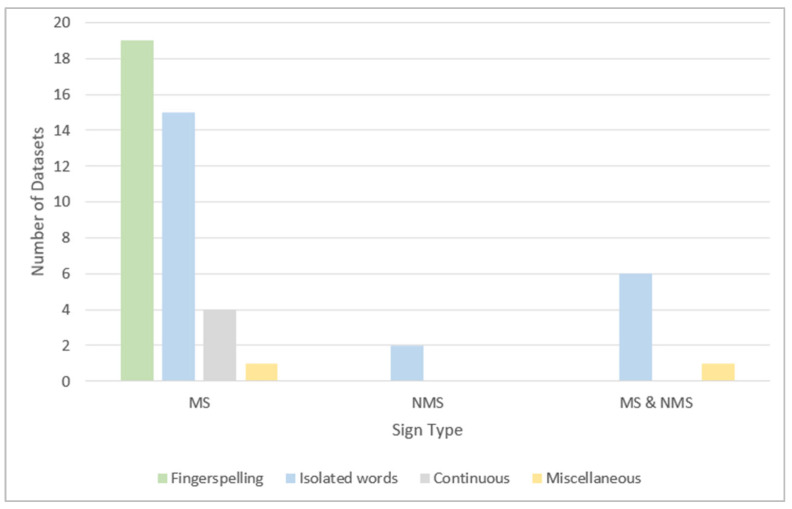

Table 9, Table 10, Table 11 and Table 12 show the type of sign captured in each reviewed dataset. The type of sign can be a manual sign (MS), represented by hand or arm gestures and motions and fingerspelling; non-manual signs (NMS), such as body language, lip patterns, and facial expressions; or both MS and NMS. Figure 17 illustrates that the majority of the signs in all categories—except miscellaneous—are manual signs. In fingerspelling and continuous sentence datasets, all the signs are manual. Non-manual signs are represented by only two isolated word datasets. Both sign types are represented by three isolated word datasets and one miscellaneous dataset.

Figure 17.

Number of datasets representing manual signs (MS), non-manual signs (NMS), and both signs in each dataset category.

3.1.7. RQ1.7 What Are the Data Acquisition Devices That Were Used to Capture the Data for ArSLR?

Datasets for sign language can be grouped as sensor-based or vision-based, depending on the equipment used for data acquisition. Sensor-based datasets are gathered by means of sensors that signers wear on their wrists or hands. Electronic gloves are the most utilized sensors for this purpose. One of the primary problems with sensor-based recognition methods is the need to wear these sensors while signing, which led researchers to turn to vision-based methods. Vision-based datasets are usually gathered with acquisition devices that have one or more cameras. Single-camera systems provide one piece of information about the signer, such as a color video stream. Multi-camera devices combine several cameras, each providing distinct information about the signer, such as depth and color information. One such device is the multi-modal Kinect, which can provide color, depth, and joint point information.

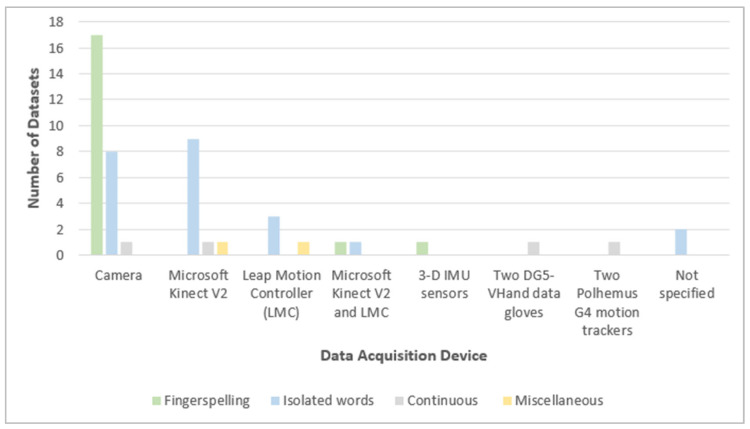

The majority of the reviewed ArSL datasets use cameras to capture the signs, followed by Kinect and Leap Motion Controllers (LMCs), as shown in Figure 18. Wearable sensor-based datasets [70,95,96] use devices such as DG5-VHand data gloves, Polhemus G4 motion trackers, and 3-D IMU sensors to capture the signs. These three acquisition devices are the least used among all the devices due to the recent tendency to experiment with vision-based ArSLR systems. No devices were specified in two of the datasets.

Figure 18.

Data acquisition devices used to capture the data in the ArSL dataset.

3.1.8. RQ1.8 What Are the Acquisition Modalities Used to Capture the Signs?

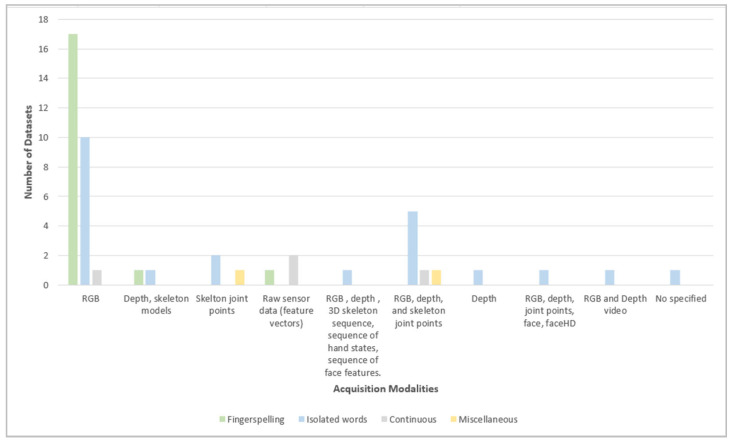

As depicted in Figure 19, the most popular acquisition modality for the datasets that belong to fingerspelling and isolated categories is RGB. Raw sensor data (feature vectors) is mostly used in continuous datasets, whereas RGB, depth, and skeleton joint points are the most popular in the category of isolated word datasets. The least used acquisition modalities for fingerspelling datasets are depth, skeleton models, and raw sensor data. For the other dataset categories, the least used modalities are distributed among different types of acquisition modalities.

Figure 19.

Acquisition modalities used to capture the signs in the ArSL dataset.

3.1.9. RQ1.9 Do the Datasets Contain Images, Videos, or Others?

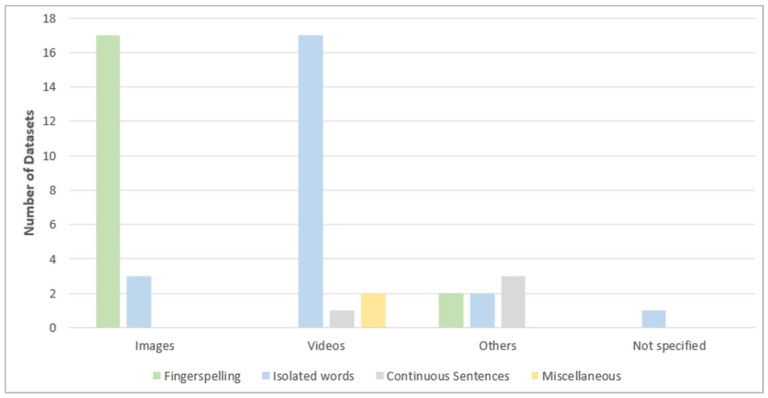

As illustrated in Figure 20, almost all fingerspelling datasets—except two—contain images. Videos are the content of around 74% of the isolated datasets, followed by images and others. Only one continuous dataset contains videos, while the remaining continuous datasets contain different content from images and videos, such as sensor readings. All the miscellaneous datasets contain videos only.

Figure 20.

Contents of ArSL datasets, images, videos, or others.

3.1.10. RQ1.10 What Is the Percentage of the Datasets That Represent Alphabets, Numbers, Words, Sentences, or a Combination of These?

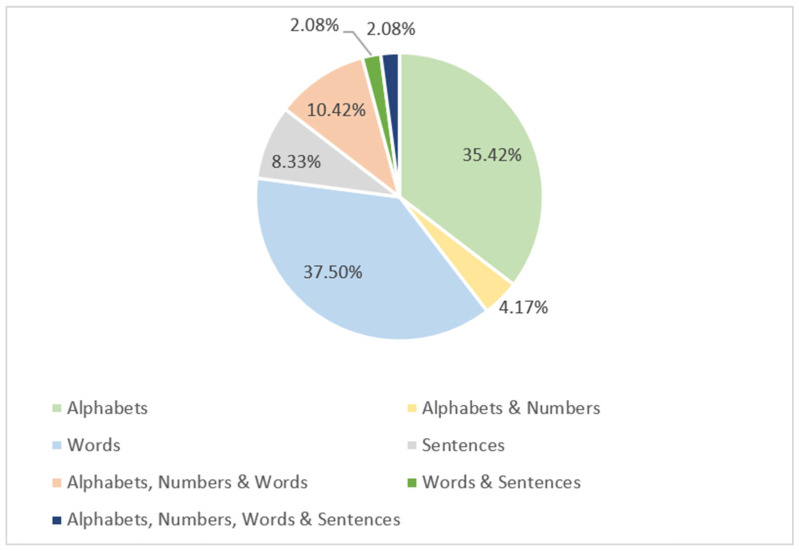

ArSL datasets can be used to represent alphabets, numbers, words, sentences, or combinations of them. As demonstrated in Figure 21, the highest percentage of the reviewed ArSL datasets, 37.50%, represent words. This is followed by 35.42% of the datasets that represent alphabets. Combinations of alphabets, numbers, and words are represented by 10.42%. The fewest ArSL datasets represent sentences; alphabets and numbers; words and sentences; and alphabets, numbers, words, and sentences, with percentages of 8.33%, 4.17%, 2.08%, and 2.08%, respectively.
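The reported shares can be cross-checked with a few lines of arithmetic. The sketch below assumes a pool of 48 datasets and the per-category counts listed in the code; both are inferred here from the percentages (the smallest share, 2.08%, corresponds to one dataset in 48) and are not stated explicitly in the text, so they should be read as an assumption.

```python
# Per-category dataset counts, INFERRED from the reported percentages
# under the assumption of 48 datasets in total (not stated in the text).
DATASET_COUNTS = {
    "words": 18,
    "alphabets": 17,
    "alphabets, numbers, and words": 5,
    "sentences": 4,
    "alphabets and numbers": 2,
    "words and sentences": 1,
    "alphabets, numbers, words, and sentences": 1,
}


def shares(counts: dict) -> dict:
    """Convert category counts into percentages rounded to two decimals."""
    total = sum(counts.values())
    return {label: round(100 * n / total, 2) for label, n in counts.items()}


percentages = shares(DATASET_COUNTS)

for label, pct in percentages.items():
    print(f"{label}: {pct:.2f}%")
```

Under this assumption, the two smallest categories each come out at 2.08%, and the rounded shares sum to 100% within rounding error.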

Figure 21.

Percentages of alphabets, numbers, words, sentence datasets, and combinations of them.

3.1.11. RQ1.11 What Is the Percentage of the Datasets That Have Isolated, Continuous, Fingerspelling, or Miscellaneous Signing Modes?

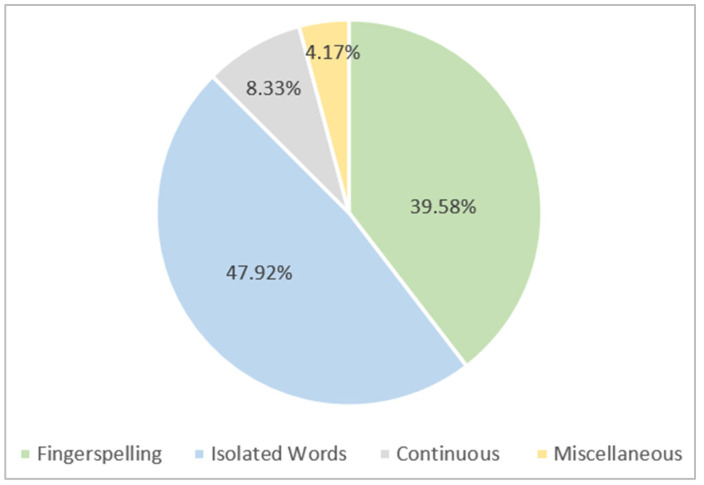

With a percentage of 47.92%, isolated mode is regarded as the most prevalent mode, followed by fingerspelling signing (39.58%) and then continuous signing mode (8.33%), as shown in Figure 22. With 4.17%, the category involving miscellaneous signing modes has the least amount of work accomplished in this area.

Figure 22.

Percentages of the datasets based on the signing mode, fingerspelling, isolated, continuous, and miscellaneous.

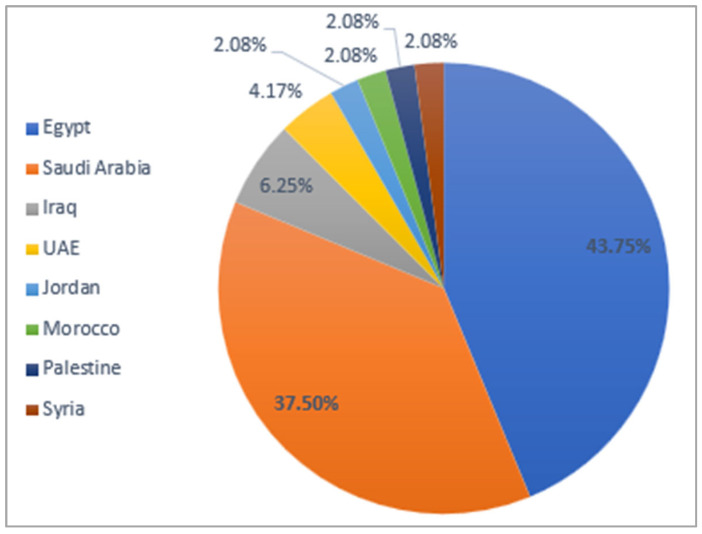

3.1.12. RQ1.12 What Is the Percentage of the Datasets Based on Their Data Collection Location/Country?

Figure 23 illustrates the distribution of the ArSL dataset collection across different countries. The figure shows that Egypt is the largest contributor to the ArSL dataset collection, accounting for 43.75%. Saudi Arabia follows with a significant contribution of 37.50%. Together, these two countries make up the majority of the dataset collection, totaling 81.25%. Iraq and the UAE contribute moderately to the dataset, with 6.25% and 4.17%, respectively. Jordan, Morocco, Palestine, and Syria each contribute a small and equal share of 2.08%, highlighting their relatively minor involvement. This distribution suggests the need to expand dataset collection efforts to the underrepresented countries to ensure a broader and more balanced representation of ArSL datasets. It might also reflect potential gaps in resources or interest in ArSL-related initiatives in these regions.

Figure 23.

Distribution of the datasets based on their data collection location/country.

3.2. Machine Learning and Deep Learning Algorithms Used for Arabic Sign Language Recognition

To address the second research question, RQ2: “What were the existing methodologies and techniques used in ArSLR?” six sub-questions were explored. These questions examine different phases of the ArSLR methods discussed in the reviewed papers, including data preprocessing, segmentation, feature extraction, and the recognition and classification of signs. The answers to each sub-question are analyzed across various ArSLR categories, such as fingerspelling, isolated words, continuous sentences, and miscellaneous methods. Table 14, Table 15, Table 16 and Table 17 provide a concise summary of the reviewed studies for each category. Rows shaded in gray indicate methods that utilize wearable sensors.

Table 14.

Summary of fingerspelling ArSLR research papers.

| Year | Ref. | Preprocessing Methods | Segmentation Methods | Feature Extraction Methods | ArSLR Algorithm | Evaluation Metrics | Performance Results | Testing and Training Methodology | Signing Mode |
|---|---|---|---|---|---|---|---|---|---|
| 2015 | [42] | Transform to YCbCr, image normalization. | Skin detection, background removal techniques. | Feature extraction through observation detection and then creation of observation vector. | HMM. This algorithm divides the rectangle surrounding the hand shape into zones. | Accuracy. | 100% recognition accuracy for 16 zones. | Training: 70.87% (253 images), Testing: 29.13% (104 images). | Signer-dependent |
| 2015 | [43] | - | - | SIFT to extract the features and LDA to reduce dimensions. | SVM, KNN, nearest-neighbor (minimum distance). | Accuracy. | SVM shows the best accuracy, around 98.9%. | Different partitioning methods were tested. | Signer-dependent |
| 2017 | [48] | Remove observations (rows) with identical values, and rows with multiple missing or null values. | - | Two feature types: | SVM, KNN, and RF. | Accuracy and AUC. | SVM produced the highest overall accuracy, 96.119%. | Training: 75% (1047 observations), Testing: 25% (351 observations). | Signer-dependent |