Abstract

Background

Molecular profiling of estrogen receptor (ER), progesterone receptor (PR), and ERBB2 (also known as Her2) is essential for breast cancer diagnosis and treatment planning. Nevertheless, current methods rely on the qualitative interpretation of immunohistochemistry and fluorescence in situ hybridization (FISH), which can be costly, time-consuming, and inconsistent. Here we explore the clinical utility of predicting receptor status from digitized hematoxylin and eosin-stained (H&E) slides using machine learning trained and evaluated on a multi-institutional dataset.

Methods

We developed a deep learning system to predict ER, PR, and ERBB2 statuses from digitized H&E slides and evaluated its utility in three clinical applications: identifying hormone receptor-positive patients, serving as a second-read tool for quality assurance, and addressing intratumor heterogeneity. For development and validation, we collected 19,845 slides from 7,950 patients across six independent cohorts representative of diverse clinical settings.

Results

Here we show that the system identifies 30.5% of patients as hormone receptor-positive, achieving a specificity of 0.9982 and a positive predictive value of 0.9992, demonstrating its ability to determine eligibility for hormone therapy without immunohistochemistry. By restaining and reassessing samples flagged as potential false negatives, we discover 31 cases of misdiagnosed ER, PR, and ERBB2 statuses.

Conclusions

These findings demonstrate the utility of the system in diverse clinical settings and its potential to improve breast cancer diagnosis. Given the substantial focus of current guidelines on reducing false negative diagnoses, this study supports the integration of H&E-based machine learning tools into workflows for quality assurance.

Subject terms: Breast cancer, Computational biology and bioinformatics, Diagnostic markers

Plain language summary

Breast cancer diagnosis involves identifying three important proteins: estrogen receptor (ER), progesterone receptor (PR), and ERBB2. Profiling these proteins helps oncologists determine which treatments are most likely to benefit patients. However, current testing methods can be expensive, time-consuming, and sometimes inaccurate. This study introduces and validates an artificial intelligence system that predicts the presence of these proteins using routine tissue slides. The system is tested on data from multiple medical centers and accurately identifies patients with ER and PR proteins who could benefit from hormone therapy. It also detects cases where the original diagnosis was incorrect. This tool may improve diagnostic accuracy, reduce errors, and enhance the efficiency of breast cancer care by integrating artificial intelligence into clinical workflows.

Shamai et al. develop and validate a deep learning system for predicting receptor status from H&E images in breast cancer. The system accurately identifies hormone receptor-positive patients and detects false negative diagnoses, supporting its integration into clinical workflows to improve diagnostic accuracy, patient care, and quality assurance.

Introduction

Breast cancer is the most common cancer diagnosed in women and the leading cause of cancer death among women aged 20–491. The National Comprehensive Cancer Network (NCCN) recommends assaying every invasive breast cancer case for expression of estrogen receptor (ER), progesterone receptor (PR), and ERBB2 (also known as Her2), which are crucial for treatment guidance and prognosis evaluation2.

Immunohistochemistry (IHC) and fluorescence in situ hybridization (FISH) are the current conventional techniques for assessing ER, PR, and ERBB2 expressions. Accordingly, hormone receptor-positive breast cancer, defined as either ER-positive or PR-positive by IHC, typically necessitates hormonal therapy as a recommended treatment approach. Current guidelines also recommend reporting ER-low-positive cases (1% ≤ IHC ≤ 10%), where the benefit of endocrine therapy is unclear3.

Unlike hematoxylin and eosin (H&E) staining, which is universally applied for cancer diagnosis, IHC and FISH are expensive, time-consuming, and inaccessible in some regions. Additionally, their interpretation requires specialized expertise and may yield inconsistent outcomes. Such inconsistencies may arise from preanalytical factors like fixation time, analytical factors like antibody types, and post-analytical factors such as subjective interpretations by pathologists4–8. Another factor that poses a considerable challenge is intratumor heterogeneity, as receptor expression can vary within different parts of a tumor9. Typically, laboratories stain only one block of the tumor for IHC, which might not fully capture this heterogeneity. Given the critical importance of accurate molecular diagnostics, current guidelines strongly advocate for diverse quality assurance measures, particularly aimed at mitigating the risk of false negative diagnoses3,10.

Machine-learning-based analysis of digitized H&E slides has shown immense potential for improving tumor detection, diagnosis, and grading11,12. Recent studies have shown the ability of deep learning models to predict the ER, PR, ERBB2, and PD-L1 statuses from H&E images in breast cancer13–18.

Nevertheless, the overall performance of these models remains inferior to the standard of care molecular profiling using IHC. Therefore, despite the potential of H&E-based receptor prediction to improve breast cancer diagnosis, the integration of this methodology into clinical workflows remains unclear, and its application and impact on diagnosis have not been rigorously validated in clinical settings.

Here, we present the development and clinical validation of a deep learning system designed to predict the ER, PR, and ERBB2 statuses in invasive breast cancer from H&E-stained whole slide images (WSIs). We introduce three applications to explore the potential impact and utility of receptor prediction in clinical practice. Namely, the validation encompasses the system’s ability to identify hormone receptor-positive patients, serve as a second-read tool for quality assurance, and overcome intratumor heterogeneity by enabling the analysis of tumor sections unstained for IHC. A key contribution of this work is the extensive and diverse data used for developing and validating the models. Our data includes six independent cohorts representative of diverse clinical settings, and collaborations enabling re-staining and reevaluation of previously diagnosed cases. Upon validation, we show that our system identifies hormone receptor-positive tumors with near-perfect specificity. Additionally, by reevaluating potential false negative diagnoses flagged by the system, we detect misclassifications in many cases diagnosed by traditional methods. Importantly, some of these reclassifications change the patients’ treatment recommendations.

Methods

Data collection and scanning

Our data collection consists of 19,845 H&E slides from 7950 patients across six independent cohorts (Table 1). The variety of scanners, magnifications, and staining reagents offers a robust framework for assessing the system’s generalizability and accuracy in diverse clinical settings. Moreover, some of the cohorts were scanned in-house for the purpose of this study, which provided several important advantages: (1) The data from Carmel, Haemek, Ipatimup, and CHUCB included all invasive breast cancer diagnoses within the collection period, excluding only slides with scanning issues or insufficient tissue. This ensures validation on uncurated real-world data from pathology labs, including common artifacts such as air bubbles, marker marks, and scanning defects. (2) Our collaboration with the Carmel Medical Center enabled multiple experiments and a thorough reassessment of potential false negative diagnoses, allowing us to assess the potential of the system as a second-read tool for quality assurance in clinical practice. (3) To simulate a prospective validation, the Carmel-Test data was designed to include patients diagnosed after the patients in Carmel-Train. This ensured an evaluation of the model’s performance amidst pre- to post-analytical changes over time.

Table 1.

Overview of the Data

| Dataset | Years | Collected slides | Collected patients | Included slides | Included patients | ER+ slides (%) | PR+ slides (%) | ERBB2+ slides (%) |
|---|---|---|---|---|---|---|---|---|
| For development and validation | | | | | | | | |
| TCGA | 1988–2013 | 3114 | 1097 | 2576 | 1040 | 77.6% | 67.0% | 18.3% |
| ABCTB | 2006–2015 | 3024 | 2552 | 2946 | 2490 | 79.3% | 71.3% | 14.7% |
| Carmel-Train | 2017–2020 | 5738 | 1483 | 5639 | 1482 | 88.2% | 74.4% | 12.7% |
| Carmel-Test | July 2020–Aug 2021 | 1872 | 578 | 1855 | 577 | 88.9% | 73.7% | 12.8% |
| For external validation | | | | | | | | |
| Haemek | 2019–2021 | 1226 | 503 | 981 | 482 | 79.3% | 49.8% | 17.2% |
| CHUCB | 2014–2020 | 218 | 183 | 176 | 172 | 87.5% | 69.9% | 13.1% |
| Ipatimup | 2021–2022 | 100 | 100 | 100 | 100 | 83.0% | 69.0% | 15.1% |
| For additional experiments | | | | | | | | |
| Carmel-Intratumor | 2017–2020 | 502 | 212 | 479 | 207 | 90.8% | 49.7% | 5.2% |
| Carmel-Benign | 2020–2021 | 4051 | 1454 | 3965 | 1440 | - | - | - |
| Carmel-Test-Rescanned | Jan–Aug 2021 | 467 | 319 | 452 | 315 | 88.1% | 71.0% | 13.3% |
| Total* | | 19,845 | 7950 | 18,717 | 7783 | | | |

This study utilized 19,845 slides from 7950 patients across six independent cohorts. See Supplementary Fig. 1 for the distribution of tiles across the cohorts. Exclusions were limited to poorly scanned or tissue-deficient slides. Carmel-Train, TCGA-Train, and ABCTB-Train (CAT-Train) were used for training the models in a cross-validation manner, as well as for hyperparameter tuning. After training, the models were locked and validated on Carmel-Test, ABCTB-Test, and TCGA-Test (CAT-Test). Haemek, CHUCB, and Ipatimup cohorts were used for additional external validation. Carmel-Benign slides were used to explore model performance enhancement by including slides with no cancer. Carmel-Intratumor slides were used to assess the system’s potential to overcome intratumor heterogeneity. Carmel-Test-Rescanned scans were used to evaluate the system’s performance consistency across different scanners.

ER estrogen receptor, PR progesterone receptor.

*Carmel-Intratumor and Carmel-Test-Rescanned are a subset of Carmel-Train and Carmel-Test and were not included in the total calculation.

We excluded slides that contained fewer than 100 tissue tiles (representing 6.55 square millimeters of tissue), because our transformer configuration requires 100 tiles per bag. Such slides were rare, constituting only 0.19% of the dataset. Additionally, we excluded slides that lacked labels or had physical scan issues. Patient pathology data was collected from the pathology reports, encompassing age, gender, breast cancer subtype, and status of ER, PR, and ERBB2. ER, PR, and ERBB2 statuses were defined in accordance with current guidelines3. Namely, samples with at least 1% of tumor nuclei showing positive nuclear staining by ER-IHC and PR-IHC were interpreted as ER-positive and PR-positive, respectively. For ERBB2, samples with an IHC score of 3+ or an IHC score of 2+ accompanied by a positive FISH test were considered ERBB2-positive. Additional data for some cohorts included cancer grade and sample excision type (core needle biopsy or surgical specimen). To prevent data leaks, the ground truth labels of test datasets were concealed from model developers. See Supplementary Fig. 1 for the distribution of tiles across the cohorts.

A detailed overview of each dataset is provided below (Table 1):

TCGA

The Cancer Genome Atlas Breast Cancer (TCGA-BRCA) dataset, publicly available and spanning 40 sites, comprises 3114 H&E slides from 1097 patients diagnosed between 1988 and 201319,20. It includes both formalin-fixed paraffin-embedded and frozen tissue sections. We utilized both diagnostic and frozen sections to enrich the training data, aiming to enhance diversity and model generalizability. Inference was performed on the diagnostic slides only. In total, 2576 diagnostic slides from 1040 TCGA patients were used in this study for training and analysis. The digitized TCGA slides are available in .svs file format, with variable magnifications and resolutions ranging from 0.11 to 0.55 microns per pixel (MPP).

ABCTB

The Australian Breast Cancer Tissue Bank (ABCTB) spans multiple sites across Australia and comprises 3024 H&E slides from 2552 patients diagnosed between 2006 and 201521,22. Scanned at the University of Sydney, these slides were provided in .ndpi files at a resolution of 0.226 MPP. We included 2946 slides from 2490 ABCTB patients in our analysis.

Carmel

The Carmel dataset, collected and scanned at the Carmel Medical Center in Israel, played a crucial role in our research. The Carmel-Train subset contained a total of 5738 H&E slides from 1483 patients diagnosed between 2017 and June 2020. Out of these, 5639 slides from 1482 patients were included in the study. The Carmel-Test subset contained 1872 H&E slides from 578 patients diagnosed between July 2020 and August 2021, with 1855 slides from 577 patients included in the study. We meticulously removed sample tumors in Carmel-Test that belonged to patients with previous diagnoses to minimize data leaks and biases, ensuring that no patients were included in both train and test datasets. For each tumor sample, we collected 1–3 H&E slides. These slides were scanned with a 3DHistech Pannoramic 250 Flash III scanner at 20x magnification at the Biomedical Core Facility in Israel, producing .mrxs slides with a resolution of 0.242 MPP. An important aspect to note is that the H&E slides were collected from the same tumor blocks that were also stained for IHC.

Carmel-Intratumor

The Carmel-Intratumor dataset was specifically collected from the Carmel Medical Center to apply our trained models to areas of the tumor not stained for IHC. This was done to detect cases where expressing cells could potentially be missed in the diagnosis due to intratumor variability. For this, we collected H&E slides fulfilling all the following inclusion criteria: (1) H&E slides containing tumor, (2) slides from surgical specimen blocks not stained for IHC, and (3) slides from tumor samples classified as negative (for a target receptor) based on IHC staining of other blocks. Core needle biopsies were excluded as they consist of a single block always stained for IHC at Carmel Medical Center. Cases with multiple samples stained for IHC that were all negative were also excluded, as such cases were less likely to have tumor expression variability.

To collect the Carmel-Intratumor data, a pathologist examined all surgical specimen H&E slides and marked which of them contained a tumor. The slide digitization process was similar to that of Carmel. In total, we collected 502 H&E slides from 495 blocks belonging to 212 patients, with 479 slides from 472 blocks belonging to 207 patients ultimately used in the study.

To broaden the analysis, Carmel-Intratumor was designed to include patients from the entire Carmel dataset, and not just Carmel-Test, which could raise concerns regarding potential data leaks. However, overfitting would not confer an advantage in this experiment. The system was applied to the Carmel-Intratumor dataset to identify tumor blocks predicted as ER-positive and PR-positive, even when the ground truth was negative. Overfitting to the ground truth status would actually result in identifying fewer potential false negative cases, which is contrary to our objectives. Moreover, Carmel-Train consists of five folds, and we ensured that each model was applied only to patients it was not trained on.

Carmel-Benign

The Carmel-Benign dataset, also collected from the Carmel Medical Center, included any breast tumor sample diagnosed as benign between 2020 and 2021. It comprised 4051 slides from 1454 patients, with 3965 slides from 1440 patients included in the study. This dataset was scanned alongside the other Carmel datasets using the same scanner.

Carmel-Test-Rescanned

The Carmel-Test-Rescanned dataset consisted of 467 slides from 319 patients from the Carmel-Test dataset, which were rescanned using a Zeiss scanner at the Faculty of Medicine, Bar-Ilan University in Israel. Ultimately, 452 slides from 315 patients were included in the analysis. The slides were scanned at an MPP of 0.220.

Haemek

The Haemek dataset, collected and scanned in-house at the Haemek Medical Center in Israel, was used for external validation. It contains 1226 H&E slides from 503 patients, encompassing any case diagnosed between 2019 and 2021. For each tumor sample, we collected 1–2 H&E slides. The slide digitization process was similar to that of Carmel. In total, 981 slides from 482 patients were included in the analysis. The large gap in the number of slides arose because IHC staining was not performed for every tumor sample, leaving many slides without corresponding IHC annotations.

CHUCB

The Centro Hospitalar Universitário Cova da Beira (CHUCB) dataset, which included 218 H&E slides from 183 patients diagnosed between 2014 and 2020 at CHUCB, Covilhã, Portugal, was also used for external validation. It included any case diagnosed during these years. Overall, 176 H&E slides from 172 patients were included in the study. The slides were digitized at Ipatimup using the 3DHistech Pannoramic 1000 scanner, producing .mrxs files at a resolution of 0.242 MPP.

Ipatimup

Lastly, the Ipatimup dataset, comprising 100 H&E slides from 100 patients diagnosed between 2021 and 2022 at the Ipatimup laboratory in Porto, Portugal, was included for external validation. This dataset encompassed any case diagnosed within this timeframe. All slides were included in the study and were digitized at Ipatimup using the same 3DHistech Pannoramic 1000 scanner, resulting in .mrxs files at a resolution of 0.242 MPP.

All H&E slides were obtained from formalin-fixed paraffin-embedded tissues, except for TCGA, which also included slides from frozen tissues. The diversity in the data collection, spanning multiple geographic locations, and diagnostic periods, ensures that our cohorts are representative of real-world clinical settings. The inclusion of various cancer grades, excision types, and patient demographics further supports the generalizability of our findings.

Slide segmentation and tiling

Digitized WSIs were segmented into tissue and background regions utilizing Otsu’s method23, enabling the exclusion of background areas. The segmented slides were then divided into non-overlapping tiles, each measuring 256 × 256 pixels. Tiles were extracted at a standardized magnification, achieving a uniform MPP of 1.0 across the different cohorts, ensuring consistency in scale and analysis (Fig. 1a).
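The segmentation and tiling steps can be illustrated with a minimal, standard-library sketch. It is not the study's implementation: the grayscale input, the convention that tissue is darker than background, the `min_tissue` fraction, and all function names are assumptions made for illustration. The last helper reproduces the area covered by a bag of 100 tiles of 256 × 256 pixels at 1.0 MPP.

```python
def otsu_threshold(pixels, levels=256):
    """Gray level that maximizes the between-class variance (Otsu's method)."""
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1
    total = len(pixels)
    sum_all = sum(i * h for i, h in enumerate(hist))
    best_t, best_var = 0, -1.0
    w0 = 0    # number of pixels at or below the candidate threshold
    sum0 = 0  # summed intensity of those pixels
    for t in range(levels):
        w0 += hist[t]
        sum0 += t * hist[t]
        if w0 == 0:
            continue
        w1 = total - w0
        if w1 == 0:
            break
        m0, m1 = sum0 / w0, (sum_all - sum0) / w1
        var_between = w0 * w1 * (m0 - m1) ** 2
        if var_between > best_var:
            best_t, best_var = t, var_between
    return best_t


def tile_grid(mask, tile=256, min_tissue=0.5):
    """Top-left corners of non-overlapping tiles whose tissue fraction is
    at least `min_tissue` (an assumed cutoff, not taken from the paper)."""
    h, w = len(mask), len(mask[0])
    coords = []
    for r in range(0, h - tile + 1, tile):
        for c in range(0, w - tile + 1, tile):
            tissue = sum(mask[y][x]
                         for y in range(r, r + tile)
                         for x in range(c, c + tile))
            if tissue / (tile * tile) >= min_tissue:
                coords.append((r, c))
    return coords


def bag_area_mm2(n_tiles=100, tile_px=256, mpp=1.0):
    """Tissue area covered by n_tiles square tiles, in square millimeters."""
    side_mm = tile_px * mpp / 1000.0  # microns to millimeters
    return n_tiles * side_mm ** 2


# Toy 4 x 4 grayscale image: dark values are tissue, 250 is bright background.
gray = [[30, 40, 250, 250],
        [35, 45, 250, 250],
        [250, 250, 250, 250],
        [250, 250, 20, 25]]
threshold = otsu_threshold([v for row in gray for v in row])
mask = [[1 if v <= threshold else 0 for v in row] for row in gray]
tiles = tile_grid(mask, tile=2)  # 2-pixel tiles only for this toy example
```

With the default arguments, `bag_area_mm2()` evaluates to about 6.55 mm², which matches the minimum tissue area quoted for the slide exclusion criterion.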

Fig. 1. Overview of the proposed deep learning system.

a The process involved automated segmentation and tiling of slides, feature extraction per tile using a receptor-CNN, and utilizing a transformer-based approach for multiple instance learning to predict receptor status at the slide level. Separate models were trained for each receptor. b A CNN was trained to distinguish between slides with cancer (from Carmel-Train) and slides with no cancer (from Carmel-Benign). This malignancy classifier was then integrated with the receptor-CNN, enhancing its capability to incorporate malignancy features into receptor status predictions. H&E Hematoxylin and Eosin, ER Estrogen Receptor, PR Progesterone Receptor, CNN Convolutional Neural Network.

Model architecture, hyperparameters, and optimization

Analyzing WSIs involves splitting the slides into tiles due to their large size, which poses learning challenges when using slide-level annotations like receptor status. To address this, we adopted a multiple instance learning (MIL)14,24 approach, which processes multiple tiles simultaneously based on their relative importance. To fully leverage the scale and variability of our datasets, we integrated convolutional neural networks (CNNs) with transformer-encoder-based MIL. Our methodology was inspired by recent studies that utilized transformers to enhance attention-based MIL analysis of WSIs25–27. This combination utilizes the power of CNNs for image feature extraction and transformers for their superior contextual interpretation capabilities (Fig. 1a).

The CNN feature extraction was performed using the Pre-activation ResNet-50 architecture28, optimized to minimize cross-entropy loss for each prediction task against the ground truth label of either ER, PR, or ERBB2 status. The training involved 1000 epochs, utilizing the Adam optimizer29 with an initial learning rate of 1 × 10⁻⁵ and a weight decay of 1 × 10⁻⁵. Each epoch processed minibatches of 18 tiles, extracting a 512-feature vector per tile.

A transformer was then employed in a MIL framework to analyze bags of 100 tile feature vectors, each bag corresponding to a unique slide, with a minibatch size of 32 bags. The transformer underwent 100 epochs of training with the AdamW optimizer30, a starting learning rate of 0.01 which was divided by 10 at epochs 30 and 70, and a weight decay of 0.01.
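The step schedule described for the transformer can be written out as a short function. This is a sketch under assumptions: epochs are taken to be 0-indexed and the drop is taken to apply from the milestone epoch onward, neither of which is stated in the text; the function name is hypothetical.

```python
def step_lr(epoch, base_lr=0.01, milestones=(30, 70), gamma=0.1):
    """Learning rate in effect during `epoch` under step decay.

    The rate is multiplied by `gamma` once for every milestone that has
    been reached, so it is divided by 10 at epoch 30 and again at epoch 70.
    """
    drops = sum(1 for m in milestones if epoch >= m)
    return base_lr * gamma ** drops


# Rates over the 100 training epochs: 0.01, then 0.001, then 0.0001.
schedule = [step_lr(e) for e in range(100)]
```

The behavior corresponds to a multi-step scheduler of the kind found in common deep learning frameworks; the source does not state which framework or scheduler class was used.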

During inference, bags of 100 tiles were randomly sampled per slide, with the CNN extracting features which were then assessed by the transformer. A score between 0 and 1 was generated per slide, indicating the likelihood of a positive status for ER, PR, and ERBB2. To enhance confidence in the model’s predictions, multiple bag samples per slide were analyzed, and an average inference score was calculated. The total training time of the CNN and transformer models was ~9.5 days per model. Inference time averaged 9 s per slide.
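The averaging over multiple bags can be sketched as follows. The callable `score_bag` is a hypothetical stand-in for the CNN feature extractor followed by the transformer, and the number of bags (`n_bags=10`) is an assumption, since the text only says that multiple bags were analyzed.

```python
import random


def slide_score(tile_features, score_bag, bag_size=100, n_bags=10, seed=0):
    """Average a bag-level score over several random bags from one slide.

    tile_features: list of per-tile feature vectors for the slide.
    score_bag: callable mapping a bag (list of vectors) to a score in [0, 1].
    """
    if len(tile_features) < bag_size:
        raise ValueError("slide has fewer tiles than one bag")
    rng = random.Random(seed)
    scores = []
    for _ in range(n_bags):
        bag = rng.sample(tile_features, bag_size)  # without replacement
        scores.append(score_bag(bag))
    return sum(scores) / n_bags


# Toy usage: the stand-in scorer is the mean of the first feature of each tile.
def toy_scorer(bag):
    return sum(vec[0] for vec in bag) / len(bag)


toy_tiles = [[0.25] for _ in range(150)]
toy_result = slide_score(toy_tiles, toy_scorer)
```

Sampling without replacement within a bag is also an assumption. The length check mirrors the exclusion of slides with fewer than 100 tissue tiles.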

Data augmentation and generalization

To improve model generalization and mitigate overfitting, diverse data augmentations were applied. These included random adjustments to color properties (brightness, contrast, saturation, and hue), Gaussian blurring, rotational flips, affine transformations, random tile shifts, and masking.

Training and inference

We developed our models using the Carmel, ABCTB, and TCGA (CAT) cohorts. Carmel-Train and Carmel-Test were collected consecutively to simulate a prospective validation on Carmel-Test. The ABCTB and TCGA cohorts were randomly partitioned at the patient level into training and testing sets, with 25% of ABCTB and 16.6% of TCGA assigned to the respective testing sets. The CAT-Train dataset comprised the Carmel-Train, ABCTB-Train, and TCGA-Train cohorts. Similarly, the CAT-Test dataset comprised the Carmel-Test, ABCTB-Test, and TCGA-Test cohorts. The CAT-Train dataset was further randomly subdivided into five folds, at the patient level, for 5-fold cross-validation. As is conventional, hyperparameter tuning was performed within the cross-validation process.
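A patient-level split means that fold membership is decided per patient and then inherited by every slide of that patient, so no patient contributes slides to both the training and held-out folds. The sketch below illustrates the idea; the round-robin assignment after shuffling and the function name are assumptions, not the study's procedure.

```python
import random


def patient_level_folds(slide_to_patient, n_folds=5, seed=0):
    """Map each slide to a fold index such that all slides of one patient
    share the same fold."""
    patients = sorted(set(slide_to_patient.values()))
    random.Random(seed).shuffle(patients)
    # Round-robin assignment keeps fold sizes (in patients) nearly equal.
    fold_of_patient = {p: i % n_folds for i, p in enumerate(patients)}
    return {s: fold_of_patient[p] for s, p in slide_to_patient.items()}


# Toy usage: 12 patients with two slides each.
toy_slides = {f"slide_{p}_{k}": f"patient_{p}"
              for p in range(12) for k in range(2)}
toy_folds = patient_level_folds(toy_slides)
```

A model trained on four folds is then evaluated on the remaining fold, and the same mapping can be reused to make sure a fold model is only applied to patients it has not seen.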

Post-training, an ensemble approach was adopted, averaging the prediction scores of the five cross-validation models to enhance robustness and generalizability across both the CAT-Test and external cohorts, without any further calibration or parameter tuning. Separate models were developed for each receptor status prediction (ER, PR, ERBB2).

The hybrid model

To further enhance the models’ ability to predict receptor status, we incorporated slides from benign tumors within the learning process (Fig. 1b). For this, the Carmel-Benign dataset was randomly split, at the patient level, into a training set (75%) and a testing set (25%). We trained a feature extractor model, specifically to classify slides as either malignant (derived from Carmel-Train) or benign (derived from Carmel-Benign-Train). At the same time, distinct feature extractor models were trained on Carmel-Train slides to predict the ER, PR, and ERBB2 status (Fig. 1b). The Malignant-vs-Benign and receptor-prediction models were trained using identical hyperparameters and strategies.

For each receptor status prediction task, the feature vectors generated by the Malignant-vs-Benign model were combined with those from the respective receptor-prediction model. This composite feature vector was then used to train the transformer model, adapting it to predict receptor statuses based on a richer representation that includes both neoplastic features and specific receptor characteristics. During the inference phase, the trained hybrid system was applied to slides in the Carmel-Test set, which consists exclusively of malignant tumors. This approach enhances the model’s ability to incorporate critical neoplastic features into the receptor status prediction process.

Prediction of eligibility for hormone therapy

To predict hormone-receptor status, which is defined as positive if either the ER or PR receptor is positive, a similar concatenation strategy was employed. Namely, feature vectors from models separately trained to predict ER and PR statuses were merged. This enriched feature vector was then utilized to train the transformer model to predict the overall hormone status. By leveraging pre-trained models for ER and PR predictions, this method efficiently utilizes existing resources, avoiding the necessity to retrain models from scratch for the combined hormone status prediction task.
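The label definition and the concatenation step can be made concrete with a small sketch. It assumes that the two extractors each emit one vector per tile for the same tiles in the same order, and that merging means end-to-end concatenation (512 + 512 = 1024 values per tile); the function names are hypothetical.

```python
def hormone_receptor_label(er_positive, pr_positive):
    """Hormone receptor status is positive if either ER or PR is positive."""
    return bool(er_positive or pr_positive)


def concat_features(er_features, pr_features):
    """Concatenate per-tile vectors from the ER and PR feature extractors."""
    if len(er_features) != len(pr_features):
        raise ValueError("both extractors must cover the same tiles")
    return [list(e) + list(p) for e, p in zip(er_features, pr_features)]


# Toy bag of 100 tiles with 512 features from each extractor.
er_bag = [[0.0] * 512 for _ in range(100)]
pr_bag = [[1.0] * 512 for _ in range(100)]
merged_bag = concat_features(er_bag, pr_bag)
```

The merged bag would then be the input on which a transformer is trained against the hormone receptor label, while the two extractors stay fixed.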

Second-read tool for quality assurance in clinical practice

To assess the consistency of traditional molecular diagnosis, we identified diagnoses that changed from receptor-negative in the initial core needle biopsy (CNB) diagnosis to receptor-positive in subsequent surgical specimen diagnoses. We used the Carmel dataset because both CNB and surgical specimens are always stained for IHC in Carmel, effectively reducing biases in this analysis. We included only patients who initially had a CNB diagnosis followed by a surgical specimen diagnosis. Additionally, patients were excluded if the surgical diagnosis occurred more than 6 months after the initial CNB or if the tumor subtype identified in the surgical diagnosis differed from that in the CNB, thereby ensuring the analysis of the same tumor.

For the second-read quality assurance system, we established thresholds to flag samples as potential false negatives. These thresholds were set to trigger alerts for the top 10%, 5%, and 2.5% of the highest-scoring negative samples for ER, PR, and ERBB2, respectively. This approach was designed to maintain a comparable and sufficient number of flagged tumors across each receptor category. The resulting thresholds were 0.15 for ER, 0.68 for PR, and 0.91 for ERBB2. These thresholds differ from those used for hormone receptor-positivity identification due to the distinct applications and models in which they were applied. For tumor blocks with more than one H&E slide, we determined if a block should be flagged for reclassification by averaging the prediction scores of its slides. Notably, we observed no differences in the flagged blocks when, instead of averaging, we randomly selected a single H&E slide per block and used its score.
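One way to derive such a threshold and apply it per block is sketched below. The rounding rule for the number of flagged samples, the inclusive comparison (`>=`), and the function names are assumptions; the toy scores are synthetic and unrelated to the study data.

```python
def flag_threshold(negative_scores, top_fraction):
    """Lowest score within the top `top_fraction` of negative-labelled samples."""
    ranked = sorted(negative_scores, reverse=True)
    k = max(1, int(round(top_fraction * len(ranked))))
    return ranked[k - 1]


def flag_blocks(block_slide_scores, threshold):
    """Flag blocks whose mean slide score reaches the threshold.

    block_slide_scores: dict mapping a block id to the scores of its slides.
    """
    flagged = []
    for block_id, scores in block_slide_scores.items():
        if sum(scores) / len(scores) >= threshold:
            flagged.append(block_id)
    return flagged


# Toy usage: 100 synthetic scores for receptor-negative samples.
toy_negative_scores = [i / 100 for i in range(100)]
toy_threshold = flag_threshold(toy_negative_scores, 0.10)  # top 10%
toy_flagged = flag_blocks({"A": [0.20, 0.10], "B": [0.95, 0.91]}, toy_threshold)
```

Because the threshold is a quantile of the negative-labelled scores, its numeric value depends on how each receptor model distributes scores among negatives, which is consistent with the three receptors ending up with very different cutoffs.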

Receptor prediction from non-neoplastic regions

To visually represent the regions within the tissue that influenced the decision-making process of our models, we generated heatmaps. We implemented the method outlined in ref. 13 with our CNN. The process began with the CNN producing 512 feature maps for each tile, taken just before the fully connected (FC) layer. These feature maps were then individually multiplied by their corresponding FC weights and summed to synthesize a single feature map. We then upscaled this map back to the size of the tile and applied a sigmoid function for normalization. Methodologically, this process is akin to applying the CNN to the vicinity of each pixel on the slide, where the collective output scores form the final heatmap. These heatmaps were then aggregated across the entire slide for a comprehensive visualization. To further optimize computational efficiency, we leveraged the fully convolutional nature of the heatmap calculation, processing considerably larger tiles at once (Supplementary Fig. 2). This approach enabled us to generate heatmaps for larger regions of the slide in a single step. Approaches like Grad-CAM yielded similar results. The main advantage of the chosen approach was its interpretable process.
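The heatmap computation for one tile can be sketched in a few lines. This is an illustration only: nearest-neighbor upscaling, the optional bias term, and the function names are assumptions, and the toy example uses 2 feature maps instead of 512.

```python
import math


def weighted_feature_map(feature_maps, fc_weights, fc_bias=0.0):
    """Weight each feature map by its FC weight and sum them into one map."""
    h, w = len(feature_maps[0]), len(feature_maps[0][0])
    combined = [[fc_bias] * w for _ in range(h)]
    for fmap, weight in zip(feature_maps, fc_weights):
        for y in range(h):
            for x in range(w):
                combined[y][x] += weight * fmap[y][x]
    return combined


def upscale_nearest(grid, factor):
    """Nearest-neighbor upscaling by an integer factor (assumed method)."""
    return [[grid[y // factor][x // factor]
             for x in range(len(grid[0]) * factor)]
            for y in range(len(grid) * factor)]


def sigmoid_map(grid):
    """Squash every value into (0, 1)."""
    return [[1.0 / (1.0 + math.exp(-v)) for v in row] for row in grid]


# Toy usage: two 2 x 2 feature maps, upscaled by 2 to a 4 x 4 heatmap.
toy_maps = [[[1.0, 0.0], [0.0, 0.0]],
            [[0.0, 0.0], [0.0, 2.0]]]
toy_weights = [2.0, -1.0]
toy_heatmap = sigmoid_map(
    upscale_nearest(weighted_feature_map(toy_maps, toy_weights), 2))
```

In the toy heatmap, the top-left region scores above 0.5 because its feature map carries a positive weight, and the bottom-right region scores below 0.5 because its weight is negative.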

Data interpretation by t-SNE

To shed light on the models’ decision-making, we utilized t-distributed stochastic neighbor embedding (t-SNE)31. For the t-SNE visualizations, each tile from the slides in Carmel-Test was processed through the CNN to obtain a 512-dimensional feature vector, alongside its corresponding prediction score for ER, PR, or ERBB2 status. These feature vectors were used for the t-SNE analysis, in which they were embedded into a two-dimensional space to facilitate a visual examination of the tiles’ distribution and clustering. This approach enabled us to identify distinct morphological patterns and prediction score-based groupings among the tiles, providing insights into the model’s predictive behavior for each of the receptors.

Statistics and reproducibility

Data collection, annotation, and analysis in our study spanned from April 2020 to January 2024. We assessed the performance of our models using the following statistical measures: positive predictive value (PPV), specificity, and the area under the ROC curve (AUC). The AUC was selected to illustrate the models’ performance due to its independence from classification thresholds and data balance, alongside its prevalent use in similar studies. PPV and specificity were chosen to demonstrate the system’s capability to accurately identify positive cases. These performance metrics were calculated at the slide level to reflect the system’s real-world application. However, statistical significance was always determined at the patient level, ensuring the independence of samples in our analysis. For the statistical significance, we employed 95% confidence intervals (CI) and one-sided P values from bootstrapped significance tests, considering P < 0.05 in a 1-tailed hypothesis test as indicative of statistical significance. P values were calculated using patient-level bootstrapping with replacement, based on 1000 replicates. Sample sizes for each experiment are provided throughout the paper. To specifically assess the statistical significance of the performance gap between two classifiers applied to the same test data, we employed the bootstrapped significance test and calculated the P value from the empirical distribution of performance differences between classifiers.
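Patient-level bootstrapping with slide-level metrics can be sketched as follows: patients are resampled with replacement, every slide of a drawn patient enters the replicate, and the AUC (or the AUC difference between two classifiers) is recomputed each time. The percentile interval, the P value definition as the fraction of replicates with a non-positive difference, and all names are assumptions for illustration.

```python
import random


def auc(labels, scores):
    """Probability that a positive outscores a negative (ties count 0.5)."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        return None
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def patient_bootstrap(patient_ids, labels, scores_a, scores_b=None,
                      n_boot=1000, seed=0):
    """Per-replicate AUC of A, or AUC(A) - AUC(B) when scores_b is given."""
    by_patient = {}
    for i, pid in enumerate(patient_ids):
        by_patient.setdefault(pid, []).append(i)
    patients = sorted(by_patient)
    rng = random.Random(seed)
    stats = []
    while len(stats) < n_boot:
        drawn = rng.choices(patients, k=len(patients))  # with replacement
        idx = [i for pid in drawn for i in by_patient[pid]]
        y = [labels[i] for i in idx]
        a = auc(y, [scores_a[i] for i in idx])
        if a is None:
            continue  # replicate lacked one class; draw again
        if scores_b is None:
            stats.append(a)
        else:
            stats.append(a - auc(y, [scores_b[i] for i in idx]))
    return stats


def percentile_ci(stats, level=0.95):
    """Percentile confidence interval from bootstrap replicates."""
    s = sorted(stats)
    lo = s[int((1 - level) / 2 * len(s))]
    hi = s[min(len(s) - 1, int((1 + level) / 2 * len(s)))]
    return lo, hi


def one_sided_p(diffs):
    """Fraction of replicates in which A does not outperform B."""
    return sum(d <= 0 for d in diffs) / len(diffs)
```

Resampling whole patients rather than individual slides keeps slides from the same patient together, which is what preserves the independence of the resampled units.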

We reported results per slide for several reasons, although clinical decisions are typically informed at the patient level. (1) Some patients had multiple IHC results due to intratumor heterogeneity or multiple excisions. For instance, a biopsy and subsequent surgical excision might show different receptor statuses. (2) The deep learning system predicts receptor expression based on the tumor region in the H&E slide, not the whole patient, as other regions or tumors may differ. Thus, predictions are designed for individual slides. Pathologists benefit from per-tumor predictions, and applying the proposed system to a biopsy is preferable over waiting for surgical specimens. (3) Inconsistent labeling across datasets prevents a consistent patient-level analysis, as TCGA often provides a single IHC result, while others like Carmel have multiple diagnoses.

Ethical approvals and consent for the datasets

The ABCTB dataset, available through the Australian Breast Cancer Tissue Bank, received ethical and scientific approval according to their access policy (see Data Availability). Informed consent was obtained from all participants in the ABCTB cohort. The Institutional Review Board (IRB) overseeing the creation of the ABCTB dataset is the Sydney Local Health District Ethics Committee (Royal Prince Alfred Zone). The creation of the TCGA dataset was approved by the Institutional IRBs of the National Cancer Institute (NCI) and the National Human Genome Research Institute, ensuring compliance with the Common Rule (45 CFR 46) and HIPAA regulations. Contributing institutions also obtained IRB approval for sample collection and data submission, with informed consent from living participants and re-consent waived for deceased individuals. Data from Carmel, Haemek, Ipatimup, and CHUCB were collected and digitized internally for this study. Each dataset’s collection followed protocols compliant with the Helsinki Declaration and relevant institutional policies, and each dataset’s access and usage were approved in advance by corresponding local ethics boards (Helsinki approvals). Specifically, data from Carmel, Ipatimup, and CHUCB were approved for the research by the Helsinki committee of the Lady Davis Carmel Medical Center, and data from Haemek was approved for the research by the Haemek Medical Center Ethics Committee. Informed consent was waived by the IRBs, as the study is retrospective, involves only digital, de-identified data with no way to trace it back to patients, and does not involve any invasive or physical interventions. As each dataset adheres to its own ethics approval processes, including prior IRB or Helsinki Declaration approvals, additional IRB approval at the university level was deemed unnecessary for the use of these datasets in this study.

Reporting summary

Further information on research design is available in the Nature Portfolio Reporting Summary linked to this article.

Results

Prediction of ER, PR, and ERBB2 from H&E slides

We trained our models to predict the ER, PR, and ERBB2 statuses from the H&E slides in five-fold cross-validation using the CAT-Train dataset, consisting of Carmel-Train, ABCTB-Train, and TCGA-Train. The models achieved cross-validation AUC scores of 0.951 for ER, 0.792 for PR, and 0.822 for ERBB2, indicating strong predictive capability (Table 2).

Table 2.

The System’s AUC Performance Across the Cohorts

| | CAT-Cross-Validation | CAT-Test | Carmel-Test | ABCTB-Test | TCGA-Test | Haemek | CHUCB | Ipatimup |
|---|---|---|---|---|---|---|---|---|
| | AUC (95% CI, n = 8721) | AUC (95% CI, n = 2788) | AUC (95% CI, n = 1855) | AUC (95% CI, n = 750) | AUC (95% CI, n = 183) | AUC (95% CI, n = 981) | AUC (95% CI, n = 176) | AUC (95% CI, n = 100) |
| ER status | 0.951 (0.945–0.958) | 0.955 (0.943–0.966) | 0.958 (0.941–0.974) | 0.953 (0.937–0.970) | 0.930 (0.889–0.971) | 0.937 (0.917–0.957) | 0.924 (0.878–0.971) | 0.959 (0.928–0.991) |
| PR status | 0.792 (0.777–0.806) | 0.807 (0.784–0.830) | 0.790 (0.755–0.823) | 0.849 (0.821–0.876) | 0.820 (0.765–0.875) | 0.741 (0.706–0.778) | 0.682 (0.612–0.750) | 0.785 (0.704–0.877) |
| ERBB2 status | 0.822 (0.808–0.837) | 0.846 (0.821–0.874) | 0.844 (0.810–0.879) | 0.872 (0.842–0.904) | 0.790 (0.688–0.895) | 0.823 (0.776–0.878) | 0.806 (0.748–0.863) | 0.690 (0.537–0.852) |

The system’s AUC performance, along with 95% confidence intervals (CIs), for predicting ER, PR, and ERBB2 status from H&E-stained WSIs. The number of slides in each cohort is presented. The AUC values were calculated at the slide level. CAT-Cross-Validation AUC represents the averaged cross-validation AUC scores across the five folds. CAT: Carmel, ABCTB, TCGA. The number of independent samples corresponds to the number of patients for each dataset and is as follows: CAT-Cross-Validation (n = 4186), CAT-Test (n = 1369), Carmel-Test (n = 577), ABCTB-Test (n = 625), TCGA-Test (n = 167), Haemek (n = 482), CHUCB (n = 172), and Ipatimup (n = 100).

ER estrogen receptor, PR progesterone receptor, CAT Carmel, ABCTB, TCGA, AUC Area Under the Curve, CI Confidence Interval.

After cross-validation, the models were locked and validated using CAT-Test (comprising Carmel-Test, ABCTB-Test, and TCGA-Test), achieving AUC scores of 0.955 for ER, 0.807 for PR, and 0.846 for ERBB2. Notably, the test AUCs were on par with or slightly higher than the cross-validation AUCs (Table 2), suggesting that hyperparameter tuning during cross-validation did not overfit to CAT-Train. Additionally, we extended our testing to the Haemek, CHUCB, and Ipatimup cohorts, which were entirely external to the CAT cohorts, without any calibration. The consistent AUC performance across all datasets, particularly high for ER status, highlights the models’ ability to differentiate receptor-expressing and non-expressing tumors across diverse cohorts and settings. The areas under the precision/recall and negative predictive value (NPV)/specificity curves are detailed in Supplementary Table 1. Given the clinical value of identifying ERBB2-low cases, the system’s ability to classify cases into ERBB2-low, ERBB2-0, and ERBB2-positive categories is presented in Supplementary Table 2.
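As a rough illustration of how an AUC and its confidence interval of the kind reported in Table 2 can be obtained, the sketch below computes a rank-based AUC and a percentile bootstrap interval. The Fig. 4 caption mentions bootstrapping with 1000 iterations and sampling with replacement; whether Table 2 used exactly this procedure, and whether resampling was done per slide or per patient, are assumptions here. The function names and the toy data are hypothetical.

```python
import random


def auc(labels, scores):
    """Rank-based AUC (Mann-Whitney statistic); tied scores count as half a win."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative label")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def bootstrap_auc_ci(labels, scores, n_iter=1000, alpha=0.05, seed=0):
    """Percentile bootstrap CI for the AUC, resampling items with replacement."""
    rng = random.Random(seed)
    n = len(labels)
    stats = []
    while len(stats) < n_iter:
        idx = [rng.randrange(n) for _ in range(n)]
        ys = [labels[i] for i in idx]
        if len(set(ys)) < 2:
            continue  # a resample with a single class has no defined AUC
        stats.append(auc(ys, [scores[i] for i in idx]))
    stats.sort()
    lower = stats[round(alpha / 2 * n_iter)]
    upper = stats[round((1 - alpha / 2) * n_iter) - 1]
    return auc(labels, scores), lower, upper


# Toy data (hypothetical labels and scores, not taken from the study).
labels = [0, 0, 1, 1, 0, 1, 1, 0, 1, 1]
scores = [0.10, 0.40, 0.35, 0.80, 0.20, 0.90, 0.70, 0.55, 0.60, 0.95]
point, lower, upper = bootstrap_auc_ci(labels, scores)
print(f"AUC = {point:.3f} (95% CI: {lower:.3f}-{upper:.3f})")
```

With several slides per patient, resampling patients rather than slides would better respect the number of independent samples stated in the table footnote.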

Prediction of eligibility for hormone therapy

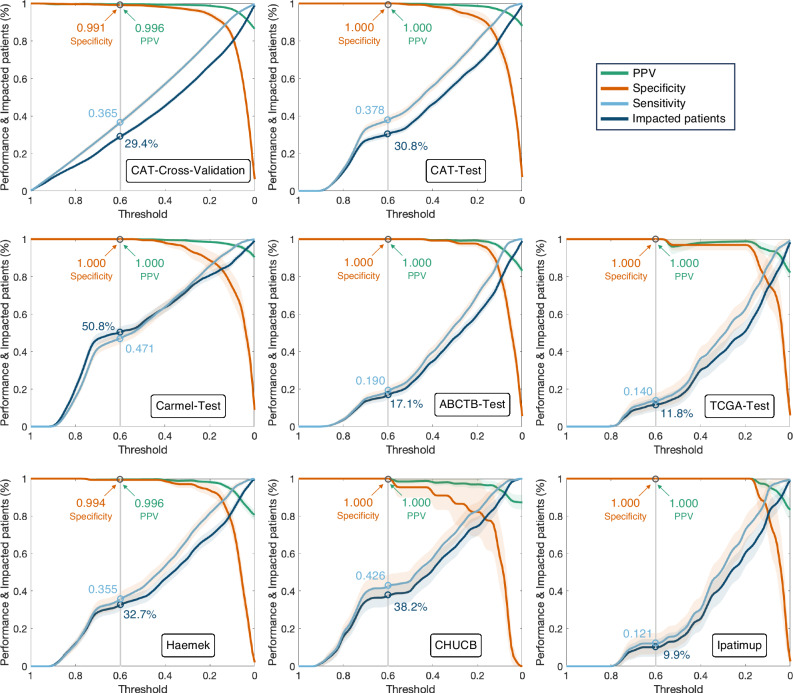

We used the trained ER and PR models to predict the hormone receptor status, achieving AUC scores per slide of 0.943 (95% CI: 0.935–0.951) for the cross-validation and 0.948 (95% CI: 0.936–0.961) for CAT-Test. While the overall performance was high, machine-learning prediction scores reflect a confidence level that can be further leveraged, with higher scores indicating an increased likelihood of positive outcomes. To that end, we utilized a classification threshold above which scores are identified as hormone receptor-positive. We then calculated the percentage of patients identified as hormone receptor-positive, along with the specificity and PPV for each threshold (Fig. 2). The operating threshold for clinical application can be selected based on cross-validation performance. In cross-validation, a threshold of 0.6 identified 29.4% of patients as hormone receptor-positive (specificity = 0.991, PPV = 0.996) (Fig. 2). When the same threshold was applied to the test cohorts, every slide identified as hormone receptor-positive was indeed positive, except in Haemek, where one slide out of 247 was negative. Overall, out of 3926 test slides (2102 patients) across all cohorts, 1242 slides (31.6%) from 642 patients (30.5%) were identified as hormone receptor-positive by the system, of which all but one slide were indeed positive (specificity = 0.9982, PPV = 0.9992).

Fig. 2. The ability of the proposed system to identify hormone receptor-positive patients.

Slides with prediction scores above the threshold are classified as hormone receptor-positive. The system’s performance is evaluated as specificity (orange), sensitivity (cyan), and PPV (green) per slide. The high specificity and PPV demonstrate the system’s ability to identify hormone receptor-positive patients without IHC staining. The performance and impacted patients (dark blue) are presented with respect to the threshold, showing that a threshold of 0.6 is a good choice for the operating threshold. The sensitivity can be interpreted as the percentage of actual hormone receptor-positive slides that are correctly identified as hormone receptor-positive. The 95% confidence intervals are highlighted for each plot. The number of independent samples for each threshold corresponds to the relative portion of impacted patients from the total number of patients in each dataset: CAT-Cross-Validation (n = 4186), CAT-Test (n = 1369), Carmel-Test (n = 577), ABCTB-Test (n = 625), TCGA-Test (n = 167), Haemek (n = 482), CHUCB (n = 172), and Ipatimup (n = 100). IHC Immunohistochemistry, PPV positive predictive value. CAT Carmel, ABCTB, TCGA.

This indicates the system’s high accuracy in identifying hormone receptor-positive patients. Based on these results, such a system could potentially identify, without IHC, about a third of breast cancer patients as eligible for hormone therapy. Importantly, while we applied a single threshold across all cohorts, calibrating the threshold to specific centers could enhance its impact. For instance, in ABCTB, Haemek, and Ipatimup, such a calibration could impact additional patients without compromising performance.
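The threshold analysis described above can be sketched as follows: for a given threshold, count the slides called hormone receptor-positive and derive the fraction identified, PPV, specificity, and sensitivity. This is a minimal slide-level sketch with hypothetical names and toy data; the study reports the identified fraction per patient, which would require an additional grouping step not shown here.

```python
def threshold_metrics(labels, scores, threshold):
    """Slide-level metrics when scores above `threshold` are called positive."""
    called = [y for y, s in zip(labels, scores) if s > threshold]
    tp = sum(called)            # called positive and truly positive
    fp = len(called) - tp       # called positive but truly negative
    n_pos = sum(labels)
    n_neg = len(labels) - n_pos
    return {
        "fraction_identified": len(called) / len(labels),
        "ppv": tp / len(called) if called else None,
        "specificity": (n_neg - fp) / n_neg if n_neg else None,
        "sensitivity": tp / n_pos if n_pos else None,
    }


# Toy data (hypothetical): 1 = hormone receptor-positive by IHC, 0 = negative.
labels = [1, 1, 1, 0, 0, 1]
scores = [0.9, 0.7, 0.5, 0.65, 0.2, 0.3]
for t in (0.5, 0.6, 0.8):
    print(t, threshold_metrics(labels, scores, t))

# PPV implied by the reported test counts: 1242 slides identified, one of them negative.
reported_ppv = (1242 - 1) / 1242
print(f"{reported_ppv:.4f}")  # 0.9992
```

Raising the threshold trades the fraction of identified slides for higher PPV and specificity, which is the trade-off shown in Fig. 2.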

We next investigated the characteristics of tumor samples predicted as hormone receptor-positive, including their tumor subtype, grade, and molecular subtype (Supplementary Data 1). Our findings show that the predicted hormone receptor-positive group included tumors from any of the clinicopathology categories, suggesting the system could be applied across all breast cancer types. Intriguingly, the system successfully identified high-grade tumors as hormone receptor-positives, even though their morphology is not typically associated with hormone receptor-positivity.

The percentage of high-grade and ERBB2-positive tumors in the identified hormone receptor-positive cases was lower than in the actual hormone receptor-positive group, suggesting that the system is less likely to identify hormone receptor positivity in such tumors than in others. Additionally, the hormone receptor-positive predictions included almost no ER-low-positive tumors, showing that the system primarily identifies ER-high-positivity.

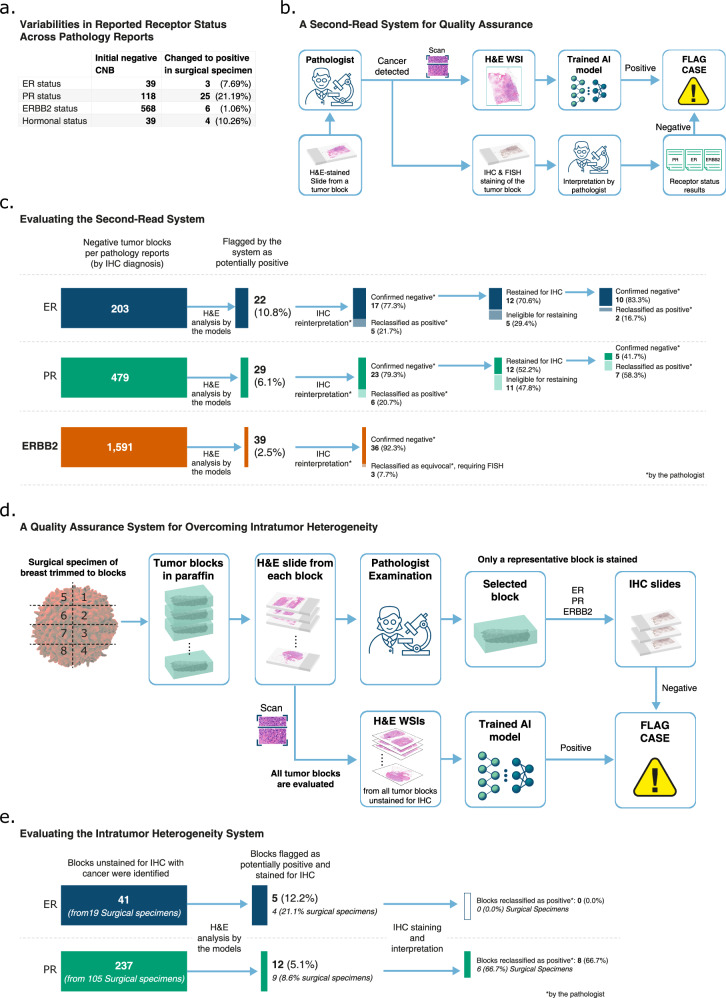

Second-read tool for quality assurance in clinical practice

Although mitigated through a variety of quality controls, IHC-based diagnosis may still have substantial variabilities. To better understand the variabilities within our data, we examined the pathology reports in Carmel Medical Center, where all tumor samples are stained for IHC. Focusing on patients with both CNB and subsequent surgical specimen diagnoses, we identified diagnoses that changed from receptor-negative to receptor-positive (Fig. 3a). The presence of such variabilities, which were more pronounced for PR and comparatively less for ERBB2, despite the lab’s adherence to current guidelines, underscores the need for enhanced quality assurance measures.

Fig. 3. Enhancing diagnosis by flagging potentially false negative cases.

a We included patients following the most common diagnostic pathway at Carmel Medical Center, encompassing all invasive breast cancer cases diagnosed from 2017 to July 2021, beginning with an initial core needle biopsy (CNB) diagnosis, followed by a subsequent diagnosis of the same tumor a couple of months later. We report the proportion of patients whose initial negative diagnosis was later altered to positive, reflecting the inconsistencies in traditional IHC diagnosis. b A Second-Read System for Quality Assurance: The digital pathology diagnosis is done in parallel to the pathologist diagnosis by traditional IHC staining. The system flags cases where the pathologist’s diagnosis is negative, but the deep learning model indicates positive. The pathologist should consider restaining and reevaluating these flagged cases. c We illustrate our experiment for reevaluating tumor blocks initially diagnosed as negative by pathologists but flagged as potential false negatives by our system in Carmel Medical Center. The process begins with a reinterpretation of the IHC slides by an expert pathologist, followed by restaining of blocks still deemed negative. In our study, ERBB2 slides were not restained for IHC. d We illustrate the process of overcoming intratumor heterogeneity in surgical specimens using the trained models. The pathologist’s diagnosis process begins with the tumor being trimmed into blocks. Slices from each block are then cut and stained with H&E for pathological examination, based on which the most representative block is selected for subsequent IHC staining. While the pathologist typically stains only one block for IHC, the trained models are applied to the H&E slides from all cancerous tumor blocks, effectively mapping the entire tumor for potential missed receptor expression. For surgical specimens classified as negative by the pathologist, blocks that are classified as positive by the system are flagged for reevaluation. 
e Eligible cases are surgical specimens in Carmel Medical Center that were diagnosed as negative by the pathologist based on IHC staining of their representative blocks and contain alternative blocks with cancer that were unstained for IHC. For these surgical specimens, the alternative blocks were identified, and their H&E slides were analyzed by the models. Blocks that were above a predefined threshold were flagged as potentially having receptor expression. We stained the flagged blocks for IHC, and the pathologist interpreted the results. H&E Hematoxylin and Eosin, WSI Whole Slide Image, ER Estrogen Receptor, PR Progesterone Receptor, CNB Core Needle Biopsy, IHC Immunohistochemistry, FISH Fluorescence In Situ Hybridization, AI Artificial Intelligence.

We propose utilizing our trained models as a second-read system for quality assurance, for identifying potential false negative molecular diagnoses (Fig. 3b). To that end, our system was applied to tumor blocks marked negative for ER, PR, and ERBB2 in the pathology reports. Per receptor, blocks with H&E scores exceeding a predefined threshold were flagged by our system as potential false negatives for reassessment.
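The flagging rule described above amounts to a simple filter: keep blocks that were reported negative by IHC and whose H&E prediction score exceeds the receptor-specific threshold. The sketch below illustrates this under stated assumptions; the `Block` record, the field names, and the threshold value are hypothetical and are not taken from the study.

```python
from dataclasses import dataclass


@dataclass
class Block:
    block_id: str
    ihc_positive: bool  # receptor status from the pathology report
    score: float        # H&E-based prediction score in [0, 1]


def flag_potential_false_negatives(blocks, threshold):
    """Return IHC-negative blocks whose prediction score exceeds the threshold."""
    return [b for b in blocks if not b.ihc_positive and b.score > threshold]


# Toy example with a hypothetical threshold of 0.5.
blocks = [
    Block("B1", ihc_positive=False, score=0.82),  # negative report, high score: flagged
    Block("B2", ihc_positive=False, score=0.10),  # negative report, low score: not flagged
    Block("B3", ihc_positive=True, score=0.91),   # already positive: not reassessed
]
flagged = flag_potential_false_negatives(blocks, threshold=0.5)
print([b.block_id for b in flagged])  # ['B1']
```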

When this system was applied at Carmel Medical Center, a total of 203 ER-negative, 479 PR-negative, and 1591 ERBB2-negative tumor blocks were identified (Fig. 3c). The system flagged 23 ER, 29 PR, and 39 ERBB2 blocks as potential false negatives. Upon reinterpretation of the IHC staining, 5 of the flagged ER blocks and 6 of the flagged PR blocks were reclassified as positive. For ERBB2, 3 blocks were reinterpreted as equivocal (IHC = 2), requiring FISH for a conclusive outcome.

For ER and PR blocks confirmed negative by reinterpretation, we restained the blocks for IHC by slicing adjacent slides. Due to insufficient material, some blocks were not suitable for restaining. Of the blocks that could be restained, 2 ER blocks and 7 PR blocks turned out to be positive, underscoring the substantial role of staining as a contributing factor to IHC variability, particularly in PR cases.

Notably, one ER and one PR case showed a strong IHC = 100% staining upon reinterpretation (Supplementary Table 3); these were likely registry errors in the pathology report system as they were from the same tumor. The rest of the ER cases had IHC between 1% and 10%, suggesting that the system could be particularly helpful for detecting missed ER-low-positive cases, which are harder to identify than ER-high-positive cases (Supplementary Table 3).

To further explore ER-low-positive cases, we visualized the ER status (ER-negative, ER-low-positive, ER-high-positive) of each tumor block in relation to its ER prediction score (Supplementary Fig. 3). High prediction scores were almost always associated with high ER positivity. Additionally, ER-low-positives with intermediate prediction scores were occasionally missed by IHC staining or pathologist interpretation.

In total, out of 23 ER and 29 PR flagged blocks, 7 (30.4%) and 13 (44.8%) were reclassified as positive, respectively. Furthermore, when correcting for the exclusion of cases ineligible for restaining, these proportions can be estimated as 38.4% (=5/23 + 2/12) for ER and 79.0% (=6/29 + 7/12) for PR. This shows that by selectively flagging a small subset (3–10%) of cases with a potentially large proportion of false negatives, our system offers a practical quality assurance approach.
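The reported proportions can be checked with a few lines of arithmetic. The sketch below reproduces 38.4% and 79.0% by adding the fraction of flagged blocks reclassified on reinterpretation to the fraction of restained blocks that turned positive. The counts of 5 of 23 and 2 restained positives for ER, and 6 of 29 and 7 of 12 for PR, come from the text; the ER restaining denominator of 12 is an inference that makes the sum match 38.4%, and the helper name is hypothetical.

```python
from fractions import Fraction


def corrected_proportion(reinterpreted_pos, flagged, restained_pos, restained):
    """Reinterpretation fraction plus restaining fraction, as summed in the text."""
    return Fraction(reinterpreted_pos, flagged) + Fraction(restained_pos, restained)


er = corrected_proportion(5, 23, 2, 12)
pr = corrected_proportion(6, 29, 7, 12)
print(f"ER: {float(er):.1%}, PR: {float(pr):.1%}")  # ER: 38.4%, PR: 79.0%

# Uncorrected proportions over all flagged blocks.
print(f"ER: {7 / 23:.1%}, PR: {13 / 29:.1%}")  # ER: 30.4%, PR: 44.8%
```

The simple sum treats the restaining yield as applying to all flagged blocks; a stricter correction would apply it only to blocks still negative after reinterpretation, which would give lower estimates.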

Addressing intratumor heterogeneity

In the standard diagnostic process for surgical specimens, a representative tumor block is chosen for IHC staining (Fig. 3d). While laboratories may stain additional blocks as required, usually only one block is stained, primarily due to considerations of workload and cost-effectiveness. In the case of intratumor heterogeneity, however, this practice may overlook expressive regions in the tumor, increasing the risk of false negative diagnoses.

To explore the utility of our models in addressing intratumor heterogeneity, we focused on ER-negative and PR-negative surgical specimens diagnosed in Carmel Medical Center. In contrast to the second-read system where IHC-stained blocks were reassessed, here we stained alternative blocks previously unstained for IHC. We identified 19 ER-negative and 105 PR-negative specimens with unstained cancer-containing blocks (Fig. 3e). We applied our models to the H&E slides of these blocks, and the system flagged 5 ER and 12 PR blocks as potentially expressive. Subsequent IHC staining and pathological review revealed no ER expression in the flagged ER blocks (Fig. 3e). However, 8 of the flagged PR blocks showed PR expression with IHC between 50–100% (Supplementary Table 3).

These results indicate that mapping the entire surgical specimen through H&E analysis can enable a practical approach for detecting false negative diagnoses due to tumor heterogeneity, particularly for receptors like PR with high tumor variability. Notably, at Carmel Medical Center, IHC staining is occasionally performed on multiple blocks per surgical specimen, considerably reducing such variability. In most pathology centers, where IHC is typically performed only once, this methodology could play a crucial role in reducing false negative diagnoses even for ER.

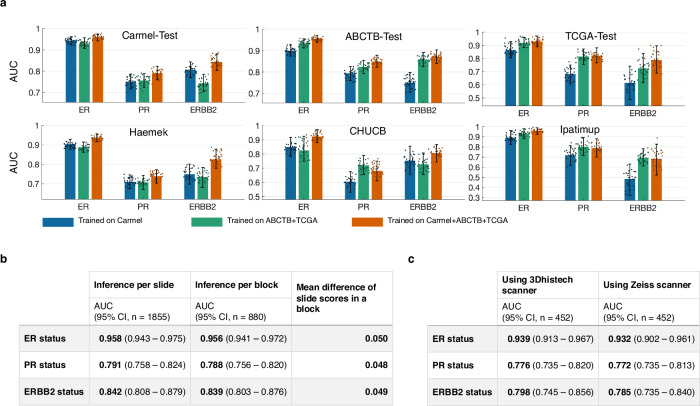

Impact of data variability on model performance

To evaluate the impact of training dataset size and diversity on the predictive and generalization ability of our models, we retrained the models using several dataset combinations and compared their performance (Fig. 4a). It can be seen that the models performed better on each target cohort when their training data included that cohort. For example, in the ABCTB-Test subplot, the green and orange bars (training included ABCTB) were higher than the blue bar (training excluded ABCTB). Interestingly, the performance also improved when adding training cohorts other than the target cohort. Additionally, the models trained on all cohorts usually generalized better than those trained on fewer cohorts. These results show that the diversity and size of the training data play an important role in both internal performance and generalizability.

Fig. 4. Impact of the variety of datasets, scanners, and block slices on the performance.

a We present the AUC performance for predicting ER, PR, and ERBB2 status across different test sets. We compare the performance of models trained exclusively on Carmel-Train (blue), those trained on the combined ABCTB-Train and TCGA-Train datasets (green), and models trained on all three training cohorts collectively (orange). Unsurprisingly, the models showed higher performance on Carmel-Test when the Carmel-Train dataset was included in the training. Interestingly, the performance on Carmel also improved when the training included Carmel-Train, ABCTB-Train, and TCGA-Train, as opposed to solely using Carmel-Train. Similarly, the performance on ABCTB-Test and TCGA-Test improved when ABCTB-Train and TCGA-Train were included in the training, and the addition of Carmel-Train further improved the performance. For the external cohorts, the models trained on all three CAT-Train cohorts generally outperformed those trained on a subset of CAT-Train. Specifically, for PR and ERBB2 status, adding the Carmel-Train dataset to the training negatively impacted the performance in CHUCB and Ipatimup. This decrease in performance might be attributed to the limited data in these cohorts, potentially affecting the reliability of the performance metrics. For each bar, 95% confidence intervals are shown. The number of independent samples corresponds to the number of patients for each dataset: Carmel-Test (n = 577), ABCTB-Test (n = 625), TCGA-Test (n = 167), Haemek (n = 482), CHUCB (n = 172), and Ipatimup (n = 100). The confidence intervals were computed via bootstrapping with 1000 iterations, sampling with replacement. Each bar also shows 30 randomly selected points from the bootstrap iterations. b Utilizing the models trained on all three cohorts and applied to the Carmel-Test data. We computed a per-block score by averaging the scores of individual H&E slides within each corresponding block. 
The AUC performance at the block level did not surpass the AUC achieved at the individual slide level. 95% confidence intervals are presented, where the number of independent samples corresponds to the number of patients in Carmel-Test (n = 577). Additionally, we computed the absolute difference in prediction scores for pairs of H&E slides from the same blocks. The low mean difference indicates that the models are robust to the inherent variability within the blocks. c The aim was to evaluate the impact on performance when applying the models to slides scanned with a scanner not used in the training. For this goal, a subset of H&E slides from the Carmel-Test dataset, originally scanned with the 3DHISTECH Pannoramic 250 FLASH scanner, was rescanned using a Zeiss scanner, forming the Carmel-Test-Rescanned cohort. 95% confidence intervals are presented, where the number of independent samples corresponds to the number of patients (n = 315). The results demonstrate no significant difference in AUC performance between the two scanners, affirming the models’ robustness against various scanning modalities. H&E Hematoxylin and Eosin, ER Estrogen Receptor, PR Progesterone Receptor, AUC Area Under the Curve.

Next, we evaluated whether utilizing multiple H&E slides per block, rather than one, contributes to the models’ performance. We computed a per-block score by averaging the scores of individual H&E slides within each corresponding block. This analysis showed that aggregating scores across multiple slides per block did not improve the performance and that there was high consistency in scores among slides from the same block, suggesting that analyzing multiple slides per block does not offer additional benefits (Fig. 4b). Finally, we explored the models’ robustness to variations in scanning equipment and observed a decrease in performance when applying the models to slides scanned with an external scanner. However, the reduction was not statistically significant (P = 0.182, 0.408, and 0.162 for ER, PR, and ERBB2, respectively), suggesting that diverse training data can also reduce the need for scanner-specific adjustments (Fig. 4c).
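The per-block analysis can be sketched in a few lines: slide scores are grouped by block and averaged, and the consistency within a block is summarized by the mean absolute difference over pairs of slides from the same block. This is an illustrative sketch only; the input format of `(block_id, score)` pairs and the function names are assumptions.

```python
from collections import defaultdict
from itertools import combinations
from statistics import mean


def group_by_block(slides):
    """Group slide scores by block; `slides` is an iterable of (block_id, score)."""
    by_block = defaultdict(list)
    for block_id, score in slides:
        by_block[block_id].append(score)
    return by_block


def per_block_scores(slides):
    """Average the slide-level scores within each block."""
    return {block: mean(scores) for block, scores in group_by_block(slides).items()}


def mean_within_block_difference(slides):
    """Mean absolute score difference over all slide pairs sharing a block."""
    diffs = [
        abs(a - b)
        for scores in group_by_block(slides).values()
        for a, b in combinations(scores, 2)
    ]
    return mean(diffs) if diffs else None


# Toy scores (hypothetical): block A has two slides, block B has one.
slides = [("A", 0.75), ("A", 0.25), ("B", 0.5)]
print(per_block_scores(slides))
print(mean_within_block_difference(slides))
```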

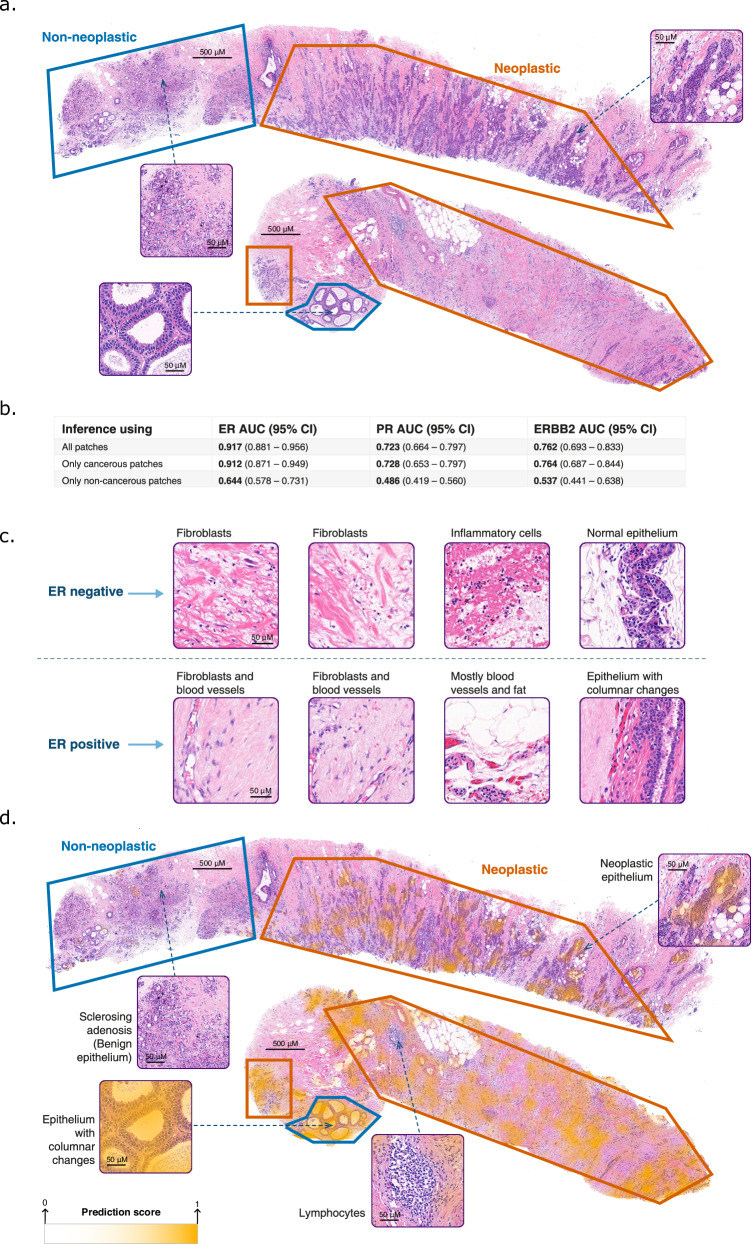

Receptor prediction from non-neoplastic regions

To explore the contribution of non-neoplastic regions to the models’ decision-making, an expert pathologist marked neoplastic and non-neoplastic regions in 200 slides from Carmel-Test (Fig. 5a). We constrained our trained models to make their predictions from either neoplastic or non-neoplastic regions. The models’ performance was significantly better using neoplastic regions than non-neoplastic ones. However, ER status could still be predicted from non-neoplastic regions alone (P < 0.001) (Fig. 5b), suggesting that these areas might carry informative cues about the tumor’s ER expression, even though ER expression by IHC is typically assessed only using neoplastic epithelium. In contrast, for PR and ERBB2, receptor status could not be predicted from non-neoplastic areas.

Fig. 5. Applying the models to neoplastic and non-neoplastic regions.

a Pathologist segmentation of neoplastic regions (orange) and non-neoplastic regions (blue) on top of 200 H&E slides from the Carmel-Test dataset. Selected tiles are magnified for detailed observation. The segmentation was carefully done to avoid cross-contamination, with the pathologist marking only the clearly identifiable regions. b The model’s AUC performance was evaluated in three scenarios: applying it exclusively to neoplastic regions (orange), to non-neoplastic regions (blue), and to both. This evaluation demonstrated that non-neoplastic regions contribute valuable information regarding the ER status of the tumor. The 95% confidence intervals are indicated, where the number of independent samples is n = 164. c Tiles from non-neoplastic regions with high and low ER prediction scores are illustrated for a better understanding of the system’s decision-making. d The heatmaps overlaid on top of the H&E images highlight the areas that the system relied on for making the prediction for ER status. The system did not highlight the lymphocyte area in the neoplastic region. In the non-neoplastic areas, the system highlighted columnar cell changes but did not highlight benign epithelial tissues, including the sclerosing adenosis. The color legend of the heatmap is shown. A stronger yellow color indicates a higher score for ER+ prediction, signifying that the system places greater reliance on this region for its final prediction. H&E Hematoxylin and Eosin, ER Estrogen Receptor, PR Progesterone Receptor, AUC Area Under the Curve.

To better understand the model’s ER status predictions, the pathologist examined non-neoplastic tiles with the highest and lowest ER prediction scores. These regions contained stroma, normal fibroblasts, neutrophils, normal epithelium, blood vessels, and fat tissue (Fig. 5c). The ER-positive cases, corresponding to high prediction scores, had no neutrophils, and sparser, more homogeneous, and fibrillar stroma than the ER-negative cases. Additionally, some epithelial tissues in the ER-positive cases had columnar cell changes, which are non-obligate precursors of ER-positive breast cancer. We also utilized heatmaps to show the regions that influenced the model’s decision (Fig. 5d). The heatmaps mostly highlighted neoplastic epithelium, in line with the native localization of ER. Interestingly, the system also highlighted selective regions from the non-neoplastic areas.

The system’s ability to infer ER status from the entire tumor, encompassing both neoplastic and non-neoplastic regions, may be advantageous for quality assurance, by analyzing regions complementary to those typically assessed in IHC-based diagnosis. This approach may be particularly beneficial in cases with minimal neoplastic tissue.

Incorporating slides with no cancer

To understand whether incorporating benign tumors could enhance performance, we compiled the Carmel-Benign dataset, consisting of 1,440 patients (3,965 slides) diagnosed with benign breast tumors at Carmel Medical Center (Table 1). Carmel-Benign was randomly divided into Carmel-Benign-Train (75%) and Carmel-Benign-Test (25%) sets. We developed a model using the Carmel-Benign-Train and Carmel-Train datasets to classify H&E slides as either benign or malignant. Applied to the test slides, this cancer-versus-benign model achieved AUC = 0.994 (95% CI: 0.991–0.996), demonstrating a high ability to identify neoplastic features.

Next, for each receptor, the cancer-versus-benign model was integrated with the receptor-prediction model, forming a hybrid model, which was applied to the Carmel-Test slides (Fig. 1b). The hybrid models improved the performance over the receptor-prediction models slightly for ER and PR, and significantly for ERBB2, suggesting that an improved ability to identify neoplastic features could enhance the prediction of receptor status (Supplementary Table 4).

Data interpretation by t-SNE

To shed light on the models’ decision-making, we utilized t-SNE31 to arrange tiles with similar predictive characteristics closely together (Fig. 6). Interestingly, tiles mapped at the bottom of all plots presented non-cancerous areas, connective tissue, abundant extracellular matrix, and artifacts. Such tiles had intermediate prediction scores, showing that they were less relevant for the receptor predictions.

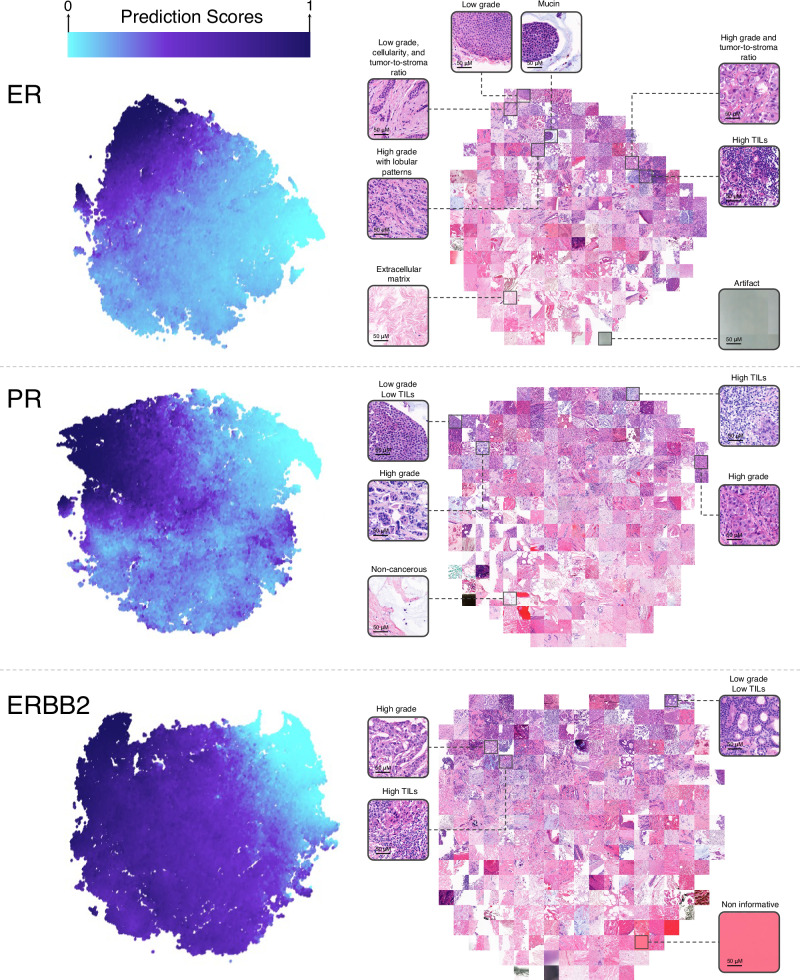

Fig. 6. t-SNE visualization for tiles in Carmel-Test.

The t-SNE visualizations for ER, PR, and ERBB2 represent feature vectors of Carmel-Test tiles embedded into two-dimensional space. Each point in the colored map corresponds to a 256 × 256 tile, with its color indicating the tile’s prediction score between 0 (light blue) and 1 (dark blue). Distinct color zones underscore how tiles with varying morphologies are grouped into separate regions. To showcase the morphological patterns characteristic of each region, a selection of tiles from the t-SNE plot is enlarged and displayed. ER Estrogen Receptor, PR Progesterone Receptor, TIL Tumor-Infiltrating Lymphocytes, t-SNE t-Distributed Stochastic Neighbor Embedding.

For ER, high-score tiles typically featured cancer cells with low nuclear pleomorphism, mucin presence, low tumor-to-stroma ratios, and minimal tumor-infiltrating lymphocytes (TILs). These findings align with known characteristics of hormone receptor-positive tumors, such as low nuclear and histologic grades, and infiltrative growth patterns, often associated with mucin production, like in lobular, mucinous, or solid papillary carcinomas32–35. Conversely, low-score tiles had cancer cells with larger, more variable nuclei, solid growth patterns, high tumor-to-stroma ratios, and numerous TILs. This was consistent with typical features of hormone receptor-negative tumors, having solid growth patterns with higher nuclear grades, frequently associated with TIL presence36. Aligning with our analysis, some high-score tiles displayed high-grade areas, typically not indicative of ER positivity. These tiles generally exhibited less solid architectures than the low-score high-grade tiles and had lobular growth patterns, even in invasive ductal carcinomas.

The PR t-SNE displayed features similar to those of ER but showed lower distinction between low- and high-score tiles, particularly for high nuclear pleomorphism, which was evident in both. For ERBB2, high-score tiles were characterized by cancer cells with high nuclear pleomorphism and an increased number of TILs, aligning with the understanding that such cases typically have higher nuclear and histologic grades and a greater likelihood of TIL presence37,38.

Expert pathologists’ predictions of ER status from H&E images

We assessed the ability of two expert pathologists to predict the ER status based solely on H&E-stained images. For this experiment, we randomly selected 250 slides from 250 distinct patients within the Carmel-Test dataset. Each pathologist independently reviewed the slides and provided an estimated ER status based on their visual examination. Additionally, to evaluate the pathologists’ ability to identify ER-positive tumors, we asked them to specifically indicate the cases they were confident were ER-positive. We then compared their performance to that of our deep learning model (Supplementary Fig. 4). While the pathologists demonstrated strong predictive ability, the model ultimately outperformed them. Notably, when we focused on high-grade cases, the model’s advantage was even more pronounced. This difference is likely attributable to the pathologists’ reliance on tumor grade as a key factor in their predictions.

Discussion

In this study, we developed and validated a deep-learning system for analyzing H&E slides from breast cancer patients to predict ER, PR, and ERBB2 expression. This task is among the few that humans have not been shown to perform in practice, yet for which machine-learning models have shown promise. However, the clinical applicability of previously reported models remained ambiguous, hindered by limited data and lack of real-world validation. Here, we evaluated the performance and clinical utility of a receptor prediction system in a real-world setting, focusing on three clinical applications: (1) identifying hormone receptor-positive patients, (2) serving as a second-read system for quality assurance, and (3) reducing false negative diagnoses by accounting for intratumor heterogeneity.

To represent a diverse patient population and technical variables, including different machinery, staining reagents, lab personnel, and scanners, we collected data from six independent cohorts across Israel, Portugal, Australia, and the USA. Three of these cohorts were external and were not included in the models’ development. These external cohorts included all invasive breast cancer cases diagnosed during the collection period, representing uncurated data. The data from Carmel Medical Center was unique in several aspects: it consisted of all cases diagnosed between 2017–2021, and included an independently collected test set comprising patients diagnosed after those used for developing the models. This approach allowed a simulation of prospective validation. Moreover, the collaboration with Carmel Medical Center allowed assessment of the system’s potential as a quality assurance tool integrated into the clinical workflow.

After assembling the datasets, we evaluated the models’ general performance in predicting ER, PR, and ERBB2 statuses, showing high AUCs across all cohorts. Notably, when tested on the TCGA and ABCTB datasets, our AUCs for ER, PR, and ERBB2 (0.949, 0.843, and 0.859) exceeded those reported in recent representative studies on the same datasets (0.83–0.92, 0.72–0.81, and 0.58–0.79)14–16. This is likely due to the diversity and size of our training data, coupled with our transformer-based MIL approach.

To evaluate the ability of the system to identify hormone receptor-positive patients, we applied our models to the test data from all six cohorts. For patients with high prediction scores, representing a third of the total evaluated, the system was non-inferior to traditional IHC for the identification of hormone receptor-positive tumors (PPV = 0.9992, specificity = 0.9982). Importantly, the one misclassified slide could not be reassessed for potential false negative diagnosis. Furthermore, our findings indicate that calibrating the system’s threshold for different institutions could potentially expand the identified patients by at least 10%. Achieving such performance for patients with high prediction scores might pave the way to utilize the system in places where IHC is inaccessible.

Current guidelines recommend meticulous quality control practices to mitigate the risk of false negative diagnoses. Motivated by this directive, we assessed our system’s utility as a quality assurance tool integrated into the pathology workflow. First, we used the system as a second-read tool for flagging potential false negative IHC diagnoses. We uncovered misclassifications arising from a range of factors, such as staining deficiencies, pathologist misinterpretation, and registry errors. In total, 23 IHC diagnoses were reclassified (7 ER, 13 PR, and 3 ERBB2 cases), and in some of these cases eligibility for hormonal therapy could be reconsidered. This marks the first instance of detection of receptor misclassification by deep learning-based H&E analysis.

Second, we used our system to flag potential false negative IHC diagnoses resulting from intratumor heterogeneity. To that end, we applied our system across all tumor blocks of selected surgical specimens. While none of these specimens were reclassified as ER-positive following their reassessment, six surgical specimens were reclassified as PR-positive, indicating considerable PR expression heterogeneity. This method could also be used prior to IHC, guiding pathologists to select the blocks most likely to yield positive IHC staining (Fig. 7).

Fig. 7. Prioritizing blocks with higher probability of positive expression.

By ordering tumor blocks within each surgical specimen based on their probability for receptor positivity, pathologists can improve the selection of tumor blocks for IHC staining. H&E Hematoxylin and Eosin, IHC Immunohistochemistry.
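The block-prioritization idea can be sketched as a simple group-and-sort: for each surgical specimen, order its tumor blocks by predicted probability of receptor positivity so that the most promising block is considered first. The function name `rank_blocks_by_specimen`, the tuple layout, and the specimen and block identifiers below are hypothetical; this illustrates the ordering step only and is not the authors' implementation.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

# (specimen_id, block_id, predicted probability of receptor positivity)
BlockPrediction = Tuple[str, str, float]


def rank_blocks_by_specimen(
    predictions: Iterable[BlockPrediction],
) -> Dict[str, List[Tuple[str, float]]]:
    """Group block-level predictions by specimen, sorted by descending probability."""
    by_specimen: Dict[str, List[Tuple[str, float]]] = defaultdict(list)
    for specimen_id, block_id, probability in predictions:
        by_specimen[specimen_id].append((block_id, probability))
    return {
        specimen_id: sorted(blocks, key=lambda block: block[1], reverse=True)
        for specimen_id, blocks in by_specimen.items()
    }


# Hypothetical block-level scores for two specimens.
toy_predictions = [
    ("S1", "A", 0.12),
    ("S1", "B", 0.81),
    ("S1", "C", 0.45),
    ("S2", "A", 0.30),
    ("S2", "B", 0.07),
]

ranked = rank_blocks_by_specimen(toy_predictions)
for specimen_id, blocks in ranked.items():
    print(specimen_id, [block_id for block_id, _ in blocks])
```

In this toy input, block B of specimen S1 would be the first candidate for IHC staining, followed by C and then A.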

Overall, using our quality assurance systems, 4.45% of the ER-negative and 5.98% of the PR-negative IHC diagnoses were reclassified as positive. Given 25% ER-negative and 30% PR-negative rates in the general breast cancer population [39,40], and an annual incidence of 300,000 cases in the USA [1], this translates to approximately 3000 ER and 5000 PR false-negative diagnoses that could be detected annually in the USA.
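The extrapolation above is a product of three quantities: annual incidence, the receptor-negative rate, and the fraction of negative diagnoses reclassified as positive. The short sketch below reproduces that arithmetic using only the figures quoted in the text; the variable and function names are illustrative.

```python
ANNUAL_US_CASES = 300_000  # annual breast cancer incidence in the USA (from the text)


def expected_reclassified(
    annual_cases: float, negative_rate: float, reclassified_fraction: float
) -> float:
    """Expected number of negative IHC diagnoses per year reclassified as positive."""
    return annual_cases * negative_rate * reclassified_fraction


# ER: 25% of cases are ER-negative, of which 4.45% were reclassified.
er_cases = expected_reclassified(ANNUAL_US_CASES, 0.25, 0.0445)

# PR: 30% of cases are PR-negative, of which 5.98% were reclassified.
pr_cases = expected_reclassified(ANNUAL_US_CASES, 0.30, 0.0598)

print(f"ER: about {er_cases:,.0f} cases per year")
print(f"PR: about {pr_cases:,.0f} cases per year")
```

The products come to about 3,300 ER and 5,400 PR cases per year, which correspond to the rounded estimates of 3000 and 5000. The calculation assumes that reclassification rates observed at a single institution generalize to the national population.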

Current guidelines for ER testing recommend reporting ER-low-positive cases (1% ≤ IHC ≤ 10%). A limitation of our hormone status prediction system is that it is not designed to discriminate between low and high ER-positive cases. Nevertheless, cases identified by the system were almost never ER-low-positive, likely because of the high operating threshold chosen to achieve high specificity.

Another limitation of our deep-learning system is the lack of interpretability of its predictions, because it does not rely on explainable features. To gain insight into the system’s decision-making processes, we employed various methods, including segmentation of non-neoplastic regions, generation of heatmaps, and t-SNE visualizations. These experiments confirmed that the system based its predictions on known histopathologic features, largely corroborating established correlations between tissue morphology and receptor status. Additionally, these analyses showed that, unlike IHC, which evaluates hormonal status strictly in neoplastic epithelial regions, our system also based its predictions on stromal areas and precancerous lesions. This ability to broaden the scope of the interpretable data beyond conventional diagnosis is advantageous for quality assurance and may be especially valuable in cases characterized by minimal neoplastic tissue.

A limitation of our study was that the quality assurance system was tested in a single institution only. However, given that the practice in Carmel Medical Center is to stain every tumor sample with IHC, we anticipate even higher false-negative detection rates in medical centers with less rigorous standards. Another limitation of the heterogeneity experiment was the absence of ERBB2 restained slides. Nevertheless, the low incidence of ERBB2 inconsistencies found in our second-read analysis suggests limited practical benefit in its reassessment.

The ability of our deep-learning system to predict PR and ERBB2 statuses from H&E images is lower than its ability to predict ER status. This trend has also been observed in previous studies [13–16]. Given the substantial therapeutic implications of ERBB2 quantification, and given that all three receptors (ER, PR, and ERBB2) are needed to categorize patients into the four breast cancer subtypes, this limits the application of deep learning to H&E images in breast cancer. It remains unclear whether this limitation is due to biological factors or current machine-learning capabilities. For instance, H&E images may not present the morphological features necessary for accurately predicting ERBB2 status by IHC. Nevertheless, it is important to note that our method does not aim to replace IHC entirely. Instead, we demonstrate that although deep learning may not fully substitute for IHC, it can still be valuable for identifying patients eligible for hormone therapy and for identifying potential receptor misclassifications. Our approach thus offers a practical application of machine learning in clinical practice, despite the current limitations in accurately categorizing breast cancer based on H&E images.

This study demonstrates the feasibility, in a real-world setting, of using a system for the prediction of receptor status based on H&E-stained slides in breast cancer patients. We show that for a subset of patients, the system can recapitulate IHC results with near-total accuracy. Moreover, we show that the system could be effectively integrated into the pathologic workflow as a quality assurance tool aimed at mitigating false-negative classification.

Acknowledgements

This research was supported by the Israel Innovation Authority—Kamin 69997 (R.K. and G.S.), the Israel Science Foundation (ISF) grant 679/18 (R.K. and G.S.), and the Israel Precision Medicine Partnership program (IPMP) grant 3864/21 (R.K. and G.S.). We would like to thank Saeb Eyadat for helping with the data acquisition and quality assurance, Hen Davidov for supporting the deep learning experiments, Maya Holdengreber for performing the slide scanning, and Liat Dizengoff for managing the Helsinki approvals in Carmel Medical Center.

Author contributions

G.S. conceptualized the study, designed the experiments, and conducted the statistical analysis. G.S., R.S., S.C., K.S., and T.N. collaborated on data collection, processing, machine learning model development, and experimentation. E.S., A.C., T.G., and A.P. contributed significantly to data collection, annotation, and pathology data interpretation. G.S. and R.K. supervised the project. All authors contributed to drafting the manuscript and provided critical revisions.

Peer review

Peer review information

Communications Medicine thanks Jakob Kather and the other, anonymous, reviewer(s) for their contribution to the peer review of this work. Peer reviewer reports are available.

Data availability

The data were composed of six independent cohorts: (1) The TCGA-BRCA dataset, publicly available at https://portal.gdc.cancer.gov. (2) The ABCTB dataset, accessible from the Australian Breast Cancer Tissue Bank, is subject to ethical and scientific approvals as described in their access policy: https://nsw.biobanking.org/biobanks/view/7. The remaining data collected from medical centers are not available for public access due to privacy and ethical considerations, in alignment with the Helsinki agreements and institutional policies. Interested researchers may request access directly from the respective institutions: (3) Carmel data from the Carmel Medical Center, Israel. (4) Haemek data from the Haemek Medical Center, Israel. (5) Ipatimup data from the Ipatimup Laboratory, Portugal. (6) CHUCB data from the Centro Hospitalar Universitário Cova da Beira, Portugal. Sample sizes and descriptions for each cohort are provided throughout the manuscript. Data used in this study were collected in accordance with relevant regulations and ethical approvals from the respective institutions. The source data for Fig. 2, Fig. 4a, and Fig. 6 are provided in Supplementary Data files 2–4.

Code availability

The source code used in this study can be accessed at: https://github.com/shachar5020/TransformerMIL4ReceptorPrediction. Additionally, the code has been archived in Zenodo [41]. Statistical analysis was performed using MATLAB 2019a and MATLAB 2023a. Preprocessing, training of the models, and inference were done using Python 3.10.6 and the PyTorch library version 1.12.1. Additional Python libraries used for database management, graphical plotting, scientific calculations, and other tasks include NumPy v.1.23.1, Pandas v.1.4.4, SciPy v.1.9.1, PyTorch Lightning v.1.9.3, and OpenSlide v.3.4.1.

Competing interests

The authors declare no competing interests.

Footnotes

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Change history

2/28/2025

In the version of the article initially published, the description of Supplementary Data 1 was incomplete and has now been amended.

Supplementary information

The online version contains supplementary material available at 10.1038/s43856-024-00695-5.

References

- 1. Siegel, R. L., Miller, K. D., Wagle, N. S. & Jemal, A. Cancer statistics, 2023. CA Cancer J. Clin. 73, 17–48 (2023).

- 2. Gradishar, W. J. et al. NCCN Guidelines® Insights: breast cancer, version 4.2023. J. Natl Compr. Cancer Netw. 21, 594–608 (2023).

- 3. Allison, K. H. et al. Estrogen and progesterone receptor testing in breast cancer: ASCO/CAP guideline update. J. Clin. Oncol. 38, 1346–1366 (2020).

- 4. Ziegenhorn, H.-V. et al. Breast cancer pathology services in sub-Saharan Africa: a survey within population-based cancer registries. BMC Health Serv. Res. 20, 912 (2020).

- 5. Wang, B. et al. Impact of the 2018 ASCO/CAP guidelines on HER2 fluorescence in situ hybridization interpretation in invasive breast cancers with immunohistochemically equivocal results. Sci. Rep. 9, 16726 (2019).

- 6. Lin, F. & Chen, Z. Standardization of diagnostic immunohistochemistry: literature review and Geisinger experience. Arch. Pathol. Lab. Med. 138, 1564–1577 (2014).

- 7. Gown, A. M. Current issues in ER and HER2 testing by IHC in breast cancer. Mod. Pathol. 21, S8–S15 (2008).

- 8. Bahreini, F., Soltanian, A. R. & Mehdipour, P. A meta-analysis on concordance between immunohistochemistry (IHC) and fluorescence in situ hybridization (FISH) to detect HER2 gene overexpression in breast cancer. Breast Cancer 22, 615–625 (2015).

- 9. Allott, E. H. et al. Intratumoral heterogeneity as a source of discordance in breast cancer biomarker classification. Breast Cancer Res. 18, 68 (2016).

- 10. Wolff, A. C. et al. Human epidermal growth factor receptor 2 testing in breast cancer: ASCO-College of American Pathologists guideline update. J. Clin. Oncol. 41, 3867–3872 (2023).

- 11. Pantanowitz, L. et al. An artificial intelligence algorithm for prostate cancer diagnosis in whole slide images of core needle biopsies: a blinded clinical validation and deployment study. Lancet Digit. Health 2, e407–e416 (2020).

- 12. Campanella, G. et al. Clinical-grade computational pathology using weakly supervised deep learning on whole slide images. Nat. Med. 25, 1301–1309 (2019).

- 13. Shamai, G. et al. Artificial intelligence algorithms to assess hormonal status from tissue microarrays in patients with breast cancer. JAMA Netw. Open 2, e197700 (2019).

- 14. Naik, N. et al. Deep learning-enabled breast cancer hormonal receptor status determination from base-level H&E stains. Nat. Commun. 11, 5727 (2020).

- 15. Rawat, R. R. et al. Deep learned tissue ‘fingerprints’ classify breast cancers by ER/PR/Her2 status from H&E images. Sci. Rep. 10, 7275 (2020).

- 16. Gamble, P. et al. Determining breast cancer biomarker status and associated morphological features using deep learning. Commun. Med. 1, 1–12 (2021).

- 17. Bychkov, D. et al. Deep learning identifies morphological features in breast cancer predictive of cancer ERBB2 status and trastuzumab treatment efficacy. Sci. Rep. 11, 4037 (2021).

- 18. Shamai, G. et al. Deep learning-based image analysis predicts PD-L1 status from H&E-stained histopathology images in breast cancer. Nat. Commun. 13, 6753 (2022).