Abstract

Background

Youth involved in the legal system have disproportionately higher rates of problematic substance use than non-involved youth. Identifying and connecting legal-involved youth to substance use intervention is critical and relies on the connection between legal and behavioral health agencies, which may be facilitated by learning health systems (LHS). We analyzed the impact of an LHS intervention on youth legal and behavioral health personnel ratings of their cross-system collaboration. We also examined organizational climate toward evidence-based practice (EBP) over and above the LHS intervention.

Methods

Data were derived from a type II hybrid effectiveness trial implementing an LHS intervention with youth legal systems and community mental health centers (CMHCs) in eight Indiana counties. Using a stepped wedge design, counties were randomly assigned to one of three cohorts and stepped in at nine-month intervals. Counties were in the treatment phase for 18 months, after which they were in the maintenance phase. Youth legal system and CMHC personnel completed five waves of data collection (n=307 total respondents, ranging from 108 to 178 per wave). Cross-system collaboration was measured via the Cultural Exchange Inventory, organizational EBP climate via the Implementation Climate Scale and Implementation Citizenship Behavior Scale, and intervention via a dummy-coded indicator variable. We fit linear mixed models to examine the association of cross-system collaboration with 1) the treatment indicator alone and 2) the treatment indicator together with the organizational EBP climate variables.

Results

The treatment indicator alone was not significantly associated with cross-system collaboration. When the organizational EBP climate variables were included, the treatment indicator was significantly associated with cross-system collaboration output: compared to the control phase, the treatment (B=0.41, standard error [SE]=0.20) and maintenance (B=0.60, SE=0.29) phases were associated with greater cross-system collaboration output.

Conclusions

The analysis may have been underpowered to detect an effect; third variables may have explained variance in cross-system collaboration, and, thus, the inclusion of important covariates may have reduced residual errors and increased the estimation precision. The LHS intervention may have affected cross-system collaboration perception and offers a promising avenue of research to determine how systems work together to improve legal-involved-youth substance use outcomes. Future research is needed to replicate results among a larger sample and examine youth-level outcomes.

Trial registration

Clinicaltrials.gov identifier: NCT04499079. Registered 30 July 2020. https://clinicaltrials.gov/study/NCT04499079.

Supplementary Information

The online version contains supplementary material available at 10.1186/s43058-024-00686-6.

Keywords: Youth, Legal system, Juvenile justice, Behavioral health, Mental health, Community mental health, Cross-system collaboration, Learning health system, Type II hybrid effectiveness implementation trial

Contributions to the Literature.

While future research is needed to analyze patient-level outcomes, improved cross-system collaboration ratings offer insight into potential mechanisms of learning health systems on patient outcomes.

Many personnel who rated cross-system collaboration were not involved in certain components of the intervention (i.e., learning health system training and monthly cross-system meetings), suggesting improved collaboration for agency personnel who were not meeting directly with the other system.

Our differential results for cross-system collaboration process and output underscore the importance of examining cross-system collaboration subdomains separately.

Our analytic approach may offer a blueprint to those who implemented stepped wedge designs with clustered data when data collection steps may have been disrupted (e.g., due to COVID-19).

Background

Youth involved in the legal system disproportionately use substances more frequently and earlier than youth who are non-legal-involved [1, 2]. An international review of detained youth suggested the median lifetime prevalence of substance use disorders to be 51% for males and 59% for females (range: 11–100%), compared to approximately 7–11% in the general population [3]. Other studies among legal-involved youth found that lifetime prevalence was as high as 62% [4]. Despite the considerable need, many youth who are legal-involved are minimally connected to behavioral health care. Among youth in need of substance use treatment, studies report that between 48% and 56% received substance use intervention [5, 6], the majority of which was delivered in residential or outpatient settings. Only 25% of youth on probation with identified mental health needs reported receiving care [7].

Strategies for addressing the treatment gaps and needs of youth involved in the legal system are critical for improving youth outcomes and development [8–10]. A national survey of substance use intervention offerings among community supervision (i.e., probation) and behavioral health agencies found that, combined, approximately only one-third offered any substance use intervention for youth who are legal-involved; behavioral health agencies were more likely to offer interventions compared to community supervision agencies (45% vs 17%) [11]. Community supervision agencies have the advantage of identifying youth at risk for problematic substance use yet have limited training or capacity to provide robust evidence-based substance use intervention. Mental health agencies, on the other hand, are better equipped to provide substance use intervention yet may be limited in capacity to robustly identify legal-involved youth who are at greatest need for behavioral health treatment. Therefore, building and strengthening the connection between systems (i.e., the youth legal and mental health systems) may offer a viable solution to address treatment gaps [12]. This sentiment echoes conclusions from prior studies. Qualitative results from focus groups of youth who are legal-involved, caregivers, behavioral health providers, and probation officers emphasized the importance of improving the collaboration between probation and behavioral health agencies to facilitate care [13]. Findings from other studies examining legal, behavioral health, and other systems have identified strategies to improve cross-system collaboration such as increasing screening practices, facilitating treatment referral, reducing redundancies in treatment activities, and coordinating ongoing care [12, 14].

Despite the potential benefits of coordination between the youth legal system (YLS) and behavioral health agencies, collaboration remains limited. For instance, a study of nationally representative community supervision and mental health agencies paired within counties revealed that two-fifths of paired community supervision and behavioral health agencies were rated as low on indices of cross-system collaboration [15]. While there is a natural impetus to build the connection between the YLS and behavioral health care organizations, numerous barriers impede effective collaboration, including a lack of formal protocols, a lack of informal working relationships, and differing philosophies across systems [16, 17]. Interventions to address such barriers and facilitate the connection between systems may improve linkage to substance use intervention among youth involved in the legal system.

One intervention that may improve the connection between systems is implementation of a learning health system (LHS), which the National Academy of Medicine has endorsed as a model framework for improving health outcomes [18]. LHS is a quality improvement approach that aims to continuously integrate data, insights derived from data, and implementation of evidence-based solutions into a health system. As an example, the state of Washington developed an LHS, Comparative Effectiveness Research Translation Network (CERTAIN), which informed numerous studies targeting physical health conditions [19]. Within behavioral health domains, qualitative research examining strategies to improve the cross-system collaboration between child welfare and mental health has similarly highlighted the role of sharing data and having joint decision-making meetings – common features of an LHS [14]. It is hypothesized that implementation of an LHS would yield greater cross-system collaboration between YLS and behavioral health agencies. One prior study conducted qualitative interviews with YLS and behavioral health agency staff about their perceived utility of a data dashboard within LHS; results suggested data dashboard review may facilitate collaboration between systems [20]. However, there has been minimal systematic investigation of the association between the LHS and cross-system collaboration ratings between YLS and behavioral health agencies.

In addition to the inconsistent connections between youth legal and behavioral health agencies that may be strengthened through an LHS approach, the organizational climate towards evidence-based practices (EBPs) may influence the success of cross-system collaborations. Given differences in training, organizational culture, and beliefs about behavioral health, EBP climate may differ between youth legal and behavioral health settings. EBP climate may be reflected at the organizational and individual level, with the latter often reflected through EBP citizenship behavior that aids in the adoption and maintenance of EBPs within an organization. Past research has demonstrated that both organizational support for EBPs and individual EBP citizenship were associated with EBP use [21, 22], yet more research is needed to understand the association between cross-system collaboration and EBP climate. Research has identified that minimal cross-system collaboration among legal, mental health, and child welfare systems may hinder the adoption of EBPs, such as multi-systemic therapy [23]. Extending this research to examine the association between EBP climate and cross-system collaboration may offer an important avenue for future studies to conceptualize how to increase availability of EBPs across systems.

To address these gaps in the literature, we leveraged data from a multi-site hybrid type II trial designed to evaluate whether an LHS approach could improve substance use services for youth involved in the legal system. Specifically, we examined two aims: 1) quantify the impact of implementing an LHS between the YLS and local behavioral health agencies on personnel ratings of attitudes toward their cross-system collaboration, and 2) characterize the association between EBP implementation climate and rating of the cross-system collaboration over and above the impact due to LHS implementation. We hypothesized that the LHS would improve ratings of the cross-system collaboration exchange. We also predicted that YLS and behavioral health personnel’s ratings of EBP climate would be positively associated with cross-system collaboration independent of the effect of implementation of the LHS.

Methods

Sample and setting: learning health system

The data were derived from the Alliances to Disseminate Addiction Prevention and Treatment (ADAPT) project, which aimed to evaluate an LHS between the YLS and community mental health centers (CMHCs) to improve the identification of risk for substance use disorders and connection to indicated care for youth involved in the legal system. ADAPT was designed as a cluster-randomized stepped wedge hybrid type II effectiveness-implementation trial, in which eight counties in one Midwest state were enrolled in the study from September 2020 through February 2024. Counties were eligible for participation if their rates of drug or opioid overdose/prescriptions were above the state average or if they had fewer than the state average of behavioral health providers per individual with substance use problems. Each county was randomly assigned to one of three cohorts, which were then stepped into the study's active implementation phase with approximately nine months between each step. Cohort 1 included two counties. Cohorts 2 and 3 each included three counties. In ADAPT, the research team conducted separate needs assessments by system. Personnel from the YLS and CMHC that served each county were trained at the beginning of their implementation phase, met quarterly to review county-level data to develop solutions, and met monthly to facilitate their cross-system collaboration. Participating counties had flexibility in choosing their ADAPT team (i.e., who would attend the initial training and participate in the monthly meetings). In addition to the site champions (often supervisors from each system), counties could choose to include an additional 1–2 representatives from their YLS and 1–2 representatives from their CMHC.
Examples of specific solutions identified and implemented within counties through their learning health system collaboration meetings included reserving a small number of appointments for YLS-referred youth to address long intake wait times at CMHCs, submitting a grant application to fund services for mild-moderate substance use disorders at CMHCs, and using standardized substance use screening measures in YLS settings.

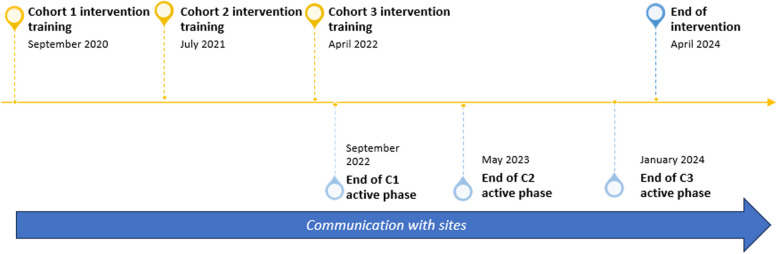

Each cohort was in the implementation phase for 18 months, after which it was in the maintenance phase and was no longer required to engage in quarterly and monthly meetings. By study completion, the maintenance phase was 19 months for cohort 1, 12 months for cohort 2, and 3 months for cohort 3. Additional details about the study design and rationale can be found in the published protocol [10]. Figure 1 summarizes the timeline of the ADAPT study.

Fig. 1.

ADAPT timeline

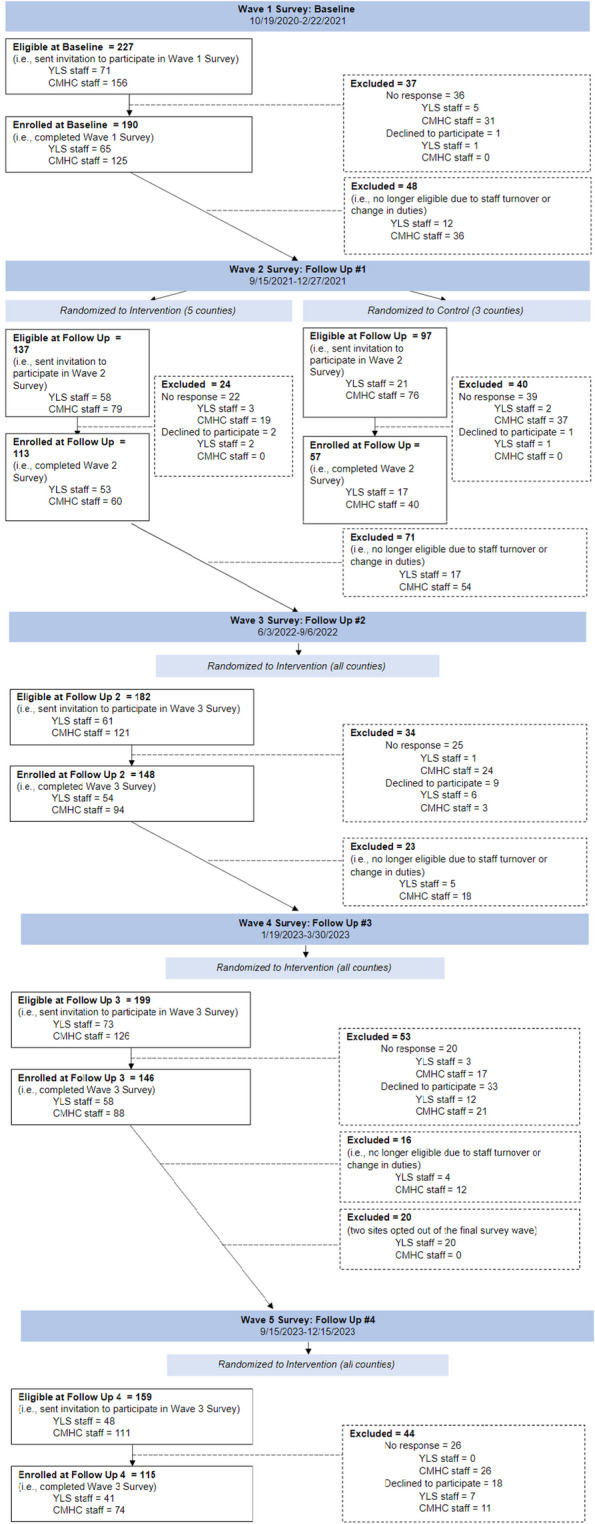

During the study, personnel from the YLS and CMHCs were identified via publicly available websites and staff contact lists provided by agency leaders. These personnel were invited by email to participate in five waves of survey data collection. They were emailed a personal link to a REDCap survey, which was preceded by a study information sheet that explained informed consent. If personnel did not consent to survey participation but completed the survey, their survey data were removed from analysis. If personnel were no longer employed by the YLS or CMHC involved in the study, their information was removed from the research team’s contact list for subsequent survey waves. A CONSORT diagram of survey participants can be found in Fig. 2. No harms were documented during the study.

Fig. 2.

CONSORT diagram of survey participants

ADAPT was approved by the first author’s Institutional Review Board (Protocol #1910282231) and was preregistered as a clinical trial (ClinicalTrials.gov identifier: NCT04499079). Data are not made publicly available to preserve participant confidentiality but may be made available upon reasonable request. Analytic code is publicly available at https://github.com/dayusun/ADAPT/blob/main/adapt_bs_final.html.

Measures

Demographics

Survey participants provided information regarding their age (18–25, 26–35, 36–45, 46–55, 56–65, ≥ 66 years), gender (female, male, transgender, nonbinary, prefer not to report), race (White, Black/African American, American Indian/Alaskan Native, Asian, Native Hawaiian/Pacific Islander, other, prefer not to report), ethnicity (Hispanic/Latino(a), Non-Hispanic/Latino(a), do not know), time working at specific YLS or CMHC agency (< 1, 1–4, 5–9, 10–14, 15–19, ≥ 20 years), education level (high school, some college, Associate’s degree, Bachelor’s degree, Master’s degree, Doctorate degree), and job satisfaction (very satisfied, satisfied, not satisfied/dissatisfied, dissatisfied, very dissatisfied). Age and job satisfaction were used from the first survey wave in which a participant responded.

Treatment indicator

According to the stepped wedge design, each county had data corresponding to a control (pre-implementation) phase, an intervention phase, and a maintenance (post-implementation) phase. A dummy-coded indicator variable was created (control phase = “0”, the reference category; intervention phase = “1”; and maintenance phase = “2”), which was considered the primary independent variable of interest. Date and time data for each submitted survey were used to categorize responses to the corresponding implementation phase (control, intervention, maintenance).
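The phase assignment described above can be illustrated with a minimal Python sketch. It is not the authors' code: the function names, the step-in date, and the survey dates are hypothetical, and the only details taken from the text are the three phase codes and the 18-month implementation window.

```python
from datetime import date

def add_months(d: date, months: int) -> date:
    """Shift a date by whole months, clamping the day to 28 so the result is valid."""
    total = d.year * 12 + (d.month - 1) + months
    return date(total // 12, total % 12 + 1, min(d.day, 28))

def classify_phase(submitted: date, step_in: date, implementation_months: int = 18) -> int:
    """Return 0 (control), 1 (intervention), or 2 (maintenance) for one survey response."""
    if submitted < step_in:
        return 0
    if submitted < add_months(step_in, implementation_months):
        return 1
    return 2

# Hypothetical step-in date for one county; not taken from the study.
step_in = date(2021, 1, 15)
survey_dates = (date(2020, 11, 1), date(2021, 6, 1), date(2023, 1, 1))
phases = [classify_phase(d, step_in) for d in survey_dates]
print(phases)
```

Because the classification uses the submission timestamp rather than the planned wave, a late response is assigned to the phase the county was actually in when the survey was completed.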

Cross-system collaboration

Cultural Exchange Inventory (CEI)

The CEI is a 15-item measure developed to capture the process and outcomes of the exchange of information, attitudes, and practices between individuals employed in different systems. Process was measured using seven items and included items such as, “I feel like we respect one another” and “I feel like they answer all my questions.” Output was measured using eight items and included items such as, “I feel like I am learning something from them” and “I feel like they are changing their practices because of this collaboration.” Each item is rated on a 7-point Likert scale ranging from “Not at all” to “A great deal.” Process and output are on comparable scales and can be compared directly.

Palinkas and colleagues (2018) originally developed the CEI through semi-structured individual interviews and focus groups with individuals employed in the child welfare, child mental health, and youth legal systems [24]. The authors conducted an exploratory factor analysis on each domain (process and output) separately. The CEI demonstrated strong internal validity and support for convergent and discriminant validity. We recognized that the CEI has been minimally adopted outside the research group that developed it, which warranted external investigation of the measure's factor structure. Therefore, for each domain, we assessed inter-item polychoric correlations and conducted exploratory factor analysis using maximum likelihood estimation. Polychoric correlations demonstrated ranges of 0.53–0.90 and 0.70–0.87 for process and output, respectively. Factor analysis supported a single factor for process and a single factor for output; factor loadings ranged from 0.73–0.94 and 0.87–0.91, respectively. Our analyses supported the factor analytic structure proposed by Palinkas and colleagues (2018). Therefore, we examined each domain as a separate dependent variable. Scale scores were calculated by averaging respective items within each domain (i.e., process, output). A graphical summary of scores across the five waves can be found in Appendix 1. Although we examined CEI process and output separately, we also investigated the correlation between the two domains. The Pearson correlation (r = 0.77) suggested overlap in the constructs (59% shared variance) but also notable distinction (41% unique variance).
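As a worked illustration of the scoring and the shared-variance figures, the short Python sketch below averages items within each domain and squares the reported correlation. The item responses are hypothetical; only the item counts (seven process, eight output), the 7-point scale, and r = 0.77 come from the text.

```python
from statistics import mean

# Hypothetical responses from one participant on the 7-point CEI items.
process_items = [6, 5, 7, 6, 5, 6, 6]      # seven process items
output_items = [4, 5, 4, 3, 5, 4, 4, 5]    # eight output items

# Scale scores are the mean of the items within each domain.
process_score = mean(process_items)
output_score = mean(output_items)

r = 0.77             # Pearson correlation reported in the text
shared = r ** 2      # proportion of variance the two domains share
unique = 1 - shared  # proportion not shared

print(f"process={process_score:.2f}, output={output_score:.2f}")
print(f"shared variance={shared:.0%}, unique variance={unique:.0%}")
```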

Organizational climate and citizenship behavior

Implementation Climate Scale (ICS)

The ICS is an 18-item measure designed to capture the organizational climate that may affect the outcomes of EBP implementation. The ICS has demonstrated strong internal consistency and construct validity [25]. External validation studies supported the internal consistency and concurrent validity of the scale [26–28]. In the present study, participants were asked to rate the extent to which they agreed with items following the prompt, “Evidence-based practice (EBP) is the integration of the best research evidence, clinical expertise and patient needs that will result in the best patient outcomes. For the purposes of this survey EBP refers to substance use screening, referral, and appropriate substance use treatment.” Six dimensions are measured: focus on evidence-based practice (e.g., “One of the team’s main goals is to use evidence-based practices effectively”), educational support for EBP (e.g., “This team provides evidence-based practice trainings or in-services”), recognition for evidence-based practice (e.g., “Staff on this team who use evidence-based practices are seen as experts”), rewards for evidence-based practice (e.g., “The better you are at using evidence-based practices, the more likely you are to get a bonus or a raise”), selection for evidence-based practice (e.g., “This team selects staff who value evidence-based practice”), and selection for openness (e.g., “This team selects staff open to new types of interventions”). Participants were asked to rate responses on a five-point Likert scale from “Not at all” to “Very great extent.” Consistent with suggestions from previous research, we created an average score of all 18 items to assess implementation climate at the work group level for our analyses [25].

Implementation Citizenship Behavior Scale (ICBS)

The ICBS is a six-item measure of individuals’ ratings of their behavior toward staying informed about EBPs, as well as helping others and the organization implement and use EBPs. The measure was designed to determine the extent to which individual employees exceed job expectations or requirements that facilitate the EBP implementation. ICBS has demonstrated strong internal consistency and construct validity [29]. External studies have validated the measure within [30] and outside [31] the U.S. While the ideal approach is to have supervisors rate subordinates on observed citizenship behaviors, in this study respondents were asked to rate the frequency with which they perform each of the behaviors on a five-point Likert scale from “Not at all” to “Frequently, if not always.” Items include, “Helping others with responsibilities related to the implementation of evidence-based practices” and “Helping teach evidence-based practice implementation procedures to new team members.” For analyses, we created an average score of all six items consistent with prior research [29].

Note that the CEI, ICS, and ICBS were included in each wave of survey data collection. Responses were timestamped with date and time to verify correspondence with the intended timepoint and intervention phase.

Analyses

We conducted two sets of maximum-likelihood linear mixed models in RStudio version 4.2.2 [32] using the lme4 package (version 1.1–35.2) corresponding to our two aims. For Aim 1, each dependent variable was modeled in a separate model and regressed on fixed effects for the treatment indicator and dummy-coded demographic covariates (age, race, gender, ethnicity, time at agency, highest education level, and job satisfaction). All covariates were determined a priori.

Survey respondents may have entered/exited the sample across waves or may have only answered one wave due to staff turnover. To accommodate this data structure, we included random effects for the participant identifier to account for autocorrelation of repeated survey measures within individuals. We also included the interaction between county and system (YLS vs. CMHC) to account for the within-county nested data.

To adjust for potential confounding due to time, we first attempted to specify wave of survey data collection as a dummy-coded indicator. However, due to the onset of the COVID-19 pandemic, Cohorts 1 and 2 were stepped into the survey data collection at the same time (see Fig. 2), disrupting the data collection schedule. As a result, the indicator for wave of survey collection was highly collinear with the treatment indicator. Instead, we included fixed effect parameters using a cubic B-spline model. We chose a priori a specification of three inner knots, aligned with prior literature [33, 34], for six total parameters. Time was modeled continuously as days since the start of the trial, which was made possible by the survey submission timestamp. The spline regression also accounted for discrepancies between the planned and actual response times of personnel within counties and therefore captured the actual temporal effect more exactly than would have been possible with a wave indicator.
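To make the parameter count concrete, the following Python sketch builds a cubic B-spline basis with three inner knots using the Cox-de Boor recursion and confirms that it yields six columns once the first basis function is dropped in favor of the model intercept. This is an illustration under stated assumptions, not the authors' implementation: the function names are hypothetical, the 0 to 1250 day range is a rough stand-in for the trial period, and the equally spaced knot locations are invented (the text does not report where the knots were placed).

```python
def bspline_basis(x, knots, degree=3):
    """Evaluate all B-spline basis functions at x via the Cox-de Boor recursion."""
    # Degree 0: indicator of the knot interval that contains x.
    basis = [1.0 if knots[i] <= x < knots[i + 1] else 0.0
             for i in range(len(knots) - 1)]
    if x == knots[-1]:
        # Close the final non-empty interval on the right so the upper
        # boundary is covered.
        last = max(i for i in range(len(knots) - 1) if knots[i] < knots[i + 1])
        basis[last] = 1.0
    for d in range(1, degree + 1):
        nxt = []
        for i in range(len(knots) - d - 1):
            left_den = knots[i + d] - knots[i]
            right_den = knots[i + d + 1] - knots[i + 1]
            left = (x - knots[i]) / left_den * basis[i] if left_den > 0 else 0.0
            right = ((knots[i + d + 1] - x) / right_den * basis[i + 1]
                     if right_den > 0 else 0.0)
            nxt.append(left + right)
        basis = nxt
    return basis

def time_spline_row(day, inner_knots, t_min, t_max, degree=3):
    """Spline columns for one timestamp, dropping the first basis function
    because the model intercept absorbs it."""
    knots = [t_min] * (degree + 1) + list(inner_knots) + [t_max] * (degree + 1)
    return bspline_basis(day, knots, degree)[1:]

# Hypothetical time scale: days 0..1250 with equally spaced inner knots.
T_MAX = 1250.0
INNER = [312.5, 625.0, 937.5]
row = time_spline_row(400.0, INNER, 0.0, T_MAX)
print(len(row))  # number of spline parameters per outcome model
```

With degree 3 and three inner knots, the full basis has 3 + 3 + 1 = 7 functions; removing one to avoid redundancy with the intercept leaves the six parameters reported as Basis 1 through Basis 6.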

For Aim 2, in addition to the parameters outlined above, we aimed to determine the association between EBP organizational citizenship behavior and climate and cross-system collaboration over and above the treatment indicator. Therefore, we included the ICS and ICBS variables as fixed effects variables in the model.
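One way to write the models described above is sketched below. The notation is ours rather than the authors', and two points are assumptions: that the county-by-system term enters as a random intercept, and that all random effects and residuals are normally distributed. For the response of participant $i$ at measurement occasion $j$, in county $c(i)$ and system $s(i)$:

$$
\begin{aligned}
Y_{ij} ={}& \beta_0 + \beta_1\,\mathbb{1}[\text{phase}_{ij} = \text{intervention}] + \beta_2\,\mathbb{1}[\text{phase}_{ij} = \text{maintenance}] \\
&+ \sum_{m=1}^{6} \gamma_m B_m(t_{ij}) + \mathbf{x}_i^{\top}\boldsymbol{\delta} + \theta_1\,\text{ICS}_{ij} + \theta_2\,\text{ICBS}_{ij} \\
&+ u_i + v_{c(i),s(i)} + \varepsilon_{ij},
\end{aligned}
$$

$$
u_i \sim N(0, \sigma_u^2), \qquad v_{c,s} \sim N(0, \sigma_v^2), \qquad \varepsilon_{ij} \sim N(0, \sigma^2).
$$

Here $Y_{ij}$ is the CEI process or output score, $B_m(t_{ij})$ are the six cubic B-spline basis functions evaluated at days since trial start, and $\mathbf{x}_i$ collects the dummy-coded demographic covariates. The Aim 1 models omit the ICS and ICBS terms (equivalently, $\theta_1 = \theta_2 = 0$), and the control phase is the reference category for $\beta_1$ and $\beta_2$.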

Results

The total sample was 307 unique individuals with 738 measurements across waves. The total number of respondents by wave ranged from 108 (wave 5) to 178 (wave 1). The sample was predominantly White (92%), non-Hispanic/Latino(a) (94%), and female (80%). Approximately 30% of the sample were aged 26–35 years. Most individuals (~ 60%) had been at their agency for 1–9 years and had a Bachelor’s (45%) or Master’s degree (41%). Approximately 85% of individuals rated their job satisfaction as either satisfied or very satisfied. See Table 1 for a demographic description of the sample.

Table 1.

Sample demographics

| Variable | YLS, N (%)ᵃ | CMHC, N (%)ᵇ |
|---|---|---|
| Age | | |
| 18–25 years old | 7 (6.8) | 31 (15.2) |
| 26–35 years old | 19 (18.4) | 73 (35.8) |
| 36–45 years old | 29 (28.2) | 48 (23.5) |
| 46–55 years old | 31 (30.1) | 30 (14.7) |
| 56–65 years old | 16 (15.5) | 17 (8.3) |
| 66 or older | 0 | 5 (2.5) |
| Missing | 1 (1.0) | 0 |
| Race | | |
| American Indian/Alaskan Native | 0 | 0 |
| Asian | 1 (1.0) | 3 (1.5) |
| Black/African American | 6 (5.8) | 6 (2.9) |
| Multiracial | 1 (1.0) | 2 (1.0) |
| Native Hawaiian/Pacific Islander | 0 | 0 |
| White | 91 (88.3) | 191 (93.6) |
| Prefer not to answer | 2 (1.9) | 0 |
| Missing | 2 (1.9) | 2 (1.0) |
| Ethnicity | | |
| Hispanic/Latino(a) | 4 (3.9) | 9 (4.4) |
| Non-Hispanic/Latino(a) | 96 (93.2) | 192 (94.1) |
| Unknown | 3 (2.9) | 3 (1.5) |
| Gender | | |
| Female | 75 (72.8) | 167 (81.9) |
| Male | 26 (25.2) | 33 (16.2) |
| Transgender | 0 | 0 |
| Nonbinary | 0 | 3 (1.5) |
| Prefer not to answer | 0 | 3 (1.5) |
| Missing | 2 (1.9) | 1 (0.5) |
| Time at Agency | | |
| < 1 year | 12 (11.7) | 43 (21.1) |
| 1–4 years | 28 (27.2) | 83 (40.7) |
| 5–9 years | 17 (16.5) | 53 (26.0) |
| 10–14 years | 10 (9.7) | 13 (6.4) |
| 15–19 years | 14 (13.6) | 3 (1.5) |
| ≥ 20 years | 22 (21.4) | 7 (3.4) |
| Missing | 0 | 2 (1.0) |
| Education | | |
| High School | 1 (1.0) | 2 (1.0) |
| Some College | 3 (2.9) | 7 (3.4) |
| Associate’s Degree | 0 | 6 (2.9) |
| Bachelor’s Degree | 67 (65.0) | 72 (35.3) |
| Master’s Degree | 19 (18.4) | 108 (52.9) |
| Doctorate Degree | 13 (12.6) | 9 (4.4) |
| Job Satisfaction | | |
| Very Satisfied | 41 (39.8) | 50 (24.5) |
| Satisfied | 54 (52.4) | 119 (58.3) |
| Not satisfied/dissatisfied | 6 (5.8) | 25 (12.3) |
| Dissatisfied | 0 | 10 (4.9) |
| Very dissatisfied | 2 (1.9) | 0 |
| ICS, M (SD) | 2.34 (0.67) | 2.56 (0.78) |
| ICBS, M (SD) | 2.46 (0.92) | 2.55 (0.95) |

ᵃ Based on 103 unique individuals. ᵇ Based on 204 unique individuals.

For Aim 1, the treatment indicator was not statistically significantly associated with CEI process or CEI output, nor were any of the spline parameters. However, when the ICS and ICBS parameters were included (Aim 2), we found that the treatment phase (B = 0.41, Standard Error (SE) = 0.20) and maintenance phase (B = 0.60, SE = 0.29) were associated with greater reported CEI output compared to the control phase. Stated differently, the treatment and maintenance phases were associated with increases of 0.41 and 0.60, respectively, in the mean rating on CEI output compared to the control phase.
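As a rough check on how the reported estimates relate to the 0.05 threshold, the Python sketch below computes Wald z statistics, two-sided p-values, and 95% confidence intervals from the rounded B and SE values for CEI output. It relies on a normal approximation, which is an assumption on our part; the study's own tests use model-based degrees of freedom, so the exact p-values will differ somewhat.

```python
import math

def wald_summary(b, se):
    """z statistic, normal-approximation two-sided p-value, and 95% CI."""
    z = b / se
    p = math.erfc(abs(z) / math.sqrt(2))
    return z, p, (b - 1.96 * se, b + 1.96 * se)

# (B, SE) pairs for CEI output as reported in the text and Table 2.
estimates = {
    "Aim 1 treatment": (0.37, 0.22),
    "Aim 1 maintenance": (0.46, 0.32),
    "Aim 2 treatment": (0.41, 0.20),
    "Aim 2 maintenance": (0.60, 0.29),
}
for label, (b, se) in estimates.items():
    z, p, (lo, hi) = wald_summary(b, se)
    print(f"{label}: z={z:.2f}, p~{p:.3f}, 95% CI=({lo:.2f}, {hi:.2f})")
```

Under this approximation the Aim 2 estimates fall just beyond the conventional cutoff of |z| = 1.96 while the Aim 1 estimates fall short of it, which illustrates how modest changes in the point estimates and standard errors moved the results across the significance threshold.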

Additionally, we found that both the ICS (B = 0.62, SE = 0.08) and ICBS measures (B = 0.30, SE = 0.06) were positively associated with CEI process, as well as CEI output (B = 0.51, SE = 0.09 and B = 0.34, SE = 0.07, respectively, for ICS and ICBS). Results can be interpreted as for every one unit (i.e., 1.0 increment of mean score) increase of the ICS, there was a 0.62 and 0.51 increase in the mean on the CEI process and output, respectively. See Table 2 for the summary of results. For details about covariates in linear mixed models, Appendix 2 includes regression parameter estimates with standard errors and Appendix 3 presents Type III omnibus F test results using Satterthwaite’s method for calculating approximate degrees of freedom. Refer to Appendix 4 for the graphical representation of the estimated B-spline temporal effect for CEI process and output.

Table 2.

Results from linear mixed models

| Predictor | Aim 1: CEI Processᵃ, B (SE) | Aim 1: CEI Outputᵇ, B (SE) | Aim 2: CEI Processᶜ, B (SE) | Aim 2: CEI Outputᵈ, B (SE) |
|---|---|---|---|---|
| Treatment Indicator | | | | |
| Control | REF | REF | REF | REF |
| Treatment | 0.32 (0.22) | 0.37 (0.22) | 0.36 (0.21) | 0.41 (0.20)* |
| Maintenance | 0.31 (0.32) | 0.46 (0.32) | 0.51 (0.31) | 0.60 (0.29)* |
| Spline Parameter | | | | |
| Basis 1 | -1.47 (1.20) | -0.16 (1.23) | -0.98 (1.12) | 0.24 (1.15) |
| Basis 2 | -0.39 (0.46) | -0.37 (0.47) | -0.41 (0.43) | -0.46 (0.44) |
| Basis 3 | -0.72 (0.69) | 0.18 (0.70) | -0.75 (0.65) | 0.12 (0.65) |
| Basis 4 | -0.16 (0.70) | -0.16 (0.71) | -0.32 (0.67) | -0.31 (0.67) |
| Basis 5 | -0.79 (0.98) | -0.03 (1.00) | -0.61 (0.94) | 0.01 (0.96) |
| Basis 6 | -0.33 (0.75) | -0.02 (0.76) | -0.52 (0.72) | -0.23 (0.72) |
| ICS | - | - | 0.62 (0.08)** | 0.51 (0.09)** |
| ICBS | - | - | 0.30 (0.06)** | 0.34 (0.07)** |

CEI Cultural Exchange Inventory, ICS Implementation Climate Scale, ICBS Implementation Citizenship Behavior Scale

*p < 0.05. **p < 0.01. ᵃ Based on 661 repeated measurements, 287 unique individuals. ᵇ Based on 662 repeated measurements, 288 unique individuals. ᶜ Based on 629 repeated measurements, 280 unique individuals. ᵈ Based on 630 repeated measurements, 281 unique individuals.

Discussion

The aims of the current paper were to examine the association of developing and implementing an LHS between the YLS and CMHCs with personnel ratings of the cross-system collaboration, as well as to examine the association between EBP climate and the cross-system collaboration over and above the impact attributed to the LHS intervention. The results suggest that when EBP climate variables (ICBS and ICS) were not included in the model, the LHS was not associated with ratings of the cross-system collaboration (Aim 1). However, when measures of EBP implementation climate and citizenship were included (Aim 2), not only were these variables positively associated with ratings of cross-system collaboration, but so was the treatment indicator. In particular, the intervention and maintenance phases of the LHS intervention were predictive of perceived output of the cross-system collaboration.

These results suggest that Aim 1 may have been underpowered to detect an association between the LHS intervention and cross-system collaboration ratings. Including additional statistically significant covariates may have reduced residual error, which would increase the precision of the coefficient estimates and the likelihood of detecting a significant effect, potentially by adjusting for confounding and by reducing collinearity between the stepped-wedge study design and time. Notably, the standard errors decreased when covariates were included, which is consistent with reduced statistical power for Aim 1. Therefore, two main findings emerged: 1) while not definitive due to nonsignificant findings without covariates, the LHS intervention may have affected perceived output from the cross-system collaboration, and 2) EBP organizational climate and citizenship behavior were positively associated with cross-system collaboration ratings even when controlling for the effect of the LHS implementation.

First, compared to the control phase, implementation of the LHS was predictive of increases in the ratings of the cross-system collaboration output, which includes items that measure perceptions of learning from, teaching, changing opinions about, and changing practices within the other system. Specifically in this study, the implementation phase of the LHS included quarterly review of county-level data and monthly hour-long meetings between the YLS and CMHCs facilitated by a research staff member. Formal meetings facilitated by the research staff member ceased during the maintenance phase, although counties were encouraged to continue meeting; anecdotal evidence suggests a few counties continued meeting. Positive association with cross-system collaboration output ratings is consistent with our hypothesis that the LHS has promising potential to improve ratings of the collaboration between the YLS and CMHCs. Prior qualitative research facilitating academic-community partnerships among behavioral health, legal, child welfare, and school systems suggested that cross-system discussions led to sharing and expanding resource knowledge [35]. While ADAPT differs in its intervention, frequent meetings with cross-systems partners may have increased perceived output of such meetings.

Notably, we did not observe an association between the LHS intervention and perceived cross-system collaboration process (e.g., beliefs that systems work well together, understand, and respect each other). The null findings were inconsistent with our hypotheses. It is possible that low power contributed to the null results. This explanation is consistent with the finding that the treatment coefficients for process were not much smaller than the coefficients for output, suggesting that the intervention may have had a smaller but positive impact on process and that a larger sample may have shown significant results for process. However, the potential for a positive impact of the intervention on process in a larger sample is only conjecture at this point, given that the effect was non-significant in this study. Furthermore, it is possible that the LHS affects perceived output but not process. While measures of cross-system collaboration process and output were correlated in the current sample, results indicate the importance of studying the two domains separately. Although cross-system collaboration process and output are theoretically interrelated, positive ratings of process may not be necessary for positive ratings of output in this population, setting, and intervention. For example, process items included, “I feel like we understand one another” and “I feel like they answer all my questions.” Endorsement of such items may not be necessary to endorse output items (e.g., “I feel like they are learning from me”). Given that the intervention aimed to facilitate development of localized solutions derived from data, meetings may have been more effective in establishing shared objectives than in fostering and deepening the cross-system relationship. Also, most YLS and CMHC staff surveyed were not involved in the monthly and quarterly meetings. Perceptions of cross-system collaboration process (e.g., respect, understanding) may therefore not have been affected by the LHS intervention for survey respondents. Changes in system practices, which may have been disseminated by individuals attending the meetings, may be more salient to survey respondents. Future research would benefit from examining item-level responses to tease apart potential differences within the domains of cross-system collaboration process and output (e.g., understanding, respect, time devotion within process), as well as analyzing subgroups of respondents by job role and/or involvement in different aspects of the intervention (e.g., monthly/quarterly meetings with the other system).

Second, self-rated EBP organizational climate and citizenship behavior were positively associated with cross-system collaboration process and output ratings. These associations were observed in models adjusting for the effects of the LHS; over and above the effects of the LHS, measures of EBP climate were strongly associated with cross-system collaboration. Because organizational climate and citizenship behavior were not experimentally manipulated, we cannot infer causality between these variables and cross-system collaboration ratings. It is possible that they affect one another bidirectionally; future research would benefit from modeling such a relationship, identifying ideal points of intervention, and matching specific interventions to specific systems. Regardless, the observed associations may align with prior research. For example, one study found that probation staff who had higher ratings of their agency reported more positive attitudes toward EBPs [36], which have been linked with organizational EBP support [21]. While Viglione and Blasko [36] looked within agency, the authors suggested that EBP beliefs may influence organizational commitment. In the current study, EBP climate may influence or reflect one’s commitment to addressing complex public health problems or openness to new ideas, feedback, and innovative solutions. In fact, prior research suggested that while probation officers supported EBPs for staff supervision, they were concerned about support for innovation and collaboration within their agency [37]. Likewise, EBP climate measures may be related to perceptions of within-system and cross-system collaboration support.

Strengths and limitations

The current study is the first, to our knowledge, to examine the impact of implementing an LHS between the YLS and CMHCs on ratings of the cross-system collaboration. While we focused our analysis on a proximal outcome (ratings of the cross-system collaboration), we are ultimately interested in how the LHS affects behavioral health service utilization among legal-involved youth (potentially mediated by the cross-system collaboration). One study that aimed to strengthen the collaboration among detention centers and mental health agencies found that detained youth who received individualized treatment plans and case management endorsed fewer behavioral health symptoms post-detention-release [38]. While that study did not randomize youth to interventions, results suggested that collaborations between legal and mental health organizations may impact youth-level outcomes. The current study also utilized a stepped wedge design, which allowed for ethical randomization of the LHS, a consideration that is especially important in community-engaged research. While the stepped wedge design allows for adjustment for potential time confounding, previous researchers have commented on the minimal research examining how to include such adjustment [39]. Our indicator variable for wave of survey collection was highly collinear with our treatment indicator. Modeling time using splines allowed us to accomplish a non-parametric adjustment for the potentially confounding impact of temporal or secular trends.
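To illustrate why survey wave and treatment status overlap in a stepped wedge design, the following minimal sketch builds a hypothetical schedule for three cohorts across five waves and computes the correlation between wave number and an exposure indicator. It assumes that waves are spaced nine months apart, so the 18-month treatment phase spans two waves, and that cohorts begin treatment at waves 2, 3, and 4. These timings, along with the names `START_WAVE`, `phase`, and `pearson`, are assumptions for illustration and do not reproduce the trial's actual schedule or analytic code.

```python
import math

N_WAVES = 5
TREATMENT_WAVES = 2  # assumed: 18-month treatment phase / 9-month wave spacing
START_WAVE = {1: 2, 2: 3, 3: 4}  # hypothetical wave at which each cohort steps in


def phase(cohort, wave):
    """Return the study phase of a cohort at a given wave."""
    start = START_WAVE[cohort]
    if wave < start:
        return "control"
    if wave < start + TREATMENT_WAVES:
        return "treatment"
    return "maintenance"


def pearson(xs, ys):
    """Pearson correlation between two equal-length numeric sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


wave_range = range(1, N_WAVES + 1)
schedule = {c: [phase(c, w) for w in wave_range] for c in START_WAVE}

# One row per cohort-wave cell: wave number and whether the LHS had started.
waves = [w for c in START_WAVE for w in wave_range]
exposed = [0 if phase(c, w) == "control" else 1
           for c in START_WAVE for w in wave_range]

r = pearson(waves, exposed)

for cohort, phases in schedule.items():
    print(f"cohort {cohort}: {phases}")
print(f"correlation between wave and exposure: {r:.2f}")
```

Under these assumed timings the correlation is roughly 0.77: later waves are mostly exposed and earlier waves are mostly control, so a per-wave indicator and the treatment indicator carry much of the same information.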

The results should be contextualized within the following limitations. First, as mentioned above in the interpretation of our findings, our study may have been underpowered to detect an association between the LHS and cross-system collaboration ratings. While the inclusion of additional covariates and the use of splines may have increased power by reducing collinearity, stepped wedge designs remain susceptible to bias and reductions in power due to logistical interruptions of the planned design and misspecification of secular trends [40]. Second, consistent with prior literature [41], we observed considerable staff turnover, which reduced the number of eligible participants throughout the study. Staff turnover and survey attrition may have also hindered the impact of the LHS, as new employees would not have participated in intervention-related training or regular meetings with staff from the other system; frequent staff turnover may have had negative impacts on cross-system collaboration, since new employees had few opportunities to develop connections with the other system or to form opinions of their interactions. Third, the LHS under investigation in the current study included numerous components, such as the development of a data dashboard to disseminate local data, review of local data, development of localized solutions, and frequent meetings between the YLS and CMHCs. It is unclear whether one, a few, or a unique combination of those components is responsible for the observed results. Qualitative and mixed-methods studies, in addition to specialized quantitative designs (e.g., fractional factorial randomized trials) [42], may provide greater insights into the perceived benefits of the intervention to directly inform component trial designs. Regardless, analyses in the current investigation using a stepped wedge design offer an incremental understanding of an LHS intervention that has the potential to impact youth outcomes.

Conclusion

In the current investigation, we examined the effect of implementing an LHS between the YLS and CMHCs, in a hybrid type II effectiveness-implementation trial, on ratings of their cross-system collaboration. While our study was challenged by low power and collinearity between time and the implementation variable, our findings offer tentative support that the LHS improved ratings among YLS and CMHC personnel on the output of their cross-system collaboration. Future research should consider the impact of LHS interventions on youth-specific outcomes.

Acknowledgements

We would like to thank our community partners who participated in the ADAPT project.

Abbreviations

- YLS: youth legal system

- CERTAIN: Comparative Effectiveness Research Translation Network

- EBP(s): evidence-based practice(s)

- ADAPT: Alliances to Disseminate Addiction Prevention and Treatment

- CMHC(s): community mental health center(s)

- CEI: Cultural Exchange Inventory

- ICS: Implementation Climate Scale

- ICBS: Implementation Citizenship Behavior Scale

- SE: standard error

Authors’ contributions

LO conceptualized the project and wrote and edited the manuscript. DS conducted analyses. KS and MA provided supervision and conceptualization support. PM provided statistical consultation. ZA, TZ, LH, and MA secured funding. GA and LS provided methodological consultation. LG and AD provided writing support. All authors provided manuscript editorial support.

Funding

This research was supported by the National Institutes of Health through the National Institute on Drug Abuse Justice Community Opioid Innovation Network cooperative through award number UG1DA050070.

Data availability

Data are not made publicly available to preserve participant confidentiality but may be made available upon reasonable request. Analytic code is publicly available at https://github.com/dayusun/ADAPT/blob/main/adapt_bs_final.html.

Declarations

Ethics approval and consent to participate

ADAPT was approved by the first author’s Institutional Review Board (Protocol #1910282231). All participants provided consent to participate. Data from individuals who did not consent to participation were excluded from analyses.

Consent for publication

All authors consent for publication.

Competing interests

Gregory Aarons, PhD, serves on the editorial board for Implementation Science Communications. All other authors declare no conflict of interest relevant to submission.

Footnotes

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1. Schwalbe CS. Impact of probation interventions on drug use outcomes for youths under probation supervision. Child Youth Serv Rev. 2019;98:58–64.

- 2. Prinz RJ, Kerns SE. Early substance use by juvenile offenders. Child Psychiatry Hum Dev. 2003;33:263–77.

- 3. Borschmann R, Janca E, Carter A, Willoughby M, Hughes N, Snow K, et al. The health of adolescents in detention: a global scoping review. Lancet Public Health. 2020;5(2):e114–26.

- 4. Aarons GA, Brown SA, Hough RL, Garland AF, Wood PA. Prevalence of adolescent substance use disorders across five sectors of care. J Am Acad Child Adolesc Psychiatry. 2001;40(4):419–26.

- 5. Johnson TP, Cho YI, Fendrich M, Graf I, Kelly-Wilson L, Pickup L. Treatment need and utilization among youth entering the juvenile corrections system. J Subst Abuse Treat. 2004;26(2):117–28.

- 6. Mulvey EP, Schubert CA, Chaissin L. Substance use and delinquent behavior among serious adolescent offenders: Citeseer; 2010.

- 7. White C. Treatment services in the juvenile justice system: Examining the use and funding of services by youth on probation. Youth Violence Juvenile Justice. 2019;17(1):62–87.

- 8. Belenko S, Knight D, Wasserman GA, Dennis ML, Wiley T, Taxman FS, et al. The Juvenile Justice Behavioral Health Services Cascade: A new framework for measuring unmet substance use treatment services needs among adolescent offenders. J Subst Abuse Treat. 2017;74:80–91.

- 9. Knight D, Belenko S, Robertson A, Wiley T, Wasserman G, Leukefeld C, et al., editors. Designing the optimal JJ-TRIALS study: EPIS as a theoretical framework for selection and timing of implementation interventions. Addiction Science & Clinical Practice; 2015: BioMed Central.

- 10. Aalsma MC, Aarons GA, Adams ZW, Alton MD, Boustani M, Dir AL, et al. Alliances to disseminate addiction prevention and treatment (ADAPT): A statewide learning health system to reduce substance use among justice-involved youth in rural communities. J Subst Abuse Treat. 2021;128:108368.

- 11. Funk R, Knudsen HK, McReynolds LS, Bartkowski JP, Elkington KS, Steele EH, et al. Substance use prevention services in juvenile justice and behavioral health: results from a national survey. Health Justice. 2020;8:1–8.

- 12. Knight DK, Belenko S, Dennis ML, Wasserman GA, Joe GW, Aarons GA, et al. The comparative effectiveness of Core versus Core+Enhanced implementation strategies in a randomized controlled trial to improve substance use treatment receipt among justice-involved youth. BMC Health Serv Res. 2022;22(1):1535.

- 13. Elkington KS, Lee J, Brooks C, Watkins J, Wasserman GA. Falling between two systems of care: Engaging families, behavioral health and the justice systems to increase uptake of substance use treatment in youth on probation. J Subst Abuse Treat. 2020;112:49–59.

- 14. Bunger AC, Chuang E, Girth AM, Lancaster KE, Smith R, Phillips RJ, et al. Specifying cross-system collaboration strategies for implementation: a multi-site qualitative study with child welfare and behavioral health organizations. Implement Sci. 2024;19(1):13.

- 15. Scott CK, Dennis ML, Grella CE, Funk RR, Lurigio AJ. Juvenile justice systems of care: results of a national survey of community supervision agencies and behavioral health providers on services provision and cross-system interactions. Health Justice. 2019;7(1):11.

- 16. Kapp SA, Petr CG, Robbins ML, Choi JJ. Collaboration Between Community Mental Health and Juvenile Justice Systems: Barriers and Facilitators. Child Adolesc Soc Work J. 2013;30(6):505–17.

- 17. Johnson-Kwochka A, Dir A, Salyers MP, Aalsma MC. Organizational structure, climate, and collaboration between juvenile justice and community mental health centers: implications for evidence-based practice implementation for adolescent substance use disorder treatment. BMC Health Serv Res. 2020;20(1):929.

- 18. McGinnis JM, Fineberg HV, Dzau VJ. Advancing the learning health system. N Engl J Med. 2021;385(1):1–5.

- 19. Flum DR, Alfonso-Cristancho R, Devine EB, Devlin A, Farrokhi E, Tarczy-Hornoch P, et al. Implementation of a “real-world” learning health care system: Washington state’s Comparative Effectiveness Research Translation Network (CERTAIN). Surgery. 2014;155(5):860–6.

- 20. Dir AL, O’Reilly L, Pederson C, Schwartz K, Brown SA, Reda K, et al. Early development of local data dashboards to depict the substance use care cascade for youth involved in the legal system: qualitative findings from end users. BMC Health Serv Res. 2024;24(1):687.

- 21. Aarons GA, Sommerfeld DH, Walrath-Greene CM. Evidence-based practice implementation: the impact of public versus private sector organization type on organizational support, provider attitudes, and adoption of evidence-based practice. Implement Sci. 2009;4:1–13.

- 22. Haider S, Fernandez-Ortiz A, de Pablos HC. Organizational citizenship behavior and implementation of evidence-based practice: Moderating role of senior management’s support. Health systems. 2017;6(3):226–41.

- 23. Carstens CA, Panzano PC, Massatti R, Roth D, Sweeney HA. A naturalistic study of MST dissemination in 13 Ohio communities. J Behav Health Serv Res. 2009;36:344–60.

- 24. Palinkas LA, Garcia A, Aarons G, Finno-Velasquez M, Fuentes D, Holloway I, et al. Measuring collaboration and communication to increase implementation of evidence-based practices: the cultural exchange inventory. Evidence Policy. 2018;14(1):35–61.

- 25. Ehrhart MG, Aarons GA, Farahnak LR. Assessing the organizational context for EBP implementation: the development and validity testing of the Implementation Climate Scale (ICS). Implement Sci. 2014;9:1–11.

- 26. Ehrhart MG, Torres EM, Wright LA, Martinez SY, Aarons GA. Validating the Implementation Climate Scale (ICS) in child welfare organizations. Child Abuse Negl. 2016;53:17–26.

- 27. Ehrhart MG, Torres EM, Hwang J, Sklar M, Aarons GA. Validation of the Implementation Climate Scale (ICS) in substance use disorder treatment organizations. Subst Abuse Treat Prev Policy. 2019;14:1–10.

- 28. Peters N, Borge RH, Skar A-MS, Egeland KM. Measuring implementation climate: psychometric properties of the Implementation Climate Scale (ICS) in Norwegian mental health care services. BMC Health Serv Res. 2022;22(1):23.

- 29. Ehrhart MG, Aarons GA, Farahnak LR. Going above and beyond for implementation: the development and validity testing of the Implementation Citizenship Behavior Scale (ICBS). Implement Sci. 2015;10:1–9.

- 30. Torres EM, Seijo C, Ehrhart MG, Aarons GA. Validation of a pragmatic measure of implementation citizenship behavior in substance use disorder treatment agencies. J Subst Abuse Treat. 2020;111:47–53.

- 31. Borge RH, Skar A-MS, Endsjø M, Egeland KM. Good soldiers in implementation: validation of the Implementation Citizenship Behavior Scale and its relation to implementation leadership and intentions to use evidence-based practices. Implementation Science Communications. 2021;2(1):136.

- 32. R Core Team. R: A language and environment for statistical computing. Vienna, Austria: R Foundation for Statistical Computing; 2022.

- 33. Harrell FE. Regression modeling strategies: with applications to linear models, logistic regression, and survival analysis: Springer; 2001.

- 34. James G, Witten D, Hastie T, Tibshirani R. An introduction to statistical learning: Springer; 2013.

- 35. Tolou-Shams M, Holloway ED, Ordorica C, Yonek J, Folk JB, Dauria EF, et al. Leveraging technology to increase behavioral health services access for youth in the juvenile justice and child welfare systems: A cross-systems collaboration model. J Behav Health Serv Res. 2022;49(4):422–35.

- 36. Viglione J, Blasko BL. The differential impacts of probation staff attitudes on use of evidence-based practices. Psychol Public Policy Law. 2018;24(4):449.

- 37. Belenko S, Johnson ID, Taxman FS, Rieckmann T. Probation staff attitudes toward substance abuse treatment and evidence-based practices. Int J Offender Ther Comp Criminol. 2018;62(2):313–33.

- 38. Jenson JM, Potter CC. The effects of cross-system collaboration on mental health and substance abuse problems of detained youth. Res Soc Work Pract. 2003;13(5):588–607.

- 39. Barker D, McElduff P, D’Este C, Campbell M. Stepped wedge cluster randomised trials: a review of the statistical methodology used and available. BMC Med Res Methodol. 2016;16:1–19.

- 40. Hemming K, Taljaard M. Reflection on modern methods: when is a stepped-wedge cluster randomized trial a good study design choice? Int J Epidemiol. 2020;49(3):1043–52.

- 41. Glisson C, Green P. The effects of organizational culture and climate on the access to mental health care in child welfare and juvenile justice systems. Adm Policy Ment Health Serv Res. 2006;33(4):433–48.

- 42. Collins LM, Murphy SA, Strecher V. The multiphase optimization strategy (MOST) and the sequential multiple assignment randomized trial (SMART): new methods for more potent eHealth interventions. Am J Prev Med. 2007;32(5 Suppl):S112–8.
