Abstract

Introduction

This study aims to evaluate US Department of Defense hospital efficiency.

Methods

Drawing on the American Hospital Association’s annual survey data, the study employs data envelopment analysis, slack analysis, and the Malmquist Productivity Index to identify the differences in hospital efficiency between Air Force, Army, and Navy hospitals as well as the trends of their efficiency from 2010 to 2021.

Results

US Department of Defense hospitals operated inefficiently from 2010 to 2021, although the average technical efficiency of all DOD hospitals increased slightly during this period. The inefficiency of all US Department of Defense hospitals may be due to the lack of pure technical efficiency rather than the suboptimal scale. However, as the efficiency trends in Navy hospitals differ from those in Army and Air Force hospitals, we should be careful in addressing the inefficiency of each type of US Department of Defense hospital.

Conclusion

Informed by the findings, this study enhances our understanding of US Department of Defense hospital efficiency and the policy implications, offering practical advice to healthcare policymakers, hospital executives, and managers on managing military hospitals.

Keywords: U.S. DOD hospitals, hospital efficiency, DEA, slack analysis, MPI

Introduction

Military hospitals are important because they directly relate to national defense. As well as serving the unique healthcare needs of active-duty service members, veterans, and their families, most military hospitals offer training grounds for medical professionals, medical research and development, and disaster and pandemic responses. These functions benefit citizens in general as well as those who serve in the military.

The United States has one of the largest and most preeminent military healthcare systems in the world. The US Military Health System (MHS) provides 9.6 million beneficiaries, including military personnel, with healthcare services through military treatment facilities such as US Department of Defense (DOD) hospitals and clinics. It is also in charge of developing medical-ready forces in the US and around the world. The current budget of the MHS is USD58.4 billion, an increase of roughly USD20 billion from USD38 billion in 2010, which corresponds to a compound annual growth rate (CAGR) of 3.3%.

However, the MHS cannot be completely free from the trends of fiscal constraint. In March 2023, the Biden Administration requested $58.7 billion from Congress for the 2024 MHS budget.1 Despite diminishing resources, the MHS is confronting an increasing demand for healthcare services, especially as it is expected to improve the ability to prevent, detect, and respond to biological incidents and threats like the COVID-19 pandemic.

As they have done in the past when confronted with a challenge, DOD hospitals are meeting this challenge with a variety of efforts to enhance hospital efficiency.2–5 It is meaningful, therefore, to analyze DOD hospital efficiency, thereby drawing practical policy and managerial implications on how to manage these hospitals efficiently. This analysis will benefit healthcare policymakers, hospital executives, and public managers.

Despite their importance, military hospitals receive less attention these days. Although healthcare researchers keep studying hospital efficiency, they focus more on hospitals overall, attempting to generalize their findings to all kinds of hospitals.6–9 This has led them to pay relatively little attention to military hospitals, treating them as a type of public hospital.

To fill the gap in previous studies and generate practical implications on hospital efficiency, this study set out to evaluate US Department of Defense (DOD) hospital efficiency. More specifically, it attempts to identify the differences in hospital efficiency between the Air Force, Army, and Navy hospitals, as well as fluctuations in their efficiency from 2010 to 2021. Drawing on the American Hospital Association’s annual survey data during this period, the study employs data envelopment analysis (DEA), slack analysis, and the Malmquist Productivity Index (MPI).

The paper is structured as follows. First, it provides a snapshot of hospital-efficiency research. Second, it explains the empirical strategy, including data-gathering techniques, variables and measurements, and analytics. Third, it interprets the descriptive statistics as well as the results of the DEA, slack analysis, and MPI. Lastly, it discusses the findings and their implications and offers further considerations in the conclusion.

Literature Review

The demand for healthcare services has increased due to the aging population, chronic diseases, the cost of medical technology, and rising customer expectations. This demand has led to increased pressure on health expenditure. To help hospitals meet the increasing demand without causing a spike in health expenditure, previous research on hospital management has focused on assessing hospital performance and prescribing ways to improve it.

Various concepts, particularly economic efficiency and productivity, are applied to assess hospital performance. Although often used interchangeably, economic efficiency and productivity are distinct. Productivity refers to the ratio of outputs to inputs; larger values indicate better performance. Economic efficiency in the hospital-efficiency literature encompasses two types: technical and allocative. Technical efficiency indicates how well a set of inputs is converted into a set of outputs. To achieve technical efficiency, an organization tries to obtain maximum outputs from given inputs or employ minimum inputs to generate given outputs.10,11 A technically efficient organization wastes no resources: it cannot produce more with the same inputs or produce the same outputs with fewer inputs. Allocative efficiency concerns the choice of the input mix or the output mix: it identifies the input mix that minimizes costs or the output mix that maximizes revenue.

Relying on these concepts, hospital-efficiency researchers have made continuous efforts to find out practical ways to optimize hospital management. These researchers have focused on identifying relevant input and output variables to analyze hospital efficiency.12,13 According to systematic reviews of hospital-efficiency studies, the most widely used inputs involve human resources (ie, the number of doctors, nurses, other medical staff, other non-medical staff, and total employed staff), physical resources (ie, the number of beds, equipment, and infrastructure), and financial resources (ie, the total amount of budget, the total amount of expenditure, the total amount of non-labor costs, the value of fixed capital, and the cost of drug supply). The number of outpatients and inpatients, average daily admission, the number of surgeries, the number of deliveries, the average length of stay, bed occupancy rate, life expectancy, death rate, survival rate, malnutrition rate, the total amount of revenue, and the total amount of profit are generally employed as outputs in the hospital literature.14–17

To analyze the input and output variables, hospital-efficiency research has also paid attention to selecting and refining methodological approaches.14–17 The two main methodological approaches used are the non-parametric (such as DEA) and the parametric (such as stochastic frontier analysis or SFA) approaches.18,19 To examine the productivity trends of a hospital over time, DEA-based MPI has also been widely employed in hospital-efficiency research.20,21

Hospital-efficiency research has employed these methods and applied the results to improve hospital performance. Indeed, hospital-efficiency analysis enables hospital managers to assess the extent to which their hospital is efficiently run. In addition, the results of hospital-efficiency analysis can be compared between individual hospitals or between countries.22 For example, Céu Mateus et al conducted a comparative analysis of hospital efficiency between English, Portuguese, Spanish, and Slovenian hospitals using stochastic frontier analysis and ordinary least squares (OLS) regression analysis.23

The change in hospital efficiency can also be compared using panel data.24 For instance, one study analyzed the efficiency of 107 Greek NHS (national health service) hospitals over a five-year period from 2009 to 2013 by using DEA and identified how hospital efficiency changed during the period.25

Based on the results of hospital-efficiency analysis, researchers have gone further to identify the determinants of hospital efficiency. This effort has uncovered the effects of organizational factors (eg, internal resources and organizational publicness)26 and environments (eg, market competition and the population covered by the hospital)27 on hospital efficiency. For example, using DEA to assess the efficiency of hospitals in the US, one study found that the hospital–physician integration level, teaching status, and market competition were positively associated with hospital efficiency.28

Data and Methods

Data

This study attempted to analyze the efficiency of US DOD hospitals. Thus, the unit of analysis in this study is individual military hospitals. The study obtained data from the American Hospital Association’s annual surveys ranging from 2010 to 2021 to evaluate the efficiency of DOD hospitals. The data include input and output information from the US Air Force, Army, and Navy hospitals.

Methods

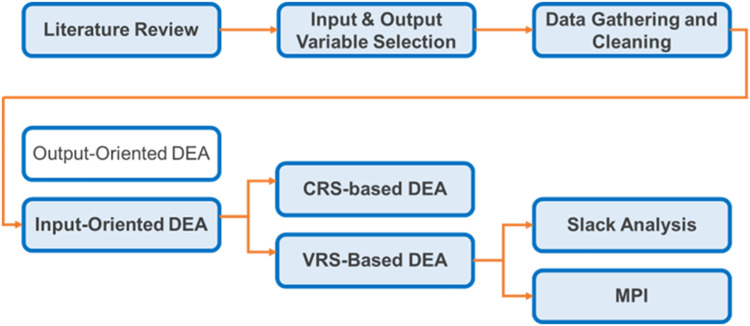

In this study, we employed multiple methods to assess the extent to which DOD hospitals are run efficiently. The methods include DEA, slack analysis, and MPI.

Data Envelopment Analysis

Our study employed input-oriented DEA to analyze DOD hospital efficiency. DEA is the most widely used non-parametric method for assessing efficiency. It is a mathematical technique that measures the efficiency of homogeneous decision-making units, such as hospitals, schools, and banks, by using a set of inputs and outputs.29 DEA compares the relative efficiency of decision-making units in the sample by assessing how efficiently each unit uses a set of inputs to produce a set of outputs.30 The result is an efficiency score that ranges from 0 to 1, where 1 indicates that the unit is efficient relative to the others in the sample, while a score below 1 means that the unit is inefficient and has room for improvement. DEA thus enables us not only to measure the relative efficiency of decision-making units but also to identify the best-performing ones. The most efficient decision-making units lie on the efficiency frontier, which indicates that they produce the maximum outputs for a given level of inputs. DEA therefore lets hospital managers benchmark against the best-performing hospitals, thereby contributing to improving hospital efficiency.

In DEA, two kinds of returns-to-scale (RTS) assumptions are widely used in hospital-efficiency studies: constant-returns-to-scale (CRS) and variable-returns-to-scale (VRS).31 The CRS-based efficiency model assumes that all decision-making units operate at an optimal scale.31 Because it assumes that outputs change in proportion to inputs, an increase or decrease in the scale of production does not by itself affect efficiency. If the CRS-based efficiency score of a decision-making unit is 1, the unit is operating at the optimal scale and using its inputs efficiently to produce the maximum outputs. On the other hand, if the CRS-based efficiency score is less than 1, the unit could achieve the same level of output with fewer inputs.
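To make the CRS score concrete, consider the special case of one input and one output, where the CRS efficiency of a unit reduces to its output-to-input ratio divided by the best ratio in the sample. The following minimal sketch uses three hypothetical hospitals with made-up bed counts and inpatient days; the names and numbers are illustrative assumptions and are not drawn from the study's data, which involve five inputs and five outputs and therefore require linear programming.

```python
# Hypothetical hospitals: (beds, inpatient days). Values are illustrative only.
hospitals = {
    "A": (50.0, 10_000.0),
    "B": (100.0, 15_000.0),
    "C": (80.0, 16_000.0),
}


def crs_efficiency(units):
    """CRS efficiency for one input and one output.

    Each unit's output-to-input ratio is divided by the best ratio
    observed in the sample, so frontier units score exactly 1.
    """
    productivity = {name: y / x for name, (x, y) in units.items()}
    best = max(productivity.values())
    return {name: p / best for name, p in productivity.items()}


scores = crs_efficiency(hospitals)
for name, score in sorted(scores.items()):
    print(f"{name}: {score:.2f}")
```

In this toy sample, hospitals A and C define the frontier, and B's score of 0.75 means that it could in principle deliver the same inpatient days with 75% of its beds.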

In contrast to CRS-based efficiency, VRS-based efficiency assumes that the scale of operations can affect efficiency.31 That is to say, VRS-based efficiency enables us to compare inefficient hospitals to efficient hospitals of the same size. Thus, VRS-based efficiency separates scale efficiency from overall technical efficiency, giving us pure technical efficiency. If the VRS-based efficiency score is 1, the implication is that the decision-making unit is operating at optimal efficiency given its current scale. If its VRS-based efficiency score is less than 1, it indicates that the decision-making unit is not using its inputs efficiently enough to achieve maximum outputs given its current scale. In this study, we employed both CRS- and VRS-based efficiency models.
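For reference, the input-oriented envelopment form commonly used for these two models can be written as follows. This is the textbook formulation, given here as an assumption about notation rather than as the exact specification in Supplementary Information 1: $x_{ij}$ and $y_{rj}$ denote input $i$ and output $r$ of hospital $j$, hospital $o$ is the one being evaluated, $\lambda_j$ are the weights placed on peer hospitals, and $\theta_o^{*}$ is the resulting efficiency score.

```latex
\begin{aligned}
\theta_o^{*} = \min_{\theta,\,\lambda}\ & \theta \\
\text{s.t.}\ & \sum_{j=1}^{n} \lambda_j x_{ij} \le \theta\, x_{io}, && i = 1,\dots,m, \\
& \sum_{j=1}^{n} \lambda_j y_{rj} \ge y_{ro}, && r = 1,\dots,s, \\
& \lambda_j \ge 0, && j = 1,\dots,n \quad \text{(CRS)}, \\
& \sum_{j=1}^{n} \lambda_j = 1 && \text{(added for VRS only)}.
\end{aligned}
```

Because the VRS model only adds a constraint to the CRS model, the VRS score of a hospital can never be lower than its CRS score.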

Slack Analysis

To specify inefficient areas based on VRS-efficiency analysis, we also employed slack analysis. It identifies which input or output is generating inefficiencies. That is to say, the results of slack analysis clarify the surplus of inputs or deficit of outputs, thus improving the pure technical efficiency of a decision-making unit by guiding it to increase its outputs or decrease its inputs.
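A common way to compute slacks, sketched here under the assumption of a standard two-stage input-oriented VRS model (the study's own formulation is given in its supplementary material), is to fix the efficiency score $\theta_o^{*}$ of hospital $o$ from the first stage and then maximize the remaining input excesses $s_i^{-}$ and output shortfalls $s_r^{+}$. The symbols $x_{ij}$, $y_{rj}$, and $\lambda_j$ denote input $i$ of hospital $j$, output $r$ of hospital $j$, and the peer weights, respectively.

```latex
\begin{aligned}
\max_{\lambda,\,s^{-},\,s^{+}}\ & \sum_{i=1}^{m} s_i^{-} + \sum_{r=1}^{s} s_r^{+} \\
\text{s.t.}\ & \sum_{j=1}^{n} \lambda_j x_{ij} + s_i^{-} = \theta_o^{*}\, x_{io}, && i = 1,\dots,m, \\
& \sum_{j=1}^{n} \lambda_j y_{rj} - s_r^{+} = y_{ro}, && r = 1,\dots,s, \\
& \sum_{j=1}^{n} \lambda_j = 1, \qquad \lambda_j,\ s_i^{-},\ s_r^{+} \ge 0 .
\end{aligned}
```

Under this formulation, a positive $s_i^{-}$ marks an input that remains in surplus even after all inputs are scaled down by $\theta_o^{*}$, so the implied target for that input is $\theta_o^{*} x_{io} - s_i^{-}$.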

CRS-based efficiency indicates overall technical efficiency, encompassing pure technical efficiency and scale efficiency; VRS-based efficiency indicates pure technical efficiency. If we divide CRS-based efficiency by VRS-based efficiency, we obtain scale efficiency. The scale efficiency score measures the extent to which a decision-making unit deviates from an optimal scale.10 If the scale efficiency score is 1, the CRS-based efficiency score is the same as the VRS-based efficiency score, implying that the decision-making unit is operating at its optimal scale; a score below 1 indicates scale inefficiency.
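The decomposition described in this paragraph amounts to a single division. The sketch below uses hypothetical scores (0.72 under CRS and 0.90 under VRS) chosen only for illustration; the function name `scale_efficiency` is likewise an assumption and not part of the study.

```python
def scale_efficiency(crs_score: float, vrs_score: float) -> float:
    """Return scale efficiency: CRS-based efficiency divided by VRS-based efficiency."""
    # A CRS score can never exceed the VRS score of the same unit.
    if not (0.0 < crs_score <= vrs_score <= 1.0):
        raise ValueError("expected 0 < CRS score <= VRS score <= 1")
    return crs_score / vrs_score


# Hypothetical hospital: overall technical efficiency 0.72,
# pure technical efficiency 0.90.
crs, vrs = 0.72, 0.90
se = scale_efficiency(crs, vrs)
print(f"pure technical efficiency = {vrs:.2f}, scale efficiency = {se:.2f}")
```

Here the scale efficiency (0.80) is lower than the pure technical efficiency (0.90), so more of this hypothetical hospital's overall inefficiency would be attributed to its scale than to how it uses its inputs.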

Malmquist Productivity Index

To examine the productivity trends of a hospital over time, this study employed the DEA-based MPI, which has been widely employed in hospital-efficiency research.20,21 It enables us to analyze panel data to investigate changes in the relationship between a set of inputs and a set of outputs in a hospital during a given period. This technique can not only accommodate a set of inputs and outputs but also present the change in specific indices, including total factor productivity, technical efficiency, technology, pure efficiency, and scale efficiency during a certain period.32 If the value of the MPI is higher than 1, the productivity of a hospital in t+1 has increased compared to its productivity in t; a value below 1 indicates a decline.
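The index is usually written as a geometric mean of two efficiency ratios. The expression below is the standard textbook form, offered as a sketch under assumed notation rather than as the study's exact specification: $E^{s}(x^{\tau}, y^{\tau})$ denotes the CRS-based efficiency score of a hospital's period-$\tau$ inputs and outputs measured against the period-$s$ frontier.

```latex
\begin{aligned}
M_{t,t+1}
&= \left[
\frac{E^{t}(x^{t+1}, y^{t+1})}{E^{t}(x^{t}, y^{t})}
\times
\frac{E^{t+1}(x^{t+1}, y^{t+1})}{E^{t+1}(x^{t}, y^{t})}
\right]^{1/2} \\
&= \underbrace{\frac{E^{t+1}(x^{t+1}, y^{t+1})}{E^{t}(x^{t}, y^{t})}}_{\text{technical efficiency change}}
\times
\underbrace{\left[
\frac{E^{t}(x^{t+1}, y^{t+1})}{E^{t+1}(x^{t+1}, y^{t+1})}
\times
\frac{E^{t}(x^{t}, y^{t})}{E^{t+1}(x^{t}, y^{t})}
\right]^{1/2}}_{\text{technological change}}
\end{aligned}
```

The first factor captures whether the hospital moved closer to the frontier, and the second captures whether the frontier itself shifted. When VRS scores are also available, the technical efficiency change can be split further into pure efficiency change and scale efficiency change.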

In sum, relying on CRS-based and VRS-based efficiency techniques, we analyzed the overall technical efficiency, pure technical efficiency, and scale efficiency of DOD hospitals from 2010 to 2021. In addition, to specify the surplus of input variables, we ran a slack analysis. Lastly, we applied the DEA-based MPI technique to investigate productivity changes over time (See Supplementary Information 1 which summarizes a mathematical formula for each statistical method). Our statistical procedures to analyze DOD hospital efficiency are presented in Figure 1.

Figure 1.

Statistical Procedures.

Variables and Measurements

Building on previous studies of hospital efficiency,14,33–35 this study carefully selected five input and five output variables. Table 1 summarizes our input and output variables and their references.

Table 1.

Input and Output Variables and Their References

| Variable | References | Data Source |
|---|---|---|
| Inputs | | The American Hospital Association’s annual surveys ranging from 2010 to 2021 |
| Beds | Azreena et al, 2018; Ravaghi et al, 2020 | |
| Expenses (M US $) | Azreena et al, 2018; O’Neill et al, 2008 | |
| FTE Physicians | Fazria & Dhamayanti, 2021 | |
| FTE Nurses | | |
| FTE Other | | |
| Outputs | | |
| Outpatient Visits | Azreena et al, 2018; O’Neill et al, 2008 | |
| Inpatient Days | Harrison & Coppola, 2007; Harrison & Meyer, 2014; Harrison & Ogniewski, 2005 | |
| Inpatient Surgical Operation | Azreena et al, 2018; Fazria & Dhamayanti, 2021 | |
| Outpatient Surgical Operation | | |
| FTE Trainees | Han & Lee, 2021; Lee et al, 2015; Oh et al, 2022; Oh et al, 2023; O’Neill, 1998; Ozcan, 1993 | |

Input Variables

The study employed hospital beds, operating expenses, and full-time employees (physicians, nurses, and other employees) as input variables to analyze hospital efficiency.

Hospital Beds

The number of hospital beds has been widely used as a capital investment to measure hospital resources14,33 because it is critical to providing services to hospital inpatients. Both excessive bed capacity and shortage of available beds can negatively affect how a hospital functions. Thus, we included hospital beds as one of the input variables in our analysis, measuring the total number of beds in a military hospital in a certain year.

Operating Expenses

Previous studies have generally employed operating expenses to assess the overall cost of hospital operations.14,34 We also included operating expenses as an input variable in our analysis, measuring it by the total amount of operating expenses in a military hospital in a specific year. However, we did not include payroll expenses because the number of full-time employees is a distinct input variable in our analysis.

Full-Time Employees

As human resources have a critical impact on the healthcare system, hospital-efficiency research has often used medical personnel as a labor input variable.35 Relying on previous studies, we included full-time employees as an input variable in our analysis, operationalizing the full-time employees in three ways: 1) the number of full-time physicians, 2) the number of nurses, and 3) the number of other employees in a military hospital in a specified year.

Output Variables

This study employed outpatient visits, inpatient days, inpatient and outpatient surgical operations, and full-time employee trainees as output variables to analyze hospital efficiency.

Outpatient Visits

Outpatient visits indicate the number of patients who come to the hospital to receive medical, dental, or other services but are not admitted, including all clinic visits, referred visits, and observation services. Outpatient visits are widely accepted in hospital-efficiency research as a way to capture hospital outputs.14,34 We also included outpatient visits as one of our output variables, operationalized as the total number of outpatient visits in a military hospital in a specified year.

Inpatient Days

Inpatient days are the number of days during which patients receive medical services in a hospital, and they can be considered an output variable. As the implementation of the Prospective Payment System based on the Diagnosis-Related Group (DRG) shifted the primary standard for hospital reimbursement from inpatient days to cases, attention to inpatient days as an output variable has been steadily decreasing in hospital-efficiency studies.14,34 However, previous studies of US federal hospitals show that inpatient days can be useful in evaluating the output of US federal healthcare systems.3,4,36 As our study focused on US military hospitals, we included inpatient days as one of the output variables in our analysis, measuring it by the total number of inpatient days in a military hospital in a specified year.

Surgical Operations

Surgical operation is a scheduled surgical service performed on patients and is widely regarded as one of the output variables in hospital-efficiency research.14,35 Our analysis involved surgical operations as an output variable. To add nuance to the measurement of surgical operations, we drew a distinction between outpatient and inpatient operations based on whether a patient receiving a surgical service remained in the hospital overnight. We measured outpatient and inpatient surgical operations by the total number of such operations in a military hospital in a specified year.

Full-Time Employee Trainees

Full-time employee trainees, such as medical and dental residents, can be regarded as both input and output variables34 and a resource for inexpensive labor. They can also be viewed as the achievement of a social mission. Considering that federal hospitals are one of the largest medical training providers in the United States and that there are enough previous studies that show the value of full-time employee trainees as an output variable in hospital-efficiency analysis,37–42 we included full-time employee trainees as an output variable, measuring it as the total number of full-time employee trainees in a military hospital in a specified year (See Supplementary Table 1, which shows the results of principal component analysis on our input and output variables, justifying our variable selection).

Results

Descriptive Statistics

Descriptive statistics for all DOD hospitals are presented in Table 2, while Tables 3–5 show descriptive statistics for Air Force, Army, and Navy hospitals, respectively. Tables 2–5 provide the number of hospitals and the means and standard deviations of their input and output variables by year from 2010 to 2021, for all DOD hospitals and each hospital type.

Table 2.

Descriptive Statistics of Input and Output Variables (All DOD Hospitals)

| Variable | 2010 | 2011 | 2012 | 2013 | 2014 | 2015 | 2016 | 2017 | 2018 | 2019 | 2020 | 2021 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | (N=45) | (N=42) | (N=43) | (N=43) | (N=43) | (N=42) | (N=41) | (N=40) | (N=40) | (N=40) | (N=38) | (N=38) |
| | M (SD) | M (SD) | M (SD) | M (SD) | M (SD) | M (SD) | M (SD) | M (SD) | M (SD) | M (SD) | M (SD) | M (SD) |
| Inputs | | | | | | | | | | | | |
| Beds | 92.07 | 88.21 | 84.07 | 82.42 | 82.47 | 83.64 | 84.41 | 85.90 | 85.73 | 84.70 | 87.97 | 85.42 |
| | (82.38) | (76.54) | (75.58) | (76.21) | (76.78) | (77.29) | (76.54) | (76.88) | (77.09) | (75.89) | (76.47) | (71.56) |
| Expenses (M US $) | 150.58 | 207.61 | 187.58 | 205.12 | 250.66 | 267.75 | 246.71 | 264.87 | 326.89 | 339.53 | 310.12 | 294.10 |
| | (128.09) | (170.40) | (187.55) | (182.19) | (278.30) | (287.31) | (237.26) | (244.00) | (279.44) | (339.23) | (316.27) | (269.41) |
| FTE Physicians | 142.78 | 82.55 | 98.81 | 125.35 | 139.23 | 116.19 | 130.32 | 154.05 | 166.38 | 184.25 | 149.08 | 188.08 |
| | (98.73) | (74.71) | (103.15) | (93.28) | (139.94) | (148.96) | (162.53) | (168.03) | (143.92) | (194.68) | (147.02) | (135.47) |
| FTE Nurses | 254.84 | 284.38 | 240.19 | 305.58 | 350.26 | 299.14 | 330.51 | 355.55 | 327.48 | 348.48 | 343.47 | 352.26 |
| | (219.18) | (257.90) | (247.11) | (272.02) | (355.93) | (356.87) | (367.06) | (372.46) | (309.97) | (381.70) | (350.42) | (294.32) |
| FTE Other | 1,236.42 | 1,214.14 | 979.19 | 1,146.14 | 1,253.12 | 1,041.79 | 1,082.37 | 1,393.18 | 1,579.30 | 1,689.63 | 1,690.24 | 1,760.53 |
| | (769.96) | (713.07) | (973.49) | (881.99) | (1,310.69) | (1,414.92) | (1,122.41) | (1,562.41) | (1,238.67) | (1,611.52) | (1,211.51) | (1,246.77) |
| Outputs | | | | | | | | | | | | |
| Outpatient Visits | 413,873.70 | 480,246.90 | 444,511.20 | 398,654.60 | 458,749.40 | 405,303.10 | 468,103.60 | 423,702.30 | 395,792.70 | 419,818.20 | 312,896.80 | 502,612.20 |
| | (217,254.70) | (391,008.40) | (237,057.20) | (273,801.60) | (298,639.30) | (325,892.60) | (295,484.40) | (549,649.00) | (344,858.10) | (430,104.00) | (368,818.30) | (449,983.70) |
| Inpatient Days | 18,161.64 | 17,375.64 | 14,743.05 | 15,326.74 | 18,110.19 | 17,210.76 | 15,761.12 | 18,055.33 | 15,953.33 | 17,172.50 | 15,786.03 | 14,694.63 |
| | (21,082.45) | (17,829.80) | (15,132.22) | (16,201.73) | (20,990.02) | (20,704.64) | (16,475.93) | (19,719.22) | (18,090.23) | (21,666.18) | (17,942.29) | (16,310.11) |
| Inpatient Surgical Operation | 1,241.33 | 753.12 | 742.51 | 1,386.93 | 831.81 | 866.67 | 1,806.49 | 1,247.60 | 2,998.60 | 2,940.85 | 2,483.53 | 2,313.90 |
| | (1,351.14) | (614.23) | (702.46) | (1,410.92) | (1,106.59) | (1,717.20) | (2,011.96) | (1,480.73) | (4,857.66) | (5,590.32) | (4,990.27) | (4,426.69) |
| Outpatient Surgical Operation | 2,115.20 | 1,640.81 | 2,171.00 | 2,522.74 | 1,646.00 | 1,604.45 | 4,264.85 | 3,271.55 | 4,481.90 | 4,626.38 | 3,304.82 | 3,596.68 |
| | (1,663.79) | (728.13) | (763.50) | (1,878.82) | (1,315.54) | (1,577.27) | (12,501.80) | (4,021.06) | (4,401.20) | (5,367.28) | (4,700.35) | (4,085.81) |
| FTE Trainees | 55.42 | 60.19 | 43.74 | 38.98 | 48.47 | 49.79 | 46.17 | 59.80 | 64.08 | 70.48 | 63.37 | 80.03 |
| | (83.98) | (107.38) | (81.82) | (82.50) | (116.55) | (117.66) | (112.21) | (126.05) | (110.64) | (133.36) | (108.93) | (113.96) |

Table 3.

Descriptive Statistics of Input and Output Variables (Air Force Hospitals)

| Variable | 2010 | 2011 | 2012 | 2013 | 2014 | 2015 | 2016 | 2017 | 2018 | 2019 | 2020 | 2021 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | (N=10) | (N=7) | (N=8) | (N=8) | (N=8) | (N=7) | (N=7) | (N=7) | (N=7) | (N=7) | (N=7) | (N=7) |
| | M (SD) | M (SD) | M (SD) | M (SD) | M (SD) | M (SD) | M (SD) | M (SD) | M (SD) | M (SD) | M (SD) | M (SD) |
| Inputs | | | | | | | | | | | | |
| Beds | 94.60 | 71.57 | 58.25 | 59.50 | 59.50 | 66.57 | 66.57 | 65.86 | 65.86 | 65.86 | 65.86 | 65.86 |
| | (81.65) | (24.57) | (29.12) | (29.03) | (29.03) | (22.73) | (22.74) | (22.87) | (22.87) | (22.87) | (22.87) | (22.87) |
| Expenses (M US $) | 141.05 | 158.20 | 115.08 | 124.29 | 154.48 | 174.85 | 184.98 | 174.58 | 243.73 | 243.73 | 218.16 | 249.42 |
| | (131.86) | (70.26) | (73.11) | (83.29) | (83.73) | (102.05) | (106.68) | (120.97) | (102.46) | (102.46) | (165.15) | (114.25) |
| FTE Physicians | 134.40 | 86.43 | 56.13 | 104.25 | 101.38 | 57.57 | 83.00 | 106.57 | 111.29 | 110.71 | 81.43 | 147.29 |
| | (81.25) | (28.86) | (52.42) | (46.51) | (40.36) | (21.92) | (21.30) | (31.90) | (32.48) | (32.79) | (30.60) | (49.41) |
| FTE Nurses | 247.70 | 227.14 | 130.50 | 247.63 | 251.50 | 170.14 | 217.86 | 241.43 | 209.00 | 208.57 | 196.57 | 258.14 |
| | (214.18) | (102.54) | (101.89) | (163.37) | (158.28) | (129.74) | (127.36) | (154.52) | (136.61) | (136.37) | (216.96) | (128.61) |
| FTE Other | 1,195.40 | 892.71 | 542.75 | 746.75 | 831.25 | 350.00 | 649.14 | 843.43 | 1,084.14 | 1,084.86 | 1,416.14 | 1,311.86 |
| | (710.30) | (209.88) | (634.75) | (334.19) | (306.90) | (231.62) | (130.10) | (111.31) | (303.61) | (303.47) | (166.78) | (555.05) |
| Outputs | | | | | | | | | | | | |
| Outpatient Visits | 360,609.30 | 325,503.00 | 340,498.80 | 250,357.60 | 390,412.80 | 332,344.00 | 457,266.40 | 272,443.30 | 292,059.60 | 292,059.60 | 219,356.00 | 405,669.60 |
| | (185,329.80) | (49,742.19) | (87,657.58) | (122,417.10) | (163,468.90) | (105,214.20) | (136,184.00) | (114,269.10) | (83,206.41) | (83,206.41) | (122,491.50) | (289,550.20) |
| Inpatient Days | 17,055.80 | 15,664.00 | 9,017.00 | 10,538.63 | 10,778.50 | 11,965.43 | 12,408.71 | 12,606.43 | 10,834.86 | 10,834.86 | 9,685.29 | 9,326.29 |
| | (21,268.17) | (11,279.65) | (5,846.86) | (10,883.76) | (9,915.27) | (8,454.84) | (8,808.86) | (11,757.72) | (9,770.55) | (9,770.55) | (9,229.86) | (7,283.06) |
| Inpatient Surgical Operation | 1,260.70 | 606.43 | 435.50 | 2,032.00 | 926.88 | 830.43 | 1,575.29 | 740.86 | 1,004.00 | 1,004.00 | 310.57 | 622.86 |
| | (1,337.37) | (369.98) | (558.98) | (1,044.28) | (686.84) | (1,191.26) | (1,166.17) | (287.70) | (1,060.01) | (1,060.01) | (339.50) | (388.44) |
| Outpatient Surgical Operation | 1,932.40 | 1,645.29 | 2,325.75 | 3,705.38 | 2,142.00 | 2,147.71 | 2,577.29 | 2,399.00 | 3,354.00 | 3,354.00 | 1,449.00 | 2,075.43 |
| | (1,311.87) | (225.76) | (791.69) | (1,716.83) | (878.04) | (723.64) | (785.58) | (937.68) | (772.99) | (772.99) | (300.46) | (630.68) |
| FTE Trainees | 42.70 | 13.71 | 4.88 | 3.63 | 1.00 | 0.43 | 0.43 | 14.86 | 14.71 | 14.71 | 13.86 | 31.14 |
| | (76.35) | (12.16) | (3.80) | (5.88) | (2.14) | (1.13) | (1.14) | (11.65) | (29.25) | (29.25) | (24.77) | (63.00) |

Table 4.

Descriptive Statistics of Input and Output Variables (Army Hospitals)

| Variable | 2010 | 2011 | 2012 | 2013 | 2014 | 2015 | 2016 | 2017 | 2018 | 2019 | 2020 | 2021 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | (N=22) | (N=22) | (N=22) | (N=22) | (N=22) | (N=22) | (N=21) | (N=20) | (N=20) | (N=20) | (N=20) | (N=20) |
| | M (SD) | M (SD) | M (SD) | M (SD) | M (SD) | M (SD) | M (SD) | M (SD) | M (SD) | M (SD) | M (SD) | M (SD) |
| Inputs | | | | | | | | | | | | |
| Beds | 87.77 | 87.32 | 85.00 | 81.77 | 82.00 | 81.27 | 82.67 | 85.80 | 84.95 | 83.20 | 83.20 | 83.20 |
| | (74.51) | (73.77) | (71.21) | (72.40) | (72.55) | (73.15) | (71.39) | (71.81) | (71.04) | (69.08) | (69.08) | (69.08) |
| Expenses (M US $) | 143.17 | 207.46 | 194.22 | 214.64 | 249.64 | 264.52 | 246.80 | 276.39 | 331.76 | 361.56 | 296.24 | 272.26 |
| | (117.56) | (167.31) | (177.44) | (171.18) | (254.43) | (269.98) | (231.68) | (237.53) | (261.11) | (385.35) | (305.92) | (266.52) |
| FTE Physicians | 148.00 | 79.95 | 95.77 | 116.50 | 129.82 | 109.14 | 121.10 | 142.70 | 166.25 | 202.95 | 142.75 | 183.90 |
| | (109.61) | (61.11) | (91.05) | (84.02) | (125.06) | (131.46) | (142.24) | (146.33) | (128.95) | (226.60) | (123.44) | (130.05) |
| FTE Nurses | 246.27 | 279.05 | 243.95 | 307.68 | 351.82 | 297.73 | 329.19 | 364.75 | 337.00 | 380.75 | 354.25 | 349.05 |
| | (205.55) | (240.70) | (233.35) | (284.57) | (346.10) | (331.69) | (340.46) | (357.09) | (286.83) | (428.21) | (333.05) | (283.60) |
| FTE Other | 1,207.68 | 1,223.68 | 948.59 | 1,125.64 | 1,174.27 | 1,005.86 | 1,061.14 | 1,321.15 | 1,572.40 | 1,804.30 | 1,545.85 | 1,766.80 |
| | (752.77) | (669.10) | (843.87) | (793.36) | (1,164.40) | (1,235.06) | (967.56) | (1,361.24) | (1,070.78) | (1,830.21) | (1,043.32) | (1,183.01) |
| Outputs | | | | | | | | | | | | |
| Outpatient Visits | 450,339.70 | 482,100.60 | 454,650.00 | 429,034.90 | 457,591.70 | 401,844.00 | 457,871.60 | 373,252.90 | 386,852.00 | 447,828.50 | 258,127.50 | 481,171.90 |
| | (224,308.20) | (364,897.00) | (244,176.10) | (282,313.40) | (286,625.90) | (329,330.20) | (298,034.40) | (486,312.10) | (321,483.50) | (503,665.10) | (325,432.60) | (454,111.20) |
| Inpatient Days | 17,426.91 | 17,116.95 | 15,903.55 | 16,430.59 | 18,674.64 | 16,664.36 | 15,901.33 | 19,436.80 | 16,459.05 | 18,755.70 | 15,517.10 | 14,535.90 |
| | (19,172.15) | (17,536.37) | (16,269.65) | (17,337.65) | (20,338.04) | (19,647.52) | (16,423.70) | (20,610.59) | (17,686.55) | (24,254.73) | (17,079.61) | (15,850.62) |
| Inpatient Surgical Operation | 1,165.59 | 742.09 | 783.23 | 1,367.59 | 982.77 | 1,119.32 | 2,032.86 | 1,198.75 | 2,984.50 | 3,247.65 | 2,189.20 | 2,339.20 |
| | (1,258.71) | (613.75) | (673.93) | (1,599.32) | (1,389.02) | (2,160.94) | (2,482.91) | (1,311.22) | (4,334.35) | (6,377.63) | (4,228.48) | (4,240.74) |
| Outpatient Surgical Operation | 2,161.68 | 1,541.14 | 2,129.18 | 2,331.36 | 1,697.36 | 1,708.73 | 6,156.95 | 2,892.15 | 4,303.45 | 4,684.20 | 2,902.65 | 3,575.95 |
| | (1,869.24) | (692.81) | (715.00) | (1,749.22) | (1,506.95) | (1,943.44) | (17,421.31) | (3,560.70) | (3,992.63) | (6,097.99) | (4,035.96) | (3,878.76) |
| FTE Trainees | 56.77 | 60.68 | 43.27 | 35.95 | 42.86 | 43.27 | 39.90 | 51.50 | 66.10 | 79.75 | 59.25 | 83.00 |
| | (89.15) | (102.97) | (73.78) | (74.29) | (104.56) | (104.41) | (98.23) | (111.76) | (100.70) | (147.53) | (94.09) | (109.75) |

Table 2 shows that the number of DOD hospitals decreased from 45 in 2010 to 38 in 2021. Regarding the inputs of DOD hospitals, the mean number of hospital beds decreased by 6.65 beds or 7.22% over 11 years. This reduction is interesting because the number of active-duty DOD personnel decreased by 5.75% over the same period, from 1,417,370 in 2010 to 1,335,848 in 2021.

In contrast, the other input variables increased markedly during this period. For example, Table 2 shows that mean operating expenses per hospital increased by USD143.52 million: the 2021 figure of USD294.10 million is 195.31% of the 2010 figure (USD150.58 million). Even allowing for inflation from 2010 to 2021, this increase is noticeable. The CAGR of operating expenses from 2010 to 2021 is 6.27%. The number of full-time employees also increased markedly during this period. The mean number of full-time physicians increased by 45.30 or 31.73%, from 142.78 in 2010 to 188.08 in 2021, an estimated CAGR of 2.54%. The mean number of full-time nurses increased by 97.42 or 38.23%, while the mean number of other full-time employees increased by 524.10 or 42.39%. Their CAGRs are 2.99% and 3.26%, respectively. Overall, the inputs of DOD hospitals increased remarkably from 2010 to 2021.

Table 2 shows that the outputs of DOD hospitals also increased during this period, except for inpatient days. The total number of outpatient visits, surgical operations, and full-time employee trainees increased from 2010 to 2021. For example, the total number of outpatient visits increased by 88,738.50 or 21.44%. The total number of inpatient surgical operations increased by 1,072.56 or 86.40%, from 1,241.33 in 2010 to 2,313.90 in 2021. The total number of outpatient surgical operations also increased by 1,481.48 or 70.04%, from 2,115.20 in 2010 to 3,596.68 in 2021. The CAGRs of the two types of surgical operations from 2010 to 2021 are 5.82% and 4.94%, respectively. The total number of full-time employee trainees also increased by 24.60 or 44.39%, from 55.42 in 2010 to 80.03 in 2021. Its CAGR is 3.40%. On the other hand, the total number of inpatient days decreased from 18,161.64 in 2010 to 14,694.63 in 2021. The gap is 3,467.01 days or 19.09%, which corresponds to a CAGR of −1.91%.

Changes in the input and output variables from 2010 to 2021 reveal some differences between the Air Force, Army, and Navy hospitals. Navy hospitals have shown an increase in both inputs and outputs, while Air Force hospitals have revealed a noticeable decrease in outputs. (See Supplementary Table 2, which summarizes the CAGR of each input and output in each of the three types of DOD hospitals).

According to Table 3, Air Force hospitals have shown an increase in operating expenses, full-time physicians, nurses, and other employees while showing a decrease in hospital beds. Regarding the output variables, Air Force hospitals have revealed a decrease in inpatient days, inpatient surgical operations, and full-time employee trainees but an increase in outpatient visits and outpatient surgical operations.

Table 4 indicates that Army hospitals have shown the same pattern of change regarding input variables. The total number of hospital beds decreased, while other input variables increased in Army hospitals from 2010 to 2021. When it comes to the output variables, Army hospitals have shown an increase in outpatient visits, inpatient surgical operations, and outpatient surgical operations while confronting a decrease in inpatient days and full-time employee trainees.

Table 5 reveals that Navy hospitals differ from other military hospitals in how their input variables changed from 2010 to 2021. All the input variables of Navy hospitals increased from 2010 to 2021. Interestingly, the number of hospital beds in Navy hospitals increased by 4.52 or 4.65%. Although its CAGR is only 0.41%, this is a notable difference from the other types of hospitals because both Air Force and Army hospitals showed a decrease in the number of hospital beds during this period. Navy hospitals also differ from the other types of hospitals in how their output variables changed. According to Table 5, all output variables in Navy hospitals increased, except for inpatient days. In particular, the numbers of inpatient surgical operations and outpatient surgical operations increased substantially. Their CAGRs from 2010 to 2021 are 8.56% and 7.04%, respectively.

Table 5.

Descriptive Statistics of Input and Output Variables (Navy Hospitals)

| Variable | 2010 | 2011 | 2012 | 2013 | 2014 | 2015 | 2016 | 2017 | 2018 | 2019 | 2020 | 2021 |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| (N=13) | (N=13) | (N=13) | (N=13) | (N=13) | (N=13) | (N=13) | (N=13) | (N=13) | (N=13) | (N=11) | (N=11) | |

| M (SD) | M (SD) | M (SD) | M (SD) | M (SD) | M (SD) | M (SD) | M (SD) | M (SD) | M (SD) | M (SD) | M (SD) | |

| Inputs | ||||||||||||

| Beds | 97.38 | 98.69 | 98.38 | 97.62 | 97.38 | 96.85 | 96.85 | 96.85 | 97.62 | 97.15 | 110.73 | 101.91 |

| (100.50) | (99.55) | (99.78) | (100.31) | (101.67) | (102.07) | (102.07) | (102.07) | (103.33) | (102.57) | (106.29) | (94.80) | |

| Expenses (M US $) | 170.47 | 234.47 | 220.98 | 238.75 | 311.59 | 323.26 | 279.79 | 295.77 | 364.18 | 357.21 | 393.88 | 362.24 |

| (149.41) | (224.18) | (245.16) | (234.73) | (378.82) | (375.34) | (298.46) | (301.39) | (367.43) | (356.56) | (402.35) | (343.96) | |

| FTE Physicians | 140.38 | 84.85 | 130.23 | 153.31 | 178.46 | 159.69 | 170.69 | 197.08 | 196.23 | 195.08 | 203.64 | 221.64 |

| (98.22) | (109.97) | (137.21) | (124.71) | (193.49) | (202.95) | (225.57) | (231.99) | (194.23) | (193.60) | (209.53) | (178.50) | |

| FTE Nurses | 274.85 | 324.23 | 301.31 | 337.69 | 408.38 | 371.00 | 393.31 | 402.85 | 376.62 | 374.15 | 417.36 | 418.00 |

| (259.23) | (331.77) | (316.67) | (313.58) | (457.16) | (467.86) | (485.94) | (476.30) | (402.15) | (400.02) | (440.46) | (383.14) | |

| FTE Other | 1,316.62 | 1,371.08 | 1,299.54 | 1,426.62 | 1,646.15 | 1,475.08 | 1,349.92 | 1,800.00 | 1,856.54 | 1,838.85 | 2,127.18 | 2,034.64 |

| (890.97) | (919.07) | (1,263.25) | (1,167.79) | (1,813.18) | (1,912.85) | (1,562.95) | (2,151.58) | (1,702.53) | (1,682.61) | (1,744.01) | (1,638.24) | |

| Outputs | ||||||||||||

| Outpatient Visits | 393,134.80 | 560,433.40 | 491,361.10 | 438,501.30 | 502,761.80 | 450,442.50 | 490,467.80 | 582,763.80 | 465,403.70 | 445,518.50 | 472,003.50 | 603,285.50 |

| (232,350.80) | (516,891.00) | (279,440.70) | (309,990.00) | (384,157.00) | (402,739.40) | (364,594.60) | (745,578.60) | (455,797.90) | (429,595.00) | (503,094.70) | (537,059.80) | |

| Inpatient Days | 20,255.69 | 18,735.08 | 16,302.85 | 16,405.23 | 21,666.77 | 20,959.85 | 17,339.77 | 18,864.00 | 17,931.38 | 18,149.38 | 20,157.27 | 18,399.45 |

| (25,307.35) | (21,921.40) | (17,067.87) | (17,459.06) | (26,658.93) | (26,861.77) | (20,179.26) | (22,379.22) | (22,374.42) | (22,754.99) | (23,206.46) | (20,970.58) | |

| Inpatient Surgical Operation | 1,354.62 | 850.77 | 862.54 | 1,022.69 | 517.85 | 458.62 | 1,565.31 | 1,595.62 | 4,094.31 | 3,511.77 | 4,401.46 | 3,344.00 |

| (1,598.45) | (733.68) | (817.62) | (1,197.19) | (692.61) | (946.22) | (1,534.49) | (2,019.22) | (6,539.19) | (5,822.97) | (7,042.96) | (5,922.30) | |

| Outpatient Surgical Operation | 2,177.15 | 1,807.08 | 2,146.54 | 2,118.85 | 1,253.85 | 1,135.46 | 2,117.08 | 4,325.08 | 5,363.77 | 5,222.54 | 5,217.00 | 4,602.46 |

| (1,645.81) | (947.52) | (871.88) | (2,025.98) | (1,139.30) | (1,094.56) | (1,383.44) | (5,490.78) | (5,981.46) | (5,754.49) | (6,632.16) | (5,496.36) | |

| FTE Trainees | 62.92 (85.93) | 84.38 (137.39) | 68.46 (110.57) | 65.85 (111.87) | 87.15 (158.84) | 87.38 (158.72) | 80.92 (152.61) | 96.77 (170.35) | 87.54 (145.86) | 86.23 (143.82) | 102.36 (152.62) | 105.73 (142.71) |

Tables 2–5 imply that DOD hospital efficiency may have changed during this period. They also hint at some meaningful differences in hospital efficiency between the three types of DOD hospitals.

Data Envelopment Analysis and Slack Analysis

We used DEA to calculate the technical efficiency scores, the number of DOD hospitals on efficiency frontiers, and average input slacks. The overall results are shown in Table 6, and the results for the Air Force, Army, and Navy hospitals are presented in Tables 7–9, respectively. (See Supplementary Figure 1, which visualizes the change of CRS-based, VRS-based, and scale efficiency).
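To make the three scores concrete, the following toy sketch computes CRS-based, VRS-based, and scale efficiency for a deliberately simplified case with one input and one output. It assumes an input orientation, which this section does not state, and uses invented data; the study itself uses several inputs and outputs, which requires solving a linear program per hospital. All function and variable names are hypothetical.

```python
def crs_efficiency(inputs, outputs, o):
    """Input-oriented CRS score for hospital o (one input, one output)."""
    best_ratio = max(y / x for x, y in zip(inputs, outputs))
    return (outputs[o] / inputs[o]) / best_ratio


def vrs_efficiency(inputs, outputs, o):
    """Input-oriented VRS score for hospital o (one input, one output).

    Finds the smallest input with which a convex combination of observed
    hospitals can produce at least outputs[o].
    """
    n = len(inputs)
    target = outputs[o]
    # Single hospitals that already produce at least the target output.
    candidates = [inputs[j] for j in range(n) if outputs[j] >= target]
    # Convex combinations of one smaller and one larger producer.
    for j in range(n):
        for k in range(n):
            if outputs[j] < target < outputs[k]:
                w = (target - outputs[j]) / (outputs[k] - outputs[j])
                candidates.append((1 - w) * inputs[j] + w * inputs[k])
    return min(candidates) / inputs[o]


# Hypothetical data: the input could be beds, the output inpatient days.
beds = [2.0, 4.0, 8.0, 6.0]
days = [1.0, 4.0, 6.0, 3.0]

for o in range(len(beds)):
    crs = crs_efficiency(beds, days, o)
    vrs = vrs_efficiency(beds, days, o)
    scale = crs / vrs  # scale efficiency = CRS score / VRS score
    print(f"hospital {o}: CRS={crs:.3f} VRS={vrs:.3f} scale={scale:.3f}")
```

A hospital with a score of 1 lies on the corresponding frontier. In this toy data, the first hospital is on the VRS frontier but not the CRS frontier, so its inefficiency is attributed entirely to scale.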

Table 6.

Average Technical Efficiency Scores, Number of Efficiency Frontiers, and VRS Input Slacks (All DOD Hospitals)

| Year | CRS Efficiency | VRS Efficiency | Scale Efficiency | Efficiency Frontiers (CRS) | Efficiency Frontiers (VRS) | Efficiency Frontiers (Scale) | VRS Input Slack: Beds | VRS Input Slack: Total Expense | VRS Input Slack: Physicians | VRS Input Slack: Nurses | VRS Input Slack: Other FTE | Number of Hospitals |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 2010 | 0.904 | 0.944 | 0.956 | 20 | 28 | 20 | 0.662 | 723,874.09 | 8.618 | 9.635 | 1.779 | 45 |

| 2011 | 0.963 | 0.987 | 0.975 | 25 | 29 | 27 | 0.016 | 1,955,633.12 | 7.627 | 0.543 | 1.760 | 42 |

| 2012 | 0.968 | 0.985 | 0.982 | 23 | 30 | 25 | 0.975 | 0.00 | 0.304 | 0.061 | 4.548 | 43 |

| 2013 | 0.920 | 0.959 | 0.958 | 24 | 27 | 25 | 0.000 | 1,518,408.75 | 3.826 | 0.005 | 54.700 | 43 |

| 2014 | 0.951 | 0.969 | 0.981 | 20 | 22 | 20 | 1.225 | 799,647.17 | 3.665 | 1.281 | 5.848 | 43 |

| 2015 | 0.973 | 0.988 | 0.985 | 36 | 37 | 36 | 0.000 | 41,499.46 | 0.033 | 0.012 | 2.000 | 42 |

| 2016 | 0.972 | 0.989 | 0.981 | 34 | 35 | 34 | 0.228 | 1,230,019.79 | 0.045 | 0.011 | 5.828 | 41 |

| 2017 | 0.968 | 0.990 | 0.977 | 32 | 34 | 32 | 0.394 | 1,043,573.15 | 0.012 | 0.011 | 12.313 | 40 |

| 2018 | 0.910 | 0.922 | 0.983 | 31 | 31 | 31 | 0.359 | 8,011,643.51 | 0.000 | 6.061 | 65.480 | 40 |

| 2019 | 0.881 | 0.928 | 0.933 | 31 | 31 | 31 | 0.673 | 6,734,720.39 | 0.019 | 5.404 | 70.321 | 40 |

| 2020 | 1.000 | 1.000 | 1.000 | 37 | 37 | 38 | 0.000 | 0.00 | 0.001 | 0.009 | 0.026 | 38 |

| 2021 | 0.991 | 0.991 | 1.000 | 26 | 26 | 38 | 5.376 | 34,271,291.50 | 4.868 | 4.852 | 0.000 | 38 |

| Mean | 0.950 | 0.971 | 0.976 | 28.250 | 30.583 | 29.750 | 0.826 | 4,694,192.58 | 2.418 | 2.324 | 18.717 | |

Table 7.

Average Technical Efficiency Scores, Number of Efficiency Frontiers, and VRS Input Slacks (Air Force Hospitals)

| Year | CRS Efficiency | VRS Efficiency | Scale Efficiency | Efficiency Frontiers (CRS) | Efficiency Frontiers (VRS) | Efficiency Frontiers (Scale) | VRS Input Slack: Beds | VRS Input Slack: Total Expense | VRS Input Slack: Physicians | VRS Input Slack: Nurses | VRS Input Slack: Other FTE | Number of Hospitals |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 2010 | 0.892 | 0.939 | 0.948 | 4 | 7 | 4 | 1.064 | 1,185,045.87 | 7.273 | 12.902 | 0.000 | 10 |

| 2011 | 0.999 | 0.999 | 1.000 | 5 | 5 | 6 | 0.035 | 51,612.98 | 0.040 | 0.000 | 0.000 | 7 |

| 2012 | 0.972 | 0.990 | 0.982 | 5 | 7 | 5 | 1.095 | 0.00 | 0.109 | 0.000 | 2.286 | 8 |

| 2013 | 0.938 | 0.979 | 0.959 | 6 | 7 | 6 | 0.000 | 0.00 | 1.901 | 0.000 | 25.565 | 8 |

| 2014 | 0.919 | 0.957 | 0.961 | 1 | 2 | 1 | 2.506 | 93,990.97 | 9.809 | 6.578 | 7.736 | 8 |

| 2015 | 1.000 | 1.000 | 1.000 | 7 | 7 | 7 | 0.000 | 0.00 | 0.000 | 0.000 | 0.000 | 7 |

| 2016 | 1.000 | 1.000 | 1.000 | 7 | 7 | 7 | 0.000 | 0.00 | 0.044 | 0.000 | 0.000 | 7 |

| 2017 | 0.977 | 0.985 | 0.991 | 6 | 6 | 6 | 0.663 | 1,743,097.17 | 0.071 | 0.000 | 20.758 | 7 |

| 2018 | 0.943 | 0.943 | 0.999 | 6 | 6 | 6 | 0.001 | 5,337,678.49 | 0.000 | 4.251 | 48.685 | 7 |

| 2019 | 0.924 | 0.940 | 0.973 | 6 | 6 | 6 | 0.000 | 3,589,614.84 | 0.044 | 3.790 | 47.291 | 7 |

| 2020 | 1.000 | 1.000 | 1.000 | 6 | 6 | 7 | 0.009 | 0.00 | 0.003 | 0.000 | 0.000 | 7 |

| 2021 | 0.979 | 0.979 | 1.000 | 2 | 2 | 7 | 11.749 | 58,490,822.08 | 10.424 | 10.390 | 0.000 | 7 |

| Mean | 0.962 | 0.976 | 0.984 | 5.083 | 5.667 | 5.667 | 1.427 | 5,874,321.87 | 2.477 | 3.159 | 12.693 | |

Table 8.

Average Technical Efficiency Scores, Number of Efficiency Frontiers, and VRS Input Slacks (Army Hospitals)

| Year | CRS Efficiency | VRS Efficiency | Scale Efficiency | Efficiency Frontiers (CRS) | Efficiency Frontiers (VRS) | Efficiency Frontiers (Scale) | VRS Input Slack: Beds | VRS Input Slack: Total Expense | VRS Input Slack: Physicians | VRS Input Slack: Nurses | VRS Input Slack: Other FTE | Number of Hospitals |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 2010 | 0.909 | 0.947 | 0.958 | 9 | 13 | 9 | 0.704 | 460,057.88 | 10.675 | 9.429 | 0.000 | 22 |

| 2011 | 0.960 | 0.985 | 0.974 | 12 | 15 | 13 | 0.019 | 3,552,534.00 | 14.321 | 0.543 | 1.934 | 22 |

| 2012 | 0.973 | 0.987 | 0.985 | 13 | 16 | 14 | 1.003 | 0.00 | 0.269 | 0.089 | 2.476 | 22 |

| 2013 | 0.921 | 0.956 | 0.962 | 12 | 14 | 12 | 0.000 | 2,001,264.35 | 4.055 | 0.009 | 60.587 | 22 |

| 2014 | 0.961 | 0.973 | 0.988 | 14 | 14 | 14 | 1.154 | 1,028,132.62 | 2.299 | 0.000 | 5.364 | 22 |

| 2015 | 0.966 | 0.985 | 0.980 | 19 | 20 | 19 | 0.000 | 54,092.91 | 0.039 | 0.023 | 3.321 | 22 |

| 2016 | 0.968 | 0.990 | 0.977 | 17 | 18 | 17 | 0.236 | 1,156,831.02 | 0.038 | 0.022 | 5.541 | 21 |

| 2017 | 0.965 | 0.985 | 0.979 | 16 | 17 | 16 | 0.516 | 1,386,116.74 | 0.000 | 0.000 | 16.097 | 20 |

| 2018 | 0.900 | 0.908 | 0.989 | 15 | 15 | 15 | 0.221 | 8,869,890.78 | 0.000 | 6.897 | 76.522 | 20 |

| 2019 | 0.867 | 0.911 | 0.936 | 15 | 15 | 15 | 0.455 | 7,035,479.00 | 0.023 | 6.290 | 79.533 | 20 |

| 2020 | 1.000 | 1.000 | 1.000 | 20 | 20 | 20 | 0.000 | 0.00 | 0.000 | 0.017 | 0.050 | 20 |

| 2021 | 0.994 | 0.994 | 1.000 | 16 | 16 | 20 | 3.471 | 21,945,135.34 | 3.255 | 3.204 | 0.000 | 20 |

| Mean | 0.949 | 0.968 | 0.977 | 14.833 | 16.083 | 15.333 | 0.648 | 3,957,461.22 | 2.915 | 2.210 | 20.952 | |

Table 9.

Average Technical Efficiency Scores, Number of Efficiency Frontiers, and VRS Input Slacks (Navy Hospitals)

| Year | CRS Efficiency | VRS Efficiency | Scale Efficiency | Efficiency Frontiers (CRS) | Efficiency Frontiers (VRS) | Efficiency Frontiers (Scale) | VRS Input Slack: Beds | VRS Input Slack: Total Expense | VRS Input Slack: Physicians | VRS Input Slack: Nurses | VRS Input Slack: Other FTE | Number of Hospitals |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 2010 | 0.906 | 0.941 | 0.958 | 7 | 8 | 8 | 0.282 | 815,584.76 | 6.172 | 7.469 | 6.159 | 13 |

| 2011 | 0.950 | 0.985 | 0.962 | 8 | 9 | 8 | 0.001 | 278,427.08 | 0.385 | 0.834 | 2.412 | 13 |

| 2012 | 0.957 | 0.979 | 0.977 | 5 | 7 | 6 | 0.852 | 0.00 | 0.484 | 0.050 | 9.447 | 13 |

| 2013 | 0.908 | 0.952 | 0.950 | 6 | 7 | 7 | 0.000 | 1,635,673.91 | 4.623 | 0.000 | 62.668 | 13 |

| 2014 | 0.952 | 0.971 | 0.980 | 5 | 6 | 5 | 0.555 | 847,229.44 | 2.195 | 0.190 | 5.505 | 13 |

| 2015 | 0.971 | 0.986 | 0.984 | 11 | 11 | 11 | 0.000 | 42,533.32 | 0.041 | 0.000 | 0.842 | 13 |

| 2016 | 0.962 | 0.982 | 0.979 | 10 | 10 | 10 | 0.337 | 2,010,566.15 | 0.056 | 0.000 | 9.430 | 13 |

| 2017 | 0.966 | 0.999 | 0.966 | 10 | 11 | 10 | 0.060 | 139,916.24 | 0.000 | 0.034 | 1.944 | 13 |

| 2018 | 0.908 | 0.932 | 0.965 | 10 | 10 | 10 | 0.764 | 8,131,090.42 | 0.000 | 5.750 | 57.536 | 13 |

| 2019 | 0.878 | 0.950 | 0.908 | 10 | 10 | 10 | 1.370 | 7,965,533.21 | 0.000 | 4.912 | 68.550 | 13 |

| 2020 | 1.000 | 1.000 | 1.000 | 11 | 11 | 11 | 0.000 | 0.00 | 0.000 | 0.000 | 0.000 | 11 |

| 2021 | 0.992 | 0.992 | 1.000 | 8 | 8 | 10 | 4.784 | 41,270,056.79 | 4.266 | 4.325 | 0.000 | 11 |

| Mean | 0.946 | 0.972 | 0.969 | 8.417 | 9.000 | 8.833 | 0.750 | 5,261,384.28 | 1.519 | 1.964 | 18.708 | |

Table 6 indicates that the average CRS-based technical efficiency from 2010 to 2021 is 0.950. DOD hospitals showed instability in CRS-based technical efficiency during this period. For example, they scored 0.904 in 2010 and recorded their lowest score, 0.881, in 2019, whereas they registered a CRS-based technical efficiency score of 1.000 in 2020. The number of CRS-based technical efficiency frontiers in Table 6 shows the same contrast: 25 out of 45 DOD hospitals (55.6%) ran inefficiently in 2010, while 37 out of 38 DOD hospitals (97.4%) ran efficiently in 2020. CRS-based technical efficiency also dropped suddenly in 2013, 2018, and 2019: it decreased by 0.05 from 2012 to 2013, by 0.06 from 2017 to 2018, and by 0.03 from 2018 to 2019. It then jumped by 0.12 from 2019 to 2020.
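The percentages of efficient and inefficient hospitals follow directly from the frontier counts in Table 6. The short sketch below recomputes them for a few years; the helper name `frontier_share` is illustrative only.

```python
def frontier_share(n_frontier, n_hospitals):
    """Percentage of hospitals on the efficiency frontier."""
    return 100.0 * n_frontier / n_hospitals


# (CRS frontier count, number of hospitals) taken from Table 6.
table6_crs = {2010: (20, 45), 2014: (20, 43), 2020: (37, 38)}

for year, (n_frontier, n_hospitals) in table6_crs.items():
    efficient = frontier_share(n_frontier, n_hospitals)
    print(f"{year}: {efficient:.1f}% efficient, {100 - efficient:.1f}% inefficient")
```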

Table 6 also shows that the average VRS-based technical efficiency from 2010 to 2021 was 0.971. Like CRS-based technical efficiency, it was unstable during this period. The VRS-based technical efficiency of DOD hospitals recorded its lowest value, 0.922, in 2018, while its highest value, 1.000, was achieved in 2020. Table 6 reveals that only 22 out of 43 DOD hospitals (51.2%) were efficient in 2014, while 37 out of 38 DOD hospitals (97.4%) ran efficiently in 2020. Like CRS-based technical efficiency, VRS-based technical efficiency also dropped suddenly in 2013 and 2018: it decreased by 0.03 from 2012 to 2013 and by 0.07 from 2017 to 2018. It then spiked by 0.07 from 2019 to 2020.

Scale efficiency increased overall from 2010 to 2021, as shown in Table 6. It was 0.956 in 2010 but reached 1.000 in 2020 and 2021. Indeed, only 20 out of 45 DOD hospitals (44.4%) operated at an optimal size in 2010, whereas all DOD hospitals have done so since 2020. Scale efficiency showed changes similar to those of CRS-based and VRS-based efficiency during this period. It decreased by 0.02 from 2012 to 2013 and by 0.05 from 2018 to 2019. Then, scale efficiency jumped by 0.07 from 2019 to 2020.

These results indicate that, overall, DOD hospitals from 2010 to 2021 did not succeed in substantially increasing their technical efficiency and thereby reaching an optimal level of efficiency. Indeed, the averages of CRS-based, VRS-based, and scale efficiency are all less than 1, indicating that not all DOD hospitals were perfectly efficient during this period. In particular, the average VRS-based technical efficiency, 0.971, was lower than the average scale efficiency, 0.976. This implies that the overall technical inefficiency originated more from a failure to achieve pure technical efficiency than from a suboptimal scale. The gap between VRS-based technical efficiency (0.922) and scale efficiency (0.983) was especially large in 2018, at 0.061. Considering that the CRS-based technical efficiency was 0.910 in that year, DOD hospitals overall ran inefficiently in 2018, and the inefficiency may be due to the lack of pure technical efficiency.

Table 6 presents the average VRS input slacks in DOD hospitals from 2010 to 2021. It reveals that the average slacks in operating expenses and other full-time employees fluctuated widely during this period. For example, they suddenly soared in 2018. The average operating expense slack in 2018 (USD8,011,643.51) was 767.71% of its 2017 value (USD1,043,573.15). The average slack in full-time other employees in 2018 (65.480 employees) was 531.80% of its 2017 value (12.313). In contrast, the slacks in hospital beds, full-time physicians, and full-time nurses generally decreased from 2010 to 2021. In particular, all input variables showed little or no slack in 2020. Indeed, CRS-based, VRS-based, and scale efficiency all recorded 1 in that year, which means that DOD hospitals were perfectly efficient. This may be due to the outbreak of the COVID-19 pandemic in 2020.
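Because a ratio to the base year and a percent change are easy to conflate, the sketch below computes both for the 2017 and 2018 average slack values in Table 6. The helper name `ratio_and_change` is illustrative and not part of the study.

```python
def ratio_and_change(old, new):
    """Return (new as a percentage of old, percent change from old to new)."""
    ratio_pct = 100.0 * new / old
    return ratio_pct, ratio_pct - 100.0


# Average VRS input slacks for 2017 and 2018, from Table 6.
expense_ratio, expense_change = ratio_and_change(1_043_573.15, 8_011_643.51)
other_fte_ratio, other_fte_change = ratio_and_change(12.313, 65.480)

print(f"Expense slack: {expense_ratio:.2f}% of 2017, change {expense_change:+.2f}%")
print(f"Other FTE slack: {other_fte_ratio:.2f}% of 2017, change {other_fte_change:+.2f}%")
```

The two measures always differ by exactly 100 percentage points, so it matters which one a reported figure refers to.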

Tables 7–9 reveal differences in technical efficiency as well as changes from 2010 to 2021 between the Air Force, Army, and Navy hospitals. The average CRS-based technical efficiency of the Air Force, Army, and Navy hospitals from 2010 to 2021 was 0.962, 0.949, and 0.946, respectively, while their average VRS-based technical efficiency was 0.976, 0.968, and 0.972. The average scale efficiency of the three types of hospitals from 2010 to 2021 was 0.984, 0.977, and 0.969, respectively. The Air Force hospitals showed relatively higher technical efficiency than Army and Navy hospitals during this period.

The results indicate that all types of DOD hospitals were inefficient overall during this period because the averages of CRS-based, VRS-based, and scale efficiency were less than 1 in all types of DOD hospitals. Interestingly, the average VRS-based technical efficiency was lower than the average scale efficiency from 2010 to 2021 in both Air Force and Army hospitals. In contrast, the average VRS-based technical efficiency was higher than the average scale efficiency during this period in Navy hospitals. This implies that the overall inefficiency of Air Force and Army hospitals is likely to originate from the lack of pure technical efficiency rather than a suboptimal scale, while the inefficiency of Navy hospitals is likely to be related to a failure to achieve an optimal size rather than the lack of pure technical efficiency.
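The comparison rule used in this paragraph can be written out explicitly. The sketch below applies it to the 2010 to 2021 means reported in Tables 7–9; the function name and the labels it returns are illustrative choices, not terminology fixed by the study.

```python
def main_inefficiency_source(vrs_mean, scale_mean):
    """Name the component with the lower average score."""
    if vrs_mean < scale_mean:
        return "pure technical inefficiency"
    if vrs_mean > scale_mean:
        return "scale inefficiency"
    return "no dominant source"


# Mean (VRS-based efficiency, scale efficiency), 2010-2021, from Tables 7-9.
means = {
    "Air Force": (0.976, 0.984),
    "Army": (0.968, 0.977),
    "Navy": (0.972, 0.969),
}

sources = {
    branch: main_inefficiency_source(vrs, scale)
    for branch, (vrs, scale) in means.items()
}
for branch, source in sources.items():
    print(f"{branch}: {source}")
```

The differences between the two averages are small (at most 0.009), so this rule indicates a tendency rather than a firm diagnosis.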

Specifically, Table 7 indicates that the Air Force hospitals have shown fluctuations in CRS-based technical efficiency, although they have maintained relatively high efficiency compared to Army and Navy hospitals. Regarding CRS-based technical efficiency, the Air Force hospitals recorded their lowest score, 0.892, in 2010, rose to 0.999 in 2011, and then declined to 0.919 in 2014. In particular, only 1 out of 8 Air Force hospitals (12.5%) was efficient in 2014. The average VRS-based technical efficiency (0.957) and scale efficiency (0.961) in 2014 were similarly low. Then, the Air Force hospitals suddenly registered a CRS-based technical efficiency score of 1.000 in 2015 and 2016. Both VRS-based technical efficiency and scale efficiency showed similar patterns during this period.

As Table 8 shows, Army hospitals increased their technical efficiency from 2010 to 2016. The average CRS-based technical efficiency, VRS-based technical efficiency, and scale efficiency of Army hospitals were 0.909, 0.947, and 0.958, respectively, in 2010 and increased to 0.968, 0.990, and 0.977 in 2016. Between 2016 and 2019, Army hospitals showed a substantial decrease in their average CRS-based technical efficiency, VRS-based technical efficiency, and scale efficiency. According to the number of CRS-based technical efficiency frontiers, only 9 out of 22 Army hospitals (40.9%) were efficient in 2010. However, 19 out of 22 Army hospitals (86.4%) ran efficiently in 2015. After this peak, the number of CRS-based technical efficiency frontiers among Army hospitals decreased to 15 out of 20 Army hospitals (75.0%) in 2019. Similar patterns appear in the changes in the average VRS-based technical efficiency and scale efficiency.

Table 9 reveals that the Navy hospitals experienced fluctuations in their technical and scale efficiency. For example, their average CRS-based, VRS-based, and scale efficiency in 2010 was 0.906, 0.941, and 0.958, respectively. These averages increased to 0.966, 0.999, and 0.966 in 2017. However, they went down to 0.878, 0.950, and 0.908 in 2019. The number of efficiency frontiers was lowest in 2014. Regarding the number of CRS-based technical efficiency frontiers, only 5 out of 13 Navy hospitals (38.5%) were efficient in 2014. The number of scale efficiency frontiers in Table 9 also indicates that only 5 out of 13 Navy hospitals (38.5%) had an optimal scale to produce maximum outputs in 2014. After this worst year, the number of efficiency frontiers increased and became stable. Ten or 11 out of 13 Navy hospitals ran efficiently from 2015 to 2019.

With regard to the average VRS input slacks, the Air Force, Army, and Navy hospitals gradually decreased their slacks from 2010 to 2021, although the average VRS input slacks suddenly soared in 2021; this rapid change in 2021 may be due to the impact of the COVID-19 pandemic. Generally, all three types of military hospitals maintained few slacks in hospital beds, full-time physicians, and full-time nurses during this period. However, as shown in Tables 7–9, they experienced some rapid changes in operating expenses and other full-time employee slacks in certain years. That is to say, the Air Force, Army, and Navy hospitals had some difficulties in managing their operating expenses and other full-time employees to maximize hospital efficiency.

Malmquist Productivity Index

We employed the DEA-based MPI to assess the productivity change of DOD hospitals from 2010 to 2021. The results are presented in Tables 10–13. (See Supplementary Figure 2, which visualizes these tables as a graph). The tables show the average MPI and its four components: technical efficiency change (Effch), technological change (Techch), pure efficiency change (Pech), and scale efficiency change (Sech).

Table 10.

MPI and Its Components (All DOD Hospitals)

| Periods | Effch | Techch | Pech | Sech | MPI |

|---|---|---|---|---|---|

| 2012–2013 | 0.956 | 1.193 | 0.980 | 0.975 | 1.141 |

| 2013–2014 | 1.028 | 0.804 | 1.008 | 1.019 | 0.827 |

| 2014–2015 | 1.045 | 0.972 | 1.025 | 1.020 | 1.015 |

| 2015–2016 | 1.000 | 1.110 | 1.000 | 1.000 | 1.110 |

| 2016–2017 | 0.967 | 0.687 | 0.988 | 0.978 | 0.664 |

| 2017–2018 | 0.926 | 1.067 | 0.920 | 1.007 | 0.989 |

| 2018–2019 | 0.948 | 1.069 | 1.006 | 0.943 | 1.014 |

| 2019–2020 | 1.178 | 1.252 | 1.093 | 1.078 | 1.474 |

| 2020–2021 | 0.990 | 0.923 | 0.990 | 1.000 | 0.914 |

| Mean | 1.002 | 0.993 | 1.000 | 1.001 | 0.995 |

Table 11.

MPI and Its Components (Air Force Hospitals)

| Periods | Effch | Techch | Pech | Sech | MPI |

|---|---|---|---|---|---|

| 2012–2013 | 0.998 | 1.476 | 0.986 | 1.012 | 1.472 |

| 2013–2014 | 0.958 | 0.638 | 0.974 | 0.984 | 0.611 |

| 2014–2015 | 1.072 | 1.080 | 1.054 | 1.018 | 1.159 |

| 2015–2016 | 1.000 | 0.974 | 1.000 | 1.000 | 0.974 |

| 2016–2017 | 0.975 | 0.705 | 0.984 | 0.991 | 0.687 |

| 2017–2018 | 0.953 | 0.988 | 0.945 | 1.009 | 0.942 |

| 2018–2019 | 0.965 | 1.036 | 0.994 | 0.971 | 1.000 |

| 2019–2020 | 1.115 | 1.154 | 1.082 | 1.030 | 1.286 |

| 2020–2021 | 0.978 | 0.864 | 0.979 | 1.000 | 0.846 |

| Mean | 1.000 | 0.963 | 0.999 | 1.001 | 0.963 |

Table 12.

MPI and Its Components (Army Hospitals)

| Periods | Effch | Techch | Pech | Sech | MPI |

|---|---|---|---|---|---|

| 2012–2013 | 0.940 | 1.149 | 0.975 | 0.964 | 1.080 |

| 2013–2014 | 1.048 | 0.864 | 1.019 | 1.029 | 0.906 |

| 2014–2015 | 1.036 | 0.941 | 1.016 | 1.019 | 0.974 |

| 2015–2016 | 1.000 | 1.172 | 1.000 | 1.000 | 1.172 |

| 2016–2017 | 0.963 | 0.667 | 0.984 | 0.978 | 0.642 |

| 2017–2018 | 0.914 | 1.080 | 0.905 | 1.010 | 0.988 |

| 2018–2019 | 0.940 | 1.091 | 1.003 | 0.938 | 1.026 |

| 2019–2020 | 1.208 | 1.290 | 1.120 | 1.079 | 1.559 |

| 2020–2021 | 1.002 | 1.007 | 1.000 | 1.002 | 1.009 |

| Mean | 1.002 | 1.012 | 1.001 | 1.001 | 1.015 |

Table 13.

MPI and Its Components (Navy Hospitals)

| Periods | Effch | Techch | Pech | Sech | MPI |

|---|---|---|---|---|---|

| 2012–2013 | 0.959 | 1.110 | 0.987 | 0.972 | 1.064 |

| 2013–2014 | 1.038 | 0.820 | 1.012 | 1.026 | 0.851 |

| 2014–2015 | 1.044 | 0.963 | 1.022 | 1.022 | 1.006 |

| 2015–2016 | 1.000 | 1.090 | 1.000 | 1.000 | 1.090 |

| 2016–2017 | 0.968 | 0.716 | 1.000 | 0.969 | 0.693 |

| 2017–2018 | 0.932 | 1.099 | 0.934 | 0.998 | 1.025 |

| 2018–2019 | 0.952 | 1.051 | 1.021 | 0.933 | 1.001 |

| 2019–2020 | 1.163 | 1.248 | 1.049 | 1.108 | 1.451 |

| 2020–2021 | 0.991 | 0.955 | 0.991 | 1.000 | 0.946 |

| Mean | 1.003 | 0.993 | 1.001 | 1.002 | 0.997 |

Table 10 shows the results of all DOD hospitals. The average MPI of all DOD hospitals from 2010 to 2021 is 0.995, indicating that the average productivity of all DOD hospitals decreased by 0.5% annually during this period. That is to say, all DOD hospitals failed to improve their productivity significantly over time.
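The reported mean MPI of 0.995 is consistent with a geometric mean of the nine period-level values listed in Table 10. That the authors averaged in this way is an assumption; the sketch below simply recomputes the mean and the implied average annual change under it.

```python
import math


def geometric_mean(values):
    """Geometric mean, the usual way to average multiplicative indices."""
    return math.exp(sum(math.log(v) for v in values) / len(values))


# MPI for all DOD hospitals, 2012-2013 through 2020-2021 (Table 10).
mpi_all_dod = [1.141, 0.827, 1.015, 1.110, 0.664, 0.989, 1.014, 1.474, 0.914]

mean_mpi = geometric_mean(mpi_all_dod)
annual_change_pct = (mean_mpi - 1) * 100  # negative means productivity fell

print(f"Mean MPI: {mean_mpi:.3f}")
print(f"Average annual productivity change: {annual_change_pct:.1f}%")
```

An arithmetic mean of the same values would exceed 1, so the choice of averaging method affects whether productivity appears to rise or fall.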

In particular, it is interesting that the average technological change was 0.993, which is less than 1, while the average pure and scale efficiency changes were 1.000 and 1.001, respectively, which are equal to or larger than 1. This may denote that the decrease in the productivity of all DOD hospitals originates from technological regress. Supplementary Figure 2 clearly shows that the MPI has moved with technological change during this period. That is to say, some DOD hospitals might have failed to fully employ technology and innovative management to produce more outputs with fewer inputs and thus stayed inefficient.
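The link between the MPI and technological change follows from the standard decomposition, in which MPI = Effch × Techch and Effch = Pech × Sech. As a sanity check, the sketch below verifies both identities against the rows of Table 10. The tolerance of 0.002 is an assumption chosen to absorb rounding to three decimals in the published table.

```python
# (Effch, Techch, Pech, Sech, MPI) for all DOD hospitals, from Table 10.
table10 = {
    "2012-2013": (0.956, 1.193, 0.980, 0.975, 1.141),
    "2013-2014": (1.028, 0.804, 1.008, 1.019, 0.827),
    "2014-2015": (1.045, 0.972, 1.025, 1.020, 1.015),
    "2015-2016": (1.000, 1.110, 1.000, 1.000, 1.110),
    "2016-2017": (0.967, 0.687, 0.988, 0.978, 0.664),
    "2017-2018": (0.926, 1.067, 0.920, 1.007, 0.989),
    "2018-2019": (0.948, 1.069, 1.006, 0.943, 1.014),
    "2019-2020": (1.178, 1.252, 1.093, 1.078, 1.474),
    "2020-2021": (0.990, 0.923, 0.990, 1.000, 0.914),
}

TOLERANCE = 0.002  # assumed allowance for three-decimal rounding


def check_decomposition(effch, techch, pech, sech, mpi, tol=TOLERANCE):
    """True if MPI = Effch * Techch and Effch = Pech * Sech within tol."""
    mpi_ok = abs(effch * techch - mpi) <= tol
    effch_ok = abs(pech * sech - effch) <= tol
    return mpi_ok and effch_ok


results = {period: check_decomposition(*row) for period, row in table10.items()}
for period, ok in results.items():
    print(period, "consistent" if ok else "inconsistent")
```

Since Effch stays close to 1 in most periods, large swings in the MPI must come mainly from Techch, which is the pattern the text describes.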

Regarding the change in productivity by year, we found that significant decreases in both the MPI and technological change occurred together in 2013–2014 and 2016–2017, while technical efficiency change, pure efficiency change, and scale efficiency change remained relatively stable in those periods. It is also notable that the MPI and all four of its components spiked in 2019–2020. This may be due to the COVID-19 pandemic.

Tables 11–13 show the results for Air Force, Army, and Navy hospitals, respectively. Only the Army hospitals achieved productivity improvement, while Air Force and Navy hospitals experienced a decrease in productivity. All three types of DOD hospitals showed similar patterns in the change of the MPI and its four components during this period: the MPI and technological change moved together, with a significant decrease in 2013–2014 and 2016–2017 and a sudden rise in 2019–2020.

Specifically, the average MPI of Army hospitals from 2010 to 2021 is 1.015, meaning that Army hospitals increased their productivity by 1.5% annually during this period. The average values of their technical efficiency change, technological change, pure efficiency change, and scale efficiency change are all larger than 1, implying that the Army hospitals improved on every component of productivity from 2010 to 2021. Indeed, the MPI and its four components have been relatively stable in Army hospitals, although the MPI and technological change showed a noticeable decrease in 2013–2014 and 2016–2017 and a remarkable increase in 2015–2016 and 2019–2020.

On the other hand, the average MPI values of Air Force and Navy hospitals are 0.963 and 0.997, which implies that their productivity decreased by 3.7% and 0.3% annually, respectively, during this period. In particular, the Air Force hospitals showed the largest decrease in productivity among the three types of DOD hospitals. Considering that their average technological change is 0.963, this decrease may be due to their inefficiency in using technology and innovative management to manage their inputs and outputs. When we examined the MPI and its four components for Air Force hospitals by year, we found that the MPI and technological change decreased sharply between 2012–2013 and 2013–2014, by 0.86 and 0.84, respectively. Indeed, Air Force hospitals had the highest MPI and technological change among the three types of DOD hospitals in 2012–2013, but they ranked third on both in 2013–2014.

Discussion

DOD hospitals are expected to fulfil two primary missions: maintain an operational medical capacity to support combat operations and offer healthcare benefits to DOD beneficiaries. Both are critically related to the overall capacity of national defense. This makes military healthcare one of the most important policy areas and one of the largest expenditures in the defense budget.

To be accountable for achieving these two primary missions against rising costs in the military healthcare system, DOD hospitals are expected to meet demand efficiently. For that reason, Congress as well as the DOD have paid attention to evaluating and improving the efficiency of DOD hospitals. For example, Congress mandated MHS reform through the Fiscal Year 2017 (FY17) National Defense Authorization Act (NDAA), which not only shifted the administration of Military Treatment Facilities (MTFs) from individual services to the Defense Health Agency but also directed the agency to investigate all MTFs to define what should be done to achieve the right-sized facilities.43–45

Academia has also engaged in assessing and prescribing hospital efficiency in the MHS. Hospital-efficiency researchers assess military hospital efficiency by analyzing technical efficiency,39,46–49 specifying the differences in efficiency between types of military hospitals, such as between DOD and Veterans Administration (VA) hospitals,48,50 comparing them to non-federal hospitals51 and identifying the determinants of military hospital performance,52 as well as the outcomes of military hospital efficiency.53,54

This study is an extension of this federal hospital-efficiency research. In particular, it focuses on analyzing all DOD hospitals, consisting of Army, Air Force, and Navy hospitals, from 2010 to 2021. The results contribute to previous federal hospital-efficiency studies by adding new findings.

First, this study describes the trends of DOD hospital efficiency from 2010 to 2021. The average CRS-based technical efficiency of all DOD hospitals moved from 0.904 in 2010 to 0.991 in 2021. It improved slightly (its CAGR is 0.84%), although there were some fluctuations during this period. The CRS-based technical efficiency of all DOD hospitals recorded its highest value (1.000) in 2020 and its lowest value (0.881) in 2019. All three types of DOD hospitals showed a similar pattern in CRS-based technical efficiency trends from 2010 to 2021. The average CRS-based technical efficiency of the Air Force hospitals moved from 0.892 in 2010 to 0.979 in 2021 (its CAGR is 0.85%), while that of the Army hospitals shifted from 0.909 in 2010 to 0.994 in 2021 (its CAGR is 0.82%), and that of the Navy hospitals increased from 0.906 in 2010 to 0.992 in 2021 (its CAGR is 0.83%).

Although a direct comparison is not possible, we can trace the trends in DOD hospital efficiency using previous studies. For example, employing DEA, Ozcan and Bannick (1994) found that the average efficiency of 124 DOD hospitals ranged from 0.91 to 0.96 from 1988 to 1990; the average efficiency of all DOD hospitals during this period was 0.95, while the average efficiency of Air Force, Army, and Navy hospitals was 0.96, 0.94, and 0.91, respectively. Over the three years, the average efficiency of all DOD hospitals increased slightly, from 0.93 in 1988 to 0.95 in 1989 and 0.94 in 1990. Harrison and Meyer (2014) also used DEA to analyze the efficiency of VA and DOD hospitals in 2007 and 2011. Their results indicated that the efficiency of federal hospitals was 0.81 in 2007 and 0.86 in 2011. Taken together, these studies suggest that DOD hospitals have not been operating efficiently, although their average efficiency has been improving slightly, with some upward and downward movements. This implies that there is room for efficiency improvement and thus policymakers and hospital managers should make more effort to find practical ways to improve the efficiency of DOD hospitals.

Second, the overall technical efficiency of all DOD hospitals has been more influenced by pure technical efficiency than by scale efficiency. The average VRS-based technical efficiency from 2010 to 2021 was 0.971, while the average scale efficiency during this period was 0.976. Given that the overall technical efficiency of all DOD hospitals during that time was 0.950, they were run inefficiently, and the inefficiency is likely due to a lack of pure technical efficiency. For example, when CRS-based technical efficiency recorded its lowest value (0.881) in 2019, slack analysis indicated that the average slack in total expenses was USD 6,734,720.39 and the average slacks in nurses and other full-time employees were 5.404 and 70.321, respectively. However, when we examined the three types of DOD hospitals separately, we found that Navy hospitals differed from Army and Air Force hospitals. For Navy hospitals, there were only three years during this period in which the average VRS-based technical efficiency was lower than the average scale efficiency: 2010, 2014, and 2018. In the remaining nine years, the average VRS-based technical efficiency was higher than the average scale efficiency. That is to say, the overall inefficiency of Navy hospitals might have been more influenced by scale efficiency than by pure technical efficiency during this period. This implies that policymakers and hospital managers should pay more attention to addressing pure technical efficiency, although the priority depends on the type of military hospital.
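The comparison above rests on the standard DEA identity that CRS-based technical efficiency equals VRS-based (pure) technical efficiency multiplied by scale efficiency. As a rough check, the product of the reported averages, 0.971 × 0.976 ≈ 0.948, is close to the reported overall average of 0.950; exact equality is not expected because an average of products differs from a product of averages. The sketch below illustrates the decomposition on hypothetical toy data with one input and one output. The hospital names, the numbers, and the brute-force frontier search are illustrative assumptions; the study itself uses multiple inputs and outputs, which requires solving a linear program per hospital.

```python
from itertools import combinations

# Hypothetical toy data, not from the study: name -> (input, output).
hospitals = {"A": (2.0, 1.0), "B": (4.0, 4.0), "C": (8.0, 6.0), "D": (6.0, 3.0)}


def crs_efficiency(name, data):
    """Input-oriented CRS score: own output/input ratio relative to the best ratio."""
    x, y = data[name]
    best_ratio = max(yj / xj for xj, yj in data.values())
    return (y / x) / best_ratio


def vrs_efficiency(name, data):
    """Input-oriented VRS score for the one-input, one-output case.

    Finds the smallest input on the convex (VRS) frontier that still yields
    at least the hospital's output, then divides by its actual input.
    """
    x, y = data[name]
    # Any single hospital producing at least y is a feasible benchmark.
    candidates = [xj for xj, yj in data.values() if yj >= y]
    # Convex combinations of two hospitals that produce exactly y.
    for (xj, yj), (xk, yk) in combinations(data.values(), 2):
        low, high = sorted([(yj, xj), (yk, xk)])
        if low[0] < y < high[0]:
            w = (high[0] - y) / (high[0] - low[0])  # weight on the lower-output hospital
            candidates.append(w * low[1] + (1 - w) * high[1])
    return min(candidates) / x


def scale_efficiency(name, data):
    """Scale efficiency is the ratio of the CRS score to the VRS score."""
    return crs_efficiency(name, data) / vrs_efficiency(name, data)


for name in hospitals:
    print(
        f"{name}: CRS={crs_efficiency(name, hospitals):.3f} "
        f"VRS={vrs_efficiency(name, hospitals):.3f} "
        f"SE={scale_efficiency(name, hospitals):.3f}"
    )
```

In this toy example, hospital D has a VRS score of about 0.556 and a scale efficiency of 0.9, so its shortfall is mainly a matter of pure technical efficiency, whereas hospital A is VRS-efficient with a scale efficiency of 0.5, so its shortfall is entirely a matter of scale. This is the same kind of distinction the text draws between Army and Air Force hospitals on the one hand and Navy hospitals on the other.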

Lastly, the change in the productivity of all DOD hospitals is related to technological efficiency. When scrutinizing the MPI and its four components for all DOD hospitals from 2010 to 2021, this study found that the MPI and technological efficiency showed the same pattern: they moved upward or downward in the same years. That is to say, an increase or a decrease in the productivity of all DOD hospitals may originate from technological efficiency or inefficiency. When DOD hospitals successfully employed technological developments or adopted innovative management, their productivity in t increased compared with that in t-1. However, when they failed to use technology and innovative management techniques in t-1, their productivity in t decreased compared with that in t-1. This implies that policymakers and hospital managers should pay more attention to employing smart technology and innovative management to improve the productivity of DOD hospitals.
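For readers less familiar with the index, the link between the MPI and its technological component can be written out with the commonly used Färe-type decomposition. The notation below is an assumption made for illustration: $D^{s}(x^{u}, y^{u})$ denotes the distance function that evaluates the input-output bundle of period $u$ against the frontier of period $s$, and the orientation convention is not taken from the study.

```latex
\begin{aligned}
M\left(x^{t}, y^{t}, x^{t-1}, y^{t-1}\right)
  &= \underbrace{\frac{D^{t}\left(x^{t}, y^{t}\right)}
                      {D^{t-1}\left(x^{t-1}, y^{t-1}\right)}}_{\text{technical efficiency change}}
     \times
     \underbrace{\left[
       \frac{D^{t-1}\left(x^{t}, y^{t}\right)}{D^{t}\left(x^{t}, y^{t}\right)}
       \cdot
       \frac{D^{t-1}\left(x^{t-1}, y^{t-1}\right)}{D^{t}\left(x^{t-1}, y^{t-1}\right)}
     \right]^{1/2}}_{\text{technological change}} \\[4pt]
\text{technical efficiency change}
  &= \text{pure technical efficiency change} \times \text{scale efficiency change}
\end{aligned}
```

Under this convention, a value above 1 for the index or for a component indicates improvement between t-1 and t, and a value below 1 indicates decline. When the technical efficiency change term stays close to 1, movements in the index are driven almost entirely by the technological change term, which is consistent with the co-movement described above.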

The findings of this study make significant contributions to the hospital-efficiency research. By highlighting inefficiencies in US DOD hospitals over a prolonged period (2010–2021) and emphasizing differences in technical efficiency across service branches, our study underscores the need for specialized analyses of DOD hospitals as distinct from public or private healthcare institutions. Unlike general hospital efficiency studies, this research demonstrates the importance of incorporating the unique dual-mission structure of DOD hospitals into efficiency assessments, offering insights that bridge operational readiness and healthcare delivery. Further research on hospital efficiency could benefit from these nuanced approaches by extending similar methods to other military healthcare systems globally.

From a managerial perspective, our findings highlight actionable steps to enhance operational efficiency. We identified input slacks, particularly in operating expenses and staffing, which provide hospital managers with a basis for targeted interventions. For example, optimizing nurse-to-patient ratios or reallocating underutilized resources could improve efficiency metrics. The differentiation between inefficiencies rooted in scale versus pure technical operations also offers specific pathways for reform. Navy hospitals, where scale inefficiencies are more pronounced, might benefit from reorganizing resource allocations or facility capacities. In contrast, the inefficiencies in Army and Air Force hospitals could be addressed by adopting advanced management practices and training programs for hospital staff.

Moreover, our study found that technological change is a primary driver of productivity fluctuations, which has direct implications for hospital management. The observed decreases in productivity due to technological inefficiencies suggest a need for greater investment in and integration of innovative healthcare technologies. Policymakers could incentivize the adoption of advanced diagnostic tools, telemedicine platforms, and data-driven management systems to address these gaps. Leveraging such technologies would not only improve operational efficiency but also enhance the quality of care provided to DOD beneficiaries.

Conclusion

The importance of DOD hospitals cannot be overstated. Their two primary missions, maintaining medical readiness for combat and delivering healthcare benefits to DOD beneficiaries, are directly related to national security. Thus, they have received significant attention and are expected to meet the demand for doing more with less, that is, to achieve optimal operational medical capacity. Assessing the efficiency of DOD hospitals benefits decision-makers and managers as well as Congress. However, there has been a decrease in hospital-efficiency research focusing only on DOD hospitals because current hospital-efficiency studies tend to regard DOD hospitals as public hospitals.

To fill the lacuna, this study aimed to evaluate DOD hospital efficiency. It drew upon the American Hospital Association’s annual survey data and employed DEA, slack analysis, and the MPI to analyze DOD hospitals from 2010 to 2021.

The findings of this study offer practical policy and managerial implications on how to manage DOD hospitals efficiently. They reveal that, overall, DOD hospitals operated inefficiently from 2010 to 2021, although the average technical efficiency of all DOD hospitals increased slightly during this period. In addition, our findings show that the inefficiency of all DOD hospitals may be due to the lack of pure technical efficiency rather than the suboptimal scale. However, as Navy hospitals seem to be different from Army and Air Force hospitals, we should be careful in addressing the inefficiency of each type of DOD hospital.

Despite these contributions, this study has some limitations that further research could address. The study focuses on measuring the efficiency of DOD hospitals and does not establish any causal relationship that could explain what generates their efficiency or inefficiency. Thus, further research should employ panel data to identify the causal factors that lead to DOD hospital efficiency. In addition, although this study analyzed the differences in efficiency between Army, Air Force, and Navy hospitals from 2010 to 2021, it employed aggregated data and thus could not explain the variations among individual DOD hospitals in their relative efficiency and its change during this period. Future studies on DOD hospital efficiency should therefore examine the differences among individual DOD hospitals, for example by using a case study approach.

Funding Statement

This research is supported by the 2023 International Joint Research Project grant from the Research Institute for National Security Affairs (RINSA), Korea National Defense University (KNDU).

Disclosure

The authors declare no competing interests.

References

- 1. Congressional Research Service (CRS). FY2024 Budget Request for the Military Health System. 2023; Accessed October 3, 2023. Available from https://crsreports.congress.gov/product/pdf/IF/IF12377.

- 2. Harrison JP, Coppola MN, Wakefield M. Efficiency of federal hospitals in the United States. J Med Syst. 2004;28(5):411–422. doi: 10.1023/B:JOMS.0000041168.28200.8c

- 3. Harrison JP, Coppola MN. The impact of quality and efficiency on federal healthcare. Int J Public Pol. 2007;2(3–4):356–371. doi: 10.1504/IJPP.2007.012913

- 4. Harrison JP, Meyer S. Measuring efficiency among US federal hospitals. Healthcare Manager. 2014;33(2):117–127. doi: 10.1097/HCM.0000000000000005

- 5. Weeks WB, Wallace AE, Wallace TA, Gottlieb DJ. Does the VA offer good health care value? J Health Care Finance. 2009;35(4):1.

- 6. Imani A, Alibabayee R, Golestani M, Dalal K. Key indicators affecting hospital efficiency: a systematic review. Front Public Health. 2022;10:830102. doi: 10.3389/fpubh.2022.830102

- 7. Moshiri H, Aljunid SM, Amin RM. Hospital efficiency: concept, measurement techniques and review of hospital efficiency studies. Malaysian J Public Health Med. 2010;10(2):35–43.

- 8. Srimayarti BN, Leonard D, Yasli DZ. Determinants of Health Service Efficiency in Hospital: a Systematic Review. Int J Eng Sci Inform Technol. 2021;1(3):87–91. doi: 10.52088/ijesty.v1i3.115

- 9. Rosko M, Al-Amin M, Tavakoli M. Efficiency and profitability in US not-for-profit hospitals. Int J Health Econom Management. 2020;20(4):359–379. doi: 10.1007/s10754-020-09284-0

- 10. Farrell MJ. The measurement of productive efficiency. J Royal Stat Soc A. 1957;120(3):253–281. doi: 10.2307/2343100

- 11. Kumbhakar SC, Lovell CK. Stochastic Frontier Analysis. Cambridge University Press; 2000.

- 12. Hadji B, Meyer R, Melikeche S, Escalon S, Degoulet P. Assessing the relationships between hospital resources and activities: a systematic review. J Med Syst. 2014;38(10):1–21. doi: 10.1007/s10916-014-0127-9

- 13. Garcia-Alonso CR, Almeda N, Salinas-Pérez JA, Gutierrez-Colosia MR, Salvador-Carulla L. Relative technical efficiency assessment of mental health services: a systematic review. Administration Policy Mental Health Mental Health Serv Res. 2019;46(4):429–444. doi: 10.1007/s10488-019-00921-6

- 14. Azreena E, Juni MH, Rosliza AM. A systematic review of hospital inputs and outputs in measuring technical efficiency using data envelopment analysis. Int J Public Health Clin Sci. 2018;5(1):17–35.

- 15. Jung S, Son J, Kim C, Chung K. Efficiency Measurement Using Data Envelopment Analysis (DEA) in Public Healthcare: research Trends from 2017 to 2022. Processes. 2023;11(3):811. doi: 10.3390/pr11030811

- 16. Mbau R, Musiega A, Nyawira L, et al. Analysing the efficiency of health systems: a systematic review of the literature. Appl Health Econ Health Pol. 2023;21(2):205–224. doi: 10.1007/s40258-022-00785-2

- 17. Zubir MZ, Noor AA, Rizal AM, et al. Approach in Inputs & Outputs Selection of Data Envelopment Analysis (DEA) Efficiency Measurement in Hospital: a Systematic Review. medRxiv. 2023;2023:10.

- 18. Hollingsworth B. Non-parametric and parametric applications measuring efficiency in health care. Healthcare Management Sci. 2003;6(4):203–218. doi: 10.1023/A:1026255523228

- 19. Hollingsworth B. The measurement of efficiency and productivity of health care delivery. Health Econom. 2008;17(10):1107–1128. doi: 10.1002/hec.1391