Abstract

The iterative shrinkage-thresholding algorithm (ISTA) is a classic optimization algorithm for solving ill-posed linear inverse problems. Many improved variants have been proposed in recent years, and the iterative weighted shrinkage-thresholding algorithm (IWSTA) is one that shows a clear advantage over the ISTA. It assigns different weights to different features so that they contribute differently to the solution. However, the weights of existing IWSTAs do not conform to the usual definition of weights: they do not sum to 1, and they are spread over a wide range. This makes the weights difficult to interpret and analyze and may lead to inaccurate solutions. Therefore, this paper proposes a new IWSTA, namely, the entropy-regularized IWSTA (ERIWSTA), with weights that are easy to calculate and interpret. The weights automatically fall within the range [0, 1] and are guaranteed to sum to 1, so they can be regarded as the probabilities with which different attributes contribute to the model, which improves the interpretability of the algorithm. Specifically, we add an entropy regularization term to the objective function of the problem model and then use the Lagrange multiplier method to solve for the weights. Experimental results on a computed tomography (CT) image reconstruction task show that the ERIWSTA outperforms the existing methods in terms of convergence speed and recovery accuracy.

Introduction

Inverse problems in imaging are essential for physical and biomedical sciences [1]. They arise widely in optical and radar systems and in imaging modalities such as X-ray computed tomography (CT), positron emission tomography (PET), and electrical tomography (ET). An inverse problem in imaging aims to estimate an unknown image from the given measurements by solving an optimization problem with a regularizer. Mathematically, the goal is to infer an original signal $x \in \mathbb{R}^n$ from its measurements $b = Ax \in \mathbb{R}^m$, where $A \in \mathbb{R}^{m \times n}$ is a linear random projection matrix. Because $m \ll n$, this inverse problem is typically ill-posed.

Therefore, researchers typically construct an optimization problem and utilize a regularizer to solve it. However, the employed regularizer is usually nonsmooth, so the problem cannot be solved in a straightforward manner. For this reason, many first-order iterative proximal gradient methods have been proposed, e.g., the iterative shrinkage-thresholding algorithm (ISTA) [2], two-step ISTA (TwIST) [3] and fast ISTA (FISTA) [4, 5]. The ISTA is one of the most popular methods for solving such problems, and its advantage is its simplicity. However, the ISTA has also been recognized as a slow method. TwIST is faster than the ISTA and remains effective. The FISTA has low complexity, fast convergence, and moderate recovery accuracy. It has been shown that the FISTA can be faster than TwIST by several orders of magnitude. In addition, the alternating direction method of multipliers (ADMM) [6] and the primal-dual hybrid gradient (PDHG) algorithm [7] are important methods that solve inverse problems with nonsmooth regularizers with high computational efficiency. Unfortunately, all of these methods treat every attribute equally, which can result in inaccurate solutions.

Recently, the iterative weighted shrinkage-thresholding algorithm (IWSTA) [8] and its variants have been developed to extend the range of practical applications. These methods often outperform unweighted algorithms. Nasser et al. [9] proposed the weighted FISTA (W-FISTA), which has higher estimation efficiency than the original FISTA at the same complexity. Candes et al. [10] proposed an iterative algorithm for reweighted L1 minimization (IRL1) to better penalize nonzero coefficients. This method addresses the imbalance between the L1 and L0 norms. Chartrand et al. [11] proposed an iterative reweighted least-squares (IRLS) algorithm to attain an improved ability to recover sparse signals. IRLS and IRL1 are known for their state-of-the-art reconstruction rates for noiseless and noisy measurements. Foucart et al. [12] proposed a weighted method to obtain the solution of a system with the minimal Lq quasinorm (WLQ). This method generalizes and improves the result obtained with the L1 norm. Wipf et al. [13] presented separable and nonseparable iterative reweighting algorithms and introduced nonnegative sparse coding examples via reweighted L1 minimization (IRW) for solving linear inverse problems. However, the weights of these algorithms do not conform to the usual definition of weights: they do not sum to one, and they are distributed over an enormous range. Such weights are difficult to interpret and may lead to inaccurate results. Table 1 lists some IWSTAs and their details.

Table 1. Variants of weighted methods.

This paper proposes a new IWSTA based on entropy regularization called the entropy-regularized IWSTA (ERIWSTA). This algorithm makes the weights easy to calculate, and it has good interpretability. Additionally, the iterative formula update process becomes simple. Experimental results obtained in synthetic CT image denoising tasks show that the proposed method is feasible and effective.

Related work

We introduce the general model of an inverse problem and the method used to solve it (the ISTA). For convenience, we first present the notation. Matrices are represented by capital letters. For a matrix A, $A_{*i}$, $A_{i*}$ and $A_{ij}$ denote the i-th column, the i-th row and the (i, j)-th element of A, respectively; $\|\cdot\|_p$ represents the p-norm of a vector. All vectors are column vectors unless transposed to a row vector by a superscript T.

The equation of an inverse problem in imaging can be expressed as follows [14]:

$$b = Ax + \epsilon, \tag{1}$$

where $x \in \mathbb{R}^n$ denotes an unknown image, $b \in \mathbb{R}^m$ denotes the given measurements, $A \in \mathbb{R}^{m \times n}$ is called the system matrix (usually, $m \ll n$), and $\epsilon$ is the unknown disturbance term or noise. Note that the system is underdetermined. To reconstruct the original image x, researchers usually construct the following optimization problem [15]:

$$\min_{x} \|Ax - b\|_2^2. \tag{2}$$

Generally, the least-squares method is used to solve the problem.

To suppress overfitting, some scholars [16–18] have introduced the L0 norm to Eq (2) as sparse prior information. Therefore, to obtain the solution of Eq (2), we must solve an optimization problem that minimizes the cost function [19]:

$$\min_{x} \|Ax - b\|_2^2 + \beta\|x\|_0, \tag{3}$$

where $\|x\|_0$, the number of nonzero components of x, is the regularizer that imposes prior knowledge (sparsity), and β > 0, the regularization coefficient, is a hyperparameter that controls the tradeoff between accuracy and sparsity. Eq (3) is an NP-hard, highly discrete optimization problem [20], so it is challenging to solve exactly. Thus, we must seek an effective approximate solution. The L1 norm regularizer is introduced as a substitute for the L0 norm. That is [2],

$$\min_{x} \|Ax - b\|_2^2 + \beta\|x\|_1. \tag{4}$$

This is a convex, continuous optimization problem whose objective is nondifferentiable wherever a component of x equals 0. The classic method for solving the problem is the ISTA proposed by Chambolle et al. [21, 22]. The ISTA updates x through the following shrinkage and soft-thresholding operation in each iteration:

$$x^{k+1} = \operatorname{soft}\left(x^k - 2tA^T(Ax^k - b),\ \beta t\right), \tag{5}$$

where k represents the k-th iteration, t is an appropriate step size and soft is the soft-thresholding function, applied component-wise. The soft function has the following form:

$$\operatorname{soft}(x_i, \theta) = \operatorname{sign}(x_i)\max\{|x_i| - \theta,\ 0\}, \tag{6}$$

where $\operatorname{sign}(x_i)$ is the sign function of $x_i$ and θ is the threshold.
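The shrinkage step described above can be sketched in a few lines of plain Python. This is a minimal illustration rather than the authors' MATLAB implementation: the function names `soft` and `ista_step` are hypothetical, matrices are plain nested lists, and the step size `t` is assumed to be small enough for convergence (for example, `t <= 1 / (2 * L)` with `L` no smaller than the largest eigenvalue of AᵀA).

```python
def soft(v, theta):
    """Soft-thresholding of a scalar: sign(v) * max(|v| - theta, 0)."""
    if v > theta:
        return v - theta
    if v < -theta:
        return v + theta
    return 0.0


def ista_step(A, b, x, beta, t):
    """One ISTA iteration for min ||Ax - b||_2^2 + beta * ||x||_1."""
    m, n = len(A), len(x)
    # Residual r = A x - b.
    r = [sum(A[i][j] * x[j] for j in range(n)) - b[i] for i in range(m)]
    # Gradient of ||Ax - b||_2^2 is 2 A^T r.
    g = [2.0 * sum(A[i][j] * r[i] for i in range(m)) for j in range(n)]
    # Gradient step, then component-wise shrinkage with threshold beta * t.
    return [soft(x[j] - t * g[j], beta * t) for j in range(n)]


# Usage: with A = I the problem separates, and one step with t = 0.5 already
# lands on the minimiser of (x_i - b_i)^2 + beta * |x_i| for each component.
A = [[1.0, 0.0], [0.0, 1.0]]
x = ista_step(A, [3.0, 0.2], [0.0, 0.0], beta=1.0, t=0.5)
print(x)
```

The small component (0.2) falls below the threshold and is set to zero, while the large component is shrunk toward zero by the threshold amount, which is the sparsity-promoting behaviour of the L1 regularizer.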

Recently, the IWSTA has attracted more interest than the ISTA, as it outperforms its unweighted counterparts in most cases. In these methods, decision variables and weights are optimized in an alternating manner, or decision variables are optimized under heuristically chosen weights. Usually, the objective function of this type of algorithm has the following form [13]:

$$\min_{x} \|Ax - b\|_2^2 + \beta\sum_{i=1}^{n} w_i|x_i|. \tag{7}$$

In this paper, we improve the second term in Eq (7) to obtain a variant. Then, we propose an iterative update law for the variant.

Methodology

The main idea of IWSTA-type algorithms is to define a weight for each attribute based on the current iteration xk and then use the defined weights to obtain a new x. In this section, we introduce an entropy regularizer to the cost function and obtain the following optimization model:

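$$\min_{x, w} \Phi(x, w) = \underbrace{\|Ax - b\|_2^2}_{F(x)} + \underbrace{\beta\sum_{i=1}^{n} w_i|x_i| + \gamma\sum_{i=1}^{n} w_i\ln w_i}_{G(x)} \quad \text{s.t.}\ \sum_{i=1}^{n} w_i = 1,\ w_i \ge 0, \tag{8}$$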

where γ ≥ 0 is a given hyperparameter, and the inspiration for adding a term with logarithms comes from the literature [23]. It is worth noting that if we do not use the entropy regularizer, w can easily be solved as $w_i = 1$ when $|x_i| = \min\{|x_1|, \ldots, |x_n|\}$ and $w_i = 0$ otherwise. This update rule can be easily explained by an example:

$$\min_{w}\ 4w_1 + w_2 + 5w_3 \quad \text{s.t.}\ w_1 + w_2 + w_3 = 1,\ w_i \ge 0.$$

The solutions are $w_1 = 0$, $w_2 = 1$ and $w_3 = 0$, among which $w_2$ corresponds to the minimum value of {4, 1, 5}. This is very similar to the weight computation in the k-means algorithm. It shows the simple fact that only one element of w is 1 and the others are 0, which is grossly incompatible with the actual problem. We therefore add the negative entropy of the weights to measure the uncertainty of the weights and to encourage more attributes to help with signal reconstruction, because it is well known in information theory that $\sum_{i=1}^{n} w_i\ln w_i$ is minimized when

$$w_1 = w_2 = \cdots = w_n = \frac{1}{n}. \tag{9}$$

We alternatively solve w and x in Eq (8) as follows.

Update rule for w

To solve w, we introduce the Lagrange multiplier λ and obtain the following Lagrange function. For w, F(x) is a constant, so we only construct a Lagrange function on G(x), which can be expressed as follows:

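$$L(w, \lambda) = \beta\sum_{i=1}^{n} w_i|x_i| + \gamma\sum_{i=1}^{n} w_i\ln w_i + \lambda\left(\sum_{i=1}^{n} w_i - 1\right). \tag{10}$$

We set the partial derivatives of L(w, λ) with respect to $w_i$ and λ to zero and then obtain the following two equations:

$$\frac{\partial L(w, \lambda)}{\partial w_i} = \beta|x_i| + \gamma(1 + \ln w_i) + \lambda = 0, \tag{11}$$

$$\frac{\partial L(w, \lambda)}{\partial \lambda} = \sum_{i=1}^{n} w_i - 1 = 0. \tag{12}$$

From Eq (11), we know that

$$w_i = \exp\left(-1 - \frac{\lambda}{\gamma}\right)\exp\left(-\frac{\beta|x_i|}{\gamma}\right). \tag{13}$$

Substituting Eq (13) into Eq (12), we have

$$\exp\left(-1 - \frac{\lambda}{\gamma}\right)\sum_{l=1}^{n}\exp\left(-\frac{\beta|x_l|}{\gamma}\right) = 1. \tag{14}$$

It follows that

$$\exp\left(-1 - \frac{\lambda}{\gamma}\right) = \frac{1}{\sum_{l=1}^{n}\exp\left(-\beta|x_l|/\gamma\right)}. \tag{15}$$

Substituting this expression into Eq (13), we obtain that

$$w_i = \frac{\exp\left(-\beta|x_i|/\gamma\right)}{\sum_{l=1}^{n}\exp\left(-\beta|x_l|/\gamma\right)}. \tag{16}$$

Such weights certainly satisfy the constraints that $w_i \ge 0$ and $\sum_{i=1}^{n} w_i = 1$.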
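The closed-form weight update can be illustrated with a short Python sketch. It assumes the weights take the normalised exponential (softmax-like) form that follows from the entropy-regularized objective, with γ > 0; the function name `entropy_weights` and the max-shift used for numerical stability are illustrative choices, not part of the original method description.

```python
import math


def entropy_weights(x, beta, gamma):
    """Weights w_i proportional to exp(-beta * |x_i| / gamma), normalised to sum to 1."""
    if gamma <= 0:
        raise ValueError("gamma must be positive for the closed-form weights")
    scores = [-beta * abs(xi) / gamma for xi in x]
    # Subtracting the largest score avoids overflow and leaves the ratio unchanged.
    shift = max(scores)
    expd = [math.exp(s - shift) for s in scores]
    total = sum(expd)
    return [e / total for e in expd]


# Usage with the magnitudes {4, 1, 5} from the example in the text.
for gamma in (0.01, 1.0, 1e6):
    print(gamma, entropy_weights([4.0, 1.0, 5.0], beta=1.0, gamma=gamma))
```

With a very small γ the weights approach the one-hot solution (0, 1, 0) of the unregularized problem, and with a very large γ they approach the uniform distribution, so γ controls how many attributes share the weight.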

Update rule for x

Inspired by the work concerning the ISTA, we adopt a similar approach for the iterative update process of x. The construction of a majorization is an important step toward obtaining the update rule.

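Definition 0.1 (Majorization) Let Ψ(x) be a real-valued function of the vector $x \in \mathbb{R}^n$. A function $\psi_k(x; x^k)$ is called a majorization of Ψ(x) at the fixed point $x^k$ if $\psi_k(x^k; x^k) = \Psi(x^k)$ and $\psi_k(x; x^k) \ge \Psi(x)$ for all x.

Clearly, Ψ(x) is nonincreasing under the update rule $x^{k+1} = \arg\min_x \psi_k(x; x^k)$ because

$$\Psi(x^{k+1}) \le \psi_k(x^{k+1}; x^k) \le \psi_k(x^k; x^k) = \Psi(x^k). \tag{17}$$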

Then, we can construct a majorization for F(x).

Remark 0.1. Conditions for constructing a surrogate function: A basic principle of optimization algorithms is to construct an easily minimized function $\psi_k(x; x^k)$ to replace the original function Ψ(x), compute the minimizer of $\psi_k(x; x^k)$, and use it as the new iterate (i.e., $x^{k+1} = \arg\min_x \psi_k(x; x^k)$). By repeating these two steps, constructing the surrogate function and minimizing it, a sequence of iterates $x^k$ is obtained. This sequence makes $\Psi(x^k)$ monotonically nonincreasing when the surrogate function satisfies the following conditions [24, 25]:

$$\psi_k(x^k; x^k) = \Psi(x^k), \tag{18a}$$

$$\psi_k(x; x^k) \ge \Psi(x) \quad \text{for all } x. \tag{18b}$$

Proposition 0.1 Notably, F(x) is a differentiable convex function with a Lipschitz-continuous gradient, and it has the following majorization function at the fixed current iterate $x^k$:

$$F(x, x^k) = F(x^k) + \nabla F(x^k)^T(x - x^k) + L\|x - x^k\|_2^2, \tag{19}$$

where L is larger than or equal to the maximum eigenvalue of $A^TA$.

Proof. It is well known that

$$F(x) = F(x^k) + \nabla F(x^k)^T(x - x^k) + (x - x^k)^TA^TA(x - x^k). \tag{20}$$

Comparing F(x) with $F(x, x^k)$, we find that only their last terms differ. By the eigendecomposition of the symmetric positive semidefinite matrix $A^TA$, we know that $A^TA = Q^T\Sigma Q$, in which Q is an orthogonal matrix consisting of the eigenvectors and Σ is a diagonal matrix consisting of the eigenvalues. Let $z = x - x^k$; then,

$$z^TA^TAz = (Qz)^T\Sigma(Qz) \le L\|Qz\|_2^2 = L\|z\|_2^2. \tag{21}$$

It is also certain that $F(x, x^k) = F(x)$ if $x = x^k$. Thus, the proof is established. Now, we obtain the majorization for the cost function Φ(x, w) on x.
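The two majorization properties used in the proof can be checked numerically on a small example. The sketch below assumes F(x) = ‖Ax − b‖² and a quadratic surrogate of the form F(xᵏ) + ∇F(xᵏ)ᵀ(x − xᵏ) + L‖x − xᵏ‖²; the matrix, the test points, and the choice of L as the squared Frobenius norm of A (an easy upper bound on the largest eigenvalue of AᵀA) are illustrative assumptions.

```python
def F(A, b, x):
    """Data-fidelity term ||Ax - b||_2^2."""
    return sum((sum(a * xj for a, xj in zip(row, x)) - bi) ** 2
               for row, bi in zip(A, b))


def grad_F(A, b, x):
    """Gradient 2 A^T (Ax - b)."""
    r = [sum(a * xj for a, xj in zip(row, x)) - bi for row, bi in zip(A, b)]
    return [2.0 * sum(A[i][j] * r[i] for i in range(len(A)))
            for j in range(len(x))]


def majorizer(A, b, x, xk, L):
    """Quadratic surrogate of F around the fixed point xk."""
    g = grad_F(A, b, xk)
    d = [xi - xki for xi, xki in zip(x, xk)]
    return (F(A, b, xk)
            + sum(gi * di for gi, di in zip(g, d))
            + L * sum(di * di for di in d))


A = [[1.0, 2.0, 0.0], [0.0, 1.0, -1.0]]
b = [1.0, 0.5]
# Assumption: the trace of A^T A (squared Frobenius norm) bounds its largest eigenvalue.
L = sum(a * a for row in A for a in row)
xk = [0.3, -0.2, 0.1]

for x in ([0.0, 0.0, 0.0], [1.0, -1.0, 2.0], [-3.0, 0.5, 0.25], xk):
    print(x, F(A, b, x), majorizer(A, b, x, xk, L))
```

At every test point the surrogate value is at least the value of F, and the two coincide at x = xᵏ, which are exactly the conditions required of a majorization.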

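$$\psi_k(x, w; x^k) = F(x, x^k) + \beta\sum_{i=1}^{n} w_i|x_i| + \gamma\sum_{i=1}^{n} w_i\ln w_i, \tag{22}$$

which can be reorganized as:

$$\psi_k(x, w; x^k) = L\left\|x - \left(x^k - \frac{1}{2L}\nabla F(x^k)\right)\right\|_2^2 + \beta\sum_{i=1}^{n} w_i|x_i| + C. \tag{23}$$

Then,

$$\psi_k(x, w; x^k) = L\sum_{i=1}^{n}\left(x_i - \left(x^k - \frac{1}{2L}\nabla F(x^k)\right)_i\right)^2 + \beta\sum_{i=1}^{n} w_i|x_i| + C, \tag{24}$$

where $(\cdot)_i$ denotes the i-th element of the vector $(\cdot)$ and C collects the terms that do not depend on x.

Let

$$v^k = x^k - \frac{1}{2L}\nabla F(x^k), \tag{25}$$

so we know that

$$\psi_k(x, w; x^k) = \sum_{i=1}^{n}\left[L\left(x_i - v_i^k\right)^2 + \beta w_i|x_i|\right] + C. \tag{26}$$

Let

$$f(x_i) = L\left(x_i - v_i^k\right)^2 + \beta w_i|x_i|. \tag{27}$$

Let $x_i^{k+1}$ be the value of $x_i$ when the partial derivative is 0, that is:

$$\frac{\partial f(x_i)}{\partial x_i}\bigg|_{x_i = x_i^{k+1}} = 2L\left(x_i^{k+1} - v_i^k\right) + \beta w_i\operatorname{sign}\left(x_i^{k+1}\right) = 0. \tag{28}$$

By transferring terms, we can obtain the following equation:

$$x_i^{k+1} = v_i^k - \frac{\beta w_i}{2L}\operatorname{sign}\left(x_i^{k+1}\right). \tag{29}$$

That is,

$$x_i^{k+1} = \operatorname{soft}\left(v_i^k,\ \frac{\beta w_i}{2L}\right). \tag{30}$$

Due to $v^k = x^k - \frac{1}{2L}\nabla F(x^k)$ and $\nabla F(x) = 2A^T(Ax - b)$, the iterative formula can be easily obtained as follows:

$$x_i^{k+1} = \operatorname{soft}\left(\left(x^k - \frac{1}{L}A^T(Ax^k - b)\right)_i,\ \frac{\beta w_i}{2L}\right). \tag{31}$$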

Therefore, the update of x is completed.
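Putting the two update rules together gives an alternating scheme, sketched below in plain Python. This is an illustrative reading of the method under stated assumptions, not the authors' MATLAB code: the weights are taken proportional to exp(−β|xᵢ|/γ), the x-update is a gradient step of size 1/L on AᵀA-scaled residuals followed by soft-thresholding with the per-component threshold βwᵢ/(2L), and L is set to the squared Frobenius norm of A as a simple upper bound on the largest eigenvalue of AᵀA. The function names and the tiny test problem are hypothetical.

```python
import math


def soft(v, theta):
    """Soft-thresholding of a scalar."""
    if v > theta:
        return v - theta
    if v < -theta:
        return v + theta
    return 0.0


def entropy_weights(x, beta, gamma):
    """Weights proportional to exp(-beta * |x_i| / gamma), summing to 1."""
    scores = [-beta * abs(xi) / gamma for xi in x]
    shift = max(scores)  # stabilises the exponentials
    expd = [math.exp(s - shift) for s in scores]
    total = sum(expd)
    return [e / total for e in expd]


def objective(A, b, x, w, beta, gamma):
    """||Ax - b||^2 + beta * sum w_i |x_i| + gamma * sum w_i ln w_i."""
    fit = sum((sum(a * xj for a, xj in zip(row, x)) - bi) ** 2
              for row, bi in zip(A, b))
    l1 = beta * sum(wi * abs(xi) for wi, xi in zip(w, x))
    ent = gamma * sum(wi * math.log(wi) for wi in w if wi > 0.0)
    return fit + l1 + ent


def eriwsta(A, b, beta, gamma, iters=200):
    """Alternate the closed-form w-update with the thresholded x-update."""
    m, n = len(A), len(A[0])
    # Assumption: squared Frobenius norm as an upper bound on lambda_max(A^T A).
    L = sum(a * a for row in A for a in row)
    x = [0.0] * n
    w = [1.0 / n] * n
    for _ in range(iters):
        w = entropy_weights(x, beta, gamma)
        r = [sum(A[i][j] * x[j] for j in range(n)) - b[i] for i in range(m)]
        v = [x[j] - sum(A[i][j] * r[i] for i in range(m)) / L for j in range(n)]
        x = [soft(v[j], beta * w[j] / (2.0 * L)) for j in range(n)]
    return x, w


A = [[1.0, 0.0, 1.0], [0.0, 1.0, 1.0]]
b = [1.0, 2.0]
x, w = eriwsta(A, b, beta=0.1, gamma=1.0)
print(x, w, objective(A, b, x, w, 0.1, 1.0))
```

Because the w-update minimizes the objective exactly for fixed x and the x-update minimizes a majorization for fixed w, each pass of the loop cannot increase the objective, which is the property the derivation relies on.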

Experiments

Experimental description

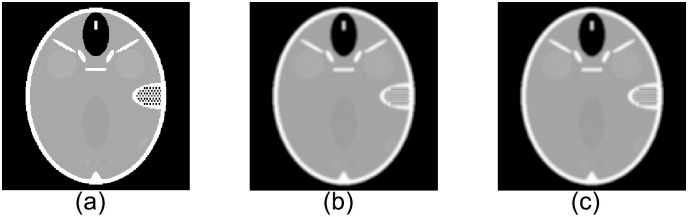

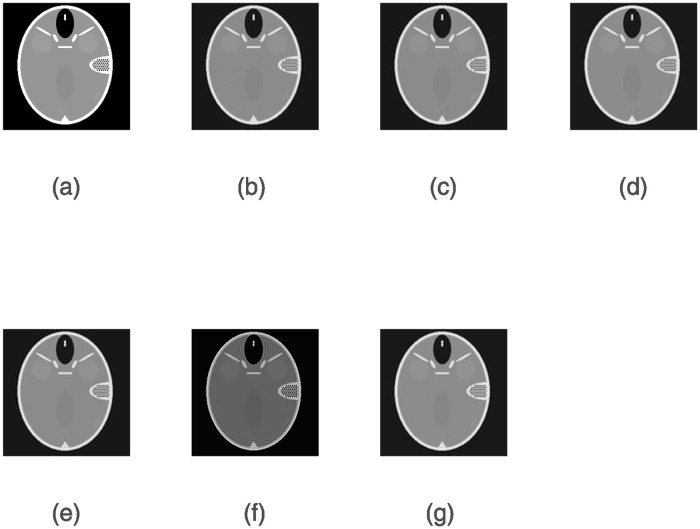

We use a simulated CT dataset and a real PET dataset to evaluate the performance of the ERIWSTA. The simulated CT dataset consists of a Shepp-Logan phantom with 256 × 256 pixels. The use of phantom data brings many advantages, including accurate a priori knowledge of the pixel values and the freedom to add noise as needed. We blur the image using a uniform 5 × 5 kernel (generated by the fspecial MATLAB function) and then add Gaussian noise with a mean of 0 and variances of $10^{-2}$ and $10^{-3}$. The original image and the blurred, noisy images are shown in Fig 1. The real dataset comes from the cooperating hospital. It includes low-dose and normal-dose PET images with a size of 256 × 256. All experiments are performed on an HP computer with a 2.5 GHz Intel(R) Core(TM) i7-4710MQ CPU and 12 GB of memory using MATLAB R2019a.
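The degradation applied to the phantom can be sketched in plain Python as follows. This is only an approximation of the described MATLAB pipeline: the function names are hypothetical, edge pixels are replicated at the border (the boundary handling used in the original experiments is not stated), and the random seed is an illustrative choice.

```python
import random


def uniform_blur(img, k=5):
    """Blur a 2D list with a k x k uniform (averaging) kernel.

    Assumption: out-of-range neighbours are replaced by the nearest edge pixel.
    """
    h, w = len(img), len(img[0])
    r = k // 2
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            s = 0.0
            for di in range(-r, r + 1):
                for dj in range(-r, r + 1):
                    ii = min(max(i + di, 0), h - 1)
                    jj = min(max(j + dj, 0), w - 1)
                    s += img[ii][jj]
            out[i][j] = s / (k * k)
    return out


def add_gaussian_noise(img, variance, seed=0):
    """Add zero-mean Gaussian noise with the given variance to every pixel."""
    rng = random.Random(seed)
    sd = variance ** 0.5
    return [[p + rng.gauss(0.0, sd) for p in row] for row in img]


# Usage on a tiny synthetic image (a single bright pixel).
img = [[0.0] * 7 for _ in range(7)]
img[3][3] = 25.0
degraded = add_gaussian_noise(uniform_blur(img), variance=1e-2)
print(degraded[3][3])
```

The blur spreads the bright pixel evenly over its 5 × 5 neighbourhood before the noise is added, mirroring the order of operations described in the text.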

Fig 1. Original and noisy head phantom images.

(a) Head phantom with 256 × 256 pixels; (b) and (c) blurred images with 5 × 5 uniform kernels and additive Gaussian noise with variances of $\sigma = 10^{-2}$ and $\sigma = 10^{-3}$, respectively.

Evaluation standard

This paper uses the mean absolute error (MAE) to measure the similarity between the reconstructed and true images. The MAE is calculated by taking the average of the absolute differences between the restored pixel values and the true pixel values. Let x represent the ground truth, $\hat{x}$ represent the reconstructed image and N denote the number of voxels. The MAE is defined as follows:

$$\mathrm{MAE} = \frac{1}{N}\sum_{i=1}^{N}\left|\hat{x}_i - x_i\right|. \tag{32}$$

Generally, lower MAE values indicate better reconstructed image quality.
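As a small illustration of the metric, the sketch below computes the MAE as the average absolute difference between corresponding pixel values. The function name is hypothetical, and images are assumed to be flattened into equal-length sequences of numbers.

```python
def mean_absolute_error(truth, recon):
    """Average of |recon_i - truth_i| over all pixels (inputs are flat sequences)."""
    if len(truth) != len(recon):
        raise ValueError("inputs must have the same number of pixels")
    if not truth:
        raise ValueError("inputs must not be empty")
    return sum(abs(r - t) for t, r in zip(truth, recon)) / len(truth)


# Usage: absolute errors 0, 1 and 2 average to 1.0.
print(mean_absolute_error([0.0, 1.0, 2.0], [0.0, 2.0, 0.0]))
```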

Experimental results of simulated dataset

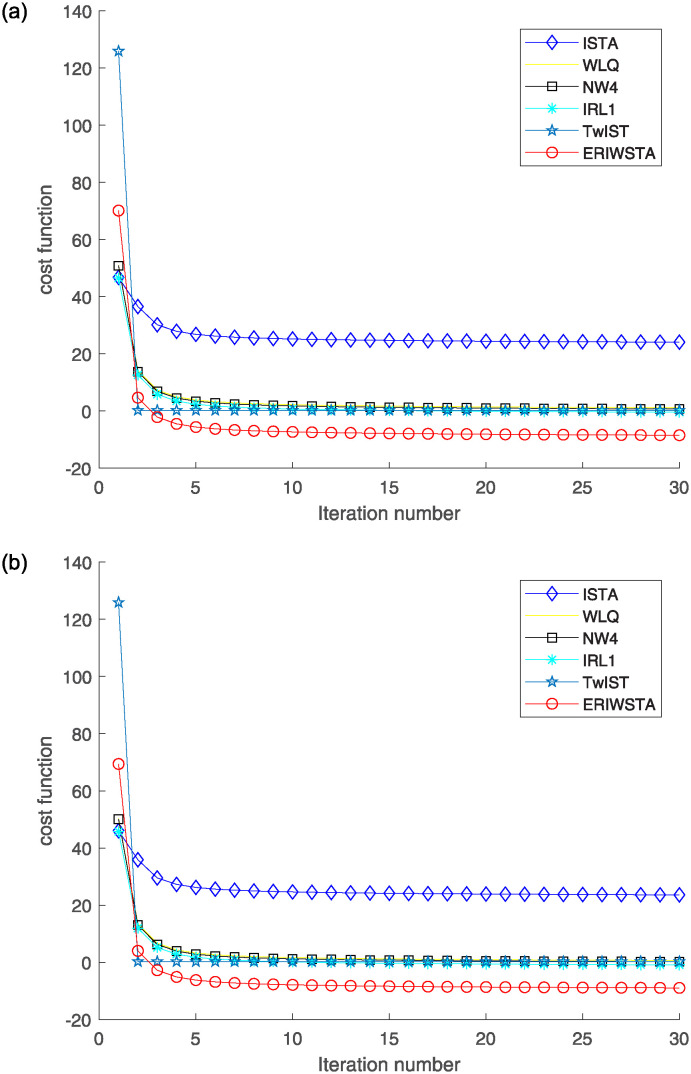

To illustrate the performance of the proposed method, we provide some visual results. Fig 2 displays the cost function curves produced by six algorithms over the iterations: the ISTA, WLQ, IRW, IRL1, TwIST and the ERIWSTA. Because the algorithms minimize different cost functions, their cost values should not be compared directly. However, we can visually compare their convergence speeds: an algorithm converges faster when its curve flattens within fewer iterations. We find that all six algorithms converge within a small number of iterations and that the proposed algorithm converges quickly, with the ERIWSTA reaching a stable state early.

Fig 2. The cost function Φ(x, w) versus the number of iterations for different Gaussian noise levels: (a) $\sigma = 10^{-2}$ and (b) $\sigma = 10^{-3}$.

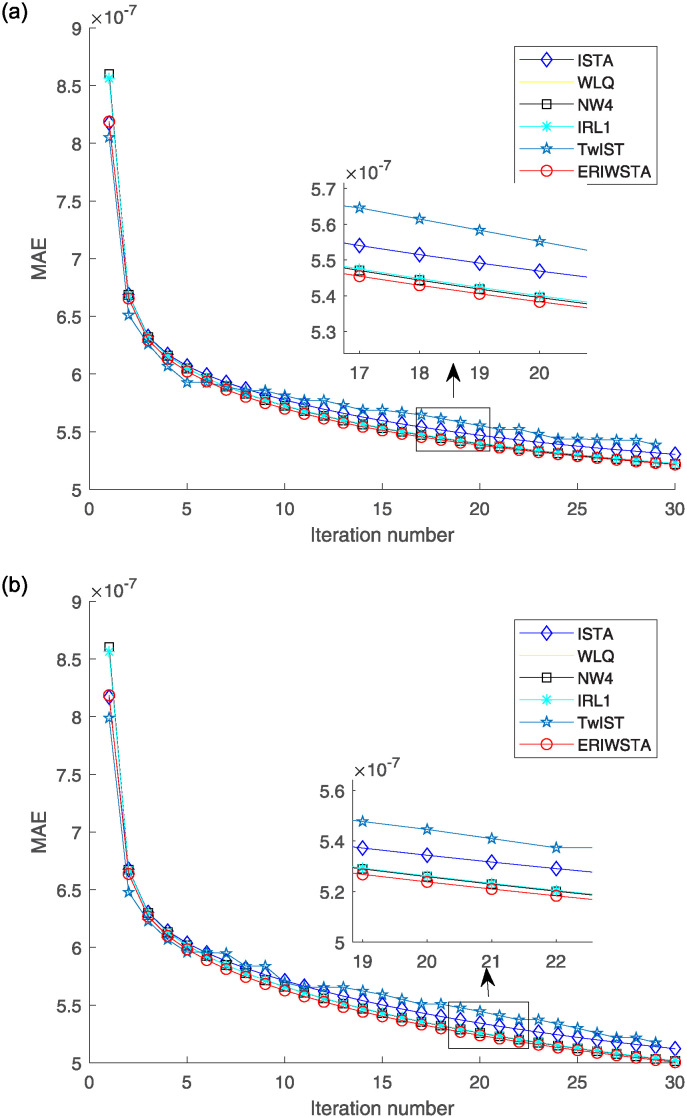

Fig 3 shows the MAE curves of the six algorithms versus the number of iterations. Subgraphs (a) and (b) show the curves for Gaussian noise levels of $\sigma = 10^{-2}$ and $\sigma = 10^{-3}$, respectively. We can observe that each algorithm reaches a small MAE value, indicating that all methods have considerable denoising capabilities. However, as the enlarged detail view shows, the ERIWSTA attains the lowest MAE value at every iteration and thus yields a clearer denoised image than the other algorithms.

Fig 3. MAE versus the number of iterations for different Gaussian noise levels: (a) $\sigma = 10^{-2}$ and (b) $\sigma = 10^{-3}$.

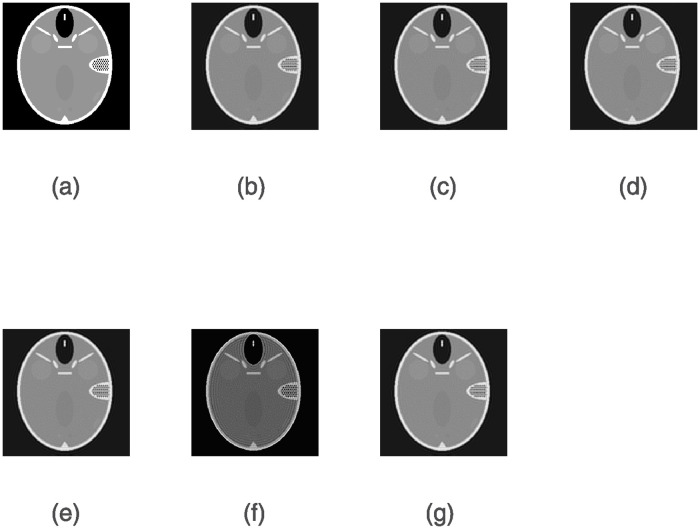

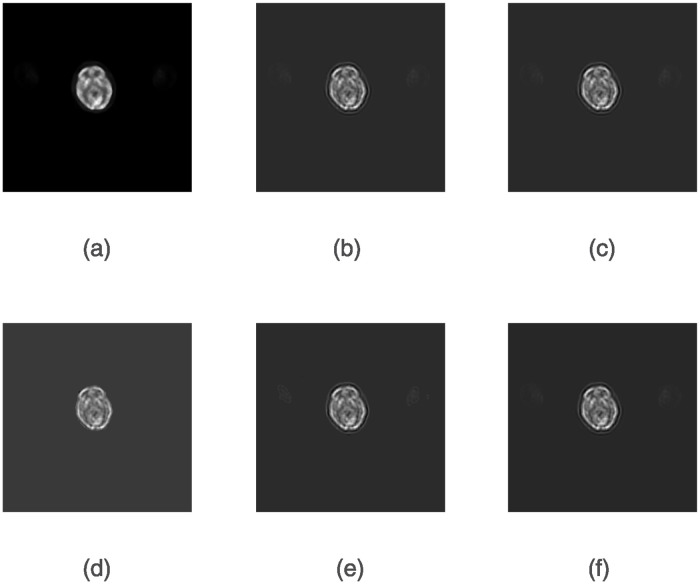

Figs 4 and 5 show the denoising results obtained by the six algorithms under the two noise levels. As seen, the image reconstructed by the ERIWSTA shows better noise removal than those of the other algorithms, and it more accurately recovers the edge information and texture features of the head phantom.

Fig 4. The denoising results yielded by different algorithms on a dataset with Gaussian noise at a level of $\sigma = 10^{-2}$ after 30 iterations.

(a) denotes the original image, and (b)–(g) denote the denoised images produced by the ISTA, WLQ, IRW, IRL1, TwIST and the ERIWSTA, respectively.

Fig 5. The denoising results yielded by different algorithms on a dataset with Gaussian noise at a level of $\sigma = 10^{-3}$ after 30 iterations.

(a) denotes the original image, and (b)-(g) denote the denoised images produced by the ISTA, WLQ, IRW, IRL1, TwIST and the ERIWSTA, respectively.

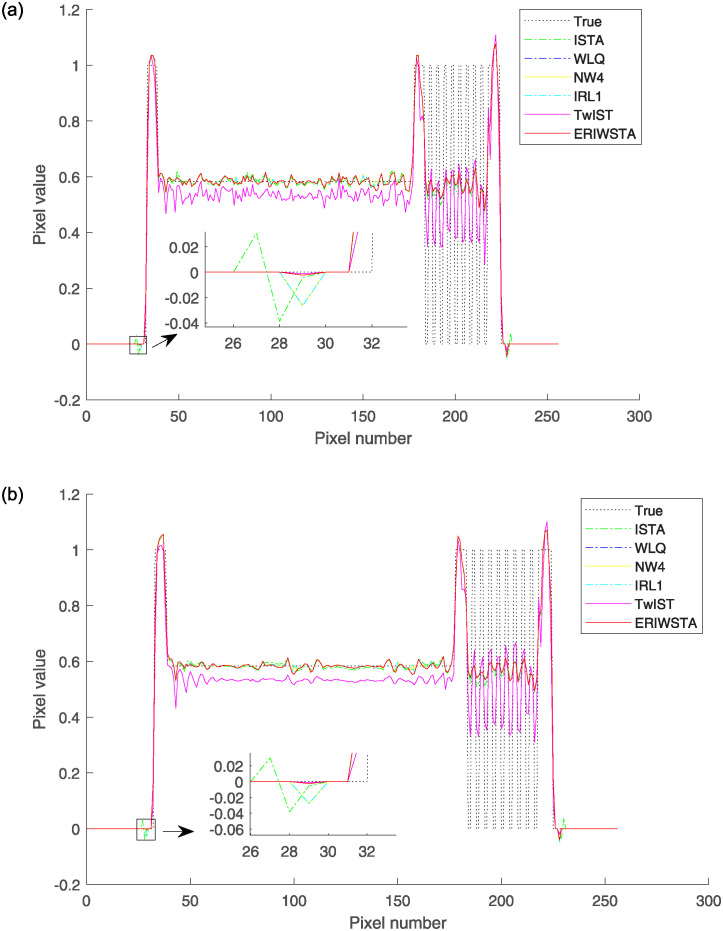

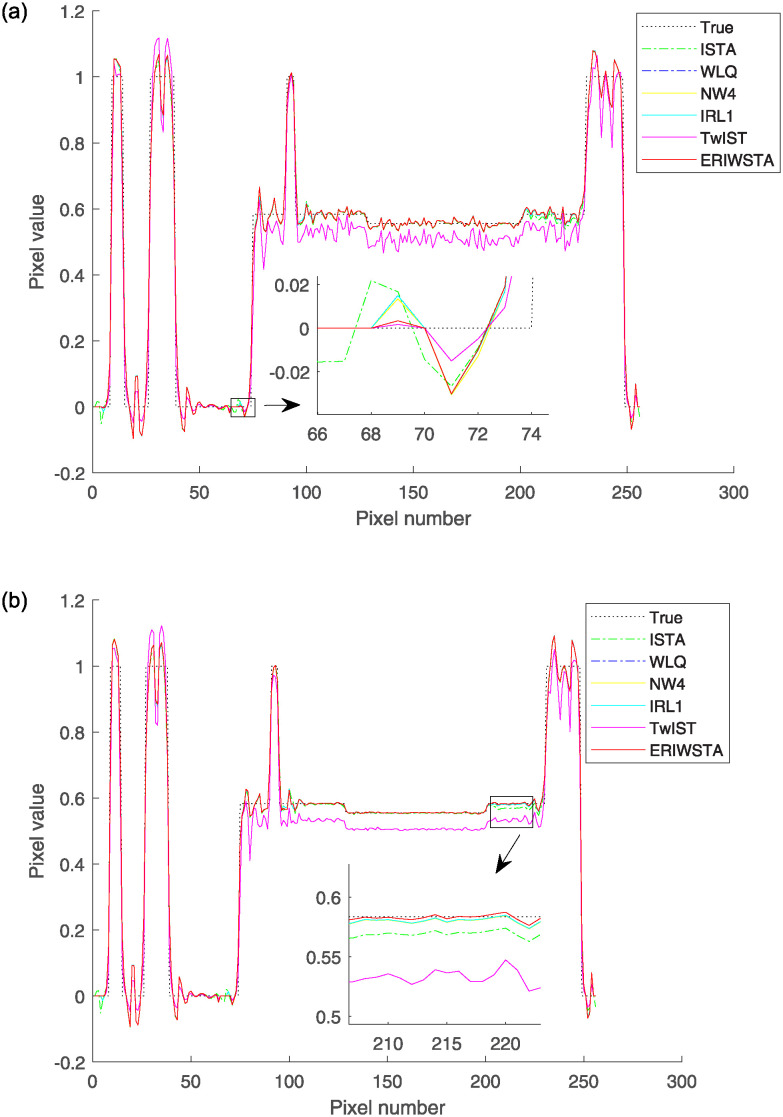

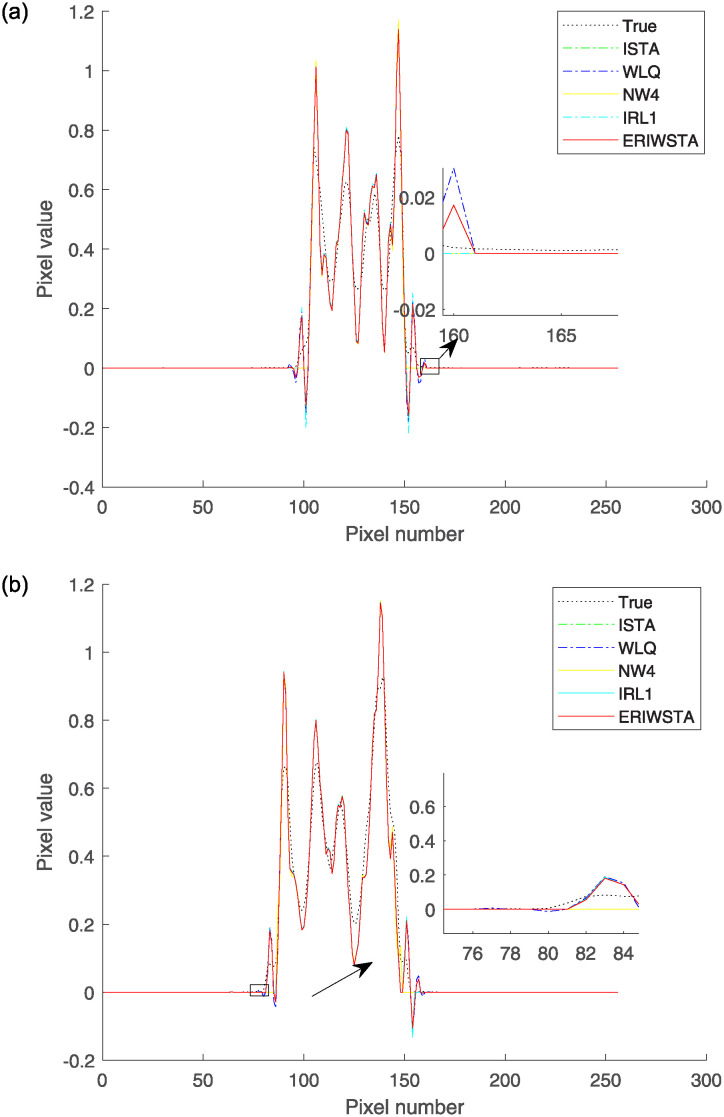

In addition, we further verify the effectiveness of the proposed algorithm. We select the same row and column of four reconstructed images for one slice and compare them with the corresponding rows and columns of the real image by their pixel values. Figs 6 and 7 show the comparisons among the horizontal and vertical center profiles of the restored images, respectively. IRL1 yields significant deviations from the true values in both the horizontal and vertical regions. The ISTA, IRW, WLQ and TwIST recover well in the horizontal region but sometimes have abnormal states in the vertical region. These methods cannot guarantee a stable denoising effect. Compared with the other algorithms, the ERIWSTA does not guarantee the lowest denoising errors in all intervals, but it can fit the true values more accurately overall. The ERIWSTA also has the best stability and robustness, can guarantee good denoising effects in areas with minor pixel value variations, and can track the true profile more accurately.

Fig 6. Horizontal central profiles produced for the 128th row of the restored images with different Gaussian noise levels: (a) $\sigma = 10^{-2}$ and (b) $\sigma = 10^{-3}$.

Fig 7. Vertical central profiles produced for the 128th column of the restored images with different Gaussian noise levels: (a) $\sigma = 10^{-2}$ and (b) $\sigma = 10^{-3}$.

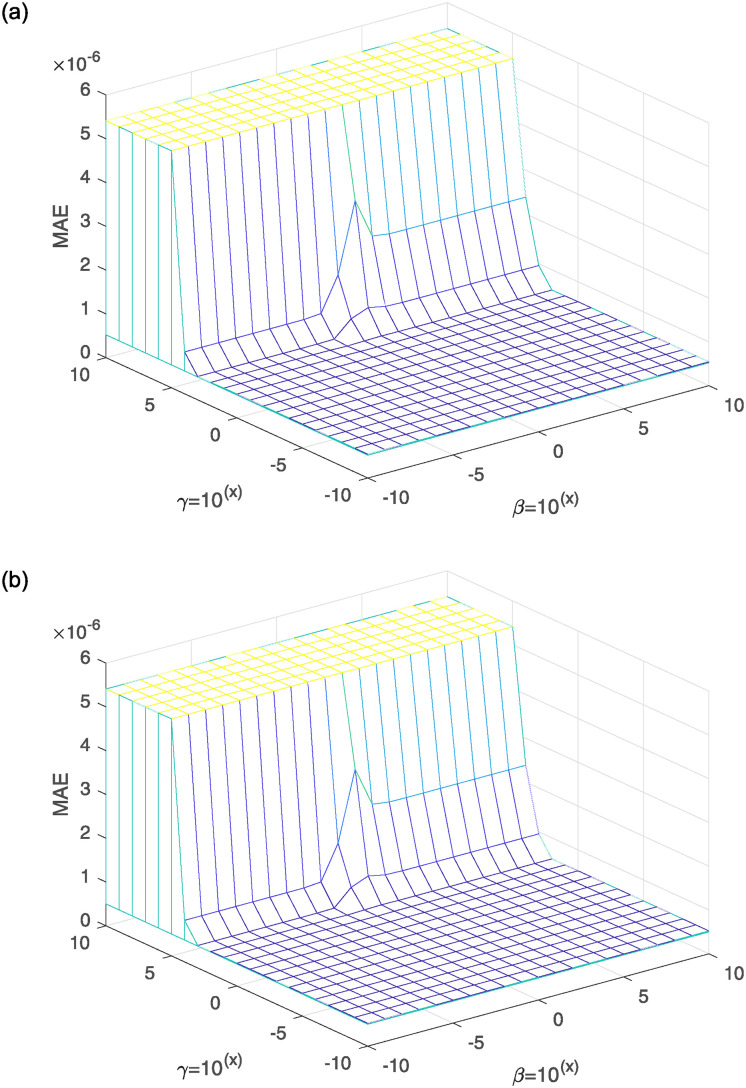

Hyperparameter selection

We conduct hyperparameter experiments to select the optimal penalty hyperparameter β and the optimal entropy-weighted hyperparameter γ. We search a parameter range from $10^{-10}$ to $10^{10}$ and choose the MAE as the evaluation index. Each experiment runs for 100 iterations, and we plot a three-dimensional graph for the ERIWSTA whose vertical axis represents the MAE values corresponding to different hyperparameters, as shown in Fig 8. The optimal values of β and γ can be chosen from wide ranges over which the ERIWSTA maintains stable, low MAE values, which also shows the robustness of the ERIWSTA. In addition, to ensure a fair comparison, we choose the optimal hyperparameter values for all the compared algorithms. Tables 2 and 3 list the optimal hyperparameter values of all the algorithms for the datasets with noise levels of $\sigma = 10^{-2}$ and $\sigma = 10^{-3}$, respectively. An interesting observation is that, regardless of whether the noise level is low or high, the restoration accuracy of our algorithm is always better than that of the other approaches.
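The search over β and γ described above amounts to an exhaustive sweep of a logarithmic grid. The sketch below shows one way this could be organised; `log_grid`, `grid_search` and `toy_score` are hypothetical names, and `toy_score` is only a stand-in for "run the algorithm for a fixed number of iterations and return the MAE", not the actual error surface of any method in this paper.

```python
import math
from itertools import product


def log_grid(low_exp, high_exp, step=1):
    """Powers of ten from 10**low_exp to 10**high_exp inclusive."""
    return [10.0 ** e for e in range(low_exp, high_exp + 1, step)]


def grid_search(score, betas, gammas):
    """Return (best_score, beta, gamma) minimising score(beta, gamma) on the grid."""
    best = None
    for beta, gamma in product(betas, gammas):
        s = score(beta, gamma)
        if best is None or s < best[0]:
            best = (s, beta, gamma)
    return best


def toy_score(beta, gamma):
    """Placeholder objective with an arbitrary minimum, used only for illustration."""
    return (math.log10(beta) - 2.0) ** 2 + (math.log10(gamma) + 2.0) ** 2


best = grid_search(toy_score, log_grid(-10, 10), log_grid(-10, 10))
print(best)
```

A sweep of this kind evaluates every grid point, so its cost grows with the product of the grid sizes, and it can only return values that lie on the chosen grid.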

Fig 8. 3D profiles of the MAE with respect to β and γ for different Gaussian noise levels: (a) $\sigma = 10^{-2}$ and (b) $\sigma = 10^{-3}$.

Table 2. The optimal MAE values and corresponding hyperparameters (Gaussian noise with $\sigma = 10^{-2}$).

| Algorithm | β | γ | δ | MAE |
|---|---|---|---|---|
| ISTA | $10^{-3}$ | − | − | $5.312077 \times 10^{-7}$ |
| WLQ | $10^{-5}$ | $10^{-10}$ | $10^{-3}$ | $5.228672 \times 10^{-7}$ |
| IRW | $10^{-5}$ | $10^{-2}$ | $10^{-3}$ | $5.410231 \times 10^{-7}$ |
| IRL1 | $10^{-5}$ | − | $10^{-3}$ | $5.228672 \times 10^{-7}$ |
| TwIST | $10^{-5}$ | − | $10^{-5}$ | $5.333443 \times 10^{-7}$ |
| ERIWSTA | $10^{2}$ | $10^{-2}$ | − | $5.218246 \times 10^{-7}$ |

Table 3. The optimal MAE values and corresponding hyperparameters (Gaussian noise with $\sigma = 10^{-3}$).

| Algorithm | β | γ | δ | MAE |
|---|---|---|---|---|
| ISTA | $10^{-3}$ | − | − | $5.122013 \times 10^{-7}$ |
| WLQ | $10^{-5}$ | $10^{-5}$ | $10^{-3}$ | $5.018339 \times 10^{-7}$ |
| IRW | $10^{-5}$ | $10^{-2}$ | $10^{-3}$ | $5.410231 \times 10^{-7}$ |
| IRL1 | $10^{-5}$ | − | $10^{-3}$ | $5.018340 \times 10^{-7}$ |
| TwIST | $10^{-5}$ | − | $10^{-5}$ | $5.311041 \times 10^{-7}$ |
| ERIWSTA | $10^{2}$ | $10^{-2}$ | − | $5.005524 \times 10^{-7}$ |

Experimental results of real PET dataset

To make the results more convincing, we add experimental results on real PET images. Fig 9 shows the denoised images; its second to sixth columns are the images reconstructed using the ISTA, WLQ, IRW, IRL1 and the proposed method, respectively. All methods have considerable denoising ability, and the proposed algorithm denoises best. Fig 10 compares the horizontal and vertical center profiles of the restored PET images. The ISTA, IRW and WLQ recover well in the horizontal region but sometimes behave abnormally in the vertical region. Compared with the other algorithms, the ERIWSTA does not guarantee the lowest denoising errors in all intervals, but it fits the true values more accurately overall. The ERIWSTA also has the best stability and robustness.

Fig 9. The denoising results yielded by different algorithms.

(a) denotes the ground truth, and (b)–(f) denote the denoised images produced by the ISTA, WLQ, IRW, IRL1 and the ERIWSTA, respectively.

Fig 10. Comparison between the PET images reconstructed by different algorithms and the label images along specific pixel profiles.

(a) shows the pixel values along the 128th row of the corresponding images; (b) shows the pixel values along the 128th column of the corresponding images.

Conclusions

This paper proposes a new IWSTA for solving linear inverse problems based on entropy regularization. The main innovation of the algorithm is its introduction of an entropy regularization term in the loss function of the classic ISTA. The weights of the new algorithm are easy to calculate and have good interpretability. In addition, the iterative process of updating the formula becomes simple. Finally, we demonstrate the algorithm’s effectiveness in CT and PET data denoising experiments.

Although the experimental results show that the proposed method effectively removes noise, this work still needs to be improved. The hyperparameters of all algorithms in this paper are obtained by manually adjusting them according to the resulting imaging quality. This selection method requires repeated experiments over the selected parameter intervals to choose the optimal parameters. It is inefficient and can select only the best parameters within the given interval. Therefore, in the future, we will study a method for adaptively setting the parameters to accurately select the optimal parameters of each algorithm, thus ensuring the algorithm’s effectiveness and further improving its noise reduction ability. In addition, the data used in this experiment are phantom data. In algorithmic analyses, simulated data have useful properties: it is easy to add noise, which is conducive to the theoretical study of an algorithm’s effectiveness. However, the proposed algorithm still must be verified further in practice. Therefore, verifying the algorithm’s effectiveness on more patients’ clinical data and improving the algorithm based on an actual application would be meaningful.

Supporting information

(PDF)

Data Availability

The data are shared on Dryad and the link is as follows: https://datadryad.org/stash/share/5rW5F45qSTpPSoudI9dJr66mWAd3KoNMbJ8Sho5Ell0.

Funding Statement

This work was supported by the Natural Science Foundation of Liaoning Province (2002-MS-114). The funder had a role in study conceptualization, formal analysis, investigation, methodology, supervision, and writing-review and editing of the manuscript.

References

- 1. Xiang JX, Dong YG, Yang YJ. FISTA-net: Learning a fast iterative shrinkage thresholding network for inverse problems in imaging. IEEE Transactions on Medical Imaging. 2021. Jan;40(5):1329–1339. doi: 10.1109/TMI.2021.3054167

- 2. Daubechies I, Defrise M, Demol C. An iterative thresholding algorithm for linear inverse problems with a sparsity constraint. Communications on Pure and Applied Mathematics: A Journal Issued by the Courant Institute of Mathematical Sciences. 2004. Aug;57(11):1413–1457. doi: 10.1002/cpa.20042

- 3. Bioucas-Dias JM, Figueiredo MA. A new TwIST: Two-step iterative shrinkage/thresholding algorithms for image restoration. IEEE Transactions on Image processing. 2007. Nov;16(12):2992–3004. doi: 10.1109/TIP.2007.909319

- 4. Beck A, Teboulle M. A fast iterative shrinkage-thresholding algorithm for linear inverse problems. SIAM journal on imaging sciences. 2009. Mar;2(1):183–202. doi: 10.1137/080716542

- 5. Vonesch C, Unser M. A fast iterative thresholding algorithm for wavelet-regularized deconvolution. In: Wavelets XII. SPIE, 2007: 135–139.

- 6. Boyd S, Parikh N, Chu E, Peleato B, Eckstein J. Distributed optimization and statistical learning via the alternating direction method of multipliers. Foundations and Trends® in Machine learning. 2011. Jul;3(1):1–122. doi: 10.1561/2200000016

- 7. Chambolle A, Pock T. A first-order primal-dual algorithm for convex problems with applications to imaging. Journal of mathematical imaging and vision. 2011. Dec;40:120–145. doi: 10.1007/s10851-010-0251-1

- 8. Zhu WX, Huang ZL, Chen JL, Peng Z. Iterative weighted thresholding method for sparse solution of underdetermined linear equations. Sci. China Math. 2020. Jul;64(3):639–664. doi: 10.1007/s11425-018-9467-7

- 9. Nasser A, Elsabrouty M, Muta O. Weighted fast iterative shrinkage thresholding for 3D massive MIMO channel estimation. In: 2017 IEEE 28th Annual International Symposium on Personal, Indoor, and Mobile Radio Communications (PIMRC), Montreal, QC, Canada. IEEE, 2017: 1–5.

- 10. Candes EJ, Wakin MB, Boyd SP. Enhancing sparsity by reweighted L1 minimization. Journal of Fourier analysis and applications. 2008. Oct;14:877–905. doi: 10.1007/s00041-008-9045-x

- 11. Chartrand R, Yin W. Iteratively reweighted algorithms for compressive sensing. In: 2008 IEEE international conference on acoustics, speech and signal processing, Las Vegas, NV, USA. IEEE, 2008: 3869–3872.

- 12. Foucart S, Lai MJ. Sparsest solutions of undetermined linear systems via Lq-minimization for 0 < q ≤ 1. Applied and Computational Harmonic Analysis. 2009. May;26(3):395–407. doi: 10.1016/j.acha.2008.09.001

- 13. Wipf D, Nagarajan S. Iterative reweighted L1 and L2 methods for finding sparse solutions. IEEE Journal of Selected Topics in Signal Processing. 2010;4(2):317–329. doi: 10.1109/JSTSP.2010.2042413

- 14. Chung JL, Nagy JG. An efficient iterative approach for large-scale separable nonlinear inverse problems. SIAM Journal on Scientific Computing. 2010;31(6):4654–4674. doi: 10.1137/080732213

- 15. Donoho DL. Compressed sensing. IEEE Transactions on Information Theory. 2006. Apr;52(4):1289–1306. doi: 10.1109/TIT.2006.871582

- 16. Elad M, Figueiredo MT, Ma Y. On the role of sparse and redundant representations in image processing. Proceedings of the IEEE. 2010. Feb;98(6):972–982. doi: 10.1109/JPROC.2009.2037655

- 17. Wright J, Ma Y, Mairal J, Sapiro G, Huang TS, Yan SC. Sparse representation for computer vision and pattern recognition. Proceedings of the IEEE. 2010. Jun;98(6):1031–1044. doi: 10.1109/JPROC.2010.2044470

- 18. Gong MG, Liu J, Li H, Cai Q, Su LZ. A multiobjective sparse feature learning model for deep neural networks. IEEE transactions on neural networks and learning systems. 2015. Aug;26(12):3263–3277. doi: 10.1109/TNNLS.2015.2469673

- 19. Barbie D, Lucibello C, Saglietti L, Krzakala F, Zdeborova L. Compressed sensing with l0-norm: statistical physics analysis and algorithms for signal recovery. arXiv:2304.12127v1. 2023.

- 20. Natarajan BK. Sparse approximate solutions to linear systems. SIAM journal on computing. 1995;24(2):227–234. doi: 10.1137/S0097539792240406

- 21. Figueiredo MA, Nowak RD. An EM algorithm for wavelet-based image restoration. IEEE Transactions on Image Processing. 2003. Aug;12(8):906–916. doi: 10.1109/TIP.2003.814255

- 22. Chambolle A, Devore RA, Lee NY, Lucier BJ. Nonlinear wavelet image processing: variational problems, compression, and noise removal through wavelet shrinkage. IEEE Transactions on Image Processing. 1998. Mar;7(3):319–335. doi: 10.1109/83.661182

- 23. James MQ. Some methods for classification and analysis of multivariate observations. In: Proceedings of the Fifth Berkeley Symposium on Mathematical Statistics and Probability, Oakland, CA, USA. 1967: 281–297.

- 24. Teng YY, Zhang YN, Li H, Kang Y. A convergent non-negative deconvolution algorithm with Tikhonov regularization. Inverse Problems. 2015. Mar;31(3):035002. doi: 10.1088/0266-5611/31/3/035002

- 25. Jacobson MW, Fessler JA. An expanded theoretical treatment of iteration-dependent majorize-minimize algorithms. IEEE Transactions on Image Processing. 2007. Oct;16(10):2411–2422. doi: 10.1109/tip.2007.904387
