Abstract

Does competition increase cheating? This question has been investigated by both psychologists and economists in the past and received conflicting answers. Notably, prior experimental work compared how people behaved under competitive and non-competitive tasks that were associated with different levels of uncertainty about the reward that people would receive. We aim to experimentally disentangle the effect of competition from the effects of uncertain rewards. We conducted an incentivized, pre-registered study featuring real-time interaction between participants (N = 765). We introduce an uncertain non-competitive incentive scheme along with the certain non-competitive scheme and the (uncertain) competitive scheme. We find that competition significantly increases the magnitude (but not the prevalence) of cheating relative to both non-competitive schemes, with the effect of competition being larger when the level of uncertainty is held constant across schemes.

Keywords: Cheating, Competition, (dis)honesty, Effort, Risk, Uncertainty

Subject terms: Human behaviour, Behavioural methods

Introduction

Does competing with others make people act more dishonestly? Decision-makers within different types of organizations (e.g., companies, governments) and in different sectors of society (e.g., education, industry) are routinely confronted with this question. Consider companies interested in tying their employees’ compensation to their performance. Should they employ individual, non-competitive incentives that depend only on the target employee’s own performance (e.g., giving a bonus upon achieving target quotas or other performance indicators) or opt for competitive incentives which depend on the target employee’s performance relative to other employees’ performance? Both types of performance-based incentives may affect not only employee effort1–3 but also their tendency to engage in an array of dishonest and counterproductive work behaviors: taking shortcuts, prioritizing quantity over quality, sabotaging other employees, or even fabricating reports and contracts4–6.

The question of whether and how competition affects honesty has been of interest to social scientists for decades. A large swath of empirical research in psychology and economics has focused on investigating the effect of competitive incentives on dishonest or anti-social behavior7–16. Recently, the question of whether competition facilitates dishonest or immoral behavior has been the object of one of the largest ever crowd-sourced projects in the behavioral sciences, involving 45 research groups across the globe17 (the “#ManyDesigns” project).

Despite ample work investigating the causal link between competitive incentive schemes and dishonest behavior, empirical evidence is, at best, mixed. While some experimental studies found a higher rate of cheating under competitive payment schemes than under individual (non-competitive) incentives10,11, others found no effect of competition on the prevalence of dishonest behavior9,12. Similarly, while some work shows that the intensity of competition is positively correlated with the prevalence of cheating, in both field settings14 and lab experiments7, others document that more intense competition leads to significantly lower levels of cheating11. We contribute to this literature by disentangling the effects of competition from the effects of a factor which covaried with competition in past experimental designs—the uncertainty in the rewards that will be obtained in the relevant task.

The need to disentangle reward uncertainty and competition

The seeming inconsistency of past results on the effects of competition is likely driven by a host of factors, including different design choices (e.g., operationalizations of cheating, observability of cheating, group composition, nature of the task) and study populations. However, we note that most experimental investigations into the effect of competitive incentives on dishonest behavior confounded the effect of competition with the effects of uncertainty9–12. In these studies, while the competitive incentives were always inherently uncertain and probabilistic (i.e., only a fraction of participants received compensation; participants could not tell with certainty if they would be paid), the non-competitive incentives were always certain and deterministic (i.e., all participants received compensation; participants knew with certainty that they would be paid). For example, in the experiment conducted by Rigdon and D’Esterre9, participants had to solve math problems. In the non-competitive conditions, participants received a guaranteed payment of $0.50 per correct answer, regardless of the performance of others. By contrast, in the competitive conditions, they were matched with another participant, and only the person with the higher total score was paid $0.50 per correct answer, while the lower performer received $0. That is, final payments were always uncertain, with the possibility of earning nothing for submitting correct responses, as compensation also depended on the relative performance. Notably, some of these studies did equate the expected value of incentives across (uncertain) competitive and (certain) non-competitive incentive schemes, to make these conditions more comparable10,12. However, drawing causal inferences about the role of competition from a direct comparison between these two types of incentive scheme is still problematic.
In decision-theoretic terms, the causal analysis of a potential competition effect across these two types of incentives relies on the assumptions that participants have a constant marginal valuation for money (i.e., earning an extra $1 is equally valuable, regardless of the sum of past earnings) and that participants weigh probabilities objectively. If these requirements are not met (see section “Potential effects of uncertainty on cheating”), then any potential difference (or lack of difference) between the individual non-competitive incentive scheme and the competitive scheme can arise, or remain undetected, due to a combination of factors, including non-constant marginal valuation of money, subjective probability weighting, and the presence of competition itself. This makes it impossible to isolate the effect of competition per se and may have contributed to inconsistent and inconclusive results on the effect of competitive incentives on dishonest behavior.

Similarly, in the recent crowd-sourced project on competition and moral behavior17, among the 17 experiments that investigated whether people cheat more for monetary incentives in competitive environments, 12 experimental designs (71%) either confounded the effects of competition and uncertainty (i.e., directly compared a certain non-competitive incentive scheme with an uncertain competitive scheme) or failed to control for the expected value of incentives across competitive and non-competitive conditions. In the other five designs (29%), the level of uncertainty was the same across the competitive and non-competitive conditions, but the lack of the crucial third condition in these designs (i.e., the certain non-competitive incentive) made it impossible to study the effects of competition and uncertainty at the same time. In other words, in none of these experiments did researchers experimentally cross the two potential manipulations of uncertainty and competition.

Potential effects of uncertainty on cheating

Though reward uncertainty and competition often co-occur in many real-world contexts, their effects are conceptually independent and should be disentangled to be truly understood. There are, at least, three distinct mechanisms through which the presence of uncertainty may affect dishonest behavior (and motivation), which are independent from the potential effect of competition: (1) non-constant marginal valuation of money, (2) subjective probability weighting, and (3) differences in the perceived “moral cost” of cheating under uncertainty.

Non-constant marginal valuation of money

There is robust evidence that people do not evaluate outcomes in a linear fashion, which can contribute to different cheating rates across incentive schemes. If individuals have an increasing marginal valuation of money, they might be more motivated by the prospect of getting a larger reward under the uncertain (competitive) incentive scheme than under the certain (non-competitive) one, even if the expected value of the incentives is the same across schemes. This holds even when people have perfectly accurate beliefs about the likelihood of receiving uncertain incentives.

Conversely, people with a diminishing marginal valuation of money may be motivated to cheat less under uncertain incentives. It follows that the net effect of uncertain incentives on population-level dishonest behavior may depend on the relative proportion of people with diminishing/increasing/constant marginal valuation of money. For example, if most participants exhibit diminishing marginal valuations, then the effect of uncertainty would, on average, reduce participants’ motivation to cheat, countering the potential effect of competition under a competitive (uncertain) incentive scheme, relative to a non-competitive (certain) scheme.
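To make this argument concrete, the following minimal sketch compares a sure payment with a lottery of equal expected value. The power-utility form u(x) = x^alpha, the three exponents, and the amounts (a sure 10 versus a 25% chance of 40) are illustrative assumptions, not estimates taken from this study or from the literature.

```python
def power_utility(amount: float, alpha: float) -> float:
    """Hypothetical valuation of money: u(x) = x ** alpha.

    alpha < 1 -> diminishing marginal valuation (concave)
    alpha = 1 -> constant marginal valuation (linear)
    alpha > 1 -> increasing marginal valuation (convex)
    """
    return amount ** alpha


def expected_utility(outcomes, alpha: float) -> float:
    """Expected utility of a lottery given (probability, amount) pairs."""
    return sum(p * power_utility(x, alpha) for p, x in outcomes)


# Two schemes with the same expected value (10); illustrative numbers only.
certain = [(1.0, 10.0)]
uncertain = [(0.25, 40.0), (0.75, 0.0)]

for alpha in (0.5, 1.0, 1.5):
    u_certain = expected_utility(certain, alpha)
    u_uncertain = expected_utility(uncertain, alpha)
    print(f"alpha={alpha}: certain={u_certain:.2f}, uncertain={u_uncertain:.2f}")
```

Under these assumptions, the uncertain scheme is valued below the certain one when alpha < 1, equally when alpha = 1, and above it when alpha > 1, even though the expected value never changes.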

Subjective probability weighting

In addition to (or independently of) people’s valuation of money, participants’ subjective perception of probabilities may also confound direct comparisons between competitive (uncertain) and non-competitive (certain) schemes. For example, most empirical work suggests that people tend to overweight comparatively small probabilities18–20, thus making them overly optimistic. The objective probability of receiving a bonus payment under competitive incentives ranged between 13 and 50% in the past experiments9–12,17, which makes the possibility of overweighting probabilities under competition particularly relevant. Even if people have a constant marginal valuation of money and even if the objective expected value of incentives is the same across competitive and non-competitive schemes, overweighting probabilities may make competitive schemes disproportionately motivating, and thus lead people to cheat more.

On the other hand, participants may value certain incentives disproportionately compared to uncertain incentives even when their expected values are identical21, which further complicates and confounds any causal investigation of competition when directly comparing behavior under competitive (uncertain) to non-competitive (certain) schemes.
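As a rough illustration of how subjective weighting can distort objectively equated incentives, the sketch below applies a one-parameter inverse-S-shaped weighting function of the kind used in cumulative prospect theory. The functional form and the value gamma = 0.61 are assumptions chosen for illustration; neither is estimated or reported in this paper.

```python
def weight(p: float, gamma: float = 0.61) -> float:
    """Assumed inverse-S probability weighting function.

    w(p) = p**gamma / (p**gamma + (1 - p)**gamma) ** (1 / gamma)
    gamma = 1 reproduces objective weighting, w(p) = p.
    """
    numerator = p ** gamma
    return numerator / (numerator + (1 - p) ** gamma) ** (1 / gamma)


# Winning probabilities spanning the range used in past experiments.
for p in (0.13, 0.25, 0.50):
    print(f"objective p={p:.2f} -> subjective weight={weight(p):.3f}")
```

With this assumed parameterization, probabilities at the low end of the range (13% and 25%) receive weights above their objective values, whereas a 50% chance does not, so the direction and size of any distortion would depend on the winning probability a given design used.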

Moral cost of dishonest behavior/self-signaling

Finally, cheating may have different psychological costs depending on whether rewards are certain or uncertain. There is growing evidence that people consider both utilitarian (i.e., outcome-based) and deontological (i.e., action-based) perspectives when determining the morality of their behavior, and that there is substantial variation across individuals in the extent to which they care about utilitarian vs. deontological considerations22. For example, if some participants base their inference of morality partly on the outcome of their actions23, aligned with utilitarian ethics, cheating for uncertain (as opposed to certain) incentives may allow participants to maintain a positive self-view in terms of being honest (or allow them to view cheating as less morally condemnable).

When there is a deterministic link between actions and outcomes, there is no ambiguity that any gains via dishonest acts are ill-gotten, but when there is uncertainty about the consequences, people may exploit this ambiguity in a self-serving way24,25. For example, people may engage in motivated reasoning under uncertain incentives: “Even if I cheat, I might not gain any benefit from doing so, so cheating is not that bad.” Such self-serving reasoning would require far more mental gymnastics under certain incentives, i.e., when cheating is surely consequential.

On the other hand, if people rely on a general process of “counterfactual attribution”, uncertain incentives may also reduce the willingness to cheat or engage in other acts of dishonest behavior. As Silver and Silverman26 found in the context of rewards, actors who do good knowing they might not be rewarded for it may appear as if they would have been willing to act regardless of incentives. Applied to the context of dishonest behavior, cheating for uncertain rewards may make it seem as if the actor would have been willing to cheat even without any incentive to do so. Regardless of whether the presence of uncertainty in incentives increases or decreases the perceived moral cost of cheating, these potential differences further confound the causal investigation of competition in direct comparisons between competitive (uncertain) and non-competitive (certain) incentive schemes.

Overview of the experiment

To investigate the potential effects of the various incentive schemes on dishonest behavior, we conducted a pre-registered experiment (https://aspredicted.org/kyxz-b4gb.pdf) that relied on the recently introduced “spot-the-difference task”27,28. In the spot-the-difference task, participants are presented with 20 pairs of images that have some differences between them (see Fig. 1). Participants score points if they report seeing three differences between images, but unbeknownst to them, 10 pairs of images have only two differences between them. Thus, participants can achieve scores above 50% only by dishonestly reporting seeing three differences where only two exist. The only experimental manipulation is the way scores are converted into monetary payment at the end of the experiment. In the certain condition, individual scores are converted into payment at a fixed rate. In the uncertain condition, one participant out of four is randomly selected to receive payment. Finally, in the competitive condition, the participant with the highest score in a group of four receives payment (see the Methods section for more details). This task presents several advantages over the widely used, canonical experimental paradigms (e.g., the die task, the coin task, the sender-receiver game, the matrix task). First, online participants are less familiar with the spot-the-difference task, and it is less transparent that this task is meant to measure dishonest behavior. Second, recent work has raised serious concerns about the construct validity of the canonical experimental paradigms29: “rather than deception, the results from these studies instead measure how participants perceive their relationship with the experimenter.” Third, this experimental task allows us to study both the prevalence of dishonest behavior (i.e., whether a larger proportion of people cheat under competition) and its magnitude (i.e., whether competition motivates people to cheat more intensively).
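The scoring logic of the task can be sketched as follows. The function and variable names are hypothetical, and the example participant is invented for illustration; only the structure (20 rounds, 10 of which contain just two differences) comes from the description above.

```python
def count_reports(true_differences, said_yes):
    """Split "Yes" reports into honest and dishonest counts.

    true_differences: actual number of differences in each round (2 or 3)
    said_yes: whether the participant reported finding three differences
    """
    pairs = list(zip(true_differences, said_yes))
    honest = sum(1 for n, yes in pairs if yes and n == 3)
    dishonest = sum(1 for n, yes in pairs if yes and n == 2)
    return honest, dishonest


# Hypothetical participant: 10 three-difference rounds followed by
# 10 two-difference rounds; "Yes" on all of the former and on 4 of the latter.
rounds = [3] * 10 + [2] * 10
answers = [True] * 10 + [True] * 4 + [False] * 6

honest, dishonest = count_reports(rounds, answers)
share_yes = (honest + dishonest) / len(rounds)
print(honest, dishonest, share_yes)
```

Any share of “Yes” reports above 0.5 necessarily includes at least one report on a two-difference round, which is what makes individual instances of overreporting observable.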

Fig. 1.

Sample pair of images used in the “spot-the-difference” task. This task and the corresponding stimuli were adapted from Speer et al.27 and Gai and Puntoni28.

Results

Construct validity

We report some insights that speak to the validity of the spot-the-difference task, i.e., whether participants’ tendency to report three differences in the two-difference rounds is correlated with their tendency to engage in dishonest behaviors in general. We examined three measures: (1) participants’ self-reported responses to the honesty/humility sub-inventory of the HEXACO personality test, (2) participants’ self-reported risk-taking attitudes in the domain of ethical decisions, and (3) participants’ approval rate on Prolific, which can be interpreted as an indicator of real-world socially questionable behavior30.

After pooling the data across all three conditions, we found a significantly negative correlation between participants’ self-reported honesty/humility score and the number of dishonestly reported rounds (i.e., which had only two differences between the images), r(765) = − 0.14, p < 0.001, indicating that those people who are more likely to engage in dishonest behaviors in their daily lives are also more inclined to overreport their performance on the present task. We did not find any significant differences in the honesty/humility scores between any two conditions, all p ≥ 0.399.

Similarly, we found a significantly positive correlation between participants’ self-reported risk-taking attitude in the domain of ethical and financial decisions and the number of dishonestly reported rounds, r(765) = 0.13, p < 0.001, indicating that those who tend to take more ethical and financial risk in their daily lives are more inclined to overreport their performance on the present task. We did not find any significant differences in the self-reported risk-taking attitude between any two conditions, all p ≥ 0.520.

Finally, we also found a significantly negative correlation between participants’ approval rate on Prolific and the number of dishonestly reported rounds, r(765) = − 0.10, p = 0.006. These consistent results suggest that the spot-the-difference task is a valid measure of participants’ willingness to engage in dishonest, or ethically questionable, behavior.

Assumption checks

Participants felt rather skilled and confident in their ability to spot differences in the task (M = 3.61 out of 5, SD = 0.96), and were confident that they could have found three differences in all 20 rounds if they had unlimited time to work on the task (M = 4.20 out of 5, SD = 1.17). Most participants (57%) were certain that they would have found three differences in all rounds, whereas only 6% thought that they could certainly not have found three differences in all rounds (possibly realizing that some rounds had only two differences). There were no significant differences between any two conditions in either reported skill or confidence about finding all differences given unlimited time, all p ≥ 0.169. These results indicate that most participants were not aware of the impossibility of finding three differences in all rounds, and thus, participants likely did not think that the study investigated their tendency to act dishonestly (which further corroborates the construct validity of the task).

Main results

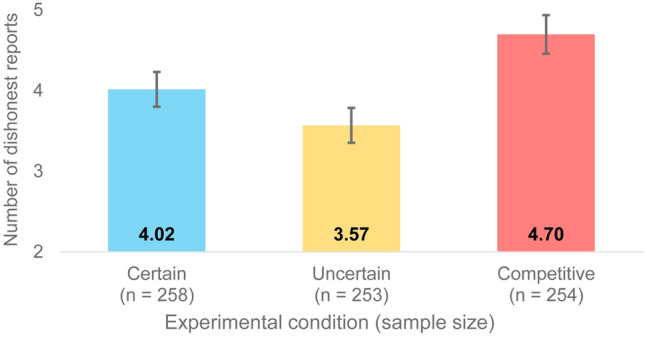

First, we conducted an independent-samples t-test (two-tailed) to compare the number of dishonestly reported rounds between the certain and competitive conditions, to test whether we replicated the main effect of competition reported in some of the prior work contrasting these two incentive schemes10,11. We found that participants in the competitive condition reported significantly more rounds dishonestly (M = 4.70, SD = 3.82) compared to people in the certain condition (M = 4.02, SD = 3.46), t(504) = 2.11, p = 0.035, Cohen’s d = 0.19 (see red vs. blue bars in Fig. 2). By contrast, we did not find a significant difference in the number of rounds reported “honestly” (i.e., rounds that actually had three differences), p = 0.379. Note that due to a programming error in the spot-the-difference task, two participants (0.3%) were prompted to report differences in some of the three-difference (i.e., “honest”) rounds multiple times, and as a result ended up reporting honestly 19 and 34 times. Since these participants actually reported 10 rounds honestly (but some of these multiple times), we adjusted these erroneous numbers to 10. Doing so did not affect the main results in any meaningful way.
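The reported effect size can be approximately reproduced from the summary statistics above. Per-condition sample sizes are not stated in this passage, so the sketch below assumes equal group sizes, in which case the pooled standard deviation reduces to the root mean of the two variances; the helper name is hypothetical.

```python
import math


def cohens_d_equal_n(mean_a: float, sd_a: float, mean_b: float, sd_b: float) -> float:
    """Cohen's d for two groups, ASSUMING equal group sizes."""
    pooled_sd = math.sqrt((sd_a ** 2 + sd_b ** 2) / 2)
    return (mean_a - mean_b) / pooled_sd


# Competitive (M = 4.70, SD = 3.82) vs. certain (M = 4.02, SD = 3.46).
d = cohens_d_equal_n(4.70, 3.82, 4.02, 3.46)
print(round(d, 2))  # 0.19 under the equal-n assumption
```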

Fig. 2.

Number of dishonestly reported rounds (out of 10) across experimental conditions. Error bars represent ± 1 standard error.

To test whether this difference between the certain and competitive conditions could be explained by the uncertain nature of rewards in the competitive condition, we also contrasted these two conditions to the uncertain condition. We conducted a linear OLS regression analysis, using the number of dishonestly reported rounds as the outcome measure. We added two independent variables, coded as binary measures: (1) uncertainty of rewards (coded as 1 in the uncertain and competitive conditions; 0 in the certain condition); (2) presence of competition (coded as 1 in the competitive condition; 0 in certain and uncertain conditions).

This regression analysis revealed a significant positive effect of the presence of competition on the number of dishonestly reported rounds, β = 1.13, SE = 0.32, t(762) = 3.55, p < 0.001 (see Table 1, column 1). By contrast, there was no significant effect of the presence of uncertainty on the number of dishonestly reported rounds, β = − 0.45, SE = 0.32, t(762) = 1.41, p = 0.159. Directionally, the introduction of uncertainty (without the introduction of competition) seems to have reduced the number of dishonestly reported rounds (M = 3.57, SD = 3.44) compared to the certain condition, although this reduction was not significant, p = 0.144. These results are robust to the inclusion of demographic factors (gender, age, income) in the analyses, and we did not find any significant interactions between gender, age, or income, and the experimental manipulations, all p ≥ 0.111 (we report the detailed analyses in the Supplementary Information). This pattern of results strongly suggests that it is not the inherent uncertainty of rewards that increases dishonest reporting in competitive environments, but specifically the competitive aspect of the incentive scheme. Finally, we did not find any significant effect of the experimental manipulation on the number of honest reports, both p ≥ 0.305 (Table 1, column 2).
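Because the competitive condition is coded 1 on both predictors, the competition coefficient captures the difference between the competitive and uncertain conditions (i.e., with uncertainty held constant), while the uncertainty coefficient captures the difference between the uncertain and certain conditions. The sketch below recovers the three condition means from the coefficients in Table 1, column 1; the function and dictionary names are hypothetical.

```python
def predicted_mean(intercept, b_uncertainty, b_competition, uncertainty, competition):
    """Predicted outcome for a condition under the dummy coding described above."""
    return intercept + b_uncertainty * uncertainty + b_competition * competition


# (uncertainty, competition) codes for each condition.
CODING = {"certain": (0, 0), "uncertain": (1, 0), "competitive": (1, 1)}

# Coefficients from Table 1, column 1 (number of dishonest reports).
coef = {"intercept": 4.016, "b_uncertainty": -0.446, "b_competition": 1.128}

means = {
    condition: predicted_mean(uncertainty=u, competition=c, **coef)
    for condition, (u, c) in CODING.items()
}
for condition, mean in means.items():
    print(f"{condition}: {mean:.2f}")
```

The recovered values match the condition means reported in the text (4.02, 3.57, and 4.70), as expected for a model with one parameter per condition.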

Table 1.

Results of OLS regression analyses.

| Dependent variable: | Number of dishonest reports (0–10) | Number of honest reports (0–10) | Never dishonest (% of participants) | Always dishonest (% of participants) |
|---|---|---|---|---|
| | (1) | (2) | (3) | (4) |
| Presence of uncertainty | −0.446 | −0.013 | 0.035 | −0.017 |
| | (0.317) | (0.119) | (0.035) | (0.032) |
| Presence of competition | 1.128*** | 0.122 | −0.044 | 0.086** |
| | (0.318) | (0.119) | (0.035) | (0.032) |
| Constant | 4.016*** | 8.922*** | 0.186*** | 0.140*** |
| | (0.223) | (0.084) | (0.025) | (0.023) |
| Observations | 765 | 765 | 765 | 765 |
| R² | 0.016 | 0.002 | 0.002 | 0.010 |
| Adjusted R² | 0.014 | −0.001 | −0.0003 | 0.008 |

**p < 0.01; ***p < 0.001.

Exploratory analyses

Prevalence vs. magnitude of dishonest reporting. Next, we investigated how the presence of competition increased the average number of dishonest reports: did competition increase the proportion of participants who submitted dishonest reports, or did it increase the extent of overreporting among those who submitted dishonest reports (or both)?

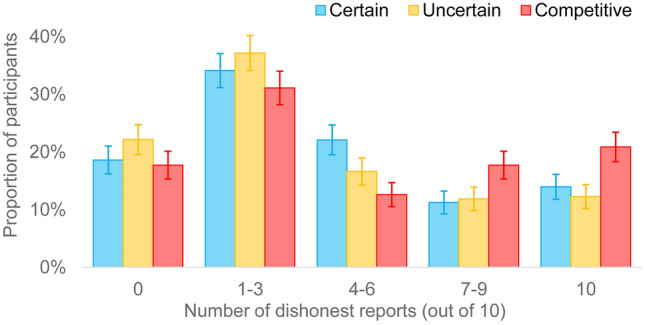

Neither the presence of uncertainty, nor the presence of competition had a significant effect on the proportion of fully honest participants (who did not submit any dishonest reports), both p ≥ 0.210 (see Table 1, column 3). About the same proportion of participants remained fully honest in the certain (M = 18.6%), uncertain (M = 22.1%), and competitive conditions (M = 17.7%). That is, the presence of competition (or uncertainty) did not affect the proportion of participants who submitted any number of dishonest reports.

By contrast, the average number of dishonest reports among those who submitted at least one dishonest report was significantly higher in the competitive condition (M = 5.71, SD = 3.46) than in both the certain condition (M = 4.93, SD = 3.19), t(414) = 2.38, p = 0.018, Cohen’s d = 0.23, and the uncertain condition (M = 4.58, SD = 3.24), t(404) = 3.38, p = 0.001, Cohen’s d = 0.34. There was no significant difference between the latter two conditions, p = 0.274.

Furthermore, the presence of competition significantly increased the proportion of participants who submitted the maximum number of dishonest reports (i.e., reported seeing three differences in all 10 two-difference rounds), β = 0.09, SE = 0.03, t(762) = 2.68, p = 0.008 (see Table 1, column 4). This proportion was substantially larger in the competitive condition (M = 20.9%) than in either the certain condition (M = 14.0%) or the uncertain condition (M = 12.3%). There was no significant effect of the presence of uncertainty on the proportion of participants who submitted the maximum number of dishonest reports, p = 0.596.

Figure 3 shows the full distribution of dishonest reports across conditions, highlighting that the main effect of competition occurred largely due to an increase in the proportion of participants who reported most rounds (7 or more) dishonestly. This suggests that competitive incentives do not necessarily increase the prevalence of dishonest behavior, but increase the magnitude of dishonest behavior, pushing people towards more extreme ways of cheating.

Fig. 3.

Relative frequency of dishonest reports (proportion of participants submitting given number of dishonest reports) across conditions. Error bars represent ± 1 standard error.

Discussion

The question of whether competing with others leads people to behave unethically has been the object of extensive empirical research. However, results from previous experimental investigations could be interpreted as reflecting a combination of competing with other people and feeling uncertain about whether a reward would be obtained. We conducted a large-scale pre-registered behavioral experiment that separated these effects, thereby providing a more precise test of whether competition leads to dishonest behavior. Employing a task that allowed us to unobtrusively observe individual instances of cheating, we compared a competitive scheme to both a certain non-competitive scheme (that resembled past “piece-rate” treatments) and an uncertain non-competitive scheme in which expected rewards were as uncertain as those in the competitive scheme. Overall, we found that the presence of competition increased cheating among participants. In particular, the competitive scheme in our experiment significantly increased the magnitude (but not the prevalence) of dishonest behavior, compared to both the certain and the uncertain non-competitive schemes.

This investigation yielded several insights. First, previous research found inconsistent results about the effects of competition on cheating. While this likely occurs for several different reasons, we suggest that one major limitation of past work has been the potential confounding effect of uncertain rewards, which was present in all direct comparisons between certain non-competitive and uncertain competitive incentive schemes. By contrast, we argue that controlling for uncertainty (i.e., comparing uncertain non-competitive schemes with uncertain competitive ones) might result in more accurate, less confounded estimates of the effect of competition on dishonest behavior.

Second, the effect of competition in the present experiment was larger when comparing the competitive scheme to the uncertain non-competitive scheme (d = 0.30) than when comparing it to the certain non-competitive scheme (d = 0.19). This suggests that, if anything, the presence of uncertain rewards, per se, may reduce participants’ willingness to act dishonestly. This finding might thus help explain some of the inconsistency of results in past studies in which the effect of competition was confounded by the effects of uncertainty9–12,17. It further implies that even those past studies which did find a significant effect of competition when comparing the non-competitive certain incentives to the competitive uncertain incentives potentially underestimated the true effect of competition10,11.

Finally, another reason for inconsistency in past work may be the heterogeneity of the dependent measures used to detect dishonest behavior, and in particular their differential sensitivity. In our study we found that neither the presence of competition nor the presence of uncertainty affected the proportion of participants who submitted dishonest reports, but we found significant effects on the average magnitude of cheating. Based on these results, it is possible that past experiments that could only test whether competition increases the prevalence of cheating (but not the magnitude) may have underestimated the effect of competition on dishonest behavior.

While the present experiment helps in disentangling the effects of uncertainty and competition, it also has some inherent limitations. In our study, we focused on a specific type of uncertainty: the probabilistic nature of being compensated for one’s work. However, it is possible that social competition also changes the nature of uncertainty that participants face, potentially introducing new components of uncertainty. For example, the presence of competition may add another component of uncertainty surrounding the likelihood of being the best in a group, independently of monetary compensation. That is, while the uncertain condition involves financial uncertainty only, the competition condition may also involve social uncertainty, on top of financial uncertainty, and it is possible that these influence people’s decision-making processes differently. For instance, uncertainty about whether one is outperforming peers can create a psychological tension that drives individuals to cheat in order to secure a perceived advantage. Conversely, uncertainty related to reward probabilities, such as a 25% chance of winning, may evoke different emotional responses and risk assessments. This distinction is crucial, as it suggests that what we interpret as a “competition effect” could instead be simply a reflection of how different forms of uncertainty affect ethical decision-making. Therefore, while our findings indicate that competition amplifies the magnitude of cheating, especially after controlling for reward uncertainty, we acknowledge the need for further exploration into the specific mechanisms at play. Future research should aim to disentangle these types of uncertainty to clarify their respective influences on cheating behavior, ultimately enriching our understanding of the interplay between competition and ethical conduct.

Methods

Transparency and openness

We report how we determined our sample size (see the Supplementary Information), all data exclusions (if any), all manipulations, and all measures, and the study follows JARS31. All data, analysis code, and research materials are publicly available at OSF: https://osf.io/86eb9. Data were analyzed using R, version 4.2.2 (R Core Team, 2022). We pre-registered the experiment on AsPredicted.org: https://aspredicted.org/kyxz-b4gb.pdf. The study was reviewed and approved by the Institutional Review Board at the Rotterdam School of Management, Erasmus University. All research was performed in accordance with relevant guidelines and regulations, and informed consent was obtained from all participants.

Participants and pre-screening criteria

We recruited 1,066 participants on Prolific to participate in this experiment in exchange for $2, in addition to any potential bonus payment depending on their performance in the spot-the-difference task. While it is common practice on crowd-sourced platforms (e.g., Prolific, Amazon Mechanical Turk) to only recruit participants with a high “approval rate” (i.e., whose submissions are deemed of high quality by the requesters), in our study we did not set any such criteria. Since the purpose of our study was to investigate dishonest behavior, and since lower approval rates may signal a higher likelihood of engaging in such behavior when completing online studies, restricting participation based on approval rate would have introduced a selection bias. In fact, lower approval rates on Prolific are correlated with a stronger tendency to cheat in experimental paradigms that are widely used to investigate dishonesty (e.g., coin flip task)30. We relied on this insight to test the external validity of the spot-the-difference task. The only recruitment criteria were that participants had to be at least 18 years old, reside in the U.S., and speak English as their first language.

Exclusions from data analyses

We excluded 301 participants (28.2%) from the analyses: Ninety (8.4%) who did not complete the full survey (i.e., quit before responding to all questions or could not be matched with others); 116 (10.9%) who failed to correctly answer at least one of the attention check or comprehension check questions; and 95 (8.9%) who reported a confidence of 0% that they interacted with real people. These exclusion criteria were all pre-registered. The final sample contained 765 responses (49.2% female; Mage = 40.5 years).
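The exclusion counts and percentages reported above can be cross-checked with a few lines of arithmetic. The Python sketch below is purely illustrative (the authors' analyses were run in R); the variable and function names are ours, and all numbers are copied from the text.

```python
# Sanity check of the reported exclusion counts and percentages.
# All numbers are copied from the text; names are illustrative only.
recruited = 1066
excluded = {
    "incomplete_or_unmatched": 90,
    "failed_attention_or_comprehension_check": 116,
    "zero_confidence_real_people": 95,
}

total_excluded = sum(excluded.values())
final_sample = recruited - total_excluded


def pct(count, total=recruited):
    """Share of the recruited sample, in percent, rounded to one decimal."""
    return round(100 * count / total, 1)


shares = {reason: pct(n) for reason, n in excluded.items()}

print(total_excluded, pct(total_excluded), final_sample)
print(shares)
```

Under these inputs the three exclusion groups sum to 301 participants, leaving the final sample of 765.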

Procedure

Participants were directed to a Qualtrics survey in which they first read about the “spot-the-difference” task, which we adapted from Speer et al.27 and Gai and Puntoni28. In the spot-the-difference task participants are presented with pairs of images that have several differences between them (for example, an object is missing, or some areas have different colors), and their task is to find these differences (see Fig. 1 for a sample pair of images that has three differences between the images).

After describing the spot-the-difference task, we randomly assigned participants to one of the following three conditions, which only differed in how participants’ compensation was determined: (1) certain, (2) uncertain, or (3) competitive compensation. In all three conditions, we truthfully told participants that they would first be matched with three other people to form a group of four, and then work individually on the same set of 20 pairs of images, presented one pair at a time. We informed participants that they would all work on the same set of images so as to prevent (incorrect) beliefs that the spot-the-difference task may be substantially easier or harder for other participants.

In each of these 20 rounds, participants saw two images next to each other for five seconds. After the images disappeared, participants responded to the question: “Did you find 3 differences?” by selecting either “Yes” or “No.” Importantly, participants did not indicate what (or where) those differences actually were, but simply reported whether they had found three differences. If the participant selected “No,” they did not earn anything in that round. However, if the participant selected “Yes,” they earned a number of points that later determined their bonus payment, depending on the experimental condition. In the certain condition, participants earned 10 points for each round in which they reported “Yes” (i.e., reported that they found three differences). Thus, in this condition participants could earn up to 200 points if they reported “Yes” in all 20 rounds. In the uncertain and competitive conditions, participants earned 40 points for each time they reported “Yes” (i.e., could earn up to 800 points). At the end of the study, these points were converted into a bonus payment in the following way:

In the certain condition participants learned that they would receive a bonus payment of $0.01 for each point they earned in the task (i.e., up to $2).

In the uncertain condition, one member in each group of four was randomly selected and had their points converted into a bonus payment ($0.01 for each point, i.e., up to $8). Thus, in this condition, if a participant was not selected randomly for payment, they did not receive any bonus, regardless of how many differences they reported. Since the likelihood of being randomly selected for payment in a group of four is 25%, the expected bonus payment for each report of “Yes” was identical across the certain condition (i.e., 100% × $0.10 = $0.10 per round) and uncertain condition (i.e., 25% × $0.40 + 75% × $0 = $0.10 per round).

Finally, in the competitive condition, we told participants that the person who earned the most points in each group would have their points converted into a bonus payment ($0.01 for each point, i.e., up to $8); the other three group members would not earn any bonus, regardless of how many differences they reported. We ensured that there was always only one “winner” in each group. We told participants that in case of a tie (i.e., if the top two participants reported the same number of “Yes” in the task), the tie would be broken randomly, and one participant would be paid as if they were the sole winner, whereas the other participant would not earn any money. Thus, in both the uncertain and competitive conditions, only one person earned a bonus, which, in expectation, was the same ($0.10 per round) as the guaranteed bonus of $0.10 per round in the certain condition.
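The three payment rules described above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' implementation (the study itself ran in Qualtrics with SMARTRIQS); the function and constant names are hypothetical, and the expected value for the competitive condition rests on the simplifying assumption that every group member is equally likely to win.

```python
import random

CENTS_PER_POINT = 1  # $0.01 per point in every condition
POINTS_PER_YES = {"certain": 10, "uncertain": 40, "competitive": 40}
GROUP_SIZE = 4


def expected_cents_per_yes(condition):
    """Ex-ante expected bonus (in cents) for one "Yes" report.

    For the competitive condition this assumes that all four group members
    are equally likely to win (a simplifying assumption of this sketch).
    """
    p_paid = 1.0 if condition == "certain" else 1.0 / GROUP_SIZE
    return p_paid * POINTS_PER_YES[condition] * CENTS_PER_POINT


def group_bonuses(condition, yes_counts, rng=random):
    """Return each member's bonus in cents, given their number of "Yes" reports."""
    points = [n * POINTS_PER_YES[condition] for n in yes_counts]
    if condition == "certain":
        return [p * CENTS_PER_POINT for p in points]
    if condition == "uncertain":
        paid = rng.randrange(len(points))  # one member drawn at random
    else:
        top = max(points)
        # Ties for first place are broken at random, so there is one winner.
        paid = rng.choice([i for i, p in enumerate(points) if p == top])
    return [p * CENTS_PER_POINT if i == paid else 0 for i, p in enumerate(points)]
```

For example, `group_bonuses("competitive", [20, 12, 20, 3])` pays 800 cents to exactly one of the two tied top reporters and nothing to the other three members, while `expected_cents_per_yes` returns 10 cents for every condition.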

Before the 20 incentivized rounds, participants answered a comprehension check question about how the bonus payments would be determined and completed three (unincentivized) practice rounds of the spot-the-difference task. These practice rounds included three pairs of images that each had exactly three differences between the images, and participants could spend as much time comparing the pairs of images as they wanted. Then, we matched participants in groups of four using SMARTRIQS32,33, and participants started working on the 20 incentivized rounds of the spot-the-difference task.

Unlike in the practice rounds, in which there were always three differences between the two images, in half of the incentivized rounds (ten out of 20) there were only two differences between images. Thus, since it was impossible to find three differences in these ten rounds, any report of “Yes” in these “two-difference” rounds was necessarily untruthful, and as such, we treat all “Yes” responses in these rounds as dishonest reports. Reporting “Yes” in the other ten rounds (i.e., those that actually contained three differences) could be either honest or dishonest, depending on whether participants actually found the three differences. Since participants did not have to report what (or where) the three differences were, this experimental paradigm does not allow us to determine the honesty of reporting in the three-difference rounds. Therefore, we treat all “Yes” responses in the three-difference rounds as “honest reports.”

The primary dependent variable was the number of dishonest reports, that is, the number of two-difference rounds (out of 10) in which participants dishonestly reported that they found three differences (i.e., selected “Yes”). This variable could take any integer value from 0 to 10 (inclusive); we treated it as a continuous variable in the analyses.
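The scoring rule for the primary dependent variable can be illustrated with a short Python sketch. The function name, the data layout, and the example responses are hypothetical and are not taken from the authors' analysis code.

```python
def count_dishonest_reports(responses, n_differences):
    """Count "Yes" reports in rounds that contained only two differences.

    responses: list of booleans, True if the participant reported "Yes".
    n_differences: list with the true number of differences (2 or 3) per round.
    """
    if len(responses) != len(n_differences):
        raise ValueError("responses and n_differences must have the same length")
    return sum(
        1 for said_yes, n in zip(responses, n_differences) if said_yes and n == 2
    )


# Hypothetical participant; the round order below is made up for illustration.
n_differences = [3, 2] * 10  # ten three-difference and ten two-difference rounds
responses = [True, False] * 7 + [True, True] * 3

print(count_dishonest_reports(responses, n_differences))  # 3
```

“Yes” reports in three-difference rounds are deliberately ignored here, mirroring the decision to treat them as honest reports.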

After participants completed the 20 incentivized rounds, we showed them all 20 images on a single screen, and they had to select which image they liked the most. We told participants that at the end of the study, we would reveal the favorite images of their group members. This task served two purposes: First, it served as a distractor, strengthening perceptions that the study intended to measure individual differences in the ability to detect differences. Second, it justified why all participants had to be assigned to groups of four, even if bonus payments did not depend on other participants’ performance.

After selecting their favorite image, but before being informed about their final payment (or learning about the performance of others in their group), participants completed a questionnaire consisting of three groups of questions. First, participants provided their basic demographic information (gender, age, and annual household income). Second, participants completed the 10-item Honesty-Humility subscale of the HEXACO personality test34 (Cronbach’s α = 0.80) and an 8-item domain-specific risk-taking scale35 (Cronbach’s α = 0.75). These two scales respectively aimed to measure participants’ overall tendency to act honestly and to incur ethical and financial risks in their daily lives. The latter scale included four items that measured participants’ tendency to take ethical risks (e.g., “Cheating on an exam” or “Cheating by a significant amount on your income tax return”, Cronbach’s α = 0.79) and four items that asked participants about their willingness to take financial risks (e.g., “Investing 5% of your annual income in a very speculative stock” or “Gambling a week’s income at a casino”, Cronbach’s α = 0.66). Third, participants responded to a series of questions about the spot-the-difference task and reported their expectations and beliefs about their own and other participants’ performance. Participants rated how skilled or confident they felt when working on the task (from 1 = not skilled/confident at all to 5 = very skilled/confident). Then, they indicated how many of 100 other participants they thought: (a) performed better than them, (b) performed worse than them, and (c) performed equally well as them (each from 0 to 100). Following these estimates, participants indicated the likelihood they thought they performed the best in their group of four (from 0% = certainly did not perform the best to 100% = certainly performed the best). Then, we asked participants to estimate the number of rounds in which they reported that they found three differences but were not fully certain whether they actually found those differences (from 0 to 20) and the number of rounds in which they thought that others reported three differences when others were not fully certain (from 0 to 20).

Finally, participants indicated their confidence about their ability to find three differences in all 20 rounds if they had unlimited time to work on the spot-the-difference task, as opposed to viewing each pair of images for only 5 s (from 1 = certainly would NOT have found 3 differences in all rounds to 5 = certainly would have found 3 differences in all rounds). This last question was meant to serve as an assumption check, to assess whether participants (incorrectly) believed that it was possible to find three differences in all 20 rounds if they had unlimited time. If participants were confident in their ability and (incorrectly) believed that they could have found three differences in all 20 rounds, it is unlikely that they viewed the present task as a measure of dishonest behavior. By contrast, if participants realized that the task was impossible, they could have inferred that the purpose of the study was to investigate dishonest behavior and overreporting, and this realization could have affected their behavior.

After participants completed all parts of the questionnaire, we showed them the favorite images of each member of their group, informed them about their final bonus payment (in all conditions), whether they had been randomly selected for bonus payment (only in the uncertain condition), and whether they reported finding three differences in more rounds than others in their group (only in the competitive condition).

Finally, participants indicated their confidence that they interacted with real people in the study (they did), as opposed to bots (from 0 = not confident at all to 100 = very confident). As pre-registered, we used this question to exclude participants from subsequent analyses who (incorrectly) thought that they did not interact with real people. To test whether this exclusion criterion affected any of our main results, we conducted robustness check analyses that we report in the Supplementary Information. In brief, we find that all the main results are robust to the inclusion of participants who did not believe that they interacted with real people.

Author contributions

A.M.: Conceptualization (equal), methodology (lead), software (lead), formal analysis (lead), investigation (lead), resources (lead), data curation (lead), writing—original draft (lead), writing—review and editing (equal), visualization (lead). G.P.: Conceptualization (equal), methodology (support), software (support), investigation (support), writing—original draft (support), writing—review and editing (equal), supervision (lead), funding acquisition (lead).

Funding

This research did not receive any specific grant from funding agencies in the public, commercial, or not-for-profit sectors.

Data availability

All data, analysis code, and research materials are available at OSF: https://osf.io/86eb9. We pre-registered the experiment on AsPredicted.org: https://aspredicted.org/kyxz-b4gb.pdf.

Declarations

Competing interests

The authors declare no competing interests.

Use of generative AI (GenAI)

During the preparation of this work the authors did not use any LLM/GenAI services (like ChatGPT). The authors have reviewed and edited the content as needed and take full responsibility for the content of the publication.

Footnotes

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

The online version contains supplementary material available at 10.1038/s41598-024-83621-y.

References

1. Bracha, A. & Fershtman, C. Competitive incentives: working harder or working smarter? Manag. Sci. 59, 771–781 (2013).

2. Gneezy, U. & Rey-Biel, P. On the relative efficiency of performance pay and noncontingent incentives. J. Eur. Econ. Assoc. 12, 62–72 (2014).

3. Lazear, E. P. Performance pay and productivity. Am. Econ. Rev. 90, 1346–1361 (2000).

4. Hegarty, W. H. & Sims, H. P. Some determinants of unethical decision behavior: an experiment. J. Appl. Psychol. 63, 451–457 (1978).

5. Pierce, J. R., Kilduff, G. J., Galinsky, A. D. & Sivanathan, N. From glue to gasoline. Psychol. Sci. 24, 1986–1994 (2013).

6. Balafoutas, L., Czermak, S., Eulerich, M. & Fornwagner, H. Incentives for dishonesty: An experimental study with internal auditors. Econ. Inq. 58, 764–779 (2020).

7. Chui, C., Kouchaki, M. & Gino, F. “Many others are doing it, so why shouldn’t I?”: How being in larger competitions leads to more cheating. Organ. Behav. Hum. Decis. Process. 164, 102–115 (2021).

8. Rigdon, M. L. & D’Esterre, A. Sabotaging another: priming competition increases cheating behavior in tournaments. South. Econ. J. 84, 456–473 (2017).

9. Rigdon, M. L. & D’Esterre, A. The effects of competition on the nature of cheating behavior. South. Econ. J. 81, 140425121019003 (2015).

10. Faravelli, M., Friesen, L. & Gangadharan, L. Selection, tournaments, and dishonesty. J. Econ. Behav. Organ. 110, 160–175 (2015).

11. Cartwright, E. & Menezes, M. L. C. Cheating to win: Dishonesty and the intensity of competition. Econ. Lett. 122, 55–58 (2014).

12. Schwieren, C. & Weichselbaumer, D. Does competition enhance performance or cheating? A laboratory experiment. J. Econ. Psychol. 31, 241–253 (2010).

13. Buser, T. & Dreber, A. The flipside of comparative payment schemes. Manag. Sci. 62, 2626–2638 (2016).

14. Bennett, V. M., Pierce, L., Snyder, J. A. & Toffel, M. W. Customer-driven misconduct: how competition corrupts business practices. Manag. Sci. 59, 1725–1742 (2013).

15. Gill, D., Prowse, V. & Vlassopoulos, M. Cheating in the workplace: An experimental study of the impact of bonuses and productivity. J. Econ. Behav. Organ. 96, 120–134 (2013).

16. Schurr, A. & Ritov, I. Winning a competition predicts dishonest behavior. Proc. Natl. Acad. Sci. 113, 1754–1759 (2016).

17. Huber, C. et al. Competition and moral behavior: A meta-analysis of forty-five crowd-sourced experimental designs. Proc. Natl. Acad. Sci. 120, (2023).

18. Glaser, C., Trommershäuser, J., Mamassian, P. & Maloney, L. T. Comparison of the distortion of probability information in decision under risk and an equivalent visual task. Psychol. Sci. 23, 419–426 (2012).

19. Gonzalez, R. & Wu, G. On the shape of the probability weighting function. Cogn. Psychol. 38, 129–166 (1999).

20. Lejarraga, T., Pachur, T., Frey, R. & Hertwig, R. Decisions from experience: from monetary to medical gambles. J. Behav. Decis. Mak. https://doi.org/10.1002/bdm.1877 (2015).

21. Kahneman, D. & Tversky, A. Prospect theory: an analysis of decision under risk. Econometrica 47, 263–292 (1979).

22. Capraro, V., Halpern, J. Y. & Perc, M. From outcome-based to language-based preferences. J. Econ. Lit. 62, 115–154 (2024).

23. Mazar, N., Amir, O. & Ariely, D. The dishonesty of honest people: a theory of self-concept maintenance. J. Mark. Res. 45, 633–644 (2008).

24. Dana, J., Weber, R. A. & Kuang, J. X. Exploiting moral wiggle room: experiments demonstrating an illusory preference for fairness. Econ. Theory 33, 67–80 (2007).

25. Garcia, T., Massoni, S. & Villeval, M. C. Ambiguity and excuse-driven behavior in charitable giving. Eur. Econ. Rev. 124, 103412 (2020).

26. Silver, I. & Silverman, J. Doing good for (maybe) nothing: How reward uncertainty shapes observer responses to prosocial behavior. Organ. Behav. Hum. Decis. Process. 168, 104113 (2022).

27. Speer, S. P. H., Smidts, A. & Boksem, M. A. S. Cognitive control increases honesty in cheaters but cheating in those who are honest. Proc. Natl. Acad. Sci. 117, 19080–19091 (2020).

28. Gai, P. J. & Puntoni, S. Language and consumer dishonesty: a self-diagnosticity theory. J. Consum. Res. 48, 333–351 (2021).

29. Skowronek, S. About 70% of participants know that the canonical deception paradigms measure dishonesty. Acad. Manag. Proc. 2021, 13725 (2021).

30. Schild, C., Lilleholt, L. & Zettler, I. Behavior in cheating paradigms is linked to overall approval rates of crowdworkers. J. Behav. Decis. Mak. 34, 157–166 (2021).

31. Appelbaum, M. et al. Journal article reporting standards for quantitative research in psychology: The APA Publications and Communications Board task force report. Am. Psychol. 73, 3–25 (2018).

32. Molnar, A. How to implement real-time interaction between participants in online surveys: A practical guide to SMARTRIQS. Quant. Methods Psychol. 16, 334–354 (2020).

33. Molnar, A. SMARTRIQS: A simple method allowing real-time respondent interaction in qualtrics surveys. J. Behav. Exp. Financ. 22, 161–169 (2019).

34. Ashton, M. & Lee, K. The HEXACO-60: A short measure of the major dimensions of personality. J. Pers. Assess. 91, 340–345 (2009).

35. Weber, E. U., Blais, A.-R. & Betz, N. E. A domain-specific risk-attitude scale: measuring risk perceptions and risk behaviors. J. Behav. Decis. Mak. 15, 263–290 (2002).
