Summary

Background

Understanding the mechanisms of algorithmic bias is highly challenging due to the complexity and uncertainty of how various unknown sources of bias impact deep learning models trained with medical images. This study aims to bridge this knowledge gap by studying where, why, and how biases from medical images are encoded in these models.

Methods

We systematically studied layer-wise bias encoding in a convolutional neural network for disease classification using synthetic brain magnetic resonance imaging data with known disease and bias effects. We quantified the degree to which disease-related information, as well as morphology-based and intensity-based biases, was represented within the learned features of the model.

Findings

Although biases were encoded throughout the model, a stronger encoding did not necessarily lead to the model using these biases as a shortcut for disease classification. We also observed that intensity-based effects had a greater influence on shortcut learning compared to morphology-based effects when multiple biases were present.

Interpretation

We believe that these results constitute an important first step towards a deeper understanding of algorithmic bias in deep learning models trained using medical imaging data. This study also showcases the benefits of utilising controlled, synthetic bias scenarios for objectively studying the mechanisms of shortcut learning.

Funding

Alberta Innovates, Natural Sciences and Engineering Research Council of Canada, Killam Trusts, Parkinson Association of Alberta, River Fund at Calgary Foundation, Canada Research Chairs Program.

Keywords: Artificial intelligence, Algorithmic bias, Synthetic data

Research in context.

Evidence before this study

A search of the terms (“medical imaging” and “deep learning”) and (“performance disparities” or “algorithmic bias” or “shortcut learning”) was conducted on PubMed and Google Scholar for relevant articles published prior to July 2024. Previous works in this domain have mostly focused on case studies of subgroup performance disparities in medical imaging AI models, and development and/or evaluation of bias mitigation strategies on such models. A small number of recent studies investigated how biases are encoded within the learned features of models trained using medical imaging data. However, these studies were performed with real medical imaging datasets, which contain many unknown and untraceable sources of bias. Thus, there has not yet been an established method for comprehensively and reliably studying the mechanisms of algorithmic bias encoding and shortcut learning.

Added value of this study

We propose an analytical framework for objectively quantifying the degree to which biases are encoded throughout multiple layers of a deep learning model using a recently proposed tool for generating synthetic datasets with controlled biases. Counterfactual bias scenario datasets generated with this framework enable a definitive and traceable quantification of algorithmic encoding of bias, which is not possible using real medical imaging datasets. Employing this methodology, we empirically demonstrated how the learned features of bias contributed to the ability of the model to exploit bias “shortcuts” when trained to classify disease, and showed how different types of biases can influence this so-called shortcut learning to different extents.

Implications of all the available evidence

Understanding how AI systems encode and utilise dataset bias is highly important but difficult to comprehensively study with real-world medical imaging data containing many unknown sources of bias. This work establishes an analytical foundation for comprehensive quantification of the mechanisms of algorithmic bias which could extend to various other architectures and tasks, and enhances the general understanding of bias in AI for medical image analysis.

Introduction

Medical imaging datasets have a unique level of complexity when compared to standard computer vision (CV) datasets used in artificial intelligence (AI)-based image analysis. For instance, many medical imaging modalities are three-dimensional rather than two-dimensional, and classification of medical images often depends on subtle, nearly indistinguishable pathological differences rather than on high-level interpretable features such as the object type. The features of a medical image that could introduce bias into AI models, and the ways in which these bias-associated features interact and compound, are also distinctly complex in comparison. Consider datasets commonly used in research on spurious correlations in CV: in Waterbirds,1 the source of bias is whether a particular bird is pasted onto a land or water background, and in CelebA,2 a person's hair colour can be spuriously correlated with their perceived gender. These spurious attributes can lead to shortcut learning, in which deep learning models learn to associate a classification outcome with some extraneous feature, leading to poor generalisation on data that does not contain this association.3,4 Studies that have used these datasets as “toy” examples for investigating bias have benefitted from being able to attribute downstream algorithmic bias to these known and obvious attributes. For example, Taghanaki et al.5 proposed a masking algorithm to mitigate shortcut learning in CV models. They found that the baseline models undesirably relied on known spurious attributes, while their proposed method used the correct features for the classification task (e.g., hair instead of facial features for classifying hair colour, and the bird instead of the background for classifying bird type). Yenamandra et al.6 aimed to interpret the shortcuts learned in CV models by visualising subsets of data with poor performance, and were able to validate that their method performed as intended because of prior knowledge of the types of images expected to perform poorly (e.g., waterbirds on land). Such studies using simple “toy” datasets have given researchers a deeper understanding of how spurious correlations and subsequent shortcut learning manifest in deep learning models used in CV.

While these insights regarding algorithmic bias generated in the CV domain are undoubtedly valuable, they may not translate directly to the medical image analysis domain. In medical imaging datasets, features associated with biological characteristics (e.g., age, sex, skin tone), social determinants of health (e.g., economic stability, access to healthcare, experiences of discrimination), acquisition conditions (e.g., scanner type, imaging protocol), and the distribution of all of these factors across multiple sites combine in ways that create largely uninterpretable and untraceable sources of algorithmic bias.3,7–13 Thus, it is difficult to confidently develop and validate methods or findings when few sources of bias in real-world medical image datasets are conclusively known. Despite these challenges, recent studies have taken steps towards greater insight into how these (often sensitive) attributes contribute to bias, typically measured in the form of subgroup performance disparities.3,14 For instance, Glocker et al.14 used dimensionality reduction techniques, including principal component analysis (PCA), to investigate how bias is encoded within the learned feature space of the penultimate layer of a chest X-ray classification model. They found that disease classes could be discriminated along the first principal component (PC), and that associations in the PCs between sensitive attributes and disease could be related to the model's subgroup performance disparities. Their approach of inspecting the marginal distributions of subgroups within a model's penultimate-layer feature space has also been applied to image acquisition bias in Parkinson's disease classification11 and to race and sex bias in brain age prediction15 from brain magnetic resonance imaging (MRI) data.

While these studies also found that subgroups with performance disparities could be distinguished within the feature space of trained models, it remains unknown which image features associated with those subgroups made them susceptible to algorithmic bias in the first place. Typically, the real-world categories in which performance disparities are observed (e.g., self-reported race15) are at best proxy variables that may obscure the real sources of spurious correlations and subsequent shortcut learning. This gap in the literature currently prevents a comprehensive understanding of shortcut learning-induced bias in medical image analysis models. While it is often assumed that spurious features associated with some combination of biological, social, and imaging factors are what lead to bias, the complexity and uncertainty of how these factors interact make it challenging to pinpoint where these biases manifest in learned representations throughout a model, why such biases lead to shortcut learning, and how different sources of bias interact with each other.

The recently proposed Simulated Bias in Artificial Medical Images (SimBA) framework16,17 was developed to enable the study of bias in medical imaging models with enhanced objectivity, as it provides control over the sources of bias in a dataset (similar to Waterbirds) along with the realism and scale of medical images (here: 3D brain MRI). With this publicly available framework, it is possible to define ground truth disease effects (the target for a classification task) and bias effects (intended to act as spurious correlations) in the data. A key benefit of this framework is the ability to generate true counterfactual datasets, in which the same simulated subjects can be studied under various “bias scenarios”. For example, a brain MRI of a given simulated subject can be generated with or without a specified bias effect, so that the counterfactual scenario in which an alternate version of the image exists is directly available. This concept is related to the idea of “digital twins” in clinical trials, where multiple treatment scenarios can be studied for a given patient in silico.18 Deep learning models trained using SimBA counterfactual datasets therefore provide strong baselines for subsequent analyses, making it possible to explore questions such as “what would happen to the model if the biases were X instead of Y?” The utility of this framework was showcased in previous work,17 where performance disparities and the efficacy of bias mitigation methods were shown to differ between counterfactual scenarios with varying proximity between disease and bias effects. Overall, the SimBA framework makes biases traceable via direct comparison to baseline scenarios in which otherwise identical datasets do not contain the specific biases being studied. This enables a degree of conclusiveness in studying a model's internal representations of bias that is not possible with real medical imaging data, where many known and unknown sources of bias interact in complex ways and true counterfactuals are usually not available.

The contribution of this work is an analysis framework, built on SimBA, that provides strategies for developing foundational knowledge of the where, why, and how of bias in medical imaging AI. In a convolutional neural network (CNN) trained using synthetic brain MRI datasets with different known biases, we quantified how those biases were encoded in a dimensionality-reduced feature space in multiple layers throughout the model. We believe that such an analysis of bias encoding across multiple layers of a model is needed to develop a comprehensive understanding of feature interactions. Methodologically, we also extend the SimBA framework to incorporate multiple bias subgroups and intensity-based bias effects in addition to the existing morphology-based biases. Using this setup to systematically investigate algorithmic bias encoding due to shortcut learning, we demonstrate how layer-wise analysis of counterfactual bias scenarios provides new insight into the following three key questions:

Q1. Where is bias encoded?

Q2. Why does shortcut learning occur?

Q3. How are different sources of bias encoded?

Methods

Data

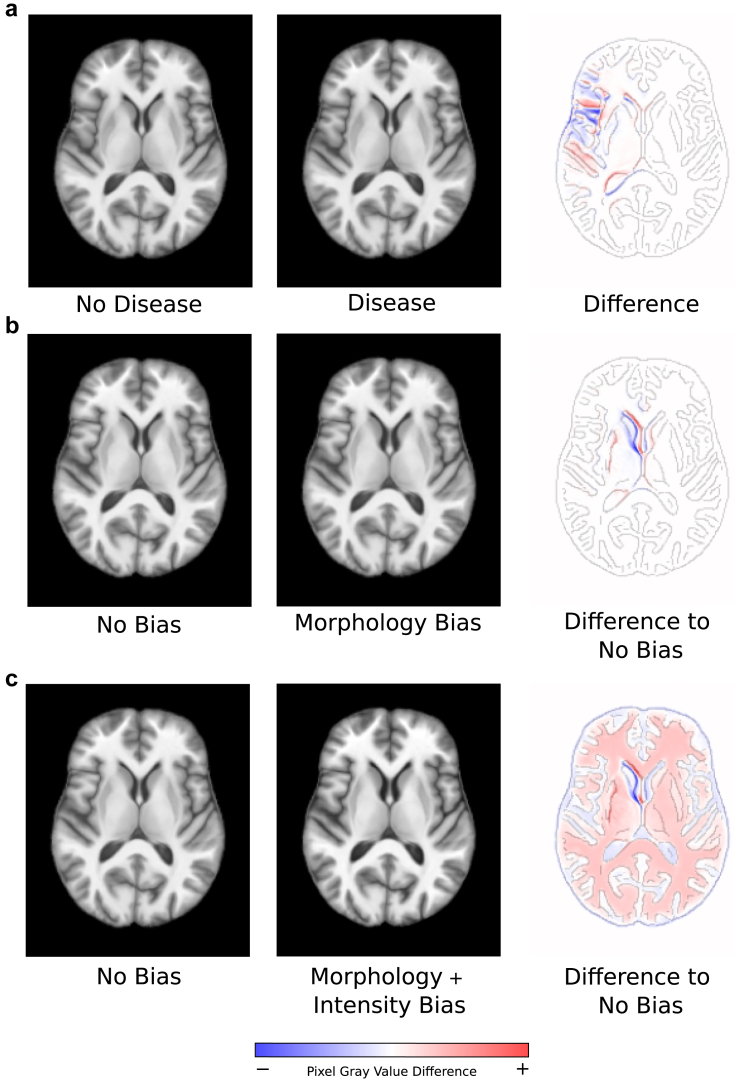

We utilised SimBA16,17 to generate synthetic T1-weighted brain MRI datasets of size 173 × 211 × 155 voxels (1 mm isotropic spacing), exemplified in Fig. 1. These datasets contained realistic differences in global brain morphology (“subject effects”, representing inter-subject variation) and two balanced classes (“disease” and “non-disease”), which were modelled as varying degrees of morphological deformation in the left insular cortex region, identical to the approach described in Stanley et al.17 (see Fig. 1a). These effects were not intended to represent any real-world disease condition, but instead served as arbitrary but realistic “toy” classes for the purpose of an objective bias analysis.

Fig. 1.

Examples of axial slices of synthetic SimBA data. The third column shows difference maps between the images in the second and first columns of the corresponding row, overlaid on an outline of the first-column image. Blue regions indicate negative pixel grey value differences, red regions indicate positive pixel grey value differences, and white regions indicate no pixel grey value difference. a: Comparison of an image from the non-disease class with an image from the disease class. b: Comparison between no bias and morphology bias counterfactual images. c: Comparison between no bias and morphology-plus-intensity bias counterfactual images.

To investigate where and why bias was encoded in a CNN model (Q1, Q2), we generated a dataset of 4303 3D images, a subset of which included a morphology-based bias effect. A counterfactual bias scenario dataset without the morphology-based bias effect, using the same 4303 simulated subjects, was also generated to serve as a baseline for comparison (Fig. 2b, “Morphology Bias Dataset” and Fig. 2a, “No Bias Dataset”). The morphology-based bias effect was represented by a morphological deformation in a brain region separate from the disease effect (here: the left putamen) (see Fig. 1b). Identical to the approach described in Stanley et al.,17 bias effects impacted the same brain region for all affected images in the dataset, but varied in the degree of morphological deformation. These bias effects were added to the majority of the disease class (i.e., 70%) and a minority of the non-disease class (i.e., 30%), which simulates a “prevalence disparity”.19 This setup was previously shown16,17 to lead to performance disparities between the “bias” and “non-bias” subgroups (i.e., the subsets of data with and without the bias effect, respectively) as a result of a CNN learning to associate the presence or absence of the bias effect with the respective disease classes (i.e., shortcut learning).
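The prevalence disparity described above can be sketched as follows. This is a minimal illustration under our own assumptions, not SimBA's implementation: the helper `assign_bias` is hypothetical, and it gives the bias effect to a fixed fraction of each class by sampling subjects without replacement. The counterfactual No Bias dataset then corresponds to the same subjects with every flag set to 0.

```python
import random


def assign_bias(labels, p_disease=0.7, p_nondisease=0.3, seed=0):
    """Hypothetical helper: return a 0/1 bias flag for each subject.

    labels: sequence of class labels (1 = disease, 0 = non-disease).
    A fraction p_disease of the disease class and p_nondisease of the
    non-disease class receive the bias effect (a "prevalence disparity").
    """
    rng = random.Random(seed)  # fixed seed so the assignment is reproducible
    flags = [0] * len(labels)
    for cls, frac in ((1, p_disease), (0, p_nondisease)):
        members = [i for i, y in enumerate(labels) if y == cls]
        for i in rng.sample(members, round(frac * len(members))):
            flags[i] = 1
    return flags


labels = [1] * 100 + [0] * 100
morph_bias = assign_bias(labels)   # Morphology Bias scenario
no_bias = [0] * len(labels)        # counterfactual No Bias scenario: same subjects, no effect
print(sum(morph_bias[:100]), sum(morph_bias[100:]))  # 70 30
```

The realised subgroup sizes reported for this study (Fig. 2 and Table 1) are close to, but not exactly, these nominal fractions.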

Fig. 2.

Illustrative representation of the composition of counterfactual datasets, where shaded regions indicate the proportion of bias subgroups within each dataset. a: No Bias Dataset, where no bias effects are present in any of the images. b: Morphology Bias Dataset, where a majority of the disease class and a minority of the non-disease class contain images with morphology-based bias effects. c: Morphology and Intensity Bias Dataset, where a majority of the disease class and a minority of the non-disease class contain images with both morphology and intensity-based bias effects. Sizes of bias subgroups are as follows: i: n = 1440, ii: n = 713, iii: n = 704, iv: n = 1446, v: n = 606, vi: n = 834, vii: n = 109, viii: n = 595, ix: n = 602, x: n = 111, xi: n = 844, xii: n = 602. “Subject A” and “Subject B” represent example images from the datasets. Each simulated subject appears exactly once in each of the three counterfactual datasets, but may manifest with a morphology bias effect, an intensity bias effect, or both, depending on the bias scenario (e.g., Subject A).

To study how different sources of bias were encoded in a CNN model (Q3), we generated an additional counterfactual dataset of the same 4303 simulated subjects, in which both morphology- and intensity-based bias effects were present in subsets of the data (Fig. 2c, “Morphology and Intensity Bias Dataset”). Specifically, we simulated a scenario in which the data from the same synthetic subjects used for Q1/Q2 were acquired using two different MRI scanners, resulting in a global intensity-based bias effect. This effect was introduced by increasing image contrast by 20% (Fig. 1c). The intensity bias effect was present in 70% of the disease class and 30% of the non-disease class, again producing a prevalence disparity, here with respect to the intensity bias subgroups. In other words, within each class there were four distinct bias subgroups, defined by the presence or absence of each of the morphology- and intensity-based bias effects (Fig. 2c, “Morphology and Intensity Bias Dataset”). Overall, 12,909 synthetic 3D brain MRI images were generated for this work, belonging to 4303 distinct simulated subjects, each of which was present in all three counterfactual bias scenario datasets as illustrated in Fig. 2.
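One plausible way to implement a global 20% contrast increase is to scale each voxel's deviation from the mean brain intensity by 1.2, which leaves the mean unchanged and raises the standard deviation by 20%. This is a sketch under that assumption only; SimBA's exact intensity transform is not specified here, and `increase_contrast` and its `mask` argument are hypothetical.

```python
import numpy as np


def increase_contrast(img, factor=1.2, mask=None):
    """Hypothetical intensity-bias transform: scale intensities about their mean.

    img:    3D array of voxel intensities.
    factor: 1.2 corresponds to a 20% contrast increase.
    mask:   optional boolean array selecting brain voxels; voxels outside the
            mask (e.g., background) are left unchanged.
    """
    img = np.asarray(img, dtype=np.float64)
    if mask is None:
        mask = np.ones(img.shape, dtype=bool)
    out = img.copy()
    mu = img[mask].mean()
    out[mask] = mu + factor * (img[mask] - mu)  # deviations from the mean grow by `factor`
    return out


rng = np.random.default_rng(0)
volume = rng.normal(100.0, 10.0, size=(8, 8, 8))
biased = increase_contrast(volume)
print(round(biased.std() / volume.std(), 3))  # 1.2
```

Scaling about the mean, rather than multiplying raw intensities by 1.2, is a design choice made here so that the effect changes contrast without also shifting overall brightness.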

Models and performance evaluation

Similar to prior works with SimBA,16,17 we studied a basic CNN with six blocks. Each of the first five blocks consisted of a convolution (2 × 2 × 2; filters = 16, 32, 64, 128, and 256, respectively), batch normalisation, sigmoid activation, and max pooling (2 × 2 × 2), and the sixth block consisted of average pooling and dropout, followed by a fully-connected softmax classification layer. A learning rate of 1e−4, a batch size of 4, and the Adam optimizer were used, and the model was trained with early stopping on validation loss (patience = 15 epochs), typically converging after around 30 epochs. Separate models with the same architecture and optimisation strategy were trained for each of the three counterfactual bias scenario datasets (i.e., No Bias, Morphology Bias, Morphology + Intensity Bias). Models were implemented in Keras/TensorFlow 2.10 and trained on an NVIDIA GeForce RTX 3090 GPU.
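To give a sense of the feature dimensions n analysed in the bias encoding experiments, the flattened activation size after each block can be estimated. This sketch assumes "same"-padded convolutions (which preserve spatial size), 2 × 2 × 2 max pooling with stride 2 that rounds down, and global average pooling in block 6; none of these details are stated above, so the numbers are illustrative only. `block_feature_sizes` is a hypothetical helper.

```python
def block_feature_sizes(input_shape=(173, 211, 155), filters=(16, 32, 64, 128, 256)):
    """Return (spatial shape, channels, flattened size) after each of the six blocks.

    Assumes 'same' padding in each convolution, 2x2x2 max pooling with stride 2
    (floor division), and global average pooling in block 6.
    """
    shape = tuple(input_shape)
    sizes = []
    for channels in filters:
        shape = tuple(s // 2 for s in shape)  # max pooling halves each dimension
        flat = channels
        for s in shape:
            flat *= s
        sizes.append((shape, channels, flat))
    # Block 6: global average pooling keeps one value per channel of block 5.
    sizes.append(((), filters[-1], filters[-1]))
    return sizes


for block, (shape, channels, flat) in enumerate(block_feature_sizes(), start=1):
    print(f"block {block}: spatial {shape}, channels {channels}, n = {flat:,}")
```

Under these assumptions, n ranges from about 11 million values per image after block 1 down to 256 after block 6, far more than the number of test images m in the early blocks, so PCA on the m × n matrix yields at most m − 1 components with non-zero variance.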

Each of the three datasets (4303 images each) was split into partitions of 50%/25%/25% for training, validation, and testing (Table 1). The splits were identical for each bias scenario dataset, ensuring that the same simulated subjects were used for training, validating, and testing all three counterfactual models. Each model had identical weight initialisation and batch loading, and used deterministic GPU behaviour. These identical pipelines for each counterfactual scenario enabled a detailed analysis of the learned features that arose solely from the morphology and intensity bias effects under study, since each bias scenario had a corresponding baseline without bias effects for comparison.
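Because the split is defined over simulated subject IDs rather than over images, the same partition can be reused for all three counterfactual datasets. The sketch below (hypothetical helper `split_subjects`) stratifies by a subgroup key so that each bias subgroup is divided roughly 50%/25%/25%, as in Table 1; it is an illustration, not the authors' code. For deterministic training runs, TensorFlow 2.10 provides `tf.keras.utils.set_random_seed` and `tf.config.experimental.enable_op_determinism`, although the source does not say which mechanism was used.

```python
import random
from collections import defaultdict


def split_subjects(strata_by_subject, fractions=(0.5, 0.25, 0.25), seed=0):
    """Hypothetical stratified train/validation/test split over subject IDs.

    strata_by_subject: dict mapping subject ID -> stratum key, e.g.
        (disease, morphology_bias, intensity_bias).
    Within each stratum, subjects are shuffled with a fixed seed and divided
    according to `fractions`; rounding remainders go to the test partition.
    """
    rng = random.Random(seed)
    strata = defaultdict(list)
    for subject_id in sorted(strata_by_subject):
        strata[strata_by_subject[subject_id]].append(subject_id)
    train, val, test = [], [], []
    for key in sorted(strata):
        ids = strata[key]
        rng.shuffle(ids)
        n_train = int(fractions[0] * len(ids))
        n_val = int(fractions[1] * len(ids))
        train += ids[:n_train]
        val += ids[n_train:n_train + n_val]
        test += ids[n_train + n_val:]
    return train, val, test


# The same ID split indexes the No Bias, Morphology Bias and
# Morphology + Intensity Bias versions of each subject.
subjects = {i: (i % 2, (i // 2) % 2) for i in range(160)}
train_ids, val_ids, test_ids = split_subjects(subjects)
print(len(train_ids), len(val_ids), len(test_ids))  # 80 40 40
```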

Table 1.

Composition of bias subgroups in train/validation/test splits of the dataset.

| Class | Morphology + Intensity subgroup | Morphology + No Intensity subgroup | No Morphology + Intensity subgroup | No Morphology + No Intensity subgroup |
|---|---|---|---|---|
| Disease class | 418/206/210 | 306/149/151 | 298/151/146 | 56/30/23 |
| Non-disease class | 55/30/26 | 300/149/153 | 303/146/153 | 420/212/212 |

Columns indicate subgroup with given bias effects (e.g., Morphology + Intensity = images with both morphology and intensity bias effects, Morphology + No Intensity = images with morphology bias effect but not intensity bias effect).
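As a consistency check, the counts in Table 1 can be tallied to recover the dataset size, the train/validation/test proportions, and the realised prevalence of each bias effect per class. The realised prevalences (about 67% in the disease class and 33% in the non-disease class) are close to the nominal 70%/30% described in the Data section, and the per-class morphology totals match the Fig. 2 subgroup sizes (e.g., 1440 disease images with the morphology bias). The helper `count` is hypothetical.

```python
# Train/validation/test counts from Table 1, keyed by (class, morphology bias, intensity bias).
TABLE1 = {
    ("disease", True, True): (418, 206, 210),
    ("disease", True, False): (306, 149, 151),
    ("disease", False, True): (298, 151, 146),
    ("disease", False, False): (56, 30, 23),
    ("non-disease", True, True): (55, 30, 26),
    ("non-disease", True, False): (300, 149, 153),
    ("non-disease", False, True): (303, 146, 153),
    ("non-disease", False, False): (420, 212, 212),
}


def count(table, predicate):
    """Total number of images over all partitions for subgroup keys satisfying predicate."""
    return sum(sum(v) for k, v in table.items() if predicate(k))


total = count(TABLE1, lambda k: True)
split = [sum(v[j] for v in TABLE1.values()) / total for j in range(3)]
for cls in ("disease", "non-disease"):
    n_cls = count(TABLE1, lambda k: k[0] == cls)
    morph = count(TABLE1, lambda k: k[0] == cls and k[1]) / n_cls
    inten = count(TABLE1, lambda k: k[0] == cls and k[2]) / n_cls
    print(f"{cls}: n = {n_cls}, morphology {morph:.1%}, intensity {inten:.1%}")
print(total, [round(s, 3) for s in split])
```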

Disease classification accuracy and bias in model performance were evaluated on the test partition for models trained on each of the counterfactual bias scenario datasets. Model performance bias was measured by computing performance disparities between bias subgroups. In other words, differences in performance were calculated between the subsets of data that either contained (“bias subgroup”) or did not contain (“non-bias subgroup”) the specified bias effect(s). More specifically, the differences in true positive rates (ΔTPR) and false positive rates (ΔFPR) were computed between bias and non-bias subgroups in the test dataset, where a positive classification corresponded to the disease class.
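The disparity metrics can be written in a few lines. In this sketch (hypothetical helper names), each test image carries a subgroup tag: "bias", "nonbias", or anything else for images excluded from the comparison (e.g., images with only one of the two bias effects in the multi-bias scenario, following the definition in the note to Table 3). Differences are computed as bias subgroup minus non-bias subgroup.

```python
def tpr_fpr(y_true, y_pred):
    """True and false positive rates, with disease (label 1) as the positive class."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    return tp / (tp + fn), fp / (fp + tn)


def disparities(y_true, y_pred, subgroup):
    """Return (delta_TPR, delta_FPR) = bias subgroup minus non-bias subgroup."""
    def rates(tag):
        idx = [i for i, g in enumerate(subgroup) if g == tag]
        return tpr_fpr([y_true[i] for i in idx], [y_pred[i] for i in idx])

    tpr_b, fpr_b = rates("bias")
    tpr_n, fpr_n = rates("nonbias")
    return tpr_b - tpr_n, fpr_b - fpr_n


y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]
group = ["bias"] * 4 + ["nonbias"] * 4
print(disparities(y_true, y_pred, group))  # (0.5, 0.5)
```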

Statistics

Ten weight initialisation seeds were analysed for each of the three bias scenarios to compute 95% confidence intervals for accuracy and performance disparities. Differences in accuracy between the three models trained on the respective bias scenarios (No Bias, Morphology Bias, Morphology + Intensity Bias) were tested using a one-way repeated measures ANOVA with Tukey's multiple comparison testing, after checking for normality with the Shapiro–Wilk test. Two-tailed paired t-tests with Bonferroni correction were used to test for significant differences in ΔTPR and ΔFPR between the Morphology Bias and No Bias models, and between the Morphology + Intensity Bias and No Bias models, again after checking for normality with the Shapiro–Wilk test. Significance was assessed at α = 0.05. Analysis was performed in GraphPad Prism 10.0.2.
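The analysis was performed in GraphPad Prism; as a rough Python equivalent, a t-based 95% confidence interval over the ten seeds and a paired t statistic can be computed as below. The t-based interval is our assumption (the source does not state how the intervals were computed), and the helpers are hypothetical. With SciPy available, `scipy.stats.t.ppf(0.975, df=9)` gives the critical value hard-coded here, and `scipy.stats.ttest_rel` returns the paired test's p-value, which can be Bonferroni-corrected by multiplying it by the number of comparisons (two here).

```python
import math
import statistics

# Two-sided 95% critical value of Student's t with 9 degrees of freedom (n = 10 seeds).
T_CRIT_95_DF9 = 2.2621571628


def ci95_over_seeds(values):
    """Mean and t-based 95% confidence interval for exactly ten per-seed results."""
    if len(values) != 10:
        raise ValueError("the hard-coded critical value assumes n = 10")
    mean = statistics.mean(values)
    half_width = T_CRIT_95_DF9 * statistics.stdev(values) / math.sqrt(len(values))
    return mean, mean - half_width, mean + half_width


def paired_t(a, b):
    """Paired t statistic for per-seed results a and b (same seeds, same order)."""
    diffs = [x - y for x, y in zip(a, b)]
    return statistics.mean(diffs) / (statistics.stdev(diffs) / math.sqrt(len(diffs)))
```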

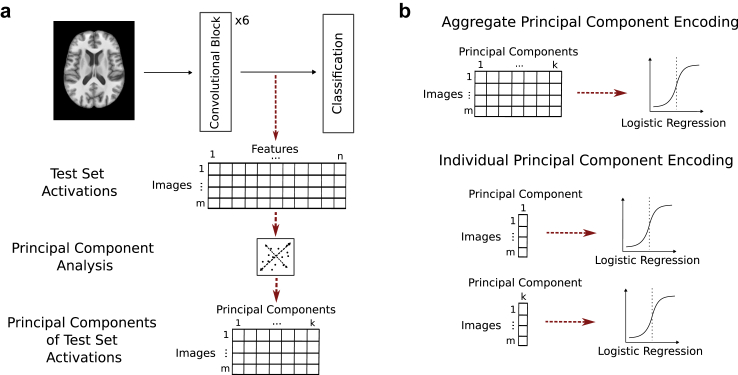

Evaluation of bias encoding

We quantitatively measured the amount of disease or bias encoding in the trained CNNs using the activations (features) of the test partition from a given model layer. After applying principal component analysis (PCA) to reduce the original high-dimensional feature space, we assessed the accuracy of a logistic regression classifier in predicting disease or bias from the activations projected onto the principal components. This process is illustrated in Fig. 3. More precisely, we ran inference on a trained model using a test dataset of size m and extracted feature activations of size n from each layer L of interest. These features were flattened into a one-dimensional vector per image, yielding an m × n matrix for each layer L. For each L, a PCA decomposition retaining enough components to explain 99% of the variance in the feature space was then performed. The resulting projection matrix had shape m × k, where each of the m test images was projected onto each of the k principal components (PCs, ordered by decreasing explained variance) (see Fig. 3a). Disease or bias encoding for each layer was determined by the accuracy of a logistic regression model trained to classify disease or bias, respectively, from the activations in this PC space. These accuracies essentially represent the degree of linear separation of bias or disease within the dimensionality-reduced feature space of the model. Encoding was measured both at an aggregate level (i.e., using all PCs from a given layer together) and at an individual PC level (i.e., using each PC from a given layer separately) (Fig. 3b). We present the individual encoding results from the last four convolutional blocks for a single weight initialisation seed in the main manuscript, with full results for all blocks and additional model replicates in the Supplementary Material (Figs. S1–S4, respectively).
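The encoding measure can be sketched with NumPy as below. This is an illustration under stated assumptions, not the authors' implementation: PCA is computed by a singular value decomposition of the centred m × n matrix, the logistic regression is fitted by plain gradient descent on standardised PC scores (a library solver such as scikit-learn's `LogisticRegression` would be the usual choice), and accuracy is measured in-sample as an index of linear separability, since the text does not state whether held-out or cross-validated accuracy was used. All function names are hypothetical.

```python
import numpy as np


def pca_scores(activations, var_threshold=0.99):
    """Project flattened activations (m images) onto the leading PCs.

    Keeps the smallest number k of PCs whose cumulative explained variance
    reaches var_threshold; columns are ordered by decreasing variance.
    """
    X = activations.reshape(activations.shape[0], -1).astype(np.float64)  # m x n
    X = X - X.mean(axis=0)
    U, S, _ = np.linalg.svd(X, full_matrices=False)
    explained = S**2 / np.sum(S**2)
    k = int(np.searchsorted(np.cumsum(explained), var_threshold)) + 1
    return U[:, :k] * S[:k]  # m x k matrix of PC scores


def linear_accuracy(Z, y, lr=0.1, steps=2000):
    """In-sample accuracy of a logistic regression fitted by gradient descent."""
    Z = (Z - Z.mean(axis=0)) / (Z.std(axis=0) + 1e-12)  # standardise each PC
    A = np.hstack([Z, np.ones((Z.shape[0], 1))])  # append intercept column
    w = np.zeros(A.shape[1])
    for _ in range(steps):
        p = 1.0 / (1.0 + np.exp(-np.clip(A @ w, -30, 30)))  # predicted P(y = 1)
        w -= lr * A.T @ (p - y) / len(y)  # gradient step on the mean log-loss
    return float(np.mean((A @ w > 0) == (y == 1)))


def encoding(activations, y, var_threshold=0.99):
    """Aggregate (all PCs) and individual (one PC at a time) encoding accuracies."""
    Z = pca_scores(activations, var_threshold)
    aggregate = linear_accuracy(Z, y)
    individual = [linear_accuracy(Z[:, [j]], y) for j in range(Z.shape[1])]
    return aggregate, individual
```

For early blocks, n can run to millions of values per image, so a memory-efficient or randomised PCA would likely be needed in practice; the direct SVD is used here for clarity.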

Fig. 3.

a: Process of extracting features and performing dimensionality reduction for individual layers of a trained model. b: Difference between quantitative measures of “aggregate” versus “individual” encodings from principal components.

Role of funders

The funding sources played no role in the design, analysis, interpretation, or reporting of the study, nor in the decision of where to submit the manuscript for publication.

Ethics

This research is exempt from ethical approval as the analysis is based on synthetically generated T1-weighted brain MRI datasets.

Results

Model performance and disparities

The simulated bias scenarios indeed led to biased models in which shortcut learning can be inferred to have occurred, as evidenced by the following two observations about model performance.

First, the overall classification accuracies were 84.9% (95% CI: 84.7%–85.2%), 86.1% (85.8%–86.3%), and 88.3% (87.6%–88.9%) for the models trained on the No Bias dataset, the Morphology Bias dataset, and the Morphology + Intensity Bias dataset, respectively (Table 2). Both the Morphology Bias model (p = 0.0003, one-way repeated measures ANOVA with Tukey's multiple comparison testing) and the Morphology + Intensity Bias model (p < 0.0001) had significantly higher accuracies than the No Bias model, and the Morphology + Intensity Bias model (p = 0.0002) also had a significantly higher accuracy than the Morphology Bias model. These improved overall accuracies when training with biased data, compared to unbiased data, clearly demonstrate that the model leveraged the bias effects to increase accuracy beyond what would be achievable using only the disease effects for the binary classification task. In other words, if the model relied solely on disease effects for classification, its performance would be expected to be no higher than that achieved using the No Bias dataset. Therefore, the significantly higher accuracies achieved with the Morphology Bias and Morphology + Intensity Bias datasets must result from the model exploiting the bias shortcut, since the setups were otherwise identical.

Table 2.

Disease classification accuracy of the model trained on each counterfactual dataset.

| Bias Scenario Dataset | No Bias | Morphology Bias | Morphology and Intensity Bias |
|---|---|---|---|
| Disease classification accuracy (%) | 84.9 (84.7–85.2) | 86.1 (85.8–86.3) | 88.3 (87.6–88.9) |

Reported as mean (95% CI).

Second, there were significant differences (Δ) in TPR and FPR for the models trained on the biased data (Table 3, Supplementary Material Table S1). More precisely, the ΔTPR and ΔFPR between the subgroups with and without the morphology bias effect were 16.7% (14.0%–19.7%, p < 0.0001, two-tailed paired t-test) and 19.0% (17.6%–20.5%, p < 0.0001), respectively. In the multi-bias scenario, the ΔTPR was 40.4% (33.0%–47.9%, p < 0.0001) and the ΔFPR was 37.7% (34.0%–41.4%, p < 0.0001) between the subgroups with both and with neither of the morphology and intensity bias effects. In other words, in each bias scenario the biased model predicted the class associated with the majority bias subgroup at a higher rate.

Table 3.

Difference in true positive rate (ΔTPR) and false positive rate (ΔFPR) for the Morphology Bias dataset and Morphology and Intensity Bias dataset.

| Bias Scenario Dataset | Morphology Bias | Morphology and Intensity Bias |
|---|---|---|
| ΔTPR (%) | 16.7 (14.0–19.7, p < 0.0001) | 40.4 (33.0–47.9, p < 0.0001) |
| ΔFPR (%) | 19.0 (17.6–20.5, p < 0.0001) | 37.7 (34.0–41.4, p < 0.0001) |

The subgroups used in the calculations for the Morphology Bias Dataset are those with the morphology bias effects minus those without morphology bias effects. The subgroups used in the calculations for the Morphology and Intensity Bias Dataset are those with both morphology and intensity bias effects minus those without morphology or intensity bias effects. Reported as mean (95% CI). p-Values indicate statistically significant results compared to the No Bias scenario.

Q1: Where is bias encoded?

The morphology-based bias effect could be identified with 100% accuracy from the aggregate collection of PCs of each convolutional block of the CNN (Supplementary Material Table S2), indicating that the bias was strongly encoded throughout the model. Individual PC encoding values are presented in Fig. 4. Inspection of the individual PC encodings for the Morphology Bias scenario (Fig. 4a) showed that a single PC dominated the degree of bias encoding in each block (>90% accuracy), although it did not reach the 100% accuracy seen in the aggregate encoding analysis. The remainder of the information required to classify bias with 100% accuracy was likely contained within the PC that we observed to be disease-dominant (i.e., the PC yielding the highest accuracy for disease classification), from which bias could be classified with ∼70% accuracy in each layer. Thus, bias was encoded jointly in its “own” PC and in the orthogonal PC primarily used to predict the disease class. Although the baseline No Bias dataset (Fig. 4b) only included disease effects, a bias encoding level of ∼62% was observed within each disease-dominant PC. These values represent the “chance level” bias encoding contained within the disease PCs, since bias subgroup membership was correlated with disease class (i.e., 70% of the disease class belonged to the bias subgroup, so accurate classification of disease status also resulted in a >50% chance of correctly classifying bias subgroup membership).
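The ∼62% chance level can be roughly reproduced with a back-of-envelope calculation. Assume (our simplification, not stated in the source) that a bias classifier operating on the disease-dominant PC of the No Bias model effectively reuses the disease prediction, and that disease-prediction errors are independent of bias subgroup. Let p be the disease accuracy and π the fraction of test images whose (would-be) morphology bias status agrees with their class, i.e., bias + disease or no bias + non-disease, counted from the test column of Table 1:

```latex
\begin{aligned}
\mathrm{Acc}_{\mathrm{bias}} &\approx \pi\,p + (1-\pi)(1-p), \qquad
\pi = \frac{(210 + 151) + (153 + 212)}{1074} = \frac{726}{1074} \approx 0.676, \\
\mathrm{Acc}_{\mathrm{bias}} &\approx 0.676 \times 0.849 + 0.324 \times 0.151 \approx 0.574 + 0.049 \approx 0.62,
\end{aligned}
```

where p ≈ 0.849 is the overall No Bias model accuracy (Table 2), used here only as a stand-in for the accuracy of the disease-dominant PC.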

Fig. 4.

a: Individual principal component (PC) encoding of disease and bias in the model trained on the Morphology Bias dataset. b: Individual PC encoding of disease and bias in the model trained on the No Bias dataset. Earlier layers result in more PCs due to a higher-dimensional feature space. However, we visualise only enough PCs to show where a substantial level of disease or bias encoding occurs. PCs are ordered in descending amount of variability contained in the original activation space.

Q2: Why does bias lead to shortcut learning?

Given the individual PC encodings (Fig. 4a), it can be seen that the bias-dominant PCs contained near chance-level disease information (∼50%). Therefore, it may be argued that the bias-dominant PCs did not contribute directly to shortcut learning, since they would not provide any useful information that could influence disease classification. In other words, just because biases were encoded, this did not necessarily mean that these biases contributed to shortcut learning for the main task.

The reason behind the shortcut learning (and, consequently, the performance disparities) in the Morphology Bias scenario became clearer when comparing this model's encoding patterns to those of the baseline No Bias scenario (Fig. 4b). In this scenario without bias, the disease-dominant PC from each layer encoded bias membership at a chance level of ∼62%, i.e., it identified the synthetic subjects that would have contained the bias effect in the Morphology Bias scenario with ∼62% accuracy. In the model trained using the Morphology Bias dataset, where these bias effects were present, the disease-dominant PCs encoded bias with classification accuracies 6.5, 8.5, 9.9, and 8.4 percentage points above the chance level for each respective block (Fig. 4a). In blocks 3, 5, and 6, the level of disease encoding was also higher than would be possible if the model only had access to the disease effect information, as in the No Bias scenario. These results indicate that the model learned representations of the disease that were associated with bias features, as these shared representations could be used as a shortcut for achieving higher accuracy.

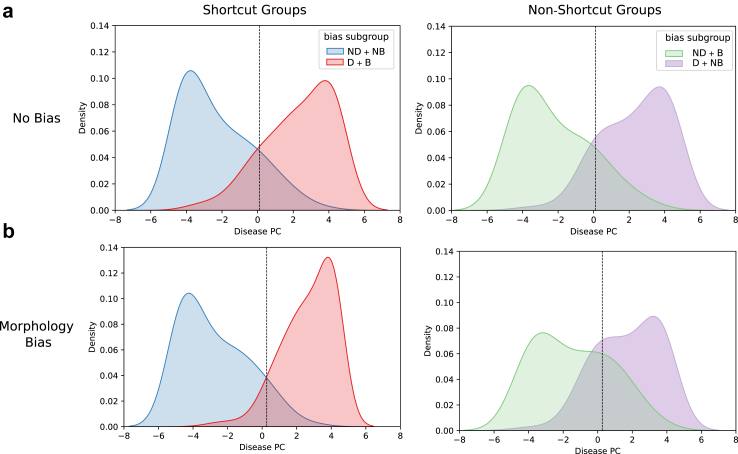

The model's association between disease classification and bias subgroups is further visualised in Fig. 5, which shows the distributions of activations from the penultimate layer of the CNN (block 6) for the test partition images along the disease-dominant PC. The marginal distributions of each bias subgroup with its corresponding ground truth disease class label are displayed. Here, the “shortcut groups” are the subgroups that contain (or lack) the bias effect in line with the majority of data within the disease (or non-disease) class. These are the subgroups for which the shortcut for predicting disease classes holds (i.e., in Fig. 2, group i: bias effect + disease class, and group iv: no bias effect + non-disease class). Conversely, the “non-shortcut groups” are the subgroups in which the presence (or absence) of the bias effect does not match the majority within the respective disease or non-disease class, and which a model reliant on shortcuts would misclassify at a higher rate (i.e., in Fig. 2, group iii: no bias effect + disease class, and group ii: bias effect + non-disease class). These plots also contain a vertical line corresponding to a linear decision boundary separating the disease and non-disease classes.

Fig. 5.

Distribution of test partition activations from the penultimate layer (block 6) of the model along the disease-dominant PC (PC #1) in a: the No Bias scenario (top row) and b: the Morphology Bias scenario (bottom row). The vertical dashed line represents the decision boundary of a logistic regression model classifying disease with a threshold of 0.5. Abbreviations: D: disease class, ND: non-disease class, B: bias subgroup, NB: non-bias subgroup.

In the No Bias scenario (Fig. 5a), the distributions of the shortcut and non-shortcut groups (left and right columns, respectively) appear relatively similar. In contrast, in the Morphology Bias scenario (Fig. 5b), the shapes of the shortcut group distributions are quite different from those of the non-shortcut groups. More specifically, when the morphology bias effect was present, the shortcut groups exhibited a higher density towards the extremes of the distribution, while the non-shortcut groups had a higher density towards the middle of the distribution, compared to their No Bias counterparts shown in Fig. 5a. Relative to the linear decision boundary separating the disease and non-disease classes, the shortcut and non-shortcut groups in the No Bias scenario (Fig. 5a) have regions of similar size that fall on the wrong side of the decision boundary (representing misclassifications). In the Morphology Bias scenario (Fig. 5b), these regions are much larger for the non-shortcut groups than for the shortcut groups. This finding illustrates how the spurious correlations between the bias subgroups and disease labels led the model to learn feature representations that achieved high accuracy on the shortcut groups, which comprised the majority of the dataset, at the expense of misclassifying a larger proportion of the minority non-shortcut groups.
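The "wrong side of the decision boundary" regions can be quantified as a per-subgroup misclassification rate along a single PC. The sketch below is an illustration under stated assumptions, not the analysis code of this study: it fits a one-dimensional scikit-learn `LogisticRegression` (whose `predict` applies a 0.5 probability threshold for binary labels) and, for simplicity, evaluates it on the same scores it was fitted on. The function name `groupwise_error` and the synthetic demo data are hypothetical.

```python
import numpy as np
from sklearn.linear_model import LogisticRegression


def groupwise_error(pc_scores, disease, bias):
    """Fit a 1D logistic regression on PC scores and return the
    misclassification rate for each (bias, disease) subgroup."""
    x = np.asarray(pc_scores, dtype=float).reshape(-1, 1)
    y = np.asarray(disease, dtype=int)
    b = np.asarray(bias, dtype=int)
    clf = LogisticRegression().fit(x, y)
    wrong = clf.predict(x) != y  # binary predict() thresholds probability at 0.5
    return {(bi, di): float(wrong[(b == bi) & (y == di)].mean())
            for bi in (0, 1) for di in (0, 1)
            if np.any((b == bi) & (y == di))}


if __name__ == "__main__":
    # Synthetic demo: the bias effect co-occurs with disease 70% of the time
    # and shifts the PC score, mimicking a spurious correlation.
    rng = np.random.default_rng(0)
    n = 1000
    disease = rng.integers(0, 2, n)
    bias = np.where(disease == 1, rng.random(n) < 0.7, rng.random(n) < 0.3).astype(int)
    scores = 1.5 * (disease - 0.5) + 1.0 * (bias - 0.5) + rng.normal(0, 1, n)
    print(groupwise_error(scores, disease, bias))
```

In this toy setup, the keys `(1, 1)` and `(0, 0)` correspond to the shortcut groups and would be expected to show lower error than `(0, 1)` and `(1, 0)`.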

Q3: How are different sources of bias encoded?

Fig. 6 shows the disease and bias encoding within individual PCs for the model trained on the dataset with both morphology- and intensity-based bias effects. Two main differences can be observed compared to the morphology bias-only counterfactual scenario. First, the distribution of bias information across PCs varied as data moved through the network. For example, the morphology bias-dominant PC (#10) in block 3 did not contain any information useful for classifying intensity bias, while the intensity bias-dominant PC (#6) allowed for 71% accurate classification of the morphology bias. In block 4, PCs #3–5 encoded either bias effect to a similar degree, while in the final two blocks of the model, single PCs dominated by a specific bias effect emerged, similar to what was seen in the morphology bias-only scenario (i.e., PC #2 for intensity bias, PC #3 for morphology bias). Second, the shortcut strength of the bias effect (measured as the amount of bias encoding in the disease-dominant PC compared to the chance-level encoding of the No Bias scenario) differed between the intensity- and morphology-based bias effects. In each layer analysed, the shortcut strength of the intensity bias was considerably stronger than that of the morphology bias. While the morphology bias still yielded higher-than-chance accuracy (relative to a chance level of 62%; see Supplementary Material Fig. S1), the intensity bias in the last three blocks appeared to have the strongest influence on the feature representation used for predicting the target disease class. This may have been because the model could easily identify the intensity effect as a shortcut, without having to distinguish between the morphology that defines the disease effect and that which defines the bias effect.
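Given a table of per-PC probe accuracies for each label (as plotted in Fig. 6), identifying the dominant PC for each label and the shortcut strength of a bias is straightforward. The sketch below assumes such a table already exists; the function names, the 1-based PC numbering, and the encoding of the four combined subgroups as integers 0–3 are illustrative choices, not taken from the authors' code.

```python
import numpy as np


def dominant_pcs(probe_acc: dict[str, np.ndarray]) -> dict[str, int]:
    """1-based index of the PC with the highest probe accuracy, per label."""
    return {label: int(np.argmax(acc)) + 1 for label, acc in probe_acc.items()}


def shortcut_strength(probe_acc, bias_label, chance_level, disease_label="disease"):
    """Bias accuracy on the disease-dominant PC minus the No Bias chance level."""
    pc = dominant_pcs(probe_acc)[disease_label] - 1
    return float(probe_acc[bias_label][pc] - chance_level)


def combined_subgroup(morph_bias, intensity_bias):
    """Encode presence/absence of both bias effects as four classes (0-3)."""
    return 2 * np.asarray(morph_bias, dtype=int) + np.asarray(intensity_bias, dtype=int)


# Illustrative accuracies for three PCs (not values from this study).
acc = {
    "disease": np.array([0.95, 0.60, 0.55]),
    "intensity": np.array([0.75, 0.90, 0.50]),
    "morphology": np.array([0.66, 0.50, 0.92]),
}
print(dominant_pcs(acc))  # {'disease': 1, 'intensity': 2, 'morphology': 3}
```

With these illustrative values, the intensity bias would show a larger shortcut strength than the morphology bias, because its accuracy on the disease-dominant PC (0.75) exceeds the chance level by more than the morphology accuracy (0.66) does.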

Fig. 6.

Individual principal component (PC) encoding of disease, morphological bias, intensity bias, and bias subgroup (i.e., the four distinct subgroups defined by the presence or absence of both morphology- and intensity-based bias effects) for the Morphology + Intensity Bias scenario.

Discussion

In this study, we systematically investigated how bias manifested and propagated through a classification CNN, using counterfactual synthetic brain MRI datasets representing three distinct scenarios (No Bias, Morphology Bias, Morphology + Intensity Bias). We measured bias encoding as the ability of a linear model to predict bias from the PCs of the model's feature space, and found that: 1) bias was encoded throughout the model, strongly in a bias-dominant PC and subtly in a disease-dominant PC; 2) the encoding of bias in the disease-dominant PC reflected the influence that the bias effects had on shortcut learning; and 3) different sources of bias were encoded to different extents throughout the model and influenced shortcut learning to different degrees. Using these controlled counterfactual bias scenarios, we provided empirical evidence that deep learning models exploit spurious correlations to improve accuracy, as expected when they are trained using standard empirical risk minimisation.20
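The measurement described above (PCA of a layer's activations, then a linear model predicting a label from each PC) can be sketched with scikit-learn as follows. This is a minimal illustration, not the authors' pipeline: the function name `per_pc_probe_accuracy`, the choice of logistic regression as the linear model, 5-fold cross-validation, flattening of activations, and fitting PCA on all samples before cross-validation are all assumptions made here for brevity.

```python
import numpy as np
from sklearn.decomposition import PCA
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score


def per_pc_probe_accuracy(activations, labels, n_components=10, cv=5, seed=0):
    """Project flattened layer activations onto their leading PCs and return,
    for each PC separately, the cross-validated accuracy of a linear probe."""
    feats = np.asarray(activations, dtype=float)
    feats = feats.reshape(feats.shape[0], -1)  # (n_samples, n_features)
    scores = PCA(n_components=n_components, random_state=seed).fit_transform(feats)
    probe = LogisticRegression(max_iter=1000)
    return np.array([
        cross_val_score(probe, scores[:, [k]], labels, cv=cv, scoring="accuracy").mean()
        for k in range(n_components)
    ])


if __name__ == "__main__":
    # Toy activations: one channel carries a strong label-dependent shift.
    rng = np.random.default_rng(0)
    labels = rng.integers(0, 2, 200)
    acts = rng.normal(size=(200, 2, 2, 2))
    acts[:, 0, 0, 0] += 6 * labels
    print(per_pc_probe_accuracy(acts, labels, n_components=5))
```

Running the same function once with disease labels and once with bias labels yields the kind of per-PC disease and bias encoding profiles discussed in this article; fitting PCA inside each fold would avoid the mild leakage in this simplified version.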

This work combined extended versions of the SimBA framework for synthetic data generation and the bias analyses proposed by Glocker et al.14 to investigate bias across various layers in a CNN model. Similar to this related prior study, which used real chest x-ray data,14 we also found that disease labels could be discriminated within the first principal component of the feature space of the last convolutional block, and that shifts in the marginal distribution of bias subgroups in this space can be an indicator of performance disparities. Importantly, the studies by both Glocker et al.14 and Brown et al.3 showed that a strong level of bias encoding in the feature space does not necessarily indicate that this bias is contributing to harmful shortcut learning. In this work, we not only corroborate these conclusions but also provide new empirical justification for them: shortcut learning resulted from the way that bias attributes influenced the representation of disease features, whereas strong bias encoding occurred elsewhere in the feature space but did not contribute any useful information for classifying disease.
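One way to quantify a shift in the marginal distribution of two subgroups along a single PC is a two-sample Kolmogorov-Smirnov test, available as `scipy.stats.ks_2samp`. The sketch below uses it purely for illustration; it is not a claim about the exact statistic used in this study or in the cited works, and the function name `subgroup_shift` is hypothetical.

```python
import numpy as np
from scipy.stats import ks_2samp


def subgroup_shift(pc_scores, subgroup, group_a, group_b):
    """Two-sample KS statistic and p-value comparing the distributions of
    PC scores for two subgroups (larger statistic = larger shift)."""
    s = np.asarray(pc_scores, dtype=float)
    g = np.asarray(subgroup)
    res = ks_2samp(s[g == group_a], s[g == group_b])
    return float(res.statistic), float(res.pvalue)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    scores = np.concatenate([rng.normal(0.0, 1.0, 300), rng.normal(0.5, 1.0, 300)])
    groups = np.array(["NB"] * 300 + ["B"] * 300)
    print(subgroup_shift(scores, groups, "NB", "B"))
```

The KS statistic is the maximum distance between the two empirical cumulative distributions, so it is sensitive to the changes in distribution shape seen in Fig. 5b as well as to simple shifts in the mean.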

While our experiments were (purposely) highly controlled and some individual results are likely specific to the model architecture, we believe that some of the general patterns of bias encoding observed in this work, e.g., certain bias attributes dominating subspaces of the model's learned features and bias attributes influencing learned disease features, are also likely to occur in real datasets, albeit in a more complex manner. For example, in a prior real-world neuroimaging analysis of bias in a multi-site Parkinson's disease CNN classifier, Souza et al.11 found that the MRI scanner type, which is likely to introduce a stronger intensity-based bias than a morphological bias, was a significant partial mediator between true and predicted disease status. Conversely, sex (which is more likely to contribute morphology-based bias) was not a significant mediator. Thus, their model may have learned an intensity-dominant shortcut, similar to what we observed in our multi-bias scenario. However, as the intensity bias that we modelled in this work was a static contrast change affecting the entire image, future work should aim to model intensity-based biases that manifest non-linearly across the image space or are present to varying degrees throughout a dataset, which may be more representative of real-world image acquisition effects (e.g., “bias field” artifacts causing intensity non-uniformities in MRI acquisition).
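To make the contrast between the two kinds of intensity effect concrete, the NumPy sketch below compares a static, image-wide contrast change with a smooth, spatially varying multiplicative field. Both functions, their parameters (`gain`, `offset`, `strength`), and the ramp-plus-Gaussian form of the field are hypothetical toy choices; this is not how SimBA generates its intensity bias, and it is only a crude stand-in for a real MRI bias field.

```python
import numpy as np


def global_contrast_shift(img, gain=1.1, offset=0.0):
    """Static intensity change applied identically to every voxel."""
    return gain * np.asarray(img, dtype=float) + offset


def smooth_bias_field(shape, strength=0.2, seed=None):
    """Toy low-frequency multiplicative field (random linear ramp plus a
    Gaussian bump), with values in [1 - strength/2, 1 + strength/2)."""
    rng = np.random.default_rng(seed)
    grids = np.meshgrid(*[np.linspace(-1, 1, n) for n in shape], indexing="ij")
    ramp = sum(rng.uniform(-1, 1) * g for g in grids)
    centre = rng.uniform(-0.5, 0.5, size=len(shape))
    bump = np.exp(-sum((g - c) ** 2 for g, c in zip(grids, centre)) / 0.5)
    field = ramp + bump
    field = (field - field.min()) / (field.max() - field.min() + 1e-12)  # to [0, 1)
    return 1.0 + strength * (field - 0.5)  # multiplicative, centred on 1


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    img = rng.random((32, 32, 32))
    static = global_contrast_shift(img, gain=1.1)
    # Sampling `strength` per subject gives a bias present to varying degrees.
    nonuniform = img * smooth_bias_field(img.shape, strength=rng.uniform(0.1, 0.3), seed=1)
    print(static.mean(), nonuniform.mean())
```

The first transformation can be undone by a single global normalisation, whereas the second changes relative intensities between regions, which is one reason it may be a harder and more realistic bias to study.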

In the specific datasets used in this work, we modelled disease effects and morphology-based bias effects as impacting separate brain regions. However, in some real-world examples of anatomical biases from medical images, these effects could overlap or impact the same regions. For instance, regions relevant to lung or heart-related disease detection in chest x-ray images could overlap with demographic-associated features,21 and pigmentation of lesions across different skin tones could impact models trained for dermatological tasks.22 In brain imaging specifically, saliency map-derived importance scores for certain brain regions have been shown to vary between performance-disparate subgroups,23 possibly indicating that these regions were both relevant to the classification task and a source of bias. Various previous works have studied morphological differences between demographic subgroups in brain imaging (e.g.,24,25), which can help facilitate future work that aims to model bias effects that are relevant to real-world populations. However, relatively few studies,21,23,26,27 most of which analysed chest x-ray images, have been conducted so far to identify specific features in medical images which may be used as shortcuts by deep learning models and how those are related to disease-relevant features. Thus, while the flexibility of SimBA can enable researchers to study both spatially isolated and overlapping effects in future studies, complementary work also needs to be done on real-world data to identify how disease and bias effects manifest in various conditions, which can enable more faithful modelling of these conditions in the context of the analysis proposed in this study.

We observed that a relatively small number of PCs explained most of the variation represented in the feature space encodings of the SimBA datasets. This is expected to a certain degree, since the simulated data contained only three main “effects” (subject morphology, disease effects, and bias effects) without any additional structured or unstructured noise. More complex real-world datasets would likely require many more PCs, with biases encoded to different extents. Therefore, such an analysis of real-world data would also inevitably be much more complex. Identifying how individual sources of bias are encoded in such real-world data would also not be as easily interpretable as in our setup. While we have access to each counterfactual dataset, which allows us to precisely compare how the addition of biases modifies the encoding, it may not even be possible to identify a true, single source of bias in real data, let alone explore how it is encoded in individual components and the extent to which it influences disease classification. Thus, although not necessarily a direct representation of what one would expect to obtain from a layer-wise analysis of bias encoding in real data, our setup enables us to confidently trace known sources of bias, helping us to develop a stronger understanding of how bias manifests in the simplest scenarios, which can systematically be made more complex.
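The number of PCs needed to explain most of the variance can be read off the cumulative explained-variance ratio. The NumPy sketch below computes it from the singular values of the centred feature matrix; the function name and the 95% threshold are illustrative assumptions. The toy example mirrors the point made above: features generated from only three latent factors with no added noise are fully explained by at most three components.

```python
import numpy as np


def n_components_for_variance(features, threshold=0.95):
    """Smallest number of PCs whose cumulative explained variance ratio
    reaches `threshold`, computed from the SVD of the centred features."""
    X = np.asarray(features, dtype=float)
    X = X.reshape(X.shape[0], -1)
    X = X - X.mean(axis=0)
    s = np.linalg.svd(X, compute_uv=False)
    ratio = s ** 2 / np.sum(s ** 2)
    k = int(np.searchsorted(np.cumsum(ratio), threshold)) + 1
    return min(k, len(ratio))  # guard against floating-point round-off at 1.0


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Three latent "effects" mixed into 50 features, with no additional noise.
    X = rng.normal(size=(100, 3)) @ rng.normal(size=(3, 50))
    print(n_components_for_variance(X, 0.95))
```

Adding unstructured noise to `X` in this toy example increases the number of components required, which is the behaviour one would expect for real-world data.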

Additionally, the way bias is encoded likely manifests differently depending on the architecture of the model. Since this study is the first analysis demonstrating how synthetic data with simulated biases can be used to study layer-wise bias encoding, we chose to use a rather basic CNN that excluded components which could make the interpretation of results more complex (e.g., skip connections, attention blocks). However, future studies using the proposed SimBA-based framework should aim to investigate more complex architectures to facilitate understanding of how biases manifest differently under different conditions, especially in state-of-the-art deep learning models. Furthermore, dimensionality reduction methods other than PCA could be used. Notably, supervised decomposition methods like linear discriminant analysis or supervised PCA, as well as stochastic algorithms like t-distributed stochastic neighbour embedding (t-SNE) or uniform manifold approximation and projection (UMAP), could give different interpretations of how the feature space within biased models is organised, which would be an interesting direction of investigation for future work.
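As an example of how a supervised decomposition can organise the same features differently from PCA, the sketch below compares the first PCA axis with the single linear discriminant axis from scikit-learn's `LinearDiscriminantAnalysis`. The toy data, the hypothetical `first_axis_scores` and `separation` helpers, and the use of a standardised mean difference as the separation measure are all illustrative assumptions; t-SNE and UMAP are not shown.

```python
import numpy as np
from sklearn.decomposition import PCA
from sklearn.discriminant_analysis import LinearDiscriminantAnalysis


def first_axis_scores(features, labels):
    """Scores on the first PCA axis (unsupervised) and the first LDA axis (supervised)."""
    X = np.asarray(features, dtype=float)
    X = X.reshape(X.shape[0], -1)
    pca_axis = PCA(n_components=1).fit_transform(X)[:, 0]
    lda_axis = LinearDiscriminantAnalysis(n_components=1).fit_transform(X, labels)[:, 0]
    return pca_axis, lda_axis


def separation(scores, labels):
    """Absolute standardised mean difference between the two classes."""
    s, y = np.asarray(scores), np.asarray(labels)
    a, b = s[y == 0], s[y == 1]
    pooled = np.sqrt((a.var(ddof=1) + b.var(ddof=1)) / 2)
    return abs(a.mean() - b.mean()) / pooled


if __name__ == "__main__":
    # A high-variance nuisance dimension plus a low-variance, label-related one.
    rng = np.random.default_rng(0)
    y = rng.integers(0, 2, 200)
    X = np.column_stack([10 * rng.normal(size=200), 2 * y + 0.5 * rng.normal(size=200)])
    pca_s, lda_s = first_axis_scores(X, y)
    print(separation(pca_s, y), separation(lda_s, y))
```

Here PCA's first axis follows the high-variance nuisance dimension, whereas LDA's axis follows the label, so conclusions about which component "carries" a label can depend on the decomposition chosen.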

Importantly, the type of shortcut learning that we investigate in this work is not the only reason for bias observed in deep learning models trained on medical images. In general, AI-based medical image analysis models are considered to be biased (or “unfair”) when they fail to perform equally across various subsets of the population (often relating to sensitive sociodemographic attributes). As shown in this work, this can occur even when the test (or deployment) population faithfully represents the training population, but a shortcut is learned that does not generalise to all subgroups. It is also a problem when dataset shift occurs,28 in which the distribution of data in the test set differs from that of the training set, such that learned feature representations generalise inadequately. A key future direction for the proposed analysis framework is modelling specific data “mismatch” scenarios, such as shifts in class prevalence or label annotation policies, to better understand whether internal representations of bias differ when bias arises from different causal mechanisms.
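A class-prevalence shift of the kind mentioned above can be simulated by resampling an evaluation set to a chosen fraction of disease cases. The NumPy sketch below is a minimal, hypothetical helper (`resample_prevalence` is not part of SimBA) that samples without replacement and raises an error when the requested prevalence cannot be met.

```python
import numpy as np


def resample_prevalence(labels, prevalence, n, seed=None):
    """Indices of a subsample of size `n` whose fraction of positive
    (disease) labels equals `prevalence`, sampled without replacement."""
    rng = np.random.default_rng(seed)
    y = np.asarray(labels)
    pos, neg = np.flatnonzero(y == 1), np.flatnonzero(y == 0)
    n_pos = int(round(prevalence * n))
    n_neg = n - n_pos
    if n_pos > len(pos) or n_neg > len(neg):
        raise ValueError("not enough samples for the requested prevalence")
    idx = np.concatenate([rng.choice(pos, n_pos, replace=False),
                          rng.choice(neg, n_neg, replace=False)])
    rng.shuffle(idx)
    return idx


if __name__ == "__main__":
    labels = np.array([1] * 50 + [0] * 50)  # balanced "training-like" pool
    test_idx = resample_prevalence(labels, prevalence=0.2, n=40, seed=0)
    print(labels[test_idx].mean())  # fraction of disease cases in the shifted set
```

Comparing bias encoding in models evaluated on such shifted sets against the matched-distribution case would be one way to probe the question raised above.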

Finally, beyond improving our understanding of how various bias scenarios are encoded in a model's learned features, analysis of bias encoding using SimBA can also facilitate a deeper understanding of how effectively bias mitigation strategies target the sources of shortcut learning. For instance, future work could investigate questions such as: to what degree do bias mitigation strategies disentangle the relationship between bias and disease features within disease-dominant PCs? Do bias “unlearning” strategies primarily remove information from bias-dominant PCs, which appear to contribute little, if anything, to shortcut learning? While out of scope for this initial study, future experiments using the proposed framework can provide deeper insight into how bias mitigation strategies act on the internal representations of deep learning medical image analysis models, and the extent to which representations of bias vary between model architectures.

Conclusion

In summary, we utilised counterfactual bias scenarios with synthetic brain MRI data to systematically analyse how different biases were encoded in a convolutional neural network used for classification of medical images. Through this and future work building upon this analytical framework, the research community can build foundational knowledge of how bias propagates through deep learning models and manifests as shortcut learning, which could help facilitate the development of models or bias mitigation strategies that are inherently robust to this phenomenon.

Contributors

E.A.M.S. was responsible for conceptualisation, design of experiments, development and implementation of code, analysis and interpretation of results, verification of data, and writing of the manuscript. R.S. contributed to conceptualisation, design of experiments, verification of data, analysis and interpretation, and review/editing of the manuscript. M.W. and N.D.F. contributed to conceptualisation, review/editing of the manuscript, and provided senior supervision. All authors read and reviewed the final version of the manuscript.

Data sharing statement

Datasets and code are available for download from https://github.com/estanley16/SimBA and https://github.com/estanley16/bias_encoding.

Declaration of interests

The authors have no competing interests to declare.

Acknowledgements

ES was supported by Alberta Innovates, Natural Sciences and Engineering Research Council of Canada (NSERC) and Killam Trusts. RS was supported by Parkinson Association of Alberta. NDF was supported by Calgary Foundation, Canada Research Chairs Program and NSERC. We would like to thank the reviewers for their constructive and thoughtful comments that greatly improved the paper.

Footnotes

Supplementary data related to this article can be found at https://doi.org/10.1016/j.ebiom.2024.105501.

Appendix A. Supplementary data

References

- 1. Sagawa S., Koh P.W., Hashimoto T.B., Liang P. Distributionally robust neural networks for group shifts: on the importance of regularization for worst-case generalization. Available from: http://arxiv.org/abs/1911.08731

- 2. Liu Z., Luo P., Wang X., Tang X. IEEE International Conference on Computer Vision (ICCV). IEEE; Santiago, Chile: 2015. Deep learning face attributes in the wild; pp. 3730–3738. Available from: https://ieeexplore.ieee.org/document/7410782

- 3. Brown A., Tomasev N., Freyberg J., Liu Y., Karthikesalingam A., Schrouff J. Detecting shortcut learning for fair medical AI using shortcut testing. Nat Commun. 2023;14(1):4314. doi: 10.1038/s41467-023-39902-7.

- 4. Banerjee I., Bhattacharjee K., Burns J.L., et al. “Shortcuts” causing bias in radiology artificial intelligence: causes, evaluation, and mitigation. J Am Coll Radiol. 2023;20(9):842–851. doi: 10.1016/j.jacr.2023.06.025.

- 5. Taghanaki S.A., Khani A., Khani F., et al. Masktune: mitigating spurious correlations by forcing to explore. Available from: http://arxiv.org/abs/2210.00055

- 6. Yenamandra S., Ramesh P., Prabhu V., Hoffman J. 2023 IEEE/CVF International Conference on Computer Vision (ICCV). IEEE; Paris, France: 2023. Facts: first amplify correlations and then slice to discover bias; pp. 4771–4781. Available from: https://ieeexplore.ieee.org/document/10377984/

- 7. Lee T., Puyol-Antón E., Ruijsink B., Shi M., King A.P. In: Statistical Atlases and Computational Models of the Heart Regular and CMRxMotion Challenge Papers. Camara O., Puyol-Antón E., Qin C., et al., editors. Springer Nature Switzerland; Cham: 2022. A systematic study of race and sex bias in CNN-based cardiac MR segmentation; pp. 233–244. (Lecture Notes in Computer Science)

- 8. Groh M., Harris C., Soenksen L., et al. 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW). IEEE; Nashville, TN, USA: 2021. Evaluating deep neural networks trained on clinical images in dermatology with the fitzpatrick 17k dataset; pp. 1820–1828. Available from: https://ieeexplore.ieee.org/document/9522867/

- 9. Seyyed-Kalantari L., Zhang H., McDermott M.B.A., Chen I.Y., Ghassemi M. Underdiagnosis bias of artificial intelligence algorithms applied to chest radiographs in under-served patient populations. Nat Med. 2021;27(12):2176–2182. doi: 10.1038/s41591-021-01595-0.

- 10. Stanley E.A.M., Wilms M., Forkert N.D. In: Ethical and Philosophical Issues in Medical Imaging, Multimodal Learning and Fusion Across Scales for Clinical Decision Support, and Topological Data Analysis for Biomedical Imaging. Baxter J.S.H., Rekik I., Eagleson R., et al., editors. Springer Nature Switzerland; Cham: 2022. Disproportionate subgroup impacts and other challenges of fairness in artificial intelligence for medical image analysis; pp. 14–25. (Lecture Notes in Computer Science)

- 11. Souza R., Winder A., Stanley E.A.M., et al. Identifying biases in a multicenter MRI database for Parkinson's disease classification: is the disease classifier a secret site classifier? IEEE J Biomed Health Inform. 2024;28(4):2047–2054. doi: 10.1109/JBHI.2024.3352513.

- 12. Konz N., Mazurowski M.A. Medical Imaging with Deep Learning. Vol. 227. 2024. Reverse Engineering Breast MRIs: Predicting Acquisition Parameters Directly from Images; pp. 829–845. (Proceedings of Machine Learning Research). Available from: https://proceedings.mlr.press/v227/konz24a.html

- 13. Souza R., Wilms M., Camacho M., et al. Image-encoded biological and non-biological variables may be used as shortcuts in deep learning models trained on multisite neuroimaging data. J Am Med Inf Assoc. 2023;30. doi: 10.1093/jamia/ocad171.

- 14. Glocker B., Jones C., Bernhardt M., Winzeck S. Algorithmic encoding of protected characteristics in chest X-ray disease detection models. EBioMedicine. 2023;89. doi: 10.1016/j.ebiom.2023.104467.

- 15. Piçarra C., Glocker B. In: Clinical Image-Based Procedures, Fairness of AI in Medical Imaging, and Ethical and Philosophical Issues in Medical Imaging. Wesarg S., Puyol Antón E., Baxter J.S.H., et al., editors. Springer Nature Switzerland; Cham: 2023. Analysing race and sex bias in brain age prediction; pp. 194–204. (Lecture Notes in Computer Science)

- 16. Stanley E.A.M., Wilms M., Forkert N.D. In: Medical Image Computing and Computer Assisted Intervention – MICCAI 2023. Greenspan H., Madabhushi A., Mousavi P., et al., editors. Springer Nature Switzerland; Cham: 2023. A flexible framework for simulating and evaluating biases in deep learning-based medical image analysis; pp. 489–499. (Lecture Notes in Computer Science)

- 17. Stanley E.A.M., Souza R., Winder A.J., et al. Towards objective and systematic evaluation of bias in artificial intelligence for medical imaging. J Am Med Inf Assoc. 2024;31. doi: 10.1093/jamia/ocae165.

- 18. Bordukova M., Makarov N., Rodriguez-Esteban R., Schmich F., Menden M.P. Generative artificial intelligence empowers digital twins in drug discovery and clinical trials. Expert Opin Drug Discov. 2024;19(1):33–42. doi: 10.1080/17460441.2023.2273839.

- 19. Jones C., Castro D.C., De Sousa Ribeiro F., Oktay O., McCradden M., Glocker B. A causal perspective on dataset bias in machine learning for medical imaging. Nat Mach Intell. 2024;15:1–9.

- 20. Zare S., Nguyen H.V. In: Medical Image Computing and Computer Assisted Intervention – MICCAI 2022. Wang L., Dou Q., Fletcher P.T., Speidel S., Li S., editors. Springer Nature Switzerland; Cham: 2022. Removal of confounders via invariant risk minimization for medical diagnosis; pp. 578–587. (Lecture Notes in Computer Science)

- 21. Yi P.H., Wei J., Kim T.K., et al. Radiology “forensics”: determination of age and sex from chest radiographs using deep learning. Emerg Radiol. 2021;28(5):949–954. doi: 10.1007/s10140-021-01953-y.

- 22. Adamson A.S., Smith A. Machine learning and health care disparities in dermatology. JAMA Dermatol. 2018;154(11):1247–1248. doi: 10.1001/jamadermatol.2018.2348.

- 23. Stanley E.A.M., Wilms M., Mouches P., Forkert N.D. Fairness-related performance and explainability effects in deep learning models for brain image analysis. J Med Imaging. 2022;9(6). doi: 10.1117/1.JMI.9.6.061102.

- 24. Cosgrove K.P., Mazure C.M., Staley J.K. Evolving knowledge of sex differences in brain structure, function, and chemistry. Biol Psychiatry. 2007;62(8):847–855. doi: 10.1016/j.biopsych.2007.03.001.

- 25. Atilano-Barbosa D., Barrios F.A. Brain morphological variability between whites and African Americans: the importance of racial identity in brain imaging research. Front Integr Neurosci. 2023;17. doi: 10.3389/fnint.2023.1027382. Available from: https://www.frontiersin.org/journals/integrative-neuroscience/articles/10.3389/fnint.2023.1027382/full

- 26. Gichoya J.W., Banerjee I., Bhimireddy A.R., et al. AI recognition of patient race in medical imaging: a modelling study. Lancet Digit Health. 2022;4(6):e406–e414. doi: 10.1016/S2589-7500(22)00063-2.

- 27. Lotter W. Acquisition parameters influence AI recognition of race in chest x-rays and mitigating these factors reduces underdiagnosis bias. Nat Commun. 2024;15(1):7465. doi: 10.1038/s41467-024-52003-3.

- 28. Castro D.C., Walker I., Glocker B. Causality matters in medical imaging. Nat Commun. 2020;11(1):3673. doi: 10.1038/s41467-020-17478-w.
