Abstract

This survey explores the transformative impact of foundation models (FMs) in artificial intelligence, focusing on their integration with federated learning (FL) in biomedical research. Foundation models such as ChatGPT, LLaMa, and CLIP, which are trained on vast datasets through methods including unsupervised pretraining, self-supervised learning, instructed fine-tuning, and reinforcement learning from human feedback, represent significant advancements in machine learning. These models, with their ability to generate coherent text and realistic images, are crucial for biomedical applications that require processing diverse data forms such as clinical reports, diagnostic images, and multimodal patient interactions. The incorporation of FL with these sophisticated models presents a promising strategy to harness their analytical power while safeguarding the privacy of sensitive medical data. This approach not only enhances the capabilities of FMs in medical diagnostics and personalized treatment but also addresses critical concerns about data privacy and security in healthcare. This survey reviews the current applications of FMs in federated settings, underscores the challenges, and identifies future research directions including scaling FMs, managing data diversity, and enhancing communication efficiency within FL frameworks. The objective is to encourage further research into the combined potential of FMs and FL, laying the groundwork for healthcare innovations.

Keywords: Foundation model, Federated learning, Healthcare, Biomedical, Large language model, Vision language model, Privacy, Multimodal

Introduction

Foundation models (FMs) [1, 2] have risen to prominence as pivotal elements in the field of artificial intelligence [3]. These models are distinguished by their deep learning architectures and a vast number of parameters, allowing them to excel in tasks ranging from text generation to video analysis, capabilities that surpass those of previous AI systems. FMs are developed using advanced training techniques, including unsupervised pretraining [4, 5], self-supervised training [6, 7], instructed fine-tuning [8], and reinforcement learning from human feedback [9]. These methodologies equip them to generate coherent text and realistic images with unprecedented accuracy, showcasing their transformative potential across various domains.

The potential of foundation models extends far beyond mere technical capabilities. These models mark a significant paradigm shift in how we utilize artificial intelligence for cutting-edge scientific problem-solving. As versatile tools, they can be rapidly adapted and fine-tuned for specific tasks, eliminating the need to develop new models from the ground up. This adaptability is crucial in fields where meaningful insights must be extracted from limited datasets. It is particularly transformative in biomedical healthcare, where the efficacy of AI must be balanced with stringent data privacy considerations [10–12]. In this domain, foundation models not only enhance our analytical capabilities but must also handle sensitive health information with the utmost integrity, thereby aligning technological advancement with ethical standards.

Federated Learning (FL) [13, 14], a method for training machine learning models across multiple decentralized devices or servers without exchanging local data samples, aligns well with the capabilities of foundation models in the biomedical healthcare sector. In this context, where data privacy and collaborative efforts are essential, FL enables the utilization of vast and varied datasets characteristic of the medical field while protecting sensitive patient information. By applying FL, foundation models can access and analyze extensive medical data without breaching privacy [15, 16], thus overcoming major obstacles in deploying AI technologies where data confidentiality is crucial. Existing applications of FL in conjunction with FMs typically involve training strategies that range from starting from scratch to prompt fine-tuning. FL enhances the application of FMs across both large language models and vision-language models, allowing for comprehensive and privacy-conscious analyses.

Integrating the privacy-preserving and decentralization features of FL with the robust, generalizable capabilities of FMs enables researchers to perform in-depth analyses using insights pooled from local datasets. This approach not only broadens the scope and accuracy of medical research but also complies with stringent data protection laws such as the General Data Protection Regulation (GDPR) [17] in Europe and the Health Insurance Portability and Accountability Act (HIPAA) [18] in the United States. The potential for healthcare is profound, facilitating more personalized medicine where treatment plans are precisely tailored to individual genetic profiles, lifestyles, and medical histories. Additionally, FMs that are pre-trained or fine-tuned via federated learning on diverse datasets can reveal new biomarkers and therapeutic targets, thereby significantly pushing the boundaries of medical research and improving patient care. The synergy between federated learning and foundation models heralds a significant leap forward in the use of medical data, driving innovation in medical technologies while rigorously protecting patient privacy.

This paper presents a comprehensive survey of the latest advancements in foundation models and federated learning within the biomedical and healthcare sectors, highlighting their implementations and addressing the persistent challenges encountered in these fields. A notable application of these technologies is the federated adaptation of pre-trained vision-language models, as in FedClip [19], which enhances both generalization and personalization in image classification tasks. Additionally, MedCLIP [20] employs vision-text contrastive learning with roughly 20K medical pre-training samples to surpass current benchmarks in medical diagnostics. FedMed [21], a tailored federated learning framework, effectively counters performance degradation in federated settings, facilitating high-quality collaborative training. Another groundbreaking model, MedGPT [22], based on the GPT architecture, utilizes electronic health records to predict future medical events, offering the potential to detect early signs of critical illnesses, such as cancer or cardiovascular diseases [23], before they are typically diagnosable through conventional methods. Importantly, the utilization of federated learning ensures that sensitive patient data is processed on-site and never leaves the institution’s local environment, thus significantly enhancing data security and maintaining strict patient confidentiality.

The integration of federated learning (FL) with foundation models (FMs) offers unprecedented potential to transform medical diagnostics and personalize treatments, greatly enhancing the capabilities of healthcare systems to deliver exceptional care while adhering to rigorous standards of data privacy and security. This technological advancement not only improves patient outcomes but also strengthens trust in the use of AI within critical sectors such as healthcare. However, deploying federated FMs in the biomedical domain comes with significant challenges, including ensuring data privacy and security, achieving model generalization across diverse datasets, and mitigating bias while maintaining fairness. Addressing these issues is essential for harnessing the full capabilities of federated FMs in healthcare and biomedical research.

Furthermore, this paper explores future directions and ongoing challenges in the field, emphasizing the importance of real-time learning and adaptation, fostering collaborative innovation, and the generation of synthetic data for both academic and industrial applications within FL frameworks. By overcoming these challenges, researchers and practitioners can fully realize the potential of federated foundation models, leading to revolutionary advancements in healthcare. These efforts will not only contribute to scientific progress but also to the practical, ethical, and efficient implementation of AI technologies in sensitive environments, ultimately benefiting global health outcomes.

We provide a comprehensive review of existing literature on Federated Learning (FL) and Foundation Models (FM) within the biomedical and healthcare domains. This review meticulously categorizes and discusses various aspects such as biomedical and healthcare data sources, foundation models, federated privacy, and downstream tasks, offering a thorough synthesis of current knowledge and methodologies.

We introduce a taxonomy of biomedical healthcare foundation models, classifying the existing representative FMs from diverse perspectives including model architecture, training strategy, and intended application purposes. This taxonomy aids in the systematic understanding and comparison of different models.

We explore the open challenges and outline future research directions for the integration of FL with FMs in the biomedical and healthcare sectors, providing insights into unresolved issues and potential advancements.

To the best of our knowledge, this is the first survey paper to extensively cover foundation models in federated learning specifically tailored for biomedical and healthcare applications. Our survey uniquely addresses both large-language and vision-language models, highlighting their relevance and transformative potential in this context.

How do we collect papers?

In this survey, we collect over two hundred related papers in the fields of federated learning, foundation models, and biomedical healthcare. We use Google Scholar as our main literature search engine, with PubMed, Web of Science, and IEEE Xplore as additional essential tools. Moreover, we check most of the related top-tier venues, such as NeurIPS, ICML, ICLR, CVPR, ECCV, and Bioinformatics. The major keywords we use include “Biomedical Federated Learning”, “Medical Pretrained Foundation Model”, and “Healthcare Federated Pretrain Training”. The most representative papers, such as Med-BERT [24], FedClip [19], and MedCLIP [20], are regarded as seed papers for reference checking.

Organization

The rest of this survey is organized as follows. The Background section describes the FM and FL literature relevant to our work. The Federated learning and foundation models section details how to apply FMs with FL. The applications of FMs in biomedicine and healthcare are summarized in the Foundation models in biomedical healthcare section. The challenges and future directions of federated FMs in the biomedical and healthcare sectors are discussed in the Open challenges and opportunities in federated foundation biomedical research section. Finally, we conclude our survey in the Conclusions section.

Background

Background on foundation models

The latest wave of AI innovation has seen the emergence of a new class of AI models often referred to as foundation models (FMs), a term popularized by the Stanford Institute for Human-Centered AI [25]. FMs can be categorized into two model types: large language models (LLMs) and vision-language models (VLMs). For example, LLMs including ChatGPT and GPT-4 [26] from OpenAI demonstrate impressive capabilities to generate coherent text. VLMs such as DALL-E 2 [27] show the ability to create realistic images and art from a text description. These models are trained with pretraining, self-supervised training, and reinforcement-instructed fine-tuning on broad data at immense scale and high resource costs, resulting in models with billions of parameters [25]. In this section, we introduce the backbone of foundation models in the Backbone networks in foundation models section; pre-trained large language models and vision-language models are discussed in the Foundation on text: large language models and Foundation beyond text: vision language models sections, respectively.

Backbone networks in foundation models

The significant advancements in foundation models are largely due to the evolution of their underlying architectures, transitioning from Long Short-Term Memory networks (LSTM) [28] to Transformers [29]. Initially, LSTMs served as the basic architecture for early pre-trained models, but their recurrent structure is computationally intensive when scaled to deeper layers. In response to these limitations, the Transformer architecture was developed and quickly established itself as the standard for modern natural language processing (NLP) [30]. The superiority of Transformers over LSTMs can be attributed to two key factors: (1) Efficiency: Transformers eliminate recurrence, enabling parallel computation of tokens. (2) Effectiveness: The attention mechanism facilitates dynamic spatial interactions between tokens, contingent on the input itself. This section provides a brief overview of the evolution of backbone networks in foundation models, highlighting the transition from LSTMs to Transformers, followed by vision language model backbones from Convolutional Neural Networks (CNNs) [31] to Vision Transformers (ViTs) [32].

Backbone Networks in Texts.

The Transformer has become the backbone of most pre-trained language models, such as BERT [33], GPT [1], and T5 [34], building upon the self-attention module and feed-forward networks (FFNs). The self-attention module facilitates token interaction, while the FFN refines token representations using non-linear transformations. The Transformer architecture is designed to process tokens efficiently in parallel, thanks to the elimination of recurrent units and the use of position embeddings. Additionally, the architecture includes residual connections, layer normalization, and other features that prevent saturation issues and enhance expressive power with large-scale data and deep layers. In the self-attention module, the input is linearly transformed into query, key, and value (Q, K, V) spaces; the attention scores between the queries and keys are computed and then used to weight the values. The FFN module processes the weighted values to generate the output. The Transformer architecture has proven to be superior in terms of capacity and scalability, enabling the development of increasingly sophisticated language models. Considering an input X, the linear transformation of X into Q, K, V is computed as follows:

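$$Q = XW^{Q}, \quad K = XW^{K}, \quad V = XW^{V} \tag{1}$$

where $W^{Q}$, $W^{K}$, and $W^{V}$ are learnable projection matrices. The self-attention module is then calculated with a softmax function as follows:

$$\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\left(\frac{QK^{\top}}{\sqrt{d_k}}\right)V \tag{2}$$

where $d_k$ is the dimensionality of the keys.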

To this end, the FFN provides the non-linear features for the transformer architecture. Besides the self-attention and FFN modules, the transformer architecture also includes residual connections [35], layer normalization [36], and positional encoding [37] to enhance the model’s performance. The transformer architecture has been widely adopted in various pre-trained language models, such as BERT, GPT, and T5, and has been instrumental in advancing the field of natural language processing (NLP).
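The projection and attention steps described above can be sketched in a few lines. The following is a minimal, single-head illustration in plain Python, assuming the standard scaled dot-product form from the Transformer paper [29]; the function names (`matmul`, `softmax`, `self_attention`) and the toy inputs are illustrative choices, not code from any of the surveyed models.

```python
import math


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def softmax(row):
    """Numerically stable softmax over one list of scores."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x, w_q, w_k, w_v):
    """Single-head self-attention: project X, score queries against keys, weight values."""
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(k[0])  # key dimensionality used for scaling
    k_t = [list(col) for col in zip(*k)]
    scores = matmul(q, k_t)
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, v), weights


# Toy input: 3 tokens with 2 features each; identity projections (assumed for illustration).
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
eye = [[1.0, 0.0], [0.0, 1.0]]
out, weights = self_attention(x, eye, eye, eye)
```

Each row of `weights` is a probability distribution over the input tokens, which is what allows every output token to depend on the whole sequence without recurrence.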

Backbone Networks in Images.

Convolutional Neural Networks (CNNs) [31] have long been the foundation for many vision-related tasks, characterized by their distinctive architecture comprising convolutional, pooling, activation, and fully connected layers. These layers work in unison: convolutional layers act as trainable filters that identify image patterns such as edges and textures, pooling layers reduce data dimensionality, activation layers introduce non-linearity, and fully connected layers synthesize these features into predictions. This architecture has not only been pivotal in vision applications but has also been adapted for language understanding tasks.

As the field evolves, there has been a notable shift towards incorporating Transformer architectures into vision tasks. This integration is exemplified by the development of Vision Transformers (ViT) [32], which apply the Transformer’s self-attention mechanisms to image patches for feature extraction, representing a significant evolution from traditional CNN approaches. This concept has similarly impacted computational biology, as seen in models like AlphaFold2 [38], which leverages Transformer technology for protein structure prediction. These adaptations underscore the versatility and robustness of Transformer models across different scientific domains.
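To make the idea of applying self-attention to image patches concrete, the sketch below splits a small image into non-overlapping patches and flattens each one into a vector, which is the tokenization step that precedes the Transformer in a ViT-style model. It is a simplified illustration for a single-channel image; the function name `patchify` and the toy 4x4 image are assumptions, and the learned linear projection and position embeddings that follow in a real model are omitted.

```python
def patchify(image, patch):
    """Split a 2D image (list of rows) into flattened, non-overlapping patch vectors."""
    height, width = len(image), len(image[0])
    if height % patch or width % patch:
        raise ValueError("image sides must be divisible by the patch size")
    patches = []
    for r in range(0, height, patch):
        for c in range(0, width, patch):
            # Flatten the patch row by row into one token vector.
            patches.append([image[r + i][c + j] for i in range(patch) for j in range(patch)])
    return patches


# Toy 4x4 single-channel image with pixel values 0..15 (assumed for illustration).
image = [[4 * r + c for c in range(4)] for r in range(4)]
tokens = patchify(image, 2)
```

Each entry of `tokens` plays the role that a word embedding plays in a language model: the sequence of patch vectors is what the self-attention layers operate on.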

Foundation on text: large language models

In the field of Natural Language Processing (NLP), the evolution of methods to build token representations has been marked by significant advancements. Initially, typical approaches such as those proposed by [39, 40] focused on creating ’static word embeddings,’ where a one-to-one mapping between words and their vector representations is established. These embeddings are termed ’static’ because they do not account for the context in which a word is used, thus limiting their ability to reflect the diverse meanings words can have in different settings.

Recognizing the limitations of static embeddings, there has been a shift towards developing ’contextualized word embeddings.’ These representations are dynamic, with the vector for a word varying according to its contextual usage. For instance, the word ’bank’ would have different embeddings in ’river bank’ compared to ’money bank.’ This approach, exemplified by models like ELMo [41], GPT [42], and BERT [33], significantly enhances the quality of word representations by conditioning them on the surrounding context (on both sides in the case of ELMo and BERT), thereby improving performance across various NLP tasks.

Historically, neural language models [43, 44] served as foundational frameworks in NLP, utilizing relatively shallow neural architectures for efficient training. These models were primarily pre-trained on tasks like unidirectional language modeling, which involves predicting the next word based on previous words. Subsequent innovations such as Skip-Gram [39] aimed to enrich word embeddings by predicting surrounding words or by using the context on both sides of a target word. GloVe [40] extended this by focusing on word co-occurrence probabilities.

The advent of deep learning brought about more sophisticated approaches for learning word representations. ELMo [41] introduced a bidirectional language modeling task, utilizing both forward and backward context in its pre-training. GPT [42] continued with unidirectional modeling, while BERT [33] innovated with the Masked Language Model. This method involves masking words in a sentence and predicting them based on the remaining unmasked context, allowing for deeper bidirectional context modeling.
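The masking step of the Masked Language Model objective can be illustrated with a short sketch. The code below is a simplified version that replaces each selected token with a mask symbol and records the original token as the prediction target. The function name `mask_tokens`, the `[MASK]` string, the fixed seed, and the example sentence are assumptions for illustration; BERT's actual procedure also sometimes keeps or randomly replaces selected tokens, which is omitted here.

```python
import random


def mask_tokens(tokens, mask_prob=0.15, mask_token="[MASK]", seed=0):
    """Return (masked_tokens, labels); labels hold the original token only at masked positions."""
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    masked, labels = [], []
    for tok in tokens:
        if rng.random() < mask_prob:
            masked.append(mask_token)
            labels.append(tok)   # the model must predict this token
        else:
            masked.append(tok)
            labels.append(None)  # no loss is computed at this position
    return masked, labels


# Hypothetical example sentence; a higher mask_prob is used so the short input gets masked.
tokens = "the patient shows signs of early stage disease".split()
masked, labels = mask_tokens(tokens, mask_prob=0.5, seed=1)
```

Because the unmasked tokens on both sides of each masked position remain visible, the prediction can draw on left and right context at once.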

Further developments like the T5 model [34] introduced an encoder-decoder framework for generating text outputs, proving particularly effective in text generation tasks such as summarization and question-answering. These advancements have been integral to the development of versatile language models like OpenAI’s GPT-3, InstructGPT, Codex, and ChatGPT, which not only generate text but also engage in conversational exchanges, admit errors, and handle complex user interactions.

Representative Large Language Models

Large Language Models (LLMs) have become pivotal in the evolution of natural language understanding and generation. The progression of the GPT series, from GPT-1 [42] to GPT-3 [45], and the subsequent release of GPT-4 [26], illustrates a remarkable expansion in model size and versatility. These models have profoundly impacted AI research and applications, heralding a new era of computational linguistics. Concurrently, BERT [33] revolutionized pre-training approaches by emphasizing bidirectional training, which significantly enhances language understanding capabilities. PaLM [46], another notable advancement, has achieved state-of-the-art results across diverse language tasks, highlighting the potential for scalability in LLMs. Recent innovations also include Bard [47], which integrates extensive world knowledge into a context-aware framework, and LLaMa [2], which prioritizes efficiency and practical applicability in language model design. Collectively, these models mark crucial developments in the field, each contributing distinctively to the enrichment and complexity of machine learning techniques that underpin contemporary AI systems.

Foundation beyond text: vision language models

Deep neural networks have exhibited remarkable success across a variety of vision tasks, such as image classification, object detection, and instance segmentation, largely attributable to the effectiveness of pre-training. Initially, pre-training in the vision domain involved training models on extensive annotated image datasets like ImageNet [48]. However, to address issues such as generalization errors and spurious correlations inherent in supervised learning, various self-supervised learning methods have been developed.

A significant area of advancement in AI research is the integration of vision and language models, which aims to develop systems capable of understanding and generating content that spans visual and textual modalities. The introduction of the Vision Transformer (ViT) [49] marked a pivotal shift by applying the transformer architecture, originally designed for natural language processing, directly to sequences of image patches. This approach fundamentally changed the paradigm of how models process visual information. Building on this, CLIP (Contrastive Language-Image Pre-training) [50] advanced the field by learning visual concepts through natural language supervision, enabling the model to adeptly handle various vision tasks with minimal task-specific training. Further extending these innovations, Stable Diffusion [51] ventured into generative art, providing tools to create intricate images from textual descriptions. The most recent breakthrough, Segment Anything [52], tackles image segmentation using deep learning to precisely identify and delineate multiple objects within images in ways that are contextually relevant. Collectively, these developments not only bridge the gap between visual data and language processing but also set the stage for more intuitive and interactive AI systems.
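The contrastive language-image objective mentioned above can be sketched as a symmetric cross-entropy over a batch of paired image and text embeddings, where the i-th image should match the i-th text. This is a minimal plain-Python illustration rather than the CLIP implementation: the function names, the toy two-dimensional embeddings, and the fixed temperature of 0.07 are assumptions (in practice the temperature is typically a learned parameter).

```python
import math


def _normalize(v):
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def _cross_entropy_diagonal(logits):
    """Mean cross-entropy where the correct class for row i is column i."""
    total = 0.0
    for i, row in enumerate(logits):
        m = max(row)
        log_sum_exp = m + math.log(sum(math.exp(v - m) for v in row))
        total += log_sum_exp - row[i]
    return total / len(logits)


def contrastive_loss(image_embs, text_embs, temperature=0.07):
    """Symmetric image-to-text and text-to-image contrastive loss for paired embeddings."""
    imgs = [_normalize(v) for v in image_embs]
    txts = [_normalize(v) for v in text_embs]
    logits = [[sum(a * b for a, b in zip(i, t)) / temperature for t in txts] for i in imgs]
    logits_t = [list(col) for col in zip(*logits)]  # text-to-image direction
    return 0.5 * (_cross_entropy_diagonal(logits) + _cross_entropy_diagonal(logits_t))


# Toy embeddings (assumed): correctly paired versus deliberately swapped captions.
aligned = contrastive_loss([[1.0, 0.0], [0.0, 1.0]], [[1.0, 0.0], [0.0, 1.0]])
mismatched = contrastive_loss([[1.0, 0.0], [0.0, 1.0]], [[0.0, 1.0], [1.0, 0.0]])
```

The loss is small when each image embedding is closest to its own caption and large when the pairing is wrong, which is the signal that drives the two encoders toward a shared embedding space.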

Challenges of foundation models

The paradigm of foundation models (FMs) represents a significant shift from traditional task-specific models that have long dominated the AI landscape. These pre-trained models are designed for adaptation to a variety of tasks they were not originally trained for [53]. Adaptation techniques include user or engineer prompts, continual learning, and fine-tuning, methods that expand their application to fields where data scarcity impedes the development of specialized algorithms. This flexibility introduces exciting possibilities for scalable, reusable AI models across diverse domains, including transformative potential in healthcare [54]. However, this shift also presents unique challenges, including the risk of over-generalization, the difficulty in fine-tuning for highly specialized tasks, and the ethical implications of deploying such versatile technologies in sensitive areas. These challenges necessitate rigorous validation, careful implementation, and ongoing monitoring to ensure that the deployment of foundation models aligns with ethical standards and practical requirements.

Over-trusting High Performance & Output Coherence: Ensuring Safe & Reliable Use

Despite the high accuracy and broad capabilities of larger models, it’s critical to address ethical and legal standards to ensure their use remains safe, fair, and privacy-conscious [53]. In healthcare, the necessity for accurate and reliable data for clinical decision-making cannot be overstated. However, verifying the correctness of outputs from FMs poses a challenge, as demonstrated by systems like ChatGPT, whose outputs can mimic human-like text, potentially leading to automation bias and misuse [55]. The complexity of these models often precludes a full understanding of their mechanisms, necessitating cautious deployment decisions, especially in sensitive fields like healthcare. This includes designing interfaces that clearly articulate the limitations and probabilistic nature of AI outputs and developing robust validation processes to ensure safety and fairness.

Building AI in a Vacuum: Decontextualized & Centralized

AI development frequently takes place in isolation, focused on technological accuracy before considering real-world user needs [56]. This ’development in a vacuum’ has drawn increasing scrutiny for failing to address the actual conditions and requirements of end-users [57]. Foundation models, in particular, suffer from this issue as they require significant adaptation to be truly effective outside of initial testing environments. A greater emphasis on ethnographic studies could provide deeper insights into the practical applications and challenges of AI within operational settings. Moreover, integrating AI technology into everyday use demands an understanding of specific user contexts, necessitating strategies for risk mitigation and a move towards more user-centered research directions. Validating the utility of AI in real-world settings and ensuring its integration into clinical practice remain formidable challenges, but ones that the human-computer interaction (HCI) community is well-equipped to tackle by bridging the ’last mile’ of AI in healthcare [58].

Background of federated learning in foundation models

Background of conventional federated learning and frameworks

Federated Learning (FL) is a machine learning paradigm where multiple clients, such as mobile devices or entire organizations, collaboratively train a model under the orchestration of a central server, such as a service provider, while keeping the training data decentralized. This method not only adheres to the principles of focused collection and data minimization but also addresses many systemic privacy risks and costs associated with traditional centralized machine learning approaches. The concept of FL, first introduced by McMahan et al. in 2016 [13], has grown significantly in interest from both theoretical and practical perspectives. This approach is defined by challenges including unbalanced and non-IID data across numerous unreliable devices, limited communication bandwidth, and the complexities of model training and implementation across diverse and distributed environments.

Since its inception, the focus of federated learning has expanded beyond mobile and edge devices to include applications involving a small number of more reliable entities, such as multiple organizations collaborating to train a model. This has led to distinguishing between “cross-device” and “cross-silo” federated learning, each with unique challenges and requirements. In this survey, we delve into the specifics of cross-device federated learning, highlighting its practical aspects, challenges, and its potential to train and implement foundation models (FMs) in a distributed fashion.

Groundbreaking FL works, including McMahan et al. [13], laid the foundational framework for FL systems. Research in FL has since advanced, focusing on enhancing data privacy in applications such as medical image segmentation [59] and addressing ongoing challenges related to communication efficiency, scalability, and model robustness [60, 61]. Notable developments in FL include SCAFFOLD [62] and FedProx [61], which tackle issues such as client update variance and client drift in non-IID data environments. Further contributions from FedGSam [63], FedLGA [14], and LoMar [64] have advanced FL by developing generalized strategies and adaptive algorithms that enhance learning processes in federated settings.
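As a concrete illustration of server-side orchestration, the sketch below implements one aggregation round of federated averaging (FedAvg), the rule introduced by McMahan et al. [13]: the server combines client model parameters weighted by each client's local sample count, and no raw data is exchanged. Model parameters are represented as flat lists of floats for simplicity; the function name `fedavg` and the two hypothetical hospital clients are assumptions for illustration.

```python
def fedavg(client_weights, client_sizes):
    """Average client parameter vectors, weighting each client by its number of samples."""
    if len(client_weights) != len(client_sizes):
        raise ValueError("need one sample count per client")
    total = sum(client_sizes)
    n_params = len(client_weights[0])
    return [
        sum(w[i] * n for w, n in zip(client_weights, client_sizes)) / total
        for i in range(n_params)
    ]


# Two hypothetical hospitals: one with 100 local samples, one with 300.
hospital_a = [1.0, 2.0]
hospital_b = [3.0, 4.0]
global_model = fedavg([hospital_a, hospital_b], [100, 300])
```

Weighting by sample count means larger clients pull the global model more strongly, which is one reason unbalanced and non-IID client data can cause the drift that methods such as SCAFFOLD and FedProx aim to reduce.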

Moreover, the development of open-source FL frameworks such as TsingTao [65], Flower [66], FedML [67], FATE [68], and FederatedScope [69] has significantly advanced the accessibility and standardization of FL practices. Designing specialized FL systems and benchmarks is imperative to meet the unique needs and challenges of foundation models (FM). Although current FL frameworks have made significant strides in both academic and industrial settings [66–71], they may not fully satisfy the specific requirements for optimizing memory, communication, and computational demands associated with FMs. Platforms like FedML [67] and FATE [68] are adapted to better support FMs, but extensive research is still needed to thoroughly explore system requirements and integration strategies for these models.

Motivations of federated learning for foundation models

Scarcity of Compliant Large-Scale Data

The shortage of large-scale, high-quality, legally compliant data has become a critical driver for the adoption of federated learning in the context of foundation models. This scarcity is particularly acute in sectors such as technology and social media, where data compliance and privacy issues are increasingly foregrounded [72–74].

High Computational Resource Demand

Training large-scale foundation models demands significant computational resources. For instance, training LLaMa with 65 billion parameters required 2048 NVIDIA A100 GPUs over 21 days [2], while the smaller 1.3 billion parameter GPT-3 model needed 64 Tesla V100 GPUs for a week [45]. The development of GPT-4 also highlighted these intensive demands, utilizing substantial resources over several months at considerable financial costs [26]. Federated learning can help alleviate these demands by distributing computational tasks across multiple devices, thereby optimizing resource utilization.

Continuous Model Updating Challenges

As data continually evolves, particularly from sources like IoT sensors and edge devices, keeping foundation models updated becomes a significant challenge [75, 76]. Federated learning offers a dynamic solution by enabling ongoing, incremental updates to FMs with new data, which allows these models to adapt to emerging data landscapes without the need to reinitiate training processes. This approach not only enhances the models’ accuracy and relevance but also ensures their adaptability to real-world changes [77].

Reducing Response Delays and Enhancing FM Services

One of the foremost benefits of applying federated learning to foundation models is the potential to deliver nearly instant responses, thus significantly improving user experience. Traditional central server deployments often face latency and privacy issues due to the required network communications between users and servers [78]. Federated learning addresses these concerns by enabling models to operate directly on local devices, minimizing network dependencies, reducing latency, and improving privacy protections. This approach not only enhances response times but also ensures a seamless, privacy-conscious interaction, maintaining user trust and satisfaction in the services provided by foundation models.

Motivations of foundation models for federated learning

Foundation Models can significantly contribute to enhancing the efficacy of Federated Learning. This section explores the motivations behind leveraging FM within FL, examines the challenges posed by this integration, and discusses the potential opportunities it offers to the field.

Data Privacy and Shortage Dilemma in FL

In federated settings, clients often grapple with limited or imbalanced datasets, especially in federated few-shot learning contexts [79]. Such data scarcity can result in suboptimal model performance, as it may not fully capture the diversity of the data distribution [80]. Moreover, privacy concerns are intensified due to the potential for sensitive information recovery from model updates in FL [81, 82]. These issues are particularly acute in sectors like healthcare or finance, where data privacy regulations or the inherent sensitivity of the data restrict availability, thus complicating the training process and limiting FL’s effectiveness in these crucial areas. One promising solution is the use of synthetic data generated by FMs. Being extensively pre-trained on vast datasets and further refined through techniques such as fine-tuning and prompt engineering, FMs possess a deep understanding of complex data distributions, enabling them to produce synthetic data that closely mirrors real-world diversity.

Performance Dilemma in FL

FL can mitigate issues related to non-IID and biased data by leveraging the advanced capabilities of FMs, thus enhancing performance across various tasks and domains [83]. FMs can improve FL’s efficiency in several ways. (1). Starting Point Advantage: FMs provide a robust starting point for FL. Clients can begin fine-tuning directly on their local data instead of starting from scratch, leading to faster convergence and enhanced performance while reducing the need for extensive communication rounds [84, 85]. (2). Data Diversity Enhancement: FMs act as powerful generators that can synthesize diverse data, enriching the training dataset in FL. An example is GPT-FL [86], which utilizes generative models to produce synthetic data that improves downstream model training on servers. This approach not only boosts test accuracy but also enhances communication and client sampling efficiency. (3). Knowledge Distillation: FMs can address performance issues in FL by acting as knowledgeable teachers through techniques like knowledge distillation [87].

New Sharing Paradigm Empowered by FM

Unlike traditional FL, which involves sharing high-dimensional model parameters, FMs use a new paradigm through prompt tuning. PROMPTFL [88] showcases how FM capabilities can be leveraged to efficiently combine global aggregation with local training on sparse data. This approach focuses on training prompts rather than the entire model, thereby optimizing resource use and enhancing performance. Building on this concept, FedPrompt [89] introduces an innovative prompt tuning method specifically designed for FL, while a recent study FedTPG [90] explores a scalable prompt generation network that learns across multiple clients, aiming to generalize to unseen classes effectively.

Machine learning in biomedical and health care

Biomedical ML: data fusion

Data is the cornerstone of sense-making in artificial intelligence (AI) and plays a crucial role in many sectors, including healthcare, where it comes from diverse sources such as care providers, insurers, and academic publications [53, 91]. Healthcare data varies in form (e.g., clinical notes, medical images), scale (e.g., patient versus population level), and style (professional versus lay language), posing both opportunities and challenges for the application and training of AI models. Despite the proficiency of machine learning methods in managing and extracting insights from vast, multi-dimensional data [92], it is vital to address how societal biases and inequalities are embedded in the data. Disparities can manifest in various aspects of healthcare, such as the prioritization of certain medical issues and the exclusion or misrepresentation of specific population groups. These issues often stem from barriers like limited healthcare access, restrictive criteria for clinical trial participation, or the risk of inaccurate data due to documentation errors and systemic discrimination [93]. For example, in California, the mandate to verify citizenship at hospitals has reduced autism diagnosis rates among Hispanic children in the context of stringent federal immigration policies.

Healthcare and biomedicine are major sectors within the U.S. economy, accounting for about 17% of the Gross Domestic Product (GDP) [94–97]. These fields require substantial financial investments and extensive medical knowledge, encompassing everything from patient care to scientific exploration of diseases and the development of new therapies [54, 98]. We envision machine learning models as central repositories of medical knowledge, trained on a diverse array of data sources and modalities within medicine [99, 100]. These models could serve as dynamic platforms that medical professionals and researchers use to access and contribute to the latest findings, enhancing their ability to make informed decisions [101].

Biomedical Data Fusion

In the field of biomedical research, a significant challenge lies in deciphering the complex interactions within and between the cellular and organismal levels, characterized by diverse components that exhibit emergent behaviors [102]. The data collected through various sensors, while rich, often provide limited insights when examined in isolation due to the specificity of each measurement modality [103]. Data fusion, the process of integrating data from multiple views, aims to provide a holistic view of biological phenomena by combining disparate data sources that offer unique perspectives on the same subject [104]. This approach is generally advantageous in several ways, categorized into complementary, redundant, and cooperative features of the data [105]. These features are not mutually exclusive but interact synergistically, enhancing the robustness and accuracy of the insights gained.

Data fusion requires the use of sophisticated machine learning (ML) methods capable of integrating both structured and unstructured data while accommodating their varied statistical properties, sources of non-biological variation, high dimensionality, and distinct patterns of missing values [103, 106]. A comprehensive examination of these strategies is presented in a review of multimodal deep learning approaches with potential advancements and methodologies in the medical field [107].

Categories Summary of Data Fusion

Data fusion techniques can be broadly grouped into three main approaches: early fusion, intermediate fusion, and late fusion. Early fusion typically involves direct modeling techniques in which different types of neural networks process the combined input data. This includes fully connected networks for a straightforward integration of features across modalities [108], convolutional networks that are effective in handling spatial data [109], and recurrent networks suited for sequential data integration [110]. Autoencoders also play a significant role in early fusion, with variations such as regular [111], denoising [112], stacked [113], and variational autoencoders [114] being employed to refine the fusion process.

Intermediate fusion, on the other hand, involves branching strategies that can be homogeneous, focusing on either marginal [115] or joint representations [116], or heterogeneous, which also targets both marginal [117] and joint data representations [118]. These strategies optimize the integration by selectively focusing on how data from the same or different modalities are fused.

Late fusion utilizes aggregation methods to combine features at a higher level, often after initial independent processing. Techniques in this category include simple averaging [119] and weighted averaging [120], where weights might be assigned based on the reliability or importance of each modality. Furthermore, meta-learning approaches are utilized to dynamically adjust these weights for optimal performance [121], thus enhancing the fusion’s effectiveness by incorporating learning-based adjustments. These methods ensure that the final model output maximally benefits from the diverse characteristics of all data modalities involved.
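As a minimal sketch of the simple and weighted averaging described above, the snippet below fuses class probabilities produced by two independently trained modality-specific models. The function name `late_fusion`, the modality names, and all numeric values are illustrative assumptions and are not taken from the cited works.

```python
from typing import Dict, List, Optional


def late_fusion(probs_by_modality: Dict[str, List[float]],
                weights: Optional[Dict[str, float]] = None) -> List[float]:
    """Fuse per-modality class probabilities by (weighted) averaging."""
    names = list(probs_by_modality)
    if weights is None:
        # Simple averaging: every modality gets the same weight.
        weights = {name: 1.0 for name in names}
    total = sum(weights[name] for name in names)
    n_classes = len(probs_by_modality[names[0]])
    fused = [0.0] * n_classes
    for name in names:
        w = weights[name] / total  # normalize so the weights sum to 1
        for k, p in enumerate(probs_by_modality[name]):
            fused[k] += w * p
    return fused


# Hypothetical outputs of two models, each trained on one modality.
preds = {"imaging": [0.8, 0.2], "clinical_notes": [0.4, 0.6]}
simple = late_fusion(preds)
# Assumed reliability weights: imaging is trusted three times as much.
weighted = late_fusion(preds, {"imaging": 3.0, "clinical_notes": 1.0})
```

In a meta-learning variant, the weights would be learned from validation data rather than fixed by hand as they are here.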

FM in biomedical healthcare

Motivations

Foundation models hold transformative potential for biomedical research, particularly in the realms of drug discovery and disease understanding, thereby enhancing healthcare solutions [122]. Biomedical discovery processes are currently characterized by intensive demands on human resources, lengthy experimental timelines, and substantial financial outlays. For example, the drug development journey includes stages from basic research, such as protein target identification and potent molecule discovery, through clinical development involving clinical trials, to the final drug approval stage. This extensive process typically spans more than a decade and incurs costs often exceeding one billion dollars [123]. Thus, the ability to expedite biomedical discovery by harnessing existing data and published findings becomes crucial, especially during critical times like the COVID-19 outbreak, which resulted in significant loss of life and economic damage [124].

Foundation models contribute to biomedical advancements in two primary ways. Firstly, these models exhibit strong generative capabilities, as seen with coherent text generation in models such as GPT-3. These capabilities can be utilized for generating experimental protocols in clinical trials and in designing novel molecules for drug discovery [125, 126]. Secondly, foundation models excel at integrating diverse data modalities in medicine, facilitating the exploration of biomedical concepts across various scales, from molecular to patient and population levels, and integrating multiple knowledge sources, including imaging, textual, and chemical data [127–132]. This integrated approach enables discoveries that might be challenging with single-modality data alone.

Additionally, foundation models are adept at transferring knowledge across different data modalities. For instance, research by Lu et al. [133] demonstrated how a transformer model, initially trained on natural language, a data-rich modality, could be adapted for other sequence-based tasks, such as protein folding predictions, a longstanding challenge in biomedicine. These capabilities highlight the potential applications of foundation models in addressing complex biomedical tasks.

Applications

Foundation models (FMs) hold significant potential for revolutionizing healthcare applications through their adaptability and efficiency in performing specific healthcare and biomedical tasks. They have been proposed for use in a variety of areas including disease prediction [24], triage or discharge recommendations [98], and health administration tasks such as clinical notes summarization [134] and medical text simplification [1]. These applications leverage the unique capabilities of FMs, such as fine-tuning and prompting [45], to tailor solutions to specific needs, enhancing both the accuracy and efficiency of medical services.

FMs are particularly effective in patient-facing roles, such as question-answering systems and clinical trial matching applications, benefiting both researchers and patients by simplifying access to information and streamlining patient recruitment processes [126, 135–137]. As central interfaces, FMs facilitate interactions among data, tasks, and individuals, improving the operational efficiency of healthcare services. This is further explored in subsequent sections focusing on specific healthcare and biomedical tasks.

Additionally, FMs serve as repositories of extensive medical knowledge, accessible by healthcare professionals and the public for purposes like medical question-answering and interactive chatbot applications. Innovations such as ChatGPT [86] and Bard [138] provide conversational user interfaces that assist users in navigating complex health information and obtaining relevant health advice.

The implementation of FMs also promises to accelerate healthcare application development and research. These models can automate processes such as structured dataset generation, data labeling, and synthetic data creation [139]. Looking forward, there is considerable scope for developing new FM-enabled capabilities, particularly through the use of multimodal data, a feature characteristic of the healthcare domain. Beyond natural language processing, breakthroughs are already evident in areas like biomedical research, where tools like AlphaFold [140] have made significant advances in predicting human protein structures to aid drug development. Similarly, innovations in genome sequencing are hastening the detection of disease-causing genetic variants [141], and new methods are being developed for optimized clinical trial design [142]. This multidisciplinary integration highlights the transformative potential of FMs in enhancing and expanding the capabilities of the healthcare sector.

Federated learning and foundation models

Federated learning (FL) and foundation models represent two cutting-edge approaches in the field of machine learning. Federated learning offers a decentralized approach to training models across multiple nodes or devices, ensuring privacy and maintaining data locality. In contrast, foundation models, due to their vast size and generalized pre-training, provide significant adaptability and scalability for a variety of tasks. Integrating these technologies poses unique challenges but also opens up exciting opportunities for innovation in AI training and application. This section explores the relationship between federated learning and foundation models, highlighting key research directions and recent advancements in this domain. Depending on the training paradigm, foundation models can either be trained from scratch or fine-tuned on top of pre-trained models within a federated learning framework. Additionally, the application of foundation models in federated learning can extend to large language models or vision language models.

Related surveys further enrich our understanding of this integration. A recent survey by Yu et al. [83] discusses the intersection of foundation models with federated learning, exploring the motivations behind their integration, the challenges faced, and future directions for research in this domain. This survey serves as a crucial resource for comprehending the current landscape and the potential of combining federated learning strategies with the robust capabilities of foundation models. Another pivotal work by Zhuang et al. [143] provides an in-depth analysis of how foundation models can be effectively adapted and optimized within a federated learning framework, discussing both the technical hurdles and the potential breakthroughs. Additionally, Kairouz et al. [60] offer a comprehensive overview of the advancements and persistent challenges in federated learning, highlighting issues such as algorithm efficiency, data heterogeneity, and security concerns. Together, these surveys offer complementary insights into the evolving field of federated learning and foundation models, emphasizing both its complexities and its transformative potential.

Federated learning and foundation models

The integration of pre-training techniques within federated learning (FL) setups, especially for large-scale models, is increasingly viewed as essential for boosting model performance and broadening applicability. Chen et al. highlight the vital role of pre-training in preparing large models for the challenges posed by the decentralized nature of federated datasets [84]. In a similar vein, Nguyen et al. investigate how the initial conditions of model training, such as the starting points of pre-training and model initialization, critically influence the effectiveness and convergence of FL models [144]. These studies stress the importance of a careful pre-training phase so that large models are equipped to handle the complexities of federated learning, thereby maximizing their performance and utility in diverse applications. The application of federated learning to foundation models not only addresses these preparatory needs but also leverages the strengths of both paradigms to offer several key advantages: (1). Efficient Distributed Learning: Federated learning enables models to learn from data distributed across multiple devices or servers without needing to centralize the data, thus preserving privacy and reducing data movement costs. (2). Parameter-efficient Training: By utilizing techniques such as model compression and prompt tuning within a federated framework, the training process becomes more parameter-efficient. This is particularly beneficial in environments where computational resources are limited. (3). Prompt Tuning: This method involves fine-tuning a model on a specific task by adjusting a small set of parameters, and when combined with federated learning, it allows for personalized model tuning on decentralized data. (4). Model Compression: Techniques like quantization and pruning that reduce the model size can be effectively applied in federated settings, enhancing the feasibility of deploying large models on edge devices with limited storage and processing capabilities.
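To make the idea of learning from distributed data without centralizing it more concrete, the sketch below shows one server-side aggregation step. It assumes sample-count-weighted averaging in the style of federated averaging, a common aggregation rule that the text above does not itself prescribe. The function name and the toy client values are hypothetical.

```python
from typing import List, Tuple


def federated_average(client_updates: List[Tuple[List[float], int]]) -> List[float]:
    """Average client parameter vectors, weighted by local sample counts.

    Each entry is (parameters, num_local_samples). Only parameters are
    shared with the server; the raw data never leaves the client.
    """
    total = sum(n for _, n in client_updates)
    dim = len(client_updates[0][0])
    global_params = [0.0] * dim
    for params, n in client_updates:
        for i, value in enumerate(params):
            global_params[i] += (n / total) * value
    return global_params


# Two hypothetical clients: a small site and a site with three times more data.
updates = [([1.0, 2.0], 100), ([3.0, 4.0], 300)]
global_params = federated_average(updates)
```

In a full training loop, the server would broadcast `global_params` back to the clients, which would continue local training and repeat the step for several rounds.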

Efficient Distributed Learning Algorithms

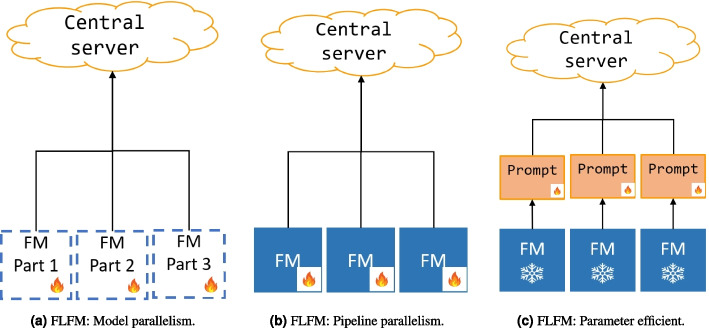

Efficient distributed learning algorithms are critical for optimizing foundation models within the constraints of limited resources [89, 145]. These algorithms are specifically engineered to address the twin challenges of enhancing communication and computation efficiency during the training and deployment of large FMs across a network of devices, which may vary in capabilities and network conditions. Two pivotal techniques in this regard are model parallelism and pipeline parallelism.

Model parallelism [146] involves dividing the model into different segments and distributing these segments across multiple devices. This allows for simultaneous processing and can significantly expedite the computation process by leveraging the combined power of multiple devices. On the other hand, pipeline parallelism [147] focuses on enhancing the overall system’s efficiency and scalability by organizing the computation process in stages. Each stage can be processed on different devices in a pipeline manner, thus optimizing the workflow and reducing idle times.
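The benefit of staging the computation can be illustrated with a toy schedule. The sketch below assumes a forward pass only, one device per stage, and equal compute time for every stage and micro-batch; these simplifications are ours and do not describe any particular system from the cited works.

```python
from typing import List, Tuple


def pipeline_schedule(num_stages: int, num_microbatches: int) -> List[List[Tuple[int, int]]]:
    """Return schedule[t]: the (stage, microbatch) pairs running at time step t."""
    steps = num_stages + num_microbatches - 1
    schedule = []
    for t in range(steps):
        # Stage s works on micro-batch t - s once that micro-batch has reached it.
        active = [(s, t - s) for s in range(num_stages)
                  if 0 <= t - s < num_microbatches]
        schedule.append(active)
    return schedule


schedule = pipeline_schedule(num_stages=3, num_microbatches=4)
pipelined_steps = len(schedule)   # stages overlap in time
sequential_steps = 3 * 4          # one (stage, micro-batch) pair at a time
```

Under these assumptions, the pipelined schedule finishes in 6 time steps instead of 12, because different stages work on different micro-batches at the same time.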

An illustrative example of these parallelism strategies in federated learning (FL) for FMs is shown in Fig. 1, where participants train distinct layers of a model using their own private, local data. Fig. 1a illustrates model parallelism and Fig. 1b illustrates pipeline parallelism. This approach not only maintains the privacy of the data but also contributes to the efficiency of the learning process. Recent studies, such as the research conducted by Yuan et al., validate the practicality of utilizing pipeline parallelism for decentralized FM training across heterogeneous devices [148].

Fig. 1. Efficient distributed learning and parameter-efficient strategies for foundation models in federated learning

Parameter-efficient Training Methods

Parameter-efficient training methods are increasingly critical in optimizing foundation models for specific domains or tasks. These methods typically involve integrating adapters, a technique where the core parameters of the FM are frozen and only a small, task-specific section of the model is fine-tuned. This approach is illustrated in Fig. 1c, which shows how adapters can be effectively incorporated into the federated learning framework for FMs. Recent implementations such as FedCLIP [50] and FFM [83] utilize this method to fine-tune FMs, achieving substantial performance improvements.

By focusing adjustments on small adapters rather than the entire model, these training methods greatly reduce the computational and communication demands typically required [149, 150]. This is particularly beneficial in FL environments where conserving bandwidth and processing power is crucial due to the distributed nature of the data and the varying capacities of participating devices. However, despite these efficiencies, the underlying requirement for substantial computational resources to manage the FM and execute the fine-tuning process remains significant.
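A rough way to see the communication saving is to count how many parameters a client would actually transmit. The sketch below uses a toy model stored as a dictionary; the `adapter.` naming convention, the layer names, and the sizes are hypothetical and chosen only for illustration.

```python
from typing import Dict, List, Tuple

Params = Dict[str, List[float]]


def split_trainable(params: Params, adapter_prefix: str = "adapter.") -> Tuple[Params, Params]:
    """Separate frozen backbone parameters from trainable adapter parameters."""
    frozen = {k: v for k, v in params.items() if not k.startswith(adapter_prefix)}
    trainable = {k: v for k, v in params.items() if k.startswith(adapter_prefix)}
    return frozen, trainable


def count(params: Params) -> int:
    """Total number of scalar parameters."""
    return sum(len(v) for v in params.values())


# Toy model: a large frozen backbone plus a small adapter (sizes are made up).
model = {
    "backbone.layer1": [0.0] * 10_000,
    "backbone.layer2": [0.0] * 10_000,
    "adapter.down": [0.0] * 100,
    "adapter.up": [0.0] * 100,
}
frozen, trainable = split_trainable(model)
# Only the adapter is updated locally and sent to the server each round.
payload_fraction = count(trainable) / count(model)
```

In this toy setting the client sends about 1% of the parameters per round, although it must still hold and run the full backbone locally, which matches the caveat noted above.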

Prompt Tuning

Prompt tuning has rapidly gained traction as a communication-efficient alternative to full model tuning, demonstrating effectiveness comparable to more resource-intensive methods [151]. This technique involves fine-tuning lightweight, additional tokens while keeping the foundational model’s main parameters frozen, which avoids the necessity of sharing large model parameters across the network. In federated learning scenarios, this approach enables leveraging the collective knowledge from multiple participants to refine the prompts used in FM training, potentially enhancing the performance of the FM.
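The mechanism can be sketched as follows: each client learns a few soft prompt vectors, the server averages only those vectors, and the result is prepended to the input of the frozen model. This is a simplified illustration under our own assumptions (unweighted averaging, toy three-dimensional embeddings, hypothetical function names), not the procedure of any specific cited method.

```python
from typing import List

Vector = List[float]


def average_prompts(client_prompts: List[List[Vector]]) -> List[Vector]:
    """Server step: element-wise average of the clients' soft prompt tokens."""
    n_clients = len(client_prompts)
    n_tokens = len(client_prompts[0])
    dim = len(client_prompts[0][0])
    return [[sum(p[t][d] for p in client_prompts) / n_clients for d in range(dim)]
            for t in range(n_tokens)]


def prepend_prompt(prompt: List[Vector], token_embeddings: List[Vector]) -> List[Vector]:
    """Client step: the frozen model sees prompt tokens followed by the input tokens."""
    return prompt + token_embeddings


# Two hypothetical clients, each with 2 prompt tokens of dimension 3.
client_a = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
client_b = [[0.0, 0.0, 1.0], [0.0, 1.0, 0.0]]
global_prompt = average_prompts([client_a, client_b])

# Placeholder embeddings for a 4-token input sequence.
tokens = [[0.1, 0.2, 0.3]] * 4
model_input = prepend_prompt(global_prompt, tokens)
```

Here each round transmits 2 x 3 numbers per client, regardless of how large the frozen model is.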

The integration of prompt tuning in FL, similar to the parameter efficient approach depicted in Fig. 1c, has been explored in recent research. Studies such as FedPrompt [152] and PROMPTFL [88] have shown promising results by improving the quality and effectiveness of prompt-based training methods through FL frameworks. These methods enable efficient and targeted tuning of model behaviors without requiring extensive data transfer or the deployment of large-scale models on each participant’s device, thereby conserving bandwidth and computational resources.

Moreover, a recent study, FedTPG [90], investigates a scalable prompt generation network that learns across multiple clients, aiming to generalize effectively to unseen classes. This approach demonstrates the potential of FL to enhance the sophistication of prompt tuning methodologies by distributing the learning process across a wide array of devices and data sources.

However, the implementation of prompt tuning in FL is not without challenges. One concern is the assumption that large FMs are readily available on user devices, which may not be feasible in resource-constrained environments. Another is the potential privacy risk of relying on cloud-based FM APIs, which could compromise the security of sensitive data.

Model Compression

Model compression has emerged as a vital strategy to mitigate the substantial memory, communication, and computational demands of large foundation models. By minimizing the size of these models, model compression enables more practical deployments within federated learning frameworks without significantly compromising performance. Prominent compression techniques include knowledge distillation, where a smaller model is trained to emulate the performance of a larger one [153], and quantization, which reduces the numerical precision of model parameters to decrease both size and computational complexity [154]. Additionally, pruning eliminates superfluous or redundant model parameters, significantly lowering the resource requirements of the model [155].
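Two of these techniques are simple enough to sketch in a few lines. The example below assumes symmetric per-tensor int8 quantization and global magnitude pruning, which are common textbook variants rather than the specific schemes of the cited works; the weight values are made up.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric quantization: map floats to integers in [-127, 127]."""
    scale = max(abs(w) for w in weights) / 127 or 1.0  # avoid a zero scale
    return [round(w / scale) for w in weights], scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Recover approximate float weights from the integers and the scale."""
    return [v * scale for v in q]


def magnitude_prune(weights: List[float], sparsity: float) -> List[float]:
    """Set the smallest-magnitude fraction `sparsity` of the weights to zero."""
    k = int(len(weights) * sparsity)
    smallest = sorted(range(len(weights)), key=lambda i: abs(weights[i]))[:k]
    pruned = list(weights)
    for i in smallest:
        pruned[i] = 0.0
    return pruned


weights = [0.5, -1.27, 0.02, 0.9, -0.01, 0.3]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))
pruned = magnitude_prune(weights, sparsity=0.5)
```

The rounding error of this quantizer is bounded by half the scale per weight, and the pruned vector keeps only the three largest-magnitude entries. Both steps reduce what has to be stored or transmitted, at the cost of some approximation.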

Implementing these compression techniques effectively requires striking a balance between reducing model size and preserving essential capabilities. This balance ensures that the compressed model performs robustly in real-world applications, maintaining the functionality of the foundation model while reducing operational demands. Research in model compression therefore focuses not only on shrinking model dimensions but also on enhancing efficiency and intelligence for specific deployment scenarios. Several works illustrate these directions. ResFed [156] is a framework that leverages model compression in federated learning to significantly cut bandwidth and storage needs while maintaining high model accuracy. Knowledge distillation [153] allows a compact “student” model to learn effectively from a larger “teacher” model, enabling the student to achieve similar performance at much lower computational cost. Quantization techniques have been explored for training neural networks that perform inference using only integer arithmetic, substantially lightening model load without sacrificing accuracy [154]. A thorough review of neural network pruning techniques shows their potential to significantly reduce model size while maintaining or improving performance [155]. Finally, the integration of model compression into federated learning is discussed in [61], which tackles challenges related to efficiency and scalability in privacy-preserving, decentralized machine learning.
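The teacher-student idea can be made concrete with the commonly used temperature-scaled distillation loss. The sketch below assumes the standard formulation (KL divergence between softened teacher and student distributions, scaled by the squared temperature); the logits and the temperature value are illustrative, and a practical setup would usually add a supervised loss on ground-truth labels.

```python
import math
from typing import List


def softmax(logits: List[float], temperature: float = 1.0) -> List[float]:
    """Numerically stable softmax with temperature scaling."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits: List[float],
                      teacher_logits: List[float],
                      temperature: float = 2.0) -> float:
    """KL(teacher || student) on softened distributions, scaled by T^2."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    kl = sum(pt * math.log(pt / ps)
             for pt, ps in zip(p_teacher, p_student) if pt > 0)
    return temperature ** 2 * kl


teacher = [4.0, 1.0, 0.5]       # hypothetical teacher logits
good_student = [4.0, 1.0, 0.5]  # matches the teacher exactly
poor_student = [0.5, 1.0, 4.0]  # ranks the classes in the opposite order
loss_good = distillation_loss(good_student, teacher)
loss_poor = distillation_loss(poor_student, teacher)
```

A higher temperature flattens both distributions, so the student also learns from the relative probabilities the teacher assigns to incorrect classes.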

FL on large language models and vision language models

In this section, we delve into the integration of federated learning with foundation models on the two main model applications: large language models and vision language models, exploring the unique challenges and opportunities presented by these advanced AI systems.

FL on large language models

Federated learning applied to large language models (LLMs) represents a transformative approach to harnessing decentralized datasets for model training, while prioritizing data privacy and security [21, 157]. This method is especially crucial for LLMs because of their inherent requirement for vast and varied data inputs to accurately capture and interpret the complexities of human language.

This nature of FL effectively addresses privacy concerns by ensuring that sensitive or proprietary data does not leave its original location, thereby reducing the risk of data breaches. Additionally, this decentralized approach allows LLMs to learn from a wider array of linguistic inputs, reflecting regional dialects, colloquialisms, and cultural nuances that might not be present in a centralized dataset [158].

Moreover, the application of FL to LLMs facilitates the development of models that are not only linguistically comprehensive but also more personalized and responsive to local contexts. By training on diverse datasets that are geographically dispersed, LLMs can develop a deeper understanding of language variations and user-specific preferences, leading to improved performance in tasks such as language translation, sentiment analysis [159], and contextual understanding.

This method also helps in mitigating biases that are often present in centralized training datasets. Since FL involves multiple datasets that are not centrally collected, the resulting model is trained on a broader spectrum of data sources, which can contribute to more balanced and fair outputs. Thus, federated learning not only enhances the privacy and security of data used in training LLMs but also boosts the models’ ability to decipher and utilize the full richness of human language, making them more accurate and effective in real-world applications.

Practical Applications of FL on LLMs

The integration of federated learning with large language models is yielding groundbreaking frameworks and methodologies that significantly enhance language model training while adhering to data privacy and security protocols. In this survey, we highlight several notable applications and advancements in this domain, including:

Privacy-preserving Federated Learning and its application to natural language processing: This work [157] explores privacy-preserving techniques in federated learning for training large language models. It focuses in particular on models such as BERT and GPT-3, providing insights into how federated learning can be leveraged to maintain privacy without sacrificing the performance of language models in NLP applications.

FedMed: A federated learning framework for language modeling: This work [21] introduces “FedMed”, a federated learning framework designed specifically for language modeling. The framework addresses the performance degradation commonly encountered in federated settings and showcases effective strategies for collaborative training without compromising model quality.

Efficient Federated Learning with Pre-Trained Large Language Model Using Several Adapter Mechanisms: This study [160] presents a method to enhance federated learning efficiency by integrating adapter mechanisms into pre-trained large language models. It emphasizes the benefits of using smaller transformer-based models to alleviate the extensive computational demands typically associated with training large models in a federated setting. The approach not only preserves data privacy but also improves learning efficiency and adaptation to new tasks.

OpenFedLLM: This contribution is a seminal effort in federated learning specifically designed for large language models. The “OpenFedLLM” framework facilitates the federated training of language models across diverse and geographically distributed datasets. A standout feature of this framework is its capability to ensure data privacy during collaborative model training. It also incorporates federated value alignment, a novel approach that promotes the alignment of model outputs with human ethical standards, ensuring that the trained models adhere to desirable ethical behaviors [161]. Moreover, OpenFedLLM is open-source, making it accessible to the broader research community and fostering collaboration in the development of federated language models.

Pretrained Models for Multilingual Federated Learning: This study addresses the complex challenges of utilizing pretrained language models within a federated learning context across multiple languages. Weller et al.’s work is crucial for understanding how multilingualism impacts federated learning algorithms, particularly the effects of non-IID (not independent and identically distributed) data inherent in natural language processing tasks across different languages. The research explores three main tasks: language modeling, machine translation, and text classification, providing valuable insights into the adaptability of federated learning to diverse linguistic datasets [162].

GPT-FL: This innovative approach integrates federated learning with prompt-based techniques to train large language models. “GPT-FL” employs prompt learning within a federated framework, which allows for efficient learning from decentralized data sources while maintaining data privacy. This method enhances model adaptability and performance across various linguistic tasks, making it a promising solution for applications requiring high levels of customization and responsiveness to user-specific contexts [86].

In summary, work on LLMs in FL focuses on balancing privacy preservation with high performance in NLP applications. Privacy-preserving federated learning [157], FedMed [21], and adapter-based fine-tuning [160] explore strategies to mitigate performance degradation in federated settings and to improve training efficiency. These approaches are particularly suited to managing the significant computational overhead associated with LLMs while ensuring that sensitive data remains secure within its local environment. OpenFedLLM introduces an open-source framework that emphasizes ethical alignment in model outputs, advocating responsible AI practices that adhere to human ethical standards and addressing the growing concern over AI alignment with societal values. Meanwhile, research on Pretrained Models for Multilingual Federated Learning tackles the challenges of multilingualism and non-IID data in federated learning, offering insights into managing diverse linguistic data and enhancing the robustness of language models across different languages. GPT-FL combines prompt-based techniques within a federated framework, improving model adaptability and customization across linguistic tasks and allowing for the personalized, contextually relevant responses that dynamic real-world applications require.

FL on vision language models

The integration of federated learning with vision language models (VLMs) marks a significant advancement in multimodal learning where both visual and textual data are processed in a privacy-preserving, distributed learning environment. These models are crucial for tasks that necessitate a deep understanding and generation of information from visual cues and textual descriptions. Federated learning enhances the capability of VLMs by enabling them to learn from a diverse set of decentralized data sources, including images and associated annotations from various geographic and demographic distributions without the need to centralize sensitive data.

VLMs integrated with FL are particularly beneficial in scenarios where data privacy is paramount, such as in healthcare for patient image data or in surveillance where personal data protection is critical. By processing data locally and only sharing model updates, FL preserves the privacy and security of the underlying data, while still benefiting from the diverse data attributes necessary for robust model training.

This approach also allows for the training of more personalized and region-specific models, capturing a wide array of cultural and contextual nuances in visual-textual datasets. For example, a VLM trained via federated learning can better understand and generate language descriptions for regional landmarks or culturally specific events, enhancing its applicability across different global contexts.

Moreover, the decentralized nature of FL helps in mitigating dataset bias, a common issue in centralized training datasets. Since the training data in FL comes from a wide range of sources, the models are less likely to overfit to the biases present in a single dataset, leading to more generalizable and fair VLMs.

This section underscores the crucial role of prompt learning in expanding the capabilities of both language and vision models trained in federated environments. By facilitating efficient task adaptation and maintaining data privacy, prompt learning represents a significant step forward in the development of AI systems that can operate across diverse and distributed data landscapes.

Practical Applications of FL on VLMs

FedCLIP: Pioneering the field of federated vision-language models, FedCLIP [19] adapts the powerful CLIP (Contrastive Language-Image Pre-training) architecture [50] to operate in a federated setting. Unlike traditional learning models that centralize data, FedCLIP enables collaborative learning across decentralized image datasets with accompanying text descriptions. Crucially, this approach safeguards data privacy by eliminating the need for sensitive user data to leave local devices.

PromptFL: [88] demonstrates the power of combining federated learning with prompt learning techniques for training models on distributed visual and textual data. Prompt learning injects flexibility into model training. In PromptFL, federated learning preserves privacy while prompt learning improves training effectiveness and efficiency across diverse datasets.
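To make the idea of federated prompt learning concrete, the following is a minimal NumPy sketch, not the PromptFL implementation. It assumes a frozen backbone represented by fixed image features and fixed class text embeddings, a learnable per-class prompt offset, and sample-weighted averaging on the server. The function names `local_prompt_update` and `aggregate_prompts` and all hyperparameters are hypothetical.

```python
import numpy as np

def softmax(z):
    z = z - z.max(axis=1, keepdims=True)   # subtract row max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

def local_prompt_update(prompt, class_embed, feats, labels, lr=0.5, steps=20):
    """Client step: train only the prompt offsets; class_embed stays frozen."""
    prompt = prompt.copy()
    onehot = np.eye(class_embed.shape[0])[labels]
    for _ in range(steps):
        text = class_embed + prompt                        # prompted class embeddings (C x d)
        probs = softmax(feats @ text.T)                    # image-text similarity -> class probabilities
        grad = (probs - onehot).T @ feats / len(labels)    # cross-entropy gradient w.r.t. the prompt
        prompt -= lr * grad
    return prompt

def aggregate_prompts(prompts, sizes):
    """Server step: sample-weighted average of the clients' prompt tensors."""
    weights = np.asarray(sizes, dtype=float)
    return np.average(np.stack(prompts), axis=0, weights=weights)
```

In this sketch only the small prompt tensor travels between client and server, which illustrates why prompt-based federated training can be lighter on communication than exchanging full model weights.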

FedPrompt: Communication-Efficient and Privacy-Preserving Prompt Tuning in Federated Learning [152] addresses two critical aspects of federated learning for vision-language models: efficiency and privacy. Prompt tuning offers adaptability but can be communication-intensive. FedPrompt explores methods to reduce communication overhead while still reaping the benefits of prompt tuning, all while ensuring that sensitive data remains protected.

pFedPrompt: [163] (“Personalized Prompt for Vision-Language Models in Federated Learning”) addresses personalization challenges in federated vision-language models by learning personalized prompts tailored to individual clients or datasets within the federated system. The aim is to unlock performance gains by having the model adapt its behavior to specific local data distributions.

FedTPG: (Text-driven Prompt Generation for Vision-Language Models in Federated Learning) [90] aims to enhance prompt generation techniques in a federated context. It introduces the idea of learning a prompt generator network which can produce context-aware prompts that guide vision-language models to tackle a variety of tasks. This has potential benefits for scenarios where a model must adapt to new classes or data it hasn’t encountered previously, aligning well with the distributed nature of federated learning.

FedMM: In computational pathology, fusing information from multiple modalities can significantly improve diagnostic accuracy. However, centralized training approaches raise privacy concerns due to the sensitive nature of medical images. FedMM introduces a federated framework designed specifically to handle multi-modal data in this context. The key idea of [164] is to train individual feature extractors for each modality in a federated manner. Because only these learned feature extractors are shared, raw image data remains protected within each institution. FedMM can accommodate the situation where different institutions or hospitals may have different sets of available modalities. It enables collaborative learning even with this data heterogeneity. Subsequent tasks like classification can be performed locally using the features extracted by the federated models.
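The handling of institutions with different modality sets can be illustrated with a short sketch. This is an assumption-laden illustration rather than the FedMM algorithm: each client is represented as a dictionary mapping a modality name to that extractor's weights, and each modality is averaged only over the clients that hold it, weighted by sample count. The function name `aggregate_by_modality` and the weighting scheme are hypothetical.

```python
import numpy as np

def aggregate_by_modality(client_extractors, client_sizes):
    """Average each modality's extractor over the clients that actually have it.

    client_extractors: list of dicts {modality name: weight array};
                       a client simply omits modalities it does not hold.
    client_sizes:      number of local samples per client (assumed weighting).
    """
    modalities = {m for ext in client_extractors for m in ext}
    global_extractors = {}
    for m in sorted(modalities):
        holders = [(ext[m], n) for ext, n in zip(client_extractors, client_sizes) if m in ext]
        total = sum(n for _, n in holders)
        global_extractors[m] = sum(w * (n / total) for w, n in holders)
    return global_extractors
```

For example, if one hospital holds both image and text extractors and another holds only an image extractor, the image extractor is averaged across both sites while the text extractor is taken from the single site that has it.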

FedDAT: Foundation models offer impressive performance across many tasks but often require substantial amounts of data for finetuning. FedDAT [165] addresses the challenge of finetuning these models in a federated context where the goal is to protect data privacy. To handle heterogeneity, FedDAT leverages a Dual-Adapter Teacher technique to regularize how model updates are made on each client. Furthermore, it employs Mutual Knowledge Distillation to facilitate efficient knowledge transfer across clients in the federated system.

CLIP2FL: Real-world data is often messy, and client devices in a federated system might have data with different characteristics or class imbalances. CLIP2FL [166] tackles this by using a pre-trained CLIP model as guidance. On the client-side, CLIP is used for knowledge distillation to improve the local feature representations. On the server-side, CLIP is employed to generate features which help retrain the server’s classifier, mitigating the negative impact of the long-tailed data problem.
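Client-side knowledge distillation of the kind described above is commonly expressed as a temperature-scaled KL divergence between teacher and student predictions. The sketch below shows that generic loss in NumPy; it is not taken from the CLIP2FL code, and the temperature value and function names are assumptions. Here the teacher logits would stand in for outputs of the pre-trained CLIP model and the student logits for the local model.

```python
import numpy as np

def softmax(z, T=1.0):
    z = z / T
    z = z - z.max(axis=-1, keepdims=True)   # numerical stability
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def distillation_loss(student_logits, teacher_logits, T=2.0):
    """Mean KL(teacher || student) on temperature-softened distributions, scaled by T^2."""
    p = softmax(teacher_logits, T)   # soft targets from the teacher
    q = softmax(student_logits, T)   # student predictions
    eps = 1e-12                      # avoids log(0)
    kl = np.sum(p * (np.log(p + eps) - np.log(q + eps)), axis=-1)
    return float(kl.mean() * T ** 2)
```

The loss is zero when the student reproduces the teacher's distribution and grows as the two diverge; in practice it is usually combined with the ordinary supervised loss on local labels.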

FedAPT: [167] introduces a method for collaborative learning in federated settings where data resides on multiple clients with varying domains (e.g., different image styles or categories). FedAPT aims to improve model generalization across these domains while maintaining data privacy. The key innovation is adaptive prompt tuning within the federated learning framework: instead of sharing raw data, FedAPT trains a meta-prompt and an adaptive network that personalize text prompts for each test sample. This allows the model to better adjust to domain-specific characteristics.

General Commerce Intelligence: [168] discusses the development of a novel NLP-based engine designed for commerce applications. This engine leverages federated learning to provide personalized services while ensuring privacy preservation across multiple merchants. The authors focus on creating a “glocally” (globally and locally) optimized system that balances global optimization needs with local data privacy requirements.

In summary, federated learning models for vision-language tasks have demonstrated significant innovation in enhancing privacy and efficiency while leveraging the synergy between textual and visual data. Models like FedCLIP, PromptFL, and FedPrompt focus on privacy and decentralization, with trade-offs in model accuracy and communication overhead. Personalization and adaptability are key in pFedPrompt and FedAPT, which aim to tailor learning to local datasets but introduce complexity in managing local and global optimization. FedTPG and CLIP2FL enhance adaptability to new tasks and data variability, although at the cost of increased computational demands. FedMM and FedDAT tackle challenges in multi-modal and heterogeneous data integration, crucial for applications like medical diagnostics. Lastly, General Commerce Intelligence optimizes federated learning for commercial applications, balancing local privacy with global optimization needs.

Framework for federated foundation models in biomedical

Conceptual framework

We simulate a hierarchical multi-tier architecture for the integration of FL and FMs to handle biomedical challenges:

Data Centers: Each compute node in FL hosts its own private biomedical datasets, which are stored locally; only model updates are communicated to the server, so that privacy is preserved.

Model Host: Foundation Models, pretrained on large-scale, public datasets, serve as the backbone on the server, which can be fine-tuned with the distributed data for the targeted biomedical challenges. Note that the large-scale model can be trained or transferred with model distillation or finetuning methods like Parameter-Efficient Fine-Tuning [169].

Aggregation: FL algorithms such as FedAvg [13] and FedProx [61] aggregate updates from the nodes while addressing data heterogeneity and fairness concerns.

Feedback: Explainable metrics and evaluation pipelines will be introduced post-aggregation to make the system robust and trustworthy.
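The data-center, model-host, and aggregation tiers above can be sketched as a single communication round. The code below is a simplified illustration under stated assumptions, not the survey's Algorithm 1: the "model" is a linear least-squares model standing in for the trainable part of a foundation model, clients run a few local gradient steps, and the server performs sample-weighted averaging in the style of FedAvg. Setting `mu > 0` adds a FedProx-style proximal term that pulls local weights toward the global ones. All function names and hyperparameters are hypothetical.

```python
import numpy as np

def local_update(w_global, X, y, lr=0.1, epochs=5, mu=0.0):
    """Data-center tier: train on private data; only the weights leave the node."""
    w = w_global.copy()
    for _ in range(epochs):
        grad = X.T @ (X @ w - y) / len(y)   # least-squares gradient on local data
        grad += mu * (w - w_global)         # FedProx-style proximal term (mu=0 gives plain FedAvg)
        w -= lr * grad
    return w

def fedavg(client_weights, client_sizes):
    """Aggregation tier: sample-weighted average of client weights."""
    sizes = np.asarray(client_sizes, dtype=float)
    return np.average(np.stack(client_weights), axis=0, weights=sizes)

def run_round(w_global, clients, mu=0.0):
    """One round: broadcast global weights, train locally, aggregate on the server."""
    updates = [local_update(w_global, X, y, mu=mu) for X, y in clients]
    return fedavg(updates, [len(y) for _, y in clients])
```

A typical use is to call `run_round` repeatedly, feeding each round's output back in as the next global weights. The feedback tier (explainability metrics and evaluation) would run on the aggregated model after each round and is omitted here.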

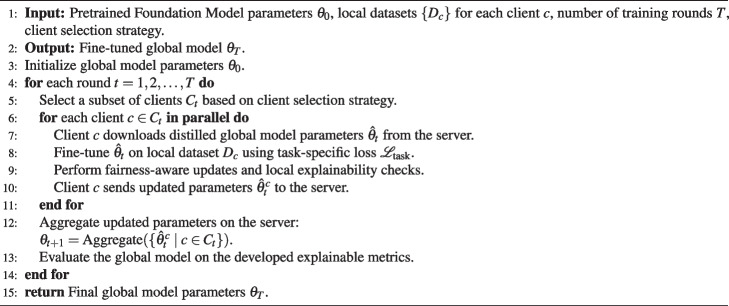

Algorithm

Algorithm 1 summarizes the Federated Foundation Model procedure for biomedical applications:

Algorithm 1 Federated Foundation Model in Biomedical

Practical applications

Training foundation models within a federated learning framework presents distinct challenges, particularly due to the disparate nature of data sources and the varied computational resources across participating devices. The overarching goal is to cultivate effective and inclusive training strategies that can efficiently manage device heterogeneity and ensure data privacy, all while maintaining high model performance.

Training foundation models from scratch within a federated learning context is an ambitious endeavor that involves complex coordination and robust algorithmic strategies. Unlike traditional centralized training environments, federated learning necessitates handling data that remains on local devices, preventing the direct sharing of raw data. This scenario demands sophisticated techniques to efficiently aggregate learning from disparate data sources, which are often uneven in size and diversity. The primary challenge lies in ensuring that the model learns effectively from each node without requiring extensive computational resources or compromising the integrity and privacy of the data. To overcome these hurdles, training strategies must be carefully designed to optimize the learning process across the network, allowing for both model convergence and performance retention. Such strategies often involve advanced algorithms for secure multi-party computation, differential privacy, or decentralized optimization methods. By training foundation models from scratch in this way, the federated approach not only safeguards data privacy but also harnesses the unique insights embedded in local data distributions, leading to more robust and generalizable models.
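As one illustration of the privacy techniques mentioned above, a client can clip its model update and add Gaussian noise before sending it to the server, which is the basic mechanism behind differentially private federated averaging. The sketch below shows only this clip-and-noise step; it does not compute a privacy budget, and the function name and default parameters are assumptions chosen for illustration.

```python
import numpy as np

def privatize_update(update, clip_norm=1.0, noise_multiplier=1.0, rng=None):
    """Clip an update to L2 norm <= clip_norm, then add Gaussian noise.

    The noise standard deviation is noise_multiplier * clip_norm. Translating
    these settings into a formal (epsilon, delta) guarantee requires a separate
    privacy accountant, which this sketch does not include.
    """
    rng = np.random.default_rng() if rng is None else rng
    norm = np.linalg.norm(update)
    scale = min(1.0, clip_norm / (norm + 1e-12))   # shrink only if the norm exceeds the bound
    clipped = update * scale
    noise = rng.normal(0.0, noise_multiplier * clip_norm, size=update.shape)
    return clipped + noise
```

Clipping bounds how much any single client can influence the aggregate, and the noise masks individual contributions; larger noise improves privacy at the cost of slower or less accurate convergence.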

Prompt learning is emerging as a pivotal approach in both natural language processing and computer vision fields, enabling models to adapt to new tasks with minimal changes to their architecture or weights. This section explores the integration of prompt learning with federated learning (FL) across different domains, highlighting recent advancements and unique applications.

Furthermore, beyond merely fostering participation, it is crucial to consider how profits and costs associated with deploying FMs via APIs are distributed. Ensuring a fair allocation of rewards and benefits is imperative to maintain trust and promote sustained cooperation among stakeholders. Mechanisms need to be established to define the distribution of profits derived from the use of FMs, guaranteeing a fair share of economic benefits. This equitable distribution is essential not only for fostering a sense of fairness but also for encouraging continued participation and investment in the FL ecosystem for FMs.

The concept of Federated Foundation Models is at the forefront of federated learning, enabling the training of large-scale models across distributed networks. This methodology is particularly effective in dealing with the challenges related to synchronizing and updating model parameters in environments where data quality and quantity are inconsistent across nodes. It ensures that learning is continuous and effective, even when network conditions and data availability vary significantly [83].

Additionally, the work titled “Heterogeneous Ensemble Knowledge Transfer for Training Large Models in Federated Learning” by Cho et al. [170] explores innovative techniques for transferring knowledge in federated settings. This study is crucial for the development of robust models capable of performing well across diverse network conditions. By facilitating knowledge transfer, this approach allows for the aggregation of insights from different data distributions and device capabilities, which is essential for building comprehensive and resilient models.

Furthermore, “No One Left Behind: Inclusive Federated Learning over Heterogeneous Devices” by Liu et al. [171] focuses on creating federated learning algorithms that integrate every participating device, regardless of its computational capabilities or the quality of the data it holds. This inclusivity ensures that every device contributes to and benefits from the collaborative learning process, thus maximizing the utilization of available data and enhancing the overall performance of the model. This approach is fundamental to achieving equity in model training and ensuring that the advantages of sophisticated model learning are universally accessible.

These studies provide a foundation for further research into strategies that better integrate foundation models into federated learning frameworks, prioritizing inclusivity and efficiency.

Real-world applications of federated FM in healthcare

Recently, the integration of Federated Learning with Foundation Models has begun to demonstrate transformative potential in real-world healthcare applications. In this part, we highlight specific case studies and practical examples where these technologies have been successfully deployed.

Predicting Parkinson’s Disease Progression: [172] applies FL to train explainable AI (XAI) [173] models for predicting the progression of Parkinson’s disease. By collaborating across multiple medical centers without sharing raw patient data, the authors developed models that maintained patient privacy while achieving high predictive accuracy.

Mammography Analysis: [174] focuses on using FL for mammography analysis, enabling different healthcare providers to collaboratively train deep learning models without centralizing sensitive patient data.

Intensive Care Unit (ICU) Mortality Prediction: The FLICU [175] framework uses FL to predict ICU mortality. By training models on decentralized data from multiple ICUs, the study demonstrated that FL could achieve performance comparable to centralized models.

Foundation models in biomedical healthcare