Abstract

Bearings are critical components of mechanical systems, and their health affects system reliability. Proactive monitoring and diagnosis of bearing faults can prevent serious safety incidents. Among the diagnostic methods that analyze bearing vibration signals, deep learning is notably effective. However, bearings often operate in noisy environments, especially during failures, and this challenges most current deep learning methods, which assume noise-free data. This paper therefore designs a Multi-Location Multi-Scale Multi-Level Information Attention Activation Network (MLSCA-CW) that combines soft threshold, self-activation and self-attention mechanisms and performs well in different kinds of strong noise environments. The model has enhanced noise filtering and multi-location information fusion capabilities. Our comparative and ablation experiments demonstrate that the model's components, including the multi-location multi-scale vibration feature extraction module, the soft threshold noise filtering module, the multi-scale self-activation mechanism and the layer attention mechanism, are highly effective at filtering noise from various locations and extracting multi-dimensional features. On the CWRU dataset, the MLSCA-CW model achieves 92.02% accuracy under various strong noise disturbances and outperforms SOTA methods under challenging working conditions.

Keywords: Deep learning, Bearing fault diagnosis, Self-activation mechanism, Attention mechanism

Subject terms: Information technology, Scientific data, Computational science

Introduction

Bearings are crucial components in mechanical systems, typically responsible for carrying loads and transmitting motion. Their condition directly influences the reliability of mechanical systems. During operation, alternating stress can degrade or damage bearing components, posing safety risks to both equipment and personnel. Consequently, monitoring bearing health and diagnosing bearing faults are essential for preventing major safety incidents in mechanical systems1. Initially, bearing fault diagnosis relied primarily on physical modeling and signal processing2. This approach required researchers to have a deep understanding of the structure of the mechanical system, and the resulting diagnostic methods were constrained by prior knowledge, limiting their broad application in industrial settings3–7. With the rapid advancement and deployment of deep learning, data-driven deep learning algorithms have become the dominant technology. They can adaptively extract fault characteristics directly from data and can establish a multi-dimensional nonlinear mapping between fault signals and their categories, enabling accurate and fast diagnosis of bearing faults8. However, bearings often operate in complex and harsh environments, so their vibration signals contain significant environmental noise. In the event of bearing failure in particular, fault features may be obscured by noise, substantially reducing model accuracy9,10.

In recent years, deep learning has advanced rapidly, with numerous models achieving breakthroughs in areas such as computer vision, natural language processing and weather forecasting11–13. These developments demonstrate the ability of deep learning to automatically discover data patterns layer by layer from large datasets. Currently, mainstream CNNs and their variants have shown promising results in diagnosing bearing faults on noise-free fault signal datasets. However, the real-world operating environments of bearings differ significantly from ideal laboratory conditions. Mechanical systems operate amid a variety of strong noises, so deep learning networks trained on clean signals perform less satisfactorily when applied to actual diagnosis and prediction. In response to this challenge, some researchers have begun to study bearing fault diagnosis from vibration signals in environments with a single type of noise. For instance, Zhang et al.14 proposed an enhanced residual network for diagnosing bearing faults under Gaussian noise with an SNR of 0–8 dB. Hou et al.15 added Gaussian-like noise with an SNR of 10–20 dB to the dataset, used an attention mechanism, and filtered the noise through the Fourier transform. Similarly, Yao et al.16 developed an intelligent method based on a stacked inverse residual convolution neural network (SIRCNN) that diagnoses bearing faults in environments with a single type of noise at SNRs of −6 to 10 dB. In summary, current research mainly targets vibration signals with a single type of weak noise, and studies on bearing fault diagnosis from signals with multiple strong noises remain scarce. Moreover, data from a single sensor can be limited by factors such as the sensor's operational state, potentially causing distortion and errors in the vibration signals17. A model trained on such data may therefore fail to learn distinct fault features and become less robust, which reduces its effectiveness.
Given this limitation, it is crucial to use information from sensors at multiple locations so that deep-learning-based networks can identify more significant and complete fault features.

To address this challenge, this paper designs a novel Multi-Location Multi-Scale Multi-Level Information Attention Activation Network (MLSCA-CW), which combines soft threshold, self-activation and self-attention mechanisms to perform well in various strong noise environments. Specifically, we consider SNRs ranging from −9 to 9 dB, which correspond to strong noise in real-world conditions. Our experimental design also mixes multiple noise sources to simulate the complex noise environment of actual equipment. The experimental results show that even in such complex, strong noise environments, our method can effectively extract fault features and diagnose faults accurately. The main contributions of this paper are as follows:

A novel feature extraction module (MLVFE) that adaptively extracts and fuses information from sensors at multiple locations is designed. The module applies multiple one-dimensional convolutions to each location channel to extract features at multiple scales, and a weight distribution sub-module generates dynamic weights for the features from different locations, achieving efficient extraction and weighted fusion of multi-location sensor information.

An improved self-activation mechanism (MSC-SA). Unlike previous studies, this paper uses multi-scale convolution to extract multi-scale signal features and efficiently and adaptively corrects the main features according to the features at different scales, so that more information is considered and feature extraction becomes more effective.

The deep residual shrinkage network module with channel-wise thresholds (DRSN-CW) is placed at multiple key locations of the MLSCA-CW model. Its soft threshold mechanism further filters noise from the vibration features extracted by the multi-location feature extraction module and from the multi-dimensional signal features extracted by the self-activation mechanisms, improving the robustness of the model.

A multi-layer attention module is proposed. It is added after the self-activation mechanisms to automatically learn the weights of shallow and deep features in multiple feature subspaces, so as to adaptively enhance useful features and suppress useless ones.

The rest of this article is organized as follows. “Related work” section reviews related work, “Method” section provides a detailed introduction to the proposed method, “Experiment” section describes the experiment and results, and “Conclusion” section gives the conclusion.

Related work

Deep learning has emerged as a prominent method for bearing fault diagnosis. Unlike traditional machine learning methods18–21, deep learning models bypass the cumbersome process of feature engineering and act as powerful, expressive universal function approximators. Especially when data are plentiful, deep learning can autonomously learn complex data patterns. Convolutional Neural Networks (CNN)22, Autoencoders (AE)23,24, Deep Belief Networks (DBN)25,26 and Recurrent Neural Networks (RNN)27 have all attracted wide attention in the field of intelligent fault diagnosis. Since CNNs were first applied to rolling bearing fault identification in 201628, they and their variants have achieved satisfactory results in classifying noise-free bearing fault vibration signals29. Wang et al.30 applied strategies such as wavelet decomposition and interpolation to transform vibration signals into grayscale images, improving the generalization performance of the CNN model. Wen et al.31 used a CNN based on LeNet-5 to enhance the learned feature scale, achieving an accuracy of 99.79% on a noise-free test set. Zhuang et al.32 improved model performance by using a multi-scale CNN to extract features at various scales. Li et al.33 introduced a bearing defect diagnosis algorithm based on a CNN with improved Dempster–Shafer theory (IDSCNN), which further enhanced network performance. Pan et al.34 developed a CNN-based architecture named “LiftingNet” to mitigate the impact of speed variations on prediction outcomes.

However, most current deep learning methods use noise-free datasets, and the few studies that add noise to the dataset use no more than two types of low-intensity noise. For example, Deng et al.35 proposed MgNet, a framework based on multi-granularity information fusion, and evaluated its performance under weak Laplacian and Gaussian noise. Yan et al.36 proposed an attention-mechanism-guided residual convolutional variational autoencoder network (AM-RCVAE), which can diagnose bearing faults under Gaussian white noise and pink noise with SNRs of −6 to 6 dB. Zhang et al.14 proposed a residual learning network that improves information flow through the network, for bearing fault diagnosis under a single type of noise with SNRs of 0–9 dB. Yao et al.16 proposed a stacked inverse residual convolution neural network (SIRCNN) to classify the type and severity of bearing faults in single-noise environments with SNRs of −6 to 10 dB. Bearing fault diagnosis under high-intensity, multi-noise environments is a prerequisite for applying deep-learning-based bearing fault diagnosis methods in practice.

However, existing networks ignore the contribution of shallow features, the extraction of multi-scale information and the fusion of multi-location information, relying excessively on deep layers to extract effective information from a single location. As a result, they perform poorly in complex noise environments. It is therefore necessary to design a new neural network that performs multi-scale feature extraction and fusion of multi-location vibration signals, adaptive aggregation of multi-level features, and multi-stage noise filtering, so as to extract fault features efficiently and identify fault types accurately.

Method

In this paper, the MLSCA-CW model mainly consists of a variable-scale multi-location information extraction and weighted fusion module, a soft-threshold-based noise filtering module, an improved multi-scale self-activation mechanism, and a multi-layer attention mechanism. In the network design, we take into account both the coupling information contained in data from sensors at multiple locations and the complementary nature of features at different scales within a single sensor. To this end, we weight and aggregate the signal features extracted at each location by one-dimensional convolutions with multiple receptive fields, and fuse the information from the multiple location sensors to enhance the feature information. To mitigate the interference of noise in these features, the output of the multi-location feature extraction module is fed into the soft threshold module, which adaptively adjusts and suppresses noise, filtering out most of the noise in the feature signals. The resulting features then undergo multi-scale convolution to extract features at various scales, and a self-activation mechanism computed from these multi-scale features dynamically rectifies the primary feature, making the fault features clearer. The signal passes through several self-activation modules with the same structure to extract multi-level features from shallow to deep layers. Through this progressive extraction and separation, the fault features and the noise mixed into them become easier to distinguish in the high-dimensional space. At the end of each self-activation layer, the soft threshold mechanism filters the noise further, purifying the features. The resulting clean shallow and deep features contain the most important information for bearing fault diagnosis.
By employing a multi-layer attention module, the shallow and deep features are fused into highly discriminative signals, which are then fed into a multi-layer perceptron to produce the final fault diagnosis.

Framework of MLSCA-CW

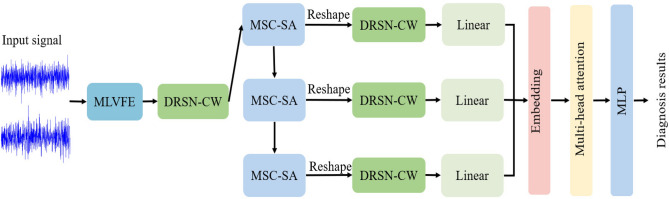

The MLSCA-CW network comprises a multi-location feature extraction and fusion module, four soft threshold signal filtering modules, three feature mapping processors, a multi-layer attention module, and a classifier that integrates feature information to produce diagnostic results. The framework of MLSCA-CW is shown in Fig. 1.

Fig. 1.

Framework of MLSCA-CW. The inputs are vibration signals from sensors at two locations, and the output is the classification result. MLVFE is the multi-location vibration signal feature extractor, DRSN-CW is the soft-threshold-based noise filtering module, MSC-SA denotes the groups of self-activation modules based on multi-scale convolution, Linear is a linear layer, Embedding is the feature embedding layer, Multi-head Attention is the multi-head attention mechanism, and MLP is a multi-layer perceptron.

Key modules of MLSCA-CW

Multi-location vibration feature extractor

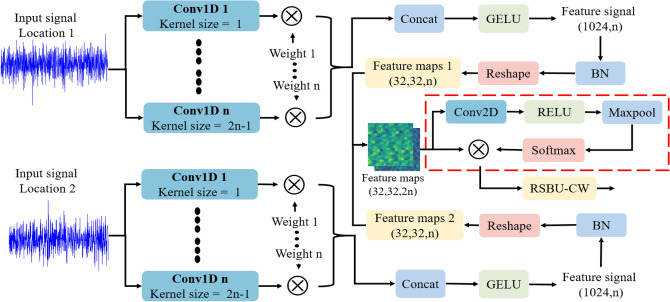

In a neural network, the quality of the input signal affects the final performance of the network, and high-quality input data is the basis of good performance. Zheng et al.17 found that data collected by a sensor at a single location suffer from problems such as signal distortion, weak resistance to interference, and incomplete characteristic signals. Furthermore, the sensor captures one-dimensional signals, and the limited expressive capacity of one-dimensional convolution leads to incomplete feature extraction from these signals. There is no consensus among scholars on efficient methods for converting such one-dimensional signals into more expressive two-dimensional features. Among the various transformation methods, Chen et al.37 placed a dedicated feature extractor at the front end of the network to enable adaptive, learnable feature extraction, which also reduces the need for additional data preprocessing, as demonstrated in Cheng's study38. Furthermore, research has shown39 that convolutional networks with multiple receptive fields express data characteristics more comprehensively than those with a fixed receptive field, and this richer representation also increases the robustness and accuracy of the network. We therefore place a self-designed multi-receptive-field multi-location vibration feature extraction module (MLVFE, Fig. 2), based on one-dimensional convolution, at the front end of the network to achieve a more comprehensive nonlinear feature mapping of the multiple one-dimensional input signals.

Fig. 2.

Structure of the Multi-location Vibration Signal Feature Extractor (MLVFE). The inputs are the vibration signals of sensors at two locations, and the output is a two-dimensional, multi-location fused feature map of the vibration signals. Conv1D n (n = 1, 2, 3, ...) denotes a one-dimensional convolution with kernel size 2n−1; these convolutions extract vibration signal features at multiple scales. The dotted box is the weight distribution sub-module, which processes the extracted features through a two-dimensional convolution, a ReLU activation function and max pooling, and finally uses a Softmax activation function to convert them into weights for the information from the different sensor locations, realizing dynamic fusion of multi-sensor information.

Specifically, given the signal inputs of the sensors at two distinct locations, the output 2D feature map of MLVFE is calculated according to Eq. (1), whose operators are the convolution operation, a Conv1D with kernel size i, a Conv2D with kernel size i, and batch normalization (BN). In addition, to couple the different spatial features, a trainable weight is attached to each Conv1D in MLVFE. Furthermore, to facilitate the fusion and complementation of vibration signal characteristics across locations, an adaptive learning parameter W, similar to the learned weights of an attention mechanism, is calculated through the Conv2D, ReLU, max-pooling and Softmax operations of the weight distribution sub-module (Fig. 2).

We use multiple unshared sets of one-dimensional convolutions to process the signals at different locations separately and capture the multi-scale features contained in the signal at each location. Taking the signal at one location as an example, three one-dimensional convolutions with kernel sizes 1, 3 and 5 each generate a feature vector, and these vectors are then concatenated into a single feature map.

| 1 |
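Because Eq. (1) is not reproduced here, the following NumPy sketch only illustrates the general idea of MLVFE under stated assumptions: each location has its own (unshared) kernels of sizes 1, 3 and 5, their "same"-length outputs are stacked into a 2D map, and a softmax over per-location scores weights the fusion. The scoring step (ReLU followed by a global maximum, scaled by a hypothetical per-location scalar) is a crude stand-in for the Conv2D, ReLU and max-pooling path of the weight distribution sub-module, and all function names are hypothetical.

```python
import numpy as np

def conv1d_same(x, kernel):
    """Convolve a single-channel signal with one kernel, keeping its length."""
    return np.convolve(x, kernel, mode="same")

def multi_scale_features(x, kernels):
    """Stack the outputs of several kernels (e.g. sizes 1, 3, 5) for one location."""
    return np.stack([conv1d_same(x, k) for k in kernels])  # (n_kernels, L)

def softmax(v):
    e = np.exp(v - np.max(v))
    return e / e.sum()

def mlvfe_sketch(signals, kernel_sets, score_scales):
    """Hypothetical, simplified MLVFE: per-location multi-scale maps + softmax fusion.

    signals:      list of 1-D arrays, one per sensor location (same length L)
    kernel_sets:  one unshared list of kernels per location
    score_scales: hypothetical per-location scalars standing in for trainable weights
    """
    maps = [multi_scale_features(x, ks) for x, ks in zip(signals, kernel_sets)]
    # Stand-in for Conv2D -> ReLU -> max pooling: ReLU, then a global max per location.
    scores = np.array([s * np.max(np.maximum(m, 0.0)) for m, s in zip(maps, score_scales)])
    weights = softmax(scores)  # one weight per location; the weights sum to 1
    fused = sum(w * m for w, m in zip(weights, maps))  # weighted fusion, (n_kernels, L)
    return fused, weights

rng = np.random.default_rng(0)
de, fe = rng.standard_normal(1024), rng.standard_normal(1024)  # two sensor locations
kernels_de = [rng.standard_normal(k) for k in (1, 3, 5)]
kernels_fe = [rng.standard_normal(k) for k in (1, 3, 5)]
fused, weights = mlvfe_sketch([de, fe], [kernels_de, kernels_fe], score_scales=[1.0, 1.0])
```

In a trained network the kernels and scoring layers would be learned; here they are random, so the sketch only demonstrates the data flow and shapes, not useful features.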

Deep residual shrinkage network with channel-wise thresholds

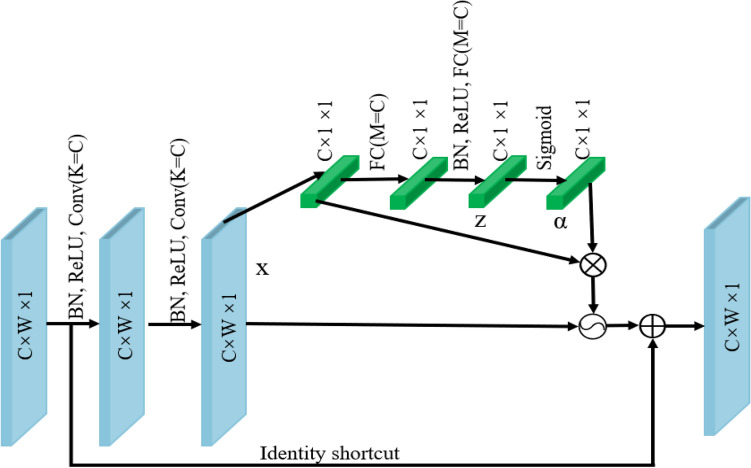

Soft thresholding is a commonly used denoising method in signal processing40,41. The general workflow is to transform the original input signal, through a series of processing steps, into a feature space in which near-zero values carry little information, and then apply a soft threshold that sets these near-zero features to zero. The classic wavelet thresholding method designs a task-oriented filter that converts useful information into features with large positive or negative values and converts noise and other useless information into near-zero features, thereby eliminating the influence of noise. This approach is effective, but designing such a filter requires highly specialized signal processing knowledge and remains challenging. Deep learning offers a way around this: its basic working principle is to fit data adaptively in multiple dimensions using gradient descent, which can take over the feature engineering required by soft thresholding42. This motivated the DRSN family of networks, which combine soft thresholding with deep learning. The structural framework of the Deep Residual Shrinkage Network with Channel-wise Thresholds (DRSN-CW) is shown in Fig. 3.

Fig. 3.

Structure of DRSN-CW. K is the number of convolution kernels in the convolutional layer, M is the number of neurons in the FC network, and C, W and 1 in C × W × 1 represent the number of channels, the width and the height of the feature map, respectively. X, Z and α are the feature maps and vectors used when determining the threshold.

The soft thresholding function is given in Eq. (2):

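$$ y = \begin{cases} x - \tau, & x > \tau \\ 0, & -\tau \le x \le \tau \\ x + \tau, & x < -\tau \end{cases} \tag{2} $$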

where x is the input feature, y is the output feature, and τ is the threshold, a positive parameter. Soft thresholding is similar to, but not the same as, the ReLU function: it sets features close to zero to zero, but unlike ReLU it preserves both positive and negative features.

Because deep learning approaches the optimum gradually through gradient descent, it is useful to analyze soft thresholding from the perspective of its gradient. As shown in Eq. (3), the derivative of the output y with respect to the input x is either 0 or 1. This property helps mitigate gradient vanishing and explosion during training.

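$$ \frac{\partial y}{\partial x} = \begin{cases} 1, & x > \tau \\ 0, & -\tau \le x \le \tau \\ 1, & x < -\tau \end{cases} \tag{3} $$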
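A minimal NumPy sketch of the soft thresholding function and its derivative, written elementwise for a fixed threshold τ (the `tau` argument). The function names are illustrative only.

```python
import numpy as np

def soft_threshold(x, tau):
    """Soft thresholding: values in [-tau, tau] become 0; others shrink toward 0 by tau."""
    return np.sign(x) * np.maximum(np.abs(x) - tau, 0.0)

def soft_threshold_grad(x, tau):
    """Derivative dy/dx: 1 where |x| > tau and 0 inside [-tau, tau]."""
    return (np.abs(x) > tau).astype(float)

x = np.array([-2.0, -0.5, 0.0, 0.3, 1.5])
y = soft_threshold(x, tau=0.5)
g = soft_threshold_grad(x, tau=0.5)
```

Unlike ReLU, which would map −2.0 to 0, soft thresholding keeps the negative feature (as −1.5), which is why it suits vibration features that carry information in both signs.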

The DRSN-CW module consists of three BN and ReLU operations, two convolutional layers, two fully connected layers, a global average pooling layer, and a sigmoid activation function. As shown in Fig. 3, the green part is the dedicated sub-network for threshold estimation. In this sub-network, the absolute values of the input are passed through global average pooling to produce a one-dimensional vector. This vector then passes through two fully connected layers, each with as many neurons as the input feature map has channels, to obtain a scaling parameter vector. Finally, the scaling parameter vector is passed through the sigmoid function, Eq. (4), which maps each element into the interval (0, 1).

$$ \alpha_c = \frac{1}{1 + e^{-z_c}} \tag{4} $$

where z_c is the feature of the c-th neuron and α_c is the c-th scaling parameter. The thresholds are then calculated as in Eq. (5):

$$ \tau_c = \alpha_c \cdot \underset{i,j}{\mathrm{average}} \left| x_{i,j,c} \right| \tag{5} $$

where τ_c is the threshold of the c-th channel of the feature map, and i, j and c are the indexes of the width, height and channel of the feature map. Because α_c lies in (0, 1), each learned threshold lies between zero and the mean absolute value of its channel, which keeps it within a reasonable range. The soft thresholding function is then applied to each channel of the input with its own threshold. In addition, to avoid gradient vanishing and explosion, the classical identity shortcut of residual networks is employed.
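The threshold-estimation branch can be sketched in NumPy for a single (C, W) feature map. This is a simplified illustration, not the authors' implementation: the fully connected weights (`w1`, `b1`, `w2`, `b2`) are hypothetical random values, the BN inside the branch is omitted, and the BN-ReLU-Conv layers of the residual branch are dropped, so the thresholding is applied directly to the input before the identity shortcut is added.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def channel_thresholds(x, w1, b1, w2, b2):
    """Per-channel thresholds tau_c for a (C, W) feature map.

    w1, b1, w2, b2 are hypothetical FC weights; BN inside the branch is omitted.
    """
    gap = np.abs(x).mean(axis=1)              # global average pooling of |x|, shape (C,)
    hidden = np.maximum(w1 @ gap + b1, 0.0)   # first FC layer + ReLU
    z = w2 @ hidden + b2                      # second FC layer gives z_c
    alpha = sigmoid(z)                        # scaling parameters alpha_c in (0, 1)
    return alpha * gap                        # tau_c = alpha_c * mean |x_c|

def shrinkage_unit(x, params):
    """Channel-wise soft thresholding followed by an identity shortcut.

    The BN-ReLU-Conv layers of the residual branch are omitted in this sketch.
    """
    tau = channel_thresholds(x, *params)
    filtered = np.sign(x) * np.maximum(np.abs(x) - tau[:, None], 0.0)
    return x + filtered, tau

rng = np.random.default_rng(1)
C, W = 4, 32
x = rng.standard_normal((C, W))
params = (rng.standard_normal((C, C)), np.zeros(C), rng.standard_normal((C, C)), np.zeros(C))
out, tau = shrinkage_unit(x, params)
```

Deriving each threshold from the channel's own mean absolute value is what keeps τ_c on the same scale as the features it filters, whatever that scale happens to be.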

Multi-scale multi-headed self-activation mechanism

Activation functions are key to the adaptive learning of deep networks; an appropriate activation function ensures the mapping ability of a neural network in multi-dimensional feature subspaces. Scholars currently divide activation functions into two major categories: the rectified linear unit ReLU(x) = max(0, x) and its variants, and the Swish function Swish(x) = x·σ(βx), where σ is the sigmoid function, which achieves high accuracy in neural networks43. The activation function of the self-activation mechanism adopted in this paper is a smooth approximation of ReLU that also retains the accuracy advantage of Swish. It is calculated by Eq. (6).

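$$ S_\beta(\eta_a, \eta_b) = (\eta_a - \eta_b)\,\sigma\!\left[\beta(\eta_a - \eta_b)\right] + \eta_b \tag{6} $$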

where β is a learnable parameter, σ is the sigmoid function, and η_a and η_b are the signal features obtained by mapping through different receptive fields. When η_a = x, η_b = 0 and β → ∞, the function reduces to ReLU(x) = max(0, x), while with η_a = x, η_b = 0 and a finite β it becomes Swish, x·σ(βx). That is, the self-activation function adopted in this paper can select an activation function suited to a specific task through its adaptive parameters, and ReLU and Swish are both special forms of it43. In addition, unlike the Swish function, whose gradient has fixed upper and lower bounds, its bounds can be adjusted. Setting η_a = p1x and η_b = p2x with learnable parameters p1 ≠ p2 and substituting them into Eq. (6) gives f(x) = (p1 − p2)x·σ[β(p1 − p2)x] + p2x. Calculating the first and second derivatives of f and solving d²f/dx² = 0, for β > 0 and p1 > p2 the maximum and minimum values of the first derivative of f are obtained as shown in Eq. (7):

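$$ \max\left(\frac{df}{dx}\right) \approx 1.0998\,p_1 - 0.0998\,p_2, \qquad \min\left(\frac{df}{dx}\right) \approx 1.0998\,p_2 - 0.0998\,p_1 \tag{7} $$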

As Eq. (7) shows, modifying p1 and p2 adjusts the gradient range of the activation function, that is, the activation degree of neurons. The value of β controls how fast df/dx approaches its upper and lower bounds (p1 as x → +∞ and p2 as x → −∞ when β > 0 and p1 > p2): the larger β is, the smaller |x| needs to be for df/dx to come close to these bounds, and vice versa. Therefore, choosing a reasonable β can improve the overall performance of the model. For example, β is set as a trainable parameter in44, which improves model performance. In our proposed module, β is likewise a learnable parameter determined by network training.
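The derivative bounds can be checked numerically. The sketch below assumes the substituted form f(x) = (p1 − p2)x·σ[β(p1 − p2)x] + p2x with illustrative values p1 = 1.5, p2 = 0.2 and β = 1 (hypothetical choices satisfying β > 0 and p1 > p2). It evaluates the analytic first derivative on a dense grid and compares its extremes and limits with Eq. (7).

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def f(x, p1, p2, beta):
    """Self-activation with eta_a = p1*x and eta_b = p2*x."""
    d = (p1 - p2) * x
    return d * sigmoid(beta * d) + p2 * x

def df_dx(x, p1, p2, beta):
    """Analytic first derivative of f with respect to x."""
    s = beta * (p1 - p2) * x
    sig = sigmoid(s)
    # d/ds [s * sigmoid(s)] = sigmoid(s) + s * sigmoid(s) * (1 - sigmoid(s))
    return (p1 - p2) * (sig + s * sig * (1.0 - sig)) + p2

p1, p2, beta = 1.5, 0.2, 1.0  # illustrative values with beta > 0 and p1 > p2
xs = np.linspace(-20.0, 20.0, 400001)
grad = df_dx(xs, p1, p2, beta)
print(grad.max(), 1.0998 * p1 - 0.0998 * p2)  # numerical maximum vs. Eq. (7)
print(grad.min(), 1.0998 * p2 - 0.0998 * p1)  # numerical minimum vs. Eq. (7)
```

The extremes do not depend on β, since β only rescales the x axis. This is consistent with the text: p1 and p2 set the gradient range, while β sets how quickly that range is reached.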

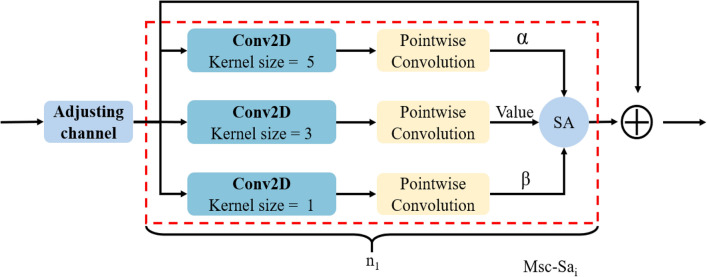

In the Multi-Scale Multi-headed Self-Activation module (MSC-SA), the activation degree of neurons can be adjusted adaptively through three trainable parameters, analogous to an inductive bias as in transformers, which avoids the limitations of a fixed activation function in feature extraction and gradient calculation. Building on this, to further enhance the model's robustness, we employ multi-scale convolution combined with pointwise convolution to extract channel information. This information serves as a parameter that regulates the activation level of neurons, yielding a new self-activation function, as illustrated in Fig. 4.

Fig. 4.

Structure of MSC-SA. The multipliers in the figure indicate the number of repetitions of the corresponding operations.

To keep the feature shapes consistent for the residual connection, the number of input feature channels is adjusted by a downsampling multi-scale convolution before MSC-SA extracts the input features. Then, in a manner similar to the multi-head self-attention mechanism of transformers, the channels are split into heads, and the self-activation function values of the features are computed for all heads in parallel.
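One possible reading of the multi-head self-activation step is sketched below in NumPy; it is not the paper's exact module. Assumptions: two depthwise branches with kernel sizes 3 and 5 (hypothetical choices) produce η_a and η_b, the channels are split evenly into heads, and each head applies the smooth-maximum self-activation with its own β. The downsampling and pointwise convolutions are not reproduced, and all names are illustrative.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def depthwise_conv_same(x, kernels):
    """Convolve each channel of x (C, L) with its own 1-D kernel, keeping length L."""
    return np.stack([np.convolve(xc, k, mode="same") for xc, k in zip(x, kernels)])

def self_activation(eta_a, eta_b, beta):
    """Smooth maximum of eta_a and eta_b, controlled by beta."""
    diff = eta_a - eta_b
    return diff * sigmoid(beta * diff) + eta_b

def msc_sa_sketch(x, kernels_a, kernels_b, betas, n_heads):
    """Hypothetical multi-head self-activation on a (C, L) feature map.

    eta_a and eta_b come from two branches with different receptive fields; the
    channels are split into n_heads groups, each with its own beta, and all heads
    are evaluated in parallel via broadcasting.
    """
    C, L = x.shape
    if C % n_heads != 0:
        raise ValueError("channels must divide evenly into heads")
    eta_a = depthwise_conv_same(x, kernels_a).reshape(n_heads, C // n_heads, L)
    eta_b = depthwise_conv_same(x, kernels_b).reshape(n_heads, C // n_heads, L)
    beta = np.asarray(betas, dtype=float).reshape(n_heads, 1, 1)
    return self_activation(eta_a, eta_b, beta).reshape(C, L)

rng = np.random.default_rng(2)
C, L, heads = 8, 64, 4
x = rng.standard_normal((C, L))
ka = [rng.standard_normal(3) for _ in range(C)]  # receptive field 3
kb = [rng.standard_normal(5) for _ in range(C)]  # receptive field 5
y = msc_sa_sketch(x, ka, kb, betas=[0.5, 1.0, 2.0, 4.0], n_heads=heads)
```

Splitting channels into heads lets each group learn a different activation shape (for example, ReLU-like with a large β or smoother with a small β) at no extra cost in the forward pass.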

Multi-headed layer attention mechanism

The MLSCA-CW model uses three multi-head self-activation modules with multi-scale receptive fields to extract the features of the signal from shallow to deep. In previous studies, only the final deep features served as the basis for the classifier, so the shallow features were discarded even though they may contain information useful for prediction. This paper therefore proposes an approach that integrates shallow and deep features through a multi-head attention mechanism, enabling the model to adaptively use both for comprehensive information extraction, which improves robustness and prediction accuracy. After noise reduction by the DRSN-CW modules, the shallow and deep features are each passed through a linear layer, as shown in Fig. 1, so that all outputs share a uniform shape. To help the attention mechanism learn the relationships between deep and shallow features, the result is then processed by an embedding layer, which captures the complex hidden relationships between them. The process can be expressed as Eq. (8).

| 8 |

where the inputs are the features of each network layer, the outputs are their embeddings, and E is a trainable matrix used for the nonlinear mapping from the input features to their embeddings. The subscript denotes the network layer index.
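The linear-plus-embedding step can be sketched as follows, assuming hypothetical feature-map shapes for the three stages and an embedding size D = 16. Each stage's map is flattened and projected to D by its own linear layer, and a shared matrix E is then applied. The text calls the mapping nonlinear but does not specify the nonlinearity, so this sketch applies E as a plain matrix product (an assumption).

```python
import numpy as np

def to_tokens(stage_outputs, linear_weights, E):
    """Turn per-stage feature maps into one embedded token per network layer.

    stage_outputs:  list of (C_l, L_l) arrays, shallow to deep (hypothetical shapes)
    linear_weights: one (D, C_l * L_l) matrix per stage, giving a uniform size D
    E:              shared (D, D) embedding matrix (applied linearly in this sketch)
    """
    tokens = [W @ f.ravel() for f, W in zip(stage_outputs, linear_weights)]
    X = np.stack(tokens)  # (N, D): one row per network layer
    return X @ E.T        # embedded tokens, (N, D)

rng = np.random.default_rng(4)
D = 16
shapes = [(32, 256), (64, 64), (128, 16)]  # hypothetical shallow-to-deep shapes
stages = [rng.standard_normal(s) for s in shapes]
linears = [rng.standard_normal((D, c * l)) / np.sqrt(c * l) for c, l in shapes]
E = rng.standard_normal((D, D)) / np.sqrt(D)
tokens = to_tokens(stages, linears, E)
```

Projecting every stage to the same size D is what allows features of very different resolutions to be treated as a short sequence of comparable tokens by the attention module.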

The MLSCA-CW model applies the attention mechanism in multi-head form to capture the coupling between all features. Specifically, for each input feature, the multi-head mechanism (A = 4 heads) produces four outputs. To obtain the final output for that input, these four outputs are first concatenated, and a single fully connected layer without a nonlinear activation then applies a linear transformation to the concatenation. The complete computation is as follows:

Let z_l (l = 1, ..., N) denote the inputs of the multi-layer attention module, one per network layer; they are also the outputs of the embedding layer. The query, key and value (q/k/v) vectors are calculated by Eq. (9).

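$$ q_l^{(a)} = W_Q^{(a)}\,\mathrm{LN}(z_l), \quad k_l^{(a)} = W_K^{(a)}\,\mathrm{LN}(z_l), \quad v_l^{(a)} = W_V^{(a)}\,\mathrm{LN}(z_l), \qquad a = 1, \dots, A \tag{9} $$

where LN(·) denotes layer normalization, W_Q^{(a)}, W_K^{(a)} and W_V^{(a)} are the trainable projection matrices of head a, a is the index of the attention head, and A is the total number of attention heads. The attention weights are calculated through a scaled dot product, as shown in Eq. (10).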

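$$ \omega_{l,l'}^{(a)} = \mathrm{SM}_{l'}\!\left( \frac{ {q_l^{(a)}}^{\top} k_{l'}^{(a)} }{ \sqrt{D_h} } \right), \qquad l' = 1, \dots, N \tag{10} $$

where SM is the softmax activation function, taken over l', and D_h is the dimension of each attention head.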

In the encoding step of the attention mechanism, as shown in Eq. (11), the output of each attention head is obtained as the weighted sum of the value vectors v, using that head's self-attention coefficients.

$$ s_l^{(a)} = \sum_{l'=1}^{N} \omega_{l,l'}^{(a)}\, v_{l'}^{(a)} \tag{11} $$

Then, as shown in Eq. (12), the vectors from all heads are concatenated and linearly projected to obtain the attention representations of the hierarchical features within the network.

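$$ z'_l = W_O \left[ s_l^{(1)}; s_l^{(2)}; \dots; s_l^{(A)} \right] \tag{12} $$

where W_O is the weight matrix of the linear projection layer.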
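A compact NumPy sketch of multi-head self-attention across layer tokens, following the steps described above: layer normalization, per-head q/k/v projections, scaled dot-product softmax weights, a weighted sum of values, and concatenation followed by a linear projection without activation. Assumptions: three layer tokens (N = 3), embedding size 16, A = 4 heads, random projection matrices, a layer norm without learnable gain or bias, and the row-vector convention (h @ W rather than W h).

```python
import numpy as np

def layer_norm(z, eps=1e-5):
    mu = z.mean(axis=-1, keepdims=True)
    var = z.var(axis=-1, keepdims=True)
    return (z - mu) / np.sqrt(var + eps)

def softmax(s, axis=-1):
    e = np.exp(s - s.max(axis=axis, keepdims=True))
    return e / e.sum(axis=axis, keepdims=True)

def layer_attention(z, Wq, Wk, Wv, Wo, n_heads):
    """Multi-head self-attention over N layer tokens z of shape (N, D)."""
    N, D = z.shape
    dh = D // n_heads
    h = layer_norm(z)

    def split(m):
        # (N, D) -> (n_heads, N, dh): one slice of the projection per head
        return m.reshape(N, n_heads, dh).transpose(1, 0, 2)

    q, k, v = split(h @ Wq), split(h @ Wk), split(h @ Wv)
    attn = softmax(q @ k.transpose(0, 2, 1) / np.sqrt(dh))  # (n_heads, N, N)
    s = attn @ v                                            # weighted sum of values
    concat = s.transpose(1, 0, 2).reshape(N, D)             # concatenate the heads
    return concat @ Wo, attn                                # linear projection, no activation

rng = np.random.default_rng(3)
N, D, A = 3, 16, 4  # three layer tokens, embedding size 16, four heads
z = rng.standard_normal((N, D))
Wq, Wk, Wv, Wo = (rng.standard_normal((D, D)) / np.sqrt(D) for _ in range(4))
out, attn = layer_attention(z, Wq, Wk, Wv, Wo, n_heads=A)
```

Because each row of `attn` is a distribution over layers, a trained model can learn, per head, how much each output should draw on shallow versus deep features.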

Experiment

This study uses the PyTorch deep learning platform to build the deep neural networks, leveraging autograd and GPU-accelerated tensor computation. The operating system is Windows 10.

Dataset

The data used in this paper come from the Case Western Reserve University (CWRU) Bearing Fault Diagnosis Dataset45, the Intelligent Maintenance Systems (IMS) Dataset46, and the University of Ottawa (UofO) dataset48,49. The widely used CWRU dataset contains noise-free vibration data of bearings under different fault modes and working conditions; in this paper, it is used to study bearing fault diagnosis under different kinds of strong noise. The operating environment of the bearings in the IMS dataset is closer to actual conditions, so this paper uses it to study the comprehensive performance of the model.

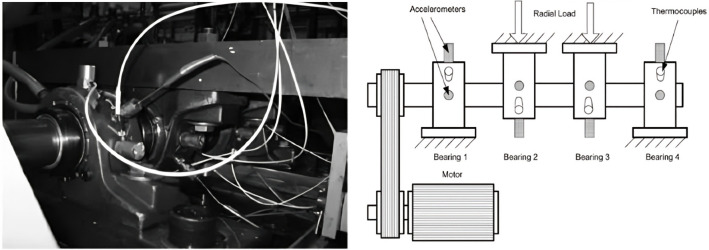

a) Case Western Reserve University (CWRU) Dataset

The dataset was collected by the Case Western Reserve University Bearing Data Center, and the bearing faults were artificially induced by electrical discharge machining (EDM). Specifically, single-point faults with diameters of 0.007, 0.014 and 0.021 inches were introduced separately into the inner ring, outer ring and rolling elements of the bearings. The bearings were then installed in the test motor, and data were recorded by vibration sensors at the motor's drive end (DE) and fan end (FE). Throughout the experiments, the motor load ranged from 0 to 3 hp, the motor speed varied slightly between 1720 and 1797 RPM, and the vibration signals were sampled at 12 kHz and 48 kHz. In our experiments, the DE and FE data with a fault size of 0.007 inch, a sampling frequency of 12 kHz and a load of 0 hp were selected45.

b) Intelligent Maintenance Systems (IMS) Dataset

The dataset is life-cycle data for rolling bearings produced by the NSF I/UCR Intelligent Maintenance Systems Center at the University of Cincinnati, USA, with support from Rexnord, Inc., Milwaukee, WI46.

As shown in Fig. 5, an AC motor rotates at a constant speed of 2000 rpm and is connected to the shaft by a friction belt. Four Rexnord ZA-2115 double-row roller bearings are installed on the rotating shaft, and a radial load of 6000 pounds is applied to the bearings through a spring mechanism. All bearings are force-lubricated. The vibration signals of the bearings in the X and Y directions were measured with high-precision quartz accelerometers. All bearings failed after exceeding their design life (more than 100 million revolutions). This paper treats the data collected by the accelerometers in the two directions as bearing signals from two different locations. In our experiments, the health status of the bearings was classified into four categories: outer ring failure (OR), inner ring failure (IR), rolling element failure (BF) and healthy bearing (H).

Fig. 5.

The IMS systems47.

c) University of Ottawa (UofO) Dataset

The experiments were conducted using a SpectraQuest machinery fault simulator (MFS-PK5M). The motor drives the shaft, and its rotational speed is controlled by an AC drive. Two ER16K ball bearings support the shaft; the left bearing is healthy, while the right one is replaced with bearings in various health conditions. An ICP accelerometer (Model 623C01) is mounted on the housing of the experimental bearing to collect vibration data, and an incremental encoder (EPC model 775) measures the shaft's rotational speed. The datasets are described in detail in References48,49.

Experimental setup

To convert the one-dimensional vibration signals into two-dimensional feature maps, each sample in this paper consists of 1024 vibration sampling points captured simultaneously at two locations. In the CWRU dataset, we treat the drive end (DE) and fan end (FE) as two different locations. Samples are extracted with a sliding window using a step size of 128, yielding 4744 samples: 1186 each for the healthy state, rolling element fault, outer ring fault and inner ring fault.
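The sliding-window sampling can be sketched as follows. Each sample stacks the same 1024-point window from both locations, and with a record of n points the number of windows is ⌊(n − 1024)/step⌋ + 1. The 5000-point records below are synthetic placeholders, not actual CWRU data, and the function names are hypothetical.

```python
import numpy as np

def sliding_window_samples(loc_a, loc_b, window=1024, step=128):
    """Cut two synchronised 1-D recordings into samples of shape (2, window)."""
    n = min(len(loc_a), len(loc_b))
    if n < window:
        raise ValueError("recordings are shorter than one window")
    starts = range(0, n - window + 1, step)
    return np.stack([np.stack([loc_a[s:s + window], loc_b[s:s + window]]) for s in starts])

def expected_count(n, window=1024, step=128):
    """Number of windows: floor((n - window) / step) + 1."""
    return (n - window) // step + 1

de = np.arange(5000, dtype=float)   # synthetic stand-in for a drive-end record
fe = -np.arange(5000, dtype=float)  # synthetic stand-in for a fan-end record
samples = sliding_window_samples(de, fe)
```

With step 128 consecutive samples overlap by 896 points, which multiplies the number of training samples; the IMS and UofO settings (step 1024) produce non-overlapping windows instead.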

For the IMS dataset, following the analysis of Hai Qiu et al.47, data from bearing 1 of dataset 1 between 23/10/2003 12:06:24 and 09/11/2003 13:05:58 are considered healthy. Data from bearing 3 of dataset 1 between 18/11/2003 08:22:30 and 24/11/2003 23:57:32 are considered inner ring faults, and data from bearing 4 of dataset 1 over the same period are considered rolling element faults. Data from bearing 3 of dataset 3 between 12/4/2004 14:51:57 and 18/4/2004 02:42:55 are considered outer ring faults. For bearings 1, 3 and 4 of dataset 1, the two channels are regarded as the two locations; for dataset 3, the bearing 3 data are duplicated to serve as the data for both locations. Samples are intercepted with a sliding window of step size 1024, yielding 4,095 samples. The numbers of samples for the healthy, rolling element fault, outer ring fault and inner ring fault classes are 1023, 1123, 1123 and 1124, respectively.

For the UofO dataset, data set 01 is selected for each of the healthy, inner ring fault and outer ring fault conditions under increasing bearing speed. In each bearing data set, 'Channel_1' is the vibration data measured by the accelerometer and 'Channel_2' is the speed data measured by the encoder; this paper regards 'Channel_1' and 'Channel_2' as data from two different positions. Sliding-window interception with a step size of 1024 yields 5,860 samples: 1953 healthy, 1953 outer ring fault and 1954 inner ring fault samples.

All samples are divided into training, validation and test sets in the ratio 6:2:2. To ensure the credibility and reproducibility of the results, a fixed random seed is used. During training, the learning rate is set to 0.001 and the batch size to 64. The loss function is cross-entropy, and the network is optimized with the Adam optimizer32, an adaptive variant of stochastic gradient descent.
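A reproducible 6:2:2 split and batching into groups of at most 64 samples can be sketched as below. This is an illustration under stated assumptions: the seed value 42 and the helper names `split_622` and `batches` are hypothetical, the split is a plain (non-stratified) shuffle, and the training loop itself (cross-entropy loss, Adam, learning rate 0.001) would come from a deep learning framework and is not shown.

```python
import random
from typing import Iterator, List, Sequence, Tuple


def split_622(n_samples: int, seed: int = 42) -> Tuple[List[int], List[int], List[int]]:
    """Shuffle sample indices with a fixed seed and split them 6:2:2."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)  # local RNG: same seed -> same split
    n_train = n_samples * 6 // 10
    n_val = n_samples * 2 // 10
    train = indices[:n_train]
    val = indices[n_train:n_train + n_val]
    test = indices[n_train + n_val:]  # any rounding remainder goes to the test set
    return train, val, test


def batches(indices: Sequence[int], batch_size: int = 64) -> Iterator[List[int]]:
    """Yield consecutive mini-batches of at most `batch_size` indices."""
    for start in range(0, len(indices), batch_size):
        yield list(indices[start:start + batch_size])


train, val, test = split_622(4744)
print(len(train), len(val), len(test))  # 2846 948 950
```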

Three evaluation metrics are used: prediction accuracy (ACC), F1-score and Recall. They are calculated as follows:

$$\mathrm{ACC}=\frac{1}{N}\sum_{i=1}^{C} TP_i \tag{13}$$

$$\text{F1-score}=\frac{1}{C}\sum_{i=1}^{C}\frac{2\,TP_i}{2\,TP_i+FP_i+FN_i} \tag{14}$$

$$\mathrm{Recall}=\frac{1}{C}\sum_{i=1}^{C}\frac{TP_i}{TP_i+FN_i} \tag{15}$$

where $TP_i$, $FP_i$ and $FN_i$ denote the numbers of true positives, false positives and false negatives of class $i$, $C$ is the number of classes and $N$ is the total number of test samples.
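The metrics can be computed from a confusion matrix as sketched below. This assumes ACC is the overall accuracy and that F1-score and Recall are macro-averaged over the C classes; the function name `metrics_from_confusion` is hypothetical. Per-class F1 is written as 2TP/(2TP + FP + FN), which equals the harmonic mean of precision and recall.

```python
from typing import Sequence, Tuple


def metrics_from_confusion(cm: Sequence[Sequence[int]]) -> Tuple[float, float, float]:
    """Return (ACC, macro F1, macro Recall) from a C x C confusion matrix.

    cm[i][j] counts samples whose true class is i and predicted class is j.
    """
    c = len(cm)
    total = sum(sum(row) for row in cm)
    tp = [cm[i][i] for i in range(c)]
    fn = [sum(cm[i]) - tp[i] for i in range(c)]                       # row total minus diagonal
    fp = [sum(cm[r][i] for r in range(c)) - tp[i] for i in range(c)]  # column total minus diagonal

    acc = sum(tp) / total
    # Per-class scores; a zero denominator (class absent and never predicted) scores 0
    f1 = [2 * tp[i] / (2 * tp[i] + fp[i] + fn[i]) if (2 * tp[i] + fp[i] + fn[i]) else 0.0
          for i in range(c)]
    recall = [tp[i] / (tp[i] + fn[i]) if (tp[i] + fn[i]) else 0.0 for i in range(c)]
    return acc, sum(f1) / c, sum(recall) / c


# Two-class toy example: 5/5 of class 0 and 4/5 of class 1 are correct
acc, f1, rec = metrics_from_confusion([[5, 0], [1, 4]])
print(round(acc, 4), round(f1, 4), round(rec, 4))  # 0.9 0.899 0.9
```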

To evaluate the effectiveness of the proposed MLSCA-CW network, six benchmark models are selected for comparison.

LR model: Logistic regression (LR) is a classic machine learning model for problems in which the dependent variable is categorical, and it can handle multi-class classification. It offers good interpretability, low computational cost and fast training.

MC-CNN model50: This network incorporates multi-scale convolution into the traditional CNN architecture, which enhances the model's robustness. It is widely used in fault diagnosis and is a high-performing multi-scale method.

WDCNN model51: A widely used bearing fault diagnosis method that uses a wide convolution kernel in its first convolutional layer to extract features and suppress noise.

Multiscale inner product model52: A deep learning method that extracts fault features from vibration signals using multi-scale inner products and locally connected features. It has a strong ability to capture multi-scale local information and is a high-performing multi-scale method.

SANet model53: This model uses multi-scale convolution for feature extraction and, inspired by the Transformer architecture, incorporates a self-activation mechanism. It performs strongly overall in accuracy and robustness and is a high-performing multi-scale method.

QCNN model54: A convolutional neural network built from quadratic neurons, from which an attention mechanism is derived; it has a strong noise-filtering effect.
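Several of these baselines (MC-CNN, the Multiscale inner product model and SANet) rely on multi-scale feature extraction. The core idea can be sketched as parallel 1-D convolutions with different kernel sizes applied to the same signal, so each branch sees a different receptive field. This is a minimal illustration only: the fixed moving-average kernels and the kernel sizes (3, 5, 7) are hypothetical stand-ins for learned weights, not the configuration of any model above.

```python
from typing import List, Sequence


def conv1d_same(x: Sequence[float], kernel: Sequence[float]) -> List[float]:
    """Zero-padded 1-D cross-correlation (the 'convolution' of deep learning
    libraries) that keeps the output length equal to the input length.
    Assumes an odd kernel length."""
    k = len(kernel)
    pad = k // 2
    padded = [0.0] * pad + list(x) + [0.0] * pad
    return [sum(kernel[j] * padded[i + j] for j in range(k)) for i in range(len(x))]


def multi_scale_features(x: Sequence[float], kernel_sizes=(3, 5, 7)) -> List[List[float]]:
    """One branch per kernel size; moving-average kernels are hypothetical
    placeholders for learned filters."""
    return [conv1d_same(x, [1.0 / k] * k) for k in kernel_sizes]


impulse = [0.0] * 4 + [1.0] + [0.0] * 4
for k, branch in zip((3, 5, 7), multi_scale_features(impulse)):
    # A wider kernel spreads the impulse over more neighbouring points
    print(k, sum(1 for v in branch if v > 0))
```

In a trained network the branch outputs would typically be concatenated or otherwise fused before the next layer.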

This study first conducts preliminary tests of the model on the CWRU and IMS datasets. To study the model's robustness to multiple types of strong noise, Gaussian, Laplacian, Brownian and violet noise are added to the original CWRU signals at SNRs from −9 dB to 9 dB in steps of 3 dB, where $\mathrm{SNR}=10\log_{10}(P_s/P_n)$ and $P_s$ and $P_n$ denote the signal and noise power, respectively. The IMS dataset is further used to test the model's overall performance.

Results and discussion

Ablation experiment

To assess the efficacy of the proposed modules, we conducted comprehensive ablation experiments on the network modules under violet noise with SNRs ranging from −9 dB to 9 dB. The results are presented in Tables 1 and 2: Table 1 reports the ablation results of the two-location model, and Table 2 those of the single-location model (drive end (DE) data). MLSCA denotes the MLSCA-CW model with the soft threshold module removed; MLSCA-CW-LSA removes the layer attention mechanism at the end of the structure; MLSCA-CW-SCA removes the multi-scale self-activation mechanism; and MLSCA-FFT replaces the soft threshold module with a fast Fourier transform (FFT).

Table 1.

Ablation results (%) of the models under violet noise of different intensities (CWRU dataset, two locations).

| Model | Metric | −9 dB | −6 dB | −3 dB | 0 dB | 3 dB | 6 dB | 9 dB |
|---|---|---|---|---|---|---|---|---|
| MLSCA-CW | ACC | 92.017 | 92.437 | 94.118 | 96.639 | 100.000 | 100.000 | 100.000 |
| | F1-score | 87.802 | 90.130 | 93.052 | 95.452 | 100.000 | 100.000 | 100.000 |
| MLSCA | ACC | 68.487 | 76.891 | 77.731 | 78.151 | 79.412 | 80.672 | 87.815 |
| | F1-score | 57.745 | 65.497 | 71.404 | 73.887 | 75.648 | 76.667 | 87.479 |
| MLSCA-CW-LSA | ACC | 84.034 | 88.655 | 91.176 | 95.378 | 100.000 | 100.000 | 100.000 |
| | F1-score | 68.116 | 86.634 | 88.365 | 93.749 | 100.000 | 100.000 | 100.000 |
| MLSCA-CW-SCA | ACC | 78.992 | 81.513 | 81.933 | 82.773 | 83.193 | 86.134 | 92.857 |
| | F1-score | 66.776 | 67.780 | 68.728 | 76.395 | 77.026 | 81.189 | 91.008 |
| MLSCA-FFT | ACC | 61.765 | 63.445 | 74.790 | 76.050 | 77.731 | 79.412 | 84.454 |
| | F1-score | 46.556 | 51.475 | 68.913 | 71.019 | 74.568 | 73.527 | 83.146 |

Best results are highlighted.

Table 2.

Ablation results (%) of the models under violet noise of different intensities (CWRU dataset, single location).

| Model | Metric | −9 dB | −6 dB | −3 dB | 0 dB | 3 dB | 6 dB | 9 dB |
|---|---|---|---|---|---|---|---|---|
| MLSCA-CW | ACC | 91.437 | 92.017 | 93.697 | 94.597 | 95.277 | 99.160 | 100.000 |
| | F1-score | 88.815 | 89.326 | 89.665 | 92.078 | 92.563 | 98.985 | 100.000 |
| MLSCA | ACC | 73.109 | 75.210 | 76.050 | 79.412 | 84.034 | 84.454 | 92.857 |
| | F1-score | 67.711 | 70.018 | 70.157 | 74.224 | 79.210 | 80.813 | 91.590 |
| MLSCA-CW-LSA | ACC | 91.597 | 92.437 | 92.857 | 93.277 | 94.539 | 96.218 | 99.580 |
| | F1-score | 89.186 | 90.512 | 91.088 | 91.571 | 92.605 | 95.453 | 99.519 |
| MLSCA-CW-SCA | ACC | 83.193 | 83.193 | 84.454 | 84.874 | 86.975 | 90.756 | 93.277 |
| | F1-score | 68.151 | 72.383 | 79.472 | 79.535 | 84.337 | 88.925 | 92.305 |
| MLSCA-FFT | ACC | 73.529 | 76.471 | 77.731 | 79.832 | 81.933 | 83.193 | 88.235 |
| | F1-score | 67.594 | 69.448 | 71.267 | 76.235 | 78.352 | 77.352 | 86.290 |

Best results are highlighted.

According to Table 1, removing the soft threshold noise reduction module from the MLSCA-CW model significantly reduces its noise resistance. At −9 dB SNR, the MLSCA model achieves a fault classification accuracy of only 68.487% and an F1-score of only 57.745%, showing that its classification is strongly affected by noise. In contrast, the proposed MLSCA-CW model reaches an accuracy of 92.017% and an F1-score of 87.802% under the same conditions. Even in the low-noise environment with an SNR of 9 dB, the MLSCA model without the soft threshold module reaches only 87.815% accuracy, which further suggests that the soft threshold module filters out most of the noise while retaining the main fault features. Removing the layer attention module (MLSCA-CW-LSA) prevents the model from effectively using low-dimensional features: classification relies only on the final high-dimensional features and ignores important information that may be contained in the shallow features. The results show that, without layer attention, the extracted high-dimensional features are still sufficient for accurate nonlinear classification when the SNR is above 0 dB, where the accuracy reaches 100%.

However, as the noise intensity increases, the performance of MLSCA-CW-LSA declines and falls below that of the proposed MLSCA-CW model, and the gap widens as the noise becomes stronger (84.034% versus 92.017% at −9 dB). This indicates that the designed layer attention mechanism effectively integrates high- and low-dimensional vibration features, giving the classifier more comprehensive and complete information and substantially improving model performance. The MLSCA-CW-SCA model removes the multi-scale self-activation mechanism. As Table 1 shows, this causes a serious performance decline compared with the complete model: even under very weak noise (SNR = 9 dB) the model reaches only 92.857% accuracy, and its accuracy drops to 78.992% in the strong noise environment of −9 dB. This shows that the improved self-activation mechanism contributes to both the accuracy and the robustness of MLSCA-CW. FFT is an effective noise reduction tool in traditional signal processing, and the MLSCA-FFT model uses it in place of the soft threshold module. The results show that MLSCA-FFT loses much of its noise filtering ability for both single- and two-location signals; in the two-location setting at −9 dB, its accuracy is more than 30 percentage points lower than that of MLSCA-CW (61.765% versus 92.017%). This further shows that soft thresholding has clear advantages over the traditional FFT for filtering noise in bearing data: it adapts its treatment of noise through channel-specific thresholds, which helps maintain high diagnostic accuracy and stability, especially when the noise environment changes dynamically. In contrast, FFT-based filtering relies on a fixed frequency-domain filter, and its excessive suppression of specific frequencies in high-noise environments may remove important signal components. This comparison supports the soft-thresholding approach adopted in this paper.
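The soft-thresholding operation, y = sign(x) · max(|x| − τ, 0), and one way of making τ channel-specific can be sketched as follows. The threshold rule here (a sigmoid-scaled fraction of each channel's mean absolute value, as in deep residual shrinkage networks) is an assumption for illustration; the MLSCA-CW module may compute its thresholds differently, and the per-channel `logits` are hypothetical stand-ins for values a trained network would learn.

```python
import math
from typing import List, Sequence


def soft_threshold(values: Sequence[float], tau: float) -> List[float]:
    # y = sign(x) * max(|x| - tau, 0): small-magnitude (noise-like) values become 0,
    # larger values are shrunk towards 0 by tau
    return [math.copysign(max(abs(v) - tau, 0.0), v) for v in values]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def channelwise_soft_threshold(feature_map: Sequence[Sequence[float]],
                               logits: Sequence[float]) -> List[List[float]]:
    """feature_map: list of channels, each a list of floats.
    logits: one hypothetical learned scalar per channel; sigmoid maps it to (0, 1)."""
    out = []
    for channel, z in zip(feature_map, logits):
        mean_abs = sum(abs(v) for v in channel) / len(channel)
        tau = sigmoid(z) * mean_abs  # threshold scales with each channel's own amplitude
        out.append(soft_threshold(channel, tau))
    return out


fm = [[2.0, -2.0, 0.5, -0.5], [0.1, -0.1, 3.0, 0.0]]
print(channelwise_soft_threshold(fm, [0.0, 0.0]))
```

Because τ is tied to each channel's amplitude, a noisier channel automatically receives a larger threshold, whereas a fixed frequency-domain filter treats every input the same way.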

The single-location ablation results in Table 2 follow broadly the same pattern as the two-location results in Table 1. For the full MLSCA-CW model, however, single-location accuracy is lower than two-location accuracy at every SNR tested. This shows that the two-location feature extractors effectively supplement the single-location features, enriching the fault features extracted by the model and improving the accuracy of bearing fault diagnosis.

Overall, the soft-thresholding module is essential for effectively filtering noise and preserving fault features, particularly in high-noise environments. The layer attention mechanism plays a crucial role in integrating both low-dimensional and high-dimensional features, enabling the model to focus on critical patterns in noisy data. The multi-scale self-activation mechanism enhances the model’s robustness by dynamically activating important features across multiple scales, which is especially beneficial under varying noise conditions. Additionally, utilizing multi-location data provides richer context and features, improving fault classification accuracy by capturing a more comprehensive set of fault information from multiple sensor locations.
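As an illustration of the general idea behind layer attention, the sketch below fuses pooled feature vectors from several layers using softmax weights, so shallow (low-dimensional) and deep (high-dimensional) information both reach the classifier. It assumes each layer's features have already been pooled and projected to a common length, and the scoring vector `query` is a hypothetical stand-in for learned parameters; it is not the exact design of the MLSCA-CW layer attention module.

```python
import math
from typing import List, Sequence, Tuple


def softmax(scores: Sequence[float]) -> List[float]:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def layer_attention(layer_features: Sequence[Sequence[float]],
                    query: Sequence[float]) -> Tuple[List[float], List[float]]:
    """Fuse per-layer feature vectors with attention weights.

    layer_features: one pooled vector per layer (shallow to deep), all of the
    same length as `query` (assumed already projected to a common size).
    query: hypothetical learned scoring vector.
    """
    # Relevance score of each layer = dot product with the query
    scores = [sum(f * q for f, q in zip(feat, query)) for feat in layer_features]
    weights = softmax(scores)  # non-negative and summing to 1 across layers
    dim = len(query)
    fused = [sum(w * feat[k] for w, feat in zip(weights, layer_features)) for k in range(dim)]
    return fused, weights


shallow, middle, deep = [0.2, 0.9, 0.1], [0.8, 0.1, 0.4], [0.5, 0.5, 0.5]
fused, weights = layer_attention([shallow, middle, deep], query=[1.0, -1.0, 0.5])
print([round(w, 3) for w in weights], [round(v, 3) for v in fused])
```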

In summary, the soft threshold module, layer attention mechanism, improved self-activation mechanism and two-location feature extractors of the MLSCA-CW model contribute significantly to its performance and robustness.

Comparative experiments

Model performance without noise

To evaluate model performance without added noise, tests were conducted on the CWRU and IMS datasets. As shown in Table 3, all models except LR achieved 100% prediction accuracy on the CWRU dataset. On the IMS dataset, the MLSCA-CW, MC-CNN and QCNN models reached 100% prediction accuracy.

Table 3.

Comprehensive performance of each model.

| Model | CWRU ACC | CWRU F1-score | IMS ACC | IMS F1-score |
|---|---|---|---|---|
| MLSCA-CW | 100.000 | 100.000 | 100.000 | 100.000 |
| LR | 82.689 | 66.410 | 69.597 | 65.226 |
| MC-CNN | 100.000 | 100.000 | 100.000 | 100.000 |
| WDCNN | 100.000 | 100.000 | 99.389 | 99.396 |
| Multiscale inner product | 100.000 | 100.000 | 99.634 | 99.643 |
| SANet | 100.000 | 100.000 | 92.918 | 92.965 |
| QCNN | 100.000 | 100.000 | 100.000 | 100.000 |

Best results are highlighted.

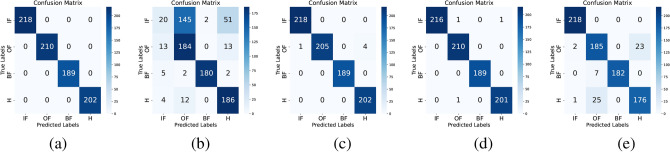

To evaluate the models more intuitively, we further visualized their predictions on the IMS dataset as the confusion matrices shown in Fig. 6.

Fig. 6.

Confusion matrix for all methods in the IMS dataset. IF, OF, BF and H respectively represent inner ring fault, outer ring fault, ball fault and health. (a) Confusion matrix of MLSCA-CW, MC-CNN and QCNN models. (b) Confusion matrix of LR model. (c) Confusion matrix of WDCNN model. (d) Confusion matrix of Multiscale inner product model. (e) Confusion matrix of SANet model.

According to Fig. 6, the MLSCA-CW, MC-CNN and QCNN models classify all test samples correctly, while the other models make some errors. Specifically, the LR model is highly inaccurate in identifying inner ring faults; the WDCNN model misclassifies only some outer ring fault samples; the Multiscale inner product model partially confuses inner ring faults with healthy bearings; and the SANet model more often confuses outer ring faults with healthy bearings.

In noise-free environments, the MLSCA-CW, MC-CNN, and QCNN models all achieved 100% prediction accuracy. However, bearings often operate amidst various types of intense noise in real-world conditions. Therefore, we tested the performance of each model under various types and intensities of noise.

Model performance under various types of intense noise

a) CWRU dataset performance

Because bearings operate amid various types of intense noise, this paper explores the model's performance in complex environments with multiple types of noise background. The proposed model and the six comparison models were tested on the same dataset (CWRU) with the same testing method, and the results are presented in Tables 4 and 5.

Table 4.

ACC performance comparison of different models on the CWRU dataset under different SNRs (%).

| Model | Noise type | −9 dB | −6 dB | −3 dB | 0 dB | 3 dB | 6 dB | 9 dB |
|---|---|---|---|---|---|---|---|---|
| MLSCA-CW (two locations) | Gauss | 100.000 | 100.000 | 100.000 | 100.000 | 100.000 | 100.000 | 100.000 |
| | Laplace | 100.000 | 100.000 | 100.000 | 100.000 | 100.000 | 100.000 | 100.000 |
| | Violet | 92.017 | 92.437 | 94.118 | 96.639 | 100.000 | 100.000 | 100.000 |
| | Brown | 99.160 | 100.000 | 100.000 | 100.000 | 100.000 | 100.000 | 100.000 |
| | Mixed | 95.378 | 97.899 | 99.580 | 100.000 | 100.000 | 100.000 | 100.000 |
| MLSCA-CW (single location) | Gauss | 100.000 | 100.000 | 100.000 | 100.000 | 100.000 | 100.000 | 100.000 |
| | Laplace | 99.580 | 99.580 | 99.580 | 100.000 | 100.000 | 100.000 | 100.000 |
| | Violet | 91.437 | 92.017 | 93.697 | 94.597 | 95.277 | 99.160 | 100.000 |
| | Brown | 99.580 | 100.000 | 100.000 | 100.000 | 100.000 | 100.000 | 100.000 |
| | Mixed | 90.756 | 96.639 | 99.580 | 99.580 | 100.000 | 100.000 | 100.000 |
| LR | Gauss | 48.739 | 56.303 | 60.084 | 65.126 | 71.429 | 80.672 | 81.513 |
| | Laplace | 47.479 | 48.319 | 61.345 | 67.647 | 68.487 | 73.950 | 75.630 |
| | Violet | 49.580 | 56.303 | 58.824 | 60.504 | 61.345 | 62.605 | 31.092 |
| | Brown | 31.092 | 31.933 | 33.613 | 35.714 | 38.655 | 44.958 | 52.101 |
| | Mixed | 52.941 | 53.782 | 57.983 | 58.824 | 62.605 | 65.126 | 66.387 |
| MC-CNN | Gauss | 97.479 | 98.739 | 99.580 | 100.000 | 100.000 | 100.000 | 100.000 |
| | Laplace | 100.000 | 100.000 | 100.000 | 100.000 | 100.000 | 100.000 | 100.000 |
| | Violet | 81.933 | 83.824 | 94.958 | 98.739 | 100.000 | 100.000 | 100.000 |
| | Brown | 41.176 | 42.857 | 99.580 | 100.000 | 100.000 | 100.000 | 100.000 |
| | Mixed | 82.773 | 86.218 | 99.557 | 100.000 | 100.000 | 100.000 | 100.000 |
| WDCNN | Gauss | 60.504 | 77.311 | 93.277 | 99.580 | 99.580 | 100.000 | 100.000 |
| | Laplace | 96.387 | 97.185 | 97.899 | 98.697 | 99.370 | 100.000 | 100.000 |
| | Violet | 81.092 | 81.513 | 82.521 | 84.538 | 93.025 | 97.479 | 100.000 |
| | Brown | 87.815 | 88.655 | 93.697 | 99.160 | 100.000 | 100.000 | 100.000 |
| | Mixed | 82.773 | 84.638 | 91.176 | 99.580 | 100.000 | 100.000 | 100.000 |
| Multiscale inner product | Gauss | 92.437 | 98.319 | 98.739 | 99.580 | 100.000 | 100.000 | 100.000 |
| | Laplace | 89.076 | 94.538 | 99.580 | 100.000 | 100.000 | 100.000 | 100.000 |
| | Violet | 84.874 | 88.655 | 89.916 | 91.597 | 92.437 | 97.059 | 100.000 |
| | Brown | 99.580 | 99.580 | 99.580 | 100.000 | 100.000 | 100.000 | 100.000 |
| | Mixed | 94.538 | 96.526 | 98.375 | 99.131 | 99.580 | 100.000 | 100.000 |
| SANet | Gauss | 92.080 | 92.577 | 93.697 | 94.958 | 100.000 | 100.000 | 100.000 |
| | Laplace | 98.562 | 99.148 | 99.580 | 99.580 | 100.000 | 100.000 | 100.000 |
| | Violet | 65.966 | 73.109 | 74.370 | 85.714 | 87.395 | 97.059 | 100.000 |
| | Brown | 38.655 | 49.160 | 60.504 | 79.832 | 79.974 | 80.672 | 87.625 |
| | Mixed | 68.487 | 81.092 | 84.034 | 90.336 | 97.479 | 99.160 | 100.000 |
| QCNN | Gauss | 99.580 | 99.580 | 100.000 | 100.000 | 100.000 | 100.000 | 100.000 |
| | Laplace | 100.000 | 100.000 | 100.000 | 100.000 | 100.000 | 100.000 | 100.000 |
| | Violet | 84.034 | 85.714 | 91.597 | 93.697 | 95.798 | 100.000 | 100.000 |
| | Brown | 90.756 | 91.176 | 99.160 | 99.580 | 99.580 | 100.000 | 100.000 |
| | Mixed | 89.076 | 92.017 | 92.437 | 98.319 | 100.000 | 100.000 | 100.000 |

Best results are highlighted.

Table 5.

F1-score performance comparison of different models on the CWRU dataset under different SNRs (%).

| Model | Noise type | −9 dB | −6 dB | −3 dB | 0 dB | 3 dB | 6 dB | 9 dB |
|---|---|---|---|---|---|---|---|---|
| MLSCA-CW (two locations) | Gauss | 100.000 | 100.000 | 100.000 | 100.000 | 100.000 | 100.000 | 100.000 |
| | Laplace | 100.000 | 100.000 | 100.000 | 100.000 | 100.000 | 100.000 | 100.000 |
| | Violet | 87.802 | 90.130 | 93.052 | 95.452 | 100.000 | 100.000 | 100.000 |
| | Brown | 98.942 | 100.000 | 100.000 | 100.000 | 100.000 | 100.000 | 100.000 |
| | Mixed | 94.320 | 97.333 | 99.460 | 100.000 | 100.000 | 100.000 | 100.000 |
| MLSCA-CW (single location) | Gauss | 100.000 | 100.000 | 100.000 | 100.000 | 100.000 | 100.000 | 100.000 |
| | Laplace | 98.401 | 99.349 | 99.507 | 100.000 | 100.000 | 100.000 | 100.000 |
| | Violet | 88.815 | 89.326 | 89.665 | 92.078 | 92.563 | 98.985 | 100.000 |
| | Brown | 99.423 | 100.000 | 100.000 | 100.000 | 100.000 | 100.000 | 100.000 |
| | Mixed | 88.649 | 95.360 | 99.460 | 99.460 | 100.000 | 100.000 | 100.000 |
| LR | Gauss | 38.477 | 39.334 | 50.944 | 56.203 | 64.422 | 74.491 | 77.103 |
| | Laplace | 32.712 | 37.082 | 53.722 | 60.418 | 63.232 | 65.506 | 67.829 |
| | Violet | 41.206 | 41.737 | 43.645 | 45.199 | 46.185 | 52.642 | 58.449 |
| | Brown | 13.939 | 15.643 | 19.439 | 20.339 | 24.304 | 28.845 | 39.236 |
| | Mixed | 40.637 | 42.835 | 48.288 | 50.714 | 54.749 | 55.324 | 57.463 |
| MC-CNN | Gauss | 96.826 | 97.784 | 99.500 | 100.000 | 100.000 | 100.000 | 100.000 |
| | Laplace | 100.000 | 100.000 | 100.000 | 100.000 | 100.000 | 100.000 | 100.000 |
| | Violet | 72.880 | 76.414 | 92.008 | 97.726 | 100.000 | 100.000 | 100.000 |
| | Brown | 19.111 | 28.976 | 99.400 | 100.000 | 100.000 | 100.000 | 100.000 |
| | Mixed | 79.630 | 84.524 | 99.276 | 100.000 | 100.000 | 100.000 | 100.000 |
| WDCNN | Gauss | 52.476 | 65.848 | 90.640 | 99.418 | 99.418 | 100.000 | 100.000 |
| | Laplace | 93.582 | 96.307 | 97.047 | 97.499 | 98.780 | 100.000 | 100.000 |
| | Violet | 73.759 | 75.463 | 76.335 | 80.832 | 89.444 | 96.454 | 100.000 |
| | Brown | 85.080 | 86.575 | 91.957 | 99.235 | 100.000 | 100.000 | 100.000 |
| | Mixed | 76.725 | 79.217 | 88.710 | 99.525 | 100.000 | 100.000 | 100.000 |
| Multiscale inner product | Gauss | 90.388 | 97.898 | 98.439 | 99.465 | 100.000 | 100.000 | 100.000 |
| | Laplace | 85.418 | 93.438 | 99.438 | 100.000 | 100.000 | 100.000 | 100.000 |
| | Violet | 81.336 | 84.192 | 86.129 | 87.263 | 89.512 | 95.903 | 100.000 |
| | Brown | 99.468 | 99.468 | 99.496 | 100.000 | 100.000 | 100.000 | 100.000 |
| | Mixed | 91.087 | 93.436 | 97.350 | 98.735 | 99.418 | 100.000 | 100.000 |
| SANet | Gauss | 90.528 | 91.841 | 92.751 | 93.612 | 100.000 | 100.000 | 100.000 |
| | Laplace | 97.585 | 99.463 | 99.490 | 99.496 | 100.000 | 100.000 | 100.000 |
| | Violet | 57.685 | 64.359 | 61.165 | 78.834 | 81.678 | 95.925 | 100.000 |
| | Brown | 13.939 | 37.957 | 42.133 | 60.103 | 68.290 | 68.908 | 69.014 |
| | Mixed | 57.704 | 68.707 | 79.048 | 87.701 | 96.641 | 99.051 | 100.000 |
| QCNN | Gauss | 99.463 | 99.463 | 100.000 | 100.000 | 100.000 | 100.000 | 100.000 |
| | Laplace | 100.000 | 100.000 | 100.000 | 100.000 | 100.000 | 100.000 | 100.000 |
| | Violet | 77.840 | 83.911 | 88.613 | 91.222 | 94.186 | 100.000 | 100.000 |
| | Brown | 89.551 | 89.727 | 98.866 | 99.490 | 99.496 | 100.000 | 100.000 |
| | Mixed | 86.803 | 89.614 | 90.267 | 97.956 | 100.000 | 100.000 | 100.000 |

Best results are highlighted.

Table 4 shows that the MLSCA-CW (two locations) model has excellent noise resistance: at −9 dB SNR, its classification accuracy exceeds 90% in all five noise environments. (Mixed noise is an equal-proportion mixture of Gaussian, Laplacian, Brownian and violet noise.) Under the same noise conditions, MLSCA-CW generally outperforms the comparison models, and under Gaussian and Laplacian noise its classification accuracy reaches 100% at every SNR tested. We also find that the classical LR model cannot effectively learn the complex nonlinear relationships involved. Most models perform well in lower-noise scenarios, apart from significant degradation in certain noise environments at SNRs below −3 dB (e.g., SANet performs poorly under Brownian and violet noise). Notably, the accuracy of the benchmark models drops significantly in strong noise environments between −9 dB and −6 dB. MC-CNN builds multi-scale feature extraction on traditional convolution, and features extracted over multi-scale receptive fields can be more complete. However, under high noise intensity (e.g., an SNR of −6 dB or below), the original signal is overwhelmed by noise, and features extracted by multi-scale convolution alone contain many strong noise components, which sharply degrades the model's predictions.

The WDCNN model uses a wide convolution kernel in its first convolutional layer to extract features and suppress noise. The results show that this does suppress noise, and the model performs well under Laplacian noise. However, for other noise types and at high noise intensity, WDCNN degrades substantially; under Gaussian noise at −9 dB its accuracy drops to 60.504%. The Multiscale inner product model, which uses multi-scale inner products and locally connected feature extraction, filters noise effectively: it outperforms the two-location MLSCA-CW model under −9 dB Brownian noise (99.580% versus 99.160%), but falls short of MLSCA-CW in the other cases. This suggests that combining multi-scale inner products with correlation-based noise filtering is a promising approach to feature extraction, and it supports the design of MLSCA-CW, which combines multi-scale convolution and a layer attention mechanism with corresponding feature enhancement. SANet relies mainly on a depth-separated self-activation mechanism, which often performs well against some types of strong noise and offers a new idea for improving network robustness. However, the results indicate that this mechanism still needs to be combined with other techniques to cope with the multiple types of strong noise found in real bearing operating environments. QCNN is essentially an attention-based model, and the results show that its overall performance is relatively strong. Nevertheless, there is still considerable room for improvement under violet noise and other noise types in highly noisy environments with SNRs of −9 dB and −6 dB.

These results show that appropriate use of attention mechanisms helps improve model robustness. Based on the above analysis, the MLSCA-CW model, which combines the multi-scale multi-location feature extractor, soft threshold noise filtering, the multi-scale self-activation mechanism and the layer attention mechanism, can be expected to improve prediction accuracy and robustness over the benchmark models, and the data in Tables 4 and 5 support this conclusion. In mixed noise environments, the MLSCA-CW (two locations) model matches or outperforms the comparison models at every SNR from −9 to 9 dB, reaching 95.378% accuracy at −9 dB. Another important feature of MLSCA-CW is that it makes fuller use of sensor signals from multiple locations to diagnose bearing faults under complex conditions. The extracted multi-location fault features are fused so that they complement one another and enrich the fault information, allowing the network to better fit the nonlinear mapping between signal features and fault types and thereby improving prediction accuracy. Tables 4 and 5 give the results of MLSCA-CW using single-location and two-location sensor signals. In terms of accuracy (Table 4), the only case in which MLSCA-CW (single location) outperforms MLSCA-CW (two locations) is Brownian noise at −9 dB, where it is 0.42 percentage points higher (99.580% versus 99.160%); under all other noise types and SNRs, the single-location model does not exceed the two-location model. This analysis shows that the proposed multi-location information aggregation method enhances, to a certain extent, the model's ability to extract fault features and improves its prediction performance in different application scenarios.

b) UofO dataset performance

To further verify the generalization and robustness of the proposed model, we use the UofO variable-speed bearing diagnosis dataset. Since this paper focuses on diagnostic performance under multiple types of strong noise, SNRs of −9, −6 and −3 dB are selected for this comparison. ACC, F1-score and Recall are used as evaluation metrics, and the results are shown in Table 6.

Table 6.

ACC, F1-score, and Recall comparison of different models in UofO dataset under different SNRs (%).

| SNR (dB) | − 9 | − 6 | − 3 | |||||||

|---|---|---|---|---|---|---|---|---|---|---|

| Model | Noise type | ACC | F1-score | Recall | ACC | F1-score | Recall | ACC | F1-score | Recall |

| MLSCA-CW (two locations) | Gauss-noise | 100.000 | 100.000 | 100.000 | 100.000 | 100.000 | 100.000 | 100.000 | 100.000 | 100.000 |

| Laplace-noise | 100.000 | 100.000 | 100.000 | 100.000 | 100.000 | 100.000 | 100.000 | 100.000 | 100.000 | |

| Violet-noise | 99.744 | 99.744 | 99.744 | 100.000 | 100.000 | 100.000 | 100.000 | 100.000 | 100.000 | |

| Brownian-noise | 95.819 | 95.828 | 95.819 | 96.160 | 96.084 | 96.087 | 96.331 | 96.349 | 96.355 | |

| | Mixed-noise | 73.976 | 73.987 | 73.976 | 81.655 | 81.630 | 81.607 | 86.263 | 86.059 | 85.990 |
| MLSCA-CW (Single Location) | Gauss-noise | 89.759 | 89.837 | 89.759 | 92.918 | 92.943 | 93.013 | 99.915 | 99.917 | 99.916 |
| | Laplace-noise | 88.055 | 87.764 | 88.055 | 99.829 | 99.825 | 99.829 | 99.829 | 99.823 | 99.823 |
| | Violet-noise | 67.491 | 66.903 | 67.491 | 67.662 | 66.959 | 66.957 | 68.515 | 68.431 | 68.515 |
| | Brownian-noise | 69.966 | 70.422 | 69.966 | 71.331 | 71.388 | 71.177 | 78.157 | 77.906 | 77.771 |
| | Mixed-noise | 63.140 | 62.380 | 63.450 | 64.505 | 56.557 | 64.944 | 66.212 | 59.645 | 66.212 |
| LR | Gauss-noise | 80.887 | 80.857 | 80.887 | 81.399 | 81.206 | 81.686 | 82.594 | 82.203 | 82.594 |
| | Laplace-noise | 80.802 | 80.574 | 80.802 | 81.826 | 81.531 | 81.826 | 83.532 | 83.170 | 83.532 |
| | Violet-noise | 62.201 | 60.689 | 62.201 | 65.785 | 62.516 | 64.547 | 68.345 | 67.076 | 68.345 |
| | Brownian-noise | 32.594 | 18.560 | 32.594 | 33.703 | 20.273 | 33.481 | 35.410 | 23.759 | 35.410 |
| | Mixed-noise | 37.500 | 37.080 | 37.500 | 38.225 | 34.837 | 38.225 | 43.345 | 39.934 | 43.078 |
| MC-CNN | Gauss-noise | 95.734 | 95.737 | 95.734 | 97.440 | 97.438 | 97.440 | 100.000 | 100.000 | 100.000 |
| | Laplace-noise | 94.966 | 94.968 | 94.966 | 96.416 | 96.413 | 96.416 | 100.000 | 100.000 | 100.000 |
| | Violet-noise | 81.911 | 81.878 | 81.911 | 85.448 | 85.391 | 85.448 | 92.217 | 92.126 | 92.217 |
| | Brownian-noise | 41.076 | 40.546 | 41.076 | 46.200 | 45.463 | 46.200 | 95.601 | 95.588 | 95.601 |
| | Mixed-noise | 68.174 | 68.443 | 68.174 | 70.734 | 70.804 | 70.734 | 74.147 | 74.209 | 74.147 |
| WDCNN | Gauss-noise | 73.294 | 73.266 | 73.294 | 74.915 | 74.944 | 74.915 | 99.915 | 99.916 | 99.916 |
| | Laplace-noise | 100.000 | 100.000 | 100.000 | 100.000 | 100.000 | 100.000 | 100.000 | 100.000 | 100.000 |
| | Violet-noise | 65.870 | 63.396 | 65.870 | 67.065 | 66.142 | 67.044 | 69.625 | 68.699 | 69.625 |
| | Brownian-noise | 72.952 | 72.924 | 72.952 | 73.294 | 73.418 | 73.175 | 76.024 | 75.754 | 75.671 |
| | Mixed-noise | 68.089 | 68.240 | 68.381 | 70.478 | 70.141 | 70.070 | 73.891 | 73.745 | 73.891 |
| Multiscale inner product | Gauss-noise | 92.491 | 92.495 | 92.491 | 95.648 | 95.671 | 95.648 | 97.355 | 97.367 | 97.355 |
| | Laplace-noise | 89.505 | 89.501 | 89.505 | 92.065 | 92.064 | 92.065 | 94.625 | 94.619 | 94.625 |
| | Violet-noise | 83.959 | 83.930 | 83.959 | 85.666 | 85.646 | 85.666 | 88.225 | 88.208 | 88.225 |
| | Brownian-noise | 91.041 | 91.043 | 91.041 | 92.747 | 92.753 | 92.747 | 95.307 | 95.308 | 95.307 |
| | Mixed-noise | 69.120 | 69.098 | 69.120 | 72.504 | 72.453 | 72.504 | 74.196 | 74.229 | 74.196 |
| SANet | Gauss-noise | 69.795 | 69.414 | 69.795 | 78.157 | 76.166 | 78.505 | 79.863 | 78.058 | 79.863 |
| | Laplace-noise | 68.345 | 58.102 | 68.345 | 69.198 | 59.924 | 69.198 | 71.758 | 64.939 | 71.758 |
| | Violet-noise | 66.638 | 64.001 | 66.638 | 67.065 | 67.083 | 67.199 | 68.601 | 68.450 | 68.601 |
| | Brownian-noise | 52.986 | 52.579 | 52.986 | 55.205 | 51.545 | 55.219 | 56.911 | 53.621 | 56.911 |
| | Mixed-noise | 53.840 | 53.528 | 54.046 | 55.034 | 54.700 | 54.862 | 58.106 | 57.489 | 58.106 |
| QCNN | Gauss-noise | 99.829 | 99.829 | 99.829 | 100.000 | 100.000 | 100.000 | 100.000 | 100.000 | 100.000 |
| | Laplace-noise | 100.000 | 100.000 | 100.000 | 100.000 | 100.000 | 100.000 | 100.000 | 100.000 | 100.000 |
| | Violet-noise | 67.235 | 66.850 | 67.235 | 69.113 | 67.640 | 68.070 | 70.819 | 70.205 | 70.819 |
| | Brownian-noise | 52.218 | 52.018 | 52.218 | 54.181 | 49.979 | 54.194 | 56.485 | 56.316 | 56.485 |
| | Mixed-noise | 72.696 | 72.369 | 72.696 | 74.061 | 73.675 | 73.680 | 75.341 | 75.333 | 75.341 |

Best results are highlighted.

As shown in Table 6, the proposed MLSCA-CW (Two Locations) achieves the best performance across SNRs and noise types. Its prediction accuracy stays at or above 95.819% under every single noise type at −9 dB. Under −9 dB Mixed-noise on the variable-speed bearing data it still reaches 73.976%, and under −3 dB Mixed-noise it reaches 86.263%, about 10.9 percentage points above the best competing model (QCNN, 75.341%).

MLSCA-CW (Single Location) lacks the signal from one sensor location, so the fault features it extracts are incomplete and its prediction accuracy drops. The results confirm that, under variable-speed conditions, incomplete fault features lower the prediction accuracy, and losing the information from one position makes fault and noise characteristics more easily confused.

The LR model has weak nonlinear mapping ability. Under complex conditions such as Violet-noise, Brownian-noise, and Mixed-noise its expressive power is low, and its accuracy falls to 32.594% under −9 dB Brownian-noise and 37.500% under −9 dB Mixed-noise. MC-CNN performs multi-scale feature extraction with multi-scale convolution, collecting fault features over several receptive fields to strengthen feature extraction and improve its expressive power; this behavior is consistent with its results on the CWRU dataset. Under Gauss-noise and Laplace-noise the model expresses fault features well and performs strongly. Under high-intensity Violet-noise, Brownian-noise, and Mixed-noise, however, multi-scale convolution cannot separate fault features from noise features well, the model reaches the limit of its expressive power, and its worst accuracy is only about 41% (41.076% under −9 dB Brownian-noise).
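The noise conditions compared here are defined by a noise type and an SNR, where SNR_dB = 10·log10(P_signal / P_noise). As a minimal sketch of how a clean vibration segment can be corrupted at a target SNR, the Python below scales a unit-scale noise sequence so that this relation holds exactly. It assumes Brownian noise is generated as integrated (cumulatively summed) white Gaussian noise and Violet noise as differenced white Gaussian noise; the function names are hypothetical, and the paper's own noise generator, including its Mixed-noise recipe, is not reproduced here.

```python
import math
import random


def signal_power(x):
    """Mean power of a 1-D signal: the average of the squared samples."""
    return sum(v * v for v in x) / len(x)


def make_noise(n, kind, rng):
    """Hypothetical generator for the four single noise types (unit scale)."""
    if kind == "gauss":
        return [rng.gauss(0.0, 1.0) for _ in range(n)]
    if kind == "laplace":
        # The difference of two i.i.d. Exp(1) variables is Laplace(0, 1).
        return [rng.expovariate(1.0) - rng.expovariate(1.0) for _ in range(n)]
    white = [rng.gauss(0.0, 1.0) for _ in range(n + 1)]
    if kind == "brownian":
        # Assumption: integrated white noise (PSD ~ 1/f^2), mean removed.
        walk, acc = [], 0.0
        for v in white[:n]:
            acc += v
            walk.append(acc)
        mean = sum(walk) / n
        return [v - mean for v in walk]
    if kind == "violet":
        # Assumption: differentiated white noise (PSD ~ f^2).
        return [white[i + 1] - white[i] for i in range(n)]
    raise ValueError(f"unknown noise type: {kind}")


def add_noise(x, snr_db, kind, seed=0):
    """Return x plus `kind` noise scaled so that 10*log10(Ps/Pn) == snr_db."""
    rng = random.Random(seed)
    noise = make_noise(len(x), kind, rng)
    target_power = signal_power(x) / 10 ** (snr_db / 10)
    scale = math.sqrt(target_power / signal_power(noise))
    return [xi + scale * ni for xi, ni in zip(x, noise)]


# Usage: corrupt a toy sinusoid with -9 dB violet noise and re-measure the SNR.
clean = [math.sin(2 * math.pi * 0.05 * t) for t in range(2048)]
noisy = add_noise(clean, -9, "violet")
residual = [a - b for a, b in zip(noisy, clean)]
print(10 * math.log10(signal_power(clean) / signal_power(residual)))
```

At −9 dB the added noise carries about 7.94 times the signal power (10^0.9), which is why separating fault features from noise is hardest at this level.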

The first convolutional layer of WDCNN uses a wide kernel to extract features and suppress noise. The experiments show that WDCNN separates fault features from Laplace-noise well, which gives it strong expressive power in the Laplace-noise environment (100% accuracy at every SNR). For the other noise types, however, and especially for strong −9 dB noise, its ability to separate noise drops sharply: at −9 dB its accuracy ranges only from 65.870% to 73.294%. The Multiscale inner product model uses multi-scale inner products and locally connected feature extraction, which filter noise well, but it still trails MLSCA-CW (Two Locations). Under −9 dB Mixed-noise its accuracy is only 69.120%, and even when the SNR is raised by 6 dB (to −3 dB) its accuracy improves by only about 5 percentage points. These results indicate that multi-scale inner products with locally connected feature extraction are a promising approach to noise removal, and they further demonstrate the advantage of MLSCA-CW (Two Locations), which combines multi-scale convolution with a layer attention mechanism. SANet mainly adopts a Transformer-based self-activation mechanism with deep separation, and its performance is acceptable on some kinds of noisy data at constant rotational speed (such as the CWRU data). Under variable speed and high noise intensity, however, SANet performs even worse than LR under Gauss-noise and Laplace-noise, which indicates that its expressive power under variable-speed conditions leaves considerable room for improvement.
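The margins quoted in this comparison, such as the roughly 10-point lead of MLSCA-CW (Two Locations) under −3 dB Mixed-noise and the roughly 5-point gain of the Multiscale inner product model from −9 to −3 dB, can be recomputed directly from Table 6. The short Python below does this for the Mixed-noise rows. It reads the first, fourth, and seventh numeric columns as accuracy at −9, −6, and −3 dB; the −9 and −3 dB columns match the values quoted in the text, while the −6 dB reading is an assumption.

```python
# Accuracy (%) under Mixed-noise, copied from Table 6. The first, fourth and
# seventh numeric columns are read as -9, -6 and -3 dB (assumption for -6 dB).
MIXED_ACC = {
    "MLSCA-CW (Two Locations)": (73.976, 81.655, 86.263),
    "MLSCA-CW (Single Location)": (63.140, 64.505, 66.212),
    "LR": (37.500, 38.225, 43.345),
    "MC-CNN": (68.174, 70.734, 74.147),
    "WDCNN": (68.089, 70.478, 73.891),
    "Multiscale inner product": (69.120, 72.504, 74.196),
    "SANet": (53.840, 55.034, 58.106),
    "QCNN": (72.696, 74.061, 75.341),
}
SNRS_DB = (-9, -6, -3)
PROPOSED = "MLSCA-CW (Two Locations)"
# Baselines exclude both MLSCA-CW variants.
BASELINES = [m for m in MIXED_ACC if not m.startswith("MLSCA-CW")]


def margin_over_best_baseline(i):
    """Best baseline at SNR index i and the proposed model's lead in points."""
    best = max(BASELINES, key=lambda m: MIXED_ACC[m][i])
    return best, MIXED_ACC[PROPOSED][i] - MIXED_ACC[best][i]


def gain_from_minus9_to_minus3(model):
    """Accuracy gained (percentage points) when the SNR rises from -9 to -3 dB."""
    acc = MIXED_ACC[model]
    return acc[2] - acc[0]


for i, snr in enumerate(SNRS_DB):
    best, lead = margin_over_best_baseline(i)
    print(f"{snr:>3} dB: best baseline = {best}, lead = {lead:.3f} points")
for model in (PROPOSED, "Multiscale inner product"):
    print(f"{model}: +{gain_from_minus9_to_minus3(model):.3f} points from -9 to -3 dB")
```

With these values QCNN is the strongest baseline at all three SNRs, and the lead of MLSCA-CW (Two Locations) over it grows from about 1.3 points at −9 dB to about 10.9 points at −3 dB.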

Essentially, QCNN is a model based on an attention mechanism. It filters strong Gauss-noise and Laplace-noise very effectively, but its filtering ability drops sharply for the other noise types and for Mixed-noise. In general, attention-based models such as Multiscale inner product, SANet, and QCNN, as well as models built on multi-scale convolution operators such as MC-CNN and WDCNN, filter some noise types better than LR. This further supports the design of MLSCA-CW (Two Locations), which combines a multi-scale, multi-location feature extractor, a soft-threshold noise filter, a multi-scale self-activation mechanism, and a layer attention mechanism to filter out multiple types of strong noise. Another key feature of MLSCA-CW is that it extracts fault features from sensors at multiple positions. In the variable-speed environment, the accuracy of MLSCA-CW (Single Location) drops markedly compared with MLSCA-CW (Two Locations), which shows that multi-position vibration features help complete the fault features and separate them from the noise. The degradation of MLSCA-CW (Single Location) is also more pronounced here than under the constant speed of CWRU, indicating that MLSCA-CW (Two Locations) supplements features more comprehensively under variable speed and is better suited to complex conditions.
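The soft-threshold noise filter named above shrinks small-magnitude activations to zero while keeping large ones. A minimal sketch of the standard soft-thresholding operator, y = sign(x)·max(|x| − τ, 0), is shown below in Python. The paper's own rule for choosing τ is not restated here; the hypothetical `adaptive_threshold` rule, τ = sigmoid(logit) · mean(|x|) in the style of deep residual shrinkage networks, is an assumption for illustration only.

```python
import math


def soft_threshold(x, tau):
    """Soft thresholding: shrink each value toward zero by tau.

    Values with |v| <= tau become 0; larger values keep their sign.
    """
    if tau < 0:
        raise ValueError("tau must be non-negative")
    out = []
    for v in x:
        mag = abs(v) - tau
        out.append(math.copysign(mag, v) if mag > 0 else 0.0)
    return out


def adaptive_threshold(x, logit):
    """Hypothetical rule: tau = sigmoid(logit) * mean(|x|).

    Since sigmoid(logit) lies in (0, 1], tau never exceeds mean(|x|),
    so the threshold follows the scale of the input features.
    """
    alpha = 1.0 / (1.0 + math.exp(-logit))
    return alpha * sum(abs(v) for v in x) / len(x)


# Usage: small activations are zeroed, large ones are shrunk by tau.
features = [0.05, -0.3, 1.2, -2.0, 0.1]
tau = adaptive_threshold(features, logit=0.0)
print(tau, soft_threshold(features, tau))
```

Unlike hard thresholding, the operator is continuous in x, which keeps the filtered output free of jumps at ±τ.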

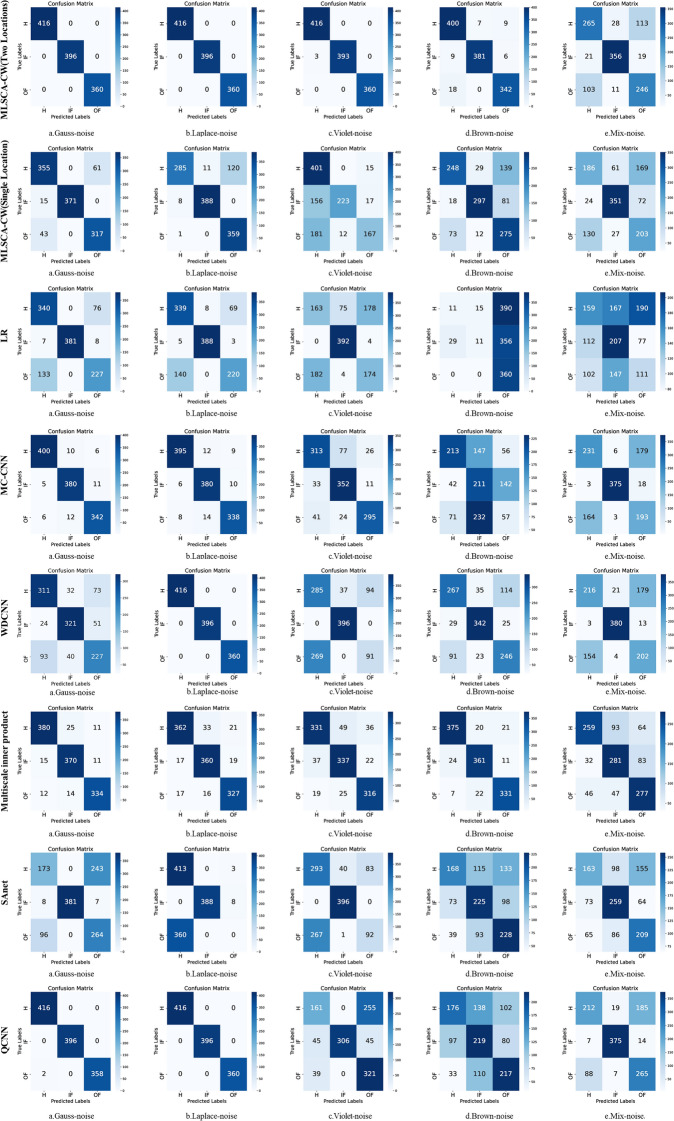

To further analyze the behavior of each model under strong noise (low SNR), we plotted the confusion matrix of each model at −9 dB SNR; the results are shown in Fig. 7. As Fig. 7 shows, MLSCA-CW (Two Locations) frequently confuses healthy bearings with outer-ring faults under Mixed-noise, but its overall performance is better than that of the other benchmark models. MLSCA-CW (Single Location) is less able to distinguish healthy bearings from outer-ring faults, and more samples of these two classes are confused. Among the other models, the Multiscale inner product model has recognition rates that are evenly distributed across noise types, but its overall accuracy is lower than that of MLSCA-CW (Two Locations). MC-CNN performs poorly at identifying outer-ring faults under Brownian-noise. WDCNN struggles to distinguish healthy bearings from outer-ring faults under most noise types. Under Laplace-noise, SANet's identification accuracy for outer-ring faults is 0%: all outer-ring faults are misclassified as healthy. QCNN performs poorly at identifying healthy bearings under Violet-noise, Brownian-noise, and Mixed-noise.
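Class-level failures such as SANet's 0% outer-ring accuracy are read from a confusion matrix as per-class recall: the diagonal count of a row divided by that row's total, with rows as true classes and columns as predicted classes. The Python sketch below uses a hypothetical three-class label set and made-up counts, not the values in Fig. 7, to show how one class can be missed entirely while overall accuracy still looks moderate.

```python
def per_class_recall(cm, labels):
    """Recall of each true class: diagonal count / row total.

    Rows of `cm` are true classes and columns are predicted classes.
    """
    recalls = {}
    for i, label in enumerate(labels):
        row_total = sum(cm[i])
        recalls[label] = cm[i][i] / row_total if row_total else float("nan")
    return recalls


def overall_accuracy(cm):
    """Fraction of all samples that lie on the diagonal."""
    correct = sum(cm[i][i] for i in range(len(cm)))
    return correct / sum(sum(row) for row in cm)


# Hypothetical labels and made-up counts (not taken from Fig. 7): every
# outer-ring sample is predicted as healthy.
LABELS = ["healthy", "inner-ring", "outer-ring"]
CM = [
    [90, 5, 5],    # true healthy
    [10, 85, 5],   # true inner-ring
    [100, 0, 0],   # true outer-ring, all misclassified as healthy
]

print(per_class_recall(CM, LABELS))
print(f"overall accuracy: {overall_accuracy(CM):.3f}")
```

Here the outer-ring recall is 0 while overall accuracy is still about 58%, which is why the per-class view in Fig. 7 complements the aggregate scores in Table 6.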

Fig. 7.

Confusion matrices of all models at −9 dB SNR on the UofO dataset. The model name for each row is shown on the left; a, b, c, d, and e denote Gauss-noise, Laplace-noise, Violet-noise, Brownian-noise, and Mixed-noise, respectively.

In summary, the proposed MLSCA-CW (Two Locations) still performs best on the UofO variable-speed bearing dataset, while the other baseline models show shortcomings of varying degrees in different noise environments. This supports the effectiveness of the approach adopted by MLSCA-CW and indicates that the model generalizes well and is robust.

Although advances in GPU technology have lowered computing costs, algorithmic efficiency remains an important criterion for evaluating a model. We therefore measured the single-sample inference time of each model while maintaining model performance. The single-sample inference time is 0.515 ms for MLSCA-CW, 0.943 ms for MC-CNN, 0.725 ms for Multiscale inner product, 0.725 ms for SANet, and 0.456 ms for QCNN. Overall, the proposed model offers clear performance advantages and is faster than all compared models except QCNN, which makes it promising for near-real-time applications.
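The text does not state the hardware, batch size, or timing protocol behind these figures. As a minimal sketch of one common protocol, the Python below times a single-sample forward pass with `time.perf_counter`, discards warm-up calls, and reports the median over repeated runs. `dummy_predict` and its fixed weights are a hypothetical stand-in for a trained model, so the printed latency says nothing about MLSCA-CW itself.

```python
import statistics
import time

# Hypothetical stand-in for a trained model: a fixed linear classifier with
# 4 output classes over a 1024-sample input window.
WEIGHTS = [[((i + j) % 7 - 3) / 3.0 for j in range(1024)] for i in range(4)]


def dummy_predict(x):
    """Return the index of the highest linear score (the predicted class)."""
    scores = [sum(w * v for w, v in zip(row, x)) for row in WEIGHTS]
    return max(range(len(scores)), key=scores.__getitem__)


def measure_latency_ms(predict, sample, warmup=10, repeats=200):
    """Median wall-clock time (ms) of predict(sample), batch size 1."""
    if repeats < 1:
        raise ValueError("repeats must be at least 1")
    for _ in range(warmup):  # untimed calls to warm caches
        predict(sample)
    times = []
    for _ in range(repeats):
        t0 = time.perf_counter()
        predict(sample)
        times.append((time.perf_counter() - t0) * 1e3)
    return statistics.median(times)


sample = [0.01 * t for t in range(1024)]
print(f"median single-sample latency: {measure_latency_ms(dummy_predict, sample):.3f} ms")
```

The median is used rather than the mean because it is less sensitive to occasional scheduler pauses. For a model running on a GPU, kernel launches are asynchronous, so the device has to be synchronized before the timer stops (in PyTorch, `torch.cuda.synchronize()`); otherwise the measured time can be misleadingly short.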

Conclusion

This paper proposes a new multi-position, multi-scale, multi-level information attention activation network (MLSCA-CW) for bearing fault diagnosis. Ablation experiments show that the soft-threshold noise removal, the layer attention mechanism, the multi-scale self-activation mechanism, and the extraction of multi-scale features from multi-position sensor data are highly effective at filtering out various types of high-intensity noise while improving the model's expressive power. Furthermore, soft-threshold noise removal shows clear advantages over the traditional FFT approach.

Comparison experiments demonstrate that, under both constant-speed and variable-speed operating conditions, the proposed MLSCA-CW outperforms current advanced multi-scale bearing fault diagnosis models, such as MC-CNN, SANet, and QCNN, in the presence of various types of strong noise. On the CWRU dataset, MLSCA-CW maintains a high diagnostic accuracy of 92.02% in noise environments ranging from −9 to 9 dB. On the UofO variable-speed bearing fault diagnosis dataset, it achieves a diagnostic accuracy of at least 95.819% under each single noise type at SNRs from −9 to −3 dB. Even under mixed noise, its diagnostic accuracy remains significantly higher than that of multi-scale baseline models such as SANet. Experiments on datasets with different working conditions and mixed noise sources further validate the model's robustness and practicality. In addition, the single-sample inference time of MLSCA-CW is only 0.515 ms, indicating its potential for near-real-time computation.

In summary, the MLSCA-CW model, which integrates soft-threshold, self-activation, and self-attention mechanisms to effectively extract multi-scale features from multi-position sensor data, demonstrates strong adaptability and robustness under high-intensity and multi-noise real-world conditions, along with significant real-time computing potential. The MLSCA-CW model provides reliable technical support for machinery fault diagnosis. Future research can further optimize the model structure and expand its application to fault diagnosis of more types of mechanical equipment.

Author contributions

Yu Zhang: Conceptualization, Methodology, Software, Visualization, Writing - original draft. Lianlei Lin: Formal analysis, Supervision, Writing - review & editing. Junkai Wang: Writing - review & editing. Wei Zhang: Data curation, Validation. Sheng Gao: Supervision, Writing - review & editing. Zongwei Zhang: Supervision, Writing - review & editing.

Data availability

This paper uses the CWRU, IMS, and UofO datasets for training and testing. The CWRU dataset is available at https://engineering.case.edu/bearingdatacenter/download-data-file. The IMS dataset is available at https://www.nasa.gov/intelligent-systems-division/discovery-and-systems-health/pcoe/pcoe-data-set-repository/. The UofO dataset is available at https://data.mendeley.com/datasets/v43hmbwxpm/1.

Declarations

Competing interests

The authors declare no competing interests.

Footnotes

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1. Li, X., Shao, H., Lu, S., Xiang, J. & Cai, B. Highly efficient fault diagnosis of rotating machinery under time-varying speeds using LSISMM and small infrared thermal images. IEEE Trans. Syst. Man Cybern. Syst. 52, 7328–7340. 10.1109/TSMC.2022.3151185 (2022).

- 2. Upadhyay, N. & Kankar, P. Diagnosis of bearing defects using tunable Q-wavelet transform. J. Mech. Sci. Technol. 32, 549–558. 10.1007/s12206-018-0102-8 (2018).

- 3. Assaad, B., Eltabach, M. & Antoni, J. Vibration-based condition monitoring of a multistage epicyclic gearbox in lifting cranes. Mech. Syst. Signal Process. 42, 351–367 (2014).

- 4. Rauber, T. W., De Assis Boldt, F. & Varejao, F. M. Heterogeneous feature models and feature selection applied to bearing fault diagnosis. IEEE Trans. Industr. Electron. 62, 637–646 (2015).

- 5. Yu, X., Dong, F., Ding, E., Wu, S. & Fan, C. Rolling bearing fault diagnosis using modified LFDA and EMD with sensitive feature selection. IEEE Access 6, 3715–3730 (2017).

- 6. Upadhyay, N. & Chourasiya, S. Extreme learning machine and ensemble techniques for classification of rolling element bearing defects. Life Cycle Reliab. Saf. Eng. 11, 189–201. 10.1007/s41872-022-00196-1 (2022).