Abstract

Aims

The aim of this study was to assess the validity of undertaking time‐series analyses on both fatal and non‐fatal drug overdose outcomes for the surveillance of emerging drug threats, and to determine the validity of analyzing non‐fatal indicators to support the early detection of fatal overdose outbreaks.

Design, setting and participants

We conducted time‐series analyses of county‐level fatal and non‐fatal overdose counts collected at monthly intervals between 2015 and 2021 in California and Florida, USA. To analyze these data, we used the Farrington algorithm (FA), a method used to detect aberrations in time‐series data such that an abnormal increase in counts relative to previous observations would result in an alert. The FA's performance was compared with a bench‐mark approach, using the standard deviation as an aberration detection threshold. We evaluated whether monthly alerts in non‐fatal overdose can aid in identifying fatal drug overdose outbreaks, defined as a statistically significant increase in the 6‐month overdose death rate. We also conducted analyses across regions, i.e. clusters of counties.

Measurements

Measurements were taken during emergency department and emergency medical service visits.

Findings

Both methods yielded a similar proportion of alerts across scenarios for non‐fatal overdoses, while the bench‐mark method yielded more alerts for fatal overdoses. For both methods, the correlations between surveillance evaluations were relatively poor in the detection of aberrations (typically < 35%) but were high between evaluations yielding no alerts (typically > 75%). For ongoing fatal overdose outbreaks, a strategy based on the detection of alerts at the county level from either method yielded a sensitivity of 66% for both California and Florida. At the regional level, the equivalent analyses had sensitivities of 81% for California and 77% for Florida.

Conclusion

Aberration detection methods can support the early detection of fatal drug overdose outbreaks, particularly when methodologies are applied in combination rather than individual methods separately.

Keywords: Outbreak, Overdose, Surveillance

INTRODUCTION

In 2022 there were more than 100 000 drug overdose deaths in the United States, which represented the highest number on record for a single year [1]. While the origins of the crisis stem from extra‐medical use and diversion of prescription opioids, the situation evolved as regulations curtailed the accessibility of these medicines and heroin availability and affordability increased. Potent synthetic opioids penetrated the drug supply leading to much higher overdose mortality rates, and both polysubstance use of opioids and stimulants, as well as the increased adulteration of the supply with a range of psychoactive substances, have further complicated the overdose epidemic [2]. In addition to the structural issues driving the overdose crisis, including weak welfare and health‐care systems, other factors have compounded it, including the COVID‐19 pandemic [3]. The high levels of variability in the production of synthetic opioids have increased the likelihood of emerging drug overdose epidemics, as users and dealers do not know the strength or effects of new drugs [4]. This constant evolution means that, over relatively short periods, different (and sometimes novel) identification approaches are needed to respond to the specific public health challenges associated with each epidemic, which might also be affecting different demographic groups in distinct geographic areas.

Given this situation, it is critical that overdose outbreaks are detected as early as possible so that public health organizations can take appropriate measures to mitigate the health impacts of newly introduced, harmful substances within the local drug supply [5]. To this end, abnormally high overdose counts at a given time‐point can be indicative of new substances, drug use patterns or demographic groups engaging in drug use, potentially leading to an outbreak. Time‐series methods provide an effective means for establishing whether overdose counts are abnormally high, depending on whether or not overdose counts exceed pre‐specified forecasts based on historical overdose trends. While the availability of overdose mortality data from US Centers for Disease Control and Prevention (CDC) WONDER and some local coroner's offices has permitted the implementation of these methods [6], the lag in public data dissemination means that these data are not conducive to the development of a real‐time surveillance mechanism [7]. The potential benefits of using alternative metrics, such as emergency department (ED) and emergency medical service (EMS) visits related to drug use, have been demonstrated in specific localities [8]. Indeed, non‐fatal overdose is a key predictor of fatal overdose at the individual level and therefore we expect it to be a strong indicator at the population level [9, 10, 11]. While there is no nationally coordinated scheme in place to systematically collect and make these data publicly available at county level, as per the mortality data from CDC WONDER, non‐fatal drug overdose statistics are often collected by states [8, 12, 13].

In this study we sought to investigate the suitability of using non‐fatal overdose drug data for the early detection of fatal overdose outcomes, using data from California and Florida, as these two states publicly share non‐fatal overdose data at county level and at monthly intervals. We apply the Farrington algorithm (FA), a statistical method widely used in the field of infectious diseases to detect aberrations in time‐series data, such that an abnormal increase in counts, relative to previous observations, would result in an alert. We compare the FA's performance to that of a bench‐mark approach, using the standard deviation as an aberration detection threshold, to evaluate their potential contributions to overdose surveillance efforts, both separately and in combination with one another.

The aims of the study are twofold. First, it aims to assess the validity of undertaking time‐series analyses on both fatal and non‐fatal drug overdose outcomes for the surveillance of emerging drug threats in real time. We evaluate whether monthly alerts in non‐fatal overdose identified through aberration detection methods can aid in identifying spikes in fatal overdose occurring during the same month. The second aim is to explore the use of non‐fatal overdose outcomes to support the ‘early detection’ of fatal drug overdose outbreaks, which we define as a statistically significant increase in the 6‐month overdose death rate. This second analysis addresses the frequent random fluctuations in fatal overdose rates, which may not necessarily reflect changing trends in mortality and could potentially lead to unfounded alerts. By defining a fatal overdose outbreak as a long‐term significant increase in overdose deaths, we aim to focus on the early identification of fundamental changes in the drug supply, drug use patterns or communities involved which pose an emerging threat in the context of the overdose crisis. To achieve this, we assess whether a surveillance system using aberration detection analyses to detect monthly alerts in non‐fatal overdose outcomes can support the identification of an ongoing, long‐term, fatal overdose outbreak.

METHODS

Data sets

Monthly drug overdose death counts for all counties in California and Florida between 2015 and 2021 were extracted from the CDC WONDER restricted database (using underlying cause of death codes X40–44, X60–64, X85 and Y10–14). Monthly drug‐related ED visit counts for 55 counties in California between 2016 and 2021 were obtained from the EpiCenter webpage [14]. These counts reflect visits meeting the criteria for the ‘Poisoning: Drug’ category of the ICD‐10–CM external cause‐of‐injury matrix for reporting mechanism and intent of injury [15]. Monthly counts of overdose‐suspected emergency medical service (EMS) visits were obtained from the Florida Department of Health for all 67 counties in Florida between 2015 and 2020. These data were collected through the Florida EMS Tracking and Reporting System and recorded events were based on the Enhanced State Opioid Overdose Surveillance (ESOOS) criteria, as defined by the state of Florida, intended to detect incidents involving any drug overdose.

It is important to acknowledge the differences between ED and EMS data for the assessment of drug overdose trends. ED data capture information on individuals receiving care in a hospital setting, while those treated by EMS responders may or may not be transported to a hospital for further care. A study of data from nine US states has shown that opioid‐involved overdose encounters captured in EMS data were consistently higher than the number of encounters captured in ED data [16]. A variety of reasons have been put forward to explain the refusal of people treated by EMS responders to go for further care. These include experiences of intolerable withdrawal symptoms after receiving naloxone, as well as concerns regarding the adequacy of care for withdrawal symptoms and stigma from health‐care professionals at hospitals [17]. Despite these differences, the work by Casillas and colleagues shows that ED and EMS data provide comparable and relevant metrics for identifying spikes or anomalies [16].

In addition to county‐level analyses, we also investigated fatal and non‐fatal overdose outcomes at the regional level. In California, regions were defined based on Covered California's 19 rating regions, each of which has different pricing and health insurance options [18]. Two regions in this system fall within Los Angeles county and were therefore grouped together. For Florida, regions were defined based on Florida's Statewide Medicaid Managed Care (SMMC) program, corresponding to eleven regions [19]. See Supporting information, Appendix A for more information on these administrative divisions.

The Institutional Review Board of the University of California San Diego determined that an ethics review was not required for this study because it is certified as not human subjects research according to the Code of Federal Regulations, Title 45, part 46 and UCSD Standard Operating Policies and Procedure (reference no. 807788). The analysis was not pre‐registered and the results should be considered exploratory.

Using the Farrington algorithm as a surveillance tool

There is an extensive list of methods available for the purposes of aberration detection within syndromic surveillance systems [20]. Deciding on a method is complicated, given that there are many factors that can influence the performance of an algorithm, including the characteristics of the time‐series being monitored and those of the epidemic that is the target of the surveillance [20]. The FA method was chosen for use in the current study for pragmatic reasons. First, it is widely used by public health authorities for the surveillance of epidemiological data to detect abnormal increases in time‐series data for different types of health harms [21]. Secondly, the availability of an established and accessible R software package should facilitate the implementation of this approach by overdose surveillance teams, increasing the likelihood of its scale‐up if found to perform well [22]. We applied an algorithm that fitted a negative binomial regression model with spline terms to monthly count data from the previous 3 years to characterize time trends for the outcomes of interest. This approach was applied separately for each geographical jurisdiction, i.e. separate models were fitted for each unique county or region. The model includes an offset term capturing the county population size, given that the likelihood of an opioid‐involved event occurring is expected to increase with population size, all other things being equal. This model establishes a threshold value for the next time‐point, above which observed counts are assumed to reflect a deviation from expectations, based on the negative binomial regression model fitted to previous observed values; that is, beyond a chance occurrence. If the observed count exceeds the threshold value, an alarm is raised by the model.

All analyses presented in this paper have been carried out using the surveillance package in R [22]. A tutorial paper, associated with the surveillance package, demonstrates how the FA model should be implemented in R using example data sets [23]. The package requires the user to format and provide time‐series data for analysis, as well as specifying parameters related to the model specification and the calculation of the threshold value. A key methodological consideration when specifying the model is the choice of the upper percentile of the distribution (α) to represent the threshold. For example, if we assume that parameter α equals 0.05 then this denotes a threshold above which values are expected to occur with an associated likelihood of 5% or less, based on trends in the past data. All else being equal, a smaller value of α will correspond to a higher threshold value and a smaller likelihood of a resulting alert from the algorithm. Ultimately, the value selected denotes the strength of evidence required against the null hypothesis; i.e. that deviations in the observed count from expectations result from random fluctuations. If the threshold is set too high (i.e. α is too small), important aberrations could be missed, whereas setting it too low (i.e. α is too large) could result in frequent false alarms. As such, we explore a range of values (0.01, 0.05, 0.10) for this parameter, as the optimal value is expected to vary among counties due to differences in population size, the degree of measurement error in count estimates and the clinical implications of a false positive or false negative.
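The relationship between the upper percentile and the threshold can be illustrated with a minimal Python sketch. This is not the surveillance package's implementation and it omits the regression step entirely: it assumes that an expected count (`mu`) and a dispersion parameter (`size`) have already been estimated, and both names and the example values are hypothetical. It simply finds the smallest count whose cumulative negative binomial probability reaches 1 − α and raises an alert when the observed count exceeds it.

```python
import math


def nb_pmf(y, mu, size):
    """Negative binomial probability of count y, given mean mu and dispersion size."""
    p = size / (size + mu)
    log_pmf = (
        math.lgamma(y + size) - math.lgamma(size) - math.lgamma(y + 1)
        + size * math.log(p) + y * math.log(1.0 - p)
    )
    return math.exp(log_pmf)


def upper_threshold(mu, size, alpha, max_count=100_000):
    """Smallest count u such that P(Y <= u) >= 1 - alpha."""
    cumulative = 0.0
    for y in range(max_count + 1):
        cumulative += nb_pmf(y, mu, size)
        if cumulative >= 1.0 - alpha:
            return y
    raise ValueError("threshold not reached; increase max_count")


def is_alert(observed, mu, size, alpha):
    """An alert is raised only when the observed count exceeds the threshold."""
    return observed > upper_threshold(mu, size, alpha)


# Hypothetical expected count and dispersion, chosen for illustration only.
for alpha in (0.10, 0.05, 0.01):
    print(alpha, upper_threshold(mu=12.0, size=5.0, alpha=alpha))
```

Under these assumptions the threshold never decreases as α is reduced, which mirrors the trade‐off between missed aberrations and false alarms described above.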

When applying the FA the analyst also needs to specify the number of years of data that will be fitted in the model, known as the baseline data. We use 3 years of baseline data to ensure that there are enough data points to fit the regression model, which is in keeping with findings in the methodological literature which propose that this is the minimum time‐frame required for the estimation of robust results [20]. No additional covariates were included in the model. In certain applications, covariates may be included if they are important predictors of the time‐series data and can provide better forecasts, e.g. weather covariates for heat‐related illnesses [24]. However, while potential covariates, including MOUD and naloxone coverage, were considered, data were not available at the county‐level for both states during the period investigated.

Bench‐mark surveillance approach: standard deviation

An alternative approach for generating alerts was also tested based on estimates of the mean and standard deviation for the previous 3 years of count data (alerts were only raised for counts ≥ 4). To promote comparability between the two methods, we also varied the statistical inference criteria for the standard deviation approach, so that it would correspond to an α of 0.01, 0.05 and 0.10, respectively. The standard deviation approach was chosen based on reports from collaborators at public health departments describing its application to support their surveillance efforts and based on its widespread use as a standard statistical measure.
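A rule of this kind can be sketched in a few lines of Python. The sketch below is illustrative only: the text does not specify how the significance level was mapped to a multiple of the standard deviation, so the use of a one‐sided normal quantile is an assumption, and the function and argument names are hypothetical.

```python
from statistics import NormalDist, mean, stdev


def sd_rule_alert(history, observed, alpha=0.05, min_count=4):
    """Flag `observed` if it exceeds mean + z * SD of the baseline counts.

    history   : counts from the baseline window (e.g. the previous 36 months)
    alpha     : upper-tail probability; the one-sided normal quantile is an
                assumption made for this sketch
    min_count : alerts are suppressed for counts below this value
    """
    z = NormalDist().inv_cdf(1.0 - alpha)
    threshold = mean(history) + z * stdev(history)
    return observed >= min_count and observed > threshold


# Hypothetical 36-month baseline for one county.
baseline = [3, 5, 4, 6, 5, 4] * 6
print(sd_rule_alert(baseline, observed=12))  # well above the baseline
print(sd_rule_alert(baseline, observed=5))   # within normal variation
```

The `min_count` guard prevents alerts in very small counties, where a change from zero to two or three events would otherwise exceed the threshold.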

Comparison of alerts generated with monthly fatal and non‐fatal data

We assessed the degree of alignment between the monthly alerts generated from fatal and non‐fatal data using a variety of metrics. Sensitivity and specificity for the detection of a spike in the fatal overdose count were estimated based on alerts detected using non‐fatal overdose data relative to those detected using the equivalent fatal overdose data. Positive and negative predictive values (PPV and NPV) were also estimated, indicating the likelihood that an alert in the non‐fatal outcome data would successfully identify an alert identified in the overdose mortality data.
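The four metrics can be computed from paired monthly alert indicators, treating alerts in the fatal data as the reference standard. The following Python sketch shows the calculation; the function name and the example series are hypothetical and do not reproduce any study data.

```python
def diagnostic_metrics(nonfatal_alerts, fatal_alerts):
    """Compare non-fatal alerts (test) against fatal alerts (reference)."""
    pairs = list(zip(nonfatal_alerts, fatal_alerts))
    tp = sum(1 for n, f in pairs if n and f)          # both alert
    fp = sum(1 for n, f in pairs if n and not f)      # non-fatal alert only
    fn = sum(1 for n, f in pairs if not n and f)      # fatal alert only
    tn = sum(1 for n, f in pairs if not n and not f)  # neither alerts

    def ratio(numerator, denominator):
        return numerator / denominator if denominator else float("nan")

    return {
        "sensitivity": ratio(tp, tp + fn),
        "specificity": ratio(tn, tn + fp),
        "ppv": ratio(tp, tp + fp),
        "npv": ratio(tn, tn + fn),
    }


# Hypothetical alert indicators for eight monthly evaluations.
nonfatal = [True, False, True, False, False, True, False, False]
fatal = [True, False, False, False, True, False, False, False]
print(diagnostic_metrics(nonfatal, fatal))
```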

Use of monthly non‐fatal overdose data for the early detection of ongoing fatal overdose outbreaks

We define a fatal overdose outbreak as a statistically significant increase in the 6‐month overdose death rate. A statistically significant difference was assumed when the 95% confidence interval for a given 6‐month period was higher than, and non‐overlapping with, the 95% confidence interval for the previous 6‐month period. To examine the potential early detection of ongoing fatal overdose outbreaks using non‐fatal overdose data, we calculated the number of alerts generated over 6 months by applying the FA and the standard deviations method to non‐fatal data when a fatal overdose outbreak occurred versus when it did not occur.
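The outbreak definition can be expressed as a simple comparison of confidence intervals. The text does not state how the intervals were calculated, so the Python sketch below assumes a normal approximation to a Poisson count and a constant population across the two periods; these assumptions, the function names and the example numbers are all hypothetical.

```python
import math


def rate_ci(deaths, population, z=1.96):
    """Approximate 95% CI for a death rate (normal approximation, assumed)."""
    rate = deaths / population
    half_width = z * math.sqrt(deaths) / population
    return max(0.0, rate - half_width), rate + half_width


def is_outbreak(previous_deaths, current_deaths, population):
    """True if the current 6-month CI lies entirely above the previous one."""
    _, previous_upper = rate_ci(previous_deaths, population)
    current_lower, _ = rate_ci(current_deaths, population)
    return current_lower > previous_upper


# Hypothetical county with 500 000 residents.
print(is_outbreak(previous_deaths=40, current_deaths=80, population=500_000))
print(is_outbreak(previous_deaths=40, current_deaths=50, population=500_000))
```

Requiring non‐overlapping intervals is a conservative criterion, which is consistent with the aim of avoiding alerts driven by random month‐to‐month fluctuation.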

Diagnostics

There are stark contrasts between counties in terms of their population sizes. For the 55 counties in California included in the analysis, the population sizes ranged between 8527 and 9 761 210 in 2023 [25]. In Florida, the county population sizes ranged between 7831 and 2 757 592 in 2022 [26]. These differences in population size inevitably give rise to overdose counts (both fatal and non‐fatal) that vary in magnitude throughout counties, regardless of the corresponding rates, conditional on population size. This situation can present a challenge when making inferences concerning changing overdose rates for jurisdictions with small populations compared to those with larger populations, all other things being equal. In anticipation of this concern, we sought to examine variations in the statistical power of time‐trend parameter estimates for the FA models among population sizes and achieved this by illustrating P‐value significance test estimates across counties.

RESULTS

Descriptive statistics for fatal and non‐fatal drug outcomes in California and Florida

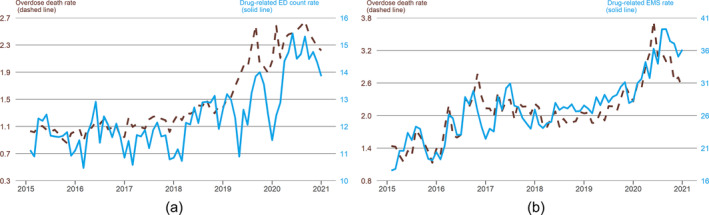

At the state level, there were increases in drug‐related outcomes over time in both California and Florida (see Figure 1a,b). Overdose death rates were generally higher in Florida than in California at equivalent time‐points and both states exhibited notable increases in 2020. Fatal and non‐fatal outcomes showed similar trends in both states aside from a deviation in ED visits in California in 2020, most probably due to disruptions in health‐care services resulting from the COVID‐19 pandemic. At the county level, the correlations between fatal and non‐fatal outcomes were high in both states (see Table 1).

FIGURE 1.

(a) Overdose death rates and drug‐related emergency department (ED) admissions in California (per 100 k); (b) overdose death rates and drug‐related emergency medical service (EMS) visits in Florida (per 100 k).

TABLE 1.

Correlations in fatal and non‐fatal outcomes.

| Year | California | Florida |
|---|---|---|
| 2015 | NA | 0.81 |
| 2016 | 0.94 | 0.78 |
| 2017 | 0.96 | 0.83 |
| 2018 | 0.96 | 0.86 |
| 2019 | 0.97 | 0.89 |
| 2020 | 0.97 | 0.86 |
| 2021 | 0.98 | NA |
| All years | 0.93 | 0.84 |

Abbreviation: NA = not available.

Descriptive statistics for surveillance evaluation of fatal and non‐fatal outcomes

The proportion of evaluations with alerts generated was higher as the parameter value α increased, regardless of the outcome or state under investigation. This finding can be seen in Tables 2 and 3, which also show that the standard deviation approach yields a larger proportion of alerts compared to the FA method in the fatal outcome data across all scenarios. Specifically, 3–22% of monthly evaluations led to an alert, corresponding to an average of approximately one to eight alerts per county during a 3‐year period. The two approaches yield a similar proportion of alerts throughout scenarios (5–23%) with the non‐fatal outcome data. The estimates corresponding to the ‘Alert from either method’ show the proportion of alerts generated when alerts from either or both methods are detected. This approach yields the largest proportion of alerts, although the divergence from the other three approaches is larger for the non‐fatal outcome data, which indicates an incongruity in trends across the two outcomes. The estimates corresponding to the ‘Alert from both methods’ approach show the degree of overlap between the FA method and the standard deviation rule, capturing the data points where alerts were yielded for both methods. For this approach, the proportion of alerts is either equal to or smaller than those obtained using a single method, and these estimates indicate a greater degree of overlap between the methods in the fatal data compared to the non‐fatal data. The analyses of region‐level data yielded larger proportions of evaluations with alerts (i.e. greater overlap) compared to those conducted at the county level (see Supporting information, Tables S1a and S2a for details).
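The ‘either’ and ‘both’ strategies described above amount to element‐wise logical OR and AND operations on the two alert series. The Python sketch below illustrates this with hypothetical alert indicators; it is not drawn from the study data.

```python
def combine_alerts(fa_alerts, sd_alerts):
    """Return the 'either method' and 'both methods' alert series."""
    either = [a or b for a, b in zip(fa_alerts, sd_alerts)]
    both = [a and b for a, b in zip(fa_alerts, sd_alerts)]
    return either, both


def proportion(flags):
    """Share of monthly evaluations that yielded an alert."""
    return sum(flags) / len(flags)


# Hypothetical monthly alert indicators from the two methods.
fa = [True, False, False, True, False, False, False, True]
sd = [True, True, False, False, False, False, False, True]
either, both = combine_alerts(fa, sd)
print(proportion(fa), proportion(sd), proportion(either), proportion(both))
```

By construction, the ‘both’ proportion cannot exceed that of either single method, and the ‘either’ proportion cannot fall below it.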

TABLE 2.

Proportion of monthly surveillance evaluations on county‐level fatal opioid overdose data yielding an alert.

| | Alert from Farrington algorithm | Alert from standard deviation rule | Alert from either method | Alert from both methods |
|---|---|---|---|---|
| California | | | | |
| α = 0.01 | 0.03 | 0.08 | 0.08 | 0.03 |
| α = 0.05 | 0.09 | 0.17 | 0.18 | 0.08 |
| α = 0.10 | 0.13 | 0.22 | 0.24 | 0.11 |
| Florida | | | | |
| α = 0.01 | 0.03 | 0.04 | 0.05 | 0.02 |
| α = 0.05 | 0.06 | 0.08 | 0.10 | 0.04 |
| α = 0.10 | 0.09 | 0.11 | 0.14 | 0.06 |

TABLE 3.

Proportion of monthly surveillance evaluations on county‐level non‐fatal overdose data yielding an alert.

| | Alert from Farrington algorithm | Alert from standard deviation rule | Alert from either method | Alert from both methods |
|---|---|---|---|---|
| California | | | | |
| α = 0.01 | 0.05 | 0.05 | 0.07 | 0.02 |
| α = 0.05 | 0.12 | 0.12 | 0.16 | 0.07 |
| α = 0.10 | 0.18 | 0.18 | 0.24 | 0.12 |
| Florida | | | | |
| α = 0.01 | 0.06 | 0.07 | 0.09 | 0.04 |
| α = 0.05 | 0.14 | 0.15 | 0.20 | 0.10 |
| α = 0.10 | 0.21 | 0.23 | 0.29 | 0.14 |

Assessment of alerts generated with monthly non‐fatal data to identify spikes in monthly fatal overdose data

Tables 4 and 5 show that correlations between surveillance evaluations on county‐level fatal and non‐fatal data yielding alerts are relatively poor, i.e. less than 35% sensitivity for most of the scenarios considered. In contrast, the correlation between evaluations yielding no alerts is high, i.e. more than 75% specificity for most of the scenarios considered. The results in Tables 4 and 5 correspond to analyses with the parameter value α set to 0.05. Additional results with different α values can be found in the Supporting information (Tables S4a and S4b). These results show that correlations between surveillance evaluations on county‐level fatal and non‐fatal data yielding alerts increase as α is increased. In contrast, correlations between evaluations yielding no alerts decrease as α is increased. The correlations between evaluations on region‐level fatal and non‐fatal data yielding alerts are consistently higher, albeit still modest at best, in comparison to those from the county‐level analyses. The differences between correlations among evaluations yielding no alerts at the region‐level compared to those at the county‐level were mixed.

TABLE 4.

Correlations between monthly fatal and non‐fatal surveillance evaluations at county and region‐level for California.

| | Alert from Farrington algorithm | Alert from standard deviation rule | Alert from either method | Alert from both methods |
|---|---|---|---|---|
| County‐level | | | | |
| Sensitivity | 0.17 | 0.19 | 0.25 | 0.11 |
| Specificity | 0.89 | 0.90 | 0.85 | 0.93 |
| PPV | 0.13 | 0.27 | 0.27 | 0.12 |
| NPV | 0.92 | 0.84 | 0.84 | 0.92 |
| Region‐level | | | | |
| Sensitivity | 0.21 | 0.29 | 0.34 | 0.14 |
| Specificity | 0.82 | 0.87 | 0.80 | 0.89 |
| PPV | 0.19 | 0.52 | 0.47 | 0.18 |
| NPV | 0.84 | 0.71 | 0.69 | 0.85 |

Abbreviations: PPV = positive predictive value; NPV = negative predictive value.

TABLE 5.

Correlations between monthly fatal and non‐fatal surveillance evaluations at county and region‐level for Florida.

| | Alert from Farrington algorithm | Alert from standard deviation rule | Alert from either method | Alert from both methods |
|---|---|---|---|---|
| County‐level | | | | |
| Sensitivity | 0.32 | 0.25 | 0.34 | 0.20 |
| Specificity | 0.87 | 0.85 | 0.82 | 0.91 |
| PPV | 0.14 | 0.13 | 0.17 | 0.09 |
| NPV | 0.95 | 0.93 | 0.92 | 0.96 |
| Region‐level | | | | |
| Sensitivity | 0.39 | 0.43 | 0.52 | 0.24 |
| Specificity | 0.87 | 0.81 | 0.76 | 0.91 |
| PPV | 0.40 | 0.33 | 0.42 | 0.25 |
| NPV | 0.87 | 0.87 | 0.82 | 0.91 |

Abbreviations: PPV = positive predictive value; NPV = negative predictive value.

TABLE 6.

Testing performance of using non‐fatal surveillance evaluations for the early detection of fatal overdose outbreaks in California.

| | Farrington algorithm | Standard deviation rule | Alert from either method | Alert from both methods |
|---|---|---|---|---|
| County‐level | | | | |
| Sensitivity | 0.51 | 0.55 | 0.66 | 0.40 |
| Specificity | 0.57 | 0.59 | 0.47 | 0.70 |
| PPV | 0.34 | 0.37 | 0.35 | 0.37 |
| NPV | 0.73 | 0.76 | 0.76 | 0.73 |
| Region‐level | | | | |
| Sensitivity | 0.73 | 0.69 | 0.81 | 0.59 |
| Specificity | 0.50 | 0.57 | 0.40 | 0.68 |
| PPV | 0.56 | 0.58 | 0.54 | 0.61 |
| NPV | 0.69 | 0.68 | 0.72 | 0.66 |

Abbreviations: PPV = positive predictive value; NPV = negative predictive value.

Assessment of alerts generated using non‐fatal data for the early detection of overdose outbreaks

Tables 6 and 7 show the test performance of using monthly non‐fatal surveillance evaluations for the early detection of fatal overdose outbreaks (defined as a statistically significant increase in the fatal overdose rate during a 6‐month period). During fatal overdose outbreaks, a strategy based on the detection of alerts from either the FA or standard deviation methods consistently yielded the highest sensitivity estimates of the four approaches considered, with 66% sensitivity at county‐level for both California and Florida and 81% and 77% sensitivity at regional level for California and Florida, respectively. However, specificity was low for this scenario at 40–47% throughout the two states at county and region‐level. During the phases without fatal overdose outbreaks, a strategy guided by the detection of no alerts on both the FA and standard deviation methods yielded the highest specificity (68–79%). The PPV and NPV varied less across scenarios, but using ‘alerts from both methods’ yielded better outcomes overall (i.e. higher PPV with relatively low impact on NPV) at both county and region‐level for both states. Based on the sensitivity and PPV results, we can see that the region‐level analyses were better for the identification of true positives (i.e. cases where there is an ongoing fatal overdose outbreak) compared to the county‐level analyses. Building on the PPV results, we observed increases in the likelihood of a statistically significant fatal overdose outbreak having occurred as the number of non‐fatal alerts increased within a 6‐month time‐frame (see Supporting information, Tables S5a to S5d). This trend did not always exhibit a clear significance, however, which may be partly due to the limited number of observations. Among false negatives at the county level in California, 72% of cases had at least one alert (with the FA or standard deviation method) when looking at the corresponding region‐level evaluations. This equated to 38% of all county‐level evaluations yielding no alert. In Florida, 49% of the false negatives at the county level had at least one alert for the corresponding region‐level evaluations, which equated to 26% of all the county‐level evaluations with no alert. The specificity and NPV results indicate that the county‐level analyses were superior for the identification of true negatives (i.e. cases without fatal overdose outbreaks) compared to the region‐level analyses.

TABLE 7.

Testing performance of using non‐fatal surveillance evaluations for the early detection of fatal overdose outbreaks in Florida.

| | Farrington algorithm | Standard deviation rule | Alert from either method | Alert from both methods |
|---|---|---|---|---|
| County‐level | | | | |
| Sensitivity | 0.53 | 0.54 | 0.66 | 0.39 |
| Specificity | 0.59 | 0.59 | 0.47 | 0.72 |
| PPV | 0.32 | 0.32 | 0.31 | 0.34 |
| NPV | 0.78 | 0.78 | 0.79 | 0.76 |
| Region‐level | | | | |
| Sensitivity | 0.65 | 0.64 | 0.77 | 0.52 |
| Specificity | 0.66 | 0.54 | 0.43 | 0.79 |
| PPV | 0.57 | 0.49 | 0.48 | 0.63 |
| NPV | 0.73 | 0.68 | 0.73 | 0.70 |

Abbreviations: PPV = positive predictive value; NPV = negative predictive value.

Figures in the Supporting information (S2–S152) show time‐series data for non‐fatal overdose data for all the counties and regions in California and Florida, as well as the timing of alerts generated with the different methods and the timing of fatal overdose outbreaks. For these plots, the surveillance algorithms may be regarded as exhibiting a good performance if two sets of characteristics are observed: (i) alerts are raised only during ongoing fatal overdose outbreaks (indicated by shaded regions) and (ii) each distinct fatal overdose outbreak phase exhibits at least one alert raised by a surveillance algorithm. There are 10 counties (Kern, Lassen, Los Angeles, Madera, Orange, Riverside, San Francisco, Santa Cruz, Tehama and Tulare) exhibiting these characteristics in California and eight counties (Broward, Clay, Collier, Duval, Gulf, Levy, Palm Beach and Pinellas) exhibiting them in Florida. In contrast, the surveillance algorithms may be regarded as exhibiting a bad performance if two sets of characteristics are observed: (i) alerts are only raised at time‐points outside the fatal overdose outbreak phases and (ii) there are overdose outbreak phases observed without any alerts. There are four counties (Imperial, Marin, Placer and Plumas) exhibiting these characteristics in California and six counties (Bradford, Calhoun, Columbia, Hernando, Manatee and St Johns) exhibiting them in Florida.

Diagnostics

There was variation throughout counties in the proportion of surveillance evaluations meeting P‐value significance criteria associated with the time trends in the FA models. Figures S1a–S1d in the Supporting Information show that counties with smaller populations (fewer than 500 k) were less likely to exhibit low P‐values compared to those with larger populations (greater than 500 k). This finding is important, because results in the Supporting information (Tables S2a and S2b) show that the proportion of surveillance evaluations yielding an alert changes if a significance cut‐off criterion is employed, along the lines of the original FA method [27]. The region‐level analyses exhibited higher proportions of surveillance evaluations meeting P‐value significance criteria compared to the county‐level analyses (see Supporting information, Tables S3a and S3b), providing further evidence on the robustness of the FA method in larger populations.

DISCUSSION

This paper represents an important contribution to the published literature as the first study, to our knowledge, applying the FA method to time‐series overdose data for the surveillance of overdose spikes and outbreaks. We find that the application of aberration methods to monthly non‐fatal overdose to detect monthly fatal overdose spikes has a relatively low performance due to erratic fatal overdose outcomes (i.e. relatively small numbers). However, the performance is improved in the context of ongoing fatal overdose outbreaks, especially when assessing alerts at the regional level rather than the county level. This finding aligns with guidance recommending that the target geographical area for assessing the number of overdoses should have a minimum population of 500 000 people [28]. In contrast, county‐level surveillance appears to be more appropriate for ruling out the possibility of an overdose outbreak. We recommend that future research should explore the use of decision tree classification methods to identify the optimal combination of the aberration detection outputs for predicting the occurrence of overdose outbreaks [29]. These extensions might also explore the use of variables reflecting county characteristics and the patterns of alerts accruing over time.

Regardless of the model specification used to interpret the aberration detection outputs, analysts inevitably encounter a trade‐off between sensitivity and specificity when formulating a strategic response to these outputs. As such, the analyst must (implicitly or explicitly) weigh up the implications of all the possible outcomes (i.e. true positive, false negative, true negative, false positive). A challenge here lies in the need to characterize the strategic response that would be implemented in the face of an outbreak, and to anticipate the benefits and costs associated with the potential outcomes. Harm reduction strategies play a critical role in preventing opioid‐related overdoses, but research has shown that an understanding of the engagement of people who use drugs with these services is needed to ensure effective implementation [30]. Given that overdose outbreaks have historically tended to coincide with changes in the drug supply and/or an identifiable change in health behaviors associated with drug use [2, 3], a starting‐point for health departments in responding to future outbreaks should be identifying the causes and/or populations of interest through qualitative assessments. A successful example of this type of response was documented in Connecticut in 2019, when an alert was raised by the state‐wide surveillance program following EMS visits for suspected overdoses among people smoking crack cocaine [31]. The alert prompted a response from local harm reduction groups involving the distribution of fentanyl test strips and naloxone to people using crack cocaine, as well as warnings regarding the dangers of using alone. In addition, it is important that overdose surveillance systems and outbreak detection methods are continually evaluated to ensure that they promote the best use of public health resources and operate efficiently [32].
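The trade-off described above can be made concrete with a small Python sketch that raises an alert when either of two methods alerts and then compares sensitivity and specificity against known outbreak periods. The function names and the example data are hypothetical and are not taken from the study; the sketch only illustrates how the four outcome types feed into the two measures.

```python
def combine_either(alerts_a, alerts_b):
    """Alert in a period if either method alerts in that period."""
    return [a or b for a, b in zip(alerts_a, alerts_b)]


def sensitivity_specificity(alerts, outbreaks):
    """Return (sensitivity, specificity) for paired alert/outbreak flags.

    A measure is None when its denominator is zero.
    """
    tp = sum(1 for a, o in zip(alerts, outbreaks) if a and o)
    fn = sum(1 for a, o in zip(alerts, outbreaks) if not a and o)
    tn = sum(1 for a, o in zip(alerts, outbreaks) if not a and not o)
    fp = sum(1 for a, o in zip(alerts, outbreaks) if a and not o)
    sensitivity = tp / (tp + fn) if (tp + fn) else None
    specificity = tn / (tn + fp) if (tn + fp) else None
    return sensitivity, specificity


# Made-up flags for six evaluation periods.
method_1 = [True, False, False, True, False, False]
method_2 = [False, True, False, True, False, True]
outbreaks = [True, True, False, True, False, False]

single = sensitivity_specificity(method_1, outbreaks)
combined = sensitivity_specificity(combine_either(method_1, method_2), outbreaks)
```

In this toy example, combining the two methods raises sensitivity from 2/3 to 1 while lowering specificity from 1 to 2/3, which is the kind of exchange an analyst would need to weigh against the costs of responding to a false alert.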

Strengths and limitations

This paper demonstrates the surveillance potential of using aberration detection methods to identify drug overdose outbreaks. More work is needed to determine the validity of the methods across a broader range of geographical areas, given that the present study only looks at data from two states. Furthermore, the generalizability of the findings may be limited by the time‐frames over which data were available, and so additional research should apply aberration detection methods to future data. The latter point is particularly relevant, given that the time‐points evaluated in this study cover the onset of the COVID‐19 pandemic. More generally, it is important to acknowledge that there are a multitude of possible surveillance methods available, beyond those employed in this study, that future work could draw on and integrate for the surveillance of drug overdose outcomes [33]. This study has demonstrated that combining two surveillance methods for the detection of overdose outbreaks is more productive than using one method alone, indicating that the adoption of additional methods could yield further gains. Future work might also consider incorporating data on the geospatial proximity of overdoses in nearby counties using geographical weights, which can improve stability in outbreak detection [34]. In addition, evaluating the strength of other potential population‐level fatal overdose predictors, such as the incidence of soft‐tissue infections, endocarditis and other drug‐related harms, might lead to improved performance, particularly in the context of highly adulterated drug supplies [35]. Finally, it is important to acknowledge that while this work might help in mitigating the impacts of future overdose outbreaks, it does not address the structural drivers of the overdose crisis in the United States. To this end, policymakers must tackle root causes of the overdose crisis, including the lack of economic opportunities, poor working and housing conditions and eroded social support for depressed communities [36].

CONCLUSIONS

This study assesses the validity of employing aberration detection methods for the surveillance of emerging drug threats in real time. The results indicate that these methods can be used to support the early detection of fatal drug overdose outbreaks, particularly when assessing data from regions comprising multiple counties rather than single counties alone. Furthermore, we observe that early detection is improved when multiple aberration detection methodologies are applied in combination rather than individual methods separately. We recommend that health departments use these methods within a surveillance system, in combination with qualitative assessments to identify changes in the drug supply and/or identifiable changes in health behaviors associated with drug use.

AUTHOR CONTRIBUTIONS

Thomas Patton: Conceptualization (equal); data curation (equal); formal analysis (equal); investigation (equal); methodology (equal); validation (equal); visualization (equal); writing—original draft (equal). Sharon Trillo‐Park: Conceptualization (equal); data curation (equal); project administration (equal). Bethan Swift: Validation (equal). Annick Bórquez: Conceptualization (equal); methodology (equal); supervision (equal); validation (equal).

DECLARATION OF INTERESTS

None to declare.

Supporting information

Appendix S1. Supporting Information

Figure S1a: Proportion of Surveillance Evaluations on Fatal Data with Trend Terms Meeting P‐Value Significance Criteria By Population Size of Counties in California.

Figure S1b: Proportion of Surveillance Evaluations on Nonfatal Data with Trend Terms Meeting P‐Value Significance Criteria By Population Size of Counties in California.

Figure S1c: Proportion of Surveillance Evaluations on Fatal Data with Trend Terms Meeting P‐Value Significance Criteria By Population Size of Counties in Florida.

Figure S1d: Proportion of Surveillance Evaluations on Nonfatal Data with Trend Terms Meeting P‐Value Significance Criteria By Population Size of Counties in Florida.

Figure S2: Time‐series for Alameda.

Figure S3: Time‐series for Amador.

Figure S4: Time‐series for Butte.

Figure S5: Time‐series for Calaveras.

Figure S6: Time‐series for Colusa.

Figure S7: Time‐series for Contra Costa.

Figure S8: Time‐series for Del Norte.

Figure S9: Time‐series for El Dorado.

Figure S10: Time‐series for Fresno.

Figure S11: Time‐series for Glenn.

Figure S12: Time‐series for Humboldt.

Figure S13: Time‐series for Imperial.

Figure S14: Time‐series for Inyo.

Figure S15: Time‐series for Kern.

Figure S16: Time‐series for Kings.

Figure S17: Time‐series for Lake.

Figure S18: Time‐series for Lassen.

Figure S19: Time‐series for Los Angeles.

Figure S20: Time‐series for Madera.

Figure S21: Time‐series for Marin.

Figure S22: Time‐series for Mariposa.

Figure S23: Time‐series for Mendocino.

Figure S24: Time‐series for Merced.

Figure S25: Time‐series for Modoc.

Figure S26: Time‐series for Mono.

Figure S27: Time‐series for Monterey.

Figure S28: Time‐series for Napa.

Figure S29: Time‐series for Nevada.

Figure S30: Time‐series for Orange.

Figure S31: Time‐series for Placer.

Figure S32: Time‐series for Plumas.

Figure S33: Time‐series for Riverside.

Figure S34: Time‐series for Sacramento.

Figure S35: Time‐series for San Benito.

Figure S36: Time‐series for San Bernardino.

Figure S37: Time‐series for San Diego.

Figure S38: Time‐series for San Francisco.

Figure S39: Time‐series for San Joaquin.

Figure S40: Time‐series for San Luis Obispo.

Figure S41: Time‐series for San Mateo.

Figure S42: Time‐series for Santa Barbara.

Figure S43: Time‐series for Santa Clara.

Figure S44: Time‐series for Santa Cruz.

Figure S45: Time‐series for Shasta.

Figure S46: Time‐series for Siskiyou.

Figure S47: Time‐series for Solano.

Figure S48: Time‐series for Sonoma.

Figure S49: Time‐series for Stanislaus.

Figure S50: Time‐series for Tehama.

Figure S51: Time‐series for Trinity.

Figure S52: Time‐series for Tulare.

Figure S53: Time‐series for Tuolumne.

Figure S54: Time‐series for Ventura.

Figure S55: Time‐series for Yolo.

Figure S56: Time‐series for Yuba.

Figure S57: Time‐series for Alachua.

Figure S58: Time‐series for Baker.

Figure S59: Time‐series for Bay.

Figure S60: Time‐series for Bradford.

Figure S61: Time‐series for Brevard.

Figure S62: Time‐series for Broward.

Figure S63: Time‐series for Calhoun.

Figure S64: Time‐series for Charlotte.

Figure S65: Time‐series for Citrus.

Figure S66: Time‐series for Clay.

Figure S67: Time‐series for Collier.

Figure S68: Time‐series for Columbia.

Figure S69: Time‐series for DeSoto.

Figure S70: Time‐series for Dixie.

Figure S71: Time‐series for Duval.

Figure S72: Time‐series for Escambia.

Figure S73: Time‐series for Flagler.

Figure S74: Time‐series for Franklin.

Figure S75: Time‐series for Gadsden.

Figure S76: Time‐series for Gilchrist.

Figure S77: Time‐series for Glades.

Figure S78: Time‐series for Gulf.

Figure S79: Time‐series for Hamilton.

Figure S80: Time‐series for Hardee.

Figure S81: Time‐series for Hendry.

Figure S82: Time‐series for Hernando.

Figure S83: Time‐series for Highlands.

Figure S84: Time‐series for Hillsborough.

Figure S85: Time‐series for Holmes.

Figure S86: Time‐series for Indian River.

Figure S87: Time‐series for Jackson.

Figure S88: Time‐series for Jefferson.

Figure S89: Time‐series for Lafayette.

Figure S90: Time‐series for Lake.

Figure S91: Time‐series for Lee.

Figure S92: Time‐series for Leon.

Figure S93: Time‐series for Levy.

Figure S94: Time‐series for Liberty.

Figure S95: Time‐series for Madison.

Figure S96: Time‐series for Manatee.

Figure S97: Time‐series for Marion.

Figure S98: Time‐series for Martin.

Figure S99: Time‐series for Miami‐Dade.

Figure S100: Time‐series for Monroe.

Figure S101: Time‐series for Nassau.

Figure S102: Time‐series for Okaloosa.

Figure S103: Time‐series for Okeechobee.

Figure S104: Time‐series for Orange.

Figure S105: Time‐series for Osceola.

Figure S106: Time‐series for Palm Beach.

Figure S107: Time‐series for Pasco.

Figure S108: Time‐series for Pinellas.

Figure S109: Time‐series for Polk.

Figure S110: Time‐series for Putnam.

Figure S111: Time‐series for Santa Rosa.

Figure S112: Time‐series for Sarasota.

Figure S113: Time‐series for Seminole.

Figure S114: Time‐series for St. Johns.

Figure S115: Time‐series for St. Lucie.

Figure S116: Time‐series for Sumter.

Figure S117: Time‐series for Suwannee.

Figure S118: Time‐series for Taylor.

Figure S119: Time‐series for Union.

Figure S120: Time‐series for Volusia.

Figure S121: Time‐series for Wakulla.

Figure S122: Time‐series for Walton.

Figure S123: Time‐series for Washington.

Figure S124: Time‐series for Region 1.

Figure S125: Time‐series for Region 2.

Figure S126: Time‐series for Region 3.

Figure S127: Time‐series for Region 4.

Figure S128: Time‐series for Region 5.

Figure S129: Time‐series for Region 6.

Figure S130: Time‐series for Region 7.

Figure S131: Time‐series for Region 8.

Figure S132: Time‐series for Region 9.

Figure S133: Time‐series for Region 10.

Figure S134: Time‐series for Region 11.

Figure S135: Time‐series for Region 12.

Figure S136: Time‐series for Region 13.

Figure S137: Time‐series for Region 14.

Figure S138: Time‐series for Regions 15–16.

Figure S139: Time‐series for Region 17.

Figure S140: Time‐series for Region 18.

Figure S141: Time‐series for Region 19.

Figure S142: Time‐series for Region 1.

Figure S143: Time‐series for Region 2.

Figure S144: Time‐series for Region 3.

Figure S145: Time‐series for Region 4.

Figure S146: Time‐series for Region 5.

Figure S147: Time‐series for Region 6.

Figure S148: Time‐series for Region 7.

Figure S149: Time‐series for Region 8.

Figure S150: Time‐series for Region 9.

Figure S151: Time‐series for Region 10.

Figure S152: Time‐series for Region 11.

Table S1a: Proportion of Surveillance Evaluations on Region‐Level ODD Data Yielding an Alert.

Table S1b: Proportion of Surveillance Evaluations on Region‐Level Nonfatal Data Yielding an Alert.

Table S2a: Proportion of Surveillance Evaluations on County‐Level ODD Data Yielding an Alert (significant trend cases only).

Table S2b: Proportion of Surveillance Evaluations on County‐Level Nonfatal Data Yielding an Alert (significant trend cases only).

Table S3a: Proportion of Surveillance Evaluations on County‐Level Fatal Data Meeting P‐Value Significance Criteria.

Table S3b: Proportion of Surveillance Evaluations on County‐Level Nonfatal Data Meeting P‐Value Significance Criteria.

Table S4a: Correlations Between Fatal and Nonfatal Surveillance Evaluations at the County‐Level for California.

Table S4b: Correlations Between Fatal and Nonfatal Surveillance Evaluations at the County‐Level for Florida.

Table S5a: Likelihood of a statistically‐significant increase in the overdose death rate (ODR) having occurred contingent on the number of monthly nonfatal alerts derived over 6‐month timeframes contingent on whether (California, county level).

Table S5b: Likelihood of a statistically‐significant increase in the overdose death rate (ODR) having occurred contingent on the number of monthly nonfatal alerts derived over 6‐month time‐frames contingent on whether (Florida, county level).

Table S5c: Odds ratios of a statistically‐significant increase in the overdose death rate (ODR) having occurred contingent on the number of monthly nonfatal alerts derived over 6‐month timeframes contingent on whether (California, county level).

Table S5d: Odds ratios of a statistically‐significant increase in the overdose death rate (ODR) having occurred contingent on the number of monthly nonfatal alerts derived over 6‐month timeframes contingent on whether (Florida, county level).

ACKNOWLEDGEMENTS

This work was supported by a T32 Training Grant (T32 DA023356) and a NIDA Avenir Grant (DP2DA049295). The authors would like to thank Christos Lazarou, Christina Galardi, Katie O'Shields and Slone Taylor for participating in discussions about the topic of overdose data surveillance during the early stages of this work.

Patton T, Trillo‐Park S, Swift B, Bórquez A. Early detection and prediction of non‐fatal drug‐related incidents and fatal overdose outbreaks using the Farrington algorithm. Addiction. 2025;120(2):266–275. 10.1111/add.16674

DATA AVAILABILITY STATEMENT

The mortality data that support the findings of this study are available from the CDC WONDER restricted database. Access to, and use of, these data require research‐proposal review [https://www.cdc.gov/nchs/nvss/index.htm] and approval by the National Center for Health Statistics. The emergency department count data from California in this study are openly available from the EpiCenter webpage at https://skylab4.cdph.ca.gov/epicenter/. The emergency medical services count data from Florida in this study are openly available from the Florida Department of Health webpage at https://www.flhealthcharts.gov/Charts/.

REFERENCES

- 1. Ahmad F, Cisewski J, Rossen L, Sutton P. Provisional drug overdose death counts [internet]. Atlanta, GA: Department of Health and Human Services, CDC, National Center for Health Statistics; 2023. Available at: https://www.cdc.gov/nchs/nvss/vsrr/drug-overdose-data.htm (accessed 26 January 2024).

- 2. Ciccarone D. The triple wave epidemic: supply and demand drivers of the US opioid overdose crisis. Int J Drug Policy. 2019;71:183–188.

- 3. Ciccarone D. The rise of illicit fentanyls, stimulants and the fourth wave of the opioid overdose crisis. Curr Opin Psychiatry. 2021;34:344–350.

- 4. Pardo B, Taylor J, Caulkins JP, Kilmer B, Reuter P, Stein BD. The future of fentanyl and other synthetic opioids. Santa Monica, CA: RAND Corporation; 2019.

- 5. Hoots BE. Opioid overdose surveillance: improving data to inform action. Public Health Rep. 2021;136:5S–8S.

- 6. Cartus AR, Li Y, Macmadu A, Goedel WC, Allen B, Cerdá M, et al. Forecasted and observed drug overdose deaths in the US during the COVID‐19 pandemic in 2020. JAMA Netw Open. 2022;5:e223418.

- 7. Blanco C, Wall MM, Olfson M. Data needs and models for the opioid epidemic. Mol Psychiatry. 2021;27:787–792.

- 8. Moore PQ, Weber J, Cina S, Aks S. Syndrome surveillance of fentanyl‐laced heroin outbreaks: utilization of EMS, medical examiner and poison center databases. Am J Emerg Med. 2017;35:1706–1708.

- 9. Lowder EM, Amlung J, Ray BR. Individual and county‐level variation in outcomes following non‐fatal opioid‐involved overdose. J Epidemiol Community Health. 2020;74:369–376.

- 10. Krawczyk N, Eisenberg M, Schneider KE, Richards TM, Lyons BC, Jackson K, et al. Predictors of overdose death among high‐risk emergency department patients with substance‐related encounters: a data linkage cohort study. Ann Emerg Med. 2020;75:1–12.

- 11. Thylstrup B, Seid AK, Tjagvad C, Hesse M. Incidence and predictors of drug overdoses among a cohort of > 10,000 patients treated for substance use disorder. Drug Alcohol Depend. 2020;206:107714.

- 12. Barboza GE, Angulski K. A descriptive study of racial and ethnic differences of drug overdoses and naloxone administration in Pennsylvania. Int J Drug Policy. 2020;78:102718.

- 13. Goldstick J, Ballesteros A, Flannagan C, Roche J, Schmidt C, Cunningham RM. Michigan system for opioid overdose surveillance. Inj Prev. 2021;27:500–505.

- 14. California Department of Public Health, Injury and Violence Prevention Branch. EpiCenter: California injury data online [internet]. Available at: https://skylab4.cdph.ca.gov/epicenter/ (accessed 26 January 2024).

- 15. Hedegaard H, Johnson RL, Garnett M, Thomas KE. The International Classification of Diseases, 10th revision, clinical modification (ICD–10–CM): external cause‐of‐injury framework for categorizing mechanism and intent of injury. Natl Health Stat Rep. 2019;136:1–22.

- 16. Casillas SM, Stokes EK, Vivolo‐Kantor AM. Comparison of emergency medical services and emergency department encounter trends for non‐fatal opioid‐involved overdoses, nine states, United States, 2020–2022. Ann Epidemiol. 2024;97:38–43.

- 17. Bergstein RS, King K, Melendez‐Torres G, Latimore AD. Refusal to accept emergency medical transport following opioid overdose, and conditions that may promote connections to care. Int J Drug Policy. 2021;97:103296.

- 18. Weinberg M, Kallerman P. A study of affordable care act competitiveness in California [internet]. The Brookings Institution and the Rockefeller Institute of Government; 2017. Available at: https://www.brookings.edu/wp-content/uploads/2017/02/ca-aca-competitiveness.pdf (accessed 26 January 2024).

- 19. Florida Agency for Health Care Administration. Eleven‐region structure of the statewide Medicaid managed care (SMMC) program [internet]. Available at: https://ahca.myflorida.com/content/download/5999/file/SMMC_Region_Map.pdf (accessed 26 January 2024).

- 20. Faverjon C, Berezowski J. Choosing the best algorithm for event detection based on the intended application: a conceptual framework for syndromic surveillance. J Biomed Inform. 2018;85:126–135.

- 21. Hulth A, Andrews N, Ethelberg S, Dreesman J, Faensen D, Van Pelt W, et al. Practical usage of computer‐supported outbreak detection in five European countries. Eurosurveillance. 2010;15:19658.

- 22. Meyer S, Held L, Höhle M. Spatio‐temporal analysis of epidemic phenomena using the R package surveillance. J Stat Softw. 2017;77:1–55.

- 23. Salmon M, Schumacher D, Höhle M. Monitoring count time series in R: aberration detection in public health surveillance. J Stat Softw. 2016;70:1–35.

- 24. Perry AG, Korenberg MJ, Hall GG, Moore KM. Modeling and syndromic surveillance for estimating weather‐induced heat‐related illness. J Environ Public Health. 2011;2011:750236.

- 25. State of California, Department of Finance. State of California, Department of Finance, E‐5 population and housing estimates for cities, counties and the state—January 1, 2021–2023 [internet]. Sacramento, CA; 2023. Available at: https://dof.ca.gov/forecasting/demographics/estimates/e-5-population-and-housing-estimates-for-cities-counties-and-the-state-2020-2023/ (accessed 26 January 2024).

- 26. Florida Legislature, Office of Economic and Demographic Research. Florida population estimates by county and municipality [internet]. Available at: http://edr.state.fl.us/Content/population-demographics/data/index-floridaproducts.cfm (accessed 26 January 2024).

- 27. Noufaily A, Enki DG, Farrington P, Garthwaite P, Andrews N, Charlett A. An improved algorithm for outbreak detection in multiple surveillance systems. Stat Med. 2013;32:1206–1222.

- 28. Sanghavi D, Alley D. Transforming population health‐ARPA‐H's new program targeting broken incentives. N Engl J Med. 2024;390:295–298.

- 29. Song YY, Ying L. Decision tree methods: applications for classification and prediction. Shanghai Arch Psychiatry. 2015;27:130–135.

- 30. Mistler CB, Chandra DK, Copenhaver MM, Wickersham JA, Shrestha R. Engagement in harm reduction strategies after suspected fentanyl contamination among opioid‐dependent individuals. J Community Health. 2021;46:349–357.

- 31. Canning P, Doyon S, Ali S, Logan SB, Alter A, Hart K, et al. Using surveillance with near‐real‐time alerts during a cluster of overdoses from fentanyl‐contaminated crack cocaine, Connecticut, June 2019. Public Health Rep. 2021;136:18S–23S.

- 32. Klaucke DN, Buehler JW, Thacker SB, Parrish RG, Trowbridge FL. Guidelines for evaluating surveillance systems; 1988. Available at: https://www.cdc.gov/mmwr/preview/mmwrhtml/00001769.htm (accessed 26 January 2024).

- 33. Lawson AB, Kleinman K. Spatial and Syndromic Surveillance for Public Health. Hoboken, NJ: John Wiley & Sons Ltd; 2005.

- 34. Marks C, Abramovitz D, Donnelly CA, Carrasco‐Escobar G, Carrasco‐Hernández R, Ciccarone D, et al. Identifying counties at risk of high overdose mortality burden during the emerging fentanyl epidemic in the USA: a predictive statistical modelling study. Lancet Public Health. 2021;6:e720–e728.

- 35. Friedman J, Montero F, Bourgois P, Wahbi R, Dye D, Goodman‐Meza D, et al. Xylazine spreads across the US: a growing component of the increasingly synthetic and polysubstance overdose crisis. Drug Alcohol Depend. 2022;233:109380.

- 36. Friedman SR, Krawczyk N, Perlman DC, Mateu‐Gelabert P, Ompad DC, Hamilton L, et al. The opioid/overdose crisis as a dialectics of pain, despair, and one‐sided struggle. Front Public Health. 2020;8:540423.
