Abstract

Conducting penetration testing (pentesting) in cybersecurity is a crucial turning point for identifying vulnerabilities within the framework of Information Technology (IT), where real malicious offensive behavior is simulated to identify potential weaknesses and strengthen preventive controls. Given the complexity of the tests, time constraints, and the specialized level of expertise required for pentesting, analysis and exploitation tools are commonly used. Although useful, these tools often introduce uncertainty in findings, resulting in high rates of false positives. To enhance the effectiveness of these tests, Machine Learning (ML) has been integrated, showing significant potential for identifying anomalies across various security areas through detailed detection of underlying malicious patterns. However, pentesting environments are unpredictable and intricate, requiring analysts to make extensive efforts to understand, explore, and exploit them. This study considers these challenges, proposing a recommendation system based on a context-rich, vocabulary-aware transformer capable of processing questions related to the target environment and offering responses based on necessary pentest batteries evaluated by a Reinforcement Learning (RL) estimator. This RL component assesses optimal attack strategies based on previously learned data and dynamically explores additional attack vectors. The system achieved an F1 score and an Exact Match rate over %, demonstrating its accuracy and effectiveness in selecting relevant pentesting strategies.

Keywords: penetration testing, reinforcement learning, recommender systems

1. Introduction

With the continuous advancement of technology, cybersecurity has become crucial for protecting digital assets against threats, vulnerabilities, malicious artifacts, and other sophisticated cyber risks that impact their environment [1]. In this context, underpinned by the principles of Confidentiality, Integrity, and Availability (CIA), offensive cybersecurity is proposed as a range of dynamic techniques to robustly assess whether the applied controls and policies are effectively maintained within the targeted infrastructure [2].

In accordance with the National Institute of Standards and Technology (NIST) Special Publication (SP) 800-115, Technical Guide to Information Security Testing and Assessment [3], pentesting constitutes a fundamental component of offensive security. Through the execution of tests that simulate active attacks, mimicking the real behavior of a malicious actor, vulnerabilities in various information assets can be assessed, either manually or automatically. This process ultimately facilitates the identification of the most effective defense-response strategies.

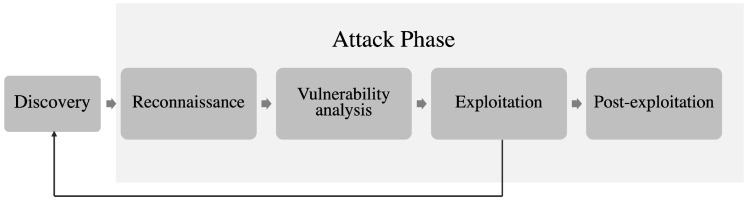

While various methodologies and techniques for pentesting exist, the SysAdmin, Audit, Networking, and Security (SANS) Institute [4] has articulated a set of refined steps to conduct a comprehensive audit. This process begins with target identification, also referred to as the discovery phase, and proceeds through service reconnaissance, vulnerability analysis, exploitation, post-exploitation activities, and the generation of findings reports. Figure 1 illustrates a summary of these stages.

Figure 1.

Phases of pentesting execution according to the best practices guide from SANS [4]. Note that the stages of reconnaissance, vulnerability analysis, exploitation, and post-exploitation are designated as attack phases, as these activities are actively engaged during the exercise. Additionally, the cycle may repeat if lateral movement opportunities arise during the exploitation stage.

In this context, the backbone of the pentesting process lies in the careful design of tests, as the environments in which they are conducted often operate under a black-box premise. This means the analyst has only minimal knowledge of the environment and must dynamically uncover the relevant assets according to a structured reconnaissance and execution plan. This poses a constant challenge, as environments are typically dynamic and unpredictable, requiring a high level of expertise, finely calibrated tools, and substantial time windows, which frequently turns into a race between detection and mitigation due to the critical nature of the assets involved [5].

As argued in [6], while the expertise of the pentester and the adaptability of tools to agile methodologies enhance the design of test batteries based on key infrastructure components of the target environment, no approach can definitively anticipate the complexity or duration of the exercise. This unpredictability may, in turn, increase the likelihood of false positives stemming from unexplored gaps, unsuccessful proofs of concept, or imprecisions in testing.

To address these challenges, there has been growing interest in applying ML techniques within the pentesting design and execution process. This approach seeks a more automated, precise, and efficient perspective adaptable to various domains, specifically in the planning and attack phases of testing, thereby enabling experts to cover the targeted exploitation objectives more comprehensively [7].

Although various branches of ML have applied different algorithms to classify, cluster, or predict aspects of the pentesting process, Reinforcement Learning (RL) has distinguished itself by its ability to rapidly adapt to diverse reconnaissance surfaces and construct attack vectors in a more realistic manner. This adaptability allows RL to broaden its reach toward new horizons of exploration and exploitation in vulnerable scenarios, redefining pentesting practices [8].

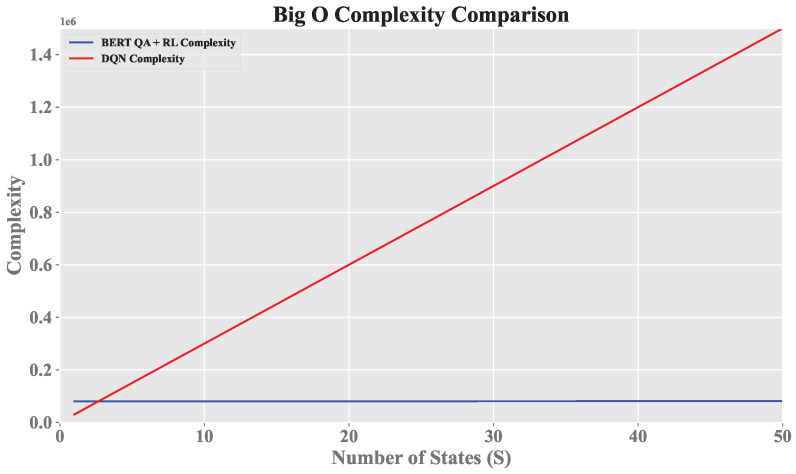

The literature has thoroughly examined the benefits and challenges of using RL in cybersecurity, highlighting its ability to adapt to constantly evolving offensive and defensive technologies [9,10]. However, relying exclusively on an RL agent in pentesting is challenging due to the dynamic nature of environments and the exponential growth in tests required to optimize reconnaissance and attack phases. This challenge presents a prohibitive computational problem of order , where S represents the target state space, A denotes the set of test actions, and T is the time horizon for executing potential attacks. The combination of these factors significantly increases the learning time and the trajectory required to find an optimal solution in vulnerable environments [8,11].

As indicated in [12], this situation has led pentesters, despite the wide availability of ML and RL tools, to continue relying on established databases such as the Common Vulnerabilities and Exposures (CVE) list and the National Vulnerability Database (NVD). These resources allow them to identify vulnerable artifacts and focus on the proof-of-concept (PoC) tests needed to validate findings.

In this context, according to [13], the continuous pentesting process should rely on a balance between the estimation provided by the RL algorithm, the accuracy of intelligent testing, and expert validation, ensuring that results align with the environment requirements. Defining a general space for target attributes within the environment and a finite domain for detecting vulnerabilities is essential. Calibrating the scope of the RL agent based on the probable number of tests is also crucial to prevent excessive generalization during the exploratory process. Additionally, consolidating results through pentester monitoring is fundamental to ensuring the quality of the procedure.

Within this framework, the present study addresses the limitations of current RL approaches in pentesting by proposing a resource-efficient architecture that maintains effectiveness while aligning closely with the skills of pentesters. The proposed model not only monitors all ecosystem components but also guides the analyst in precisely identifying the necessary tests and executing them optimally within a Question–Answer (QA) recommendation system (RS).

This approach leverages the synergy between RL and the text transformer BERT (Bidirectional Encoder Representations from Transformers) [14]. RL emulates the pentesting process within the desired domain and feeds a QA-based RS with tuples of question, context, and answer, enabling the pentester to consult and optimize the design, identification, and assessment of vulnerabilities. This integration allows the RL agent to learn from the evaluation environment and generate contextually relevant, actionable recommendations designed to guide the effective implementation of offensive tests, thereby avoiding system overload.

The structure of this manuscript is organized as follows: Section 2 examines related works that have explored traditional RL approaches, Quality Learning (QL), Deep Learning (DL) combined with RL (DRL), and hybrid models that manage one or more estimators or algorithms depending on the type of environment and the intended scope of exploitation for pentesting activities. Section 3 describes the methods and materials employed in developing the proposed RL system for QA-based recommendations within the pentesting process, hereafter referred to as BERT QA RL + RS, a name that combines BERT-based QA, Reinforcement Learning, and a Recommender System. Subsequently, Section 4 evaluates and discusses the results of BERT QA RL + RS and compares them with other state-of-the-art studies. Finally, Section 6 presents the conclusions and suggests possible directions for future research.

2. Related Work

In [15], one of the first works to employ a traditional RL approach, the Intelligent Automated Penetration Testing (IAPT) approach is described. This approach utilizes a solution model based on the Partially Observable Markov Decision Process (POMDP), where an agent seeks the optimal path to exploit vulnerabilities and earn rewards in a controlled environment with various network failures.

Further, in [16], the authors enhanced the solution of the IAPT agent by adding more exploration/exploitation actions within the range of already identified gaps from the Common Vulnerabilities and Exposures (CVE) list, expanding their repertoire of policies and rewards. The results demonstrated that incorporating solutions of the Generalized Value Iteration Pruning (GIP) type in the simulated environment can uncover multiple exploitation paths while minimizing the complexity of the policies associated with POMDP.

In references [9,17], the classic RL problem is reformulated with a focus on QL, where an off-policy temporal difference is assumed, meaning the agent estimates actions and values starting with an initial hypothesis about the environment to be exploited. In line with the findings of [17], a Capture the Flag (CTF) scenario can be envisioned, where the agent's incremental learning can track seasonal and nonseasonal ports, server attacks, and the exploitation of web vulnerabilities, typically requiring between 100 and 500 iterations to achieve successful reward outcomes. Similarly, Ref. [9] extended that work by integrating a layer known as the Double-Deep-Q-Network (DDQN), wherein the agent is able to develop more observational routes towards the attack objective, converging in fewer reward–penalty episodes.

In another context, the authors of [18] apply the concept of QL to the post-exploitation phase, where the agent learns under the premise of environments already compromised in Microsoft Windows and Linux operating systems. The QL estimation results indicate that the agent can converge towards processes for discovering plaintext credentials and privilege escalation (PEsc) with minimal policies and actions.

From the perspective of DL layers, the studies presented in [10,19] suggest that incorporating classifiers for optimal exploitation environments is a timely addition to determine whether the agent will be capable of injecting a payload into the vulnerable target. In [19], it is described that the desired characteristics originate from the types of operating systems, service versions, and exploitation modes, which—depending on the generalization of the output layer—can guide the RL agent in executing the most ideal attack during the transition of actions and policies, thereby increasing the desired reward. Similarly, Ref. [10] posits that by promoting the use of payloads from common exploitation tools such as Metasploit, SQLmap, and Weevely, the selection in the classification of test batteries can be more efficient and cost-effective in discovering optimal conditions for agent attacks.

Within simulation environments where all phases of pentesting are completed, projects such as Network Attack Simulator (NASim) [20] and PenGym [21] are noteworthy. Both projects operate within a quasi-real configuration spectrum, incorporating network environments with hosts, network topology, compromise actions, defensive devices, and vulnerable targets. NASim leverages a classic RL approach, where the agent bases its actions on a kill chain driven by satisfactory exploitation probabilities, assuming the maximum reward value for the agent. Conversely, PenGym enhances the effectiveness of the entire pentesting process through modules called ’tiny,’ where the RL algorithm focuses on session-based transition actions, achieving a faster exploratory reward value than its counterpart.

In [22], a novel architecture named Cascaded Reinforcement Learning Agents (CRLA) was introduced. This architecture addresses the challenge of discrete action spaces in an autonomous pentest simulator, where the number of actions exponentially increases with complexity across various network exploitation scenarios. It was demonstrated that CRLA identified optimal attack strategies in scenarios with large action spaces more rapidly and robustly than conventional QL agents.

Regarding the use of BERT models in the field of cybersecurity, the authors of [23] proposed VE-Extractor, an approach that uses a BERT QA model to extract vulnerability events from the textual descriptions in vulnerability reports, including the vulnerability event trigger and the event arguments (such as consequence or operation).

The studies [24,25] show that Large Language Models (LLMs) offer many advantages over traditional classification methods. In [24], a BERT-based Vulnerability Detection (BBVD) method was proposed to detect software vulnerabilities at the source code level in the high-level programming languages C/C++, obtaining superior results to average models based on traditional classifications. The authors of [25] presented an automated categorization of vulnerability data using DL. In this paper, they found that BERT designs fitted with LSTM outperformed standard models in precision, F1 score, accuracy, and recall, also demonstrating the advantage of using LLMs in the field of cybersecurity.

Ultimately, in reference [26], a dataset of 1813 CVEs annotated with all corresponding MITRE ATT&CK techniques was presented, and models were proposed to automatically link a CVE to one or more techniques based on the textual description of the CVE metadata. Therein, they established a robust baseline that considers classical machine learning models and state-of-the-art pre-trained BERT-based linguistic models while countering the highly imbalanced training set with data augmentation strategies based on the TextAttack framework.

3. Methods and Materials

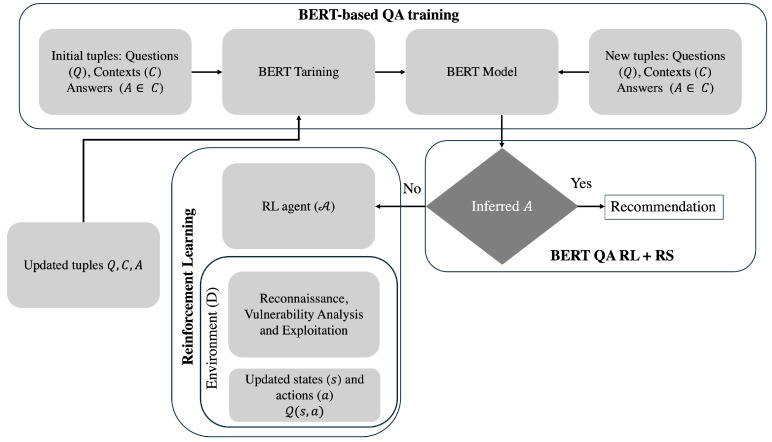

In Figure 2, the workflow of BERT QA RL + RS is illustrated.

Figure 2.

General diagram of the proposed architecture showing the interaction between the RL agent and the recommender system to obtain as output a suggestion of attacks to be performed on the available machine.

To describe the proposal represented in Figure 2, consider a traditional scenario where a pentester follows the guidelines established by NIST SP 800-115, which define a structured methodology for conducting black-box penetration tests. Initially, as in any work with estimators during the BERT-based QA training phase, a pre-trained model is unfrozen and fine-tuned with a dataset related to the necessary QA context to recalibrate the weights and customize it for the specific task. In this case, the training involves various historical CWE cases across multiple domains, including recognition, vulnerability analysis, and exploitation. These cases are diverse and objective-driven, organized into tuples consisting of questions (Q) specifying objectives to test, contexts (C) detailing how the solution to Q is formulated, and answers (A), each of which is the contextualized answer that directly addresses Q.

Once the BERT QA model is generated, the pentester might begin with limited information about the target, such as IP addresses, URLs, domain names, or topological layouts. Based on this information, the pentester would determine and experiment with various tests to identify service infrastructure and preliminary vulnerabilities, assess their exploitability, and propose mitigation measures. By querying the trained BERT QA model, an inferred RS response to the question may be obtained. However, if the response is unsatisfactory or not present, the following scenario involving the Reinforcement Learning (RL) phase can be considered:

The workflow begins when the pentester defines the evaluation objectives as a query Q, which structures the input for the BERT QA system while aligning with the recommendation of NIST SP 800-115 to clearly establish the scope and objectives. For example,

According to the guidelines of NIST SP 800-115, this query initiates the recognition phase by focusing on the target’s characteristics. If Q matches a context C already present in the knowledge base of BERT QA RL + RS, an inferred response is generated. For example,

The response A includes actionable insights into vulnerabilities and aligns with the emphasis of NIST SP 800-115 on identifying and documenting specific risks.

In cases where no response can be inferred, the query Q is transferred to the RL agent. Based on the decomposition of Q, the agent identifies which pentesting attributes are most suitable for reconnaissance, vulnerability analysis, and exploitation within a controlled environment. A training process is initiated, where a state–action matrix records the states s and actions a that the agent must observe to determine successful interaction episodes for evaluating the desired objective. Over successive iterations, the RL agent maximizes the effectiveness of the reward sequence across the pentesting steps. When no further paths remain to be explored, the output of the agent provides a new tuple (Q, C, A) to recalibrate the weights of the BERT QA model, incorporating new information for future recommendations. This iterative process ensures a dynamic and adaptive penetration testing approach.

When BERT QA RL + RS is integrated with new tuples, the pentester benefits from the combination of NIST SP 800-115 and the BERT QA RL system, resulting in potential new RS recommendations, as demonstrated in the following phases:

Planning and Preparation: NIST SP 800-115 emphasizes defining clear objectives and scoping the penetration test. In this workflow, Q establishes these objectives, while the BERT QA RL + RS system aligns them with pre-trained contexts C, offering immediate actionable insights or delegating tasks to the RL agent when novel scenarios arise.

Recognition: According to NIST SP 800-115, identifying active hosts, open ports, and running services is critical. The RL agent operationalizes this by automating reconnaissance tasks using tools such as Nmap, correlating findings with known configurations in the BERT QA RL + RS system. This accelerates the discovery phase while ensuring consistency.

Vulnerability Identification: NIST SP 800-115 recommends correlating reconnaissance data with known vulnerabilities. The RL agent cross-references identified software versions and services against CWE and CVE databases, enriched by the contextual understanding provided by the BERT QA RL + RS system, to identify vulnerabilities with actionable clarity.

Exploitation: NIST SP 800-115 advises conducting controlled exploitation to validate findings. Leveraging outputs from BERT QA RL + RS, the RL agent selects optimal paths for proof-of-concept attacks using tools such as Metasploit, testing vulnerabilities like CWE-94 by attempting CI in functions.lib.php.

Reporting and Recommendations: NIST SP 800-115 underscores the importance of documenting findings and proposing mitigations. Here, the tuple (Q, C, A) consolidates the test results, integrating newly discovered insights into the BERT QA RL + RS knowledge base. For example, the system may recommend upgrading PHP to version 7.4 to mitigate CWE-94 or implementing input validation to address CWE-79.

By combining the structured methodology of NIST SP 800-115 with the adaptive capabilities of the BERT QA RL + RS system, this workflow automates and optimizes key phases of penetration testing. The state–action matrix ensures iterative learning from the controlled environment, while continuous updates to the BERT QA RL + RS system incorporate novel scenarios, enabling effective handling of diverse and previously unseen configurations.
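The control flow of this workflow can be sketched in a few lines of Python. This is only an illustrative sketch under stated assumptions: the dictionary lookup stands in for BERT QA inference, and the names `recommend`, `knowledge_base`, and `rl_explore` are hypothetical and do not come from the proposed system.

```python
from typing import Callable, Dict, Tuple

# A (Q, C, A) tuple: question, context, answer.
QATuple = Tuple[str, str, str]


def recommend(
    query: str,
    knowledge_base: Dict[str, QATuple],
    rl_explore: Callable[[str], QATuple],
) -> Tuple[str, str]:
    """Return (answer, source), where source is "qa" or "rl".

    Assumption: an exact-match dictionary lookup replaces the BERT QA
    inference step, and rl_explore is a hypothetical stand-in for the
    RL agent that returns a new (Q, C, A) tuple.
    """
    hit = knowledge_base.get(query)
    if hit is not None:
        return hit[2], "qa"
    # No answer could be inferred: delegate to the RL agent and store the
    # new tuple so that later queries are answered without re-exploring.
    new_tuple = rl_explore(query)
    knowledge_base[query] = new_tuple
    return new_tuple[2], "rl"


def stub_agent(query: str) -> QATuple:
    """Hypothetical RL agent that returns a fixed tuple for the demo."""
    context = "Service scan results followed by a matching weakness entry."
    return query, context, "Run a service-version scan, then check the matching CWE."


kb: Dict[str, QATuple] = {}
first = recommend("Which tests apply to the web server on port 80?", kb, stub_agent)
second = recommend("Which tests apply to the web server on port 80?", kb, stub_agent)
```

The first call falls through to the stub agent and the second is served from the updated knowledge base, which mirrors the feedback loop between the RL agent and the QA model described above.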

In Section 3.1, Section 3.2 and Section 3.3, the steps of the BERT QA RL + RS strategy are described in depth.

3.1. BERT-Based QA Training

BERT [27] represents a series of pre-trained transformer-based models designed for various natural language understanding tasks. By utilizing a masked language model (MLM) schema, BERT enables predictions across multiple outputs, including classification, next-word and next-sentence prediction, term clustering, and inferring questions related to specific domain contexts.

In the architecture of BERT for QA, the core components consist of a query Q, which is framed as an argumentative formulation within a knowledge domain. For example, in the case of a RS tailored for penetration testing, Q could be defined as a set of features linked to target attributes, aiming to infer the optimal actionable pathway for reconnaissance, vulnerability assessment, and exploitation.

Associated with Q is a context C, which provides detailed information on the presupposition intended for inference, serving as the referential framework toward a factual response A. This response A outlines how to conduct asset reconnaissance, identifies recognized assets that exhibit vulnerabilities, and specifies the steps to exploit these vulnerabilities. Depending on the target’s unique scenario, A may also include steps for post-exploitation.

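The response A is a specific statement delineated within the context C by its starting and ending indices, $A_{\text{start}}$ and $A_{\text{end}}$, as shown below:

$$A = C\,[A_{\text{start}} : A_{\text{end}}], \qquad A_{\text{start}} = 115, \quad A_{\text{end}} = 210$$

where $A_{\text{start}}$ indicates the starting index at position 115, and $A_{\text{end}}$ indicates the ending index at position 210 within the characters of C.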
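As a minimal sketch of this span representation, the snippet below locates an answer inside a context string and recovers it from its character indices. The end index is treated as exclusive, which is an assumption (the text does not state the convention), and the context and answer strings are invented for illustration.

```python
from typing import Tuple


def locate_answer(context: str, answer: str) -> Tuple[int, int]:
    """Return (start, end) character indices of answer inside context.

    Assumption: end is exclusive, so context[start:end] == answer.
    """
    start = context.find(answer)
    if start == -1:
        raise ValueError("answer does not occur in the context")
    return start, start + len(answer)


def extract_answer(context: str, start: int, end: int) -> str:
    """Recover the answer span A from the context C and its indices."""
    if not 0 <= start < end <= len(context):
        raise ValueError("span indices fall outside the context")
    return context[start:end]


# Illustrative context and answer (not taken from the dataset).
context = (
    "The login form on port 80 does not sanitize the user parameter. "
    "Proof of concept: send a single quote in the user field and observe the SQL error."
)
answer = "send a single quote in the user field and observe the SQL error"
start, end = locate_answer(context, answer)
recovered = extract_answer(context, start, end)
```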

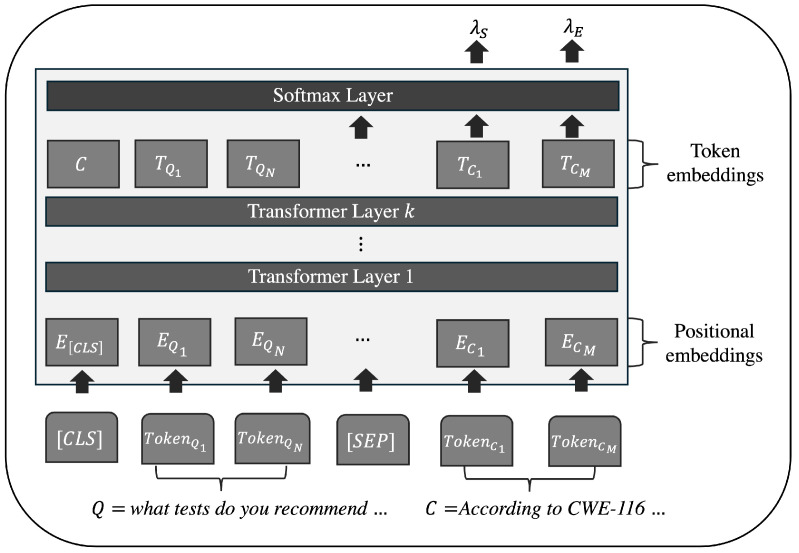

Figure 3 schematizes the mechanism by which BERT models, utilized in QA tasks, train the sample set for RS within the context of penetration testing, thereby enabling the generation of predictions related to A.

Figure 3.

General architecture of BERT for QA. Note how Q and C are included as inputs, separated by a special token, [SEP], which indicates the boundary between the two sequences. Additionally, the token [CLS] signifies that the sequence will be used in a masked classification model, facilitating the emulation of potential answer selection.

The inputs for BERT in QA RS tasks are tuples $(Q, C, A)$, with $A \subseteq C$, and the respective start and end indices $A_{\text{start}}$ and $A_{\text{end}}$. At this stage, the sequences Q and C must be tokenized, including start and separation tokens between Q and C, namely [CLS] and [SEP]. The [CLS] token is placed at the beginning of the sequence to indicate a classification task, while the [SEP] token separates different sentences or segments in the text, as shown in Equation (1):

$$I = [\text{CLS}],\ q_1, \ldots, q_N,\ [\text{SEP}],\ c_1, \ldots, c_M,\ [\text{SEP}] \tag{1}$$

In this equation, I represents the input to the BERT QA RL + RS model. The tokens $q_N$ and $c_M$ denote the N-th and M-th tokens of Q and C, respectively.
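The construction of the input sequence can be illustrated with a small, dependency-free sketch. Whitespace splitting is used here as a stand-in for the WordPiece tokenizer that BERT actually uses, and `build_input` and `max_len` are hypothetical names chosen for this example.

```python
from typing import List, Tuple

CLS, SEP = "[CLS]", "[SEP]"


def build_input(
    question_tokens: List[str],
    context_tokens: List[str],
    max_len: int = 384,
) -> Tuple[List[str], List[int]]:
    """Assemble [CLS] Q [SEP] C [SEP] and the matching segment ids.

    Segment id 0 marks [CLS], the question tokens, and the first [SEP];
    segment id 1 marks the context tokens and the final [SEP].
    """
    budget = max_len - len(question_tokens) - 3  # room left for the context
    if budget < 0:
        raise ValueError("question alone exceeds max_len")
    context_tokens = context_tokens[:budget]  # truncate an overlong context
    tokens = [CLS] + question_tokens + [SEP] + context_tokens + [SEP]
    segment_ids = [0] * (len(question_tokens) + 2) + [1] * (len(context_tokens) + 1)
    return tokens, segment_ids


# Whitespace tokenization is an assumption made only for this sketch.
q_tokens = "which test applies to apache 2.4 on port 80".split()
c_tokens = "apache 2.4 path traversal proof of concept".split()
tokens, segment_ids = build_input(q_tokens, c_tokens)
```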

Each token $q_i$ and $c_j$ is transformed into a high-dimensional embedding vector, capturing the context, order, and relationships among tokens in I. In BERT, two types of embeddings are calculated first: the token embeddings $E$, which capture the vocabulary-based meaning of tokens for Q and C, and the segment embeddings $S$, which distinguish between tokens of Q and C and aid in the interpretation of each sequence, as shown in Equation (2).

$$S = [\,S_Q, \ldots, S_Q,\ S_C, \ldots, S_C\,] \in \mathbb{R}^{d} \tag{2}$$

The segment embedding vector S lies within the sequence dimension d, corresponding to the maximum length of I.

Positional embeddings analyze the structure and order of each $q_i$ and $c_j$, enabling identification of positional context within the sequence and the relationships between Q and C to locate the potential answer A embedded in C, as defined in Equation (3).

$$P = [\,P_1, P_2, \ldots, P_d\,] \in \mathbb{R}^{d} \tag{3}$$

The positional embedding vector P also lies within the sequence dimension d, corresponding to the maximum length of I.

The combined representation of tokens, defined by Equation (4), merges the three embeddings as follows:

$$H = E + S + P \tag{4}$$

This combined embedding, $H$, represents the full token sequence, with $T$ denoting the total number of tokens generated by E, S, and P, including the special tokens [CLS] and [SEP].

The embeddings H then undergo k layers of transformation, each consisting of several interconnected elements, including Multi-Head Attention, stabilization via Normalization, a nonlinear Feed-Forward Network (FFN), and an output activation to generate the probabilistic predictions for the potential output indices of A, referred to as the start logits ($L_{\text{start}}$) and end logits ($L_{\text{end}}$).

The Multi-Head Attention mechanism allows for simultaneous attention to different parts of the discourse between H of Q and C, facilitating the learning of relationships between words, both nearby and distant, within a single context. This enhances language comprehension by utilizing the pre-existing parameters W in the BERT model. For each attention head, three matrices are computed: the query matrix $q = H W^{q}$, which transforms H into a query space that establishes relationships and similarities among all tokens embedded in the sequence, allowing focused attention on each token; the key matrix $K = H W^{K}$, representing the contextual characteristics of the tokens in H in relation to the query matrix; and the value matrix $V = H W^{V}$, which contains the information transferred between tokens based on the keys, capturing relevance, context, and the relationships learned with other tokens.

In this case, the attention in each head is calculated with $q$, $K$, and $V$, where $d_k$ is the dimension of the q and K vectors, as shown in Equation (5):

$$\text{Attention}(q, K, V) = \text{softmax}\!\left(\frac{q K^{\top}}{\sqrt{d_k}}\right) V \tag{5}$$

Here, $\sqrt{d_k}$ acts as a normalization factor, preventing the attention scores from becoming excessively large and thus stabilizing the learning process.
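To make the scaled dot-product attention concrete, the following sketch implements it with plain Python lists so that it runs without third-party libraries; a practical implementation would use a tensor library instead. The helper names and the toy matrices are assumptions for illustration only.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(m: Matrix) -> Matrix:
    return [list(col) for col in zip(*m)]


def softmax(row: List[float]) -> List[float]:
    """Numerically stable softmax over one row of scores."""
    peak = max(row)
    exps = [math.exp(v - peak) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention(q: Matrix, k: Matrix, v: Matrix) -> Tuple[Matrix, Matrix]:
    """Return (output, weights) of scaled dot-product attention."""
    d_k = len(k[0])  # dimension of the query and key vectors
    scores = matmul(q, transpose(k))
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, v), weights


# Toy example: identical keys give uniform weights, so every output row
# is the average of the value rows.
q = [[1.0, 0.0], [0.0, 1.0]]
k = [[1.0, 1.0], [1.0, 1.0]]
v = [[2.0, 0.0], [0.0, 2.0]]
output, weights = attention(q, k, v)
```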

When using all attention heads, the complete calculation is defined as shown in Equation (6):

$$\text{MultiHead}(H) = \text{Concat}(\text{head}_1, \ldots, \text{head}_h)\, W^{O} \tag{6}$$

where $\text{head}_h$ denotes the output of the h-th attention head, and $W^{O}$ is the parameter matrix used to project the concatenated output into a final dimension of d.

After the MultiHead attention process, the sequential output passes through a feed-forward neural network (FFN) that applies nonlinear transformations to its representations. This process captures the contextual importance and unique characteristics of each element in the sequence, creating a latent language representation that integrates the information from Q, C, and the associations of A, as shown in Equation (7).

$$\text{FFN}(X) = \sigma(X W_1 + b_1)\, W_2 + b_2 \tag{7}$$

In this formulation, $\text{FFN}(X)$ is the FFN output and $\sigma$ is the nonlinear activation; $W_1$ and $W_2$ represent BERT parameters to be adjusted for the latent language representation, and $b_1$ and $b_2$ serve as bias terms that help maintain the contextual relationships within the vocabulary range of the sequences in $I$.

Consequently, normalization stabilizes learning and enhances both generalization and convergence by keeping the internal values resulting from the MultiHead and FFN layers within an appropriate range. This prevents issues of instability or gradient vanishing during training, where $\text{Norm}(H + X)$ is applied. Here, H represents the embedding input, and X can refer to the MultiHead output or the FFN output.
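The effect of this normalization step can be illustrated with a short sketch that adds a sublayer output to its input and normalizes each token vector to zero mean and unit variance. The learned scale and shift parameters of a full layer normalization are deliberately omitted, and the function names are hypothetical.

```python
import math
from typing import List

Matrix = List[List[float]]


def layer_norm(vector: List[float], eps: float = 1e-12) -> List[float]:
    """Normalize one token vector to zero mean and unit variance.

    Simplification: the learned scale and shift parameters are left out.
    """
    mean = sum(vector) / len(vector)
    var = sum((v - mean) ** 2 for v in vector) / len(vector)
    return [(v - mean) / math.sqrt(var + eps) for v in vector]


def add_and_norm(h: Matrix, x: Matrix) -> Matrix:
    """Apply Norm(H + X) row by row, where X is a sublayer output."""
    return [layer_norm([a + b for a, b in zip(h_row, x_row)]) for h_row, x_row in zip(h, x)]


h = [[1.0, 2.0, 3.0, 4.0]]   # one token embedding (toy values)
x = [[0.5, 0.5, 0.5, 0.5]]   # matching sublayer output (toy values)
normalized = add_and_norm(h, x)
```

Whatever the scale of the sublayer output, each normalized row stays in a bounded range, which is the stabilizing property the paragraph above refers to.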

The results of the final operation generate an output layer where logits are calculated, representing the unnormalized scores used to estimate the probabilities for the different indices corresponding to the correct answer A. Let Norm be the final step following the FFN, and let its output represent a series of token distributions over start and end positions in T, with weights W calibrated to produce an output based on the probability of all possible start and end indices for A, referred to as the start logits $L_{\text{start}}$ and end logits $L_{\text{end}}$.

To transform the logits into numerical indices, a softmax function must be applied. The argmax denotes the highest probability value corresponding to the predictions of A, as given by $\hat{A}_{\text{start}} = \arg\max\big(\text{softmax}(L_{\text{start}})\big)$ and $\hat{A}_{\text{end}} = \arg\max\big(\text{softmax}(L_{\text{end}})\big)$, where $\hat{A}_{\text{start}}$ is the predicted start index, and $\hat{A}_{\text{end}}$ is the predicted end index.
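A minimal sketch of this decoding step follows. It applies softmax to each logit vector and takes the argmax independently, as described above; practical QA decoders often add the constraint that the start index must not exceed the end index, which is not enforced here. The logit values are invented for illustration.

```python
import math
from typing import List, Tuple


def softmax(logits: List[float]) -> List[float]:
    """Numerically stable softmax."""
    peak = max(logits)
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict_span(start_logits: List[float], end_logits: List[float]) -> Tuple[int, int]:
    """Return the most probable start and end token indices."""
    p_start = softmax(start_logits)
    p_end = softmax(end_logits)
    start = max(range(len(p_start)), key=p_start.__getitem__)
    end = max(range(len(p_end)), key=p_end.__getitem__)
    return start, end


# Toy logits over a four-token sequence.
start_logits = [0.1, 2.5, 0.3, -1.0]
end_logits = [-0.5, 0.2, 3.1, 0.0]
span = predict_span(start_logits, end_logits)
```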

It is challenging to address all possible values that currently exist, including legacy, temporary, current, or zero-day vulnerabilities. However, the NIST vulnerability repository [28] provides a substantial list of approximately 93,000 records of security breaches reported by various vendors since 2013. These breaches are classified by impact level—informative, low, medium, or high—based on temporal, environmental, network, and exploit complexity factors. Following this line of reasoning, the samples from [28] include a unique submission identifier, year, characteristics, CVE (if applicable), vendor, vulnerability type, CWE, affected versions, vulnerability description, and proof of concept (if applicable).

Of the total 93,000, more than 171 distinct CWE types were captured, spread across 43,080 well-identified vulnerabilities with proofs of concept. These encompass 40,554 vendors, languages, services, and products, primarily operating systems such as Ubuntu-Linux, Fedora-Linux, Android-Linux, Windows 7, 8, 10, and Windows Server 2008, as well as Java Struts, PHP, Apache2, Nginx, C/C++, Python Flask, and openSUSE, to generate complete tuples (Q, C, A). Of the remaining 41,679, only 1 or 2 of Q, C, or A were available, and 8421 were candidates for submission to the RL estimator.

The questions Q will be formulated in a closed and argumentative form, containing characteristics such as the environment, possible versions, ports, and information related to the target’s surrounding space to be evaluated. The contextual definition of C, in turn, will be associated with the vendor, one or more CVEs, the vulnerability, affected versions, one or more CWEs, vulnerability description, and proof of concept. Finally, A will only contain the proof of concept and the indices $A_{\text{start}}$, $A_{\text{end}}$ to denote its boundary within C. If none exists, then A will be completed with a note indicating it will be sent to the RL agent for evaluation.
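The assembly of a training tuple from one repository record might look like the sketch below. The field names (`vendor`, `cve`, `vulnerability`, `affected_versions`, `cwe`, `description`, `poc`), the note text, and the sample record are all hypothetical; the actual schema of the repository in [28] may differ.

```python
from typing import Dict, Optional

# Hypothetical placeholder used when a record has no proof of concept.
RL_NOTE = "No proof of concept available; forwarded to the RL agent for evaluation."

# Assumed field names; the order follows the description of C in the text.
CONTEXT_FIELDS = ("vendor", "cve", "vulnerability", "affected_versions", "cwe", "description")


def build_tuple(question: str, record: Dict[str, Optional[str]]) -> Dict[str, object]:
    """Build a (Q, C, A) tuple with character indices of A inside C."""
    context = " ".join(record[f] for f in CONTEXT_FIELDS if record.get(f))
    poc = record.get("poc")
    if not poc:
        # Incomplete record: mark it as a candidate for the RL estimator.
        return {"question": question, "context": context,
                "answer": RL_NOTE, "start": None, "end": None}
    start = len(context) + 1  # skip the space that joins context and PoC
    context = f"{context} {poc}"
    return {"question": question, "context": context,
            "answer": poc, "start": start, "end": start + len(poc)}


# Invented record for illustration only.
record = {
    "vendor": "ExampleVendor",
    "cve": None,
    "vulnerability": "Cross-site scripting",
    "affected_versions": "1.0 to 1.4",
    "cwe": "CWE-79",
    "description": "The comment field reflects user input without encoding.",
    "poc": "Submit <script>alert(1)</script> in the comment field.",
}
complete = build_tuple("Which test applies to the comment form?", record)
incomplete = build_tuple("Which test applies to the comment form?", {**record, "poc": None})
```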

3.2. Reinforcement Learning

To formulate the RL problem in an ideal pentesting scenario, consider an agent interacting with an environment consisting of a set of technologies with vulnerable services. For the agent to conduct pentesting tests over the scope of the environment, a state space $S$ is required to represent the possible configurations of the environment based on its technologies, along with an action space $A$ that includes recognition, vulnerability identification, and exploitation tests. Consequently, at each step, the agent transitions within $S$ by selecting an action $a \in A$ given a state $s \in S$, with the goal of maximizing a reward $r$ through effective recognition, identification, or exploitation of the vulnerable services.

However, the previous formulation from a classical RL perspective can present challenges in highly dynamic environments, such as penetration testing scenarios, where incremental changes in technologies, services, and potential vulnerabilities—among other factors—are common. In such contexts, freedom of interaction with limited prior knowledge of the environment is essential. For this reason, Q-Learning [29] was chosen as the learning architecture, a type of RL that provides flexibility to handle dynamism and offers high convergence capabilities.

According to [13,29], Q-learning, also known as quality learning, emerges as an alternative for infinite horizon environments. This method is well suited to an agent that must navigate environments with limited knowledge, adapting its decisions as it observes environmental conditions. In this context, the agent learns from its actions to maximize its rewards, without being bound to a specific adjustment policy.

Within penetration testing, this approach is formulated using a two-dimensional state–action matrix $Q(s,a)$ for each pair $(s,a)$, where s denotes the state and a the associated action. The value of $Q(s,a)$ estimates the optimal reward obtained by performing an action a linked to recognition, vulnerability identification, or exploitation, conditioned on s. The goal is for the agent, with preliminary knowledge of the testing scenario in a given environment, to accumulate the highest Q-values, which represent its most effective interactions. Equation (8) shows the calculation of these values.

| $Q(s,a) \leftarrow Q(s,a) + \alpha \left[ r + \gamma \max_{a'} Q(s',a') - Q(s,a) \right]$ | (8) |

Therein, we have the following:

$Q(s,a)$ represents the Q-value of the current state–action pair. If the agent is in an initial state with preliminary knowledge of the environment, s remains unknown, and the agent would be in the recognition stage, performing the action a of port or service scanning.

$\alpha$ is the learning rate, which regulates the influence of new information on $Q(s,a)$ updates. A high $\alpha$ implies that this information significantly impacts the value adjustments during the interaction of a within state s, determining the amount of data the agent requires to recognize technologies associated with a specific port or service.

r is the reward obtained by performing action a in state s. For instance, the agent could receive a high reward for successfully identifying the versions of technologies linked to a particular port or service.

$\gamma$ is the discount factor for future rewards. If the agent fails to recognize the port in s, $\gamma$ governs the importance of future versus immediate rewards, prompting the agent to adopt more aggressive strategies to gather information about the port and assess its potential vulnerabilities.

$s'$ is the resulting state after taking action a. Once the services and technologies associated with a port are identified, the agent advances towards vulnerability identification in a new state $s'$, where the action $a'$ will focus on analyzing potential vulnerabilities.

$\max_{a'} Q(s',a')$ represents the maximum Q-value across all possible actions in the subsequent state $s'$. This value corresponds to the highest expected reward that the agent can achieve from $s'$ by selecting the optimal action $a'$. In this sequence of actions, it would indicate that the agent has accumulated sufficient information to progress from port identification to service association and, ultimately, to vulnerability exploitation.
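The update rule referenced as Equation (8) can be illustrated with a minimal tabular sketch. It assumes the standard Q-learning update; the action labels, state names, and the `q_update` helper are hypothetical and are not taken from the authors' implementation.

```python
from collections import defaultdict

# Hypothetical action labels for the three test families described in the text.
ACTIONS = ("recognition", "identification", "exploitation")


def q_update(q_table, state, action, reward, next_state, alpha, gamma):
    """Apply one tabular Q-learning update and return the new Q(s, a)."""
    # Highest Q-value reachable from the next state over all actions.
    best_next = max(q_table[(next_state, a)] for a in ACTIONS)
    td_target = reward + gamma * best_next
    q_table[(state, action)] += alpha * (td_target - q_table[(state, action)])
    return q_table[(state, action)]


q = defaultdict(float)  # unseen (state, action) pairs start at 0.0
new_value = q_update(q, "port_21_unknown", "recognition", reward=1.0,
                     next_state="ftp_service_identified", alpha=0.5, gamma=0.9)
print(new_value)  # 0.5
```

With an empty table, the next-state term is zero, so the first update moves the Q-value halfway (alpha = 0.5) toward the immediate reward.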

For the construction of the environment, the OpenAI Gym library [30] for the Python programming language was employed. This library provides essential tools for establishing a Q-learning type reinforcement learning environment. Consequently, the environment was configured to train the agent in the action space within an infrastructure consisting of two virtual machines (VMs), each with its own state space and different configurations.

Following the recommendations of the Cybersecurity and Infrastructure Security Agency (CISA) [31], various maturity reports were considered to determine the most suitable and reproducible scenarios in . This approach allowed the machines to be populated with various security breaches based on the most persistent vulnerabilities that, according to the CISA Advisory AA23-215, continue to impact production systems.

Although the environments in this RL scenario are intentionally vulnerable, their configuration reflects common and critical real-world security problems, mimicking possible attack schemes. However, in most RL problems, observations may be unpredictable, as in production systems, since the horizon over can increase over time.

To replicate back-end production conditions, the reported scenarios are structured to showcase an incremental progression, starting with network services, transitioning to web vulnerabilities, and culminating in exploitation through misconfigurations. This approach ensures the scenarios are both comprehensive and interconnected, demonstrating realistic attack pathways:

Network Services: The initial setup includes vulnerabilities in services such as FTP, SSH, and Telnet. For example, a misconfigured FTP service exposes sensitive directories, enabling unauthorized access to confidential files. This stage establishes a foundational exploitation route in network services.

Web Vulnerabilities: Building upon the network exploitation, the progression incorporates web-based weaknesses such as XSS and SQL-injection (SQLi). For instance, leveraging credentials from the compromised FTP server, an attacker could exploit an insecure web application to inject malicious SQL queries, gaining unauthorized database access.

Misconfiguration Scenarios: The final stage addresses critical configuration failures. Expanding on the previous exploit, a poorly configured database instance with no password protection allows for further data extraction. This demonstrates the compounding effect of misconfigurations as a vulnerability multiplier.

Following this progression, approximately 1520 vulnerable configurations were assembled for the virtual machines (VMs) in the environments. While it is infeasible to cover all existing vulnerabilities due to their dynamic and evolving nature, these configurations focus on the most common and impactful vulnerabilities observed in real-world scenarios. This ensures a practical and representative framework for evaluating penetration testing architectures, emphasizing network services, web vulnerabilities, and system misconfigurations to simulate realistic attack pathways.

The detailed configurations of the VMs supporting these scenarios are as follows:

First Machine: This machine contains a series of configurations based on the Linux operating system, built upon the Metasploitable 2 [32] framework, with a set of intentionally vulnerable underlying services that facilitate practice in command injection (CI); misconfigurations; brute-force attacks (BFAs); exposed directories (EDs); outdated components (OCs); and cryptographic flaws (CFs), authentication bypass (AB), and integrity failures, enabling various penetration testing exercises. The services include vulnerable versions of File Transfer Protocol (FTP), Secure Shell (SSH), Telnet, Tomcat, Network File System (NFS), UnrealIRCd, and Apache within the scope of network services; database management systems with security flaws in MySQL and PostgreSQL; a minimal version of Damn Vulnerable Web Application (DVWA) [33], which presents vulnerabilities such as XSS, directory traversal (DT), insecure deserialization (ID), arbitrary CI (ACI), and PEsc; file-sharing services through Samba; and RPC services (specifically Distcc and RExec), both configured in ways that allow vulnerability exploitation. In total, this machine offers more than 100 exploitation paths, 50 CVEs with proof-of-concept exploits, and over 40 reported weaknesses in the CWE (Common Weakness Enumeration) [34].

Second Machine: Similarly configured with Ubuntu , this machine hosts a series of , including Metasploitable 3, an updated version of its predecessor featuring vulnerabilities in Windows 8 and 10 operating systems specifically targeting services such as Tomcat, Python’s Flask, and Jenkins. Additionally, it offers several exploitation paths for the new version of Microsoft RDP. On the other hand, it enables handling PoCs for Fedora with vulnerable applications such as PHP, Apache Struts for Java, FTP, and Webmin. In total, it allows for the analysis of patterns for RCE, XSS, DT, information leakage, insecure configuration (IC), ID, API abuse (AA), CF, AB, and authorization flaws (AFs). Overall, there are 80 paths for reconnaissance, vulnerability analysis, and exploitation, with 40 identified CVEs and over 50 CWEs.

When the agent is in its initial state and begins the series of transitions between the different configurations of the environment, it is preconfigured with three sensors aligned to the state–action pairs at any given moment. For the recognition phase, Network Mapper (Nmap) [35] was employed, a well-known analyzer of technologies, services, and protocols associated with network ports using both passive and aggressive scanning techniques. In the vulnerability analysis states, an extension called Nmap Vulners [36] was used, which compares the data collected in the recognition phase with previously reported patterns in the CVE database. When an action a falls in the exploitation block, the agent directs operations toward various Metasploit [37] modules, a suite that integrates confirmed payloads, to establish the target, attack path, and exploitation method.

To ensure gradual and continuous learning, an exploration-exploitation strategy was implemented as part of the Q-Learning algorithm, which operates iteratively, progressively refining the estimates of the Q-values. Over multiple episodes of interaction with the environment, the agent adjusts its policy, when necessary, to maximize the cumulative rewards r of the state–action process [38], as detailed in Algorithm 1.

Each successful a yields a specific reward, ranging from , depending on the complexity of the attack, with a maximum cumulative reward of 3: 1 for stage, 2 for , and 3 for . In the event of failed attempts, adjusts to enable to return to the exploration–exploitation process at the current state s but with improved control over the search path through a more flexible while recalculating to optimize the desired path until it converges at .

The RL agent does not rely on any preexisting dataset for training. Instead, it learns through direct interaction with a simulated environment , which includes the two intentionally vulnerable virtual machines (VMs) described above. The agent uses trial-and-error exploration to improve its policy, receiving rewards based on the success of its actions. This design ensures that the training process adapts to dynamic scenarios rather than static data, mitigating risks of overfitting. Furthermore, the VM configurations were periodically altered to introduce variability, enhancing the generalization capabilities of the RL agent.

| Algorithm 1 Q-Learning for recognition, vulnerability identification, and exploitation tests. | |

|

The iteration episodes for the agent conclude under two conditions: the first is the successful completion of exploitation, and the second is truncation, which occurs if the agent cannot complete any of the specified actions toward exploitation.
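The two episode-ending conditions can be sketched with a toy environment that mirrors the `reset`/`step` style of Gym without importing it. This is an illustrative assumption, not the authors' environment: the class name, the reward of 1 per completed stage (one reading of the maximum cumulative reward of 3), and the `max_steps` default are all hypothetical.

```python
class StagedPentestEnv:
    """Toy environment: recognition -> identification -> exploitation."""

    STAGES = ("recognition", "identification", "exploitation")

    def __init__(self, max_steps=20):  # hypothetical truncation limit
        self.max_steps = max_steps
        self.reset()

    def reset(self):
        self.stage = 0
        self.steps = 0
        return self.stage

    def step(self, action):
        self.steps += 1
        reward = 0.0
        if action == self.STAGES[self.stage]:
            reward = 1.0  # assumed: one unit per completed stage
            self.stage += 1
        # Condition 1: exploitation completed. Condition 2: step budget exhausted.
        terminated = self.stage == len(self.STAGES)
        truncated = not terminated and self.steps >= self.max_steps
        state = min(self.stage, len(self.STAGES) - 1)
        return state, reward, terminated, truncated


def run_episode(env, policy):
    """Run one episode and return (total reward, steps taken, reached goal)."""
    state = env.reset()
    total = 0.0
    while True:
        state, reward, terminated, truncated = env.step(policy(state))
        total += reward
        if terminated or truncated:
            return total, env.steps, terminated


env = StagedPentestEnv()
print(run_episode(env, lambda s: StagedPentestEnv.STAGES[s]))  # (3.0, 3, True)
print(run_episode(env, lambda s: "recognition"))               # (1.0, 20, False)
```

The first policy always picks the action matching the current stage and terminates successfully; the second never gets past recognition and is truncated at the step limit.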

This strategy defines a parameter $\epsilon$ to guide the agent in selecting an effective action a for each state s. At each stage, the agent evaluates the probability of exploring a new action or leveraging a known one. If this probability exceeds $\epsilon$, the agent selects the most effective action learned so far. If it is lower, the agent selects a random action, ensuring broad exploration of the action space. As training advances, exploration gradually decreases, enabling the agent to converge toward optimal actions. A decay rate is applied to $\epsilon$, steadily reducing the probability of random selection until it reaches zero, at which point the agent will consistently execute only the most effective actions.
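The selection rule described above can be sketched as follows. The multiplicative decay schedule, the `eps_min` floor, and the example Q-values are assumptions for illustration; the source does not state the exact decay form.

```python
import random


def select_action(q_row, epsilon, rng):
    """Epsilon-greedy: exploit when the random draw exceeds epsilon, else explore."""
    if rng.random() > epsilon:
        return max(q_row, key=q_row.get)  # best action learned so far
    return rng.choice(list(q_row))        # random exploratory action


def decay_epsilon(epsilon, decay, eps_min=0.0):
    """Shrink epsilon multiplicatively, never dropping below eps_min (assumed form)."""
    return max(eps_min, epsilon * decay)


rng = random.Random(0)
q_row = {"recognition": 0.2, "identification": 0.7, "exploitation": 0.1}
epsilon = 1.0
for _ in range(5):
    action = select_action(q_row, epsilon, rng)
    epsilon = decay_epsilon(epsilon, decay=0.5)
print(epsilon)  # 0.03125
```

With epsilon at 1.0 every draw explores; as epsilon shrinks, the greedy branch is taken more and more often.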

After executing the agent and completing the Q-table, the state–action pairs with the highest rewards over multiple episodes are compiled into a JSON interaction dataset. The output consists of five keys: the target to evaluate, the contextual characteristics of the target, the parameters used for recognition, the vulnerabilities identified, and the steps for successful exploitation. Some rows contain complete data for all three stages (reconnaissance, vulnerability identification, and exploitation), while others may contain only the first two stages or just the initial stage. In cases where the agent did not reach its goal, these columns are marked as failed attempts, which lets the RS determine that, for a given Q, there is no A capable of responding to the query.

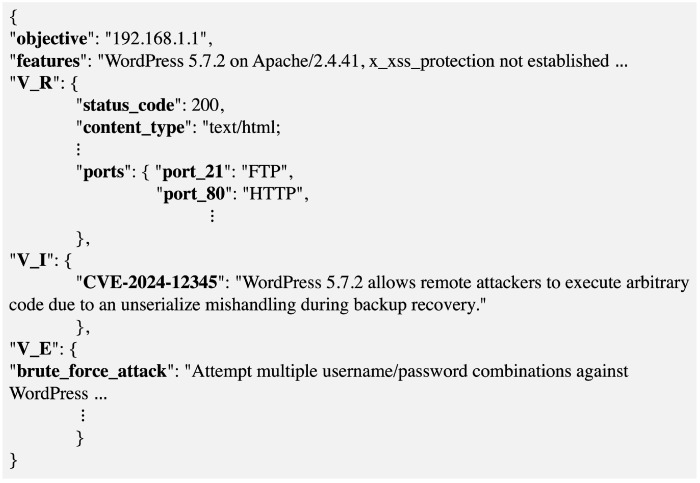

Once the agent completes its exploration/exploitation in the environment, all JSON objects are consolidated into a single dataset for subsequent ingestion by the BERT QA RL + RS. Figure 4 provides an example of a JSON object generated as a result of the RL process.

Figure 4.

The JSON format begins with an objective key to define the target, followed by essential characteristics in the features key, which are required to initialize the RL estimator. When the agent completes its training, it outputs the reconnaissance results in the V_R key, the identification of one or more vulnerabilities in the V_I key, and the outputs from the exploitation episode in the V_E key, which serve as input for the BERT QA RL + RS.
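A record with this key layout might be assembled as in the sketch below. Only the key names (`objective`, `features`, `V_R`, `V_I`, `V_E`) come from the text; the helper name, the field values, and the `"failed attempt"` marker are hypothetical placeholders.

```python
import json


def build_interaction_record(objective, features, v_r=None, v_i=None, v_e=None):
    """Assemble one RL interaction record; stages not reached are marked as failed."""
    failed = "failed attempt"  # hypothetical marker for an unreached stage
    return {
        "objective": objective,
        "features": features,
        "V_R": v_r if v_r is not None else failed,
        "V_I": v_i if v_i is not None else failed,
        "V_E": v_e if v_e is not None else failed,
    }


# Illustrative values only; 192.0.2.10 is a reserved documentation address.
record = build_interaction_record(
    objective="192.0.2.10",
    features={"port": 21, "protocol": "tcp"},
    v_r={"service": "ftp"},
)
print(json.dumps(record, indent=2))
```

Here only the recognition stage succeeded, so `V_I` and `V_E` carry the failure marker that the recommender can later interpret as "no answer available".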

3.3. BERT QA RL + RS

In order to integrate BERT QA RL + RS into the RL estimator, and under the assumption that a question Q does not yield a satisfactory answer , it is essential to define a transition function, as outlined in [39]. Initially, it is assumed that the RL process starts with an empty table in its first iteration.

Let a confidence threshold be defined from a specific performance metric for BERT QA RL + RS. If the predicted answer's probability of adequately addressing the question Q in the context C falls below this threshold, then the RL estimator will seek the optimal environment in which to initialize the state–action pairs of the Q-table.

In this scenario, starts in an initial state and performs an action . After the first iteration, re-evaluates to confirm or adjust , allowing the RL estimator to proceed to the next iteration until it converges at . If convergence is not achieved—that is, if there are no values within the spaces and that yield a new A—a context C and an answer A will be returned, indicating that there are no routes for question Q in any of the spaces , , and .

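Conversely, if a transition to recognition, vulnerability identification, or exploitation is feasible, then the output keys from the RL estimator will be concatenated within context C, with the recognition output assigned to the V_R key, the identification output to V_I, and the exploitation output to V_E, forming a new tuple $(Q, C, A)$, which will be added to the BERT QA RL + RS model.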
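The hand-off between the QA model and the RL estimator can be sketched as a simple routing function. This is a loose illustration under stated assumptions: `answer_or_explore`, the stub components, and the returned dictionary layout are hypothetical, and the comparison against the threshold is one plausible reading of the transition described above.

```python
def answer_or_explore(question, context, qa_model, rl_estimator, threshold):
    """Return the QA answer when confident enough, else defer to the RL estimator."""
    answer, confidence = qa_model(question, context)
    if confidence >= threshold:
        return {"source": "qa", "answer": answer, "context": context}
    rl_output = rl_estimator(question, context)  # dict with V_R / V_I / V_E, or None
    if rl_output is None:
        return {"source": "none", "answer": "no route found", "context": context}
    # Concatenate the RL output keys into the context to form a new tuple.
    return {"source": "rl", "answer": rl_output, "context": {**context, **rl_output}}


# Stub components, for illustration only.
def stub_qa(question, context):
    return "proof of concept at some span", 0.35


def stub_rl(question, context):
    return {"V_R": "ftp on 21/tcp", "V_I": "weak configuration", "V_E": "anonymous login"}


result = answer_or_explore("Is FTP on port 21 exploitable?", {"vendor": "example"},
                           stub_qa, stub_rl, threshold=0.8)
print(result["source"])  # rl
```

Because the stub QA confidence (0.35) is below the threshold (0.8), the question is routed to the RL estimator and its keys are merged into the context.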

The Bellman equation for updating the value of for a new question Q with an uninferred answer is expressed in Equation (9):

| (9) |

where represents the updated value for contexts Q and C in the u-th iteration of an uninferred , is the learning rate, r is the reward obtained, and is the discount factor that values future rewards. This iterative process continues until each new answer reaches an acceptable confidence level or until the RL estimator completes its training in environment , as defined by .

Since the weights of the BERT model are frozen after the last training process, it is necessary to incorporate the new inputs , , and . As discussed in Section 1, new inputs are generated to construct a u-th version of the tokens:

This yields a new representation with segment embeddings , positional embeddings , and a final concatenated representation H now using the latest BERT parameters W, as shown in Equation (10).

| (10) |

In this equation, BERT represents the latest trained model, and refer to the new question and context for the u-th input, and W denotes the updated model weights.

As a next step, the weights W can be unfrozen for the u-th inputs and recalibrated through a fine-tuning layer that utilizes the new representations H and the answers , with their start and end indices, , as specified in Equation (11).

| (11) |

Here, represents the weights to be recalibrated based on the new prediction , is the softmax function, and serves as the bias term. To complete the calibration of the weights W with respect to the new answers , Equation (11) is decomposed into the new logits distributions for the start and end indices, generating a cross-entropy loss function , as expressed in Equation (12).

| (12) |

In this context, and denote the decomposed weights for the start and end indices. The normalized predictions of these indices are calculated as

The weights and the bias will be iteratively readjusted as new u tuples are ingested from the RL estimator, optimizing their recalibration as outlined in Equations (13) and (14).

| (13) |

| (14) |

where represents the learning rate, and ∇ denotes the gradient change applied to update the BERT weights as new values of , , and are added.
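As a rough illustration of the fine-tuning objective described above, the sketch below normalizes start and end logits with a softmax, computes a cross-entropy loss over the true indices, and applies one gradient-descent step. It assumes the loss is the sum of the two negative log-likelihoods (some implementations average them instead); `softmax`, `span_loss`, and `sgd_step` are hypothetical helper names, not the authors' code.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def span_loss(start_logits, end_logits, start_idx, end_idx):
    """Cross-entropy over the start and end positions (assumed: summed)."""
    p_start = softmax(start_logits)
    p_end = softmax(end_logits)
    return -(math.log(p_start[start_idx]) + math.log(p_end[end_idx]))


def sgd_step(params, grads, lr):
    """One plain gradient-descent update: p <- p - lr * grad."""
    return [p - lr * g for p, g in zip(params, grads)]


loss = span_loss([2.0, 0.5, 0.1], [0.1, 0.3, 2.5], start_idx=0, end_idx=2)
print(loss)
print(sgd_step([1.0, 2.0], [0.5, -1.0], lr=0.1))
```

In a real fine-tuning run the gradients would come from backpropagation through the model; here they are passed in directly to keep the example self-contained.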

Ultimately, while the reliance on static datasets such as CVE and CWE serves as a foundation, the system’s iterative interaction between the RL estimator and the dynamically updated contextual knowledge ensures adaptability. This process leverages the new question, context, and answer inputs, as described, to enhance the model’s ability to address novel attack vectors. By refining the weights W and recalibrating the fine-tuning layer for each new iteration, the system mitigates limitations associated with static data reliance, so that it can adapt to evolving security landscapes. Such adaptability aligns with best practices for reinforcement learning systems, as outlined in [40], enabling continuous learning and improved response generation.

4. Results

In this study, the results are systematically divided into three subsections to emphasize the distinct contributions of the proposed BERT QA RL + RS framework. This structure facilitates a detailed analysis of each component, highlighting their respective contributions and performance. The subsections and principal findings are outlined as follows:

Section 4.1 Computational Efficiency of the Reinforcement Learning Agent: This subsection evaluated the RL agent’s learning behavior, convergence, and computational efficiency across 16 hyperparameter configurations. Configuration 12 emerged as the best-performing configuration, achieving the highest cumulative rewards and the shortest episode lengths. These findings highlight the agent’s ability to effectively balance exploration and exploitation within the state-action space, as well as its robustness under varying initial conditions.

Section 4.2 Performance Analysis of BERT QA Models: The study compared the computational efficiency and QA accuracy of three BERT-based models: BERT, RoBERTa, and DistilBERT. While DistilBERT demonstrated superior computational efficiency, requiring less training time and resources, RoBERTa achieved the highest QA accuracy with an F1-Score of 99.99%. These results emphasize the trade-offs between computational resource demands and QA precision, enabling informed decisions about model selection based on specific application needs.

Section 4.3 Combined RL and BERT QA RL + RS Framework: The integration of the RL agent with BERT QA RL + RS demonstrated its practical utility in prioritizing critical vulnerabilities within an automated penetration testing environment. The system effectively prioritized the most important vulnerabilities, with 14 out of 23 recommendations aligning with the top vulnerabilities in the CVE dataset. Additionally, the total training time for the integrated framework was approximately 1129.4 min, and the average task execution time was 23 min, which included RL decision-making and BERT inference. These results underscore the practical applicability of the integrated framework in prioritizing and addressing high-risk vulnerabilities in real-world scenarios.

4.1. Computational Efficiency of the Reinforcement Learning Agent

Assuming that a pentester intends to consult the process of a test battery with a question Q, there are two possible paths: BERT QA RL + RS can infer an answer A, or it can submit the question to the agent, which recalibrates BERT QA RL + RS by exploring and exploiting the state and action spaces to produce new tuples $(Q, C, A)$. Taking this last hypothesis into account, the NIST dataset, already structured in tuples $(Q, C, A)$, was divided into 80% for training and 20% for testing without replacement. First, the RL estimator was evaluated, assuming that there are instances not inferred in BERT QA RL + RS. The following performance metrics are presented in this context:

- Cumulative Reward (CR): The sum of rewards obtained by the agent over an episode or a period. The objective is to maximize this sum, as formulated in Equation (15):

  $$CR_i = \sum_{j=1}^{T} r_j \qquad (15)$$

  where $CR_i$ is the cumulative reward at instant i, T is the time horizon, and $r_j$ is the reward at instant j.

- Episode Length (EL): Represents the number of steps (actions) taken by the agent to complete an episode, as determined by Equation (16):

  $$EL_i = \sum_{j=1}^{N} t_j \qquad (16)$$

  Here, $EL_i$ denotes the episode length at instant i, N is the total number of steps, and $t_j$ is the duration of each step j. This metric evaluates the total number of actions required by the agent to complete its task.

- Policy Entropy (PE): Measures the uncertainty of the agent’s policy, which is useful for evaluating its level of exploration. Policy entropy is defined in Equation (17):

  $$PE = -\sum_{a} \pi(a) \log \pi(a) \qquad (17)$$

  where $\pi(a)$ is the probability of taking action a. A high entropy value indicates greater exploration in the selection of actions, while low values indicate a more stable and defined policy.

- Mean Squared Error (MSE): Evaluates the accuracy of the agent’s predictions compared to real values in the environment, as shown in Equation (18):

  $$MSE = \frac{1}{N} \sum_{i=1}^{N} \left( \hat{y}_i - y_i \right)^2 \qquad (18)$$

  where N is the number of examples, $\hat{y}_i$ represents the agent’s predictions, and $y_i$ represents the real values in the i-th iteration. This mean squared error quantifies the difference between the actions predicted by the agent and those observed, providing a measure of accuracy.
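The four metrics above can be computed from logged episode data along the following lines. This is a minimal sketch assuming unit-duration steps, natural-logarithm entropy, and plain Python lists as inputs; the function names are hypothetical.

```python
import math


def cumulative_reward(rewards):
    """Sum of the rewards collected over one episode."""
    return sum(rewards)


def episode_length(actions):
    """Number of steps (actions) taken in one episode, assuming unit-duration steps."""
    return len(actions)


def policy_entropy(probs):
    """Shannon entropy of an action distribution (natural log, zero terms skipped)."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def mean_squared_error(predicted, observed):
    """Average squared difference between predicted and observed values."""
    return sum((p - o) ** 2 for p, o in zip(predicted, observed)) / len(predicted)


print(cumulative_reward([1.0, 1.0, 1.0]))            # 3.0
print(episode_length(["recognition", "identification", "exploitation"]))  # 3
print(policy_entropy([0.5, 0.5]))                    # log(2), about 0.693
print(mean_squared_error([1.0, 2.0], [1.0, 4.0]))    # 2.0
```

A uniform distribution maximizes the entropy, while a policy that always picks one action has zero entropy, which matches the exploration interpretation given above.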

Table 1 presents the parameters used for the RL estimator, specifically for the Q-learning agent. Each parameter distinctly influences the iterative behavior, with values (Value 1, Value 2) that, according to [41], have been shown to be effective across the desired i episodes for recognition, vulnerability identification, and exploitation.

Table 1.

Description of hyperparameters for the RL agent and their respective values.

| Hyperparameter | Description | Value 1 | Value 2 |
|---|---|---|---|
| Alpha ($\alpha$) | Learning rate that controls how much the agent learns from each new experience. A higher value accelerates learning but may lead to unstable convergence. | | |
| Gamma ($\gamma$) | Discount factor that determines the importance of future rewards. A higher value prioritizes long-term rewards. | | |
| Epsilon ($\epsilon$) | Exploration rate that controls the probability of the agent taking a random action instead of following its policy. A higher value encourages exploration. | | |
| Epsilon Decay ($\epsilon_{\text{decay}}$) | Decay rate for the exploration rate ($\epsilon$), which controls how $\epsilon$ decreases over time, allowing the agent to reduce exploration as it learns. | | |

As suggested in [42], simulations are particularly valuable in tasks where real-world interactions are costly or infeasible, enabling agents to learn robust behaviors in a risk-free environment. This principle emphasizes the necessity of conducting multiple simulations to validate the consistency and robustness of the RL agent. Through these simulations, it is possible to ensure that the results are reproducible under identical conditions, confirming that outcomes are not merely coincidental or influenced by stochastic external factors but reflect the expected performance of the agent.

To verify the consistency of the agent, two simulations were carried out under identical initial conditions. The first simulation (Sim. 1) established a controlled environment with predefined states, actions, and a fixed reward structure. The primary objective of Sim. 1 was to iteratively optimize the Q-values, allowing the agent to adaptively learn an optimal policy. The second simulation (Sim. 2) replicated these conditions to confirm that the policies learned in Sim. 1 were not influenced by stochastic factors such as random initialization or environmental noise. Any discrepancies between the results of Sim. 1 and Sim. 2 would indicate potential sensitivity issues, as noted in [40], where reproducibility in RL often depends on addressing randomness in exploration strategies and environmental dynamics.

The iterative learning process ensures that the agent incrementally improves its policy, maximizing the cumulative rewards over multiple episodes. During training, the progressive reduction of $\epsilon$ decreases random exploration, focusing on exploiting the best-known actions for each state. The consistency between Sim. 1 and Sim. 2 validates the reliability of the Q-value updates and the adaptability of the agent to dynamic environments.

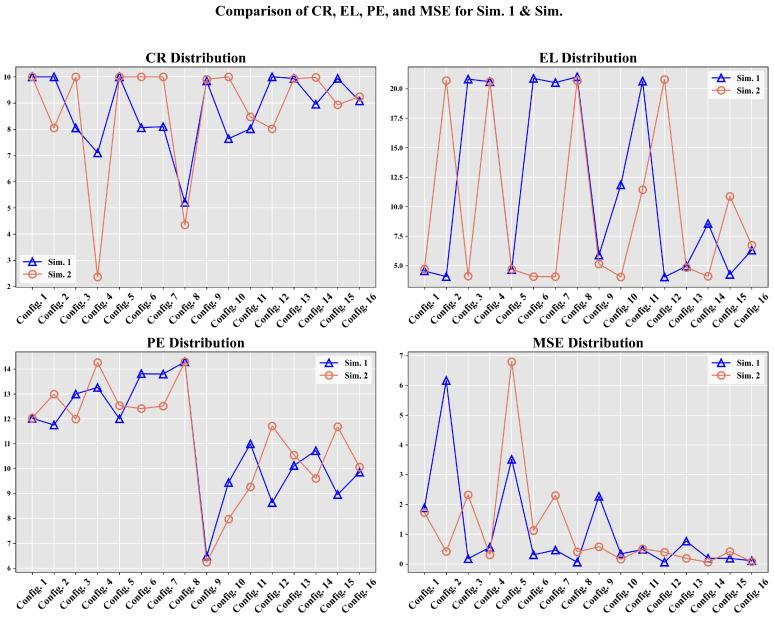

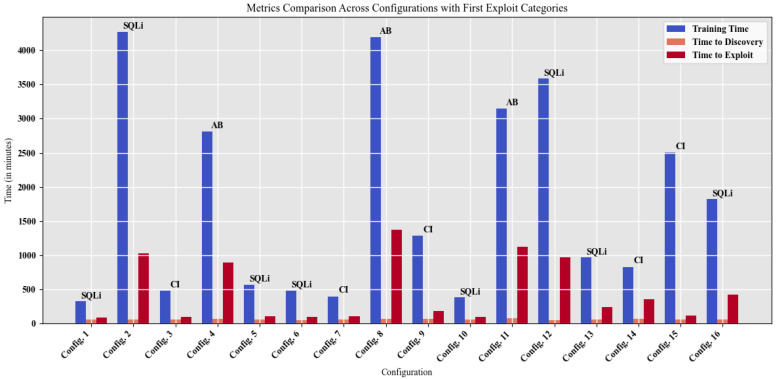

The results obtained with the experimental design are presented below, analyzing the influence of the variation in selected hyperparameters. Table 2 shows the configurations used for execution, based on combinations of hyperparameters. Figure 5 shows the evolution of MSE, CR, EL, and PE across the 16 configurations for the two RL simulations, Sim. 1 and Sim. 2.

Table 2.

Agent configuration parameters.

| Configuration | $\alpha$ | $\gamma$ | $\epsilon$ | $\epsilon$ decay |
|---|---|---|---|---|
| Config. 1 | | | | |
| Config. 2 | | | | |
| Config. 3 | | | | |
| Config. 4 | | | | |
| Config. 5 | | | | |
| Config. 6 | | | | |
| Config. 7 | | | | |
| Config. 8 | | | | |
| Config. 9 | | | | |
| Config. 10 | | | | |
| Config. 11 | | | | |
| Config. 12 | | | | |
| Config. 13 | | | | |
| Config. 14 | | | | |
| Config. 15 | | | | |
| Config. 16 | | | | |

Figure 5.

Evolution of the metrics MSE, CR, EL, and PE across 16 configurations for Sim. 1 and Sim. 2 RL simulations.
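Sixteen configurations arise naturally from four hyperparameters with two candidate values each (2^4 = 16). The sketch below enumerates such a grid; the numeric values are placeholders chosen only for illustration, not the values used in the study, and the resulting order does not necessarily match the numbering of Config. 1 to Config. 16.

```python
from itertools import product

# Placeholder values (hypothetical); the study's actual values are not reproduced here.
grid = {
    "alpha": (0.1, 0.5),
    "gamma": (0.9, 0.99),
    "epsilon": (0.5, 1.0),
    "epsilon_decay": (0.99, 0.995),
}

# Cartesian product of the two candidate values of each hyperparameter.
configs = [dict(zip(grid, values)) for values in product(*grid.values())]

print(len(configs))  # 16
print(configs[0])
```

Each dictionary in `configs` can then be passed to a training run, which keeps the sweep reproducible and easy to log.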

Taking Figure 5 as a reference, where the MSE metrics for the two simulations (Sim. 1 and Sim. 2) are compared, a quantitative hypothesis was also proposed to evaluate overfitting. Overfitting in RL environments [43] occurs when the agent adjusts its policy excessively to a specific configuration or initial seed, thereby losing its capacity for generalization under slightly different conditions.

To quantify the stability of the agent's behavior between simulations, the normalized relative difference of the MSE was defined, as shown in Equation (19).

| (19) |

For the 16 evaluated configurations, a set of values was obtained. For instance,

These results indicate that, for these configurations, the differences between the two simulations are less than 5%. That is, the variation in the MSE metric between Sim. 1 and Sim. 2 is very small, suggesting significant stability in the performance of the agent under changes in initial conditions.

If the agent had strongly overfitted to a specific configuration or seed, values of the normalized relative difference much closer to 1 would have been observed, reflecting a strong dependency on the original run. Instead, the low values obtained indicate that the agent behaves stably and consistently, reducing the likelihood of overfitting under the evaluated conditions. The numerical evidence therefore supports the conclusion that overfitting is minimal, at least within the stable subset of analyzed configurations. On this basis, the best overall results for Sim. 1 were achieved with Configuration 12 and for Sim. 2 with Configuration 10. These configurations demonstrate a balance among the learning rate ($\alpha$), the importance of future rewards ($\gamma$), exploration ($\epsilon$ with a slow decay rate), and persistence in long-term learning.

Configurations with the lower learning-rate value exhibited a higher tendency to end by truncation. Despite the balance between exploration and exploitation to identify successful states, a very low learning rate limits the capacity of the agent to execute optimal actions.

The MSE values remained low because comparisons were made against the current Q-table, ensuring that the action a taken did not deviate significantly from the best action known by the agent. In contrast, PE values support the notion that a proper balance between exploration and exploitation is essential.

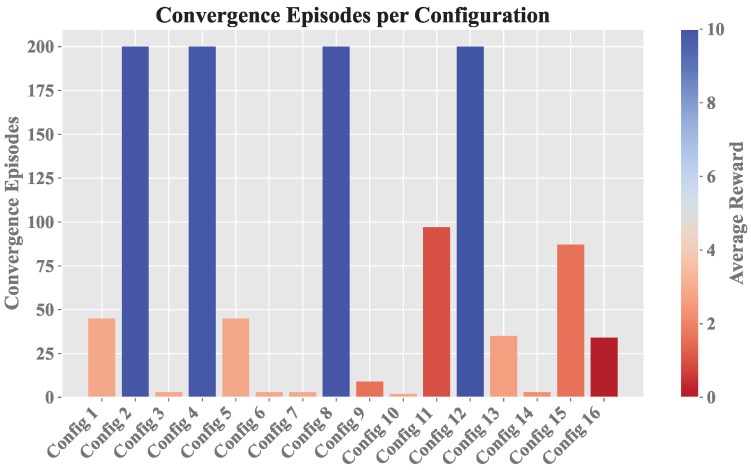

Given the similar results of both simulations, the subsequent analyses were carried out on Sim. 2, since it presented a smaller number of episodes terminated by truncation, which allows for a more detailed study of the agent’s behavior during learning. Figure 6 shows the convergence relationship for each configuration.

Figure 6.

Agent convergence for hyperparameter configurations.

Configurations with convergence at 200 indicate that they were truncated and did not achieve stable learning, a phenomenon that was more pronounced for the first learning-rate value. The four configurations terminated by truncation also achieved a good reward; nevertheless, they are not desirable, because the reward achieved by the agent must be balanced against the time it takes to obtain it, particularly in this work, where the efficiency of the proposed architecture is at stake.

Figure 6 also shows the average reward r before reaching convergence, highlighting the contrast between the performance of the agent while exploring new actions and its performance once it began to prioritize optimal actions. For the second learning-rate value, the average reward r was initially low before convergence; however, most configurations eventually achieved values close to 10, representing the maximum reward when exploiting any state s in the recognition, vulnerability identification, or exploitation stages.

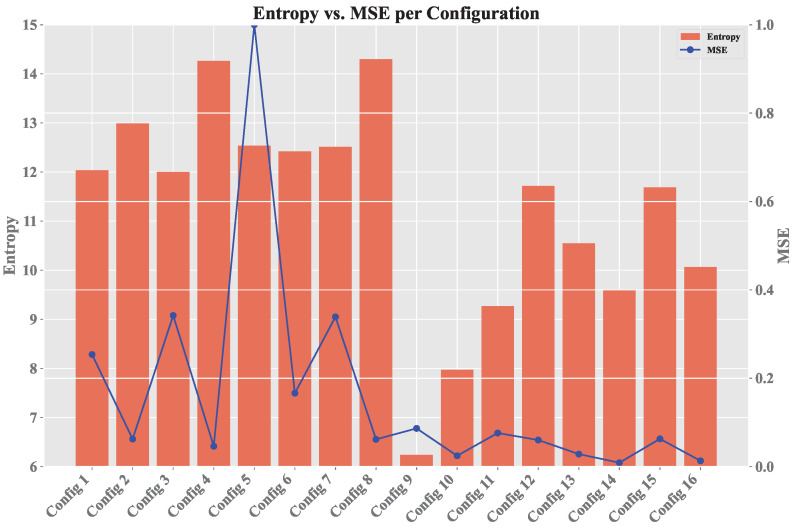

Figure 7 displays the relationship between policy entropy and MSE for each configuration. Higher PE values indicate greater disorder in the selection of actions, while lower PE values indicate more stable learning, showing that the second learning rate is more consistent. The same applies to MSE, which is generally lower in the final configurations. Peaks in these values suggest the use of exploration with a higher $\epsilon$ value, which results in the selection of random actions that may not be optimal, thus affecting the error rate.

Figure 7.

Comparison of PE and MSE for hyperparameter configurations.

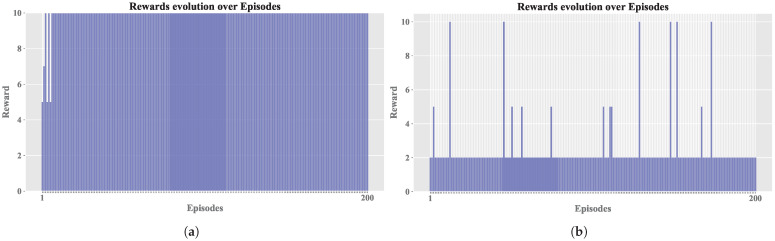

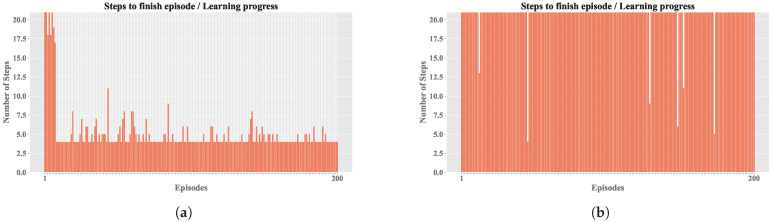

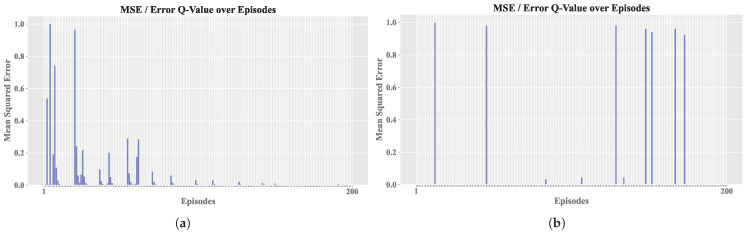

To facilitate a detailed comparison, two agent configurations were selected from Sim. 2: Config. 9, which exhibited a balanced performance in terms of reward and steps, and Config. 4, which demonstrated a less favorable outcome. While Figure 5 identifies the optimal configurations for each simulation, these often achieved perfect reward averages, which is an atypical occurrence in such environments. Therefore, we opted for Config. 9 and Config. 4 to provide a more realistic comparison.

Figure 8 shows the comparison between the cumulative reward of both configurations. It shows how the best configuration achieved the maximum reward in almost all episodes, with the exception of the initial episodes where the agent had not yet identified an optimal operating policy; in contrast, the other configuration had lower rewards throughout the training.

Figure 8.

Average reward comparisons. (a) Best configuration average reward. (b) Worst configuration average reward.

Figure 9 presents comparisons of average episode length (EL) between the configurations. In Figure 9a, the best configuration achieved shorter episodes, indicating faster convergence. In contrast, Figure 9b, the worst configuration, showed longer episodes with no convergence, resulting in episodes being truncated.

Figure 9.

Average EL comparisons. (a) Best configuration average EL. (b) Worst configuration average EL.

Regarding the EL, as shown in Figure 9, training without convergence led to truncation across all episodes (exceeding 20 steps). In contrast, the other configuration achieved early convergence; random actions were occasionally selected during training as part of the exploration technique, and when these proved ineffective, the previously learned actions were resumed. Figure 10 and Figure 11 share the characteristic that, in the best configuration, an initial phase of training disorder was observed until convergence was reached. Conversely, in the configuration that failed to stabilize, there were higher entropy measures and persistent error spikes throughout the training process.

Figure 10.

Average mean squared error (MSE) comparisons. (a) Best configuration average MSE. (b) Worst configuration average MSE.

Figure 11.

Average PE comparisons. (a) Best configuration average PE. (b) Worst configuration average PE.

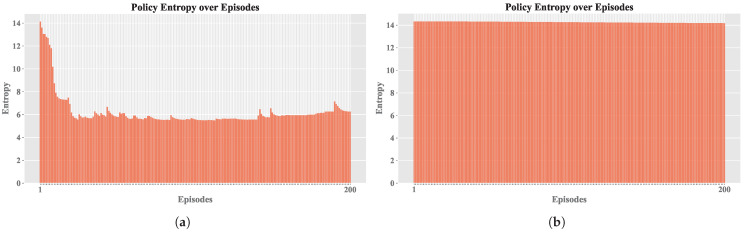

In addition to the metrics associated with RL environments, other metrics typical of a pentesting environment were observed and are described in Figure 12. These metrics provide a broader perspective on the model’s performance by incorporating key aspects relevant to real-world penetration testing scenarios. Specifically, this figure illustrates, for each configuration, the progression of the total training time, the time required to detect vulnerabilities, and the time taken to execute the first successful attack. Furthermore, it identifies the specific type of attack performed, offering insights into the effectiveness and efficiency of different strategies employed during the training process. These additional metrics are essential for evaluating the practical applicability and adaptability of the models in dynamic and complex security environments.

Figure 12.

Evolution of the metrics training time, time to discovery, and time to exploit across 16 configurations for two RL simulations. The figure shows how each metric evolves across the configurations, with different colors representing the individual metrics. Additionally, the figure includes the corresponding “First Exploit” categories, highlighting the different attack methods used.

4.2. Performance Analysis of BERT QA Models

For BERT QA RL + RS, several pre-trained models are available, each differing in vocabulary size, weights, attention heads, transformer layers, and embeddings. The models were selected for their balance between accuracy and efficiency, with each one being suited to a different scenario:

BERT uncased: Ref. [44] used this as the base model, providing a reliable benchmark for QA tasks and demonstrating a robust contextual understanding. It consists of 12 transformer blocks k, 768 embedding dimensions, 12 attention heads, and 4096 weights W.

RoBERTa: Ref. [45] enhanced BERT pretraining by removing certain constraints and leveraging a larger dataset with a more versatile masking approach, offering improved accuracy for QA and making it ideal for maximizing precision. It is composed of 12 transformer layers k, 768 embedding dimensions, 12 attention heads, and 3072 weights.

DistilBERT: Ref. [46] employed a lighter version of BERT uncased that retains much of the performance at a lower computational cost, making it ideal for QA environments with limited resources. It includes approximately of the original vocabulary, consisting of 512 embeddings, 12 attention heads, and 3072 weights W.

In the context of performance metrics for BERT QA RL + RS, an informative resource analysis can be performed to highlight the computational cost, the cumulative learning progress of BERT models [44,45,46], and the temporal efficiency of the training process, as shown in Table 3.

Table 3.

Computational efficiency metrics.

| Metric | Description | Formula |
|---|---|---|
| Total FLOPs | Represents the computational workload during model training, providing an indication of resource consumption. | — |
| Training Loss | Reflects the model’s progress in learning by measuring the discrepancy between predicted and actual values. | |
| Training Time | Represents the total duration of the training process, indicating the temporal efficiency of the model training. | — |
| Training Samples per Second (SPS) | Indicates the rate at which data samples are processed, measured in samples per second. | |
| Training Steps per Second (STS) | Denotes the frequency of training steps, providing insight into the model’s training step speed. | |
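The two throughput metrics in Table 3 can be read as ratios of work done to wall-clock training time. The sketch below shows that reading under stated assumptions: the function name `throughput` and the sample, step, and time values are hypothetical and are not results from this study.

```python
def throughput(num_samples, num_steps, train_seconds):
    """Return (SPS, STS): samples and optimizer steps processed per second."""
    if train_seconds <= 0:
        raise ValueError("train_seconds must be positive")
    sps = num_samples / train_seconds
    sts = num_steps / train_seconds
    return sps, sts


if __name__ == "__main__":
    # Hypothetical run: 4800 samples in batches of 16 gives 300 steps, over 600 s.
    sps, sts = throughput(num_samples=4800, num_steps=300, train_seconds=600.0)
    print(sps, sts)  # 8.0 0.5
```

When every step processes one full batch, STS equals SPS divided by the batch size, so the two metrics carry the same information at different granularity.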

Since the training was conducted in an intentionally vulnerable test environment, the times reported in Figure 12 are lower than those that would be obtained in a real-world environment, but they serve as a basis for evaluating the agent’s performance in that environment. Figure 12 also shows that the three categories of attack the agent manages to perform at the beginning of its training are authentication bypass (AB), SQL injection (SQLi), and command injection (CI).

Table 4 summarizes the computational efficiency metrics for each BERT QA RL + RS model, indicating that DistilBERT [46] achieved the fastest training time while maintaining a low loss, that is, it learned efficiently.

Table 4.

Computational efficiency metrics for BERT QA training.

From these results, the total training time for the system integrating BERT QA RL + RS and RL can be calculated. The average training time for the RL agent, across all configurations, is 34 min, while the average training time for the BERT models is 1095.4 min, as detailed in Table 4 (where it is expressed in seconds). The total training time for the proposed architecture is therefore 34 + 1095.4 = 1129.4 min.

As for execution, the total time depends on the models selected for the final architecture. In general, inference with the BERT models is estimated to take approximately 2 min, to which the execution time of the RL agent in its best configuration (Config. 9, 21 min) must be added. This last step is necessary only if the BERT model does not provide an answer.
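The time budget discussed in the two preceding paragraphs can be written out as a short sketch. The four constants are the figures quoted in the text, reading the best RL configuration as Config. 9 with a 21 min run. The `fallback_rate` parameter, the fraction of queries for which the BERT model gives no answer, is a hypothetical quantity introduced only for illustration and is not reported in this section.

```python
RL_TRAIN_MIN = 34.0      # average RL training time across configurations (from the text)
BERT_TRAIN_MIN = 1095.4  # average BERT training time (from the text)
BERT_INFER_MIN = 2.0     # approximate BERT inference time (from the text)
RL_EXEC_MIN = 21.0       # RL agent run time, best configuration (assumed reading: Config. 9)


def total_training_minutes():
    """Training cost of the whole architecture: RL agent plus BERT models."""
    return RL_TRAIN_MIN + BERT_TRAIN_MIN


def execution_minutes(bert_answered):
    """The RL agent runs only when the BERT model does not provide an answer."""
    return BERT_INFER_MIN if bert_answered else BERT_INFER_MIN + RL_EXEC_MIN


def expected_execution_minutes(fallback_rate):
    """Average execution time for a hypothetical fallback rate in [0, 1]."""
    if not 0.0 <= fallback_rate <= 1.0:
        raise ValueError("fallback_rate must lie in [0, 1]")
    return BERT_INFER_MIN + fallback_rate * RL_EXEC_MIN


if __name__ == "__main__":
    print(round(total_training_minutes(), 1))   # 1129.4
    print(execution_minutes(bert_answered=False))  # 23.0
    print(expected_execution_minutes(0.25))     # 7.25
```

The worst case for a single query is therefore about 23 min, while the average cost depends on how often the fallback to the RL agent is needed.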

In terms of resource consumption, both models require at least 16 GB of RAM and 4 CPU cores, which are also necessary for the use of the models during the inference phase.

Thus, the choice of the best model depends on the specific project priorities. If speed and efficiency are critical, DistilBERT is the most suitable choice due to its superior runtime performance and comparable metrics. However, if the project prioritizes slightly higher QA performance, BERT or RoBERTa may be preferred, although the trade-off in computational efficiency should be considered.

4.3. Combined Performance Metrics for RL and BERT QA RL + RS

The third component of the RL and BERT QA RL + RS analysis focuses on metrics that evaluate the quality of predictions and inferences made by the models when predicting tuples , where A represents the ground truth. These metrics are critical for understanding how well the models capture and replicate the expected outputs in tasks requiring precise and contextually accurate answers. Table 5 details these metrics, which provide a comprehensive evaluation of the performance of the models. Additionally, Table 6 summarizes the hyperparameters used during the training of all models to ensure optimal configurations for achieving high-quality results.

Table 5.

QA accuracy metrics for BERT QA RL + RS.

| Metric | Description | Formula |
|---|---|---|
| Precision | Measures the proportion of correct words in the prediction relative to all predicted words. In the QA context, it evaluates the accuracy of the model’s generated answer. | |
| Recall | Measures the proportion of correct predicted words relative to all words in the correct answer. Evaluates if the model captures the keywords of the expected response. | |
| Exact Match (EM) | This metric measures the percentage of answers that exactly match the correct answer. It is a very strict metric, counting answers as correct only if they are identical to the expected response. | |
| F1-Score | F1 is a metric that combines precision and recall. It is used to measure the overlap between predicted words and words in the correct answer. Unlike EM, it does not require exact identity but assesses how many words in the prediction match those in the correct answer. | |
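The word-overlap definitions in Table 5 can be made concrete with a minimal sketch in the style commonly used for extractive QA evaluation. It is an illustration under stated assumptions, not the evaluation code used in this study: normalization is reduced to lowercasing and whitespace splitting, and the function names and example strings are hypothetical.

```python
from collections import Counter


def tokens(text):
    """Simplified normalization (an assumption): lowercase and split on whitespace."""
    return text.lower().split()


def exact_match(prediction, truth):
    """1.0 only if the normalized prediction is identical to the ground truth."""
    return float(tokens(prediction) == tokens(truth))


def precision_recall_f1(prediction, truth):
    """Word-overlap precision, recall, and F1 between prediction and ground truth."""
    pred, gold = tokens(prediction), tokens(truth)
    # Multiset intersection counts each shared word at most as often as it
    # appears in both answers.
    overlap = sum((Counter(pred) & Counter(gold)).values())
    if overlap == 0:
        return 0.0, 0.0, 0.0
    precision = overlap / len(pred)  # correct words / all predicted words
    recall = overlap / len(gold)     # correct words / all words in the correct answer
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


if __name__ == "__main__":
    print(exact_match("SQL injection attack", "sql injection"))
    print(precision_recall_f1("SQL injection attack", "sql injection"))
```

In this example the prediction contains one extra word, so EM is 0 while precision is 2/3, recall is 1, and F1 is 0.8, which illustrates why F1 is the more forgiving of the two headline metrics.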

Table 6.

Training hyperparameters for BERT QA RL + RS models.

| Hyperparameter | Description | Value |
|---|---|---|
| train_epochs | Number of complete passes through the entire training dataset. A higher number of epochs may improve model performance, though an excessive number could lead to overfitting. | 3 |
| train_batch_size | Determines the number of samples the model processes simultaneously during training. A larger batch size can accelerate training but requires more memory. | 16 |
| eval_batch_size | Similar to the training batch size, it controls the number of samples the model processes at once during evaluation. | 16 |
| learning_rate | Defines the rate at which the model adjusts its weights based on the loss gradient. A high learning rate speeds up training but may hinder convergence, while a lower rate results in more stable, albeit slower, learning. | |
| weight_decay | A regularization parameter that helps prevent overfitting by penalizing large weights, ensuring that the model generalizes well to unseen data. | |

The QA accuracy metrics for each model are shown in Table 7.

Table 7.

Accuracy metrics comparison for BERT QA models.

For this evaluation, weighted average metrics were used, providing a balanced assessment of model performance across all instances in the BERT QA RL + RS task. This approach accounts for the varying importance and distribution of questions and answers, offering a more accurate representation of overall model performance. Since all models demonstrated high performance with metrics exceeding for both the EM and F1-Score, these weighted metrics ensure fair evaluation and reflect performance across different question types.
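One common way to compute such a weighted average is to weight each group's score by the number of instances it contains. The sketch below assumes that weighting scheme, which the text does not specify, and uses hypothetical per-question-type scores and counts rather than results from this study.

```python
def weighted_average(scores, weights):
    """Average of scores where each score counts in proportion to its weight."""
    if not scores or len(scores) != len(weights):
        raise ValueError("scores and weights must be non-empty and of equal length")
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must sum to a positive value")
    return sum(s * w for s, w in zip(scores, weights)) / total


if __name__ == "__main__":
    f1_by_type = [0.9, 0.8]   # hypothetical F1 for two question types
    support = [30, 10]        # hypothetical number of questions of each type
    print(weighted_average(f1_by_type, support))
    print(sum(f1_by_type) / len(f1_by_type))
```

With these illustrative numbers the weighted F1 is 0.875 and the unweighted mean is 0.85: the larger question type pulls the weighted figure toward its own score.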

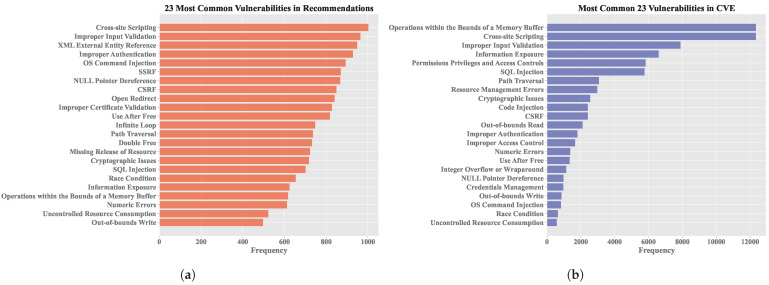

Since attacks are recommended based on the vulnerabilities of each target machine, it is relevant to analyze the vulnerabilities considered by the recommendation system. Figure 13a shows the list of vulnerabilities available in the recommendation system, while Figure 13b presents the top 23 vulnerabilities in the CVE dataset.

Figure 13.

Common vulnerabilities supported by the solution. (a) Vulnerabilities in the recommendation system. (b) Top vulnerabilities in the CVE dataset.

Of the 23 vulnerabilities currently used by the recommendation system, 14 (about 61%) are among the top vulnerabilities according to CVE, indicating that the system targets critical vulnerabilities and suggests relevant attacks accordingly.

5. Discussions

To delve deeper into the BERT QA RL + RS proposal, this section adopts three comparative approaches to evaluate its performance and contributions. Each approach emphasizes a specific aspect of the architecture, from its qualitative advantages to its computational and statistical underpinnings, as well as its comparison with alternative methodologies. These approaches are structured into three main subsections:

Qualitative Comparison with State-of-the-Art and Common Pentesting Tools (Section 5.1)—This subsection explores the qualitative strengths of the proposed architecture in comparison with existing solutions, focusing on its adaptability, scalability, and modular design.

Statistical Validation Analysis and Computational Complexity Comparison (Section 5.2)—This subsection details a statistical analysis of the proposal’s reliability and contrasts its computational complexity with conventional methods such as Q-Learning and DQN.

Comparison of BERT QA RL + RS with Genetic Algorithms (Section 5.3)—This subsection provides a comparative analysis between the proposed architecture and Genetic Algorithms (GAs), emphasizing their respective strengths and limitations in a penetration testing context.

5.1. Qualitative Comparison with State-of-the-Art and Common Pentesting Tools

In this first point of comparison, the proposed BERT QA RL + RS framework is evaluated alongside traditional penetration testing tools and AI-enhanced solutions. These tools include widely recognized frameworks such as Metasploit [47], Nessus [48], OWASP ZAP [49], and Burp Suite [50], as well as advanced AI-enhanced tools like PentestGPT [51] and CyberProbe AI [52]. The comparison is organized based on the five phases of penetration testing outlined in NIST 800-155: preparation, discovery, analysis, exploitation, and reporting.

The strengths and limitations of each tool are presented, emphasizing their specific contributions and gaps in comprehensive penetration testing compared with BERT QA RL + RS, as listed in Table 8.

Table 8.

Comparison of penetration testing tools based on NIST 800-155 methodology.

| Tool | Advantages | Disadvantages | NIST 800-155 Coverage |
|---|---|---|---|
| Metasploit [47] | Comprehensive exploitation capabilities; extensive module library for payloads and post-exploitation. | Lacks automation; requires skilled operators; limited discovery and reporting. | Partial: Focused on exploitation and reporting. |
| Nessus [48] | Robust vulnerability scanning; extensive plugin support. | Limited exploitation features; requires external integration for advanced reporting. | Partial: Emphasizes discovery and analysis. |
| OWASP ZAP [49] | Highly effective for web application scanning; CI/CD integration. | Limited for multi-layered systems; manual intervention needed for reporting. | Partial: Focused on discovery and analysis. |
| Burp Suite [50] | Customizable for web penetration testing; rich plugin ecosystem. | Requires significant manual effort; limited to web applications. | Partial: Focused on discovery and analysis. |
| PentestGPT [51] | AI-based approach; rapid vulnerability identification; generates remediation suggestions. | Limited in complex system architectures; struggles with adaptive learning. | Partial: Covers preparation and discovery. |
| CyberProbe AI [52] | Advanced AI-driven scanning; effective for threat prioritization; integrates seamlessly with DevSecOps pipelines. | Expensive licensing; relies on pre-trained models; limited exploit generation. | Partial: Focuses on preparation, discovery, and reporting. |
| BERT QA RL + RS (This proposal) | Fully automated end-to-end framework; reinforcement learning ensures adaptability; QA provides contextual understanding; excels in multi-layered system testing. | Higher resource demands; training requires significant time. | Complete: Covers all NIST phases, including preparation, discovery, analysis, and exploitation. |

The analysis summarized in Table 8 indicates that BERT QA RL + RS demonstrates robust capabilities in addressing complex, multi-layered systems through automated, end-to-end testing processes. Its reinforcement learning and contextual question-answering methodology enable dynamic adaptation to evolving environments and effective prioritization of vulnerabilities based on criticality. In comparison, traditional tools such as Metasploit and Nessus, while reliable in specific areas, require significant manual configuration for advanced tasks and lack comprehensive automation. Similarly, AI-enhanced solutions like PentestGPT and CyberProbe AI provide notable automation capabilities but encounter limitations when addressing complex or ambiguous scenarios, which are areas where the BERT QA RL + RS framework shows a distinct advantage.