Abstract

Background

Artificial intelligence (AI) technologies are increasingly recognized for their potential to revolutionize research practices. However, there is a gap in understanding the perspectives of Middle East and North Africa (MENA) researchers on ChatGPT. This study explores the knowledge, attitudes, and perceptions of MENA researchers regarding the use of ChatGPT in research.

Methods

A cross-sectional survey was conducted among 369 MENA researchers. Participants provided demographic information and responded to questions about their knowledge of AI, their experience with ChatGPT, their attitudes toward technology, and their perceptions of the potential roles and benefits of ChatGPT in research.

Results

The mean knowledge score (expressed as a percentage) was 58.3 ± 19.6, indicating a moderate level of knowledge about ChatGPT. Attitudes towards its use were generally positive (mean attitude score 68.1 ± 8.1), with many participants expressing enthusiasm for integrating ChatGPT into their research workflow. About 56 % of the sample reported using ChatGPT for various applications. In addition, 27.6 % expressed their intention to use it in their research, while 17.3 % had already started doing so. However, perceptions varied, and concerns about accuracy, bias, and ethical implications were highlighted. Knowledge scores differed significantly by gender (p < 0.001), working country (p < 0.05), and employment field (p < 0.05). Attitude scores differed significantly by highest qualification and employment field (p < 0.05). These findings underscore the need for targeted training programs and ethical guidelines to support the effective use of ChatGPT in research.

Conclusion

MENA researchers demonstrate considerable awareness of and interest in integrating ChatGPT into their research workflow. Addressing concerns about reliability and ethical implications is essential for advancing scientific innovation in the MENA region.

Keywords: Artificial intelligence, ChatGPT, MENA researchers, Research practices, Ethical implications, Scientific innovation

1. Introduction

Artificial intelligence (AI) has emerged as a transformative force in many domains, including research, by offering innovative tools and methodologies that augment human capabilities [1]. Machine learning (ML), a core subfield of AI, focuses on building systems that learn from data and improve without being explicitly programmed [2]. Among AI tools, ChatGPT (Chat Generative Pre-trained Transformer) stands out as an advanced large language model developed by OpenAI (OpenAI Inc., USA), trained to generate human-like text in response to user instructions [3]. Since its release in 2022, ChatGPT has garnered significant attention within the research community for its potential to streamline research tasks ranging from literature review to data generation [4,5].

The utilization of ChatGPT in research holds promise for revolutionizing traditional methodologies by providing automated assistance in information extraction, data analysis, and hypothesis formulation [6]. By leveraging its vast training dataset and advanced natural language processing capabilities, ChatGPT can facilitate access to a wealth of information, identify patterns and trends within existing literature, and even propose novel research hypotheses [5,7]. Furthermore, its ability to generate human-like text enables seamless communication and collaboration between researchers, transcending geographical and linguistic barriers [8].

However, integrating ChatGPT into research practices also raises important questions and considerations. Concerns regarding the reliability and accuracy of generated text (e.g., hallucinations), potential biases in data interpretation, and the ethical implications of AI-driven research methodologies have been voiced within the academic community [[9], [10], [11], [12]]. Moreover, the extent to which researchers are aware of, knowledgeable about, and receptive to incorporating ChatGPT into their workflows remains an open question.

The Middle East and North Africa (MENA) region presents a unique context for studying the adoption of AI tools in research. It encompasses a diverse range of countries with varying levels of technological development, academic infrastructure, and research funding [13]. Moreover, the region faces specific challenges, such as political instability and limited access to resources, which can affect the integration of advanced technologies like ChatGPT [14].

While several studies have explored the use of AI and general attitudes and perceptions towards it, there is a notable gap in understanding how researchers from the MENA region specifically view the use of ChatGPT in their research [[15], [16], [17], [18], [19], [20]]. For example, a study of 200 researchers from Egypt reported that 11.5 % used ChatGPT in their research, primarily to rephrase paragraphs and find references [21], and qualitative studies from the MENA region have proposed establishing guidelines and training for AI use in academic and research institutions [22,23]. Beyond these studies, there is limited insight into the attitudes and perceptions of MENA researchers regarding ChatGPT, which makes it difficult to develop tailored strategies and interventions that promote its responsible and effective use in the region. This gap is particularly significant given the diverse cultural, linguistic, and economic contexts within the MENA region, which may influence researchers' willingness to adopt new technologies such as ChatGPT.

To address this gap, this study conducted a cross-sectional investigation of the knowledge, attitudes, and perceptions of MENA researchers towards ChatGPT. In doing so, it seeks to highlight the potential benefits and drawbacks of using ChatGPT in research practices in the unique context of the MENA region, guiding policymakers and academic institutions in making informed decisions about the use of such technology.

2. Material and methods

2.1. Study design

This study employed a cross-sectional design to assess the knowledge, attitudes, and perceptions of MENA researchers toward using ChatGPT in research settings. The questionnaire was developed based on the study aim and a review of the literature [[15], [16], [17], [18], [19], [20]]. The survey was open to participants from May to September 2023. The study was reported following the STROBE checklist for cross-sectional studies to ensure comprehensive reporting [24].

2.2. Ethical considerations

The study protocol received ethical approval from the Institutional Review Board of the Jordan University of Science and Technology (JUST-IRB Approval No. 30/160/2023). Participants were informed about the purpose of the study and provided voluntary electronic consent before completing the questionnaire. To maintain anonymity, no personally identifiable information was collected during data collection.

2.3. Study tool

The survey questionnaire was developed using Google Forms. It was reviewed by experienced university professors for validity, clarity, and relevance, and their feedback was used to refine the questions. Its internal consistency reliability was supported by a Cronbach's alpha of 0.884. The questionnaire consisted of four main sections: demographic information (e.g., age, gender, educational background, research area, and institutional affiliation); knowledge of AI and ChatGPT use (6 items covering participants' familiarity with ChatGPT, their previous AI use in research, and their understanding of AI and ML concepts); attitudes (27 items on integrating ChatGPT into their research practices); and perceptions (28 items on perceived advantages, disadvantages, and potential ethical implications).
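Cronbach's alpha summarizes how consistently a set of items measures the same construct: alpha = k/(k - 1) x (1 - sum of item variances / variance of respondents' total scores), where k is the number of items. The Python sketch below computes it from a respondents x items matrix of numeric item codes. The function name `cronbach_alpha` and the toy responses are hypothetical; the study's value of 0.884 was computed on its own data and cannot be reproduced without the raw responses.

```python
from statistics import pvariance


def cronbach_alpha(responses: list[list[float]]) -> float:
    """Cronbach's alpha for a respondents x items matrix of numeric item codes.

    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total scores))
    """
    k = len(responses[0])  # number of items
    if k < 2:
        raise ValueError("alpha needs at least two items")
    items = list(zip(*responses))  # transpose: one tuple of answers per item
    item_var_sum = sum(pvariance(item) for item in items)
    totals = [sum(row) for row in responses]  # each respondent's total score
    return k / (k - 1) * (1 - item_var_sum / pvariance(totals))


# Hypothetical toy data: 4 respondents answering 3 items on a 1-5 scale
toy = [[4, 5, 4], [3, 3, 3], [5, 5, 4], [2, 3, 2]]
print(round(cronbach_alpha(toy), 3))  # 0.962
```

Population variances are used throughout; sample variances would give the same alpha, because the n/(n - 1) factor cancels in the ratio.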

2.4. Data collection

A convenience sampling method was used to recruit participants from academic institutions, research centers, and other relevant organizations across the MENA region. Eligible participants were Arab researchers currently working in the MENA region. The survey was available online, allowing researchers to participate at their convenience. The survey link was distributed through e-mail lists, social media platforms, and professional networks such as LinkedIn and ResearchGate. To ensure a diverse sample, efforts were made to include researchers from various disciplines and institutions. A minimum sample size of 281 was calculated using G*Power 3.1 software (medium effect size, α = 0.05, power = 0.90), and recruitment continued until this target was reached.
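G*Power derives a minimum sample size from the chosen test family, effect size, α, and power. The text does not state which test family (or how many groups) was specified, so the figure of 281 cannot be re-derived here. As a hedged illustration of the same trade-off only, the sketch below uses the Fisher z approximation for a two-sided test of a Pearson correlation, taking Cohen's conventional medium effect r = 0.3 as an assumption; `n_for_correlation` is a hypothetical helper, and G*Power's exact routines may differ by a few participants.

```python
import math
from statistics import NormalDist


def n_for_correlation(r: float, alpha: float = 0.05, power: float = 0.90) -> int:
    """Approximate minimum N to detect a Pearson correlation r (two-sided test).

    Fisher z approximation: N = ((z_{1 - alpha/2} + z_{power}) / atanh(r))**2 + 3
    """
    std_normal = NormalDist()
    z_alpha = std_normal.inv_cdf(1 - alpha / 2)  # critical value of the two-sided test
    z_power = std_normal.inv_cdf(power)          # quantile matching the desired power
    return math.ceil(((z_alpha + z_power) / math.atanh(r)) ** 2 + 3)


print(n_for_correlation(0.3))               # 113 (alpha = 0.05, power = 0.90)
print(n_for_correlation(0.3, power=0.80))   # 85
```

The result differs from 281 because the study's actual G*Power settings are not reported; the sketch only shows how a larger power or a smaller effect size raises the required sample.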

2.5. Data analysis

After data collection was completed, responses were exported to an Excel spreadsheet for initial data management and cleaning. Statistical analysis was conducted using the Statistical Package for the Social Sciences, version 23 (SPSS, IBM Corp., USA). Descriptive statistics, including frequencies (N), percentages (%), means, and standard deviations (SD), were computed for demographic variables and survey items as appropriate. Knowledge and attitude scores were expressed as percentages by taking each participant's mean item score and multiplying it by 100. To examine relationships between demographic factors and participants' knowledge and attitude scores, independent t-tests (two groups) and one-way ANOVA (more than two groups) were used to compare mean scores across groups, and Pearson's correlation coefficient (r) was used to assess the strength and direction of relationships between scores. Statistical significance was set at p < 0.05 for all analyses.
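The scoring rule described above (mean item score multiplied by 100) and Pearson's r can be sketched in a few lines of Python. The item coding is an assumption: the text does not say how responses were mapped to numbers, so this sketch assumes each item is first coded on a 0-1 scale (for example, a 5-point Likert response rescaled by the hypothetical helper `rescale_likert`), which makes scores range from 0 to 100. All function names are illustrative and are not part of the study's SPSS workflow.

```python
import math


def rescale_likert(value: int, points: int = 5) -> float:
    """Hypothetical coding: map a 1..points Likert response onto the 0..1 range."""
    return (value - 1) / (points - 1)


def percent_score(item_scores: list[float]) -> float:
    """Participant score: mean of the item scores (assumed 0-1) multiplied by 100."""
    return sum(item_scores) / len(item_scores) * 100


def pearson_r(x: list[float], y: list[float]) -> float:
    """Pearson product-moment correlation between two equal-length samples."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length samples with at least two values")
    mean_x, mean_y = sum(x) / len(x), sum(y) / len(y)
    s_xy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    s_xx = sum((a - mean_x) ** 2 for a in x)
    s_yy = sum((b - mean_y) ** 2 for b in y)
    return s_xy / math.sqrt(s_xx * s_yy)


# One hypothetical respondent's attitude items on a 5-point scale
attitude = percent_score([rescale_likert(v) for v in [5, 4, 3, 4]])
print(attitude)  # 75.0
```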

3. Results

3.1. Demographics of participants

A total of 369 researchers participated in the survey, with the majority being male (65.3 %). The age distribution showed that 36.5 % of participants were between 22 and 35 years old, and 33.8 % were between 36 and 45 years old. The majority of participants worked in Jordan (83.5 %), held a PhD (59.6 %), and were employed at academic institutions (74.0 %). Regarding specialties, the participants were distributed among various fields, with pharmacy (22.8 %) and applied medical sciences (19.2 %) being the most represented. Table 1 shows the detailed demographic characteristics.

Table 1.

Demographic characteristics of study participants (N = 369).

| Variables | N (%) |
|---|---|
| Gender | |
| Male | 241 (65.3) |
| Female | 128 (34.7) |
| Age (years) | |
| 22–35 | 134 (36.5) |
| 36–45 | 124 (33.8) |
| 46–55 | 76 (20.7) |
| ≥56 | 33 (9.0) |
| Working country | |
| Jordan | 308 (83.5) |
| Other countries | 61 (16.5) |
| Highest qualification | |
| Bachelor | 56 (15.2) |
| Master | 93 (25.2) |
| PhD | 220 (59.6) |
| Employment field | |
| Academic (i.e., universities) | 273 (74.0) |
| Government sector | 32 (8.7) |
| Other^a | 64 (17.3) |
| Specialty | |
| Nursing | 60 (16.3) |
| Pharmacy | 84 (22.8) |
| Medicine | 64 (17.3) |
| Biological sciences | 53 (14.4) |
| Applied medical sciences | 71 (19.2) |
| Dentistry | 37 (10.0) |
| Years of experience | |
| >15 years | 124 (33.6) |
| 11–15 years | 54 (14.6) |
| 6–10 years | 73 (19.8) |
| 1–5 years | 101 (27.4) |
| Less than 1 year | 17 (4.6) |
| Number of published studies | |
| >50 | 28 (7.6) |
| 31–50 | 37 (10.0) |
| 21–30 | 38 (10.3) |
| 11–20 | 57 (15.4) |
| 6–10 | 47 (12.7) |
| 1–5 | 100 (27.1) |
| None | 62 (16.8) |

^a Other fields include institutions or companies in industry or private research centers.

Note: Age was missing for two participants; age percentages are based on 367 respondents.

3.2. Knowledge of AI and ChatGPT

According to Table 2, most participants reported familiarity with AI and ML: 98.9 % had heard about AI, 84.6 % had heard about ML, and 88.3 % had heard about ChatGPT. The most commonly used resources for learning about AI and its applications were internet pages (61.5 %) and social media (44.2 %). A majority of participants (56.4 %) had used ChatGPT. Regarding research use, 27.6 % of all participants intended to use it in their research, and 17.3 % had already started using it.

Table 2.

Familiarity and usage of AI and ChatGPT (N = 369).

| Question and response | N (%) |
|---|---|
| Have you ever heard about AI? | |
| Yes, and I consider myself an expert in this field | 13 (3.5) |
| Yes, and I can explain to you what AI is | 113 (30.6) |
| Yes, I know a little information about it | 221 (59.9) |
| Yes, but I don't know what it is exactly | 18 (4.9) |
| No, I haven't heard about it | 4 (1.1) |
| Have you ever heard about ML? | |
| Yes, and I consider myself an expert in this field | 9 (2.4) |
| Yes, and I can explain to you what ML is | 74 (20.1) |
| Yes, I know a little information about it | 159 (43.1) |
| Yes, but I don't know what it is exactly | 70 (19.0) |
| No, I haven't heard about it | 57 (15.4) |
| What resources did you use to learn about AI and its applications? (more than one response allowed) | |
| Scientific papers | 147 (39.8) |
| Social media | 163 (44.2) |
| Friends and colleagues | 129 (34.9) |
| Internet pages | 227 (61.5) |
| I did not try to learn about AI and its applications | 27 (7.3) |
| Have you heard about ChatGPT? | |
| Yes | 326 (88.3) |
| No | 35 (9.5) |
| Maybe | 8 (2.2) |
| Have you used ChatGPT? | |
| Yes | 208 (56.4) |
| No | 153 (41.5) |
| I tried but couldn't access it | 8 (2.2) |
| Do you think you will use ChatGPT in your research? | |
| Yes, I will use it | 102 (27.6) |
| I've already started using it | 64 (17.3) |
| I won't use it now, but I may use it in the future | 116 (31.4) |
| No | 87 (23.6) |

3.3. Attitudes towards ChatGPT technology

The attitudes and impressions regarding ChatGPT in research, shown in Table 3, reveal a spectrum of viewpoints among researchers. Most researchers agreed that they rely heavily on technology in their research (75.6 %) and were confident in their ability to learn technology skills easily (87.5 %). Perceptions of ChatGPT's capabilities were mixed: 72.4 % agreed that it facilitates access to information, but only 27.1 % agreed that it can answer complex questions, and 55.8 % agreed that it cannot solve problems requiring high mental abilities. Researchers also identified potential ethical concerns: 76.2 % agreed that using ChatGPT may raise ethical issues in research, 53.4 % that it may contribute to increased plagiarism, and 47.2 % that it may exhibit biases. Finally, few participants agreed that ChatGPT provides accurate information in their research field (14.1 %), whereas 37.7 % agreed that using it reduces research reliability and 42.8 % disagreed that it increases scientific integrity.

Table 3.

Attitudes and impressions regarding ChatGPT in research (N = 369).

| Statement | Agree N (%) | Neutral N (%) | Disagree N (%) |
|---|---|---|---|
| I rely heavily on technology in my research. | 279 (75.6) | 61 (16.5) | 29 (7.9) |
| I possess advanced technological skills. | 227 (61.5) | 126 (34.1) | 16 (4.3) |
| I can learn technology skills easily. | 323 (87.5) | 43 (11.7) | 3 (0.8) |
| I do not prefer using new technological tools in my research, as the existing tools are sufficient. | 55 (14.9) | 84 (22.8) | 230 (62.3) |
| ChatGPT can answer complex questions. | 100 (27.1) | 186 (50.4) | 83 (22.5) |
| ChatGPT cannot solve problems requiring high mental abilities. | 206 (55.8) | 137 (37.1) | 26 (7.0) |
| Information from ChatGPT can be relied upon without review. | 22 (6.0) | 91 (24.7) | 256 (69.4) |
| ChatGPT provides accurate information in the research field. | 52 (14.1) | 187 (50.7) | 130 (35.2) |
| ChatGPT answers can be directly copied into research papers. | 33 (8.9) | 75 (20.3) | 261 (70.7) |
| The solutions provided by ChatGPT are effective. | 67 (18.2) | 198 (53.7) | 104 (28.2) |
| Using ChatGPT reduces research reliability. | 139 (37.7) | 155 (42.0) | 75 (20.3) |
| ChatGPT may contribute to increased plagiarism. | 197 (53.4) | 132 (35.8) | 40 (10.8) |
| Using ChatGPT increases scientific integrity. | 44 (11.9) | 167 (45.3) | 158 (42.8) |
| Researchers are responsible for ChatGPT errors. | 304 (82.4) | 52 (14.1) | 13 (3.5) |
| ChatGPT may exhibit biases. | 174 (47.2) | 149 (40.4) | 46 (12.5) |
| Using ChatGPT may raise ethical issues in research. | 281 (76.2) | 73 (19.8) | 15 (4.1) |
| ChatGPT requires independent testing for scientific use. | 252 (68.3) | 100 (27.1) | 17 (4.6) |
| ChatGPT may replace researchers in some disciplines. | 124 (33.6) | 114 (30.9) | 131 (35.5) |
| Researchers need formal training to use ChatGPT. | 260 (70.5) | 92 (24.9) | 17 (4.6) |
| Using ChatGPT positively impacts researchers' careers. | 188 (50.9) | 144 (39.0) | 37 (10.0) |
| Research contributed by ChatGPT can be confidently cited. | 63 (17.1) | 130 (35.2) | 176 (47.7) |
| Research by ChatGPT is easily accepted in scientific journals. | 55 (14.9) | 196 (53.1) | 118 (32.0) |
| Using ChatGPT increases the number of published papers. | 222 (60.2) | 121 (32.8) | 26 (7.0) |
| Using ChatGPT facilitates access to information. | 267 (72.4) | 91 (24.7) | 11 (3.0) |
| Using ChatGPT in research saves time and effort. | 265 (71.8) | 93 (25.2) | 11 (3.0) |
| Using ChatGPT reduces review and publication costs. | 191 (51.8) | 141 (38.2) | 37 (10.0) |
| Using ChatGPT reduces overall research costs. | 213 (57.7) | 129 (35.0) | 27 (7.3) |

3.4. Perceptions of AI and ChatGPT

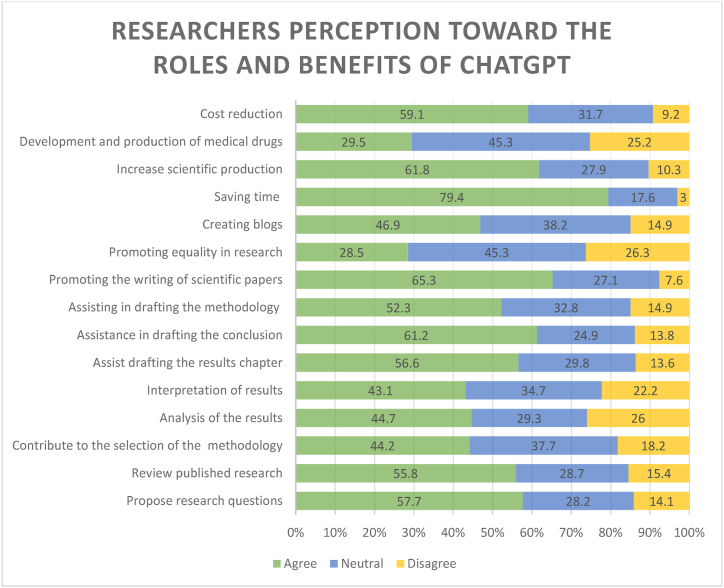

Fig. 1 presents the distribution of responses among researchers regarding various tasks that ChatGPT could potentially assist with. Regarding the potential research roles of ChatGPT, the majority of participants agreed that it could propose research questions, review published research, and assist in drafting conclusions, results, and methodology (52–61 %). Participants also recognized several potential benefits of using ChatGPT in research. The most agreed-upon benefits included saving time, promoting the writing of scientific papers, increasing scientific production, and reducing costs (59–79 %).

Fig. 1.

Researchers' perceptions of ChatGPT contributions to research (N = 369).

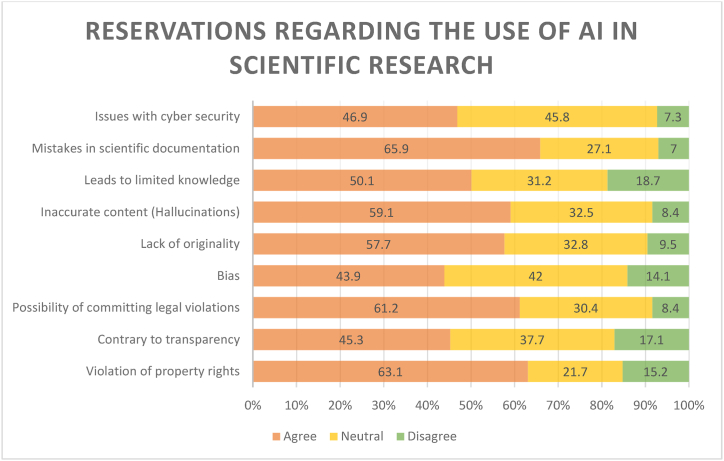

Fig. 2 presents researchers' concerns regarding potential risks and drawbacks associated with ChatGPT use. Most researchers expressed apprehension that ChatGPT could lead to mistakes in scientific documentation, violations of property rights, legal violations, and the adoption of inaccurate content. Fewer than half of the respondents raised concerns about cybersecurity and bias.

Fig. 2.

Researchers' concerns regarding potential risks of ChatGPT (N = 369).

3.5. Support for ethical regulations and training

Table 4 summarizes researchers' views on the ethical considerations of integrating AI into scientific research. Most participants supported regulating the use of AI in research (77–91 %): 91.3 % endorsed an ethical code governing AI use, and 77.0 % approved of journals and publishers requiring authors to disclose AI involvement. Nearly half (47.7 %) agreed that AI should be credited as an author if it contributed to preparing a paper or book. In addition, 64.5 % reported a need for training on the ethical use of AI in research.

Table 4.

Researchers' attitudes toward the ethical considerations of AI integration in scientific research (N = 369).

| Question and response | N (%) |
|---|---|
| Do you endorse the implementation of an ethical code to govern the moral utilization of AI in scientific research? | |
| Yes | 337 (91.3) |
| No | 32 (8.7) |
| Do you approve of publishing houses or journals requesting authors to disclose the involvement of AI in drafting and preparing different sections of the paper/book? | |
| Yes | 284 (77.0) |
| No | 29 (7.9) |
| Maybe | 56 (15.2) |
| Do you agree that AI should be credited as an author if it played a role in preparing the paper/book? | |
| Yes | 176 (47.7) |
| No | 127 (34.4) |
| Maybe | 66 (17.9) |
| Do you feel the need for training regarding the ethical utilization of AI in scientific research? | |
| Yes | 238 (64.5) |
| No | 47 (12.7) |
| Maybe | 84 (22.8) |

3.6. Factors associated with researchers’ knowledge and attitude

Table 5 compares researchers’ knowledge and attitude scores regarding ChatGPT use in research across demographic groups. Knowledge scores differed significantly by gender (p < 0.001), working country (p < 0.05), and employment field (p < 0.05). Specifically, female researchers had lower knowledge scores than male researchers, and participants working in other countries had higher knowledge scores than those working in Jordan. Researchers from the academic sector had higher knowledge scores than those from the government sector and other fields.
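As an illustration of the independent t-test named in the Methods, the sketch below recomputes the gender comparison of knowledge scores from the rounded summary statistics in Table 5 (n = 241 and 128; means 60.9 and 53.3; SDs 20.0 and 18.1), using Student's pooled-variance form. Two assumptions apply: SPSS may have reported its Welch (unequal-variance) variant instead, and the p-value here comes from a normal approximation to the t distribution, which is close only because df is large. `pooled_t_test` is a hypothetical helper.

```python
import math
from statistics import NormalDist


def pooled_t_test(n1: int, mean1: float, sd1: float,
                  n2: int, mean2: float, sd2: float) -> tuple[float, int, float]:
    """Student's independent-samples t-test (equal variances) from summary statistics.

    Returns (t, df, p), where p is a two-sided p-value from a normal approximation
    to the t distribution; it is close to the exact value only when df is large.
    """
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1**2 + (n2 - 1) * sd2**2) / df
    se = math.sqrt(pooled_var * (1 / n1 + 1 / n2))  # standard error of the mean difference
    t = (mean1 - mean2) / se
    p = 2 * (1 - NormalDist().cdf(abs(t)))
    return t, df, p


# Knowledge scores by gender (Table 5): male vs. female
t, df, p = pooled_t_test(241, 60.9, 20.0, 128, 53.3, 18.1)
print(f"t({df}) = {t:.2f}, approximate p = {p:.4f}")
```

With these rounded inputs the sketch gives t(367) of about 3.59 and p < 0.001, consistent with the significance level reported in Table 5.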

Table 5.

Parameters affecting researchers’ scores (N = 369).

| Variable | Knowledge, mean (%) ± SD | Knowledge p-value^a | Attitude, mean (%) ± SD | Attitude p-value^a |
|---|---|---|---|---|
| Gender | | | | |
| Male | 60.9 ± 20.0 | <0.001 | 68.0 ± 8.2 | 0.723 |
| Female | 53.3 ± 18.1 | | 68.3 ± 7.9 | |
| Age | | | | |
| 22–35 | 58.3 ± 19.6 | 0.427 | 69.3 ± 8.5 | 0.131 |
| 36–45 | 59.7 ± 20.7 | | 67.2 ± 8.4 | |
| 46–55 | 58.7 ± 17.9 | | 67.7 ± 6.8 | |
| ≥56 | 53.3 ± 19.4 | | 66.9 ± 6.4 | |
| Working country | | | | |
| Jordan | 57.3 ± 19.7 | 0.022 | 68.2 ± 8.0 | 0.877 |
| Other countries | 63.5 ± 18.6 | | 68.0 ± 8.2 | |
| Highest qualification | | | | |
| Bachelor | 57.7 ± 21.4 | 0.317 | 68.7 ± 6.7 | 0.035 |
| Master | 55.8 ± 21.0 | | 70.0 ± 8.6 | |
| PhD | 59.5 ± 18.5 | | 67.3 ± 8.1 | |
| Employment field | | | | |
| Academic | 60.0 ± 23.1 | 0.011 | 67.6 ± 8.2 | 0.028 |
| Government sector | 51.0 ± 21.1 | | 71.6 ± 6.9 | |
| Other | 54.4 ± 21.4 | | 68.6 ± 7.9 | |
| Specialty | | | | |
| Nursing | 56.7 ± 18.7 | 0.125 | 67.3 ± 8.1 | 0.366 |
| Pharmacy | 59.6 ± 18.8 | | 67.2 ± 8.0 | |
| Medicine | 54.2 ± 20.8 | | 68.5 ± 7.0 | |
| Biological sciences | 57.7 ± 20.2 | | 68.6 ± 9.2 | |
| Applied medical sciences | 63.3 ± 17.8 | | 69.7 ± 8.6 | |
| Dentistry | 56.3 ± 22.4 | | 67.3 ± 7.2 | |
| Years of experience | | | | |
| >15 years | 56.7 ± 20.9 | 0.062 | 67.6 ± 6.6 | 0.918 |
| 11–15 years | 61.6 ± 22.4 | | 68.4 ± 9.9 | |
| 6–10 years | 55.7 ± 21.8 | | 68.0 ± 8.6 | |
| 1–5 years | 61.6 ± 21.8 | | 68.6 ± 8.5 | |
| Less than 1 year | 51.0 ± 21.8 | | 68.3 ± 6.9 | |
| Number of published studies | | | | |
| >50 | 59.8 ± 22.5 | 0.064 | 66.2 ± 6.7 | 0.282 |
| 31–50 | 56.8 ± 14.5 | | 66.6 ± 7.6 | |
| 21–30 | 65.6 ± 16.0 | | 67.5 ± 7.1 | |
| 11–20 | 61.3 ± 19.9 | | 67.6 ± 8.8 | |
| 6–10 | 59.8 ± 20.7 | | 68.0 ± 8.5 | |
| 1–5 | 56.2 ± 21.0 | | 68.7 ± 9.1 | |
| None | 53.6 ± 18.6 | | 70.0 ± 6.3 | |

^a Calculated by independent t-test (two groups) or one-way ANOVA (more than two groups); p < 0.05 indicates statistical significance.

Attitude scores differed significantly by highest qualification and employment field (p < 0.05). Participants with a Master's degree had the highest attitude scores, and researchers from the government sector had higher attitude scores than those from academic institutions or other fields. No statistically significant differences in either knowledge or attitude scores were found by age, specialty, years of experience, or number of published studies.
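Similarly, the one-way ANOVA for attitude scores by employment field can be approximated from the summary statistics in Table 5. The sketch computes F from group sizes, means, and SDs and, because this comparison has three groups (2 numerator degrees of freedom), uses the exact closed form P(F > f) = (1 + 2f/v)^(-v/2) for an F(2, v) distribution, so no statistics library is needed. The function names are hypothetical, and because the published means and SDs are rounded, the result will not match the SPSS output exactly.

```python
def one_way_anova(groups: list[tuple[int, float, float]]) -> tuple[float, int, int]:
    """One-way ANOVA F statistic from (n, mean, sd) summaries of each group."""
    n_total = sum(n for n, _, _ in groups)
    k = len(groups)
    grand_mean = sum(n * m for n, m, _ in groups) / n_total
    ss_between = sum(n * (m - grand_mean) ** 2 for n, m, _ in groups)
    # (n - 1) * sd**2 recovers each group's within-group sum of squares
    ss_within = sum((n - 1) * sd**2 for n, _, sd in groups)
    df_between, df_within = k - 1, n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


def f_sf_df1_equals_2(f_stat: float, df_within: int) -> float:
    """Exact upper-tail probability P(F > f_stat) for an F(2, df_within) distribution."""
    return (1 + 2 * f_stat / df_within) ** (-df_within / 2)


# Attitude scores by employment field (Table 5): academic, government sector, other
groups = [(273, 67.6, 8.2), (32, 71.6, 6.9), (64, 68.6, 7.9)]
f_stat, df_b, df_w = one_way_anova(groups)
if df_b != 2:
    raise ValueError("the closed-form p-value is only valid for three groups")
p = f_sf_df1_equals_2(f_stat, df_w)
print(f"F({df_b}, {df_w}) = {f_stat:.2f}, p = {p:.3f}")
```

With the rounded inputs this gives F(2, 366) of about 3.68 and p of about 0.026, close to the reported p = 0.028.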

Table 6 shows the correlations of the knowledge and attitude scores with the total score. Both scores correlated positively and significantly with the total score (knowledge: r = 0.927; attitude: r = 0.412; p < 0.001). Because the total score is composed of these two subscales, these part-whole correlations mainly indicate how strongly each subscale contributes to the total; they do not directly measure the relationship between knowledge and attitude.

Table 6.

Correlation analysis of researchers’ scores (N = 369).

| Score | Mean (%) ± SD | Pearson's r with total score | p-value^a |
|---|---|---|---|
| Knowledge | 58.3 ± 19.6 | 0.927 | <0.001 |
| Attitude | 68.1 ± 8.1 | 0.412 | <0.001 |
| Total (knowledge and attitude combined) | 63.2 ± 10.8 | 1 | – |

^a Calculated by Pearson's correlation test; p < 0.05 indicates statistical significance.
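Table 6 does not report the knowledge-attitude correlation directly. If the total score is the unweighted mean of the two subscale scores (an assumption, though consistent with 63.2 = (58.3 + 68.1)/2), then Var(T) = (Var K + Var A + 2 Cov(K, A))/4, so the three reported SDs determine the knowledge-attitude covariance. The sketch below works through this arithmetic; `implied_correlations` is a hypothetical helper, and the inputs are the rounded SDs from Table 6, not raw data.

```python
def implied_correlations(sd_k: float, sd_a: float, sd_t: float) -> tuple[float, float, float]:
    """Return (r_KA, r_KT, r_AT), assuming total T = (K + A) / 2.

    Var(T) = (Var K + Var A + 2 Cov(K, A)) / 4, so Cov(K, A) follows from the three SDs.
    """
    cov_ka = (4 * sd_t**2 - sd_k**2 - sd_a**2) / 2
    r_ka = cov_ka / (sd_k * sd_a)
    # Cov(K, T) = (Var K + Cov(K, A)) / 2, and likewise Cov(A, T) = (Var A + Cov(K, A)) / 2
    r_kt = (sd_k**2 + cov_ka) / (2 * sd_k * sd_t)
    r_at = (sd_a**2 + cov_ka) / (2 * sd_a * sd_t)
    return r_ka, r_kt, r_at


r_ka, r_kt, r_at = implied_correlations(sd_k=19.6, sd_a=8.1, sd_t=10.8)
print(f"r(K,T) = {r_kt:.3f}, r(A,T) = {r_at:.3f}, implied r(K,A) = {r_ka:.3f}")
```

With the rounded SDs, the sketch gives r(K,T) of about 0.927 and r(A,T) of about 0.423, close to the reported 0.927 and 0.412, which is consistent with the equal-weight assumption. The same arithmetic implies a knowledge-attitude correlation near zero (about 0.05); because the SDs are rounded and the composition of the total score is inferred, this would need to be confirmed against the raw data.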

4. Discussion

The introduction of ChatGPT has gained interest across various industries, including academia and scientific research, as it holds promise for assisting in tasks such as manuscript editing. However, concerns arise regarding its accuracy, particularly in medical research, where outdated or incorrect information could lead to significant consequences [25]. The findings of this study contribute to the theoretical understanding of how familiarity and attitudes towards AI technologies like ChatGPT are developing among MENA researchers and provide practical insights for enhancing research productivity and ethical standards.

The high level of familiarity with AI and ML concepts and awareness of ChatGPT among the surveyed researchers is encouraging, with the majority of participants indicating that they had heard of them. This suggests growing awareness of advanced technologies within the MENA research community, which is relevant to understanding technology diffusion and adoption in academic settings. The ChatGPT chatbot has gained wide recognition, reflecting its growing prominence as an AI tool for generating human-like text [26]. Moreover, the high percentage of participants who had used ChatGPT or intended to use it in their research highlights the perceived potential of this technology to enhance research productivity and efficiency. In studies from Saudi Arabia, most surveyed researchers were familiar with AI tools and ChatGPT [27,28]. In a study from Jordan, most participants expressed interest in using AI for healthcare and research purposes [29]. In a survey of 420 researchers from the United States, 40 % had tried ChatGPT, with a range of perspectives reported on its uses in education, research, and healthcare [30]. In a global study of 456 urologists, about half reported using ChatGPT in their research [31]. Thus, the current findings align with the global trend of increasing interest in AI-driven tools for various applications, including research automation, content writing, and knowledge extraction [[32], [33], [34], [35], [36]].

While researchers generally exhibited positive attitudes towards new AI technology, including ChatGPT, concerns about reliability, accuracy, biases, and ethical implications were prevalent. These mixed attitudes reflect differing perspectives among researchers regarding AI use in research and speak to discussions on technological ambivalence and the complexity of AI adoption. This finding agrees with studies conducted in several MENA countries, including Jordan, Saudi Arabia, Egypt, and Lebanon [21,23,37,38]. Similar concerns were highlighted in studies from developed countries [[39], [40], [41], [42]]. The perceived potential for ChatGPT to introduce plagiarism and documentation errors, and even to replace researchers, underscores the need for robust quality control mechanisms and ethical guidelines to mitigate these risks. This practical insight can guide the development of policies and training programs that address these concerns, and it is consistent with previous recommendations for proactive action on researchers' perceptions and informed decision-making regarding AI integration in research practices [[43], [44], [45]].

Participants identified various potential benefits of using ChatGPT in research, including saving time, promoting scientific writing, and increasing scientific production. These findings align with previous research from the MENA region and worldwide highlighting the potential of AI to simplify research processes and facilitate knowledge generation [11,46]. For example, in a study from Jordan, 38 % of participants had used AI in writing research papers [47]. In a study from Saudi Arabia, teachers and students were satisfied with using AI for translation [48]. Graduates and students from medical schools in several countries, including Germany, Austria, and Switzerland, had positive perceptions of AI in terms of enhancing healthcare, decreasing medical errors, and improving the precision of physician diagnoses [49,50]. On the other hand, participants in the current study also expressed concerns about the risks associated with ChatGPT, including scientific inaccuracies, potential biases, and ethical dilemmas such as property rights violations, highlighting the importance of balancing innovation with responsible use. These concerns agree with studies conducted in Austria, the United States, Egypt, and Portugal [21,[50], [51], [52]]. Both the benefits and the concerns reflect broader debates surrounding the responsible and ethical use of AI, including issues related to transparency, accountability, and algorithmic bias [53,54]. Addressing these issues requires transparent communication, rigorous validation processes, and ongoing monitoring of AI systems’ performance and impact [44]. These recommendations provide a practical framework for the responsible integration of AI technologies in research.

Most surveyed MENA researchers supported implementing ethical regulations to govern AI use in research and advocated disclosing AI contributions in publications, reflecting a commitment to upholding ethical standards and transparency in scientific work. This is consistent with previous studies from the MENA region [[55], [56], [57], [58], [59]] and reflects a recognition of the need for ethical guidelines to ensure the responsible and transparent use of AI in research and education [[60], [61], [62]]. Furthermore, the desire for formal training on the ethical use of AI suggests a proactive approach to equipping researchers with the knowledge and skills necessary to navigate the complexities of AI-driven research and to address ethical challenges associated with ChatGPT and similar AI tools [26,59].

Notably, several demographic factors were associated with researchers' knowledge and attitudes toward ChatGPT. Male researchers and those working outside Jordan had significantly higher knowledge scores, highlighting the role of individual and contextual factors in shaping perceptions of AI technologies. Employment field was associated with both scores: researchers in the academic sector had the highest knowledge scores, whereas those in the government sector had the highest attitude scores. Both knowledge and attitude scores correlated positively with the total score, with a stronger correlation for knowledge (r = 0.927) than for attitude (r = 0.412). Because the total score combines the two subscales, these correlations do not by themselves show that more knowledgeable researchers hold more positive attitudes; whether enhancing knowledge about ChatGPT among MENA researchers increases acceptance of its use in research remains to be tested. Previous research reported a widespread neutral-to-negative attitude towards ChatGPT within the Arabic-speaking community alongside strong interest in the tool among MENA users [63]; our findings align with this strong interest. These contrasting findings underscore the need for a comprehensive understanding of the enthusiasm and apprehension surrounding ChatGPT adoption within MENA cultural contexts.

The findings of this study have several implications for research and practice in the MENA region. Theoretical contributions include insights into technology adoption and ethical considerations, while practical implications involve recommendations for ethical AI use, training, and policy development. First, interdisciplinary collaboration between AI experts, ethicists, and domain-specific researchers is needed to develop robust guidelines for the ethical use of AI in research [[64], [65], [66], [67]]. Second, educational institutions and research organizations in the MENA region should integrate AI literacy and ethics training, including training on ChatGPT, into research programs to empower researchers to use AI-driven technologies responsibly [68,69]. Finally, governmental and institutional funding bodies in the region should support initiatives that promote responsible AI development and foster a culture of ethical research conduct within the MENA academic community [57].

4.1. Limitations and future research directions

We acknowledge several limitations of this study. Its cross-sectional design limits the ability to establish causal relationships between variables, and the use of self-reported data may introduce response bias. Moreover, the study focused specifically on researchers in the MENA region, with most participants from Jordan, which limits the generalizability of the findings to other regions. Future research could employ more rigorous and diverse sampling methods and objective measures to overcome these limitations. Mixed-methods studies could also explore the perspectives of researchers from diverse cultural and linguistic backgrounds, and longitudinal designs could track how perceptions of ChatGPT evolve over time, providing valuable insights into its long-term impact on research practices. While the current study provides a comprehensive overview of the knowledge, attitudes, and perceptions of MENA researchers toward ChatGPT, several other avenues for future research (e.g., its influence on researcher productivity) could build on our findings to deepen understanding of AI use in research.

In conclusion, this study contributes to the growing body of literature on integrating AI in research. While participants recognized the potential benefits of ChatGPT for enhancing research productivity, they also raised important concerns about its reliability and ethical implications. Addressing both benefits and concerns is crucial for ensuring the responsible and ethical integration of ChatGPT and similar AI tools into the research process, ultimately advancing scientific knowledge and innovation in the MENA region.

5. Conclusion

This study highlights the moderate awareness and cautious stance of MENA researchers regarding ChatGPT, reflecting a blend of interest in its potential to enhance research productivity and significant concerns about accuracy, bias, and ethical implications. While the benefits recognized align with global trends, the unique challenges faced by the MENA region emphasize the need for interdisciplinary collaboration, AI literacy, and tailored ethical guidelines to ensure responsible AI integration. By addressing these concerns and learning from other regions’ experiences, the MENA research community can foster responsible ChatGPT integration into research practices, ultimately advancing scientific innovation while ensuring ethical practices.

CRediT authorship contribution statement

Sana'a A. Jaber: Writing – review & editing, Writing – original draft, Visualization, Validation, Software, Methodology, Investigation, Formal analysis, Data curation, Conceptualization. Hisham E. Hasan: Writing – review & editing, Writing – original draft, Visualization, Validation, Supervision, Software, Methodology, Investigation, Formal analysis, Data curation, Conceptualization. Karem H. Alzoubi: Writing – review & editing, Writing – original draft, Software, Resources, Project administration, Investigation, Funding acquisition, Data curation, Conceptualization. Omar F. Khabour: Writing – review & editing, Writing – original draft, Visualization, Supervision, Project administration, Methodology, Investigation, Funding acquisition, Data curation, Conceptualization.

Ethics and consent declarations

This study was reviewed and approved by the Institutional Review Board of Jordan University of Science and Technology (JUST-IRB) under approval number 30/160/2023, dated May 17, 2023. All participants provided electronic informed consent to participate in the study and for their data to be published.

Data availability statement

Data will be made available on request via e-mail from the corresponding author (Karem Alzoubi: khalzoubi@just.edu.jo).

Funding

This work was supported by the National Institutes of Health, Bethesda, MD, USA [grant number R25TW010026] and the Deanship of Research at Jordan University of Science and Technology, Irbid, Jordan [grant number 291-2023].

Declaration of competing interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Acknowledgment

The authors would like to thank the Deanship of Research at Jordan University of Science and Technology for its support.

Footnotes

Supplementary data to this article can be found online at https://doi.org/10.1016/j.heliyon.2024.e41331.

Appendix A. Supplementary data


References

- 1. Xu Y., Liu X., Cao X., Huang C., Liu E., Qian S., Liu X., Wu Y., Dong F., Qiu C.-W., Qiu J., Hua K., Su W., Wu J., Xu H., Han Y., Fu C., Yin Z., Liu M., Roepman R., Dietmann S., Virta M., Kengara F., Zhang Z., Zhang L., Zhao T., Dai J., Yang J., Lan L., Luo M., Liu Z., An T., Zhang B., He X., Cong S., Liu X., Zhang W., Lewis J.P., Tiedje J.M., Wang Q., An Z., Wang F., Zhang L., Huang T., Lu C., Cai Z., Wang F., Zhang J. Artificial intelligence: a powerful paradigm for scientific research. Innovation. 2021;2 doi: 10.1016/j.xinn.2021.100179.

- 2. Taye M.M. Understanding of machine learning with deep learning: architectures, workflow, applications and future directions. Computers. 2023;12:91. doi: 10.3390/computers12050091.

- 3. Menon D., Shilpa K. “Chatting with ChatGPT”: analyzing the factors influencing users' intention to Use the Open AI's ChatGPT using the UTAUT model. Heliyon. 2023;9 doi: 10.1016/j.heliyon.2023.e20962.

- 4. Memarian B., Doleck T. ChatGPT in education: methods, potentials, and limitations. Comput. Hum. Behav.: Artificial Humans. 2023;1 doi: 10.1016/j.chbah.2023.100022.

- 5. Ray P.P. ChatGPT: a comprehensive review on background, applications, key challenges, bias, ethics, limitations and future scope. Internet of Things and Cyber-Physical Systems. 2023;3:121–154. doi: 10.1016/j.iotcps.2023.04.003.

- 6. Niloy A.C., Bari M.A., Sultana J., Chowdhury R., Raisa F.M., Islam A., Mahmud S., Jahan I., Sarkar M., Akter S., Nishat N., Afroz M., Sen A., Islam T., Tareq M.H., Hossen M.A. Why do students use ChatGPT? Answering through a triangulation approach. Comput. Educ.: Artif. Intell. 2024;6 doi: 10.1016/j.caeai.2024.100208.

- 7. Dahmen J., Kayaalp M.E., Ollivier M., Pareek A., Hirschmann M.T., Karlsson J., Winkler P.W. Artificial intelligence bot ChatGPT in medical research: the potential game changer as a double-edged sword. Knee Surg. Sports Traumatol. Arthrosc. 2023;31:1187–1189. doi: 10.1007/s00167-023-07355-6.

- 8. Malik A.R., Pratiwi Y., Andajani K., Numertayasa I.W., Suharti S., Darwis A., Marzuki. Exploring artificial intelligence in academic essay: higher education student's perspective. International Journal of Educational Research Open. 2023;5 doi: 10.1016/j.ijedro.2023.100296.

- 9. Michel-Villarreal R., Vilalta-Perdomo E., Salinas-Navarro D.E., Thierry-Aguilera R., Gerardou F.S. Challenges and opportunities of generative AI for higher education as explained by ChatGPT. Educ. Sci. 2023;13:856. doi: 10.3390/educsci13090856.

- 10. Doyal A.S., Sender D., Nanda M., Serrano R.A. Chat GPT and artificial intelligence in medical writing: concerns and ethical considerations. Cureus. 2023 doi: 10.7759/cureus.43292.

- 11. Kooli C. Chatbots in education and research: a critical examination of ethical implications and solutions. Sustainability. 2023;15:5614. doi: 10.3390/su15075614.

- 12. Xu H., Shuttleworth K.M.J. Medical artificial intelligence and the black box problem: a view based on the ethical principle of “do no harm”. Intelligent Medicine. 2024;4:52–57. doi: 10.1016/j.imed.2023.08.001.

- 13. Currie-Alder B., Arvanitis R., Hanafi S. Research in Arabic-speaking countries: funding competitions, international collaboration, and career incentives. Sci Public Policy. 2018;45:74–82. doi: 10.1093/scipol/scx048.

- 14. Rizk N. Artificial intelligence and inequality in the Middle East. In: Dubber M.D., Pasquale F., Das S., editors. The Oxford Handbook of Ethics of AI. Oxford University Press; 2020. pp. 624–649.

- 15. Farrokhnia M., Banihashem S.K., Noroozi O., Wals A. A SWOT analysis of ChatGPT: implications for educational practice and research. Innovations in Education and Teaching International. 2023:1–15.

- 16. McLennan S., Meyer A., Schreyer K., Buyx A. German medical students' views regarding artificial intelligence in medicine: a cross-sectional survey. PLOS Digital Health. 2022;1 doi: 10.1371/journal.pdig.0000114.

- 17. Fritsch S.J., Blankenheim A., Wahl A., Hetfeld P., Maassen O., Deffge S., Kunze J., Rossaint R., Riedel M., Marx G., Bickenbach J. Attitudes and perception of artificial intelligence in healthcare: a cross-sectional survey among patients. Digit Health. 2022;8 doi: 10.1177/20552076221116772.

- 18. Coakley S., Young R., Moore N., England A., O'Mahony A., O'Connor O.J., Maher M., McEntee M.F. Radiographers' knowledge, attitudes and expectations of artificial intelligence in medical imaging. Radiography. 2022;28:943–948. doi: 10.1016/j.radi.2022.06.020.

- 19. Kansal R., Bawa A., Bansal A., Trehan S., Goyal K., Goyal N., Malhotra K. Differences in knowledge and perspectives on the usage of artificial intelligence among doctors and medical students of a developing country: a cross-sectional study. Cureus. 2022 doi: 10.7759/cureus.21434.

- 20. Karolinoerita V., Cahyana D., Hadiarto A., Hati D.P., Pratamaningsih M.M., Mulyani A., Hikmat M., Ramadhani F., Gani R.A., Heryanto R.B., Gofar N., Suriadikusumah A., Irawan I., Sukarman S., Suratman S. Application of ChatGPT in soil science research and the perceptions of soil scientists in Indonesia. SSRN Electron. J. 2023 doi: 10.2139/ssrn.4401008.

- 21. Abdelhafiz A.S., Ali A., Maaly A.M., Ziady H.H., Sultan E.A., Mahgoub M.A. Knowledge, perceptions and attitude of researchers towards using ChatGPT in research. J. Med. Syst. 2024;48:26. doi: 10.1007/s10916-024-02044-4.

- 22. Hasanein A.M., Sobaih A.E.E. Drivers and consequences of ChatGPT use in higher education: key stakeholder perspectives. Eur J Investig Health Psychol Educ. 2023;13:2599–2614. doi: 10.3390/ejihpe13110181.

- 23. Alsadhan A., Al-Anezi F., Almohanna A., Alnaim N., Alzahrani H., Shinawi R., AboAlsamh H., Bakhshwain A., Alenazy M., Arif W., Alyousef S., Alhamidi S., Alghamdi A., AlShrayfi N., Bin Rubaian N., Alanzi T., AlSahli A., Alturki R., Herzallah N. The opportunities and challenges of adopting ChatGPT in medical research. Front. Med. 2023;10 doi: 10.3389/fmed.2023.1259640.

- 24. von Elm E., Altman D.G., Egger M., Pocock S.J., Gøtzsche P.C., Vandenbroucke J.P. The strengthening the reporting of observational studies in epidemiology (STROBE) statement: guidelines for reporting observational studies. PLoS Med. 2007;4:e296. doi: 10.1371/journal.pmed.0040296.

- 25. Wen J., Wang W. The future of ChatGPT in academic research and publishing: a commentary for clinical and translational medicine. Clin. Transl. Med. 2023;13 doi: 10.1002/ctm2.1207.

- 26. Dwivedi Y.K., Kshetri N., Hughes L., Slade E.L., Jeyaraj A., Kar A.K., Baabdullah A.M., Koohang A., Raghavan V., Ahuja M., Albanna H., Albashrawi M.A., Al-Busaidi A.S., Balakrishnan J., Barlette Y., Basu S., Bose I., Brooks L., Buhalis D., Carter L., Chowdhury S., Crick T., Cunningham S.W., Davies G.H., Davison R.M., Dé R., Dennehy D., Duan Y., Dubey R., Dwivedi R., Edwards J.S., Flavián C., Gauld R., Grover V., Hu M.-C., Janssen M., Jones P., Junglas I., Khorana S., Kraus S., Larsen K.R., Latreille P., Laumer S., Malik F.T., Mardani A., Mariani M., Mithas S., Mogaji E., Nord J.H., O'Connor S., Okumus F., Pagani M., Pandey N., Papagiannidis S., Pappas I.O., Pathak N., Pries-Heje J., Raman R., Rana N.P., Rehm S.-V., Ribeiro-Navarrete S., Richter A., Rowe F., Sarker S., Stahl B.C., Tiwari M.K., van der Aalst W., Venkatesh V., Viglia G., Wade M., Walton P., Wirtz J., Wright R. Opinion Paper: “So what if ChatGPT wrote it?” Multidisciplinary perspectives on opportunities, challenges and implications of generative conversational AI for research, practice and policy. Int J Inf Manage. 2023;71 doi: 10.1016/j.ijinfomgt.2023.102642.

- 27. Syed W., Bashatah A., Alharbi K., Bakarman S.S., Asiri S., Alqahtani N. Awareness and perceptions of ChatGPT among academics and research professionals in Riyadh, Saudi Arabia: implications for responsible AI use. Med Sci Monit. 2024;30 doi: 10.12659/MSM.944993.

- 28. Almulla M.A. Investigating influencing factors of learning satisfaction in AI ChatGPT for research: university students perspective. Heliyon. 2024;10 doi: 10.1016/j.heliyon.2024.e32220.

- 29. Temsah M.-H., Aljamaan F., Malki K.H., Alhasan K., Altamimi I., Aljarbou R., Bazuhair F., Alsubaihin A., Abdulmajeed N., Alshahrani F.S., Temsah R., Alshahrani T., Al-Eyadhy L., Alkhateeb S.M., Saddik B., Halwani R., Jamal A., Al-Tawfiq J.A., Al-Eyadhy A. ChatGPT and the future of digital health: a study on healthcare workers' perceptions and expectations. Healthcare (Basel). 2023;11 doi: 10.3390/healthcare11131812.

- 30. Hosseini M., Gao C.A., Liebovitz D.M., Carvalho A.M., Ahmad F.S., Luo Y., MacDonald N., Holmes K.L., Kho A. An exploratory survey about using ChatGPT in education, healthcare, and research. PLoS One. 2023;18 doi: 10.1371/journal.pone.0292216.

- 31. Eppler M., Ganjavi C., Ramacciotti L.S., Piazza P., Rodler S., Checcucci E., Gomez Rivas J., Kowalewski K.F., Belenchón I.R., Puliatti S., Taratkin M., Veccia A., Baekelandt L., Teoh J.Y.-C., Somani B.K., Wroclawski M., Abreu A., Porpiglia F., Gill I.S., Murphy D.G., Canes D., Cacciamani G.E. Awareness and use of ChatGPT and large language models: a prospective cross-sectional global survey in urology. Eur. Urol. 2024;85:146–153. doi: 10.1016/j.eururo.2023.10.014.

- 32. Huang X., Zou D., Cheng G., Chen X., Xie H. Trends, research issues and applications of artificial intelligence in language education. Educ. Technol. Soc. 2023;26:112–131. https://www.jstor.org/stable/48707971

- 33. Marzuki, Widiati U., Rusdin D., Darwin, Indrawati I. The impact of AI writing tools on the content and organization of students' writing: EFL teachers' perspective. Cogent Education. 2023;10 doi: 10.1080/2331186X.2023.2236469.

- 34. Collins C., Dennehy D., Conboy K., Mikalef P. Artificial intelligence in information systems research: a systematic literature review and research agenda. Int J Inf Manage. 2021;60 doi: 10.1016/j.ijinfomgt.2021.102383.

- 35. Quan H., Li S., Zeng C., Wei H., Hu J. Big data and AI-driven product design: a survey. Appl. Sci. 2023;13:9433. doi: 10.3390/app13169433.

- 36. Vinuesa R., Azizpour H., Leite I., Balaam M., Dignum V., Domisch S., Felländer A., Langhans S.D., Tegmark M., Fuso Nerini F. The role of artificial intelligence in achieving the Sustainable Development Goals. Nat. Commun. 2020;11:233. doi: 10.1038/s41467-019-14108-y.

- 37. Abu Hammour K., Alhamad H., Al-Ashwal F.Y., Halboup A., Abu Farha R., Abu Hammour A. ChatGPT in pharmacy practice: a cross-sectional exploration of Jordanian pharmacists' perception, practice, and concerns. J Pharm Policy Pract. 2023;16:115. doi: 10.1186/s40545-023-00624-2.

- 38. Al-Dujaili Z., Omari S., Pillai J., Al Faraj A. Assessing the accuracy and consistency of ChatGPT in clinical pharmacy management: a preliminary analysis with clinical pharmacy experts worldwide. Res Social Adm Pharm. 2023;19:1590–1594. doi: 10.1016/j.sapharm.2023.08.012.

- 39. Wang C., Liu S., Yang H., Guo J., Wu Y., Liu J. Ethical considerations of using ChatGPT in health care. J. Med. Internet Res. 2023;25 doi: 10.2196/48009.

- 40. Høj S., Thomsen S.F., Ulrik C.S., Meteran H., Sigsgaard T., Meteran H. Evaluating the scientific reliability of ChatGPT as a source of information on asthma. J. Allergy Clin. Immunol.: Global. 2024;3 doi: 10.1016/j.jacig.2024.100330.

- 41. Gordon E.R., Trager M.H., Kontos D., Weng C., Geskin L.J., Dugdale L.S., Samie F.H. Ethical considerations for artificial intelligence in dermatology: a scoping review. Br. J. Dermatol. 2024;190:789–797. doi: 10.1093/bjd/ljae040.

- 42. Laohawetwanit T., Pinto D.G., Bychkov A. A survey analysis of the adoption of large language models among pathologists. Am. J. Clin. Pathol. 2024 doi: 10.1093/ajcp/aqae093.

- 43. Siala H., Wang Y. SHIFTing artificial intelligence to be responsible in healthcare: a systematic review. Soc. Sci. Med. 2022;296 doi: 10.1016/j.socscimed.2022.114782.

- 44. Aldoseri A., Al-Khalifa K.N., Hamouda A.M. Re-thinking data strategy and integration for artificial intelligence: concepts, opportunities, and challenges. Appl. Sci. 2023;13:7082. doi: 10.3390/app13127082.

- 45. Heaton D., Nichele E., Clos J., Fischer J.E. “ChatGPT says no”: agency, trust, and blame in Twitter discourses after the launch of ChatGPT. AI and Ethics. 2024.

- 46. Dwivedi Y.K., Sharma A., Rana N.P., Giannakis M., Goel P., Dutot V. Evolution of artificial intelligence research in Technological Forecasting and Social Change: research topics, trends, and future directions. Technol. Forecast. Soc. Change. 2023;192 doi: 10.1016/j.techfore.2023.122579.

- 47. Mosleh R., Jarrar Q., Jarrar Y., Tazkarji M., Hawash M. Medicine and pharmacy students' knowledge, attitudes, and practice regarding artificial intelligence programs: Jordan and West Bank of Palestine. Adv. Med. Educ. Pract. 2023;14:1391–1400. doi: 10.2147/AMEP.S433255.

- 48. Sahari Y., Al-Kadi A.M.T., Ali J.K.M. A cross sectional study of ChatGPT in translation: magnitude of use, attitudes, and uncertainties. J. Psycholinguist. Res. 2023;52:2937–2954. doi: 10.1007/s10936-023-10031-y.

- 49. Alkhaaldi S.M.I., Kassab C.H., Dimassi Z., Oyoun Alsoud L., Al Fahim M., Al Hageh C., Ibrahim H. Medical student experiences and perceptions of ChatGPT and artificial intelligence: cross-sectional study. JMIR Med Educ. 2023;9 doi: 10.2196/51302.

- 50. Weidener L., Fischer M. Artificial intelligence in medicine: cross-sectional study among medical students on application, education, and ethical aspects. JMIR Med Educ. 2024;10 doi: 10.2196/51247.

- 51. Choudhury A., Elkefi S., Tounsi A. Exploring factors influencing user perspective of ChatGPT as a technology that assists in healthcare decision making: a cross sectional survey study. PLoS One. 2024;19 doi: 10.1371/journal.pone.0296151.

- 52. Magalhães Araujo S., Cruz-Correia R. Incorporating ChatGPT in medical informatics education: mixed methods study on student perceptions and experiential integration proposals. JMIR Med Educ. 2024;10 doi: 10.2196/51151.

- 53. Jeyaraman M., Ramasubramanian S., Balaji S., Jeyaraman N., Nallakumarasamy A., Sharma S. ChatGPT in action: harnessing artificial intelligence potential and addressing ethical challenges in medicine, education, and scientific research. World J. Methodol. 2023;13:170–178. doi: 10.5662/wjm.v13.i4.170.

- 54. Hadi Mogavi R., Deng C., Juho Kim J., Zhou P., Kwon Y.D., Hosny Saleh Metwally A., Tlili A., Bassanelli S., Bucchiarone A., Gujar S., Nacke L.E., Hui P. ChatGPT in education: a blessing or a curse? A qualitative study exploring early adopters' utilization and perceptions. Comput. Hum. Behav.: Artificial Humans. 2024;2 doi: 10.1016/j.chbah.2023.100027.

- 55. Hasan H.E., Jaber D., Khabour O.F., Alzoubi K.H. Perspectives of pharmacy students on ethical issues related to artificial intelligence: a comprehensive survey study. Res Sq. 2024 doi: 10.21203/rs.3.rs-4302115/v1.

- 56. Alrefaei A.F., Hawsawi Y.M., Almaleki D., Alafif T., Alzahrani F.A., Bakhrebah M.A. Genetic data sharing and artificial intelligence in the era of personalized medicine based on a cross-sectional analysis of the Saudi human genome program. Sci. Rep. 2022;12:1405. doi: 10.1038/s41598-022-05296-7.

- 57. Arawi T., El Bachour J., El Khansa T. The fourth industrial revolution: its impact on artificial intelligence and medicine in developing countries. Asian Bioeth Rev. 2024;16:513–526. doi: 10.1007/s41649-024-00284-7.

- 58. Hammoudi Halat D., Shami R., Daud A., Sami W., Soltani A., Malki A. Artificial intelligence readiness, perceptions, and educational needs among dental students: a cross-sectional study. Clin Exp Dent Res. 2024;10:e925. doi: 10.1002/cre2.925.

- 59. Hasan H.E., Jaber D., Khabour O.F., Alzoubi K.H. Ethical considerations and concerns in the implementation of AI in pharmacy practice: a cross-sectional study. BMC Med. Ethics. 2024;25:55. doi: 10.1186/s12910-024-01062-8.

- 60. Díaz-Rodríguez N., Del Ser J., Coeckelbergh M., López de Prado M., Herrera-Viedma E., Herrera F. Connecting the dots in trustworthy Artificial Intelligence: from AI principles, ethics, and key requirements to responsible AI systems and regulation. Inf. Fusion. 2023;99 doi: 10.1016/j.inffus.2023.101896.

- 61. Nguyen A., Ngo H.N., Hong Y., Dang B., Nguyen B.-P.T. Ethical principles for artificial intelligence in education. Educ. Inf. Technol. 2023;28:4221–4241. doi: 10.1007/s10639-022-11316-w.

- 62. Haque MdA., Li S. Exploring ChatGPT and its impact on society. AI and Ethics. 2024.

- 63. Al-Khalifa S., Alhumaidhi F., Alotaibi H., Al-Khalifa H.S. ChatGPT across Arabic Twitter: a study of topics, sentiments, and sarcasm. Data. 2023;8:171. doi: 10.3390/data8110171.

- 64. McLennan S., Fiske A., Tigard D., Müller R., Haddadin S., Buyx A. Embedded ethics: a proposal for integrating ethics into the development of medical AI. BMC Med. Ethics. 2022;23:6. doi: 10.1186/s12910-022-00746-3.

- 65. Stahl B.C., Leach T. Assessing the ethical and social concerns of artificial intelligence in neuroinformatics research: an empirical test of the European Union Assessment List for Trustworthy AI (ALTAI). AI and Ethics. 2023;3:745–767. doi: 10.1007/s43681-022-00201-4.

- 66. Bouhouita-Guermech S., Gogognon P., Bélisle-Pipon J.-C. Specific challenges posed by artificial intelligence in research ethics. Front Artif Intell. 2023;6 doi: 10.3389/frai.2023.1149082.

- 67. Arbelaez Ossa L., Lorenzini G., Milford S.R., Shaw D., Elger B.S., Rost M. Integrating ethics in AI development: a qualitative study. BMC Med. Ethics. 2024;25:10. doi: 10.1186/s12910-023-01000-0.

- 68. Meskher H., Belhaouari S.B., Thakur A.K., Sathyamurthy R., Singh P., Khelfaoui I., Saidur R. A review about COVID-19 in the MENA region: environmental concerns and machine learning applications. Environ. Sci. Pollut. Res. Int. 2022;29:82709–82728. doi: 10.1007/s11356-022-23392-z.

- 69. Farhat H., Alinier G., Helou M., Galatis I., Bajow N., Jose D., Jouini S., Sezigen S., Hafi S., Mccabe S., Somrani N., El Aifa K., Chebbi H., Ben Amor A., Kerkeni Y., Al-Wathinani A.M., Abdulla N.M., Jairoun A.A., Morris B., Castle N., Al-Sheikh L., Abougalala W., Ben Dhiab M., Laughton J. Perspectives on preparedness for chemical, biological, radiological, and nuclear threats in the Middle East and North Africa region: application of artificial intelligence techniques. Health Secur. 2024;22:190–202. doi: 10.1089/hs.2023.0093.
