Summary

As light sheet fluorescence microscopy (LSFM) becomes widely available, reconstruction of time-lapse imaging will further our understanding of complex biological processes at cellular resolution. Here, we present a comprehensive workflow for in toto capture, processing, and analysis of multi-view LSFM experiments using the ex vivo mouse embryo as a model system of development. Our protocol describes imaging on a commercial LSFM instrument followed by computational analysis in discrete segments, using open-source software. Quantification of migration and morphodynamics is included.

For complete details on the use and execution of this protocol, please refer to Dominguez et al.1

Subject areas: developmental biology, microscopy, computer sciences


Highlights

• Instructions for in toto mouse embryo time-lapse imaging
• Guidance on interactive 4D image processing and fusion of multi-view datasets
• Steps for tracking at single-cell resolution with open-source F-TGMM
• Quantification and visualization with Fiji-based open-source software

Publisher’s note: Undertaking any experimental protocol requires adherence to local institutional guidelines for laboratory safety and ethics.


Before you begin

Early mammalian development is well studied across several model organisms, including human. However, concerted morphological events involving multiple cell populations and tissues remain poorly characterized, partly due to technical limitations. We present an integrated biological-to-microscopic-to-computational workflow to deeply and comprehensively examine these events in mouse embryos in toto. This protocol leverages four-dimensional whole-embryo light sheet imaging and an open-source computational pipeline to permit longitudinal reconstruction of development at single-cell resolution.

Based on a similar, pre-existing comprehensive strategy for mouse embryo imaging,2 our protocol accommodates data from ‘turnkey’ light sheet fluorescence microscopes (LSFM), including Zeiss Z.1, Ultramicroscope II, or MuVi SPIM. Moreover, our computational pipeline has notable enhancements in pre-processing of LSFM image volumes, interactive registration and fusion of 4D datasets, tracking at cellular resolution, and quantitative analysis of tracking data. Although the computational steps are CPU- and (GP)GPU-intensive, they can be performed on a single cost-effective PC workstation running entirely open-source software, and most acquired datasets do not require massively parallel or cluster computing. Besides improved portability and ease of use, our methods improve on prior approaches in CPU and memory efficiency, as well as in accuracy. We recommend using (k)ubuntu 24.04 LTS for the computational steps (Zeiss Z.1 acquisition workstations run Microsoft Windows), although the pre-tracking steps have also been validated on Windows. Forked Tracking with Gaussian Mixture Models (F-TGMM),1,2,3 the tracking package we employ, has been pre-built for Linux and CUDA 11.1, making it compatible out of the box with the majority of recent nVidia graphics cards.

Institutional permissions

Any experiments on live vertebrates or higher invertebrates must be performed in accordance with relevant institutional and national guidelines and regulations. All mouse protocols were approved by the Institutional Animal Care and Use Committee at UCSF. Mice were housed in a barrier animal facility with standard (12-h dark/light) husbandry conditions at the Gladstone Institutes. All experiments conform to the relevant regulatory standards. Users who wish to adopt this protocol will need approval from the relevant regulatory bodies at their institution.

Prepare materials for embryo culture and imaging

Timing: 4 h

1. Heat-inactivate and aliquot fetal bovine serum (FBS) and rat serum as described in “materials and equipment” below.
2. Prepare CB-DMB (if imaging early cardiogenesis) and APE stock solutions as described in “materials and equipment” below.
3. Prepare aliquots of embryo mounting medium as described in “materials and equipment” below.
4. On the day prior to a live imaging experiment:
   a. Prepare fresh dissection medium and embryo culture medium.
   b. Clean bench and dissection area with 70% ethanol.
   c. Fill the Zeiss Z.1 live imaging sample chamber with 70% ethanol and empty it. Wash the chamber twice with distilled water.
   d. Use 70% ethanol spray to sanitize the sample chamber temperature probe, the tip of the gas / CO2 tubing, and the round white chamber cover (with narrow slit for capillary). Use 70% ethanol to sanitize the capillary holder/mount, thumbscrew, and required fittings. Allow all items to dry completely in a clean container. Keep these items in a clean, dry place until imaging.
   e. Warm the benchtop incubator to 34.5°C for storage of molten embryo mounting medium. Warm one digital dry bath to 75°C to melt embryo mounting medium, and the other to 37°C to use as a warm block for the explanted uterus.
   f. Place one temperature-controllable warm plate under a dissection stereomicroscope and the other nearby. Warm both to 37°C.

CRITICAL: 34.5°C is narrowly above the gelling temperature at sea level for the gelatin and agarose products listed in the key resources table, mixed at the proportions described in “materials and equipment.” We recommend that users empirically determine the proper temperature between 30°C and 37°C that maintains the mounting medium just above its gelling temperature.

Set up workstation PC for computational analysis

Timing: 2 h

5. Download Kubuntu 24.04 LTS (https://kubuntu.org/getkubuntu/) and write its image to a fresh USB drive (USB 3.1 and 128+ GB recommended) to use as installation medium, following instructions provided by Canonical, developers of Ubuntu (https://ubuntu.com/tutorials/create-a-usb-stick-on-windows).
6. Boot from the Kubuntu installation USB and install the operating system (OS).
   a. A keystroke at system power-on may be required to access the boot menu; consult the system documentation.
   b. Once booted, follow the instructions to install Kubuntu, or use live persistent mode if desired (not recommended).
7. Install nVidia driver 430 to 550 as directed by nVidia, Canonical, or third-party online documentation (e.g., https://ubuntu.com/server/docs/nvidia-drivers-installation).
   a. Install packages (the commands below install driver 550):
      $ sudo apt update
      $ sudo ubuntu-drivers list
      $ sudo ubuntu-drivers install nvidia:550
   b. Reboot the system and use the command below to confirm that the driver is functional; it will show GPUs, the maximum CUDA version supported, and real-time hardware usage:
      $ nvidia-smi
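Once `nvidia-smi` works, the same check can be scripted. The Python sketch below is an illustration, not part of the protocol's software: it calls `nvidia-smi` with its `--query-gpu` and `--format=csv,noheader,nounits` options, then flags GPUs whose driver major version falls outside the 430–550 range stated in this step (the key resources table lists 450–550) or whose memory is below the 8 GB recommended for the workstation. The 5% memory margin is an assumption, since reported totals can sit slightly below the nominal capacity.

```python
import shutil
import subprocess

# Query only the fields we need; "nounits" makes memory.total a bare MiB integer.
QUERY = [
    "nvidia-smi",
    "--query-gpu=name,driver_version,memory.total",
    "--format=csv,noheader,nounits",
]


def parse_gpu_csv(text):
    """Turn nvidia-smi CSV rows into dicts (one per GPU)."""
    gpus = []
    for line in text.strip().splitlines():
        # rsplit keeps any comma inside the GPU name attached to the name field
        name, driver, mem_mib = (field.strip() for field in line.rsplit(",", 2))
        gpus.append({"name": name, "driver": driver, "memory_mib": int(mem_mib)})
    return gpus


def check_gpu(gpu, min_driver=430, max_driver=550, min_mem_gib=8, margin=0.95):
    """Return a list of problems; an empty list means the GPU looks usable."""
    problems = []
    major = int(gpu["driver"].split(".")[0])
    if not min_driver <= major <= max_driver:
        problems.append(f"driver {gpu['driver']} outside {min_driver}-{max_driver}")
    if gpu["memory_mib"] < min_mem_gib * 1024 * margin:
        problems.append(f"only {gpu['memory_mib']} MiB of GPU memory")
    return problems


def query_gpus():
    """Run nvidia-smi if it is on PATH; return [] when it is missing or fails."""
    if shutil.which("nvidia-smi") is None:
        return []
    try:
        result = subprocess.run(QUERY, capture_output=True, text=True, check=True)
    except subprocess.CalledProcessError:
        # nvidia-smi exists but could not talk to the driver (e.g., after a kernel update)
        return []
    return parse_gpu_csv(result.stdout)


if __name__ == "__main__":
    gpus = query_gpus()
    if not gpus:
        print("No GPU reported by nvidia-smi; revisit the driver installation")
    for gpu in gpus:
        issues = check_gpu(gpu)
        print(gpu["name"], gpu["driver"], "OK" if not issues else "; ".join(issues))
```

The thresholds are keyword arguments, so a stricter range (for example `min_driver=450`) can be passed without editing the function.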

8. Download Fiji packages using a web browser, then install (remember paths):
   a. Python dependencies, using Konsole (i.e., Terminal):
      $ sudo apt install python3-pip
      $ sudo pip3 install numpy scipy h5py pandas
      $ sudo pip3 install matplotlib seaborn cython==0.29.35
      $ git clone https://github.com/bhoeckendorf/pyklb
      $ cd pyklb
      $ python3 setup.py build
      $ sudo python3 setup.py install
   b. Fiji, according to instructions at https://fiji.sc. Ensure that maximum heap space is close to RAM capacity (Edit → Options → Memory & Threads...), and that the number of parallel threads matches the capabilities of the workstation PC.
   c. LSFMProcessing (remember paths):
      i. Using Konsole (i.e., Terminal), download repository:
      ii. Follow installation instructions, including all dependencies, at https://github.com/mhdominguez/LSFMProcessing.
   d. Official MaMuT and BigStitcher builds:
      i. Fiji “Help” menu → “Update...” → “Manage update sites”
      ii. Check “MaMuT”, “BigStitcher”
      iii. Hit “Close” then “Apply changes”
   e. MaMuT and multiview-reconstruction .jar builds with advanced features: follow instructions to download untagged releases and overwrite official .jar files, at https://github.com/mhdominguez/MaMuT and https://github.com/mhdominguez/multiview-reconstruction.
   f. KLB format integration with Fiji: download all .jar files bundled with the most recent release at https://github.com/mhdominguez/klb-bdv and place them in Fiji.app/jars.
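Before moving on, it can help to confirm that the Python packages from step 8a are importable by the same `python3` that will run later scripts. This is a minimal sketch using only the standard library's `importlib.util.find_spec`; it assumes each import name matches its package name, except `Cython`, whose import name is capitalized.

```python
import importlib.util

# Import names for the packages installed in step 8a (pyklb is built from source).
REQUIRED = ["numpy", "scipy", "h5py", "pandas", "matplotlib", "seaborn", "Cython", "pyklb"]


def missing_modules(names):
    """Return the subset of top-level module names that cannot be found."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules(REQUIRED)
    if missing:
        print("Missing Python modules:", ", ".join(missing))
    else:
        print("All Python dependencies found")
```

`find_spec` locates modules without importing them, so the check is quick even for heavy packages such as SciPy.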

9. Using a web browser, download F-TGMM, and install:
   a. Save the most recent Linux ...build-with-libraries.x86–64.tar.gz archive at https://github.com/mhdominguez/F-TGMM/releases to ~/Downloads/F-TGMM.tar.gz.
      Note: In Linux, the tilde ‘~’ is an alias pointing to the user’s home directory (e.g., /home/fred, if the username is ‘fred’).
   b. Install to /opt/tgmm using Konsole (i.e., Terminal):
      $ sudo mkdir /opt/tgmm
      $ cd ~/Downloads
      $ sudo tar -xvzf F-TGMM.tar.gz -C /opt/tgmm
   c. Install GNU parallel, for running watershed segmentation:
      $ sudo apt install parallel
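The tilde note also matters inside scripts: the shell expands `~`, but most programs receive it literally. As an optional sanity check before extracting with `sudo tar`, the Python sketch below expands the path with `os.path.expanduser` and lists the archive's first entries with the standard `tarfile` module. The default path mirrors step 9a; the 20-entry limit is arbitrary.

```python
import os
import tarfile


def list_archive(path="~/Downloads/F-TGMM.tar.gz", limit=20):
    """Expand '~', open a gzip-compressed tar, and return (full path, first entries, entry count)."""
    full_path = os.path.expanduser(path)  # '~' -> the user's home directory
    with tarfile.open(full_path, "r:gz") as archive:
        names = archive.getnames()
    return full_path, names[:limit], len(names)


if __name__ == "__main__":
    default = os.path.expanduser("~/Downloads/F-TGMM.tar.gz")
    if os.path.exists(default):
        path, first, count = list_archive(default)
        print(f"{path}: {count} entries")
        for name in first:
            print("  ", name)
    else:
        print("Archive not found at", default)
```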

10. Install SVF, MaMuT, and MaMuT library packages.
   a. Download SVF using Konsole (i.e., Terminal):
      $ cd ~/Downloads
      $ git clone https://github.com/mhdominguez/SVF
      $ cd ~/Downloads/SVF
      $ git clone https://github.com/GuignardLab/IO
      $ git clone https://github.com/leoguignard/TGMMlibraries
      $ cd IO
      $ sudo python3 setup.py install
      $ cd ../TGMMlibraries
      $ sudo python3 setup.py install
   b. Unpack blank dataset for SVF / MaMuT reconstructions:
      $ cd ~/Downloads/SVF
      $ tar -xzvf Blank\ Dataset.tar.gz
   c. Download MaMuTLibrary using Konsole (i.e., Terminal):
      $ cd ~/Downloads
      $ git clone https://github.com/mhdominguez/MaMuTLibrary
   d. Download TrackingFiles included with this protocol for processing example data:
      $ cd ~/Downloads
      $ git clone https://github.com/mhdominguez/Dominguez-Protocols-2024-TrackingFiles TrackingFiles

Alternatives: Instead of installing an OS and software on their own system, users can run LSFMProcessing-Kubuntu, a live Linux distribution that can be written to a USB thumb drive and booted on x86 64-bit hardware (the majority of Intel and AMD systems). Booting a live Linux OS will not disturb the native OS or permanent software on the system. LSFMProcessing-Kubuntu is pre-packaged with nVidia drivers and all the software for the computational workflow in this protocol. Download and setup instructions are linked in the key resources table. By using this live Linux distribution, the user agrees to comply with all relevant third-party licenses for the included software.

CRITICAL: This protocol has only been tested with the software/driver versions stated here. Ubuntu 18.04 to 24.04 LTS will also work identically with our protocol, though its GNOME desktop environment has fewer features and slightly inferior performance compared with Kubuntu, which is based on KDE. If users wish to use Windows, most protocol steps in Fiji, BigStitcher, MaMuT, and SVF are compatible using the pre-built software included here. Users can switch to Linux for the F-TGMM step if desired, or can build F-TGMM for Windows following instructions included in the repository (linked in key resources table).


Set up microscope PC workstation, including ZLAPS

Timing: 10 min

11. Ensure user has access to microscope and workstation prior to imaging experiments, and that user will be able to clean and sanitize working components of the microscope in advance of any planned experiments.
12. If using ZEN on Microsoft Windows, install ZLAPS (“Install” in https://github.com/mhdominguez/ZLAPS) for adaptive stage control during future live imaging runs.

Key resources table

| REAGENT or RESOURCE | SOURCE | IDENTIFIER |

|---|---|---|

| Chemicals, peptides, and recombinant proteins | ||

| Low MP agarose | Fisher | BP165-25 |

| Gelatin | Sigma | G1890 |

| Rat serum, special collection | Valley Biomedical | AS3061-SC, must be individually requested by purchaser; specify to “dispense in 125 mL aliquots” |

| Fetal bovine serum | Thermo Fisher Scientific | 10082139 |

| DMEM/F-12 | Thermo Fisher Scientific | 11039021 |

| GlutaMAX | Thermo Fisher Scientific | 35050061 |

| ITS-X | Thermo Fisher Scientific | 51500056 |

| Penicillin/Streptomycin | Thermo Fisher Scientific | 15070063 |

| b-estradiol | Sigma | E8875 |

| Progesterone | Sigma | P3972 |

| N-acetyl cysteine “NAC” | Sigma | A7250 |

| CB-DMB | Sigma | C5374 |

| Phosphate-buffered saline (PBS) | Thermo Fisher Scientific | 10010023 |

| Glass capillaries and piston, large (at least 4–5 per experiment) | Sigma | Z328502 and BR701938 (green) |

| Glass capillaries and piston, small (at least 4–5 per experiment) | Sigma | Z328480 and BR701932 (black) |

| Wide orifice low-retention tips | Rainin | 30389197 |

| Cell culture grade sterile water | MilliporeSigma | W3500 |

| Parafilm M | Any | N/A |

| Chemstrip 10 MD urine test strips (or similar) | Roche | 03260763160 |

| pH test paper, narrow range 6.0 to 8.0 (or similar) | Fisherbrand | 13-640-502 |

| Deposited data | ||

| Example raw .czi images for mouse embryo dataset at E7.5/ EHF, captured in two frontal-lateral oblique views (100° offset). Channel 1 acquired with 488 nm laser and GFP emission filter, representing Smarcd3-F6-nGFP. Channel 2 acquired with 561 nm laser and RFP emission filter, representing Mesp1-Cre lineage through RCL-H2B-mCherry reporter | This paper | https://datadryad.org/stash/dataset/doi:10.5061/dryad.nk98sf823 |

| Fused .klb files for mouse example dataset above | This paper | https://datadryad.org/stash/dataset/doi:10.5061/dryad.nk98sf823 |

| Intermediate (source and result) data files for above .klb dataset, processed as described herein | This paper | https://github.com/mhdominguez/Dominguez-Protocols-2024-TrackingFiles/tree/main/intermediate-data |

| Experimental models: Organisms/strains | ||

| Mouse: Mesp1-Cre, heterozygous embryos (E6 to E8) of either gender, bred with below strains | Saga et al.4 | N/A |

| Mouse: RCL-H2B-mCherry, heterozygous embryos (E6 to E8) of either gender, bred with above and below strains | Jackson Laboratory | cat: 023139 |

| Mouse: Smarcd3-F6-nGFP, heterozygous embryos (E6 to E8) of either gender, bred with above strains | Devine et al.5 | N/A |

| Software and algorithms | ||

| Fiji (base ImageJ v.1.53f) | Schindelin et al.6 | https://github.com/fiji/fiji |

| ZLAPS (ZEN lightsheet adaptive positioning system) | N/A | https://github.com/mhdominguez/ZLAPS |

| F-TGMM v.2.5 | McDole et al.2 and Dominguez et al.1 | https://github.com/mhdominguez/F-TGMM |

| “SVF” (TGMM2SVF and SVF2MaMuT) | McDole et al.2 and Dominguez et al.1 | https://github.com/mhdominguez/SVF |

| LSFM Processing Scripts | Dominguez et al.1 | https://github.com/mhdominguez/LSFMProcessing |

| PSF Generator | Biomedical Imaging Group at EPFL | http://bigwww.epfl.ch/algorithms/psfgenerator/ |

| Parallel Spectral Deconvolution | Piotr Wendykier | https://sites.google.com/site/piotrwendykier/software/deconvolution/parallelspectraldeconvolution |

| BigStitcher | Hörl et al.7 and Dominguez et al.1 | https://github.com/PreibischLab/BigStitcher and https://github.com/mhdominguez/multiview-reconstruction |

| KLB file format (including system library and script wrappers) | McDole et al.2 | https://github.com/JaneliaSciComp/keller-lab-block-filetype/ |

| KLB Fiji integration | McDole et al.2 and Dominguez et al.1 | https://github.com/mhdominguez/klb-bdv |

| MaMuT | Wolf et al.8,9 | https://github.com/mhdominguez/MaMuT |

| MaMuT script library | Dominguez et al.1 | https://github.com/mhdominguez/MaMuTLibrary |

| TrackingFiles for handling example data with LSFMProcessing, F-TGMM, SVF, and MaMuT | This paper | https://github.com/mhdominguez/Dominguez-Protocols-2024-TrackingFiles |

| “LSFMProcessing-Kubuntu” - stable, custom Linux environment, with all software above included | This paper | https://gitlab.com/mhdominguez1/LSFMProcessing-Kubuntu24.04 |

| Kubuntu 24.04 LTS | Canonical and Kubuntu developers | https://kubuntu.org/getkubuntu/ |

| nVidia driver (versions 450 to 550) | nVidia corporation | N/A |

| Other | ||

| Zeiss Z.1 “Lightsheet” Microscope with installed features: laser lines and emission filters for desired fluorophores; dual pco.edge 4.2 cameras with liquid cooling; Zeiss incubation with CO2 setup | Zeiss | RRID:SCR_020919 |

| Zeiss 20×/1.0 “W Plan-APOCHROMAT” multi-immersion objective | Zeiss | similar to 421452-9700-000 |

| M32 × 0.075 to M27 × 0.75 10 mm-offset ring for 20×/1.0 W objective | Zeiss | N/A |

| Dual Zeiss “LSFM” 10×/0.2 illumination objectives | Zeiss | N/A |

| Zeiss Z.1 Lightsheet incubation sample chamber with temperature probe | N/A | N/A |

| Sample holder for capillaries | N/A | N/A |

| Sample chamber lid with small slit | N/A | N/A |

| Windows Workstation with ZEN | Zeiss/HP | RRID:SCR_018163 |

| Syringe pump capable of 2–30 μL / min infusion flow | World Precision Instruments | AL-300 |

| 20 mL Luer Lock syringes | VWR | 76290-384 |

| 60 mL Luer Lock syringes | VWR | 76290-388 |

| 3-way (2 female) stopcocks | Medex supply | MX5311L |

| Tubing with Luer ends | Cole Parmer | UX-30526-18 |

| Stereo dissection microscope (fluorescence is preferred but not absolutely necessary) | Leica | MZ 12, MZ FLIII, or similar |

| P200 micropipette | N/A | N/A |

| 200 μL wide-orifice low-retention micropipette tips | Rainin | 30389188 |

| Serological pipettes, 2–50 mL | N/A | N/A |

| Center well IVF dish | Thermo Fisher Scientific | 12-565-024 |

| 6 cm Petri dishes | MilliporeSigma | P5481 |

| 10 cm Petri dishes | MilliporeSigma | P5731 |

| Temperature-controllable warming plate (need two) | Fisher Scientific | NC0987506 |

| Benchtop incubator | Benchmark Scientific | H2200-HC |

| Benchtop digital dry bath with removable blocks (need two) | Benchmark Scientific | BSH1001 |

| Microdissection forceps #5, Inox, Tip Size .05 × .01 mm (qty 2+) | VWR | 100190-496 |

| Mouse dissection toolkit (qty 1+ each) | Medline | MDS1012011, MDS1017012, MDS0859411, and MDS0811013 |

| Microcentrifuge tubes | N/A | N/A |

| 50 mL conical vials | N/A | N/A |

| 50 mL conical vials w/ 0.22 μm vacuum-driven filter | Thermo Fisher Scientific | SCGP00525 |

| Cell culture hood with vacuum aspiration setup | Any | N/A |

| Tissue culture incubator at 37°C and 5% CO2 | Any | N/A |

| Spray bottle with 70% ethanol | Any | N/A |

| Laboratory refrigerator and freezer | Any | N/A |

| Microwave | Any | N/A |

| Oxygen and isoflurane vaporizer setup for mouse euthanasia | Any | N/A |

| 8+ TB external hard drives | Seagate | STKP14000400 |

| Workstation PC: 8+ core x86 CPU; 128 GB RAM; nVidia GPU with 8+ GB RAM; 2+ TB storage including ample swap partition / pagefile | Lenovo, Dell, HP, or similar | N/A |

| USB 3.1 thumb drive, 128+ GB | Any | N/A |
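The workstation requirements in the table above can be checked quickly on a Linux machine. This is a minimal sketch using standard-library calls only, assuming Linux (the `os.sysconf` names used are POSIX/Linux). Two caveats are built in as assumptions: `os.cpu_count()` counts logical CPUs, so hyper-threading can make a 4-core CPU look like 8; and the kernel reports slightly less RAM than is installed, so a 10% tolerance is applied. Swap size is not checked.

```python
import os
import shutil

# Minimums from the "Workstation PC" row of the key resources table.
MIN_CPUS = 8       # table says 8+ cores; os.cpu_count() reports logical CPUs
MIN_RAM_GIB = 128
MIN_DISK_TB = 2    # decimal terabytes, as drives are sold


def shortfalls(cpus, ram_gib, disk_tb, tolerance=0.9):
    """Compare measured specs to the minimums; tolerance absorbs reporting overhead."""
    problems = []
    if cpus < MIN_CPUS:
        problems.append(f"{cpus} logical CPUs (< {MIN_CPUS})")
    if ram_gib < MIN_RAM_GIB * tolerance:
        problems.append(f"{ram_gib:.1f} GiB RAM (< {MIN_RAM_GIB})")
    if disk_tb < MIN_DISK_TB * tolerance:
        problems.append(f"{disk_tb:.2f} TB on this volume (< {MIN_DISK_TB})")
    return problems


def measure(path="/"):
    """Linux-only: logical CPUs, physical RAM (GiB), and size (TB) of the volume at `path`."""
    cpus = os.cpu_count() or 0
    ram_gib = os.sysconf("SC_PAGE_SIZE") * os.sysconf("SC_PHYS_PAGES") / 1024**3
    disk_tb = shutil.disk_usage(path).total / 1e12
    return cpus, ram_gib, disk_tb


if __name__ == "__main__":
    specs = measure()
    print("cpus=%d ram=%.1f GiB disk=%.2f TB" % specs)
    print("\n".join(shortfalls(*specs)) or "Meets the recommended workstation specs")
```

Pass the mount point of the data drive to `measure()` if processing will happen on a volume other than `/`.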

Materials and equipment

APE solution at 2000× (N-acetyl cysteine, progesterone, b-estradiol)

APE simulates the in utero hormonal environment, and provides protection from damage when embryos are grown in atmospheric oxygen.10,11

•Prepare three individual reagent stocks in cell-culture grade DMSO:
   ○NAC stock (at 4,348×): dissolve 26.61 mg in 1.5 mL DMSO.
   ○Progesterone stock (at 4,000×): dissolve 3 mg in 2.4 mL DMSO.
   ○Estradiol stock (at 50,000×): dissolve 3.27 mg in 30 mL DMSO.
•Compound APE at 2,000× by combining the component stocks:
   ○Above NAC stock: add 1,380 μL.
   ○Above progesterone stock: add 1,500 μL.
   ○Above estradiol stock: add 120 μL.

Divide 2,000× APE (suggested ∼20 μL aliquots) and store at −80°C for up to 1 year.
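The APE recipe can be checked with the dilution relation C1V1 = C2V2, expressing each concentration as a fold over 1×. The sketch below uses only numbers from the recipe and the medium tables; it confirms that each stock ends at about 2,000× in the combined 3,000 μL mix, and that 25 μL in 50 mL (Dissection medium) or 18 μL in 36 mL (Embryo culture medium) brings APE to 1×.

```python
# (fold over 1x, volume added in uL) for each stock, from the APE recipe above
APE_STOCKS = {
    "NAC": (4348, 1380.0),
    "progesterone": (4000, 1500.0),
    "estradiol": (50000, 120.0),
}


def fold_in_mix(stocks):
    """C2 = C1 * V1 / V_total for every component of a combined mix."""
    total_ul = sum(volume for _, volume in stocks.values())
    folds = {name: fold * volume / total_ul for name, (fold, volume) in stocks.items()}
    return total_ul, folds


def working_fold(stock_fold, stock_ul, final_ml):
    """Fold concentration after adding stock_ul of a stock to final_ml of medium."""
    return stock_fold * stock_ul / (final_ml * 1000.0)


if __name__ == "__main__":
    total, folds = fold_in_mix(APE_STOCKS)
    print(f"combined volume: {total:.0f} uL")
    for name, fold in folds.items():
        print(f"  {name}: {fold:.1f}x")
    print("dissection medium APE:", working_fold(2000, 25, 50), "x")
    print("culture medium APE:", working_fold(2000, 18, 36), "x")
```

NAC comes out at 2000.1× rather than exactly 2,000×, a rounding effect of the 4,348× stock that is negligible in practice.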

Prepare heat-inactivated fetal bovine serum

•Divide 1 L FBS into 50 mL aliquots and freeze at −20°C.

Store fresh FBS at −20°C for up to 9 months.

•When ready to use a new 50 mL aliquot, thaw first at 37°C, then heat-inactivate at 56°C for 30 min.
•Divide heat-inactivated FBS into 5 mL aliquots.

Store heat-inactivated FBS at −20°C for up to 6 months.

Prepare heat-inactivated rat serum

•When ready to use a 125 mL aliquot,12 thaw first at 37°C, then heat-inactivate at 56°C for 30 min.
•Cool to room temperature (15°C–25°C) and sterile-filter the rat serum into 15 mL aliquots using 0.22 μm 50 mL conical vial filters.

Store aliquoted rat serum at −20°C for up to 3 months.

Prepare CB-DMB (for imaging the heart at E7-E8 only)

CB-DMB suppresses the early heartbeat by inhibiting NCX1 channels.13

•Dissolve 5 mg of CB-DMB in 1.0585 mL DMSO to make a 10 mM stock.

Store at −20°C for up to 1 year.
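The CB-DMB numbers follow from molarity = moles / volume. The short sketch below back-calculates the molar mass implied by dissolving 5 mg in 1.0585 mL to reach 10 mM (about 472 g/mol), and the working concentration from 9 μL of stock in the ∼36 mL of Embryo culture medium listed below (2.5 μM). The `solvent_volume_ml` helper is our suggestion, not a protocol step: if a product label lists a different molar mass (for example, for a different salt form), it gives the DMSO volume that would still yield 10 mM.

```python
def implied_molar_mass(mass_mg, volume_ml, conc_mm):
    """g/mol implied by dissolving mass_mg in volume_ml to reach conc_mm."""
    moles = (conc_mm / 1000.0) * (volume_ml / 1000.0)  # mol/L * L
    return (mass_mg / 1000.0) / moles


def solvent_volume_ml(mass_mg, molar_mass, conc_mm):
    """DMSO volume (mL) that brings mass_mg of a compound to conc_mm."""
    moles = (mass_mg / 1000.0) / molar_mass
    return moles / (conc_mm / 1000.0) * 1000.0


def final_conc_um(stock_mm, stock_ul, final_ml):
    """Working concentration (uM) after diluting stock_ul of a stock_mm stock into final_ml."""
    return stock_mm * 1000.0 * stock_ul / (final_ml * 1000.0)


if __name__ == "__main__":
    mm = implied_molar_mass(5, 1.0585, 10)
    print(f"implied molar mass: {mm:.2f} g/mol")
    print(f"working concentration: {final_conc_um(10, 9, 36):.2f} uM")
```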

Prepare embryo mounting medium

•Place 300 mg low melting point agarose and 600 mg gelatin in a 50 mL conical vial, add 20 mL sterile PBS, and vortex.
•Close conical vial tightly and microwave repeatedly on high for 5–7 s until melted, stopping to remove and replace lid (to vent hot vapor) each time.
•Aliquot melted gel mix into sterile microcentrifuge tubes and cool to room temperature (15°C–25°C).

Store at +4°C for up to 3 months if remaining free of contamination.
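For reference, the agarose and gelatin contents of the mounting medium, expressed as weight per volume relative to the 20 mL of PBS (approximate, since the dissolved solids add slightly to the final volume):

```latex
\text{agarose: } \frac{0.300\ \text{g}}{20\ \text{mL}} = \frac{1.5\ \text{g}}{100\ \text{mL}} = 1.5\%\ \text{(w/v)},
\qquad
\text{gelatin: } \frac{0.600\ \text{g}}{20\ \text{mL}} = \frac{3.0\ \text{g}}{100\ \text{mL}} = 3.0\%\ \text{(w/v)}
```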

Dissection medium

| Reagent | Final concentration | Amount |

|---|---|---|

| DMEM/F12 (+HEPES, no phenol red) | 87% | 43.5 mL |

| Heat-inactivated FBS (see above) | 10% | 5 mL |

| Penicillin/streptomycin 100× | 1× | 0.5 mL |

| ITS-X 100× | 1× | 0.5 mL |

| GlutaMAX 100× | 1× | 0.5 mL |

| APE 2000× | 1× | 25 μL |

| Total | – | 50 mL |

Store at +4°C for up to 1 month.

Embryo culture medium

| Reagent | Final concentration | Amount |

|---|---|---|

| DMEM/F12 (+HEPES, no phenol red) | 42% | 15 mL |

| Heat-inactivated FBS | 14% | 5 mL |

| Heat-inactivated rat serum | 42% | 15 mL |

| Penicillin/streptomycin 100× | 1× | 360 μL |

| ITS-X 100× | 1× | 360 μL |

| GlutaMAX 100× | 1× | 360 μL |

| APE 2000× | 1× | 18 μL |

| CB-DMB 10 mM (a) | 2.5 μM | 9 μL |

| Total | – | ∼36 mL |

Store at +4°C for up to 1 week.

(a) Only for heart imaging at E7–E8.

CRITICAL: CB-DMB is an irritant and is toxic by ingestion; only use when imaging the early heart to quench motion artifact.

Alternatives: If recurrent contamination of live cultures occurs despite robust sanitization of imaging chamber(s), user may add chloramphenicol at final concentration of 5–10 μg/mL to Embryo Culture Medium.
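To prepare a different volume of either medium, the table amounts can be scaled in proportion. This is a minimal sketch, assuming the listed amounts are exact (they sum to 50.025 mL and about 36.1 mL, slightly off the nominal totals). It scales a recipe to a target volume and reports each component's percentage and its fold relative to stock, so 100× supplements and 2,000× APE can be checked at about 1×. Drop the CB-DMB entry when not imaging the heart.

```python
# Amounts in mL, transcribed from the Dissection medium and Embryo culture medium tables above.
DISSECTION_ML = {
    "DMEM/F12": 43.5, "FBS": 5.0, "Pen/Strep": 0.5,
    "ITS-X": 0.5, "GlutaMAX": 0.5, "APE": 0.025,
}
CULTURE_ML = {
    "DMEM/F12": 15.0, "FBS": 5.0, "rat serum": 15.0, "Pen/Strep": 0.36,
    "ITS-X": 0.36, "GlutaMAX": 0.36, "APE": 0.018, "CB-DMB": 0.009,
}


def scale_recipe(recipe_ml, target_total_ml):
    """Scale every component so the amounts sum to target_total_ml."""
    factor = target_total_ml / sum(recipe_ml.values())
    return {name: amount * factor for name, amount in recipe_ml.items()}


def percent_composition(recipe_ml):
    """Percentage (v/v) of the total contributed by each component."""
    total = sum(recipe_ml.values())
    return {name: 100.0 * amount / total for name, amount in recipe_ml.items()}


def final_fold(recipe_ml, name, stock_fold):
    """Fold concentration of a stock (e.g., 100x or 2000x) in the finished medium."""
    return stock_fold * recipe_ml[name] / sum(recipe_ml.values())


if __name__ == "__main__":
    no_heart = {k: v for k, v in CULTURE_ML.items() if k != "CB-DMB"}
    for name, ml in scale_recipe(no_heart, 20.0).items():
        print(f"{name:>10}: {ml * 1000:8.1f} uL")
    print("rat serum %:", round(percent_composition(CULTURE_ML)["rat serum"], 1))
    print("ITS-X fold:", round(final_fold(CULTURE_ML, "ITS-X", 100), 3))
```

The rounded percentages match the tables (about 42% rat serum and 14% FBS in Embryo culture medium), which is a quick way to catch transcription errors.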

Step-by-step method details

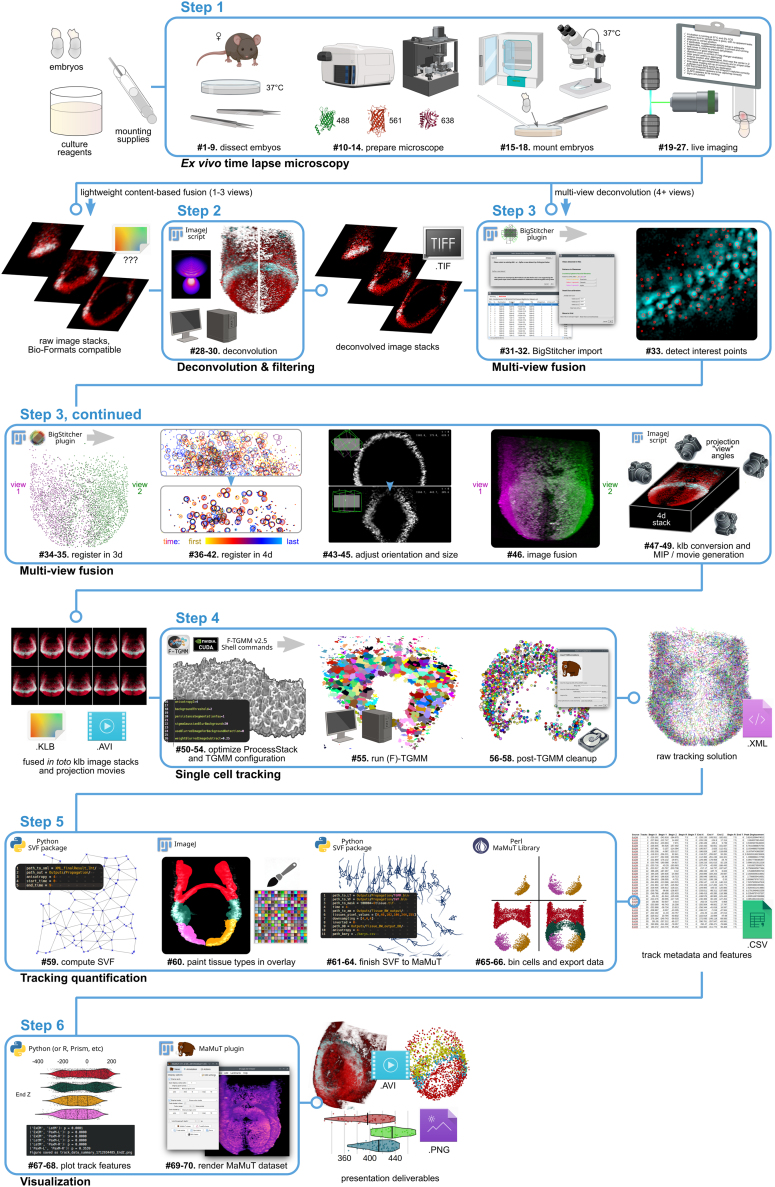

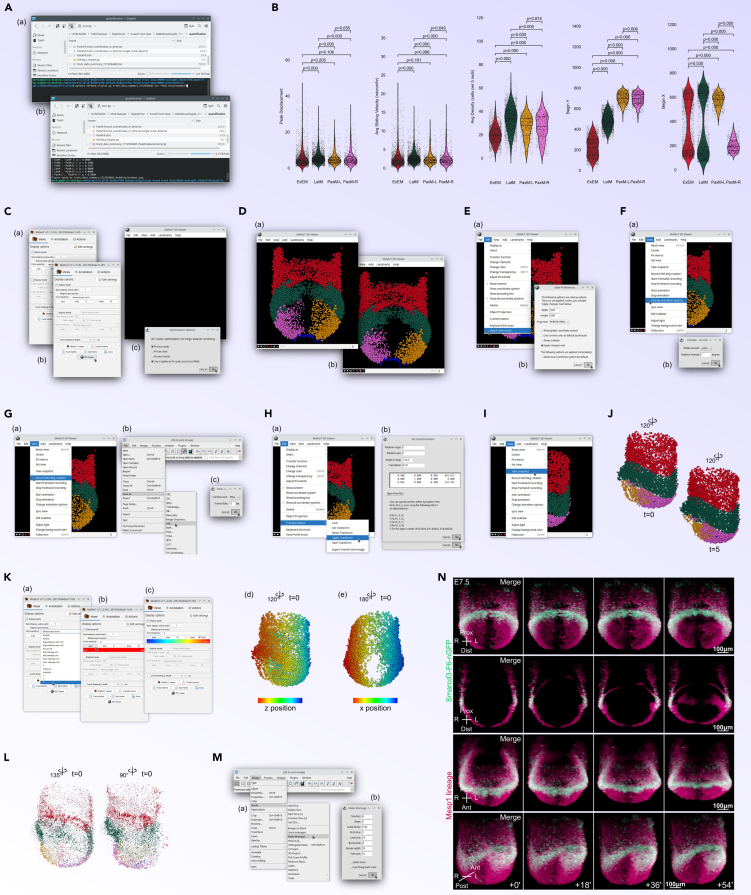

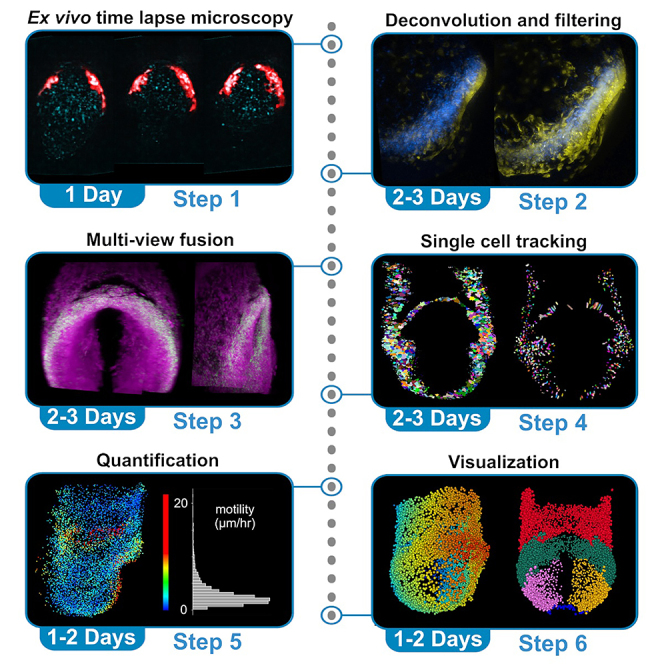

An overview of the below procedures, including the inputs and outputs of each step, is detailed in Figure 1. For a description of software packages and how they are used in this protocol, see Table 1.

Figure 1.

Overview of comprehensive workflow, partitioned by step

The step-by-step method details are broken down into discrete functions for each step. For quick reference, each segment of the protocol (5 across in the workflow, to be read left-to-right then top-to-bottom) points to numbered sub-steps (bold numbers following ‘#’) within the protocol text.

Table 1.

Software packages used in this protocol

| Step(s) | Name | Description |

|---|---|---|

| 1: #10–19 | Zeiss ZEN | Proprietary Windows software included with Zeiss Lightsheet Z.1 and 7 microscopes – necessary for setup and acquisition on these instruments. For non-Zeiss imaging, refer to the software included with your microscope. |

| 1: #14, #24–25 | ZLAPS (ZEN lightsheet adaptive positioning system) | Open-source IT3 and ImageJ scripted utility (Windows) that interfaces with ZEN to provide adaptive time-lapse acquisitions. Such features may be included in future versions of ZEN or your microscope’s software, obviating this package. |

| 2–6: #28–71 | (k)ubuntu 18.04 to 24.04 | Open-source Linux operating system for PC x86–64 hardware that we use for our computational workflow. User can adapt virtually all software below for Windows, although pre-built F-TGMM is only provided for Linux (user would need to download F-TGMM sources as well as nVidia CUDA toolkit and compile for Windows if desired). |

| 2: #28–71 | Fiji6 “Fiji is just ImageJ” | Open-source multi-platform Java-based application evolved from the original NIH ImageJ, with many plugins and tools included. Needed for virtually all computation steps of this workflow. |

| 2: #28–30; 3: #44, #47; 4: #53 | LSFM Processing Scripts1 | Collection of macros in Fiji for automating deconvolution, filtering, and format interconversion. Additionally contains Perl and Python scripts to augment BigStitcher and TGMM integration. |

| 2: #28–29 | PSF Generator & Parallel Spectral Deconvolution | Fiji plugins that are needed for single-view deconvolution performed by LSFM Processing Scripts. |

| 3: #31–47 | BigStitcher1,7 | Fiji plugin for registering (aligning) all image stacks together in 4d, and fusing them into volumes (free from motion artifact, drift, and jitter) for each time point and channel. We recommend using our multiview-reconstruction.jar (see key resources table for link to github repository) containing performance enhancements, additional user options, and Lightweight Content Based Fusion. |

| 3–4: #47–55 | KLB Fiji integration1,2 & KLB library2 | System library, Python package, and Fiji utility for working with klb file format. klb is not absolutely necessary although it is highly recommended for fused datasets as the preferred format for (F-)TGMM input images. |

| 4: #50–55 | F-TGMM1 v2.5 | Application in C++ and nVidia CUDA, compatible only with nVidia (GP)GPUs, for segmentation and tracking of fused 4d datasets (klb or tif formatted). Pre-built only for Linux, though it can also be built for Windows. |

| 3: #49; 4: #50–57; 5: #59–61 | TrackingFiles | Collection of scripts, lookup tables, and configuration files for use with F-TGMM, SVF, and MaMuT. |

| 5: #59–64 | SVF1,2 | Python application for statistically resolving TGMM tracking solutions into vector-like morphogenetic maps. Improves spatial accuracy and reconstructs continuous cell tracks across the full duration of the dataset. Affords backward and forward propagation of cell or tissue labels (identities) that are annotated by the user. |

| 4: #58; 5: #64; 6: #69–70 | MaMuT1,8 | Fiji plugin for visualizing tracking solutions, either directly from TGMM (raw) or outputted by SVF. We recommend using our MaMuT.jar (see key resources table for link to github repository) containing additional user options and 3d viewer. |

| 5: #55–56 | MaMuT script library1 | Perl scripts for manipulating MaMuT datasets via filtering, coloring, annotating, subsetting, merging, and exporting track data to visualize or quantify cell behaviors. |

| 2–6: #28–71 | LSFMProcessing-Kubuntu | USB-bootable custom live Linux distribution (Kubuntu 24.04 base) containing proprietary nVidia drivers (version 550), and all software above other than ZEN and ZLAPS (see key resources table for link to GitLab repository for instructions to download and use). |

Ex vivo time-lapse microscopy

Timing: 9–24 h (Note: 8–12 h user time)

Here, embryos will be harvested from pregnant dams, dissected, mounted, and subjected to multiview light sheet imaging. Prior to starting, ensure that all steps above in “prepare materials for embryo culture and imaging” have been followed, and that all necessary reagents and equipment (listed in key resources table) are available.

Optional: If using our example raw dataset to rehearse all computational steps, download all .czi.bz2 files (ignore .klb files) from our repository at Data Dryad (https://datadryad.org/stash/dataset/doi:10.5061/dryad.nk98sf823). Ensure adequate disk space (60 GB) is available, and run the following command in the download folder to decompress the .czi files (in Kubuntu’s Dolphin file manager, press F4 to toggle a console below the folder view): bunzip2 *.bz2. Note that bunzip2 is single-threaded and slow. Skip to #28 below and perform processing steps on these files.
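Because `bunzip2` processes one file at a time, decompressing several .czi.bz2 files concurrently can save time on a multi-core workstation. This is a minimal sketch with Python's standard `bz2` and `concurrent.futures` modules. It uses a thread pool on the assumption that CPython's bz2 module releases the GIL while decompressing; the worker count of 4 and the script name `unbz2_parallel.py` are arbitrary. Unlike `bunzip2`, it keeps the original .bz2 files, so delete them afterward to recover disk space.

```python
import bz2
import shutil
import sys
from concurrent.futures import ThreadPoolExecutor
from pathlib import Path


def decompress_one(src):
    """Decompress 'name.czi.bz2' to 'name.czi' next to it, streaming in 16 MiB chunks."""
    src = Path(src)
    dst = src.with_suffix("")  # strip only the final '.bz2'
    with bz2.open(src, "rb") as fin, open(dst, "wb") as fout:
        shutil.copyfileobj(fin, fout, 16 * 1024 * 1024)
    return dst


def decompress_folder(folder, workers=4):
    """Decompress every *.bz2 file in folder, several at a time."""
    files = sorted(Path(folder).glob("*.bz2"))
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(decompress_one, files))


if __name__ == "__main__":
    if len(sys.argv) != 2:
        print("usage: python3 unbz2_parallel.py /path/to/czi_folder")
    else:
        for path in decompress_folder(sys.argv[1]):
            print("wrote", path)
```

Disk throughput, not CPU, may become the limit on a single external drive, so a modest worker count is a reasonable starting point.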

1. Prepare embryo culture and mounting reagents:
   a. Melt 2–3 tubes of embryo mounting medium per dam using a digital dry bath at 75°C, then transfer the tubes to a benchtop incubator at 34.5°C when completely liquid.
   b. Pre-warm embryo dissection medium, embryo culture medium, PBS, and DMEM/F-12 to 37°C.

2. When embryo mounting medium has equilibrated to incubator temperature (∼10 min), prepare and store capillaries (small/black capillaries for E6.5–E7.0, large/green capillaries for E7.5, jumbo/blue capillaries for E9.0+):
   a. Use a P200 pipette to fill a glass capillary with mounting medium.
   b. Once filled, orient the capillary vertically and allow medium to start to drip out of one end. As it drips, insert the plunger into that end to make a good seal with the medium (no air bubbles at the piston/gel interface).
   c. Store the capillary/piston setup horizontally in the benchtop incubator at 34.5°C to maintain its liquid state.
   d. Repeat the above steps until 4–8 capillaries per dam are prepared and awaiting embedding.

3. Per dam, add 6 mL dissection medium each to two 6 cm round petri dishes; also, add 1.5 mL embryo culture medium to the center well of two IVF dishes (one for initial dissection, one for desired/chosen embryos). Temporarily store these dishes in a tissue culture incubator.
4. Per dam, add 12 mL PBS to a 10 cm petri dish, and add 12 mL DMEM/F-12 to another 10 cm petri dish. Place these dishes on a 37°C warm block to maintain body temperature.
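The per-dam quantities in steps 1–4 scale linearly with the number of dams. The small planning sketch below (a hypothetical helper, not part of the protocol's software) totals them; it does not include the embryo culture medium used to fill the imaging chamber in step 11, whose volume is not specified here.

```python
# Per-dam amounts from steps 1-4 above.
PER_DAM_ML = {
    "dissection medium": 2 * 6.0,                   # two 6 cm dishes
    "embryo culture medium (IVF wells)": 2 * 1.5,   # two center wells
    "PBS": 12.0,
    "DMEM/F-12": 12.0,
}
# (minimum, maximum) item counts per dam
PER_DAM_COUNTS = {
    "mounting medium tubes": (2, 3),
    "filled capillaries": (4, 8),
    "6 cm dishes": (2, 2),
    "IVF dishes": (2, 2),
    "10 cm dishes": (2, 2),
}


def plan(n_dams):
    """Return total volumes (mL) and (min, max) item counts for n_dams."""
    volumes = {name: ml * n_dams for name, ml in PER_DAM_ML.items()}
    counts = {name: (lo * n_dams, hi * n_dams) for name, (lo, hi) in PER_DAM_COUNTS.items()}
    return volumes, counts


if __name__ == "__main__":
    volumes, counts = plan(2)
    for name, ml in volumes.items():
        print(f"{name}: {ml:g} mL")
    for name, (lo, hi) in counts.items():
        print(f"{name}: {lo}" if lo == hi else f"{name}: {lo}-{hi}")
```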

5. Following established institutional protocol, deeply anesthetize and euthanize pregnant dam(s) on the desired day of pregnancy.
   a. Collect the uterus into the 10 cm petri dish containing PBS, and swirl gently for ∼30 s to remove gross blood.
   b. Transfer the uterus to the 10 cm petri dish containing DMEM/F-12 at 37°C and bring it to the dissection stereomicroscope.
   c. Clean the now-used warm block with 70% ethanol and return it to the 37°C digital dry bath to re-warm.
6. CRITICAL: Maintain 37°C as closely as possible during dissection (e.g., using temperature-controllable warming plates, working quickly).

7. Following common14,15 or user-preferred procedures, microdissect embryos and remove Reichert’s membrane in each 6 cm dish.
8. Using a P200 pipette with low-retention wide-orifice tips, transfer each dissected embryo into the center well of the IVF dish and maintain 37°C (e.g., on a temperature-controllable warming plate).
9. Screen embryos with a fluorescence microscope if desired, and transfer selected embryos to a fresh center well dish with embryo culture medium.

Optional: If desired, intermediate-term embryo culture can be initiated at this point by transferring embryos to the outer “moat” portion of the IVF dish containing ∼2 mL embryo culture medium in an incubator at 37°C and 5% CO2. We recommend using an orbital shaker platform at 50–70 rpm such that embryos circulate around the moat like a lazy river. Culture medium should be changed every day; for this, embryos can be picked up or transferred using a 25 mL serological pipette.

Pause point: (5 min): After confirming embryos are viable for imaging and are safely stored at 37°C and 5% CO2, we turn our attention to the microscope setup and the imaging/incubation chamber. The below steps are somewhat specific to our Zeiss Z.1 Lightsheet microscope; however, they can be easily adapted to user equipment.

-

10.Follow a pre-imaging checklist at the microscope:

-

a.Confirm thermoelectric cooler/incubation instrument has sufficient coolant. Confirm cameras (if cooled) have sufficient coolant (Figure 2Aa).

-

b.Ensure that the correct objectives are installed (Figure 2Ab) in the microscope (for detection, we use a 20× water-immersion objective with a correction collar), including settings on any correction collar(s) (we use RI = 1.345 for embryo culture medium). Configure software (i.e., ZEN) according to microscope configuration.

-

c.Install incubation sample chamber in microscope (Figure 2Ac). Ensure that the top rim and lower rails are dry before installing, though other parts may remain damp from cleaning.

-

d.Set up tubing (Figure 2Ad), ignoring the 20 mL syringe and pump if supplemental ddH2O is not required (see below).

-


11.Set up syringe with embryo culture medium:

-

a.Remove the plunger (stopcock closed to incubation chamber) from the 60 mL syringe, then close stopcock to the 60 mL syringe and fill with embryo culture medium.

-

b.After checking tubing, close the stopcock to the 20 mL syringe and slowly depress plunger of 60 mL syringe to fill the incubation chamber.

-

c.Close stopcock to chamber.

-


12.

Use ZEN software to begin incubation at 37°C and 5% CO2 (Figure 2Ba). Ensure that the temperature is rising and that CO2 control is starting normally.

-

13.

Use ZEN software to set up excitation and emission channels as suited for the experiment (Figure 2Bb-c).

-

14.If using ZLAPS, start ZLAPS (Figure 2Bd).

-

a.Follow instructions for configuration prior to imaging (“Usage” in https://github.com/mhdominguez/ZLAPS).

-

b.In the ZEN Experiment Manager tab, check “Z-Stack” and “Multiview Acquisition” and uncheck “Time Series” (Figure 2Be).


Optional: If not using ZLAPS, check “Z-Stack” and “Time Series” and “Multiview Acquisition” if desired (Figure 2Bf).

Optional: Depending on the laboratory setup and the user’s confidence in securing viable embryos for imaging, steps 10–14 above can be performed prior to embryo harvest (step 5) to decrease the time embryos are resting at 37°C and 5% CO2 prior to imaging.

Pause point: (5 min): After confirming that the microscope is ready for imaging, we return to mounting the embryos and bringing them to the LSFM for auditioning. The steps below pertain to mounting embryos for imaging in a Zeiss Z.1 microscope; however, they can be adapted to other instrumentation.

-

15.

Discard the lid from a fresh 50 mL conical vial, then tape the vial horizontally to the flat surface of the 37°C warm block, maintaining ample space on the block abutting its open top to rest capillary and piston rods (Figure 2C).

-

16.Remove one capillary/piston setup at a time from benchtop incubator, and keeping as sterile as possible, cool at room temperature (15°C–25°C) until the mounting medium is starting to gel (on pushing some medium out with piston, it is just barely able to maintain the cylindrical shape of the capillary). Immediately proceed to mounting an embryo with this capillary (Video S1):

-

a.Push down piston rod at least 25%–33% of the overall capillary length to visualize gel, then use dissecting forceps to cut gel off sharply to remove this lower portion.

-

b.Place the lower end of capillary (opposite the piston) into the dish containing embryos, holding it with the non-dominant hand. Use the dominant hand to push piston to extrude ∼1–2 mm of gel, then pick up dissecting forceps with dominant hand.

-

c.While holding capillary with non-dominant hand, use dominant hand with dissecting forceps to lightly grab the ectoplacental cone from one embryo, pushing and shoving it gently into the extruded gel from the capillary (Figure 2D).

-

d.Once the embryo is immobilized by adequate embedding of the ectoplacental cone into the gel, use the dominant hand (first return forceps to tabletop) to retract piston and gel column containing mounted embryo (with excess culture medium) back into the capillary. Park embryo about 4–5 mm above the lower open end of the capillary.

-

e.Allow capillary with mounted embryo to cool to room temperature (15°C–25°C) for 1–2 min, then place capillaries inside open-top horizontally-oriented conical vial at 37°C prepared above.


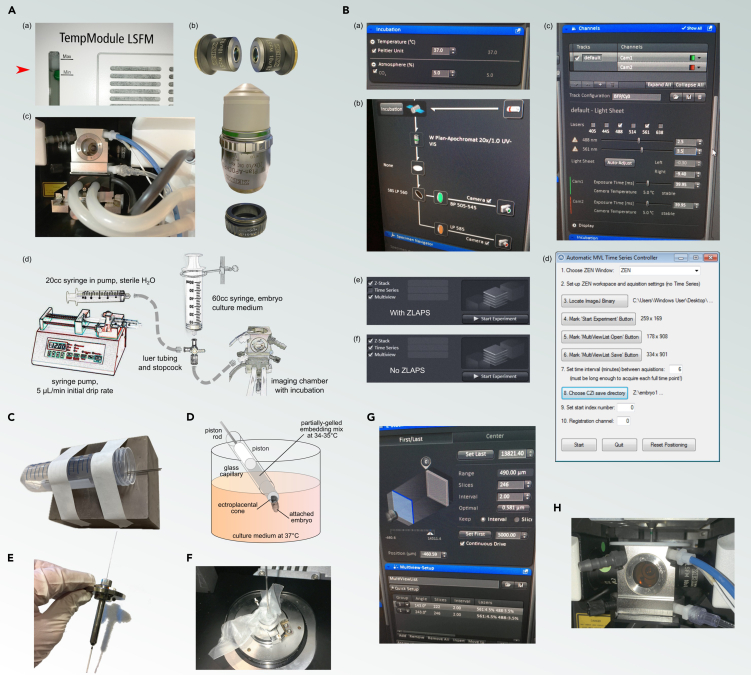

Figure 2.

Ex vivo time lapse microscopy

(A) Microscope Preparation (Steps 10a–d): Setup and checks before imaging including confirmation of coolant levels and objective installation, and preparation of the incubation sample chamber and tubing.

(B) Incubation and Software Configuration (Steps 11–14): Initial setup of the incubation environment using ZEN software, configuring the excitation and emission channels, and additional setup for ZLAPS, if used.

(C) Temperature Maintenance after Mounting (Step 15): Conical vial is taped to warm block to use as storage/transfer device for embryo-loaded capillaries.

(D) Embryo Mounting (Step 16): Detailed procedure for mounting embryos for imaging, including adjusting piston rod and embryo placement into capillary.

(E) Embryo Positioning for Imaging (Step 19): Placement of embryo-containing capillary into the microscope stage and initial positioning for imaging.

(F) Capillary Securement in Microscope (Step 22): Securing capillary on the microscope stage for stable time lapse imaging. Use of parafilm to minimize downward movement of the piston rod during a long experiment.

(G) Z-stack and Multiview setup in ZEN (Step 24): Configuration of Multiview settings, initiation of time lapse imaging, and environmental conditions maintenance.

(H) Tank setup (Step 25): Checks on medium level in the tank, presence of white tank cover to prevent dehydration, and connection of tubing including CO2.

Embryo must be trimmed for unobstructed illumination and observation from multiple angles with respect to region of interest. Ectoplacental cone is pushed into a column of partially-gelled agarose/gelatin mix for 360° free imaging of embryonic region.

Troubleshooting 1: Mounting embryos requires patience and practice, and the gelling conditions may need optimization for best results.

-

17.

Repeat previous step for each embryo. When all embryos are mounted, carefully move the 37°C warm block with conical vial and capillaries to the microscope room.

-

18.

Confirm microscope setup is appropriate, with no leaks in incubation chamber or tubing, no leaks in peltier/TEC coolant connections, and that incubation is running at 37°C and 5% CO2.

Optional: If desired, verify the culture medium pH using a sterile transfer pipette/dropper and test strips (e.g., Chemstrip 10).

-

19.Load the first embryo-containing capillary into the capillary holder (Figure 2E), then:

-

a.Seat the capillary holder onto the microscope stage.

-

b.Using ZEN Specimen Navigator to position the stage, move capillary into the working position just above the objectives’ field of view.

-

c.Under camera visualization, slowly push piston rod to lower embryo freely into surrounding culture medium.


Note: Do not extend the agarose column into the immersion medium more than is necessary for imaging the critical aspects of the embryo.

-

20.Focusing on areas of the specimen that are NOT the subject of the live imaging experiment, align the light sheets in the detection plane.

-

a.Ensure pivot scanner (if equipped) is enabled, and that Online Dual Side Fusion is enabled (depending on embryo size, photon budget, and intended multiview setup).

-

b.If imaging more than one channel, ensure that alignment of light sheets is correct in all channels (all channels should align simultaneously), and if not, consult technical support.

-


21.Quickly obtain auditioning views of the specimen, then change capillaries to audition each embryo that has been mounted:

-

a.Upwardly retract piston rod under camera visualization to park embryo a few mm above bottom of capillary.

-

b.Raise the stage to its loading position to unmount.

-


22.

Select the desired embryo for time lapse imaging, and load its capillary into the microscope again as above.

Note: If using Z.1 and similar top-load microscopes, after positioning the embryo into the objectives’ field of view, carefully open microscope loading platform and use parafilm to tightly wrap the piston rod to the thumbscrew (Figure 2F). Wedging parafilm between the thumbscrew and piston rod is also helpful to prevent unintended downward movement of the piston during imaging (Figure 2F).

-

23.

Verify light sheet alignment. Configure objective zoom, accounting for growth of the specimen during the experiment (though this can be adjusted later as needed).

CRITICAL: Adequate segmentation in computational steps requires adherence to both Nyquist and segmentation scale principles. The distance separating the borders of the closest adjacent nuclei should be at least twice (preferably severalfold greater, to account for aberration, noise, and other artifacts) the pixel scale factor (μm/pixel) at acquisition. At E6.5, the most densely packed nuclei have boundaries that are offset by as little as 600–800 nm; therefore, we recommend 0.3 μm/pixel (or smaller) imaging resolution.
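The sampling rule above reduces to a one-line calculation: divide the narrowest border-to-border gap by the oversampling factor (2 at minimum). A minimal Python sketch, assuming the gap is known in nanometres; the function name `max_pixel_size_um` is hypothetical and not part of any software used in this protocol:

```python
def max_pixel_size_um(min_border_gap_nm: float, oversampling: float = 2.0) -> float:
    """Largest acquisition pixel size (um/pixel) that still samples the
    narrowest gap between adjacent nuclear borders at the given factor.

    oversampling=2.0 is the Nyquist minimum; larger values leave margin
    for aberration, noise, and other artifacts.
    """
    if min_border_gap_nm <= 0 or oversampling <= 0:
        raise ValueError("gap and oversampling factor must be positive")
    return (min_border_gap_nm / oversampling) / 1000.0  # nm -> um


# E6.5 example from the text: borders offset by as little as 600-800 nm.
for gap_nm in (600, 800):
    print(f"{gap_nm} nm gap -> <= {max_pixel_size_um(gap_nm):.2f} um/pixel")
```

The 0.3 μm/pixel recommendation follows from the 600 nm lower bound; passing a larger `oversampling` value gives the more conservative pixel size suggested for noisy or aberrated data.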

-

24.

If using ZLAPS, follow configuration steps and start imaging. Otherwise, configure Multiview-Setup (Figure 2G) and acquire a first time point. Place tank cover to prevent dehydration.

-

25.Follow an imaging checklist to systematically ensure smooth operation. An example of such a standard operating checklist we have employed before/during a live LSFM experiment:

-

a.✓ incubation is running at 37°C and 5% CO2 (Figure 2Ba).

-

b.✓ medium level in sample tank is good, with no apparent leaks (Figure 2H).

-

c.If desired, verify culture medium pH and/or density/specific gravity using a sterile transfer pipette/dropper and test strips (remove tank cover).

-

d.✓ stopcock in correct position.

-

e.✓ if applicable, supplemental ddH2O setup is adequate with correct levels (Figure 2Ad).

-

f.✓ if applicable, supplemental ddH2O is primed and running.

-

g.✓ tank cover in place to prevent dehydration (Figure 2H).

-

h.✓ lightsheets in good alignment.

-

i.✓ objective zoom is correct (if mag changer available).

-

j.✓ laser power setting is appropriate for the stage and experimental plan (Figure 2Bb).

-

k.✓ embryo position and view angles are good, with region of interest near the center of each Z-stack.

-

l.✓ multiview set-ups established correctly and in proper order.

-

m.✓ time lapse imaging set-up at correct intervals (we recommend 6 min for whole cell tracking at E6-E7) with sufficient time points requested.

-

n.✓ sufficient hard drive space on destination.

-

o.GO first time point! Ensure first time point captures correctly.

-

p.✓ second time point appears to be capturing correctly.

-

q.✓ ZLAPS appears to be working.

-

r.✓ signs are posted to indicate that a live experiment is taking place.

CRITICAL: If long-term (6+ h) imaging will be undertaken, we recommend checking that the medium level in the sample chamber remains adequate. If evaporation is occurring, the user can verify medium pH and density/specific gravity as described in the above checklist. Light sheets may need alignment adjustment every few hours depending on the instrument.

Optional: If pH is not in the desired range (usually 7.4 to 7.5), adjust CO2 concentration accordingly.

Optional: If the sample chamber medium level is declining, and density or specific gravity measurements indicate that evaporation is taking place, set up the supplemental ddH2O syringe and pump:


s.Close stopcock to the unattached sideport if it is not already in this position.

-

t.Fill a 20 mL syringe with sterile deionized water (i.e., cell culture grade), attach it to Luer tubing and prime the tubing by depressing the plunger.

-

u.Connect the other end of the tubing to the stopcock. Close the stopcock to the sample chamber.

-

v.Slowly depress the plunger of the 20 mL syringe in order to evacuate any air bubbles into the inverted culture medium syringe, and away from the sample chamber.

-

w.Close the stopcock to the 60 mL syringe and begin pump operation. We recommend starting at ∼5 μL/min flow and adjusting as needed to maintain a good level of medium in the sample chamber.

-


26.

Each time the user walks away from the live imaging setup (regardless of the time), we recommend following a similar checklist to maximize endurance of the experiment.

CRITICAL: The developmental stage at the onset, as well as the total duration of imaging, should depend on the biological process under study. Our example dataset is approximately 3 h long; however, for study of migration and morphogenesis during early heart formation in the mouse, an adequate duration is 9–24 h depending on experimental design. Embryos should be harvested at a stage at least several hours before the biological events to be captured, to account for variability. The embryo culture medium recipe should be modified for optimal development at that stage.11,12,15
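When planning run length, it can help to convert duration into the number of time points to request (checklist item m) and into the supplemental ddH2O volume the pump will deliver (step 25w). The sketch below assumes the first frame is acquired at t = 0 and a constant pump flow; the helper names are hypothetical:

```python
import math


def timepoints_needed(duration_h: float, interval_min: float) -> int:
    """Frames required to span duration_h, counting the first frame at t = 0."""
    return math.floor(duration_h * 60 / interval_min) + 1


def supplement_volume_ml(duration_h: float, flow_ul_per_min: float) -> float:
    """ddH2O volume delivered by the pump over the whole run (mL)."""
    return duration_h * 60 * flow_ul_per_min / 1000.0


def syringe_runtime_h(syringe_ml: float, flow_ul_per_min: float) -> float:
    """Hours until a syringe of syringe_ml empties at a constant flow."""
    return syringe_ml * 1000.0 / flow_ul_per_min / 60.0


for hours in (3, 9, 24):
    print(f"{hours} h at 6-min intervals: {timepoints_needed(hours, 6)} time points; "
          f"ddH2O at 5 uL/min: {supplement_volume_ml(hours, 5):.1f} mL")
print(f"20 mL syringe at 5 uL/min lasts ~{syringe_runtime_h(20, 5):.1f} h")
```

At the suggested starting flow of ∼5 μL/min, a 24 h run draws about 7.2 mL, well within a single 20 mL syringe; if the flow rate is titrated upward, the same arithmetic shows when a refill would be needed.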

-

27.When the experiment is finished, take care to follow the reverse of the setup steps. In addition:

-

a.Consider rechecking pH and density or specific gravity, and note these values for titration of CO2 and supplemental ddH2O flow rate in future live imaging runs.

-

b.Ensure sample chamber and all fittings that contact the culture medium are adequately sanitized with 10% bleach and/or 70% ethanol. We recommend periodic disassembly of the sample chamber for scrubbing, immersion, and/or sonication of its components (where appropriate).


Pause point: After data are collected, processing can commence at any time. Remember to back up each raw dataset after it is collected. The remaining steps in this protocol can be followed as written, or easily adapted to images acquired on microscopy equipment other than that described above. Since data processing can proceed at the user's pace, there are no further Pause points in the protocol.

CRITICAL: In planning image acquisitions (#22–25 above), users must consider the overall goals of their experiments, size and accessibility of region(s) of interest (ROI) within the specimen, the microscope they are using, their photon budget, as well as other factors (Figure 3A). Time lapse imaging usually requires trade-offs between temporal and spatial resolution, as finer time granularity may limit the available time to acquire images/views for each frame and vice-versa.

Note: Our own experience and empirical testing have informed our typical acquisition of two channels (488 nm, 561 nm) with 2–3 views offset by 72°–110° about the y-axis. This multiview setup is compatible with 6-min interval imaging of early mouse embryos on a Zeiss Z.1. We initially process our raw images with single-view deconvolution and enable additional de-blurring, since the alternative approach (multiview deconvolution) is inefficient when fusing fewer than 4 views.16 Users should critically examine their own experimental goals and equipment before deciding on an imaging plan. Iterative planning, informed by experience gained during imaging and processing, helps optimize an experiment's photon budget and recovery of high signal-to-noise data, as well as downstream user and CPU time.
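The temporal/spatial trade-off can be checked before imaging by estimating how long one time point takes to acquire and comparing it with the interval. This is a minimal sketch under a simplified timing model (every channel imaged at every slice of every view, plus a fixed per-view overhead for rotation and settling); the exposure, slice count, and overhead values are hypothetical placeholders, not Z.1 specifications:

```python
def seconds_per_timepoint(channels, views, slices, exposure_s, view_overhead_s=0.0):
    """Rough acquisition time for one time point under a simple model:
    each channel is exposed once per slice in every view, and each view
    adds a fixed overhead (stage rotation, settling)."""
    return channels * views * slices * exposure_s + views * view_overhead_s


def fits_interval(interval_min, **acq):
    """True if one time point can be acquired within the imaging interval."""
    return seconds_per_timepoint(**acq) <= interval_min * 60


# Hypothetical values: 2 channels, 3 views, 600 slices, 30 ms exposure,
# ~5 s per view for stage rotation and settling.
plan = dict(channels=2, views=3, slices=600, exposure_s=0.030, view_overhead_s=5.0)
print(f"{seconds_per_timepoint(**plan):.0f} s per time point; "
      f"fits 6-min interval: {fits_interval(6, **plan)}")
```

Adding views or slices, or shortening the interval, quickly consumes the available time per frame; the remaining headroom is also where photon budget (exposure and laser power) can be traded against signal-to-noise.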

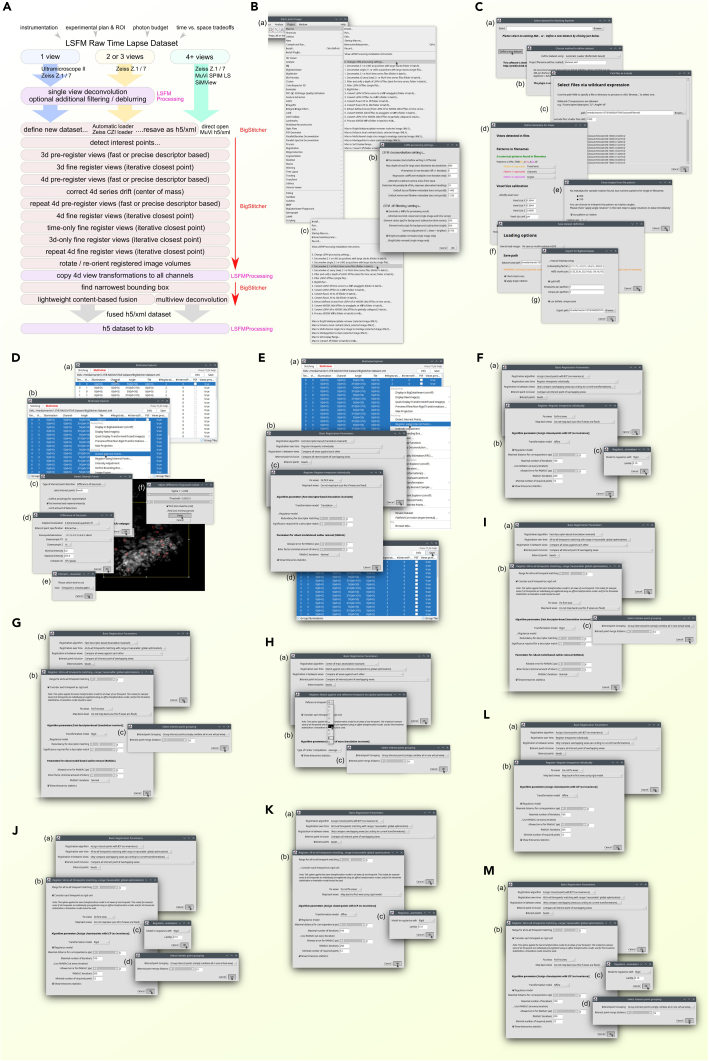

Figure 3.

Deconvolution, filtering, and multiview registration

(A) Pre-Processing Workflow Overview (Steps before 28): Visual representation of the recommended workflow for image acquisition and considerations, including setup and planning for multiview imaging.

(B) Initial Image Deconvolution and Filtering (Steps 28–30): Setup, parameter adjustment, and execution of LSFMProcessing macros in Fiji for deconvolution.

(C) Data Import and Pre-processing for BigStitcher (Step 32): Steps for importing and re-saving data in the BigStitcher-friendly h5/xml format.

(D) Interest Points Detection (Step 33): Process of detecting interest points within the datasets for image registration, with adjustment of detection parameters shown.

(E) Initial 3D Pre-Registration Using Interest Points (Step 34): Pre-registration in 3D using interest points detected earlier, detailing settings adjustments for optimal registration.

(F) Fine 3D Registration (Step 35): Detailed view of the fine registration process in 3D, with specific algorithm adjustments.

(G) Initial 4D Pre-Registration (Step 36): Setup and pre-registration across time points in 4D, using rigid transformation.

(H) 4D Series Undrift Using Center of Mass (Step 37): Procedure for correcting positional drift over time using the center of mass algorithm, selecting a mid-sequence reference time point.

(I) Repeating 4D Pre-Registration (Step 38): Repeat of the initial 4D pre-registration to refine alignment after undrift, emphasizing increased time point matching range.

(J) Fine 4D Registration (Step 39): Fine registration in 4D, focusing on optimizing view and time point overlap, with rigid or affine transformations.

(K) Unconstrained 4D Registration for Improved Tracking (Step 40): Advanced registration step to enhance tracking accuracy by allowing more flexible correspondence between time points (without view constraints).

(L) Re-Registration in 3D Unconstrained in Time (Step 41): Re-registration in 3D without time constraints, aimed at improving the fit of multiview datasets across different registration stages.

(M) Final Fine 4D Registration (Step 42): Completion of the registration process with fine adjustments in 4D, maximizing alignment and transformation accuracy over extended time points and views.

Deconvolution and filtering

Timing: 2 days (30 min user time)

In this step, our workflow employs single-view CPU-based deconvolution and additional de-blurring, which are followed by registration in the next step. In other words, raw images from each view will be processed prior to registering and fusing those images into a solid in toto volume.

Optional: For datasets with 4 or more views, if desired, skip to #32 and use Multiview Deconvolution in #46. Users should consult our recommended workflow (Figure 3A) prior to advancing to this step, especially if acquiring more than 3 views (e.g., SiMView, MuVi SPIM LS) or if using different instrumentation than we describe here.

-

28.

Open Fiji, install LSFMProcessing macros as instructed (https://github.com/mhdominguez/LSFMProcessing), and adjust deconvolution settings (Figure 3Ba-b, Plugins → Macros → 0. Change LSFM processing settings...).

Note: For 64 GB RAM/heap and 4-megapixel image slices, we recommend a maximum block depth of 320 slices; for 120 GB RAM/heap, we recommend a maximum block depth of 480 slices. Within the same settings window, adjust filtering settings as desired, including the application of additional deblurring, range compression to 8-bit, and/or stack depth uniformity.
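To adapt the block depth to other slice sizes or RAM, it may help to estimate the in-memory size of one block. The sketch below assumes 32-bit float voxels during deconvolution (an assumption, not a documented property of LSFMProcessing). Under that assumption, the recommended depths correspond to a heap that holds roughly a dozen or more copies of a block, a plausible margin for an iterative deconvolution that keeps several working arrays; this is an interpretation of the recommended values rather than a rule from the macros:

```python
def block_size_gb(megapixels_per_slice, slices, bytes_per_voxel=4):
    """In-memory size (GB, 1e9 bytes) of one deconvolution block,
    assuming 32-bit float voxels (bytes_per_voxel=4) during processing."""
    return megapixels_per_slice * 1e6 * slices * bytes_per_voxel / 1e9


for heap_gb, depth in ((64, 320), (120, 480)):
    block = block_size_gb(4, depth)
    print(f"{heap_gb} GB heap, {depth}-slice block: {block:.2f} GB per copy "
          f"(heap holds ~{heap_gb / block:.1f} copies)")
```

For a camera with a different slice size, keeping a similar heap-to-block ratio is a reasonable starting point, to be confirmed empirically.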

-

29.

Run deconvolution on the raw 4D dataset (Figure 3Bc, Plugins → Macros → 1. Deconvolve Z.1 time series (folder in batch)…). Follow instructions to select input and output folders.

-

30.

If desired, run post-deconvolution filtering (2. Filter and unify z-depth of LSFM .tif files (best for time series; folder in batch)…).

Note: As shown above in #28, user settings will affect which methods are used in this step. The output folder of deconvolution is the input folder in this step. This step’s output will be written to a new “Filtered” sub-folder.

CRITICAL: Ensure ample disk space is available on destination drive at each step. Expect deconvolved and filtered image sets each to consume similar space as the original raw images.
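Because the deconvolved and filtered sets each take roughly as much space as the raw data, a quick estimate of raw size indicates the free space needed before starting. A minimal sketch with hypothetical acquisition dimensions (2048 × 2048 px, 600 slices, 16-bit, 2 channels, 3 views, 31 time points); substitute your own values, and note that if filtering compresses to 8-bit, that copy will be roughly half the size:

```python
def stack_bytes(x_px, y_px, slices, bytes_per_px=2):
    """Bytes in one z-stack (one view, one channel, one time point)."""
    return x_px * y_px * slices * bytes_per_px


def dataset_tb(timepoints, channels, views, x_px, y_px, slices, bytes_per_px=2):
    """Raw dataset size in TB (1e12 bytes)."""
    n_stacks = timepoints * channels * views
    return n_stacks * stack_bytes(x_px, y_px, slices, bytes_per_px) / 1e12


# Hypothetical run: 31 time points (3 h at 6 min), 2 channels, 3 views,
# 2048 x 2048 px, 600 slices, 16-bit.
raw = dataset_tb(31, 2, 3, 2048, 2048, 600)
# Raw + deconvolved + filtered, each of similar size.
print(f"raw ~{raw:.2f} TB; raw + deconvolved + filtered ~{3 * raw:.2f} TB")
```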

Multi-view fusion

Timing: 2 days (2–8 h user time)

This step imports image data into BigStitcher, a Fiji plugin for registering (aligning) all image stacks together in 4d, and fusing them into a single volume (free from motion artifact, drift, and jitter) for each time point and channel.

Optional: Datasets with 4 or more views (e.g., those collected on SiMView, MuVi SPIM LS, and similar microscopes) can take advantage of multiview deconvolution in BigStitcher rather than single-view deconvolution prior to BigStitcher import (Figure 3A). If this is the case, the user can import raw acquired image data and re-save as h5/xml (e.g., SiMView) or can use images already collected in h5/xml format (e.g., MuVi SPIM), and then proceed here for BigStitcher registration.

-

31.

In Fiji, run BigStitcher (Plugins → BigStitcher → BigStitcher).

-

32.Import outside data and re-save in BigStitcher/BDV format (Figure 3C):

-

a.Click Define a new dataset.

-

b.Using Automatic Loader, proceed ("OK") to specify the input files. If the user is importing raw unprocessed images with the intention of fusing with multiview deconvolution, another import method (e.g., Zeiss Lightsheet) may be appropriate.

-

c.Excluding files below 1,000 kB (to exclude log files and PSFs), choose the input directory, which should be the Filtered folder created in #30 above, or the deconvolved output folder (#29 above) if skipping step #30.

-

d.Choose filename patterns to identify different channels, time points, and views (T = time point, C = channel, A = angle, as in Figure 3Cd).

-

e.Confirm that angle information is correctly parsed by the BigStitcher importer (Figure 3Ce).

-

f.Choose re-save h5/xml output format, and select the dataset export path (Figure 3Cf).

CRITICAL: Ensure ample disk space is available on destination drive.


g.Confirm h5/xml settings (Figure 3Cg). We recommend splitting datasets by time point and view setup (i.e., combination of channel and angle).
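Before import, it can be worth confirming that every combination of time point, channel, and angle is present, since a missing stack may only surface later during registration. The sketch below assumes a hypothetical naming scheme (`..._T0001_C1_A2.tif`) that uses the same T/C/A letters as the BigStitcher filename pattern; adjust the regular expression to match your actual files:

```python
import re
from itertools import product

# Hypothetical naming scheme: the T/C/A tags mirror the BigStitcher pattern
# letters, but the exact layout of your output filenames may differ.
PATTERN = re.compile(r"T(\d+)_C(\d+)_A(\d+)\.tif$")


def missing_stacks(filenames):
    """Return (t, c, a) combinations absent from an otherwise full grid."""
    found = set()
    for name in filenames:
        m = PATTERN.search(name)
        if m:
            found.add(tuple(int(g) for g in m.groups()))
    if not found:
        return []
    ts, cs, angles = (sorted({key[i] for key in found}) for i in range(3))
    return sorted(set(product(ts, cs, angles)) - found)


names = [f"embryo_T{t:04d}_C{c}_A{a}.tif"
         for t in range(3) for c in range(2) for a in range(3)]
names.remove("embryo_T0001_C1_A2.tif")
print(missing_stacks(names))  # [(1, 1, 2)]
```

This only checks combinations of values that appear at least once, so a time point missing from every channel and angle will not be flagged; comparing against the number of time points requested at acquisition covers that case.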


-

33.Detect interest points for registration (Figure 3D):

-

a.When import and re-save is complete, BigStitcher will open the dataset (Figure 3Da).

-

b.In Multiview Explorer, sort/rank images by Channel, then select all images belonging to an appropriate channel (e.g., nuclei, puncta). Right-click and select Detect interest points from the Multiview Explorer context menu (Figure 3Db).

-

c.If using an 8-bit dataset, choose Set minimal and maximal intensity; otherwise, proceed (“OK”) with Difference-of-Gaussian detection (Figure 3Dc).

-

d.Select 2× in Downsample XY (Figure 3Dd). If using 8-bit, choose 0 and 255 as the minimal and maximal intensities. Note: Different Downsample options will affect accuracy and computation time, and can be tested empirically for datasets of different size and resolution.

-

e.When prompted, select the time point and view you would like to visualize for detection of interest points (Figure 3De). Note: Typically, Timepoint 0, Viewsetup 0 works. However, if the signal intensity/quality declines through the dataset, we recommend choosing an intermediate time point.

-

f.Moving/resizing the ROI box over the sample data, adjust Sigma (radius of the Gaussian) and Threshold (intensity of the Gaussian) to maximize correct detections while minimizing noisy/incorrect detections (e.g., those in the void away from the sample). Note: For our acquisitions, we use a Sigma of ∼5 pixels, and for our 8-bit dataset we adjust the Threshold slider to near 0.002 (Figure 3Df). Troubleshooting 2: Careful selection of interest point detection parameters can markedly improve registration results. The user must interactively move through the z-stack to find the sigma and threshold settings that minimize background detections.

-

g.Save the dataset in Multiview Explorer. Frequent saving between subsequent steps is recommended.
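To build intuition for how Sigma and Threshold interact, the Difference-of-Gaussian idea can be reproduced on a small synthetic volume with NumPy and SciPy. This is an illustrative re-implementation, not BigStitcher's code: the width ratio `k = 1.6` and the rescaling of intensities from the minimal/maximal values to 0–1 are assumptions (we assume the latter is why a Threshold near 0.002 is meaningful for an 8-bit dataset):

```python
import numpy as np
from scipy import ndimage


def detect_dog_peaks(volume, sigma=5.0, threshold=0.002,
                     min_intensity=0.0, max_intensity=255.0, k=1.6):
    """Difference-of-Gaussian spot detection on a 3D array (z, y, x).

    Intensities are rescaled so that min_intensity -> 0 and
    max_intensity -> 1. k is the ratio of the two Gaussian widths
    (1.6 here; BigStitcher's internal ratio may differ).
    """
    img = (volume.astype(np.float64) - min_intensity) / (max_intensity - min_intensity)
    dog = ndimage.gaussian_filter(img, sigma) - ndimage.gaussian_filter(img, k * sigma)
    # Keep voxels that are maxima of their 3x3x3 neighbourhood and exceed the threshold.
    is_peak = ndimage.maximum_filter(dog, size=3) == dog
    return np.argwhere(is_peak & (dog > threshold))


# Synthetic 8-bit-range "nucleus": a Gaussian blob centred at voxel (20, 20, 20).
z, y, x = np.indices((41, 41, 41))
blob = 255.0 * np.exp(-((z - 20) ** 2 + (y - 20) ** 2 + (x - 20) ** 2) / (2 * 3.0 ** 2))
print(detect_dog_peaks(blob, sigma=3.0).tolist())  # [[20, 20, 20]]
```

Raising the threshold above the blob's DoG response removes the detection entirely, while an overly low threshold on real data admits noise peaks in the background, which is the behavior to watch for when adjusting the slider interactively.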


-

34.Pre-register in 3d (Figure 3E):

-

a.Select all images from the channel where interest points were detected. Right click in Multiview Explorer and select Register using Interest Points… (Figure 3Eb).

-

b.Comparing all views and all interest points, use the Fast descriptor-based (translation invariant) algorithm to Register timepoints individually, using the interest points found above (Figure 3Eb).


Note: On our datasets, we fit with Translation transformations, turn off Regularize model, and reduce Significance required for a descriptor match as well as Inlier factor to improve correspondences (interest points that match between images/views) in this initial pre-registration step (Figure 3Ec). Decreasing Redundancy for descriptor matching usually improves the speed of the registration.

CRITICAL: User should become familiar with the BigStitcher registration workflow. Each time Register using Interest Points… is used, a new transformation matrix is concatenated to the stack of registrations (matrices) for any image (each image/view is displayed in a distinct row in Multiview Explorer), incrementing the # Registrations for that line. At the time of image fusion, each view’s matrix stack is collapsed into a single transform matrix to bring that view into alignment with the others in the fused volume. Be familiar with the Transformation model options that are available: Translation (movement in X, Y, and/or Z), Rigid (Translation plus rotation in X, Y, and/or Z), and Affine (Rigid plus scale and shear). Non-rigid transformations – those that go beyond Affine fitting (i.e. mesh/cage warp, etc.) – are not accepted during registration but can be applied during Image Fusion, leveraging corresponding interest points in the registered images/views.

CRITICAL: To undo any registration step, ensure all images/views that were registered are selected in Multiview Explorer (they normally remain selected when methods are called), then right click and select Remove Transformation→ Latest/Newest Transformation from the context menu. Do this whenever an undesired transformation occurs (i.e., view alignment worsens rather than improves) either through Register using Interest Points… or Apply Transformation(s).
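The registration stack described above can be pictured with 4×4 homogeneous matrices: each registration round appends a matrix, fusion collapses the stack into one matrix, and removing the latest transformation pops the newest entry. The NumPy sketch below illustrates this concept only; it is not BigStitcher's internal code, and it assumes that newer transforms are applied after older ones (so they multiply on the left):

```python
import numpy as np


def translation(tx, ty, tz):
    """4x4 homogeneous translation matrix."""
    m = np.eye(4)
    m[:3, 3] = (tx, ty, tz)
    return m


def rotation_z(degrees):
    """4x4 homogeneous rotation about the Z axis."""
    a = np.radians(degrees)
    m = np.eye(4)
    m[:2, :2] = [[np.cos(a), -np.sin(a)], [np.sin(a), np.cos(a)]]
    return m


def collapse(stack):
    """Compose a registration stack (oldest first) into one matrix.

    The newest transform is applied last, so it multiplies on the left.
    """
    total = np.eye(4)
    for m in stack:
        total = m @ total
    return total


stack = [translation(5, 0, 0), rotation_z(90)]   # two registration rounds
point = np.array([1.0, 0.0, 0.0, 1.0])           # homogeneous (x, y, z, 1)
print(np.round(collapse(stack) @ point, 6))      # [0. 6. 0. 1.]
stack.pop()                                      # "Remove Transformation -> Latest"
print(np.round(collapse(stack) @ point, 6))      # [6. 0. 0. 1.]
```

Because matrix products do not commute, the order of registration rounds matters, which is one reason to undo an unwanted step immediately rather than try to correct it with later transformations.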

-

35.Finely register in 3d (Figure 3F):

-

a.Select images from channel where interest points were detected. Right click in Multiview Explorer and select Register using Interest Points….

-

b.Comparing only overlapping views but all interest points, use the Assign closest-points with ICP (no invariance) algorithm to Register timepoints individually, using the interest points found above (Figure 3Fa).


Note: We typically run ICP with Rigid or preferably Affine transformation models, enabling Regularize model and disabling Use RANSAC (Figure 3Fb). We typically do not change the regularization Model or Lambda value from their default settings (Figure 3Fc).
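The core loop behind "Assign closest-points with ICP" can be sketched in a few lines: pair each moving point with its nearest fixed point, discard pairs farther apart than a maximal distance (compare the Maximal distance for correspondance setting mentioned in step 40), fit a transform to the pairs, and repeat. The sketch below uses a rigid least-squares (Kabsch) fit with NumPy and SciPy; BigStitcher additionally supports affine models and regularization, which are not reproduced here:

```python
import numpy as np
from scipy.spatial import cKDTree


def kabsch(moving, fixed):
    """Least-squares rigid transform (R, t) mapping moving -> fixed points."""
    mc, fc = moving.mean(axis=0), fixed.mean(axis=0)
    h = (moving - mc).T @ (fixed - fc)
    u, _, vt = np.linalg.svd(h)
    d = np.sign(np.linalg.det(vt.T @ u.T))       # guard against reflections
    r = vt.T @ np.diag([1.0, 1.0, d]) @ u.T
    return r, fc - r @ mc


def icp_rigid(moving, fixed, max_dist=5.0, iterations=20):
    """Rigid ICP: pair each moving point with its closest fixed point
    (ignoring pairs farther than max_dist), refit, and repeat."""
    tree = cKDTree(fixed)
    current = moving.copy()
    for _ in range(iterations):
        dist, idx = tree.query(current)
        keep = dist <= max_dist
        if keep.sum() < 3:
            break                                # too few correspondences
        r, t = kabsch(current[keep], fixed[idx[keep]])
        current = current @ r.T + t
    return current


# Fixed "interest points": a 5x5x5 grid 10 px apart; moving = slightly rotated/shifted copy.
g = np.arange(-20, 21, 10.0)
fixed = np.array(np.meshgrid(g, g, g, indexing="ij")).reshape(3, -1).T
a = np.radians(2.0)
rz = np.array([[np.cos(a), -np.sin(a), 0], [np.sin(a), np.cos(a), 0], [0, 0, 1]])
moving = fixed @ rz.T + [0.5, -0.3, 0.2]
aligned = icp_rigid(moving, fixed)
print(np.abs(aligned - fixed).max() < 1e-6)  # True
```

The example converges because the initial offset is small relative to the point spacing; when views start far apart, nearest-neighbor pairing becomes unreliable, which is why a descriptor-based pre-registration precedes ICP in this workflow.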

-

36.Pre-register in 4d (Figure 3G):

-

a.Select images from channel where interest points were detected. Right click in Multiview Explorer and select Register using Interest Points….

-

b.Comparing all views and all interest points, use the Fast descriptor-based (translation invariant) algorithm to register over time using All-to-all timepoints matching with range, with the interest points found above (Figure 3Ga).


Note: Because this is a pre-registration step, we decrease the sliding window Range for all-to-all timepoint matching to 3, and enable Consider each timepoint as rigid unit. To account for temporal shifts in specimen positioning, we use the Rigid transformation model and leave other settings similar to pre-registration in 3d above (Figure 3Gb-c).

-

37.Undrift series in 4d (Figure 3H):

-

a.Select images from channel where interest points were detected. Right click in Multiview Explorer and select Register using Interest Points….

-

b.Comparing all views and all interest points, use the Center of mass algorithm to register over time using Match against one reference timepoint (no global optimization), using the interest points found above (Figure 3Ha).

-

c.Choose a high-quality single time point in the middle of the series to use as Reference timepoint, and enable Consider each timepoint as rigid unit.
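The idea behind center-of-mass undrift can be illustrated by computing, for every time point, the translation that moves its interest-point centroid onto the reference time point's centroid. This NumPy sketch uses the mean of the interest point coordinates and synthetic data with a constant drift; BigStitcher's Center of mass implementation may differ in detail (e.g., in how the center is computed):

```python
import numpy as np


def undrift_offsets(points_by_timepoint, reference):
    """Per-time-point translation that moves each time point's
    interest-point centre of mass onto the reference time point's.

    points_by_timepoint: dict {t: (N_t, 3) array of x, y, z coordinates}.
    """
    ref_com = np.asarray(points_by_timepoint[reference]).mean(axis=0)
    return {t: ref_com - np.asarray(p).mean(axis=0)
            for t, p in points_by_timepoint.items()}


# Synthetic specimen drifting +2 px in x per time point.
rng = np.random.default_rng(0)
base = rng.uniform(0, 100, size=(200, 3))
series = {t: base + [2.0 * t, 0.0, 0.0] for t in range(5)}
offsets = undrift_offsets(series, reference=2)   # mid-series reference
print(offsets[0], offsets[4])
```

Choosing a mid-series reference keeps the largest corrections at either end of the series roughly equal, rather than accumulating them all at the final time points.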


-

38.

Repeat pre-register in 4d (Figure 3I) now that positional drift is corrected. Follow steps in #36 above. However, increase the Range for all-to-all timepoint matching in this step to at least 5 for improved precision and smoothness of temporal registration.

Optional: The above two steps (#37 and #38) can be omitted if positional drift is minimal in your dataset, but we recommend checking for this (by fusing example time points from beginning to end and viewing as 4d hyperstack) after step #36 if you intend to skip #37 and #38. All-to-all timepoints matching with range is excellent for finely registering in 3d and 4d, but slow drift in the specimen center can accumulate over longer time durations, which unnecessarily increases the final fused volume and adds artifactual movement in cell tracking.
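To see why a larger Range improves temporal smoothness at a computational cost, it helps to count which time-point pairs get compared. The sketch below assumes that "All-to-all timepoints matching with range" compares each time point with every later time point up to Range frames away; this is our illustrative reading, and BigStitcher's exact definition of the range may differ:

```python
def timepoint_pairs(n_timepoints, match_range):
    """Time-point pairs (i, j), i < j, compared when each frame is matched
    against all frames within match_range of it (illustrative reading)."""
    return [(i, j)
            for i in range(n_timepoints)
            for j in range(i + 1, min(i + match_range, n_timepoints - 1) + 1)]


for r in (3, 5):
    print(f"range {r}: {len(timepoint_pairs(31, r))} pairs for 31 time points")
```

Each frame is linked only to nearby frames, so small residual errors can still chain together across a long series; this is consistent with the slow drift described above and with the benefit of a center-of-mass undrift before the wider-range registration.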

Troubleshooting 3: Temporal or spatial gaps in registration are common in large datasets, and require additional registration steps with alternate settings.

-

39.Finely register in 4d (Figure 3J):

-

a.Select images from the channel where interest points were detected. Right click in Multiview Explorer and select Register using Interest Points….

-

b.Comparing only overlapping views but all interest points, use the Assign closest-points with ICP (no invariance) algorithm using All-to-all timepoints matching with range, with the interest points found above (Figure 3Ja).

-

c.Set Range for all-to-all timepoint matching to 3, and enable Consider each timepoint as rigid unit. Similar to step #35, use Rigid or Affine transformation models, enable Regularize model, and disable Use RANSAC (Figure 3Jb).

-


40.Finely register in 4d while each time point’s images/views are unconstrained (Figure 3K). This step improves tracking accuracy through better registration across time while not holding images/views rigidly together at each time point.

-

a.Select images from channel where interest points were detected. Right click in Multiview Explorer and select Register using Interest Points….

-

b.Comparing only overlapping views but all interest points, use the Assign closest-points with ICP (no invariance) algorithm using All-to-all timepoints matching with range, with the interest points found above (Figure 3Ka).

-

c.Set Range for all-to-all timepoint matching to 5, and disable Consider each timepoint as rigid unit. Use Affine transformation, enable Regularize model, and disable Use RANSAC (Figure 3Kb).


Note: Increase Maximal distance for correspondance (px) as needed to align images/views that are not in close proximity before this step.

-

41.

Finely re-register in 3d, now unconstrained in time. This step is paired with the above and below steps to improve overall 4d fitting of multiview datasets. Follow a similar procedure as that in step #35 above (Figure 3L).

-

42.

Complete final fine registration in 4d, using a similar procedure to step #39, except increase Range for all-to-all timepoint matching to at least 5, and use Affine transformation model (Figure 3M).

Example expected results for presentation, including multichannel maximal projections, SVF/MaMuT reconstructions, and single-channel anaglyphs (for viewing with red/blue 3d glasses).

Troubleshooting 4: ICP (iterative closest point) registrations are prone to model overfitting and may need parameter tweaking to account for large temporal or spatial movements between time points. Generally, we recommend enabling Regularize model, especially for Affine transformations.

-

43.When the single channel is satisfactorily registered in 4d, apply rigid rotation transformations to align the specimen with the XYZ coordinate system for fusion.

-

a.To examine the specimen’s current orientation, use Multiview Explorer to select all images/views from at least one time point in the now-registered channel, then right click and choose Image fusion… (Figure 4Aa).

-

b.In the Image Fusion dialog, select Display using ImageJ with Downsampling at 4 or greater, 16-bit Pixel type, Non-Rigid disabled, Content based fusion disabled, and Precompute images (Figure 4Ab).

-

c.The fused image volume will appear as a new Fiji image. From the menu, use Image → Stacks → Z Project… to create a maximum projection along Z, i.e., onto the XY plane (Figure 4Ac). Optional: We recommend creating and using keyboard shortcuts for Z Project… and other frequently used functions (Plugins → Shortcuts → Add Shortcut…).

-

d.Use the Angle tool to draw (estimate) the rotation about Z needed to align the specimen with the XY axes (Figure 4B). Type ‘M’ to measure this angle.

-

e.Return to Multiview Explorer, and select all images/views from the channel where interest points were detected to rotate the specimen:

-

f.Repeat fusion and maximum projection as above (#43a-c) to confirm proper alignment with the XY axes (Figure 4D). Note: If the specimen rotates in the wrong direction, either undo the transformation or repeat the rotation (#43e above) using the negative of the rotation angle. Each transformation step can be undone with Remove Transformation → Latest/Newest Transformation.

-

g.Use orthogonal view projections to check rotation and alignment:

-

i.Choose the window containing the newly aligned fused volume, and use Image → Stacks → Reslice [/]… with Rotate 90 degrees enabled and Start at: either Top or Left (Figure 4E).

-

ii.Create a maximum projection of the resliced image volume in either XZ or YZ planes (following #43c above) and estimate the rotations needed to align the specimen in those axes (following #43d above).

-

iii.Apply the rotations and recheck specimen alignment (following #43e-f above).

-

h.Repeat this step iteratively until the specimen is satisfactorily rotated in all axes. As an alternative to this process of fusing and measuring angles (#43a-g above), the user can apply free, interactive manual transformations in BigDataViewer (Figure 4F).
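The sign of the measured angle is a common source of "rotated the wrong way" errors, because Fiji's image Y axis points downward. The sketch below computes a signed angle from the three points of an Angle-tool selection and builds the corresponding 3x4 rotation matrix about a chosen axis, which makes it easy to see that rotating by −θ undoes +θ. It is an illustration under stated assumptions: the helper names are hypothetical, and the row-major 12-value layout is our assumption about how affine entries are written in dataset.xml, not a statement of how BigStitcher applies rotations internally.

```python
import math

import numpy as np


def signed_angle_deg(vertex, p_ref, p_target):
    """Signed angle (degrees) from arm vertex->p_ref to arm vertex->p_target.

    Points are (x, y) pixel coordinates as in Fiji, where y increases
    downward, so a positive angle here appears clockwise on screen.
    """
    ax, ay = p_ref[0] - vertex[0], p_ref[1] - vertex[1]
    bx, by = p_target[0] - vertex[0], p_target[1] - vertex[1]
    return math.degrees(math.atan2(ax * by - ay * bx, ax * bx + ay * by))


def rotation_affine(axis, degrees, center=(0.0, 0.0, 0.0)):
    """3x4 affine matrix rotating by `degrees` about `axis` ('x', 'y' or 'z')
    through `center`; counter-clockwise when viewed from the positive axis
    in a right-handed (y-up) coordinate system."""
    t = math.radians(degrees)
    c, s = math.cos(t), math.sin(t)
    R = np.array({
        "x": [[1, 0, 0], [0, c, -s], [0, s, c]],
        "y": [[c, 0, s], [0, 1, 0], [-s, 0, c]],
        "z": [[c, -s, 0], [s, c, 0], [0, 0, 1]],
    }[axis], dtype=float)
    ctr = np.asarray(center, dtype=float)
    # Translation chosen so that `center` maps onto itself.
    return np.hstack([R, (ctr - R @ ctr)[:, None]])


def as_row_major_string(M):
    """12 space-separated values, row by row (assumed <affine> layout)."""
    return " ".join(f"{v:.6f}" for v in np.asarray(M).ravel())
```

For example, an Angle-tool selection whose vertex is at the specimen's center, with one arm along the image X axis and the other along the specimen's long axis, gives the Z rotation to apply; if the result looks mirrored, the sign convention differs and the negated angle is the one to use.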

-

44.Copy view transformations from the registered channel (with the interest points) to all other channels (Figure 4G).

-

a.Save dataset in Multiview Explorer, then close.

-

b.Open the console/command line and navigate to dataset folder (in Kubuntu’s Dolphin, press F4 to toggle the console panel that appears below folder navigation).

-

c.Ensure Perl is installed (on Windows) and that dataset_folder_copy_view_transformations.pl is copied to that folder.

-

d.Run in terminal: $ perl dataset_folder_copy_view_transformations.pl

Note: The script will modify dataset.xml and print the correlated view setups for which it updated the transformation matrix stack (Figure 4G).

-

e.Re-open dataset in BigStitcher. Confirm that all channels are now co-registered.
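The internals of dataset_folder_copy_view_transformations.pl are not described here. As a hedged illustration of what copying view transformations means inside a BigDataViewer/BigStitcher dataset.xml, the Python sketch below copies the full ViewTransform stack from each view setup of a source channel onto the setups of other channels that share the same angle, illumination, and tile, time point by time point. It assumes the usual XML layout (ViewSetup elements with an attributes block of channel/angle/illumination/tile ids, and ViewRegistration elements carrying timepoint and setup attributes); the function names are hypothetical, and this is not a replacement for the authors' script.

```python
import xml.etree.ElementTree as ET
from copy import deepcopy


def _setup_attributes(root):
    """Map ViewSetup id -> {'channel': ..., 'angle': ..., ...} from dataset.xml."""
    attrs = {}
    for vs in root.iter("ViewSetup"):
        node = vs.find("attributes")
        attrs[vs.findtext("id")] = (
            {child.tag: child.text for child in node} if node is not None else {}
        )
    return attrs


def copy_view_transformations(root, source_channel):
    """Copy every ViewTransform of setups in `source_channel` onto the setups of
    other channels sharing the same non-channel attributes, per time point.

    Returns a list of (timepoint, source_setup, target_setup) that were updated.
    """
    attrs = _setup_attributes(root)
    regs = {
        (vr.get("timepoint"), vr.get("setup")): vr
        for vr in root.iter("ViewRegistration")
    }

    def view_key(setup_id):
        # Everything except the channel identifies "the same view".
        return tuple(sorted((k, v) for k, v in attrs[setup_id].items() if k != "channel"))

    updated = []
    for (tp, src_id), src_reg in regs.items():
        if attrs.get(src_id, {}).get("channel") != source_channel:
            continue
        for dst_id, dst_attrs in attrs.items():
            if dst_attrs.get("channel") == source_channel:
                continue
            if view_key(dst_id) != view_key(src_id) or (tp, dst_id) not in regs:
                continue
            dst_reg = regs[(tp, dst_id)]
            for vt in dst_reg.findall("ViewTransform"):
                dst_reg.remove(vt)  # drop the target's old transform stack
            for vt in src_reg.findall("ViewTransform"):
                dst_reg.append(deepcopy(vt))
            updated.append((tp, src_id, dst_id))
    return updated
```

A cautious usage pattern would be tree = ET.parse("dataset.xml"), then copy_view_transformations(tree.getroot(), "0"), then tree.write("dataset_copied.xml", encoding="UTF-8", xml_declaration=True), and opening the copy in BigStitcher before replacing the original.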

-

45.Set up the narrowest bounding box (Figure 4H):

-

a.Select all images/views in Multiview Explorer (any image selection is acceptable).

-

b.Right click in Multiview Explorer and select Define Bounding Box… from the context menu. Choose Define using the BigDataViewer interactively (Figure 4Hb).

-

c.Adjust the XYZ extremes of the bounding box, visualizing specimen and box with BigDataViewer (the bounding box interior is shaded purple).

-

d.Narrow the boundaries as much as possible without excluding regions of the specimen.

-

CRITICAL: Ensure that the entire 4d specimen (or at least the region of interest) is included in the box. Be careful to check that the entire Z (mouse wheel +/− Ctrl) and T extents of the specimen are in-bounds of the box.
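Because the fused output scales with the product of the box extents, time points, and channels, a quick size estimate shows what a narrower box (together with the Z anisotropy and pixel type chosen in step #46) saves in memory and disk space. A minimal sketch with hypothetical names and numbers: it treats the box corners as inclusive full-resolution pixel coordinates (an assumption) and ignores HDF5/KLB compression and any padding the software may add.

```python
import math


def fused_size_bytes(bbox_min, bbox_max, n_timepoints, n_channels,
                     z_anisotropy=4.0, bytes_per_pixel=2, downsampling=1):
    """Approximate uncompressed size of a fused 4d export.

    bbox_min / bbox_max are the (x, y, z) corners of the bounding box in
    isotropic (XY-sized) pixels, treated as inclusive. Z is additionally
    divided by z_anisotropy; every axis is divided by `downsampling`.
    """
    nx, ny, nz = (hi - lo + 1 for lo, hi in zip(bbox_min, bbox_max))
    nx = math.ceil(nx / downsampling)
    ny = math.ceil(ny / downsampling)
    nz = math.ceil(nz / (downsampling * z_anisotropy))
    return nx * ny * nz * bytes_per_pixel * n_timepoints * n_channels


def gib(n_bytes):
    """Convert bytes to GiB for display."""
    return n_bytes / 1024 ** 3
```

For a hypothetical 1000 × 1000 × 400-pixel box, one time point and one channel, 16-bit output with 4× Z anisotropy needs 200,000,000 bytes, whereas a Z-isotropic export of the same box needs four times as much; trimming 10% from each XY edge of the box removes a further 36%.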

-

46.Export in toto image volumes using lightweight content based fusion (or multiview deconvolution) (Figure 4I).

-

a.Select all images in Multiview Explorer (Ctrl+A) (Figure 4Ia).

-

b.Right click in Multiview Explorer and select Image Fusion…. Use 16-bit pixels (or 8-bit if previously range-compressed), Linear Interpolation, and Cached image loading (to decrease heap memory usage). Configure Image Fusion settings:

-

i.If using our multiview-reconstruction.jar plugin, choose the orientation of the fusion. Current XYZ orientation will create a Z-stack in the as-seen orientation in BigDataViewer, whereas other orientation options will create side or top views.

-

ii.Set your desired output Anisotropy in Z (we use 4× anisotropy for all fusions).

CRITICAL: If using Anisotropy in Z (see above), fuse with the most appropriate view/angle to maximize resolution in the axis of expected cell movement for downstream tracking. Optional: If stacks are Z isotropic at time of fusion, consider reslicing and rotating the stacks after fusion – to create the most desirable view for tracking – then manually downscale in Z.

-

iii.Disable (faster, best for testing fusions) or Enable (slower, best for final fused output) content based fusion. Note: We recommend lightweight content based fusion from our multiview-reconstruction.jar, using 2× downsampled weights.

-

iv.Save fusions for each time point and channel in xml/hdf5 format (Figure 4Ib). Optional: If fusing 4 or more views, (MultiView) Deconvolution… may improve the quality of fused image volumes compared with [lightweight] content based fusion. To generate theoretical point spread function (PSF) files, run step 1 of LSFMProcessing with deconvolution itself disabled (Plugins → Macros → 0. Change LSFM processing settings… to disable deconvolution, then Plugins → Macros → 1. Deconvolve Z.1 time series (folder in batch)…). This will create _psf.tif files in the raw dataset directory for each view or channel requiring a separate PSF. Follow the BigStitcher instructions to Assign… those external PSF .tif files to each view setup (channel/angle combination). With multiview deconvolution, we typically use default settings with Optimization II and a Z-anisotropy of 1 (i.e., isotropic in Z). When fusions are exported as Z isotropic, we subsequently generate front and side views with an anisotropy of 4 by downscaling the Z-axis (preceded by optional reslicing if an orthogonal view is desired) using batch processing. Z-isotropic output images tend to be large and extremely costly to process compared with Z-anisotropic images.

-

c.In the subsequent Save as new XML Project options, enable split hdf5, with 1 time point per partition and 1 setup per partition (Figure 4Ic). Ensure ample free memory and disk space on the target drive before proceeding to Image Fusion or Multiview Deconvolution. Note: Newer, official versions of multiview-reconstruction.jar (this does not apply to our GitHub-supplied version) may offer additional options at the time of fusion. The default settings are often appropriate, but we recommend consulting the online BigStitcher documentation for assistance.
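For the Z-isotropic route described in step #46 (reslice if an orthogonal view is wanted, then downscale Z by 4), the two array operations involved are sketched below in NumPy. This assumes a fused stack already loaded as a (Z, Y, X) array; the helper names are hypothetical, block averaging is only one possible downscaling choice, and the authors' own batch processing is not specified here.

```python
import numpy as np


def reslice_side_view(vol_zyx):
    """Reorder a (Z, Y, X) stack to (X, Y, Z): one slice per original X
    position, each slice showing Y rows by Z columns. Fiji's Reslice may
    additionally flip or rotate the result depending on its options."""
    return np.transpose(vol_zyx, (2, 1, 0))


def downscale_axis0(vol, factor=4):
    """Average consecutive blocks of `factor` slices along axis 0 (the stack
    depth), dropping an incomplete trailing block; the dtype is preserved."""
    n = (vol.shape[0] // factor) * factor
    blocks = vol[:n].reshape(n // factor, factor, *vol.shape[1:])
    return np.round(blocks.mean(axis=1)).astype(vol.dtype)
```

Applying downscale_axis0 to either the original stack or its resliced version yields a 4× Z-anisotropic volume that is one quarter of the isotropic size, which is the main reason the authors recommend anisotropic output for tracking.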

-

47.For downstream compatibility and improved lossless compression, use LSFMProcessing macros to convert .h5 files in the fused dataset folder(s) to klb format.

-

a.Open Plugins → Macros → 4. Convert fused .h5 to .klb… (Figure 4J).

-

b.Ensure ample free space on disk before proceeding.

-

c.Follow prompts to choose folder location of the h5/xml dataset.

-

Note: This requires a complete installation (including dependencies) of LSFMProcessing.

Optional: If using our sample fused dataset to rehearse tracking and quantification steps, download all .klb files (ignore the .czi.bz2 files) from our repository at Data Dryad (https://datadryad.org/stash/dataset/doi:10.5061/dryad.nk98sf823) to a unique folder location. Proceed from #48 below. For creating the dataset.xml file, note that the pixel spacing in this dataset is 0.3044 microns in XY and 2.0 microns in Z.

-

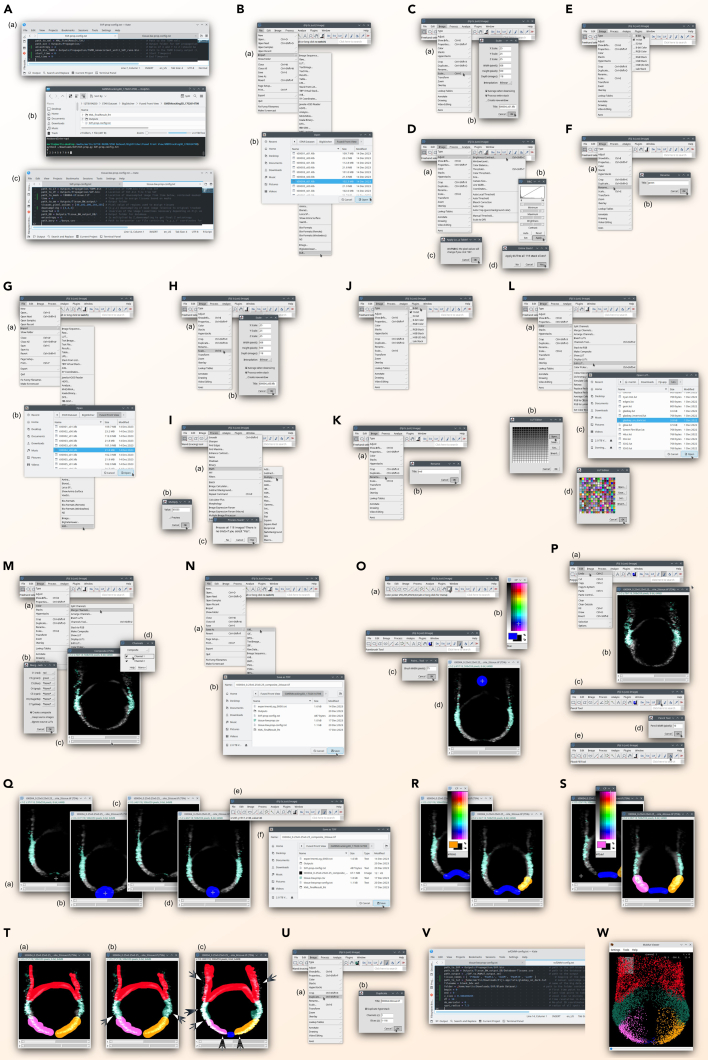

48.Recommended: Create a BigDataViewer dataset.xml file to pair with klb files generated in the above step (Figure 5A).

-

a.Open the original multiview (not fused) dataset.xml in Kate or other text editor (Figure 5Aa). Search (Ctrl+F) for voxelSize and observe the X or Y pixel dimension value from the <size> tag as shown, which is populated by three space-delimited (floating point) numbers that reflect the X Y Z voxel size, usually in μm.

-

b.Open the KLB importer via the Fiji menu (Figure 5Ab): Plugins → BigDataViewer → Open KLB (or use Plugins → Macros → 5. Create .klb BigDataViewer dataset.xml file…).

-

c.In the middle-lower portion of the dialog (Figure 5Ac), enable Manually specify pixel spacing (μm).

-

i.Enter the X and Y pixel unit sizes to the right of Manually specify pixel spacing (μm).

-

ii.Compute and enter the Z voxel size by multiplying this X voxel dimension by the Z anisotropy factor that was chosen during fusion (#46 above).

-

d.At the top of the dialog (Figure 5Ac), to the far right of Template file, click … and choose the first klb image, usually named t00000_s00.klb. Set up the filename pattern for all subsequent images:

-

i.In the table below, modify Color channel to be file path tagged with ‘s’ (no quotes) and Time to be tagged with ‘t’.

-

ii.Set First for both Color channel and Time to 0, and set Stride for both to 1.

-

iii.Set Last for Color channel or Time to [number of channels or time points – 1], as observed in the t00??? or s0? values among klb files.

-

e.Click Save XML to write a new dataset_klb.xml file that can be used in BigDataViewer (or BigStitcher) with the klb files. Optional: After the .klb dataset is created, the user may opt to archive/back up the pre-fusion multiview h5/xml dataset files (∗.h5 and [usually] dataset.xml used in steps #31–45 above) and the raw (czi, tif, or h5) data (from steps #1–27 above), while deleting intermediate files that are easily and algorithmically reproducible (such as deconvolved image stacks and/or post-deconvolution filtered image stacks). After completing step #48, the fused h5/xml dataset can be considered an intermediate step and deleted or archived; if not using klb/xml, the user will need to preserve the fused h5/xml dataset for downstream use in MaMuT.
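Steps #48a and #48c–d reduce to reading one value from an XML file, one multiplication, and counting files. The Python sketch below assumes the voxelSize/size layout described in step #48a and klb names of the form t00000_s00.klb as in step #48d; the helper names are hypothetical. For example, with 0.3044 μm XY pixels and a Z anisotropy of 4, the Z spacing to enter would be 0.3044 × 4 = 1.2176 μm.

```python
import re
import xml.etree.ElementTree as ET

KLB_NAME = re.compile(r"t(\d+)_s(\d+)\.klb$")


def voxel_size_from_xml(xml_text):
    """(x, y, z) from the first <voxelSize><size> element of a dataset.xml."""
    size = ET.fromstring(xml_text).find(".//voxelSize/size")
    if size is None:
        raise ValueError("no <voxelSize><size> element found")
    x, y, z = (float(v) for v in size.text.split())
    return x, y, z


def fused_z_spacing(xy_spacing, z_anisotropy):
    """Z voxel size of the fused stack: XY voxel size times the Z anisotropy."""
    return xy_spacing * z_anisotropy


def klb_ranges(names):
    """Return (last_time, last_channel, missing) for names like t00000_s00.klb.

    `missing` lists (t, s) combinations absent from the folder; with First = 0
    and Stride = 1, the filename pattern expects every combination to exist.
    """
    found = set()
    for name in names:
        m = KLB_NAME.search(name)
        if m:
            found.add((int(m.group(1)), int(m.group(2))))
    if not found:
        raise ValueError("no t#####_s##.klb files found")
    last_t = max(t for t, _ in found)
    last_s = max(s for _, s in found)
    missing = [(t, s) for t in range(last_t + 1) for s in range(last_s + 1)
               if (t, s) not in found]
    return last_t, last_s, missing
```

In practice, the names could come from pathlib, e.g. [p.name for p in Path(folder).glob("*.klb")]; last_t and last_s are the Last values for Time and Color channel, and a non-empty missing list flags gaps that would break the filename pattern.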

-

49.Generate projection images and anaglyphs for visual presentation of the 4d dataset (Figures 5B and 5C).

-

a.Open maximum intensity projection (MIP) exporter from the Fiji menu (Figure 5Ba): Plugins → Macros → 5. Convert t0XXXX .klb/.tif files to anaglyphs or MIPs (folder in batch)….

-

b.Select the folder containing the klb dataset.

-

c.In the KLB/TIF processing settings dialog (Figure 5Bc), choose the desired views and projections. Note: Custom partial z-MIP settings… can be used to generate cutaway views of the specified slices; however, the user must know the slice numbers before opening the MIP exporter. We recommend previewing a few .klb images throughout the time series to determine whether cutaway views would help show morphogenetic phenomena relevant to the goals of the study. These previews will allow the user to find the slice numbers to enter in Custom partial MIP settings….

-

d.Confirm that a new folder, “MIPs”, was created in the klb dataset directory, and that tif files have been generated for each desired view or projection (Figure 5Bd).

CRITICAL: Ensure ample disk space is available.
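The exporter's internals are not described here. As a hedged sketch of the underlying operations, the NumPy code below computes a full maximum intensity projection, a partial z-MIP (cutaway) over a chosen slice range, and a simple red/cyan anaglyph built from two MIPs of the stack sheared in opposite directions, a cheap stand-in for small left/right viewing angles. It assumes each time point is already loaded as a (Z, Y, X) array; the function names and the default shear are hypothetical, and this is not the LSFMProcessing macro's algorithm.

```python
import numpy as np


def mip(vol_zyx, z_start=None, z_stop=None):
    """Maximum intensity projection along Z. Passing z_start/z_stop (0-based,
    stop exclusive) gives a partial MIP, i.e. a cutaway through those slices."""
    return vol_zyx[z_start:z_stop].max(axis=0)


def sheared_mip(vol_zyx, shift_per_slice):
    """MIP after shifting slice z horizontally by round(z * shift_per_slice)
    pixels (zero-filled); approximates viewing the stack from a small angle."""
    nz, ny, nx = vol_zyx.shape
    out = np.zeros((ny, nx), dtype=vol_zyx.dtype)
    for z in range(nz):
        dx = int(round(z * shift_per_slice))
        if abs(dx) >= nx:
            continue  # slice shifted completely out of frame
        shifted = np.zeros_like(vol_zyx[z])
        if dx >= 0:
            shifted[:, dx:] = vol_zyx[z][:, : nx - dx]
        else:
            shifted[:, :dx] = vol_zyx[z][:, -dx:]
        np.maximum(out, shifted, out=out)
    return out


def red_cyan_anaglyph(vol_zyx, shift_per_slice=0.2):
    """8-bit RGB anaglyph: left-eye view in red, right-eye view in green+blue.
    Swap the sign of shift_per_slice if depth appears inverted."""
    left = sheared_mip(vol_zyx, +shift_per_slice).astype(np.float64)
    right = sheared_mip(vol_zyx, -shift_per_slice).astype(np.float64)
    lo = min(left.min(), right.min())
    hi = max(left.max(), right.max())
    scale = 255.0 / (hi - lo) if hi > lo else 0.0

    def to8(img):
        # Shared intensity scaling keeps both eyes' views comparable.
        return np.round((img - lo) * scale).astype(np.uint8)

    r8 = to8(right)
    return np.stack([to8(left), r8, r8], axis=-1)
```

Running mip on every time point of a series, or mip with the slice range found while previewing, gives the per-frame images that the exporter writes into the MIPs folder; each 16-bit frame of a large embryo stack produces a comparatively small 2D image, but thousands of them still add up, hence the disk space warning.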

-