Abstract

The incorporation of ethical settings in Automated Driving Systems (ADSs) has been extensively discussed in recent years with the goal of enhancing potential stakeholders’ trust in the new technology. However, a comprehensive ethical framework for ADS decision-making, capable of merging multiple ethical considerations and investigating their consistency, is currently missing. This paper addresses this gap by providing a taxonomy of ADS decision-making based on the Agent-Deed-Consequences (ADC) model of moral judgment. Specifically, we identify three main components of traffic moral judgment: driving style, traffic rule compliance, and risk distribution. Then, we suggest distinguishable ethical settings for each traffic component.

Keywords: Traffic moral judgment, ADC Model of moral judgment, Autonomous vehicle ethics, Autonomous vehicle decision-making

Introduction

The high expectations for driving automation include radical changes in transportation, as well as benefits such as reduction of roadway fatalities, diminishing emissions, and economic prosperity (Faisal et al., 2019). Companies like Waymo and Tesla, as well as government institutions (USDOT, 2020), have been heavily investing in this technology to enhance citizens’ mobility. As highly automated vehicles are already operating in the U.S. territory (Waymo, 2024), addressing ethical issues such as privacy, cybersecurity, liability, and fair decisions in traffic is pressing. This paper focuses on the problem of decision-making for Automated Driving Systems (ADSs), specifically, on delineating ethical settings to implement in the vehicles’ trajectory planning (Millar, 2017).

Although the need for ethical ADS decision-making may sound too alarmist and hardly feasible (Iagnemma, 2018), implementing ethical settings will very likely enhance potential stakeholders’ trust in this technology (Henschke, 2020; Gill, 2021). For ADS to become a trusted member of our traffic system, its road decisions must reflect the common-sense intuitions of stakeholders. Such moral intuitions could serve as inputs for political discussion aimed at aligning driving automation with relevant values (Cecchini et al., 2024; Savulescu et al., 2021).

The main factors in traffic moral judgment and their consistency are still under debate. A number of scholars have highlighted how an ADS’ trajectories on the road imply a problem of risk distribution among different road users (Geisslinger et al., 2021; Etienne, 2022; Bonnefon et al., 2019; Evans, 2021). Other independent ethical issues have emerged, such as the vehicle’s behavior in low-risk everyday traffic situations (Himmelreich, 2018; De Freitas et al., 2021) and cooperation between ADS and other users in lane changing and merging (Islam et al., 2023; Borenstein et al., 2019). The goal of this paper is to define key morally relevant areas for ADS decision-making and suggest multiple ethical options. Ultimately, we aim to provide a taxonomy for designing and programming ADS that aligns with common-sense moral judgment.

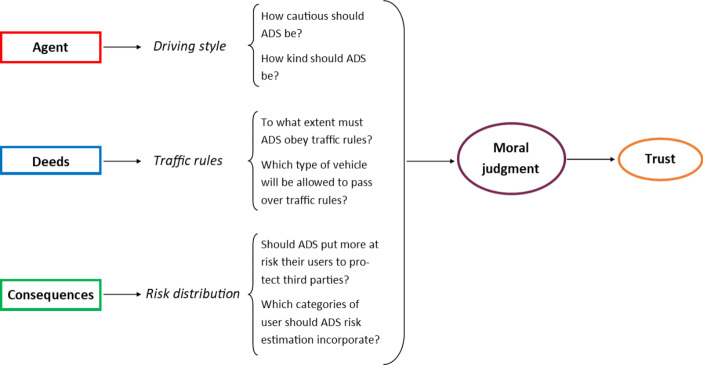

To navigate moral complexity in traffic, some authors have recently proposed to study people’s perception of ADS according to the Agent-Deed-Consequences (ADC) model of moral judgment (Dubljević, 2020; Cecchini et al., 2023). In brief, this framework posits that the moral acceptability of traffic conduct depends on the combination of the character of the driver (A component), the actions performed (D component), and the consequences produced (C component). Although the model is promising and supported by recent evidence (Dubljević et al., 2018), its full application to ADS still faces some challenges and requires further discussion. For instance, it is still unclear what character, deeds, and consequences would mean for ADS and what kind of decisions the three components would enhance. This essay advances the model by defining the ethical components more precisely and facilitating the transition from moral judgment to algorithmic decision-making in traffic.

This paper proceeds as follows. After a brief introduction to the problem of ethical decision-making for ADS (Sect. “The Problem of Ethical Decision-Making in ADS”), we summarize the core principles of the ADC model of moral judgment, also highlighting the main advantages and challenges of its application in the traffic context (Sect. “The ADC Model of Moral Judgment”). Then, we discuss each ethical component in traffic and sketch an array of ethical settings for ADS decision-making (Sect. “Agent, Deeds, and Consequences in Traffic”). Finally, we discuss how the model can be employed to ascertain moral preferences for ADS decision-making (Sect. “Discussion”).

The Problem of Ethical Decision-Making in ADS

ADS is the hardware and software that can collectively perform the entire driving task on a sustained basis (SAE On-Road Automated Driving Committee, 2021, p. 7). Notably, the Society of Automotive Engineers (SAE) distinguishes six levels of driving automation, from level 0 (“No automation”), which corresponds to conventional vehicles, to level 5 (“Full driving automation”). ADS is involved only at high or full automation (levels 4–5, SAE), in which the system can perform all driving functions without any expectation that a user will need to intervene (SAE On-Road Automated Driving Committee, 2021, p. 26).1 In other words, the user does not need to supervise the vehicle, as ADS can independently achieve a “minimal risk condition” when necessary; that is, bringing the vehicle to a stop within its path or removing it from an active lane to minimize the risk of a crash. For this reason, vehicles equipped with ADS (technically, ADS-operated vehicles) are commonly known as “automated” or “autonomous vehicles.”2 Such machines are already operating as ride-hailing services in San Francisco, Phoenix, and Los Angeles, and their release in other U.S. cities is expected shortly (Waymo, 2024).

The extended automaticity of ADS has raised some ethical concerns, including its capacity to make right decisions in sensitive situations on the road. For this reason, some scholars have argued for the need to implement agreed-upon ethical settings into ADS (Lin, 2016; Millar, 2017). The ethical settings would contribute to the trajectory planning of an ADS-operated vehicle, permitting decisions aligned with certain ethical values, such as safety, fairness, or environment preservation.

The need for an ethical ADS may sound alarmist and, perhaps, a distraction from other important ethical issues in ADS like privacy, cybersecurity, or liability (Iagnemma, 2018). However, there are good reasons to believe that programming ADSs with ethical settings enhances trust of potential stakeholders. As Henschke (2020) argues, system reliability is not the only factor that makes ADS trustworthy. Even if an ADS is reliable, traffic accidents do still happen,3 and co-existence between ADS-operated vehicles and conventional vehicles augments risk further. As some cases of avoidable fatalities have shown, disastrous events involving ADS deeply impact trust in the technology and its public perception.4 In order to increase the system’s resilience to such cases, ADS must display predictability and good will (Henschke, 2020). Likely, ethical settings for ADS would define a protocol for acting under uncertain conditions, increasing predictability in decision-making. Moreover, the ethical settings would ensure that the will to implement ADS is directed at avoiding harm to humans, and treating all road users with due care, even at the cost of breaking traffic rules if necessary. The combination of these aspects requires ADSs to be trained to act as functional equivalents of sensitive moral agents, capable of understanding the complexity of different traffic situations.

In support of the claim that ethical decision-making matters for stakeholders’ trust in ADS, a recent survey among U.S. participants reports that ADS’ ability to respond to moral dilemmas is perceived as the most important issue to address as compared to other technical, legal, and ethical questions regarding driving automation (Gill, 2021). This empirical result corroborates the supposition that potential users may not trust an ADS that is not aligned with certain ethical settings.5 The risk is that users may simply “opt out” of using ADS, thus nullifying all its expected benefits (Bonnefon et al., 2020, p. 110).

Since ADS needs to be a trusted part of our traffic community, its decisions on the road have to be aligned with stakeholders’ common-sense intuitions. For this reason, understanding people’s moral judgment about ADS behavior is a crucial starting point for developing trustworthy machines.6 The remainder of this paper aims to identify the key areas in which ADS could potentially be subject to moral judgment.

The ADC Model of Moral Judgment

Like in any other ethical domain, moral judgment in traffic depends on complex information processing, involving multiple considerations at the same time. A promising candidate for studying moral complexity in traffic is the Agent-Deed-Consequences (ADC) model of moral judgment (Dubljević, 2020; Cecchini et al., 2023). In this section, we summarize the model, and the main advantages and challenges of its application in the traffic context.

The core tenet of the ADC model is that moral judgment depends on positive or negative evaluations of three different classes of information: about the character and intentions of an agent (Agent component, A), their actions (Deed component, D), and the consequences brought about in the situation (Consequences component, C) (Dubljević & Racine, 2014). The three components provide complementary information about moral conduct: persistent character traits and underlying intentions illuminate the background and motivations that lead to an individual’s deed. The nature of the deed, in turn, explains why certain consequences resulted. When subjects consider a moral situation, they merge single evaluations into an overall moral judgment, which will be positive or negative according to the components’ valence. Therefore, the model predicts that the situation will be perceived as morally acceptable if all three A, D, and C components are deemed positive and unacceptable if all three components are viewed as negative. For example, if an observant ambulance driver (A+) successfully navigates a congested route while transporting a severely burned patient to a hospital (D+) and, in the end, the patient is stabilized and recovering (C+), the moral judgment of the situation will be positive (MJ+). Conversely, if a terrorist (A-) uses his vehicle to ram pedestrians (D-) and several innocent people die (C-), the moral judgment will be clearly negative (MJ-).

In some situations, the three components are aligned: good people do good deeds, resulting in positive consequences, while bad people do bad deeds, generating negative outcomes. However, moral stimuli might be discordant, generating conflicting intuitions. If A, D, and C do not align, the valence of the resultant judgment will depend on the specific weight of each component. In real-life scenarios, the components rarely have the same weight; rather, one dominant component typically determines the valence of the resultant judgment, and the other two moderate the effect. For example, if the lives of eight airplane passengers are threatened, people tend to forgive bad motives (A-) and deeds (D-) for extremely good high-stakes consequences (C++, MJ+) (Dubljević et al., 2018, Experiment 2). By contrast, in low-stakes situations like cooperation at the workplace, people do not tolerate bad deeds such as drug taking (D–), even in the face of good (low-stakes) consequences (C+, MJ-) (Sattler et al., 2023).

One apparent theoretical benefit of the ADC model is its ambition to integrate intuitions coming from competing moral preferences: for example, subjects aligned with virtue ethics tend to assign more weight to character and intentions than deeds and consequences (WA> WD, WA> WC); by contrast, in deontological intuitions, information about the action (and the duties it accomplishes) outweigh agency and consequences (WD> WA, WD> WC); finally, consequentialist intuitions prioritize consequences over deeds, character, and intentions (WC> WD, WC> WA). Note that the model does not intend to capture sophisticated reasons stemming from the contemporary development of virtue ethics, deontology, and consequentialism.7 Rather, the framework aims to investigate lay people’s responsiveness to certain classes of stimuli, which may or may not be incorporated into abstract moral theories.
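The weighting idea can be made concrete with a minimal sketch. It assumes, purely for illustration, that component valences are encoded as signed numbers and combined by a linear weighted sum; the ADC literature cited here does not commit to this functional form, and the names `Weights` and `moral_judgment`, as well as all numeric weights, are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Weights:
    """Hypothetical weights W_A, W_D, W_C for the three ADC components."""
    agent: float
    deed: float
    consequences: float


def moral_judgment(a: float, d: float, c: float, w: Weights) -> float:
    """Return a signed score; a positive score stands for MJ+, a negative one for MJ-.

    Assumption: valences are signed numbers (e.g. -1 for A-, +2 for C++)
    and are merged by a weighted sum. This is an illustrative choice only.
    """
    return w.agent * a + w.deed * d + w.consequences * c


# Consequence-dominant profile (W_C > W_D, W_C > W_A), as in the airplane example:
# bad motives (A-) and deeds (D-) are outweighed by high-stakes consequences (C++).
consequence_dominant = Weights(agent=0.2, deed=0.2, consequences=0.6)
airplane_score = moral_judgment(a=-1, d=-1, c=2, w=consequence_dominant)

# Deed-dominant profile (W_D > W_A, W_D > W_C), as in the workplace example:
# a bad deed (D-) is not redeemed by good low-stakes consequences (C+).
# The agent valence is set to 0 here because the example leaves it unspecified.
deed_dominant = Weights(agent=0.2, deed=0.6, consequences=0.2)
workplace_score = moral_judgment(a=0, d=-1, c=1, w=deed_dominant)

print(airplane_score > 0, workplace_score < 0)
```

Under these assumed weights, the first case yields a positive score and the second a negative one, mirroring the direction of the two reported judgments; the sketch says nothing about how such weights should be estimated.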

The potential to study the interaction between conflicting intuitions is certainly an advantage in traffic moral judgment and decision-making, which involve competing ethical considerations, such as driving style, traffic rules, and risk distribution. Moreover, compartmentalizing different aspects of a given situation simplifies programming and computation for artificial agents, as the system can replace comprehensive moral judgments with more manageable, discrete computations (Pflanzer et al., 2022, p. 8). Finally, the ADC model offers a framework that is easy to operationalize into experimental settings: to the extent that the A, D, and C components can be translated into different textual or audio-visual stimuli, various moral situations can be created, facilitating the collection of a large amount of data about traffic moral judgments (Cecchini et al., 2023).

Besides these promising benefits, the application of the ADC model in ADS decision-making faces important challenges and requires some discussion. What do the A, D, and C components represent in traffic scenarios? In particular, what are the functional equivalents of character and intentions in vehicles? How can the components of moral judgment be translated into specific prescriptions for ADS decision-making?

Agent, Deeds, and Consequences in Traffic

This section elaborates the adaptation of the ADC model in the road traffic context. First, we define the A, D, and C components’ meanings for traffic moral judgment. The components’ definitions correspond to three distinct areas in which ADS will have to make relevant decisions and be morally judged accordingly: driving style (agency), rule compliance (deed), and risk distribution (consequences). Second, in order to facilitate the transition from traffic moral judgment to ADS decision-making, we outline different ethical settings according to numerical values (from 1 to 0) for each of the three defined components of traffic conduct. Unlike the influential “trolley paradigm”,8 we assume here that ADS decision-making is prospective (i.e., programmed in advance) (Nyholm & Smids, 2016; Himmelreich, 2018). Thus, the ethical options discussed represent prescriptions valid for a wide range of circumstances, rather than immediate decisions. Additionally, we premise that ADS prescriptions are directed at public or commercial vehicle fleets, each regarded as a collective agent.

In these tasks, we proceed from the most basic constraints concerning traffic rules to arrive at the most complex ones related to traffic agency. Note that the purpose of this section is not to argue for specific ethical settings for ADS. Neither do we discuss how and by whom the settings should be deliberated.9 Rather, our goal is to display the representational power of the ADC model by suggesting new testing scenarios and relevant ethical discussions for them.

The Deeds

According to some moral philosophers, morality is constituted by a set of pro tanto rules like ‘one ought to pay one’s debts’, ‘one ought to keep one’s promises’, or ‘one ought not to lie’ (Ross, 1930; Frederick, 2015).10 Such obligations hold ceteris paribus, that is, under non-exceptional circumstances. For example, if Timmy promises Jane that he will meet her for lunch, people expect that Timmy accomplishes this unless on his way he sees a drowning child. In this case, people would expect Timmy to break his promise given the exceptional circumstances.

Even if they are not absolute but only pro tanto, ceteris paribus moral rules have some important practical functions in a community (Cecchini, 2020). General moral statements help agents quickly categorize certain acts as good or bad, providing clear guidance for decision-making. Additionally, moral rules facilitate social interactions and coordination by making human behavior predictable (Bicchieri, 2006). These rational considerations are supported by some evidence showing that internally represented rules, in cooperation with relevant emotions, contribute to people’s moral judgment in trolley-like dilemmas (Mallon & Nichols, 2010). Furthermore, other evidence suggests that people tend to condemn violations of moral norms if the circumstances are not exceptional (Sattler et al., 2023).

The analogy we draw here is that traffic rules such as road signs and speed limits function in ADS decision-making similarly to how pro tanto obligations function in moral reasoning.11 First, road signs guide ADS behavior under normal conditions, with exceptions made for emergencies. Second, they serve as the most basic ethical constraints for ADS-operated vehicles, helping to differentiate between right and wrong decisions. Third, compliance with traffic rules is essential to ensure that the behavior of ADSs is predictable and aligned with the expectations of other road users. Therefore, it is reasonable to assert that, for ADSs, a ‘good deed’ corresponds to compliance with a traffic rule, while a ‘bad deed’ corresponds to a traffic violation.

Needless to say, people expect that future ADS will respect traffic rules and they will trust ADS accordingly. However, interesting moral questions arise from considering to what extent ADS should obey traffic rules. Existing laws in the U.S. assume that drivers can balance safety and compliance with traffic rules. Thus, the challenge is to establish an appropriate threshold of rule compliance for ADS in order to align its behavior with common sense (De Freitas et al., 2021). The moral threshold likely depends on the function of the vehicle. For instance, if an ADS is embedded into an ambulance or a law enforcement vehicle, it will certainly be allowed to selectively disobey traffic signs; yet, to avoid hazards, we might not want these vehicles to be completely insensitive to them (they will need at least to slow down in proximity of busy intersections). What about a regular ADS-operated vehicle that must reach the hospital for an emergency? Is it morally permissible for it to disobey some traffic rules like speed limits? Additionally, society might expect that ADS is sensitive enough to disregard some traffic signs (e.g., a solid line dividing two lanes) to prevent a collision with another user.

These scenarios suggest that ADS’ behavior related to rule compliance can be set to different degrees. The two extreme options are an ADS that respects traffic rules with no exceptions (D = 1) and an ADS that completely disregards them to reach certain purposes (D = 0). To specify the conditions under which an ADS is authorized to override the rules, a possible solution comprises a distinction between high-risk and low-risk traffic signs and an increasing percentage of speed limit violations. Different ethical options arise accordingly (Table 1). For example, an ADS can be instructed to ignore low-risk traffic signs and exceed speed limits by 10% in the case of high risk of collision (D = 0.8).12

Table 1.

Different values for the deed component according to compliance with traffic rules

| Deed (D) value | Ethical setting |
| --- | --- |
| 1 | Obey every traffic sign, no exception |
| 0.8 | Authorized to ignore low-risk traffic signs and exceed speed limits by 10% in the case of high risk of collision |
| 0.5 | Authorized to ignore low-risk traffic signs and exceed speed limits by 30% in the case of high risk of collision |
| 0.2 | Authorized to ignore traffic signs and exceed speed limits by 50% in the case of high risk of collision |
| 0 | Ignore any traffic signs to reach the destination |
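The settings in Table 1 lend themselves to a simple lookup structure. The following is a minimal sketch under stated assumptions: the names `DeedSetting`, `DEED_SETTINGS`, and `max_speed` are hypothetical, speeds are taken to be in km/h, and D = 0 is modeled as having no speed cap because Table 1 states none for that setting.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class DeedSetting:
    """One row of Table 1 (field names are illustrative, not from the source)."""
    ignorable_signs: str            # "none", "low-risk", or "all"
    speed_excess: Optional[float]   # allowed fraction above the limit; None = no cap stated
    only_if_high_collision_risk: bool


DEED_SETTINGS = {
    1.0: DeedSetting("none", 0.0, False),
    0.8: DeedSetting("low-risk", 0.10, True),
    0.5: DeedSetting("low-risk", 0.30, True),
    0.2: DeedSetting("all", 0.50, True),
    0.0: DeedSetting("all", None, False),  # assumption: D = 0 is unconstrained
}


def max_speed(limit_kmh: float, d: float, high_collision_risk: bool) -> Optional[float]:
    """Return the highest speed the setting tolerates, or None if no cap applies."""
    setting = DEED_SETTINGS[d]
    if setting.speed_excess is None:
        return None
    if setting.only_if_high_collision_risk and not high_collision_risk:
        # Exceptions in Table 1 are conditional on a high risk of collision.
        return limit_kmh
    return limit_kmh * (1 + setting.speed_excess)


# With a 50 km/h limit, D = 0.8 tolerates about 55 km/h only under high collision risk.
print(max_speed(50, 0.8, high_collision_risk=True))
print(max_speed(50, 0.8, high_collision_risk=False))
```

How "high risk of collision" and the split between high-risk and low-risk signs would be determined is left open here, as it is in the text.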

The Consequences

The ethics of an emerging technology like ADS is largely dependent on whether it will bring benefits and minimize harm. This fundamental ethical constraint is largely in line with common sense and the German Ethics Commission for Automated Driving, which reports that “The licensing of automated systems is not justifiable unless it promises to produce at least a diminution in harm compared with human driving, in other words, a positive balance of risks” (2017, Art. 2).

Avoiding collisions and harm requires breaking traffic rules under certain circumstances. This consideration reflects the philosophical standpoint known as ethical consequentialism, according to which the morality of an action depends on the value brought by its consequences (Mill, 1863). A large body of psychological evidence highlights how consideration of harm in moral judgment is deeply rooted in the human mind, regardless of the culture (Schein & Gray, 2018). Indeed, in contrast with the influential “dual process” theory of moral judgment (Greene, 2013), which had posited that consequentialist judgments require slow and effortful reasoning, a growing number of studies show that consequentialist responses to moral dilemmas can be fast and automatic (see Cecchini, 2021). Therefore, if ADS must act like a sensitive moral agent, it will have to consider the moral outcomes of its actions when deciding what to do. However, it is debated in the literature how moral consequences should be considered.

Consequentialist decision-making in ADS is fundamentally a problem of risk distribution (Geisslinger et al., 2021; Bonnefon et al., 2019; Evans, 2021). According to a recent account, risk in driving automation (R) can be defined as the product of collision probability (p) and estimated harm (H), which are both functions of the trajectory of the vehicle (u) (Geisslinger et al., 2021, p. 1043):

$$R(u) = p(u)\,H(u) \tag{1}$$

The collision probability is calculated according to the automated driving perception system, which estimates the distance between detected objects and the uncertainties due to noise, range limitations, and occluded areas. The estimation of harm can be measured according to the kinetic energy produced by the impact, which has been considered as a reliable indicator for classifying the severity of an injury (Sobhani et al., 2011).
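As a minimal sketch of this definition, the snippet below computes risk as the product of collision probability and harm, using kinetic energy as the harm proxy mentioned above. The class `Trajectory`, the function names, the vehicle mass, and all numeric values are hypothetical illustrations; any normalization or calibration of harm that a real planner would need is not modeled.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Trajectory:
    """A candidate trajectory u with assumed, pre-computed estimates."""
    name: str
    collision_probability: float  # p(u), between 0 and 1
    impact_speed_ms: float        # assumed relative speed at impact, in m/s


def kinetic_energy(mass_kg: float, speed_ms: float) -> float:
    """Kinetic energy in joules, used here as a stand-in for estimated harm H(u)."""
    return 0.5 * mass_kg * speed_ms ** 2


def risk(u: Trajectory, mass_kg: float) -> float:
    """R(u) = p(u) * H(u), with H(u) approximated by kinetic energy."""
    return u.collision_probability * kinetic_energy(mass_kg, u.impact_speed_ms)


candidates = [
    Trajectory("keep lane center", collision_probability=0.02, impact_speed_ms=10.0),
    Trajectory("shift toward curb", collision_probability=0.01, impact_speed_ms=15.0),
]
vehicle_mass_kg = 1500.0  # illustrative value

# Pick the candidate with the lowest estimated risk.
safest = min(candidates, key=lambda u: risk(u, vehicle_mass_kg))
print(safest.name)
```

In this toy comparison, the lower collision probability of the second trajectory does not compensate for its higher impact energy, so the first trajectory has the lower risk.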

While the mathematical formulation of risk involves technical issues that cannot be thoroughly discussed here, a debated moral question is what type of risk ADS should consider in planning the vehicle’s trajectory: should the system be programmed to minimize the risk for its users, or should it calculate risk for third parties? Which road users should be included in the estimation? Answers to such questions likely determine the nature of the consequences that ADS moral decisions will bring about. To illustrate the problem, Geisslinger and colleagues (2021, p. 1042) imagine how an ADS-operated car might position itself in a lane according to whether it is programmed to minimize risk for itself or vulnerable users like a cyclist (Fig. 1). Assuming a large scale of implementation of ADS, the change in the trajectory could determine a significant shift in the number and severity of crashes per year (Bonnefon et al., 2019).

Fig. 1. To the extent that the lateral distance from a vehicle influences the probability of a collision, the ADS-operated car’s trajectory determines the distribution of risk among the ego vehicle and other road users (adapted from Geisslinger et al., 2021, p. 1042).

The distribution of risk in ADS decision-making has been hotly debated in the literature. Notably, a “selfish” ADS (C = 0 in Table 2) that minimizes risk only for its users could have more positive financial consequences for vehicle manufacturers, but more negative consequences for public safety (Bonnefon et al., 2016). On the other hand, an “altruistic” ADS (C = 1), estimating risk for external road users, could diminish the trust of potential consumers. In between the two extremes of a purely “selfish” and a purely “altruistic” ADS, some ethical considerations may create different nuances. For example, it could be relevant to consider if the third-party road user is violating traffic signs (e.g., a jaywalker) since the latter has some responsibility for creating a dangerous situation (Etienne, 2022). Nevertheless, targeting unlawful road users may constitute a form of technological paternalism that may appear to punish those who do not follow the letter of the law (Evans et al., 2020). Furthermore, it could be morally important for an ADS to minimize risk for socially relevant vehicles like public transportation, school buses, or hazardous material transportation (Evans, 2021) (C = 0.5).

Table 2.

Different values for the consequences component according to the distribution of risk

| Consequences (C) value | Ethical setting |
| --- | --- |
| 1 | Minimize risk for other users, disregard risk for ego vehicle |
| 0.8 | Calculate risk for any law-compliant road users |
| 0.5 | Calculate risk for priority users (vulnerable users, public transportation, hazardous material transportation, etc.) |
| 0.2 | Calculate risk for vulnerable users (pedestrians, cyclists, etc.) |
| 0 | Minimize risk for ego vehicle only |
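One way to read Table 2 operationally is as a filter on which road users enter the risk estimate. The sketch below is illustrative only: `RoadUser`, `counted_users`, and the attribute names are hypothetical, and it assumes that the ego vehicle is always counted for the intermediate settings (0.8, 0.5, 0.2), which Table 2 does not state explicitly.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass(frozen=True)
class RoadUser:
    name: str
    is_ego: bool = False
    law_compliant: bool = True
    vulnerable: bool = False        # pedestrians, cyclists, etc.
    priority_vehicle: bool = False  # public transportation, hazardous material transport, etc.


# Which non-ego users are included for each intermediate C value in Table 2.
_THIRD_PARTY_FILTERS: Dict[float, Callable[[RoadUser], bool]] = {
    0.8: lambda u: u.law_compliant,
    0.5: lambda u: u.vulnerable or u.priority_vehicle,
    0.2: lambda u: u.vulnerable,
}


def counted_users(c: float, users: List[RoadUser]) -> List[RoadUser]:
    """Return the users whose risk enters the estimate under setting c."""
    if c == 1:
        return [u for u in users if not u.is_ego]  # disregard risk for ego vehicle
    if c == 0:
        return [u for u in users if u.is_ego]      # ego vehicle only
    if c not in _THIRD_PARTY_FILTERS:
        raise ValueError(f"unsupported C value: {c}")
    keep = _THIRD_PARTY_FILTERS[c]
    # Assumption: the ego vehicle is still counted for intermediate settings.
    return [u for u in users if u.is_ego or keep(u)]


scene = [
    RoadUser("ego", is_ego=True),
    RoadUser("cyclist", vulnerable=True),
    RoadUser("school bus", priority_vehicle=True),
    RoadUser("jaywalker", law_compliant=False, vulnerable=True),
    RoadUser("car"),
]
print([u.name for u in counted_users(0.8, scene)])
```

In this example the jaywalker drops out at C = 0.8 but is counted at C = 0.5 and C = 0.2, which makes the concern about penalizing unlawful road users easy to see.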

The Agent

A substantial part of moral philosophy agrees that acting ethically is not just accomplishing duties and bringing valuable consequences, but also developing a consistent character. Indeed, one of the oldest and most important lessons from virtue ethics is that moral conduct fundamentally depends on stable traits of personality (i.e., virtues and vices) like honesty or courage (Aristotle, 2004). Information about character and personality is relevant to emphasize the important moral difference between an agent who merely acts rightly and an agent who is wholehearted in what they do (Annas, 2006, p. 517). From this ethical standpoint, what matters for moral conduct is not just compliance with certain duties, nor the outcomes brought by the actions, but also the manner in which certain actions are performed. For example, it is not enough to obey speed limits and avoid collisions; a virtuous driver is supposed to demonstrate patience when interacting with novice drivers on the road or kindness when dealing with traffic congestion.

Unlike isolated good deeds, virtues make moral behavior lasting and reliable, creating a trustworthy environment enabled by good relationships. This aspect should not be underestimated in understanding moral psychology. Indeed, an important line of research investigating the influence of character on moral judgment points out that people interpret cues about moral character as indicators for future behavior (Uhlmann et al., 2015). This explains why people tend to tolerate accidental bad consequences provoked by subjects displaying good traits (Dubljević et al., 2018). Conversely, people tend to blame actions revealing bad character (e.g., cruelty in a videogame), even though the action involves no harm (Uhlmann et al., 2015). Therefore, information about people’s character is a crucial factor of moral judgment and regulates trust between agents.

Of course, contemporary artificial agents are not sophisticated enough to possess human character traits. However, insofar as they can represent the world, pursue goals, and realize them, some current AI applications can be understood as rudimentary non-biological intentional agents (List, 2021, p. 1219). Some technical characteristics might perform functions like traits in human beings and, accordingly, people may interpret them as virtues and vices. This hypothesis is corroborated by a recent study showing that people tend to attribute moral traits (e.g., dishonesty or cowardice) to AI systems, albeit to a lesser extent than to human beings (Gamez et al., 2020).

Since character ascriptions to artificial agents might have an impact on users’ moral judgment and trust in technology, it is worth considering whether ADS has functional equivalents of moral traits. A good candidate is ADS driving style: whether a vehicle drives cautiously or aggressively is an important cue for understanding if the vehicle is safe and trustworthy or, on the contrary, is going to create dangerous situations on the road. For this reason, information about an ADS’ driving style might be perceived by users as good or bad and have an impact on their trust in the vehicle.13 Similarly to human virtues, a good driving style is crucial for long-term safety and to create a trustworthy traffic environment. Even if an ADS-operated vehicle is driving on an empty road and is not violating any traffic sign, it might be important that it adopts appropriate caution, given plausible “what if scenarios” that could easily arise, like a pedestrian suddenly running across the street (Keeling, 2021, p. 44). As Himmelreich (2018, p. 678) points out, ADS will need to be trained to deal with not only life-and-death emergencies, but also mundane traffic scenarios like approaching a crosswalk with limited visibility, making a left turn with oncoming traffic, or navigating through busy intersections. Should ADS be designed for maximizing time efficiency in such circumstances or should they be extremely cautious to prevent danger? This is likely a morally relevant question suggesting that ADS driving style constitutes a relevant ethical component besides traffic rules and risk distribution.

One could object that driving style should be included in the consequences (C component) because it still determines the overall risk taken by a vehicle. In response to this objection, it is worth noting that while driving style does contribute to public safety (which is a value relevant to all three components), it is independent of the perception of imminent risk of collision with other users. In other words, an ADS set for a certain driving style will adhere to it regardless of the perception of risk due to other users in the vicinity. Thus, while the C value determines the distribution of harm between the ego vehicle and other users, driving style determines the vehicle’s intrinsic ethical character. For this reason, it is plausible to suppose that ADS requires separate prescriptions for driving style and risk distribution.

Two main “virtues” contributing to an ethical ADS driving style (broadly understood) can be identified. The first virtue can be defined as vehicle cautiousness, which comprises the ADS’ acceleration and braking modes. For example, current Tesla vehicles offer three different acceleration modes: “chill”, which limits acceleration for a slightly smoother and gentler ride, “sport”, which provides the normal level of acceleration, and “insane”, which provides the maximum level of acceleration immediately available (Tesla, 2023). It is not difficult to foresee that the acceleration mode adopted for future ADS will have consequences on the ethical perception of the new technology and a relevant environmental impact.

The second virtue contributing to the overall ADS driving style can be categorized as vehicle kindness. This ethical aspect concerns the ADS’ interaction with other road users, regulated by its communication modules (e.g., Vehicle-to-Vehicle and Vehicle-to-Pedestrian). One concrete technical issue in this domain is the extent to which an ADS should allow other vehicles to merge in situations not covered by strict traffic rules (Borenstein et al., 2019). People might be more tolerant of ADS if it is designed to be kind to other road users. On the other hand, extreme kindness might not be desirable since other users could exploit it. Perhaps, ADS should be programmed to strike a balance between the two by being kind primarily to socially relevant vehicles like ambulances, fire trucks, school buses, or drivers with disabilities. Vehicle kindness should also be relative to national road etiquettes, given the cultural differences across the world (Hongladarom & Novotny, 2021). For instance, drivers in Italy or India sound their horns often, while in the Czech Republic or Thailand, everyone is expected not to blow their horns unless there is an emergency (p. 101). Communication with non-motorized users like pedestrians and cyclists is, finally, another aspect contributing to vehicle kindness. An ADS-operated vehicle allowing a pedestrian or a cyclist to cross the street requires communication schemes equivalent to drivers’ hand gestures (Islam et al., 2023, p. 692) or signaling patterns. This interaction with vulnerable road users is particularly important since detecting them is still a challenging task for current ADS sensors.

Multiple types of agency can be generated by combining different levels of cautiousness and kindness (Table 3). For instance, a virtuous vehicle (A = 1) is extremely cautious in acceleration and braking, and kind to any road user. On the other hand, a vicious vehicle (A = 0) maximizes time efficiency, i.e., it is extremely aggressive on the road and kind to no user. In between these two extremes, ADS driving style can vary according to the level of cautiousness and the range of users toward which it behaves kindly.14

Table 3.

Different values for the agent component according to the vehicle’s driving style

| Component | Value | Driving style |
| --- | --- | --- |
| Agent (A) | 1 | Extremely cautious, kind to any road user |
| | 0.8 | Quite cautious, kind to priority users (vulnerable users, public transportation, hazardous material transportation, etc.) |
| | 0.5 | Cautious, kind to priority users |
| | 0.2 | Aggressive, kind to only emergency vehicles |
| | 0 | Maximize time efficiency: extremely aggressive, no kindness |
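
As an illustration only, the rows of Table 3 could be encoded as a small lookup structure. The following Python sketch is not part of the ADC model itself: the names `AgentSetting`, `AGENT_SETTINGS`, and `agent_setting` are hypothetical, and snapping an intermediate value to the nearest listed row is our assumption, since Table 3 defines only five anchor values.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentSetting:
    """One row of Table 3 (hypothetical encoding)."""
    value: float
    cautiousness: str
    kind_to: str


# The five anchor values listed in Table 3, from virtuous to vicious.
AGENT_SETTINGS = (
    AgentSetting(1.0, "extremely cautious", "any road user"),
    AgentSetting(0.8, "quite cautious", "priority users"),
    AgentSetting(0.5, "cautious", "priority users"),
    AgentSetting(0.2, "aggressive", "emergency vehicles only"),
    AgentSetting(0.0, "extremely aggressive", "no one"),
)


def agent_setting(value: float) -> AgentSetting:
    """Return the Table 3 row closest to `value`.

    Assumption: intermediate values snap to the nearest anchor row.
    """
    if not 0.0 <= value <= 1.0:
        raise ValueError("A must lie in [0, 1]")
    return min(AGENT_SETTINGS, key=lambda s: abs(s.value - value))
```

Under this encoding, a request such as `agent_setting(0.25)` would resolve to the "aggressive, kind to only emergency vehicles" row; whether intermediate values should instead be interpolated is left open by the framework.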

Discussion

Let us summarize the overall picture we have detailed so far. It is plausible to say that ADS will have to respect traffic rules and that they will be judged positively or negatively accordingly. This deontological aspect is captured by the Deed (D) component of moral judgment. Yet, ADS’ compliance with traffic rules may conflict with the ethical demand of preventing harm on the road, which could require disregarding traffic signs in some emergencies. Thus, an important factor contributing to the moral acceptability of ADS is how their trajectories distribute risk among different road users and under different circumstances. Such a consequentialist ethical standpoint is represented in the model by the Consequences (C) component of moral judgment. However, ethical conduct on the road is not just traffic rules compliance and minimization of risk, but also social interaction with other users, who can judge a vehicle’s driving style. This ethical aspect comprises ADS behavior in every low-risk traffic situation not regulated by traffic rules; specifically, vehicle cautiousness and kindness. Since driving style is the functional equivalent in ADS of human character, the model reflects it as the Agent (A) component of moral judgment.

The ADC model predicts that each traffic component contributes to the moral acceptability of ADS. Additionally, we anticipate that moral judgment will positively correlate with subjective trust in ADS, as stakeholders tend to trust technology that aligns with their common-sense morality (Fig. 2).

Fig. 2.

The ADC model applied to ADS moral judgment

The traffic decision-making model just outlined can be employed to study moral preferences according to the type of ADS-operated vehicle. The first step is to develop hypotheses about social expectations on ADS decision-making by combining relevant values (between 0 and 1) for each traffic component. For example, one may set an ADS-operated ambulance in emergency mode to maximize time efficiency (A = 0/0.2), disregard traffic signs (D = 0/0.2), and prioritize its own protection from risk (C = 0/0.2). The emergency settings for an ADS embedded in a police car could be similar for A and D, but society might expect the vehicle to be disposed to sacrifice itself for other users (C = 0.8/1). By contrast, an ADS-operated truck carrying hazardous materials could be programmed to be very cautious and gentle with other users (A = 0.8/1), while ready to protect itself from other users (C = 0/0.2), possibly at the cost of breaking some traffic rules (D = 0.5), considering the long-term damage that any collision it is involved in could cause (Evans, 2021). A food delivery ADS, in turn, might be set for a medium agent virtue (A = 0.5), since people would expect a sufficient level of efficiency; it would then have to be extremely respectful of traffic rules (D = 0.8/1) and sufficiently altruistic (C = 0.5/0.8), insofar as it carries neither human beings nor highly valuable cargo.
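
The hypothesized profiles above can be written down compactly. The Python sketch below is one possible encoding, not a prescribed implementation: the names `Range`, `Profile`, `PROFILES`, and `within` are hypothetical, and reading a notation such as "0/0.2" as the closed interval from 0 to 0.2 is our assumption. The police profile reuses the ambulance values for A and D, following the statement that they "could be similar".

```python
from typing import NamedTuple


class Range(NamedTuple):
    low: float
    high: float


class Profile(NamedTuple):
    agent: Range          # A: driving style
    deed: Range           # D: traffic rules compliance
    consequences: Range   # C: risk distribution


# Hypothesized settings per vehicle type, as described in the text.
PROFILES = {
    "ambulance_emergency": Profile(Range(0.0, 0.2), Range(0.0, 0.2), Range(0.0, 0.2)),
    "police_emergency": Profile(Range(0.0, 0.2), Range(0.0, 0.2), Range(0.8, 1.0)),
    "hazmat_truck": Profile(Range(0.8, 1.0), Range(0.5, 0.5), Range(0.0, 0.2)),
    "food_delivery": Profile(Range(0.5, 0.5), Range(0.8, 1.0), Range(0.5, 0.8)),
}


def within(profile: Profile, a: float, d: float, c: float) -> bool:
    """Check whether a concrete (A, D, C) triple falls inside a profile."""
    return all(r.low <= v <= r.high for r, v in zip(profile, (a, d, c)))
```

For instance, `within(PROFILES["food_delivery"], 0.5, 1.0, 0.9)` would be false under this reading, because C = 0.9 exceeds the hypothesized altruism range for a delivery vehicle.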

The hypotheses can be tested through various types of experiments. Initially, preliminary evidence can be obtained by asking subjects to assess the moral acceptability of traffic scenarios involving ADS-operated vehicles that adhere to ethical settings for A, D, and C. The traffic scenarios can be presented either textually or visually. Additionally, to ensure robustness and ecological validity of moral preferences, ADS ethical settings can be evaluated using a driving simulator. In this first-person experimental condition, participants act as passengers in ADS-operated vehicles that adhere to ethical settings for driving style, rule compliance, and risk distribution. Following the simulation, experimenters measure participants’ moral judgment and trust in the technology (see Shahrdar et al., 2019).
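
To give a sense of the size of such an experimental design, the sketch below enumerates every combination of A, D, and C settings as a full factorial set of scenario conditions. It assumes, for illustration, that D and C take the same five levels listed for A in Table 3; the names `LEVELS` and `scenario_conditions` are hypothetical, and an actual study might sample only a subset of these conditions.

```python
from itertools import product

# Assumed levels for each component (taken from the A values in Table 3).
LEVELS = (0.0, 0.2, 0.5, 0.8, 1.0)


def scenario_conditions(levels=LEVELS):
    """Enumerate all (A, D, C) combinations as experimental conditions."""
    return [
        {"A": a, "D": d, "C": c}
        for a, d, c in product(levels, repeat=3)
    ]


conditions = scenario_conditions()
print(len(conditions))  # 5 levels ** 3 components = 125
```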

If combined, the third- and first-person perspectives provide a good understanding of individuals’ moral preferences for ADS behavior. A future challenge we foresee is to assess A, D, and C preferences at a system level of analysis, considering mixed road scenarios in which fleets of ADS vehicles operate alongside traditional vehicles (Borenstein et al., 2019). To address this problem, researchers can run large-scale traffic flow simulations using appropriate software.15 In the simulations, fleets of ADS-operated vehicles could be set to adhere to common-sense ethical settings. Subsequently, researchers can observe whether the traffic system satisfies objectively defined parameters of ethical values, such as safety, fairness, and mobility. This testing would illuminate how micro-level ethical decision-making impacts macro-level ethical consequences in driving automation.

Conclusion

Future ADS will have to be trained to make relevant decisions involving different ethical values. The ADC model of moral judgment promises to clarify this moral complexity by analyzing traffic moral conduct into different components comprising information about the agent, the deed, and the consequences of an action in a given situation. In this paper, we have argued that the agent component of ADS should be understood as the vehicle’s driving style, the deed as the level of compliance with traffic rules, and the consequences as the risk distribution among road users. Then, we have described distinguishable ethical settings according to numerical values assigned to each A, D, and C component.

The framework we have articulated in this work has several theoretical benefits. First, its holistic approach to moral judgment integrates multiple ethical considerations in traffic that emerged in the recent literature. Second, the ADC model enhances continuity between human moral judgment, trust, and algorithmic decision-making in ADS. Finally, the model promises ease of computation and flexibility in programming according to the practical needs served by ADS.

The path to ADS ethical decision-making is still long, and more work needs to be done to translate the multiple ethical demands into suitable algorithms. The hope is that the advances of the ADC model we proposed here, in parallel with the necessary technological progress, represent a step toward plausible ADS ethical settings.

Acknowledgements

We would like to thank all those who have helped in carrying out the research. We are grateful to the audience of the 2024 meeting of the South Carolina Society for Philosophy, the Autonomous Vehicle Symposium at NC State, and the Ethics of AI workshop at Utah State, where we presented earlier versions of this paper. Special thanks are due to the members of the NeuroComputational Ethics Research Group at NC State University for their helpful feedback, particularly to Sean Brantley and Ryan Sterner for their assistance in the manuscript preparation.

Author Contributions

Conceptualization: D.C., V.D.; Original draft: D.C.; Review and editing: D.C., V.D.

Funding

This research was partly supported by the National Science Foundation (NSF) CAREER award under Grant No. 2043612 (V.D.). Any opinions, findings, and conclusions or recommendations expressed in this material are those of the authors and do not necessarily reflect the views of the NSF.

Declarations

Conflict of Interest

No conflict of interests.

Footnotes

The fundamental difference between level 4 and level 5 concerns the conditions under which the ADS can operate. At level 4, the ADS can operate under specified conditions, while at level 5, the ADS acts unconditionally, that is, “there are no design-based weather, time-of-day, or geographical restrictions on where and when the system can operate the vehicle” (SAE On-Road Automated Driving Committee, 2021, p. 32).

The SAE discourages the use of these terms because they are functionally imprecise, neglecting that ADS-operated vehicles are not fully autonomous but dependent on cooperation with the user and other outside entities (SAE On-Road Automated Driving Committee 2021, p. 34).

Statistics from the National Highway Traffic Safety Administration report that nearly 400 crashes involving level 2 systems occurred on US roads between July 2021 and December 2022 (Standing General Order on Crash Reporting for Incidents Involving ADS and Level 2 ADAS, 2021).

In 2018, Elaine Herzberg, a 49-year-old woman, died as a result of being hit by an Uber self-driving car in Tempe, Arizona. While Ms Herzberg was walking with her bicycle, the vehicle’s automatic systems failed to identify her as an imminent collision danger and the car’s passenger could not avoid the accident since they were visually distracted (BBC, 2020). After this episode, Uber removed its test vehicles from roads and friends of the victim demanded that Uber be closed down (Henschke, 2020, p. 81).

However, we cannot rule out the possibility that the results of this survey are influenced by a misrepresentation of the ethics of driving automation in the media, which could exaggerate the likelihood of moral dilemmas.

Although we believe that common-sense moral judgment should contribute to the alignment of ADSs, we do not contend that this must be the ultimate authority. Other inputs, such as philosophical theory or constitutional frameworks (e.g., the Vienna Convention on Road Traffic), should be considered. For further discussion on the role of moral intuitions in artificial intelligence alignment, see Cecchini et al. (2024).

Disagreement between philosophical theories lies more in interpretation of components rather than prioritization. For example, Kantian deontological theory interprets good intentions (A+) as intending to act out of duty itself, while classic utilitarianism understands good intentions as intending to maximize utility.

This paradigm holds that ADS ethical decision-making is fundamentally an applied trolley case (Awad et al., 2018); that is, a dilemmatic situation in which an ADS-operated vehicle has to choose between two unavoidable harms, for instance, either swerving left, hitting a lethal obstacle, or proceeding forward, killing a pedestrian crossing the street. This approach is misleading for several reasons. First, unlike the trolley dilemma, which describes an immediate choice, decision-making in ADS has to be programmed in advance. Second, ADS’ ethical situations are not binary but comprise multiple options, as the trajectory of the vehicle is continuous rather than discrete. Third, the outcomes of each car trajectory are far from certain, unlike the two options in the trolley dilemma.

We do not take a stand between users’ freedom to customize ethical settings and mandatory regulations by relevant institutions (see Etienne, 2022 for discussion).

Notably, some, like moral particularists (Dancy, 2004), strongly criticize this view.

By “traffic rules” here, we strictly mean road signs and not statutory laws that regulate the entire spectrum of traffic conduct and define exceptions related to signs.

The estimation of risk for the vehicle depends on the value assigned to the C component (see Table 2).

Relevantly, a preliminary study using a virtual reality simulator has reported that people’s trust in self-driving cars covaries with the ADS’ driving style (aggressive vs. prudent and respectful) (Shahrdar, Park & Nojoumian, 2019).

The sensitivity of ADS to different vehicle types has become a real problem in San Francisco, where police and fire departments have been complaining about how ADS-operated vehicles interfere with rescue operations (Kerr, 2023).

For example, the open-source MATSim (MATSim.org).

References

- Annas, J. (2006). Virtue ethics. In D. Copp (Ed.), The Oxford handbook of ethical theory, (515–536). Oxford University Press.

- Aristotle (2004). Nicomachean ethics. Roger Crisp (Ed.). Cambridge University Press.

- Awad, E., Dsouza, S., Kim, R., Schulz, J., Henrich, J., Shariff, A., Bonnefon, J-F., & Rahwan, I. (2018). The moral machine experiment. Nature, 563(7729), 59–64.

- BBC (2020, 16 September). BBC News. https://www.bbc.com/news/technology-54175359

- Bicchieri, C. (2006). The grammar of society: The nature and dynamics of social norms. Cambridge University Press.

- Bonnefon, J-F., Shariff, A., & Rahwan, I. (2016). The social dilemma of autonomous vehicles. Science, 352(6397), 36–37.

- Bonnefon, J-F., Shariff, A., & Rahwan, I. (2019). The trolley, the bull bar, and why engineers should care about the ethics of autonomous cars. Proceedings of the IEEE, 107(3), 502–504.

- Bonnefon, J-F., Shariff, A., & Rahwan, I. (2020). The moral psychology of AI and the ethical opt-out problem. In S.M. Liao (Ed.), Ethics of artificial intelligence, (109–126). Oxford University Press.

- Borenstein, J., Herkert, J. R., & Miller, K. W. (2019). Self-driving cars and engineering ethics: The need for a system level. Science and Engineering Ethics, 25, 383–398.

- Cecchini, D. (2020). Problems for moral particularism: Can we really escape general reasons? Philosophical Inquiries, 8(2), 31–46.

- Cecchini, D. (2021). Dual-process reflective equilibrium: Rethinking the interplay between intuition and reflection in moral reasoning. Philosophical Explorations, 24(3), 295–311.

- Cecchini, D., Brantley, S., & Dubljević, V. (2023). Moral judgment in realistic traffic scenarios: Moving beyond the trolley paradigm for ethics of autonomous vehicles. AI & Society. 10.1007/s00146-023-01813-y

- Cecchini, D., Pflanzer, M., & Dubljević, V. (2024). Aligning artificial intelligence with moral intuitions: An intuitionist approach to the alignment problem. AI and Ethics, 1–11. 10.1007/s43681-024-00496-5

- Cunneen, M., Mullins, M., Murphy, F., Shannon, D., Furxhi, I., & Ryan, C. (2020). Autonomous vehicles and avoiding the trolley (dilemma): Vehicle perception, classification, and the challenges of framing decision ethics. Cybernetics and Systems, 51(1), 59–80.

- Dancy, J. (2004). Ethics without principles. Clarendon.

- De Freitas, J., Censi, A., Smith, B. W., Di Lillo, L., Anthony, S. E., & Frazzoli, E. (2021). From driverless dilemmas to more practical commonsense tests for automated vehicles. Psychological and Cognitive Sciences, 118(11), e2010202118.

- Dubljević, V. (2020). Toward implementing the ADC model of moral judgment in autonomous vehicles. Science and Engineering Ethics, 26, 2461–2472.

- Dubljević, V., & Racine, E. (2014). The ADC of moral judgment: Opening the black box of moral intuitions with heuristics about agents, deeds, and consequences. AJOB Neuroscience, 5(4), 3–20.

- Dubljević, V., Sattler, S., & Racine, E. (2018). Deciphering moral intuition: How agents, deeds, and consequences influence moral judgment. PLOS ONE, 13(10), e0206750.

- Etienne, H. (2022). A practical role-based approach for autonomous vehicle moral dilemmas. Big Data & Society, 9(2), 1–12.

- Evans, N. G. (2021). Ethics and risk distribution for autonomous vehicles. In R. Jenkins, D. Cerny & T. Hribek (Eds), Autonomous vehicle ethics, (pp. 7–19). Oxford University Press.

- Evans, K., de Moura, N., Chauvier, S., Chatila, R., & Dogan, E. (2020). Ethical decision making in autonomous vehicles: The AV ethics project. Science and Engineering Ethics, 26, 3285–3312.

- Faisal, A., Yigitcanlar, T., Kamruzzaman, M., & Currie, G. (2019). Understanding autonomous vehicles: A systematic literature review on capability, impact, planning and policy. The Journal of Transportation and Land Use, 12(1), 45–72.

- Frederick, D. (2015). Pro-tanto obligations and ceteris-paribus rules. Journal of Moral Philosophy, 12(3), 255–266.

- Gamez, P., Shank, B. D., Arnold, C., & North, M. (2020). Artificial virtue: The machine question and perceptions of moral character in artificial moral agents. AI & SOCIETY, 35, 795–809.

- Geisslinger, M., Poszler, F., Betz, J., Lütge, C., & Lienkamp, M. (2021). Autonomous driving ethics: From trolley problem to ethics of risk. Philosophy & Technology, 34, 1033–1055.

- German Ethics Commission for Automated and Connected Driving (2017). Federal ministry for transport and digital infrastructure.

- Gill, T. (2021). Ethical dilemmas are really important to potential adopters of autonomous vehicles. Ethics and Information Technology, 23, 657–673.

- Greene, J. (2013). Moral tribes: Emotions, reason, and the gap between us and them. Penguin.

- Henschke, A. (2020). Trust and resilient autonomous driving systems. Ethics and Information Technology, 22, 81–92.

- Himmelreich, J. (2018). Never mind the trolley: The ethics of autonomous vehicles in mundane situations. Ethical Theory and Moral Practice, 21, 669–684.

- Hongladarom, S., & Novotny, D. D. (2021). Autonomous vehicles in drivers’ school: A non-western perspective. In R. Jenkins, D. Cerny & T. Hribek (Eds), Autonomous vehicle ethics: The trolley problem beyond, (pp. 99–113). Oxford University Press.

- Iagnemma, K. (2018). Why we have the ethics of self-driving cars all wrong. 21 January. https://www.weforum.org/agenda/2018/01/why-we-have-the-ethics-of-self-driving-cars-all-wrong/

- Islam, M. M., Al Redwan Newaz, A., Song, L., Lartey, B., Lin, S. C., Fan, W., Hajbabaie, A., Khan, M. A., Partovi, A., Phuapaiboon, T., Homaifar, A. & Karimoddini, A. (2023). Connected autonomous vehicles: State of practice. Applied Stochastic Models in Business and Industry, 9(5), 684–700.

- Keeling, G. (2021). Automated vehicles and the ethics of classification. In R. Jenkins, D. Cerny & T. Hribek (Eds), Autonomous vehicle ethics, (pp. 41–57). Oxford University Press.

- Kerr, D. (2023). Why police and firefighters in San Francisco are complaining about driverless cars. 10 August. https://www.npr.org/2023/08/10/1193106866/why-police-and-firefighters-in-san-francisco-are-complaining-about-driverless-ca

- Lin, P. (2016). Why ethics matters for autonomous cars. In M. Maurer, J.C. Gerdes, B. Lenz & H. Winner (Eds), Autonomous driving: Technical, legal and social aspects, (pp. 69–86). Springer.

- List, C. (2021). Group agency and artificial intelligence. Philosophy & Technology, 34, 1213–1242.

- Mallon, R., & Nichols, S. (2010). Rules. In J. Doris (Ed.), The moral psychology handbook, (pp. 297–320). Oxford University Press.

- Mill, J. S. (1863). Utilitarianism. Parker, Son, and Bourn, West Strand.

- Millar, J. (2017). Ethics settings for autonomous vehicles. In P. Lin, A. Keith and R. Jenkins (Eds.), Robot ethics 2.0: From autonomous cars to artificial intelligence, (pp. 20–34). Oxford University Press.

- National Highway Traffic Safety Administration (2021). Order on crash reporting for incidents involving ADS and Level 2 ADAS. General Order.

- Nyholm, S., & Smids, J. (2016). The ethics of accident-algorithms for self-driving cars: An applied trolley problem? Ethical Theory and Moral Practice, 19, 1275–1289.

- Pflanzer, M., Traylor, Z., Lyons, J. B., Dubljevic, V., & Nam, C. S. (2022). Ethics in human-AI teaming: Principles and perspectives. AI and Ethics 3, 917–935 (2023).

- Ross, W. D. (1930). The right and the good. 2002. P. Stratton Lake (Ed.). Clarendon Press.

- SAE On-Road Automated Driving Committee. (2021). Taxonomy and definitions for terms related to driving automation systems for on-road motor vehicles. SAE International. 10.4271/J3016_202104

- Sattler, S., Dubljević, V., & Racine, E. (2023). Cooperative behavior in the workplace: Empirical evidence from the agent-deed-consequences model of moral judgment. Frontiers in Psychology, 13, 1064442. 10.3389/fpsyg.2022.1064442

- Savulescu, J., Gyngell, C., & Kahane, G. (2021). Collective reflective equilibrium in practice (CREP) and controversial novel technologies. Bioethics, 35, 652–663.

- Schein, C., & Gray, K. (2018). The theory of dyadic morality: Reinventing moral judgment by redefining harm. Personality and Social Psychology Review, 22(1), 32–70.

- Shahrdar, S., Park, C., & Nojoumian, M. (2019). Human trust measurement using an immersive virtual reality autonomous vehicle simulator. In AIES’19: Session 9: Human and machine interaction (pp. 515–520). Association for Computing Machinery. https://dl.acm.org/doi/proceedings/10.1145/3306618?tocHeading=heading14

- Sobhani, A., Young, W., Logan, D., & Bahrololoom, S. (2011). A kinetic energy model of two-vehicle crash injury severity. Accident Analysis and Prevention, 43(3), 741–754.

- Tesla (2023). Tesla model X owner’s manual. Accessed December 2023. https://www.tesla.com/ownersmanual/modelx/en_us/GUID-43B58CAD-3DB7-4421-BFCA-0E921B6F731D.html

- Uhlmann, L. E., Pizarro, A. D., & Diermeier, D. (2015). A person-centered approach to moral judgment. Perspectives on Psychological Science, 10(1), 72–81.

- USDOT. (2020). Ensuring American leadership in automated vehicle technologies: Automated vehicles 4.0. https://www.transportation.gov/sites/dot.gov/files/2020-02/EnsuringAmericanLeadershipAVTech4.pdf

- Waymo (2024). Waymo.com. Accessed November 2024. https://waymo.com/