Abstract

Despite major advances in artificial intelligence (AI) research for healthcare, the deployment and adoption of AI technologies remain limited in clinical practice. This paper describes the FUTURE-AI framework, which provides guidance for the development and deployment of trustworthy AI tools in healthcare. The FUTURE-AI Consortium was founded in 2021 and comprises 117 interdisciplinary experts from 50 countries representing all continents, including AI scientists, clinical researchers, biomedical ethicists, and social scientists. Over a two year period, the FUTURE-AI guideline was established through consensus based on six guiding principles: fairness, universality, traceability, usability, robustness, and explainability. To operationalise trustworthy AI in healthcare, a set of 30 best practices was defined, addressing technical, clinical, socioethical, and legal dimensions. The recommendations cover the entire lifecycle of healthcare AI, from design, development, and validation to regulation, deployment, and monitoring.

Introduction

In the field of healthcare, artificial intelligence (AI), that is, algorithms with the ability to self-learn logic and data interactions, has been increasingly used to develop computer aided models for tasks such as disease diagnosis, prognosis, prediction of therapy response or survival, and patient stratification.1 Despite major advances, the deployment and adoption of AI technologies remain limited in real world clinical practice. In recent years, concerns have been raised about the technical, clinical, ethical, and societal risks associated with healthcare AI.2 3 In particular, existing research has shown that AI tools in healthcare can be prone to errors and patient harm, biases and increased health inequalities, lack of transparency and accountability, as well as data privacy and security breaches.4 5 6 7 8

To increase adoption in the real world, it is essential that AI tools are trusted and accepted by patients, clinicians, health organisations, and authorities. However, clear and widely accepted guidelines are lacking on how healthcare AI tools should be designed, developed, evaluated, and deployed to be trustworthy: technically robust, clinically safe, ethically sound, and legally compliant (see glossary in appendix table 1).9 To have a real impact at scale, such guidelines for responsible and trustworthy AI must be obtained through wide consensus involving international and interdisciplinary experts.

In other domains, international consensus guidelines have made lasting impacts. For example, the FAIR guideline10 for data management has been widely adopted by researchers, organisations, and authorities, as the principles provide a structured framework for standardising and enhancing the tasks of data collection, curation, organisation, and storage. Although it can be argued that the FAIR principles do not cover every aspect of data management because they focus more on findability, accessibility, interoperability, and reusability of the data, and less on privacy and security, they delivered a code of practice that is now widely accepted and applied.

AI in healthcare has unique properties compared with other domains. One is the special trust relation between doctors and patients: patients generally cannot objectively assess the diagnosis and treatment decisions of their doctors. This dynamic underscores the need for AI systems to be not only technically robust and clinically safe, but also ethically sound and transparent, so that they complement the trust patients place in their healthcare providers. However, compared with non-AI tools, the highly complex underlying data processing often offers little transparency into the exact working mechanisms. Unlike medical equipment, AI currently lacks universally accepted measures for quality assurance. Moreover, compared with chat assistants and synthetic image generators, which receive increasing public interaction, healthcare is a more sensitive domain where errors can have major consequences. Addressing these specific gaps is therefore crucial for trustworthy AI in healthcare.

Initial efforts have focused on providing recommendations for the reporting of AI studies for different medical domains or clinical tasks (eg, TRIPOD+AI,11 CLAIM,12 CONSORT-AI,13 DECIDE-AI,14 PROBAST-AI,15 CLEAR16). These guidelines do not provide best practices for the actual development and deployment of the AI tools, but promote standardised and complete reporting of their development and evaluation. Recently, several researchers have published promising ideas on possible best practices for healthcare AI.17 18 19 20 21 22 23 24 However, these proposals have not been established through wide international consensus and do not cover the whole lifecycle of healthcare AI (ie, from design, development, and validation to deployment, usage, and monitoring).

In other initiatives, the World Health Organization published a report focused on key ethical and legal challenges and considerations. Because it was intended for health ministries and governmental agencies, it did not explore the technical and clinical aspects of trustworthy AI.25 Likewise, Europe’s High-Level Expert Group on Artificial Intelligence established a comprehensive self-assessment checklist for AI developers. However, it covered AI in general and did not address the unique risks and challenges of AI in medicine and healthcare.26

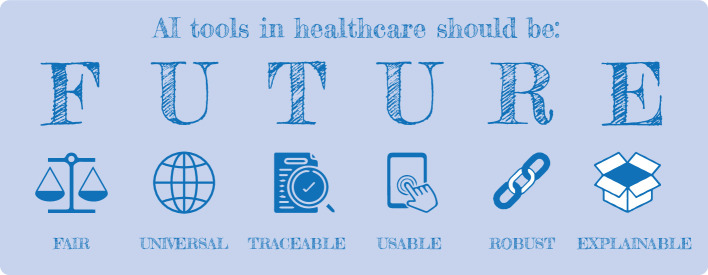

This paper addresses an important gap in the field of healthcare AI by delivering the first structured and holistic guideline for trustworthy and ethical AI in healthcare, established through wide international consensus and covering the entire lifecycle of AI. The FUTURE-AI Consortium was started in 2021 and currently comprises 117 international and interdisciplinary experts from 50 countries (fig 1), representing all continents (Europe, North America, South America, Asia, Africa, and Oceania). Additionally, the members represent a variety of disciplines (eg, data science, medical research, clinical medicine, computer engineering, medical ethics, social sciences) and data domains (eg, radiology, genomics, mobile health, electronic health records, surgery, pathology). To develop the FUTURE-AI framework, we drew inspiration from the FAIR principles for data management and defined concise recommendations organised according to six guiding principles: fairness, universality, traceability, usability, robustness, and explainability (fig 2).

Fig 1.

Geographical distribution of the multidisciplinary experts

Fig 2.

Organisation of the FUTURE-AI framework for trustworthy artificial intelligence (AI) according to six guiding principles—fairness, universality, traceability, usability, robustness, and explainability

Summary points.

Despite major advances in medical artificial intelligence (AI) research, clinical adoption of emerging AI solutions remains challenging owing to limited trust and ethical concerns

The FUTURE-AI Consortium unites 117 experts from 50 countries to define international guidelines for trustworthy healthcare AI

The FUTURE-AI framework is structured around six guiding principles: fairness, universality, traceability, usability, robustness, and explainability

The guideline addresses the entire AI lifecycle, from design and development to validation and deployment, ensuring alignment with real world needs and ethical requirements

The framework includes 30 detailed recommendations for building trustworthy and deployable AI systems, emphasising multistakeholder collaboration

Continuous risk assessment and mitigation are fundamental, addressing biases, data variations, and evolving challenges during the AI lifecycle

FUTURE-AI is designed as a dynamic framework, which will evolve with technological advancements and stakeholder feedback

Methods

FUTURE-AI is a structured framework that provides guiding principles and step-by-step recommendations for operationalising trustworthy and ethical AI in healthcare. This guideline was established through international consensus over a 24 month period using a modified Delphi approach.27 28 The process began with the definition of the six core guiding principles, followed by an initial set of recommendations, which were then subjected to eight rounds of extensive feedback and iterative discussions aimed at reaching consensus. We used two complementary methods to aggregate the results: a quantitative approach, which involved analysing the voting patterns of the experts to identify areas of consensus and disagreement; and a qualitative approach, focusing on the synthesis of feedback and discussions based on recurring themes or new insights raised by several experts.

Definition of FUTURE-AI guiding principles

To develop a user friendly guideline for trustworthy AI in medicine, we used the same approach as in the FAIR guideline, based on a minimal set of guiding principles. Defining overarching guiding principles facilitates the streamlining and structuring of best practices, as well as their implementation by future end users of the FUTURE-AI guideline.

To this end, we first reviewed the existing literature on healthcare AI, focusing on trustworthy AI and related topics in healthcare, such as responsible AI, ethical AI, AI deployment, and terms relating to the six principles identified later. Additional searches were performed for related guidelines, for example, on AI reporting and AI evaluation, and for guidelines or position statements from relevant (public) bodies such as the EU, the United States Food and Drug Administration (FDA), and WHO. This review enabled us to identify a wide range of requirements and dimensions often cited as essential for trustworthy AI.29 30 In the following rounds, the literature review was iteratively expanded based on the experts' advice and the widening of the scope (see round 3).

As table 1 shows, these requirements were then thematically grouped, leading to our definition of the six core principles (ie, fairness, universality, traceability, usability, robustness, and explainability), which were arranged to form an easy-to-remember acronym (FUTURE-AI).

Table 1.

Clustering of trustworthy artificial intelligence (AI) requirements and selection of FUTURE-AI guiding principles

| Clusters of requirements | Core principles |
|---|---|
| 1. Fairness, diversity, inclusiveness, non-discrimination, unbiased AI, equity | Fairness |
| 2. Generalisability, adaptability, interoperability, applicability, universality | Universality |
| 3. Traceability, monitoring, continuous learning, auditing, accountability | Traceability |
| 4. Human centred AI, user engagement, usability, accessibility, efficiency | Usability |
| 5. Robustness, reliability, resilience, safety, security | Robustness |
| 6. Transparency, explainability, interpretability, understandability | Explainability |

Round 1: Definition of an initial set of recommendations

Six working groups composed of three experts each (including clinicians, data scientists, and computer engineers) were created to explore the six guiding principles separately. The experts were recruited from five European projects (EuCanImage, ProCAncer-I, CHAIMELEON, PRIMAGE, INCISIVE), which together formed the AI for Health Imaging (AI4HI) network. By using “AI for medical imaging” as a common use case, each working group conducted a thorough literature review, then proposed a definition of the guiding principle in question, together with an initial list of best practices (between 6 and 10 for each guiding principle).

Subsequently, the working groups engaged in an iterative process of refining these preliminary recommendations through online meetings and email exchanges. At this stage, a degree of overlap and redundancy was identified across recommendations. For example, a recommendation to report any identified bias was initially proposed under both the fairness and traceability principles, while a recommendation to train the AI models with representative datasets appeared under fairness and robustness. After removing the redundancies and refining the formulations, a set of 55 preliminary recommendations was derived and then distributed to a broader panel of experts for further assessment, discussion, and refinement in the next round.

Round 2: Online survey

In this round, the FUTURE-AI Consortium was expanded to 72 members by recruiting new experts, including AI scientists, healthcare practitioners, ethicists, social scientists, legal experts, and industry professionals; this same group of 72 members took part in rounds 2–5. Experts were identified from the literature, through networks, and through online searches. Most were recruited to complement the original consortium based on academic credentials, geographical location, and under-represented expertise or demographics, to ensure a consortium representative in terms of geography and (healthcare) disciplines. We then conducted an online survey in which the experts assessed each recommendation using five voting options (absolutely essential, very important, of average importance, of little importance, not important at all). The participants were also able to rate the formulation of each recommendation (“I would keep it as it is,” “I would refine its definition”) and propose modifications. Furthermore, they were able to propose merging recommendations or adding new ones. The survey included a section for free text feedback on the core principles and the overall FUTURE-AI guideline.

The survey responses were analysed quantitatively to assess the level of consensus. Recommendations that garnered a high level of agreement (>90%) were selected for further discussion. Recommendations that attracted considerable negative feedback, particularly those that prescribed specific methods rather than general guidance, were discarded. The written feedback also prompted the merging of some recommendations, with the aim of producing a more concise guideline that future users could adopt more easily. Consequently, a revised list of 22 recommendations was derived, and 16 contentious points were identified for further discussion.
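The quantitative step can be sketched as follows. This is a minimal Python illustration, not the consortium's analysis code: it assumes, as a hypothetical rule that the text does not state, that a vote counts as agreement when it is “absolutely essential” or “very important”, and it applies the >90% selection threshold to that share. The function names and the example survey are invented for illustration.

```python
from collections import Counter

# The five voting options used in the round 2 survey.
OPTIONS = (
    "absolutely essential",
    "very important",
    "of average importance",
    "of little importance",
    "not important at all",
)

# Assumption (not stated in the guideline): a vote counts as agreement
# when it is one of the two highest options.
AGREE_OPTIONS = {"absolutely essential", "very important"}


def agreement_level(votes):
    """Return the share of votes (0 to 1) that fall into AGREE_OPTIONS."""
    if not votes:
        raise ValueError("no votes recorded")
    unexpected = set(votes) - set(OPTIONS)
    if unexpected:
        raise ValueError(f"unexpected options: {sorted(unexpected)}")
    counts = Counter(votes)
    return sum(counts[option] for option in AGREE_OPTIONS) / len(votes)


def select_for_discussion(survey, threshold=0.90):
    """Return the IDs of recommendations whose agreement exceeds the threshold."""
    return [rec_id for rec_id, votes in survey.items()
            if agreement_level(votes) > threshold]


# Hypothetical survey with two recommendations and 20 votes each.
example_survey = {
    "R1": ["absolutely essential"] * 12 + ["very important"] * 7
          + ["of average importance"],
    "R2": ["very important"] * 15 + ["not important at all"] * 5,
}
print(select_for_discussion(example_survey))  # ['R1'] (19/20 = 95% agreement)
```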

As part of the survey, we also asked the experts whether these guiding principles adequately captured the diverse requirements for trustworthy AI in healthcare. Although the experts largely agreed, they suggested introducing a seventh “general” category to cover broader issues such as data privacy, societal considerations, and regulatory compliance, and thus to produce a holistic framework. The best practices in this category are overarching; for example, multistakeholder engagement (general 1) is relevant to all six guiding principles, so placing it in a single category avoids repeating it under each principle.

Round 3: Feedback on the reduced set of recommendations

The updated version of the guideline from round 2 was distributed to all experts for another round of feedback. This involved assessing both the adequacy and the phrasing of the recommendations. Additionally, we presented the points of contention identified in the survey, encouraging experts to offer their insights on these disagreements. Examples of contentious topics included the recommendation to perform multicentre versus local clinical evaluation, and the necessity (or not) to systematically evaluate the AI tools against adversarial attacks.

The feedback received from the experts was crucial for resolving several contentious issues, particularly through refining the wording of the recommendations. Moreover, the scope was broadened from “AI in medical imaging” to “AI in healthcare” because we realised that most of the recommendations hold for healthcare in general, making the guideline more broadly applicable. This led to the expansion of the FUTURE-AI guideline to a total of 30 best practices, including six new recommendations within the “general” category. Areas of disagreement that remained unresolved were carefully documented and summarised for future discussions.

Round 4: Further feedback and rating of the recommendations

The updated recommendations were sent out to the experts for additional feedback, this time in written form, to assess each recommendation’s clarity, feasibility, and relevance. This phase allowed for more precise phrasing of the recommendations. As an example, the original recommendation to train AI models with “diverse, heterogeneous data” was refined by using the term “representative data” because many experts argued that representative data more effectively capture the essential characteristics of the populations, while the term heterogeneous is more ambiguous.

Furthermore, we implemented a system to rate each best practice according to the specific needs and goals of each AI project. A key focus was to distinguish between healthcare AI tools at the research or proof-of-concept stage and those intended for clinical deployment, because they require different levels of compliance. Healthcare AI tools in the research or proof-of-concept stage are typically in their experimental phase and require some flexibility as their capabilities are being explored and fine-tuned. In contrast, AI tools intended for clinical deployment will interact directly with patient care and therefore require higher standards of compliance to ensure they are ethical, safe, and effective. At this point in the process, the consortium members were asked to assess all the recommendations separately for proof-of-concept and deployable AI tools, and to categorise each as either “recommended” or “highly recommended.”

Round 5: Feedback on the manuscript

At this stage, with a well developed set of 30 recommendations, the first and last authors of the study drafted the first version of the FUTURE-AI manuscript. The draft was circulated among the experts, starting a series of iterative feedback sessions to ensure that the FUTURE-AI guideline was articulated precisely and clearly. This process incorporated diverse perspectives from clinical, technical, and non-technical experts, making the manuscript more reader friendly and accessible to a broad audience. Experts were also able to suggest additional resources or references to further substantiate the recommendations. Where relevant, examples of methods were integrated into the manuscript to demonstrate the practical implementation of the best practices in real world scenarios.

Round 6: New “external” feedback

In round 6 we invited additional experts (n=44) who had not participated in the initial stages of the study to provide independent feedback. This group was carefully selected to ensure more diverse representation of expertise (eg, patient advocates, social scientists, regulatory experts), as well as wider geographical diversity (especially across Africa, Latin America, and Asia).

These experts were asked to provide written feedback and to vote on each recommendation (ie, agree, disagree, neutral, did not understand, no opinion). For most recommendations that did not reach clear agreement (again assessed by consensus level), the main cause was misinterpretation or unclear wording. This stage was therefore especially helpful for pinpointing remaining areas of ambiguity or contention that required further discussion, and for identifying formulations that needed refinement so that the entire guideline is clear and accessible to a diverse audience within the medical AI community.
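A sketch of how round 6 votes could be triaged to separate unclear wording from genuine disagreement. The 90% agreement threshold and the rule that “did not understand” must be the single most frequent non-agree option are assumptions made for illustration; the text states only that consensus level was used.

```python
from collections import Counter

# Voting options used by the external experts in round 6.
VOTE_OPTIONS = ("agree", "disagree", "neutral", "did not understand", "no opinion")


def triage(votes, agree_threshold=0.9):
    """Classify one recommendation from its round 6 votes.

    Returns "consensus" when the share of "agree" votes reaches the
    (hypothetical) threshold, "unclear" when "did not understand" is strictly
    the most common of the remaining options, and "contentious" otherwise.
    """
    if not votes:
        raise ValueError("no votes recorded")
    counts = Counter(votes)
    unexpected = set(counts) - set(VOTE_OPTIONS)
    if unexpected:
        raise ValueError(f"unexpected options: {sorted(unexpected)}")
    if counts["agree"] / len(votes) >= agree_threshold:
        return "consensus"
    not_understood = counts["did not understand"]
    others = [counts[o] for o in VOTE_OPTIONS
              if o not in ("agree", "did not understand")]
    if not_understood > 0 and all(not_understood > n for n in others):
        return "unclear"  # candidate for rewording rather than further debate
    return "contentious"


print(triage(["agree"] * 6 + ["did not understand"] * 3 + ["disagree"]))  # unclear
```

Recommendations flagged as “unclear” would go back for rewording, while “contentious” ones would be carried forward to the consensus meetings.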

Round 7: Online consensus meetings

Based on the feedback from previous rounds, we identified a few topics that continued to evoke a degree of contention among experts, particularly concerning the exact wording of certain recommendations. Hence, we convened four online meetings in June 2023 specifically aimed at deepening the discussions around the remaining contentious areas and reaching a final consensus on both the recommendations and their formulations.

These discussions resolved outstanding issues, such as the recommendation to systematically validate AI tools against adversarial attacks, which many experts considered a cybersecurity concern and which was thus grouped with other related concerns; or the recommendation that clinical evaluations should be conducted by third parties, which was deemed impractical at scale, especially in resource limited settings. As a result of these consensus meetings, the final list of FUTURE-AI recommendations was established and their formulations were finalised, as detailed in table 2.

Table 2.

List of FUTURE-AI recommendations, together with the expected compliance for both research and deployable artificial intelligence (AI) tools (+: recommended, ++: highly recommended)

| Recommendations | Research | Deployable |
|---|---|---|
| Fairness | | |
| 1. Define any potential sources of bias from an early stage | ++ | ++ |
| 2. Collect information on individuals’ and data attributes | + | + |
| 3. Evaluate potential biases and, when needed, bias correction measures | + | ++ |
| Universality | | |
| 1. Define intended clinical settings and cross setting variations | ++ | ++ |
| 2. Use community defined standards (eg, clinical definitions, technical standards) | + | + |
| 3. Evaluate using external datasets and/or multiple sites | ++ | ++ |
| 4. Evaluate and demonstrate local clinical validity | + | ++ |
| Traceability | | |
| 1. Implement a risk management process throughout the AI lifecycle | + | ++ |
| 2. Provide documentation (eg, technical, clinical) | ++ | ++ |
| 3. Define mechanisms for quality control of the AI inputs and outputs | + | ++ |
| 4. Implement a system for periodic auditing and updating | + | ++ |
| 5. Implement a logging system for usage recording | + | ++ |
| 6. Establish mechanisms for AI governance | + | ++ |
| Usability | | |
| 1. Define intended use and user requirements from an early stage | ++ | ++ |
| 2. Establish mechanisms for human-AI interactions and oversight | + | ++ |
| 3. Provide training materials and activities (eg, tutorials, hands-on sessions) | + | ++ |
| 4. Evaluate user experience and acceptance with independent end users | + | ++ |
| 5. Evaluate clinical utility and safety (eg, effectiveness, harm, cost-benefit) | + | ++ |
| Robustness | | |
| 1. Define sources of data variation from an early stage | ++ | ++ |
| 2. Train with representative real world data | ++ | ++ |
| 3. Evaluate and optimise robustness against real world variations | ++ | ++ |
| Explainability | | |
| 1. Define the need and requirements for explainability with end users | ++ | ++ |
| 2. Evaluate explainability with end users (eg, correctness, impact on users) | + | + |
| General | | |
| 1. Engage interdisciplinary stakeholders throughout the AI lifecycle | ++ | ++ |
| 2. Implement measures for data privacy and security | ++ | ++ |
| 3. Implement measures to address identified AI risks | ++ | ++ |
| 4. Define adequate evaluation plan (eg, datasets, metrics, reference methods) | ++ | ++ |
| 5. Identify and comply with applicable AI regulatory requirements | + | ++ |
| 6. Investigate and address application specific ethical issues | + | ++ |
| 7. Investigate and address social and societal issues | + | + |
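For teams that want to track compliance programmatically, table 2 can be encoded as data. The sketch below is a hypothetical helper, not part of the FUTURE-AI deliverables; the recommendation wording is abbreviated where the table gives examples in parentheses.

```python
# Table 2 as data: (principle, number, recommendation, research, deployable).
# "+" = recommended, "++" = highly recommended.
TABLE_2 = [
    ("Fairness", 1, "Define any potential sources of bias from an early stage", "++", "++"),
    ("Fairness", 2, "Collect information on individuals' and data attributes", "+", "+"),
    ("Fairness", 3, "Evaluate potential biases and, when needed, bias correction measures", "+", "++"),
    ("Universality", 1, "Define intended clinical settings and cross setting variations", "++", "++"),
    ("Universality", 2, "Use community defined standards", "+", "+"),
    ("Universality", 3, "Evaluate using external datasets and/or multiple sites", "++", "++"),
    ("Universality", 4, "Evaluate and demonstrate local clinical validity", "+", "++"),
    ("Traceability", 1, "Implement a risk management process throughout the AI lifecycle", "+", "++"),
    ("Traceability", 2, "Provide documentation", "++", "++"),
    ("Traceability", 3, "Define mechanisms for quality control of the AI inputs and outputs", "+", "++"),
    ("Traceability", 4, "Implement a system for periodic auditing and updating", "+", "++"),
    ("Traceability", 5, "Implement a logging system for usage recording", "+", "++"),
    ("Traceability", 6, "Establish mechanisms for AI governance", "+", "++"),
    ("Usability", 1, "Define intended use and user requirements from an early stage", "++", "++"),
    ("Usability", 2, "Establish mechanisms for human-AI interactions and oversight", "+", "++"),
    ("Usability", 3, "Provide training materials and activities", "+", "++"),
    ("Usability", 4, "Evaluate user experience and acceptance with independent end users", "+", "++"),
    ("Usability", 5, "Evaluate clinical utility and safety", "+", "++"),
    ("Robustness", 1, "Define sources of data variation from an early stage", "++", "++"),
    ("Robustness", 2, "Train with representative real world data", "++", "++"),
    ("Robustness", 3, "Evaluate and optimise robustness against real world variations", "++", "++"),
    ("Explainability", 1, "Define the need and requirements for explainability with end users", "++", "++"),
    ("Explainability", 2, "Evaluate explainability with end users", "+", "+"),
    ("General", 1, "Engage interdisciplinary stakeholders throughout the AI lifecycle", "++", "++"),
    ("General", 2, "Implement measures for data privacy and security", "++", "++"),
    ("General", 3, "Implement measures to address identified AI risks", "++", "++"),
    ("General", 4, "Define adequate evaluation plan", "++", "++"),
    ("General", 5, "Identify and comply with applicable AI regulatory requirements", "+", "++"),
    ("General", 6, "Investigate and address application specific ethical issues", "+", "++"),
    ("General", 7, "Investigate and address social and societal issues", "+", "+"),
]

# Column index of the compliance level for each development stage.
STAGE_COLUMN = {"research": 3, "deployable": 4}


def requirements(stage, level="++"):
    """List recommendation IDs with the given compliance level for a stage."""
    column = STAGE_COLUMN[stage]
    return [f"{row[0]} {row[1]}" for row in TABLE_2 if row[column] == level]


# Sanity check: no recommendation is stricter for research than for deployment.
assert all(not (row[3] == "++" and row[4] == "+") for row in TABLE_2)

print(len(requirements("deployable")), len(requirements("research")))  # 26 13
```

Filtering with `requirements("deployable", "+")` returns the four recommendations that remain “recommended” even for deployable tools: fairness 2, universality 2, explainability 2, and general 7.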

Round 8: Final consensus vote

The final step of the process was a consensus vote on the derived recommendations, conducted through an online survey. By this stage, the consortium had grown to 117 experts as more people responded to the recruitment efforts described above: the original 72 experts from round 2, some of the 44 experts who provided feedback in round 6, and several additional experts. By the end of this process, all the recommendations were approved with less than 5% disagreement among all FUTURE-AI members. The small residual disagreement concerned mainly whether individual recommendations should be rated “recommended” or “highly recommended” for research and deployable tools.

FUTURE-AI guideline

In this section, we provide definitions and justifications for each of the six guiding principles and give an overview of the FUTURE-AI recommendations. Table 2 provides a summary of the recommendations, together with the proposed level of compliance (ie, recommended v highly recommended). Note that supplementary table 1 in the appendix presents a glossary of the main terms used in this paper, while supplementary table 2 lists the main stakeholders of relevance to the FUTURE-AI framework.

Fairness

The fairness principle states that AI tools in healthcare should maintain the same performance across individuals and groups of individuals (including under-represented and disadvantaged groups). AI driven medical care should be provided equally for all citizens. Biases in healthcare AI can arise from differences in the attributes of the individuals (eg, sex, gender, age, ethnicity, socioeconomic status, medical conditions) or of the data (eg, acquisition site, machines, operators, annotators). Because perfect fairness might be impossible to achieve in practice, fair AI tools should be developed such that potential AI biases are identified, reported, and minimised as much as possible, so that performance across subgroups is ideally the same and at least highly similar.31 To this end, the FUTURE-AI framework defines three recommendations for fairness.

Fairness 1: Define sources of bias

Bias in healthcare AI is application specific.32 At the design phase, the interdisciplinary AI development team (see glossary) should identify possible types and sources of bias for their AI tool.33 These might include group attributes (eg, sex, gender, age, ethnicity, socioeconomic status, geography), the medical profiles of the individuals (eg, with comorbidities or disability), as well as human and technical biases during data acquisition, labelling, data curation, or the selection of the input features.

Fairness 2: Collect information on individual and data attributes

To identify biases and apply measures for increased fairness, relevant attributes of the individuals, such as sex, gender, age, ethnicity, risk factors, comorbidities, or disabilities, should be collected. This should be subject to informed consent and approval by ethics committees to ensure an appropriate balance between the benefits of non-discrimination and the risks of reidentification. Information for assessing the similarity of medical profiles (eg, risk factors, comorbidities, biomarkers, anatomical properties34) should also be collected to verify equal treatment. Furthermore, relevant information about the datasets, such as the centres where they were acquired, the machines used, and the preprocessing and annotation processes, should be systematically collected to address technical and human biases. When complete data collection is logistically challenging, two alternative approaches can be considered: imputing missing attributes or removing samples with incomplete data. The choice between these methods should be made case by case, considering the specific context and requirements of the AI system.
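The two fallback options for incomplete attribute data can be sketched as follows. This minimal example assumes records are Python dictionaries with `None` for missing values and uses most-frequent-value imputation, one simple choice among many (multiple imputation is a common alternative); the attribute names and records are hypothetical.

```python
from collections import Counter


def complete_cases(records, attributes):
    """Option 1: keep only records in which every listed attribute is present."""
    return [r for r in records if all(r.get(a) is not None for a in attributes)]


def impute_most_frequent(records, attributes):
    """Option 2: fill missing categorical attributes with the most frequent value.

    Returns new dictionaries (the input is not modified) and adds an
    '<attribute>_imputed' flag so later bias analyses can separate observed
    from imputed values.
    """
    filled = [dict(r) for r in records]
    for a in attributes:
        observed = [r[a] for r in records if r.get(a) is not None]
        if not observed:
            continue  # nothing to impute from; leave the attribute missing
        most_frequent = Counter(observed).most_common(1)[0][0]
        for r in filled:
            missing = r.get(a) is None
            r[f"{a}_imputed"] = missing
            if missing:
                r[a] = most_frequent
    return filled


# Hypothetical records with one individual attribute and one data attribute.
records = [
    {"id": 1, "sex": "F", "site": "A"},
    {"id": 2, "sex": None, "site": "A"},
    {"id": 3, "sex": "F", "site": "B"},
    {"id": 4, "sex": "M", "site": None},
]
print([r["id"] for r in complete_cases(records, ["sex", "site"])])  # [1, 3]
print(impute_most_frequent(records, ["sex", "site"])[1])
```

Flagging imputed values is a deliberate choice: imputation can itself mask subgroup differences, so later fairness analyses may need to be repeated on complete cases only.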

Fairness 3: Evaluate fairness

When possible (that is, when the individuals’ and data attributes are available), bias detection methods should be applied using fairness metrics such as true positive rates, statistical parity, group fairness, and equalised odds.31 35 To correct any identified biases, mitigation measures such as data resampling, bias free representations, and equalised odds postprocessing36 37 38 39 40 should be tested to verify their impact on both the tool’s fairness and the model’s accuracy. Importantly, any remaining bias should be documented and reported to inform the end users and citizens (see traceability 2).
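The group metrics named above can be computed directly from predictions. This sketch assumes binary labels and predictions and reports each criterion as the largest between-group difference, one common way of summarising them; the data are hypothetical and the code is not tied to any particular fairness library.

```python
def group_rates(y_true, y_pred, groups):
    """Per-group true positive rate, false positive rate and selection rate."""
    rates = {}
    for g in sorted(set(groups)):
        idx = [i for i, gi in enumerate(groups) if gi == g]
        pos = [i for i in idx if y_true[i] == 1]
        neg = [i for i in idx if y_true[i] == 0]
        if not pos or not neg:
            raise ValueError(f"group {g!r} needs both positive and negative cases")
        rates[g] = {
            "tpr": sum(y_pred[i] for i in pos) / len(pos),
            "fpr": sum(y_pred[i] for i in neg) / len(neg),
            "selection_rate": sum(y_pred[i] for i in idx) / len(idx),
        }
    return rates


def fairness_gaps(y_true, y_pred, groups):
    """Largest between-group differences for three common fairness criteria."""
    rates = group_rates(y_true, y_pred, groups)

    def gap(key):
        values = [r[key] for r in rates.values()]
        return max(values) - min(values)

    return {
        # Statistical parity: equal rates of positive predictions.
        "statistical_parity_difference": gap("selection_rate"),
        # Equal opportunity: equal true positive rates.
        "equal_opportunity_difference": gap("tpr"),
        # Equalised odds: equal true positive AND false positive rates.
        "equalised_odds_difference": max(gap("tpr"), gap("fpr")),
    }


# Hypothetical binary labels and predictions for two subgroups.
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 0, 0, 1, 0, 1, 0]
groups = ["A"] * 4 + ["B"] * 4
print(fairness_gaps(y_true, y_pred, groups))
```

In this toy example the two groups receive positive predictions at the same rate (statistical parity difference of 0), yet their true and false positive rates differ by 0.5, which illustrates why several metrics should be inspected together.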

Universality

The universality principle emphasises that a healthcare AI tool should be generalisable outside the controlled environment where it was built. Specifically, the AI tool should be able to generalise to new patients and new users (eg, new clinicians) and, when applicable, to new clinical sites. Depending on the intended scope of application, healthcare AI tools should be as interoperable and as transferable as possible so they can benefit citizens and clinicians at scale. To this end, the FUTURE-AI framework defines four recommendations for universality.

Universality 1: Define clinical settings

At the design phase, the development team should specify the clinical settings in which the AI tool will be applied (eg, primary healthcare centres, hospitals, home care, low versus high resource settings, one or several countries), and anticipate potential obstacles to universality (eg, differences in end users, clinical definitions, medical equipment or IT infrastructures across settings).

Universality 2: Use existing standards

To ensure the quality and interoperability of the AI tool, it should be developed based on existing community defined standards. These might include clinical definitions of diseases by medical societies, medical ontologies (eg, Systematized Nomenclature of Medicine Clinical Terms (SNOMED CT)41), data models (eg, Observational Medical Outcomes Partnership (OMOP)42), interface standards (eg, Digital Imaging and Communications in Medicine (DICOM), Health Level Seven (HL7) Fast Healthcare Interoperability Resources (FHIR)), data annotation protocols, evaluation criteria,21 and technical standards (eg, Institute of Electrical and Electronics Engineers (IEEE)43 or International Organization for Standardization (ISO)44).

Universality 3: Evaluate using external data

To assess generalisability, technical validation of the AI tools should be performed with external datasets that are distinct from those used for model training.45 These might include reference or benchmarking datasets that are representative of the task in question (ie, approximating the expected real world variations). Except for AI tools intended for a single centre, clinical evaluation studies should be performed at several sites to assess performance and interoperability across clinical workflows.46 If the tool’s generalisability is limited, mitigation measures (eg, transfer learning or domain adaptation) should be applied and tested.
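Where several sites contribute data, a leave-one-site-out (internal-external) validation loop is one way to approximate the external evaluation described above; it complements, rather than replaces, truly external datasets. In this sketch, `train_fn` and `eval_fn` are hypothetical placeholders for a project's own code, and a majority-class “model” stands in for a real one.

```python
from collections import Counter


def leave_one_site_out(records, train_fn, eval_fn):
    """For each site, train on all other sites and evaluate on the held-out site.

    train_fn(records) -> model and eval_fn(model, records) -> score are
    placeholders for the project's own training and evaluation code.
    """
    sites = sorted({r["site"] for r in records})
    if len(sites) < 2:
        raise ValueError("need at least two sites")
    results = {}
    for held_out in sites:
        train = [r for r in records if r["site"] != held_out]
        test = [r for r in records if r["site"] == held_out]
        model = train_fn(train)
        results[held_out] = eval_fn(model, test)
    return results


# Toy stand-ins: the "model" is simply the majority label of the training data.
def train_majority(records):
    return Counter(r["label"] for r in records).most_common(1)[0][0]


def accuracy(model, records):
    return sum(r["label"] == model for r in records) / len(records)


labels_by_site = {"A": [1, 1, 0], "B": [1, 1, 1, 1], "C": [0, 0, 0]}
records = [{"site": s, "label": y} for s, ys in labels_by_site.items() for y in ys]
print(leave_one_site_out(records, train_majority, accuracy))
```

The toy results (about 0.67 for site A and 0.0 for sites B and C) show how a model that looks reasonable on pooled data can transfer poorly to individual sites.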

Universality 4: Evaluate local clinical validity

Clinical settings vary in many aspects, such as populations, equipment, clinical workflows, and end users. Therefore, to ensure trust at each site, the AI tools should be evaluated for their local clinical validity.17 In particular, the AI tool should fit the local clinical workflows and perform well on the local populations. If performance decreases when the tool is evaluated locally, the AI model should be recalibrated and the recalibration tested (eg, through model fine tuning).
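As one illustration of recalibration, the sketch below applies recalibration-in-the-large, which shifts only the model's intercept on the logit scale so that the mean predicted risk matches the event rate observed at the local site. This is a swapped-in technique (fine tuning, as mentioned above, or full logistic recalibration of intercept and slope are alternatives), and the local data are hypothetical.

```python
import math

EPS = 1e-12  # keeps probabilities away from 0 and 1 before taking logits


def logit(p):
    p = min(max(p, EPS), 1 - EPS)
    return math.log(p / (1 - p))


def sigmoid(z):
    return 1 / (1 + math.exp(-z))


def fit_intercept_shift(probs, outcomes, tol=1e-10):
    """Recalibration-in-the-large: find the shift a such that the mean of
    sigmoid(logit(p) + a) over the local patients equals their event rate."""
    event_rate = sum(outcomes) / len(outcomes)
    if event_rate in (0, 1):
        raise ValueError("need both events and non-events in the local data")
    z = [logit(p) for p in probs]
    lo, hi = -30.0, 30.0
    # The mean prediction increases monotonically with the shift, so bisection converges.
    while hi - lo > tol:
        mid = (lo + hi) / 2
        mean_pred = sum(sigmoid(zi + mid) for zi in z) / len(z)
        if mean_pred < event_rate:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


def recalibrate(probs, shift):
    """Apply the fitted intercept shift to new predictions."""
    return [sigmoid(logit(p) + shift) for p in probs]


# Hypothetical local data: the model predicts 20% risk, but 4 of 10 patients have the event.
local_probs = [0.2] * 10
local_outcomes = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
shift = fit_intercept_shift(local_probs, local_outcomes)
print(round(shift, 4), round(recalibrate(local_probs, shift)[0], 4))  # 0.9808 0.4
```

Because only the intercept changes, the ranking of patients, and therefore discrimination metrics such as the AUC, stays the same; if local performance problems go beyond calibration, fine tuning would be needed.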

Traceability

The traceability principle states that medical AI tools should be developed together with mechanisms for documenting and monitoring the complete trajectory of the AI tool, from development and validation to deployment and usage. This will increase transparency and accountability by providing detailed and continuous information on the AI tools during their lifetime to clinicians, healthcare organisations, citizens and patients, AI developers, and relevant authorities. AI traceability will also enable continuous auditing of AI models,47 the identification of risks and limitations, and updates to the AI models when needed.

Traceability 1: Implement risk management

Throughout the AI tool’s lifecycle, the multidisciplinary development team should analyse potential risks, assess each risk’s likelihood, effects, and risk-benefit balance, define risk mitigation measures, monitor the risks and mitigations continuously, and maintain a risk management file. The risks might include those explicitly covered by the FUTURE-AI guiding principles (eg, bias, harm, data breach), but also application specific risks. Other risks to consider include human factors that might lead to misuse of the AI tool (eg, not following the instructions, receiving insufficient training), application of the AI tool to individuals who are not within the target population, use of the tool by people other than the target end users (eg, a technician instead of a physician), hardware failure, incorrect data annotations or input values, and adversarial attacks. Mitigation measures might include warnings to the users, system shutdown, reprocessing of the input data, the acquisition of new input data, or the use of an alternative procedure or human judgment only. Monitoring and reassessment of risk might involve the use of various feedback channels, such as customer feedback and complaints, as well as logged real world performance and issues (see traceability 5).
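A risk management file is often organised as a register that scores each risk by likelihood and severity. The sketch below assumes hypothetical 1 to 5 scales and an acceptability threshold of 10; these values are illustrative only and would be defined per project (eg, within a process aligned with ISO 14971). The example risks are taken from the list above.

```python
from dataclasses import dataclass, field


@dataclass
class Risk:
    """One entry in a risk management file (hypothetical 1-5 scales)."""
    description: str
    likelihood: int  # 1 = rare ... 5 = frequent
    severity: int    # 1 = negligible ... 5 = catastrophic
    mitigations: list = field(default_factory=list)

    def __post_init__(self):
        for name in ("likelihood", "severity"):
            if not 1 <= getattr(self, name) <= 5:
                raise ValueError(f"{name} must be between 1 and 5")

    @property
    def score(self):
        return self.likelihood * self.severity


def risks_requiring_action(register, threshold=10):
    """Return risks at or above the (hypothetical) threshold, worst first."""
    flagged = [r for r in register if r.score >= threshold]
    return sorted(flagged, key=lambda r: r.score, reverse=True)


register = [
    Risk("Tool applied to individuals outside the target population", 3, 4,
         ["Warn users when inputs fall outside the intended population"]),
    Risk("Hardware failure during use", 2, 3, ["Fall back to the standard procedure"]),
    Risk("Incorrect input values", 4, 4, ["Input quality control", "Request new input data"]),
]
for risk in risks_requiring_action(register):
    print(risk.score, risk.description)
```

Re-scoring the register after each audit or reported incident keeps the file aligned with the continuous monitoring the recommendation calls for.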

Traceability 2: Provide documentation

To increase transparency, traceability, and accountability, adequate documentation should be created and maintained for the AI tool,48 which might include (a) an AI information leaflet to inform citizens and healthcare professionals about the tool’s intended use, risks (eg, biases) and instructions for use; (b) a technical document to inform AI developers, health organisations, and regulators about the AI model’s properties (eg, hyperparameters), training and testing data, evaluation criteria and results, biases and other limitations, and periodic audits and updates49 50 51; (c) a publication based on existing AI reporting standards13 15 52; and (d) a risk management file (see traceability 1).
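
The technical document can also be kept in machine readable form so that it is versioned alongside the AI model. The dataclass below is a hypothetical skeleton, not an established reporting schema. Its field names mirror the items listed in (b), and all values are placeholders.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class TechnicalDocumentation:
    """Hypothetical skeleton for the technical document of an AI tool."""
    tool_name: str
    version: str
    intended_use: str
    hyperparameters: dict
    training_data: str
    testing_data: str
    evaluation_criteria: list
    evaluation_results: dict
    known_biases_and_limitations: list
    audits: list = field(default_factory=list)  # appended after each periodic audit

    def record_audit(self, date: str, summary: str) -> None:
        self.audits.append({"date": date, "summary": summary})

    def to_json(self) -> str:
        return json.dumps(asdict(self), indent=2)


doc = TechnicalDocumentation(
    tool_name="example-risk-model",  # hypothetical values throughout
    version="1.0.0",
    intended_use="Risk prediction for adult outpatients",
    hyperparameters={"learning_rate": 0.001, "epochs": 50},
    training_data="Description of training cohorts and sites",
    testing_data="Description of external test cohort",
    evaluation_criteria=["AUC", "calibration", "statistical parity difference"],
    evaluation_results={"AUC": None},  # to be filled in from the evaluation study
    known_biases_and_limitations=["Not validated in patients under 18"],
)
doc.record_audit("2025-01-15", "No drift detected")
print(doc.to_json())
```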

Traceability 3: Implement continuous quality control

The AI tool should be developed and deployed with mechanisms for continuous monitoring and quality control of the AI inputs and outputs,47 such as to identify missing or out-of-range input variables, inconsistent data formats or units, incorrect annotations or data preprocessing, and erroneous or implausible AI outputs. For quality control of the AI decisions, uncertainty estimates should be provided (and calibrated53) to inform the end users about the degree of confidence in the results.54
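
Input and output checks of this kind can start as simple rules. The sketch below assumes tabular inputs with hypothetical plausibility ranges (to be agreed with clinical experts) and adds a binned expected calibration error, one common way to check whether predicted probabilities are calibrated. The use of ten equal width bins is an assumption.

```python
PLAUSIBLE_RANGES = {  # hypothetical ranges, to be set with clinical experts
    "age": (18, 110),
    "heart_rate": (20, 250),
    "systolic_bp": (50, 260),
}


def check_inputs(record: dict) -> list:
    """Return a list of quality issues for one input record (empty if none)."""
    issues = []
    for name, (low, high) in PLAUSIBLE_RANGES.items():
        value = record.get(name)
        if value is None:
            issues.append(f"missing {name}")
        elif isinstance(value, bool) or not isinstance(value, (int, float)):
            issues.append(f"non-numeric {name}: {value!r}")
        elif not low <= value <= high:
            issues.append(f"out-of-range {name}: {value}")
    return issues


def check_output(prob: float) -> list:
    """Flag implausible AI outputs (here: probabilities outside [0, 1])."""
    return [] if 0.0 <= prob <= 1.0 else [f"implausible output: {prob}"]


def expected_calibration_error(probs, labels, n_bins=10):
    """Binned gap between predicted positive-class probability and observed frequency."""
    total = len(probs)
    ece = 0.0
    for b in range(n_bins):
        low, high = b / n_bins, (b + 1) / n_bins
        in_bin = [i for i, p in enumerate(probs) if low < p <= high or (b == 0 and p == 0.0)]
        if not in_bin:
            continue
        mean_prob = sum(probs[i] for i in in_bin) / len(in_bin)
        observed = sum(labels[i] for i in in_bin) / len(in_bin)
        ece += len(in_bin) / total * abs(observed - mean_prob)
    return ece


print(check_inputs({"age": 54, "heart_rate": 400, "systolic_bp": None}))
# ['out-of-range heart_rate: 400', 'missing systolic_bp']
```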

Traceability 4: Implement periodic auditing and updating

The AI tool should be developed and deployed with a configurable system for periodic auditing,47 which should define the datasets and timelines for periodic evaluations (eg, every year). The periodic auditing should enable the identification of data or concept drifts, newly occurring biases, performance degradation or changes in the decision making of the end users.55 Accordingly, necessary updates to the AI models or AI tools should be applied.56
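
For the periodic audit, input (data) drift can be screened by comparing the distribution of each input variable in the audit period against a reference period. This sketch uses the population stability index. The bin edges, the data, and the threshold of 0.2 (a commonly cited rule of thumb rather than a clinical standard) are assumptions. Concept drift and performance degradation additionally require labelled outcomes from the audit period.

```python
import bisect
import math


def _bin_proportions(values, edges, floor=1e-4):
    """Share of values per bin; values outside the edges are clamped into the end bins."""
    counts = [0] * (len(edges) - 1)
    for v in values:
        i = bisect.bisect_right(edges, v) - 1
        i = min(max(i, 0), len(counts) - 1)
        counts[i] += 1
    n = len(values)
    # A small floor avoids division by zero and log(0) for empty bins.
    return [max(c / n, floor) for c in counts]


def population_stability_index(reference, current, edges):
    ref = _bin_proportions(reference, edges)
    cur = _bin_proportions(current, edges)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))


def audit_feature(name, reference, current, edges, threshold=0.2):
    """Flag a feature whose distribution has shifted since the reference period."""
    psi = population_stability_index(reference, current, edges)
    return {"feature": name, "psi": round(psi, 3), "drift": psi > threshold}


# Hypothetical ages at deployment (reference) and in this year's audit.
age_edges = [18, 40, 60, 80, 110]
reference_ages = [25, 35, 45, 55, 65, 75] * 50
current_ages = [65, 70, 75, 85, 90, 95] * 50  # an older population this year
print(audit_feature("age", reference_ages, reference_ages, age_edges))  # no drift
print(audit_feature("age", reference_ages, current_ages, age_edges))
```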

Traceability 5: Implement AI logging

To increase traceability and accountability, an AI logging system should be implemented to trace the user’s main actions in a privacy preserving manner, specify the data that are accessed and used, record the AI predictions and clinical decisions, and log any encountered issues. Time series statistics and visualisations should be used to inspect the usage of the AI tool over time.
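
A minimal logging sketch is shown below, assuming one JSON record per AI use written to a text stream. User and patient identifiers are replaced with a keyed hash (HMAC-SHA256 from the Python standard library), which is pseudonymisation rather than anonymisation. The key handling, field names, and daily counts used for time series inspection are illustrative assumptions.

```python
import hashlib
import hmac
import io
import json
from collections import Counter
from datetime import datetime, timezone

# Hypothetical key; in practice it would come from a secrets store, never from code.
PSEUDONYMISATION_KEY = b"replace-with-secret-key"


def pseudonymise(identifier: str) -> str:
    """Keyed hash, so log entries can be linked per user or patient without raw IDs."""
    digest = hmac.new(PSEUDONYMISATION_KEY, identifier.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()[:16]


def log_event(stream, user_id, patient_id, data_used, ai_prediction,
              clinical_decision, issue=None, when=None):
    record = {
        "timestamp": (when or datetime.now(timezone.utc)).isoformat(),
        "user": pseudonymise(user_id),
        "patient": pseudonymise(patient_id),
        "data_used": data_used,
        "ai_prediction": ai_prediction,
        "clinical_decision": clinical_decision,
        "issue": issue,
    }
    stream.write(json.dumps(record) + "\n")  # one JSON object per line
    return record


def daily_usage(log_text: str) -> Counter:
    """Number of logged AI uses per day, as input for time series plots."""
    return Counter(json.loads(line)["timestamp"][:10] for line in log_text.splitlines() if line)


log = io.StringIO()
log_event(log, "dr.smith", "patient-001", ["ECG", "blood_pressure"], 0.82, "refer to cardiology",
          when=datetime(2025, 3, 1, 9, 30, tzinfo=timezone.utc))
log_event(log, "dr.smith", "patient-002", ["ECG"], None, "no AI result", issue="missing ECG lead",
          when=datetime(2025, 3, 1, 11, 0, tzinfo=timezone.utc))
print(daily_usage(log.getvalue()))  # Counter({'2025-03-01': 2})
```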

Traceability 6: Implement AI governance

After deployment, the governance of the AI tool should be specified. In particular, the roles of risk management, periodic auditing, maintenance, and supervision should be assigned, such as to IT teams or healthcare administrators. Furthermore, responsibilities for AI related errors should be clearly specified among clinicians, healthcare centres, AI developers, and manufacturers. Accountability mechanisms should be established, incorporating both individual and collective liability, alongside compensation and support structures for patients affected by AI errors.

Usability

The usability principle states that the end users should be able to use an AI tool to achieve a clinical goal efficiently and safely in their real world environment. On one hand, this means that end users should be able to use the AI tool’s functionalities and interfaces easily and with minimal errors. On the other hand, the AI tool should be clinically useful and safe, for example, improve the clinicians’ productivity and/or lead to better health outcomes for the patients and avoid harm. To this end, five recommendations for usability are defined in the FUTURE-AI framework.

Usability 1: Define user requirements

The AI developers should engage clinical experts, end users (eg, patients, physicians), and other relevant stakeholders (eg, data managers, administrators) from an early stage to compile information on the AI tool’s intended use and end user requirements (eg, human-AI interfaces), as well as on human factors that might affect the usage of the AI tool57 (eg, digital literacy level, age group, ergonomics, automation bias). Special attention should be paid to the fit with the current clinical workflow, including system level implementation of AI and interactions with other (AI) support tools. Using a majority voting strategy among diverse stakeholders to identify the most relevant clinical issues might help to ensure that solutions are broadly applicable rather than tailored to individual preferences.

Usability 2: Define human-AI interactions and oversight

Based on the user requirements, the AI developers should implement interfaces to enable end users to effectively use the AI model, annotate the input data in a standardised manner, and verify the AI inputs and results. Given the high stakes nature of medical AI, human oversight is essential and increasingly required by policy makers and regulators.17 26 Human-in-the-loop mechanisms should be designed and implemented to perform specific quality checks (eg, to flag biases, errors, or implausible explanations), and to overrule the AI predictions when necessary. Regulations, the benefits of automation, and patient preferences regarding AI autonomy might vary per use case and over time,58 therefore requiring use case specific human oversight mechanisms and periodic auditing and updates (see traceability 4).
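
One simple human-in-the-loop pattern routes uncertain predictions to a clinician and records whenever a clinician overrules the AI. In this sketch the probability thresholds (0.2 and 0.8) and the route names are hypothetical and would be set per use case; the recorded overrides could feed the periodic audits.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Decision:
    ai_probability: float
    route: str             # "ai_positive", "ai_negative" or "clinician_review"
    final: Optional[str]   # filled in once a human has confirmed or overruled
    overruled: bool = False


def route_case(prob: float, lower: float = 0.2, upper: float = 0.8) -> Decision:
    """Send uncertain AI outputs to a clinician instead of presenting a firm suggestion."""
    if prob >= upper:
        return Decision(prob, "ai_positive", None)
    if prob <= lower:
        return Decision(prob, "ai_negative", None)
    return Decision(prob, "clinician_review", None)


def apply_clinician_decision(decision: Decision, clinician_label: str) -> Decision:
    """The clinician always has the final word; disagreements are recorded for auditing."""
    suggested = {"ai_positive": "positive", "ai_negative": "negative"}.get(decision.route)
    decision.final = clinician_label
    decision.overruled = suggested is not None and suggested != clinician_label
    return decision


d = apply_clinician_decision(route_case(0.91), "negative")
print(d.route, d.final, d.overruled)  # ai_positive negative True
```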

Usability 3: Provide training

To facilitate best usage of the AI tool, minimise errors and harm, and increase AI literacy, the developers should provide training materials (eg, tutorials, manuals, examples) and/or training activities (eg, hands-on sessions) in an accessible format and language, taking into account the diversity of end users (eg, specialists, nurses, technicians, citizens, or administrators).

Usability 4: Evaluate clinical usability

To facilitate adoption, the usability of the AI tool within the local clinical workflows should be evaluated in real world settings with representative and diverse end users (eg, with respect to sex, gender, age, clinical role, digital proficiency, and disability). The usability tests should gather evidence on the user’s satisfaction, performance and productivity, and assess human factors that might affect the usage of the AI tool57 (eg, confidence, learnability, automation bias).

Usability 5: Evaluate clinical utility

The AI tool should be evaluated for its clinical utility and safety. The clinical evaluations of the AI tool should show benefits for the patient (eg, earlier diagnosis, better outcomes), for the clinician (eg, increased productivity, improved care), and/or for the healthcare organisation (eg, reduced costs, optimised workflows) compared with the current standard of care. Additionally, it is important to show, for example through a randomised clinical trial,59 that the AI tool is safe and does not cause harm to individuals or specific groups.

Robustness

The robustness principle refers to the ability of a medical AI tool to maintain its performance and accuracy under expected or unexpected variations in the input data. Existing research has shown that even small, imperceptible variations in the input data might lead AI models into incorrect decisions.60 Biomedical and health data can be subject to major variations in the real world (both expected and unexpected), which can affect the performance of AI tools. Therefore, it is important that healthcare AI tools are designed and developed to be robust against real world variations, and evaluated and optimised accordingly. To this end, three recommendations for robustness are defined in the FUTURE-AI framework.

Robustness 1: Define sources of data variations

At the design phase, the development team should first define robustness requirements for the AI tool in question by making an inventory of the sources of variation that might affect the AI tool’s robustness in the real world. These might include differences in equipment, technical faults of machines, heterogeneities in data acquisition or annotation, and/or adversarial attacks.60

Robustness 2: Train with representative data

Clinicians, citizens, and other stakeholders are more likely to trust the AI tool if it is trained on data that adequately represent the variations encountered in real world clinical practice.61 Therefore, the training datasets should be carefully selected, analysed, and enriched according to the sources of variation identified at the design phase (see robustness 1). Training with representative datasets also allows for improvement of other principles, for example, more representative bias estimation and mitigation for fairness.

Robustness 3: Evaluate robustness

Evaluation studies should be implemented to evaluate the AI tool’s robustness (eg, stress tests, repeatability tests62) under conditions that reflect the variations of real world clinical practice. These might include data, equipment, technician, clinician, patient, and centre related variations. Depending on the results, mitigation measures should be implemented and tested to optimise the robustness of the AI model, such as regularisation,63 data augmentation,64 data harmonisation,65 or domain adaptation.66
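
A stress test can be as simple as re-evaluating the model while adding increasing amounts of input noise. The sketch below assumes Gaussian noise as the perturbation and uses a toy threshold classifier as a stand-in for a trained model. Real stress tests would use perturbations that mimic the sources of variation identified under robustness 1 (eg, scanner noise, motion artefacts).

```python
import random


def accuracy(model, xs, ys):
    return sum(model(x) == y for x, y in zip(xs, ys)) / len(ys)


def noise_stress_test(model, xs, ys, noise_levels, seed=0):
    """Accuracy when Gaussian noise of increasing standard deviation is added to the inputs."""
    rng = random.Random(seed)  # fixed seed so that the stress test is repeatable
    results = {}
    for sigma in noise_levels:
        noisy = [[v + rng.gauss(0.0, sigma) for v in x] for x in xs]
        results[sigma] = accuracy(model, noisy, ys)
    return results


def toy_model(x):
    """Toy stand-in for a trained model: class 1 when the mean feature value is positive."""
    return int(sum(x) / len(x) > 0)


# Hypothetical, perfectly separable data with four features per case.
data_rng = random.Random(42)
xs = ([[data_rng.uniform(1, 3)] * 4 for _ in range(100)]
      + [[data_rng.uniform(-3, -1)] * 4 for _ in range(100)])
ys = [1] * 100 + [0] * 100
print(noise_stress_test(toy_model, xs, ys, [0.0, 1.0, 5.0]))
```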

Explainability

The explainability principle states that medical AI tools should provide clinically meaningful information about the logic behind the AI decisions. Although medicine is a high stakes discipline that requires transparency, reliability, and accountability, machine learning techniques often produce complex models that are black box in nature. Explainability is considered desirable from a technological, medical, ethical, legal, and patient perspective.67 It enables end users to interpret the AI model and outputs, understand the capacities and limitations of the AI tool, and intervene when necessary, such as to decide whether or not to use it. However, explainability is a complex task whose challenges need to be carefully addressed during AI development and evaluation to ensure that AI explanations are clinically meaningful and beneficial to end users.68 Two recommendations for explainability are defined in the FUTURE-AI framework.

Explainability 1: Define explainability needs

At the design phase, it should be established with end users and domain experts if explainability is required for the AI tool. If so, the specific requirements for explainability should be defined with representative experts and end users, including (a) the goal of the explanations (eg, global description of the model’s behaviour v local explanation of each AI decision); (b) the most suitable approach for AI explainability69; and (c) the potential limitations to anticipate and monitor (eg, over-reliance of the end users on the AI decision68).

Explainability 2: Evaluate explainability

The explainable AI methods should be evaluated, first quantitatively, using computational methods to assess the correctness of the explanations,70 71 and then qualitatively with end users to assess their impact on user satisfaction, confidence, and clinical performance.72 The evaluations should also identify any limitations of the AI explanations (eg, they are clinically incoherent,73 they are sensitive to noise or adversarial attacks,74 or they unreasonably increase the confidence in the AI generated results75).
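
As one example of a quantitative check of explanation correctness, a deletion test removes features in the order ranked by the explanation and tracks how quickly the model output falls: a faithful explanation should make the output drop sooner. The sketch assumes a toy linear model whose true contributions are known and a baseline value of 0 for removed features, both of which are illustrative choices.

```python
def deletion_curve(model, x, attributions, baseline=0.0):
    """Model output as features are replaced by a baseline, most important first."""
    order = sorted(range(len(x)), key=lambda i: attributions[i], reverse=True)
    current = list(x)
    outputs = [model(current)]
    for i in order:
        current[i] = baseline
        outputs.append(model(current))
    return outputs


def deletion_score(model, x, attributions, baseline=0.0):
    """Mean of the deletion curve: lower means the influential features were found sooner."""
    curve = deletion_curve(model, x, attributions, baseline)
    return sum(curve) / len(curve)


# Toy linear model whose true feature contributions are known (weight * value).
weights = [3.0, 2.0, 1.0]


def linear_model(x):
    return sum(w * v for w, v in zip(weights, x))


x = [1.0, 1.0, 1.0]
faithful = [3.0, 2.0, 1.0]    # matches the true contributions
unfaithful = [1.0, 2.0, 3.0]  # ranks the features in reverse order
print(deletion_score(linear_model, x, faithful), deletion_score(linear_model, x, unfaithful))  # 2.5 3.5
```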

General recommendations

Finally, seven general recommendations are defined in the FUTURE-AI framework, which apply across all principles of trustworthy AI in healthcare.

General 1: Engage stakeholders continuously

Throughout the AI tool’s lifecycle, the AI developers should continuously engage with interdisciplinary stakeholders, such as healthcare professionals, citizens, patient representatives, expert ethicists, data managers, and legal experts. This interaction will facilitate the understanding and anticipation of the needs, obstacles, and pathways towards acceptance and adoption. Methods to engage stakeholders might include working groups, advisory boards, one-to-one interviews, cocreation meetings, and surveys.

General 2: Ensure data protection

Adequate measures to ensure data privacy and security should be put in place throughout the AI lifecycle. These might include privacy enhancing techniques (eg, differential privacy, encryption), data protection impact assessment, and appropriate data governance after deployment (eg, logging system for data access, see traceability 5). If deidentification is implemented (eg, pseudonymisation, k-anonymity), the balance between the health benefits for citizens and the risks for reidentification should be carefully assessed and considered. Furthermore, the manufacturers and deployers should implement and regularly evaluate measures for protecting the AI tool against malicious or adversarial attacks, such as by using system level cybersecurity solutions or application specific defence mechanisms (eg, attack detection or mitigation).76
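
For deidentified datasets, k-anonymity can be checked by counting how many records share each combination of quasi-identifiers. The sketch below uses hypothetical records and treats age, sex, and postcode area as the quasi-identifiers; generalising age into ten year bands raises k from 1 to 2. k-anonymity alone does not prevent attribute disclosure (eg, when all records in a group share the same diagnosis), so it is only one input to the reidentification risk assessment.

```python
from collections import Counter


def generalise_age(age: int, band: int = 10) -> str:
    """Replace an exact age with a band, eg 47 -> '40-49'."""
    start = (age // band) * band
    return f"{start}-{start + band - 1}"


def k_anonymity(records, quasi_identifiers) -> int:
    """Smallest number of records sharing the same combination of quasi-identifiers."""
    groups = Counter(tuple(r[q] for q in quasi_identifiers) for r in records)
    return min(groups.values())


# Hypothetical records; "diagnosis" is the sensitive attribute.
patients = [
    {"age": 47, "sex": "F", "postcode_area": "AB1", "diagnosis": "x"},
    {"age": 43, "sex": "F", "postcode_area": "AB1", "diagnosis": "y"},
    {"age": 61, "sex": "M", "postcode_area": "CD2", "diagnosis": "x"},
    {"age": 68, "sex": "M", "postcode_area": "CD2", "diagnosis": "z"},
]
quasi = ["age", "sex", "postcode_area"]
print(k_anonymity(patients, quasi))  # 1: every record is unique
generalised = [{**p, "age": generalise_age(p["age"])} for p in patients]
print(k_anonymity(generalised, quasi))  # 2
```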

General 3: Implement measures to address AI risks

At the development stage, the development team should define an AI modelling plan that is aligned with the application specific requirements. After implementing and testing a baseline AI model, the AI modelling plan should include mitigation measures to address the challenges and risks identified at the design stage (see fairness 1 to explainability 1). These might include measures to enhance robustness to real world variations (eg, regularisation, data augmentation, data harmonisation, domain adaptation), ensure generalisability across settings (eg, transfer learning, knowledge distillation), and correct for biases across subgroups (eg, data resampling, bias free representation, equalised odds post processing).
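
Data resampling can be sketched as random oversampling of smaller subgroups. This example assumes records stored as dictionaries with a hypothetical sex attribute. Oversampling balances subgroup sizes in the training data but duplicates records, so its effect on bias and on overall performance should still be checked in the fairness evaluation (see table 5).

```python
import random
from collections import Counter, defaultdict


def oversample_subgroups(records, group_key, seed=0):
    """Randomly duplicate records of smaller subgroups until all subgroups are the same size."""
    rng = random.Random(seed)
    groups = defaultdict(list)
    for r in records:
        groups[r[group_key]].append(r)
    target = max(len(members) for members in groups.values())
    balanced = []
    for members in groups.values():
        balanced.extend(members)
        balanced.extend(rng.choices(members, k=target - len(members)))  # with replacement
    rng.shuffle(balanced)
    return balanced


# Hypothetical training set in which one subgroup is under-represented.
training = [{"sex": "F", "x": i} for i in range(20)] + [{"sex": "M", "x": i} for i in range(80)]
balanced = oversample_subgroups(training, "sex")
print(sorted(Counter(r["sex"] for r in balanced).items()))  # [('F', 80), ('M', 80)]
```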

General 4: Define an adequate AI evaluation plan

To increase trust and adoption, an appropriate evaluation plan should be defined, including test data, metrics, and reference methods. First, adequate test data should be selected to assess each dimension of trustworthy AI. In particular, the test data should be well separated from the training data to prevent data leakage.77 Furthermore, adequate evaluation metrics should be carefully selected, taking into account their benefits and potential flaws.78 Finally, benchmarking with respect to reference AI tools or standard practice should be performed to enable comparative assessment of model performance.
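
Two parts of the evaluation plan are sketched below under simple assumptions: a patient-level split, which keeps all records of a patient on the same side of the train/test boundary to avoid leakage, and the statistical parity difference, using the fairness criterion of −0.1 to 0.1 listed in table 5. The data, the choice of which group is treated as unprivileged, and the split fraction are hypothetical.

```python
import random


def split_by_patient(records, test_fraction=0.2, seed=0):
    """Assign whole patients (not individual records) to either training or test data."""
    patient_ids = sorted({r["patient_id"] for r in records})
    random.Random(seed).shuffle(patient_ids)
    n_test = max(1, round(len(patient_ids) * test_fraction))
    test_ids = set(patient_ids[:n_test])
    train = [r for r in records if r["patient_id"] not in test_ids]
    test = [r for r in records if r["patient_id"] in test_ids]
    return train, test


def statistical_parity_difference(predictions, groups, unprivileged, privileged):
    """P(prediction = 1 | unprivileged) - P(prediction = 1 | privileged)."""
    def positive_rate(g):
        selected = [p for p, grp in zip(predictions, groups) if grp == g]
        return sum(selected) / len(selected)
    return positive_rate(unprivileged) - positive_rate(privileged)


# Hypothetical data: three scans per patient, so a record-level split would leak patients.
records = [{"patient_id": f"P{i:02d}", "scan": s} for i in range(10) for s in range(3)]
train, test = split_by_patient(records)
assert not {r["patient_id"] for r in train} & {r["patient_id"] for r in test}

preds = [1, 0, 1, 1, 0, 0, 1, 0]
sexes = ["F", "F", "F", "F", "M", "M", "M", "M"]
spd = statistical_parity_difference(preds, sexes, unprivileged="M", privileged="F")
print(round(spd, 2), "fair" if -0.1 <= spd <= 0.1 else "biased")  # -0.5 biased
```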

General 5: Comply with AI regulations

The development team should identify the applicable AI regulations, which vary by jurisdiction and over time. For example, in the EU, the recent AI Act classifies AI tools that are regulated as medical devices and require third party conformity assessment as high risk; such tools must comply with safety, transparency, and quality obligations, and undergo conformity assessments. Identifying the applicable regulations at an early stage enables regulatory obligations to be anticipated based on the AI tool’s intended classification and risks.

General 6: Investigate application specific ethical issues

In addition to the well known ethical issues that arise in medical AI (eg, privacy, transparency, equity, autonomy), AI developers, domain specialists, and professional ethicists should identify, discuss, and address all application specific ethical, social, and societal issues as an integral part of the development and deployment of the AI tool.79

General 7: Investigate social and environmental issues

In addition to clinical, technical, legal, and ethical implications, a healthcare AI tool might have specific social and environmental impacts. These need to be considered and addressed to ensure that the AI tool has a positive impact on citizens and society. Relevant issues might include the impact of the AI tool on working conditions and power relations, on the new skills (or deskilling) of healthcare professionals and citizens,80 and on future interactions between citizens, health professionals, and social carers. Furthermore, for environmental sustainability, AI developers should consider strategies to reduce the carbon footprint of the AI tool.81 Regulatory agencies or independent organisations could provide certifications or marks for AI tools that meet certain sustainability criteria. This approach can encourage transparency, give insight into an AI tool’s environmental impact, and highlight tools developed with environmentally friendly practices.

To enable the implementation of the FUTURE-AI framework in practice, we provide a step-by-step guide by embedding the recommended best practices in chronological order across the key phases of an AI tool’s lifecycle, as shown in figure 3 and as follows:

Fig 3.

Embedding the FUTURE-AI best practices into an agile process throughout the artificial intelligence (AI) lifecycle. E=explainability; F=fairness; G=general; R=robustness; T=traceability; Un=universality; Us=usability

The design phase is initiated with a human centred, risk aware strategy by engaging all relevant stakeholders and conducting a comprehensive analysis of clinical, technical, ethical, and social requirements, leading to a list of specifications and a list of risks to monitor (eg, potential biases, lack of robustness, generalisability, and transparency).

Accordingly, the development phase prioritises the collection of representative datasets for effective training and testing, ensuring they reflect variations across the intended settings, equipment, protocols, and populations as identified previously. Furthermore, an adequate AI development plan is defined and implemented given the identified requirements and risks, including mitigation strategies and human centred mechanisms to meet the initial design's functional and ethical requirements.

Subsequently, the validation phase comprehensively examines all dimensions of trustworthy AI, including system performance but also robustness, fairness, generalisability, and explainability, and concludes with the generation of all necessary documentation.

Finally, the deployment phase is dedicated to ensuring local validity, providing training, implementing monitoring mechanisms, and ensuring regulatory compliance for adoption in real world healthcare practice.

Operationalisation of FUTURE-AI

In this section, we provide a detailed list of practical steps for each recommendation, accompanied by specific examples of approaches and methods that can be applied to operationalise each step towards trustworthy AI, as shown in table 3, table 4, table 5, and table 6. This approach offers easy-to-use, step-by-step guidance for all end users of the FUTURE-AI framework when designing, developing, validating and deploying new AI tools for healthcare.

Table 3.

Practical steps and examples to implement FUTURE-AI recommendations during design phase

| Recommendations | Operations | Examples |
|---|---|---|
| Engage interdisciplinary stakeholders (general 1) | Identify all relevant stakeholders | Patients, GPs, nurses, ethicists, data managers82 83 |
| | Provide information on the AI tool and AI | Educational seminars, training materials, webinars84 |
| | Set up communication channels with stakeholders | Regular group meetings, one-to-one interviews, virtual platform85 |
| | Organise cocreation consensus meetings | One day cocreation workshop with n=15 multidisciplinary stakeholders86 |
| | Use qualitative methods to gather feedback | Online surveys, focus groups, narrative interviews87 |
| Define intended use and user requirements (usability 1) | Define the clinical need and AI tool’s goal | Risk prediction, disease detection, image quantification |
| | Define the AI tool’s end users | Patients, cardiologists, radiologists, nurses |
| | Define the AI model’s inputs | Symptoms, heart rate, blood pressure, ECG, image scan, genetic test |
| | Define the AI tool’s functionalities and interfaces | Data upload, AI prediction, AI explainability, uncertainty estimation88 |
| | Define requirements for human oversight | Visual quality control, manual corrections89 90 |
| | Adjust user requirements for all end user subgroups | According to role, age group, digital literacy level91 |
| Define intended clinical settings and cross setting variations (universality 1) | Define the AI tool’s healthcare setting(s) | Primary care, hospital, remote care facility, home care |
| | Define the resources needed at each setting | Personnel (experience, digital literacy), medical equipment (eg, >1.5 T MRI scanner), IT infrastructure |
| | Specify if the AI tool is intended for high end and/or low resource settings | Facilities with MRI scanners >1.5 T v low field MRIs (eg, 0.5 T), high end v low cost portable ultrasound92 93 |
| | Identify all cross setting variations | Data formats, medical equipment, data protocols, IT infrastructure94 |
| Define sources of data heterogeneity (robustness 1) | Engage relevant stakeholders to assess data heterogeneity | Clinicians, technicians, data managers, IT managers, radiologists, device vendors |
| | Identify equipment related data variations | Differences in medical devices, manufacturers, calibrations, machine ranges (from low cost to high end)95 |
| | Identify protocol related data variations | Differences in image sequences, data acquisition protocols,96 data annotation methods, sampling rates, preprocessing standards |
| | Identify operator related data variations | Differences in experience and proficiency, operator fatigue, subjective judgment, technique variability |
| | Identify sources of artefacts and noise | Image noise, motion artefacts, signal dropout, sensor malfunction |
| | Identify context specific data variations | Lower quality data acquisition in emergency units or during periods of high patient volume |
| Define any potential sources of bias (fairness 1) | Engage relevant stakeholders to define the sources of bias | Patients, clinicians, epidemiologists, ethicists, social carers97 98 |
| | Define standard attributes that might affect the AI tool’s fairness | Sex, age, socioeconomic status99 |
| | Identify application specific sources of bias beyond standard attributes | Skin colour for skin cancer detection,100 101 breast density for breast cancer detection34 |
| | Identify all possible human biases | Data labelling, data curation99 |
| Define the need and requirements for explainability with end users (explainability 1) | Engage end users to define explainability requirements | Clinicians, technicians, patients102 |
| | Specify if explainability is necessary | Not necessary for the AI enabled image segmentation component, critical for AI enabled diagnosis |
| | Specify the objectives of AI explainability (if it is needed) | Understanding AI model, aiding diagnostic reasoning, justifying treatment recommendations103 |
| | Define suitable explainability approaches | Visual explanations, feature importance, counterfactuals104 |
| | Adjust the design of the AI explanations for all end user subgroups | Heatmaps for clinicians, feature importance for patients105 106 |
| Investigate ethical issues (general 6) | Consult ethicists on ethical considerations | Ethicists specialised in medical AI and/or in the application domain (eg, paediatrics)107 |
| | Assess if the AI tool’s design is aligned with relevant ethical values | Right to autonomy, information, consent, confidentiality, equity107 |
| | Identify application specific ethical issues | Ethical risks for a paediatric AI tool (eg, emotional impact on children)108 109 |
| | Comply with local ethical AI frameworks | AI ethical guidelines from Europe,2 United Kingdom,110 111 United States,112 Canada,113 China,114 India,115 Japan,116 117 Australia,118 etc |
| Investigate social and environmental issues (general 7) | Investigate AI tool’s social and environmental impact | Workforce displacement, worsened working conditions and relations, deskilling,80 dehumanisation of care, reduced health literacy, increased carbon footprint,119 negative public perception107 120 |
| | Define mitigations to enhance the AI tool’s social and environmental impact | Interfaces for physician-patient communication, workforce training, educational programmes, energy efficient computing practices, public engagement initiatives |
| | Optimise algorithms for energy efficiency | Develop and use energy efficient algorithms that minimise computational demands; techniques such as model pruning, quantisation, and edge computing can reduce the energy required for AI tasks |
| | Promote responsible data usage | Collect and process only the necessary amount of data; use federated learning techniques to minimise data transfers, keeping data localised and reducing energy consuming data movement |
| | Monitor and report the environmental impact of the AI tool | Regularly monitor and report on the environmental impact of AI systems used in healthcare, including energy usage, carbon emissions, and waste generation |
| Use community defined standards (universality 2) | Use a standard definition for the clinical task | Definition of heart failure by the American College of Cardiology121 |
| | Use a standard method for data labelling | BI-RADS for breast imaging122 |
| | Use a standard ontology for the AI inputs | DICOM for imaging data,123 SNOMED for clinical data41 |
| | Adopt technical standards | IEEE 2801-2022 for medical AI software43 |
| | Use standard evaluation criteria | See Maier-Hein et al21 for medical imaging applications, Barocas et al31 and Bellamy et al35 for fairness evaluation |
| Implement a risk management process (traceability 1) | Identify all possible clinical, technical, ethical, and societal risks | Bias against under-represented subgroups, limited generalisability to low resource facilities, data drift, lack of acceptance by end users, sensitivity to noisy inputs124 |
| | Identify all possible operational risks | Misuse of the AI tool (owing to insufficient training or not following the instructions), application of the AI tool outside of the target population (eg, individuals with implants), use of the tool by people other than the target end users (eg, technician instead of physician), hardware failure, incorrect data annotations, adversarial attacks76 125 |
| | Assess the likelihood of each risk | Very likely, likely, possible, rare |
| | Assess the consequences of each risk | Patient harm, discrimination, lack of transparency, loss of autonomy, patient reidentification126 |
| | Prioritise all the risks depending on their likelihood and consequences | Risk of bias (if no personal attributes are included in the model) v risk of patient reidentification (if personal attributes are collected) |
| | Define mitigation measures to be applied during AI development | Data enhancement, data augmentation,127 bias correction techniques, domain adaptation,66 transfer learning,128 continuous learning129 |
| | Define mitigation measures to be applied after deployment | Warnings to the users, system shutdown, reprocessing of the input data, acquisition of new input data, use of an alternative procedure, or human judgment only |
| | Set up a mechanism to monitor and manage risks over time | Periodic risk assessment every six months |
| | Create a comprehensive risk management file | Including all risks, their likelihood and consequences, risk mitigation measures, risk monitoring strategy |

AI=artificial intelligence; BI-RADS=breast imaging reporting and data system; DICOM=Digital Imaging and Communications in Medicine; ECG=electrocardiogram; GP=general practitioner; IEEE=Institute of Electrical and Electronics Engineers; MRI=magnetic resonance imaging; SNOMED=Systematized Nomenclature of Medicine; T=tesla.

Table 4.

Practical steps and examples to implement FUTURE-AI recommendations during development phase

| Recommendations | Operations | Examples |
|---|---|---|
| Collect representative training dataset (robustness 2) | Collect training data that reflect the demographic variations | According to age, sex, ethnicity, socioeconomic status |
| | Collect training data that reflect the clinical variations | Disease subgroups, treatment protocols, clinical outcomes, rare cases |
| | Collect training data that reflect variations in real world practice | Data acquisition protocols, data annotations, medical equipment, operational variations (eg, patient motion during scanning)125 |
| | Artificially enhance the training data to mimic real world conditions | Data augmentation,127 data synthesis (eg, low quality data, noise addition),130 data harmonisation,131 132 data homogenisation133 |
| Collect information on individuals’ and data attributes (fairness 2) | Request approval for collecting data on personal attributes | Sex, age, ethnicity, socioeconomic status134 |
| | Collect information on standard attributes of the individuals (if available and allowed) | Sex, age, nationality, education135 |
| | Include application specific information relevant for fairness analysis | Skin colour, breast density,34 presence of implants, comorbidity136 |
| | Estimate data distributions across subgroups | Male v female, across ethnic groups |
| | Collect information on data provenance | Data centres, equipment characteristics, data preprocessing, annotation processes |
| Implement measures for data privacy and security (general 2) | Implement measures to ensure data privacy and security | Data deidentification, federated learning,137 138 differential privacy, encryption139 |
| | Implement measures against malicious attacks | Firewalls, intrusion detection systems, regular security audits139 |
| | Adhere to applicable data protection regulations | General Data Protection Regulation,140 Health Insurance Portability and Accountability Act141 |
| | Define suitable data governance mechanisms | Access control, logging system |
| Implement measures against identified AI risks (general 3) | Implement a baseline AI model and identify its limitations | Bias, lack of generalisability142 |
| | Implement methods to enhance robustness to real world variations (if needed) | Regularisation,143 data augmentation,127 data harmonisation,131 domain adaptation66 |
| | Implement methods to enhance generalisability across settings (if needed) | Regularisation, transfer learning,144 knowledge distillation145 |
| | Implement methods to enhance fairness across subgroups (if needed) | Data resampling, bias free representation,36 equalised odds postprocessing37 38 146 |
| Establish mechanisms for human-AI interactions (usability 2) | Implement mechanisms to standardise data preprocessing and labelling | Data preprocessing pipeline, data labelling plugin |
| | Implement an interface for using the AI model | Application programming interface |
| | Implement interfaces for explainable AI | Visual explanations, heatmaps, feature importance bars105 106 |
| | Implement mechanisms for user centred quality control of the AI results | Visual quality control, uncertainty estimation147 |
| | Implement a mechanism for user feedback | Feedback interface148 |

AI=artificial intelligence.

Table 5.

Practical steps and examples to implement FUTURE-AI recommendations during evaluation phase

| Recommendations | Operations | Examples |
|---|---|---|
| Define adequate evaluation plan (general 4) | Identify the dimensions of trustworthy AI to be evaluated | Robustness, clinical safety, fairness, data drifts, usability, explainability |
| | Select appropriate testing datasets | External dataset from a new hospital, public benchmarking dataset148 |
| | Compare the AI tool against standard of care | Conventional risk predictors, visual assessment by radiologist, decision by clinician149 150 |
| | Select adequate evaluation metrics | F1 score for classification, concordance index for survival,21 statistical parity for fairness151 |
| Evaluate using external datasets and/or multiple sites (universality 3) | Identify relevant public datasets | The Cancer Imaging Archive,152 UK Biobank,153 M&Ms,154 MAMA-MIA,155 BRATS156 |
| | Identify external private datasets | New prospective dataset from same site or from different clinical centre157 158 |
| | Select multiple evaluation sites | Three sites in same country, five sites in two different countries |
| | Verify that evaluation data and sites reflect real world variations | Variations in demographics, clinicians, equipment |
| | Confirm that no evaluation data were used during training | Yes/no |
| Evaluate fairness and bias correction measures (fairness 3) | Select attributes and factors for fairness evaluation | Sex, age, skin colour, comorbidity |
| | Define fairness metrics and criteria | Statistical parity difference between −0.1 and 0.1 defines fairness35 |
| | Evaluate fairness and identify biases | Fair with respect to age, biased with respect to sex |
| | Evaluate bias mitigation measures | Training data resampling,159 equalised odds postprocessing37 38 146 |
| | Evaluate impact of mitigation measures on model performance | Data resampling removed sex bias but reduced model performance160 |
| | Report identified and uncorrected biases | In AI information leaflet and technical documentation161 (see traceability 2) |
| Evaluate user experience (usability 4) | Evaluate usability with diverse end users | According to sex, age, digital proficiency level, role, clinical profile162 163 |
| | Evaluate user satisfaction using usability questionnaires | System usability scale164 |
| | Evaluate user performance and productivity | Diagnosis time with and without AI tool, image quantification time165 |
| | Assess training of new end users | Average time to reach competency, training difficulties166 |
| Evaluate clinical utility and safety (usability 5) | Define clinical evaluation plan | Randomised controlled trial,59 167 in silico trial168 |
| | Evaluate if AI tool improves patient outcomes | Better risk prevention, earlier diagnosis, more personalised treatment169 |
| | Evaluate if AI tool enhances productivity or quality of care | Enhanced patient triage, shorter waiting times, faster diagnosis, higher patient intake169 |
| | Evaluate if AI tool results in cost savings | Reduction in diagnosis costs,170 171 reduction in overtreatment172 |
| | Evaluate AI tool’s safety | Side effects or major adverse events in randomised controlled trials173 174 |
| Evaluate robustness (robustness 3) | Evaluate robustness under real world variations | Using test-retest datasets,175 176 multivendor datasets177 |
| | Evaluate robustness under simulated variations | Using simulated repeatability tests,148 synthetic noise and artefacts (eg, image blurring)178 |
| | Evaluate robustness against variations in end users | Different technicians or annotators |
| | Evaluate mitigation measures for robustness enhancement | Regularisation,63 data augmentation,64 127 noise addition, normalisation,179 resampling, domain adaptation66 |
| Evaluate explainability (explainability 2) | Assess if explanations are clinically meaningful | Review by expert panels, alignment with current clinical guidelines, explanations not pointing to shortcuts73 |
| | Assess explainability quantitatively using objective measures | Fidelity, consistency, completeness, sensitivity to noise180-182 |
| | Assess explainability qualitatively with end users | Using user tests or questionnaires to measure confidence and effect on clinical decision making183 184 |
| | Evaluate if explanations cause end user overconfidence or overreliance | Measure changes in clinician confidence,185 186 performance with and without AI tool187 |
| | Evaluate if explanations are sensitive to input data variations | Stress tests under perturbations to evaluate the stability of explanations74 188 |
| Provide documentation (traceability 2) | Report evaluation results in publication using AI reporting guidelines | Peer reviewed scientific publication using TRIPOD-AI reporting guideline15 |
| | Create technical documentation for AI tool | AI passport,189 model cards49 (including model hyperparameters, training and testing data, evaluations, limitations, etc) |
| | Create clinical documentation for AI tool | Guidelines for clinical use, AI information leaflet (including intended use, conditions and diseases, targeted populations, instructions, potential benefits, contraindications) |
| | Provide risk management file | Including identified risks, mitigation measures, monitoring measures |
| | Create user and training documentation | User manuals, training materials, troubleshooting, FAQs (see usability 2) |
| | Identify and provide all locally required documentation | Compliance documents and certifications (see general 5) |

AI=artificial intelligence; FAQ=frequently asked questions.
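The evaluation table names the F1 score for classification and statistical parity as a fairness metric, with fairness defined as a statistical parity difference between −0.1 and 0.1. The sketch below shows how both could be computed for a binary classifier; it is illustrative only. It assumes binary labels and predictions coded as 0/1 and a single sensitive attribute, uses the common definition of statistical parity difference as the positive prediction rate of the unprivileged group minus that of the privileged group, and uses hypothetical function names and toy data.

```python
from typing import Hashable, Sequence


def f1_score(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """Binary F1 score with 1 as the positive class: 2TP / (2TP + FP + FN)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


def positive_rate(y_pred: Sequence[int], groups: Sequence[Hashable], group: Hashable) -> float:
    """Fraction of positive predictions among the members of one subgroup."""
    preds = [p for p, g in zip(y_pred, groups) if g == group]
    if not preds:
        raise ValueError(f"no samples for subgroup {group!r}")
    return sum(preds) / len(preds)


def statistical_parity_difference(
    y_pred: Sequence[int], groups: Sequence[Hashable], unprivileged: Hashable, privileged: Hashable
) -> float:
    """P(prediction = 1 | unprivileged) - P(prediction = 1 | privileged); 0 means parity."""
    return positive_rate(y_pred, groups, unprivileged) - positive_rate(y_pred, groups, privileged)


def meets_parity_criterion(spd: float, tolerance: float = 0.1) -> bool:
    """Fairness criterion from the table: the difference must lie within [-0.1, 0.1]."""
    return -tolerance <= spd <= tolerance


# Toy data for illustration only.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 1, 0, 1, 0]
sex = ["F", "M", "F", "M", "F", "M", "F", "M"]
spd = statistical_parity_difference(y_pred, sex, unprivileged="M", privileged="F")
print(f1_score(y_true, y_pred), spd, meets_parity_criterion(spd))  # 0.75 -0.5 False
```

Which subgroup is treated as privileged is an application specific choice, and swapping the two only flips the sign of the difference. Statistical parity also ignores the true labels, so it is usually reported alongside label-aware metrics rather than on its own.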

Table 6.

Practical steps and examples to implement FUTURE-AI recommendations during deployment phase

| Recommendations | Operations | Examples |
|---|---|---|
| Evaluate and demonstrate local clinical validity (universality 4) | Test AI model using local data | Data from local clinical registry |
| | Identify factors that could affect AI tool’s local validity | Local operators, equipment, clinical workflows, acquisition protocols |
| | Assess AI tool’s integration within local clinical workflows | Whether the AI tool’s interface aligns with the hospital IT system148 or disrupts routine practice |
| | Assess AI tool’s local practical utility and identify any operational challenges | Time to operate, clinician satisfaction, disruption of existing operations148 190 |
| | Implement adjustments for local validity | Model calibration, fine-tuning,191 transfer learning192-194 |
| | Compare performance of AI tool with that of local clinicians | Side-by-side comparison, in silico trial |
| Define mechanisms for quality control of AI inputs and outputs (traceability 3) | Implement mechanisms to identify erroneous input data | Missing value or out-of-distribution detector,195 automated image quality assessment73 196 197 |
| | Implement mechanisms to detect implausible AI outputs | Postprocessing sanity checks, anomaly detection algorithm198 |
| | Provide calibrated uncertainty estimates to indicate AI tool’s confidence | Calibrated uncertainty estimates per patient or data point53 54 199 |
| | Implement system for continuous quality monitoring | Real time dashboard tracking data quality and performance metrics200 |
| | Implement feedback mechanism for users to report issues | Feedback portal enabling clinicians to report discrepancies or anomalies |
| Implement system for periodic auditing and updating (traceability 4) | Define schedule for periodic audits | Biannual or annual |
| | Define audit criteria and metrics | Accuracy, consistency, fairness, data security148 |
| | Define datasets for periodic audits | Newly acquired prospective dataset from local hospital |
| | Implement mechanisms to detect data or concept drifts | Detecting shifts in input data distributions148 190 |
| | Assign role of auditor(s) for AI tool | Internal auditing team, third party company190 |
| | Update AI tool based on audit results | Updating AI model,56 re-evaluating AI model,148 adjusting operational protocols, continuous learning201-204 |
| | Implement reporting system for audits and subsequent updates | Automatic sharing of detailed reports with healthcare managers and clinicians |
| | Monitor impact of AI updates | Impact on system performance and user satisfaction56 |
| Implement logging system for usage recording (traceability 5) | Implement logging framework capturing all interactions | User actions, AI inputs, AI outputs, clinical decisions |
| | Define data to be logged | Timestamp, user ID, patient ID (anonymised), action details, results |
| | Implement mechanisms for data capture | Software to automatically record all data and operations |
| | Implement mechanisms for data security | Encrypted log files, privacy preserving techniques205 |
| | Provide access to logs for auditing and troubleshooting | By defining authorised personnel, eg, healthcare or IT managers |
| | Implement mechanism for end users to log any issues | A user interface to enter information about operational anomalies |
| | Implement log analysis | Time series statistics and visualisations to detect unusual activities and alert administrators |
| Provide training (usability 3) | Create user manuals | User instructions, capabilities, limitations, troubleshooting steps, examples, and case studies |
| | Develop training materials and activities | Online courses, workshops, hands-on sessions |
| | Use formats and languages accessible to intended end users | Multiple formats (text, video, audio) and languages (English, Chinese, Swahili) |
| | Customise training to all end user groups | Role specific modules for specialists, nurses, and patients |
| | Include training to enhance AI and health literacy | On application specific AI concepts (eg, radiomics, explainability), AI driven clinical decision making |
| Identify and comply with applicable AI regulatory requirements (general 5) | Engage regulatory experts to investigate regulatory requirements | Regulatory consultants from intended local settings |
| | Identify specific regulations based on AI tool’s intended markets | FDA’s SaMD framework in the United States,206 MDR and AI Act207 in the EU |
| | Identify specific requirements based on AI tool’s purpose | De Novo classification (Class III)208 |
| | Define list of milestones towards regulatory compliance | MDR certification: technical verification, pivotal clinical trial, risk and quality management, postmarket follow-up |
| Establish mechanisms for AI governance (traceability 6) | Assign roles for AI tool’s governance | For periodic auditing, maintenance, supervision (eg, healthcare manager) |
| | Define responsibilities for AI related errors | Responsibilities of clinicians, healthcare centres, AI developers, and manufacturers |
| | Define mechanisms for accountability | Individual v collective accountability/liability,25 compensation, support for patients |

AI=artificial intelligence; FDA=United States Food and Drug Administration; MDR=medical device regulation; SaMD=Software as a Medical Device.
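The deployment table lists the data to be logged for each interaction (timestamp, user ID, patient ID, action details, results). A minimal sketch of one way to produce such log entries is shown below, assuming JSON Lines as the log format and an HMAC-SHA256 keyed hash to replace the raw patient ID. All function names, field names, and the key are hypothetical. Keyed hashing is pseudonymisation rather than full anonymisation (anyone holding the key can re-link tokens to patients), and the encryption of log files listed in the table is not shown.

```python
import hashlib
import hmac
import json
from datetime import datetime, timezone
from typing import Any, Optional


def pseudonymise(patient_id: str, key: bytes) -> str:
    """HMAC-SHA256 token: the same patient and key always give the same token; the raw ID is not stored."""
    return hmac.new(key, patient_id.encode("utf-8"), hashlib.sha256).hexdigest()


def make_log_entry(
    user_id: str,
    patient_id: str,
    action: str,
    result: Any,
    key: bytes,
    timestamp: Optional[datetime] = None,
) -> str:
    """Serialise one interaction as a single JSON line containing the fields listed in the table."""
    ts = timestamp if timestamp is not None else datetime.now(timezone.utc)
    entry = {
        "timestamp": ts.isoformat(),
        "user_id": user_id,
        "patient_token": pseudonymise(patient_id, key),
        "action": action,
        "result": result,
    }
    return json.dumps(entry, sort_keys=True)


def append_log(path: str, line: str) -> None:
    """Append-only write, one JSON object per line (JSON Lines)."""
    with open(path, "a", encoding="utf-8") as handle:
        handle.write(line + "\n")


# Hypothetical key; in practice it would be held by authorised personnel, outside the log store.
secret = b"replace-with-a-key-held-by-the-IT-manager"
line = make_log_entry(
    user_id="radiologist-07",
    patient_id="MRN-123456",
    action="run_segmentation",
    result={"tumour_volume_ml": 12.4},
    key=secret,
    timestamp=datetime(2024, 1, 15, 9, 30, tzinfo=timezone.utc),
)
print(line)
```

Because the same patient always maps to the same token under one key, auditors can still follow a patient's history across log entries without the log containing the raw identifier.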

Discussion

Despite the tremendous amount of research in medical AI in recent years, currently only a limited number of AI tools have made the transition to clinical practice. Although many studies have shown the huge potential of AI to improve healthcare, major clinical, technical, socioethical, and legal challenges persist.

In this paper, we presented the results of an international effort to establish a consensus guideline for developing trustworthy and deployable AI tools in healthcare. To this end, the FUTURE-AI Consortium was established to bring together knowledge and expertise from a wide range of disciplines and stakeholders, resulting in consensus and broad support, both geographically and across domains. Through an iterative process that lasted 24 months, the FUTURE-AI framework was established, comprising a comprehensive and self-contained set of 30 recommendations that covers the whole lifecycle of medical AI. By organising the recommendations under six guiding principles, the framework clearly characterises the pathways towards responsible and trustworthy AI. Because of its broad coverage, the FUTURE-AI guideline can benefit a wide range of stakeholders in healthcare, as detailed in table 2 in the appendix.

FUTURE-AI is a risk informed framework: it proposes assessing application specific risks and challenges early in the process (eg, risk of discrimination, lack of generalisability, data drifts over time, lack of acceptance by end users, potential harm to patients, lack of transparency, data security vulnerabilities, ethical risks) and then implementing tailored measures to reduce these risks (eg, collecting data on individuals’ attributes to assess and mitigate bias). Because each measure has benefits and potential weaknesses that developers need to assess, risks and benefits must be weighed against each other. For example, collecting data on individuals’ attributes might increase the risk of reidentification, but can help reduce the risk of bias and discrimination. Therefore, in FUTURE-AI, risk management (as recommended in traceability 1) must be a continuous and transparent process throughout the AI tool’s lifecycle.

FUTURE-AI is also an assumption-free, highly collaborative framework, which recommends continuous engagement with multidisciplinary stakeholders to understand application specific needs, risks, and solutions (general 1). Such engagement is crucial for investigating all possible risks and factors that might reduce trust in a specific AI tool. For example, instead of making assumptions about possible sources of bias, FUTURE-AI recommends that AI developers engage with healthcare professionals, domain experts, representative citizens, and/or ethicists early in the process to form interdisciplinary AI development teams and investigate in depth the application specific sources of bias, which might include domain specific attributes (eg, breast density for AI applications in breast cancer).