Abstract

Advances in large language models (LLMs) have empowered a variety of applications. However, there is still a significant gap in research when it comes to understanding and enhancing the capabilities of LLMs in the field of mental health. In this work, we present a comprehensive evaluation of multiple LLMs on various mental health prediction tasks via online text data, including Alpaca, Alpaca-LoRA, FLAN-T5, GPT-3.5, and GPT-4. We conduct a broad range of experiments, covering zero-shot prompting, few-shot prompting, and instruction fine-tuning. The results indicate a promising yet limited performance of LLMs with zero-shot and few-shot prompt designs for mental health tasks. More importantly, our experiments show that instruction finetuning can significantly boost the performance of LLMs for all tasks simultaneously. Our best-finetuned models, Mental-Alpaca and Mental-FLAN-T5, outperform the best prompt design of GPT-3.5 (25 and 15 times bigger) by 10.9% on balanced accuracy and the best of GPT-4 (250 and 150 times bigger) by 4.8%. They further perform on par with the state-of-the-art task-specific language model. We also conduct an exploratory case study on LLMs’ capability on mental health reasoning tasks, illustrating the promising capability of certain models such as GPT-4. We summarize our findings into a set of action guidelines for potential methods to enhance LLMs’ capability for mental health tasks. Meanwhile, we also emphasize the important limitations before achieving deployability in real-world mental health settings, such as known racial and gender bias. We highlight the important ethical risks accompanying this line of research.

Additional Key Words and Phrases: Mental Health, Large Language Model, Instruction Finetuning

1. INTRODUCTION

The recent surge of Large Language Models (LLMs), such as GPT-4 [18], PaLM [23], FLAN-T5 [24], and Alpaca [115], demonstrates the promising capability of large pre-trained models to solve various tasks in zero-shot settings (i.e., tasks not encountered during training). Example tasks include question answering [87, 100], logic reasoning [124, 135], machine translation [15, 45], etc. A number of experiments have revealed that, built on hundreds of billions of parameters, these LLMs have started to show the capability to understand the human common sense beneath the natural language and do proper reasoning and inference accordingly [18, 85].

Among different applications, one particular question yet to be answered is how well LLMs can understand human mental health states through natural language. Mental health problems represent a significant burden for individuals and societies worldwide. A recent report suggested that more than 20% of adults in the U.S. experience at least one mental disorder in their lifetime [9] and 5.6% have suffered from a serious psychotic disorder that significantly impairs functioning [3]. The global economy loses around $1 trillion annually in productivity due to depression and anxiety alone [2].

In the past decade, there has been a plethora of research in natural language processing (NLP) and computational social science on detecting mental health issues via online text data such as social media content (e.g., [26, 32, 33, 38, 47]). However, most of these studies have focused on building domain-specific machine learning (ML) models (i.e., one model for one particular task, such as stress detection [46, 84], depression prediction [38, 113, 127, 128], or suicide risk assessment [28, 35]). Even for traditional pre-trained language models such as BERT, they need to be finetuned for specific downstream tasks [37, 72]. Some studies have also explored the multi-task setup [12], such as predicting depression and anxiety at the same time [106]. However, these models are constrained to predetermined task sets, offering limited flexibility. From a different aspect, another line of research has been exploring the application of chatbots for mental health services [20, 21, 68]. Most chatbots are simply rule-based and can benefit from more advanced models that empower the chatbots [4, 68]. Despite the growing research efforts of empowering AI for mental health, it’s important to note that existing techniques can sometimes introduce bias and even provide harmful advice to users [54, 74, 116].

Since natural language is a major component of mental health assessment and treatment [43, 110], LLMs could be a powerful tool for understanding end-users’ mental states through their written language. These instruction-finetuned and general-purpose models can understand a variety of inputs and obviate the need to train multiple models for different tasks. Thus, we envision employing a single LLM for a variety of mental health-related tasks, such as multiple question-answering, reasoning, and inference. This vision opens up a wide range of opportunities for UbiComp, Human-Computer Interaction (HCI), and mental health communities, such as online public health monitoring systems [44, 90], mental-health-aware personal chatbots [5, 36, 63], intelligent assistants for mental health therapists [108], online moderation tools [39], daily mental health counselors and supporters [109], etc. However, there is a lack of investigation into understanding, evaluating, and improving the capability of LLMs for mental-health-related tasks.

There are few recent studies on the evaluation of LLMs (e.g., ChatGPT) on mental-health-related tasks, most of which are in zero-shot settings with simple prompt engineering [10, 67, 132]. Researchers have shown preliminary results that LLMs have the initial capability of predicting mental health disorders using natural language, with promising but still limited performance compared to state-of-the-art domain-specific NLP models [67, 132]. This remaining gap is expected since existing general-purpose LLMs are not specifically trained on mental health tasks. However, to achieve our vision of leveraging LLMs for mental health support and assistance, we need to address the research question: How to improve LLMs’ capability of mental health tasks?

We conducted a series of experiments with six LLMs, including Alpaca [115] and Alpaca-LoRA (LoRA-finetuned LLaMA on Alpaca dataset) [51], which are representative open-source models focused on dialogue and other tasks; FLAN-T5 [24], a representative open-source model focused on task-solving; LLaMA2 [118], one of the most advanced open-source model released by Meta; GPT-3.5 [1] and GPT-4 [18], representative close-sourced LLMs over 100 billion parameters. Considering the data availability, we leveraged online social media datasets with high-quality human-generated mental health labels. Due to the ethical concerns of existing AI research for mental health, we aim to benchmark LLMs’ performance as an initial step before moving toward real-life deployment. Our experiments contained three stages: (1) zero-shot prompting, where we experimented with various prompts related to mental health, (2) few-shot prompting, where we inserted examples into prompt inputs, and (3) instruction-finetuning, where we finetuned LLMs on multiple mental-health datasets with various tasks.

Our results show that the zero-shot approach yields promising yet limited performance on various mental health prediction tasks across all models. Notably, FLAN-T5 and GPT-4 show encouraging performance, approaching the state-of-the-art task-specific model. Meanwhile, providing a few shots in the prompt can improve the model performance to some extent , but the advantage is limited. Finally and most importantly, we found that instruction-finetuning significantly enhances the model performance across multiple mental-health-related tasks and various datasets simultaneously. Our finetuned Alpaca and FLAN-T5, namely Mental-Alpaca and Mental-FLAN-T5, significantly outperform the best of GPT-3.5 across zero-shot and few-shot settings (×25 and 15 bigger than Alpaca and FLAN-T5) by an average of 10.9% on balance accuracy, as well as the best of GPT-4 by 4.8% (×250 and 150 bigger than Alpaca and FLAN-T5). Meanwhile, Mental-Alpaca and Mental-FLAN-T5 can further perform on par with the task-specific state-of-the-art Mental-RoBERTa [58]. We further conduct an exploratory case study on LLM’s capability of mental health reasoning (i.e., explaining the rationale behind their predictions). Our results illustrate the promising future of certain LLMs like GPT-4, while also suggesting critical failure cases that need future research attention. We open-source our code and model at https://github.com/neuhai/Mental-LLM.

Our experiments present a comprehensive evaluation of various techniques to enhance LLMs’ capability in the mental health domain. However, we also note that our technical results do not imply deployability. There are many important limitations of leveraging LLMs in mental health settings, especially along known racial and gender gaps [6, 42]. We discuss the important ethical risks to be addressed before achieving real-world deployment.

The contribution of our paper can be summarized as follows:

We present a comprehensive evaluation of prompt engineering, few-shot, and finetuning techniques on multiple LLMs in the mental health domain.

With online social media data, our results reveal that finetuning on a variety of datasets can significantly improve LLM’s capability on multiple mental-health-specific tasks across different datasets simultaneously.

We release our model Mental-Alpaca and Mental-FLAN-T5 as open-source LLMs targeted at multiple mental health prediction tasks.

We provide a few technical guidelines for future researchers and developers on turning LLMs into experts in specific domains. We also highlight the important ethical concerns regarding leveraging LLMs for health-related tasks.

2. BACKGROUND

We briefly summarize the related work in leveraging online text data for mental health prediction (Sec. 2.1). We also provide an overview of the ongoing research in LLMs and their application in the health domain (Sec. 2.2).

2.1. Online Text Data and Mental Health

Online platforms, especially social media platforms, have been acknowledged as a promising lens that is capable of revealing insights into the psychological states, health, and well-being of both individuals and populations [22, 30, 33, 47, 91]. In the past decade, there has been extensive research about leveraging content analysis and social interaction patterns to identify and predict risks associated with mental health issues, such as anxiety [8, 104, 111], major depressive disorder [32, 34, 89, 119, 128, 129], suicide ideation [19, 29, 35, 101, 114], and others [25, 27, 77, 103]. The real-time nature of social media, along with its archival capabilities, often helps in mitigating retrospective bias. The rich amount of social media data also facilitates the identification, monitoring, and potential prediction of risk factors over time. In addition to observation and detection, social media platforms could further serve as effective channels to offer in-time assistance to communities at risk [66, 73, 99].

From the computational technology perspective, early research started with basic methods [27, 32, 77]. For example, pioneering work by Coppersmith et al. [27] employed correlation analysis to reveal the relationship between social media language data and mental health conditions. Since then, researchers have proposed a wide range of feature engineering methods and built machine-learning models for the prediction [14, 79, 82, 102, 119]. For example, De Choudhury et al. [34] extracted a number of linguistic styles and other features to build an SVM model to perform depression prediction. Researchers have also explored deep-learning-based models for mental health prediction to obviate the need for hand-crafted features [57, 107]. For instance, Tadesse et al. [114] employed an LSTM-CNN model and took word embeddings as the input to detect suicide ideation on Reddit. More recently, pre-trained language models have become a popular method for NLP tasks, including mental health prediction tasks [48, 58, 83]. For example, Jiang et al. [60] used the contextual representations from BERT as input features for mental health issue detection. Otsuka et al. [88] evaluated the performance of BERT-based pre-trained models in clinical settings. Meanwhile, researchers have also explored the multi-task setup [12] that aims to predict multiple labels. For example, Sarkar et al. [106] trained a multi-task model to predict depression and anxiety at the same time. However, these multi-task models are constrained to a predetermined task set and thus have limited flexibility. Our work joins the same goal and aims to achieve a more flexible multi-task capability. We focus on the next-generation technology of instruction-finetuned LLMs, leverage their power in natural language understanding, and explore their capability on mental health tasks with social media data.

2.2. LLM and Health Applications

After the great success of Transformer-based language models such as BERT [37] and GPT [96], researchers and practitioners have advanced towards larger and more powerful language models (e.g., GPT-3 [17] and T5 [97]). Meanwhile, researchers have proposed instruction finetuning, a method that utilizes varied prompts across multiple datasets and tasks. This technique guides a model during training and generation phases to perform diverse tasks within a single unified framework [123]. These instruction-finetuned LLMs, such as GPT-4 [18], PaLM [23], FLAN-T5 [24], LLaMA [117], Alpaca [115], contain tens to hundreds of billions of parameters, demonstrating promising performance across a variety of tasks, such as question answering [87, 100], logic reasoning [124, 135], machine translation [15, 45], etc.

Researchers have explored the capability of these LLMs in health fields [59, 70, 71, 75, 85, 112, 125?]. For example, Singhal et al. [112] finetuned PaLM-2 on medical domains and achieved 86.5% on MedQA dataset. Similarly, Wu et al. [125] finetuned LLaMA on medical papers and showed promising results on multiple biomedical QA datasets. Jo et al. [61] explored the deployment of LLMs for public health scenarios. Jiang et al. [59] trained a medical language model on unstructured clinical notes from the electronic health record and fine-tuned it across a wide range of clinical and operational predictive tasks. Their evaluation indicates that such a model can be used for various clinical tasks.

There is relatively less work in the mental health domain. Some work explored the capability of LLMs for sentiment analysis and emotion reasoning [64, 95, 134].

Closer to our study, Lamichhane [67] and Amin et al. [10] tested the performance of ChatGPT (GPT-3.5) on multiple classification tasks (e.g., stress, depression, and suicide detection). The results showed that ChatGPT shows the initial potential for mental health applications, but it still has a great room for improvement, with at least 5–10% performance gaps on accuracy and F1-score. Yang et al. [132] further evaluated the potential reasoning capability of GPT-3.5 for reasoning tasks (e.g., potential stressors). However, most previous studies focused solely on zero-shot prompting and did not explore other methods to improve the performance of LLMs. Very recently, Yang et al. [133] released Mental-LLaMA, a set of LLaMA-based models finetuned on mental health datasets for a set of mental health tasks. Table 1 summarizes the recent related work exploring LLMs’ capabilities on mental-health-related tasks. None of the existing work explores the capability other than LLaMA or GPT-3.5. In this work, we present a comprehensive and systematic exploration of multiple LLMs’ performance on mental health tasks, as well as multiple methods to improve their capabilities.

Table 1.

A Summary of LLM-related Research for Mental Health Applications.

| LLMs | Methods | Tasks | |

|---|---|---|---|

| Lamichhane [67] | GPT-3.5 | Zero-shot | Classification |

| Amin et al. [10] | GPT-3.5 | Zero-shot | Classification |

| Yang et al. [132] | GPT-3.5 | Zero-shot | Classification, Reasoning |

| Mental-LLaMA [133] | LLaMA2, Vicuna (LLaMA-based) | Zero-shot, Few-shot, Instruction Finetuning | Classification, Reasoning |

| Mental-LLM (Our Work) | Alpaca, Alapca-LoRA, FLAN-T5, LLaMA2, GPT-3.5, GPT-4 | Zero-shot, Few-shot, Instruction Finetuning | Classification, Reasoning |

3. METHODS

We introduce our experiment design with LMMs on multiple mental health prediction task setups, including zero-shot prompting (Sec. 3.1), few-shot prompting (Sec. 3.2), and instruction finetuning (Sec. 3.3). These setups are model-agnostic, and we will present the details of language models and datasets employed for our experiment in the next section.

3.1. Zero-shot Prompting

The language understanding and reasoning capability of LLMs have enabled a wide range of applications without the need for any domain-specific data, but only providing appropriate prompts [65, 122]. Therefore, we start with prompt design for mental health tasks in a zero-shot setting.

The goal of prompt design is to empower a pre-trained general-purpose LLM to achieve good performance on tasks in the mental health domain. We propose a general zero-shot prompt template that consists of four parts:

| (1) |

where is the online text data generated by end-users. provides specifications for a mental health prediction target. poses the question for LLMs to answer. And OutputConstraint controls the output of models (e.g., “Only return yes or no” for a binary classification task).

We propose several design strategies for , as shown in the top part of Table 2: (1) Basic, which leaves it as blank; (2) Context Enhancement, which provides more social media context about the TextData; (3) Mental Health Enhancement, which inserts mental health concept by asking the model to act as an expert. (4) Context & Mental Health Enhancement, which combines both enhancement strategies by asking the model to act as a mental health expert under the social media context.

Table 2.

Prompt Design for Mental Health Prediction Tasks. aims to provide a better specification for LLMs and poses the questions for LLMs to answer. For Part 1, we propose three strategies: context enhancement, mental health enhancement, and the combination of both. As for Part 2, we design different content for multiple mental health problem categories and prediction tasks. For each part, we propose two to three versions to improve its variation.

| Strategy | ||

|---|---|---|

| Basic |

|

|

| Context Enhancement |

|

|

| Mental Health Enhancement |

|

|

| Context & Mental Health Enhancement |

|

|

| Category | Task | |

| Mental state (e.g., stressed, depressed) | Binary classification (e.g., yes or no) |

|

| Multi-class classification (e.g., multiple levels) |

|

|

| Critical risk action (e.g., suicide) | Binary classification (e.g., yes or no) |

|

| Multi-class classification (e.g., multiple levels) |

|

|

As for , we mainly focus on two categories of mental health prediction targets: (1) predicting critical mental states, such as stress or depression, and (2) predicting high-stake risk actions, such as suicide. We tailor the question description for each category. Moreover, for both categories, we explore binary and multi-class classification tasks1. Thus, we also make small modifications based on specific tasks to ensure appropriate questions (see Sec. 4 for our mental health tasks). The bottom part of Table 2 summarizes the mapping.

For both and , we propose several versions to improve its variability. We then evaluate these prompts on multiple LLMs on different datasets and compare their performance.

3.2. Few-shot Prompting

In order to provide more domain-specific information, researchers have also explored few-shot prompting with LLMs by providing few-shot demonstrations to support in-context learning (e.g., [7, 31]). Note that these few examples are used solely in prompts, and the model parameters remain unchanged. The intuition is to present a few “examples” for the model to learn domain-specific knowledge in situ. In our setting, we also test this strategy by adding additional randomly sampled pairs. The design of the few-shot prompt is straightforward:

| (2) |

where is the number of prompt-label pairs and is capped by the input length limit of a model. Note that both the and follow Eq. 1 and employ the same design of and to ensure consistency.

3.3. Instruction Finetuning

In contrast to the few-shot prompting strategy in Sec. 3.2, the goal of this strategy is closer to the traditional few-shot transfer learning, where we further train the model with a small amount of domain-specific data (e.g., [52, 71, 126]). We experiment with multiple finetuning strategies.

3.3.1. Single-dataset Finetuning.

Following most of the previous work in the mental health field [26, 35, 132], we first conduct basic finetuning on a single dataset (the training set). This finetuned model can be tested on the same dataset (the test set) to evaluate its performance and different datasets to evaluate its generalizability.

3.3.2. Multi-dataset Finetuning.

From Sec. 3.1 to Sec. 3.3.1, we have been focusing on one single mental health dataset . More interestingly, we further experiment with finetuning across multiple datasets simultaneously. Specifically, we leverage instruction finetuning to enable LLMs to handle multiple tasks in different datasets [17].

It is noteworthy that such an instruction finetuning setup differs from the state-of-the-art mental-health-specific models (e.g., Mental-RoBERTa [58]). The previous models are finetuned for a specific task, such as depression prediction or suicidal ideation prediction. Once trained on task A, the model becomes specific to task A and is only suitable for solving that particular task. In contrast, we finetune LLMs on several mental health datasets, employing diverse instructions for different tasks across these datasets in a single iteration. This enables them to handle multiple tasks without additional task-specific finetuning.

For both single- and multi-dataset finetuning, we follow the same two steps:

| (3) |

where is the total size of the training/test dataset represents the set of datasets used for finetuning, and indicates the specific dataset index . Both and follow Eq. 1. Similar to the few-shot setup in Eq. 2, they employ the same design of and .

4. IMPLEMENTATION

Our method design is agnostic to specific datasets or models. In this section, we introduce the specific datasets (Sec. 4.1) and models (Sec. 4.2) involved in our experiments. In particular, we highlight our instructional-finetuned open-source models Mental-Alpaca and Mental-FLAN-T5 (Sec. 4.2.1). We also provide an overview of our experiment setup and evaluation metrics (Sec. 4.3).

4.1. Datasets and Tasks

Our experiment is based on four well-established datasets that are commonly employed for mental health analysis. These datasets were collected from Reddit due to their high-quality and availability. It is noteworthy that we intentionally avoid using datasets with weak labels based on specific linguistic patterns (e.g., whether a user ever stated “I was diagnosed with X”). Instead, we used ones with human expert annotations or supervision. We define six diverse mental health prediction tasks based on these datasets.

Dreaddit [120]: This dataset collected posts via Reddit PRAW API [94] from Jan 1, 2017 to Nov 19, 2018, which contains ten subreddits in the five domains (abuse, social, anxiety, PTSD, and financial) and includes 2929 users’ posts. Multiple human annotators rated whether sentence segments showed the stress of the poster, and the annotations were aggregated to generate final labels. We used this dataset for a post-level binary stress prediction (Task 1).

DepSeverity [80]: This dataset leveraged the same posts collected in [120], but with a different focus on depression. Two human annotators followed DSM-5 [98] and categorized posts into four levels of depression: minimal, mild, moderate, and severe. We employed this dataset for two post-level tasks: binary depression prediction (i.e., whether a post showed at least mild depression, Task 2), and four-level depression prediction (Task 3).

SDCNL [49]: This dataset also collected posts from Python Reddit API, including r/SuicideWatch and r/Depressionfrom 1723 users. Through manual annotation, they labeled whether each post showed suicidal thoughts. We employed this dataset for the post-level binary suicide ideation prediction (Task 4).

CSSRS-Suicide [40]: This dataset contains posts from 15 mental health-related subreddits from 2181 users between 2005 and 2016. Four practicing psychiatrists followed Columbia Suicide Severity Rating Scale (C-SSRS) guidelines [93] to manually annotate 500 users on suicide risks in five levels: supportive, indicator, ideation, behavior, and attempt. We leveraged this dataset for two user-level tasks: binary suicide risk prediction (i.e., whether a user showed at least suicide indicator, Task 5), and five-level suicide risk prediction (Task 6).

In order to test the generalizability of our methods, we also leveraged three other datasets from various platforms. Similarly, all datasets contain human annotations as labels.

Red-Sam [62, 105]: This dataset also collected posts with PRAW API [94], involving five subreddits (Mental Health, depression, loneliness, stress, anxiety). Two domain experts’ annotations were aggregated to generate depression labels. We used this dataset as an external evaluation dataset on binary depression detection (Task 2). Although also from Reddit, this dataset was not involved in few-shot learning or instruction finetuning. We cross-checked datasets to ensure there were no overlapping posts.

Twt-60Users [56]: This dataset collected twitters from 60 users during 2015 with Twitter API. Two human annotators labeled every tweet with depression labels. We used this non-Reddit dataset as an external evaluation dataset on depression detection (Task 2). Note that this dataset has imbalanced labels (90.7% False), as most tweets did not indicate mental distress.

SAD [76]: This dataset contains SMS-like text messages with nine types of daily stressor categories (work, school, financial problem, emotional turmoil, social relationships, family issues, health, everyday decision-making, and other). These messages were written by 3578 humans. We used this non-Reddit dataset as an external evaluation dataset on binary stress detection (Task 1). Note that human crowd-workers write the messages under certain stressor-triggered instructions. Therefore, this dataset has imbalanced labels on the other side (94.0% True).

Table 3 summarizes the information of the seven datasets and six mental health prediction tasks. For each dataset, we conducted an 80%/20% train-test split. Notably, to avoid data leakage, each user’s data were placed exclusively in either the training or test set.

Table 3.

Summary of Seven Mental Health Datasets Employed for Our Experiment. The top four datasets are used for both training and testing, while the bottom three datasets are used for external evaluation. We define six diverse mental health prediction tasks on these datasets.

| Dataset | Task | Dataset Size | Text Length (Token) |

|---|---|---|---|

| Dreaddit [120] Source: Reddit |

#1: Binary Stress Prediction post-level |

Train: 2838 (47.6% False, 52.4% True) Test: 715 (48.4% False, 51.6% True) |

Train: 114 ± 41 Test: 113 ± 39 |

| DepSeverity [80] Source: Reddit |

#2: Binary Depression Prediction post-level |

Train: 2842 (72.9% False, 17.1% True) Test: 711 (72.3% False, 17.7% True) |

Train: 114 ± 41 Test: 113 ± 37 |

| #3: Four-level Depression Prediction post-level |

Train: 2842 (72.9% Minimum, 8.4% Mild, 11.2% Moderate, 7.4% Severe) Test: 711 (72.3% Minimum, 7.2% Mild, 11.5% Moderate, 10.0% Severe) |

Train: 114 ± 41 Test: 113 ± 37 |

|

| SDCNL [49] Source: Reddit |

#4: Binary Suicide Ideation Prediction post-level |

Train: 1516 (48.1% False, 51.9% True) Test: 379 (49.1% False, 50.9% True) |

Train: 101 ± 161 Test: 92 ± 119 |

| CSSRS-Suicide [40] Source: Reddit |

#5: Binary Suicide Risk Prediction user-level |

Train: 400 (20.8% False, 79.2% True) Test: 100 (25.0% False, 75.0% True) |

Train: 1751 ± 2108 Test: 1909 ± 2463 |

| #6: Five-level Suicide Risk Prediction user-level |

Train: 400 (20.8% Supportive, 20.8% Indicator, 34.0% Ideation, 14.8% Behavior, 9.8% Attempt) Test: 100 (25.0% Supportive, 16.0% Indicator, 35.0% Ideation, 18.0% Behavior, 6.0% Attempt) |

Train: 1751 ± 2108 Test: 1909 ± 2463 |

|

| Red-Sam [105] Source: Reddit |

#2: Binary Depression Prediction post-level |

External Evaluation: 3245 (26.1% False, 73.9% True) | External Evaluation: 151 ± 139 |

| Twt-60Users [56] Source: Twitter |

#2: Binary Depression Prediction post-level |

External Evaluation: 8135 (90.7% False, 9.3% True) | External Evaluation: 15 ± 7 |

| SAD [76] Source: SMS-like |

#1: Binary Stress Prediction post-level |

External Evaluation: 6185 (6.0% False, 94.0% True) | External Evaluation: 13 ± 6 |

4.2. Models

We experimented with multiple LLMs with different sizes, pre-training targets, and availability.

Alpaca (7B) [115]: An open-source large model finetuned from another open-sourced LLaMA 7B model [117] on instruction following demonstrations. Experiments have shown that Alpaca behaves qualitatively similarly to OpenAI’s text-davinci-003 on certain task metrics. We choose the relatively small 7B version to facilitate running and finetuning on consumer hardware.

Alpaca-LoRA (7B) [51]: Another open-source large model finetuned from LLaMA 7B model using the same dataset as Alpaca [115]. This model leverages a different finetuning technique called low-rank adaptation (LoRA) [51], with the goal of reducing finetuning cost by freezing the model weights and injecting trainable rank decomposition matrices into each layer of the Transformer architecture. Despite the similarity in names, it is important to note that Alpaca-LoRA is entirely distinct from Alpaca. They are trained on the same dataset but with different methods.

FLAN-T5 (11B) [24]: An open-source large model T5 [97] finetuned with a variety of task-based datasets on instructions. Compared to other LLMs, FLAN-T5 focuses more on task solving and is less optimized for natural language or dialogue generation. We picked the largest version of FLAN-T5 (i.e., FLAN-T5-XXL), which has a comparable size of Alpaca.

LLaMA2 (70B) [118]: A recent open-source large model released by Meta. We picked the largest version of LLaMA2, whose size is between FLAN-T5 and GPT-3.5.

GPT-3.5 (175B) [1]: This large model is closed-source and available through API provided by OpenAI. We picked the gpt-3.5-turbo, one of the most capable and cost-effective models in the GPT-3.5 family.

GPT-4 (1700B) [18]: This is the largest closed-source model available through OpenAI API. We picked the gpt-4-0613. Due to the limited availability of API, the cost of finetuning GPT-3.5 or GPT-4 is prohibitive.

It is worth noting that Alpaca, Alpaca-LoRA, GPT-3.5, LLaMA2 and GPT-4 are all finetuned with natural dialogue as one of the optimization goals. In contrast, FLAN-T5 is more focused on task-solving. In our case, the user-written input posts resemble natural dialogue, whereas the mental health prediction tasks are defined as specific classification tasks. It is unclear and thus interesting to explore which LLM fits better with our goal.

4.2.1. Mental-Alpaca & Mental-FLAN-T5.

Our methods of zero-shot prompting (Sec. 3.1) and few-shot prompting (Sec. 3.2) do not update model parameters during the experiment. In contrast, instruction finetuning (Sec. 3.3) will update model parameters and generate new models. To enhance their capability in the mental health domains, we update Alpaca and FLAN-T5 on six tasks across the four datasets in Sec. 4.1 using the multi-dataset instruction finetuning method (Sec. 3.3.2), which leads to our new model Mental-Alpaca and Mental-FLAN-T5.

4.3. Experiment Setup and Metrics

For zero-shot and few-shot prompting methods, we load open-source models (Alpaca, Alpaca-LoRA, FLAN-T5, LLaMA2) with one to eight Nvidia A100 GPUs to do the tasks, depending on the size of the model. For closed-source models (GPT-3.5, and GPT-4), we use OpenAI API to conduct chat completion tasks.

As for finetuning Mental-Alpaca and Mental-FLAN-T5, we merge the four datasets together and provide instructions for all six tasks (in the training set). We use eight Nvidia A100 GPUs for instruction finetuning. With cross entropy as the loss function, we backpropagate and update model parameters in 3 epochs, with Adam optimizer and a learning rate as (cosine scheduler, warmup ratio 0.03).

We focus on balanced accuracy as the main evaluation metric, i.e., the mean of sensitivity (true positive rate) and specificity (true negative rate). We picked this metric since it is more robust to class imbalance compared to the accuracy or F1 score [16, 129]. It is noteworthy that the sizes of LLMs we compare are vastly different, with the number of parameters ranging from 7B to 1700B. A larger model is usually expected to have a better overall performance than a smaller model. We inspect whether this expectation holds in our experiments.

5. RESULTS

We summarize our experiment results with zero-shot prompting (Sec. 5.1), few-shot prompting (Sec. 5.2), and instruction finetuning (Sec. 5.3). Moreover, although we mainly focus on prediction tasks in this research, we also present the initial results of our exploratory case study on mental health reasoning tasks in Sec. 5.4.

Overall, our results show that zero-shot and few-shot settings show promising performance of LLMs for mental health tasks, although their performance is still limited. Instruction-finetuning on multiple datasets (Mental-Alpaca and Mental-FLAN-T5) can significantly boost models’ performance on all tasks simultaneously. Our case study also reveals the strong reasoning capability of certain LLMs, especially GPT-4. However, we note that these results do not indicate the deployability. We highlight important ethical concerns and gaps in Sec. 6.

5.1. Zero-shot Prompting Shows Promising yet Limited Performance

We start with the most basic zero-shot prompting with Alpaca, Alpaca-LoRA, FLAN-T5, LLaMA2, GPT-3.5, and GPT-4. The balanced accuracy results are summarized in the first sections of Table 4. and achieve better overall performance than the naive majority baseline (), but they are far from the task-specific baseline models BERT and Mental-RoBERTa (which have 20%−25% advantages). With much larger models , the performance gets more promising ( over baseline), which is inline with previous work [132]. GPT-3.5’s advantage over Alpaca and Alpaca-LoRA is expected due to its larger size (25×).

Table 4.

Balanced Accuracy Performance Summary of Zero-shot, Few-shot and Instruction Finetuning on LLMs. highlights the best performance among zero-shot prompt designs, including context enhancement, mental health enhancement, and their combination (see Table. 2). Detailed results can be found in Table 10 in Appendix. Small numbers represent standard deviation across different designs of and . The baselines at the bottom rows do not have standard deviation as the task-specific output is static, and prompt designs do not apply. Due to the maximum token size limit, we only conduct few-shot prompting on a subset of datasets and mark other infeasible datasets as “−”. For each column, the best result is bolded, and the second best is underlined.

| Dataset | Dreaddit | DepSeverity | SDCNL | CSSRS-Suicide | |||

|---|---|---|---|---|---|---|---|

| Category | Model | Task #1 | Task #2 | Task #3 | Task #4 | Task #5 | Task #6 |

| Zero-shot Prompting | 0.593±0.039 | 0.522±0.022 | 0.431±0.050 | 0.493±0.007 | 0.518±0.037 | 0.232±0.076 | |

| 0.612±0.065 | 0.577±0.028 | 0.454±0.143 | 0.532±0.005 | 0.532±0.033 | 0.250±0.060 | ||

| 0.571±0.043 | 0.548±0.027 | 0.437±0.044 | 0.502±0.011 | 0.540±0.012 | 0.187±0.053 | ||

| 0.571±0.043 | 0.548±0.027 | 0.437±0.044 | 0.502±0.011 | 0.567±0.038 | 0.224±0.049 | ||

| 0.659±0.086 | 0.664±0.011 | 0.396±0.006 | 0.643±0.021 | 0.667±0.023 | 0.418±0.012 | ||

| 0.663±0.079 | 0.674±0.014 | 0.396±0.006 | 0.653±0.011 | 0.667±0.023 | 0.418±0.012 | ||

| 0.720±0.012 | 0.693±0.034 | 0.429±0.013 | 0.589±0.010 | 0.691±0.014 | 0.261±0.018 | ||

| 0.720±0.012 | 0.711±0.033 | 0.444±0.021 | 0.643±0.014 | 0.722±0.039 | 0.367±0.043 | ||

| 0.685±0.024 | 0.642±0.017 | 0.603±0.017 | 0.460±0.163 | 0.570±0.118 | 0.233±0.009 | ||

| 0.688±0.045 | 0.653±0.020 | 0.642±0.034 | 0.632±0.020 | 0.617±0.033 | 0.310±0.015 | ||

| 0.700±0.001 | 0.719±0.013 | 0.588±0.010 | 0.644±0.007 | 0.760±0.009 | 0.418±0.009 | ||

| 0.725±0.009 | 0.719±0.013 | 0.656±0.001 | 0.647±0.014 | 0.760±0.009 | 0.441±0.057 | ||

| Few-shot Prompting | 0.632±0.030 | 0.529±0.017 | 0.628±0.005 | — | — | — | |

| 0.786±0.006 | 0.678±0.009 | 0.432±0.009 | — | — | — | ||

| 0.721±0.010 | 0.665±0.015 | 0.580±0.002 | — | — | — | ||

| 0.698±0.009 | 0.724±0.005 | 0.613±0.001 | — | — | — | ||

| Instructional Finetuning | Mental-Alpaca | 0.816±0.006 | 0.775 ±0.006 | 0.746 ±0.005 | 0.724 ±0.004 | 0.730±0.048 | 0.403±0.029 |

| Mental-FLAN-T5 | 0.802±0.002 | 0.759±0.003 | 0.756 ±0.001 | 0.677±0.005 | 0.868 ±0.006 | 0.481 ±0.006 | |

| Baseline | Majority | 0.500±––– | 0.500±––– | 0.250±––– | 0.500±––– | 0.500±––– | 0.200±––– |

| BERT | 0.783±––– | 0.763±––– | 0.690±––– | 0.678±––– | 0.500±––– | 0.332±––– | |

| Mental-RoBERTa | 0.831 ±––– | 0.790 ±––– | 0.736±––– | 0.723 ±––– | 0.853 ±––– | 0.373±––– | |

Surprisingly, achieves much better overall results compared to and , and even and GPT-3.5 . Note that LLaMA2 is 6 times bigger than FLAN-T5 and GPT-3.5 is 15 times bigger. On Task #6 (Five-level Suicide Risk Prediction), even outperforms the state-of-the-art Mental-RoBERTa by 4.5%. Comparing these results, the task-solving-focused model FLAN-T5 appears to be better at the mental health prediction tasks in a zero-shot setting. We will introduce more interesting findings after finetuning (see Sec. 5.3.1).

In contrast, the advantage of GPT-4 becomes relatively less remarkable considering its gigantic size. ’s average performance outperforms (150× size), (25× size), and (10× size) by 6.4%, 7.5%, and 10.6%, respectively. Yet it is still very encouraging to observe that GPT-4 is approaching the state-of-the-art on these tasks , and it also outperforms Mental-RoBERTa on Task #6 by 4.5%. In general, these results indicate the promising capability of LLMs on mental health prediction tasks compared to task-specific models, even without any domain-specific information.

5.1.1. The Effectiveness of Enhancement Strategies.

In Sec. 3.1, we propose context enhancement, mental health enhancement, and their combination strategies for zero-shot prompt design to provide more information about the domain. Interestingly, our results suggest varied effectiveness on different LLMs and datasets.

Table 5 provides a zoom-in summary of the zero-shot part in Table 4. For Alpaca, LLaMA2, GPT-3.5, and GPT-4, the three strategies improved the performance in general (, 13 out of 18 tasks show positive changes; , 12/18 tasks positive; , 12/18 tasks positive; , 11/18 tasks positive). However, for Alpaca-LoRA and FLAN-T5, adding more context or mental health domain information would reduce the model performance . For Alpaca-LoRA, this limitation may stem from being trained with fewer parameters, potentially constraining its ability to understand context or domain specifics. For FLAN-T5, this reduced performance might be attributed to its limited capability in processing additional information, as it is primarily tuned for task-solving.

Table 5.

Balanced Accuracy Performance Change using Enhancement Strategies.

| Dataset | Dreaddit | DepSeverity | SDCNL | CSSRS-Suicide | |||

|---|---|---|---|---|---|---|---|

| Model | Task #1 | Task #2 | Task #3 | Task #4 | Task #5 | Task #6 | –All Six Tasks |

| ↑ +0.019 | ↑ +0.045 | ↑ +0.023 | ↑ +0.004 | ↑ +0.014 | ↑ +0.018 | ↑ +0.021 | |

| ↑ +0.000 | ↑ +0.055 | ↑ +0.013 | ↓ −0.011 | ↑ +0.006 | ↑ +0.004 | ↑ +0.011 | |

| ↓ −0.053 | ↑ +0.037 | ↓ −0.010 | ↑ +0.039 | ↓ −0.007 | ↓ −0.010 | ↓ −0.001 | |

| ↓ −0.035 | ↓ −0.047 | ↓ −0.094 | ↓ −0.030 | ↑ +0.027 | ↑ +0.027 | ↓ −0.025 | |

| ↓ −0.071 | ↓ −0.047 | ↓ −0.105 | ↓ −0.005 | ↑ +0.017 | ↑ +0.029 | ↓ −0.031 | |

| ↓ −0.071 | ↓ −0.048 | ↓ −0.051 | ↓ −0.003 | ↓ −0.023 | ↑ +0.037 | ↓ −0.027 | |

| ↑ +0.004 | ↑ +0.011 | ↓ −0.018 | ↑ +0.010 | ↓ −0.018 | ↓ −0.040 | ↓ −0.009 | |

| ↓ −0.043 | ↑ +0.003 | ↓ −0.030 | ↑ +0.005 | ↓ −0.013 | ↓ −0.046 | ↓ −0.021 | |

| ↓ −0.055 | ↓ −0.003 | ↓ −0.007 | ↑ +0.002 | ↓ −0.010 | ↓ −0.036 | ↓ −0.018 | |

| ↓ −0.062 | ↑ +0.014 | ↓ −0.019 | ↑ +0.000 | ↑ +0.031 | ↑ +0.106 | ↑ +0.012 | |

| ↓ −0.102 | ↑ +0.018 | ↓ −0.033 | ↑ +0.053 | ↑ +0.004 | ↑ +0.031 | ↓ −0.005 | |

| ↓ −0.136 | ↑ +0.011 | ↑ +0.016 | ↑ +0.054 | ↓ −0.002 | ↑ +0.067 | ↑ +0.002 | |

| ↑ +0.003 | ↑ +0.011 | ↓ −0.060 | ↑ +0.157 | ↑ +0.007 | ↑ +0.031 | ↑ +0.025 | |

| ↓ −0.006 | ↓ −0.006 | ↑ +0.039 | ↑ +0.116 | ↓ −0.093 | ↑ +0.077 | ↑ +0.021 | |

| ↓ −0.005 | ↓ −0.015 | ↑ +0.014 | ↑ +0.172 | ↑ +0.047 | ↑ +0.020 | ↑ +0.039 | |

| ↑ +0.006 | ↑ +0.000 | ↑ +0.001 | ↑ +0.000 | ↓ −0.007 | ↑ +0.023 | ↑ +0.004 | |

| ↑ +0.025 | ↓ −0.035 | ↑ +0.067 | ↑ +0.002 | ↓ −0.023 | ↓ −0.022 | ↑ +0.002 | |

| ↑ +0.018 | ↓ −0.031 | ↑ +0.061 | ↑ +0.003 | ↓ −0.063 | ↓ −0.006 | ↓ −0.003 | |

| –All Six Models | ↓ −0.031 | ↑ +0.000 | ↓ −0.011 | ↑ +0.032 | ↓ −0.006 | ↑ +0.017 | ↑ +0.000 |

| –Alpaca, GPT-3.5, GPT-4 | ↑ +0.001 | ↑ +0.007 | ↑ +0.017 | ↑ +0.053 | ↓ −0.013 | ↑ +0.015 | ↑ +0.013 |

The green/red color indicates increased/decreased accuracy. This table zooms in on the zero-shot section of Table 4. ↑/↓ marks the ones with better/worse performance in comparison.

The effectiveness of strategies on different datasets/tasks also varies. We observe that Task#4 from the SDCNL dataset and Task#6 from the CSSRS-Suicide dataset benefit the most from the enhancement. In particular, GPT-3.5 benefits very significantly from enhancement on Task #4 . And LLaMA2 benefits significantly on Task #6 . These could be caused by the different nature of datasets. Our results suggest that these enhancement strategies are generally more effective for critical action prediction (e.g., suicide, 2/3 tasks positive) than mental state prediction (e.g., stress and depression, 1/3 task positive).

We also compare the effectiveness of different strategies on the four models with positive effects: Alpaca, LLaMA2, GPT-3.5, and GPT-4. The context enhancement strategy has the most stable improvement across all mental health prediction tasks ( tasks positive; tasks positive; tasks positive; tasks positive). Comparatively, the mental health enhancement strategy is less effective ( tasks positive; tasks positive; tasks positive; tasks positive). The combination of the two strategies yields diverse results. It has the most significant improvement on GPT-3.5’s performance, but not on all tasks ( tasks positive), followed by LLaMA2 ( tasks positive). However, it has slightly negative impact on the average performance of Alpaca ( tasks positive) or GPT-4 ( tasks positive). This indicates that larger language models (LLaMA2, GPT-3.5 vs. Alpaca) have a strong capability to leverage the information embedded in the prompts. But for the huge GPT-4, adding prompts seems less effective, probably because it already contains similar basic information in its knowledge space.

We summarize our key takeaways from this section:

Both small-scale and large-scale LLMs show promising performance on mental health tasks. FLAN-T5 and GPT-4’s performance is approaching task-specific NLP models.

The prompt design enhancement strategies are generally effective for dialogue-focused models, but not for task-solving-focused models. These strategies work better for critical action prediction tasks such as suicide prediction.

Providing more contextual information about the task & input can consistently improve performance in most cases.

Dialogue-focused models with larger trainable parameters (Alpaca vs. Alpaca-LoRA, as well as LLaMA2/GPT-3.5 vs. Alpaca) can better leverage the contextual or domain information in the prompts, yet GPT-4 shows less effect in response to different prompts.

5.2. Few-shot Prompting Improves Performance to Some Extent

We then investigate the effectiveness of few-shot prompting. Note that since we observe diverse effects of prompt design strategies in Table 5, in this section, we only experiment with the prompts with the best performance in the zero-shot setting. Moreover, we exclude Alpaca-LoRA due to its less promising results and LLaMA2 due to its high computation cost.

Due to the maximum input token length of models (2048), we focus on Dreaddit and DepSeverity datasets that have a shorter input and experiment with in Eq. 2 for binary classification and for multi-class classification, i.e., one sample per class. We repeat the experiment on each task three times and randomize the few shot samples for each run.

We summarize the overall results in the second section of Table 4 and the zoom-in comparison results in Table 6. Although language models with few-shot prompting still underperform task-specific models, providing examples of the task can improve model performance on most tasks compared to zero-shot prompting . Interestingly, few-shot prompting is more effective for and tasks positive; tasks positive) than and tasks positive; tasks positive). Especially for Task #3, we observe an improved balanced accuracy of 19.7% for Alpaca but a decline of 2.3% for GPT-3.5, so that Alpaca outperforms GPT-3.5 on this task. A similar situation is observed for FLAN-T5 (improved by 12.7%) and GPT-4 (declined by 0.2%) on Task #1. This may be attributed to the fact that smaller models such as Alpaca and FLAN-T5 can quickly adapt to complex tasks with only a few examples. In contrast, larger models like GPT-3.5 and GPT-4, with their extensive “in memory” data, find it more challenging to rapidly learn from new examples.

Table 6.

Balanced Accuracy Performance Change with Few-shot Prompting. This table is calculated between the zero-shot and the few-shot sections of Table 4.

| Dataset | Dreaddit | DepSeverity | ||

|---|---|---|---|---|

| Model | Task #1 | Task #2 | Task #3 | –All Three Tasks |

| ↑ +0.039 | ↑ +0.007 | ↑ +0.197 | ↑ +0.081 | |

| ↑ +0.127 | ↑ +0.014 | ↑ +0.036 | ↑ +0.059 | |

| ↑ +0.036 | ↑ +0.023 | ↓ −0.023 | ↑ +0.012 | |

| ↓ −0.002 | ↑ +0.005 | ↑ +0.025 | ↑ +0.009 | |

| –All Four Models | ↑ +0.051 | ↑ +0.012 | ↑ +0.059 | ↑ +0.041 |

This leads to the key message from this experiment: Few-shot prompting can improve the performance of LLMs on mental health prediction tasks to some extent, especially for small models.

5.3. Instruction Finetuning Boost Performance for Multiple Tasks Simultaneously

Our experiments so far have shown that zero-shot and few-shot prompting can improve LLMs on mental health tasks to some extent, but their overall performance is still below state-of-the-art task-specific models. In this section, we explore the effectiveness of instruction finetuning.

Due to the prohibitive cost and lack of transparency of GPT-3.5 and GPT-4 finetuning, we only experiment with Alpaca and FLAN-T5 that we have full control of. We picked the most informative prompt to provide more embedded knowledge during the finetuning. As introduced in Sec. 3.3.2 and Sec. 4.2.1, we build Mental-Alpaca and Mental-FLAN-T5 by finetuning Alpaca and FLAN-T5 on all six tasks across four datasets at the same time.

The third section of Table 4 summarizes the overall results, and Table 7 highlights the key comparisons. We observe that both Mental-Alpaca and Mental-FLAN-T5 achieve significantly better performance compared to the unfinetuned versions . Both finetuned models surpass GPT-3.5’s best performance among zero-shot and few-shot settings across all six tasks and outperform GPT-4’s best version in most tasks ( tasks positive; tasks positive). Recall that GPT-3.5/GPT-4 are 25/250 times bigger than Mental-Alpaca and 15/150 times bigger than Mental-FLAN-T5.

Table 7.

Balanced Accuracy Performance Change with Instruction Finetuning. This table is calculated between the finetuning and zero-shot section, as well as the finetuning and few-shot section of Table 4. and are the best results among zero-shot and few-shot settings.

| Dataset | Dreaddit | DepSeverity | SDCNL | CSSRS-Suicide | ||

|---|---|---|---|---|---|---|

| Model | Task #1 | Task #2 | Task #3 | Task #4 | Task #5 | Task #6 |

| ↑ +0.223 | ↑ +0.253 | ↑ +0.315 | ↑ +0.231 | ↑ +0.212 | ↑ +0.171 | |

| ↑ +0.184 | ↑ +0.246 | ↑ +0.118 | — | — | — | |

| ↑ +0.143 | ↑ +0.095 | ↑ +0.360 | ↑ +0.047 | ↑ +0.201 | ↑ +0.034 | |

| ↑ +0.016 | ↑ +0.081 | ↑ +0.324 | — | — | — | |

| Results comparison: | ||||||

| Mental-RoBERTa | 0.831 | 0.790 | 0.736 | 0.723 | 0.853 | 0.373 |

| 0.721 | 0.665 | 0.642 | 0.632 | 0.617 | 0.310 | |

| 0.725 | 0.724 | 0.656 | 0.647 | 0.760 | 0.441 | |

| Mental-Alpaca | 0.816 | 0.775 | 0.746 | 0.724 | 0.730 | 0.403 |

| Mental-FLAN-T5 | 0.802 | 0.759 | 0.756 | 0.677 | 0.868 | 0.481 |

More importantly, Mental-Alpaca and Mental-FLAN-T5 perform on par with the state-of-the-art Mental-RoBERTa. Mental-Alpaca has the best performance on one task and the second best on three tasks, while Mental-FLAN-T5 has the best performance on three tasks. It is noteworthy that Mental-RoBERTa is a task-specific model, which means it is specialized on one task after being trained on it. In contrast, Mental-Alpaca and Mental-FLAN-T5 can simultaneously work across all tasks with a single-round finetuning. These results show the strong effectiveness of instruction finetuning: By finetuning LLMs on multiple mental health datasets with instructions, the models can obtain better capability to solve a variety of mental health prediction tasks.

5.3.1. Dialogue-Focused vs. Task-Solving-Focused LLMs.

We further compare Mental-Alpaca and Mental-FLAN-T5. Overall, their performance is quite close , with Mental-Alapca better at Task #4 on SDCNL and Mental-FLAN-T5 better at Task #5 and #6 on CSSRS-Suicide. In Sec. 5.1, we observe that has a much better performance than tasks positive) in the zero-shot setting. However, after finetuning, FLAN-T5’s advantage disappears.

Our comparison result indicates that Alpaca, as a dialogue-focused model, is better at learning from human natural language data compared to FLAN-T5. Although FLAN-T5 is good at task solving and thus has a better performance in the zero-shot setting, its performance improvement after instruction finetuning is relatively smaller than that of Alpaca. This observation has implications for future stakeholders. If the data and computing resources for finetuning are not available, using task-solving-focused LLMs could lead to better results. When there are enough data and computing resources, finetuning dialogue-based models can be a better choice. Furthermore, models like Alpaca, with their dialogue conversation capabilities, may be more suitable for downstream applications, such as mental well-being assistants for end-users.

5.3.2. Does Finetuning Generalize across Datasets?

We further measure the generalizability of LLMs after finetuning. To do this, we instruction-finetune the model on one dataset and evaluate it on all datasets (as introduced in Sec. 3.3.1). As the main purpose of this part is not to compare different models but evaluate the finetuning method, we only focus on Alpaca. Table 8 summarizes the results.

Table 8.

Balanced Accuracy Cross-Dataset Performance Summary of Mental-Alpaca Finetuning on Single Dataset.

| Test Dataset | Dreaddit | DepSeverity | SDCNL | CSSRS-Suicide | ||

|---|---|---|---|---|---|---|

| Finetune Dataset | Task #1 | Task #2 | Task #3 | Task #4 | Task #5 | Task #6 |

| Dreaddit | ↑ 0.720 | ↑ 0.623 | ↓ 0.474 | ↑ 0.720 | ↓ 0.156 | |

| DepSeverity | ↑ 0.618 | | 0.493 | ↑ 0.753 | ↓ 0.156 | ||

| SDCNL | ↓ 0.468 | ↓ 0.461 | ↑ 0.623 | ↑ 0.573 | ↓ 0.156 | |

| CSSRS-Suicide | ↓ 0.500 | ↓ 0.500 | ↑ 0.622 | ↑ 0.500 | ||

| Reference: | ||||||

| 0.593 | 0.522 | 0.431 | 0.493 | 0.518 | 0.232 | |

| Mental-Alpaca | 0.816 | 0.775 | 0.746 | 0.724 | 0.730 | 0.403 |

indicate the results of the model finetuned and tested on the same dataset. The bottom few rows are related Alpaca versions for reference. ↑/↓ marks the ones with better/worse cross-dataset performance compared to the zero-shot version .

We first find that finetuning and testing on the same dataset lead to good performance, as indicated by the entries on the diagonal in Table 8. Some results are even better than Mental-Alpaca (5 out of 6 tasks) or Mental-RoBERTa (3 out of 6 tasks), which is not surprising. More interestingly, we investigate cross-dataset generalization performance (i.e., the ones off the diagonal). Overall, finetuning on a single dataset achieves better performance compared to the zero-shot setting . However, the performance changes vary across tasks. For example, finetuning on any dataset is beneficial for Task #3 and #5 , but detrimental for Task #6 and almost futile for Task #4 . Generalizing across Dreaddit and DepSeverity shows good performance, but this is mainly because they share the language corpus. These results indicate that finetuning on a single dataset can provide mental health knowledge with a certain level and thus improve the overall generalization results, but such improvement is not stable across tasks.

Moreover, we further evaluate the generalizability of our best models instructional-finetuned on multiple datasets, i.e., Mental-Alpaca and Mental-FLAN-T5. We leverage external datasets that are not included in the finetuning. Table 9 highlights the key results. More detailed results can be found in Table 10.

Table 9.

Balanced Accuracy Performance Summary on Three External Datasets. These datasets come from diverse social media platforms. For each column, the best result is bolded, and the second best is underlined.

| Dataset | Red-Sam | Twt-60Users | SAD | |

|---|---|---|---|---|

| Category | Model | Task #2 | Task #2 | Task #1 |

| Zero-shot Prompting | 0.527±0.006 | 0.569±0.017 | 0.557±0.041 | |

| 0.577±0.004 | 0.649±0.021 | 0.477±0.016 | ||

| 0.563±0.029 | 0.613±0.046 | 0.767±0.050 | ||

| 0.574±0.008 | 0.736 ±0.019 | 0.704±0.026 | ||

| 0.506±0.004 | 0.571±0.000 | 0.750±0.027 | ||

| 0.511±0.000 | 0.566±0.017 | 0.854 ±0.006 | ||

| Instructional Finetuning | Mental-Alpaca | 0.604 ±0.012 | 0.718±0.011 | 0.819 ±0.006 |

| ↑ +0.077 | ↑ +0.149 | ↑ +0.262 | ||

| Mental-FLAN-T5 | 0.582 ±0.002 | 0.736 ±0.003 | 0.779±0.002 | |

| ↑ +0.019 | ↑ +0.123 | ↑ +0.012 |

Consistent with the results in Table 7, the instruction finetuning enhances the model performance on external datasets . Both Mental-Alpaca and Mental-FLAN-T5 ranked top 1 or 2 in 2/3 external tasks. It is noteworthy that Twt-60Users and SAD datasets are collected outside Reddit, and their data is different from the source of finetuning datasets. These results demonstrate strong evidence that instruction finetuning with diverse tasks, even with data collected from a single social media platform, can significantly enhance LLMs’ generalizability across multiple scenarios.

5.3.3. How Much Data Is Needed?

Additionally, we are interested in exploring how the size of the dataset impacts the results of instruction finetuning. To answer this question, we downsample the training set to 50%, 20%, 10%, 5%, and 1% of the original size and repeat each one three times. We increase the training epoch accordingly to make sure that the model is exposed to a similar amount of data. Similarly, we also focus on Alpaca only. Figure 1 visualizes the results. With only 1% of the data, the finetuned model is able to outperform the zero-shot model on most tasks (5 out of 6). With 5% of the data, the finetuned model has a better performance on all tasks. As expected, the model performance has an increasing trend with more training data. For many tasks, the trend approaches a plateau after 10%. The difference between 10% training data (less than 300 samples per dataset) and 100% training data is not huge .

Fig. 1.

Balanced Accuracy Performance Summary of Mental-Alpaca Finetuning with Different Sizes of Training Set. The finetuning is conducted across four datasets and six tasks. Each solid line represents the performance of the finetuned model on each task. The dashed line indicates the performance baseline. Note that the x-axis is in the log scale.

5.3.4. More Data in One Dataset vs. Fewer Data across Multiple Datasets.

In Sec. 5.3.2, the finetuning on a single dataset can be viewed as training on a smaller set (around 5–25% of the original size) with less variation (i.e., no finetuning across datasets). Thus, the results in Sec. 5.3.2 are comparable to those in Sec. 5.3.3. We found that the model’s overall performance is better when the model is finetuned across multiple datasets when overall training data sizes are similar . This suggests that increasing data variation can more effectively benefit finetuning outcomes when the training data size is fixed.

These results can guide future developers and practitioners in collecting the appropriate data size and sources to finetune LLMs for the mental health domain efficiently. We have more discussion in the next section. In summary, we highlight the key takeaways of our finetuning experiments as follows:

Instruction finetuning on multiple mental health datasets can significantly boost the performance of LLMs on various mental health prediction tasks. Mental-Alpaca and Mental-FLAN-T5 outperform GPT-3.5 and GPT-4, and perform on par with the state-of-the-art task-specific model.

Although task-solving-focused LLMs may have better performance in the zero-shot setting for mental health prediction tasks, dialogue-focused LLMs have a stronger capability of learning from human natural language and can improve more significantly after finetuning.

Finetuning LLMs on a small number of datasets and tasks may improve model generalizable knowledge in mental health, but its effect is not robust. Comparatively, finetuning on diverse tasks can robustly enhance generalizability across multiple social media platforms.

Finetuning LLMs on a small number of samples (a few hundred) across multiple datasets can already achieve favorable performance.

When the data size is the same, finetuning LLMs on data with larger variation (i.e., more datasets and tasks) can achieve better performance.

5.4. Case Study of LLMs’ Capability on Mental Health Reasoning

In addition to evaluating LLMs’ performance on classification tasks, we also take an initial step to explore LLMs’ capability on mental health reasoning. This is another strong advantage of LLMs since they can generate human-like natural language based on embedded knowledge. Due to the high cost of a systematic evaluation of reasoning outcomes, here we present a few examples as a case study across different LLMs. It is noteworthy that we do not aim to claim that certain LLMs have better/worse reasoning capabilities. Instead, this section aims to provide a general sense of LLMs’ performance on mental health reasoning tasks.

Specifically, we modify the prompt design by inserting a Chain-of-Thought (CoT) prompt [65] at the end of OutputConstraint in Eq. 1: “Return [set of classification labels]. Provide reasons step by step”. We compare Alpaca, FLAN-T5, GPT-3.5, and GPT-4. Our results indicate the promising reasoning capability of these models, especially GPT-3.5 and GPRT-4. We also experimented with the finetuned Mental-Alpaca and Mental-FLAN-T5. Unfortunately, our results show that after finetuning solely on classification tasks, these two models are no longer able to generate reasoning sentences even with the CoT prompt. This suggests a limitation of the current finetuned model.

5.4.1. Diverse Reasoning Capabilities across LLMs.

We present several examples as our case study to illustrate the reasoning capability of these LLMs. The first example comes from the binary stress prediction task (Task #1) on the Dreaddit dataset (see Figure 2). All models give the right classification, but with significantly different reasoning capabilities. First, FLAN-T5 generates the shortest reason. Although it is reasonable, it is superficial and does not provide enough insights. This is understandable because FLAN-T5 is targeted at task-solving instead of reasoning. Compared to FLAN-T5, Alpaca generates better reason. Among the five reasons, two of them accurately analyze the user’s mental state given the stressful situations. Meanwhile, GPT-3.5 and GPT-4 generate expert-level high-quality reasons. The inference from the user’s statement is accurate and deep, indicating their powerful capability of understanding human emotion and mental health. Comparing the two models, GPT-3.5’s reason is simpler, following the user’s statement point by point and adding basic comments, while GPT-4’s output is more organic and insightful, yet more concise.

Fig. 2.

A Case Study of Correct Reasoning Examples on Task #1 Binary Stress Prediction on Dreaddit Dataset. Bolded texts highlight the mental-health-related content in the input section, and the answers of LLMs. Underlined texts highlight the reasoning content generated by LLMs, and italicized & underlined texts indicate the wrong or unrelated content.

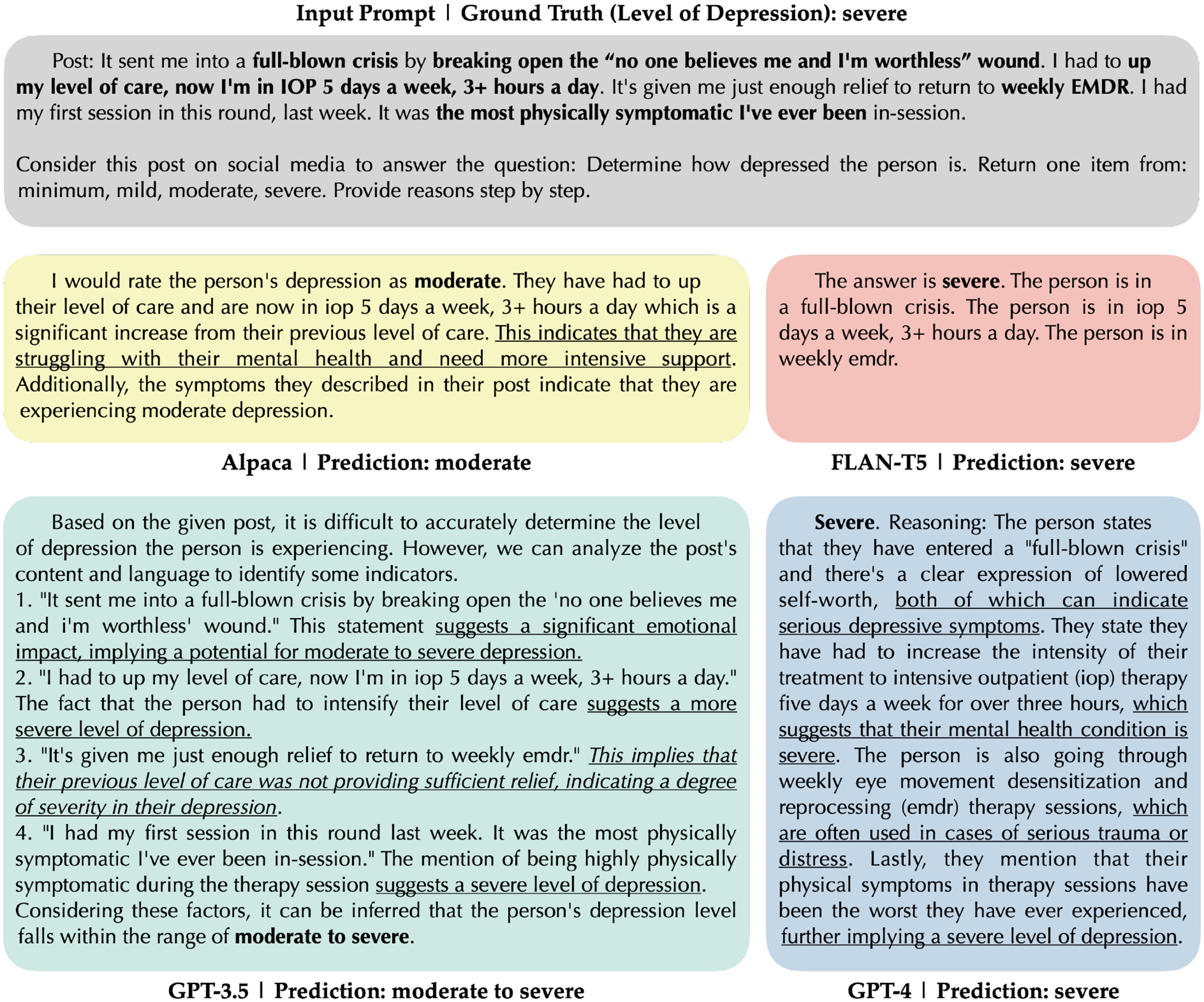

We also have a similar observation in the second example from the four-level depression prediction task (Task #3) on the DepSeverity dataset (see Figure 3). In this example, although FLAN-T5’s prediction is correct, it simply repeats the fact stated by the user, without providing further insights. Alpaca makes the wrong prediction, but it provides one sentence of accurate reasoning (although relatively shallow). GPT-3.5 makes an ambiguous prediction that includes the correct answer. In contrast, GPT-4 generates the highest quality reasoning with the right prediction. With its correct understanding of depressive symptoms, GPT-4 can accurately infer from the user’s situation, link it to symptoms, and provides insightful analysis.

Fig. 3.

A Case Study of Mixed Reasoning Examples on Task #3 Four-level Depression Prediction on DepSeverity Dataset. Alpaca wrongly predicted the label, and GPT-3.5 provided a wrong inference on the meaning of “relief”.

5.4.2. Wrong and Dangerous Reasoning from LLMs.

However, we also want to emphasize the incorrect reasoning content, which may lead to negative consequences and risks. In the first example, Alpaca generated two wrong reasons for the hallucinated “reliance on others” and “safety concerns”, along with an unrelated reason for readers instead of the poster. In the second example, GPT-3.5 misunderstood what the user meant by “relief”. To better illustrate this, we further present another example where all four LLMs make problematic reasoning (see Figure 4). In this example, the user was asking for others’ opinions on social anxiety, with their own job interview experience as an example. Although the user mentioned situations where they were anxious and stressed, it’s clear that they were calm when writing this post and described their experience in an objective way. However, FLAN-T5, GPT-3.5, and GPT-4 all mistakenly take the description of the anxious interview experience as evidence to support their wrong prediction. Although Alpaca makes the right prediction, it does not understand the main theme of the post. The false positives reveal that LLMs may overly generalize in a wrong way: Being stressed in one situation does not indicate that a person is stressed all the time. However, the reasoning content alone reads smoothly and logically. If the original post was not provided, the content could be very misleading, resulting in a wrong prediction with reasons that “appears to be solid”. These examples clearly illustrate the limitations of the current LLMs for mental health reasons, as well as their risks of introducing dangerous bias and negative consequences to users.

Fig. 4.

A Case Study of Incorrect Reasoning Examples on Task #1 Binary Stress Prediction on Dreaddit Dataset. FLAN-T5, GPT-3.5, and GPT-4 all make false positive predictions. All four LLMs provide problematic reasons.

The case study suggests that GPT-4 enjoys impressive reasoning capability, followed by GPT-3.5 and Alpaca. Although FLAN-T5 shows a promising zero-shot performance, it is not good at reasoning. Our results reveal the encouraging capability of LLMs to understand human mental health and generate meaningful analysis. However, we also present examples where LLMs can make mistakes and offer explanations that appear reasonable but are actually flawed. This further suggests the importance of more future research on LLMs’ ethical concerns and safety issues before real-world deployment.

6. DISCUSSION

Our experiment results reveal a number of interesting findings. In this section, we discuss potential guidelines for enabling LLMs for mental -health-related tasks (Sec. 6.1). We envision promising future directions (Sec. 6.2), while highlighting important ethical concerns and limitations with LLMs for mental health (Sec. 6.3). We also summarize the limitations of the current work (Sec. 6.4).

6.1. Guidelines for Empowering LLMs for Mental Health Prediction Tasks

We extract and summarize the takeaways from Sec. 5 into a set of guidelines for future researchers and practitioners on how to empower LLMs to be better at various mental health prediction tasks.

When computing resources are limited, combine prompt design & few-shot prompting, and pick prompts carefully.

As the size of large models continues to grow, the requirement for hardware (mainly GPU) has also been increasing, especially when finetuning an LLM. For example, in our experiment, Alpaca was trained on eight 80GB A100s for three hours [115]. With limited computing resources, only running inferences or resorting to APIs is feasible. In these cases, zero-shot and few-shot prompt engineering strategies are viable options. Our results indicate that providing few-shot mental health examples with appropriate enhancement strategies can effectively improve prediction performance. Specifically, adding contextual information about the online text data is always helpful. If the available model is large and contains rich knowledge (at least 7B trainable parameters), adding mental health domain information can also be beneficial.

With enough computing resources, instruction finetune models on various mental health datasets.

When there are enough computing resources and model training/finetuning is possible, there are more options to enhance LLMs for mental health prediction tasks. Our experiments clearly show that instruction finetuning can significantly boost the performance of models, especially for dialogue-based models since they can better understand and learn from human natural language. When there are multiple datasets available, merging multiple datasets and tasks altogether and finetuning the model in a single round is the most effective approach to enhance its generalizability.

Implement efficient finetuning with hundreds of examples and prioritize data variation when data resource is limited.

Figure 1 shows that finetuning does not require large datasets. If there is no immediately available dataset, collecting small datasets with a few hundred samples is often good enough. Moreover, when the overall amount of data is limited (e.g., due to resource constraints), it is more advantageous to collect data from a variety of sources, each with a smaller size, than to collect a single larger dataset. Because instruction finetuning generalizes better when data and tasks have a larger variation.

More curated finetuning datasets are needed for mental health reasoning.

Our case study suggests that Mental-Alpaca and Mental-FLAN-T5 can only generate classification labels after being finetuned solely on classification tasks, losing their reasoning capability. This is a major limitation of the current models. A potential solution involves incorporating more datasets focused on reasoning or causality into the instruction finetuning process, so that models can also learn the relationship between mental health outcomes and causal factors.

Limited Prediction and Reasoning Performance for Complex Contexts.

LLMs tend to make more mistakes when the conversation contexts are more complex [13, 69]. Our results contextualize this in the mental health domain. Section 5.4.2 shows an example case where most LLMs not only predict incorrectly but also provide flawed reasoning processes. Further analysis of mispredicted instances indicates a recurring difficulty: LLMs often err when there’s a disconnect between the literal context and the underlying real-life scenarios. he example in Figure 4 is a case where LLMs are confused by the hypothetical stressful case described by the person. In another example, all LLMs incorrectly assess a person with severe depression (false negative): “I’m just blown away by this doctor’s willingness to help. I feel so validated every time I leave his office, like someone actually understands what I’m struggling with, and I don’t have to convince them of my mental illness. Bottom line? Research docs if you can online, read their reviews and don’t give up until you find someone who treats you the way you deserve. If I can do this, I promise you can!” Here, LLMs are misled by the outwardly positive sentiment, overlooking the significant cues such as regular doctor visits and explicit mentions of mental illness. These observations underscore a critical shortfall of LLMs: they cannot handle complex mental health-related tasks, particularly those concerning chronic conditions like depression. The variability of human expressions over time and the models’ susceptibility to being swayed by superficial text rather than underlying scenarios present significant challenges.

Despite the promising capability of LLMs for mental health tasks, they are still far from being deployable in the real world. Our experiments reveal the encouraging performance of LLMs on mental health prediction and reasoning tasks. However, as we note in Sec. 6.3, our current results do not indicate LLMs’ deployability in real-life mental health settings. There are many important ethical and safety concerns and gaps before deployment to be addressed before achieving robustness and deployability.

6.2. Beyond Mental Health Prediction Task and Online Text Data

Our current experiments mainly involve mental health prediction tasks, which are essentially classification problems. There are more types of tasks that our experiments don’t cover, such as regression (e.g., predicting a score on a mental health scale). In particular, reasoning is an attractive task as it can fully leverage the capability of LLMs on language generation [18, 85]. Our initial case study on reasoning is limited but reveals promising performance, especially for large models such as GPT-4. We plan to conduct more experiments on tasks that go beyond classification.

In addition, there is another potential extension direction. In this paper, we mainly focus on online text data, which is one of the important data sources of the ubiquitous computing ecosystem. However, there are more available data streams that contain rich information, such as the multimodal sensor data from mobile phones and wearables (e.g., [55, 78, 81, 130, 131]). This leads to another open question on how to leverage LLMs for time-series sensor data. More research is needed to explore potential methods to merge the online text information with sensor streams. These are another set of exciting research questions to explore in future work.

6.3. Ethics in LLMs and Deployability Gaps for Mental Health

Although our experiments on LLMs have shown promising capability for mental-health-related tasks, it still has a long way to go before being deployed in real-life systems. Recent research has revealed the potential bias or even harmful advice introduced by LLMs [50], especially with the gender [42] and racial [6] gaps. In mental health, these gaps and disparities between population groups have been long-standing [54]. Meanwhile, our case study of incorrect prediction, over-generalization, and “falsely reasonable” explanations further highlight the risk of current LLMs. Recent studies are calling for more research emphasis and efforts in assessing and mitigating these biases for mental health [54, 116].

Although with a much stronger capability of understanding natural language (and early signs of mental health domain knowledge in our case), LLMs are no different from other modern AI models that are trained on a large amount of human-generated content, which exhibit all the biases that humans do [53, 86, 121]. Meanwhile, although we carefully picked datasets with human expert annotations, there exist potential biases in the labels, such as stereotypes [92], confirmation bias [41], normative vs. descriptive labels [11]. Besides, privacy is another important concern. Although our datasets are based on public social media platforms, it is necessary to carefully handle mental-health-related data and guarantee anonymity in any future efforts. These ethical concerns need to receive attention not only at the monitoring and prediction stage, but also in the downstream applications, ranging from assistants for mental health experts to chatbots for end-users. Careful efforts into safe development, auditing, and regulation are very much needed to address these ethical risks.

6.4. Limitations

Our paper has a few limitations. First, although we carefully inspect the quality of our dataset and cover different categories of LLM, the the range of datasets and the types of LLMs included are still limited. Our findings are based on the observations of these datasets and models, which may not generalize to other cases. Related, our exploration of zero-shot few-shot prompt design is not comprehensive. The limited input window of some models also limits our exploration of more samples for few-shot prompting. Furthermore, we have not conducted a systematic evaluation of these models’ performance in mental health reasoning. Future work can design larger-scale experiments to include more datasets, models, prompt designs, and better evaluation.

Second, our datasets were mainly from Reddit, which could be limited. Although our analysis in Section 5.3.2 shows that finetuned models have cross-platform generalizability, the finetuning was only based on Reddit and can introduce bias. Meanwhile, although the labels are not directly accessible on the platforms, it is possible that these text data have been included in the initial training of these large models. We still argue that there is little information leakage as long as the models haven’t seen the labels for our tasks, but it is hard to measure how the initial training process may affect the outcomes in our evaluation.

Third, another important limitation of the current work is the lack of evaluation of model fairness. Our anonymous datasets do not include comprehensive demographic information, making it hard to compare the performance across different population groups. As we discussed in the previous section, lots of future work on ethics and fairness is necessary before deploying such systems in real life.

7. CONCLUSION

In this paper, we present the first comprehensive evaluation of multiple LLMs (Alpaca, Alpaca-LoRA, FLAN-T5, LLaMA2, GPT-3.5, and GPT-4) on mental health prediction tasks (binary and multi-class classification) via online text data. Our experiments cover zero-shot prompting, few-shot prompting, and instruction finetuning. The results reveal a number of interesting findings. Our context enhancement strategy can robustly improve performance for all LLMs, and our mental health enhancement strategy can enhance models with a large number of trainable parameters. Meanwhile, few-shot prompting can also robustly improve model performance even by providing just one example per class. Most importantly, our experiments show that instruction finetuning across multiple datasets can significantly boost model performance on various mental health prediction tasks at the same time, generalizing across external data sources and platforms.. Our best finetuned models, Mental-Alpaca and Mental-FLAN-T5, outperform much larger LLaMA2, GPT-3.5 and GPT-4, and perform on par with the state-of-the-art task-specific model Mental-RoBERTa. We also conduct an exploratory case study on these models’ reasoning capability, which further suggests both the promising future and the important limitations of LLMs. We summarize our findings as a set of guidelines for future researchers, developers, and practitioners who want to empower LLMs with better knowledge of mental health for downstream tasks. Meanwhile, we emphasize that our current efforts of LLMs in mental health are still far from deployability. We highlight the important ethical concerns accompanying this line of research.

CCS Concepts: • Human-centered computing → Ubiquitous and mobile computing; • Applied computing → Life and medical sciences.

ACKNOWLEDGMENTS

This work is supported by VW Foundation, Quanta Computing, and the National Institutes of Health (NIH) under Grant No. 1R01MD018424-01.

APPENDIX: DETAILED RESULTS TABLES

Table 10.