Abstract

Some patients with a lesion to the primary visual cortex (V1) show “blindsight”: the remarkable ability to guess correctly about attributes of stimuli presented to the blind hemifield. Here, we show that blindsight can be induced in normal observers by using transcranial magnetic stimulation of the occipital cortex but exclusively for the affective content of unseen stimuli. Surprisingly, access to the affective content of stimuli disappears upon prolonged task training or when stimulus visibility increases, allegedly increasing the subjects' confidence in their overall performance. This finding suggests that availability of conscious information suppresses access to unconscious information, supporting the idea of consciousness as a repressant of unconscious tendencies.

In a number of recent studies, blindsight has been reported for the emotional expression of faces (1-3). This phenomenon has been dubbed “affective blindsight.” The existence of affective blindsight provides ground to the claim that there is an unconscious, subcortical route for the processing of affective information in the human brain (4). These findings have led some researchers to claim that we are “blindly led by our emotions” (5, 6). Although numerous studies have made it clear that considerable processing of (affective) information is possible in the absence of awareness, and that the outcomes of these unconscious processes may affect behavior (7-9), most of these experiments have been conducted in patients, so that it remains unclear which mechanisms contribute to unconsciously guided behavior in normal individuals (8). Here, we study the possibility of affective blindsight in normal observers, rendering schematic faces (emoticons) invisible by applying transcranial magnetic stimulation (TMS) to the visual cortex.

Methods

Ten neurologically normal subjects with normal or corrected-to-normal vision (18-27 years old, seven males, including one of the authors) participated in experiment 1; seven of these subjects (five males, including one of the authors) participated in the control experiments. Participation of the author did not influence the results (see Figs. 4 and 5, which are published as supporting information on the PNAS web site). The overall effects remained unchanged, although significance levels dropped slightly (from P = 0.0000 to P = 0.0001 because of the decrease in number of trials). All subjects had given their written informed consent before the experiments. Visual stimuli were generated on a PC and shown on a 15-inch BenQ TFT (Suzhou, China) monitor, running at a refresh rate of 60 Hz. Stimuli were gray (136.2 cd/m²) on a white background (145.5 cd/m²); contrast was 3.3%. The stimuli were presented for one or two frames (i.e., 17 or 33 ms); presentation time was varied between blocks of 54 trials (Supporting Text, which is published as supporting information on the PNAS web site). Size of individual emoticons was ≈1° × 1° of visual angle; size of the entire array was ≈2° × 2° of visual angle. In each trial, four emoticons were presented: three neutral ones and one either happy or sad. The emotional emoticon could be presented on either the left or right of a fixation dot. Position and emotion were counterbalanced over two blocks; i.e., both emotions were presented on all four possible locations an equal number of times after two blocks. Emoticons were chosen instead of real faces to control for differences in visual features between expressions, because, for example, differences in processing of happy or sad faces might be related to features other than emotional expression (10). Schematic faces effectively function as emotional stimuli (11), and recent evidence suggests that they are processed similarly to real faces, although they contain fewer feature confounds (12).
Also, in real faces, the emotional content is mostly carried by the low spatial frequency information, which is processed by both cortical (13) and subcortical (14) areas. Emoticons contain primarily these low spatial frequency attributes.
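As a quick check on the display parameters given above, the following sketch recomputes the stimulus contrast and the frame durations from the reported luminances and refresh rate. It assumes the Michelson definition of contrast; the text does not name the definition used, but this one reproduces the reported 3.3%. The function names are illustrative only.

```python
def michelson_contrast(l_max, l_min):
    """Michelson contrast: (Lmax - Lmin) / (Lmax + Lmin)."""
    return (l_max - l_min) / (l_max + l_min)


def frame_duration_ms(refresh_hz, n_frames=1):
    """Duration of n_frames video frames in milliseconds."""
    return 1000.0 * n_frames / refresh_hz


# Luminances (cd/m^2) and refresh rate (Hz) as reported in Methods.
background, stimulus = 145.5, 136.2
contrast = michelson_contrast(background, stimulus)

print(f"contrast: {100 * contrast:.1f}%")                   # contrast: 3.3%
print(f"one frame: {frame_duration_ms(60, 1):.1f} ms")      # one frame: 16.7 ms
print(f"two frames: {frame_duration_ms(60, 2):.1f} ms")     # two frames: 33.3 ms
```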

Happy and sad (instead of, e.g., fearful) facial expressions were chosen to further minimize differences in visual features between expressions: in our stimuli, only one feature (namely the curvature of the mouth) differed between the expressions used. Recent neuroimaging experiments show that not only fearful and neutral (15, 16) but also happy and sad stimuli evoke differential amygdala responses in the absence of awareness (17). Expression and location were chosen at random on each trial. Subjects had to indicate as quickly as possible the emotional expression of the deviant emoticon, and after that, report its location (left or right). This latter task was not time pressured. Subjects received feedback on the emotion task, but not on the localization task, to keep them motivated to maximize their performance, even though they did not perceive a stimulus (Fig. 1). We used a four-stimulus display to maximize TMS suppression. A previous study with a similar task has shown that in a four-item display, positive and negative schematic faces are detected equally well (18). In a separate control experiment, we confirmed that in our four-item display, happy and sad emoticons were detected equally well. Performance was ≈80% for both tasks (see Table 1). Subjects reported being fully aware of all four stimuli. However, performance on the emotion task was slightly worse for sad emoticons on the right side of fixation than for the other categories (P = 0.002). Despite the short presentation times, subjects were thus highly accurate on both localization and emotion tasks when no TMS pulses were applied.

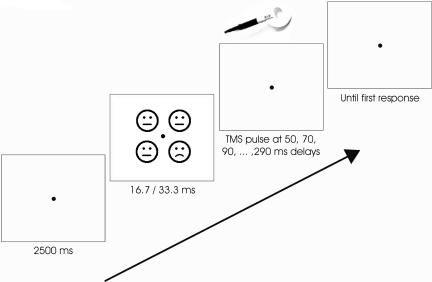

Fig. 1.

Typical trial run. Subjects fixated a blank screen (145.5 cd/m²) with a fixation dot on a 15-inch BenQ TFT monitor with a refresh rate of 60 Hz for 2,500 ms. Then, a set of four emoticons was presented. Each emoticon measured ≈1° × 1° of visual angle, the total set ≈2° × 2°. Emoticons were light gray (136.2 cd/m²) on a white background (145.5 cd/m²), i.e., contrast was 3.3%. Emoticons were presented for one frame (16.7 ms) or for two frames (33 ms, Fig. 2d). Presentation times were varied between blocks. TMS pulses were delivered at random delays relative to stimulus onset, ranging from 50 to 290 ms. Behavioral responses were given as quickly as possible, and a new trial was started after their completion.

Table 1. Performance on emotion discrimination and localization without TMS pulses.

| | Left of fixation: emotion, % | Left of fixation: localization, % | Right of fixation: emotion, % | Right of fixation: localization, % | Overall: emotion, % | Overall: localization, % |
|---|---|---|---|---|---|---|
| Happy | 77 | 74 | 85 | 82 | 83 | 79 |
| Sad | 82 | 85 | 71* | 75 | 77 | 80 |
| Overall | 81 | 80 | 78 | 78 | 80 | 79 |

Seven subjects participated in this experiment, which was identical to the TMS experiment, except that no TMS pulses were given. Presentation time was one frame (16.7 ms). Performance on the emotion task for sad emoticons on the right side of the fixation dot was slightly worse than for sad emoticons on the left side of fixation (*, P = 0.002).

At one of 13 delays, ranging from 50 to 290 ms after stimulus onset, a TMS pulse was delivered to the occipital pole to target V1 and surrounding early visual areas (19-23). Subjects were seated in a chair with their heads fixed in a head support unit, and were instructed to maintain fixation throughout a trial and to minimize blinking. We used a Magstim (Whitland, U.K.) 200 stimulator at 90% of maximum output with a 140-mm circular coil. The lower rim of the coil was positioned 1.5 cm above the inion. Magnetic stimulation at this location ≈90-130 ms after stimulus onset is known to result in suppression of stimulus visibility (19-23).

Per condition, data were pooled over subjects and TMS delays. Binomial tests were used to test whether performance at a given delay was significantly suppressed as compared with baseline level, defined as the mean performance on the trials in which pulses were delivered to the occipital pole between 210 and 290 ms. To test whether performance on the localization task and the emotion task was significantly different, we used a binomial test for dependent proportions. In addition, we post hoc isolated trials in which subjects failed to localize the deviant emoticon (at those delays in which localization performance was suppressed most) and computed performance on the emotion discrimination task for those trials. Performance was tested against chance level (50%) by using a binomial test.
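The tests described in this paragraph can be sketched with exact binomial tail probabilities. This is an illustration under stated assumptions, not the analysis code used in the study: the "binomial test for dependent proportions" is taken here to be an exact McNemar-style test on discordant trials, the one-sided direction of the baseline and chance tests is assumed, and all counts in the example are hypothetical.

```python
from math import comb


def binom_pmf(k, n, p):
    """P(X = k) for X ~ Binomial(n, p)."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)


def binom_lower_tail(k, n, p):
    """P(X <= k): is performance suppressed relative to rate p?"""
    return sum(binom_pmf(i, n, p) for i in range(k + 1))


def binom_upper_tail(k, n, p):
    """P(X >= k): is performance above rate p (e.g., chance = 0.5)?"""
    return sum(binom_pmf(i, n, p) for i in range(k, n + 1))


def mcnemar_exact(b, c):
    """Two-sided exact test for dependent proportions (assumed form).

    b: trials with emotion correct but localization incorrect
    c: trials with localization correct but emotion incorrect
    Under the null hypothesis, each discordant trial is equally
    likely to fall in either cell.
    """
    n = b + c
    if n == 0:
        return 1.0
    return min(1.0, 2 * binom_lower_tail(min(b, c), n, 0.5))


# Hypothetical counts for one TMS delay (not data from the paper).
n_trials = 336        # e.g., 7 subjects x 48 trials
baseline_rate = 0.80  # mean performance at the 210-290 ms delays
loc_correct = 180
emo_correct = 235

print("localization vs. baseline:",
      binom_lower_tail(loc_correct, n_trials, baseline_rate))
print("emotion vs. chance:",
      binom_upper_tail(emo_correct, n_trials, 0.5))
print("emotion vs. localization:", mcnemar_exact(b=90, c=35))
```

Each delay is tested separately in this sketch, so the resulting P values would still need to be compared against a threshold corrected for the number of delays.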

Results

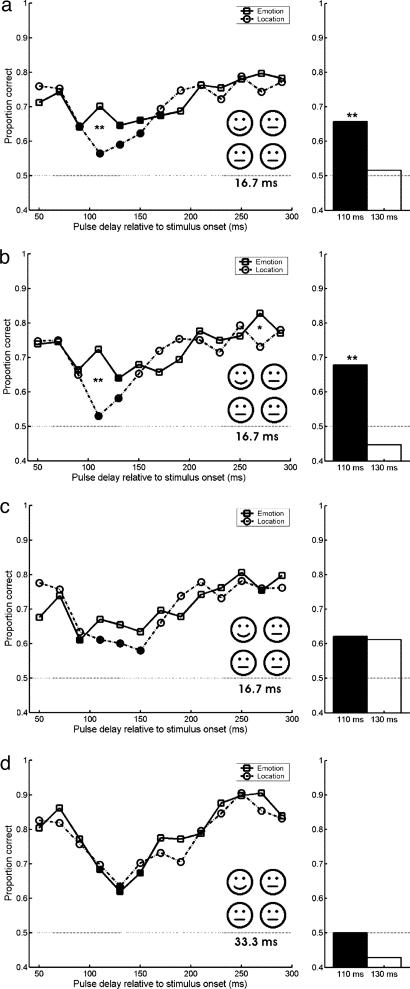

When stimuli were presented for one frame (16.7 ms, Fig. 2a), subjects were unable to localize the emotional emoticon among three neutral ones when activity in striate cortex was disrupted 110 ms after stimulus onset (Fig. 2a, P = 0.000). The fact that failure to localize was accompanied by the absence of conscious experience of the emotional emoticon was supported by two observations. First, subjects verbally reported not seeing the deviant emoticon on a subset of the trials, most likely the ones in which TMS pulses were given at 110 ms after stimulus onset. Second, it was confirmed in a separate detection experiment, in which 6 of the 10 subjects participated, that “emotional” trials could not be discriminated from “all neutral” ones when a pulse was given at 110 ms after stimulus onset: average detection performance was 48% and not significantly above chance level (P = 0.70, 48 trials per subject, see Table 2).

Fig. 2.

Affective blindsight during TMS suppression. TMS pulses were delivered at 13 delays relative to the onset of the four emoticons, ranging from 50 to 290 ms in 20-ms steps. Mean scores per delay are shown. Squares, emotion task; circles, localization task. Dotted lines indicate chance level. Filled symbols indicate performance that is significantly [P < 0.004, threshold (0.05) corrected for 13 multiple comparisons] below baseline performance, which is the average performance at the 210-290 ms delays. The bar charts (Right) show the performance on the emotion task for the trials in which the response to the localization task was incorrect, for the 110- and 130-ms TMS delays. Asterisks, difference in performance between the two tasks is significant: *, P < 0.004; **, P < 7.7 × 10⁻⁵. Thresholds (0.05 and 0.001) are corrected for 13 multiple comparisons. Statistics were performed with binomial tests. (a) Scores for the one-frame presentation time. All seven subjects did 48 trials per delay. Performance on the emotion task is significantly higher than performance on the localization task when a pulse is given at 110 ms after stimulus onset (P = 0.01). (b) Scores for the first six blocks of the one-frame presentation time (24 trials per subject per delay for 10 subjects). Performance on the emotion task is significantly higher than performance on the localization task when a pulse is given at 110 ms after stimulus onset (P = 0.000). (c) Scores for the second six blocks of the one-frame presentation time (24 trials per subject per delay for seven subjects). (d) Scores for the two-frame presentation time. All seven subjects did 24 trials per delay.
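The delay grid and the corrected significance thresholds mentioned in this legend follow from simple arithmetic, sketched below. The sketch assumes a Bonferroni-style correction (uncorrected threshold divided by the number of delays), which matches the stated 0.004 value; the variable names are illustrative.

```python
# TMS delays: 50 to 290 ms in 20-ms steps.
delays_ms = list(range(50, 291, 20))

# Baseline window used for comparison: the 210-290 ms delays.
baseline_delays_ms = [d for d in delays_ms if d >= 210]

# Bonferroni-style correction: divide each threshold by the number of delays.
corrected = {alpha: alpha / len(delays_ms) for alpha in (0.05, 0.001)}

print(len(delays_ms))                 # 13
print(baseline_delays_ms)             # [210, 230, 250, 270, 290]
print(f"{corrected[0.05]:.4f}")       # 0.0038
print(f"{corrected[0.001]:.2e}")      # 7.69e-05
```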

Table 2. Performance on emotion detection task with pulses delivered at 110 ms.

| | Left of fixation, % | Right of fixation, % | Overall, % |
|---|---|---|---|
| Happy | 48 | 47 | 48 |
| Sad | 47 | 50 | 48 |
| Overall | 47 | 48 | 48 |

Six subjects participated in this experiment. Visual stimulation was identical to the main experiment (presentation time was one frame, 16.7 ms), except that in 50% of the trials, no emotional emoticon was present. Subjects had to indicate whether there was an emotional emoticon.

Notwithstanding the subjective and objective loss of perception of the emoticons, subjects were able to accurately guess whether the emotional expression of the deviant emoticon was happy or sad, but exclusively when emoticons were presented for only one frame (16.7 ms). Although visibility and localization of the deviant emoticon were at chance at a TMS delay of 110 ms, emotional expression could be reported at values significantly above chance (Fig. 2a), providing further evidence for the existence of affective blindsight, here in normal subjects. Happy emoticons were reported more accurately than sad emoticons (P = 0.000), but location did not affect emotion discrimination overall (Table 3). However, sad emoticons presented to the left of fixation were discriminated slightly better than sad emoticons presented to the right of fixation (P = 0.05, see Table 3). Neither emotion nor location had an effect on suppression as measured with the localization task.

Table 3. Emotion discrimination performance for different expressions and locations for the 110-ms pulse delay.

| | Left of fixation, % | Right of fixation, % | Overall, % |
|---|---|---|---|
| Happy | 78 | 79 | 79** |
| Sad | 65* | 57* | 61** |
| Overall | 72 | 69 | 70 |

Happy expressions are discriminated better than sad expressions (**, P = 0.000); only for the sad expressions there is a small effect of laterality (*, P = 0.05).

Affective blindsight is further confirmed by a separate analysis in which we isolated the trials where subjects failed to localize the deviant emoticon after a TMS pulse at 110 or 130 ms (Fig. 2a Right). Even in these trials, subjects could discriminate between happy and sad emoticons significantly above chance (P = 0.000, for the 110-ms pulses).
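The post hoc analysis described here amounts to filtering trials by TMS delay and localization outcome, then testing the remaining emotion responses against chance. A minimal sketch follows; the trial records, field names, and counts are all hypothetical, and the one-sided exact binomial test is an assumed form of the test against 50%.

```python
from math import comb


def binom_upper_tail(k, n, p=0.5):
    """P(X >= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


def emotion_when_unlocalized(trials, delays_ms=(110, 130)):
    """Emotion performance on trials where localization failed.

    Returns (number of such trials, number with correct emotion
    response, one-sided P value against chance).
    """
    missed = [t for t in trials
              if t["delay_ms"] in delays_ms and not t["loc_correct"]]
    n = len(missed)
    k = sum(t["emo_correct"] for t in missed)
    p_value = binom_upper_tail(k, n) if n else float("nan")
    return n, k, p_value


# Hypothetical trial records (not data from the paper).
trials = (
    [{"delay_ms": 110, "loc_correct": False, "emo_correct": True}] * 28
    + [{"delay_ms": 110, "loc_correct": False, "emo_correct": False}] * 12
    + [{"delay_ms": 110, "loc_correct": True, "emo_correct": True}] * 60
    + [{"delay_ms": 250, "loc_correct": False, "emo_correct": False}] * 5
)

n, k, p = emotion_when_unlocalized(trials, delays_ms=(110,))
print(n, k, round(k / n, 2))   # 40 28 0.7
print(p)
```

Trials at other delays and trials with correct localization are excluded before the test, so the P value reflects only the responses given when the deviant emoticon was not localized.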

TMS-induced blindsight was most pronounced during the first six blocks of the one-frame condition (Fig. 2b). Seven subjects did 12 blocks of the one-frame condition. In the second six blocks, stimulus suppression diminished (Fig. 2c), most likely as a result of training (23). Here, we made a puzzling observation: although stimulus visibility at the critical TMS intervals (110-130 ms) apparently increased due to training or habituation, judging the emotional expression did not benefit from this increased visibility, even though feedback was given on the emotion judgments, not on the localization. In other words, an overall increase in stimulus visibility (judged from the increase in performance for the last six compared with the first six blocks of experimentation, Fig. 2b vs. 2c) did not improve the affective blindsight performance. If anything, there was a relative decrease of the blindsight performance, in the sense that there was no significant discrimination between happy and sad emoticons during trials in which localization was disrupted (Fig. 2c Right, corrected for multiple comparisons).

Results of the two-frame condition confirm that increased visibility suppresses the affective blindsight effect: increasing the presentation time from one to two frames (33 ms) resulted in an increase in overall and baseline performance (Fig. 2d). However, localization was still strongly suppressed by TMS pulses at 130 ms. Surprisingly, access to the emotional content of the unseen emoticon disappeared; identification of the emotional expression was suppressed equally strongly by TMS pulses at 130 ms (Fig. 2d). Subsequent analysis of the trials in which subjects failed to localize the deviant emoticon shows that in these trials, emotion discrimination was at chance level (Fig. 2d Right). This result shows that the occurrence of TMS-induced affective blindsight depends on overall stimulus visibility: it only occurs when subjects are in a general state of uncertainty about the stimuli. Note that the results of training and of increasing stimulus visibility in the two-frame condition are independent, because the experiments of Fig. 2 (and 3) were run in random order across subjects (see Table 4, which is published as supporting information on the PNAS web site, for detailed information on task order).

We have tested three alternative explanations for our results. First, the differences in localization and emotional expression detection, and their dependence on overall stimulus visibility, may be attributed to shifts in decision criterion. We eliminated the role of decision criterion by calculating d′ values. The effects remained the same, showing that shifts in decision criterion did not play a role in the disappearance of TMS-induced affective blindsight by increased stimulus visibility (see Fig. 6, which is published as supporting information on the PNAS web site).
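To illustrate how d′ separates sensitivity from decision criterion, the sketch below applies the standard equal-variance signal detection formulas to a happy/sad judgment. The mapping is an assumption made for illustration: a "happy" response to a happy emoticon is treated as a hit and a "happy" response to a sad emoticon as a false alarm. The example rates are taken from the overall column of Table 3; the authors' own d′ computation is reported in the supporting information and may differ.

```python
from statistics import NormalDist


def dprime_and_criterion(hit_rate, fa_rate):
    """Equal-variance signal detection measures.

    d' = z(H) - z(F) indexes sensitivity; c = -(z(H) + z(F)) / 2
    indexes response bias (negative c: bias toward the "signal"
    response). Rates of exactly 0 or 1 must be adjusted beforehand,
    because the inverse normal CDF is undefined there.
    """
    z = NormalDist().inv_cdf
    z_hit, z_fa = z(hit_rate), z(fa_rate)
    return z_hit - z_fa, -0.5 * (z_hit + z_fa)


# Table 3, overall column: 79% correct for happy, 61% correct for sad.
hit_rate = 0.79        # "happy" response to a happy emoticon
fa_rate = 1 - 0.61     # "happy" response to a sad emoticon

d_prime, criterion = dprime_and_criterion(hit_rate, fa_rate)
print(round(d_prime, 2), round(criterion, 2))
```

With these rates, d′ comes out at roughly 1.1 with a negative criterion, i.e., sensitivity is above zero even after the apparent bias toward "happy" responses is factored out.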

Second, it might be that the observed affective blindsight is a direct consequence of the fact that subjects received feedback on the “emotion” task, and that this task was the primary task. We therefore also ran the tasks in the reverse order, i.e., subjects first had to localize the deviant emoticon as quickly as possible, for which they received feedback, and then judge its emotional expression. In this version of the experiment, both tasks showed suppression, and no significant difference in performance between the two tasks was found (Fig. 3a). A residual affective blindsight effect was found in the sense that in trials in which localization was successfully suppressed, emotional judgment was significantly above chance (Fig. 3a Right). The reverse, above-chance localization on trials in which emotional judgment was successfully suppressed, was not found (residual localization performance on the 110-ms delay was 58%, P = 0.19; on the 130-ms delay 43%, P = 0.90). Comparing these data with our previous results indicates that blindsight occurs only for the emotional expression, not for localizing the deviant emoticon. It also shows, however, that access to the affective content may disappear very rapidly. Affective blindsight is strongest when responses are given immediately after the presentation of stimuli. The reduction of affective blindsight in this condition is unlikely to be an effect of training or increased stimulus visibility, because blocks of this experiment were randomly mixed with blocks of the experiments of Fig. 2.

Fig. 3.

Control experiments. Magnetic stimulation was the same as in the first two experiments, but task order (a) and visual stimulation (b) were varied. Stimuli were shown for one frame in both experiments. Symbols are as in Fig. 2. (a) Scores for the reversed task order experiment. Seven subjects each did 24 trials per delay. (b) Scores for the curvature and localization tasks. Seven subjects each did 24 trials per delay.

Third, a possible explanation might be that subjects did not show blindsight for the affective content of stimuli but instead for the curvature of the mouth. To test this hypothesis, we repeated the first experiment, but now with scrambled emoticons: eyes and mouth were placed in a non-face configuration. In this version of the experiment, suppression at a TMS latency of 130 ms was equally strong for the localization and “emotional expression” tasks, i.e., no “curvature blindsight” was present (however, performance was slightly above chance in both conditions, probably due to further training of our subjects, because this experiment was performed after the other experiments in four of seven subjects; see Fig. 3b and Table 4). Again, in trials in which subjects could not localize the deviant emoticon, they also could not discriminate between the two curvatures of the mouth (Fig. 3b Right). These results suggest that blindsight is exclusive for the expression signaled by the emoticon as a whole (24).

Discussion

Our results show that affective blindsight may be induced in normal observers by using TMS of the striate cortex. We conjecture that affective blindsight is most likely mediated by a subcortical pathway to the amygdala, through the midbrain and thalamus. In normal observers, this pathway provides a route for processing behaviorally relevant, unseen visual stimuli, in parallel to the cortical routes necessary for conscious identification, most likely along the ventral pathway from V1 to temporal cortex (4, 13-15, 25). Occipital TMS blocks access to the cortical route, most likely by interfering with V1 activity (19-23). Even if our TMS pulses had “spilled” to extrastriate areas like V2 or V3, the interpretation would not be altered.

Our results show that blocking the cortical route by means of TMS blocks perception, but not affective discrimination, although exclusively when stimuli are generally hard to see. This finding is paradoxical, because it suggests that access to unconscious information is only possible when, during the experiment, stimulus strength is on average below a certain threshold, so that the subjects feel rather insecure about how to respond. A similar paradox has been found in neuroimaging studies on amygdala responses to emotional stimuli: both in blindsight patients (1-3) and in normal observers (8, 15-18), the amygdala shows activation when an affective face is presented but not seen. However, blindsight patients are capable of guessing the affective content of the stimulus above chance (1-3), whereas normal observers are not (8, 15-18). This finding suggests that conscious perception of a stimulus affects access to unconsciously processed information.

Studies on affective priming confirm this conclusion: when observers have to rate the emotional valence of neutral targets, they tend to rate the targets as more negative when these are preceded by invisible negative affective primes and as more positive when the prime is positive. However, when primes are clearly visible, the effect reverses, and observers tend to rate targets as more positive if the prime was negative and vice versa (26).

We theorize that the availability of conscious information triggers a behavioral mode in which maximum accuracy is achieved by using consciously perceived information, processed in cortical areas such as V1 and the fusiform face area, whereas access to unconscious information, processed in the subcortical route to the amygdala, is blocked (4, 14, 18). However, if conscious information is sparse, maximum accuracy under such circumstances may be achieved by incorporating unconscious information in response preparation. Although the subject may feel like he is guessing in such conditions, his guesses are actually affected by unconsciously processed information, leading to above-chance performance. So, we might be “blindly led by emotions” but only when we have no other option available.

The finding that availability of conscious information inhibits responding to unconsciously processed information seems to be in line with the Freudian mechanism of active repression of unconscious information by consciousness (27). Although the unconscious information repressed here has little to do with the Freudian unconscious, the proposed mechanism of repression remains the same. Recently, there has been a renewed interest in the idea of repression, triggered by the emergence of some supporting experimental evidence (27, 28). We provide further and more direct evidence in favor of this hypothesis.

Acknowledgments

We thank Sabrina La Fors, Jeroen Vermeer, and Thomas Pronk for their assistance during TMS measurements. J.J. is supported by a grant from the Social and Behavioral Sciences Research Council of the Netherlands Organization for Scientific Research.

This paper was submitted directly (Track II) to the PNAS office.

Abbreviations: TMS, transcranial magnetic stimulation; V1, primary visual cortex.

References

- 1. De Gelder, B., Vroomen, J., Pourtois, G. & Weiskrantz, L. (1999) NeuroReport 10, 3759-3763.

- 2. Morris, J. S., De Gelder, B., Weiskrantz, L. & Dolan, R. J. (2001) Brain 124, 1241-1252.

- 3. Pegna, A. J., Khateb, A., Lazeyras, F. & Seghier, M. L. (2005) Nat. Neurosci. 8, 24-25.

- 4. LeDoux, J. (1996) The Emotional Brain (Simon & Schuster, New York).

- 5. Heywood, C. A. & Kentridge, R. W. (2000) Trends Cogn. Sci. 4, 125-126.

- 6. De Gelder, B., Vroomen, J., Pourtois, G. & Weiskrantz, L. (2000) Trends Cogn. Sci. 4, 126-127.

- 7. Weiskrantz, L., Warrington, E. K., Sanders, M. D. & Marshall, J. (1974) Brain 97, 709-728.

- 8. Pessoa, L. (2005) Curr. Opin. Neurobiol. 15, 1-9.

- 9. Esteves, F. & Öhman, A. (1993) Scand. J. Psychol. 34, 1-18.

- 10. Nothdurft, H. C. (1993) Perception 22, 1287-1298.

- 11. Eastwood, J. D., Smilek, D. & Merikle, P. M. (2001) Percept. Psychophys. 63, 1004-1013.

- 12. Sagiv, N. & Bentin, S. (2001) J. Cognit. Neurosci. 13, 937-951.

- 13. Winston, J. S., Vuilleumier, P. & Dolan, R. J. (2003) Curr. Biol. 13, 1824-1829.

- 14. Vuilleumier, P., Armony, J. L., Driver, J. & Dolan, R. J. (2003) Nat. Neurosci. 6, 624-631.

- 15. Morris, J. S., Öhman, A. & Dolan, R. J. (1999) Proc. Natl. Acad. Sci. USA 96, 1680-1685.

- 16. Whalen, P. J., Rauch, S. L., Etcoff, N. L., McInerney, S. C., Lee, M. B. & Jenike, M. A. (1998) J. Neurosci. 18, 411-418.

- 17. Killgore, W. D. S. & Yurgelun-Todd, D. A. (2004) NeuroImage 21, 1215-1223.

- 18. Öhman, A., Lundqvist, D. & Esteves, F. (2001) J. Pers. Soc. Psychol. 80, 381-396.

- 19. Juan, C. H. & Walsh, V. (2004) Exp. Brain Res. 150, 259-263.

- 20. Cowey, A. & Walsh, V. (2000) NeuroReport 11, 3269-3273.

- 21. Amassian, V. E., Cracco, R. Q., Maccabee, P. J., Cracco, J. B., Rudell, A. & Eberle, L. (1989) Electroencephalogr. Clin. Neurophysiol. 74, 458-462.

- 22. Corthout, E., Uttl, B., Walsh, V., Hallett, M. & Cowey, A. (1999) NeuroReport 10, 2631-2634.

- 23. Corthout, E., Uttl, B., Walsh, V., Hallett, M. & Cowey, A. (2000) NeuroReport 11, 1565-1569.

- 24. Suzuki, S. & Cavanagh, P. (1995) J. Exp. Psychol. Hum. Percept. Perform. 21, 901-913.

- 25. Goodale, M. A. & Milner, A. D. (1992) Trends Neurosci. 15, 20-25.

- 26. Murphy, S. T. & Zajonc, R. B. (1993) J. Pers. Soc. Psychol. 64, 723-739.

- 27. Solms, M. (2004) Sci. Am. 290, 82-88.

- 28. Anderson, M. C. & Green, C. (2001) Nature 410, 366-369.
