Abstract

In the context of the increasing popularity of Big Data paradigms and deep learning techniques, we introduce a novel large-scale hyperspectral imagery dataset, termed Orbita Hyperspectral Images Dataset-1 (OHID-1). It comprises 10 hyperspectral images from diverse regions of Zhuhai City, China, each with 32 spectral bands, a spatial resolution of 10 meters and a spectral range of 400–1000 nanometers. The core objective of this dataset is to improve the performance of hyperspectral image classification and to pose substantial challenges to existing hyperspectral image processing algorithms. Compared with traditional open-source hyperspectral datasets and recently released large-scale ones, OHID-1 presents more intricate features and greater classification complexity, providing labels for 7 classes over a wider area. Furthermore, this study demonstrates the utility of OHID-1 by testing it with selected hyperspectral classification algorithms. This dataset can help advance cutting-edge research in urban sustainable development science and land use analysis. We invite the scientific community to devise novel methodologies for an in-depth analysis of these data.

Subject terms: Mathematics and computing, Environmental sciences

Background & Summary

Hyperspectral remote sensing acquires many narrow spectral bands and provides a complete image for each band. This offers numerous advantages over conventional remote sensing with only three RGB bands:

Improved ground object classification: The high spectral resolution of hyperspectral images (HSI) makes it much easier to detect subtle differences in appearance and boundaries between objects.

Better chemical composition analysis: It is much easier to identify various materials through specific light emission and absorption characteristics.

Differential analysis: Differences between images in one band and those in another band can be highly significant.

Quantitative analysis: Working with narrow spectral bands can reduce noise and help overcome interference.

These advantages make HSI useful for many applications, including agricultural analysis1–3, weather forecasting4, land5 and ocean resource mapping6,7, and a variety of others8,9.

Classification of pixels in HSI is of fundamental importance. Classification uses labeled data to train a model that learns the features associated with each label by adjusting its internal weights until the overall error on the training set is minimized. Repeatedly adjusting these weights to find the combination that minimizes the overall error of the chosen model requires substantial computation. However, the large advances in computing power in recent years have made this approach practical, and it is widely used for many purposes, including HSI analysis.
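As a minimal illustration of the weight-adjustment loop described above, the sketch below trains a linear softmax classifier on per-pixel spectra with plain gradient descent in NumPy. It is not one of the DNNs evaluated in this paper; the function names, the learning rate and the number of epochs are illustrative assumptions, and the mean cross-entropy stands in for the "overall error".

```python
import numpy as np

# Illustrative sketch: helper names and hyperparameters are hypothetical.


def softmax(logits):
    """Row-wise softmax, shifted by the row maximum for numerical stability."""
    z = logits - logits.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def train_softmax_classifier(X, y, n_classes, lr=0.05, epochs=200):
    """Fit a linear softmax classifier to pixel spectra by gradient descent.

    X: (n_pixels, n_bands) spectra; y: (n_pixels,) integer labels in [0, n_classes).
    Returns the weights, the bias and the training loss recorded at each epoch.
    """
    n, d = X.shape
    W = np.zeros((d, n_classes))
    b = np.zeros(n_classes)
    Y = np.eye(n_classes)[y]  # one-hot targets
    losses = []
    for _ in range(epochs):
        P = softmax(X @ W + b)
        # Overall error on the training set: mean cross-entropy.
        losses.append(float(-np.mean(np.log(P[np.arange(n), y] + 1e-12))))
        # Gradient of the mean cross-entropy with respect to the logits.
        G = (P - Y) / n
        # Adjust the internal weights a small step against the gradient.
        W -= lr * (X.T @ G)
        b -= lr * G.sum(axis=0)
    return W, b, losses


def predict(X, W, b):
    """Predicted class index for each spectrum."""
    return np.argmax(X @ W + b, axis=1)
```

In use, pass an array of pixel spectra of shape (pixels, 32) with integer labels; standardizing each band first (zero mean, unit variance) usually keeps a fixed learning rate stable, and the recorded losses should decrease as the weights are adjusted.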

Deep Neural Networks (DNNs) have been found to be a useful supervised approach for many challenging tasks, including image parsing10–14 and natural language processing15–17. Setting up a DNN relies heavily on the availability of appropriately labelled datasets and in recent years these have been published for various tasks18–21. Examples include CIFAR-10/100 and ImageNet14 for image recognition, Microsoft COCO22, Chinese City Parking Dataset (CCPD)23,24 and PASCAL VOC25 for object detection, and ActivityNet18 and the “something something” video datasets26 for video parsing27. These datasets are large-scale and well-annotated.

Several datasets have also been published for remote sensing images28–39. These fall into multiple categories, including standard red-green-blue (RGB) images and HSI. Exploiting HSI30,35,40–44 is popular because HSI contains rich spectral information that better reveals the spatial features and material composition of ground objects. For example, HSI has been combined with DNNs to obtain better object recognition accuracy by using information from specific spectral bands45, and for winter wheat analysis3,46. However, the available open-source HSI datasets are limited. Consider the Indian Pines28, Salinas Valley32, and Pavia University29 datasets, which are widely used in classification research. Analysing them, we find that:

① These older datasets contain relatively small amounts of data and are typically used with conventional algorithms or shallow DNNs. Since shallow DNNs have limited learning capabilities, these networks have limited generalization performance and are not suitable for many practical purposes.

② The generalization ability of a classification model is an important indicator of its performance and must be tested on different scenes and different types of datasets, but the existing datasets cannot meet this demand.

In the context of the increasing popularity of big data paradigms and deep learning techniques, we built a new hyperspectral dataset with complex characteristics using data from Zhuhai Orbita Aerospace Technology Co., Ltd (Orbita) and named it Orbita Hyperspectral Images Dataset-147 (OHID-1). It covers different types of areas in Zhuhai City, China.

The Necessity of opening the OHID-1 dataset

We compared OHID-147 with other HSI datasets and used 8 well-known DNNs to establish a baseline for the classification difficulty that results from the richer characteristics of OHID-147. The results show that AI algorithms developed for the earlier datasets do not perform well on OHID-147, which means that OHID-147 poses greater challenges than previously available HSI datasets. We therefore invite the scientific community to develop methods that study these data in depth. We believe that OHID-147 can contribute to the study of HSI and help advance HSI classification performance.

As mentioned above, an effective classification method should be tested on various kinds of scenes and different types of HSI data. Much current research uses data captured by airborne sensors for experiments, because most public datasets were acquired by airborne sensors (Table 1). The Botswana dataset is one of the few publicly available satellite datasets; it contains only 1 scene and has a spatial resolution of 30 m.

Table 1.

Comparison between the existing public datasets and the OHID-1 dataset.

| Dataset | Resolution | Channels | Spectrum | Number of classes | Scenes | Size (pixels) | Sensor |
|---|---|---|---|---|---|---|---|
| Indian Pines (IP)28 | 20 m | 220 | 400–2500 nm | 16 | 1 | 145 × 145 | AVIRIS (Airborne) |
| Pavia University (PU)29 | 1.3 m | 115 | 430–860 nm | 9 | 1 | 610 × 340 | ROSIS (Airborne) |
| Kennedy Space Center (KSC)30 | 18 m | 224 | 400–2500 nm | 13 | 1 | 512 × 614 | AVIRIS (Airborne) |
| Houston31 | 2.5 m | 144 | 364–1046 nm | 15 | 1 | 349 × 1905 | ITRES CASI-1500 (Airborne) |
| Salinas Valley32 | 3.7 m | 224 | 400–2500 nm | 16 | 1 | 512 × 217 | AVIRIS (Airborne) |
| Botswana33 | 30 m | 242 | 400–2500 nm | 14 | 1 | 1476 × 256 | Hyperion (Spaceborne) |
| Xiongan New Area (Matiwan Village)34 | 0.5 m | 250 | 400–1000 nm | 19 | 1 | 3750 × 1580 | Full spectrum Multi-modal Imaging spectrometer (Airborne) |
| ShanDongFeiCheng (SDFC)35 | 10 m | 63 | 400–1000 nm | 19 | 2 | 2000 × 2700, 2100 × 2840 | high score special aviation hyperspectral spectrometer (Airborne) |
| Trento36 | 1 m | 63 | 400–980 nm | 6 | 1 | 600 × 166 | AISA Eagle (Airborne) |
| Chikusei37 | 2.5 m | 128 | 343–1018 nm | 19 | 1 | 2517 × 2335 | Headwall Hyperspec-VNIR-C (Airborne) |
| WHU-Hi38 | 0.109 m | 274 | 400–1000 nm | 16 | 1 | 1217 × 303 | Headwall Nano-hyperspec (Airborne) |
| OHID-1 (from this paper) | 10 m | 32 | 400–1000 nm | 7 | 10 | 512 × 512 | CMOS (Spaceborne) |

Compared with airborne data, satellite data also has irreplaceable value in environmental monitoring, agriculture, city management and other fields, because it offers high timeliness at low labor cost. Although satellite data still has unresolved issues, such as radiometric and geometric calibration, cloud contamination and low spatial resolution, we believe that exploring its applications has significant value for social development.

The Research value of the OHID-1 dataset

In addition to helping improve the effectiveness of classification algorithms, OHID-147 can also be used in other computer vision (CV) research. For example, it can be used for super-resolution reconstruction, a popular topic in CV. Super-resolution reconstruction is important for the application of HSI data because it improves spatial resolution. Owing to hardware limitations, hyperspectral satellite images involve a well-known trade-off between spatial and spectral resolution, and the resulting low spatial resolution leads to insufficient ground detail in the image. This limitation restricts the widespread application of hyperspectral images across various domains. Our dataset not only has a spatial resolution of 10 m, which is high for spaceborne hyperspectral imagery, but also contains 10 scenes for evaluating different models.
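One common way to use a dataset like OHID-1 for super-resolution research is to simulate low-resolution inputs from the 10 m scenes and train a model to recover the originals. The sketch below assumes the simplest degradation model, averaging non-overlapping pixel blocks in every band; real sensor degradations (point spread function, noise) are more complex, and the function names are hypothetical.

```python
import numpy as np

# Illustrative sketch: block averaging is an assumed, simplified degradation model.


def block_average(cube, factor):
    """Simulate a coarser-resolution cube by averaging factor x factor pixel blocks.

    cube: (rows, cols, bands); rows and cols must be divisible by factor.
    """
    h, w, c = cube.shape
    if h % factor or w % factor:
        raise ValueError("image size must be divisible by the downsampling factor")
    blocks = cube.reshape(h // factor, factor, w // factor, factor, c)
    return blocks.mean(axis=(1, 3))


def make_sr_pair(cube, factor=2):
    """Return a (low-resolution input, high-resolution target) training pair."""
    return block_average(cube, factor), cube
```

With a 512 × 512 × 32 scene and `factor=4`, the low-resolution input is 128 × 128 × 32, which corresponds to 40 m pixels.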

There is now a much stronger call for integrated knowledge of the character and dynamics of cities, social and technological systems, and their interfaces. OHID-147 can therefore contribute to cutting-edge research on the sustainability and development of cities, urbanization, agriculture, contemporary climate change, cyclone forecasting, biodiversity conservation, environmental behavior, environmental degradation, green infrastructure, health and environment, land use, natural resource management, water-soil-waste, and other remote sensing tasks related to the sustainability and management of cities.

Methods

Samples of OHID-1 Dataset

To help address the above limitations, we propose a new open-source dataset, OHID-147. OHID-147 was collected by the “Zhuhai No.1” hyperspectral satellite (OHS) constellation (Fig. 1), designed and produced by Zhuhai Orbita Aerospace Technology Co., Ltd (Orbita); in 2023 the company was renamed Zhuhai Aerospace Microchips Science & Technology Co., Ltd. With eight OHS satellites (designated A, B, C, D, E, F, G and H30), “Zhuhai No.1” can make global observations within two days. The satellite payload has four principal components: (i) the lens, (ii) the focal plane, (iii) the focusing mechanism and (iv) the hood. “Zhuhai No.1” uses pushbroom imaging, with a 150 km swath width, a 10 m spatial resolution, a 2.5 nm spectral resolution and a 400–1000 nm wavelength range. Owing to transmission and storage limitations, 32 bands are delivered; the bands are programmable, and the total number of bands is 256. A single hyperspectral satellite orbits the Earth about 15 times per day, and the maximum single data acquisition time in one orbit is about 8 minutes. Because of this data quality, HSI captured by “Zhuhai No.1” has been applied in many fields, such as water body monitoring and land use classification.

Fig. 1.

Space-borne OHS imagery: (a) Space-borne OHS imagery (10 m spatial resolution and 2.5 nm spectral resolution). (b) Pixels within the same class show strong spectral variability, while pixels of different classes (Building and Farmland) show spectral similarity.

OHID-147 provides 10 hyperspectral images, each with 32 spectral bands and a size of 512 × 512 pixels, covering 7 types of objects (Fig. 1). This makes OHID-147 suitable for (i) training DNNs for hyperspectral images, (ii) training deeper DNNs, and (iii) improving the generalization performance of DNNs. Sample scenes annotated with different land-use types are shown in Fig. 2. The scenes in Fig. 2 (Part I) come from different types of areas, and Fig. 2 (Part II) shows part of Zhuhai City, Guangdong Province, China. The different land-use types in these images are annotated in detail.

Fig. 2.

Sample scenes from the OHID-147 dataset: Part I, different types of areas in Zhuhai City, Guangdong Province, China. Part II, part of Zhuhai City, Guangdong Province, China.

Subfigure (a) of Fig. 1 comprises two components: a high-spectral-resolution line graph and a schematic of satellite remote sensing. The line graph displays detailed spectral information at a spectral resolution of 2.5 nm; its horizontal axis is wavelength, indicating the range of electromagnetic radiation captured by the sensor, and its vertical axis is radiance. Subfigure (b) uses samples from the dataset to show the mean spectral curves and the mean ± standard deviation of the spectra for the building and farmland categories. In both plots, the horizontal axis is wavelength and the vertical axis is radiance. These plots illustrate the spectral variability within, and the spectral similarity between, the building and farmland categories.

Figure 2 presents sample scenes from the OHID-1 dataset. Part I showcases different types of areas in the dataset (categorized as city, country and mountain), with their corresponding annotated pseudo-color images, all from Zhuhai City, Guangdong Province, China. The annotations are the labeled areas within the images. Part II zooms in on one image from the dataset, covering part of Zhuhai City, Guangdong Province, China, to give detailed views of both the image and its annotations.

To give a clearer picture of the current public datasets, 11 common public HSI datasets and their parameters are listed in Table 1. These 11 datasets differ in spatial resolution, spectral resolution and spectral range. Moreover, only one of them (Botswana) was acquired by a spaceborne sensor. This indicates that existing methods need to be tested on a wider variety of datasets to improve their generalization ability. For this reason, we propose OHID-147, which was collected by a spaceborne sensor and contains 10 scenes.

Labeling

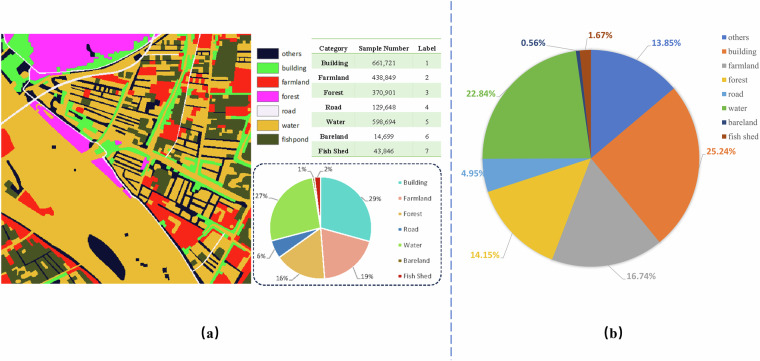

The OHID-147 uses 7 labels which mainly correspond to basic objects such as buildings and roads, as shown in Fig. 4. There are 10 scenes, all of Zhuhai, a coastal city in China. All the scenes have the same spatial resolution of 10 m/pixel, with 512 × 512 pixels per scene.

Fig. 4.

Schematic diagram of dataset annotation and statistics: (a) Class distribution within a single scene image from OHID-1. (b) Class distribution across all OHID-1 images.

OHID-147 contains two folders, one for images and one for labels:

images: 32-band hyperspectral images of the 10 scenes, in “tif” format;

labels: The semantic labels corresponding to the 10 scenes of hyperspectral images, which are single-channel data, “tif” format.

We selected 10 representative 512 × 512 regions from the four original images for annotation; their locations are shown in Fig. 3. The dataset samples were classified into 7 categories (building, farmland, forest, road, water, bare land and fish shed), and the number of samples in each category is shown in Fig. 4. Figure 4a details the categories and their distribution within a single scene image from OHID-147, and Fig. 4b gives the distribution over all OHID-147 images. The figure shows that OHID-147 is characterized by both multiple categories and class imbalance. Among the seven categories, water and road together occupy more than half of the total, whereas bare land and fish shed each account for less than 2%. This imbalance poses a challenge for subsequent HSI classification tasks.
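The class imbalance described above can be quantified directly from the label maps. The sketch below counts pixels per class, converts the counts to proportions, and derives inverse-frequency weights of the kind often passed to a weighted loss. It assumes that label value 0 marks unlabeled pixels and that values 1 to 7 are the seven classes; the mapping from values to class names is given in the dataset's “sample_proportion.png” and is not reproduced here. The function names are hypothetical.

```python
import numpy as np

# Illustrative sketch: label value 0 is ASSUMED to mean "unlabeled".


def class_counts(label_maps, n_classes=7):
    """Pixel count of classes 1..n_classes summed over all label maps."""
    counts = np.zeros(n_classes + 1, dtype=np.int64)
    for lab in label_maps:
        lab = np.asarray(lab, dtype=np.int64).ravel()
        if lab.size and (lab.min() < 0 or lab.max() > n_classes):
            raise ValueError(f"label values must lie in 0..{n_classes}")
        counts += np.bincount(lab, minlength=n_classes + 1)
    return counts[1:]  # drop value 0


def class_proportions(counts):
    """Fraction of labeled pixels belonging to each class."""
    counts = np.asarray(counts, float)
    return counts / counts.sum()


def inverse_frequency_weights(counts):
    """w_k = N / (K * n_k) over the K classes present; absent classes get weight 0."""
    counts = np.asarray(counts, float)
    present = counts > 0
    weights = np.zeros_like(counts)
    weights[present] = counts[present].sum() / (present.sum() * counts[present])
    return weights
```

Rare classes such as bare land and fish shed receive large weights under this scheme, which counteracts their small share of the training pixels.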

Fig. 3.

The regional distribution map.

Figure 4 has two parts that detail the categories and their distribution within the OHID-1 dataset. The samples are categorized into seven classes: buildings, farmland, forests, roads, water areas, bare land and fish sheds. Subfigure (a) shows the categories and their distribution within a single scene image, including a pseudo-color annotated image, a table of sample counts per category, and a pie chart of the category distribution. Subfigure (b) is a pie chart of the overall class distribution of the dataset.

To ensure label accuracy, we used high-resolution aerial images acquired on similar dates as references and conducted on-site surveys with GPS positioning. Buildings, roads, rivers, large areas of woodland and other easily distinguishable features were confirmed and manually outlined with reference to the aerial images. For bare soil, fish sheds and other features that change rapidly over time, we used drones to conduct on-the-spot investigations and matched the drone's GPS positions to the OHID-1 plot coordinates to obtain accurate features for the image area. Figure 5 shows an example reference aerial image for each of the 7 categories, together with the corresponding colors used in the annotated images.

Fig. 5.

Reference aerial images and colours used for annotation: (a) Image cube. (b) Ground-truth image. (c) Typical zone in the study area.

We also analyzed the reflectance of the scenes at different wavelengths; Fig. 6 shows the average reflectance of each object class in each band. The figure shows that the “Zhuhai No.1” data capture the spectral characteristics of each object class and distinguish the classes well, although buildings and roads have similar reflectance.
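The per-class average spectra plotted in Fig. 6 can be computed from a scene cube and its label map as sketched below. To put a number on the building/road similarity, the sketch also computes the spectral angle between two mean spectra; this standard similarity measure is our choice here, not one used in the paper. The function names are hypothetical, and label 0 is again assumed to mean unlabeled.

```python
import numpy as np

# Illustrative sketch: label 0 is ASSUMED to mean "unlabeled"; names are hypothetical.


def class_mean_spectra(cube, labels, n_classes=7):
    """Mean spectrum of each class (row k-1 holds label k); NaN for absent classes.

    cube: (rows, cols, bands) reflectance; labels: (rows, cols) integer map.
    """
    X = cube.reshape(-1, cube.shape[-1]).astype(float)
    y = labels.ravel()
    means = np.full((n_classes, X.shape[1]), np.nan)
    for k in range(1, n_classes + 1):
        mask = y == k
        if mask.any():
            means[k - 1] = X[mask].mean(axis=0)
    return means


def spectral_angle(a, b):
    """Spectral angle (radians) between two spectra; 0 means identical spectral shape."""
    a, b = np.asarray(a, float), np.asarray(b, float)
    cos = np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b))
    return float(np.arccos(np.clip(cos, -1.0, 1.0)))
```

A small angle between the building and road mean spectra would reflect the similarity visible in Fig. 6.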

Fig. 6.

Reflectance of each object.

Figure 5 presents three subplots. Subplot (a) is the hyperspectral image cube. Subplot (b) is the ground-truth image, which provides the reference labels for the hyperspectral data. Subplot (c) shows a typical zone in the study area.

In Fig. 6, the horizontal axis is the band wavelength in nanometers (nm), and the vertical axis is reflectance in percent (%).

Data Records

Data storage format

OHID-147 is available from the Figshare repository at https://figshare.com/articles/online_resource/OHID-1/27966024/8. It covers different types of areas in Zhuhai City, China. This link provides access to the original data, the dataset, the preprocessing code and the classification code.

Original data

Original data are available from Baidu Netdisk: https://pan.baidu.com/s/1qMtY7ossLwRh0pI2v2bnDg?pwd=bi70 (access code: bi70).

This link provides the raw data and annotations of OHID-147 in two formats, “mat” and “tif”. All data are 5056 × 5056 pixels; the raw data have 32 bands and the annotation data have 1 band.

Dataset

The “image” folder contains 10 hyperspectral images, each with 32 spectral bands and a size of 512 × 512 pixels, depicting 7 types of objects. The files are named “201912_n.tif”, where n ranges from 1 to 10. The “labels” folder contains the labels for the ten images in the “images” folder, using the same naming format “201912_n.tif”. Each label takes values from 0 to 7, and the category represented by each value is given in “sample_proportion.png”. For each n from 1 to 10, “201912_n.png” is a bar chart of the category distribution in “201912_n.tif”, and “201912_n_color.png” is the pseudo-color visualization of the labels in “201912_n.tif”.
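A minimal loader for one image/label pair, following the “201912_n.tif” naming described above, might look like the sketch below. It assumes that the third-party tifffile package is used to read the TIFF files (any reader returning a NumPy array can be passed instead). Because the band axis may be stored first or last depending on how the files were written, the sketch moves the 32-band axis to the end. Directory names are parameters, since the text refers to the image folder as both “image” and “images”; the helper names are hypothetical.

```python
from pathlib import Path

import numpy as np

# Illustrative sketch: helper names are hypothetical; tifffile use is an ASSUMPTION.
N_BANDS = 32
N_SCENES = 10


def scene_file_names(n_scenes=N_SCENES):
    """File names following the "201912_n.tif" pattern, n = 1..n_scenes."""
    return [f"201912_{n}.tif" for n in range(1, n_scenes + 1)]


def to_band_last(cube, n_bands=N_BANDS):
    """Return the cube as (rows, cols, bands), whether bands were stored first or last."""
    if cube.ndim != 3:
        raise ValueError(f"expected a 3-D cube, got shape {cube.shape}")
    if cube.shape[-1] == n_bands:
        return cube
    if cube.shape[0] == n_bands:
        return np.moveaxis(cube, 0, -1)
    raise ValueError(f"no axis of length {n_bands} in shape {cube.shape}")


def load_scene(image_dir, label_dir, name, read=None):
    """Load one hyperspectral scene and its single-channel label map."""
    if read is None:
        import tifffile  # assumption: the third-party tifffile package is installed
        read = tifffile.imread
    cube = to_band_last(np.asarray(read(Path(image_dir) / name)))
    labels = np.asarray(read(Path(label_dir) / name))
    if labels.shape != cube.shape[:2]:
        raise ValueError(f"label shape {labels.shape} does not match image {cube.shape[:2]}")
    if labels.min() < 0 or labels.max() > 7:
        raise ValueError("label values are expected to lie in 0..7")
    return cube, labels
```

For example, `load_scene("images", "labels", "201912_1.tif")` would return a (512, 512, 32) cube and a (512, 512) label map, assuming the folders are laid out as described.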

Preprocessing

The “Preprocessing” folder provides the code for band synthesis and for slicing the original files.
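The two preprocessing steps named above, band synthesis and slicing, can be sketched with NumPy as follows. This is not the code shipped in the “Preprocessing” folder. It assumes the single-band inputs are already co-registered 2-D arrays of identical shape, and the helper names are hypothetical. OHID-1 uses 10 hand-selected 512 × 512 regions, so `crop_region` mirrors that selection, while `tile_origins` simply enumerates every complete tile (81 per 5056 × 5056 scene).

```python
import numpy as np

# Illustrative sketch: helper names are hypothetical, not the shipped preprocessing code.


def stack_bands(band_images):
    """Band synthesis: combine single-band 2-D arrays into one (rows, cols, bands) cube."""
    shapes = {np.shape(b) for b in band_images}
    if len(shapes) != 1:
        raise ValueError(f"all bands must share one shape, got {shapes}")
    return np.stack(band_images, axis=-1)


def crop_region(cube, row, col, size=512):
    """Slice a size x size region whose top-left corner is (row, col)."""
    h, w = cube.shape[:2]
    if row < 0 or col < 0 or row + size > h or col + size > w:
        raise ValueError("region falls outside the image")
    return cube[row:row + size, col:col + size]


def tile_origins(h, w, size=512):
    """Top-left corners of all complete, non-overlapping size x size tiles."""
    return [(r, c)
            for r in range(0, h - size + 1, size)
            for c in range(0, w - size + 1, size)]
```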

Codes

The “HSI_Classification” folder contains the code for 1D CNN, 2D CNN, 3D CNN and SVM. This code is built upon the HSI classification algorithms at https://github.com/zhangjinyangnwpu/HSI_Classification, with the main changes made in the “unit.py” file. The “HyLiTE” files contain the HyLITE code, which is built upon the HyLITE algorithms at https://github.com/zhoufangqin/hylite, with the main changes made in the “main.py” file. We added code for reading additional files to make it compatible with other datasets.

Data source selection

The original satellite images of the dataset come from four OHS images acquired by four hyperspectral satellites between 2019 and 2020. Each scene is 5056 × 5056 pixels.

The naming rule of “Zhuhai No.1” hyperspectral satellite data product is “Satellite + ID + Receiving Station _ Receiving time _ scene _ level_Band_sensor”, where:

Satellite: the type of remote sensing satellite in the “Zhuhai No.1” constellation; hyperspectral satellites are denoted H;

ID: the satellite number. Hyperspectral satellites are numbered A, B, C, D, E, F, G and H; satellites A to D were launched in the second group and E to H in the third group. The naming convention is shown in Fig. 7.
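The naming rule above can be split into its fields mechanically. The sketch below assumes that the first field concatenates a one-letter satellite type, a one-letter satellite ID and the receiving-station code, and that the remaining five fields are separated by underscores. The name used in the test and usage line is made up to exercise the parser and is not a real product name (Fig. 7 gives the actual example); the function name is hypothetical.

```python
import os

# Illustrative sketch: field layout is an ASSUMPTION based on the naming rule above.
# Launch groups as stated in the text: A-D second group, E-H third group.
LAUNCH_GROUP = {**dict.fromkeys("ABCD", "second"), **dict.fromkeys("EFGH", "third")}
FIELDS = ("receiving_time", "scene", "level", "band", "sensor")


def parse_product_name(file_name):
    """Split <Satellite><ID><Station>_<Time>_<Scene>_<Level>_<Band>_<Sensor> into fields."""
    stem, _ = os.path.splitext(os.path.basename(file_name))
    parts = stem.split("_")
    if len(parts) != 1 + len(FIELDS):
        raise ValueError(f"expected {1 + len(FIELDS)} underscore-separated fields: {stem}")
    head = parts[0]
    if len(head) < 3:
        raise ValueError(f"first field too short: {head}")
    satellite, sat_id, station = head[0], head[1], head[2:]
    if satellite != "H":
        raise ValueError("hyperspectral products are expected to start with 'H'")
    if sat_id not in LAUNCH_GROUP:
        raise ValueError(f"unknown hyperspectral satellite ID: {sat_id}")
    info = {"satellite": satellite, "id": sat_id,
            "launch_group": LAUNCH_GROUP[sat_id], "station": station}
    info.update(zip(FIELDS, parts[1:]))
    return info
```

For the hypothetical name `HBK1_20191201000000_0001_L1B_B32_CMOS.tif`, the parser reports satellite H, ID B (second launch group) and station K1, and returns the other fields as strings.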

Fig. 7.

Naming rules for the data products of “Zhuhai No.1” hyperspectral satellite.

Figure 7 illustrates the naming convention for the data products of the “Zhuhai No.1” hyperspectral satellite. It provides an example of a data product name. Each part of the example name is clearly labeled and described to ensure understanding of the naming convention and the data products it represents.

Data Pre-processing

During data pre-processing, ENVI software was used to perform radiometric calibration, atmospheric correction, geometric correction and orthographic correction on the collected data48–50.

Radiometric calibration

Radiometric calibration converts the digital number (DN) recorded by the sensor into the radiance value L. Calibrated hyperspectral remote sensing data are essential for accurately extracting the true physical properties of ground objects from the imagery, and they allow hyperspectral data collected in different regions or at different times to be compared. To allow comparison and analysis of hyperspectral data from different remote sensors, spectrometers and even system simulations, the following calibration formula is used for OHS hyperspectral satellite images:

| 1 |

In the formula:

Le is apparent radiance;

gain is the absolute gain coefficient of radiometric calibration;

offset is the absolute offset coefficient of radiometric calibration;

TDIStage is the number of time delay integration (TDI) stages, which can be obtained from the TDIStage field of the metadata file (XXX_meta.xml) in the hyperspectral data folder.

The gain and offset parameters for each band are read automatically by ENVI from the metadata file. Both are calibrated in W/(m²·sr·μm), so the calculated radiance Le is also in W/(m²·sr·μm).

Atmospheric correction

To obtain surface radiation information, the influence of external factors such as the atmosphere must be removed; this process is called atmospheric radiometric correction. Because the surface information retrieved in this way is generally reflectance, the process is also called reflectance inversion. Surface reflectance is retrieved by inverting an atmospheric radiative transfer model with dedicated software, based on atmospheric radiative transfer theory. The MODTRAN model and the FLAASH software are mainly used for atmospheric correction of OHS hyperspectral satellite data.

The FLAASH algorithm was used for atmospheric correction. Based on the location of the study area and the time of image acquisition, we selected the tropical atmospheric model, set the aerosol model to urban, and converted the image from apparent radiance to surface reflectance.

Geometric correction

During image acquisition, many factors cause geometric deformation, so that shapes in the image differ from those in the selected map projection. These deformations appear as displacement, rotation, scaling, affine distortion, bending and other effects, and they distort the geometry or position of the image. Geometric correction is therefore needed to remove these errors and improve the positioning accuracy and practical value of the remote sensing image.

The key steps in geometric correction of remote sensing image mainly include:

Establish a unified coordinate system and map projection for the distorted and reference images. This paper adopts the “CGCs2000_3_degree_Gauss_Kruger_CM_114E” coordinate system.

Select ground control points (GCPs): following GCP selection principles, locate pairs of points at the same ground position on the distorted image and the reference image.

Select a correction model, estimate its parameters from the selected GCPs, and then use the model to transform pixel coordinates between the distorted image and the reference image.

Select an appropriate resampling method to assign gray values to the output pixels of the corrected image.
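The GCP-based steps above can be sketched as follows, assuming a first-order polynomial (affine) correction model fitted by least squares and nearest-neighbour resampling. The paper does not state which model or resampling method ENVI was configured with, so both are illustrative choices, and the function names are hypothetical. Coordinates are (x, y) = (column, row) pairs, and the RMSE of the GCP residuals is the usual figure for checking the fit.

```python
import numpy as np

# Illustrative sketch: affine model and nearest-neighbour resampling are ASSUMED choices.


def fit_affine(src_xy, dst_xy):
    """Least-squares first-order polynomial (affine) model mapping src -> dst.

    src_xy, dst_xy: (n, 2) arrays of matching GCP coordinates, n >= 3.
    Returns a (3, 2) matrix C such that [x, y, 1] @ C = [x', y'].
    """
    src = np.asarray(src_xy, float)
    dst = np.asarray(dst_xy, float)
    if src.shape != dst.shape or src.ndim != 2 or src.shape[1] != 2 or len(src) < 3:
        raise ValueError("need at least 3 matching (x, y) GCP pairs")
    A = np.column_stack([src, np.ones(len(src))])
    coeffs, *_ = np.linalg.lstsq(A, dst, rcond=None)
    return coeffs


def apply_affine(coeffs, xy):
    """Transform (n, 2) points with an affine coefficient matrix."""
    xy = np.asarray(xy, float)
    return np.column_stack([xy, np.ones(len(xy))]) @ coeffs


def gcp_rmse(coeffs, src_xy, dst_xy):
    """Root-mean-square GCP residual, in the units of dst (e.g. pixels)."""
    resid = apply_affine(coeffs, src_xy) - np.asarray(dst_xy, float)
    return float(np.sqrt(np.mean(np.sum(resid ** 2, axis=1))))


def resample_nearest(image, out_to_in, out_shape):
    """Map each output pixel back into the input with `out_to_in` and copy the
    nearest input pixel. Output pixels landing outside the input are set to 0."""
    rows, cols = np.indices(out_shape)
    out_xy = np.column_stack([cols.ravel(), rows.ravel()])
    in_xy = np.rint(apply_affine(out_to_in, out_xy))
    x, y = in_xy[:, 0].astype(int), in_xy[:, 1].astype(int)
    valid = (x >= 0) & (x < image.shape[1]) & (y >= 0) & (y < image.shape[0])
    flat = np.zeros((x.size,) + image.shape[2:], dtype=image.dtype)
    flat[valid] = image[y[valid], x[valid]]
    return flat.reshape(tuple(out_shape) + image.shape[2:])
```

Resampling works backwards (output to input) so that every output pixel receives exactly one value. Bilinear or cubic resampling would replace the rounding step.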

Orthographic correction

Orthographic correction corrects the spatial and geometric distortions of an image acquired under multi-center projection to produce an orthographic image. It rectifies not only geometric distortions caused by systematic factors but also those induced by terrain relief. The RPC coefficients provided with the L1 radiometrically corrected products of the OHS hyperspectral satellites are used to build rational function models for orthographic correction of the hyperspectral images. As an alternative to the rigorous sensor geometry model, the rational function model enables orthographic correction of remote sensing images without control points. The RPC coefficients comply with the NITF 2.1 standard (RPC00B format).
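To show how a rational function model maps ground coordinates to image coordinates, the sketch below evaluates an RPC00B model for one ground point, using the RPC00B ordering of the 20 cubic terms and the usual offset/scale normalization. The dictionary keys mirror common RPC field names, but the container and function names are assumptions. A full orthographic correction would additionally sample heights from a DEM for every output ground cell and resample the image; that part is omitted here.

```python
import numpy as np

# Illustrative sketch: the dict layout and function names are ASSUMPTIONS.


def rpc00b_terms(P, L, H):
    """The 20 cubic polynomial terms in RPC00B order.

    P, L, H are normalized latitude, longitude and height.
    """
    return np.array([
        1.0, L, P, H, L * P, L * H, P * H, L * L, P * P, H * H,
        P * L * H, L ** 3, L * P * P, L * H * H, L * L * P,
        P ** 3, P * H * H, L * L * H, P * P * H, H ** 3,
    ])


def rpc_ground_to_image(lat, lon, height, rpc):
    """Project one ground point (degrees, degrees, metres) to image (row, col)."""
    # Normalize the ground coordinates with the RPC offsets and scales.
    P = (lat - rpc["LAT_OFF"]) / rpc["LAT_SCALE"]
    L = (lon - rpc["LONG_OFF"]) / rpc["LONG_SCALE"]
    H = (height - rpc["HEIGHT_OFF"]) / rpc["HEIGHT_SCALE"]
    t = rpc00b_terms(P, L, H)
    # Normalized image coordinates are ratios of two cubic polynomials.
    row_n = np.dot(rpc["LINE_NUM_COEFF"], t) / np.dot(rpc["LINE_DEN_COEFF"], t)
    col_n = np.dot(rpc["SAMP_NUM_COEFF"], t) / np.dot(rpc["SAMP_DEN_COEFF"], t)
    # De-normalize to pixel units.
    return (float(row_n * rpc["LINE_SCALE"] + rpc["LINE_OFF"]),
            float(col_n * rpc["SAMP_SCALE"] + rpc["SAMP_OFF"]))
```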

After these processing steps, the data can support a variety of industry applications. Table 2 lists some of these applications and the corresponding spectral bands. Different ground objects have different spectral characteristics, which are expressed in different spectral bands, so appropriate bands are selected according to the spectral characteristics of the objects of interest when using hyperspectral remote sensing images. These bands were also included when constructing OHID-147.

Table 2.

Applications of “Zhuhai No.1” hyperspectral satellite.

| Application | Criteria | Wavelength (nm) | OHID-1 Reference Band |
|---|---|---|---|
| Black and Smelly Water | Chlorophyll Inversion | 566 and 670 | b7, b14 |
| | Suspended Matter and Transparency | 670 and 806 | b14, b23 |
| | Dissolved Oxygen Inversion | 520 and 566 | b4, b7 |
| | Total Nitrogen and Phosphorus Inversion | 566 and 670 | b7, b14 |
| | Black and Smelly Water | 550–580 and 626–700 | b6-b8 and b11-b17 |
| Water | NDWI | Near-infrared and green bands | b21-b32 and b3-b7 |
| | Suspended Matter Concentration | 700–850 | b16-b26 |
| | Yellow Substance | 466 | b1 |
| | Chlorophyll | 480, 536, 566 | b2, b5, b7 |
| | Sediment, CODMn | 500 | b3 |
| | Red Tide | 520 | b4 |
| | Cyanobacteria Bloom | 550, 626, 686 | b6, b11 |
| | Algae | 610–640 | b10-b12 |
| | Chlorophyll Absorption | 656 | b13 |
| | Chlorophyll Fluorescence | 686 | b15 |
| | Water Plants, Transparency | 716 | b17 |
| Forestry | Chlorophyll | 640–660 and 430–450 | b12-b13 |
| | Anthocyanin | 537 | b5 |
| | NDVI | Near-infrared and red bands | b14, b24 |
| | Pests and Diseases | 400–700 and 720–1100 | b1-b16 and b17-b32 |
| | Red Edge Location (Pests and Diseases) | 680–750 | b15-b19 |
| | FHI (Forest Health Evaluation) | 566, 606, 654, 866 | b7, b10, b13, b27 |
| | PRI (Photochemical Reflectance Index) | 531, 570 | b5, b7 |
| Crop | SAVI (Soil-Adjusted Vegetation Index), Rice Growth | 700–750 and 725–890 | b16-b19 and b18-b28 |
| | RVI (Ratio Vegetation Index) | 465–605 and 860–1000 | b1-b9 and b27-b32 |
| | SAVI, Wheat Growth | 695–750 and 735–1000 | b16-b19 and b18-b32 |
| | RVI, Wheat Growth | 460–590 and 725–1000 | b1-b9 and b18-b32 |
| Ocean | Bleached Coral | 520–580 | b4-b8 |
| | Water and Land Segmentation | 776–940 | b21-b32 |
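As an example of using the Table 2 band assignments, the sketch below computes NDVI, (NIR − Red)/(NIR + Red), and the McFeeters form of NDWI, (Green − NIR)/(Green + NIR), from a band-last OHID-1 cube. It takes red = b14 and NIR = b24 from the NDVI row. Using b7 as the green band and b24 as the NIR band for NDWI is an assumption, since Table 2 lists only the ranges b3-b7 and b21-b32. Band numbers are 1-based as in the table, and the helper names are hypothetical.

```python
import numpy as np

# Illustrative sketch: band numbers are 1-based, as in Table 2.
RED_BAND, NIR_BAND = 14, 24  # NDVI row of Table 2
GREEN_BAND = 7               # ASSUMPTION: one band picked from the b3-b7 range


def band(cube, number):
    """Return band `number` (1-based) from a (rows, cols, bands) cube as float."""
    return cube[..., number - 1].astype(float)


def normalized_difference(a, b):
    """(a - b) / (a + b), with 0 wherever the denominator is 0."""
    num, den = a - b, a + b
    out = np.zeros_like(num)
    np.divide(num, den, out=out, where=den != 0)
    return out


def ndvi(cube):
    """NDVI = (NIR - Red) / (NIR + Red)."""
    return normalized_difference(band(cube, NIR_BAND), band(cube, RED_BAND))


def ndwi(cube, nir_band=NIR_BAND):
    """McFeeters NDWI = (Green - NIR) / (Green + NIR)."""
    return normalized_difference(band(cube, GREEN_BAND), band(cube, nir_band))
```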

Technical Validation

Quality of OHID-1 Dataset

Ensuring the image quality of the dataset is critical to guaranteeing that the input data meet high standards, beyond factors such as spatial resolution or the number of spectral bands. We chose five parameters to characterize the quality of the data; the quality control (QC) applied during processing is summarized as follows:

① Uncontrolled positioning accuracy: Utilize CE90 to evaluate the uncontrolled positioning accuracy of OHS hyperspectral satellite images, ensuring that the error is less than 500 m.

② Controlled positioning accuracy: Select an appropriate number and distribution of control points in the image, calculate their distance from the true position coordinates, and ensure the error is less than 3 pixels.

③ Full-band image registration accuracy: After the registration process is completed, evaluate the positions of each control point to verify that the absolute and geographic positioning errors relative to other products in the dataset are less than 3 pixels.

④ Relative radiometric calibration error: Examine the OHS hyperspectral satellite image after radiometric calibration, calculate the error between the calibrated radiance values and the true radiance values, and confirm that the relative radiometric calibration error is less than 3%.

⑤ Signal-to-noise ratio (SNR): Select suitable images that meet the requirements of a solar elevation angle greater than 30 degrees and a ground reflectance greater than 0.2, calculate their SNR, and verify that it falls within the range of 25 to 40 dB.
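The numeric thresholds in the QC list can be checked with a few helper functions, sketched below. The definitions are assumptions made for illustration: CE90 is taken as the empirical 90th percentile of horizontal errors, the relative calibration error as the mean absolute relative error, and SNR as 20·log10(mean/standard deviation) over a homogeneous region. The exact procedures used during OHID-1 processing are not specified in the text, and the function names are hypothetical.

```python
import numpy as np

# Illustrative sketch: metric definitions are ASSUMPTIONS; thresholds are from the QC list.
CE90_MAX_M = 500.0
MAX_PX_ERROR = 3.0
REL_CAL_MAX_PCT = 3.0
SNR_RANGE_DB = (25.0, 40.0)


def ce90(dx_m, dy_m):
    """Empirical CE90: the 90th percentile of horizontal (radial) errors, in metres."""
    return float(np.percentile(np.hypot(dx_m, dy_m), 90))


def max_gcp_error_px(measured_xy, true_xy):
    """Largest Euclidean distance (pixels) between measured and true GCP positions."""
    d = np.asarray(measured_xy, float) - np.asarray(true_xy, float)
    return float(np.max(np.hypot(d[:, 0], d[:, 1])))


def relative_calibration_error_pct(calibrated, reference):
    """Mean absolute relative error between calibrated and reference radiance, in %."""
    calibrated = np.asarray(calibrated, float)
    reference = np.asarray(reference, float)
    return float(100 * np.mean(np.abs(calibrated - reference) / np.abs(reference)))


def snr_db(region):
    """SNR of a homogeneous region as 20*log10(mean/std) (assumed definition)."""
    region = np.asarray(region, float)
    return float(20 * np.log10(region.mean() / region.std()))


def qc_checks(ce90_m, gcp_px, registration_px, rel_cal_pct, snr):
    """Pass/fail for each of the five QC criteria."""
    return {
        "uncontrolled_positioning": ce90_m < CE90_MAX_M,
        "controlled_positioning": gcp_px < MAX_PX_ERROR,
        "band_registration": registration_px < MAX_PX_ERROR,
        "relative_calibration": rel_cal_pct < REL_CAL_MAX_PCT,
        "snr": SNR_RANGE_DB[0] <= snr <= SNR_RANGE_DB[1],
    }
```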

Algorithms adopted

Many methods have been published for optimizing the performance of DNNs in recent years13,17,51–55. For HSI classification, the following methods have been successfully applied. CDCNN56,57 uses a network that is deeper than most others. It fuses ideas from AlexNet54, DCNN19, ResNet13 and FCN22, and uses a residual structure composed only of convolutional layers to extract HSI features.

However, CDCNN only handles 2-D data. To address this problem, SSRN58 takes 3-D data as input and improves its structure with ideas from 3DCNN and ResNet. To further improve accuracy, DBMA21 and DBDA59 use a double-branch structure that extracts spatial and spectral features separately and merges them during inference. SSSAN60 differs from the other methods mentioned here in that the backbone of its visual feature extraction module is a transformer. CVSSN61 improves the representation of spectral-spatial characteristics based on the extraction of spatial information. FDSSC62 proposes a novel network to improve speed and accuracy. HyLITE63, a vision transformer that incorporates both local and spectral information, has been reported to outperform other networks. The classification frameworks of the different DNNs are shown in Figs. 8–14.

Fig. 8.

Flowcharts of the different DCNN classification frameworks: (a) 1DCNN. (b) 2DCNN. (c) 3DCNN.

Fig. 14.

Flowchart of HyLITE framework.

Figure 8 is divided into three parts, each visualizing a different convolutional neural network (CNN) architecture along with its parameters and components. Part (a) depicts the structure of 1DCNN, part (b) the structure of 2DCNN, and part (c) the structure of 3DCNN.


1DCNN

CNNs differ in how they implement convolutional and max pooling layers and in how the network is trained. As depicted in Fig. 8(a), 1DCNN comprises five layers: the input layer, the convolutional layer C1, the max pooling layer M2, the fully connected layer F3, and the output layer. Let $\theta$ denote the complete set of trainable parameters, $\theta = \{\theta_1, \theta_2, \theta_3, \theta_4\}$, where $\theta_i$ is the parameter set between the $(i-1)$-th and the $i$-th layer, with $i$ ranging from 1 to 4.

In HSI, each pixel sample can be treated as a 2D image with a height of 1, analogous to 1D audio inputs in speech recognition, so similar processing techniques can be applied to HSI data. Hence, the size of the input layer is simply $(n_1, 1)$, where $n_1$ is the total number of bands. Layer C1 filters the $n_1 \times 1$ input data with 20 kernels of size $k_1 \times 1$ and comprises $20 \times n_2 \times 1$ nodes, where $n_2 = n_1 - k_1 + 1$. Between the input layer and layer C1 there are $20 \times (k_1 + 1)$ trainable parameters. Layer M2, the second hidden layer, is a max pooling layer with kernel size $(k_2, 1)$ and comprises $20 \times n_3 \times 1$ nodes, where $n_3 = n_2 / k_2$. Layer F3 has $n_4$ nodes and $(20 \times n_3 + 1) \times n_4$ trainable parameters in its connection with layer M2. Finally, the output layer contains $n_5$ nodes and $(n_4 + 1) \times n_5$ trainable parameters in its connection with layer F3. Consequently, the 1DCNN classifier has a total of $20 \times (k_1 + 1) + (20 \times n_3 + 1) \times n_4 + (n_4 + 1) \times n_5$ trainable parameters64.
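The parameter count of this 1DCNN layout can be checked with a few lines of Python. In this sketch, n1 is the number of bands, k1 the C1 kernel length, k2 the M2 pooling size, n4 the number of F3 nodes, and n5 the number of classes. It assumes valid (unpadded) convolution in C1 and non-overlapping pooling in M2, so the pooled length is taken as the floor of n2 / k2. The values n1 = 32 and n5 = 7 match the 32 bands and 7 classes of OHID-1, while k1 = 5, k2 = 3 and n4 = 100 are purely illustrative, not the settings used in the experiments.

```python
def cnn1d_param_count(n1, k1, k2, n4, n5, n_kernels=20):
    """Node and trainable-parameter counts for the 1DCNN layout (C1 -> M2 -> F3 -> output)."""
    n2 = n1 - k1 + 1                        # C1 output length per kernel (valid convolution)
    n3 = n2 // k2                           # M2 output length per kernel (non-overlapping pooling)
    c1_params = n_kernels * (k1 + 1)        # each kernel: k1 weights + 1 bias
    f3_params = (n_kernels * n3 + 1) * n4   # M2 -> F3, including bias
    out_params = (n4 + 1) * n5              # F3 -> output, including bias
    # Max pooling (M2) has no trainable parameters.
    return {"n2": n2, "n3": n3, "total": c1_params + f3_params + out_params}


result = cnn1d_param_count(n1=32, k1=5, k2=3, n4=100, n5=7)
print(result)  # {'n2': 28, 'n3': 9, 'total': 18927}
```

Most of the parameters sit in the M2-to-F3 connection, which is why the number of F3 nodes dominates the model size for a fixed band count.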


2DCNN

As depicted in Fig. 8(b), the input hyperspectral image of 2DCNN is represented as a 3D tensor of shape $h \times w \times c$, where h and w are the image's height and width and c is the number of spectral bands (channels). To suit CNNs, the hyperspectral image is decomposed into patches, each of which encapsulates the spectral and spatial information of a specific pixel, so that relevant features can be extracted for classification65. Specifically, to classify the pixel $p_{x,y}$ located at $(x, y)$ on the image plane while merging spectral and spatial information, 2DCNN uses a square patch of size $s \times s$ centered at $p_{x,y}$. Let $t_{x,y}$ denote the class label of the pixel at location $(x, y)$ and $P_{x,y}$ the patch centered at $p_{x,y}$. A dataset $D$ can then be constructed, consisting of tuples $(P_{x,y}, t_{x,y})$. Each patch $P_{x,y}$ is itself a 3D tensor of shape $s \times s \times c$ that encapsulates the spectral and spatial information of pixel $p_{x,y}$.

The patch tensor is then decomposed into c matrices, each of shape s × s. These matrices are fed into a CNN, which progressively constructs high-level features that capture both the spectral and spatial attributes of the central pixel. Finally, the extracted features are passed to a multi-layer perceptron (MLP), which performs the classification.
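The patch-based dataset construction described above can be sketched with NumPy. The sketch assumes that the hyperspectral cube is stored as an h × w × c array, that label 0 marks unlabeled pixels, and that the borders are zero-padded so edge pixels also receive full s × s patches. The function name `build_patch_dataset` is hypothetical and the data are random placeholders.

```python
import numpy as np


def build_patch_dataset(image, labels, s):
    """Form (patch, label) tuples from an h x w x c cube using s x s patches centered on labeled pixels."""
    if s % 2 == 0:
        raise ValueError("patch size s must be odd so that each patch has a central pixel")
    r = s // 2
    # Zero-pad the spatial borders so that edge pixels also get full s x s patches.
    padded = np.pad(image, ((r, r), (r, r), (0, 0)), mode="constant")
    patches, targets = [], []
    for x in range(image.shape[0]):
        for y in range(image.shape[1]):
            if labels[x, y] == 0:  # assumption: label 0 marks unlabeled pixels
                continue
            # Rows x..x+s-1 of the padded cube are rows x-r..x+r of the original.
            patches.append(padded[x:x + s, y:y + s, :])
            targets.append(labels[x, y])
    return np.stack(patches), np.array(targets)


rng = np.random.default_rng(0)
cube = rng.random((10, 12, 32))          # h=10, w=12, c=32 bands
gt = rng.integers(0, 8, size=(10, 12))   # 0 = unlabeled, 1..7 = classes
P, t = build_patch_dataset(cube, gt, s=5)
# Split each s x s x c patch into c matrices of shape s x s (channel-first, as CNN frameworks expect).
X = np.moveaxis(P, -1, 1)
print(P.shape[1:], X.shape[1:])  # (5, 5, 32) (32, 5, 5)
```

Zero padding is only one way to handle borders; reflection padding or simply skipping border pixels are equally common choices.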


3DCNN

A combined spatio-spectral model is needed to analyze both the spectral and spatial information in hyperspectral data. The key advantage of a spatio-spectral framework is that both components are integrated from the outset and remain linked throughout processing. Making full use of the available data in this way can significantly reduce costs. Unlike the preceding methods, 3DCNN processes spatial and spectral components concurrently through genuine 3D convolutions, which makes better use of the limited number of available samples and requires fewer trainable parameters66. 3DCNN decomposes the problem into the processing of a sequence of volumetric representations of the image: each pixel is associated with a $d \times d$ spatial neighborhood and $n_{\text{bands}}$ spectral bands, so that each pixel is treated as a volumetric element of dimension $d \times d \times n_{\text{bands}}$. The core idea of this architecture is to combine the principles of traditional CNNs with 3D convolution operations. Unlike standard 1D convolution operators, which examine only the spectral content of the data, 3D convolutions analyze spatial and spectral information together.

Figure 8(c) provides an overview of the 3DCNN architecture, which stacks several blocks of CNN layers to obtain deep and efficient representations of the image. First, a set of 3D convolutional layers handles the three-dimensional input voxels; each of these layers comprises a number of volumetric kernels that convolve simultaneously across the width, height, and depth axes of the input. This 3D convolution stack is followed by a series of 1 × 1 convolution (1D) layers that discard spatial neighborhood information, and then by a sequence of fully connected layers. In essence, the architecture takes 3D voxels as input, first generates 3D feature maps, and progressively reduces them to 1D feature vectors.
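To make the difference between spectral-only and spatio-spectral filtering concrete, the following NumPy sketch implements a naive single-kernel 3D convolution over one voxel stored as (bands, height, width). It assumes no padding, stride 1, no bias, and a single input and output channel, so it illustrates the operation rather than the 3DCNN implementation itself. The voxel size d = 7 and the 7 × 3 × 3 kernel are illustrative.

```python
import numpy as np


def conv3d_valid(volume, kernel):
    """Naive 'valid' 3D cross-correlation, the operation computed by a 3D convolutional layer."""
    vb, vh, vw = volume.shape
    kb, kh, kw = kernel.shape
    out = np.empty((vb - kb + 1, vh - kh + 1, vw - kw + 1))
    # The kernel slides along the spectral (depth) axis and both spatial axes at once.
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            for k in range(out.shape[2]):
                out[i, j, k] = np.sum(volume[i:i + kb, j:j + kh, k:k + kw] * kernel)
    return out


rng = np.random.default_rng(0)
voxel = rng.random((32, 7, 7))   # n_bands=32, d=7: one pixel's volumetric neighborhood
kernel = rng.random((7, 3, 3))   # 7 bands deep, 3 x 3 spatially
fmap = conv3d_valid(voxel, kernel)
print(fmap.shape)                # (26, 5, 5): still a 3D feature map
```

A kernel of shape (k, 1, 1) would reduce this to a spectral-only 1D convolution, while a kernel covering the whole voxel collapses it to a single value, mirroring how the architecture gradually turns 3D feature maps into 1D feature vectors.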


CDCNN

Figure 9 shows the overall framework of the CDCNN model, which can be divided into three main modules: a feature vector extraction module, a class center vector extraction module, and a prediction module. The feature vector extraction module, on the left side of Fig. 9, converts each input image into a vector representing its features. The class center vector extraction module, in the blue area in the upper right corner of Fig. 9, extracts the class center vectors. The prediction module, in the red dashed box in the bottom right corner, predicts image categories based on posterior probability values67.
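The class-center and posterior-probability steps can be illustrated with a short NumPy sketch. The text does not specify how distances are turned into posterior probabilities, so this sketch assumes that each class center is the mean feature vector of its class and that posteriors are a softmax over negative squared Euclidean distances to the centers. The 2D feature vectors stand in for the output of the feature extraction module, and all names are hypothetical.

```python
import numpy as np


def class_centers(features, labels, n_classes):
    """Class center vectors: the mean feature vector of each class."""
    return np.stack([features[labels == k].mean(axis=0) for k in range(n_classes)])


def posterior(features, centers):
    """Assumed posterior: softmax over negative squared distances to each class center."""
    d2 = ((features[:, None, :] - centers[None, :, :]) ** 2).sum(axis=-1)  # (N, K)
    logits = -d2 - (-d2).max(axis=1, keepdims=True)  # shift for numerical stability
    p = np.exp(logits)
    return p / p.sum(axis=1, keepdims=True)


train_feats = np.array([[0.0, 0.0], [0.0, 1.0], [5.0, 5.0], [5.0, 6.0]])
train_labels = np.array([0, 0, 1, 1])
centers = class_centers(train_feats, train_labels, n_classes=2)  # [[0, 0.5], [5, 5.5]]
probs = posterior(np.array([[0.2, 0.4], [4.9, 5.2]]), centers)
print(probs.argmax(axis=1))  # [0 1]
```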


DBMA

Figure 10 shows the overall framework of the DBMA model. The top branch is the spectral branch, which extracts spectral features and is composed of dense spectral blocks and channel attention blocks. The bottom branch is the spatial branch, which extracts spatial features and is composed of dense spatial blocks and spatial attention blocks21.
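A channel attention block of the kind used in the spectral branch reweights each feature channel by a learned score. The NumPy sketch below assumes the CBAM formulation, in which average- and max-pooled channel descriptors pass through a shared two-layer MLP and a sigmoid; the exact block in DBMA may differ in details, and the random weights stand in for trained ones.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def channel_attention(fmap, w1, w2):
    """CBAM-style channel attention for a (C, H, W) feature map.

    w1 has shape (C // r, C) and w2 has shape (C, C // r): a shared two-layer MLP.
    """
    def mlp(v):
        return w2 @ np.maximum(w1 @ v, 0.0)  # ReLU between the two layers

    avg = fmap.mean(axis=(1, 2))             # (C,) global average pooling
    mx = fmap.max(axis=(1, 2))               # (C,) global max pooling
    weights = sigmoid(mlp(avg) + mlp(mx))    # (C,) one weight per channel, in (0, 1)
    return fmap * weights[:, None, None], weights


rng = np.random.default_rng(0)
C, r = 16, 4
fmap = rng.standard_normal((C, 7, 7))
w1 = rng.standard_normal((C // r, C)) * 0.1
w2 = rng.standard_normal((C, C // r)) * 0.1
out, weights = channel_attention(fmap, w1, w2)
print(out.shape, weights.shape)  # (16, 7, 7) (16,)
```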


DBDA

The overall structure of the DBDA network is shown in Fig. 11. For convenience, the top branch is called the “spectral branch” and the bottom branch the “spatial branch”. Spectral and spatial feature maps are obtained by feeding the input into the spectral and spatial branches, respectively59.
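As a rough illustration of attention in the spectral branch, the sketch below implements a DANet-style channel self-attention in NumPy: channel affinities are computed from the flattened feature map, normalized with a softmax, and used to form a weighted sum of channels that is added back to the input. We assume this formulation for DBDA's spectral attention; the scale factor `beta`, which is learned in practice, is exposed as an argument, and the function names are hypothetical.

```python
import numpy as np


def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)  # shift for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def channel_self_attention(fmap, beta=1.0):
    """DANet-style channel attention on a (C, H, W) feature map."""
    c, h, w = fmap.shape
    a = fmap.reshape(c, h * w)             # (C, N): each row is one channel
    affinity = softmax(a @ a.T, axis=-1)   # (C, C): row i = attention of channel i over all channels
    out = beta * (affinity @ a) + a        # attention-weighted channels plus residual input
    return out.reshape(c, h, w)


rng = np.random.default_rng(0)
fmap = rng.standard_normal((24, 9, 9))
out = channel_self_attention(fmap, beta=0.5)
print(out.shape)  # (24, 9, 9)
```

With `beta` initialized at 0, as is common for learned residual scales, the block starts as an identity mapping and gradually learns how much channel mixing to apply.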


SSSAN

Figure 12 shows the overall framework of the SSSAN60 model. The spectral module consists of two convolutional layers, two batch normalization layers, and two ReLU layers. The spatial module consists of two attention modules, two convolutional layers, two batch normalization layers, and two ReLU layers. The kernel size in the spatial module is 3 × 3 × q, where q is the number of channels of the input features. Padding is applied in each convolutional layer to keep the spatial size of the convolutional features at 7 × 7. At the end of the SSSAN model, two fully connected layers and a softmax layer perform the classification.
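The effect of padding on the 7 × 7 spatial size can be checked with the standard output-size formula for a convolution, ⌊(n + 2p − k)/s⌋ + 1. This small Python sketch assumes symmetric padding and stride 1 unless stated; q = 64 is an illustrative channel count, not a value taken from SSSAN.

```python
def conv_output_size(n, k, p=0, stride=1):
    """Output length of a convolution along one axis."""
    return (n + 2 * p - k) // stride + 1


def same_padding(k):
    """Padding that keeps the size unchanged for an odd kernel and stride 1."""
    if k % 2 == 0:
        raise ValueError("same padding with stride 1 requires an odd kernel size")
    return (k - 1) // 2


q = 64  # illustrative number of input feature channels
print(conv_output_size(7, 3, p=same_padding(3)))  # 7: spatial size preserved with padding 1
print(conv_output_size(7, 3, p=0))                # 5: without padding the map shrinks
print(conv_output_size(q, q, p=0))                # 1: a depth-q kernel spans all q channels
```

The last line shows why a 3 × 3 × q kernel acts like a 2D convolution over all input channels: along the channel axis it produces a single output position.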


CVSSN

Figure 13 shows the overall framework of the CVSSN61 model. Based on two similarity measures, CVSSN designs an adaptive weighted addition based spectral vector self-similarity module (AWA-SVSS) and a Euclidean distance based feature vector self-similarity module (ED-FVSS), which together mine the spatial relationships oriented to the central vector. In addition, a spectral-spatial information fusion module (SSIF) provides a new way to fuse the central 1D spectral vector with the corresponding 3D patch, enabling efficient spectral-spatial feature learning in the subsequent modules. Finally, a scale information complementary convolution module (SIC-Conv) and a channel spatial separation convolution module (CSS-Conv) are implemented for efficient spectral-spatial feature learning.
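The idea behind a Euclidean-distance self-similarity module can be sketched in NumPy: compute the distance between the central pixel's vector and every vector in the patch, then map the distances to similarity weights that emphasize neighbors resembling the center. This is a simplified illustration under our own assumptions; the 1 / (1 + d) mapping and the reweighting step are not taken from CVSSN, and the function name is hypothetical.

```python
import numpy as np


def center_similarity(patch):
    """Similarity of each pixel in an (s, s, c) patch to the central pixel vector."""
    s = patch.shape[0]
    center = patch[s // 2, s // 2]                  # (c,) central spectral vector
    dist = np.linalg.norm(patch - center, axis=-1)  # (s, s) Euclidean distances
    return 1.0 / (1.0 + dist)                       # 1 at the center, smaller for dissimilar pixels


rng = np.random.default_rng(0)
patch = rng.random((7, 7, 32))
patch[0, 0] = patch[3, 3]           # a neighbor identical to the center
sim = center_similarity(patch)
weighted = patch * sim[..., None]   # emphasize center-like neighbors
print(sim[3, 3], sim[0, 0])         # 1.0 1.0
```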

HyLITE

Fig. 9.

Flowchart of CDCNN framework.

Fig. 10.

Flowchart of DBMA framework.

Fig. 11.

Flowchart of DBDA framework.

Fig. 12.

Flowchart of SSSAN framework.

Fig. 13.

Flowchart of CVSSN framework.

Figure 14 is divided into two parts by a dashed line. On the left side, a Vision Transformer (ViT) structure is depicted, with a detailed illustration of the process through which hyperspectral data is input into the ViT structure. On the right side, the specific layer structure within the transformer architecture is shown.

Figure 14 gives an illustrative overview of HyLITE. The model follows the protocol of SpectralFormer and treats HSI classification as an image-level classification task. Consider an image-label pair $(x, y)$ with $x \in \mathbb{R}^{H \times W \times C}$ and $y \in \{1, \dots, K\}$, where $x$ is a low-resolution square image with spatial dimensions $H \times W$ and $C$ spectral bands, cropped from a high-resolution hyperspectral image by overlapping patchification. Each patch is assigned the label of its central pixel, chosen from $K$ possible classes that depend on the dataset. The objective is to train a vision transformer $f_\theta$ with parameters $\theta$ to predict the image label, $\hat{y} = f_\theta(x)$, where $\hat{y}$ is the model's predicted label.
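Overlapping patchification with center-pixel labels, as described above, can be sketched in NumPy. The patch size, stride, and random data are illustrative assumptions (HyLITE's own settings are not given here), the function name is hypothetical, and the transformer itself is omitted.

```python
import numpy as np


def overlapping_patches(cube, labels, size, stride):
    """Crop size x size patches with the given stride from an (H, W, C) cube.

    When stride < size, neighboring patches overlap. Each patch takes the label
    of its central pixel (size is assumed odd).
    """
    height, width, _ = cube.shape
    r = size // 2
    patches, targets = [], []
    for i in range(0, height - size + 1, stride):
        for j in range(0, width - size + 1, stride):
            patches.append(cube[i:i + size, j:j + size])
            targets.append(labels[i + r, j + r])  # label of the central pixel
    return np.stack(patches), np.array(targets)


rng = np.random.default_rng(0)
cube = rng.random((9, 9, 32))
labels = rng.integers(1, 8, size=(9, 9))
x, y = overlapping_patches(cube, labels, size=5, stride=2)
print(x.shape, y.shape)  # (9, 5, 5, 32) (9,)
```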

Evaluation Metrics

To evaluate HSI classification performance, we utilized the Overall Accuracy (OA), Average Accuracy (AA), and the kappa coefficient as evaluation metrics. The OA can be calculated using formula (2):

$$\mathrm{OA} = \frac{m}{N} \tag{2}$$

where $m$ is the number of correctly classified samples and $N$ is the total number of samples.

The AA can be obtained by formula (3):

$$\mathrm{AA} = \frac{1}{n} \sum_{i=1}^{n} \frac{m_i}{c_i} \tag{3}$$

where $n$ is the number of classes, $m_i$ is the number of correctly classified samples of class $i$, and $c_i$ is the number of samples of class $i$.

The kappa coefficient measures the agreement between the predicted labels and the ground truth beyond the agreement expected by chance. It can be calculated using formula (4):

$$\kappa = \frac{\mathrm{OA} - p_e}{1 - p_e} \tag{4}$$

where pe is calculated with formula (5):

$$p_e = \frac{\sum_{i=1}^{n} c_i \, \hat{c}_i}{N^2} \tag{5}$$

where $c_i$ is the number of samples of the $i$th class, $\hat{c}_i$ is the number of samples predicted as the $i$th class, OA is the overall accuracy, and $N$ is the total number of samples.
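The three metrics can be computed from a confusion matrix, as in the following NumPy sketch. It uses the standard chance-agreement term of Cohen's kappa, in which the expected agreement is computed from the true and predicted sample counts of each class. The function name and toy labels are illustrative; the kappa value can be cross-checked against scikit-learn's `cohen_kappa_score`.

```python
import numpy as np


def classification_metrics(y_true, y_pred, n_classes):
    """Return (OA, AA, kappa) for integer class labels 0..n_classes-1."""
    y_true = np.asarray(y_true)
    y_pred = np.asarray(y_pred)
    cm = np.zeros((n_classes, n_classes), dtype=np.int64)
    np.add.at(cm, (y_true, y_pred), 1)     # rows: true class, columns: predicted class
    n_total = cm.sum()
    correct = np.diag(cm)                  # correctly classified samples per class
    true_counts = cm.sum(axis=1)           # samples per true class
    pred_counts = cm.sum(axis=0)           # samples per predicted class
    oa = correct.sum() / n_total
    aa = np.mean(correct / true_counts)
    pe = np.sum(true_counts * pred_counts) / n_total ** 2  # chance agreement
    kappa = (oa - pe) / (1.0 - pe)
    return float(oa), float(aa), float(kappa)


y_true = [0, 0, 0, 0, 1, 1, 2, 2, 2, 2]
y_pred = [0, 0, 0, 1, 1, 1, 2, 2, 0, 2]
oa, aa, kappa = classification_metrics(y_true, y_pred, n_classes=3)
print(round(oa, 3), round(aa, 3), round(kappa, 3))  # 0.8 0.833 0.697
```

AA weights every class equally, so it falls well below OA when small classes are misclassified, which is the pattern visible for several methods on OHID-1 in Table 5.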

The level of agreement indicated by the value of the kappa coefficient is graded as shown in Table 3.

Table 3.

Classes for the kappa coefficient.

| Kappa | Agreement level |
|---|---|
| −1 | Complete disagreement |
| 0 | Chance agreement |
| 0.00∼0.20 | Slight |
| 0.21∼0.40 | Fair |
| 0.41∼0.60 | Moderate |
| 0.61∼0.80 | Substantial |
| 0.81∼1.00 | Almost perfect |

Experimental settings and results

We conducted experiments on OHID-1⁴⁷ with nine DNNs and one traditional method: (1) 1DCNN64, (2) 2DCNN65, (3) 3DCNN66, (4) CDCNN67, (5) DBMA21, (6) DBDA59, (7) SSSAN60, (8) CVSSN61, (9) HyLITE63 and (10) SVM68. For each class, 500 randomly selected samples were used for training and the remaining samples for testing. The data were normalized. The DNNs were trained with the Adam optimizer and the cross-entropy loss, with the learning rate and batch size set empirically to 0.0001 and 64, respectively, for up to 200 epochs; the learning rate was multiplied by 0.1 at epochs 50, 90, and 110. Each experiment was repeated 10 times, and the mean OA, AA, and kappa coefficient are reported as the final results. The main parameters were set empirically and are listed in Table 4. For the SVM, the cost and gamma parameters were selected by grid search, giving cost = 9.514 and gamma = 0.03125; the SVM was likewise trained and tested 10 times.
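The sampling protocol and learning-rate schedule can be sketched in Python. The sketch assumes that `labels` holds one class label per labeled pixel and that epochs are counted from 0; the function names are hypothetical and the toy labels are placeholders, not OHID-1 data.

```python
import numpy as np


def per_class_split(labels, n_train=500, seed=0):
    """Randomly pick n_train indices per class for training; the rest go to testing.

    A class with fewer than n_train samples puts all of its samples in the training set.
    """
    rng = np.random.default_rng(seed)
    train_idx, test_idx = [], []
    for k in np.unique(labels):
        idx = rng.permutation(np.flatnonzero(labels == k))
        train_idx.append(idx[:n_train])
        test_idx.append(idx[n_train:])
    return np.concatenate(train_idx), np.concatenate(test_idx)


def lr_at_epoch(epoch, base_lr=1e-4, milestones=(50, 90, 110), gamma=0.1):
    """Step schedule: multiply the learning rate by gamma at each milestone epoch."""
    return base_lr * gamma ** sum(epoch >= m for m in milestones)


labels = np.repeat([1, 2, 3], [600, 700, 800])  # toy labels for three classes
train, test = per_class_split(labels)
print(len(train), len(test))  # 1500 600
print([lr_at_epoch(e) for e in (0, 50, 90, 110)])
```

In PyTorch, the same step schedule corresponds to `torch.optim.lr_scheduler.MultiStepLR(optimizer, milestones=[50, 90, 110], gamma=0.1)` stepped once per epoch.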

Table 4.

Main parameters for training DNNs.

| Parameters | Max Epochs | Early Stop | Learning Rate | Batch Size |
|---|---|---|---|---|
| Value | 200 | 30 | 0.0001 | 64 |
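Table 4 does not state how the early-stopping value is applied. A common interpretation, assumed in this minimal sketch, is a patience of 30 epochs without improvement in validation loss; the class name and the `min_delta` parameter are hypothetical.

```python
class EarlyStopping:
    """Signal a stop after `patience` consecutive epochs without validation-loss improvement."""

    def __init__(self, patience=30, min_delta=0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best = float("inf")
        self.bad_epochs = 0

    def step(self, val_loss):
        """Record one epoch's validation loss; return True when training should stop."""
        if val_loss < self.best - self.min_delta:
            self.best = val_loss
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience


stopper = EarlyStopping(patience=3)
decisions = [stopper.step(loss) for loss in [1.0, 0.9, 0.95, 0.95, 0.95]]
print(decisions)  # [False, False, False, False, True]
```

Under this interpretation, training with the Table 4 settings runs for at most 200 epochs and stops earlier once 30 epochs pass without improvement.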

The overall experimental results are given in Table 5, and the classification maps for the different datasets are shown in Figs. 15–21.

Table 5.

Experimental results on OHID-1 and other HSI datasets.

| Dataset | Metric | 1DCNN | 2DCNN | 3DCNN | CDCNN | DBMA | DBDA | SVM | SSSAN | CVSSN | HyLITE |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Indian Pines | OA | 0.861 | 0.917 | 0.84 | 0.623 | 0.932 | 0.954 | 0.694 | 0.956 | 0.981 | 0.983 |
| | AA | 0.916 | 0.93 | 0.904 | 0.509 | 0.877 | 0.965 | 0.656 | 0.957 | 0.959 | 0.969 |
| | kappa | 0.838 | 0.902 | 0.814 | 0.559 | 0.922 | 0.947 | 0.647 | 0.95 | 0.978 | 0.980 |
| Pavia University | OA | 0.918 | 0.968 | 0.971 | 0.877 | 0.947 | 0.96 | 0.843 | 0.998 | 0.999 | 0.991 |
| | AA | 0.925 | 0.969 | 0.97 | 0.824 | 0.955 | 0.965 | 0.83 | 0.998 | 0.998 | 0.988 |
| | kappa | 0.891 | 0.957 | 0.961 | 0.836 | 0.93 | 0.947 | 0.788 | 0.997 | 0.998 | 0.989 |
| Salinas Valley | OA | 0.911 | 0.936 | 0.882 | 0.778 | 0.954 | 0.975 | 0.881 | 0.985 | 0.997 | 0.964 |
| | AA | 0.964 | 0.974 | 0.951 | 0.799 | 0.963 | 0.98 | 0.915 | 0.993 | 0.998 | 0.984 |
| | kappa | 0.901 | 0.928 | 0.868 | 0.755 | 0.949 | 0.972 | 0.867 | 0.983 | 0.997 | 0.960 |
| WHU-Hi-Hanchuan | OA | 0.792 | 0.869 | 0.854 | 0.678 | 0.838 | 0.857 | 0.707 | 0.985 | 0.991 | 0.952 |
| | AA | 0.754 | 0.856 | 0.833 | 0.429 | 0.78 | 0.821 | 0.506 | 0.983 | 0.986 | 0.889 |
| | kappa | 0.759 | 0.848 | 0.83 | 0.615 | 0.809 | 0.831 | 0.655 | 0.983 | 0.989 | 0.943 |
| WHU-Hi-Longkou | OA | 0.918 | 0.969 | 0.963 | 0.967 | 0.993 | 0.996 | 0.949 | 0.96 | 0.999 | 0.989 |
| | AA | 0.925 | 0.973 | 0.958 | 0.924 | 0.979 | 0.989 | 0.885 | 0.886 | 0.997 | 0.977 |
| | kappa | 0.891 | 0.96 | 0.951 | 0.956 | 0.991 | 0.995 | 0.934 | 0.948 | 0.998 | 0.986 |
| WHU-Hi-Honghu | OA | 0.742 | 0.887 | 0.841 | 0.778 | 0.888 | 0.899 | 0.757 | 0.986 | 0.993 | 0.946 |
| | AA | 0.726 | 0.88 | 0.84 | 0.464 | 0.831 | 0.879 | 0.538 | 0.97 | 0.983 | 0.870 |
| | kappa | 0.687 | 0.858 | 0.804 | 0.716 | 0.859 | 0.873 | 0.684 | 0.982 | 0.991 | 0.931 |
| OHID-1 | OA | 0.592 | 0.663 | 0.615 | 0.724 | 0.736 | 0.74 | 0.691 | 0.895 | 0.903 | 0.869 |
| | AA | 0.616 | 0.687 | 0.648 | 0.562 | 0.601 | 0.61 | 0.543 | 0.836 | 0.886 | 0.698 |
| | kappa | 0.501 | 0.583 | 0.529 | 0.653 | 0.67 | 0.675 | 0.614 | 0.855 | 0.864 | 0.798 |

Fig. 15.

Visualization of experimental results of the Indian Pines dataset: (a) true color. (b) ground truth. (c) 1DCNN. (d) 2DCNN. (e) 3DCNN. (f) HyLITE. (g) SVM.

Fig. 21.

Visualization of experimental results of the WHU-Hi-HongHu dataset: (a) true color. (b) ground truth. (c) 1DCNN. (d) 2DCNN. (e) 3DCNN. (f) HyLITE. (g) SVM.

Figure 15 contains seven images and a legend. Image (a) shows the true-color image of the Indian Pines dataset, and image (b) shows the ground truth. Images (c) to (g) show the classification maps obtained by training four deep neural networks (DNNs) and one traditional method on the Indian Pines dataset and performing inference: image (c) corresponds to 1DCNN (one-dimensional convolutional neural network), image (d) to 2DCNN (two-dimensional convolutional neural network), image (e) to 3DCNN (three-dimensional convolutional neural network), image (f) to HyLITE, and image (g) to SVM. The legend gives the color assigned to each Indian Pines category in the inference results.

Figure 16 contains seven images and a legend. Image (a) shows the true-color image of the Pavia University dataset, and image (b) shows the ground truth. Images (c) to (g) show the classification maps obtained by training four deep neural networks (DNNs) and one traditional method on the Pavia University dataset and performing inference: image (c) corresponds to 1DCNN, image (d) to 2DCNN, image (e) to 3DCNN, image (f) to HyLITE (Hyperspectral Locality-aware Image TransformEr), and image (g) to SVM. The legend gives the color assigned to each Pavia University category in the inference results.

Fig. 16.

Visualization of experimental results of the Pavia University dataset: (a) true color. (b) ground truth. (c) 1DCNN. (d) 2DCNN. (e) 3DCNN. (f) HyLITE. (g) SVM.

Figure 17 contains seven images and a legend. Image (a) shows the true-color image of the OHID-1 dataset, and image (b) shows the ground truth. Images (c) to (g) show the classification maps obtained by training four deep neural networks (DNNs) and one traditional method on the OHID-1 dataset and performing inference: image (c) corresponds to 1DCNN, image (d) to 2DCNN, image (e) to 3DCNN, image (f) to HyLITE, and image (g) to SVM. The legend gives the color assigned to each OHID-1 category in the inference results.

Fig. 17.

Visualization of experimental results of the OHID-1 dataset: (a) true color. (b) ground truth. (c) 1DCNN. (d) 2DCNN. (e) 3DCNN. (f) HyLITE. (g) SVM.

Figure 18 contains seven images and a legend. Image (a) shows the true-color image of the Salinas Valley dataset, and image (b) shows the ground truth. Images (c) to (g) show the classification maps obtained by training four deep neural networks (DNNs) and one traditional method on the Salinas Valley dataset and performing inference: image (c) corresponds to 1DCNN, image (d) to 2DCNN, image (e) to 3DCNN, image (f) to HyLITE, and image (g) to SVM. The legend gives the color assigned to each Salinas Valley category in the inference results.

Fig. 18.

Visualization of experimental results of the Salinas Valley dataset: (a) true color. (b) ground truth. (c) 1DCNN. (d) 2DCNN. (e) 3DCNN. (f) HyLITE. (g) SVM.

Figure 19 contains seven images and a legend. Image (a) shows the true-color image of the WHU-Hi-HanChuan dataset, and image (b) shows the ground truth. Images (c) to (g) show the classification maps obtained by training four deep neural networks (DNNs) and one traditional method on the WHU-Hi-HanChuan dataset and performing inference: image (c) corresponds to 1DCNN, image (d) to 2DCNN, image (e) to 3DCNN, image (f) to HyLITE, and image (g) to SVM. The legend gives the color assigned to each WHU-Hi-HanChuan category in the inference results.

Fig. 19.

Visualization of experimental results of the WHU-Hi-HanChuan dataset: (a) true color. (b) ground truth. (c) 1DCNN. (d) 2DCNN. (e) 3DCNN. (f) HyLITE. (g) SVM.

Figure 20 contains seven images and a legend. Image (a) shows the true-color image of the WHU-Hi-LongKou dataset, and image (b) shows the ground truth. Images (c) to (g) show the classification maps obtained by training four deep neural networks (DNNs) and one traditional method on the WHU-Hi-LongKou dataset and performing inference: image (c) corresponds to 1DCNN, image (d) to 2DCNN, image (e) to 3DCNN, image (f) to HyLITE, and image (g) to SVM. The legend gives the color assigned to each WHU-Hi-LongKou category in the inference results.

Fig. 20.

Visualization of experimental results of the WHU-Hi-LongKou dataset: (a) true color. (b) ground truth. (c) 1DCNN. (d) 2DCNN. (e) 3DCNN. (f) HyLITE. (g) SVM.

Figure 21 contains seven images and a legend. Image (a) shows the true-color image of the WHU-Hi-HongHu dataset, and image (b) shows the ground truth. Images (c) to (g) show the classification maps obtained by training four deep neural networks (DNNs) and one traditional method on the WHU-Hi-HongHu dataset and performing inference: image (c) corresponds to 1DCNN, image (d) to 2DCNN, image (e) to 3DCNN, image (f) to HyLITE, and image (g) to SVM. The legend gives the color assigned to each WHU-Hi-HongHu category in the inference results.

Analysis of experimental results

Table 5 shows that most methods perform worse on OHID-1⁴⁷ than on the other datasets; in terms of OA, every method except CDCNN obtains its lowest score on OHID-1. This indicates that OHID-1 is harder for current HSI classification methods to classify correctly, and that it can therefore serve as a challenging benchmark for the further development of HSI classification.

Figures 15–21 show the true-color images, the ground truth, and the classification maps obtained by the visualized methods on the seven datasets. All prediction maps approximately reproduce the overall regions of the ground truth, demonstrating the contribution of artificial intelligence techniques to hyperspectral classification. Among the visualized methods, HyLITE produces the smoothest and most accurate classification maps, which is consistent with its OA in Table 5. This is likely due to the transformer's ability to capture long-range dependencies and complex relationships within the data.

OHID-1⁴⁷ covers 7 land-cover classes in the area around Zhuhai City. Besides hyperspectral image classification, it can also be used to test general multi-class classification algorithms, both deep learning and traditional.

Usage Notes

The dataset described here is available from https://figshare.com/articles/online_resource/OHID-1/27966024/8. It offers flexibility for researchers studying HSI classification for environmental monitoring, resource management, zoning, and related applications. Due to policy restrictions, the dataset contains no geospatial information.

OHID-1⁴⁷ covers 7 classes in the area around Zhuhai City. It can be used to evaluate general hyperspectral classification algorithms, including both deep learning and traditional methods, as well as for computer vision tasks such as super-resolution.

OHID-1⁴⁷ is distinguished from other datasets by its spatial resolution, spectral characteristics, large data scale, advanced acquisition techniques, and broad application potential. Unlike traditional remote sensing datasets limited to color-space features, OHID-1 provides spectral-spatial features that enrich object details. It also covers a broader area and exhibits more complex features, which makes classification harder. Compared with other hyperspectral remote sensing datasets, it therefore poses a greater challenge to existing classification algorithms and has greater potential to drive improvements in algorithm performance.

OHID-1 has the following limitations. Because the labels of OHID-1⁴⁷ do not include desert, glacier, wetland, and other terrain types that are absent from Zhuhai City, users should assess the similarity between their target terrain and the dataset before using it. In addition, with a spatial resolution of 10 m, it is better suited to wide-area analysis than to local details such as individual buildings or rivers.

OHID-1⁴⁷ contains multiple categories with imbalanced class sizes, which poses a challenge for subsequent HSI classification tasks on imbalanced data.
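One common way to account for class imbalance, which the experiments above do not report using, is to weight the loss by inverse class frequency. The sketch below assumes the "balanced" heuristic n_samples / (n_classes × count_k), the same formula used by scikit-learn's `compute_class_weight`; the function name and toy labels are illustrative.

```python
import numpy as np


def inverse_frequency_weights(labels, n_classes):
    """Per-class weights n_samples / (n_classes * count_k); absent classes use count 1 to avoid division by zero."""
    counts = np.bincount(labels, minlength=n_classes).astype(float)
    return counts.sum() / (n_classes * np.maximum(counts, 1.0))


labels = np.array([0] * 90 + [1] * 10)  # a 9:1 imbalanced toy label set
weights = inverse_frequency_weights(labels, n_classes=2)
print(weights)  # approximately [0.556, 5.0]
```

With these weights each class contributes the same total weight (weights × counts = [50, 50]); in PyTorch they can be passed as a float tensor to `torch.nn.CrossEntropyLoss(weight=...)`.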

Besides land cover classification, this dataset has potential applications in agricultural management, environmental monitoring, resource management, and other areas.

Mineral Resource Exploration: Hyperspectral technology can identify different types of minerals, and analyzing their spectral characteristics reveals their types and distributions. This provides important guidance for mineral resource exploration and mining.

Geological Structure Analysis: Hyperspectral data can also be used to analyze geological structures, including rock types and fault distributions. This information is of great importance for geological exploration and early warning of geological disasters.

Environmental Monitoring and Protection: Hyperspectral data can identify the characteristic spectra of different pollutants in water bodies, enabling timely monitoring and early warning of water pollution. Additionally, by analyzing the spectral characteristics of soil, the degree and type of soil erosion can be assessed, providing a scientific basis for soil protection and management.

Precision Agriculture Monitoring and Management: Hyperspectral data can capture the spectral characteristics of crops at different growth stages, allowing for precise monitoring of crop growth conditions, including nutritional status and pest and disease conditions. Meanwhile, by analyzing the spectral reflectance characteristics of crops, their nutritional needs and water status can be determined, enabling precise fertilization and irrigation, which improves the utilization efficiency of agricultural resources. Furthermore, continuous monitoring of crop growth conditions using hyperspectral data allows for the establishment of crop growth models, thereby enabling more accurate yield estimates.

In conclusion, this study addressed the challenges of hyperspectral image classification by introducing a new large-scale dataset, OHID-1⁴⁷, designed to advance the performance of hyperspectral image classification algorithms. Our primary goal was to provide a comprehensive dataset that captures the complex characteristics of different areas in Zhuhai City, China, and to evaluate the effectiveness of various deep neural networks (DNNs) on it. Our results indicate that OHID-1, with its rich spectral diversity and high spatial resolution, poses significant classification challenges for existing AI algorithms, leaving room for the development of more accurate and robust classification models.

In future work, we will expand OHID-1⁴⁷ from the 100 MB scale to the 10 GB scale (OHID-2). We will also increase the number of category labels from 7 to more than 10, including desert, glacier, wetland, and other terrain types, and provide data for regions beyond Zhuhai. In addition, we will develop more efficient and accurate classification and detection algorithms, tailored to the characteristics of OHID-1, as benchmark algorithms to meet increasingly diverse application requirements.

Acknowledgements

This research was funded by the Guangdong Provincial Key Laboratory of Big Data Processing and Applications of Hyperspectral Remote Sensing Micro/Nano Satellites under Grant 2023B1212020009, and supported by the Hybrid AI and Big Data Research Center of Chongqing University of Education (2023XJPT02), the Science and Technology Research Program of Chongqing Education Commission of China (KJQN202201608), and the Collaborative QUST-CQUE Laboratory for Hybrid Methods of Big Data Analysis. This research was also funded by the Science and Technology Project of Social Development in Zhuhai No. 2420004000328.

Author contributions

The original data, with quality and data standard control, were provided by J.Y., J.D., X.C. and J.W. J.D. and X.J. performed data labeling and presentation. A.M., S.G., L.L. and D.Y. contributed to conceptualization, methodology and formal analysis. J.D., C.D., X.W., J.H., A.D. and S.X. performed the experimental studies and analyzed the experiments. A.M., A.D. and J.D. wrote the manuscript. S.G. and Y.S. revised the manuscript to its final version.

Code availability

The code for 1DCNN, 2DCNN, 3DCNN is available at GitHub: https://github.com/eecn/Hyperspectral-Classification (Hyperspectral-Classification Pytorch, nuaa.cf).

The code of CDCNN, DBDA, DBMA is available at GitHub: https://github.com/lironui/Double-Branch-Dual-Attention-Mechanism-Network.

The code for SSSAN is available at GitHub: https://github.com/jizexuan/SSSANet.

The code for CVSSN is available at GitHub: https://github.com/lms-07/CVSSN.

The code for HyLITE is available at GitHub: https://github.com/hifexplo/hylite.

The code for band synthesis and slicing of the original files is available at https://github.com/hrnavy/OHID-1 (Preprocessing).

Competing interests

The authors declare no competing interests.

Footnotes

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Contributor Information

Sergey Gorbachev, Email: gorbachev@cque.edu.cn.

Jun Yan, Email: yanjun@qust.edu.cn.

Dong Yue, Email: yued@njupt.edu.cn.

References

- 1.Yang, W., Yang, C., Hao, Z., Xie, C. & Li, M. Diagnosis of plant cold damage based on hyperspectral imaging and convolutional neural network. IEEE Access.7, 118239–118248 (2019). [Google Scholar]

- 2.Junttila, S. et al. Close-range hyperspectral spectroscopy reveals leaf water content dynamics. Remote Sensing of Environment: An Interdisciplinary Journal. 277 (2022).

- 3.Prey, L., Von Bloh, M. & Schmidhalter, U. Evaluating RGB imaging and multispectral active and hyperspectral passive sensing for assessing early plant vigor in winter wheat. Sensors.18(9), 2931 (2018). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Jones, T. A., Koch, S. & Li, Z. Assimilating synthetic hyperspectral sounder temperature and humidity retrievals to improve severe weather forecasts. Atmospheric Research.186, 9–25 (2017). [Google Scholar]

- 5.Ghosh, A. & Joshi, P. K. Hyperspectral imagery for disaggregation of land surface temperature with selected regression algorithms over different land use land cover scenes. ISPRS Journal of Photogrammetry and Remote Sensing96, 76–93 (2014). [Google Scholar]

- 6.Foglini, F. et al. Application of hyperspectral imaging to underwater habitat mapping. Southern Adriatic Sea. Sensors19(10), 2261 (2019). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Marcello, J., Eugenio, F., Martín, J. & Marqués, F. Seabed mapping in coastal shallow waters using high resolution multispectral and hyperspectral imagery. Remote Sensing10(8), 1208 (2018). [Google Scholar]

- 8.Rubo, S. & Zinkernagel, J. Exploring hyperspectral reflectance indices for the estimation of water and nitrogen status of spinach. Biosystems Engineering214, 58–71 (2022). [Google Scholar]

- 9.Gao, Y. et al. Hyperspectral and multispectral classification for coastal wetland using depthwise feature interaction network. IEEE Transactions on Geoscience and Remote Sensing60, 1–15 (2021). [Google Scholar]

- 10.Sellami, A., Abbes, A. B., Barra, V. & Farah, I. R. Fused 3-d spectral-spatial deep neural networks and spectral clustering for hyperspectral image classification. Pattern Recognition Letters.138, 594–600 (2020). [Google Scholar]

- 11.Bock, C. H., Poole, G. H., Parker, P. E. & Gottwald, T. R. Plant disease severity estimated visually, by digital photography and image analysis. and by hyperspectral imaging. Critical reviews in plant sciences29(2), 59–107 (2010). [Google Scholar]

- 12.Daudt, R. C., Le Saux, B., Boulch, A. & Gousseau, Y. Urban change detection for multispectral earth observation using convolutional neural networks. IGARSS 2018-2018 IEEE International Geoscience and Remote Sensing Symposium, 2115-2118 (2018).

- 13.He, K., Zhang, X., Ren, S. & Sun, J. Deep residual learning for image recognition. Proceedings of the IEEE conference on computer vision and pattern recognition, 770-778 (2016).

- 14.Russakovsky, O. et al. ImageNet large scale visual recognition challenge. International journal of computer vision115(3), 211–252 (2015). [Google Scholar]

- 15.Xu, R. et al. SDNN: Symmetric deep neural networks with lateral connections for recommender systems. Information Sciences595, 217–230 (2022). [Google Scholar]

- 16.Xiao, S. et al. Complementary or Substitutive? A novel deep learning method to leverage text-image interactions for multimodal review helpfulness prediction. Expert Systems with Application208, 118138 (2022). [Google Scholar]

- 17. Jin, L., Zhang, L. & Zhao, L. Feature selection based on absolute deviation factor for text classification. Information Processing & Management 60, 103251 (2023).

- 18. Caba Heilbron, F., Escorcia, V., Ghanem, B. & Carlos Niebles, J. ActivityNet: A large-scale video benchmark for human activity understanding. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 961–970 (2015).

- 19. Chen, Y. et al. Hyperspectral images classification with Gabor filtering and convolutional neural network. IEEE Geoscience and Remote Sensing Letters 14(12), 2355–2359 (2017).

- 20. Ji, X., Henriques, J. F. & Vedaldi, A. Invariant information clustering for unsupervised image classification and segmentation. Proceedings of the IEEE/CVF International Conference on Computer Vision, 9865–9874 (2019).

- 21. Ma, W., Yang, Q., Wu, Y., Zhao, W. & Zhang, X. Double-branch multi-attention mechanism network for hyperspectral image classification. Remote Sensing 11(11), 1307 (2019).

- 22. Long, J., Shelhamer, E. & Darrell, T. Fully convolutional networks for semantic segmentation. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 3431–3440 (2015).

- 23. Zou, Y. et al. License plate detection and recognition based on YOLOv3 and ILPRNET. Signal, Image and Video Processing 16, 473–480 (2022).

- 24. Zou, Y. et al. A robust license plate recognition model based on Bi-LSTM. IEEE Access 8, 211630–211641 (2020).

- 25. Everingham, M., Van Gool, L., Williams, C. K., Winn, J. & Zisserman, A. The PASCAL visual object classes (VOC) challenge. International Journal of Computer Vision 88(2), 303–338 (2010).

- 26. Goyal, R. et al. The “something something” video database for learning and evaluating visual common sense. Proceedings of the IEEE International Conference on Computer Vision, 5842–5850 (2017).

- 27. Gu, C. et al. AVA: A video dataset of spatiotemporally localized atomic visual actions. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 6047–6056 (2018).

- 28. Baumgardner, M. F., Biehl, L. L. & Landgrebe, D. A. 220 Band AVIRIS Hyperspectral Image Data Set: June 12, 1992 Indian Pine Test Site 3. Purdue University Research Repository. https://purr.purdue.edu/publications/1947/1 (2015).

- 29. Gamba, P. Pavia University Dataset. https://ehu.eus/ccwintco/index.php/Hyperspectral_Remote_Sensing_Scenes (2016).

- 30. NASA AVIRIS. Kennedy Space Center Dataset. http://www.ehu.eus/ccwintco/index.php/Hyperspectral_Remote_Sensing_Scenes (2016).

- 31. National Science Foundation Center for Airborne Laser Mapping. Houston Dataset. 2013 IEEE GRSS Data Fusion Contest. https://machinelearning.ee.uh.edu/2013-ieee-grss-data-fusion-contest/ (2013).

- 32. Salinas Valley Dataset. https://ehu.eus/ccwintco/index.php/Hyperspectral_Remote_Sensing_Scenes (2017).

- 33. Botswana Dataset. http://www.ehu.eus/ccwintco/index.php/Hyperspectral_Remote_Sensing_Scenes (2017).

- 34. Cen, Y. et al. Aerial hyperspectral remote sensing classification dataset of Xiongan New Area (Matiwan Village). National Remote Sensing Bulletin 24(11), 1299–1306 (2020).

- 35. Sun, L., Zhang, J., Li, J., Wang, Y. & Zeng, D. SDFC dataset: a large-scale benchmark dataset for hyperspectral image classification. Optical and Quantum Electronics 55(2), 173 (2023).

- 36. Trento Dataset. GitHub. https://github.com/pagrim/TrentoData (2019).

- 37. Yokoya, N. & Iwasaki, A. Airborne hyperspectral data over Chikusei. https://naotoyokoya.com/Download.html (2016).

- 38. Zhong, Y. et al. WHU-Hi: UAV-borne hyperspectral with high spatial resolution (H2) benchmark datasets and classifier for precise crop identification based on deep convolutional neural network with CRF. Remote Sensing of Environment 250, 112012 (2020).

- 39. Aerial hyperspectral remote sensing classification dataset of Xiongan New Area (Matiwan Village). 10.11834/jrs.20209065.

- 40. Jun, Y. et al. Using a hybrid FNN method for image classification of satellite remote sensing data. Artificial Intelligence Impressions 1, 159–180 (2022).

- 41. Liu, W., Zhang, Y., Yan, J., Zou, Y. & Cui, Z. Semantic segmentation network of remote sensing images with dynamic loss fusion strategy. IEEE Access 9, 70406–70418 (2021).

- 42. Mohanty, S. P., Hughes, D. P. & Salathé, M. Using deep learning for image-based plant disease detection. Frontiers in Plant Science 7, 1419 (2016).

- 43. Lee, H. & Kwon, H. Going deeper with contextual CNN for hyperspectral image classification. IEEE Transactions on Image Processing 26(10), 4843–4855 (2017).

- 44. Jozefowicz, R., Zaremba, W. & Sutskever, I. An empirical exploration of recurrent network architectures. Proceedings of the 32nd International Conference on Machine Learning (ICML) 3, 2332–2340 (2015).

- 45. Li, F. et al. Evaluating hyperspectral vegetation indices for estimating nitrogen concentration of winter wheat at different growth stages. Precision Agriculture 11(4), 335–357 (2010).

- 46. Wang, W., Dou, S., Jiang, Z. & Sun, L. A fast dense spectral–spatial convolution network framework for hyperspectral images classification. Remote Sensing 10(7), 1068 (2018).

- 47. Deng, J. & Wei, X. Dataset OHID-1: A New Large Hyperspectral Image Dataset for Multi-Classification, Figshare, 10.6084/m9.figshare.27966024.v8 (2024).

- 48. Rani, N., Mandla, V. R. & Singh, T. Evaluation of atmospheric corrections on hyperspectral data with special reference to mineral mapping. Geoscience Frontiers 8(4), 797–808 (2017).

- 49. López-Serrano, P. M., Corral-Rivas, J. J., Díaz-Varela, R. A., Álvarez-González, J. G. & López-Sánchez, C. A. Evaluation of radiometric and atmospheric correction algorithms for aboveground forest biomass estimation using Landsat 5 TM data. Remote Sensing 8(5), 369 (2016).

- 50. Prieto-Amparan, J. A. et al. Atmospheric and radiometric correction algorithms for the multitemporal assessment of grasslands productivity. Remote Sensing 10(2), 219 (2018).

- 51. Möllenbrok, L., Sumbul, G. & Demir, B. Deep active learning for multi-label classification of remote sensing images. IEEE Geoscience and Remote Sensing Letters 20, 5002405 (2023).

- 52. Tan, S., Yan, J., Jiang, Z. & Huang, L. Approach for improving YOLOv5 network with application to remote sensing target detection. Journal of Applied Remote Sensing 15(3), 036512 (2021).

- 53. Simonyan, K. & Zisserman, A. Very deep convolutional networks for large-scale image recognition. 3rd International Conference on Learning Representations (ICLR 2015), Conference Track Proceedings, 1–14 (2015).

- 54. Krizhevsky, A., Sutskever, I. & Hinton, G. E. ImageNet classification with deep convolutional neural networks. Advances in Neural Information Processing Systems 25, 1097–1105 (2012).

- 55. Webb, G. I., Keogh, E. & Miikkulainen, R. Naive Bayes. Springer 15(1), 713–714 (2010).

- 56. Shi, W. et al. Landslide recognition by deep convolutional neural network and change detection. IEEE Transactions on Geoscience and Remote Sensing 59(6), 4654–4672 (2020).

- 57. Du, R. et al. CDCNN-CMR-SV algorithm for robust adaptive wideband beamforming. Signal, Image and Video Processing 17(5), 2137–2143 (2023).

- 58. Zhong, Z. et al. Spectral–spatial residual network for hyperspectral image classification: A 3-D deep learning framework. IEEE Transactions on Geoscience and Remote Sensing 56(2), 847–858 (2017).

- 59. Li, R., Zheng, S., Duan, C., Yang, Y. & Wang, X. Classification of hyperspectral image based on double-branch dual-attention mechanism network. Remote Sensing 12(3), 582 (2020).

- 60. Zhang, X. et al. Spectral–spatial self-attention networks for hyperspectral image classification. IEEE Transactions on Geoscience and Remote Sensing 60, 5512115 (2022).

- 61. Li, M., Liu, Y., Xue, G., Huang, Y. & Yang, G. Exploring the relationship between center and neighborhoods: Central vector oriented self-similarity network for hyperspectral image classification. IEEE Transactions on Circuits and Systems for Video Technology 33(4), 1979–1993 (2022).

- 62. Wang, W., Dou, S., Jiang, Z. & Sun, L. A fast dense spectral–spatial convolution network framework for hyperspectral images classification. Remote Sensing 10(7), 1068 (2018).

- 63. Thiele, S. T. et al. Multi-scale, multi-sensor data integration for automated 3-D geological mapping. Ore Geology Reviews 136, 104252 (2021).

- 64. Hu, W., Huang, Y., Wei, L., Zhang, F. & Li, H. Deep convolutional neural networks for hyperspectral image classification. Journal of Sensors 2015, 258619 (2015).

- 65. Makantasis, K., Karantzalos, K., Doulamis, A. & Doulamis, N. Deep supervised learning for hyperspectral data classification through convolutional neural networks. 2015 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Milan, Italy, 4959–4962 (2015).

- 66. Hamida, A. B., Benoit, A., Lambert, P. & Amar, C. B. 3-D deep learning approach for remote sensing image classification. IEEE Transactions on Geoscience and Remote Sensing 56(8), 4420–4434 (2018).

- 67. Zhang, T. et al. CDCNN: a model based on class center vectors and distance comparison for wear particle recognition. IEEE Access 8, 113262–113270 (2020).

- 68. Cortes, C. & Vapnik, V. Support-vector networks. Machine Learning 20, 273–297 (1995).

Associated Data


Data Citations

- Deng, J. & Wei, X. Dataset OHID-1: A New Large Hyperspectral Image Dataset for Multi-Classification, Figshare, 10.6084/m9.figshare.27966024.v8 (2024).

Data Availability Statement

The code for 1DCNN, 2DCNN and 3DCNN is available on GitHub: https://github.com/eecn/Hyperspectral-Classification (Hyperspectral-Classification PyTorch, nuaa.cf).

The code for CDCNN, DBDA and DBMA is available on GitHub: https://github.com/lironui/Double-Branch-Dual-Attention-Mechanism-Network.

The code for SSSAN is available on GitHub: https://github.com/jizexuan/SSSANet.

The code for CVSSN is available on GitHub: https://github.com/lms-07/CVSSN.

The code for HyLITE is available on GitHub: https://github.com/hifexplo/hylite.

The code for band synthesis and slicing of the original file is available at https://github.com/hrnavy/OHID-1 (Preprocessing).