Abstract

Classification of patients' multicategory survival outcomes is important for personalized cancer treatment. Machine Learning (ML) algorithms are increasingly used to inform healthcare decisions, but these models are vulnerable to biases in data collection and algorithm creation. ML models have previously been shown to exhibit racial bias, but their fairness toward patients from different age and sex groups has yet to be studied. Therefore, we compared the multimetric performance of five ML models (random forests, multinomial logistic regression, linear support vector classifier, linear discriminant analysis, and multilayer perceptron) when classifying colorectal cancer patients (n=589) of various age, sex, and racial groups using TCGA data. All five models exhibited biases for these sociodemographic groups. We then repeated the same process on lung adenocarcinoma patients (n=515) to validate our findings. Surprisingly, most models tended to perform more poorly overall for the largest sociodemographic groups. Methods to optimize model performance, including testing the model on merged age, sex, or racial groups and creating a model trained on and used for an individual or merged sociodemographic group, show potential to reduce disparities in model performance among groups. Notably, these methods may improve ML fairness without penalizing the model for exhibiting bias, which would sacrifice overall performance.

Keywords: Colorectal cancer, survival, machine learning, multilabel classification, transcriptomics, feature selection

Introduction

Artificial Intelligence (AI) is changing medicine, with growing applications of Machine Learning (ML) algorithms in the biomedical field, and more specifically in cancer research and treatment [1-3]. Lung cancer and colorectal cancer (CRC) are the two most common causes of cancer deaths in the United States[4]. As various types of ‘omics data, including transcriptomic and genomic data, have been generated in recent decades[3], ML algorithms have been used to predict disease prognosis and to help inform treatment for patients with lung cancer[5-8] and CRC[9-11]. However, these models are vulnerable to biases in both data collection and algorithm creation, often resulting in minority groups, such as racial and age minorities, being fit into a model designed for the majority[12-17].

Racial biases have been shown in ML models used in US healthcare systems, where models underperform for certain racial groups[18-20]. However, these studies considered only accuracy when evaluating model fairness. We have previously shown that although accuracy is important, additional metrics such as precision, recall, and F1 may help to better characterize a model’s performance and thus evaluate its fairness[5, 10, 21]. In addition, while racial biases have been considered, studying ML model performance across age and sex groups may reveal other biases within these models, as reported in non-cancer medical fields[17, 22-25]. The existence of these biases warrants a better understanding of which algorithms exhibit the least bias toward patients from different sociodemographic groups, and whether these algorithms can be optimized to improve model fairness. Although previous studies have investigated this question, many penalize ML models for exhibiting bias, which reduces bias at the cost of overall performance[26-28]. Hence, we aimed to understand the multimetric performance of random forests (RF), multinomial logistic regression (MLogit), linear support vector classifier (Linear SVC), linear discriminant analysis (LDA), and multilayer perceptron (MLP) when classifying four-category outcomes for CRC and lung adenocarcinoma patients from various sociodemographic groups. We also explored ways to reduce bias in model performance, including testing the model on merged age, sex, and racial groups, and training and testing the model on an individual or merged sociodemographic group. We found that both methods have the potential to increase algorithmic fairness (i.e., reduce bias) for certain ML models, and that the latter method can do so without significantly reducing overall ML performance.

Methods

Data Extraction

We obtained individual-level colorectal cancer and lung adenocarcinoma data from The Cancer Genome Atlas Program (TCGA) Pancancer Atlas through the cBioPortal repository[29], as described before[5, 7, 9, 10, 21]. The data were publicly available and de-identified, making this an exempt study (category 4) that did not require Institutional Review Board (IRB) review.

We performed a differentially expressed gene (DEG) analysis using the Chi-square test to remove less relevant genes. The outcome of the classification models was the patients’ four-category survival status: Alive with No Known Disease Progression, Alive with Disease Progression, Dead with No Known Disease Progression, and Dead with Disease Progression.
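The text does not specify how the Chi-square test was applied to the expression matrix or which cutoff was used. One plausible reading, sketched below under stated assumptions, scores each gene against the four-category outcome with scikit-learn's `chi2` (which requires non-negative features, as expression values are) and keeps genes below a p-value cutoff. The `alpha=0.05` cutoff, the integer outcome codes, the helper name `select_genes_by_chi2`, and the synthetic data are all hypothetical.

```python
import numpy as np
from sklearn.feature_selection import chi2

# Four-category survival outcome from the text; the integer codes 0-3 are an assumption.
OUTCOMES = [
    "Alive with No Known Disease Progression",
    "Alive with Disease Progression",
    "Dead with No Known Disease Progression",
    "Dead with Disease Progression",
]


def select_genes_by_chi2(expression, labels, alpha=0.05):
    """Return indices of genes whose chi-square p-value against the outcome is below alpha.

    expression: (n_patients, n_genes) array of non-negative expression values.
    labels: (n_patients,) array of outcome codes 0-3.
    alpha: hypothetical cutoff; the original threshold is not stated.
    """
    _, p_values = chi2(expression, labels)
    return np.flatnonzero(p_values < alpha)


# Synthetic example: 5 outcome-dependent genes followed by 50 noise genes.
rng = np.random.default_rng(0)
labels = rng.integers(0, 4, size=200)
informative = labels[:, None] * 10.0 + rng.poisson(5, size=(200, 5))
noise = rng.poisson(5, size=(200, 50)).astype(float)
expression = np.hstack([informative, noise])

selected = select_genes_by_chi2(expression, labels)
print("selected gene indices:", selected)
```

A fixed p-value cutoff is only one option; keeping the top-k genes with `SelectKBest(chi2, k=...)` would be an equally reasonable reading of the text.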

We grouped the patients by age, race, and sex, the three sociodemographic factors of interest, to analyze the extent of ML model bias toward each group. CRC patients were divided into three age groups (33-60, 61-72, or 73+ years old) and into five racial groups: Race Unknown, American Indian or Alaska Native, Black or African American, White, and Asian and Pacific Islander (AAPI). Lung patients were split into two age groups (below 65 years old, or 65 and above) and into three racial groups: Black, White, or Other. Both CRC and Lung patients were also divided into Male and Female groups based on their sex.
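The age cut points above can be expressed as left-closed bins, so that a 61-year-old falls into "61-72" and a 65-year-old into "65+". The sketch below assumes a pandas DataFrame with an `age` column in years; the column names and the helper `add_sociodemographic_groups` are hypothetical.

```python
import numpy as np
import pandas as pd


def add_sociodemographic_groups(df, cancer):
    """Add an 'age_group' column using the cut points described in the text.

    df: DataFrame with an 'age' column in years (column name is an assumption).
    cancer: "CRC" or "Lung".
    """
    out = df.copy()
    if cancer == "CRC":
        bins, labels = [0, 61, 73, np.inf], ["33-60", "61-72", "73+"]
    elif cancer == "Lung":
        bins, labels = [0, 65, np.inf], ["Below 65", "65+"]
    else:
        raise ValueError(f"unknown cancer type: {cancer}")
    # right=False makes each bin closed on the left, e.g. [61, 73) -> "61-72".
    out["age_group"] = pd.cut(out["age"], bins=bins, labels=labels, right=False)
    return out


patients = pd.DataFrame({"age": [33, 60, 61, 72, 73, 88],
                         "sex": ["Male", "Female"] * 3})
crc = add_sociodemographic_groups(patients, "CRC")
print(crc["age_group"].tolist())
# ['33-60', '33-60', '61-72', '61-72', '73+', '73+']
```

Race and sex are already categorical, so they can be used as group labels directly.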

Pipelines

All ML processes were conducted using Python 3.6.9 in Google Colaboratory. We used five ML models: RF, MLogit, Linear SVC, LDA, and MLP. For each model, we created a pipeline that automated model creation and tuning and the collection of four performance metrics: Accuracy, Precision, Recall, and F1. Performance metrics were obtained using the cross_validate function from scikit-learn's sklearn.model_selection module. Each tuned model was run 20 times to obtain the mean and standard deviation of each performance metric.
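A minimal sketch of the "run 20 times, report mean and SD" step, assuming each run reshuffles the folds. The stratified, shuffled 5-fold scheme and the macro averaging of Precision, Recall, and F1 over the four outcome classes are assumptions, since the text does not state them; `repeated_cv_metrics` is a hypothetical helper, and LDA on synthetic data is used only to keep the demo fast.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.discriminant_analysis import LinearDiscriminantAnalysis
from sklearn.model_selection import StratifiedKFold, cross_validate

# Macro averaging over the four outcome classes is an assumption; the text does not say.
SCORING = {
    "accuracy": "accuracy",
    "precision": "precision_macro",
    "recall": "recall_macro",
    "f1": "f1_macro",
}


def repeated_cv_metrics(model, X, y, n_repeats=20, n_splits=5):
    """Repeat cross_validate n_repeats times with a different shuffle each time.

    Returns {metric: (mean, sd)}, where each repeat contributes its mean fold score.
    """
    per_repeat = {name: [] for name in SCORING}
    for seed in range(n_repeats):
        cv = StratifiedKFold(n_splits=n_splits, shuffle=True, random_state=seed)
        scores = cross_validate(model, X, y, cv=cv, scoring=SCORING)
        for name in SCORING:
            per_repeat[name].append(scores[f"test_{name}"].mean())
    return {name: (float(np.mean(v)), float(np.std(v))) for name, v in per_repeat.items()}


# Synthetic stand-in for a gene-expression matrix with a four-class outcome.
X, y = make_classification(n_samples=300, n_features=20, n_informative=6,
                           n_classes=4, random_state=0)
results = repeated_cv_metrics(LinearDiscriminantAnalysis(), X, y)
for metric, (mean, sd) in results.items():
    print(f"{metric}: {mean:.3f} +/- {sd:.3f}")
```

Passing a dict to `scoring` lets a single `cross_validate` call return all four metrics, which keeps them computed on identical folds.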

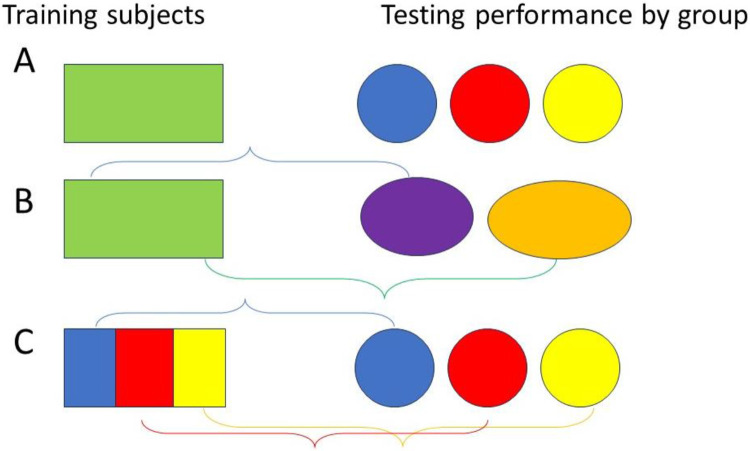

As Figure 1 shows, we applied the ML models that were tuned and trained on all patients to each group (row A). We also used these models to examine whether merging groups would reduce performance differences between or among groups (row B). Finally, we applied ML models that were trained on each individual or merged group to that same group (row C). All processes were first performed on the CRC dataset and then repeated on the Lung dataset to validate our findings.

Figure 1.

Three grouping approaches to improve machine learning fairness and reduce biases.

Comparing Different Models

To examine whether, and to what extent, biases related to our sociodemographic factors of interest affected model performance, we first tuned and trained the models on the entire dataset before testing them on each individual age, race, and sex group.

Because of the small size of the American Indian and AAPI groups in the CRC dataset (1 and 12 patients, respectively), we focused on the Race Unknown, White, and Black or African American groups to measure model bias, and included American Indian and AAPI patients only when merging groups. We also compared results across the five ML models to examine whether certain types of model were less affected by bias.

For the RF model, we used RandomForestClassifier from scikit-learn with the Gini impurity index as the split criterion. We automated the tuning of two parameters: n_estimators (the number of trees in the forest) and min_samples_split (the minimum number of samples required to split an internal node). Varying n_estimators from 5 to 145 in steps of 5 and min_samples_split from 2 to 11 in steps of 3, we performed 5-fold cross-validation with scikit-learn's cross_val_score to collect accuracy values. The model was tuned three times before the pipeline automatically selected the parameters that produced the highest average accuracy. We then tested the tuned model on individual age, race, and sex groups and collected performance metrics for each group.
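The tuning loop described above can be sketched as an explicit grid search. Our reading of "tuned three times" is that each candidate's 5-fold accuracy is averaged over three differently seeded forests; that interpretation, the helper name `tune_random_forest`, and the synthetic data are assumptions. The default grids reproduce the stated ranges (`range(5, 150, 5)` gives 5 to 145; `range(2, 12, 3)` gives 2, 5, 8, 11).

```python
from itertools import product

import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import cross_val_score


def tune_random_forest(X, y, n_estimators_grid=range(5, 150, 5),
                       min_samples_split_grid=range(2, 12, 3), n_rounds=3, cv=5):
    """Grid-search n_estimators and min_samples_split for a Gini random forest.

    Each candidate's 5-fold cross-validated accuracy is averaged over n_rounds
    differently seeded forests (our reading of "tuned three times"), and the
    candidate with the highest average accuracy is returned.
    """
    best_params, best_score = None, -np.inf
    for n_est, min_split in product(n_estimators_grid, min_samples_split_grid):
        round_scores = [
            cross_val_score(
                RandomForestClassifier(n_estimators=n_est, min_samples_split=min_split,
                                       criterion="gini", random_state=seed),
                X, y, cv=cv, scoring="accuracy",
            ).mean()
            for seed in range(n_rounds)
        ]
        score = float(np.mean(round_scores))
        if score > best_score:
            best_params = {"n_estimators": n_est, "min_samples_split": min_split}
            best_score = score
    return best_params, best_score


# The default grids match the text; a smaller grid keeps this demo fast.
X, y = make_classification(n_samples=200, n_features=20, n_informative=6,
                           n_classes=4, random_state=0)
params, score = tune_random_forest(X, y, n_estimators_grid=[5, 25],
                                   min_samples_split_grid=[2, 5])
print(params, round(score, 3))
```

scikit-learn's `GridSearchCV` would give the same single-round search in fewer lines; the explicit loop is shown because it makes the repeated-rounds averaging visible.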

For MLogit modeling, we used LogisticRegression from sklearn.linear_model with the solver set to newton-cg. To prevent overfitting, we tested the model on individual age, race, and sex groups after training it on the rest of the dataset. Because MLogit did not require tuning, we used KFold from sklearn.model_selection to perform 10-fold cross-validation before extracting performance metrics.
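A minimal sketch of the MLogit evaluation with 10-fold KFold. In current scikit-learn versions, `LogisticRegression` with the newton-cg solver fits a multinomial (softmax) model when the target has more than two classes; older versions defaulted to one-vs-rest unless `multi_class="multinomial"` was set, so the exact behavior of the original setup is not confirmed here. Shuffling the folds, `max_iter=1000`, macro averaging, and the synthetic data are assumptions.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import KFold, cross_validate

# newton-cg solver as in the text; max_iter=1000 is an assumption to aid convergence.
mlogit = LogisticRegression(solver="newton-cg", max_iter=1000)

X, y = make_classification(n_samples=300, n_features=20, n_informative=6,
                           n_classes=4, random_state=1)

# 10-fold KFold as in the text; shuffling with a fixed seed is an assumption.
kfold = KFold(n_splits=10, shuffle=True, random_state=0)
scores = cross_validate(mlogit, X, y, cv=kfold,
                        scoring=["accuracy", "precision_macro", "recall_macro", "f1_macro"])
summary = {name: float(vals.mean()) for name, vals in scores.items()
           if name.startswith("test_")}
print(summary)
```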

To create our Linear SVC, LDA, and supervised neural network (MLP) models, we used SVC from sklearn.svm, LinearDiscriminantAnalysis from sklearn.discriminant_analysis, and MLPClassifier from sklearn.neural_network, respectively. The adam solver was used for the MLP model. We split the dataset into training and testing sets using train_test_split from sklearn.model_selection. To test the performance of the tuned model on individual sociodemographic groups, we dropped members of other groups from the testing set. For this model, we also used KFold to perform 10-fold cross-validation and obtain the model’s performance metrics.
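The train-on-everyone, test-per-group step above can be sketched as follows: each model is fit on the full training split, and its test-set predictions are then scored one group at a time by masking out all other groups. The text does not give the SVC kernel, so `kernel="linear"` is assumed to match the "Linear SVC" label; the helper `per_group_metrics`, the 25% test fraction, macro averaging, and the synthetic sex labels are also assumptions.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.discriminant_analysis import LinearDiscriminantAnalysis
from sklearn.metrics import accuracy_score, f1_score, precision_score, recall_score
from sklearn.model_selection import train_test_split
from sklearn.neural_network import MLPClassifier
from sklearn.svm import SVC


def per_group_metrics(model, X_test, y_test, groups_test):
    """Score a fitted model on each group separately, i.e. after dropping the
    members of every other group from the test set."""
    results = {}
    for group in np.unique(groups_test):
        mask = groups_test == group
        y_true, y_pred = y_test[mask], model.predict(X_test[mask])
        results[str(group)] = {
            "accuracy": accuracy_score(y_true, y_pred),
            "precision": precision_score(y_true, y_pred, average="macro", zero_division=0),
            "recall": recall_score(y_true, y_pred, average="macro", zero_division=0),
            "f1": f1_score(y_true, y_pred, average="macro", zero_division=0),
        }
    return results


models = {
    "Linear SVC": SVC(kernel="linear"),  # kernel choice is an assumption
    "LDA": LinearDiscriminantAnalysis(),
    "MLP": MLPClassifier(solver="adam", max_iter=1000, random_state=0),
}

X, y = make_classification(n_samples=400, n_features=20, n_informative=6,
                           n_classes=4, random_state=2)
sex = np.random.default_rng(2).choice(["Male", "Female"], size=len(y))
X_tr, X_te, y_tr, y_te, sex_tr, sex_te = train_test_split(
    X, y, sex, test_size=0.25, random_state=0)

all_results = {}
for name, model in models.items():
    model.fit(X_tr, y_tr)  # trained on every patient in the training split
    all_results[name] = per_group_metrics(model, X_te, y_te, sex_te)
print(all_results["LDA"])
```

Because the model is fit once and only the evaluation is split by group, any difference between groups reflects how a single shared model treats them.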

Combining Groups

We combined individual race and age groups in the CRC and Lung datasets and tested the ML models on these subgroups to determine whether doing so would reduce the gap in model performance between subgroups.

For CRC, we created age subgroups of patients aged 33-72, 61+, and either 33-60 or 73+. We also created race subgroups of Unknown/Nonwhite (Race Unknown, Black or African American, American Indian, and AAPI), Race Known (White, Black or African American, American Indian, and AAPI), White/Unknown, and Minorities (Black or African American, American Indian, and AAPI) for the CRC dataset. For Lung, we grouped patients into race subgroups of Minorities (Black and Other), Black/White, and Nonblack (White and Other).
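Because several merged subgroups overlap (for example, Black or African American patients belong to both Unknown/Nonwhite and Race Known), one convenient representation is a set of member groups per subgroup, turned into a boolean patient mask when needed. The sketch below encodes the subgroups listed above; the exact label strings and the helper `subgroup_mask` are assumptions for illustration.

```python
import pandas as pd

# Merged subgroups from the text. Subgroups may overlap, so each is stored as a
# set of member groups rather than as a new, mutually exclusive label.
CRC_AGE_SUBGROUPS = {
    "33-72": {"33-60", "61-72"},
    "61+": {"61-72", "73+"},
    "33-60 or 73+": {"33-60", "73+"},
}
CRC_RACE_SUBGROUPS = {
    "Unknown/Nonwhite": {"Race Unknown", "Black or African American",
                         "American Indian or Alaska Native", "AAPI"},
    "Race Known": {"White", "Black or African American",
                   "American Indian or Alaska Native", "AAPI"},
    "White/Unknown": {"White", "Race Unknown"},
    "Minorities": {"Black or African American", "American Indian or Alaska Native", "AAPI"},
}
LUNG_RACE_SUBGROUPS = {
    "Minorities": {"Black", "Other"},
    "Black/White": {"Black", "White"},
    "Nonblack": {"White", "Other"},
}


def subgroup_mask(individual_groups, members):
    """Boolean mask of patients whose individual group belongs to a merged subgroup."""
    return individual_groups.isin(members)


race = pd.Series(["White", "Race Unknown", "Black or African American", "AAPI"])
print(subgroup_mask(race, CRC_RACE_SUBGROUPS["Minorities"]).tolist())
# [False, False, True, True]
```

Each comparison in the tables then pits one mask against its complement (for example, Minorities vs Unknown/White), which is how the number of groups compared at a time drops to two.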

By creating these subgroups, we reduced the number of groups compared at a time from three (CRC age and Lung race) or five (CRC race) to two. We tested the models tuned on the entire dataset on these subgroups using the same automated pipelines as before. We then compared the performance metrics for each subgroup against the remaining individual groups (or subgroups composed of those individual groups) to identify whether differences in model performance across race and age groups had been reduced or exacerbated.

Tuning Individual Groups

Finally, we tuned and trained each ML model on individual race, age, and sex groups, as well as on the combined race and age subgroups created in the previous section, to determine whether individualizing models for specific groups would reduce ML model bias. We used the same pipelines and processes as in the first method (comparing different ML models).

Each race, age, and sex group and subgroup was split into training and testing sets. The model was first tuned and trained on the individual group or subgroup, then tested, and its performance metrics were collected. The data within each group were reshuffled in each of the 20 iterations we performed.
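A minimal sketch of the within-group approach (row C): a fresh copy of the model is trained and tested only on patients in one group or merged subgroup, and the group's train/test split is reshuffled on each of the 20 iterations. The tuning step is omitted for brevity; the 25% test fraction, macro F1, the helper `within_group_scores`, and the synthetic data are assumptions. Taking a boolean mask lets the same function handle merged subgroups.

```python
import numpy as np
from sklearn.base import clone
from sklearn.datasets import make_classification
from sklearn.discriminant_analysis import LinearDiscriminantAnalysis
from sklearn.metrics import accuracy_score, f1_score
from sklearn.model_selection import train_test_split


def within_group_scores(model, X, y, in_group, n_iterations=20, test_size=0.25):
    """Train and test fresh copies of `model` using only patients where in_group is True.

    The group's train/test split is reshuffled on every iteration; the mean and
    SD of accuracy and macro F1 across iterations are returned.
    """
    X_g, y_g = X[in_group], y[in_group]
    accs, f1s = [], []
    for seed in range(n_iterations):
        X_tr, X_te, y_tr, y_te = train_test_split(
            X_g, y_g, test_size=test_size, shuffle=True, random_state=seed)
        fitted = clone(model).fit(X_tr, y_tr)  # never sees patients outside the group
        y_pred = fitted.predict(X_te)
        accs.append(accuracy_score(y_te, y_pred))
        f1s.append(f1_score(y_te, y_pred, average="macro", zero_division=0))
    return {"accuracy": (float(np.mean(accs)), float(np.std(accs))),
            "f1": (float(np.mean(f1s)), float(np.std(f1s)))}


X, y = make_classification(n_samples=400, n_features=20, n_informative=6,
                           n_classes=4, random_state=3)
sex = np.random.default_rng(3).choice(["Male", "Female"], size=len(y))
female_scores = within_group_scores(LinearDiscriminantAnalysis(), X, y, sex == "Female")
print(female_scores)
```

`clone` gives an unfitted copy on every iteration, so no information leaks between runs or between groups.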

Statistics and reproducibility

Mean squared error (MSE) was calculated to compare differences in ML performance among groups, and standard deviation (SD) is shown in the figures. The number of independent experiments, the number of events, and statistical details and methods are indicated in the relevant figure legends. Student’s t-test was used to compare ML performance metrics. P values less than 0.05 were considered significant.
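The tables report a percent difference (Delta, %) with asterisks for significance. The text does not define Delta, but taking the second-named group as the reference appears consistent with the reported numbers: for CRC sex with Linear SVC, (62 − 77)/77 ≈ −19.5%, close to the −19.7% in Table 5. The sketch below assumes that definition and applies scipy's two-sided Student's t-test (`equal_var=True`) to the 20 per-run scores of each group; the MSE comparison is not sketched because its exact use is not described. The helper names and the synthetic accuracies are hypothetical.

```python
import numpy as np
from scipy import stats


def percent_difference(scores_a, scores_b):
    """Delta (%) of group A's mean metric relative to group B's mean (B is the reference)."""
    mean_a, mean_b = np.mean(scores_a), np.mean(scores_b)
    return 100.0 * (mean_a - mean_b) / mean_b


def significance_stars(p_value):
    """Asterisk convention from the tables: * <0.05, ** <0.01, *** <0.001."""
    if p_value < 0.001:
        return "***"
    if p_value < 0.01:
        return "**"
    if p_value < 0.05:
        return "*"
    return ""


def compare_groups(scores_a, scores_b):
    """Percent difference plus a two-sided Student's t-test (equal variances assumed)."""
    _, p_value = stats.ttest_ind(scores_a, scores_b, equal_var=True)
    delta = percent_difference(scores_a, scores_b)
    return f"{delta:.1f}{significance_stars(p_value)}", delta, p_value


# Hypothetical accuracies from 20 runs, centred on the CRC Linear SVC sex example.
rng = np.random.default_rng(0)
female = rng.normal(0.77, 0.01, size=20)
male = rng.normal(0.62, 0.01, size=20)
label, delta, p_value = compare_groups(male, female)
print(label)
```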

Results

Baseline Characteristics

Using the Chi-square test, we identified 2,034 DEGs in the CRC dataset and 2,647 DEGs in the Lung dataset. There were 589 qualified CRC cases, with 406 (68.7%) patients alive with no progression, 65 (11.0%) alive with progression, 35 (5.9%) dead with no known progression, and 85 (14.4%) dead with disease progression (Supplementary Table 1) [10]. There were 188 (31.9%) cases between 33 and 60 years of age, 197 (33.4%) between 61 and 72, and 206 (35.0%) aged 73 or older. Of these patients, 310 (52.6%) were male and 279 (47.4%) female. In addition, the race of 229 (38.9%) cases was unknown; 1 (0.2%) patient was American Indian or Alaska Native, 64 (10.9%) were Black, 283 (48.0%) were White, and 12 (2.0%) were AAPI.

In the Lung dataset, there were 515 qualified cases, with 252 (48.9%) alive without disease, 76 (14.8%) alive with disease, 80 (15.5%) dead with no known disease, and 107 (20.8%) dead with disease (Supplementary Table 2)[7]. Of these, 220 (42.7%) patients were under 65 years old and 295 (57.3%) were 65 or older; 277 (53.8%) were male and 238 (46.2%) female. In addition, 52 (10.1%) were Black, 388 (75.3%) were White, and 75 (14.6%) were of another race.

The breakdown of each race, age, and sex group into the four survival categories is shown in Supplementary Tables 1 and 2 as well.

Model Performance

For all five ML models, results were similar across the 20 iterations. In most models, performance tended to be poorer overall for larger sociodemographic groups than for smaller ones.

Among the three CRC age groups, RF, Linear SVC, LDA, and MLP had the highest accuracy for the second-largest group, patients aged 61-72 (73%, 73%, 83%, and 72%, respectively), with LDA showing the best overall performance (precision 54%, recall 62%, and F1 68%) (Figure 2). Interestingly, the below-65 Lung age group also had the highest accuracy in every model except Linear SVC, and MLP showed the best overall performance for this group (57% accuracy, 41% precision, 45% recall, and 40% F1), despite it being the smaller of the two age groups (Figure 3).

Figure 2.

The five ML models’ performance classifying patients in different age, race, and sex groups into their 4-category survival outcomes in the CRC dataset after being trained on patients from each group. Error bar, standard deviation.

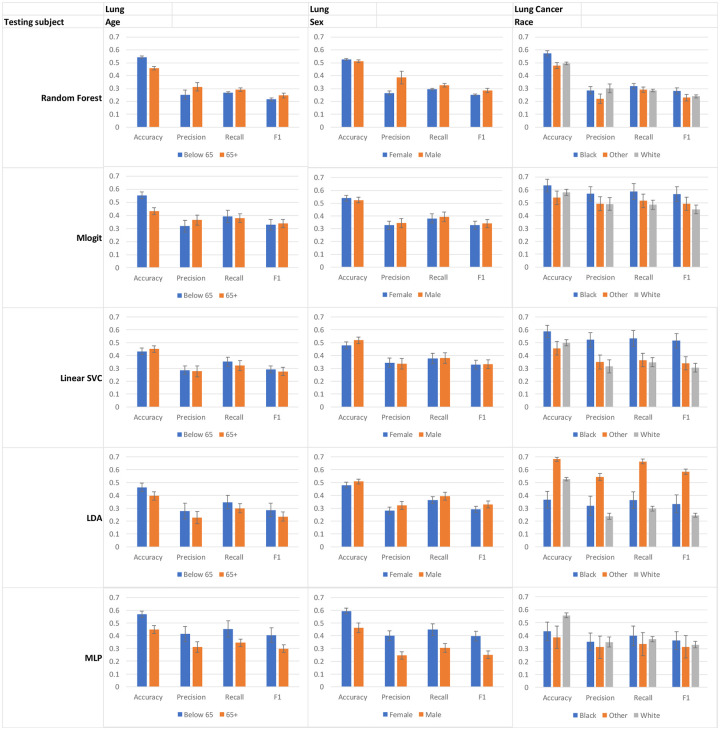

Figure 3.

Model performance for lung cancer patients in different sociodemographic groups after being trained on the entire dataset. Error bar, standard deviation.

Both the CRC and Lung datasets had fewer female than male cases. However, the models generally had higher accuracy for female patients (except for MLP in CRC and Linear SVC and LDA in Lung). The most striking differences in accuracy occurred with Linear SVC for CRC (77% vs. 62%) and MLP for Lung (59% vs. 46%), both of which had the best overall performance for female patients (Figures 2 and 3).

Finally, among CRC racial groups, patients whose race was unknown (the second-largest group) typically had the highest accuracy. Strikingly, Black or African American patients, the smallest group we focused on when comparing models, consistently had the highest Precision, Recall, and F1 values in all five models, despite not having the highest accuracy. Similar findings were observed in the Lung dataset, where the two smallest groups, Black patients and patients of another race, had the highest Precision, Recall, and F1 values across all of the ML models. Unlike the CRC racial groups, however, they also had the highest accuracy values in every model except MLP.

When we combined individual age and race groups and tested the models trained on the entire dataset on these subgroups, we found that merging groups could either exacerbate or reduce performance gaps, depending on the choice of subgroup.

For CRC, the ML models typically had higher accuracy for the two younger age groups than for the 73+ group (Figure 2). This was reflected by larger percent differences when either younger group’s accuracy was compared with that of the 73+ group than when the two younger groups were compared with each other (Table 1). When the two higher-performing groups were combined into a single subgroup, the accuracy gap between them and the 73+ group grew for every model except MLP. However, when either younger group was combined with the 73+ group, the percent differences decreased markedly, for example from 10.9% and 8.6% to 0.8% and 0.4% with Linear SVC (Table 1).

Table 1.

Testing whether merging CRC age groups, and training the model on individual or combined groups, affects the percent difference in performance between groups. The three groups 33-60, 61-72, and 73+ are denoted as G1, G2, and G3, respectively. The color shade indicates the difference, while the number of asterisks indicates the level of statistical differences (*, <0.05; **, <0.01; ***, <0.001).

| Tuning subject | All | | | | | | Subgroup | | | Subgroup vs All | | | | | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Testing subject | G2 vs G1 | G3 vs G2 | G3 vs G1 | G3 vs G1+2 | G2+3 vs G1 | G2 vs G1+3 | G3 vs G1+2 | G2+3 vs G1 | G2 vs G1+3 | G1 | G2 | G3 | G1+2 | G2+3 | G1+3 |

| Accuracy | Delta (%) | Delta (%) | Delta (%) | Delta (%) | Delta (%) | Delta (%) | Delta (%) | Delta (%) | Delta (%) | Delta (%) | Delta (%) | Delta (%) | Delta (%) | Delta (%) | Delta (%) |

| Random Forest | 0.7* | −17.0*** | −16.5*** | −17.3*** | −8.1*** | 10.5*** | −1.2*** | −9.4*** | −10.0*** | 0.4* | 0.7* | 22.3*** | 0.0 | −1.0*** | 0.2 |

| Mlogit | −3.7*** | −12.7*** | −15.9*** | −17.3*** | 1.4** | 1.8** | 18.1*** | −3.6*** | −3.5*** | 6.1*** | 10.1*** | 9.2*** | 6.7*** | 0.8 | 8.2*** |

| Linear SVC | 2.5*** | −10.9*** | −8.6*** | −13.6*** | 0.8 | 0.4 | 8.8*** | −9.1*** | −8.9*** | 8.9*** | 6.2*** | 5.6*** | −0.8 | −1.8*** | −2.9*** |

| LDA | 15.2*** | −31.7*** | −21.3*** | −28.7*** | −9.8*** | 16.2*** | −15.9*** | −14.5*** | −14.8*** | 15.1*** | −0.1 | 46.3*** | −12.3*** | 9.0*** | −1.1* |

| MLP | −5.6*** | −0.1 | −5.7*** | −1.1 | −8.6*** | 7.4*** | 8.9 | 1.0 | 2.3* | −9.9*** | −4.6*** | −2.1* | 5.4*** | −0.4 | 4.8*** |

| Precision | |||||||||||||||

| Random Forest | −23.0*** | 48.1*** | 14.1** | 48.1*** | −4.8 | −34.6*** | 42.5** | −9.7** | 39.4*** | 6.7 | 3.8 | −39.6*** | 27.4*** | 1.2 | −5.3 |

| Mlogit | −20.9*** | 9.7** | −13.2*** | 3.1 | 7.9* | 5.4 | 9.4*** | −13.3*** | 22.9*** | 13.9*** | 43.9*** | 23.2*** | 39.0*** | −8.4** | 86.6*** |

| Linear SVC | −22.6*** | 5.0 | −18.8*** | 5.0 | −34.9*** | −14.5*** | 14.8** | −8.7** | −21.5*** | 4.1 | 34.5*** | −5.0 | 14.5** | 46.0*** | −9.8** |

| LDA | 22.4*** | −42.7*** | −29.9*** | −23.6*** | −40.7*** | 79.1*** | −33.9*** | −25.5*** | −38.9*** | 24.9*** | 2.0 | 78.1*** | −10.1** | 56.9*** | 11.6* |

| MLP | −21.3*** | 21.2*** | −4.6 | 30.9*** | −27.5*** | 3.3 | −23.0** | −11.2** | −42.8*** | −3.1 | 23.0*** | 0.4 | 1.1 | 18.7*** | −27.3*** |

| Recall | |||||||||||||||

| Random Forest | −21.7*** | 10.7*** | −13.3*** | 11.1*** | −19.5*** | −5.6*** | 4.0*** | −20.4*** | 5.1*** | 1.5* | 1.6 | −10.7*** | 3.2*** | 0.4 | 0.7 |

| Mlogit | −18.6*** | 1.9 | −17.0*** | 7.4* | −8.9** | 17.0*** | −0.2 | 2.6 | −0.2 | −9.7*** | 10.9*** | −1.7 | 5.4 | 1.7 | 29.5*** |

| Linear SVC | −12.7*** | −1.1 | −13.7*** | 7.2* | −31.3*** | 4.1 | 1.6 | −29.2*** | −27.2*** | 6.6* | 22.1*** | −2.9 | 5.8 | 9.8** | −7.4** |

| LDA | 24.3*** | −32.7*** | −16.3*** | −7.6* | −32.4*** | 67.0*** | −34.7*** | −42.9*** | −40.2*** | 26.6*** | 1.8 | 51.2*** | −8.7** | 6.8* | 1.6 |

| MLP | −15.9*** | 8.3** | −8.9** | 26.1*** | −33.5*** | 19.3*** | −19.8*** | −23.9*** | −35.0*** | −10.3** | 6.8* | −1.3 | −0.2 | 2.6 | −17.1*** |

| F1 | |||||||||||||||

| Random Forest | −20.4*** | 11.2*** | −11.4*** | 14.3*** | −20.4*** | −5.9** | 8.9*** | −19.6*** | 6.2** | 2.1 | 1.8 | −14.1*** | 6.9*** | 3.1* | 1.7 |

| Mlogit | −20.0*** | 2.8 | −17.8*** | 3.6 | −4.0 | 14.3*** | 5.6** | 10.2*** | 7.3*** | −2.0 | 22.5*** | 7.0** | 17.1*** | 12.5*** | 50.2*** |

| Linear SVC | −15.9*** | −0.3 | −16.1*** | 4.5 | −31.8*** | −2.9 | 7.3* | −29.5*** | −26.9*** | 8.1* | 28.5*** | −3.0 | 8.7 | 11.8*** | −8.8** |

| LDA | 25.9*** | −40.7*** | −25.3*** | −18.9*** | −37.6*** | 77.4*** | −36.6*** | −45.4*** | −41.6*** | 28.4*** | 1.9 | 71.8*** | −11.7*** | 12.3** | 5.6 |

| MLP | −19.0*** | 12.9*** | −8.5** | 28.3*** | −32.4*** | 14.9*** | −19.2*** | −24.9*** | −37.9*** | −8.7* | 12.7** | −1.5 | 2.2 | 1.4 | −19.6 |

Racial groups in the CRC and Lung datasets behaved similarly. Combining small groups, such as Black patients or other minorities, with a larger racial group reduced the percent differences in performance metrics like Precision, Recall, and F1 (Tables 3 and 4). The improvement was most pronounced for MLogit, where differences in Precision as large as 73.7% and 44.2% were reduced to 24.1% and 7.3% for CRC (Table 3).

Table 3.

Examining the effects of ways to mitigate model bias among CRC Race groups. The color shade indicates the difference, while the number of asterisks indicates the level of statistical differences (*, <0.05; **, <0.01; ***, <0.001).

| Tuning subject | All | | | | | | Subgroup | | | Subgroup vs All | | | | | | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Testing subject | Black vs Unknown | White vs Unknown | White vs Black | White vs Nonwhite/Unknown | Unknown vs Known | Unknown/White vs Minorities | White vs Unknown/Nonwhite | Unknown vs Known | Unknown/White vs Minorities | White | Unknown | Unknown/White | Black | Unknown/Nonwhite | Known | Minorities |

| Accuracy | Delta (%) | Delta (%) | Delta (%) | Delta (%) | Delta (%) | Delta (%) | Delta (%) | Delta (%) | Delta (%) | Delta (%) | Delta (%) | Delta (%) | Delta (%) | Delta (%) | Delta (%) | Delta (%) |

| Random Forest | −10.5*** | −16.6*** | −6.9*** | −13.6*** | 19.2*** | 2.4** | −15.1*** | −3.4** | 1.3** | −0.8* | −18.6*** | 0.0 | −7.6*** | 0.9* | 0.5* | 1.0 |

| Mlogit | −3.7** | 0.4 | 4.3** | −2.1** | 4.6** | −80*** | −9.5*** | 5.5*** | 3.0*** | −0.4 | 9.6*** | 7.7*** | 3.9** | 7.8*** | 8.7*** | −3.8*** |

| Linear SVC | −4.3* | −10.3*** | −6.3*** | −3.8*** | 12.8*** | 9.7*** | −14.2*** | 12.7*** | 7.5*** | −3.3*** | −7.2*** | −9.1*** | −9.4*** | 8.4*** | −7.1*** | −7.2*** |

| LDA | −11.1*** | −17.1*** | −6.7*** | −16.7*** | 15.9*** | 2.8*** | −9.3*** | 29.6*** | −2.5** | 10.0*** | 14.0*** | −6.2*** | 2.6** | 1.0** | 1.9* | −1.0 |

| MLP | −20.0*** | −22.1*** | −2.5* | −18.6*** | 24.1*** | 86.0*** | −30*** | 12.8*** | 17.7*** | 10.1*** | −7.0*** | −0.9 | 7.3*** | −7.5*** | 2.3* | 56.7*** |

| Precision | ||||||||||||||||

| Random Forest | 45.1*** | −9.4* | −37.6*** | −27.8*** | −20.0*** | −19.6** | 1.9 | 28.1** | −42.9*** | 24.0** | 38.1*** | −24.1*** | −22.6*** | −12.1* | −13.8* | 6.9 |

| Mlogit | 73.7*** | −3.1 | −44.2*** | −24.1*** | −7.3*** | −51.3*** | 13.8*** | −38.9*** | 25.3*** | 67.1*** | 12.3*** | 97.9*** | −6.8** | 11.4** | 70.4*** | −23.0*** |

| Linear SVC | 34.0*** | −6.9* | −30.5*** | 8.0* | 61.7* | −38.3*** | −6.3 | 25.4*** | −6.6* | −24.0*** | −18.0*** | 21.1*** | −47.2*** | −12.4** | 5.8 | −20.0*** |

| LDA | 48.8*** | −30.0*** | −53.0*** | −29.2*** | −31.7*** | −34.5*** | 1.8 | 106.4*** | −39.8*** | 44.1*** | 60.9*** | 15.2*** | −32.2*** | 0.3 | −46.8*** | 25.3*** |

| MLP | 9.6*** | −27.5*** | −33.8*** | −24.6*** | 41.8*** | 34.2*** | −8.7* | 17.7** | 21.1*** | 3.3 | −11.2** | 23.3** | −31.6*** | −14.7*** | 7.1 | 36.7*** |

| Recall | ||||||||||||||||

| Random Forest | 33.9*** | −14.0*** | −35.7*** | −14.5*** | 11.5*** | −29.7*** | −11.4*** | 39.2*** | −30.8*** | 2.3 | 21.6*** | −2.3*** | −34.5*** | −1.3 | −2.6** | −0.8 |

| Mlogit | 45.8*** | −8.3** | −37.1*** | −13.4*** | 9.3*** | −50.8*** | 4.7** | −23.6*** | −13.1*** | 14.2*** | −13.5*** | 31.8*** | −28.2*** | −5.5* | 23.8*** | −25.3*** |

| Linear SVC | 28.8*** | −13.9*** | −33.1*** | 0.0 | 56.0 | −43.1*** | −12.9*** | 29.6*** | −29.1*** | −17.5*** | −14.6*** | 9.4*** | −44.8*** | −5.4 | 2.8 | −12.3*** |

| LDA | 28.6*** | −32.7*** | −47.6*** | −20.9*** | −21.0*** | −27.7*** | −1.4 | 81.3*** | −46.3*** | 24.0*** | 25.0*** | 11.0*** | −35.1*** | −0.5 | −45.5*** | 49.4*** |

| MLP | 11.9*** | −31.2*** | −38.5*** | −23.2*** | 45.4*** | 19.1*** | −7.0* | 30.1*** | −7.4** | 8.1* | −10.3** | 20.7*** | −33.5*** | −10.7*** | 0.3 | 55.3*** |

| F1 | ||||||||||||||||

| Random Forest | 36.9*** | −19.4*** | −41.1*** | −23.2*** | 10.5*** | −32.2*** | −14.6*** | 50.7*** | −36.9*** | 7.5 | 27.8*** | −6.3*** | −36.7*** | −3.3 | −6.3** | 0.6 |

| Mlogit | 59.5*** | −6.7* | −41.5*** | −17.6*** | 4.0*** | −51.9*** | 5.6** | −30.0*** | −0.2 | 29.7*** | −5.6* | 53.7*** | −24.1*** | 1.1 | 40.3*** | −26.0*** |

| Linear SVC | 32.8*** | −13.1*** | −34.5*** | 2.7 | 61.8 | −42.3*** | −11.6*** | 29.9*** | −21.8*** | −19.4*** | −16.7*** | 11.6*** | −47.3*** | −6.3* | 3.7 | −17.7*** |

| LDA | 25.6*** | −37.8*** | −50.5*** | −26.7*** | −19.2*** | −30.1*** | −2.7 | 100.0*** | −44.5*** | 32.4*** | 32.3*** | 12.0*** | −34.4*** | −0.3 | −46.6*** | 41.0*** |

| MLP | 8.3** | −31.5*** | −36.7*** | −24.9*** | 47.9*** | 21.7*** | −7.9* | 27.6*** | 4.0*** | 5.8 | −11.3** | 20.7*** | −33.0*** | −13.8*** | 2.8 | 41.3*** |

Table 4.

Examining the effects of ways to mitigate model bias among lung race groups. The color shade indicates the difference, while the number of asterisks indicates the level of statistical differences (*, <0.05; **, <0.01; ***, <0.001).

| Tuning subject | All | | | | | | Subgroup | | | Subgroup vs All | | | | | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Testing subject | Other vs Black | Other vs White | Black vs White | White vs Minorities | Other vs Black/White | Black vs Nonblack | White vs Minorities | Other vs Black/White | Black vs Nonblack | White | Other | Black | Minorities | Black/White | Nonblack |

| Accuracy | Delta (%) | Delta (%) | Delta (%) | Delta (%) | Delta (%) | Delta (%) | Delta (%) | Delta (%) | Delta (%) | Delta (%) | Delta (%) | Delta (%) | Delta (%) | Delta (%) | Delta (%) |

| Random Forest | −16.8*** | 3.5** | −13.9*** | 0.0 | −5.5*** | 16.8*** | −2.4* | −3.6*** | 14.4*** | −2.8** | −0.4 | −3.5* | −0.4 | −2.4*** | −1.4* |

| Mlogit | −15.0*** | 7.6** | −8.5*** | 16.2*** | 26.5*** | 30.1*** | 24.7*** | −29.8*** | −2.7** | 3.4** | −23.0*** | −5.2** | −3.6* | 38.9*** | 26.8*** |

| Linear SVC | −22.4*** | 9.2** | −15.3*** | 33.5*** | −12.8*** | 52.7*** | 127.2*** | 56.8*** | 77.1*** | 37.3*** | 89.5*** | 31.3*** | −19.3*** | 5.4*** | 13.2*** |

| LDA | 85.3*** | −22.7*** | 43.2*** | 22.3*** | 30.4*** | −29.6*** | 0.7 | −55.7*** | −20.1*** | −12.5*** | −64.8*** | 17.7** | 6.3** | 3.6** | 3.6*** |

| MLP | −10.4 | 43.6*** | 28.6*** | 43.9*** | −23.6*** | −22.7*** | −3.6 | −27.8*** | −17.0*** | −14.4*** | −9.8 | 2.8 | 27.9*** | −4.5** | −4.3** |

| Precision | |||||||||||||||

| Random Forest | −23.7*** | 38.8*** | 5.9 | 25.6*** | −34.8*** | −15.1*** | 23.1*** | −28.4*** | −16.0*** | 6.9 | 5.9 | −8.4* | 9.1 | −3.6 | −7.4 |

| Mlogit | −13.7*** | −0.2 | −13.9*** | 52.0*** | 79.6*** | 73.3*** | 74.9* | −54.4*** | −33.9*** | 20.8*** | −48.0*** | −29.5*** | 5.0 | 104.7*** | 84.8*** |

| Linear SVC | −33.3*** | −9.5 | −9.5 | 5.3 | −16.9*** | 12.6* | 101.1*** | 66.9*** | 67.1*** | 13.3** | 75.1*** | 38.4*** | −40.7*** | −12.9*** | −6.8 |

| LDA | 70.0*** | −56.3*** | −25.6*** | −18.5*** | 62.4*** | 25.0*** | 23.9*** | −47.4*** | 18.5*** | 26.5*** | −70.2*** | 14.1* | −16.8*** | −8.1* | 20.3*** |

| MLP | −12.4 | 12.9 | −1.1 | 41.7*** | −23.8*** | 4.7 | 22.5*** | −14.4** | 20.8** | 10.3** | −15.8* | 6.8 | 27.5*** | −25.1*** | −7.4* |

| Recall | |||||||||||||||

| Random Forest | −8.5*** | −1.7 | −10.7*** | 2.9 | 0.3 | 10.8*** | 2.1 | −0.7 | 4.9* | 2.5 | 2.1 | −6.3* | 3.2 | 2.4* | −1.0 |

| Mlogit | −12.6*** | −5.8* | −17.7*** | 25.3*** | 59.0*** | 72.7*** | 45.3*** | −37.3*** | −11.4*** | 3.1 | −41.6*** | −20.9*** | −11.1** | 48.1*** | 54.3*** |

| Linear SVC | −31.7*** | −4.4 | −34.7*** | 9.1 | −14.2*** | 71.9*** | 90.4*** | 69.3*** | 83.4*** | 24.7*** | 84.6*** | 3.6 | −28.5*** | −6.4* | −2.9 |

| LDA | 82.9*** | −53.4*** | −17.9*** | −18.4*** | 66.0*** | 14.9** | 10.1** | −41.2*** | 16.8** | 13.1*** | −67.7*** | 13.2* | −16.2*** | −12.0*** | 11.4*** |

| MLP | −16.0* | 11.0 | −6.8 | 16.6*** | −21.0*** | 8.4 | 8.3* | −14.7* | 22.0*** | 8.9** | −15.2 | 5.5 | 17.2** | −21.5*** | −6.3* |

| F1 | |||||||||||||||

| Random Forest | −18.8*** | 4.4 | −15.2*** | 9.6*** | −8.0** | 16.0*** | 9.7** | −10.5*** | 8.8** | 8.8** | 4.4 | −7.4* | 8.7* | 7.2*** | −1.2 |

| Mlogit | −13.1*** | −9.1** | −21.0*** | 34.5*** | 77.3*** | 86.5*** | 63.0*** | −48.4*** | −23.6*** | 12.1*** | −49.1*** | −27.9*** | −7.5* | 74.8*** | 76.0*** |

| Linear SVC | −34.4*** | −9.5* | −40.6*** | 6.3*** | −12.9*** | 84.6*** | 111.6*** | 78.4*** | 90.6*** | 25.2*** | 88.5*** | −1.2 | −37.2*** | −8.0** | −4.3 |

| LDA | 75.4*** | −58.0*** | −26.4*** | −17.8*** | 70.8*** | 27.1*** | 14.2*** | −9.0 | 24.6*** | 14.7*** | −53.1*** | 12.6* | −17.4*** | −12.0*** | 14.9*** |

| MLP | −13.5 | 5.4 | −8.8 | 27.4*** | −18.5** | 10.4 | 12.1** | −12.3*** | 28.3*** | 9.7** | −17.9* | 6.4 | 24.7*** | −23.7*** | −8.5** |

We also tuned the ML models on individual or combined age, sex, and race groups before testing them on other members of the same group, to see whether this could improve model performance. This method appeared to reduce the performance difference between groups for certain models without the need to penalize the model for bias [26-28].

RF and MLP generally showed reduced model bias with this method. Whereas RF accuracy remained relatively constant for both CRC and Lung patients, Precision, Recall, and F1 typically improved, especially for CRC sex and age groups and Lung sex groups (Tables 1, 5, and 6). For MLP, this method significantly improved all performance metrics across many of the CRC and Lung groups, especially for CRC and Lung sex groups (Tables 5 and 6).

Table 5.

Examining the effects of ways to mitigate model bias among CRC groups based on sex. The color shade indicates the difference, while the number of asterisks indicates the level of statistical differences (*, <0.05; **, <0.01; ***, <0.001).

| Tuning subject | All | Subgroup | Subgroup vs All | |

|---|---|---|---|---|

| Testing subject | Male vs Female | Male vs Female | Female | Male |

| Accuracy | Delta (%) | Delta (%) | Delta (%) | Delta (%) |

| Random Forest | −3.6*** | −3.6*** | −0.6* | −0.6* |

| Mlogit | −5.0*** | −7.0*** | 4.1*** | 1.9** |

| Linear SVC | −19.7*** | −1.4 | −18.5*** | 0.0 |

| LDA | −1.4** | −6.2*** | 4.0*** | −1.0* |

| MLP | −11.5*** | 2.3*** | −1.0 | 14.6*** |

| Precision | ||||

| Random Forest | 21.8** | 5.7 | 21.8** | 5.7 |

| Mlogit | 3.4 | −22.2*** | 53.0*** | 15.1*** |

| Linear SVC | −10.4*** | 19.2*** | −35.8*** | −14.6*** |

| LDA | −5.8 | −4.8 | −2.3 | −1.2 |

| MLP | −26.6*** | 9.7* | −8.9* | 36.2*** |

| Recall | ||||

| Random Forest | 2.4* | 0.0 | 3.5** | 1.2 |

| Mlogit | −3.5 | −19.1*** | 5.9* | −11.3*** |

| Linear SVC | −15.7*** | 11.2*** | −30.1*** | −7.8** |

| LDA | −6.7* | −6.2* | −2.6 | −2.0 |

| MLP | −20.7*** | 3.8 | −5.5 | 23.8*** |

| F1 | ||||

| Random Forest | 4.2* | −0.9 | 8.4*** | 3.1 |

| Mlogit | −2.4 | −20.8*** | 21.0*** | −1.8*** |

| Linear SVC | −24.3*** | 14.5*** | −34.7*** | −1.3 |

| LDA | −6.2 | −6.5* | −1.3 | −1.7 |

| MLP | −24.8*** | 6.6 | −7.2 | 31.6*** |

Table 6.

Examining the effects of ways to mitigate model bias among Lung groups based on sex. The color shade indicates the difference, while the number of asterisks indicates the level of statistical differences (*, <0.05; **, <0.01; ***, <0.001).

| Tuning subject | All | Subgroup | Subgroup vs All | |

|---|---|---|---|---|

| Testing subject | Male vs Female | Male vs Female | Female | Male |

| Accuracy | Delta (%) | Delta (%) | Delta (%) | Delta (%) |

| Random Forest | −2.5*** | −2.7* | −0.4 | −0.6 |

| Mlogit | −2.6 | −12.9** | 9.1*** | −2.5 |

| Linear SVC | 8.1*** | 2.0 | 15.9*** | 9.3*** |

| LDA | 6.3*** | 8.2* | 3.8** | 5.7*** |

| MLP | −22.1*** | −37.0*** | 4.2** | −15.8*** |

| Precision | ||||

| Random Forest | 45.1*** | 31.1 | 0.4 | −9.3* |

| Mlogit | 5.2 | −5.1*** | 51.1*** | 36.3*** |

| Linear SVC | −2.0 | 53.2*** | −5.2 | 53.0*** |

| LDA | 14.5*** | 25.4 | −0.7 | 8.7* |

| MLP | −38.8*** | −11.6*** | −31.0*** | −0.4 |

| Recall | ||||

| Random Forest | 10.5*** | 7.1 | 0.7 | −2.5* |

| Mlogit | 3.1 | −2.1*** | 14.4*** | 8.7** |

| Linear SVC | 0.8 | 37.3** | −0.5 | 35.5*** |

| LDA | 8.5** | 21.4 | −5.0 | 6.3* |

| MLP | −32.0*** | −20.6*** | −19.7*** | −6.3 |

| F1 | ||||

| Random Forest | 13.1*** | 7.1 | 0.8 | −4.6* |

| Mlogit | 4.0 | −1.9*** | 28.4*** | 21.1*** |

| Linear SVC | 0.9 | 45.8 | −1.2 | 42.8*** |

| LDA | 13.1*** | 24.5 | −1.7 | 8.2* |

| MLP | −36.9*** | −21.3*** | −24.6*** | −6.0 |

Linear SVC reduced the gap in accuracy from 19.7% to 1.4% for the CRC sex groups and from 8.1% to 2.0% for the lung sex groups (Tables 5 and 6). However, it often widened the gap for other performance metrics, especially across the lung sociodemographic groups (Tables 2, 4, and 6).
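The Delta values in these tables are between-group gaps in a performance metric, with asterisks marking significance. This section does not state exactly how each gap or its P value was computed, so the Python sketch below rests on two labeled assumptions: the gap is the difference between two groups' mean per-run scores in percentage points, and significance comes from a two-sided permutation test (a stand-in, not necessarily the test the authors used). Only the asterisk thresholds are taken from the table captions; the function names are hypothetical.

```python
import random
from statistics import fmean


def gap_pp(scores_a, scores_b):
    """Gap between two groups' mean per-run scores, in percentage points (assumed definition)."""
    return 100 * (fmean(scores_a) - fmean(scores_b))


def permutation_p_value(scores_a, scores_b, n_perm=10_000, seed=0):
    """Two-sided permutation test on the difference in means (a stand-in test)."""
    rng = random.Random(seed)
    observed = abs(fmean(scores_a) - fmean(scores_b))
    pooled = list(scores_a) + list(scores_b)
    n_a = len(scores_a)
    hits = 0
    for _ in range(n_perm):
        rng.shuffle(pooled)  # randomly reassign runs to the two groups
        diff = abs(fmean(pooled[:n_a]) - fmean(pooled[n_a:]))
        if diff >= observed - 1e-12:  # small tolerance for floating-point ties
            hits += 1
    return (hits + 1) / (n_perm + 1)  # add-one correction keeps p above zero


def stars(p):
    """Asterisk notation from the table captions."""
    if p < 0.001:
        return "***"
    if p < 0.01:
        return "**"
    if p < 0.05:
        return "*"
    return ""
```

A permutation test is used here only because it needs no distributional assumptions and runs on the standard library; with `n_perm=10_000` the smallest attainable P value is about 0.0001, which is enough to resolve the `***` threshold.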

Table 2.

Effects of strategies to mitigate model bias among lung age groups. The color shade indicates the size of the difference, and the number of asterisks indicates the level of statistical significance (*, P < 0.05; **, P < 0.01; ***, P < 0.001).

| Tuning subject | All | Subgroup | Subgroup vs All | Subgroup vs All |
|---|---|---|---|---|
| Testing subject | 65+ vs Below 65 | 65+ vs Below 65 | Below 65 | 65+ |
| Accuracy | Delta (%) | Delta (%) | Delta (%) | Delta (%) |
| Random Forest | −16.1*** | −17.9*** | 0.4 | −1.8 |
| Mlogit | −21.9*** | −1.1*** | 1.6 | 28.7*** |
| Linear SVC | 4.4* | −24.7*** | 26.4*** | −8.9*** |
| LDA | −14.5*** | −7.0*** | 14.0*** | 24.0*** |
| MLP | −20.9*** | 0.9 | −24.3*** | −3.3 |
| Precision | | | | |
| Random Forest | 24.2*** | 23.8*** | 1.6 | 1.3 |
| Mlogit | 15.1*** | 49.9*** | 16.4*** | 51.5*** |
| Linear SVC | −3.1 | −27.1*** | 25.2*** | −5.8 |
| LDA | −18.0** | −29.5*** | −5.0 | −18.4** |
| MLP | −24.7*** | 43.5*** | −38.3*** | 17.7*** |
| Recall | | | | |
| Random Forest | 9.3*** | 10.6*** | 1.9 | 3.1 |
| Mlogit | −3.3 | 33.2*** | −6.9* | 28.3*** |
| Linear SVC | −8.8 | −23.4*** | 13.1*** | −5.0 |
| LDA | −13.8** | −23.4*** | 5.5 | −6.3 |
| MLP | −24.0*** | 26.1*** | −31.7*** | 13.3*** |
| F1 | | | | |
| Random Forest | 14.4*** | 19.6*** | 4.2* | 8.9** |
| Mlogit | 3.0 | 44.0*** | 2.4 | 43.2*** |
| Linear SVC | −5.5 | −27.5*** | 20.9*** | −7.2* |
| LDA | −18.2*** | −27.8*** | 3.1 | −9.0* |
| MLP | −26.2*** | 31.4*** | −35.6*** | 14.7*** |

Finally, we calculated the MSE values for each method we used to try to reduce model bias (Table 7). Across the same ML models and performance metrics, both merging groups and tuning and testing the model on the same group often reduced the magnitude of the MSE values. This was especially apparent for models such as RF (data not shown) across both CRC and lung cancer patients grouped by age, sex, and race.

Table 7.

Mean squared errors by cancer type and sociodemographic group.

| Tuning subject | All | All on Subgroup | Subgroup | Subgroup vs All |
|---|---|---|---|---|
| CRC | | | | |
| Age | 0.0091 | 0.0110 | 0.0041 | 0.0220 |
| Gender | 0.0045 | N/A | 0.0027 | 0.0110 |
| Race | 0.0237 | 0.0216 | 0.0145 | 0.0174 |
| Lung | | | | |
| Age | 0.0046 | N/A | 0.0059 | 0.0071 |
| Gender | 0.0054 | N/A | 0.0086 | 0.0790 |
| Race | 0.0176 | 0.0081 | 0.0163 | 0.0246 |

Discussion

Here, we examined how age, sex, and race affect the multimetric performance of five ML models in classifying patients' 4-category survival outcomes using CRC and lung adenocarcinoma data from TCGA. We confirmed that ML models exhibit significant biases and found that they tended to perform more poorly overall for the largest sociodemographic groups. Our data show that both merging groups (depending on the choice of subgroup) and training models on each individual or merged group have the potential to reduce ML performance biases and thus improve AI fairness.

Few published studies have evaluated ML model bias in classifying cancer outcomes using omic and clinical data, although some have investigated racial biases in ML modeling of population data [18] and in cancer imaging or classification [14, 20, 27, 30]. However, most, if not all, of these studies evaluate model performance solely on accuracy. This study is novel in that, for the first time, we compared the multimetric performance of RF, MLogit, Linear SVC, LDA, and MLP models to empirically examine model bias across age, sex, and race groups.
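To illustrate why accuracy-only evaluation can mask group disparities in a multicategory setting, the Python sketch below computes accuracy together with macro-averaged precision, recall, and F1. Macro averaging and the class labels 0 to 3 are assumptions (the averaging scheme is not stated in this section), the function name is hypothetical, and the two groups are invented toy data, not TCGA results.

```python
def multimetric(y_true, y_pred, labels=(0, 1, 2, 3)):
    """Accuracy plus macro-averaged precision, recall and F1 over the given labels."""
    pairs = list(zip(y_true, y_pred))
    accuracy = sum(t == p for t, p in pairs) / len(pairs)
    precisions, recalls, f1s = [], [], []
    for c in labels:
        tp = sum(t == c and p == c for t, p in pairs)
        fp = sum(t != c and p == c for t, p in pairs)
        fn = sum(t == c and p != c for t, p in pairs)
        precision = tp / (tp + fp) if tp + fp else 0.0  # class never predicted
        recall = tp / (tp + fn) if tp + fn else 0.0     # class absent from y_true
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        precisions.append(precision)
        recalls.append(recall)
        f1s.append(f1)
    k = len(labels)
    return {"accuracy": accuracy, "precision": sum(precisions) / k,
            "recall": sum(recalls) / k, "f1": sum(f1s) / k}


# Two invented groups with identical accuracy but different macro recall.
group_a = multimetric([0, 1, 2, 3, 0, 1, 2, 3], [0, 1, 2, 3, 0, 1, 0, 0])
group_b = multimetric([0, 0, 0, 0, 0, 1, 2, 3], [0, 0, 0, 0, 0, 1, 0, 0])
print(group_a["accuracy"], group_b["accuracy"])  # 0.75 0.75
print(group_a["recall"], group_b["recall"])      # 0.75 0.5
```

Both toy groups reach 75% accuracy, yet macro recall differs by 25 percentage points because group B's rarer outcome classes are never predicted correctly; an accuracy-only comparison would report no gap.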

Moreover, with one exception [18], previous cancer studies on ML or AI fairness have sorted patients into binary categories, especially for factors like race (comparing Black and White patient groups), further marginalizing racial minorities [13, 14, 20]. Thus, model performance and biases toward other racial groups, such as Asian American and Pacific Islander (AAPI) or Native American patients, are poorly understood. Although we were limited by the small size of these groups and could examine them only when merged with other groups, our findings may shed light on how algorithmic bias affects model performance for small minority groups. Furthermore, most previous ML studies of cancer survival using transcriptomic data and socioeconomic status classify patient outcomes as binary or time-based survival [30, 31]. By analyzing four outcome categories, especially in the context of patients' sociodemographic factors, our models may help clarify treatment outcomes (e.g., a death with cancer likely linked to under-treatment) and thus inform personalized cancer treatments.
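The merging rule for small racial groups is not given in this section. The Python sketch below assumes one simple, hypothetical rule: any group with fewer than `min_size` patients is pooled into a single `"Other"` category. The threshold, the label, the function name, and the toy counts are all illustrative assumptions, not the study's actual groups or sizes.

```python
from collections import Counter


def merge_small_groups(groups, min_size, merged_label="Other"):
    """Relabel every group with fewer than min_size patients as merged_label.

    Returns the relabeled list and the size of the merged group, so the caller
    can check whether merging alone produced a group large enough to evaluate.
    """
    counts = Counter(groups)
    relabeled = [g if counts[g] >= min_size else merged_label for g in groups]
    return relabeled, Counter(relabeled)[merged_label]


# Toy race labels (invented counts, not TCGA data); the threshold is hypothetical.
race = ["White"] * 40 + ["Black"] * 8 + ["AAPI"] * 3 + ["Native American"] * 1
merged, merged_n = merge_small_groups(race, min_size=10)
print(Counter(merged), merged_n)
```

Returning the merged group's size matters because pooling several very small groups can still leave a category too small for stable per-group metrics, which is the limitation described above.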

We also examined, for the first time to our knowledge, how to reduce age- and gender-related biases in ML performance when classifying cancer outcomes. Several original studies have focused on gender biases in ML performance [17, 23-25, 32-36], but to our knowledge few have focused on cancer. Meanwhile, review and guideline articles have raised concerns about gender bias and fairness [13, 37-40]. Age has been included as a covariate in several studies of AI/ML fairness [13, 14, 16, 18, 19, 22, 27], but only a few have focused on fairness with respect to age (i.e., ageism) [17]. Therefore, our work sheds light on the existence of, and possible solutions to, age-related ML performance biases and may help improve ML performance for older patients, who fared worse in our study.

In addition, many prior studies of AI fairness penalize the model for exhibiting bias [26-28, 41]. By training models on individual or merged groups, this study reduced performance gaps for RF, Linear SVC, and MLP without such penalization, despite possible overfitting. This approach may reduce algorithmic bias while allowing the model to perform well for patients from every group, rather than degrading performance for some groups to equalize it.
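Training on individual or merged groups can be sketched as fitting one model per group and routing each patient to the model for their own group. In the Python sketch below, a minimal nearest-centroid classifier is a hypothetical stand-in for RF, Linear SVC, or MLP, and the toy data are invented to show the mechanism only: when a small group's feature-outcome relationship differs from the majority's, a pooled model fits the majority and misclassifies the minority, whereas group-specific models fit both.

```python
from collections import defaultdict


class NearestCentroid:
    """Minimal nearest-centroid classifier (a stand-in for RF, Linear SVC, MLP)."""

    def fit(self, X, y):
        sums, counts = {}, defaultdict(int)
        for x, label in zip(X, y):
            if label not in sums:
                sums[label] = [0.0] * len(x)
            sums[label] = [s + v for s, v in zip(sums[label], x)]
            counts[label] += 1
        self.centroids = {c: [s / counts[c] for s in sums[c]] for c in sums}
        return self

    def predict(self, X):
        def dist2(a, b):
            return sum((u - v) ** 2 for u, v in zip(a, b))
        return [min(self.centroids, key=lambda c: dist2(x, self.centroids[c])) for x in X]


def fit_group_specific(X, y, groups):
    """Train one model per (individual or merged) group."""
    models = {}
    for g in set(groups):
        idx = [i for i, gi in enumerate(groups) if gi == g]
        models[g] = NearestCentroid().fit([X[i] for i in idx], [y[i] for i in idx])
    return models


def predict_group_specific(models, X, groups):
    """Route each patient to their group's model (KeyError for an unseen group)."""
    return [models[g].predict([x])[0] for x, g in zip(X, groups)]


# Toy data (invented): majority group A and minority group B have
# opposite feature-outcome relationships.
X = [[0.0], [0.0], [1.0], [1.0], [0.0], [1.0]]
y = [0, 0, 1, 1, 1, 0]
groups = ["A", "A", "A", "A", "B", "B"]

pooled_pred = NearestCentroid().fit(X, y).predict(X)
group_pred = predict_group_specific(fit_group_specific(X, y, groups), X, groups)
print(pooled_pred)  # [0, 0, 1, 1, 0, 1]: both group-B patients misclassified
print(group_pred)   # [0, 0, 1, 1, 1, 0]: matches y
```

Each group-specific model sees fewer training samples, which is the overfitting risk acknowledged above; with real data, group-specific performance would need to be estimated by cross-validation within each group rather than on the training data as in this toy example.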

This study has several limitations. We used 5-fold cross-validation and ran each model 20 times to rigorously examine the models, an approach previously shown to be effective [5, 9, 10]. Although 10-fold cross-validation provides more samples for training, it substantially reduces the sample size of each validation/testing set. Given our focus on minority groups, we chose 5-fold cross-validation even though 10-fold cross-validation is often preferred. Moreover, the sample sizes of the two TCGA cohorts may be too small, especially for the individual sociodemographic groups. Future large-scale studies with more balanced age, sex, and race cohorts, or at least cohorts more representative of the US population, are warranted to better understand algorithmic bias and to examine the intersectionality of different sociodemographic identities. Furthermore, we did not use so-called fairness metrics; instead, we used traditional group-versus-group comparisons for robust statistical inference. The main reason is that such AI fairness metrics remain largely debated or are attributable to a single source [18, 42]. Indeed, one group appears to prefer established (balanced) accuracy as the performance metric over fairness metrics [43]. Another group recommended using the metric best aligned with the clinical purpose when assessing the fairness of ML for clinical decision support, because different fairness metrics often yield conflicting results [44]. Finally, we could not use independent datasets as comprehensive as TCGA. Indeed, improving data sources and recruiting more patients from racial, age, and gender minority groups have been recommended [45-48]. We hope that better and more comprehensive datasets will become available and enable us to rigorously examine our findings in an independent dataset. Until then, additional studies are needed to validate our findings.
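The repeated cross-validation design can be sketched with the Python standard library. Stratifying folds by the 4-category outcome is an assumption (this section does not say whether folds were stratified), the function name is hypothetical, and the toy labels are invented; scikit-learn's `RepeatedStratifiedKFold(n_splits=5, n_repeats=20)` is an off-the-shelf alternative.

```python
import random
from collections import defaultdict


def repeated_stratified_kfold(y, n_splits=5, n_repeats=20, seed=0):
    """Yield (train_idx, test_idx) for repeated k-fold CV stratified by outcome class."""
    rng = random.Random(seed)
    for _ in range(n_repeats):
        by_class = defaultdict(list)
        for i, label in enumerate(y):
            by_class[label].append(i)
        folds = [[] for _ in range(n_splits)]
        offset = 0
        for label in sorted(by_class):
            idx = by_class[label]
            rng.shuffle(idx)  # new random assignment on every repeat
            for j, i in enumerate(idx):
                # deal patients round-robin so every fold gets a similar class mix
                folds[(offset + j) % n_splits].append(i)
            offset += len(idx)  # resume the round-robin where this class stopped
        for k in range(n_splits):
            test = sorted(folds[k])
            train = sorted(i for f, fold in enumerate(folds) if f != k for i in fold)
            yield train, test


# Invented 4-category labels; n = 515 matches the CRC cohort size.
toy_y = [i % 4 for i in range(515)]
for k in (5, 10):
    splits = repeated_stratified_kfold(toy_y, n_splits=k, n_repeats=1)
    sizes = sorted({len(test) for _, test in splits})
    print(f"{k}-fold: test folds of {sizes} patients")
```

With n = 515, 5-fold cross-validation leaves 103 patients in each test fold, versus 51 or 52 with 10-fold; a subgroup making up a tenth of the cohort would contribute only about 5 patients to each 10-fold test set, which illustrates the trade-off described above.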

In summary, we show that ML models, including RF, MLogit, Linear SVC, LDA, and MLP, exhibit bias toward different age, sex, and racial groups when classifying patients with colorectal cancer or lung adenocarcinoma into 4-category survival outcomes. These findings may improve our understanding of algorithmic bias and of how to better represent patients from all sociodemographic backgrounds. Our study also identifies several model design strategies that may reduce model bias.

Supplementary Material

Acknowledgments

Funding

This work was supported by the U.S. National Science Foundation (IIS-2128307 to LZ), the National Institute of Diabetes and Digestive and Kidney Diseases, National Institutes of Health (R01DK132885 to NG) and the National Cancer Institute, National Institutes of Health (R37CA277812 to LZ).

Footnotes

Conflicts of Interest

The authors declare no other conflicts of interest.

Compliance with ethical standards

This study used publicly available, de-identified data and was therefore exempt from IRB review.

Data Availability Statement

The datasets used and/or analyzed in this study are available on the cBioPortal website (https://www.cbioportal.org/). The program code is available from the corresponding authors on reasonable request.

References

1. Cui M, Deng F, Disis ML et al. Advances in the Clinical Application of High-throughput Proteomics, Explor Res Hypothesis Med 2024;9:209–220.
2. Cui M, Cheng C, Zhang L. High-throughput proteomics: a methodological mini-review, Lab Invest 2022;102:1170–1181.
3. Liu DD, Zhang L. Trends in the characteristics of human functional genomic data on the gene expression omnibus, 2001-2017, Lab Invest 2019;99:118–127.
4. Siegel RL, Kratzer TB, Giaquinto AN et al. Cancer statistics, 2025, CA Cancer J Clin 2025;75:10–45.
5. Deng F, Zhou H, Lin Y et al. Predict multicategory causes of death in lung cancer patients using clinicopathologic factors, Comput Biol Med 2021;129:104161.
6. She Y, Jin Z, Wu J et al. Development and Validation of a Deep Learning Model for Non-Small Cell Lung Cancer Survival, JAMA Netw Open 2020;3:e205842.
7. Deng F, Shen L, Wang H et al. Classify multicategory outcome in patients with lung adenocarcinoma using clinical, transcriptomic and clinico-transcriptomic data: machine learning versus multinomial models, Am J Cancer Res 2020;10:4624–4639.
8. Shang G, Jin Y, Zheng Q et al. Histology and oncogenic driver alterations of lung adenocarcinoma in Chinese, Am J Cancer Res 2019;9:1212–1223.
9. Deng F, Zhao L, Yu N et al. Union With Recursive Feature Elimination: A Feature Selection Framework to Improve the Classification Performance of Multicategory Causes of Death in Colorectal Cancer, Lab Invest 2024;104:100320.
10. Feng CH, Disis ML, Cheng C et al. Multimetric feature selection for analyzing multicategory outcomes of colorectal cancer: random forest and multinomial logistic regression models, Lab Invest 2021.
11. Zhang M, Hu W, Hu K et al. Association of KRAS mutation with tumor deposit status and overall survival of colorectal cancer, Cancer Causes Control 2020;31:683–689.
12. Zhang DY, Venkat A, Khasawneh H et al. Implementation of Digital Pathology and Artificial Intelligence in Routine Pathology Practice, Lab Invest 2024;104:102111.
13. Wang Y, Wang L, Zhou Z et al. Assessing fairness in machine learning models: A study of racial bias using matched counterparts in mortality prediction for patients with chronic diseases, J Biomed Inform 2024;156:104677.
14. Soltan A, Washington P. Challenges in Reducing Bias Using Post-Processing Fairness for Breast Cancer Stage Classification with Deep Learning, Algorithms 2024;17.
15. Kolla L, Parikh RB. Uses and limitations of artificial intelligence for oncology, Cancer 2024;130:2101–2107.
16. Iezzoni LI. Cancer detection, diagnosis, and treatment for adults with disabilities, Lancet Oncol 2022;23:e164–e173.
17. Tan W, Wei Q, Xing Z et al. Fairer AI in ophthalmology via implicit fairness learning for mitigating sexism and ageism, Nat Commun 2024;15:4750.
18. Trentz C, Engelbart J, Semprini J et al. Evaluating machine learning model bias and racial disparities in non-small cell lung cancer using SEER registry data, Health Care Manag Sci 2024;27:631–649.
19. Ganta T, Kia A, Parchure P et al. Fairness in Predicting Cancer Mortality Across Racial Subgroups, JAMA Netw Open 2024;7:e2421290.
20. Huti M, Lee T, Sawyer E et al. An Investigation into Race Bias in Random Forest Models Based on Breast DCE-MRI Derived Radiomics Features, Clin Image Based Proced Fairness AI Med Imaging Ethical Philos Issues Med Imaging (2023) 2023;14242:225–234.
21. Deng F, Huang J, Yuan X et al. Performance and efficiency of machine learning algorithms for analyzing rectangular biomedical data, Lab Invest 2021;101:430–441.
22. Doo FX, Naranjo WG, Kapouranis T et al. Sex-Based Bias in Artificial Intelligence-Based Segmentation Models in Clinical Oncology, Clin Oncol (R Coll Radiol) 2025;39:103758.
23. Dibaji M, Ospel J, Souza R et al. Sex differences in brain MRI using deep learning toward fairer healthcare outcomes, Front Comput Neurosci 2024;18:1452457.
24. Stanley EAM, Wilms M, Mouches P et al. Fairness-related performance and explainability effects in deep learning models for brain image analysis, J Med Imaging (Bellingham) 2022;9:061102.
25. Seyyed-Kalantari L, Liu G, McDermott M et al. CheXclusion: Fairness gaps in deep chest X-ray classifiers, Pac Symp Biocomput 2021;26:232–243.
26. Genc M. Penalized logistic regression with prior information for microarray gene expression classification, Int J Biostat 2024;20:107–122.
27. Maros ME, Capper D, Jones DTW et al. Machine learning workflows to estimate class probabilities for precision cancer diagnostics on DNA methylation microarray data, Nat Protoc 2020;15:479–512.
28. Herrmann M, Probst P, Hornung R et al. Large-scale benchmark study of survival prediction methods using multi-omics data, Brief Bioinform 2020.
29. Gao J, Aksoy BA, Dogrusoz U et al. Integrative analysis of complex cancer genomics and clinical profiles using the cBioPortal, Sci Signal 2013;6:pl1.
30. Chierici M, Bussola N, Marcolini A et al. Integrative Network Fusion: A Multi-Omics Approach in Molecular Profiling, Front Oncol 2020;10:1065.
31. Shen J, Wang L, Taylor JMG. Estimation of the optimal regime in treatment of prostate cancer recurrence from observational data using flexible weighting models, Biometrics 2017;73:635–645.
32. Salahuddin Z, Chen Y, Zhong X et al. From Head and Neck Tumour and Lymph Node Segmentation to Survival Prediction on PET/CT: An End-to-End Framework Featuring Uncertainty, Fairness, and Multi-Region Multi-Modal Radiomics, Cancers (Basel) 2023;15.
33. Yaseliani M, Noor EAM, Hasan MM. Mitigating Sociodemographic Bias in Opioid Use Disorder Prediction: Fairness-Aware Machine Learning Framework, JMIR AI 2024;3:e55820.
34. Li D, Lin CT, Sulam J et al. Deep learning prediction of sex on chest radiographs: a potential contributor to biased algorithms, Emerg Radiol 2022;29:365–370.
35. Lucas AM, Palmiero NE, McGuigan J et al. CLARITE Facilitates the Quality Control and Analysis Process for EWAS of Metabolic-Related Traits, Front Genet 2019;10:1240.
36. Wang R, Chaudhari P, Davatzikos C. Bias in machine learning models can be significantly mitigated by careful training: Evidence from neuroimaging studies, Proc Natl Acad Sci U S A 2023;120:e2211613120.
37. Yang Y, Lin M, Zhao H et al. A survey of recent methods for addressing AI fairness and bias in biomedicine, J Biomed Inform 2024;154:104646.
38. Liu M, Ning Y, Teixayavong S et al. A translational perspective towards clinical AI fairness, NPJ Digit Med 2023;6:172.
39. Do H, Nandi S, Putzel P et al. A joint fairness model with applications to risk predictions for underrepresented populations, Biometrics 2023;79:826–840.
40. Bhanot K, Qi M, Erickson JS et al. The Problem of Fairness in Synthetic Healthcare Data, Entropy (Basel) 2021;23.
41. Ashford LJ, Spivak BL, Ogloff JRP et al. Statistical learning methods and cross-cultural fairness: Trade-offs and implications for risk assessment instruments, Psychol Assess 2023;35:484–496.
42. Sikstrom L, Maslej MM, Hui K et al. Conceptualising fairness: three pillars for medical algorithms and health equity, BMJ Health Care Inform 2022;29.
43. Chin MH, Afsar-Manesh N, Bierman AS et al. Guiding Principles to Address the Impact of Algorithm Bias on Racial and Ethnic Disparities in Health and Health Care, JAMA Netw Open 2023;6:e2345050.
44. Labkoff S, Oladimeji B, Kannry J et al. Toward a responsible future: recommendations for AI-enabled clinical decision support, J Am Med Inform Assoc 2024;31:2730–2739.
45. Bedi S, Liu Y, Orr-Ewing L et al. Testing and Evaluation of Health Care Applications of Large Language Models: A Systematic Review, JAMA 2025;333:319–328.
46. Griffin AC, Wang KH, Leung TI et al. Recommendations to promote fairness and inclusion in biomedical AI research and clinical use, J Biomed Inform 2024;157:104693.
47. Gu T, Pan W, Yu J et al. Mitigating bias in AI mortality predictions for minority populations: a transfer learning approach, BMC Med Inform Decis Mak 2025;25:30.
48. Ueda D, Kakinuma T, Fujita S et al. Fairness of artificial intelligence in healthcare: review and recommendations, Jpn J Radiol 2024;42:3–15.
