Abstract

With the advent of the Internet, movie reviews have emerged as a crucial reference for users in selecting films and hold significant value in guiding filmmakers and platforms in content recommendation. Consequently, accurate classification of movie reviews has extensive practical applications. Traditional manual classification methods, however, are not only time-intensive and laborious but also susceptible to subjective bias. In response, automated classification techniques leveraging deep learning have become a promising alternative. Among these, the BERT model, renowned for its bidirectional encoder architecture, excels in contextual understanding and semantic representation. Nevertheless, it faces challenges in capturing long-range word dependencies and fully extracting local features in lengthy texts. Moreover, model bias stemming from sensitive information, such as gender and race, embedded in the data can compromise the fairness of classification outcomes. To address these limitations, this study introduces several enhancements to the BERT model. First, a dynamic positional offset encoding mechanism grounded in attention is employed to replace traditional absolute positional encoding, thereby enhancing the model’s capacity to process positional information. Second, a dynamic weighted fusion pooling strategy is proposed, integrating average pooling, maximum pooling, and self-attention pooling to improve the comprehensiveness of feature extraction. Additionally, during data preprocessing, sensitive attributes such as gender and race are mitigated through the removal or obfuscation of specific terms or features, combined with data augmentation techniques including easy data augmentation (EDA) and noise injection to generate neutral review samples. This approach reduces potential biases and enhances the model’s generalization capabilities. Experimental results on the IMDb movie review dataset demonstrate the efficacy of the proposed improvements, with the improved BERT model achieving a 0.73% increase in F1 score and a 0.90% improvement in accuracy, thereby validating the effectiveness of the modifications.

Keywords: Movie rating, Bert, Model bias, Dynamic position bias, Dynamic weighted fusion strategy, EDA, IMDb

Subject terms: Computer science, Information technology

Introduction

The Internet has transformed movie reviews into a pivotal platform for audiences to share their cinematic experiences. These reviews have assumed a pivotal role within the film market, exerting a notable influence on audience decision-making processes. A review of global film market statistics reveals a consistent and sustained growth trajectory in the movie industry over recent years. In 2019, global box office revenues reached $42.4 billion, according to Statista. Movie reviews constitute a pivotal source of information for prospective filmgoers, serving as a crucial guide in their decision-making process regarding which films to watch. China, in particular, is one of the fastest-growing markets in the global film industry. According to a report by the China Film Association, the country’s box office revenue in 2023 is projected to reach approximately 60.69 billion yuan, with a projected audience size of around 1.32 billion. A survey conducted by Nielsen further indicates that over 70% of viewers utilize reviews and ratings as a reference point when selecting a film.

Review platforms such as IMDb and Rotten Tomatoes amass a substantial volume of user reviews and ratings, which exert a considerable influence on the reach and box office performance of films. Research indicates that films receiving ratings of 8.0 or above on IMDb generate 30% to 50% more revenue than those with lower ratings. The advent of social media has led to an expansion in the reach of movie reviews beyond their traditional platforms. Social networks such as Weibo and WeChat have emerged as significant venues for users to share and discuss their viewing experiences. In 2023, a study revealed that over 90% of Chinese internet users engaged in posting or reading movie reviews on these platforms.

The integration of user-generated reviews within brand marketing has become a pivotal element. For instance, the Chinese movie rating platform Douban Movie boasts over 20 million user reviews, making it a prominent platform within China’s cultural landscape. The categorization of reviews based on sentiment or opinion facilitates producers’ ability to gauge audience feedback and provides potential viewers with information that influences their choices. This classification enhances the delivery of personalized content, thereby improving the user experience. Consequently, the accurate classification of movie reviews has emerged as a pivotal research domain within the broader field of natural language processing.

Conventional approaches to the classification of movie reviews employ feature extraction techniques, such as the bag-of-words model and term frequency-inverse document frequency (TF-IDF) analysis. Subsequent to these methods are machine learning algorithms, including support vector machines (SVM) and random forests. However, these methods have been shown to lack the capacity to effectively capture word order and contextual dependencies, resulting in suboptimal performance in understanding the intricate relationships within film reviews. In recent years, deep learning models, including convolutional neural networks (CNNs), recurrent neural networks (RNNs), and long short-term memory (LSTM) networks, have been employed to enhance the capture of contextual information. Nevertheless, these models continue to encounter challenges in processing extensive sequences and are computationally onerous.

Despite the strides made in deep learning, these models continue to grapple with the capture of long-range dependencies and semantic hierarchies within text. This presents a significant opportunity to enhance the classification performance of these models. The BERT (Bidirectional Encoder Representations from Transformers) model is designed to address these issues by capturing contextual information through its bidirectional transformer architecture. In contrast to conventional models, BERT employs pre-training tasks such as masked language modeling and next-sentence prediction to learn generalized language representations, thereby demonstrating superior performance across a spectrum of tasks.

BERT offers several key advantages. Firstly, its bidirectional encoding captures both the left and right contexts of words, thereby facilitating a more profound semantic understanding. Secondly, BERT’s pre-training on extensive corpora enhances its generalization and transfer learning abilities. Nevertheless, the original BERT (OBERT) model exhibits certain limitations, particularly in tasks such as film review classification, which suggest the potential for further optimization.BERT’s word embedding layer utilizes absolute positional encoding1, a method that is inadequate for capturing the relative relationships between words. This encoding method does not account for the distance between words, which is critical for many tasks. Absolute positional encoding is static; consequently, it is unable to adapt to task-specific variations, which limits its ability to handle texts of different lengths. This limitation becomes particularly problematic for texts of a greater length, where BERT demonstrates an inability to capture long-range word dependencies.BERT’s pooling strategy, known as CLS pooling2, employs the hidden state of the CLS token to represent the entire sentence. While this method is efficient, it fails to capture information from all positions in the sequence, which can result in information loss. This issue is more pronounced in longer texts, where the CLS token may fail to capture significant local features, thereby diminishing the model’s capacity to process long-distance dependencies.In order to address these limitations,the present paper proposes three major enhancements:

Absolute positional encoding should be replaced with dynamic positional bias encoding. This approach integrates relative positional encoding with learnable positional encoding, thereby enabling the model to more effectively capture word relationships and enhance contextual understanding.

The conventional CLS pooling strategy is substituted with a dynamic weighted fusion approach. This strategy integrates average pooling3, max pooling4, and self-attention pooling5, thereby enhancing the model’s adaptability to diverse data and facilitating the extraction of key information in varied contexts.

The efficacy of the model is augmented through Easy Data Augmentation (EDA)6 and noise injection techniques7. Additionally, L2 regularization8 is employed to enhance the model’s generalization, mitigate overfitting, and augment its resilience to unseen data. The remainder of this paper is structured as follows: Section "Related Work" offers a comprehensive review of the extant state of research. The subsequent section, Section "Methods", introduces the enhanced BERT (IBERT) model. The subsequent section, Section "Experimental analysis", details the experimental design and the subsequent analysis of the results. Concluding the study, Section "Results and Discussion" explores prospective avenues for further research.

Related work

In recent years, the rapid advancement of natural language processing (NLP) technology has greatly propelled film review classification tasks. Early studies primarily used traditional machine learning techniques. Pang et al.9 investigated sentiment classification for film reviews, proposing a method that utilized the bag-of-words model and TF-IDF representation. They combined this with traditional classifiers, such as support vector machines. However, this approach ignored word order and contextual information, limiting its ability to capture semantic relationships within film reviews. As a result, the classification performance was suboptimal.

Building on this work, Mikolov et al.10 introduced the Word2Vec model. This model used the Skip-Gram and CBOW techniques to generate word embeddings, marking a significant advancement in word embedding technology. Following this, Pennington et al.11 developed the GloVe model, which utilized matrix decomposition to create global word embeddings. These embeddings were better at capturing broader word relationships. Despite these advancements, both Word2Vec and GloVe models struggled with long-range dependencies and complex contextual sentiment variations, which are common in lengthy texts like film reviews. These reviews often involve multiple sentiments, sarcasm, and complex plot analysis.

The rise of deep learning led to the development of text classification methods based on convolutional neural networks (CNNs). Kim et al.12 proposed a CNN model for extracting local text features. This model used convolution and pooling operations to derive n-gram features from movie reviews, achieving high classification accuracy. However, while CNNs are effective at capturing local semantic information, they are less effective at processing long-range dependencies, especially in long reviews. This presents an opportunity to improve CNN performance.

To address the limitations of CNNs in handling long-range dependencies, recurrent neural networks (RNNs)13 and long short-term memory (LSTM) networks were introduced. Tang et al.14 developed a sentiment classification model based on bidirectional LSTM. This model captures both forward and backward dependencies, improving classification effectiveness. However, LSTMs suffer from high computational complexity when processing long texts, leading to long training times and challenges in modeling extremely long dependencies.

Vaswani et al.15 introduced the Transformer model, which uses an attention mechanism. This model significantly improved long text processing by bypassing the need for context-based word processing, as used in RNNs. The Transformer model’s self-attention approach handled long texts better. However, despite its effectiveness, Transformers require significant computation during training and struggle with tasks that demand fine-grained sentiment analysis.

The BERT model, proposed by Devlin et al.16, represents a significant advance in film review classification. BERT captures contextual information through pre-training and fine-tuning. It has demonstrated remarkable performance in many NLP tasks. However, relying solely on the [CLS] token to represent the entire sentence can cause the model to miss important information, particularly local and fine-grained features in long texts.

To improve positional representation, Shaw et al.17 introduced relative position encoding. This technique sets attentional weights based on the relative distance between words, rather than fixed position values. It improves the model’s ability to capture long-range dependencies. However, while relative position encoding improves positional awareness, it remains static, which limits its adaptability across different inputs.

To address BERT’s inefficiency in processing long texts, Beltagy et al.18 introduced the Longformer model. Longformer uses a local sparse attention mechanism and a limited number of global attentions. This reduces BERT’s computational complexity while maintaining sufficient contextual information. However, Longformer focuses more on reducing computational overhead than on optimizing positional representation or dynamically handling long-range dependencies.

Kitaev et al.19 introduced the Reformer model to address the computational complexity and memory limitations of traditional Transformers. The Reformer employs techniques such as locality-sensitive hashing and reversible residual networks to reduce computational overhead and memory usage. While this improves training efficiency, the constrained attention mechanisms may limit the model’s ability to capture long-range dependencies and complex global semantics, reducing its effectiveness in modeling long, intricate texts.

Gao et al.20 presented SimCSE, which uses contrastive learning to improve sentence representations and pooling methods. SimCSE is trained via unsupervised and supervised contrastive learning, generating more robust sentence representations, especially during pooling. While this technique excels in sentence-level tasks, it struggles to extract local details and diverse features in long text classification. Moreover, most of these methods rely on pre-trained sentence representations that are insufficient for capturing the full range of dynamic features in complex, long texts.

Methods

This study improves the original BERT model in several ways. Firstly, it replaces absolute positional encoding with dynamic positional bias21–23 encoding based on the attention mechanism24. This change enhances the model’s adaptability in processing positional information. Next, the study incorporates a dynamic weighted fusion pooling strategy25–27 that combines average pooling, max pooling, and self-attention pooling. This approach improves feature extraction and helps capture critical information. Additionally, the model’s generalization performance is enhanced through data augmentation using EDA and noise injection. These improvements enable superior performance when processing long texts and complex linguistic structures. The improved model is shown in Fig. 1.

Fig. 1.

Structure of the IBERT network.

Dynamic position bias

In the OBERT model, each word in the input sequence is assigned a fixed absolute position vector. In other words, every word’s position is encoded independently from the others. This method does not directly capture the distance between words, which is a disadvantage for tasks that require position sensitivity (such as syntactic analysis, question-answering, and text classification). Moreover, the encoding dimension is fixed. If a sentence exceeds the maximum sequence length, the model cannot effectively represent the positional relationships of the extra words.

The dynamic position bias proposed in this paper improves upon traditional absolute position encoding. Its core idea is to combine relative position encoding with a learnable position bias. This approach is based on the attention mechanism. Relative position encoding28 captures the distance between words. For example, when two words are adjacent, their relative distance is 1, regardless of their absolute positions in the sentence.Dynamic position bias introduces learnable bias parameters based on this relative encoding. These parameters are automatically learned during model training via backpropagation. As a result, the model can adjust the position biases according to the data distribution, which helps it handle different tasks and sequence lengths more effectively.This dynamic bias is applied during the weight calculation of the attention mechanism. When computing the attention weight, the model considers both the word-to-word relevance (derived from the content vectors) and the relative positional bias as an additional weighting term. This means that the attention mechanism assigns different biases to different relative positions. As a result, the model can better distinguish dependencies between words and improve its understanding of the semantic meaning of the text.The structure of the dynamic positional bias is shown in Fig. 2.

Fig. 2.

The architecture of the dynamic position bias.

Algorithm 1 details how dynamic positional bias is integrated into the attention mechanism. This step-by-step explanation clarifies the calculation process.

First, the basic attention score is computed through the dot product of Query and Key vectors. This follows the standard approach used in traditional attention mechanisms.Next, relative distances between tokens are encoded into a vector R. This step establishes relative positional encoding, where positional relationships are determined by token distances rather than absolute positions. The encoded vector R is then processed by a multi-layer perceptron (MLP). This transformation converts relative distances into meaningful positional biases through the MLP processing.A learnable parameter b is then added to refine this bias. During training, b is optimized through backpropagation. This learnable component enables the model to adapt positional biases dynamically based on varying data patterns and task needs.Next, the dynamic positional bias B is combined with the basic attention score. This enhancement improves how the model processes and applies relative positional information. Finally, the updated attention score is converted into attention weights. These weights are applied to generate the final output.

By integrating dynamic positional bias in this sequential manner, the model gains stronger awareness of positional relationships. This improvement allows it to better handle tasks requiring precise understanding of context and relative token positions.

Algorithm 1.

Dynamic Bias in Self-Attention

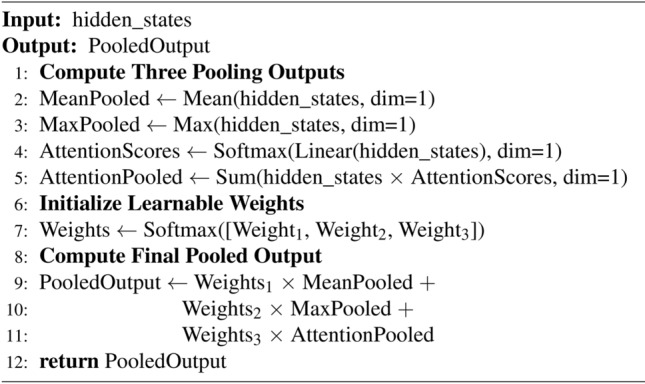

Dynamic weighted fusion strategy

In classification tasks, the CLS pooling mechanism in the BERT model is commonly used to obtain a sentence representation. It treats the CLS token vector as a summary of the entire sentence and directly uses it for classification. This vector is progressively updated through the multi-layer transformer and is designed to capture global sentence information.However, the CLS vector provides only a compressed representation of the sentence. It may fail to capture all important details, especially in long or semantically complex sentences. In sentiment classification task29, for example, multiple sentiment words may need to be considered together. A single CLS vector may not sufficiently encode this diverse sentiment information. Additionally, CLS pooling is static, meaning it does not adapt to different inputs or tasks. Unlike the self-attention mechanism, it does not flexibly focus on the most relevant parts of a sentence in different contexts. As a result, it struggles with tasks that require capturing local details or complex semantic relationships.

To address these limitations, this paper proposes a dynamically weighted fusion strategy that integrates average pooling, max pooling, and self-attention pooling. By introducing learnable dynamic weights, the model can adaptively combine the advantages of different pooling methods. Instead of relying on a single pooling approach, the model dynamically selects the most suitable strategy for the given input.This approach allows for multi-perspective feature extraction. Average pooling captures general sentence-level features, max pooling highlights the most informative features, and self-attention pooling selectively emphasizes important parts of the sentence. Learnable weights further enhance adaptability by adjusting during training through backpropagation30 and gradient descent31. This improves the model’s flexibility and performance across different tasks and datasets.The structure of the dynamic weighted fusion strategy is shown in Fig. 3.

Fig. 3.

The architecture of the dynamic weighted fusion strategy.

In order to more clearly demonstrate the implementation process of this strategy, we provide Algorithm 2 to detail the specific calculation steps of the dynamic weighted fusion strategy.Starting from the input sequence embedding H, the model first computes feature representations using three different pooling methods. Average pooling calculates the global representation by averaging the sequence embeddings, thereby capturing the overall information within the sequence. Maximum pooling selects the maximum value along each dimension to extract the most significant features, highlighting the critical aspects of the input sequence. Self-attention pooling employs an attention mechanism to generate a weight matrix that focuses on the semantically important parts of the sequence, enhancing contextual understanding. Subsequently, a learnable parameter matrix W and a softmax function are utilized to produce dynamic weights that adjust the contributions of the three pooling methods. Finally, the model computes a weighted sum of the feature representations from these pooling methods to generate the final global feature representation, effectively integrating comprehensive, salient, and contextually relevant information.

Algorithm 2.

Dynamic Weighted Pooling Strategy

Data augmentation and L2 regularization

EDA operations and noise injection were applied to the training dataset. EDA comprises four basic operations: synonym replacement, random insertion, random replacement, and random deletion. By slightly perturbing the original text, EDA generates a large number of diverse training samples. This increased variety helps the model understand multiple expressions and prevents it from overfitting to specific patterns. It also prepares the model to handle different inputs and reduces errors when encountering unfamiliar data. This approach is especially useful when dealing with small sample sizes or insufficient datasets.

Adding noise to the training data simulates real-world scenarios where inputs may be incorrect or imperfect. This uncertainty teaches the model to handle abnormal data effectively. Instead of memorizing specific training patterns, the model learns to capture the underlying structure and patterns of the data. As a result, the model becomes more robust in various environments and under different input conditions.

Additionally, L2 regularization is employed during model training. By adding a weight attenuation term to the loss function, L2 regularization helps prevent the model from overfitting. When combined with data augmentation, it effectively reduces the risk of overfitting and improves performance on unseen data. Together, data augmentation and regularization enhance the model’s robustness and generalization capabilities when processing diverse text inputs.

Experimental analysis

IMDb dataset

The dataset used in this paper is the IMDb movie review dataset, which contains 50,000 movie review samples from the IMDb movie database, divided into two categories: positive reviews and negative reviews. The dataset is randomly divided into training set, validation set, and test set in the proportion of 80%, 10%, and 10%, respectively, including 40,000 training samples, 5,000 validation samples, and 5,000 test samples. The dataset covers a variety of movie genres and different user reviews, and the length of the movie reviews varies from short comments to detailed reviews. It is very diverse and challenging, and can effectively test the performance of the model in the sentiment classification task. As shown in Table 1.

Table 1.

IMDb dataset.

| Characteristic | Description |

|---|---|

| Dataset name | IMDb movie review dataset |

| Sample size | 50000 |

| Category | Positive, Negative |

| Average movie review length | About 230 words |

| Number of training set samples | 40000 |

| Number of validation set samples | 5000 |

| Number of test set samples | 5000 |

| Data source | IMDb movie database |

To reduce bias in sentiment prediction32,33, this study applies several preprocessing techniques to remove or blur sensitive information from the data. Sensitive information includes words or features related to gender, race, religion, and other similar attributes. Data augmentation techniques-such as synonym replacement, random insertion, random deletion, random swapping, and noise injection-are then used to generate neutral comment samples. This process helps decrease the model’s reliance on sensitive features and enhances its fairness and robustness.

First, a list of sensitive words is defined. This list includes gender terms (e.g., “male,” “female”) and racial terms (e.g., “white,” “black”), among others. The predefined list is used to identify sensitive words in the text. These words are then replaced with general labels or placeholders. For example, “male” can be replaced with “[gender]” or “[sensitive word].”

If it is necessary to retain sensitive words, Word2Vec is used to reduce their impact. In this case, words containing sensitive information are replaced with similar, neutral words. For example, “male” might be replaced with “human” or “individual.” If a text contains specific gender, race, or other sensitive labels, that information can be removed or replaced with a more neutral description. For instance, the phrase “black male” can be modified to “individual.”

Next, data augmentation techniques generate diverse neutral review samples. Techniques like synonym replacement and random insertion help ensure a balanced ratio of sentiment-neutral samples. This balance reduces the model’s reliance on extreme sentiments, such as overly positive or overly negative expressions.

Finally, the generated neutral samples are merged into the training set. Figure 4 illustrates this process.

Fig. 4.

Data preprocessing.

Experimental environment and parameter setting

The experimental environment for this article is: Operating System: Windows 10; GPU: NVIDIA RTX 2080; Deep learning framework: PyTorch; programming language: python3.10; experimental environment built in Pycharm.

In the present experiment, Bayesian optimization34 was utilized to systematically search for key hyperparameters, and an early stopping mechanism35 was introduced to prevent overfitting of the model. Through the implementation of Bayesian optimization, we were able to intelligently adjust parameters such as the learning rate, batch size, and maximum sequence length. The optimal hyperparameter configuration that was determined on the validation set included 15 epochs, a batch size of 16, a maximum length of 128, and an initial learning rate of 0.000001.During the training process, the accuracy, F1 score, and loss value of the validation set were monitored in real time. The early stopping mechanism was employed to terminate the training when there was no significant improvement in the indicators within several consecutive epochs. The experimental findings indicate that the performance metrics of the model attained their zenith at the 15th epoch, coinciding with the stabilization of the validation loss. Subsequent training did not yield any enhancement in performance; rather, it exhibited indications of overfitting.

Consequently, the integration of Bayesian optimization with the early stopping mechanism was undertaken to ascertain the most optimal hyperparameter configuration. This investigation was conducted within the constraints of the existing dataset and experimental parameters. The optimal configuration, as determined by this analysis, comprises 15 epochs, a batch size of 16, a maximum length of 128, and an initial learning rate of 0.000001.The training parameter settings are shown in Table 2.

Table 2.

Training parameters of the model.

| Training parameters | Details |

|---|---|

| Epochs | 15 |

| Batch-size | 16 |

| Category | Positive, Negative |

| Max length | 128 |

| Initial learning rate | 0.000001 |

Model evaluation

The main evaluation metrics used in this paper are F1 score, accuracy and confusion matrix.

The F1 score is a harmonic mean of precision and recall that comprehensively reflects the classification effect of the model. The F1 score strikes a balance between precision and recall, and is suitable for evaluating the overall performance of the model when the categories are unbalanced. For the movie review classification task, the F1 score can effectively measure the overall performance of the model in classifying positive and negative emotions. The accuracy rate is the proportion of samples with correct predictions by the model out of all samples. When the category distribution is balanced, the accuracy rate is a very intuitive overall performance metric that reflects the overall classification ability of the model. The confusion matrix shows a detailed comparison of the model’s prediction and actual classification, including the number of correct and incorrect classifications. The confusion matrix clearly shows in which categories the model performs better or worse, which is helpful in diagnosing model problems.

The formula is:

|

In this context, precision refers to the proportion of actual positive samples among those predicted as positive by the model. Conversely, the recall rate represents the proportion of correctly predicted positive samples among all actual positive samples. TP represents the number of samples that are predicted by the model to be positive and are, in fact, positive. FP denotes the number of samples that are predicted by the model to be positive but are, in reality, negative. TN signifies the number of samples that are predicted by the model to be negative and are, in truth, negative. FN, on the other hand, refers to the number of samples that are predicted by the model to be negative but are, in fact, positive.

Results and discussion

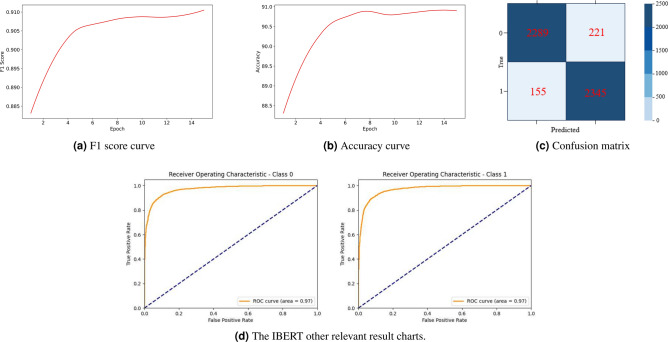

Experimental results of the IBERT model

The results of the experimental investigation into the efficacy of the IBERT model are presented in Fig. 5. Figure 5a illustrates the F1 score curve, Fig. 5b depicts the accuracy curve, Fig. 5c displays the confusion matrix, and Fig. 5d illustrates other pertinent plots associated with this experiment.

Fig. 5.

Experiment result charts.

To illustrate the actual effect of the model, a test was conducted on the IMDB movie review dataset. The subsequent cases are employed to elucidate the model’s performance and application scenarios. For a more thorough examination of these cases, please refer to Table 3.

Table 3.

Real case analysis.

| Case number | Actual comments | Sentiment prediction | Prediction rating | Actual score | Analysis and discussion |

|---|---|---|---|---|---|

| 1 | “The movie was absolutely fantastic! The acting was superb, and the story kept me engaged the entire time.” | Positive | 8.5 | 9 | The model correctly identified the positive sentiment in the comments and predicted a high score, validating the accuracy of the model in positive sentiment comments. |

| 2 | “The movie was okay, but I felt that it was a bit too long. Some parts dragged a little.” | Neutral | 6 | 6 | The model successfully captures the neutral sentiment in the comments and gives reasonable rating predictions, indicating that the model can effectively handle comments that lack strong emotions. |

| 3 | “This movie was a complete waste of time. The acting was terrible, and the plot made no sense at all.” | Negative | 1 | 1 | In the negative sentiment reviews, the model accurately identified strong negative sentiments and predicted low ratings, demonstrating its robustness in negative sentiment analysis. |

| 4 | Action Movies: “An intense thrill ride with mind-blowing action sequences and an amazing cast!” | Positive | 8.5 | – | The model accurately identified the positive sentiment in the action movie and predicted a high rating. |

| 5 | Romance Movies: “A heartfelt story about love and loss, with incredible performances from the lead actors.” | Positive | 9 | – | The model was able to capture the positive sentiment in the romance film reviews and predicted a 9-star rating, demonstrating good sentiment recognition capabilities. |

| 6 | Comedy Movies: “The movie had some funny moments, but overall, it was just too predictable and cliché.” | Neutral | 6 | – | The model successfully handled neutral sentiment in comedy films and predicted a 6-star rating, demonstrating adaptability and diversity across different movie genres. |

Ablation experiment

To verify the IBERT model in this paper, ablation experiments are carried out by combining several modules. The experimental results on the same test set are shown in Table 3. As can be seen from the table, with BERT-base as the base model, Combination 1 is the OBERT model that has not been improved; Combination 2 is to change the position encoding method of the Bert model, abbreviated as Imroved1 in the table below, the accuracy is improved by 0.42% and the F1 score is improved by 0.33%; Combination 3 is to change the pooling strategy of the Bert model, abbreviated as Imroved2 in the table below, the accuracy reached 90. 58%, and the F1 score reached 0.89; Combination 4 is a combination of Combination 2 and Combination 3, changing the position coding method and the pooling strategy of the Bert model, the accuracy reached 90.78%, the F1 score increased by 0.54%, and the recognition ability of positive and negative samples in the confusion matrix was also improved; Combination 5 is a data enhancement operation based on Combination 4. Compared to the original model, the accuracy is improved by 0.83%, the F1 score is improved by 0.65%, and the recognition ability of positive and negative samples in the confusion matrix is further improved compared to combination 4. It is the model with the strongest performance in this experiment, which proves the effectiveness of this paper’s improvement of the OBERT model. EDA and noise injection are abbreviated as DA, F1 score is abbreviated as F1, and accuracy is abbreviated as A. Table 4 shows the results of the ablation experiment.

Table 4.

Training parameters of the model.

| Combination | Improved1 | Improved2 | DA | F1 | A(%) |

|---|---|---|---|---|---|

| 1 | 0.88 | 90.22 | |||

| 2 |

|

|

|

0.89 | 90.64 |

| 3 |

|

|

|

0.89 | 90.58 |

| 4 |

|

|

|

0.90 | 90.78 |

| 5 |

|

|

|

0.92 | 91.12 |

Significant values are in bold.

To more effectively illustrate the enhanced efficacy of the revised Bert model, data visualization techniques were employed to present performance comparison graphs between the OBERT model and the IBERT model, as well as the data enhancement operations based on the improvements. The comparison results are illustrated in Fig. 6. In Fig. 6, the horizontal coordinate represents the epoch number, while the vertical coordinate represents the F1 score, accuracy, training loss, and validation loss, respectively. Figure 6e depicts the confusion matrix.

Fig. 6.

Comparison of results between the IBERT and the OBERT.

Comparison with other models

To investigate in more detail the performance of the improved model proposed in this paper in text classification tasks, we conducted comparative experiments with other prominent classical models, including CNN, RNN, LSTM, GRU36 and FastText37, using the same dataset and training parameters. The results of the comparative experiments are presented in Table 5. As evidenced in Table 4, the IBERT model demonstrated superior detection performance compared to other models, including CNN, RNN, LSTM, GRU, FastText, RoBERTa38, XLNet39, T540 and the OBERT model.F1 score is abbreviated as F1, and accuracy is abbreviated as A.

Table 5.

Training parameters of the model.

| Models | F1 | A(%) |

|---|---|---|

| CNN | 0.86 | 86.81 |

| RNN | 0.82 | 83.28 |

| LSTM | 0.87 | 87.11 |

| GRU | 0.85 | 85.42 |

| FastText | 0.88 | 89.01 |

| RoBERTa | 0.91 | 90.88 |

| XLNet | 0.91 | 90.76 |

| T5 | 0.89 | 90.32 |

| BERT | 0.89 | 90.22 |

| IBERT | 0.92 | 91.12 |

Significant values are in bold.

Experimental effect verification

To further demonstrate the performance improvement of the IBERT model and the generalization ability of the improved model, this paper also selects two other public datasets, SST-2 and AGNews, for verification, and compares the classification results of the model before and after the improvement to clearly show the difference brought about by the improvement. The comparison results are shown in Figs. 7 and 8. The blue curve in the figure shows the F1 score and accuracy of the OBERT model classification, while the red curve shows the F1 score and accuracy of the IBERT model. It can be seen from the figure that the F1 score and accuracy of the IBERT model have both improved compared to the OBERT model. It can also be seen from the confusion matrix that the IBERT model has also improved its ability to identify positive and negative samples compared to the OBERT model. Therefore, the generalization ability of the IBERT model has also been improved.

Fig. 7.

Experimental results on SST-2 dataset.

Fig. 8.

Experimental results on AGNews dataset.

Conclusions

This paper proposes improvements to the BERT model. The new IBERT model introduces a content-based dynamic position offset into the attention mechanism. It replaces the original absolute position encoding with a dynamic position offset encoding. In addition, the traditional CLS pooling is replaced by a dynamic weighted fusion pooling strategy. This new pooling strategy combines average pooling, maximum pooling, and self-attention pooling to extract more comprehensive key information from the text.The model’s generalization ability is further enhanced by applying EDA-based data augmentation and noise injection to the training dataset. Experimental results show that, compared with the OBERT model and other mainstream text classification models, IBERT can more effectively capture semantic features. It achieves better performance in sentiment classification of IMDb movie reviews, reduces misclassification under complex language structures, and improves both accuracy and robustness.

These improvements have broad application potential. They are relevant not only for sentiment analysis but also for tasks such as text summarization and machine translation. In the future, the model’s performance on long texts and complex contexts can be further enhanced by expanding the dataset and optimizing the dynamic position offset mechanism. This will further improve the model’s applicability and stability.

Author contributions

Ning Weijun was responsible for writing the main part of the manuscript and performed data analysis. Wu Haodong prepared Figs. 1, 2 and 3. Zhao Qihao prepared Figs. 4, 5 and 6. Zhang Tianxin participated in the experimental design and data collection. Wang Fuwei, as the first supervisor, guided the development of the research plan and the entire research process. Wang Weimin, as the second supervisor, provided important guidance on the experimental methods and results discussion. All authors participated in the results discussion and reviewed the final version of the manuscript.

Data availability

The datasets used in this study are accessible through the following links: IMDb: https://datasets.imdbws.com and SST-2: https://nlp.stanford.edu/sentiment and AGNews: https://huggingface.co/datasets/fancyzhx/ag_news.

Declarations

Competing interests

The authors declare no competing interests.

Footnotes

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1.Chen, P. -C. et al. A simple and effective positional encoding for transformers. arXiv:2104.08698 (2021).

- 2.Zhang, C., Liwicki, S. & Cipolla, R. Beyond the CLS token: Image reranking using pretrained vision transformers. In BMVC, 80 (2022).

- 3.Bieder, F., Sandkühler, R. & Cattin, P. C. Comparison of methods generalizing max-and average-pooling. arXiv:2103.01746 (2021).

- 4.Zhou, P. et al. Text classification improved by integrating bidirectional LSTM with two-dimensional max pooling. arXiv:1611.06639 (2016).

- 5.Chen, F., Datta, G., Kundu, S. & Beerel, P. A. Self-attentive pooling for efficient deep learning. In Proceedings of the IEEE/CVF winter conference on applications of computer vision, 3974–3983 (2023).

- 6.Wei, J. & Zou, K. Eda: Easy data augmentation techniques for boosting performance on text classification tasks. arXiv:1901.11196 (2019).

- 7.Rizos, G., Hemker, K. & Schuller, B. Augment to prevent: short-text data augmentation in deep learning for hate-speech classification. In Proceedings of the 28th ACM international conference on information and knowledge management, 991–1000 (2019).

- 8.Xie, X., Xie, M., Moshayedi, A. J. & Noori Skandari, M. H. A hybrid improved neural networks algorithm based on L2 and dropout regularization. Math. Probl. Eng.2022, 8220453 (2022). [Google Scholar]

- 9.Pang, B., Lee, L. & Vaithyanathan, S. Thumbs up? Sentiment classification using machine learning techniques. arXiv:cs/0205070 (2002).

- 10.Mikolov, T. Efficient estimation of word representations in vector space. arXiv:1301.37813781 (2013).

- 11.Pennington, J., Socher, R. & Manning, C. D. Glove: Global vectors for word representation. In Proceedings of the 2014 conference on empirical methods in natural language processing (EMNLP), 1532–1543 (2014).

- 12.Zhang, Y. & Wallace, B. A sensitivity analysis of (and practitioners’ guide to) convolutional neural networks for sentence classification. arXiv:1510.03820 (2015).

- 13.Subramanian, A. A. V. & Venugopal, J. P. A deep ensemble network model for classifying and predicting breast cancer. Comput. Intell.39, 258–282 (2023). [Google Scholar]

- 14.Tang, D., Qin, B. & Liu, T. Document modeling with gated recurrent neural network for sentiment classification. In Proceedings of the 2015 conference on empirical methods in natural language processing, 1422–1432 (2015).

- 15.Ashish, V. Attention is all you need. Adv. Neural Inf. Process. Syst.30, (2017).

- 16.Devlin, J. Bert: Pre-training of deep bidirectional transformers for language understanding. arXiv:1810.04805 (2018).

- 17.Shaw, P., Uszkoreit, J. & Vaswani, A. Self-attention with relative position representations. arXiv:1803.02155 (2018).

- 18.Beltagy, I., Peters, M. E. & Cohan, A. Longformer: The long-document transformer. arXiv:2004.05150 (2020).

- 19.Kitaev, N., Kaiser, Ł. & Levskaya, A. Reformer: The efficient transformer. arXiv:2001.04451 (2020).

- 20.Gao, T., Yao, X. & Chen, D. SimCSE: Simple contrastive learning of sentence embeddings. arXiv:2104.08821 (2021).

- 21.He, P., Liu, X., Gao, J. & Chen, W. DeBERTa: Decoding-enhanced BERT with disentangled attention. arXiv:2006.03654 (2020).

- 22.Ke, G., He, D. & Liu, T.-Y. Rethinking positional encoding in language pre-training. arXiv:2006.15595 (2020).

- 23.Raisanen, V., Elbamby, M. & Petrov, D. Cross-stakeholder service orchestration for B5G through capability provisioning. arXiv:2008.07162 (2020).

- 24.Karthikeyan, N. et al. A novel attention-based cross-modal transfer learning framework for predicting cardiovascular disease. Comput. Biol. Med.170, 107977 (2024). [DOI] [PubMed] [Google Scholar]

- 25.Zafar, A. et al. A comparison of pooling methods for convolutional neural networks. Appl. Sci.12, 8643 (2022). [Google Scholar]

- 26.Xiao, N. & Zhang, L. Dynamic weighted learning for unsupervised domain adaptation. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 15242–15251 (2021).

- 27.Yu, D., Wang, H., Chen, P. & Wei, Z. Mixed pooling for convolutional neural networks. In Rough sets and knowledge technology: 9th international conference, RSKT 2014, Shanghai, China, October 24-26, 2014, Proceedings 9, 364–375 (Springer, 2014).

- 28.Wu, K., Peng, H., Chen, M., Fu, J. & Chao, H. Rethinking and improving relative position encoding for vision transformer. In Proceedings of the IEEE/CVF international conference on computer vision, 10033–10041 (2021).

- 29.Liu, S. M. & Chen, J.-H. A multi-label classification based approach for sentiment classification. Expert Syst. Appl.42, 1083–1093 (2015). [Google Scholar]

- 30.Hecht-Nielsen, R. Theory of the backpropagation neural network. In Neural Netw. Percept., 65–93 (Elsevier, 1992).

- 31.Haji, S. H. & Abdulazeez, A. M. Comparison of optimization techniques based on gradient descent algorithm: A review. PalArch’s J. Archaeol. Egypt/Egyptol.18, 2715–2743 (2021). [Google Scholar]

- 32.Jothi Prakash, V. & Arul Antran Vijay, S. A multi-aspect framework for explainable sentiment analysis. Pattern Recogn. Lett.178, 122–129 (2024). [Google Scholar]

- 33.Prakash, J. & Vijay, A. A. S. Cross-lingual sentiment analysis of Tamil language using a multi-stage deep learning architecture. ACM Trans. Asian Low-Resour. Lang. Inf. Process.22 (2023).

- 34.Wang, X., Jin, Y., Schmitt, S. & Olhofer, M. Recent advances in Bayesian optimization. ACM Comput. Surv.55, 1–36 (2023). [Google Scholar]

- 35.Ferro, M. V., Mosquera, Y. D., Pena, F. J. R. & Bilbao, V. M. D. Early stopping by correlating online indicators in neural networks. Neural Netw.159, 109–124 (2023). [DOI] [PubMed] [Google Scholar]

- 36.Dey, R. & Salem, F. M. Gate-variants of gated recurrent unit (GRU) neural networks. In 2017 IEEE 60th international midwest symposium on circuits and systems (MWSCAS), 1597–1600 (IEEE, 2017).

- 37.Yao, T., Zhai, Z. & Gao, B. Text classification model based on fastText. In 2020 IEEE International conference on artificial intelligence and information systems (ICAIIS), 154–157 (IEEE, 2020).

- 38.Yinhan, L. et al. Roberta: A robustly optimized BERT pretraining approach. arXiv:1907.11692 1–13 (2019).

- 39.Yang, Z. XLNet: Generalized autoregressive pretraining for language understanding. arXiv:1906.08237 (2019).

- 40.Raffel, C. et al. Exploring the limits of transfer learning with a unified text-to-text transformer. J. Mach. Learn. Res.21, 1–67 (2020).34305477 [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.

Data Availability Statement

The datasets used in this study are accessible through the following links: IMDb: https://datasets.imdbws.com and SST-2: https://nlp.stanford.edu/sentiment and AGNews: https://huggingface.co/datasets/fancyzhx/ag_news.